Significance

Although sign languages and nonlinguistic gesture use the same modalities, only sign languages have established vocabularies and follow grammatical principles. This is the first study (to our knowledge) to ask how the brain systems engaged by sign language differ from those used for nonlinguistic gesture matched in content, using appropriate visual controls. Signers engaged classic left-lateralized language centers when viewing both sign language and gesture; nonsigners showed activation only in areas attuned to human movement, indicating that sign language experience influences gesture perception. In signers, sign language activated left hemisphere language areas more strongly than gestural sequences. Thus, sign language constructions—even those similar to gesture—engage language-related brain systems and are not processed in the same ways that nonsigners interpret gesture.

Keywords: brain, American Sign Language, fMRI, deafness, neuroplasticity

Abstract

Sign languages used by deaf communities around the world possess the same structural and organizational properties as spoken languages: In particular, they are richly expressive and also tightly grammatically constrained. They therefore offer the opportunity to investigate the extent to which the neural organization for language is modality independent, as well as to identify ways in which modality influences this organization. The fact that sign languages share the visual–manual modality with a nonlinguistic symbolic communicative system—gesture—further allows us to investigate where the boundaries lie between language and symbolic communication more generally. In the present study, we had three goals: to investigate the neural processing of linguistic structure in American Sign Language (using verbs of motion classifier constructions, which may lie at the boundary between language and gesture); to determine whether we could dissociate the brain systems involved in deriving meaning from symbolic communication (including both language and gesture) from those specifically engaged by linguistically structured content (sign language); and to assess whether sign language experience influences the neural systems used for understanding nonlinguistic gesture. The results demonstrated that even sign language constructions that appear on the surface to be similar to gesture are processed within the left-lateralized frontal-temporal network used for spoken languages—supporting claims that these constructions are linguistically structured. Moreover, although nonsigners engage regions involved in human action perception to process communicative, symbolic gestures, signers instead engage parts of the language-processing network—demonstrating an influence of experience on the perception of nonlinguistic stimuli.

Sign languages such as American Sign Language (ASL) are natural human languages with linguistic structure. Signed and spoken languages also largely share the same neural substrates, including left hemisphere dominance revealed by brain injury and neuroimaging. At the same time, sign languages provide a unique opportunity to explore the boundaries of what, exactly, “language” is. Speech-accompanying gesture is universal (1), yet such gestures are not language—they do not have a set of structural components or combinatorial rules and cannot be used on their own to reliably convey information. Thus, gesture and sign language are qualitatively different, yet both convey symbolic meaning via the hands. Comparing them can help identify the boundaries between language and nonlinguistic symbolic communication.

Despite this apparently clear distinction between sign language and gesture, some researchers have emphasized their apparent similarities. One focus of contention has been “classifier constructions” (also called “verbs of motion”). In ASL, a verb of motion (e.g., moving in a circle) includes a root expressing the motion event, morphemes marking the manner and direction of motion (e.g., forward or backward), and a classifier that specifies the semantic category (e.g., vehicle) or the size and shape (e.g., round, flat) of the moving object (2). Although verbs of motion with classifiers also occur in some spoken languages, in ASL these constructions are often iconic (the forms of the morphemes frequently resemble the visual–spatial meanings they express), and they have therefore become a focus of debate about the degree to which they (and other parts of ASL) are linguistic or gestural in character. Some researchers have argued that the features of motion and spatial relationships marked in ASL verbs of motion are not in fact linguistic morphemes but are based on the analog imagery system that underlies nonlinguistic visual–spatial processing (3–5). In contrast, Supalla (2, 6, 7) and others have argued that these ASL constructions are linguistic in nature: they differ from gestures in having segmental structure, in being produced and perceived in a discrete, categorical (rather than analog) manner, and in being governed by morphological and syntactic regularities found in other languages of the world.

These similarities and contrasts between sign language and gesture allow us to ask some important questions about the neural systems for language and gesture. The goal of this study was to examine the neural systems underlying the processing of ASL verbs of motion compared with nonlinguistic gesture. This allowed us to ask whether, from the point of view of neural systems, there is “linguistic structure” in ASL verbs of motion. It also allowed us to distinguish networks involved in “symbolic communication” from those involved specifically in language, and to determine whether “sign language experience” alters systems for gesture comprehension.

It is already established that a very similar, left-lateralized neural network is involved in the processing of many aspects of lexical and syntactic information in both spoken and signed languages. This includes the inferior frontal gyrus (IFG) (classically called Broca’s area), superior temporal sulcus (STS) and adjacent superior and middle temporal gyri, and the inferior parietal lobe (IPL) (classically called Wernicke’s area) including the angular (AG) and supramarginal gyri (SMG) (4, 8–18). Likewise, narrative and discourse-level aspects of signed language depend largely on right STS regions, as they do for spoken language (17, 19).

Although the neural networks engaged by signed and spoken language are overall quite similar, some studies have suggested that the linguistic use of space in sign language engages additional brain regions. During both comprehension and production of spatial relationships in sign language, the superior parietal lobule (SPL) is activated bilaterally (4, 5, 12). In contrast, parallel studies in spoken languages have found no (12) or only left (20) parietal activation when people describe spatial relationships. These differences between signed and spoken language led Emmorey et al. (5) to conclude that, “the location and movements within [classifier] constructions are not categorical morphemes that are selected and retrieved via left hemisphere language regions” (p. 531). However, in these studies, signers had to move their hands whereas speakers did not; it is unclear whether parietal regions are involved in processing linguistic structure in sign language as opposed to simply using the hands to symbolically represent spatial structure and relationships. Other studies have touched on the question of symbolic communication, comparing the comprehension of sign language with pantomime and with meaningless, sign-like gestures (11, 21–23). In signers, activation for both sign language and pantomime gestures was reported in classical language-related areas including the IFG, the posterior region of the STS (STSp), and the SMG, although typically these activations are stronger for sign language than gesture. Similar patterns of activation—although often more bilateral—have been observed in nonsigners, for meaningful as well as for meaningless gesture perception (24–27).

Thus, on the one hand, the classical left-lateralized “language-processing” network appears to be engaged by both signers and nonsigners for interpreting both sign language and nonlinguistic gesture. On the other hand, sign language experience appears to drive a specialization of these regions for signs over nonsigns in signers, whereas nonsigners show similar levels of activation for gestures and for spoken descriptions of human actions (27). In the right hemisphere, when viewing gestures, both signers and nonsigners show activation of the homologs of the left hemisphere language regions noted above, including the STS (both the STSp, associated with biological motion, and more anterior areas), inferior and superior parietal regions, and the IFG.

One important caveat in considering the literature is that most studies have compared task-related activation to a simple baseline condition that did not control for the types of movements made or for other low-level stimulus features. Thus, apparent differences between sign language and gesture may in fact be attributable to their distinct physical/perceptual qualities, whereas subtle but important activation differences between sign language and nonlinguistic communication may have been “washed out” by the overall similarity of brain activation when people attempt to find meaning in hand, body, and face movement relative to a static stimulus.

Our goal in the present study was to further investigate the neural processing of ASL verbs of motion and to determine whether we could dissociate the brain systems involved in deriving meaning from “symbolic communication” (including both language and gesture) from those specifically engaged by “linguistically structured content” (sign language), while controlling for the sensory and spatial processing demands of the stimuli. We also asked whether sign language experience influences the brain systems used to understand nonlinguistic gesture. To address these questions, we measured brain activation using functional MRI (fMRI) while two groups of people, deaf native ASL signers and nonsigning, hearing native English speakers, viewed two types of videos: ASL verbs of motion constructions describing the paths and manners of movement of toys [e.g., a toy cow falling off a toy truck, as the truck moves forward (2); see Fig. S5 for examples], and gestured descriptions of the same events. Importantly, we also included a “backward-layered” control condition in which the ASL and gesture videos were played backward, with three different videos superimposed (as in refs. 17 and 18).
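The backward-layered control condition can be illustrated with a minimal sketch. It assumes each video has already been decoded into a NumPy array of frames and that "superimposed" means averaging pixel intensities across the three reversed clips; the text does not state the blending rule or how clips of different lengths were aligned, so trimming to the shortest clip is also an assumption. The function name `backward_layered` is hypothetical.

```python
import numpy as np


def backward_layered(clips):
    """Reverse each clip in time and superimpose them into one control clip.

    `clips` is a sequence of arrays shaped (frames, height, width[, channels])
    with matching spatial dimensions. Assumptions (not stated in the text):
    clips are trimmed to the shortest length and blended by averaging.
    """
    if len(clips) == 0:
        raise ValueError("need at least one clip")
    n_frames = min(clip.shape[0] for clip in clips)
    # Play each clip backward, then keep a common number of frames.
    reversed_clips = [clip[::-1][:n_frames].astype(np.float64) for clip in clips]
    # Superimpose by averaging pixel values across the layered clips.
    return np.mean(reversed_clips, axis=0)
```

With three decoded clips, `backward_layered([clip_a, clip_b, clip_c])` would yield one control clip (the variable names are placeholders). Averaging keeps pixel values within the original intensity range, while reversal and layering disrupt the temporal order and legibility of the signs and gestures.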

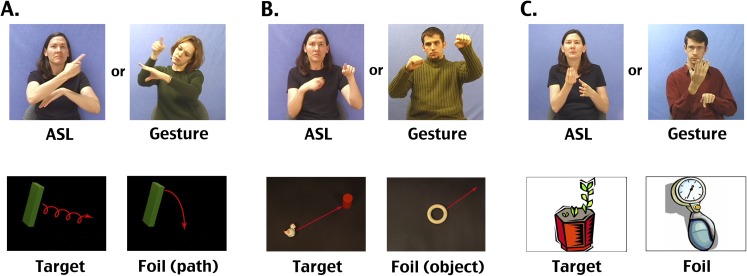

Fig. S5.

Example stimuli. Top row: Example frames from ASL and gesture movies shown to participants in the fMRI study. A and B are from movies elicited by stop-motion videos produced by author TS (2); C is from movies elicited by clip art stimuli. Bottom row: Still images shown to participants in the fMRI study after each ASL or gesture stimulus. For A and B, these images also represent the objects and motion paths in the original elicitation stimuli. Although the animated elicitation videos were easy to understand, screenshots of them were not very clear; these images are therefore reconstructions of the scenes, made with the same props as in the original movies or very similar ones.

We predicted that symbolic communication (i.e., both gesture and ASL, in signers and nonsigners) would activate areas typically seen in both sign language and meaningful gesture processing, including the left IFG and the SMG and STSp bilaterally. We further predicted that the left IFG would show stronger activation for linguistically structured content, i.e., in the contrast of ASL versus gesture in signers but not in nonsigners. We also predicted that other areas typically associated with syntactic processing and lexical retrieval, including the anterior and middle left STS, would be more strongly activated by ASL in signers. Such a finding would support the argument that verbs of motion constructions are governed by linguistic morphology, and would argue against the view that they are gestural rather than linguistic constructions. Finally, we predicted that visual–manual language experience would lead to greater activation of the left IFG in signers than in nonsigners when viewing gesture, although less than for ASL.

Results

Behavioral Performance.

Deaf signers were more accurate than hearing nonsigners in judging which picture matched the preceding video, for both ASL (signers: 1.99% errors; nonsigners: 17.85% errors) and gestures (signers: 2.37% errors; nonsigners: 5.68% errors) (Fig. S1). A generalized linear mixed model with a binomial distribution, including the factors Group (signers, nonsigners) and Stimulus Type (ASL, gesture), identified a significant Group × Stimulus Type interaction (z = 3.53; P = 0.0004). Post hoc tests showed that signers were significantly more accurate than nonsigners for both ASL (z = 7.61; P < 0.0001) and gestures (z = 2.9; P = 0.004). Furthermore, deaf signers showed similar accuracy for ASL and gesture stimuli, whereas hearing nonsigners were significantly more accurate in judging gestural than ASL stimuli (z = 6.91; P < 0.0001).
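To make the Group × Stimulus Type interaction concrete, the sketch below computes the analogous contrast from the reported mean error rates on the log-odds (logit) scale that a binomial model uses. It is a descriptive illustration only: the fitted mixed model works on individual trials and includes random effects, so this difference of differences does not reproduce the reported z statistic.

```python
import math


def logit(p):
    """Log-odds of a proportion p (0 < p < 1)."""
    return math.log(p / (1.0 - p))


# Mean error proportions reported in the text.
errors = {
    ("signers", "ASL"): 0.0199,
    ("signers", "gesture"): 0.0237,
    ("nonsigners", "ASL"): 0.1785,
    ("nonsigners", "gesture"): 0.0568,
}


def stimulus_effect(group):
    """ASL minus gesture difference in error log-odds for one group."""
    return logit(errors[(group, "ASL")]) - logit(errors[(group, "gesture")])


# Interaction = difference between the two groups' stimulus effects (logit scale).
interaction = stimulus_effect("nonsigners") - stimulus_effect("signers")
print(f"signers: {stimulus_effect('signers'):+.2f}, "
      f"nonsigners: {stimulus_effect('nonsigners'):+.2f}, "
      f"interaction: {interaction:+.2f}")
# prints "signers: -0.18, nonsigners: +1.28, interaction: +1.46"
```

The near-zero effect for signers and the large positive effect for nonsigners (more errors on ASL) are what drive the positive interaction term.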

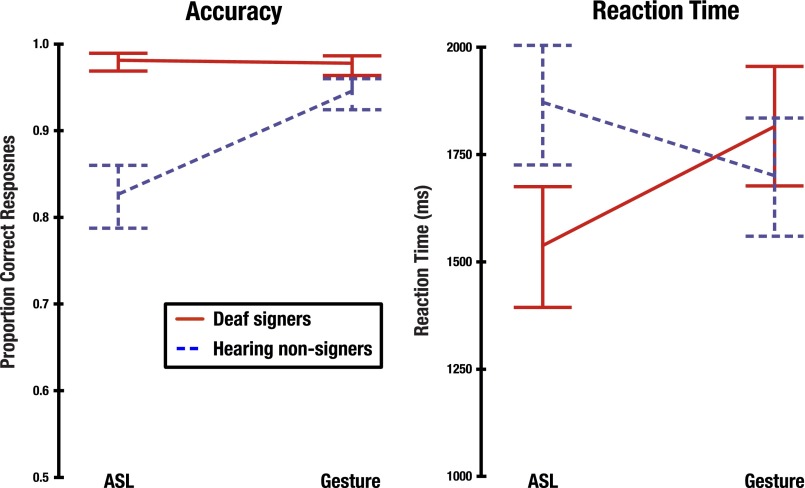

Fig. S1.

Behavioral data, including accuracy (Left) and reaction time (Right). Error bars represent 95% confidence intervals around each mean.

Examination of the reaction times (RTs) in Fig. S1 suggested a Group × Stimulus Type interaction for this measure as well: signers responded faster to ASL (1,543.4 ms; SD = 264.1) than to gestures (1,815.4 ms; SD = 314.3), whereas nonsigners responded faster to gestures (1,701.3 ms; SD = 325.3) than to ASL (1,875.0 ms; SD = 334.4). This observation was borne out by a 2 (Group) × 2 (Stimulus Type) linear mixed-effects analysis, which showed a significant Group × Stimulus Type interaction [F(1, 2,795) = 148.08; P < 0.0001]. RTs were significantly faster for signers than nonsigners when judging ASL stimuli (t = 3.36; P = 0.0006), but the two groups did not differ significantly when judging gesture stimuli (t = 1.15; P = 0.2493). Signers were also significantly faster at judging ASL than gesture stimuli (t = 10.54; P < 0.0001), whereas nonsigners showed the reverse pattern, responding more quickly to gesture stimuli (t = 6.63; P < 0.0001).
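For RTs analyzed on the original millisecond scale, the interaction is simply the difference between the two groups' simple effects. The sketch below computes it from the reported cell means; like any calculation on aggregate means, it ignores the random effects and trial-level variance of the reported mixed-effects analysis.

```python
# Mean RTs in ms, as reported in the text.
rt = {
    ("signers", "ASL"): 1543.4,
    ("signers", "gesture"): 1815.4,
    ("nonsigners", "ASL"): 1875.0,
    ("nonsigners", "gesture"): 1701.3,
}


def simple_effect(group):
    """ASL minus gesture mean RT (ms) for one group; negative means ASL was faster."""
    return rt[(group, "ASL")] - rt[(group, "gesture")]


signer_effect = simple_effect("signers")        # about -272 ms
nonsigner_effect = simple_effect("nonsigners")  # about +174 ms
interaction_ms = nonsigner_effect - signer_effect
# Simple effects with opposite signs indicate a crossover interaction.
is_crossover = signer_effect * nonsigner_effect < 0
print(round(interaction_ms, 1), is_crossover)  # 445.7 True
```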

fMRI Data.

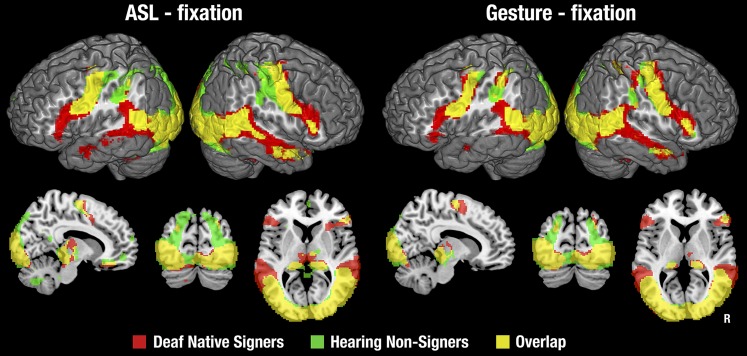

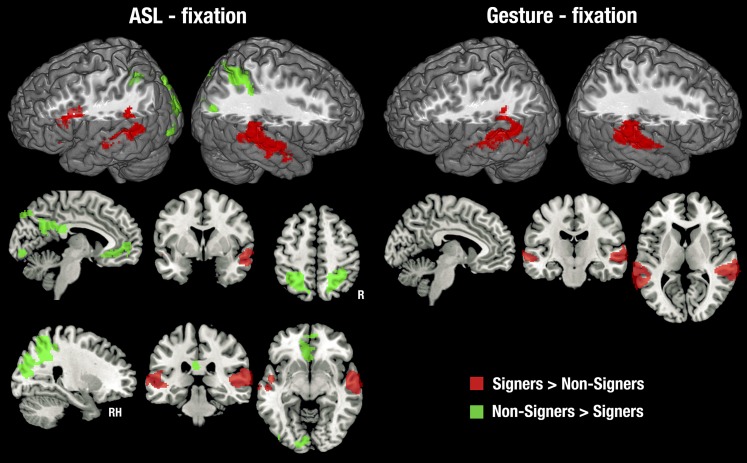

Although we developed backward-layered control stimuli to closely match and control for the low-level properties of our stimuli, we first examined the contrast with fixation to compare our data with previous studies (most of which used this contrast) and to gain perspective on the entire network of brain regions that responded to ASL and gesture. This is shown in Fig. S2 with details in Tables S1 and S2. Relative to fixation, ASL and gesture activated a common, bilateral network of brain regions in both deaf signers and hearing nonsigners, including occipital, temporal, inferior parietal, and motor regions. Deaf signers showed unique activation in the IFG and the anterior and middle STS bilaterally.

Fig. S2.

Statistical maps for each stimulus type relative to the fixation cross baseline, in each subject group. Thresholded at z > 2.3, with a cluster size-corrected P < 0.05. In the coronal and sagittal views, the right side of the brain is shown on the right side of each image.

Table S1.

Areas showing significantly greater fMRI response to ASL than fixation baseline, for each group

| Deaf native signers | Hearing nonsigners | |||||||||||

| Brain region | Cluster size | Max z | X | Y | Z | Brain region | Cluster size | Max z | X | Y | Z | |

| Frontal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| IFG, pars opercularis | 4,398 | 6.39 | −44 | 16 | 20 | Precentral gyrus | 3,333 | 5.43 | −36 | −20 | 62 | |

| Cingulate gyrus | 12 | 2.85 | −18 | −24 | 38 | |||||||

| Frontal pole | 8 | 3.35 | 0 | 56 | −2 | |||||||

| Right hemisphere | ||||||||||||

| IFG, pars opercularis | 4,126 | 6.99 | 58 | 18 | 22 | Precentral gyrus | 3,286 | 4.9 | 46 | −14 | 58 | |

| Frontal pole | 82 | 3.62 | 36 | 36 | −16 | Frontal pole | 78 | 3.39 | 2 | 56 | −2 | |

| Superior frontal gyrus | 49 | 4.09 | 14 | −6 | 68 | Frontal pole | 37 | 3.69 | 34 | 36 | −18 | |

| Precentral gyrus | 12 | 3.37 | 2 | −14 | 66 | Superior frontal gyrus | 35 | 3.4 | 12 | −10 | 68 | |

| Frontal pole | 12 | 3.84 | 2 | 54 | −6 | |||||||

| Frontal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Supplementary motor area | 702 | 6.03 | −2 | 0 | 54 | Frontal medial cortex | 836 | 6.06 | 0 | 50 | −10 | |

| Subcallosal cortex | 361 | 5.26 | −10 | 26 | −18 | Supplementary motor area | 482 | 4.14 | −2 | −2 | 56 | |

| Right hemisphere | ||||||||||||

| Supplementary motor area | 495 | 5.01 | 2 | 0 | 60 | Frontal medial cortex | 629 | 5.61 | 2 | 50 | −10 | |

| Frontal medial cortex | 467 | 4.37 | 4 | 36 | −18 | Supplementary motor area | 342 | 4.05 | 8 | −10 | 64 | |

| Frontal orbital cortex | 15 | 2.96 | 30 | 28 | 6 | Frontal orbital cortex | 151 | 4.22 | 36 | 30 | −18 | |

| Temporal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| Planum temporale/supramarginal gyrus | 3,364 | 6.29 | −52 | −42 | 20 | Lateral occipital cortex, inferior | 1,054 | 5.44 | −42 | −62 | 8 | |

| Right hemisphere | ||||||||||||

| Middle temporal gyrus, temporo-occipital | 5,368 | 5.97 | 50 | −58 | 10 | Middle temporal gyrus, temporo-occipital | 2,868 | 5.6 | 48 | −42 | 8 | |

| Planum temporale | 9 | 2.77 | 56 | −36 | 20 | |||||||

| Temporal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Fusiform gyrus, occipital | 2,847 | 5.58 | −36 | −78 | −8 | Fusiform gyrus, occipital | 2,860 | 7.11 | −26 | −84 | −14 | |

| Parahippocampal gyrus, posterior | 137 | 6.38 | −14 | −32 | −4 | Parahippocampal gyrus, posterior | 213 | 5.93 | −12 | −32 | −2 | |

| Parahippocampal gyrus, anterior | 26 | 2.95 | −26 | −2 | −22 | |||||||

| Right hemisphere | ||||||||||||

| Lingual gyrus | 2,847 | 5.65 | 6 | −88 | −8 | Fusiform gyrus, temporal-occipital | 3,151 | 6.78 | 36 | −58 | −16 | |

| Fusiform cortex, anterior temporal | 231 | 5.01 | 36 | −2 | −36 | Parahippocampal gyrus, posterior | 199 | 6.64 | 18 | −32 | −2 | |

| Parahippocampal gyrus, anterior | 189 | 7.17 | 18 | −28 | −6 | Parahippocampal gyrus, anterior | 71 | 3.9 | 18 | −10 | −26 | |

| Fusiform cortex, posterior temporal | 13 | 3.79 | 46 | −18 | −18 | Parahippocampal gyrus, anterior | 28 | 2.98 | 26 | 0 | −22 | |

| Parietal-superior | ||||||||||||

| Left hemisphere | ||||||||||||

| Superior parietal lobule | 600 | 5.73 | −32 | −48 | 42 | Superior parietal lobule | 1,952 | 5.98 | −26 | −50 | 46 | |

| Postcentral gyrus | 146 | 4.15 | −58 | −10 | 46 | |||||||

| Postcentral gyrus | 30 | 3.3 | −48 | −12 | 60 | |||||||

| Right hemisphere | ||||||||||||

| Superior parietal lobule | 469 | 3.69 | 32 | −54 | 48 | Superior parietal lobule | 2,293 | 5.43 | 30 | −46 | 50 | |

| Postcentral gyrus | 67 | 3.21 | 54 | −10 | 54 | Postcentral gyrus | 10 | 3.71 | 44 | −6 | 26 | |

| Postcentral gyrus | 54 | 3.73 | 54 | −22 | 58 | |||||||

| Parietal-inferior | ||||||||||||

| Left hemisphere | ||||||||||||

| Supramarginal gyrus, posterior | 1,304 | 6.65 | −54 | −42 | 22 | Supramarginal gyrus, posterior | 479 | 3.96 | −30 | −46 | 36 | |

| Supramarginal gyrus, posterior | 426 | 4.58 | −48 | −48 | 10 | |||||||

| Supramarginal gyrus, anterior | 12 | 3.16 | −66 | −22 | 32 | |||||||

| Right hemisphere | ||||||||||||

| Supramarginal gyrus, posterior | 1,013 | 5.56 | 60 | −38 | 12 | Supramarginal gyrus, posterior | 704 | 5.9 | 48 | −42 | 10 | |

| Supramarginal gyrus, posterior | 39 | 3.01 | 32 | −40 | 38 | Supramarginal gyrus, posterior | 215 | 4.5 | 32 | −40 | 40 | |

| Angular gyrus | 9 | 3.31 | 30 | −56 | 38 | Angular gyrus | 7 | 3.48 | 26 | −54 | 36 | |

| Occipital | ||||||||||||

| Left hemisphere | ||||||||||||

| Lateral occipital cortex, inferior | 5,365 | 6.04 | −38 | −80 | −6 | Lateral occipital cortex, inferior | 8,386 | 7.64 | −48 | −76 | −4 | |

| Lateral occipital cortex, superior | 255 | 3.23 | −28 | −66 | 38 | |||||||

| Right hemisphere | ||||||||||||

| Lateral occipital cortex, inferior | 5,348 | 6.82 | 50 | −66 | 6 | Lateral occipital cortex, inferior | 7,446 | 7 | 38 | −82 | −10 | |

| Posterior-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Cingulate gyrus, posterior | 32 | 8.25 | −16 | −28 | −6 | Parahippocampal gyrus, posterior | 479 | 5.91 | −20 | −30 | −6 | |

| Cuneus | 137 | 4.12 | −18 | −80 | 32 | |||||||

| Precuneus | 22 | 2.55 | −2 | −78 | 50 | |||||||

| Cingulate gyrus, posterior | 12 | 2.79 | −18 | −26 | 34 | |||||||

| Cuneus | 9 | 3.41 | −12 | −88 | 14 | |||||||

| Right hemisphere | ||||||||||||

| Cingulate gyrus, posterior | 50 | 5.98 | 20 | −30 | −4 | Parahippocampal gyrus, posterior | 349 | 6.36 | 20 | −30 | −4 | |

| Cuneus | 91 | 3.95 | 20 | −78 | 30 | |||||||

| Precuneus | 9 | 3.16 | 24 | −52 | 34 | |||||||

| Occipital pole | 6 | 2.88 | 14 | −86 | 20 | |||||||

| Thalamus | ||||||||||||

| Left hemisphere | ||||||||||||

| Posterior parietal projections | 805 | 8.25 | −16 | −28 | −6 | Temporal projections | 647 | 6.53 | −24 | −28 | −6 | |

| Right hemisphere | ||||||||||||

| Posterior parietal projections | 795 | 7.18 | 8 | −30 | −4 | Temporal projections | 617 | 6.64 | 18 | −32 | −2 | |

| Prefrontal projections | 118 | 3.32 | 14 | −12 | 2 |

Because the contrasts often elicited extensive activation, including a single large cluster for each contrast that included the occipital, temporal, parietal, and often frontal lobes bilaterally, to create these tables we first took the z maps shown in the figures, which had been thresholded at z > 2.3, and then cluster size-corrected for P < 0.05 and masked them with a set of anatomically defined regions of interest (ROIs). These ROIs were derived from the Harvard–Oxford Cortical Atlas provided with the FSL software package (version 5.0.8) by combining all anatomically labeled regions in that atlas comprising the larger regions labeled in the table. Results for each ROI were obtained by performing FSL’s cluster procedure on the thresholded z map masked by each ROI, and then obtaining the most probable anatomical label for the peak of each cluster using FSL’s atlasquery function. Thus, it is important to recognize, with reference to Fig. S2, that many of the active regions listed in this table were part of larger clusters in the whole-brain analysis. However, because clustering for the table was performed on the same statistical map shown in Fig. S2, the location and extent of the activations in total are equivalent in the figure and table.
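The table-building procedure described above (threshold the z map, mask it with each anatomical ROI, cluster within the ROI, report each cluster's peak) can be sketched as follows. This is a simplified illustration, not FSL's `cluster` or `atlasquery`: it assumes the z map and a boolean ROI mask share one voxel grid, uses 26-connectivity as an assumed neighbourhood, omits the cluster-size correction, and reports voxel indices rather than MNI coordinates or atlas labels. The function `roi_clusters` is hypothetical.

```python
from collections import deque
from itertools import product

import numpy as np

# Assumed 26-connected neighbourhood: every voxel sharing a face, edge, or corner.
OFFSETS = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]


def roi_clusters(zmap, roi_mask, z_thresh=2.3, min_size=1):
    """Cluster supra-threshold voxels of `zmap` that fall inside `roi_mask`.

    Returns a list of (size, peak_z, peak_index) tuples, largest cluster first.
    Simplified sketch: no cluster-size correction and no atlas labelling.
    """
    supra = (zmap > z_thresh) & roi_mask
    seen = np.zeros(supra.shape, dtype=bool)
    clusters = []
    for start in zip(*np.nonzero(supra)):
        start = tuple(int(c) for c in start)
        if seen[start]:
            continue
        # Breadth-first flood fill from this seed voxel.
        seen[start] = True
        queue, members = deque([start]), []
        while queue:
            vox = queue.popleft()
            members.append(vox)
            for d in OFFSETS:
                nb = tuple(v + o for v, o in zip(vox, d))
                inside = all(0 <= c < s for c, s in zip(nb, supra.shape))
                if inside and supra[nb] and not seen[nb]:
                    seen[nb] = True
                    queue.append(nb)
        if len(members) >= min_size:
            # Report the voxel with the maximum z value as the cluster peak.
            peak = max(members, key=lambda v: zmap[v])
            clusters.append((len(members), float(zmap[peak]), peak))
    return sorted(clusters, reverse=True)
```

Calling this once per anatomical ROI mirrors the per-ROI clustering described above; converting voxel indices to MNI coordinates would additionally require the image's affine matrix.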

Table S2.

Areas showing significantly greater fMRI response to gesture than fixation baseline, for each group

| Deaf native signers | Hearing nonsigners | |||||||||||

| Brain region | Cluster size | Max z | X | Y | Z | Brain region | Cluster size | Max z | X | Y | Z | |

| Frontal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| Inferior frontal gyrus, pars triangularis | 4,284 | 5.49 | −52 | 26 | 12 | Precentral gyrus | 2,926 | 5.33 | −32 | −12 | 52 | |

| Right hemisphere | ||||||||||||

| Precentral gyrus | 4,340 | 5.08 | 50 | 0 | 38 | Precentral gyrus | 3,242 | 5.09 | 62 | 6 | 34 | |

| Superior frontal gyrus | 96 | 4.07 | 12 | −6 | 66 | Superior frontal gyrus | 19 | 3.21 | 12 | −10 | 68 | |

| Precentral gyrus | 19 | 3.64 | 4 | −14 | 66 | |||||||

| Frontal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Supplementary motor area | 478 | 4.75 | −8 | −10 | 64 | Supplementary motor area | 193 | 3.76 | −6 | −6 | 64 | |

| Right hemisphere | ||||||||||||

| Supplementary motor area | 432 | 4.95 | 8 | −4 | 62 | Supplementary motor area | 156 | 3.75 | 8 | −8 | 62 | |

| Temporal-lateral | 8 | 2.87 | 28 | 14 | −24 | |||||||

| Left hemisphere | ||||||||||||

| Middle temporal gyrus, temporo-occipital | 2,329 | 6.23 | −46 | −62 | 2 | Lateral occipital cortex, inferior | 896 | 5.43 | −42 | −62 | 8 | |

| Planum temporale | 37 | 3.32 | −46 | −44 | 18 | |||||||

| Right hemisphere | ||||||||||||

| Middle temporal gyrus, temporo-occipital | 3,726 | 6.16 | 46 | −60 | 4 | Middle temporal gyrus, temporo-occipital | 1,727 | 5.72 | 46 | −42 | 8 | |

| Planum temporale | 7 | 2.79 | 56 | −36 | 22 | |||||||

| Temporal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Lingual gyrus | 2,423 | 6.4 | −2 | −90 | −6 | Fusiform gyrus, occipital | 2,787 | 6.75 | −34 | −78 | −16 | |

| Parahippocampal gyrus, posterior | 129 | 5.55 | −20 | −28 | −8 | Parahippocampal gyrus, posterior | 142 | 5.9 | −14 | −32 | −6 | |

| Right hemisphere | ||||||||||||

| Lingual gyrus | 2,672 | 6.36 | 2 | −88 | −6 | Fusiform gyrus, occipital | 2,866 | 6.56 | 24 | −90 | −10 | |

| Parahippocampal gyrus, posterior | 148 | 5.88 | 16 | −32 | −2 | Parahippocampal gyrus, posterior | 149 | 6.7 | 10 | −30 | −6 | |

| Fusiform gyrus, anterior temporal | 119 | 5.06 | 34 | 0 | −34 | Parahippocampal gyrus, anterior | 60 | 4.11 | 18 | −8 | −20 | |

| Fusiform gyrus, posterior temporal | 15 | 2.67 | 42 | −12 | −28 | |||||||

| Parietal-superior | ||||||||||||

| Left hemisphere | ||||||||||||

| Superior parietal lobule | 1,380 | 5.19 | −32 | −50 | 52 | Superior parietal lobule | 1,309 | 5.6 | −28 | −54 | 54 | |

| Postcentral gyrus | 52 | 3.25 | −46 | −18 | 62 | |||||||

| Right hemisphere | ||||||||||||

| Superior parietal lobule | 1,170 | 4.99 | 30 | −48 | 56 | Superior parietal lobule | 1,089 | 5.71 | 32 | −50 | 54 | |

| Postcentral gyrus | 506 | 4.19 | 64 | −4 | 36 | Postcentral gyrus | 563 | 5.29 | 54 | −16 | 54 | |

| Postcentral gyrus | 8 | 3.67 | 44 | −6 | 28 | |||||||

| Parietal-inferior | ||||||||||||

| Left hemisphere | ||||||||||||

| Supramarginal gyrus, posterior | 1,726 | 7.48 | −58 | −44 | 18 | Supramarginal gyrus, anterior | 612 | 4.14 | −34 | −40 | 38 | |

| Supramarginal gyrus, posterior | 409 | 5.1 | −48 | −48 | 10 | |||||||

| Right hemisphere | ||||||||||||

| Supramarginal gyrus, posterior | 1,207 | 5.63 | 58 | −38 | 18 | Supramarginal gyrus, posterior | 765 | 6.27 | 48 | −42 | 10 | |

| Supramarginal gyrus, posterior | 68 | 3.67 | 32 | −40 | 40 | Supramarginal gyrus, posterior | 583 | 4.78 | 32 | −40 | 40 | |

| Supramarginal gyrus, anterior | 20 | 2.71 | 52 | −26 | 46 | |||||||

| Occipital | ||||||||||||

| Left hemisphere | ||||||||||||

| Lateral occipital cortex, inferior | 5,501 | 6.43 | −46 | −64 | 4 | Lateral occipital cortex, inferior | 7,280 | 6.85 | −48 | −76 | −4 | |

| Lateral occipital cortex, superior | 328 | 3.7 | −24 | −74 | 30 | |||||||

| Right hemisphere | ||||||||||||

| Lateral occipital cortex, inferior | 5,426 | 6.72 | 50 | −66 | 6 | Occipital pole | 6,440 | 6.51 | 24 | −92 | −8 | |

| Lateral occipital cortex, superior | 83 | 2.79 | 30 | −56 | 64 | Lateral occipital cortex, superior | 260 | 4.79 | 24 | −58 | 52 | |

| Posterior-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Parahippocampal gyrus, posterior | 33 | 5.49 | −20 | −30 | −6 | Parahippocampal gyrus, posterior | 28 | 5.92 | −16 | −32 | −4 | |

| Cuneus | 9 | 3 | −20 | −74 | 34 | |||||||

| Right hemisphere | ||||||||||||

| Cingulate gyrus, posterior | 38 | 5.74 | 20 | −30 | −4 | Parahippocampal gyrus, posterior | 40 | 6.13 | 12 | −32 | −2 | |

| Cuneus | 8 | 3.21 | 20 | −76 | 36 | |||||||

| Thalamus | ||||||||||||

| Left hemisphere | ||||||||||||

| Posterior parietal projections | 397 | 5.75 | −8 | −30 | −4 | Posterior parietal projections | 492 | 5.92 | −16 | −32 | −4 | |

| Right hemisphere | ||||||||||||

| Temporal projections | 502 | 5.94 | 16 | −32 | 0 | Posterior parietal projections | 546 | 6.23 | 16 | −32 | −2 |


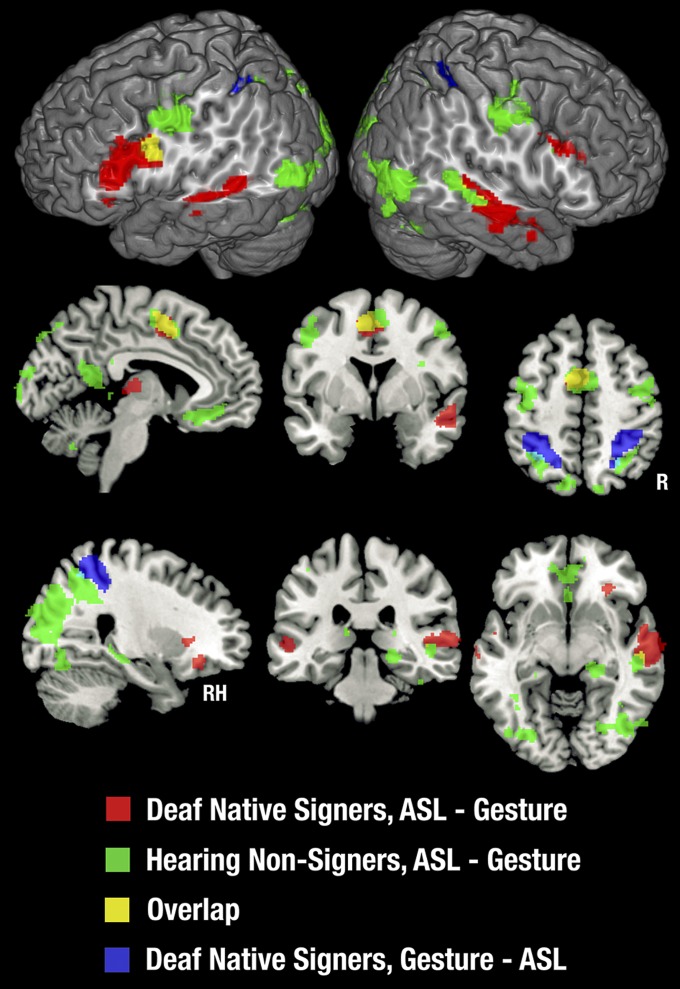

Contrasts with Backward-Layered Control Stimuli.

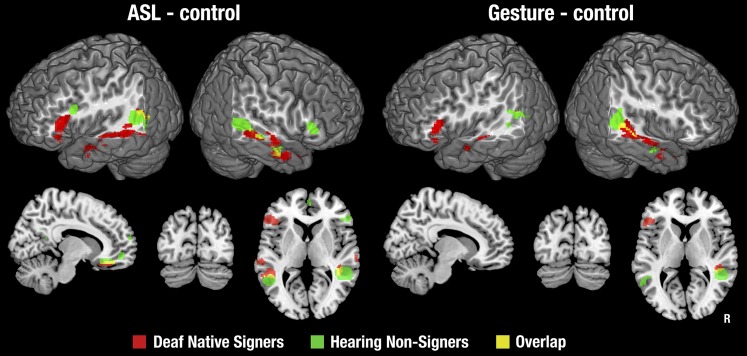

The better-matched contrasts of ASL and gesture with the backward-layered control stimuli identified a subset of the brain regions activated in the contrast with fixation (Fig. 1; details of activation foci are provided in Tables S3 and S4). Very little of this activation was shared between signers and nonsigners. Notably, all of the occipital and superior parietal activation seen in the contrast with the fixation baseline was eliminated when we used a control condition matched on higher-level visual features. On the lateral surface of the cortex, activations were restricted to areas in and around the IFG and along the STS, extending into the IPL. Medially, ASL (but not gesture) activated the ventromedial frontal cortex and fusiform gyrus in both groups. Signers uniquely showed activation in the left IFG and bilateral anterior/middle STS, for both ASL and gesture. Nonsigners uniquely showed activation in the STSp bilaterally for both ASL and gesture (although with a small amount of overlap with signers in the left STSp), as well as in the left inferior frontal sulcus and right IFG for ASL only. The only area showing extensive overlap between signers and nonsigners was the right STS.

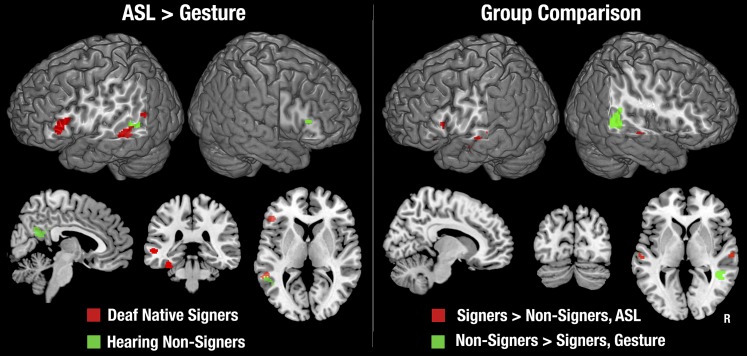

Fig. 1.

Statistical maps for each stimulus type relative to the backward-layered control stimuli, in each subject group. Statistical maps were masked with the maps shown in Fig. S2, so that all contrasts represent brain areas activated relative to fixation baseline. Thresholded at z > 2.3, with a cluster size-corrected P < 0.05. In the coronal and sagittal views, the right side of the brain is shown on the right side of each image.
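The masking step in this caption, and the "shared" versus "unique" activation described in the text, can be sketched with boolean voxel masks. This assumes the contrast z maps and the thresholded, cluster-corrected fixation maps are on a common voxel grid; the integer coding of overlap categories and both function names are hypothetical conveniences, not a description of how Fig. 1 was produced.

```python
import numpy as np


def masked_contrast(z_contrast, fixation_mask, thresh=2.3):
    """Keep contrast voxels above threshold that were also active versus fixation."""
    return (z_contrast > thresh) & fixation_mask


def overlap_labels(signers_mask, nonsigners_mask):
    """Code each voxel: 0 = neither, 1 = signers only, 2 = nonsigners only, 3 = both."""
    return signers_mask.astype(int) + 2 * nonsigners_mask.astype(int)
```

For example, `np.bincount(labels.ravel(), minlength=4)` counts the voxels in each of the four categories.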

Table S3.

Cluster locations and z values of significant activations in the contrasts between ASL and matched backward-layered control condition, for each group

| Deaf native signers | Hearing nonsigners | |||||||||||

| Brain region | Cluster size | Max z | X | Y | Z | Brain region | Cluster size | Max z | X | Y | Z | |

| Frontal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| Inferior frontal gyrus, pars triangularis | 571 | 7.03 | −50 | 22 | 12 | Inferior frontal gyrus, pars opercularis | 117 | 4.25 | −42 | 14 | 26 | |

| Supplementary motor area | 22 | 3.39 | −4 | −14 | 62 | Frontal pole | 8 | 3.58 | 0 | 56 | 4 | |

| Right hemisphere | ||||||||||||

| Inferior frontal gyrus, pars triangularis | 139 | 5.49 | 54 | 32 | 2 | |||||||

| Frontal pole | 66 | 4.74 | 8 | 60 | 14 | |||||||

| Frontal pole | 11 | 3.78 | 2 | 54 | −6 | |||||||

| Frontal pole | 8 | 3.45 | 36 | 34 | −14 | |||||||

| Frontal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Frontal medial cortex | 356 | 4.98 | −2 | 40 | −14 | Paracingulate gyrus | 807 | 5.78 | −8 | 46 | −8 | |

| Supplementary motor area | 138 | 3.84 | −6 | −6 | 66 | |||||||

| Right hemisphere | ||||||||||||

| Frontal medial cortex | 299 | 4.8 | 2 | 40 | −14 | Paracingulate gyrus | 624 | 5.72 | 4 | 52 | 4 | |

| Frontal orbital cortex | 80 | 3.38 | 36 | 32 | −16 | |||||||

| Temporal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| Middle temporal gyrus, posterior | 1,073 | 5.37 | −62 | −40 | −2 | Middle temporal gyrus, temporo-occipital | 375 | 5.69 | −52 | −46 | 4 | |

| Planum temporale | 16 | 3.05 | −56 | −40 | 20 | |||||||

| Right hemisphere | ||||||||||||

| Middle temporal gyrus, anterior | 1,345 | 4.09 | 60 | −4 | −22 | Middle temporal gyrus, temporo-occipital | 952 | 7.74 | 52 | −38 | 0 | |

| Temporal pole | 22 | 2.88 | 28 | 14 | −30 | |||||||

| Temporal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Fusiform gyrus, posterior temporal | 115 | 6.36 | −34 | −34 | −18 | |||||||

| Fusiform gyrus, posterior temporal | 46 | 3.69 | −38 | −10 | −30 | |||||||

| Right hemisphere | ||||||||||||

| Fusiform gyrus, posterior temporal | 66 | 3.84 | 32 | −32 | −22 | Fusiform gyrus, temporal-occipital | 40 | 3.05 | 42 | −40 | −22 | |

| Parahippocampal gyrus, anterior | 63 | 3.74 | 32 | −8 | −24 | Fusiform gyrus, posterior temporal | 6 | 3.54 | 44 | −24 | −14 | |

| Fusiform gyrus, posterior temporal | 12 | 3.45 | 42 | −20 | −18 | |||||||

| Parietal-superior | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere | ||||||||||||

| Parietal-inferior | ||||||||||||

| Left hemisphere | ||||||||||||

| Angular gyrus | 238 | 3.95 | −56 | −56 | 18 | Angular gyrus | 362 | 5.08 | −46 | −56 | 14 | |

| Right hemisphere | ||||||||||||

| Supramarginal gyrus | 7 | 2.73 | 40 | −38 | 4 | Supramarginal gyrus | 181 | 6.09 | 46 | −38 | 6 | |

| Occipital | ||||||||||||

| Left hemisphere | ||||||||||||

| Lateral occipital cortex | 9 | 2.79 | −54 | −62 | 18 | |||||||

| Right hemisphere | ||||||||||||

| Posterior-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Precuneus | 295 | 6.84 | −4 | −56 | 12 | |||||||

| Right hemisphere | ||||||||||||

| Precuneus | 161 | 5.14 | 4 | −56 | 22 | |||||||

| Thalamus | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere |

Maps were masked to ensure that areas shown here also showed significantly greater activation than the fixation baseline, i.e., these voxels are a subset of those presented in Table S1 and Fig. S2. These areas correspond to the statistical map shown in Fig. 1. Details of table creation are as for Table S1.
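As a rough illustration of how a cluster table like this one can be derived from a thresholded, masked z-map, the Python sketch below labels connected suprathreshold voxels and reports each cluster's size, maximum z, and peak location. It is a minimal sketch under stated assumptions, not the FSL procedure used in the study: the z > 2.3 threshold and the fixation-baseline mask follow the text, but the `min_voxels` size filter is a hypothetical stand-in for FSL's cluster-size correction, and the 2-mm MNI affine in the usage note is an assumed example.

```python
import numpy as np
from scipy import ndimage


def cluster_table(zmap, fixation_zmap, z_thresh=2.3, min_voxels=1):
    """Summarize suprathreshold clusters in a 3D z-map.

    A voxel is kept only if it exceeds z_thresh in the contrast of interest
    AND in the corresponding contrast with fixation baseline (the masking step).
    min_voxels is a crude, hypothetical size filter, not cluster-level correction.
    """
    mask = (zmap > z_thresh) & (fixation_zmap > z_thresh)
    # Label connected components (default structuring element: face-connected neighbours).
    labels, n_clusters = ndimage.label(mask)
    rows = []
    for label_id in range(1, n_clusters + 1):
        in_cluster = labels == label_id
        size = int(in_cluster.sum())
        if size < min_voxels:
            continue
        # Peak voxel = location of the maximum z within this cluster.
        masked_z = np.where(in_cluster, zmap, -np.inf)
        peak = tuple(int(i) for i in np.unravel_index(np.argmax(masked_z), zmap.shape))
        rows.append({"size": size, "max_z": float(zmap[peak]), "peak_index": peak})
    # Largest clusters first, as in the tables above.
    rows.sort(key=lambda row: row["size"], reverse=True)
    return rows


def voxel_to_mm(index, affine):
    """Convert a voxel index (i, j, k) to template mm coordinates via a 4x4 affine."""
    homogeneous = np.array([*index, 1.0])
    x, y, z = (affine @ homogeneous)[:3]
    return (float(x), float(y), float(z))
```

For example, with the standard 2-mm MNI152 affine assumed here (diagonal −2, 2, 2 with offsets 90, −126, −72), voxel index (45, 63, 36) maps to (0, 0, 0) mm, which is how a peak index would be turned into the X, Y, Z columns.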

Table S4.

Cluster locations and z values of significant activations in the contrasts between gesture and matched backward-layered control condition, for each group

| Deaf native signers | Hearing nonsigners | |||||||||||

| Brain region | Cluster size | Max z | X | Y | Z | Brain region | Cluster size | Max z | X | Y | Z | |

| Frontal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| Inferior frontal gyrus, pars triangularis | 312 | 6.04 | −48 | 26 | 8 | |||||||

| Superior frontal gyrus | 51 | 4.02 | −10 | −6 | 64 | |||||||

| Right hemisphere | ||||||||||||

| Frontal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Supplementary motor area | 190 | 4.04 | −6 | −8 | 62 | |||||||

| Right hemisphere | ||||||||||||

| Temporal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| Superior temporal gyrus, anterior | 307 | 4.92 | −54 | −6 | −12 | Middle temporal gyrus, temporo-occipital | 92 | 3.23 | −56 | −52 | 2 | |

| Planum temporale | 6 | 2.7 | −54 | −40 | 20 | |||||||

| Right hemisphere | ||||||||||||

| Middle temporal gyrus, temporo-occipital | 880 | 4.24 | 46 | −40 | 0 | |||||||

| Temporal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere | ||||||||||||

| Parietal-superior | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere | ||||||||||||

| Parietal-inferior | ||||||||||||

| Left hemisphere | ||||||||||||

| Angular gyrus | 15 | 3.28 | −52 | −58 | 18 | Angular gyrus | 130 | 3.71 | −44 | −52 | 16 | |

| Right hemisphere | ||||||||||||

| Supramarginal gyrus, posterior | 121 | 5.57 | 42 | −42 | 8 | |||||||

| Occipital | ||||||||||||

| Left hemisphere | ||||||||||||

| Lateral occipital cortex, superior | 20 | 3.27 | −50 | −62 | 16 | |||||||

| Right hemisphere | ||||||||||||

| Posterior-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere | ||||||||||||

| Thalamus | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere |

Maps were masked to ensure that areas shown here also showed significantly greater activation than the fixation baseline, i.e., these voxels are a subset of those presented in Table S1 and Fig. S2. These areas correspond to the statistical map shown in Fig. 1. Details of table creation are as for Table S1.

Comparison of ASL and Gesture Within Each Group.

No areas were more strongly activated by gesture than by ASL in either group. In signers, the ASL–gesture contrast yielded an exclusively left-lateralized network, including the IFG and middle STS, as well as the fusiform gyrus (Fig. 2, Left, and Table S5). By contrast, the only areas that showed significantly stronger activation for ASL than gesture in hearing people were a small part of the left STSp (distinct from the areas activated in signers) and the posterior cingulate gyrus. Although nonsigners showed significant activation for ASL but not gesture in or around the IFG bilaterally, these activations were not significantly stronger for ASL than for gesture, suggesting subthreshold activation for gesture in these regions. Between-condition contrasts for the data relative to fixation baseline (without the backward-layered control condition subtracted) are shown in Fig. S3.

Fig. 2.

(Left) Between-condition differences for each subject group, for the contrasts with backward-layered control stimuli. No areas showed greater activation for gesture than ASL in either group. Thresholded at z > 2.3, with a cluster size-corrected P < 0.05. (Right) Between-group differences for the contrast of each stimulus type relative to the backward-layered control stimuli. No areas were found that showed stronger activation in signers than nonsigners for gesture, nor in nonsigners than signers for ASL. Thresholded at z > 2.3, with a cluster size-corrected P < 0.05. Significant between-group differences in the left IFG were obtained in a planned region of interest analysis at z > 2.3, uncorrected for cluster size.

Table S5.

Cluster locations and z values of significant activations in the contrasts between the two language conditions (ASL and gesture), for each group

| Deaf native signers | Hearing nonsigners | |||||||||||

| Brain region | Cluster size | Max z | X | Y | Z | Brain region | Cluster size | Max z | X | Y | Z | |

| Frontal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| Inferior frontal gyrus, pars triangularis | 278 | 3.09 | −46 | 24 | 16 | |||||||

| Right hemisphere | ||||||||||||

| Inferior frontal gyrus, pars triangularis | 9 | 2.49 | 48 | 28 | 12 | |||||||

| Frontal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere | ||||||||||||

| Temporal-lateral | ||||||||||||

| Left hemisphere | ||||||||||||

| Superior temporal gyrus, posterior | 187 | 3.02 | −56 | −42 | 4 | Middle temporal gyrus, temporo-occipital | 82 | 2.82 | −48 | −44 | 6 | |

| Right hemisphere | ||||||||||||

| Temporal-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Fusiform gyrus, posterior temporal | 81 | 3.34 | −34 | −34 | −22 | |||||||

| Right hemisphere | ||||||||||||

| Parietal-superior | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere | ||||||||||||

| Parietal-inferior | ||||||||||||

| Left hemisphere | ||||||||||||

| Angular gyrus | 79 | 3.01 | −46 | −60 | 16 | Supramarginal gyrus, posterior | 8 | 2.68 | −50 | −44 | 8 | |

| Right hemisphere | ||||||||||||

| Occipital | ||||||||||||

| Left hemisphere | ||||||||||||

| Lateral occipital cortex, superior | 7 | 2.93 | −50 | −62 | 16 | |||||||

| Right hemisphere | ||||||||||||

| Posterior-medial | ||||||||||||

| Left hemisphere | ||||||||||||

| Cingulate gyrus, posterior | 42 | 2.92 | 0 | −52 | 20 | |||||||

| Right hemisphere | ||||||||||||

| Cingulate gyrus, posterior | 107 | 3.03 | 4 | −46 | 24 | |||||||

| Thalamus | ||||||||||||

| Left hemisphere | ||||||||||||

| Right hemisphere |

These correspond to the statistical maps shown in Fig. 2, Left. The input to the between-language contrast was the statistical maps shown in Fig. 1 and Tables S3 and S4, in other words with activation in the backward-layered control condition subtracted and masked with the contrasts of the language condition with fixation baseline. Only contrasts where ASL elicited greater activation than gesture are shown, because no regions showed greater activation for gesture than for ASL. Details of table creation are as for Table S1.
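The logic of this between-condition contrast (each condition's own backward-layered control subtracted first, then ASL compared with gesture within subjects) can be sketched as follows. This is a simplified stand-in that assumes hypothetical arrays of per-subject parameter estimates: it uses an ordinary one-sample t test across subjects, converted to z, whereas the study used FSL FEAT's higher-level modeling, and it omits the fixation-baseline masking step.

```python
import numpy as np
from scipy import stats


def asl_minus_gesture_z(asl, asl_ctrl, gest, gest_ctrl):
    """Return a z-map for ASL > gesture after control subtraction.

    Each argument is a hypothetical array of shape (n_subjects, n_voxels)
    holding per-subject parameter estimates for one condition.
    """
    asl_effect = np.asarray(asl) - np.asarray(asl_ctrl)     # ASL vs. its matched control
    gest_effect = np.asarray(gest) - np.asarray(gest_ctrl)  # gesture vs. its matched control
    diff = asl_effect - gest_effect                          # within-subject difference
    t, _ = stats.ttest_1samp(diff, popmean=0.0, axis=0)
    df = diff.shape[0] - 1
    # One-sided conversion: large positive z means ASL > gesture.
    return stats.norm.isf(stats.t.sf(t, df))
```

Thresholding the returned map at z > 2.3 would then give the ASL > gesture voxels; gesture > ASL would correspond to large negative values.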

Fig. S3.

Within-group, between-condition differences, for each stimulus condition relative to fixation. Thresholded at z > 2.3, with a cluster size-corrected P < 0.05.

Between-Group Comparisons.

Signers showed significantly stronger activation for ASL than nonsigners did in the anterior/middle STS bilaterally and in the left IFG (Fig. 2, Right, and Table S6). The area of stronger activation in the left IFG did not survive multiple-comparison correction. However, because differences between groups were predicted a priori in this region, we interrogated it using a planned region of interest analysis. Left IFG was defined as Brodmann’s areas 44 and 45 (28), and within this region we thresholded activations at z > 2.3, uncorrected for multiple comparisons. As seen in Fig. 2, the area that showed stronger activation for signers than nonsigners lay within the left IFG cluster that, in signers, showed stronger activation for ASL than gesture. Hearing nonsigners showed greater activation than signers only for gesture, and this was restricted to the STSp/SMG of the right hemisphere. Between-group contrasts for the data relative to fixation baseline (without the backward-layered control condition subtracted) are shown in Fig. S4.
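A minimal sketch of this region of interest step, assuming a hypothetical atlas volume in which Brodmann's areas 44 and 45 carry the integer labels 44 and 45 (atlas coding varies, and the study's own ROI definition followed ref. 28): voxels inside the ROI are thresholded at z > 2.3 without cluster correction, and the overlap with another map, such as the signers' ASL > gesture cluster, is reported as a fraction.

```python
import numpy as np


def roi_uncorrected(zmap, atlas, roi_labels=(44, 45), z_thresh=2.3):
    """Boolean map of ROI voxels whose z exceeds z_thresh (no cluster-size correction)."""
    roi = np.isin(atlas, roi_labels)
    return roi & (np.asarray(zmap) > z_thresh)


def overlap_fraction(a, b):
    """Fraction of the True voxels in boolean map `a` that are also True in `b`."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    n_a = int(a.sum())
    return 0.0 if n_a == 0 else float((a & b).sum()) / n_a
```

Because no cluster-size correction is applied, a map produced this way is only interpretable as a test of the a priori regional hypothesis, which is why the caption of Fig. 2 flags it as uncorrected.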

Table S6.

Cluster locations and z values of significant activations in the contrasts between the two groups, for each language type

| Deaf native signers > hearing nonsigners (ASL) | Hearing nonsigners > deaf native signers (gesture) | ||||||||||

| Brain region | Cluster size | Max z | X | Y | Z | Brain region | Cluster size | Max z | X | Y | Z |

| Frontal-lateral | |||||||||||

| Left hemisphere | |||||||||||

| Inferior frontal gyrus, pars triangularis | 16 | 2.75 | −54 | 22 | 10 | ||||||

| Right hemisphere | |||||||||||

| Precentral gyrus | 4,340 | 5.08 | 50 | 0 | 38 | ||||||

| Superior frontal gyrus | 96 | 4.07 | 12 | −6 | 66 | ||||||

| Precentral gyrus | 19 | 3.64 | 4 | −14 | 66 | ||||||

| Frontal-medial | |||||||||||

| Left hemisphere | |||||||||||

| Right hemisphere | |||||||||||

| Temporal-lateral | |||||||||||

| Left hemisphere | |||||||||||

| Superior temporal gyrus, posterior/planum temporale | 73 | 3.45 | −62 | −16 | 2 | ||||||

| Right hemisphere | |||||||||||

| Superior temporal gyrus, posterior/planum temporale | 37 | 3.11 | 64 | −20 | 4 | Middle temporal gyrus, temporo-occipital | 174 | 3.43 | 42 | −48 | 6 |

| Temporal-medial | |||||||||||

| Left hemisphere | |||||||||||

| Right hemisphere | |||||||||||

| Parietal-superior | |||||||||||

| Left hemisphere | |||||||||||

| Right hemisphere | |||||||||||

| Parietal-inferior | |||||||||||

| Left hemisphere | |||||||||||

| Right hemisphere | |||||||||||

| Supramarginal gyrus, posterior | 73 | 3.67 | 52 | −38 | 18 | ||||||

| Occipital | |||||||||||

| Left hemisphere | |||||||||||

| Right hemisphere | |||||||||||

| Posterior-medial | |||||||||||

| Left hemisphere | |||||||||||

| Right hemisphere | |||||||||||

| Thalamus | |||||||||||

| Left hemisphere | |||||||||||

| Right hemisphere |

These correspond to the statistical maps shown in Fig. 2, Right. The input to the between-group contrast was the statistical maps shown in Fig. 1 and Tables S3 and S4, in other words with activation in the backward-layered control condition subtracted and masked with the contrasts of the language condition with fixation baseline. Note that deaf signers showed greater activation than nonsigners only for ASL, whereas hearing nonsigners showed greater activation than deaf signers only for gesture. Details of table creation are as for Table S1.

Fig. S4.

Between-group differences for the contrast of each stimulus type relative to the fixation cross baseline. Thresholded at z > 2.3, with a cluster size-corrected P < 0.05.

Discussion

The central question of this study was whether distinct brain systems are engaged during the perception of sign language, compared with gestures that also use the visual–manual modality and serve as symbolic communication but lack linguistic structure. Some previous work has suggested that aspects of sign language, such as verbs of motion, are nonlinguistic and are processed like gesture, thus relying on brain areas involved in processing biological motion and other spatial information. This position would predict shared brain systems for understanding verbs of motion constructions and nonlinguistic gestures expressing similar content. In contrast, we hypothesized that verbs of motion constructions are linguistically governed and, as such, would engage language-specific brain systems in signers distinct from those used for processing gesture. We also investigated whether knowing sign language influenced the neural systems recruited for nonlinguistic gesture, by comparing responses to gesture in signers and nonsigners. Finally, we compared signers and nonsigners to determine whether understanding symbolic communication differs when it employs a linguistic code as opposed to when it is created ad hoc. Because there is little symbolic but nonlinguistic communication in the oral–aural channel, such a comparison is best done using sign language and gesture. Although many neuroimaging studies have contrasted language with control stimuli, to our knowledge no studies have compared linguistic and nonlinguistic stimuli while attempting to match their semantic and symbolic content. Here, ASL and gesture were each used to describe the same action events.

In the present study, compared with the low-level fixation baseline, ASL and gesture activated an extensive, bilateral network of brain regions in both deaf signers and hearing nonsigners. This network is consistent with previous studies of both sign language and gesture that used similar contrasts with a low-level baseline (10, 22–27). Critically, however, compared with the backward-layered conditions that controlled for stimulus features (such as biological motion and face perception) and for motor responses, a much more restricted set of brain areas was activated, with considerably less overlap across groups and conditions. Indeed, the only area commonly activated across sign language and gesture in both signers and nonsigners was in the middle/anterior STS region of the right hemisphere. In general, when there were differences between stimulus types, ASL elicited stronger brain activation than gesture in both groups. However, the areas that responded more strongly to ASL were almost entirely different between groups, again supporting the influence of linguistic experience in driving the brain responses to symbolic communication.

Linguistic Structure.

Our results show that ASL verbs of motion produce a distinct set of activations in native signers, based in the classic left hemisphere language areas: the IFG and anterior/middle STS. This pattern of activation was significantly different from that found in signers observing gesture sequences expressing approximately the same semantic content, and was wholly different from the bilateral pattern of activation in the STSp found in hearing nonsigners observing either sign language or gestural stimuli. These results thus suggest that native signers do not process ASL verbs of motion as nonlinguistic imagery, in the way that nonsigners appear to (nonsigners showed activation primarily in areas associated with general biological motion processing), but rather in terms of their linguistic structure (i.e., as complex morphology), as Supalla (2, 6, 7) has argued. This finding is also consistent with evidence that both grammatical judgment ability and left IFG activation correlated with age of acquisition in congenitally deaf people who learned ASL as a first language (14). Thus, despite their apparent iconicity, ASL verbs of motion are processed in terms of their discrete combinatorial structure, like complex words in other languages, and for this type of processing they depend on the left hemisphere network that underlies spoken languages as well as other aspects of signed languages.

The other areas more strongly activated by ASL—suggesting linguistic specialization—were in the left temporal lobe. These included the middle STS—an area associated with lexical (lemma) selection and retrieval in studies of spoken languages (29)—and the posterior STS. For signers, this left-lateralized activation was posterior to the STSp region activated bilaterally in nonsigners for both ASL and gesture and typically associated with biological motion processing. The area activated in signers is in line with the characterization of this region as “Wernicke’s area” and its association with semantic and phonological processing.

Symbolic Communication.

Symbolic communication was a common feature of both the ASL and gesture stimuli. Previous studies had suggested that both gesture and sign language engage a broad, common network of brain regions including classical left hemisphere language areas. Some previous studies used pantomimed actions (10, 21, 27), which are more literal and less abstractly symbolic than some of the gestures used in the present study; other studies used gestures with established meanings [emblems (22, 27, 30)] or meaningless gestures (21, 23, 30). Thus, those stimuli differed from ours in their meaningfulness and degree of abstract symbolism. Our data revealed that, when sign language and gesture stimuli are closely matched for content, and once perceptual contributions are properly accounted for, a much more restricted set of brain regions is commonly engaged across stimulus types and groups, limited to middle and anterior regions of the right STS. We have consistently observed activation in this anterior/middle right STS area in our previous studies of sign language processing: in both hearing native signers and late learners (16), and in deaf native signers viewing ASL sentences with complex morphology, including spatial morphology (18), as well as sentences with narrative and prosodic markers (17). The present findings extend this to nonlinguistic stimuli in nonsigners, suggesting that the right anterior STS is involved in the comprehension of symbolic manual communication regardless of linguistic structure or sign language experience.

Sign Language Experience.

Our results indicate that lifelong use of a visual–manual language alters the neural response to nonlinguistic manual gesture. Left frontal and temporal language processing regions showed activation in response to gesture only in signers, once low-level stimulus features were accounted for. Although these same left hemisphere regions were more strongly activated by ASL, their activation by gesture exclusively in signers suggests that sign language experience drives these areas to attempt to analyze visual–manual symbolic communication even when it lacks linguistic structure. An extensive portion of the right anterior/middle STS was also activated exclusively in signers. This region showed no specialization for linguistically structured material, although in previous studies we found sensitivity of this area to both morphological and narrative/prosodic structure in ASL (17, 18). It thus seems that knowledge of a visual–manual sign language can lead to this region’s becoming more sensitive to manual movements that have symbolic content, whether linguistic or not, and that activation in this region increases with the amount of information that needs to be integrated to derive meaning.

It is interesting to note that our findings contrast with some previous studies that compared ASL and gesture and found left IFG activation only for ASL (10, 21). This finding was interpreted as evidence for a “gating mechanism” whereby signs were distinguished from gesture at an early stage of processing in native signers, with only signs passed forward to the IFG. However, those previous studies did not use perceptually matched control conditions (allowing for the possibility of stimulus feature differences) and used pantomimed actions. In contrast, we used sequences of gestures that involved the articulators to symbolically represent objects and their paths. In this sense, our stimuli more closely match the abstract, symbolic nature of language than does pantomime. Thus, there does not appear to be a strict “gate” whereby the presence or absence of phonological or syntactic structure determines whether signers engage the left IFG; rather, signers may engage language-related processing strategies when meaning needs to be derived from abstract, symbolic manual representations.

Conclusions

This study was designed to assess the effects of linguistic structure, symbolic communication, and linguistic experience on brain activation. In particular, we sought to compare how sign languages and nonlinguistic gesture are treated by the brain, in signers and nonsigners. This comparison is of special interest in light of recent claims that some highly spatial aspects of sign languages (e.g., verbs of motion) are organized and processed like gesture rather than like language. Our results indicate that ASL verbs of motion are not processed like spatial imagery or other nonlinguistic materials, but rather are organized and mentally processed like grammatically structured language, in specialized brain areas including the IFG and STS of the left hemisphere.

Although in this study both ASL and gesture conveyed information to both signers and nonsigners, we identified only restricted areas of the right anterior/middle STS that responded similarly to symbolic communication across stimulus types and groups. Overall, our results suggest that sign language experience modifies the neural networks that are engaged when people try to make sense of nonlinguistic, symbolic communication. Nonsigners engaged a bilateral network typically engaged in the perception of biological motion. For native signers, on the other hand, rather than sign language being processed like gesture, gesture is processed more like language. Signers recruited primarily language-processing areas, suggesting that lifelong sign language experience leads to imposing a language-like analysis even for gestures that are immediately recognized as nonlinguistic.

Finally, our findings support the analysis of verbs of motion classifier constructions as being linguistically structured (2, 6, 7), in that they specifically engage classical left hemisphere language-processing regions in native signers but not areas subserving nonlinguistic spatial perception. We suggest that this is because, although the signs for describing spatial information may have had their origins in gesture, over generations of use they have become regularized and abstracted into segmental, grammatically controlled linguistic units—a phenomenon that has been repeatedly described in the development and evolution of sign languages (31–33) and the structure and historical emergence of full-fledged adult sign languages (2, 6, 7, 34). Human communication systems seem always to move toward becoming rapid, combinatorial, and highly grammaticized systems (31); the present findings suggest that part of this change may involve recruiting the left hemisphere network as the substrate of rapid, rule-governed computation.

Materials and Methods

Please see SI Materials and Methods for detailed materials and methods.

Participants.

Nineteen congenitally deaf native learners of ASL (signers) and 19 normally hearing, native English speakers (nonsigners) participated. All provided informed consent. Study procedures were reviewed by the University of Rochester Research Subjects Review Board.

ASL and Gesture Stimuli.

Neural responses to sign language and gesture were compared by asking participants to watch videotaped ASL and gesture sequences expressing approximately the same content. Both ASL and gesture video sequences were elicited by the same set of stimuli, depicting events of motion. These stimuli included a set of short videos of toys moving along various paths, relative to other objects, and a set of line drawings depicting people, animals, and/or objects engaged in simple activities. Examples of these are shown in Fig. S5. After viewing each such video or picture, the signer or gesturer was filmed producing, respectively, ASL sentences or gesture sequences describing it. These short clips were shown to participants in the scanner. The ASL constructions were produced by a native ASL signer; gestured descriptions were produced by three native English speakers who did not know sign language. The native signer and the gesturers were instructed to describe each video or picture without speaking, immediately after viewing it. The elicited ASL and gestures were video recorded, edited, and saved as digital files for use in the fMRI experiment. Backward-layered control stimuli were created by making the signed and gestured movies partly transparent, reversing them in time, and then overlaying three such movies (all of one type, i.e., ASL or gesture) in software and saving these as new videos.
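The construction of the backward-layered control stimuli can be sketched with NumPy, treating each movie as an array of frames. This is an illustrative approximation, not the video-editing procedure actually used: equal-weight blending (each layer at 1/3 opacity for three movies) and padding shorter movies to the longest duration by holding their last reversed frame are assumptions, since the text does not specify the transparency level or how unequal durations were handled.

```python
import numpy as np


def backward_layered(movies):
    """Build one control movie from several movies of the same stimulus type.

    movies: list of float arrays shaped (frames, height, width, channels), values
    in [0, 1], all with the same frame size. Each movie is reversed in time,
    padded to the longest duration by repeating its last reversed frame
    (assumption), and the layers are averaged, i.e. blended with equal
    opacity 1/len(movies) (assumption).
    """
    n_frames = max(movie.shape[0] for movie in movies)
    layers = []
    for movie in movies:
        reversed_movie = np.asarray(movie, dtype=float)[::-1]
        missing = n_frames - reversed_movie.shape[0]
        pad = [(0, missing)] + [(0, 0)] * (reversed_movie.ndim - 1)
        layers.append(np.pad(reversed_movie, pad, mode="edge"))
    # Equal-weight blend keeps pixel values within the original [0, 1] range.
    return np.mean(layers, axis=0)
```

Because the control is built only from movies of one type, its low-level motion, hand, and face content stays matched to that condition while the temporal reversal and overlap disrupt any interpretable meaning.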

fMRI Procedure.

Each participant completed four fMRI scans (runs) of 40 trials each. Each trial consisted of a task cue (instructions to try to determine the meaning of the video, or to watch for symmetry between the two hands), followed by a video (ASL, gesture, or control), followed by a response prompt. For ASL and gesture movies, participants saw two pictures and had to indicate via button press which best matched the preceding video. For control movies, participants made button presses indicating whether, during the movie, three hands had simultaneously shown the same handshape. Two runs involved ASL stimuli only, whereas the other two involved gesture stimuli only. Within each run, one-half of the trials were the ASL or gesture videos and the other half were their backward-layered control videos. The ASL movies possessed enough iconicity, and the “foil” pictures were designed to be different enough from the targets, that task performance was reasonably high even for nonsigners viewing ASL. Data were collected using an echo-planar imaging pulse sequence on a 3-T MRI system (echo time, 30 ms; repetition time, 2 s; 90° flip angle, 4-mm isotropic resolution). fMRI data were analyzed using FSL FEAT software according to recommendations of the software developers (fsl.fmrib.ox.ac.uk/fsl/fslwiki/).
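The run structure described above can be made concrete with a short Python sketch that builds one participant's four runs from the 40 items assigned to ASL and the 40 assigned to gesture. The trial labels, the one-control-per-video pairing, and the randomization of trial and run order are assumptions for illustration; the text specifies only the trial counts and that runs were ASL-only or gesture-only.

```python
import random


def build_runs(asl_items, gesture_items, seed=0):
    """Return four runs (lists of (label, item) trials) for one participant.

    Two runs contain only ASL trials and two only gesture trials; within each run,
    half the trials are videos and half are backward-layered controls of that type.
    """
    rng = random.Random(seed)
    runs = []
    for stim_type, items in (("ASL", asl_items), ("gesture", gesture_items)):
        items = list(items)
        rng.shuffle(items)
        half = len(items) // 2
        for run_items in (items[:half], items[half:]):
            trials = [(stim_type, item) for item in run_items]
            # Hypothetical pairing: one control trial per video trial, indexed 0..n-1.
            trials += [(f"{stim_type}-control", k) for k in range(len(run_items))]
            rng.shuffle(trials)
            runs.append(trials)
    rng.shuffle(runs)  # assumed: run order randomized per participant
    return runs
```

With 40 ASL and 40 gesture items, this yields 4 runs of 40 trials (20 videos plus 20 controls each), or 160 trials in total, matching the counts given above.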

SI Materials and Methods

Participants.

Nineteen congenitally deaf (80 dB or greater loss in both ears), native learners of ASL (eight males; mean age, 22.7 y; range, 19–31) and 19 normally hearing, native English speakers (nine males; mean age, 20.3 y; range, 19–26) participated in this study. All were right-handed, had at least 1 y of postsecondary education, had normal or corrected-to-normal vision, and reported no neurological problems. Both parents of all deaf participants used ASL, and all but two deaf people reported using hearing aids. None of the participants in this study had participated in previous studies from our laboratory involving these or any similar stimuli. None of the hearing participants reported knowing ASL, although one lived with roommates who used ASL, one reported knowing “a few signs,” and another reported knowing how to fingerspell. All subjects gave informed consent, and the study procedures were reviewed by the University of Rochester Research Subjects Review Board. Participants received $100 financial compensation for participating in the study.

Materials Used to Elicit ASL and Gesture Stimuli.

The ASL and gesture stimuli used in the fMRI experiment were elicited by the same set of materials, which included a set of short videos as well as a set of pictures. The first were a set of short, stop-motion animations originally developed by author T.S. to elicit ASL verbs of motion constructions (2). These videos depict small toys (e.g., people, animals, and vehicles) moving along various paths, sometimes in relation to other objects. Fig. S5 shows still frames taken from several of these stimuli, as examples. This set comprised 85 movies, all of which were used for elicitation, although only a subset of the ASL and gesture sequences elicited by them was ultimately used in the fMRI study (see below). The second set of elicitation stimuli consisted of colored “clipart” line drawings obtained from Internet searches. These were selected to show people and/or objects, either involved in an action or in particular positions relative to other objects. For example, one picture showed a boy playing volleyball, whereas another depicted three chickens drinking from a trough. One hundred such images were selected and used for ASL and gesture elicitation, although again only a subset of the elicited ASL and gesture sequences was used in the fMRI experiment.

Procedure Used to Elicit ASL and Gesture Stimuli.

We recruited one native ASL signer and three nonsigning, native English speakers as models to produce the ASL and gesture sequences, respectively, to be used as stimuli in the fMRI experiment. Our goal was to make the gesture sequences as similar as possible to the ASL stimuli in terms of fluidity of production, duration, and information represented. Of course, ASL and gesture production are inherently different: a native ASL signer can readily access the necessary signs in her lexicon and produce a grammatical sequence describing the scene, whereas nonsigners must generate appropriate gestures “on the fly,” including deciding how to represent the individual referents and their relative positions and/or movements. To help ensure fluidity, the gesture models were given opportunities to practice each gesture before filming, and all were brought into the studio on more than one occasion for filming. We recruited three gesture models to generate a wider range of gestures from which to select the most concise, fluid, and understandable versions. Because ASL is a language, we used only one signer as a model; the signs and grammar she produced would not be expected to vary substantially across native signers. Although the variety in appearance of the nonsigners may have led to less habituation of visual responses to these stimuli than to the signer, we do not see this as a problem because the control stimuli (backward-overlaid versions of the same movies) were matched in this factor. Videos were recorded on a Sony digital video camera and subsequently digitized for editing using Final Cut Pro (Apple). The signer was a congenitally deaf, native learner of ASL born to deaf native ASL signing parents. For each elicitation stimulus, she was instructed to describe the scene using ASL. Instructions were provided by author T.S., a native signer.
The gesturers were selected for their ability to generate gestural descriptions of the elicitation stimuli. Two of the gesturers had some acting experience, but none were professional actors. The gesturers were never in the studio together and did not at any time discuss their gestures with each other. Each gesturer was informed of the purpose of the recordings and was instructed by the hearing experimenters to describe the videos and pictures using only their hands and body, without speaking, in a way that would help someone watching their gestures choose between two subsequent pictures: one representing the elicitation stimulus the gesturer had seen, and the other representing a different picture or video. Each gesturer came to the studio on several occasions for stimulus recording and had the opportunity to produce each gesture several times both within and across recording sessions. We found that this resulted in the shortest and most fluid gestures; initial attempts were often very slow, as the gesturer worked out how best to depict particular objects and received feedback from the experimenter regarding the clarity of the gestures in the video recordings (i.e., positions of hands and body relative to the camera). The experimenters who conducted the gesture recording (authors A.J.N. and N.F.) did not suggest particular gestures or ways of describing scenes, nor did they attempt to make the gestures similar to ASL; feedback was provided only to encourage clarity and fluidity.

Subsequent to ASL and gesture recording, the videos were viewed by the experimenters and a subset was selected for behavioral pilot testing. Author T.S. was responsible for selecting the ASL stimuli, whereas A.J.N. and N.F. selected the gestural stimuli. Only one example of a gesture was selected for each elicitation stimulus (i.e., the gesture sequence from one of the three gesturers), using criteria of duration (preferring shorter videos) and clarity of the relationship between the gesture and the elicitation stimulus, while balancing the number of stimuli depicting each of the three gesturers. In total, 120 gestured stimuli and 120 ASL stimuli were selected for behavioral pilot testing.

Selection of Stimuli for fMRI Experiment.

As noted above, a total of 240 candidate stimuli (120 ASL and 120 gesture) were selected for behavioral pilot testing. We then conducted a behavioral pilot study with six normally hearing nonsigners to identify which stimuli were most accurately and reliably identified by nonsigners. The pilot study was conducted in a similar way to the fMRI experiment: on each trial, one video was shown, followed by two pictures. One of the pictures was the target stimulus that had been used to elicit the preceding video (gesture or ASL), and the other was a “foil.” For the stop-motion elicitation stimuli, still images were created that showed a key frame from the video, with a red arrow drawn on the image to depict the path of motion. Foils for these images showed either the same object(s) following a different path (“path foils”) or different object(s) following the same path (“object foils”). For the videos elicited by clipart, foils were other clipart pictures that showed either a similar actor/object performing a different action or different actors/objects performing a similar action. Subjects were asked to choose via button press which picture best matched the preceding video.

From the set of 240 pilot stimuli, the stimuli with the highest mean accuracy across pilot subjects were selected for the fMRI experiment. This yielded a final set of 80 items, 40 elicited by the stop-motion animation stimuli and 40 by the clipart stimuli. For each of these 80 items, both the gestured and the ASL versions were used in the fMRI study. Presentation was counterbalanced, such that a given participant saw either the gestured or the ASL version of a particular elicitation stimulus, but never both. The movies ranged in duration from 2 to 10.8 s, with the ASL movies averaging 4 s in length (range, 2.8–8.1 s) and the gesture movies averaging 7.2 s (range, 4.5–9.2 s). Although the ASL movies were on average shorter, the backward-layered control movies for each condition were generated from that condition’s own stimuli and therefore spanned a comparable range of durations. Thus, the imaging results in which activation for the control stimuli is subtracted out, and in particular the observed differences between ASL and gesture stimuli, should not be attributable to differences in movie length between conditions.
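The counterbalancing described above can be illustrated as two complementary stimulus lists. This is a minimal sketch under assumptions the text does not state: items are split evenly within each elicitation type (stop-motion and clipart), and each participant is given one of the two lists. The function name `counterbalanced_lists` and the item labels are hypothetical.

```python
import random


def counterbalanced_lists(items_by_type, seed=0):
    """Build two complementary version assignments (item -> "ASL" or "gesture").

    Within each elicitation type, half the items are assigned to ASL and half
    to gesture in list A; list B swaps every assignment. A participant given
    one list sees only one version of each item, while across the pair of
    lists every item appears once in each version.
    """
    rng = random.Random(seed)
    list_a = {}
    for items in items_by_type.values():
        shuffled = list(items)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        for i, item in enumerate(shuffled):
            list_a[item] = "ASL" if i < half else "gesture"
    list_b = {item: ("gesture" if v == "ASL" else "ASL") for item, v in list_a.items()}
    return list_a, list_b


# Hypothetical item labels: 40 stop-motion and 40 clipart elicitation stimuli.
items = {
    "stop_motion": [f"sm{i:02d}" for i in range(40)],
    "clipart": [f"ca{i:02d}" for i in range(40)],
}
list_a, list_b = counterbalanced_lists(items)
```

With 80 items, each list yields 40 ASL and 40 gesture trials, i.e., 20 target trials in each of the two runs per stimulus type.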

fMRI Control Stimuli.

Our goal in developing the control stimuli for the fMRI experiment was to match them as closely as possible to the visual properties of the ASL and gesture stimuli while preventing participants from deriving meaning from them. We used the same approach that we had used successfully in previous fMRI studies of ASL (17, 18): each movie was made partially transparent and played backward, and three such movies were then digitally overlaid. The three movies overlaid in each control video were chosen to have similar lengths, and in the case of the gesture movies, all three overlaid movies showed the same person; equal numbers of control movies were produced showing each of the three gesturers. We overlaid three movies because previous pilot testing had shown that signers were quite good at understanding single (nonoverlaid) ASL sentences played backward, but not overlaid stimuli.
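The control-stimulus construction (time reversal, partial transparency, and overlay of three movies) can be sketched with NumPy on decoded frame arrays. This is an illustration, not the authors’ editing workflow: it assumes frames are already loaded as float arrays in [0, 1], that every layer gets equal opacity (1/3 for three movies), and that the overlay is trimmed to the shortest movie. The source specifies none of these details, and `make_control_movie` is a hypothetical name.

```python
import numpy as np


def make_control_movie(movies, alpha=None):
    """Reverse each movie in time, make it partially transparent, and overlay them.

    movies: list of arrays shaped (frames, height, width, channels), values in [0, 1].
    alpha:  opacity applied to every layer; defaults to 1 / len(movies) (assumed).
    """
    if alpha is None:
        alpha = 1.0 / len(movies)
    # Trim every layer to the shortest movie (assumed), then play it backward.
    n_frames = min(m.shape[0] for m in movies)
    reversed_layers = [m[:n_frames][::-1] for m in movies]
    # Alpha-weighted sum of the layers, clipped back to the valid intensity range.
    overlay = np.zeros_like(reversed_layers[0], dtype=float)
    for layer in reversed_layers:
        overlay += alpha * layer
    return np.clip(overlay, 0.0, 1.0)
```

Equal opacity keeps any one layer from dominating, which is one plausible way to make the three overlaid signers or gesturers equally (in)visible.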

Procedure.

Participants were provided with instructions for the task before going into the MRI scanner and were reminded of these instructions once they were inside the scanner, immediately before starting the task. Participants were told to watch each movie (gestured or ASL) and try to understand what was being communicated. They were told that two of the four runs would contain gestures produced by nonsigners and the other two would contain ASL. Signers were instructed to try to make sense of the gestures; nonsigners were instructed to try to make sense of all of the stimuli, extracting whatever meaning they could from the ASL stimuli. Each run started with text indicating whether gesture or ASL would be presented. Participants were instructed to wait for the response prompt after the end of each movie and then choose which of two pictures best matched the stimulus they had seen. The pictures shown were those described above under Selection of Stimuli for fMRI Experiment, i.e., one target and one foil. For backward-layered control stimuli, participants were instructed to watch for any point at which three of the six hands in the movie had the same handshape (i.e., position of the fingers and hand). The response options after these control trials were one picture depicting three iconic hands in the same handshape and a second picture showing three different handshapes. The left/right position on the screen of each response option (target/foil or same/different handshapes) was pseudorandomized across trials so that each possible response was presented an equal number of times on each side of the screen. Participants made their responses with their feet via fiber optic response boxes. Foot responses were used to minimize response-related activation of the hand representation in motor cortex, because we predicted that observing people’s hands in the stimuli might also activate hand motor cortex.
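The left/right balancing of response options can be sketched as a shuffled list with equal counts of each side, generated separately for each trial type. This minimal version assumes an even number of trials per trial type and imposes no further constraints (for example, on runs of consecutive same-side trials), which the text does not describe; `balanced_sides` is a hypothetical helper.

```python
import random


def balanced_sides(n_trials, seed=0):
    """Return a shuffled list of target positions with equal 'left' and 'right' counts."""
    if n_trials % 2:
        raise ValueError("an even number of trials is needed to balance the two sides")
    sides = ["left", "right"] * (n_trials // 2)
    random.Random(seed).shuffle(sides)
    return sides


# One balanced list per trial type in a run (20 target and 20 control trials).
# For control trials, "left" means the same-handshape picture appears on the left.
target_sides = balanced_sides(20, seed=1)
control_sides = balanced_sides(20, seed=2)
```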

Participants were given several practice trials with each type of stimulus before going into the MRI scanner, were allowed to ask for clarification, and were given feedback if it appeared they did not understand the instructions. For signers, all communication was in ASL, either through a research assistant fluent in ASL or via an interpreter. While in the MRI scanner, hearing participants could communicate with researchers via an audio intercom, whereas for signers a two-way video intercom was used. Stimulus presentation used DirectRT software (Empirisoft) running on a PC connected to a JVC DLA-SX21U LCD projector, which projected the video via a long-throw lens onto a Mylar projection screen placed at the head end of the MRI bore; participants viewed the screen via an angled mirror.

In the MRI scanner, a total of four stimulus runs were conducted for each participant; two of these contained ASL stimuli and two contained gesture stimuli. The ordering of the stimuli was counterbalanced across participants within each subject group. Each run comprised 40 trials, with equal numbers of target stimuli (i.e., ASL or gestures, depending on the run) and control stimuli presented in a pseudorandomized order. Trials were separated by “jittered” intertrial intervals of 0–10 s (in steps of 2 s), during which a fixation cross was presented, to optimize recovery of the hemodynamic response for each condition. Each trial began with a 1-s visual cue indicating whether the task and stimuli on that trial required comprehension of ASL or gestures, or attention to hand similarity in the control condition. The cue was followed by the stimulus movie and then, after a random delay of 0.25–3 s during which the screen was blank, by the response prompt showing two alternatives as described above. The trial ended as soon as the participant made a response, or after 4 s if no response was made.
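The trial structure of one run can be sketched as a schedule generator. The timing values (1-s cue, 0.25–3-s blank delay, 4-s maximum response window, 0–10-s jitter in 2-s steps) come from the text; the roughly even spread of jitter values, the unconstrained shuffle, and the name `build_run` are assumptions made for illustration.

```python
import random

JITTER_VALUES_S = [0, 2, 4, 6, 8, 10]  # intertrial intervals in 2-s steps


def build_run(n_target=20, n_control=20, seed=0):
    """Return one run as a list of trial dicts in pseudorandomized order."""
    rng = random.Random(seed)
    trial_types = ["target"] * n_target + ["control"] * n_control
    rng.shuffle(trial_types)
    # Spread jitter values as evenly as the trial count allows, then shuffle (assumed).
    n_trials = len(trial_types)
    itis = [JITTER_VALUES_S[i % len(JITTER_VALUES_S)] for i in range(n_trials)]
    rng.shuffle(itis)
    schedule = []
    for trial_type, iti in zip(trial_types, itis):
        schedule.append({
            "type": trial_type,                        # ASL/gesture target or control
            "cue_s": 1.0,                              # visual cue indicating the task
            "blank_delay_s": rng.uniform(0.25, 3.0),   # blank screen before the prompt
            "max_response_s": 4.0,                     # ends at response or after 4 s
            "iti_s": iti,                              # fixation cross between trials
        })
    return schedule


run = build_run()
```

Movie durations are not part of the schedule here because they vary by item; in practice each trial’s movie length would be inserted between the cue and the blank delay.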

MRI Data Acquisition.

MRI data were collected on a 3 T Siemens Trio scanner using an eight-channel head coil. Functional images were collected using a standard gradient-echo, echo-planar pulse sequence with echo time (TE) = 30 ms, repetition time (TR) = 2 s, flip angle = 90°, field of view = 256 mm, and 64 × 64 matrix (resulting in 4 × 4-mm in-plane resolution), with 30 axial slices, 4 mm thick, collected in interleaved order. Each fMRI run started with four “dummy” acquisitions (full-brain volumes), which were discarded before analysis. T1-weighted structural images were collected using a 3D magnetization-prepared rapid gradient-echo pulse sequence with TE = 3.93 ms, TR = 2,020 ms, inversion time = 1,100 ms, flip angle = 15°, field of view = 256 mm, 256 × 256 matrix, and 160 slices, 1 mm thick (resulting in 1-mm isotropic voxels).
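The derived acquisition quantities follow from simple arithmetic on the stated parameters, as sketched below. The slab-coverage figure assumes no gap between slices, which the text does not state, and the (x, y, z, time) array layout in the hypothetical helper `drop_dummy_volumes` is an assumption about how the data are stored.

```python
import numpy as np


def in_plane_resolution_mm(fov_mm, matrix_size):
    """Voxel edge length in the imaging plane: field of view divided by matrix size."""
    return fov_mm / matrix_size


def drop_dummy_volumes(data_4d, n_dummy=4):
    """Discard the first n_dummy volumes along the last (time) axis."""
    return data_4d[..., n_dummy:]


functional_res = in_plane_resolution_mm(256, 64)    # 4.0 mm
structural_res = in_plane_resolution_mm(256, 256)   # 1.0 mm
functional_coverage_mm = 30 * 4                     # 120 mm, assuming no slice gap
dummy_duration_s = 4 * 2.0                          # 4 discarded volumes x TR of 2 s
```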

fMRI Preprocessing and Data Analysis.

fMRI data processing was carried out using FMRI Expert Analysis Tool (FEAT), version 5.98, part of FMRIB’s Software Library (FSL) (fsl.fmrib.ox.ac.uk/fsl/fslwiki/). Before statistical analysis, the following preprocessing steps were applied to the data from each run, for each subject: motion correction using MCFLIRT; nonbrain removal using BET; spatial smoothing using a Gaussian kernel of FWHM 8 mm; grand-mean intensity normalization of the entire 4D dataset by a single multiplicative factor; and high-pass temporal filtering (Gaussian-weighted least-squares straight line fitting, with σ = 36.0 s). Runs were removed from further processing and analysis if they contained head motion in excess of 2 mm or other visible MR artifacts; in total, two runs were rejected from one nonsigning participant, and four runs were rejected across three signing participants.
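Two of the preprocessing numbers can be made concrete. A Gaussian kernel specified by its FWHM has standard deviation σ = FWHM / (2√(2 ln 2)) ≈ FWHM / 2.355, so the 8-mm kernel corresponds to σ ≈ 3.4 mm. The run-exclusion rule is sketched as a threshold on a per-volume displacement trace; which motion summary the authors thresholded is not stated, so `run_exceeds_motion` and its input are assumptions.

```python
import math

import numpy as np


def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def run_exceeds_motion(displacement_mm, limit_mm=2.0):
    """True if any volume's displacement (in mm) exceeds the exclusion limit."""
    return float(np.max(np.abs(displacement_mm))) > limit_mm


sigma_mm = fwhm_to_sigma(8.0)  # about 3.40 mm
```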

Statistical analysis proceeded through three levels, again using FEAT. The first level was the analysis of each individual run using general linear modeling (GLM). The time series representing the “on” periods for each of the two stimulus types in a run (ASL or gesture, depending on the run, and the backward-layered control condition) were entered as separate regressors into the GLM, with prewhitening to correct for local autocorrelation. Coefficients were obtained for each stimulus type (effectively, the contrast between the stimuli and the fixation baseline periods that occurred between trials), as well as for contrasts between the target stimuli (ASL or gesture) and the backward-layered control condition.
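The first-level model can be sketched as ordinary least squares on HRF-convolved regressors for the target and control conditions, followed by a target-minus-control contrast. This is a simplified stand-in for FEAT, not a reimplementation: it uses a single-gamma HRF (an assumed shape, not necessarily the basis configured in FEAT), omits the prewhitening step, and the onsets in the usage lines are made up. Only TR = 2 s comes from the text.

```python
import math

import numpy as np

TR = 2.0   # repetition time (s), from the acquisition parameters
DT = 0.1   # fine time grid used for convolution (s), assumed


def gamma_hrf(t, shape=6.0, scale=1.0):
    """Single-gamma hemodynamic response (assumed shape; peaks at 5 s)."""
    t = np.asarray(t, dtype=float)
    h = np.where(t > 0, t ** (shape - 1) * np.exp(-t / scale), 0.0)
    return h / (math.gamma(shape) * scale ** shape)


def make_regressor(onsets, durations, n_vols):
    """Boxcar for the 'on' periods, convolved with the HRF and sampled once per TR."""
    n_fine = int(round(n_vols * TR / DT))
    boxcar = np.zeros(n_fine)
    for onset, duration in zip(onsets, durations):
        boxcar[int(round(onset / DT)):int(round((onset + duration) / DT))] = 1.0
    hrf = gamma_hrf(np.arange(0.0, 32.0, DT))
    convolved = np.convolve(boxcar, hrf)[:n_fine] * DT
    return convolved[::int(round(TR / DT))][:n_vols]


def fit_glm(y, regressors):
    """OLS fit with an intercept column; returns one beta per regressor plus the intercept."""
    X = np.column_stack(list(regressors) + [np.ones(len(y))])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


# Made-up event timings for one run of 80 volumes (160 s).
n_vols = 80
x_target = make_regressor([10, 50, 90], [4, 4, 4], n_vols)
x_control = make_regressor([30, 70, 110], [4, 4, 4], n_vols)
y = 2.0 * x_target + 1.0 * x_control + 5.0           # noiseless synthetic voxel
beta = fit_glm(y, [x_target, x_control])
target_vs_control = float(np.dot([1, -1, 0], beta))  # contrast: target minus control
```

On this noiseless synthetic voxel the fit recovers the generating weights, so the contrast equals 1; with real data, prewhitening matters for valid variance estimates even though it leaves this point estimate logic unchanged.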

To identify brain areas activated by each stimulus type relative to its backward-layered control condition, a second-level analysis was performed for each participant, combining all of that participant’s runs (up to four, after the run exclusions described above). The inputs to this analysis were the coefficients (β weights) obtained for each contrast in the first-level GLMs. This was done using a fixed-effects model, by forcing the random-effects variance to zero in FMRIB’s Local Analysis of Mixed Effects (FLAME).
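With the random-effects variance fixed at zero and a single mean regressor, combining runs reduces to an inverse-variance weighted average of the run-level estimates. The sketch below illustrates that arithmetic on per-run contrast estimates (`copes`) and their variances (`varcopes`), borrowing FSL’s terms. It returns a plain ratio of estimate to standard error and does not reproduce FLAME’s degrees-of-freedom handling or its conversion to z.

```python
import numpy as np


def fixed_effects(copes, varcopes):
    """Inverse-variance weighted combination of run-level estimates.

    copes, varcopes: arrays shaped (n_runs, ...) holding each run's contrast
    estimate and its variance (per voxel if extra dimensions are present).
    Returns the combined estimate, its variance, and estimate / standard error.
    """
    copes = np.asarray(copes, dtype=float)
    varcopes = np.asarray(varcopes, dtype=float)
    weights = 1.0 / varcopes                 # more precise runs count more
    combined_var = 1.0 / weights.sum(axis=0)
    combined = combined_var * (weights * copes).sum(axis=0)
    return combined, combined_var, combined / np.sqrt(combined_var)


# Two runs; the second is noisier and therefore gets a third of the weight.
est, var, ratio = fixed_effects([1.0, 3.0], [1.0, 3.0])
```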

A third-level, across-subjects analysis was then performed separately for each group, using each subject’s coefficients from the second-level GLM. This yielded the activation maps for each group, for both the contrasts with the fixation baseline and the contrasts with backward-layered control stimuli. This was done using FLAME stages 1 and 2. The resulting z-statistic images were thresholded using clusters determined by z > 2.3 and a corrected cluster significance threshold (35) of P < 0.05. The results of the contrasts with backward-layered control stimuli were masked with the results of the contrast with fixation, to ensure that any area identified as more strongly activated by ASL or gestures than by control stimuli also showed significantly increased signal relative to the low-level fixation baseline.
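The thresholding and masking steps can be sketched on NumPy arrays. This is only an approximation of FSL’s procedure: FSL assesses cluster significance with Gaussian random field theory, whereas this sketch substitutes a fixed minimum cluster size (`min_size`, a hypothetical stand-in), and it assumes face-adjacent (6-connected) voxels when forming clusters. All function names are hypothetical.

```python
from collections import deque

import numpy as np

NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def label_clusters(mask):
    """Label face-connected clusters in a 3D boolean mask via breadth-first search."""
    labels = np.zeros(mask.shape, dtype=int)
    n_clusters = 0
    for seed in zip(*np.nonzero(mask)):
        if labels[seed]:
            continue
        n_clusters += 1
        labels[seed] = n_clusters
        queue = deque([seed])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= nb[i] < mask.shape[i] for i in range(3))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = n_clusters
                    queue.append(nb)
    return labels, n_clusters


def cluster_threshold(zmap, z_thresh=2.3, min_size=10):
    """Keep voxels with z > z_thresh that belong to clusters of at least min_size voxels."""
    labels, n_clusters = label_clusters(zmap > z_thresh)
    keep = np.zeros(zmap.shape, dtype=bool)
    for k in range(1, n_clusters + 1):
        cluster = labels == k
        if cluster.sum() >= min_size:
            keep |= cluster
    return keep


def masked_contrast(z_vs_control, z_vs_fixation, **kwargs):
    """Target > control voxels that are also active relative to the fixation baseline."""
    return cluster_threshold(z_vs_control, **kwargs) & cluster_threshold(z_vs_fixation, **kwargs)
```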

Finally, between-group analyses were performed in FLAME stages 1 and 2, again using each participant’s coefficients from the second-level analyses. Thresholding was the same as for the third-level analyses. Thresholded maps showing greater activation for one group than the other were masked so that significant group differences were restricted to areas that showed significantly greater activation for ASL or gesture relative to its control condition within the group showing stronger activation in the between-group contrast.
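The between-group masking rule amounts to two Boolean intersections, sketched below on voxel masks that are assumed to be already thresholded. The function and argument names are hypothetical.

```python
import numpy as np


def mask_group_differences(a_gt_b, b_gt_a, a_within, b_within):
    """Restrict each direction of a group difference to the group that drives it.

    a_gt_b, b_gt_a:     thresholded between-group maps (A > B and B > A).
    a_within, b_within: each group's thresholded target > control map.
    """
    a_gt_b = np.asarray(a_gt_b, dtype=bool)
    b_gt_a = np.asarray(b_gt_a, dtype=bool)
    a_within = np.asarray(a_within, dtype=bool)
    b_within = np.asarray(b_within, dtype=bool)
    return a_gt_b & a_within, b_gt_a & b_within


# Toy example over four voxels: A = signers, B = nonsigners.
signers_gt_non, non_gt_signers = mask_group_differences(
    [1, 1, 0, 0], [0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, 0]
)
```

A voxel where signers exceed nonsigners survives only if signers themselves showed activation there relative to the control condition, and vice versa.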

Acknowledgments