Abstract

Introduction

Patient-reported outcomes (PROs) are often the outcomes of greatest importance to patients. The minimally important difference (MID) provides a measure of the smallest change in the PRO that patients perceive as important. An anchor-based approach is the most appropriate method for MID determination. No study or database currently exists that provides all anchor-based MIDs associated with PRO instruments; nor are there any accepted standards for appraising the credibility of MID estimates. Our objectives are to complete a systematic survey of the literature to collect and characterise published anchor-based MIDs associated with PRO instruments used in evaluating the effects of interventions on chronic medical and psychiatric conditions and to assess their credibility.

Methods and analysis

We will search MEDLINE, EMBASE and PsycINFO (1989 to present) to identify studies addressing methods to estimate anchor-based MIDs of target PRO instruments or reporting empirical ascertainment of anchor-based MIDs. Teams of two reviewers will screen titles and abstracts, review full texts of citations, and extract relevant data. On the basis of findings from studies addressing methods to estimate anchor-based MIDs, we will summarise the available methods and develop an instrument addressing the credibility of empirically ascertained MIDs. We will evaluate the credibility of all studies reporting on the empirical ascertainment of anchor-based MIDs using the credibility instrument, and assess the instrument's inter-rater reliability. We will separately present reports for adult and paediatric populations.

Ethics and dissemination

No research ethics approval is required as we will be using aggregate data from published studies. Our work will summarise anchor-based methods available to establish MIDs, provide an instrument to assess the credibility of available MIDs, determine the reliability of that instrument, and provide a comprehensive compendium of published anchor-based MIDs associated with PRO instruments, which will help improve the interpretability of outcome effects in systematic reviews and practice guidelines.

Keywords: MID, Minimally Important Difference, Patient Reported Outcome, Systematic Survey, Protocol

Background

For decades, longevity and major morbid events (eg, stroke, myocardial infarction) have been a primary focus in health research. Increasingly, investigators and clinicians have acknowledged the critical role of disease and treatment-related symptoms, as well as the function and perceptions of well-being for informed clinical decision-making. Typically measured by direct patient inquiry, these outcomes, previously generally referred to as ‘quality of life’ or ‘health-related quality of life’ measures, are now most commonly referred to as patient-reported outcomes (PROs). PROs provide patients’ perspectives on treatment benefits and harms, and are often the outcomes of most importance to patients.

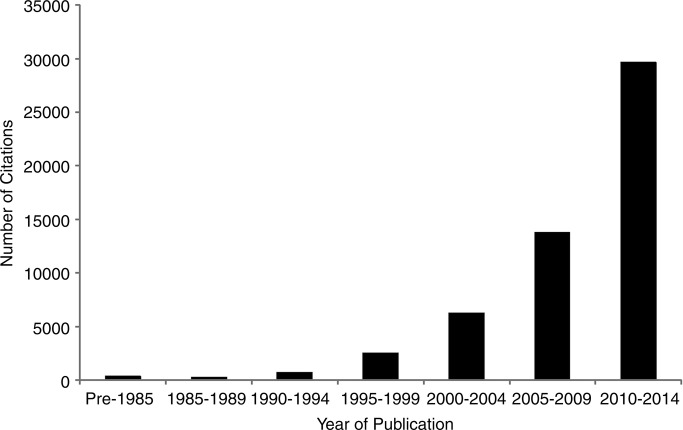

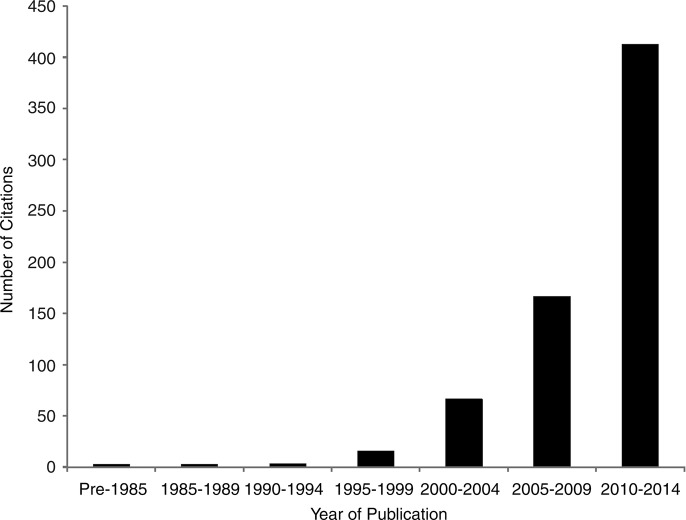

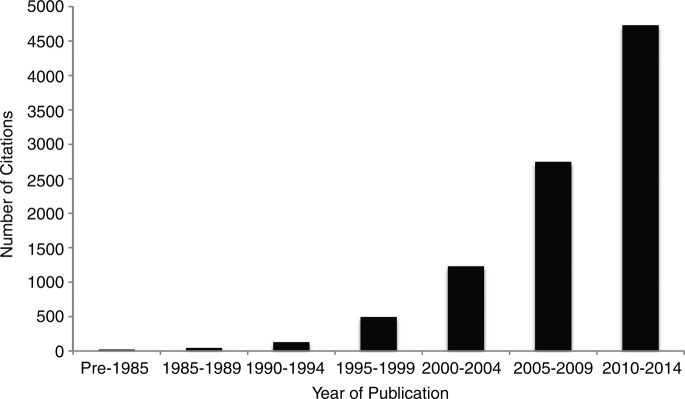

The PRO literature has grown exponentially over the past three decades (figure 1), with several instruments (eg, Short-Form-36,1 Beck Depression Inventory2) in routine use in research and clinical practice. The number of clinical trials evaluating the impact of interventions on PROs has also steadily increased (figure 2), and PROs are increasingly considered in practice guidelines (figure 3).

Figure 1.

Number of citations found in PubMed with search terms of patient reported outcome, by 5-year stratum.

Figure 2.

Number of citations found in PubMed with the search terms of patient reported outcome limited to clinical trials, by 5-year stratum.

Figure 3.

Number of citations found in PubMed with search terms of patient reported outcome and practice guidelines, by 5-year stratum.

Although PROs are often measured as primary outcomes in clinical trials, challenges remain in their application. In particular, although evidence supporting reliability, validity and responsiveness exists for many PRO instruments, interpreting their results remains difficult.

Interpretability has to do with understanding the changes in instrument scores that constitute trivial, small but important, moderate or large differences in effect. For instance, if a treatment improves a PRO score by three points relative to control, what are we to conclude? Is the treatment effect large, warranting widespread dissemination in clinical practice, or is it trivial, suggesting that the new treatment should be abandoned? Recognition of this potentially serious limitation has led to increasing interest in the interpretation of treatment effects on PROs.3 4

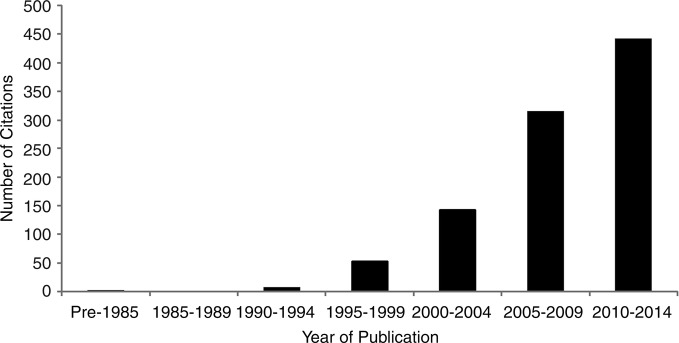

The minimally important difference (MID) provides a measure of the smallest change in the PRO of interest that patients perceive as important, either beneficial or harmful, and that would lead the patient or clinician to consider a change in management.5 Knowledge of the MID allows decision-makers to better interpret the magnitude of treatment effect and assess the trade-off between beneficial and harmful outcomes. Patients, clinicians and clinical practice guideline developers require knowledge of the MID to guide their decisions. For example, a guideline developer using the GRADE approach6 might use the MID as a decision threshold: the quality of evidence for a given intervention would be rated down for imprecision if the CI surrounding a pooled effect estimate includes the MID,7 but not if the estimate is sufficiently precise, with the CI lying well above the MID threshold. The MID also provides a metric for clinical trialists planning sample sizes for their studies. This is accomplished by first calculating the proportion of patients achieving an MID or greater change, and subsequently determining the difference in the proportion of responders between treatment and control that trialists would consider clinically important. The widespread recognition of the usefulness of the MID is reflected in the exponential growth in the number of citations reporting MIDs since the concept was first introduced into the medical literature in 1989 (figure 4).3
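The two uses of the MID described above can be sketched in a few lines of Python. This is an illustrative sketch only: the function names, the assumption that higher scores mean benefit, and the toy change scores are ours and are not part of the protocol. "Well above" is simplified here to "entirely above".

```python
def imprecision_check(ci_lower, ci_upper, mid):
    """Compare the CI of a pooled effect with the MID threshold.

    Assumes higher scores indicate benefit and that the MID is expressed
    in the same units as the effect estimate (hypothetical convention).
    """
    if ci_lower > mid:
        return "CI lies entirely above the MID"
    if ci_upper < mid:
        return "CI lies entirely below the MID"
    return "CI includes the MID: consider rating down for imprecision"


def responder_proportion(change_scores, mid):
    """Proportion of patients whose change score reaches the MID or more."""
    responders = sum(1 for change in change_scores if change >= mid)
    return responders / len(change_scores)


# Toy data (invented for illustration): change scores in each trial arm.
treatment_changes = [1, 6, 7, 3, 8]
control_changes = [0, 2, 6, 1, 4]
difference_in_responders = (
    responder_proportion(treatment_changes, mid=5)
    - responder_proportion(control_changes, mid=5)
)
```

The difference in responder proportions is the quantity a trialist would compare against the smallest difference they consider clinically important when planning a sample size.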

Figure 4.

Number of citations found in PubMed with search terms of minimal (clinically) important difference, by 5-year stratum.

There are two primary approaches for estimating an MID: distribution-based and anchor-based methods. Distribution-based methods rely on the distribution around the mean scores of the measure of interest (eg, SD).8 In the anchor-based approach, investigators examine the relation between the target PRO instrument and an independent measure that is itself interpretable—the anchor. An appropriate anchor will be relevant to patients (eg, measures of symptoms, disease severity or response to treatment).9 Investigators often use global ratings of change (patients classifying themselves as unchanged, or experiencing small, moderate and large improvement or deterioration) as an anchor. It is generally agreed that the patient-reported anchor-based approach is the optimal way to determine the MID because it directly captures the patients’ preferences and values,8 10 although it can still be problematic if the credibility of the anchor is in question (eg, is the anchor itself interpretable and are responses on the anchor independent of responses on the PRO?).
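To make the contrast between the two approaches concrete, the following Python sketch computes one estimate of each kind from toy data. It rests on assumptions that are not taken from the protocol: the anchor-based estimate is taken as the mean change among patients who rate themselves as having a small improvement (one of several anchor-based methods), the distribution-based estimate uses half a baseline SD (a common rule of thumb), and all names and numbers are hypothetical.

```python
import statistics


def anchor_based_mid(changes_with_ratings, anchor_category="small improvement"):
    """Mean PRO change among patients in the chosen global-rating category.

    changes_with_ratings: iterable of (change_score, global_rating) pairs.
    """
    selected = [
        change
        for change, rating in changes_with_ratings
        if rating == anchor_category
    ]
    if not selected:
        raise ValueError("no patients in the chosen anchor category")
    return statistics.mean(selected)


def distribution_based_mid(baseline_scores, sd_fraction=0.5):
    """Fraction of the baseline SD (0.5 SD is an assumed rule of thumb)."""
    return sd_fraction * statistics.stdev(baseline_scores)


# Toy data, invented for illustration only.
patients = [
    (0, "unchanged"),
    (4, "small improvement"),
    (5, "small improvement"),
    (6, "small improvement"),
    (15, "large improvement"),
]
baseline = [40, 50, 60]
```

Only the first function uses information about what patients themselves consider a change, which is why the text above treats the anchor-based estimate as the preferred one.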

Currently, there is no study or database that systematically documents all available anchor-based MIDs associated with PRO instruments. In addition, there are currently no accepted standards for appraising the credibility of an MID determination; incorrect methods, assumptions or interpretation of MID could be detrimental to otherwise well-designed clinical trials, systematic reviews and guidelines. For example, systematic review authors and guideline developers might interpret trials using PROs incorrectly, and provide misleading guidance to patients and clinicians.11 12 In addition, erroneous MIDs may lead to inappropriate sample size calculations.

Our objectives are therefore to:

1. Summarise the anchor-based methods that investigators have used to estimate MIDs and the criteria thus far suggested to conduct studies optimally (henceforth referred to as a ‘systematic survey addressing the anchor-based methods used to estimate MIDs’).

2. Develop an instrument for evaluating the credibility of MIDs that emerge from identified PRO instruments.

3. Document published anchor-based MIDs associated with PRO instruments used in evaluating the effects of interventions on chronic medical and psychiatric conditions in adult and paediatric populations (henceforth referred to as a ‘systematic survey of the inventory of published anchor-based MIDs’).

4. Apply the credibility criteria (developed in objective 2) to each of the MID estimates that emerge from our synthesis in objective 3.

5. Determine the reliability of our credibility instrument (developed in objective 2).

To ensure the feasibility and a manageable scope of the project and given that the primary use of PROs is in the management of chronic medical and psychiatric conditions,13 we have restricted our focus to these clinical areas.

Methods

Eligibility criteria

We define an anchor-based approach as any independent assessment to which the PRO instrument is compared, irrespective of the interpretability or the quality of the anchor. We will include two types of publications: (1) Methods articles addressing MID estimation using an anchor-based approach (theoretical descriptions, summaries, commentaries, critiques). We will include only studies that dedicate a minimum of two paragraphs to discuss methodological issues when estimating MIDs from both of these types of studies. (2) Original reports of studies that document the empirical development of an anchor-based MID for a particular instrument in a wide range of chronic medical and psychiatric conditions. That is, studies will compare the results of a PRO instrument to an independent standard (the ‘anchor’), irrespective of the interpretability or the quality of the anchor. We will include adult (≥18 years of age) and paediatric populations (<18 years of age). PROs of interest will include self-reported patient-important outcomes of health-related quality of life, functional ability, symptom severity and measures of psychological distress and well-being.

In our definition of a PRO, if the patient is incapable of responding to the instrument and a proxy is used, then the study is still eligible. If the clinician completes only the anchor, then the study is also still eligible. For anchor-based MIDs identified in the paediatric population for children under the age of 13, we will include both patient-reported and caregiver-reported instruments.

We will exclude studies when only the clinician completes the PRO instrument (ineligible proxy), as well as studies reporting only distribution-based MIDs without an accompanying anchor-based MID.
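The respondent-related rules above can be summarised as a small decision function. This is a simplified sketch: the labels `"patient"`, `"proxy"` and `"clinician"` and the function name are hypothetical, and the other eligibility criteria (publication type, clinical condition, search dates) are deliberately left out.

```python
def meets_respondent_criteria(pro_respondent, reports_anchor_based_mid):
    """Apply the respondent and MID-type rules from the eligibility criteria.

    pro_respondent: who completed the PRO instrument, one of
        "patient", "proxy" (for example, a caregiver) or "clinician".
    reports_anchor_based_mid: True if the study reports at least one
        anchor-based MID. Who completed the anchor does not matter here.
    """
    if not reports_anchor_based_mid:
        # Distribution-based MIDs alone are excluded.
        return False
    if pro_respondent == "clinician":
        # A clinician completing the PRO instrument is an ineligible proxy.
        return False
    return pro_respondent in {"patient", "proxy"}
```

Note that the anchor respondent is intentionally not an argument: a study in which the clinician completes only the anchor remains eligible.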

Information sources and search

We will search MEDLINE, EMBASE and PsycINFO for studies published from 1989 to the present (the MID concept was first introduced into the medical literature in 1989).3 Search terms will include database subject headings and text words for the concepts: ‘minimal important difference’, ‘minimal clinical important difference’, ‘clinically important difference’, ‘minimal important change’, alone and in combination, and adapted for each of the chosen databases. Table 1 presents the MEDLINE search strategy. One of the co-authors (DLP) works closely with MAPI Trust, which maintains PROQOLID—a large repository of commonly used, well-validated PRO instruments.13 To supplement our search, the investigator has granted us access to the PROQOLID internal library, which houses approximately 180 citations related to MID estimates. We will, in addition, search the citation lists of included studies and collect narrative and systematic reviews for original studies that report an MID for a given instrument.

Table 1.

Search strategies for MEDLINE, January 1989 to present

| Line | Search statement |
| --- | --- |
| 1 | (clinical* important difference? or clinical* meaningful difference? or clinical* meaningful improvement? or clinical* relevant mean difference? or clinical* significant change? or clinical* significant difference? or clinical* important improvement? or clinical* meaningful change? or mcid or minim* clinical* important or minim* clinical* detectable or minim* clinical* significant or minim* detectable difference? or minim* important change? or minim* important difference? or smallest real difference? or subjectively significant difference?).tw. |
| 2 | “Quality of Life”/ |
| 3 | “outcome assessment(health care)”/or treatment outcome/or treatment failure/ |
| 4 | exp pain/ |
| 5 | exp disease attributes/or exp “signs and symptoms”/ |
| 6 | or/2–5 |
| 7 | 1 and 6 |
| 8 | health status indicators/or “severity of illness index”/or sickness impact profile/or interviews as topic/or questionnaires/or self report/ |
| 9 | Pain Measurement/ |
| 10 | patient satisfaction/or patient preference/ |
| 11 | or/8–10 |
| 12 | 7 and 11 |
| 13 | limit 12 to yr=“1989 -Current” |
| 14 | (quality of life or life qualit??? or hrqol or hrql).mp. |
| 15 | (assessment? outcome? or measure? outcome? or outcome? studies or outcome? study or outcome? assessment? or outcome? management or outcome? measure* or outcome? research or patient? outcome? or research outcome? or studies outcome? or study outcome? or therap* outcome? or treatment outcome? or treatment failure?).mp. |
| 16 | pain????.mp. |
| 17 | ((activity or sever* or course) adj3 (disease or disabilit* or symptom*)).mp. |
| 18 | or/14–17 |
| 19 | 1 and 18 |
| 20 | (questionnaire? or instrument? or interview? or inventor* or test??? or scale? or subscale? or survey? or index?? or indices or form? or score? or measurement?).mp. |
| 21 | (patient? rating? or subject* report? or subject* rating? or self report* or self evaluation? or self appraisal? or self assess* or self rating? or self rated).mp. |
| 22 | (patient? report* or patient? observ* or patient? satisf*).mp. |
| 23 | anchor base??.mp. |
| 24 | or/20–23 |
| 25 | 19 and 24 |
| 26 | limit 25 to yr=“1989 -Current” |
| 27 | 13 or 26 |
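For readers less familiar with Ovid syntax, the Boolean structure of Table 1 can be expressed with Python sets. This is only a sketch of how the numbered lines combine: it assumes each line has already been run and returned a set of record identifiers (the dictionary `sets_by_line` is hypothetical), and it omits the publication-year limits applied in lines 13 and 26.

```python
def combine_search_lines(sets_by_line):
    """Combine per-line result sets following the structure of Table 1.

    sets_by_line maps a Table 1 line number to the set of record IDs
    retrieved by that line (hypothetical input format).
    """
    s = sets_by_line
    mid_terms = s[1]

    # Subject-heading arm: lines 2-13 (year limit not modelled).
    heading_outcomes = s[2] | s[3] | s[4] | s[5]        # line 6
    heading_measures = s[8] | s[9] | s[10]              # line 11
    heading_arm = mid_terms & heading_outcomes & heading_measures  # lines 7, 12

    # Text-word arm: lines 14-26 (year limit not modelled).
    text_outcomes = s[14] | s[15] | s[16] | s[17]       # line 18
    text_measures = s[20] | s[21] | s[22] | s[23]       # line 24
    text_arm = mid_terms & text_outcomes & text_measures  # lines 19, 25

    return heading_arm | text_arm                       # line 27


# Toy example with invented record IDs.
toy_sets = {line: set() for line in range(1, 28)}
toy_sets[1] = {101, 102, 103}
toy_sets[2] = {101}
toy_sets[8] = {101}
toy_sets[14] = {102, 103}
toy_sets[20] = {102}
toy_result = combine_search_lines(toy_sets)
```

In both arms a record must match an MID term, an outcome concept and a measurement concept; the two arms differ only in whether the outcome and measurement concepts are matched through subject headings or text words.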

Study selection

Teams of two reviewers will independently screen titles and abstracts to identify potentially eligible citations. To determine eligibility, the same reviewers will review the full texts of citations flagged as potentially eligible.

Data collection, items and extraction

Teams of data extractors will, independently and in duplicate, extract data using two pilot-tested data collection forms: one for the systematic survey addressing the anchor-based methods used to estimate MIDs, and the second for the systematic survey of the inventory of published anchor-based MIDs. On study initiation, we will conduct calibration exercises until sufficient agreement is achieved. Our data collection forms will include the following items: study design, description of population, interventions, outcomes, characteristics of candidate instruments (eg, generic or disease specific), characteristics of independent anchor measures, critiques/commentary on methods to estimate MID(s), and credibility criteria for method(s) to estimate MIDs.

For studies addressing the anchor-based methods used to estimate MIDs, a team of methodologists familiar with MID methods will use standard thematic analysis techniques14 to abstract concepts related to the methodological quality of MID determinations until reaching saturation. We will review coding and revise the taxonomy of methodological factors iteratively until informational redundancy is achieved. Appendix A presents the data extraction items for the systematic survey of the inventory of published anchor-based MIDs. Reviewers will resolve disagreements by discussion and, if needed, a third team member will serve as an adjudicator.

Subsequently, we will synthesise and complete each objective as follows:

Objective 1: Anchor-based methods used to estimate MIDs

We will classify the anchor-based approaches used to determine MIDs into separate categories, describe their methods, and summarise their advantages, disadvantages and important factors that constitute a high-quality anchor.

Objective 2: Development of a credibility instrument for studies determining MIDs

We define credibility as the extent to which the design and conduct of studies measuring MIDs are likely to have protected against misleading estimates. Similar definitions have been used for instruments measuring the credibility of other types of study designs.15 16

The systematic survey addressing methods to estimate MIDs (objective 1) will identify all the available methodologies and concepts along with their strengths and limitations, and will thus inform the item generation stage of instrument development. On the basis of the survey of the methods literature and our group's experience with methods of ascertaining MIDs,3 4 17–22 we will develop initial criteria for evaluating the credibility of anchor-based MID determinations. Our group has used these methods successfully for developing methodological quality appraisal standards across a wide range of topics.16 23–26

Using a sample of eligible studies, we will pilot the draft instrument with four target users, specifically researchers interested in the credibility of MID estimates, who will be identified within our international network of knowledge users (please see ‘Knowledge Translation’ section below). The data collected at this stage will inform item modification and reduction. This iterative process will be conducted until we achieve consensus for the final version of the instrument.

Objective 3: Systematic survey of studies generating an anchor-based MID of target PRO instruments

We will summarise MID estimates separately for paediatric and adult populations, along with study design, intervention, population characteristics, characteristics of the PRO, characteristics of the anchor and credibility ratings. If multiple MID estimates are captured for the same PRO instrument across similar clinical conditions, we will summarise all the estimates.

Objective 4: Measuring credibility of compiled MIDs

Using the instrument (the development of which we have described in objective 2), teams of two reviewers will undertake the credibility assessment for all eligible studies identified in the review. The appraisal will be performed in duplicate, using prepiloted forms. Disagreements will be resolved by discussion between the reviewers and, if needed, with a third team member. Knowledge users (eg, future systematic review authors or guideline developers) could then use their judgement to consider the credibility of the MID on a continuum from ‘highly credible’ to ‘very unlikely to be credible’.

Objective 5: Reliability study of the credibility instrument

We will conduct a reliability study of our credibility instrument by calculating the inter-rater reliability and associated 95% CI as measured by weighted κ with quadratic weights. We will complete reliability analyses using classical test theory. We will consider a reliability coefficient of at least 0.7 to represent ‘good’ inter-rater reliability.27–29 According to Walter et al,30 considering three replicates per study (three raters), a minimally acceptable level of reliability of 0.6 and an expected reliability of 0.7, an α of 0.05 and a β of 0.2, we would require a minimum of 133 studies, each assessed by every rater. We will use all the studies identified in the systematic survey of estimated MIDs to calculate the reliability estimate. On the basis of initial pilot screening, we estimate that we will have approximately 400 eligible studies.
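As an illustration of the agreement statistic named above, the following Python sketch computes Cohen's weighted κ with quadratic weights for a single pair of raters. It makes several assumptions that are not in the protocol: credibility ratings are coded as integers from 0 to k-1, only two raters are compared at a time, and the 95% CI is not computed.

```python
def quadratic_weighted_kappa(ratings_a, ratings_b, n_categories):
    """Weighted kappa with quadratic disagreement weights for two raters.

    ratings_a, ratings_b: equal-length lists of ordinal ratings coded
    0 .. n_categories - 1 (hypothetical coding of the credibility scale).
    """
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    k = n_categories
    n = len(ratings_a)

    # Observed cross-tabulation of the two raters' ratings.
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(ratings_a, ratings_b):
        observed[a][b] += 1

    row_totals = [sum(observed[i]) for i in range(k)]
    col_totals = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2  # quadratic disagreement weight
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * row_totals[i] * col_totals[j] / n

    if weighted_expected == 0:
        raise ValueError("kappa is undefined when both raters use one category")
    return 1.0 - weighted_observed / weighted_expected
```

A value returned by this function would be compared with the 0.7 threshold stated above; with three raters, one option is to report the statistic for each pair of raters.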

Knowledge translation

Our multidisciplinary study team is composed of knowledge users and researchers with a broad range of content and methodological expertise. We represent and provide direct links to international networks of key knowledge users including the GRADE Working Group, the Cochrane Collaboration, the WHO and the Canadian Agency for Drugs and Technologies in Health. Collectively, these organisations provide opportunities for disseminating our findings to an international network of clinical trialists, systematic review authors, guideline developers and researchers involved in the development of PROs.

Our dissemination strategies include the incorporation of our knowledge products into Cochrane reviews via the GRADEprofiler (http://www.guidelinedevelopment.org, a globally adopted platform that is widely used by our target audience for conducting systematic reviews and developing practice guidelines) and MAGICapp (a guideline and evidence summary creation tool that uses the GRADE framework). We will offer our work for relevant portions of the printed and online versions of the Cochrane Handbook, and we will develop interactive education sessions (workshops) and research briefs to inform knowledge users from various health disciplines about our findings and their implications for clinical trial, systematic review and guideline development.

Discussion

Our systematic survey will represent the first overview of methods to develop anchor-based MIDs, and the first comprehensive compendium of published anchor-based MIDs associated with PRO instruments used in evaluating the effects of interventions on chronic medical and psychiatric conditions. Our systematic survey on methods to estimate MIDs will draw attention to the methodological issues and challenges involved in MID determinations. In doing so, we will deepen the understanding and improve the quality of reporting and use of MIDs by our target knowledge users. The methods review will inform the development of an instrument to determine the credibility of anchor-based MIDs that will allow us to address existing MIDs and can subsequently be used to evaluate new studies offering anchor-based MIDs. This work will also help knowledge users identify anchor-based MIDs that may be less credible, misleading or inappropriate with respect to the average magnitude of change that is important to patients.

We recognise that some variability in MIDs will be attributable to context and patient characteristics. For example, anchor-based MIDs are established using average magnitudes of change that are considered important to patients. Without individual patient data, we will not be able to explore, for example, subgroups of patients with mild or severe disease, gender differences and the relative contributions of these factors. We will alert our knowledge users to this potential limitation.

Collectively, these efforts will promote better-informed decision-making by clinical trialists, systematic review authors, guideline developers and clinicians interpreting treatment effects on PROs.

Acknowledgments

The authors would like to thank Tamsin Adams-Webber at the Hospital for Sick Children and Mr. Paul Alexander for their assistance with developing the initial literature search.

Footnotes

Contributors: BCJ, SE, GHG and GN conceived the study design, and AC-L, TAF, DLP, BRH and HJS contributed to the conception of the design. BCJ and SE drafted the manuscript, and all authors reviewed several drafts of the manuscript. All authors approved the final manuscript to be published.

Funding: This project is funded by the Canadian Institutes of Health Research, Knowledge Synthesis grant number DC0190SR. SE is supported by MITACS Elevate and SickKids Restracomp Postdoctoral Fellowship Awards.

Competing interests: TAF has no COI with respect to the present study but has received lecture fees from Eli Lilly, Meiji, Mochida, MSD, Otsuka, Pfizer and Tanabe-Mitsubishi, and consultancy fees from Sekisui Chemicals and Takeda Science Foundation. He has received royalties from Igaku-Shoin, Seiwa-Shoten and Nihon Bunka Kagaku-sha publishers. He has received grant or research support from the Japanese Ministry of Education, Science, and Technology, the Japanese Ministry of Health, Labour and Welfare, the Japan Society for the Promotion of Science, the Japan Foundation for Neuroscience and Mental Health, Mochida and Tanabe-Mitsubishi. He is a diplomate of the Academy of Cognitive Therapy. All other authors declare no conflicts of interest.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Ware JE Jr, Sherbourne CD. The MOS 36-item short-form health survey (SF-36). I. Conceptual framework and item selection. Med Care 1992;30:473–83. doi:10.1097/00005650-199206000-00002

- 2. Beck AT, Ward CH, Mendelson M et al. An inventory for measuring depression. Arch Gen Psychiatry 1961;4:561–71. doi:10.1001/archpsyc.1961.01710120031004

- 3. Jaeschke R, Singer J, Guyatt GH. Measurement of health status. Ascertaining the minimal clinically important difference. Control Clin Trials 1989;10:407–15. doi:10.1016/0197-2456(89)90005-6

- 4. Johnston BC, Thorlund K, Schunemann HJ et al. Improving the interpretation of quality of life evidence in meta-analyses: the application of minimal important difference units. Health Qual Life Outcomes 2010;8:116. doi:10.1186/1477-7525-8-116

- 5. Schunemann HJ, Guyatt GH. Commentary–goodbye M(C)ID! Hello MID, where do you come from? Health Serv Res 2005;40:593–7. doi:10.1111/j.1475-6773.2005.0k375.x

- 6. Guyatt GH, Oxman AD, Vist GE et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 2008;336:924–6. doi:10.1136/bmj.39489.470347.AD

- 7. Guyatt GH, Oxman AD, Kunz R et al. GRADE guidelines 6. Rating the quality of evidence–imprecision. J Clin Epidemiol 2011;64:1283–93. doi:10.1016/j.jclinepi.2011.01.012

- 8. Guyatt GH, Osoba D, Wu AW et al; Clinical Significance Consensus Meeting Group. Methods to explain the clinical significance of health status measures. Mayo Clin Proc 2002;77:371–83. doi:10.4065/77.4.371

- 9. Guyatt GH, Juniper EF, Walter SD et al. Interpreting treatment effects in randomised trials. BMJ 1998;316:690–3. doi:10.1136/bmj.316.7132.690

- 10. King MT. A point of minimal important difference (MID): a critique of terminology and methods. Expert Rev Pharmacoecon Outcomes Res 2011;11:171–84. doi:10.1586/erp.11.9

- 11. Johnston BC, Patrick DL, Thorlund K et al. Patient-reported outcomes in meta-analyses – part 2: methods for improving interpretability for decision-makers. Health Qual Life Outcomes 2013;11:211. doi:10.1186/1477-7525-11-211

- 12. Johnston BC, Bandayrel K, Friedrich JO et al. Presentation of continuous outcomes in meta-analysis: a survey of clinicians’ understanding and preferences. Cochrane Database Syst Rev 2013;Suppl 1(212).

- 13. MAPI Research Trust. Patient-Reported Outcome and Quality of Life Database (PROQOLID). 2013. http://www.proqolid.org

- 14. Morse J. Designing funded qualitative research. In: Denzin NK, Lincoln YS, eds. Handbook of qualitative research. Thousand Oaks, CA: Sage, 1994:220–35.

- 15. Murad MH, Montori VM, Ioannidis JP et al. How to read a systematic review and meta-analysis and apply the results to patient care: users’ guides to the medical literature. JAMA 2014;312:171–9. doi:10.1001/jama.2014.5559

- 16. Sun X, Briel M, Walter SD et al. Is a subgroup effect believable? Updating criteria to evaluate the credibility of subgroup analyses. BMJ 2010;340:c117. doi:10.1136/bmj.c117

- 17. Fallah A, Akl EA, Ebrahim S et al. Anterior cervical discectomy with arthroplasty versus arthrodesis for single-level cervical spondylosis: a systematic review and meta-analysis. PLoS ONE 2012;7:e43407. doi:10.1371/journal.pone.0043407

- 18. Turner D, Schunemann HJ, Griffith LE et al. The minimal detectable change cannot reliably replace the minimal important difference. J Clin Epidemiol 2010;63:28–36. doi:10.1016/j.jclinepi.2009.01.024

- 19. Turner D, Schunemann HJ, Griffith LE et al. Using the entire cohort in the receiver operating characteristic analysis maximizes precision of the minimal important difference. J Clin Epidemiol 2009;62:374–9. doi:10.1016/j.jclinepi.2008.07.009

- 20. Juniper EF, Guyatt GH, Willan A et al. Determining a minimal important change in a disease-specific Quality of Life Questionnaire. J Clin Epidemiol 1994;47:81–7. doi:10.1016/0895-4356(94)90036-1

- 21. Johnston BC, Thorlund K, da Costa BR et al. New methods can extend the use of minimal important difference units in meta-analyses of continuous outcome measures. J Clin Epidemiol 2012;65:817–26. doi:10.1016/j.jclinepi.2012.02.008

- 22. Yalcin I, Patrick DL, Summers K et al. Minimal clinically important differences in Incontinence Quality-of-Life scores in stress urinary incontinence. Urology 2006;67:1304–8. doi:10.1016/j.urology.2005.12.006

- 23. Levine M, Ioannidis J, Haines T. Harm (observational studies). In: Guyatt G, Rennie D, Meade MO et al., eds. Users’ guides to the medical literature: a manual for evidence-based clinical practice. McGraw-Hill, 2008:363–82.

- 24. Randolph A, Cook DJ, Guyatt G. Prognosis. In: Guyatt G, Rennie D, Meade MO et al., eds. Users’ guides to the medical literature: a manual for evidence-based clinical practice. McGraw-Hill, 2008:509–22.

- 25. Furukawa TA, Jaeschke R, Cook D et al. Measuring of patients’ experience. In: Guyatt G, Rennie D, Meade MO et al., eds. Users’ guides to the medical literature: a manual for evidence-based clinical practice. 2nd edn. New York: McGraw-Hill, Inc, 2008:249–72.

- 26. Akl E, Sun X, Busse JW et al. Specific instructions for estimating unclearly reported blinding status in randomized trials were reliable and valid. J Clin Epidemiol 2012;65:262–7. doi:10.1016/j.jclinepi.2011.04.015

- 27. Heppner PP, Kivlighan DM, Wampold BE. Research design in counseling. Pacific Grove, CA: Brooks/Cole, 1992.

- 28. Kaplan RM, Saccuzzo DP. Psychological testing: principles, applications, and issues. 4th edn. Pacific Grove, CA: Brooks/Cole, 1997.

- 29. Shrout PE, Fleiss JL. Intraclass correlations: uses in assessing interrater reliability. Psychol Bull 1979;86:420–8. doi:10.1037/0033-2909.86.2.420

- 30. Walter SD, Eliasziw M, Donner A. Sample size and optimal designs for reliability studies. Stat Med 1998;17:101–10.