Abstract

Background

The goal of this survey paper is to overview cellular measurements using optical microscopy imaging followed by automated image segmentation. The cellular measurements of primary interest are taken from mammalian cells and their components. They are denoted as two- or three-dimensional (2D or 3D) image objects of biological interest. In our applications, such cellular measurements are important for understanding cell phenomena, such as cell counts, cell-scaffold interactions, cell colony growth rates, or cell pluripotency stability, as well as for establishing quality metrics for stem cell therapies. In this context, this survey paper is focused on automated segmentation as a software-based measurement leading to quantitative cellular measurements.

Methods

We define the scope of this survey and a classification schema first. Next, all found and manually filtered publications are classified according to the main categories: (1) objects of interest (or objects to be segmented), (2) imaging modalities, (3) digital data axes, (4) segmentation algorithms, (5) segmentation evaluations, (6) computational hardware platforms used for segmentation acceleration, and (7) object (cellular) measurements. Finally, all classified papers are converted programmatically into a set of hyperlinked web pages with occurrence and co-occurrence statistics of assigned categories.

Results

The survey paper presents to a reader: (a) the state-of-the-art overview of published papers about automated segmentation applied to optical microscopy imaging of mammalian cells, (b) a classification of segmentation aspects in the context of cell optical imaging, (c) histogram and co-occurrence summary statistics about cellular measurements, segmentations, segmented objects, segmentation evaluations, and the use of computational platforms for accelerating segmentation execution, and (d) open research problems to pursue.

Conclusions

The novel contributions of this survey paper are: (1) a new type of classification of cellular measurements and automated segmentation, (2) statistics about the published literature, and (3) a web hyperlinked interface to classification statistics of the surveyed papers at https://isg.nist.gov/deepzoomweb/resources/survey/index.html.

Keywords: Cellular measurements, Cell segmentation, Segmented objects, Segmentation evaluation, Accelerated execution of segmentation for high-throughput biological application

Background

Segmentation is one of the fundamental digital image processing operations. It is used ubiquitously across all scientific and industrial fields where imaging has become the qualitative observation and quantitative measurement method. Segmentation design, evaluation, and computational scalability can be daunting for cell biologists because of a plethora of segmentation publications scattered across many fields with reported segmentation choices that are highly dependent on scientific domain specific image content. Thus, the goal of this survey paper is to overview automated image segmentations used for cellular measurements in biology.

In quantitative image-based cell biology, cellular measurements are primarily derived from detected objects using image segmentation methods. In order to report statistically significant results for any hypothesis or task, cellular measurements have to be taken from a large number of images. This requires automated segmentation which includes algorithm design, evaluation, and computational scalability in high-throughput applications. This survey is motivated by the need to provide a statistics-based guideline for cell biologists to map their cellular measurement tasks to the frequently used segmentation choices.

The large number of publications reporting on both the omnipresent image segmentation problem and cell biology problems using image-based cellular measurements was narrowed down by adding more specific cell biology criteria and considering recent publications dated from the year 2000 until the present. While general survey papers are cited without any date constraints to provide references to segmentation fundamentals, statistics-based guidelines are reported for selected published papers that focus on optical microscopy imaging of mammalian cells and that utilize three-dimensional (3D) image cubes consisting of X-Y-Time or X-Y-Z dimensions (or X-Y-Z over time). Although there are many promising optical microscopy imaging modalities, we have primarily focused on the conventional phase contrast, differential interference contrast (DIC), confocal laser scanning, and fluorescent and dark/bright field modalities. In the space of mammalian cells and their cellular measurements, we included publications reporting in vitro cell cultures. The goal of such cellular measurements is to understand the spectrum of biological and medical problems in the realm of stem cell therapies and regenerative medicine, or cancer research and drug design. We introduce first the basic motivations behind cellular measurements via microscopy imaging and segmentation. Next we describe the types of results that come from image segmentation and the requirements that are imposed on segmentation methods.

Motivation

We address three motivational questions behind this survey: (1) why is quantitative cell imaging important for cell biology; (2) why is segmentation critical to cellular measurements; and (3) why is automation of segmentation important to cell biology research? We analyze image segmentation and cellular characterization as software-based cellular measurements that are applied to images of mammalian cells.

First, cell research has its unique role in understanding living biological systems and developing next generation regenerative medicine and stem cell therapies for repairing diseases at the cellular level. Live cell imaging and 3D cell imaging play an important role in both basic science and drug discovery at the levels of a single cell and its components, as well as at the levels of tissues and organs [1]. While qualitative cell imaging is commonly used to explore complex cell biological phenomena, quantitative cell imaging is less frequently used because of the additional complexity associated with qualifying the quantitative aspects of the instrumentation, and the need for software-based analysis. If quantitative cell imaging is enabled then a wide range of applications can benefit from high statistical confidence in cellular measurements at a wide range of length scales. For example, quantitative cell imaging is potentially a powerful tool for qualifying cell therapy products such as those that can cure macular degeneration, the leading cause of blindness in adults (7 million US patients, gross domestic product loss $30 billion [2]). On the research side, quantitative cell imaging is needed to improve our understanding of complex cell phenomena, such as cell-scaffold interactions, and cell colony behavior such as pluripotency stability, and is especially powerful when these phenomena can be studied in live cells dynamically.

Second, the segmentation of a variety of cell microscopy image types is a necessary step to isolate an object of interest from its background for cellular measurements. At a very low level, segmentation is a partition of an image into connected groups of pixels that have semantic meaning. Mammalian cell segmentation methods can be found in literature that focuses on biological and medical image informatics. They aim to improve the efficiency, accuracy, usability, and reliability of medical imaging services within the healthcare enterprise [3]. Segmentation methods also become a part of quantitative techniques for probing cellular structure and dynamics, and for cell-based screens [4]. Cellular measurement without image segmentation would be limited to statistics of either a portion of a cell (i.e., portion of a cell interior covered by one field of view) or a mixture of a cell and its background. Thus, accurate and efficient segmentation becomes critical for cellular measurements.

Third, with the advancements in cell microscopy imaging and the increasing quantity of images, the automation of segmentation has gained importance not only for industrial applications but also for basic research. The benefits of automation can be quantified in terms of its cost, efficiency, and reproducibility of image segmentation per cell. The benefits motivate the design of automated segmentations while maximizing their accuracy. However, with automation comes a slew of questions for cell biologists about design and evaluations of accuracy, precision, and computational efficiency.

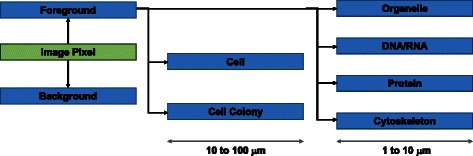

Image segmentation results are objects of interest to cell biologists that can be described by semantically meaningful terms in cell biology and can also be characterized by spectral intensity, shape, motion, or textural measurements from acquired images. Fig. 1 illustrates generic and cell specific labels assigned to a 2D image pixel (or 3D image voxel) during segmentation. Specific semantic labels depend on the type of experiment. For instance, the stain choice in an experimental design followed by imaging modality and segmentation method determines a semantic label of a segmentation result. It is also common to incorporate a priori knowledge about cells to obtain semantically meaningful segmentation results. For example, cell connectivity defines segmentation results at the image level to be connected sets of 2D pixels or 3D voxels.

Fig. 1.

Segmentation labels ranging from generic (foreground, background) to cell specific objects relevant to diffraction-limited microscopy (DNA/RNA, protein, organelle, or cytoskeleton)

Segmentation results and imaging measurement pipeline

Given a connected set of 2D pixels or 3D voxels as a segmentation result, one can obtain cellular measurements about (1) motility of cells, (2) cell and organelle morphology, (3) cell proliferation, (4) location and spatial distribution of biomarkers in cells, (5) populations of cells with multiple phenotypes, and (6) combined multiple measurements per cell [5].
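
As an illustration of how a connected set of pixels leads to a measurement, the following minimal sketch thresholds a small 2D intensity array, labels 4-connected foreground regions, and reports the area and centroid of each region. The function names `label_foreground` and `measure_objects`, the fixed threshold, and the toy image are assumptions made for this example only; they do not reproduce any specific method from the surveyed papers.

```python
from collections import deque


def label_foreground(image, threshold):
    """Label 4-connected regions of pixels brighter than `threshold`.

    Returns a label image of the same size, where 0 marks background.
    """
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] <= threshold or labels[r][c]:
                continue  # background pixel or already labeled
            next_label += 1
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of one object
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and image[ny][nx] > threshold
                            and not labels[ny][nx]):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
    return labels


def measure_objects(labels):
    """Return {label: {"area": pixel count, "centroid": (row, col)}}."""
    sums = {}
    for r, row in enumerate(labels):
        for c, lab in enumerate(row):
            if lab:
                n, sum_r, sum_c = sums.get(lab, (0, 0, 0))
                sums[lab] = (n + 1, sum_r + r, sum_c + c)
    return {lab: {"area": n, "centroid": (sum_r / n, sum_c / n)}
            for lab, (n, sum_r, sum_c) in sums.items()}


# Toy image (hypothetical intensities): two bright objects on a dark background.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 0],
    [0, 9, 9, 0, 8, 0],
    [0, 0, 0, 0, 8, 0],
]
labels = label_foreground(image, threshold=5)
measurements = measure_objects(labels)
```

Area and centroid are only the simplest geometry and location measurements; motility, for example, would additionally require linking labeled objects across time frames.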

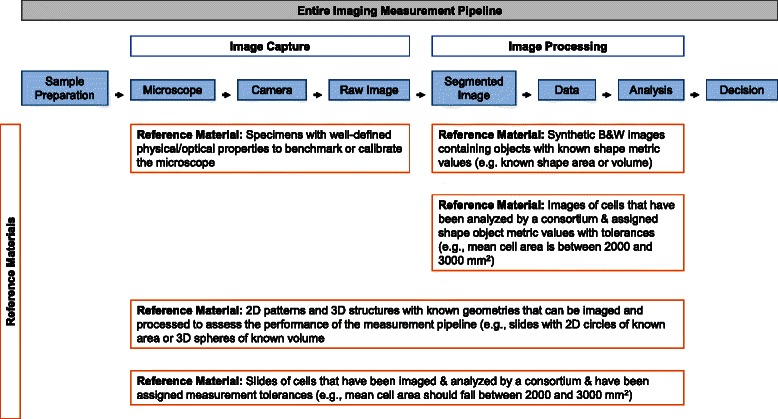

These cellular measurements from segmentation results depend on the entire imaging measurement pipeline shown in Fig. 2.

Fig. 2.

Top: The pipeline for an imaging measurement. Bottom: Different types of reference materials that can be used to evaluate performance of the different stages of the measurement pipeline

The pipeline for an imaging measurement is broken down into three stages: sample preparation, image capture and image processing. Reference materials, organized by constituent parts (Fig. 2, orange boxes), can be used to evaluate the performance of the stages of the pipeline.

Survey usefulness and organization

This survey paper reports statistics of classification categories for automated segmentation methods. The segmentation classification categories are introduced to provide multiple perspectives on an image segmentation step. Segmentation can be viewed from the perspective of a cell biologist as a cellular measurement, or from the perspective of a computer scientist as an algorithm. Both cell biologists and computer scientists are interested in analyzing accuracy, error, and execution speed of segmentation (i.e., evaluation perspective of segmentation) as applied to cell measurements. We establish multiple categories for various perspectives on segmentation and classify each paper accordingly.

The term “statistics” refers to frequencies of occurrence and co-occurrence for the introduced classification categories. The occurrence and co-occurrence values are also known as 1st and 2nd order statistics. The term “survey statistics” indicates that we perform a survey of papers, classify them into categories, and then report statistics of the categories.
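
To make the two kinds of statistics concrete, the sketch below counts occurrences (1st order) and pairwise co-occurrences (2nd order) over a handful of classified papers. The paper identifiers, the dictionary layout, and the function name `survey_statistics` are hypothetical and chosen only for illustration; the sketch also assumes one category per criterion per paper, which may not hold for every surveyed publication.

```python
from collections import Counter
from itertools import combinations

# Hypothetical classification records: criterion -> assigned category.
papers = {
    "paper_A": {"object": "Cell", "segmentation": "Thresholding",
                "evaluation": "Visual inspection"},
    "paper_B": {"object": "Nucleus", "segmentation": "Watershed",
                "evaluation": "Pixel-level evaluation"},
    "paper_C": {"object": "Cell", "segmentation": "Thresholding",
                "evaluation": "Pixel-level evaluation"},
}


def survey_statistics(papers):
    """Count how often each category, and each pair of categories, is assigned."""
    occurrence = Counter()
    co_occurrence = Counter()
    for categories in papers.values():
        # Sort so that each pair is always stored in the same order.
        tags = sorted(categories.items())
        occurrence.update(tags)
        co_occurrence.update(combinations(tags, 2))
    return occurrence, co_occurrence


occurrence, co_occurrence = survey_statistics(papers)
cell_and_thresholding = co_occurrence[
    (("object", "Cell"), ("segmentation", "Thresholding"))
]
```

A pair with a high count indicates a frequently used combination, while a pair that never appears points to a combination that has not been reported in the classified set.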

The usefulness of survey statistics lies in gaining insights into the community-wide usage of segmentation. With this insight, a principal investigator who is not interested in segmentation research itself can classify his or her cellular measurement problem and follow the segmentation most frequently used in the community. Thus, work focusing on other aspects of cell biology can simply refer to the other papers that have reported the same segmentation method, and the segmentation choice can be justified by the usage statistics in the cell biology community. On the other hand, a principal investigator who is interested in doing segmentation research can gain insights about which segmentation methods have not been applied to certain cellular measurements and hence explore those new segmentation approaches.

Overall, this survey aims at understanding the state-of-the-art of cellular measurements in the context of the imaging measurement pipeline yielding segmented objects. Following from Fig. 2, cellular measurements have an intrinsic accuracy, precision, and execution speed that depend on the steps of the pipeline. In order to understand the attributes of cellular measurements, we performed a survey of published literature with the methodology described in the Methods section. The segmentation-centric steps of the imaging measurement pipeline are outlined in the Results section. Statistical summaries of classified publications can be found in the Discussion section. Finally, the Conclusions section presents a list of open research questions based on our observations of the published papers.

Methods

This survey was prepared based on an iterative process denoted in literature as “a cognitive approach” [6]. This approach starts with an initial definition of the scope of this survey (i.e., see the search filters in the Endnotes section) and a classification schema. All found and manually filtered publications are classified into the categories presented in Table 1. For the purpose of this survey, the classification includes main categories of (1) objects of interest (or objects to be segmented), (2) imaging modalities, (3) digital data axes, (4) segmentation algorithms, (5) segmentation evaluations, (6) computational hardware platforms used for segmentation acceleration, and (7) object (cellular) measurements. The sub-categories in Table 1 come from specific taxonomies that are introduced in the sub-sections of the Results section.

Table 1.

Seven main classification criteria of publications (columns) and their categories

| Object of interest | Imaging modality | Data axes | Segmentation | Segmentation evaluation | Segmentation acceleration | Object^a measurement |
|---|---|---|---|---|---|---|
| Cell | Phase contrast | X-Y-T | Active contours + Level Set | Visual inspection | Cluster | Geometry |
| Nucleus | Differential interference contrast | X-Y-Z | Graph-based | Object-level evaluation | Graphics Processing Unit (GPU) | Motility |
| Synthetic (digital model) | Bright-field | X-Y-Z-T | Morphological | Pixel-level evaluation | Multi-core CPU | Counting |
| Synthetic (reference material) | Dark-field | | Other | Technique is not specified | Single-core Central Processing Unit (CPU) | Location |
| Other | Confocal fluorescence | | Partial Derivative Equations | Unknown | Unknown | Intensity |
| | Wide-field fluorescence | | Region growing | | | |
| | Two-photon fluorescence | | Thresholding | | | |
| | Light sheet | | Watershed | | | |

^a Object refers to the categories of an object of interest and clusters of objects

The categories of objects of interest were chosen based on foci of cell biology studies and capabilities of optical microscopy. We have selected cell, nucleus, and synthetically generated objects generated using a digital model or a reference material. Synthetic objects are used for segmentation evaluations. The category “Other” includes, for instance, Golgi apparatus boundary, extracellular space, heterochromatin foci, olfactory glomeruli, or laminin protein.

The segmentation categories are based on published techniques across a variety of application domains. They follow standard categories (e.g., thresholding, region growing, active contours and level set) in segmentation surveys [7–9] with additional refinements (e.g., watershed, cluster-based, morphological, or Partial Derivative Equations (PDEs)). The taxonomy for segmentation categories is presented in the Design of automated segmentation algorithms section.

The evaluation of automated segmentation is categorized according to the level of automation as visual inspection (i.e., manual) and object-level or pixel-level evaluation. The object-level evaluation is concerned with the accuracy of the number of objects and/or approximate location, for example, in the case of counting or tracking. The pixel-level evaluation is about assessing accuracy of object shape and location, for instance, in the case of geometry or precise motility. Some papers do not report evaluation at all (classified as “unknown”) while others report results without specifying a segmentation evaluation method (classified as “technique is not specified”).
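
The difference between the two automated evaluation levels can be sketched as follows. The overlap scores (Jaccard index and Dice coefficient) and the relative count error are common choices used here as assumptions; the surveyed papers use a variety of metrics, and the function names and toy masks are hypothetical.

```python
def pixel_level_scores(reference, result):
    """Jaccard index and Dice coefficient between two binary masks."""
    ref = {(r, c) for r, row in enumerate(reference)
           for c, v in enumerate(row) if v}
    res = {(r, c) for r, row in enumerate(result)
           for c, v in enumerate(row) if v}
    union = len(ref | res)
    if union == 0:
        return 1.0, 1.0  # both masks empty: treated as perfect agreement
    intersection = len(ref & res)
    jaccard = intersection / union
    dice = 2 * intersection / (len(ref) + len(res))
    return jaccard, dice


def object_level_count_error(reference_count, result_count):
    """Relative error in the number of detected objects (e.g., for counting)."""
    if reference_count <= 0:
        raise ValueError("reference_count must be positive")
    return abs(result_count - reference_count) / reference_count


# Pixel-level: compare the shape of one object against a reference mask.
reference_mask = [[0, 1, 1],
                  [0, 1, 1]]
result_mask = [[0, 0, 1],
               [0, 1, 1]]
jaccard, dice = pixel_level_scores(reference_mask, result_mask)

# Object-level: only the number of objects matters, not their exact outline.
count_error = object_level_count_error(reference_count=10, result_count=9)
```

A segmentation can score well at the object level (correct count) while scoring poorly at the pixel level (inaccurate boundaries), which is why the appropriate level depends on the cellular measurement.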

The categories of segmentation acceleration reflect current computational hardware platforms available to researchers in microscopy labs and in high-throughput biological environments. The platforms include single-core CPU (central processing unit), multi-core CPU, GPU (graphics processing unit), and computer cluster. We have not found a segmentation paper utilizing a supercomputer with a large shared memory. In addition, some researchers report a multi-core CPU hardware platform but do not mention whether the software was taking advantage of multiple cores (i.e., algorithm implementations are different for multi-core CPU than for single-core CPU platforms). Papers that do not report anything about a computational platform or the efficiency of segmentation execution are placed into the category “Unknown”.

Finally, the object or cellular measurement categories are derived from five types of analyses that are performed with 2D + time or 3D cell imaging. These analyses are related to motility, shape, location, counting, and image intensity. They are the primary taxa for mammalian cell image segmentation. Any other specific types of analyses were included in these main five classes or their combinations. For instance, monitoring cell proliferation would be classified as motility and counting, and abundance quantification of intracellular components would be classified as location and counting.

While we went over close to 1000 publications and cross-referenced more than 160 papers, we classified only 72 papers according to the above criteria. We excluded from the classification publications that presented surveys or foundational material, did not include enough information about a segmentation method, or were published before the year 2000. Co-authors of this survey sometimes included a few of these papers in the main text to refer to previously published surveys, to seminal publications, or to the key aspects of segmentations demonstrated outside of the scope of this survey. Thus, there is a discrepancy between the number of classified and cross-referenced papers. The 72 papers went through independent classifications by at least two co-authors. If a different category was assigned by two co-authors, then a third co-author performed another independent classification. Although this verification process doubled the amount of work, we opted for classification quality rather than for quantity given our limited resources.

Our method for validating the classification schema presented above is to compute the occurrence of papers that fall into each category, and the co-occurrence of the classification categories in each paper. The lists of papers contributing to each occurrence or co-occurrence number are converted programmatically into a set of hyperlinked web pages and can be browsed at https://isg.nist.gov/deepzoomweb/resources/survey/index.html. The publications and their statistical summaries can be interpreted not only for validation purposes (low values suggest removing a segmentation category from classification) but also for identifying segmentation methods that have not been applied to optical microscopy images of mammalian cells.

Results

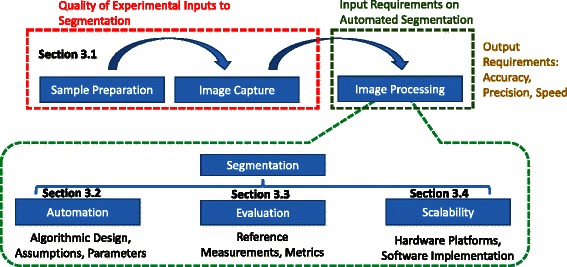

We organized the results into four main sub-sections devoted to (1) experimental inputs to segmentation, (2) automated segmentation, (3) evaluation of automated segmentation, and (4) hardware platforms for computational scalability of automated segmentation, as illustrated in Fig. 3. The sections have a direct relationship to the imaging pipeline presented in Fig. 2.

Fig. 3.

Survey organization of the Results section with respect to the imaging measurement pipeline. Four sections are devoted to the quality of segmentation inputs (Experimental inputs to cell imaging and segmentation), automation (Design of automated segmentation algorithms), evaluation (Evaluations of automated segmentations) and computational scalability (Scalability of automated segmentations)

Due to the typical variations in microscopy image appearance, it is important to understand experimental cell imaging inputs to automated segmentation. Variations in cells, reagents, and microscope instrumentations have a great impact on segmentation accuracy [10]. Thus, the design of an automated segmentation algorithm is driven by sensitivity of segmentation to the variations in cell imaging inputs.

The choice of automated segmentation technique can be facilitated by our understanding of segmentation algorithm design, particularly the assumptions for image invariance, the mathematical model for obtaining segments, and the model parameters. Numerical representations of a mathematical model and techniques for optimizing model parameters can also vary across implementations of the same automated segmentation method and determine performance robustness to extreme inputs.

Evaluations of automated segmentation are critical for the comparison-based choice of a segmentation algorithm, for optimization of segmentation parameters, and for the dynamic monitoring of segmentation results to guarantee performance and consistency. However, evaluations depend on designing task-specific metrics and on either reference segmentation for supervised evaluations or an objective cost function for unsupervised evaluations.

Finally, with the continuous advancements in microscopy, automated segmentations are deployed in increasingly diverse research and industrial settings and applied to exponentially growing volumes of microscopy images. In order to create cost effective solutions when segmenting large amounts of images, computational scalability of segmentation on a variety of hardware platforms becomes a selection criterion and has to be included in the evaluations. With the emphasis on reproducibility of biological experiments, computational scalability is not only of interest to bio-manufacturing production environments but also to research institutions conducting large scale microscopy experiments to achieve high statistical confidence of findings.

Experimental inputs to cell imaging and segmentation

While optical microscopy is frequently used as a qualitative tool for descriptive evaluations of cells, the tool is used increasingly to generate digital images that are segmented and used to measure the shape, arrangement, location and the abundance of cellular structures or molecules. There are many advantages to quantitative analysis by automated segmentation algorithms including the capability to assess large datasets generated by automated microscopy in an unbiased manner. In the absence of computational analysis, researchers are often limited to comparatively small sample sizes and presenting microscopic data with a few “look what I saw” images.

The cellular measurements derived from image segmentation can be strongly influenced by specimen preparation [11] and the instrumentation [12] used to image the specimens. The single most important factor for good segmentations is high contrast between foreground and background, and this is achieved by carefully considering four inputs: (1) Cells, (2) Reagents, (3) Culture Substrate/Vessels, and (4) Optical Microscopy Instrumentation. Common sources of variability from these inputs are outlined in Table 2 and should be carefully managed in order to provide high foreground intensity and low background intensity. Images from the initial observations that characterize a new biological finding are not always the best for quantitative analysis. Refinement and optimization of the sample preparation and the imaging conditions can often facilitate quantitative analysis. In the overview of the four experimental inputs, we highlight reports that have used experimental techniques to improve or facilitate downstream segmentation and analysis. Interested readers can consult in-depth technical reviews and books on reagents [13–16], culture substrate/vessels [17–19], and optical microscopy instrumentation [20–22].

Table 2.

Sources of variability in a quantitative optical microscopy pipeline and methods for monitoring and assuring data quality

| Stage of pipeline | Measurement assurance strategy | Source of variability assessed/addressed | Reference |
|---|---|---|---|
| Sample Preparation | Establish well-defined protocols for handling cells (ASTM F2998) | Cell culture variability (cell type, donor, passage, history, culturing protocol, user technique) | [23, 94] |
| | Use stable and validated stains (e.g., photostable, chemically stable, high affinity, well characterized antibody reagents) | Instability of probe molecule and non-specific staining | [95–98] |
| | Choose substrate with low and homogeneous background signal for selected imaging mode or probe (ASTM F2998) | Interference from background | [94, 99–101] |
| | Optimize medium [filter solutions to reduce particulates, reduce autofluorescence (phenol red, riboflavin, glutaraldehyde), avoid proteins/serum during imaging] | | |
| | Optimize experimental design to the measurement (e.g., low seeding density if images of single cells are best) (ASTM F2998) | Interference from cells in contact | [94, 102] |
| Image Capture | Use optical filters to assess limit of detection, saturation and linear dynamic range of image capture (ASTM F2998) | Instrument performance variability (e.g., light source intensity fluctuations, camera performance, degradation of optical components, changes in focus) | [94, 103, 104] |
| | Optimize match of dyes, excitation/emission wavelength, and optical filters | Poor signal and noisy background | [105, 106] |
| | Minimize refractive index mismatch of objective, medium, coverslips & slides | | |
| | Use highest resolution image capture that is practical (e.g., balance throughput with magnification, balance numerical aperture with desired image depth) | | |
| | Calibrate pixel area to spatial area with a micrometer | Changes in magnification | [107, 108] |
| | Collect flat-field image to correct for illumination inhomogeneity (ASTM F2998) | Non-uniformity of intensity across the microscope field of view | [94, 109–112] |
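
As an illustration of the last strategy in Table 2, the sketch below applies a commonly used flat-field correction: subtract a dark image from both the raw and the flat-field image, divide, and rescale by the mean of the dark-subtracted flat-field image. This formula is an assumption for the example, not a procedure quoted from the cited references, and the function name `flat_field_correct` and the toy images are hypothetical.

```python
def flat_field_correct(raw, flat, dark):
    """Correct illumination non-uniformity in `raw`.

    raw  -- image of the specimen
    flat -- image of a uniform field under the same illumination
    dark -- image acquired with no light reaching the camera
    All three are 2D lists of the same size.
    """
    rows, cols = len(raw), len(raw[0])
    gain = [[flat[r][c] - dark[r][c] for c in range(cols)]
            for r in range(rows)]
    if any(g <= 0 for row in gain for g in row):
        raise ValueError("flat-field image must exceed dark image everywhere")
    mean_gain = sum(map(sum, gain)) / (rows * cols)
    return [[(raw[r][c] - dark[r][c]) * mean_gain / gain[r][c]
             for c in range(cols)]
            for r in range(rows)]


# Toy data: the right column receives half the illumination of the left one.
dark = [[10, 10], [10, 10]]
flat = [[110, 60], [110, 60]]
# A uniform specimen therefore appears twice as bright on the left.
raw = [[210, 110], [210, 110]]
corrected = flat_field_correct(raw, flat, dark)
```

After correction the uniform specimen has the same intensity in every pixel, so a single global threshold would no longer favor the brighter side of the field of view.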

A final, but critical aspect of the inputs for cell imaging experiments is documenting metadata about how cells, reagents and instrumentation were used [23]. Storing and accessing metadata describing a cellular imaging experiment has been the focus of several research efforts including ProtocolNavigator [24] and the Open Microscopy Environment project [25, 26]. This metadata serves as evidence for measurement reproducibility in the cell image experiments. The irreproducibility of biological studies has recently been highlighted [27, 28]. A benefit to performing cellular studies using measurements derived from image segmentation is that they can, in principle, be quantitatively reproduced. This means that statistics can be applied to the data to determine the measurement uncertainty. Because the measurement uncertainty depends on the experimental inputs, methods that can be used to monitor each input can be valuable for assuring the reproducibility of a complex, quantitative imaging pipeline. A tabulated list of sources of variability and reference protocols and materials that can be used to monitor the measurement quality in a quantitative cell imaging analysis pipeline prior to segmentation is provided in Table 2.

Input: cells

We focus this survey on the imaging of cultured mammalian cells because of the critical role these systems play in drug screening, medical diagnostics, therapies, and basic cell biology research. The complexity of cellular features observed during imaging can lead to challenging segmentation problems. At the population level, mammalian cells exhibit substantial phenotypic heterogeneity [29], even among a genetically homogeneous population of cells. This means that it is important to image and measure large numbers of cells in order to obtain statistical confidence about the distribution of phenotypes in the population.

Despite the challenges associated with segmenting and identifying cells, in some cases experimental approaches can be selected to facilitate automated analysis and segmentation. In a recent example, Singer et al. [30] placed a histone-GFP fusion protein downstream of the Nanog promoter in mouse pluripotent stem cells. In this way, the Nanog reporter was localized to the nucleus. A similar approach was used by Sigal et al. to probe the dynamic fluctuations exhibited by 20 nuclear proteins [31]. Without nuclear localization, the image analysis would have been substantially more challenging as cells were frequently touching, and the boundary between cells was not well defined in the images. In such cases, a few considerations in the design of the cellular specimen to be imaged can greatly reduce the complexity of algorithms required for segmentation and improve the confidence in the numerical results.

Input: reagents

Reagents used as indicators for cellular function or as labels for specific structures are central to quantitative imaging experiments. The development of probes has a rich history and researchers have access to a large number of probe molecules, including labeled antibodies, through commercial vendors. A description of two recent surveys of probes is provided below so that interested readers can navigate the wide range of technologies that are available. Giuliano et al. produced a particularly relevant review of reagents used within the context of high content imaging [16]. Their work provides a very good overview of the types of probes used in fluorescence microscopy and how they can be applied as physiological indicators, immunoreagents, fluorescent analogs of macromolecules, positional biosensors, and fluorescent protein biosensors. In evaluating a fluorescent reagent, they consider the following six critical probe properties: fluorescence brightness (resulting from high absorbance and quantum efficiency), photostability, chemical stability, phototoxicity, non-specific binding, and perturbation of the reaction to be analyzed. Many references to the papers describing the original development of the probes themselves are included in the survey. Another relevant review was produced by Kilgore, Dolman and Davidson, who survey reagents for labeling vesicular structures [13], organelles [14], and cytoskeletal components [15]. This work includes experimental protocols as well as citations to original articles where the probes were applied.

Input: culture substrate/vessel

Cells are cultured on many different types of surfaces. From the perspective of collecting digital images prior to quantitative analysis, the ideal tissue culture substrate would be completely transparent at all relevant wavelengths, non-fluorescent, defect free and have a spatially flat surface. These features would facilitate segmentation because the substrate/vessel would not produce any interfering signal with the structures of interest in the image. In practice, cells are frequently cultured on tissue culture polystyrene (TCPS) or glass, both of which are suitable for subsequent analysis particularly at low magnification.

A confounding factor for analysis of digital images of cells is that substrates are frequently coated with extracellular matrix (ECM) proteins that are necessary for the proper growth and function of the cells. The protein coating can make segmentation more challenging by adding texture to the background, either by interfering with transmitted light or by binding probe molecules and thus becoming a source of background signal that can interfere with accurate segmentation [32]. Using soft lithography to place ECM proteins on a surface in a two-dimensional pattern can simplify segmentation by confining cells to specific locations and shapes. This approach facilitated the quantification of rates of fluorescent protein degradation within individual cells [33]. Patterning has also been used to facilitate live cell analysis of stem cell differentiation. Ravin et al. used small squares patterned with adhesive proteins to limit the migration of neuronal progenitor cells to a field of view, which allowed lineage progression within these cells to be followed for multiple generations [34]. Without patterning, the image analysis problem is challenging because it requires both accurate segmentation from phase contrast or fluorescent images and tracking of cells as they migrate.

Input: optical microscopy instrumentation

The particular image acquisition settings for imaging cells will have a profound impact on the segmentation results, as has been shown by Dima et al. [10]. Therefore, selecting the appropriate instrumentation and optimal acquisition settings is critical. Frigault et al. provide general guidelines for choosing appropriate instrumentation in the form of a flow chart [22]. The authors focus on live cell imaging in 3D, but the flow chart can be applied to a wide range of cell imaging experiments. The choice of instrumentation will depend on the cellular specimen, the reagents used and the substrate. When it comes to selection of the imaging mode, the goals of qualitative visualization and quantitative analysis are the same: to image the objects under conditions that optimize the signal-to-noise ratio with minimal sample degradation. Therefore, the decision on how to image the biological sample is the same for visualization and quantitative analysis.

While it can be argued that three-dimensional (3D) culture of cells is more physiologically relevant than culturing cells on two-dimensional (2D) substrates [35], imaging cells on 3D scaffolds is more difficult. Cells on scaffolds are often imaged using optical sectioning techniques (e.g., confocal microscopy) to reduce the large amount of out-of-focus light that can obscure image details.

For confocal imaging, chromatic aberrations are increased along the Z-axis, causing the Z-resolution to be approximately 3 times worse than in the X-Y plane [36, 37]. This causes blurring in the Z-direction, where spheres appear as ellipsoids. Deconvolution algorithms have been used to remove blurring, but they can be difficult to implement since they are highly dependent on imaging parameters: excitation/emission wavelengths, numerical aperture and refractive indices (RI) of the sample, medium, optics and scaffolds. A panel of reference spheres with narrow diameter distributions (15 μm ± 0.2 μm) that are labelled with a variety of fluorescent dyes [37] can be used to assess the Z-axis aberrations for different wavelength fluorophores, but the reference spheres are not perfect mimics for cells due to differences in RI. Reference spheres are made of polystyrene, with an RI of 1.58; the RI of phosphate buffered saline is 1.33; the RI of culture medium is 1.35; and the RI of cells is challenging to measure, may depend on cell type and has been observed to be within the range of 1.38 to 1.40 [36, 38, 39].

In addition, the scaffolds used for 3D culture interfere with imaging. Non-hydrogel forming polymers, such as poly(caprolactone), can block light and obscure portions of cells that are beneath scaffold struts. Hydrogel scaffolds, such as cross-linked poly(ethylene glycol) (PEG), collagen, fibrin or matrigel scaffolds, can have differing refractive indices causing chromatic aberrations and light scattering effects in the imaging. In addition, hydrogel samples can have spatial inhomogeneities (polymer-rich or -poor phases) that can blur light. Some flat materials may be reflective and bounce light back into the detector resulting in imaging artifacts.

A potential solution could be the development of reference spheres with RIs that match cells. These could be spiked into cells during seeding into 3D scaffolds, and then beads could be imaged along with the cells. In this way, the reference spheres would be imaged under conditions identical to the cells, which would allow calibration of cell measurements against the reference spheres. A potential candidate could be PEG-hydrogel spheres containing fluorophores. Fabricating highly spherical PEG spheres with a narrow diameter distribution may be a challenge. Multi-photon absorption photopolymerization can generate highly uniform structures at 10 μm size scales and may be capable of achieving this goal [40].

Design of automated segmentation algorithms

Here, we focus on the design of segmentation methods encountered in cellular and subcellular image processing with two dimensional time sequence (x, y, t), three dimensional (x, y, z) or three dimensional time sequence (x, y, z, t) datasets. These image datasets are acquired using a subset of optical microscopy imaging modalities, such as phase contrast, differential interference contrast (DIC), confocal laser scanning, fluorescent, and bright/dark field.

Next, we describe common segmentation algorithms, their assumptions, models, and model parameters, as well as the parameter optimization approaches. In comparison to previous surveys about cell microscopy image segmentation [7], we provide more detailed insights into the design assumptions and parameter optimization of segmentation methods.

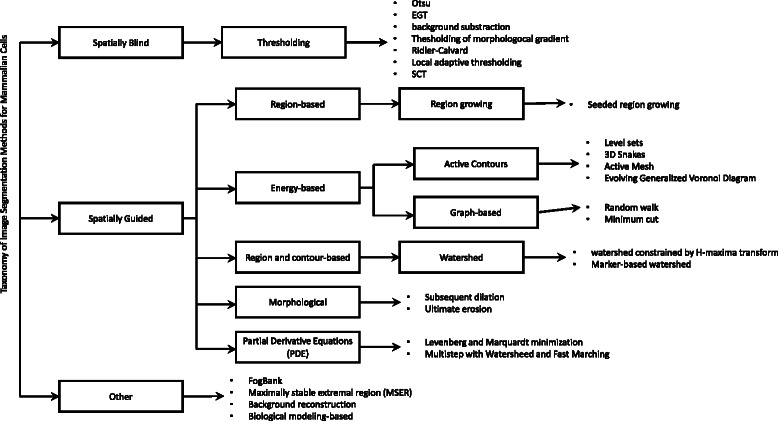

Algorithmic design and assumptions

We classified each paper found in the literature into eight segmentation categories. The categories for our classification are derived from a general taxonomy presented in [41]. Figure 4 shows the taxonomy of image segmentation methods used for mammalian cells. Table 3 lists the eight categories and the number of papers using a segmentation method from each category. Usage across the categories is uneven. Threshold-based techniques are the simplest and most commonly used techniques in the literature, followed by active contours. The third most common category is watershed, and the fourth is custom-made segmentations (the category Other). If a paper described a method combining multiple approaches, such as thresholding followed by watershed, then the paper was classified in both the thresholding and watershed categories. The section Co-occurrence of cellular measurements and segmentation provides more insight on segmentation methods, image modality and image dimensionality.

Fig. 4.

Taxonomy of image segmentation methods for mammalian cells

Table 3.

Summary usage statistics of segmentation methods in the surveyed literature

| Segmentation category | Description | Number of surveyed papers |
|---|---|---|
| Active contours + Level Set | Parametric curves which fit to an image object of interest. These curve fitting functions are regularized gradient edge detectors | 24 |
| Graph-based | Applies graph theories to segment regions of interest | 2 |
| Morphological | Apply morphological operations to segment or clean a pre-segmented image | 2 |
| Other | The methods in this category are created for a specific problem or cell line by a combination of existing techniques or by creating a new concept | 8 |
| Partial Derivative Equations | Groups pixels into different segments based on minimizing a cost function using partial derivatives | 2 |
| Region growing | Starts from a seed and grows the segmented regions following some pre-defined criterion | 2 |
| Thresholding | Threshold based techniques consider the foreground pixels to have intensity values higher (or lower) than a given threshold. | 31 |
| Watershed | Mainly used to separate touching cells or touching subcellular regions | 15 |
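To make one of the categories in Table 3 concrete, the region growing idea (start from a seed and grow the region following a pre-defined criterion) can be sketched as below. This is a minimal illustration rather than a method taken from a surveyed paper. The 4-connectivity, the growth criterion (absolute intensity difference to the seed of at most `max_diff`), and all names are our assumptions.

```python
from collections import deque


def region_grow(image, seed, max_diff):
    """Grow a 4-connected region from `seed` on a 2D list of intensities.

    A neighbor joins the region when its intensity differs from the seed
    intensity by at most `max_diff` (an assumed, illustrative criterion).
    Returns the set of (row, col) coordinates in the region.
    """
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region:
                if abs(image[nr][nc] - seed_value) <= max_diff:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


# Toy image: one bright object around (1, 1) and an isolated bright pixel
image = [
    [10, 10, 10, 10],
    [10, 200, 210, 10],
    [10, 205, 10, 10],
    [10, 10, 10, 190],
]
grown = region_grow(image, seed=(1, 1), max_diff=20)
```

The isolated bright pixel at (3, 3) satisfies the intensity criterion but is not connected to the seed, so it stays outside the region. This is the behavior that distinguishes region growing from plain thresholding.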

Every segmentation technique is designed with certain assumptions about its input images. When these assumptions are not met, the reported accuracy of segmentation results is affected. Assumptions are typically specific to each segmentation algorithm and incorporate image properties of a segmented region. According to the surveyed literature about mammalian cells and segmentation, segmentation assumptions can be categorized into three classes: (1) biological assumptions, (2) algorithmic assumptions and (3) image pre-processing assumptions.

Biological assumptions in a segmentation algorithm are those that are derived from the knowledge of a biologist. For example, a nucleus is considered to have a round shape, or a mother cell is small, round, and bright before mitosis in phase contrast images. Algorithmic assumptions are those made during the development of a segmentation algorithm, for instance that k-means clustered pixels serve as initial inputs to the level set functions, or that pixel intensities follow a bi-modal distribution. Image pre-processing assumptions are those that require image processing operations to be applied before segmenting the images. Examples of such pre-processing are Hessian-based filtering or intensity binning as necessary operations for improved performance. Each assumption class is described in more detail in Table 4.

Table 4.

A summary of segmentation assumptions in the surveyed literature

| Assumptions | Sub-category | Description | References |
|---|---|---|---|
| Biological assumptions | Image Contrast | Strong staining to get high SNR for actin fibers | [113] |
| | | Optophysical principle of image formation is known | [44] |
| | | Cell brightness significantly higher than background | [114, 115] |
| | | Cell signal higher than noise level in an acquired z-stack | [49, 116–118] |
| | Object Shape | Biological assumptions about mitotic events like mother roundness and brightness before mitosis | [119–122] |
| | | Nucleus shape is round | [123] |
| | | Specifically designed for dendritic cells | [83] |
| | | Cell line falls into one of a few object models. Cell must have smooth borders. E. coli model assumes a straight or curved rod shape with a minimum volume darker than background. Human cells assume nearly convex shape. | [124] |
| | | Cells possess only one nucleus | [125] |
| Algorithmic assumptions | Background/Foreground Boundary | Initializing level set functions based on k-means clustering | [126] |
| | Background | Background intensities are between the low and high intensities in the image | [127] |
| | | Local background must be uniform | [128, 129] |
| | | Background is piecewise linear and its intensities are between the low and high intensities in the image | [130] |
| | Foreground | Clear distinction between touching cell edge pixel intensities | [122] |
| | | Foreground pixels are drawn from a different statistical model than the background pixels | [131] |
| | | Features computed based on their gray-scale invariants | [132] |
| | Time | The first image of a time sequence should be segmented first by another algorithm like watershed | [69] |
| | Intensity Distributions | Image pixel intensities follow bi-modal histogram | [42] |
| | | The statistical properties of the foreground and background are distinct and relatively uniform, and the foreground is bright while the background is dark | [133] |
| | | Foreground and background follow Gaussian distribution | [134] |
| Image pre-processing | Background flatfield correction | Correcting inhomogeneously illuminated background intensities using a machine learning based approach to resolve differences in illumination across different locations on the cell culture plate and over time | [81] |
| | Filters | Smoothing the image using Gaussian filter | [132] |
| | | Downsampling (binning) the images | [64] |
| | | Image smoothing and automatic seed placement are used | [56] |
| | | Hessian-based filtering for better cell location and shape detection | [44] |
| | Non-linear transformation | Image pre-conditioning where the image is transformed to bright field before applying the threshold | [48] |
| | Manual input | Manual interactivity is needed to compute segmentation | [84] |

Tools, packages, code availability, languages

Several software packages include segmentation algorithms that can be applied to images across different imaging modalities and cell lines. These packages range from polished tools with graphical interfaces to simple collections of segmentation libraries. A recent survey of biological imaging software tools can be found in [26]. The list provided in Table 5 includes tools with segmentation software packages encountered during this literature survey of segmentation techniques, as well as methods that could potentially be used for mammalian cell segmentation. The table is representative of the segmentation field we are surveying, but it is by no means an exhaustive list of the available tools in that field.

Table 5.

A summary of software packages encountered during this literature survey

| Software name | Description | Tool availability | Reference |
|---|---|---|---|
| Ilastik | A tool for interactive image classification, segmentation, and analysis | S | [135] |
| FARSIGHT | Toolkit of image analysis modules with standardized interfaces | S | [136] |
| ITK | Suite of image analysis tools | S | [137] |
| VTK | Suite of image processing and visualization tools | S | [138] |
| CellSegmentation3D | Command line segmentation tool | E | [139] |
| ImageJ/Fiji | Image processing software package consisting of a distribution of ImageJ with a number of useful plugins | E + S | [78] |
| Vaa3D | Cell visualization and analysis software package | E + S | [140] |
| CellSegM | Cell segmentation tool written in MATLAB | S | [141] |
| Free-D | Software package for the reconstruction of 3D models from stacks of images | E | [142] |
| CellExplorer | Software package to process and analyze 3D confocal image stacks of C. elegans | S | [143] |
| CellProfiler | Software package for quantitative segmentation and analysis of cells | E + S | [144] |
| Kaynig’s tool | Fully automatic stitching and distortion correction of transmission electron microscope images | E + S | [145] |
| KNIME | Integrating image processing and advanced analytics | E + S | [146] |
| LEVER | Open-source tool for segmentation and tracking of cells in 2D and 3D | S | [31, 147] |
| OMERO | Client–server software for visualization, management and analysis of biological microscope images. | E + S | [148] |
| Micro-Manager | Open-source microscope control software | E + S | [149] |
| MetaMorph | Microscopy automation and image analysis software | PE | [124] |
| Imaris | Software for data visualization, analysis, segmentation, and interpretation of 3D and 4D microscopy datasets. | PE | [150] |
| Amira | Software for 3D and 4D data processing, analysis, and visualization | PE | [151] |
| Acapella | High content imaging and analysis software | PE | [85] |
| CellTracer | Cell segmentation tool written in MATLAB | E + S | [124] |
| FogBank | Single cell segmentation tool written in MATLAB | E + S | [122] |
| ICY | Open community platform for bioimage informatics. | E + S | [65] |
| CellCognition | Computational framework dedicated to the automatic analysis of live cell imaging data in the context of High-Content Screening (HCS) | E + S | [152] |

Tool Availability options are (P)roprietary, (E)xecutable Available, (S)ource Available

Optimization of segmentation parameters

In many cases, segmentation techniques rely on optimizing a parameter, a function or a model, which we refer to as optimized entities. The goal of optimizing these entities is to improve segmentation performance in the presence of noise or to improve robustness to other cell imaging variables.

Around 40 % of the surveyed papers do not mention any specific parameter, function or model optimization. Based on the remaining 60 % of the papers, five categories of optimization entities were identified: (1) intensity threshold, (2) geometric characteristics of segmented objects, (3) intensity distribution, (4) models of segmented borders, and (5) functions of customized energy or entropy. Almost 50 % of the papers that explicitly mention parameter optimization rely on intensity threshold and/or intensity distribution optimization. Parameters related to the segmented object geometry are optimized in approximately 30 % of the papers while models of segmented border location are optimized in approximately 15 % of the surveyed publications. The remaining 5 % describe algorithms that make use of customized energy or entropy functions, whose optimization leads to efficient segmentation for specific applications.

Table 6 summarizes five representative publications for the most frequently occurring categories of optimized entities (categories 1, 2 and 3 above) in terms of their use of optimization.

Table 6.

A summary of five publications in terms of their use of segmentation parameter optimization

| Optimized entity | Optimization approach | Segmentation workflow | Reference |
|---|---|---|---|
| Intensity threshold, intensity distribution | Otsu technique [43] to minimize intra-class variance | Thresholding→Morphological seeded watershed | [42] |
| | | DIC-based nonnegative-constrained convex objective function minimization→Thresholding | [44] |
| Intensity threshold, intensity distribution, geometric characteristics of segmented objects | Find threshold that yields expected size and geometric characteristics | Gaussian filtering→Exponential fit to intensity histogram→Thresholding→Morphological refinements | [49] |
| | | Thresholding→Morphological refinements | [47] |
| Intensity distribution, geometric characteristics of segmented objects | Hessian-based filtering and medial axis transform for enhanced intensity-based centroid detection | Iterative non-uniformity correction→Hessian-based filtering→Weighted medial axis transform→Intensity-based centroid detection | [48] |

Table 6 also shows how the segmentation workflow often consists of a number of steps, such as seeded watershed, various image filters, medial axis transform, and morphological operations, which involve different optimization entities. For instance, Al-Kofahi et al. [42] employ Otsu thresholding [43], followed by seeded watershed in order to correctly segment large cells. Bise et al. [44] eliminate differential interference contrast (DIC) microscopy artifacts by minimizing a nonnegative-constrained convex objective function based on DIC principles [45], and then the resulting images are easily segmented using Otsu thresholding [43]. Ewers et al. [46] initially correct for background and de-noise using Gaussian filters. Local intensity maxima are then sought based on the upper percentile, and are optimized based on the (local) brightness-weighted centroid and on intensity moments of order zero and two. We found several examples of intensity thresholding combined with geometry-based refinements [47], iterative procedures [48], and global fitting steps [49].
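Otsu thresholding, which appears in two of the workflows above, can be sketched in a few lines. The following is a minimal illustration on a flat list of integer intensities, not the implementation used in [42] or [44]. The function name `otsu_threshold` and the 256-level default are our assumptions. The sketch selects the threshold that maximizes the between-class variance, which is equivalent to minimizing the intra-class variance.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that maximizes the between-class variance.

    Pixels with intensity <= t form one class, pixels > t the other.
    `pixels` is a flat list of integers in the range [0, levels).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_score = 0, -1.0
    w0 = 0    # pixel count of the class with intensity <= t
    sum0 = 0  # intensity sum of that class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so there is no valid split
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        # Between-class variance up to the constant factor 1 / total**2
        score = w0 * w1 * (mean0 - mean1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


# Toy bimodal "image": dark background near 10, bright cells near 200
pixels = [10] * 50 + [12] * 50 + [200] * 50 + [202] * 50
t = otsu_threshold(pixels)
foreground = [p for p in pixels if p > t]
```

In a full workflow such as thresholding followed by seeded watershed, the resulting foreground mask would be the input to the subsequent step.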

Interesting optimization approaches can also be found in applications of segmentation methods outside of the scope of this survey. Such segmentation methods use, for instance, artificial neural networks (ANN) and optimize ANN weights [50], 3D active shape models (ASM) and optimize shape variance [51], or geometrically deformable models (GDM), which rely on finding optimal internal and external forces being applied to deform 2D contours [52].

Evaluations of automated segmentations

We focus on accuracy and precision evaluations of automated segmentation algorithms. The evaluation approaches have been classified according to the taxonomy in [53]. They have been expanded by object and pixel level evaluations in Table 7. The object level evaluation is important for the studies focusing on counting, localization or tracking. The pixel level evaluation is chosen for the studies measuring object boundaries and shapes.

Table 7.

Taxonomy of segmentation evaluation approaches

| | | | | | |
|---|---|---|---|---|---|
| Taxonomy of segmentation evaluation | Subjective | | | | |
| | Objective | System Level | | | |
| | | Direct | Analytical | | |
| | | | Empirical | Unsupervised | Object level (counts, centroids) |
| | | | | | Pixel level (boundaries) |
| | | | | Supervised | Object level (counts, centroids) |
| | | | | | Pixel level (boundaries) |

The majority of evaluations found in the literature of interest to this survey fall under empirical methods with supervised and unsupervised evaluation approaches.

Next, we overview both unsupervised and supervised segmentation evaluation approaches and highlight several segmentation quality criteria and metrics, as well as challenges with creating reference segmentation results and selecting samples for the reference segmentations. Finally, we summarize evaluation methods employed in several past grand segmentation challenges that have been conducted in conjunction with bio-imaging conferences.

Unsupervised empirical evaluation design

Unsupervised evaluation methods for segmentation are also known as stand-alone evaluation methods or empirical goodness methods. A relatively broad survey of such methods is presented in [53]. Unsupervised evaluations do not require the creation of a ground truth segmentation. Thus, they scale well with the increasing number of segmentation results that have to be evaluated for accuracy. Furthermore, these methods can be used for tuning segmentation parameters, detecting images containing segments with low quality, and switching segmentation methods on the fly.

In this class of evaluation methods, the goodness of segmentation is measured by using empirical quality scores that are statistically described, and derived solely from the original image and its segmentation result. One example of a quality score is the maximization of an inter-region variance in threshold-based Otsu segmentation [43]. Unfortunately, there is no standard for unsupervised evaluation of automated segmentation because the segmentation goodness criteria are application dependent. Moreover, application and task specific criteria are often hard to capture in a quantitative way because they come from descriptions based on visual inspections. As a consequence, unsupervised segmentation evaluations are rarely reported in the literature focusing on optical 2D and 3D microscopy images of cells. We did not find a single paper that reported comparisons of task-specific segmentation methods using unsupervised evaluation methods. On the other hand, a few researchers utilized elements of unsupervised evaluations in their segmentation pipeline in order to improve their final segmentation result. We describe three such examples next.

Lin et al. [54, 55] segment cellular nuclei of different cell types. The initial segmentation is performed with a modified watershed algorithm to assist with nucleus clustering and leads to over-segmentation. The authors estimate, as a probability, the confidence that a set of connected segments forms a nucleus. This probability can be seen as an unsupervised segmentation quality score and is used for merging connected segments into a nucleus object.

Padfield et al. in [56] perform a segmentation of a spatio-temporal volume of live cells. The segmentation is based on the wavelet transform. It results in the 3D set of segmented “tubes” corresponding to cells moving through time. Some of the tubes touch at certain time points. The authors use the likelihood of a segment being a cell-like object as an unsupervised segmentation score for merging or splitting separate cell tracks.

Krzic et al. in [57] segment cellular nuclei in the early embryo. The initial segmentation is performed by means of local thresholding. The authors use the volume of the candidate object as a score for deciding whether the volume split operation should be performed. If the volume is greater than the mean volume plus one standard deviation, then the watershed algorithm is applied to the candidate object.
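The split decision described for [57] reduces to a simple rule on object volumes. The sketch below is an illustration only and not the authors' code. The function name is hypothetical, and the use of the sample standard deviation (rather than the population one) is our assumption, since the description above does not specify it.

```python
from statistics import mean, stdev


def needs_split(volumes):
    """Flag candidate objects whose volume exceeds mean + one standard deviation.

    `volumes` holds one volume (e.g., in voxels) per candidate object.
    Flagged objects would be passed on to a watershed-based split.
    """
    cutoff = mean(volumes) + stdev(volumes)  # assumption: sample standard deviation
    return [v > cutoff for v in volumes]


# Five nuclei of similar size and one candidate that is likely two touching nuclei
volumes = [100, 105, 95, 102, 98, 210]
flags = needs_split(volumes)
```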

Supervised empirical evaluation design

Supervised empirical evaluation methods, also named empirical discrepancy methods, are used to evaluate segmentations by comparing the segmented image against a ground-truth (or gold-standard) reference image. These methods often give a good estimation of the segmentation quality, but can be time-consuming and difficult for the expert in charge of manually segmenting the reference images. We overview publications that address a few challenges related to the creation of a reference segmentation, sampling, and evaluation metrics.

Creation of databases with reference cell segmentations

There is growing availability of reference segmentations on which to evaluate segmentation methods. A number of research groups have created databases of images and segmentation results that span a range of imaging modalities, object scales, and cellular objects of interest. Reference images are needed to test 3D segmentation algorithms across the variety of imaging modalities and over a wide variety of scales from cell nuclei to thick sections of biological tissues. We summarized a few cell image databases in Table 8.

Table 8.

Examples of reference cell image databases

| Cell image databases | Biological content | Scale of objects | Axes of acquired data | References |
|---|---|---|---|---|
| Biosegmentation benchmark | Mammalian cell lines | Nuclear to multi-cellular | X-Y-Z | [58] |
| Cell Centered Database | Variety of cell lines, initial data of nervous system | Subcellular to multi-cellular | X-Y-Z, X-Y-T, X-Y-Z-T | [59] |
| Systems Science of Biological Dynamics (SSBD) database | Single-molecule, cell, and gene expression nuclei. | Single-molecule to cellular | X-Y-T | [153] |
| Mouse Retina SAGE Library | Mouse retina cells | Cellular | X-Y-Z-T | [60] |

Gelasca et al. in [58] describe a dataset with images covering multiple species, many levels of imaging scale, and multiple imaging modalities, with associated manual reference data for use in segmentation algorithm comparisons and standard evaluation of algorithms. The database includes images from light microscopy, confocal microscopy, and microtubule tracking and objects from one micron to several hundred microns in diameter. They also provide analysis methods for segmentation, cell counting, and cell tracking. For each data set in the database, the number of objects of interest varies with the data set.

Martone et al. in [59] have created the Cell Centered Database for high-resolution 3D light and electron microscopy images of cells and tissues. This database offers hundreds of datasets to the public. They have developed a formal ontology for subcellular anatomy which describes cells and their components as well as interactions between cell components.

A database developed based on the work of Blackshaw et al. in [60], and accessible at http://cepko.med.harvard.edu/, contains imaging data to investigate the roles of various genes in the development of the mouse retina. Various clustering methods are available to understand the relationships between sets of genes at different stages of development. A review of biological imaging software tools summarizes the current state of public image repositories in general, including those with and without reference data sets [26].

Sampling of objects to create reference cell images

When creating reference cell image databases, there is a question of cell sampling. For the reference databases in Table 8, little information is available describing the sampling method and how the number of reference objects for each set is chosen, or how the variability across a population of images is found.

In general, cell image samples for inclusion into the reference database can be drawn from (1) multiple cell lines, (2) multiple biological preparations, (3) one experimental preparation with many images (X-Y-T or X-Y-Z), (4) one image containing many cells, and (5) regions of a cell. A sampling strategy would be applied to select images of cells, nuclei, or cell clusters. This topic of image sampling using fluorescence images of different biological objects has been explored by Singh et al. in [61]. By performing uniform random sampling of the acquired images and comparing their variability for different sample sizes, one can estimate the sample size needed to stay within a specified variance. Similarly, Peskin et al. in [62] offer a study that estimated the variability of cell image features based on unusually large reference data sets for 2D images over time. The authors showed that the range of sample numbers required depends upon the cell line, feature of interest, image exposure, and image filters.
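The idea of comparing variability across sample sizes can be sketched as follows. This is an illustrative procedure under our own assumptions, not the method of [61] or [62]. Here `feature_values` is a hypothetical list holding one feature value per image (for example, mean cell area), and the helper name, the number of trials, and the stopping rule (standard deviation of the sample means at or below `tolerance`) are ours.

```python
import random
from statistics import mean, pstdev


def smallest_sample_size(feature_values, tolerance, trials=200, seed=0):
    """Return the smallest sample size whose sample means vary little.

    For each candidate size n, draw `trials` uniform random samples
    (without replacement), compute each sample mean, and accept n when
    the standard deviation of those means is at most `tolerance`.
    """
    rng = random.Random(seed)
    for n in range(2, len(feature_values) + 1):
        sample_means = [mean(rng.sample(feature_values, n)) for _ in range(trials)]
        if pstdev(sample_means) <= tolerance:
            return n
    return len(feature_values)


# Synthetic per-image feature values (assumed data, for illustration only)
data_rng = random.Random(1)
areas = [data_rng.gauss(100.0, 10.0) for _ in range(50)]
n_tight = smallest_sample_size(areas, tolerance=1.0)
n_loose = smallest_sample_size(areas, tolerance=3.0)
```

A tighter tolerance requires at least as many sampled images as a looser one, which mirrors the observation that the required sample numbers depend on the feature and the acceptable variability.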

The number of objects selected for analysis varies with the type of object in the image and its scale. Nuclear images tend to have larger numbers of objects in associated analyses. Examples include studies on rat hippocampus [55], various human tissues [63], and a variety of other species, for which the numbers of nuclei per image range from 200 to 800. These numbers are high compared with images of larger objects, such as breast cancer cells [58] or rat brain cells [55], for which the number of objects in the entire study is much lower, i.e. 50 to 150. The vast majority of studies do not explain exactly how the number of objects is selected, or the shapes of the distributions of resulting data (Gaussian or non-Gaussian).

In this survey, we encountered 44 papers that referred to different sampling methods including exhaustive sampling (13), manually selected samples (11), statistical sampling (13, random or stratified), or systematic sampling (7, regular sub-sampling of data or simply choosing the first N samples). These sampling techniques were used for selecting objects of interest to create reference segmentations. We found 49 papers that described the creation of reference segmentations by using automatic (4), semi-automatic (4), manual (38), and visual (3) approaches. The manual approaches created a reference segment representation, while visual approaches provided just a high level label. There were several papers that reported creation of reference segmentations but did not report sampling of objects of interest. Some papers used combinations of sampling strategies (4) or creation methods (6).

Future research involving the availability and utility of a reference data set will depend upon the extent of efforts made to manually create sets that represent true image variability for a very wide range of applications. As more reference data is collected, one can begin to ask relevant questions about required sampling sizes for different types of applications.

Segmentation accuracy and precision measures

Following the classification in the survey of evaluation methods for image segmentation [9], the measures used in supervised empirical segmentation evaluation methods can be classified in four main categories: measures based on (1) the number of mis-segmented voxels, (2) the position of mis-segmented voxels, (3) the number of objects, and (4) the feature values of segmented objects. We summarized measures, metrics and cellular measurements in Table 9, and describe each category of segmentation evaluation measures next.

(1) Measures based on the number of mis-segmented voxels

These measures view segmentation results as a cluster of voxels, and hence evaluate segmentation accuracy using statistics such as the Jaccard and Dice indices. These indices for a class can be written as:

$$J(R, S) = \frac{|R \cap S|}{|R \cup S|} \tag{1}$$

$$D(R, S) = \frac{2\,|R \cap S|}{|R| + |S|} \tag{2}$$

where R is the set of voxels of the reference segmentation and S is the set of voxels obtained by the tested algorithm. To define a metric on the entire image, one can take the average of those indices over all the classes. These two measures were the most commonly used in the reviewed papers, notably in [61, 64–68].
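As a minimal sketch, both indices can be computed directly on sets of voxel coordinates, with R as the reference set and S as the tested set. The function names and the convention of returning 1.0 when both sets are empty are assumptions made for illustration.

```python
def jaccard_index(reference, segmented):
    """Jaccard index |R ∩ S| / |R ∪ S| for two sets of voxel coordinates."""
    union = reference | segmented
    if not union:
        return 1.0  # assumed convention: two empty segmentations agree perfectly
    return len(reference & segmented) / len(union)


def dice_index(reference, segmented):
    """Dice index 2 |R ∩ S| / (|R| + |S|) for two sets of voxel coordinates."""
    total = len(reference) + len(segmented)
    if total == 0:
        return 1.0  # same assumed convention as above
    return 2 * len(reference & segmented) / total


# Toy example: voxels are (x, y, z) tuples; 2 voxels overlap, 5 voxels in the union.
R = {(0, 0, 0), (0, 1, 0), (1, 0, 0), (1, 1, 0)}
S = {(0, 1, 0), (1, 1, 0), (2, 1, 0)}

print(jaccard_index(R, S))  # 2 / 5
print(dice_index(R, S))     # 4 / 7
```

The two indices are monotonically related (D = 2J / (1 + J)), so they rank a set of segmentations of one object in the same order.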

Table 9.

A summary of segmentation evaluation metrics

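| Measures based on | Metric name | Cellular measurement | Reference |
|---|---|---|---|
| Number of mis-segmented voxels | Jaccard | Synthetic | [65] |
| Number of mis-segmented voxels | Dice | Cell | [120, 129, 154] |
| Number of mis-segmented voxels | Dice | Synthetic | [154] |
| Number of mis-segmented voxels | Dice | Other | [66] |
| Number of mis-segmented voxels | F-measure | Synthetic | [155] |
| Number of mis-segmented voxels | Adjusted Rand Index | Cell | [122] |
| Number of mis-segmented voxels | Custom measure | Nucleus | [61] |
| Number of mis-segmented voxels | Custom measure | Cell | [67] |
| Number of mis-segmented voxels | Misclassification error | Nucleus | [156] |
| Number of mis-segmented voxels | Misclassification error | Other | [156] |
| Number of mis-segmented voxels | Accuracy (ACC) | Cell | [157, 158] |
| Position of mis-segmented voxels | Average distance | Cell | [56] |
| Position of mis-segmented voxels | Average distance | Synthetic | [117] |
| Position of mis-segmented voxels | Average distance | Other | [116] |
| Position of mis-segmented voxels | Root square mean of deviation | Synthetic | [159] |
| Position of mis-segmented voxels | Histogram of distances | Nucleus | [138] |
| Number of objects | Object count | Nucleus | [55, 56, 123, 160–162] |
| Number of objects | Object count | Cell | [81, 119, 163] |
| Number of objects | Precision/Recall | Nucleus | [54, 84] |
| Number of objects | Precision/Recall | Cell | [44, 69, 84, 127] |
| Number of objects | F-measure | Nucleus | [84] |
| Number of objects | F-measure | Cell | [69, 84] |
| Number of objects | Bias index | Cell | [69] |
| Number of objects | Sensitivity | Nucleus | [138, 164] |
| Number of objects | Custom measure | Cell | [67] |
| Number of objects | Cell detection rate | Cell | [165] |
| Feature values of segmented objects | Velocity histogram | Cell | [166] |
| Feature values of segmented objects | Object position | Nucleus | [167] |
| Feature values of segmented objects | Object position | Cell | [151, 163, 166] |
| Feature values of segmented objects | Object position | Synthetic | [168] |
| Feature values of segmented objects | Pearson’s correlation slope and intercept for velocity measurements | Cell | [166] |
| Feature values of segmented objects | Voxel intensity based | Synthetic | [159] |
| Feature values of segmented objects | Voxel intensity based | Other | [73] |
| Feature values of segmented objects | Object area and shape based | Cell | [151] |
| Feature values of segmented objects | Object area and shape based | Other | [73] |
| Feature values of segmented objects | Structural index | Cell | [151] |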

Another common measure is the F-measure which is based on precision and recall:

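$$\mathrm{Precision}(R, S) = \frac{|R \cap S|}{|S|} \tag{3}$$

$$\mathrm{Recall}(R, S) = \frac{|R \cap S|}{|R|} \tag{4}$$

$$F(R, S) = \frac{2 \cdot \mathrm{Precision}(R, S) \cdot \mathrm{Recall}(R, S)}{\mathrm{Precision}(R, S) + \mathrm{Recall}(R, S)} \tag{5}$$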

where R and S have the same meaning as before. The F-measure has been used in [69, 70].
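A minimal sketch of the voxel-level precision, recall, and F-measure follows, again treating R and S as sets of voxel coordinates. The function names and the choice to return 0.0 for an empty denominator are assumptions made for illustration.

```python
def precision(reference, segmented):
    """Fraction of segmented voxels that are also in the reference: |R ∩ S| / |S|."""
    return len(reference & segmented) / len(segmented) if segmented else 0.0


def recall(reference, segmented):
    """Fraction of reference voxels recovered by the segmentation: |R ∩ S| / |R|."""
    return len(reference & segmented) / len(reference) if reference else 0.0


def f_measure(reference, segmented):
    """Harmonic mean of precision and recall."""
    p = precision(reference, segmented)
    r = recall(reference, segmented)
    return 2 * p * r / (p + r) if p + r > 0 else 0.0


# Toy example: 2 overlapping voxels, |R| = 4, |S| = 3.
R = {(0, 0, 0), (0, 1, 0), (1, 0, 0), (1, 1, 0)}
S = {(0, 1, 0), (1, 1, 0), (2, 1, 0)}

print(precision(R, S))  # 2 / 3
print(recall(R, S))     # 2 / 4
print(f_measure(R, S))  # 4 / 7
```

With these set-based definitions, the F-measure of a single class takes the same value as the Dice index of the same two sets.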

These measures based on the number of mis-segmented voxels have the advantage of being simple to compute. However, they do not take into account the location of a mis-segmented voxel. The location might be important since a mis-segmented voxel close to a segment boundary might not contribute to a segmentation error as much as one far away.

(2) Measures based on the position of mis-segmented voxels

Measuring the segmentation discrepancy by taking into account only the number of mis-segmented voxels may not be sufficient to rank several segmentations of the same objects. While two segmentation results can be similar when measuring the number of mis-segmented voxels, they might be dissimilar when measuring positions of mis-segmented voxels. The most common measure based on positions of mis-segmented voxels is the Hausdorff distance [71]. For two compared shapes, it is defined as the largest of the minimum distances from each point of one shape to the other shape, taken in both directions, and it has been used to evaluate 3D nuclei segmentation in [72]. Another approach is to use the distances between boundary voxels of the 3D ground truth and segmented objects in 2D slices, as used by S. Takemoto and H. Yokota in [73].
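The Hausdorff distance can be sketched with a brute-force computation over two non-empty sets of voxel coordinates. The function names are assumptions, the Euclidean distance is assumed as the point-to-point distance, and the quadratic cost makes this suitable only for small sets such as boundary voxels.

```python
import math


def directed_hausdorff(a, b):
    """Largest distance from a point of a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance: the larger of the two directed distances."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Toy example with voxel coordinates as (x, y, z) tuples.
A = {(0, 0, 0), (1, 0, 0)}
B = {(0, 0, 0), (4, 0, 0)}

print(directed_hausdorff(A, B))  # 1.0: (1,0,0) is 1 away from (0,0,0)
print(directed_hausdorff(B, A))  # 3.0: (4,0,0) is 3 away from (1,0,0)
print(hausdorff_distance(A, B))  # 3.0
```

The example shows why both directions are needed: a single stray voxel in one segmentation dominates the symmetric distance even when the other direction is small.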

(3) Measures based on the number of objects

Voxel-level measures have the disadvantage of measuring performance without considering aggregations of voxels that form semantically meaningful objects. Measures based on the number of objects attempt to address this issue. Depending on the specific study and its spatial resolution, the objects are usually colonies, cells or nuclei. Once semantically meaningful objects are defined, one can use the same measures as those introduced for measuring the number of mis-segmented voxels. As examples, two such studies have reported the use of the Jaccard index [74] and the F-measure [70]. With object-based measurements, however, the challenge lies in matching the objects from the automatically segmented images with the objects specified as ground truth. This step is not trivial since the automatic segmentation can result in false positives (a segmented object that does not exist in the ground truth), false negatives (a ground truth object missing from the automatic segmentation), splits (one object detected as multiple objects) and merges (multiple objects detected as one object). One possible solution can be found in [74] where a reference cell R and a segmented cell S match if |R ∩ S| > 0.5 |R|.
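The matching criterion |R ∩ S| > 0.5 |R| can be sketched as follows. This is an illustration of the criterion only, not the evaluation code of [74]: the dictionary-based representation, the labels, and the function name are assumptions, and segmented objects are assumed not to overlap each other, so at most one of them can cover more than half of a reference object.

```python
def match_objects(reference_objects, segmented_objects, threshold=0.5):
    """Map each reference label to the segmented label that covers
    more than `threshold` of its voxels, if such an object exists."""
    matches = {}
    for ref_label, ref_voxels in reference_objects.items():
        for seg_label, seg_voxels in segmented_objects.items():
            if len(ref_voxels & seg_voxels) > threshold * len(ref_voxels):
                matches[ref_label] = seg_label
                break  # unique when segmented objects are disjoint
    return matches


# Toy 2D example: labels map to sets of (x, y) pixels.
reference = {
    "r1": {(0, 0), (0, 1), (0, 2), (0, 3)},
    "r2": {(5, 0), (5, 1)},
    "r3": {(9, 9)},
}
segmented = {
    "s1": {(0, 1), (0, 2), (0, 3), (0, 4)},  # covers 3 of 4 pixels of r1
    "s2": {(5, 0)},                          # covers exactly half of r2: no match
    "s3": {(7, 7)},                          # overlaps nothing
}

matches = match_objects(reference, segmented)
false_negatives = set(reference) - set(matches)
false_positives = set(segmented) - set(matches.values())
print(matches)          # {'r1': 's1'}
print(false_negatives)  # r2 and r3
print(false_positives)  # s2 and s3
```

Once matches are known, object-level counts of true positives, false positives, and false negatives follow directly, and a merge shows up as one segmented label matched by several reference labels.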

(4) Measures based on the feature values of segmented objects

Image segmentation can be viewed as a necessary step to extract properties of segmented objects. The extraction goal leads to segmentation evaluations based on one or several extracted features (properties) of a segment. The evaluation objective is to verify that features extracted from the segmented object are equivalent to features measured on the original object (reference features). In other words, conclusions derived from measured features over segmented objects will be the same for the original and the segmented object. This type of evaluation is used by S. Takemoto and H. Yokota in [73]. They use a custom similarity metric that combines intensity-based and shape-based image feature measurements, and rank several algorithms for a given 3D segmentation task based on the distance between feature vectors. Similarly, centroids of segments are used as features in [56] and [58], which can be viewed as an extension of measuring the position of mis-segmented voxels.
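As a minimal sketch of a feature-based comparison, the centroid of a segment can serve as the feature, and the Euclidean distance between the reference and segmented centroids as the discrepancy. The function names are assumptions, and this is not the metric of [56], [58], or [73].

```python
import math


def centroid(voxels):
    """Mean coordinate of a non-empty set of pixel or voxel tuples."""
    n = len(voxels)
    dims = len(next(iter(voxels)))
    return tuple(sum(v[d] for v in voxels) / n for d in range(dims))


def centroid_error(reference, segmented):
    """Euclidean distance between the centroids of two segments."""
    return math.dist(centroid(reference), centroid(segmented))


# Toy 2D example: the segmented object is shifted by one pixel along x.
R = {(0, 0), (2, 0), (0, 2), (2, 2)}
S = {(1, 0), (3, 0), (1, 2), (3, 2)}

print(centroid(R))           # (1.0, 1.0)
print(centroid(S))           # (2.0, 1.0)
print(centroid_error(R, S))  # 1.0
```

Other features, such as area or mean intensity, can be compared in the same way by replacing the feature function and the distance.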

Among the aforementioned measures, the most common ones are the measures based on the number of mis-segmented voxels, such as the well-known Dice or Jaccard indices. Nonetheless, other measures can be found in the literature that are based on either a custom design [61] or a combination of several existing measures [73]. It is also important to note that, due to the amount of labor needed to establish 3D reference segmentations manually from volumetric data, evaluations are sometimes performed against 2D reference segmentations of 2D slices extracted from 3D volumetric data [61, 73, 75].

Confidence in segmentation accuracy estimates

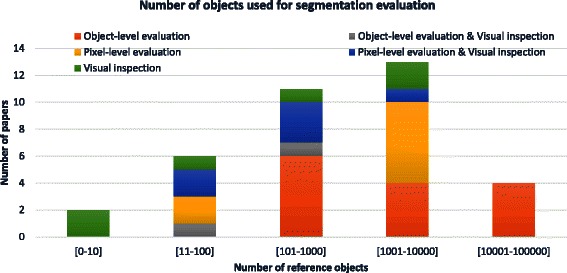

The sampling method and the sample size of reference objects determine the confidence in segmentation evaluation accuracy. We have extracted the information about the number of reference objects (sample size) from the classified papers and summarized it in Fig. 5. The numbers are presented per Segmentation Evaluation category introduced in Table 1. The papers that did not specify the sample size in units matching the object categories (i.e., cells, nuclei, etc.) but rather in time frames were labeled as having an “unknown” number of reference objects. The histogram in Fig. 5 shows 50 out of 72 papers that report the number of reference objects. It illustrates the distribution of the papers relying on qualitative/visual evaluations (2, 4, 5, 3, 0) and quantitative segmentation evaluations (0, 0, 6, 10, 4) as the number of reference objects increases.

Fig. 5.

A histogram of the number of evaluation objects used in surveyed papers that reported segmentation evaluation

Evaluations of segmentation grand challenges

In the past, segmentation accuracy evaluation of biomedical images has been formulated as grand challenges by several conferences. The majority of challenges have been affiliated with the Medical Image Computing and Computer-Assisted Intervention (MICCAI) conference and the IEEE International Symposium on Biomedical Imaging (ISBI): From Nano to Macro (see http://grand-challenge.org/All_Challenges/). Other conferences, such as SPIE, ECCV, and ICPR for computer vision and pattern recognition communities, have recently introduced such biomedical image challenges as well.

Although the specific biomedical imaging domain varies in these challenges, almost all of them include a segmentation step. For example, among the grand challenges affiliated with the 2015 ISBI conference, seven out of the eight included segmentation. Out of those seven, two challenges are related to mammalian cell segmentation (Cell Tracking Challenge and Segmentation of Overlapping Cervical Cells from Multi-layer Cytology Preparation Volumes). These challenges run over two to three years since the segmentation problem remains an open problem in general. In addition, the challenges transition from segmenting 2D to 3D data sets which increases the difficulty of designing an accurate solution.

In terms of challenge evaluation, the challenge of segmentation of overlapping cervical cells is assessed using the average Dice Coefficient against manually annotated cytoplasm for each cell and nucleus, and against a database of synthetically overlapped cell images constructed from images of isolated cervical cells [76, 77]. The cell tracking challenge is evaluated using the Jaccard index, and against manually annotated objects (the ground truth) consisting of the annotation of selected frames (2D) and/or image planes (in the 3D cases) [74].

Summary of segmentation evaluation

Evaluation of automated segmentation methods is a key step in cellular measurements based on optical microscopy imaging. Without such evaluations, cellular measurements and the biological conclusions derived from them lack error bars, and others cannot compare the results or reproduce the work.

The biggest challenge with segmentation evaluations is the creation of reference criteria (unsupervised approach) or reference data (supervised approach). The reference criteria are often hard to capture in a quantitative way because they are based on observations of experts’ visual inspections. As a consequence, unsupervised segmentation evaluations are rarely reported in the literature using optical microscopy images of cells. If segmentation parameters have to be optimized then some papers use “goodness criteria” for this purpose.

The challenge with creating reference data lies in the amount of labor, human fatigue, and reference consistency across human subjects. Software packages for creating reference segmentation results have been developed [78, 79]. These software packages provide user-friendly interfaces to reduce the amount of time needed. However, they do not address the problem of sampling for reference data, and they do little to alleviate the human aspects of the creation process.

Finally, there are no guidelines for reporting segmentation evaluations. For example, evaluations of segmentation objects are summarized in terms of the total number of cells, frames or image stacks, or a sampling frame rate from an unknown video stream. These reporting variations lead to ambiguity when attempts are made to compare or reproduce published work.

Scalability of automated segmentations

We have focused our survey of the segmentation literature on the use of desktop solutions with or without accelerated hardware (such as GPUs), and the use of distributed computing using cluster and cloud resources. These advanced hardware platforms require special considerations of computational scalability during segmentation algorithm design and execution. The categories of hardware platforms in Table 1 can be placed into a taxonomy based on the type of parallelism employed, as given in Table 10.

Table 10.

Taxonomy of hardware platforms

| Execution | Type of parallelism | Hardware platform |
|---|---|---|
| Parallel | MIMD | Cluster |
| Parallel | MIMD | Multi-core CPU |
| Parallel | SIMD | GPU |
| Serial | | Single-core CPU |

SIMD is Single Instruction, Multiple Data streams, MIMD is Multiple Instruction, Multiple Data streams [169]

Based on our reading of the literature that meets the survey criteria, the topic of computational scalability is currently not a major concern for the 3D segmentation of cells and subcellular components. While algorithms in other application areas of 2D and 3D medical image segmentation are often developed to support scalability and efficiency [80], most of the papers we surveyed made no claims about computational efficiency or running time. Of the works that did claim speed as a feature, only a few exploited any kind of parallelism, such as computer clusters [81], GPUs [82], or multi-core CPUs [83–87]. Some other algorithms made use of the GPU for rendering (e.g. Mange et al. [83]) rather than for the segmentation itself. For algorithms that did exploit parallelism for the actual segmentation, it was generally either to achieve high throughput on a large data set (e.g. on clusters for cell detection in Buggenthin et al. [81]) or to support a real-time, interactive application (e.g. on multi-core CPUs for cell tracking in Mange et al. [83] and for cell fate prediction in Cohen et al. [88]). We did not find any works which made use of more specialized hardware, such as FPGAs.

In addition to algorithmic parallelism and computational scalability, the segmentation result representation plays an important role in the execution speed of rendering and post-processing of segments. The output of most cell segmentation algorithms is in the form of pixels or voxels, which are a set of 2D or 3D grid points, respectively, that sample the interior of the segmented region. Some other works produce output in the form of a 3D triangle mesh (e.g. Pop et al. [89]), or its 2D equivalent, a polygon (e.g. Winter et al. [87]). While the triangular mesh representation is very amenable to rendering, especially on the GPU, it is less suitable than a voxel representation for certain types of post-segmentation analysis, such as volume computation.
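The suitability of the voxel representation for volume computation can be seen in a minimal sketch: the volume is the voxel count multiplied by the physical volume of one voxel. The function name and the spacing values are assumptions made for illustration.

```python
def segment_volume(voxels, spacing):
    """Volume of a voxel segment: voxel count times the volume of one voxel.

    `spacing` is the physical voxel size along (x, y, z), e.g. in micrometers.
    """
    voxel_volume = spacing[0] * spacing[1] * spacing[2]
    return len(voxels) * voxel_volume


# Toy example: 3 voxels with a hypothetical anisotropic spacing of 0.5 x 0.5 x 2.0.
segment = {(0, 0, 0), (0, 0, 1), (0, 1, 0)}
volume = segment_volume(segment, (0.5, 0.5, 2.0))
print(volume)  # 3 voxels * 0.5 per voxel = 1.5
```

For a triangle mesh, the same quantity requires a closed, consistently oriented surface and a summation over all triangles, which is one reason the voxel form is often more convenient for this type of analysis.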