Abstract

A number of recent studies have demonstrated variants of the action–sentence compatibility effect (ACE), wherein the execution of a motor response is facilitated by the comprehension of sentences that describe actions taking place in the same direction as the motor response (e.g., a sentence about action towards one’s body facilitates the execution of an arm movement towards the body). This paper presents an experiment that explores how the timing of the motor response during the processing of sentences affects the magnitude of the ACE that is observed. The results show that the ACE occurs when the motor response is executed at an early point in the comprehension of the sentence, disappears for a time, and then reappears when the motor response is executed right before the end of the sentence. These data help to refine our understanding of the temporal dynamics involved in the activation and use of motor information during sentence comprehension.

Keywords: Embodied cognition, Language comprehension, Theory of Event Coding, Simulation, Motor planning

A number of recent papers have provided support for the view that the comprehension of language involves the construction of sensorimotor simulations of the objects, actions, and events that are being described. Behavioural studies have shown that both perceptual (e.g., Connell, 2007; Holt & Beilock, 2006; Kaschak et al., 2005; Kaschak, Zwaan, Aveyard, & Yaxley, 2006; Richardson, Spivey, Barsalou, & McRae, 2003; Zwaan, Madden, Yaxley, & Aveyard, 2004; Zwaan, Stanfield, & Yaxley, 2002) and motoric (e.g., Borreggine & Kaschak, 2006; Glenberg & Kaschak, 2002; Kaschak & Glenberg, 2000; Zwaan & Taylor, 2006) information is active during the processing of sentences. The behavioural results are complemented by neuroimaging studies showing that the processing of words and sentences involves the activation of the same neural regions that would be involved in actually perceiving or acting with the referent of the words, or engaging in the action depicted in the sentences (e.g., Buccino et al., 2005; Gernsbacher & Kaschak, 2003; Isenberg et al., 1999; Kan et al., 2003; Martin & Chao, 2001; Pulvermüller, 1999). The focus of this paper is on a specific finding from this literature, the action–sentence compatibility effect (ACE; Glenberg & Kaschak, 2002).

The ACE is observed when the execution of a task response is facilitated by the comprehension of a sentence describing an action that has the same motor features as the task response. Glenberg and Kaschak (2002) originally demonstrated the ACE in a task where participants read sentences such as, “Meghan gave you a pen” (action towards you) or, “You gave Meghan a pen” (action away from you), and judged whether each sentence was sensible. Indicating that a sentence was sensible required making an arm movement either towards the body or away from the body. Participants were faster to execute the motor response when its direction matched the direction of motion described by the sentence (e.g., making a response towards the body to the sentence, “Meghan gave you a pen”; see Borreggine & Kaschak, 2006, and Zwaan & Taylor, 2006, for further demonstrations of ACE effects).

Borreggine and Kaschak (2006) developed an account of the ACE based on the theory of event coding (TEC) developed by Hommel and colleagues (e.g., Hommel, Müsseler, Aschersleben, & Prinz, 2001). TEC is a theory of both perception and action planning, although we discuss it here solely in terms of action planning. TEC proposes that action planning takes place in two stages. In the first stage, the actor activates features associated with one or more potential actions. For example, a person might consider grasping a salt shaker, a glass, or a coffee mug, all of which are situated on a table in front of her. Features associated with the potential grasp of all of those objects become active at this point (e.g., the salt shaker and glass might activate the “right” feature because they are to the right of the person, whereas the mug activates the “left” feature because it is to the left of the person). If more than one potential action activates the same feature (e.g., the salt shaker and glass both activate the “right” feature), that feature receives more activation than if it were activated by only one potential action, and priming between the potential actions occurs. In the second stage of action planning, the person selects an action for execution (e.g., grasping the salt shaker). The features relevant for the execution of this action are bound into what Hommel et al. (2001) call an event code. For present purposes, the important point about an event code is that when a feature (“right”) is bound to a particular action (grasping the salt shaker), it is temporarily less available for use in preparing another action that involves the same feature (e.g., grasping the glass, which also requires the “right” feature).

Based on TEC (and Townsend & Bever’s, 2001, late assignment of syntax theory, LAST), Borreggine and Kaschak (2006) proposed that the simulation of motor actions during language comprehension is a two-step process (see Richardson, Spivey, & Cheung, 2001, for a similar TEC-based approach to language processing). The first step occurs during online language processing, where features relevant to the action being described (e.g., “towards” or “away”) are activated. This information is activated as soon as it becomes available in the incoming linguistic input (e.g., information about the nature of the action becomes available when a verb such as “handed” is processed; see Zwaan & Taylor, 2006). The features are not immediately bound into a complete simulation of the action because the comprehender must delay running the simulation until there is enough information to fully specify the action being taken. For example, upon reading, “You dealt the cards …,” there is enough information to know that the action is an “away” action, but there is not yet enough information to run the full simulation because it is not yet known who is being dealt the cards. The second step in simulating motor actions during language comprehension occurs at or near the completion of the sentence, once enough information has accrued to fully specify the action that is taking place. Here, the active features are bound into a full simulation of the action described by the sentence. This is akin to TEC’s proposal that features are bound into an event code when an action is executed.

On Borreggine and Kaschak’s (2006) view, the ACE arises from two parallel two-step processes: one that prepares the motor response for execution and one that simulates the action described in the sentence. Once the motor response needed to make the sensibility judgement (towards or away) is known, it activates a directional feature (e.g., towards). As the sentence is processed, a directional feature (e.g., towards) is also activated in preparation for the simulation that will be run. When the motor response and the sentence activate the same feature, priming occurs between the preparation of the motor response and the preparation of the simulation (as in the grasping example used to illustrate TEC earlier in the paper). This priming is the mechanism that drives the ACE. However, one further precondition must be met for the ACE to be observed: The participant must execute the motor response at a point in time at which the directional feature is not yet bound to the simulation of the sentence (in Borreggine & Kaschak’s experiments, this meant executing the motor response before the simulation was run). Under these conditions, the execution of the motor response is speeded by the priming that arises when the sentence and the motor response activate the same feature. Once the feature has been bound to the simulation, it becomes less available for use in executing the motor response, and the ACE disappears.

Borreggine and Kaschak (2006) provided support for their approach by showing that the presence or absence of the ACE could be controlled by manipulating the point in the trial at which participants knew what motor response to make in order to indicate that the sentence was sensible. They presented sentences to the participants auditorily, and at some point during each trial a visual cue would indicate whether the participant should make a towards response or an away response in order to say that the sentence made sense (they were to make no response if the sentence was not sensible). When the visual cue was presented at the beginning of the trial, the motor response could be prepared early in the comprehension of the sentence, and participants were able to execute the motor response before the end of the sentence (given that most sentences were obviously sensible or nonsensible; see Borreggine & Kaschak, 2006, for a discussion). The ACE was seen under these circumstances. When the visual cue was presented at various points immediately following the conclusion of the sentence, the ACE disappeared.

Borreggine and Kaschak’s (2006) results are consistent with two explanations. The first explanation (which we call the feature binding account) is the TEC-based account described earlier: At (or around) the end of the sentence, participants bind the directional feature (towards or away) to their simulation of the sentence, and the feature is therefore less available for use in executing the motor response. An alternative account (which we call the feature activation account) would explain the presence (and absence) of the ACE without proposing that motor features are bound to the simulation of the sentence. On this view, the comprehension of sentences involves activating motoric information that can prime the execution of congruent motor responses (similar to Borreggine & Kaschak’s account). The ACE will therefore appear when the motor response is executed during online sentence processing (as in Borreggine & Kaschak’s Experiment 1) and will disappear when sentence processing has been completed (as in Borreggine & Kaschak’s Experiments 2–4). Note that in this case, the ACE disappears not because motor features are bound to the simulation, but because the motor features relevant to the sentence are no longer active once sentence comprehension has been completed.

In order to distinguish between the feature binding and feature activation accounts of the ACE, it is necessary to pin down the temporal dynamics of motor congruence effects during sentence processing. Some steps have already been taken in this direction. With regard to feature activation, Zwaan and Taylor (2006) used a phrase-by-phrase reading task to show that motor information about the actions described in the sentence is activated as early as possible during sentence comprehension (e.g., when the verb indicates the nature of the action to be taken). This would need to be the case in order for the ACE to arise in the manner discussed above. Things are less clear with regard to the binding of features into simulations. Borreggine and Kaschak’s (2006) data are consistent with the idea that the execution of simulations (and the requisite binding of features) occurs at or near the end of the sentence, but they are also consistent with accounts that do not make recourse to feature binding during simulations. However, the feature binding and feature activation accounts make different predictions regarding the pattern of ACE effects that should arise at different points during online sentence comprehension. The feature activation account predicts that the ACE should be present throughout the presentation of the sentence (since the comprehender’s focus on the incoming language should keep the relevant motor features active while the participant is processing the linguistic input). The feature binding account, on the other hand, predicts that the ACE should disappear at some point around the end of the sentence, perhaps even somewhat before the end of the sentence.
Given that sentence processing is incremental in nature (e.g., Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy, 1995) and that many extant models of sentence processing can arrive at a plausible sentence interpretation before all of the words have been fully processed (e.g., MacDonald, Pearlmutter, & Seidenberg, 1994; McRae, Spivey-Knowlton, & Tanenhaus, 1998), it is possible that comprehenders are able to start running their simulations before the actual conclusion of the sentence (i.e., once they have enough information to specify the nature of the action taking place).

The experiment presented below is designed to take a first step towards pinning down the window of time during which simulations are run during sentence comprehension. Whereas Borreggine and Kaschak (2006) focused their efforts on observing the ACE effects that arise when the nature of the motor response needed for the sensibility judgement was known either at the beginning or after the end of the sentences, the present experiment explores how the ACE changes when the motor response is planned and executed at different points within the sentence itself. In our experiment, participants were asked to listen to the sentences used in earlier studies of the ACE (Borreggine & Kaschak, 2006; Glenberg & Kaschak, 2002). They were told to remember what each sentence meant, as they were to be given a memory test at a later point in the experiment. We also told participants that they would be engaging in a secondary task designed to examine how the presence of distraction affects their memory. This distractor task replaced the sensibility judgement task employed in earlier studies of the ACE. At some point during each trial, a visual cue would appear on the screen. Based on the cue, participants were told to immediately execute one of two actions: a towards response or an away response. These responses were identical to the motor responses used by Borreggine and Kaschak (2006). The visual cue was presented 500, 1,500, or 2,000 ms after the onset of the sentence. (Note that the average sentence length for our materials was approximately 2,300 ms.)

The condition in which the motor cue was presented 500 ms after the onset of the sentence was designed to replicate the ACE within the slightly modified paradigm employed for this study. Because the presentation of the motor cue (and the subsequent planning and execution of the motor response) occurs very early in the sentence, it is expected that the ACE should arise for the reasons given earlier: Activation of a directional feature (“towards”) by the sentence and motor response primes the use of that feature, speeding the execution of the motor response. The conditions in which the motor cue was presented at 1,500 and 2,000 ms after the onset of the sentence were designed to present the motor cue at time points near the end of the sentences in order to determine when (and if) comprehenders are binding the activated motor features into a simulation. If comprehenders are running simulations before the end of the sentence, it is expected that the ACE should be attenuated in one or both of these conditions. Such a finding would take a step towards ruling out the feature activation account of the ACE, as this would show that the ACE disappears at a point in time where some processing of the linguistic input remains to be done.

METHOD

Participants

The participants were 144 introductory psychology students from Florida State University (48 in each of the three cue presentation conditions). They received course credit in exchange for their participation. Across the conditions, the data from a total of 9 participants were replaced due to low accuracy on the experimental task. Average response accuracy for the remaining participants was over 98%.

Materials

The critical sentences for this experiment were the 40 transfer sentences used by Glenberg and Kaschak (2002). Each sentence had a “towards” version (e.g., “Mark dealt the cards to you”) and an “away” version (e.g., “You dealt the cards to Mark”). In addition, 20 of the transfer sentences described literal, concrete transfer (as in the “Mark” sentences), and 20 of the sentences described abstract transfer (e.g., “Andy pitched you the idea”). An additional 40 filler sentences were selected from Glenberg and Kaschak’s (2002) materials. These fillers were the nonsensical items used in previous ACE experiments (e.g., “Frank boiled you the sky”). Although the experiment did not employ a sensibility judgement task, we decided to include the nonsensible items both to make the memory task facing the participants more challenging and to keep the overall set of materials as similar as possible to the earlier ACE studies. A total of 18 additional sentences (9 sensible) were generated to serve as practice items at the beginning of the experiment. All sentences were recorded by a female speaker of American English using the freeware, open source software program Audacity 1.23 (Creative Commons attribution licensure) and played using Nullsoft Winamp 5.08. The mean length of the critical sentences was 2,267 ms (SD = 336 ms). Critical sentences are listed in Appendix A.

To ensure that each sentence appeared equally often in all four critical conditions of the experiment (away sentence, away response; away sentence, towards response; towards sentence, away response; towards sentence, towards response), we created four counterbalanced lists, on each of which a different set of 10 sentences was assigned to each of the four critical conditions. Sentences were assigned to conditions randomly, subject to the constraints that exactly 10 sentences appeared in each condition on each list and that each sentence appeared in a given condition on only one of the four lists. On each list, each condition contained an equal number of concrete and abstract transfer sentences.
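One way to satisfy these constraints is a Latin-square rotation: split the concrete and the abstract items into four groups of five each, and on each list shift which group is assigned to which condition. The Python sketch below is a hypothetical illustration of that idea, not the assignment procedure actually used (the text describes it only as random within constraints); the function name `build_lists` and the use of integer item ids are assumptions.

```python
import random

# (sentence direction, response direction) for the four critical conditions
CONDITIONS = [
    ("away", "away"),
    ("away", "towards"),
    ("towards", "away"),
    ("towards", "towards"),
]


def build_lists(concrete_ids, abstract_ids, seed=0):
    """Return four lists, each a dict mapping item id -> condition.

    Hypothetical Latin-square rotation: items of each transfer type are
    shuffled into four groups of five; on list k, group g is assigned to
    condition (g + k) % 4, so each item visits every condition exactly once.
    """
    if len(concrete_ids) != 20 or len(abstract_ids) != 20:
        raise ValueError("expected 20 concrete and 20 abstract items")
    rng = random.Random(seed)
    groups_by_type = []
    for ids in (concrete_ids, abstract_ids):
        shuffled = list(ids)
        rng.shuffle(shuffled)
        groups_by_type.append([shuffled[g * 5:(g + 1) * 5] for g in range(4)])

    lists = []
    for k in range(4):
        assignment = {}
        for g in range(4):
            condition = CONDITIONS[(g + k) % 4]
            # 5 concrete + 5 abstract items -> 10 items per condition
            for groups in groups_by_type:
                for item in groups[g]:
                    assignment[item] = condition
        lists.append(assignment)
    return lists


lists = build_lists(list(range(20)), list(range(20, 40)))
```

Rotating fixed groups (rather than re-randomizing each list independently) is what guarantees that no sentence appears in the same condition on two lists.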

Procedure

Participants were randomly assigned to counterbalance lists, with the constraint that an equal number of participants appeared on each of the four lists across the entire experiment. The participants were told that they were going to hear a series of sentences, and that they should remember what each sentence meant as the experiment was going to end with a memory test (which was not actually administered). They sat with the computer keyboard situated on their lap at a 90-degree angle from its normal orientation, such that the letter “Q” was situated away from their body, and the letter “P” was situated near their body. To initiate the playing of a sentence, participants pressed and held down the “Y” key on the keyboard. Participants were also told that they would engage in a distractor task during the experiment. They were told that at some point during each trial, the letter “P” or “Q” would appear on the computer screen. As soon as they saw the letter, they were to immediately release the “Y” key and press either the “P” or the “Q” key (based on the letter presented on the screen). A “P” response would be a response towards the body, and a “Q” response would be a response away from the body. The “Y”, “P”, and “Q” buttons had plastic blocks placed on top of them to facilitate responding. The “P” or “Q” appeared on the screen 500 ms, 1,500 ms, or 2,000 ms after the onset of the sentence. The timing of the “P” and “Q” motor cues was manipulated between participants. To reduce practice effects, participants first responded to 18 practice items (9 sensible). These practice items transitioned smoothly into the critical part of the experiment so that participants would not be aware of the change.

Design and analysis

The dependent variable was the time required to press the “P” or “Q” button after the relevant letter had been presented on the computer screen. The data were analysed as follows. First, all incorrect responses (where participants pressed the wrong button) were removed from the dataset. Less than 1% of the responses were errors.¹ Next, we omitted all response times longer than 3,000 ms. The remaining data were further screened for outliers by removing all response times that were more than 2 standard deviations from each participant’s mean response time in each of the four critical conditions (away sentence, away response, and so on). The data were then analysed using a 2 (Sentence Direction: towards vs. away) × 2 (Response Direction: towards vs. away) × 3 (Cue Presentation: 500, 1,500, or 2,000 ms after sentence onset) analysis of variance (ANOVA). Analyses were conducted across participants (denoted F1) and across items (denoted F2). Sentence direction and response direction are both within-participants and within-items variables. Cue presentation was a between-participants variable and a within-items variable.
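The three screening steps described above (drop errors, drop response times over 3,000 ms, then trim beyond 2 SD within each participant × condition cell) can be sketched as follows. This is a minimal illustration, assuming each trial is a dictionary with hypothetical keys `participant`, `condition`, `rt` (in ms), and `correct`. It uses the sample standard deviation; the text does not say how cells with fewer than two trials were handled, so such cells are simply kept here.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim(trials, max_rt=3000, sd_cutoff=2.0):
    """Return the trials that survive error removal, the RT ceiling, and SD trimming."""
    # Steps 1 and 2: remove incorrect responses and RTs longer than max_rt
    kept = [t for t in trials if t["correct"] and t["rt"] <= max_rt]

    # Step 3: group the remaining trials by participant x critical condition
    cells = defaultdict(list)
    for t in kept:
        cells[(t["participant"], t["condition"])].append(t)

    result = []
    for cell in cells.values():
        rts = [t["rt"] for t in cell]
        if len(rts) < 2:
            # SD is undefined for a single trial; keep it (assumption)
            result.extend(cell)
            continue
        m, s = mean(rts), stdev(rts)
        # keep RTs within sd_cutoff sample SDs of the cell mean
        result.extend(t for t in cell if abs(t["rt"] - m) <= sd_cutoff * s)
    return result
```

Note that the ceiling is applied before the cell means and SDs are computed, so a 3,500-ms response does not inflate the SD used for trimming.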

It came to our attention after we had begun running the experiment that 11 of the critical sentences had at least one version (towards or away) that was shorter than 2,000 ms (in all but one case, both versions were less than 2,000 ms), meaning that the motor cue was presented after the end of these sentences in the 2,000-ms cue presentation condition. We therefore excluded these items from the main analyses of the experiment. This was done because our goal was to explore the ways that the timing of the motor response within the sentence changed the ACE as the motor response moved from an early point in the sentence (500-ms cue presentation) to progressively later points in the sentence (1,500- and 2,000-ms cue presentations). To make this comparison across conditions, it was important that the motor response be executed before the end of the sentence in all three cue presentation conditions (a constraint violated by the sentences shorter than 2,000 ms). Although these items are excluded from the main analyses of the data, they are included in the post hoc analyses conducted to explore how the magnitude of the ACE is related to the length of the individual sentences (presented after the main results).

RESULTS

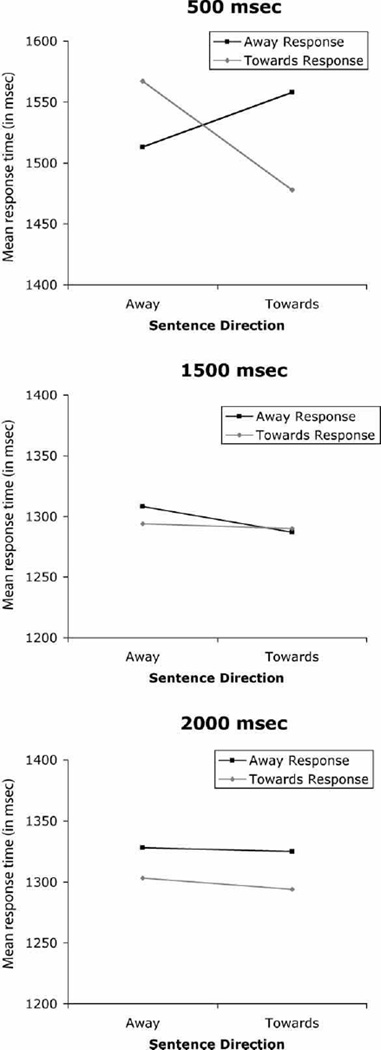

In each cue presentation condition, the critical question is whether we observe the ACE. The ACE manifests itself as a significant Sentence Direction × Response Direction interaction, such that responses are faster when the direction of the sentence matches the direction of the response than when the direction of the sentence mismatches the direction of the response. The results are presented in Figure 1.

Figure 1.

Motor response times as a function of sentence direction and response direction across cue presentation conditions.

Statistical analyses revealed a significant Sentence Direction × Response Direction × Cue Presentation interaction, F1(2, 141) = 4.01, p < .05; F2(2, 56) = 3.06, p = .055, indicating that the ACE differed across the three cue presentation conditions. Within the individual cue presentation conditions, there was a significant ACE in the 500-ms condition, F1(1, 47) = 9.74, p < .01; F2(1, 28) = 5.07, p < .05, but not in the 1,500- or 2,000-ms conditions (Fs < 1). In the 500-ms condition, participants were faster to produce “towards” actions than “away” actions when responding during “towards” sentences, F1(1, 47) = 5.73, p < .05; F2(1, 28) = 5.25, p < .05, but the tendency to produce “away” actions faster than “towards” actions during “away” sentences was not significant, F1(1, 47) = 2.99, p = .09; F2(1, 28) = 1.34, p = .25. Thus, delaying the execution of the motor response until a relatively late point in the sentence (1,500 or 2,000 ms after sentence onset) eliminated the ACE. In addition to the significant three-way interaction, the main analysis also revealed an effect of cue presentation condition, F1(2, 141) = 6.01, p < .01; F2(2, 56) = 208.80, p < .001, with participants in the 500-ms condition responding more slowly than participants in the other two conditions. An examination of the data in all three cue presentation conditions showed that the longer average response times in the 500-ms condition were due to a subset of the participants (around 25% of the participants in that condition) with somewhat long response times (average response times for these participants were > 2,000 ms, whereas no participants in the 1,500- or 2,000-ms conditions had average response times over 2,000 ms).
Although the behaviour of these participants is somewhat discrepant from the behaviour of the other participants in the experiment in terms of overall response latency, it is important to note that their data show essentially the same pattern as the data of the rest of the participants in the 500-ms condition. Removing these participants from the analyses has very little impact on the statistical results.

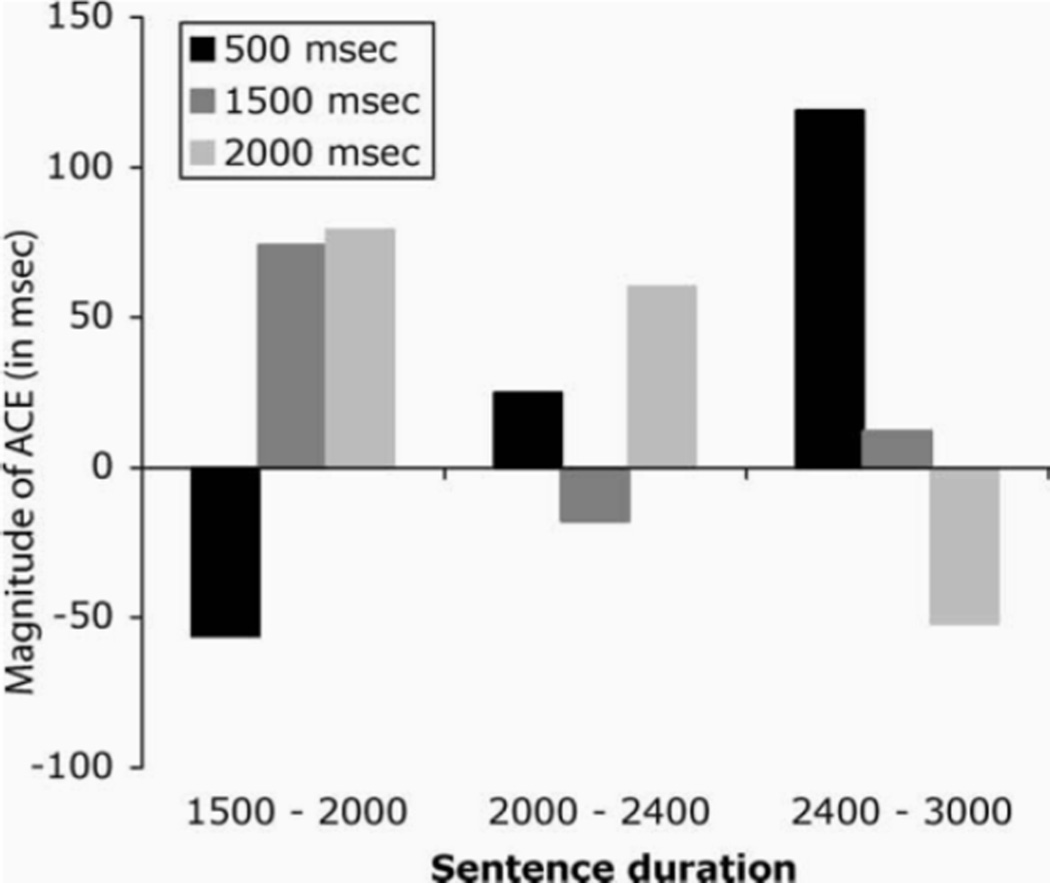

These data take us part of the way towards finding the window of time in which simulations are run during the comprehension of our critical sentences. Given an average sentence length of around 2,300 ms, the fact that the ACE was eliminated when the motor cue was presented at both 1,500 ms and 2,000 ms after the onset of the sentence suggests that the simulations are being run at some point around the last 300–800 ms of the sentence (a time period that largely covers the last noun phrase of the sentence). Although the experiment was not originally designed to do so, we decided to exploit the fact that there was some variability in the length of our sentences (sentence lengths ranging from 1,500 ms to 3,000 ms) in order to see whether we could get a better handle on the timing issue. We broke our sentences into three length categories: 1,500–2,000 ms (11 sentences; as discussed earlier, these sentences were excluded from the previous analyses), 2,000–2,400 ms (14 sentences), and 2,400–3,000 ms (15 sentences). These length categories were constructed in part to equate the number of sentences in each condition (and thus to facilitate the subsequent analysis). For each sentence, we calculated an ACE value for each of the three cue presentation conditions. The ACE value was calculated as (away sentence, towards response – away sentence, away response) – (towards sentence, towards response – towards sentence, away response), such that the canonical ACE would result in a positive number, and a reversed ACE would result in a negative number. The resulting ACE values were analysed using a 3 (Cue Presentation condition: 500 ms, 1,500 ms, 2,000 ms) × 3 (Sentence Duration: 1,500–2,000 ms, 2,000–2,400 ms, 2,400–3,000 ms) ANOVA with cue presentation as a within-items variable and sentence duration as a between-items variable.
We did not conduct analyses across participants because the counterbalancing scheme set up to balance other variables in the experiment resulted in imbalances in the sentence duration variable for participants across the cells of the design.
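The per-item ACE value and the duration categories can be expressed compactly. The Python sketch below assumes cell means keyed by (sentence direction, response direction) and treats the categories as half-open intervals, with 3,000 ms included in the longest one; the text gives only the ranges, so this boundary handling is an assumption, as are the function names and the example values.

```python
def ace_value(cell_means):
    """cell_means maps (sentence_dir, response_dir) -> mean RT in ms.

    ACE = (AS-TR - AS-AR) - (TS-TR - TS-AR); positive values indicate the
    canonical ACE (matching responses faster), negative values a reversed ACE.
    """
    away_sentence = cell_means[("away", "towards")] - cell_means[("away", "away")]
    towards_sentence = (
        cell_means[("towards", "towards")] - cell_means[("towards", "away")]
    )
    return away_sentence - towards_sentence


def duration_bin(length_ms):
    """Assign a sentence length (ms) to one of the three duration categories."""
    if 1500 <= length_ms < 2000:
        return "1500-2000"
    if 2000 <= length_ms < 2400:
        return "2000-2400"
    if 2400 <= length_ms <= 3000:
        return "2400-3000"
    raise ValueError(f"length {length_ms} ms is outside the 1500-3000 ms range")


# Hypothetical cell means: matching responses 600 ms, mismatching 650 ms
example = {
    ("away", "away"): 600, ("away", "towards"): 650,
    ("towards", "towards"): 600, ("towards", "away"): 650,
}
print(ace_value(example))  # 100
print(duration_bin(2267))  # 2000-2400
```

Because the towards-sentence difference is subtracted, a 50-ms matching advantage in each sentence direction adds up to an ACE value of 100 ms rather than cancelling out.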

The ACE values across cue presentation conditions and sentence durations are presented in Figure 2. The main effects of cue presentation condition and sentence duration were not significant (Fs < 1), but there was a significant Cue

Figure 2.

Magnitude of the action–sentence compatibility effect (ACE) for items as a function of cue presentation condition and sentence duration.

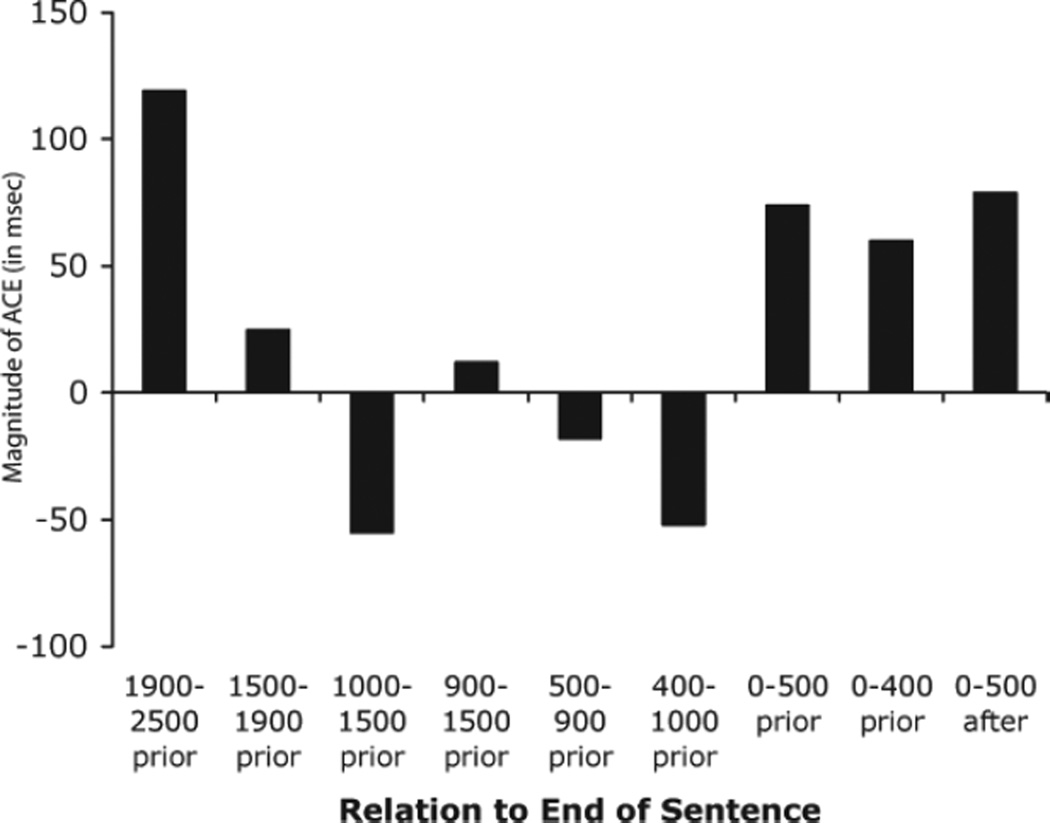

Presentation Condition × Sentence Duration interaction, F2(4, 74) = 2.56, p < .05. The interaction reflects the fact that each of the three sentence duration categories shows a different pattern of ACE values across cue presentation conditions. For the shortest sentences (1,500–2,000 ms), the ACE is weakest in the 500-ms condition. For the midlength sentences (2,000–2,400 ms), the ACE is weakest in the 1,500-ms condition. For the longest sentences (2,400–3,000 ms), the ACE is weakest in the 2,000-ms condition. In the interest of clarifying the patterns presented in Figure 2, it is instructive to reorder the individual conditions in the figure according to the distance between the presentation of the motor cue and the end of the sentence. For example, when considering the 1,500–2,000-ms group of sentences, the cue is presented between 1,000 and 1,500 ms before the end of the sentence in the 500-ms cue presentation condition, between 0 and 500 ms before the end of the sentence in the 1,500-ms cue presentation condition, and between 0 ms and 500 ms after the end of the sentence in the 2,000-ms cue presentation condition. Figure 3 presents the reordered data, with the distance between the cue and the end of the sentence displayed along the x-axis. Note that there are some cases where the time periods covered by different conditions overlap. In this case, we ordered the conditions such that the one that had the lower time bound closest to the end of the sentence was considered to be closer to the end of the sentence than the condition with a lower bound that was further from the end of the sentence. Thus, the time bin between 400 and 1,000 ms before the end of the sentence was considered to be closer to the end of the sentence than the time bin between 500 and 1,000 ms before the end of the sentence. In the discussion that follows, the conclusions are unaffected by reversing the position of these overlapping conditions.

Figure 3.

Magnitude of the action–sentence compatibility effect (ACE) for items as a function of the timing of the motor response cue relative to the end of the sentence.

The data in Figure 3 show that the ACE is present both when the motor cue is presented at an early stage in sentence processing (1,900–2,500 ms before the end of the sentence) and when the motor cue is presented near the very end of the sentence (from around 500 ms before the end of the sentence until 500 ms after the end of the sentence), but not when the motor cue is presented between 500 and 1,900 ms before the end of the sentence.
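The cue-to-end windows used to reorder the conditions for Figure 3 follow from simple arithmetic on the duration categories and cue onsets: a sentence of length L ends L minus the cue onset after the cue. The Python sketch below reproduces that arithmetic and the ordering rule described for overlapping windows. The names are hypothetical, and breaking the tie between the two windows that share a 0-ms lower bound by their upper bound is our assumption, since the text does not specify it.

```python
BINS = [(1500, 2000), (2000, 2400), (2400, 3000)]  # sentence duration categories, ms
CUES = [500, 1500, 2000]  # cue onset after sentence onset, ms


def cue_window(bin_range, cue_ms):
    """Distance of the cue before the end of the sentence, as (near, far) in ms.

    A category [lo, hi] gives the window [lo - cue_ms, hi - cue_ms].
    Negative values mean the cue came after the sentence had ended.
    """
    lo, hi = bin_range
    return (lo - cue_ms, hi - cue_ms)


def ordered_windows():
    """All nine category x cue windows, ordered from latest to earliest in the sentence.

    Windows are ordered by their near edge (the bound closest to the end of the
    sentence); ties are broken by the far edge (assumption).
    """
    windows = [cue_window(b, c) for b in BINS for c in CUES]
    return sorted(windows)  # tuples sort by near edge, then far edge


print(ordered_windows())
# [(-500, 0), (0, 400), (0, 500), (400, 1000), (500, 900),
#  (900, 1500), (1000, 1500), (1500, 1900), (1900, 2500)]
```

The earliest window (1,900 to 2,500 ms before the end) comes from the longest sentences with the 500-ms cue, and the only window extending past the end of the sentence comes from the shortest sentences with the 2,000-ms cue.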

Statistically, the ACE was significantly different from 0 when the motor cue was presented 1,900–2,500 ms before the end of the sentence, t(14) = 2.26, p < .05; when the motor cue was presented between 0 and 400 ms before the end of the sentence, t(23) = 2.58, p < .05; and when the data from the 0–400-ms and 0–500-ms before-the-end conditions were combined (on account of the degree of temporal overlap between these conditions), t(24) = 2.61, p < .05. These data suggest that the simulation of the motor action described in the sentence (which binds the “towards” or “away” directional feature to the simulation and thereby disrupts the ACE) appears to take place between 500 and 1,900 ms before the end of the sentence (i.e., while the comprehender is processing the final noun phrase in the sentence). Interestingly, the data also suggest not only that the ACE is present when the motor response is executed early in the processing of the sentence, but also that it reappears after the simulation has been run, but before the end of the sentence. We take up the implications of this pattern in the Discussion section.

Besides helping to define the range of time in which simulations might be executed during the comprehension of these sentences, the data presented in Figures 2 and 3 also help to rule out an alternative account of the presence of the ACE in the 500-ms cue presentation condition and its absence in the 1,500- and 2,000-ms conditions. One might propose that participants in the 1,500- and 2,000-ms conditions did not show the ACE because they were not actually paying attention to the sentences (in spite of our instructions that they should remember the sentences for a later memory test). The data in Figure 2 argue against this possibility: Within each cue presentation condition, some sentences show an ACE whereas others do not, and this difference is explained, at least in part, by the timing of the motor response within the sentence. This pattern would not be expected if participants were not attending to the content of the sentences.

DISCUSSION

The experiment reported in this paper was designed to further our understanding of the temporal dynamics of the ACE. We were interested in determining whether comprehenders run simulations of the actions described in the sentences before the end of the sentence and, if so, at what point before the end of the sentence this takes place. The results suggest that comprehenders simulate the action described in the sentence before processing of the entire sentence has been completed. Evidence for this claim comes from the 1,500- and 2,000-ms conditions, in which the ACE was eliminated by delaying the execution of the “towards” and “away” motor responses until near the end of the sentence. Our examination of the data from individual sentences suggests that the locus of this simulation is somewhere between 500 and 1,900 ms before the end of the sentence. Because this estimate arises from a post hoc analysis of the data, it should be accepted tentatively, pending more carefully controlled studies of the ACE in this time window.

Our data support the feature binding account of the ACE developed by Borreggine and Kaschak (2006). The presence of the ACE in the 500-ms cue presentation condition reflects the activation of directional features (towards or away) by both the content of the sentence and the motor response. The absence of the ACE in the 1,500-ms and 2,000-ms cue presentation conditions is a consequence of the relevant directional features being bound to the simulation of the action described by the sentence. The running of the simulation (and, consequently, the binding of features) appears to occur between 500 and 1,900 ms before the end of the sentence. The fact that the ACE reemerges after the simulation has run, but before the end of the sentence, is also consistent with this account. Even though the action described by the sentence has already been simulated, the task requires participants to continue attending to the content of the sentence (as they need to remember the sentence for a later memory test). As participants continue to maintain the content of the sentence in memory, they reactivate the directional features relevant to the action of the sentence, which allows the ACE to reemerge at the very end of the sentence.

The data do not support the feature activation account described earlier. The feature activation account predicts that the ACE should be present throughout the processing of the sentence, as the motor features associated with the action depicted in the sentence should be active (and available to prime the motor response) as long as the sentence is being processed. This was not the case. Furthermore, the feature activation account does not have a mechanism to explain why the ACE would disappear during the processing of the sentence and then reemerge at the very end of the sentence. Even if one presumes that the disappearance of the ACE during the sentence reflects the fact that the process of comprehension was completed before the end of the sentence, the fact that participants were instructed to remember the sentences would suggest that they would continue to keep the content of the sentence in memory. From the perspective of the feature activation account, this possibility would seem to preclude the disappearance of the ACE at some point during the sentence.
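The contrast between the two accounts can be summarised as two prediction functions evaluated against the qualitative pattern reported in the Results. In this minimal sketch, the observed pattern is keyed by the midpoint of each window discussed (0–400, 500–1,900, and 1,900–2,500 ms before the end of the sentence), and the function names are hypothetical. Because the 500–1,900 ms window was itself estimated post hoc from these data, a match for the binding account is a consistency check, not an independent test.

```python
# Post hoc estimate (ms before sentence end) of when the simulation runs.
SIM_WINDOW_MS = (500, 1900)

# Qualitative pattern from the Results, keyed by a representative time (the
# midpoint of each window discussed): True = ACE present, False = ACE absent.
OBSERVED = {200: True, 1200: False, 2200: True}


def activation_account(ms_before_end):
    """Feature activation: features stay active throughout, so an ACE is always predicted."""
    return True


def binding_account(ms_before_end, window=SIM_WINDOW_MS):
    """Feature binding: no ACE while features are bound to the running simulation;
    an ACE before it (features merely active) and after it (features reactivated
    while the sentence is rehearsed for the memory test)."""
    lo, hi = window
    return not (lo <= ms_before_end < hi)


def fits(account, observed=OBSERVED):
    """True if the account's prediction matches every observed window."""
    return all(account(t) == ace for t, ace in observed.items())


print("activation account fits:", fits(activation_account))  # False
print("binding account fits:", fits(binding_account))        # True
```

The activation account fails on the middle window alone: it has no state in which active features stop priming the response, which is the mechanism the binding account supplies.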

Our findings take a step towards nailing down the time course of the ACE during sentence comprehension, but it is important to note that this time window is only a rough estimate and is likely to be affected by the characteristics of the individual sentences themselves. For example, if the content of the sentence is predictable (e.g., in “The bartender served you …” it is somewhat likely that the final noun phrase in the sentence will be a phrase such as “the drink”), it may be possible to run the simulation at an earlier point in the sentence than if the nature of the action cannot be ascertained until the content of the final noun phrase in the sentence is known. The possibility of sentence-level variables affecting the timing of the simulation process is one explanation for why the ACE was absent for a period of around 1,400 ms (between 500 and 1,900 ms before the end of the sentence) in our data. Further studies will be needed to determine exactly how long motor features are bound to simulations during sentence comprehension. Together with Taylor and Zwaan’s (2008, this issue) exploration of the role of linguistic focus in shaping the motor resonance that occurs during sentence processing, our suggestion that factors such as predictability can affect the timing of motor resonance and the execution of simulations during sentence processing argues that it may be fruitful to examine the role of a range of psycholinguistic variables in constraining the use of sensorimotor information in language comprehension.

Although we have discussed at length how our results fit within the framework of theories of motor planning (i.e., TEC), they are also consistent with extant theories of sentence processing. The two-stage approach to the comprehension of sentences is in accord with Townsend and Bever’s (2001) LAST, which claims that sentence processing involves an initial stage in which many sources of information (probabilistic information about the use of particular words and syntactic constructions, “world knowledge,” etc.) are used to generate a quick-and-dirty interpretation of the sentence and a second stage in which a more refined interpretation of the sentence is generated. Thus, the initial stage of sentence processing would involve the activation of motor information relevant to the actions that are being described in the sentence (see Zwaan & Taylor, 2006, for a demonstration of the early activation of motor information during sentence processing; see also Chambers, Tanenhaus, & Magnuson, 2004), and the second stage would involve running a more precise simulation of the events being described (see Zwaan & Taylor, 2006, for a similar argument).

Although we have interpreted our data in the context of a sensorimotor or “embodied” approach to language comprehension, it is interesting to note that there is an apparent disconnect between the results presented by Borreggine and Kaschak (2006) and other results that are taken as support for the sensorimotor view (e.g., Connell, 2007; Zwaan et al., 2004; Zwaan et al., 2002), including the results presented here. Whereas Borreggine and Kaschak’s (2006) Experiments 2–4 suggest that simulations occur during online sentence comprehension and that congruence effects such as the ACE disappear once the sentence has been completed, other reports have shown congruence effects between a sentence that is processed and a stimulus (typically a picture; e.g., Connell, 2007; Zwaan et al., 2002) that is presented after the end of the sentence. In our view, the discrepancy between Borreggine and Kaschak’s (2006) results and other results reported in the literature can be explained by examining the demands of the different experimental tasks used across studies. In sentence–picture judgement tasks, participants process the sentence and then need to assess whether the picture that is presented matches the content of the sentence. This judgement requires the participant to keep the content of the sentence in memory after they have finished the process of sentence comprehension, and thus this paradigm reveals congruence effects even though the perceptual stimulus is presented after the offset of the sentence. In contrast, Borreggine and Kaschak’s (2006) paradigm does not require participants to keep their representation of the content of the sentence active after they have finished the process of sentence comprehension. When the presentation of the motor cue was delayed until after the end of the sentence, all participants needed to remember was that they intended to make a “yes” response. Thus, there is no congruence effect when the motor cue is presented after the end of the sentence.

This proposal allows us to explain why Borreggine and Kaschak (2006) did not observe the ACE when the motor cue was presented after the end of the sentence, whereas we do observe a positive ACE under the same circumstances. Because the present task focused on memory for the sentences, participants may have continued rehearsing sentence content after having comprehended the sentence. As such, the relevant motor features were reactivated after the simulation was run (see earlier discussion) and remained active as the participant continued rehearsing the sentence. Thus, a version of the ACE task that requires memory of the sentence content beyond the conclusion of the sentence shows an ACE after the conclusion of the sentence (see Figure 3), whereas a version of the same task that does not require memory of the sentence beyond the end of the trial does not.

The hypothesis advanced here suggests that the interaction between linguistic and sensorimotor processing depends in part on when and how participants are focusing on the content of the sentence. Taylor and Zwaan (2008, this issue) make a similar point with their lexical focus hypothesis (LFH). In an earlier set of experiments, Zwaan and Taylor (2006) used a phrase-by-phrase reading task to show that motoric information was active while the verb of the sentence was processed, but was not active when other regions of the sentence were being processed. Taylor and Zwaan (2008, this issue) use a similar task to show that the activation of motoric information can be extended beyond the verb when additional lexical items (particularly adverbs) continue to focus attention on the action that is being described. It will be important to continue exploring how experimental task demands and linguistic cues affect the timing and shape of “embodied” effects in language processing.

The data reported in this paper have shed some light on the temporal dynamics that surround the activation and use of motor information during the processing of sentences about action. This work takes a small step towards understanding how sensorimotor information is used during online sentence comprehension. In order to develop a fuller understanding of these dynamics, it will be necessary to conduct studies using both a broader range of sentence types and a broader range of action types. Such explorations will place important constraints on the development of sensorimotor theories of language comprehension.

Acknowledgments

Thanks to Christopher Borda, Devona Gray-Hogans, Sarah Hahn, Ranezethiel Hernandez, Melissa Hilvar, Elizabeth Huamonte, Bridget Ingwell, Divya Manjunath, Jenna McHenry, Brett Mercer, Donald Moysey, Matthew O’Brien, Jamie Sorenson, Big Clay Money (aka Clayton Weiss), and Katelyn Wukovits, all of whom helped to run these experiments. Thanks to Sian Beilock and two anonymous reviewers for their helpful comments on a previous version of this manuscript.

Appendix A

Critical sentences for the study

For the sake of brevity, we have only listed the “towards” version of each sentence. “Away” versions can be constructed by reversing the agent and beneficiary of the action in each sentence (e.g., “Katie handed the puppy to you” would become, “You handed the puppy to Katie”). A full list of the “away” sentences can be found in Borreggine and Kaschak (2006). The sentences are arranged by length categories.

| 1,500–2,000 ms (n = 11) | 2,000–2,400 ms (n = 14) | 2,400–3,000 ms (n = 15) |
|---|---|---|
| Katie handed the puppy to you. | Helen awarded the medal to you. | Alex forked over the cash to you. |
| Andy pitched the idea. | Jack kicked the football to you. | Andy delivered the pizza to you. |
| Amanda paid you tribute. | Vincent donated money to you. | Kelly dispensed the rations to you. |
| Liz told you the story. | Amber drove the car to you. | Jeff entrusted the key to you. |
| Joe kicked you the soccer ball. | Mark dealt the cards to you. | Sally slid you the cafeteria tray. |
| Mike sold the land to you. | Christine bought you ice cream. | Shawn shot you the rubber band. |
| Mike rolled you the marble. | Diane threw you the pen. | Art bestowed the honor upon you. |
| Jenni sang you a song. | Courtney handed you the notebook. | Sara transmitted the orders to you. |
| He taught you a lesson. | Your dad poured you some water. | Tiana devoted her time to you. |
| Heather slipped you a note. | Adam conveyed the message to you. | Anna transferred responsibility to you. |
| Paul hit you the baseball. | Dan confessed his secret to you. | John dedicated the song to you. |
| Ian received the complaint from you. | Steve lavished you with praise. | |
| Your sister blew you a kiss. | Chris offered you some writing tips. | |
| Your family sent you regards. | Jesse gave you another chance. | |
| The policeman radioed the message to you. | | |

Footnotes

Errors were analysed with a Cue Presentation × Sentence Direction × Response Direction ANOVA, which showed a main effect of response direction (F1 and F2 > 7.00, p < .05), with responses on away trials being slightly more accurate than responses on towards trials (99.5% vs. 99.0%). The Cue Presentation × Sentence Direction interaction was significant by participants but not by items, F1(2, 141) = 4.00, p < .05; F2(2, 78) = 1.98, p = .15: participants in the 500-ms condition were more accurate when responding during towards sentences than when responding during away sentences (99.3% for towards sentences, 98.6% for away sentences), and the opposite pattern held for participants in the 1,500-ms condition (99.4% for away sentences, 98.9% for towards sentences) and in the 2,000-ms condition (99.7% for away sentences, 99.6% for towards sentences). Given that so few participants made any errors at all (only 19% of participants made an error in responding) and that even fewer made more than one error (6% of participants), it is our sense that the statistical significance of these small differences reflects the extreme lack of variability in the error data rather than systematic differences in the way that participants were responding to the task.

References

- Borreggine KL, Kaschak MP. The action-sentence compatibility effect: It’s all in the timing. Cognitive Science. 2006;30:1097–1112. doi: 10.1207/s15516709cog0000_91.

- Buccino G, Riggio L, Melli G, Binkofski F, Gallese V, Rizzolatti G. Listening to action-related sentences modulates the activity of the motor system: A combined TMS and behavioral study. Cognitive Brain Research. 2005. doi: 10.1016/j.cogbrainres.2005.02.020.

- Chambers CG, Tanenhaus MK, Magnuson JS. Actions and affordances in syntactic ambiguity resolution. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:687–696. doi: 10.1037/0278-7393.30.3.687.

- Connell L. Representing object color in language comprehension. Cognition. 2007;102:476–485. doi: 10.1016/j.cognition.2006.02.009.

- Gernsbacher MA, Kaschak MP. Neuroimaging studies of language production and comprehension. Annual Review of Psychology. 2003;54:91–114. doi: 10.1146/annurev.psych.54.101601.145128.

- Glenberg AM, Kaschak MP. Grounding language in action. Psychonomic Bulletin & Review. 2002;9:558–565. doi: 10.3758/bf03196313.

- Holt LE, Beilock SL. Expertise and its embodiment: Examining the impact of sensorimotor skill expertise on the representation of action-related text. Psychonomic Bulletin and Review. 2006;13:694–701. doi: 10.3758/bf03193983.

- Hommel B, Müsseler J, Aschersleben G, Prinz W. The theory of event coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences. 2001;24:849–937. doi: 10.1017/s0140525x01000103.

- Isenberg N, Silbersweig D, Engelien A, Emmerich K, Malavade K, Benti B, et al. Linguistic threat activates the human amygdala. Proceedings of the National Academy of Sciences. 1999;96:10456–10459. doi: 10.1073/pnas.96.18.10456.

- Kan IP, Barsalou LW, Solomon KO, Minor JK, Thompson-Schill SL. Role of mental imagery in a property verification task: fMRI evidence for perceptual representation of conceptual knowledge. Cognitive Neuropsychology. 2003;20:525–540. doi: 10.1080/02643290244000257.

- Kaschak MP, Glenberg AM. Constructing meaning: The role of affordances and grammatical constructions in language comprehension. Journal of Memory and Language. 2000;43:508–529.

- Kaschak MP, Madden CJ, Therriault DJ, Yaxley RH, Aveyard M, Blanchard AA, et al. Perception of motion affects language processing. Cognition. 2005;94:B79–B89. doi: 10.1016/j.cognition.2004.06.005.

- Kaschak MP, Zwaan RA, Aveyard M, Yaxley RH. Perception of auditory motion affects language processing. Cognitive Science. 2006;30:733–744. doi: 10.1207/s15516709cog0000_54.

- MacDonald MC, Pearlmutter NJ, Seidenberg MS. Lexical nature of syntactic ambiguity resolution. Psychological Review. 1994;101:676–703. doi: 10.1037/0033-295x.101.4.676.

- Martin A, Chao LL. Semantic memory and the brain: Structure and process. Current Opinion in Neurobiology. 2001;11:194–201. doi: 10.1016/s0959-4388(00)00196-3.

- McRae K, Spivey-Knowlton MJ, Tanenhaus MK. Modeling the influence of thematic fit (and other constraints) in on-line sentence comprehension. Journal of Memory and Language. 1998;38:283–312.

- Pulvermüller F. Words in the brain’s language. Behavioral and Brain Sciences. 1999;22:253–279.

- Richardson DC, Spivey MJ, Barsalou LW, McRae K. Spatial representations active during real-time comprehension of verbs. Cognitive Science. 2003;27:767–780.

- Richardson DC, Spivey MJ, Cheung J. Motor representations in memory and mental models: Embodiment in cognition. In: Moore JD, Stenning K, editors. Proceedings of the Twenty-third Annual Meeting of the Cognitive Science Society. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc; 2001. pp. 867–872.

- Tanenhaus MK, Spivey-Knowlton MJ, Eberhard KM, Sedivy JC. Integration of visual and linguistic information in spoken language comprehension. Science. 1995;268:1632–1634. doi: 10.1126/science.7777863.

- Taylor LJ, Zwaan RA. Motor resonance and linguistic focus. Quarterly Journal of Experimental Psychology. 2008;61:896–904. doi: 10.1080/17470210701625519.

- Townsend DJ, Bever TG. Sentence comprehension. Cambridge, MA: MIT Press; 2001.

- Zwaan RA, Madden CJ, Yaxley RH, Aveyard ME. Moving words: Dynamic mental representations in language comprehension. Cognitive Science. 2004;28:611–619.

- Zwaan RA, Stanfield RA, Yaxley RH. Language comprehenders mentally represent the shape of objects. Psychological Science. 2002;13:168–171. doi: 10.1111/1467-9280.00430.

- Zwaan RA, Taylor L. Seeing, acting, understanding: Motor resonance in language comprehension. Journal of Experimental Psychology: General. 2006;135:1–11. doi: 10.1037/0096-3445.135.1.1.