Summary

Brains are optimized for processing ethologically relevant sensory signals. However, few studies have characterized the neural coding mechanisms that underlie the transformation from natural sensory information to behavior. Here, we focus on acoustic communication in Drosophila melanogaster, and use computational modeling to link natural courtship song, neuronal codes, and female behavioral responses to song. We show that melanogaster females are sensitive to long timescale song structure (on the order of tens of seconds). From intracellular recordings, we generate models that recapitulate neural responses to acoustic stimuli. We link these neural codes with female behavior by generating model neural responses to natural courtship song. Using a simple decoder, we predict female behavioral responses to the same song stimuli with high accuracy. Our modeling approach reveals how long timescale song features are represented by the Drosophila brain, and how neural representations can be decoded to generate behavioral selectivity for acoustic communication signals.

Introduction

A central goal of neuroscience is to understand how the natural sensory stimuli that inform behavior are represented by the brain (deCharms and Zador, 2000; Theunissen and Elie, 2014). To solve this problem, much of the field has focused on optimal coding theory, which posits that sensory neurons encode and transmit as much information about stimuli as possible to downstream networks (Fairhall et al., 2001; Sharpee et al., 2006). However, these approaches rarely take into account the animal's tasks and goals. This presents a problem because, in addition to representing stimuli as faithfully and efficiently as possible, nervous systems must also reduce information to facilitate downstream computations that inform behavior (Barlow, 2001; Olshausen and Field, 2004). In support of this, many sensory codes are often not “optimal” in the classical sense (Salinas, 2006). For example, the increase in sparseness observed in many systems can reduce stimulus information but greatly simplifies decision-making and learning by making behaviorally relevant stimulus features explicit (Clemens et al., 2011; Quiroga et al., 2005). Likewise, the generation of intensity or size invariant codes is necessary for robust object recognition but involves a loss of sensory information (Carandini and Heeger, 2012; Dicarlo et al., 2012).

The best way, therefore, to understand sensory representations is to link naturalistic sensory stimuli, neural codes, and animal behavior. This involves three steps. First, the stimulus features and timescales important for behavior must be identified. Second, the codes the brain uses to represent behaviorally relevant stimulus features (‘encoding’) must be characterized. Third, the relationship between neural representations and animal behavior (‘decoding’) must be defined. Accomplishing all of this is challenging for most natural behaviors because it is difficult to recapitulate them in a fixed preparation in which neural codes can be recorded. We address this challenge here by using computational modeling as a link between natural behavior and neural codes recorded in non-behaving animals.

We focus on the acoustic communication system of Drosophila. The Drosophila brain comprises a small number of neurons; this feature combined with genetic tools facilitates identifying individual neurons and neuron types for recordings. In Drosophila, acoustic communication occurs during courtship: males chase females and produce patterned songs in response to dynamic sensory feedback (Coen et al., 2014). Courtship unfolds over many minutes, and females arbitrate mating decisions based in large part on features present in male courtship songs. Numerous patterns on timescales ranging from tens of milliseconds to several seconds are present within song (Arthur et al., 2013); how females process and respond to these timescales of auditory information has never before been addressed. We do this here using a large behavioral data set of simultaneously recorded song and fly movements during natural courtship.

To determine how the brain represents courtship song information, we performed in vivo intracellular recordings from auditory neurons in the Drosophila brain. The antennal mechanosensory and motor complex (AMMC) is the primary projection area of fly auditory receptor neurons (termed Johnston's Organ neurons or JONs) (Kamikouchi et al., 2006). The two major populations of sound-responsive JONs terminate in AMMC zones A and B (Kamikouchi et al., 2009; Yorozu et al., 2009). Recent studies have mapped several of the central neurons that innervate these zones (Lai et al., 2012; Vaughan et al., 2014), and have found that most project to a nearby neuropil termed the ventrolateral protocerebrum (VLP). Projections from the VLP to other brain regions remain uncharacterized (but see Yu et al. (2010)). While genetic tools exist that label many AMMC or VLP neurons (Pfeiffer et al., 2008), only a handful have been functionally characterized (Lai et al., 2012; Tootoonian et al., 2012; Vaughan et al., 2014). Here we sample a larger population of AMMC and VLP neurons and generate computational models that effectively recapitulate responses to naturalistic stimuli.

To link neural codes in the AMMC and VLP to the female's behavioral response to song, one would ideally record neural activity during behavior. However, recording techniques can disrupt the highly dynamical interactions that occur during social behaviors like courtship. Moreover, in vivo neural recordings from the Drosophila brain are typically too brief in duration to present a large battery of natural song stimuli. We thus use computational modeling to infer links between the neural codes for song in the AMMC/VLP and female behavior. That is, using our model of stimulus encoding, we generate neuronal responses to the song stimuli recorded during natural courtship as a substitute for direct recordings. Based on these surrogate neural responses, we predict female behavior using simple transformations. Our study reveals an unexpected behavioral selectivity for song structure on long timescales in Drosophila females. Using our encoder/decoder approach, we find that of the two major computations in AMMC/VLP neurons, only one - biphasic filtering - is necessary to explain the female responses to courtship song, while the other - adaptation - is dispensable. Finally, we propose a putative circuit that can extract behaviorally relevant song features from AMMC/VLP responses and transform them into the female behavioral response.

Results

Identifying the courtship song features that drive female song responses

Drosophila melanogaster courtship song comprises two modes, sine and pulse, and males typically alternate between production of these modes during a song bout (Fig. 1A). During courtship, males produce many song bouts prior to copulation with a female; females may therefore be sensitive to a range of timescales that characterize courtship song, from the short species-specific spacing between pulses within pulse mode, known as the inter-pulse interval (IPI), to the long pauses between bouts (Fig. 1B). Previously, we showed that the more sine or pulse song a male produces, the more a sexually receptive female slows down (thus producing a negative correlation between song amount and female speed) (Coen et al., 2014). We re-analyzed this dataset of song and simultaneously tracked fly movements from 315 male-female pairs to examine female responsiveness to various song features and timescales (Fig. 1C). This dataset corresponded to ~4000 minutes of courtship between wild type males of 8 different melanogaster strains and females genetically-engineered to be both pheromone-insensitive and blind (PIBL). This genetic manipulation maximizes the salience of song for these females.

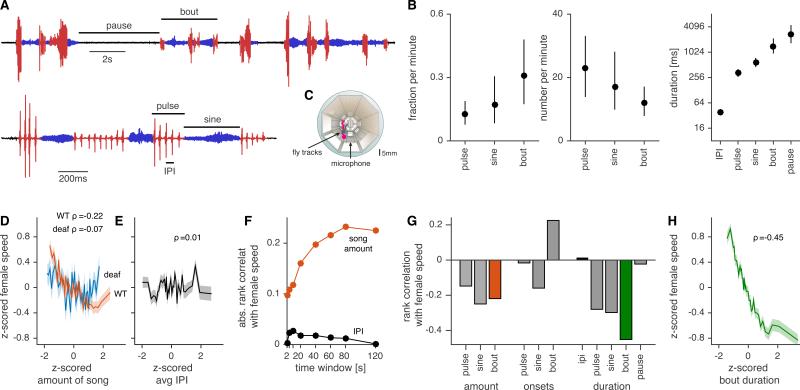

Figure 1. Song features driving female behavioral responses.

A Structure of Drosophila melanogaster courtship song. Song bouts consist of alternations between pulse (red) and sine (blue) modes; bouts are interleaved with pauses. Pulse song consists of trains of pulses, separated by species-specific inter-pulse intervals (IPI).

B Courtship song is structured on multiple timescales. Left: The fraction of one minute of courtship that consists of pulse song, sine song, and bouts. Middle: The number (per one minute of courtship) of pulse songs, sine songs, and bouts. Right: The duration of IPIs, pulse songs, sine songs, bouts, and pauses. All plots show median and inter-quartile range. We analyzed song from 3896 minutes of courtship between females and wild type males from 8 geographically diverse strains.

C Behavioral assay for recording male song and male/female movements (Coen et al., 2014).

D, E Behavioral preference function (see Experimental Procedures) for the amount of song (D) or IPI (E). We grouped the data into ~100 minute bins (sorted by x-value), and plotted the mean +/− s.e.m. for each bin. Both female speed and song amount or IPI are z-scored for each male strain. To quantify the strength of association between song features and female speed we calculated rank correlations (ρ) from the raw, unbinned data. The correlation between song amount and female speed is strongly reduced for deaf females (D, blue trace).

F Absolute rank correlation between female speed and the amount of song (orange) or IPI (black) for varying time windows. Rank correlation with IPI is weak for all window durations (all abs. ρ<0.03). The curve for amount of song begins to saturate at ~60s. We therefore analyzed correlations between female speed and song features in 60s windows for all subsequent analyses.

G Rank correlation between 11 song features and female speed (see Experimental Procedures for definitions of song features). Song amount (orange) negatively correlates with female speed while IPI (black) is uncorrelated. Bout duration (green) is most strongly associated with female speed.

H Behavioral preference function for bout duration.

See also Fig. S1.

We first considered the total amount of song and average female speed within a given time window (Fig. 1D, orange, rank correlation(ρ)=−0.22); this correlation began to saturate at time windows of ~60 seconds (Fig. 1F, orange), even for non-overlapping windows (Fig. S1D). This suggests that song information affects females on timescales much longer than the duration of a single song bout. The correlation between song and female speed was mostly abrogated by deafening the female (Fig. 1D, blue), which demonstrates this relationship is dependent on hearing the male song. While previous studies suggested the importance of IPI for female receptivity (Bennet-Clark and Ewing, 1969; Schilcher, 1976), we found no correlation between female speed and IPI (Fig. 1E, ρ=0.01); this was true for both short and long time windows (Fig. 1F, black). This suggests that the range of IPIs produced by conspecific males (of the 8 geographically diverse strains we examined) is too narrow relative to the female preference function for IPI to strongly modulate female speed. We next asked whether females are sensitive to other conspecific song features within the 60 second time window of integration (Fig. S1A). We found that most song features were significantly correlated with female speed (Fig. 1G), likely due to correlations between song features (Fig. S1B); however, bout duration was most strongly correlated with female speed (Fig. 1G-H), and this relationship was independent of other features (Fig. S1C). This suggests that melanogaster females evaluate the structure, not just the total amount, of male song.
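The association measure used above can be sketched in a few lines. This is a minimal illustration, not the analysis code used for the paper: it assumes one value per courtship window for a song feature and for female speed, z-scores both within each male strain, and then computes a rank correlation. The function names and the toy data are hypothetical.

```python
import numpy as np

def average_ranks(x):
    """Rank values from 1..n, giving tied values their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    # Average the ranks within each group of tied values.
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    sums = np.bincount(inverse, weights=ranks)
    return (sums / counts)[inverse]

def rank_correlation(x, y):
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    return np.corrcoef(average_ranks(x), average_ranks(y))[0, 1]

def zscore_within_groups(values, groups):
    """Z-score values separately for each group label (e.g. male strain)."""
    values = np.asarray(values, dtype=float)
    groups = np.asarray(groups)
    out = np.empty_like(values)
    for g in np.unique(groups):
        mask = groups == g
        out[mask] = (values[mask] - values[mask].mean()) / values[mask].std()
    return out

# Toy data (assumption): 400 one-minute windows from 8 strains, with
# female speed decreasing as song amount increases.
rng = np.random.default_rng(0)
n_windows = 400
strain = rng.integers(0, 8, size=n_windows)
song_amount = rng.random(n_windows)
female_speed = -song_amount + 0.3 * rng.normal(size=n_windows)

rho = rank_correlation(
    zscore_within_groups(song_amount, strain),
    zscore_within_groups(female_speed, strain),
)
print(f"rank correlation between song amount and speed: {rho:.2f}")
```

Repeating the same computation for different window durations would give a curve of the kind shown in Fig. 1F.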

Whole-cell patch clamp recordings from AMMC/VLP neurons in the female brain

To determine how the female brain encodes male song structure, we recorded from a subset of neurons innervating the AMMC and VLP neuropils; these neurons represent the first relays for processing courtship song in the brain (Fig. 2A). We hypothesized that computations within these AMMC/VLP neurons should support the encoding and extraction of behaviorally relevant song features like bout duration. To link neural responses with our behavioral dataset (Fig. 1), we used a computational modeling strategy (Fig. 2B). We built an encoder model that captures the stimulus transformations implemented by AMMC/VLP neurons and we used it to predict the responses to natural courtship song from our behavioral data set. We then fit a simple decoder model to predict female speed from the encoder representation of song.

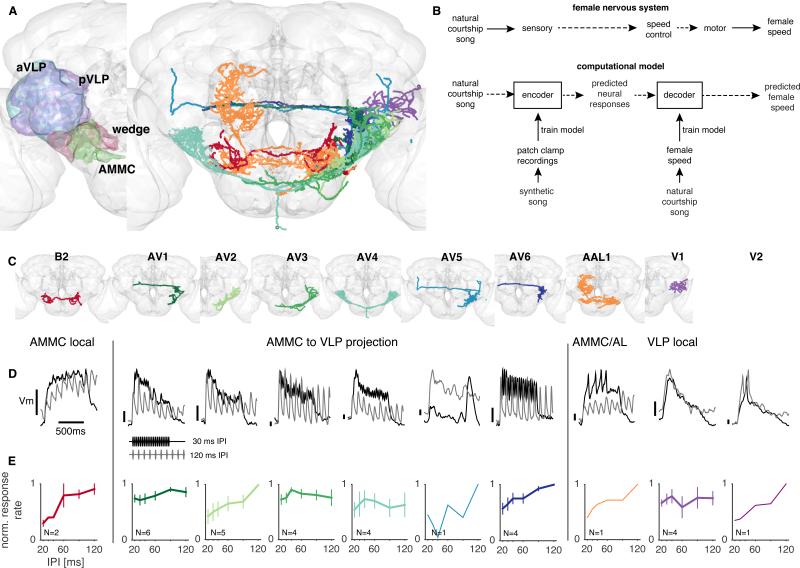

Figure 2. Auditory responses in the AMMC/VLP.

A Left: Projection areas of auditory neurons in the fly brain. Auditory receptor neurons project to the antennal mechanosensory and motor center (AMMC, green). From there, AMMC neurons project to different parts of the ventrolateral protocerebrum (VLP) – the wedge (red), anterior VLP (aVLP, cyan) and posterior VLP (pVLP, blue). Right: Skeletons of neurons in our dataset; skeletons come from the FlyCircuit database of single neuron morphologies (Chiang et al., 2010) and were identified based on our fills of recorded neurons.

B To reveal the neural computations linking song processing with female behavior, we model the female nervous system using an encoding and decoding stage. The decoder is trained using the natural courtship data (song and associated female speed) to reproduce female behavioral responses to song from encoder responses.

C Skeletons of individual neurons from our study (compare with A). We were not able to identify the V2 neuron in the FlyCircuit database, but the fill of this neuron reveals diffuse arborization throughout the VLP (Supplemental Movie S1).

D Baseline subtracted responses (changes in membrane voltage) of 10 types of AMMC/VLP neurons to synthetic pulse trains with IPIs of 30ms (black) or 120ms (gray). Vertical bar (for each trace) corresponds to Vm scale (ranging from 0-1 mV).

E IPI tuning curves (see Experimental Procedures). Plots show mean +/− s.e.m. Number of recordings per cell type indicated in each panel.

See also Fig. S2 and S3, and Supplemental Movies S1-S5.

We first identified genetic enhancer lines that labeled AMMC and VLP neurons by screening images of GFP expression from >6,000 GAL4 lines generated by the Dickson lab (BJD, unpublished and (Kvon et al., 2014)). Two recent studies identified several of the neuron types innervating the AMMC and VLP (Lai et al., 2012; Vaughan et al., 2014). Our screen identified some of these neuron types, in addition to several new ones (Table 1). Systematic electrophysiological recordings from the full set of identified AMMC and VLP neurons are challenging because many of these neurons are not accessible for patch clamp recordings in a preparation in which the antennae are intact and motile (Tootoonian et al., 2012). We therefore recorded from 15 different accessible neuron types (Table 1 and Fig. S2) from the pool of genetically-labeled AMMC and VLP neurons; if auditory responses in this sample were largely similar between cell types, this would suggest that these neural responses are representative of the larger AMMC/VLP population.

Table 1.

Summary of anatomical and histological information on the neurons recorded from in this study. See Supplemental Movies S1-5 and Fig. S2 for additional information. Confocal stacks and z-projections of the VT lines can be accessed at http://brainbase.imp.ac.at. Ipsilateral/contralateral defined with respect to soma position.

| driver line | N | neuron name (this paper) | neuron name (alternatives) | cholinergic | GABAergic | cell body | projections⁴ |
|---|---|---|---|---|---|---|---|
| JO2 (NP1046), VT049365 | 2 | AMMC-B2 | B2¹ | no¹ | yes¹ | ventral | AMMC (bilateral) |
| VT029306 | 6 | AMMC-VLP1 (AV1) | AMMC-VLP PN² | no | no | posterior | AMMC (ipsilateral), pVLP (bilateral) |
| VT050279 | 5 | AMMC-VLP2 (AV2) | | no | no | ventral | AMMC (ipsilateral), wedge (ipsilateral), aVLP (ipsilateral), SOG (ipsilateral) |
| VT050279 | 4 | AMMC-VLP3 (AV3) | | no | yes | ventral | AMMC (ipsilateral), wedge (ipsilateral), aVLP (ipsilateral), SOG (ipsilateral) |
| VT050245 | 4 | AMMC-VLP4 (AV4) | wedge-wedge PN² | no | yes² | ventral | wedge (bilateral) |
| VT029306 | 1 | AMMC-VLP5 (AV5) | | no | no | posterior | AMMC (ipsilateral), wedge (ipsilateral), aVLP (bilateral) |
| VT029306, VT011148 | 4 | AMMC-AV6 (AV6) | aLN(GCI)³, A1¹, AMMC-VLP PN² | no¹ | no¹ | posterior | AMMC (ipsilateral), wedge (ipsilateral), aVLP (bilateral) |
| JO2 (NP1046) | 1 | AMMC-AL1 (AAL1) | | | | ventral | AL (contralateral), AMMC (bilateral), SOG (bilateral) |
| VT2042 | 4 | VLP1 (V1) | | no | no | posterior | pVLP (ipsilateral) |
| not GFP labeled | 1 | VLP2 (V2) | | | | ventral | VLP (ipsilateral) |
| VT020822 | | | | | | ventral | |
| VT002600 | | | | no | no | ventral | |
| VT010262 | | | | | | ventral | |
| VT008188 | | | | | | posterior | |
| VT000772 | | | aPN3³ | | | posterior | |

⁴ Based on single neuron fills (with biocytin)

Abbreviations: AMMC – antennal mechanosensory and motor center; VLP – ventrolateral protocerebrum; aVLP – anterior VLP, pVLP – posterior VLP, LH – lateral horn.

We presented a broad range of stimuli during recordings (see Experimental Procedures). However, 33% (5/15) of the neuron types we recorded did not respond to any of our acoustic stimuli; these neurons may be postsynaptic to non-auditory receptors (Kain and Dahanukar, 2015; Otsuna and Ito, 2006; Yorozu et al., 2009). The 10 neuron types that responded to acoustic stimuli included: 1 AMMC local neuron (AMMC-B2), 7 candidate AMMC projection neurons (AMMC-VLP AV1-6 and AMMC-AL AAL1), and 2 VLP local neurons (VLP V1-2) (Fig. 2C). We confirmed that all recorded neurons innervated the AMMC and/or VLP via imaging (Table 1, Fig. S2, and Supplemental Movies S1-5). We found that auditory activity for almost all responsive neuron types was characterized by graded changes in membrane voltage (Vm) and not action potentials (Fig. 2D), as we had shown previously for neuron type AMMC-AV6 (a.k.a. A1 (Tootoonian et al., 2012)). Four of the sound-responsive cell types (AV1, AV3, AAL1 and V2) occasionally produced spikes during responses to pulse trains (Fig. 2D and S3A). Whereas the subthreshold responses of AV1 and AV3 cells were evoked consistently across animals for a given cell type, spikes were not (Fig. S3A-E). For neurons that we recorded from long enough to present all of our auditory stimuli, we were able to generate frequency and intensity tuning curves (Fig. S3F-H); these curves were similar across cell types but were all distinct from the receptor neuron (JON) population (Tootoonian et al., 2012), implying that subthreshold responses in AMMC/VLP neurons do not simply reflect the tuning of the auditory receptor inputs. These data collectively suggest that Vm changes (and not spikes) are likely to represent the “auditory code” or output of these neurons. Like frequency and intensity tuning, tuning for IPI was also relatively uniform – the cell types we recorded from were either un-tuned for IPI or responded more strongly to long IPIs (Fig. 2E).
While other subsets of AMMC/VLP neurons may possess distinct tuning from the neurons we recorded ((Vaughan et al., 2014) and see Discussion), the similarity of auditory responses among the AMMC/VLP neurons in our dataset is striking and suggests that our sample of neurons may represent computations common to auditory neurons in these two brain areas. To examine these computations directly, we next generated models of each recorded cell's response.

A computational model to predict AMMC/VLP neuron responses

We constructed encoder models (see Fig. 2B) to predict the stimulus-evoked Vm changes of every recorded neuron (Fig. 3A); these models were first fit to AMMC/VLP responses to artificial pulse trains (Fig. 2D) and then tested using a diverse set of stimuli including excerpts of recorded courtship song. The model used here is an extension of the standard linear-nonlinear (LN) model (Schwartz et al., 2006). Commonly, LN models consist of two stages: a linear filter that represents the neuron's preferred temporal feature and a nonlinearity that transforms the filtered stimulus into a prediction of the neuronal response. Because we observed a prominent response adaptation in almost all of our recordings (Fig. 2D), we added an adaptation stage to the input of the LN model (Fig. S4A-B), generating aLN models. Our implementation of the adaptation stage is based on a model for a depressing synapse that can account for multiple adaptation timescales (David and Shamma, 2013). However, we remain agnostic about the biophysical basis of adaptation in fly auditory neurons. All model parameters were fit by minimizing the squared error (see Experimental Procedures).
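The three stages of the aLN model (adaptation, linear filter, nonlinearity) can be illustrated with a small forward model. This is only a sketch under stated assumptions: the adaptation stage here is a single-timescale, depressing-synapse-like gain control rather than the multi-timescale model of David and Shamma (2013), the filter is a toy biphasic kernel, the nonlinearity is linear, and all parameter values and function names are hypothetical. Parameter fitting by squared-error minimization is not shown.

```python
import numpy as np

def adapt(envelope, dt, tau=2.0, strength=1.0):
    """Depressing-synapse-like gain control (illustrative, one timescale).

    A depletion variable d grows while the stimulus is on and recovers
    with time constant tau; the stimulus is scaled by (1 - d).
    """
    d = 0.0
    adapted = np.empty_like(envelope, dtype=float)
    for i, s in enumerate(envelope):
        adapted[i] = s * (1.0 - d)
        d += dt * (strength * s * (1.0 - d) - d / tau)
        d = min(max(d, 0.0), 1.0)
    return adapted

def biphasic_filter(dt, pos_tau=0.05, neg_tau=0.5, neg_weight=0.1, duration=2.0):
    """Toy filter: a fast positive lobe followed by a shallow, long negative lobe."""
    t = np.arange(0.0, duration, dt)
    return np.exp(-t / pos_tau) / pos_tau - neg_weight * np.exp(-t / neg_tau) / neg_tau

def aln_response(envelope, dt, kernel, gain=1.0, offset=0.0):
    """Adaptation -> causal linear filter -> (here linear) nonlinearity."""
    adapted = adapt(envelope, dt)
    filtered = np.convolve(adapted, kernel)[: len(envelope)] * dt
    return gain * filtered + offset

# Stimulus envelope: a single 1 s pulse train bout starting at t = 0.5 s.
dt = 0.001
t = np.arange(0.0, 4.0, dt)
bout = (t >= 0.5) & (t < 1.5)
envelope = bout.astype(float)

vm = aln_response(envelope, dt, biphasic_filter(dt))
onset_peak = vm[bout].max()       # response shortly after bout onset
end_of_bout = vm[bout][-1]        # adapted response at the end of the bout
offset_min = vm[t >= 1.5].min()   # negative offset response after the bout
print(onset_peak, end_of_bout, offset_min)
```

In this toy model the response decreases over the course of the bout and dips below baseline after the bout ends, which are the two qualitative features the aLN models are meant to capture.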

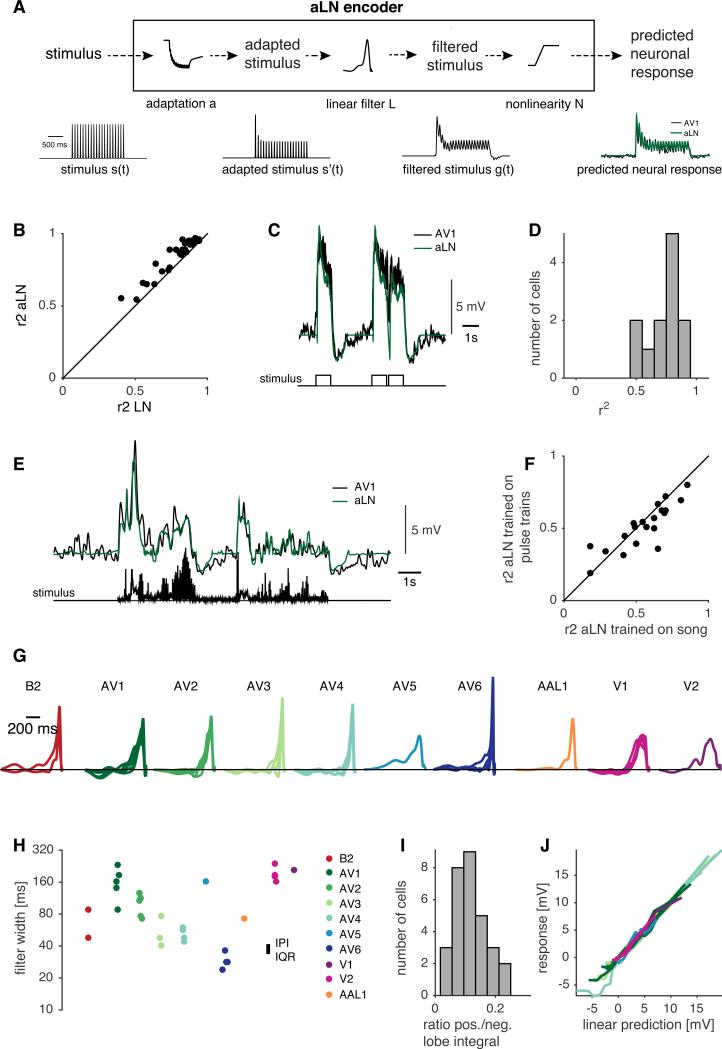

Figure 3. Adaptive linear-nonlinear (aLN) models reproduce AMMC/VLP responses.

A Structure of the aLN model; inputs were short pulse train stimuli of varying IPI (see Fig. 2D). The stimulus envelope s(t) is processed by an adaptation stage (a). The adapted stimulus s’(t) is then transformed by a standard LN model with a filter (F) and an input-output function or nonlinearity (N) to yield a prediction of the membrane voltage (green).

B Adding adaptation to the model improves performance for all cells. Coefficient of determination r² for aLN models = 0.92 (0.11) median(IQR) and for LN models (without the adaptation stage) = 0.84 (0.21), p=7×10⁻¹², sign test, N=36 neurons.

C An aLN model fitted to naturalistic pulse train stimuli effectively predicts neuronal responses. The stimulus (bottom trace) is a 10 minute sequence of 1 second pulse train bouts (IPI=40ms) with a natural distribution of pauses between trains. AV1 neuron response (black) and aLN prediction (green) for same recording as in A, r²=0.88.

D Assessment of the fits of the aLN model to naturalistic pulse train stimuli (r²=0.78(0.18) median(IQR), N=12).

E aLN models predict responses to natural courtship song. Song envelope (bottom trace) and membrane voltage of an AV1 neuron (black, top trace) or prediction from an aLN model fitted to short pulse trains (green trace).

F Performance of aLN models fitted to natural song (r² = 0.59+/−0.19) vs. the performance of aLN models fitted to artificial short pulse trains and tested with natural song (r² = 0.52+/−0.11) p=0.50, sign test, N=20.

G Linear filters for all cells in the dataset. Color scheme for different cells is also used in H and J.

H Width of the positive filter lobe for all cells (measured at half-maximal height, log scale). Interquartile range of conspecific IPIs for melanogaster is indicated.

I Ratio of the integral of the positive and negative lobes of the linear filters (0.11(0.06), median(IQR)).

J Nonlinearities from aLN models.

See also Fig. S4 and S5.

We evaluated model performance by comparing predicted pulse train responses to actual, single-trial responses for three types of stimuli: i) pulse trains (of varying IPI) used for fitting the model (‘short pulse trains’), ii) a long (10 min) series of 1 second pulse trains separated by pauses drawn from the natural distribution present in D. melanogaster courtship songs (‘naturalistic pulse trains’), and iii) excerpts of natural courtship song. r² values for pulse train response predictions were high for all cell types examined (r² IQR 0.78-0.95), and were reduced by 10% when excluding the adaptation stage of the model (Fig. 3B). Moreover, models without the adaptation stage failed to fully reproduce the response decrease over a pulse train observed in our recordings (Fig. S5A). aLN models were also able to reproduce responses to stimuli containing naturalistic bout structure (Fig. 3C-D); they reproduced the adaptation observed within and across trains as well as the negative (offset) responses at bout ends (Fig. S5). We next compared the performance of models estimated using short pulse trains to those estimated using recorded excerpts of natural fly songs, which contain mixtures of sine and pulse song (Fig. 3E-F). The performance of the latter model constitutes an upper bound for the performance of aLN models fitted to artificial pulse trains when tested on recorded courtship songs. In general, model performance was not significantly different from that upper bound (Fig. 3F). Overall, this suggests that our aLN model captures the major aspects of AMMC/VLP encoding.

Strong similarities in model parameters across cell types would indicate similar response properties in the AMMC/VLP and would imply that the AMMC/VLP neurons we sampled are likely to be representative of the full population (but see Discussion). We observed no strong, qualitative differences in the shapes of linear filters across the 10 sampled cell types (Fig. 3G). All filters consisted of a dominant, positive lobe that was as wide or wider than a prominent feature of fly song, the IPI (Fig. 3H). Filters of the same cell type were more similar than those of different cell types, indicating that filter shape was cell-type specific (Fig. S4C). Interestingly, the filter durations of AMMC to VLP PNs (AV1-AV6) tiled a wide range between 30 and 300 ms (Fig. 3H) suggesting that the AV neurons act as a filter bank with different low-pass cutoff frequencies. 81% of the filters were also biphasic, i.e. they exhibited a relatively shallow but long negative component (Fig. 3G, I). Although weaker than the positive lobe of the linear filter, the negative lobe strongly affected model responses: removing the negative lobe from the filters (setting negative weights to zero) diminished adaptation and abolished negative offset responses (Fig. S5C). Re-fitting the adaptation and nonlinearity parameters did not fully restore model performance (Fig. S5D-F). Taken together, these analyses demonstrate that the negative lobe of the linear filters is necessary to faithfully reproduce AMMC/VLP responses.
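The filter measures and the negative-lobe manipulation described above can be sketched as follows. This is an illustration with a toy biphasic kernel, not one of the fitted filters; the helper names are hypothetical, and the lobe ratio is assumed here to be the negative-lobe integral divided by the positive-lobe integral.

```python
import numpy as np

dt = 0.001
t = np.arange(0.0, 3.0, dt)
# Toy biphasic kernel (assumption): fast positive lobe, shallow long negative lobe.
kernel = np.exp(-t / 0.05) / 0.05 - 0.1 * np.exp(-t / 0.5) / 0.5

def positive_lobe_width(kernel, dt):
    """Width of the positive lobe at half of its maximal height, in seconds."""
    above = np.flatnonzero(kernel >= kernel.max() / 2.0)
    return (above[-1] - above[0] + 1) * dt

def lobe_ratio(kernel, dt):
    """Integral of the negative lobe relative to that of the positive lobe."""
    positive = kernel[kernel > 0].sum() * dt
    negative = -kernel[kernel < 0].sum() * dt
    return negative / positive

def remove_negative_lobe(kernel):
    """Set all negative filter weights to zero."""
    return np.clip(kernel, 0.0, None)

def respond(stimulus, kernel):
    """Causal linear filtering of a stimulus envelope."""
    return np.convolve(stimulus, kernel)[: len(stimulus)] * dt

# A single 1 s bout; the response after sample 2000 is the offset response.
bout = np.zeros(5000)
bout[1000:2000] = 1.0
offset_biphasic = respond(bout, kernel)[2000:].min()
offset_rectified = respond(bout, remove_negative_lobe(kernel))[2000:].min()

print(positive_lobe_width(kernel, dt), lobe_ratio(kernel, dt))
print(offset_biphasic, offset_rectified)
```

With the negative weights set to zero, the filtered response can no longer drop below baseline, so the negative offset response disappears in this toy example.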

By contrast, adaptation parameters were as variable across as within cell types (Fig. S4D). The majority of cells had only one or two timescales of adaptation, with the most frequent dominant time scale being 2 seconds (Fig. S4E-G). This long time constant suggests that adaptation is active during the coding of song bout structure (see Fig. 1B for relevant timescales). The nonlinearities in the aLN model were uniform and nearly linear across cell types, likely because these cells lack spiking nonlinearities (Kato et al., 2014) (Fig. 3J). Thus, the aLN encoder model 1) suggests relative homogeneity for song encoding across a morphologically diverse subset of AMMC/VLP neurons, 2) reveals two relatively simple computations of all recorded AMMC/VLP neurons (biphasic filtering and adaptation) and 3) effectively predicts single-trial responses to courtship song stimuli.

Readouts of AMMC/VLP neurons exceed behavioral tuning for song amount

We next sought to relate the neural representation of song in AMMC/VLP neurons to female slowing. To do this, we used the aLN (encoder) model to predict neural responses (for each of the 32 recorded neurons) to each of the ~4000 minutes of courtship song stimuli from our behavioral dataset. This produced a total of 127,552 model responses. We then built a decoder model to predict recorded female speed from each individual neuron's response (Fig. 4A). The decoder integrated neural responses over time windows of one minute – it thereby linked computations on the order of hundreds of milliseconds to behavioral timescales of tens of seconds. The decoder model serves two goals: i) to reveal the computations within AMMC/VLP neurons that underlie female sensitivity for bout structure and ii) to suggest computations downstream of AMMC/VLP neurons that generate female responses to song.

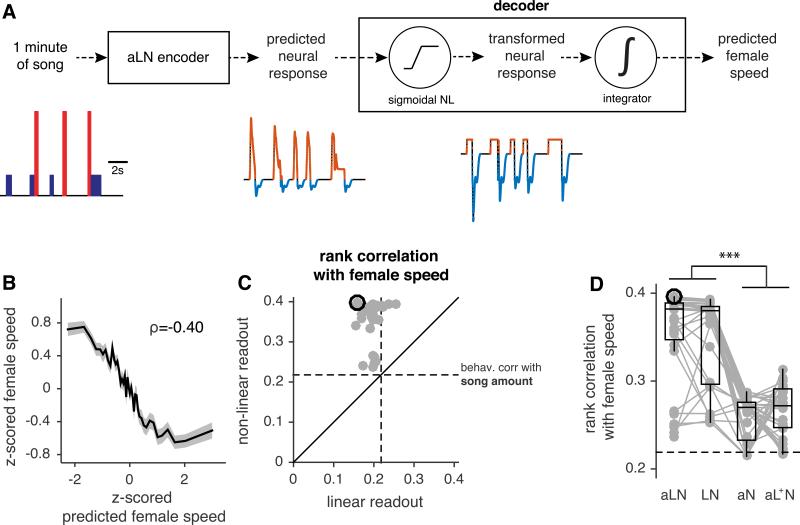

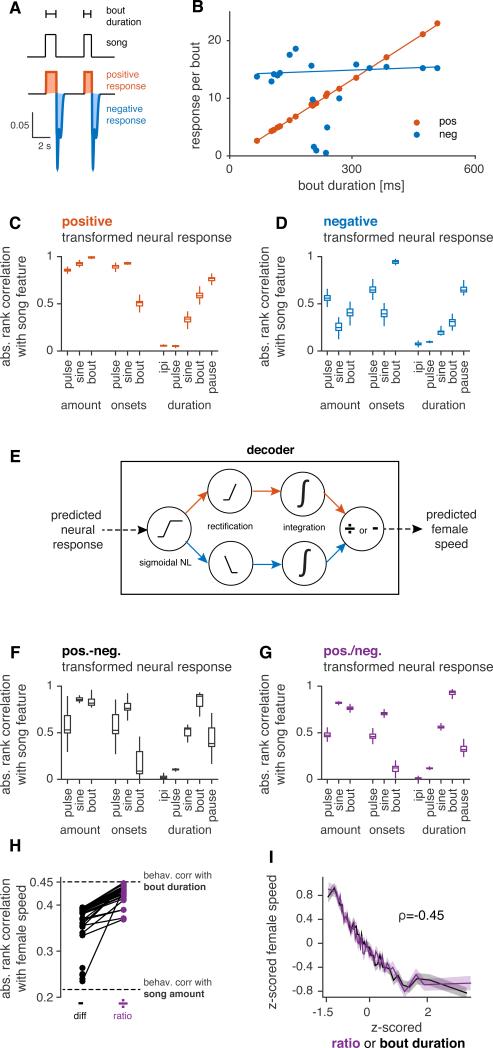

Figure 4. Decoding neuronal responses to predict behavior.

A AMMC/VLP responses to 1 minute segments of natural courtship containing song (left below) are predicted using the aLN encoder. The predicted neural responses (middle below, cell type AV1) are then transformed (right below) and integrated to yield a prediction of the average female speed for that courtship segment. The sigmoidal nonlinearity is optimized for the match between predicted female speed and actual female speed (see panel B). Positive responses are strongly compressed (orange) and negative responses at bout ends are amplified (blue) by the sigmoidal NL (see Fig. S6A-D).

B For all 3896 courtship windows, actual female speed versus predicted speed (from decoding a single AV1 neuron), ρ=−0.40. Although the original rank correlation between decoder output and female speed is positive, we inverted the curve to match the plots in Fig. 1.

C Rank correlations for actual versus predicted female speed for all 32 cells in the dataset. The sigmoidal NL improves the correlation for all cells (w/ sigmoidal NL ρ=0.38(0.04) (median(IQR)), w/o sigmoidal NL ρ=0.19(0.02), p=0, sign test). Dashed lines correspond to the absolute rank correlation between song amount and female speed (ρ=0.22; see Fig. 1D). The decoder outperforms the correlation with song amount for all cells (p=5×10⁻⁶, sign test) and most cells (29/32) perform worse than this correlation when decoded linearly (p=3×10⁻⁶, sign test).

D Biphasic filtering - but not adaptation - is necessary for good predictions of female speed. aLN = full encoding model; LN = model lacking adaptation; aN = model lacking filtering; aL+N = all negative weights of the linear filter set to zero. aLN vs. LN p = 0.08, aLN/LN vs. aN/aL+N p<1×10⁻⁴, sign test, p-values Bonferroni-corrected for 4 comparisons. The dashed line shows the absolute rank correlation between song amount and female speed.

Black circles in C and D indicate the performance values for the example neuron shown in B.

To incorporate nonlinearities commonly found in neurons, our decoder model started with a sigmoidal nonlinearity, which imposed both a threshold and a saturation on the model neuron response (Fig. 4A). Threshold and saturation values in our model were chosen independently for each cell to optimize the match between that cell's readout and female speed. Applying the nonlinearity produced a “transformed neural response”, which when integrated, created a predicted female speed that could be compared with the recorded female speed (Fig. 4B, compare with Fig. 1D). Including the sigmoidal nonlinearity improved correlations with female behavior in all cells (Fig. 4C), which suggests that simply integrating the raw predicted AMMC/VLP responses is not sufficient to explain female behavior. Notably, all cells outperformed the correlation between song amount and female speed when decoded this way (Fig. 4C; all points are above the horizontal dashed line, which is the rank correlation between song amount and female speed, Fig. 1D), implying that the decoder relies on song features other than just the amount of song.
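The decoder described above (sigmoidal nonlinearity, integration over the window, per-cell optimization against female speed) can be sketched as follows. This is a minimal illustration under assumptions: the sigmoid is parameterized by a midpoint and a slope rather than by explicit threshold and saturation values, the fit is a simple grid search on the absolute rank correlation, and the model responses and speeds are synthetic. All names are hypothetical.

```python
import numpy as np

def sigmoid(v, midpoint, slope):
    """Logistic nonlinearity imposing a soft threshold and a saturation."""
    return 1.0 / (1.0 + np.exp(-slope * (v - midpoint)))

def decode(responses, midpoint, slope):
    """Transform each window's response, then integrate (average) over time."""
    return sigmoid(responses, midpoint, slope).mean(axis=1)

def spearman(x, y):
    """Rank correlation for continuous data (no special handling of ties)."""
    rank_x = np.argsort(np.argsort(x))
    rank_y = np.argsort(np.argsort(y))
    return np.corrcoef(rank_x, rank_y)[0, 1]

def fit_decoder(responses, speed, midpoints, slopes):
    """Grid search for the sigmoid that best matches the decoder to speed."""
    best = (0.0, None, None)
    for m in midpoints:
        for k in slopes:
            rho = spearman(decode(responses, m, k), speed)
            if abs(rho) > abs(best[0]):
                best = (rho, m, k)
    return best

# Synthetic data (assumption): 300 windows of model responses, with speed
# generated from a known sigmoidal readout plus a little noise.
rng = np.random.default_rng(1)
window_means = rng.uniform(-1.0, 1.0, size=300)
responses = window_means[:, None] + rng.normal(size=(300, 200))
speed = -decode(responses, 0.5, 4.0) + 0.01 * rng.normal(size=300)

best_rho, best_midpoint, best_slope = fit_decoder(
    responses, speed, np.linspace(-1.0, 1.0, 9), [1.0, 4.0, 16.0]
)
print(best_rho, best_midpoint, best_slope)
```

Dropping the sigmoid and averaging the raw responses gives the linear readout that the nonlinear decoder is compared against in Fig. 4C.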

We next removed from the encoding models either adaptation (generating LN models) or the linear filter (generating aN models) (Fig. 4D). Removing the filter, but not adaptation, strongly reduced performance. Removing both filtering and adaptation – thereby predicting female speed from the raw song traces – further reduced performance (ρ = 0.14). Furthermore, the amplification of the negative response components by the sigmoidal nonlinearity in the decoder (Fig. 4A, blue) suggests that the negative lobe of the biphasic filter plays a major role in creating sensitivity to behaviorally relevant features of song. This amplification was not specific to any cell type (Fig. S6A-D). Removing the negative lobe of the linear filter (setting all negative weights to zero; “aL+N” models) reduced decoder performance as strongly as removing the filter altogether (Fig. 4D). Thus, the decoder model predicts female speed and identifies biphasic filtering as essential for reproducing behavioral selectivity.

A modified decoder model matches behavioral tuning for bout duration

We next identified the song features (Fig. 1G) most strongly correlated with the decoder output. Our decoder model integrates both positive (Fig. 5A, orange) and negative (Fig. 5A, blue) response components over one minute of courtship (thus, over several song bouts). The positive response component constitutes a faithful, binary representation of bout structure without discriminating between pulse and sine; it is thus highly correlated with the duration of each individual bout (Fig. 5B, orange) and the integral of the positive response over one minute correlates best with the amount of song (Fig. 5C). By contrast, the negative offset response is largely invariant to each individual bout's duration (Fig. 5B, blue) and its integral over one minute correlates best with the number of bout onsets (Fig. 5D). Thus, the positive and negative response components generated by the biphasic filters in AMMC/VLP neurons (Fig. 3) represent two distinct and behaviorally relevant song features – song amount and bout number – in a multiplexed code within the Vm of single neurons (see Discussion).
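
The separation into two response components can be sketched as follows, assuming an idealized response trace that is +1 during each bout and shows a fixed-size negative dip after each bout offset. The helper `toy_response` and its parameters are hypothetical and only serve to show why the two integrals track different song features.

```python
import numpy as np

def split_components(response, baseline=0.0):
    """Half-wave rectify around baseline into positive and negative parts."""
    r = np.asarray(response, dtype=float) - baseline
    positive = np.maximum(r, 0.0)
    negative = np.maximum(-r, 0.0)  # magnitude of the below-baseline part
    return positive, negative

def toy_response(bout_durations, pause=50, offset_len=10, offset_amp=0.5):
    """Idealized trace: +1 during each bout, then a fixed negative dip,
    then silence. Durations are given in samples."""
    chunks = []
    for d in bout_durations:
        chunks.append(np.ones(d))
        chunks.append(-offset_amp * np.ones(offset_len))
        chunks.append(np.zeros(pause))
    return np.concatenate(chunks)

pos, neg = split_components(toy_response([100, 300, 200]))
song_amount_readout = pos.sum()   # grows with total song duration
bout_number_readout = neg.sum()   # grows with the number of bout offsets
```

For the three bouts in this example, the positive integral is 600 (the total number of song samples) and the negative integral is 15 (three offsets of fixed size), regardless of how the 600 samples are distributed across the three bouts.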

Figure 5. Modified decoder model predicts female responses to bout duration.

A Separation of the transformed neural response (Fig. 4A) into positive (orange) and negative (blue) response components by half-wave rectification at baseline. Trace is from the same neuron as in Fig. 4A.

B Correlation between bout duration and positive (ρ=0.99, p=0) or negative (ρ=0.20, p=0.49) response components.

C Absolute rank correlation between positive response components (averaged over one minute, not for each individual bout as in B) and song features for all cells. The rank correlation with song bout amount is highest (all p<2×10−8, one-sided sign test).

D Same as (C) but for negative response components. The rank correlation with bout onsets is highest (all p<1×10−6, one-sided sign test).

E Alternative decoder based on reading out positive and negative response components separately and then combining via subtraction (equivalent to the original decoder, Fig. 4A) or division (ratio-based decoder).

F Same as (C) but for the original decoder (Fig. 4A). There is no single feature that correlates most strongly with this decoder readout. However, the output of this decoder has a strong correlation with bout duration (ρ=0.89(0.13), median(IQR)).

G Same as (C) but for the ratio-based decoder. Its output correlates most strongly with bout duration (ρ=0.93(0.04), all p<1×10−3, one-sided sign test).

H The ratio-based decoder outperforms the original subtraction-based decoder for all cells and reaches the correlation values achieved between the female behavior and bout duration (original decoder: ρ=0.38(0.04), ratio decoder: ρ=0.42(0.02), p=3×10−8, sign test).

I Comparison of female speed preference function for bout duration from behavioral data (black, reproduced from Fig. 1H) and from the ratio decoder for one AV1 cell (purple) – rank correlations are the same (p<1×10−13).

N=32 cells for (C) and N=26 cells for (D, F, G) since 6/32 cells did not exhibit a detectable negative response component. All tuning curves and correlation values based on 3896 one minute segments of song. All p-values in C,D,F,G are Bonferroni corrected for 55 comparisons between all song features. See also Fig. S6.

Our behavioral analysis identified bout duration as the best predictor of female speed. How does the female brain extract this information? Because the decoder integrates over positive and negative response components, it relies on the difference between song amount and bout number for predicting female speed. However, bout duration is the ratio, not the difference, of song amount and bout number, and it can therefore be explicitly decoded by instead dividing the integrated positive and negative response components of AMMC/VLP neurons within the decoder (Fig. 5E). The readout from this modified decoder correlates more strongly with bout duration (compare Figs. 5F and G, p=3×10−8, one-sided sign test) and better predicts female speed (Fig. 5H); it reads out responses of single neurons with a near-perfect match to the behavioral correlation between bout duration and female speed (Fig. 5I). The nonlinearity in the new ratio-based decoder is not strictly necessary to predict behavior (Fig. S6E). By contrast, in the original, difference-based decoder, the nonlinearity played a major role (Fig. 4C): there, the weights of song amount and bout number were adjusted by compression and amplification, respectively. We thus propose a simple algorithm that can extract bout duration based on a few operations: biphasic filtering in AMMC/VLP neurons, and rectification, integration and division in downstream neurons. Our approach links female selectivity for a particular property of male courtship song (song bout duration) to the neural representation of song in the female brain in a physiologically plausible way.
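
The difference between the two readouts can be written out in a short worked form. The symbols below ($r$ for the transformed response, $P$ and $N$ for the integrated components, $A$ for song amount, $n$ for bout number, and the proportionality constants $a$ and $b$) are introduced here for illustration only, and the proportionalities are idealizations of the correlations reported in Fig. 5C and D.

```latex
\begin{align}
P &= \int_0^T \max\bigl(r(t), 0\bigr)\,dt \;\approx\; a\,A,
&
N &= \int_0^T \max\bigl(-r(t), 0\bigr)\,dt \;\approx\; b\,n, \\
P - N &\approx a\,A - b\,n
&&\text{(difference-based decoder)}, \\
\frac{P}{N} &\approx \frac{a}{b}\,\frac{A}{n} = \frac{a}{b}\,\bar{d}
&&\text{(ratio-based decoder)},
\end{align}
```

Here $\bar{d} = A/n$ is the mean bout duration within the integration window $T$. Under these assumptions the ratio is a monotonic function of $\bar{d}$, so its rank correlation with bout duration does not depend on the scale factor $a/b$, whereas the difference depends on the relative weighting of $A$ and $n$.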

Discussion

In this study, we used computational models to link natural stimulus features, neural codes, and animal behavior. This approach enabled us to i) identify behaviorally relevant song features during natural behavior (Fig. 1), ii) characterize the computations that underlie the representation of sounds in early auditory neurons (Figs. 2 and 3), iii) link these computations to female behavior (Fig. 4), and iv) propose a simple algorithm for generating behavioral selectivity for song features on long timescales (Fig. 5). Similar approaches have been successfully applied in other systems, e.g. to link olfactory discrimination behavior with neural codes in Drosophila (Parnas et al., 2013) or to study the emergence of object recognition in the mammalian visual system (Dicarlo et al., 2012). Our study extends this approach to a social behavior like courtship.

Female sensitivity to song structure on long timescales

Drosophila melanogaster courtship song contains features spanning multiple timescales. Short timescale features like the inter-pulse interval (IPI) vary across species, and previous studies have focused on the IPI in relation to female receptivity (Bennet-Clark and Ewing, 1969; Schilcher, 1976). When examining timescales of integration > 2 seconds, we failed to uncover a strong effect of conspecific IPI range on female speed. Instead, we found that females are most sensitive to long timescale song features like bout duration. Our previous study showed that melanogaster females slow down in response to conspecific but not heterospecific male song; the major difference between these songs is on short timescales (e.g., IPIs), as males of both species shape song bout structure in accordance with the female's behavior (Coen et al., 2014). Taken together, our results are most consistent with the interpretation that short timescale song features indicate species identity, whereas long timescale features (like bout duration) indicate fitness (signaling, for example, a male's ability to follow the female) and are used to differentiate between conspecifics. In this context, it is worth noting that pheromonal incompatibilities between species typically prevent courtship between heterospecifics (Billeter et al., 2009; Fan et al., 2013).

The influence of longer timescale song structure on female speed is non-trivial, as indicated by three aspects of our behavioral data. First, bout duration was a stronger predictor than any other single song feature or pair of song features (Fig. 1G and Fig. S1C). Second, the duration of bouts, but not the pauses between bouts, was strongly correlated with female speed (Fig. S1B). This is contrary to the expectation if the selectivity for bout duration were a trivial consequence of its correlation with song amount, since pause duration negatively correlates with song amount. Third, females reduce speed most to song with few bout onsets but many sine onsets, while pulse onsets were uncorrelated with female speed. This pattern cannot be explained by correlations between these features and song amount. All three points indicate that females are not simply accumulating conspecific song, but instead are evaluating song bout structure on timescales exceeding that of the IPI. This has implications not only for the neural computations underlying song processing (discussed below) but also for evolution and sexual selection theories.

AMMC/VLP neurons and the representation of courtship song

To characterize the representation of song in the early auditory centers of the Drosophila brain, we recorded from as many different types of AMMC/VLP neurons as possible, and we sampled both local and projection neurons. To our surprise, response properties quantified through the aLN model (Fig. 3) revealed little qualitative difference between cell types. All neurons exhibited long linear filters, showed pronounced adaptation during responses to pulse trains, and most produced negative offset responses. The major, quantitative difference among AMMC/VLP neurons was the duration of the positive filter lobes (Fig. 3H); however, this feature did not impact behavioral predictions (rank correlation between duration of the positive lobe and decoder performance ρ=−0.01, p=0.96). The range of filter durations may thus serve other aspects of song processing or other acoustically-driven behaviors (Lehnert et al., 2013; Vaughan et al., 2014). The relative homogeneity in response properties in our data set also makes it unlikely that females rely on any single neuron type when processing male song. However, a recent study focused on two AMMC neuron types not sampled in our study: one cluster of AMMC local neurons (aLN(al)) and one cluster of AMMC projection neurons (B1 or aPN1), neither of which can be recorded from while keeping the antenna intact and motile in air. That study found that neural silencing of either cluster in females lengthened the time to copulation (Vaughan et al., 2014). Whether these neuron types are also necessary for female slowing in response to courtship song was not determined. Additionally, we do not know how the auditory responses of these two neuron types differ from the AMMC/VLP neurons we sampled. Imaging neural responses using fast voltage sensors (Cao et al., 2013) should facilitate mapping the auditory codes of the full complement of neurons in the AMMC/VLP.

Linking computations in the auditory pathway with female behavior

Of the two main computations in our aLN encoder model (biphasic filtering and adaptation), only biphasic filtering influenced the full model's (encoder + decoder) ability to predict female behavior. In principle, adaptation should contribute to tuning for song features given that it acts on long, behaviorally relevant timescales and is known to affect temporal coding (Benda and Herz, 2003). We posit that in Drosophila AMMC/VLP neurons, adaptation may mainly serve as a gain control mechanism to preserve auditory sensitivity and conserve energy across the large range of intensities likely encountered by the female during courtship. The decoder model also revealed an important role for the weak, long negative lobe of the biphasic filter, which produces negative offset responses after the end of song bouts in 81% of the neurons recorded. This negative response is independent of bout duration and thus forms the basis for counting the number of bouts through integration. Since the decoder integrates over long time windows, as the female does in evaluating male song, the negative lobe of the filter leads to a signal that is clearly different from noise and hence can be used by the decoder. Note that in a noiseless system, the amplification of the weak negative response components is not strictly necessary to match behavior (Fig. S6E). However, amplification ensures robustness to noise; it is therefore desirable to place it as early as possible in the neural pathway, as occurs in our decoder (Fig. 5E). The negative lobe's biophysical basis may be slow inhibition or activation of hyperpolarizing currents after depolarization (e.g. Ca++-dependent K+ channels underlying AHPs).

Similar biphasic filters have been found in numerous other systems (Hart and Alon, 2013; Kato et al., 2014; Nagel and Doupe, 2006); there they are proposed to support efficient coding (Atick and Redlich, 1990; Zhao and Zhaoping, 2011). Here, we add another aspect that makes biphasic filters advantageous: the two lobes correspond to two neuronal response features (tonic and phasic) and signal distinct song features (song amount and bout number). This representation thus constitutes a multiplexed code, in which multiple features are encoded in different aspects of a single data stream (Blumhagen et al., 2011; Ratte et al., 2013). Since the tonic and phasic components occur sequentially, they can be easily de-multiplexed using rectification, an essential component of the decoder model (Fig. 5). The decoder further provides evidence that females evaluate bout duration, independent of our behavioral analysis, because we only considered the neuronal response patterns and female speed values (not song parameters) when optimizing the original (non-ratio-based) decoder's sigmoidal nonlinearity. Nonetheless, its output was strongly correlated with bout duration (ρ = 0.89).

Given that our knowledge of higher-order auditory neurons in the Drosophila brain is limited (Lai et al., 2012; Zhou et al., 2014), the utility of our decoder model is that it posits simple algorithms for transforming the auditory codes of AMMC/VLP neurons into behavioral responses to courtship song. We propose that an AMMC/VLP neuron is read out by two downstream neurons. One of these neurons positively rectifies the AMMC/VLP response and encodes bout structure in a binary way. Alternatively, this neuron could get its input from one of the AMMC/VLP neurons in our data set that lacked a sufficiently strong negative lobe (Fig. 3I). The other downstream neuron reads out the phasic response components at the end of each bout. This could be implemented through release from inhibition (cf. Liu et al., 2015). To improve the signal-to-noise ratio, these phasic responses could be amplified by voltage-dependent conductances (Engel and Jonas, 2005; González-Burgos and Barrionuevo, 2001). The output of these two downstream neurons would then be integrated, for example through recurrent connectivity, intracellular molecules like calcium, or extracellular molecules like neuropeptides (Durstewitz et al., 2000; Flavell et al., 2013; Major and Tank, 2004). Finally, the two integrated values are combined by divisive inhibition to yield an output that is used to control female speed (Gabbiani et al., 2002; Silver, 2010).

Our decoder model, like female behavior, computes average bout duration, not the duration of single song bouts, suggesting that individual Drosophila neurons do not encode bout duration explicitly. This contrasts with other systems, where duration-tuned neurons have been found (Aubie et al., 2012). In the case of processing echolocation signals or speech, knowing the duration of individual calls is likely essential. However, for the female fruit fly, the duration of an individual bout may carry little information about male fitness. This highlights a general property of the integration processes associated with decision-making or behavioral control (Brunton et al., 2013; Clemens et al., 2014; DasGupta et al., 2014): some features of the sensory environment may be available to the animal only in the form of averages or summary statistics (Clemens and Ronacher, 2013; McDermott and Simoncelli, 2011; Freeman and Simoncelli, 2011). This has consequences for the search for neural correlates of behavior, in that explicit neuronal selectivity for behaviorally relevant features may never arise until after decision-making stages.

Experimental Procedures

Flies

Details regarding all fly stocks and genotypes can be found in Supplemental Experimental Procedures.

Behavioral Analysis

We used a previously published data set of natural courtship song and accompanying male and female movements, from pairs of wild-type males (of 8 geographically diverse strains) and PIBL (pheromone-insensitive and blind) females (Coen et al., 2014). Definitions of song features and details of all analyses can be found in Supplemental Experimental Procedures.

Sound Delivery and Electrophysiology

Patch clamp recordings were performed and auditory stimuli were delivered as described previously (Tootoonian et al., 2012). We recorded from 15 different cell types with projections in the AMMC/VLP (see Table 1). For details see Supplemental Experimental Procedures.

Analysis and Modeling of Electrophysiological Data

Tuning curves for frequency, intensity and inter-pulse interval were constructed from the baseline subtracted membrane voltage (Vm). The Vm response was predicted using an adaptive linear-nonlinear (aLN) model, in which the stimulus envelope was pre-processed by a divisive adaptation stage, before being filtered and nonlinearly transformed to the predicted Vm. See Supplemental Experimental Procedures for details on analyses, model parameters and fitting procedures.
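
The order of operations in the aLN model (divisive adaptation, then linear filtering, then a static nonlinearity) can be sketched as below. This is a minimal illustration under stated assumptions: the leaky-integrator form of the adaptation state, the parameters `tau` and `strength`, and the default `tanh` output nonlinearity are placeholders introduced here, not the fitted model, whose details are given in Supplemental Experimental Procedures.

```python
import numpy as np

def divisive_adaptation(envelope, tau, strength, dt):
    """Divide the envelope by a term that grows with its recent history.
    The history is tracked by a leaky integrator (an assumption)."""
    env = np.asarray(envelope, dtype=float)
    out = np.empty_like(env)
    state = 0.0
    alpha = dt / tau  # update fraction per time step; requires dt < tau
    for i, s in enumerate(env):
        out[i] = s / (1.0 + strength * state)
        state += alpha * (s - state)
    return out

def aln_predict(envelope, h, tau, strength, dt, nonlinearity=np.tanh):
    """Adaptation, then causal linear filtering with h, then a nonlinearity."""
    adapted = divisive_adaptation(envelope, tau, strength, dt)
    filtered = np.convolve(adapted, h)[: len(adapted)]
    return nonlinearity(filtered)
```

For a constant envelope, the adapted signal starts at the input value and decays toward `1 / (1 + strength)` of it, which mimics response adaptation during a sustained stimulus; setting `strength=0` disables adaptation and reduces the sketch to an LN model.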

Behavioral Model

For predicting the female behavioral response to song, we used aLN models fitted to the electrophysiological data. We generated model neural responses for the courtship song recorded in the behavioral assay. We then predicted female speed using a decoder, which consisted of a sigmoidal nonlinearity and an integration stage. See Supplemental Experimental Procedures for details on the decoder model and variants tested.

Acknowledgements

We thank Carlos Brody, Jonathan Pillow, Tim Hanks, and Adam Calhoun for comments on the manuscript. JC was funded by the DAAD (German Academic Exchange Foundation), CCG by a Marie-Curie International Research Fellowship, and MM by the Alfred P. Sloan Foundation, Human Frontiers Science Program, NSF CAREER award, McKnight Foundation, Klingenstein Foundation, NSF BRAIN Initiative EAGER award, and an NIH New Innovator award.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Author Contributions: JC, CCG and MM designed the study. CCG performed neural recordings and immunohistochemistry, with assistance from X-JG. PC collected the behavioral data. BJD contributed genetic enhancer lines. JC analyzed the data and generated the computational models. JC and MM wrote the paper.

References

- Arthur BJ, Sunayama-Morita T, Coen P, Murthy M, Stern DL. Multi-channel acoustic recording and automated analysis of Drosophila courtship songs. BMC Biol. 2013;11:11. doi: 10.1186/1741-7007-11-11.

- Atick JJ, Redlich AN. Towards a theory of early visual processing. Neural Computation. 1990;2:308–320.

- Aubie B, Sayegh R, Faure PA. Duration Tuning across Vertebrates. Journal of Neuroscience. 2012;32:6373–6390. doi: 10.1523/JNEUROSCI.5624-11.2012.

- Barlow H. Redundancy reduction revisited. Network: Computation in Neural Systems. 2001;12:241–253.

- Benda J, Herz AVM. A universal model for spike-frequency adaptation. Neural Computation. 2003;15:2523–2564. doi: 10.1162/089976603322385063.

- Bennet-Clark HC, Ewing AW. Pulse interval as a critical parameter in the courtship song of Drosophila melanogaster. Animal Behaviour. 1969;17:755–759.

- Billeter J-C, Atallah J, Krupp JJ, Millar JG, Levine JD. Specialized cells tag sexual and species identity in Drosophila melanogaster. Nature. 2009;461:987–991. doi: 10.1038/nature08495.

- Blumhagen F, Zhu P, Shum J, Schärer Y-PZ, Yaksi E, Deisseroth K, Friedrich RW. Neuronal filtering of multiplexed odour representations. Nature. 2011;479:493–498. doi: 10.1038/nature10633.

- Brunton BW, Botvinick MM, Brody CD. Rats and Humans Can Optimally Accumulate Evidence for Decision-Making. Science. 2013;340:95–98. doi: 10.1126/science.1233912.

- Cao G, Platisa J, Pieribone VA, Raccuglia D, Kunst M, Nitabach MN. Genetically Targeted Optical Electrophysiology in Intact Neural Circuits. Cell. 2013;154:904–913. doi: 10.1016/j.cell.2013.07.027.

- Carandini M, Heeger DJ. Normalization as a canonical neural computation. Nature Reviews Neuroscience. 2012;13:51–62. doi: 10.1038/nrn3136.

- Chiang A-S, Lin C-Y, Chuang C-C, Chang H-M, Hsieh C-H, Yeh C-W, Shih C-T, Wu J-J, Wang G-T, Chen Y-C, et al. Three-Dimensional Reconstruction of Brain-wide Wiring Networks in Drosophila at Single-Cell Resolution. Current Biology. 2010;21:1–11. doi: 10.1016/j.cub.2010.11.056.

- Clemens J, Ronacher B. Feature extraction and integration underlying perceptual decision making during courtship behavior. Journal of Neuroscience. 2013;33:12136–12145. doi: 10.1523/JNEUROSCI.0724-13.2013.

- Clemens J, Krämer S, Ronacher B. Asymmetrical integration of sensory information during mating decisions in grasshoppers. Proc Natl Acad Sci U S A. 2014;111:16562–16567. doi: 10.1073/pnas.1412741111.

- Clemens J, Kutzki O, Ronacher B, Schreiber S, Wohlgemuth S. Efficient transformation of an auditory population code in a small sensory system. Proc Natl Acad Sci U S A. 2011;108:13812–13817. doi: 10.1073/pnas.1104506108.

- Coen P, Clemens J, Weinstein AJ, Pacheco DA, Deng Y, Murthy M. Dynamic sensory cues shape song structure in Drosophila. Nature. 2014;507:233–237. doi: 10.1038/nature13131.

- DasGupta S, Ferreira CH, Miesenböck G. FoxP influences the speed and accuracy of a perceptual decision in Drosophila. Science. 2014;344:901–904. doi: 10.1126/science.1252114.

- David SV, Shamma SA. Integration over Multiple Timescales in Primary Auditory Cortex. Journal of Neuroscience. 2013;33:19154–19166. doi: 10.1523/JNEUROSCI.2270-13.2013.

- deCharms RC, Zador A. Neural Representation and the Cortical Code. Annu. Rev. Neurosci. 2000;23:613–647. doi: 10.1146/annurev.neuro.23.1.613.

- Dicarlo JJ, Zoccolan D, Rust NC. How Does the Brain Solve Visual Object Recognition? Neuron. 2012;73:415–434. doi: 10.1016/j.neuron.2012.01.010.

- Durstewitz D, Seamans JK, Sejnowski TJ. Neurocomputational models of working memory. Nature Neuroscience. 2000;3(Suppl):1184–1191. doi: 10.1038/81460.

- Engel D, Jonas P. Presynaptic Action Potential Amplification by Voltage-Gated Na+ Channels in Hippocampal Mossy Fiber Boutons. Neuron. 2005;45:405–417. doi: 10.1016/j.neuron.2004.12.048.

- Fairhall AL, Lewen GD, Bialek W, de Ruyter van Steveninck RR. Efficiency and ambiguity in an adaptive neural code. Nature. 2001;412:787–792. doi: 10.1038/35090500.

- Fan P, Manoli DS, Ahmed OM, Chen Y, Agarwal N, Kwong S, Cai AG, Neitz J, Renslo A, Baker BS, et al. Genetic and Neural Mechanisms that Inhibit Drosophila from Mating with Other Species. Cell. 2013;154:89–102. doi: 10.1016/j.cell.2013.06.008.

- Flavell SW, Pokala N, Macosko EZ, Albrecht DR, Larsch J, Bargmann CI. Serotonin and the Neuropeptide PDF Initiate and Extend Opposing Behavioral States in C. elegans. Cell. 2013;154:1023–1035. doi: 10.1016/j.cell.2013.08.001.

- Freeman J, Simoncelli EP. Metamers of the ventral stream. Nature Neuroscience. 2011;14:1195–1201. doi: 10.1038/nn.2889.

- Gabbiani F, Krapp HG, Koch C, Laurent G. Multiplicative computation in a visual neuron sensitive to looming. Nature. 2002;420:320–324. doi: 10.1038/nature01190.

- González-Burgos G, Barrionuevo G. Voltage-gated sodium channels shape subthreshold EPSPs in layer 5 pyramidal neurons from rat prefrontal cortex. Journal of Neurophysiology. 2001;86:1671–1684. doi: 10.1152/jn.2001.86.4.1671.

- Hart Y, Alon U. The utility of paradoxical components in biological circuits. Mol. Cell. 2013;49:213–221. doi: 10.1016/j.molcel.2013.01.004.

- Kain P, Dahanukar A. Secondary Taste Neurons that Convey Sweet Taste and Starvation in the Drosophila Brain. Neuron. 2015;85:819–832. doi: 10.1016/j.neuron.2015.01.005.

- Kamikouchi A, Inagaki HK, Effertz T, Hendrich O, Fiala A, Göpfert MC, Ito K. The neural basis of Drosophila gravity-sensing and hearing. Nature. 2009;458:165–171. doi: 10.1038/nature07810.

- Kamikouchi A, Shimada T, Ito K. Comprehensive Classification of the Auditory Sensory Projections in the Brain of the Fruit Fly Drosophila melanogaster. J. Comp. Neurol. 2006;499:317–356. doi: 10.1002/cne.21075.

- Kato S, Xu Y, Cho CE, Abbott LF, Bargmann CI. Temporal Responses of C. elegans Chemosensory Neurons Are Preserved in Behavioral Dynamics. Neuron. 2014;81:616–628. doi: 10.1016/j.neuron.2013.11.020.

- Kvon EZ, Kazmar T, Stampfel G, Yáñez-Cuna JO, Pagani M, Schernhuber K, Dickson BJ, Stark A. Genome-scale functional characterization of Drosophila developmental enhancers in vivo. Nature. 2014 doi: 10.1038/nature13395.

- Lai JS-Y, Lo S-J, Dickson BJ, Chiang A-S. Auditory circuit in the Drosophila brain. Proc Natl Acad Sci U S A. 2012;109 doi: 10.1073/pnas.1117307109.

- Lehnert BP, Baker AE, Gaudry Q, Chiang A-S, Wilson RI. Distinct Roles of TRP Channels in Auditory Transduction and Amplification in Drosophila. Neuron. 2013;77:115–128. doi: 10.1016/j.neuron.2012.11.030.

- Liu WW, Mazor O, Wilson RI. Thermosensory processing in the Drosophila brain. Nature. 2015 doi: 10.1038/nature14170.

- Major G, Tank D. Persistent neural activity: prevalence and mechanisms. Current Opinion in Neurobiology. 2004;14:675–684. doi: 10.1016/j.conb.2004.10.017.

- McDermott JH, Simoncelli EP. Sound texture perception via statistics of the auditory periphery: evidence from sound synthesis. Neuron. 2011;71:926–940. doi: 10.1016/j.neuron.2011.06.032.

- Nagel KI, Doupe AJ. Temporal Processing and Adaptation in the Songbird Auditory Forebrain. Neuron. 2006;51:845–859. doi: 10.1016/j.neuron.2006.08.030.

- Olshausen BA, Field DJ. Sparse coding of sensory inputs. Current Opinion in Neurobiology. 2004;14:481–487. doi: 10.1016/j.conb.2004.07.007.

- Otsuna H, Ito K. Systematic analysis of the visual projection neurons of Drosophila melanogaster. I. Lobula-specific pathways. J. Comp. Neurol. 2006;497:928–958. doi: 10.1002/cne.21015.

- Parnas M, Lin AC, Huetteroth W, Miesenböck G. Odor Discrimination in Drosophila: From Neural Population Codes to Behavior. Neuron. 2013;79:932–944. doi: 10.1016/j.neuron.2013.08.006.

- Pfeiffer BD, Jenett A, Hammonds AS, Ngo T-TB, Misra S, Murphy C, Scully A, Carlson JW, Wan KH, Laverty TR, et al. Tools for neuroanatomy and neurogenetics in Drosophila. Proc Natl Acad Sci U S A. 2008;105:9715–9720. doi: 10.1073/pnas.0803697105.

- Quiroga RQ, Reddy L, Kreiman G, Koch C, Fried I. Invariant visual representation by single neurons in the human brain. Nature. 2005;435:1102–1107. doi: 10.1038/nature03687.

- Ratte S, Hong S, De Schutter E, Prescott SA. Impact of Neuronal Properties on Network Coding: Roles of Spike Initiation Dynamics and Robust Synchrony Transfer. Neuron. 2013;78:758–772. doi: 10.1016/j.neuron.2013.05.030.

- Salinas E. How Behavioral Constraints May Determine Optimal Sensory Representations. PLoS Biology. 2006;4:e387. doi: 10.1371/journal.pbio.0040387.

- von Schilcher F. The role of auditory stimuli in the courtship of Drosophila melanogaster. Animal Behaviour. 1976;24:18–26.

- Schwartz O, Pillow JW, Rust NC, Simoncelli EP. Spike-triggered neural characterization. Journal of Vision. 2006;6:484–507. doi: 10.1167/6.4.13.

- Sharpee TO, Sugihara H, Kurgansky AV, Rebrik SP, Stryker MP, Miller KD. Adaptive filtering enhances information transmission in visual cortex. Nature. 2006;439:936–942. doi: 10.1038/nature04519.

- Silver RA. Neuronal arithmetic. Nature Reviews Neuroscience. 2010;11:474–489. doi: 10.1038/nrn2864.

- Theunissen FE, Elie JE. Neural processing of natural sounds. Nature Reviews Neuroscience. 2014;15:355–366. doi: 10.1038/nrn3731.

- Tootoonian S, Coen P, Kawai R, Murthy M. Neural Representations of Courtship Song in the Drosophila Brain. Journal of Neuroscience. 2012;32:787–798. doi: 10.1523/JNEUROSCI.5104-11.2012.

- Vaughan AG, Zhou C, Manoli DS, Baker BS. Neural Pathways for the Detection and Discrimination of Conspecific Song in D. melanogaster. Current Biology. 2014 doi: 10.1016/j.cub.2014.03.048.

- Yorozu S, Wong A, Fischer BJ, Dankert H, Kernan MJ, Kamikouchi A, Ito K, Anderson DJ. Distinct sensory representations of wind and near-field sound in the Drosophila brain. Nature. 2009;458:201–205. doi: 10.1038/nature07843.

- Yu JY, Kanai MI, Demir E, Jefferis GSXE, Dickson BJ. Cellular organization of the neural circuit that drives Drosophila courtship behavior. Current Biology. 2010;20:1602–1614. doi: 10.1016/j.cub.2010.08.025.

- Zhao L, Zhaoping L. Understanding Auditory Spectro-Temporal Receptive Fields and Their Changes with Input Statistics by Efficient Coding Principles. PLoS Comput Biol. 2011;7:e1002123. doi: 10.1371/journal.pcbi.1002123.

- Zhou C, Pan Y, Robinett CC, Meissner GW, Baker BS. Central Brain Neurons Expressing doublesex Regulate Female Receptivity in Drosophila. Neuron. 2014;83:149–163. doi: 10.1016/j.neuron.2014.05.038.
