Abstract

Perceptual learning is a long-lasting improvement in performance on a perceptual task following training. A hallmark of perceptual learning is task specificity: after participants have trained on and learned a particular task, learning rarely transfers to another task, even with identical stimuli. Accordingly, it is assumed that performing a task throughout training is a requirement for learning to occur on that specific task. Thus, interleaving training trials of a target task with those of another task should not improve performance on the target task. However, recent findings in audition show that interleaving two tasks during training can facilitate perceptual learning, even when the training on neither task yields learning on its own. Here we examined the role of cross-task training in the visual domain by training 4 groups of human observers for 3 consecutive days on an orientation comparison task (target task) and/or a spatial-frequency comparison task (interleaving task). Interleaving small amounts of training on each task, which were ineffective alone, not only enabled learning on the target orientation task, as in audition, but also surpassed the learning attained by training on that task alone for the same total number of trials. This study illustrates that cross-task training in visual perceptual learning can be more effective than single-task training. The results reveal a comparable learning principle across modalities and demonstrate how to optimize training regimens to maximize perceptual learning.

Keywords: Perceptual learning, cross-task training, orientation, spatial-frequency, comparison task, transfer

1. Introduction

Plasticity is a key feature of the brain that adapts our perception and behavior to ongoing changes in the environment. Plasticity in the perceptual domain is revealed through perceptual learning (PL), the ability to improve performance on sensory tasks through training (Carmel & Carrasco, 2008; Levi & Li, 2009; Sagi, 2011). In this study we evaluated whether cross-task training, i.e., interleaving training with a different task, would affect perceptual learning in vision.

Specificity is a hallmark of PL; after training with a single specific stimulus and task, PL rarely generalizes to untrained stimulus features or untrained tasks (reviews: Sagi, 2011; Wright & Zhang, 2009). For example, training on orientation discrimination leads to learning for the trained orientation, but once learning has been achieved, orientation discrimination for the orthogonal orientation is not improved, showing feature specificity of learning (e.g., Crist et al., 1997; Karni & Sagi, 1991; Seitz et al., 2009; Schoups et al., 2001; Shiu & Pashler, 1992; Zhang J. Y. et al., 2010). Likewise, training on, and learning of, one task often does not aid learning on a different task, even with the same extensively trained stimulus, showing task specificity (Ahissar & Hochstein, 1993; Mossbridge et al., 2006; Shiu & Pashler, 1992). For example, training on brightness discrimination of lines leads to learning, but such training does not improve orientation discrimination of the same lines (Shiu & Pashler, 1992). Thus, these studies of task learning suggest that performing a task throughout training is a requirement for learning to occur on that specific task.

Recently, PL transfer has been reported across locations and features with training procedures that involve exposure to more than one stimulus or training on more than one task (Harris, Gliksberg, & Sagi, 2012; Xiao et al., 2008; Wang et al., 2012; Zhang J. Y. et al., 2010). For example, learning on a texture-discrimination task (TDT) can transfer to untrained locations at which task-irrelevant dummy trials containing textures oriented 45° away from the trained stimulus have been presented, possibly due to a release from adaptation (Harris et al., 2012); learning on this task, however, differs from other visual PL phenomena in that it may reflect temporal learning (Wang, Cong, & Yu, 2013). Similarly, learning of contrast discrimination can transfer to untrained locations if orientation learning has occurred at those locations (double-training paradigm; Xiao et al., 2008). With respect to transfer across features, orientation learning in the fovea can transfer to the orthogonal orientation when the orientation learning is followed by contrast learning with the orthogonal stimulus (training-plus-exposure procedure); this finding has been attributed to rule-based learning (Zhang J. Y. et al., 2010).

The studies mentioned so far focused on learning generalization: whether learning is specific or generalizes was examined after training, once learning had already been achieved. In contrast, in this study we investigate the effects of interleaving tasks during the process of learning acquisition (cross-task training). According to learning specificity, learning would require continuous training on the task to be learned, so interleaving training trials of one task with trials of another task would have no effect on learning. Previous results are consistent with that prediction: switching between bisection and vernier discrimination tasks using the same stimulus for both tasks did not improve learning on either task (Huang et al., 2012; Li et al., 2004). These results are in line with findings that the same stimulus can elicit different cortical representations, in both vision (Li et al., 2004) and audition (Polley et al., 2006), depending on the trained task. However, a recent study in audition showed that an amount of training on one task that is insufficient to yield learning on its own enables PL when it is coupled with training on a different task (cross-task training) that uses the same standard stimulus (Wright et al., 2010).

Can cross-task training also enable PL in vision? Can cross-task training even enhance PL? Although PL has been investigated in all sensory modalities, little is known about the extent to which PL mechanisms are similar across them or specific to each modality. Similarities in PL across sensory modalities have been assumed (Polley et al., 2006; Seitz & Dinse, 2007) but rarely tested. Here we use a cross-task training paradigm in vision modeled after that used in audition (Wright et al., 2010). To match the auditory training paradigm, we implement comparison tasks, instead of conventional discrimination tasks (e.g., Huang et al., 2012; Li et al., 2004), and use the same standard stimulus for both tasks. We focus our assessment on learning on a target orientation-comparison task, because PL for orientation is well characterized (Dosher & Lu, 2005; Karni & Sagi, 1991; Schoups, et al., 2001; Shiu & Pashler, 1992; Xiao et al., 2008; Zhang T. et al., 2010; Zhang J. Y. et al., 2010). We use spatial-frequency comparison as the interleaving task.

To investigate the role of cross-task training in vision, we first establish a training amount that is insufficient to lead to learning of orientation when observers are trained only on the orientation or the spatial-frequency comparison task. We then examine a cross-task training paradigm by alternating training between the orientation comparison and spatial-frequency comparison tasks. According to task specificity, there should be no interaction between the tasks during training, and thus cross-task training should not enable learning. Additionally, to compare the effects of cross-task training to single-task training, and to control for the total number of exposures to the same standard stimulus, we train another group of observers only on orientation.

Although task specificity was the primary focus of our study, we also examine the effects of cross-task training on generalization across features by testing the untrained orthogonal orientation, as PL specificity for orientation has been established (e.g., Karni & Sagi, 1991; Seitz et al., 2009; Shiu & Pashler, 1992; Zhang J. Y. et al., 2010).

2. Methods

2.1. Observers

Twenty-eight human observers with normal or corrected-to-normal vision participated in this study (sixteen females; mean age 24.1 years, SD = 6.79). All observers were naïve to the study’s purpose and had not participated in experiments using the tasks and stimuli used here. Observers were paid for their participation. The NYU Review Board approved the protocol, and observers gave informed consent.

2.2. Apparatus and Stimuli

The stimuli were presented on a calibrated 21-in. color IBM monitor (resolution: 1280 × 960 pixels; refresh rate: 100 Hz). The experiment was programmed in MATLAB 7.1 using Psychtoolbox 3.0.8. Observers were seated in a dark room, 57 cm from the screen, with their heads supported by a chin- and forehead-rest. The screen background was gray (57 cd/m²).

The stimuli were two Gabor patches (30% contrast), subtending 2° of visual angle, located on the horizontal meridian and centered at 5° eccentricity. On each trial, the standard stimulus was equally likely to be on either side of a fixation cross, and the other stimulus was on the opposite side. The standard stimulus was a Gabor patch of constant spatial frequency (4 cpd) and orientation (30° or 300°). The other stimulus was either of the same spatial frequency as the standard, with a slight clockwise or counter-clockwise orientation offset (orientation task), or of the same orientation as the standard, with a higher or lower spatial frequency (spatial-frequency task).
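To make the stimulus description concrete, the sketch below builds one Gabor patch with the reported parameters (2° size, 4 cpd, 30% contrast) and converts it to luminance on the 57 cd/m² background. It is an illustration under stated assumptions, not the stimulus code used in the study: the pixels-per-degree value, the Gaussian envelope width (`sigma_deg`), the phase, and the angle convention are hypothetical, because the text does not report them. It also shows that, with a cosine-phase carrier, a 300° grating is identical to a 120° grating, that is, orthogonal to the 30° standard.

```python
import numpy as np

def make_gabor(size_deg=2.0, sf_cpd=4.0, orientation_deg=30.0, contrast=0.30,
               px_per_deg=32.0, sigma_deg=None, phase=0.0):
    """Return a Gabor patch as contrast modulation in [-contrast, contrast].

    Assumptions (not reported in the text): px_per_deg, sigma_deg, phase,
    and the convention that orientation_deg is the stripe orientation,
    counter-clockwise from horizontal.
    """
    if sigma_deg is None:
        sigma_deg = size_deg / 6.0  # hypothetical: +/-3 SD of the envelope fills the patch
    n = int(round(size_deg * px_per_deg))
    # Pixel-centre coordinates in degrees, origin at the patch centre
    coords = (np.arange(n) - (n - 1) / 2.0) / px_per_deg
    x, y = np.meshgrid(coords, -coords)  # y increases upward
    theta = np.deg2rad(orientation_deg)
    # Coordinate perpendicular to the stripes, along which luminance is modulated
    u = -x * np.sin(theta) + y * np.cos(theta)
    carrier = np.cos(2 * np.pi * sf_cpd * u + phase)
    envelope = np.exp(-(x**2 + y**2) / (2 * sigma_deg**2))
    return contrast * carrier * envelope

def to_luminance(gabor, background_cd_m2=57.0):
    """Map contrast modulation to luminance around the gray background."""
    return background_cd_m2 * (1.0 + gabor)

standard = make_gabor(orientation_deg=30.0)
orthogonal = make_gabor(orientation_deg=300.0)
```

Because the carrier is a cosine, flipping the stripe angle by 180° leaves the patch unchanged, which is why the 30° and 300° standards form an orthogonal pair.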

2.3. Task and Procedure

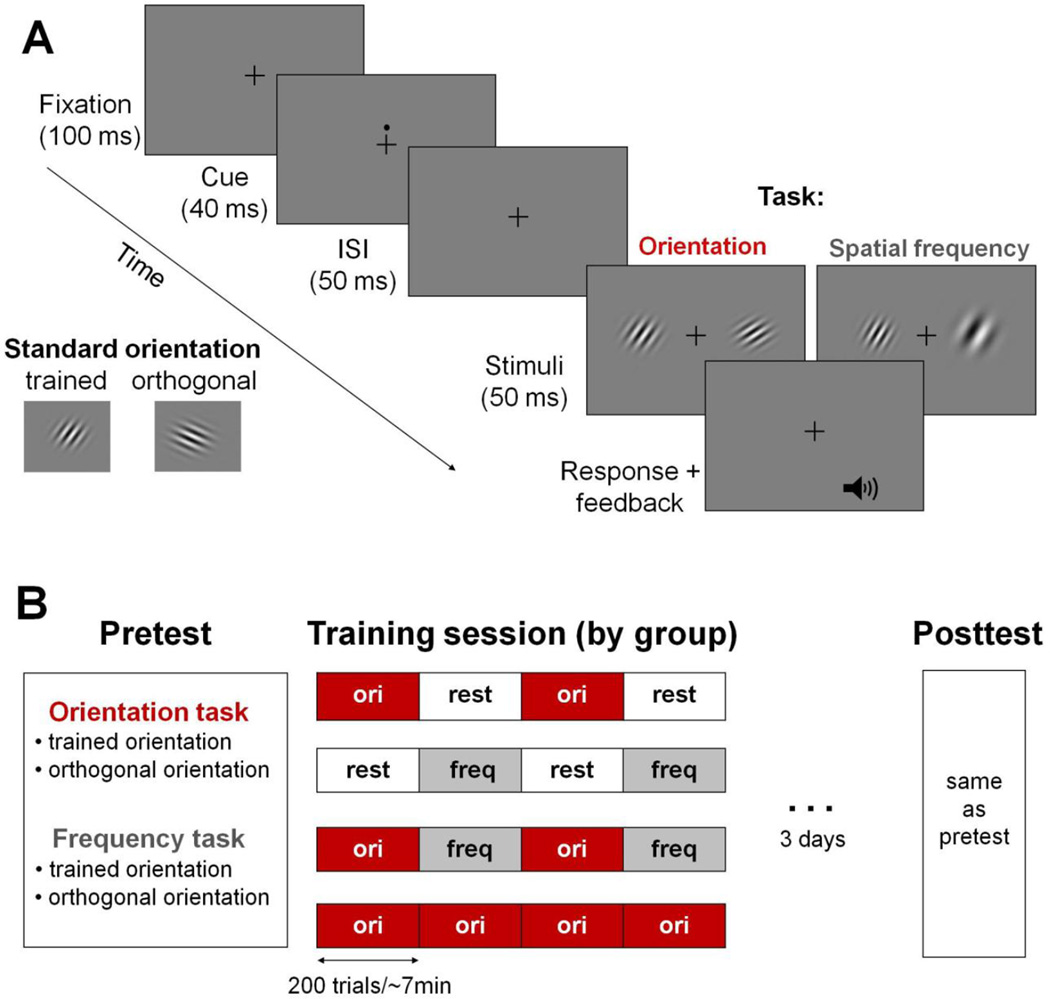

Observers were asked to maintain fixation throughout the trial sequence and to indicate, by pressing a keyboard button, which of the two stimuli (right or left) was more clockwise for the orientation task, or of higher spatial frequency for the spatial-frequency task. Each trial began with a 100-ms fixation cross (0.25° × 0.25°, <4 cd/m²) at the center, followed by a 50-ms temporal cue (a black dot just above fixation). Then, after a 50-ms inter-stimulus interval (ISI), the two stimuli appeared for 100 ms (Figure 1A). A feedback tone indicated whether the response on each trial was correct or incorrect.
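At the 100-Hz refresh rate, one video frame lasts 10 ms, so every epoch of the trial sequence corresponds to a whole number of frames. The sketch below converts the reported durations into frame counts; it assumes, hypothetically, that epochs were timed by counting frames, which the text does not state, and the names `TRIAL_EPOCHS_MS` and `epochs_in_frames` are illustrative.

```python
REFRESH_HZ = 100  # monitor refresh rate reported in the apparatus description

# Trial epochs in presentation order, durations in ms (from the trial sequence)
TRIAL_EPOCHS_MS = [
    ("fixation", 100),
    ("temporal_cue", 50),
    ("isi", 50),
    ("stimuli", 100),
]

def epochs_in_frames(epochs_ms, refresh_hz=REFRESH_HZ):
    """Convert each epoch duration to a frame count.

    Raises ValueError if a duration is not a whole number of frames,
    since such an epoch could not be shown exactly at this refresh rate.
    """
    frame_ms = 1000.0 / refresh_hz
    frames = []
    for name, duration_ms in epochs_ms:
        n = duration_ms / frame_ms
        if abs(n - round(n)) > 1e-9:
            raise ValueError(f"{name}: {duration_ms} ms is not a whole number of frames")
        frames.append((name, int(round(n))))
    return frames

# epochs_in_frames(TRIAL_EPOCHS_MS)
# -> [("fixation", 10), ("temporal_cue", 5), ("isi", 5), ("stimuli", 10)]
```

The check matters when porting the paradigm: at 75 Hz, for instance, a 100-ms epoch would be 7.5 frames and could not be reproduced exactly.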

Figure 1.

A. Trial sequence used for all groups. Each trial started with a 100-ms fixation cross, followed by a 50-ms temporal cue, followed by a 50-ms ISI, after which the two stimuli appeared for 100 ms. The stimuli included a standard stimulus (oriented at 30° or 300°) and a test stimulus that varied in spatial frequency or orientation depending on the task. The location of the standard (left or right) varied randomly from trial to trial. Observers had to compare the two stimuli and indicate which one was more clockwise (orientation task) or had a higher spatial frequency (spatial-frequency task). Each response was followed by an auditory cue indicating whether the response was correct.

B. Procedure across groups (n=7 per group). All observers participated in a pre-test and a post-test that included testing on the two tasks with the two standard stimuli (order counterbalanced). Each group underwent a different training procedure that was identical on each of the 3 training days. Groups “O-O-” and “-F-F” trained on orientation alternating with rest and on rest alternating with spatial frequency, respectively (400 trials per day). Group “OFOF” trained on orientation alternating with spatial frequency (400 trials per task per day). Group “OOOO” trained only on orientation (800 trials per day).

2.4. Testing sessions

The experiment was completed within 5 days. All observers participated in the same pre-test (Day 1) and post-test (Day 5), during which they were tested on both the orientation- and spatial-frequency comparison tasks. The pre-test began with a brief period of practice with each of the two tasks. We then adjusted the orientation or spatial-frequency difference from the standard to achieve ~70% accuracy. Throughout the experiment, performance (% correct) was measured with these fixed stimulus differences. The pre-test and post-test were identical and included four blocks of 300 trials for each of four conditions, each testing a combination of task (orientation or spatial frequency) with either standard orientation (30° or 300°). The order of tasks and orientations was independently counterbalanced across observers for the pre-test and the post-test.
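The four test conditions are the crossing of task (orientation, spatial frequency) with standard orientation (30°, 300°). One possible way to counterbalance their order is sketched below. The scheme is hypothetical: the text states only that task order and orientation order were independently counterbalanced across observers, so the cycling rule, the nesting of orientations within tasks, and the `session_offset` used to vary order between pre-test and post-test are assumptions made for illustration.

```python
from itertools import permutations, product

TASKS = ("orientation", "spatial_frequency")
STANDARDS_DEG = (30, 300)

def session_order(observer_index, session_offset=0):
    """Return the four (task, standard) test conditions in testing order.

    Hypothetical scheme: cycle through the 2 x 2 combinations of
    (task order, orientation order) across observers; session_offset
    lets pre-test and post-test use different combinations.
    """
    task_orders = list(permutations(TASKS))          # 2 possible task orders
    ori_orders = list(permutations(STANDARDS_DEG))   # 2 possible orientation orders
    combos = list(product(task_orders, ori_orders))  # 4 combinations
    task_order, ori_order = combos[(observer_index + session_offset) % len(combos)]
    # Hypothetical nesting: both standards are tested within each task
    return [(task, ori) for task in task_order for ori in ori_order]

# session_order(0) -> [("orientation", 30), ("orientation", 300),
#                      ("spatial_frequency", 30), ("spatial_frequency", 300)]
```

Whatever the ordering rule, each observer is tested on all four task × orientation conditions in every test session.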

2.5. Training regimens

Training was performed on three consecutive days (Days 2–4) with the 30° standard stimulus. Observers were randomly assigned to one of four training regimens (n=7 per group; Figure 1B), in which they were trained on the spatial-frequency task, the orientation task, or both (cross-task training). For simplicity, “O” indicates training on the orientation task, “F” indicates training on the spatial-frequency task, and “–” indicates rest in the dark room for ~7 min (the time needed to perform 200 trials).

Groups trained on: (1) “O–O–”, orientation alternating with rest (400 trials per day); (2) “–F–F”, rest alternating with spatial frequency (400 trials per day); (3) “OFOF”, orientation alternating with spatial frequency (400 trials per task, 800 trials per day); (4) “OOOO”, orientation only (800 trials per day). Each block consisted of 200 trials. Each training session lasted ~30 min for all groups.
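Writing each regimen as a string of 200-trial blocks makes the trial bookkeeping explicit: “OFOF” and “OOOO” both present the 30° standard on 800 trials per day, whereas “O–O–” and “–F–F” present it on 400. The encoding below is only a sketch; the hyphen stands for a rest block, and the function names are hypothetical.

```python
from collections import Counter

BLOCK_TRIALS = 200  # trials per training block, as stated in the text
TRAINING_DAYS = 3   # Days 2-4

# One character per block: "O" orientation, "F" spatial frequency, "-" rest (~7 min)
REGIMENS = ("O-O-", "-F-F", "OFOF", "OOOO")

def daily_trials(regimen, block_trials=BLOCK_TRIALS):
    """Trials per task on one training day; rest blocks contribute no trials."""
    counts = Counter(block for block in regimen if block != "-")
    return {task: n * block_trials for task, n in counts.items()}

def standard_exposures(regimen, block_trials=BLOCK_TRIALS, days=TRAINING_DAYS):
    """Total presentations of the 30-degree standard across all training days.

    The standard appears on every training trial, whichever task is performed.
    """
    return days * sum(daily_trials(regimen, block_trials).values())

if __name__ == "__main__":
    for name in REGIMENS:
        print(name, daily_trials(name), standard_exposures(name))
```

Running it prints 1200 standard exposures over training for “O-O-” and “-F-F” and 2400 for both “OFOF” and “OOOO”, which is the equality the “OOOO” control group was designed to provide.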

3. Results

Cross-task training improved learning

To compare improvement across groups, we first established that pre-test performance did not differ across groups [for both tasks and both orientations, all p≥.357]. We then conducted a mixed-design ANOVA with session (pre-test vs. post-test), task (orientation vs. spatial frequency), and stimulus orientation (trained vs. orthogonal) as within-subject factors and group as the between-subject factor. There was a significant 4-way interaction [F(3,24)=4.94, p=.008], so we examined each group separately.¹ For each group, we examined the effects of task (orientation vs. spatial frequency) and stimulus orientation (trained vs. orthogonal) on the amount of improvement (session: pre-test vs. post-test), using repeated-measures ANOVAs and paired two-tailed t-tests.
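For readers who want to check the per-group statistics, a paired two-tailed t-test reduces to a one-sample test on the post-test minus pre-test differences, with df = n − 1 (df = 6 for the seven observers per group, matching the t(6) values reported in the Results). The sketch computes only the t statistic with the standard library; the two-tailed p-value is then 2·P(T > |t|) for a t distribution with df degrees of freedom (for example via `scipy.stats.t.sf`). The numbers in the usage line are illustrative, not data from this study.

```python
import math
import statistics

def paired_t(pre, post):
    """Paired t statistic for post - pre.

    Returns (t, df), where df = n - 1 for n paired observations.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    # Standard error of the mean difference (sample SD / sqrt(n))
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / se, n - 1

# Illustrative % correct values for five hypothetical observers
t, df = paired_t([60, 62, 58, 65, 61], [61, 64, 61, 69, 66])
# t is about 4.243 (exactly 3 * sqrt(2)), df == 4
```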

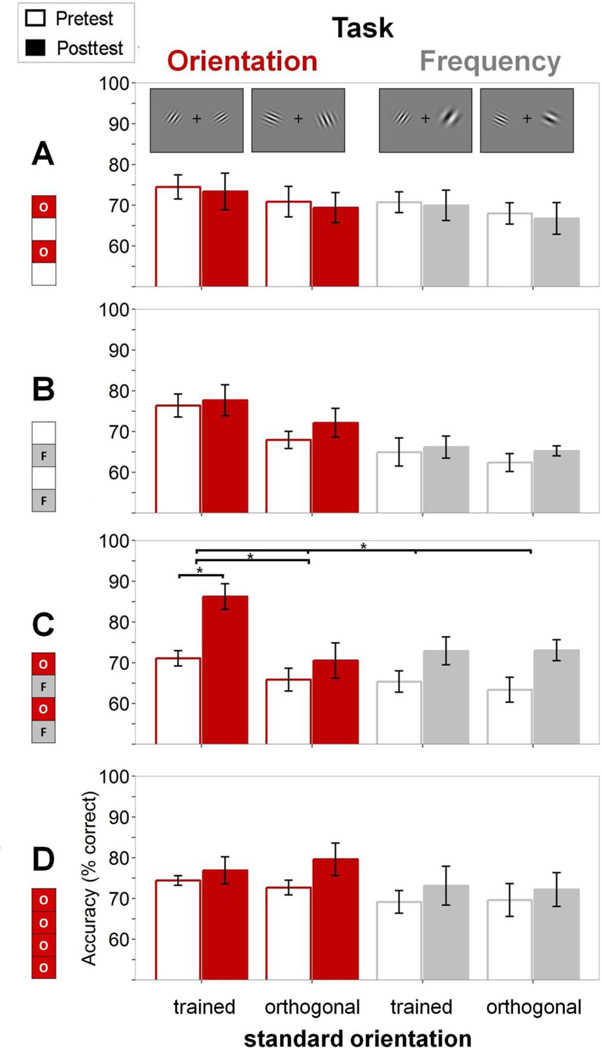

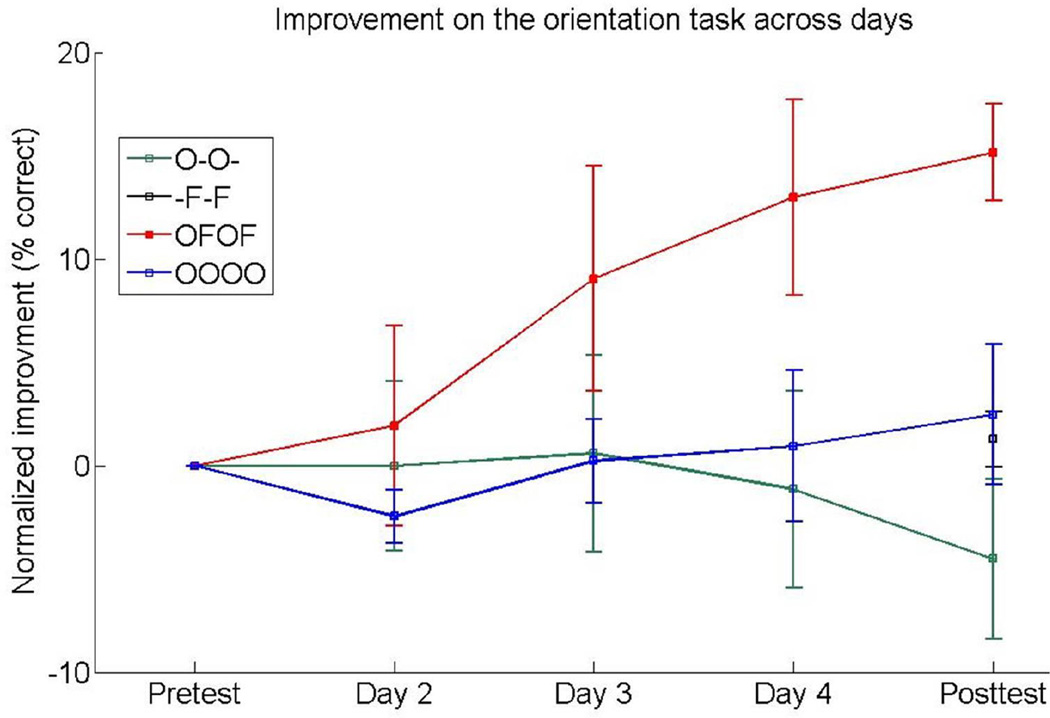

By design, the two groups that received only minimal training (400 trials per day) showed no improvement in any condition. There was no significant main effect of session, no task × session interaction, and no 3-way interaction for either the “O–O–” group or the “–F–F” group (Figure 2A,B) [all p≥.234]. Neither group showed learning across blocks during the training phase (Figure 3; green and black symbols). Having established that the “O–O–” group did not learn in the trained orientation condition (the target condition), we could then evaluate whether interleaving spatial-frequency training with orientation training would enable learning in that condition. Likewise, the finding that the “–F–F” group did not improve on the orientation task indicates that the spatial-frequency trials on their own could not aid learning on orientation through generalization.

Figure 2.

Results of testing sessions for all groups (n=7 per group). A. Empty and filled bars indicate pretest and post-test accuracy (% correct), respectively. Results are shown separately for the two tasks and two orientations of the standard stimulus: red indicates orientation and grey spatial frequency for both trained and orthogonal (untrained) orientation. Group “OFOF” showed significant learning on the trained orientation condition (see text). Results show means across observers, error bars depict SEM.

Figure 3.

Normalized improvement across days for all groups on the orientation task. The normalized amount of improvement was calculated for each observer by subtracting the % correct on the pre-test day from that on each of Days 2–5. Performance on the orientation task improved gradually across days for the cross-task group (“OFOF”; red symbols and line) but not for the other groups (“O-O-”, green symbols and line; “-F-F”, black symbols; “OOOO”, blue symbols and line). Results show means across observers; error bars depict SEM.
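The normalization used in Figure 3 is a per-observer subtraction of the pre-test score, followed by a group mean and SEM for each day. A minimal sketch, with hypothetical function names and illustrative numbers rather than study data; days on which a group has no orientation-task data are assumed simply to be absent from the input.

```python
import math
import statistics

def normalized_improvement(pct_correct_by_day, baseline_day=1):
    """Subtract the pre-test (Day 1) % correct from every later day's % correct."""
    baseline = pct_correct_by_day[baseline_day]
    return {day: pc - baseline
            for day, pc in sorted(pct_correct_by_day.items())
            if day != baseline_day}

def mean_sem(values):
    """Group mean and standard error of the mean (sample SD / sqrt(n))."""
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(len(values))

# One illustrative observer: Day 1 is the pre-test, Day 5 the post-test
# normalized_improvement({1: 70, 2: 72, 3: 74, 4: 75, 5: 78}) -> {2: 2, 3: 4, 4: 5, 5: 8}
```

Subtracting each observer's own baseline removes between-observer differences in starting accuracy, so the plotted values start from zero and reflect change only.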

Despite the lack of learning in the target condition (the orientation task at 30°) in the “O–O–” and “–F–F” groups, there was learning in that condition when training alternated between the orientation and spatial-frequency tasks. For the “OFOF” group, there was a significant main effect of session [F(1,6)=10.774, p=.017] and a significant 3-way interaction [F(1,6)=13.341, p=.011], indicating differential learning across tasks and stimuli (Figure 2C). For the target task, orientation comparison, there was a significant effect of session [F(1,6)=29.123, p=.002] and a significant session × stimulus interaction [F(1,6)=11.983, p=.013], revealing learning for the trained orientation [t(6)=6.444, p=.0006] but only marginal generalization to the untrained orthogonal orientation [t(6)=1.938, p=.1]. Performance in the trained orientation condition increased gradually across training blocks (Figure 3; red symbols), indicating significant learning even though the amount of orientation training was the same as the amount that was insufficient on its own (“O–O–” group). No learning occurred for the interleaving spatial-frequency comparison task; there was no significant main effect of session or session × stimulus interaction [p≥.131].

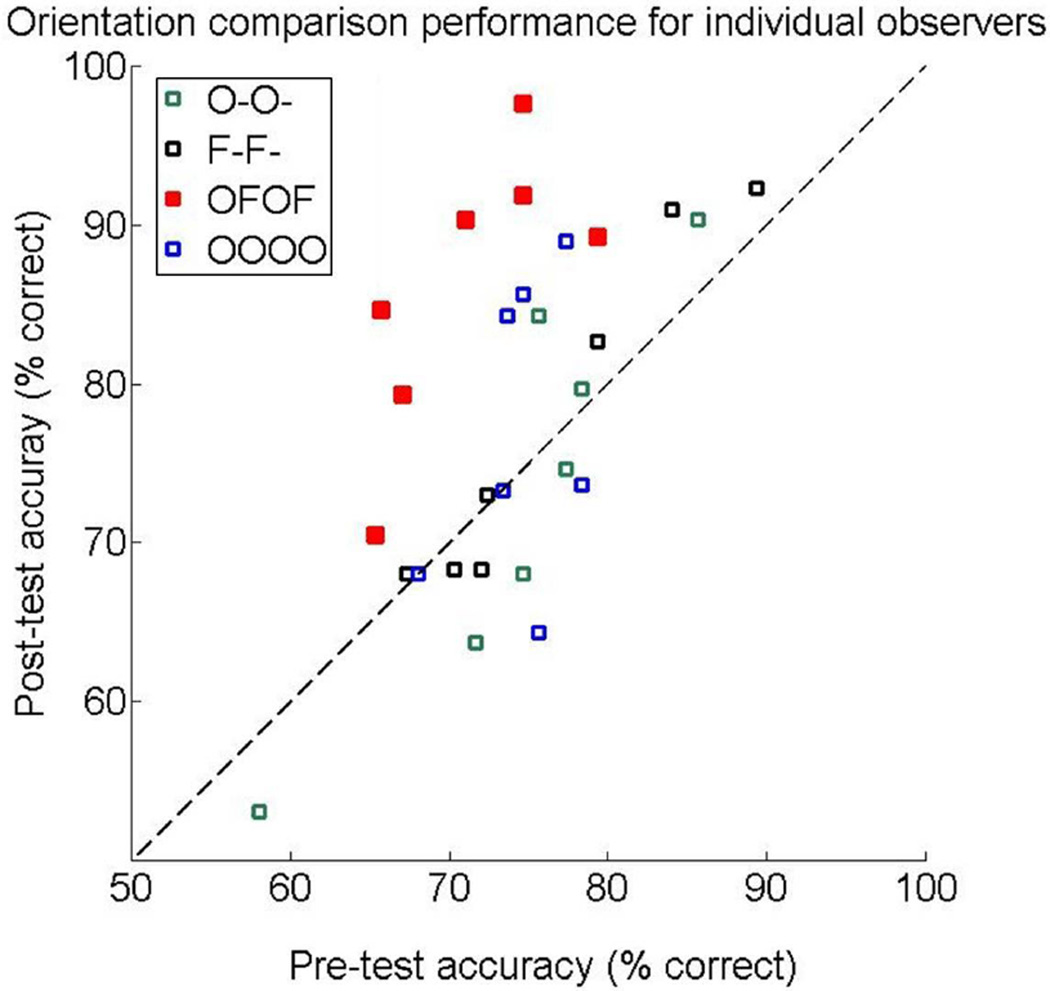

The learning in the target orientation condition in the “OFOF” group depended on the interleaving of the tasks and was not merely due to the additional exposure to the same standard stimulus in the spatial-frequency task: when observers trained only on the orientation task (“OOOO”), no learning occurred, even though that group received the same number of exposures to the standard stimulus as the “OFOF” group (800 trials per day). The “OOOO” group did not improve in any condition, as shown by the lack of a significant main effect of session or of any 3-way or 2-way interaction [all p≥.304; Figure 2D; Figure 3, blue symbols]. This result contrasts with the significant improvement shown by the “OFOF” group, the only group that showed significant learning (Figures 2 and 3), an improvement evident in every one of its observers (Figure 4).

Figure 4.

Pre-test and post-test performance on the orientation task of individual observers across groups. The dashed diagonal line indicates identical performance between pre-test and post-test. All observers from the cross-task group (red symbols, “OFOF”) are above the line and above observers from the other groups (“O-O-”, green symbols; “-F-F”, black; “OOOO”, blue), showing the benefit of cross-task training.

4. Discussion

4.1. Cross-task training benefits visual perceptual learning

We asked whether a visual variant of a cross-task training paradigm that is beneficial for auditory learning (Wright et al., 2010) would also benefit visual perceptual learning. We found that cross-task training, that is, replacing a portion of orientation-comparison training with spatial-frequency comparison, not only enabled learning but also yielded more learning than the same total amount of training on the orientation task alone. This study reveals that post-training task specificity does not imply that tasks do not interact during training.

We first established an amount of training on an orientation-comparison task (Group “O-O-”) and a spatial-frequency comparison task (Group “-F-F”) that yielded no learning on either task. We then combined the two tasks in a cross-task training regimen (Group “OFOF”) to examine whether training on both tasks would enable learning on the target orientation condition even though the amount of training in each task alone was insufficient to do so. Were the processes that enable learning task specific, as implied by the typical lack of generalization from a learned task to an untrained task (Shiu and Pashler, 1992; Ahissar and Hochstein, 1993; Mossbridge et al., 2006), additional training with the spatial-frequency task would not facilitate improvement in the orientation comparison task. Contrary to previous results that showed no benefit of interleaving tasks in vision (Li et al., 2004a; Huang et al., 2012; see below), we found significant benefit with our cross-task regimen, demonstrating that interleaving training on a given task can enable learning on a different task. These results are parallel to those in audition in which cross-task training enabled PL (Wright et al., 2010), and thus reveal a comparable learning principle. Learning with the visual cross-task regimen was more pronounced for the trained stimulus than for the untrained orthogonal orientation, consistent with previous findings of PL stimulus specificity (Wright & Zhang, 2009; Sagi, 2011).

We ruled out the possibility that the mere additional exposure to the standard stimulus in the cross-task group (“OFOF”) could account for these results by testing an additional group of observers who received prolonged single-task training on the orientation-comparison task (Group “OOOO”). Strikingly, the cross-task training group exhibited orientation learning whereas this single-task group did not. Orientation learning has been observed in previous studies, but with more training trials (6 sessions of >1500 trials each rather than 3 sessions of 800 trials each) and with a discrimination rather than a comparison task (Dosher and Lu, 2005; Xiao et al., 2008; Zhang T. et al., 2010; Zhang J. Y. et al., 2010). We note that performance did not deteriorate within sessions in any of the regimens, indicating that fatigue or inattention did not prevent learning (Censor et al., 2006; Mednick et al., 2005; Molloy et al., 2012).

Heretofore, it has been assumed that performing the relevant task is essential for visual learning (Shiu and Pashler, 1992; Ahissar and Hochstein, 1993; Huang et al., 2007; Xiao et al., 2008; Zhang J. Y. et al., 2010a; the exception being subthreshold stimulation, Sasaki et al., 2010). However, the present results show that continuous performance of the relevant visual task is not required throughout training. Cross-task training not only enabled visual learning but also facilitated it relative to more extensive training on a single task, suggesting that different tasks performed during training can affect each other and further enhance learning.

4.2. Possible explanations for cross-task training benefit

Why was interleaving between our visual tasks better than training on only one task, even when the total number of trials was the same? We first rule out two possibilities: (1) Learning occurs independently for each task and generalizes across tasks. This cannot be the case, as neither spatial-frequency nor orientation training yielded orientation learning when performed alone for the 400 trials/day provided in the cross-task training regimen. (2) Spacing between training blocks. Distributed training can lead to more learning than massed training (Cepeda et al., 2006). However, spacing alone cannot account for our results because the spacing between blocks and days was identical for the three groups (“O-O-”, “-F-F” and “OFOF”); a rest period in the single-task groups lasted the same time as the alternating task.

Our findings are in agreement with the idea that learning emerges when neural processes responsible for performing the trained task are stimulated sufficiently while in a sensitized state (Wright et al., 2010). To reach this state, some task performance is required, because no learning is achieved through (supraliminal) stimulus exposure alone. Given this state, stimulus exposure that is sufficient to allow the processes to surpass a threshold is necessary for learning, because too little training does not yield improvement (Wright & Sabin, 2007; Aberg et al., 2009; Wright et al., 2010). According to this idea, training on our orientation task placed the activated neural circuitry in the sensitized state, and the additional exposures to the same standard stimulus, albeit through a different task (spatial-frequency), provided enough stimulation to enable learning.

We extend this theoretical framework by suggesting that the visual system may favor learning a standard stimulus that appears in two different contexts, because that provides two distinct error signals. This proposal is consistent with findings that training elicits different neuronal tuning depending on the performed task (Li et al., 2004; Polley et al., 2006) and that top-down modulatory signals enable neurons to carry more information about task-relevant stimulus features (Gilbert & Li, 2013). Processing the same standard stimulus to solve two different tasks prompts two distinct error signals, which may affect each other, potentially leading to a strengthened representation and enhanced learning.

Another possibility is that cross-task training could improve learning by increasing the weights on the most diagnostic channels. The Augmented Hebbian Reweighting Model (AHRM) accounts for several perceptual learning phenomena, including transfer across features and locations (Dosher et al., 2013; Liu, Dosher & Lu, 2014; Petrov, Dosher & Lu, 2005, 2006). Interestingly, model simulations for orientation training of Gabor stimuli suggested that learning increases the weight not only for the most diagnostic orientation channels but also for the target spatial-frequency bands (Petrov, Dosher & Lu, 2005, 2006). Thus, in the present study, training on the spatial-frequency comparison task (interleaving task) may have changed weights on the orientation channels and thus improved orientation learning.
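The reweighting idea can be illustrated with a deliberately simplified Hebbian update with soft weight bounds, loosely in the spirit of the AHRM. This is not the published model, which includes, among other components, a normalized representation layer of orientation- and frequency-tuned channels, adaptive bias control, and internal noise; the two-channel setup, learning rate, and feedback weight below are hypothetical. The toy shows only the core mechanism: the weight from a channel whose activation predicts the correct response grows, while the weight from a channel that responds identically on every trial stays near zero.

```python
import numpy as np

def reweight_step(w, a, feedback, eta=0.05, feedback_weight=1.0,
                  w_min=-1.0, w_max=1.0):
    """One simplified Hebbian reweighting step with soft weight bounds.

    w: read-out weights from the representation channels to the decision unit
    a: channel activations on this trial
    feedback: +1 or -1, the correct response, used as a teaching signal
    """
    # Postsynaptic activity driven by the weighted channels plus the feedback input
    o = np.tanh(w @ a + feedback_weight * feedback)
    # Hebbian term: presynaptic activation times postsynaptic activity
    delta = eta * a * o
    # Soft bounds: increases scale with headroom to w_max, decreases with distance to w_min
    return np.where(delta > 0, w + delta * (w_max - w), w + delta * (w - w_min))

def train(n_trials=200):
    """Alternate correct responses; channel 0 is diagnostic, channel 1 is not."""
    w = np.zeros(2)
    for t in range(n_trials):
        feedback = 1.0 if t % 2 == 0 else -1.0
        # Channel 0's sign matches the correct response; channel 1 is constant
        a = np.array([feedback, 0.5])
        w = reweight_step(w, a, feedback)
    return w

weights = train()
```

In this toy, which channels gain weight depends only on which activations covary with the feedback, not on which task label produced the trial; that is the general sense in which training on one task could, in a reweighting account, change weights used by another.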

Finally, there are similar characteristics between PL and long-term potentiation (LTP; Aberg and Herzog, 2012). Similar to the need for sufficient synaptic stimulation in LTP, minimal amounts of training do not lead to learning, but learning can be achieved with sufficient training (Wright & Sabin, 2007; Aberg et al., 2009; Wright et al., 2010). Our results show that in vision, as in audition (Wright et al., 2010), sufficient training can be achieved by performing different tasks with the same standard stimulus, possibly by synapse activation brought about by the standard stimulus, in conjunction with interleaved and distributed training.

4.3. Cross-task training and task specificity in PL

The present results differ from those of several previous investigations that focused on interactions between tasks in perceptual learning. During learning acquisition, interleaving training with acuity and hyperacuity discrimination tasks does not aid performance on either task (Li et al., 2004; Huang et al., 2012). This result differs from the present data showing a between-task interaction that aids learning. Several differences between the current and previous investigations may account for this discrepancy. For example, the amount of training on each task alone was already sufficient to yield learning in the previous investigations, but not in the present one. Once the amount of training provided on each task alone is sufficient to yield learning on that task, the benefit from interleaved training may decrease, because there is less room for improvement. Thus, it is possible that the benefit of cross-task training may decrease with increases in single-task training. Moreover, in addition to requiring judgments about different stimulus features (orientation and spatial frequency as opposed to acuity and hyperacuity), we used a comparison rather than a discrimination task. Importantly, the standard stimulus was the same in both the target task (orientation) and the interleaving task (spatial frequency) throughout cross-task training (“OFOF”). Thus, it is also possible that training on a comparison task may be better suited to a cross-task training PL regimen than training on a discrimination task, as the standard stimulus must be encoded to perform both comparison tasks successfully.

After training on one task, visual learning is typically specific to the trained task (e.g., Ahissar & Hochstein, 1993; Shiu & Pashler, 1992); however, in some cases there is cross-task generalization, either when one task relies on the other to be performed (e.g., to perform a curvature task, observers first extract orientation; McGovern et al., 2012) or for easy tasks using different stimuli (Pavlovskaya & Hochstein, 2011). The present demonstration of an interaction between tasks differs from the interactions revealed in those studies because judging one stimulus feature (orientation) did not rely on the other (spatial frequency), task difficulty was similar across groups, learning did not generalize between the tasks, and the interaction affected learning acquisition rather than generalization.

As mentioned in the Introduction, other recent findings show that learning two different tasks can facilitate generalization, that is, reduce location and feature specificity (Xiao et al., 2008; Zhang J. Y. et al., 2010). In these studies, improvement on one task (e.g., contrast discrimination) learned at one location or for a specific feature can transfer to another location or feature through additional training on a different task (e.g., orientation), as long as there is significant learning on both tasks. The authors interpret their findings as reflecting a rule-based learning mechanism. We also trained two tasks in the present study, but instead of focusing on generalization, we asked what is required for the training itself to be effective. By design, neither task yielded learning when trained alone, suggesting that no rule learning occurred, yet PL was attained when the tasks were interleaved. This finding suggests that rule learning is not necessary during training. Moreover, by showing that the two interleaved tasks interacted during training, this study places constraints on rule-based learning accounts.

5. Conclusions

We found that merely alternating tasks during training enables visual PL, revealing similarities with auditory PL and suggesting common underlying learning principles in different modalities. Surprisingly, a cross-task regimen enhanced PL compared to single-task training even with the same total number of trials. The present results illustrate that cross-task training can enhance brain plasticity and maximize learning. These findings shed light on theoretical principles underlying learning acquisition and have translational potential to treat perceptual disorders.

Highlights.

Perceptual learning in vision is highly specific to the trained task

We examined whether interleaving practice with another task would enable learning

For cross-task training, we interleaved training with two tasks, neither of which yielded learning on its own

Cross-task training led to more learning than single-task training thereby maximizing PL

For PL to occur, performance on the relevant task is not required throughout training

Acknowledgments

This study was supported by NIH Grant R01 EY016200 (MC) and NIH Grant R01 DC004453 (BAW).

We thank members of the Carrasco lab for comments on the study and manuscript, and Sarah Cohen and Young A Lee for help with data acquisition.

Abbreviations

- PL: Perceptual learning

Footnotes


¹ Reaction times, our secondary measure, decreased with training [F(1,22)=18.065, p<.0001], but there was no interaction with group [p=.142]. Thus, there was no speed-accuracy trade-off. Due to a technical problem, reaction time data from two observers in the “O-O-” group are missing.

The authors declare no competing financial interests.

References

- Aberg KC, Herzog MH. About similar characteristics of visual perceptual learning and LTP. Vision Research. 2012;61:100–106. doi: 10.1016/j.visres.2011.12.013.

- Aberg KC, Tartaglia EM, Herzog MH. Perceptual learning with Chevrons requires a minimal number of trials, transfers to untrained directions, but does not require sleep. Vision Research. 2009;49(16):2087–2094. doi: 10.1016/j.visres.2009.05.020.

- Adini Y, Wilkonsky A, Haspel R, Tsodyks M, Sagi D. Perceptual learning in contrast discrimination: The effect of contrast uncertainty. Journal of Vision. 2004;4(12). doi: 10.1167/4.12.2.

- Ahissar M, Hochstein S. Attentional control of early perceptual learning. Proceedings of the National Academy of Sciences of the United States of America. 1993;90(12):5718. doi: 10.1073/pnas.90.12.5718.

- Astle AT, Webb BS, McGraw PV. Spatial frequency discrimination learning in normal and developmentally impaired human vision. Vision Research. 2010;50(23):2445–2454. doi: 10.1016/j.visres.2010.09.004.

- Banai K, Ortiz JA, Oppenheimer JD, Wright BA. Learning two things at once: differential constraints on the acquisition and consolidation of perceptual learning. Neuroscience. 2010;165(2):436–444. doi: 10.1016/j.neuroscience.2009.10.060.

- Carmel D, Carrasco M. Perceptual Learning and Dynamic Changes in Primary Visual Cortex. Neuron. 2008;57:799–801. doi: 10.1016/j.neuron.2008.03.009.

- Censor N, Karni A, Sagi D. A link between perceptual learning, adaptation and sleep. Vision Research. 2006;46(23):4071–4074. doi: 10.1016/j.visres.2006.07.022.

- Cepeda NJ, Pashler H, Vul E, Wixted JT, Rohrer D. Distributed practice in verbal recall tasks: A review and quantitative synthesis. Psychological Bulletin. 2006;132(3):354. doi: 10.1037/0033-2909.132.3.354.

- Crist RE, Kapadia MK, Westheimer G, Gilbert CD. Perceptual learning of spatial localization: specificity for orientation, position, and context. Journal of Neurophysiology. 1997;78(6):2889–2894. doi: 10.1152/jn.1997.78.6.2889.

- Dosher BA, Lu ZL. Perceptual learning in clear displays optimizes perceptual expertise: Learning the limiting process. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(14):5286–5290. doi: 10.1073/pnas.0500492102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dosher BA, Jeter P, Liu J, Lu ZL. An integrated reweighting theory of perceptual learning. Proceedings of the National Academy of Sciences. 2013;110(33):13678–13683. doi: 10.1073/pnas.1312552110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gilbert CD, Li W. Top-down influences on visual processing. Nature Reviews Neuroscience. 2013;14(5):350–363. doi: 10.1038/nrn3476. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harris H, Gliksberg M, Sagi D. Generalized perceptual learning in the absence of sensory adaptation. Current Biology. 2012;22(19):1813–1817. doi: 10.1016/j.cub.2012.07.059. [DOI] [PubMed] [Google Scholar]

- Huang CB, Lu ZL, Dosher B. Co-learning analysis of two perceptual learning tasks with identical input stimuli supports the reweighting hypothesis. Journal of Vision. 2008;8(6):1123–1123. doi: 10.1016/j.visres.2011.11.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jeter PE, Dosher BA, Petrov A, Lu ZL. Task precision at transfer determines specificity of perceptual learning. Journal of Vision. 2009;9(3):1. doi: 10.1167/9.3.1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Karni A, Sagi D. Where practice makes perfect in texture discrimination: evidence for primary visual cortex plasticity. Proceedings of the National Academy of Sciences. 1991;88(11):4966–4970. doi: 10.1073/pnas.88.11.4966. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Levi DM, Li RW. Perceptual learning as a potential treatment for amblyopia: a mini-review. Vision Research. 2009;49:2535–2549. doi: 10.1016/j.visres.2009.02.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li W, Piëch V, Gilbert CD. Perceptual learning and top-down influences in primary visual cortex. Nature neuroscience. 2004;7(6):651–657. doi: 10.1038/nn1255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu J, Dosher B, Lu ZL. Modeling trial by trial and block feedback in perceptual learning. Vision Research. 2014 doi: 10.1016/j.visres.2014.01.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGovern DP, Webb BS, Peirce JW. Transfer of perceptual learning between different visual tasks. Journal of vision. 2012;12(11) doi: 10.1167/12.11.4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mednick SC, Arman AC, Boynton GM. The time course and specificity of perceptual deterioration. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(10):3881–3885. doi: 10.1073/pnas.0407866102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mossbridge JA, Fitzgerald MB, O'Connor ES, Wright BA. Perceptual-learning evidence for separate processing of asynchrony and order tasks. The Journal of Neuroscience. 2006;26(49):12708–12716. doi: 10.1523/JNEUROSCI.2254-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nahum M, Nelken I, Ahissar M. Stimulus uncertainty and perceptual learning: Similar principles govern auditory and visual learning. Vision research. 2010;50(4):391–401. doi: 10.1016/j.visres.2009.09.004. [DOI] [PubMed] [Google Scholar]

- Parkosadze K, Otto TU, Malania M, Kezeli A, Herzog MH. Perceptual learning of bisection stimuli under roving: Slow and largely specific. Journal of Vision. 2008;8(1) doi: 10.1167/8.1.5. [DOI] [PubMed] [Google Scholar]

- Pavlovskaya M, Hochstein S. Perceptual learning transfer between hemispheres and tasks for easy and hard feature search conditions. Journal of Vision. 2011;11(1) doi: 10.1167/11.1.8. [DOI] [PubMed] [Google Scholar]

- Petrov AA, Dosher BA, Lu ZL. The dynamics of perceptual learning: an incremental reweighting model. Psychological review. 2005;112(4):715. doi: 10.1037/0033-295X.112.4.715. [DOI] [PubMed] [Google Scholar]

- Petrov AA, Dosher BA, Lu ZL. Perceptual learning without feedback in non-stationary contexts: Data and model. Vision research. 2006;46(19):3177–3197. doi: 10.1016/j.visres.2006.03.022. [DOI] [PubMed] [Google Scholar]

- Polley DB, Steinberg EE, Merzenich MM. Perceptual learning directs auditory cortical map reorganization through top-down influences. The journal of Neuroscience. 2006;26(18):4970–4982. doi: 10.1523/JNEUROSCI.3771-05.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sagi D. Perceptual learning in Vision Research. Vision research. 2011;51(13):1552–1566. doi: 10.1016/j.visres.2010.10.019. [DOI] [PubMed] [Google Scholar]

- Schoups A, Vogels R, Qian N, Orban G. Practicing orientation identification improves orientation coding in V1 neurons. Nature. 2001;412(6846):549–553. doi: 10.1038/35087601. [DOI] [PubMed] [Google Scholar]

- Seitz AR, Dinse HR. A common framework for perceptual learning. Current opinion in neurobiology. 2007;17(2):148–153. doi: 10.1016/j.conb.2007.02.004. [DOI] [PubMed] [Google Scholar]

- Seitz AR, Kim D, Watanabe T. Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron. 2009;61(5):700–707. doi: 10.1016/j.neuron.2009.01.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Seitz AR, Yamagishi N, Werner B, Goda N, Kawato M, Watanabe T. Task-specific disruption of perceptual learning. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(41):14895–14900. doi: 10.1073/pnas.0505765102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shiu LP, Pashler H. Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Perception & psychophysics. 1992;52(5):582–588. doi: 10.3758/bf03206720. [DOI] [PubMed] [Google Scholar]

- Tartaglia EM, Aberg KC, Herzog MH. Perceptual learning and roving: Stimulus types and overlapping neural populations. Vision research. 2009;49(11):1420–1427. doi: 10.1016/j.visres.2009.02.013. [DOI] [PubMed] [Google Scholar]

- Wang R, Cong LJ, Yu C. The classical TDT perceptual learning is mostly temporal learning. Journal of vision. 2013;13(5):9. doi: 10.1167/13.5.9. [DOI] [PubMed] [Google Scholar]

- Wang R, Zhang JY, Klein SA, Levi DM, Yu C. Task relevancy and demand modulate double-training enabled transfer of perceptual learning. Vision research. 2012;61:33–38. doi: 10.1016/j.visres.2011.07.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wright BA, Sabin AT. Perceptual learning: how much daily training is enough? Experimental Brain Research. 2007;180(4):727–736. doi: 10.1007/s00221-007-0898-z. [DOI] [PubMed] [Google Scholar]

- Wright BA, Zhang Y. A review of the generalization of auditory learning. Philosophical Transactions of the Royal Society B: Biological Sciences. 2009;364(1515):301–311. doi: 10.1098/rstb.2008.0262. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wright BA, Sabin AT, Zhang Y, Marrone N, Fitzgerald MB. Enhancing perceptual learning by combining practice with periods of additional sensory stimulation. The Journal of Neuroscience. 2010;30(38):12868–12877. doi: 10.1523/JNEUROSCI.0487-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Xiao LQ, Zhang JY, Wang R, Klein SA, Levi DM, Yu C. Complete transfer of perceptual learning across retinal locations enabled by double training. Current Biology. 2008;18(24):1922–1926. doi: 10.1016/j.cub.2008.10.030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yotsumoto Y, Chang LH, Watanabe T, Sasaki Y. Interference and feature specificity in visual perceptual learning. Vision research. 2009;49(21):2611–2623. doi: 10.1016/j.visres.2009.08.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang T, Xiao LQ, Klein SA, Levi DM, Yu C. Decoupling location specificity from perceptual learning of orientation discrimination. Vision research. 2010;50(4):368–374. doi: 10.1016/j.visres.2009.08.024. [DOI] [PubMed] [Google Scholar]

- Zhang JY, Zhang GL, Xiao LQ, Klein SA, Levi DM, Yu C. Rule-based learning explains visual perceptual learning and its specificity and transfer. The Journal of Neuroscience. 2010;30(37):12323–12328. doi: 10.1523/JNEUROSCI.0704-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]