Abstract

Practice can improve visual perception, and these improvements are considered to be a form of brain plasticity. Training-induced learning is time-consuming and requires hundreds of trials across multiple days. The process of learning acquisition is understudied. Can learning acquisition be potentiated by manipulating visual attentional cues? We developed a protocol in which we used task-irrelevant cues for between-groups manipulation of attention during training. We found that training with exogenous attention can enable the acquisition of learning. Remarkably, this learning was maintained even when observers were subsequently tested under neutral conditions, which indicates that a change in perception was involved. Our study is the first to isolate the effects of exogenous attention and to demonstrate its efficacy in enabling learning. We propose that exogenous attention boosts perceptual learning by enhancing stimulus encoding.

Keywords: learning, attention, vision, perception

Training can enhance performance on basic perceptual tasks over time; this phenomenon is called perceptual learning (for a review, see Sagi, 2011). These long-lasting perceptual improvements are an example of adult brain plasticity and ameliorate perceptual deficits in presbyopia, amblyopia, and cortical blindness (e.g., Das & Huxlin, 2010; Levi & Li, 2009; Polat et al., 2012; Sagi, 2011). Perceptual learning is very time consuming; training usually requires long sessions across multiple days. However, the factors that facilitate learning acquisition are still understudied. Optimizing training, and realizing its translational potential to help recover function in perceptual disorders, requires identifying conditions that facilitate learning acquisition. In this study, we examined whether exogenous attention during training could enable perceptual learning when none was present otherwise.

The role of attention in perceptual learning has been much discussed but remains poorly understood. Even so, attention is considered a gate for perceptual learning (Ahissar & Hochstein, 2004; Lu, Yu, Sagi, Watanabe, & Levi, 2010; Roelfsema, van Ooyen, & Watanabe, 2010; Tsushima & Watanabe, 2009). On the one hand, some studies suggest that top-down attention is necessary for learning. Training on one task does not improve performance on an alternative task, even with the same stimulus (Ahissar & Hochstein, 1993; Shiu & Pashler, 1992), and neural tuning differs according to the training task and stimulus (Li, Piëch, & Gilbert, 2004). On the other hand, learning can occur for subthreshold, task-irrelevant stimuli (Paffen, Verstraten, & Vidnyánszky, 2008; Seitz & Watanabe, 2009; Watanabe, Náñez, & Sasaki, 2001), especially when attention is directed away from the stimuli (Choi, Seitz, & Watanabe, 2009). In most studies, attention’s role in perceptual learning has been inferred, but it has been defined and operationalized only sporadically. Indeed, in perceptual-learning studies, the term “attention” has been used to refer to a variety of different phenomena. For instance, attention has been equated with task difficulty (Bartolucci & Smith, 2011; Huang & Watanabe, 2012), inferred from neural activity in attention-related brain areas (Mukai et al., 2007; Tsushima, Sasaki, & Watanabe, 2006), or used interchangeably with conscious perception (Tsushima & Watanabe, 2009; but these separate constructs can be manipulated independently, Koch & Tsuchiya, 2007). Most commonly, authors invoke attention to describe the fact that observers perform a task with a specific stimulus (Meuwese, Post, Scholte, & Lamme, 2013; Paffen et al., 2008; Seitz & Watanabe, 2009; Watanabe et al., 2001).

The role of covert attention (i.e., the focusing of attention on a given location in the absence of eye or head movement) has very rarely been examined directly in perceptual learning. Covert attention allows selective prioritization of information and enhances perceptual processing on a variety of visual tasks. There are two types of spatial covert attention: exogenous attention (reflexive, involuntary, and transient, peaking at about 100 ms and decaying shortly thereafter) and endogenous attention (voluntary and sustained, deploying in ~300 ms; Carrasco, 2011, 2014; Yantis & Jonides, 1996).

Here, we provide a protocol to directly test the role of attention in perceptual learning and examine its role on learning acquisition. We chose exogenous attention to disentangle spatial attention from task relevance. Peripheral cues attract exogenous spatial attention to the upcoming stimuli independent of cue validity (Giordano, McElree, & Carrasco, 2009) and provide no information with regard to the correct response on the task, thereby allowing us to manipulate and isolate the role of covert exogenous attention in perceptual learning.

To investigate whether exogenous attention enables learning, we first established a training regimen that was insufficient to lead to learning when observers were trained without attention. We trained two groups of observers, one with exogenous attention and the other without it, on an orientation-comparison task, given that perceptual learning for orientation is well characterized (e.g., Dosher & Lu, 2005; Karni & Sagi, 1991; Schoups et al., 2001; Szpiro, Wright, & Carrasco, 2014; Xiao et al., 2008; Zhang et al., 2010). To isolate the role of attention, we performed the pretest and the posttest under a neutral condition. We hypothesized that training with attention would enable learning. Although evaluating the role of exogenous attention on learning acquisition was the primary focus of this study, we also examined the effects of exogenous attention on generalization across features and tasks.

Method

Observers

Fourteen human observers with normal or corrected-to-normal vision participated in the perceptual learning study (mean age = 20.7 years, SD = 2.66). They were randomly assigned to one of two groups (attention or neutral). This sample size (n = 7 per group) is common for studies of perceptual learning (Ahissar & Hochstein, 1993; Chirimuuta, Burr, & Morrone, 2007; Dosher, Han, & Lu, 2010; Huang & Watanabe, 2012; Paffen et al., 2008; Szpiro, Wright, & Carrasco, 2014). For the control experiment, a novel group of 11 observers participated (mean age = 23.85 years, SD = 2.29). All observers were naive to the purpose of the study and had not participated in experiments using the tasks and stimuli used in the present experiments. Observers were paid for their participation. The New York University review board approved the protocol, and observers gave informed consent.

Apparatus, stimuli, and cues

The stimuli and cues were presented on a calibrated 21-in. color monitor (IBM P260) with a resolution of 1,280 × 960 pixels and a refresh rate of 100 Hz. The experiment was programmed in MATLAB Version 7.1 (The MathWorks, Natick, MA) using Psychophysics Toolbox (Version 3.0.8; Brainard, 1997). Observers were seated in a dark room, 57 cm from the screen; their heads were supported by a chin-and-forehead rest. The screen background was gray (57 cd/m²).

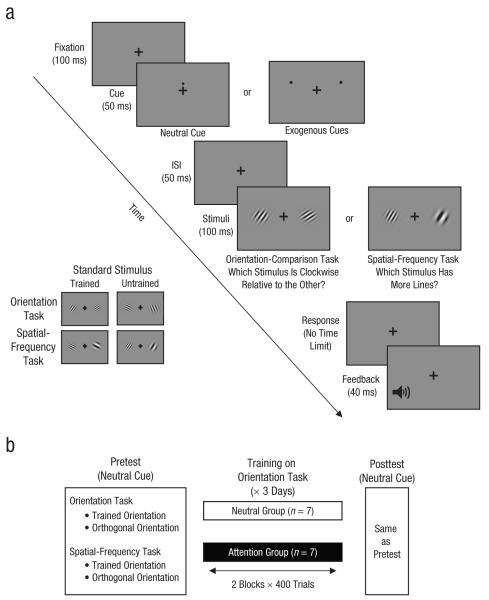

On each trial, the stimuli were two Gabor patches (30% contrast), subtending 2° of visual angle and located on the horizontal meridian at 5° eccentricity. One stimulus was the standard stimulus and the other stimulus was the comparison stimulus (see Fig. 1a). The standard stimulus was equally likely to be on either side of a fixation cross, and the comparison stimulus was on the opposite side. There were two possible standard stimuli: orientation was 30° in the trained stimulus and 300° in the orthogonal or untrained stimulus; spatial frequency (4 cycles/deg) was the same in both standards. The comparison stimulus differed depending on the task. In the orientation-comparison task, the standard stimulus and the comparison stimulus had the same spatial frequency (4 cycles/deg), but the comparison stimulus was rotated slightly clockwise or counterclockwise relative to the standard stimulus. In the spatial-frequency task, the standard stimulus and the comparison stimulus had the same orientation, but the comparison stimulus had a higher or lower spatial frequency than the standard stimulus. The location of the stimuli was constant throughout the experiment; thus, there was no location uncertainty. We used these parameters in a prior study in which a task was learned only by interleaving practice with another task (Szpiro, Wright, & Carrasco, 2014).
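A Gabor patch is a sinusoidal grating windowed by a Gaussian envelope. The following is a minimal Python sketch of how the standard and comparison stimuli described above could be generated, using the reported contrast (30%), extent (2°), spatial frequency (4 cycles/deg), and standard orientation (30°). The pixel density (`px_per_deg`), the envelope width (`sigma_deg`), the orientation convention, and the 9° offset are illustrative assumptions; none of them is reported in the text, and this is not the authors' MATLAB code.

```python
import math

def gabor_patch(size_deg=2.0, sf_cpd=4.0, ori_deg=30.0, contrast=0.30,
                px_per_deg=32, sigma_deg=0.4):
    """Return a square Gabor as rows of contrast modulations in [-contrast, contrast].

    px_per_deg and sigma_deg are assumed values for illustration only.
    With image rows running top to bottom, ori_deg tilts the stripes
    clockwise from vertical (an assumed convention).
    """
    n = int(round(size_deg * px_per_deg))
    theta = math.radians(ori_deg)
    half = (n - 1) / 2.0
    patch = []
    for row in range(n):
        y = (row - half) / px_per_deg      # vertical position in degrees
        line = []
        for col in range(n):
            x = (col - half) / px_per_deg  # horizontal position in degrees
            # Position along the axis of luminance modulation
            u = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2 * sigma_deg ** 2))
            line.append(contrast * envelope * math.cos(2 * math.pi * sf_cpd * u))
        patch.append(line)
    return patch

# Standard stimulus at the trained orientation, and a comparison stimulus
# rotated by a hypothetical 9-degree offset (offsets were set per observer).
standard = gabor_patch(ori_deg=30.0)
comparison = gabor_patch(ori_deg=30.0 + 9.0)
```

To display such a patch, each modulation value `m` would be mapped to luminance as background × (1 + `m`) on a calibrated monitor.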

Fig. 1.

Trial sequence and protocol for training and testing. Each trial began with a screen showing a fixation cross (a), followed by either the neutral cue at the center of the screen or two exogenous cues above the locations of the upcoming stimuli. After the cues, there was a brief interstimulus interval (ISI). In the orientation-comparison task, observers had to determine which of the two stimuli was rotated clockwise relative to the other; in the example shown here, the stimulus on the right is rotated clockwise relative to the other. In the spatial-frequency task, observers had to determine which of the two stimuli had more lines; in the example shown here, the stimulus on the left has more lines. Standard stimuli were oriented either at 30° (trained) or at 300° (orthogonal or untrained) relative to vertical. The protocol (b) consisted of a pretest, 3 days of training, and a posttest. Participants were tested on both tasks, with both the trained and the orthogonal (untrained) standard stimuli; the neutral cue was used in all test trials. Participants were trained on the orientation-comparison task only. The neutral group trained with the neutral cue, and the attention group trained with the exogenous cues.

There were two types of cues: neutral and exogenous. The neutral cue consisted of one black dot, just above the fixation cross. The exogenous cues were two black dots that appeared simultaneously, one above the location of each of the upcoming stimuli. There were two exogenous cues because processing of both stimuli was required for the comparison task. Such cues effectively direct attention to both locations (Bay & Wyble, 2014; Carmel & Carrasco, 2009). All cues were the same size and were uninformative about the task. The neutral cue had the same timing as the exogenous cues to eliminate temporal uncertainty regarding stimulus onset. It has been shown that exogenous attention improves processing relative to a variety of neutral cues (Carrasco, Williams, & Yeshurun, 2002; Talgar, Pelli, & Carrasco, 2004; Yeshurun & Carrasco, 2008).

Task and procedure

Observers were asked to maintain fixation throughout the trial sequence and to indicate, by pressing a keyboard button, which of the two stimuli (the one on the right or left) was rotated clockwise relative to the other (for the orientation-comparison task) or had higher spatial frequency (for the spatial-frequency task). Note that for these comparison tasks, it was necessary to compare the two stimuli; thus, it is detrimental to fixate on one stimulus and optimal to fixate on the center of the display. Figure 1a illustrates the stimulus sequence. Each trial began with the fixation cross (0.25° × 0.25°, < 4 cd/m²) at the center for 100 ms, followed for 50 ms by either the neutral cue or the two exogenous cues. Then, after a 50-ms interstimulus interval, the two stimuli were presented for 100 ms. Observers then responded using the keyboard; there was no time limit for response. Finally, a 40-ms feedback tone indicated whether the response was correct or incorrect. Accuracy was the main dependent variable; we also analyzed reaction times to rule out any speed-accuracy trade-offs.
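On a 100-Hz monitor each frame lasts 10 ms, so every visual event in the trial sequence corresponds to a whole number of frames. The sketch below, in Python, converts the reported durations to frame counts and computes the cue-to-stimulus onset asynchrony. The event names and the helper `ms_to_frames` are hypothetical and serve only to illustrate the arithmetic.

```python
REFRESH_HZ = 100  # monitor refresh rate reported in the text

# Durations of the visual events in one trial, in milliseconds
TRIAL_EVENTS_MS = [
    ("fixation", 100),
    ("cue", 50),
    ("isi", 50),
    ("stimuli", 100),
]

def ms_to_frames(duration_ms, refresh_hz=REFRESH_HZ):
    """Convert a duration to frames, rejecting durations that do not fit whole frames."""
    frames = duration_ms * refresh_hz / 1000
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError(
            f"{duration_ms} ms is not a whole number of frames at {refresh_hz} Hz"
        )
    return int(round(frames))

frames = {name: ms_to_frames(ms) for name, ms in TRIAL_EVENTS_MS}

# Stimulus-onset asynchrony between cue onset and stimulus onset:
# cue duration plus interstimulus interval
cue_to_stimulus_soa_ms = 50 + 50
```

The resulting 100-ms onset asynchrony coincides with the approximate peak of exogenous attention noted in the introduction.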

Testing sessions

In addition to assessing learning acquisition for tasks using the trained stimulus, we investigated whether the learning would transfer to another feature (by testing observers in the orientation-comparison task with the stimulus in an orthogonal orientation) and to another task (by testing observers in the spatial-frequency task).

The experiment was completed in 5 consecutive days. All observers completed the same pretest (Day 1) and posttest (Day 5) with neutral cues. They were tested on both the orientation and spatial-frequency tasks, with standard stimuli in both the trained and untrained orientations. The pretest session began with a brief period of practice (about 100 trials) with each of the two tasks. We then manually adjusted the orientation or spatial frequency of the comparison stimulus so that the difference between it and the standard stimulus allowed a given observer to achieve about 70% accuracy. The orientation differences ranged between 5° and 12.5° across observers (mean = 9.03°, SD = 2.5°), and the spatial-frequency differences ranged between 0.18 and 0.35 cycles per degree (mean = 0.27, SD = 0.007). There was no significant difference between groups for the orientation differences, t(12) = 1.51, p > .1, or for the spatial-frequency differences, t(12) = 9.49, p > .1. Performance (percentage correct) was measured throughout the sessions for these fixed stimulus differences. The pretest and posttest were identical and consisted of 1,200 trials each; there were 300 trials for each of the four combinations of task type (orientation or spatial frequency) and standard-stimulus type (trained or untrained). The order of tasks and orientations was independently counterbalanced across observers for the pretest and the posttest, and each combination of standard stimulus and task was tested in a separate block.

Training regimens

Training on the orientation-comparison task was performed over 3 consecutive days (Days 2–4) with the 30° standard stimulus. Each training session consisted of 800 trials and lasted about 30 min for each group. Observers were randomly assigned to two groups: 7 observers trained with the two exogenous cues and the other 7 trained with the neutral cue (Fig. 1b).
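The trial budget of the full protocol follows directly from the numbers given for the testing and training sessions. The Python sketch below tallies it; the variable names and condition labels are illustrative, and the short practice period at the start of the pretest is not counted.

```python
from itertools import product

TASKS = ("orientation", "spatial_frequency")
STANDARDS = ("trained_30deg", "untrained_300deg")
TRIALS_PER_TEST_BLOCK = 300        # per task-by-standard combination
TRAINING_DAYS = 3                  # Days 2-4
TRIALS_PER_TRAINING_SESSION = 800

# Each test session has one block per combination of task and standard stimulus
test_blocks = list(product(TASKS, STANDARDS))
trials_per_test_session = len(test_blocks) * TRIALS_PER_TEST_BLOCK

# Training uses only the orientation task with the 30-degree standard
training_trials = TRAINING_DAYS * TRIALS_PER_TRAINING_SESSION

# Pretest + training + posttest
total_trials_per_observer = 2 * trials_per_test_session + training_trials
```

Under these counts, each observer completes as many trials in the two test sessions combined as in the three training sessions.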

Control experiment

In a control experiment, we used the orientation-comparison task to evaluate whether performance differed in a central-neutral-cue condition and a no-cue condition. The procedure was very similar to that in the perceptual-learning study. Observers participated in one session (~40 min) and performed the orientation-comparison task with the two Gabor stimuli oriented at 30°. The central neutral cue was identical to the neutral cue in the perceptual-learning experiment. The no-cue condition was identical to the central-neutral-cue condition except that a cue did not appear before presentation of the stimuli. As in the perceptual-learning experiment, there was no temporal uncertainty regarding the onset of the Gabor stimuli because all observers received audio feedback after each trial, which served as an indicator of the onset of the following trial. We then manually adjusted the orientation of the comparison stimulus so that the difference between it and the standard stimulus allowed a given observer to achieve about 70% accuracy; the orientation differences ranged between 2° and 10° across observers (mean = 5.36°, SD = 2.8°).

To compare performance between the central-neutral-cue and the no-cue conditions, we had each observer perform four blocks in each cueing condition; each block consisted of 100 trials, for a total of 400 trials in each cueing condition. The order of the blocks was random except that a given cueing condition could not repeat more than twice in a row.
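One simple way to produce a random block order that respects the repetition limit is rejection sampling: shuffle the eight blocks and reshuffle whenever a condition occurs more than twice in a row. The Python sketch below illustrates this approach. The text does not say how the order was actually generated, so the method, the function names, and the condition labels are all assumptions.

```python
import random

def longest_run(seq):
    """Length of the longest stretch of identical consecutive items."""
    best = run = 0
    prev = object()  # sentinel that equals no real item
    for item in seq:
        run = run + 1 if item == prev else 1
        best = max(best, run)
        prev = item
    return best

def constrained_block_order(conditions=("central_neutral_cue", "no_cue"),
                            blocks_per_condition=4, max_run=2, rng=None):
    """Shuffle blocks until no condition occurs more than max_run times in a row."""
    rng = rng or random.Random()
    blocks = [c for c in conditions for _ in range(blocks_per_condition)]
    while True:
        rng.shuffle(blocks)
        if longest_run(blocks) <= max_run:
            return list(blocks)

# A seeded generator makes the example reproducible
order = constrained_block_order(rng=random.Random(0))
```

Rejection sampling is adequate here because many orderings of four plus four blocks satisfy the constraint, so the loop ends after a few shuffles on average.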

Results

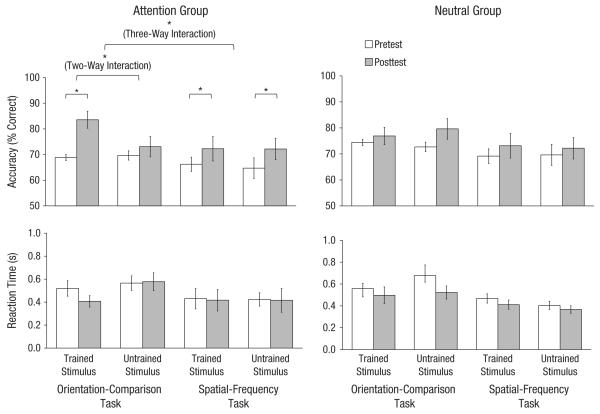

First, we established that accuracy in the pretest did not differ between the neutral and attention groups for either task and for either standard stimulus (all ps > .1). Then we examined whether and how the two training procedures affected learning. We conducted a mixed-design analysis of variance (ANOVA) with session (pretest vs. posttest), task (orientation vs. spatial frequency), and stimulus orientation (trained vs. untrained) as within-subjects repeated factors and group as the between-subjects factor. To rule out speed-accuracy trade-offs, we analyzed reaction times. They were slightly faster after training but not significantly so, F(1, 12) = 2.81, p = .12, and there were no significant interactions (Fig. 2, bottom row; all ps > .2). Thus, we proceeded to analyze our main dependent measure—accuracy.

Fig. 2.

Results for the attention group (left) and the neutral group (right) after training on the orientation-comparison task. Accuracy (top row) and reaction time (bottom row) are presented separately. Error bars represent ±1 SEM. Asterisks represent significant differences (*p < .05).

For the accuracy data, there was a significant four-way interaction (Attention Group × Task × Stimulus Orientation × Session), F(1, 12) = 6.54, p = .025, which indicates a significant difference in learning between the two groups. We examined learning for each group separately using repeated measures three-way ANOVAs. For the neutral group, neither the three-way interaction (Task × Stimulus Orientation × Session), F(1, 6) = 1.26, p = .304 (Fig. 2, top right), nor the two-way interactions for either of the tasks, all F(1, 6)s < 1.94, p > .211, nor the main effects of learning for either the trained or the untrained stimuli in either task, all t(6)s < 1, p > .39, were significant. In contrast, for the attention group, there was a significant three-way interaction (Task × Stimulus Orientation × Session), F(1, 6) = 6.35, p = .045 (Fig. 2, top left). To explore this interaction, we examined the learning effects for each task in the attention group.

Attention enables learning in the orientation-comparison task with the trained stimulus

For the attention group (Fig. 2, left), there was a main effect of session for the trained orientation-comparison task, two-way ANOVA, F(1, 6) = 9.935, p = .02, ηp² = .623, and a significant two-way interaction between session and stimulus orientation, F(1, 6) = 6.43, p = .044, ηp² = .518. These effects emerged from significant learning for the trained stimulus, t(6) = 3.14, p = .02, Cohen’s d = 1.252, but not for the untrained (orthogonal) stimulus, t(6) = 1.59, p = .162. For all 7 observers, when they trained with the attentional cues, learning was better for the trained stimulus than for the untrained stimulus.
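Partial eta squared can be recovered from an F statistic and its degrees of freedom as F × df_effect / (F × df_effect + df_error), which follows from its definition as SS_effect / (SS_effect + SS_error). The short Python sketch below applies this identity to the F values reported above as a consistency check; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F values reported above for the attention group, each with df = (1, 6)
main_effect_session = partial_eta_squared(9.935, 1, 6)
session_by_orientation = partial_eta_squared(6.43, 1, 6)
```

Both results agree with the reported effect sizes to within the rounding of the published F values.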

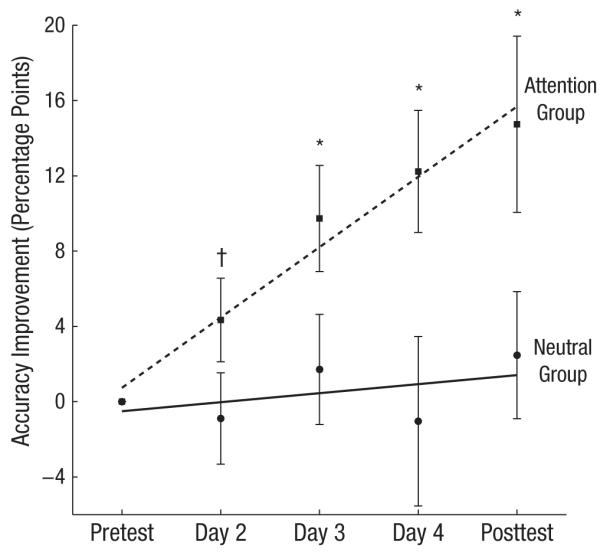

To determine the learning rate (Fig. 3), we calculated for each observer the average improvement in accuracy (relative to the pretest) on the orientation-comparison task when the standard stimulus was the trained stimulus and plotted this improvement across sessions. We then calculated the slope of the best-fitting regression line. For the attention group, we found a significantly positive slope, t(6) = 3.29, p = .016, which indicates that observers’ performance in this group improved steadily; in contrast, for the observers in the neutral group, the slope was not significantly different from zero, t(6) = 0.6, p = .57. Furthermore, independent-samples one-tailed t tests revealed differences between the groups that were marginal on Day 2 and significant from Day 3 onward (Day 2: t(12) = 1.59, p = .07; Day 3: t(12) = 1.97, p = .04; Day 4: t(12) = 2.39, p = .02; Day 5, posttest: t(12) = 2.12, p = .03). Note that the improved performance in the attention group was maintained during the posttest, when both groups were tested with a neutral cue.
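The learning-rate analysis has two steps: fit a least-squares line to each observer's improvement scores across sessions, then test whether the group's slopes differ from zero with a one-sample t test. The Python sketch below illustrates both steps. The improvement scores are invented solely to make the example run and are not data from the study; which sessions enter the fit (here Days 2 to 5) is also an assumption.

```python
import math
import statistics

def ols_slope(xs, ys):
    """Slope of the least-squares regression line of ys on xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def one_sample_t(values, mu=0.0):
    """Return (t statistic, degrees of freedom) for a one-sample t test against mu."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    return (mean - mu) / (sd / math.sqrt(n)), n - 1

SESSIONS = [2, 3, 4, 5]  # assumed: training Days 2-4 plus the posttest (Day 5)

# HYPOTHETICAL improvement scores (percentage points relative to pretest),
# one row per observer; these are NOT the study's data.
improvements = [
    [1.0, 3.0, 4.0, 6.0],
    [0.0, 2.0, 2.0, 5.0],
    [2.0, 1.0, 4.0, 4.0],
    [3.0, 4.0, 6.0, 9.0],
    [-1.0, 0.0, 2.0, 2.0],
    [1.0, 1.0, 3.0, 2.0],
    [0.0, 3.0, 5.0, 7.0],
]

slopes = [ols_slope(SESSIONS, row) for row in improvements]
t_stat, df = one_sample_t(slopes)
```

With 7 observers per group the test has 6 degrees of freedom, matching the t(6) values reported above.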

Fig. 3.

Improvements in accuracy (relative to the pretest) on the orientation-comparison task when the standard stimulus was the trained stimulus. Accuracy is graphed as a function of session, separately for the attention group and the neutral group. Error bars represent ±1 SEM. The dagger and asterisks represent the significance of the differences between the groups (†p < .1, *p < .05).

Attention facilitates learning in the untrained spatial-frequency task

For the attention group, we examined whether and how training on the orientation-comparison task influenced performance on the untrained spatial-frequency task (Fig. 2, left). Evidence of learning also emerged for the untrained spatial-frequency task: There was a main effect of session, F(1, 6) = 12.47, p = .012, ηp² = .675, and no Stimulus Orientation × Session interaction, F(1, 6) < 1. For all but 1 observer, attention improved performance for the untrained task.

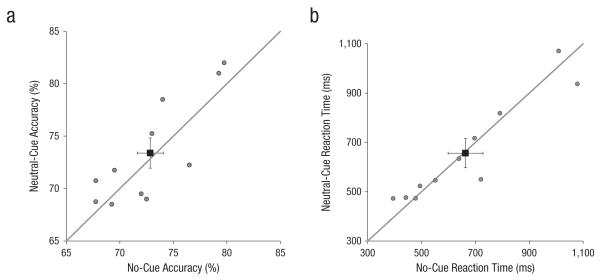

Central-neutral-cue and no-cue conditions do not differ

In the control experiment, we examined whether the central neutral cue might have prevented the processing of the two stimuli by attracting attention to the center. We compared performance in a central-neutral-cue condition with that in a no-cue condition. Paired t tests revealed that performance did not differ significantly between the two conditions—accuracy: t(10) = 0.63, p = .54; reaction time: t(10) = 0.28, p = .78 (Fig. 4). If anything, for most observers, accuracy was higher in the central-neutral-cue condition than in the no-cue condition. Thus, the central neutral cue was not responsible for the lack of learning in the neutral condition in the perceptual-learning experiment.

Fig. 4.

Accuracy (a) and reaction time (b) in the control experiment (n = 11). Performance in the central-neutral-cue condition is plotted against performance in the no-cue condition. The solid lines represent the equality lines. The black squares represent the average for each condition, and error bars represent ±1 SEM.

Discussion

This is the first study to isolate the effects of exogenous attention on learning acquisition and to disentangle it from task relevance. Attention has been presumed to play a central role in perceptual learning (Ahissar & Hochstein, 1993, 2004; Lu et al., 2010; Meuwese et al., 2013; Roelfsema et al., 2010; Seitz & Watanabe, 2009; Shiu & Pashler, 1992; Tsushima & Watanabe, 2009), but it has almost always been inferred and rarely operationalized and manipulated.

In this study, we experimentally manipulated attention between groups. In contrast to studies in which the stimuli were defined as attended and unattended stimuli according to task context, we equated all parameters between groups: Stimuli and testing sessions were identical between groups, and the timing between the cues in both groups was identical, thus eliminating the possibility that timing differences could underlie the benefit in the attention group. Both neutral and exogenous cues were task irrelevant and provided no information that could inform task performance, thereby distinguishing attention from task performance and relevance.

Only one previous study has examined the role of exogenous spatial attention on the acquisition stage of perceptual learning: During training, different locations were cued with different exogenous-cue types (attended, divided attention, unattended; Mukai, Bahadur, Kesavabhotla, & Ungerleider, 2011). Unfortunately, the fact that different cues were manipulated within observers prevented any direct interpretation of the role of attention on learning. Moreover, the effects of different cue types could stem from the transfer of learning across locations brought about by exogenous attention (Carrasco, Baideme, & Giordano, 2009; Donovan, Szpiro, & Carrasco, 2015).

Although endogenous attention has been shown to improve learning for task-relevant perceptual learning (Ito, Westheimer, & Gilbert, 1998; Schoups et al., 2001) and task-irrelevant, exposure-based sensory adaptation (Gutnisky, Hansen, Iliescu, & Dragoi, 2009), both of these studies found learning for attended and unattended conditions. Moreover, because the effect of attention was tested within subjects, it is hard to distinguish between learning and endogenous-attention facilitation of transfer across locations (Ito et al., 1998). Relevant to our current findings are the differences between endogenous and exogenous attention in terms of the effort required from the observer, the flexibility versus automaticity, the time course, and the probable different effects on stimuli processing (see reviews by Carrasco, 2011, 2014).

In this study, by design, we set the number of training days and trials so that training with a neutral cue would not yield learning. We then trained another group of observers on the same task with exogenous cues. Attention modulated performance and, remarkably, enabled learning. In contrast to studies finding that the effect of endogenous attention decreases with practice (object-based attention: Dosher et al., 2010; dual-task performance: Chirimuuta et al., 2007), this was not the case in our study. The pronounced difference between observers who trained with neutral cues and those who trained with exogenous cues did not decrease (Fig. 3). Critically, this attention benefit was maintained during the posttest, when a neutral cue was used for both groups. The learning in the attention group reflects improved visual processing, the ability to compare nearby orientations, rather than an improved ability to attend. Had the improved performance reflected an improved ability to attend, performance improvements would no longer be present during the posttest, in which exogenous cues were no longer present. Furthermore, the brief stimulus presentation precluded deployment of endogenous (voluntary) attention, which takes about 300 ms (e.g., Liu, Stevens, & Carrasco, 2007; Nakayama & Mackeben, 1989). Moreover, the control experiment in the present study confirmed that a central neutral cue does not attract attention away from target stimuli (Carrasco et al., 2002; Talgar et al., 2004; Yeshurun & Carrasco, 2008), given that performance in an orientation-comparison task was the same with and without a central cue (Fig. 4).

Together, these findings show that manipulating attention can dramatically change the learning process. Exogenous attention is a form of covert spatial attention that prioritizes processing at cued spatial locations automatically and involuntarily, even for irrelevant and uninformative cues (Carrasco, 2011, 2014). This resource allocation to upcoming spatial locations by exogenous peripheral cues enhances the signal (Carrasco, 2011) and can improve the processing of several locations simultaneously (Bay & Wyble, 2014; Carmel & Carrasco, 2009). We speculate that the enhanced signal for the cued stimuli in our study may be learned more effectively than the stimuli without the cues. The possibility that an enhanced signal facilitates perceptual learning is consistent with the finding that attention enhances the signal of the attended stimulus and facilitates memory encoding (Ballesteros, Reales, García, & Carrasco, 2006). It has been proposed that perceptual learning may be attained only when a certain threshold of sensory stimulation is reached (Seitz & Dinse, 2007; Szpiro, Wright, & Carrasco, 2014); exogenous attention may facilitate the sensory stimulation required to attain that threshold. Future studies will further understanding of the underlying mechanism responsible for this attention benefit to visual learning.

Interleaving tasks during training enables learning, but it does not transfer across tasks or features (Szpiro, Wright, & Carrasco, 2014). In the current study, attention enabled learning for the trained task and also for the untrained task (i.e., the spatial-frequency comparison), albeit to a lesser degree, which indicates that attention can also facilitate transfer across tasks. However, as in a previous study (Sagi, 2011), learning did not transfer across features to the orthogonal untrained orientation for the trained task. The current finding of transfer across tasks, but no transfer across features, is consistent with previous findings on perceptual-learning specificity for orientation (Dosher & Lu, 2005; Karni & Sagi, 1991; Schoups et al., 2001; Xiao et al., 2008; Zhang et al., 2010) and may reflect hyperspecificity and overfitting to the trained feature of the trained task (as suggested by Gutnisky et al., 2009; Sagi, 2011).

We implemented a protocol for testing the interaction of attention and perceptual learning by using a between-subjects design in which both covert exogenous attention and neutral conditions are isolated during training. This study reveals that directing exogenous attention to the upcoming stimuli using uninformative peripheral cues enables learning and task transfer. Existing perceptual-learning protocols that aid clinical populations (e.g., Das & Huxlin, 2010; Levi & Li, 2009; Polat et al., 2012; Sagi, 2011) can benefit from the present findings by merely presenting a peripheral cue before upcoming stimuli. These cues can speed up learning, and possibly enable it in otherwise ineffective protocols, without additional effort from participants, which makes them especially beneficial for visual rehabilitation.

Acknowledgments

We thank Sarah Cohen for help with data collection.

Funding

This work was supported by National Eye Institute Grant EY016200 (to M. Carrasco).

Footnotes

Author Contributions

M. Carrasco and S. F. A. Szpiro designed the study, performed the analysis, and wrote the manuscript. S. F. A. Szpiro collected the data.

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

References

- Ahissar M, Hochstein S. Attentional control of early perceptual learning. Proceedings of the National Academy of Sciences, USA. 1993;90:5718–5722. doi: 10.1073/pnas.90.12.5718. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ahissar M, Hochstein S. The reverse hierarchy theory of visual perceptual learning. Trends in Cognitive Sciences. 2004;8:457–464. doi: 10.1016/j.tics.2004.08.011. [DOI] [PubMed] [Google Scholar]

- Ballesteros S, Reales JM, García E, Carrasco M. Selective attention affects implicit and explicit memory for familiar pictures at different delay conditions. Psicothema. 2006;18:88–99. [PubMed] [Google Scholar]

- Bartolucci M, Smith AT. Attentional modulation in visual cortex is modified during perceptual learning. Neuropsychologia. 2011;49:3898–3907. doi: 10.1016/j.neuropsychologia.2011.10.007. [DOI] [PubMed] [Google Scholar]

- Bay M, Wyble B. The benefit of attention is not diminished when distributed over two simultaneous cues. Attention, Perception, & Psychophysics. 2014;76:1287–1297. doi: 10.3758/s13414-014-0645-z. [DOI] [PubMed] [Google Scholar]

- Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10:433–436. [PubMed] [Google Scholar]

- Carmel D, Carrasco M. Bright and dark attention: Distinct effect of divided attention at attended and unattended locations [Abstract] Journal of Vision. 2009;9(8) doi: 10.1167/9.8.123. Article 123. [DOI] [Google Scholar]

- Carrasco M. Visual attention: The past 25 years. Vision Research. 2011;51:1484–1525. doi: 10.1016/j.visres.2011.04.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carrasco M. Spatial attention: Perceptual modulation. In: Kastner S, Nobre AC, editors. The Oxford handbook of attention. Oxford University Press; Oxford, England: 2014. pp. 183–230. [Google Scholar]

- Carrasco M, Baideme L, Giordano AM. Covert attention generalizes perceptual learning [Abstract] Journal of Vision. 2009;9(8) doi: 10.1167/9.8.857. Article 857. [DOI] [Google Scholar]

- Carrasco M, Williams PE, Yeshurun Y. Covert attention increases spatial resolution with or without masks: Support for signal enhancement. Journal of Vision. 2002;2(6) doi: 10.1167/2.6.4. Article 4. [DOI] [PubMed] [Google Scholar]

- Chirimuuta M, Burr D, Morrone MC. The role of perceptual learning on modality-specific visual attentional effects. Vision Research. 2007;47:60–70. doi: 10.1016/j.visres.2006.09.002. [DOI] [PubMed] [Google Scholar]

- Choi H, Seitz AR, Watanabe T. When attention interrupts learning: Inhibitory effects of attention on TIPL. Vision Research. 2009;49:2586–2590. doi: 10.1016/j.visres.2009.07.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Das A, Huxlin KR. New approaches to visual rehabilitation for cortical blindness: Outcomes and putative mechanisms. The Neuroscientist. 2010;16:374–387. doi: 10.1177/1073858409356112. [DOI] [PubMed] [Google Scholar]

- Donovan I, Szpiro S, Carrasco M. Exogenous attention facilitates perceptual learning transfer within and across visual hemifields. Journal of Vision. 2015;15(10) doi: 10.1167/15.10.11. Article 11.

- Dosher BA, Han S, Lu ZL. Perceptual learning and attention: Reduction of object attention limitations with practice. Vision Research. 2010;50:402–415. doi: 10.1016/j.visres.2009.09.010.

- Dosher BA, Lu Z-L. Perceptual learning in clear displays optimizes performance: Learning the limiting process. Proceedings of the National Academy of Sciences, USA. 2005;102:5286–5290. doi: 10.1073/pnas.0500492102.

- Giordano AM, McElree B, Carrasco M. On the automaticity and flexibility of covert attention: A speed-accuracy trade-off analysis. Journal of Vision. 2009;9(3) doi: 10.1167/9.3.30. Article 30.

- Gutnisky DA, Hansen BJ, Iliescu BF, Dragoi V. Attention alters visual plasticity during exposure-based learning. Current Biology. 2009;19:555–560. doi: 10.1016/j.cub.2009.01.063.

- Huang TR, Watanabe T. Task attention facilitates learning of task-irrelevant stimuli. PLoS ONE. 2012;7(4) doi: 10.1371/journal.pone.0035946. Article e35946.

- Ito M, Westheimer G, Gilbert CD. Attention and perceptual learning modulate contextual influences on visual perception. Neuron. 1998;20:1191–1197. doi: 10.1016/s0896-6273(00)80499-7.

- Karni A, Sagi D. Where practice makes perfect in texture discrimination: Evidence for primary visual cortex plasticity. Proceedings of the National Academy of Sciences, USA. 1991;88:4966–4970. doi: 10.1073/pnas.88.11.4966.

- Koch C, Tsuchiya N. Attention and consciousness: Two distinct brain processes. Trends in Cognitive Sciences. 2007;11:16–22. doi: 10.1016/j.tics.2006.10.012.

- Levi DM, Li RW. Perceptual learning as a potential treatment for amblyopia: A mini-review. Vision Research. 2009;49:2535–2549. doi: 10.1016/j.visres.2009.02.010.

- Li W, Piëch V, Gilbert CD. Perceptual learning and top-down influences in primary visual cortex. Nature Neuroscience. 2004;7:651–657. doi: 10.1038/nn1255.

- Liu T, Stevens ST, Carrasco M. Comparing the time course and efficacy of spatial and feature-based attention. Vision Research. 2007;47:108–113. doi: 10.1016/j.visres.2006.09.017.

- Lu Z-L, Yu C, Sagi D, Watanabe T, Levi D. Perceptual learning: Functions, mechanisms, and applications. Vision Research. 2010;50:365–367. doi: 10.1016/j.visres.2010.01.010.

- Meuwese JD, Post RA, Scholte HS, Lamme VA. Does perceptual learning require consciousness or attention? Journal of Cognitive Neuroscience. 2013;25:1579–1596. doi: 10.1162/jocn_a_00424.

- Mukai I, Bahadur K, Kesavabhotla K, Ungerleider LG. Exogenous and endogenous attention during perceptual learning differentially affect post-training target thresholds. Journal of Vision. 2011;11(1) doi: 10.1167/11.1.25. Article 25.

- Mukai I, Kim D, Fukunaga M, Japee S, Marrett S, Ungerleider LG. Activations in visual and attention-related areas predict and correlate with the degree of perceptual learning. The Journal of Neuroscience. 2007;27:11401–11411. doi: 10.1523/JNEUROSCI.3002-07.2007.

- Nakayama K, Mackeben M. Sustained and transient components of focal visual attention. Vision Research. 1989;29:1631–1647. doi: 10.1016/0042-6989(89)90144-2.

- Paffen CL, Verstraten FA, Vidnyánszky Z. Attention-based perceptual learning increases binocular rivalry suppression of irrelevant visual features. Journal of Vision. 2008;8(4) doi: 10.1167/8.4.25. Article 25.

- Polat U, Schor C, Tong JL, Zomet A, Lev M, Yehezkel O, Levi DM. Training the brain to overcome the effect of aging on the human eye. Scientific Reports. 2012;2 doi: 10.1038/srep00278. Article 278.

- Roelfsema PR, van Ooyen A, Watanabe T. Perceptual learning rules based on reinforcers and attention. Trends in Cognitive Sciences. 2010;14:64–71. doi: 10.1016/j.tics.2009.11.005.

- Sagi D. Perceptual learning in Vision Research. Vision Research. 2011;51:1552–1566. doi: 10.1016/j.visres.2010.10.019.

- Schoups A, Vogels R, Qian N, Orban G. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412:549–553. doi: 10.1038/35087601.

- Seitz AR, Dinse HR. A common framework for perceptual learning. Current Opinion in Neurobiology. 2007;17:148–153. doi: 10.1016/j.conb.2007.02.004.

- Seitz AR, Watanabe T. The phenomenon of task-irrelevant perceptual learning. Vision Research. 2009;49:2604–2610. doi: 10.1016/j.visres.2009.08.003.

- Shiu LP, Pashler H. Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Perception & Psychophysics. 1992;52:582–588. doi: 10.3758/bf03206720.

- Szpiro S, Spering M, Carrasco M. Perceptual learning modifies untrained pursuit eye movements. Journal of Vision. 2014;14(8) doi: 10.1167/14.8.8. Article 8.

- Szpiro S, Wright B, Carrasco M. Learning one task by interleaving practice with another task. Vision Research. 2014;101:118–124. doi: 10.1016/j.visres.2014.06.004.

- Talgar CP, Pelli D, Carrasco M. Covert attention enhances letter identification without affecting channel tuning. Journal of Vision. 2004;4(1) doi: 10.1167/4.1.3. Article 3.

- Tsushima Y, Sasaki Y, Watanabe T. Greater disruption due to failure of inhibitory control on an ambiguous distractor. Science. 2006;314:1786–1788. doi: 10.1126/science.1133197.

- Tsushima Y, Watanabe T. Roles of attention in perceptual learning from perspectives of psychophysics and animal learning. Learning & Behavior. 2009;37:126–132. doi: 10.3758/LB.37.2.126.

- Watanabe T, Náñez JE, Sasaki Y. Perceptual learning without perception. Nature. 2001;413:844–848. doi: 10.1038/35101601.

- Xiao L-Q, Zhang J-Y, Wang R, Klein SA, Levi DM, Yu C. Complete transfer of perceptual learning across retinal locations enabled by double training. Current Biology. 2008;18:1922–1926. doi: 10.1016/j.cub.2008.10.030.

- Yantis S, Jonides J. Attentional capture by abrupt visual onsets: New perceptual objects or visual masking? Journal of Experimental Psychology: Human Perception and Performance. 1996;22:1505–1513. doi: 10.1037//0096-1523.22.6.1505.

- Yeshurun Y, Carrasco M. The effects of transient attention on spatial resolution and the size of the attentional cue. Perception & Psychophysics. 2008;70:104–113. doi: 10.3758/pp.70.1.104.

- Zhang JY, Zhang GL, Xiao LQ, Klein SA, Levi DM, Yu C. Rule-based learning explains visual perceptual learning and its specificity and transfer. The Journal of Neuroscience. 2010;30:12323–12328. doi: 10.1523/JNEUROSCI.0704-10.2010.