Abstract

Shearers play an important role in fully mechanized coal mining faces, and accurately identifying their cutting patterns helps to improve the automation level of shearers and to ensure the safety of coal mining. The least squares support vector machine (LSSVM) has been proven to offer strong potential in prediction and classification problems, particularly when an appropriate meta-heuristic algorithm is employed to determine the values of its two parameters. However, these meta-heuristic algorithms have the drawbacks of being hard to understand and reaching the global optimal solution slowly. In this paper, an improved fruit fly optimization algorithm (IFOA) is presented to optimize the parameters of LSSVM, and the LSSVM coupled with IFOA (IFOA-LSSVM) is used to identify the shearer cutting pattern. The vibration acceleration signals of five cutting patterns were collected and the state features were extracted based on the ensemble empirical mode decomposition (EEMD) and the kernel function. Some examples of the IFOA-LSSVM model are presented and the results are compared in detail with the LSSVM, PSO-LSSVM, GA-LSSVM and FOA-LSSVM models. The comparison results indicate that the proposed approach is feasible and efficient and outperforms the others. Finally, an industrial application example at a coal mining face is presented to demonstrate the effect of the proposed system.

Keywords: shearer cutting pattern identification, least squares support vector machine, fruit fly optimization algorithm, ensemble empirical mode decomposition, feature extraction

1. Introduction

In a fully mechanized coal mining face, the shearer is the most important piece of mining equipment and uses a drum to cut the coal. Because of the poor working conditions, shearer operators cannot determine accurately, by visual inspection alone, whether the shearer drum is cutting coal, rock, or coal with gangue. This can lead to poor coal quality and low mining efficiency. Moreover, accidents in collieries are occurring with increasing frequency. The main reason for these problems is that the automation level of coal mining equipment is too low. With the development of suitable automation techniques, the automatic control of shearers has attracted more and more attention, and accurate monitoring of the shearer working status plays an indispensable role in it. Therefore, researching the identification approach for shearer cutting patterns has become a challenging and significant subject [1].

Traditional identification techniques for shearer cutting patterns are mostly based on coal-rock recognition. The most influential methods are γ-ray detection [2], radar detection [3], infrared detection [4], and image detection [5]. However, these methods cannot satisfy the needs of practical applications and achieve low recognition rates because of the harsh conditions in practical production. In this context, this paper draws on the fault diagnosis and pattern recognition methods used for traditional equipment and focuses on the identification method for shearer cutting patterns. Sensors are extensively used in pattern recognition and diagnosis systems to tackle the problem of perception by providing information about the machine. Collecting state information from vibrations has become an effective approach: vibration-based analysis is becoming the most commonly used method and has proved efficient in various real applications. For a shearer, the rocker arm is the critical component, and its vibrations comprehensively reflect the cutting conditions of the shearer, which can be diagnosed correctly by appropriate measurement and processing of the sensor signals.

In general, existing pattern recognition methods can be classified into two categories: model-based methods and data-driven methods. Model-based pattern recognition aims to determine a pattern using the system’s analytical/mathematical model(s). However, the analytical/mathematical model associated with a specific pattern is difficult to construct accurately, which makes model-based methods ineffective. In recent years, with the development of intelligent computing technology, data-driven methods have received much attention. In these methods, pattern diagnosis is realized by mapping the pattern space to the feature space through modern intelligent algorithms [6], such as expert systems [7,8], neural networks [9,10], fuzzy logic [11], rough sets [12], and their hybrid methods [13,14]. Although neural networks and other conventional artificial intelligence techniques have been widely used in fault diagnosis and pattern recognition, they require sufficient samples and generalize poorly, because models built on the empirical risk minimization principle can over-fit the samples. The support vector machine (SVM) is a machine learning algorithm based on the structural risk minimization principle and has been widely used in classification and regression prediction because of its desirable generalization performance. The least squares support vector machine (LSSVM) is a reformulation of SVM which leads to solving a linear Karush-Kuhn-Tucker (KKT) system. The LSSVM can deal with non-linear systems with high precision, making it a powerful tool for modeling and forecasting non-linear systems [15,16,17]. The performance of an LSSVM model largely depends on the values of its two parameters. One of them, the regularization parameter “C”, controls the tradeoff between margin maximization and error minimization. The other, the kernel parameter, implicitly defines the nonlinear mapping from the input space to the high-dimensional feature space. Therefore, optimizing the parameters of LSSVM is an indispensable step for good performance in a learning task. Currently, several meta-heuristic algorithms have been employed to determine appropriate values of these two parameters, such as particle swarm optimization [18,19], the genetic algorithm [20,21], the ant colony algorithm [22], and the immune algorithm [23]. However, these optimization algorithms share the drawbacks of being hard to understand and reaching the global optimal solution slowly.

The fruit fly optimization algorithm (FOA) proposed by Pan [24] is a novel evolutionary computation and optimization technique. It has the advantages of being easy to understand and of requiring shorter program code than other algorithms. FOA has recently been applied in a variety of fields, such as power load forecasting [25], neural network parameter optimization [26], PID controller parameter tuning [27], and the design and optimization of key control characteristics [28]. However, it often becomes trapped in a local optimum, which leads to premature convergence. In this research, an improved fruit fly optimization algorithm (IFOA) is proposed to optimize the two parameters of the LSSVM model. The resulting IFOA-LSSVM model uses a fruit fly optimization algorithm with two improvements to efficiently control the global search for the LSSVM parameters in shearer cutting pattern identification.

The remaining parts of the paper are organized as follows: Section 2 summarizes some related works about the state of the art approaches to the problem. Section 3 introduces the basic theory of the original LSSVM and FOA methods, and presents the proposed IFOA-LSSVM model in detail. Section 4 describes the identification system of shearer cutting patterns based on the proposed method. Section 5 provides some examples and comparisons of IFOA-LSSVM with other methods. Section 6 presents the application results of the proposed method on a coal mining face. Section 7 gives the conclusions of our paper and proposes some future work.

2. Related Works on Identification of Shearer Cutting Patterns

In the past decades, many researchers have focused on coal-rock identification to roughly estimate the cutting state of shearers, and many kinds of coal-rock recognition methods have been successively proposed. In [2], gamma-ray backscatter sensing was used to measure the boundary coal thickness. In [3], a radar coal thickness sensor was developed to identify the coal-rock interface and measure the thickness of a coal seam. In [5], a coal-rock interface identification method was provided based on the processing of visible light and infrared images. In [29], the recognition of a coal-rock interface in top caving was investigated via the vibration signals of the tail beam of the hydraulic support. In [30], the color, grey scale, grain, shape and other image features of visible light images and infrared images were integrated and used to identify the coal-rock interface. In [31], the wavelet packet was utilized to extract the features of the torsional vibration signal of a drum shaft, and the extracted features were integrated to recognize the coal-rock interface through fuzzy neural network technology. In [32], acoustic detection was applied to the identification of the coal-rock interface according to the sonic wave reflection and refraction at the water-coal interface and coal-rock interface. In [33], Sahoo et al. carried out experiments on the application of an opto-tactile sensor for recognizing rock surfaces. In [34], radar technology was used to identify the coal-rock interface and obtain the cutting patterns of a shearer.

Although many coal-rock recognition methods have been developed, they have some common disadvantages. Firstly, the coal-rock detectors in the above references are complex and place overly strict requirements on the geological conditions of the coal seam, so they cannot be applied widely in practical production. Furthermore, the recognition rate is sensitive to the gangue included in the coal seam. Therefore, this paper utilizes the data-driven theory and proposes an intelligent identification method for shearer cutting patterns based on the integration of the least squares support vector machine and an improved fruit fly optimization algorithm.

3. Least Squares Support Vector Machine with Improved Fruit Fly Optimization Algorithm

3.1. Least Squares Support Vector Machine

The support vector machine, a reliable tool for solving pattern recognition and classification problems, was initially presented by Vapnik and his coworkers in 1995 based on statistical learning theory and the structural risk minimization principle [35]. The least squares support vector machine (LSSVM) is an extension of SVM which applies the linear least squares criteria to the loss function instead of inequality constraints [36].

Given a set of samples $\{(x_i, y_i)\}_{i=1}^{m}$, $x_i$ is the input data and $y_i$ is the corresponding output value for sample i. The formulation of the primal problem for the LSSVM can be given as follows:

| $\min_{w, b, \xi} J(w, \xi) = \frac{1}{2} w^{T} w + \frac{C}{2} \sum_{i=1}^{m} \xi_i^{2}$ | (1) |

subject to the equality constraint:

| $y_i = w^{T} \varphi(x_i) + b + \xi_i, \quad i = 1, 2, \ldots, m$ | (2) |

where J is the objective function; 1/2wTw is used as a flatness measurement function; C is the regularization parameter, which determines the tradeoff between the training error and the model flatness; ξi is the slack variable; the nonlinear mapping φ(·) maps the input data into a high dimensional feature space, where a linear regression problem is obtained and solved; b is the bias, and w is a weight vector of the same dimension as the feature space.

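The Lagrangian function L can be constructed by:

| $L(w, b, \xi, \alpha) = J(w, \xi) - \sum_{i=1}^{m} \alpha_i \left[ w^{T} \varphi(x_i) + b + \xi_i - y_i \right]$ | (3) |

where αi is the Lagrange multiplier. The Karush-Kuhn-Tucker (KKT) conditions for optimality are given by:

| $\frac{\partial L}{\partial w} = 0 \Rightarrow w = \sum_{i=1}^{m} \alpha_i \varphi(x_i); \quad \frac{\partial L}{\partial b} = 0 \Rightarrow \sum_{i=1}^{m} \alpha_i = 0; \quad \frac{\partial L}{\partial \xi_i} = 0 \Rightarrow \alpha_i = C \xi_i; \quad \frac{\partial L}{\partial \alpha_i} = 0 \Rightarrow w^{T} \varphi(x_i) + b + \xi_i - y_i = 0$ | (4) |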

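Based on Equation (3), one can formulate a linear system Ax = B in order to represent this problem as:

| $\begin{bmatrix} 0 & I^{T} \\ I & \Omega + C^{-1} E \end{bmatrix} \begin{bmatrix} b \\ A \end{bmatrix} = \begin{bmatrix} 0 \\ Y \end{bmatrix}$ | (5) |

where I = [1, 1, …, 1]T, A = [α1, α2, …, αm]T, Y = [y1, y2, …, ym]T, Ω is the m × m matrix with entries Ωij = φ(xi)Tφ(xj), and E is the m × m identity matrix. According to Mercer’s condition, the kernel function can be set as:

| $K(x_i, x_j) = \varphi(x_i)^{T} \varphi(x_j)$ | (6) |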

Then, the regression function of the LSSVM model can be described as follows:

| $f(x) = \sum_{i=1}^{m} \alpha_i K(x, x_i) + b$ | (7) |

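For a classification problem, yi ∈ {−1, 1} indicates the corresponding desired output and the classification decision function is described as follows:

| $f(x) = \operatorname{sgn}\left( w^{T} \varphi(x) + b \right)$ | (8) |

Equation (1) for the classification problem should meet the following equality constraint:

| $y_i \left[ w^{T} \varphi(x_i) + b \right] = 1 - \xi_i, \quad i = 1, 2, \ldots, m$ | (9) |

The corresponding optimization problem of the LSSVM model is described with the Lagrangian function as follows:

| $L(w, b, \xi, \alpha) = J(w, \xi) - \sum_{i=1}^{m} \alpha_i \left\{ y_i \left[ w^{T} \varphi(x_i) + b \right] - 1 + \xi_i \right\}$ | (10) |

Using the same processing method, the classification decision function is described as follows:

| $f(x) = \operatorname{sgn}\left( \sum_{i=1}^{m} \alpha_i y_i K(x, x_i) + b \right)$ | (11) |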

There are several different types of Mercer kernel function K(x, xi), such as the sigmoid, polynomial and radial basis function (RBF) kernels. The RBF is a common choice because it has few parameters to set and performs well overall [37]. Therefore, this paper selects the RBF as the kernel function:

| $K(x, x_i) = \exp\left( -\frac{\lVert x - x_i \rVert^{2}}{2 \delta^{2}} \right)$ | (12) |

Consequently, two parameters need to be chosen in the LSSVM model: the bandwidth of the Gaussian RBF kernel “δ” and the regularization parameter “C”. Many studies have shown that the LSSVM parameters have great influence on its learning and generalization ability. This paper presents an improved fruit fly optimization algorithm to determine the optimal values of these two parameters, so that the LSSVM achieves its best generalization ability.
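As an illustration of how the two parameters enter the computation, the following minimal sketch solves the linear system of Equation (5) and evaluates the regression function of Equation (7) in plain Python. It is not the authors' implementation. The `1/C` diagonal term assumes that the squared slack variables are weighted by C/2, the `2 * delta ** 2` kernel scaling is an assumed form of the RBF kernel, and the function names are hypothetical.

```python
import math


def rbf_kernel(a, b, delta):
    """Gaussian RBF kernel; the 2 * delta**2 scaling is an assumption."""
    sq = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-sq / (2.0 * delta ** 2))


def solve_linear(mat, rhs):
    """Solve mat * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(mat)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def lssvm_fit(xs, ys, c, delta):
    """Build and solve the (m + 1) x (m + 1) system; return (alphas, bias)."""
    m = len(xs)
    mat = [[0.0] + [1.0] * m]  # first row enforces sum(alpha) = 0
    for i in range(m):
        row = [1.0] + [rbf_kernel(xs[i], xs[j], delta) for j in range(m)]
        row[i + 1] += 1.0 / c  # ridge term from the regularization parameter C
        mat.append(row)
    sol = solve_linear(mat, [0.0] + list(ys))
    return sol[1:], sol[0]


def lssvm_predict(x, xs, alphas, bias, delta):
    """Regression output f(x) = sum_i alpha_i * K(x, x_i) + b."""
    return sum(a * rbf_kernel(x, xi, delta) for a, xi in zip(alphas, xs)) + bias
```

For two-class labels in {−1, 1}, taking the sign of `lssvm_predict` gives a class decision. A small C lets the outputs deviate more from the training targets, while a small δ makes the kernel more local, which is why both values have to be tuned together.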

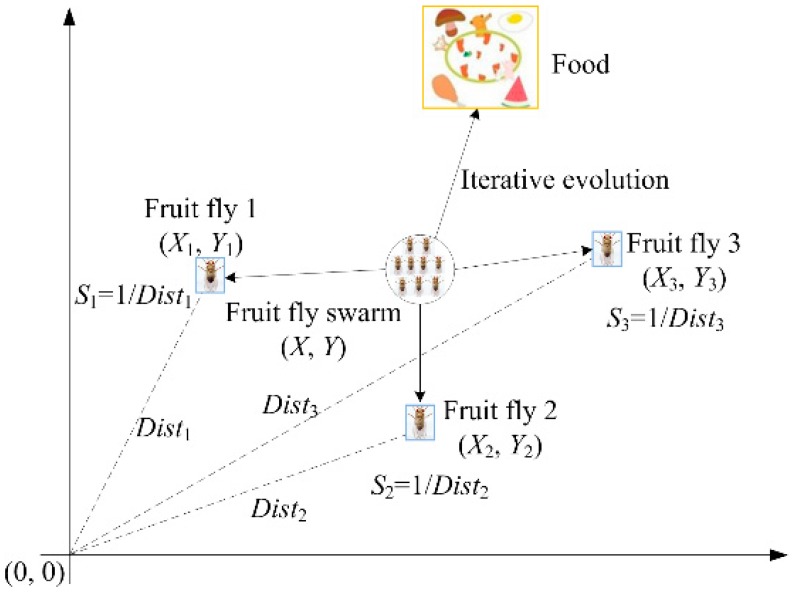

3.2. The Basic FOA and Analysis

The fruit fly optimization algorithm (FOA) is a new swarm intelligence algorithm proposed by Pan [24], and it is a kind of interactive evolutionary computation method. The basic idea of FOA is to imitate the food finding behavior of the fruit fly. While searching for food, a fruit fly initially smells a particular odor by using its olfactory organs, sends and receives information from its neighbors, and compares the current best location and fitness. Flies identify the fitness values by taste and fly toward the location with better fitness. They then use their sensitive vision to seek the food and fly further in that direction. Figure 1 shows the iterative food finding process of a fruit fly swarm.

Figure 1.

Food searching iterative process of fruit fly swarm.

According to the food finding characteristics of fruit fly swarm, the FOA can be divided into several steps, as follows:

Step 1: Parameter initialization. The swarm location range (LR), maximum iteration number (Maxgen), and population size (sizepop) are initialized. The initial fruit fly swarm location (X_axis, Y_axis) and the random flight distance range FR should be initialized first:

| $\mathrm{X\_axis} = \mathrm{rand}(LR), \quad \mathrm{Y\_axis} = \mathrm{rand}(LR)$ | (13) |

Step 2: Population initialization. The random direction and distance for food searching of any individual fruit fly can be given as follows:

| $X_i = \mathrm{X\_axis} + \mathrm{rand}(FR), \quad Y_i = \mathrm{Y\_axis} + \mathrm{rand}(FR)$ | (14) |

Step 3: Population evaluation. Firstly, the distance from the location of fruit fly i to the origin (Disti) is calculated by using the following equation:

| $\mathrm{Dist}_i = \sqrt{X_i^{2} + Y_i^{2}}$ | (15) |

Secondly, the smell concentration judgment value (Si) needs to be calculated, and the value of Si is the reciprocal of the distance Disti:

| $S_i = 1 / \mathrm{Dist}_i$ | (16) |

Then, the smell concentration (Smelli) of the individual fruit fly location is calculated by inputting the smell concentration judgment value (Si) into the smell concentration judgment function (also called the fitness function). Finally, the fruit fly with the minimum smell concentration (the minimal value of Smelli) among the swarm is identified:

| $\mathrm{Smell}_i = \mathrm{Function}(S_i), \quad [\mathrm{bestSmell}, \mathrm{bestIndex}] = \min(\mathrm{Smell}_i)$ | (17) |

Step 4: Vision searching process. The minimal concentration value and the corresponding X, Y coordinates are retained. The fruit fly swarm flies toward the location with the minimal smell concentration value by using vision:

| $\mathrm{Smellbest} = \mathrm{bestSmell}, \quad \mathrm{X\_axis} = X(\mathrm{bestIndex}), \quad \mathrm{Y\_axis} = Y(\mathrm{bestIndex})$ | (18) |

Step 5: The iterative optimization is entered to repeat Steps (2)–(4). When the smell concentration reaches the preset precision value or the iteration number reaches the maximum Maxgen, the loop stops.

Through the analysis of Equations (14)–(15), it can be found that FOA has some disadvantages which limit its searching performance. The disadvantages are summarized below.

(1) It is clear that the value Si is positive, and this smell concentration judgment value is then substituted into the smell concentration judgment function to find the smell concentration at the individual location of the fruit fly. That is to say, the variable of the fitness function lies in the zone (0, +∞), which prevents the application of FOA to problems with negative numbers in the domain.

(2) FOA depends only on the current generation optimal solution: when the optimal individual is found, all fruit flies fly towards this individual and are then updated according to Equation (14). This operation greatly reduces the diversity and exploration ability of the fly swarm. Furthermore, the current generation optimal solution may not be the global optimum, and an inappropriate FR will trap the FOA in a local optimal solution.
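The five steps of the basic FOA can be sketched in a few lines of Python. This is a minimal illustration rather than the reference implementation of [24]. The symmetric ranges `[-lr, lr]` and `[-fr, fr]`, the default values, and the function name `foa_minimize` are assumptions made for the example.

```python
import math
import random


def foa_minimize(fitness, maxgen=100, sizepop=20, lr=1.0, fr=1.0, seed=0):
    """Basic FOA: minimize fitness(S), where S is the reciprocal distance."""
    rng = random.Random(seed)
    # Step 1: random initial swarm location inside the location range LR.
    x_axis = rng.uniform(-lr, lr)
    y_axis = rng.uniform(-lr, lr)
    best_s, best_smell = None, float("inf")
    for _ in range(maxgen):
        swarm = []
        for _ in range(sizepop):
            # Step 2: random flight around the current swarm location.
            x = x_axis + rng.uniform(-fr, fr)
            y = y_axis + rng.uniform(-fr, fr)
            # Step 3: distance to the origin and its reciprocal S.
            dist = max(math.hypot(x, y), 1e-12)
            s = 1.0 / dist
            swarm.append((fitness(s), s, x, y))
        smell, s, x, y = min(swarm)
        # Step 4: keep the best smell and move the swarm to that location.
        if smell < best_smell:
            best_s, best_smell = s, smell
            x_axis, y_axis = x, y
    # Step 5: the loop ends after maxgen iterations.
    return best_s, best_smell
```

Calling `foa_minimize(lambda s: (s - 2.0) ** 2)` searches for S near 2. Because S is a reciprocal distance it is always positive, so a target such as S = −2 can never be reached, which is the first disadvantage listed above.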

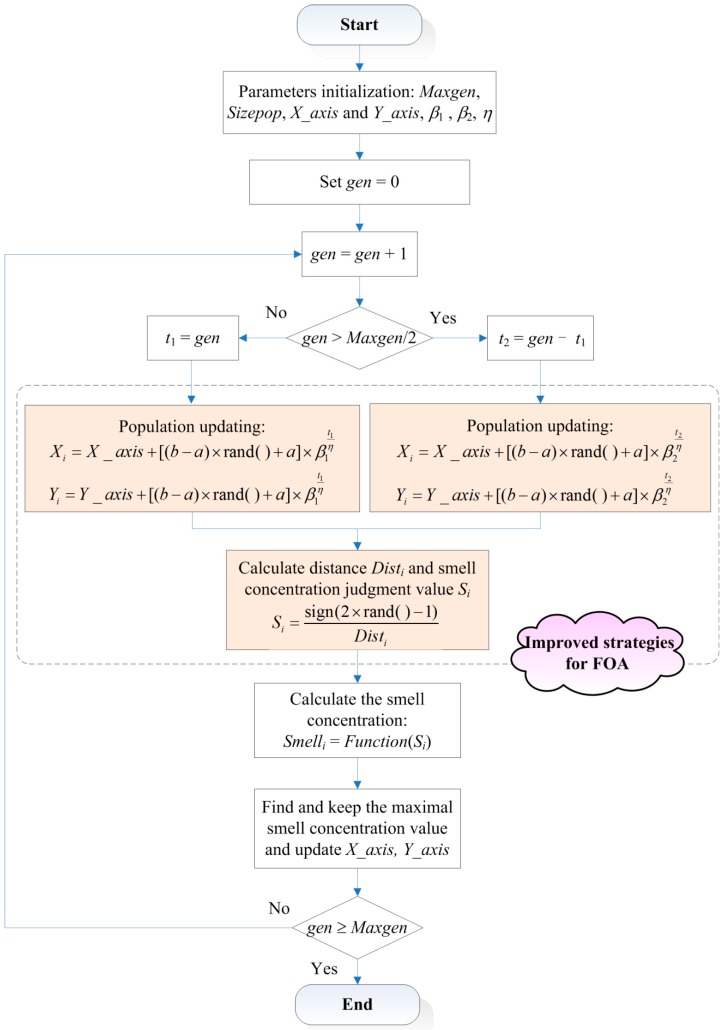

3.3. The Improved Strategies for FOA

Based on the aforementioned analysis, the original FOA has demanding application conditions and can possibly get into a local extreme. Thus, two improvements for the original FOA are proposed in this subsection.

(1) In Step (3), in order to ensure that the variable of the fitness function lies in the zone (−∞, +∞), the smell concentration judgment value (Si) can be calculated by the following equation:

| (19) |

(2) In order to improve the diversity and exploration ability of the fly swarm and increase its ability to break away from a local optimum, this paper proposes an improved strategy for FOA that expands the search in the initial phase and narrows it in the later phase. Let the fruit fly population be updated by the following equation:

| (20) |

where β is defined as the adjustment factor; η is used to control the flight distance range FR and can be determined according to the practical problem; gen is the current number of iterations. In the first phase, the random flight distance range should increase to realize the diversity of the population. The adjustment factor β should be larger than 1, marked as β1, and the number of iterations in this phase, t1, is equal to gen. Thus, the fruit fly population can be updated as follows:

| (21) |

where [a, b] denotes the flight distance range of the fruit fly. In the second phase, the random flight distance range should decrease to enhance the convergence accuracy and convergence speed. The adjustment factor β should be smaller than 1, marked as β2, and the number of iterations in this phase, t2, is equal to gen − t1. Thus, the fruit fly population can be updated as follows:

| (22) |

The complete flowchart of improved FOA is shown in Figure 2.

Figure 2.

The flowchart of improved FOA.
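The two-phase idea can be illustrated with a small schedule function. The functional form below is an assumption made only for illustration and is not taken from the paper's update equations: it scales the flight range [a, b] by η·β1^t1·β2^t2 and switches phase at Maxgen/2. The names `flight_scale` and `flight_range` are hypothetical, and the default values follow the settings reported in Section 5.

```python
def flight_scale(gen, maxgen, beta1=1.1, beta2=0.9, eta=1.4):
    """Assumed multiplier applied to the flight range at iteration gen."""
    t1 = min(gen, maxgen // 2)  # iterations spent in the expanding phase
    t2 = gen - t1               # iterations spent in the narrowing phase
    return eta * beta1 ** t1 * beta2 ** t2


def flight_range(gen, maxgen, a=-10.0, b=10.0, **factors):
    """Scaled flight distance range [a, b] under the assumed schedule."""
    scale = flight_scale(gen, maxgen, **factors)
    return scale * a, scale * b
```

Under this assumed schedule the range grows while gen ≤ Maxgen/2, which favors exploration, and shrinks afterwards, which favors local refinement around the best location found so far.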

3.4. Improved Fruit Fly Optimization Algorithm for Parameters Selection of LSSVM Model

Selecting appropriate bandwidth “δ” and regularization parameter “C” of LSSVM is extremely important for the classification performance of LSSVM. In this paper, the proposed IFOA is used to choose the appropriate parameter values of the LSSVM model, named IFOA-LSSVM model. The details of IFOA for parameters determination of the LSSVM model are as follows:

Step 1: Initialization parameters. The maximum iteration number Maxgen, the population size sizepop, the initial fruit fly swarm location (X_axis, Y_axis), and the flight distance range FR should be determined at first. In the LSSVM model, two parameters need to be determined and we can set X_axis = rands(1, 2), Y_axis = rands(1, 2), where rands( ) denotes the random number generation function. Set gen = 0.

Step 2: Evolution starting. In the IFOA-LSSVM program, we employ two variables [X(i, :), Y(i, :)] to represent the flight distance for food finding of an individual fruit fly i. If gen ≤ Maxgen/2, the flight direction of fruit fly i should be updated by Equation (21). If gen > Maxgen/2, the flight direction of fruit fly i should be updated by Equation (22).

Step 3: Calculation. In the IFOA-LSSVM program, D(i,1) and D(i,2) are used to represent the distance Disti of the fruit fly i to the origin, which can be calculated as follows:

| (23) |

Similarly, we can use S(i, 1) and S(i, 2) to describe the smell concentration judgment value Si and it can be calculated as follows:

| (24) |

In the proposed model, the parameters (C, δ) of LSSVM are represented by S(i, 1) and S(i, 2), and can be set as C = 20× |S(i, 1)|, δ = |S(i, 2)|, respectively. Then, the smell concentration Smelli (also called the fitness value of fruit fly i) should be calculated. We adopt two fitness functions to represent the regression prediction performance and classification ability of IFOA-LSSVM model. One is the root-mean-square error (RMSE) between the outputs of LSSVM and actual values and another is the classification error rate (CER).

Step 4: Updating. The fruit flies are operated according to Equations (19) and (20), and then the swarm is updated through Equations (21) and (22). The smell concentration values are calculated again. Set gen = gen +1.

Step 5: Iteration termination. When gen reaches the maximum iteration number, the termination criterion is satisfied and the optimal parameters (C*, δ*) of the LSSVM model are obtained. Otherwise, go back to Step 2.
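The mapping in Step 3 from the smell concentration judgment values to the LSSVM parameters can be written as a short helper. In this sketch `evaluate` is a hypothetical callable that trains an LSSVM with the given (C, δ) and returns its error (RMSE or CER); it stands in for whatever training routine is used.

```python
def decode_parameters(s1, s2):
    """Map S(i, 1), S(i, 2) to the LSSVM parameters: C = 20|S1|, delta = |S2|."""
    return 20.0 * abs(s1), abs(s2)


def smell(s1, s2, evaluate):
    """Fitness of one fruit fly: the error returned for its decoded (C, delta)."""
    c, delta = decode_parameters(s1, s2)
    return evaluate(c, delta)
```

For example, `decode_parameters(-1.5, 0.25)` gives C = 30 and δ = 0.25. The absolute values keep both parameters non-negative even when the improved algorithm lets S take negative values.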

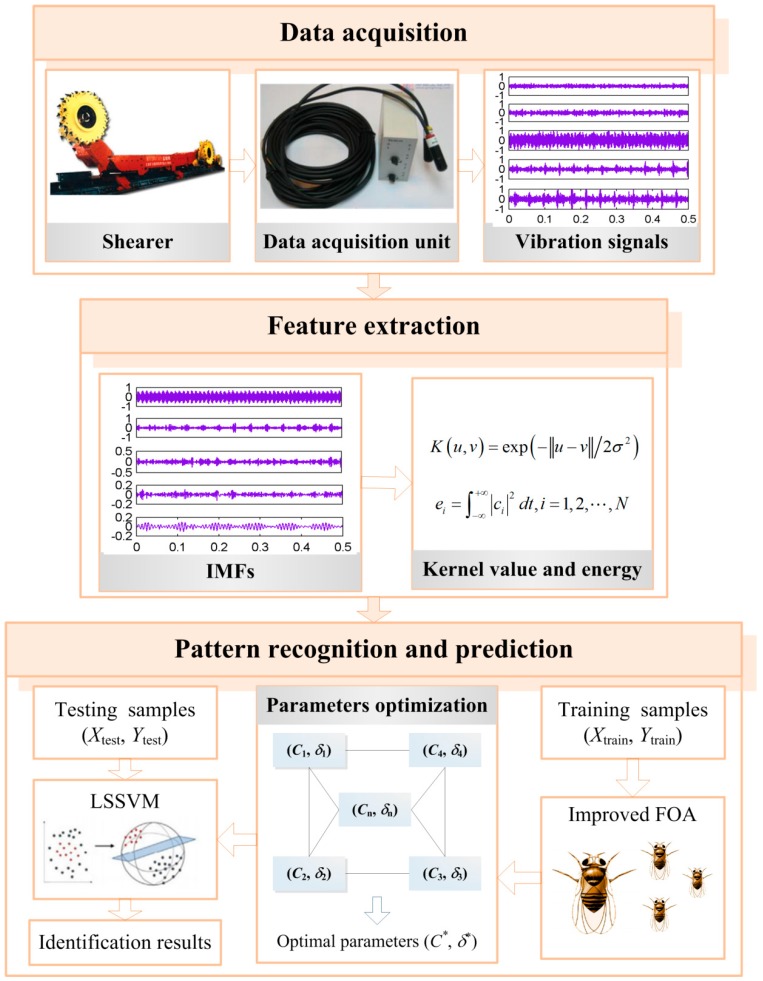

4. The Identification System for Shearer Cutting Pattern Based on Proposed Method

The intelligent identification of the shearer cutting pattern based on the proposed method is essentially a pattern recognition system, shown in Figure 3. It mainly consists of data acquisition, feature extraction, and pattern recognition and prediction, which are explained as follows.

Figure 3.

The identification system for shearer cutting pattern based on proposed method.

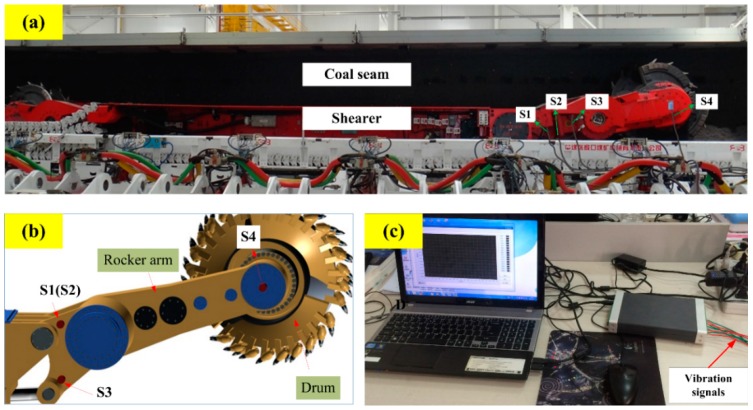

4.1. Data Acquisition

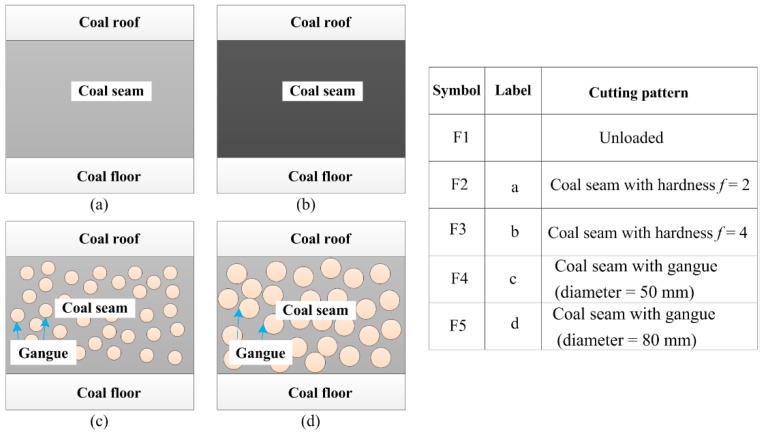

The cutting pattern diagnosis of shearers starts with data acquisition to collect the machinery working information. Vibration signal acquisition is the most commonly used method which is realized by sensors. In this study, the data were acquired through four sensors installed in a self-designed experimental system for a shearer cutting coal, as shown in Figure 4. In the experiment, the coal seam was mainly divided into four parts, including two kinds of coal seams with different hardness and the coal seam with some strata of gangue. All cutting patterns of the shearer (including the shearer with unloaded condition) are represented in Figure 5.

Figure 4.

Self-designed experimental system for shearer cutting coal: (a) The experiment bench of shearer cutting coal; (b) The installation sketch of accelerometers; (c) Vibration signals processing device.

Figure 5.

Different geological conditions of coal seam.

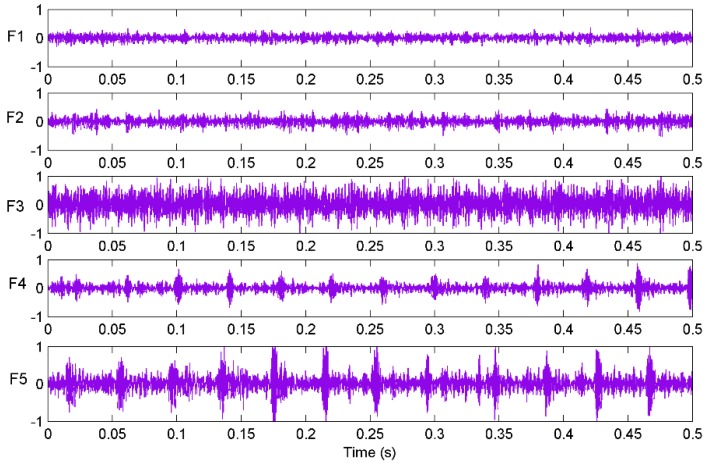

In Figure 4, the signs “S1, S2, S3 and S4” refer to four accelerometers located on the rocker arm. A multifunctional high-speed collector performed the data acquisition and the data were collected into a notebook computer through the USB interface. The sampling frequency was set as 12 kHz and the sampling time of each sample was 0.5 s. Vibration signals of sensor S1 with different patterns are plotted in Figure 6. Finally, 400 groups of samples were obtained with 80 groups of samples for each cutting pattern.

Figure 6.

Vibration signals from sensor S1 in different cutting patterns.
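The size of the data set follows directly from the acquisition settings. The short calculation below only restates the figures given above, and the variable names are illustrative.

```python
SAMPLING_RATE_HZ = 12_000   # sampling frequency of the collector
SAMPLE_DURATION_S = 0.5     # sampling time of each sample
CUTTING_PATTERNS = 5        # five cutting patterns, including the unloaded case
GROUPS_PER_PATTERN = 80

# Number of points recorded per sensor channel in one sample.
points_per_sample = int(SAMPLING_RATE_HZ * SAMPLE_DURATION_S)
# Total number of sample groups across all cutting patterns.
total_groups = CUTTING_PATTERNS * GROUPS_PER_PATTERN
```

Each sample therefore contains 6000 points per accelerometer channel, and the 400 groups are balanced across the five cutting patterns.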

4.2. Feature Extraction

Signal feature extraction is a critical initial step in any pattern recognition and fault diagnosis system. The extraction accuracy has a great influence on the final identification results, so many signal processing approaches have been developed to obtain desirable features for machinery pattern diagnosis, among which the Fast Fourier Transform (FFT) and the Wavelet Transform (WT) are widely used and well established. When a fault occurs, new frequency components may appear and the convergence of the frequency spectrum may change. However, for weak signals the features are submerged in strong background noise and are difficult to extract effectively with traditional methods. The ensemble empirical mode decomposition (EEMD) proposed in [38] addresses this: it adds a certain amount of Gaussian white noise to the original signal before decomposing it, so as to solve the problem of frequency aliasing. This method is well suited to non-stationary and non-linear signals [39,40]. The steps of EEMD can be briefly summarized as follows:

(1) Determine the ensemble number M, initialize the amplitude of the added white noise, and set m = 1.

(2) Add a white noise series with the given amplitude to the original signal:

| $x_m(t) = x(t) + a_m(t)$ | (25) |

where x(t) denotes the original signal, am(t) denotes the mth added white noise series and xm(t) denotes the noise-added signal of the mth trial.

(3) Using the EMD method [41], decompose the noise-added signal xm(t) into N intrinsic mode functions (IMFs), marked as bnm(t) (n = 1, 2, …, N), where bnm(t) represents the nth IMF of the mth trial.

(4) If m < M, let m = m + 1 and repeat Steps (2) and (3) with a different white noise series each time until m = M.

(5) Calculate the ensemble mean of the M trials for each IMF and output the mean cn(t) (n = 1, 2, …, N) of each of the N IMFs as the final decomposed result:

| $c_n(t) = \frac{1}{M} \sum_{m=1}^{M} b_{nm}(t)$ | (26) |
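The ensemble part of these steps can be sketched independently of the EMD routine itself. In the illustration below, `decompose` is any callable that splits a signal into a fixed number of equally long components. The `toy_decompose` function is only a placeholder (a moving average and its residual) and is not an EMD implementation; a real EMD routine would be supplied in its place. All names are hypothetical.

```python
import random


def eemd(signal, decompose, ensemble_size, noise_amplitude, seed=0):
    """Average the components returned by decompose over noisy copies."""
    rng = random.Random(seed)
    sums = None
    for _ in range(ensemble_size):
        # Step 2: add a fresh Gaussian white-noise series to the signal.
        noisy = [x + rng.gauss(0.0, noise_amplitude) for x in signal]
        # Step 3: decompose the noise-added signal into N components.
        components = decompose(noisy)
        if sums is None:
            sums = [[0.0] * len(signal) for _ in components]
        for total, comp in zip(sums, components):
            for t, value in enumerate(comp):
                total[t] += value
    # Step 5: ensemble mean of each component over the M trials.
    return [[v / ensemble_size for v in total] for total in sums]


def toy_decompose(signal):
    """Placeholder, NOT EMD: residual and 3-point moving average."""
    smooth = []
    for t in range(len(signal)):
        window = signal[max(0, t - 1):t + 2]
        smooth.append(sum(window) / len(window))
    detail = [x - s for x, s in zip(signal, smooth)]
    return [detail, smooth]
```

The sketch assumes that every trial yields the same number of components. A real EMD routine may return a different number of IMFs per trial, in which case the number has to be fixed or the results aligned before averaging.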

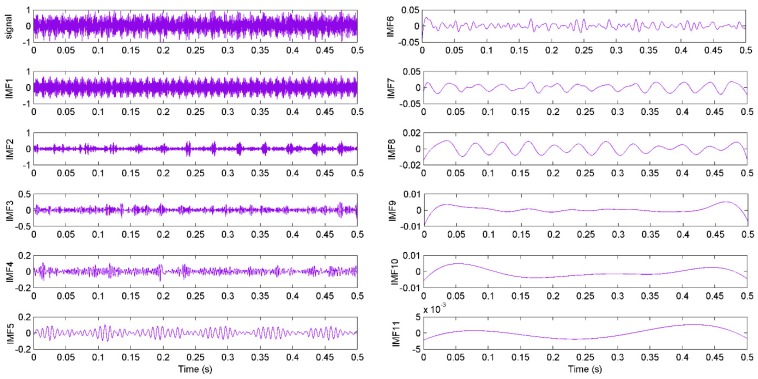

According to the above steps, a measured signal is decomposed and the decomposition results are given in Figure 7. It shows 11 IMFs in different frequency bands decomposed by the EEMD algorithm. It can be seen from the figure that the original signal is very complicated and the decomposed IMFs are hard to use for state diagnosis. Hence, features of the signals need to be extracted. In addition, the correlation coefficients between the last three IMFs (IMF9, IMF10 and IMF11) and the original signal are too low. Therefore, the kernel function value and energy of the first eight IMFs were extracted and used as features for pattern identification [42].

Figure 7.

The decomposed components with EEMD and original signal from S1 at F3.

The kernel feature is computed with a kernel function. Firstly, the signal collected by the ith sensor is defined as a sequence xi = {xi1, xi2, …, xil}, where i = 1, 2, …, S, S is the number of sensors and l is the number of sampling points. Then the sequence is decomposed by EEMD to get N IMFs: {c1,c2,…,cN}, where ck = {ck1,ck2,…,ckl}, k = 1,2,…,N.

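The 2-norm of the kth IMF can be calculated as follows:

| $\mathrm{norm}_k = \lVert c_k \rVert_2 = \sqrt{\sum_{j=1}^{l} c_{kj}^{2}}$ | (27) |

Then, a vector can be constructed from the N IMFs:

| $\mathrm{NORM} = [\mathrm{norm}_1, \mathrm{norm}_2, \ldots, \mathrm{norm}_N]$ | (28) |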

A Gaussian kernel function is described as $K(x, y) = \exp\left( -\lVert x - y \rVert^{2} / (2\sigma^{2}) \right)$. If v is defined as the zero vector {0}1×N, the kernel feature value of the signal from the ith sensor can be calculated as kfi = K(NORM, v). Finally, a kernel feature sample KF can be obtained from the signal data of the S sensors:

| $KF = [kf_1, kf_2, \ldots, kf_S]$ | (29) |

We assume that ei is the energy of the ith IMF, which can be calculated as follows:

| $e_i = \sum_{j=1}^{l} c_{ij}^{2}$ | (30) |

The maximum energy of the IMFs is used to generate the energy sample EF, shown as follows:

| (31) |

In this experiment, the parameter of the Gaussian kernel function σ was set as 5. A feature sample could be constructed by the KF, EF or the combination of KF and EF. The performance of the proposed model based on different features was investigated and analyzed in Section 5.
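The two feature types can be computed from the IMFs of one sensor as sketched below. The Gaussian kernel is written in the common form exp(−‖x − y‖²/(2σ²)), which is an assumption here, and taking the largest IMF energy as the energy feature is one reading of the description above. The function names are hypothetical.

```python
import math


def kernel_feature(imfs, sigma=5.0):
    """Kernel feature kf = K(NORM, 0) for the IMFs of one sensor signal."""
    # NORM collects the 2-norm of each IMF.
    norms = [math.sqrt(sum(v * v for v in imf)) for imf in imfs]
    # Assumed Gaussian kernel evaluated against the zero vector.
    return math.exp(-sum(n * n for n in norms) / (2.0 * sigma ** 2))


def imf_energies(imfs):
    """Energy of each IMF: the sum of its squared sample values."""
    return [sum(v * v for v in imf) for imf in imfs]


def sensor_features(imfs, sigma=5.0):
    """Kernel feature and maximum IMF energy for one sensor."""
    return [kernel_feature(imfs, sigma), max(imf_energies(imfs))]
```

Concatenating the output of `sensor_features` over the four accelerometers would give one combined KF + EF feature sample per vibration record.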

4.3. Pattern Recognition and Prediction

In the shearer cutting pattern identification system, the support vector machine, especially the least squares support vector machine, is widely used as a pattern recognition and prediction approach to diagnose which working pattern the machinery is in. The proposed improved fruit fly optimization algorithm is adopted to determine the optimal parameters of the least squares support vector machine.

5. Example Computation and Comparison Analysis

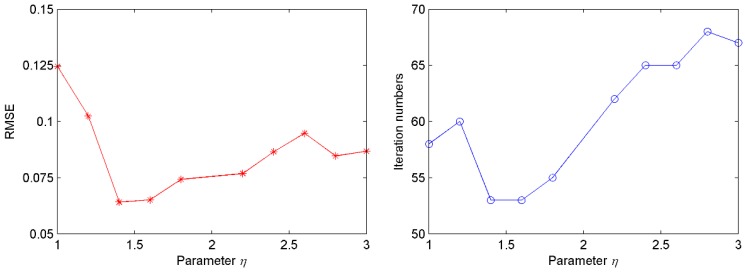

In this example, all samples were collected from the self-designed experimental system shown in Figure 4. Four hundred groups of samples were obtained, with 80 groups for each cutting pattern. The corresponding cutting patterns were quantized as 1, 2, 3, 4 and 5. Seventy-five percent of the samples were used as training samples to optimize the parameters of LSSVM, and the remaining samples were used to test the generalization ability of the proposed model. The parameters were set as follows: Maxgen = 100, sizepop = 20, (X_axis, Y_axis) ⊂ [−1, 1], FR = [−10, 10] (thus a = −10, b = 10), β1 = 1.1, β2 = 0.9. The parameter η has great influence on the quality of the solution and the searching speed. For different η, the training root-mean-square error (RMSE) and the number of iterations needed to reach it are shown in Figure 8. As this figure shows, the proposed method achieves its best training RMSE and iteration number when η equals 1.4, so η is set to 1.4 in this experiment.

Figure 8.

The influence of different η on the performance of proposed method.

In order to measure the prediction performance of the IFOA-LSSVM model, the classification error rate (CER) and the difference between the output of the model and the desired output were considered as the evaluation indexes. In this paper, the following measures were employed for model evaluation: the RMSE, the mean absolute error (MAE), the mean relative error (MRE), and Theil’s inequality coefficient (TIC). The CER represented the categorization performance of IFOA-LSSVM. The RMSE, MAE and MRE confirmed the prediction accuracy of the proposed model. The TIC indicated the level of agreement between the proposed model and the studied process. These indicators were defined as follows:

| (32) |

| (33) |

| (34) |

| (35) |

| (36) |

where n is the number of samples; yi denotes the actual value of the ith sample; ŷi denotes the LSSVM output of the ith sample.
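For reference, the five indicators can be computed as in the following sketch. It uses the commonly adopted definitions of these measures, which are assumed here rather than taken from Equations (32)–(36): the MRE is returned as a fraction (multiply by 100 for a percentage), the TIC is Theil's U1 form, and the CER compares predicted class labels with the actual labels.

```python
import math


def cer(actual, predicted_labels):
    """Classification error rate: fraction of wrongly labelled samples."""
    wrong = sum(1 for y, p in zip(actual, predicted_labels) if y != p)
    return wrong / len(actual)


def rmse(actual, output):
    """Root-mean-square error between actual values and model outputs."""
    return math.sqrt(sum((y - o) ** 2 for y, o in zip(actual, output)) / len(actual))


def mae(actual, output):
    """Mean absolute error."""
    return sum(abs(y - o) for y, o in zip(actual, output)) / len(actual)


def mre(actual, output):
    """Mean relative error as a fraction; actual values must be non-zero."""
    return sum(abs(y - o) / abs(y) for y, o in zip(actual, output)) / len(actual)


def tic(actual, output):
    """Theil's inequality coefficient (U1 form, assumed)."""
    n = len(actual)
    root_actual = math.sqrt(sum(y * y for y in actual) / n)
    root_output = math.sqrt(sum(o * o for o in output) / n)
    return rmse(actual, output) / (root_actual + root_output)
```

With the cutting patterns quantized as 1 to 5, the actual values are never zero, so the relative error is always defined. A TIC close to 0 indicates close agreement between the model outputs and the actual values.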

As described in the feature extraction subsection, our feature construction included two types of features: the kernel feature (KF) and the energy feature (EF). Here we constructed three models based on KF, EF, and the combination of KF and EF (KF + EF), respectively. In order to reduce the random error, the models were trained and tested about 50 times with each feature set and the average values were compared. The performance of the IFOA-LSSVM model trained and tested with the various features is shown in Table 1.

Table 1.

The performance of IFOA-LSSVM on the testing set with different combinations of features.

| Training Features | CER | RMSE | MAE | MRE (%) | TIC |
|---|---|---|---|---|---|
| KF | 0.1782 | 0.3291 | 0.1754 | 7.79 | 0.0669 |
| EF | 0.2193 | 0.4262 | 0.2014 | 9.83 | 0.0939 |
| KF + EF | 0.03015 | 0.0611 | 0.0524 | 2.66 | 0.0089 |

According to the results in Table 1, the proposed model trained with the combination of KF and EF showed an obvious improvement over the models trained with either feature alone. This demonstrates that both types of features contribute to identifying the cutting patterns of the shearer. Henceforth, the combination of KF and EF was selected as the feature samples used to train the proposed model.

In order to investigate the performance of the proposed IFOA-LSSVM model, four other models were employed for comparison: FOA-LSSVM, PSO-LSSVM (LSSVM optimized by the particle swarm optimization algorithm), GA-LSSVM (LSSVM optimized by the genetic algorithm), and the single LSSVM. The parameters of PSO were set as: population size = 20, maximum iteration number = 100, acceleration factors C1 = 1.5 and C2 = 1.7. The parameters of GA were set as: population size = 20, maximum iteration number = 100, crossover probability = 0.5, mutation probability = 0.1. The experimental environment was the same for all methods. The models were trained and tested about 50 times and the average values were compared. The comparison results of the different models on the testing samples are listed in Table 2.

Table 2.

Comparison of LSSVM, PSO-LSSVM, GA-LSSVM, FOA-LSSVM and IFOA-LSSVM models.

| Model | Optimal C* | Optimal δ* | CER | RMSE | MAE | MRE (%) | TIC |
|---|---|---|---|---|---|---|---|
| LSSVM | 10 | 2 | 0.1096 | 0.2609 | 0.1401 | 6.17 | 0.0544 |
| PSO-LSSVM | 32.5671 | 3.8547 | 0.08108 | 0.1304 | 0.0815 | 4.11 | 0.0238 |
| GA-LSSVM | 15.1136 | 1.2256 | 0.06915 | 0.1107 | 0.0831 | 3.71 | 0.0289 |
| FOA-LSSVM | 19.3742 | 0.2827 | 0.05011 | 0.0694 | 0.0645 | 2.88 | 0.0112 |
| IFOA-LSSVM | 28.3846 | 0.0513 | 0.03015 | 0.0611 | 0.0524 | 2.66 | 0.0089 |

In Table 2, the optimal parameters (C* and δ*) of each model are those that gave the smallest CER among the 50 testing results. IFOA selected C = 28.3846 and δ = 0.0513 for the LSSVM model, while FOA selected C = 19.3742 and δ = 0.2827. GA and PSO yielded C = 15.1136 and δ = 1.2256, and C = 32.5671 and δ = 3.8547, respectively. In the single LSSVM model, C and δ were chosen as 10 and 2.

The error indexes listed in Table 2 indicate that the IFOA-LSSVM model performed better than the other models. In detail, the LSSVM model gave a CER of 0.1096, an RMSE of 0.2609, an MAE of 0.1401, an MRE of 6.17%, and a TIC of 0.0544. The PSO-LSSVM model obtained a CER of 0.08108, an RMSE of 0.1304, an MAE of 0.0815, an MRE of 4.11%, and a TIC of 0.0238. The GA-LSSVM model produced a CER of 0.06915, an RMSE of 0.1107, an MAE of 0.0831, an MRE of 3.71%, and a TIC of 0.0289. The FOA-LSSVM model obtained a CER of 0.05011, an RMSE of 0.0694, an MAE of 0.0645, an MRE of 2.88%, and a TIC of 0.0112. Finally, the IFOA-LSSVM model achieved a CER of 0.03015, an RMSE of 0.0611, an MAE of 0.0524, an MRE of 2.66%, and a TIC of 0.0089. The LSSVM models coupled with an optimization algorithm all performed much better than the single LSSVM, which shows that parameter selection is of considerable significance for the learning and generalization performance of LSSVM. Among the optimized models, IFOA-LSSVM performed better than PSO-LSSVM, GA-LSSVM and FOA-LSSVM on every index. The proposed IFOA-LSSVM therefore provided reliable and superior regression prediction performance and classification ability for the shearer cutting pattern.
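To make the role of the two tuned parameters concrete, the following is a minimal sketch of an LSSVM in its standard function-estimation form, where the regularization parameter C and the kernel width δ are the only quantities to choose. It assumes a Gaussian kernel written as exp(-||x - z||² / (2δ²)), which may differ from the paper's convention by a constant factor, and it uses random synthetic data rather than the shearer features. The function names are hypothetical.

```python
import numpy as np

def rbf_kernel(A, B, delta):
    """Gaussian kernel matrix between the rows of A and the rows of B."""
    d2 = np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * delta ** 2))

def lssvm_fit(X, y, C, delta):
    """Solve the LSSVM linear system [[0, 1^T], [1, K + I/C]] [b; alpha] = [0; y]."""
    n = X.shape[0]
    A = np.zeros((n + 1, n + 1))
    A[0, 1:] = 1.0
    A[1:, 0] = 1.0
    A[1:, 1:] = rbf_kernel(X, X, delta) + np.eye(n) / C
    rhs = np.concatenate(([0.0], y))
    sol = np.linalg.solve(A, rhs)
    return sol[0], sol[1:]  # bias b and support values alpha

def lssvm_predict(X_train, b, alpha, X_new, delta):
    """Evaluate f(x) = sum_i alpha_i * K(x, x_i) + b."""
    return rbf_kernel(X_new, X_train, delta) @ alpha + b

# Synthetic stand-in data: 30 samples, 4 features, labels coded 1..5 (assumption)
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 4))
y = rng.integers(1, 6, size=30).astype(float)

C, delta = 10.0, 2.0  # the untuned single-LSSVM setting from Table 2
b, alpha = lssvm_fit(X, y, C, delta)
y_fit = lssvm_predict(X, b, alpha, X, delta)
```

Because training reduces to one linear solve, each candidate pair (C, δ) proposed by an optimizer is cheap to evaluate, which is what makes a population-based search over these two parameters practical.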

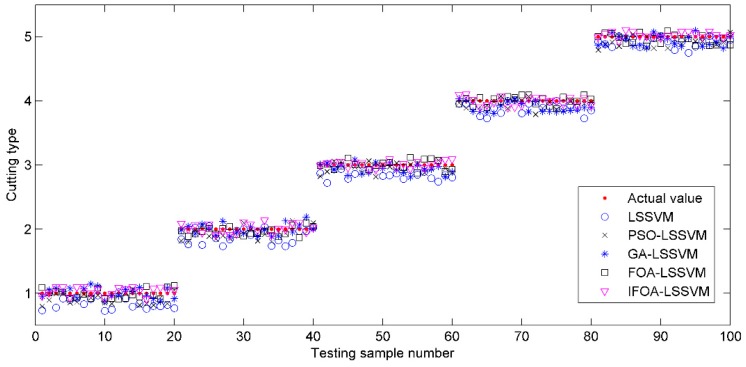

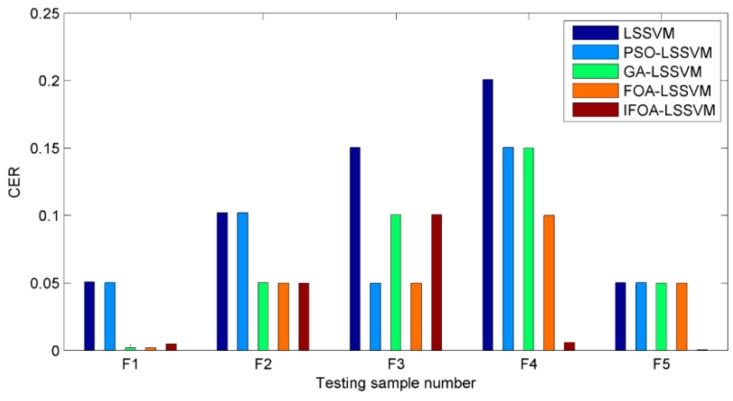

Visual comparisons of the prediction values and the classification results are shown in Figure 9 and Figure 10. The prediction results of the IFOA-LSSVM model were close to the real values on most data points, and the classification performance of the proposed model was superior to that of the competing models.

Figure 9.

The prediction values of testing samples based on different models.

Figure 10.

The CERs of five cutting types based on different models.

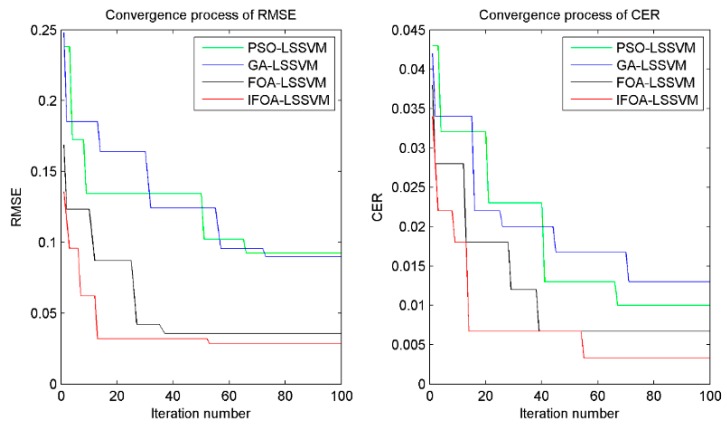

To investigate the efficiency of the proposed method, the convergence performance of four models (PSO-LSSVM, GA-LSSVM, FOA-LSSVM and IFOA-LSSVM) was compared and analyzed. The convergence curves of RMSE and CER obtained with the four algorithms are illustrated in Figure 11. The results indicate that the proposed method converged to the global optimal fitness about 20 iterations earlier than PSO-LSSVM and GA-LSSVM. Although it needed slightly more iterations than FOA-LSSVM, its final RMSE and CER were lower. Specifically, the RMSE and CER of IFOA-LSSVM were 0.0285 and 0.0033, whereas those of PSO-LSSVM, GA-LSSVM and FOA-LSSVM were 0.0924 and 0.010, 0.0898 and 0.013, and 0.0355 and 0.0067, respectively, which indicates that the proposed method achieved higher forecasting and classification accuracy than the other three methods. In brief, the computation results show that the proposed IFOA-LSSVM model had better efficiency and generalization ability in identifying the shearer cutting pattern.

Figure 11.

Comparison of PSO, GA, FOA and IFOA for optimization process.
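For readers unfamiliar with the kind of search whose convergence is compared in Figure 11, the sketch below shows a basic fruit fly optimization loop over two positive parameters. It follows the commonly described basic FOA (random flight around the swarm location, smell concentration judgment value S = 1/Dist, move to the best fly) and does not include the improvements that define IFOA. The population size and iteration count are borrowed from the PSO and GA settings purely for illustration, and the quadratic `toy_fitness` is a hypothetical stand-in for the error of an LSSVM trained with a candidate pair (C, δ).

```python
import numpy as np

def basic_foa(fitness, n_params=2, pop_size=20, max_iter=100, step=1.0, seed=0):
    """Minimise `fitness` with a basic fruit fly optimization loop."""
    rng = np.random.default_rng(seed)
    # Swarm location: one (x, y) coordinate pair per parameter
    x_axis = rng.uniform(0.0, 1.0, n_params)
    y_axis = rng.uniform(0.0, 1.0, n_params)
    best_s, best_fit = None, np.inf
    history = []
    for _ in range(max_iter):
        # Smell-based search: every fly takes a random step from the swarm location
        x = x_axis + rng.uniform(-step, step, (pop_size, n_params))
        y = y_axis + rng.uniform(-step, step, (pop_size, n_params))
        dist = np.sqrt(x ** 2 + y ** 2)
        s = 1.0 / np.maximum(dist, 1e-12)  # candidate parameter values (always positive)
        fit = np.array([fitness(si) for si in s])
        i = int(np.argmin(fit))
        # Vision-based search: the swarm moves to the best fly only if it improves
        if fit[i] < best_fit:
            best_fit, best_s = fit[i], s[i].copy()
            x_axis, y_axis = x[i].copy(), y[i].copy()
        history.append(best_fit)
    return best_s, best_fit, history

def toy_fitness(p):
    # Hypothetical surrogate with its minimum at (2.0, 0.5); not an LSSVM error
    return (p[0] - 2.0) ** 2 + (p[1] - 0.5) ** 2

best_s, best_fit, history = basic_foa(toy_fitness)
print(best_s, best_fit)
```

The `history` list is the best-so-far fitness per iteration, which is the quantity a convergence plot of this kind would display.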

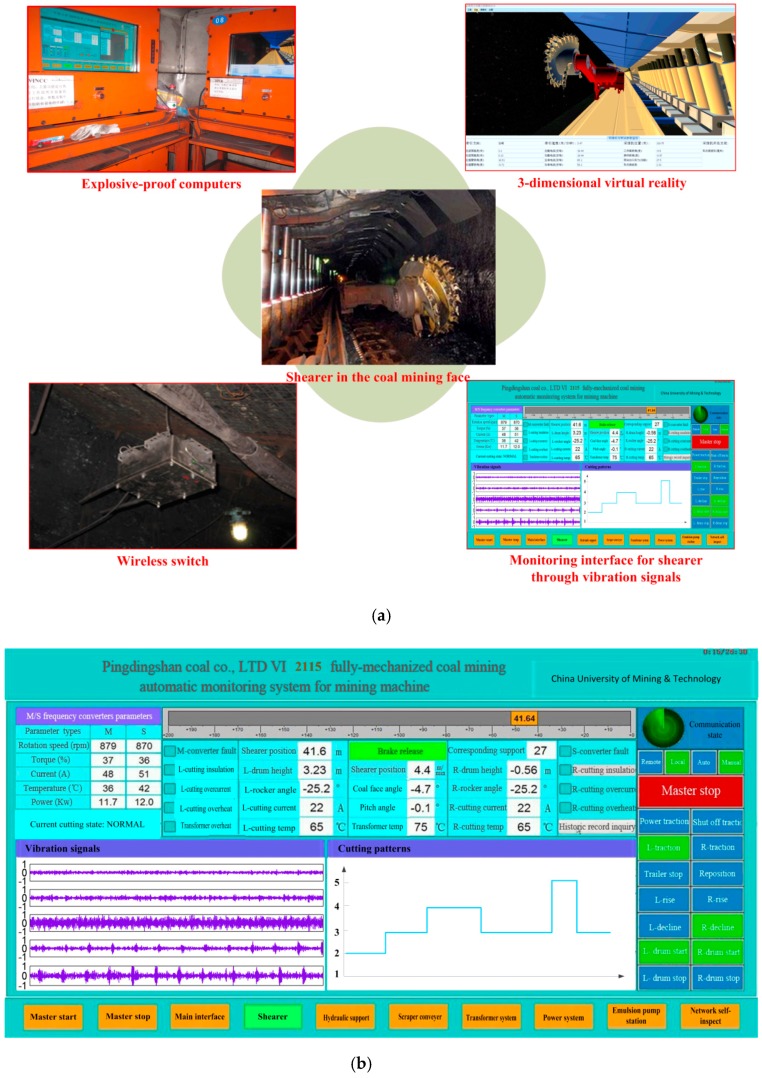

6. Industrial Application

To verify the applicability of the proposed method, a system based on it was developed and an industrial test was carried out on a coal mining face. The basic structure of the system is shown in Figure 12. The test was performed at the 2115 coal mining face in the No.13 Mine of the Pingdingshan Coal Industrial Group Corporation. As shown in Figure 12, the accelerometers were installed inside the shearer rocker arm shell to guarantee reliability. The vibration signals were collected and transmitted to the explosion-proof computers through wireless switches. The computers processed the signals and executed the proposed method to identify the shearer cutting pattern. Meanwhile, a 3-dimensional virtual reality system was used to display the working status of the shearer.

Figure 12.

Basic structure of the system for industrial test in the coal mining face: (a) The system in coal mining face based on proposed method; (b) The monitoring interface for shearer.

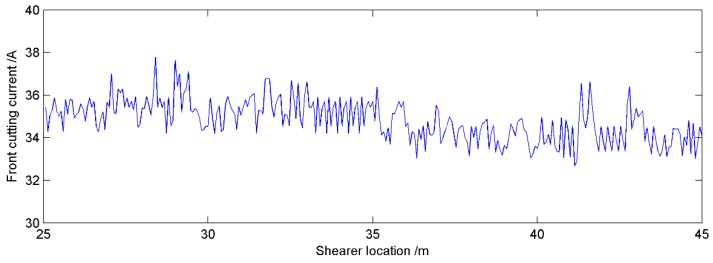

The goal of the proposed method is to accurately identify the cutting pattern of the shearer, which can provide the basis for its automatic control. To assess this, the cutting current of the front cutting motor while the shearer advanced from 25 m to 45 m is plotted in Figure 13. In this monitoring interval, the front cutting current varied between 32.6894 A and 37.7769 A, with an average of 34.8074 A. The maximum current was only about 8.53% above the average value. The results indicate that the shearer could work smoothly and safely in the coal seam according to the identification provided by the proposed method, and that the system was stable and reliable in practical application.

Figure 13.

Front cutting current curve according to proposed method.
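The 8.53% figure quoted for the front cutting current follows directly from the reported minimum, maximum and average values. The short check below reproduces it and also gives the corresponding deviation of the minimum, which is derived here from the same three numbers and is not stated in the text. The variable names are illustrative.

```python
# Reported front cutting current over the 25 m to 45 m interval, in amperes
i_min, i_max, i_mean = 32.6894, 37.7769, 34.8074

# Relative deviation of the extremes from the average, in percent
above = 100.0 * (i_max - i_mean) / i_mean
below = 100.0 * (i_mean - i_min) / i_mean

print(f"maximum is {above:.2f}% above the average")  # 8.53
print(f"minimum is {below:.2f}% below the average")  # 6.08
```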

7. Conclusions and Future Work

In this paper, we propose a novel method for identifying the shearer cutting pattern based on the least squares support vector machine optimized by an improved fruit fly optimization algorithm (IFOA-LSSVM). The proposed method uses the IFOA to automatically select appropriate parameters for the LSSVM model in order to improve the forecasting and classification accuracy. The training features are constructed by combining the kernel feature and the energy feature. To validate the proposed method, four alternative models (single LSSVM, PSO-LSSVM, GA-LSSVM, and FOA-LSSVM) are employed to compare the forecasting and classification performance. The example computation results show that the CER, RMSE, MAE, MRE and TIC of the proposed model are smaller than those obtained by the competing models. Meanwhile, in terms of convergence, the IFOA-LSSVM model reaches the optimum in fewer iterations than PSO-LSSVM and GA-LSSVM and with better final precision than all three optimized alternatives. Furthermore, the industrial application result indicates that the system based on the proposed method can provide stable and reliable references for the automatic control of a shearer.

In future studies, the authors will analyze the vibrations of other parts of the shearer to assess their influence on the classification results, and plan to investigate advanced feature extraction methods to further improve the pattern identification results. Possible improvements include intelligent algorithms for synchronous feature selection and parameter optimization. In addition, applications of the proposed method in the fault diagnosis domain are also worth further study.

Acknowledgments

The support of the China Postdoctoral Science Foundation (No. 2015M581879), the National Key Basic Research Program of China (No. 2014CB046301), the National Natural Science Foundation of China (No. 51475454), the Joint Funds of the National Natural Science Foundation of China (No. U1510117) and the Priority Academic Program Development (PAPD) of Jiangsu Higher Education Institutions in carrying out this research is gratefully acknowledged.

Author Contributions

Lei Si and Zhongbin Wang conceived and designed the experiments; Xinhua Liu, Ze Liu and Jing Xu performed the experiments; Lei Si and Chao Tan analyzed the data; Lei Si wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Si L., Wang Z.B., Tan C., Liu X.H. A novel approach for coal seam terrain prediction through information fusion of improved D–S evidence theory and neural network. Measurement. 2014;54:140–151. doi: 10.1016/j.measurement.2014.04.015.

- 2. Bessinger S.L., Neison M.G. Remnant roof coal thickness measurement with passive gamma ray instruments in coal mine. IEEE Trans. Ind. Appl. 1993;29:562–565. doi: 10.1109/28.222427.

- 3. Chufo R.L., Johnson W.J. A radar coal thickness sensor. IEEE Trans. Ind. Appl. 1993;29:834–840. doi: 10.1109/28.245703.

- 4. Markham J.R., Solomon P.R., Best P.E. An FT-IR based instrument for measuring spectral emittance of material at high temperature. Rev. Sci. Instrum. 1990;61:3700–3708. doi: 10.1063/1.1141538.

- 5. Sun J.P., She J. Wavelet-based coal-rock image feature extraction and recognition. J. China Coal Soc. 2013;38:1900–1904.

- 6. Heng A., Zhang S., Tan A.C.C., Mathew J. Rotating machinery prognostics: State of the art, challenges and opportunities. Mech. Syst. Signal Pr. 2009;23:724–739. doi: 10.1016/j.ymssp.2008.06.009.

- 7. Ma D.Y., Liang D.Y., Zhao X.S., Guan R.C., Shi X.H. Multi-BP expert system for fault diagnosis of power system. Eng. Appl. Artif. Intell. 2013;26:937–944. doi: 10.1016/j.engappai.2012.03.017.

- 8. Mani G., Jerome J. Intuitionistic fuzzy expert system based fault diagnosis using dissolved gas analysis for power transformer. J. Electr. Eng. Technol. 2014;9:2058–2064. doi: 10.5370/JEET.2014.9.6.2058.

- 9. Bangalore P., Tjernberg L.B. An artificial neural network approach for early fault detection of gearbox bearings. IEEE Trans. Smart Grid. 2015;6:980–987. doi: 10.1109/TSG.2014.2386305.

- 10. Ayrulu-Erdem B., Barshan B. Leg motion classification with artificial neural networks using wavelet-based features of gyroscope signals. Sensors. 2011;11:1721–1723. doi: 10.3390/s110201721.

- 11. Winston D.P., Saravanan M. Single parameter fault identification technique for DC motor through wavelet analysis and fuzzy logic. J. Electr. Eng. Technol. 2013;8:1049–1055. doi: 10.5370/JEET.2013.8.5.1049.

- 12. El-Baz A.H. Hybrid intelligent system-based rough set and ensemble classifier for breast cancer diagnosis. Neural. Comput. Appl. 2015;26:437–446. doi: 10.1007/s00521-014-1731-9.

- 13. Seera M., Lim C.P. Online motor fault detection and diagnosis using a hybrid FMM-CART model. IEEE Trans. Neural Netw. Learn. Syst. 2014;25:806–812. doi: 10.1109/TNNLS.2013.2280280.

- 14. Li K., Chen P., Wang S.M. An intelligent diagnosis method for rotating machinery using least squares mapping and a fuzzy neural network. Sensors. 2012;12:5919–5939. doi: 10.3390/s120505919.

- 15. Wang T., Chen J., Zhou Y., Snoussi H. Online least squares one-class support vector machines-based abnormal visual event detection. Sensors. 2013;13:17130–17155. doi: 10.3390/s131217130.

- 16. Silva D.A., Silva J.P., Neto A.R.R. Novel approaches using evolutionary computation for sparse least square support vector machines. Neurocomputing. 2015;168:908–916. doi: 10.1016/j.neucom.2015.05.034.

- 17. Ji J., Zhang C.S., Kodikara J., Yang S. Prediction of stress concentration factor of corrosion pits on buried pipes by least squares support vector machine. Eng. Fail. Anal. 2015;55:131–138. doi: 10.1016/j.engfailanal.2015.05.010.

- 18. Li B., Li D.Y., Zhang Z.J., Yang S.M., Wang F. Slope stability analysis based on quantum-behaved particle swarm optimization and least squares support vector machine. Appl. Math. Model. 2015;39:5253–5264. doi: 10.1016/j.apm.2015.03.032.

- 19. Harish N., Mandal S., Rao S., Patil S.G. Particle Swarm Optimization based support vector machine for damage level prediction of non-reshaped berm breakwater. Appl. Soft Comput. 2015;27:313–321. doi: 10.1016/j.asoc.2014.10.041.

- 20. Elbisy M.S. Sea wave parameters prediction by support vector machine using a genetic algorithm. J. Coast. Res. 2015;31:892–899. doi: 10.2112/JCOASTRES-D-13-00087.1.

- 21. Cervantes J., Li X.O., Yu W. Imbalanced data classification via support vector machines and genetic algorithms. Connect. Sci. 2014;26:335–348. doi: 10.1080/09540091.2014.924902.

- 22. Zhang X.L., Chen W., Wang B.J., Chen X.F. Intelligent fault diagnosis of rotating machinery using support vector machine with ant colony algorithm for synchronous feature selection and parameter optimization. Neurocomputing. 2015;167:260–279. doi: 10.1016/j.neucom.2015.04.069.

- 23. Aydin I., Karakose M., Akin E. A multi-objective artificial immune algorithm for parameter optimization in support vector machine. Appl. Soft Comput. 2011;11:120–129. doi: 10.1016/j.asoc.2009.11.003.

- 24. Pan W.T. A new fruit fly optimization algorithm: Taking the financial distress model as an example. Knowl.-Based Syst. 2012;26:69–74. doi: 10.1016/j.knosys.2011.07.001.

- 25. Li H.Z., Guo S., Li C.J., Sun J.Q. A hybrid annual power load forecasting model based on generalized regression neural network with fruit fly optimization algorithm. Knowl.-Based Syst. 2013;37:378–387. doi: 10.1016/j.knosys.2012.08.015.

- 26. Chen P.W., Lin W.Y., Huang T.H., Pan W.T. Using fruit fly optimization algorithm optimized grey model neural network to perform satisfaction analysis for e-business service. Appl. Math. Inform. Sci. 2013;7:459–465. doi: 10.12785/amis/072L12.

- 27. Sheng W., Bao Y. Fruit fly optimization algorithm based fractional order fuzzy-PID controller for electronic throttle. Nonlinear Dynam. 2013;73:611–619. doi: 10.1007/s11071-013-0814-y.

- 28. Xing Y. Design and optimization of key control characteristics based on improved fruit fly optimization algorithm. Kybernetes. 2013;42:466–481. doi: 10.1108/03684921311323699.

- 29. Wang B.P., Wang Z.C., Li Y.X. Application of wavelet packet energy spectrum in coal-rock interface recognition. Key Eng. Mater. 2011;474:1103–1106. doi: 10.4028/www.scientific.net/KEM.474-476.1103.

- 30. Sun J.P. Study on identified method of coal and rock interface based on image identification. Coal Sci. Technol. 2011;39:77–79.

- 31. Ren F., Liu Z.Y., Yang Z.J., Liang G.Q. Application study on the torsional vibration test in coal-rock interface recognition. J. Taiyuan Univ. Technol. 2010;41:94–96.

- 32. Yang W.C., Qiu J.B., Zhang Y., Zhao F.J., Liu X. Acoustic modeling of coal-rock interface identification. Coal Sci. Technol. 2015;43:100–103.

- 33. Sahoo R., Mazid A.M. Application of opto-tactile sensor in shearer machine design to recognize rock surfaces in underground coal mining; Proceedings of the IEEE International Conference on Industrial Technology; Churchill, Australia. 10–13 February 2009; pp. 916–921.

- 34. Bausov I.Y., Stolarczyk G.L., Stolarczyk L.G., Koppenjan S.D.S. Look-ahead radar and horizon sensing for coal cutting drums; Proceedings of the 4th International Workshop on Advanced Ground Penetrating Radar; Naples, Italy. 27–29 June 2007; pp. 192–195.

- 35. Cortes C., Vapnik V. Support-vector networks. Mach. Learn. 1995;20:273–297. doi: 10.1007/BF00994018.

- 36. Suykens J.A.K., Vandewalle J. Least squares support vector machine classifiers. Neural Process Lett. 1999;9:293–300. doi: 10.1023/A:1018628609742.

- 37. Keerthi S.S., Lin C.J. Asymptotic behaviors of support vector machines with Gaussian kernel. Neural Comput. 2003;15:1667–1689. doi: 10.1162/089976603321891855.

- 38. Wu Z., Huang N.E. Ensemble empirical mode decomposition: A noise-assisted data analysis method. Adv. Adapt. Data Anal. 2009;1:1–41. doi: 10.1142/S1793536909000047.

- 39. Lv J.X., Wu H.S., Tian J. Signal denoising based on EEMD for non-stationary signals and its application in fault diagnosis. Comput. Eng. Appl. 2011;47:223–227.

- 40. Lei Y.G., Li N.P., Lin J., Wang S.Z. Fault diagnosis of rotating machinery based on an adaptive ensemble empirical mode decomposition. Sensors. 2013;13:16950–16964. doi: 10.3390/s131216950.

- 41. Huang N.E., Shen Z., Long S.R., Wu M.C., Shih E.H., Zheng Q., Tung C.C., Liu H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lon. A. 1998;454:903–995. doi: 10.1098/rspa.1998.0193.

- 42. Yang D.L., Liu Y.L., Li X.J., Ma L.Y. Gear fault diagnosis based on support vector machine optimized by artificial bee colony algorithm. Mech. Mach. Theory. 2015;90:219–229. doi: 10.1016/j.mechmachtheory.2015.03.013.