Abstract

Crying is the most salient vocal signal of distress. The cries of a newborn infant alert adult listeners and often elicit caregiving behavior. For the parent, rapid responding to an infant in distress is an adaptive behavior, functioning to ensure offspring survival. The ability to react rapidly requires quick recognition and evaluation of stimuli followed by a co-ordinated motor response. Previous neuroimaging research has demonstrated early specialized activity in response to infant faces. Using magnetoencephalography, we found similarly early (100–200 ms) differences in neural responses to infant and adult cry vocalizations in auditory, emotional, and motor cortical brain regions. We propose that this early differential activity may help to rapidly identify infant cries and engage affective and motor neural circuitry to promote adaptive behavioral responding, before conscious awareness. These differences were observed in adults who were not parents, perhaps indicative of a universal brain-based “caregiving instinct.”

Keywords: caregiving, infant, magnetoencephalography, orbitofrontal cortex, vocalization

Introduction

Communication between parents and their offspring has long captured the interest of scientists (Darwin 1872, 1877; Lorenz 1943). From an evolutionary perspective, this early communication is essential for offspring survival by promoting protective and nurturing behaviors in parents. In humans, early parenting behavior is widely acknowledged to have far-reaching consequences for child cognitive and socio-emotional development. Parental responses to infant cries in particular have received much attention as a foundation of attachment relationships (Bowlby 1969; Ainsworth et al. 1978).

Crying, at least in early life, is thought to be largely reflexive, often occurring in response to pain, hunger, or separation from a caregiver (Bell and Ainsworth 1972; Soltis 2004). Much like the solicitation signals of other species (MacLean 1985; Newman 1985), an infant's distress cry ultimately serves to promote proximity between infant and caregiver (Ainsworth 1969; Bowlby 1969; Hunziker and Barr 1986). The sound of a human infant cry is characterized by a high and highly variable pitch, an overall “falling” or “rising–falling” melody, typically with some degree of tremor (or “vibrato”), and often includes abrupt changes in harmonic structure (Kent and Murray 1982; Golub and Corwin 1985). These acoustic features are thought to be largely attributable to infants' short vocal cords and limited muscular control over the vocal tract (Kent and Murray 1982; Ostwald and Murray 1985).

Theoretical models of responsive parental behavior highlight the capacity of vocal and facial cues to capture attention. Lorenz (1943) proposed that the specific configuration of an infant face (“Kindchenschema”) acts as an “innate releaser” of caregiving behavior. Building on this theory, Murray (1979) suggested that the acoustic structure of an infant cry acts as a “motivational entity,” rapidly alerting an adult listener, while additional factors contribute to the selection of particular behaviors. Importantly, the motivational entity theory allows for a range of motives for behavior, such as a desire to terminate an aversive stimulus, an empathic response to reduce distress in another, or an evolutionary desire to ensure the wellbeing of offspring (Hoffman 1975; Murray 1979). Observational studies have shown that across cultures, infant crying provokes selective orienting of attention toward the infant and a desire to intervene, typically to provide care (Frodi et al. 1978; Bornstein et al. 2008, 2012). Beyond this initial orienting response, infant-directed behavior can be influenced by a number of other factors including gender, parental experience, specific features of cues, and the broader context of care and culture (Lamb 1977; Wood and Gustafson 2001; Bornstein et al. 2008, 2012; Kruger and Konner 2010).

Adults often report the sound of a crying infant as annoying, distressing, aversive, and likely to promote a desire to perform a caregiving response (e.g., Frodi et al. 1978; Schuetze and Zeskind 2001; Soltis 2004). There is evidence suggesting that hearing infant cries can initiate a broad range of physiological reactions in adult listeners (Frodi et al. 1978; Out et al. 2010). Changes have been demonstrated in heart rate (Frodi and Lamb 1980; Wiesenfeld et al. 1981; Bleichfeld and Moely 1984; Furedy et al. 1989; Del Vecchio et al. 2009), skin conductance (Frodi et al. 1978; Wiesenfeld et al. 1981), blood pressure (Frodi et al. 1978), respiratory sinus arrhythmia (Joosen et al. 2013), hand grip force (Bakermans-Kranenburg et al. 2012), and even skin temperature in breastfeeding mothers (Vuorenkoski et al. 1969). In addition, there is a large body of work showing individual differences in perceptual and physiological reactions to infant cries, varying according to gender, parental status, adult attachment style, and mental health (Furedy et al. 1989; Schuetze and Zeskind 2001; Out et al. 2010; Ablow et al. 2013).

Over the course of the lifespan, audible crying tends to become less frequent, with children developing the capacity to inhibit vocalized crying and learning to access care through other, less metabolically costly, means (Bell and Ainsworth 1972; Rebelsky and Black 1972; Gekoski et al. 1983). By adulthood, crying is still a signal of distress, but is less salient to others (Cornelius 1984). Listeners' responses to adult cries are largely culturally and contextually determined (e.g., Vingerhoets et al. 2000). Adult crying can alleviate distress by moving the self to action (Tomkins 1962), by establishing physiological homeostasis (Efran and Spangler 1979), or in some cases by motivating others to engage in prosocial behaviors (Hill and Martin 1997; Hendriks et al. 2008). There is little behavioral research directly comparing responses to infant and adult cries; however, we recently demonstrated that adult listeners report a greater motivation to respond to infant compared with adult cries (Parsons, Young, Stein, et al. 2014). At a more implicit level, listening to infant cries can evoke more rapid responding on a motor task, compared with listening to adult cries (Parsons et al. 2012; Young et al. 2015).

The mechanisms by which the brain can process these salient expressions to allow rapid, adaptive behavioral responses are not yet fully understood (Parsons, Stark, et al. 2013). Models of emotional processing describe “dual stream” systems for rapid identification of salient stimuli, followed by slower, detailed appraisal (LeDoux 2000; Adolphs 2002). A number of key regions are recruited in the processing of emotional vocalizations or affective prosody. These include superior temporal sulci/gyri (STS/STG), the orbitofrontal cortex (OFC), the supplementary motor area, middle temporal gyrus, basal ganglia, and amygdala, as confirmed by a recent meta-analysis (Ethofer et al. 2013; Yovel and Belin 2013; Belyk and Brown 2014).

Auditory vocal processing begins with analysis of basic acoustic features in subcortical and primary auditory regions (Belin et al. 2004, 2011; Schirmer and Kotz 2006; Yovel and Belin 2013). Extraction of linguistic, affective, or speaker-related content is then thought to occur within different subregions of the STS/STG (Belin et al. 2004, 2011). The right STS, a region shown to be highly selective to the human voice compared with other environmental sounds, is considered the vocal equivalent of the fusiform face area (Belin et al. 2000, 2004). This region is particularly sensitive to the affective content of human vocalizations, responding more to emotional, compared with neutral vocalizations (Grandjean et al. 2005). Evidence from EEG studies demonstrates that event-related potentials (ERPs), thought to be generated by activity in the STS/STG (the P200 component), are sensitive to the valence, arousal, and category of emotional vocal stimuli (Paulmann and Kotz 2006, 2008). Supporting this suggestion, fMRI evidence has shown an association between activity in bilateral STS and emotional intensity in vocalizations (Ethofer et al. 2006). Activity in these regions is then thought to project to frontal regions, such as the OFC and inferior frontal gyrus (IFG), for appraisal and higher-order processing [Schirmer and Kotz 2006; Frühholz and Grandjean 2013; for a review of auditory vocal processing, see Frühholz et al. (2014, 2015)].

The human OFC has been shown to be a nexus for reward-related processing, critically involved in subjective appraisal of stimuli (Kringelbach 2005; Rudebeck and Murray 2014; Berridge and Kringelbach 2015). The temporal unfolding of information processing within this heterogeneous brain region is still under investigation (Kringelbach and Rolls 2004). Studies of individuals with lesions to the OFC have demonstrated impaired recognition of emotional vocal and facial expressions (Hornak et al. 1996, 2003; Grandjean et al. 2008). One such lesion study also demonstrated unaffected sensory processing of emotional vocal stimuli (as shown by the P200 ERP response), suggesting that the OFC may be more involved in evaluative than perceptual processing (Paulmann et al. 2010). In support of this “higher-order” function of the OFC, fMRI studies have demonstrated enhanced activity in this region when task demands require explicit attention for the evaluation of emotional vocalizations (Sander et al. 2005; Quadflieg et al. 2008). There is emerging evidence that the OFC may additionally play a role in the rapid detection of salience. The affective prediction hypothesis suggests that the OFC is involved in early stages of processing, aiding the identification of salient stimuli and facilitating motor reactions (for reviews, see Bar 2003, 2007, 2009). In support, there is mounting evidence that swift propagation of sensory information to the OFC (140 ms) from primary sensory regions may aid preattentive recognition (Bar et al. 2006; Kringelbach et al. 2008; D'Hondt et al. 2013; Parsons, Young, et al. 2013). It has been suggested that feedback projections to sensory areas could then impact further perceptual processing.

The affective prediction hypothesis also suggests that feedforward projections from the OFC to motor areas (e.g., motor cortex and basal ganglia) could prime rapid behavioral responding. One study demonstrated that activity in the OFC and motor cortex was correlated with speed of evaluation of emotional stimuli, supporting this notion (Ethofer et al. 2013). An alternative hypothesis, mirror neuron theory, implicates motor areas more directly in perceptual processing. This theory suggests a neuroanatomical overlap in systems for observing and executing actions (Di Pellegrino et al. 1992; Rizzolatti and Arbib 1998). This early recruitment of the motor system has been strongly emphasized in models of speech perception (Scott and Johnsrude 2003; Watkins et al. 2003; Warren et al. 2006). The role of motor systems in affective vocal processing is less well understood. It has been suggested that the mirror neuron system may support understanding of intention or preparation of imitative or nonimitative motor responses, relevant to the affective content of cues (Leslie et al. 2004; Ferrari et al. 2005; Iacoboni et al. 2005).

Existing studies of neural responses to infant vocalizations point to a comparable network of neural regions to that described above for emotional vocalizations more generally (Lorberbaum et al. 2002; Seifritz et al. 2003; Laurent and Ablow 2012a; Montoya et al. 2012; Riem et al. 2012; De Pisapia et al. 2013; Hipwell et al. 2015). Within this network, enhanced activity in the OFC, as measured with fMRI, has consistently been associated with the processing of multimodal infant cues including vocalizations (e.g., Lorberbaum et al. 2002; Laurent and Ablow 2012a, 2012b) and facial expressions (e.g., Bartels and Zeki 2004; Strathearn et al. 2008; Montoya et al. 2012). Yet, the low temporal resolution and indirect nature of the blood oxygen level-dependent signal in fMRI studies mean that it is very difficult to resolve the fine-grained temporal dynamics of brain activity. A number of recent studies have used magnetoencephalography (MEG) and, in line with the affective prediction hypothesis, there is now evidence that viewing infant faces is associated with greater early activity in the OFC, compared with viewing adult faces (Kringelbach et al. 2008; Parsons, Young, et al. 2013).

Building on these findings of responses to infant faces, we investigated responses to infant vocalizations to assess evidence for early differentiation of infant from adult vocalizations. We used MEG to compare neural activity in response to infant and adult cry vocalizations from a standardized database of emotional vocalizations (the OxVoc database; Parsons, Young, Stein, et al. 2014). We hypothesized that infant and adult cry vocalizations would be associated with differential early activity in auditory, orbitofrontal, and motor cortical regions.

Materials and Methods

Participants

MEG was used to examine both the timing and sources of early neural responses to infant and adult cry vocalizations in healthy men and women (N = 25, 13 male, 12 female). Participants were aged between 20 and 34 years (M = 23.88, SD = 2.97), and none were parents. They were recruited through email advertisement sent to a pool of participants who had previously participated in MRI research studies at Aarhus University Hospital, Denmark. All participants were right-handed, were not currently taking any medication affecting the brain, and had reported having no hearing problems. Ethical approval for the study was granted by the Ethics Committee of Central Region Denmark.

Experimental Task

Participants performed a target tone-detection task, within which vocalization stimuli were presented incidentally. The task required participants to make a button press response when they heard a target tone (400 Hz pure tone lasting 500 ms) and not respond when they heard distractor tones (500 Hz pure tone lasting 500 ms; the identity of target and distractor tones was counterbalanced across participants). Fifteen exemplars of each sound type (selected from a larger database, Parsons, Young, Stein, et al. 2014) were presented in each task block. Blocks of each stimulus type were repeated 8 times, resulting in a total of 120 stimulus presentations for each sound category. The order of blocks, and stimuli within blocks, was randomized.

Stimuli

Sound stimuli consisted of infant and adult cry vocalizations taken from an established stimulus database (Parsons, Young, Stein, et al. 2014). In brief, infant vocalization stimuli were obtained from video recordings of infants interacting with a caregiver in their own homes [see Young et al. (2012)]. Adult vocalization stimuli were obtained from video diary blogs recorded by females, aged approximately 18–30 years [see Parsons, Young, Stein, et al. (2014) for details]. Permission to use these stimuli for research was obtained directly from parents and individuals involved. During the experimental paradigm, participants also listened to other vocalization stimuli, including neutral and positively valenced infant and adult vocalizations; results for these stimuli are not discussed here. Stimuli were presented with Presentation® software (Neurobehavioral Systems, Inc.) through an MEG-compatible in-ear earphone delivery system built in-house.

Stimuli consisted of 1.5 s auditory bursts, matched for root-mean-square intensity and with 150 ms linear rise and fall times applied to each clip. The physical features of the infant and adult cry stimuli used are presented in Table 1. Independent t-tests demonstrated significant differences in fundamental frequency (F0) and average burst length, but not in the number of bursts.

Table 1.

Key physical parameters of vocalization stimuli

| Parameter | Infant cry: M, SD | Infant cry: Range | Adult cry: M, SD | Adult cry: Range | t-value | P-value | r-value |
|---|---|---|---|---|---|---|---|
| F0 (Hz) | 444.30, 43.16 | 336.06–527.56 | 339.84, 64.32 | 257.81–445.31 | 5.22 | <0.001 | 0.69 |
| Burst duration (s) | 1.06, 0.47 | 0.45–1.50 | 0.57, 0.13 | 0.23–0.75 | 3.83 | 0.001 | 0.58 |
| Number of bursts | 1.73, 0.88 | 1–3 | 2.13, 0.35 | 2–3 | −1.63 | 0.12 | −0.29 |

F0, fundamental frequency.

Sound Intensity Matching Procedure

Perceived “loudness” of auditory stimuli can affect the perceived intensity of the emotion within a sound (Murray and Arnott 1993). To match loudness across participants varying in sensitivity to sound intensity, a threshold of hearing test was completed by each participant immediately prior to MEG scanning. An adaptive staircase procedure [comparable to Levitt (1971)] was used to assess individual thresholds of hearing. Briefly, this was a two-alternative forced-choice task in which participants indicated whether they heard sounds of varying intensities. A two-down, one-up design was used, meaning that after a sound was detected, the subsequent sound presentation was decreased by 10 dB, but when a sound was not detected, the subsequent sound presentation was increased by 5 dB. The criterion for absolute threshold of hearing was 2 consecutive “up/down” reversals (the point at which participants reported that they could hear a sound, after not hearing the previous sound) at the same value. Stimuli were presented at 70 dB above this level.
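The staircase rule described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the starting level of 60 dB, the trial cap, and the deterministic simulated listener (`hears`) are hypothetical choices made only for illustration. The step sizes (10 dB down, 5 dB up), the stopping rule (2 consecutive reversals at the same level), and the 70 dB offset come from the text.

```python
from typing import Callable, List, Optional


def staircase_threshold(
    hears: Callable[[float], bool],
    start_db: float = 60.0,      # hypothetical starting level
    step_down_db: float = 10.0,  # decrease after a detected sound
    step_up_db: float = 5.0,     # increase after a missed sound
    max_trials: int = 200,       # hypothetical safety cap
) -> Optional[float]:
    """Return the level at which 2 consecutive reversals coincide, else None."""
    level = start_db
    prev_heard: Optional[bool] = None
    reversal_levels: List[float] = []
    for _ in range(max_trials):
        heard = hears(level)
        # A reversal is counted when a sound is heard after the previous one was missed.
        if heard and prev_heard is False:
            reversal_levels.append(level)
            if len(reversal_levels) >= 2 and reversal_levels[-1] == reversal_levels[-2]:
                return level
        prev_heard = heard
        level = level - step_down_db if heard else level + step_up_db
    return None


# Hypothetical listener who detects every sound at or above 22 dB.
threshold = staircase_threshold(lambda level_db: level_db >= 22.0)
# Stimuli are then presented 70 dB above the estimated threshold.
presentation_level = None if threshold is None else threshold + 70.0
```

With this simulated listener the levels visited are 60, 50, 40, 30, 20, 25, 15, 20, 25, so the procedure stops at 25 dB, the first level reached by two successive reversals.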

MEG Recordings

MEG recordings were performed using a 306-channel Elekta-Neuromag TRIUX system (Center of Functionally Integrative Neuroscience, Aarhus University Hospital), comprising 102 magnetometers and 204 planar gradiometers at a sampling rate of 1000 Hz. Before recording, a three-dimensional digitizer (Polhemus) was used to record the participant's head shape relative to the position of 5 head position indicator coils fixed to the head. In addition, the positions of 3 fiducial markers (the nasion and the left and right preauricular points) and 20–30 additional scalp “head-shape” points were recorded to aid later coregistration with MRI images. Data were recorded as part of a larger study of auditory processing in back-to-back sessions lasting 15 min each (later concatenated as a single session). A T1-weighted structural MRI had previously been acquired for each participant as a part of another research study at Aarhus University Hospital (Siemens Trio 3-T scanner, inversion time = 900 ms, repetition time = 1900 ms, echo time = 2.52 ms, flip angle = 9°, slice thickness = 1 mm, field of view = 250 × 250 mm). All MEG data analyses were carried out using in-house MATLAB (The MathWorks, Inc.) software with functions from FieldTrip (Oostenveld et al. 2011), SPM8 (Litvak et al. 2011), and FSL (Jenkinson et al. 2012).

Data Preprocessing

Channels containing clear artifacts were detected visually and marked as “bad” using downsampled data (sampling rate, 250 Hz) with an in-house built data viewer. External noise was removed using spatiotemporal signal space separation (Taulu and Simola 2006) applied to the raw, non-downsampled data, using only “good” channels (MaxFilter™, Elekta). Cleaned data were then downsampled to 250 Hz and high-pass filtering (0.1 Hz, two-pass Butterworth filter, SPM8) was performed to remove slow drifts in the data. Independent components analysis was used to decompose data into 60 components (data were prewhitened by normalizing to smallest eigenvalues; Hyvarinen and Oja 2000; Vigario et al. 2000). The outputs of this analysis were a mixing matrix (number of channels × number of components), 60 temporally independent time courses, and their back-projected spatial topographies. Identified components were manually reviewed to remove those containing artifacts (cardiac, ocular, or muscular), based on time courses, frequency spectrum, and spatial topography (Mantini et al. 2011; Muthukumaraswamy 2013). Data were then epoched (250 ms prestimulus to 1750 ms poststimulus) and an in-house automated outlier algorithm was applied, using robust linear regression to detect and remove epochs and channels with outlying minima and standard deviations (epochs with weights <0.40 and channels with weights <0.01 were removed).

Sensor-Level (Event-Related Field) Analysis

For event-related field (ERF) analyses, single-trial data were cropped from 200 ms prestimulus to 500 ms poststimulus onset. Data were low-pass filtered at 45 Hz and baseline corrections were performed (using the 100-ms prestimulus period for each trial). Averaged group sensor-level data were used to identify time windows of interest. Sensors exhibiting peak averaged responses during identified time windows in each hemisphere were selected for sensor-level statistical analysis of category-specific effects. Averaged time courses from each individual participant were extracted for each stimulus category and paired t-tests (one-tailed) were used to assess differences in ERF amplitude at time windows of interest.
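As an illustration of the two computations described above, the following Python sketch applies a 100-ms prestimulus baseline correction to cropped epochs and computes a paired t statistic across participants. It is a simplified stand-in, not the authors' MATLAB code: the array layout (trials × samples), the function names, and the toy data are assumptions. A one-tailed P-value would additionally require the t distribution with n − 1 degrees of freedom, which is omitted here.

```python
import numpy as np

FS = 250  # Hz, sampling rate after downsampling


def baseline_correct(epochs: np.ndarray, n_pre: int, n_base: int) -> np.ndarray:
    """Subtract each trial's mean over the last n_base prestimulus samples.

    epochs: trials x samples array; stimulus onset is at sample index n_pre.
    """
    baseline = epochs[:, n_pre - n_base:n_pre].mean(axis=1, keepdims=True)
    return epochs - baseline


def paired_t(a: np.ndarray, b: np.ndarray) -> float:
    """Paired t statistic for a - b, with len(a) - 1 degrees of freedom."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(d.mean() / (d.std(ddof=1) / np.sqrt(d.size)))


n_pre = round(0.200 * FS)   # 50 samples: 200 ms prestimulus
n_post = round(0.500 * FS)  # 125 samples: 500 ms poststimulus
n_base = round(0.100 * FS)  # 25 samples: 100 ms baseline

# Toy data: 3 trials with a constant offset, which baseline correction removes.
toy_epochs = np.full((3, n_pre + n_post), 2.0)
corrected = baseline_correct(toy_epochs, n_pre, n_base)

# Toy per-participant amplitudes for two conditions (hypothetical values).
t_value = paired_t(np.array([2.0, 4.0, 6.0]), np.array([1.0, 2.0, 3.0]))
```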

Source Reconstruction

Preprocessed datasets were concatenated for each participant, taking the first session as a reference for averaging head positions (ensuring a single beamformer solution for each participant; Luckhoo et al. 2014). Anatomical data from T1-weighted MR scans were segmented and normalized to a template MNI brain. MEG data were coregistered using digitized fiducial markers and refined using additional head-shape points (SPM8).

Source reconstruction was performed using a dual-source adaptation of the Linearly Constrained Minimum Variance (LCMV) beamformer (Van Veen et al. 1997; Woolrich et al. 2011). This method used an overlapping spheres model to estimate dipoles at each location in a 6-mm3 mesh representation of the template MNI brain (Huang et al. 1999). Although beamforming has proved powerful at reconstructing source signals in electromagnetic imaging (Hillebrand et al. 2005), it can be limited in the presence of highly correlated source signals, such as early bilateral auditory responses (Van Veen et al. 1997; Sekihara et al. 2002; Herdman et al. 2003; Dalal et al. 2006). To overcome this, a bilateral implementation of the LCMV beamformer was employed, in which the beamformer spatial filtering weights for each dipole were estimated together with those of the dipole's contralateral counterpart (Brookes et al. 2007). A scalar formulation of the beamformer was used, in which each dipole's 3 spatial orientations were collapsed to one direction obtained by maximizing the output of the beamformer (Sekihara et al. 2001). This was carried out on both of the bilateral dipoles in the dual-source beamformer. Iterating through all dipole locations in the 6-mm grid yielded a whole-brain estimate of source activity for each trial for each participant in the time window 200 ms prestimulus to 400 ms poststimulus in the frequency range of interest, 1–40 Hz.
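For readers unfamiliar with LCMV beamforming, the sketch below computes standard unit-gain LCMV weights, W = (LᵀC⁻¹L)⁻¹LᵀC⁻¹, in Python with NumPy. Stacking the lead fields of a dipole and its contralateral counterpart as two columns of L is one common way to estimate their weights jointly; whether this matches the authors' exact dual-source formulation is an assumption. The function name, the optional `reg` diagonal loading, and the random lead fields and covariance are hypothetical and serve only to show the unit-gain property.

```python
import numpy as np


def lcmv_weights(leadfield: np.ndarray, cov: np.ndarray, reg: float = 0.0) -> np.ndarray:
    """Unit-gain LCMV weights W = (L^T C^-1 L)^-1 L^T C^-1.

    leadfield: sensors x k matrix (one column per source component)
    cov:       sensors x sensors data covariance (symmetric)
    reg:       hypothetical diagonal loading, as a fraction of mean sensor variance
    Returns a k x sensors weight matrix.
    """
    n = cov.shape[0]
    c = cov + reg * (np.trace(cov) / n) * np.eye(n)
    c_inv_l = np.linalg.solve(c, leadfield)  # C^-1 L
    gram = leadfield.T @ c_inv_l             # L^T C^-1 L
    return np.linalg.solve(gram, c_inv_l.T)  # (L^T C^-1 L)^-1 L^T C^-1


# Toy example: 10 sensors and a pair of bilateral sources with fixed orientation.
rng = np.random.default_rng(0)
L_pair = rng.standard_normal((10, 2))  # columns: dipole and contralateral dipole
data = rng.standard_normal((10, 500))  # sensors x time samples
C = np.cov(data)
W = lcmv_weights(L_pair, C)

# Each row of W passes its own source with unit gain and nulls the paired source.
gain = W @ L_pair
source_timecourses = W @ data          # 2 x time samples
```

The product `W @ L_pair` equals the identity matrix, which is the linear constraint that gives the method its name: each spatial filter leaves its target source unchanged while suppressing the correlated contralateral source.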

General Linear Model

The source-reconstructed data were epoched into trial-specific time windows of 400 ms (100 ms prestimulus and 300 ms poststimulus), and a separate general linear model was run sequentially at each dipole location for each time point, for each stimulus category (Hunt et al. 2012; see the section “Experimental Procedures” in Supplementary Material). An initial demonstration of beamformer efficacy was carried out using all auditory stimuli from the entire experimental session (n = 840), compared against prestimulus baseline (see Supplementary Material). Following resolution of bilateral auditory cortices at 100 ms, differential processing of infant and adult cry vocalizations was assessed. First-level analyses consisted of calculation of “contrast of parameter estimates” (infant cry − adult cry) for each dipole and each time point. Resulting data were converted to absolute values and baseline-corrected using the 100-ms prestimulus period as a baseline. Data were then subject to second-level analyses, using one-sample t-tests with variances spatially smoothed with a Gaussian kernel (full-width at half-maximum = 50 mm). Results were computed for a series of t-stat maps temporally averaged over 10-ms windows, ranging from 95 to 220 ms poststimulus onset (overlapping by 5 ms). Multiple comparison corrections were conducted using a nonparametric cluster-based permutation test [for details, see Hunt et al. (2012)] with 5000 permutations and a cluster-forming t-threshold of 3.2 (equivalent to a corrected P < 0.05). Peak coordinates within significant clusters were identified and converted to z statistics.
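The logic of a cluster-based permutation test can be sketched in one dimension. The Python example below is a generic illustration under stated assumptions, not the procedure of Hunt et al. (2012): it uses sign-flipping of participants' contrast values, defines clusters as runs of contiguous points along a single axis rather than in three-dimensional source space, and uses the largest cluster size as the test statistic. The toy data, function names, and the reduced number of permutations are hypothetical; only the cluster-forming threshold of 3.2 and the sample size of 25 are taken from the text.

```python
from typing import Tuple

import numpy as np


def one_sample_t(x: np.ndarray) -> np.ndarray:
    """One-sample t statistic at each point; x is participants x points."""
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))


def max_cluster_size(t: np.ndarray, thresh: float) -> int:
    """Length of the longest run of contiguous points with t > thresh."""
    best = run = 0
    for above in t > thresh:
        run = run + 1 if above else 0
        best = max(best, run)
    return best


def cluster_permutation_p(
    x: np.ndarray, thresh: float = 3.2, n_perm: int = 5000, seed: int = 0
) -> Tuple[int, float]:
    """Return the observed maximum cluster size and its permutation P-value."""
    rng = np.random.default_rng(seed)
    observed = max_cluster_size(one_sample_t(x), thresh)
    exceed = 0
    for _ in range(n_perm):
        # Under the null, each participant's contrast is equally likely to be flipped.
        signs = rng.choice([-1.0, 1.0], size=(x.shape[0], 1))
        if max_cluster_size(one_sample_t(x * signs), thresh) >= observed:
            exceed += 1
    return observed, (1 + exceed) / (1 + n_perm)


# Toy contrast data: 25 participants, 40 points, with an effect at points 10-19.
rng = np.random.default_rng(1)
contrast = rng.standard_normal((25, 40))
contrast[:, 10:20] += 2.0
observed_size, p_value = cluster_permutation_p(contrast, n_perm=500)
```

Comparing the observed cluster against the null distribution of the maximum cluster size is what controls the family-wise error rate across all points tested.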

Results

Behavioral Data

Performance during the tone-detection task was at ceiling level with no significant differences in reaction times to the tones during the blocks of presentation of infant and adult cries (t(24) = 0.05, P = 0.86; mean RT = 691 ms).

Summary of Results of MEG Data Analysis

We used standard MATLAB tools to analyze the MEG data of all 25 participants both in sensor space and at the source level. Sensor-level analyses demonstrated significant differences in ERF time courses at both 100 and 200 ms to infant cries compared with adult cries in the right hemisphere. Using source-level analysis, we found early (at 95–135 ms) significant differences in activity between infant and adult cry vocalizations in temporal (STG and temporal pole) and frontal regions (OFC and anterior cingulate cortex [ACC]), and later differences (175–205 ms) in visual and motor regions.

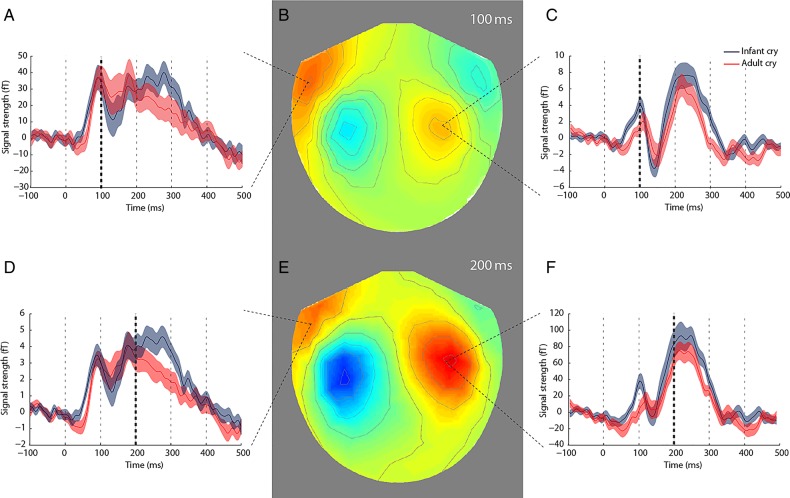

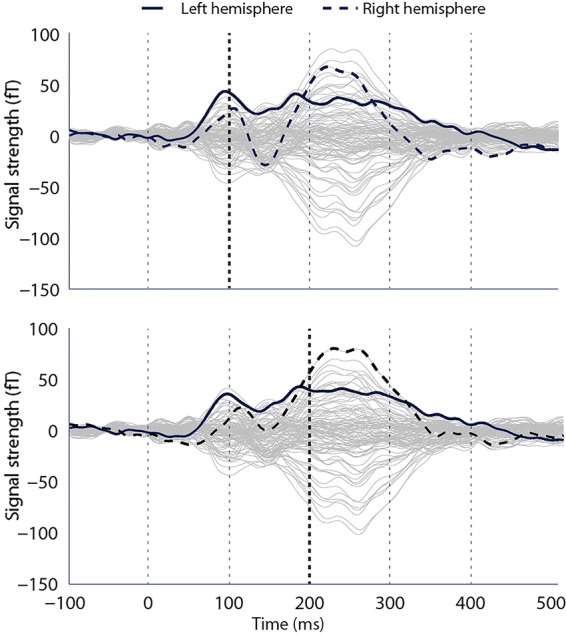

Sensor-Level Analysis

Figure 1 shows the averaged ERF time courses in response to all auditory stimuli (combined across conditions and participants) for each MEG channel. Based on these ERFs, time windows of interest were identified as the classical early auditory ERF components (the N100 and P200). Maximally responsive channels at each of these time points in each hemisphere (4 channels in total) were selected for further analysis. Time courses from each of these sensors are presented in Figure 2, along with scalp topographies. Paired-sample t-tests demonstrated greater ERF amplitude in response to infant cries than to adult cries in maximally responsive channels in the right hemisphere; this difference was significant at 100 ms [t(24) = 1.97, P = 0.03, r = 0.37] and approached significance at 200 ms [t(24) = 1.64, P = 0.06, r = 0.32]. There were no significant differences in ERF amplitude in maximally responsive channels in the left hemisphere at 100 ms [t(24) = 0.22, P = 0.41, r = 0.04] or 200 ms [t(24) = −0.63, P = 0.27, r = 0.13]. Right hemisphere channels showing significant differences were fronto-temporally located (Fig. 2).
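The effect sizes reported above are consistent with the common conversion from a t statistic to r. The formula is not stated in the text, so its use here is an assumption, shown with the right-hemisphere result at 100 ms as a worked example:

```latex
r = \sqrt{\frac{t^{2}}{t^{2} + df}}, \qquad
r_{100\,\mathrm{ms}} = \sqrt{\frac{1.97^{2}}{1.97^{2} + 24}}
                     = \sqrt{\frac{3.88}{27.88}} \approx 0.37
```

The same conversion applied to t(24) = 1.64 gives r ≈ 0.32, matching the value reported at 200 ms.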

Figure 1.

Individual sensor time courses (averaged across conditions) presenting maximally responding channels at 100 ms (upper) and 200 ms (lower). Vertical dashed lines indicate timing intervals, and lines in “bold” indicate the time point analyzed.

Figure 2.

Sensor-level data showing averaged ERFs at 100 ms (B) and 200 ms (E) poststimulus onset. (A, C, D, and F) Category-specific time courses from peak sensors in each hemisphere from −100 ms prestimulus to 500 ms poststimulus. In the right hemisphere only, the amplitude of ERFs from peak sensors was greater in response to infant cries (blue) than to adult cries (red) at 100 ms (P < 0.05) and 200 ms (P = 0.06). Error bars represent mean ± standard error. Vertical dashed lines indicate timing intervals, and lines in “bold” indicate the time point analyzed.

Source-Level Analysis

Source reconstruction demonstrated different spatial distributions of neural activity in response to infant and adult vocalizations from 90 to 200 ms poststimulus (significant at cluster level P < 0.05, corrected for multiple comparisons using nonparametric cluster-based permutation tests, see the Methods section for details). Table 2 presents peak coordinates within significant clusters demonstrating differential early activity to infant and adult cries.

Table 2.

Neural activity across poststimulus time windows differentiating infant and adult cry vocalizations

| Time window (ms) | Cortical region | t-stat | MNI X | MNI Y | MNI Z | L/R |
|---|---|---|---|---|---|---|
| 90–100 | Primary auditory cortex (STG) | 4.38 | 42 | −30 | 12 | R |
| 95–105 | Auditory cortex | 4.14 | 42 | −30 | 18 | R |
| 100–110 | Primary auditory cortex (STG) | 4.54 | 54 | −30 | 18 | R |
| 105–115 | Primary auditory cortex (STG) | 5.59 | 54 | −30 | 18 | R |
| 110–120 | Primary auditory cortex (STG) | 6.54 | 60 | −24 | 12 | R |
| 115–125 | Primary auditory cortex (STG) | 7.60 | 60 | −24 | 12 | R |
| 120–130 | Primary auditory cortex (STG) | 8.34 | 60 | −24 | 12 | R |
| | Primary auditory cortex (STG) | 4.07 | −60 | −24 | 6 | L |
| 125–135 | Primary auditory cortex (STG) | 8.15 | 60 | −24 | 12 | R |
| | Primary auditory cortex (STG) | 4.24 | −42 | −24 | 24 | L |
| | Orbitofrontal cortex | 4.47 | −34 | 20 | −16 | L |
| | Temporal pole | | −46 | 18 | −22 | L |
| | Anterior cingulate cortex | | −6 | 36 | 18 | L |
| 130–140 | Primary auditory cortex (STG) | 4.93 | −54 | −30 | 12 | L |
| | Primary auditory cortex (STG) | 7.4 | 54 | −24 | 12 | R |
| 135–145 | Primary auditory cortex (STG) | 6.62 | 54 | −24 | 12 | R |
| | Primary auditory cortex (STG) | 4.96 | −54 | −30 | 12 | L |
| 140–150 | Auditory cortices | 5.74 | 60 | −24 | 18 | R |
| | Primary auditory cortex (STG) | 4.59 | −54 | −30 | 12 | L |
| 145–155 | Auditory cortices | 4.42 | 60 | −24 | 18 | R |
| | Auditory cortices | 3.97 | −60 | −18 | 18 | L |
| 150–160 | Auditory cortices | 4.64 | −60 | −18 | 18 | L |
| 155–165 | Auditory cortices | 4.64 | −66 | −18 | 18 | L |
| | Primary auditory cortex (STG) | 4.09 | 68 | −18 | 0 | R |
| 160–170 | Primary auditory cortex (STG) | 4.32 | 68 | −18 | 0 | R |
| 165–175 | Primary auditory cortex (STG) | 4.41 | 68 | −18 | 6 | R |
| 170–180 | Primary auditory cortex (STG) | 4.37 | −60 | −24 | 12 | L |
| 175–185 | Primary auditory cortex (STG) | 4.35 | −60 | −24 | 12 | L |
| | Motor cortex | | −46 | −8 | 36 | L |
| 180–190 | Auditory cortices | 4.20 | −36 | −18 | 36 | L |
| 185–195 | Auditory cortices | 4.13 | −30 | −24 | 18 | L |
| | Visual cortex | 4.08 | 12 | −84 | −6 | R |
| 190–200 | Auditory cortices | 3.89 | −60 | −18 | 18 | L |
| | Orbitofrontal cortex | 3.83 | −34 | 20 | −16 | L |
| | Temporal pole | | −36 | 24 | −24 | L |
| | Anterior cingulate cortex | 3.87 | 18 | 18 | 30 | R |

Note: Peak voxels within clusters of significant activity are reported, with thresholding at P < 0.05 (corrected for multiple comparisons), listed by time point and cortical region. There were no significant differences observed from 195–205, 200–210, 205–215, or 210–220 ms.

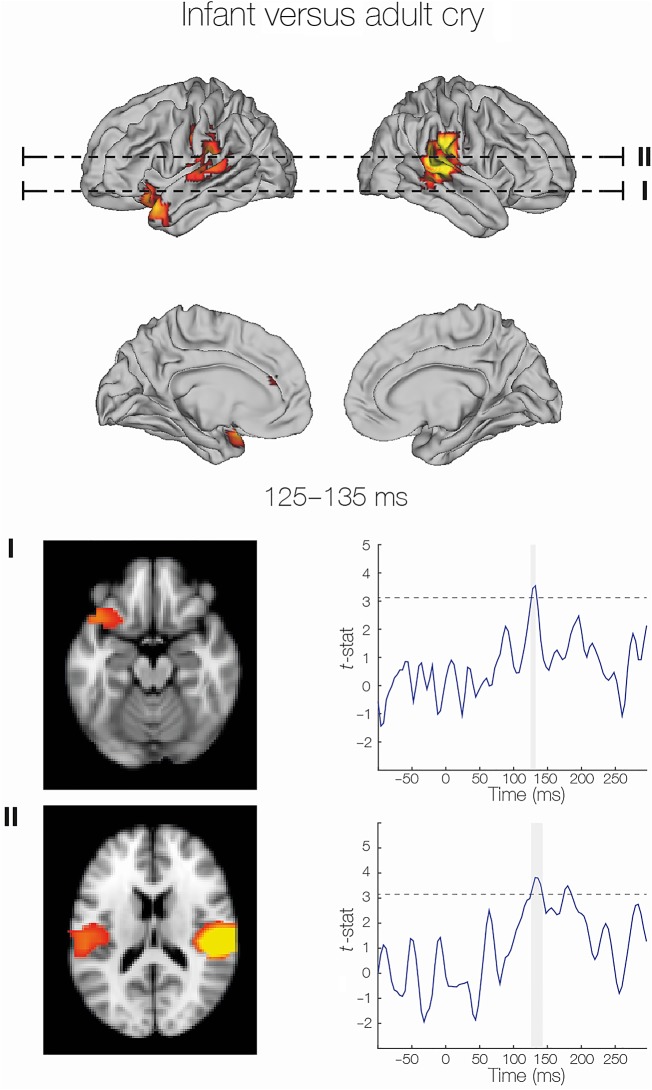

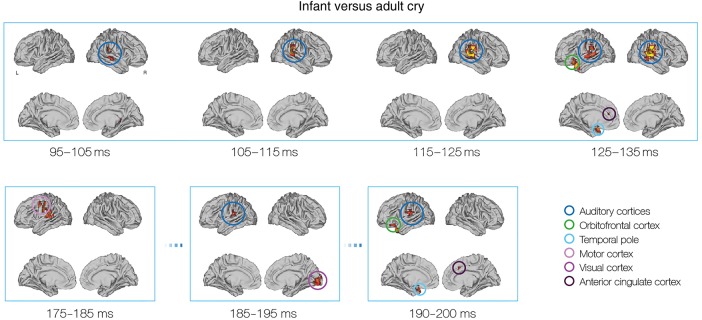

To summarize, the earliest differences in neural activity in response to infant and adult cry vocalizations were observed at around 100 ms. These differences were apparent in auditory regions, and were particularly strong in the right STG (see Fig. 3 for spatial distribution and Table 2 for statistics and coordinates of significant clusters). Differential processing in auditory regions continued throughout the time window tested (95–200 ms). From 125 to 135 ms, a transient difference in frontal activity was observed in the left OFC and anterior cingulate cortex (Fig. 4). Following this, differential processing moved to more posterior locations in the brain, including the postcentral gyrus (145–165 ms), the motor cortex (175–185 ms), and visual cortex (185–195 ms). Finally, from 190 to 200 ms, a second transient burst in frontal activity was observed, also localized to the OFC and anterior cingulate cortex.

Figure 3.

Listening to infant compared with adult cry vocalizations was associated with differential early transient activity in the OFC peaking around 130 ms after stimulus onset. During this time, differential auditory cortex activity is also at a peak. Source reconstruction of the infant versus adult cry contrast is demonstrated (upper) and axial slices at the levels shown are demonstrated (lower). Statistical differences over time (t-stat time courses derived from the general linear model) are also presented to demonstrate the transient nature of these effects. Dotted lines indicate a t-threshold of 3.2 (equivalent to P < 0.05), and vertical gray bars indicate the time that differential activity is above this threshold.

Figure 4.

The evolution of differential neural activity in response to infant and adult cry vocalizations from 95 to 200 ms. Time windows demonstrating changes of interest are presented (statistical details using overlapping 10 ms windows are presented in Table 1). Early differences were observed in right-lateralized auditory regions. At 125–135 ms, there were differences in the OFC and anterior cingulate cortex (ACC). Later differences were demonstrated in the motor and visual cortices, with repeat recruitment of OFC and ACC at 195–205 ms. Data were rendered onto inflated brain templates using the Connectome Workbench v1.0 tool (Marcus et al. 2011).
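The overlapping 10 ms windows referred to in the caption can be enumerated with a short sketch such as the one below. The 5 ms step is an assumption inferred from the window labels reported in the table note (e.g., 195–205 and 200–210 ms), and the function name `overlapping_windows` is hypothetical.

```python
def overlapping_windows(start_ms, stop_ms, width_ms=10, step_ms=5):
    """List (onset, offset) windows of fixed width that fit within [start, stop].

    Illustrative only: the 10 ms width is stated in the caption; the 5 ms step
    is inferred from the reported window labels and is an assumption.
    """
    windows = []
    onset = start_ms
    while onset + width_ms <= stop_ms:
        windows.append((onset, onset + width_ms))
        onset += step_ms
    return windows


# Windows spanning 95 ms to 220 ms, matching the range of labels in the text.
windows = overlapping_windows(95, 220)
```

Because each window shares half its duration with its neighbors, an effect reported in one window (e.g., 190–200 ms) need not reach significance in the adjacent one (e.g., 195–205 ms).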

Discussion

We demonstrated rapid differentiation of infant and adult vocal distress in auditory, emotional, and motor brain regions. Differential activity, at a speed faster than conscious processing (Sergent et al. 2005), was found at the source level in emotional regions (OFC and ACC, 125–135 and 190–200 ms) and motor regions (175–185 ms). We also found sustained neural activity differentiating these stimuli in auditory regions (90–200 ms). This was corroborated by sensor-level findings of enhanced ERF activity for infant compared with adult cry vocalizations at 100 ms poststimulus in right-lateralized MEG sensors.

An infant's cry carries particular salience for adult listeners, signaling need for care and promoting the initiation of caregiving behavior (Lorenz 1943; Murray 1979). Previous work has demonstrated that adults have specific behavioral and psychophysiological responses to these sounds (e.g., Parsons et al. 2012; Joosen et al. 2013). Here, we show evidence for how this might be supported by early differential neural activity. The timing of this activity, in the range of 100–300 ms, is considered “preconscious” in studies that demonstrate a typical delay of around 500 ms between the onset of neural activity and the emergence of mental awareness [most notably described and examined by Libet (2002, 2006)].

We observed sustained differential activity in “voice-selective” STS/STG, thought to be sensitive to intensity, type, and salience of vocal emotion (Belin et al. 2004, 2011; Ethofer et al. 2013). In our data, early differences (90–120 ms) were observed in the right hemisphere only. This lateralization has previously been conceptualized as important in assessing the spectral content of auditory stimuli [for review, see Schirmer and Kotz (2006)]. Beyond 120 ms, we showed differential activity in both left and right auditory cortices, in line with recent work demonstrating bilateral processing of affective prosody (Frühholz and Grandjean 2013; Frühholz et al. 2015). The current study was not designed to investigate the functional roles of different components of activity. However, we would hypothesize that, in line with a large body of previous work, early differential activity in STS/STG is likely related to perceptual, rather than evaluative, processes. As infant and adult cry stimuli differ in pitch, it is plausible that early auditory cortex activity reflects processing of this acoustic feature. Future work could investigate this by using stimuli that systematically vary in acoustic properties.

The OFC is a key region involved in the appraisal of emotional expressions across modalities (Adolphs 2002; Hornak et al. 2003). Theories of auditory emotional processing suggest involvement of the OFC at around 300 ms poststimulus, after perceptual processing has occurred in auditory regions (Schirmer and Kotz 2006). Our data partially support this, demonstrating earlier STG than OFC activity for differentiating vocal emotions. However, we found evidence of 2 transient bursts of activity in the OFC, occurring earlier than 300 ms (at 125–135 and 190–200 ms). We suggest that this is more consistent with the affective prediction hypothesis, which proposes a dynamic interplay between OFC and sensory regions that may enable preattentive recognition of salient stimuli and prime behavioral responses (Bar 2007; Barrett and Bar 2009).

The neurobiology of parent–infant interactions is thought to heavily recruit networks involved in reward processing and social cognition, in which the OFC is thought to play a central role (Parsons et al. 2010; Swain et al. 2011; Parsons, Stark, et al. 2013; Feldman 2015). We have previously demonstrated early, differential OFC activity in response to infant faces compared with adult faces (Kringelbach et al. 2008; Parsons, Young, et al. 2013). Findings presented here show a similar effect in the auditory domain. Taken together, we speculate that this reflects a neural representation of a “caregiving instinct”, a preconscious response that may allow rapid detection and evaluation of infant-specific cues. Critically, these effects were observed in adults who were not parents, suggesting a potentially universal, modality-independent sensitivity to infant cues in the OFC. Replication and more detailed investigation of this effect for infant vocalizations would be important for exploring this hypothesis further. In the visual domain, we previously demonstrated diminished OFC responses to infant faces when there was a structural abnormality (cleft lip), suggesting that this early response relies on particular configurations of stimulus features (Parsons, Young, et al. 2013). Similar work characterizing changes in OFC responses relating to varying stimulus properties, task demands, and behavioral performance would be important for investigating the extent of this effect in the auditory domain. Given the wealth of extant research demonstrating specific behavioral and physiological responses to infant cries, work combining multiple levels of analysis (e.g., neural, physiological, and behavioral) in the same individuals would also be an important avenue for future research into human caregiving behavior.

We also observed activity in the ACC from 190 to 200 ms, similar to previous fMRI studies that have demonstrated ACC activity in response to infant cry vocalizations, compared with artificial control stimuli (e.g., Lorberbaum et al. 2002; Sander et al. 2007; De Pisapia et al. 2013). Here, we show that this region is also sensitive to the difference between infant and adult cry vocalizations. The role of the ACC in caregiving behavior has previously been highlighted in animal studies, showing that ACC lesions can disrupt rodent maternal responses to pup vocalizations (e.g., Murphy et al. 1981). More broadly, the ACC in humans has been conceptualized as an “alarm system,” mediating signals communicating both physical and social pain (Eisenberger and Lieberman 2004). Within this context, emotional vocalizations from conspecifics could constitute “social pain” stimuli, communicating distress in others and perhaps aiding the orienting of attention.

Differences in neural activity were also found in the motor cortex. Previous studies using fMRI to investigate neural responses to infant vocalizations have not robustly demonstrated a role for motor cortical regions, although one study demonstrated motor cortex activity in response to infant faces (Caria et al. 2012). Findings presented here show that differential motor cortex activity was transient in nature and therefore unlikely to be detected with the slower temporal resolution of fMRI. Both the affective prediction hypothesis and mirror neuron theory suggest early involvement of motor regions in the processing of affective vocalizations (as highlighted in motor theories of speech perception; Rizzolatti and Arbib 1998; Scott and Johnsrude 2003). Further work investigating the functional role of this activity would be of much interest.

Potential Limitations

MEG provides greater sensitivity to cortical than subcortical sources of neural activity (Hillebrand and Barnes 2002). As such, there is limited power to detect differential activity in subcortical regions, such as the amygdala and basal ganglia, regions thought to support rapid processing and evaluation of emotional stimuli (e.g., LeDoux 2000; Dolan 2002; Paulmann et al. 2008). Previous work has also demonstrated differential acoustic processing in the auditory brainstem (Kraus and Nicol 2005; Nan et al. 2015) and differential sensitivity to infant cry vocalizations in the periaqueductal gray of the midbrain (using intracranial recordings; Parsons, Young, Joensson, et al. 2014). Evidence from techniques more sensitive to subcortical regions, such as fMRI, is important to consider in neural models of affective auditory processing. This is of particular importance in the context of a proposed “caregiving instinct” as these processes are likely to have been evolutionarily conserved and as such may recruit phylogenetically older (e.g., subcortical) brain structures. In addition, the current paradigm involved incidental listening, so we are unable to directly specify what impact this differential processing may have on behavior.

Future Directions

Investigating other types of motivationally salient auditory cues would further assess the extent to which early OFC functioning is comparable across modalities. Similarly, inclusion of relevant behavioral measures would inform theories of the function of this rapid differential activity. Previous work investigating individual differences in behavioral and physiological responses to infant cry vocalizations has demonstrated a number of effects, including differences related to participant sex, parental status, depressive symptoms, and attachment style (Furedy et al. 1989; Schuetze and Zeskind 2001; Out et al. 2010; Ablow et al. 2013). The current study was not sufficiently powered to look at these differences, but future work should investigate the neural correlates of these previously demonstrated effects. Specific to the further investigation of human parenting behavior, assessment of these effects in parents of young infants would allow investigation of the impact of parental experience on these processes. In addition, identification of neural activity specific to the recognition of one's own infant would further inform our understanding of the neural underpinnings of the parent–infant relationship. Linking neural activity to caregiving behavior over time would eventually provide a better mechanistic understanding of the brain changes associated with the transition to parenthood.

Conclusions

We demonstrate evidence for rapid differentiation of infant and adult distress vocalizations in auditory, emotional (OFC), and motor brain regions. We propose that hearing an infant cry initiates dynamic activity among these brain regions that may aid quick detection and evaluation of these salient cues and prime adaptive responding. Taken together with previous findings demonstrating similarly early OFC activity in response to infant faces, we suggest that these responses may form part of the neural basis of a so-called “caregiving instinct”. Future work investigating how this neural signature may change as a result of parental experience, and in relation to caregiving behavior, would be of particular interest.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

The research was supported by an MRC Studentship awarded to K.S.Y., an ERC Consolidator Grant: CAREGIVING (no. 615539), and a TrygFonden Charitable Foundation grant to M.L.K., a Wellcome Trust Grant (no. 090139) to A.S., as well as the Center for Music in the Brain at Aarhus University. Funding to pay the Open Access publication charges for this article was provided by the Wellcome Trust.

Notes

We would like to thank Christopher Bailey for technical assistance with this study. Conflict of Interest: None declared.

References

- Ablow JC, Marks AK, Shirley Feldman S, Huffman LC. 2013. Associations between first-time expectant women's representations of attachment and their physiological reactivity to infant cry. Child Dev. 84:1373–1391.

- Adolphs R. 2002. Neural systems for recognizing emotion. Curr Opin Neurobiol. 12:169–177.

- Ainsworth MD. 1969. Object relations, dependency, and attachment: a theoretical review of the infant-mother relationship. Child Dev. 40:969–1025.

- Ainsworth MD, Blehar MC, Waters E, Wall S. 1978. Patterns of attachment: a psychological study of the strange situation. New York: Psychology Press. p. 137–153.

- Bakermans-Kranenburg MJ, van Ijzendoorn MH, Riem MME, Tops M, Alink LRA. 2012. Oxytocin decreases handgrip force in reaction to infant crying in females without harsh parenting experiences. Soc Cogn Affect Neurosci. 7:951–957.

- Bar M. 2003. A cortical mechanism for triggering top-down facilitation in visual object recognition. J Cogn Neurosci. 15:600–609.

- Bar M. 2007. The proactive brain: using analogies and associations to generate predictions. Trends Cogn Sci. 11:280–289.

- Bar M. 2009. The proactive brain: memory for predictions. Philos Trans Roy Soc B Biol Sci. 364:1235–1243.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, Dale AM, Hämäläinen MS, Marinkovic K, Schacter DL, Rosen BR. 2006. Top-down facilitation of visual recognition. Proc Natl Acad Sci USA. 103:449–454.

- Barrett LF, Bar M. 2009. See it with feeling: affective predictions during object perception. Philos Trans Roy Soc B Biol Sci. 364:1325–1334.

- Bartels A, Zeki S. 2004. The neural correlates of maternal and romantic love. Neuroimage. 21:1155–1166.

- Belin P, Bestelmeyer PEG, Latinus M, Watson R. 2011. Understanding voice perception. Br J Psychol. 102:711–725.

- Belin P, Fecteau S, Bedard C. 2004. Thinking the voice: neural correlates of voice perception. Trends Cogn Sci. 8:129–135.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. 2000. Voice-selective areas in human auditory cortex. Nature. 403:309–312.

- Bell SM, Ainsworth MD. 1972. Infant crying and maternal responsiveness. Child Dev. 43:1171–1190.

- Belyk M, Brown S. 2014. Perception of affective and linguistic prosody: an ALE meta-analysis of neuroimaging studies. Soc Cogn Affect Neurosci. 9:1395–1403.

- Berridge KC, Kringelbach ML. 2015. Pleasure systems in the brain. Neuron. 86:646–664.

- Bleichfeld B, Moely BE. 1984. Psychophysiological responses to an infant cry: comparison of groups of women in different phases of the maternal cycle. Dev Psychol. 20:1082–1091.

- Bornstein MH, Putnick DL, Heslington M, Gini M, Suwalsky JT, Venuti P, de Falco S, Giusti Z, Zingman de Galperín C. 2008. Mother-child emotional availability in ecological perspective: three countries, two regions, two genders. Dev Psychol. 44:666.

- Bornstein MH, Putnick DL, Suwalsky JT, Venuti P, de Falco S, de Galperín CZ, Gini M, Tichovolsky MH. 2012. Emotional relationships in mothers and infants: culture-common and community-specific characteristics of dyads from rural and metropolitan settings in Argentina, Italy, and the United States. J Cross-Cult Psychol. 43(2):171–197.

- Bowlby J. 1969. Attachment and loss. New York: Hogarth Press.

- Brookes MJ, Stevenson CM, Barnes GR, Hillebrand A, Simpson MI, Francis ST, Morris PG. 2007. Beamformer reconstruction of correlated sources using a modified source model. Neuroimage. 34:1454–1465.

- Caria A, de Falco S, Venuti P, Lee S, Esposito G, Rigo P, Birbaumer N, Bornstein MH. 2012. Species-specific response to human infant faces in the premotor cortex. Neuroimage. 60:884–893.

- Cornelius RR. 1984. A rule model of adult emotional expression. In: Malatesta CZ, Izard CE, editors. Emotion in adult development. Beverly Hills, CA: Sage. p. 213–233.

- Dalal SS, Sekihara K, Nagarajan SS. 2006. Modified beamformers for coherent source region suppression. IEEE Trans Biomed Eng. 53:1357–1363.

- Darwin C. 1872. The expression of the emotions in man and animals. London: John Murray.

- Darwin C. 1877. I.—a biographical sketch of an infant. Mind. 2:285–294.

- Del Vecchio T, Walter A, O'Leary SG. 2009. Affective and physiological factors predicting maternal response to infant crying. Infant Behav Dev. 32:117–122.

- De Pisapia N, Bornstein MH, Rigo P, Esposito G, De Falco S, Venuti P. 2013. Sex differences in directional brain responses to infant hunger cries. Neuroreport. 24:142–146.

- D'Hondt F, Lassonde M, Collignon O, Lepore F, Honoré J, Sequeira H. 2013. “Emotions Guide Us”: behavioral and MEG correlates. Cortex. 49:2473–2483.

- Di Pellegrino G, Fadiga L, Fogassi L, Gallese V, Rizzolatti G. 1992. Understanding motor events: a neurophysiological study. Exp Brain Res. 91:176–180.

- Dolan RJ. 2002. Emotion, cognition, and behavior. Science. 298:1191–1194.

- Efran JS, Spangler TJ. 1979. Why grown-ups cry. Motiv Emot. 3:63–72.

- Eisenberger NI, Lieberman MD. 2004. Why rejection hurts: a common neural alarm system for physical and social pain. Trends Cogn Sci. 8:294–300.

- Ethofer T, Anders S, Wiethoff S, Erb M, Herbert C, Saur R, Grodd W, Wildgruber D. 2006. Effects of prosodic emotional intensity on activation of associative auditory cortex. Neuroreport. 17:249–253.

- Ethofer T, Bretscher J, Wiethoff S, Bisch J, Schlipf S, Wildgruber D, Kreifelts B. 2013. Functional responses and structural connections of cortical areas for processing faces and voices in the superior temporal sulcus. Neuroimage. 76:45–56.

- Feldman R. 2015. The adaptive human parental brain: implications for children's social development. Trends Neurosci. 38(6):387–399.

- Ferrari PF, Rozzi S, Fogassi L. 2005. Mirror neurons responding to observation of actions made with tools in monkey ventral premotor cortex. J Cogn Neurosci. 17:212–226.

- Frodi AM, Lamb ME. 1980. Child abusers’ responses to infant smiles and cries. Child Dev. 51:238–241.

- Frodi AM, Lamb ME, Leavitt LA, Donovan WL. 1978. Fathers’ and mothers’ responses to infant smiles and cries. Infant Behav Dev. 1:187–198.

- Frühholz S, Grandjean D. 2013. Processing of emotional vocalizations in bilateral inferior frontal cortex. Neurosci Biobehav Rev. 37:2847–2855.

- Frühholz S, Gschwind M, Grandjean D. 2015. Bilateral dorsal and ventral fiber pathways for the processing of affective prosody identified by probabilistic fiber tracking. Neuroimage. 109:27–34.

- Frühholz S, Trost W, Grandjean D. 2014. The role of the medial temporal limbic system in processing emotions in voice and music. Prog Neurobiol. 123:1–17.

- Furedy JJ, Fleming AS, Ruble D, Scher H, Daly J, Day D, Loewen R. 1989. Sex differences in small-magnitude heart-rate responses to sexual and infant-related stimuli: a psychophysiological approach. Physiol Behav. 46:903–905.

- Gekoski MJ, Rovee-Collier CK, Carulli-Rabinowitz V. 1983. A longitudinal analysis of inhibition of infant distress: the origins of social expectations? Infant Behav Dev. 6:339–351.

- Golub HL, Corwin MJ. 1985. A physioacoustic model of the infant cry. In: Lester BM, Zachariah Boukydis CF, editors. Infant crying: theoretical and research perspectives. New York: Plenum Press; p. 59–82.

- Grandjean D, Sander D, Lucas N, Scherer KR, Vuilleumier P. 2008. Effects of emotional prosody on auditory extinction for voices in patients with spatial neglect. Neuropsychologia. 46:487–496.

- Grandjean D, Sander D, Pourtois G, Schwartz S, Seghier ML, Scherer KR, Vuilleumier P. 2005. The voices of wrath: brain responses to angry prosody in meaningless speech. Nat Neurosci. 8:145–146.

- Hendriks MC, Croon MA, Vingerhoets AJ. 2008. Social reactions to adult crying: the help-soliciting function of tears. J Soc Psychol. 148:22–42.

- Herdman AT, Wollbrink A, Chau W, Ishii R, Ross B, Pantev C. 2003. Determination of activation areas in the human auditory cortex by means of synthetic aperture magnetometry. Neuroimage. 20:995–1005.

- Hill P, Martin RB. 1997. Empathic weeping, social communication, and cognitive dissonance. J Soc Clin Psychol. 16:299–322.

- Hillebrand A, Barnes GR. 2002. A quantitative assessment of the sensitivity of whole-head MEG to activity in the adult human cortex. Neuroimage. 16:638–650.

- Hillebrand A, Singh KD, Holliday IE, Furlong PL, Barnes GR. 2005. A new approach to neuroimaging with magnetoencephalography. Hum Brain Mapp. 25:199–211.

- Hipwell A, Guo C, Phillips M, Swain J, Moses-Kolko E. 2015. Right frontoinsular cortex and subcortical activity to infant cry is associated with maternal mental state talk. J Neurosci. 35:12725–12732.

- Hoffman ML. 1975. Developmental synthesis of affect and cognition and its implications for altruistic motivation. Dev Psychol. 11:607.

- Hornak J, Bramham J, Rolls ET, Morris RG, O'Doherty J, Bullock P, Polkey C. 2003. Changes in emotion after circumscribed surgical lesions of the orbitofrontal and cingulate cortices. Brain. 126:1691–1712.

- Hornak J, Rolls E, Wade D. 1996. Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia. 34:247–261.

- Huang MX, Mosher JC, Leahy RM. 1999. A sensor-weighted overlapping-sphere head model and exhaustive head model comparison for MEG. Phys Med Biol. 44:423–440.

- Hunt LT, Kolling N, Soltani A, Woolrich MW, Rushworth MF, Behrens TE. 2012. Mechanisms underlying cortical activity during value-guided choice. Nat Neurosci. 15:470–476, S471–473.

- Hunziker UA, Barr RG. 1986. Increased carrying reduces infant crying: a randomized controlled trial. Pediatrics. 77:641–648.

- Hyvarinen A, Oja E. 2000. Independent component analysis: algorithms and applications. Neural Netw. 13:411–430.

- Iacoboni M, Molnar-Szakacs I, Gallese V, Buccino G, Mazziotta JC, Rizzolatti G. 2005. Grasping the intentions of others with one's own mirror neuron system. PLoS Biol. 3:e79.

- Jenkinson M, Beckmann CF, Behrens TE, Woolrich MW, Smith SM. 2012. FSL. Neuroimage. 62:782–790.

- Joosen KJ, Mesman J, Bakermans-Kranenburg MJ, Pieper S, Zeskind PS, Van Ijzendoorn MH. 2013. Physiological reactivity to infant crying and observed maternal sensitivity. Infancy. 18:414–431.

- Kent RD, Murray AD. 1982. Acoustic features of infant vocalic utterances at 3, 6, and 9 months. J Acoust Soc Am. 72:353–365.

- Kraus N, Nicol T. 2005. Brainstem origins for cortical “what” and “where” pathways in the auditory system. Trends Neurosci. 28:176–181.

- Kringelbach ML. 2005. The human orbitofrontal cortex: linking reward to hedonic experience. Nat Rev Neurosci. 6:691–702.

- Kringelbach ML, Lehtonen A, Squire S, Harvey AG, Craske MG, Holliday IE, Green AL, Aziz TZ, Hansen PC, Cornelissen PL et al. 2008. A specific and rapid neural signature for parental instinct. PLoS ONE. 3:doi:10.1371/journal.pone.0001664.

- Kringelbach ML, Rolls ET. 2004. The functional neuroanatomy of the human orbitofrontal cortex: evidence from neuroimaging and neuropsychology. Prog Neurobiol. 72:341–372.

- Kruger AC, Konner M. 2010. Who responds to crying? Maternal care and allocare among the !Kung. Hum Nat. 21:309–329.

- Lamb ME. 1977. Father-infant and mother-infant interaction in the first year of life. Child Dev. 48:167–181.

- Laurent HK, Ablow JC. 2012a. A cry in the dark: depressed mothers show reduced neural activation to their own infant's cry. Soc Cogn Affect Neurosci. 7:125–134.

- Laurent HK, Ablow JC. 2012b. The missing link: mothers’ neural response to infant cry related to infant attachment behaviors. Infant Behav Dev. 35:761–772.

- LeDoux JE. 2000. Emotion circuits in the brain. Annu Rev Neurosci. 23:155–184.

- Leslie KR, Johnson-Frey SH, Grafton ST. 2004. Functional imaging of face and hand imitation: towards a motor theory of empathy. Neuroimage. 21:601–607.

- Levitt H. 1971. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 49(2B):467–477.

- Libet B. 2002. The timing of mental events: Libet's experimental findings and their implications. Conscious Cogn. 11:291–299.

- Libet B. 2006. Reflections on the interaction of the mind and brain. Prog Neurobiol. 78:322–326.

- Litvak V, Mattout J, Kiebel S, Phillips C, Henson R, Kilner J, Barnes G, Oostenveld R, Daunizeau J, Flandin G et al. 2011. EEG and MEG data analysis in SPM8. Comput Intell Neurosci. 2011:852961.

- Lorberbaum JP, Newman JD, Horwitz AR, Dubno JR, Lydiard RB, Hamner MB, Bohning DE, George MS. 2002. A potential role for thalamocingulate circuitry in human maternal behavior. Biol Psychiatry. 51:431–445.

- Lorenz K. 1943. Die angeborenen Formen Möglicher Erfahrung. [Innate forms of potential experience]. Z Tierpsychol. 5:235–519.

- Luckhoo HT, Brookes MJ, Woolrich MW. 2014. Multi-session statistics on beamformed MEG data. Neuroimage. 95:330–335.

- MacLean PD. 1985. Brain evolution relating to family, play, and the separation call. Arch Gen Psychiatry. 42:405–417.

- Mantini D, Della Penna S, Marzetti L, de Pasquale F, Pizzella V, Corbetta M, Romani GL. 2011. A signal-processing pipeline for magnetoencephalography resting-state networks. Brain Connect. 1:49–59.

- Marcus DS, Harwell J, Olsen T, Hodge M, Glasser MF, Prior F, Jenkinson M, Laumann T, Curtiss SW, Van Essen DC. 2011. Informatics and data mining tools and strategies for the human connectome project. Front Neuroinform. 5:4.

- Montoya JL, Landi N, Kober H, Worhunsky PD, Rutherford HJV, Mencl WE, Mayes LC, Potenza MN. 2012. Regional brain responses in nulliparous women to emotional infant stimuli. PLoS ONE. 7(5):e36270.

- Murphy MR, MacLean PD, Hamilton SC. 1981. Species-typical behavior of hamsters deprived from birth of the neocortex. Science. 213:459–461.

- Murray AD. 1979. Infant crying as an elicitor of parental behavior: an examination of two models. Psychol Bull. 86:191–215.

- Murray IR, Arnott JL. 1993. Toward the simulation of emotion in synthetic speech: a review of the literature on human vocal emotion. J Acoust Soc Am. 93:1097–1108.

- Muthukumaraswamy SD. 2013. High-frequency brain activity and muscle artifacts in MEG/EEG: a review and recommendations. Front Hum Neurosci. 7:138.

- Nan Y, Skoe E, Nicol T, Kraus N. 2015. Auditory brainstem's sensitivity to human voices. Int J Psychophysiol. 95(3):333–337.

- Newman JD. 1985. The infant cry of primates: an evolutionary perspective. In: Lester BM, Zachariah Boukydis CF, editors. Infant crying: theoretical and research perspectives. New York: Plenum Press; p. 307–323.

- Oostenveld R, Fries P, Maris E, Schoffelen JM. 2011. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci. 2011:156869. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ostwald PF, Murray T. 1985. The communicative and diagnostic significance of infant sounds. In: Lester BM, Zachariah Boukydis CF, editors. Infant crying: theoretical and research perspectives. New York: Plenum Press; p. 139–158. [Google Scholar]

- Out D, Pieper S, Bakermans-Kranenburg MJ, Van Ijzendoorn MH. 2010. Physiological reactivity to infant crying: a behavioral genetic study. Genes Brain Behav. 9:868–876. [DOI] [PubMed] [Google Scholar]

- Parsons C, Young K, Stein A, Craske M, Kringelbach ML. 2014. Introducing the Oxford Vocal (OxVoc) Sounds Database: a validated set of non-acted affective sounds from human infants, adults and domestic animals. Emot Sci. 5:562. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Parsons CE, Stark EA, Young KS, Stein A, Kringelbach ML. 2013. Understanding the human parental brain: a critical role of the orbitofrontal cortex. Soc Neurosci. 8:525–543. [DOI] [PubMed] [Google Scholar]

- Parsons CE, Young KS, Joensson M, Brattico E, Hyam JA, Stein A, Green AL, Aziz TZ, Kringelbach ML. 2014. Ready for action: a role for the human midbrain in responding to infant vocalizations. Soc Cogn Affect Neurosci. 9:977–984. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Parsons CE, Young KS, Mohseni H, Woolrich MW, Thomsen KR, Joensson M, Murray L, Goodacre T, Stein A, Kringelbach ML. 2013. Minor structural abnormalities in the infant face disrupt neural processing: a unique window into early caregiving responses. Soc Neurosci. 8:268–274. [DOI] [PubMed] [Google Scholar]

- Parsons CE, Young KS, Murray L, Stein A, Kringelbach ML. 2010. The functional neuroanatomy of the evolving parent-infant relationship. Prog Neurobiol. 91:220–241. [DOI] [PubMed] [Google Scholar]

- Parsons CE, Young KS, Parsons E, Stein A, Kringelbach ML. 2012. Listening to infant distress vocalizations enhances effortful motor performance. Acta Paediatr. 101:e189–e191. [DOI] [PubMed] [Google Scholar]

- Paulmann S, Kotz SA editors. 2006. Valence, arousal, and task effects on the P200 in emotional prosody processing. Paper presented at the 12th Annual Conference on Architectures and Mechanisms for Language Processing 2006 (AMLaP).

- Paulmann S, Kotz SA. 2008. Early emotional prosody perception based on different speaker voices. Neuroreport. 19:209–213.

- Paulmann S, Pell MD, Kotz SA. 2008. Functional contributions of the basal ganglia to emotional prosody: evidence from ERPs. Brain Res. 1217:171–178.

- Paulmann S, Seifert S, Kotz SA. 2010. Orbito-frontal lesions cause impairment during late but not early emotional prosodic processing. Soc Neurosci. 5:59–75.

- Quadflieg S, Mohr A, Mentzel H-J, Miltner WH, Straube T. 2008. Modulation of the neural network involved in the processing of anger prosody: the role of task-relevance and social phobia. Biol Psychol. 78:129–137.

- Rebelsky F, Black R. 1972. Crying in infancy. J Genet Psychol. 121:49–57.

- Riem MME, Van Ijzendoorn MH, Tops M, Boksem MAS, Rombouts SARB, Bakermans-Kranenburg MJ. 2012. No laughing matter: intranasal oxytocin administration changes functional brain connectivity during exposure to infant laughter. Neuropsychopharmacology. 37:1257–1266.

- Rizzolatti G, Arbib MA. 1998. Language within our grasp. Trends Neurosci. 21:188–194.

- Rudebeck PH, Murray EA. 2014. The orbitofrontal oracle: cortical mechanisms for the prediction and evaluation of specific behavioral outcomes. Neuron. 84:1143–1156.

- Sander D, Grandjean D, Pourtois G, Schwartz S, Seghier ML, Scherer KR, Vuilleumier P. 2005. Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. Neuroimage. 28:848–858.

- Sander K, Frome Y, Scheich H. 2007. fMRI activations of amygdala, cingulate cortex, and auditory cortex by infant laughing and crying. Hum Brain Mapp. 28:1007–1022.

- Schirmer A, Kotz SA. 2006. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn Sci. 10:24–30.

- Schuetze P, Zeskind PS. 2001. Relations between women's depressive symptoms and perceptions of infant distress signals varying in pitch. Infancy. 2:483–499.

- Scott SK, Johnsrude IS. 2003. The neuroanatomical and functional organization of speech perception. Trends Neurosci. 26:100–107.

- Seifritz E, Esposito F, Neuhoff JG, Lüthi A, Mustovic H, Dammann G, von Bardeleben U, Radue EW, Cirillo S, Tedeschi G et al. 2003. Differential sex-independent amygdala response to infant crying and laughing in parents versus nonparents. Biol Psychiatry. 54:1367–1375.

- Sekihara K, Nagarajan SS, Poeppel D, Marantz A. 2002. Performance of an MEG adaptive-beamformer technique in the presence of correlated neural activities: effects on signal intensity and time-course estimates. IEEE Trans Biomed Eng. 49:1534–1546.

- Sekihara K, Nagarajan SS, Poeppel D, Marantz A, Miyashita Y. 2001. Reconstructing spatio-temporal activities of neural sources using an MEG vector beamformer technique. IEEE Trans Biomed Eng. 48:760–771.

- Sergent C, Baillet S, Dehaene S. 2005. Timing of the brain events underlying access to consciousness during the attentional blink. Nat Neurosci. 8:1391–1400.

- Soltis J. 2004. The signal functions of early infant crying. Behav Brain Sci. 27:443–458.

- Strathearn L, Li J, Fonagy P, Montague PR. 2008. What's in a smile? Maternal brain responses to infant facial cues. Pediatrics. 122:40–51.

- Swain J, Kim P, Ho SS. 2011. Neuroendocrinology of parental response to baby-cry. J Neuroendocrinol. 23:1036–1041.

- Taulu S, Simola J. 2006. Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Phys Med Biol. 51:1759–1768.

- Tomkins S. 1962. Affect/imagery/consciousness. Vol. 2: The negative affects. Oxford, England: Springer.

- Van Veen BD, van Drongelen W, Yuchtman M, Suzuki A. 1997. Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans Biomed Eng. 44:867–880.

- Vigario R, Sarela J, Jousmaki V, Hamalainen M, Oja E. 2000. Independent component approach to the analysis of EEG and MEG recordings. IEEE Trans Biomed Eng. 47:589–593.

- Vingerhoets AJ, Cornelius RR, Van Heck GL, Becht MC. 2000. Adult crying: a model and review of the literature. Rev Gen Psychol. 4:354.

- Vuorenkoski V, Wasz-Höckert O, Koivisto E, Lind J. 1969. The effect of cry stimulus on the temperature of the lactating breast of primipara. A thermographic study. Experientia. 25:1286–1287.

- Warren JE, Sauter DA, Eisner F, Wiland J, Dresner MA, Wise RJ, Rosen S, Scott SK. 2006. Positive emotions preferentially engage an auditory-motor “mirror” system. J Neurosci. 26:13067–13075.

- Watkins KE, Strafella AP, Paus T. 2003. Seeing and hearing speech excites the motor system involved in speech production. Neuropsychologia. 41:989–994.

- Wiesenfeld AR, Malatesta CZ, DeLoach LL. 1981. Differential parental response to familial and unfamiliar infant distress signals. Infant Behav Dev. 4:281–295.

- Wood RM, Gustafson GE. 2001. Infant crying and adults’ anticipated caregiving responses: acoustic and contextual influences. Child Dev. 72:1287–1300.

- Woolrich M, Hunt L, Groves A, Barnes G. 2011. MEG beamforming using Bayesian PCA for adaptive data covariance matrix regularization. Neuroimage. 57:1466–1479.

- Young KS, Parsons CE, Stein A, Kringelbach ML. 2012. Interpreting infant vocal distress: the ameliorative effect of musical training in depression. Emotion. 12:1200–1205.

- Young KS, Parsons CE, Stein A, Kringelbach ML. 2015. Motion and emotion: depression reduces psychomotor performance and alters affective movements in caregiving interactions. Front Behav Neurosci. 9:26.

- Yovel G, Belin P. 2013. A unified coding strategy for processing faces and voices. Trends Cogn Sci. 17:263–271.
