Abstract

The auditory system is stunning in its capacity for change: a single neuron can modulate its tuning in minutes. Here we articulate a conceptual framework to understand the biology of auditory learning, where an animal must engage cognitive, sensorimotor, and reward systems to spark neural remodeling. Central to our framework is a consideration of the auditory system as an integrated whole that interacts with other circuits to guide and refine life in sound. Despite our emphasis on the auditory system, these principles may apply across the nervous system. Understanding neuroplastic changes in both normal and impaired sensory systems guides strategies to improve everyday communication.

Keywords: neuroplasticity, auditory learning, language development, language impairment

Graphical abstract

Learning, language & communication

Nervous system plasticity has been observed across the animal kingdom from single cells to sophisticated circuits. Sensory systems are prodigious in their ability to reshape response properties following learning, and in the auditory system plasticity has been observed from cochlea to cortex. This learning is fundamental to our ability to function in and adapt to our environments. Experience navigating this sensory world drives language development—perhaps the most remarkable auditory learning task humans accomplish—and it is necessary to understand the principles that govern this plasticity to devise strategies to improve language and communication in normal and disordered systems.

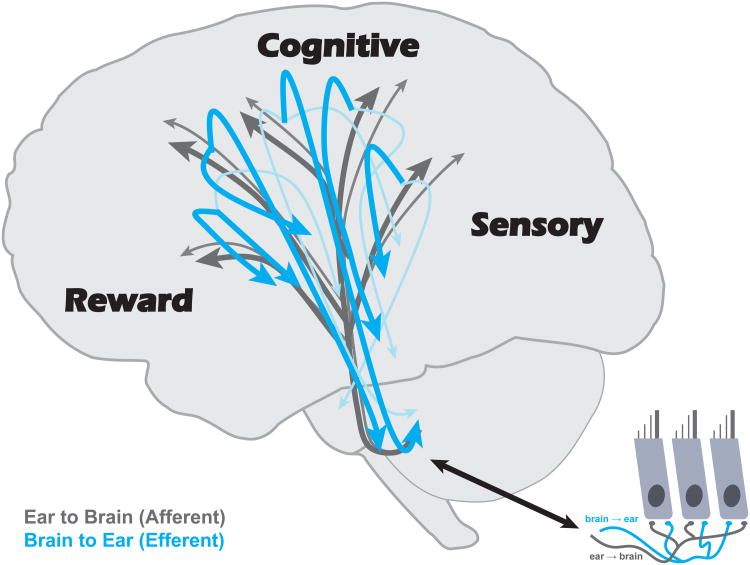

Here we argue that cognitive, sensorimotor, and reward ingredients engender biological changes in sound processing. The mechanisms behind these changes lie in two sets of dichotomous systems: (i) the afferent projections that transmit signals from ear to brain and the efferent projections that propagate signals from brain to ear, and (ii) the primary and nonprimary processing streams that suffuse the auditory neuraxis (Fig. 1). We highlight experiments that advance our understanding of the neurophysiological foundations underlying auditory processing and that offer objective markers of auditory processing in humans. Finally, we place learning in the context of a distributed, but integrated, auditory system.

Figure 1.

The auditory system is a distributed, but integrated, circuit. Key to this framework is the rich series of afferent (ear-to-brain/bottom-up) and efferent (brain-to-ear/top-down) projections that pervade every station along the auditory pathway—including to and from the outer hair cells (inset). These pathways contain primary (darker colors) and nonprimary (lighter colors) divisions of the auditory system, and facilitate both sound processing and neural plasticity. Successful auditory learning engages cognitive, sensorimotor, and reward networks, and the intersection of these circuits guides neuroplasticity.

Rethinking the auditory system: A distributed, but integrated, circuit

Traditional models characterized the auditory system as a series of relay stations along an assembly line, each with distinct functions [1–3]. While these hierarchical models recognized the interconnectivity of the system, the emphasis was on characterizing each nucleus's specialization. The idea was that understanding each station would provide the building blocks necessary to construct the auditory circuit, and this “inside-out” approach has contributed greatly to our understanding of auditory neurophysiology.

We propose a complementary “outside-in” approach. Our view is that the auditory system should be thought of as a distributed, but integrated, circuit (Fig. 1). Any acoustic event catalyzes activity throughout the auditory neuraxis, and we argue that sound processing—and any assay thereof—is a reflection of this integrated network. Although each structure is specialized to perform a specific function, this specialization has evolved in the context of the entire circuit. To understand auditory learning, then, we are forced to move past a focus on an individual processing station as a single locus of activity, expertise, or disorder.

Our view is consistent with an emerging trend in neuroscience to consider the interplay of multiple processing stations, and the “give-and-take” between cortical and/or subcortical systems, underlying human behavior [4–8].

Plasticity in the human auditory system: A double-edged sword

We regard everyday auditory experience as a learning process that shapes the nervous system, not least because auditory experience is necessary for the maturation of basic auditory circuits [9–11]. These changes may be amplified, for better or worse, and cases of expertise and deprivation both contribute to understanding how experience shapes auditory circuitry [12]. Neuroplasticity must therefore be viewed as a double-edged sword. The cognitive, sensorimotor, and reward ingredients of auditory experience drive plasticity, and a hypothesis based on this framework is that insults to any of these domains dictate the resulting phenotype.

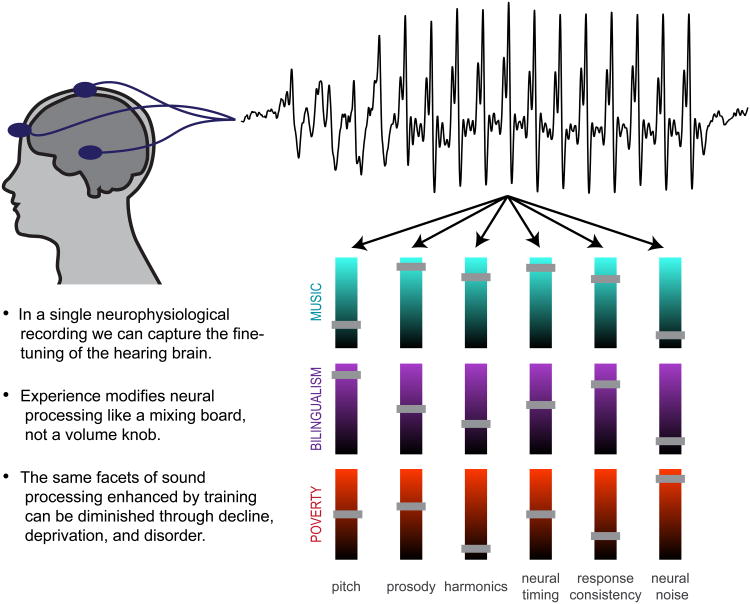

Of particular interest in our research program is the neural coding of fast auditory events, such as the details that convey phonemic identity in speech (Box 1). Our laboratory has developed an approach, based on the frequency-following response (FFR), to index the influence of life experience on the neural coding of these fast acoustic details. We have previously referred to this response as the auditory brainstem response to complex sounds, or cABR, but fear that this terminology undermines the integrated and experience-dependent nature of the auditory-neural activity it indexes. The FFR is as complex as the eliciting stimulus, and we use “FFR” to refer to the aggregate neural activity in auditory midbrain that reflects the coding of speech features, including activity that “follows” both transient and static acoustic cues. Because auditory midbrain is a “hub” of intersecting afferent and efferent auditory projections, as well as projections to and from non-auditory cortices, its response properties are shaped by this constellation of cognitive, sensory, and reward input (Fig. 2). Thus, despite its subcortical basis, the FFR reflects the distributed, but integrated, activity emblematic of auditory processing.

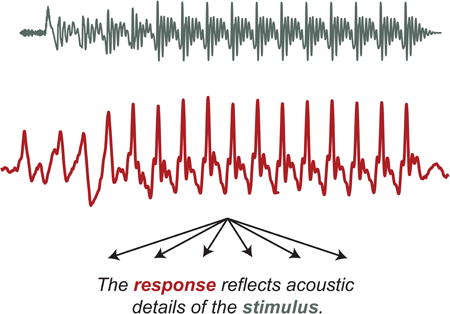

Box 1. Indexing auditory processing in humans: A matter of time.

The acoustic world unfolds at once across timescales, from subsecond syllables to multiminute monologues. Much as the visual system must integrate basic cues such as color, edge, and motion into a coherent object, the auditory system must integrate acoustic cues across time and frequency into meaningful percepts. It has been argued that insensitivity to temporal cues at one or more rates may contribute to language impairment [91,94], and so an important goal is to understand how the brain makes sense of information within and across these timescales.

Neurophysiological responses to speech sounds provide glimpses of the integrity of processing key acoustic features across timescales. The frequency-following response (FFR) reflects neural processing of fast acoustic information such as subsyllabic cues. A major advantage of this approach is the physical symmetry between the evoking stimulus and the response (see Fig. I), meaning that the latter reflects the integrity with which any acoustic cue is transcribed: consonants and vowels, prosody, timing, pitch and harmonics, and more. Thus, within a single evoked response rests a plethora of information about how well details of sound are coded. In fact, when the FFR is played through a speaker it is recognizable as the eliciting stimulus [95].

Moreover, FFR properties are linked to everyday listening skills. Few of these are as complex and computationally demanding as understanding speech in noise, which depends on a series of interactions between factors both exogenous (the talker, their accent, the language being spoken, and the acoustics of the room and noise) and endogenous (the listener, their experience, their cognitive abilities, and their hearing acuity). Because of these demands, in particular the demands for speed and precision in auditory processing, it stands to reason that any number of insults may constrain these processes, and indeed many clinical populations exhibit difficulties recognizing speech in noise. In this regard, the ability to recognize speech in noise may reflect overall brain health. FFR properties are linked to these listening challenges, suggesting that the FFR may help uncover individual differences in listening abilities and in responses to intervention [96–100], thereby providing a biological indication of brain health. The FFR is agnostic to a subject's age and species: the same protocols have been used as early as infancy [101], across the lifespan [102], and in animal models [103], providing granularity and uniformity to the study of sound processing. Thus, the FFR can help link neural function to everyday communication skills such as hearing speech in noise.
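The stimulus-response symmetry described in this box suggests one simple way to quantify how faithfully a response transcribes its stimulus: correlate the two waveforms while allowing for a neural transmission delay. The sketch below is a minimal illustration under stated assumptions, not a description of any published analysis pipeline. The signals are synthetic (a 100 Hz harmonic complex standing in for a steady vowel, and a hypothetical “response” that is a delayed, attenuated, noisy copy of it), and the 8 ms delay, the 0 to 15 ms search window, the 20 kHz sampling rate, and the function name `stimulus_response_correlation` are all illustrative choices.

```python
import numpy as np


def stimulus_response_correlation(stimulus, response, fs, max_lag_ms=15.0):
    """Return (best_r, best_lag_ms): the largest Pearson correlation between
    the stimulus and the response over response delays of 0 to max_lag_ms.

    Assumes both arrays share the sampling rate fs (Hz) and that the response
    is at least as long as the stimulus.
    """
    max_lag = int(round(max_lag_ms * fs / 1000.0))  # search window in samples
    n = len(stimulus) - max_lag                     # overlap length, fixed across lags
    best_r, best_lag = -1.0, 0
    for lag in range(max_lag + 1):
        # Compare the stimulus with the response shifted later by `lag` samples.
        r = np.corrcoef(stimulus[:n], response[lag:lag + n])[0, 1]
        if r > best_r:
            best_r, best_lag = r, lag
    return best_r, best_lag * 1000.0 / fs


# Synthetic example (all values hypothetical): a 200 ms harmonic complex
# with a 100 Hz fundamental stands in for a steady vowel.
fs = 20000                       # sampling rate in Hz
t = np.arange(4000) / fs         # 4000 samples = 200 ms
stim = (np.sin(2 * np.pi * 100 * t)
        + 0.5 * np.sin(2 * np.pi * 200 * t)
        + 0.3 * np.sin(2 * np.pi * 300 * t))

# Hypothetical "response": the stimulus delayed by 8 ms (160 samples at 20 kHz),
# attenuated, and buried in Gaussian noise.
delay_samples = 160
rng = np.random.default_rng(0)
resp = np.zeros_like(stim)
resp[delay_samples:] = 0.1 * stim[:-delay_samples]
resp += 0.02 * rng.standard_normal(len(stim))

r, lag_ms = stimulus_response_correlation(stim, resp, fs)
print(f"r = {r:.2f} at a lag of {lag_ms:.1f} ms")
```

Because this synthetic stimulus repeats every 10 ms, a search window wider than the one used here would also find a near-identical peak at 18 ms; real speech stimuli are not strictly periodic, and real recordings involve preprocessing that this sketch omits.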

Figure 2.

Measuring neural responses to speech allows us to evaluate auditory processing, and the legacy of auditory experience, in humans. Scalp electrodes pick up neural firing in response to sound, and the brainwave recapitulates a life in sound by reflecting the fine-tuning of the hearing brain through experience. The nature of an individual's experience shapes the nature of the plasticity: different elements of sound processing are selectively modulated, for better or worse, within an individual. This is illustrated through a mixing board analogy with several aspects of sound processing shown; short bars above the midline reflect enhancements to auditory processing, and short bars below the midline reflect diminutions. Bars at the halfway point reflect aspects of sound processing that appear unaffected by that particular experience. Although we highlight several aspects of sound processing in this illustration, much more may be glimpsed through these neurophysiological responses.

This research emphasizes that experience leaves its imprint on automatic sound processing, which is always on and cannot be volitionally turned off, and that this imprint persists even after training has stopped [13,14]. Thus, biological infrastructure in the auditory system is influenced by an individual's life in sound. No two people hear the world in exactly the same way, because acoustic experiences impart enduring biological legacies (Fig. 2).

Cognitive influences on auditory processing

The cognitive component of our framework is grounded in these principles: (i) listening is an active process that engages cognitive networks; (ii) the precision of automatic sound processing in the brain is linked to cognitive skills such as attention and working memory; (iii) the cognitive systems engaged during listening selectively modulate the aspects of sound that are behaviorally relevant. The legacy of this repeated, active engagement is ingrained in the nervous system over time as listeners make sound-to-meaning connections.

Speech understanding relies on the ability to pull on cognitive functions such as working memory and attention [15–18]; engagement of these systems strengthens the neural circuits that facilitate listening [19]. One study showed that cognitive factors shape auditory learning in an experiment comparing two groups of rats [20]. The first group trained to attend to frequency contrasts in a set of tones, whereas a different group trained on intensity contrasts—crucially, an identical stimulus set was used in both groups. Cortical maps changed along the acoustic dimension that was trained, demonstrating that what is relevant to an animal dictates map plasticity.

In humans, several studies show links between the integrity of the neural processing of sound and cognitive abilities [21], suggesting that the legacy of cognitive engagement is revealed through the precision of neural function. These links further suggest that training to strengthen a cognitive skill can propagate to sensory systems [22–24].

Many of these insights come from studies of music training, which provides a model for understanding the biology of auditory learning [25–27]. Making music requires an individual to engage multiple cognitive systems and to direct attention to the sounds that are heard, produced, and manipulated. The physical act of producing sound, through instrument or voice, mandates intricate motor control and stimulates auditory-motor projections [28]. In addition, music is an inherently rewarding stimulus that elicits activity throughout the limbic system [29]. The musician's brain has been finely tuned to process sound, making the musician a test case for exploring what is possible in terms of experience-dependent plasticity.

With regard to cognitive-sensory coupling, individuals with music training exhibit stronger neural coding of speech in noise concomitant with heightened auditory working memory [30]. Contrast this with bilinguals, who exhibit stronger neural coding of pitch cues concomitant with heightened inhibitory control [31]. A musician needs to pull out another instrument's “voice” from an ensemble while mentally rehearsing a musical excerpt, which facilitates processing of signals in a complex soundscape and exercises working memory. A bilingual, by contrast, needs to actively suppress one mental lexicon while using voice pitch as a cue to activate the appropriate one. Whereas music training is associated with superior speech recognition in certain types of background noise [32,33,cf. 34], the cognitive systems engaged through bilingualism create a different situation. Bilinguals have superior recognition of non-speech sounds in noise but inferior recognition of speech in noise, due to cognitive interference from the mental lexicon they are attempting to suppress during active listening [35,36]. Thus, the impact of this cognitive-sensory coupling on everyday listening skills depends on which constellation of cognitive and sensory skills is rehearsed. This juxtaposition illustrates an important principle of auditory plasticity: cognitive systems tune into particular details of sound and selectively modulate the sensory systems that represent those features (Fig. 2). By analogy, then, auditory learning may be thought of as a “mixing board” more than a single “volume knob,” with distinct aspects of neural coding selectively modulated as a function of the precipitating experience [37,38]. This contrast also reinforces the notion of a double-edged sword in experience-dependent plasticity, and adds a layer of nuance: within an individual, some listening skills may be strengthened whereas others may be suppressed.

There is similarly a tight interplay between cognitive and sensory losses; in fact, older adults with hearing loss exhibit faster declines in working memory, presumably because degraded auditory acuity limits opportunities for cognitively engaging and socially rewarding interactions [39]. Training these cognitive skills, however, can cascade to boosts in sensory processing. For example, older adults have delayed neural timing in response to consonants, but not vowels [40]; auditory-cognitive training that directs attention to consonants (including built-in reward cues) reverses this signature aging effect [23]. Similar phenomena are observed following cognitive interventions in the visual system [24,41].

These studies illustrate that the same neural pathways are implicated in disorder and in its remediation, consistent with the view that both should be conceptualized as auditory learning. They demonstrate how fine-grained aspects of sound processing are selectively modulated based on the cognitive demands and bottlenecks of the experience (Fig. 2). Moreover, these cases exemplify the coupling between the integrity with which the nervous system transcribes sound and the cognitive skills important for everyday listening.

Sensorimotor influences on auditory processing

The sensorimotor component of our framework is grounded in these principles: (i) the infrastructure responsible for encoding basic sound features is labile; (ii) extreme cases of deprivation and expertise illuminate mechanisms that apply to a typical system; (iii) the entire auditory pathway—including the hair cells—can be thought of as sites of “memory storage” because response properties reflect the legacy of auditory experience.

Basic sensory infrastructure has a potential for reorganization. The most extreme examples comprise cases of profound deprivation, such as deafness, blindness, and amputation, where sensory cortices are coopted by circuits dedicated to the remaining senses—but only after a period of adaptation (that is, learning) [42,43]. These extremes illustrate the brain's potential for reorganization and the mechanisms underlying this remodeling.

In terms of expertise, music again offers a model for auditory learning. Musicians process sound more efficiently even when not playing music, suggesting that repeated active engagement with sound shapes the automatic state of the nervous system [19]. In fact, the imprint of music training extends all the way to the outer hair cells of the cochlea [44,45]. The musician model also demonstrates that sensory input alone is insufficient to drive neural remodeling: comparisons between children undergoing active music training (which engages cognitive, motor, and reward networks) and those in music appreciation classes have shown neurophysiological changes only in the former [46]. Thus, sensory input may be necessary, but not sufficient, for auditory learning [47].

With regard to language learning, evidence from songbirds demonstrates a causal role for the basal ganglia in song learning [48], suggesting a role for the motor system in language learning. We are just beginning to learn how the motor system is involved in auditory learning in humans, but motor acuity appears to be tied to language abilities [49,50], and training rhythmic skills can boost literacy skills [51]. This rhythm-language link may underlie the observation that music training confers gains in reading achievement.

Finally, we mention an example of sensory learning that on its surface appears to occur automatically. Infants quickly learn statistical regularities in the acoustic environment, and this is thought to contribute to language acquisition [52]. But not even these ostensibly passive learning processes are exempt from cognitive influence: prior experience and active expectations guide statistical learning [53,54]. Thus, as young as infancy, listeners can connect incoming sounds to meaning, and also exert meaning on incoming sounds, reinforcing the interplay between sensorimotor and cognitive systems in auditory learning.

Reward (limbic) influences on auditory processing

The reward component of our framework is grounded in these principles: (i) reward systems spark reorganization in fundamental auditory infrastructure; (ii) social and reward contexts gate auditory learning in humans; but (iii) limbic input can create the conditions to learn something that does not optimize auditory processing.

We learn what we care about. Consequently, the limbic system likely facilitates neural remodeling. Classic studies show that stimulation of the cholinergic nucleus basalis galvanizes cortical map reorganization [55,56]. Aberrant sensory-limbic coupling, in turn, is involved in disorders such as tinnitus [57], but also in their treatment [47]. This again emphasizes that identical networks are implicated in conditions of both enhancement and diminution to sound processing.

Less is known about how the limbic system guides auditory learning in humans, in part due to practical limitations controlling the expression of neuromodulators (although early evidence is promising [58]). Once again, music training provides a model: listening to and producing music activates multiple auditory-limbic projections [29, 59]. Given that music training directs attention to minute details of sound in a rewarding context, it stands to reason that these neuromodulators play a role in the resulting neural remodeling.

The limbic system may also play a role in language development. It has been argued that infants must tune into the aforementioned statistical patterns in the auditory environment to jumpstart language learning, but that these computations are gated by social (i.e., reward) context [60]. For example, infants learn non-native phonemic contrasts when they are modeled by a tutor speaking “motherese,” but only if that tutor is present and interacting with the child—a video of the tutor is insufficient [61].

Deficits in reward input, then, are hypothesized to contribute to language impairment. Children with autism exhibit reduced functional connectivity between limbic structures and voice-selective auditory cortex, which suggests a decoupling of sensorimotor and reward networks during everyday listening [62]. Indeed, many children with autism show poor neural coding of prosodic cues that convey emotional and pragmatic information [63].

Children whose mothers have relatively low levels of education—a proxy for socioeconomic status—present a different case of deprivation. Children in these homes hear approximately 30 million fewer words than their peers; in addition, they hear two fifths the number of different words, meaning that both the quantity and quality of their everyday linguistic input is impoverished [64]. Consider that a mother's voice is perhaps the single most rewarding sensory cue available to a child. If the sensory input is impoverished, but the conditions are right for learning, what is learned may itself be impoverished. Indeed, this linguistic impoverishment is reflected by poor neural coding and cognitive skills [65] (see Fig. 2). This is consistent with evidence from animal models that environmental deprivation constrains nervous system development; environmental enrichment, however, reverses this maladaptive plasticity [66], reinforcing the concept of auditory learning as a double-edged sword. This hypothesis also aligns with evidence that task reward structure shapes not only whether plasticity occurs, but how it manifests [67, 68].

Taken together, these studies illustrate that, on the one hand, a lack of reward structure stymies the mechanisms of auditory learning. On the other hand, sufficient reward structure paired with impoverished content may cause learning of the wrong material. Presumably, this principle applies to the cognitive and sensorimotor aspects of auditory learning as well.

Mechanisms of learning

Having laid the groundwork to understand that cognitive, sensorimotor, and reward systems are necessary to drive neural remodeling, the question arises: how do these systems influence automatic sound processing?

Two anatomical dichotomies help navigate this integrated circuit and its role in auditory learning: afferent vs. efferent projections and the primary vs. nonprimary pathways.

Afferent & efferent projections: Bottom-up meets top-down

The first dichotomy comprises the projections that feed signals forwards and backwards through the auditory system (Fig. 1). The bottom-up afferent projections transmit information forwards to accomplish signal processing (“ear to brain”) whereas the top-down efferent projections propagate signals backwards (“brain to ear”); both extend between cochlea and cortex [69], and the latter mediates remodeling in subcortical structures [70,71].

Our proposal is that the efferent network shapes automatic response properties in cochlear and subcortical stations, which is why the basic response properties of the auditory system, such as otoacoustic emissions and electrophysiological responses, reflect life experiences in sound. It has been argued that similar mechanisms underlie both attention-driven online changes and long-term plasticity [67]. This leads to the hypothesis that experiences engaging cognitive, sensorimotor, and reward systems, if repeated sufficiently, can over time facilitate functional remodeling by imparting a “memory” to afferent processing [19,72] and by shaping future learning [73–75].

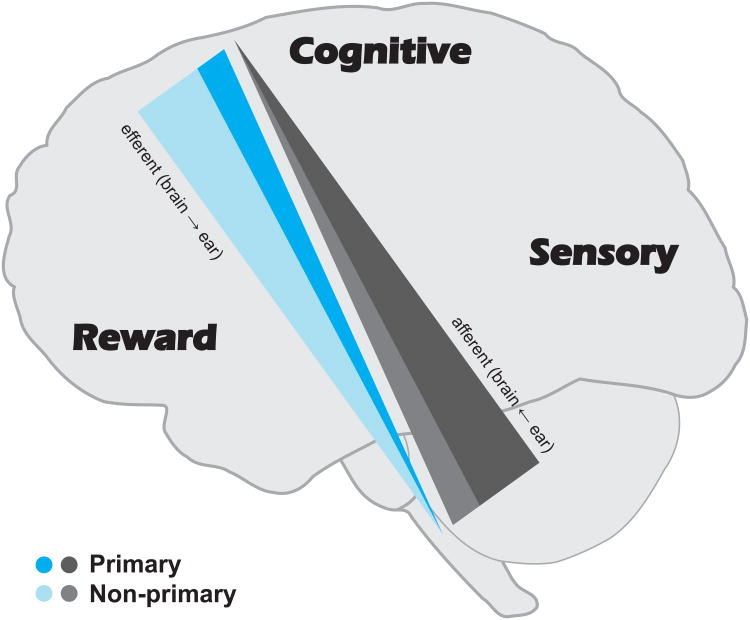

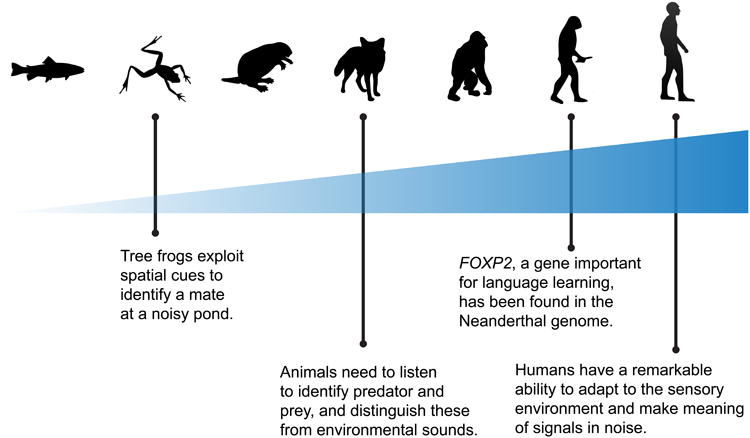

We hypothesize that the efferent system has become larger and more intricate over evolution, in step with increasingly sophisticated auditory behaviors. A number of complex auditory behaviors, many of which are important for listening in everyday situations, are similar across species. These include the learning observed in animals with precocious auditory abilities such as bats [76], ferrets [77], and humans [78]. This similarity may be due to convergent evolution, the independent evolution of a trait in distinct lineages based on the needs of the organism. These behaviors are perhaps most sophisticated in humans (Fig. 3), and we speculate that the convergent evolution of efferent projections may underlie some of these behaviors, as well as the key role that auditory learning plays in developing the skills necessary for effective everyday communication. If one accepts that language learning draws on the circuitry necessary for auditory learning, one could imagine a role for the efferent system in language development, and poor activation of these top-down networks as a chief factor in language impairment [79].

Figure 3.

We propose that the corticofugal system (top-down projections) has become richer over evolution, with more fibers, and more connections between them, in species of increasing phylogenetic sophistication. We speculate that this underlies some of the increasingly sophisticated behaviors observed across species. These behaviors likely emerged convergently; that is, they evolved independently in distinct lineages as a function of the organism's communication needs. Frogs are capable of exploiting many of the basic acoustic cues we use in complex soundscapes, such as spatial hearing and listening in the dips of background noise [104]. More sophisticated animals had to make meaning from diverse environmental sounds, learning both to ignore the rustling wind and to hustle when a predator approached. We propose that language learning is contingent upon the rigor and activation of this system, and it is interesting to note that our close evolutionary relative H. neanderthalensis carried the modern human variant of FOXP2 [105], a gene implicated in language learning and impairment.

Primary & nonprimary divisions

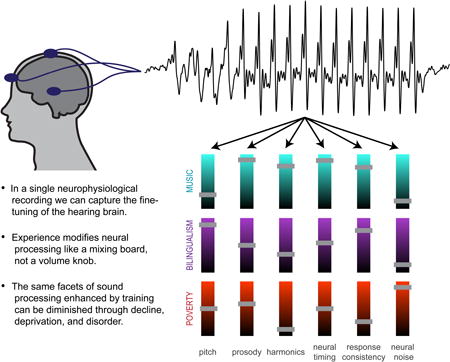

The second dichotomy pertains to auditory structures such as cochlear nucleus, inferior colliculus, thalamus, and cortex: the distinction between primary and nonprimary pathways (also known as lemniscal and paralemniscal, cochleotopic and diffuse, or highway and country road; Fig. 4). Neurons in the primary pathway are biased to respond to auditory stimuli, whereas nonprimary neurons are more multisensory. The primary pathway is tonotopically organized, shows sharp tuning, and strongly phase-locks to the stimulus, whereas the nonprimary pathway is not especially tonotopic, has broader tuning, and does not time-lock as strongly to stimuli [80]. In part for these reasons, it is thought that primary auditory cortex (“core”) represents nearly all incoming signals whereas nonprimary cortex (“belt” and “parabelt”) specializes in communication signals such as speech and music [81–83].

Figure 4.

The primary and nonprimary pathways operate in parallel throughout the auditory system. Each is schematized as a wedge. The larger ear-to-brain wedge (afferent primary) illustrates the predominantly stable, automatic processing, whereas the smaller ear-to-brain wedge (afferent nonprimary) illustrates the relatively smaller degree of evanescence. This dichotomy is flipped in the efferent system, where the larger wedge (efferent nonprimary) shows a predominance of evanescence in processing, whereas the smaller wedge (efferent primary) indicates that this system is relatively less stable. The tradeoff between stability and evanescence across the afferent and efferent systems may underlie the ability to maintain enough plasticity to adapt to new situations while also retaining enough stability to draw on previous experiences (language, memory, knowledge of the sensory world). The more an auditory activity is performed, repeated, and overlearned, the more it transfers to the primary pathway, which becomes a repository of auditory experience by virtue of changes to its basic response properties.

From our systems-wide perspective, however, an additional distinction emerges: the primary processing stream preferentially codes fast temporal information, whereas the nonprimary stream codes relatively slow information [84–86]. This hypothesis is consistent with evidence from the rat trigeminal system [87] and primate visual system [88] that parallel pathways code fast and slow information. The functional consequences for language development are only beginning to be understood; however, preliminary evidence suggests that deficits in either fast or slow auditory-temporal processing may lead to language impairment, and that the two deficits need not co-occur [89–91].

Less is known about learning in the primary vs. nonprimary pathways. The nonprimary pathway has been implicated in rapid task-related plasticity, such as adapting to stimulus context [92] and classical conditioning [93]. During active listening, neurons in prefrontal cortex change their tuning first, followed by neurons in nonprimary auditory cortex, and finally neurons in primary auditory cortex [7]. This leads to the hypothesis that the nonprimary system is more labile than the primary circuitry, and may facilitate rapid online learning and adaptation in connection with cognitive and reward circuits. We speculate that changes to the afferent pathway are biased towards stability: the system exhibits a more persistent physiology that resists transient changes, and relatively few of its projections exhibit task-related evanescence (Fig. 4). Conversely, the efferent pathway is biased towards in-the-moment changes; this evanescence facilitates phenomena such as selective attention to one speaker, and makes the efferent system relatively less persistent in sound processing. The more an activity is done, repeated, and overlearned, the more likely remodeling will occur in the primary pathway and, eventually, influence afferent processing.

This primary-persistent, nonprimary-evanescent distinction may underlie the capacity to strike a balance between stability of auditory processing and malleability in attention, adaptation, and learning (Outstanding Questions Box).

Concluding Remarks and Future Directions

We have reviewed the auditory system's ability to change. In particular, we have argued that cognitive, sensorimotor, and reward systems optimize auditory learning, and that this learning underlies success in everyday language and communication. We have also argued that the auditory system should be thought of as a distributed, but integrated, circuit that is a moving target—for better or worse, its response properties change through the interplay of cognitive and reward circuits during everyday listening. Thus, both expertise and disorder should be considered from a common standpoint of neuroplasticity. While our emphasis has been on the auditory system, we argue that these principles extend to other sensory systems [4,6,8].

The recognition that states of decline, deprivation, and disorder should be viewed through a lens of plasticity suggests that they may, in part, be reversible. If the same pathways are responsible for expertise and disorder, then the conditions that facilitate expertise may ameliorate communicative difficulties. Our framework therefore makes a clear case for auditory training as an intervention for listening and language difficulties, and—so long as the training integrates cognitive, sensorimotor, and reward systems—early evidence is promising.

Finally, we have highlighted how measuring the integrity of sound processing at basic levels of the auditory system opens a window into human communication and the imprint of a life spent in sound. A healthy brain is both labile and stable, able to adapt to new environments while drawing on knowledge and experience to make sense of the sensory world. Thus, in addition to motivating and informing interventions, our framework can guide training by identifying an individual's strengths and weaknesses in the neural processes important for everyday communication.

Outstanding Questions Box.

How do auditory experiences layer and interact across an individual's life? How does attention in the past facilitate or constrain future learning?

How do the indexes of learning discovered in subdivisions of the auditory pathway work together as functional processing of sound becomes shaped by experiences?

Is the neural processing of particular sound details more or less malleable with experience?

How does the auditory system balance temporal processing across timescales of acoustic information? Is there a single “timekeeper” of nested oscillators or is each distinct? Does plasticity at one timescale of auditory processing imply plasticity at multiple timescales?

What “dosage” of auditory training is necessary to impart meaningful and lasting neurophysiological and behavioral changes? Our framework would predict that training combining cognitive, sensorimotor, and reward components is best suited to producing fast and long-lasting changes.

Can evolutionary, comparative studies of the corticofugal pathway explain the phenomenal learning capacity observed in acoustically sophisticated species? Is this interconnectedness at the heart of language and auditory learning? We speculate that the ability to learn and to modulate sensory infrastructure has increased over the course of evolution, and thus that the influence of auditory learning on everyday behavior is greater in more sophisticated species (Fig. 3).

How does our framework for auditory processing extend to other sensory systems?

How can the lessons of auditory learning be transferred outside of the laboratory and into clinical, educational, and community settings?

Trends Box.

The auditory system should be thought of as a distributed, but integrated, circuit that is more than a simple set of processing stations.

Experiences sculpt the auditory system and impart a biological “memory” that can change automatic response properties from cochlea to cortex.

The cognitive, sensorimotor, and reward aspects of these experiences optimize auditory learning.

Acknowledgments

We are grateful to Trent Nicol for his input on this manuscript and to colleagues in the Auditory Neuroscience Laboratory, past and present, for the many experiments and conversations that motivated this framework. Supported by NIH (R01 HD069414), NSF (BCS 1430400), and the Knowles Hearing Center.

Glossary

- auditory neuraxis

the auditory information processing pathway of the nervous system that transmits information back and forth between the cochlea and cortex

- FFR/cABR (frequency-following response/auditory brainstem response to complex sounds)

a scalp-recorded potential that consists of aggregate neural processing of sound details and that captures a snapshot of the integrity of auditory processing. While historically FFR referred to responses to low-frequency pure tones, the FFR can be as rich and complex as the eliciting stimulus, and we use it to refer to neural activity that “follows” both transient and periodic acoustic events

- phoneme

the smallest unit of speech that conveys a change in meaning. Phonemic information is conveyed by fine-grained acoustic contrasts. For example, the acoustic difference between /b/ and /g/ is phonemically meaningful, but the acoustic difference between /p/ in [putter] and /p/ in [sputter] is not

- inhibitory control

the ability to actively suppress information irrelevant to the task at hand

- phaselocking

the ability of auditory neurons to change their intrinsic rhythms to follow those of incoming sounds

- auditory processing

a cluster of listening skills that refer to the ability to make meaning from sound. Listeners can have normal hearing thresholds but still struggle to process auditory information

- statistical learning

an implicit process of picking up on the statistical regularities in the environment; infants exhibit this ability and it is thought to be a principal component of language learning

- otoacoustic emissions

sounds generated by outer hair cells of the inner ear; in certain cases these sounds can be modulated by active listening
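The statistical-learning entry above can be illustrated with the transitional-probability statistic that infant segmentation studies such as Saffran et al. [52] build on: the probability that syllable B follows syllable A, P(B | A) = frequency(AB) / frequency(A). The Python sketch below is a hypothetical illustration, not a model of the neural mechanisms reviewed here; the function name `transitional_probabilities`, the made-up words, and their order are assumptions chosen for the example. It shows that, in a continuous stream without pauses, transitions inside a word are fully predictable while transitions across word boundaries are not, a dip that statistical-learning accounts propose listeners can exploit to locate word boundaries.

```python
from collections import Counter


def transitional_probabilities(syllables):
    """Estimate P(B | A) for every adjacent syllable pair (A, B).

    P(B | A) = count of A immediately followed by B
               / count of A in any non-final position.
    """
    pair_counts = Counter(zip(syllables, syllables[1:]))
    first_counts = Counter(syllables[:-1])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}


# Hypothetical three-syllable "words", concatenated without pauses so that
# word boundaries are not marked acoustically.
words = [["bi", "da", "ku"], ["pa", "do", "ti"], ["go", "la", "bu"]]
order = [0, 1, 2, 0, 2, 1, 0, 1, 2, 1, 0, 2]  # arbitrary illustrative sequence
stream = [syllable for i in order for syllable in words[i]]

tps = transitional_probabilities(stream)
print(tps[("bi", "da")])  # within a word -> 1.0
print(tps[("ku", "pa")])  # across a word boundary -> 0.5
```

In this particular stream every within-word pair such as bi→da has a transitional probability of 1.0, whereas ku→pa spans a word boundary and is only 0.5, because "ku" is followed by "pa" and by "go" equally often.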


References

- 1. Webster DB. An overview of mammalian auditory pathways with an emphasis on humans. In: The Mammalian Auditory Pathway: Neuroanatomy. Springer; 1992. pp. 1–22.

- 2. Peretz I, Coltheart M. Modularity of music processing. Nat Neurosci. 2003;6:688–691. doi: 10.1038/nn1083.

- 3. Winer JA, Schreiner CE. The central auditory system: a functional analysis. In: The Inferior Colliculus. Springer; 2005. pp. 1–68.

- 4. Saalmann YB, et al. The pulvinar regulates information transmission between cortical areas based on attention demands. Science. 2012;337:753–756. doi: 10.1126/science.1223082.

- 5. Bajo VM, King AJ. Cortical modulation of auditory processing in the midbrain. Front Neural Circuits. 2012;6:114. doi: 10.3389/fncir.2012.00114.

- 6. Behrmann M, Plaut DC. Distributed circuits, not circumscribed centers, mediate visual recognition. Trends Cogn Sci. 2013;17:210–219. doi: 10.1016/j.tics.2013.03.007.

- 7. Atiani S, et al. Emergent selectivity for task-relevant stimuli in higher-order auditory cortex. Neuron. 2014;82:486–499. doi: 10.1016/j.neuron.2014.02.029.

- 8. Siegel M, et al. Cortical information flow during flexible sensorimotor decisions. Science. 2015;348:1352–1355. doi: 10.1126/science.aab0551.

- 9. Gordon KA, et al. Activity-dependent developmental plasticity of the auditory brain stem in children who use cochlear implants. Ear Hear. 2003;24:485–500. doi: 10.1097/01.AUD.0000100203.65990.D4.

- 10. Benasich AA, et al. Plasticity in Developing Brain: Active Auditory Exposure Impacts Prelinguistic Acoustic Mapping. J Neurosci. 2014;34:13349–13363. doi: 10.1523/JNEUROSCI.0972-14.2014.

- 11. Tierney A, et al. Music training alters the course of adolescent auditory development. Proc Natl Acad Sci U S A. 2015;112:10062–10067. doi: 10.1073/pnas.1505114112.

- 12. Kilgard MP. Harnessing plasticity to understand learning and treat disease. Trends Neurosci. 2012;35:715–722. doi: 10.1016/j.tins.2012.09.002.

- 13. Skoe E, Kraus N. A little goes a long way: How the adult brain is shaped by musical training in childhood. J Neurosci. 2012;32:11507–11510. doi: 10.1523/JNEUROSCI.1949-12.2012.

- 14. White-Schwoch T, et al. Older adults benefit from music training early in life: Biological evidence for long-term training-driven plasticity. J Neurosci. 2013;33:17667–17674. doi: 10.1523/JNEUROSCI.2560-13.2013.

- 15. Anderson S, et al. A dynamic auditory-cognitive system supports speech-in-noise perception in older adults. Hear Res. 2013;300:18–32. doi: 10.1016/j.heares.2013.03.006.

- 16. Shinn-Cunningham BG. Object-based auditory and visual attention. Trends Cogn Sci. 2008;12:182–186. doi: 10.1016/j.tics.2008.02.003.

- 17. Rönnberg J, et al. Cognition counts: A working memory system for ease of language understanding (ELU). Int J Audiol. 2008;47:S99–S105. doi: 10.1080/14992020802301167.

- 18. Nahum M, et al. Low-level information and high-level perception: the case of speech in noise. PLoS Biol. 2008;6:e126. doi: 10.1371/journal.pbio.0060126.

- 19. Kraus N, Chandrasekaran B. Music training for the development of auditory skills. Nat Rev Neurosci. 2010;11:599–605. doi: 10.1038/nrn2882.

- 20. Polley DB, et al. Perceptual learning directs auditory cortical map reorganization through top-down influences. J Neurosci. 2006;26:4970–4982. doi: 10.1523/JNEUROSCI.3771-05.2006.

- 21. Kraus N, et al. Cognitive factors shape brain networks for auditory skills: spotlight on auditory working memory. Ann N Y Acad Sci. 2012;1252:100–107. doi: 10.1111/j.1749-6632.2012.06463.x.

- 22. Green CS, Bavelier D. Action video game modifies visual selective attention. Nature. 2003;423:534–537. doi: 10.1038/nature01647.

- 23. Anderson S, et al. Reversal of age-related neural timing delays with training. Proc Natl Acad Sci U S A. 2013;110:4357–4362. doi: 10.1073/pnas.1213555110.

- 24. Anguera J, et al. Video game training enhances cognitive control in older adults. Nature. 2013;501:97–101. doi: 10.1038/nature12486.

- 25. Patel AD. Why would musical training benefit the neural encoding of speech? The OPERA hypothesis. Front Psychol. 2011;2. doi: 10.3389/fpsyg.2011.00142.

- 26. Herholz SC, Zatorre RJ. Musical training as a framework for brain plasticity: Behavior, function, and structure. Neuron. 2012;76:486–502. doi: 10.1016/j.neuron.2012.10.011.

- 27. Strait DL, Kraus N. Biological impact of auditory expertise across the life span: musicians as a model of auditory learning. Hear Res. 2014;308:109–121. doi: 10.1016/j.heares.2013.08.004.

- 28. Limb CJ, Braun AR. Neural substrates of spontaneous musical performance: An fMRI study of jazz improvisation. PLoS ONE. 2008;3:e1679. doi: 10.1371/journal.pone.0001679.

- 29. Blood AJ, et al. Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nat Neurosci. 1999;2:382–387. doi: 10.1038/7299.

- 30. Parbery-Clark A, et al. Musical experience limits the degradative effects of background noise on the neural processing of sound. J Neurosci. 2009;29:14100–14107. doi: 10.1523/JNEUROSCI.3256-09.2009.

- 31. Krizman J, et al. Subcortical encoding of sound is enhanced in bilinguals and relates to executive function advantages. Proc Natl Acad Sci U S A. 2012;109:7877–7881. doi: 10.1073/pnas.1201575109.

- 32. Swaminathan J, et al. Musical training, individual differences and the cocktail party problem. Sci Rep. 2015;5. doi: 10.1038/srep11628.

- 33. Zendel BR, Alain C. Musicians experience less age-related decline in central auditory processing. Psychol Aging. 2012;27:410. doi: 10.1037/a0024816.

- 34. Boebinger D, et al. Musicians and non-musicians are equally adept at perceiving masked speech. J Acoust Soc Am. 2015;137:378–387. doi: 10.1121/1.4904537.

- 35. Rogers CL, et al. Effects of bilingualism, noise, and reverberation on speech perception by listeners with normal hearing. Appl Psycholinguist. 2006;27:465–485.

- 36. Van Engen KJ. Similarity and familiarity: Second language sentence recognition in first- and second-language multi-talker babble. Speech Commun. 2010;52:943–953. doi: 10.1016/j.specom.2010.05.002.

- 37. Strait DL, et al. Musical experience and neural efficiency – effects of training on subcortical processing of vocal expressions of emotion. Eur J Neurosci. 2009;29:661–668. doi: 10.1111/j.1460-9568.2009.06617.x.

- 38. Kraus N, Nicol T. The cognitive auditory system. In: Fay R, Popper A, editors. Perspectives on Auditory Research. Springer-Verlag; 2014. pp. 299–319.

- 39. Lin FR, et al. Hearing loss and cognitive decline in older adults. JAMA Intern Med. 2013;173:293–299. doi: 10.1001/jamainternmed.2013.1868.

- 40. Anderson S, et al. Aging affects neural precision of speech encoding. J Neurosci. 2012;32:14156–14164. doi: 10.1523/JNEUROSCI.2176-12.2012.

- 41. Mishra J, et al. Adaptive training diminishes distractibility in aging across species. Neuron. 2014;84:1091–1103. doi: 10.1016/j.neuron.2014.10.034.

- 42. Merzenich MM, et al. Somatosensory cortical map changes following digit amputation in adult monkeys. J Comp Neurol. 1984;224:591–605. doi: 10.1002/cne.902240408.

- 43. Neville HJ, et al. Cerebral organization for language in deaf and hearing subjects: biological constraints and effects of experience. Proc Natl Acad Sci U S A. 1998;95:922–929. doi: 10.1073/pnas.95.3.922.

- 44. Micheyl C, et al. Difference in cochlear efferent activity between musicians and non-musicians. Neuroreport. 1997;8:1047–1050. doi: 10.1097/00001756-199703030-00046.

- 45. Bidelman GM, et al. Psychophysical auditory filter estimates reveal sharper cochlear tuning in musicians. J Acoust Soc Am. 2014;136:EL33–EL39. doi: 10.1121/1.4885484.

- 46. Kraus N, et al. Auditory learning through active engagement with sound: biological impact of community music lessons in at-risk children. Front Neurosci. 2014;8. doi: 10.3389/fnins.2014.00351.

- 47. Engineer ND, et al. Reversing pathological neural activity using targeted plasticity. Nature. 2011;470:101–104. doi: 10.1038/nature09656.

- 48. Brainard MS, Doupe AJ. Interruption of a basal ganglia–forebrain circuit prevents plasticity of learned vocalizations. Nature. 2000;404:762–766. doi: 10.1038/35008083.

- 49. Thomson JM, Goswami U. Rhythmic processing in children with developmental dyslexia: auditory and motor rhythms link to reading and spelling. J Physiol Paris. 2008;102:120–129. doi: 10.1016/j.jphysparis.2008.03.007.

- 50. Woodruff Carr K, et al. Beat synchronization predicts neural speech encoding and reading readiness in preschoolers. Proc Natl Acad Sci U S A. 2014. doi: 10.1073/pnas.1406219111.

- 51. Bhide A, et al. A Rhythmic Musical Intervention for Poor Readers: A Comparison of Efficacy With a Letter-Based Intervention. Mind Brain Educ. 2013;7:113–123.

- 52. Saffran JR, et al. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926.

- 53. Lew-Williams C, Saffran JR. All words are not created equal: Expectations about word length guide infant statistical learning. Cognition. 2012;122:241–246. doi: 10.1016/j.cognition.2011.10.007.

- 54. Skoe E, et al. Prior Experience Biases Subcortical Sensitivity to Sound Patterns. J Cogn Neurosci. 2014;27:124–140. doi: 10.1162/jocn_a_00691.

- 55. Bakin JS, Weinberger NM. Induction of a physiological memory in the cerebral cortex by stimulation of the nucleus basalis. Proc Natl Acad Sci U S A. 1996;93:11219–11224. doi: 10.1073/pnas.93.20.11219.

- 56. Kilgard MP, Merzenich MM. Cortical map reorganization enabled by nucleus basalis activity. Science. 1998;279:1714–1718. doi: 10.1126/science.279.5357.1714.

- 57. Leaver AM, et al. Dysregulation of limbic and auditory networks in tinnitus. Neuron. 2011;69:33–43. doi: 10.1016/j.neuron.2010.12.002.

- 58. De Ridder D, et al. Placebo-Controlled Vagus Nerve Stimulation Paired With Tones in a Patient With Refractory Tinnitus: A Case Report. Otol Neurotol. 2015;36:575–580. doi: 10.1097/MAO.0000000000000704.

- 59. Salimpoor VN, et al. Interactions between the nucleus accumbens and auditory cortices predict music reward value. Science. 2013;340:216–219. doi: 10.1126/science.1231059.

- 60. Kuhl PK. Is speech learning “gated” by the social brain? Dev Sci. 2007;10:110–120. doi: 10.1111/j.1467-7687.2007.00572.x.

- 61. Kuhl PK, et al. Foreign-language experience in infancy: Effects of short-term exposure and social interaction on phonetic learning. Proc Natl Acad Sci U S A. 2003;100:9096–9101. doi: 10.1073/pnas.1532872100.

- 62. Abrams DA, et al. Underconnectivity between voice-selective cortex and reward circuitry in children with autism. Proc Natl Acad Sci U S A. 2013;110:12060–12065. doi: 10.1073/pnas.1302982110.

- 63. Russo N, et al. Deficient brainstem encoding of pitch in children with autism spectrum disorders. Clin Neurophysiol. 2008. doi: 10.1016/j.clinph.2008.01.108.

- 64. Hart B, Risley TR. Meaningful differences in the everyday experience of young American children. Paul H Brookes Publishing; 1995.

- 65. Skoe E, et al. The impoverished brain: disparities in maternal education affect the neural response to sound. J Neurosci. 2013;33:17221–17231. doi: 10.1523/JNEUROSCI.2102-13.2013.

- 66. Zhu X, et al. Environmental Acoustic Enrichment Promotes Recovery from Developmentally Degraded Auditory Cortical Processing. J Neurosci. 2014;34:5406–5415. doi: 10.1523/JNEUROSCI.5310-13.2014.

- 67. David SV, et al. Task reward structure shapes rapid receptive field plasticity in auditory cortex. Proc Natl Acad Sci U S A. 2012;109:2144–2149. doi: 10.1073/pnas.1117717109.

- 68. Xiong Q, et al. Selective corticostriatal plasticity during acquisition of an auditory discrimination task. Nature. 2015;521:348–351. doi: 10.1038/nature14225.

- 69. Kral A, Eggermont JJ. What's to lose and what's to learn: development under auditory deprivation, cochlear implants and limits of cortical plasticity. Brain Res Rev. 2007;56:259–269. doi: 10.1016/j.brainresrev.2007.07.021.

- 70. Bajo VM, et al. The descending corticocollicular pathway mediates learning-induced auditory plasticity. Nat Neurosci. 2010;13:253–260. doi: 10.1038/nn.2466.

- 71. Ayala YA, et al. Differences in the strength of cortical and brainstem inputs to SSA and non-SSA neurons in the inferior colliculus. Sci Rep. 2015;5. doi: 10.1038/srep10383.

- 72. Krishnan A, et al. Encoding of pitch in the human brainstem is sensitive to language experience. Cogn Brain Res. 2005;25:161–168. doi: 10.1016/j.cogbrainres.2005.05.004.

- 73. Wright BA, et al. Enhancing perceptual learning by combining practice with periods of additional sensory stimulation. J Neurosci. 2010;30:12868–12877. doi: 10.1523/JNEUROSCI.0487-10.2010.

- 74. Sarro EC, Sanes DH. The cost and benefit of juvenile training on adult perceptual skill. J Neurosci. 2011;31:5383–5391. doi: 10.1523/JNEUROSCI.6137-10.2011.

- 75. Green C, Bavelier D. Learning, attentional control, and action video games. Curr Biol. 2012;22:R197–R206. doi: 10.1016/j.cub.2012.02.012.

- 76. Gao E, Suga N. Experience-dependent plasticity in the auditory cortex and the inferior colliculus of bats: role of the corticofugal system. Proc Natl Acad Sci U S A. 2000;97:8081. doi: 10.1073/pnas.97.14.8081.

- 77. Bajo VM, et al. The ferret auditory cortex: descending projections to the inferior colliculus. Cereb Cortex. 2007;17:475–491. doi: 10.1093/cercor/bhj164.

- 78. Patel AD. The evolutionary biology of musical rhythm: was Darwin wrong? PLoS Biol. 2014;12. doi: 10.1371/journal.pbio.1001821.

- 79. White-Schwoch T, et al. Auditory processing in noise: A preschool biomarker for literacy. PLoS Biol. 2015;13:e1002196. doi: 10.1371/journal.pbio.1002196.

- 80. Schreiner CE, Urbas JV. Representation of amplitude modulation in the auditory cortex of the cat. II. Comparison between cortical fields. Hear Res. 1988;32:49–63. doi: 10.1016/0378-5955(88)90146-3.

- 81. Rauschecker JP, et al. Processing of complex sounds in the macaque nonprimary auditory cortex. Science. 1995;268:111–114. doi: 10.1126/science.7701330.

- 82. Zatorre RJ, et al. Structure and function of auditory cortex: music and speech. Trends Cogn Sci. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.

- 83. Overath T, et al. The cortical analysis of speech-specific temporal structure revealed by responses to sound quilts. Nat Neurosci. 2015;18:903–911. doi: 10.1038/nn.4021.

- 84. Kraus N, et al. Midline and temporal lobe MLRs in the guinea pig originate from different generator systems: a conceptual framework for new and existing data. Electroencephalogr Clin Neurophysiol. 1988;70:541–558. doi: 10.1016/0013-4694(88)90152-6.

- 85. Kraus N, et al. Discrimination of speech-like contrasts in the auditory thalamus and cortex. J Acoust Soc Am. 1994;96:2758–2768. doi: 10.1121/1.411282.

- 86. Abrams DA, et al. A possible role for a paralemniscal auditory pathway in the coding of slow temporal information. Hear Res. 2011;272:125–134. doi: 10.1016/j.heares.2010.10.009.

- 87. Ahissar E, et al. Transformation from temporal to rate coding in a somatosensory thalamocortical pathway. Nature. 2000;406:302–306. doi: 10.1038/35018568.

- 88. Merigan WH, Maunsell JH. How parallel are the primate visual pathways? Annu Rev Neurosci. 1993;16:369–402. doi: 10.1146/annurev.ne.16.030193.002101.

- 89. Kraus N, et al. Auditory neurophysiologic responses and discrimination deficits in children with learning problems. Science. 1996;273:971–973. doi: 10.1126/science.273.5277.971.

- 90. Abrams DA, et al. Rapid acoustic processing in the auditory brainstem is not related to cortical asymmetry for the syllable rate of speech. Clin Neurophysiol. 2010;121:1343–1350. doi: 10.1016/j.clinph.2010.02.158.

- 91. Goswami U. A temporal sampling framework for developmental dyslexia. Trends Cogn Sci. 2011;15:3–10. doi: 10.1016/j.tics.2010.10.001.

- 92. Kraus N, et al. Nonprimary auditory thalamic representation of acoustic change. J Neurophysiol. 1994;72:1270–1277. doi: 10.1152/jn.1994.72.3.1270.

- 93. Kraus N, Disterhoft JF. Response plasticity of single neurons in rabbit auditory association cortex during tone-signalled learning. Brain Res. 1982;246:205–215. doi: 10.1016/0006-8993(82)91168-4.

- 94. Tallal P, Piercy M. Defects of non-verbal auditory perception in children with developmental aphasia. Nature. 1973;241:468–469. doi: 10.1038/241468a0.

- 95. Galbraith GC, et al. Intelligible speech encoded in the human brain stem frequency-following response. Neuroreport. 1995;6:2363–2367. doi: 10.1097/00001756-199511270-00021.

- 96. Cunningham J, et al. Neurobiologic responses to speech in noise in children with learning problems: deficits and strategies for improvement. Clin Neurophysiol. 2001;112:758–767. doi: 10.1016/s1388-2457(01)00465-5.

- 97. Anderson S, et al. Auditory brainstem response to complex sounds predicts self-reported speech-in-noise performance. J Speech Lang Hear Res. 2013;56:31–43. doi: 10.1044/1092-4388(2012/12-0043).

- 98. Rocha-Muniz CN, et al. Sensitivity, specificity and efficiency of speech-evoked ABR. Hear Res. 2014;28:15–22. doi: 10.1016/j.heares.2014.09.004.

- 99. Tarasenko MA, et al. The auditory brainstem response to complex sounds: a potential biomarker for guiding treatment of psychosis. Front Psychiatry. 2014;5:142. doi: 10.3389/fpsyt.2014.00142.

- 100. White-Schwoch T, et al. Auditory-neurophysiological responses to speech during early childhood: Effects of background noise. Hear Res. 2015;328:34–47. doi: 10.1016/j.heares.2015.06.009.

- 101. Anderson S, et al. Development of subcortical speech representation in human infants. J Acoust Soc Am. 2015;137:3346–3355. doi: 10.1121/1.4921032.

- 102. Skoe E, et al. Stability and plasticity of auditory brainstem function across the lifespan. Cereb Cortex. 2015. doi: 10.1093/cercor/bht311.

- 103. Warrier CM, et al. Inferior colliculus contributions to phase encoding of stop consonants in an animal model. Hear Res. 2011;282:108–118. doi: 10.1016/j.heares.2011.09.001.

- 104. Vélez A, et al. Anuran acoustic signal perception in noisy environments. In: Animal Communication and Noise. Springer; 2013. pp. 133–185.

- 105. Krause J, et al. The derived FOXP2 variant of modern humans was shared with Neandertals. Curr Biol. 2007;17:1908–1912. doi: 10.1016/j.cub.2007.10.008.