Abstract

Background

Individual patient data (IPD) meta-analysis is considered the “gold standard” for exploring the effectiveness of interventions in different subgroups of patients. However, obtaining IPD is time-consuming and contact with the researchers responsible for the original trials is usually required. To date, there are no studies evaluating different strategies to optimize the process for retrieval of IPD from such researchers. Our aim is to examine the impact of providing incentives to the researchers responsible for the trials eligible for a meta-analysis to submit their IPD.

Methods/Design

We updated our previously published systematic reviews for type 1 diabetes mellitus comparing long- and intermediate-acting insulin regimens (from January 2013 to June 2015) and for Alzheimer’s dementia comparing cognitive enhancers (from January 2015 to May 2015). Randomized controlled trials (RCTs) fulfilling the eligibility criteria of the systematic reviews were eligible. We will randomly allocate authors of the reports of these RCTs into an intervention or control group. Those allocated to the intervention group will be contacted by email, mail, and phone, and will be asked to provide the IPD from their RCT and will be given a financial incentive. Those allocated to the control group will be contacted by email, mail, and phone, but will not receive a financial incentive. Our primary outcome will be the proportion of authors who provide the IPD. The secondary outcomes will be the time to return the dataset (defined as the period between the information request and the authors’ response with the dataset), and completeness of data. We will compare the response rates in the two groups using the odds ratio and the corresponding 95 % confidence interval. We will also use binary logistic regression and Cox regression analyses to examine whether different RCT characteristics, such as study size and sponsor information, influence the probability of providing IPD and the time needed to share the data.

Discussion

This study will determine whether a financial incentive affects response rates when seeking IPD from the original researchers. We will disseminate our findings in an open access scientific journal and present results at national and international conferences.

Trial registration

This trial is registered in ClinicalTrials.gov, ID number NCT02569411. Date of registration: 5 October 2015.

Keywords: Meta-analysis, Patient-level data, Individual patient data, Individual participant data, Incentive, Data retrieval, Data collection, Response rate

Background

Over the past 30 years, there has been a considerable increase in the number of published systematic reviews and meta-analyses [1, 2], and systematic reviews have become the base unit for developing clinical practice guidelines as well as other knowledge tools used in clinical practice and policy-making. Conducting a meta-analysis requires accessing relevant outcome measurements from the individual, eligible studies. However, often outcome data from eligible studies are not available, as many authors do not report them in their manuscript.

Medical journals have attempted to deal with this difficulty by endorsing standards for reporting of study results, such as the Consolidated Standards of Reporting Trials (CONSORT) checklist [3]. Despite these efforts, it has been shown that study data are inconsistently reported; missing evidence is a substantial problem and one of the greatest threats to the validity of results from a systematic review and meta-analysis [4–6]. Authors of systematic reviews, therefore, have to contact authors of the original studies to attempt to obtain the relevant data for their analysis. However, authors of the original studies may be unwilling or unable to share their data [7] and hence, systematic review authors may have to exclude these studies from the meta-analysis. This inability to obtain data occurs frequently, and it might occur for a variety of reasons. For example, the original study authors may worry that a re-analysis might show an error or a pattern they missed [8], they may have limited publication rights for the data as a study sponsor owns them [9], they may have moved to a different university and lost the data, or the data might be old and saved in an inaccessible storage device [10].

Meta-analysis can be conducted using individual patient data (IPD) (data from each individual participant enrolled in each included trial) and/or aggregated data (summary point estimates from all participants enrolled in each included trial). Meta-analysis of IPD is considered the “gold standard” approach [11] for meta-analysis, in part because it provides the opportunity to explore differences in treatment effects across different subgroups. These subgroups might include subsets of patients, such as males and females, or subsets of studies, such as those conducted in different geographical locations [12]. Knowledge about the effectiveness of interventions in different subgroups is particularly important for decision-making. To date, there has been an increase in the frequency of published IPD meta-analyses [13], but authors of these studies (or data managers) may have to devote a lot of time and effort to obtain and prepare the IPD in the required format [14]. For instance, a previously conducted IPD meta-analysis of 19 studies involved four research coordinators (who invested between 5 and 20 % of their full time) and required 2088 hours of data management and more than 1000 emails between research coordinators and the data managers [15]. A main barrier to undertaking an IPD meta-analysis is that study authors usually report aggregated data, and IPD can only be obtained by contacting the original study authors for information that they did not include in their reports. Although there is a strong movement to share anonymized IPD from randomized controlled trials (RCTs) [16–19], this has not yet been well-established and the cooperation of the original study authors is crucial for providing the data in a usable format and answering queries about their data. For example, a recently planned IPD meta-analysis could not be conducted, as the majority of the primary study authors did not share their data [20].

Given that obtaining IPD is important but also time-consuming, efforts need to be undertaken to understand how to optimize this process. Although previous studies have shown that financial incentives may improve response rates in survey requests [21–24], to the best of our knowledge there are no studies evaluating whether a financial incentive may facilitate the retrieval of IPD from authors of studies that are eligible for a systematic review.

The objective of our study is to examine the impact of providing a financial incentive to authors of RCTs that are eligible for a systematic review and meta-analysis, versus usual contact strategies to obtain the IPD. This trial protocol is in accordance with the Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT) 2013 statement [25] and is registered at ClinicalTrials.gov (NCT02569411; 5 October 2015).

Methods

Study design

Our study will be a pragmatic (or effectiveness) RCT comparing the financial incentive (i.e., intervention) against the standard process of contacting authors to obtain IPD (i.e., control). The participants will be the authors of RCTs included in our two systematic reviews for type 1 diabetes mellitus [26] and for Alzheimer’s dementia [27], and we will randomize these authors into two groups to request their IPD (see Control and intervention groups section).

We updated the literature search for our published systematic reviews for type 1 diabetes mellitus [26] from January 2013 to June 2015, and for Alzheimer’s dementia [27] from January 2015 to May 2015. Briefly, we used the terms from our previous reviews to search MEDLINE, Cochrane Central Register of Controlled Trials, and EMBASE. Gray literature (i.e., difficult to locate and unpublished studies) was searched via trial registry websites, relevant society/association websites and conference abstracts. Reference lists of included studies and relevant reviews were also scanned. We used the Synthesi.SR tool [28] to screen citations and full-text articles. To ensure reliability, we conducted a training exercise before screening titles and abstracts using our eligibility criteria. When high agreement (greater than 90 %) was observed, two team members screened each title and abstract for inclusion, independently (level 1). After pilot-testing, the same reviewers independently screened the full text of potentially relevant articles to determine inclusion (level 2). Conflicts were resolved by team discussion.

In the type 1 diabetes study, we included trials studying adults (aged 18 years or older) with type 1 diabetes and comparing long-acting basal insulin analogue preparations with other long- or intermediate-acting insulin. We included RCTs of any duration reporting glycosylated hemoglobin and severe hypoglycemia outcomes. Our updated search identified 179 citations and 15 potentially eligible studies, of which 4 RCTs met the inclusion criteria. In total, 30 RCTs were included in the updated type 1 diabetes study; all 30 evaluated glycosylated hemoglobin and 24 assessed severe hypoglycemia.

In the Alzheimer’s dementia systematic review, we included adults (aged 18 years or older) with Alzheimer’s dementia diagnosed using various criteria (e.g., Diagnostic and Statistical Manual of Mental Disorders, Nursing Minimum Data Set criteria). Again, we focused on RCTs of any duration and we included the Mini-mental State Examination and overall serious adverse events outcomes. Our updated search identified 73 citations and 12 potentially eligible studies, of which 1 RCT met the inclusion criteria. Overall, 108 RCTs were eligible for the updated Alzheimer’s dementia review, of which 74 provided data on the Mini-mental State Examination outcome and 64 provided data on the serious adverse events outcome.

Participant recruitment

Corresponding authors of RCTs included in our previous and updated systematic reviews will be eligible for inclusion. We will attempt to obtain IPD from all eligible studies by contacting the corresponding author of each included RCT. In cases where the identified studies do not report authors’ email addresses or include non-working email addresses, we will search authors’ publications, PubMed, and profiles that are publicly available, including ResearchGate and Google Scholar, to find contact information.

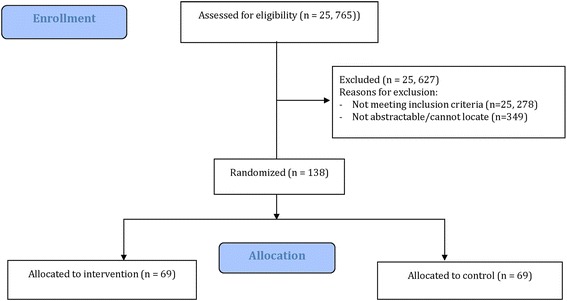

A challenge of this approach is that each author can only be contacted to ask for IPD from a single study. If a corresponding author of an eligible study has published more than one study, we will contact the first, last or the next in order author as presented in the paper. If a single author is included in more than one paper, then we will only contact him/her once for the newest study and the older study will be excluded. In such a case, for the IPD review and at the end of the RCT, we will contact the authors in the same way to obtain the IPD for all excluded studies. All authors who provide feedback during the conduct of the updated systematic review and IPD network meta-analysis for the type 1 diabetes study and the Alzheimer’s dementia study, will become part of an active collaboration and will be included in the authorship in the final publication (only if they agree) [29, 30]. This is in accordance with the International Committee of Medical Journal Editors (ICMJE) criteria [31]. A CONSORT flow diagram depicting the process of the study is presented in Fig. 1 [3]. This flow chart will be updated showing the flow of participants in the entire trial in the main manuscript.

Fig. 1. Consolidated Standards of Reporting Trials (CONSORT) flow diagram of the process of the randomized controlled trial

Randomization and blinding

Eligible authors will be randomized to one of the two trial groups using a 1:1 procedure. Randomization will be performed using a computer-generated random number list, and adequate allocation concealment will be ensured as the sequence will not be revealed until the end of the study. The computer randomization will be done centrally and conducted by a statistician (AAV) who will be blinded to the authors’ names. However, it is impossible to blind the corresponding authors and research personnel who will be in contact with them due to the nature of intervention. Blinding of outcome assessors is also not possible in this design.
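As an illustration only, a 1:1 allocation list of the kind described above could be generated along the following lines. The function name `allocate_1to1`, the seed value, and the use of anonymized study identifiers in place of author names are assumptions of this sketch. The protocol does not state whether simple or restricted randomization is used, so the balanced shuffle shown here is one possible choice rather than the authors’ procedure.

```python
import random

def allocate_1to1(study_ids, seed):
    """Return {study_id: 'intervention' | 'control'} with near-equal group sizes."""
    rng = random.Random(seed)          # fixed seed makes the list reproducible
    ids = list(study_ids)
    rng.shuffle(ids)                   # random order of anonymized study IDs
    half = len(ids) // 2
    # First half of the shuffled list -> intervention, remainder -> control.
    return {sid: ("intervention" if i < half else "control")
            for i, sid in enumerate(ids)}

# 138 eligible authors, identified only by a hypothetical numeric study ID.
allocation = allocate_1to1(range(1, 139), seed=2015)
```

Working from identifiers rather than names keeps the person generating the list blinded to who the authors are, in line with the description above.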

Control and intervention groups

Control group

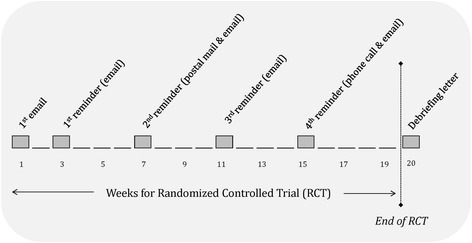

We will contact authors of eligible studies on Alzheimer’s dementia and type 1 diabetes allocated to the control group to participate using four strategies, as per Dillman’s method [21] to optimize response rates and obtain IPD. First, authors will be sent an email requesting their IPD. Second, we will send four email reminders at 2-, 6-, 10-, and 14-week intervals after the initial email. Third, in week 7, we will send a reminder by post in addition to email. Fourth, in week 15 we will attempt to contact the corresponding author by phone. The duration of our study will be 19 weeks in total (see Fig. 2).

Fig. 2. Study process flow diagram
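The contact timeline for the control group can also be written down as a small schedule. This is a sketch under stated assumptions: week 0 is taken to be the initial email, the start date is hypothetical, and the names `CONTACT_SCHEDULE` and `contact_dates` are introduced here for illustration.

```python
from datetime import date, timedelta

# Week offsets taken from the text; week 0 is the initial email.
CONTACT_SCHEDULE = [
    (0, "initial email"),
    (2, "email reminder"),
    (6, "email reminder"),
    (7, "postal reminder"),
    (10, "email reminder"),
    (14, "email reminder"),
    (15, "phone call"),
]
STUDY_DURATION_WEEKS = 19

def contact_dates(start):
    """Map each planned contact to a calendar date, given the first-email date."""
    return [(start + timedelta(weeks=w), what) for w, what in CONTACT_SCHEDULE]

start = date(2016, 2, 1)                       # hypothetical start date
plan = contact_dates(start)
end_of_study = start + timedelta(weeks=STUDY_DURATION_WEEKS)
```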

Intervention group

Using the same approach used for the control group, we will contact authors using the four approaches described above. Authors allocated to the intervention group will be additionally provided with a financial incentive (CAD100). Each participant allocated to the intervention group will receive an upfront CAD100 incentive as a gift certificate from Amazon (www.amazon.com) with the first email notification. In the same email we will clarify that at the end of the RCT, we will offer the same financial incentive to the authors allocated to the control group.

We will send two different letters by email, one for each group, simultaneously to the authors. The same letters will be sent to the authors’ mail addresses, which will have been printed in two different files, one for each group. In both letters, we will ask authors of the original studies to be included in the group authorship on the understanding that they provide feedback on results and take part in writing and reviewing the systematic review manuscript for the final publication, as is common practice in collaborative IPD reviews [32–35]. At the end of the RCT, we will send a debriefing letter to all the authors who participated in our study. All authors who share their data with us will be appropriately cited and they will be acknowledged in our final manuscript if they wish.

Outcomes

Our primary outcome will be the proportion of RCT authors included in our published systematic reviews who provide complete IPD. We will define complete IPD as information on population, interventions, outcomes and randomization as outlined below for the two reviews:

Population: the type 1 diabetes RCTs should include: age, sex, pregnancy, initial baseline glucose control (e.g., baseline glycosylated hemoglobin level), presence of comorbid conditions, previous history of hypoglycemia, other medications used for each participant, drop-outs along with reasons for drop-out, and number of participants, and Alzheimer’s dementia RCTs should include: age, sex, severity of the Alzheimer’s dementia, previous response to treatment for Alzheimer’s dementia, presence of behavioral disturbance, comorbid conditions (e.g., stroke), other medications used for each patient, drop-outs along with reasons for withdrawal, and number of participants

Interventions: including allocated treatment and dosage

Outcomes: including event and date of event for severe hypoglycemia in the type 1 diabetes review and serious adverse events in the Alzheimer’s dementia review, as well as glycosylated hemoglobin and Mini-mental State Examination values and measurement dates for type 1 diabetes and Alzheimer’s dementia, respectively

Date of randomization for each participant and overall method of randomization for all study participants

If any of the above items are not provided in the data we receive, but have been collected according to the RCT’s protocol, the study’s dataset will be considered partially complete. These items were chosen as the most vital data for IPD analyses based on input from clinicians on the relevant systematic review team.
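The completeness rule can be expressed as a short check. This is a minimal sketch: the function name `classify_dataset` and the item labels are hypothetical, and it assumes that an item the trial never collected does not count against completeness, as the protocol states when describing the secondary outcomes.

```python
def classify_dataset(required, provided, collected):
    """Classify a received dataset under the completeness rule.

    required  : items requested for the review
    provided  : items present in the dataset received
    collected : items the RCT collected according to its protocol
    """
    missing = set(required) - set(provided)
    # An item counts against completeness only if the trial actually collected it.
    withheld = missing & set(collected)
    return "partially complete" if withheld else "complete"

required = {"age", "sex", "pregnancy"}                      # illustrative subset
never_collected = classify_dataset(required, {"age", "sex"}, {"age", "sex"})
not_shared = classify_dataset(required, {"age", "sex"}, {"age", "sex", "pregnancy"})
```

In the first call the pregnancy item was never collected, so the dataset counts as complete. In the second it was collected but not shared, so the dataset counts as partially complete.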

Our secondary outcomes will be the time taken to obtain the dataset and the completeness of data. We will determine the duration between the information request and the authors’ provision of their dataset to estimate the time required to obtain data from authors. In case the authors send multiple datasets (e.g., first received dataset is incomplete, but after exchanging several emails the final received dataset is complete) over a period of time, we will consider the last date of correspondence to estimate the time required to obtain IPD. The completeness of the received dataset is crucial to investigators, as missing data might prevent inclusion of an RCT in the meta-analysis. An IPD meta-analysis may be biased if it is based only on a subset of trials [13]. If an RCT author provides us with the requested information, but some variables are missing (e.g., age, sex, pregnancy) because these were not collected during the RCT, then we will consider the dataset complete if this was reported in the study protocol. This is because the data are not missing due to selection bias. However, in case the requested information is not provided and the data have been collected in the RCT, the dataset will be considered incomplete. In such cases, we will not be able to control for these variables for the particular RCT in the analysis.
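As a sketch of how the time-to-dataset outcome could be derived, the function below measures the interval from the initial request to the last dataset received. The function name `days_to_obtain_ipd` and the dates are hypothetical. Returning `None` for authors who never send data is an assumption of this sketch, intended to flag them for censoring in a time-to-event analysis.

```python
from datetime import date

def days_to_obtain_ipd(request_date, dataset_dates):
    """Days from the initial request to the last dataset received.

    Returns None when no dataset was received.
    """
    if not dataset_dates:
        return None
    return (max(dataset_dates) - request_date).days

# Hypothetical author: incomplete file after 3 weeks, final file after 8 weeks.
elapsed = days_to_obtain_ipd(date(2016, 2, 1),
                             [date(2016, 2, 22), date(2016, 3, 28)])
```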

Ethical approval and confidentiality

Ethical approval for this RCT was obtained from the Research Ethics Board (Dr. David Mazer, Dr. Philip Berger, and Dr. Brenda McDowell) of St. Michael’s Hospital (see Appendix). We are conducting the RCT to examine the impact of incentivizing authors versus usual contact strategies to obtain original IPD, and we feel that disclosing this early on in a consent letter will bias our results. Instead, we intend to send authors a debriefing letter after they share their data with us, letting them know that they were part of an RCT and that they can withdraw their data from our analysis, if they wish. The information generated during our study will be confidential and limited to the purposes, as described in this protocol. We will request authors to share anonymized IPD only, where each patient will be linked to a specific identifier.

Power and sample size

A sample size of at least 116 participants in total (58 per group) for evaluation will provide 80 % power at the 5 % level of significance (two-sided) to detect an increase in response rates from 30 % in the control group to 55 % in the experimental group with 1:1 allocation. This is based on studies examining response rates of surveys. We anticipate a large response difference (i.e., absolute increase of 25 %) between the two groups as large and upfront incentives have been shown to be more effective than no, small or promised monetary incentives [36–39]. James et al. [36] compared survey response rates between US$25 prepaid and US$25 promised and found that upfront payment of a cash incentive was significantly more effective (odds ratio (OR) 2.88, 95 % confidence interval (CI) (1.70, 4.89)). Similarly, Pit et al. [38] conducted a pairwise meta-analysis comparing monetary incentives versus no incentives in survey responses and found that cash incentives were more effective at increasing response rates (OR = 1.87, 95 % CI (1.55, 2.26)). Our response rate estimate for the control arm (30 %) was based on previous empirical findings for retrieval of missing data in meta-analysis [40]. We expect to have adequate power for a 25 % response difference between the groups, as the current number of authors to contact is 138 (i.e., 30 authors to contact for the type 1 diabetes systematic review and 108 authors for the Alzheimer’s dementia systematic review). We have experience in contacting authors, as this is a regular process to ask for additional aggregated data on the eligible studies to enhance clarity in a meta-analysis, and on average we have a good response rate (over 60 %).
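The stated sample size can be checked with a standard normal-approximation formula for comparing two proportions. The protocol does not say which formula or software was used, so the unpooled-variance version below is an assumption; it does reproduce 58 per group for the stated inputs. The function name `n_per_group` is introduced here for illustration.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(p_control, p_intervention, alpha=0.05, power=0.80):
    """Per-group sample size for comparing two proportions (unpooled variance)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    variance = (p_control * (1 - p_control)
                + p_intervention * (1 - p_intervention))
    delta = p_intervention - p_control
    return ceil((z_alpha + z_beta) ** 2 * variance / delta ** 2)

n = n_per_group(0.30, 0.55)   # 58 per group, 116 in total
```

Other variants, such as a pooled variance under the null hypothesis or a continuity correction, give somewhat larger numbers, so the 138 available authors leave some margin under those variants as well.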

Data collection, management and statistical analysis

Two team members (AAV, HA) will independently assess the data retrieved to ensure the datasets are complete as defined in the Outcomes section. The assessment process will be done blind to the allocation using a computer-generated random number list. Conflicts will be resolved by discussion or involvement of a third member (SES, ACT). All IPD will be held on a password-protected database on a secure server at St. Michael’s Hospital. Access to data and authors’ responses will be restricted to the research team and will not be shared with any third parties.

The analysis will be performed on an intention-to-treat basis and participants will be analyzed in the arms they were allocated to, regardless of whether they received the intervention or not. Results will be reported according to the CONSORT statement [3]. We will describe the two groups in terms of their baseline characteristics, including sex (in RCTs the investigators are focused on the sex of the patient (biological) and not gender) of authors contacted, sponsor information, study size, risk of bias, treatments compared, magnitude and statistical significance of the treatment effect (as presented in the published trial), year of publication, country in which the study was conducted (according to first author), and journal in which the study was published, irrespective of whether the authors provided their IPD. The funding source will be categorized as: (1) industry-sponsored trials (funded by or authored by an employee of a pharmaceutical or other commercial organization), (2) publicly-sponsored trials (governmental sources and non-profit organizations, including universities, hospitals, and foundations), and (3) non-sponsored trials (no funding source) [41]. We will also categorize studies according to their sample size as small (fewer than 50 patients per arm), moderate (50–150 patients per arm), and large (more than 150 patients per arm) [42]. Similar to our previous systematic review [26], we will appraise the risk of bias using the Cochrane Risk of Bias tool [43]. Two investigators (HA and AAV/ACT) will independently assess the risk of bias in each included study, and any disagreements will be resolved by discussion with a third investigator (SES). If risk of bias is unclear, we will ask the author for clarification. Risk of bias will be assessed considering direct investigation of the IPD as described in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) extension to IPD checklist [44]. 
For example, randomization might appear to be at low risk of bias in the text, but when assessing this using IPD it might show that there is an unbalanced treatment allocation to groups, and vice versa. We believe that a small study size and high risk of bias are factors that may affect IPD retrieval.

In this RCT, we will summarize categorical data in each group using frequencies and percentages and 95 % CIs, and we will use the chi-square test or Fisher’s exact test as appropriate to compare them. The continuous characteristics of each group will be presented using means and 95 % CIs if approximately normally distributed, and medians and interquartile ranges (IQRs) if non-normally distributed. We will compare means of the two groups using the t test, and medians of the two groups using the Wilcoxon-Mann-Whitney test. We will also use summary statistics, e.g., OR for dichotomous data, mean difference for symmetrical continuous data, and ratio of geometric means for skewed continuous data, along with the corresponding 95 % CI. We will use the Shapiro-Wilk test to determine normality for each variable.

The primary data analysis will compare responses with complete IPD between experimental and control groups using ORs and corresponding 95 % CIs. We will calculate the OR for response as soon as we have completed all different strategies to contact the eligible authors, as described in the Control and intervention groups section. For the secondary outcome (time needed to share the data), we will calculate the mean and 95 % CI for both control and experimental groups, and then we will compare them using mean difference and 95 % CI. We will also describe the completeness of each study’s dataset using percentages and 95 % CIs, and compare the average completeness of IPD between groups using mean difference and 95 % CI. If authors are lost to follow-up (e.g., they promise they will share the data and we never hear back from them), a sensitivity analysis will be undertaken using a complete case analysis to assess the robustness of the findings with respect to missing data for all outcomes.
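For the primary comparison, an OR and its 95 % CI can be computed from the 2 × 2 table of group by response. The sketch below uses the log-scale (Woolf) interval, which is one common choice; the protocol does not specify the interval method. The counts are hypothetical values chosen to resemble the planning assumptions, and the 0.5 correction for empty cells is an addition of this sketch.

```python
from math import exp, log, sqrt
from statistics import NormalDist

def odds_ratio_ci(resp_int, n_int, resp_ctl, n_ctl, level=0.95):
    """Odds ratio (intervention vs control) with a Woolf (log-scale) CI."""
    a, b = resp_int, n_int - resp_int      # intervention: responders, non-responders
    c, d = resp_ctl, n_ctl - resp_ctl      # control: responders, non-responders
    if 0 in (a, b, c, d):
        # Haldane-Anscombe correction avoids division by zero in sparse tables.
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    or_ = (a * d) / (b * c)
    se = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return or_, exp(log(or_) - z * se), exp(log(or_) + z * se)

# Hypothetical counts: 38 of 69 (about 55 %) vs 21 of 69 (about 30 %) respond.
or_, lower, upper = odds_ratio_ci(38, 69, 21, 69)
```

Under intention-to-treat, the denominators are all randomized authors in each arm, regardless of whether the incentive was actually received.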

To examine whether different study characteristics influence the probability of providing complete IPD as specified in the primary outcome, we will use binary logistic regression adjusting for any confounding variables (e.g., small study size, high risk of bias, funding) as appropriate. We will start with bivariate regression analyses (including one dependent and one independent variable) and then for significant moderators we will simultaneously enter them into multiple regression models as long as the minimum number of cases per independent variable is 10. The significance of the variables in the model will be evaluated with the Wald chi-square test and determination of ORs with the associated 95 % CIs. Similarly, to investigate the potential influence of the aforementioned confounding variables on the secondary outcome time needed to share the data, we will apply a Cox regression model adjusting for these explanatory variables. Statistical significance will be assessed at the 0.05 level (two-tailed). All statistical analyses will be conducted by the lead author (AAV).
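The rule of at least 10 cases per independent variable limits how many covariates the multiple regression model can hold. The sketch below assumes that “cases” refers to the less frequent outcome category (responders or non-responders), which is a common conservative reading but is not stated in the protocol. The function name `max_covariates` and the counts are hypothetical.

```python
def max_covariates(n_responders, n_total, cases_per_variable=10):
    """Largest number of covariates allowed under a cases-per-variable rule.

    Assumes 'cases' means the less frequent outcome category
    (responders or non-responders).
    """
    limiting = min(n_responders, n_total - n_responders)
    return limiting // cases_per_variable

# Hypothetical: 59 of 138 authors provide complete IPD.
k = max_covariates(59, 138)
```

With these illustrative counts the model could include up to five covariates; with very few responders it could include none.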

Discussion

To date, there has been a steady increase in publication of systematic reviews that conduct an IPD meta-analysis [13], but obtaining IPD is time-consuming and costly, and depends on the authors’ willingness to share their data. Reviewers who wish to complete an IPD meta-analysis are often not able to obtain the IPD of all studies, and hence results might be prone to several biases [14, 45, 46]. To the best of our knowledge, this is the first experimental study that explores the effects of a cash incentive to encourage authors of eligible studies to submit their IPD. Results of this study will establish feasibility and provide evidence on the level of response with respect to different study characteristics. In addition, we will capture the most effective and practical strategies for maximizing the amount of IPD obtained. Previous research evaluating the influence of financial incentives on survey requests showed that such incentives improve response rates considerably (average improvement between 15 and 19 %) [21–24]. This is particularly important, as the study’s sample may not be random according to the patient population, which may result in biased study results and conclusions. A limitation of this study is that we used survey research to inform our sample size, as to the best of our knowledge there are no studies assessing response rate in retrieving IPD from collaborative studies using financial incentives.

Findings of our study will contribute to the future planning of IPD meta-analyses given that the IPD retrieval is part of their research process. We will be able to provide guidance on ways that IPD might be obtained. This research will help customize approaches to planning and conducting IPD meta-analyses, including estimation of the time needed and effective ways to collect the IPD. This will help reviewers to effectively plan their timelines, which may increase the use of IPD in meta-analysis and network meta-analysis. We will disseminate the results of this study in an open access scientific journal and present our results at conferences.

Trial status

As of October 2015, we have updated the literature searches, finalized the screening process for our systematic reviews, and randomized the authors of the eligible studies. We plan to start contacting the authors at the beginning of February 2016.

Acknowledgements

We thank Ms. Becky Skidmore for updating the literature searches. We would also like to thank Jaimie Ann Adams and Susan Le for formatting the manuscript.

Funding

AAV is funded by the Canadian Institutes of Health Research (CIHR) Banting Postdoctoral Fellowship Program. SES is funded by a Tier 1 Canada Research Chair in Knowledge Translation. ACT is funded by a Drug Safety and Effectiveness Network/Canadian Institutes of Health Research New Investigator Award in Knowledge Synthesis.

Abbreviations

- CI

confidence interval

- CONSORT

Consolidated Standards of Reporting Trials

- IQR

interquartile range

- IPD

individual patient data

- PRISMA

Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- RCT

randomized controlled trial

- SPIRIT

Standard Protocol Items: Recommendations for Interventional Trials

Appendix

Ethical approval obtained from the Research Ethics Board to conduct this randomized controlled trial.

Footnotes

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

AAV, SES and ACT conceived and designed the study, and helped write the draft protocol. HA registered the protocol in ClinicalTrials.gov and edited the draft protocol. LAS and MC edited the draft protocol. All authors read and approved the final protocol. HA will be responsible for contacting the RCT authors. AAV will be responsible for the analysis. AAV, ACT, LAS, MC and SES will be responsible for the interpretation of the results. All authors will be responsible for the final publication. All authors read and approved the final manuscript.

Contributor Information

Areti Angeliki Veroniki, Email: veronikia@smh.ca

Sharon E. Straus, Email: sharon.straus@utoronto.ca

Huda Ashoor, Email: ashoorh@smh.ca

Lesley A. Stewart, Email: lesley.stewart@york.ac.uk

Mike Clarke, Email: m.clarke@qub.ac.uk

Andrea C. Tricco, Phone: 416-8646060, Email: triccoa@smh.ca

References

- 1. Egger M, Ebrahim S, Smith GD. Where now for meta-analysis? Int J Epidemiol. 2002;31(1):1–5. doi: 10.1093/ije/31.1.1.

- 2. Chalmers I, Haynes B. Reporting, updating, and correcting systematic reviews of the effects of health care. BMJ. 1994;309(6958):862–5. doi: 10.1136/bmj.309.6958.862.

- 3. Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomized trials. Ann Intern Med. 2010;152(11):726–32. doi: 10.7326/0003-4819-152-11-201006010-00232.

- 4. Mills E, Wu P, Gagnier J, Heels-Ansdell D, Montori VM. An analysis of general medical and specialist journals that endorse CONSORT found that reporting was not enforced consistently. J Clin Epidemiol. 2005;58(7):662–7. doi: 10.1016/j.jclinepi.2005.01.004.

- 5. Dickersin K. The existence of publication bias and risk factors for its occurrence. JAMA. 1990;263(10):1385–9. doi: 10.1001/jama.1990.03440100097014.

- 6. Parekh-Bhurke S, Kwok CS, Pang C, Hooper L, Loke YK, Ryder JJ, et al. Uptake of methods to deal with publication bias in systematic reviews has increased over time, but there is still much scope for improvement. J Clin Epidemiol. 2011;64(4):349–57. doi: 10.1016/j.jclinepi.2010.04.022.

- 7. Wicherts JM, Borsboom D, Kats J, Molenaar D. The poor availability of psychological research data for reanalysis. Am Psychol. 2006;61(7):726–8. doi: 10.1037/0003-066X.61.7.726.

- 8. Wicherts JM, Bakker M, Molenaar D. Willingness to share research data is related to the strength of the evidence and the quality of reporting of statistical results. PLoS One. 2011;6(11):e26828. doi: 10.1371/journal.pone.0026828.

- 9. Lundh A, Krogsboll LT, Gotzsche PC. Access to data in industry-sponsored trials. Lancet. 2011;378(9808):1995–6. doi: 10.1016/S0140-6736(11)61871-0.

- 10. Vines TH, Albert AY, Andrew RL, Debarre F, Bock DG, Franklin MT, et al. The availability of research data declines rapidly with article age. Curr Biol. 2014;24(1):94–7. doi: 10.1016/j.cub.2013.11.014.

- 11. Stewart LA, Clarke MJ. Practical methodology of meta-analyses (overviews) using updated individual patient data. Cochrane Working Group. Stat Med. 1995;14(19):2057–79. doi: 10.1002/sim.4780141902.

- 12. Sutton AJ, Kendrick D, Coupland CA. Meta-analysis of individual- and aggregate-level data. Stat Med. 2008;27(5):651–69. doi: 10.1002/sim.2916.

- 13. Riley RD, Lambert PC, Abo-Zaid G. Meta-analysis of individual participant data: rationale, conduct, and reporting. BMJ. 2010;340:c221. doi: 10.1136/bmj.c221.

- 14. Cooper H, Patall EA. The relative benefits of meta-analysis conducted with individual participant data versus aggregated data. Psychol Methods. 2009;14(2):165–76. doi: 10.1037/a0015565.

- 15. Ioannidis JP, Rosenberg PS, Goedert JJ, O’Brien TR, International Meta-analysis of HIV Host Genetics. Commentary: meta-analysis of individual participants’ data in genetic epidemiology. Am J Epidemiol. 2002;156(3):204–10. doi: 10.1093/aje/kwf031.

- 16.Drazen JM. Sharing individual patient data from clinical trials. N Engl J Med. 2015;372(3):201–2. doi: 10.1056/NEJMp1415160. [DOI] [PubMed] [Google Scholar]

- 17.El Emam K, Rodgers S, Malin B. Anonymising and sharing individual patient data. BMJ. 2015;350:h1139. doi: 10.1136/bmj.h1139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Gotzsche PC. Why we need easy access to all data from all clinical trials and how to accomplish it. Trials. 2011;12:249. doi: 10.1186/1745-6215-12-249. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Suvarna VR. Sharing individual patient data from clinical trials. Perspect Clin Res. 2015;6(2):71–2. doi: 10.4103/2229-3485.153996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Jaspers GJ, Degraeuwe PL. A failed attempt to conduct an individual patient data meta-analysis. Syst Rev. 2014;3:97. doi: 10.1186/2046-4053-3-97. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Dillman DA. Mail and Internet surveys: the tailored design method. Update with new Internet, visual, and mixed-mode guide. 2nd ed. New York: Wiley; 2007. [Google Scholar]

- 22.Church A. Incentives in mail surveys: a meta-analysis. Public Opin Q. 1993;57:62–79. doi: 10.1086/269355. [DOI] [Google Scholar]

- 23.Jobber D, Saunders J, Mitchell VW. Prepaid monetary incentive effects on mail survey response. J Bus Res. 2004;57:21–5. doi: 10.1016/S0148-2963(02)00280-1. [DOI] [Google Scholar]

- 24.Lesser V, Dillman D, Carlson J, Lorenz F, Mason R, Willits F. Quantifying the influence of incentives on mail survey response rates and nonresponse bias. 2001.

- 25.Chan AW, Tetzlaff JM, Altman DG, Laupacis A, Gotzsche PC, Krleza-Jeric K, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med. 2013;158(3):200–7. doi: 10.7326/0003-4819-158-3-201302050-00583. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Tricco AC, Ashoor HM, Antony J, Beyene J, Veroniki AA, Isaranuwatchai W, et al. Safety, effectiveness, and cost effectiveness of long acting versus intermediate acting insulin for patients with type 1 diabetes: systematic review and network meta-analysis. BMJ. 2014;349:g5459. doi: 10.1136/bmj.g5459. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Tricco AC, Ashoor HM, Rios P, Hamid J, Ivory JD, Khan PA, et al. Comparative safety and effectiveness of cognitive enhancers for the treatment of Alzheimer’s disease: a rapidly updated systematic review and network meta-analysis. ODPRN. 2015.

- 28.Newton D. Synthesi.SR. Knowledge translation program, St. Michael’s Hospital: Toronto, Canada; 2012.

- 29.Veroniki AA, Straus SE, Ashoor HM, Hamid JS, Yu C, Tricco AC. Safety and effectiveness of long-acting versus intermediate-acting insulin for patients with type 1 diabetes: protocol for a systematic review and individual patient data network meta-analysis. BMJ Open. 2015;5(12):e010160. doi: 10.1136/bmjopen-2015-010160. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Veroniki A, Straus S, Ashoor H, Hamid J, Hemmelgarn B, Holroyd-Leduc J, et al. Comparative safety and effectiveness of cognitive enhancers for Alzheimer’s dementia: protocol for a systematic review and individual patient data network meta-analysis. BMJ Open. 2016;6(1):e010251. doi: 10.1136/bmjopen-2015-010251. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Defining the role of authors and contributors. International Committee of Medical Journal Editors. http://www.icmje.org/recommendations/browse/roles-and-responsibilities/defining-the-role-of-authors-and-contributors.html. Accessed 25 February 2016.

- 32.Blanchard P, Hill C, Guihenneuc-Jouyaux C, Baey C, Bourhis J, Pignon JP. Mixed treatment comparison meta-analysis of altered fractionated radiotherapy and chemotherapy in head and neck cancer. J Clin Epidemiol. 2011;64(9):985–92. doi: 10.1016/j.jclinepi.2010.10.016. [DOI] [PubMed] [Google Scholar]

- 33.Middleton LJ, Champaneria R, Daniels JP, Bhattacharya S, Cooper KG, Hilken NH, et al. Hysterectomy, endometrial destruction, and levonorgestrel releasing intrauterine system (Mirena) for heavy menstrual bleeding: systematic review and meta-analysis of data from individual patients. BMJ. 2010;341:c3929. doi: 10.1136/bmj.c3929. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Pignon JP, le Maitre A, Maillard E, Bourhis J. Meta-analysis of chemotherapy in head and neck cancer (MACH-NC): an update on 93 randomised trials and 17,346 patients. Radiother Oncol. 2009;92(1):4–14. doi: 10.1016/j.radonc.2009.04.014. [DOI] [PubMed] [Google Scholar]

- 35.Daniels JP, Middleton LJ, Champaneria R, Khan KS, Cooper K, Mol BW, et al. Second generation endometrial ablation techniques for heavy menstrual bleeding: network meta-analysis. BMJ. 2012;344 doi: 10.1136/bmj.e2564. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.James KM, Ziegenfuss JY, Tilburt JC, Harris AM, Beebe TJ. Getting physicians to respond: the impact of incentive type and timing on physician survey response rates. Health Serv Res. 2011;46(1 Pt 1):232–42. doi: 10.1111/j.1475-6773.2010.01181.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Asch DA, Christakis NA, Ubel PA. Conducting physician mail surveys on a limited budget. A randomized trial comparing $2 bill versus $5 bill incentives. Med Care. 1998;36(1):95–9. doi: 10.1097/00005650-199801000-00011. [DOI] [PubMed] [Google Scholar]

- 38.Pit SW, Vo T, Pyakurel S. The effectiveness of recruitment strategies on general practitioner’s survey response rates – a systematic review. BMC Med Res Methodol. 2014;14:76. doi: 10.1186/1471-2288-14-76. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Everett SA, Price JH, Bedell AW, Telljohann SK. The effect of a monetary incentive in increasing the return rate of a survey to family physicians. Eval Health Prof. 1997;20(2):207–14. doi: 10.1177/016327879702000206. [DOI] [PubMed] [Google Scholar]

- 40.Gibson CA, Bailey BW, Carper MJ, Lecheminant JD, Kirk EP, Huang G, et al. Author contacts for retrieval of data for a meta-analysis on exercise and diet restriction. Int J Technol Assess Health Care. 2006;22(2):267–70. doi: 10.1017/S0266462306051105. [DOI] [PubMed] [Google Scholar]

- 41.Data Collection Checklist. Ottawa, Canada: Cochrane Effective Practice and Organisation of Care Review Group (EPOC). 2002.

- 42.Veroniki AA, Mavridis D, Higgins JP, Salanti G. Characteristics of a loop of evidence that affect detection and estimation of inconsistency: a simulation study. BMC Med Res Methodol. 2014;14:106. doi: 10.1186/1471-2288-14-106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Higgins J. Green S. The Cochrane Collaboration: Cochrane handbook for systematic reviews of interventions; 2011. [Google Scholar]

- 44.Stewart LA, Clarke M, Rovers M, Riley RD, Simmonds M, Stewart G, et al. Preferred Reporting Items for Systematic Review and Meta-Analyses of individual participant data: the PRISMA-IPD Statement. JAMA. 2015;313(16):1657–65. doi: 10.1001/jama.2015.3656. [DOI] [PubMed] [Google Scholar]

- 45.Berlin JA, Santanna J, Schmid CH, Szczech LA, Feldman HI. Individual patient- versus group-level data meta-regressions for the investigation of treatment effect modifiers: ecological bias rears its ugly head. Stat Med. 2002;21(3):371–87. doi: 10.1002/sim.1023. [DOI] [PubMed] [Google Scholar]

- 46.Higgins JP, Whitehead A, Turner RM, Omar RZ, Thompson SG. Meta-analysis of continuous outcome data from individual patients. Stat Med. 2001;20(15):2219–41. doi: 10.1002/sim.918. [DOI] [PubMed] [Google Scholar]