Abstract

Background and Aims:

Medical education is changing with the introduction of objective structured clinical examination (OSCE) as an assessment tool, but its implementation in the postgraduate evaluation process in anaesthesiology is still limited. We evaluated the effectiveness of OSCE and compared it with conventional examinations as formative assessment tools in anaesthesiology.

Methods:

We conducted a cross-sectional comparative study in a defined population of anaesthesiology postgraduate students to evaluate the effectiveness of OSCE compared with conventional examination as a formative assessment tool in anaesthesiology. Thirty-five students took the conventional written examination on the 1st day, the viva voce on the 2nd day and the OSCE on the last day. At the end of the assessment, all students were asked to complete a perception questionnaire, and we analysed their perceptions of OSCE.

Results:

Students' perception of the objective structured clinical examination (OSCE) was positive: they described it as structured 9 (25.7%), fair 19 (54.3%) and unbiased 13 (37.1%), with more standardised scoring 9 (25.7%). Students perceived OSCE to be less stressful than the other examinations. Thirty-one (88.6%) students agreed that OSCE is easier to pass than the conventional method, and 29 (82.9%) reported that the degree of emotional stress is lower in OSCE than in traditional methods.

Conclusion:

OSCE is a better evaluation tool than conventional examination.

Keywords: Anesthesiology, objective structured clinical examination, objective structured practical examination, postgraduate

INTRODUCTION

Bloom defined three main domains of learning: cognitive (knowledge), psychomotor (skills) and affective (attitude).[1] The learning cycle is a triad of educational objectives, instructional methodology and assessment.[2] Of these, assessment is critical. Effective assessment tools for each domain of learning should judge students' progress through the course fairly and objectively. In a changing learning environment, assessment and evaluation strategies need to be reoriented.[3]

Assessment is an essential component of medical education in affiliated medical colleges. Regular assessment shows whether the aims and objectives of education programmes prescribed by the university have been attained.[4]

With changing trends in medical education, it is time to introduce objective structured clinical examination and objective structured practical examination (OSCE/OSPE) as methods of learning and of assessing practical skills among anaesthesiology postgraduates. The primary aim of the present study was to evaluate and compare the conventional method of examination with OSCE/OSPE. The secondary aim was to explore students' perception of OSCE/OSPE as a learning and assessment tool.

METHODS

Permission to conduct the survey was obtained from the Institute Ethics and Research Committee. The questionnaire was critically reviewed by the Department of Medical Education, and informed consent was obtained from all participants. The subjects were the 1st-, 2nd- and 3rd-year postgraduates of the Anaesthesiology department of a tertiary care hospital with an associated medical college. There were seven students in each batch.

The university follows a semester system with assessment every 6 months, usually by traditional methods such as a theory written examination and a practical viva voce. In this study, the cognitive domain was assessed by the theory paper, the affective domain by the viva voce and the psychomotor domain by OSCE/OSPE.

Because OSCE/OSPE was being conducted for the first time, students were given a prescribed syllabus to maintain uniformity and were oriented to the format in advance. After the prescribed syllabus for the semester was completed, the OSCE was announced 15 days in advance. Before this tool was used for evaluation, all staff members had completed the basic medical education workshop conducted by the Department of Medical Education. Structured questions with key answers were prepared for the question stations, together with corresponding checklists.

A total of 35 students were assessed over two successive semesters. The assessment was spread over 3 days to prevent exhaustion of the candidates. On the first day, students took a written examination comprising two long essay questions of 20 marks each, three short notes of 10 marks each and five brief answers of 6 marks each. On the next day, they took a viva voce on the same topics. On the last day, they were examined on 14 stations and six procedure tables.

Students rotated through all stations and moved to the next station at the signal. Each station was designed so that the task could be completed comfortably within 5 min. After every five stations there was a rest station, where the question papers of the previous five stations were kept. This rest station was part of the circuit of workstations, which ensured that no two participants were there at the same time. The reliability of the questions was estimated by calculating Cronbach's alpha.
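Cronbach's alpha compares the sum of the item variances with the variance of candidates' total scores: alpha = k/(k - 1) × (1 - Σ item variances / total-score variance), where k is the number of items (stations or questions). The paper does not report item-level data or SPSS settings, so the following is only a minimal sketch of this standard formula in plain Python; the function name `cronbach_alpha` and the 4 × 3 score matrix are hypothetical and are not study data.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a candidates x items score matrix (hypothetical helper).

    Each inner list holds one candidate's marks on every item. Population
    variances are used throughout; using sample variances for both the items
    and the total gives the same ratio, so alpha is unchanged.
    """
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across candidates
    item_vars = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of the candidates' total scores
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical marks: four candidates (rows) on three items (columns)
marks = [
    [2, 3, 3],
    [4, 4, 5],
    [3, 3, 4],
    [5, 5, 5],
]
print(round(cronbach_alpha(marks), 3))  # 0.957
```

Values closer to 1 indicate that the items rank candidates consistently; a single alpha for the whole OSCE says nothing about whether individual stations are valid.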

At the end of the examination, all participants were required to complete a questionnaire in a single sitting supervised by a staff member. The questionnaire comprised five sections. The questions and possible responses were carefully framed, again through a departmental consensus meeting, and the questionnaire was reviewed by the Department of Medical Education and the Ethics Committee of the institute. The questions were selected to assess rigidity, stress, fairness and potential bias with respect to both examination styles [Annexure 1].

The first section of the questionnaire explored the students' perception of and feedback on the OSCE/OSPE examination. The second section assessed whether they were satisfied with the way the examination was conducted. The third and fourth sections compared the OSCE/OSPE examination with the other methods of assessment.

Students needed 50% to pass each of the three types of examination. The marks obtained were also graded: >60% was Grade I, between 40% and 60% was Grade II and <40% was Grade III. To rule out practice bias, no question was repeated.
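The grading bands and the pass mark can be expressed as two small functions. This is a hypothetical sketch: the names `grade`, `passed` and `to_percent` are invented, and because the text gives >60% for Grade I and "between 40 and 60%" for Grade II, the sketch assumes that exactly 40% and exactly 60% fall in Grade II. The written-paper maximum of 100 follows from the Methods (2 × 20 + 3 × 10 + 5 × 6).

```python
# Maximum for the written paper described in the Methods:
# two long essays x 20, three short notes x 10, five brief answers x 6
WRITTEN_MAX = 2 * 20 + 3 * 10 + 5 * 6  # 100


def to_percent(obtained: float, maximum: float) -> float:
    """Convert raw marks to a percentage of the maximum."""
    return 100 * obtained / maximum


def grade(percent: float) -> str:
    """Grade bands as described; assumes 40% and 60% belong to Grade II."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    if percent > 60:
        return "Grade I"
    if percent >= 40:
        return "Grade II"
    return "Grade III"


def passed(percent: float, pass_mark: float = 50) -> bool:
    """Pass/fail is separate from grading: 50% is required to pass."""
    return percent >= pass_mark


for raw in (72, 55, 45, 38):
    pct = to_percent(raw, WRITTEN_MAX)
    print(raw, grade(pct), "pass" if passed(pct) else "fail")
# 72 Grade I pass
# 55 Grade II pass
# 45 Grade II fail
# 38 Grade III fail
```

Because the pass mark (50%) lies inside the Grade II band, a Grade II student may still have failed that examination.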

Data were entered in Microsoft Office Excel 2007 and analysed using IBM SPSS Statistics Base, version 19 (SPSS South Asia Pvt. Ltd, Bangalore, India). Results were expressed as frequency and percentage. Marks were expressed as mean ± standard deviation; the examinations were compared using one-way ANOVA, followed by a post hoc test for pairwise comparisons between examinations. P < 0.05 was considered statistically significant.
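The one-way ANOVA named above compares the spread of the examination means around the grand mean with the spread of marks within each examination. The sketch below computes the F statistic and its degrees of freedom in plain Python; the marks are hypothetical percentages, not study data, and the function name is invented. With SciPy installed, `scipy.stats.f_oneway(theory, viva, osce)` returns the same F statistic together with its P value. The post hoc test used in the study is not named, so it is not reproduced here.

```python
from statistics import fmean


def one_way_anova_f(*groups: list[float]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA (hypothetical helper)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    means = [fmean(g) for g in groups]
    # Between-group sum of squares: group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: marks around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical percentage marks for four students in each examination
theory = [45, 50, 40, 55]
viva = [50, 55, 45, 60]
osce = [65, 70, 60, 75]

f_stat, df_b, df_w = one_way_anova_f(theory, viva, osce)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")  # F(2, 9) = 10.40
```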

RESULTS

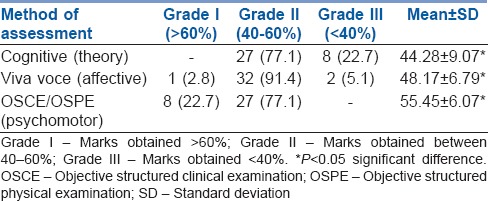

A total of 35 completely filled questionnaires were returned, a response rate of 100%. Table 1 shows the students' levels in the cognitive, psychomotor and affective domains. Most domain grades, 86 of 105 (81.9%; 35 students × 3 domains), were Grade II. Comparing Grade III across domains, no student scored <50% in the psychomotor domain, whereas 8 (22.9%) students scored <40% (Grade III) in the cognitive domain. The difference between theory marks and viva marks was not statistically significant (P = 0.078). OSCE marks differed significantly from both viva voce marks (P < 0.001) and theory written examination marks (P < 0.001).
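The Grade II figure is a share of domain-level grades rather than of students: each of the 35 students received one grade in each of three domains. A minimal arithmetic check, with a hypothetical `percent` helper, reproduces the reported response rate and Grade II share.

```python
def percent(count: int, denominator: int, places: int = 1) -> float:
    """Percentage rounded to the given number of decimal places."""
    return round(100 * count / denominator, places)


STUDENTS = 35
DOMAINS = 3  # cognitive, psychomotor and affective
domain_grades = STUDENTS * DOMAINS  # 105 domain-level grades

print(percent(35, STUDENTS))       # 100.0 (response rate)
print(percent(86, domain_grades))  # 81.9 (Grade II share across all domains)
```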

Table 1.

Comparative evaluation of marks obtained in different examinations

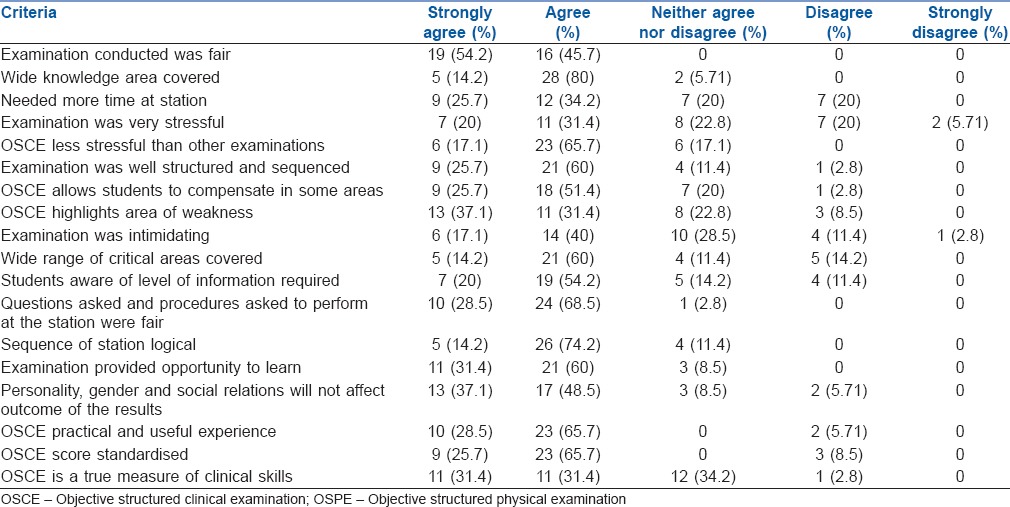

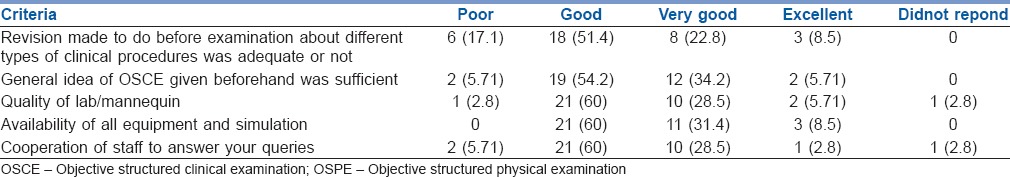

Table 2 presents the students' evaluation of OSCE/OSPE attributes. Most students, 21 (60%), viewed OSCE as a fair assessment tool that was well structured and covered a wide range of critical areas of the discipline. Most reported that OSCE helped highlight their problem areas and provided an opportunity to learn. Most students agreed that it was practical and that the scoring system was standardised. The students were satisfied with the way the OSCE/OSPE examinations were conducted [Table 3].

Table 2.

Postgraduate students' feedback on various aspects of objective structured clinical examination/objective structured practical examination

Table 3.

Postgraduate students' feedback on various aspects of objective structured clinical examination/objective structured practical examination

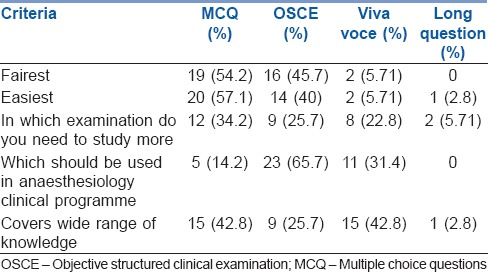

Most participants found multiple-choice questions the easiest and fairest format, but 23 (65.7%) felt that OSCE should be used in the clinical programme [Table 4].

Table 4.

Feedback on the various types of examination conducted

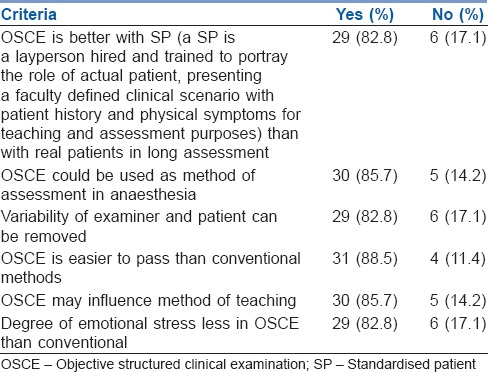

Most students found OSCE more satisfying than the traditional clinical examination [Table 5].

Table 5.

Comparison of objective structured clinical examination/objective structured physical examination with conventional method of assessment

DISCUSSION

An essential component of medical education is the regular assessment of clinical skills and competence, and a specialty such as anaesthesiology requires examinations that address the cognitive, psychomotor and affective domains. This requires educators to make informed decisions using assessments that measure students' clinical knowledge and skills accurately.[5] Simulation-based training and assessment can be used in anaesthesia to assess the cognitive, psychomotor and affective domains in postgraduates.

OSCE was originally developed in Dundee in the mid-1970s;[5] it was described in 1975, and in more detail in 1979, by Harden and his group,[6] and was later extended to practical examinations (OSPE). In India, OSCE/OSPE is currently used as a formative or summative examination in selected medical colleges for undergraduates and for Diplomate of National Board courses, where it is allotted only a small percentage of the marks. On reviewing the literature, we found that most previously published studies were conducted on undergraduate medical students. This may be the first such study in anaesthesiology in India.

OSCE is increasingly used because of its objectivity and reliability. This form of evaluation helps us give students feedback on their progress or performance, allows us to measure the effectiveness of teaching style, modalities and lesson content, and motivates students.[7] Mastering practical skills is of the utmost importance in anaesthesia, and in this specialty the assessment component influences students' learning strategies. Therefore, for an assessment task to achieve the desired outcome, it must use instruments that yield valid, accurate and reliable data.[5,8]

There is growing evidence that simulation-based training and assessment in anaesthesiology are gaining momentum for assessing competence at the "shows how" level. Assessment in anaesthesia should reflect an appropriate level of professionalism, in-depth anaesthesia knowledge, technical skills, interpersonal and communication skills and systems-based practice.[9,10] It should also be able to capture additional information about the examinee.[11] We have often seen that a student who does well in theory does not do well in viva voce or skill demonstration.[10,11]

The conventional method of assessing practical knowledge by viva voce has often been criticised by students. The commonly cited problems were irrelevant questions and discrepancies in the questions asked by examiners, which may lead to wide variation in scores.[12] OSCE was therefore introduced so that the time allowed, the questions asked and the marking were uniform for every student.

The advantage of this examination is that scoring is more objective, because the standards of competence are preset and scoring uses agreed checklists. This minimises examiner variability.[13] A limitation is that checklists can be either too easy or too difficult; this can be addressed by designing well-balanced questions and checklists. Students often complain that gender bias and personal or social relationships may influence their final scores. The participants in our study strongly agreed that OSCE/OSPE may help avoid such bias. Because a wide range of topics is covered, OSCE also maintains student interest.[12,14]

Students in this study strongly agreed that more time was needed at the workstations. Previous studies have raised the same concern about time in OSPE and have stressed that it should not become an exercise in how fast students can perform a technique but should focus on how well they perform it.[12,15] Although students felt intimidated by the OSPE examination, they were enthusiastic about it because of its objectivity, uniformity and reliability. The participants found OSCE less stressful than the conventional examination, which allowed them to perform better. Consistent with other studies, students preferred multiple-choice questions as a method of assessment because they were able to recall facts better.[12]

Another advantage of OSCE is that it can be adapted to local needs, departmental policies and available resources. A limitation noted by Ananthakrishnan is that OSCE can cause observer fatigue when the examiner has to record the performance of several candidates on lengthy checklists.[6] Other disadvantages include possible non-cooperation of patients, the need for examiners to observe each student's performance carefully and the time required to prepare an OSCE.

One limitation of this study is its small sample size. From a single experience, it is not possible to judge all the difficulties and constraints of OSCE. Multicentre studies with larger samples will be needed before OSCE/OSPE can be implemented in the curriculum. Another limitation was that examiner feedback was not assessed, although it could have given insight into the realism of this assessment format.

From the results of our study, we conclude that, when correctly designed, OSCE/OSPE can be feasible and acceptable to students for the assessment of skills in postgraduate anaesthesiology training. However, many more studies are needed before OSCE can confidently be included in postgraduate examinations in combination with traditional methods.

CONCLUSION

OSCE can be a significantly better evaluation tool than conventional methods, especially in terms of objectivity, uniformity and the range of clinical scenarios that can be assessed. Further studies are required before OSCE can be recommended as a formative evaluation tool in postgraduate anaesthesia training.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

REFERENCES

1. Shafique A. Comparative performance of undergraduate students in objectively structured practical examinations and conventional orthodontic practical examinations. Pak Oral Dent J. 2013;33:312–8.

2. Mahajan AS, Shankar N, Tandon OP. The comparison of OSPE with conventional physiology practical assessment. J Int Assoc Med Sci Educ. 2004;14:54–7.

3. Kwizera E, Stepien A. Correlation between different PBL assessment components and the final mark for MB ChB III at a rural South African university. Afr J Health Prof Educ. 2009;1:11–4.

4. Charles J, Kalpana S, Max LJ, Shantharam D. A cross sectional study on domain based evaluation of medical students at the Tamil Nadu Dr. M.G.R. Medical University - A success. IOSR J Res Method Educ. 2014;4:33–6.

5. Frantz JM, Rowe M, Hess DA, Rhoda AJ, Sauls BL, Wegner L. Student and staff perceptions and experiences of the introduction of objective structured practical examinations: A pilot study. Afr J Health Prof Educ. 2013;5:72–4.

6. Ananthakrishnan N. Objective structured clinical/practical examination (OSCE/OSPE). J Postgrad Med. 1993;39:82–4.

7. Wani P, Dalvi V. OSPE versus traditional clinical examination in human physiology: Student perception. Int J Med Sci Public Health. 2013;2:543–7.

8. Boursicot K, Roberts T. How to set up an OSCE. Clin Teach. 2005;2:16–20.

9. Chan CY. Is OSCE valid for evaluation of the six ACGME general competencies? J Chin Med Assoc. 2011;74:193–4. doi: 10.1016/j.jcma.2011.03.012.

10. Ben-Menachem E, Ezri T, Ziv A, Sidi A, Brill S, Berkenstadt H. Objective structured clinical examination-based assessment of regional anesthesia skills: The Israeli National Board Examination in Anesthesiology experience. Anesth Analg. 2011;112:242–5. doi: 10.1213/ANE.0b013e3181fc3e42.

11. Savoldelli GL, Naik VN, Joo HS, Houston PL, Graham M, Yee B, et al. Evaluation of patient simulator performance as an adjunct to the oral examination for senior anesthesia residents. Anesthesiology. 2006;104:475–81. doi: 10.1097/00000542-200603000-00014.

12. Kundu D, Das HN, Sen G, Osta M, Mandal T, Gautam D. Objective structured practical examination in biochemistry: An experience in medical college, Kolkata. J Nat Sci Biol Med. 2013;4:103–7. doi: 10.4103/0976-9668.107268.

13. Dippenaar H, Steinberg WJ. Evaluation of clinical medicine in the final postgraduate examinations in family medicine. SA Fam Pract. 2008;50:67–67d.

14. Majagi SI. Introduction of OSPE to undergraduate in pharmacology subjects and its comparison with that of conventional practical. World J Med Pharm Biol Sci. 2011;1:27–33.

15. Al-Mously N, Nabil NM, Salem R. Students feedback on OSCE: An experience of a new medical college in Saudi Arabia. J Int Assoc Med Sci Educ. 2012;22:10–6.
