Abstract

Background

Otitis media is one of the most common childhood diseases worldwide, but because of a lack of doctors and health personnel in developing countries it is often misdiagnosed or not diagnosed at all. This may lead to serious and even life-threatening complications. There is thus a need for an automated, computer-based image-analysis system that could assist in making accurate otitis media diagnoses anywhere.

Methods

A method for automated diagnosis of otitis media is proposed. The method uses image-processing techniques to classify otitis media. The system is trained using high quality pre-assessed images of tympanic membranes, captured by digital video-otoscopes, and classifies undiagnosed images into five otitis media categories based on predefined signs. Several verification tests analyzed the classification capability of the method.

Findings

An accuracy of 80.6% was achieved for images taken with commercial video-otoscopes, while an accuracy of 78.7% was achieved for images captured on-site with a low cost custom-made video-otoscope.

Interpretation

The high accuracy of the proposed otitis media classification system compares well with the classification accuracy of general practitioners and pediatricians (~ 64% to 80%) using traditional otoscopes, and therefore holds promise for the future in making automated diagnosis of otitis media in medically underserved populations.

Keywords: Decision tree, Feature extraction, Automated diagnosis, Otitis media, Video-otoscope, Global medicine

Highlights

- Computer-based otitis media classification can provide access to diagnosis in developing countries.

- Diagnostic accuracy of the image-analysis classification system was comparable to general practitioners' and pediatricians'.

Most people globally do not have access to health care specialists who can diagnose one of the most common childhood illnesses, otitis media (middle ear infection). Otitis media affects more than half a billion people annually, and early and accurate diagnosis can ensure appropriate treatment to minimize the widespread impact of ear infections. We developed an image-analysis classification system that can diagnose otitis media with an accuracy comparable to that of general practitioners and pediatricians. The software system, which can be cloud-based for remote image uploading, could provide rapid access to accurate diagnoses in developing countries.

1. Introduction

Otitis media (OM) is the second most important cause of hearing loss, which ranked fifth on the global burden of disease and affected 1.23 billion people in 2013 (Global Burden of Disease Study 2013 Collaborators, 2015). It is one of the most common childhood illnesses and constitutes a major chronic disease in low and middle-income countries (Global Burden of Disease Study 2013 Collaborators, 2015, World Health Organization, 2004). The incidence of OM in sub-Saharan Africa (SSA), South Asia and Oceania is two- to eight-fold higher than in developed world regions with India and SSA accounting for the majority of OM related deaths (Monasta et al., 2012, Acuin, 2004).

Common types of OM include acute otitis media (AOM), otitis media with effusion (OME), and chronic suppurative otitis media (CSOM) (Paparella et al., 1985). OM is often misdiagnosed, or not diagnosed at all, and consequently treated incorrectly, which may lead to serious or even life-threatening complications (Asher et al., 2005, Buchanan and Pothier, 2008, Legros et al., 2008).

Access to ear, nose and throat (ENT) specialists and equipment to diagnose OM is severely limited in developing countries (Fagan and Jacobs, 2009, World Health Organization, 2013). In Africa, for example, the majority of countries (64%) report fewer than one ENT specialist per million people (World Health Organization, 2013). In addition, general practitioners and pediatricians, who are also scarce in developing countries, are often prone to under- or over-diagnose OM (Asher et al., 2005, Buchanan and Pothier, 2008, Legros et al., 2008). There is therefore a great need to develop systems that can facilitate accurate diagnosis of OM in underserved areas of the world. Kuruvilla et al. (2013) recently reported an automated algorithm based on image-analysis for distinguishing between two types of OM (AOM and OME) and middle ears without effusion, with a reported accuracy of 85.61%. While these results are promising, classification of the full range of OM types, together with other clinical presentations such as ear canal obstructions, has not been demonstrated.

The aim of this study was to develop and validate a new image-analysis system to classify images obtained from commercial video-otoscopes into one of the following diagnostic groups: 1) obstructing wax or foreign bodies in the external ear canal (O/W); 2) normal tympanic membrane (n-TM); 3) AOM; 4) OME, and 5) CSOM with perforation. The image-analysis system was also evaluated in a clinical population using a low cost custom-made video-otoscope.

2. Methods

2.1. Development of the Image-Analysis Classification System

A decision tree was employed to classify images into one of the five diagnostic groups. For the tree to make an accurate diagnosis, the predefined features associated with each diagnosis (Table 1) had to be accurately identified in the images by feature extraction methods (Supplementary material 1 provides a detailed discussion of the image processing method). Feature extraction included pre-processing followed by the detection of the malleus bone, tympanic membrane (TM) shape and color, perforation, obstructive wax, middle-ear fluid, and light reflex (Table 1).

Table 1.

Predefined features associated with each diagnosis (O/W — obstructing wax or foreign bodies in the external ear canal; n-TM — normal tympanic membrane; AOM — acute otitis media; OME — otitis media with effusion; CSOM — chronic suppurative otitis media with perforation).

| Feature | O/W | n-TM | AOM | OME | CSOM with perforation |
|---|---|---|---|---|---|
| Malleus bone visible | Can be either visible or not | Yes | No | Yes | Can be either visible or not |
| Tympanic membrane shape | Can be categorized as retracted, normal, bulging or irregular | Can be categorized as retracted, normal, bulging or irregular | Bulging | Retracted | Can be categorized as normal, bulging or irregular |
| Color | Pearly white | Pearly white | Predominantly red | Can be categorized as red or opaque | Pearly white |
| Perforation | No | No | No | No | Yes |
| Wax | Can be present or not | Can be present or not | Can be present or not | Can be present or not | Can be present or not |
| Fluid | No | No | Can be either visible or not | Yes | No |
| Light reflex | No | Yes | Can be either visible or not | Can be either visible or not | No |

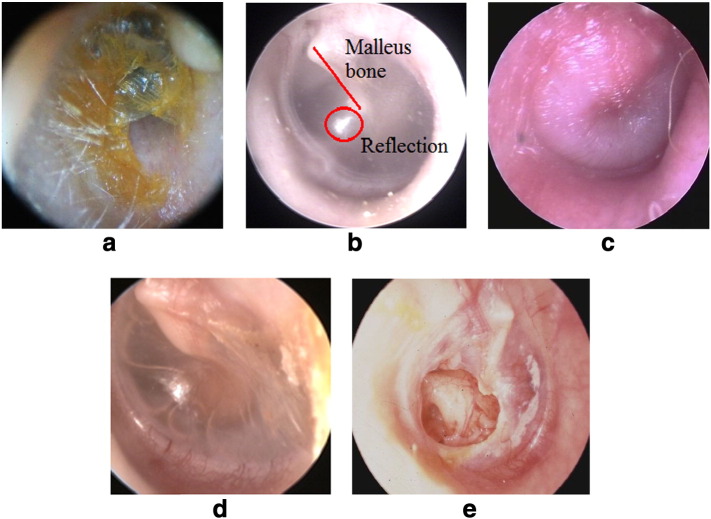

Pre-diagnosed color TM photographs (n = 562) from three TM image collections, captured with a range of commercially available video-otoscopes, were re-evaluated independently by two experienced (> 35 years of practice) specialists in otology. From these, a set of 489 images (at least 500 × 500 pixels) of the TM and external ear canal was included in the study (examples provided in Fig. 1). Seventy-three of the original 562 images (12.99%) were discarded because of insufficient image quality or disagreement between the two otologists. Only images on which the otologists' diagnoses agreed were accepted (approximately 50% of them from children). The final set of images was classified as O/W (n = 120), n-TM (n = 123), AOM (n = 80), OME (n = 80), and CSOM with perforation (n = 86).

Eighty percent of all images (n = 391) were randomly selected to develop the feature extraction algorithms and the remaining 20% (n = 98) were used for the validation study. The higher ratio of training images was used to improve classification accuracy, while a proportion (20%) not used to train the system was required for validation and testing. For each image in the training set (n = 391), the feature extraction algorithms received a diagnosed image as input and produced an array containing the image's features with the corresponding diagnosis as output.

The decision tree was constructed by continuously dividing the input variables (feature vectors), or examples produced by the feature extraction algorithms, based on an attribute value test according to Quinlan (1986). This division ensures that the examples that least resemble the feature are eventually eliminated so that the best fit is found. The example feature vectors were subsequently used to manually design the decision tree, which constituted the classification algorithm employed in this study. No additional training was performed.

Matlab® (MathWorks, Inc., Natick, Massachusetts, United States) was used as the software platform due to its processing capabilities, extended set of functions and familiarity. The user interface was designed, using “guide” in Matlab®, to be understandable, reliable and easy to use (Supplementary material 2 provides a detailed discussion of the creation of the decision tree and the classification methods).
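The random 80/20 division of the 489 accepted images can be illustrated with a short sketch. This is written in Python rather than Matlab® purely for illustration; the image identifiers, the fixed seed and the use of a simple (non-stratified) shuffle are assumptions, since the text states only that the training images were randomly selected.

```python
import random

# Class counts reported in the text (they sum to 489).
CLASS_COUNTS = {"O/W": 120, "n-TM": 123, "AOM": 80, "OME": 80, "CSOM": 86}


def split_images(class_counts, train_fraction=0.8, seed=0):
    """Shuffle (image_id, label) pairs and split them into training and validation sets."""
    images = []
    for label, count in class_counts.items():
        for i in range(count):
            # Hypothetical image identifiers, used only to make the pairs unique.
            images.append((f"{label}_{i:03d}", label))
    rng = random.Random(seed)  # fixed seed is an assumption, for reproducibility
    rng.shuffle(images)
    n_train = round(train_fraction * len(images))
    return images[:n_train], images[n_train:]


train_set, validation_set = split_images(CLASS_COUNTS)
print(len(train_set), len(validation_set))  # 391 98
```

With 489 images, rounding 80% gives the 391 training and 98 validation images reported above.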

Fig. 1.

Examples of the five diagnostic classification categories.

a. Obstructing wax or foreign bodies (O/W) in external ear canal precluding visualization of the TM to establish an OM diagnosis; b. a normal TM (n-TM) showing a semi-transparent pearly white TM, triangular shaped light reflex and malleus bone clearly visible (red ring and line, respectively); c. acute otitis media (AOM) showing a bulging TM with red color; d. otitis media with effusion (OME) showing a retracted TM and fluid in the middle ear; e. chronic suppurative otitis media (CSOM) showing a TM perforation.

Images courtesy C. Laurent 2014.

Table 1 shows the five diagnostic groups and their corresponding features, according to which the feature extraction algorithms were developed. To classify an image of unknown diagnosis or pathology, the image is provided as input to the system, after which pre-processing and feature extraction are performed. Once all features are extracted, the decision tree classifies the feature vector associated with the input image. The output, which consists of the extracted features as well as the final diagnosis, is then presented to the user in an output window on the notebook screen.
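To make the relationship between an extracted feature vector and the Table 1 criteria concrete, the following Python sketch checks which diagnostic groups a feature vector is compatible with. It is not the decision tree used in the study (that tree is described in Supplementary material 2); it is a simple template matcher built directly from Table 1. The feature names, value strings and the `compatible_diagnoses` helper are hypothetical, and wax is omitted because Table 1 lists it as "can be present or not" for every group.

```python
ANY = None  # marks "can be either" entries in Table 1
ALL_SHAPES = {"retracted", "normal", "bulging", "irregular"}

# Allowed feature values per diagnostic group, transcribed from Table 1.
TEMPLATES = {
    "O/W": {"malleus_visible": ANY, "shape": ALL_SHAPES, "color": {"pearly white"},
            "perforation": {False}, "fluid": {False}, "light_reflex": {False}},
    "n-TM": {"malleus_visible": {True}, "shape": ALL_SHAPES, "color": {"pearly white"},
             "perforation": {False}, "fluid": {False}, "light_reflex": {True}},
    "AOM": {"malleus_visible": {False}, "shape": {"bulging"}, "color": {"red"},
            "perforation": {False}, "fluid": ANY, "light_reflex": ANY},
    "OME": {"malleus_visible": {True}, "shape": {"retracted"}, "color": {"red", "opaque"},
            "perforation": {False}, "fluid": {True}, "light_reflex": ANY},
    "CSOM": {"malleus_visible": ANY, "shape": {"normal", "bulging", "irregular"},
             "color": {"pearly white"}, "perforation": {True}, "fluid": {False},
             "light_reflex": {False}},
}


def compatible_diagnoses(features):
    """Return the diagnostic groups whose Table 1 template the feature vector satisfies."""
    matches = []
    for diagnosis, template in TEMPLATES.items():
        if all(allowed is ANY or features[name] in allowed
               for name, allowed in template.items()):
            matches.append(diagnosis)
    return matches


normal_ear = {"malleus_visible": True, "shape": "normal", "color": "pearly white",
              "perforation": False, "fluid": False, "light_reflex": True}
bulging_red_ear = {"malleus_visible": False, "shape": "bulging", "color": "red",
                   "perforation": False, "fluid": False, "light_reflex": False}
print(compatible_diagnoses(normal_ear))       # ['n-TM']
print(compatible_diagnoses(bulging_red_ear))  # ['AOM']
```

A template check like this can return zero or several groups for a noisy feature vector, which is one reason an ordered decision tree is a more natural fit for producing a single diagnosis.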

Execution time of the system, i.e. the time it takes to diagnose an image submitted after capturing, is determined by the respective execution times of the feature extraction algorithms and the decision tree classification. Total execution time of the image-analysis classification system was calculated as 3.45 s for a notebook computer with the following specifications: Intel(R) i7-3632QM 2.2 GHz CPU with 8 GB RAM running a 64-bit Windows 7 operating system.

2.2. Validation of the Image-Analysis Classification System

The image-analysis classification system was validated on 20% (n = 98) of the 489 diagnosed color images of TMs received from the two experienced otologists. These images had not been used previously to train the system. The image-analysis system classified the images via the trained decision tree, and the classifications were compared with the original diagnoses. Reliability of the image-analysis classification system was determined by investigating the agreement between diagnosed images and system classifications using Cohen's kappa. The unweighted kappa statistic (κ) was used to quantify “strength of agreement”, or diagnostic concordance.
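For reference, unweighted Cohen's kappa is κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e the agreement expected by chance from the marginal totals. The Python sketch below computes it from a confusion matrix. The 2 × 2 matrix is a made-up toy example, not the study data, and `cohens_kappa` is a hypothetical helper name.

```python
def cohens_kappa(confusion):
    """Unweighted Cohen's kappa for a square confusion matrix (rows: reference, columns: system)."""
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    # Observed agreement: proportion of cases on the diagonal.
    observed = sum(confusion[i][i] for i in range(k)) / total
    # Chance agreement: product of matching row and column marginals.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (observed - expected) / (1 - expected)


# Toy example (not study data): 35 of 50 cases agree, chance agreement is 0.5.
toy_confusion = [[20, 5],
                 [10, 15]]
kappa = cohens_kappa(toy_confusion)
print(f"{kappa:.2f}")  # 0.40
```

Applied to the 5 × 5 table of reference diagnoses against system classifications, the same calculation yields the κ reported in the Results.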

In a separate pilot study, the image-analysis classification system was validated clinically on images captured by a custom-made video-otoscope. This was designed as an example of a low cost replacement for current clinical video-otoscopes. The shell of a commercial ear-cleaning product was used as the body for the otoscope. Internal components were replaced by a battery, a light emitting diode (LED), and a resistor. Universal serial bus (USB) communication between the otoscope and a notebook computer was implemented using an audio/video cable to USB converter. The front part of the custom-made video-otoscope was removed to make space for the camera (2 megapixels) just inside the opening, with a disposable ear tip attached (Fig. 2). Supplementary material 3 provides detailed information about the construction of the custom-made video-otoscope. The final production cost of the custom-made video-otoscope came to around $84.

Fig. 2.

Side view of the final low cost custom-made video-otoscope.

The low cost custom-made video-otoscope was used to examine the ears of patients in an emergency room at a hospital in Pretoria, South Africa. A qualified emergency unit nurse without previous training in video-otoscopy performed the examinations together with an experienced (> 20 years of practice) general practitioner (GP). The Institutional Review Board provided ethical clearance before any data collection commenced, and each patient signed a consent form explaining the examination procedure. No patient under the age of 18 years was enrolled in the study, which was conducted in an emergency room for adults.

The GP first made an inspection of the ear with a hand-held otoscope and classified the ear canal, and/or TM status into one of the five diagnostic categories. The nurse then captured images using the custom-made video-otoscope and stored these on a notebook computer. Thereafter the image-analysis system classified the images stored on the computer via the trained decision tree and a comparison was made to the GP's diagnosis.

2.2.1. Funding

None of the authors received external funding.

3. Results

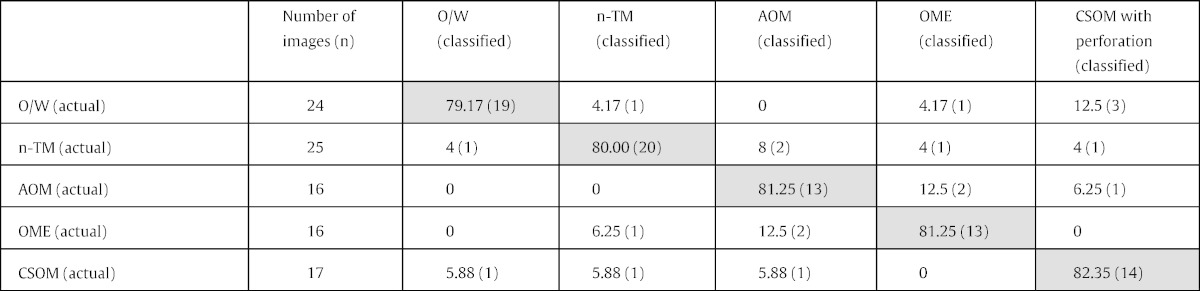

Table 2 shows the number of pre-diagnosed images used for validation in each diagnostic group. Disregarding their diagnoses, all of the validation images were pre-processed, feature extracted, and classified via the decision tree, yielding the results in Table 2, with an average objective classification accuracy of 80.61% (79/98).

Table 2.

Diagnostic classification accuracy (n = 98). Shaded cells = % correct diagnosis; unshaded cells = % incorrect diagnosis.

The unweighted kappa agreement was 0.76 (95% CI 0.66–0.86), indicating substantial agreement.

Performance characteristics of the image-analysis classification system are presented in Table 3. Sensitivity across all five diagnostic categories was between 0.79 and 0.82. AOM had the lowest specificity (0.92), while O/W had the highest (0.97). AOM had the lowest positive predictive value (PPV) (0.75) and O/W had the highest (0.89). Negative predictive values (NPV) were all between 0.94 and 0.95.

Table 3.

Performance parameters for each diagnosed category (PPV = positive predictive value; NPV = negative predictive value).

| Parameter | O/W | n-TM | AOM | OME | CSOM with perforation |
|---|---|---|---|---|---|
| Sensitivity | 0.79 | 0.80 | 0.81 | 0.81 | 0.82 |
| Specificity | 0.97 | 0.95 | 0.92 | 0.94 | 0.94 |
| PPV | 0.89 | 0.83 | 0.75 | 0.80 | 0.78 |
| NPV | 0.94 | 0.94 | 0.95 | 0.95 | 0.95 |
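Per-category parameters such as those in Table 3 are conventionally derived from a multi-class confusion matrix by treating each category in turn as "positive" and all others as "negative". The Python sketch below shows that one-vs-rest calculation. The 3 × 3 matrix is a made-up toy example, not the study data, and `one_vs_rest_metrics` is a hypothetical helper name.

```python
def one_vs_rest_metrics(confusion, index):
    """Sensitivity, specificity, PPV and NPV for one class of a square confusion matrix.

    Rows are reference diagnoses, columns are system classifications.
    """
    total = sum(sum(row) for row in confusion)
    tp = confusion[index][index]                      # class correctly identified
    fn = sum(confusion[index]) - tp                   # class missed by the system
    fp = sum(row[index] for row in confusion) - tp    # other classes labelled as this class
    tn = total - tp - fn - fp                         # everything else
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Toy example (not study data): three classes, ten reference cases each.
toy_confusion = [[8, 1, 1],
                 [2, 7, 1],
                 [0, 2, 8]]
metrics = one_vs_rest_metrics(toy_confusion, 0)
print({name: round(value, 2) for name, value in metrics.items()})
# {'sensitivity': 0.8, 'specificity': 0.9, 'ppv': 0.8, 'npv': 0.9}
```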

Using the low cost custom-made video-otoscope, the nurse was able to capture 108 high quality images in three of the five diagnostic categories (Table 1). The images captured by the nurse were classified using the image-analysis classification system (Table 4), with an overall diagnostic agreement of 78.70% (85/108). Since the custom-made video-otoscope was tested only in adults, not all diagnostic groups were observed.

Table 4.

Results of system on images obtained from custom-made video-otoscope.

| Category | Number of images collected | Number of images correctly diagnosed | Percentage correctly diagnosed |
|---|---|---|---|
| O/W | 15 | 11 | 73.33 |
| n-TM | 72 | 58 | 80.56 |
| OME | 21 | 16 | 76.19 |
| Total | 108 | 85 | 78.70 |
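The percentages in Table 4 follow directly from the image counts, as the short Python check below shows. The counts are taken from the table; the variable and function names are illustrative only.

```python
# (images collected, images correctly diagnosed) per category, from Table 4.
TABLE_4 = {"O/W": (15, 11), "n-TM": (72, 58), "OME": (21, 16)}


def percent_correct(collected, correct):
    """Percentage of collected images that were correctly diagnosed."""
    return 100.0 * correct / collected


for category, (collected, correct) in TABLE_4.items():
    print(f"{category}: {percent_correct(collected, correct):.2f}%")

total_collected = sum(collected for collected, _ in TABLE_4.values())
total_correct = sum(correct for _, correct in TABLE_4.values())
overall = percent_correct(total_collected, total_correct)
print(f"Total: {total_correct}/{total_collected} = {overall:.2f}%")  # Total: 85/108 = 78.70%
```

The same arithmetic applied to the validation set on commercial video-otoscope images (79 of 98 correct) gives the 80.61% reported above.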

4. Discussion

The new image-analysis classification system demonstrated a high degree of agreement (80.6%) with the combined diagnoses of the two otologists, varying between 79.2% and 82.4% across the five diagnostic categories in the set of test images from adults and children. The accuracy of the image-analysis classification system compares favorably to the average diagnostic accuracy of pediatricians (Kuruvilla et al., 2013) (∼ 80%), GPs (Blomgren and Pitkäranta, 2003, Jensen and Louis, 1999) (64–75%) and ENT specialists (Pichichero and Poole, 2001) (73%) using handheld otoscopes. Based on an individual diagnostic accuracy for ENT specialists of 73% (Pichichero and Poole, 2001) and using a Gaussian statistical analysis, the combined diagnostic accuracy of the two otologists could be calculated to be 88.13%. The ground truth data used to train the image-analysis system may therefore contain an estimated 11.87% of inaccurate diagnoses. This uncertainty can be reduced, and the validity of the ground truth data improved, by increasing the number of experienced specialists diagnosing the images used to train the system. For instance, with three specialists the combined diagnostic accuracy would be 94.40%, and with five specialists 98.64%.

Compared to an algorithm proposed by Kuruvilla et al. (2013) that only distinguished between two diagnoses, the proposed system is able to diagnose five types of common external ear canal and middle ear conditions, including AOM, OME and CSOM with perforation. Utilization of an automated system for differential diagnosis of OM based on video-otoscopic images can provide a valuable diagnostic crosscheck for health personnel and, in poorly resourced sectors, could serve as the only source for a diagnosis.

The image-analysis system rarely indicated a false negative classification across OM categories, with a negative predictive value of 0.95. Normal TMs were incorrectly indicated in only 8% of AOM cases and 4% of OME and CSOM with perforation cases. This accuracy is promising since it is similar to or better than the diagnostic accuracy reported for pediatricians (Kuruvilla et al., 2013) and GPs (Blomgren and Pitkäranta, 2003, Jensen and Louis, 1999). Minimizing false negatives is important to ensure treatment in cases where it is indicated. Over-diagnosis by clinicians, however, is often a problem in AOM (Pichichero and Poole, 2001), and as a result the American Academy of Pediatrics recently prioritized greater diagnostic specificity to reduce over-diagnosis and unnecessary treatment (Lieberthal et al., 2013). The current system, in contrast, correctly identified AOM in 81.3% of instances. This could support accurate diagnoses at primary health care levels at which skilled clinical assessment is often unavailable or limited. A computer-assisted diagnostic system such as this can also provide a useful second opinion to assist health care workers in making correct diagnoses. Benefits include timely initiation of treatment and reduced health system burden related to over-referrals.

There were, however, some misclassifications of diagnoses noted. For example, O/W was misclassified as CSOM in 12.5% of cases. This is likely attributable to the feature extraction algorithm, which associates yellow features with the O/W category, and identifies similar features in some cases of CSOM. Moreover, the misclassification of AOM as OME and vice versa, in 12.5% of cases, could be attributed to middle-ear fluid not being visible with the TM detected by the system as opaque. These overlaps will be investigated to ensure improved differentiation in future improvements of the system.

The custom-made video-otoscope yielded an overall clinical diagnostic accuracy of 78.7%, compared to 80.6% on images from commercial video-otoscopes. Although it was assessed on a smaller sample of ears from adults (n = 108) with only three diagnostic categories represented (Table 4), the results suggest that a custom-made video-otoscope could provide a low-cost method for accurate diagnosis of common ear disease. However, future investigations should evaluate the clinical validity in children compared to adults. The accumulated production cost of the custom-designed video-otoscope was around $84, which is at least five times cheaper than commercial entry-level video-otoscopes. Of course, as with regular video-otoscopes, it still requires a computer to capture images. The computer together with the Matlab® software adds significantly to the cost. It would therefore cost in the region of $1000 to operate the complete system. However, the use of a netbook and an open source equivalent of Matlab® (i.e. GNU Octave) would bring the cost down to between $250 and $300. The cost of equipment must be seriously considered if a method for automated diagnosis of OM is to be a viable solution for underserved contexts.

The present study demonstrates the accuracy of an image analysis system for diagnosis of one of the most common childhood illnesses (World Health Organization, 2004, Global Burden of Disease Study 2013 Collaborators, 2015). In light of the shortage of specialists able to accurately diagnose OM, especially in low- and middle-income countries, a system like this could be very valuable to ensure that appropriate treatment is provided. For instance, nurses could be trained to take pictures of the TM using a video-otoscope, and the pictures could then be analyzed by the image analysis system locally or on a cloud-based server. In many world regions this is likely to be the only opportunity for access to a diagnosis of ear disease amongst large populations. In addition to these applications, the system could be employed for teaching and training purposes to up-skill general health care workers in diagnosing ear disease.

In conclusion, we have proposed and validated a new image-analyzing system for the most frequent OM diagnoses and obstructions of the ear canal. The system, loaded onto a conventional notebook computer, has a diagnostic accuracy that compares favorably to that of pediatricians and GPs. It could be a valuable new tool for health personnel seeing OM patients in developing countries.

5. Limitations

The classification algorithm used in the current system is a decision tree constructed manually by analyzing the feature vectors produced by the feature extraction algorithms used to process the training images. The resulting decision tree is therefore fixed, and will have to be redesigned if more training images are incorporated. A neural network, however, can be trained using the same feature vectors used to construct the decision tree and can be trained further with a single image's feature vector upon assessment by specialists. A comparison of the respective accuracy of these two approaches could be useful.

Acquiring anamnestic information from patients, such as occurrence of fever, pain, hearing loss and discharge, could increase the diagnostic accuracy of the system and will be considered in future validation studies. The main aim of this study, however, was to use only image analysis and feature vector classification to diagnose the various forms of OM, and we did not have additional metadata.

Manual examination of misdiagnosed images could help improve the overall accuracy of the system. In its current form, the person operating the system has to inspect the image quality before submitting an image for diagnosis. If images are of poor quality (e.g. poor focus, motion artifacts, insufficient light, partial TM visibility), the system will still attempt to make a diagnosis, which may result in reduced accuracy.

Author Contributions

Hermanus C Myburgh (HCM) and Willemien H van Zijl (WHvZ) contributed equally to the development of the image-analyzing software, but HCM was the main author. DeWet Swanepoel (DWS) conceptualized the project and contributed to the analysis of results and writing of the manuscript. Sten Hellström (SH) was consulted as a specialist on otitis media to interpret findings and to correct the manuscript. Claude Laurent (CL) was co-author and corresponding author. Respective contributions: HCM 30%, WHvZ 20%, DWS 20%, SH 10%, CL 20%.

Conflict of Interest

The authors have no conflict of interest to declare.

Acknowledgments

We are indebted to Dr. Björn Åberg, Oslo, Norway and Dr. Thorbjörn Lundberg, Umeå, Sweden for providing us with high quality photographs from their respective TM image collections.

Footnotes

Supplementary data to this article can be found online at http://dx.doi.org/10.1016/j.ebiom.2016.02.017.

Appendix A. Supplementary Data

Supplementary materials.

References

- Acuin J. WHO; Geneva: 2004. Chronic Suppurative Otitis Media: Burden of Illness and Management Options. (http://www.who.int/pbd/deafness/activities/hearing_care/otitis_media.pdf, accessed August 29, 2012)

- Asher E., Leibovitz E., Press J., Greenberg D., Bilenko N., Reuveni H. Accuracy of acute otitis media diagnosis in community and hospital settings. Acta Paediatr. 2005;94(4):423–428. doi: 10.1111/j.1651-2227.2005.tb01912.x.

- Blomgren K., Pitkäranta A. Is it possible to diagnose acute otitis media accurately in primary health care? Fam. Pract. 2003;20:524–527. doi: 10.1093/fampra/cmg505.

- Buchanan C.M., Pothier D.D. Recognition of paediatric otopathology by general practitioners. Int. J. Pediatr. Otorhinolaryngol. 2008;72(5):669–673. doi: 10.1016/j.ijporl.2008.01.030.

- Fagan J.J., Jacobs M. Survey of ENT services in Africa: need for a comprehensive intervention. Global Health Action. 2009;2:1–7. doi: 10.3402/gha.v2i0.1932.

- Global Burden of Disease Study 2013 Collaborators. Global, regional, and national incidence, prevalence, and years lived with disability for 301 acute and chronic diseases and injuries in 188 countries, 1990–2013: a systematic analysis for the Global Burden of Disease Study 2013. Lancet. 2015;386:743–800. doi: 10.1016/S0140-6736(15)60692-4.

- Jensen P.M., Louis J. Criteria, performance and diagnostic problems in diagnosing acute otitis media. Fam. Pract. 1999;16:262–268. doi: 10.1093/fampra/16.3.262.

- Kuruvilla A., Shaikh N., Hoberman A., Kovacevic J. Automated diagnosis of otitis media: vocabulary and grammar. Int. J. Biomed. Imaging. 2013;2013:1–15. doi: 10.1155/2013/327515.

- Legros J.M., Hitoto H., Garnier F., Dagorne C., Parot-Schinkel E., Fanello S. Clinical qualitative evaluation of the diagnosis of acute otitis media in general practice. Int. J. Pediatr. Otorhinolaryngol. 2008;72(1):23–30. doi: 10.1016/j.ijporl.2007.09.010.

- Lieberthal A.S., Carroll A.E., Chonmaitree T. The diagnosis and management of acute otitis media. Pediatrics. 2013;131(3):e964–e999. doi: 10.1542/peds.2012-3488.

- Monasta L., Ronfani L., Marchetti F. Burden of disease caused by otitis media: systematic review and global estimates. PLoS One. 2012;7(4). doi: 10.1371/journal.pone.0036226.

- Paparella M., Bluestone C., Arnold W. Definition and classification. Ann. Otol. Rhinol. Laryngol. 1985;94(Suppl. 116):8–9.

- Pichichero M.E., Poole M.D. Assessing diagnostic accuracy and tympanocentesis skills in the management of otitis media. Arch. Pediatr. Adolesc. Med. 2001;155(10):1137–1142. doi: 10.1001/archpedi.155.10.1137.

- Quinlan J. Induction of decision trees. Mach. Learn. 1986;1:81–106.

- World Health Organization. World Health Organization; Geneva: 2004. Chronic Suppurative Otitis Media. Burden of Illness and Management Options; p. 83.

- World Health Organization. Switzerland; Geneva: 2013. Multi-Country Assessment of National Capacity to Provide Hearing Care. (Retrieved from: http://0www.who.int.innopac.up.ac.za/pbd/publications/WHOReportHearingCare_Englishweb.pdf)
