Abstract

Children’s service systems are faced with a critical need to disseminate evidence-based mental health interventions. Despite the proliferation of comprehensive implementation models, little is known about the key active processes in effective implementation strategies. This proof of concept study focused on the effect of change agent interactions as conceptualized by Rogers’ diffusion of innovation theory on providers’ (N = 57) use of a behavioral intervention in a child welfare agency. An experimental design compared use for providers randomized to training as usual or training as usual supplemented by change agent interactions after the training. Results indicate that the enhanced condition increased use of the intervention, supporting the positive effect of change agent interactions on use of new practices. Change agent types of interaction may be a key active process in implementation strategies following training.

Keywords: Implementation science, evidence-based practice, children’s mental health services, child welfare

Introduction

A critical issue facing the implementation of evidence-based mental health services is the lack of empirically-based strategies to effectively train existing staff in new practices. Comprehensive implementation models suggest supporting each phase of implementation at multiple levels (e.g., staff, organization, and external systems; see Fixsen, Naoom, Blase, Friedman, & Wallace, 2005; Tabak, Khoong, Chambers & Brownson, 2012), but the importance of using a particular model for a specific setting and the key components needed for success are largely unknown (Powell, Proctor, & Glass, 2014; Proctor, 2012). In particular, little data are available to understand the underlying processes supporting uptake of new practices. Even in implementation studies with positive outcomes, the relative importance of specific strategies or approaches within different contexts is frequently unclear (Novins, Green, Legha, & Aarons, 2013).

Successful implementation of evidence-based practices is particularly complex when training units of existing providers, who have established practices and may need more intensive training to shift their practice behavior (Sholomskas et al., 2005). Because attempts to increase use of evidence-based mental health practices frequently involve enhancement of existing services, understanding the active components of strategies to support uptake of new practices in these types of training initiatives is particularly important. Just as evidence-based interventions have progressed from establishment of effectiveness to a focus on identification of key active components (Abry, Hulleman, & Rimm-Kaufman, 2015), the next challenge for implementation research is to evolve from models based primarily on correlational studies to an understanding of the impact of specific strategies and processes on practice behaviors (Blase & Fixsen, 2013; Proctor, 2012). The proof of concept study reported in this article addressed this need by studying the effect of interactions with a change agent, which are posited to be a key influence on uptake of new practices in Rogers’ (2003) theory of diffusion. The effect of exposure to a change agent on use of new practices was examined after a typical training in an evidence-based behavioral intervention in a child welfare agency.

Implementation Challenges

Historically, development and dissemination of new practices was expected to progress from controlled trials to real-world effectiveness studies and then natural uptake by practice communities, but it has been recognized that this expectation is unrealistic (Fixsen, Blase, Naoom, & Wallace, 2009; Proctor et al., 2009; Weisz, Chu, & Polo, 2004). Instead, what occurs is a lag of as much as 15–20 years in the integration of more effective practices into usual care (Balas & Boren, 2000; Walker, 2004). Even when the practice change appears relatively simple, such as prescribing a different medication, a change in provider behavior is unlikely to occur on the basis of receiving information alone. When interventions are perceived as complex and involve an overall shift in practice orientation, as is often the case with evidence-based mental health interventions, supporting uptake and sustained use presents even more challenges (Henggeler & Lee, 2002; Riemer, Rosof-Williams, & Bickman, 2005).

In-person training in a time-limited workshop format is a common implementation strategy used to provide information about new practices to existing mental health providers. Often provided as continuing education or required in-service workshops, this strategy provides more opportunity for active participation than other implementation strategies focused on education, such as providing printed materials (e.g., treatment manuals). Unfortunately, the effects of training on use of new mental health practices have been disappointing. While training increases knowledge, when provided without other support, it is unlikely to change practice behavior (Beidas & Kendall, 2010; Fixsen et al., 2005; Torrey & Gorman, 2005). As in mental health systems, traditional child welfare training efforts generally involve in-person or web-based training with little or no follow up to support use of the new practices after completion of training. Little is known about the extent that training in child welfare settings results in improved services for children and families due to a lack of randomized studies, but existing data suggest little enduring change in practices results from these trainings (Collins, Amodeo, & Clay, 2007).

These findings are consistent with studies indicating that factors at multiple levels (e.g., individual, agency, and larger policy context) potentially facilitate or impede use of new practices. Individuals choose to initiate a new practice based on individual-level factors such as their attitudes toward evidence-based practices (EBPs), personality, and openness to novel experiences and perceived risk (Kolko, Cohen, Mannarino, Baumann, & Knudsen, 2009; Palinkas et al., 2008); perceived advantages, costs, and complexity of the practice (Henggeler et al., 2002); as well as compatibility of the new practice with their role (Dingfelder & Mandell, 2011). Additionally, factors such as supervisor and administrative support (Aarons & Palinkas, 2007; Antle, Barbee, & van Zyl, 2008; Damanpour & Schneider, 2009; Kolko et al., 2012) and an organization’s culture and climate (in particular, lower proficiency and higher resistance to change) may affect an individual’s adoption of new practices (Aarons & Sawitzky, 2006; Glisson, Dukes, & Green, 2011; Patterson, Dulmus, & Maguin, 2013). Given the range of factors that affect practice behavior, it is unsurprising that providing training alone is ineffective.

Training Enhancement

Enhancement of training outcomes is essential to increase the return on training investments and ultimately improve services. Multiple studies indicate that providing post-training support (e.g., expert consultation, performance feedback, and reminders) increases use of new practices after training (Beidas & Kendall, 2010; Gustafson et al., 2013; Miller, Yahne, Moyers, Martinez, & Pirritano, 2004). Consultation, defined as the provision of support in the use of a specific intervention by an external expert consultant, has the most empirical support in increasing use of and fidelity to an intervention (Edmunds, Beidas, & Kendall, 2013; Herschell et al., 2009; Nadeem, Gleacher, & Beidas, 2013). Elements included in consultation (e.g., review of training content, problem solving, and application to specific clients) are likely to overlap significantly with those of similar strategies also shown to have positive effects, such as coaching, audit and feedback, and supervision as conceptualized by multisystemic therapy developers (Edmunds et al., 2013; Gustafson et al., 2013; Miller et al., 2004; Nadeem et al., 2013; Schoenwald, Sheidow, & Chapman, 2009). In this article, the term consultation is used to describe this range of support provided by an external expert who has a formal role focused on increasing use of targeted practices. Supervision provided by another agency-based provider might overlap with consultation, particularly with newer practitioners, but is distinct in that it also emphasizes oversight and evaluation of the provider’s work over a longer period of time and might have little focus on increasing targeted practices after training (Nadeem et al., 2013; Milne, 2007).

While the positive effects of consultation are encouraging, many questions related to how to best engage and support providers after training remain unanswered. Consultation has been characterized as a “black box” with undefined mechanisms of change (Nadeem et al., 2013). Variation in the elements included in consultation leads to questions about which elements, or core activities, have significant effects on different groups of providers. While focusing on distinct elements and their corresponding functions in consultation is important (see Nadeem et al., 2013), these models primarily rely on social learning theory with less incorporation of concepts implied by other theories. This results in an emphasis on the importance of learning processes such as reinforcement and expansion of knowledge and skills, behavioral rehearsal, and application of training content to address barriers and client needs. These processes are likely to be key to the success of consultation models, but other theoretical perspectives might highlight quite different processes, for example, influences such as perceptions of costs and benefits, social position, or provider networks. Interestingly, providers themselves emphasize social connection with other therapists and their consultant when discussing effective consultation models (Beidas et al., 2013). Experimental research isolating distinct effects of different elements and, to the extent possible, interactional processes as suggested by a broader theoretical base, is needed to understand how to most effectively and efficiently support providers after training.

In addition, a potential limitation of consultation models is their development in the context of efficacy trials, in which providers are selected for inclusion on the basis of their interest and motivation to learn the new practice. The selection process used in many efficacy and effectiveness studies is likely to have a significant impact on providers’ level of participation in post-training activities and uptake of the new practice relative to implementation efforts after typical agency trainings. In Miller et al.’s (2004) study focused on the effects of coaching and feedback after training, for example, striking differences were noted in participation in consultation sessions between a pilot group of providers who were required to participate by their supervisor (median participation of 0 sessions out of 6) and a self-selected group of providers who completed an average of 5 out of 6 sessions. These findings parallel those of other studies suggesting that in the absence of individual motivation, positive attitudes, and concurrence between providers’ current practices and the innovation, adoption will be low (Aarons, Hurlburt, & Horwitz, 2011). A greater understanding of how to increase receptivity to training content and increase use among existing providers is critically needed.

Application of Rogers’ Diffusion of Innovations Theory

The potential for interactions with influential individuals to affect innovations in practice is intriguing, as these types of interaction might affect receptivity to training, motivation, and ultimately use of new practices. Social interactions are central in Rogers’ diffusion of innovations theory (2003), cited as the most influential theory in implementation science, yet very few studies have tested these central premises. Diffusion of innovations focuses on the multiple decision points and influences on attitudes and behavior over time, suggesting that innovations are diffused through social networks by interactions between providers and key individuals who affect practices due to their personal characteristics as well as aspects of the practice and setting. These types of interactions might be key active processes to incorporate into established implementation strategies such as consultation, or might be a distinct strategy that should be integrated into comprehensive implementation plans.

In Rogers’ theory, a key role is played by change agents, whose social interaction with potential adopters of the innovation supports initial use by providers who are open to innovation early in the implementation process. Early adopters’ initial use of a practice may represent just a small portion of the services provided, but it is crucial, as it leads to the diffusion of the new practice throughout a network. The term “change agent” has been widely used in popular culture and business settings, with wide variation in its conceptualization. In this study, a change agent influence was enacted as closely as possible as hypothesized by Rogers’ (2003) theory. Following this theory, the change agent role was created with the following characteristics: 1) Generates interest in a novel practice through informal social interactions that inspire early adopters to try the new practice; 2) Similarity to the providers in position level (i.e., not a supervisor); 3) Does not have authority or interest in mandating practice change; 4) Perceived to have more advanced knowledge; 5) Personal qualities include high self-efficacy and outgoing, adventurous personality (Moore et al., 2004; Gingiss, Gottlieb, & Brink, 1994); and 6) Strong communication skills.

Change agent interactions might overlap with those of a charismatic consultant or supervisor, but clearly the change agent role is distinct from this role due to their effect through informal interactions and inspiration of other providers to try a practice that they endorse. Following these guidelines, change agents do not meet with providers regularly about the intervention or have any oversight over the provider or the providers’ use of the intervention.

As defined by Rogers (2003), the change agent is distinct from a champion, who is an individual at a high administrative level who strongly supports implementation through the influence provided by their position. Champions potentially affect administrative support for an innovation, while change agents affect practices more directly, primarily through their personal qualities and perceived expertise rather than any formal position. They are also distinct from key opinion leaders, who are individuals within an organization who are often early adopters of an innovation who develop expertise and later in the implementation process are key influences within the agency. Change agents may come from within or outside the organization, while key opinion leaders and champions generally influence innovation from within their organization.

Despite the predominance of diffusion of innovation theory in implementation research, only rarely have the effects of specific influences that it hypothesizes been directly tested. It is unknown whether change agent interactions have a distinct effect on uptake or sustained use of innovations in either social services or mental health practices. Across different fields, studies focused on Rogers’ theory have generally involved description or retrospective analyses of extent of diffusion, rather than prospective tests using an experimental design (Rogers, 2003). An exception to this is Atkins and colleagues’ (2008) study focused on the use of key opinion leader teachers in disseminating classroom-based behavior management strategies to other teachers. This study supported a central idea of Rogers’ theory: that uptake is more likely when an intervention is supported by a key opinion leader who is similar to potential adopters. Dissemination by key opinion leader teachers resulted in greater intervention uptake among teachers than dissemination by mental health providers based in the school (Atkins et al., 2008). Similar results have supported use of physician coaches versus non-physicians to influence physicians’ practices (van den Hombergh, Grol, van den Hoogen, & van den Bosch, 1999). However, whether a change agent process has an effect on initial use of an intervention in an agency setting has not been previously examined.

Study Aims

This study investigated the effect of post-training change agent interactions on use of an evidence-based intervention relative to training as usual with no additional post-training support. An aspect of Rogers’ diffusion of innovations theory was operationalized by training a mental health provider to interact with existing providers as a change agent in the agency setting. By randomly assigning providers to the condition that involved contact with a change agent, this study isolated the effect of change agent interactions on use of an evidence-based intervention after training as usual to understand if the distinct process of change agent interaction supports uptake of new practices among existing providers. We expected that:

Providers randomized to a condition that provided interaction with a change agent after training as usual would have greater use of the intervention than providers who received only training as usual;

Extent of contact with the change agent in the first half of the enhanced condition would be correlated with subsequent use of the intervention.

Methods

This study examined the effect of a change agent on uptake of an intervention to address child behavior problems in an experimental longitudinal design. Fifty-seven providers from a single large urban child welfare agency were randomly assigned to two conditions. The control group received “training as usual” (n = 26) while the experimental enhanced training group (n = 31) received the same training followed by potential contact with a change agent. When turnover of providers (therapists and case managers) occurred, new staff were assigned the condition of the previous provider, so that the change agent could continue to interact with providers serving the same foster parents and children. Providers self-reported use of the intervention components at up to five time points over a 14-month period with baseline interviews occurring prior to the enhanced services phase. A total of 188 observations (provider interviews) were obtained. The study received Institutional Review Board approval from the University of Illinois at Chicago as well as the Illinois Department of Children and Family Services.
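The assignment logic described above can be illustrated with a minimal sketch. The article does not report how randomization was carried out, so the sketch assumes simple (unblocked) randomization, which is one procedure that can yield unequal group sizes such as 26 and 31. The provider IDs, the seed, and the names `randomize` and `replace_provider` are hypothetical and are not taken from the study.

```python
import random
from dataclasses import dataclass

# Condition labels are illustrative names for the two study arms.
CONDITIONS = ("training_as_usual", "enhanced")


@dataclass
class Provider:
    provider_id: str
    condition: str


def randomize(provider_ids, seed=None):
    """Assign each provider independently to one of the two conditions.

    Assumption: simple randomization; the study's actual procedure is not reported.
    """
    rng = random.Random(seed)
    return {pid: Provider(pid, rng.choice(CONDITIONS)) for pid in provider_ids}


def replace_provider(roster, departing_id, new_id):
    """On turnover, the new provider inherits the departing provider's condition."""
    departing = roster.pop(departing_id)
    roster[new_id] = Provider(new_id, departing.condition)
    return roster[new_id]


# Hypothetical roster of 57 providers, P01 through P57.
roster = randomize([f"P{i:02d}" for i in range(1, 58)], seed=2024)
```

Having a replacement inherit the departing provider's condition keeps each caseload of foster parents and children within a single arm, which is the rationale the design gives for this rule.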

Agency Setting

The selected agency is one of the largest urban child welfare agencies in the Midwest. At the point when the study was initiated, over 500 children placed in foster care were served by the agency. While conducting this study by randomizing providers within a single agency limits the generalizability of its findings, it equalized the effects of agency-level factors that are likely to have a strong influence on uptake of a new practice across the experimental and control groups and provided the opportunity to study a specific influence (change agent interaction) on behavioral change at the individual level. At the time of initiation of the study, this agency had just completed participation in a pilot study of the behavioral intervention used in this study. Positive results from this study (see ----) had increased administrative support for implementation of the intervention, strengthening a key factor supporting use in both conditions and providing a basis for the study.

Participants

Fifty-seven providers (43 case managers and 14 therapists) participated in this study. Both types of providers were included based on early pilot study findings indicating that both groups viewed foster parent support in addressing behavioral issues as an important issue and both also did not currently view providing parenting interventions as a part of their role (------). Foster parents and their foster children (N = 119) who were served by the providers also were enrolled, but the primary outcome examined in this article is providers’ reports of the extent of their use of the intervention with enrolled children. Providers served an average of 3.2 children each, with some children served by both a case manager and a therapist. In this agency, case managers are responsible for planning and oversight of the child’s care and services. They conduct assessments, monitor the foster home and the child’s wellbeing, provide information and support to foster parents, make referrals, and attend administrative and court hearings. Therapists are assigned to provide individual child treatment, and at the time of this study appeared to practice from an eclectic orientation. Their role involved seeing children individually once a week for hour-long sessions. While their clinical director had a psychodynamic orientation, most also had training in cognitive behavioral approaches.

As shown in Table 1, providers were predominately female and African American. Providers were recruited for the study if they had at least one child age 4–13 on their caseload who might benefit from the intervention. Eligibility was assessed using a brief 11-item screening instrument that was completed by case managers caring for all children who met age criteria. Criteria that screened children as eligible included factors such as psychiatric hospitalization in the past year, placement disruption in past year due to behavior, placement in specialized foster care due to behavior problems, a foster parent requesting services to address behavior problems, and foster parent submission of a 30-day notice to have child removed from her home due to behavior problems. Randomization occurred prior to consent and all but one provider who had an eligible child on his or her caseload consented to participate (98%). Participants in both the control and enhanced services groups were compensated for their time with $30 for completing an interview for the first child on their caseload and an additional $10 for each additional child.

Table 1.

Provider Demographics (N = 56)

| Variable | M | SD | % |
|---|---|---|---|
| Age | 33.46 | 7.76 | |
| Female | | | 80 |
| Race | | | |
| African American | | | 46 |
| White | | | 46 |
| Asian | | | 5 |
| Other | | | 2 |
| Degree | | | |
| Bachelors | | | 36 |
| Masters | | | 7 |
| Not reported | | | 7 |
| Position | | | |
| Case Manager | | | 25 |
| Therapist | | | 75 |

Note. Demographic data were missing for one provider.

Evidence-based Intervention

This study used materials adapted from Keeping Foster Parents Trained and Supported (KEEP), an evidence-based intervention developed specifically for foster families as a preventative model for use with elementary school age foster children (see Chamberlain et al., 2008; Price, Chamberlain, Landsverk, & Reid, 2009). KEEP is designed to be provided primarily in a group format and focuses on increasing effective praise, positive interactions, and use of consistent, mild discipline techniques. It is effective in reducing child behavior problems and improving placement outcomes as compared to services as usual. It is important to note that the Oregon Social Learning Center Community Programs (OSLCCP), which provides comprehensive training and implementation support to agencies, municipalities, and states seeking to adopt KEEP, was not involved in the initial training or design of this study in any way. The present study was not a test of either the effectiveness of KEEP or a comprehensive implementation strategy for KEEP, which has demonstrated effectiveness following a structured implementation protocol developed in San Diego County (Chamberlain et al., 2008; Chamberlain et al., 2012; Price et al., 2009) that can be accessed through OSLCCP. Instead, this study used KEEP intervention materials with the consent of the developer only for the purpose of studying uptake and use of an evidence-based intervention after receiving “training as usual” in an agency context when enhanced support is provided by a change agent.

The KEEP manual had been adapted for use in a pilot study conducted just before the initiation of the change agent study. The adaptations were made (1) to provide KEEP in a home visiting or individual format and (2) to provide additional content focused on school success. The home visiting manual retained all of the elements of the KEEP intervention but included supplementary guidance for the provider to facilitate use in an individual format and assist them with development of behavior charts. It was also re-formatted so different elements (e.g., effective praise, behavior charts, timeout) could be accessed easily. Foster parent handouts were all retained as in the original manual.

Training as Usual

After completing the consent process and a baseline interview, providers and supervisors completed training as usual, which involved 16 hours of interactive instruction in behavioral concepts and use of parent management training with foster parents using KEEP materials. The training occurred at the agency and, as noted above, did not involve OSLCCP. Providers were asked to attend by an email sent by the agency’s administration, but were not expected to attend if they had conflicts such as court dates for children on their caseload. Of the 57 providers who participated in the study, 44 (85%) participated in one or more training sessions. Based on the agency’s request, training was spread over four sessions. On average, providers attended 2.55 (SD = 1.13) sessions. Therapists and case managers were trained separately, as the barriers to implementation and skills in different strategies were expected to differ due to the providers’ prior experience and role with the child and foster parent. In initial meetings with agency staff, supervisors were encouraged to support providers’ use of the intervention, but providers (therapists and case managers) were not required to use it. Providers who were not able to attend the trainings or who partially attended them received all materials provided during the missed trainings, but did not receive individual or “make up” training, consistent with how trainings are provided in child welfare settings. All providers were given a copy of the home visiting manual. Selected clips from Off Road Parenting (Pacifici, Chamberlain, & White, 2002), which demonstrates parent management training techniques, were compiled on a DVD made for the project. Portable DVD players were available at the agency for use during agency sessions or home visits with foster parents.

Training as usual included a focus on understanding underlying behavioral concepts of the intervention as well as a focus on active learning, including behavioral demonstrations, role plays, and case consultation during the training. At the end of training, providers planned for use of the intervention with a specific foster parent to encourage initiation of use. At the time of study initiation, therapists primarily saw children individually and had limited in-person contact with foster parents. Most case managers saw foster parents once a month and focused mainly on monitoring to assure child safety and address agency and court requirements, with a more limited focus on providing support or skills enhancement to foster parents. To address these barriers, therapist training included content on the importance of involving foster parents in treatment for behavior problems and how to engage foster parents in treatment. Case manager training focused on selecting material that was most relevant to each child and foster parent’s needs and providing this material to the foster parent during home visits. Both types of providers were also trained to provide some content by phone in situations where it might be difficult to meet regularly with foster parents.

All participants were provided with the trainer’s email address and encouraged to contact the trainer with any questions that they had about the intervention or their use of the techniques with foster parents, but no follow up after the training was provided to control group providers. To verify that the training was at least the same quality as similar trainings provided by the agency, satisfaction with training as usual was assessed with a brief measure that asked participants to rate the training and then to compare it to other similar trainings they had received on a five-point scale. The training was rated as above average, with providers reporting that it was of higher quality than other trainings they had received.

Experimental Enhanced Condition: Infusion of Change Agent

Our experimental condition sought to enhance intervention uptake by introducing the time-limited support of a project-trained change agent. The change agent’s goal was to initiate discussion about the intervention and its potential benefits with enhanced condition providers. She primarily sought out informal opportunities for information exchange and discussion of the intervention, although she was available for formal consultations as well. Formal consultation sessions with her were rare, however, with very few providers ever meeting with her at a set time to discuss the intervention or their cases. Our goal was to create a situation analogous to an agency’s infusion of a highly regarded provider or other staff member who has received specialized training in both (1) a new intervention that an agency seeks to adopt and (2) strategies to engage and support other providers’ use of the intervention during implementation that are consistent with a change agent role.

Change Agent Selection

Because our implementation plan was dependent on the selection of an effective change agent, we screened and interviewed candidates carefully for this position. We were largely successful in hiring a change agent with the characteristics of an early adopter as described in previous work (Gingiss et al., 1994; Moore et al., 2004). She had high perceived self-efficacy, an interest in learning new interventions, a history of high centrality in employment networks, and excellent communication skills as described by previous employers. She also met all agency hiring requirements and was similar to many agency staff in her previous experience and demographic characteristics. However, unlike the therapists and case managers she worked with, she had several years of experience in providing clinical supervision. While this enhanced the extent to which she was perceived to have advanced knowledge, she had some difficulty moving from a more traditional, structured supervisor/consultant role (her previous work experience) to the informal, more varied types of interaction that the position entailed. To support her role as a change agent, we provided weekly support and coaching with goals specified for each week and monitored fidelity to these activities through a log of interactions and reflections on how each week progressed.

Change Agent Activities

As planned, the change agent activities included a minimal number of formal events, including a kick-off session to meet providers and a “staff spotlight” presentation with an early adopter, a case manager whom the change agent identified through their interactions. In the staff spotlight, the case manager discussed her use of the intervention with other providers. Although only enhanced condition providers received invitations to the kick-off session, it was also attended by control condition providers, possibly due to the food provided for this event. Contamination related to control providers’ attendance at this event was thought to be minimal, however, as this event focused on an overview of the study and informal discussion not focused on the intervention.

The change agent attended all trainings as usual prior to starting in her role as a change agent. As she was new to the agency, she was introduced to participants as a member of the research project who would be interacting with some providers after the training. Recruitment materials and the consent process included complete disclosure of the study aims and the change agent’s role, so providers in both groups understood her role and knew that she would be interacting only with providers in the enhanced condition. Control group providers who did not attend the training as usual might not have been able to identify the change agent, but most did attend the training and so would have recognized her as a member of the research staff. She maintained a desk at the agency two days a week at each site so that she was able to seek out interaction with providers assigned to the experimental group on a regular basis, but she did not become a part of the agency’s staff. She did not attend staff meetings or any events that were not related to the intervention. Consistent with the change agent role, after the training she completed the intervention with two foster parents herself so she was able to endorse the intervention and its potential benefits in her interactions with providers.

As planned, her contacts with enhanced condition providers primarily consisted of informal interactions, through unscheduled conversations about enrolled children and the intervention or casual conversations about other topics. Nearly all of her interactions were in person (94.4%) with the remaining contacts by telephone. She memorized the names of all providers enrolled in the project and introduced herself to enhanced condition providers as they passed her desk or by going to their cubicles or offices. For example, in a typical interaction, she would encounter an enhanced condition provider at the Xerox machine, while entering the building, or when passing their cubicle, and would ask about their enrolled children. She might then mention her use of the intervention with a foster parent, or ask if the provider thought a particular child on her caseload might benefit. For a provider who indicated that they were considering use or who had started the intervention, she would ask how it had gone and if they had any questions. In her contacts, she attempted to provide an enthusiastic but genuine endorsement of the intervention and its possible benefits. In contrast, her contacts with control providers were limited to brief casual conversations such as greetings. Her contacts were fairly consistent across the 6-month enhanced condition period but were reduced in the last two months of her time at the agency when she only went to each of the two agency offices once a week.

At the end of the six month enhanced condition period and prior to the Time 4 interview with providers, she left her project position for a more traditional clinical director position. Another change agent was hired to complete the final tasks that the main change agent had begun, which consisted of helping coordinate staff spotlights for early adopters to present their use of the intervention. The second change agent was less assertive and had difficulty continuing the first change agent’s activities given her lack of earlier contact with providers. She had very few contacts with agency staff related to the intervention and after three months, she transitioned out of this role.

Change Agent Fidelity

Fidelity to the change agent role was assessed by examining records of interaction with both intervention and control group providers that the change agent maintained throughout a 4-month period that spanned from approximately a month prior to the average Time 2 interview until the average Time 3 interview. These records indicate that she spoke with 71% of the enhanced group providers about the intervention in this period. Some contamination of the change agent condition also occurred, as would be expected given that she was housed in the agency with both control and experimental providers; she also talked with 15% of control providers about the intervention at some point. However, the average length of aggregated contacts within the period was very different. She recorded an average of 27.19 (SD, 40.6) minutes of interaction with enhanced group providers and just .87 (SD, 2.72) minutes of interaction with control group providers specifically about the intervention. These data suggest that her level and content of interaction with enhanced group providers were consistent with her role.

Measurement

Providers responded to a series of questions about their use of parent management training skills and project materials in their work with each foster parent caring for a child eligible for the intervention in the past 30 days. To create conservative measures of use, only use that included using a manual, DVD, or handout to teach a particular skill (e.g., use of rewards, timeout) was counted in the two outcome variables. To assess this, a series of questions asked about different components of the intervention. For example, first a question asked “How many times in the past 30 days did you talk to the foster parent about using incentives or rewards?” Then a follow up question asked “How many times did you use a manual to describe this strategy? Was it… didn’t get a chance to use it, one, two, three…” This allowed exclusion of more general discussion of behavioral parenting concepts with foster parents (which was reported by the majority of providers) and provides some indication that their presentation of the skill at least partially reflected the KEEP intervention.

This strategy was designed specifically to measure use of the manualized intervention over time, but has limitations as it is based on self-report. Hurlburt and colleagues’ (2010) findings from a study comparing therapists’ self-reported use of EBP practices indicate that therapists tend to perceive and report much greater use of evidence-based intervention strategies than is observed in their recorded sessions. Consistent with this finding, in our study many components were reported to be used frequently in the first question that asked about overall use of the strategy with a particular foster parent. For example, skills focused on increasing use of encouragement were reported to be discussed with 47% of foster parents in the past 30 days at baseline and 63% at Time 2; behavior charts 9% at baseline and 24% at Time 2; effective requests 28% at baseline and 40% at Time 2. While these data suggest an increase in use of these strategies after the training, the high percentages suggest that over-reporting is likely to have occurred, supporting screening out use that occurred without using the manual or handouts.

Findings from Hurlburt’s study also raise questions about the validity of therapists’ reports about their use. The intraclass correlations between the therapists’ reports and coders’ ratings for strategies including (1) responding effectively to negative behavior, (2) effective commands/limit setting, (3) use of rewards systems, and (4) use of time out were low (.63, .35, .35, −.05, respectively), which suggests questionable validity for all skills other than effective commands. Requiring use of a manual, video, or handout to support presentation of the skill to count reported use hopefully increased validity and reduced over-reporting. Only 35.1% of providers reported any use of the intervention materials (manual and/or handouts) after the training, so this measurement strategy did substantially reduce the intensity of use that was counted. However, without recording and coding the providers’ interactions with foster parents, which was not feasible in this study, the extent to which this increased validity is unknown.

Two variables were created to measure use. An additional variable was created from change agent records of interaction with providers to indicate intensity of providers’ interactions with the change agent.

Initiation of Use

This is a dichotomous variable coded with 1= used manuals and handouts at least one time with one or more foster parents in previous 30 days, and 0 = no use of intervention materials in past 30 days. This variable was measured at baseline and in each of the post baseline interviews.

Amount of Use

This variable summed the total number of intervention components presented to each enrolled foster parent in the past 30 days. For example, if a provider reported using a manual to talk with a foster parent about three topics such as praise, behavioral charts, and time out in the past 30 days, this use would be coded with a 3. If the provider reported using the intervention components with more than one child, this use was summed across cases; for example, a single provider who talked with two different foster parents about timeout using the materials would be coded with a 2. This variable was measured and coded the same way at baseline and across all time points.
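The coding rules for the two use variables can be sketched as follows. This is an illustrative sketch only, not the authors' scoring code: the record layout and the names `amount_of_use` and `initiation_of_use` are assumptions, and each record is taken to list only the components that a provider presented with the manual or handouts to one enrolled foster parent in the past 30 days.

```python
from typing import Dict, Set

# Hypothetical layout: for one provider, map each enrolled foster parent to the
# set of components presented with the manual or handouts in the past 30 days.
ProviderReport = Dict[str, Set[str]]


def amount_of_use(report: ProviderReport) -> int:
    """Sum the components presented, across all enrolled foster parents."""
    return sum(len(components) for components in report.values())


def initiation_of_use(report: ProviderReport) -> int:
    """Return 1 if any materials-supported use was reported, else 0."""
    return 1 if amount_of_use(report) > 0 else 0


# The two examples given in the text, plus a provider with no use.
one_parent = {"foster_parent_a": {"praise", "behavior charts", "time out"}}
two_parents = {"foster_parent_a": {"time out"}, "foster_parent_b": {"time out"}}
no_use = {"foster_parent_a": set()}

print(amount_of_use(one_parent), amount_of_use(two_parents), initiation_of_use(no_use))
```

Under these assumptions the first provider is coded 3 and the second is coded 2, matching the examples above, and the provider with no materials-supported use is coded 0 on both variables.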

It should be noted that these measures do not comprehensively assess all use, since each assessment asked only about use in the past 30 days, and use was only measured every three months at Times 2, 3 and 4, and then four months later at the final time point. In particular, use that occurred immediately after the training, which occurred right after the baseline interviews, might not be detected, so that initiation of use is likely to be undercounted. In addition, use was only measured as reported for specific foster parents and children who were enrolled in the study. This might also lead to under reporting if providers also used the intervention with other foster parents and children who were not enrolled.

Level of Change Agent Interaction

Records of interactions with providers maintained by the change agent over a four-month period during the enhanced phase provided an opportunity to assess whether level of interaction with a change agent is associated with subsequent use of the intervention. Total time spent interacting with the change agent prior to the Time 3 interview was calculated by summing the duration of all interactions. Only interactions that included some content related to the intervention were included in this count. Records of interaction were generally recorded immediately after the interaction occurred, and at the end of each day at the latest. These records are thought to have a reasonably high level of accuracy given the immediacy of the recording, the regular review of records, and the correspondence of the records with the change agent’s descriptions of her work and level of interaction in coaching sessions. However, the validity of this variable is untested; the change agent may have failed to record all interactions, or may have estimated duration incorrectly, particularly for interactions that were frequently recorded at the end of the day.
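The construction of this variable can be illustrated with a short sketch. The log format, field names, and example entries below are hypothetical and are not drawn from the study records; the only rules taken from the text are that durations are summed per provider and that interactions with no intervention-related content are excluded.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Interaction:
    provider_id: str          # hypothetical identifier
    minutes: float            # duration as recorded by the change agent
    about_intervention: bool  # True if any content concerned the intervention


def interaction_minutes(log: Iterable[Interaction], provider_id: str) -> float:
    """Total minutes of intervention-related contact for one provider."""
    return sum(
        entry.minutes
        for entry in log
        if entry.provider_id == provider_id and entry.about_intervention
    )


# Hypothetical log entries for illustration only.
example_log = [
    Interaction("p01", 10.0, True),
    Interaction("p01", 5.0, False),   # casual conversation, excluded
    Interaction("p01", 2.5, True),
    Interaction("p02", 1.0, False),
]

print(interaction_minutes(example_log, "p01"))
```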

Data Analysis

Differences in use across the intervention and control groups were assessed using a zero-inflated Poisson model with random effects, a type of mixed effects regression model (Hedeker & Gibbons, 2006). Mixed models, similar to hierarchical linear models, can provide estimates of differences in groups at different time points while modeling the clustering of observations within individuals that is likely to occur over time (i.e., a provider who uses an intervention at one point in time is more likely to use it at a later point). These methods are also well suited to estimate nonlinear effects, which is essential in this study, since providers might initially use the intervention and then desist. Mixed effects models also use all available observations and are less restrictive regarding missing data than other longitudinal methods (Hedeker, Gibbons, & Flay, 1994). This is an important consideration in this study given the level of missing data that could potentially occur due to staff turnover, child placement moves, and missed interviews.

In this study’s data, a high proportion of “0” responses was obtained on measures of intervention use (90% in the control group and 79% in the enhanced group across all time periods, including baseline), indicating no use of the intervention in the past 30 days. In addition, among providers who did use the intervention, responses had a Poisson distribution, as expected with count data. To adequately model this distribution, a zero-inflated Poisson (“ZIP”) model was estimated using PROC NLMIXED in SAS. In this method, the excess of zeros (in this case, no use reported in past 30 days) is assumed to be potentially due to a different process than the process determining level of use; for example, it may be impossible for some providers to use the intervention in a given 30-day period because they have no contact with an enrolled foster parent. This must be modeled to obtain more accurate parameter estimates for the count analysis, which is conditional on the probability of a zero observation (Lee, Wang, Scott, Yau, & McLachlan, 2006). Thus the ZIP model includes two parts to estimate the outcome variable: a logistic regression model with a random effect to model correlations within an individual across time and a Poisson model, also with a random effect at the individual level. These two parts are estimated at the same time so that the excess of zeros is accounted for and does not bias estimates in the Poisson results. Demographic variables and factors potentially affecting use such as position type (e.g., case manager or therapist) were initially included in the model but were deleted as none approached significance and deleting these variables did not change the results. The final model was chosen by comparing model fit indices and testing the significance of the change in the −2 log likelihood using the chi-square distribution for a series of nested models. A nonsignificant covariate for baseline in the zero inflation portion of the model was deleted as it did not improve fit and deletion did not affect the results. These tests also indicated that inclusion of the zero inflation portion of the model and two random effects (random intercepts for both the Poisson and zero inflation parts) significantly improved the model fit. As expected, inclusion of the random effects diminished the statistical significance of the estimates, particularly in the zero inflation portion of the model.
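For readers unfamiliar with this class of models, a standard ZIP formulation with random intercepts in both parts can be written as below. The notation is an assumption introduced here for illustration and does not appear in the original analysis: $y_{ij}$ is the amount of use reported by provider $i$ at time $j$, $E_{ijt}$ indicates an enhanced condition provider observed at Time $t$, $\pi_{ij}$ is the probability of an excess zero, and $\lambda_{ij}$ is the Poisson mean.

$$
P(y_{ij} = 0) = \pi_{ij} + (1 - \pi_{ij})\, e^{-\lambda_{ij}}, \qquad
P(y_{ij} = k) = (1 - \pi_{ij})\, \frac{e^{-\lambda_{ij}} \lambda_{ij}^{k}}{k!}, \quad k = 1, 2, \ldots
$$

$$
\operatorname{logit}(\pi_{ij}) = \gamma_0 + \sum_{t=2}^{5} \gamma_t E_{ijt} + u_i, \qquad
\log(\lambda_{ij}) = \beta_0 + \sum_{t=2}^{5} \beta_t E_{ijt} + v_i
$$

Here $u_i$ and $v_i$ are provider-level random intercepts, each assumed to be normally distributed with mean zero. In this sketch, a negative $\gamma_t$ means a lower probability of excess zeros in the enhanced group at Time $t$, and a positive $\beta_t$ means a higher expected amount of use.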

This analysis provides estimates of both amount of use (expected to be higher in the intervention group relative to the control group and baseline) and zero inflation (indicating no use, expected to be higher in the control group). To test the effect of the change agent, the change agent-enhanced group’s use of the intervention was contrasted at each of the four time points after baseline with the control group’s use. This strategy allows for detection of nonlinear effects across time without specifying how use would vary across time.

Testing mediation of effects by entering level of change agent interaction into the ZIP model was not possible since this variable was only measured for a limited period rather than throughout the enhanced support period. However, associations between level of interaction and use could be compared at two different time periods. We expected that use at Time 3 would be related to level of interaction in the four months before use was reported, consistent with the hypothesis that more intense contact with the change agent would be related to greater use at a later point. A weaker correlation was expected between level of change agent interaction and use at Time 2, which occurred prior to most of the period in which the change agent interactions were recorded. Pearson’s correlations were used to test the significance of these associations.
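As a reminder of what the correlation coefficient measures, the computation can be sketched in a few lines. The function name `pearson_r` and the example values are hypothetical and are not study data, and the significance test itself is omitted.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical values: minutes of interaction and amount of use per provider.
minutes = [0.0, 5.0, 10.0, 20.0, 40.0]
use = [0, 0, 1, 3, 6]
print(round(pearson_r(minutes, use), 2))
```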

Results

Descriptive

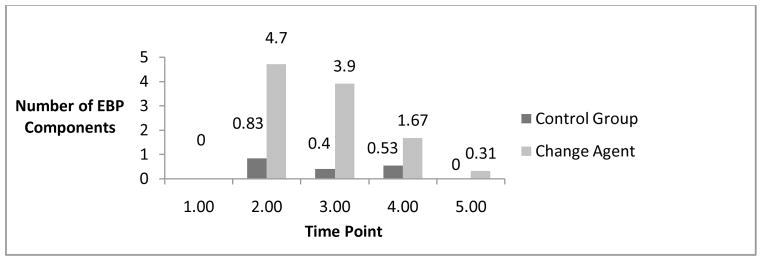

No use of the intervention occurred at baseline, as expected. After baseline, average use was higher in the enhanced group, but this difference diminished over time, as shown in Figure 1. At Time 2, three months after baseline, use in the enhanced group peaked at 4.7 (SD, 10.65) units of the intervention in 30 days. In comparison, use in the control group was .83 (SD, 1.85) even at its peak at Time 2, indicating that on average the control group providers had almost no use in a 30-day period. At Times 3 and 4, use was reduced, with means (standard deviations in parentheses) of 3.9 (7.3) and 1.67 (4.13) in the enhanced group and .4 (1.39) and .52 (1.65) in the control group. By the final interview, 13 months after the baseline and a year after the training, no use was reported in the control group and use was .31 (SD, .70) units in the enhanced group. Overall, 35.1% of providers reported any use of the intervention materials at some point. The percentage reporting any use of the materials after baseline was similar in the two groups, suggesting that the enhanced group’s higher use reflects more use among the providers who initiated any use rather than a greater number of providers initiating use.

Figure 1.

Mean Provider Use in Past 30 Days by Condition

The effect sizes (Cohen’s d) of the change agent intervention at Times 2–5 were .51, .67, .37, and .63, indicating moderate effects.
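These effect sizes can be approximately recovered from the group means and standard deviations reported above. The sketch below assumes that the pooled standard deviation is the square root of the average of the two group variances (equal weighting of the groups); the exact formula used in the original analysis is not stated, and the function name `cohens_d` is introduced here for illustration. The control group values at Time 5 are taken as a mean and standard deviation of zero, as implied by the report of no use.

```python
import math


def cohens_d(mean_1: float, sd_1: float, mean_2: float, sd_2: float) -> float:
    """Cohen's d using the root mean of the two group variances as pooled SD."""
    pooled_sd = math.sqrt((sd_1 ** 2 + sd_2 ** 2) / 2)
    return (mean_1 - mean_2) / pooled_sd


# (enhanced mean, enhanced SD, control mean, control SD) as reported in the text.
reported = {
    "Time 2": (4.7, 10.65, 0.83, 1.85),
    "Time 3": (3.9, 7.3, 0.4, 1.39),
    "Time 4": (1.67, 4.13, 0.52, 1.65),
    "Time 5": (0.31, 0.70, 0.0, 0.0),  # control: no use reported
}

for time_point, values in reported.items():
    print(time_point, round(cohens_d(*values), 2))
```

Under this assumption the computed values round to .51, .67, .37, and .63, in line with the reported effect sizes.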

Prediction of Use

Results from the Poisson regression model indicate that the change agent condition significantly influenced the level of providers’ use of the intervention, with greater use occurring in the change agent condition at Times 2, 3 and 4. This indicates that a positive effect extended through nine months (or the entire period in which a change agent was housed at the agency in at least one site). This effect was strongest at Time 3, and then diminished over time until it was nonsignificant at Time 5, which was after the second change agent had left the agency. For example, at Time 2, use in the change agent condition was greater than use in the control condition by a factor of 3.63 [exp(1.29)]; at Time 3 by a factor of 5.30 [exp(1.67)]; at Time 4 by a factor of 3.17 [exp(1.16)]. Although these results may seem to suggest a strong effect for the change agent condition, these estimates are relative to the very low level of use in the control condition.
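The conversion from Poisson coefficients to multiplicative factors can be sketched as follows, using the two-decimal estimates shown in Table 2. The function name `rate_ratio` is introduced here for illustration. Because the table values are rounded, factors computed this way can differ from those quoted in the text in the second decimal place, presumably because the text was based on unrounded estimates.

```python
import math

# Poisson-part coefficients for the enhanced condition, as rounded in Table 2.
poisson_estimates = {
    "Enhanced T2": 1.29,
    "Enhanced T3": 1.67,
    "Enhanced T4": 1.16,
    "Enhanced T5": -0.45,
}


def rate_ratio(coefficient: float) -> float:
    """Multiplicative change in expected use implied by a log-link coefficient."""
    return math.exp(coefficient)


for label, b in poisson_estimates.items():
    print(f"{label}: exp({b}) = {rate_ratio(b):.2f}")
```

A factor above 1 indicates greater expected use in the enhanced condition than in the control condition at that time point; the Time 5 coefficient is negative, so its factor falls below 1, although that estimate was not statistically significant.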

The logistic regression part of the ZIP model is frequently viewed as less important than the Poisson part, as its primary purpose is often to obtain unbiased Poisson parameter estimates for those with non-zero data. In this study, however, these results are also of interest since “zero inflation” corresponds to greater than expected zero use. Results from the logistic part of the ZIP model indicate that control group providers were more likely than enhanced condition providers to report excess zero use in the prior 30 days at Time 3 (p < .05). In addition, a nonsignificant trend for greater “no use” in the control group at Time 2 occurred (p = .05). Specifically, the high overall odds of having zero use [15.03 = exp(2.73)] were reduced for providers in the change agent group at Time 3 by a factor of .15 (.15*15.03). However, no significant difference in excess zero use occurred across the groups at other time points.

Correlation of Use with Change Agent Interactions

As expected, providers’ level of use reported at Time 3 was significantly correlated with their level of interaction with the change agent in the four months before Time 3. Within the enhanced group, the Pearson’s correlation was .57 (p < .01); within the entire sample at Time 3, the correlation was .59 (p < .001). In contrast, use of the intervention reported for the previous 30 days at Time 2 was not associated with change agent interactions that occurred after Time 2. At Time 2, correlations were −.09 (p = .67) in the enhanced change agent group and .03 (p = .85) in the entire sample.

Discussion

This study’s results provide evidence for the influence of interaction with a change agent on use of a novel intervention. After receiving a typical “training as usual” at their agency, child welfare providers randomized to a change agent condition reported greater use of the intervention relative to a control group that did not have the opportunity for contact with the change agent. The effect of the change agent was significant throughout the period in which she was in contact with providers, with a decrease occurring as she transitioned out of her role. These results support the role of change agent interactions in use even in the absence of any requirement or institutional support for the new practices. As noted by an agency director in a qualitative study focused on implementation of EBPs, this role is one that is not generally present as individuals or agencies consider adoption of a new therapy (Proctor et al., 2007). While drug companies invest heavily in marketing that provides strong, enthusiastic endorsement of their products through advertising and interactions with “drug reps,” dissemination of manualized psychosocial treatments does not explicitly include these types of strategies. Our results support the potential for ongoing, informal positive interactions related to the new practice to increase use of a novel intervention within the practice setting.

Isolation of a specific type of interaction process that might be a key active component of an implementation strategy has not been previously studied in a child welfare setting. Even in the broader implementation literature, the relationships between elements of specific strategies and use of new practices are unclear. Clearly, providing initial trainings with no follow up is unlikely to impact provider behavior. As Powell, Proctor, and Glass (2014) state, “ongoing training (e.g., booster sessions), access to supervision, and expert consultation, peer support, and ongoing fidelity monitoring seem to be an important step in ensuring successful implementation and intervention sustainability” (pp. 204 – 206). While research supports providing consultation to address this need (Edmunds et al., 2013), consultation can include many distinct functions, such as education, modeling use, role play, imposing requirements, correcting drift from the intervention, ongoing monitoring, providing encouragement and social support, problem solving how to overcome barriers, and demonstrating potential benefits (Edmunds et al., 2013; Nadeem et al., 2013). The findings from this study indicate that one of the active components in effective follow up strategies is distinct from either requiring use or providing extended supervision. Having contacts with a change agent who persists in reminding providers about the intervention, is available for consultation on an as needed basis, expresses enthusiasm for the intervention, provides brief examples of the intervention’s benefits, and asks about the provider’s use of it with clients has an independent positive effect. This process might be an active component of consultation strategies or might provide a distinct benefit when provided as a strategy in addition to consultation, which may never be accessed by some providers.

Evidence-based intervention purveyors who provide a strong endorsement for their interventions may use change agent types of interactions to generate enthusiasm and support for the intervention. However, the extent that this type of interaction is ongoing after the purveyor’s training is likely to be variable. Ongoing consultation calls, which are a standard part of some training models after an initial training in an EBP, might also have the potential to fill this role. The tendency for behaviors to shift back to original models of care in the absence of continued support (Deane et al., 2014; Miller et al., 2004) and the findings of this study suggest that effective, sustained mechanisms to support use when consultation calls end need to be developed. Particularly when the service context is characterized by multiple demands and many new initiatives, as is the case in child welfare settings, new practices may be lost quickly after the implementation period.

Studies that target supervisors’ role in supporting implementation may help us better understand how to initiate and embed new practices into child welfare settings. Supervisory support and supervisory training have been identified as key factors to target when initiating practice change, particularly uptake of evidence-based practices (Aarons & Palinkas, 2007; Antle et al., 2008; Barbee, Christensen, Antle, Wandersman, & Cahn, 2011). Increasing supervisors’ own use of a novel practice and training them to provide explicit, ongoing, positively-oriented support for use of the intervention (followed by their own ongoing consultation to sustain their support) might effectively integrate a change agent role into a supervision model. Further exploration of this question is needed, however, before assuming that the change agent role is compatible with the supervisory role. Change agents have personal characteristics (charisma, extroversion, persuasive abilities) that may be uncommon in existing supervisors, who may be chosen for their role due to very different characteristics. Change agent activities might be more effectively incorporated into implementation efforts through other mechanisms, such as designation of a provider with a strong interest in an intervention to receive early training in both the new practice and strategies to engage others in learning about the intervention as a change agent. Learning collaborative models, although understudied (Nadeem et al., 2013), might also serve this function, as they provide the opportunity for providers with different levels of use, interest, and enthusiasm for a new practice to interact. In some groups, a highly invested, enthusiastic provider could serve as a change agent. These types of influences should be examined in implementation research that isolates the differential effects of strategies such as supervisor, peer, and change agent support and interaction.

Additional research is also needed to understand how the effects of different implementation strategies are potentially moderated by work and contextual factors. Child welfare work is characterized by a high level of autonomy in tasks, responsibility and influence in decisions with uncertain outcomes, personal and professional risk, multiple demands, and frequent crises (Glisson & Green, 2011; Golden, 2009; Wulczyn, Barth, Yuan, Harden, & Landsverk, 2008). Child welfare organizational cultures are characterized by a high level of stress, but also potentially high proficiency, given the demands for accountability and documentation. However, much of the direct work with child welfare clients is unobserved, providing the potential for independent practice decisions. Work is highly documented, but as in other settings, documentation does not ensure fidelity to a particular intervention or model of care (Dingfelder & Mandell, 2011; Lipsky, 1980). These factors affect the practices of both therapists who work in child welfare agencies and case managers who attempt to integrate mental health interventions into their home visits. In this context, providers might be particularly focused on their established roles and have difficulty incorporating more proactive mental health practices that are not viewed as essential to their central role (Leathers et al., 2009). Successful implementation may require distinct strategies that recognize how these distinct features of the work environment affect intervention uptake and sustained use. Strategies such as incorporation of a change agent role, which focuses primarily on increasing interest and self-initiated use of a practice rather than relying on mandated use, might be particularly important in this context given the number of requirements imposed within child welfare and the extent to which these requirements may lead to little change in practices in these settings.

Limitations

Although the findings from this study were statistically significant, the clinical impact of the change agent alone is likely to be small. The majority of providers in both groups never used the intervention materials during the periods measured, and at most, the change agent had a moderate impact on use among those who did: mean levels of use in the enhanced condition indicate that many who did initiate use probably provided only parts of the intervention. The use of a statistical model that accounts for an excess of zeros in the outcome variable provides some additional information about the patterns of use. Although the control group had a higher probability of zero use than the change agent group, this difference was significant at only one time point, indicating that the change agent did not influence the practice behaviors of many in the enhanced group. Thus, while this study supports the effect of change agent interactions, it is clearly an inadequate implementation strategy when provided alone, as would be expected given the very low intensity of the strategy. In particular, larger studies are needed to assess the impact of change agent interactions in combination with consultation strategies, with a focus on the potential benefits of interactions with internal and external change agents as a part of a more comprehensive implementation plan. Change agent interactions might be fostered in optimal consultation models, or might have an independent effect that should be optimized through enhancement of these interactions within provider groups or by providing an external change agent during implementation.

Further development of the change agent role will also need to consider how to ensure client privacy and confidentiality of information. In this study, the change agent focused on approaching providers at times when they were alone so conversations would be confidential. This was not difficult because the study agency had recently expanded to a larger space, and so work areas were fairly spacious, even for caseworkers who worked in cubicles, and therapists all had private offices. However, some breaches of confidentiality might have occurred given the change agent’s focus on initiating conversations in common agency spaces. In an agency in which cubicles are closely spaced together and staff are more frequently in the office, this risk would be greater as change agent interactions could be more difficult to initiate without being overheard. How protection of confidentiality is addressed in future studies of change agent effects will depend on how the change agent role is operationalized as well as the agencies’ physical environments.

Use of a single agency in this study was necessary given funding constraints and the very large sample that would be required to adequately model agency-level effects, but this design limits generalizability of the findings. Additionally, the randomization by provider prohibited a clear understanding of foster parent and child outcomes because enrolled children potentially were served by multiple providers who could be assigned to either the change agent or control group, which complicates attempts to assess the impact of the change agent condition on child or foster parent outcomes. A larger study involving randomization of all providers assigned to each case, preferably at the team level so use within teams could be optimized, would be needed to adequately assess child-level outcomes. Ideally, randomization would occur at the agency level to allow assessment of agency-level factors, variation in change agent characteristics, and moderators of effects.

Finally, measurement of use was by design a conservative measure that assessed use with specific enrolled children in the last 30 days at 3-month intervals. Use was only counted if it involved use of the intervention manual or handouts. This substantially reduced the estimates of use of the intervention, as only 35% of providers reported ever using the intervention materials although the majority reported some use of the components with foster parents. This measure also does not capture use for most of the 3-month period or initial use that occurred immediately after the training. Use might also be undercounted over time due to some providers’ lessened reliance on the intervention manual and other materials as they discussed a particular component with a foster parent, or their perception that they had covered all the relevant parts of the intervention for their enrolled children. Although this measurement strategy was chosen to increase the specificity of the measure, it prohibits assessment of the extent to which the intervention was fully provided. Additionally, no measures of the provider’s fidelity were used; providers tend to substantially over-report their use of evidence-based practice components (Hurlburt et al., 2010), and use of a component may have been incomplete or inconsistent with the intervention without being detected. Future studies of specific implementation components that seek to identify effects at the client level will need to incorporate more comprehensive measurement of use and fidelity assessment.

Conclusion

While this study’s results support the role of a change agent in influencing practice behaviors, how to optimize this effect as a component of a more comprehensive implementation process needs further study. A strength of the study was its application of Rogers’ (2003) theory and isolation of a specific hypothesized effect in an experimental design. Given how often the change agent role is mentioned in the implementation literature despite a lack of systematic study of this role, the findings of this study are a first step in understanding this potential influence. Focusing future research on additional influences incorporated into implementation components, and on their relative importance in different service contexts, is essential to overcoming implementation barriers in typical service settings.

Table 2.

Zero-Inflated Poisson Mixed-Effects Regression Model Predicting Use

| | B | SE | p |
|---|---|---|---|
| Poisson Estimates | | | |
| Intercept | .96 | .35 | <.01 |
| Enhanced T2 | 1.29 | .46 | <.01 |
| Enhanced T3 | 1.67 | .46 | <.01 |
| Enhanced T4 | 1.16 | .49 | .02 |
| Enhanced T5 | −.45 | .75 | .56 |
| Inflated Zero Estimates | | | |
| Intercept | 2.73 | .55 | <.01 |
| Enhanced T2 | −1.52 | .76 | .05 |
| Enhanced T3 | −1.93 | .77 | .02 |
| Enhanced T4 | −.95 | .92 | .30 |
| Enhanced T5 | −1.34 | .99 | .18 |

Note. N = 57 providers with a total of 187 observations across time. Demographic variables including race, sex, age, time employed, and position type were deleted from the model to simplify results as they were nonsignificant and did not affect results. Variance components in the model included random intercepts for both the Poisson and inflated zero portions of the model (Poisson, .61, SE = .29, p < .05; inflated zero, 1.46, SE = 1.08, p = .18).
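
The coefficients in Table 2 are easier to read once they are back-transformed. The following is a minimal sketch, not part of the original analysis. It assumes the conventional parameterization of a zero-inflated Poisson model (a log link for the count portion and a logit link for the inflated zero portion) and assumes that each "Enhanced" row is a contrast between the enhanced condition and training as usual at that time point. Under those assumptions, exponentiating a Poisson estimate gives a rate ratio and exponentiating an inflated zero estimate gives an odds ratio for never using the intervention. The variable names are illustrative only, and because the model includes random intercepts, the values are provider-specific rather than population-averaged.

```python
import math

# B values copied from Table 2; the dictionary keys are the table's row labels.
poisson_b = {
    "Enhanced T2": 1.29,
    "Enhanced T3": 1.67,
    "Enhanced T4": 1.16,
    "Enhanced T5": -0.45,
}
zero_b = {
    "Enhanced T2": -1.52,
    "Enhanced T3": -1.93,
    "Enhanced T4": -0.95,
    "Enhanced T5": -1.34,
}
zero_intercept = 2.73  # inflated zero intercept from Table 2


def inv_logit(x: float) -> float:
    """Convert a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


# Assumption: log link, so exp(B) is a multiplicative change in expected use.
rate_ratios = {row: round(math.exp(b), 2) for row, b in poisson_b.items()}

# Assumption: logit link, so exp(B) is an odds ratio for being an excess zero.
zero_odds_ratios = {row: round(math.exp(b), 2) for row, b in zero_b.items()}

# Probability of an excess zero implied by the intercept alone, i.e. for the
# reference condition with the random intercept set to zero.
baseline_zero_prob = inv_logit(zero_intercept)

for row in poisson_b:
    print(f"{row}: rate ratio {rate_ratios[row]}, "
          f"excess-zero odds ratio {zero_odds_ratios[row]}")
print(f"Baseline excess-zero probability: {baseline_zero_prob:.2f}")
```

Read this way, the T2 Poisson estimate of 1.29 corresponds to a rate ratio of roughly 3.6, and the T2 inflated zero estimate of −1.52 corresponds to odds of non-use roughly one fifth as large, always subject to the coding assumptions stated above. Estimates that are nonsignificant in Table 2 (for example, T5) should not be interpreted as effects after back-transformation.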

Acknowledgments

This research was supported by a grant from the National Institute of Mental Health (RC1 MH088732). The views expressed in this paper solely reflect the views of the authors and do not necessarily reflect the views of the National Institutes of Health.

References

- Aarons GA, Hurlburt M, Horwitz SM. Advancing a Conceptual Model of Evidence-Based Practice Implementation in Public Service Sectors. Administration and Policy in Mental Health. 2011;38:4–23. doi: 10.1007/s10488-010-0327-7.

- Aarons GA, Palinkas LA. Implementation of evidence-based practice in child welfare: Service provider perspectives. Administration and Policy in Mental Health and Mental Health Services Research. 2007;34:411–419. doi: 10.1007/s10488-007-0121-3.

- Aarons GA, Sawitzky AC. Organizational climate partially mediates the effect of culture on work attitudes and staff turnover in mental health services. Administration and Policy in Mental Health and Mental Health Services Research. 2006;33:289–301. doi: 10.1007/s10488-006-0039-1.

- Abry T, Hulleman CS, Rimm-Kaufman S. Using indices of fidelity to intervention core components to identify program active ingredients. American Journal of Evaluation. 2015;36(3):320–338.

- Antle BF, Barbee AP, van Zyl MA. A comprehensive model for child welfare training evaluation. Children and Youth Services Review. 2008;30:1063–1080.

- Atkins MS, Frazier SL, Leathers SJ, Graczyk PA, Talbott E, Jakobsons L, Adil JA, Marinez-Lora A, Demirtas H, Gibbons RB, Bell CC. Teacher key opinion leaders and mental health consultation in low-income urban schools. Journal of Consulting and Clinical Psychology. 2008;76:905–908. doi: 10.1037/a0013036.

- Balas EA, Boren SA. Managing clinical knowledge for health care improvement. In: Bemmel J, McCray AT, editors. Yearbook of medical informatics 2000: Patient-centered systems. Stuttgart, Germany: Schattauer Verlagsgesellschaft mbH; 2000. pp. 65–70.

- Barbee AP, Christensen D, Antle B, Wandersman A, Cahn K. Successful adoption and implementation of a comprehensive casework practice model in a public child welfare agency: Application of the Getting to Outcomes (GTO) model. Children and Youth Services Review. 2011;33:622–633.

- Beidas RS, Edmunds JM, Cannuscio CC, Gallagher M, Downey MM, Kendall PC. Therapists’ perspectives on the effective elements of consultation following training. Administration and Policy in Mental Health and Mental Health Services Research. 2013;40(6):507–517. doi: 10.1007/s10488-013-0475-7.

- Beidas RS, Kendall PC. Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Clinical Psychology: Science and Practice. 2010;17:1–30. doi: 10.1111/j.1468-2850.2009.01187.x.

- Blase K, Fixsen D. Core intervention components: Identifying and operationalizing what makes programs work (ASPE Research Brief) Washington, DC: OHSP, US Department of Health and Human Services; 2013. Retrieved from http://aspe.hhs.gov/hsp/13/KeyIssuesforChildrenYouth/CoreIntervention/rb_CoreIntervention.cfm.

- Chamberlain P, Price J, Leve L, Laurent H, Landsverk JA, Reid JB. Prevention of behavior problems for children in foster care: outcomes and mediation effects. Prevention Science. 2008;9:17–27. doi: 10.1007/s11121-007-0080-7.

- Chamberlain P, Roberts R, Jones H, Marsenich L, Sosna T, Price JM. Three collaborative models for scaling up evidence-based practices. Administration and Policy in Mental Health and Mental Health Services Research. 2012;39:278–290. doi: 10.1007/s10488-011-0349-9.

- Collins ME, Amodeo M, Clay C. Training as a factor in policy implementation: Lessons from a national evaluation of child welfare training. Children and Youth Services Review. 2007;29:1487–1502.

- Damanpour F, Schneider M. Characteristics of innovation and innovation adoption in public organizations: Assessing the role of managers. Journal of Public Administration Research and Theory. 2009;19:495–522.

- Deane FP, Andresen R, Crowe TP, Oades LG, Ciarrochi J, Williams V. A comparison of two coaching approaches to enhance implementation of a recovery-oriented service model. Administration and Policy in Mental Health and Mental Health Services Research. 2014;41:660–667. doi: 10.1007/s10488-013-0514-4.

- Dingfelder HE, Mandell DS. Bridging the research-to-practice gap in autism intervention: An application of diffusion of innovation theory. Journal of Autism and Developmental Disorders. 2011;5:597–609. doi: 10.1007/s10803-010-1081-0.

- Edmunds JM, Beidas RS, Kendall PC. Dissemination and implementation of evidence-based practices: Training and consultation as implementation strategies. Clinical Psychology: Science and Practice. 2013;20:152–165. doi: 10.1111/cpsp.12031.

- Fixsen DL, Blase KA, Naoom SF, Wallace F. Core implementation components. Research on Social Work Practice. 2009;19:531–540.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005. (FMHI Publication #231)

- Gingiss PL, Gottlieb NH, Brink SG. Measuring cognitive characteristics associated with adoption and implementation of health innovations in schools. American Journal of Health Promotion. 1994;8:294–301.

- Glisson C, Dukes D, Green P. The effects of the ARC organizational intervention on caseworker turnover, climate, and culture in children’s service systems. Child Abuse & Neglect. 2011;30:855–880. doi: 10.1016/j.chiabu.2005.12.010.

- Glisson C, Green P. Organizational climate, services, and outcomes in child welfare systems. Child Abuse & Neglect. 2011;35:582–591. doi: 10.1016/j.chiabu.2011.04.009.

- Golden O. Reforming child welfare. Washington, DC: The Urban Institute; 2009.

- Gustafson DH, Quanbeck AR, Robinson JM, Ford JH, II, Pulvermacher A, French MT, McConnell KJ, Batalden PB, Hoffman KA, McCarty D. Which elements of improvement collaboratives are most effective? A cluster-randomized trial. Addiction. 2013;108:1145–1157. doi: 10.1111/add.12117.

- Hedeker D, Gibbons RD, Flay BR. Random-effects regression models for clustered data with an example from smoking prevention research. Journal of Consulting and Clinical Psychology. 1994;62:757–765. doi: 10.1037//0022-006x.62.4.757.

- Hedeker D, Gibbons RD. Longitudinal data analysis. Wiley Series in Probability and Statistics. Hoboken, NJ: Wiley-Interscience; 2006.

- Henggeler S, Lee T. What happens after the innovation is identified? Clinical Psychology: Science and Practice. 2002;9:191–194.

- Herschell AD, McNeil CB, Urquiza AJ, McGrath JM, Zebell NM, Timmer SG, et al. Evaluation of a treatment manual and workshops for disseminating Parent-Child Interaction Therapy. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36:63–81. doi: 10.1007/s10488-008-0194-7.

- Hurlburt MS, Garland AF, Nguyen K, Brookman-Frazee L. Child and family therapy process: Concordance of therapist and observational perspectives. Administration and Policy in Mental Health and Mental Health Services Research. 2010;37:230–244. doi: 10.1007/s10488-009-0251-x.

- Kolko DJ, Baumann BL, Herschell AD, Hart JA, Holden EA, Wisniewski SR. Implementation of AF-CBT by community practitioners serving child welfare and mental health: A randomized trial. Child Maltreatment. 2012;17:32–46. doi: 10.1177/1077559511427346.

- Kolko D, Cohen J, Mannarino A, Baumann B, Knudsen K. Community treatment of child sexual abuse: A survey of practitioners in the National Child Traumatic Stress Network. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36:37–49. doi: 10.1007/s10488-008-0180-0.

- Leathers SJ, Atkins MS, Spielfogel JE, McMeel LS, Wesley JM, Davis R. Context-specific mental health services for children in foster care. Children and Youth Services Review. 2009;31:1289–1297. doi: 10.1016/j.childyouth.2009.05.016.

- Lee AH, Wang K, Scott JA, Yau KKW, McLachlan GJ. Multi-level zero-inflated Poisson regression modelling of correlated count data with excess zeros. Statistical Methods in Medical Research. 2006;15:47–61. doi: 10.1191/0962280206sm429oa.

- Lipsky M. Street-level Bureaucracy: Dilemmas of the Individual in Public Services. New York: Russell Sage Foundation; 1980.

- Miller WR, Yahne CE, Moyers TB, Martinez J, Pirritano M. A randomized trial of methods to help clinicians learn motivational interviewing. Journal of Consulting and Clinical Psychology. 2004;72(6):1050–1062. doi: 10.1037/0022-006X.72.6.1050.

- Milne D. An empirical definition of clinical supervision. British Journal of Clinical Psychology. 2007;46:437–447. doi: 10.1348/014466507X197415.

- Moore KA, Peters RH, Hills HA, LaVasseur JB, Rich AR, Hunt WM, Young MS, Valente TW. Characteristics of opinion leaders in substance abuse treatment agencies. American Journal of Drug and Alcohol Abuse. 2004;30:187–203. doi: 10.1081/ada-120029873.

- Nadeem E, Gleacher A, Beidas RS. Consultation as an implementation strategy for evidence-based practices across multiple contexts: Unpacking the black box. Administration and Policy in Mental Health and Mental Health Services Research. 2013;40:439–540. doi: 10.1007/s10488-013-0502-8.

- Nadeem E, Olin SS, Hill LC, Hoagwood KE, Horwitz SM. Understanding the components of quality improvement collaboratives: A systematic literature review. The Milbank Quarterly. 2013;91(2):354–394. doi: 10.1111/milq.12016.

- Novins DK, Green AE, Legha RK, Aarons GA. Dissemination and implementation of evidence-based practices for child and adolescent mental health: A systematic review. Journal of the American Academy of Child and Adolescent Psychiatry. 2013;52:1009–1025. doi: 10.1016/j.jaac.2013.07.012.

- Pacifici C, Chamberlain P, White L. Off Road Parenting. Oregon: Northwest Media, Inc; 2002.

- Palinkas L, Schoenwald S, Hoagwood K, Landsverk J, Chorpita B, Weisz J. An ethnographic study of implementation of evidence-based treatments in child mental health: First steps. Psychiatric Services. 2008;59:738–746. doi: 10.1176/ps.2008.59.7.738.

- Patterson Silver Wolf (Adelv unegv Waya) DA, Dulmus CN, Maguin E. Is openness to using empirically supported treatments related to organizational culture and climate? Journal of Social Service Research. 2013;39:562–571. doi: 10.1080/01488376.2013.804023.