Significance

Understanding dynamic constraints and balances in nature has facilitated rapid development of knowledge and enabled technology, including aircraft, combustion engines, satellites, and electrical power. This work develops a novel framework to discover governing equations underlying a dynamical system simply from data measurements, leveraging advances in sparsity techniques and machine learning. The resulting models are parsimonious, balancing model complexity with descriptive ability while avoiding overfitting. There are many critical data-driven problems, such as understanding cognition from neural recordings, inferring climate patterns, determining stability of financial markets, predicting and suppressing the spread of disease, and controlling turbulence for greener transportation and energy. With abundant data and elusive laws, data-driven discovery of dynamics will continue to play an important role in these efforts.

Keywords: dynamical systems, machine learning, sparse regression, system identification, optimization

Abstract

Extracting governing equations from data is a central challenge in many diverse areas of science and engineering. Data are abundant whereas models often remain elusive, as in climate science, neuroscience, ecology, finance, and epidemiology, to name only a few examples. In this work, we combine sparsity-promoting techniques and machine learning with nonlinear dynamical systems to discover governing equations from noisy measurement data. The only assumption about the structure of the model is that a few important terms govern the dynamics, so that the equations are sparse in the space of possible functions; this assumption holds for many physical systems in an appropriate basis. In particular, we use sparse regression to determine the fewest terms in the dynamic governing equations required to accurately represent the data. This results in parsimonious models that balance accuracy with model complexity to avoid overfitting. We demonstrate the algorithm on a wide range of problems, from simple canonical systems, including linear and nonlinear oscillators and the chaotic Lorenz system, to the fluid vortex shedding behind an obstacle. The fluid example illustrates the ability of this method to discover the underlying dynamics of a system that took experts in the community nearly 30 years to resolve. We also show that this method generalizes to parameterized systems and systems that are time-varying or have external forcing.

Advances in machine learning (1) and data science (2) have promised a renaissance in the analysis and understanding of complex data, extracting patterns in vast multimodal data that are beyond the ability of humans to grasp. However, despite the rapid development of tools to understand static data based on statistical relationships, there has been slow progress in distilling physical models of dynamic processes from big data. This has limited the ability of data science models to extrapolate the dynamics beyond the attractor where they were sampled and constructed.

An analogy may be drawn with the discoveries of Kepler and Newton. Kepler, equipped with the most extensive and accurate planetary data of the era, developed a data-driven model for planetary motion, resulting in his famous elliptic orbits. However, this was an attractor-based view of the world, and it did not explain the fundamental dynamic relationships that give rise to planetary orbits, or provide a model for how these bodies react when perturbed. Newton, in contrast, discovered a dynamic relationship between momentum and energy that described the underlying processes responsible for these elliptic orbits. This dynamic model may be generalized to predict behavior in regimes where no data were collected. Newton’s model has proven remarkably robust for engineering design, making it possible to land a spacecraft on the moon, which would not have been possible using Kepler’s model alone.

A seminal breakthrough by Bongard and Lipson (3) and Schmidt and Lipson (4) has resulted in a new approach to determine the underlying structure of a nonlinear dynamical system from data. This method uses symbolic regression [i.e., genetic programming (5)] to find nonlinear differential equations, and it balances complexity of the model, measured in the number of terms, with model accuracy. The resulting model identification realizes a long-sought goal of the physics and engineering communities to discover dynamical systems from data. However, symbolic regression is expensive, does not scale well to large systems of interest, and may be prone to overfitting unless care is taken to explicitly balance model complexity with predictive power. In ref. 4, the Pareto front is used to find parsimonious models. There are other techniques that address various aspects of the dynamical system discovery problem. These include methods to discover governing equations from time-series data (6), equation-free modeling (7), empirical dynamic modeling (8, 9), modeling emergent behavior (10), and automated inference of dynamics (11–13); ref. 12 provides an excellent review.

Sparse Identification of Nonlinear Dynamics (SINDy)

In this work, we reenvision the dynamical system discovery problem from the perspective of sparse regression (14–16) and compressed sensing (17–22). In particular, we leverage the fact that most physical systems have only a few relevant terms that define the dynamics, making the governing equations sparse in a high-dimensional nonlinear function space. The use of sparsity methods in dynamical systems is quite recent (23–30). Here, we consider dynamical systems (31) of the form

$$\frac{d}{dt}\mathbf{x}(t) = \mathbf{f}(\mathbf{x}(t)). \tag{1}$$

The vector $\mathbf{x}(t) \in \mathbb{R}^n$ denotes the state of a system at time t, and the function $\mathbf{f}(\mathbf{x}(t))$ represents the dynamic constraints that define the equations of motion of the system, such as Newton’s second law. Later, the dynamics will be generalized to include parameterization, time dependence, and forcing.

The key observation is that for many systems of interest, the function $\mathbf{f}$ consists of only a few terms, making it sparse in the space of possible functions. Recent advances in compressed sensing and sparse regression make this viewpoint of sparsity favorable, because it is now possible to determine which right-hand-side terms are nonzero without performing a combinatorially intractable brute-force search. Under suitable conditions on the library and the data, the sparse solution can then be found with high probability using convex methods that scale favorably to large problems with Moore’s law. The resulting sparse model identification inherently balances model complexity (i.e., sparsity of the right-hand-side dynamics) with accuracy, avoiding overfitting the model to the data. Wang et al. (23) have used compressed sensing to identify nonlinear dynamics and predict catastrophes; here, we advocate using sparse regression to mitigate noise.

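To determine the function $\mathbf{f}$ from data, we collect a time history of the state $\mathbf{x}(t)$ and either measure the derivative $\dot{\mathbf{x}}(t)$ or approximate it numerically from $\mathbf{x}(t)$. The data are sampled at several times $t_1, t_2, \ldots, t_m$ and arranged into two matrices:

$$\mathbf{X} = \begin{bmatrix} \mathbf{x}^T(t_1) \\ \mathbf{x}^T(t_2) \\ \vdots \\ \mathbf{x}^T(t_m) \end{bmatrix} = \begin{bmatrix} x_1(t_1) & x_2(t_1) & \cdots & x_n(t_1) \\ x_1(t_2) & x_2(t_2) & \cdots & x_n(t_2) \\ \vdots & \vdots & \ddots & \vdots \\ x_1(t_m) & x_2(t_m) & \cdots & x_n(t_m) \end{bmatrix},$$

$$\dot{\mathbf{X}} = \begin{bmatrix} \dot{\mathbf{x}}^T(t_1) \\ \dot{\mathbf{x}}^T(t_2) \\ \vdots \\ \dot{\mathbf{x}}^T(t_m) \end{bmatrix} = \begin{bmatrix} \dot{x}_1(t_1) & \dot{x}_2(t_1) & \cdots & \dot{x}_n(t_1) \\ \dot{x}_1(t_2) & \dot{x}_2(t_2) & \cdots & \dot{x}_n(t_2) \\ \vdots & \vdots & \ddots & \vdots \\ \dot{x}_1(t_m) & \dot{x}_2(t_m) & \cdots & \dot{x}_n(t_m) \end{bmatrix}.$$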

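Next, we construct a library $\Theta(\mathbf{X})$ consisting of candidate nonlinear functions of the columns of $\mathbf{X}$. For example, $\Theta(\mathbf{X})$ may consist of constant, polynomial, and trigonometric terms:

$$\Theta(\mathbf{X}) = \begin{bmatrix} \mathbf{1} & \mathbf{X} & \mathbf{X}^{P_2} & \mathbf{X}^{P_3} & \cdots & \sin(\mathbf{X}) & \cos(\mathbf{X}) & \cdots \end{bmatrix}. \tag{2}$$

Here, higher polynomials are denoted as $\mathbf{X}^{P_2}, \mathbf{X}^{P_3}$, etc., where $\mathbf{X}^{P_2}$ denotes the quadratic nonlinearities in the state $\mathbf{x}$:

$$\mathbf{X}^{P_2} = \begin{bmatrix}
x_1^2(t_1) & x_1(t_1)x_2(t_1) & \cdots & x_2^2(t_1) & x_2(t_1)x_3(t_1) & \cdots & x_n^2(t_1) \\
x_1^2(t_2) & x_1(t_2)x_2(t_2) & \cdots & x_2^2(t_2) & x_2(t_2)x_3(t_2) & \cdots & x_n^2(t_2) \\
\vdots & \vdots & \ddots & \vdots & \vdots & \ddots & \vdots \\
x_1^2(t_m) & x_1(t_m)x_2(t_m) & \cdots & x_2^2(t_m) & x_2(t_m)x_3(t_m) & \cdots & x_n^2(t_m)
\end{bmatrix}.$$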
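The library construction in Eq. 2 can be sketched in Python with NumPy. This is a minimal illustration under our own assumptions, not the authors' released code: the function name `polynomial_library` and the column labels `x0`, `x1`, and so on are hypothetical, and only the constant and polynomial columns are built (trigonometric columns such as $\sin(\mathbf{X})$ could be appended in the same way with `np.sin`).

```python
import itertools

import numpy as np


def polynomial_library(X, order):
    """Build Theta(X): every monomial of the state variables up to `order`.

    X has shape (m, n): m time samples (rows) of an n-dimensional state (columns).
    Returns (Theta, names), where Theta has shape (m, p).
    """
    m, n = X.shape
    columns = [np.ones(m)]  # constant term
    names = ["1"]
    for degree in range(1, order + 1):
        # combinations_with_replacement lists each monomial exactly once,
        # e.g. for n=2, degree=2: (0,0)->x0^2, (0,1)->x0*x1, (1,1)->x1^2
        for combo in itertools.combinations_with_replacement(range(n), degree):
            columns.append(np.prod(X[:, list(combo)], axis=1))
            names.append("*".join(f"x{i}" for i in combo))
    return np.column_stack(columns), names
```

For n = 3 states and polynomials up to fifth order this yields p = 56 columns, the number of monomials of degree at most 5 in three variables.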

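Each column of $\Theta(\mathbf{X})$ represents a candidate function for the right-hand side of Eq. 1. There is tremendous freedom in choosing the entries in this matrix of nonlinearities. Because we believe that only a few of these nonlinearities are active in each row of $\mathbf{f}$, we may set up a sparse regression problem to determine the sparse vectors of coefficients $\Xi = [\boldsymbol{\xi}_1\ \boldsymbol{\xi}_2\ \cdots\ \boldsymbol{\xi}_n]$ that determine which nonlinearities are active:

$$\dot{\mathbf{X}} = \Theta(\mathbf{X})\,\Xi. \tag{3}$$

This is illustrated in Fig. 1. Each column $\boldsymbol{\xi}_k$ of $\Xi$ is a sparse vector of coefficients determining which terms are active in the right-hand side for one of the row equations $\dot{x}_k = \mathbf{f}_k(\mathbf{x})$ in Eq. 1. Once $\Xi$ has been determined, a model of each row of the governing equations may be constructed as follows:

$$\dot{x}_k = \mathbf{f}_k(\mathbf{x}) = \Theta(\mathbf{x}^T)\,\boldsymbol{\xi}_k. \tag{4}$$

Note that $\Theta(\mathbf{x}^T)$ is a vector of symbolic functions of elements of $\mathbf{x}$, as opposed to $\Theta(\mathbf{X})$, which is a data matrix. Thus,

$$\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x}) = \Xi^T \left(\Theta(\mathbf{x}^T)\right)^T. \tag{5}$$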
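To make the distinction between Eqs. 4 and 5 concrete, the following sketch evaluates $\Theta(\mathbf{x}^T)$ at a single state and applies $\Xi^T$. For illustration only, $\Xi$ is filled in by hand with the Lorenz coefficients ($\sigma = 10$, $\rho = 28$, $\beta = 8/3$) over a library truncated at quadratic order; in practice $\Xi$ is the output of the sparse regression. The names `theta_row`, `model_rhs`, and `print_model` are hypothetical.

```python
import numpy as np

# Candidate functions for a 3-state system up to quadratic order
# (an illustrative subset of Eq. 2; the ordering is our own choice).
NAMES = ["1", "x", "y", "z", "x^2", "xy", "xz", "y^2", "yz", "z^2"]


def theta_row(state):
    """Theta(x^T): every candidate function evaluated at one state, shape (p,)."""
    x, y, z = state
    return np.array([1.0, x, y, z, x * x, x * y, x * z, y * y, y * z, z * z])


def model_rhs(state, Xi):
    """Eq. 5: f(x) = Xi^T Theta(x^T)^T, one sparse dot product per row equation."""
    return Xi.T @ theta_row(state)


def print_model(Xi, lhs=("x'", "y'", "z'")):
    """Print only the active (nonzero) terms of each row equation."""
    for k, name in enumerate(lhs):
        terms = [f"{c:+.3g} {NAMES[j]}" for j, c in enumerate(Xi[:, k]) if c != 0]
        print(f"{name} = " + " ".join(terms))


# Lorenz coefficients written into Xi by hand (column k = row equation k).
sigma, rho, beta = 10.0, 28.0, 8.0 / 3.0
Xi = np.zeros((len(NAMES), 3))
Xi[NAMES.index("x"), 0], Xi[NAMES.index("y"), 0] = -sigma, sigma
Xi[NAMES.index("x"), 1], Xi[NAMES.index("y"), 1] = rho, -1.0
Xi[NAMES.index("xz"), 1] = -1.0
Xi[NAMES.index("z"), 2], Xi[NAMES.index("xy"), 2] = -beta, 1.0

print_model(Xi)
```

Because $\Xi$ is sparse, each row equation touches only two or three library columns, which is what keeps the identified model readable.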

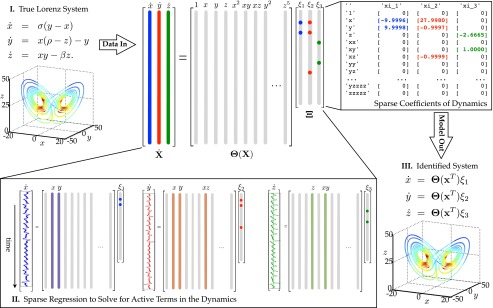

Fig. 1.

Schematic of the SINDy algorithm, demonstrated on the Lorenz equations. Data are collected from the system, including a time history of the states $\mathbf{X}$ and derivatives $\dot{\mathbf{X}}$; the assumption of having $\dot{\mathbf{X}}$ is relaxed later. Next, a library of nonlinear functions of the states, $\Theta(\mathbf{X})$, is constructed. This nonlinear feature library is used to find the fewest terms needed to satisfy $\dot{\mathbf{X}} = \Theta(\mathbf{X})\,\Xi$. The few entries in the vectors of Ξ, solved for by sparse regression, denote the relevant terms in the right-hand side of the dynamics. Parameter values are $\sigma = 10$, $\beta = 8/3$, $\rho = 28$, $(x_0, y_0, z_0)^T = (-8, 7, 27)^T$. The trajectory on the Lorenz attractor is colored by the adaptive time step required, with red indicating a smaller time step.

Each column of Eq. 3 requires a distinct optimization to find the sparse vector of coefficients $\boldsymbol{\xi}_k$ for the kth row equation. We may also normalize the columns of $\Theta(\mathbf{X})$, especially when entries of $\Xi$ are small, as discussed in the SI Appendix.

For examples in this paper, the matrix $\Theta(\mathbf{X})$ has size $m \times p$, where p is the number of candidate functions, and $p \ll m$ because there are more data samples than functions; this is possible in a restricted basis, such as the polynomial basis in Eq. 2. In practice, it may be helpful to test many different function bases and use the sparsity and accuracy of the resulting model as a diagnostic tool to determine the correct basis in which to represent the dynamics. In SI Appendix, Appendix B, two examples are explored where the sparse identification algorithm fails because the dynamics are not sparse in the chosen basis.

Realistically, often only $\mathbf{X}$ is available, and $\dot{\mathbf{X}}$ must be approximated numerically, as in all of the continuous-time examples below. Thus, $\mathbf{X}$ and $\dot{\mathbf{X}}$ are contaminated with noise, so Eq. 3 does not hold exactly. Instead,

$$\dot{\mathbf{X}} = \Theta(\mathbf{X})\,\Xi + \eta\mathbf{Z}, \tag{6}$$

where $\mathbf{Z}$ is modeled as a matrix of independent identically distributed Gaussian entries with zero mean, and noise magnitude η. Thus, we seek a sparse solution to an overdetermined system with noise. The least absolute shrinkage and selection operator (LASSO) (14, 15) is an $\ell_1$-regularized regression that promotes sparsity and works well with this type of data. However, it may be computationally expensive for very large data sets. An alternative based on sequential thresholded least-squares is presented in Code 1 in the SI Appendix.
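Sequential thresholded least squares alternates between a least-squares fit and zeroing every coefficient whose magnitude falls below a threshold λ, then refits each row equation on the surviving library columns. The sketch below is our own Python rendering of that idea, assuming NumPy; it is not a transcription of Code 1, and the name `stlsq`, the fixed iteration count, and the synthetic test problem are illustrative choices.

```python
import numpy as np


def stlsq(Theta, dXdt, threshold, max_iter=10):
    """Sequential thresholded least squares for dXdt ~ Theta @ Xi (Eq. 6).

    Theta: (m, p) library matrix; dXdt: (m, n) derivative data.
    Returns Xi with shape (p, n); entries below `threshold` are exactly zero.
    """
    # Initial guess: ordinary least squares for all row equations at once.
    Xi = np.linalg.lstsq(Theta, dXdt, rcond=None)[0]
    for _ in range(max_iter):
        small = np.abs(Xi) < threshold  # coefficients to prune
        Xi[small] = 0.0
        for k in range(dXdt.shape[1]):  # one regression per row equation
            big = ~small[:, k]
            if big.any():
                # Refit using only the library columns that survived pruning.
                Xi[big, k] = np.linalg.lstsq(Theta[:, big], dXdt[:, k], rcond=None)[0]
    return Xi
```

The threshold acts as the sparsity knob: larger values prune more terms, so it should sit below the smallest coefficient that is expected to be real.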

Depending on the noise, it may be necessary to filter $\mathbf{X}$ and $\dot{\mathbf{X}}$ before solving for $\Xi$. In many of the examples below, only the data $\mathbf{X}$ are available, and $\dot{\mathbf{X}}$ are obtained by differentiation. To counteract differentiation error, we use the total variation regularization (32) to denoise the derivative (33). This works quite well when only state data are available, as illustrated on the Lorenz system (SI Appendix, Fig. S7). Alternatively, the data $\mathbf{X}$ and $\dot{\mathbf{X}}$ may be filtered, for example using the optimal hard threshold for singular values described in ref. 34. Insensitivity to noise is a critical feature of an algorithm that identifies dynamics from data (11–13).
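One way to apply the optimal hard threshold of ref. 34 when the noise level is unknown is to truncate the SVD at $\tau = \omega(\beta)\, y_{\mathrm{med}}$, where $y_{\mathrm{med}}$ is the median singular value, $\beta$ is the aspect ratio of the matrix, and $\omega(\beta) \approx 0.56\beta^3 - 0.95\beta^2 + 1.82\beta + 1.43$ is the polynomial approximation given in that reference. The sketch assumes i.i.d. noise and uses the hypothetical name `svht_denoise`; it illustrates only this filtering step, not the total variation derivative.

```python
import numpy as np


def svht_denoise(X):
    """Truncate the SVD of X at the approximate optimal hard threshold
    for an unknown noise level. Returns (denoised matrix, retained rank)."""
    m, n = X.shape
    beta = min(m, n) / max(m, n)  # aspect ratio in (0, 1]
    omega = 0.56 * beta**3 - 0.95 * beta**2 + 1.82 * beta + 1.43
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    tau = omega * np.median(s)  # threshold on the singular values
    r = int(np.sum(s > tau))  # number of singular values kept
    return (U[:, :r] * s[:r]) @ Vt[:r, :], r
```

The retained rank is a by-product worth inspecting: a sudden change in r between data sets can signal that the noise level or the underlying dimension has changed.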

Often, the physical system of interest may be naturally represented by a partial differential equation (PDE) in a few spatial variables. If data are collected from a numerical discretization or from experimental measurements on a spatial grid, then the state dimension n may be prohibitively large. For example, in fluid dynamics, even simple 2D and 3D flows may require tens of thousands up to billions of variables to represent the discretized system. The proposed method is ill-suited for a large state dimension n, because of the combinatorial growth of $\Theta$ in n and because each of the n row equations in Eq. 4 requires a separate optimization. Fortunately, many high-dimensional systems of interest evolve on a low-dimensional manifold or attractor that is well-approximated using a low-rank basis (35, 36). For example, if data are collected for a high-dimensional system as in Eq. 2, it is possible to obtain a low-rank approximation using dimensionality reduction techniques, such as the proper orthogonal decomposition (POD) (35, 37).
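A common way to compute POD modes is through the SVD of a snapshot matrix. The sketch below assumes snapshots stored as rows (matching the row-per-time-sample convention for $\mathbf{X}$), subtracts the temporal mean, and uses the Euclidean inner product; solvers on nonuniform grids would typically weight the inner product by cell volumes. The name `pod` and the synthetic test data are hypothetical.

```python
import numpy as np


def pod(snapshots, r):
    """POD of a snapshot matrix (rows = time samples, columns = grid points).

    Returns the temporal mean, the first r orthonormal spatial modes (columns),
    the r-dimensional time coefficients, and the fraction of energy captured.
    """
    mean = snapshots.mean(axis=0)
    fluct = snapshots - mean  # decompose fluctuations about the mean
    U, s, Vt = np.linalg.svd(fluct, full_matrices=False)
    modes = Vt[:r].T  # shape (n_grid, r), orthonormal columns
    coeffs = fluct @ modes  # shape (m, r): the reduced state over time
    energy = np.sum(s[:r] ** 2) / np.sum(s ** 2)
    return mean, modes, coeffs, energy
```

The returned `coeffs` are the low-dimensional coordinates on which a sparse regression such as Eq. 3 could then be run.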

The proposed sparse identification of nonlinear dynamics (SINDy) method depends on the choice of measurement variables, data quality, and the sparsifying function basis. There is no single method that will solve all problems in nonlinear system identification, but this method highlights the importance of these underlying choices and can help guide the analysis. The challenges of choosing measurement variables and a sparsifying function basis are explored in SI Appendix, section 4.5 and Appendixes A and B.

Simply put, we need the right coordinates and function basis to yield sparse dynamics; the feasibility and flexibility of these requirements are discussed in Discussion and SI Appendix section 4.5 and Appendixes A and B. However, it may be difficult to know the correct variables a priori. Fortunately, time-delay coordinates may provide useful variables from a time series (9, 12, 38). The ability to reconstruct sparse attractor dynamics using time-delay coordinates is demonstrated in SI Appendix, section 4.5 using a single variable of the Lorenz system.
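Time-delay coordinates can be built from a single scalar series by stacking shifted copies of it, giving a Hankel-type matrix whose rows can serve as state vectors. A minimal sketch, assuming a uniformly sampled series; the name `delay_embed` and the parameters `q` (number of delays) and `tau` (delay in samples) are hypothetical, and the construction used in SI Appendix, section 4.5 may differ in detail.

```python
import numpy as np


def delay_embed(x, q, tau=1):
    """Stack q delayed copies of a scalar series x into a Hankel-type matrix.

    Row j is [x[j], x[j + tau], ..., x[j + (q - 1) * tau]].
    """
    m = len(x) - (q - 1) * tau  # number of complete delay vectors
    if m <= 0:
        raise ValueError("time series too short for this embedding")
    return np.column_stack([x[i * tau : i * tau + m] for i in range(q)])
```

Each row of the result plays the role of one row of $\mathbf{X}$, so the same library and regression steps apply unchanged.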

The choice of coordinates and the sparsifying basis are intimately related, and the best choice is not always clear. However, basic knowledge of the physics (e.g., Navier–Stokes equations have quadratic nonlinearities, and the Schrödinger equation has $|u|^2 u$ terms) may provide a reasonable choice of nonlinear functions and measurement coordinates. In fact, the sparsity and accuracy of the proposed sparse identified model may provide valuable diagnostic information about the correct measurement coordinates and basis in which to represent the dynamics. Choosing the right coordinates to simplify dynamics has always been important, as exemplified by Lagrangian and Hamiltonian mechanics (39). There is still a need for experts to find and exploit symmetry in the system, and the proposed methods should be complemented by advanced algorithms in machine learning to extract useful features.

Results

We demonstrate the algorithm on canonical systems*, ranging from linear and nonlinear oscillators (SI Appendix, section 4.1), to noisy measurements of the chaotic Lorenz system, to the unsteady fluid wake behind a cylinder, extending this method to nonlinear PDEs and high-dimensional data. Finally, we show that bifurcation parameters may be included in the models, recovering the parameterized logistic map and the Hopf normal form from noisy measurements. In each example, we explore the ability to identify the dynamics from state measurements alone, without access to derivatives.

It is important to reiterate that the sparse identification method relies on a fortunate choice of coordinates and function basis that facilitate sparse representation of the dynamics. In SI Appendix, Appendix B, we explore the limitations of the method for examples where these assumptions break down: the Lorenz system transformed into nonlinear coordinates and the glycolytic oscillator model (11–13).

Chaotic Lorenz System.

As a first example, consider a canonical model for chaotic dynamics, the Lorenz system (40):

$$\dot{x} = \sigma(y - x) \tag{7a}$$

$$\dot{y} = x(\rho - z) - y \tag{7b}$$

$$\dot{z} = xy - \beta z \tag{7c}$$

Although these equations give rise to rich and chaotic dynamics that evolve on an attractor, there are only a few terms in the right-hand side of the equations. Fig. 1 shows a schematic of how data are collected for this example, and how sparse dynamics are identified in a space of possible right-hand-side functions using convex minimization.

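For this example, data are collected for the Lorenz system and stacked into two large data matrices $\mathbf{X}$ and $\dot{\mathbf{X}}$, where each row of $\mathbf{X}$ is a snapshot of the state $\mathbf{x}$ in time, and each row of $\dot{\mathbf{X}}$ is a snapshot of the time derivative of the state $\dot{\mathbf{x}}$ in time. Here, the right-hand-side dynamics are identified in the space of polynomials $\Theta(\mathbf{X})$ in $(x, y, z)$ up to fifth order, although other functions such as $\sin$, $\cos$, $\exp$, or higher-order polynomials may be included:

$$\Theta(\mathbf{X}) = \begin{bmatrix} 1 & x(t) & y(t) & z(t) & x(t)^2 & x(t)y(t) & \cdots & z(t)^5 \end{bmatrix}.$$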

Each column of $\Theta(\mathbf{X})$ represents a candidate function for the right-hand side of Eq. 1. Because only a few of these terms are active in each row of $\mathbf{f}$, we solve the sparse regression problem in Eq. 3 to determine the sparse vectors of coefficients $\Xi = [\boldsymbol{\xi}_1\ \boldsymbol{\xi}_2\ \boldsymbol{\xi}_3]$ that determine which terms are active in the dynamics. This is illustrated schematically in Fig. 1, where sparse vectors $\boldsymbol{\xi}_k$ are found to represent the derivative $\dot{\mathbf{X}}$ as a linear combination of the fewest terms in $\Theta(\mathbf{X})$.

In the Lorenz example, the ability to capture dynamics on the attractor is more important than the ability to predict an individual trajectory, because chaos will quickly cause any small variations in initial conditions or model coefficients to diverge exponentially. As shown in Fig. 1, the sparse model accurately reproduces the attractor dynamics from chaotic trajectory measurements. The algorithm not only identifies the correct terms in the dynamics, but it accurately determines the coefficients to within 0.03% of the true values. We also explore the identification of the dynamics when only noisy state measurements are available (SI Appendix, Fig. S7). The correct dynamics are identified, and the attractor is preserved for surprisingly large noise values. In SI Appendix, section 4.5, we reconstruct the attractor dynamics in the Lorenz system using time-delay coordinates from a single measurement $x(t)$.
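The Lorenz experiment can be reproduced in outline with NumPy alone. This sketch rests on our own assumptions: a fixed-step fourth-order Runge–Kutta integrator (the figure used an adaptive step), the standard parameters σ = 10, ρ = 28, β = 8/3, the initial condition (−8, 7, 27), exact derivatives evaluated from Eq. 7, a polynomial library truncated at third rather than fifth order, and a threshold of 0.025. All helper names are hypothetical.

```python
import itertools

import numpy as np

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0


def lorenz(s):
    """Right-hand side of Eq. 7."""
    x, y, z = s
    return np.array([SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z])


def simulate(s0, dt, steps):
    """Fixed-step RK4 trajectory; row i is the state at time i * dt."""
    out = np.empty((steps, 3))
    s = np.array(s0, dtype=float)
    for i in range(steps):
        out[i] = s
        k1 = lorenz(s)
        k2 = lorenz(s + 0.5 * dt * k1)
        k3 = lorenz(s + 0.5 * dt * k2)
        k4 = lorenz(s + dt * k3)
        s = s + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return out


def poly_library(X, order):
    """Monomials in (x, y, z) up to `order`, labelled e.g. 'xz' for x*z."""
    cols, names = [np.ones(len(X))], ["1"]
    for d in range(1, order + 1):
        for c in itertools.combinations_with_replacement(range(X.shape[1]), d):
            cols.append(np.prod(X[:, list(c)], axis=1))
            names.append("".join("xyz"[i] for i in c))
    return np.column_stack(cols), names


def stlsq(Theta, dXdt, threshold, max_iter=10):
    """Sequential thresholded least squares, one refit per row equation."""
    Xi = np.linalg.lstsq(Theta, dXdt, rcond=None)[0]
    for _ in range(max_iter):
        small = np.abs(Xi) < threshold
        Xi[small] = 0.0
        for k in range(dXdt.shape[1]):
            big = ~small[:, k]
            if big.any():
                Xi[big, k] = np.linalg.lstsq(Theta[:, big], dXdt[:, k], rcond=None)[0]
    return Xi


X = simulate([-8.0, 7.0, 27.0], dt=0.001, steps=10000)
dXdt = np.array([lorenz(s) for s in X])  # exact derivatives (assumption)
Theta, names = poly_library(X, order=3)
Xi = stlsq(Theta, dXdt, threshold=0.025)
for k, lhs in enumerate("xyz"):
    terms = " ".join(f"{Xi[j, k]:+.4f} {names[j]}" for j in np.flatnonzero(Xi[:, k]))
    print(f"{lhs}' = {terms}")
```

With exact derivatives the regression keeps the seven nonzero coefficients of Eq. 7 and prunes the rest. We stop at third order so that the unnormalized library stays well conditioned; a fifth-order library would benefit from the column normalization mentioned earlier.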

PDE for Vortex Shedding Behind an Obstacle.

The Lorenz system is a low-dimensional model of more realistic high-dimensional PDE models for fluid convection in the atmosphere. Many systems of interest are governed by PDEs (24), such as weather and climate, epidemiology, and the power grid, to name a few. Each of these examples is characterized by big data, consisting of large spatially resolved measurements with millions or billions of states and spanning orders of magnitude of scale in both space and time. However, many high-dimensional, real-world systems evolve on a low-dimensional attractor, making the effective dimension much smaller (35).

Here we generalize the SINDy method to an example in fluid dynamics that typifies many of the challenges outlined above. In the context of data from a PDE, our algorithm shares some connections to the dynamic mode decomposition, which is a linear dynamic regression (41–43). Data are collected for the fluid flow past a cylinder at Reynolds number 100 using direct numerical simulations of the 2D Navier–Stokes equations (44, 45). The nonlinear dynamic relationship between the dominant coherent structures is identified from these flow-field measurements with no knowledge of the governing equations.

The flow past a cylinder is a particularly interesting example because of its rich history in fluid mechanics and dynamical systems. It has long been theorized that turbulence is the result of a series of Hopf bifurcations that occur as the flow velocity increases (46), giving rise to richer and more intricate structures in the fluid. After 15 years, the first Hopf bifurcation was discovered in a fluid system, in the transition from a steady laminar wake to laminar periodic vortex shedding at Reynolds number 47 (47, 48). This discovery led to a long-standing debate about how a Hopf bifurcation, with cubic nonlinearity, can be exhibited in a Navier–Stokes fluid with quadratic nonlinearities. After 15 more years, this was resolved using a separation of timescales and a mean-field model (49), shown in Eq. 8. It was shown that coupling between oscillatory modes and the base flow gives rise to a slow manifold (Fig. 2, Left), which results in algebraic terms that approximate cubic nonlinearities on slow timescales.

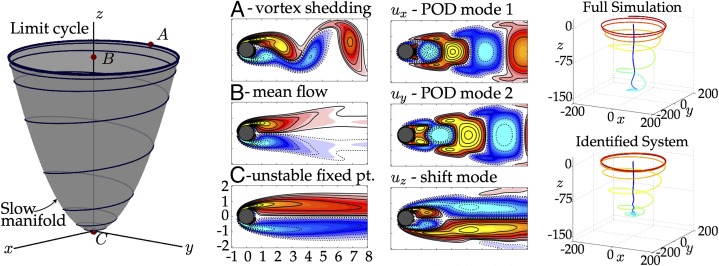

Fig. 2.

Example of a high-dimensional dynamical system from fluid dynamics. The vortex shedding past a cylinder is a prototypical example that is used for flow control, with relevance to many applications, including drag reduction behind vehicles. The vortex shedding is the result of a Hopf bifurcation. However, because the Navier–Stokes equations have quadratic nonlinearity, it is necessary to use a mean-field model with a separation of timescales, where a fast mean-field deformation is slave to the slow vortex shedding dynamics. The parabolic slow manifold is shown (Left), with the unstable fixed point (C), mean flow (B), and vortex shedding (A). A POD basis and shift mode are used to reduce the dimension of the problem (Middle Right). The identified dynamics closely match the true trajectory in POD coordinates, and most importantly, they capture the quadratic nonlinearity and timescales associated with the mean-field model.

This example provides a compelling test case for the proposed algorithm, because the underlying form of the dynamics took nearly three decades for experts in the community to uncover. Because the state dimension is large, consisting of the fluid state at hundreds of thousands of grid points, it is first necessary to reduce the dimension of the system. The POD (35, 37) provides a low-rank basis resulting in a hierarchy of orthonormal modes that, when truncated, capture the most energy of the original system for the given rank truncation. The first two most energetic POD modes capture a significant portion of the energy, and steady-state vortex shedding is a limit cycle in these coordinates. An additional mode, called the shift mode, is included to capture the transient dynamics connecting the unstable steady state (“C” in Fig. 2) with the mean of the limit cycle (49) (“B” in Fig. 2). These modes define the coordinates in Fig. 2.
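The shift mode can be constructed from the difference between the mean of the limit cycle and the unstable steady state, orthonormalized against the POD modes with one Gram–Schmidt step. The sketch below assumes real-valued fields flattened into vectors, POD modes stored as orthonormal columns, and a Euclidean inner product; the function name `shift_mode` is hypothetical, and ref. 49 remains the authority on the exact definition used there.

```python
import numpy as np


def shift_mode(u_mean, u_steady, modes):
    """Mean-flow correction u_mean - u_steady, made orthogonal to the POD modes
    (orthonormal columns of `modes`) and normalized to unit length."""
    d = u_mean - u_steady
    d = d - modes @ (modes.T @ d)  # remove components along the POD modes
    return d / np.linalg.norm(d)
```

Projecting flow snapshots onto the two leading POD modes and this shift mode gives the three coordinates used in the mean-field model.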

In the coordinate system described above, the mean-field model for the cylinder dynamics is given by (49)

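$$\dot{x} = \mu x - \omega y + A x z \tag{8a}$$

$$\dot{y} = \omega x + \mu y + A y z \tag{8b}$$

$$\dot{z} = -\lambda\left(z - x^2 - y^2\right) \tag{8c}$$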

If λ is large, so that the z dynamics are fast, then the mean flow rapidly corrects to be on the (slow) manifold $z = x^2 + y^2$ given by the amplitude of vortex shedding. When substituting this algebraic relationship into Eqs. 8a and 8b, we recover the Hopf normal form on the slow manifold.
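Carrying out the substitution explicitly shows how cubic terms emerge from the quadratic model. Setting $z = x^2 + y^2$ in Eqs. 8a and 8b and then passing to polar coordinates $x = r\cos\theta$, $y = r\sin\theta$ (a step we add for clarity) gives

$$\begin{aligned}
\dot{x} &= \mu x - \omega y + A x\,(x^2 + y^2), \\
\dot{y} &= \omega x + \mu y + A y\,(x^2 + y^2), \\
r\dot{r} &= x\dot{x} + y\dot{y} = \mu r^2 + A r^4 \;\Rightarrow\; \dot{r} = \mu r + A r^3, \\
r^2\dot{\theta} &= x\dot{y} - y\dot{x} = \omega r^2 \;\Rightarrow\; \dot{\theta} = \omega.
\end{aligned}$$

For $\mu > 0$ and $A < 0$, the amplitude settles on the stable limit cycle $r = \sqrt{-\mu/A}$, which is the cubic saturation characteristic of the Hopf normal form.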

With a time history of these three coordinates, the proposed algorithm correctly identifies quadratic nonlinearities and reproduces a parabolic slow manifold. Note that derivative measurements are not available, but are computed from the state variables. Interestingly, when the training data do not include trajectories that originate off of the slow manifold, the algorithm incorrectly identifies cubic nonlinearities, and fails to identify the slow manifold.

Normal Forms, Bifurcations, and Parameterized Systems.

In practice, many real-world systems depend on parameters, and dramatic changes, or bifurcations, may occur when the parameter is varied. The SINDy algorithm is readily extended to encompass these important parameterized systems, allowing for the discovery of normal forms (31, 50) associated with a bifurcation parameter μ. First, we append μ to the dynamics:

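$$\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x}; \mu) \tag{9a}$$

$$\dot{\mu} = 0 \tag{9b}$$

It is then possible to identify $\mathbf{f}(\mathbf{x}; \mu)$ as a sparse combination of functions of $\mathbf{x}$ as well as the bifurcation parameter μ.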

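Identifying parameterized dynamics is shown in two examples: the 1D logistic map with stochastic forcing,

$$x_{k+1} = \mu x_k (1 - x_k) + \eta_k,$$

and the 2D Hopf normal form (51),

$$\begin{aligned}
\dot{x} &= \mu x + \omega y - A x (x^2 + y^2), \\
\dot{y} &= -\omega x + \mu y - A y (x^2 + y^2).
\end{aligned}$$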

The logistic map is a classical model for population dynamics, and the Hopf normal form models spontaneous oscillations in chemical reactions, electrical circuits, and fluid instability.

The noisy measurements and the sparse dynamic reconstructions for both examples are shown in Fig. 3. In the logistic map example, the stochastically forced trajectory is sampled at 10 discrete parameter values, shown in red. From these measurements, the correct parameterized dynamics are identified. The parameterization is accurate enough to capture the cascade of bifurcations as μ is increased, resulting in the detailed bifurcation diagram in Fig. 3. Parameters are identified to within 0.1% of true values (SI Appendix, Appendix C).
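Because the logistic map is discrete-time, the regression target is $x_{k+1}$ rather than a derivative, and μ enters the library as one more variable alongside $x_k$, in the spirit of Eq. 9. The sketch below uses our own choices for the training values of μ, the forcing amplitude, the library order, and the threshold (the article's settings are in SI Appendix); all helper names are hypothetical.

```python
import itertools

import numpy as np


def poly_library(Z, order):
    """Monomials of the columns of Z (here Z = [x_k, mu]) up to `order`."""
    cols, names = [np.ones(len(Z))], ["1"]
    for d in range(1, order + 1):
        for c in itertools.combinations_with_replacement(range(Z.shape[1]), d):
            cols.append(np.prod(Z[:, list(c)], axis=1))
            names.append("*".join(("x", "mu")[i] for i in c))
    return np.column_stack(cols), names


def stlsq(Theta, y, threshold, max_iter=10):
    """Sequential thresholded least squares for a single output column."""
    xi = np.linalg.lstsq(Theta, y, rcond=None)[0]
    for _ in range(max_iter):
        big = np.abs(xi) >= threshold
        xi[~big] = 0.0
        if not big.any():
            break
        xi[big] = np.linalg.lstsq(Theta[:, big], y, rcond=None)[0]
    return xi


rng = np.random.default_rng(0)
mus = np.linspace(2.5, 3.9, 10)  # 10 training values of mu (our choice)
eta = 1e-4  # stochastic forcing amplitude (our choice)
rows, targets = [], []
for mu in mus:
    x = 0.5
    for _ in range(1000):
        x_next = mu * x * (1.0 - x) + eta * rng.standard_normal()
        rows.append((x, mu))  # library input: current state and parameter
        targets.append(x_next)  # regression target: next state
        x = x_next

Theta, names = poly_library(np.array(rows), order=3)
xi = stlsq(Theta, np.array(targets), threshold=0.1)
print({n: round(float(c), 4) for n, c in zip(names, xi) if c != 0})
```

Only the terms $\mu x$ and $\mu x^2$ survive, with coefficients close to +1 and −1, which is the logistic map written as $x_{k+1} = \mu x_k - \mu x_k^2$.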

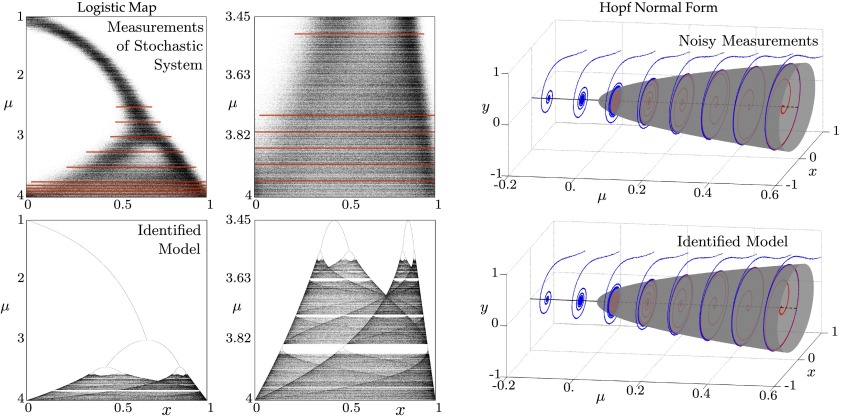

Fig. 3.

SINDy algorithm is able to identify normal forms and capture bifurcations, as demonstrated on the logistic map (Left) and the Hopf normal form (Right). Noisy data from both systems are used to train models. For the logistic map, a handful of parameter values μ (red lines) are used for the training data, and the correct normal form and bifurcation sequence are captured (below). Noisy data for the Hopf normal form are collected at a few values of μ, and the total variation derivative (33) is used to compute time derivatives. The accurate Hopf normal form is reproduced (below). The nonlinear terms identified by the algorithm are in SI Appendix, section 4.4 and Appendix C.

In the Hopf normal-form example, noisy state measurements from eight parameter values are sampled, with data collected on the blue and red trajectories in Fig. 3 (Top Right). Noise is added to the position measurements to simulate sensor noise, and the total variation regularized derivative (33) is used. In this example, the normal form is correctly identified, resulting in accurate limit cycle amplitudes and growth rates (Bottom Right). The correct identification of a normal form depends critically on the choice of variables and the nonlinear basis functions used for $\Theta$. In practice, these choices may be informed by machine learning and data mining, by partial knowledge of the physics, and by expert human intuition.

Similarly, time dependence and external forcing or feedback control may be added to the vector field:

$$\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x}, \mathbf{u}(t), t).$$

Generalizing the SINDy algorithm makes it possible to analyze systems that are externally forced or controlled. For example, the climate both depends on parameters (50) and is subject to external forcing, including carbon dioxide and solar radiation. The financial market is another important example with forcing and active feedback control.
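Forcing enters the library the same way as a parameter: the input is stacked next to the state, and candidate functions are built from both. The sketch uses a hypothetical discrete-time forced system of our own, $x_{k+1} = 0.8x_k - 0.2x_k^3 + 0.5u_k$ with a random measured input $u_k$, rather than any system from the article; helper names are likewise hypothetical.

```python
import itertools

import numpy as np


def poly_library(Z, order, labels):
    """Monomials of the columns of Z up to `order`, named with `labels`."""
    cols, names = [np.ones(len(Z))], ["1"]
    for d in range(1, order + 1):
        for c in itertools.combinations_with_replacement(range(Z.shape[1]), d):
            cols.append(np.prod(Z[:, list(c)], axis=1))
            names.append("*".join(labels[i] for i in c))
    return np.column_stack(cols), names


def stlsq(Theta, y, threshold, max_iter=10):
    """Sequential thresholded least squares for a single output column."""
    xi = np.linalg.lstsq(Theta, y, rcond=None)[0]
    for _ in range(max_iter):
        big = np.abs(xi) >= threshold
        xi[~big] = 0.0
        if not big.any():
            break
        xi[big] = np.linalg.lstsq(Theta[:, big], y, rcond=None)[0]
    return xi


# Hypothetical forced system (not from the article), driven by a random input.
rng = np.random.default_rng(1)
u = rng.uniform(-1.0, 1.0, 2000)
x = np.zeros(len(u) + 1)
for k in range(len(u)):
    x[k + 1] = 0.8 * x[k] - 0.2 * x[k] ** 3 + 0.5 * u[k]

# The input u_k is treated as one more library variable next to the state x_k.
Theta, names = poly_library(np.column_stack([x[:-1], u]), 3, ("x", "u"))
xi = stlsq(Theta, x[1:], threshold=0.05)
print({n: round(float(c), 4) for n, c in zip(names, xi) if c != 0})
```

Since the input appears in the library, cross terms such as $x u$ would be picked up just as readily if the true dynamics contained them.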

Discussion

In summary, we have demonstrated a powerful technique to identify nonlinear dynamical systems from data without assumptions on the form of the governing equations beyond sparsity in a suitable function basis. This builds on prior work in symbolic regression but with innovations related to sparse regression, which allow our algorithms to scale to high-dimensional systems. We demonstrate this method on a number of example systems exhibiting chaos, high-dimensional data with low-rank coherence, and parameterized dynamics. As shown in the Lorenz example, the ability to predict a specific trajectory may be less important than the ability to capture the attractor dynamics. The example from fluid dynamics highlights the remarkable ability of this method to extract dynamics in a fluid system that took three decades for experts in the community to explain. There are numerous fields where this method may be applied, where there are ample data and the absence of governing equations, including neuroscience, climate science, epidemiology, and financial markets. Finally, normal forms may be discovered by including parameters in the optimization, as shown in two examples. The identification of sparse governing equations and parameterizations marks a significant step toward the long-held goal of intelligent, unassisted identification of dynamical systems.

We have demonstrated the robustness of the sparse dynamics algorithm to measurement noise and unavailability of derivative measurements in the Lorenz system (SI Appendix, Figs. S6 and S7), logistic map (SI Appendix, section 4.4.1), and Hopf normal form (SI Appendix, section 4.4.2) examples. In each case, the sparse regression framework appears well-suited to measurement and process noise, especially when derivatives are smoothed using the total-variation regularized derivative.

A significant outstanding issue in the above approach is the correct choice of measurement coordinates and the choice of sparsifying function basis for the dynamics. As shown in SI Appendix, Appendix B, the algorithm fails to identify an accurate sparse model when the measurement coordinates and function basis are not amenable to sparse representation. In the successful examples, the coordinates and function spaces were somehow fortunate in that they enabled sparse representation. There is no simple solution to this challenge, and there must be a coordinated effort to incorporate expert knowledge, feature extraction, and other advanced methods. However, in practice, there may be some hope of obtaining the correct coordinate system and function basis without knowing the solution ahead of time, because we often know something about the physics that guides the choice of function space. The failure to identify an accurate sparse model also provides valuable diagnostic information about the coordinates and basis. If we have few measurements, these may be augmented using time-delay coordinates, as demonstrated on the Lorenz system (SI Appendix, section 4.5). When there are too many measurements, we may extract coherent structures using dimensionality reduction. We also demonstrate the use of polynomial bases to approximate Taylor series of nonlinear dynamics (SI Appendix, Appendix A). The connection between sparse optimization and dynamical systems will hopefully spur developments to automate and improve these choices.

Data science is not a panacea for all problems in science and engineering, but used in the right way, it provides a principled approach to maximally leverage the data that we have and inform what new data to collect. Big data are now common across the sciences, where the data are inherently dynamic, and where traditional approaches are prone to overfitting. Data discovery algorithms that produce parsimonious models are both rare and desirable. Data science will only become more critical to efforts in science and engineering, such as understanding the neural basis of cognition, extracting and predicting coherent changes in the climate, stabilizing financial markets, managing the spread of disease, and controlling turbulence, where data are abundant, but physical laws remain elusive.


Acknowledgments

We are grateful for discussions with Bing Brunton and Bernd Noack, and for insightful comments from the referees. We also thank Tony Roberts and Jean-Christophe Loiseau. S.L.B. acknowledges support from the University of Washington. J.L.P. thanks Bill and Melinda Gates for their support of the Institute for Disease Modeling and their sponsorship through the Global Good Fund. J.N.K. acknowledges support from the US Air Force Office of Scientific Research (FA9550-09-0174).

Footnotes

The authors declare no conflict of interest.

*Code is available at faculty.washington.edu/sbrunton/sparsedynamics.zip.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1517384113/-/DCSupplemental.

References

- 1.Jordan MI, Mitchell TM. Machine learning: Trends, perspectives, and prospects. Science. 2015;349(6245):255–260. doi: 10.1126/science.aaa8415. [DOI] [PubMed] [Google Scholar]

- 2.Marx V. Biology: The big challenges of big data. Nature. 2013;498(7453):255–260. doi: 10.1038/498255a. [DOI] [PubMed] [Google Scholar]

- 3.Bongard J, Lipson H. Automated reverse engineering of nonlinear dynamical systems. Proc Natl Acad Sci USA. 2007;104(24):9943–9948. doi: 10.1073/pnas.0609476104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Schmidt M, Lipson H. Distilling free-form natural laws from experimental data. Science. 2009;324(5923):81–85. doi: 10.1126/science.1165893. [DOI] [PubMed] [Google Scholar]

- 5.Koza JR. Genetic Programming: On the Programming of Computers by Means of Natural Selection. Vol 1 MIT Press; Cambridge, MA: 1992. [Google Scholar]

- 6.Crutchfield JP, McNamara BS. Equations of motion from a data series. Complex Syst. 1987;1(3):417–452. [Google Scholar]

- 7.Kevrekidis IG, et al. Equation-free, coarse-grained multiscale computation: Enabling microscopic simulators to perform system-level analysis. Commun Math Sci. 2003;1(4):715–762. [Google Scholar]

- 8.Sugihara G, et al. Detecting causality in complex ecosystems. Science. 2012;338(6106):496–500. doi: 10.1126/science.1227079. [DOI] [PubMed] [Google Scholar]

- 9. Ye H, et al. Equation-free mechanistic ecosystem forecasting using empirical dynamic modeling. Proc Natl Acad Sci USA. 2015;112(13):E1569–E1576. doi: 10.1073/pnas.1417063112.

- 10. Roberts AJ. Model Emergent Dynamics in Complex Systems. SIAM; Philadelphia: 2014.

- 11. Schmidt MD, et al. Automated refinement and inference of analytical models for metabolic networks. Phys Biol. 2011;8(5):055011. doi: 10.1088/1478-3975/8/5/055011.

- 12. Daniels BC, Nemenman I. Automated adaptive inference of phenomenological dynamical models. Nat Commun. 2015;6:8133. doi: 10.1038/ncomms9133.

- 13. Daniels BC, Nemenman I. Efficient inference of parsimonious phenomenological models of cellular dynamics using S-systems and alternating regression. PLoS One. 2015;10(3):e0119821. doi: 10.1371/journal.pone.0119821.

- 14. Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc Series B. 1996;58(1):267–288.

- 15. Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning. 2nd Ed. Springer; New York: 2009.

- 16. James G, Witten D, Hastie T, Tibshirani R. An Introduction to Statistical Learning. Springer; New York: 2013.

- 17. Donoho DL. Compressed sensing. IEEE Trans Inf Theory. 2006;52(4):1289–1306.

- 18. Candès EJ, Romberg J, Tao T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans Inf Theory. 2006;52(2):489–509.

- 19. Candès EJ, Romberg J, Tao T. Stable signal recovery from incomplete and inaccurate measurements. Commun Pure Appl Math. 2006;59(8):1207–1223.

- 20. Candès EJ, Wakin MB. An introduction to compressive sampling. IEEE Signal Process Mag. 2008;25(2):21–30.

- 21. Baraniuk RG. Compressive sensing. IEEE Signal Process Mag. 2007;24(4):118–120.

- 22. Tropp JA, Gilbert AC. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans Inf Theory. 2007;53(12):4655–4666.

- 23. Wang WX, Yang R, Lai YC, Kovanis V, Grebogi C. Predicting catastrophes in nonlinear dynamical systems by compressive sensing. Phys Rev Lett. 2011;106(15):154101. doi: 10.1103/PhysRevLett.106.154101.

- 24. Schaeffer H, Caflisch R, Hauck CD, Osher S. Sparse dynamics for partial differential equations. Proc Natl Acad Sci USA. 2013;110(17):6634–6639. doi: 10.1073/pnas.1302752110.

- 25. Ozoliņš V, Lai R, Caflisch R, Osher S. Compressed modes for variational problems in mathematics and physics. Proc Natl Acad Sci USA. 2013;110(46):18368–18373. doi: 10.1073/pnas.1318679110.

- 26. Mackey A, Schaeffer H, Osher S. On the compressive spectral method. Multiscale Model Simul. 2014;12(4):1800–1827.

- 27. Brunton SL, Tu JH, Bright I, Kutz JN. Compressive sensing and low-rank libraries for classification of bifurcation regimes in nonlinear dynamical systems. SIAM J Appl Dyn Syst. 2014;13(4):1716–1732.

- 28. Proctor JL, Brunton SL, Brunton BW, Kutz JN. Exploiting sparsity and equation-free architectures in complex systems (invited review). Eur Phys J Spec Top. 2014;223:2665–2684.

- 29. Bai Z, et al. Low-dimensional approach for reconstruction of airfoil data via compressive sensing. AIAA J. 2014;53(4):920–930.

- 30. Arnaldo I, O’Reilly UM, Veeramachaneni K. Building predictive models via feature synthesis. Proceedings of the 2015 Annual Conference on Genetic and Evolutionary Computation. ACM; New York: 2015. pp 983–990.

- 31. Guckenheimer J, Holmes P. Nonlinear Oscillations, Dynamical Systems, and Bifurcations of Vector Fields. Applied Mathematical Sciences, Vol 42. Springer; Berlin: 1983.

- 32. Rudin LI, Osher S, Fatemi E. Nonlinear total variation based noise removal algorithms. Physica D. 1992;60(1):259–268.

- 33. Chartrand R. Numerical differentiation of noisy, nonsmooth data. ISRN Appl Math. 2011;2011:164564.

- 34. Gavish M, Donoho DL. The optimal hard threshold for singular values is 4/√3. IEEE Trans Inf Theory. 2014;60(8):5040–5053.

- 35. Holmes P, Lumley JL, Berkooz G, Rowley CW. Turbulence, Coherent Structures, Dynamical Systems and Symmetry. Cambridge Monographs on Mechanics. 2nd Ed. Cambridge Univ Press; Cambridge, UK: 2012.

- 36. Majda AJ, Harlim J. Information flow between subspaces of complex dynamical systems. Proc Natl Acad Sci USA. 2007;104(23):9558–9563.

- 37. Berkooz G, Holmes P, Lumley JL. The proper orthogonal decomposition in the analysis of turbulent flows. Annu Rev Fluid Mech. 1993;25:539–575.

- 38. Takens F. Detecting strange attractors in turbulence. Lect Notes Math. 1981;898:366–381.

- 39. Marsden JE, Ratiu TS. Introduction to Mechanics and Symmetry. 2nd Ed. Springer; New York: 1999.

- 40. Lorenz EN. Deterministic nonperiodic flow. J Atmos Sci. 1963;20:130–141.

- 41. Rowley CW, Mezić I, Bagheri S, Schlatter P, Henningson D. Spectral analysis of nonlinear flows. J Fluid Mech. 2009;641:115–127.

- 42. Schmid PJ. Dynamic mode decomposition of numerical and experimental data. J Fluid Mech. 2010;656:5–28.

- 43. Mezić I. Analysis of fluid flows via spectral properties of the Koopman operator. Annu Rev Fluid Mech. 2013;45:357–378.

- 44. Taira K, Colonius T. The immersed boundary method: A projection approach. J Comput Phys. 2007;225(2):2118–2137.

- 45. Colonius T, Taira K. A fast immersed boundary method using a nullspace approach and multi-domain far-field boundary conditions. Comput Methods Appl Mech Eng. 2008;197:2131–2146.

- 46. Ruelle D, Takens F. On the nature of turbulence. Commun Math Phys. 1971;20:167–192.

- 47. Jackson CP. A finite-element study of the onset of vortex shedding in flow past variously shaped bodies. J Fluid Mech. 1987;182:23–45.

- 48. Zebib A. Stability of viscous flow past a circular cylinder. J Eng Math. 1987;21:155–165.

- 49. Noack BR, Afanasiev K, Morzynski M, Tadmor G, Thiele F. A hierarchy of low-dimensional models for the transient and post-transient cylinder wake. J Fluid Mech. 2003;497:335–363.

- 50. Majda AJ, Franzke C, Crommelin D. Normal forms for reduced stochastic climate models. Proc Natl Acad Sci USA. 2009;106(10):3649–3653. doi: 10.1073/pnas.0900173106.

- 51. Marsden JE, McCracken M. The Hopf Bifurcation and Its Applications. Vol 19. Springer; New York: 1976.
