Significance

Our ability to learn how to avoid harm is critical for maintaining physical and mental health. However, excessive harm avoidance can be maladaptive, as evident in psychiatric disorders such as avoidant personality disorder and obsessive-compulsive disorder. We therefore investigated the neural factors underlying individual imbalances in harm avoidance behavior. Our findings show that such imbalances can be predicted by the function and structure of an individual’s striatum, a brain region that is critical for goal-directed decision-making. Moreover, the neural signals expressed in this region revealed key processes through which individuals learn to avoid harm. These findings highlight a neural basis for imbalanced harm avoidance behavior, extreme forms of which may contribute to psychiatric pathology.

Keywords: avoidance learning, pain, individual differences, striatum, prediction errors

Abstract

Pain is an elemental inducer of avoidance. Here, we demonstrate that people differ in how they learn to avoid pain, with some individuals refraining from actions that resulted in painful outcomes and others favoring actions that helped prevent pain. These individual biases were best explained by differences in learning from outcome prediction errors and were associated with distinct forms of striatal responses to painful outcomes. Specifically, striatal responses to pain were modulated in a manner consistent with an aversive prediction error in individuals who learned predominantly from pain, whereas in individuals who learned predominantly from success in preventing pain, modulation was consistent with an appetitive prediction error. In contrast, striatal responses to success in preventing pain were consistent with an appetitive prediction error in both groups. Furthermore, variation in striatal structure, encompassing the region where pain prediction errors were expressed, predicted participants’ predominant mode of learning, suggesting the observed learning biases may reflect stable individual traits. These results reveal functional and structural neural components underlying individual differences in avoidance learning, which may be important contributors to psychiatric disorders involving pathological harm avoidance behavior.

Pain conveys vital feedback on our actions, informing us whether an action compromises our safety and should be avoided. However, learning what to avoid doing, rather than what to do, could lead to maladaptive passive risk-avoidant behavior. For instance, when learning to ski, an overreaction to a painful fall could render a person overly cautious and hinder progress in skill acquisition. Likewise, failed investments might lead to an overconservative passive financial strategy, whereas social rejection might engender reclusive behavior. In some individuals, such as those with avoidant personality disorders, refraining too much from potentially harmful actions can manifest as a stable personality trait (1).

However, an opposite tendency, to learn predominantly from successful actions that helped avoid harm, might lead to maladaptive active behavior. Thus, in soccer, sporadic success in preventing goals by diving to the left or right before seeing where a penalty kick is heading is sufficient for goalkeepers to overwhelmingly prefer this suboptimal active strategy, when in fact the optimal strategy is to passively stay put (2). In the extreme, excessive repetitive activity so as to avoid harm may constitute compulsivity, a debilitating feature of obsessive-compulsive disorder (3).

Complementing previous studies of learning about abstract outcomes (4, 5), we investigated individual biases in learning to avoid pain. To this end, we used a novel gambling task that probes how participants adjust their choices in response to painful electrical shocks and, additionally, how they adjust their choices in response to success in preventing shocks. In line with studies of reward learning (6–9), learning in our task was best explained as driven by an outcome prediction error that reflects the difference between expected and actual outcomes. Consistent with the expression of such a teaching signal, blood-oxygen level-dependent (BOLD) responses to outcomes in the striatum were modulated by expectation. However, striatal responses to shocks were qualitatively different in negative learners (i.e., those who predominantly learned from shocks) compared with positive learners (i.e., those who predominantly learned from success in avoiding shocks). Specifically, striatal activity was consistent with an aversive prediction error signal in negative learners and with an appetitive prediction error signal in positive learners. The degree to which a participant tended to learn from success in avoiding shocks rather than from experiencing shocks was predicted by the structure of the participant’s striatum, specifically by higher gray matter density where the response to shocks was consistent with a prediction error signal.

Results

Individual Biases in Learning from Pain and Its Prevention.

To test for individual differences in learning to avoid pain, we asked 41 participants to play a card game in which their goal was to minimize the number of painful electrical shocks they might receive. Participants could avoid shocks by gambling that the number they were about to draw would be higher than the number the computer had drawn. An unsuccessful gamble resulted in a shock and a successful gamble led to its avoidance. Alternatively, participants could always decline the gamble and opt for a fixed, known 50% probability of receiving a shock (Fig. 1A). Importantly, participants played with three different decks of cards and had to learn by trial and error how likely a gamble was to be successful with each deck.

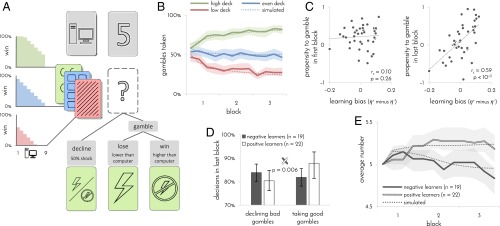

Fig. 1.

Experimental design and learning performance. n = 41 participants. (A) Experimental design. On each trial, participants were presented with one of three possible decks and a number between 1 and 9 drawn by the computer. If participants decided to gamble, a shock was delivered only if the number that they drew was lower than the computer’s number. Participants were only informed whether they won or lost the gamble, not which number they drew. Participants had to learn by trial and error how likely gambles were to succeed with each of the three decks. One deck contained a uniform distribution of numbers between 1 and 9 (even deck), one deck contained more 1’s (low deck), making gambles 30% less likely to succeed, and one deck contained more 9’s (high deck), making gambles 30% more likely to succeed. Opting to decline the gamble resulted in a 50% probability of shock regardless of which numbers were drawn by the computer. (B) Gambles taken with each deck as a function of time. Percentages were computed separately for each set of 15 contiguous trials (4 sets/60 trials per block). (C) Participants’ propensity to gamble in first (Left) and last (Right) blocks of trials as a function of learning bias. Propensity to gamble was computed by regressing out the effects of deck and computer’s number on participant’s choices using logistic regression. The numbers 1 and –1 correspond to always and never gamble, respectively. Learning bias was inferred from a participant’s choices using the learning model. (D) Proportion of bad gambles that were declined and good gambles that were taken in last block of trials as a function of learning bias. Participants with a positive learning bias (positive learners) declined fewer bad gambles and took more good gambles than participants with a negative learning bias (negative learners). Gambles were defined as good or bad based on probability of winning (good: >50%; bad <50%). Error bars: 95% bootstrap CI. 
(E) Average number drawn by computer as a function of time and learning bias. To maintain participants at a 50% gambling rate, numbers increased for positive learners and decreased for negative learners. In B and E, dotted line indicates simulated task performance of learning model. Shaded areas: 95% bootstrap CI.
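The definition of good and bad gambles in the legend can be made concrete for the even deck, which is the only deck whose composition is fully specified in the text. The following is a minimal sketch, assuming a single card drawn uniformly from 1 to 9 and a shock only when the participant's number is lower than the computer's (so a tie counts as a win); the function names are hypothetical and are not taken from the study's code.

```python
from fractions import Fraction


def p_win_even_deck(computer_number: int) -> Fraction:
    """Probability that a gamble succeeds with the even deck.

    Assumption: one card is drawn uniformly from 1..9, and a shock is
    delivered only if the drawn number is lower than the computer's number.
    """
    if not 1 <= computer_number <= 9:
        raise ValueError("computer_number must be between 1 and 9")
    winning_cards = 10 - computer_number  # cards that are >= computer_number
    return Fraction(winning_cards, 9)


def is_good_gamble(p_win: Fraction) -> bool:
    """A gamble is 'good' if it beats the 50% shock risk of declining."""
    return p_win > Fraction(1, 2)


for c in range(1, 10):
    p = p_win_even_deck(c)
    print(c, round(float(p), 3), "good" if is_good_gamble(p) else "bad")
```

Under these assumptions, gambles with the even deck are good against computer numbers 1 to 5 and bad against 6 to 9. The low and high decks shift these probabilities, but their exact composition is not given here, so they are left out of the sketch.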

In principle, participants can acquire information about the decks from both successful and unsuccessful gambles. Indeed, we observed from their behavior that as they gained experience with the three decks, their willingness to gamble with each deck differed (Fig. 1B). A more in-depth analysis indicated that they did not learn from the two types of outcomes to the same degree. Thus, the learning algorithm that best explained participants’ choices included two different learning rates, one for learning from shock outcomes (η–) and one for learning from no-shock outcomes (η+; log Bayes factor compared with algorithm with a single learning rate = 27.7). In this algorithm, the two learning rates determine the degree to which the two types of outcomes impact on subsequent expectations of gambling with each of the decks, and these expectations in combination with the numbers drawn by the computer determine whether subsequent gambles are taken or declined. The algorithm also accounts for each participant’s baseline propensity to take gambles. Learning in the favored algorithm is weighted by associability (10, 11), and the choices made for each deck tend to persist (7) (see SI Appendix for details of all learning algorithms and a validation of the model comparison procedure).
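The core idea of separate learning rates for the two outcome types can be illustrated with a simple delta-rule update. This is a deliberately simplified sketch and not the favored algorithm itself: it omits the associability weighting, choice persistence, and baseline gambling propensity described above (see SI Appendix for the actual model). The function name and the coding of expectation as the expected probability of a no-shock outcome are assumptions made for illustration.

```python
def update_expectation(expectation: float, no_shock: bool,
                       eta_plus: float, eta_minus: float) -> float:
    """One delta-rule update of a deck's expected gamble success (0..1).

    Simplification: associability, choice persistence, and baseline
    propensity to gamble from the full model are not included.
    """
    outcome = 1.0 if no_shock else 0.0
    prediction_error = outcome - expectation
    # eta_plus scales learning from no-shock outcomes,
    # eta_minus scales learning from shock outcomes.
    learning_rate = eta_plus if no_shock else eta_minus
    return expectation + learning_rate * prediction_error


v = 0.5
v = update_expectation(v, no_shock=True, eta_plus=0.3, eta_minus=0.1)
v = update_expectation(v, no_shock=False, eta_plus=0.3, eta_minus=0.1)
print(v)
```

With these illustrative values, a success moves the expectation further than a subsequent shock pulls it back, which is the asymmetry the two learning rates are meant to capture.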

Further analysis showed that the difference between the two learning rates captured significant interindividual variation (log Bayes factor of algorithm with two learning rates per participant compared with algorithm with one average learning rate per participant and a group parameter for difference between the learning rates = 20.1). On this basis, we next computed each participant’s learning bias as the difference between the two learning rates that best fitted the participant’s choices (η+ minus η–). This bias reflects the degree to which a participant learned what gambles to take (because they resulted in no-shock outcomes) rather than what gambles to avoid (because they resulted in shocks). A positive learning bias (η+ > η–) entails that a propensity to gamble will emerge as the participant is learning from outcomes, whereas a negative learning bias (η+ < η–) should engender a propensity to decline a gamble (9). A comparison of participants’ raw propensity to gamble at the beginning and end of the experiment confirmed this expectation (Fig. 1C). Consequently, by the end of the experiment, participants with a positive learning bias came to take more good gambles, but also declined fewer bad gambles, than participants with a negative learning bias (Fig. 1D).
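Why a learning bias should translate into a propensity to gamble or decline can be seen in a simplified setting. The sketch below assumes a plain delta rule with two learning rates (without the associability and persistence terms of the full model) and iterates the expected update for a gamble that succeeds with a fixed probability. Because the update is linear in the expectation, its long-run average settles at p·η+ / (p·η+ + (1 − p)·η–), which exceeds the true success probability when η+ > η– and falls below it when η+ < η–. The function name and parameter values are hypothetical.

```python
def expected_equilibrium(eta_plus: float, eta_minus: float,
                         p_success: float = 0.5, n_steps: int = 2000) -> float:
    """Long-run average expectation under an asymmetric delta rule.

    Assumption: expectation v is the believed probability of no shock;
    successes pull v toward 1 at rate eta_plus, shocks pull v toward 0
    at rate eta_minus.
    """
    v = 0.5
    for _ in range(n_steps):
        gain = p_success * eta_plus * (1.0 - v)        # average pull toward 1
        loss = (1.0 - p_success) * eta_minus * v       # average pull toward 0
        v += gain - loss
    return v


print(expected_equilibrium(0.3, 0.1))  # positive bias: overestimates a 50% gamble
print(expected_equilibrium(0.1, 0.3))  # negative bias: underestimates it
print(expected_equilibrium(0.2, 0.2))  # no bias: settles at the true 50%
```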

To ensure that all participants nevertheless gambled at a similar rate and, thus, received roughly equal amounts of information about the decks, we increased or decreased the numbers the computer drew according to each participant’s own gambling rate, while ensuring that the three decks were always matched against similar computer-drawn numbers. Thus, because of their propensity to gamble, positive learners ended up playing against high numbers, whereas negative learners ended up playing against low numbers [Fig. 1E; mean difference 0.36, confidence interval (CI) 0.15–0.6, P = 0.001, bootstrap test; as a result, positive learners received a slightly higher number of shocks (mean 74.5, CI 72.7–76.5) than negative learners (mean 72.0, CI 70.3–73.3)]. In sum, participants who learned more from painful outcomes developed a propensity to avoid gambling, whereas participants who learned more from success in preventing pain developed a propensity to gamble.

Striatal Response to Pain Underlies Learning Bias.

Behaviorally, learning in our task was best explained as driven by outcome prediction errors (SI Appendix, Figs. S1 and S2). Such prediction errors are known to be expressed in the striatum (7–9, 12, 13), and, thus, we focused on examining participants’ striatal activity in response to task outcomes. BOLD responses in the striatum (and elsewhere) were overwhelmingly stronger in response to shock than to no-shock outcomes (SI Appendix, Fig. S3), likely reflecting sensory and affective processing of the shocks. Thus, to specifically test for prediction error signals, we examined the response to each type of outcome (shock and no-shock) separately, testing whether these responses were modulated by expectation.

We found that BOLD responses to no-shock outcomes in the head of the caudate nucleus were negatively modulated by expectation (derived by using the learning algorithm that best fitted participants’ behavior; Fig. 2A), consistent with an appetitive prediction error. This signal was not explained away by the effect of associability [P < 0.05, familywise error (FWE) corrected]. In contrast, when accounting for prediction errors, we did not find evidence for an associability signal in the striatum or elsewhere (P > 0.05 FWE corrected). Moreover, when decomposing the prediction error (PE) signal into the two components that affect a participant’s expectation, the one reflecting previous experience with the current deck and the other reflecting the number drawn by the computer, we found a significant effect within the identified striatal region in response to both PE components {Fig. 2B; peak Montreal Neurological Institute (MNI) coordinates: deck expectation [−10 6 6], t40 = –4.0, P = 0.04; number [−8 6 2], t40 = 4.2, P = 0.03; P values are FWE corrected across the striatum}.

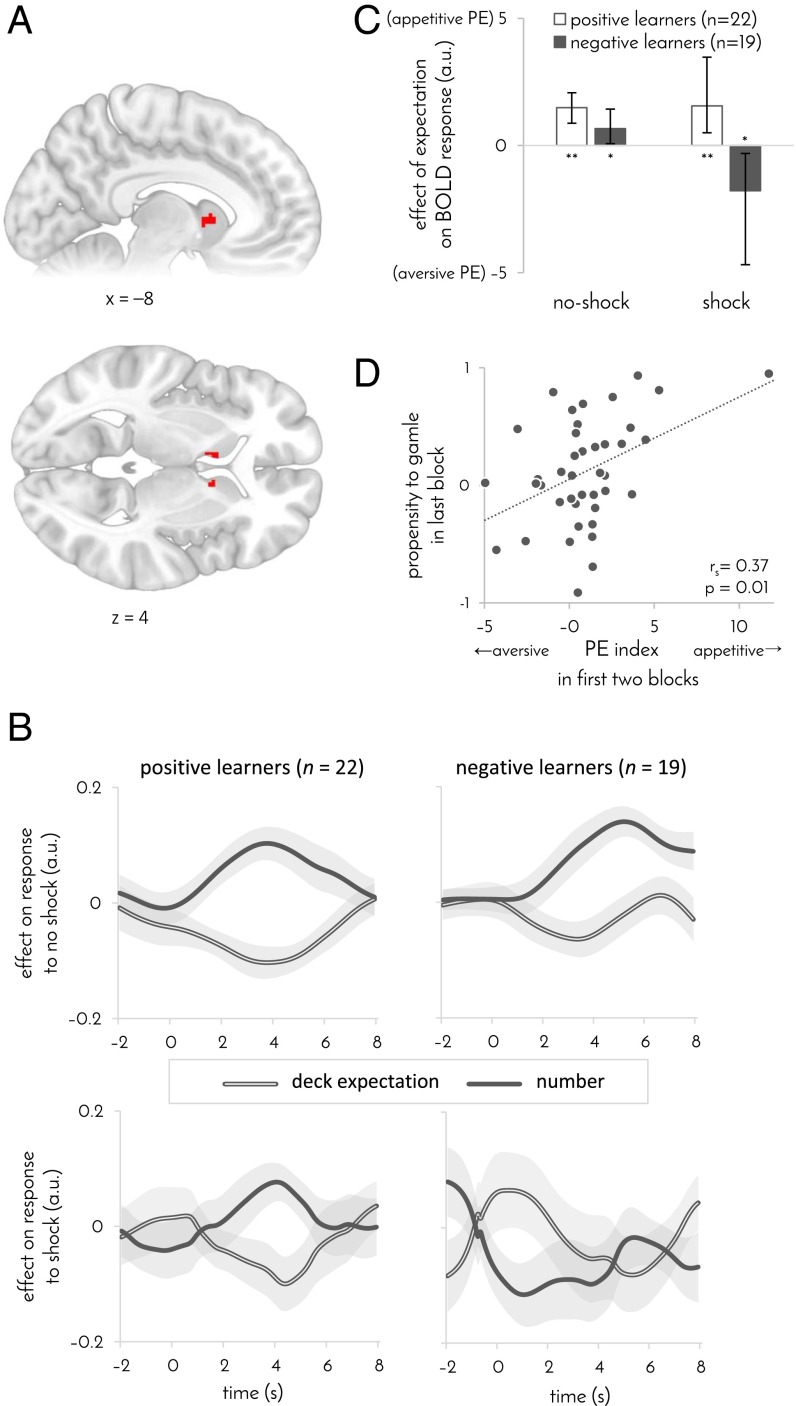

Fig. 2.

PE signaling in the striatum. n = 41 participants. (A) Striatal area where BOLD response to no-shock outcomes was modulated by expectations. Map is masked and FWE corrected for volume of striatum (P < 0.05, GLM). Extent: 22 voxels. Peak MNI coordinates: [–6 10 2] t40 = 4.5, corrected P = 0.01; [10 8 4] t40 = 4.7, corrected P = 0.007. x and z denote Montreal Neurological Institute (MNI) coordinates. (B) The two components of the prediction error. Effects of deck-based expectation and the number drawn by the computer on BOLD response to shock (Bottom) and no shock (Top) outcomes in the striatal ROI identified in A. Results are shown separately for positive (Left) and negative (Right) learners. A higher deck expectation and a lower number indicate lower expectation of a shock, and thus, an appetitive PE is consistent with a rise in the effect of number and a dip in the effect of deck expectation on the response to the outcomes. The converse pattern is consistent with an aversive PE. Time 0 indicates outcome onset. Shaded area: SEM. (C) Effect of expectations on BOLD response to no-shock and shock outcomes as a function of learning bias. Positive values indicate an effect that is consistent with an appetitive PE. *P < 0.05, **P < 0.005, error bars: 95% bootstrap CI. (D) Propensity to gamble in the last task block as a function of a participant’s PE index, computed as the average effect of expectation on striatal response across both types of outcomes. An appetitive PE index predicted subsequent propensity to gamble (computed as in Fig. 1C). GLM coefficients in C and D were taken from the striatal area identified in A.

Whereas responses to no-shock outcomes were similarly modulated by expectations in positive and negative learners, the response to shock outcomes was qualitatively different in the two groups. No prediction error signal was evident in striatal responses to shocks across both groups of participants (P > 0.05, FWE small-volume corrected). However, examining the two groups separately revealed that the BOLD response to shocks was positively modulated by expectation in negative learners and negatively modulated by expectation in positive learners (Fig. 2C; responses measured in the same area where the response to no-shock outcomes was modulated by expectations; correlation between learning bias and average general linear model (GLM) coefficient: r = 0.4, P = 0.005, permutation test; partial correlation accounting for number of shocks received: ρs = 0.38, P = 0.007, permutation test; a similar difference between positive and negative learners was evident in the caudate in a voxel-based analysis across the striatum, with peak MNI coordinates [−10 −2 18], P = 0.03 small-volume corrected, permutation test, as well as when averaging across the whole caudate, P = 0.05, Bonferroni corrected for the three subregions of the striatum). In other words, in response to shocks, striatal activity in negative learners was consistent with an aversively signed prediction error, as seen in previous studies of pain prediction (14, 15). By contrast, in positive learners, activity was consistent with an appetitively signed prediction error, as in previous work on pain-relief prediction (16). Importantly, this difference between the groups was not associated with a difference in the mean response to shock compared with no-shock outcomes (P = 0.60, bootstrap test, GLM; SI Appendix, Fig. S3 C and D). These results suggest that, across both types of outcomes, positive learners express an appetitively signed prediction error, whereas negative learners express the absolute value of the prediction error.

If striatal prediction errors indeed drive avoidance learning, then the modulation of striatal responses to outcomes by expectation should predict whether a participant subsequently developed a propensity to gamble. Modulation consistent with an appetitive prediction error (signaling which gambles should be taken) should lead to a progressively increasing propensity to gamble, whereas modulation consistent with an aversive prediction error (signaling which gambles should be declined) should lead to an increasing propensity to decline. In agreement with this prediction, the degree to which striatal activity was consistent with an appetitive (rather than aversive) prediction error in the first two experimental blocks correlated with participants’ propensity to gamble in the last block, even when controlling for striatal activity in the last block (partial rs = 0.37, P = 0.01, permutation test). By contrast, the propensity to gamble in the first block was not correlated with the striatal prediction error signaling in the second and third block (Fig. 2C; partial rs = 0.05, P = 0.37, permutation test, controlling for the prediction error difference in the first block). Thus, we can conclude that striatal responses to outcomes were predictive of whether a participant would subsequently develop a propensity to accept or decline gambles.
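The partial rank correlations with permutation tests reported above can be sketched in a few lines. This is an illustrative implementation under stated assumptions, not the analysis code used in the study: the covariate is removed by linear regression on ranks, the null distribution is built by shuffling one variable, and the P value is one-sided. All function names are hypothetical.

```python
import random


def ranks(values):
    """1-based ranks; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def residuals(y, z):
    """Residuals of y after simple linear regression on z."""
    n = len(y)
    mz, my = sum(z) / n, sum(y) / n
    szz = sum((a - mz) ** 2 for a in z)
    slope = sum((a - mz) * (b - my) for a, b in zip(z, y)) / szz
    return [b - my - slope * (a - mz) for a, b in zip(z, y)]


def partial_spearman(x, y, z):
    """Rank correlation between x and y, controlling for z."""
    rx, ry, rz = ranks(x), ranks(y), ranks(z)
    return pearson(residuals(rx, rz), residuals(ry, rz))


def permutation_p(x, y, z, n_perm=2000, seed=0):
    """One-sided permutation P value (assumed scheme: shuffle x only)."""
    rng = random.Random(seed)
    observed = partial_spearman(x, y, z)
    x_perm = list(x)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(x_perm)
        if partial_spearman(x_perm, y, z) >= observed:
            count += 1
    return observed, (count + 1) / (n_perm + 1)
```

Here `x` would hold, for example, each participant's early striatal prediction error index, `y` the propensity to gamble in the last block, and `z` the covariate being controlled for.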

Striatal Signals Prefigure Learned Neural Responses.

Differences between participants in how they learn should engender not only differences in behavior (i.e., propensity to gamble), but also differences in neural responses to stimuli or contexts about which they are learning (i.e., decks). Such an effect can be expected to be particularly evident for the even deck, because the even deck provided a context where negative learners came to avoid gambling and positive learners came to favor gambling (P = 0.01, permutation test; Fig. 3A). In simple terms, negative learners came to regard the even deck as a low deck, whereas positive learners came to regard it as a high deck. This result, however, is based on the same behavioral data used to estimate participants’ learning biases. Thus, to test the same effect using an alternative independent measure, we examined each participant’s BOLD responses to the three decks.

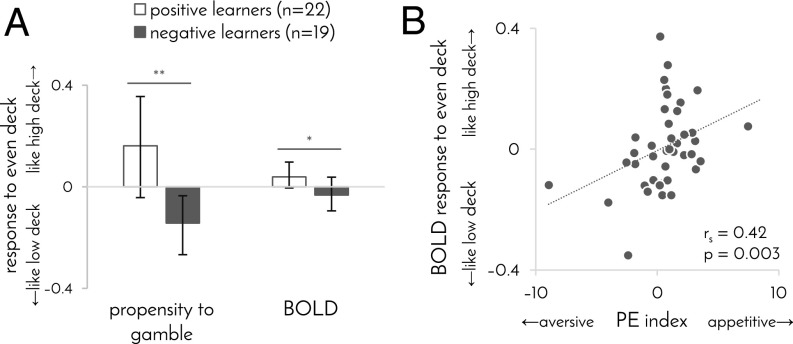

Fig. 3.

Behavioral and neural responses to the even deck. (A) Propensity to gamble and BOLD response to even deck as a function of learning bias. Propensity to gamble was computed as in Fig. 1C but exclusively for the even deck. By contrast, all participants avoided gambling with the low deck (propensity to gamble –0.42, CI –0.54 to –0.30) and favored gambling with the high deck (propensity to gamble 0.72, CI 0.60–0.80). BOLD response of 1 indicates that the response to the even deck was identical to the response to the high deck, whereas a value of –1 indicates that it was identical to the response to the low deck. Similarity of BOLD responses was computed as the Euclidean distance between the two responses’ GLM coefficients across all gray matter. Error bars: 95% bootstrap confidence intervals, *P = 0.05, **P = 0.01, permutation test. (B) BOLD response to even deck, compared with high and low decks, as a function of striatal PE index. PE index taken from Fig. 2D, and BOLD response was computed as in A.

In keeping with the behavioral result, we found that BOLD responses to the even deck were more similar to BOLD responses to the high deck in positive learners and more similar to BOLD responses to the low deck in negative learners (Fig. 3A). Moreover, the differences between participants in their representation of the even deck correlated with the degree to which striatal activity was consistent with an appetitive (rather than aversive) prediction error (Fig. 3B; rs = 0.42, P = 0.003, permutation test). In fact, striatal activity in the first two experimental blocks predicted subsequent BOLD response to the even deck, measured during the last block (partial rs = 0.34, controlling for striatal activity in last block, P = 0.02, permutation test), whereas there was no evidence for the reverse relationship, between the BOLD response to the even deck in the first block and striatal activity in the last two blocks (partial rs = 0.03, controlling for striatal activity in first block, P = 0.42, permutation test). Together, these results suggest that differences in prediction error signaling between positive and negative learners shaped their subsequent neural responses to the stimuli about which participants were learning.
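The Fig. 3 legend describes a BOLD similarity measure that equals 1 when the response to the even deck is identical to the response to the high deck and –1 when it is identical to the response to the low deck, based on Euclidean distances between GLM coefficients. The exact normalization is not stated in the text, so the sketch below uses one formula that satisfies those two endpoints; it should be read as an assumption, and the function names are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length coefficient vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def deck_similarity_index(even, high, low):
    """Index in [-1, 1]: 1 = even deck looks like high, -1 = like low.

    Assumed normalization: (d_low - d_high) / (d_low + d_high).
    """
    d_high = euclidean(even, high)
    d_low = euclidean(even, low)
    if d_high + d_low == 0:
        raise ValueError("all three responses are identical")
    return (d_low - d_high) / (d_low + d_high)


high = [1.0, 2.0, 3.0]
low = [-1.0, 0.0, 1.0]
print(deck_similarity_index(high, high, low))             # identical to high
print(deck_similarity_index(low, high, low))              # identical to low
print(deck_similarity_index([0.0, 1.0, 2.0], high, low))  # halfway between
```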

Learning Bias Is Predicted by the Structure of the Striatum.

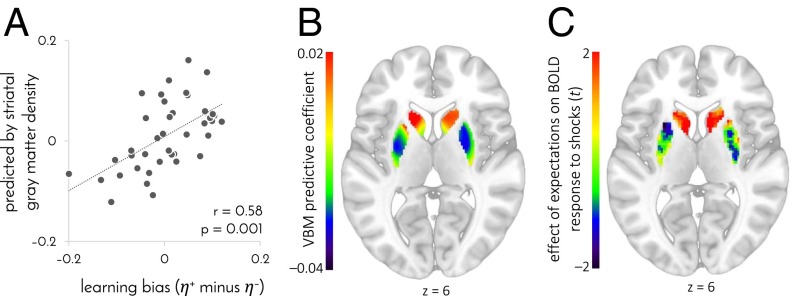

If individual differences in learning and striatal function, evident in our task, reflect stable individual traits, then we might also expect underlying differences in striatal structure. Indeed, a positive learning bias was associated with higher gray matter density in the head of the caudate (extent: 1 voxel, MNI coordinates [–17 23 6], P = 0.03 FWE small-volume corrected), as measured by using voxel-based morphometry (17) from magnetization transfer anatomical images that are particularly suited for measuring subcortical structures (18). To test whether the overall structure of participants’ striatum predicted their learning biases, we used the gray matter density of each participant’s 6,315 striatal voxels to predict each participant’s learning bias, by means of a regularized regression model that reflected the relationship between learning bias and striatal gray matter density in other participants (i.e., using fivefold cross-validation). Learning biases predicted by striatal structure significantly correlated with the actual biases derived from participants’ behavior (Fig. 4A; r = 0.58, P = 0.001, permutation test), with a positive learning bias predicted for 17 of the 22 positive learners, and a negative learning bias predicted for 13 of the 19 negative learners. Furthermore, the predictive relationship between gray matter density and learning bias was mostly negative throughout the putamen and accumbens and mostly positive in the caudate, and thus, it did not involve differences in overall striatal volume (Fig. 4B; mean predictive coefficient 0.0, CI –0.0002 to 0.0001). This predictive spatial pattern was not random, but presented a striking match with the area where BOLD responses to shocks were modulated by expectation (Fig. 4C; regression of individual expectation GLM coefficients on group gray matter-density predictive coefficients: mean 0.44, CI 0.22–0.77, P = 10⁻⁵, bootstrap test). That is, a more positive learning bias was predicted by higher gray matter density where responses to shocks were consistent with an (appetitive or aversive) prediction error signal, and lower gray matter density in areas that showed no such signal.
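The cross-validated prediction scheme described above can be sketched as follows. The text specifies a regularized regression with fivefold cross-validation but not the form of regularization or the penalty strength, so the ridge (L2) penalty and its value here are assumptions, as are the function names. The example is written for a handful of features rather than 6,315 voxels, for which a dedicated numerical library would be more appropriate.

```python
import random


def solve(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (m[r][n] - tail) / m[r][r]
    return w


def fit_ridge(x, y, penalty):
    """Ridge weights and intercept, fit on mean-centered data."""
    n, p = len(x), len(x[0])
    x_mean = [sum(row[j] for row in x) / n for j in range(p)]
    y_mean = sum(y) / n
    xc = [[row[j] - x_mean[j] for j in range(p)] for row in x]
    yc = [v - y_mean for v in y]
    gram = [[sum(r[i] * r[j] for r in xc) + (penalty if i == j else 0.0)
             for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * t for r, t in zip(xc, yc)) for i in range(p)]
    w = solve(gram, xty)
    intercept = y_mean - sum(wj * mj for wj, mj in zip(w, x_mean))
    return w, intercept


def cross_validated_predictions(x, y, penalty=1.0, n_folds=5, seed=0):
    """Predict each participant from a model fit on the other folds only."""
    idx = list(range(len(y)))
    random.Random(seed).shuffle(idx)
    preds = [0.0] * len(y)
    for fold in range(n_folds):
        test = idx[fold::n_folds]
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        w, b = fit_ridge([x[i] for i in train], [y[i] for i in train], penalty)
        for i in test:
            preds[i] = b + sum(wj * xj for wj, xj in zip(w, x[i]))
    return preds
```

The essential property is that each participant's predicted learning bias comes from a model that never saw that participant's data, so a correlation between predicted and actual biases reflects out-of-sample prediction rather than fit.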

Fig. 4.

Striatal gray matter density predicts learning bias. n = 41 participants. (A) Learning bias predicted by gray matter density in the 6,315 voxels of the striatum as a function of the true learning bias inferred from participants’ choices. Learning biases were predicted from gray matter density by using cross-validated regularized linear regression. (B) Gray matter density coefficients used to predict learning bias. To create the map, predictive coefficients were averaged across participants and generalized across the striatum by assigning a fraction of each coefficient to each voxel proportionally to the gray matter-density variance shared between that voxel and the coefficient’s designated voxel. (C) Representation of expectations in the response to shocks. t values were computed by using a group-level GLM that included both negative learners, whose BOLD response was regressed against aversively signed prediction errors, and positive learners, whose BOLD response was regressed against appetitively signed prediction errors. There were no significant differences between positive and negative learners within the striatum (P > 0.5, FWE small-volume corrected). The map is masked for the volume of the striatum and not thresholded. z value in B and C denotes MNI coordinate.

Amygdala Specifically Involved in Learning from Pain.

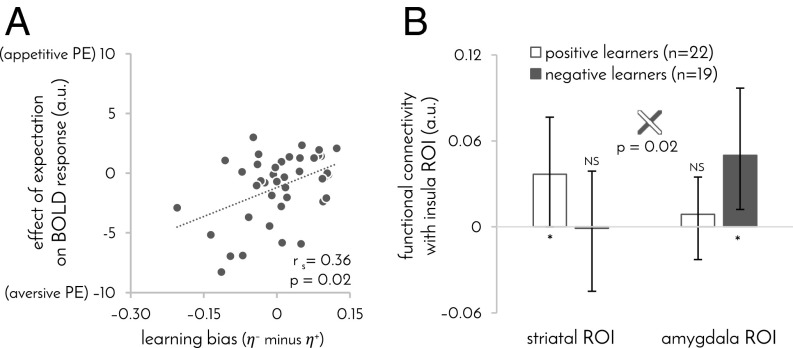

A large body of animal and human work implicates the amygdala and periaqueductal gray (PAG) in learning from aversive outcomes and, in particular, in generating aversive prediction errors (19–24). BOLD responses to outcomes in these areas suggest that both areas were involved in learning in our task, albeit in different ways. Responses to shock and no-shock outcomes in the PAG were modulated by expectations in the same way as in our striatal ROI (SI Appendix, Fig. S3E; although we note that the ability of fMRI to discern PAG signals from neighboring structures is limited; ref. 25). In contrast, the amygdala showed no significant modulation in the response to no-shock outcomes, but its response to shocks was consistent with an aversive prediction error across the whole group (extent: 1 voxel, MNI coordinates [28 –6 –18], t40 = 2.8, P = 0.04 corrected for the volume of the amygdala). This latter effect was particularly pronounced in participants with a negative learning bias (Fig. 5A), mirroring the modulation of striatal and PAG activity in these same participants.

Fig. 5.

Individual learning biases outside of the striatum. (A) Effect of expectation on BOLD response to shocks as a function of learning bias in the amygdala region where this effect was significant (MNI coordinates [28 −6 −18]). Responses were most consistent with an aversive prediction error in participants who mostly learned from shock outcomes. n = 41 participants. (B) Functional connectivity between striatal (Fig. 2A) and amygdala (A) ROIs, and the insula area in which the response to shocks correlated with learning bias (MNI coordinates [46 −32 20]). Error bars: 95% bootstrap CI, *P ≤ 0.05, NS: P > 0.1.

Interestingly, a positive learning bias was associated with a stronger response to shock than to no-shock outcomes in right posterior insula (extent: three voxels, peak MNI coordinates [46 –32 20], P = 0.04 FWE-corrected for all voxels that significantly responded to shocks, shown in SI Appendix, Fig. S3A). The insula is thought to feed information about salient, painful, and aversive events to amygdala and striatum (26–28). Therefore, we next examined functional connectivity between this area and the striatal and amygdala regions in which response to outcomes was modulated by expectation. Positive learners showed significant functional connectivity between the insula and striatal regions, whereas negative learners showed significant functional connectivity between the insula and amygdala regions (correlation of learning bias with difference between striatal and amygdala functional connectivity: rs = 0.42, P = 0.005; Fig. 5B). Taken together, these results are consistent with previous suggestions that the amygdala is exclusively involved in learning from increases in aversive outcomes, whereas the striatum and PAG also partake in learning from decreases in aversive outcomes (21, 23, 29).

Discussion

We demonstrate that the two ways through which one can learn to avoid harm are used to different degrees by different individuals. In negative learners—those who primarily learned from being shocked and, thus, developed a propensity to avoid gambles—dorsal striatal and amygdalar responses to shocks were consistent with an aversive prediction error. In contrast, in positive learners—those who primarily learned from their success in preventing shocks and, thus, developed a propensity to gamble—the dorsal striatum’s response to shocks was consistent with an appetitive prediction error. This difference in striatal responses to outcomes anticipated observed differences in learned behavior, and in the neural responses to stimuli about which participants were learning. Participants’ learning bias was also predicted by the structure of their striatum, indicating that learning biases in our task reflected, at least in part, stable individual traits. Together, the findings reveal neural underpinnings of an elementary behavioral trait that predicts whether an individual learns predominantly what to do to prevent harm or what to avoid doing.

A multitude of animal and human studies implicate the striatum in learning about aversive outcomes (12, 13, 30–34). Striatal areas, including the caudate region identified in our study, have been shown to represent prediction error signals in both classical conditioning (14–16) and instrumental pain avoidance learning (35, 36). However, individual differences in the expression of these prediction error signals have not been studied to our knowledge. The present study was designed to assess the degree to which participants learn what actions have painful outcomes compared with what actions help avoid pain. The latter type of learning, defined in the animal literature as active avoidance learning, is particularly interesting, because it specifies that the very absence of a shock is reinforcing (37). Indeed, our results show that striatal response to outcomes in participants who were biased in favor of active avoidance learning mimicked striatal responses typically seen in studies of reward learning, with no-shock outcomes cast as reward and shock outcomes as reward omission. In contrast, in participants biased in favor of passive avoidance learning (i.e., learning what gambles should be avoided), striatal response to painful outcomes was consistent with an aversive prediction error, as seen in fear conditioning (14).

Thus, our results show that striatal response to pain is consistent with an appetitive prediction error in some individuals and with an aversive prediction error in others. In contrast, striatal responses to successful prevention of pain seem broadly consistent with an appetitive prediction error. Put another way, while some individuals represent an appetitively signed prediction error in response to both types of outcomes, others represent an unsigned prediction error (38) (aversively signed in response to pain and appetitively signed in response to pain prevention). That said, we note that in our experiment, the response to pain was generally stronger than the response to pain prevention, which is inconsistent with a purely appetitive prediction error, although this stronger response could reflect affective and sensory processing of the shocks.

Our findings concur with a view of the striatum as involved in processing both appetitive and aversive outcomes (26, 39). The amygdala, in contrast, was involved in our study solely in processing aversive outcomes, but not their omission. This involvement of the amygdala was most evident in participants who primarily learned from shock outcomes, and underscored by greater functional connectivity with the insula, a region with an established role in processing salient and aversive outcomes (40). These results strongly concur with previous work, indicating that learning from aversive outcomes engages the amygdala, whereas learning from success in avoiding aversive outcomes involves inhibition of the amygdala and activation of the striatum (29, 41–43). That said, we note that animal studies that were able to examine subregions of the striatum and amygdala with greater spatial resolution reveal a more complex picture (44, 45). Finally, whereas functional connectivity between medial prefrontal cortex, amygdala, and striatum has been shown to mediate avoidance learning (43), it did not mediate learning biases in our task, suggesting that such connectivity might be equally involved in learning from increases and decreases in aversive outcomes.

Several reports have linked variations in the structure of the striatum to individual differences in healthy and pathological decision-making behavior (46, 47) and to the expression of certain pain disorders (48, 49). Of particular relevance to the present study is the observation that obsessive-compulsive disorder (OCD), which features compulsive harm-avoidance behavior, is associated with higher gray matter density in the putamen (50). In our study, higher gray matter density in the putamen (and lower gray matter density in the caudate) predicted better learning from shocks and poorer learning from success in avoiding shocks. It is possible that such insensitivity to safety signals might engender excessively persistent harm avoidance behaviors, which in healthy individuals normally terminate when safety is attained. Thus, this finding raises the interesting possibility that failure to adjust to success in harm avoidance may contribute to compulsivity in OCD.

In conclusion, we describe, for the first time to our knowledge, individual biases in learning from actual painful outcomes on the one hand and from their prevention on the other. These biases are associated with qualitative differences in striatal prediction error signaling and are predicted by differences in striatal structure. Further research should reveal how these functional and structural characteristics map onto psychiatric disorders that feature imbalanced harm avoidance behavior.

Materials and Methods

The experimental protocol was approved by the University College London local research ethics committee, and informed consent was obtained from all participants. Electrical stimulation was individually titrated to induce a moderate subjective pain level. Participants performed the experiment while being scanned in a Siemens Trio 3T MRI scanner. See SI Appendix for further details of the experiment, modeling, and functional MRI procedures.

Acknowledgments

This work was funded by Wellcome Trust’s Cambridge-University College London Mental Health and Neurosciences Network Grant 095844/Z/11/Z (to E.E. and R.J.D.), Wellcome Trust Investigator Award 098362/Z/12/Z (to R.J.D.), the Gatsby Charitable Foundation (P.D.), and Swiss National Science Foundation Grant 151641 (to T.U.H.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1519829113/-/DCSupplemental.

References

- 1. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 5th Ed. Am Psychiatr Assoc; Washington, DC: 2013.

- 2. Bar-Eli M, Azar OH, Ritov I, Keidar-Levin Y, Schein G. Action bias among elite soccer goalkeepers: The case of penalty kicks. J Econ Psychol. 2007;28:606–621.

- 3. Abramowitz JS, Taylor S, McKay D. Obsessive-compulsive disorder. Lancet. 2009;374(9688):491–499. doi: 10.1016/S0140-6736(09)60240-3.

- 4. Frank MJ, Seeberger LC, O’Reilly RC. By carrot or by stick: Cognitive reinforcement learning in parkinsonism. Science. 2004;306(5703):1940–1943. doi: 10.1126/science.1102941.

- 5. Frank MJ, Moustafa AA, Haughey HM, Curran T, Hutchison KE. Genetic triple dissociation reveals multiple roles for dopamine in reinforcement learning. Proc Natl Acad Sci USA. 2007;104(41):16311–16316. doi: 10.1073/pnas.0706111104.

- 6. Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442(7106):1042–1045. doi: 10.1038/nature05051.

- 7. Schönberg T, Daw ND, Joel D, O’Doherty JP. Reinforcement learning signals in the human striatum distinguish learners from nonlearners during reward-based decision making. J Neurosci. 2007;27(47):12860–12867. doi: 10.1523/JNEUROSCI.2496-07.2007.

- 8. Hare TA, O’Doherty J, Camerer CF, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J Neurosci. 2008;28(22):5623–5630. doi: 10.1523/JNEUROSCI.1309-08.2008.

- 9. Niv Y, Edlund JA, Dayan P, O’Doherty JP. Neural prediction errors reveal a risk-sensitive reinforcement-learning process in the human brain. J Neurosci. 2012;32(2):551–562. doi: 10.1523/JNEUROSCI.5498-10.2012.

- 10. Li J, Schiller D, Schoenbaum G, Phelps EA, Daw ND. Differential roles of human striatum and amygdala in associative learning. Nat Neurosci. 2011;14(10):1250–1252. doi: 10.1038/nn.2904.

- 11. Boll S, Gamer M, Gluth S, Finsterbusch J, Büchel C. Separate amygdala subregions signal surprise and predictiveness during associative fear learning in humans. Eur J Neurosci. 2013;37(5):758–767. doi: 10.1111/ejn.12094.

- 12. Cohen JY, Haesler S, Vong L, Lowell BB, Uchida N. Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature. 2012;482(7383):85–88. doi: 10.1038/nature10754.

- 13. Delgado MR, Li J, Schiller D, Phelps EA. The role of the striatum in aversive learning and aversive prediction errors. Philos Trans R Soc Lond B Biol Sci. 2008;363(1511):3787–3800. doi: 10.1098/rstb.2008.0161.

- 14. Seymour B, et al. Temporal difference models describe higher-order learning in humans. Nature. 2004;429(6992):664–667. doi: 10.1038/nature02581.

- 15. Schiller D, Levy I, Niv Y, LeDoux JE, Phelps EA. From fear to safety and back: Reversal of fear in the human brain. J Neurosci. 2008;28(45):11517–11525. doi: 10.1523/JNEUROSCI.2265-08.2008.

- 16. Seymour B, et al. Opponent appetitive-aversive neural processes underlie predictive learning of pain relief. Nat Neurosci. 2005;8(9):1234–1240. doi: 10.1038/nn1527.

- 17. Ashburner J, Friston KJ. Voxel-based morphometry--the methods. Neuroimage. 2000;11(6 Pt 1):805–821. doi: 10.1006/nimg.2000.0582.

- 18. Helms G, Draganski B, Frackowiak R, Ashburner J, Weiskopf N. Improved segmentation of deep brain grey matter structures using magnetization transfer (MT) parameter maps. Neuroimage. 2009;47(1):194–198. doi: 10.1016/j.neuroimage.2009.03.053.

- 19. Phillips RG, LeDoux JE. Differential contribution of amygdala and hippocampus to cued and contextual fear conditioning. Behav Neurosci. 1992;106(2):274–285. doi: 10.1037//0735-7044.106.2.274.

- 20. Kim JJ, Rison RA, Fanselow MS. Effects of amygdala, hippocampus, and periaqueductal gray lesions on short- and long-term contextual fear. Behav Neurosci. 1993;107(6):1093–1098. doi: 10.1037//0735-7044.107.6.1093.

- 21. Cole S, McNally GP. Complementary roles for amygdala and periaqueductal gray in temporal-difference fear learning. Learn Mem. 2008;16(1):1–7. doi: 10.1101/lm.1120509.

- 22. Johansen JP, Tarpley JW, LeDoux JE, Blair HT. Neural substrates for expectation-modulated fear learning in the amygdala and periaqueductal gray. Nat Neurosci. 2010;13(8):979–986. doi: 10.1038/nn.2594.

- 23. Yacubian J, et al. Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J Neurosci. 2006;26(37):9530–9537. doi: 10.1523/JNEUROSCI.2915-06.2006.

- 24. Mobbs D, et al. When fear is near: Threat imminence elicits prefrontal-periaqueductal gray shifts in humans. Science. 2007;317(5841):1079–1083. doi: 10.1126/science.1144298.

- 25. Linnman C, Moulton EA, Barmettler G, Becerra L, Borsook D. Neuroimaging of the periaqueductal gray: State of the field. Neuroimage. 2012;60(1):505–522. doi: 10.1016/j.neuroimage.2011.11.095.

- 26. Navratilova E, Porreca F. Reward and motivation in pain and pain relief. Nat Neurosci. 2014;17(10):1304–1312. doi: 10.1038/nn.3811.

- 27. Bushnell MC, Čeko M, Low LA. Cognitive and emotional control of pain and its disruption in chronic pain. Nat Rev Neurosci. 2013;14(7):502–511. doi: 10.1038/nrn3516.

- 28. Leong JK, Pestilli F, Wu CC, Samanez-Larkin GR, Knutson B. White-matter tract connecting anterior insula to nucleus accumbens correlates with reduced preference for positively skewed gambles. Neuron. 2016;89(1):63–69. doi: 10.1016/j.neuron.2015.12.015.

- 29. Rogan MT, Leon KS, Perez DL, Kandel ER. Distinct neural signatures for safety and danger in the amygdala and striatum of the mouse. Neuron. 2005;46(2):309–320. doi: 10.1016/j.neuron.2005.02.017.

- 30. Salamone JD. The involvement of nucleus accumbens dopamine in appetitive and aversive motivation. Behav Brain Res. 1994;61(2):117–133. doi: 10.1016/0166-4328(94)90153-8.

- 31. Pezze MA, Feldon J. Mesolimbic dopaminergic pathways in fear conditioning. Prog Neurobiol. 2004;74(5):301–320. doi: 10.1016/j.pneurobio.2004.09.004.

- 32. McNally GP, Westbrook RF. Predicting danger: The nature, consequences, and neural mechanisms of predictive fear learning. Learn Mem. 2006;13(3):245–253. doi: 10.1101/lm.196606.

- 33. Tom SM, Fox CR, Trepel C, Poldrack RA. The neural basis of loss aversion in decision-making under risk. Science. 2007;315(5811):515–518. doi: 10.1126/science.1134239.

- 34. Bolstad I, et al. Aversive event anticipation affects connectivity between the ventral striatum and the orbitofrontal cortex in an fMRI avoidance task. PLoS One. 2013;8(6):e68494. doi: 10.1371/journal.pone.0068494.

- 35. Seymour B, Daw ND, Roiser JP, Dayan P, Dolan R. Serotonin selectively modulates reward value in human decision-making. J Neurosci. 2012;32(17):5833–5842. doi: 10.1523/JNEUROSCI.0053-12.2012.

- 36. Roy M, et al. Representation of aversive prediction errors in the human periaqueductal gray. Nat Neurosci. 2014;17(11):1607–1612. doi: 10.1038/nn.3832.

- 37. Mowrer OH, Lamoreaux RR. Fear as an intervening variable in avoidance conditioning. J Comp Psychol. 1946;39:29–50. doi: 10.1037/h0060150.

- 38. Bromberg-Martin ES, Matsumoto M, Hikosaka O. Dopamine in motivational control: Rewarding, aversive, and alerting. Neuron. 2010;68(5):815–834. doi: 10.1016/j.neuron.2010.11.022.

- 39. Ramirez F, Moscarello JM, LeDoux JE, Sears RM. Active avoidance requires a serial basal amygdala to nucleus accumbens shell circuit. J Neurosci. 2015;35(8):3470–3477. doi: 10.1523/JNEUROSCI.1331-14.2015.

- 40. Menon V, Uddin LQ. Saliency, switching, attention and control: A network model of insula function. Brain Struct Funct. 2010;214(5-6):655–667. doi: 10.1007/s00429-010-0262-0.

- 41. Moscarello JM, LeDoux JE. Active avoidance learning requires prefrontal suppression of amygdala-mediated defensive reactions. J Neurosci. 2013;33(9):3815–3823. doi: 10.1523/JNEUROSCI.2596-12.2013.

- 42. Choi JS, Cain CK, LeDoux JE. The role of amygdala nuclei in the expression of auditory signaled two-way active avoidance in rats. Learn Mem. 2010;17(3):139–147. doi: 10.1101/lm.1676610.

- 43. Collins KA, Mendelsohn A, Cain CK, Schiller D. Taking action in the face of threat: Neural synchronization predicts adaptive coping. J Neurosci. 2014;34(44):14733–14738. doi: 10.1523/JNEUROSCI.2152-14.2014.

- 44. Badrinarayan A, et al. Aversive stimuli differentially modulate real-time dopamine transmission dynamics within the nucleus accumbens core and shell. J Neurosci. 2012;32(45):15779–15790. doi: 10.1523/JNEUROSCI.3557-12.2012.

- 45. Martinez RC, et al. Active vs. reactive threat responding is associated with differential c-Fos expression in specific regions of amygdala and prefrontal cortex. Learn Mem. 2013;20(8):446–452. doi: 10.1101/lm.031047.113.

- 46. van den Bos W, Rodriguez CA, Schweitzer JB, McClure SM. Connectivity strength of dissociable striatal tracts predict individual differences in temporal discounting. J Neurosci. 2014;34(31):10298–10310. doi: 10.1523/JNEUROSCI.4105-13.2014.

- 47. Kreitzer AC, Malenka RC. Striatal plasticity and basal ganglia circuit function. Neuron. 2008;60(4):543–554. doi: 10.1016/j.neuron.2008.11.005.

- 48. Apkarian AV, Hashmi JA, Baliki MN. Pain and the brain: Specificity and plasticity of the brain in clinical chronic pain. Pain. 2011;152(3) Suppl:S49–S64. doi: 10.1016/j.pain.2010.11.010.

- 49. Schmidt-Wilcke T, et al. Striatal grey matter increase in patients suffering from fibromyalgia--a voxel-based morphometry study. Pain. 2007;132(Suppl 1):S109–S116. doi: 10.1016/j.pain.2007.05.010.

- 50. Rotge JY, et al. Gray matter alterations in obsessive-compulsive disorder: An anatomic likelihood estimation meta-analysis. Neuropsychopharmacology. 2010;35(3):686–691. doi: 10.1038/npp.2009.175.
