Abstract

Biology is the study of dynamical systems. Yet most of us working in biology have limited pedagogical training in the theory of dynamical systems, an unfortunate historical fact that can be remedied for future generations of life scientists. In my particular field of systems neuroscience, neural circuits are rife with nonlinearities at all levels of description, rendering simple methodologies and our own intuition unreliable. Therefore, our ideas are likely to be wrong unless informed by good models. These models should be based on the mathematical theories of dynamical systems since functioning neurons are dynamic—they change their membrane potential and firing rates with time. Thus, selecting the appropriate type of dynamical system upon which to base a model is an important first step in the modeling process. This step all too easily goes awry, in part because there are many frameworks to choose from, in part because the sparsely sampled data can be consistent with a variety of dynamical processes, and in part because each modeler has a preferred modeling approach that is difficult to move away from. This brief review summarizes some of the main dynamical paradigms that can arise in neural circuits, with comments on what they can achieve computationally and what signatures might reveal their presence within empirical data. I provide examples of different dynamical systems using simple circuits of two or three cells, emphasizing that any one connectivity pattern is compatible with multiple, diverse functions.

Keywords: hidden Markov model, point attractors, marginal states, line attractors, continuous attractors, oscillating systems, cyclic attractors, limit cycles, strange attractors, heteroclinics

Introduction

When we try to understand any biological process, our models of the system matter. Our ideas of how a parameter impacts the system or how a variable responds to manipulations of the system determine what questions we attempt to answer and which experiments we perform. These ideas are based on our own mental models, which can be misleading if not founded on the appropriate dynamical principles 1, 2.

In this article, I hope to provide a short introduction to the types of dynamical system that arise in neural circuits. In doing so, I explore why there is a perhaps surprising lack of consensus on the nature of the dynamics within mammalian neural circuits—modelers aiming to explain various cognitive processes commence from seemingly incompatible starting points, such as chaotic systems, oscillators, or sets of point attractor states. I briefly consider hallmarks and support for each paradigm and summarize how—in spite of the tremendous quantity of electrophysiological data—room for debate remains as to which type of dynamical system is best used to model and understand brain function.

Hidden variables within neural data

Neural circuits are nonlinear dynamical systems that, in principle, can be described by coupled differential equations 3. However, the relevant continuous variables necessary for a full description of the behavior of a functioning neural circuit are typically hidden from us. At a minimum, these include the membrane potential of every cell, but recordings of neural activity in behaving vertebrates such as mammals are limited to a small subset of cells. Even if all recorded cells reside in one circuit that we wish to describe, the circuit, which could be distributed or compact, receives inputs from tens of thousands of other neurons, whose activity is unknown. Moreover, even a continuous variable such as membrane potential is most commonly observed only at the discrete times of voltage spikes. Therefore, our descriptions of neural circuits require us to infer the behavior of underlying hidden variables when we observe only a sparse subset of them.

Numerous other properties impact the ongoing behavior of a cell and the circuit as a whole. These may include the number of vesicles of neurotransmitter and their voltage-dependent release probabilities at each synaptic connection or the cell-average states of activation and inactivation of the various ion channels. Calcium concentration and spatial distributions of all these values can also affect neural activity. Such an overwhelming abundance of known unknowns makes a complete description impossible and helps explain why we not only lack a concrete, detailed explanation of the behavior of most neural circuits but do not even know which dynamical system provides the best model of that behavior.

Classes of dynamical system

A dynamical system is any system that changes in time and can be described by a set of coupled differential equations. A pendulum is a simple example, the Hodgkin-Huxley model of a neuron a more complicated one, and the coordinated activity of all neurons in a brain an intractable one. To characterize a dynamical system rigorously, one should know how the rate of change of all relevant variables depends on the combination of their instantaneous values. One then can simulate how they change in time from any initial condition and plot these co-varying variables together as a trajectory. If a small change in initial conditions leads to identical behavior after some transient period, then the system possesses a point attractor state—trajectories converge if their initial difference is not too large. If the system is an oscillator, trajectories converge to a particular loop—a limit cycle—in which differences in initial conditions are maintained over time as a fixed phase offset. If trajectories diverge from each other across a broad range of initial conditions while all variables remain bounded, then the system is chaotic.
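The recipe described above (specify the rate of change of every variable, then integrate forward from an initial condition) can be sketched in a few lines of Python. This is a minimal illustration and is not taken from the article's supporting code. It uses the pendulum mentioned above, with an assumed damping term added so that the resting position is a point attractor, and a simple forward-Euler integrator. The function names and parameter values are illustrative choices.

```python
import math

def simulate(deriv, state, dt, n_steps):
    """Forward-Euler integration of d(state)/dt = deriv(state)."""
    trajectory = [tuple(state)]
    for _ in range(n_steps):
        rates = deriv(state)
        state = [x + dt * dx for x, dx in zip(state, rates)]
        trajectory.append(tuple(state))
    return trajectory

def damped_pendulum(state, damping=0.5):
    """Rate of change of (angle, angular velocity); units and damping are assumed."""
    theta, omega = state
    return [omega, -math.sin(theta) - damping * omega]

# Two nearby initial angles, both released from rest.
traj_a = simulate(damped_pendulum, [1.0, 0.0], dt=0.01, n_steps=6000)
traj_b = simulate(damped_pendulum, [1.2, 0.0], dt=0.01, n_steps=6000)

initial_gap = math.dist(traj_a[0], traj_b[0])
final_gap = math.dist(traj_a[-1], traj_b[-1])
print(f"gap between trajectories: {initial_gap:.3f} -> {final_gap:.2e}")
```

Both trajectories end at the same resting state, which is the signature of a point attractor. Replacing `damped_pendulum` with another derivative function gives the same workflow for any low-dimensional system.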

Here we consider circuits with only two or three neurons to provide examples of many different types of dynamics. In general, a system with hundreds or thousands of neurons—so that we would need a space of hundreds or thousands of dimensions to plot the dynamics as the coordinated set of membrane potentials or firing rates of all neurons—could contain point attractors, limit cycles, and regions of chaos depending on which subsets of cells were more strongly active for one period of time. The richness of such high-dimensional systems and their relevance to cognitive function make them an important area of current study 4– 10.

Point attractors

A point attractor state is equivalent to a stable fixed point of the dynamics, such as the bottom of a bowl with a ball in it. No neural circuit in vivo can strictly be in a point attractor state, as that would require all variables (such as membrane potentials) to be static. However, it may be reasonable to consider a broad average across variables, such as the mean firing rate of a large group of neurons, to be stationary following any initial transient response to a fixed input. Simple systems without feedback operate in such point attractor states if cells have one value of firing rate in the absence of stimulus and, typically following a period of adaptation, shift to a different stable firing rate in the presence of a stimulus ( Figure 1). Neurons in the sensory periphery appear to behave in this manner.

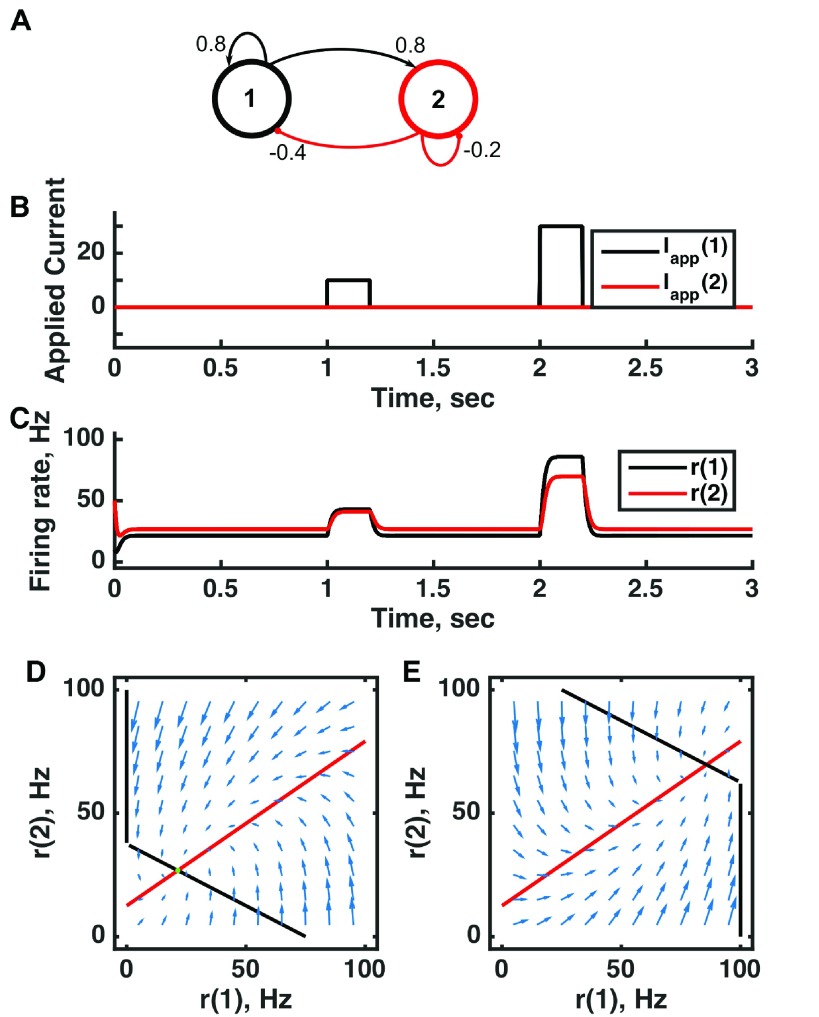

Figure 1. A single point attractor is present without input and a different one with input in a threshold-linear two-unit circuit.

( A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections between units. ( B) Applied current as a function of time. Two different sized pulses of current are applied to unit 1. ( C) Firing rate as a function of time in the coupled network. During each current step, a new attractor is produced, but following current offset the original activity state is reached. ( D) Any particular combination of the firing rates of the two units (x-axis is rate of unit 1, y-axis is rate of unit 2) determines the way those firing rates change in time (arrows). Starting from any pair of firing rates, any trajectory following arrows terminates at the point of intersection of the two lines. Red line: nullcline for unit 2—the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Since unit 1 excites unit 2, the fixed point for r(2) increases with r(1). Black line: nullcline for unit 1—the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). Since unit 2 inhibits neuron 1, the fixed point for r(1) decreases with r(2). The crossing point of the nullclines is a fixed point of the whole system. The fixed point is stable (so is an attractor state) because arrows converge on the fixed point. ( E) As in (D) but the solution during the second pulse of applied current. The applied current shifts the nullcline for r(1) so that the fixed point of the system is at much higher values of r(1) and r(2). For parameters, see supporting Matlab code, “dynamics_two_units.m”.
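The behavior summarized in Figure 1 can be approximated with a short firing-rate simulation. The sketch below is not the supporting Matlab code. It assumes a threshold-linear rate model in which unit 1 excites unit 2 and unit 2 inhibits unit 1, and the connection strengths, time constant, and input current are illustrative values chosen so that the fixed points are easy to check by hand (the nullclines r(1) = I - 0.5 r(2) and r(2) = r(1) cross at r(1) = r(2) = I/1.5).

```python
def rectify(x, r_max=100.0):
    """Threshold-linear response, saturating at r_max (Hz)."""
    return min(max(x, 0.0), r_max)

def run(r1, r2, current_1, duration_ms, dt=0.1, tau=10.0,
        w_12=1.0, w_21=0.5):
    """Integrate both rates with forward Euler and return the final pair.

    w_12: excitation from unit 1 to unit 2 (assumed value).
    w_21: inhibition from unit 2 to unit 1 (assumed value).
    """
    for _ in range(round(duration_ms / dt)):
        target_1 = rectify(current_1 - w_21 * r2)
        target_2 = rectify(w_12 * r1)
        r1, r2 = (r1 + dt * (target_1 - r1) / tau,
                  r2 + dt * (target_2 - r2) / tau)
    return r1, r2

rest = run(5.0, 40.0, current_1=0.0, duration_ms=500)      # arbitrary start, no input
driven = run(*rest, current_1=30.0, duration_ms=500)       # during a current pulse
recovered = run(*driven, current_1=0.0, duration_ms=500)   # after current offset
print(rest, driven, recovered)
```

With these assumed parameters the circuit settles at (0, 0) without input, moves to (20, 20) during the pulse, and returns to (0, 0) afterwards: one point attractor per input condition.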

Variability in the spiking of a neuron—both within a trial and between trials—appears to be at odds with a point attractor description, which suggests a stable, stationary set of firing rates. However, such variability can be attributed to various noise terms that lead to each neuron’s spikes being produced randomly (say, as a Poisson process) with probability that depends on the rate—a hidden variable, which could be static and deterministic—and/or to noise-driven fluctuations in the rate about its stable fixed point. Thus, a point attractor framework is not incompatible with ever-varying neural activity, especially, as we shall discuss below, for systems with many attractor states.
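A brief sketch makes the point concrete: a perfectly static rate still yields variable spike counts when spikes are drawn randomly. The example assumes a Bernoulli approximation to a Poisson process in 1 ms bins, a rate of 20 Hz, and 200 one-second trials. All of these values are illustrative.

```python
import random
from statistics import mean, pvariance

def poisson_spike_count(rate_hz, duration_s, rng, dt=0.001):
    """Count spikes when each small bin emits a spike with probability rate * dt."""
    n_bins = round(duration_s / dt)
    return sum(rng.random() < rate_hz * dt for _ in range(n_bins))

rng = random.Random(0)     # fixed seed so the sketch is reproducible
rate_hz = 20.0             # the hidden variable: identical on every trial (assumed value)
counts = [poisson_spike_count(rate_hz, 1.0, rng) for _ in range(200)]

# Fano factor: variance of the spike count divided by its mean (near 1 for Poisson).
fano_factor = pvariance(counts) / mean(counts)
print(f"counts range from {min(counts)} to {max(counts)}, "
      f"mean {mean(counts):.1f}, Fano factor {fano_factor:.2f}")
```

The trial-to-trial spread in counts arises entirely from the random spike generation, since the underlying rate never changes.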

Multistability and memory

When a system possesses multiple point attractor states in the absence of stimuli, then the history of prior stimuli can determine the neural circuit’s current activity state—the particular attractor in which it resides—so the system can retain memories ( Figure 2). In some of the most important pioneering work in computational and theoretical neuroscience 11– 14, Grossberg and Hopfield demonstrated how such discrete memory states can form via activity-dependent changes in the strength of connections between coactive neurons during stimulus presentation. While the initial analyses of the capacity (number of memories held) of such networks relied on binary neurons that were either on or off and updated in discrete time steps 12, the principle of memory formation and memory retrieval via an imperfect stimulus has been demonstrated in continuous firing rate models in continuous time 14, 15 and in circuits of model spiking neurons 16. These pattern-learning systems, known generically as autoassociative networks, provide great insight into how memories can be distributed across overlapping sets of cells and retrieved from imperfect stimuli via pattern completion to a point attractor state.
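Pattern completion in an autoassociative network can be illustrated with a very small binary network of the kind used in the early capacity analyses. The sketch below is a generic textbook construction, not the specific model of any cited study. It stores two hand-picked orthogonal patterns with a Hebbian outer-product rule and then recalls one of them from a corrupted cue. The patterns, network size, and update schedule are assumptions made for illustration.

```python
def train_hebbian(patterns):
    """Weights from summed outer products of +/-1 patterns, with no self-connections."""
    n = len(patterns[0])
    return [[0.0 if i == j else sum(p[i] * p[j] for p in patterns) / n
             for j in range(n)] for i in range(n)]

def recall(weights, cue, max_sweeps=10):
    """Update units one at a time, in a fixed order, until no unit changes state."""
    state = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i, row in enumerate(weights):
            field = sum(w * s for w, s in zip(row, state))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i], changed = new_value, True
        if not changed:
            break
    return state

# Two orthogonal memories (illustrative patterns).
memory_a = [1, 1, 1, 1, -1, -1, -1, -1]
memory_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hebbian([memory_a, memory_b])

corrupted = [-1] + memory_a[1:]           # imperfect stimulus: first element flipped
completed = recall(weights, corrupted)
print(completed == memory_a)
```

Each stored pattern is a fixed point of the update rule, so a cue that starts close to one of them is pulled back to it.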

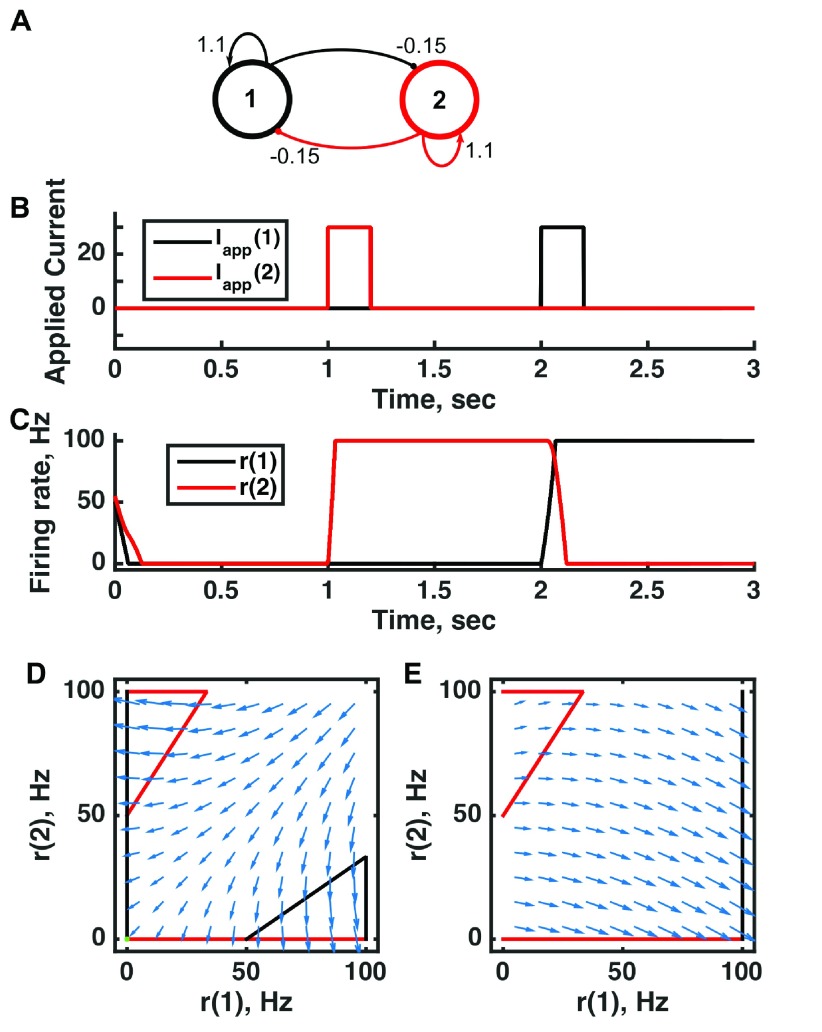

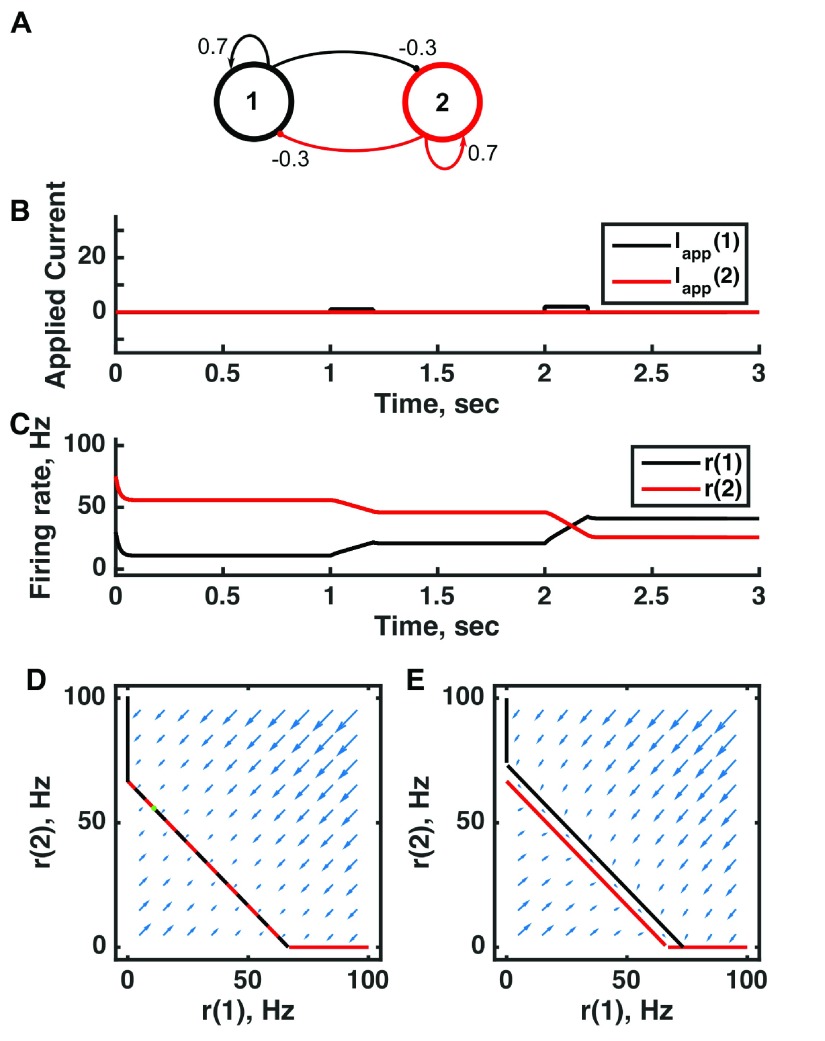

Figure 2. A multistable attractor network can be switched between states to encode distinct memories through persistent activity in a threshold-linear two-unit circuit.

( A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections. The strong self-excitatory feedback renders each unit unstable once active. ( B) Applied current as a function of time. The first pulse is applied to unit 1, the second to unit 2. ( C) Firing rate as a function of time in the coupled network reveals three different activity states: both units inactive or either one active. The activity persists after offset of the applied current (a signature of multistability) and so retains memory of past inputs. ( D) Any particular combination of the firing rates of the two units (x-axis is rate of unit 1, y-axis is rate of unit 2) determines the way those firing rates change in time (arrows). Depending on the initial pair of firing rates, a trajectory following arrows terminates at one of the points of intersection of the two lines, either (0,0) or (100,0) or (0,100). The intersections at the midpoints of the lines are unstable: if activity of unit 1 is under 50 Hz, it decays to 0; if it is over 50 Hz, it will increase to 100 Hz (if unit 2 is inactive). Red line: nullcline for unit 2, the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Since unit 1 inhibits unit 2, the fixed point for r(2) decreases with r(1). Black line: nullcline for unit 1, the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). Since unit 2 inhibits unit 1, the fixed point for r(1) decreases with r(2). The crossing points of the nullclines are fixed points of the whole system. A fixed point is stable (so is an attractor state) where arrows converge on it. ( E) As in ( D) but the solution during the second pulse of applied current. The applied current shifts the nullcline for r(1) so that the only fixed point of the system is at (100,0). For parameters, see supporting Matlab code, “dynamics_two_units.m”.
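The switching between memory states described for Figure 2 can be reproduced qualitatively with a few lines of simulation. The sketch below is not the supporting Matlab code. It assumes two threshold-linear units with self-excitation, mutual inhibition, a firing threshold, and saturation at 100 Hz, with weights and pulse amplitudes chosen for illustration so that the stable states are (0,0), (100,0), and (0,100) and the unstable midpoint is at 50 Hz.

```python
def rectify(x, r_max=100.0):
    """Threshold-linear response, saturating at r_max (Hz)."""
    return min(max(x, 0.0), r_max)

def run(rates, currents, duration_ms, dt=0.1, tau=10.0,
        w_self=2.0, w_cross=2.0, threshold=50.0):
    """Forward-Euler integration; all parameter values are assumptions."""
    r1, r2 = rates
    i1, i2 = currents
    for _ in range(round(duration_ms / dt)):
        target_1 = rectify(w_self * r1 - w_cross * r2 + i1 - threshold)
        target_2 = rectify(w_self * r2 - w_cross * r1 + i2 - threshold)
        r1, r2 = (r1 + dt * (target_1 - r1) / tau,
                  r2 + dt * (target_2 - r2) / tau)
    return r1, r2

state = (0.0, 0.0)
history = []
for currents, duration in [((0, 0), 200),      # no input: both units stay silent
                           ((60, 0), 100),     # pulse to unit 1
                           ((0, 0), 300),      # delay: unit 1 remains active
                           ((0, 300), 100),    # stronger pulse to unit 2
                           ((0, 0), 300)]:     # delay: unit 2 remains active
    state = run(state, currents, duration)
    history.append(state)
    print(currents, tuple(round(r, 2) for r in state))
```

The state reached at the end of each delay depends on which unit was stimulated last, so the activity carries a memory of past input. The second pulse is larger in this sketch because it must overcome the inhibition from the already active unit 1.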

Inhibition-stabilized network

The value of a dynamical systems approach to understanding neural-circuit behavior that is otherwise highly counter-intuitive—even paradoxical—is exemplified by the inhibition-stabilized (IS) network. The behavior of the IS network is particularly worth taking the time to understand because there is evidence that regions of both the hippocampus and the cortex could operate in the IS regime.

The IS network is a feedback-dominated network in which self-excitation is strong enough to destabilize excitatory firing rates in the absence of feedback inhibition. However, feedback inhibition is also very strong, in fact dominant enough to clamp the excitatory firing at what is an otherwise unstable fixed point of the dynamics 17. In IS circuits, the strong feedback inhibition is to similarly tuned neurons—to the same cells they receive excitation from—unlike most point attractor models in which the dominant inhibitory effect is cross-inhibition between differently tuned cells to enhance selectivity.

An intriguing property of the IS network is that a decrease in external excitatory input to inhibitory cells causes their steady-state firing rate to increase 17. The initial transient decrease in firing of the inhibitory cells causes a strong increase in firing rate of the excitatory cells. The feedback loop is strong enough that the net effect on inhibitory cells, following the ensuing increase in their excitatory input, is an increase in firing rate ( Figure 3). In the final state, with decreased external excitatory input to inhibitory cells, both inhibitory and excitatory cells have higher firing rate. The effect can be called paradoxical because in the final state the excitatory cells fire at a higher rate while receiving more inhibitory input than before.

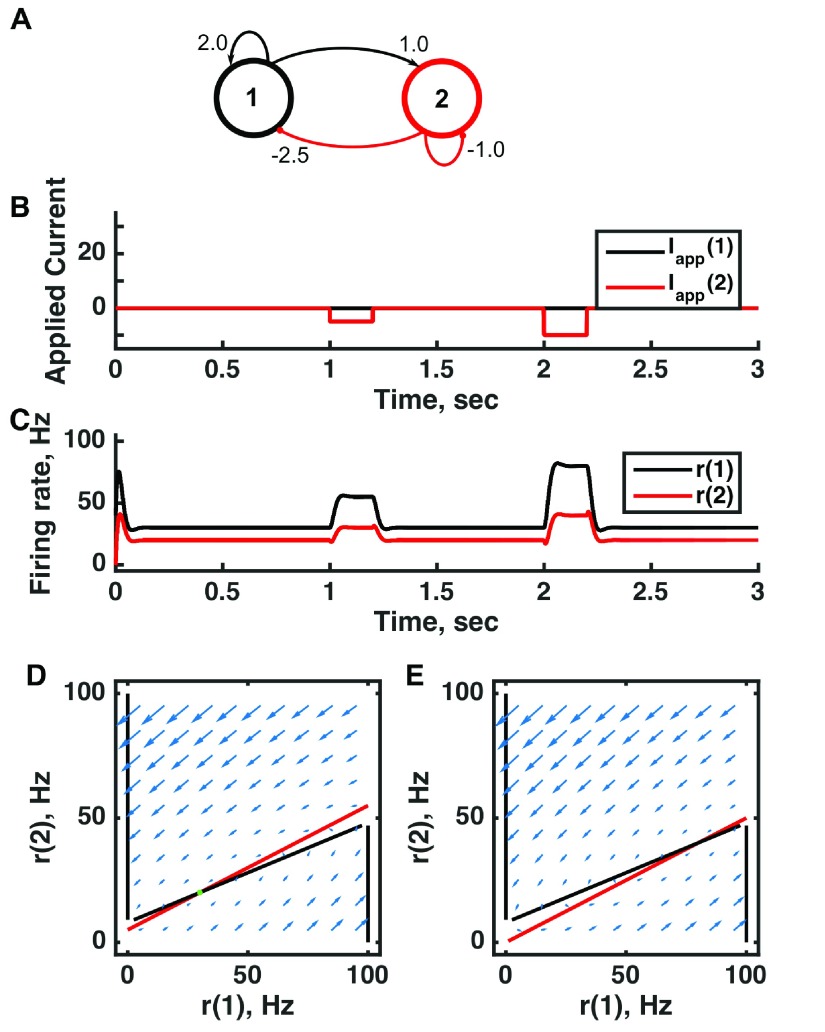

Figure 3. The “paradoxical” shift of a single point attractor in the inhibition-stabilized regime in a threshold-linear two-unit circuit.

( A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections between units. Architecture is identical to that in Figure 1. ( B) Applied current as a function of time. Two different sized inhibitory pulses of current are applied to unit 2. ( C) Firing rate as a function of time in the coupled network. During each current step, a new attractor is produced, but following current offset the original activity state is reached. When inhibition is applied to unit 2, the rates of both unit 1 and unit 2 increase as a result of the ensuing net within-circuit increase in excitation to both units. The “paradox” lies in that external inhibition to unit 2 results in an increase in its rate (due to internal raised excitation) and, even more counter-intuitively, unit 1 stabilizes at a higher firing rate in the presence of greater inhibitory input from unit 2. ( D) Any particular combination of the firing rates of the two units (x-axis is rate of unit 1, y-axis is rate of unit 2) determines the way those firing rates change in time (arrows). Starting from any pair of firing rates, any trajectory following arrows terminates at the point of intersection of the two lines. Red line: nullcline for unit 2, the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Since unit 1 excites unit 2, the fixed point for r(2) increases with r(1). Black line: nullcline for unit 1, the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). Since unit 2 inhibits unit 1, at high enough r(2) only r(1) = 0 is possible and at low r(2) only r(1) = 100 is possible. The line joining the minimum and maximum values of r(1) would normally be of unstable fixed points, but in this system it is stabilized. The crossing point of the nullclines is the fixed point of the whole system. The fixed point is stable (so is an attractor state) because arrows converge on the fixed point. ( E) As in ( D) but the solution during the second pulse of applied current. The inhibitory applied current shifts the nullcline for r(2) down, and the result is that the fixed point of the system moves to higher values of both r(1) and r(2). For parameters, see supporting Matlab code, “dynamics_two_units.m”.
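The paradoxical response can be checked numerically with a two-unit rate model. The sketch below is not the supporting Matlab code. It assumes one excitatory and one inhibitory threshold-linear unit, with excitatory self-coupling greater than 1 (so the excitatory unit alone would be unstable) and an inhibitory time constant shorter than the excitatory one (which keeps the fixed point stable). All weights, inputs, and time constants are illustrative. In the linear regime the steady state is E = (input_e - w_ei * input_i) / (1 - w_ee + w_ei * w_ie) and I = w_ie * E + input_i.

```python
def rectify(x):
    """Threshold-linear response (no saturation is needed at these rates)."""
    return max(x, 0.0)

def settle(rates, input_e, input_i, duration_ms=500.0, dt=0.05,
           tau_e=10.0, tau_i=2.0, w_ee=2.0, w_ei=1.5, w_ie=2.0):
    """Integrate excitatory (e) and inhibitory (i) rates until they settle.

    All parameter values are assumptions chosen to place the circuit in an
    inhibition-stabilized regime (w_ee > 1 with a stable fixed point).
    """
    e, i = rates
    for _ in range(round(duration_ms / dt)):
        target_e = rectify(w_ee * e - w_ei * i + input_e)
        target_i = rectify(w_ie * e + input_i)
        e, i = (e + dt * (target_e - e) / tau_e,
                i + dt * (target_i - i) / tau_i)
    return e, i

baseline = settle((0.0, 0.0), input_e=40.0, input_i=10.0)
reduced = settle(baseline, input_e=40.0, input_i=0.0)   # less external drive to inhibition
print("baseline (E, I):", baseline)
print("reduced  (E, I):", reduced)
```

With these values the steady state moves from (12.5, 35) to (20, 40): removing external drive from the inhibitory unit raises its rate, and the excitatory unit fires faster despite receiving more inhibition.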

Evidence for neural circuit operation in the IS regime was first provided by a combination of modeling and data analysis for the hippocampus during theta oscillations, based on the relative phase relationship of oscillatory activity from excitatory and inhibitory cells 17. More recently, strong evidence for operation in this regime has been provided for the primary visual cortex as an explanation of how two stimuli can switch from producing supralinear to sublinear summation as their contrast increases and the IS regime is reached 18, 19.

Attractor-state itinerancy

The term itinerancy is used if a system switches rapidly between distinguishable patterns of activity that last significantly longer than the switching time. Switches can occur by noise-driven fluctuations in a circuit with many stable point attractors 20 or via biological processes such as synaptic depression or firing rate adaptation—which operate more slowly than changes in firing rates of cells—between quasistable attractor states 21. A system with just two quasistable states can give rise to a relaxation oscillator by the latter process 1, 22, 23, whereas a system with many quasistable states can give rise to “chaotic itinerancy” 24, 25.
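A relaxation oscillator of the kind mentioned above can be sketched with a single bistable unit and a slow adaptation variable. This is a generic illustration under assumed parameters, not a model from the cited work: the unit has strong self-excitation and saturates at 100 Hz, and an adaptation current builds up slowly while the unit is active and decays slowly while it is silent.

```python
def rectify(x, r_max=100.0):
    """Threshold-linear response, saturating at r_max (Hz)."""
    return min(max(x, 0.0), r_max)

def simulate(duration_ms=8000.0, dt=0.5, tau_r=10.0, tau_a=500.0,
             w_self=2.0, gain_a=1.5, net_drive=10.0):
    """Firing-rate trace of one self-exciting unit with slow adaptation.

    net_drive is the constant input minus the firing threshold; every value
    here is an assumption chosen so that neither state remains stable.
    """
    rate, adaptation, trace = 0.0, 0.0, []
    for _ in range(round(duration_ms / dt)):
        target = rectify(w_self * rate - adaptation + net_drive)
        rate, adaptation = (rate + dt * (target - rate) / tau_r,
                            adaptation + dt * (gain_a * rate - adaptation) / tau_a)
        trace.append(rate)
    return trace

trace = simulate()
# Count entries into the high-rate state (upward crossings of 50 Hz).
switches_up = sum(a < 50.0 <= b for a, b in zip(trace, trace[1:]))
print("entries into the active state:", switches_up)
```

The rate changes on the 10 ms time scale, whereas the dwell time in each state is set by the 500 ms adaptation time constant, so each state lasts far longer than the switch between states.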

Itinerancy through quasistable states can subserve sequence memory, with distinct states reached in response to both the number of stimuli and the types of stimuli in a sequence 21. Noise-induced itinerancy through point attractor states can also serve as the neural basis of a sampling framework for Bayesian computation 26 and can lead to optimal decision making if certain biological constraints must be met by the system 27.

Perhaps the most compelling evidence for attractor state itinerancy arises during bistable percepts 28– 32, when switching from one percept to the other and back occurs in the presence of a constant, ambiguous stimulus such as the Necker cube 33. Models of the phenomenon suggest a neural circuit possessing two attractor states with transitions between them produced by a combination of synaptic depression and noise-driven fluctuations 34– 36.
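The noise-driven part of this mechanism can be illustrated with an abstract two-state system. The sketch below is a toy example, not one of the cited circuit models, and it omits synaptic depression entirely. It assumes a single variable x moving in a double-well landscape with stable states at x = -1 and x = +1 (standing in for the two percepts) and additive Gaussian noise. The noise amplitude, duration, and switching criterion are illustrative.

```python
import math
import random

def count_switches(duration=2000.0, dt=0.01, noise=0.7, seed=1):
    """Euler-Maruyama integration of dx = (x - x**3) dt + noise dW.

    A switch is counted only when x reaches the opposite well (beyond 0.8 on
    the other side), so brief excursions across x = 0 are ignored.
    """
    rng = random.Random(seed)
    x, current_well, switches = 1.0, 1, 0
    dwell, dwell_times = 0.0, []
    for _ in range(round(duration / dt)):
        x += (x - x ** 3) * dt + noise * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        dwell += dt
        if x * current_well < -0.8:        # arrived in the other well
            current_well = -current_well
            switches += 1
            dwell_times.append(dwell)
            dwell = 0.0
    return switches, dwell_times

switches, dwell_times = count_switches()
mean_dwell = sum(dwell_times) / len(dwell_times)
print(f"{switches} switches, mean dwell time {mean_dwell:.1f} (arbitrary units)")
```

The input never changes, yet the state alternates at irregular intervals, which is the qualitative behavior reported during bistable perception.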

It is worth noting that experimental identification of such attractor state itinerancy may require non-standard analyses of neural spike trains. The standard practice of averaging across trials after their alignment to stimulus onset would fail to reveal such inherent dynamics because the timing of transitions varies across trials and indeed the initial state may differ from trial to trial. Thus, across-trial averaging would reveal simply a blurred, approximately constant rate dependent on the average response during the two distinct percepts. Therefore, it is essential to use single-trial methods of analysis where possible if one hopes to uncover such underlying dynamics. The use of averaging across trials has been a necessity when analyzing spike trains in vivo because of the apparent randomness and limited amount of information contained within the spike times of any one neuron in any one trial. However, methods such as hidden Markov modeling (HMM) 37– 39 allow one to treat each trial independently, especially if one has access to spike trains from many simultaneously recorded cells with correlated activity. HMM produces an analysis that assumes discrete states of activity with transitions between these activity states that vary in timing from trial to trial. Analysis of neural spike trains in vivo in multiple cortical areas by HMM and other methods has produced strong evidence for state transitions during cognitive processes, including motor preparation 37, 40, taste processing 39, and perceptual decision making 41, 42.
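The single-trial state decoding that an HMM provides can be sketched with the Viterbi algorithm applied to binned spike counts. The example below is a generic illustration, not the analysis pipeline of the cited studies. It assumes two states with known Poisson rates and a fixed probability of remaining in the same state from one bin to the next, whereas a full HMM analysis would fit these parameters to the data (for example with the Baum-Welch algorithm). The spike counts are made up for illustration.

```python
import math

def poisson_logpmf(k, lam):
    """Log probability of observing k spikes when the expected count is lam."""
    return k * math.log(lam) - lam - math.lgamma(k + 1)

def viterbi(counts, rates, stay_prob):
    """Most likely state sequence for Poisson emissions with symmetric switching."""
    n_states = len(rates)
    log_stay = math.log(stay_prob)
    log_switch = math.log((1.0 - stay_prob) / (n_states - 1))
    score = [math.log(1.0 / n_states) + poisson_logpmf(counts[0], r) for r in rates]
    back_pointers = []
    for k in counts[1:]:
        new_score, pointers = [], []
        for s, r in enumerate(rates):
            candidates = [score[p] + (log_stay if p == s else log_switch)
                          for p in range(n_states)]
            best = max(range(n_states), key=lambda p: candidates[p])
            pointers.append(best)
            new_score.append(candidates[best] + poisson_logpmf(k, r))
        score = new_score
        back_pointers.append(pointers)
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(back_pointers):   # trace the best path backwards
        state = pointers[state]
        path.append(state)
    return path[::-1]

# Hypothetical spike counts per time bin from one trial (summed over cells).
counts = [1, 0, 2, 1, 0, 9, 11, 8, 10, 12]
path = viterbi(counts, rates=[1.0, 10.0], stay_prob=0.95)
print(path)
```

Because each trial is decoded separately, the transition time is free to differ from trial to trial instead of being blurred by averaging.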

Addressing the unrealistic firing rates in multistable models

The multistability necessary for memory is typically achieved in models via a subset of neurons switching between two states: a low firing rate state, where the feedback activity is insufficient to generate a sustained response, and a high firing rate state, reinforced by recurrent excitatory feedback, whose rate is limited only by saturation of an intrinsic or synaptic process. Unless the saturating process has a slow time constant, such active states maintained by recurrent feedback typically have much higher firing rates than those observed in vivo. Indeed, in simple models, once excitatory feedback is increased enough to engender stable persistent activity in the absence of input, that activity can be at the neuron’s maximal firing rate, on the order of 100 Hz (as in Figure 2). Such rates are incompatible with the smaller changes of activity during memory tasks in vivo, often no more than 10 Hz between pre-stimulus and post-stimulus levels 43, 44, calling into question the validity of these recurrent excitatory models.

This issue can be resolved by several different modeling assumptions 45. If recurrent feedback current is mediated primarily through N-methyl-D-aspartate (NMDA) receptors and they are allowed a 100 ms time constant (though 50 ms may be more reasonable at in vivo temperatures for mammals), then the high firing rate state can be limited to 20 to 30 Hz 46, 47. Furthermore, if a slow time constant for synaptic facilitation of 7 seconds is used, then firing rates in the active state can be reduced to levels below 10 Hz 48. A final possibility is that the network contains subgroups of excitatory and inhibitory cells operating in the IS regime ( Figure 4) in which active subgroups can, in principle, maintain stable activity at arbitrarily low rates while suppressing the activity of other subgroups via cross-inhibition 49– 51. In this regime, a system of point attractor states is compatible with the low firing rates of persistently active neurons observed in vivo.

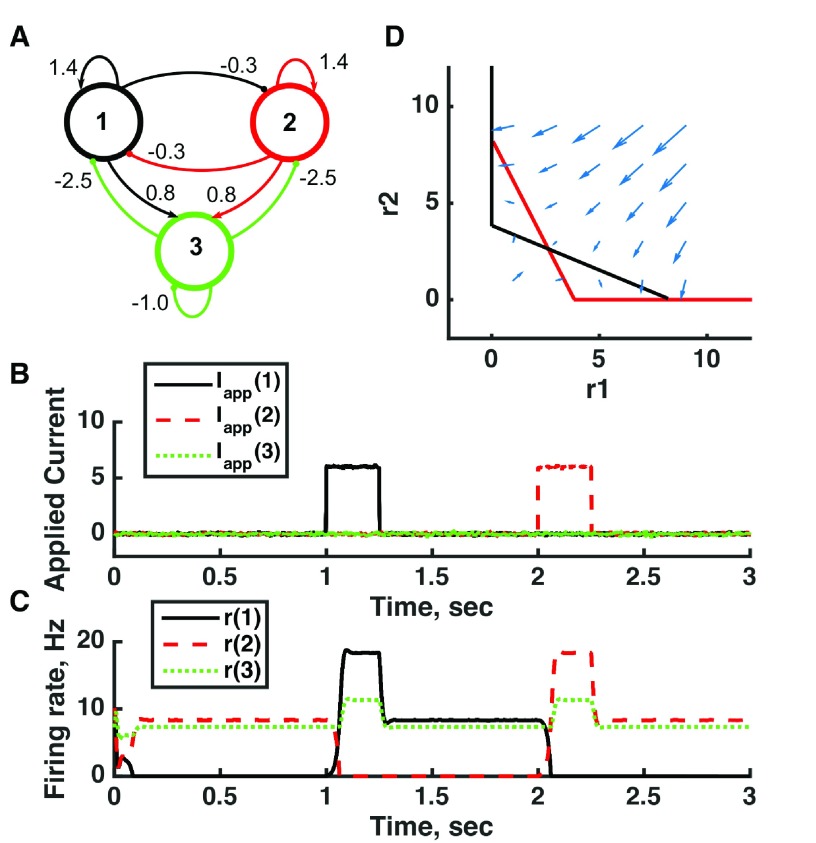

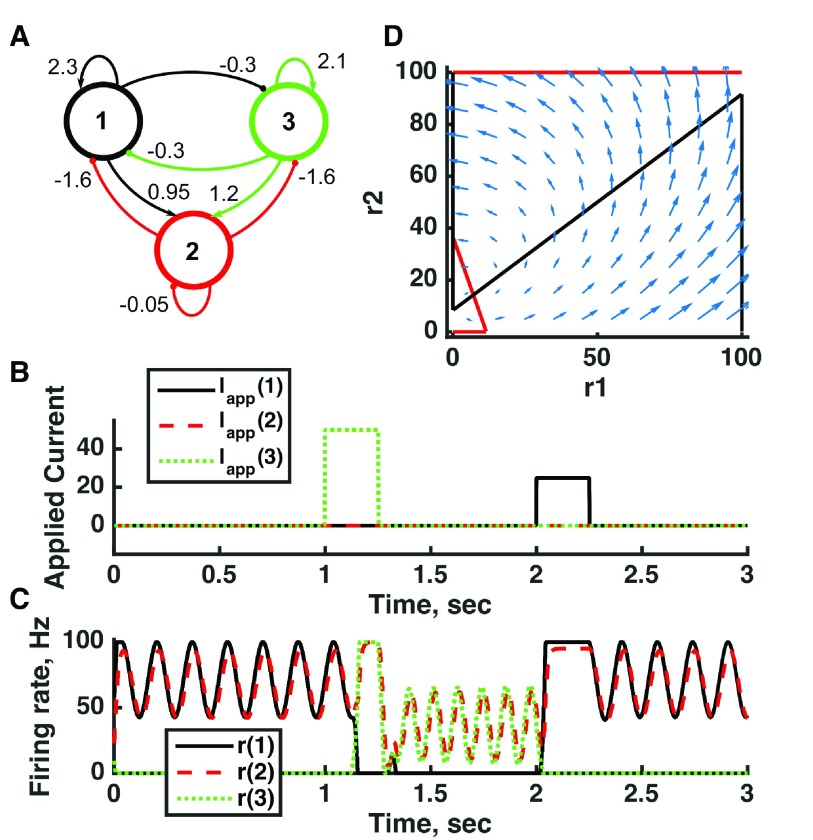

Figure 4. Bistability with low stable firing rates in the inhibition-stabilized threshold-linear three-unit circuit.

( A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections between units. A third unit is added to the architecture of Figure 3. ( B) Applied current as a function of time. The first current pulse is applied to unit 1, and the second is applied to unit 3. A small amount of noise is added to the current to prevent the system resting at an unstable symmetric state, toward which it is otherwise drawn. ( C) Firing rate as a function of time in the coupled network. The current steps switch activity between stable states in which no neuron’s activity is greater than 10 Hz—note the difference in rates from the “traditional” bistability of Figure 2. ( D) Any particular combination of the firing rates of the three units (x-axis is rate of unit 1, y-axis is rate of unit 3) determines the way those firing rates change in time (arrows). Only a plane out of the full three-dimensional space of arrows is shown—the plane corresponding to dr(3)/dt = 0. Starting from any pair of firing rates, any trajectory following arrows terminates at the point of intersection of the two lines. Red line: nullcline for unit 2—the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Black line: nullcline for unit 1—the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). Since unit 2 inhibits neuron 1, the fixed point for r(1) decreases with r(2). The crossing points of the nullclines are fixed points of the whole system. The asymmetric fixed points are stable (so are attractor states) because arrows converge on them, whereas the intervening symmetric fixed point is unstable. For parameters, see supporting Matlab code, “dynamics_three_units.m”.

A problem related to the one above is that most models of bistability produce spike patterns in the high-activity state that are much more regular than those observed in vivo. Systems that transition between various stable states will cause spike trains to be less regular because of the contribution of rate variation. Lower firing rates will likely also help with this problem because most model neurons produce irregular spike trains when operating below threshold in a fluctuation-driven regime at low firing rates. However, spike statistics such as the coefficient of variation (CV) of interspike intervals have not been analyzed to date in models of the IS regime.

Marginal states (line attractors or continuous attractors)

If a dynamical system possesses a continuous range of points (a line) that variables of the system approach, then the attractor state has “marginal stability”; if the activity is perturbed away from the line, then it recovers toward the line, but deviations along the line can accumulate over time 52. In neural circuit models, marginal states either depend on an underlying symmetry (for example, translation in space when considering memory for position via a ring attractor) 53– 56 or require other fine-tuning of parameters 57– 60. When neural activity enters a marginal state, the whole system can be described by a reduced number of variables, such as the position along the line of a line attractor 61. Systems with marginal states are able to encode and store the values of continuous quantities 53, 54, 60, integrate information over time perfectly 55, 57, 62 ( Figure 5), combine prior information with sensory input in a Bayesian manner 63, and in general achieve optimal computational performance 64. In practice, a system with many point attractor states that are close to each other—that is, total circuit activity differs little between states—can appear like a line attractor, performing integration 8 yet with the benefit of greater robustness and stability 65.

Figure 5. A marginal state or continuous/line attractor is produced by careful tuning of the connection strengths in a threshold-linear two-unit circuit.

( A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections between units. The architecture is identical to that of Figure 2. ( B) Applied current as a function of time. Two very small pulses of current are applied to unit 1. ( C) Firing rate as a function of time in the coupled network. During each current step, the firing rate of unit 1 increases linearly because of the applied current. Inhibitory feedback to unit 2 causes a linear decrease in its firing rate. Upon stimulus offset, the firing rate reached is maintained. ( D) Any particular combination of the firing rates of the two units (x-axis is rate of unit 1, y-axis is rate of unit 2) determines the way those firing rates change in time (arrows). Starting from any pair of firing rates, any trajectory following arrows terminates at a point where the two lines overlap each other. Red line: nullcline for unit 2—the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Black line: nullcline for unit 1—the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). Since the two units inhibit each other, the overlapping lines have negative gradient. ( E) A small applied current to unit 1 shifts its nullcline to the right slightly. Now the only fixed point is where the two lines intersect at r(2) = 0, but in the region between the two parallel nullclines, the rate of change is small (note the small arrows parallel to the lines) so firing rates change gradually. For parameters, see supporting Matlab code, “dynamics_two_units.m”.
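The dependence of integration on fine-tuning can be seen in an even simpler setting than the two-unit circuit of Figure 5. The sketch below assumes a single rate variable with linear positive feedback, tau dr/dt = -r + w r + I(t), which is a common textbook reduction and not the supporting Matlab model. When the feedback weight w is exactly 1, the leak is cancelled and the unit integrates its input. A 5% mistuning turns it into a leaky integrator with a time constant of tau / (1 - w). Parameter values are illustrative.

```python
def integrate_pulses(w_feedback, pulses, total_ms=1000, tau_ms=10.0, r_start=20.0):
    """Euler steps of 1 ms for tau dr/dt = -r + w_feedback * r + I(t).

    pulses: list of (start_ms, stop_ms, amplitude); values are assumptions.
    Returns the rate at the end of every 100 ms.
    """
    rate, samples = r_start, []
    for t in range(total_ms):
        current = sum(amp for start, stop, amp in pulses if start <= t < stop)
        rate += (-rate + w_feedback * rate + current) / tau_ms
        rate = max(rate, 0.0)               # firing rates cannot be negative
        if (t + 1) % 100 == 0:
            samples.append(rate)
    return samples

pulses = [(100, 200, 0.5), (500, 600, 0.5)]     # two small, brief inputs
tuned = integrate_pulses(1.0, pulses)           # feedback exactly cancels the leak
detuned = integrate_pulses(0.95, pulses)        # 5% too little feedback
print("tuned:  ", [round(r, 2) for r in tuned])
print("detuned:", [round(r, 2) for r in detuned])
```

In the tuned case each pulse raises the rate by amplitude × duration / tau (5 Hz here) and the new rate is held after the pulse ends. In the detuned case the rate decays between pulses and the memory of the input is lost.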

Predicted experimental signatures of marginal states include drift of neural activity as noise accumulates in the manner of a random walk—leading to variance increasing linearly with time 54—in the presence of a constant stimulus; perfect temporal integration of inputs 55, 57; and correlations within single neural spike trains that decay linearly over time 52, 66. These have acquired some degree of experimental support 67– 69.
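The first of these signatures, variance that grows linearly with time, follows directly from noise accumulating without decay along the attractor. A minimal numerical check, assuming independent Gaussian noise added at every time step to an otherwise perfect integrator (the trial count, step count, and noise amplitude are illustrative):

```python
import random
from statistics import pvariance

def drift_variances(n_trials=2000, n_steps=200, noise_sd=0.1, seed=0):
    """Across-trial variance of position along the attractor at T/2 and at T."""
    rng = random.Random(seed)
    at_half, at_end = [], []
    for _ in range(n_trials):
        position = 0.0                      # displacement along the line attractor
        for step in range(n_steps):
            position += noise_sd * rng.gauss(0.0, 1.0)   # noise is never forgotten
            if step + 1 == n_steps // 2:
                at_half.append(position)
        at_end.append(position)
    return pvariance(at_half), pvariance(at_end)

var_half, var_end = drift_variances()
ratio = var_end / var_half
print(f"variance at T/2: {var_half:.2f}, at T: {var_end:.2f}, ratio: {ratio:.2f}")
```

Doubling the elapsed time approximately doubles the variance. For a point attractor, by contrast, the variance would saturate, because deviations decay back toward the fixed point.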

Oscillating systems (cyclic attractors or limit cycles)

Observations of oscillations are widespread throughout the brain 70, 71; indeed, the earliest human extracranial recordings revealed oscillations in electrical potential 72. In some cases, the presence of oscillations is inferred from a peak in the power spectrum at a particular frequency 73, 74, although such a peak could arise from attractor state itinerancy, chaos, or heteroclinic orbits (see below) in the absence of a true oscillator. Yet, given the overwhelming abundance of evidence, there is no doubt as to the existence of oscillations in neural circuits, something still in question for the other dynamical frameworks discussed here. Therefore, current research focuses on elucidating the role, if any, of oscillations in diverse mental processes 75, 76.

Spiking neurons themselves can be oscillators, so it should not seem surprising that neural circuits can also oscillate. Circuit oscillations can arise from the intrinsic oscillations of constituent neurons, or from the circuit connectivity ( Figure 6), or from a combination of the two. In general, any system with fast positive feedback and slower negative feedback is liable to oscillate ( Figure 7). Moreover, in any nonlinear dynamical system, a change in the inputs leads to a change in amplitude and frequency of ongoing oscillations, so correlations between oscillatory power or frequency and task condition are inevitable. Therefore, observed correlations between oscillatory power or synchrony and behavior or cognitive process—whether related to attention 77, arousal 78, memory load 79, or sleep state 80– 82—may indicate a causal dependence in one direction (oscillations cause the process) or the other (particular processes cause oscillations as an epiphenomenon). The difficulty of distinguishing the two arises because experiments aimed at altering an oscillation inevitably alter other properties of the dynamical system necessary for the cognitive process.
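The statement that fast positive feedback combined with slower negative feedback produces oscillations can be illustrated with the FitzHugh-Nagumo equations, a standard two-variable textbook model. It is used here as a generic stand-in and is not the two-unit or three-unit circuit of Figures 6 and 7. The parameter values are the commonly used ones (a = 0.7, b = 0.8, eps = 0.08), and the input levels are illustrative.

```python
def fitzhugh_nagumo(current, duration=400.0, dt=0.01,
                    a=0.7, b=0.8, eps=0.08):
    """Trace of the fast variable v; time and voltage are in arbitrary units."""
    v, w, trace = -1.0, 0.0, []
    for _ in range(round(duration / dt)):
        dv = v - v ** 3 / 3.0 - w + current     # fast self-amplification via the +v term
        dw = eps * (v + a - b * w)              # slow negative feedback
        v, w = v + dt * dv, w + dt * dw
        trace.append(v)
    return trace

def late_summary(trace):
    """Number of cycles and peak-to-peak amplitude after the initial transient."""
    late = trace[len(trace) // 2:]
    cycles = sum(x < 0.0 <= y for x, y in zip(late, late[1:]))
    return cycles, max(late) - min(late)

cycles_on, amplitude_on = late_summary(fitzhugh_nagumo(current=0.5))
cycles_off, amplitude_off = late_summary(fitzhugh_nagumo(current=0.0))
print(f"with input:    {cycles_on} cycles, amplitude {amplitude_on:.2f}")
print(f"without input: {cycles_off} cycles, amplitude {amplitude_off:.2e}")
```

Changing the input switches the same system between a point attractor and a limit cycle, which is one reason why oscillatory power inevitably covaries with task conditions.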

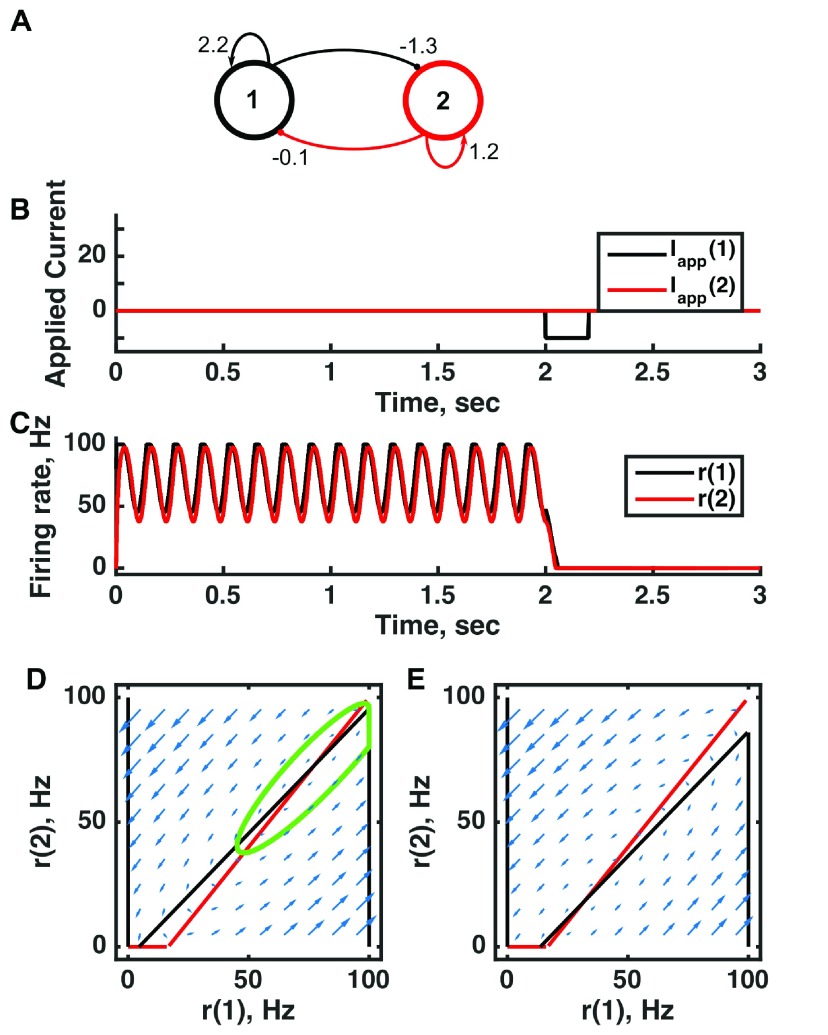

Figure 6. An oscillator produced by directed excitatory and inhibitory connections in a bistable threshold-linear two-unit circuit.

( A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections between units. The architecture is identical to that of Figure 1 and Figure 3. ( B) Applied current as a function of time. An inhibitory pulse of current is applied to unit 1. ( C) Firing rate as a function of time in the coupled network. Oscillations are switched off by the inhibition to unit 1, so the system has two stable attractors: one a limit cycle, the other a point attractor. ( D) Any particular combination of the firing rates of the two units (x-axis is rate of unit 1, y-axis is rate of unit 2) determines the way those firing rates change in time (arrows). Depending on the starting point, a trajectory following the arrows will fall on the limit cycle (the green closed orbit, which represents the coordinated variation of firing rate with time during the oscillations) or will reach the fixed point at the origin. Red line: nullcline for unit 2—the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Since unit 1 excites unit 2, the fixed point for r(2) increases with r(1). Black line: nullcline for unit 1—the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). Crossing points of the nullclines are the fixed points of the whole system, but the one within the limit cycle is unstable, as is the one with r(2) = 0 but r(1) > 0. ( E) As in ( D) but the solution during the inhibitory pulse of applied current. The inhibitory current shifts the nullcline for r(1) down, and the result is that all trajectories terminate at the origin. For parameters, see supporting Matlab code, “dynamics_two_units.m”.

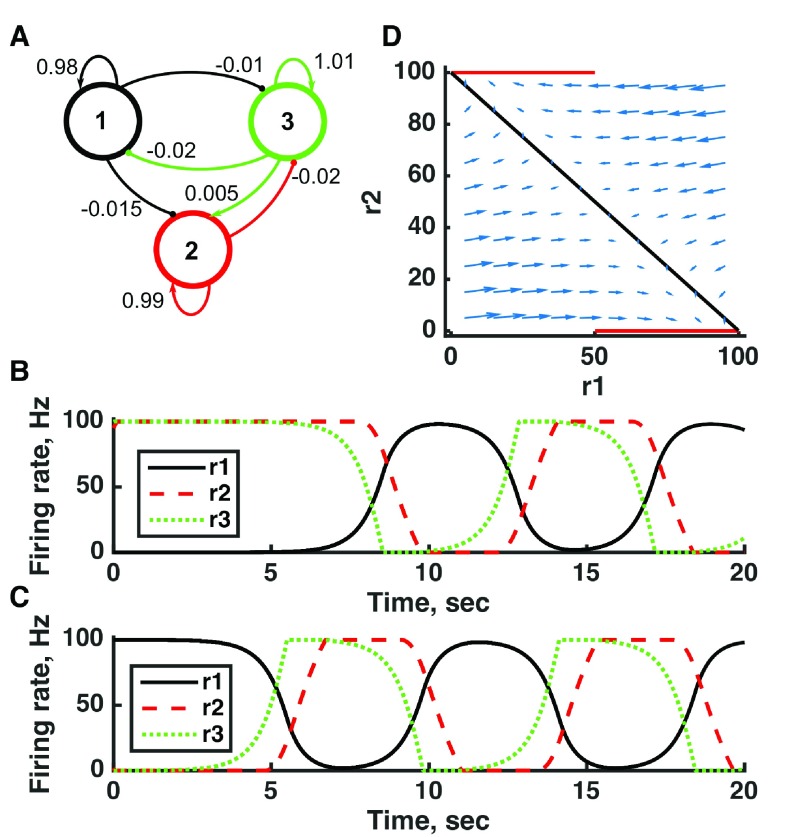

Figure 7. Switching between two distinct oscillators in a bistable threshold-linear three-unit circuit.

(A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections between units. Architecture is identical to that of Figure 4. (B) Applied current as a function of time. The first current pulse is applied to unit 3, and the second is applied to unit 1. (C) Firing rate as a function of time in the coupled network. The current steps switch activity between two stable states with different frequencies of oscillation. (D) Any particular combination of the firing rates of the three units (x-axis is rate of unit 1, y-axis is rate of unit 3) determines the way those firing rates change in time (arrows). Only a plane out of the full three-dimensional space of arrows is shown: the plane corresponding to dr(3)/dt = 0. Red line: nullcline for unit 2, the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Black line: nullcline for unit 1, the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). The crossing points of the nullclines are fixed points of the whole system, none of which is stable in this example. For parameters, see supporting Matlab code, “dynamics_three_units.m”.

The importance of oscillations is perhaps undisputed only in the case of motor systems that produce a repeating, periodic output 83 or in the sensations of whisking and olfaction that are directly related to such motor rhythms. Perhaps the most accepted roles for information processing by oscillations are within hippocampal place cells, whose phase of firing with respect to the ongoing 7 to 10 Hz theta oscillation contains substantial information 84–87.

Oscillating systems contain limit cycles and so appear as closed loops in a plot of one variable against another (Figure 6D). Since activity is attracted to the limit cycle, which is a line embedded within a higher-dimensional space, oscillating systems have some similarities to line attractors. In particular, small perturbations can be accumulated in the phase of the oscillation (along the line of the limit cycle), so, as with line attractors, noise accumulates as a random walk in one particular direction. Moreover, the phase of an oscillator retains a memory of perturbations, so oscillators can also be integrators, albeit only up to an offset of one cycle and with the need of an unperturbed oscillator for comparison.
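The random-walk accumulation of phase noise can be sketched with a phase-only description of an oscillator. This is an assumed reduction, in which the limit cycle is replaced by a single phase variable advancing at constant frequency with small random kicks, and the function name, the 8 Hz frequency, and the noise level are hypothetical. If phase errors accumulate as a random walk, the variance across trials of the offset from an unperturbed reference oscillator should grow roughly linearly with time, so it should be about twice as large at the end of the run as at the midpoint.

```python
import math
import random


def phase_offset_variance(n_trials=2000, n_steps=200, dt=1e-3,
                          freq_hz=8.0, noise_sd=1.0, seed=0):
    """Variance across trials of the phase offset between a noisy oscillator
    and an unperturbed reference, at the midpoint and at the end of the run."""
    rng = random.Random(seed)
    omega = 2.0 * math.pi * freq_hz
    kick_sd = noise_sd * math.sqrt(dt)  # s.d. of the random phase kick per step
    mid, end = [], []
    for _ in range(n_trials):
        phase_noisy = 0.0
        phase_ref = 0.0
        for step in range(1, n_steps + 1):
            phase_ref += omega * dt                               # unperturbed oscillator
            phase_noisy += omega * dt + rng.gauss(0.0, kick_sd)   # perturbed oscillator
            if step == n_steps // 2:
                mid.append(phase_noisy - phase_ref)
        end.append(phase_noisy - phase_ref)

    def variance(xs):
        m = sum(xs) / len(xs)
        return sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

    return variance(mid), variance(end)


if __name__ == "__main__":
    var_mid, var_end = phase_offset_variance()
    print(f"variance at midpoint: {var_mid:.3f}, at end: {var_end:.3f}")
```

The offset is only defined relative to the reference phase, which reflects the caveat in the text that an unperturbed oscillator is needed for comparison.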

Chaotic systems (strange attractors)

A high-dimensional neural system (as arises if the activity of each neuron can vary with little correlation with the activity of other neurons) with a balance between excitatory and inhibitory random connections becomes chaotic if the connections are strong enough 88–90. An early model of eye-blink conditioning in the cerebellum (the part of the mammalian brain with the highest density of cells and connections) used a chaotic circuit to encode and reproduce timing information 91, 92.

The smallest change in the initial conditions of a chaotic system leads to an indeterminate change in response, which can pose a serious problem for information processing and memory (Figure 8). Yet a system operating near or at the “edge of chaos” can be computationally efficient 93 and become reliably entrained to inputs while responding more rapidly than ordered systems 89, 94. Moreover, certain learning rules for changing the connection strengths between neurons in a chaotic system can allow the encoding of almost any spatiotemporal input pattern 4, 95, the switching between multiple patterns 4, and the encoding and processing of many rule-based tasks 6, 8. Thus, chaotic systems appear to be highly flexible and trainable.
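Sensitivity to initial conditions is easy to demonstrate numerically. The sketch below does not use the three-unit circuit of Figure 8, whose parameters are in the supporting Matlab code. As a stand-in it uses the logistic map with growth parameter 4, a standard textbook chaotic system that is not a neural model, and the function names and starting values are hypothetical. Two orbits that start 1e-10 apart remain indistinguishable for the first few iterations and then diverge until their separation is of the same order as the values themselves.

```python
def logistic_orbit(x0, n_steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0."""
    xs = [x0]
    for _ in range(n_steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def separation(x0=0.3, delta=1e-10, n_steps=100):
    """Absolute difference, step by step, between two orbits whose
    initial conditions differ by delta."""
    a = logistic_orbit(x0, n_steps)
    b = logistic_orbit(x0 + delta, n_steps)
    return [abs(p - q) for p, q in zip(a, b)]


if __name__ == "__main__":
    seps = separation()
    for step in (0, 10, 20, 30, 40, 50):
        print(f"step {step:3d}: separation {seps[step]:.3e}")
```

The same comparison applied to a model with a stable fixed point or limit cycle would show the separation staying small or shrinking, which is one practical way to tell the regimes apart in simulation.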

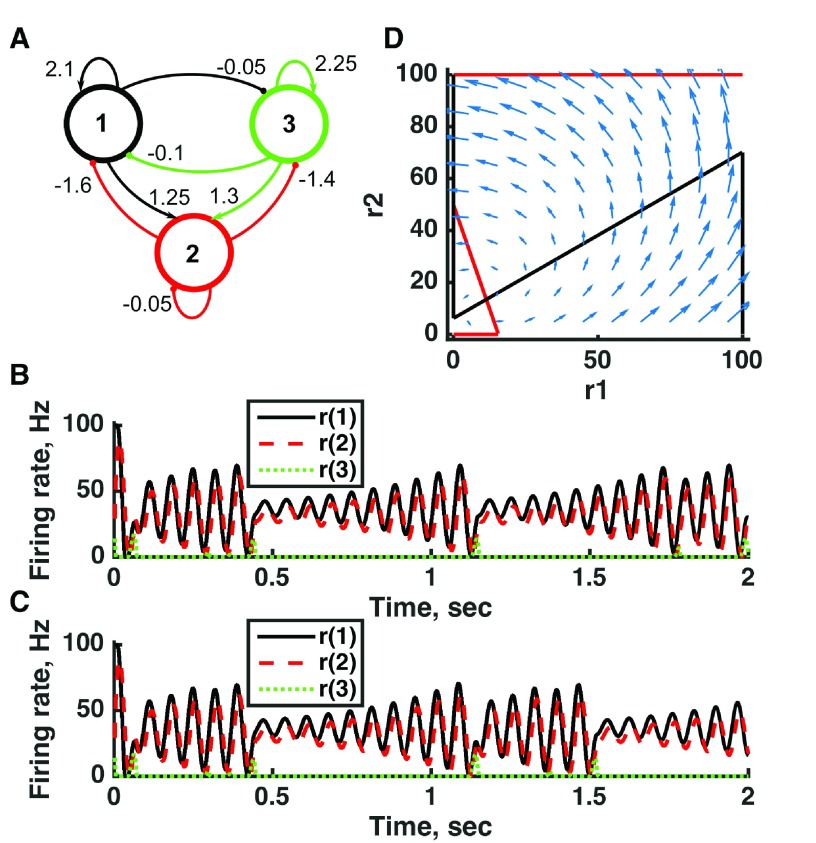

Figure 8. Chaotic activity in a threshold-linear three-unit circuit.

(A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections between units. Architecture is identical to that of Figure 4 and Figure 7. (B, C) Firing rate as a function of time in the coupled network, from two imperceptibly different initial conditions. No applied current is present. The minuscule difference in initial conditions is amplified over time, so, for example, unit 3 produces a small burst of activity after 1.5 s in (B), but that burst is absent from (C). (D) Any particular combination of the firing rates of the three units (x-axis is rate of unit 1, y-axis is rate of unit 3) determines the way those firing rates change in time (arrows). Only a plane out of the full three-dimensional space of arrows is shown: the plane corresponding to dr(2)/dt = 0. Red line: nullcline for unit 2, the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Black line: nullcline for unit 1, the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). The crossing points of the nullclines are unstable fixed points of the whole system. For parameters, see supporting Matlab code, “dynamics_three_units.m”.

Observed signatures of chaos include the apparent randomness and variability of spike trains, especially during spontaneous activity in the absence of stimuli, and the initial drop of such variability upon stimulus presentation 94, 96.

Chaos can arise in systems with quasistable attractor states as an itinerancy between the states in an order possessing no pattern 97, 98 or in an oscillating system (typically as unpredictable jumps between different types of oscillation). Heteroclinic sequences (see below) can also be chaotic 99. Adding a small amount of intrinsic noise to a chaotic system has a similar effect to perturbing its initial conditions: the divergence of activity that, without noise, follows a small change in initial conditions then occurs across separate trials even when the initial conditions are identical.

Heteroclinics

A common type of fixed point in a system with many variables (meaning it is high-dimensional) is a saddle point. Saddle points are so-named because, like the saddle on a horse or the saddle on a ridge, there are directions where the natural tendency is to approach the fixed point (moving down from a higher point on the ridge) and other directions where the natural tendency is to move away from the fixed point (down to the valley below). If the state of a dynamical system can move toward one such saddle point and then move away from it to another one, and so on, the trajectory is called a heteroclinic sequence.

Heteroclinic sequences have similarities to systems with attractor-state itinerancy and a type of oscillator that switches between states that appear stable on a short timescale, called a relaxation oscillator. All three systems have states toward which the system is drawn but at which the system does not remain. In a heteroclinic sequence, activity can be funneled toward each saddle point as if it were an attractor state but, once in the vicinity of the saddle point, will find the direction of instability and move away (Figure 9). In the absence of noise, the duration in a “state” (the vicinity of a particular saddle point) depends on how close to the fixed point the system gets and therefore may vary with initial conditions. Interestingly, a small amount of noise can make these state durations more regular 100.
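The dependence of state duration on the closeness of approach can be made concrete with the simplest possible saddle. The sketch assumes a linear two-variable system with one stable and one unstable direction, rather than the three-unit circuit of Figure 9, and the function name, rates, and exit radius are hypothetical. Near such a saddle the unstable coordinate grows exponentially, so the time to escape from an initial offset eps to a fixed radius should be close to ln(radius/eps) divided by the unstable rate: each thousandfold closer approach adds the same fixed increment to the dwell time.

```python
import math


def dwell_time(eps, rate_out=1.0, rate_in=1.0, dt=1e-3, exit_radius=1.0):
    """Time spent near a linear saddle at the origin (Euler integration).

    The state starts at distance 1 along the stable direction (s) and at a
    small offset eps along the unstable direction (u).
    """
    if eps == 0.0:
        raise ValueError("eps must be nonzero, or the state never leaves the saddle")
    s, u, t = 1.0, eps, 0.0
    while abs(u) < exit_radius:
        s += dt * (-rate_in * s)   # stable direction: decays toward the saddle
        u += dt * (rate_out * u)   # unstable direction: grows away from it
        t += dt
    return t


if __name__ == "__main__":
    for eps in (1e-3, 1e-6, 1e-9):
        predicted = math.log(1.0 / eps)  # ln(exit_radius / eps) / rate_out
        print(f"eps={eps:.0e}: simulated {dwell_time(eps):.3f}, predicted {predicted:.3f}")
```

Because the dwell time depends only logarithmically on eps, a noise floor that sets a typical minimum distance from the saddle would be expected to narrow the spread of durations, in line with the observation cited above.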

Figure 9. Heteroclinic orbits in a threshold-linear three-unit circuit.

(A) Diagram of the model circuit. Arrows indicate excitatory connections, and balls indicate inhibitory connections between units. (B, C) Firing rate as a function of time in the coupled network from different initial conditions (near the fixed points) with no applied current. Activity is initially slow to move away from the vicinity of the fixed point (along its unstable direction) but after a cycle returns to the vicinity of the same fixed point (along its stable direction). (D) Any particular combination of the firing rates of the three units (x-axis is rate of unit 1, y-axis is rate of unit 3) determines the way those firing rates change in time (arrows). Only a plane out of the full three-dimensional space of arrows is shown: the plane corresponding to dr(2)/dt = 0. Red line: nullcline for unit 2, the value of r(2) at which dr(2)/dt = 0 (its fixed point) given a value of r(1). Black line: nullcline for unit 1, the value of r(1) at which dr(1)/dt = 0 (its fixed point) given a value of r(2). The crossing points of the nullclines are fixed points of the whole system, in this case at r(1) = 100, r(2) = 0, and r(3) = 0 and at r(1) = 0, r(2) = 100, and r(3) = 100. The fixed points are saddle points in that firing rates can either approach the fixed point or move away from it, depending on the precise set of rates. For parameters, see supporting Matlab code, “dynamics_three_units.m”.

Models of heteroclinic sequences have been proposed as a basis for memory 101, 102 and decision making 103, 104. According to some calculations, a randomly connected neural circuit would contain a suitable number of heteroclinic trajectories for information processing 105. In the high-dimensional space of neural activity, most random fixed points would have some directions along which they attract neural activity and other directions along which they repel it, meaning that they would be the saddle points necessary for producing heteroclinic sequences. However, no unique predictions of cognitive processing via heteroclinic sequences have been linked to empirical data to date.

Criticality

Many scientists argue that the brain is in a critical state, in fact exhibiting self-organized criticality, and so should be studied as such. Criticality is a measured state of a system rather than a dynamical model. A critical system is characterized by a number of properties, including the following: power law decays of the durations and sizes of features such as neural avalanches 106; relationships between the exponents of these different power laws; a scaling of the time dependence of these features when binned by size onto a universal curve 107; and correlations of fluctuations that extend across the system. All of these features arise from a particular distribution of the number of possible states binned according to their probability of occurrence (a distribution that, in principle, can be enumerated 108), which conspires to remove any stereotypical size of the system's fluctuations. Critical systems have been argued to be optimal at information processing 93, 109.
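The absence of a stereotypical avalanche size can be illustrated with a branching process, a generic stand-in that is not one of the circuit models in this review. In this assumed model each active unit can activate each of two downstream units with probability sigma/2, so sigma is the mean number of units activated per active unit; the function names, the size cap, and the thresholds are hypothetical. At sigma = 1 the avalanche sizes should be heavy-tailed, with a noticeable fraction of very large events, whereas at sigma = 0.7 large avalanches should be vanishingly rare.

```python
import random


def avalanche_size(sigma, rng, max_size=10_000):
    """Total number of activations in one avalanche of a branching process.

    Each active unit activates each of 2 downstream units with probability
    sigma / 2. The avalanche is cut off once it reaches max_size.
    """
    active, size = 1, 1
    p = sigma / 2.0
    while active > 0 and size < max_size:
        # Count how many units the current generation activates.
        active = sum(1 for _ in range(2 * active) if rng.random() < p)
        size += active
    return size


def tail_fraction(sigma, threshold=100, n_avalanches=2000, seed=1):
    """Fraction of simulated avalanches whose size is at least threshold."""
    rng = random.Random(seed)
    sizes = [avalanche_size(sigma, rng) for _ in range(n_avalanches)]
    return sum(s >= threshold for s in sizes) / n_avalanches


if __name__ == "__main__":
    for sigma in (0.7, 1.0):
        print(f"sigma={sigma}: fraction of avalanches with size >= 100 "
              f"is {tail_fraction(sigma):.3f}")
```

A heavy tail in such a simulation is only one of the signatures listed above; the exponent relationships and the collapse onto a universal curve would need to be checked separately.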

It is likely that most of the above dynamical systems under appropriate conditions could produce critical behavior 110, although the term criticality is sometimes used for systems operating at the edge of chaos 93 where critical properties can arise in random networks 111. Criticality appears to be more strongly dependent on the modular or hierarchical structure of network connectivity 112–114 than on the within-module dynamics.

Characteristics of criticality have been measured in cortical cultures 106, 107, intact retina 108, and whole-brain imaging 115. Observations to date suggest that much neural activity is close to being critical 109, 116 rather than exactly critical. This leaves open the possibility that a particular dynamical system that cannot engender exact criticality is still close enough to provide an accurate description of neural activity (but see 108).

Summary

Many different types of dynamical system have been proposed as models of neural activity, each of which can be justified by experimental evidence in some brain regions and circumstances. Different neural circuits in different parts of the brain may operate in different dynamical regimes because of connectivity differences, in particular differences in relative dominance of feedback or feedforward connections and in the relative contributions of excitatory or inhibitory neurons. Moreover, since the dynamical regime of a neural circuit depends on many factors (only one of which is the connectivity pattern), it is likely that a single circuit can change between dynamical regimes following learning or when it receives inputs or neural modulation.

Any one of these types of dynamical system (or any other not mentioned) could provide the most accurate basis for understanding a particular neurological function; another may be compatible with the observed data and more useful for explaining particular features of the neural behavior, while others may at heart be incompatible with the known circuit properties. Therefore, it behooves us all to avoid entrenchment in our favorite paradigm and to improve our understanding of the variety of dynamical systems when attempting to understand neural circuit function.

A final point worth making is that just knowing the connectome—which neurons are connected to each other—does not tell us about the operation of a neural circuit. Many of the dynamics we see arise in the same simple circuit with the same neurons (for example, Figure 1, Figure 3, and Figure 6 have identical architecture, even accounting for type of synapse, as do Figure 4, Figure 7, and Figure 8); differences in neural excitability or differences in strengths of connections can produce different functionality within a single architecture. Conversely, distinct connectivity patterns can give rise to the same function. Rather, observation of the coordinated activity of many neurons during mental processing is the route to understanding the remarkable abilities of our brains.

Editorial Note on the Review Process

F1000 Faculty Reviews are commissioned from members of the prestigious F1000 Faculty and are edited as a service to readers. In order to make these reviews as comprehensive and accessible as possible, the referees provide input before publication and only the final, revised version is published. The referees who approved the final version are listed with their names and affiliations but without their reports on earlier versions (any comments will already have been addressed in the published version).

The referees who approved this article are:

Harel Shouval, Department of Neurobiology and Anatomy, University of Texas Medical School, Houston, TX, 77030, USA

Mark Goldman, Center for Neuroscience, Department of Neurobiology, Physiology, and Behavior, and Department of Ophthalmology and Vision Science, University of California at Davis, Davis, CA, 95618, USA

John Beggs, Department of Physics, Indiana University, Bloomington, IN, 47405, USA

Funding Statement

The author(s) declared that no grants were involved in supporting this work.

[version 1; referees: 3 approved]

Supplementary material

dynamics_two_units.

dynamics_three_units.

References

- 1. Izhikevich EM: Solving the distal reward problem through linkage of STDP and dopamine signaling. Cereb Cortex. 2007;17(10):2443–52. 10.1093/cercor/bhl152 [DOI] [PubMed] [Google Scholar]

- 2. Strogatz SH: Nonlinear Dynamics and Chaos.Boulder, CO: Westview Press,2015. Reference Source [Google Scholar]

- 3. Dayan P, Abbott LF: Theoretical Neuroscience. MIT Press,2001. Reference Source [Google Scholar]

- 4. Sussillo D, Abbott LF: Generating coherent patterns of activity from chaotic neural networks. Neuron. 2009;63(4):544–57. 10.1016/j.neuron.2009.07.018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Rigotti M, Ben Dayan Rubin D, Wang XJ, et al. : Internal representation of task rules by recurrent dynamics: the importance of the diversity of neural responses. Front Comput Neurosci. 2010;4:24. 10.3389/fncom.2010.00024 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Barak O, Sussillo D, Romo R, et al. : From fixed points to chaos: three models of delayed discrimination. Prog Neurobiol. 2013;103:214–22. 10.1016/j.pneurobio.2013.02.002 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Laje R, Buonomano DV: Robust timing and motor patterns by taming chaos in recurrent neural networks. Nat Neurosci. 2013;16(7):925–33. 10.1038/nn.3405 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Mante V, Sussillo D, Shenoy KV, et al. : Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature. 2013;503(7474):78–84. 10.1038/nature12742 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Rigotti M, Barak O, Warden MR, et al. : The importance of mixed selectivity in complex cognitive tasks. Nature. 2013;497(7451):585–90. 10.1038/nature12160 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Rajan K, Harvey CD, Tank DW: Recurrent Network Models of Sequence Generation and Memory. Neuron. 2016;90(1):128–42. 10.1016/j.neuron.2016.02.009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Grossberg S: Some nonlinear networks capable of learning a spatial pattern of arbitrary complexity. Proc Natl Acad Sci U S A. 1968;59(2):368–72. 10.1073/pnas.59.2.368 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Hopfield JJ: Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci U S A. 1982;79(8):2554–8. 10.1073/pnas.79.8.2554 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Cohen MA, Grossberg S: Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans Syst Man Cybern. 1983;SMC-13(5):815–26. 10.1109/TSMC.1983.6313075 [DOI] [Google Scholar]

- 14. Hopfield JJ: Neurons with graded response have collective computational properties like those of two-state neurons. Proc Natl Acad Sci U S A. 1984;81(10):3088–92. 10.1073/pnas.81.10.3088 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Treves A: Graded-response neurons and information encodings in autoassociative memories. Phys Rev A. 1990;42(4):2418–30. 10.1103/PhysRevA.42.2418 [DOI] [PubMed] [Google Scholar]

- 16. Battaglia FP, Treves A: Stable and rapid recurrent processing in realistic autoassociative memories. Neural Comput. 1998;10(2):431–50. 10.1162/089976698300017827 [DOI] [PubMed] [Google Scholar]

- 17. Tsodyks MV, Skaggs WE, Sejnowski TJ, et al. : Paradoxical effects of external modulation of inhibitory interneurons. J Neurosci. 1997;17(11):4382–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Ahmadian Y, Rubin DB, Miller KD: Analysis of the stabilized supralinear network. Neural Comput. 2013;25(8):1994–2037. 10.1162/NECO_a_00472 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Rubin DB, Van Hooser SD, Miller KD: The stabilized supralinear network: a unifying circuit motif underlying multi-input integration in sensory cortex. Neuron. 2015;85(2):402–17. 10.1016/j.neuron.2014.12.026 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Miller P, Katz DB: Stochastic transitions between neural states in taste processing and decision-making. J Neurosci. 2010;30(7):2559–70. 10.1523/JNEUROSCI.3047-09.2010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Miller P: Stimulus number, duration and intensity encoding in randomly connected attractor networks with synaptic depression. Front Comput Neurosci. 2013;7:59. 10.3389/fncom.2013.00059 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Fitzhugh R: Impulses and Physiological States in Theoretical Models of Nerve Membrane. Biophys J. 1961;1(6):445–66. 10.1016/S0006-3495(61)86902-6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Nagumo J, Arimoto S, Yoshizawa S: An Active Pulse Transmission Line Simulating Nerve Axon. Proc IRE. 1962;50(10):2061–70. 10.1109/JRPROC.1962.288235 [DOI] [Google Scholar]

- 24. Kaneko K, Tsuda I: Chaotic itinerancy. Chaos. 2003;13(3):926–36. 10.1063/1.1607783 [DOI] [PubMed] [Google Scholar]

- 25. Tsuda I: Chaotic itinerancy and its roles in cognitive neurodynamics. Curr Opin Neurobiol. 2015;31:67–71. 10.1016/j.conb.2014.08.011 [DOI] [PubMed] [Google Scholar]

- 26. Moreno-Bote R, Knill DC, Pouget A: Bayesian sampling in visual perception. Proc Natl Acad Sci U S A. 2011;108(30):12491–6. 10.1073/pnas.1101430108 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Miller P, Katz DB: Accuracy and response-time distributions for decision-making: linear perfect integrators versus nonlinear attractor-based neural circuits. J Comput Neurosci. 2013;35(3):261–94. 10.1007/s10827-013-0452-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Poston T, Stewart I: Nonlinear modeling of multistable perception. Behav Sci. 1978;23(5):318–34. 10.1002/bs.3830230403 [DOI] [PubMed] [Google Scholar]

- 29. Gorea A, Lorenceau J: Perceptual bistability with counterphase gratings. Vision Res. 1984;24(10):1321–31. 10.1016/0042-6989(84)90187-1 [DOI] [PubMed] [Google Scholar]

- 30. Pressnitzer D, Hupé J: Temporal dynamics of auditory and visual bistability reveal common principles of perceptual organization. Curr Biol. 2006;16(13):1351–7. 10.1016/j.cub.2006.05.054 [DOI] [PubMed] [Google Scholar]

- 31. Hupé J, Joffo L, Pressnitzer D: Bistability for audiovisual stimuli: Perceptual decision is modality specific. J Vis. 2008;8(7):1.1–15. 10.1167/8.7.1 [DOI] [PubMed] [Google Scholar]

- 32. Toppino TC, Long GM: Time for a change: what dominance durations reveal about adaptation effects in the perception of a bi-stable reversible figure. Atten Percept Psychophys. 2015;77(3):867–82. 10.3758/s13414-014-0809-x [DOI] [PubMed] [Google Scholar]

- 33. Marr D: Vision: A Computational Investigation into the Human Representation and Processing of Visual Information.Cambridge, MA, USA: MIT Press,2010. Reference Source [Google Scholar]

- 34. Dayan P: A hierarchical model of binocular rivalry. Neural Comput. 1998;10(5):1119–35. 10.1162/089976698300017377 [DOI] [PubMed] [Google Scholar]

- 35. Deco G, Martí D: Deterministic analysis of stochastic bifurcations in multi-stable neurodynamical systems. Biol Cybern. 2007;96(5):487–96. 10.1007/s00422-007-0144-6 [DOI] [PubMed] [Google Scholar]

- 36. Moreno-Bote R, Rinzel J, Rubin N: Noise-induced alternations in an attractor network model of perceptual bistability. J Neurophysiol. 2007;98(3):1125–39. 10.1152/jn.00116.2007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. Seidemann E, Meilijson I, Abeles M, et al. : Simultaneously recorded single units in the frontal cortex go through sequences of discrete and stable states in monkeys performing a delayed localization task. J Neurosci. 1996;16(2):752–68. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38. Gat I, Tishby N, Abeles M: Hidden Markov modelling of simultaneously recorded cells in the associative cortex of behaving monkeys. Network-Comp Neural Sys. 1997;8(3):297–322. 10.1088/0954-898X_8_3_005 [DOI] [Google Scholar]

- 39. Jones LM, Fontanini A, Sadacca BF, et al. : Natural stimuli evoke dynamic sequences of states in sensory cortical ensembles. Proc Natl Acad Sci U S A. 2007;104(47):18772–7. 10.1073/pnas.0705546104 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40. Abeles M, Bergman H, Gat I, et al. : Cortical activity flips among quasi-stationary states. Proc Natl Acad Sci U S A. 1995;92(19):8616–20. 10.1073/pnas.92.19.8616 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Latimer KW, Yates JL, Meister ML, et al. : NEURONAL MODELING. Single-trial spike trains in parietal cortex reveal discrete steps during decision-making. Science. 2015;349(6244):184–7. 10.1126/science.aaa4056 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Sadacca BF, Mukherjee N, Vladusich T, et al. : The Behavioral Relevance of Cortical Neural Ensemble Responses Emerges Suddenly. J Neurosci. 2016;36(3):655–69. 10.1523/JNEUROSCI.2265-15.2016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Miyashita Y: Neuronal correlate of visual associative long-term memory in the primate temporal cortex. Nature. 1988;335(6193):817–20. 10.1038/335817a0 [DOI] [PubMed] [Google Scholar]

- 44. Warden MR, Miller EK: Task-dependent changes in short-term memory in the prefrontal cortex. J Neurosci. 2010;30(47):15801–10. 10.1523/JNEUROSCI.1569-10.2010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45. Brunel N: Persistent activity and the single-cell frequency-current curve in a cortical network model. Network. 2000;11(4):261–80. 10.1088/0954-898X_11_4_302 [DOI] [PubMed] [Google Scholar]

- 46. Wang XJ: Synaptic basis of cortical persistent activity: the importance of NMDA receptors to working memory. J Neurosci. 1999;19(21):9587–603. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. Brunel N, Wang XJ: Effects of neuromodulation in a cortical network model of object working memory dominated by recurrent inhibition. J Comput Neurosci. 2001;11(1):63–85. 10.1023/A:1011204814320 [DOI] [PubMed] [Google Scholar]

- 48. Mongillo G, Barak O, Tsodyks M: Synaptic theory of working memory. Science. 2008;319(5869):1543–6. 10.1126/science.1150769 [DOI] [PubMed] [Google Scholar]

- 49. Amit DJ, Treves A: Associative memory neural network with low temporal spiking rates. Proc Natl Acad Sci U S A. 1989;86(20):7871–5. 10.1073/pnas.86.20.7871 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50. Golomb D, Rubin N, Sompolinsky H: Willshaw model: Associative memory with sparse coding and low firing rates. Phys Rev A. 1990;41(4):1843–54. 10.1103/PhysRevA.41.1843 [DOI] [PubMed] [Google Scholar]

- 51. Latham PE, Nirenberg S: Computing and stability in cortical networks. Neural Comput. 2004;16(7):1385–412. 10.1162/089976604323057434 [DOI] [PubMed] [Google Scholar]

- 52. Ben-Yishai R, Bar-Or RL, Sompolinsky H: Theory of orientation tuning in visual cortex. Proc Natl Acad Sci U S A. 1995;92(9):3844–8. 10.1073/pnas.92.9.3844 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53. Zhang K: Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: a theory. J Neurosci. 1996;16(6):2112–26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54. Compte A, Brunel N, Goldman-Rakic PS, et al. : Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cereb Cortex. 2000;10(9):910–23. 10.1093/cercor/10.9.910 [DOI] [PubMed] [Google Scholar]

- 55. Song P, Wang XJ: Angular path integration by moving "hill of activity": a spiking neuron model without recurrent excitation of the head-direction system. J Neurosci. 2005;25(4):1002–14. 10.1523/JNEUROSCI.4172-04.2005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56. Machens CK, Brody CD: Design of continuous attractor networks with monotonic tuning using a symmetry principle. Neural Comput. 2008;20(2):452–85. 10.1162/neco.2007.07-06-297 [DOI] [PubMed] [Google Scholar]

- 57. Seung HS: How the brain keeps the eyes still. Proc Natl Acad Sci U S A. 1996;93(23):13339–44. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58. Seung HS, Lee DD, Reis BY, et al. : The autapse: a simple illustration of short-term analog memory storage by tuned synaptic feedback. J Comput Neurosci. 2000;9(2):171–85. 10.1023/A:1008971908649 [DOI] [PubMed] [Google Scholar]

- 59. Seung HS, Lee DD, Reis BY, et al. : Stability of the memory of eye position in a recurrent network of conductance-based model neurons. Neuron. 2000;26(1):259–71. 10.1016/S0896-6273(00)81155-1 [DOI] [PubMed] [Google Scholar]

- 60. Miller P, Brody CD, Romo R, et al. : A recurrent network model of somatosensory parametric working memory in the prefrontal cortex. Cereb Cortex. 2003;13(11):1208–18. 10.1093/cercor/bhg101 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61. Hopfield JJ: Understanding Emergent Dynamics: Using a Collective Activity Coordinate of a Neural Network to Recognize Time-Varying Patterns. Neural Comput. 2015;27(10):2011–38. 10.1162/NECO_a_00768 [DOI] [PubMed] [Google Scholar]

- 62. Conklin J, Eliasmith C: A controlled attractor network model of path integration in the rat. J Comput Neurosci. 2005;18(2):183–203. 10.1007/s10827-005-6558-z [DOI] [PubMed] [Google Scholar]

- 63. Deneve S, Latham PE, Pouget A: Efficient computation and cue integration with noisy population codes. Nat Neurosci. 2001;4(8):826–31. 10.1038/90541 [DOI] [PubMed] [Google Scholar]

- 64. Latham PE, Deneve S, Pouget A: Optimal computation with attractor networks. J Physiol Paris. 2003;97(4–6):683–94. 10.1016/j.jphysparis.2004.01.022 [DOI] [PubMed] [Google Scholar]

- 65. Koulakov AA, Raghavachari S, Kepecs A, et al. : Model for a robust neural integrator. Nat Neurosci. 2002;5(8):775–82. 10.1038/nn893 [DOI] [PubMed] [Google Scholar]

- 66. Miller P, Wang X: Power-law neuronal fluctuations in a recurrent network model of parametric working memory. J Neurophysiol. 2006;95(2):1099–114. 10.1152/jn.00491.2005 [DOI] [PubMed] [Google Scholar]

- 67. Romo R, Brody CD, Hernández A, et al. : Neuronal correlates of parametric working memory in the prefrontal cortex. Nature. 1999;399(6735):470–3. 10.1038/20939 [DOI] [PubMed] [Google Scholar]

- 68. Aksay E, Baker R, Seung HS, et al. : Anatomy and discharge properties of pre-motor neurons in the goldfish medulla that have eye-position signals during fixations. J Neurophysiol. 2000;84(2):1035–49. [DOI] [PubMed] [Google Scholar]

- 69. Huk AC, Shadlen MN: Neural activity in macaque parietal cortex reflects temporal integration of visual motion signals during perceptual decision making. J Neurosci. 2005;25(45):10420–36. 10.1523/JNEUROSCI.4684-04.2005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70. Buzsáki G, Draguhn A: Neuronal oscillations in cortical networks. Science. 2004;304(5679):1926–9. 10.1126/science.1099745 [DOI] [PubMed] [Google Scholar]

- 71. Buzsáki G: Rhythms of the Brain.New York, NY: Oxford University Press.2006. 10.1093/acprof:oso/9780195301069.001.0001 [DOI] [Google Scholar]

- 72. Berger H: Über das Elektrenkephalogramm des Menschen. Archiv f Psychiatrie. 1929;87(1):527–70. 10.1007/BF01797193 [DOI] [Google Scholar]

- 73. Buzsáki G, Wang XJ: Mechanisms of gamma oscillations. Annu Rev Neurosci. 2012;35:203–25. 10.1146/annurev-neuro-062111-150444 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74. Matzner A, Bar-Gad I: Quantifying spike train oscillations: biases, distortions and solutions. PLoS Comput Biol. 2015;11(4):e1004252. 10.1371/journal.pcbi.1004252 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75. Wang XJ: Neurophysiological and computational principles of cortical rhythms in cognition. Physiol Rev. 2010;90(3):1195–268. 10.1152/physrev.00035.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76. Freeman WJ: Mechanism and significance of global coherence in scalp EEG. Curr Opin Neurobiol. 2015;31:199–205. 10.1016/j.conb.2014.11.008 [DOI] [PubMed] [Google Scholar]

- 77. Fries P, Reynolds JH, Rorie AE, et al. : Modulation of oscillatory neuronal synchronization by selective visual attention. Science. 2001;291(5508):1560–3. 10.1126/science.1055465 [DOI] [PubMed] [Google Scholar]

- 78. Fontanini A, Katz DB: 7 to 12 Hz activity in rat gustatory cortex reflects disengagement from a fluid self-administration task. J Neurophysiol. 2005;93(5):2832–40. 10.1152/jn.01035.2004 [DOI] [PubMed] [Google Scholar]

- 79. Raghavachari S, Kahana MJ, Rizzuto DS, et al. : Gating of human theta oscillations by a working memory task. J Neurosci. 2001;21(9):3175–83. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80. Crunelli V, Hughes SW: The slow (<1 Hz) rhythm of non-REM sleep: a dialogue between three cardinal oscillators. Nat Neurosci. 2010;13(1):9–17. 10.1038/nn.2445 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81. Buzsáki G: Hippocampal sharp wave-ripple: A cognitive biomarker for episodic memory and planning. Hippocampus. 2015;25(10):1073–188. 10.1002/hipo.22488 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82. Cirelli C, Tononi G: Cortical development, electroencephalogram rhythms, and the sleep/wake cycle. Biol Psychiatry. 2015;77(12):1071–8. 10.1016/j.biopsych.2014.12.017 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83. Marder E, Calabrese RL: Principles of rhythmic motor pattern generation. Physiol Rev. 1996;76(3):687–717. [DOI] [PubMed] [Google Scholar]

- 84. Jensen O, Lisman JE: Position reconstruction from an ensemble of hippocampal place cells: contribution of theta phase coding. J Neurophysiol. 2000;83(5):2602–9. [DOI] [PubMed] [Google Scholar]

- 85. Lisman J: The theta/gamma discrete phase code occuring during the hippocampal phase precession may be a more general brain coding scheme. Hippocampus. 2005;15(7):913–22. 10.1002/hipo.20121

- 86. Lisman JE, Jensen O: The θ-γ neural code. Neuron. 2013;77(6):1002–16. 10.1016/j.neuron.2013.03.007

- 87. Chadwick A, van Rossum MC, Nolan MF: Independent theta phase coding accounts for CA1 population sequences and enables flexible remapping. eLife. 2015;4:e03542. 10.7554/eLife.03542

- 88. Sompolinsky H, Crisanti A, Sommers HJ: Chaos in random neural networks. Phys Rev Lett. 1988;61(3):259–62. 10.1103/PhysRevLett.61.259

- 89. van Vreeswijk C, Sompolinsky H: Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science. 1996;274(5293):1724–6. 10.1126/science.274.5293.1724

- 90. van Vreeswijk C, Sompolinsky H: Chaotic balanced state in a model of cortical circuits. Neural Comput. 1998;10(6):1321–71. 10.1162/089976698300017214

- 91. Buonomano DV, Mauk MD: Neural Network Model of the Cerebellum: Temporal Discrimination and the Timing of Motor Responses. Neural Comput. 1994;6(1):38–55. 10.1162/neco.1994.6.1.38

- 92. Buonomano DV, Merzenich MM: Temporal information transformed into a spatial code by a neural network with realistic properties. Science. 1995;267(5200):1028–30. 10.1126/science.7863330

- 93. Bertschinger N, Natschläger T: Real-time computation at the edge of chaos in recurrent neural networks. Neural Comput. 2004;16(7):1413–36. 10.1162/089976604323057443

- 94. Rajan K, Abbott LF, Sompolinsky H: Stimulus-dependent suppression of chaos in recurrent neural networks. Phys Rev E Stat Nonlin Soft Matter Phys. 2010;82(1 Pt 1):11903. 10.1103/PhysRevE.82.011903

- 95. Maass W, Natschläger T, Markram H: Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 2002;14(11):2531–60. 10.1162/089976602760407955

- 96. Churchland MM, Yu BM, Cunningham JP, et al.: Stimulus onset quenches neural variability: a widespread cortical phenomenon. Nat Neurosci. 2010;13(3):369–78. 10.1038/nn.2501

- 97. Yang XS, Huang Y: Complex dynamics in simple Hopfield neural networks. Chaos. 2006;16(3):33114. 10.1063/1.2220476

- 98. Stern M, Sompolinsky H, Abbott LF: Dynamics of random neural networks with bistable units. Phys Rev E Stat Nonlin Soft Matter Phys. 2014;90(6):62710. 10.1103/PhysRevE.90.062710

- 99. Varona P, Rabinovich MI, Selverston AI, et al.: Winnerless competition between sensory neurons generates chaos: A possible mechanism for molluscan hunting behavior. Chaos. 2002;12(3):672–7. 10.1063/1.1498155

- 100. Horchler AD, Daltorio KA, Chiel HJ, et al.: Designing responsive pattern generators: stable heteroclinic channel cycles for modeling and control. Bioinspir Biomim. 2015;10(2):26001. 10.1088/1748-3190/10/2/026001

- 101. Afraimovich VS, Zhigulin VP, Rabinovich MI: On the origin of reproducible sequential activity in neural circuits. Chaos. 2004;14(4):1123–9. 10.1063/1.1819625

- 102. Bick C, Rabinovich MI: Dynamical origin of the effective storage capacity in the brain's working memory. Phys Rev Lett. 2009;103(21):218101. 10.1103/PhysRevLett.103.218101

- 103. Rabinovich MI, Huerta R, Afraimovich V: Dynamics of sequential decision making. Phys Rev Lett. 2006;97(18):188103. 10.1103/PhysRevLett.97.188103

- 104. Rabinovich MI, Huerta R, Varona P, et al.: Transient cognitive dynamics, metastability, and decision making. PLoS Comput Biol. 2008;4(5):e1000072. 10.1371/journal.pcbi.1000072

- 105. Huerta R, Rabinovich M: Reproducible sequence generation in random neural ensembles. Phys Rev Lett. 2004;93(23):238104. 10.1103/PhysRevLett.93.238104

- 106. Beggs JM, Plenz D: Neuronal avalanches in neocortical circuits. J Neurosci. 2003;23(35):11167–77.

- 107. Friedman N, Ito S, Brinkman BA, et al.: Universal critical dynamics in high resolution neuronal avalanche data. Phys Rev Lett. 2012;108(20):208102. 10.1103/PhysRevLett.108.208102

- 108. Tkačik G, Mora T, Marre O, et al.: Thermodynamics and signatures of criticality in a network of neurons. Proc Natl Acad Sci U S A. 2015;112(37):11508–13. 10.1073/pnas.1514188112

- 109. Beggs JM: The criticality hypothesis: how local cortical networks might optimize information processing. Philos Trans A Math Phys Eng Sci. 2008;366(1864):329–43. 10.1098/rsta.2007.2092

- 110. Deco G, Jirsa VK: Ongoing cortical activity at rest: criticality, multistability, and ghost attractors. J Neurosci. 2012;32(10):3366–75. 10.1523/JNEUROSCI.2523-11.2012

- 111. Kadmon J, Sompolinsky H: Transition to Chaos in Random Neuronal Networks. Phys Rev X. 2015;5:041030. 10.1103/physrevx.5.041030

- 112. Wang SJ, Hilgetag CC, Zhou C: Sustained activity in hierarchical modular neural networks: self-organized criticality and oscillations. Front Comput Neurosci. 2011;5:30. 10.3389/fncom.2011.00030

- 113. Friedman EJ, Landsberg AS: Hierarchical networks, power laws, and neuronal avalanches. Chaos. 2013;23(1):13135. 10.1063/1.4793782

- 114. Russo R, Herrmann HJ, de Arcangelis L: Brain modularity controls the critical behavior of spontaneous activity. Sci Rep. 2014;4:4312. 10.1038/srep04312

- 115. Tagliazucchi E, Balenzuela P, Fraiman D, et al.: Criticality in large-scale brain FMRI dynamics unveiled by a novel point process analysis. Front Physiol. 2012;3:15. 10.3389/fphys.2012.00015

- 116. Beggs JM, Timme N: Being critical of criticality in the brain. Front Physiol. 2012;3:163. 10.3389/fphys.2012.00163
