Abstract

Adaptive behaviour entails the capacity to select actions as a function of their energy cost and expected value and the disruption of this faculty is now viewed as a possible cause of the symptoms of Parkinson’s disease. Indirect evidence points to the involvement of the subthalamic nucleus—the most common target for deep brain stimulation in Parkinson’s disease—in cost-benefit computation. However, this putative function appears at odds with the current view that the subthalamic nucleus is important for adjusting behaviour to conflict. Here we tested these contrasting hypotheses by recording the neuronal activity of the subthalamic nucleus of patients with Parkinson’s disease during an effort-based decision task. Local field potentials were recorded from the subthalamic nucleus of 12 patients with advanced Parkinson’s disease (mean age 63.8 years ± 6.8; mean disease duration 9.4 years ± 2.5) both OFF and ON levodopa while they had to decide whether to engage in an effort task based on the level of effort required and the value of the reward promised in return. The data were analysed using generalized linear mixed models and cluster-based permutation methods. Behaviourally, the probability of trial acceptance increased with the reward value and decreased with the required effort level. Dopamine replacement therapy increased the rate of acceptance for efforts associated with low rewards. When recording the subthalamic nucleus activity, we found a clear neural response to both reward and effort cues in the 1–10 Hz range. In addition these responses were informative of the subjective value of reward and level of effort rather than their actual quantities, such that they were predictive of the participant’s decisions. OFF levodopa, this link with acceptance was weakened. Finally, we found that these responses did not index conflict, as they did not vary as a function of the distance from indifference in the acceptance decision. 
These findings show that low-frequency neuronal activity in the subthalamic nucleus may encode the information required to make cost-benefit comparisons, rather than signal conflict. The link between these neural responses and behaviour was stronger under dopamine replacement therapy. Our findings are consistent with the view that Parkinson’s disease symptoms may be caused by a disruption of the processes involved in balancing the value of actions with their associated effort cost.

Keywords: Parkinson’s disease, subthalamic nucleus, reward, effort, decision-making

Introduction

The subthalamic nucleus (STN) occupies a unique position within the basal ganglia, standing at the crossroad between the indirect and hyperdirect pathways (Nambu, 2004; Mathai and Smith, 2011), and has received much attention lately given the remarkable efficacy of deep brain stimulation (DBS) of the STN in the treatment of Parkinson’s disease symptoms (Krack et al., 2003). Computational models have proposed that the STN acts by increasing the decision threshold during situations in which several choices are possible, slowing down responses, and thereby preventing impulsive decisions (Frank, 2006; Bogacz, 2007). A large body of evidence supports these models of STN function, by demonstrating correlates of conflict in the theta and delta bands of neuronal activity within the STN (Cavanagh et al., 2011; Zavala et al., 2013) that are coherent with cortical activity at the same frequency (Zavala et al., 2014), and by showing that STN stimulation leads to impaired conflict resolution and impulsive responses (Frank et al., 2007; Cavanagh et al., 2011; Green et al., 2013). However, these interpretations are mostly based on the classical view that STN DBS changes behaviour by inhibiting the STN, and are thus challenged by the suggestion that DBS may actually increase STN output (McIntyre and Hahn, 2010).

In parallel to its putative role in conflict resolution, another body of evidence points to the STN being involved in motivational behaviours and reward-related processes. Correlative evidence comes from electrophysiological recordings made in the non-human primate and rodent which have shown that STN neurons respond to reward presentation or to the expectation of reward and do so differently depending on the type or size of the reward (Darbaky et al., 2005; Lardeux et al., 2009, 2013; Espinosa-Parrilla et al., 2013). Causal evidence comes from studies where either lesioning or inactivating the STN through high frequency stimulation reduces addictive behaviours. In animals, disrupting the STN impacts on the motivation for rewards such as food or cocaine (Baunez et al., 2002, 2005; Uslaner et al., 2005; Rouaud et al., 2010; Serranová et al., 2011) and increases approach behaviour towards reward cues (Uslaner et al., 2008). Taken together these findings suggest that the STN may be crucial in regulating the incentive motivation associated with reward, i.e. how much effort one is willing to invest for a given reward (Baunez and Lardeux, 2011). In humans, indirect evidence points to the involvement of the STN in reward-related behaviours with studies showing a reduction of impulse control disorders and dopamine dysregulation syndrome following STN DBS (Lhommée et al., 2012; Eusebio et al., 2013) or indicating that the STN is involved in the evaluation of behaviourally relevant stimuli (Sauleau et al., 2009). Overall there is little evidence that human STN neurons may actually respond to reward presentation/expectation.

Here, to disentangle the motivational from the conflict-adaptation accounts of STN function, we recorded local field potentials (LFPs) in the STN of patients with Parkinson’s disease, both ON and OFF dopamine replacement therapy, while they were asked to perform an effort-based decision-making task. Patients were asked to decide whether to accept each trial based on the level of reward indicated in the first cue and the force required signalled in the second cue (Fig. 1A). If patients accepted the trial they pressed a dynamometer with the required force. We then compared directly the STN response to the subjective value of the reward and effort cues and the response to conflict, which was maximal when the subjective reward value equalled the subjective effort cost.

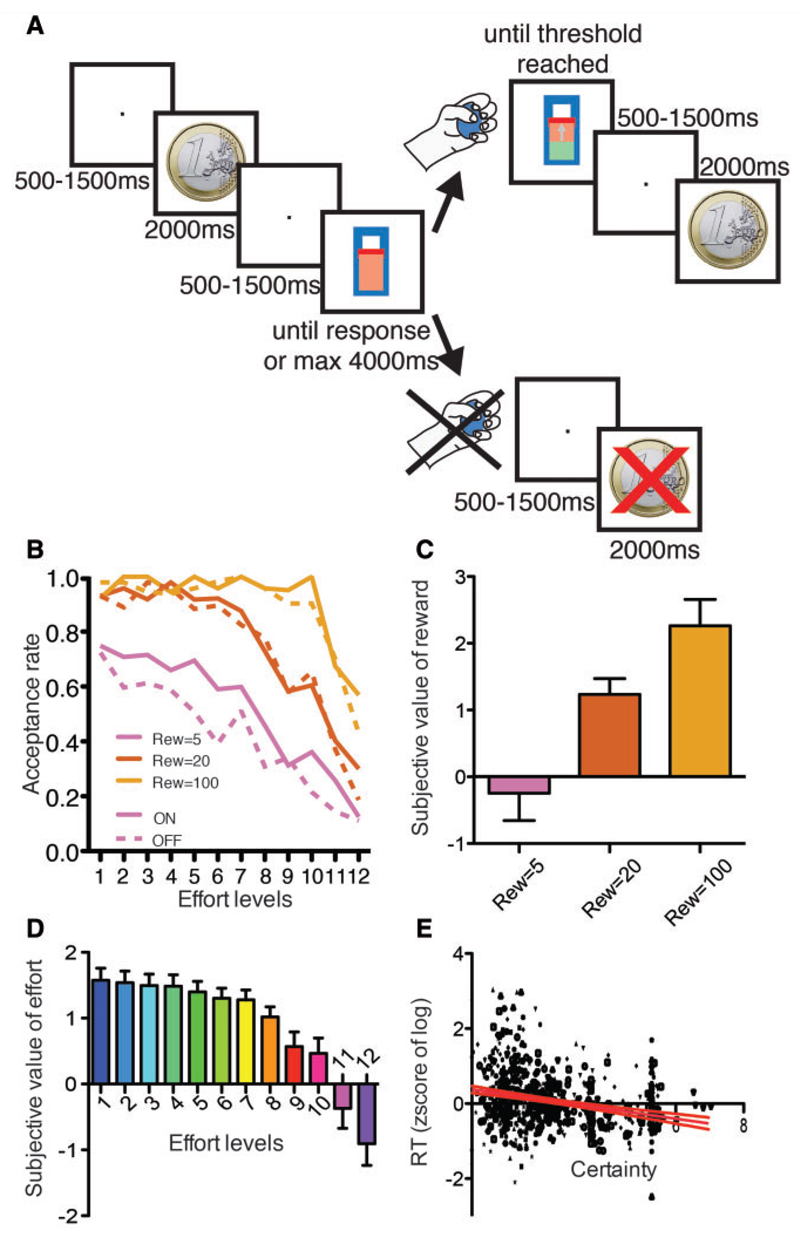

Figure 1. Behavioural task and performance.

(A) Schematic depiction of the task. (B) The proportion of accepted trials is shown as a function of the effort required (x-axis), the reward condition (pink: 5 c, red: 20 c, orange: €1) and the treatment condition (ON: solid; OFF: dashed). (C) Subjective value of reward as a function of the objective reward amount. Error bars indicate the standard error of the mean. (D) Subjective cost of effort as a function of the effort level. (E) The reaction time is plotted as a function of certainty. Each dot corresponds to an accepted trial, and each shape is specific to a particular subject. The red lines indicate the 95% confidence interval around the line of regression.

Materials and methods

Patients and surgery

Fourteen patients participated with informed written consent and the permission of the local ethics committee, and in compliance with national legislation and the Declaration of Helsinki. Two of these patients were not included in the final dataset because they failed to understand the task and their responses during the experiment were random. All had advanced idiopathic Parkinson’s disease (11 males, mean age 63.8 years ± 6.8; mean disease duration 9.4 years ± 2.5). The clinical details of all patients are summarized in Table 1. None of the patients had impulse control disorders or dopamine dysregulation syndrome as defined previously (Krack et al., 2003; Eusebio et al., 2013). Bilateral electrode implantation in the STN was performed as previously described (Fluchère et al., 2014). The DBS lead used was model 3389 (Medtronic Neurological Division) with four platinum-iridium cylindrical surfaces (1.27 mm diameter and 1.5 mm length) and a centre-to-centre separation of 2 mm. Contact 0 was the most caudal and contact 3 was the most rostral. The DBS lead was connected to an externalized extension (Medtronic Neurological Division) used for the LFP recordings (see below). The implantation of the pulse generator Activa PC® (Medtronic Neurological Division) was performed a few days later. Proper placement of the DBS lead in the region of the STN was supported by the following tests: (i) intra-operative micro-recordings (three to five electrodes on each side); (ii) intra-operative macro-stimulation for the detection of capsular spread; (iii) significant improvement in the Unified Parkinson’s Disease Rating Scale (UPDRS) motor score during chronic DBS OFF medication (11.3 ± 8.3), obtained 6 months after surgery, compared with UPDRS OFF medication with stimulator switched off (29.3 ± 9.4; P < 0.0001, paired t-test); and (iv) visual analysis of the fusion of preoperative magnetic resonance scan and postoperative CT scan (see Supplementary material and Supplementary Fig. 1).

Table 1. Clinical details of the patients.

| Case | Age (years)/sex | Disease duration (years) | Preoperative motor UPDRS (OFF/ON drugs) | Preoperative medication (LEDD, mg) |
|---|---|---|---|---|
| 1 | 54/M | 13 | 15/1 | 900 |
| 2 | 68/M | 6 | 34/9 | 750 |
| 3 | 65/F | 10 | 39/2 | 1500 |
| 4 | 63/M | 10 | 46/15 | 1150 |
| 5 | 67/M | 12 | 31/8 | 800 |
| 6 | 68/M | 12 | 19/6 | 1400 |
| 7 | 47/M | 7 | 21/0 | 1050 |
| 8 | 62/M | 5 | 41/17 | 925 |
| 9 | 70/M | 11 | 35/10 | 1000 |
| 10 | 71/F | 11 | 21/5 | 850 |
| 11 | 63/F | 6 | 33/1 | 1000 |
| 12 | 69/M | 10 | 23/5 | 600 |

LEDD = levodopa equivalent daily dose.

Recordings

Setting

Recordings were carried out in two separate sessions: one after overnight withdrawal of antiparkinsonian medication (hereafter referred to as the OFF-DOPA condition) and one after administration of the patient’s morning levodopa-equivalent dose of medication (ON-DOPA condition). The order of the sessions was balanced, with six patients starting with the OFF-DOPA session. UPDRS motor scoring was performed in both OFF- and ON-DOPA conditions to ensure that the medication induced a correct ON-DOPA state (26.9 ± 10.0 versus 7.6 ± 4.7; P < 0.0001, paired t-test). This also suggested that stun effects were minimal at the time of recording (compared with the UPDRS score OFF medication and off stimulation 6 months postoperatively of 29.3 ± 9.4; P = 0.43, paired t-test).

Patients were comfortably seated at a 70–80 cm distance from a 19-inch CRT monitor refreshed at 100 Hz, on which a sequence of cues was displayed (Fig. 1A). A photodiode was attached to the upper left corner of the screen and was connected to the amplifier so as to synchronize the display with the recording of the electrophysiological signals. An Eyelink 1000 camera system (SR Research) was positioned under the screen and was aimed at the patient’s dominant eye. A target sticker was placed on the patient’s forehead and was used by the Eyelink to correct for head movements while keeping track of the pupil position. A pressure bulb was strapped to the right hand of the patients. When a power grip force was exerted on the bulb, air pressure increased. These pressure changes were measured by means of a pressure transducer connected to the bulb through closed tubing. The pressure signal was then recorded by means of a 16-bit analogue-to-digital data acquisition device (National Instruments).

Local field potentials

STN LFPs were recorded from both hemispheres through the DBS electrodes in the few days that followed implantation prior to connection to the subcutaneous stimulator (mean 5.6 days, range 3–6). In addition, EMG activity was recorded with surface electrodes placed over the first dorsal interossei muscles in a belly-tendon derivation. All signals were amplified, filtered (band-pass filter: 0.25–300 Hz; notch filter: 50 Hz), and sampled at 2048 Hz using a Porti®32 amplification system (TMS International) and a custom-written software (developed by Alek Pogosyan).

Task

The patients were instructed to squeeze a dynamometer with variable force and with a promise of a variable virtual monetary reward. The task started with a 500–1500 ms display of a fixation cross over a 35% grey background (Fig. 1A). Fixation was followed by the display of the reward cue, consisting of the representation of a 4.5°-wide coin informing the patient of the reward they would receive if the task was completed successfully (three levels of reward: 5 c, 20 c, €1). The second cue indicated the level of force needed for the forthcoming task (12 levels representing the integral of handgrip force over time, see below) and was represented as a vertical gauge (2 × 8.75° for a 70-cm viewing distance) with a horizontal bar indicating the level required, hereafter called the threshold. When the second cue appeared, which was also indicated by a concurrent beep sound, the patients had to decide whether they accepted the trial. If they accepted, they simply had to start pressing the dynamometer, after which the level in the gauge rose at a speed proportional to the force applied to the dynamometer. The speed was computed so that filling the gauge completely required exerting a force equal to maximal voluntary contraction (MVC) for 7.6 s. Therefore, when the threshold was midlevel, the patient could reach it either by exerting a force corresponding to 50% of their MVC for 7.6 s or, for instance, by exerting a force at 80% of MVC for 4.75 s. The 12 levels used in the task were equivalent to 0.5, 0.725, 0.95, 1.0, 1.45, 1.9, 2.0, 2.9, 3.8, 4.0, 5.8, and 7.6 s at MVC, although as noted above, subjects were free to vary how these effort levels were achieved by trading duration for force. After the level indicated on the gauge reached the threshold, there was a 500–1500 ms fixation screen, followed by the reward feedback, accompanied by a bell sound.
The reward feedback consisted of the same coin as the one presented during the reward cue display, with the sentence ‘You win:’ displayed on top, and was shown for 2000 ms. Any squeezing of the bulb before the effort cue onset stopped the trial immediately, with an orange screen indicating to the patient that they had squeezed the dynamometer too early. When the patients wished to refuse the proposed trial, they had to withhold their response for 4 s, after which a display of the reward amount they had refused was shown crossed in red on the screen for 2000 ms. Intercue intervals were randomized so as to limit the expectancy of the upcoming cue and any related preparation. The probability of the 36 conditions (3 reward × 12 force) was equal and they appeared in a randomized order. Due to time constraints, only the dominant hand performed the hand grip task.
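
The effort levels described above are integrals of force over time, so the same threshold can be reached with different combinations of force and duration. The following minimal sketch illustrates that arithmetic under the assumption of a constant grip force. The function and constant names are hypothetical and are not taken from the task software.

```python
# Illustrative sketch only: names are hypothetical, values come from the task description.
FULL_GAUGE_S = 7.6  # seconds at 100% MVC needed to fill the whole gauge

# The 12 effort levels, expressed as seconds at MVC.
EFFORT_LEVELS_S = [0.5, 0.725, 0.95, 1.0, 1.45, 1.9, 2.0, 2.9, 3.8, 4.0, 5.8, 7.6]


def gauge_height(level_s: float) -> float:
    """Threshold position as a fraction of the full gauge."""
    return level_s / FULL_GAUGE_S


def time_to_threshold(level_s: float, force_fraction: float) -> float:
    """Seconds needed to reach a threshold when holding a constant force.

    level_s: required effort in 'seconds at MVC' (integral of force over time).
    force_fraction: constant force expressed as a fraction of MVC (must be > 0).
    """
    if force_fraction <= 0:
        raise ValueError("force_fraction must be positive")
    return level_s / force_fraction


def accumulated_effort(force_trace, dt: float) -> float:
    """Integrate a sampled force trace (in fractions of MVC) over time."""
    return sum(f * dt for f in force_trace)
```

For the midlevel threshold (3.8 s at MVC), `time_to_threshold(3.8, 0.5)` gives 7.6 s and `time_to_threshold(3.8, 0.8)` gives 4.75 s, matching the two examples in the text.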

Procedure

After the patients were installed, the electrodes fixed, the Eyelink system calibrated, and a 3-min rest recording of the electrophysiological activity acquired, the patients first had to perform three maximal voluntary contractions by squeezing the pressure bulb as hard as possible. Vocal encouragements were systematically provided during this exercise. Patients were then trained on the task. They performed a slowed-down version of the task in which a break was added between each phase (reward cue/effort cue/choice/effort exertion/reward feedback), allowing the experimenter to explain the meaning of each phase and the responses that were expected from them. Then, each patient performed four 8-min blocks of the task, both ON and OFF dopamine replacement therapy, with a total of 64 min on task. Each block included an average of 37.46 ± 8.58 trials [mean ± standard deviation (SD)]. Before each block, three maximal voluntary contractions were performed and used to adjust the efforts required during the following block.

In addition, when time allowed, six patients also underwent the control version of the task, in which they had to provide their response orally, while the experimenter squeezed the dynamometer, every other aspect of the task remaining the same. The number of 8-min blocks of the control task varied between two and four in each treatment condition depending on time and fatigue constraints.

Analysis

Behavioural analyses

All the behavioural analyses consisted of Generalized Linear Mixed Models (GLMMs), performed with SAS Enterprise Guide software, Version 5.1. (Copyright © 2012 SAS Institute Inc., Cary, NC, USA). The model included systematically the treatment condition (ON, OFF), the reward cue value (5, 20, 100), the effort cue value (from 1 to 12) and all their interactions. Patients were included as random effects, taking account of the between-subject variations of intercepts and all fixed effect slopes. The residuals were systematically inspected to ensure their normal distribution, homoscedasticity and their lack of variation as a function of the explanatory variables.

Signal analysis

The difference between the signals acquired from each adjacent pair of DBS electrode contacts was computed, leading to three bipolar pairs for each hemisphere. Each of these bipolar signals was then aligned on the onset of the reward and the effort cues. They were filtered with a series of finite impulse response band-pass filters with different cut-off frequencies, Hilbert-transformed and log-transformed, leading to a set of 12 frequency bands: 0.001–2 Hz, 2–4 Hz, 4–6 Hz, 6–8 Hz, 8–11 Hz, 11–16 Hz, 16–23 Hz, 23–32 Hz, 32–45 Hz, 45–64 Hz, 64–91 Hz, 91–128 Hz (Freeman, 2004). These signals were then down-sampled to 10 Hz by taking the maximum value within each 100-ms time bin. Artefacts were removed by suppressing, within each time-frequency-dipole bin, values beyond 5 SD from the mean obtained in this particular bin over all trials.
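
The preprocessing chain described above (bipolar derivation, band-pass filtering, Hilbert envelope, log transform, down-sampling by the maximum, artefact rejection) can be sketched as follows. This is an illustration using NumPy only, not the authors' code. The windowed-sinc filter design, the number of taps, and all function names are assumptions. The signal must be longer than the filter kernel.

```python
import numpy as np

FS = 2048.0  # sampling rate in Hz, as stated in the text


def bipolar(contacts):
    """Adjacent-contact differences: (n_contacts, n_samples) -> (n_contacts - 1, n_samples)."""
    return np.diff(np.asarray(contacts, dtype=float), axis=0)


def fir_bandpass(low_hz, high_hz, fs=FS, numtaps=4097):
    """Windowed-sinc band-pass kernel (assumed design: difference of two low-pass filters)."""
    n = np.arange(numtaps) - (numtaps - 1) / 2

    def lowpass(fc):
        return 2 * fc / fs * np.sinc(2 * fc / fs * n)

    return (lowpass(high_hz) - lowpass(low_hz)) * np.hamming(numtaps)


def analytic_envelope(x):
    """Amplitude envelope obtained from an FFT-based Hilbert transform."""
    n = x.size
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * weights))


def log_band_amplitude(x, low_hz, high_hz, fs=FS):
    """Band-pass filter, take the Hilbert envelope, then log-transform."""
    filtered = np.convolve(x, fir_bandpass(low_hz, high_hz, fs), mode="same")
    return np.log(analytic_envelope(filtered) + 1e-12)  # offset avoids log(0)


def downsample_max(x, fs=FS, bin_s=0.1):
    """Down-sample to 1 / bin_s Hz by keeping the maximum within each time bin."""
    bins = np.floor(np.arange(x.size) / (fs * bin_s)).astype(int)
    return np.array([x[bins == b].max() for b in range(bins.max() + 1)])


def reject_outliers(trials, n_sd=5.0):
    """Replace by NaN the values beyond n_sd SDs from the across-trial mean of each bin.

    trials: array of shape (n_trials, n_bins) for one frequency band and one dipole.
    """
    trials = np.asarray(trials, dtype=float)
    mean, sd = trials.mean(axis=0), trials.std(axis=0)
    cleaned = trials.copy()
    cleaned[np.abs(trials - mean) > n_sd * sd] = np.nan
    return cleaned
```

Because 100 ms at 2048 Hz is not a whole number of samples, the sketch assigns each sample to a bin by its time stamp rather than reshaping the signal into fixed-size blocks.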

Subject-wise analyses of the LFP response to the cues were performed by means of cluster-based permutation tests (Maris and Oostenveld, 2007). The activity within the clusters so obtained was averaged and analysed subsequently by means of linear mixed models, performed with the SAS software. Similar to the behavioural analyses described above, the intercept and all the fixed effect slopes were systematically included in the random part of the model. We modelled the successive trials as repeated measures, with an autoregressive model to account for the error variance-covariance matrix (Littell et al., 1998).
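
To illustrate the logic of a cluster-based permutation test, the following simplified sketch works on a single time axis rather than the full time-frequency maps analysed here. It assumes baseline-corrected single-trial responses, a one-sided test for increases, and random sign flipping across trials to build the null distribution. The threshold, the number of permutations, and the function names are illustrative choices and do not reproduce the settings used in the study.

```python
import numpy as np


def _t_values(data):
    """One-sample t statistic per bin; data has shape (n_trials, n_bins)."""
    n = data.shape[0]
    return data.mean(axis=0) / (data.std(axis=0, ddof=1) / np.sqrt(n))


def _clusters(t, threshold):
    """Return (start, stop, mass) for each run of contiguous bins with t > threshold.

    `stop` is exclusive; `mass` is the sum of t values inside the cluster.
    """
    clusters, start = [], None
    for i, above in enumerate(np.append(t > threshold, False)):
        if above and start is None:
            start = i
        elif not above and start is not None:
            clusters.append((start, i, float(t[start:i].sum())))
            start = None
    return clusters


def cluster_permutation_test(data, threshold=2.0, n_perm=1000, seed=0):
    """Return (start, stop, p_value) for every observed supra-threshold cluster."""
    data = np.asarray(data, dtype=float)
    rng = np.random.default_rng(seed)
    observed = _clusters(_t_values(data), threshold)
    null_max = np.zeros(n_perm)
    for p in range(n_perm):
        # Under the null hypothesis, the sign of each trial's response is exchangeable.
        signs = rng.choice([-1.0, 1.0], size=(data.shape[0], 1))
        perm = _clusters(_t_values(data * signs), threshold)
        null_max[p] = max((mass for _, _, mass in perm), default=0.0)
    return [
        (start, stop, float((np.sum(null_max >= mass) + 1) / (n_perm + 1)))
        for start, stop, mass in observed
    ]
```

Comparing each observed cluster mass with the distribution of the largest cluster mass under permutation is what controls the family-wise error rate across bins.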

Subjective value and cost computation

The subjective value and cost of the reward and effort cues were computed on the basis of logistic regressions (Hensher et al., 2005):

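$$\hat{P} = \frac{1}{1 + e^{-(\beta_0 + \beta_{Rew}\,\mathrm{Rew} + \beta_{Eff}\,\mathrm{Eff} + \epsilon)}} \qquad (1)$$

where $\hat{P}$ represents the estimated probability of acceptance, Rew corresponds to the z-scored reward level and Eff to the z-scored effort condition, and $\beta_0$, $\beta_{Rew}$ and $\beta_{Eff}$ are the fitted regression coefficients. The subjective value of reward was computed by putting all Eff values to zero while the subjective cost of effort was obtained by fixing all Rew values to zero. $\epsilon$ represents the error term, with logistic distribution.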

The subject-by-subject estimates of subjective value were then computed for each reward level from the fitted model, with $\mathrm{Rew}_i$ referring to the value of Rew for the ith reward level. The subject-by-subject subjective cost of effort was computed similarly for each effort level, and the two were combined into an overall ‘net subjective value’ for each reward and effort combination. Finally, we computed a ‘certainty’ variable, equal to the absolute value of the net subjective value and representing the distance from indifference between accept and reject decisions.
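
As an illustration, the logistic regression of acceptance on z-scored reward and effort, and the derived net value and certainty, could be sketched as follows. The Newton-Raphson fit, the function names, and the choice to express the net value as the full linear predictor (intercept included, so that zero corresponds to a 50% acceptance probability) are assumptions made for this sketch and are not a description of the authors' implementation.

```python
import numpy as np


def zscore(x):
    """Standardize a variable to zero mean and unit standard deviation."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()


def fit_logistic(X, y, n_iter=25):
    """Maximum-likelihood logistic regression by Newton-Raphson.

    X: design matrix whose first column is the intercept (ones).
    y: binary choices (1 = accept, 0 = reject).
    """
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        w = p * (1.0 - p)
        hessian = X.T @ (X * w[:, None])
        beta = beta + np.linalg.solve(hessian, X.T @ (y - p))
    return beta


def net_value_and_certainty(beta, rew_z, eff_z):
    """Net value in log-odds units (assumed form) and certainty = |net value|."""
    net = beta[0] + beta[1] * np.asarray(rew_z) + beta[2] * np.asarray(eff_z)
    return net, np.abs(net)
```

With one subject's trials, `X` would be built as `np.column_stack([np.ones(n), zscore(reward), zscore(effort)])`. A certainty of zero then marks the reward and effort combinations at which accepting and rejecting are equally likely.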

Results

Behavioural results

First, we assessed the effect of the block order and the treatment condition on the MVC scores with a repeated-measures ANOVA. We found a significant effect of block order [F(1,49) = 11.9, P = 0.0054], confirming the importance of adapting the force levels to the MVC block by block, but we found no effect of the treatment condition [F(1,11) = 0.06, P = 0.80, interaction: F(1,11) = 2.73, P = 0.13], perhaps because of the postoperative state and any attendant stun effects, or because only standard morning levodopa-equivalent doses of medication were used as a challenge.

As expected, the probability of accepting to execute the trial increased with the proposed reward [Fig. 1B; treatment × reward × effort GLMM: main effect of reward F(2,22) = 18.50, P < 0.0001, all post hoc P < 0.05] and decreased with the required effort [F(1,11) = 23.22, P = 0.0005, slope = −0.78 ± 0.18]. In addition, in the low reward condition, trial acceptance rate was higher in the ON than in the OFF DOPA condition [reward × treatment: F(2,22) = 3.85, P = 0.0368; all other P-values > 0.1].

Based on these behavioural decisions, we computed the subjective value and cost attributed by the patients to each reward and effort cue (Fig. 1C and D), a net subjective value variable integrating these two factors and a certainty variable representing the distance from equivalence in the decision (see ‘Materials and methods’ section). A certainty of zero corresponded to equivalence between the accept and reject decision, i.e. to the maximal level of conflict between these two choice options (Wunderlich et al., 2009). Accordingly, we found that the reaction time in accepted trials decreased with certainty [i.e. increased with conflict; Fig. 1E; treatment × certainty value GLMM: main effect of certainty: F(1,2290) = 46.93, P < 0.0001, slope = −0.025 ± 0.0037; all other P-values > 0.4].

Local field potential response to the reward cue

Regarding the neural activity in STN, we found a clear LFP response to the reward cue in the low frequency range (Supplementary Fig. 2A, averaged over treatment conditions). However, the amplitude and consistency of this response varied between subjects (Supplementary Fig. 2B). We therefore used cluster-based permutation tests to isolate subject-by-subject the significant part of the response, which was mostly limited to frequencies below 10 Hz (all P ≤ 0.05, Fig. 2A). The average number of significant time-frequency bins included in the clusters was similar in both hemispheres [Friedman non-parametric test for the hemisphere effect controlling for any treatment effect: χ2(1) = 0.2, P = 0.6519] and between ventral and dorsal contacts [Friedman test: χ2(1) = 0.49, P = 0.49]. The responses to the reward cue, averaged over all subject-specific significant time–frequency bins, were strongly affected by the reward condition [reward × treatment GLMM: main effect of reward, F(2,3324) = 14.69, P < 0.0001; all other P-values > 0.4; Fig. 2B], such that €1 rewards led to larger responses than 5 or 20 c rewards (Tukey-corrected post hocs, all P < 0.0001). This effect was also similar across the hemispheres [same GLMM with an additional side effect: main effect of reward: F(2,5355) = 9.53, P < 0.0001; reward × side interaction: F(2,5355) = 0.06, P = 0.94], even though there were overall stronger responses in dipoles located in the right hemisphere [main effect of hemisphere: F(1,5355) = 8.78, P = 0.003]. We found no difference between electrode contacts located ventrally and dorsally (all main effects and interactions: P > 0.3).

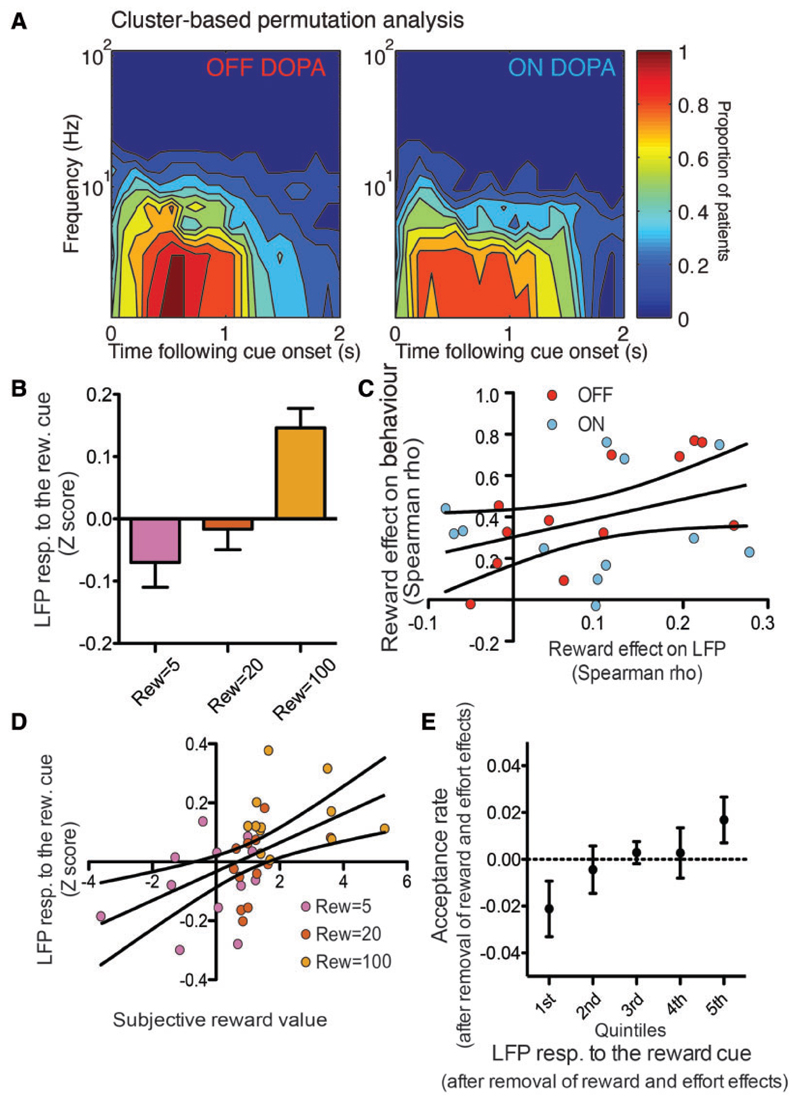

Figure 2. LFP responses to the reward cue.

(A) Significant time-frequency clusters isolated by means of the cluster-based permutation analysis of the LFP response to the reward cue. The colour code indicates the proportion of subjects in which the LFP power increased significantly for each combination of frequency and latency conditions. (B) Average LFP response to the reward cue as a function of the reward level. Error bars indicate the standard error of the mean. (C) Effect of reward on behaviour (Spearman rho coefficient of the correlation between reward and acceptance) as a function of the effect of reward on the LFP (Spearman rho between reward and LFP). Each dot corresponds to a different subject, with the treatment condition indicated with the colour code. The regression line is shown in black with the corresponding 95% confidence interval. (D) LFP response to the reward cue as a function of the subjective value of reward. The colour code indicates the reward conditions and each dot corresponds to a different subject. (E) Effect of the LFP response to the reward cue on acceptance rate. The residual of the acceptance rate after removal of the effort and reward effects is shown on the y-axis, while the residual of the LFP response to the reward cue after removal of the effort and reward effects, separated into five quintiles to facilitate visibility, is shown on the x-axis.

In addition to depending merely on the reward level indicated by the cue, the LFP responses also signalled the subjective value of the reward, irrespective of the treatment condition. This was evidenced first by a correlation between the behavioural impact of the reward and its influence on the LFP [Fig. 2C; factorial ANOVA with Spearman correlation coefficients between reward levels and acceptance as dependent variable, Spearman correlation between reward and LFP response as continuous predictor, treatment condition as categorical predictor and subject index as random factor: effect of the continuous predictor: F(1,20) = 4.37, P = 0.0496; all other effects P > 0.1] and second by adding the subjective value of reward to the GLMM of the effect of reward levels on LFP responses [Fig. 2D; subjective reward value × reward level × treatment GLMM: effect of subjective value of reward: F(1,3318) = 5.44, P = 0.0198; effect of reward level: F(2,3318) = 7.04, P = 0.0009; all other effects P > 0.05].

In addition, the trial-to-trial variation in the response to the reward cue was predictive of some of the variability in the decision of the patient to either accept or refuse to execute the trial [Fig. 2E; LFP response + reward × effort × treatment GLMM on binary choice data: main effect of LFP response: F(1,3316) = 3.84, P = 0.05; reward slope: 2.17 ± 0.43 with and 2.35 ± 0.47 without the LFP predictor; effort slope: −2.16 ± 0.43 with and −2.31 ± 0.46 without the LFP predictor], such that larger responses led to higher chances of accepting the trial (slope of the LFP effect = 0.14 ± 0.074), irrespective of the treatment condition (interaction: P > 0.4). The combined findings that the reward condition affected the LFP response to the reward cue on the one hand, and that the LFP response predicted the decision on the other hand, fulfil the joint significance criterion for mediation (Hayes and Scharkow, 2013). This suggests that part of the effect of the reward on the decision was mediated by the LFP response to the reward cue in STN, and therefore that the STN response may have been causally involved in shaping the decision, even though its contribution was small, given the magnitude of the change in the reward and effort coefficients. It is important to emphasize that assessing the significance of the reduction in the coefficients associated with the independent variables (referred to as the delta method or the Sobel test) has been described as the least powerful and least trustworthy method for mediation analysis (Hayes and Scharkow, 2013), and no validated method has been described to perform this test with binary dependent variables and correlations between successive observations. That is why we did not attempt to perform this test on the present data.
Finally, because these findings are correlative, they do not allow us to exclude that the mediation effect goes the other way and that, instead of the LFP response mediating the decision, it was the acceptance decision that mediated the effect of the reward condition on the LFP response. Irrespective of which of these two interpretations is correct, the present findings overall show that the STN activity was indicative of the subjective value attributed by the patient to the reward proposed in each particular trial.

Local field potential response to the effort cue

To analyse the response to the effort cue, we ran a similar cluster-based permutation analysis as the one used above for the response to the reward cue [alpha = 0.05, Fig. 3A and Supplementary Fig. 3A; no significant difference in average number of frequency bins across hemispheres: χ2(1) = 0.54, P = 0.4624, or ventro-dorsal locations: χ2(1) = 0.39, P = 0.53]. We evaluated the relation between the amplitude of the response, averaged within the whole response cluster, and the effort condition. We found a significant effect of the effort condition [Fig. 3B; GLMM: fixed effect of effort: F(11,3205) = 2.27, P = 0.0095] and a marginal effect of the reward level [F(2,3205) = 2.87, P = 0.0568] but no effect of treatment or interaction (all P > 0.1; Supplementary Fig. 3B). When comparing these responses across hemispheres, we found a significant effect of both reward and effort [effort: F(11,6121) = 2.34, P = 0.0073; reward: F(2,6121) = 3.65, P = 0.026], but no interaction with the hemisphere factor (all P > 0.3) or any other significant effect (all P > 0.1). Similarly, when adding a factor separating ventral and dorsal electrode contact locations, we found no effect of this factor and no interaction (all P > 0.1). To determine the direction of the effort effect, we modelled it as a continuous factor and found that the LFP amplitude decreased for larger effort intensities [F(1,3205) = 5.08, P = 0.024]. Similar to the reward cue, we found that this response to the effort cue depended on its subjective value. Indeed, the between-subject difference in the effect of the effort cue on the LFP correlated with its effect on behaviour [see Fig. 3C; factorial ANOVA: F(1,20) = 6.81, P = 0.0168] independently of the treatment condition (all P > 0.1). 
Along the same line, when adding the net subjective value variable as a continuous predictor to the model in addition to the reward and effort factors, the LFP response was found to be proportional to this value [GLMM: F(1,3229) = 4.82, P = 0.0282; see Fig. 3D]. Crucially, we found that this relation of proportionality with the LFP response was absent when considering the absolute value of the net subjective value instead, which can be viewed as the distance from indifference, or as an index of certainty [Fig. 3E; F(1,3229) = 0.11, P = 0.74]. Similarly, when running a GLMM analysis with both the certainty and the net subjective value variables, we found a significant effect of net subjective value [F(1,3271) = 5.67, P = 0.017] but not of certainty [F(1,3271) = 0.22, P = 0.9].

Figure 3. LFP responses to the effort cue.

(A) Results of the cluster-based permutation analysis, showing the proportion of subjects with significant LFP activity in each delay-frequency bin following the effort cue onset. (B) Average LFP response to the effort cue as a function of the effort condition. Error bars indicate standard error of the means. (C) Effect of the effort condition on behaviour (Spearman rho) as a function of the effect of effort on the LFP response to the effort cue (Spearman rho). The colour code indicates the treatment condition. The black lines indicate the 95% confidence interval around the line of regression. (D and E) Average LFP response as a function of the net subjective value (D) or certainty (E). Each dot corresponds to the average of all the trials in one effort/reward condition for one subject, with the colour indicating the reward condition (same colour code as Figs 1 and 2). X- and y-axes error bars indicate the standard error of the mean. The regression line is shown in black with its 95% confidence interval. (F) Effect of the LFP response to the effort cue on the acceptance rate. Same convention as Fig. 2E. (G) Effect of the pupil response on the LFP response. The residual of the pupil responses, after removal of the effort and reward effects, were separated in five quintiles. Each dot indicates the average of the LFP residual (after removing the reward and effort effects) for each pupil size quintile with the error bars indicating the standard error of the mean.

Finally, and again similar to the response to the reward cue, when using the LFP response to the effort cue as a predictor of the decision of the patient to either refuse or accept the trial, in addition to the reward and effort conditions, it provided significant additional information [Fig. 3F; GLMM: F(1,3267) = 13.95, P = 0.0002; reward slope: 2.19 ± 0.43 with and 2.35 ± 0.47 without the LFP predictor; effort slope: −2.16 ± 0.43 with and −2.31 ± 0.46 without the LFP predictor]. However, this time, there was also a strong interaction with the treatment condition [F(1,3267) = 7.88, P = 0.005], which indicated that this relation with acceptance was stronger in the ON DOPA condition (logistic coefficient OFF DOPA: 0.19 ± 0.12, P = 0.11; ON DOPA: 0.56 ± 0.12, P < 0.0001). Again, this joint significance suggests that the effect of the effort and reward conditions on the acceptance decision may have been mediated by the STN response or, more generally, it suggests that this response was indicative of the net subjective value of the trial.

Correlation with pupil response

Finally, we found that the LFP response to the effort cue (limited to accepted trials to avoid the confounding effect of the response) correlated strongly with pupil response [Fig. 3G; GLMM: pupil main effect: F(1,1579) = 23.70, P < 0.0001, no significant interaction with treatment, all P > 0.4], while the pupil response itself was affected by reward and effort conditions [GLMM: reward: F(2,1651) = 4.88, P = 0.0077, effort: F(11,1651) = 2.86, P = 0.001] such that it increased with reward (all P-values < 0.01 for comparisons between €1 and other reward levels) and decreased with the effort condition (when modelling the effort as a continuous variable: slope = −0.0063 ± 0.0029). These results again satisfy the joint significance criterion for mediation (with the caveat already noted), and indicate that the STN and the pupil respond similarly to the effort and reward information conveyed by the cues.
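The quintile summary displayed in Fig. 3G can be sketched as follows, assuming that the pupil and LFP residuals (after removal of the reward and effort effects) have already been computed for each trial. The function name `quintile_means` and the example values are illustrative assumptions and are not taken from the analysis code used in the study.

```python
from statistics import mean


def quintile_means(pupil_residuals, lfp_residuals, n_bins=5):
    """Sort trials by pupil residual, split them into `n_bins` groups of
    (nearly) equal size, and return the mean pupil and LFP residual per group."""
    if len(pupil_residuals) != len(lfp_residuals):
        raise ValueError("both inputs must contain one value per trial")
    if len(pupil_residuals) < n_bins:
        raise ValueError("need at least one trial per bin")
    order = sorted(range(len(pupil_residuals)), key=pupil_residuals.__getitem__)
    n = len(order)
    summary = []
    for b in range(n_bins):
        idx = order[b * n // n_bins:(b + 1) * n // n_bins]
        summary.append((mean([pupil_residuals[i] for i in idx]),
                        mean([lfp_residuals[i] for i in idx])))
    return summary


# Illustrative values only: the LFP residual is twice the pupil residual.
pupil = [9.0, 0.0, 8.0, 1.0, 7.0, 2.0, 6.0, 3.0, 5.0, 4.0]
lfp = [2.0 * p for p in pupil]
for pupil_mean, lfp_mean in quintile_means(pupil, lfp):
    print(pupil_mean, lfp_mean)
```

Binning is used here only for display; the statistical test reported above was run on trial-by-trial values.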

Local field potential response during the control task

To determine how much of the acceptance effect depended on the preparation of the manual response, we looked at the data gathered in a control task, in which no squeezing of the dynamometer was required. The participants provided instead an oral response, instructing the experimenter to execute the effort or not (n = 6). Importantly, since in this version of the task subjects had to respond both in case of acceptance and rejection of the offer, an effect of acceptance on the LFPs could not be explained merely as a motor preparation response.

The subjects’ behaviour did not differ significantly between the control and main tasks [Supplementary Fig. 4A; GLMM: task main effect and interactions, all P > 0.1; GLMM on control task only, reward main effect: F(1,4) = 10.44, P = 0.032; effort main effect: F(1,4) = 22.98, P = 0.0087; reward × treatment interaction: F(1,4) = 8.35, P = 0.0446; effort × treatment interaction: F(1,4) = 8.47, P = 0.0437; no other significant effect].

The fact that the control task required a response both in case of acceptance and rejection allowed us to study further the relation between reaction time and conflict. In four of the participants, the oral responses were recorded by means of an accelerometer attached to the patient’s chin. Reaction times were computed by taking the time difference between the effort cue onset and the maximum of the root mean square signal from the accelerometer. We performed a GLMM analysis of these data with the treatment condition, the net subjective value and the certainty variable as factors. We found a significant effect of net subjective value [Supplementary Fig. 4B; F(1,3) = 33.63, P = 0.01, slope = −0.048 ± 0.008], certainty [F(1,3) = 10.90, P = 0.046, slope = −0.02 ± 0.006] and an interaction between the two [F(1,3) = 16.61, P = 0.028, slope = 0.001 ± 0.0003]. None of the other effects were significant (all P > 0.05). This shows that the reaction time was influenced both by net subjective value, with faster responses for larger values, and by certainty, with longer reaction times for net subjective values close to zero. While the first finding probably relates to the higher motivation associated with large-value trials, the second effect can be explained by decision conflict.
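The reaction time measure described above (time from effort cue onset to the peak of the root mean square accelerometer signal) can be sketched as follows. The sliding-window length, the sampling rate and the function names `moving_rms` and `reaction_time` are assumptions made for this illustration; the text does not specify how the root mean square signal was windowed.

```python
import math


def moving_rms(signal, window):
    """Root mean square of `signal` in a sliding window of `window` samples."""
    if window < 1 or window > len(signal):
        raise ValueError("window must be between 1 and len(signal)")
    squares = [x * x for x in signal]
    running = sum(squares[:window])
    rms = [math.sqrt(running / window)]
    for i in range(window, len(squares)):
        running += squares[i] - squares[i - window]
        rms.append(math.sqrt(max(running, 0.0) / window))
    return rms


def reaction_time(signal, sampling_rate_hz, cue_onset_s, window=5):
    """Time between cue onset and the centre of the window with maximal RMS.

    `signal` is assumed to start at time 0 of the trial recording.
    """
    rms = moving_rms(signal, window)
    peak_index = max(range(len(rms)), key=rms.__getitem__)
    peak_time_s = (peak_index + (window - 1) / 2) / sampling_rate_hz
    return peak_time_s - cue_onset_s


# Illustrative trace: silence, a brief burst of chin movement, silence.
trace = [0.0] * 100 + [1.0, -1.0, 1.0, -1.0, 1.0] + [0.0] * 100
print(reaction_time(trace, sampling_rate_hz=100.0, cue_onset_s=0.5))
```

Taking the peak rather than the onset of the movement signal gives a later estimate of response time, but one that is less sensitive to the choice of an onset threshold.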

The cluster-based permutation analyses also showed comparable results to the main experiment for the LFP responses to the reward cue (Supplementary Fig. 4C and D, all P < 0.05). We averaged the activity within the frequencies and electrode contact pairs isolated from the clusters and performed a GLMM with task (main or control) and reward as factors. We found a significant effect of reward [F(2,3771) = 3.48, P = 0.0308] but no interaction with task [F(2,3771) = 1.15, P = 0.317], or any other interaction (P > 0.5). These effects consisted in an increase of the LFP response with reward, irrespective of the task (Supplementary Fig. 4E). We also found again a significant effect of the subjective value of reward on the trial-by-trial response amplitude [Supplementary Fig. 4F; F(1,3970) = 7.11, P = 0.0008; but no other significant effect: all P > 0.3].

The LFP response to the effort cue was also similar, with clusters qualitatively comparable to the ones obtained in the main task (all P < 0.05; Supplementary Fig. 4G and H). When running a GLMM on the average response, with treatment, task, reward and effort as factors, we found a significant effect of effort [Supplementary Fig. 4I; F(11,3781) = 2.01, P = 0.024], irrespective of the task [F(11,3781) = 0.65, P = 0.78] and no other significant effect (all P > 0.1). We also found a significant effect of the net subjective value [Supplementary Fig. 4J; F(1,3917) = 9.30, P = 0.0023], but no interaction with the task factor [F(1,3917) = 0.02, P = 0.89] and no other significant effect (all P > 0.2). Finally, when comparing the predictive effect of the LFP response on the decision across tasks, we found that while the reward cue response failed to predict the acceptance decision [F(1,3960) = 1.20, P = 0.27], the LFP response to the effort cue was still predictive of the decision [Supplementary Fig. 4H; F(1,3907) = 4.61, P = 0.0318; reward slope: 2.37 ± 0.52 with and 2.53 ± 0.58 without the LFP predictor; effort slope: −2.34 ± 0.48 with and −2.47 ± 0.51 without the LFP predictor], irrespective of the task [F(1,3907) = 0.84, P = 0.3598].

Discussion

In summary, we found robust low-frequency LFP responses in the STN, which signalled the subjective value of reward and the subjective cost of effort indicated by visual cues in an effort-based decision-making task. When the patients’ dopamine levels were relatively normalized through dopamine replacement therapy, the signal differentiated between accepted and refused trials, whereas OFF treatment, this relation with acceptance was weakened. LFP responses were also found to correlate strongly with the change in pupil size.

These findings were replicated in a second task during which the experimenter performed the effort according to the participants’ decisions. This replication in the control condition, and the similar findings in both hemispheres, confirms that the low-frequency LFP response does not result merely from the preparation of a motor response, as the control task required verbal responses both in case of acceptance and rejection of the offer. Having the experimenter perform the effort introduced a social aspect to the decision, but kept patients engaged in the paradigm despite postoperative factors such as fatigue. This social aspect did not appear to affect the behaviour of the participants, which did not differ significantly from that in the main task.

Subthalamic nucleus and cost-benefit decision-making

Our results show that synchronized activity in populations of STN neurons reflects the subjective value of reward and the subjective cost of effort and that this information can be used to predict the decision in an effort-based decision-making task. These results extend earlier findings of reward-related responses in animals (Teagarden and Rebec, 2007; Espinosa-Parrilla et al., 2013; Lardeux et al., 2013) and provide to our knowledge the first evidence of such responses in the human STN. Taken together with data from interventional studies in animals (Uslaner et al., 2008) and in humans (van Wouwe et al., 2011) our results suggest a pivotal role for the STN in cost-benefit decision-making and motivation-related processes. The STN is well positioned to play such a role, given that it receives direct input from, on the one hand, orbitofrontal cortex (Maurice et al., 1998), a cortical area known to be central to reward-based decision-making and subjective valuation of rewards (Padoa-Schioppa, 2011) and, on the other hand, anterior cingulate, insula and supplementary motor area (Maurice et al., 1998; Manes et al., 2014), regions thought to be involved in effort processing (Croxson et al., 2009; Zénon et al., 2015). Our results are compatible with a scheme in which the actual valuation of reward and effort processing occurs separately in the abovementioned cortical areas and is thereafter passed along to the STN where this information is used for cost-benefit computation. Alternatively, it could be proposed, in accordance with a recent theoretical study (Hwang, 2013), that the effort cost signal originates from the indirect pathway while the reward information would be relayed from cortex to the STN through the hyperdirect pathway.
However, the correlative nature of the present findings does not allow us to determine the causal role of the STN in the cost-benefit computation process and other cortical and subcortical regions have been shown to encode net subjective value (Hillman and Bilkey, 2010). Therefore, it is also plausible that this computation largely occurs upstream and that the STN predominantly relays this information. Dissociating these hypotheses will require further studies.

Alterations of reward-based behaviours, such as pathological gambling, are frequently observed in Parkinson’s disease (Ferrara and Stacy, 2008). The aim of the present study was to explore the neurophysiological markers of reward and cost-benefit decision-making in the STN of Parkinson’s disease patients free from such disorders. However, further studies should explore how this system is disrupted in patients with impulse control disorders as well as the impact of dopamine in this context. Evidence that STN DBS reduces addictive behaviours in patients with Parkinson’s disease and animals also points to an important role of the STN in pathological disturbance of reward-based behaviours (Rouaud et al., 2010; Lhommée et al., 2012; Eusebio et al., 2013).

Subthalamic nucleus and conflict

Computational models have proposed a role for STN in slowing down behavioural responses in the case of conflict between the decision variables (Frank et al., 2007). A series of experimental findings has concurred with this hypothesis by showing increased low-frequency responses during conflict situations (Cavanagh et al., 2011; Zavala et al., 2013) and a decrease in the reaction time adjustment to conflict when the STN is disturbed with DBS (Green et al., 2013; Zavala et al., 2015). In the present study, the level of decision conflict was maximal when the probability to accept the offer was close to indifference, as confirmed by increased reaction times. Crucially, we found that STN LFP responses, instead of signalling conflict, varied in proportion to subjective value, which was maximal for high reward and low efforts. A potential explanation for this discrepancy with previous studies may be the difference between the tasks used. Our task involved an accept/reject decision whereas most previous studies involved a two-alternative forced choice decision task and it could be argued that the nature of the decision conflict may differ between these two types of tasks. Another potential caveat in the interpretation of the present results in light of the conflict literature is that the moment at which any conflict signal should occur is unclear in our task. Because of the limitation in the number of trials, we chose to use a fixed order of cue presentation in the task, in which the reward cue was systematically shown before the effort cue. This results in an asymmetry between the role of the reward and the effort information in making the decision. For example, the participants may have adopted a strategy consisting of making an early decision following the reward cue, and potentially revising this when the following effort cue was unexpectedly high or low. These strategies may have affected the moment of occurrence and the extent of the decision conflict.

However, another way to reconcile our findings with the previous studies addressing conflict in STN is to assume an alternative function for the low-frequency activity in STN. We propose that the role of this STN signal may be understood in the context of task-engagement and resource allocation.

Subthalamic nucleus and task engagement

The low-frequency STN responses were found to correlate strongly with the magnitude of the pupil response. Pupil size is viewed as a signature of the energization of behaviour, dependent on noradrenaline tone (Aston-Jones and Cohen, 2005; Varazzani et al., 2015) and has been associated with mental (Kahneman, 1973) and physical (Zénon et al., 2014) task-engagement. Therefore, low-frequency STN responses could signal how much resource to allocate to the selected actions (Turner and Desmurget, 2010), as a function of their net subjective value, by mobilizing attentional (Kahneman, 1973; Baunez and Robbins, 1997) and/or physical effort in the face of motivating cues or demanding situations. This hypothesis can be viewed as an extension of the ‘expected value of control’ model of anterior cingulate, proposed by Shenhav et al. (2013), which we apply here specifically to physical effort. Within this framework, decision conflict is a special case in which the unexpected dilemma between options of similar values calls for an online increase in cognitive control, which incurs a cognitive cost (Shenhav et al., 2013). In agreement with this putative role of STN in controlling task-engagement, recent pieces of evidence have shown that STN oscillatory activities over a broad frequency range are involved in the control of motor effort (Anzak et al., 2012; Tan et al., 2015). More generally, this hypothesis also concurs with the view that one of the main functions of the basal ganglia is to adjust response vigour in order to optimize net benefits and that the disruption of this function caused by dopamine depletion in Parkinson’s disease may be responsible for associated motor symptoms (Mazzoni et al., 2007; Turner and Desmurget, 2010; Baraduc et al., 2013).

The role of dopamine in decision-making

The evidence for the role of dopamine in learning, through the signalling of reward prediction errors, is overwhelming (Montague et al., 1996; Schultz et al., 1997; Wise, 2004; Glimcher, 2011), even though the details of the interpretation of these findings may vary (Friston et al., 2014). Dopamine also influences decision-making even in stable contexts in which no learning is involved (Cagniard et al., 2006; Sharot et al., 2012; Rutledge et al., 2015), pointing to its dual role. Regarding effort-based decision-making in particular, dopamine increases the willingness to exert effort (Salamone et al., 2009; Chong et al., 2015), especially when exchanged for low rewards (Wardle et al., 2011; Treadway et al., 2012). In agreement with these earlier studies, we found that dopamine depletion led to a decreased tendency to accept effortful trials, but only in low reward conditions, even though we cannot exclude the possibility that this specificity was caused by ceiling effects, affecting only uncertain decisions, rather than low-reward conditions. In addition, dopamine withdrawal led to some level of disruption in the link between STN activity and the decision-making process: even though the reward and effort responses in STN showed little or no alterations, they were less predictive of the choice. This suggests that dopamine depletion did not alter the process of associating a value (positive or negative) to the cues but rather disrupted the downstream mechanisms involved in using these cue-related values in order to reach a decision. This relative functional disconnection of the STN from the decision-making circuit under dopamine withdrawal may lead to a disruption of the balance between action value and effort investment and be causally involved in some of the deficits exhibited by parkinsonian patients (Schmidt et al., 2008; Turner and Desmurget, 2010).

Limitations

Regarding the analyses performed, we used cluster-based permutation techniques, which account for the correlations between neighbouring time-frequency bins and address the problem of multiple comparisons. However, like all non-parametric approaches, they are very conservative (Friston, 2012), and we cannot exclude that, besides the low-frequency response, other components of STN activity may have contained relevant information about the subjective value of the cues and the decision to accept or reject the trial.
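For readers unfamiliar with the approach, the general principle of a cluster-based permutation test (Maris and Oostenveld, 2007) can be sketched in one dimension. This is a simplified illustration and not the analysis pipeline used here: it tests a one-sample effect across subjects along a single axis of bins, uses sign-flipping as the permutation scheme, and takes the summed t-value as the cluster statistic. The threshold of 2.2 (close to the two-sided 5% critical t-value for 11 degrees of freedom), the number of permutations, the function names and the synthetic data are all assumptions.

```python
import math
import random


def t_values(data):
    """One-sample t statistic across subjects (rows) for each bin (column)."""
    n = len(data)
    stats = []
    for b in range(len(data[0])):
        column = [row[b] for row in data]
        mean = sum(column) / n
        var = sum((v - mean) ** 2 for v in column) / (n - 1)
        stats.append(mean / math.sqrt(var / n) if var > 0 else 0.0)
    return stats


def clusters(stats, threshold):
    """Return (start, stop, mass) for each run of adjacent same-sign bins
    whose |t| exceeds `threshold`; `mass` is the summed t-value."""
    found, start, current_sign = [], None, 0
    for b in range(len(stats) + 1):
        sign = 0
        if b < len(stats) and abs(stats[b]) > threshold:
            sign = 1 if stats[b] > 0 else -1
        if start is not None and sign != current_sign:
            found.append((start, b, sum(stats[start:b])))
            start = None
        if sign != 0 and start is None:
            start, current_sign = b, sign
    return found


def cluster_permutation_test(data, threshold=2.2, n_permutations=500, seed=0):
    """Return (start, stop, p_value) for each observed cluster."""
    rng = random.Random(seed)
    observed = clusters(t_values(data), threshold)
    null = []
    for _ in range(n_permutations):
        signs = [rng.choice((-1.0, 1.0)) for _ in data]
        flipped = [[s * v for v in row] for s, row in zip(signs, data)]
        masses = [abs(m) for _, _, m in clusters(t_values(flipped), threshold)]
        null.append(max(masses, default=0.0))
    results = []
    for start, stop, mass in observed:
        exceed = sum(1 for m in null if m >= abs(mass))
        results.append((start, stop, (exceed + 1) / (n_permutations + 1)))
    return results


# Synthetic data: 12 subjects, 10 bins, a consistent effect in bins 3 to 6.
data = []
for s in range(12):
    row = []
    for b in range(10):
        if 3 <= b < 7:
            row.append(1.0 + 0.1 * (s % 3))
        else:
            row.append(0.3 * (-1) ** (s + b) * (1 + 0.1 * (s % 4)))
    data.append(row)

results = cluster_permutation_test(data)
print(results)
```

Because only the largest cluster mass of each permutation enters the null distribution, a single test covers all bins, which is how the method controls for multiple comparisons while exploiting the correlation between neighbouring bins.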

It is also worth pointing out that, in our task, larger effort intensities led to longer execution durations. Therefore, we cannot dissociate the cost of time from the cost of effort per se in our results. Again, further studies controlling effort intensity and effort duration separately should help to address this issue.

It is also noteworthy that the magnitude of the effect of the LFP response on the upcoming decision was relatively small, albeit significant. It may also be that the diversity and various tuning properties of local neurons might impact on the upcoming decision, but these features would not have been reflected in the LFP signal.

Finally, we must stress the fact that our findings are correlative and that they suggest, rather than demonstrate, the causal implication of the STN in the decision-making process. Further studies, relying for instance on interferential methods, will be necessary to address this issue.

To conclude, our findings indicate that the STN is involved in balancing the value of actions with their associated effort cost, placing this nucleus within a larger vigour circuit encompassing the basal ganglia (Turner and Desmurget, 2010). Dopamine withdrawal appears to decrease the involvement of the STN within this vigour network. These findings may prove decisive in understanding the pathophysiology of Parkinson’s disease and the impact of STN DBS on addictive behaviours (Rouaud et al., 2010; Eusebio et al., 2013; Pelloux and Baunez, 2013).

Supplementary Material

Supplementary material is available at Brain online.

Acknowledgements

We would like to thank Paul Sauleau and Sabrina Ravel for their participation in the development of an earlier version of the task and Etienne Olivier for his helpful comments on an earlier version of the manuscript. We are grateful to Alek Pogosyan for sharing his custom-developed software with us. We are thankful to Giorgio Spatola for his assistance in the postoperative fusion of CT and MR scans and lead coordinates determination.

Funding

This study was sponsored by Assistance Publique – Hôpitaux de Marseille, and funded by the Agence Nationale de la Recherche (France, ANR-09-MNPS-028-01), Innoviris (Belgium) and the Fondation Médicale Reine Elisabeth (FMRE, Belgium). PB was funded by the Medical Research Council (MC_UU_12024/1) and NIHR Oxford Biomedical Centre.

Abbreviations

- DBS: deep brain stimulation

- GLMM: generalized linear mixed model

- LFP: local field potential

- STN: subthalamic nucleus

References

- Anzak A, Tan H, Pogosyan A, Foltynie T, Limousin P, Zrinzo L, et al. Subthalamic nucleus activity optimizes maximal effort motor responses in Parkinson’s disease. Brain. 2012;135:2766–78. doi: 10.1093/brain/aws183.

- Aston-Jones G, Cohen JD. An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu Rev Neurosci. 2005;28:403–50. doi: 10.1146/annurev.neuro.28.061604.135709.

- Baraduc P, Thobois S, Gan J, Broussolle E, Desmurget M. A common optimization principle for motor execution in healthy subjects and parkinsonian patients. J Neurosci. 2013;33:665–77. doi: 10.1523/JNEUROSCI.1482-12.2013.

- Baunez C, Amalric M, Robbins TW. Enhanced food-related motivation after bilateral lesions of the subthalamic nucleus. J Neurosci. 2002;22:562–8. doi: 10.1523/JNEUROSCI.22-02-00562.2002.

- Baunez C, Dias C, Cador M, Amalric M. The subthalamic nucleus exerts opposite control on cocaine and ‘natural’ rewards. Nat Neurosci. 2005;8:484–9. doi: 10.1038/nn1429.

- Baunez C, Lardeux S. Frontal cortex-like functions of the subthalamic nucleus. Front Syst Neurosci. 2011;5:83. doi: 10.3389/fnsys.2011.00083.

- Baunez C, Robbins TW. Bilateral lesions of the subthalamic nucleus induce multiple deficits in an attentional task in rats. Eur J Neurosci. 1997;9:2086–99. doi: 10.1111/j.1460-9568.1997.tb01376.x.

- Bogacz R. The basal ganglia and cortex implement optimal decision making between alternative actions. Neural Comput. 2007;19:442–7. doi: 10.1162/neco.2007.19.2.442.

- Cagniard B, Beeler JA, Britt JP, McGehee DS, Marinelli M, Zhuang X. Dopamine scales performance in the absence of new learning. Neuron. 2006;51:541–7. doi: 10.1016/j.neuron.2006.07.026.

- Cavanagh JF, Wiecki TV, Cohen MX, Figueroa CM, Samanta J, Sherman SJ, et al. Subthalamic nucleus stimulation reverses mediofrontal influence over decision threshold. Nat Neurosci. 2011;14:1462–7. doi: 10.1038/nn.2925.

- Chong TT-J, Bonnelle V, Manohar S, Veromann K-R, Muhammed K, Tofaris GK, et al. Dopamine enhances willingness to exert effort for reward in Parkinson’s disease. Cortex. 2015;69:40–6. doi: 10.1016/j.cortex.2015.04.003.

- Croxson PL, Walton ME, O’Reilly JX, Behrens TEJ, Rushworth MFS. Effort-based cost-benefit valuation and the human brain. J Neurosci. 2009;29:4531–41. doi: 10.1523/JNEUROSCI.4515-08.2009.

- Darbaky Y, Baunez C, Arecchi P, Legallet E, Apicella P. Reward-related neuronal activity in the subthalamic nucleus of the monkey. Neuroreport. 2005;16:1241–4. doi: 10.1097/00001756-200508010-00022.

- Espinosa-Parrilla J-F, Baunez C, Apicella P. Linking reward processing to behavioral output: motor and motivational integration in the primate subthalamic nucleus. Front Comput Neurosci. 2013;7:175. doi: 10.3389/fncom.2013.00175.

- Eusebio A, Witjas T, Cohen J, Fluchère F, Jouve E, Régis J, et al. Subthalamic nucleus stimulation and compulsive use of dopaminergic medication in Parkinson’s disease. J Neurol Neurosurg Psychiatry. 2013;84:868–74. doi: 10.1136/jnnp-2012-302387.

- Ferrara JM, Stacy M. Impulse-control disorders in Parkinson’s disease. CNS Spectr. 2008;13:690–8. doi: 10.1017/s1092852900013778.

- Fluchère F, Witjas T, Eusebio A, Bruder N, Giorgi R, Leveque M, et al. Controlled general anaesthesia for subthalamic nucleus stimulation in Parkinson’s disease. J Neurol Neurosurg Psychiatry. 2014;85:1167–73. doi: 10.1136/jnnp-2013-305323.

- Frank MJ, Samanta J, Moustafa AA, Sherman SJ. Hold your horses: impulsivity, deep brain stimulation, and medication in Parkinsonism. Science. 2007;318:1309–12. doi: 10.1126/science.1146157.

- Frank MJ. Hold your horses: a dynamic computational role for the subthalamic nucleus in decision making. Neural Netw. 2006;19:1120–36. doi: 10.1016/j.neunet.2006.03.006.

- Freeman WJ. Origin, structure, and role of background EEG activity. Part 1. Analytic amplitude. Clin Neurophysiol. 2004;115:2077–88. doi: 10.1016/j.clinph.2004.02.029.

- Friston KJ, Schwartenbeck P, FitzGerald T, Moutoussis M, Behrens T, Dolan RJ. The anatomy of choice: dopamine and decision-making. Philos Trans R Soc B Biol Sci. 2014;369 doi: 10.1098/rstb.2013.0481.

- Friston KJ. Ten ironic rules for non-statistical reviewers. Neuroimage. 2012;61:1300–10. doi: 10.1016/j.neuroimage.2012.04.018.

- Glimcher PW. Understanding dopamine and reinforcement learning: the dopamine reward prediction error hypothesis. Proc Natl Acad Sci USA. 2011;108(Suppl 3):15647–54. doi: 10.1073/pnas.1014269108.

- Green N, Bogacz R, Huebl J, Beyer A-K, Kühn AA, Heekeren HR. Reduction of influence of task difficulty on perceptual decision making by STN deep brain stimulation. Curr Biol. 2013;23:1681–4. doi: 10.1016/j.cub.2013.07.001.

- Hayes AF, Scharkow M. The relative trustworthiness of inferential tests of the indirect effect in statistical mediation analysis does method really matter? Psychol Sci. 2013;24:1918–27. doi: 10.1177/0956797613480187.

- Hensher DA, Rose JM, Greene WH. Applied choice analysis: a primer. Cambridge University Press; 2005.

- Hillman KL, Bilkey DK. Neurons in the rat anterior cingulate cortex dynamically encode cost-benefit in a spatial decision-making task. J Neurosci. 2010;30:7705–13. doi: 10.1523/JNEUROSCI.1273-10.2010.

- Hwang EJ. The basal ganglia, the ideal machinery for the cost-benefit analysis of action plans. Front Neural Circuits. 2013;7:121. doi: 10.3389/fncir.2013.00121.

- Kahneman D. Attention and effort. Prentice Hall; 1973.

- Krack P, Batir A, Van Blercom N, Chabardès S, Fraix V, Ardouin C, et al. Five-year follow-up of bilateral stimulation of the subthalamic nucleus in advanced Parkinson’s disease. N Engl J Med. 2003;349:1925–34. doi: 10.1056/NEJMoa035275.

- Lardeux S, Paleressompoulle D, Pernaud R, Cador M, Baunez C. Different populations of subthalamic neurons encode cocaine vs. sucrose reward and predict future error. J Neurophysiol. 2013;110:1497–510. doi: 10.1152/jn.00160.2013.

- Lardeux S, Pernaud R, Paleressompoulle D, Baunez C. Beyond the reward pathway: coding reward magnitude and error in the rat subthalamic nucleus. J Neurophysiol. 2009;102:2526–37. doi: 10.1152/jn.91009.2008.

- Lhommée E, Klinger H, Thobois S, Schmitt E, Ardouin C, Bichon A, et al. Subthalamic stimulation in Parkinson’s disease: restoring the balance of motivated behaviours. Brain. 2012;135:1463–77. doi: 10.1093/brain/aws078.

- Littell RC, Henry PR, Ammerman CB. Statistical analysis of repeated measures data using SAS procedures. J Anim Sci. 1998;76:1216–31. doi: 10.2527/1998.7641216x.

- Manes JL, Parkinson AL, Larson CR, Greenlee JD, Eickhoff SB, Corcos DM, et al. Connectivity of the subthalamic nucleus and globus pallidus pars interna to regions within the speech network: a meta-analytic connectivity study. Hum Brain Mapp. 2014;35:3499–516. doi: 10.1002/hbm.22417.

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007;164:177–90. doi: 10.1016/j.jneumeth.2007.03.024.

- Mathai A, Smith Y. The corticostriatal and corticosubthalamic pathways: two entries, one target. So what? Front Syst Neurosci. 2011;5:64. doi: 10.3389/fnsys.2011.00064.

- Maurice N, Deniau JM, Glowinski J, Thierry AM. Relationships between the prefrontal cortex and the basal ganglia in the rat: physiology of the corticosubthalamic circuits. J Neurosci. 1998;18:9539–46. doi: 10.1523/JNEUROSCI.18-22-09539.1998.

- Mazzoni P, Hristova A, Krakauer JW. Why don’t we move faster? Parkinson’s Disease, movement vigor, and implicit motivation. J Neurosci. 2007;27:7105–16. doi: 10.1523/JNEUROSCI.0264-07.2007.

- McIntyre CC, Hahn PJ. Network perspectives on the mechanisms of deep brain stimulation. Neurobiol Dis. 2010;38:329–37. doi: 10.1016/j.nbd.2009.09.022.

- Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16:1936–47. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- Nambu A. A new dynamic model of the cortico-basal ganglia loop. Prog Brain Res. 2004;143:461–6. doi: 10.1016/S0079-6123(03)43043-4.

- Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci. 2011;34:333–59. doi: 10.1146/annurev-neuro-061010-113648.

- Pelloux Y, Baunez C. Deep brain stimulation for addiction: why the subthalamic nucleus should be favored. Curr Opin Neurobiol. 2013;23:713–20. doi: 10.1016/j.conb.2013.02.016.

- Rouaud T, Lardeux S, Panayotis N, Paleressompoulle D, Cador M, Baunez C. Reducing the desire for cocaine with subthalamic nucleus deep brain stimulation. Proc Natl Acad Sci USA. 2010;107:1196–200. doi: 10.1073/pnas.0908189107.

- Rutledge R, Skandali N, Dayan P, Dolan RJ. Dopaminergic modulation of decision making and subjective well-being. J Neurosci. 2015;35:9811–22. doi: 10.1523/JNEUROSCI.0702-15.2015.

- Salamone JD, Correa M, Farrar AM, Nunes EJ, Pardo M. Dopamine, behavioral economics, and effort. Front Behav Neurosci. 2009;3:13. doi: 10.3389/neuro.08.013.2009.

- Sauleau P, Eusebio A, Thevathasan W, Yarrow K, Pogosyan A, Zrinzo L, et al. Involvement of the subthalamic nucleus in engagement with behaviourally relevant stimuli. Eur J Neurosci. 2009;29:931–42. doi: 10.1111/j.1460-9568.2009.06635.x.

- Schmidt L, d’Arc BF, Lafargue G, Galanaud D, Czernecki V, Grabli D, et al. Disconnecting force from money: effects of basal ganglia damage on incentive motivation. Brain. 2008;131:1303–10. doi: 10.1093/brain/awn045. [DOI] [PubMed] [Google Scholar]

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–9. doi: 10.1126/science.275.5306.1593. [DOI] [PubMed] [Google Scholar]

- Serranová T, Jech R, Dušek P, Sieger T, Růžička F, Urgošík D, et al. Subthalamic nucleus stimulation affects incentive salience attribution in Parkinson’s disease. Mov Disord. 2011;26:2260–6. doi: 10.1002/mds.23880. [DOI] [PubMed] [Google Scholar]

- Sharot T, Guitart-Masip M, Korn CW, Chowdhury R, Dolan RJ. How dopamine enhances an optimism bias in humans. Curr Biol. 2012;22:1477–81. doi: 10.1016/j.cub.2012.05.053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shenhav A, Botvinick MM, Cohen JD. The expected value of control: an integrative theory of anterior cingulate cortex function. Neuron. 2013;79:217–40. doi: 10.1016/j.neuron.2013.07.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tan H, Pogosyan A, Ashkan K, Cheeran B, FitzGerald JJ, Green AL, et al. Subthalamic nucleus local field potential activity helps encode motor effort rather than force in parkinsonism. J Neurosci. 2015;35:5941–9. doi: 10.1523/JNEUROSCI.4609-14.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Teagarden MA, Rebec GV. Subthalamic and striatal neurons concurrently process motor, limbic, and associative information in rats performing an operant task. J Neurophysiol. 2007;97:2042–58. doi: 10.1152/jn.00368.2006. [DOI] [PubMed] [Google Scholar]

- Treadway MT, Buckholtz JW, Cowan RL, Woodward ND, Li R, Ansari MS, et al. Dopaminergic mechanisms of individual differences in human effort-based decision-making. J Neurosci. 2012;32:6170–6. doi: 10.1523/JNEUROSCI.6459-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Turner RS, Desmurget M. Basal ganglia contributions to motor control: a vigorous tutor. Curr Opin Neurobiol. 2010;20:704–16. doi: 10.1016/j.conb.2010.08.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Uslaner JM, Dell’Orco JM, Pevzner A, Robinson TE. The influence of subthalamic nucleus lesions on sign-tracking to stimuli paired with food and drug rewards: facilitation of incentive salience attribution? Neuropsychopharmacology. 2008;33:2352–61. doi: 10.1038/sj.npp.1301653.

- Uslaner JM, Yang P, Robinson TE. Subthalamic nucleus lesions enhance the psychomotor-activating, incentive motivational, and neurobiological effects of cocaine. J Neurosci. 2005;25:8407–15. doi: 10.1523/JNEUROSCI.1910-05.2005.

- van Wouwe NC, Ridderinkhof KR, van den Wildenberg WPM, Band GPH, Abisogun A, Elias WJ, et al. Deep brain stimulation of the subthalamic nucleus improves reward-based decision-learning in Parkinson’s disease. Front Hum Neurosci. 2011;5:30. doi: 10.3389/fnhum.2011.00030.

- Varazzani C, San-Galli A, Gilardeau S, Bouret S. Noradrenaline and dopamine neurons in the reward/effort trade-off: a direct electrophysiological comparison in behaving monkeys. J Neurosci. 2015;35:7866–77. doi: 10.1523/JNEUROSCI.0454-15.2015.

- Wardle MC, Treadway MT, Mayo LM, Zald DH, de Wit H. Amping up effort: effects of d-amphetamine on human effort-based decision-making. J Neurosci. 2011;31:16597–602. doi: 10.1523/JNEUROSCI.4387-11.2011.

- Wise RA. Dopamine, learning and motivation. Nat Rev Neurosci. 2004;5:483–94. doi: 10.1038/nrn1406.

- Wunderlich K, Rangel A, O’Doherty JP. Neural computations underlying action-based decision making in the human brain. Proc Natl Acad Sci USA. 2009;106:17199–204. doi: 10.1073/pnas.0901077106.

- Zavala BA, Brittain J-S, Jenkinson N, Ashkan K, Foltynie T, Limousin P, et al. Subthalamic nucleus local field potential activity during the Eriksen flanker task reveals a novel role for theta phase during conflict monitoring. J Neurosci. 2013;33:14758–66. doi: 10.1523/JNEUROSCI.1036-13.2013.

- Zavala BA, Tan H, Little S, Ashkan K, Hariz M, Foltynie T, et al. Midline frontal cortex low-frequency activity drives subthalamic nucleus oscillations during conflict. J Neurosci. 2014;34:7322–33. doi: 10.1523/JNEUROSCI.1169-14.2014.

- Zavala BA, Zaghloul KA, Brown P. The subthalamic nucleus, oscillations, and conflict. Mov Disord. 2015;30:328–38. doi: 10.1002/mds.26072.

- Zénon A, Sidibé M, Olivier E. Pupil size variations correlate with physical effort perception. Front Behav Neurosci. 2014;8:286. doi: 10.3389/fnbeh.2014.00286.

- Zénon A, Sidibé M, Olivier E. Disrupting the supplementary motor area makes physical effort appear less effortful. J Neurosci. 2015;35:8737–44. doi: 10.1523/JNEUROSCI.3789-14.2015.

Associated Data
