Abstract

For a long time, a pyramid has expressed the idea of a hierarchy of medical evidence: not all evidence is the same. Systematic reviews and meta-analyses have been placed at the top of this pyramid for several good reasons. However, there are several counterarguments to this placement. We suggest another way of looking at the evidence-based medicine pyramid and explain how systematic reviews and meta-analyses are tools for consuming evidence, that is, for appraising, synthesising and applying it.

Keywords: EDUCATION & TRAINING (see Medical Education & Training), EPIDEMIOLOGY, GENERAL MEDICINE (see Internal Medicine)

The earliest principle of evidence-based medicine was that a hierarchy of evidence exists: not all evidence is the same. This principle became well known in the early 1990s as practising physicians learnt basic clinical epidemiology skills and started to appraise and apply evidence in their practice. Because evidence was described as a hierarchy, a pyramid was a compelling way to depict it. Evidence-based healthcare practitioners became familiar with this pyramid when reading the literature, applying evidence or teaching students.

Various versions of the evidence pyramid have been described, but all of them show weaker study designs at the bottom (basic science and case series), followed by case–control and cohort studies in the middle, then randomised controlled trials (RCTs), and, at the very top, systematic reviews and meta-analyses. This description is intuitive and likely correct in many instances. The placement of systematic reviews at the top has been interpreted in several ways, but they were still treated as one tier in a hierarchy.1 Most versions of the pyramid clearly represented a hierarchy of internal validity (risk of bias). Some versions incorporated external validity (applicability), either by placing N-of-1 trials above RCTs (because their results are most applicable to individual patients2) or by separating internal and external validity.3

Another version (the 6S pyramid) was developed to describe the sources of evidence that evidence-based medicine (EBM) practitioners can use to answer foreground questions. It forms a hierarchy that ranges from studies through synopses of studies, syntheses, synopses of syntheses and summaries to systems.4 This hierarchy may imply some increase in validity and applicability, although its main purpose is to emphasise that sources lower in the hierarchy are less preferred in practice because they require more expertise and time to identify, appraise and apply.

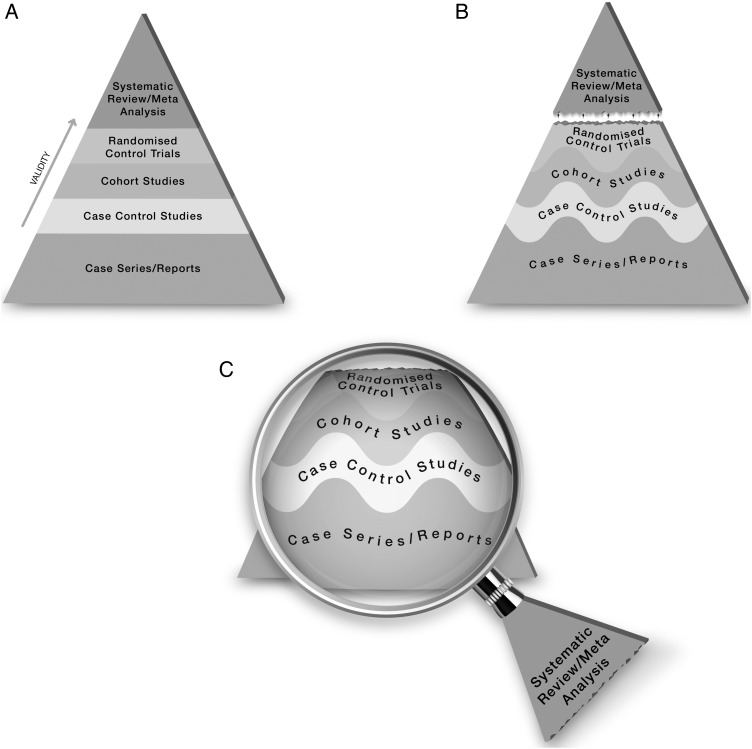

The traditional pyramid has at times been deemed too simplistic, and the importance of leaving room for argument and counterargument about the methodological merit of different designs has been emphasised.5 Other arguments challenge the placement of systematic reviews and meta-analyses at the top of the pyramid. For instance, heterogeneity (clinical, methodological or statistical) is an inherent limitation of meta-analyses that can be minimised or explained but never eliminated.6 The methodological intricacies and dilemmas of systematic reviews can also introduce uncertainty and error.7 One evaluation of 163 meta-analyses demonstrated that estimates of treatment outcomes differed substantially depending on the analytic strategy used.7 Therefore, in this perspective, we suggest two visual modifications to the pyramid to illustrate two contemporary methodological principles (figure 1). We provide the rationale and an example for each modification.
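To make the statistical side of heterogeneity concrete, the sketch below pools five hypothetical log risk ratios (invented numbers, not data from references 6 or 7) with inverse-variance weights, computes Cochran's Q and I², and compares a fixed-effect estimate with a DerSimonian-Laird random-effects estimate. The choice between these two models is just one example of an analytic decision; the sketch does not reproduce the analyses in reference 7.

```python
import math

# Hypothetical inputs, NOT taken from any cited meta-analysis:
# each tuple is (log risk ratio, standard error of that log risk ratio).
studies = [
    (math.log(0.60), 0.20),
    (math.log(0.85), 0.15),
    (math.log(1.10), 0.25),
    (math.log(0.70), 0.30),
    (math.log(1.30), 0.18),
]

def inverse_variance_pool(estimates, variances):
    """Weighted mean with weights 1/variance; returns (estimate, standard error)."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    mean = sum(w * y for w, y in zip(weights, estimates)) / total
    return mean, math.sqrt(1.0 / total)

def heterogeneity(estimates, variances):
    """Cochran's Q, I^2 (as a proportion) and the DerSimonian-Laird tau^2."""
    weights = [1.0 / v for v in variances]
    fixed_mean, _ = inverse_variance_pool(estimates, variances)
    q = sum(w * (y - fixed_mean) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    tau2 = max(0.0, (q - df) / c)
    return q, i2, tau2

y = [est for est, _ in studies]
v = [se ** 2 for _, se in studies]
q, i2, tau2 = heterogeneity(y, v)

# Fixed effect assumes one common true effect; random effects adds tau^2 to each variance.
fe_mean, fe_se = inverse_variance_pool(y, v)
re_mean, re_se = inverse_variance_pool(y, [vi + tau2 for vi in v])

for label, est, se in (("fixed", fe_mean, fe_se), ("random", re_mean, re_se)):
    low, high = math.exp(est - 1.96 * se), math.exp(est + 1.96 * se)
    print(f"{label:6s} RR {math.exp(est):.2f} (95% CI {low:.2f} to {high:.2f})")
print(f"Q = {q:.2f} on {len(y) - 1} df, I^2 = {100 * i2:.0f}%, tau^2 = {tau2:.3f}")
```

I² describes how much of the observed variation between studies exceeds what chance alone would produce. It quantifies heterogeneity but does not explain or remove it, and the random-effects model only widens the interval to acknowledge it.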

Figure 1.

The proposed new evidence-based medicine pyramid. (A) The traditional pyramid. (B) Revising the pyramid: (1) lines separating the study designs become wavy (Grading of Recommendations Assessment, Development and Evaluation), (2) systematic reviews are ‘chopped off’ the pyramid. (C) The revised pyramid: systematic reviews are a lens through which evidence is viewed (applied).

Rationale for modification 1

In the early 2000s, the Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group developed a framework in which certainty in the evidence is based on numerous factors rather than on study design alone, which challenges the pyramid concept.8 Study design appears insufficient on its own as a surrogate for risk of bias. Methodological limitations of a study, imprecision, inconsistency and indirectness are factors independent of study design that can affect the quality of evidence derived from any design. For example, a meta-analysis of RCTs evaluating intensive glycaemic control in non-critically ill hospitalised patients showed a non-significant reduction in mortality (relative risk 0.95, 95% CI 0.72 to 1.25).9 Allocation concealment and blinding were inadequate in most trials. The quality of this evidence is therefore rated down because of the methodological limitations of the trials and imprecision (a wide CI that includes both substantial benefit and substantial harm). Hence, even though it comes from five RCTs, such evidence should not be ranked high in any pyramid. The quality of evidence can also be rated up. For example, we are quite certain about the benefits of hip replacement in a patient with disabling hip osteoarthritis. Although hip replacement has not been tested in RCTs, the quality of this evidence is rated up despite the study design (non-randomised observational studies).10
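The imprecision judgement in the glycaemic control example can be checked against the quoted interval. The sketch below back-calculates the standard error of log(RR) from the 95% CI and tests whether the interval spans both an appreciable benefit and an appreciable harm. The thresholds used (RR 0.80 and 1.20) are hypothetical values chosen for illustration; in practice reviewers set them by judging what effect would matter to patients.

```python
import math

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval

def log_se_from_ci(lower, upper, z=Z_95):
    """Back-calculate the SE of log(RR), assuming the CI is symmetric on the log scale."""
    return (math.log(upper) - math.log(lower)) / (2 * z)

def imprecision_check(lower, upper, benefit_threshold, harm_threshold):
    """Report what a risk-ratio CI spans.

    benefit_threshold and harm_threshold are ASSUMED cut-offs for an
    appreciable effect (RR below/above them); they are judgement calls.
    """
    return {
        "crosses_null": lower < 1.0 < upper,
        "includes_appreciable_benefit": lower <= benefit_threshold,
        "includes_appreciable_harm": upper >= harm_threshold,
        "log_se": log_se_from_ci(lower, upper),
    }

# Pooled mortality result quoted in the text: RR 0.95 (95% CI 0.72 to 1.25).
# The 0.80 / 1.20 thresholds are hypothetical illustration values.
result = imprecision_check(0.72, 1.25, benefit_threshold=0.80, harm_threshold=1.20)
imprecise = result["includes_appreciable_benefit"] and result["includes_appreciable_harm"]

print(result)
print("CI spans appreciable benefit and harm:", imprecise)
```

Because the interval is consistent with both a meaningful reduction and a meaningful increase in mortality, the sketch flags it as imprecise; an interval lying entirely on one side of both thresholds would not be flagged.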

Therefore, the first modification to the pyramid is to change the straight lines separating study designs in the pyramid to wavy lines (going up and down to reflect the GRADE approach of rating up and down based on the various domains of the quality of evidence).
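The rating logic behind the wavy lines can be sketched in a deliberately simplified form: evidence from randomised trials starts at high certainty, observational evidence starts at low, and each concern moves the rating down (or each strength moves it up) by the number of levels assigned. Treating GRADE as arithmetic is an assumption made for illustration only; real ratings are judgements. The level counts in the examples below, including for the glycaemic control evidence, are hypothetical rather than published ratings, and the sketch does not enforce GRADE's usual restriction of rating up to observational evidence.

```python
LEVELS = ("very low", "low", "moderate", "high")

# GRADE domains for rating down and for rating up the certainty of evidence.
DOWNGRADE_DOMAINS = {"risk_of_bias", "imprecision", "inconsistency",
                     "indirectness", "publication_bias"}
UPGRADE_DOMAINS = {"large_effect", "dose_response", "plausible_confounding"}

def rate_certainty(randomised, rate_down=None, rate_up=None):
    """Simplified sketch: start at 'high' (randomised) or 'low' (observational),
    subtract levels for concerns, add levels for strengths, clamp to the scale."""
    rate_down = rate_down or {}
    rate_up = rate_up or {}
    unknown = (set(rate_down) - DOWNGRADE_DOMAINS) | (set(rate_up) - UPGRADE_DOMAINS)
    if unknown:
        raise ValueError(f"unknown domain(s): {sorted(unknown)}")
    start = LEVELS.index("high") if randomised else LEVELS.index("low")
    level = start - sum(rate_down.values()) + sum(rate_up.values())
    return LEVELS[max(0, min(level, len(LEVELS) - 1))]

# Hypothetical: one level each for risk of bias and imprecision in the RCT evidence.
glycaemic = rate_certainty(True, rate_down={"risk_of_bias": 1, "imprecision": 1})
# Hypothetical: observational evidence rated up one level for a large effect.
observational = rate_certainty(False, rate_up={"large_effect": 1})

print(glycaemic, "|", observational)
```

The point the sketch makes is the one the wavy lines convey: the starting tier depends on study design, but the final certainty can end up above or below where the design alone would place it.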

Rationale for modification 2

Another challenge to placing systematic reviews at the top of the evidence pyramid relates to the framework presented in the Journal of the American Medical Association Users' Guide on systematic reviews and meta-analysis. The Guide presents a two-step approach in which the credibility of the systematic review process is evaluated first (comprehensive literature search, rigorous study selection process, etc). If the systematic review is deemed sufficiently credible, a second step follows in which we evaluate the certainty in the evidence using the GRADE approach.11 In other words, a meta-analysis of well-conducted RCTs at low risk of bias cannot be equated with a meta-analysis of observational studies at higher risk of bias. For example, a meta-analysis of 112 surgical case series showed that in patients with thoracic aortic transection, mortality differed significantly by treatment: it was lowest after endovascular repair, followed by open repair and then non-operative management (9%, 19% and 46%, respectively; p<0.01). Clearly, this meta-analysis should not sit at the top of the pyramid alongside a meta-analysis of RCTs. After all, the evidence still consists of non-randomised studies and is likely subject to numerous confounders.

Therefore, the second modification to the pyramid is to remove systematic reviews from the top of the pyramid and use them as a lens through which other types of studies should be seen (ie, appraised and applied). The systematic review (the process of selecting the studies) and the meta-analysis (the statistical aggregation that produces a single effect size) are tools that stakeholders use to consume and apply the evidence.

Implications and limitations

Changing how patients, clinicians and other stakeholders perceive systematic reviews and meta-analyses has important implications. For example, the American Heart Association considers evidence derived from meta-analyses to have a level ‘A’ (ie, warrants the most confidence). Re-evaluation of evidence using GRADE shows that level ‘A’ evidence could be of high, moderate, low or very low quality.12 The quality of evidence drives the strength of recommendation, which is one of the last translational steps of research and the one most proximal to patient care.

One limitation of all ‘pyramids’ and depictions of evidence hierarchy relates to the constructs underpinning such schemas. The construct of internal validity may have varying definitions or be understood differently among evidence consumers. In addition, considering systematic reviews and meta-analyses as tools to consume evidence may undermine their role in new discovery (eg, identifying a new side effect that was not demonstrated in individual studies13).

This pyramid can also be used as a teaching tool. EBM teachers can compare it with existing pyramids to explain how certainty in the evidence (also called quality of evidence) is evaluated. It can be used to teach how evidence-based practitioners can appraise and apply systematic reviews in practice, and to demonstrate the evolution in EBM thinking and the modern understanding of certainty in evidence.

Footnotes

Contributors: MHM conceived the idea and drafted the manuscript. FA helped draft the manuscript and designed the new pyramid. MA and NA helped draft the manuscript.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Paul M, Leibovici L. Systematic review or meta-analysis? Their place in the evidence hierarchy. Clin Microbiol Infect 2014;20:97–100. doi:10.1111/1469-0691.12489

- 2. Agoritsas T, Vandvik P, Neumann I, et al. Finding current best evidence. In: Guyatt G, Rennie D, Meade MO, et al., eds. Users’ guides to the medical literature: a manual for evidence-based clinical practice. 3rd edn. New York, NY: McGraw-Hill, 2015:29–50.

- 3. Tomlin G, Borgetto B. Research Pyramid: a new evidence-based practice model for occupational therapy. Am J Occup Ther 2011;65:189–96. doi:10.5014/ajot.2011.000828

- 4. Resources for Evidence-Based Practice: The 6S Pyramid. Feb 18, 2016. http://hsl.mcmaster.libguides.com/ebm

- 5. Vandenbroucke JP. Observational research and evidence-based medicine: what should we teach young physicians? J Clin Epidemiol 1998;51:467–72. doi:10.1016/S0895-4356(98)00025-0

- 6. Berlin JA, Golub RM. Meta-analysis as evidence: building a better pyramid. JAMA 2014;312:603–5. doi:10.1001/jama.2014.8167

- 7. Dechartres A, Altman DG, Trinquart L, et al. Association between analytic strategy and estimates of treatment outcomes in meta-analyses. JAMA 2014;312:623–30. doi:10.1001/jama.2014.8166

- 8. Guyatt GH, Oxman AD, Vist GE, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 2008;336:924–6. doi:10.1136/bmj.39489.470347.AD

- 9. Murad MH, Coburn JA, Coto-Yglesias F, et al. Glycemic control in non-critically ill hospitalized patients: a systematic review and meta-analysis. J Clin Endocrinol Metab 2012;97:49–58. doi:10.1210/jc.2011-2100

- 10. Guyatt GH, Oxman AD, Sultan S, et al. GRADE guidelines: 9. Rating up the quality of evidence. J Clin Epidemiol 2011;64:1311–16. doi:10.1016/j.jclinepi.2011.06.004

- 11. Murad MH, Montori VM, Ioannidis JP, et al. How to read a systematic review and meta-analysis and apply the results to patient care: users’ guides to the medical literature. JAMA 2014;312:171–9. doi:10.1001/jama.2014.5559

- 12. Murad MH, Altayar O, Bennett M, et al. Using GRADE for evaluating the quality of evidence in hyperbaric oxygen therapy clarifies evidence limitations. J Clin Epidemiol 2014;67:65–72. doi:10.1016/j.jclinepi.2013.08.004

- 13. Nissen SE, Wolski K. Effect of rosiglitazone on the risk of myocardial infarction and death from cardiovascular causes. N Engl J Med 2007;356:2457–71. doi:10.1056/NEJMoa072761