Abstract

Objectives:

Implementation of interventions designed to improve the quality of medical care often proceeds differently from what is planned. Improving existing conceptual models to better understand the sources of these differences can help future projects avoid these pitfalls and achieve desired effectiveness. To inform an adaptation of an existing theoretical model, we examined unanticipated changes that occurred in an intervention designed to improve reporting of adjuvant therapies for breast cancer patients at a large, urban academic medical center.

Methods:

Guided by the complex innovation implementation conceptual framework, our study team observed and evaluated the implementation of an intervention designed to improve reporting to a tumor registry. Findings were assessed against the conceptual framework to identify boundary conditions and modifications that could improve implementation effectiveness.

Results:

The intervention successfully increased identification of the managing medical oncologist and treatment reporting. During implementation, however, unexpected external challenges including hospital acquisitions of community practices and practices’ responses to government incentives to purchase electronic medical record systems led to unanticipated changes and associated threats to implementation. We present a revised conceptual model that incorporates the sources of these unanticipated challenges.

Conclusion:

This report of our experience highlights the importance of monitoring implementation over time and accounting for changes that affect both implementation and measurement of intervention impact. In this article, we use our study to examine the challenges of implementation research in health care, and our experience can help future implementation efforts.

Keywords: Implementation research, intervention study, breast cancer therapies, innovation implementation, case study, tumor registries

Introduction

Implementation research is a burgeoning area of scientific inquiry focused on examining the process and contextual factors that affect the ability of a proven intervention to make evidence-based practices part of routine health care workflow.1 This approach is less concerned with the design and testing of either the intervention or the evidence-based practice it aims to promote than with understanding how interventions can be translated or scaled up in new environments. Implementation research has been applied successfully in a variety of settings to improve the uptake of proven interventions, ultimately benefiting care coordination, teamwork, quality reporting, and guideline adherence.2–6 However, in practice, intervention efforts still often proceed differently than planned despite the lessons learned from implementation science. This discrepancy contributes to the wide gap between the findings expected based on the results of scientific studies and the actual findings that result from the implementation of an innovation into clinical or organizational practice.7–15

Several challenges to implementing proven interventions contribute to this gap between actual and expected findings, including a lack of understanding by both clinicians and hospital managers of current research evidence,16 provider time constraints,17 competing priorities within health care organizations, unsupportive information technology,6 misaligned incentives,18 and organizational and cultural factors.4,5 One key challenge to implementation research is the constantly changing landscape in which interventions are put into practice, thus raising the possibility that observed outcomes may not actually be attributable to the intervention itself.19,20

Use of a theoretical model to guide implementation efforts is viewed as critical to overcoming these challenges and to facilitating successful uptake of an intervention.21 Yet, while models have been developed to clarify issues around the implementation of change processes,10,15,19,22,23 many models remain limited in their ability to address or account for the multiple moving parts of innovation implementation. In particular, models remain deficient in how they handle “unanticipated” challenges or threats to implementation. For example, the Consolidated Framework for Implementation Research (CFIR) acknowledges the role of anticipated change in the internal organizational context as a result of the intervention itself by predicting this co-evolution and suggesting the need for continuous re-evaluation of the internal environment.10 However, the CFIR does not conceptualize the role of unexpected events arising from the external, “outer setting” environment.24 Likewise, Flottorp et al.25 created a list of domains from 12 implementation frameworks, yet dealing with unpredicted challenges was not explicitly included in any. While several models encourage ongoing assessment and feedback, flexible models that directly address sources of uncertainty, both within and beyond organizational boundaries, may be helpful to improve implementation efforts and associated research.

In an effort to improve existing theory by incorporating unanticipated environmental changes, we examined the implementation process of an intervention developed as part of a larger study that aimed to improve reporting of the use of breast cancer adjuvant therapies.26 This larger study developed and tested an evidence-based intervention that sought to engage full-time hospital-based and community-based oncologists affiliated with a single academic medical center (AMC) in a large, urban area to improve their reporting of patients’ cancer treatments to the institution’s centralized tumor registry (TR). Adjuvant cancer therapy is often delivered by clinicians outside the hospitals that run the tumor registry, resulting in poor accuracy and under-reporting of adjuvant therapy use.27 Accurate reporting and measurement are critical to develop targeted interventions and quality improvement.28 The implementation of this intervention provided a useful case study to help researchers and practitioners respond to unanticipated challenges as they strive to improve the likelihood that well-designed interventions will be successfully implemented and their impacts appropriately measured.

New contribution

Building on prior research, we present this case study highlighting challenges to both intervention implementation and conducting implementation research. Framed by an established model of innovation implementation, we examined the implementation process for our evidence-based intervention to improve our understanding of implementation in health care delivery and to inform future efforts to implement innovative practices in health care organizations. In particular, we focus on how an established model was inadequately prepared to respond to unexpected events. Our findings provide important guidance about the process of studying implementation, including insights about critical issues to address when evaluating intervention effectiveness and impact.

Conceptual framework

We used the complex innovation implementation framework developed by Helfrich et al.29 to guide the implementation evaluation. Within this framework, complex innovations are those that are perceived as new by the adopter and require active coordinated use by multiple members to achieve organizational benefits. At the outset, we felt that this conceptual framework was appropriate for our study because of the nature of our intervention—an innovation that required coordinated use by multiple organizational members and involved the interplay of key organizational factors.

Using this conceptual model, the implementation process is defined as the transition period following the decision to adopt the intervention and during which users bring the innovation into sustained use. Implementation effectiveness is then defined as distinct from the effectiveness of the intervention itself and refers to the consistency and quality of collective innovation use. In practice, the assessment of the implementation effectiveness construct throughout various stages of the intervention can permit evaluation, for example, enabling determination of whether a failed innovation was the result of poor implementation or whether the innovation was successfully implemented but was nonetheless ineffective.

The innovation implementation model frames effective implementation as a function of management support and resource availability mediated by organizational policies and practices and by the implementation climate within the organization. Correspondingly, the implementation climate is influenced by innovation champions and by the fit between the innovation and users’ values. Also important is the concept of organizational climate, which refers to the shared perception that implementation of the innovation is a major organizational priority that is promoted, supported, and rewarded by the organization.29

The constructs of this model guided our implementation evaluation, as each domain was considered in an effort to ensure implementation effectiveness. We were interested in examining the implementation process for our planned intervention to gain further insight into challenges to and facilitators of implementation in this context, to identify boundary conditions of the implementation framework, and to inform future efforts to implement innovative practices in health care organizations.

Methods

Study setting

This study was conducted in a large, urban AMC that serves a high volume of breast cancer patients with its affiliated providers, including 23 surgical oncologists, 19 medical oncologists, and 3 radiation oncologists. At the time of the study, the hospital was attempting to achieve accreditation by the American College of Surgeons’ (ACoS) Commission on Cancer. Three-quarters of the oncologists who treat breast cancer patients were based in the community as part of solo and group practices, and one-quarter delivered care through faculty practices and resident clinics within the hospital.

Intervention development

Research team members conducted in-person interviews with 31 key informants affiliated with the AMC. Interviewees included hospital- and community-based oncologists and hospital cancer leaders recruited for participation based on their affiliation with the medical center. We used three versions of a semi-structured interview guide (clinical, non-clinical, and leadership) to conduct interviews. Study participants were asked about their awareness of, and willingness to report patient information to, the centralized TR in order to inform an intervention being designed to improve reporting of receipt of adjuvant therapies for breast cancer patients. Interviews lasted from 30 to 90 min and were recorded with participants’ permission. Recordings were transcribed verbatim.

Interviews were coded with both a priori and emergent codes, following the constant comparative method of qualitative data analysis based on grounded theory development.30 The coding team was led by a senior investigator and included a study principal investigator, a qualitative research investigator, and a research assistant. A preliminary coding dictionary was first developed based on broad topics from the key informant interview guide. This coding dictionary defined categories based on the domains of interview questions, such as “Current Process for Reporting Breast Cancer Therapy” or “Implementation Challenges and Facilitators.” This approach followed the standards of category development for rigorous qualitative analysis.31 The coding team met frequently throughout the coding process to discuss decisions about codes and emerging themes, allowing for the development of new codes based on topics discussed by key informants.31 These discussions helped to ensure consistency and accuracy of coding and to clarify emergent codes.

After interviews were conducted and analyzed, research team members convened a panel including one surgical and two medical community-based oncologists; one hospital radiation oncologist; the hospital tumor registrar, her assistant, and her administrator; and the deputy chief medical officer to discuss the findings and opportunities to improve tracking and feedback. This panel conducted a solution-focused exercise based on the barriers and facilitators identified from the key informant interviews to develop an intervention to improve communication between the tumor registrar and oncologists.3 The intervention was designed to solve problems associated with capture of cancer treatment information for patients diagnosed with cancer at the institution. Specifically, the intervention included steps to identify the surgeon, communicate with the surgeon’s office prior to surgery, request information regarding adjuvant treatment through multiple methods (e.g. phone, email, fax), and have weekly, ongoing communication with the surgeon’s office to encourage participation.3 The resultant intervention was implemented with one surgical oncologist at the AMC from April to May 2012.

Implementation process evaluation

Throughout the implementation process for the intervention described above, we monitored concurrent changes in the practice environment that could threaten the validity of the intervention, taking notes as a study team. We also collected information about our observations of the implementation process, including soliciting feedback through brief interviews from study participants involved in implementing and evaluating the intervention. Regular discussions among investigators regarding these notes and observations enabled us to form consensus about the results we present. The consensus issues identified from these notes and observations concerning the challenges faced during the implementation process were evaluated against the domains of the complex innovation implementation framework. Findings that did not fit within the existing domains were classified according to the environmental factors that influenced them.

Results

Intervention

The results from the intervention are not the primary subject of this article and are reported elsewhere in detail.3 However, a brief summary is useful for context. Prior to our intervention, the tumor registrar was unable to identify any of the pilot study patients’ managing medical oncologists, and the rate of adjuvant therapy treatment reporting at the study site during 2010–2011 was 2.6% (10 out of 387 patients). During the intervention period, 25 breast cancer patients who needed follow-up treatment and listed the participating surgical oncologist as their managing physician were identified from pathology. Following the intervention, the tumor registrar was able to identify the managing medical oncologist for 19 (76%) of the identified patients through continued communication with the surgeon’s staff, and treatment was determined for 16 (64%) of them, resulting in a significant increase in treatment reporting.
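As an illustration of the arithmetic behind these figures, the following minimal sketch recomputes the reporting rates from the counts given above and compares the pilot cohort with the 2010–2011 baseline. The choice of a two-sided Fisher exact test, and the treatment of the baseline and pilot cohorts as two independent groups, are assumptions made for illustration only; this summary does not state which test was used. All function and variable names are hypothetical.

```python
from fractions import Fraction
from math import comb

# Counts taken from the summary above.
BASELINE_REPORTED, BASELINE_TOTAL = 10, 387  # site-wide reporting, 2010-2011
PILOT_IDENTIFIED, PILOT_TREATMENT, PILOT_TOTAL = 19, 16, 25


def rate(numerator: int, denominator: int) -> float:
    """Proportion of patients meeting a criterion."""
    return numerator / denominator


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table (with the same
    margins) that is no more likely than the observed one.
    """
    row1 = a + b
    col1 = a + c
    total = a + b + c + d

    def pmf(k: int) -> Fraction:
        # Probability that row 1 contains exactly k column-1 outcomes.
        return Fraction(
            comb(col1, k) * comb(total - col1, row1 - k), comb(total, row1)
        )

    observed = pmf(a)
    k_min = max(0, row1 - (total - col1))
    k_max = min(row1, col1)
    tail = sum(
        p for p in (pmf(k) for k in range(k_min, k_max + 1)) if p <= observed
    )
    return float(tail)


baseline_rate = rate(BASELINE_REPORTED, BASELINE_TOTAL)
identified_rate = rate(PILOT_IDENTIFIED, PILOT_TOTAL)
pilot_rate = rate(PILOT_TREATMENT, PILOT_TOTAL)

# Assumed comparison: pilot (reported vs. not) against baseline (reported vs. not).
p_value = fisher_exact_two_sided(
    PILOT_TREATMENT,
    PILOT_TOTAL - PILOT_TREATMENT,
    BASELINE_REPORTED,
    BASELINE_TOTAL - BASELINE_REPORTED,
)

print(f"baseline reporting rate: {baseline_rate:.1%}")
print(f"oncologist identified:   {identified_rate:.0%}")
print(f"treatment determined:    {pilot_rate:.0%}")
print(f"Fisher exact p-value:    {p_value:.3g}")
```

Exact rational arithmetic is used for the probabilities so that the comparison against the observed table is not affected by floating-point rounding.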

Implementation process evaluation

Despite the improvements attributable to the intervention, our monitoring and assessment of the implementation process revealed both internal and external threats to the process of implementing our treatment reporting intervention. While internal threats to implementation had largely been anticipated and were consistent with our expectations about the implementation process, we also encountered external threats to implementation that created challenges that impacted both implementation and intervention effectiveness. We next discuss these threats in the context of the complex innovation implementation framework.29

Internal threats to implementation process

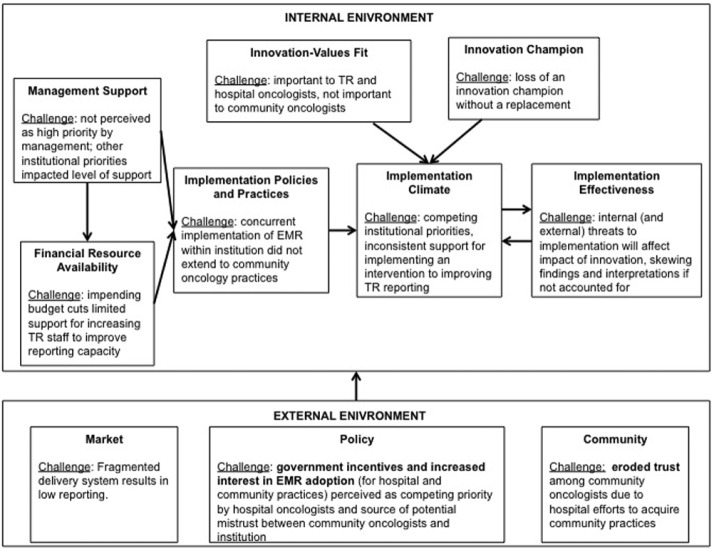

Using our conceptual model, we found internal threats to the implementation process that were largely consistent with the Helfrich innovation implementation framework (see Figure 1).29 First, the innovation implementation appeared to be threatened by the loss of an innovation champion, which likely impacted the implementation climate. While we had identified an innovation champion at the outset of the intervention project, this individual subsequently left the institution and no clear successor was identified after her departure. As a result, the implementation climate changed due to inconsistent support for the intervention.

Figure 1.

Conceptual framework for intervention implementation.

Source: adapted from the conceptual framework of complex innovation implementation.29

The second important threat involved innovation-values fit. Specifically, while the goal of improving cancer treatment reporting was important for both the AMC and the TR, this goal was not similarly valued by all of the physicians who needed to make changes to improve their cancer treatment reporting. Both hospital- and community-based oncologists noted that changes in their reporting processes created inconveniences for their practices, and few were eager to make needed modifications. Furthermore, while improving TR reporting was largely aligned with the values of hospital-based oncologists who understood that the TR could provide information that would be useful for their practice and potential research studies, the community-based oncologists did not share this goal. Oncologists in the community were concerned that changes in the reporting process could result in losing their identified oncology patients to the hospital-based oncologists and also felt that new processes could compromise the privacy of patients’ medical information.

Another threat to the implementation involved the implementation climate in the organization. At the time of the study, competing institutional priorities led to inconsistent support of and attention to the goal of improving cancer treatment reporting. Furthermore, as the organization had not declared the TR or TR reporting to be a top institutional priority, innovation implementation was not perceived as a high priority either, and this was reflected in poor management support of the intervention.

Financial resource availability was another factor impacting implementation as impending budget cuts threatened financial support for improving TR reporting as well as limiting support for hiring additional TR staff members who could help improve reporting capacity. Finally, implementation policies and practices also created a challenge for this intervention. At the time of the study, concurrent implementation of an electronic medical record (EMR) system institution-wide introduced the possibility that the TR would have better access to the treatment information for cancer patients treated by hospital-based oncologists, but as EMR implementation plans did not extend to community oncology practices, views about the potential impact of the EMR on the intervention were understandably mixed.

External threats to implementation process

In addition to factors that created internal threats to intervention implementation, we found three major external threats that impacted both the implementation process and the effectiveness of the intervention itself. First, market factors contributed to the low reporting and the need for the development and implementation of the TR reporting intervention. The fragmentation of health service delivery added an extra hurdle in implementing the intervention.

Second, concurrent with our implementation effort, the US government promoted incentives to encourage community-based physicians to purchase EMR systems for their practices. Although these incentives will result in more community physicians investing in EMRs, it remains unclear whether proliferation of EMR systems will improve cancer treatment reporting to the TR. New EMR capacity might permit TR staff to search EMR data for patients’ treatment information, but only if community oncologists grant TRs access to their external EMRs. In the context of our study, however, increased interest in EMR adoption and implementation created an additional threat to intervention implementation because it was perceived as another competing priority by hospital-based physicians and a source of potential mistrust between community-based oncologists and the hospital.

Third, adding to the mistrust created by these policy changes, changing health care reimbursement policies altered the relationship between local hospitals and physician practices in the surrounding area. With respect to our study and intervention, as hospitals moved to acquire community practices and build hospital market share, community oncologists’ trust in the study hospital and in any hospital-based intervention was weakened. Community oncologists became less willing to share the information needed for our intervention because of their expressed concerns about losing patients to the hospital.

Conceptual framework

Our evaluation of the implementation process identified internal and external contextual factors that may have threatened implementation. The internal challenges largely fit with the conceptual framework that guided our evaluation. In contrast, the complex innovation implementation framework was inadequate in its consideration of external threats to implementation effectiveness. Given the incongruence between our findings and the framework, we propose a modified conceptual framework that incorporates the internal domains originally conceptualized in the framework as well as the external domains identified in our analysis (see Figure 1). Specifically, the external domains in our modified model are market-, policy-, and community-level factors that affect the internal environment in ways that can mediate implementation effectiveness. These external domains could be considered as additions to the model but require further validation and testing.

Discussion

Implementation of a practice innovation designed to improve treatment reporting of breast cancer adjuvant therapies was hindered by both expected and unexpected barriers. In this case, unexpected external environmental challenges including hospital acquisitions of community practices contributed to deterioration of community oncologists’ trust and willingness to share data. Furthermore, practices’ responses to government incentives for community physicians to purchase EMR systems led to unexpected changes in information technologies and capabilities. If implementation evaluation did not take into account these unanticipated changes and associated threats to innovation implementation, measurement of the intervention’s impact would be skewed, thereby biasing findings that might have been inappropriately associated with failure or success of the intervention itself.

The constantly changing practice environment and a persistent inability to control practice conditions are key challenges for implementation research. For example, even if one were to develop an intervention that addressed all the implementation barriers previously identified26—in this case, improved awareness of the TR, increased communication between oncologists and the TR and so on—additional threats to the implementation process will likely emerge that are neither anticipated nor controllable (e.g. eroded trust, changes in competing priorities, market changes). These unexpected threats to implementation, however, can affect the innovation’s impact, thereby skewing findings and interpretation of results.

In addition, these constant changes make it nearly impossible to determine whether any benefits measured are a direct effect of the intervention being implemented. The Helfrich model chosen in this study did not account for external changes to the practice environment such as those threats we found to the implementation of our intervention.29 To our knowledge, this shortcoming is not unique to this model, and many implementation models overlook the role of environmental dynamism on implementation success.10,25 We are thus left to consider how we best measure the impact of an intervention, ensuring that we appropriately ascribe benefit to the intervention that is being implemented. More generally, in the context of measuring innovation and implementation effectiveness, how do we avoid measurement bias and ensure that findings are due to the implemented innovation?

Many of the issues that underlie the challenges in assessing these kinds of interventions may be addressed by employing statistical methods that explicitly take account of inherent complexities. Most of these complexities arise from temporal changes, both internal and external, that are not investigator controlled or modifiable. These complexities may be incorporated through mixed effects modeling that combines “fixed” effects that were planned and measured, with “random” effects that were not necessarily anticipated. Specifically, measured longitudinal changes that may confound the estimate of interventional impact can be included in models as random effects, thereby reducing error and bias in estimating the intervention’s effects on outcome. In this way, the threat to the integrity and validity of results from unplanned time-dependent factors (i.e. variables whose values change over time), regardless of the source, is reduced. For example, an EMR was implemented over time in different specialty clinics at the time of this study. In addition, it was offered to community-based physicians. There was both differential uptake of EMR in physician practices, and a learning curve associated with EMR use. This unanticipated factor, implementation of an EMR, can be included in a regression model as a fixed effect as well as a random effect of physicians to account for its variable uptake.
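One way to write down the EMR example above is as a mixed-effects logistic regression. The specification below is a sketch under stated assumptions rather than the model fitted in this study: the indices ($i$ for patient, $j$ for physician, $t$ for time period), the binary outcome $y_{ijt}$ (treatment reported to the TR or not), the logit link, and the covariate names are all illustrative choices.

```latex
\begin{aligned}
\operatorname{logit}\,\Pr(y_{ijt} = 1)
  &= \beta_0
   + \beta_1\,\mathrm{Intervention}_{jt}
   + \beta_2\,\mathrm{EMR}_{jt}
   + u_{0j}
   + u_{1j}\,\mathrm{EMR}_{jt}, \\
(u_{0j},\, u_{1j})^{\top}
  &\sim \mathcal{N}(\mathbf{0},\, \boldsymbol{\Sigma}).
\end{aligned}
```

Here $\beta_1$ is the intervention effect of interest and $\beta_2$ is the average (fixed) effect of EMR availability. The physician-level terms $u_{0j}$ and $u_{1j}$ allow both baseline reporting and the effect of the EMR to vary across physicians, which is one way to represent differential uptake and learning curves.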

Theoretical models are increasingly important in guiding the successful translation of research findings into practice.10,14,15,21,29 These models help researchers address an inherent challenge of implementation research: planning cannot control for every contingency in advance. They help steer research design to take into account confounding and mediating factors that will affect both the efficacy of an intervention and its effective implementation.9 However, these models do not explicitly account for unanticipated factors, leaving them unmeasured in research protocols. For example, the model we employed in this study, the complex innovation implementation framework, does not incorporate elements of the external environment. Our modifications to the model help to identify sources of external uncertainty that may jeopardize implementation efforts.

Inconsistent measurement of key factors that may be changing constantly also threatens the reliability of implementation research findings. To move forward with implementation research, it will be necessary to build in opportunities to accommodate unexpected changes and shifting external factors directly into theoretical models of implementation research design. In practice, these models will allow for the ongoing monitoring and evaluation of the implementation itself, including environmental scans, soliciting feedback, and conducting formative evaluations throughout the process.

Our experience suggests potential approaches for future implementation research that, with help from existing conceptual frameworks, can increase the accuracy and generalizability of implementation research findings. First, efforts should be made to quantify or “harden” soft data, including explicit measurement of organizational readiness for change and implementation climate.32,33 Second, changes in the outcome variable of interest over time should be examined, paying particular attention to temporal shifts and trends that might have been in place prior to implementation. Third, external factors, such as market- or policy-level changes, should be assessed throughout the implementation process. These factors had a major impact on the success of the current intervention designed to increase cancer reporting to the TR. Implementation research should attempt to assess both qualitatively and quantitatively the impact of these environmental changes on organizational change processes.

The use of an existing conceptual framework will help researchers to identify key variables to be monitored over time, including key characteristics that must be controlled for when evaluating the intervention’s success. Our revised model may help to steer researchers toward environmental factors to be identified that may affect the implementation process. Researchers may be able to determine these factors by considering, at the start, what are the deliverables of the innovation: What do we expect the innovation to achieve in the end? What would be the observable (tangible) benefits? Will the benefits be visible to those who have to implement the innovation? To those who have to support it? To patients? Furthermore, researchers should build time into their data collection strategy to permit longitudinal analysis. This approach will involve sequential measurement of key variables with ongoing monitoring throughout innovation implementation. These modifications to typical implementation research designs will help overcome bias associated with unmeasured mediators and confounders.

Most importantly, researchers must find ways to account for unmeasured effects on intervention effectiveness by hypothesizing post hoc about factors that could have affected the intervention (e.g. changing policies over time). Researchers engaged with the implementation may be able to identify these factors by discussing their observations during the course of the process. Another approach would be to track the intervention outcome over time and pre-specify variables that may influence the intervention’s outcome but that may also be changing over time. Furthermore, statistical methods using data simulation may be employed to evaluate the independent effects and contributions of these “moving parts” as the implementation proceeds.

To combat potential threats to the validity of intervention findings and to distinguish between the impact of an innovation and the effectiveness of its implementation in an ever-changing practice environment, researchers should apply rigorous approaches to monitor study protocols. Strategies can include (1) measuring and monitoring key components within the study environment that can affect the intervention’s intended outcome, (2) monitoring changes in outcome variables over time, (3) identifying potential confounders external to the study environment, and (4) collecting longitudinal measures of these key confounders.

Limitations

This study faces two key limitations. First, our findings are based on the implementation of the tumor registry reporting intervention with one physician. The experiences of this one physician may be unique, and our findings may not be generalizable to other populations. However, the challenges faced during the implementation at this single site make scaling up of the intervention questionable. The implementation challenges for small-scale interventions remain important to understand as these help to provide the evidence base for large-scale interventions.

Second, our methodological approach to identifying challenges related to intervention implementation relied largely on investigator observation. While this approach can provide important perspectives about the implementation process, it is subject to observer bias and subjectivity. Nonetheless, this approach can help to identify factors that may affect implementation and opportunities to revise existing implementation frameworks. Future research could evaluate implementation challenges through qualitative interviews that examine participants’ experience of the implementation process in order to validate our modifications to the complex innovation implementation framework.

Conclusion

Unexpected internal and external changes in policies or practices often occur during intervention implementation, threatening the validity of research findings. Our case study highlights the importance of identifying and monitoring changes that can affect the implementation process and, in turn, the intervention outcome. These factors must be measured and monitored over the course of implementation and accounted for analytically to reduce possible confounding of study results. Future research may consider developing an implementation framework that explicitly considers how to handle unanticipated events.

Footnotes

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval: Ethical approval for this study was obtained from the Institutional Review Board of the Icahn School of Medicine at Mount Sinai.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the National Cancer Institute Grant R21 CA132773.

Informed consent: Informed consent was obtained either in writing or via telephone from all subjects participating in the study.

References

- 1. Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manage Rev 1996; 21(4): 1055–1080.

- 2. Baker R, Camosso-Stefinovic J, Gillies C, et al. Tailored interventions to overcome identified barriers to change: effects on professional practice and health care outcomes. Cochrane Database Syst Rev 2010; 3: CD005470.

- 3. Bickell NA, Wellner J, Franco R, et al. New accountability, new challenges: improving treatment reporting to a tumor registry. J Oncol Pract 2013; 9(3): e81–e85.

- 4. Birken SA, Lee SY, Weiner BJ, et al. From strategy to action: how top managers’ support increases middle managers’ commitment to innovation implementation in health care organizations. Health Care Manage Rev 2015; 40(2): 159–168.

- 5. Birken SA, Lee SY, Weiner BJ, et al. Improving the effectiveness of health care innovation implementation: middle managers as change agents. Med Care Res Rev 2013; 70(1): 29–45.

- 6. McAlearney AS, Wellner J, Bickell NA. How to improve breast cancer care measurement and reporting: suggestions from a complex urban hospital. J Healthc Manag 2013; 58(3): 205–223; discussion 223–224.

- 7. Alexander JA. Quality improvement in healthcare organizations: a review of research on QI implementation. Washington, DC: Institute of Medicine, 2008.

- 8. Bryant J, Boyes A, Jones K, et al. Examining and addressing evidence-practice gaps in cancer care: a systematic review. Implement Sci 2014; 9(1): 37.

- 9. Curran GM, Bauer M, Mittman B, et al. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care 2012; 50(3): 217–226.

- 10. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 2009; 4: 50.

- 11. Feldstein AC, Glasgow RE. A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. Jt Comm J Qual Patient Saf 2008; 34(4): 228–243.

- 12. Glasgow RE, Bull SS, Gillette C, et al. Behavior change intervention research in healthcare settings: a review of recent reports with emphasis on external validity. Am J Prev Med 2002; 23(1): 62–69.

- 13. Glasgow RE, Lichtenstein E, Marcus AC. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health 2003; 93(8): 1261–1267.

- 14. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health 1999; 89(9): 1322–1327.

- 15. Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: issues in external validation and translation methodology. Eval Health Prof 2006; 29(1): 126–153.

- 16. Grimshaw JM, Eccles MP, Lavis JN, et al. Knowledge translation of research findings. Implement Sci 2012; 7: 50.

- 17. Cabana MD, Rand CS, Powe NR, et al. Why don’t physicians follow clinical practice guidelines? A framework for improvement. JAMA 1999; 282(15): 1458–1465.

- 18. Reschovsky JD, Hadley J, Landon BE. Effects of compensation methods and physician group structure on physicians’ perceived incentives to alter services to patients. Health Serv Res 2006; 41(4 Pt 1): 1200–1220.

- 19. Stetler CB, Legro MW, Wallace CM, et al. The role of formative evaluation in implementation research and the QUERI experience. J Gen Intern Med 2006; 21(Suppl. 2): S1–S8.

- 20. Stetler CB, McQueen L, Demakis J, et al. An organizational framework and strategic implementation for system-level change to enhance research-based practice: QUERI Series. Implement Sci 2008; 3: 30.

- 21. Davies P, Walker AE, Grimshaw JM. A systematic review of the use of theory in the design of guideline dissemination and implementation strategies and interpretation of the results of rigorous evaluations. Implement Sci 2010; 5: 14.

- 22. Hagedorn H, Hogan M, Smith JL, et al. Lessons learned about implementing research evidence into clinical practice. Experiences from VA QUERI. J Gen Intern Med 2006; 21(Suppl. 2): S21–S24.

- 23. Sales A, Smith J, Curran G, et al. Models, strategies, and tools. Theory in implementing evidence-based findings into health care practice. J Gen Intern Med 2006; 21(Suppl. 2): S43–S49.

- 24. Ploeg J, Davies B, Edwards N, et al. Factors influencing best-practice guideline implementation: lessons learned from administrators, nursing staff, and project leaders. Worldviews Evid Based Nurs 2007; 4(4): 210–219.

- 25. Flottorp SA, Oxman AD, Krause J, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci 2013; 8: 35.

- 26. Bickell NA, McAlearney AS, Wellner J, et al. Understanding the challenges of adjuvant treatment measurement and reporting in breast cancer: cancer treatment measuring and reporting. Med Care 2013; 51(6): e35–e40.

- 27. Bickell NA, Chassin MR. Determining the quality of breast cancer care: do tumor registries measure up? Ann Intern Med 2000; 132(9): 705–710.

- 28. Edwards BK, Brown ML, Wingo PA, et al. Annual report to the nation on the status of cancer, 1975–2002, featuring population-based trends in cancer treatment. J Natl Cancer Inst 2005; 97(19): 1407–1427.

- 29. Helfrich CD, Weiner BJ, McKinney MM, et al. Determinants of implementation effectiveness: adapting a framework for complex innovations. Med Care Res Rev 2007; 64(3): 279–303.

- 30. Strauss A, Corbin J. Basics of qualitative research: techniques and procedures for developing grounded theory. Thousand Oaks, CA: SAGE, 1998.

- 31. Constas M. Qualitative analysis as a public event: the documentation of category development procedures. Am Educ Res J 1992; 29: 253–266.

- 32. Shea CM, Jacobs SR, Esserman DA, et al. Organizational readiness for implementing change: a psychometric assessment of a new measure. Implement Sci 2014; 9: 7.

- 33. Weiner BJ. A theory of organizational readiness for change. Implement Sci 2009; 4: 67.