Significance

As Harper Lee tells us in To Kill a Mockingbird, “You never really understand a person until you consider things from his point of view, until you climb in his skin and walk around in it.” Classic theories in social psychology argue that this purported process of social simulation provides the foundations for self-regulation. In light of this, we investigated the neural processes whereby humans may regulate their affective responses to an event by simulating the way others would respond to it. Our results suggest that during perspective-taking, behavioral and neural signatures of negative affect indeed mimic the presumed affective state of others. Furthermore, the anterior medial prefrontal cortex—a region implicated in mental state inference—may orchestrate this affective simulation process.

Keywords: perspective-taking, emotion regulation, mPFC, simulation, amygdala

Abstract

Can taking the perspective of other people modify our own affective responses to stimuli? To address this question, we examined the neurobiological mechanisms supporting the ability to take another person’s perspective and thereby emotionally experience the world as they would. We measured participants’ neural activity as they attempted to predict the emotional responses of two individuals that differed in terms of their proneness to experience negative affect. Results showed that behavioral and neural signatures of negative affect (amygdala activity and a distributed multivoxel pattern reflecting affective negativity) simulated the presumed affective state of the target person. Furthermore, the anterior medial prefrontal cortex (mPFC)—a region implicated in mental state inference—exhibited a perspective-dependent pattern of connectivity with the amygdala, and the multivoxel pattern of activity within the mPFC differentiated between the two targets. We discuss the implications of these findings for research on perspective-taking and self-regulation.

The ability to respond adaptively in the face of emotionally challenging situations is essential to mental and physical health. So much so, in fact, that emotion dysregulation is a core feature of virtually every form of psychopathology. Given this, it isn’t surprising that the last decade has seen enormous growth in behavioral and brain research asking how we can effectively regulate our emotions. Although this work has made many important advances (1, 2), it has focused almost entirely on cognitive regulatory strategies that involve controlling attention to and/or rethinking the meaning of stimuli and events. As such, this work has completely overlooked the way in which social cognitive processes can be used to regulate our emotions.

The use of social cognition to regulate emotion was suggested by classic works in social psychology (3), which noted that by simulating others’ perspective on the world we could shape our own experience and behavior. It is exemplified by “(Stanislavski) method actors” who understand a role by attempting to generate within themselves the presumed thoughts and feelings of a character, thereby allowing themselves to go beyond the written words in the script and respond as their character would (4). It is also present in everyday life when we seek guidance with respect to emotional dilemmas by asking ourselves how a friend, family member, mentor or religious figure (e.g., “What would Jesus do?”) would respond in that situation.

In the current research, we asked whether and how taking the perspective of other people can modify our own affective responses to stimuli. For example, by thinking of how someone braver than ourselves would respond to a situation, we might down-regulate negative emotions, decrease aggression, and calm frazzled nerves. Alternatively, by thinking of how someone more sensitive and anxious would respond to the situation, we might enhance vigilance and increase reactivity to threatening situations.

To address these possibilities, we conducted a neuroimaging experiment investigating whether seeing the world through the eyes of a “tough” vs. a “sensitive” person can down-regulate or up-regulate affective responding, respectively. Furthermore, we sought to delineate the neural mechanisms by which such perspective-dependent regulatory consequences transpire.

Although no prior work has addressed these questions, per se, the literatures on emotion regulation (1, 5–11) and perspective-taking (12–18) can be integrated to generate testable hypotheses. On one hand, research on emotion regulation has shown that activity in lateral prefrontal cortex (i.e., dorsolateral prefrontal cortex and ventrolateral prefrontal cortex) and middle medial prefrontal cortex (i.e., presupplementary motor area, anterior ventral midcingulate cortex, and anterior dorsal midcingulate cortex) (19) supports the use of cognitive strategies to modulate activity in (largely) subcortical systems for triggering affective responses, such as the amygdala, thereby altering individuals’ emotional responses (2). On the other hand, research on perspective-taking has shown that drawing inferences about the mental states of others (also known as “mentalizing”)—as would be involved in simulating their perspective on an event—is supported by a network of regions centered on the anterior medial frontal cortex, specifically, the pregenual anterior cingulate cortex (pgACC) and the dorsomedial prefrontal cortex (dmPFC) (13, 19, 20).

Based on this literature, we formulated two hypotheses. First, we predicted that by taking the perspective of a target person, an individual could change behavioral and brain markers of affective responding, thereby providing evidence that one is emotionally experiencing the world the way the target would. Second, we predicted that these regulatory effects would be supported not by lateral prefrontal regions implicated in attentional and cognitive control, but rather, by dorsomedial prefrontal regions involved in perspective-taking. Put another way, we predicted that perspective-taking related activity in the anterior mPFC would regulate activity in neural systems for affective responding.

To test these hypotheses, we collected whole-brain fMRI data while participants attempted to predict the affective responses of other individuals. Before scanning, participants were presented with descriptions of two people, whom they were led to believe had previously participated in the experiment. These descriptions suggested that one person was likely to be emotionally sensitive and squeamish, whereas the other was likely to be rugged and tough. Next, participants viewed neutral and negative affect-inducing images and evaluated the images from either their own or the tough or sensitive targets’ perspective.

We examined the effect of perspective-taking on multiple behavioral and brain markers of affective responding, including reports of the target’s predicted affective reactions to stimuli, activation in the amygdala (which is the brain region most strongly associated with detecting, encoding, and promoting responses to affectively relevant and especially potentially threatening stimuli) (21, 22), and finally, a recently identified picture-induced negative emotion signature (PINES) (23). PINES is a distributed, whole-brain multivoxel activation pattern developed using machine learning techniques that can reliably predict levels of negative affect elicited by aversive images. Because this signature is not affected by general arousal and is not reducible to activity in the amygdala, it provides a neural marker of negative affect independent of participants’ own self-reports. We predicted that both neural measures of negative affective responding (amygdala and PINES) would simulate the presumed affective state of the target person; namely, negative affect-related activity would be up- vs. down-regulated for the sensitive (vs. tough) perspective.

To address the prefrontal systems that might support perspective-taking and regulate affective responding, we used a combination of connectivity and multivoxel pattern analyses to identify a brain region whose activity was associated with amygdala up-regulation when adopting the sensitive perspective and/or down-regulation for the tough perspective—and whose distributed pattern of activity provided evidence that it differentially represented the two perspectives. As noted, we predicted this region to be located in the anterior mPFC.

Results

Does Perspective-Taking Modulate Affective Processing?

Behavioral ratings.

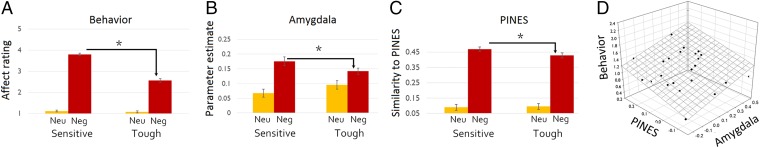

A manipulation check showed that participants reported more negative affect in response to negative than to neutral images [F(1,23) = 572.56, P < 0.001]. We conducted an ANOVA to test whether the perspective manipulation indeed altered participants’ predicted affective responses. The results showed a significant interaction [F(1,23) = 202.08, P < 0.001], such that affect ratings were lower when participants viewed negative images from the perspective of the tough (mean = 2.564, SD = 0.118) vs. the sensitive target [mean = 3.793, SD = 0.103; t(23) = 12.60, P < 0.001]. There was no significant difference in ratings for neutral images from the perspective of the tough (mean = 1.071, SD = 0.021) and sensitive (mean = 1.117, SD = 0.028) targets [t(23) = 1.58, P = 0.126; Fig. 1]. There were also no significant differences in response latencies for the sensitive (mean = 921.57, SD = 129.19) and tough (mean = 941.90, SD = 120.97) perspectives [t(23) = 0.8; not significant].

Fig. 1.

(A) Behavioral ratings of negative affect in response to negative images were higher for sensitive (vs. tough) targets. (B) Right amygdala response to negative images was higher when adopting the sensitive (vs. the tough) perspective. (C) When participants adopted the sensitive (vs. tough) perspective, their neural response to negative images reflected higher levels of negative affect, measured as the level of similarity to the PINES pattern. Error bars denote within-participant SEs. (D) Participants who exhibited a greater difference in amygdala activity and PINES expression for the tough vs. sensitive target subsequently estimated greater differences in predicted negative affect for these targets.

For negative images, affect ratings from the self perspective (mean = 3.140, SD = 0.496) were higher than those for the tough target [t(23) = 5.08, P < 0.001] and lower than those for the sensitive target [t(23) = 8.10, P < 0.001]. For neutral images, affect ratings from the self perspective (mean = 1.058, SD = 0.104) did not differ from the tough perspective [t(23) = 0.48, not significant] and were lower than those for the sensitive perspective [t(23) = 2.63, P = 0.015].

Based on participants’ affect ratings for the tough, sensitive, and self perspectives, we calculated for each participant a measure of “similarity to sensitive/tough target” that indexed the extent to which affect ratings from the self perspective were more similar to one target or the other. This measure, along with other neural and self-report measures of self-other similarity, indicated that, overall, participants did not identify more with one perspective or another and that the level of self-other similarity did not modulate our key measures (see SI Experimental Procedures, Fig. S1, and Table S1 for details of these analyses).

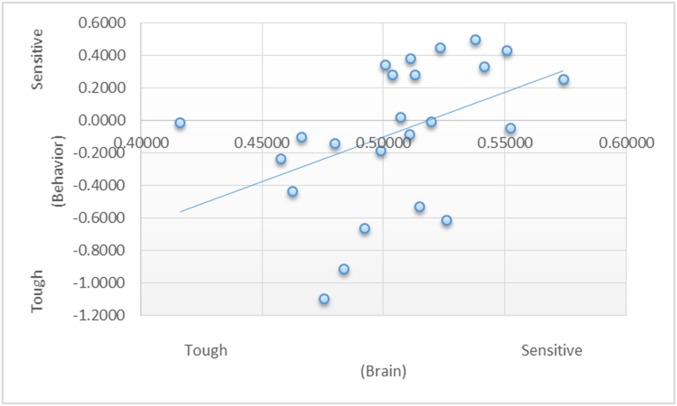

Fig. S1.

Correlation between the multivariate neural measure of self-other similarity and the behavioral measure of self-other similarity.

Table S1.

Whole-brain comparisons

| Contrast | x, y, z | K | Maximum t | Mean t | Region |
| --- | --- | --- | --- | --- | --- |
| Other > self | 3, −72, 27 | 1,325 | 8.805779 | 4.067774 | PCC |
| | 39, −66, 31 | 345 | 5.192857 | 3.44621 | rTPJ |
| | −7, 57, −11 | 352 | 4.58128 | 3.026215 | mPFC |
| | −38, −70, 42 | 396 | 4.155125 | 2.967491 | lTPJ |
| Self > other | None identified | | | | |
| Tough > sensitive | None identified | | | | |
| Sensitive > tough | None identified | | | | |

P < 0.05, family-wise error-corrected.

Amygdala analysis.

As a first step in examining whether perspective-taking modulates affective processing, we defined the right and left amygdala as anatomical regions of interest based on the Harvard-Oxford probabilistic atlas (using voxels with a 50% or higher probability of being labeled as the amygdala) and extracted parameter estimates for the six conditions (negative/neutral × sensitive/tough/self). As predicted, in the left amygdala, when participants observed the images from their own perspective, activation was higher for negative (mean = 0.177, SD = 0.167) than for neutral (mean = 0.086, SD = 0.148) images [t(23) = 2.77, P = 0.005]; likewise, in the right amygdala, activation was higher for negative (mean = 0.149, SD = 0.149) than for neutral (mean = 0.087, SD = 0.112) images [t(23) = 2.15, P = 0.020].

After establishing that amygdala activity is responsive to the presentation of aversive images when viewing them from one’s own perspective, we asked whether the amygdala was modulated when taking a tough or sensitive perspective. To do so, we conducted a 2 × 2 ANOVA with perspective (sensitive/tough) and valence (negative/neutral) as within-participant factors. As predicted, the results showed an interaction of perspective and valence in both the right [F(1,23) = 6.77, P = 0.007, partial η² = 0.227] and the left amygdala [F(1,23) = 2.96, P = 0.049, partial η² = 0.114]. In the right hemisphere, amygdala activation was lower when viewing negative images from the perspective of the tough (mean = 0.141, SD = 0.098) vs. the sensitive target (mean = 0.175, SD = 0.120) [t(23) = 2.02, P = 0.027]; there was no significant difference in activation for neutral images from the perspective of the tough (mean = 0.095, SD = 0.092) and sensitive (mean = 0.067, SD = 0.089) targets [t(23) = 1.38, P = 0.180]. In the left hemisphere, there was a marginally significant effect wherein amygdala activation was lower when viewing negative images from the perspective of the tough (mean = 0.175, SD = 0.130) vs. sensitive target (mean = 0.200, SD = 0.129) [t(23) = 1.45, P = 0.079]. There was no significant difference in activation for neutral images from the perspective of the tough (mean = 0.100, SD = 0.117) and sensitive (mean = 0.078, SD = 0.105) targets [t(23) = 1.04, P = 0.306]. For both negative and neutral images, the self perspective did not differ from the sensitive perspective in either the right or left amygdala (P > 0.27); likewise, the self perspective did not differ from the tough perspective in either the right or left amygdala (P > 0.64), suggesting that the perspective × valence interaction was not driven solely by either the tough or sensitive perspective.

Although the amygdala was more active for negative vs. neutral images when viewed from the self’s perspective, it could be argued that different subregions of the amygdala may be differentially engaged under the self and other conditions. To address this concern, we conducted a whole-brain search based on the self negative > self neutral contrast. This contrast yielded significant activation across several brain regions, including the left and right amygdala, which we then masked with anatomically defined amygdala regions based on the Harvard-Oxford probabilistic atlas. The interaction of perspective and valence remained significant in the right amygdala cluster [47 voxels, peak coordinate, x = 18, y = −3, z = −18; F(1,23) = 5.25, P = 0.015]; however, the interaction in the left amygdala (102 voxels, peak MNI coordinate, x = −12, y = −6, z = −18) did not attain significance [F(1,23) = 1.25, P = 0.136]. In light of this, we limited our subsequent analyses to the right amygdala cluster.

PINES analysis.

Another concern is that, although amygdala activation is strongly associated with the processing of negatively valenced stimuli, it is sometimes activated when processing positive stimuli (24), which may reflect a more general role for the amygdala in detecting and encoding goal-relevant stimuli (25–27). These findings suggest that the amygdala’s role in negative affect may be indirect, which complicates attempts to rely on its activation as a neural marker of negative affective responses.

In light of this, we sought to strengthen our claim that emotional perspective-taking modulates negative affective processing by using the recently identified PINES (23). The PINES is a whole-brain activation pattern developed using machine learning techniques that can reliably predict self-reported emotional responses to aversive images. As noted, prior work (23) has shown that this signature is not affected by general arousal and is not reducible to patterns of activity in the amygdala. Thus, it provides an independently validated neural marker of experienced affective negativity.

We first validated the PINES method in the current dataset by showing that the PINES expression score was significantly higher when observing negative (mean = 0.490, SD = 0.192) vs. neutral (mean = 0.069, SD = 0.152) images from the perspective of the self [t(23) = 11.24, P < 0.001]. Furthermore, regardless of perspective, the PINES score was higher for negative (mean = 0.462, SD = 0.175) vs. neutral (mean = 0.094, SD = 0.156) image-viewing conditions [F(1,23) = 349.18, P < 0.001]. Having established that the PINES pattern differentiates images as a function of their negativity in our dataset, we investigated the effect of perspective (sensitive/tough) and valence (negative/neutral) on the degree of affective negativity, as gauged by the PINES expression score. As predicted, there was an interaction between perspective and valence, mirroring the effect in the amygdala [F(1,23) = 7.72, P = 0.005]. The PINES expression score was lower when viewing negative images from the perspective of the tough (mean = 0.428, SD = 0.173) vs. sensitive target (mean = 0.468, SD = 0.188) [t(23) = 1.83, P = 0.039]; there was no significant difference in expression for neutral images from the perspective of the tough (mean = 0.095, SD = 0.092) vs. the sensitive (mean = 0.067, SD = 0.089) targets [t(23) = 1.28, P = 0.210]. The PINES calculation was done on the trial-based model that we used for all multivariate analyses [PINES, multivoxel pattern analysis (MVPA), and pattern similarity analysis]. The results are identical when using an aggregated-trial model [valence × perspective interaction: F(1,23) = 7.72, P = 0.005; see SI Experimental Procedures for further details concerning the PINES method].

Brain-behavior correlation.

Having established that the different measures of affective response are each impacted by the perspective taken, an important next question was whether and how they are related. In particular, it is important to demonstrate that one or both of the neural measures (amygdala activity and/or PINES score) predict self-reports of negative affective experience, as such correlations would support the idea that the neural regions supporting simulation of the tough vs. sensitive target’s emotions lead to changes in reports of affective experience.

To address this issue, we calculated, for each participant and for each measure of affective response, an effect score (i.e., the behavioral effect, PINES effect, and amygdala effect) defined as the difference between negative and neutral conditions for the sensitive vs. tough perspectives [i.e., sensitive (negative − neutral) − tough (negative − neutral)]. As predicted, the results showed that participants who exhibited a greater difference in amygdala activity for the tough vs. sensitive target subsequently exhibited a greater difference in their behavioral evaluations of the affective states of these targets (r = 0.38, P = 0.033). Likewise, participants who exhibited a greater difference in PINES scores for the tough vs. sensitive target subsequently exhibited a greater difference in their behavioral evaluations of the affective states of these targets (r = 0.39, P = 0.014). Interestingly, there was no correlation between the PINES effect and amygdala effect (r = 0.01), as would be expected based on prior work establishing the PINES that suggested they could be independent predictors of negative affect (23). A multiple regression with both the PINES effect and amygdala effect as predictors and the behavioral effect as the dependent variable showed a significant effect for the PINES (b = 1.193, SE = 0.562, 95% CI: 0.023, 2.364, P = 0.046) and a marginally significant effect for the amygdala (b = 0.742, SE = 0.363, 95% CI: −0.103, 1.499, P = 0.053; R² = 0.295). Thus, our results suggest that each of the two patterns made a unique contribution to changing reports of affective responding.
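As a minimal illustration of the effect score just described, the following Python sketch computes the sensitive (negative − neutral) − tough (negative − neutral) contrast and a Pearson correlation between two sets of such scores. The function names and the dictionary layout are hypothetical and are not taken from the authors' analysis code. The example input uses the group-mean affect ratings reported in the behavioral results, whereas the actual analysis used each participant's own condition values.

```python
import math

def interaction_effect(means):
    """Return sensitive (negative - neutral) - tough (negative - neutral).

    `means` maps (perspective, valence) pairs to a condition mean
    (a hypothetical layout chosen for this sketch).
    """
    sensitive = means[("sensitive", "negative")] - means[("sensitive", "neutral")]
    tough = means[("tough", "negative")] - means[("tough", "neutral")]
    return sensitive - tough

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Group-mean affect ratings reported in the behavioral results.
group_ratings = {
    ("sensitive", "negative"): 3.793,
    ("sensitive", "neutral"): 1.117,
    ("tough", "negative"): 2.564,
    ("tough", "neutral"): 1.071,
}
behavioral_effect = interaction_effect(group_ratings)
```

Given per-participant lists of effect scores, a call such as `pearson_r(amygdala_effects, behavioral_effects)` would yield a brain-behavior correlation of the kind reported here (both list names are placeholders).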

What Are the Neural Systems That Support the Perspective-Based Modulation of Affective Processing?

Psychophysiological interaction.

To identify regions that may play a key role in the perspective-taking–based regulation of amygdala activity, we conducted a psychophysiological interaction (PPI) analysis (28). This analysis was done by creating regressors for each of the experimental conditions, the amygdala time series, and interaction terms for the amygdala time series and the experimental conditions. The difference of the relevant PPI-term regression coefficient, i.e., [(amygdala time series) × (sensitive negative)] > [(amygdala time series) × (tough negative)] was then subjected to a second-level random effects analysis, which also included a between-participants covariate coding for the average difference in affect rating across conditions.

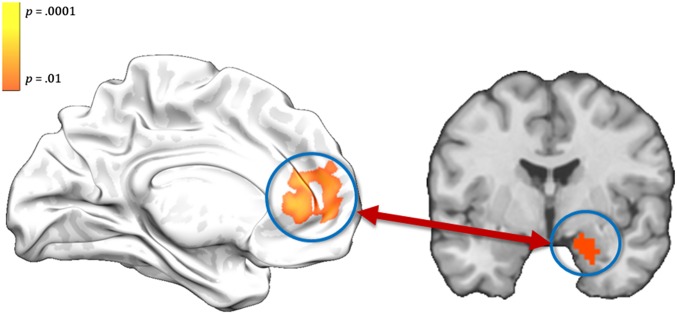

This analytical strategy allowed us to identify regions that were more positively correlated with right amygdala activation during sensitive negative trials and/or more negatively correlated with amygdala activity during tough negative trials, and that exhibited this pattern more strongly for participants who displayed greater perspective-related modulation of affective response (i.e., a greater behavioral effect). The resulting analysis yielded a cluster of 203 voxels in the anterior mPFC (specifically, pgACC and dmPFC; peak MNI coordinate, x = −9, y = 54, z = 15; Fig. 2), which survived the P < 0.05, whole-brain corrected significance threshold determined by AlphaSim. Masking out this anterior mPFC cluster did not alter the results of the PINES analysis.

Fig. 2.

The right amygdala cluster identified by contrasting the processing of negative and neutral images from the self’s perspective (Right), and the anterior mPFC region that was implicated in perspective-based regulation of amygdala activity (Left). The results suggest that the anterior mPFC up- or down-regulated amygdala activity as a function of the perspective (sensitive vs. tough) that participants adopted.

In other words, participants who showed the greatest perspective-dependent modulation of affective experience also showed the greatest perspective-dependent modulation of the anterior mPFC-amygdala pathway. More specifically, our results showed that participants who showed the greatest perspective-dependent modulation of affect ratings showed a negative coactivation pattern between the anterior mPFC and the amygdala when adopting the tough perspective.

MVPA.

If the anterior mPFC cluster identified in the PPI analysis is indeed responsible for the perspective-based modulation of amygdala activity, then the multivoxel pattern of activity in this region during image viewing could be expected to contain information that can discriminate whether participants were taking the perspective of the tough or sensitive target. To test this, we conducted an MVPA examining classification accuracy in the anterior mPFC cluster. As predicted, the classifier was able to predict the perspective participants were taking with a mean accuracy of 54.60% (SD = 6.79), which significantly differed from chance performance [t(23) = 3.32, P = 0.001]. There was no difference in overall average levels of activity in this cluster between the sensitive and tough conditions [t(23) = 0.37, P = 0.709; see SI Experimental Procedures for further details concerning MVPA analyses].

SI Experimental Procedures

Imaging Procedure.

Data were collected with a 3-T GE MR750 magnet using the standard 32-channel head coil. Before the experiment, high-resolution anatomical images [spoiled gradient recalled (SPGR); 1-mm³ resolution using sagittal slices, with a 256 × 256 within-slice acquisition matrix] were obtained. Functional images were acquired with a T2*-sensitive echo planar imaging (EPI) BOLD sequence. Forty-five 3-mm-thick slices were collected parallel to the anterior commissure (AC)–posterior commissure (PC) line, with a TR of 2,000 ms (TE = 25 ms, flip angle = 77°, field of view = 19.2 cm). Head motion was minimized by using cushions arranged around each participant's head and by explicitly guiding participants before they entered the scanner. Imaging data were preprocessed using SPM8 (Wellcome Department of Cognitive Neurology). A slice-timing correction to the first slice was performed, followed by realignment of the images to the first image. Next, data were spatially normalized to an EPI template based on the MNI305 stereotactic space. Normalized images were interpolated to 2-mm³ voxels and smoothed with a 6-mm full-width-at-half-maximum (FWHM) Gaussian kernel.

Imaging Analysis.

General analysis flow.

We used a multistage, multimethod analysis scheme. At the first stage, we sought to determine whether perspective-taking modulates affective processing. To this end, we used two converging measures: analysis of activity in the amygdala and the expression of a distributed, multivoxel pattern that was independently shown to accurately predict levels of negative affect (PINES) (22) elicited by photographic images drawn from the set used in this study (i.e., the IAPS) (39). At a second stage, we sought to identify the neural mechanisms that are involved in the perspective-dependent regulation of affective responding. To this end, we conducted a PPI analysis to identify regions that exhibited perspective-dependent coupling with the amygdala. Finally, we sought to verify whether the regions identified in the PPI analysis were indeed involved in perspective-taking. To this end, we conducted an MVPA to determine whether multivariate patterns of activity within the regions discovered using PPI distinguished between the sensitive and tough targets.

Amygdala activity.

To investigate the effects of perspective on affective processing, we analyzed neural activity in the amygdala. First-level general linear model (GLM) analyses were implemented in NeuroElf (neuroelf.net). Cue, stimulus-viewing, and response portions of each trial were modeled as boxcar regressors convolved with the canonical hemodynamic response function: 3 different regressors for each of the 3 cues, 6 different regressors for the image viewing conditions (negative/neutral crossed with self/tough/sensitive perspective) and a single regressor for all rating periods. A high-pass temporal filter (128-s cutoff, discrete cosine transform basis set), and estimates of global signal in white matter, gray matter, and cerebrospinal fluid were included as regressors of no interest. Following GLM computation for each participant, second-level random-effects analyses were performed on the group data. Significant clusters were identified using joint voxel and extent thresholds that preserved an α of less than 0.05, as determined by AlphaSim (1,000 iterations; smoothness estimated at 11.5 mm from the residual of the corresponding second-level regression), implemented in NeuroElf (uncorrected P < 0.02 and 253 contiguous voxels). The amygdala was defined based on the self negative > self neutral contrast, masked with anatomically defined amygdala regions based on the Harvard-Oxford probabilistic atlas (using voxels with a 50% or higher probability of being labeled as the amygdala).

PINES.

A second way in which we investigated the effects of perspective-taking on affective processing was by using the PINES method (22). This method provides a sensitive objective measure of participants’ level of negative affect in response to negative-affect–inducing images. The complete details pertaining to the development of the PINES measure and its validation appear in ref. 22.

Because the current study is among the first to use the PINES method since its publication, we briefly describe it here. Chang et al. (23) developed the PINES on the basis of a dataset that included 182 participants who viewed affect-inducing IAPS images in the scanner. After viewing each image, participants rated their affective experience on a scale from 1 (neutral) to 5 (very bad). At the first stage of their analysis flow, the authors performed a first-level GLM analysis wherein affect ratings were used to predict BOLD response, by creating one regressor (trial type) per rating value (on a five-point Likert scale). The dataset was then divided into a training (n = 121) and a test (n = 61) subset. The training data served as the input for a machine learning method (specifically, least absolute shrinkage and selection operator and principal components regression) that found an abstract pattern of whole-brain neural activity that best predicts reports of negative affect. The resulting pattern (the PINES) is a whole-brain map wherein each voxel contains a weight; higher positive values represent a greater contribution to higher predicted negative affect, and more negative values represent a greater contribution to lower predicted negative affect. Put another way, the degree to which whole-brain activation maps resembled (positively correlated with) the PINES map was predictive of greater reported negative affect. Importantly, the most predictive voxels of the PINES map are distributed throughout the brain and are not concentrated in classic affective processing regions such as the amygdala.

After the generation of the PINES map, it was applied to the test dataset, to investigate its applicability as a reliable measure of picture-induced negative affect. The results of this analysis showed that the degree to which neural activity was similar to the PINES pattern could serve to predict whether participants reported experiencing a medium or high degree of negative affect on 91% of the trials and whether they reported experiencing a low or medium degree of negative affect on close to 100% of the trials. Furthermore, the authors demonstrated the discriminant validity of the PINES pattern, by showing that it does not relate to participants’ rating of experienced pain in response to thermal stimulation. Thus, the degree to which neural activity resembles the PINES map provides researchers with a highly sensitive objective measure of degree of negative affect elicited by IAPS images.

In the current study, we calculated the degree of similarity to the PINES pattern across our experimental conditions. To that end, we analyzed our data using a trial-based model, in which each of the 108 experimental trials was modeled separately for each participant, resulting in 108 whole-brain coefficient maps. These models were created by iteratively specifying a regressor for the particular trial of interest alongside a common set of regressors for all remaining trials. Parallel to the second-level contrast analysis, trials were modeled on the basis of the image viewing period with a 6-s boxcar function convolved with the canonical hemodynamic response function. A high-pass filter with a cutoff of 128 s was used. The trial-based model was used for all multivariate analyses (i.e., PINES, MVPA, and the pattern similarity analysis). Calculating the PINES on the basis of an aggregated-trial model yielded identical results, validating the trial-based estimation procedure described herein.
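To make the regressor construction concrete, the sketch below builds a 6-s boxcar sampled at a 2-s TR and convolves it with a double-gamma hemodynamic response function. The double-gamma parameters (gamma shapes of 6 and 16, undershoot ratio of 1/6, 32-s length) are commonly used defaults and are an assumption here, since the text does not report the exact function implemented in NeuroElf. All function names, the onset time, and the number of scans are hypothetical.

```python
import math

def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

def double_gamma_hrf(tr, length=32.0):
    """Double-gamma HRF sampled every `tr` seconds (assumed parameters)."""
    n = int(length / tr) + 1
    hrf = [gamma_pdf(i * tr, 6.0) - gamma_pdf(i * tr, 16.0) / 6.0
           for i in range(n)]
    total = sum(hrf)
    return [h / total for h in hrf]  # normalize to unit sum

def boxcar(n_scans, onset_s, duration_s, tr):
    """1.0 for scans whose start time falls inside the event, else 0.0."""
    return [1.0 if onset_s <= i * tr < onset_s + duration_s else 0.0
            for i in range(n_scans)]

def convolve(signal, kernel):
    """Causal discrete convolution, truncated to len(signal)."""
    out = []
    for i in range(len(signal)):
        first = max(0, i - len(kernel) + 1)
        out.append(sum(signal[j] * kernel[i - j] for j in range(first, i + 1)))
    return out

TR = 2.0  # seconds, as reported in the imaging procedure
hrf = double_gamma_hrf(TR)
# Hypothetical single trial: image viewing starts 10 s into a 30-scan run.
viewing = boxcar(n_scans=30, onset_s=10.0, duration_s=6.0, tr=TR)
regressor = convolve(viewing, hrf)
```

In a trial-based model, one such regressor would be built for the trial of interest and entered into the GLM together with the regressors covering the remaining trials.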

To calculate the degree of similarity to the PINES map, for each participant in each of the six conditions, following Chang et al., we (i) multiplied the β values in each trial for each voxel by the PINES weight for that voxel, (ii) summed the values across the whole brain, and (iii) averaged the values across trials, within each of the conditions.
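Steps (i) through (iii) amount to a dot product between each trial's β map and the weight map, followed by averaging within condition. The sketch below shows this on toy data; the function names, condition labels, and all numeric values are illustrative assumptions, and real maps would contain one value per brain voxel.

```python
def pattern_expression(betas, weights):
    """Steps (i) and (ii): multiply voxel-wise betas by weights and sum."""
    return sum(b * w for b, w in zip(betas, weights))


def mean_expression_by_condition(trial_betas, trial_conditions, weights):
    """Step (iii): average the pattern expression across trials per condition."""
    totals, counts = {}, {}
    for betas, condition in zip(trial_betas, trial_conditions):
        value = pattern_expression(betas, weights)
        totals[condition] = totals.get(condition, 0.0) + value
        counts[condition] = counts.get(condition, 0) + 1
    return {c: totals[c] / counts[c] for c in totals}


# Toy data: three "voxels" and four trials (all values are made up).
weights = [0.5, -1.0, 2.0]
trial_betas = [[1.0, 0.0, 1.0], [2.0, 1.0, 0.0],
               [0.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
trial_conditions = ["sensitive_negative", "tough_negative",
                    "sensitive_negative", "tough_negative"]

scores = mean_expression_by_condition(trial_betas, trial_conditions, weights)
print(scores)  # {'sensitive_negative': 1.75, 'tough_negative': 0.75}
```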

PPI.

To identify regions that may play a key role in the perspective-taking–based regulation of amygdala activity, we conducted a PPI analysis (27). The logic of our analysis was that such regions should exhibit a pattern of functional connectivity with the amygdala that varies as a function of perspective and should do so especially for participants who show a greater difference in their affect judgments. The right amygdala cluster (identified on the basis of the self negative > self neutral contrast, masked with an anatomically defined amygdala; see Results for further details) was designated as the seed region. Regressors were created for each experimental condition, the seed-region time series, and interaction terms for the seed-region time series and the experimental conditions. The difference of the relevant PPI-term regression coefficients, i.e., [(amygdala time series) × (sensitive negative)] > [(amygdala time series) × (tough negative)], was then subjected to a second-level random effects analysis, which also included a between-participants covariate coding for the average difference in affect rating across conditions. Significant clusters were identified using a joint voxel and extent threshold that preserved an α of less than 0.05, as determined by AlphaSim (1,000 iterations; smoothness estimated at 10.0 mm from the residual of the corresponding second-level regression), implemented in NeuroElf (uncorrected P < 0.02 and 190 contiguous voxels).
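The structure of the PPI regressors and of the contrast can be sketched as follows. This is a simplified illustration rather than the NeuroElf implementation: the function names and all values are made up, mean-centering the seed is an assumption, and the product is formed directly on the measured time series without the hemodynamic deconvolution step that some PPI implementations apply.

```python
def center(values):
    """Subtract the mean from a time series (an assumed preprocessing step)."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def ppi_terms(seed, condition_regressors):
    """Multiply the centered seed time series by each condition regressor."""
    seed_c = center(seed)
    return {name: [s * r for s, r in zip(seed_c, reg)]
            for name, reg in condition_regressors.items()}


def apply_contrast(betas, contrast):
    """Weighted sum of PPI coefficients, e.g., sensitive minus tough."""
    return sum(contrast[name] * betas[name] for name in contrast)


# Toy seed time series and condition regressors (eight samples, made up).
seed = [1.0, 2.0, 3.0, 2.0, 1.0, 0.0, 1.0, 2.0]
conditions = {
    "sensitive_negative": [1, 1, 0, 0, 0, 0, 0, 0],
    "tough_negative":     [0, 0, 0, 0, 1, 1, 0, 0],
}
interactions = ppi_terms(seed, conditions)

# Contrast over the PPI coefficients: sensitive negative > tough negative.
contrast = {"sensitive_negative": 1, "tough_negative": -1}
ppi_betas = {"sensitive_negative": 0.30, "tough_negative": 0.10}  # made up
difference = apply_contrast(ppi_betas, contrast)
```

In a full analysis, the interaction columns would enter a first-level model alongside the condition regressors and the seed time series, and the per-participant `difference` values would be carried to the second-level analysis.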

MVPA.

To verify that the regions identified in the PPI analysis were indeed involved in perspective-taking, we conducted an MVPA that examined whether these regions contain information that allows accurate classification of the perspective participants adopted. This analysis relied on the same trial-based model used for the PINES analysis. MVPA was implemented using NeuroElf and applying a support-vector machine implemented with libSVM (43). To yield a classification accuracy estimate for the region of interest, linear support vector machines were trained with cost parameter C set to 1. Five thousand cross-validation permutations were run, in each of which the classifier was trained on 83% of the trials and tested on the remaining trials. For each permutation, this yielded an accuracy estimate that relied on all voxels in the region of interest as features and reflected the degree to which the multivoxel activation pattern was able to predict which perspective was taken during the trial. Classification accuracy was then averaged across iterations to yield a single accuracy value for each participant. A one-sample t test was performed over these individual accuracies, comparing with chance classification of 50% (17).
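The repeated random-split procedure can be sketched with the standard library alone. Note the substitution: to keep the example dependency-free, a nearest-centroid classifier stands in for the linear support vector machine (libSVM, C = 1) used in the study. The function names, the toy patterns, and the unstratified splits are assumptions for illustration.

```python
import random


def centroid(rows):
    """Mean pattern across a list of equal-length feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def split_accuracy(patterns, labels, train_fraction, rng):
    """Train on a random subset of trials and test on the held-out trials."""
    order = list(range(len(patterns)))
    rng.shuffle(order)
    n_train = round(train_fraction * len(order))
    train, test = order[:n_train], order[n_train:]
    centroids = {}
    for label in set(labels[i] for i in train):
        centroids[label] = centroid(
            [patterns[i] for i in train if labels[i] == label])
    hits = 0
    for i in test:
        guess = min(centroids,
                    key=lambda lab: squared_distance(patterns[i], centroids[lab]))
        hits += guess == labels[i]
    return hits / len(test)


def mean_cv_accuracy(patterns, labels, n_splits=5000, train_fraction=0.83, seed=0):
    """Average held-out accuracy over repeated random splits."""
    rng = random.Random(seed)
    return sum(split_accuracy(patterns, labels, train_fraction, rng)
               for _ in range(n_splits)) / n_splits


# Toy, clearly separable two-voxel patterns for two perspectives (made up).
patterns = [[1.0, 0.0], [0.9, 0.1], [1.1, -0.1], [1.0, 0.2], [0.8, 0.0], [1.2, 0.1],
            [0.0, 1.0], [0.1, 0.9], [-0.1, 1.1], [0.2, 1.0], [0.0, 0.8], [0.1, 1.2]]
labels = ["tough"] * 6 + ["sensitive"] * 6
accuracy = mean_cv_accuracy(patterns, labels, n_splits=200)
```

Each participant would contribute one such averaged accuracy, and the group of accuracies would then be compared with the 50% chance level.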

Supplementary Analyses.

Behavioral measures of self-other similarity.

Based on participants’ affect ratings for the tough, sensitive, and self perspectives, we calculated for each participant a measure of “similarity to sensitive/tough target” that indexed the extent to which affect ratings from the self perspective were more similar to one target or the other. For all participants, average affect ratings for the tough perspective were lower than for the sensitive perspective. We averaged affect ratings for all negative trials within the self, sensitive, and tough perspectives for each participant and then determined where the self stands with respect to the tough and sensitive conditions by calculating the z-score for the self over the three measurements (self, tough, sensitive). This measure showed that participants did not identify more with one of the targets [t(23) = 0.80; not significant].
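The z-score of the self rating over the three measurements can be written out as a short sketch. The function name and the toy ratings are hypothetical, and the use of the sample (n − 1) standard deviation is an assumption, because the text does not specify which estimator was used.

```python
import statistics


def self_z_score(self_mean, tough_mean, sensitive_mean):
    """z-score of the self rating relative to the three perspective means."""
    values = [self_mean, tough_mean, sensitive_mean]
    mu = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD; an assumption
    return (self_mean - mu) / sd


# Made-up average negative-trial ratings for two hypothetical participants.
z_midway = self_z_score(self_mean=3.0, tough_mean=2.0, sensitive_mean=4.0)
z_like_sensitive = self_z_score(self_mean=4.0, tough_mean=2.0, sensitive_mean=4.0)
```

Because tough ratings were lower than sensitive ratings for every participant, a positive value places the self closer to the sensitive target and a negative value places it closer to the tough target.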

Furthermore, at the end of the study, participants were asked to respond to the binary question: “Who did you identify with more?” Exactly half of the participants (n = 12) identified more with the sensitive target and exactly half (n = 12) identified more with the tough target. Participants also used five-point scales to rate how similar they perceived themselves to be to each of the two targets and how much they liked each of them (1 = not at all, 5 = very much). Using these measures, we calculated for each participant a measure of identification with the targets (ranging from 1 = sensitive to −1 = tough). Across participants, this measure did not significantly differ from 0 [mean = 0.02, SD = 0.33, t(23) = 0.29, not significant].

Finally, across all of the measures of self-other similarity mentioned above, the extent to which each participant identified with either the tough or sensitive perspective did not significantly interact with our key dependent measures (i.e., amygdala and PINES response). Likewise, a neural measure of self-other similarity (see below) indicated that participants’ multivoxel patterns of brain activity during self perspective were not more similar to patterns seen during the sensitive or the tough perspective and that these levels of similarity did not modulate our main dependent variables.

Neural self-other similarity.

We conducted additional analyses that were intended to investigate the possibility that participants’ identification with either the tough or sensitive target played an important role in the perspective-dependent modulation of affective responding. First, we conducted a whole-brain univariate analysis to identify the broader neural network involved in mentalizing. Second, we examined whether the multivariate activity in this network can indeed classify which perspective participants were taking. Third, we conducted a multivariate pattern similarity analysis that estimated, based on patterns of activity within the mentalizing network, which of the two perspectives was more similar to the patterns present when each individual participant made affect ratings from their own perspective. Fourth, we validated this neural measure against participants’ behavioral measure of identification. Finally, we investigated whether this neural measure of self-other similarity suggests that participants systematically identified with either the sensitive or tough perspective and whether the degree of identification moderated the perspective-dependent effects on our main dependent variables (i.e., amygdala, PINES).

Does perspective-taking recruit the mentalizing network?

Contrasting neural activity when participants took the perspective of the tough and sensitive targets vs. neural activity during self perspective trials (i.e., other > self contrast) revealed significant activation in the network previously identified to be associated with mentalizing [i.e., mPFC, right and left temporo-parietal junction (TPJ), and the posterior cingulate cortex (PCC)]. The reverse contrast did not yield significant activation. Likewise, the tough > sensitive and sensitive > tough whole-brain contrasts did not yield significant activation.

Can multivariate patterns of activity in the mentalizing network be used to discriminate between the tough and sensitive perspective?

We investigated whether neural activity within the entire mentalizing network contains information that can discriminate whether participants were taking the perspective of the tough or sensitive target. To test this, we conducted an MVPA identical to the one conducted on the dmPFC cluster that emerged from the PPI analysis. As predicted, a classifier trained on the entirety of this mentalizing network was able to predict the perspective participants were taking with a mean accuracy of 58.37% (SD = 6.79), which significantly differed from chance performance [t(23) = 6.10, P < 0.001].

Can multivariate patterns of activity within the mentalizing network be used as a measure of self-other similarity?

We computed the correlation between the β estimates across all of the voxels in this network, for each participant, for all possible “out-of-run” pairs (i.e., discarding the pairwise correlation values for trials that occurred in the same run, because single-trial β estimates from the same run are nonindependent and their correlations are therefore potentially inflated), and averaged the z-scored correlation values across all trial-type pairings (tough/self/sensitive × negative/neutral). Finally, by comparing the self-to-tough correlation values to the self-to-sensitive correlation values, we calculated for each participant a measure of the degree to which his/her neural activity during self trials resembled that of the tough or sensitive target, ranging from 0 (identical to tough) to 1 (identical to sensitive).
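The out-of-run pairing can be illustrated with a small sketch. Several details are assumptions: the "z-scored correlation values" are interpreted here as Fisher z-transformed correlations, the trial dictionaries and function names are hypothetical, and all patterns are made up. The exact formula that maps the two averages onto the 0-to-1 index is not given in the text, so it is not reproduced here.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length patterns."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def mean_out_of_run_similarity(trials, label_a, label_b):
    """Average Fisher-z correlation between two trial types, across runs only.

    trials: list of dicts with keys "run", "label", and "pattern".
    Pairs of trials from the same run are skipped.
    """
    z_values = []
    for t1 in trials:
        for t2 in trials:
            if (t1["label"] == label_a and t2["label"] == label_b
                    and t1["run"] != t2["run"]):
                r = pearson(t1["pattern"], t2["pattern"])
                z_values.append(math.atanh(r))
    return sum(z_values) / len(z_values)


# Toy four-voxel patterns from two runs (all values are made up).
trials = [
    {"run": 1, "label": "self", "pattern": [1, 2, 3, 5]},
    {"run": 2, "label": "self", "pattern": [2, 1, 4, 5]},
    {"run": 1, "label": "tough", "pattern": [1, 3, 2, 4]},
    {"run": 2, "label": "tough", "pattern": [5, 1, 2, 3]},
    {"run": 1, "label": "sensitive", "pattern": [1, 2, 4, 5]},
    {"run": 2, "label": "sensitive", "pattern": [2, 2, 3, 6]},
]
self_to_tough = mean_out_of_run_similarity(trials, "self", "tough")
self_to_sensitive = mean_out_of_run_similarity(trials, "self", "sensitive")
```

In this toy example the self patterns resemble the sensitive patterns more than the tough patterns, so `self_to_sensitive` exceeds `self_to_tough`.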

To validate the neural self-other similarity measure, we investigated whether participants’ similarity to the sensitive (or, likewise, tough) target—as assessed by their self-report ratings of affective experience—was correlated with participants’ similarity to the sensitive target—as assessed by the neural pattern similarity measure described above. The results showed a significant correlation (r = 0.45, P = 0.01; Fig. 1).

Does self-other similarity play an important role in the perspective-dependent modulation of affective response?

Finally, we analyzed whether the neural measure of self-other similarity indicated that overall, participants identified more with either the sensitive or tough target. Values on the self-other similarity measure ranged from 0.41 to 0.57 (with 0.5 representing that the participant did not identify more with either perspective). Across participants, this measure did not significantly differ from 0.5 (mean = 0.505, SD = 0.035) [t(23) = 0.7, not significant]. The results of this analysis suggest that, on average, neural activity when participants judged affective responses from their own perspective was not more similar to neural activity when adopting one or the other of the two target perspectives. Furthermore, the self-other similarity measure did not moderate the perspective × valence interactions for the PINES or for the left and right amygdala (all P > 0.14). These results dovetail with the results of the analyses reported above concerning the categorical and continuous self-reports of identification with each target, as well as the result from the measure of self-other similarity based on affect ratings—all of which indicated that self-other similarity did not play a significant role in the perspective-dependent modulation of affective responding.
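As an arithmetic check, the reported t statistic can be recovered from the summary statistics given above. The sketch assumes that the reported SD is the sample standard deviation and that n = 24; the function name is illustrative.

```python
import math


def one_sample_t(mean, sd, n, null_value):
    """t statistic for a one-sample test computed from summary statistics."""
    return (mean - null_value) / (sd / math.sqrt(n))


t_similarity = one_sample_t(mean=0.505, sd=0.035, n=24, null_value=0.5)
print(round(t_similarity, 2))  # 0.7
```

The result matches the reported t(23) = 0.7, which is far below conventional significance thresholds.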

Discussion

We sought to investigate whether (and how) taking the perspective of other people can modify our own affective responses to stimuli. We hypothesized that (i) taking the perspective of others would regulate affective processing in neural mechanisms that subserve one’s own affective experience and (ii) the neural system involved in regulating perspective-dependent affective processing would be a region implicated in mental state inference, such as the anterior mPFC (i.e., the dmPFC and pgACC).

Consistent with our first hypothesis, whenever participants took the perspective of a sensitive (vs. tough) target, three neural indicators of negative affective processing converged to suggest that participants “simulated” the presumed affective state of the target individual. First, amygdala activity was up-regulated for the sensitive (vs. tough) perspective. Second, a multivoxel, whole-brain pattern of activity that has been independently shown to accurately predict participants’ affective state (PINES) (23) indicated up-regulated negative affectivity when taking a sensitive (vs. tough) perspective. Third, participants who behaviorally predicted a greater difference in the affective responses of the sensitive and tough targets also exhibited a greater difference in their PINES and amygdala response when adopting the sensitive (vs. tough) perspective.

That perspective-taking modulates amygdala activity provides initial support for the claim that perspective-taking modulates affective processing. However, because the amygdala responds to goal-relevant stimuli in general (22, 26, 27), it could be argued that its activation does not reflect negative affective intensity per se. Nonetheless, the finding that perspective-taking modulated the PINES pattern, and that this modulation uniquely contributed to predictions of subsequent judgments of a target’s affective response over and above amygdala activity, provides strong converging evidence for the claim that “seeing the world through another’s eyes” really does change one’s own affective processing.

Having provided support for that claim, we sought to delineate the cognitive and neural mechanisms by which such perspective-dependent regulatory consequences occur. Consistent with our second hypothesis, results suggested that the anterior mPFC may regulate, or exert top-down influence over, the affective simulation. Specifically, this brain region exhibited a pattern of perspective-dependent coupling with the amygdala that was dependent on the magnitude of perceived differences in the targets’ affective response. Relatively speaking, when adopting a sensitive perspective, anterior mPFC activity was associated with increased amygdala activity; when adopting a tough perspective, anterior mPFC activity was associated with relatively decreased amygdala activity. Furthermore, an MVPA showed that the multivoxel pattern of activity in this region during image viewing contained information that discriminated whether participants were taking the perspective of the tough or sensitive target.

Implications for the Study of Perspective-Taking.

The current research addressed an age-old question concerning the process of perspective-taking. It is often suggested that people are able to take the perspective of others through a process of simulation. (Note that the term simulation is polysemous: it can refer to a cognitive process by which people may take the perspective of others, as well as to a consequence of perspective-taking. In this section we refer to the former.) The philosopher Alvin Goldman described simulation as follows: “First, the attributor creates in herself pretend states intended to match those of the target… The second step is to feed these initial pretend states into some mechanism of the attributor’s own psychology … and allow that mechanism … to generate one or more new states (e.g., decisions)” (29, pp. 80–81). In other words, according to simulation theory, the path to understanding the emotions of others relies on a readout from the very same core emotional processes that generate the emotional response in the self (see refs. 13, 30, and 31 for similar suggestions).

The current study allowed us to investigate the process of simulation with converging measures of affective processing. We showed that participants indeed exhibited greater affective negativity when they took the perspective of the sensitive (vs. tough) target. Importantly, participants who exhibited a greater difference in amygdala activity/PINES expression for the tough vs. sensitive target subsequently exhibited a greater difference in their evaluations of the affective state of these targets. Together, these findings present perhaps the most direct evidence to date for the viability of simulation theory.

The existence of shared mechanisms for both self- and other-focused processing is a prerequisite for simulation theory. However, it does not suffice to explain the process of perspective-taking. As acknowledged in some of the earliest discussions of simulation theory, if people were to simply copy their own experience and project it onto others, attempts at perspective-taking would be ineffective (15, 32). Thus, for perspective-taking to succeed, individuals must accommodate their simulation on the basis of a conceptual model of the target (e.g., “This guy is neurotic, he must be distressed by cockroaches”). This process is unlikely to rely on the amygdala alone, which is a phylogenetically ancient brain system that is unlikely to subserve the type of symbolic thought involved in conceptually-mediated perspective-taking (18). Therefore, we predicted that amygdala activity should be modulated through an interaction with a brain system that subserves such model-based, conceptual capacities.

As noted earlier, our results suggest that this system involves the anterior mPFC. This region is widely implicated in conceptual thought in general (33–35) and social cognition in particular (17, 36). To give one example, recent work shows that multivoxel patterns of activity in the anterior mPFC can be used to predict which one of two individuals a participant is thinking about (20). The current research dovetails with and builds on this prior work by showing that the anterior mPFC does not just support inferences about others’ states and traits but also supports simulation of their perspective on the world, thereby changing the way that we appraise the affective significance of events and subsequently respond to them.

Implications for Models of the Self-Regulation of Emotion.

An important implication of the current findings is the suggestion that perspective-taking could have emotion regulatory benefits. In the current study, participants did not have the explicit goal of up- or down-regulating their emotions, and yet, merely trying to understand the emotions of tough vs. sensitive others modulated the activity in a brain system involved in the generation of negative affect. Thus, our research suggests that the attempt to “walk in the shoes” of an emotionally resilient individual may cause people to feel less unpleasant in the face of adversity.

Accordingly, it may be possible to harness the type of emotional perspective-taking studied here as an emotion regulation strategy, aimed at helping individuals cope with emotional distress. Extant research within the field of emotion regulation has shown that people can effectively down-regulate negative affect by using top-down cognitive control (2). However, a limitation of many cognitive emotion regulation strategies is that they depend on attentional, linguistic, and working memory systems supported by lateral prefrontal regions. Lateral prefrontal regions are not fully developed until late adolescence (37) and can be disrupted under severe stress (38). Thus, the finding that perspective-based regulation of the amygdala relies on anterior medial rather than lateral prefrontal regions may suggest a new pathway for effective emotion regulation.

Specifically, a simulation-based emotion regulation strategy may be important in populations for which strategies dependent on lateral PFC may be problematic because lateral frontal functionality is compromised or yet to develop (39). For example, future studies could investigate whether young children may especially benefit from being taught how to regulate their emotions using simulative pretend play (“imagine that you are a big boy/girl”).

More broadly, the current findings highlight that there may be a plurality of computations and neural pathways by which emotion-regulatory consequences can occur. In this way, the current findings contribute to our growing understanding of the complexity of neural interactions that subserve important behavioral outcomes. Hopefully, future research extending the findings described herein could shed further light on strategies that support adaptive socioemotional functioning.

Experimental Procedures

Participants.

Twenty-four right-handed participants (12 females; average age, 20.5 y; SD = 2.577; range 18–28 y) participated in the experiment for monetary compensation. All were native-level English speakers, all had normal or corrected vision, and none had a history of neurological or psychiatric disorders. Sample size was determined a priori, based on previous neuroimaging studies showing regulation-related modulation of amygdala activity (2). Three additional participants were excluded from the final analysis (one for missing data and two for failing to comply with task instructions, as evidenced by a deviation of more than 3 SDs from the mean affect rating in at least one task condition). Participants gave written consent before taking part in the experiment. The study was approved by the Institutional Review Board of Columbia University.

Materials.

Target description questionnaires.

The descriptions of the tough and sensitive targets were given in the form of printed questionnaires that were ostensibly filled out by two previous participants. At the top of each questionnaire, a name appeared in handwritten text. Both names were matched to each participant’s sex. The questionnaire contained demographic details (e.g., place of birth) and responses to personal questions (e.g., music preferences, hobbies). The key differences between the two types of targets arose from the way each one had supposedly responded to particular questions. In actuality, the answers had been pretested to elicit perceptions that one target was tough and the other sensitive. For example, the tough character worked as an EMT and enjoyed action and horror movies and loud music. By contrast, the sensitive character worked as a graphic designer and liked classical music and romantic comedies. Furthermore, in one of the free response items, the tough target described him/herself as being relatively resilient and the sensitive character described him/herself as being relatively sensitive. These characteristics were embedded within more mundane details to bolster the believability of the experiment.

Affective stimuli.

Fifty-four negative images (mean normative valence = 2.76, mean normative arousal = 5.91, on a 1–9 scale) and 54 neutral images (mean normative valence = 5.32, mean normative arousal = 3.15) were taken from the International Affective Picture System (40). Both negative and neutral images were divided into three lists, matched for arousal and valence. An additional set of six similarly valenced and arousing negative images was used during training.

Behavioral Procedure.

Prescanning.

After providing consent, participants were asked to fill out a questionnaire describing various demographic and personal details about themselves. They were told that in the experiment they would be asked to predict the emotions of previous participants and that we needed their answers to the personal details questionnaire so that they could be used for the next participant. In actuality, this questionnaire was only administered to bolster the believability of the experiment, and it was not subsequently used. Immediately after filling out the questionnaire, participants were given the “character description” questionnaires, which were in the same format as the one they filled out. They were asked to read the answers of each previous participant carefully and form an impression of them in their mind.

Participants were then instructed on the task they would perform inside the scanner. They were told that they would be presented with images and that each image would be preceded either by a cue with the name of the participant whose perspective they should take or by a cue asking them to take their own perspective. Each image would be followed by a screen asking them to rate the affective response (either of themselves or of the target individual) that the image elicits. They were then told that they should rate the images based upon the perspective they were cued with and that these answers would be compared with the previous participants’ actual ratings. We told participants that trials wherein they gave the rating from their own perspective would be used for the next participants (in actuality, self-perspective trials were used to identify the neural substrates of spontaneous emotional response). Participants’ goal was to predict the previous participants’ responses as accurately as possible. To increase the incentive to do so, participants were told that if they were in the top 10% of participants in terms of accuracy, they would receive a $100 bonus (in actuality, the bonus criterion was based on scanner movement). Participants then performed a short training on the task that involved completing sample trials guided by the experimenter.

Finally, as a pretask manipulation check, participants were asked to recall the answers for each of the two previous participants’ questionnaires. Whenever participants made a mistake, the questions were repeated later on until participants arrived at 100% recall accuracy.

Scanner task.

The task consisted of 108 trials (18 negative images and 18 neutral images for each of the three perspectives) that were divided into three functional runs. Each run contained 36 trials (6 negative and 6 neutral for each of the three perspectives) and lasted 10 min and 48 s.

Stimuli were presented using E-Prime 2.0 (Psychology Software Tools). Each experimental trial began with the presentation of a cue with the name of the participant whose perspective they should take, or a cue asking them to take their own perspective, shown for 2 s. After a jittered fixation period (1–5 s), participants viewed the affective image for 6 s. The image was replaced by a screen that appeared for 3 s, asking them to rate the affective reaction to the image from the perspective they were asked to adopt (1 = neutral, 5 = very bad). The trial concluded with a second jittered fixation period (3–9 s). Stimuli were displayed in random order, and the assignment of images to the three perspective conditions was counterbalanced across participants.
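One common way to counterbalance three image lists across three perspective conditions is a simple rotation by participant. The sketch below shows that scheme only as an illustration: the text does not state which counterbalancing scheme was used, and the list names and function name are hypothetical.

```python
PERSPECTIVES = ["self", "tough", "sensitive"]
IMAGE_LISTS = ["list_A", "list_B", "list_C"]  # hypothetical list names


def list_assignment(participant_index):
    """Rotate the three image lists across perspectives by participant."""
    shift = participant_index % len(IMAGE_LISTS)
    rotated = IMAGE_LISTS[shift:] + IMAGE_LISTS[:shift]
    return dict(zip(PERSPECTIVES, rotated))


# With 24 participants, each list is paired with each perspective 8 times.
assignments = [list_assignment(i) for i in range(24)]
```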

Postscan.

At the end of the study, participants completed standardized questionnaires assessing individual differences in affective responding [Beck depression inventory (41) and state-trait anxiety inventory (42)] and perspective-taking [interpersonal reactivity index (43)]. None of these individual-difference measures was significantly correlated with our dependent variables of interest (PINES scores, amygdala activity, affect ratings), nor did they moderate the effect of perspective (or the interaction of perspective and valence) on these dependent variables. In light of this, they are not discussed in the Results section.

Acknowledgments

M.G. thanks M. Perkas, L. Chang, B. Dore, C. Helion, J. Shu, B. Denny, and R. Martin for assistance. M.G. is supported by fellowships from the Fulbright and Rothschild foundations. Completion of the manuscript was supported by National Institute on Aging Grant AG043463, National Institute of Child Health and Human Development Grant HD069178, and National Institute of Mental Health Grant MH090964 (to K.N.O.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1600159113/-/DCSupplemental.

References

- 1.Ochsner KN, Silvers JA, Buhle JT. Functional imaging studies of emotion regulation: A synthetic review and evolving model of the cognitive control of emotion. Ann N Y Acad Sci. 2012;1251:E1–E24. doi: 10.1111/j.1749-6632.2012.06751.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Buhle JT, et al. Cognitive reappraisal of emotion: A meta-analysis of human neuroimaging studies. Cereb Cortex. 2014;24(11):2981–2990. doi: 10.1093/cercor/bht154. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Mead GH. 1934. Mind, Self, and Society: From the Standpoint of a Social Behaviorist (Works of George Herbert Mead, Vol. 1) (Univ of Chicago Press, Chicago)

- 4.Bandelj N. How method actors create character roles. Sociol Forum. 2003;18(3):387–416. [Google Scholar]

- 5.Gross JJ. Antecedent- and response-focused emotion regulation: Divergent consequences for experience, expression, and physiology. J Pers Soc Psychol. 1998;74(1):224–237. doi: 10.1037//0022-3514.74.1.224. [DOI] [PubMed] [Google Scholar]

- 6.McRae K, et al. 2010 The neural bases of distraction and reappraisal. J Cogn Neurosci, 22(2):248–262. [Google Scholar]

- 7.Ochsner KN, Bunge SA, Gross JJ, Gabrieli JDE. Rethinking feelings: An FMRI study of the cognitive regulation of emotion. J Cogn Neurosci. 2002;14(8):1215–1229. doi: 10.1162/089892902760807212. [DOI] [PubMed] [Google Scholar]

- 8.Ochsner KN, Gross JJ. Cognitive emotion regulation: Insights from social cognitive and affective neuroscience. Curr Dir Psychol Sci. 2008;17(2):153–158. doi: 10.1111/j.1467-8721.2008.00566.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Wager TD, Davidson ML, Hughes BL, Lindquist MA, Ochsner KN. Prefrontal-subcortical pathways mediating successful emotion regulation. Neuron. 2008;59(6):1037–1050. doi: 10.1016/j.neuron.2008.09.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Kross E, Davidson M, Weber J, Ochsner K. Coping with emotions past: The neural bases of regulating affect associated with negative autobiographical memories. Biol Psychiatry. 2009;65(5):361–366. doi: 10.1016/j.biopsych.2008.10.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Diekhof EK, Geier K, Falkai P, Gruber O. Fear is only as deep as the mind allows: A coordinate-based meta-analysis of neuroimaging studies on the regulation of negative affect. Neuroimage. 2011;58(1):275–285. doi: 10.1016/j.neuroimage.2011.05.073. [DOI] [PubMed] [Google Scholar]

- 12.Frith CD, Frith U. The neural basis of mentalizing. Neuron. 2006;50(4):531–534. doi: 10.1016/j.neuron.2006.05.001. [DOI] [PubMed] [Google Scholar]

- 13.Mitchell JP, Banaji MR, Macrae CN. The link between social cognition and self-referential thought in the medial prefrontal cortex. J Cogn Neurosci. 2005;17(8):1306–1315. doi: 10.1162/0898929055002418. [DOI] [PubMed] [Google Scholar]

- 14.Mitchell JP, Macrae CN, Banaji MR. Dissociable medial prefrontal contributions to judgments of similar and dissimilar others. Neuron. 2006;50(4):655–663. doi: 10.1016/j.neuron.2006.03.040. [DOI] [PubMed] [Google Scholar]

- 15.Tamir DI, Mitchell JP. Neural correlates of anchoring-and-adjustment during mentalizing. Proc Natl Acad Sci USA. 2010;107(24):10827–10832. doi: 10.1073/pnas.1003242107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Saxe R, Kanwisher N. People thinking about thinking people. The role of the temporo-parietal junction in “theory of mind”. Neuroimage. 2003;19(4):1835–1842. doi: 10.1016/s1053-8119(03)00230-1. [DOI] [PubMed] [Google Scholar]

- 17.Skerry AE, Saxe R. A common neural code for perceived and inferred emotion. J Neurosci. 2014;34(48):15997–16008. doi: 10.1523/JNEUROSCI.1676-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Spunt RP, et al. Amygdala lesions do not compromise the cortical network for false-belief reasoning. Proc Natl Acad Sci USA. 2015;112(15):4827–4832. doi: 10.1073/pnas.1422679112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.de la Vega A, Chang LJ, Banich MT, Wager TD, Yarkoni T. Large-scale meta-analysis of human medial frontal cortex reveals tripartite functional organization. J Neurosci. 2016;36(24):6553–6562. doi: 10.1523/JNEUROSCI.4402-15.2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Hassabis D, et al. Imagine all the people: How the brain creates and uses personality models to predict behavior. Cereb Cortex. 2014;24(8):1979–1987. doi: 10.1093/cercor/bht042. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Phan KL, Wager T, Taylor SF, Liberzon I. Functional neuroanatomy of emotion: A meta-analysis of emotion activation studies in PET and fMRI. Neuroimage. 2002;16(2):331–348. doi: 10.1006/nimg.2002.1087. [DOI] [PubMed] [Google Scholar]

- 22.Stillman PE, Van Bavel JJ, Cunningham WA. Valence asymmetries in the human amygdala: Task relevance modulates amygdala responses to positive more than negative affective cues. J Cogn Neurosci. 2015;27(4):842–851. doi: 10.1162/jocn_a_00756. [DOI] [PubMed] [Google Scholar]

- 23. Chang LJ, Gianaros PJ, Manuck SB, Krishnan A, Wager TD. A sensitive and specific neural signature for picture-induced negative affect. PLoS Biol. 2015;13(6):e1002180. doi: 10.1371/journal.pbio.1002180.

- 24. Breiter HC, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17(5):875–887. doi: 10.1016/s0896-6273(00)80219-6.

- 25. Cunningham WA, Brosch T. Motivational salience: Amygdala tuning from traits, needs, values, and goals. Curr Dir Psychol Sci. 2012;21(1):54–59.

- 26. Lindquist KA, Wager TD, Kober H, Bliss-Moreau E, Barrett LF. The brain basis of emotion: A meta-analytic review. Behav Brain Sci. 2012;35(3):121–143. doi: 10.1017/S0140525X11000446.

- 27. Whalen PJ, Phelps EA. The Human Amygdala. Guilford Press; New York: 2009.

- 28. Friston KJ, et al. Psychophysiological and modulatory interactions in neuroimaging. Neuroimage. 1997;6(3):218–229. doi: 10.1006/nimg.1997.0291.

- 29. Goldman AI. 2005. Imitation, mind reading, and simulation. Perspectives on Imitation: From Neuroscience to Social Science, eds Hurley S and Chater N (MIT Press, Cambridge, MA), Vol 2, pp. 79–93.

- 30. Epley N, Keysar B, Van Boven L, Gilovich T. Perspective taking as egocentric anchoring and adjustment. J Pers Soc Psychol. 2004;87(3):327–339. doi: 10.1037/0022-3514.87.3.327.

- 31. Gordon R. Folk psychology as simulation. Mind Lang. 1986;1(2):158–171.

- 32. Ryle G. 1949. The Concept of Mind (Hutchinson, New York).

- 33. Fairhall SL, Caramazza A. Brain regions that represent amodal conceptual knowledge. J Neurosci. 2013;33(25):10552–10558. doi: 10.1523/JNEUROSCI.0051-13.2013.

- 34. Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 2009;19(12):2767–2796. doi: 10.1093/cercor/bhp055.

- 35. Honey CJ, Thompson CR, Lerner Y, Hasson U. Not lost in translation: Neural responses shared across languages. J Neurosci. 2012;32(44):15277–15283. doi: 10.1523/JNEUROSCI.1800-12.2012.

- 36. Denny BT, Kober H, Wager TD, Ochsner KN. A meta-analysis of functional neuroimaging studies of self- and other judgments reveals a spatial gradient for mentalizing in medial prefrontal cortex. J Cogn Neurosci. 2012;24(8):1742–1752. doi: 10.1162/jocn_a_00233.

- 37. Gogtay N, et al. Dynamic mapping of human cortical development during childhood through early adulthood. Proc Natl Acad Sci USA. 2004;101(21):8174–8179. doi: 10.1073/pnas.0402680101.

- 38. Raio CM, Orederu TA, Palazzolo L, Shurick AA, Phelps EA. Cognitive emotion regulation fails the stress test. Proc Natl Acad Sci USA. 2013;110(37):15139–15144. doi: 10.1073/pnas.1305706110.

- 39. Coccaro EF, Sripada CS, Yanowitch RN, Phan KL. Corticolimbic function in impulsive aggressive behavior. Biol Psychiatry. 2011;69(12):1153–1159. doi: 10.1016/j.biopsych.2011.02.032.

- 40. Lang PJ, Bradley MM, Cuthbert BN. 2008. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual (University of Florida, Gainesville, FL), Tech Rep A-8.

- 41. Beck AT, Steer RA, Garbin MG. Psychometric properties of the Beck depression inventory: 25 years of evaluation. Clin Psychol Rev. 1988;8(1):77–100.

- 42. Marteau TM, Bekker H. The development of a six-item short-form of the state scale of the Spielberger State-Trait Anxiety Inventory (STAI). Br J Clin Psychol. 1992;31(Pt 3):301–306. doi: 10.1111/j.2044-8260.1992.tb00997.x.

- 43. Davis MH. Measuring individual-differences in empathy: Evidence for a multidimensional approach. J Pers Soc Psychol. 1983;44(1):113–126.