Abstract

When rats come to a decision point, they sometimes pause and look back and forth as if deliberating over the choice; at other times, they proceed as if they have already made their decision. In the 1930s, this pause-and-look behaviour was termed ‘vicarious trial and error’ (VTE), with the implication that the rat was ‘thinking about the future’. The discovery in 2007 that the firing of hippocampal place cells gives rise to alternating representations of each of the potential path options in a serial manner during VTE suggested a possible neural mechanism that could underlie the representations of future outcomes. More-recent experiments examining VTE in rats suggest that there are direct parallels to human processes of deliberative decision making, working memory and mental time travel.

In the 1930s, researchers such as Muenzinger, Gentry and Tolman noticed that, at the choice point of a maze, rats would occasionally pause and look back and forth as though confused about which way to go1–3. These researchers speculated that rats were imagining potential future options, and called this behaviour ‘vicarious trial and error’ (VTE)1–3. The researchers’ hypotheses that the rats were mentally searching future trajectories contrasted with simpler situation–action-chain hypotheses that maintained that an animal recognized a situation and ‘released’ a well-learned series of actions4 (FIG. 1).

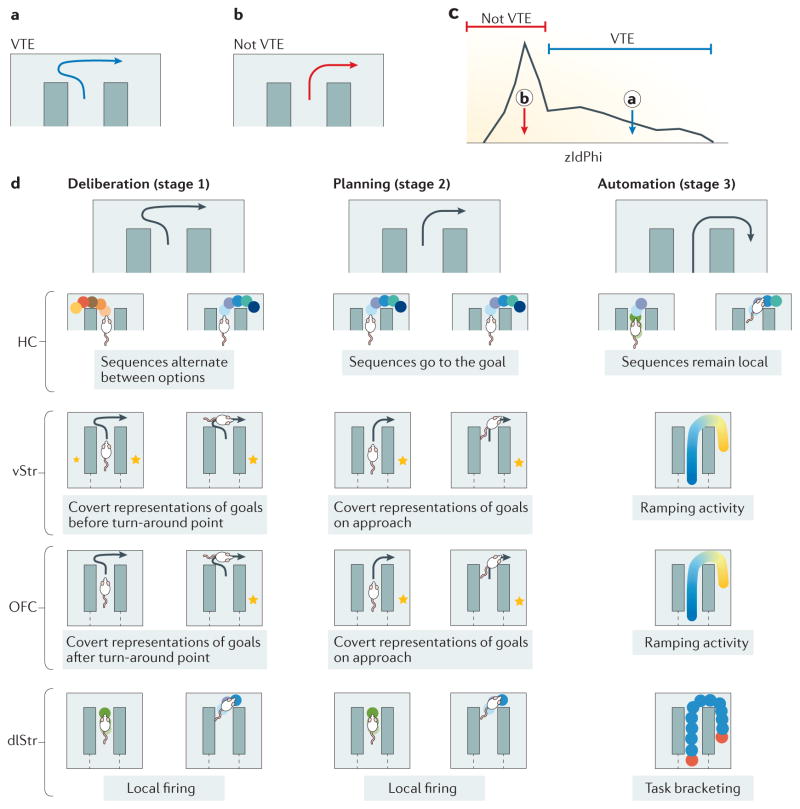

Figure 1. Vicarious trial and error.

The rodent behaviour originally termed ‘vicarious trial and error’ (VTE) by Muenzinger and Gentry1 and proposed as a prospective imagination of the future by Tolman2,3 is fundamentally a behavioural observation of pausing and reorienting. a | Example of pause-and-reorient VTE behaviour from T-choice experiments. b | Example of non-VTE behaviour: the animal orients towards only one trajectory at the choice point and continues along this trajectory. c | It is possible to quantitatively differentiate VTE laps from non-VTE laps using the zIdPhi measure. zIdPhi measures the integrated absolute angular velocity of the orientation of motion across the choice point; thus, it is high when the animals show reorientation behaviours and low when the animals simply pass through the choice point without a reorientation behaviour. zIdPhi shows a central peak (non-VTE) and a long right-skewed tail (VTE)52,66,68,89. On the panel, the circled ‘a’ shows where the path shown in panel a would fall on the zIdPhi score and the circled ‘b’ shows where the path shown in panel b would fall. d | VTE has been proposed to reflect prospective imagination and evaluation of the future. Accordingly, the shift from a flexible, deliberative decision-making process that requires this imagination to a habitual, procedural decision-making process53,54,56 can be divided into three stages (top row): deliberation (in which VTE is expressed), planning (during which VTE is diminished) and automation (when the animal expresses no VTE and instead releases an action chain). Neurophysiological data suggest information processing consistent with this hypothesis. In the hippocampus, sequences of firing of hippocampal place cells represent sweeps ahead of the animal, serially exploring the paths towards the potential goals. As behaviour automates, these sweeps transition from going in both directions to going only in one direction, and then vanish14. 
Although specific neural correlates of prefrontal cortex (PFC) firing remain unknown, PFC–hippocampus interactions are increased during VTE101,112,157 (not shown in the figure). Reward-related cells in the ventral striatum (vStr) transiently fire before the turn-around point in VTE. This early firing disappears as behaviours automate129,132. Reward-related cells in the orbitofrontal cortex (OFC) transiently fire after the animal commits to its decision. This firing appears earlier as behaviours automate66,129. Cells in the dorsolateral striatum (dlStr) do not show extra activity during VTE54, but develop task bracketing (that is, they show increased activity at the start and end of the maze) as behaviour automates and VTE disappears53,56.
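The zIdPhi measure described in the caption can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the published analysis pipeline: it assumes tracked x, y positions sampled at a fixed interval while the animal is inside the choice-point zone, and it uses plain finite differences (`numpy.gradient`) where the original analyses may have used a different smoothed derivative. The function names `idphi` and `zidphi` are hypothetical.

```python
import numpy as np

def idphi(x, y, dt):
    """IdPhi for one pass: integrated absolute angular velocity of the heading.

    x, y: position samples while the animal is in the choice-point zone.
    dt:   sampling interval in seconds.
    Plain finite differences are an assumption; published analyses may smooth.
    """
    dx = np.gradient(np.asarray(x, dtype=float), dt)
    dy = np.gradient(np.asarray(y, dtype=float), dt)
    phi = np.unwrap(np.arctan2(dy, dx))      # heading of motion (rad), unwrapped
    dphi = np.gradient(phi, dt)              # angular velocity (rad/s)
    return float(np.sum(np.abs(dphi)) * dt)  # integrate |dPhi/dt| over the pass

def zidphi(idphi_values):
    """z-score IdPhi across laps (for example, within a session) to get zIdPhi."""
    v = np.asarray(idphi_values, dtype=float)
    return (v - v.mean()) / v.std()
```

A straight pass through the choice point yields an IdPhi near zero, whereas a pass in which the heading swings back and forth accumulates a large value; after z-scoring across laps, such reorienting laps fall in the long right-hand tail.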

In the 1940s, search hypotheses as an explanation for behaviour lost favour to situation–action-chain hypotheses, in part because the proponents of the former could not provide mechanistic explanations for how VTE behaviour related to decisions. Concepts of computation5,6, information7 and representation5 were only just being developed in the 1940s. Moreover, the recognition that extracting information from representations (for example, by searching through a tree of possibilities8) takes time came only with the computer revolution of the 1950s9. Furthermore, techniques to record the neural activity of behaving rats did not come into use until the 1970s10, and neural ensembles were not accessible until the 1990s11. The decoding of neural activity at fast timescales was not possible until the 2000s12–16. These decoding operations enable the identification of neural correlates of imagination17,18 even in non-human animals19. Perhaps the pausing behaviour identified as VTE really does reflect a search-and-evaluate process through imagined worlds.

In this Review, I present evidence that the neural processes that accompany VTE reflect a deliberation process in non-human animals. This article first defines deliberation algorithmically and compares it with procedural processes and other algorithms known to drive decision making in humans and other animals. I then review the behavioural and neurophysiological data and argue that these data support the description of VTE as reflecting deliberation. Finally, I address the multiple theories of VTE that have been proposed, concluding that the most likely explanation is that VTE is a behavioural manifestation of deliberative processes.

Defining deliberation

Deliberation is a process wherein an agent searches through possibilities based on a hypothesized model of how the world works and evaluates those hypothesized outcomes as a means of making decisions20–24. Deliberation depends on three steps: determining what those possibilities are, evaluating the outcomes and then selecting which action to take. Importantly, in humans, the deliberative search-and-evaluate process is thought to be serial, with individual options constructed as imagined concrete potential futures (a process known as ‘episodic future thinking’ or ‘mental time travel’)20,21,23,25,26. How options are compared against each other remains complex and only partially understood27–29.
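The three steps of deliberation can be written as a toy serial search-and-evaluate loop. The world model, the value function and the names `deliberate`, `world_model` and `evaluate` are hypothetical illustrations rather than a published model; the sketch only shows each option being imagined as one concrete outcome and evaluated in turn before a selection is made.

```python
from typing import Callable, Dict, Hashable, List, Tuple

State = Hashable
Action = Hashable

def deliberate(state: State,
               actions: List[Action],
               world_model: Dict[Tuple[State, Action], State],
               evaluate: Callable[[State], float]
               ) -> Tuple[Action, List[Tuple[Action, State, float]]]:
    """Toy serial search-and-evaluate: one imagined future at a time."""
    trace = []
    for action in actions:                       # step 1: consider each possibility in turn
        imagined = world_model[(state, action)]  # predict its concrete outcome
        value = evaluate(imagined)               # step 2: evaluate that outcome
        trace.append((action, imagined, value))
    best_action = max(trace, key=lambda item: item[2])[0]  # step 3: select
    return best_action, trace

# Example (hypothetical): a T choice in which only the right arm is rewarded.
model = {("choice", "L"): "left_arm", ("choice", "R"): "right_arm"}
values = {"left_arm": 0.0, "right_arm": 1.0}
choice, trace = deliberate("choice", ["L", "R"], model, lambda s: values[s])
```

The returned `trace` records the order in which options were imagined, which is the serial structure the text attributes to episodic future thinking; here the left option is evaluated first and the right option is selected.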

Search-and-evaluate processes depend on having a schema of how the world works that can be used to determine the consequences of actions24,30–32. In Tolman’s terms, this schema is the so-called cognitive map, which was originally more cognitive than map3,10,33,34. Although many rodent studies treat the cognitive map as spatial, most theories assume that similar processes can occur through non-spatial schemas in all mammals35–37. Because VTE is specifically a rodent behaviour that manifests in a spatial context, only spatial analyses are reviewed here. Nevertheless, the neural processes underlying VTE are likely to reflect a more generally applicable deliberative process beyond those seen in the spatial examples used here.

Deliberative decision making in humans

Much of the extensive literature on human decision making differentiates between processes of deliberation and those of judgement20,38,39, a distinction that parallels the difference between choosing-between-options and willingness-to-pay experimental paradigms in non-human animals29. Although these differences are beyond the scope of this Review, mounting evidence in the human literature indicates that processes underlying deliberative decision making depend on episodically imagined futures21,25–27,40, such that more-concrete options are easier to imagine and tend to draw decisions towards them27,41,42. In humans, the search-and-evaluate process is hypothesized to involve interaction between the prefrontal cortex (PFC) and temporal lobe structures to create imagined episodic futures26,41,43–46. In humans and non-human primates, medial striatal structures (caudate) may be involved in deliberative processes, whereas the more-cached, automated processes depend on lateral striatal structures such as the putamen47,48.

Procedural versus deliberative decision making

Current theories of decision making suggest that decisions arise from a complex interaction of multiple action-selection processes22,24. Although a full description of this interaction is beyond the scope of this article, studies of VTE have examined behaviour during processes underlying both deliberative and procedural decision making. In procedural action selection, animals automate their behaviour when repeated actions reliably achieve goals, by developing cached action chains that can be released at appropriate times4 but that, once initiated, tend to run to conclusion49. Because these action chains are inflexible once learned, they tend to be learned slowly, particularly in comparison to the cognitive map that forms the basis for the mental search process (knowing the cognitive map does not force the selection of a specific action)10,24,34,50.
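For contrast with deliberation, the procedural properties named above (a chain cached per situation, learned slowly, released whole and run to conclusion) can be mimicked by a toy controller. Everything here is a hypothetical illustration, including the class name `ProceduralController`, the incremental strength update, the learning rate and the release threshold; it is not a model proposed in this Review.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class ProceduralController:
    """Hypothetical situation -> cached action-chain store (toy illustration)."""
    learning_rate: float = 0.05   # small value: cached chains are learned slowly
    threshold: float = 0.5        # strength needed before a chain can be released
    chains: Dict[str, List[str]] = field(default_factory=dict)
    strength: Dict[str, float] = field(default_factory=dict)

    def reinforce(self, situation: str, chain: List[str]) -> None:
        """Strengthen the cached chain each time it reliably achieves the goal."""
        self.chains[situation] = list(chain)
        s = self.strength.get(situation, 0.0)
        self.strength[situation] = s + self.learning_rate * (1.0 - s)

    def release(self, situation: str) -> List[str]:
        """Release the whole chain; no outcome is re-evaluated once it starts."""
        if self.strength.get(situation, 0.0) < self.threshold:
            return []             # not yet automated: another system must decide
        executed = []
        for action in self.chains[situation]:
            executed.append(action)   # each step follows without checking outcomes
        return executed
```

With these assumed parameters the chain needs 14 reinforcements before its strength reaches the release threshold, which is one simple way to express "learned slowly"; unlike the search in the deliberation sketch, nothing in `release` consults a model of the world.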

On the Tolman–Hull plus maze and similar T-choice mazes (BOX 1), VTE occurs when animals express deliberative strategies and vanishes as animals automate their response using procedural strategies51,52. However, VTE is also expressed on these tasks as procedural strategies are developing14,51–53, which may suggest the presence of different underlying neural processes during the development of procedural strategies (before automation)53–56.

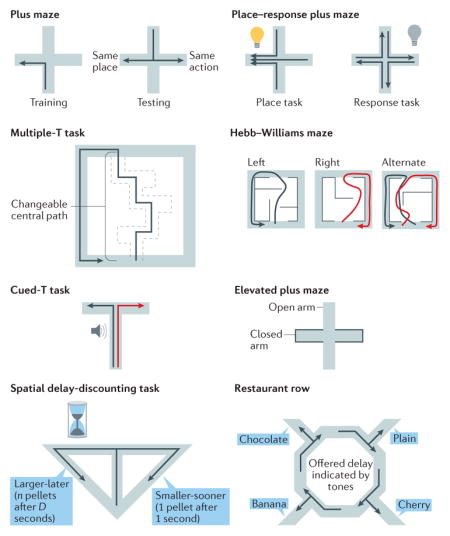

Box 1. Tasks.

Vicarious trial and error has been explored in rodents using several spatial tasks (see the figure).

Tolman–Hull plus maze

Rats are trained to turn left from the south arm to the west arm of a plus maze. The animals then start a trial from the north arm, allowing them to take a different action (to reach the same location) or the same action (leading to a different location)51,62,88.

Place or response plus maze

Rats have to proceed to a place or follow a procedural action to obtain a reward52.

Multiple-T task

Rats are trained to run through a central track and to turn left or right for food. On each day, the central track has a different shape and the side on which the final reward is placed can change, but both variables generally remain constant within a day14,54.

Left, right or alternation choice tasks

Variants of the multiple-T task in which the track or reward-delivery contingency changes part of the way through a day’s experimental session65,66,72. In the Hebb–Williams maze version, the central path is constructed of walls rather than additional T choices56.

Cued-T task

Rats or mice are trained to turn left or right at a T intersection where the correct choice depends on a sensory cue provided on the stem of the T14,53,55,140.

Elevated plus maze

Rats or mice explore a plus maze with two closed and two open arms. The open arms are less protected and more-anxious animals tend to avoid them153 (BOX 4).

Spatial delay-discounting task

Rats are trained to turn to one side of a T for a large reward that is delivered after a delay or to the other side for a smaller reward that is delivered quickly. The delay is adjusted based on the rat’s decisions, allowing the rat to balance the delay against increased reward on the larger–later side68.
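The delay adjustment can be illustrated with a simple titration rule. The specific rule here is an assumption for illustration: choosing the larger–later side lengthens its delay by a fixed step, choosing the smaller–sooner side shortens it, and the delay is clipped to assumed bounds. The function name `adjust_delay`, the 1 s step and the bounds are hypothetical.

```python
def adjust_delay(delay: float, chose_delayed_side: bool,
                 step: float = 1.0, min_delay: float = 0.0,
                 max_delay: float = 30.0) -> float:
    """Hypothetical titration of the larger-later delay (seconds).

    Step size and bounds are illustrative assumptions.
    """
    delay = delay + step if chose_delayed_side else delay - step
    return min(max(delay, min_delay), max_delay)

# A rat that first prefers the delayed side, then alternates between sides.
delay = 5.0
history = []
for choice in [True, True, True, False, True, False, True, False]:
    delay = adjust_delay(delay, choice)
    history.append(delay)
```

Under this rule, alternating between the two sides makes the delay oscillate between two neighbouring values rather than drift, which is one way the alternation strategy can hold the delays constant.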

Restaurant row task

Rats or mice are trained to run past a repeating sequence of reward options. As the animal runs past an option, a sensory cue informs it of the delay it would have to wait before receiving the reward. At each offer, the animal is given the option to wait out the delay or to skip the option and try the next option, allowing the assessment of how much the animal values that option (that is, through measuring how long it is willing to wait for a given option)67.

VTE as a model of deliberation

The hypothesis put forward in this Review is that VTE reflects the indecision underlying deliberation. Predictions can be derived from this hypothesis about the timing of VTE, the relation of VTE to learning and reward-delivery contingencies, and the neurophysiological processes that should co-occur with VTE behaviours. See BOX 2 for a list of the predictions that follow from this hypothesis and that are supported by the evidence discussed in the following two sections.

Box 2. Predictions.

The hypothesis that vicarious trial and error (VTE) behavioural events reflect an underlying deliberation process generates several testable predictions, which the data reviewed in this paper suggest are true.

Behavioural predictions

VTE should occur in situations in which the rat knows the shape of the world, because deliberation depends on being able to predict the consequences of one’s actions.

VTE should decrease as animals automate their behaviours when reward-delivery contingencies are particularly stable.

VTE should increase when reward-delivery contingencies are variable or change.

Neurophysiological predictions

During VTE events, there should be neural representations of future outcomes and their evaluations.

Those neural representations should encode the multiple outcomes serially.

Neural representations of future outcomes should involve the hippocampus and prefrontal cortex (PFC)–hippocampus interactions.

Neural representations of valuation should involve the ventral striatum and the orbitofrontal cortex.

Neural representations should shift from PFC–hippocampus interactions to dorsal striatal processes as behaviours automate.

Behavioural predictions

The key hypothesis of deliberation as a search-and-evaluate process is that animals are mentally searching through a schema of how the world works. In order to search through a schema, a sufficiently complete model of the world is required that can predict the consequences of one’s actions24,57,58. The hypothesis that VTE reflects deliberative but not procedural processes suggests that VTE should occur when rats depend on flexible decision-making strategies, which tend to occur early in learning10,34 and after changes in the contingency of reward delivery59,60. Moreover, the hypothesis that VTE reflects the indecision underlying deliberation suggests that it should occur in particularly difficult choices and should be reduced when choices are easy61.

VTE from deliberation to automation

Behavioural testing on the Tolman–Hull plus maze starts with habituation of the animal to the maze itself for several days, after which animals are trained to turn left from the south arm to the west arm. VTE occurs during the early learning phase on this task51, while animals are showing deliberative behaviours62,63. Thus, VTE occurs when rats know the shape of the maze but not what to do in the maze. Animals that maintain the deliberative strategy late in learning continue to show VTE, whereas animals that switch to procedural strategies do not51. When variants of this task are constructed such that only the deliberative strategy will lead to a reward, animals continue to show VTE throughout the task51,52. On a plus-maze variant in which rats either had to go to a place from multiple starting points (requiring a flexible action) or had to take a specific action (for example, turn left) from any starting point (thus allowing automation), VTE occurred when the reward was delivered based on which place the animal went to (that is, depending on flexible, deliberative strategies), but vanished when animals automated the component where reward was delivered based only on the action taken (that is, following a procedural strategy)52.

VTE and changes in reward contingency

On T-choice tasks14,53,54,56,64–66 (BOX 1), VTE occurs at high-cost choice points. On these tasks, rats know the general parameters of the task (for example, run through a central path, turn left or right for food and return to the loop) but not the specific reward-delivery contingency (which could change daily). For example, rats that were trained to turn left or right at the end of the central track for food but that did not know which way was going to be rewarded on a given day expressed VTE on the early laps on each day14,54. VTE also increases when animals encounter a change in the relationships between actions and reward56,65,66. VTE disappears as the behaviour automates and then reappears with the change in reward-delivery contingency. Similarly, VTE reappeared on the cued-T task when the reward was devalued53, and on the plus maze when the task switched between needing a place- and a response-based strategy for reward52.

VTE and the difficulty of choice

On the multiple-T task, VTE behaviours are only expressed at the final (difficult) choice point, but not at a topologically similar control point on one of the simpler Ts54, suggesting that simple choices do not produce VTE. On the restaurant row task (BOX 1), animals make sequential foraging decisions about whether to wait for a reward offered at a given delay. VTE behaviours do not appear when the rat is faced with very short delays (which are definitely worth staying for) or very long delays (which are definitely worth skipping), but are preferentially expressed when the economic offer is at the animal’s decision threshold67, which is presumably the difficult choice.
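The relation between offer, decision threshold and predicted VTE on the restaurant row task can be sketched as follows. The sketch assumes that the animal's threshold for a given option has already been estimated (for example, from its stay or skip choices) and treats offers within a fixed margin of that threshold as the difficult ones; the function name `classify_offers` and the 2 s margin are hypothetical.

```python
from typing import List, Tuple

def classify_offers(delays: List[float], threshold: float,
                    margin: float = 2.0) -> List[Tuple[float, str, bool]]:
    """For each offered delay (s), return (delay, economic choice, near threshold).

    threshold: assumed, previously estimated decision threshold for this option.
    margin:    hypothetical width of the 'difficult' zone around the threshold.
    """
    results = []
    for d in delays:
        decision = "stay" if d < threshold else "skip"   # economic choice
        near_threshold = abs(d - threshold) <= margin    # where VTE is predicted
        results.append((d, decision, near_threshold))
    return results

offers = classify_offers([1, 9, 11, 28], threshold=10)
```

Very short and very long delays are classified as easy stays or skips, whereas offers close to the threshold are flagged as the difficult choices at which VTE is preferentially expressed.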

Importantly, VTE does not simply occur only in situations in which there are two equal choices between which a decision must be made. One study trained rats on a spatial delay-discounting task68 (BOX 1), in which rats decide between two differently delayed and differently rewarding options. The rats can change the delays to the options by preferentially selecting one side or the other and, once the two sides are equally valued, the animals alternate between the two sides, which holds the delays constant. In this task, VTE occurs as the delays are changing and vanishes when the options become equally valued and the rats respond with a stereotyped alternating behaviour.

VTE and behaviour: conclusions

These data suggest that VTE occurs when animals are faced with difficult, changing choices on tasks in which they understand the shape of the world. However, if it is possible to automate the behaviour (when the reward-delivery contingencies are stable), then VTE disappears as behaviour automates.

The neurophysiology of VTE

On the basis of the hypothesis that VTE reflects deliberation and disappears behaviourally as animals begin to use procedural decision-making systems, several predictions about the neurophysiology accompanying VTE can be made (BOX 2).

Deliberation entails a search-and-evaluate process, in which one imagines the consequences of an action and then evaluates that outcome in light of one’s goals. This is a form of mental time travel, or episodic future thinking: placing oneself into a future situation25,69. Studying mental time travel in non-human animals requires a methodology that can detect representations of that imagined future19, and just such a methodology has been developed that is based on the observation that imagining something activates similar representational systems to perceiving that thing17,18 (BOX 3). This methodology can be used to explore how different neural circuits contribute to the search-and-evaluate process that underlies deliberation. Studies using it have shown that mental time travel itself uses the hippocampus, in a process that is probably initiated by the PFC, and that the outcomes of each possible option are evaluated in the ventral striatum (also known as the nucleus accumbens) (see below).

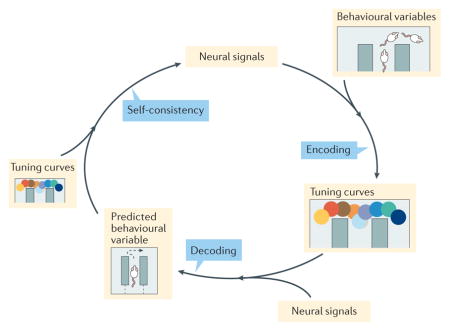

Box 3. Measuring imagination.

In humans, imagination of a thing activates the same representational systems that are active during perception of that thing17,18, and decisions that depend on an explicit knowledge of how future situations transition to other future situations include prospective representations of those future cues98. This suggests that mental time travel in non-human animals can be detected by decoding representations from patterns of neural activity and looking for times at which the neural activity decodes to information that is not immediately present (that is, when it decodes to other places or other times).

To measure representations of other places and other times, we follow a representational loop of encoding, decoding and re-encoding19 (see the figure). The encoding step ascertains the relationship between neural firing and a behavioural variable (such as the position on the maze). The decoding step reveals the information represented by a specific firing pattern (such as a firing pattern at a particular moment in time). The third, re-encoding step measures how well the decoded information predicts the observed spike train pattern. Self-consistency is a quantitative measure of the encoding–decoding–re-encoding loop, taken as how consistent the activity patterns of cells are with the information hypothesized to be represented at a moment in time154. Hidden within the encoding step is an assumption about the variables that the cells are encoding (for example, that hippocampal cells encode spatial information). Incomplete assumptions about the variables that the cells are encoding will decrease the self-consistency of the representation19,154. Sometimes, one needs to add a ‘covert’ variable (not shown on the figure), such as the imagined location of the animal, in order to correctly explain neural firing. When cells fire action potentials outside their expected tuning curves (that is, in conditions besides those to which the cells normally respond) and those spikes contain structure (for example, sequences, consistency with other simultaneously recorded cells or information about future plans), this extra structure can be interpreted as representing cognitive information about other places or other times19.
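The encoding–decoding–re-encoding loop can be sketched with a one-step Bayesian decoder. This is a minimal sketch under assumptions not stated in the box: position is discretized into bins, spiking is treated as Poisson with a flat prior over position, and self-consistency is approximated by the correlation between observed counts and the counts expected from the decoded posterior. That correlation is a simplified stand-in, not the measure of reference 154, and the names `encode`, `decode` and `self_consistency` are hypothetical.

```python
import numpy as np

def encode(spike_counts, positions, n_bins, dt):
    """Encoding: mean firing rate (Hz) of each cell in each position bin.

    spike_counts: (T, N) counts per time step; positions: (T,) bin indices.
    """
    counts = np.asarray(spike_counts, dtype=float)
    pos = np.asarray(positions)
    rates = np.zeros((n_bins, counts.shape[1]))
    for b in range(n_bins):
        in_bin = pos == b
        if in_bin.any():
            rates[b] = counts[in_bin].mean(axis=0) / dt
    return rates + 1e-3   # small floor keeps log-rates finite

def decode(counts_t, rates, tau):
    """Decoding: posterior over bins for one window of length tau (s).

    Assumes Poisson spiking and a flat prior over position.
    """
    n = np.asarray(counts_t, dtype=float)
    log_post = n @ np.log(rates).T - tau * rates.sum(axis=1)
    log_post -= log_post.max()          # numerical stability before exp
    post = np.exp(log_post)
    return post / post.sum()

def self_consistency(counts_t, rates, tau):
    """Re-encoding: correlate observed counts with those expected from the
    decoded posterior (simplified stand-in for a self-consistency measure)."""
    expected = tau * (decode(counts_t, rates, tau) @ rates)
    return float(np.corrcoef(np.asarray(counts_t, dtype=float), expected)[0, 1])
```

In this framing, a window whose spikes decode to a location other than the animal's current one, yet still re-encode with high consistency, is the kind of structured ‘non-local’ activity that the box interprets as representing another place or time.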

By contrast, procedural decision making entails the performance of an appropriate action chain in a single situation. Thus, structures involved in procedural decision making should slowly develop representations that relate specific actions to specific reward-delivery contingencies, and should directly reflect the ‘release’ of an action chain. The dorsolateral striatum has been shown to develop such representations as animals automate their behaviour (see below).

Hippocampal sequences

Normally, as a rat runs through a maze, each hippocampal place cell fires action potentials when the animal is in a specific location — in the place field of the cell10,34. During movement (for example, when running through a maze) and attentive pausing, place cell firing is organized within an oscillatory process that is reflected in the hippocampal local field potential (LFP) theta rhythm (6–10 Hz)10. Typically, theta is seen during movement, and other LFP patterns are seen during pauses; however, theta also occurs if the rat is attentive to the world during that pause, such as during freezing in fear after conditioning70 or during VTE10. In each theta cycle (approximately 140 ms), hippocampal place cells fire in a sequence along the path of the rat, from somewhere slightly behind the rat to slightly ahead of it15,71,72. By examining these hippocampal theta sequences during VTE events at decision points on a T-maze task, it was found that these sequences proceeded far ahead of the rat, alternating serially between goal-related options14.
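The behind-to-ahead structure of a theta sequence can be quantified once positions have been decoded within a cycle. The sketch assumes a linearized track in centimetres, decoded positions for successive time bins within one theta cycle, and a hypothetical 10 cm criterion for calling a cycle a forward sweep; the names `theta_sequence_extent` and `is_forward_sweep` are illustrative.

```python
import numpy as np

def theta_sequence_extent(decoded_pos, actual_pos):
    """Offsets of decoded position from the animal across one theta cycle.

    decoded_pos: decoded positions (cm, linearized track) for successive
    time bins within the cycle; actual_pos: the animal's position (cm).
    Returns (start offset, end offset, maximum distance ahead).
    """
    offsets = np.asarray(decoded_pos, dtype=float) - float(actual_pos)
    return float(offsets[0]), float(offsets[-1]), float(offsets.max())

def is_forward_sweep(decoded_pos, actual_pos, min_ahead=10.0):
    """Hypothetical criterion: the cycle starts at or behind the animal and
    its representation reaches at least min_ahead cm in front of it."""
    start, _, max_ahead = theta_sequence_extent(decoded_pos, actual_pos)
    return start <= 0.0 and max_ahead >= min_ahead
```

A cycle decoded as 95, 100, 108 and 115 cm while the rat sits at 100 cm starts behind the animal and ends well ahead of it; during VTE the text describes such sequences extending far ahead, towards the goals.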

Evidence from humans suggests that the hippocampus is both necessary for and active during episodic future thinking25,26,45,73,74. Together with the evidence described above that neural representations in the hippocampus project sequences forward along the path of the rat, this evidence suggests that the pause-and-look behaviour observed as VTE may indeed be a manifestation of deliberation, particularly on spatial tasks. Moreover, for the rat to be internally ‘trying out’ possible outcomes, the hippocampal place cell sequences should occur serially, alternately exploring the paths to each goal ahead of the animal (as opposed to representing a blend of all possible paths). This is exactly what occurs: when animals pause at the choice point, hippocampal sequences within any given theta cycle proceed along a single path towards a single goal, one at a time, first towards one goal and then towards the other14,72,75. Current experiments have only examined neural ensembles ranging over a small portion of the hippocampus (within a few millimetres). Recent work has suggested that the hippocampus and its associated structures may contain several potentially independent modules along the long septo-temporal axis76,77. Whether the hippocampal neural ensembles encode a unified representation of a ‘sweep’ in both the dorsal and the ventral hippocampus remains unknown. One can quantify how unified a representation is by measuring whether the activity of the neurons is consistent with the location decoded from the population, termed the ‘self-consistency’ of a representation19 (BOX 3). During VTE, dorsal hippocampal neural ensembles encode self-consistent representations of each individual option, serially and transiently.
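The distinction between serial exploration and a blended representation can be made concrete at the level of individual theta cycles. This sketch assumes that, for each cycle, the decoded posterior mass lying on the left and right goal arms has already been computed; the 0.8 dominance criterion and the names `label_cycles` and `count_switches` are hypothetical.

```python
from typing import List

def label_cycles(left_mass: List[float], right_mass: List[float],
                 dominance: float = 0.8) -> List[str]:
    """Label each theta cycle by the single goal arm it represents.

    left_mass, right_mass: decoded posterior mass on each arm per cycle.
    'blend' marks cycles with no single dominant path (threshold assumed).
    """
    labels = []
    for left, right in zip(left_mass, right_mass):
        total = left + right
        if total > 0 and left / total >= dominance:
            labels.append("L")
        elif total > 0 and right / total >= dominance:
            labels.append("R")
        else:
            labels.append("blend")
    return labels

def count_switches(labels: List[str]) -> int:
    """Number of switches between arms across consecutive single-path cycles."""
    single = [x for x in labels if x != "blend"]
    return sum(1 for a, b in zip(single, single[1:]) if a != b)
```

The serial account predicts that most cycles during VTE are labelled with a single arm and that the labels switch between arms, rather than most cycles falling into the blended category.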

These hippocampal sweeps during VTE were locked to the hippocampal theta oscillation14, suggesting that they may be special cases of theta sequences. During each theta cycle during normal navigation, hippocampal place cells fire in sequence along the current path of the animal15,78,79. Hippocampal theta sequences have been found to reflect ‘cognitive chunks’ (that is, continuous sequences of navigational paths) about goals and subgoals72. Moreover, these sequences have been found to represent the path to the actual goal of the animal, sweeping past earlier options at which the animal was not going to stop75.

Hippocampal LFPs: theta and LIA

In contrast to the theta rhythm, the more broad-spectral LFP known as large-amplitude irregular activity (LIA) is punctuated by short 200 ms oscillatory events called sharp-wave ripple complexes (SWRs) that contain power in frequencies of approximately 200 Hz10,80. SWRs that occur just before an animal leaves a location where it has been resting often include hippocampal SWR sequences running forward towards the goal16,81, and thus it has been hypothesized that there may be SWRs at the choice point during VTE. However, the rodent hippocampal LFP shows theta, not LIA, during VTE14,72,82, and there is little to no power in the SWR band during VTE14. Although there are intra-hippocampal oscillatory events that occur shortly before the turn-around point in VTE, these events show power at frequencies in the high-gamma range (~100 Hz), well below the SWR range82. The relationship between SWRs that occur before the journey starts16 and theta sequences that alternately explore goals during VTE14 remains unknown.
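Comparing power in the theta, high-gamma and SWR bands is one way to check which LFP state accompanies a VTE event. The sketch below uses a plain FFT periodogram; the theta range (6–10 Hz) comes from the text, whereas the high-gamma (80–120 Hz) and SWR (150–250 Hz) edges are assumed for illustration, and the names `band_power`, `BANDS` and `dominant_band` are hypothetical.

```python
import numpy as np

# Band edges in Hz: theta from the text; high-gamma and SWR edges are assumptions.
BANDS = {"theta": (6.0, 10.0), "high_gamma": (80.0, 120.0), "swr": (150.0, 250.0)}

def band_power(lfp, fs, low, high):
    """Mean periodogram power of an LFP trace between low and high Hz."""
    x = np.asarray(lfp, dtype=float)
    x = x - x.mean()                          # remove DC offset
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2
    in_band = (freqs >= low) & (freqs <= high)
    return float(power[in_band].mean())

def dominant_band(lfp, fs):
    """Name of the band with the highest mean power."""
    return max(BANDS, key=lambda name: band_power(lfp, fs, *BANDS[name]))
```

Averaging over each band, rather than summing, keeps the comparison from simply favouring the widest band; a real analysis would also need windowing and a baseline, which this sketch omits.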

Three stages of hippocampal sequences

Johnson and Redish14 first saw these VTE-related sequences on the simple multiple-T task, in which rats had already learned the general structure of the task (that is, run through the central track and turn left or right for food), but not the correct decision for that day (because reward-delivery contingencies changed from day to day). Rats that were familiar with the general structure of the task learned the correct choices quickly14,54,83, which suggests that they were finding the daily parameters to fit a learned schema84. Because rats ran the same path repeatedly through the day, they could eventually automate their behaviour, leading to highly stereotyped paths with experience54.

By decoding the hippocampal representations while the rat paused at a choice point (while the rat expressed VTE54), it was found that hippocampal representations serially explored both alternatives on early laps, sweeping first to one side and then the other14. With experience, VTE diminished but representations continued to sweep ahead of the animal; however, in these later laps, sweeps only went in one direction (the direction eventually chosen by the animal). With extended experience, VTE was no longer expressed, the path of the animal became very stereotyped, and hippocampal activity represented the local position of the animal, with little forward component at all.

I suggest that these three patterns of hippocampal representation correspond to three stages of behavioural automation. The first stage is one of indecision, in which rats know the structure of the world but need to vicariously imagine the alternatives to determine what they want to do. The second stage is one of planning, in which rats know both the structure of the world and what they want to do but, using a deliberative process, continue to run the search through only one option (checking to ensure that option is the one they want). The third stage would then be automation, in which rats no longer search the future, but rather simply execute an action chain, with the hippocampus only tracking the immediate present (FIG. 1).
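The proposed mapping from sweep patterns to stages can be stated as a small rule. This is a simplification for illustration: it assumes that forward sweeps during one choice-point pass have already been detected and labelled by the goal arm they reach, and the function name `hippocampal_stage` is hypothetical.

```python
from typing import List

def hippocampal_stage(sweep_targets: List[str]) -> str:
    """Map the goal arms reached by forward sweeps during one choice-point
    pass to the proposed stage. sweep_targets: one arm label per detected
    sweep, e.g. ['L', 'R', 'L']; an empty list means no forward sweeps."""
    arms = set(sweep_targets)
    if len(arms) >= 2:
        return "indecision"   # sweeps serially explore both alternatives
    if len(arms) == 1:
        return "planning"     # sweeps check only the option that will be taken
    return "automation"       # hippocampus tracks only the local position
```

Applied lap by lap, this rule would be expected to move from "indecision" through "planning" to "automation" as behaviour on a task with stable reward-delivery contingencies becomes stereotyped.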

Hippocampal manipulations and VTE

Hippocampal lesions decrease the expression of VTE, particularly during learning, investigatory and exploratory stages, but these same lesions can increase the expression of VTE in later stages85–87. Ageing, which disrupts cognitive spatial strategies through effects on the hippocampus88, has a similar effect of increasing VTE during later stages of tasks89. In addition, in the elevated plus maze (a task used for measuring levels of anxiety; see BOX 4), hippocampal lesions disrupt the pausing behaviour that rats exhibit before venturing on to the open arms. It may be that hippocampal lesions reduce the expression of VTE, but also prevent animals from shifting to the planning and automation stages as easily. As noted above, on tasks in which rats show a shift from a flexible behaviour to an automated behaviour, such as the multiple-T task54, the Tolman–Hull plus maze51,62,88 or the spatial delay-discounting task68, VTE typically appears during early performance along with flexible behaviour and vanishes as the behaviour automates. On these tasks, hippocampal lesions decrease the VTE that occurs early (suggesting a problem with deliberative systems), but then these hippocampal lesions also disrupt this change in VTE with experience, leading to an increase in VTE, particularly on later laps (which should be more automated). Whether this disruption in the normal decrease in VTE also disrupts processes associated with automation (such as the development of task bracketing in the dorsolateral striatum; see below) remains unknown.

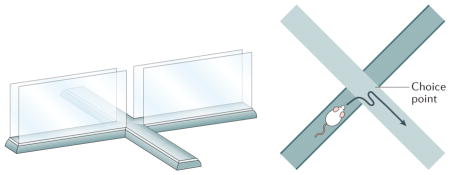

Box 4. Anxiety.

A hallmark of anxiety is the inability to act owing to pausing to consider possibilities, and particularly the dangers inherent in those possibilities. A hallmark of rodent models of anxiety is an unwillingness to venture out into open spaces. For example, on the elevated plus maze (see the figure), anxious rats prefer to stay within the enclosed arms and do not like to venture out into the open arms153. This model seems to have construct validity in terms of involvement of similar brain structures as in human anxiety155,156 and similar effects of anxiolytics155.

The central intersection point between the closed and open arms of the elevated plus maze forms a choice point (see the figure), and rats often show micro-choices that look very much like VTE events at this choice point153,157. There is increased interaction between the hippocampus and the prefrontal cortex during these events157,158. Pausing at this central intersection point has been referred to as a ‘stretch–attend posture’, during which the rat leans out and then returns back159. The stretch–attend posture occurs at times of conflict between approach to a reward (such as getting a food pellet) and avoidance of a threat (such as a dangerous predator-like robot)160,161. The stretch–attend posture has been hypothesized to be critical for risk assessment153,159 and probably represents a VTE event between staying and going. Predictions of this hypothesis are that there will be hippocampal theta sequences during these stretch–attend postures that will represent trajectories ahead of the rat14, that the hippocampus and the amygdala will show local field potential coupling70, and that there will be evaluation-related representations in structures such as the ventral striatum and the amygdala132,161.

Intra-hippocampal pharmacological manipulations can also change the expression of VTE. The cannabinoid agonist CP55940 has three notable effects on behaviour and hippocampal representations, whether given systemically or intra-hippocampally. First, it disrupts the ability to hold information across temporal gaps between trials90. Second, it disrupts the phase-related firing of place cells91. Third, it markedly increases VTE90,92. Given that phase-related firing of place cells is a reflection of the underlying place-cell theta sequences15,78, the cannabinoid-induced disruption of phase-related firing is likely to be reflective of the disruption of the theta sequences and thus indicates a disruption of the forward sweeps. A plausible interpretation of these disruptions is that CP55940 hinders the ability of the hippocampal sweeps to produce a self-consistent representation of the episodically imagined future, leaving an animal in the indecision stage, with continued expression of VTE.

In contrast to CP55940, reduction of the tonic release of noradrenaline by clonidine decreases VTE93. It is not known what effect clonidine has on theta sequences, but new data suggest that it limits the search process to a single path, even during VTE events94. This mentally searched path is the one that gets chosen by the rat94. A plausible explanation for the reduction in VTE following clonidine exposure is that clonidine drives the animal into the planning stage, in which it mentally explores only a single option and thus does not spend as much time expressing VTE.

Beyond the hippocampus

The neurophysiology of hippocampal representations during VTE14 suggests that the hippocampus enables the representation of the imagined future outcome required by the search component during the VTE process. Given the known interactions between the hippocampus and cortical representation systems34,84,95, it is likely that a full representation of an episodic future would depend on both hippocampal representations of the cognitive map and cortical representations of the features in that episodic future96,97. This is consistent with observations that episodic future thinking in humans activates hippocampal and medial temporal lobe systems26,73, and prospective representations of specific options activate cortical areas that can be used to decode those future options98.

Because disrupting hippocampal representations increases VTE87,90, it is unlikely that the hippocampus initiates the VTE process. I suggest, instead, that some other structure polls the hippocampus for that episodic future. Given the known influence of the rodent prelimbic cortex on outcome-dependent decisions99,100 and the effects of manipulating it on goal-related activity in the hippocampus101,102, the prelimbic cortex is a good candidate for the structure that initiates VTE.

The PFC

Theories of the neurophysiology underlying human deliberative processes, particularly the initiation of imagination, suggest a role for the PFC in deliberation26,45,73. In the rat, the prelimbic and infralimbic cortices (together termed the medial PFC (mPFC)) are usually identified as homologous to the primate dorsolateral PFC103,104, although the homology remains controversial105.

Cells in the mPFC tend to represent task-related parameters and, on structured maze tasks, their activity tends to chunk the environment into task-related sections106,107, analogous to the trajectory-related sequences seen in the hippocampus72,75. It is not known whether these mPFC neural patterns are directly related to the hippocampal sequences, but, interestingly, mPFC cell-firing patterns lock to hippocampal rhythms during episodic memory and decision-making tasks108,109, and interfering with the prelimbic–hippocampal circuit (through optogenetic silencing or physical lesions of the nucleus reuniens) disrupts the processing of goal-related information in the hippocampus102. Disruption of the mPFC in rats impaired the ability of the animals to switch flexibly to novel strategies110 but did not impair performance once those strategies had been well learned111, suggesting that the mPFC is necessary during those task aspects in which VTE tends to appear52.

There is a strong, transient increase in LFP interactions between the hippocampus and the mPFC at the choice point during times when rats typically express VTE101,112,113. Several researchers have suggested that coherent oscillations (measured by paired recordings of LFPs in two separate structures) can increase the transmission of information between structures, allowing for transient changes in functional connectivity as a function of different task demands114. The functional relationships between the hippocampus and the mPFC are critical for the goal-dependent firing of hippocampal cells102, and are disrupted by cannabinoids115.
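To make the notion of LFP coherence concrete: a common measure is the magnitude-squared coherence, C(f) = |Sxy(f)|² / (Sxx(f) Syy(f)), where Sxy is the cross-spectrum of the two signals and Sxx and Syy are their auto-spectra, each averaged over windowed segments. The minimal NumPy sketch below applies it to two synthetic signals that share an 8 Hz, theta-like component. The sampling rate, segment length and signals are assumptions for illustration, not values from the studies cited; in practice a tested routine such as `scipy.signal.coherence` would normally be used.

```python
import numpy as np

def magnitude_squared_coherence(x, y, fs, nperseg):
    """Welch-style estimate of magnitude-squared coherence between x and y.

    Segments of `nperseg` samples overlap by 50%; each is mean-subtracted and
    Hann-tapered before the FFT. Returns (freqs in Hz, coherence in [0, 1]).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    window = np.hanning(nperseg)
    step = nperseg // 2
    n_freqs = nperseg // 2 + 1
    sxx = np.zeros(n_freqs)
    syy = np.zeros(n_freqs)
    sxy = np.zeros(n_freqs, dtype=complex)
    for start in range(0, len(x) - nperseg + 1, step):
        xs = x[start:start + nperseg]
        ys = y[start:start + nperseg]
        fx = np.fft.rfft(window * (xs - xs.mean()))
        fy = np.fft.rfft(window * (ys - ys.mean()))
        sxx += np.abs(fx) ** 2        # accumulate auto-spectrum of x
        syy += np.abs(fy) ** 2        # accumulate auto-spectrum of y
        sxy += fx * np.conj(fy)       # accumulate cross-spectrum
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, np.abs(sxy) ** 2 / (sxx * syy)

# Synthetic example: two noisy 'LFPs' sharing an 8 Hz component (assumed values).
rng = np.random.default_rng(0)
fs = 500.0                                   # sampling rate in Hz
t = np.arange(0, 20, 1 / fs)                 # 20 s of data
hpc = np.sin(2 * np.pi * 8 * t) + rng.standard_normal(t.size)
pfc = 0.8 * np.sin(2 * np.pi * 8 * t + 0.5) + rng.standard_normal(t.size)
freqs, coh = magnitude_squared_coherence(hpc, pfc, fs, nperseg=500)
i8 = int(np.argmin(np.abs(freqs - 8)))
print(f"coherence at {freqs[i8]:.0f} Hz: {coh[i8]:.2f}")
```

Coherence is high at the shared frequency and near zero elsewhere, even though each signal on its own is dominated by noise.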

These studies suggest a model in which VTE reflects a deliberation process entailing an interaction between the mPFC and the hippocampus. I propose that the mPFC initiates the request, and the hippocampus responds by calculating the consequences of a potential sequence of actions (that is, identifying a potential future outcome). This interaction may explain why the hippocampal sequences are serial considerations of potential options: each request by the mPFC is searched through by a single theta sequence. If the hippocampus responds with poorly structured information (such as when under the influence of cannabinoids), the mPFC sends additional requests, leading to an increase in VTE. If the hippocampus responds with a confident representation of the consequences of the action (such as when under the influence of clonidine), the mPFC is satisfied and VTE is diminished. However, how the quality of the hippocampal representation is evaluated by the mPFC or other downstream structures remains unknown.
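The proposed request-and-respond loop can be caricatured in a few lines. In the toy sketch below, every ingredient is a hypothetical choice made for illustration: the quality score standing in for how well structured a hippocampal sweep is, the acceptance threshold, the noise level, and the way the cannabinoid-like and clonidine-like conditions are encoded. The number of requests issued before the mPFC accepts an answer plays the role of the amount of VTE.

```python
import random

def deliberate(options, quality_mean, rng, threshold=0.7, max_requests=20):
    """Toy mPFC-hippocampus request loop (hypothetical, not fitted to data).

    Each mPFC request triggers one hippocampal 'sweep' through a single
    option (options are visited serially, in turn). The sweep returns a
    quality score clipped to [0, 1], standing in for how self-consistent
    the imagined outcome is. The mPFC stops once a sweep reaches `threshold`.
    Returns (accepted option or None, number of requests issued).
    """
    for n in range(1, max_requests + 1):
        option = options[(n - 1) % len(options)]
        quality = min(1.0, max(0.0, rng.gauss(quality_mean, 0.15)))
        if quality >= threshold:
            return option, n
    return None, max_requests

def mean_requests(options, quality_mean, rng, trials=2000):
    """Average number of requests per decision: a proxy for the amount of VTE."""
    return sum(deliberate(options, quality_mean, rng)[1] for _ in range(trials)) / trials

rng = random.Random(42)
control = mean_requests(["left", "right"], 0.6, rng)
# Cannabinoid-like condition: sweeps are less well structured (lower mean quality).
cannabinoid_like = mean_requests(["left", "right"], 0.4, rng)
# Clonidine-like condition: search limited to one path, represented confidently.
clonidine_like = mean_requests(["left"], 0.8, rng)
print(control, cannabinoid_like, clonidine_like)
```

Lowering the mean sweep quality raises the number of requests, and restricting the search to a single confidently represented path lowers it, which is the qualitative pattern the model above proposes; nothing here is derived from recorded data.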

The ventral striatum and the OFC

It is not enough to know what will happen when exploring a potential path during deliberation — one also has to evaluate the consequences of that path, preferably in light of one’s goals57. Both the ventral striatum and the orbitofrontal cortex (OFC) have been identified as key to evaluation processes in rats116–118, monkeys119–121 and humans122–125. Neurons in these structures respond to reward value119,126–129, and reward-related cells in both the ventral striatum and the OFC fire transiently just before an animal initiates a journey to a goal127,130,131. Cells in these structures that exhibit large firing responses to reward also fire action potentials during VTE66,129,132. This suggests the presence of an imagination of reward during VTE events, potentially providing an evaluation signal.

On T-maze tasks, the pause-and-reorient behaviour of VTE has one (or more) very clear, sharp transitions that can be identified quantitatively54,66,68,82,129, making it possible to identify the timing of neural reward representations relative to decision-making processes. Covert reward signals in the ventral striatum preceded the moment of turning around, whereas the covert reward signal in the OFC appeared only as the animal oriented towards its goal, after turning around54,66,129.
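The zIdPhi measure described in Figure 1 can be sketched as follows: IdPhi integrates the absolute angular velocity of the direction of motion across the choice point, and zIdPhi z-scores it across laps. The sketch assumes simple finite-difference velocity estimates (`np.gradient`) on positions already restricted to the choice point, and z-scores across whatever set of laps is passed in; published analyses may smooth velocity differently and normalize within animals or sessions. The example laps are synthetic.

```python
import numpy as np

def idphi(x, y):
    """IdPhi: integrated absolute change in the heading of motion along a path.

    Heading is the direction of the velocity vector. Summing |change in
    heading| over samples equals integrating |angular velocity| over time,
    so the sampling interval cancels out.
    """
    vx = np.gradient(np.asarray(x, dtype=float))
    vy = np.gradient(np.asarray(y, dtype=float))
    heading = np.unwrap(np.arctan2(vy, vx))      # radians, unwrapped
    return float(np.sum(np.abs(np.diff(heading))))

def zidphi(laps):
    """z-score IdPhi across a set of laps, given as (x, y) position arrays."""
    values = np.array([idphi(x, y) for x, y in laps])
    return (values - values.mean()) / values.std()

# Synthetic choice-point passes (positions in arbitrary units).
rng = np.random.default_rng(0)
s = np.linspace(0, 1, 60)
laps = [(s, rng.uniform(0, 0.3) * s ** 2) for _ in range(9)]  # smooth, non-VTE-like passes
laps.append((s, 0.1 * np.sin(4 * np.pi * s)))                  # back-and-forth, VTE-like pass
z = zidphi(laps)
print(np.round(z, 2))
```

The smooth passes cluster together at low values, and the reorienting pass falls in the right-hand tail, mirroring the central peak and right-skewed tail described for real zIdPhi distributions.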

These data suggest that the ventral striatum provides evaluative calculations during the decision-making process itself (before the animal stops and reorients towards its goal), whereas the OFC provides expectation information only after an animal commits to its decision. This hypothesis is consistent with recent work examining the timing of choice-related value representations in primates, comparing when evaluation signals in neurons recorded from the ventral striatum and ventral prefrontal cortical areas (which are probably homologous to the rat OFC) represent the value of the selected choice121. It is also consistent with work finding that the rat OFC exhibits outcome-specific representations of expected rewards after decisions67,133.

The dorsolateral striatum

As noted above, when repeated actions reliably achieve goals, animals can use procedural decision making, which depends on an algorithm of releasing action chains after recognizing the situation49. In a sense, the animal has learned to categorize the world (by developing a schema) and acts appropriately given those categories24. A key structure in the categorize-and-act process is the dorsolateral striatum. Lesions and other manipulations that compromise the function of the dorsolateral striatum drive behaviour away from procedural decision making and towards deliberative decision making62,63. Theoretically, the information-processing steps involved in procedural decision making are considerably different from those involved in the search-and-evaluate, deliberative system22,50,54.

Structures involved in procedural decision making should slowly develop representations of situation–action pairs, but only for the cues that provide reliable information as to which actions should be taken to achieve a reward. In contrast to the ubiquitous spatial representations of hippocampal place cells10,34,134,135, structures involved in procedural learning should only develop spatial representations when knowing where one is in space informs which actions one should take. Neural ensembles in the dorsolateral striatum only develop representations of those action–task pairs that reflect cues and task parameters that are informative about the action55,136,137. For example, dorsolateral striatal representations develop spatial correlates on the multiple-T task, but not on an analogous non-spatial task even when that non-spatial task occurs on a large track-based maze83,137.
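One way to quantify whether a cell has developed spatial correlates is the spatial information of its firing-rate map, in bits per spike (often called the Skaggs information). The text above does not specify this measure; it is included only as a commonly used example, assuming a rate map and an occupancy map already binned by position. A spatially untuned cell scores zero, whereas a cell that fires in a restricted part of the track scores higher.

```python
import numpy as np

def spatial_information(rate_map, occupancy):
    """Spatial information (bits per spike) of a firing-rate map.

    I = sum_i p_i * (r_i / r_mean) * log2(r_i / r_mean), where p_i is the
    fraction of time spent in bin i and r_mean = sum_i p_i * r_i.
    Bins with zero firing contribute zero (the limit of x * log x).
    """
    rate = np.asarray(rate_map, dtype=float)
    p = np.asarray(occupancy, dtype=float)
    p = p / p.sum()                          # occupancy probability per bin
    ratio = rate / np.sum(p * rate)          # rate relative to overall mean rate
    safe = np.where(ratio > 0, ratio, 1.0)   # log2(1) = 0 for silent bins
    return float(np.sum(p * ratio * np.log2(safe)))

# Hypothetical 20-bin track with equal time spent in every bin.
bins = np.arange(20)
occupancy = np.ones(20)
flat_cell = np.full(20, 5.0)                                     # no spatial tuning
place_like = 0.1 + 20.0 * np.exp(-0.5 * ((bins - 10) / 1.5) ** 2)
print(spatial_information(flat_cell, occupancy), spatial_information(place_like, occupancy))
```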

Moreover, dorsolateral striatal ensembles should not show activity that is representative of transient mental sweeps into future outcomes (as the hippocampus does). Indeed, they do not, even during VTE events and even on tasks in which dorsolateral striatum cells do show spatial representations54,56. Nor should reward-related cells in the dorsolateral striatum show transient activity carrying covert information about reward (as the ventral striatum and the OFC do), and again they do not: although a subset of dorsolateral striatum cells do show reward-related activity53,56,83, these reward cells do not show a transient upregulation of firing during VTE events54. Thus, neural activity in the dorsolateral striatum during decision-making tasks is more consistent with an involvement of this structure in procedural decision making than in deliberative decision making.
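Statements about what an ensemble does or does not represent, such as forward sweeps in the hippocampus or covert reward signals, rest on decoding the ensemble's activity. A minimal sketch of one widely used approach, a memoryless Bayesian decoder with a flat prior and independent Poisson firing, is shown below. These are modelling assumptions, and the cited studies do not necessarily use exactly this decoder. Applied to short time windows during a pause at the choice point, a posterior that peaks far ahead of the animal's actual location is the kind of signature that identifies a sweep.

```python
import numpy as np

def decode_position(counts, tuning, tau):
    """Posterior over positions from one time window of ensemble spikes.

    counts: spike count of each cell in the window, shape (n_cells,).
    tuning: expected rate (Hz) of each cell at each position,
            shape (n_cells, n_positions).
    tau:    window length in seconds.
    Assumes a flat prior and independent Poisson firing (modelling choices).
    """
    rates = np.asarray(tuning, dtype=float) + 1e-9           # avoid log(0)
    n = np.asarray(counts, dtype=float)
    log_post = n @ np.log(rates) - tau * rates.sum(axis=0)   # log P(n | x) + const
    log_post -= log_post.max()                               # numerical stability
    post = np.exp(log_post)
    return post / post.sum()

# Synthetic ensemble: 20 cells with Gaussian fields tiling 20 positions.
rng = np.random.default_rng(1)
positions = np.arange(20)
tuning = 0.5 + 15.0 * np.exp(-0.5 * ((positions[None, :] - positions[:, None]) / 1.5) ** 2)
tau = 0.5
counts = rng.poisson(tuning[:, 5] * tau)   # simulated animal at position 5
posterior = decode_position(counts, tuning, tau)
print(int(np.argmax(posterior)))
```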

As behaviour becomes ‘ballistic’ and stereotyped, dorsolateral striatal neural ensembles transition their firing to the start and end of the journey, with decreased firing in the middle53,56,138–141. This phenomenon has been termed task bracketing, and it diminishes quickly if a change in the reward-delivery contingency forces animals back into using deliberative strategies53,56. Task bracketing is negatively correlated with the presence of VTE on a lap-by-lap basis53,56, supporting the hypothesis that VTE is indicative of decisions being made by the deliberative system, whereas task bracketing is indicative of the decisions being made by the procedural system. Interestingly, task bracketing remained after reward devaluation53 and after switching the signalling cue to a novel modality142, but disappeared during extinction140 and after a reward-delivery contingency reversal that required a change in action in response to the same set of cues56. These findings may suggest that the hypothesized relationship between reward evaluation or devaluation and deliberative search processes35 may require a more complicated process model42,143 that separates one-step and multistep processes.
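A lap-by-lap analysis of this kind can be sketched as follows. The bracketing index here is a hypothetical normalized contrast (mean firing in the first and last thirds of the lap versus the middle third), not necessarily the measure used in the cited studies. The session is synthetic: both the growth of bracketing and the decline of VTE are built in, so the resulting negative correlation only illustrates the computation and is not a result.

```python
import numpy as np

def bracketing_index(lap_rate):
    """Hypothetical task-bracketing contrast for one lap.

    lap_rate: summed ensemble firing binned by progress through the lap
    (first bin = start, last bin = end). Compares the mean of the first and
    last thirds with the middle third; positive values mean firing is
    concentrated at the start and end of the journey.
    """
    rates = np.asarray(lap_rate, dtype=float)
    k = len(rates) // 3
    edges = 0.5 * (rates[:k].mean() + rates[-k:].mean())
    middle = rates[k:len(rates) - k].mean()
    return (edges - middle) / (edges + middle)

# Synthetic session in which bracketing grows and VTE fades with practice.
rng = np.random.default_rng(2)
n_laps, n_bins = 12, 30
progress = np.linspace(0, 1, n_bins)
u_shape = (2 * progress - 1) ** 2          # high at start and end, low mid-lap
strength = np.linspace(0, 1, n_laps)       # assumed automation trajectory
bi = np.array([bracketing_index(5 + 10 * b * u_shape + rng.uniform(0, 1, n_bins))
               for b in strength])
z_idphi = 2.0 - 3.0 * strength + 0.3 * rng.standard_normal(n_laps)
corr = np.corrcoef(bi, z_idphi)[0, 1]      # lap-by-lap Pearson correlation
print(round(float(corr), 2))
```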

Neurophysiological conclusions

The neurophysiology summarized above is consistent with the hypothesis that there are (at least) two decision-making systems based on different algorithms — a deliberative system based on search-and-evaluate processes and a procedural system based on the release of learned action chains in response to situation categorization. It is also consistent with the hypothesis that VTE is a behavioural reflection of the deliberative process. The search process arises from an interaction between the PFC and the hippocampus, with pre-decision evaluation in the ventral striatum, and post-decision evaluation in the OFC. In the rat, the infralimbic cortex may have contrasting roles to the prelimbic cortex, instead affecting learned, automated procedural strategies53,99. The data reviewed above suggest that the dorsolateral striatum is a critical component of the procedural system; it learns the associated action chains that should be taken in rewarded situations. There is evidence that the dorsomedial striatum may have roles in the deliberative component55,56,63,144–146, and that there may be differences between the functions of the anterior and posterior portions of the dorsomedial striatum55,56,63, but the specific roles of the dorsomedial striatum in decision making remain incompletely explored.

Theories of VTE

The term ‘vicarious trial and error’ as originally suggested by Muenzinger, Gentry and Tolman1–3 is fraught with meaning, implying imagination, mental time travel and a cognitive search-and-evaluate process. In effect, they were proposing that rats were deliberating over choices. The neurophysiological data reviewed above suggest that this is actually an accurate description of the behaviour. Over the years, alternative accounts of VTE have been proposed, suggesting that perhaps VTE was simply a means of gathering sensory information or that it merely reflected indecision during learning. However, further examination of these two alternatives suggests that VTE really is an example of using working memory to explore mental possibilities — that is, to vicariously try out alternatives.

Is VTE a means of gathering sensory information?

As a behavioural process, VTE entails orientation towards a path to a goal, or perhaps even orientation towards the goal itself. Because of this, early theories of VTE suggested that rats were merely focusing sensory attention on cues1,147. This simple hypothesis is, however, untenable, because the proportions of VTE change as behaviour changes, even when sensory cues are held constant14,67,68,87. Certainly, there are examples of pause-and-reorientation behaviour in both rats (looking like VTE) and primates (BOX 5) that have information-gathering components. However, hippocampal place-cell sweeps do not always reflect the orientation of the rat14, suggesting that the sweeps reflect an internal process of vicarious exploration rather than a means of gathering external sensory information. Although it is true that rats have a very wide field of view, VTE has been observed in walled-maze tasks in which the animal cannot see the goal56,87, so any sensory-information-gathering theory would have to depend on only the first part of a journey. Moreover, VTE-like reorientations observed in sensory-information-gathering situations do not depend on hippocampal integrity, unlike VTE-like reorientations in goal-directed behaviours86. VTE is more likely to be a reflection of an internal process50,58, perhaps as the halted initiation of an action.

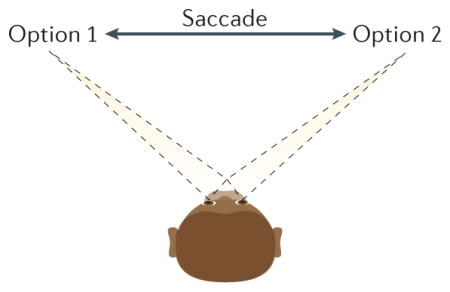

Box 5. Primates.

This Review has concentrated on the rat because vicarious trial and error (VTE) behaviour has been both best defined and most extensively studied in this organism. However, in certain visuospatial tasks in which options are separated on a visual screen, humans and non-human primates do show a similar process termed ‘saccade–fixate–saccade’ (SFS), in which the subject looks from one option to another61,162,163 (see the figure). Thus, just as VTE in the rat entails alternate orientation and reorientation towards goals, SFS may entail an alternate orientation and reorientation towards a target. This connects SFS to the extensive primate (both human and non-human) literature on visual search36,164; however, whereas visual search usually refers to situations in which perception is difficult but the decision (once the correct stimulus is perceived) is easy, SFS is usually observed in situations in which the perception is easy, but the decision is hard, such as when given a choice between two similarly valued candy bars61. As with the rats and VTE, amnesic patients with severe hippocampal dysfunction showed disruptions in these SFS revisitation processes162, which are believed to depend on prefrontal–hippocampal interactions45.

Is VTE exploration of novel environments?

On initial exploration, rats do show specific exploration-related behaviours, particularly pauses during which rats tend to show visual exploration of an environment by rearing up on their hind legs and swinging their head back and forth from side to side10,148. Although VTE often does include the head swinging from side to side, this behaviour reflects the orienting and reorienting towards paths and does not include rearing on the hind legs. Exploratory behaviours occur on novel mazes on which rats have very limited experience, whereas VTE occurs on tasks in which the animal has lots of experience but must determine the specific parameters in effect on a given day. Moreover, rearing behaviours occur throughout the space of the maze, whereas VTE occurs specifically at difficult choice points. On a circular track, animals expressed pause-and-rearing behaviours randomly throughout the track149, whereas on the multiple-T task, VTE behaviours were only expressed at the final (difficult) choice point14,54, and on the restaurant row task VTE behaviours were preferentially expressed at entries onto the reward spokes, particularly when the economic offer was at the animal’s decision threshold67. On the spatial delay-discounting task, more VTE occurred during the so-called titration phase (while rats adjusted the delay) than during the investigation or exploration phases68,87. Neurophysiologically, VTE is associated with hippocampal sweeps of representations of paths towards a goal and does not produce long-term changes in the place fields of the cells14; by contrast, exploratory pause-and-rear behaviours recruit hippocampal cells to develop new place fields149. Together, this evidence suggests that VTE is more likely to reflect a form of exploration within an internal, mental space of possibilities than exploration of the external environment.

A search through mental information space

One of the discoveries of the computer revolution is that information can be hidden within complex data structures such that accessing that information can require computation and time8. Similarly, the information about which rewards are available, and where and how to get to them, may be stored in an animal’s brain, but determining the consequences of one’s actions, and whether those actions are the right choice at this time, can require a search process through one’s knowledge about the world.
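A toy illustration of information that is stored but hidden: below, a hypothetical maze is stored as an adjacency list together with the reward available at each end. Which first turn leads to the larger reward is fully determined by this structure but is written down nowhere; it has to be computed by searching (here with breadth-first search), and the number of nodes expanded grows with the length of the path searched. The maze, node names and reward values are invented for illustration.

```python
from collections import deque

def search_option(graph, start, rewards):
    """Breadth-first search from one option; returns (reward, steps, nodes_expanded).

    graph:   dict mapping each node to the nodes reachable from it.
    rewards: dict mapping goal nodes to the reward found there.
    If no rewarded node is reachable, returns (0, None, nodes_expanded).
    """
    frontier = deque([(start, 0)])
    seen = {start}
    expanded = 0
    while frontier:
        node, depth = frontier.popleft()
        expanded += 1
        if node in rewards:
            return rewards[node], depth, expanded
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return 0, None, expanded

def evaluate_options(graph, choice_point, rewards):
    """Search each option at a choice point in turn and pick the most rewarding."""
    outcomes = {opt: search_option(graph, opt, rewards) for opt in graph[choice_point]}
    best = max(outcomes, key=lambda opt: outcomes[opt][0])
    return best, outcomes

# Hypothetical maze: the reward values at the ends are stored, but which first
# turn leads to the larger one is only revealed by searching.
maze = {"T": ["L1", "R1"], "L1": ["L2"], "L2": ["L3"], "L3": ["L_end"],
        "R1": ["R2"], "R2": ["R_end"]}
food = {"L_end": 3, "R_end": 1}
best, outcomes = evaluate_options(maze, "T", food)
print(best, outcomes)  # prints: L1 {'L1': (3, 3, 4), 'R1': (1, 2, 3)}
```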

Interestingly, early theories of working memory suggested that working memory allowed information to be explored internally to determine previously unrecognized aspects hidden within one’s knowledge150. This exploration was hypothesized to arise from prefrontal areas45,151, using sensory and other representational systems as a visuospatial scratchpad18,150. The deliberative explanation for VTE derived in this article also suggests a very similar process, whereby the mPFC sends requests to the hippocampus to explore possible outcomes, the value of which can then be evaluated by the ventral striatum.

Exploration has been hypothesized to be a form of information foraging and, analogously, deliberation has been hypothesized to consist of a mental exploration of one’s internal schema58. The latter hypothesis requires that an animal has a model of the world (a mental schema) on which to search. Consistent with this hypothesis, VTE does not occur on initial exploration, but only after the rat has a schema about the task and environment. Similarly, VTE does not occur during every decision, but only during difficult decisions (that is, where options are similarly valued67) and before automation14,53,54,56,65,68.

Conclusion

In his 1935 critique of Tolman’s VTE hypothesis, Guthrie152 said: “So far as the theory is concerned the rat is left buried in thought”. We now know that an internal search through a representation can derive information that is hidden within that representation, and thus that a search through a mental schema of the consequences of one’s actions can derive information that can change decisions. Importantly, computation takes time, so the search-and-evaluate process that underlies deliberation requires time to complete. The term ‘vicarious trial and error’ makes strong assumptions about cognitive and mental states; it implies that rats are actually searching through possibilities, evaluating those possibilities and making decisions that are based on those evaluations. In other words, it implies that rats really are deliberating. Although several questions remain (BOX 6), the data that have come in over the past decade are clear: Muenzinger, Gentry and Tolman were completely correct, and VTE is a process of vicarious (mental) trial and error (search, evaluate and test). Guthrie was also right: when rats pause at choice points, they are indeed “buried in thought”.

Box 6. Open questions.

Although we have made a lot of progress in identifying the neural mechanisms underlying vicarious trial and error (VTE) behaviours, confirming many of the original implications of the term ‘vicarious trial and error’ (an explicit search process representing future options), several key open questions remain.

How does the process decide which potential futures to search?

Mental construction of potential future outcomes is a form of a search process23,36, and thus requires a recall process that must be modulated by one’s goals. Cueing expected outcomes can guide preferences towards that outcome, but it is not yet known whether those preferences also drive mental search representation to focus on paths to that outcome35,41,44,165–168.

The information represented within hippocampal sequences is correlated with the orientation of the rat during VTE, but does not track it on a one-to-one basis14,72. Similarly, hippocampal sequences during goal-related navigation include increased representations of the chosen goal but are not uniformly targeted towards the chosen goal16,75. This suggests that VTE probably reflects the process of searching but is not necessarily oriented towards the goal itself. How these two processes (the neurophysiology of search and the orientation of the rat) are related remains incompletely explored.

How are the options evaluated?

In humans, evaluation of episodically imagined futures during deliberation seems to be based on applying perceptual valuation processes to the imagined outcomes21,27,28,169. Perceptual and deliberative valuation processes in humans and other animals activate and depend on similar neural systems28,122,124,165. Evaluative steps during VTE in rodents activate the ventral striatum and the orbitofrontal cortex66,129,132. In humans, the evaluation step depends on current emotional processes20,27,28. In rats, devaluation of a reward reduces the performance of actions that lead to that reward during early learning and when flexible behaviours are required (because reward-delivery contingencies are variable); however, after automation, reward devaluation has no such effect on the performance of these actions99. This discrepancy suggests that during deliberative processes there may be an evaluation step that is based on current needs and that is not used during procedural processes57,165. Whether this step works through a homologous process to human evaluative processes remains controversial143,166,170.

How is the action selected?

Deliberation is a decision-making process24. Studies have so far failed to find any relationship between the directions being represented during VTE and the eventual choice of normal animals14,129. The saccade–fixate–saccade processes in primates (BOX 5) have been argued to reflect integration of the value of a given choice61, which links them to the psychological decision theory of integration to threshold171. This model has extensive psychophysical and neurophysiological support in perceptual decisions in which one needs to determine how to perceive a complex stimulus172,70. For example, when a subject is faced with a set of dots on a screen that are moving mostly randomly and asked to determine the average non-random direction hidden within the random population, representations of the alternative possibilities develop slowly, integrating until they cross a threshold172. However, neural ensemble recordings in the ventral striatum and the orbitofrontal cortex of rats have failed to find integration-to-threshold signals during VTE in either structure129. Behavioural data on some tasks indicate that VTE is increased when decisions are difficult67, which is consistent with the integration-to-threshold hypothesis (more difficult decisions require more integration time), but in other tasks VTE is increased only when the animal is using a deliberative strategy. On the spatial delay-discounting task, VTE occurs when values are very different from each other and disappears when the values become equal68 (presumably because the rat can use a procedural strategy at that point on this task). Although the action-selection process that must end deliberation remains unknown at this time, the evidence strongly supports the idea that VTE reflects the search-and-evaluate process that underlies deliberation.
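The integration-to-threshold account can be sketched as a drift-diffusion process, in which a decision variable accumulates the noisy difference between two option values until it reaches an upper or a lower bound. With the hypothetical parameters below, decisions between similarly valued options take much longer than decisions between clearly different ones, which is the behavioural prediction discussed above. The sketch is not a model of the ventral striatal or OFC recordings, in which such integration signals were not found.

```python
import numpy as np

def time_to_threshold(value_a, value_b, rng, threshold=1.0, noise=0.3,
                      dt=0.01, max_steps=100_000):
    """One drift-diffusion decision between two options (hypothetical parameters).

    Evidence starts at 0 and accumulates drift (value_a - value_b) plus
    Gaussian noise until it reaches +threshold (choose 'a') or -threshold
    (choose 'b'). Returns (choice or None, decision time in seconds).
    """
    evidence = 0.0
    drift = value_a - value_b
    noise_sd = noise * np.sqrt(dt)      # per-step noise scales with sqrt(dt)
    for step in range(1, max_steps + 1):
        evidence += drift * dt + noise_sd * rng.standard_normal()
        if evidence >= threshold:
            return "a", step * dt
        if evidence <= -threshold:
            return "b", step * dt
    return None, max_steps * dt

rng = np.random.default_rng(3)
easy = [time_to_threshold(3.0, 1.0, rng)[1] for _ in range(300)]   # clearly different values
hard = [time_to_threshold(1.2, 1.0, rng)[1] for _ in range(300)]   # similar values
print(f"mean decision time: easy {np.mean(easy):.2f} s, hard {np.mean(hard):.2f} s")
```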

Acknowledgments

The author thanks all of the students and colleagues who have worked with him over the years looking at different aspects of VTE, both for helpful discussions and for the experimental and theoretical work on these questions. The author also thanks A. Johnson, K. Smith, J. Stott, S. Amemiya and Y. Breton for comments on drafts of this manuscript, and J. Voss for helpful discussions. Time to work on this manuscript was funded by MH080318 and DA030672.

Glossary

- Neural ensembles

A set of cells recorded separably, but simultaneously, generally from a single brain structure during behaviour. Information represented within ensembles can be decoded from a sufficiently large ensemble

- Mental time travel

A process in which one imagines another time and place. Sometimes referred to as episodic future thinking

- Schema

An expertise-dependent representation of the structure of the world, identifying the important parameters over which the world varies

- Cognitive map

A world representation on which one can plan. Currently, the term is generally used in a spatial context, but Tolman’s original use of the term was closer to the current use of the word ‘schema’

- Deliberative decision making

A process in which one imagines potential future outcomes (serially and individually) and then selects an action to get to that specific future outcome

- Procedural decision making

A process in which one learns an action chain and the ability to recognize the situations in which to release it. Performance is rapid, but is usually learned slowly, and is inflexible once learned

- Local field potential (LFP)

Low-frequency voltage signals reflecting neural processing. In the hippocampus, the LFP is marked by two contrasting states: theta and large-amplitude irregular activity

- Hippocampal theta sequences

Sequences of firing of hippocampal place cells within a single theta cycle, generally proceeding from the location of the rat forward towards potential goals. Also called a ‘hippocampal sweep’

- Hippocampal SWR sequences

A sequence of firing of hippocampal cells within a sharp wave ripple complex (SWR). Originally referred to as ‘replay’ (because early observations identified sequences repeated in order), but now known to include other sequences, such as those running backwards along the path of the animal or along unexplored shortcuts and novel paths

- Task bracketing

A phenomenon observed in dorsolateral striatal neural ensembles, in which the cells show increased activity at the beginning and end of an action chain sequence

- Covert reward signals

Signals reflecting imagined representation of reward, as detected from the patterns of activity of reward-associated neuronal ensembles during non-rewarded events

- Integration to threshold

A psychological theory of decision making whereby one accumulates evidence for one decision over another: when the evidence for one decision reaches a threshold, the decision is made

- Visuospatial scratchpad

A component hypothesized to underlie working memory processes, in which neural circuits normally used for perception are used to hold imagined information for processing

Footnotes

Competing interests statement

The author declares no competing interests.

References

- 1. Muenzinger KF, Gentry E. Tone discrimination in white rats. J Comp Psychol. 1931;12:195–206.

- 2. Tolman EC. Prediction of vicarious trial and error by means of the schematic sowbug. Psychol Rev. 1939;46:318–336.

- 3. Tolman EC. Cognitive maps in rats and men. Psychol Rev. 1948;55:189–208. doi: 10.1037/h0061626.

- 4. Hull CL. Principles of Behavior. Appleton-Century-Crofts; 1943.

- 5. Turing A. On computable numbers, with an application to the Entscheidungsproblem. Proc Lond Math Soc. 1937;42:230–265.

- 6. Wiener N. Cybernetics, or Control and Communication in the Animal and the Machine. Hermann; 1948.

- 7. Shannon C. A mathematical theory of communication. Bell System Techn J. 1948;27:379–423, 623–656.

- 8. Newell A, Shaw JC, Simon HA. Report on a general problem-solving program. Proc Int Conf Information Process. UNESCO; 1959. [online], http://bitsavers.informatik.uni-stuttgart.de/pdf/rand/ipl/P-1584_Report_On_A_General_Problem-Solving_Program_Feb59.pdf.

- 9. Simon H. A behavioral model of rational choice. Q J Econ. 1955;69:99–118.

- 10. O’Keefe J, Nadel L. The Hippocampus as a Cognitive Map. Clarendon Press; 1978. This is a comprehensive book laying out the hypothesis that the hippocampus has a key role in Tolman’s cognitive map. Also, this book explains one of the first proposed algorithmic differences between what is now called deliberative and procedural learning.

- 11. Wilson MA, McNaughton BL. Dynamics of the hippocampal ensemble code for space. Science. 1993;261:1055–1058. doi: 10.1126/science.8351520.

- 12. Zhang K. Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: a theory. J Neurosci. 1996;16:2112–2126. doi: 10.1523/JNEUROSCI.16-06-02112.1996.

- 13. Brown EN, Frank LM, Tang D, Quirk MC, Wilson MA. A statistical paradigm for neural spike train decoding applied to position prediction from ensemble firing patterns of rat hippocampal place cells. J Neurosci. 1998;18:7411–7425. doi: 10.1523/JNEUROSCI.18-18-07411.1998.

- 14. Johnson A, Redish AD. Neural ensembles in CA3 transiently encode paths forward of the animal at a decision point. J Neurosci. 2007;27:12176–12189. doi: 10.1523/JNEUROSCI.3761-07.2007. This paper shows for the first time that hippocampal sequences encode future outcomes at choice points.

- 15. Foster DJ, Wilson MA. Hippocampal theta sequences. Hippocampus. 2007;17:1093–1099. doi: 10.1002/hipo.20345.

- 16. Pfeiffer BE, Foster DJ. Hippocampal place-cell sequences depict future paths to remembered goals. Nature. 2013;497:74–79. doi: 10.1038/nature12112.

- 17. O’Craven KM, Kanwisher N. Mental imagery of faces and places activates corresponding stimulus-specific brain regions. J Cogn Neurosci. 2000;12:1013–1023. doi: 10.1162/08989290051137549.

- 18.Pearson J, Naselaris T, Holmes EA, Kosslyn SM. Mental imagery: functional mechanisms and clinical applications. Trends Cogn Sci. 2015;19:590–602. doi: 10.1016/j.tics.2015.08.003. This is a clear review of the now-established finding that imagination activates the same circuits as perception in humans, a principle that can be used (see reference 19) to identify mental time travel in non-human animals.

- 19.Johnson A, Fenton AA, Kentros C, Redish AD. Looking for cognition in the structure within the noise. Trends Cogn Sci. 2009;13:55–64. doi: 10.1016/j.tics.2008.11.005. This paper lays out the logic and mathematics allowing for identification of neural representations of cognitive events (such as mental time travel) from neural signals in non-human animals.

- 20.Payne J, Bettman J, Johnson E. The Adaptive Decision Maker. Cambridge Univ. Press; 1993.

- 21.Gilbert DT, Wilson TD. Prospection: experiencing the future. Science. 2007;317:1351–1354. doi: 10.1126/science.1144161.

- 22.Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- 23.Kurth-Nelson Z, Bickel WK, Redish AD. A theoretical account of cognitive effects in delay discounting. Eur J Neurosci. 2012;35:1052–1064. doi: 10.1111/j.1460-9568.2012.08058.x.

- 24.Redish AD. The Mind within the Brain: How we Make Decisions and How Those Decisions Go Wrong. Oxford Univ. Press; 2013. This is a thorough review of the concepts and theory underlying the multiple-decision systems hypothesis.

- 25.Buckner RL, Carroll DC. Self-projection and the brain. Trends Cogn Sci. 2007;11:49–57. doi: 10.1016/j.tics.2006.11.004.

- 26.Schacter DL, Addis DR. In: Predictions in the Brain: Using Our Past to Generate a Future. Bar M, editor. Oxford Univ. Press; 2011. pp. 58–69.

- 27.Gilbert DT, Wilson TD. Why the brain talks to itself: sources of error in emotional prediction. Phil Trans R Soc B. 2009;364:1335–1341. doi: 10.1098/rstb.2008.0305.

- 28.Phelps E, Lempert KM, Sokol-Hessner P. Emotion and decision making: multiple modulatory circuits. Annu Rev Neurosci. 2014;37:263–287. doi: 10.1146/annurev-neuro-071013-014119.

- 29.Redish AD, Schultheiss NW, Carter EC. The computational complexity of valuation and motivational forces in decision-making processes. Curr Top Behav Neurosci. 2015. doi: 10.1007/7854_2015_375.

- 30.Redish AD, Jensen S, Johnson A, Kurth-Nelson Z. Reconciling reinforcement learning models with behavioral extinction and renewal: Implications for addiction, relapse, and problem gambling. Psychol Rev. 2007;114:784–805. doi: 10.1037/0033-295X.114.3.784.

- 31.Gershman SJ, Blei D, Niv Y. Context, learning and extinction. Psychol Rev. 2010;117:197–209. doi: 10.1037/a0017808.

- 32.Niv Y, et al. Reinforcement learning in multidimensional environments relies on attention mechanisms. J Neurosci. 2015;35:8145–8157. doi: 10.1523/JNEUROSCI.2978-14.2015.

- 33.Johnson A, Crowe DA. Revisiting Tolman, his theories and cognitive maps. Cogn Critique. 2009;1:43–72.

- 34.Redish AD. Beyond the Cognitive Map: From Place Cells to Episodic Memory. MIT Press; 1999.

- 35.Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- 36.Winstanley CA, et al. In: Cognitive Search: Evolution, Algorithms, and the Brain. Hills T, et al., editors. MIT Press; 2012. pp. 125–156.

- 37.McKenzie S, et al. Hippocampal representation of related and opposing memories develop within distinct, hierarchically organized neural schemas. Neuron. 2014;83:202–215. doi: 10.1016/j.neuron.2014.05.019.

- 38.Lichtenstein S, Slovic P, editors. The Construction of Preference. Cambridge Univ. Press; 2006.

- 39.Kahneman D. Thinking, Fast and Slow. Farrar, Straus and Giroux; 2011.

- 40.Benoit RG, Gilbert SJ, Burgess PW. A neural mechanism mediating the impact of episodic prospection on farsighted decisions. J Neurosci. 2011;31:6771–6779. doi: 10.1523/JNEUROSCI.6559-10.2011.

- 41.Peters J, Büchel C. Episodic future thinking reduces reward delay discounting through an enhancement of prefrontal–mediotemporal interactions. Neuron. 2010;66:138–148. doi: 10.1016/j.neuron.2010.03.026.

- 42.Kwan D, et al. Future decision-making without episodic mental time travel. Hippocampus. 2012;22:1215–1219. doi: 10.1002/hipo.20981.

- 43.Hassabis D, Kumaran D, Vann SD, Maguire EA. Patients with hippocampal amnesia cannot imagine experiences. Proc Natl Acad Sci USA. 2007;104:1726–1731. doi: 10.1073/pnas.0610561104. This paper shows that the hippocampus is critical for the ability to create episodic futures in humans.

- 44.Lebreton M, et al. A critical role for the hippocampus in the valuation of imagined outcomes. PLoS Biol. 2013;11:e1001684. doi: 10.1371/journal.pbio.1001684.

- 45.Wang JX, Cohen NJ, Voss JL. Covert rapid action-memory simulation (CRAMS): a hypothesis of hippocampal–prefrontal interactions for adaptive behavior. Neurobiol Learn Mem. 2015;117:22–33. doi: 10.1016/j.nlm.2014.04.003.

- 46.Spiers HJ, Gilbert SJ. Solving the detour problem in navigation: a model of prefrontal and hippocampal interactions. Front Hum Neurosci. 2015;9:125. doi: 10.3389/fnhum.2015.00125.

- 47.Hikosaka O, et al. Parallel neural networks for learning sequential procedures. Trends Neurosci. 1999;22:464–471. doi: 10.1016/s0166-2236(99)01439-3.

- 48.Lee SW, Shimojo S, O’Doherty JP. Neural computations underlying arbitration between model-based and model-free learning. Neuron. 2014;81:687–699. doi: 10.1016/j.neuron.2013.11.028.

- 49.Dezfouli A, Balleine B. Habits, action sequences and reinforcement learning. Eur J Neurosci. 2012;35:1036–1051. doi: 10.1111/j.1460-9568.2012.08050.x.

- 50.Johnson A, van der Meer MA, Redish AD. Integrating hippocampus and striatum in decision-making. Curr Opin Neurobiol. 2007;17:692–697. doi: 10.1016/j.conb.2008.01.003.

- 51.Gardner RS, et al. A secondary working memory challenge preserves primary place strategies despite overtraining. Learn Mem. 2013;20:648–656. doi: 10.1101/lm.031336.113. On the classic Tolman–Hull plus maze, VTE arises with deliberative choices.

- 52.Schmidt BJ, Papale AE, Redish AD, Markus EJ. Conflict between place and response navigation strategies: effects on vicarious trial and error (VTE) behaviors. Learn Mem. 2013;20:130–138. doi: 10.1101/lm.028753.112.

- 53.Smith KS, Graybiel AM. A dual operator view of habitual behavior reflecting cortical and striatal dynamics. Neuron. 2013;79:361–374. doi: 10.1016/j.neuron.2013.05.038. This paper shows that VTE is negatively related to dorsolateral striatal representations that reflect procedural strategies (task bracketing).

- 54.van der Meer MAA, Johnson A, Schmitzer-Torbert NC, Redish AD. Triple dissociation of information processing in dorsal striatum, ventral striatum, and hippocampus on a learned spatial decision task. Neuron. 2010;67:25–32. doi: 10.1016/j.neuron.2010.06.023. This paper shows that hippocampal representations encode future options during VTE, ventral striatal representations encode potential rewards during VTE, and dorsolateral striatum does neither, instead slowly developing situation–action representations in line with the automation of behaviour.

- 55.Thorn CA, Atallah H, Howe M, Graybiel AM. Differential dynamics of activity changes in dorsolateral and dorsomedial striatal loops during learning. Neuron. 2010;66:781–795. doi: 10.1016/j.neuron.2010.04.036.

- 56.Regier PS, Amemiya S, Redish AD. Hippocampus and subregions of the dorsal striatum respond differently to a behavioral strategy change on a spatial navigation task. J Neurophysiol. 2015;114:1399–1416. doi: 10.1152/jn.00189.2015.

- 57.Niv Y, Joel D, Dayan P. A normative perspective on motivation. Trends Cogn Sci. 2006;10:375–381. doi: 10.1016/j.tics.2006.06.010. This paper gives an explication of the difference between search-and-evaluate and cached-action-chain decision systems.

- 58.Johnson A, Varberg Z, Benhardus J, Maahs A, Schrater P. The hippocampus and exploration: dynamically evolving behavior and neural representations. Front Hum Neurosci. 2012;6:216. doi: 10.3389/fnhum.2012.00216.

- 59.Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- 60.Liljeholm M, Tricomi E, O’Doherty JP, Balleine BW. Neural correlates of instrumental contingency learning: differential effects of action–reward conjunction and disjunction. J Neurosci. 2011;31:2474–2480. doi: 10.1523/JNEUROSCI.3354-10.2011.

- 61.Krajbich I, Rangel A. Multialternative drift-diffusion model predicts the relationship between visual fixations and choice in value-based decisions. Proc Natl Acad Sci USA. 2011;108:13852–13857. doi: 10.1073/pnas.1101328108. Investigating human saccade–fixate–saccade sequences, this paper suggests a decision model and shows that it is consistent with the behavioural selections made by subjects.

- 62.Packard MG, McGaugh JL. Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiol Learn Mem. 1996;65:65–72. doi: 10.1006/nlme.1996.0007.

- 63.Yin HH, Knowlton B, Balleine BW. Lesions of dorsolateral striatum preserve outcome expectancy but disrupt habit formation in instrumental learning. Eur J Neurosci. 2004;19:181–189. doi: 10.1111/j.1460-9568.2004.03095.x.

- 64.Gupta AS, van der Meer MAA, Touretzky DS, Redish AD. Hippocampal replay is not a simple function of experience. Neuron. 2010;65:695–705. doi: 10.1016/j.neuron.2010.01.034.

- 65.Blumenthal A, Steiner A, Seeland KD, Redish AD. Effects of pharmacological manipulations of NMDA-receptors on deliberation in the Multiple-T task. Neurobiol Learn Mem. 2011;95:376–384. doi: 10.1016/j.nlm.2011.01.011.

- 66.Steiner A, Redish AD. The road not taken: neural correlates of decision making in orbitofrontal cortex. Front Decision Neurosci. 2012;6:131. doi: 10.3389/fnins.2012.00131.

- 67.Steiner A, Redish AD. Behavioral and neurophysiological correlates of regret in rat decision-making on a neuroeconomic task. Nat Neurosci. 2014;17:995–1002. doi: 10.1038/nn.3740.

- 68.Papale A, Stott JJ, Powell NJ, Regier PS, Redish AD. Interactions between deliberation and delay-discounting in rats. Cogn Affect Behav Neurosci. 2012;12:513–526. doi: 10.3758/s13415-012-0097-7. On the spatial delay-discounting task, VTE occurs during the titration phase, when the rat is making flexible choices, not during the exploitation phase, when the rat has automated its behaviour, even though the values of the two options are equal in the exploitation phase.

- 69.Atance CM, O’Neill DK. Episodic future thinking. Trends Cogn Sci. 2001;5:533–539. doi: 10.1016/s1364-6613(00)01804-0.

- 70.Seidenbecher T, Laxmi TR, Stork O, Pape HC. Amygdalar and hippocampal theta rhythm synchronization during fear memory retrieval. Science. 2003;301:846–850. doi: 10.1126/science.1085818.

- 71.Schmidt R, et al. Single-trial phase precession in the hippocampus. J Neurosci. 2009;29:13232–13241. doi: 10.1523/JNEUROSCI.2270-09.2009.

- 72.Gupta AS, van der Meer MAA, Touretzky DS, Redish AD. Segmentation of spatial experience by hippocampal θ sequences. Nat Neurosci. 2012;15:1032–1039. doi: 10.1038/nn.3138.

- 73.Hassabis D, Maguire EA. In: Predictions in the Brain: Using our Past to Generate a Future. Bar M, editor. Oxford Univ. Press; 2011. pp. 70–82.

- 74.Howard LR, et al. The hippocampus and entorhinal cortex encode the path and Euclidean distances to goals during navigation. Curr Biol. 2014;24:1331–1340. doi: 10.1016/j.cub.2014.05.001.