Abstract

Exposure to traumatic experiences among youth is a serious public health concern. A trauma-informed public behavioral health system that emphasizes core principles such as understanding trauma, promoting safety, supporting consumer autonomy, sharing power, and ensuring cultural competence is needed to support traumatized youth and the providers who work with them. This article describes a case study of the creation and evaluation of a trauma-informed publicly funded behavioral health system for children and adolescents in the City of Philadelphia (the Philadelphia Alliance for Child Trauma Services; PACTS) using the Exploration, Preparation, Implementation, and Sustainment (EPIS) framework as a guide. We describe our evaluation of this effort with an emphasis on implementation determinants and outcomes. Implementation determinants include inner context factors, specifically therapist knowledge of and attitudes towards evidence-based practices (N = 114). Implementation outcomes include information on rate of PTSD diagnoses in agencies over time, number of youth receiving TF-CBT over time, and penetration (i.e., number of youth receiving TF-CBT divided by the number of youth screening positive on trauma screening). We describe lessons learned from our experiences building a trauma-informed public behavioral health system in the hope that this case study can guide other similar efforts.

Keywords: Trauma-informed system, Evidence-based practices, Implementation science

Exposure to traumatic experiences among children and adolescents is a serious public health concern (Gillespie et al., 2009). The majority of youth are exposed to at least one, and often multiple, traumatic experiences by the age of 17 years (Finkelhor, Turner, Ormrod, & Hamby, 2009). Chronic stress and trauma can compromise optimal brain development and negatively impact physical, emotional, behavioral, social, and cognitive development in youth (DeCandia, Guarino, & Clervil, 2014; Middlebrooks & Audage, 2008). For example, adults who experienced four or more adverse childhood experiences (ACEs) had poorer physical outcomes than adults who did not experience four or more ACEs (Felitti et al., 1998). These findings are consistent with a growing body of research that indicates a strong relationship between cumulative exposure to traumatic events in childhood and a wide array of health and mental health impairments in adulthood (Shonkoff, Boyce, & McEwen, 2009). This effect is likely more profound in urban inner-city environments (The Research and Evaluation Group at the Public Health Management Corporation, 2013).

To address the needs of traumatized youth, strengthening the infrastructure of public mental health systems around trauma-informed principles is critical. Trauma-informed systems are built around core principles, including 1) understanding trauma and its impact; 2) promoting safety; 3) supporting consumer control, choice, and autonomy; 4) sharing power and governance; 5) ensuring cultural competence; 6) integrating care; 7) the belief that healing happens in relationships; and 8) the understanding that recovery is possible (Guarino, Soares, Konnath, Clervil, & Bassuk, 2009). This article describes the creation and evaluation of a trauma-informed publicly funded behavioral health system for children and adolescents in the City of Philadelphia that included the implementation of trauma-focused cognitive-behavioral therapy (TF-CBT), an evidence-based practice (EBP) for traumatized youth. Proven efficacious (Chaffin & Friedrich, 2004), TF-CBT incorporates many key trauma-informed principles, such as psychoeducation to help families understand trauma and its impact; safety planning to prevent re-traumatization; and focusing on recovery through the creation of the trauma narrative and cognitive processing of the traumatic event (Cohen, Mannarino, & Deblinger, 2006).

This case study reflects the shared perspectives of the community-academic partners who contributed to these efforts to build a trauma-informed system. The PACTS team includes policy-makers, leadership from community mental health agencies, and a university-based evaluation team.

The objectives of this manuscript are as follows:

1. Describe the context within which the trauma-informed system and the evaluation were developed.
2. Describe the implementation science framework that guides the evaluation.
3. Present data with regard to implementation determinants and outcomes.
4. Provide recommendations, based on lessons learned, for developing and evaluating a trauma-informed public mental health system that links to other youth-serving systems.

Context

Philadelphia is a large, diverse city of over 1.5 million people. Residents include African Americans (42%), Caucasians (37%), Hispanic/Latinos (13%), Asians (6%), and individuals of other origins (2%; The Pew Charitable Trusts, 2013). Philadelphia’s poverty rate is among the highest in the nation (The Pew Charitable Trusts, 2013); educational levels are well below national averages, and unemployment rates in low-income neighborhoods are over 20%. In 2014, the homicide rate was 16 per 100,000 residents (The Pew Charitable Trusts, 2015). These indicators demonstrate the high trauma risk for Philadelphia’s youth. Approximately 80% of Philadelphia’s youth (approximately 350,000 children and adolescents) are enrolled in Medicaid. Public behavioral health services are managed by Community Behavioral Health (CBH), a nonprofit managed care organization (i.e., ‘carve-out’) established by the City of Philadelphia that functions as a component of the Department of Behavioral Health and Intellectual disAbility Services (DBHIDS). DBHIDS oversees all public behavioral health service delivery in Philadelphia County.

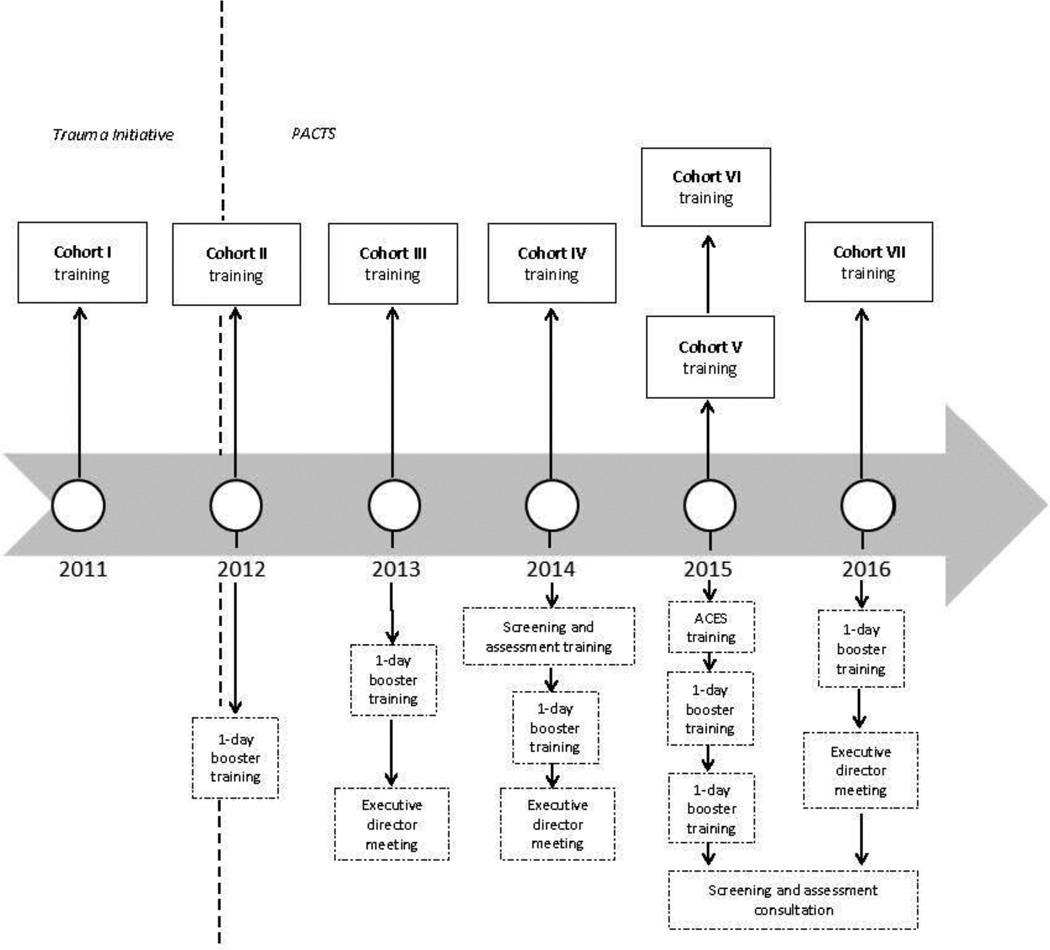

In 2005, DBHIDS initiated a system transformation for people with behavioral health needs (Achara-Abrahams, Evans, & King, 2011). This transformation emphasizes the cultures, resilience and protective factors, and unique recovery processes of individuals and families. As part of this transformation, and given the challenges facing Philadelphia residents (e.g., poverty and community violence), DBHIDS simultaneously prioritized the need to create a trauma-informed system, particularly for children and families. Given that 40% of Philadelphia adults report witnessing violence when growing up, and over 37% report four or more ACEs (The Research and Evaluation Group at the Public Health Management Corporation, 2013), conservative estimates suggest that there are approximately 30,000 youth in Philadelphia in need of evidence-based trauma treatment. The full-scale effort to develop a trauma-informed behavioral health system began in 2011 with the ‘Trauma Initiative’ (see Figure 1 for a timeline of related activities). As part of this initiative, DBHIDS contracted with treatment developers to provide training for behavioral health agencies in both trauma-specific EBPs and trauma-informed models. These approaches include TF-CBT (Cohen, Deblinger, Mannarino, & Steer, 2004; Cohen & Mannarino, 1998) and Prolonged Exposure (PE; Foa et al., 2005), both evidence-based cognitive-behavioral therapies for the treatment of PTSD in children and adults (respectively). Additionally, DBHIDS provided training in the Sanctuary Model (Bloom et al., 2003), an evidence-informed approach to building trauma-informed organizations.

Figure 1.

Timeline of TF-CBT implementation

Note. The trauma initiative began in 2011; the Philadelphia Alliance for Child Trauma Services (PACTS) began in 2012. This is denoted by the vertical dashed line. For each year, we denote the core activities that occurred to visually depict the actions taken to build a trauma-informed public behavioral health system.

In 2012, DBHIDS was awarded a National Child Traumatic Stress Initiative Community Treatment and Service Center grant (Category III) from the Substance Abuse and Mental Health Services Administration (SAMHSA) to form PACTS. The mission of PACTS is to increase the number of children who receive evidence-based trauma treatments in Philadelphia. Consistent with this mission, this manuscript focuses on describing the implementation of TF-CBT (rather than PE and Sanctuary). TF-CBT was chosen based on the strong evidence of efficacy for youth (age four to eighteen years) who have experienced potentially traumatic events (see de Arellano et al., 2014). TF-CBT consistently receives the highest ratings in research reviews on treatment for children with PTSD symptoms (Chadwick Center for Children and Families, 2009; Saunders, Berliner, & Hanson, 2004; Silverman et al., 2008). Additionally, TF-CBT is the most widely disseminated treatment for youth with PTSD symptoms, including youth from diverse cultural backgrounds (Sigel, Benton, Lynch, & Kramer, 2013), and has a proven track record of dissemination and implementation (Cohen & Mannarino, 2008).

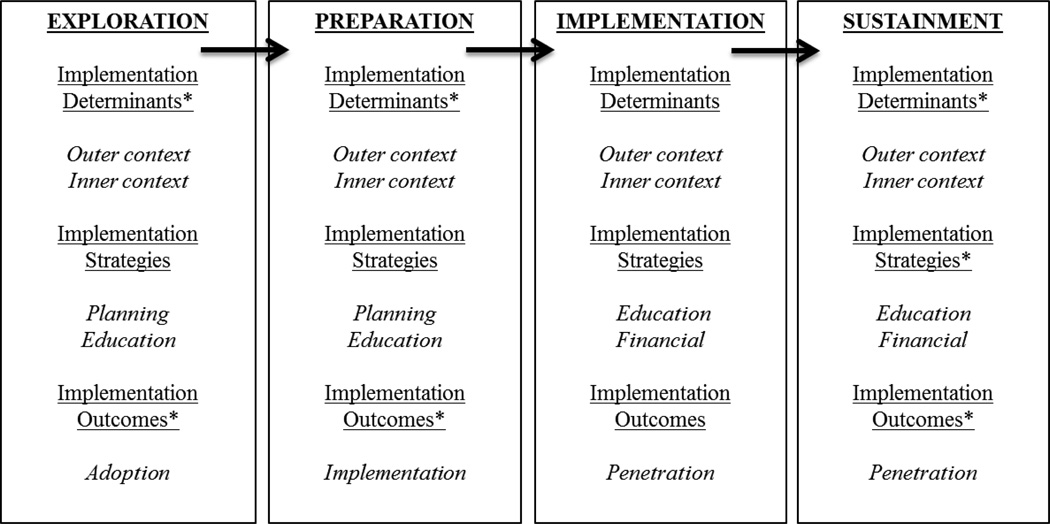

Implementation Science Framework

Both the implementation and evaluation of TF-CBT in the City of Philadelphia have been informed by leading implementation science frameworks (Aarons, Hurlburt, & Horwitz, 2011; Proctor et al., 2009), which specify the importance of the innovation being implemented (i.e., TF-CBT), the fit of the innovation with the setting, and the inner context (i.e., therapist and organizational) and outer context (i.e., system; see Figure 2) factors that influence the implementation process. Specifically, the EPIS framework (see Figure 2) is used to guide the understanding of the stages of implementation and of the contextual factors (or implementation determinants) that shape the implementation process (Aarons et al., 2011). The EPIS framework outlines the four phases of the implementation process (exploration, preparation, implementation, and sustainment) and identifies domains that are important to implementation, including the inner context (e.g., organizational and therapist characteristics) and outer context (e.g., the service environment, inter-organizational environment, and consumer support; Aarons et al., 2011). Within each phase, contextual variables relevant to the inner or outer context are posited. For example, during implementation, inner context variables hypothesized to be important to the implementation process include clinician attitudes toward EBP and their knowledge of EBP, whereas outer context variables include funding, engagement with treatment developers, and leadership. In this evaluation, we primarily focus on the implementation phase, although we provide background on the exploration and preparation phases. Future work will focus on the sustainment phase.

Figure 2.

The Exploration, Preparation, Implementation, and Sustainment (EPIS) framework

Note. Reproduced from Aarons et al. (2011). * = components that we did not measure as part of this evaluation. Our primary focus as part of this evaluation was the implementation phase. Specifically, we measured inner context implementation determinants, including therapist knowledge and attitudes, and implementation outcomes (i.e., penetration). In the manuscript, we report on implementation strategies used in each phase of the implementation process.

In addition to using EPIS to inform the implementation and evaluation, all of the work described within the case study has been strongly guided by a community-academic partnership (Drahota et al., 2016). The primary members of this partnership include policy-makers, leadership from community mental health agencies, and university-based researchers. The input of therapists, administrators, and consumers from community mental health agencies has also been solicited over the course of the efforts described.

Case Study: Creating a Trauma-Informed Behavioral Health System in the City of Philadelphia

To support TF-CBT implementation and consistent with leading implementation science frameworks used to guide the work, DBHIDS and CBH have used multi-faceted, multi-level implementation strategies including planning, educating, and financing (Powell et al., 2012; Proctor et al., 2009). Planning strategies include those that help stakeholders engage in pre-implementation strategies to increase engagement and buy-in during the exploration and preparation phases. Specifically, DBHIDS prioritized relationship building by bringing together multiple stakeholders from different organizations to attend to the barriers in implementing TF-CBT. Education strategies refer to those that are intended to change the knowledge of stakeholders implementing (e.g., training) and have occurred during all phases of the implementation process. DBHIDS has provided didactic training, ongoing clinical consultation, and technical assistance around both screening and assessment for trauma and the implementation of TF-CBT. Financial strategies refer to the use of incentives to increase use of EBPs. In 2012, CBH implemented an enhanced financial rate for therapists to incentivize use of TF-CBT during implementation. These implementation strategies are consistent with the broader approach that DBHIDS has taken to the implementation of EBPs (Powell et al., 2016).

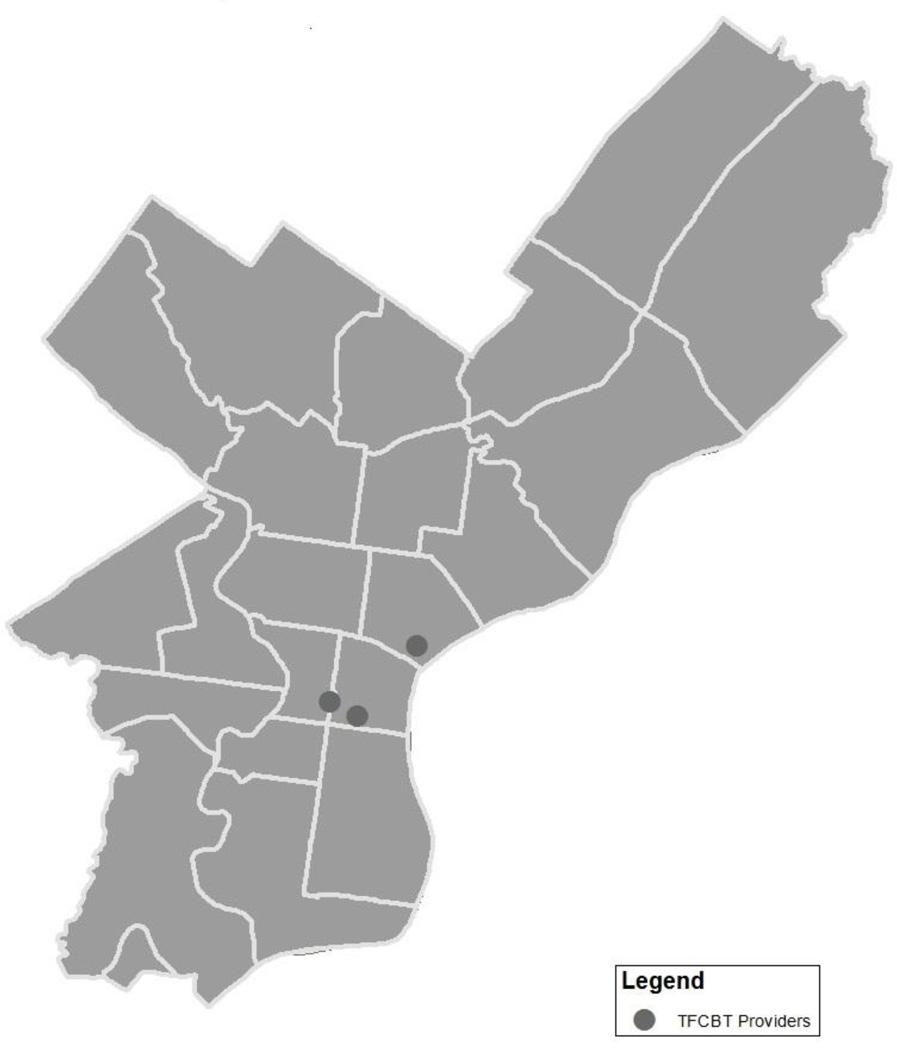

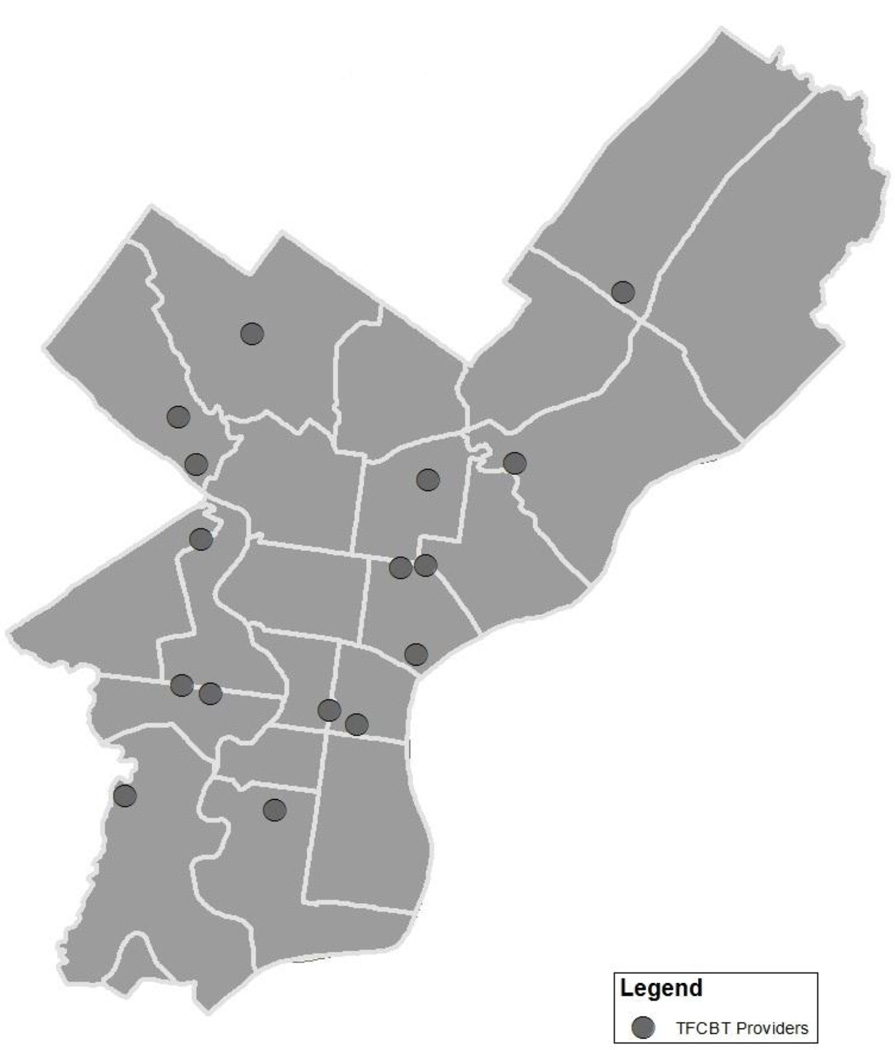

A number of activities make up the efforts to create a trauma-informed behavioral health system. First, during the preparation phase, DBHIDS endeavored to develop a coordinated system of service providers (Grasso, Webb, Cohen, & Berman, 2013) given that historically, the approach to trauma treatment in Philadelphia was not well coordinated. Three agencies in close proximity, in the center of the city, provided specialty trauma treatment, but this was not enough to meet demand across a large metropolitan city (see Figure 3 for a map of trauma providers prior to PACTS). Now, following implementation of TF-CBT through PACTS, 14 behavioral health agencies (which comprise 16 programs) across the Philadelphia metropolitan area (see Figure 4) provide TF-CBT. It is noteworthy that the number of providers providing TF-CBT has greatly increased in both general outpatient and specialty settings (i.e., residential, Hispanic/Latino oriented programs). In addition to the 14 behavioral health agencies, during the preparation and implementation phases, linkages have been made between these agencies and pediatric hospitals, child advocacy centers, crisis response centers, the medical examiner’s office, juvenile justice, the child welfare system, and schools, in order to increase system capacity to screen and refer youth appropriately.

Figure 3.

Map of trauma providers prior to PACTS

Note. Prior to PACTS, there were three programs providing TF-CBT. Each circle in the figure represents one program which has multiple therapists within it providing TF-CBT.

Figure 4.

Map of trauma providers after PACTS (as of 2016)

Note. There are 14 organizations and 16 programs in the City of Philadelphia implementing TF-CBT through PACTS. Each circle in the figure represents one program which has multiple therapists within it providing TF-CBT.

While the referral network of behavioral health providers was being built during the preparation phase, the PACTS team also began to provide training, consultation, and technical assistance to behavioral health and non-behavioral health staff in TF-CBT (see Figure 1 for a visual depiction of described activities; Hanson et al., 2014; Powell et al., 2012). Since 2011, six cohorts (182 therapists and 34 supervisors) have received training in TF-CBT. A seventh cohort will be trained in the Fall of 2016. As of 2016, only 46% of trained therapists (n = 83) and 44% of trained supervisors (n = 15) remain in their agencies, suggesting a high turnover rate. During the implementation phase, training includes two days of didactic training followed by eight months of ongoing consultation, which consists of bi-weekly consultation calls with a TF-CBT certified master trainer. These calls average one hour in duration with up to ten participants in attendance. Each clinician presents twice on active cases during the 16 calls, and attendance is expected (at least 13 out of the 16 calls). In addition to the training and consultation, each agency designates a supervisor who provides weekly internal supervision to therapists. Annual booster trainings on relevant topics (e.g., ACEs) are provided to keep therapists engaged in TF-CBT implementation. There have also been meetings with executive directors in 2013, 2014, and 2016 to keep leadership engaged in TF-CBT implementation in their agencies.
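The retention and attendance figures reported above follow from simple proportions. The sketch below is only an illustrative check of that arithmetic using the counts stated in the text; the helper name `percent` and the variable names are assumptions introduced here and are not part of the PACTS evaluation.

```python
def percent(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


# Counts reported in the text (six cohorts trained since 2011).
trained_therapists = 182
trained_supervisors = 34
remaining_therapists = 83
remaining_supervisors = 15

# Share of trained staff still in their agencies as of 2016.
therapist_retention = percent(remaining_therapists, trained_therapists)
supervisor_retention = percent(remaining_supervisors, trained_supervisors)

# Attendance expectation: at least 13 of the 16 consultation calls.
minimum_attendance = percent(13, 16)

print(f"Therapists remaining: {therapist_retention:.0f}%")
print(f"Supervisors remaining: {supervisor_retention:.0f}%")
print(f"Minimum call attendance: {minimum_attendance:.2f}%")
```

Rounded to whole percentages, these values match the 46% and 44% retention figures given in the text.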

During the implementation phase, it became clear that trauma screening and assessment were necessary to increase access to evidence-based trauma services in the community (Cusack, Frueh, & Brady, 2004; Frueh et al., 2002). Initially, few agencies implementing TF-CBT reported using a structured trauma screening at intake and/or a trauma symptom rating scale for ongoing assessment and progress monitoring. Clinicians and supervisors discussed a desire to utilize these measures; however, concerns were raised about the realities of implementing such tools, consistent with the broader literature (Jensen-Doss & Hawley, 2010, 2011; Osterberg, Jensen-Doss, Cusack, & de Arellano, 2009). During informal conversations with the PACTS team, clinicians reported the immense paperwork burdens that they faced and worries that client rapport could be impacted by adding more paperwork. Furthermore, in these conversations, clinicians emphasized the importance of ensuring that these measures could be used in clinically meaningful ways, and the need for support from their agencies around the use of these measures. Thus, in 2014, PACTS provided screening and assessment training to PACTS-trained clinicians (i.e., cohorts one through four), and subsequently provided consultation on screening and assessment to all organizations implementing TF-CBT as part of PACTS.

Methods

Evaluation of the implementation of TF-CBT is a core mission of PACTS and is led by the university-based evaluation group. To evaluate the effectiveness of the multi-faceted, multilevel implementation strategies used by DBHIDS (and consistent with the implementation science frameworks used to guide the evaluation), the evaluation team has used a hybrid approach (Curran, Bauer, Mittman, Pyne, & Stetler, 2012) that includes both implementation and client outcomes. Variables of interest include implementation determinants (inner context factors such as therapist knowledge and attitudes about EBPs) and implementation outcomes (i.e., penetration; Proctor et al., 2009). Client outcomes such as treatment outcome, symptoms, and functioning are also measured by the evaluation team but are not reported in this manuscript as collection of this data is ongoing.

Implementation Determinants

To understand the impact of the education implementation strategies used by DBHIDS (i.e., training and ongoing consultation) on implementation determinants, therapist knowledge of and attitudes towards EBP were evaluated in four cohorts (cohorts three through six).¹ Knowledge and attitudes were assessed at three time-points: pre-training, post-training (i.e., directly following the completion of the training workshop), and at six-month follow-up. The measurement strategy was modified after cohorts three and four because preliminary results suggested that knowledge and attitudes did not change over time. On the hypothesis that the general EBP knowledge and attitude questionnaires were not specific enough, trauma-specific questionnaires were used instead.

Knowledge

For cohorts three and four, therapist knowledge was measured using the Knowledge of Evidence-Based Services Questionnaire (KEBSQ; Stumpf, Higa-McMillan, & Chorpita, 2009), a 40-item self-report instrument that measures general knowledge of EBP, and demonstrates adequate psychometric properties (Stumpf et al., 2009). For cohorts five and six, the TF-CBT Knowledge Test (Woody, Anderson, D'Souza, Baxter, & Schubauer, 2015) and the TF-CBT Knowledge Questionnaire (Fitzgerald, 2012) were used to measure knowledge. The Knowledge Test provides clinicians the opportunity to apply their knowledge of TF-CBT to eight scenarios, while the Knowledge Questionnaire examines factual knowledge of principles of TF-CBT using 17 multiple-choice questions.

Attitudes

For cohorts three and four, therapist attitudes were assessed using the Evidence-Based Practice Attitude Scale (EBPAS; Aarons, 2004), a 15-item self-report questionnaire that assesses constructs related to the appeal of EBP, requirements to use EBP, general openness to new practices, and divergence between EBP and usual practice (Aarons & Sawitzky, 2006). The psychometric properties of the EBPAS have been well established and national norms are available (Aarons et al., 2010). For cohorts five and six, a modified version of the EBPAS specific to TF-CBT was utilized (Aarons, Fettes, et al., 2014). Specifically, rather than use the generic terminology in the question stems, TF-CBT was referenced (i.e., “I am willing to try TF-CBT even if I have to follow a manual” versus “I am willing to try new types of therapy/interventions even if I have to follow a treatment manual”).

Implementation Outcomes

Implementation outcomes are presented below and include administrative data. Implementation outcomes include: (1) rate of PTSD diagnoses in PACTS agencies over time, (2) number of youth receiving TF-CBT over time, and (3) penetration (i.e., number of youth receiving TF-CBT divided by the number of youth screening positive on trauma screening).

Data Sources

The data presented in this manuscript include both primary (i.e., self-reported from therapists) and administrative data (i.e., claims); primary data were collected following informed consent procedures. All data presented are quantitative; qualitative evaluation is ongoing.

Results

Implementation Determinants

Therapists trained in cohorts three and four (N = 54; 44% of therapists trained) were 87% female. See Table 1 for means and standard deviations for each variable. Means at pre-training were consistent with means provided in a national sample of therapists in community mental health clinics (Aarons et al., 2010). Five repeated-measures analyses of variance were conducted to ascertain change over time (pre-training, post-training, and six-month follow-up) in knowledge and attitudes. Knowledge and the majority of attitudes did not change significantly over time. However, a quadratic relationship with regard to openness to EBPs was observed (F(2, 50) = 6.68, p < .01), where openness to EBPs increased from pre-training (M = 2.98) to post-training (M = 3.23; F(1, 25) = 14.889, p < .01) and decreased from post-training to six-month follow-up (M = 3.05; F(1, 25) = 5.84, p < .05).

Table 1.

Therapist Evaluation Descriptive Statistics (Cohorts 3–4)

| Variables | Pre Training (n = 58) Mean | Pre Training SD | Post Training (n = 57) Mean | Post Training SD | 6-Month Follow-Up (n = 41) Mean | 6-Month Follow-Up SD | Repeated-measures ANOVA df | F | p |
|---|---|---|---|---|---|---|---|---|---|
| EBPAS Requirementsᵃ | 2.86 | 1.05 | 2.80 | 1.09 | 3.11 | 0.95 | 50 | 0.47 | 0.63 |
| EBPAS Appealᵃ | 3.03 | 0.77 | 3.21 | 0.76 | 3.26 | 0.74 | 50 | 1.63 | 0.20 |
| EBPAS Opennessᵃ | 2.98 | 0.63 | 3.23 | 0.63 | 3.05 | 0.58 | 50 | 6.68 | 0.00 |
| EBPAS Divergenceᵃ | 1.06 | 0.47 | 1.17 | 0.63 | 1.04 | 0.56 | 50 | 1.81 | 0.17 |
| KEBSQ Totalᵇ | 96.45 | 10.02 | 95.92 | 9.51 | 94.57 | 4.46 | 56 | 1.57 | 0.22 |

Note.

EBPAS = Evidence-Based Practice Attitude Scale (Aarons, 2004)

KEBSQ = Knowledge of Evidence-Based Services Questionnaire (Stumpf et al., 2009)

ᵃ Measured on a 5-point Likert scale (0-not at all; 1-slight extent; 2-moderate extent; 3-great extent; 4-very great extent). Higher scores are indicative of more positive attitudes, implementation climate, and implementation leadership.

ᵇ Measured on a scale ranging from 0–160, where higher scores are indicative of more knowledge of evidence-based services for youth.

Therapists in cohorts five and six (N = 60; 87% of therapists trained) were primarily female (83%). See Table 2 for means and standard deviations for each variable. Notably, means at pre-training on the TF-CBT specific version of the EBPAS appear higher than the general EBPAS scores measured in cohorts three and four. Six paired samples t-tests were conducted to ascertain change over time (pre- to post-training) in knowledge and attitudes. Once again, knowledge and the majority of attitudes did not change significantly over time. However, openness to TF-CBT changed over time (t(57) = −2.72, p < .01), increasing from pre-training (M = 3.40) to post-training (M = 3.58). Data at six-month follow-up are not available for cohorts five and six.²

Table 2.

Descriptive Statistics and Tests for Significance for Therapist Evaluation (Cohorts 5 and 6)

| Variables | Pre Training (n = 60) Mean | Pre Training SD | Post Training (n = 58) Mean | Post Training SD | Paired Sample t-test t | df | p |
|---|---|---|---|---|---|---|---|
| TF-CBT EBPAS Requirementsᵃ | 3.07 | 1.03 | 3.00 | 1.25 | 0.43 | 57 | 0.669 |
| TF-CBT EBPAS Appealᵃ | 3.35 | 0.63 | 3.50 | 0.58 | −1.85 | 57 | 0.069 |
| TF-CBT EBPAS Opennessᵃ | 3.40 | 0.55 | 3.58 | 0.56 | −2.72 | 57 | 0.009 |
| TF-CBT EBPAS Divergenceᵃ | 0.98 | 0.59 | 1.02 | 0.73 | −0.59 | 57 | 0.557 |
| TF-CBT EBPAS Totalᵃ | 3.21 | 0.50 | 3.26 | 0.55 | −0.85 | 57 | 0.399 |
| TF-CBT Knowledge Questionnaire - Total Correctᵇ | 9.50 | 2.95 | 9.88 | 1.90 | −1.72 | 57 | 0.175 |
| TF-CBT Knowledge Test - Total Correctᶜ | 5.36 | 1.40 | 5.74 | 1.49 | −1.38 | 57 | 0.092 |

Note.

TF-CBT EBPAS = Trauma-focused cognitive-behavioral therapy Evidence-Based Practice Attitude Scale (Aarons, Fettes, et al., 2014)

TF-CBT Knowledge Questionnaire (Fitzgerald, 2012)

TF-CBT Knowledge Test (Woody et al., 2015)

ᵃ Measured on a 5-point Likert scale (0-not at all; 1-slight extent; 2-moderate extent; 3-great extent; 4-very great extent). Higher scores are indicative of more positive attitudes, implementation climate, and implementation leadership.

ᵇ Total correct out of 17 (1-correct; 0-incorrect). Higher scores are associated with higher levels of knowledge.

ᶜ Total correct out of 8 (1-correct; 0-incorrect). Higher scores are associated with higher levels of knowledge.

Implementation Outcomes

Rate of PTSD diagnoses in PACTS agencies over time

Rate of PTSD diagnosis in agencies participating in TF-CBT implementation was investigated using Medicaid claims data. Prior to the launch of the trauma initiative in 2011, approximately 4% of youth receiving treatment in PACTS agencies had a diagnosis of PTSD. In 2014, approximately 31% of youth in agencies implementing TF-CBT had a diagnosis of PTSD (Community Behavioral Health, 2015), suggesting a substantial increase in the number of youth diagnosed with PTSD, which may be due to increased availability of and/or access to trauma services. This finding provides initial support for efforts to increase trauma screening. This rate of PTSD diagnosis falls within the estimates provided for rates of PTSD in urban, inner-city youth (i.e., 14–67%; Horowitz, Weine, & Jekel, 1995; Lipschitz, Rasmusson, Anyan, Cromwell, & Southwick, 2000). These diagnoses are based on claims data rather than research instruments and may represent an over- or underestimate of the actual PTSD diagnosis prevalence.

Number of youth receiving TF-CBT from behavioral health providers

The number of youth receiving TF-CBT from PACTS behavioral health providers was measured in two ways. First, administrative claims data were used to extract the number of youth who received TF-CBT as indicated by the TF-CBT modifier implemented in 2012 to allow agencies to bill for the enhanced rate. According to these data, 316 unique clients were treated with TF-CBT from 2012–2015. Interestingly, not all youth whose agencies billed for the enhanced TF-CBT rate had a PTSD diagnosis (see Table 3). In 2012, 50% (n = 2) of youth treated with TF-CBT had a PTSD diagnosis (N = 4). In 2013, 30% (n = 38) of youth treated with TF-CBT had a PTSD diagnosis (N = 125). In 2014, 34% (n = 43) of youth treated with TF-CBT had a PTSD diagnosis (N = 125). In 2015, 52% (n = 71) of youth treated with TF-CBT had a PTSD diagnosis (N = 137).

Table 3.

Diagnoses of youth receiving TF-CBT as reported in Medicaid claims data and billing

| Diagnosis | Number of youth with diagnosis | Percentage |
|---|---|---|
| PTSD | 146 | 35% |
| Acute reaction to stress | 1 | 0.2% |
| Adjustment Disorder | 108 | 26% |
| Anxiety Disorders | 10 | 2% |
| Disruptive Behavior Disorders | 73 | 18% |
| Mood Disorders | 26 | 6% |
| Other Disorders | 14 | 3% |
| Substance Use Disorder | 31 | 7% |
| Autism Spectrum Disorder | 5 | 1% |
| Total diagnosis records | 414 | -- |

Note: The number of unique clients is 316. Claims data may have included more than one disorder per youth, thus the total number of diagnoses is greater than this number; however if youth had a PTSD diagnosis, then they were only counted once in the PTSD row. However, if they did not have a PTSD diagnosis, they could be counted more than once in all of the other diagnosis rows.
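The percentages in Table 3 appear to be computed against the total number of diagnosis records (414) rather than the number of unique clients (316). The following sketch recomputes them from the counts in the table under that assumption; the dictionary and variable names are introduced here for illustration only.

```python
# Diagnosis counts copied from Table 3 (Medicaid claims, 2012-2015).
diagnosis_counts = {
    "PTSD": 146,
    "Acute reaction to stress": 1,
    "Adjustment Disorder": 108,
    "Anxiety Disorders": 10,
    "Disruptive Behavior Disorders": 73,
    "Mood Disorders": 26,
    "Other Disorders": 14,
    "Substance Use Disorder": 31,
    "Autism Spectrum Disorder": 5,
}

# Assumption: the denominator is the total number of diagnosis records,
# not the 316 unique clients, because one youth can carry several diagnoses.
total_records = sum(diagnosis_counts.values())

percentages = {
    name: 100.0 * count / total_records
    for name, count in diagnosis_counts.items()
}

for name, pct in percentages.items():
    print(f"{name}: {pct:.1f}%")
print(f"Total diagnosis records: {total_records}")
```

With this denominator, the recomputed shares round to the percentages shown in the table (for example, PTSD at about 35%).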

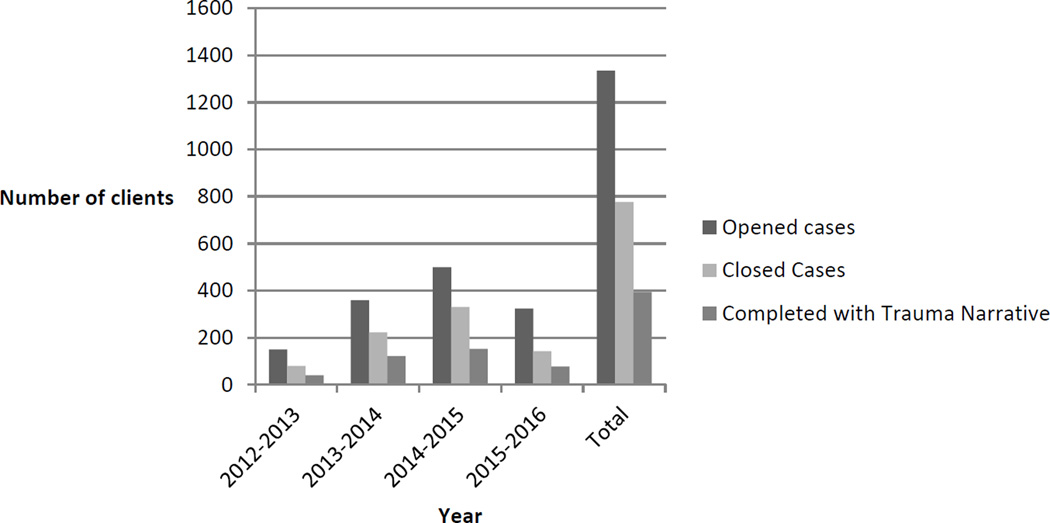

The second way to measure the number of youth receiving TF-CBT was through reports made by agency supervisors to the PACTS project manager. As evidenced by Figure 5, the number of clients starting and completing TF-CBT has grown each year, from approximately 80 youth completing treatment in 2012 to 330 youth in 2015 (the last year with complete data for the full year). Furthermore, about half of clients who have commenced TF-CBT have successfully completed all TF-CBT modules including the trauma narrative, a critical ingredient of TF-CBT (Deblinger, Mannarino, Cohen, Runyon, & Steer, 2011). The percentage of youth receiving all TF-CBT modules has been constant each year. Since 2012 when PACTS was launched, approximately 1335 children and adolescents have begun TF-CBT and about 776 youth have completed TF-CBT in the City of Philadelphia.

Figure 5.

Number of clients who started and completed TF-CBT from participating agencies implementing TF-CBT from 2012–2016

Note. Data from 2015–2016 only includes information from Quarters 1 and 2 because data is currently being collected. Opened cases refer to new cases that began TF-CBT in that year. Closed cases refer to cases that ended TF-CBT in that year. Completed with trauma narrative refers to those cases that were closed that completed TF-CBT and included the trauma narrative. All data presented here reflects unique cases.

The number of youth treated with TF-CBT as reported by supervisors is higher than the number identified through claims, a discrepancy that can be explained in several ways. First, anecdotally, organizational leadership expressed reluctance about billing at the enhanced rate unless therapists were following TF-CBT with complete fidelity. Second, and also anecdotally, a number of the billing departments at the participating agencies were not aware in the early years that they could bill at the enhanced rate. Third, participating agencies that were residential treatment facilities (two of the agencies) were not eligible for the enhanced rate. Fourth, three of the participating agencies had already negotiated an enhanced rate with CBH and did not use this modifier. Finally, therapists can only bill using this rate once they have completed all training requirements, which takes approximately ten months.

Penetration

Penetration (i.e., number of youth receiving TF-CBT divided by the number of youth screening positive on trauma screening) has not been collected systematically across the course of the project. However, the project manager recently collected data during one month (March 2016) to gain a better understanding of the number of youth screened for potentially traumatic events, the number of youth screening positive for experiencing a traumatic event, and the number of youth starting TF-CBT within participating agencies as reported by supervisors. During this one-month period, 232 youth were screened across the 14 agencies. Eighty-seven percent of these youth (n = 202) responded yes to at least one question on the Trauma History Questionnaire (THQ; Green, 1996), which is consistent with previous literature (Luthra et al., 2009). Twenty-two percent (n = 44) of eligible youth (i.e., those who screened positive on the THQ) began TF-CBT during that month. This penetration rate suggests that about one-fifth of youth screening positive for a potentially traumatic event began TF-CBT in one month in PACTS agencies. Information about trauma symptoms at screening is not available, so it is difficult to interpret this penetration rate, particularly given that not all youth who endorse a traumatic event need TF-CBT. However, given that about 15% of youth who have experienced trauma go on to develop PTSD (Giaconia et al., 1995), a penetration rate of 20% would likely reach the majority of the youth within this sample who have clinically significant PTSD symptoms.
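The penetration calculation described above can be written out as a short worked example. This is a sketch using only the March 2016 counts reported in the text; the variable names are assumptions, and the final comparison simply applies the cited 15% PTSD estimate to this sample as a rough illustration, not as a result of the evaluation.

```python
# Counts reported for the one-month snapshot (March 2016).
youth_screened = 232
youth_screened_positive = 202   # endorsed at least one THQ item
youth_started_tfcbt = 44

# Share of screened youth who endorsed at least one traumatic event.
positive_screen_rate = 100.0 * youth_screened_positive / youth_screened

# Penetration: youth starting TF-CBT divided by youth screening positive.
penetration_rate = 100.0 * youth_started_tfcbt / youth_screened_positive

# Illustrative assumption: apply the cited estimate that about 15% of
# trauma-exposed youth develop PTSD to the youth who screened positive.
assumed_ptsd_share = 0.15
expected_youth_with_ptsd = assumed_ptsd_share * youth_screened_positive

print(f"Positive screen rate: {positive_screen_rate:.0f}%")
print(f"Penetration rate: {penetration_rate:.0f}%")
print(f"Expected youth with PTSD (assumed 15%): {expected_youth_with_ptsd:.1f}")
```

Under that assumption, the number of youth who began TF-CBT exceeds the number expected to have PTSD, which is the reasoning behind the interpretation that the observed penetration rate could reach most youth with clinically significant symptoms. Because symptom data at screening are not available, this comparison cannot confirm that the youth who started treatment were the ones with PTSD.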

Discussion and Lessons Learned

This case study offers information about efforts to build a trauma-informed public behavioral health system for children and families. The results presented provide insight into the creation and initial evaluation of these efforts, and can hopefully serve as a guide to other systems undertaking similar efforts. First, a key aspect of developing a trauma-informed public behavioral health system was to establish a coordinated network of child providers located across the City of Philadelphia. In 2011, there were three programs providing trauma-specific services in one area of the city. As of 2016, there are fourteen providers, representing sixteen programs, providing evidence-based trauma treatments in all quadrants of the city. Second, linkages between locations where traumatized youth are identified and behavioral health providers have been strengthened, consistent with recommendations for building trauma-informed health systems (Grasso et al., 2013; Ko et al., 2008). Third, and perhaps most importantly, there has been an increase in the number of youth identified and treated with TF-CBT. Specifically, the number of youth diagnosed with PTSD in agencies implementing TF-CBT has increased, suggesting an increased awareness of trauma and ability to screen and assess for it. Furthermore, these agencies have reported a more than two-fold increase in the number of youth treated with TF-CBT since 2012, including youth receiving the entire treatment package, which is notable in an urban community setting. The ability to reach so many youth is partly attributable to the large footprint with regard to training behavioral health providers. Since 2011, 216 therapists and supervisors in community mental health settings have received training and consultation intended to increase implementation of TF-CBT. A number of lessons have been learned through the process of creating a trauma-informed behavioral health system, delineated below. These lessons are based upon the collective perspectives of the PACTS core team and the quantitative data presented above.

1. Agencies often need initial support in establishing trauma-based screening mechanisms

During the implementation phase, many providers raised issues related to the screening and assessment of traumatized youth, consistent with the literature (Jensen-Doss & Hawley, 2010, 2011). Initially, providers reported difficulty finding clients who were candidates for TF-CBT because many agencies did not have established trauma-based screening mechanisms. To ameliorate this issue, each agency received training and tailored consultation (Nadeem, Gleacher, & Beidas, 2013) to assist with implementing screening and progress monitoring around trauma symptoms. This experience suggests that spending more time during the exploration and preparation phases of implementation on the proper identification of assessment tools, in partnership with those who will be implementing the EBP, is critical.

2. Engaging with leaders in agencies implementing TF-CBT is important

Executive leadership buy-in and involvement are critical to the implementation process (Aarons, Ehrhart, Farahnak, & Sklar, 2014) and were emphasized through annual meetings between the PACTS team and the executive directors in PACTS agencies. Equally important is having someone at each agency who can play the role of ‘boundary spanner,’ that is, an individual who serves as the “point-person” between the implementation team and the front-line implementers. This role was typically played by the clinical supervisor at each organization. Providing small incentives (e.g., a small stipend) and acknowledgement to those individuals proved critical to reinforcing their efforts. These individuals were critical in communicating the PACTS message to front-line providers and in ensuring that incentives (e.g., enhanced rate) for implementation were communicated to administrative staff.

3. Staff turnover is a critical issue that must be planned for prior to training

Although a large number of clinicians have been trained in TF-CBT, there has been a high percentage of turnover in both therapists (46%) and supervisors (44%) over the past four years. This rate of turnover is roughly comparable to estimates in the literature (Mor Barak, Nissly, & Levin, 2001), and the annual average rate of turnover (25%) in Philadelphia community mental health clinics (Beidas, Marcus, Wolk, et al., 2015). Turnover can compromise continuity of care, destabilize agencies, diminish quality of services (Glisson & James, 2002; Knudsen, Johnson, & Roman, 2003; Mor Barak et al., 2001), and impact implementation of EBPs (Ganju, 2003; Isett et al., 2007; Woltmann et al., 2008), due to the substantial resources invested in training and supporting mental health workers.

The loss of almost half of the trained therapists is a threat to the sustainability of agencies providing TF-CBT (Stirman et al., 2012), although evidence from the Philadelphia system suggests that about 50% of therapists who turn over stay in the public system, suggesting some return on investment (Beidas, Marcus, Wolk, et al., 2015). Even more concerning is the loss of almost half of the supervisors, given that much of the literature assumes that turnover is not as insidious in supervisors and therefore emphasizes train-the-trainer models (Nakamura et al., 2014). There are likely a number of reasons for turnover in PACTS agencies, some generalizable to community mental health and others perhaps specific to trauma work. General reasons likely include professional opportunities (e.g., opportunities for promotion), personal reasons (e.g., familial responsibilities), organizational factors, and financial reasons (Beidas, Marcus, Wolk, et al., 2015). Specific reasons may include differing perspectives on how to treat trauma and vicarious traumatization. To ensure the viability of TF-CBT into the future, reducing turnover and identifying ways to support staff engaging in trauma-specific EBPs warrant future study. Strategies to reduce turnover include interventions to reduce burnout (Beidas, Marcus, Wolk, et al., 2015) and/or to improve organizational culture and climate (Glisson et al., 2008).

4. Training and consultation in evidence-based trauma treatments may be necessary but not sufficient to improve therapist knowledge and openness to them

In the therapist evaluation, change in implementation determinants hypothesized to be important to the implementation process was not observed, even after changing measurement methods, with one exception (i.e., openness to EBP). The first set of measures used in the therapist evaluation assessed knowledge of and attitudes toward EBP more broadly, rather than focusing specifically on TF-CBT. Given emerging evidence that therapists respond differently to the same questionnaire when it references EBP generally versus a specific EBP (Reding, Chorpita, Lau, & Innes-Gomberg, 2014), trauma-specific measures of attitudes (e.g., EBPAS-Trauma) and knowledge (see Woody et al., 2015) were implemented. However, findings remained consistent even with the change in measurement strategy.

Null findings with regard to therapist knowledge change are inconsistent with the larger literature (Beidas & Kendall, 2010). It is not surprising that knowledge about general EBP did not change after therapists attended a TF-CBT workshop, particularly given that the measure used (KEBSQ) does not include questions related to trauma. However, the finding that TF-CBT specific knowledge also did not change is puzzling. The data suggest that there may have been a ceiling effect on the Knowledge Test: on average, prior to training, clinicians were able to answer more than 60% of the questions correctly. Alternatively, clinicians may have come to the training with a higher than average knowledge of TF-CBT (they were required to register for the online training; https://tfcbt.musc.edu/). A ceiling effect was not observed on the Knowledge Questionnaire. Potential explanations for the lack of findings on that measure are that it was too difficult or did not represent workshop content.

The only finding in the therapist evaluation was that openness to EBP increased following training but decreased following consultation, which corroborates previous literature (Edmunds et al., 2014). Therapists who are initially open to new practices may become less open after they become aware of the significant investment needed to become competent in the EBP, making them less open to future new practices. It also may be that clinicians encountered challenges implementing TF-CBT after training and during consultation, which may have resulted in less openness (Edmunds et al., 2014). Unfortunately, it is unknown whether this pattern was replicated in the TF-CBT specific EBPAS, given that follow-up data for cohorts five and six are not available. It is of note that initial attitudinal scores on the general EBPAS appeared lower than initial attitudinal scores on the TF-CBT specific EBPAS. This provides support for previous findings that EBP attitudes may differ by practice (Reding et al., 2014), although cohort effects and other variables may have impacted these scores. Future work specifying more precise measurement strategies in implementation science is needed.

An alternative explanation for the findings observed in the therapist evaluation (i.e., lack of knowledge change; decrease in openness over time) suggests that implementation of TF-CBT did not meet the needs of therapists in the community. There are a number of explanations for why this may be the case. One explanation that is particularly germane based upon the PACTS team’s anecdotal experiences has to do with why clients come to treatment. Most youth present to community mental health clinics due to disruptive behavior problems, with many having underlying trauma histories. Therapists may need more modular approaches (Weisz et al., 2012) to allow them to treat the needs of the youth presenting to treatment; meeting the client where they indicate they need help (i.e., disruptive behavior) and then including elements of evidence-based trauma treatment. If therapists and organizations had been more involved during the exploration phase of treatment selection, a different EBP for trauma may have been selected. Additionally, therapist characteristics, such as the length of time in their agency and number of years practicing may have impacted their attitudes. This information was not collected as part of the evaluation but has been found to be predictive of knowledge and attitudes in previous work (Beidas, Marcus, Aarons, et al., 2015).

5. Youth who present in community mental health settings with trauma are heterogeneous, which has implications for how to assess and treat trauma

Youth receiving TF-CBT had heterogeneous diagnoses as reported in claims data. Approximately forty-two percent of youth had a PTSD diagnosis, while the remainder of youth had other diagnoses. The most common other diagnoses that were associated with claims data included adjustment disorder and disruptive behavior disorders. The variability in diagnoses has implications for decisions about which EBP to implement. Inclusion criteria have varied across TF-CBT treatment studies (Cohen, Mannarino, & Knudsen, 2005; Cohen, Mannarino, Perel, & Staron, 2007). Little is known about how to proceed when youth present with a history of trauma but do not meet full criteria for PTSD in community mental health settings (i.e., whether they still would benefit from TF-CBT, or if another EBP or prevention model would be more appropriate).

One important consideration that may explain the heterogeneous presentation of the client population is that external forces (e.g., outer context factors such as fiscal and training pressures) may have resulted in the identification of youth for TF-CBT even if they did not meet full criteria for PTSD. Specifically, community mental health agencies were receiving an enhanced rate for each TF-CBT session. It is unlikely that this explanation best describes the scenario, because agencies tended not to bill at the enhanced rate out of concern that they could use it only when they implemented TF-CBT with 100% fidelity. However, it is possible that participation in system efforts to implement EBP may have unintended consequences for the appropriate identification and subsequent treatment of youth in community settings. For example, therapists may treat all youth with TF-CBT even if that is not appropriate, given the desire to use what they have learned. This points to the importance of both including evidence-based assessment in all EBP efforts and measuring the potential unintended consequences of outer context factors on EBP implementation during all phases of the implementation process.

6. When implementing EBP in the community, taking a community-academic partnership approach is critical

The community-academic partnership supporting this work (Drahota et al., 2016) was the foundation of everything that was accomplished. Community partners, including policy-makers at DBHIDS, leadership at community mental health clinics, youth and families impacted by trauma, and the university-based evaluation team came together in various ways through PACTS in order to achieve the goal of improving access to TF-CBT in the City of Philadelphia. For example, all client-level outcome measures used in the client evaluation (not reported in this manuscript) were vetted by a youth community advisory board, which specifically selected the measures used in the evaluation. This allowed for the use of outcome measures that were both clinically relevant and acceptable to youth and families.

7. More work is needed to guide decisions on how to handle the agencies that struggle most with EBP implementation and sustainment

A major challenge has been balancing sustained implementation of EBPs against dismissing agencies that, from the system perspective, are not meeting the criteria to be considered TF-CBT providers. Since 2011, three agencies that received training and consultation in TF-CBT are no longer formally involved in the system initiative to implement TF-CBT (i.e., no longer sustaining the implementation of TF-CBT). Two agencies were lost due to the closing of their behavioral health services. One agency was asked to leave due to inability to meet requirements, including identifying and treating youth with TF-CBT. Balancing decision-making around when to keep working with struggling agencies versus dismissing them from efforts to implement EBP is an area that warrants future research. Organizational readiness (Scaccia et al., 2015), or how ready an organization is to implement an innovation, can provide important insights into this process.

Moving forward with a trauma-informed behavioral health system

The public behavioral health system plays a primary role in supporting the behavioral health needs of children and youth in urban communities. Previous studies have highlighted the high percentage of children and youth experiencing traumatic events (Ford et al., 1999). Although the evidence base of robust treatment practices to address trauma has grown, “current policy and practice responses do not reflect the urgency, depth or quality required by the high level of need, low impact of many current efforts, and limited community-based service capacity” (National Center for Children in Poverty, 2007, p. 3).

Maintaining the use of EBP after the initial implementation period ends is a critical challenge in the public health sector, and less is known in the implementation science literature about how to enhance sustainability than about initial implementation (Stirman et al., 2012). A benefit of Philadelphia’s payer (i.e., CBH) being integrated within DBHIDS is the ability to sustain initiatives beyond grant cycles. This has begun with the city's provision of an enhanced rate for the use of TF-CBT. However, while DBHIDS and CBH will continue to support the initiative, the significant grant funding currently available will not exist once the grant ends. Here again, it is useful to draw on the implementation science literature, which has highlighted nine core domains that affect capacity for program sustainability: political support, funding stability, partnerships, organizational capacity, program evaluation, program adaptation, communications, public health impacts, and strategic planning (Schell et al., 2013). Rather than viewing sustainability as a static end-point, newer models of sustainability emphasize continued learning and problem-solving among stakeholders, as well as the ongoing adaptation of interventions, with a primary focus on fit between interventions and context (Chambers, Glasgow, & Stange, 2013). Taken together, this suggests that in addition to outside support from DBHIDS and CBH, agencies implementing TF-CBT will need to work together to support each other as they move into the sustainment phase of implementation.

Several steps have recently been taken to move responsibility from the grant personnel to agency leaders. First, a monthly web-based supervisors meeting has been implemented to focus on the supervision of EBP and to allow stakeholders to share challenges to successful implementation (e.g., turnover, lack of agency support, serving children and families who experience ongoing chronic stress) and to problem-solve collaboratively about how best to address these barriers. Second, meetings have been held with agency leadership to discuss trauma work after the grant and to understand how to consolidate the gains made (e.g., maintaining the same number of agencies but increasing the depth of clinicians receiving training, and holding new meetings with child-serving systems regarding cross-system support). It is likely that not all current agencies will continue to offer TF-CBT without ongoing support; however, many of the agencies have established strong relationships and will continue to collaborate in the future. Other future directions include expanding the EBPs offered to providers, including those for disruptive behavior disorders, a primary presenting concern for many traumatized youth; partnering with settings where youth spend their time, such as schools; and deliberately targeting the most vulnerable youth in the system (young children ages 2–6 years, LGBTQ youth, commercially sexually exploited children, and intentionally injured youth).

Conclusion

Building a trauma-informed behavioral health system in the City of Philadelphia has been a hugely informative learning experience. Preliminary findings and lessons learned presented here can help inform other large-scale efforts to create trauma-informed behavioral health systems (Grasso et al., 2013; Ko et al., 2008).

Highlights.

Many systems are moving towards incorporating a trauma-informed approach.

We describe a case study of building a trauma-informed public behavioral health system.

We present data on implementation determinants and outcomes.

We present lessons learned based on this experience.

Acknowledgments

We are especially grateful for the support and partnership that the Department of Behavioral Health and Intellectual disAbility Services provided for this project and for the Evidence Based Practice and Innovation (EPIC) group and Ronnie Rubin, PhD. We thank Linda Trinh, John Antonovich, and Kelly Zentgraf for their assistance in manuscript preparation. We are also very grateful to the individuals who have been a part of the Philadelphia Alliance for Child Trauma Services (PACTS), including the therapists, administrators, and families who have been involved. Funding for this project was provided by a grant from the Substance Abuse and Mental Health Services Administration (SM61087) and by NIMH K23 MH 099179 (Beidas).

Footnotes

Note that outcomes for therapists were not evaluated in the first two cohorts who were trained.

Six-month follow-up data were collected for each cohort at a booster training session (specific to that cohort) held approximately six months following training (see Figure 1). This occurred for cohorts one through four. However, for cohort five, the booster session was open to all cohorts, and only 16 participants from cohort five attended that booster session. The booster session for cohort six has not yet been conducted, as they are still in active training and consultation. Thus, follow-up analyses were not conducted for cohorts five and six because of the small N.

References

- Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: the Evidence-Based Practice Attitude Scale (EBPAS) Mental Health Services Research. 2004;6:61–74. doi: 10.1023/b:mhsr.0000024351.12294.65.

- Aarons GA, Ehrhart MG, Farahnak LR, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annual Review of Public Health. 2014;35:255–274. doi: 10.1146/annurev-publhealth-032013-182447.

- Aarons GA, Fettes D, Willging C, Gunderson L, Garrison L, Phillips J. Work life and attitudes toward a specific evidence-based practice. NIMH Conference on Mental Health Services Research, Publishing; Bethesda, MD. 2014.

- Aarons GA, Glisson C, Hoagwood K, Kelleher K, Landsverk J, Cafri G. Psychometric properties and U.S. National norms of the Evidence-Based Practice Attitude Scale (EBPAS) Psychol Assess. 2010;22:356–365. doi: 10.1037/a0019188.

- Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health. 2011;38:4–23. doi: 10.1007/s10488-010-0327-7.

- Aarons GA, Sawitzky AC. Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychological Services. 2006;3:61–72. doi: 10.1037/1541-1559.3.1.61.

- Achara-Abrahams I, Evans AC, King JK. Recovery-focused behavioral health system transformation: a framework for change and lessons learned from Philadelphia. In: Kelly JF, White WL, editors. Addiction recovery management: theory, research, and practice. New York, NY: Humana Press; 2011. pp. 187–208.

- Beidas RS, Kendall PC. Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clinical Psychology. 2010;17:1–30. doi: 10.1111/j.1468-2850.2009.01187.x.

- Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, et al. Predictors of community therapists' use of therapy techniques in a large public mental health system. JAMA Pediatrics. 2015;169:374–382. doi: 10.1001/jamapediatrics.2014.3736. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beidas RS, Marcus S, Wolk CB, Powell B, Aarons GA, Evans AC, et al. A prospective examination of clinician and supervisor turnover within the context of implementation of evidence-based practices in a publicly-funded mental health system. Administration and Policy in Mental Health and Mental Health Services Research. 2015 doi: 10.1007/s10488-015-0673-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bloom SL, Bennington-Davis M, Farragher B, McCorkle D, Nice-Martini K, Wellbank K. Multiple opportunities for creating sanctuary. Psychiatric Quarterly. 2003;74:173–190. doi: 10.1023/a:1021359828022. [DOI] [PubMed] [Google Scholar]

- Chadwick Center for Children and Families. Assessment-based treatment for traumatized children: a Trauma Assessment Pathway (TAP) San Diego, CA: Author; 2009. [Google Scholar]

- Chaffin M, Friedrich B. Evidence-based treatments in child abuse and neglect. Children and Youth Services Review. 2004;26:1097–1113. [Google Scholar]

- Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implementation Science. 2013;8:117. doi: 10.1186/1748-5908-8-117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen JA, Deblinger E, Mannarino AP, Steer RA. A multisite, randomized controlled trial for children with sexual abuse-related PTSD symptoms. Journal of the American Academy of Child and Adolescent Psychiatry. 2004;43:393–402. doi: 10.1097/00004583-200404000-00005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen JA, Mannarino AP. Interventions for sexually abused children: initial treatment outcome findings. Child Maltreatment. 1998;3:17–26. [Google Scholar]

- Cohen JA, Mannarino AP. Disseminating and implementing trauma-focused CBT in community settings. Trauma Violence & Abuse. 2008;9:214–226. doi: 10.1177/1524838008324336. [DOI] [PubMed] [Google Scholar]

- Cohen JA, Mannarino AP, Deblinger E. Treating trauma and traumatic grief in children and adolescents. New York, NY: Guilford Press; 2006. [Google Scholar]

- Cohen JA, Mannarino AP, Knudsen K. Treating sexually abused children: 1 year follow-up of a randomized controlled trial. Child Abuse & Neglect. 2005;29:135–145. doi: 10.1016/j.chiabu.2004.12.005. [DOI] [PubMed] [Google Scholar]

- Cohen JA, Mannarino AP, Perel JM, Staron V. A pilot randomized controlled trial of combined trauma-focused CBT and sertraline for childhood PTSD symptoms. Journal of the American Academy of Child and Adolescent Psychiatry. 2007;46:811–819. doi: 10.1097/chi.0b013e3180547105. [DOI] [PubMed] [Google Scholar]

- Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50:217–226. doi: 10.1097/MLR.0b013e3182408812. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cusack KJ, Frueh BC, Brady KT. Trauma history screening in a community mental health center. Psychiatric Services. 2004;55:157–162. doi: 10.1176/appi.ps.55.2.157. [DOI] [PubMed] [Google Scholar]

- de Arellano MA, Lyman DR, Jobe-Shields L, George P, Dougherty RH, Daniels AS, et al. Trauma-focused cognitive-behavioral therapy for children and adolescents: assessing the evidence. Psychiatric Services. 2014;65:591–602. doi: 10.1176/appi.ps.201300255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Deblinger E, Mannarino AP, Cohen JA, Runyon MK, Steer RA. Trauma-focused cognitive behavioral therapy for children: impact of the trauma narrative and treatment length. Depression and Anxiety. 2011;28:67–75. doi: 10.1002/da.20744. [DOI] [PMC free article] [PubMed] [Google Scholar]

- DeCandia CJ, Guarino K, Clervil R. Trauma-informed care and trauma-specific services: a comprehensive approach to trauma intervention. Washington, DC: American Institute for Research; 2014. [Google Scholar]

- Drahota A, Meza RD, Brikho B, Naaf M, Estabillo JA, Gomez ED, et al. Community-academic partnerships: a systematic review of the state of the literature and recommendations for future research. The Milbank Quarterly. 2016;94:163–214. doi: 10.1111/1468-0009.12184. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Edmunds JM, Read KL, Ringle VA, Brodman DM, Kendall PC, Beidas RS. Sustaining clinician penetration, attitudes and knowledge in cognitive-behavioral therapy for youth anxiety. Implementation Science. 2014;9:89. doi: 10.1186/s13012-014-0089-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Felitti VJ, Anda RF, Nordenberg D, Williamson DF, Spitz AM, Edwards V, et al. Relationship of childhood abuse and household dysfunction to many of the leading causes of death in adults. The Adverse Childhood Experiences (ACE) Study. American Journal of Preventive Medicine. 1998;14:245–258. doi: 10.1016/s0749-3797(98)00017-8. [DOI] [PubMed] [Google Scholar]

- Finkelhor D, Turner H, Ormrod R, Hamby SL. Violence, abuse, and crime exposure in a national sample of children and youth. Pediatrics. 2009;124:1411–1423. doi: 10.1542/peds.2009-0467. [DOI] [PubMed] [Google Scholar]

- Fitzgerald MM. TF-CBT Knowledge Questionnaire. Boulder, CO: Institute of Behavioral Science; 2012. [Google Scholar]

- Foa EB, Hembree EA, Cahill SP, Rauch SA, Riggs DS, Feeny NC, et al. Randomized trial of prolonged exposure for posttraumatic stress disorder with and without cognitive restructuring: outcome at academic and community clinics. Journal of Consulting and Clinical Psychology. 2005;73:953–964. doi: 10.1037/0022-006X.73.5.953. [DOI] [PubMed] [Google Scholar]

- Ford JD, Racusin R, Daviss WB, Ellis CG, Thomas J, Rogers K, et al. Trauma exposure among children with oppositional defiant disorder and attention deficit-hyperactivity disorder. Journal of Consulting and Clinical Psychology. 1999;67:786–789. doi: 10.1037/0022-006X.67.5.786. [DOI] [PubMed] [Google Scholar]

- Frueh BC, Cousins VC, Hiers TG, Cavenaugh SD, Cusack KJ, Santos AB. The need for trauma assessment and related clinical services in a state-funded mental health system. Community Mental Health Journal. 2002;38:351–356. doi: 10.1023/a:1015909611028. [DOI] [PubMed] [Google Scholar]

- Ganju V. Implementation of evidence-based practices in state mental health systems: implications for research and effectiveness studies. Schizophrenia Bulletin. 2003;29:125–131. doi: 10.1093/oxfordjournals.schbul.a006982. [DOI] [PubMed] [Google Scholar]

- Giaconia RM, Reinherz HZ, Silverman AB, Pakiz B, Frost AK, Cohen E. Traumas and posttraumatic stress disorder in a community population of older adolescents. Journal of the American Academy of Child and Adolescent Psychiatry. 1995;34:1369–1380. doi: 10.1097/00004583-199510000-00023. [DOI] [PubMed] [Google Scholar]

- Gillespie CF, Bradley B, Mercer K, Smith AK, Conneely K, Gapen M, et al. Trauma exposure and stress-related disorders in inner city primary care patients. General Hospital Psychiatry. 2009;31:505–514. doi: 10.1016/j.genhosppsych.2009.05.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Glisson C, James LR. The cross-level effects of culture and climate in human service teams. Journal of Organizational Behavior. 2002;23:767–794. [Google Scholar]

- Glisson C, Schoenwald SK, Kelleher K, Landsverk J, Hoagwood KE, Mayberg S, et al. Therapist turnover and new program sustainability in mental health clinics as a function of organizational culture, climate, and service structure. Administration and Policy in Mental Health and Mental Health Services Research. 2008;35:124–133. doi: 10.1007/s10488-007-0152-9. [DOI] [PubMed] [Google Scholar]

- Grasso DJ, Webb C, Cohen A, Berman I. Building a consumer base for trauma-focused cognitive behavioral therapy in a state system of care. Administration and Policy in Mental Health. 2013;40:240–254. doi: 10.1007/s10488-012-0410-3. [DOI] [PubMed] [Google Scholar]

- Green BL. Trauma History Questionnaire. Measurement of stress, trauma, and adaptation. 1996;1:366–369. [Google Scholar]

- Guarino K, Soares P, Konnath K, Clervil R, Bassuk E. Trauma-informed organizational toolkit. Rockville, MD: Center for Mental Health Services, Substance Abuse and Mental Health Services Administration, Daniels Fund, National Child Traumatic Stress Network, and W.K. Kellogg Foundation; 2009. [Google Scholar]

- Hanson RF, Gros KS, Davidson TM, Barr S, Cohen J, Deblinger E, et al. National trainers' perspectives on challenges to implementation of an empirically-supported mental health treatment. Administration and Policy in Mental Health and Mental Health Services Research. 2014;41:522–534. doi: 10.1007/s10488-013-0492-6.

- Horowitz K, Weine S, Jekel J. PTSD symptoms in urban adolescent girls: compounded community trauma. Journal of the American Academy of Child and Adolescent Psychiatry. 1995;34:1353–1361. doi: 10.1097/00004583-199510000-00021.

- Isett KR, Burnam MA, Coleman-Beattie B, Hyde PS, Morrissey JP, Magnabosco J, et al. The state policy context of implementation issues for evidence-based practices in mental health. Psychiatric Services. 2007;58:914–921. doi: 10.1176/ps.2007.58.7.914.

- Jensen-Doss A, Hawley KM. Understanding barriers to evidence-based assessment: clinician attitudes toward standardized assessment tools. Journal of Clinical Child & Adolescent Psychology. 2010;39:885–896. doi: 10.1080/15374416.2010.517169.

- Jensen-Doss A, Hawley KM. Understanding clinicians' diagnostic practices: attitudes toward the utility of diagnosis and standardized diagnostic tools. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38:476–485. doi: 10.1007/s10488-011-0334-3.

- Knudsen HK, Johnson JA, Roman PM. Retaining counseling staff at substance abuse treatment centers: effects of management practices. Journal of Substance Abuse Treatment. 2003;24:129–135. doi: 10.1016/s0740-5472(02)00357-4.

- Ko SJ, Ford JD, Kassam-Adams N, Berkowitz SJ, Wilson C, Wong M, et al. Creating trauma-informed systems: child welfare, education, first responders, health care, juvenile justice. Professional Psychology: Research and Practice. 2008;39:396–404.

- Lipschitz DS, Rasmusson AM, Anyan W, Cromwell P, Southwick SM. Clinical and functional correlates of posttraumatic stress disorder in urban adolescent girls at a primary care clinic. Journal of the American Academy of Child and Adolescent Psychiatry. 2000;39:1104–1111. doi: 10.1097/00004583-200009000-00009.

- Luthra R, Abramovitz R, Greenberg R, Schoor A, Newcorn J, Schmeidler J, et al. Relationship between type of trauma exposure and posttraumatic stress disorder among urban children and adolescents. Journal of Interpersonal Violence. 2009;24:1919–1927. doi: 10.1177/0886260508325494.

- Middlebrooks JS, Audage NC. The effects of childhood stress on health across the lifespan. Atlanta, GA: Centers for Disease Control and Prevention, National Center for Injury Prevention and Control; 2008.

- Mor Barak ME, Nissly JA, Levin A. Antecedents to retention and turnover among child welfare, social work, and other human service employees: what can we learn from past research? A review and metanalysis. Social Service Review. 2001;75:625–661.

- Nadeem E, Gleacher A, Beidas RS. Consultation as an implementation strategy for evidence-based practices across multiple contexts: unpacking the black box. Administration and Policy in Mental Health and Mental Health Services Research. 2013;40:439–450. doi: 10.1007/s10488-013-0502-8.

- Nakamura BJ, Selbo-Bruns A, Okamura K, Chang JM, Slavin L, Shimabukuro S. Developing a systematic evaluation approach for training programs within a train-the-trainer model for youth cognitive behavior therapy. Behaviour Research and Therapy. 2014;53:10–19. doi: 10.1016/j.brat.2013.12.001.

- National Center for Children in Poverty. Facts about trauma for policymakers: children’s mental health. New York, NY: Columbia University: Mailman School of Public Health; 2007.

- Osterberg LD, Jensen-Doss A, Cusack KJ, de Arellano MA. Diagnostic practices for traumatized youths: do clinicians incorporate symptom scale results? Community Mental Health Journal. 2009;45:497–507. doi: 10.1007/s10597-009-9258-8.

- Powell BJ, Beidas RS, Stewart RE, Wolk CB, Rubin RM, Matlin SL, et al. Applying the policy ecology framework to Philadelphia’s behavioral health transformation efforts. Administration and Policy in Mental Health and Mental Health Services Research. 2016. doi: 10.1007/s10488-016-0733-6.

- Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review. 2012;69:123–157. doi: 10.1177/1077558711430690.

- Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36:24–34. doi: 10.1007/s10488-008-0197-4.

- Reding ME, Chorpita BF, Lau AS, Innes-Gomberg D. Providers' attitudes toward evidence-based practices: is it just about providers, or do practices matter, too? Administration and Policy in Mental Health and Mental Health Services Research. 2014;41:767–776. doi: 10.1007/s10488-013-0525-1.

- Saunders BE, Berliner L, Hanson RF. Child physical and sexual abuse: guidelines for treatment. Charleston, SC: National Crime Victims Research and Treatment Center; 2004.

- Scaccia JP, Cook BS, Lamont A, Wandersman A, Castellow J, Katz J, et al. A practical implementation science heuristic for organizational readiness: R = MC. Journal of Community Psychology. 2015;43:484–501. doi: 10.1002/jcop.21698.

- Schell SF, Luke DA, Schooley MW, Elliott MB, Herbers SH, Mueller NB, et al. Public health program capacity for sustainability: a new framework. Implementation Science. 2013;8:15. doi: 10.1186/1748-5908-8-15.

- Shonkoff JP, Boyce WT, McEwen BS. Neuroscience, molecular biology, and the childhood roots of health disparities: building a new framework for health promotion and disease prevention. Journal of the American Medical Association. 2009;301:2252–2259. doi: 10.1001/jama.2009.754.

- Sigel BA, Benton AH, Lynch CE, Kramer TL. Characteristics of 17 statewide initiatives to disseminate trauma-focused cognitive-behavioral therapy (TF-CBT). Psychological Trauma: Theory, Research, Practice, and Policy. 2013;5:323–333.

- Silverman WK, Ortiz CD, Viswesvaran C, Burns BJ, Kolko DJ, Putnam FW, et al. Evidence-based psychosocial treatments for children and adolescents exposed to traumatic events. Journal of Clinical Child and Adolescent Psychology. 2008;37:156–183. doi: 10.1080/15374410701818293.

- Stirman SW, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implementation Science. 2012;7:17. doi: 10.1186/1748-5908-7-17.

- Stumpf RE, Higa-McMillan CK, Chorpita BF. Implementation of evidence-based services for youth: assessing provider knowledge. Behavior Modification. 2009;33:48–65. doi: 10.1177/0145445508322625.

- The Pew Charitable Trusts. Philadelphia 2013: the state of the city. Philadelphia, PA: The Pew Charitable Trusts; 2013.

- The Pew Charitable Trusts. Philadelphia 2015: the state of the city. Philadelphia, PA: The Pew Charitable Trusts; 2015.

- The Research and Evaluation Group at the Public Health Management Corporation. Findings from the Philadelphia urban ACE survey. The Public Health Management Corporation; 2013.

- Weisz JR, Chorpita BF, Palinkas LA, Schoenwald SK, Miranda J, Bearman SK, et al. Testing standard and modular designs for psychotherapy treating depression, anxiety, and conduct problems in youth: a randomized effectiveness trial. Archives of General Psychiatry. 2012;69:274–282. doi: 10.1001/archgenpsychiatry.2011.147.

- Woltmann EM, Whitley R, McHugo GJ, Brunette M, Torrey WC, Coots L, et al. The role of staff turnover in the implementation of evidence-based practices in mental health care. Psychiatric Services. 2008;59:732–737. doi: 10.1176/ps.2008.59.7.732.

- Woody J, Anderson D, D'Souza H, Baxter B, Schubauer J. Dissemination of trauma-focused cognitive-behavioral therapy: a follow-up study of practitioners' knowledge and implementation. Journal of Evidence-Informed Social Work. 2015;00:1–13. doi: 10.1080/15433714.2013.849217.