Abstract

Translating evidence-based physical activity interventions into practice has been problematic. Limited research exists on the adoption decision-making process. This study explored health educator perceptions of two evidence-based physical activity programs: one developed through an integrated research-practice partnership approach (FitEx) and one research-developed program, Active Living Every Day (ALED). Semi-structured interviews were conducted with 12 health educators who were trained on either ALED (n = 6) or FitEx (n = 6) and had either delivered (n = 6) or not delivered (n = 6) the intervention. Compared with those who did not deliver, program adopters rated program characteristics, materials, processes, implementation, fit within system, and collaborations as more positive factors in decision-making. FitEx health educators were more likely to deliver the program and found it to be a better fit and easier to use. An integrated research-practice partnership may improve adoption of physical activity programs in typical practice settings.

Electronic supplementary material

The online version of this article (doi:10.1007/s13142-015-0371-7) contains supplementary material, which is available to authorized users.

Keywords: Adoption, Physical activity promotion, Decision-making, Integrated research-practice partnerships, Qualitative

Although the benefits of physical activity are well established [1] and evidence-based strategies for physical activity promotion exist [2], community-based programs to increase physical activity have failed to have a broad public health impact [3, 4]. Since 2001, the Task Force on Community Preventive Services has recommended two types of evidence-based programs be implemented in community settings: (1) individually adapted, health behavior change programs integrating behavioral strategies (i.e., goal-setting, self-monitoring, problem-solving, and relapse prevention) and (2) social support interventions [5–7]. These research-based recommendations for physical activity promotion have strong evidence of efficacy and cost-effectiveness [7, 8]. From the Surgeon General’s Report for Physical Activity and Health to the National Physical Activity Action Plan, evidence-based programs have been disseminated, recommended for implementation, and advocated for by the public health community for over two decades [1, 9, 10]. However, a significant gap exists in translating evidence-based programs into community practice to broadly reach those in need [11, 12]. One specific, practice-related area contributing to this gap is a limited understanding of program adoption [13].

In translational research, adoption can be defined as the decision of an organization, organizational staff, or community to commit to and initiate an evidence-based program [14]. As frontline individuals within an organization, organizational staff play an important role in how program initiation and uptake occur. As such, it is important to consider what factors lead staff to decide to be trained on an intervention and subsequently deliver the program [13]. Along with buy-in from an organization, staff’s knowledge, skills, attitudes, and the context in which they serve greatly impact program adoption and implementation [15–17]. Furthermore, based on Rogers’ classic diffusion of innovations theory, five main factors (i.e., relative advantage, compatibility, complexity, trialability, and observability) may influence program adoption, and each of these factors comes into play over an “S”-shaped pattern of adopter categories (i.e., innovators, early adopters, early majority, late majority, and laggards) [18–20].

One of the major contributors to the gap between research and practice has been a lack of stakeholder input in intervention design, particularly from staff involved in delivering community interventions [21–23]. In the traditional research model, the scientific investigator is perceived as the expert and is responsible for intervention design. The practitioner, conversely, is considered a simple receptor of evidence-based interventions and is encouraged to deliver the intervention with high fidelity [24]. Typically operating in silos, research and practice communities rarely interact during the developmental phase of interventions [21]. As a result, there is often a lack of fit between evidence-based programs and the structure, values, and culture where interventions are to be delivered. In recognition of the gaps between evidence-based research and real-world practice, there is a national call for new delivery models, research-practice partnerships, and application of participatory approaches to accelerate translation [25–27]. Implementation science models and frameworks incorporating the concept of bidirectionality, where there is an integration of research and practice personnel to develop and test interventions, are gaining traction [28, 29]. Using a participatory dissemination model offers promise for accelerating the adoption of evidence-based physical activity programs [26].

Unfortunately, few studies in the literature on behavioral interventions, including physical activity, report on issues related to program adoption [30]. For instance, in a recent review of the application of the RE-AIM (Reach, Effectiveness, Adoption, Implementation, and Maintenance) framework [30], criteria for reporting staff-level adoption were assessed. The reporting criteria assessed in the review included: (1) staff exclusions, (2) percent of staff offered that participate, (3) characteristics of staff participants vs. nonparticipating staff, and (4) the use of qualitative methods to understand staff participation and staff-level adoption. In reviewed manuscripts, the staff-level adoption dimension was frequently reported only as the proportion of staff participating in an intervention and did not address the multiple factors that lead to adoption decision-making or the barriers faced by those who decided not to adopt. The review underscored the paucity of staff-level reporting and further emphasized a need for more thorough examination of the decision-making of frontline staff with qualitative methodologies to begin to develop grounded theory in this area [31].

The aim of this study was to explore the perceptions of health educators, the frontline staff from a state’s cooperative extension system, who were trained with an expectation of implementing one of two evidence-based physical activity programs in their communities. Programs included in the training were developed using either the traditional research model or an integrated research-practice partnership. Through qualitative inquiry post training, the decision-making process of both program adopters and nonadopters was examined. In addition, health educators were asked about program adaptations that would be helpful for future implementation.

METHODS

Study design and intervention

A two-group pragmatic, effectiveness-implementation hybrid type 3 trial [32, 33] was designed to test the adoption rate of two evidence-based physical activity programs in a real-world setting. Aimed at addressing practice needs, pragmatic trials are designed to measure effectiveness in usual conditions of care and involve those who will ultimately deliver and be engaged in the intervention in its intended real-world setting [32]. An effectiveness-implementation hybrid trial has an a priori focus of simultaneously assessing the effectiveness of a clinical intervention (e.g., ALED or FitEx) and an implementation strategy (e.g., a participatory dissemination model) [33].

For this study, both programs included evidence-based behavioral strategies and social support and were effective in previous settings for helping diverse age groups and populations increase activity to meet US physical activity recommendations [34–36]. However, one was a research-developed program and more intensive than the other. Active Living Every Day (ALED) is a high intensity, research-developed program, which followed the traditional model of efficacy to effectiveness, to demonstration, to dissemination and community implementation [34]. FitEx is a less intense program that was developed through an integrated research-practice partnership which followed a participatory dissemination targeted model [26]. Both ALED and the process used to develop FitEx have been outlined in previous publications [34, 37]. See Table 1 for a comparison of the two interventions.

Table 1.

Comparison of evidence-based physical activity programs

| Characteristics and components | Active Living Every Day (ALED) | Fit-Extension (FitEx) |
|---|---|---|
| Program development | Researcher-developed through traditional model of efficacy to effectiveness to demonstration to dissemination | Developed through an integrated research-practice partnership following a participatory dissemination targeted model |
| Duration | 12 weeks | 8 weeks |
| Recruitment and delivery format | Health educators recruited community groups/organizations to host 1-h weekly classes with 12 to 20 adults | Health educators recruited team captains from community groups/organizations who, in turn, recruited members to form teams of six adults (i.e., family, friends, and coworkers) |
| Theoretical framework | Social cognitive theory and trans-theoretical model of behavior change | Group dynamics and social cognitive theory |
| Evidence-based principles | Lifestyle activity rather than structured exercise, individual goal-setting, behavioral skill development, self-monitoring based on readiness to change, and group problem-solving | Developing a sense of group distinctiveness, group goal setting, proximity to other group members, ongoing group interactions, feedback, information sharing, and collective problem solving |
| Promoted activities | Participants chose their own activities to do outside of class | Teams of six set walking/activity goal that would cumulatively equal the team traveling the distance across their state during the program; fruit and vegetable consumption weekly goals |
| Materials | Facilitator lesson plans and presentation slides; participant workbook with tracking logs, and step counters available for purchase from commercial vendor | Program guide, team captain packets, participant tracking logs and weekly newsletters |
| Overall program goal | Participants meet the recommended physical activity guidelines of 150 moderate intensity minutes per week | Participants meet the recommended physical activity guidelines of 150 moderate intensity minutes per week |

Sample

All family and consumer science health educators within the state’s cooperative extension (n = 56) assigned to serve at the county level were eligible to deliver a physical activity program through one of the state’s 107 county or city offices. These health educators were already working within their communities. They were recruited through an email solicitation from an assistant extension specialist, whose primary responsibility was to provide program support to the trial.

Sixty-four percent of the health educators (n = 36; 100 % female; 45.9 ± 10.8 years) responded to the solicitation and were randomly assigned to receive training to deliver either ALED (n = 18) or FitEx (n = 18). All 36 health educators completed the telephone training. All health educators randomly assigned to ALED (n = 18; 100 %) completed the in-person training; 89 % (n = 16) of health educators assigned to FitEx completed the in-person training.

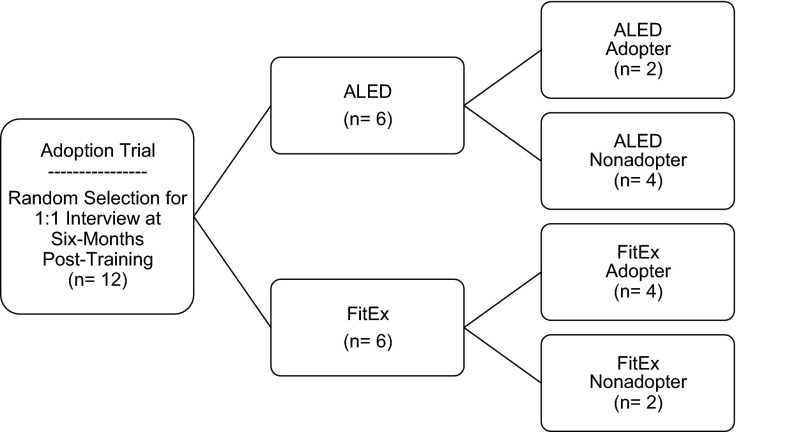

Of those completing the in-person training (n = 34), 12 health educators were randomly selected for qualitative interviews to reflect, as closely as possible, an even split of health educators who were randomly assigned to FitEx or ALED and had or had not implemented the program. All 12 health educators approached agreed to participate. As shown in Fig. 1, these health educators were stratified by both program and adoption decision (i.e., delivered or did not deliver the program following training). Two health educators delivered ALED, while four delivered FitEx. These health educators were considered program adopters for this study. Six health educators did not deliver the program to which they were randomized. Of those six, four health educators were considered ALED nonadopters and two were considered FitEx nonadopters.

Fig 1.

Sample flowchart. This figure shows interview participation by health educator status. Note: ALED = Active Living Every Day
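The sample flow reported above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python: the counts are taken from the text, while the function and variable names (`pct`, `margins`, `interviewed`) are illustrative assumptions and not part of the study's procedures.

```python
def pct(part, whole):
    """Return part/whole as a percentage rounded to the nearest integer."""
    return round(100 * part / whole)


# Counts reported in the Sample section.
eligible = 56                       # eligible health educators
responded = 36                      # responded and were randomized
assigned = {"ALED": 18, "FitEx": 18}
trained_in_person = {"ALED": 18, "FitEx": 16}

# Interview sample, stratified by program and adoption decision.
interviewed = {
    ("ALED", "adopter"): 2,
    ("ALED", "nonadopter"): 4,
    ("FitEx", "adopter"): 4,
    ("FitEx", "nonadopter"): 2,
}


def margins(cells):
    """Sum a {(program, status): n} table by program and by status."""
    by_program, by_status = {}, {}
    for (program, status), n in cells.items():
        by_program[program] = by_program.get(program, 0) + n
        by_status[status] = by_status.get(status, 0) + n
    return by_program, by_status


if __name__ == "__main__":
    print("response rate (%):", pct(responded, eligible))
    for program in assigned:
        rate = pct(trained_in_person[program], assigned[program])
        print(program, "in-person training completion (%):", rate)
    print("interview margins:", margins(interviewed))
```

The margins confirm that the 12 interviews split evenly by program (six ALED, six FitEx) and by adoption decision (six adopters, six nonadopters), even though the individual cells are unbalanced.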

Measures

An interview guide was developed to gather data around the adoption decision-making process that occurred between the initial training session and program delivery. The guide included a set of predetermined open-ended questions aimed to provide a better understanding of intervention adoption, particularly the characteristics of health educators who participated compared to those who did not, as well as perceived adoption barriers. The guide included questions addressing compatibility and complexity, two factors included in the diffusion of innovations theory [19]. In addition, a section of the interview guide included program-specific questions. For instance, for ALED, it included questions asking about class sessions and facilitator lesson plans. For FitEx, it included questions asking about the program guide, team captain packets, and newsletters. A detailed list of interview guide questions can be found in the electronic supplemental material, Table 2.

Procedures

An assistant extension specialist for cooperative extension, who had graduate-level training in qualitative research methods, led 12 semi-structured, individual interviews with the randomly selected health educators. Ten interviews were conducted in-person in a cooperative extension county office and audio-recorded. Due to the length of statewide travel and conflicting schedules, two interviews were conducted using a video conferencing platform, which allowed for video interaction between interviewer and participants, along with audio-recording. For each interview, the interviewer was instructed to follow the guide and probe with additional questions when topics emerged from the dialog. All interpretations of the interview information were reported back to the health educators to assess reliability and validity of the qualitative data. By our final interviews for each program and level of adoption, we were not obtaining new information. This study was reviewed by the Virginia Tech Institutional Review Board and had approved exemption for waiver of written consent. All participants provided verbal consent for recording.

Analysis

Audio-recorded interviews were transcribed verbatim in preparation for team analysis. Using inductive analysis, all three authors independently reviewed the transcriptions and identified meaning units associated with the semi-structured inquiries. Following best practices of content analysis of qualitative data [38], each meaning unit included a discrete phrase, sentence, or series of sentences that conveyed only one idea or one related set of perceptions. These meaning units were categorized into higher-order themes. Categorization was based on patterns and similarities between the individual meaning units as observed by each investigator. Consensus meetings resolved differences. Each theme had a number of categories and corresponding meaning units. All three authors identified themes, assigned categories, and weighted the first 100 meaning units together, resulting in high Pearson’s correlations for each author pair (0.88, 0.84, 0.87; p < 0.001). The remaining 402 meaning units were coded by the first two authors and resulted in a Pearson correlation of 0.97.
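To illustrate the pairwise agreement check described above, the following Python sketch computes Pearson's correlation between coders' weights for the same meaning units. It is a sketch under stated assumptions: the coder labels and weights are hypothetical, and the helper names (`pearson_r`, `pairwise_agreement`) are not from the study, which does not report how its correlations were computed.

```python
from itertools import combinations
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of code weights."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a coder's weights are constant")
    return sxy / sqrt(sxx * syy)


def pairwise_agreement(weights_by_coder):
    """Return {(coder_a, coder_b): r} for every pair of coders."""
    return {
        (a, b): pearson_r(weights_by_coder[a], weights_by_coder[b])
        for a, b in combinations(sorted(weights_by_coder), 2)
    }


if __name__ == "__main__":
    # Hypothetical 1-5 weights that three coders gave the same eight meaning units.
    hypothetical = {
        "author_1": [5, 4, 3, 2, 1, 4, 3, 5],
        "author_2": [5, 4, 3, 2, 2, 4, 3, 4],
        "author_3": [4, 4, 3, 1, 1, 5, 3, 5],
    }
    for pair, r in pairwise_agreement(hypothetical).items():
        print(pair, round(r, 2))
```

Pearson's correlation treats the 1 to 5 weights as interval data and measures linear association; it does not penalize a coder who is consistently one point higher than another, which is a limitation of this statistic as an agreement measure.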

Using Dedoose Version 5.1.29 [39], a web-based data management and analysis program, the authors applied a code and rating system to each meaning unit for further qualitative text analysis. Referred to as sentiment weighting, this process allowed the authors to weight each code according to several predetermined, five-point Likert scales. Depending on the meaning unit, code weights indicated degree of agreement, difficulty, quality, or satisfaction. For instance, if a health educator was referring to ease of implementation, the scale would be 1 = very difficult; 5 = very easy. The higher end of the scale (5) indicated a code weight depicting a more positive perception of the program. For example, using the transcriptions, the following codes would be applied: “the program was really easy to deliver” = 5; “the program was easy to deliver” = 4; “some parts of the program were easy to deliver, but some parts were kind of difficult” = 3; “the program was difficult to deliver” = 2; “the program was very difficult to deliver” = 1. In each coding, modifiers of a statement (e.g., very, really) were required to get the highest or lowest weighting. Using program and health educator status as descriptor variables, frequency percentage of codes and mean code weights were computed for each theme and category and used for comparison. Results were displayed in bar graphs to expose patterns in relationships between ALED adopters, ALED nonadopters, FitEx adopters, and FitEx nonadopters.
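The two summary statistics named above, frequency percentage of codes and mean code weight, can be sketched as follows. This is an illustrative Python example and not the Dedoose implementation: the data are hypothetical, the function name `summarize_theme` is invented for the sketch, and it assumes that frequency percentage means each group's share of all coded meaning units within a theme.

```python
from collections import defaultdict


def summarize_theme(units, theme):
    """Summarize one theme by health educator group.

    units: iterable of (theme, group, weight) tuples, weight on a 1-5 scale.
    Returns {group: {"frequency_pct": ..., "mean_weight": ...}}.
    """
    weights_by_group = defaultdict(list)
    for unit_theme, group, weight in units:
        if unit_theme != theme:
            continue
        if not 1 <= weight <= 5:
            raise ValueError(f"weight {weight} is outside the 1-5 scale")
        weights_by_group[group].append(weight)

    total = sum(len(w) for w in weights_by_group.values())
    return {
        group: {
            # Assumed definition: share of the theme's coded units from this group.
            "frequency_pct": 100 * len(w) / total,
            "mean_weight": sum(w) / len(w),
        }
        for group, w in weights_by_group.items()
    }


if __name__ == "__main__":
    # Hypothetical coded meaning units; not data from the study.
    hypothetical_units = [
        ("fit within system", "FitEx adopter", 5),
        ("fit within system", "FitEx adopter", 4),
        ("fit within system", "ALED nonadopter", 3),
        ("fit within system", "ALED nonadopter", 2),
        ("program materials", "ALED adopter", 4),
    ]
    for group, stats in summarize_theme(hypothetical_units, "fit within system").items():
        print(group, stats)
```

Grouping by a (program, status) label mirrors the use of program and health educator status as descriptor variables; the same function could be applied per category by passing category labels in place of themes.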

RESULTS

The interviews with health educators lasted an average of 26 min (±9.51). Overall, six themes, 18 categories, and 502 meaning units were generated from the interviews providing in-depth insights from adopters and nonadopters.

Themes and categories

The six resultant common themes that emerged from the interviews include: (1) program perceptions and characteristics, (2) program materials, (3) program processes, (4) implementation and adaptations, (5) fit within system, and (6) collaborators and existing partnerships. Theme code frequencies and mean code weights varied by health educator status as displayed in electronic supplementary material, Figure 2. All themes had an assigned code weight range reflecting the values of the 1–5 Likert scale. Program adopters had a greater percentage of code frequencies across each theme area. Categories emerged within each theme that also varied across program allocation. Category code frequencies and sample meaning units with assigned code weights are displayed in electronic supplemental material, Table 3.

Program perceptions

Within the program perceptions and characteristics theme (n = 244 meaning units), frequency of codes ranged from 12.8 % for ALED adopters to 44.5 % for ALED nonadopters. Out of a 5-point scale, the mean code weight ranged from an identical 2.5 for ALED and FitEx nonadopters to 3.2 and 3.7, respectively, for ALED and FitEx adopters. Overall, there was a more negative program perception for nonadopters in both ALED and FitEx.

Three distinct categories for both adopters and nonadopters emerged for this theme: overall rating (n = 23 meaning units), ease of implementation (n = 34 meaning units), and special populations (n = 13 meaning units). One distinct category, reach and retention (n = 23 meaning units), emerged solely for adopters. The overall rating category was similarly strong for adopters and nonadopters of both programs. Both nonadopters and adopters perceived FitEx as an easy program to implement, while ALED adopters felt neutral about the ease of program implementation and nonadopters felt the program was difficult to implement. For example, one ALED adopter said, “Overall, it was very easy to implement, wasn’t hard at all,” while a nonadopter stated, “It seemed like it was a whole lot of work.” This pattern of differences in mean code weights for ease of implementation by program and health educator status is shown in electronic supplementary material, Figure 3.

Common expressions for difficulty included health educator preparation time and program intensity, including total number of weekly sessions and amount of material to cover in a limited time period. Both adopters and nonadopters had a desire to implement programs with identified special populations, including older adults, Spanish speaking, and inmate communities. Due to technology, language, and resource barriers with these groups, the programs were perceived to be inappropriate. Finally, for this category, both ALED and FitEx adopters were pleased with the reach and retention rates of programs. However, there were large differences in the number of participants served.

Program materials

Within the program materials theme (n = 222 meaning units), frequency of codes ranged from 11 % for ALED adopters to 41 % for ALED nonadopters. Out of a 5-point scale, the mean code weights ranged from 3.2 for FitEx nonadopters to 3.8 for FitEx adopters. Four distinct categories for both adopters and nonadopters emerged for this theme: facilitator materials (n = 42 meaning units), participant materials (n = 31 meaning units), website (n = 22 meaning units), and paperwork (n = 12 meaning units). For program materials, ALED adopters were provided with lesson plans and workbooks for participants, both of which health educators deemed as helpful and useful. FitEx adopters were provided with all team captain packets, weekly newsletters, and step-by-step instructional information. Health educators enjoyed the idea of a “pre-packaged” program. As described by a FitEx adopter, “It was so nice to have stuff that was prepared. It was nice not to have to spend agent time on that…It certainly made it easier to administer the class.”

Across both programs for nonadopters, health educators also thought materials provided good, useful information. However, nonadopters in both programs perceived a need for materials to be scaled back for facilitators and more user-friendly for their intended audiences. Other reasons for poor weight ratings of program materials were that the website included technical language, materials lacked images, and associated paperwork with program activities was burdensome. In addition, program guides and captain packets were described as lengthy and potentially overwhelming by program nonadopters.

Program processes

Within the program processes theme (n = 219 meaning units), frequency of codes ranged from 6 % for ALED adopters to 58 % for ALED nonadopters. Out of a 5-point scale, the mean code weights ranged from 2.1 for FitEx nonadopters to 3.2 for FitEx adopters. Overall, there was a relatively neutral perception of program processes among FitEx adopters and a negative perception among ALED adopters and nonadopters in both ALED and FitEx.

Three distinct categories for both adopters and nonadopters emerged for this theme: general logistics (n = 29 meaning units), recruitment and retention (n = 37 meaning units), and behavior tracking (n = 28 meaning units). One distinct category, communication (n = 19 meaning units), emerged solely for FitEx adopters. For adopters, the ease of, and enthusiasm for, recruitment resonated for both programs, with health educators sharing recruitment methods such as word of mouth, announcements, and collaborators. Yet, the registration process itself, via paper and pencil in the initial round of programming, was a source of frustration for both health educators and potential participants. Similar notes of frustration with paperwork and tracking were found within ALED and FitEx; participants did not want to fill out paperwork, and health educators felt that keeping up with attendance, tracking of physical activity, and assignments were burdensome. Inputting behavior tracking data into the FitEx website was reported to be time-consuming. For FitEx adopters, communication with participants via email and phone calls was mentioned frequently and was rated positively, while ALED adopters did not mention communication at all.

Negative program processes were centered on general logistics and scheduling for both programs. At the initial training, health educators were instructed to return to their communities and implement the program within 6 months. The brief 6-month period post-training proved a challenge for both adopters and nonadopters due to workloads and conflicting community activities. However, nonadopters had difficulty locating groups in their communities and juggling program requirements with their other schedule commitments. As expressed by an ALED nonadopter, “For me, it really was trying to get a group together who were interested and who would participate and finding the time within my own schedule with other programming.” This challenge was mentioned most frequently with groups recruited from senior centers and assisted-living facilities. Health educators also lacked the time to follow-through with groups to ensure participation.

Implementation and adaptations

Within the implementation and adaptations theme (n = 137 meaning units), frequency of codes ranged from 12 % for ALED adopters to 40 % for FitEx nonadopters. Out of a 5-point scale, the mean code weights ranged from 2.4 for FitEx nonadopters to 3.7 for ALED nonadopters. For both adopters of ALED and FitEx, along with nonadopters of ALED, there was a somewhat positive perception for this theme, and a more negative perception for FitEx nonadopters.

Two categories emerged for this theme. Suggested adaptations (n = 43 meaning units) emerged for both adopters and nonadopters. Fidelity (n = 26 meaning units) emerged solely for adopters. Suggested program adaptation statements from adopters of both programs focused mainly on developing online features and increasing engagement, such as adding an exercise session to the ALED class sessions. Adopters of both programs recommended making registration and tracking available to participants online in order to streamline paperwork and reduce time burden. Health educators also suggested keeping the program materials “fresh,” providing suggestions such as including success stories online. For the FitEx program, adopters suggested incorporating a youth and/or family component into the team challenge. For instance, health educators suggested getting children involved, recruiting families for support, and hosting family-friendly kick-off events. Specifically for ALED, both adopters and nonadopters suggested class sessions include activity time, where participants would walk or engage in an exercise, in addition to the behavior change lessons. Nonadopters for FitEx suggested adaptations including a single category of activity for behavior tracking and physical activities that could be incorporated into already existing cooperative extension workshops, such as adding physical activity breaks to financial management seminars.

In a similar vein, health educators also implemented some modifications throughout the delivery of the program. Health educators who delivered FitEx shared that they modified the newsletters to be more appealing, while one ALED adopter incorporated DVDs from the library that discussed healthy eating habits and exercise. As a unique category for adopters, overall fidelity was perceived as neutral for ALED and somewhat positive for FitEx adopters (mean code weight = 3.1; 3.9 out of 5). Some health educators expressed strong program fidelity, as conveyed in a statement from a FitEx adopter, “I used all the materials that you had on the site and I distributed that information.” However, some health educators did not implement core components with high fidelity (e.g., not delivering newsletters or not entering tracking data on the website in a timely fashion to provide team feedback).

Fit within system

Within the fit within system theme (n = 103 meaning units), frequency of codes ranged from 7 % for FitEx adopters to 73 % for ALED adopters. Out of a 5-point scale, the mean code weights ranged from 2.8 for ALED nonadopters to 4.5 for FitEx adopters. Overall, there was a strong positive perception of fit within cooperative extension for FitEx adopters and a neutral perception among ALED adopters and both ALED and FitEx nonadopters.

Two distinct categories for both adopters and nonadopters emerged for this theme: health educator role (n = 17 meaning units) and infrastructure (n = 46 meaning units). Statements regarding health educator role described how health educators perceived the programs as aligning with the mission of cooperative extension and their specific job responsibilities. A FitEx adopter positively stated, “It provided me with a new curriculum to use to fulfill that area of food nutrition and health. I did not offer a physical activity program before and this was a great addition… it did not take anymore or less time than anything I do, it worked well with my programming efforts.” In contrast, an ALED nonadopter was disappointed that the program focused mainly on behavior modification and did not include activity in the program sessions, and felt that the program was therefore outside of her area of expertise. She expressed, “It’s not the kind of program that I’m used to doing so I’m not sure that I’m real comfortable with that kind of thing.” This pattern of differences for the health educator role category is shown in electronic supplementary material, Figure 3, where the mean code weight = 2.0; 2.6 for nonadopters and mean code weight = 4.0 for adopters.

As a category with negative perceptions across all health educator statuses, infrastructure for program delivery was a concern. A majority of the concerns included lack of administrative resources to support program activities, the length of time for health educator commitment, and costs for program materials and participant incentives. Perceptions were low among both ALED and FitEx adopters (mean code weight = 1.9; 2.4) and nonadopters (mean code weight = 2.1; 1.8).

Collaborations and existing partnerships

Within the collaborators and existing partnerships theme, frequency of codes ranged from 11 % for ALED adopters to 45 % for ALED nonadopters. Out of a 5-point scale, the mean code weights ranged from 2.7 for ALED adopters to 3.8 for both ALED and FitEx nonadopters. The overall perception of this theme was neutral, with a slightly more positive perception by FitEx adopters (mean code weight = 3.5) and nonadopters.

Two categories emerged for this theme. Community partners (n = 25 meaning units) emerged solely for adopters. Program assistants (n = 21 meaning units) emerged for both adopters and nonadopters. Both ALED and FitEx adopters found that community partnerships and buy-in were critical in the uptake and success of the program. For instance, a FitEx adopter stated, “The key to our success was our planning committee because they were really behind it and they really helped me.” Health educators described how they were able to recruit participants from existing partners, such as schools, senior centers, and county offices. In addition, there were explanations of how health educators were able to leverage additional funds from county government or donations from local businesses to support program activities. Obtaining resources was not a barrier to program start-up for some adopters.

Program assistants also played a critical role in uptake and successful implementation. Health educators who had access to assistance from other extension staff members and volunteers to help with program activities were more likely to adopt. Assistance with preparing team captain packets, data entry, and participant follow-up were main areas of discussion. One FitEx adopter formed a subcommittee from her already existing cooperative extension advisory group to help with the program. She explained, “They really helped me accessing resources and putting on the program, and I really felt like they were the reason our program was so successful.” Although forming a task force, consisting of various members from the community, was one of the evidence-based components of the FitEx program, very few health educators (n = 2) mentioned using a “task force” for assistance.

Discussion

The overall purpose of this study was to explore and compare health educators’ perceptions of evidence-based physical activity programs through a qualitative analysis of adopters’ and nonadopters’ decision-making processes. By assessing program uptake after health educators were trained and instructed to implement the program within 6 months, the present trial provides a naturalistic view of health educators’ role in the translation of research to practice. The differences observed in adoption rates and perception values between a research-developed program and an integrated research-practice developed program are noteworthy and demonstrate the expected advantages of applying a participatory dissemination model [25, 27]. The follow-up interviews revealed facilitators and barriers to adoption commonly reported in physical activity interventions across settings [13, 40], along with insights into a variety of contextual factors and practicalities surrounding evidence-based program adoption in real-world settings.

Although the positive overall rating for programs was identical for adopters, study findings suggest there is an advantage to training health educators in an evidence-based program developed through an integrated research-practice partnership using the participatory dissemination model. Health educators trained in the FitEx program were more likely to implement [41], and those unable to implement during the trial period shared plans for future implementation. They perceived the program to be a better fit within their system compared to those trained in the research-developed program. Based on diffusion of innovations theory, programs with greater compatibility would be more likely to be adopted [19]. This has been readily observed with schools, health departments, and aging services, where physical activity programs developed with teachers and directors have had greater uptake [42–44].

Not surprisingly, being less intensive in terms of face-to-face contact, number of weekly sessions, and participant assignments, FitEx was also perceived to be easier to implement. The perceptions of ease of delivery are similar to reports from extension health educators from the Kansas State Research and Extension System who delivered Walk Kansas [13]. In line with diffusion of innovations theory, programs with less complexity are more likely to be adopted in a shorter time [19]. As a recommended strategy in implementation science, researchers partnering with the cooperative extension service to develop FitEx and Walk Kansas proved beneficial for identifying a feasible program delivery structure and package of resources that align with the organization’s mission [22, 27, 45]. The programs fit relatively easily into the day-to-day activities of an extension health educator [37].

Several facilitators to staff level adoption were identified in the analysis. Differences in perceptions between adopters and nonadopters were noted in the following themes: program characteristics, fit within system, and program materials. These facilitators reflect those hypothesized for improved program adoption when using an integrated research-practice partnership or systems-based approach [26, 46, 47]. Specifically, adopters were likely to share more positive perceptions (mean code weight > 3) in the following categories: overall rating, ease of implementation, reach and retention, communication, facilitator and participant materials, and website. Health educators successful at starting a program in their community had existing ties to community groups that provided avenues for recruitment. Furthermore, adopters had access to program assistants and volunteers to assist with the program, and collaborations and existing partnerships were deemed critical for success. For instance, FitEx adopters used their cooperative extension advisory group members and previous community connections extensively to help with group recruitment, data entry, follow-up with teams, and providing funding for participant incentives. Emphasizing these strategies to staff may facilitate uptake of evidence-based physical activity programs. This is similar to other studies that have examined program adoption from a qualitative perspective. Specifically, they found that adoption rates were improved through strong partnerships [48]. To systematically approach program development with stakeholders, the National Cancer Institute’s Implementation Science program (http://cancercontrol.cancer.gov/is), the Center for Excellence for Training and Research Translation (http://www.centertrt.org), and the RE-AIM framework websites (http://www.re-aim.org) provide a wealth of resources.

Several barriers to staff level adoption were also identified in the analysis. Health educators were likely to share more neutral and negative perceptions (mean code weight ≤ 3) in the following themes and categories: special populations, health educator role, infrastructure, program processes, recruitment and registration, and behavior tracking. These potential barriers support previous research findings revealing that practitioners often find research-developed strategies to be less generalizable to local settings and participants [49]. Both programs were perceived as not readily able to meet the needs of the many diverse groups served by cooperative extension. In particular, literacy level and computer access among target populations, such as older adults, were issues that hindered implementation. However, computer access is becoming less of an issue as older adults and agencies serving older adults are increasingly using technology [50]. Another consistent challenge reported across both programs was the time commitment for health educators. After training, health educators thought programs would require less time. For ALED, even though materials were pre-packaged, health educators reported being overwhelmed with the amount of time needed for program preparation. This challenge aligns with reports from other health educators delivering in national evaluations of ALED [51–53]. The paperwork associated with registration, homework assignments, and the ongoing data entry of behavior tracking were perceived as time-consuming and burdensome for both staff and participants.

Health educators also encountered scheduling barriers, especially within the context of the 6-month trial period. For FitEx, the timing of the 8-week program (beginning of Spring) was not ideal for some locations (e.g., schools). For ALED, health educators had difficulty, from the standpoint of both their own schedule and participants’ schedules, planning for 12 consecutive weeks of program delivery during the trial’s time of year. Another barrier of practical concern for translational research is cost [8, 54]. Covering the costs of program materials and staff travel to implementation sites impeded adoption. Considering the limited resources of most organizations, developing affordable strategies is essential for translation. Addressing costs along with the above barriers is needed to increase the uptake of evidence-based physical activity programs [55].

There are strengths and limitations to this study. To begin, this study addressed the often-overlooked staff level adoption dimension and provided an in-depth exploration of the decision-making process for those who did and did not decide to participate. Interviews were conducted in the field directly following a real-world trial. Applying the sentiment weighting system to codes provided a richer analysis and a more in-depth understanding of the patterns between adopters and nonadopters. As for limitations, the study involves a small sample, is dependent on the individual skills of the interviewer, and may include some socially desirable responses from interviewees.

Although data collected from only 12 health educators cannot be generalized to a larger population, the findings of this study have strong external validity and may be transferable to other settings where organizations are planning and implementing community-based physical activity programs. The findings are highly relevant and actionable for improving existing programs in practice. For instance, since the interviews took place with health educators, adaptations have been made to FitEx. All registration and program activities are now available in an online format to improve registration and eliminate data entry burden for staff. Addressing other reported barriers and incorporating suggested program adaptations are areas for future research in this field.

Conclusions

In the traditional research model for dissemination, once those who are expected to deliver an evidence-based program are trained, adoption and implementation in a community are assumed to follow. The translation of research to practice is depicted as a linear, smooth path. Quite to the contrary, this qualitative study, including both adopters and nonadopters, demonstrated the known realities of the translation gap. Even when training and program tools are provided, a staff decision to participate and initiate the program is not guaranteed.

In order to bridge the gap and increase levels of physical activity in communities, real and perceived barriers for program staff must be addressed in the context of the delivery system. This study provides additional evidence of the advantages of the integrated research-practice partnership using a participatory dissemination model as a promising strategy for translation. The participatory dissemination model contributes to the external validity of research and helps to identify feasible strategies that promote staff level adoption of evidence-based physical activity programs.

Electronic supplementary material

Below is the link to the electronic supplementary material.

(DOCX 85 kb)

(DOCX 124 kb)

Interview themes; This figure displays graphs with code frequency and mean code weights by health educator status. Note: MU = meaning unit; For code weight, 1 = negative, 5 = positive (PDF 537 kb)

Select program perception and fit within system categories; This figure displays graphs with code frequency and mean code weights by health educator status. Note: MU = meaning unit; For code weight, 1 = negative, 5 = positive (PDF 537 kb)

Acknowledgments

We would like to acknowledge the health educators who participated in our interviews and candidly shared their experiences with ALED or FitEx, as well as Joan Wages who served as the interviewer for this qualitative study.

Compliance with ethical standards

Conflict of interest

The authors declare that they have no competing interests.

Adherence to ethical principles

This study followed accepted principles of ethical and professional conduct. The study received expedited review by the Virginia Tech Institutional Review Board (#08-466) and had approved exemption for waiver of written consent.

Footnotes

Implications

Practice: Exploring the perceptions of health educators identified potential barriers and facilitators to delivering physical activity programs in communities.

Policy: Investing in the participatory, integrated research-practice approach for program development may accelerate the translation of evidence-based physical activity programs.

Research: Analyzing the decision-making process of both program adopters and nonadopters, along with noting health educator adaptations, provides greater understanding of staff participation and insight into intervention strategies that may have uptake in practice settings.

References

- 1. Centers for Disease Control and Prevention. Surgeon General's report on physical activity and health. JAMA. 1996;276:522.

- 2. Heath GW, Parra DC, Sarmiento OL, Andersen LB, et al. Evidence-based intervention in physical activity: lessons from around the world. Lancet. 2012;380:272–281. doi: 10.1016/S0140-6736(12)60816-2.

- 3. Beedie C, Mann S, Jimenez A. Community fitness center-based physical activity interventions: a brief review. Curr Sports Med Rep. 2014;13:267–274. doi: 10.1249/JSR.0000000000000070.

- 4. Fisher EB, Fitzgibbon ML, Glasgow RE, et al. Behavior matters. Am J Prev Med. 2011;40:e15–30. doi: 10.1016/j.amepre.2010.12.031.

- 5. Kahn EB, Ramsey LT, Brownson RC, et al. The effectiveness of interventions to increase physical activity. A systematic review. Am J Prev Med. 2002;22:73–107. doi: 10.1016/S0749-3797(02)00434-8.

- 6. Task Force for Community Preventive Services. Recommendations to increase physical activity in communities. Am J Prev Med. 2002;22:67–72. doi: 10.1016/S0749-3797(02)00433-6.

- 7. Task Force for Community Preventive Services. Methods used for reviewing evidence and linking evidence to recommendation. In: Zaza S, Briss PA, Harris KW, eds. The Guide to Community Preventive Services: What Works to Promote Health? Atlanta, GA: Oxford University Press; 2005:431–448.

- 8. Roux L, Pratt M, Tengs TO, et al. Cost effectiveness of community-based physical activity interventions. Am J Prev Med. 2008;35:578–588. doi: 10.1016/j.amepre.2008.06.040.

- 9. Sheppard L, Senior J, Park CH, Mockenhaupt R, et al. The National Blueprint Consensus Conference summary report: strategic priorities for increasing physical activity among adults aged ≥ 50. Am J Prev Med. 2003;25:209–213. doi: 10.1016/S0749-3797(03)00185-5.

- 10. Heath GW. The role of the public health sector in promoting physical activity: national, state, and local applications. J Phys Act Health. 2009;6:S159–S167. doi: 10.1123/jpah.6.s2.s159.

- 11. Brownson RC, Jones E. Bridging the gap: translating research into policy and practice. Prev Med. 2009;49:313–315. doi: 10.1016/j.ypmed.2009.06.008.

- 12. Dzewaltowski DA, Estabrooks PA, Glasgow RE. The future of physical activity behavior change research: what is needed to improve translation of research into health promotion practice? Exerc Sport Sci Rev. 2004;32:57–63. doi: 10.1097/00003677-200404000-00004.

- 13. Downey SM, Wages J, Jackson SF, Estabrooks PA. Adoption decisions and implementation of a community-based physical activity program: a mixed methods study. Health Promot Pract. 2012;13:175–182. doi: 10.1177/1524839910380155.

- 14. Rabin BA, Brownson RC, Haire-Joshu D, Kreuter MW, Weaver NL. A glossary for dissemination and implementation research in health. J Public Health Manag Pract. 2008;14:117–123. doi: 10.1097/01.PHH.0000311888.06252.bb.

- 15. Goode AD, Eakin EG. Dissemination of an evidence-based telephone-delivered lifestyle intervention: factors associated with successful implementation and evaluation. Transl Behav Med. 2013;3:351–356. doi: 10.1007/s13142-013-0219-y.

- 16. Glasgow RE, Bull SS, Gillette C, Klesges LM, et al. Behavior change intervention research in healthcare settings: a review of recent reports with emphasis on external validity. Am J Prev Med. 2002;23:62–69. doi: 10.1016/S0749-3797(02)00437-3.

- 17. Haggis C, Sims-Gould J, Winters M, Gutteridge K, et al. Sustained impact of community-based physical activity interventions: key elements for success. BMC Public Health. 2013;13:892. doi: 10.1186/1471-2458-13-892.

- 18. Rogers E. Diffusion of innovations. New York: Free Press; 1962.

- 19. Rogers E. Diffusion of innovations. New York: Free Press; 2003.

- 20. Moseley SF. Everett Rogers' diffusion of innovations theory: its utility and value in public health. J Health Commun. 2004;9(Suppl 1):149–151. doi: 10.1080/10810730490271601.

- 21. Ammerman A, Smith TW, Calancie L. Practice-based evidence in public health: improving reach, relevance, and results. Annu Rev Public Health. 2014;35:47–63. doi: 10.1146/annurev-publhealth-032013-182458.

- 22. Jagosh J, Macaulay AC, et al. Uncovering the benefits of participatory research: implications of a realist review for health research and practice. Milbank Q. 2012;90:311–346. doi: 10.1111/j.1468-0009.2012.00665.x.

- 23. Glasgow RE, Green LW, Taylor MV, Stange KC. An evidence integration triangle for aligning science with policy and practice. Am J Prev Med. 2012;42:646–654. doi: 10.1016/j.amepre.2012.02.016.

- 24. Green LW. Public health asks of systems science: to advance our evidence-based practice, can you help us get more practice-based evidence? Am J Public Health. 2006;3:406–9. doi: 10.2105/AJPH.2005.066035.

- 25. Kessler R, Glasgow RE. A proposal to speed translation of healthcare research into practice: dramatic change is needed. Am J Prev Med. 2011;40:637–644. doi: 10.1016/j.amepre.2011.02.023.

- 26. Estabrooks PA, Glasgow RE. Translating effective clinic-based physical activity interventions into practice. Am J Prev Med. 2006;31:S45–56. doi: 10.1016/j.amepre.2006.06.019.

- 27. Chambers DA, Azrin ST. Research and services partnerships: partnership: a fundamental component of dissemination and implementation research. Psychiatr Serv. 2013;64:509–511. doi: 10.1176/appi.ps.201300032.

- 28. Glasgow RE, Vinson C, Chambers D, Khoury MJ, et al. National Institutes of Health approaches to dissemination and implementation science: current and future directions. Am J Public Health. 2012;102:1274–1281. doi: 10.2105/AJPH.2012.300755.

- 29. Brownson RC, Colditz GA, Proctor EK. Dissemination and implementation research in health: translating science to practice. Oxford University Press; 2012.

- 30. Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103:e38–46. doi: 10.2105/AJPH.2013.301299.

- 31. Kessler RS, Purcell EP, Glasgow RE, Klesges LM, et al. What does it mean to "employ" the RE-AIM model? Eval Health Prof. 2013;36:44–66. doi: 10.1177/0163278712446066.

- 32. Glasgow RE. What does it mean to be pragmatic? Pragmatic methods, measures, and models to facilitate research translation. Health Educ Behav. 2013;40:257–265. doi: 10.1177/1090198113486805.

- 33. Curran GM, Bauer M, Mittman B, Pyne JM, et al. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50:217–226. doi: 10.1097/MLR.0b013e3182408812.

- 34. Wilcox S, Dowda M, Leviton LC, Bartlett-Prescott J, et al. Active for life: final results from the translation of two physical activity programs. Am J Prev Med. 2008;35:340–351. doi: 10.1016/j.amepre.2008.07.001.

- 35. Baruth M, Wilcox S. Effectiveness of two evidence-based programs in participants with arthritis: findings from the active for life initiative. Arthritis Care Res. 2011;63:1038–1047. doi: 10.1002/acr.20463.

- 36. Dunn AL, Buller DB, Dearing JW, Cutter G, et al. Adopting an evidence-based lifestyle physical activity program: dissemination study design and methods. Transl Behav Med. 2012;2:199–208. doi: 10.1007/s13142-011-0063-x.

- 37. Estabrooks PA, Bradshaw M, Dzewaltowski DA, Smith-Ray RL. Determining the impact of Walk Kansas: applying a team-building approach to community physical activity promotion. Ann Behav Med. 2008;36:1–12. doi: 10.1007/s12160-008-9040-0.

- 38. Rubin HJ, Rubin IS. Qualitative Interviewing: The Art of Hearing Data. Thousand Oaks, CA: Sage Publications; 2004.

- 39. Dedoose 4.5. Web application for managing, analyzing, and presenting qualitative and mixed method research data. Los Angeles, CA: SocioCultural Research Consultants, LLC; 2013.

- 40. Wilcox S, Parra-Medina D, Felton GM, Poston MB, et al. Adoption and implementation of physical activity and dietary counseling by community health center providers and nurses. J Phys Act Health. 2010;7:602–612. doi: 10.1123/jpah.7.5.602.

- 41. Harden S, Johnson S, Almeida F, Estabrooks P. Improving physical activity program adoption using integrated research-practice partnerships: an effectiveness-implementation hybrid trial. Trans Behav Med. Under Review.

- 42. Brownson RC, Ballew P, Dieffenderfer B, Haire-Joshu D, et al. Evidence-based interventions to promote physical activity: what contributes to dissemination by state health departments. Am J Prev Med. 2007;33:S66–73. doi: 10.1016/j.amepre.2007.03.011.

- 43. Ory MG, Towne Jr SD, Stevens AB, Park CH, et al. Implementing and disseminating exercise programs for older adult populations. Exercise for Aging Adults. 2015:139–150.

- 44. Naylor P-J, Nettlefold L, Race D, Hoy C, et al. Implementation of school based physical activity interventions: A systematic review. Prev Med. 2015;72:95–115. doi: 10.1016/j.ypmed.2014.12.034.

- 45. Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:327–350. doi: 10.1007/s10464-008-9165-0.

- 46. Wandersman A, Chien VH, Katz J. Toward an evidence-based system for innovation support for implementing innovations with quality: tools, training, technical assistance, and quality assurance/quality improvement. Am J Community Psychol. 2012;50:445–459. doi: 10.1007/s10464-012-9509-7.

- 47. Wandersman A. Four keys to success (theory, implementation, evaluation, and resource/system support): high hopes and challenges in participation. Am J Community Psychol. 2009;43:3–21. doi: 10.1007/s10464-008-9212-x.

- 48. Harden S, Gaglio B, Shoup J, Kinney K, et al. Fidelity to and comparative results across behavioral interventions evaluated through the RE-AIM framework: A systematic review. Systematic Reviews. 2015.

- 49. Green LW, Glasgow RE, Atkins D, Stange K. Making evidence from research more relevant, useful, and actionable in policy, program planning, and practice slips "twixt cup and lip". Am J Prev Med. 2009;37:S187–191. doi: 10.1016/j.amepre.2009.08.017.

- 50. Tennant B, Stellefson M, Dodd V, Chaney B, et al. eHealth literacy and Web 2.0 health information seeking behaviors among baby boomers and older adults. J Med Internet Res. 2015;17:e70. doi: 10.2196/jmir.3992.

- 51. Estabrooks PA, Smith-Ray RL, Dzewaltowski DA, Dowdy D, et al. Sustainability of evidence-based community-based physical activity programs for older adults: lessons from Active for Life. Transl Behav Med. 2011;1:208–215. doi: 10.1007/s13142-011-0039-x.

- 52. Griffin SF, Wilcox S, Ory MG, Lattimore D, et al. Results from the Active for Life process evaluation: program delivery fidelity and adaptations. Health Educ Res. 2010;25:325–342. doi: 10.1093/her/cyp017.

- 53. Ory MG, Mier N, Sharkey JR, Anderson LA. Translating science into public health practice: lessons from physical activity interventions. Alzheimers Dement. 2007;3:S52–57. doi: 10.1016/j.jalz.2007.01.004.

- 54. Peek CJ, Glasgow RE, Stange KC, Klesges LM, et al. The 5 R’s: an emerging bold standard for conducting relevant research in a changing world. Ann Family Med. 2014;12:447–455. doi: 10.1370/afm.1688.

- 55. Evenson KR, Brownson RC, Satinsky SB, Eyler AA, et al. The U.S. National Physical Activity Plan: dissemination and use by public health practitioners. Am J Prev Med. 2013;44:431–438. doi: 10.1016/j.amepre.2013.02.002.
