Abstract

As molecular dynamics simulations access increasingly long time scales, complementary advances in the analysis of biomolecular time-series data are necessary. Markov state models offer a powerful framework for this analysis by describing a system’s states and the transitions between them. A recently established variational theorem for Markov state models now enables modelers to systematically determine the best way to describe a system’s dynamics. In the context of the variational theorem, we analyze ultra-long folding simulations for a canonical set of twelve proteins [K. Lindorff-Larsen et al., Science 334, 517 (2011)] by creating and evaluating many types of Markov state models. We present a set of guidelines for constructing Markov state models of protein folding; namely, we recommend the use of cross-validation and a kinetically motivated dimensionality reduction step for improved descriptions of folding dynamics. We also warn that precise kinetics predictions rely on the features chosen to describe the system and pose the description of kinetic uncertainty across ensembles of models as an open issue.

I. INTRODUCTION

Understanding how proteins fold into their native three-dimensional structures is a long-standing problem that has inspired the development of several experimental, theoretical, and computational methods.1,2 Molecular dynamics (MD) is one such technique in which a protein’s motions are simulated in atomic detail.3,4 Due to a multitude of computational and algorithmic advances,5–8 millisecond time scale MD simulations are now feasible, enabling the investigation of protein folding in silico.9–24 The analysis of the enormous quantities of data generated by these simulations is currently a major challenge.

Markov state models (MSMs) are one class of methods that, parametrized from MD simulations, can provide interpretable and predictive models of protein folding.12–14,16,19–21,24–38 Building a MSM involves decomposing the phase space sampled by one or more MD trajectories into a set of discrete states and estimating the (conditional) transition probabilities between each pair of states. However, there are many ways to perform the state decomposition, and the choice of MSM building protocol can introduce subjectivity into the analysis.31,39,40 Recently, a variational theorem for evaluating MSMs has been introduced, which enables the modeler to select the best MSM for a system based on its distance from a theoretical upper limit.41,42

In this work, we reanalyze twelve ultra-long protein folding MD datasets.5,18,34,43 For each system, we create MSMs with many protocol choices and utilize the variational theorem introduced by Noé and Nüske41 as a metric for cross-validation44 to determine how different modeling choices affect the quality of the MSM as defined by its ability to detect and represent the systems’ long-time scale dynamical processes. Instead of focusing on a single specific system, we have directed our analysis toward elucidating general trends in MSM construction for protein folding datasets. Due to the diversity of proteins analyzed,18 we expect that our results will be extensible to other protein folding simulation data.

To this end, we first present a general overview of MSM construction that will inform experimental researchers about the MSM building pipeline as well as update method developers on our current recommendations for “best practices.” Next, we provide an abbreviated theoretical discussion that establishes the mathematical tools necessary to state the variational bound, evaluate it under cross-validation, and understand how it relates to the kinetic time scales predicted by a MSM. We then discuss four key recommendations that emerge from our variational analysis of protein folding MSMs: first, that cross-validation is necessary to avoid overfit models; second, that the incorporation of a kinetically motivated, optional step in MSM construction consistently produces better models; third, that the assignment of conformations in the MD dataset to system states is affected by the dimensionality of the system when states are assigned; and fourth, that the kinetics predicted by MSMs are highly sensitive to which features are chosen to represent the system.

II. MODELING CHOICES

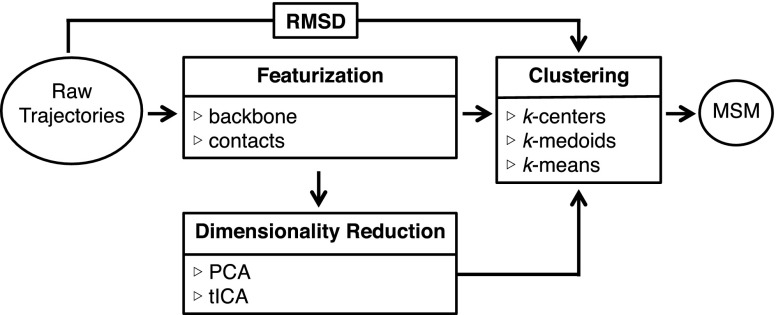

Constructing a MSM (i.e., generating state populations and pairwise transition probabilities) necessitates a state decomposition where each trajectory frame is assigned to a microstate. The state populations and pairwise transition probabilities provide the modeler with thermodynamic and kinetic information, respectively. When building a MSM from MD simulation data, it is sufficient to build the model directly from the raw MD output (atomic coordinates) but is also common to transform the data from atomic coordinates to an internal coordinate system (this transformation is often called “featurization” or “feature extraction”). Optionally, the dimensionality of these internal coordinates may be further reduced through a variance- or kinetically motivated transformation that precedes the requisite state decomposition (Fig. 1). Here, we discuss each step of the MSM building process in order to enumerate some of the options available to modelers.

FIG. 1.

The flow chart shows various options for the MSM construction starting from raw trajectory data. The state decomposition occurs in the clustering step, which is a requisite step in every MSM building protocol. The options presented in each box are intended to be a representative but not exhaustive set.

A. Featurization

The raw output of a MD trajectory consists of a time-series of frames, each of which contains the three Cartesian coordinates of every atom in the system. Optionally, a trajectory may be transformed (featurized) from its Cartesian coordinates into a system of internal coordinates. Many recent studies have constructed MSMs by initially featurizing Cartesian coordinates into a backbone-based21,35,38,45,46 or contact-based45,47–49 internal coordinate system such as φ and ψ dihedral angles or inter-residue contact distances, respectively. Internal coordinate systems may also include combinations of different types of features.
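As a rough illustration of dihedral-based featurization, the sketch below computes the dihedral angle defined by four points and applies it to every set of four consecutive α-carbon positions. The function names `dihedral` and `alpha_angles` and the five-atom trace are hypothetical and chosen only for this example; the sign convention is simply the one produced by the code, and this is not the featurizer used in this work.

```python
import math


def dihedral(p0, p1, p2, p3):
    """Return the signed dihedral angle (radians) defined by four 3D points."""
    def sub(a, b):
        return [a[i] - b[i] for i in range(3)]

    def dot(a, b):
        return sum(a[i] * b[i] for i in range(3))

    def cross(a, b):
        return [a[1] * b[2] - a[2] * b[1],
                a[2] * b[0] - a[0] * b[2],
                a[0] * b[1] - a[1] * b[0]]

    b0, b1, b2 = sub(p1, p0), sub(p2, p1), sub(p3, p2)
    n1 = cross(b0, b1)  # normal to the plane through points 0, 1, 2
    n2 = cross(b1, b2)  # normal to the plane through points 1, 2, 3
    b1_len = math.sqrt(dot(b1, b1))
    m1 = cross(n1, [c / b1_len for c in b1])
    return math.atan2(dot(m1, n2), dot(n1, n2))


def alpha_angles(ca_coords):
    """Dihedral for every set of four consecutive alpha-carbon positions."""
    return [dihedral(*ca_coords[i:i + 4]) for i in range(len(ca_coords) - 3)]


# Hypothetical five-atom alpha-carbon trace: yields two dihedral features.
trace = [(1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0),
         (-1.0, 0.0, 1.0), (-1.0, 1.0, 1.0)]
features = alpha_angles(trace)
```

A chain of N α-carbons yields N − 3 such angles per frame. In practice a library routine (for example, the dihedral functions in MDTraj) would normally replace this hand-written version.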

1. A note on utilization of the root-mean-square deviation (RMSD) distance metric

Another common strategy is to proceed from Cartesian coordinates directly to state decomposition, which is achieved via clustering (see Sec. II C). In this case, the similarity of structures is judged by their root-mean-square deviation (RMSD) of atomic distances.14,19,20,30,33,34,36,50–53 While this process does not explicitly extract “features,” it can be interpreted as a replacement for explicit featurization.

B. Dimensionality reduction

Once the trajectories have been featurized into an internal coordinate system, they can be immediately clustered into microstates for state decomposition (see Sec. II C) or pre-processed by further reducing the dimensionality of the dataset via another transformation. One type of dimensionality reduction commonly used in statistics is principal component analysis (PCA), which creates linear combinations from a dataset that account for variance in the data. A similar method, time-structure based independent component analysis (tICA), has also been recently incorporated into MSM analyses.35,54,55 In contrast to PCA, tICA describes the slowest degrees of freedom in a dataset by finding linear combinations of features that maximize autocorrelation time. Both PCA39,47,56–62 and tICA21,35,38,39,46,49,55,63,64 have been used in the analysis of protein folding and conformational change. PCA or tICA reduces the dimensionality of each frame from its number of features to a user-specified number of components (either PCs or tICs), where each component is a linear combination of the features and the weight of each feature corresponds to its relevance to that component.
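The difference between the two objectives can be seen on a toy example. The sketch below does not implement PCA or tICA (both solve eigenvalue problems over linear combinations of features); it only compares the quantity each method favors, variance versus time-lagged autocorrelation, for two synthetic one-dimensional features. The signals `fast` and `slow` are invented for illustration.

```python
def mean(xs):
    return sum(xs) / len(xs)


def variance(xs):
    m = mean(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)


def lagged_autocorrelation(xs, lag):
    """Normalized autocorrelation of a single feature at the given lag."""
    m = mean(xs)
    centered = [x - m for x in xs]
    n_pairs = len(xs) - lag
    cov = sum(centered[t] * centered[t + lag] for t in range(n_pairs)) / n_pairs
    return cov / variance(xs)


# Synthetic features: a large-amplitude signal that flips every frame and a
# small-amplitude signal that switches only every 50 frames.
fast = [3.0 if t % 2 == 0 else -3.0 for t in range(200)]
slow = [1.0 if (t // 50) % 2 == 0 else -1.0 for t in range(200)]

var_fast, var_slow = variance(fast), variance(slow)
ac_fast = lagged_autocorrelation(fast, 1)
ac_slow = lagged_autocorrelation(slow, 1)
```

Here the fast feature has the larger variance, so a variance-based criterion would rank it first, whereas the slow feature has the larger lagged autocorrelation, which is the property a kinetically motivated criterion rewards.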

C. Clustering

The clustering step is where the requisite state decomposition occurs. In this step, every frame in the time-series is assigned to a microstate. Clustering into microstates can be performed directly from Cartesian coordinates using the RMSD distance metric or from explicitly featurized trajectories or the low-dimensional output of PCA or tICA using the Euclidean distance. The clustering step reduces the representation of each frame in the time-series to a single integer (the cluster assignment) and it is from this representation that the MSM is constructed. Commonly used clustering algorithms include k-centers,16,21,30,35,36,38,39,65 k-medoids,53 and k-means.44,66,67 More sophisticated methods, such as Ward’s method,34,68,69 have also been used.
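As a minimal sketch of one of these algorithms, the code below implements the greedy farthest-point form of k-centers with a Euclidean distance. Starting from the first frame is an arbitrary assumption of this example, and the two-dimensional `frames` are invented; production implementations differ in initialization and distance metric.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def k_centers(frames, k, dist=euclidean):
    """Greedy farthest-point k-centers.

    Returns (center_indices, assignments), where assignments[i] is the
    index into center_indices of the center closest to frame i.
    """
    centers = [0]  # assumption: the first frame seeds the first center
    d_min = [dist(f, frames[0]) for f in frames]
    assignments = [0] * len(frames)
    while len(centers) < k:
        # The next center is the frame farthest from all current centers.
        new = max(range(len(frames)), key=lambda i: d_min[i])
        centers.append(new)
        for i, f in enumerate(frames):
            d = dist(f, frames[new])
            if d < d_min[i]:
                d_min[i] = d
                assignments[i] = len(centers) - 1
    return centers, assignments


# Invented 2D "frames": two tight pairs and one isolated point.
frames = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (10.0, 0.0)]
centers, assignments = k_centers(frames, 3)
```

Each frame is reduced to a single integer label, which is the representation from which the MSM is then built. Note that the isolated point is chosen as the second center, which illustrates why farthest-point selection tends to pick outlying conformations.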

D. MSM construction

The MSM itself is generated from the state decomposition produced by clustering; i.e., the state populations and pairwise transition probabilities are determined. It is standard to find the maximum likelihood estimate (MLE) of the transition matrix under the constraint that the dynamics are reversible.32 The modeler must also select a model lag time. The Markovian assumption asserts that the system is memoryless at the chosen lag time, which means that the pathway by which the system enters any state does not affect the transition probabilities.
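The counting step can be sketched as follows. This example counts transitions at a chosen lag time and then symmetrizes the counts before row-normalizing, which is a simple way to obtain a reversible transition matrix. It is deliberately not the reversible maximum likelihood estimator referred to above, and the single short trajectory is invented.

```python
def count_matrix(assignments, n_states, lag):
    """Count transitions i -> j separated by `lag` frames in each trajectory."""
    counts = [[0] * n_states for _ in range(n_states)]
    for traj in assignments:
        for t in range(len(traj) - lag):
            counts[traj[t]][traj[t + lag]] += 1
    return counts


def symmetrize(counts):
    n = len(counts)
    return [[(counts[i][j] + counts[j][i]) / 2 for j in range(n)] for i in range(n)]


def transition_matrix(counts):
    """Row-normalize symmetrized counts (simple reversible estimator, not the MLE)."""
    rows = []
    for row in symmetrize(counts):
        total = sum(row)
        rows.append([c / total if total > 0 else 0.0 for c in row])
    return rows


def stationary_populations(counts):
    """State populations implied by the symmetrized counts."""
    row_sums = [sum(row) for row in symmetrize(counts)]
    total = sum(row_sums)
    return [r / total for r in row_sums]


# One invented trajectory of cluster labels, lag time of one frame.
labels = [[0, 0, 1, 1, 0, 0, 1]]
counts = count_matrix(labels, n_states=2, lag=1)
T = transition_matrix(counts)
pi = stationary_populations(counts)
```

The populations `pi` carry the thermodynamic information and the matrix `T` the kinetic information. Because the counts were symmetrized, `pi[i] * T[i][j]` equals `pi[j] * T[j][i]`, i.e., detailed balance holds by construction.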

III. THEORY BACKGROUND

It is clear from Sec. II that there are many modeling choices involved in the MSM creation. Formally, the MSM building process offers a variety of ways to create a state decomposition (see Fig. 1). Traditionally, MSM building protocols have been determined heuristically and without an objective method to compare different models of the same system. In this section, we briefly overview the theory necessary to state the variational principle that enables the comparison of MSMs.41,42,44 This variational principle provides the modeler with a systematic way to choose which of many modeling protocols most closely approximates the time scales on which the underlying dynamical processes of the system occur.

A. Propagator

We first present the essentials of continuous-time Markov processes.70 We assert that our process, $X_t$, is homogeneous in time, ergodic, and reversible with respect to an equilibrium distribution, $\mu(x)$, which takes the continuous phase space $\Omega$ to $\mathbb{R}^{+}$.

We are interested in the probability of transitioning from $x \in \Omega$ to $y \in \Omega$ after a duration of time $\tau$, on the condition that the system is already at x. This is given by

$$p(x, y; \tau)\, dy = \mathbb{P}\left[X_{t+\tau} \in B_{\epsilon}(y) \mid X_t = x\right], \tag{1}$$

where $B_{\epsilon}(y)$ is the open $\epsilon$-ball centered at y with infinitesimal measure dy. Moreover, we are interested in the time-evolution of the entire system from time t to time $t + \tau$, which can be obtained by integrating over $p_t(x)$ for all $x \in \Omega$,

$$p_{t+\tau}(y) = \int_{\Omega} dx\, p(x, y; \tau)\, p_t(x) = \mathcal{P}(\tau) \circ p_t(y). \tag{2}$$

The propagator, $\mathcal{P}(\tau)$, admits a decomposition into a complete set of eigenfunctions and eigenvalues

$$\mathcal{P}(\tau) \circ \phi_i = \lambda_i \phi_i, \tag{3}$$

where the eigenvalues $\lambda_i$ are real and indexed in decreasing order. The first eigenfunction $\phi_1$ corresponds to the equilibrium distribution and has a unique largest eigenvalue $\lambda_1 = 1$. All subsequent eigenvalues lie within the unit interval, $|\lambda_{i>1}| < 1$, and their corresponding eigenfunctions represent the processes within the time-series.

The time scale of the ith process is given by $t_i = -\tau / \ln \lambda_i$. The propagator is often further approximated by retaining the m slowest time scales of the system (or the m largest eigenvalues). It can be shown that this is the closest possible rank-m approximation to the propagator.44
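To make the link between eigenvalues and time scales concrete, the sketch below uses the standard implied time scale relation $t_i = -\tau / \ln \lambda_i$ for a two-state transition matrix, whose eigenvalues are known in closed form. The transition probabilities and the 2 ns lag time are hypothetical values chosen only for illustration.

```python
import math


def implied_timescale(eigenvalue, lag_time):
    """Implied time scale t = -lag_time / ln(eigenvalue), for 0 < eigenvalue < 1."""
    if not 0.0 < eigenvalue < 1.0:
        raise ValueError("eigenvalue must lie strictly between 0 and 1")
    return -lag_time / math.log(eigenvalue)


def two_state_eigenvalues(p_ab, p_ba):
    """Eigenvalues of the matrix [[1 - p_ab, p_ab], [p_ba, 1 - p_ba]]."""
    return 1.0, 1.0 - p_ab - p_ba


# Hypothetical two-state model with a lag time of 2 ns.
lag_ns = 2.0
lambda_1, lambda_2 = two_state_eigenvalues(p_ab=0.01, p_ba=0.03)
slowest_timescale_ns = implied_timescale(lambda_2, lag_ns)
```

The stationary eigenvalue of 1 has no finite time scale, and the closer a remaining eigenvalue is to 1, the slower the process it describes. This is why a larger sum of leading eigenvalues corresponds to slower estimated dynamics.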

B. Variational principle

The eigenfunctions that satisfy Eq. (3) can be interpreted as the m slowest dynamical processes from a collection of time-series (e.g., MD) data. However, we do not know the true eigenfunctions and must approximate them using a trial set of ansatz eigenfunctions. The variational theorem established by Noé and Nüske41 states that the sum of the eigenvalues corresponding to the ansatz eigenfunctions is bounded from above by the sum of the true eigenvalues, i.e.,

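$$\mathrm{GMRQ} \equiv \sum_{i=1}^{m} \hat{\lambda}_i \leq \sum_{i=1}^{m} \lambda_i, \tag{4}$$

where the GMRQ stands for generalized matrix Rayleigh quotient, which is the form of the approximator when the derivation is performed in the style of Ref. 44. The eigenvalues $\hat{\lambda}_i$ are generated from the ansatz eigenfunctions in the same way as the true eigenvalues $\lambda_i$ in Eq. (3).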

For our purposes, we highlight that there exists a theoretical upper bound on the GMRQ, which means that a dynamical process cannot be measured to occur on a slower time scale than its true time scale. This enables us to select the best set of trial ansatz eigenfunctions by choosing the set that yields the maximum GMRQ. The use of a variational approach to choose MSM construction protocol is not new71 and is similar to the variational selection of the ground-state wavefunction that yields the minimum energy in quantum mechanics.

Each set of ansatz eigenfunctions is a guess at how to represent the important degrees of freedom in the system. In practice, it corresponds to the set of features (Sec. II A) or PCs/tICs (Sec. II B) from which the state decomposition (i.e., clustering, Sec. II C) is performed. Thus, in the context of the variational bound, we denote the best MSM as the one that is constructed from the optimal set of ansatz eigenfunctions.

C. Cross-validation

To create a MSM that describes the kinetics of a system from raw MD data, the requisite state decomposition is achieved by one or more dimensionality-reducing steps (recall Sec. II), each of which may involve tunable parameters. We will refer to the transformation from raw MD data to states as our modeling “protocol.”72 A set of trial ansatz eigenfunctions is a function of both the input data and the protocol. We are interested in a procedure that compares how closely different trial sets of ansatz eigenfunctions approximate the true eigenfunctions for the same data. We have already shown that the GMRQ is a suitable metric for this purpose: to compare across different protocols, we construct MSMs using each protocol and choose the protocol that yields the largest sum of eigenvalues (Eq. (4)). The GMRQ, or sum of eigenvalues, thus serves as a model’s “score.”

However, our dataset is finite and thus possesses statistical noise in addition to information about the true dynamics. In order to determine the best protocol in the context of only the system’s dynamics, we must employ cross-validation to avoid overfitting to the noise in the data. This is achieved by splitting the dataset into a training set and a test set, constructing the MSM for the training set, but then evaluating its performance (i.e., calculating the GMRQ) on the test set. This process ensures that the protocol performance is evaluated only on dynamics that are present in both the training and test sets, which are expected to correspond to the system’s true dynamics when they have been sufficiently sampled. For conciseness, we will thus refer to the MSM predicting a system’s slowest time scales under cross-validation as the “best,” or optimal, MSM for that search space.
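The mechanics of this procedure can be sketched with a generic harness. The example below repeatedly splits a list of trajectories at random into training and test sets, fits on the training set, and scores on both. The shuffle-split scheme, the function names, and the toy `fit_transitions` and `fraction_explained` functions are assumptions made for illustration; in particular, the toy score is a placeholder and not the GMRQ.

```python
import random


def shuffle_split(n_items, n_iter, test_fraction, rng):
    """Yield (train_indices, test_indices) for n_iter random splits."""
    n_test = max(1, round(n_items * test_fraction))
    for _ in range(n_iter):
        idx = list(range(n_items))
        rng.shuffle(idx)
        yield idx[n_test:], idx[:n_test]


def cross_validate(trajectories, fit, score, n_iter=5, test_fraction=0.5, seed=0):
    """Return (mean training score, mean test score) over random splits."""
    rng = random.Random(seed)
    train_scores, test_scores = [], []
    for train_idx, test_idx in shuffle_split(len(trajectories), n_iter, test_fraction, rng):
        train = [trajectories[i] for i in train_idx]
        test = [trajectories[i] for i in test_idx]
        model = fit(train)  # the model only ever sees the training set
        train_scores.append(score(model, train))
        test_scores.append(score(model, test))
    return sum(train_scores) / n_iter, sum(test_scores) / n_iter


# Toy placeholder model: memorize every observed transition.
def fit_transitions(trajs):
    return {(a, b) for traj in trajs for a, b in zip(traj, traj[1:])}


# Toy placeholder score: fraction of transitions the model has seen before.
def fraction_explained(model, trajs):
    pairs = [(a, b) for traj in trajs for a, b in zip(traj, traj[1:])]
    return sum(p in model for p in pairs) / len(pairs)


toy_trajs = [[0, 1, 0, 1], [0, 0, 1, 1], [1, 2, 1, 2], [2, 2, 0, 0]]
train_mean, test_mean = cross_validate(toy_trajs, fit_transitions, fraction_explained)
```

A model that memorizes its training data always scores perfectly on that data, so only the test score says anything about how well it describes unseen trajectories.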

It is important to note that selecting the best MSM under cross-validation addresses different modeling challenges than validating the self-consistency of a single MSM, e.g., by assessing adherence to the Chapman-Kolmogorov property.13,32,67 The comparison of models using cross-validation does not provide information about whether any single model is statistically consistent with the data (although we would expect inconsistent models to perform relatively poorly), whereas self-consistent validation does not evaluate how well a model has captured the system’s slow dynamics. Since our goal is to understand protein folding dynamics using MSMs, determining the most useful model, i.e., the model that best describes important collective degrees of freedom, requires the comparison of candidate models under cross-validation. For a representative self-consistent validation analysis, see the supplementary material, Figs. S3-S6.

IV. METHODS

The twelve MD datasets were generated by Lindorff-Larsen et al.18 via MD simulation in explicit solvent near the melting temperature. The proteins range from 10 to 80 amino acids in length. All datasets used contain a minimum of 100 μs of sampling and feature at least 10 instances each of folding and unfolding. For the analysis, we retain trajectory frames at every 2 ns. Unless otherwise specified, MSMs are created by first selecting a featurization scheme, dimensionality reduction option, and clustering algorithm (see Sec. II). Then, internal parameters relevant to those selections (e.g., the number of clusters) are optimized by generating 200 different models where internal parameters are determined by a random search (see the supplementary material, Table S2). The parameters of the highest-scoring model based on the mean of five cross-validation iterations are used for the analysis of trends in featurization, dimensionality reduction, and clustering choices.
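The parameter search described above can be sketched as a simple loop: draw parameters at random, average the score over the cross-validation iterations, and keep the best. The sampler `sample_n_clusters` and the scoring function `toy_evaluate` below are hypothetical stand-ins. A real evaluation would build an MSM and compute its cross-validated GMRQ, and the location of the toy score's maximum is arbitrary.

```python
import random


def random_search(sample_params, evaluate, n_models=200, n_cv=5, seed=0):
    """Return (best mean score, best params) over randomly sampled parameter sets."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_models):
        params = sample_params(rng)
        scores = [evaluate(params, fold) for fold in range(n_cv)]
        mean_score = sum(scores) / n_cv
        if best is None or mean_score > best[0]:
            best = (mean_score, params)
    return best


# Hypothetical sampler: number of clusters drawn uniformly from 10 to 1000.
def sample_n_clusters(rng):
    return {"n_clusters": rng.randint(10, 1000)}


# Hypothetical stand-in for a cross-validated score; the peak is arbitrary.
def toy_evaluate(params, fold):
    return 1.8 - abs(params["n_clusters"] - 400) / 2000.0


best_score, best_params = random_search(sample_n_clusters, toy_evaluate)
```

Random sampling is used here rather than a grid because it extends naturally when several parameters, such as the number of components and the number of clusters, are searched together.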

V. RESULTS

In this section, we discuss results obtained from analyzing twelve ultra-long protein folding datasets18 in the context of choices in modeling protocol. We have chosen to highlight four key results for using MSMs to model protein folding that emerge from optimal parameter selection under cross-validation. Protocol choices corresponding to the best models as well as comparisons of different featurization, dimensionality reduction, and clustering choices for each system are reported in the supplementary material (Table S3 and Fig. S15ff, respectively). We emphasize, however, that there is no “magic bullet”: we deliberately do not recommend specific protocol choices but rather assert that the best practice for constructing MSMs is to determine the modeling protocol by systematically searching hyperparameter space (i.e., modeling choices) and evaluating the GMRQ of each model. The following discussion is thus designed to be extensible to MSM construction for protein folding in general.

A. A variational approach necessitates cross-validation

We established in Sec. III B that the time scale of a dynamical process cannot be estimated to be slower than its true time scale.41 However, it is a well-known result that a variational bound on a system’s eigenvalues only holds in the limit of complete data, i.e., in the absence of statistical noise.41,42,73 In their analysis of octa-alanine dynamics, McGibbon and Pande44 used the GMRQ to show that with incomplete data, the models predicting the slowest time scales are likely describing statistical noise instead of the true dynamics, especially for models with a large number of states. Here, we extend this result to ultra-long protein folding trajectories. As an example, we created MSMs for λ-repressor (Protein Data Bank (PDB) ID: 1lmb), an 80-residue, 5-helix bundle analyzed by Lindorff-Larsen et al.18 This trajectory has previously been analyzed with MSMs by Bowman, Voelz, and Pande,19 who employed the k-centers clustering algorithm65 to construct a model with 30 000 microstates.

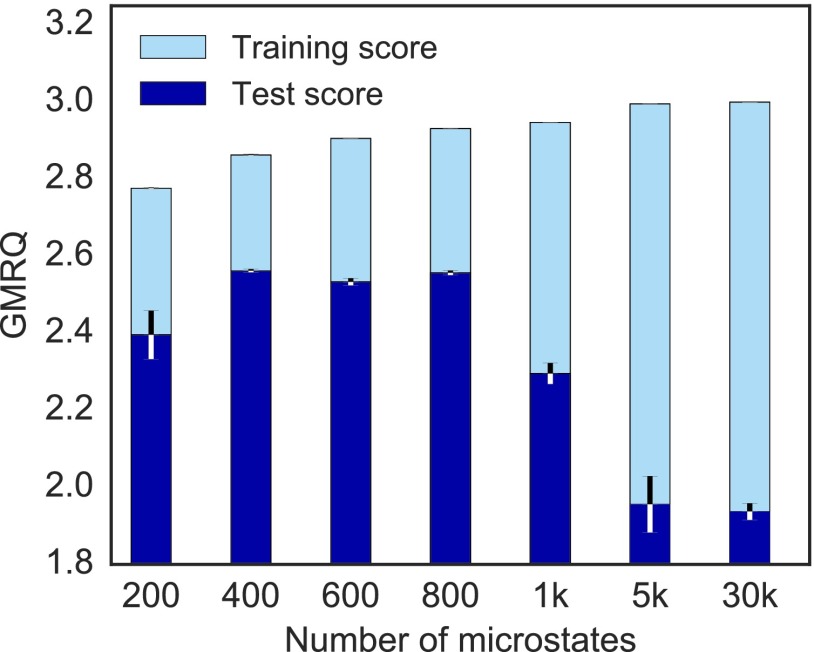

To create a new MSM for this system, we used the GMRQ under cross-validation (see Sec. III and the supplementary material, Table S2) to determine the optimal number of clusters for the dataset by creating 300 MSMs for randomly chosen numbers of microstates between 10 and 1000 using k-centers clustering with the RMSD distance metric. MSMs with 5000 and 30 000 microstates were also evaluated. Fig. 2 shows the average test and training GMRQ scores for models containing 200 to 30 000 microstates. Scores for the training datasets increase as the number of microstates increases, indicating the presence of increasingly slow processes. However, scores for the test datasets, which evaluate the ability of the model to describe data on which it was not fit, do not increase with the number of microstates. The best cross-validated model contains 400 microstates, while models containing 1000 or more microstates do not perform well when evaluated using unseen data. This is likely due to the fact that the slow transitions discovered while fitting the model on the training dataset are not present in the test dataset, indicating insufficient sampling of those processes. In some cases, the modeler may want to investigate these processes and sample them further. However, for a larger number of microstates, it becomes more difficult to sample all processes to equilibrium. Cross-validation therefore enables the modeler to choose a number of microstates that best partitions state space with respect to the slowest well-sampled processes, i.e., the processes present in both training and test sets.

FIG. 2.

GMRQ scores for two-time scale MSMs generated for λ-repressor (PDB ID: 1lmb) containing varying numbers of microstates show that cross-validation is necessary to describe a system’s underlying dynamics. All models were constructed using k-centers clustering with the RMSD distance metric. The error bars signify the score standard deviation generated from five cross-validation iterations (the error bars on the training scores are negligibly small). The discrepancy between training and test scores (light blue and dark blue, respectively) as microstate number increases is likely due to overfitting during training; thus these models exhibit poorer performance on data that were hidden from the fitting process.

B. tICA systematically produces better models

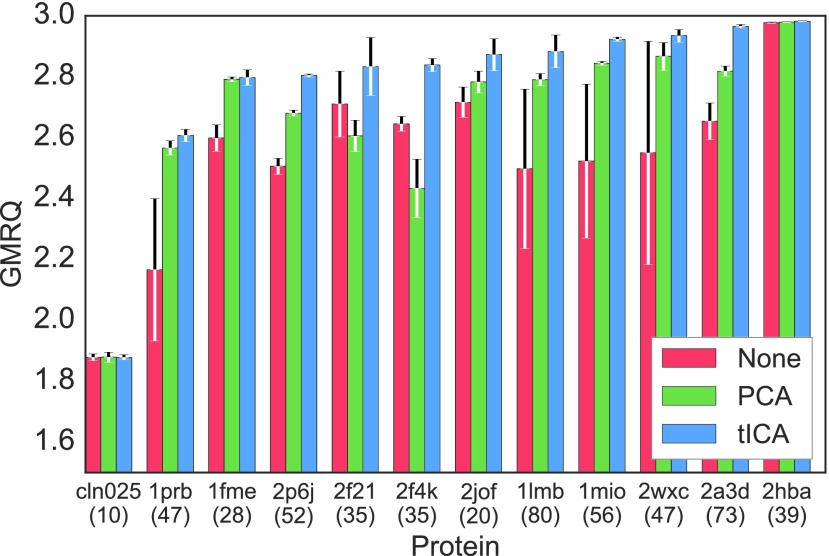

The PCA and tICA algorithms (see Sec. II B) offer an additional, optional dimensionality reduction before clustering is performed. PCA finds orthogonal degrees of freedom that account for variance in the data, while tICA identifies degrees of freedom that explain slow decorrelation.35,49,54,55 The incorporation of tICA into MSM construction was motivated by the desire to ensure, instead of assume, the retention of important kinetic information.35 Here, we show that clustering from tICs consistently produces the best models when compared to clustering from PCs or directly from features.

As an example, each of the twelve ultra-long protein trajectories was featurized using the dihedrals defined by every set of four consecutive α-carbons (α-angles74). tICA or PCA was optionally performed on the α-angle features, further reducing the dataset dimensionality up to 10 tICs or PCs. The mini-batch k-means clustering algorithm66 was then used to cluster from tICs, PCs, or α-angle features into states. For 11 of the 12 proteins, the best tICA model outperformed both the best PCA model and the best α-angles model. The one exception was chignolin, a 10-residue peptide that forms a β-hairpin in water,75 for which the scores differed only in the fourth decimal place (two orders of magnitude smaller than the score standard deviations). Fig. 3 shows the scores for the best tICA, PCA, and α-angle models for each protein. Alternate choices of featurization and clustering may be used to demonstrate the same result; see the supplementary material, Fig. S15ff.

FIG. 3.

GMRQ scores for two-time scale MSMs generated for twelve ultra-long protein folding datasets18 where additional dimensionality reduction has been omitted (red), performed with PCA (green) or performed with tICA (blue) demonstrate that tICA systematically improves models. All MSMs were made using α-angle featurization and mini-batch k-means clustering. In all cases, the best tICA model performs better than or equivalently to both the best PCA model and the best model created directly from α-angle features. The error bars signify the score standard deviation generated from five cross-validation iterations. The number of amino acids of each protein is given below its PDB ID.

C. Different clustering algorithms perform similarly well on tICA data

Clustering trajectory frames into microstates produces the state decomposition that is essential for MSM construction. Various clustering algorithms have been used for MSMs of protein folding and conformational change (see Sec. II C), and the relative performance of different clustering algorithms has been compared.32,76,77 McGibbon and Pande44 used the GMRQ under cross-validation to show that for octa-alanine, k-means produced the best models while k-centers performed poorly for several different featurization choices. The authors postulated that the poor performance of the k-centers algorithm is related to its tendency to choose outlier conformations as cluster centers. Schwantes and Pande35 compared MSM time scales predicted using k-centers clustering with time scales from a hybrid k-medoids method,77 and found them relatively unchanged when tICA was used to reduce the dimensionality of the trajectories before clustering. They suggested that problems with the k-centers algorithm are less influential when clustering is performed on low-dimensional data.

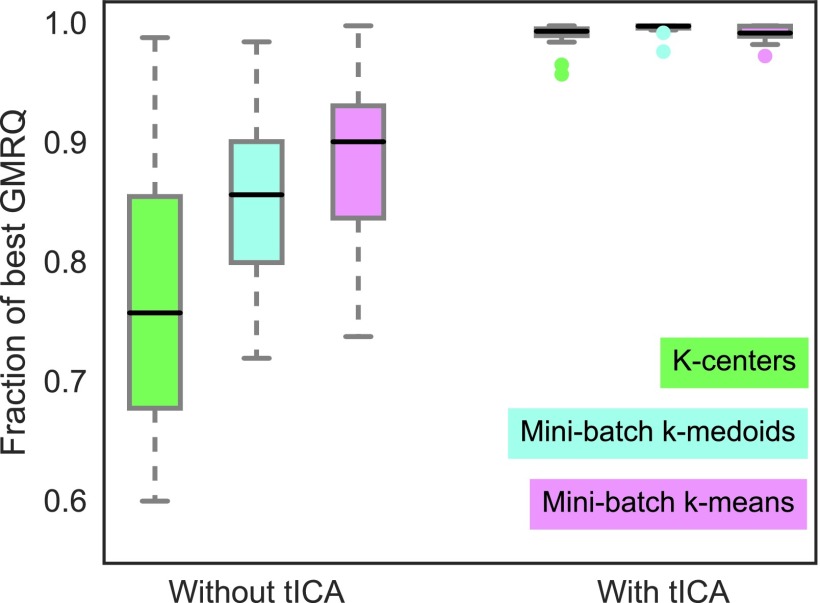

In Fig. 4, we compare mini-batch k-means, mini-batch k-medoids, and k-centers using the cross-validated GMRQs of the resulting MSMs. For this example, all twelve folding trajectories were featurized using contact distances between α-carbons, but other featurization choices will produce an equivalent result (see the supplementary material, Fig. S15ff). For each of the three clustering algorithms, two types of models were created: first directly from the contact distance features and second from tICA data generated from the same features. In order to integrate across the twelve systems, each of which has its own system-dependent upper bound on the GMRQ, we have transformed each model’s score into its ratio with the score of the best model produced for that system. We show that when protein folding trajectories are clustered from high-dimensional feature space, k-means clustering produces the best models and k-centers produces the worst models. However, when the trajectories are clustered from the lower-dimensional tICA data (chosen to be $\mathbb{R}^{10}$ or lower), k-means, k-medoids, and k-centers clustering all produce similar models. Importantly, the median score of the tICA models consistently exceeds the median score of the models clustered directly from their features. Thus we find that when clustering is preceded by tICA, which is typically chosen to reduce the dimensionality of the trajectories by one or two orders of magnitude, the clustering algorithms yield models that are not only similarly well-performing but also categorically superior to models created without tICA.
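The score normalization used to aggregate across systems can be sketched in a few lines. The system names, protocol names, and scores below are invented purely to show the arithmetic and are not results from this work.

```python
from statistics import median


def score_ratios(scores):
    """Map {system: {protocol: score}} to {protocol: [score / best score per system]}."""
    ratios = {}
    for system, by_protocol in scores.items():
        best = max(by_protocol.values())  # best model found for this system
        for protocol, score in by_protocol.items():
            ratios.setdefault(protocol, []).append(score / best)
    return ratios


# Invented scores for three hypothetical systems and three protocols.
toy_scores = {
    "system_a": {"tica_kmeans": 1.90, "features_kmeans": 1.75, "features_kcenters": 1.52},
    "system_b": {"tica_kmeans": 1.60, "features_kmeans": 1.55, "features_kcenters": 1.20},
    "system_c": {"tica_kmeans": 1.98, "features_kmeans": 1.80, "features_kcenters": 1.70},
}
ratios = score_ratios(toy_scores)
median_ratio = {protocol: median(values) for protocol, values in ratios.items()}
```

Dividing by the best score per system puts every system on a common scale with a maximum of 1, so that medians can be compared across proteins whose upper bounds on the GMRQ differ.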

FIG. 4.

Aggregated score ratios for two-time scale MSMs generated for twelve ultra-long protein datasets18 using three different clustering algorithms with or without tICA show that different clustering algorithms produce similarly well-performing models when tICA is used. All models were made using α-carbon contact distances. When clustering is performed directly from features, the dimensionality is reduced by 2-3 orders of magnitude; whereas when clustering is performed from tICs, dimensionality is reduced from $\mathbb{R}^{10}$ or lower to $\mathbb{R}^{1}$. For large dimensionality reductions, k-means clustering produces the best models. For small dimensionality reductions via tICA, clustering algorithms produce similarly well-performing models that are categorically better than models created without tICA. (The best-performing algorithm at small dimensionality reductions is k-medoids; see the supplementary material, Fig. S9.)

D. Appropriate featurization is required to describe kinetics

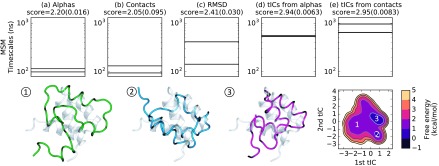

We have seen that omitting cross-validation from a MSM analysis may lead to an overfit model (Sec. V A) and that incorporating an intermediate dimensionality reduction step using tICA systematically improves models (Secs. V B and V C). In this section, we examine how the kinetic information contained within MSMs differs across models created for the same system. We constructed five MSMs for homeodomain (PDB ID: 2p6j)78 from five different types of features: (a) α-angles, (b) α-carbon contact distances, (c) pairwise α-carbon RMSD, (d) tICs from α-angles, and (e) tICs from α-carbon contact distances. All clustering was performed with the mini-batch k-medoids algorithm.

The two slowest MSM time scales of each model are presented in Fig. 5 in order of increasing scores. The time scales differ by an order of magnitude from the worst model to the best model, which indicates that not all featurization choices optimally represent the system’s slow dynamics. To investigate the folding of homeodomain, we select the best model (e) for further analysis and create a free energy landscape from its first two tICs. Structures sampled from regions of the free energy landscape of model (e) provide a preliminary interpretation of the first two tICs: progress along the first tIC leads to the formation of a secondary structure while the second tIC may track the alignment of α-helices. The first tIC is highly correlated with RMSD to the folded state and can thus serve as a reaction coordinate for the folding of homeodomain (see the supplementary material, Figs. S11-S13).

FIG. 5.

Time scale plots for two-time scale MSMs generated for homeodomain (PDB ID: 2p6j) using the best model from each of five different featurization choices (a)-(e) show that kinetics are highly sensitive to the featurization choice. All models were made using mini-batch k-medoids clustering. Standard deviations for model scores generated from five cross-validation iterations are given in parentheses, and the thickness of each horizontal line corresponding to an estimated time scale represents two standard deviations in both directions from the estimate. The kinetics feature slower processes for better models; notably, the slowest time scale predicted by the best model is an order of magnitude slower than that predicted by the worst model. A free energy landscape for model (e) was created from its first two tICs. Structures sampled from the dataset (1-3) represent different regions on the free energy surface of model (e). Each sampled structure is an α-carbon trace superimposed upon the crystal structure. The first tIC (regions 1 → 3) appears to track the formation of secondary structure, while the second tIC (regions 2 → 3) corresponds to the aligning of α-helices.

When the system’s dynamics have been sufficiently sampled, we expect thermodynamic predictions, i.e., free energies, to be much less sensitive to featurization choices, since these calculations rely only on state populations (see the supplementary material, Fig. S14). In terms of kinetics, however, it is important to recognize that models can only describe processes captured by the collective degrees of freedom chosen as the system’s features.32,71,79–81 The GMRQ serves as an excellent tool to distinguish between the predictive capabilities of MSMs constructed from different types of features, which enables modelers to choose the most suitable features. This example demonstrates that it is crucial to investigate different featurization choices, since the best model created for a given set of features may be underestimating slow time scales if those features are not capable of describing the corresponding processes.

VI. CONCLUSIONS AND OUTLOOK

MSM construction for protein folding datasets involves many modeling choices. Historically, these decisions have been heuristically motivated. The utilization of a variational principle41,42 under cross-validation enables the modeler to objectively optimize modeling protocol through the GMRQ score.44 With this tool we have reanalyzed twelve ultra-long protein folding trajectories,18 in order to determine which modeling choices systematically produce superior MSMs and to suggest a set of recommendations for constructing MSMs of protein folding. We have shown that (1) cross-validation is necessary to avoid overfitting models, (2) the use of tICA to reduce trajectory dimensionality before clustering consistently produces higher-scoring models, (3) different clustering algorithms perform similarly on low-dimensionality data from tICA, and (4) the featurization choice is paramount for building kinetic models with predictive capabilities.

In Sec. V D, we reported that MSM time scales differ widely across models constructed from different features. To a lesser extent, this is also the case for time scales predicted by MSMs that have indistinguishable scores; in other words, time scales across indistinguishably good models differ more than their intra-model uncertainties82–88 account for. This is likely due to the fact that each model is built from a different state decomposition and is thus describing a (perhaps subtly) different process. In the absence of an additional metric for distinguishing models, such as experimental results, the modeler may not be able to select the single best MSM. We therefore hypothesize that a system is better described by an ensemble of equivalently good models as opposed to a single best model. This motivates the need for new mathematical tools to describe ensembles of MSMs and their associated statistics, especially with regard to uncertainty in kinetics.

We anticipate that the advent of the GMRQ to evaluate MSMs will shift modelers from heuristic protocol choices toward systematic parameter searches informed by the results and practices presented in this work. The ability to construct MSMs capable of optimally describing slow processes in MD trajectories is invaluable to the theorist, whether those models are built to inform future experimental pursuits or to elucidate previous findings. It is important to be aware that the slow processes found by the best model may not correspond to the process of interest and instead may be artifacts of insufficient sampling or the force field used during simulation. A MSM that identifies uninformative slow processes may thus be an indicator of a problem with the raw data. We therefore stress that connection to experiment will continue to be crucial in evaluating model utility.

Free, open-source software implementing all methods used in this work is provided by the MDTraj,89 MSMBuilder,90 and Osprey91 packages, available from http://mdtraj.org and http://msmbuilder.org.

SUPPLEMENTARY MATERIAL

See the supplementary material for specifics regarding MSM methods and cross-validation, supporting information, and individual protein results.

ACKNOWLEDGMENTS

The authors thank Carlos Hernández, Matthew Harrigan, Christian Schwantes, Ariana Peck, Josh Fass, and members of the Pande and Chodera labs for helpful discussions. We acknowledge the National Institutes of Health under No. NIH R01-GM62868 for funding. We gratefully acknowledge D. E. Shaw Research for providing simulation data.

REFERENCES

- 1. Dobson C. M., Šali A., and Karplus M., Angew. Chem., Int. Ed. 37, 868 (1998).

- 2. Gruebele M., Annu. Rev. Phys. Chem. 50, 485 (1999). doi:10.1146/annurev.physchem.50.1.485

- 3. Adcock S. A. and McCammon J. A., Chem. Rev. 106, 1589 (2006). doi:10.1021/cr040426m

- 4. Dror R. O., Dirks R. M., Grossman J., Xu H., and Shaw D. E., Annu. Rev. Biophys. 41, 429 (2012). doi:10.1146/annurev-biophys-042910-155245

- 5. Shaw D. E., Deneroff M. M., Dror R. O., Kuskin J. S., Larson R. H., Salmon J. K., Young C., Batson B., Bowers K. J., Chao J. C., Eastwood M. P., Gagliardo J., Grossman J. P., Ho C. R., Ierardi D. J., Kolossváry I., Klepeis J. L., Layman T., McLeavey C., Moraes M. A., Mueller R., Priest E. C., Shan Y., Spengler J., Theobald M., Towles B., and Wang S. C., Commun. ACM 51, 91 (2008). doi:10.1145/1364782.1364802

- 6. Shirts M. and Pande V. S., Science 290, 1903 (2000). doi:10.1126/science.290.5498.1903

- 7. Buch I., Harvey M. J., Giorgino T., Anderson D. P., and Fabritiis G. D., J. Chem. Inf. Model. 50, 397 (2010). doi:10.1021/ci900455r

- 8. Kohlhoff K. J., Shukla D., Lawrenz M., Bowman G. R., Konerding D. E., Belov D., Altman R. B., and Pande V. S., Nat. Chem. 6, 15 (2014). doi:10.1038/nchem.1821

- 9. Snow C. D., Nguyen H., Pande V. S., and Gruebele M., Nature 420, 102 (2002). doi:10.1038/nature01160

- 10. Zagrovic B., Snow C. D., Shirts M. R., and Pande V. S., J. Mol. Biol. 323, 927 (2002). doi:10.1016/S0022-2836(02)00997-X

- 11. Snow C. D., Zagrovic B., and Pande V. S., J. Am. Chem. Soc. 124, 14548 (2002). doi:10.1021/ja028604l

- 12. Ensign D. L., Kasson P. M., and Pande V. S., J. Mol. Biol. 374, 806 (2007). doi:10.1016/j.jmb.2007.09.069

- 13. Noé F., Schütte C., Vanden-Eijnden E., Reich L., and Weikl T. R., Proc. Natl. Acad. Sci. 106, 19011 (2009). doi:10.1073/pnas.0905466106

- 14. Voelz V. A., Bowman G. R., Beauchamp K., and Pande V. S., J. Am. Chem. Soc. 132, 1526 (2010). doi:10.1021/ja9090353

- 15. Shaw D. E., Maragakis P., Lindorff-Larsen K., Piana S., Dror R. O., Eastwood M. P., Bank J. A., Jumper J. M., Salmon J. K., Shan Y., and Wriggers W., Science 330, 341 (2010). doi:10.1126/science.1187409

- 16. Beauchamp K. A., Ensign D. L., Das R., and Pande V. S., Proc. Natl. Acad. Sci. 108, 12734 (2011). doi:10.1073/pnas.1010880108

- 17. Buchner G. S., Murphy R. D., Buchete N.-V., and Kubelka J., Biochim. Biophys. Acta, Proteins Proteomics 1814, 1001 (2011). doi:10.1016/j.bbapap.2010.09.013

- 18. Lindorff-Larsen K., Piana S., Dror R. O., and Shaw D. E., Science 334, 517 (2011). doi:10.1126/science.1208351

- 19. Bowman G. R., Voelz V. A., and Pande V. S., J. Am. Chem. Soc. 133, 664 (2011). doi:10.1021/ja106936n

- 20. Voelz V. A., Jäger M., Yao S., Chen Y., Zhu L., Waldauer S. A., Bowman G. R., Friedrichs M., Bakajin O., Lapidus L. J., Weiss S., and Pande V. S., J. Am. Chem. Soc. 134, 12565 (2012). doi:10.1021/ja302528z

- 21. Lapidus L. J., Acharya S., Schwantes C. R., Wu L., Shukla D., King M., DeCamp S. J., and Pande V. S., Biophys. J. 107, 947 (2014). doi:10.1016/j.bpj.2014.06.037

- 22. Bowman G. R., J. Comput. Chem. 37, 558 (2015). doi:10.1002/jcc.23973

- 23. Chung H. S., Piana-Agostinetti S., Shaw D. E., and Eaton W. A., Science 349, 1504 (2015). doi:10.1126/science.aab1369

- 24. Sirur A., De Sancho D., and Best R. B., J. Chem. Phys. 144, 075101 (2016). doi:10.1063/1.4941579

- 25. Schütte C., Fischer A., Huisinga W., and Deuflhard P., J. Comput. Phys. 151, 146 (1999). doi:10.1006/jcph.1999.6231

- 26. Chodera J. D., Singhal N., Pande V. S., Dill K. A., and Swope W. C., J. Chem. Phys. 126, 155101 (2007). doi:10.1063/1.2714538

- 27. Buchete N.-V. and Hummer G., J. Phys. Chem. B 112, 6057 (2008). doi:10.1021/jp0761665

- 28. Buchete N.-V. and Hummer G., Phys. Rev. E 77, 030902 (2008). doi:10.1103/PhysRevE.77.030902

- 29. Bowman G. R., Huang X., and Pande V. S., Methods 49, 197 (2009). doi:10.1016/j.ymeth.2009.04.013

- 30. Bowman G. R., Beauchamp K. A., Boxer G., and Pande V. S., J. Chem. Phys. 131, 124101 (2009). doi:10.1063/1.3216567

- 31. Pande V. S., Beauchamp K., and Bowman G. R., Methods 52, 99 (2010). doi:10.1016/j.ymeth.2010.06.002

- 32. Prinz J.-H., Wu H., Sarich M., Keller B., Senne M., Held M., Chodera J. D., Schütte C., and Noé F., J. Chem. Phys. 134, 174105 (2011). doi:10.1063/1.3565032

- 33. Lane T. J., Bowman G. R., Beauchamp K., Voelz V. A., and Pande V. S., J. Am. Chem. Soc. 133, 18413 (2011). doi:10.1021/ja207470h

- 34. Beauchamp K. A., McGibbon R., Lin Y.-S., and Pande V. S., Proc. Natl. Acad. Sci. 109, 17807 (2012). doi:10.1073/pnas.1201810109

- 35. Schwantes C. R. and Pande V. S., J. Chem. Theory Comput. 9, 2000 (2013). doi:10.1021/ct300878a

- 36. Baiz C. R., Lin Y.-S., Peng C. S., Beauchamp K. A., Voelz V. A., Pande V. S., and Tokmakoff A., Biophys. J. 106, 1359 (2014). doi:10.1016/j.bpj.2014.02.008

- 37. Shukla D., Hernández C. X., Weber J. K., and Pande V. S., Acc. Chem. Res. 48, 414 (2015). doi:10.1021/ar5002999

- 38. Schwantes C. R., Shukla D., and Pande V. S., Biophys. J. 110, 1716 (2016). doi:10.1016/j.bpj.2016.03.026

- 39. McGibbon R. T., Schwantes C. R., and Pande V. S., J. Phys. Chem. B 118, 6475 (2014). doi:10.1021/jp411822r

- 40. Schwantes C. R., McGibbon R. T., and Pande V. S., J. Chem. Phys. 141, 090901 (2014). doi:10.1063/1.4895044

- 41. Noé F. and Nüske F., Multiscale Model. Simul. 11, 635 (2013). doi:10.1137/110858616

- 42. Nüske F., Keller B. G., Pérez-Hernández G., Mey A. S. J. S., and Noé F., J. Chem. Theory Comput. 10, 1739 (2014). doi:10.1021/ct4009156

- 43. Kubelka J., Hofrichter J., and Eaton W. A., Curr. Opin. Struct. Biol. 14, 76 (2004). doi:10.1016/j.sbi.2004.01.013

- 44. McGibbon R. T. and Pande V. S., J. Chem. Phys. 142, 124105 (2015). doi:10.1063/1.4916292

- 45. Stanley N., Esteban-Martín S., and De Fabritiis G., Nat. Commun. 5, 5272 (2014). doi:10.1038/ncomms6272

- 46. Shukla D., Peck A., and Pande V. S., Nat. Commun. 7, 10910 (2016). doi:10.1038/ncomms10910

- 47. Mu Y., Nguyen P. H., and Stock G., Proteins: Struct., Func., Bioinf. 58, 45 (2005). doi:10.1002/prot.20310

- 48. Zhou T. and Caflisch A., J. Chem. Theory Comput. 8, 2930 (2012). doi:10.1021/ct3003145

- 49. McGibbon R. T. and Pande V. S., e-print arXiv:1602.08776 (2016).

- 50. Sadiq S. K., Noé F., and De Fabritiis G., Proc. Natl. Acad. Sci. 109, 20449 (2012). doi:10.1073/pnas.1210983109

- 51. Haque I. S., Beauchamp K. A., and Pande V. S., preprint bioRxiv:008631 (2014).

- 52. Shukla D., Meng Y., Roux B., and Pande V. S., Nat. Commun. 5, 3397 (2014). doi:10.1038/ncomms4397

- 53. Vanatta D. K., Shukla D., Lawrenz M., and Pande V. S., Nat. Commun. 6, 7283 (2015). doi:10.1038/ncomms8283

- 54. Molgedey L. and Schuster H. G., Phys. Rev. Lett. 72, 3634 (1994). doi:10.1103/PhysRevLett.72.3634

- 55. Pérez-Hernández G., Paul F., Giorgino T., De Fabritiis G., and Noé F., J. Chem. Phys. 139, 015102 (2013). doi:10.1063/1.4811489

- 56. Ichiye T. and Karplus M., Proteins: Struct., Func., Bioinf. 11, 205 (1991). doi:10.1002/prot.340110305

- 57. Kitao A., Hirata F., and Gō N., Chem. Phys. 158, 447 (1991). doi:10.1016/0301-0104(91)87082-7

- 58. Amadei A., Linssen A. B. M., and Berendsen H. J. C., Proteins: Struct., Func., Bioinf. 17, 412 (1993). doi:10.1002/prot.340170408

- 59. Hayward S. and Go N., Annu. Rev. Phys. Chem. 46, 223 (1995). doi:10.1146/annurev.pc.46.100195.001255

- 60. Kitao A. and Go N., Curr. Opin. Struct. Biol. 9, 164 (1999). doi:10.1016/S0959-440X(99)80023-2

- 61. Berendsen H. J. and Hayward S., Curr. Opin. Struct. Biol. 10, 165 (2000). doi:10.1016/S0959-440X(00)00061-0

- 62. Hummer G., García A. E., and Garde S., Proteins: Struct., Func., Bioinf. 42, 77 (2001).

- 63. Naritomi Y. and Fuchigami S., J. Chem. Phys. 134, 065101 (2011). doi:10.1063/1.3554380

- 64. Noé F. and Clementi C., J. Chem. Theory Comput. 11, 5002 (2015). doi:10.1021/acs.jctc.5b00553

- 65. Gonzalez T. F., Theor. Comput. Sci. 38, 293 (1985). doi:10.1016/0304-3975(85)90224-5

- 66. Sculley D., in Proceedings of the 19th International Conference on World Wide Web, WWW’10 (ACM, New York, NY, USA, 2010), pp. 1177–1178.

- 67. Bowman G. R., Pande V. S., and Noé F., An Introduction to Markov State Models and Their Application to Long Timescale Molecular Simulation (Springer, 2014).

- 68. Ward J. H. and Amer J., J. Am. Stat. Assoc. 58, 236 (1963). doi:10.1080/01621459.1963.10500845

- 69. Müllner D., e-print arXiv:1109.2378 (2011).

- 70. For more detailed discussions, we direct the reader to Refs. 32 and 49.

- 71. Scherer M. K., Trendelkamp-Schroer B., Paul F., Pérez-Hernández G., Hoffmann M., Plattner N., Wehmeyer C., Prinz J.-H., and Noé F., J. Chem. Theory Comput. 11, 5525 (2015). doi:10.1021/acs.jctc.5b00743

- 72. The protocol is defined through the state decomposition step, which means that models with differing MSM lag times (see Sec. II D) cannot be compared using the GMRQ.

- 73. Djurdjevac N., Sarich M., and Schütte C., Multiscale Model. Simul. 10, 61 (2012). doi:10.1137/100798910

- 74. Flocco M. M. and Mowbray S. L., Protein Sci. 4, 2118 (1995). doi:10.1002/pro.5560041017

- 75. Honda S., Yamasaki K., Sawada Y., and Morii H., Structure 12, 1507 (2004). doi:10.1016/j.str.2004.05.022

- 76. Keller B., Daura X., and van Gunsteren W. F., J. Chem. Phys. 132, 074110 (2010). doi:10.1063/1.3301140

- 77. Beauchamp K. A., Bowman G. R., Lane T. J., Maibaum L., Haque I. S., and Pande V. S., J. Chem. Theory Comput. 7, 3412 (2011). doi:10.1021/ct200463m

- 78. Shah P. S., Hom G. K., Ross S. A., Lassila J. K., Crowhurst K. A., and Mayo S. L., J. Mol. Biol. 372, 1 (2007). doi:10.1016/j.jmb.2007.06.032

- 79. Swope W. C., Pitera J. W., and Suits F., J. Phys. Chem. B 108, 6571 (2004). doi:10.1021/jp037421y

- 80. Swope W. C., Pitera J. W., Suits F., Pitman M., Eleftheriou M., Fitch B. G., Germain R. S., Rayshubski A., Ward T. J. C., Zhestkov Y., and Zhou R., J. Phys. Chem. B 108, 6582 (2004). doi:10.1021/jp037422q

- 81. Sarich M., Noé F., and Schütte C., Multiscale Model. Simul. 8, 1154 (2010). doi:10.1137/090764049

- 82. Noé F., J. Chem. Phys. 128, 244103 (2008). doi:10.1063/1.2916718

- 83. Bacallado S., Chodera J. D., and Pande V., J. Chem. Phys. 131, 045106 (2009). doi:10.1063/1.3192309

- 84. Metzner P., Noé F., and Schütte C., Phys. Rev. E 80, 021106 (2009). doi:10.1103/PhysRevE.80.021106

- 85. Metzner P., Weber M., and Schütte C., Phys. Rev. E 82, 031114 (2010). doi:10.1103/PhysRevE.82.031114

- 86. Weber J. K. and Pande V. S., J. Chem. Theory Comput. 7, 3405 (2011). doi:10.1021/ct2004484

- 87. McGibbon R. T. and Pande V. S., J. Chem. Phys. 143, 034109 (2015). doi:10.1063/1.4926516

- 88. Trendelkamp-Schroer B., Wu H., Paul F., and Noé F., J. Chem. Phys. 143, 174101 (2015). doi:10.1063/1.4934536

- 89. McGibbon R. T., Beauchamp K. A., Harrigan M. P., Klein C., Swails J. M., Hernández C. X., Schwantes C. R., Wang L.-P., Lane T. J., and Pande V. S., Biophys. J. 109, 1528 (2015). doi:10.1016/j.bpj.2015.08.015

- 90. Harrigan M. P., Sultan M. M., Hernandez C. X., Husic B. E., Eastman P., Schwantes C. R., Beauchamp K. A., McGibbon R. T., and Pande V. S., preprint bioRxiv:084020 (2016).

- 91. McGibbon R. T., Hernández C. X., Harrigan M. P., Kearnes S., Sultan M. M., Jastrzebski S., Husic B. E., and Pande V. S., J. Open Source Software 1 (2016). doi:10.21105/joss.00034
