Abstract

Research examining models of memory has focused on differences in the shapes of ROC curves across tasks and has used these differences to argue for and against the existence of multiple memory processes. ROC functions are usually obtained from confidence judgments, but the reaction times associated with these judgments are rarely considered. The RTCON2 diffusion model for confidence judgments has previously been applied to data from an item recognition paradigm. It provided an alternative explanation for the shape of the z-ROC function based on how subjects set their response boundaries and these settings are also constrained by reaction times. In our experiments, we apply the RTCON2 model to data from associative recognition tasks to further test the model’s ability to accommodate non-linear z-ROC functions. The model is able to fit and explain a variety of z-ROC shapes as well as individual differences in these shapes while simultaneously fitting reaction time distributions. The model is able to distinguish between differences in the information feeding into a decision process and differences in how subjects make responses (i.e., set decision boundaries and confidence criteria). However, the model is unable to fit data from a subset of subjects in these tasks and this has implications for models of memory.

Keywords: Response time, Confidence judgments, Associative recognition memory, Receiver operating characteristics, Diffusion model

Introduction

Associative memory is memory for combinations of items (i.e., do you remember whether these items were presented together or separately during the study list). Compared to simple item recognition memory (i.e., do you remember an item or not) associative recognition shows greater declines with age (e.g., Bastin & Van der Linden, 2006; Craik, Luo, & Sakuta, 2010; Naveh-Benjamin, 2000, 2012; Ratcliff, Thapar, & McKoon, 2011), is less susceptible to decay and interference (Hockley, 1992), has different patterns of false alarm rates (Hockley, 1994; Malmberg & Xu, 2007), has a different time course (Gronlund & Ratcliff, 1989), and shows different word frequency effects (Clark, 1992), among other differences.

In this paper, we apply the RTCON2 model to an associative recognition task for which subjects used a six-point scale to rate the confidence with which they believed a pair of test items had or had not appeared together earlier in the experiment. This is the more common method of collecting confidence responses, especially in memory research, although some researchers have had subjects make a two-choice response followed by a confidence rating (Baranski & Petrusic, 1998; Merkle & Van Zandt, 2006; Pleskac & Busemeyer, 2010; Van Zandt, 2000; Van Zandt & Maldonado-Molina, 2004; Vickers, 1979; Vickers & Lee, 1998, 2000). In the model, evidence is accumulated toward a set of decision thresholds and the relative heights of these thresholds explains both the location and shape of subjects’ reaction time distributions and also the shape of their z-ROC functions. This means that z-ROC shape does not solely provide information about memory representations as has been assumed to date but also reflects individual differences in how subjects use confidence response scales. Application of the RTCON2 model to associative recognition is especially interesting because this type of memory task often produces z-ROC functions with different shapes than item recognition, and these differences have previously been used to motivate the development of various memory models (Glanzer, Hilford, & Kim, 2004; Hilford, Glanzer, Kim, & DeCarlo, 2002; Kelley & Wixted, 2001; Qin, Raye, Johnson, & Mitchell, 2001; Slotnick & Dodson, 2005; Slotnick, Klein, Dodson, & Shimamura, 2000; Wixted, 2007; Yonelinas, 1997, 1999) and in neuroscience research (Eichenbaum, Yonelinas, & Ranganath, 2007; Henson, Rugg, Shallice, & Dolan, 2000; Kim & Cabeza, 2007; Kirwan, Wixted, & Squire, 2008; Moritz, Glascher, Sommer, Buchel, & Braus, 2006; Rissman, Greely, & Wagner, 2010; Stark & Squire, 2001; Wais, 2011; Yonelinas, Hopfinger, Buonocore, Kroll, & Baynes, 2001). However, these memory models typically focus only on the kind of evidence being fed into a decision, ignore or over-simplify the process of making a decision based on that evidence, and may not produce the same estimates of evidence that a full decision model would. In contrast, our research attempts to model the process of making confidence-judgments in an associative recognition paradigm and investigate to what degree experimental findings can be accounted for with a decision-making model.

In an associative recognition memory experiment, participants study pairs of words and are then asked to distinguish between pairs of words that were previously studied together (“intact”) or studied separately (“rearranged”). In an item recognition memory experiment, participants study individual items and are then asked to distinguish between items that were previously studied (“old”) and items that were not previously studied (“new”). Most of the work investigating either type of recognition memory has relied on Signal Detection theory (Banks, 1970; Bernbach, 1967; Donaldson & Murdock, 1968; Egan, 1958; Grasha, 1970; Kintsch, 1967; Kintsch & Carlson, 1967; Lockhart & Murdock, 1970; Norman & Wickelgren, 1969; Ratcliff, McKoon, & Tindall, 1994; Ratcliff, Sheu, & Gronlund, 1992; Yonelinas, 1994). In the signal detection framework, it is assumed that each tested pair has some value of associative strength that is normally distributed for each category of tested items (for example, “intact” or “rearranged” word pairs). The intact/rearranged decision can then be modeled by placing a single criterion on a dimension representing the associative strength of the test items. If the associative strength value for a test item is above the criterion, then an ‘intact’ response is made; otherwise, if the associative strength value is below the criterion, then a ‘rearranged’ response is made. Bias toward one of the response choices can be modeled by changes in the placement of the decision criterion, and multiple response options (such as confidence judgments) can be modeled by including additional decision criteria.

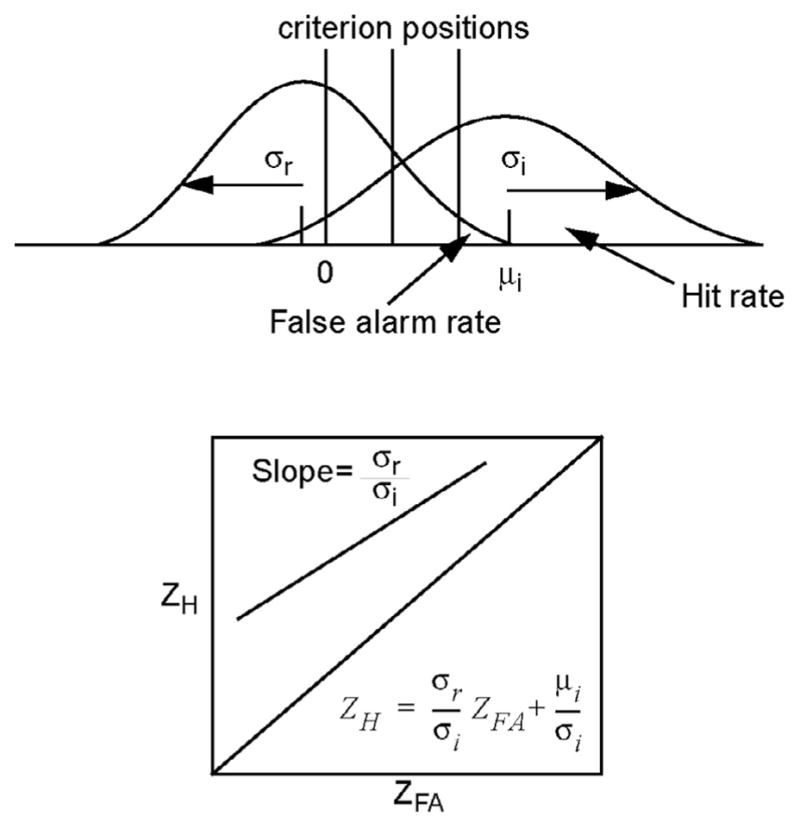

In confidence judgment procedures, subjects rate their confidence that an item is intact or rearranged using a response scale with levels ranging from ‘very sure intact’ to ‘very sure rearranged’. To model these multiple response options, additional decision criteria are used to divide the memory strength dimension into multiple response regions. Fig. 1 depicts two normal distributions, one for intact items and one for rearranged items, and three possible decision criteria. These decision criteria partition the match dimension into four response regions corresponding to four confidence categories: from left to right, high confidence rearranged, low confidence rearranged, low confidence intact, high confidence intact. As the decision criterion moves from left to right, both the hit and false alarm rates decrease.

Fig. 1.

The standard Signal Detection model with one normal distribution each for the intact and rearranged items respectively, four response regions created by three confidence criteria, the z-ROC obtained from the two distributions, and the equation relating the z-transformed hit and false alarm rates.

These decision criteria can then be used to create receiver operating characteristic (ROC) functions, which are plots of the hit rate (“intact” responses to intact word pairs) against the false alarm rate (“intact” responses to rearranged word pairs). To create an ROC function from the data, each criterion is treated as if it were the only criterion and the hit and false alarm rates for that criterion are calculated and plotted against each other as a single point on the ROC curve. Hit and false alarm rates are calculated first for the rightmost criterion, representing the highest confidence intact category, then for the two rightmost categories (adding together the number of responses in those two categories), then for the three rightmost, and so on.

These hit and false alarm rates are frequently converted to z-scores, resulting in a function called a z-ROC. The assumption of normal distributions of memory evidence predicts linear z-ROC functions with a slope equal to the ratio of the standard deviations of the “intact” and “rearranged” item distributions (Ratcliff et al., 1992). The lower portion of Fig. 1 depicts the z-ROC function obtained from the two distributions. However, linear z-ROC functions are also consistent with other kinds of distributions such as poisson, gamma, and even a combination of ramp and rectangular distributions (Banks, 1970; Lockhart & Murdock, 1970; Murdock, 1974). With different distributions of evidence, the slope of the z-ROC function is not the ratio of the standard deviations of the distributions as it is when the distributions are normal.

As predicted by SDT with normal distributions, most of the z-ROC functions found in the memory literature on item recognition have been approximately linear. However, a number of studies have demonstrated systematically non-linear z-ROC functions and these findings have prompted theoretical elaborations of the standard single-process signal-detection theory (DeCarlo, 2002; Malmberg & Xu, 2006; Ratcliff et al., 1994; Ratcliff & Starns, 2013; Rotello, Macmillan, & Reeder, 2004; Rotello, Macmillan, & Van Tassel, 2000; Yonelinas, 1994, 1997; Yonelinas, Dobbins, Szymanski, Dhaliwal, & King, 1996). Several of these theories have focused on explaining the slightly U-shaped z-ROC functions observed in some associative recognition and source-memory experiments (Glanzer et al., 2004; Hilford et al., 2002; Kelley & Wixted, 2001; Qin et al., 2001; Slotnick & Dodson, 2005; Slotnick et al., 2000; Wixted, 2007; Yonelinas, 1997, 1999).

There are a number of problems with this SDT approach to memory modeling. First, this approach often ignores differences between individuals. ROC analyses are frequently conducted on data that has been averaged across subjects, so any differences between subjects are ignored or relegated to an Appendix A. As the present study will demonstrate, there can be substantial differences in how subjects utilize confidence response scales such that it is not appropriate to only analyze averaged data (Malmberg & Xu, 2006; Ratcliff et al., 1994). Second, the SDT approach ignores the reaction time associated with each response. Although there is a well-known relationship between the speed and accuracy with which people make decisions (Pachella, 1974; Wickelgren, 1977), most memory researchers only collect and analyze accuracy data. In order to provide a complete account of the confidence decision process, it is important to consider both reaction time and accuracy. Third, the SDT approach assumes that the only source of variability in the decision process is the variability in memory strength between items. This assumption leads to inappropriate conclusions about the z-ROC functions (Ratcliff & Starns, 2009, 2013; Starns, Ratcliff, & McKoon, 2012). Fourth, elaborations of SDT often include additional memory processes or additional sources of information in order to accommodate non-linear z-ROC functions (Arndt & Reder, 2002; DeCarlo, 2002, 2003; Hilford et al., 2002; Kelley & Wixted, 2001; Rotello et al., 2004; Yonelinas, 1994; Yonelinas & Parks, 2007). With the inclusion of reaction time data and individual differences, the present study will demonstrate that these additional processes are not always necessary to produce non-linear z-ROC functions. All of these problems with SDT can potentially be addressed by using the RTCON2 model. This model produces both accuracy and reaction time predictions for individual subjects, it includes several sources of variability related to the decision process, and it has been able to fit a variety of item recognition z-ROC functions without additional memory processes (Ratcliff & Starns, 2009, 2013). The RTCON2 model is not a memory model in the same way SDT is not a memory model. A complete description of processing would have a memory model provide the distributions of memory evidence used in making the decision as in SDT. However, the model has been able to explain various z-ROC shapes observed in item recognition tasks, including non-linear functions. The explanation for these shapes is based on how subjects set their decision boundaries and is constrained by reaction time data. As such, the explanation for these shapes is based on the process of making a decision as opposed to the type of information entering into the decision process from memory. The goal of these experiments is to determine whether the RTCON2 model can similarly account for the non-linear z-ROC functions commonly observed in associative recognition tasks.

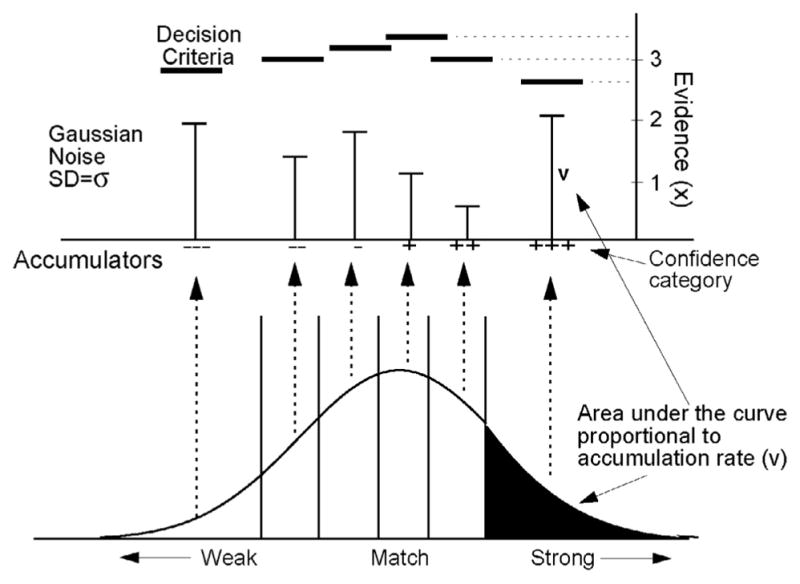

The RTCON2 model has previously been applied to confidence judgments in item recognition and motion discrimination tasks and was shown to provide a better fit to the data than several competing decision models (Ratcliff & Starns, 2013). In the RTCON2 model, the evidence available to the decision process on a single trial (i.e., the memory strength for a particular item) is assumed to be a distribution across the evidence-strength dimension instead of a single value (cf. Beck et al., 2008; Gomez, Ratcliff, & Perea, 2008; Jazayeri & Movshon, 2006; Ratcliff, 1981; Ratcliff & Starns, 2009). These item distributions have a standard deviation of 1 and their mean location varies from trial to trial (as in SDT). The bottom portion of Fig. 2 illustrates how the distribution of evidence for a single item is mapped to the decision process. As in SDT, multiple confidence criteria are used to divide the match dimension into multiple response regions corresponding to different levels of confidence.

Fig. 2.

RTCON2. The distribution of evidence for an item on a given trial drives six mutually inhibitory accumulators (one for each confidence category). The proportion of the distribution between the confidence criteria on the match dimension drives the drift rate for each confidence category. When one of the accumulators reaches its decision boundary, the corresponding response is made. Each time one accumulator takes a step up of size x, the accumulators on the opposite side take a x/(N/2) step down (where N is the number of accumulators) such that the amount of evidence stays constant (i.e., if an ‘intact’ accumulator is incremented, then the ‘rearranged’ accumulators are all decremented and the other ‘intact’ accumulators are unchanged).

Each response region has its own accumulator and decision boundary, as shown in the top portion of Fig. 2, and the diffusion processes race until one of the processes reaches its decision boundary and the corresponding response is made. Evidence for each confidence response accumulates separately over time toward a decision boundary. This is similar to other sequential sampling models that assume that noisy evidence is accumulated separately for each response alternative (as in the dual-diffusion model, Ratcliff, Hasegawa, Hasegawa, Smith, & Segraves, 2007; and the Leaky-Competing Accumulator model, Usher & McClelland, 2001).

The mean position of the distribution of evidence (μ) is determined by the quality of information extracted from the stimulus and determines the rate of accumulation (ν) for each accumulator. In an experiment, the value of μ would be different for stimulus conditions of differing difficulty. For example, in an associative recognition experiment, μ would represent the quality of the match between a given word pair and memory. A pair of words that had been presented together during the study period should have a higher degree of match (i.e., a higher value of μ) than a pair of words that had been presented in different pairs during the study period. The quality of information from stimuli of the same type is allowed to vary across trials to reflect differences in the encoding and retrieval of associative information across pairs of items. This between-trial variability in μ is assumed to be normally distributed with standard deviation s. The average rate of accumulation (ν) for each response is determined by the proportion of the within-trial distribution of evidence in each of the response regions. This accumulation process is subject to moment-to-moment variability such that processes with the same accumulation rates will not always terminate at the same time or with the same confidence response.

Several aspects of this model affect the relationship between the level of confidence and the evidence in favor of a particular choice. Specifically, a particular confidence judgment is determined by the decision boundaries of the response accumulators and by the criteria that divide the strength dimension into response regions as well as by the amount of evidence in favor of a particular confidence response. For example, a high confidence response region may have a higher decision boundary such that more evidence must be accumulated for that response to be selected. The height of the decision boundary would cause that particular response to be selected less often and with a longer reaction time than if that response region had a lower decision boundary, even for items that have a high mean value of evidence. Thus in RTCON2 confidence is not merely a function of accuracy.

The RTCON models are an extension of the diffusion model (Audley & Pike, 1965; Ratcliff, 1978, 1988, 2006; Ratcliff & McKoon, 2008), and were developed to accommodate both accuracy and reaction time distributions from multi-choice confidence judgment tasks (Ratcliff & Starns, 2009, 2013). RTCON2 differs from the original RTCON model in that it uses a slightly different decision process (RTCON2 uses constant summed evidence whereas RTCON uses an OU diffusion process) and allows accumulators to go below zero (in fact, because it is a linear process, there is no true zero point; a constant could be added to the base evidence level and decision bounds and the behavior of the model would be the same).

In the constant summed evidence algorithm, the increment to evidence (Δx) on each time step (Δt) is determined by its drift rate (v) and noise (Eq. (1)). On each time step, one of the response accumulators is selected randomly and increased (Eq. (1)) and some of the other response accumulators are decreased such that the sum of the total decrease is equal to the increase in the selected accumulator (Eq. (2)).

| (1) |

| (2) |

There are several possible variants of this algorithm. For example, all of the response accumulators could be competing (i.e., an increase on one accumulator would cause all of the other N accumulators to decrease) or only some of the accumulators could be competing (i.e., if one of the ‘intact’ accumulators was increased, only the ‘rearranged’ accumulators would decrease – the other ‘intact’ accumulators would be unchanged). For this application, we used the variant of the model where an increase in evidence in one of the ‘intact’ accumulators would cause a decrease in evidence only in the ‘rearranged’ accumulators (this is shown in Eq. (2)), but not the other ‘intact’ accumulators. This version of the constant-summed evidence algorithm makes intuitive sense in that evidence for one type of response (intact or rearranged) should not compete with other confidence levels of that same response. This version of the algorithm also provides parameter values that are more consistent across different numbers of response options. The expressions for the changes in evidence for each accumulator are given in Eqs. (1) and (2). Eq. (1) describes the update in evidence for the selected accumulator and Eq. (2) describes the corresponding change in activity for the non-selected accumulators (note that, due to noise from the second terms in the right-hand side of Eq. (1), Δxi could also be a negative value such that the other accumulators would all take a proportional step up). If the selected accumulator was one of the ‘intact’ accumulators, then Eq. (2) would be used to adjust the ‘rearranged’ accumulators, but the other intact accumulators would be unchanged (and vice versa, if a ‘rearranged’ accumulator was selected). In these equations, as is a scaling parameter that adjusts drift rate (the area under the distribution in the bottom of Fig. 2), σ is within-trial variability in the accumulation process, η is a normally distributed random variable with mean 0 and SD 1, and N is the total number of accumulators. The constant summed evidence algorithm has been shown to provide a better fit to empirical data than a competing class of models because it is better able to account for shifts in the RT distributions across confidence responses (Ratcliff & Starns, 2013).

Reaction time distributions are obtained by combining the decision time (the time taken for one of the evidence accumulators to reach a decision boundary) with a uniformly distributed non-decision component. The non-decision component is assumed to have mean Ter and range st, and it encompasses both encoding and response output processes. Reaction time distributions are also dependent on the height of the decision boundaries, which vary from trial to trial over a uniform distribution with a range of sb.

Although both RTCON2 and SDT use normal distributions of stimulus information, they produce considerably different interpretations of z-ROC functions. In SDT, the proportion of hit and false alarm rates can only be manipulated through the placement of the decision criterion. In RTCON2, the proportion of hit and false alarm responses can be manipulated either by adjusting the height of the decision boundaries or by adjusting the confidence criteria. A shift in the heights of the decision boundaries will shift the response time distributions and have an effect on the leading edge of the RT distribution whereas a shift in the confidence criteria will have a smaller effect on the leading edge. Thus, the two ways of adjusting response proportions in RTCON2 are identifiable based on reaction time distributions. Because the response proportions depend on both the height of the decision boundaries and the placement of the confidence criteria, RTCON2 is able to fit a wider variety of z-ROC functions than standard SDT. In contrast to SDT, which deals only with accuracy, RT distributions provide additional severe constraints on RTCON2 because the model not only has to account for z-ROC functions but also RT distributions.

As mentioned previously, standard SDT with normal distributions of evidence is unable to account for the non-linear z-ROC functions observed in some associative recognition experiments (Glanzer et al., 2004; Hilford et al., 2002; Kelley & Wixted, 2001; Qin et al., 2001; Slotnick & Dodson, 2005; Slotnick et al., 2000; Wixted, 2007; Yonelinas, 1997, 1999). This has prompted theorists to add extra memory processes (Yonelinas, 1994; Yonelinas & Parks, 2007) or extra sources of information (DeCarlo, 2002, 2003; Hilford et al., 2002; Kelley & Wixted, 2001) to standard SDT in order to account for these findings. Because the RTCON2 model has different ways of adjusting response proportions (but additional constraints because of RT distributions), it can potentially account for non-linear z-ROC functions through changes in the decision-making process as opposed to changes in the memory process. Moreover, because RTCON2 is fit to both accuracy and RT data, applications of the model in other paradigms have demonstrated a relationship between the shape of the z-ROC function and the behavior of response time distributions that had not previously been observed.

Another important difference between SDT and RTCON2 is that SDT contains only a single source of variability. In SDT, the variability in the distribution of memory strength is the only source of variability that affects the decision. In RTCON2, however, there is variability across trials in the quality of evidence from a stimulus (the variability in the mean value of the evidence distribution across trials), variability in the evidence accumulation process, and variability in the decision boundaries. These three sources of variability are identifiable and are needed to account for decision time, that is, RT distributions for responses for the various confidence categories (see Ratcliff & Starns, 2009, for some discussion of parameter recovery and lack of parameter correlations for RTCON). In standard SDT, the slope of the z-ROC function represents the ratio of the standard deviations of the distributions of old and new stimulus evidence. But because there are several sources of variability in the decision process, the slope of the z-ROC function is not a measure of the ratio of stimulus variability for the two choices as in SDT.

RTCON2 is able to account for both accuracy and reaction time values for confidence judgments, distinguishes between several sources of variability in the decision process, and provides an alternative explanation for the shape of z-ROC functions. The aim of these experiments is to investigate whether this model can account for data from an associative recognition task.

Experiment 1

The first experiment was designed to collect a large number of observations for a few subjects to provide stringent tests of the RTCON2 model performance on an associative recognition task. The aim is to determine whether this one-process model can account for the type of accuracy (and RT) data that has been assumed to provide evidence for different memory processes (DeCarlo, 2002, 2003; Healy, Light, & Chung, 2005; Kelley & Wixted, 2001; Rotello et al., 2000; Yonelinas, 1994). In this experiment, subjects studied lists of pairs of words and then were presented with pairs of test words and had to distinguish between intact and rearranged versions of the study pairs.

Method

Subjects

Five Ohio State University undergraduate students participated in 8 sessions and earned $10 for each completed session.

Materials

The stimuli were drawn from a pool of 814 high-frequency words, 859 low-frequency words, and 741 very-low-frequency words. Low-frequency words ranged from 4 to 6 occurrences per million (M = 4.41), very-low-frequency words ranged from 0 to 1 occurrence per million (M = 0.365), and high-frequency words ranged from 78 to 10,595 occurrences per million (M = 323.22; Kučera & Francis, 1967). Study lists were composed of 12 high-frequency words, 12 low-frequency words, and 4 very-low-frequency words selected randomly (without replacement) from the word pools. These words were randomly paired within frequency to create 14 word pairs (6 high-frequency pairs, 6 low-frequency pairs, and 2 very-low-frequency pairs). The two very-low-frequency word pairs served as buffer items for the study list and were presented in the first and last positions of the study list, and the remaining pairs were target items. All of the target word pairs were presented twice within each list. The 12 target pairs were randomly assigned to the middle study list positions with the restriction that there was at least one intervening word pair between the two presentations of each pair.

Test lists consisted of the two buffer word pairs (presented in the first and last positions of the test list) and the 12 target pairs. Each pair was presented only once during the test list and exactly half of the target pairs were randomly rearranged within frequency (i.e., a low-frequency word pair could only be rearranged with another low-frequency word pair). Words also maintained the same positions within pairs, such that a word presented as the first item in a pair during study would also be the first item of a pair during test, regardless of what word it was paired with. Thus each test list consisted of 6 rearranged pairs and 6 intact pairs. Intact pairs consisted of words which had appeared together in the study list and rearranged pairs consisted of words which appeared in different pairs in the study list.

Procedure

Each experimental session lasted approximately 50 min. The first two sessions for each subject consisted of a response-key practice block, 3 study/test blocks, a second response-key practice block, and 20 more study/test blocks. The second response-key practice block was dropped after the first two sessions, because subjects were familiar with the response keys and no longer needed the additional practice. Subjects responded using a PC keyboard on which the Z, X, C, comma, period, and slash keys were labeled with the symbols “− − −”, “− −”, “−”, “+”, “+ +”, and “+ + +”. Subjects were instructed to place their left-hand ring, middle, and index fingers on the “− − −”, “− −”, and “−” keys and their right-hand index, middle, and ring fingers on the “+”, “+ +”, and “+ + +” keys.

During the response-key practice, each of the symbols marked on the keyboard (e.g., “− −”) would appear on the screen one at a time and the subjects were told to press the designated key as quickly as possible. If a subject took longer than 800 ms to respond to one of the symbols, a “TOO SLOW” message would appear on the screen for 1000 ms. Each practice block consisted of 10 repetitions of each of the six response key options resulting in 60 trials total in each block. The symbols appeared in random order within the block with the restriction that repeated symbols had to have at least one intervening symbol.

For the remainder of the experiment, subjects were told that they would be presented with pairs of words during the study portions of the experiment and their job was to learn these pairs. During the study/test blocks, subjects initiated the start of each study list by pressing the spacebar. Each word pair in the study list was displayed for 3000 ms followed by 200 ms of blank screen. Immediately after the final study-list word pair, a message appeared directing subjects to press the space bar to begin the test list. During the test-list, subjects were required to distinguish between the word pairs that had not appeared during the study-list (rearranged word pairs) and those that had (intact word pairs). Each word pair remained on the screen until the subject had made a response. Subjects were instructed to use the different response-key options to indicate whether a word pair had appeared in the study-list and their confidence in their response. They were told to use one of the “−” keys to indicate that the word pair had not appeared in the study-list, and to use one of the “+” keys to indicate that it had. Subjects were instructed to use the different levels of “+” and “−” to indicate their amount of confidence in their response (e.g., if a subject felt very confident that a word pair was intact they would use the “+ + +” key, whereas if they felt only moderately confident they would use the “+ +” key). Subjects were encouraged to respond quickly and accurately and to try to spread their responses among all six response-keys throughout the course of the experiment. If a subject took less than 280 ms to respond to one of the test items, a “TOO FAST” message would appear on the screen for 1500 ms. Subjects were given error feedback throughout all test blocks in the form of the words “CORRECT” or “ERROR” displayed for 300 ms after their response to each test item.

Model fitting

The RTCON2 model was fit to each individual subject’s response proportion and reaction time quantiles (.1, .3, .5, .7, .9) for each of the 6 confidence response for each of the 4 conditions (rearranged high frequency, rearranged low frequency, intact high frequency, and intact low frequency word pairs). The RT quantiles divide the response proportion data into six bins for each confidence category. Initial parameter values were chosen that produced predictions similar to the empirical data, and then a simplex function (Nelder & Mead, 1965) was used to adjust the parameters of the model until the predictions matched the data as closely as possible. The match between the empirical data and the model predictions was quantified by a chi-square (χ2) statistic, which was minimized by the simplex function (see Ratcliff & Tuerlinckx, 2002 for more detail). Because there are no exact solutions for this model, simulations are used to generate predicted values from the model. To simulate the process of accumulation given by Eqs. (1) and (2), we used the simple Euler’s method with 1-ms steps (cf. Brown, Ratcliff, & Smith, 2006; Usher & McClelland, 2001). For each millisecond step, one accumulator was chosen randomly, and the evidence in it was incremented or decremented according to Eq. (1) and opposite accumulators were incremented or decremented according to Eq. (2) (e.g., if the selected accumulator was for one of the ‘intact’ responses, then the evidence in the ‘rearranged’ accumulators would be adjusted according to Eq. (2) and the other ‘intact’ accumulators would be unchanged). For each condition, 20,000 simulations of the decision process were used to generate the response proportions and RT quantiles for each confidence category. Note that we use the term ‘model predictions’ to refer to data generated by the model for a specific set of parameter values. These predictions are thus the data predicted by the model structure and a given parameter set, as opposed to predictions about some future data based on fits of the current data.

There are six RT bins for each confidence response, which gives 36 degrees of freedom for the 6-choice task. But these response proportions have to add to 1, which reduces the degrees of freedom to 35 for each condition. With four conditions, this gives a total of 140 degrees of freedom in the data. For this experiment there are 23 free parameters in RTCON2. Of these, 12 are used to represent the memory strength feeding into the decision (3 mean drift values – one is fixed to zero, 4 between-trial variability in the mean of the drift distribution, and 5 confidence criteria) and the remaining 11 parameters are used to model the decision process (6 decision boundaries, non-decision time, variability in non-decision time, the scaling parameter on drift, variability in the decision boundaries, and within-trial noise in the diffusion process). These 11 additional parameters are what enable the model to produce response times as well as accuracy. Note that an accuracy-only SDT model with the same representation of memory strength would require 12 parameters (the same ones for RTCON2) for data with only 20 degrees of freedom. Additionally, although RTCON2 has a fairly large number of parameters, a change in any one of the parameter values will affect predictions across multiple conditions or response categories such that it is not possible to remedy misfits in a single condition by simply adjusting a single parameter.

Results and discussion

There are two main results of this experiment. First, the model fits both the proportion of responses and the RT quantiles for each confidence category. Second, because the model fits the proportions of responses, it also fits the ROC and z-ROC functions for all but one subject reasonably well.

Data from this experiment consisted of response proportions and reaction-time quantiles for each subject from each condition and each confidence response. There were four conditions in this experiment: rearranged high frequency, rearranged low frequency, intact high frequency, and intact low frequency word pairs. Reaction time latencies less than 300 ms or greater than 4000 ms were excluded from these analyses (less than 0.3% of all data).

We analyzed response rates across all levels of confidence for word frequency effects. There was a higher hit-rate for LF word pairs (M = 0.81, SD = 0.07) than HF word pairs (M = 0.66, SD = 0.10) and this difference was significant (t(4) = −9.3, p < .05). There was also a higher false-alarm rate for LF word pairs (M = 0.34, SD = 0.22) than HF word pairs (M = 0.25, SD = 0.14) but this difference was not significant (t(4) = −2.3, p > .05).

The model was fit to data from individual subjects and the best-fitting model parameters are shown in Table 1. For each subject, these parameter values were used to generate predicted reaction time quantiles and response proportions for each condition. These predicted values can then be compared with the empirical data using a χ2 test to quantitatively assess the model fit. The mean χ2 value for this experiment was 254 with a SD of 36. This mean is 1.5 times the critical χ2 value (168.6) which indicates a mismatch between the model’s predictions and the data. However, the size of this mismatch is comparable to those obtained in other experiments with diffusion models. Ratcliff, Thapar, Gomez, and McKoon (2004) demonstrated that a miss as large as .1 in the proportion of responses between quantiles could produce χ2 values 2–3 times the critical value. Similarly, Ratcliff and Starns (2009) demonstrated that 10 ms perturbations of the quantile reaction times could produce large increases in χ2 values. The significance of the χ2 values is also, at least partially, a power issue. In order to fit RCON2, we need good RT quantile estimates and so need to collect a sizeable amount of data from each subject. For this experiment, we collected an average of 550 responses per condition from each subject. With this many responses, even small differences between the empirical data and the model predictions will be significant. For comparison, if we had observed these same response proportions and quantile RTs from about 358 responses per condition (65% of the actual 550) then the same χ2 test yields a mean value of 153.29 (4 out of 5 subjects have values less than the critical value) and the model would be considered to be a reasonably good match to the data. Additionally, the original RTCON model produces an average χ2 value of 331 when fit to these data. This demonstrates that this new version of the RTCON model is indeed an improvement over the original version in that it provides a closer fit to the data. The original RTCON model primarily had difficulty producing the bowed RT quantiles that were observed in this experiment.

Table 1.

Experiment 1 best fitting model parameters.

| Subject | Ter | st | as | σ | sb | χ2 | ||

|---|---|---|---|---|---|---|---|---|

| 1 | 389 | 358 | 0.021 | 0.13 | 1.47 | 196 | ||

| 2 | 363 | 178 | 0.047 | 0.11 | 0.90 | 256 | ||

| 3 | 292 | 162 | 0.067 | 0.12 | 1.04 | 250 | ||

| 4 | 476 | 383 | 0.040 | 0.15 | 0.71 | 294 | ||

| 5 | 535 | 357 | 0.049 | 0.17 | 1.39 | 273 | ||

| b1 | b2 | b3 | b4 | b5 | b6 | |||

|

|

||||||||

| 1 | 1.81 | 1.57 | 1.76 | 1.32 | 1.17 | 1.42 | ||

| 2 | 2.30 | 2.03 | 2.46 | 2.19 | 1.76 | 1.37 | ||

| 3 | 1.68 | 1.71 | 2.48 | 2.40 | 1.65 | 1.52 | ||

| 4 | 2.80 | 2.91 | 2.72 | 2.10 | 2.36 | 2.01 | ||

| 5 | 3.00 | 2.49 | 2.27 | 2.67 | 2.27 | 2.26 | ||

| c1 | c2 | c3 | c4 | c5 | ||||

|

|

||||||||

| 1 | −0.81 | 0.00 | 0.87 | 2.04 | 3.23 | |||

| 2 | −1.20 | −0.04 | 0.76 | 1.46 | 2.51 | |||

| 3 | −0.84 | 0.08 | 0.95 | 1.96 | 2.81 | |||

| 4 | −0.69 | 0.00 | 1.15 | 1.73 | 2.18 | |||

| 5 | −0.90 | −0.25 | 0.85 | 1.93 | 3.00 | |||

| μrH | μrL | μiH | μiL | srH | srL | siH | siL | |

|

|

||||||||

| 1 | 0.00 | 0.87 | 0.42 | 2.49 | 0.61 | 0.63 | 0.65 | 0.53 |

| 2 | 0.00 | −0.07 | 1.18 | 1.71 | 0.31 | 0.52 | 0.57 | 0.48 |

| 3 | 0.00 | −0.15 | 1.82 | 2.14 | 0.40 | 0.41 | 0.63 | 0.61 |

| 4 | 0.00 | −0.44 | 1.29 | 1.73 | 0.53 | 0.69 | 0.66 | 0.61 |

| 5 | 0.00 | 0.68 | 1.28 | 1.82 | 0.57 | 0.74 | 0.86 | 0.63 |

Ter is the mean nondecision time, st is the range in nondecision time, σ is the SD in within trial variability, as is the scaling factor that multiplies drift rate, sb is the range in variability in the decision boundaries, b1–b6 are the decision boundaries, c1–c5 are the confidence criteria, the μ values are the mean values of the drift rate distributions for each experimental condition, and the s values are the between-trial variability values for each experimental condition (r represents rearranged items, i represents intact items, H represents high-frequency items, and L represents low frequency items).

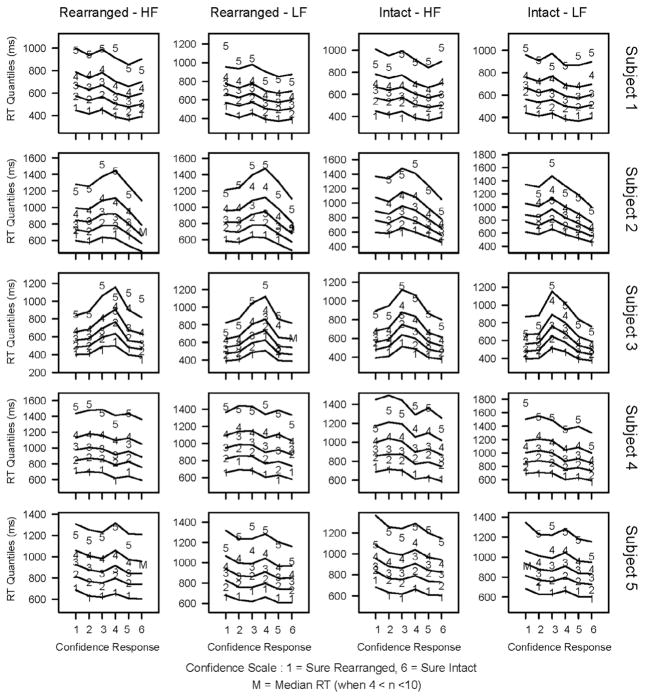

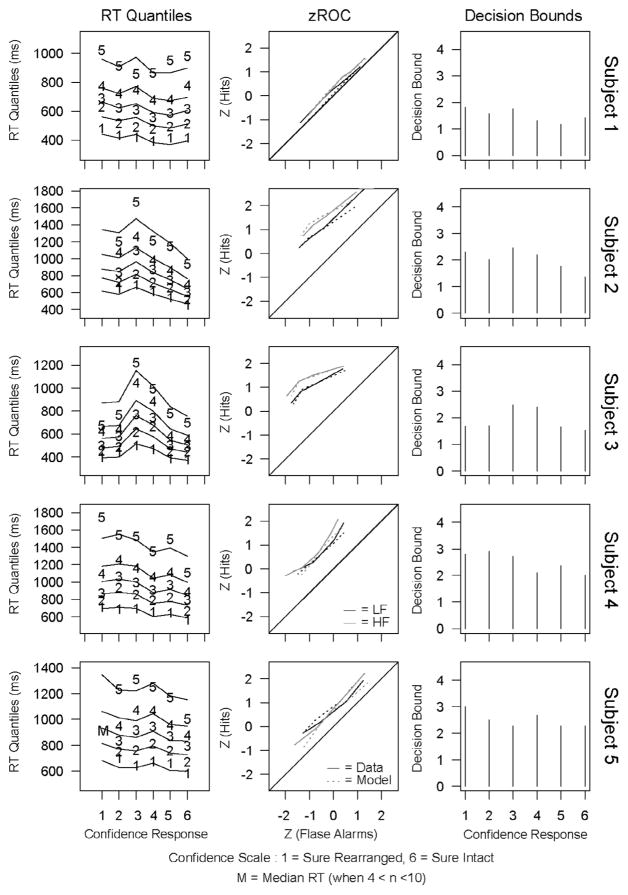

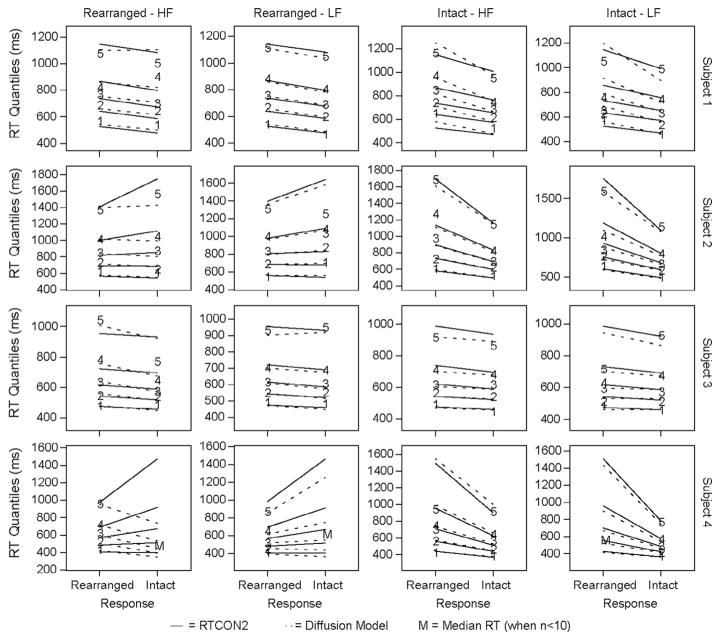

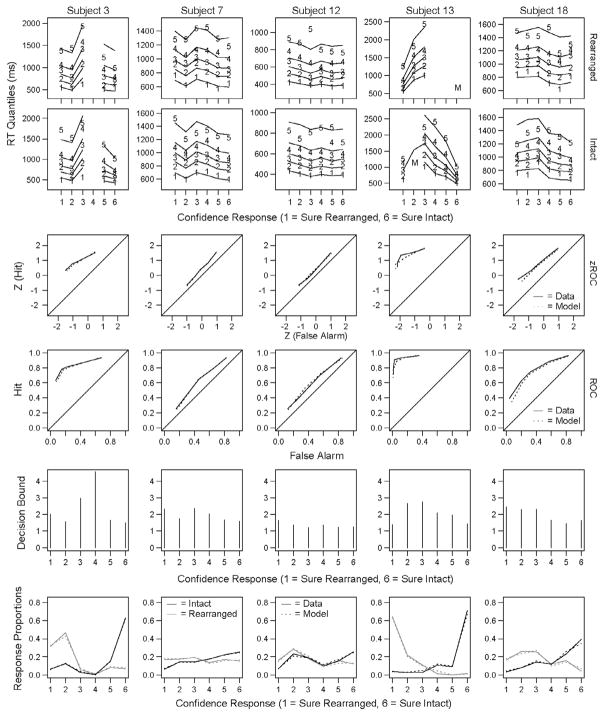

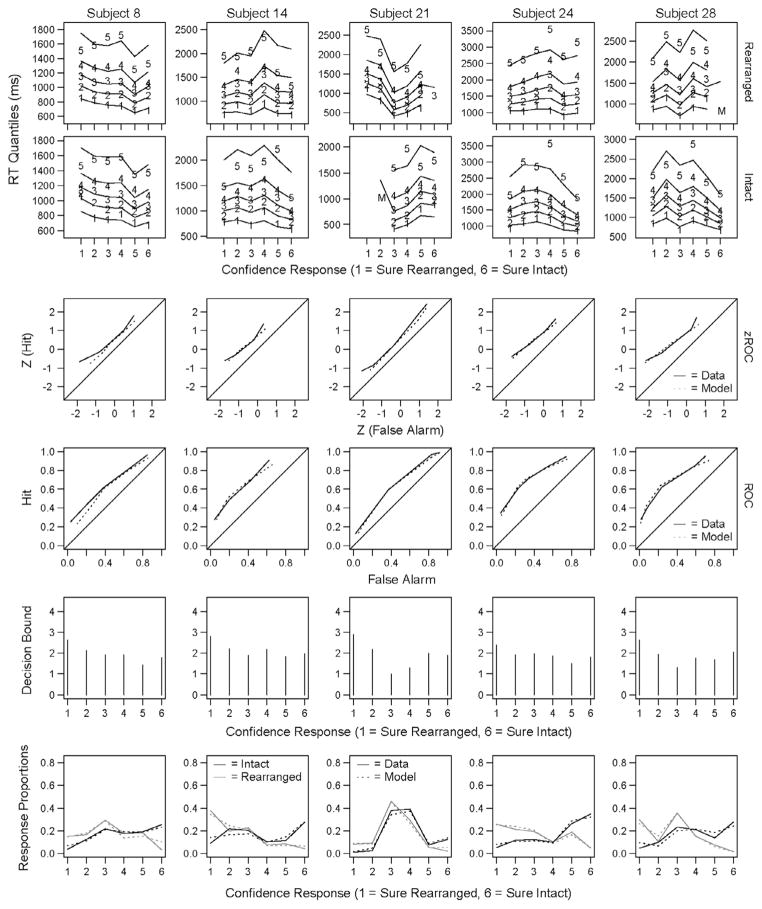

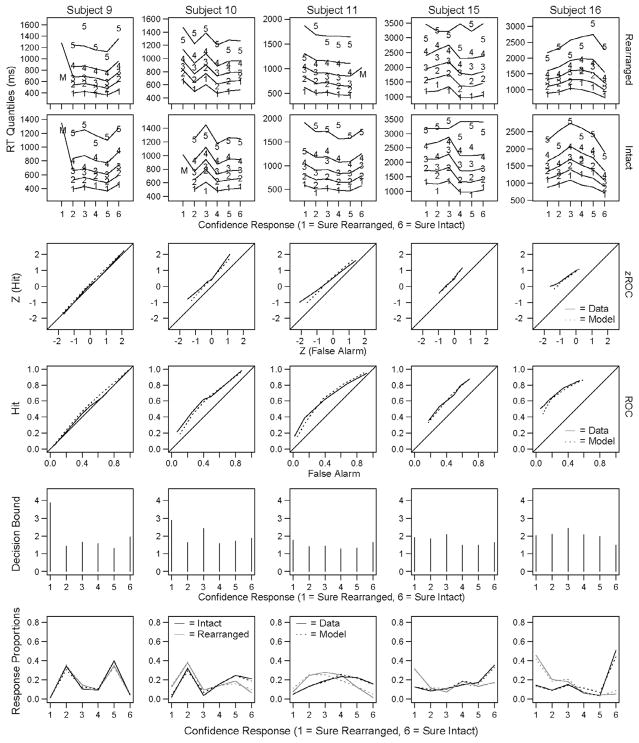

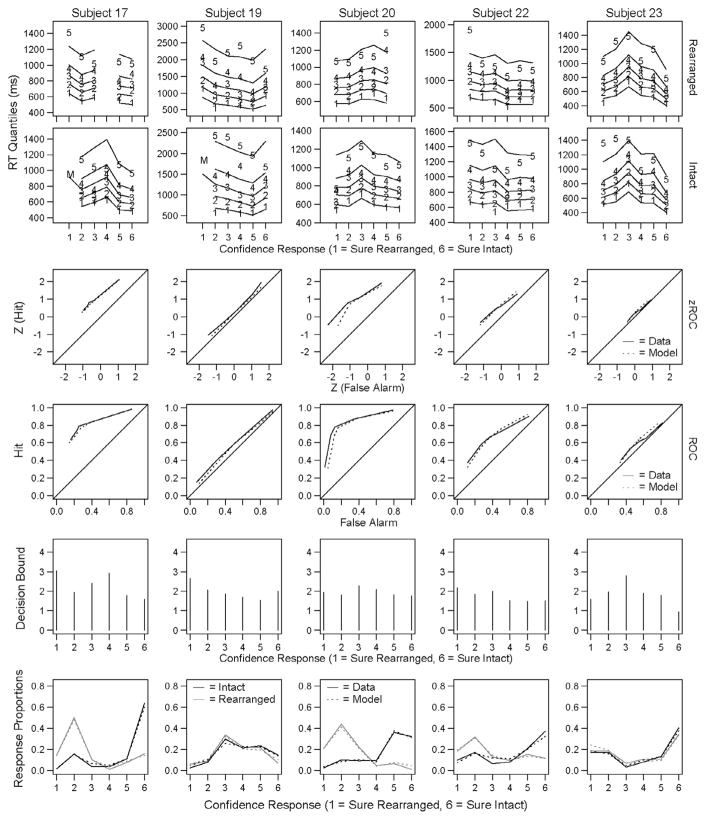

In addition to the quantitative comparison, the model predictions for each condition can also be compared with the empirical data to qualitatively assess the model fit. Quantile reaction-times for each subject for each of the four experimental conditions are shown in Fig. 3. In each of these plots, the six confidence responses are plotted across the x-axis (the “sure rearranged” category is labeled 1 and the “sure intact” category is labeled 6) and each line represents a quantile (with the lowest line depicting the .1 quantile, followed by .3, .5, .7 and .9). The numbers plotted in these figures represent the empirical data and the lines represent the predicted data from the model’s best fitting parameters. From these plots, it is apparent that there is consistency in the quantile response patterns of individual subjects across experimental conditions as well as wide differences between subjects in the quantile response patterns. For example, subjects 2 and 3 exhibit bowed reaction time quantiles where the high confidence responses are made more quickly than the low confidence responses. This is a response pattern that has been observed in previous confidence response paradigms (Murdock, 1974; Murdock & Dufty, 1972; Ratcliff & Murdock, 1976), but for which the original RTCON model was unable to account (see Ratcliff & Starns, 2013 for a discussion of why models with a decay term, such as RTCON, have difficulty capturing changes in the leading edge of RT distributions across response options). The fits to these data show that RTCON2 is able to capture this behavior of RT distributions.

Fig. 3.

Experiment 1: Quantile reaction times for each condition for each subject. Confidence responses are plotted along the x-axis (ranging from 1: Sure Rearranged to 6: Sure Intact). The numbers 1–5 depict the RT quantiles from the behavioral data and the corresponding lines depict the predictions from RTCON2. In conditions where subjects made between 4 and 10 responses the median RT is plotted as an ‘M’ and the other quantiles are not included. Conditions where subjects made fewer than 5 responses are omitted from the figure (e.g., subject 2 made fewer than 5 ‘Sure Rearranged’ responses to intact low-frequency word pairs so there are no behavioral data plotted for that condition).

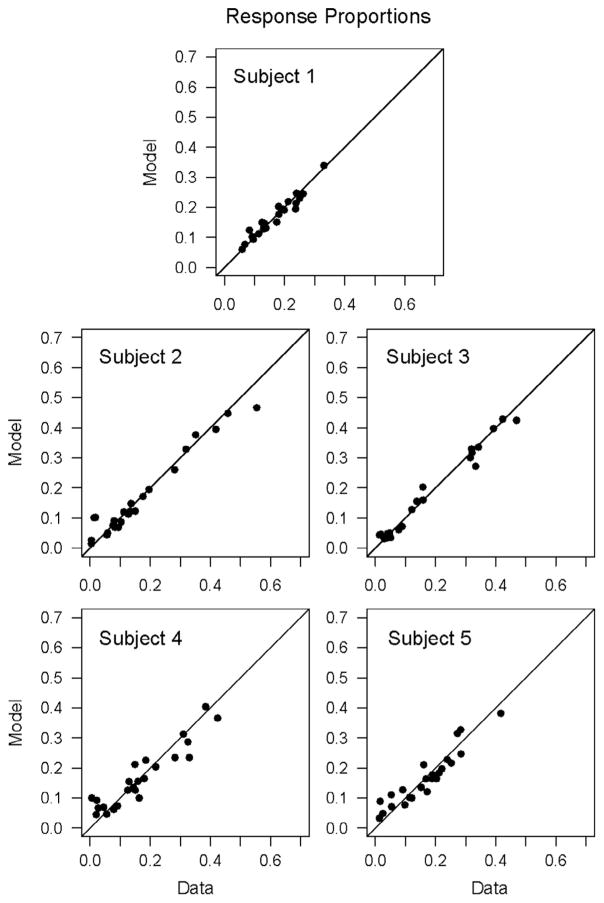

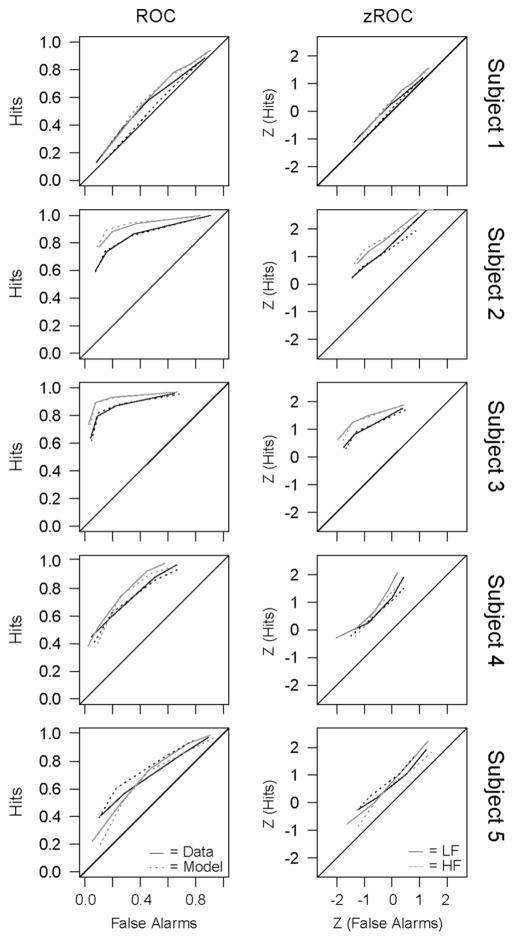

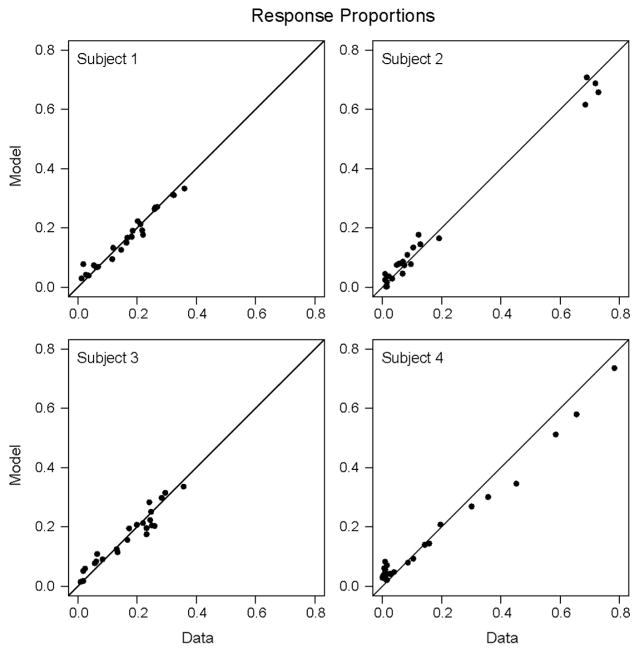

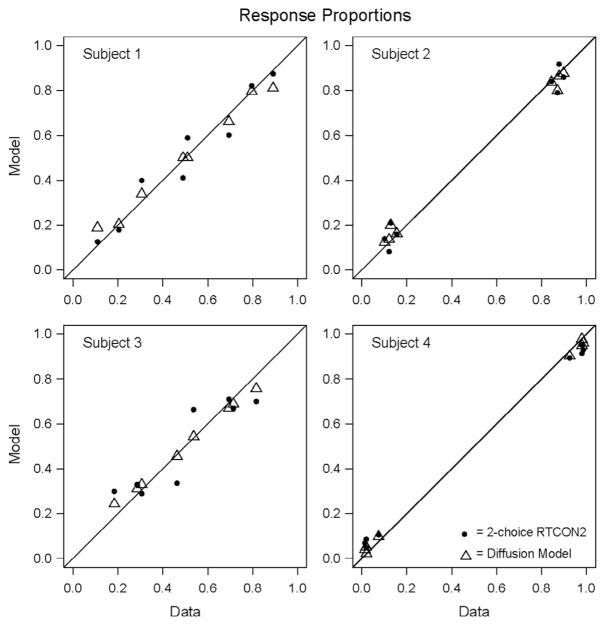

RTCON2 was also able to capture the proportion of responses in each condition and confidence category. In Fig. 4, the empirical response proportions for each subject are plotted against the predicted response proportions for that subject (with a reference line with an intercept of 0 and a slope of 1). We can see that the model matches the data reasonably well for all subjects. ROC and z-ROC functions from both the model predictions and the empirical data for each subject are plotted in Fig. 5. The solid lines depict the empirical data, the dashed lines are the predictions from the model, the black lines are for HF word pairs and the gray lines are for LF word pairs. If the model is successful at capturing the response patterns of the subjects, then the dashed lines should match the solid lines. The linearity of each of the individual z-ROC curves was tested using maximum likelihood estimation (Ogilvie & Creelman, 1968) and subjects 3, 4, and 5 were all found to have z-ROC curves that are significantly different from linear (χ2 values are reported in Table 2). Subjects 1 and 2 have z-ROC functions that are not significantly different from linear, subject 3 has inverted U-shaped z-ROC functions, and subjects 4 and 5 have U-shaped z-ROC functions. The model’s predicted ROC and z-ROC functions are relatively close to the empirical functions and exhibit the same linear and nonlinear patterns found in the empirical data for the first three subjects, but the model predicts linear z-ROC functions for subjects 4 and 5. These misfits can occur for several reasons, which will be discussed in greater detail following Experiment 3. In short, mismatches between the empirical data and model predictions occur for subjects and conditions with low numbers of observations or certain patterns of response proportions which are difficult for RTCON2 to handle. Specifically, subject 2 made very few high-confidence errors in any condition (fewer than 1.8%) and the model had difficulty producing such extreme response proportions so that a small difference between predicted and observed proportions leads to a miss in the predictions for the extreme points on the z-ROC. Similarly, subject 4 also made very few high-confidence errors. While such misses were numerically small (for example, the model predicted that subject 4 would make high confidence errors about 9.3% of the time instead of 2.2%), such small misses are exaggerated in the z-transformed ROC function. More crucially, as will be discussed following Experiment 3, certain patterns of data give rise to u-shaped z-ROC functions that are difficult for RTCON2 to produce.

Fig. 4.

Experiment 1: Empirical response proportions plotted against predicted response proportions (for the six confidence conditions and four experimental conditions for each subject) with a reference line with an intercept of 0 and a slope of 1.

Fig. 5.

Experiment 1: ROC and z-ROC functions for each subject and each condition. The solid lines are the functions from the behavioral data and the dashed lines are the predictions from RTCON2. The black lines are the functions for HF words and the gray lines are the functions for LF words. Conditions where subjects made fewer than 10 responses are omitted from the figure.

Table 2.

Linearity analysis of behavioral z-ROC curves – Experiment 1.

| Subject 1 | Subject 2 | Subject 3 | Subject 4 | Subject 5 | Average | |

|---|---|---|---|---|---|---|

| LF | 4.85 | 4.65 | 26.18* | 56.84* | 17.86* | 30.15* |

| HF | 2.30 | 5.61 | 10.39* | 75.00* | 18.59* | 20.17* |

df = 3, critical value = 7.815.

χ2 is significant at the p < .05 level.

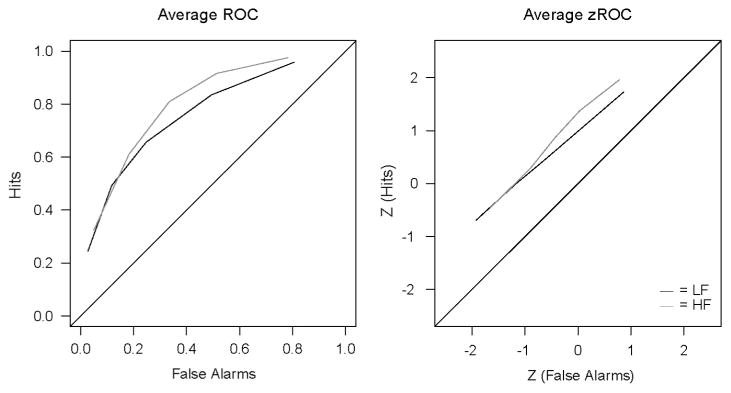

Additionally, as noted earlier, in much of the research investigating memory models it is common practice to examine only group data. For illustrative purposes, Fig. 6 shows the ROC and z-ROC functions for this experiment averaged across subjects. The linearity of the averaged z-ROC curve was also tested using the maximum likelihood estimation method (see the last column of Table 2). While the χ2 values indicate that the averaged z-ROC function is still significantly different from linear, we can see that the variety present in the individual z-ROC shapes is largely obscured by averaging.

Fig. 6.

Experiment 1: ROC and z-ROC functions for data averaged across subjects. The gray lines are the low-frequency condition and the black lines are the high-frequency condition.

In RTCON2, the shape of the z-ROC function is primarily dependent on the relative heights of the individual decision boundaries, provided the proportion of responses in each confidence category is not tiny (e.g., less than 1%). Simulations of the original RTCON model demonstrated that inverted u-shaped decision boundaries can yield inverted u-shaped z-ROC functions (Ratcliff & Starns, 2009) and fits of RTCON2 to item recognition also demonstrated this relationship (Ratcliff & Starns, 2013). In this experiment we see that the relative shape of the decision boundaries across categories corresponds to the shape of the z-ROC curves for some of the subjects. The setting of these decision boundaries is assumed to be entirely under the control of the subject, although it can be affected by instructions (Ratcliff & Starns, 2009), and so reflects an individual decision-making preference. Moreover, the relative shape of the decision boundaries is primarily constrained by the reaction time data. If a subject’s boundary for a given confidence response is set higher than the other boundaries, those responses will be slower (and the proportion of responses will be lower than if the boundary was set lower). This relationship is illustrated in Fig. 7, where the shape of the decision boundaries matches the shape of both the reaction time quantile functions for most of the subjects. The relationship between the shape of the z-ROC function and the decision boundaries is apparent for some subjects (e.g., subject 3) but not for others.

Fig. 7.

Experiment 1: Comparison of RT quantile shapes (from Intact – LF condition), z-ROC functions, and the relative heights of the decision boundaries for each subject. Plotting conventions are the same as for Figs. 3 and 5.

This experiment demonstrates that RTCON2 can fit both RT and accuracy data from an associative recognition experiment with confidence responses. The RTCON2 model distinguishes between different sources of variability, can fit individual differences in how people use confidence response scales, and provides an alternative explanation for the shape of ROC and z-ROC functions that is linked to reaction time and decision-related processes rather than changes in the nature of information from memory.

RTCON2 is able to fit both response proportions and reaction time distributions from a confidence judgment paradigm, and does so without a 1:1 mapping between accuracy and confidence. Additionally, the model is able to account for individual differences in how subjects use the confidence scale and as a result can produce a variety of ROC and z-ROC shapes. This explanation for various ROC and z-ROC shapes is entirely based on the decision-making process and individual differences in how people make confidence judgments. Therefore, some of the behavioral evidence that has been the primary support for additional memory processes may be alternatively explained through the addition of an explicit model of the decision-making process. These findings demonstrate the importance of focusing not only on what kind of information is used in a decision, but also on how the decision-making process handles that information and makes a decision.

Experiment 2

The RTCON2 model distinguishes between the representation of information from memory and how that information is used to make a decision. As such, it is important to demonstrate the validity of the representation of information in RTCON2. To this end, the second experiment was designed to compare the RTCON2 model with the standard two-choice diffusion model (Ratcliff, 1978; Ratcliff & McKoon, 2008). If these models explain individual differences in decision-making in the same way, then their parameter values should be consistent when fit to the same data. Additionally, the two-choice diffusion model’s ability to explain decision making behavior over a wide range of tasks is well-established so comparison of the two models can lend validity to the RTCON2 model. This experiment allows us to compare 6-choice and two-choice data using the RTCON2 model and then compare the RTCON2 and the diffusion model for the two-choice data. The parameters from the diffusion and RTCON2 models should be consistent when fit to the two-choice data, and the RTCON2 model should be able to fit both 6-choice and two-choice data with select parameters held constant across the number of response options.

Since most decision models focus on two-choice tasks (Busemeyer & Townsend, 1992; Laming, 1968; Link, 1975; Ratcliff, 1978; Ratcliff & McKoon, 2008; Ratcliff & Rouder, 1998; Ratcliff, Van Zandt, & McKoon, 1999; Usher & McClelland, 2001; Wagenmakers, 2009), these models are well-established and provide a benchmark for model performance. Because RTCON2 can be seen as an extension of the diffusion model, the models are quite similar. Just like RTCON2, the diffusion model has parameters that describe the non-decision process (non-decision time and variability in non-decision time, st), drift rate parameters that describe the quality of evidence entering the decision process (mean ν and between-trial variability η), and starting point (z) and boundary (a) parameters that control the amount of evidence needed to make a decision (as well as any bias toward a particular response). However, there are a few differences between the models which make their comparison worthwhile. First, the models represent the accumulation rate differently. In the diffusion model, the rate of evidence accumulation is represented as a discrete value that varies between items. In RTCON2, the rate of evidence accumulation for each response is determined by the area between the confidence criteria under a normal distribution with SD of 1 whose mean value (μ) varies between items. The area of this distribution in each response region is then scaled by a parameter (as) to produce an accumulation rate for each confidence response. Second, unlike the diffusion model, RTCON2 has no closed form solution and so must be fit by simulation methods.

In this task, subjects will alternate between using a 6-choice confidence response scale, and a two-choice response scale (corresponding to a simple intact/rearranged decision).

Method

Subjects

Four Ohio State University undergraduate students participated in 7 sessions and earned $10 for each completed session.

Materials

The stimuli were drawn from the same high-frequency, low-frequency, and very-low-frequency word pools described in the first experiment. Study lists were composed of 12 high-frequency words, 12 low-frequency words, and 4 very-low-frequency words selected randomly (without replacement) from the word pools. These words were randomly paired within frequency to create 14 word pairs (6 high-frequency pairs, 6 low-frequency pairs, and 2 very-low-frequency pairs). As in the first experiment, the 2 very-low-frequency word pairs served as buffer items for the study list and were presented in the first and last position within each list. All of the target word pairs were presented twice within each study list and were assigned to study-list positions randomly with the restriction that repeated pairs had at least one intervening word pair.

As in experiment one, test lists consisted of the two buffer word pairs (which were again presented in the first and last positions of the test list) and the 12 target pairs. Each pair was presented only once during the test list and exactly half of the target pairs were randomly rearranged within frequency and number of presentations. Thus each test list consisted of 2 buffer word pairs, 6 rearranged word pairs and 6 intact word pairs. Intact pairs consisted of words which had appeared together in the study list and rearranged pairs consisted of words which appeared in different pairs in the study list.

Procedure

Each experimental session lasted approximately 50 min. The first two sessions for each subject consisted of a response-key practice block, 3 study/test blocks, a second response-key practice block, and 20 more study/test blocks. The second response-key practice block was dropped after the first two sessions, because subjects were familiar with the response keys and no longer needed the additional practice. During the first three study/test blocks, subjects alternated between using 6 or 2 response-keys between each list (one 6-choice list, then one two-choice list, then another 6-choice list). During the last twenty study/test blocks, subjects alternated between blocks of lists (three two-choice lists, then seven 6-choice lists, then three two-choice lists, then seven 6-choice lists). Subjects responded using a PC keyboard on which the Z, X, C, comma, period, and slash keys were labeled with the symbols “− − −”, “− −”, “−”, “+”, “+ +”, and “+ + +”. Subjects were instructed to place their left-hand ring, middle, and index fingers on the “− − −”, “− −”, and “−” keys and their right-hand index, middle, and ring fingers on the “+”, “+ +”, and “+ + +” keys.

During the response-key practice, each of the symbols marked on the keyboard (e.g., “− −”) would appear on the screen one at a time and the subjects were told to press the designated key as quickly as possible. If a subject took longer than 800 ms to respond to one of the symbols, a “TOO SLOW” message would appear on the screen for 1000 ms. Each practice block consisted of 10 repetitions of each of the six response key options resulting in 60 trials total in each block. The symbols appeared in random order within the block with the restriction that repeated symbols had to have at least one intervening symbol.

For the remainder of the experiment, subjects were told that they would be presented with pairs of words during the study portions of the experiment and their job was to learn these pairs. Additionally, subjects were informed at the beginning of each study-list and each test-list how many different response-keys were to be used for that study/test block (e.g., ‘Please use all 6 confidence categories for the next study list’). During the study/test blocks, subjects initiated the start of each study list by pressing the spacebar. Each word pair in the study list was displayed for 3000 ms followed by 200 ms of blank screen. Immediately after the final study-list word pair, a message appeared directing subjects to press the space bar to begin the test list. During the test-list, subjects were required to distinguish between the word pairs that had not appeared during the study-list (rearranged word pairs) and those that had (intact word pairs). Each word pair remained on the screen until the subject had made a response.

On the 6-choice study/test blocks subjects were instructed to use all 6 response-keys to indicate whether a pair was intact or rearranged and their confidence in their response. They were told to use one of the “−” keys to indicate that the word pair had not appeared in the study-list, and to use one of the “+” keys to indicate that it had. Subjects were instructed to use the different levels of “+” and “−” to indicate their amount of confidence in their response (e.g., if a subject felt very confident that a word pair was intact they would use the “+ + +” key, whereas if they felt only moderately confident they would use the “+ +” key). On the two-choice study/test blocks, subjects were instructed to use only the two most extreme response-keys (“+ + +” and “− − −”) to indicate only whether a pair was intact or rearranged. Subjects were encouraged to respond quickly and accurately and to try to spread their responses among all six response-keys throughout the course of the experiment. If a subject took less than 280 ms to respond to one of the test items, a “TOO FAST” message would appear on the screen for 1500 ms. Subjects were given error feedback throughout all test blocks in the form of the words “CORRECT” or “ERROR” displayed for 300 ms after their response to each test item.

Model fitting

The two-choice and 6-choice versions of the RTCON2 model are fit using the same procedure described for the first experiment. To facilitate comparison between RTCON2 and the diffusion model, within-trial variability in the decision process (σ) was fixed to 0.1. All other RTCON2 parameters were allowed to vary freely when fitting the 6-choice data, and then select parameters were fixed when fitting the two-choice data. For the two-choice data, the mean value of the drift rate distributions, the between-trial variability in these mean values, and the between-trial variability in the height of the decision boundaries were fixed to the values estimated from the 6-choice data. As in the first experiment, there are 35 degrees of freedom per condition in the 6-choice task. In the two-choice task, there are also six RT bins for each response key, which gives 12 degrees of freedom, but these 12 proportions have to add to 1, which reduces the degrees of freedom to 11 per condition. With four conditions, this gives a total of 140 degrees of freedom in the 6-choice task and 44 degrees of freedom in the two-choice task. For these fits there were 23 free parameters in RTCON2 for the 6-choice data and 6 free parameters for the two-choice data, and 13 free parameters for the diffusion model.

Results and discussion

Data for this experiment consisted of response proportions and reaction-time quantiles for each subject from each condition and for each response category. Reaction time latencies less than 300 ms or greater than 4000 ms were excluded from this analysis (less than 0.1% of all data).

This experiment was designed to compare the performance of RTCON2 with the diffusion model. There are three main results of this experiment. First, all of the models fit both the proportions of responses in each confidence category and their RT quantiles well for the appropriate tasks. Second, as in the previous experiment, the RTCON2 model also fits the empirical ROC and z-ROC functions for the 6-choice task. Third, there is consistency in the model parameters across the diffusion model and RTCON2, and the RTCON2 model is able to fit data from 6-choice and two-choice task with appropriate parameters fixed across tasks.

For data collapsed over the 6-choice and two-choice tasks, there was a higher hit-rate for LF word pairs (M = 0.88, SD = 0.08) than HF word pairs (M = 0.79, SD = 0.13) and this difference was significant (t(3) = −3.5, p < .05). There was again a higher false-alarm rate for LF word pairs (M = 0.24, SD = 0.19) than HF word pairs (M = 0.21, SD = 0.16) but this difference was not significant (t(4) = −1.9, p > .05).

The diffusion model and two versions of the RTCON2 model (one for 6-choice decisions and one for two-choice decisions) were fit to the data from individual subjects. The RTCON2 model was fit to the 6-choice data and both the diffusion model and RTCON2 model were fit to the two-choice data. The RTCON2 and diffusion models were both able to fit both the quantile reaction-times and response proportions for each condition and response-key. The best-fitting parameters for each model are shown in Tables 3–5. Table 3 contains the parameters for the 6-choice version of RTCON2, Table 4 contains the parameters from the two-choice version of RTCON2, and Table 5 contains the parameters from the diffusion model.

Table 3.

Experiment 2 6-choice RTCON2 model parameters.

| Subject | Ter | st | as | σ | sb | χ2 | ||

|---|---|---|---|---|---|---|---|---|

| 1 | 436 | 280 | 0.03 | 0.1 | 0.48 | 109 | ||

| 2 | 392 | 263 | 0.04 | 0.1 | 0.47 | 129 | ||

| 3 | 373 | 137 | 0.03 | 0.1 | 1.02 | 214 | ||

| 4 | 278 | 252 | 0.03 | 0.1 | 0.86 | 152 | ||

| b1 | b2 | b3 | b4 | b5 | b6 | |||

|

|

||||||||

| 1 | 1.80 | 1.87 | 1.78 | 1.41 | 1.33 | 1.41 | ||

| 2 | 1.59 | 2.15 | 5.52 | 3.21 | 1.92 | 1.45 | ||

| 3 | 2.32 | 1.50 | 1.42 | 1.21 | 1.31 | 1.62 | ||

| 4 | 1.85 | 2.16 | 2.64 | 2.53 | 2.34 | 1.66 | ||

| c1 | c2 | c3 | c4 | c5 | ||||

|

|

||||||||

| 1 | −1.31 | 0.00 | 0.85 | 1.77 | 2.89 | |||

| 2 | −0.48 | 0.00 | 1.19 | 1.29 | 2.18 | |||

| 3 | −0.89 | −0.12 | 0.92 | 1.90 | 2.48 | |||

| 4 | −0.82 | 0.09 | 0.87 | 1.59 | 2.64 | |||

| μrH | μrL | μiH | μiL | srH | srL | siH | siL | |

|

|

||||||||

| 1 | 0.00 | −0.06 | 1.92 | 2.24 | 0.85 | 0.87 | 1.10 | 0.96 |

| 2 | 0.00 | −0.17 | 2.01 | 2.31 | 0.53 | 0.81 | 1.18 | 0.98 |

| 3 | 0.00 | −0.13 | 1.78 | 2.00 | 0.66 | 1.07 | 1.00 | 1.03 |

| 4 | 0.00 | −0.49 | 2.33 | 2.97 | 0.59 | 0.67 | 0.92 | 1.03 |

Ter is the mean nondecision time, st is the range in nondecision time, σ is the SD in within trial variability, as is the scaling factor that multiplies drift rate, sb is the range in variability in the decision boundaries, b1–b6 are the decision boundaries, c1–c5 are the confidence criteria, the μ values are the mean values of the drift rate distributions for each experimental condition, and the s values are the between-trial variability values for each experimental condition (r represents rearranged items, i represents intact items, H represents high-frequency items, and L represents low frequency items).

Table 5.

Experiment 2 diffusion model parameters.

| Subject | Ter | st | a | z | sz | χ2 | ||

|---|---|---|---|---|---|---|---|---|

| 1 | 536 | 350 | 0.13 | 0.05 | 0.09 | 62 | ||

| 2 | 500 | 280 | 0.20 | 0.09 | 0.16 | 39 | ||

| 3 | 463 | 170 | 0.11 | 0.05 | 0.09 | 42 | ||

| 4 | 305 | 10 | 0.17 | 0.08 | 0.08 | 30 | ||

| νrH | νrL | νiH | νiL | ηrH | ηrL | ηiH | ηiL | |

|

|

||||||||

| 1 | −0.21 | −0.28 | 0.20 | 0.09 | 0.29 | 0.36 | 0.27 | 0.31 |

| 2 | −0.32 | −0.39 | 0.24 | 0.23 | 0.32 | 0.36 | 0.14 | 0.22 |

| 3 | −0.02 | −0.18 | 0.10 | 0.15 | 0.23 | 0.17 | 0.01 | 0.16 |

| 4 | −0.23 | −0.38 | 0.26 | 0.37 | 0.14 | 0.15 | 0.01 | 0.15 |

Ter is the mean nondecision time, st is the range in nondecision time, a is the boundary separation, z is the starting point of the accumulation process, sz is the range in variability in the starting point, the ν values are the mean drift rate values for each experimental condition, and the η values are the between-trial variability values for each experimental condition (r represents rearranged items, i represents intact items, H represents high-frequency items, and L represents low-frequency items).

Table 4.

Experiment 2, 2-choice RTCON2 model parameters.

| Subject | Ter | st | as | b1 | b2 | c1 | χ2 |

|---|---|---|---|---|---|---|---|

| 1 | 456 | 295 | 0.01 | 2.10 | 1.51 | 0.61 | 84 |

| 2 | 405 | 233 | 0.02 | 3.07 | 2.46 | 0.84 | 45 |

| 3 | 410 | 108 | 0.01 | 1.72 | 1.49 | 1.10 | 57 |

| 4 | 266 | 60 | 0.02 | 2.88 | 2.42 | 1.14 | 35 |

Ter is the mean nondecision time, st is the range in nondecision time, as is the scaling factor that multiplies drift rate, b1–b2 are the decision boundaries, and c1 is the decision criteria.

For the 6-choice version of the RTCON2 model, the mean χ2 value for this experiment was 151 with a SD of 45. This mean is less than the critical value for χ2 with 140 degrees of freedom and α = 0.05 (168.6) indicating that the model provides an adequate fit to the data. For comparison, the original RTCON model produces an average χ2 value of 211 when fit to this data. For the two-choice version of the RTCON2 model, the mean χ2 value was 55 with a SD of 21. For the standard two-choice diffusion model, the mean χ2 value was 43 with a SD of 13. These means are both less than the critical value for χ2 with 44 degrees of freedom and α = 0.05 (60.5) indicating that both models provide an adequate fit to the data.

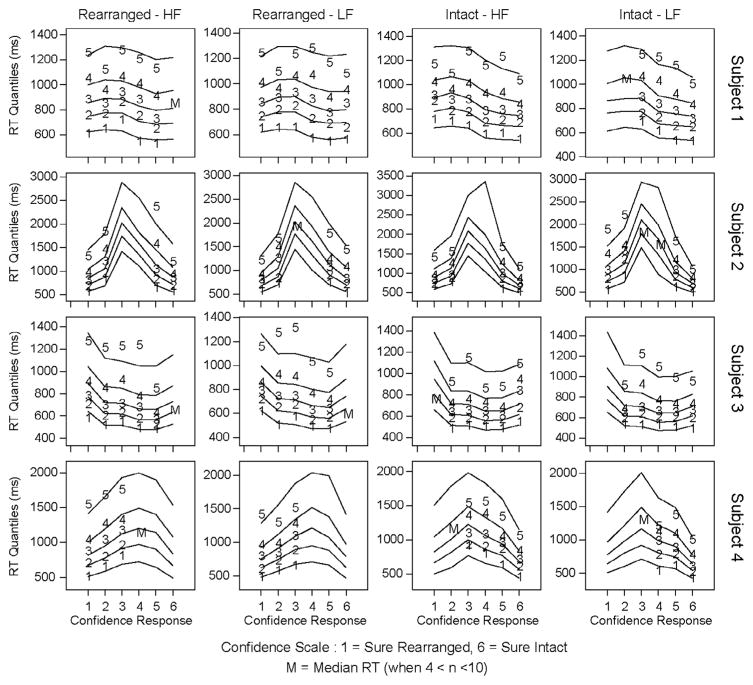

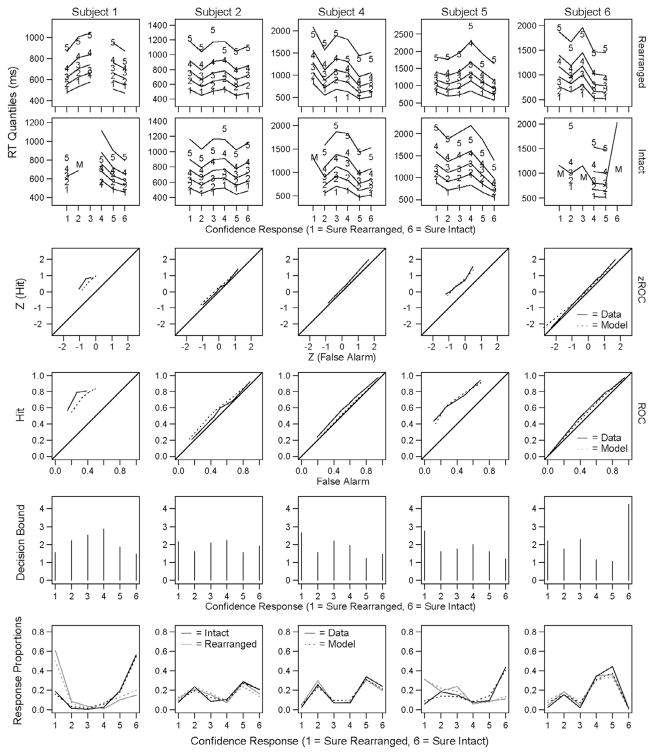

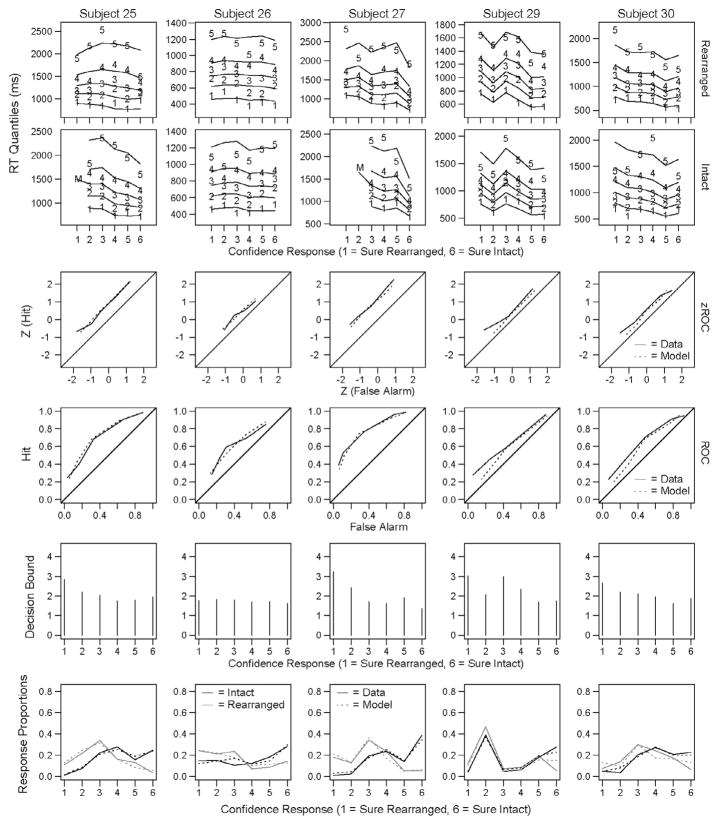

For each subject, their parameter values were used to generate predicted reaction time quantiles and response proportions for each model. These predicted values can then be compared with the empirical data to qualitatively assess the fit of the various models. For the 6-choice task, quantile reaction-times for each subject for each of the four experimental conditions (rearranged high frequency, rearranged low frequency, intact high frequency, and intact low frequency word pairs) are shown in Fig. 8 along with predicted values from the 6-choice version of RTCON2. As in the previous experiment, the 6 response keys are plotted on the x-axis and each line represents a reaction time quantile. The numbers plotted in the figures represent the subject data and the lines represent predicted data. As before, there is considerable consistency in the shapes of the subjects’ RT quantiles across conditions and considerable differences across subjects, and RTCON2 is successful at capturing these effects. Note that there is considerably less 6-choice data for this experiment compared to the first (since subjects in this experiment were alternating between using a 6-choice response scale and a two-choice response scale), so there are more conditions where subjects made fewer than 10 responses over the course of all of the sessions.

Fig. 8.

Experiment 2: Quantile reaction times from the 6-choice task for each condition for each subject. Confidence responses are plotted along the x-axis (ranging from 1: Sure Rearranged to 6: Sure Intact). The numbers 1–5 depict the RT quantiles from the behavioral data and the corresponding lines depict the predictions from RTCON2. In conditions where subjects made between 4 and 10 responses the median RT is plotted as an ‘M’ and the other quantiles are not included. Conditions where subjects made fewer than 5 responses are omitted from the figure (e.g., subject 4 made fewer than 5 ‘Intact’ responses of any confidence level to rearranged low-frequency word pairs so there are no behavioral data plotted for those conditions).

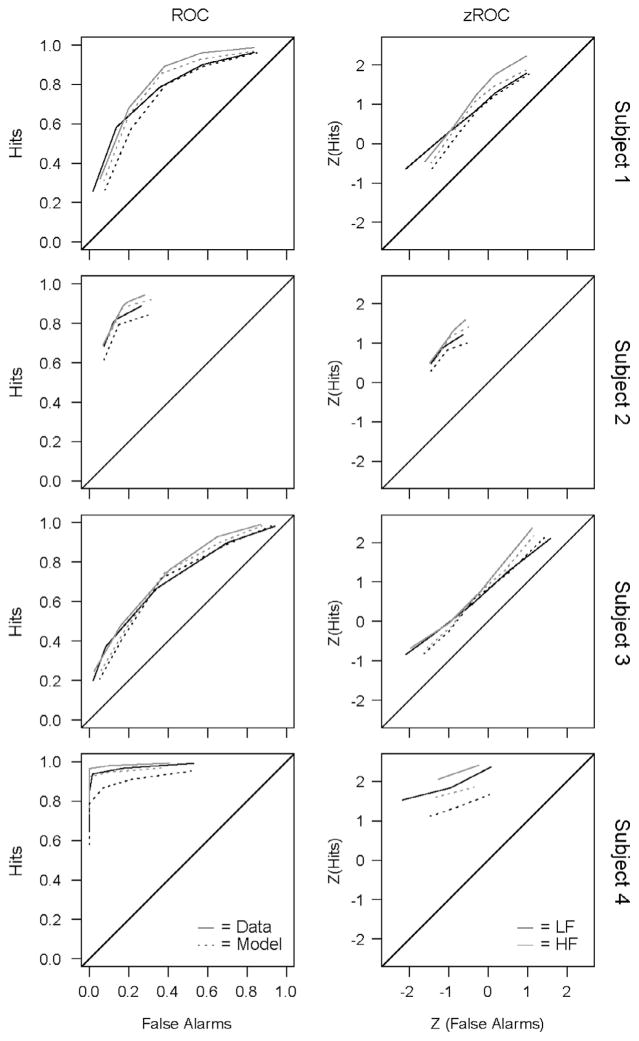

Despite these small numbers of observations, RTCON2 was still able to capture the proportion of responses in each condition and confidence category. In Fig. 9, the empirical response proportions for each subject are plotted against the model’s predicted response proportions for that subject (with a reference line with an intercept of 0 and a slope of 1). We can see that the model matches the data quite well for all subjects. ROC and z-ROC functions from both the model predictions and the empirical data for each subject are plotted in Fig. 10. The solid lines depict the empirical data, the dashed lines are the predictions from the model, the gray lines are the LF word pairs, and the black lines are the HF word pairs. If the model is successful at capturing the response patterns of the subjects, then the dashed lines should match the solid lines. The model’s predicted ROC and z-ROC functions are close to the empirical functions and generally exhibit the same linear and nonlinear patterns found in the empirical data, although there are slightly larger mismatches for subjects with extremely low numbers of observations in some conditions (such as subject 4, who made very few errors across all of the sessions).

Fig. 9.

Experiment 2: Empirical response proportions from the 6-choice task plotted against predicted response proportions (for the six confidence conditions and four experimental conditions for each subject) with a reference line with an intercept of 0 and a slope of 1.

Fig. 10.

Experiment 2: ROC and z-ROC functions from the 6-choice task for each subject and each condition. The solid lines are the functions from the behavioral data and the dashed lines are the predictions from RTCON2. The black lines are the functions for HF words and the gray lines are the functions for LF words. Conditions where subjects made fewer than 10 responses are omitted from the figure.

The linearity of the subjects’ z-ROC curves was tested using maximum likelihood estimation (Ogilvie & Creelman, 1968) and two subjects had z-ROC curves that were significantly different from linear: the low-frequency condition for subject 3 and both conditions for subject 4 (χ2 values are reported in Table 6).

Table 6.

Linearity analysis of behavioral zROC curves – Experiment 2.

df = 3, critical value = 7.815.

χ2 is significant at the p < .05 level.

For the two-choice task, Fig. 11 compares the predicted RT values from the two-choice version of RTCON2 and the diffusion model with the individual subjects’ data. Data in each row are from a single subject and data in each column are from a single experimental condition (rearranged high frequency, rearranged low frequency, intact high frequency, and intact low frequency word pairs) with the 2 response keys on the x-axis (1 representing ‘rearranged’ and 2 representing ‘intact’). The numbers plotted in the figures represent the subject data, the solid lines represent predicted data generated from RTCON2, and the dashed lines represent predicted data generated from the diffusion model. The predicted data from both models fit the empirical RT data relatively well. Both models are also able to capture the proportion of responses in each condition and response category. In Fig. 12, the empirical response proportions for each subject are plotted against the RTCON2 model’s predicted response proportions (the dots) and against the diffusion model’s predicted response proportions (the x’s) with a reference line with an intercept of 0 and a slope of 1. Although both models match the data reasonably well, the diffusion model’s predictions provide a slightly better match to the empirical data.

Fig. 11.

Experiment 2: Quantile reaction times from the two-choice task for each condition for each subject. The numbers 1–5 depict the RT quantiles from the behavioral data, the solid lines depict the predictions from RTCON2, and the dashed lines depict the predictions from the diffusion model. In conditions where subjects made fewer than 10 responses the median RT is plotted as an ‘M’ and the other quantiles are not included.

Fig. 12.

Experiment 2: Empirical response proportions from the two-choice task plotted against predicted response proportions from the RTCON2 model (the triangles) and the diffusion model (the dots) for each subject (for the two responses and four experimental conditions) with a reference line with an intercept of 0 and a slope of 1.

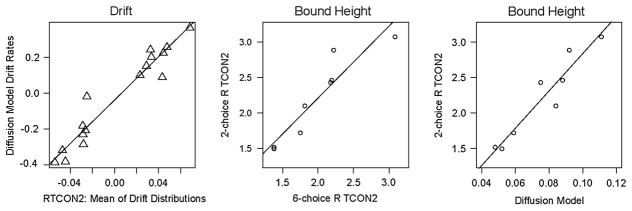

Parameters from the 6-choice version of RTCON2 were fixed for fits of the two-choice version of RTCON2, and parameters from the two-choice version of RTCON2 were compared with corresponding diffusion model parameters. Not all of the model parameters are directly comparable given that the models have different numbers of confidence criteria and decision boundaries. However, parameters that represent the quality of evidence from the stimuli (such as drift rate) and parameters that reflect individual differences in decision making (such as decision boundaries) should be consistent across all the models and tasks. A comparison of drift rate values and boundary heights across models is shown in Fig. 13. In the figure on the left, drift rate values from the diffusion model are plotted against the mean of the drift distributions from the RTCON2 model (based on fits of the 6-choice data) along with a linear regression line. The RTCON2 model fixes the mean of one of the drift distributions to zero, and allows the other drift distributions and confidence criteria to vary (see Table 3). The diffusion model allows all of the drift values to vary. For comparison purposes, for this figure the drift values from the RTCON2 model have been adjusted to match the diffusion model (mean drift values were shifted such that the middle confidence criterion was at zero) and multiplied by the scaling parameter. The two models produced very similar estimates of drift rate. This demonstrates that the RTCON2 model is able to produce estimates of the quality of evidence used in a decision that are comparable to the estimates produced by the more established standard diffusion model. In the figures in the middle and on the right of Fig. 13, the decision boundaries from the two-choice RTCON2 model are plotted against the boundaries from the 6-choice RTCON2 model and the diffusion model. For the 6-choice model, the heights of the ‘intact’ and ‘rearranged’ response options were averaged over confidence level to produce two values to compare to the parameters estimated from the two-choice task. For the diffusion model, the total distance between the two decision boundaries (a) was split into the distance from the starting point (z) to produce two values (z and a–z) to compare to the parameters estimated from the two-choice task. For both figures, a linear regression line is included for reference. Overall, the models produce similar estimates of decision boundary heights. This demonstrates both that the RTCON2 model is able to produce estimates of response caution that are comparable to estimates produced by the diffusion model and that individual differences in response caution appear consistent across response options.

Fig. 13.

Experiment 2: Comparison of drift rates and decision bounds across the models along with best-fitting linear regression lines. In the figure on the left, drift rate values have been shifted and adjusted to account for differences in how the models parameterize drift rates. In the middle figure, for the 6-choice RTCON2 model the heights of the ‘intact’ and ‘rearranged’ response options were averaged over confidence level. In the figure on the right, for the diffusion model the total distance between the two decision boundaries (a) was split into the distance from the starting point (z) to produce two values (z and a–z). Original values for all parameters are available in Tables 3–5.

This experiment provided another demonstration of RTCON2’s ability to fit a variety of z-ROC functions as well as bowed reaction time quantiles. Additionally, this experiment demonstrated consistency in model parameters within subjects and across tasks. The RTCON2 model was able to fit data from both a 6-choice task and a two-choice task with a reasonable subset of the parameters held constant across tasks. We also observed considerable correspondence between the drift rates across models. This indicates that, regardless of task differences (which likely affect other parameters such as those related to making a decision), the quality of evidence extracted from the stimulus can be held constant across task conditions.

Experiment 3

The third experiment was designed to collect a moderate number of observations from a larger group of subjects with the goal of providing more examples of non-linear z-ROC shapes to be fit by the model. As in the previous experiments, subjects in this experiment studied lists of pairs of words and then were presented with pairs of test words and had to distinguish between intact and rearranged versions of the study pairs. For this experiment, the study lists were slightly longer and each item was presented for a shorter duration in order to collect more observations from each session.

Method

Subjects

34 Ohio State University undergraduate students participated in 2 sessions each and earned research credit for an introductory Psychology course for each completed session.

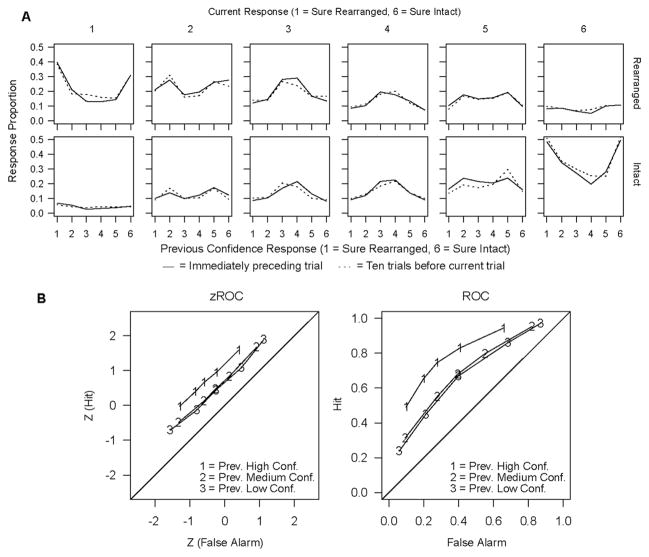

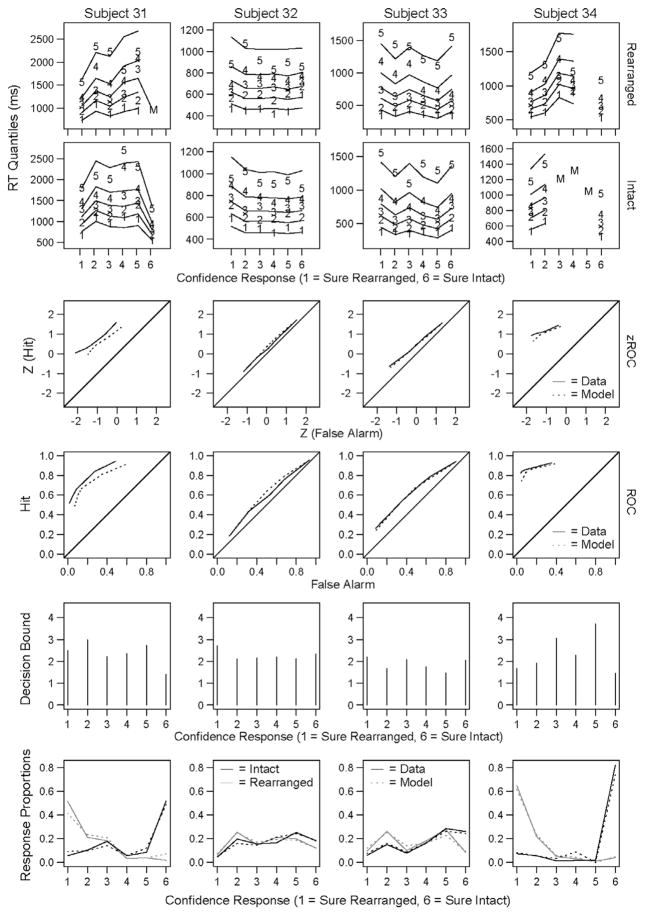

Materials