Abstract

Saccadic eye movements are the result of neural decisions about where to move the eyes. These decisions are based on visual information accumulated before the saccade; however, during an ≈100-ms interval immediately before the initiation of an eye movement, new visual information cannot influence the decision. Does the brain simply ignore information presented during this brief interval or is the information used for the subsequent saccade? Our study examines how and when the brain integrates visual information through time to drive saccades during visual search. We introduce a new technique, saccade-contingent reverse correlation, that measures the time course of visual information accrual driving the first and second saccades. Observers searched for a contrast-defined target among distractors. Independent contrast noise was added to the target and distractors every 25 ms. Only noise presented in the time interval in which the brain accumulates information will influence the saccadic decisions. Therefore, we can retrieve the time course of saccadic information accrual by averaging the time course of the noise, aligned to saccade initiation, across all trials with saccades to distractors. Results show that before the first saccade, visual information is being accumulated simultaneously for the first and second saccades. Furthermore, information presented immediately before the first saccade is not used in making the first saccadic decision but instead is stored and used by the neural processes driving the second saccade.

Saccadic eye movements reorient the fovea's line of sight to explore objects of interest. Each saccade is the result of a neural decision based on the processing of visual information. Neural activity related to motor preparation and visual selection has been measured in different brain areas before saccade execution (1-4); however, it is still unknown when and how the brain accumulates the visual information used to choose the destination of each saccade. Immediately before each saccade's execution, as a consequence of sensory transduction and motor pathway delays (5), there is a “dead time,” an ≈100-ms interval in which visual information does not influence the destination of the saccade. What is the impact of this dead time on performance and strategy in a search task? Searching for an object in a scene typically requires a sequence of several saccades. If such a sequence were a concatenation of separate, independent neural decisions, each with its own dead time, then searching a complex scene would be very inefficient and difficult. Instead, under some conditions, it appears that a fast sequence of saccades is programmed in parallel (6-12). Subsequent saccadic latencies can be very short compared with the initial saccade's latency (7, 8), and in some cases the second saccade even disregards visual information presented after the execution of the first saccade (10, 11). Recently, a study measuring neural activity in the superior colliculus of monkeys provided evidence that for sequences of fast saccades, motor activity related to the goal of a second saccade can temporally overlap with activity related to an initial saccade (13). However, the time course of how the brain weights and accumulates visual information used to guide the first and second saccades is still unknown.

Correlating human perceptual decisions with stimuli containing external noise can elucidate the mechanisms mediating decisions and actions (14-17). This technique, referred to as “reverse correlation” or “classification images,” has been used to study the mechanisms humans use to process spatial (18-20) and temporal (21, 22) visual information. The method uses the noise features that led to incorrect decisions to retrieve the weights that an observer used to integrate visual information. Here, we record human eye movements during visual search for a bright target among distractors and use the temporal reverse correlation technique aligned to saccade initiation to measure how the saccadic system integrates visual information over time. In our experiments, the contrast of the target and the distractors varied through time because of statistically independent temporal noise. Noise making a distractor brighter than the target will tend to lead observers to make an incorrect saccade to that distractor. The logic of the current experiment is that a saccade will be affected only by noise presented during the time in which the brain integrates information and not by noise presented during the dead time before saccadic execution. Thus, by averaging over trials the time series of noise values presented at the distractor location selected by the saccadic eye movement, we obtain a profile of the temporal window in which the brain accumulates visual information for eye movements during search.

Methods

Saccade-Aligned Temporal Classification Plots. The observer's task was to search for a bright Gaussian-shaped target among four dim Gaussian distractors (Fig. 1). The intensities of the target and the distractors were varied over time by drawing samples from independent normal distributions every 25 ms. The mean intensity of the sampling distribution for the target location was higher than that for the distractor locations. Gaze position as a function of time was recorded along with the noise values presented at each instant at the target and distractor locations. For each saccade, we defined the saccadic decision as the possible target location closest to the saccade's endpoint (23-25).¶ To elucidate the time interval in which the brain accumulates visual information for a sequence of saccades, we computed classification plots separately for the first and second saccades. To obtain the classification plots, we used only trials in which the saccades went to a distractor location. For each trial, we aligned the time series of the noise intensity levels relative to the first saccade's initiation time (Fig. 2). We then averaged the time series of noise intensities over these trials to obtain the classification plots. Because we average across trials with different saccadic latencies, the classification plot yields the average temporal window of integration. This window specifies how the brain acquires visual information over time to guide the saccade. We verified by computer simulations that the technique retrieves the average linear integration window even when latencies vary (see Fig. 5, which is published as supporting information on the PNAS web site).
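The saccadic decision rule and the saccade-aligned averaging described above can be sketched in Python as follows. This is a minimal illustration, not the authors' analysis code. The array layout, the function names and the 400-ms analysis window are hypothetical choices. The layout assumes one noise sample per 25-ms frame and location, stored as the deviation from the mean luminance. The 5-ms bin width is taken from the Fig. 2 caption. Only trials whose saccade landed on a distractor should be passed in.

```python
import numpy as np

FRAME_MS = 25  # one noise sample per frame (40 Hz)
BIN_MS = 5     # bin width of the classification plot (Fig. 2)


def saccadic_decision(endpoint, locations):
    """Return the index of the possible target location closest to a saccade endpoint."""
    dist = np.linalg.norm(np.asarray(locations, float) - np.asarray(endpoint, float), axis=1)
    return int(np.argmin(dist))


def classification_plot(noise, chosen, onset_ms, t_min=-400, t_max=0):
    """Average the noise at the chosen distractor, aligned to saccade onset.

    noise    : (n_trials, n_frames, n_locations) noise samples (luminance minus its mean);
               pass only trials in which the saccade went to a distractor
    chosen   : (n_trials,) index of the distractor selected by the saccade
    onset_ms : (n_trials,) saccade onset relative to stimulus onset, in ms
    Returns bin start times (ms relative to saccade onset) and the mean noise per bin.
    Bins that fall outside the stimulus on a given trial are skipped for that trial,
    so a bin to which no trial contributes is NaN.
    """
    n_trials, n_frames, _ = noise.shape
    times = np.arange(t_min, t_max, BIN_MS)
    sums = np.zeros(times.size)
    counts = np.zeros(times.size)
    for i in range(n_trials):
        # index of the frame on screen at each bin, counted from stimulus onset
        frame = np.floor((onset_ms[i] + times) / FRAME_MS).astype(int)
        valid = (frame >= 0) & (frame < n_frames)
        sums[valid] += noise[i, frame[valid], chosen[i]]
        counts[valid] += 1
    with np.errstate(invalid="ignore"):
        mean = sums / counts  # 0/0 gives NaN for bins without data
    return times, mean
```

Working in 5-ms bins while the noise changes only every 25 ms means that each frame contributes to five consecutive bins on a given trial. Across trials with different latencies, however, the frame boundaries fall at different saccade-relative times, which smooths the average.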

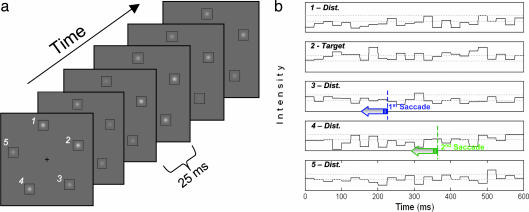

Fig. 1.

Dynamic search task. (a) The stimulus consists of a target and four distractors. In this example, the target was at the location marked by the number 2. On average the target was brighter than the distractors, but on each 40-Hz frame the intensities of the target and each of the distractors were chosen from independent normal distributions. (b) The intensities at the target and the distractor locations were recorded as a function of time along with eye positions. The dashed horizontal lines show the average intensities for the target and distractors. On some individual frames the intensity of a distractor is higher than that of the target because of the random noise. Such frames may lead the observer to make a saccade toward a distractor, but only if they are presented during the temporal window in which visual information is accumulated to guide the saccade.

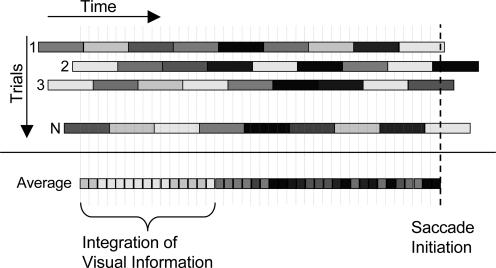

Fig. 2.

Computation of temporal classification plots. The illustration shows the way noise samples are analyzed to obtain the classification plots. The gray level of each rectangle represents the noise value on a single frame (25 ms). A row describes an individual trial and shows the noise values as a function of time at the distractor location incorrectly chosen by the saccade. The time course of noise values across trials is aligned with respect to saccade initiation, such that time equal to zero corresponds to saccade initiation on each trial, which is indicated by the vertical bold dashed line. The classification plots were obtained by averaging the noise values for each time interval (5-ms bins) over all incorrect trials for an observer. The different time windows are marked by the vertical gray dotted lines. Average noise values at each time window before the saccade initiation are represented by the gray level of the rectangles in the bottom row. The average noise intensity is close to zero (darker) at times when the stimulus information had no effect on the saccade's endpoint, but it is significantly larger than zero (lighter) at times when the visual information influenced the saccade's endpoint.

Human classification plots for the first and second saccades were obtained by averaging noise samples over trials for which the first and second saccades were to distractors. To compare the temporal integration used by the first and second saccades relative to the execution of the first saccade, for both classification plots we temporally aligned the noise samples relative to the time of the first saccade's initiation.
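The averaging can also be written in symbols. The notation is introduced here for illustration and does not come from the original:

- $n_i^{(j)}(t)$ is the noise added at location $j$ on trial $i$ at time $t$ after stimulus onset.
- $s_i$ is the onset time of the first saccade on trial $i$.
- $d_{k,i}$ is the distractor selected by the $k$th saccade.
- $\mathcal{D}_k(\tau)$ is the set of trials in which saccade $k$ landed on a distractor and time $s_i + \tau$ falls within the 600-ms stimulus.

The two classification plots would then be:

```latex
c_k(\tau) = \frac{1}{\lvert \mathcal{D}_k(\tau) \rvert}
            \sum_{i \in \mathcal{D}_k(\tau)} n_i^{(d_{k,i})}\!\left(s_i + \tau\right),
\qquad k \in \{1, 2\}
```

Because the alignment point $s_i$ is the first saccade's onset for both values of $k$, the first- and second-saccade curves share the same time axis $\tau$.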

Task. Before each trial the observer fixated a black dot and pressed the “Enter” key when he/she was ready to start the trial. After the fixation dot, a central cross appeared on the screen. This central cross remained visible throughout the trial. Subjects were instructed to fixate the cross until the onset of the stimulus. To reduce temporal anticipation, the duration between the appearance of the cross and stimulus onset was randomly chosen from a uniform distribution between 200 and 700 ms. If an observer made an eye movement larger than 1.5° before the stimulus appeared, the trial was aborted. The stimulus consisted of a 600-ms sequence of frames, with new samples of the target and distractor luminance presented every 25 ms (40 Hz). At the end of each trial, a response screen appeared. Observers indicated their five-alternative forced-choice perceptual decision about which location contained the target by using the computer mouse to place the cursor on the chosen location and clicking the left mouse button.

Each session began with a standard eye tracker calibration and validation procedure and consisted of 100 trials. Observers performed three to five sessions a day, with a recess between sessions. Subjects who participated in this study were either undergraduate psychology students or paid observers with normal or corrected-to-normal vision.

Images and Display. Stimuli were viewed binocularly on a 17-inch gray-scale monitor (M17L, Image Systems Corp., Minnetonka, MN) with an analog display controller (Dome Md2, Planar Systems, Waltham, MA). The monitor luminance was linearized by using a lookup table. The target and the distractors were spatial Gaussians with a full width at half maximum of 0.376°. Their luminance amplitudes were randomly changed every 25 ms (40 Hz) by sampling from separate normal distributions for the target and the distractors. Both target and distractor distributions had a standard deviation of 1.5 cd/m². The target's mean amplitude was 6.8 cd/m² and that of the distractors was 4.7 cd/m². The target and distractor luminance values were added to a gray background (27.5 cd/m²), and each was enclosed by a 1.2° black square outline. The target and distractors were evenly spaced (separated by angles of 72°) along an imaginary circle at 6.4° eccentricity. To reduce spatial anticipation of the target location, on each trial all of the target and distractor locations were displaced along the imaginary circle by a random overall rotation (quantized into 18° steps).
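The stimulus parameters above can be turned into a per-trial generator. The sketch below is written under stated assumptions and is not the authors' display code:

- The target location is drawn uniformly at random on each trial, which the text does not specify.
- Coordinates are in degrees relative to the central cross.
- The names `make_trial` and `SIGMA_DEG` are hypothetical.
- Rendering of the Gaussian blobs is omitted. Only the blob's standard deviation is derived from the stated FWHM.

```python
import numpy as np

FRAME_MS, STIM_MS = 25, 600
N_FRAMES = STIM_MS // FRAME_MS                    # 24 frames at 40 Hz
N_LOC = 5
TARGET_MEAN, DISTRACTOR_MEAN, SD = 6.8, 4.7, 1.5  # amplitudes in cd/m^2
BACKGROUND = 27.5                                 # cd/m^2; peak luminance = BACKGROUND + amplitude
ECCENTRICITY_DEG = 6.4
SIGMA_DEG = 0.376 / (2 * np.sqrt(2 * np.log(2)))  # Gaussian SD from the 0.376-deg FWHM


def make_trial(rng):
    """Hypothetical generator: target index, amplitudes, noise and locations for one trial."""
    target = int(rng.integers(N_LOC))             # assumption: uniform target location
    means = np.full(N_LOC, DISTRACTOR_MEAN)
    means[target] = TARGET_MEAN
    # an independent normal sample for every frame and every location
    amplitudes = rng.normal(means, SD, size=(N_FRAMES, N_LOC))
    noise = amplitudes - means                    # quantity analyzed by reverse correlation
    # five locations 72 deg apart, rotated as a whole by a random multiple of 18 deg
    rotation = 18 * int(rng.integers(360 // 18))
    angles = np.deg2rad(rotation + 72 * np.arange(N_LOC))
    xy = ECCENTRICITY_DEG * np.column_stack([np.cos(angles), np.sin(angles)])
    return target, amplitudes, noise, xy
```

Because 72° is a multiple of 18°, the quantized rotation yields only four distinct spatial layouts of the five locations. The random choice of target location within the layout provides the remaining spatial uncertainty.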

Eye Movement Recording. An infrared video-based eye tracker sampling at 250 Hz (Eyelink I, SMI/SR Research Ltd., Osgoode, ON, Canada) was used to measure gaze position. Measurements were made only on the left eye. At the beginning of each session, calibration and validation were performed by using nine black dots that were arranged in a 16° by 16° grid. The results of the validation were considered “valid” only if the maximum error was <1° and the average error was <0.5°. A head camera compensated for small head movements. In addition, observers were positioned on a chin rest and instructed to hold their head steady. Saccades were detected when both eye velocity and acceleration exceeded a threshold (velocity > 35°/s; acceleration > 9,500°/s²). Saccades less than 2.1° from the central cross were ignored when the saccadic decision was computed.
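The velocity and acceleration criterion can be illustrated with a simple offline detector. This is a hedged sketch, not the Eyelink parser, whose internal filtering is not described here. It assumes gaze positions in degrees sampled at 250 Hz. It estimates velocity and acceleration by central differences (`np.gradient`). It treats each run of supra-threshold speed as one candidate saccade, so that the acceleration and deceleration phases of a single movement are not counted twice. The onset is taken as the first sample in the run at which acceleration also exceeds threshold.

```python
import numpy as np

SAMPLE_HZ = 250       # Eyelink I sampling rate
VEL_THRESH = 35.0     # deg/s
ACC_THRESH = 9500.0   # deg/s^2


def detect_saccade_onsets(x, y, fs=SAMPLE_HZ):
    """Return sample indices of saccade onsets in a gaze trace given in degrees."""
    dt = 1.0 / fs
    speed = np.hypot(np.gradient(x, dt), np.gradient(y, dt))
    accel = np.abs(np.gradient(speed, dt))
    moving = speed > VEL_THRESH
    # locate runs of supra-threshold speed
    edges = np.diff(np.r_[0, moving.astype(int), 0])
    starts = np.flatnonzero(edges == 1)
    stops = np.flatnonzero(edges == -1)  # exclusive run ends
    onsets = []
    for s, e in zip(starts, stops):
        hits = np.flatnonzero(accel[s:e] > ACC_THRESH)
        if hits.size:                     # both criteria met within this run
            onsets.append(s + hits[0])
    return np.array(onsets, dtype=int)
```

A synthetic 6.4° movement lasting 40 ms (10 samples) is detected once, one sample after its start. At that sample the central-difference speed and acceleration first exceed both thresholds.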

Results

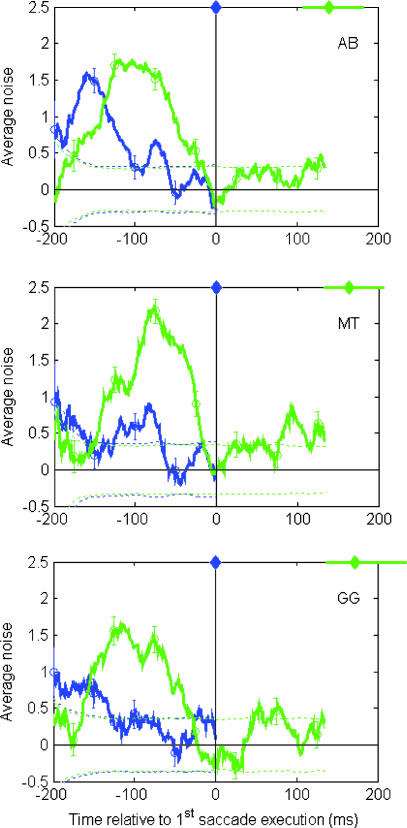

Observers generally made between one and three saccades during the 600-ms stimulus presentation time. To elucidate the time interval in which the brain accumulates visual information for a sequence of saccades, we computed classification plots for the first and second saccades. The number of images used to compute the classification plots was 2,349, 2,338, and 2,321 for observers AB, MT, and GG, respectively. Fig. 3 shows the classification plots of the first and second saccades for three naive observers, aligned with respect to the time of first saccade execution (indicated with a zero on the x axis). Fig. 3 also shows the range of the intersaccadic intervals between the first and second saccades. Median intersaccadic intervals were 140, 164, and 172 ms for observers AB, MT, and GG, respectively. The values along the y axis show the average additive noise at the distractor that was chosen as the eye movement endpoint. This value will be significantly larger than zero only at times at which information has been integrated. The classification plots represent information accumulation relative to the time of the first saccade's initiation, but information cannot be accrued before the stimulus begins. Thus, the part of the classification plot actually used by each saccade on each trial will depend on how long the stimulus was displayed before the saccade began (the first saccade's latency). Each first saccade will weight the stimulus information according to the classification plot for relative times beginning at the negative of its latency and ending at 0.
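The point that each first saccade uses only the part of the window between minus its latency and zero can be checked with a toy simulation. The code below is not the simulation reported in Fig. 5. It is a hypothetical linear observer with the following assumptions:

- It works at the 25-ms frame resolution.
- It uses a flat 200-ms integration window that ends 100 ms before saccade onset.
- Latencies are uniform between 150 and 350 ms.
- Internal noise has an SD of 2.0 cd/m².
- The observer saccades to the location with the largest weighted sum.

The stimulus statistics follow the Methods. Averaging the noise at the chosen distractor over error trials should give clearly positive values inside the window and values near zero during the dead time.

```python
import numpy as np


def simulate_classification_plot(n_trials=30000, seed=1):
    """Toy linear observer with a hypothetical integration window and dead time."""
    rng = np.random.default_rng(seed)
    n_frames, n_loc = 24, 5          # 600 ms at 25 ms per frame, five locations
    sd, advantage = 1.5, 6.8 - 4.7   # external noise SD and target-minus-distractor mean (cd/m^2)
    dead, span = 4, 8                # hypothetical: 200-ms window, then 100-ms dead time
    internal_sd = 2.0                # hypothetical internal noise on each decision variable

    latency = rng.integers(6, 15, size=n_trials)        # 6-14 frames = 150-350 ms
    target = rng.integers(n_loc, size=n_trials)
    noise = rng.normal(0.0, sd, size=(n_trials, n_frames, n_loc))
    is_target = np.arange(n_loc) == target[:, None]     # (n_trials, n_loc)
    stimulus = noise + advantage * is_target[:, None, :]

    # weight 1 for frames in [-(dead + span), -dead) relative to saccade onset, else 0;
    # frames before stimulus onset do not exist, so short latencies integrate less
    tau = np.arange(n_frames)[None, :] - latency[:, None]
    w = ((tau >= -(dead + span)) & (tau < -dead)).astype(float)
    dv = np.einsum("tf,tfl->tl", w, stimulus)
    dv += rng.normal(0.0, internal_sd, size=(n_trials, n_loc))
    choice = dv.argmax(axis=1)
    wrong = np.flatnonzero(choice != target)            # saccades to a distractor

    # saccade-aligned average of the noise at the chosen distractor
    taus = np.arange(-14, 0)
    plot = np.empty(taus.size)
    for k, t in enumerate(taus):
        frame = latency[wrong] + t
        ok = frame >= 0                                  # skip times before stimulus onset
        trials = wrong[ok]
        plot[k] = noise[trials, frame[ok], choice[trials]].mean()
    return taus, plot
```

Frames in the simulated dead time never enter the decision, so the noise there is independent of the choice and averages to about zero. Frames inside the window are positive because error trials tend to be those in which the chosen distractor's noise was high.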

Fig. 3.

Classification plots based on the incorrect trials out of 10,000 trials per observer. The first and second saccade classification plots are shown by the blue and green lines, respectively. The y axis shows the average noise values at the distractor to which the saccade was directed (incorrect saccadic decision) and is plotted in gray level units (1 gray level is 0.214 cd/m2). Error bars show the standard error. The dashed lines bracket the average noise values that are not statistically different from zero with a confidence level of 95% (t tests). Both plots were computed relative to the first saccade's initiation, which is shown as time equal to zero (indicated by the blue diamond). Times before the first saccade are shown as negative values and times after the first saccade are shown as positive values. The green diamond at the top of each chart indicates the median intersaccadic interval, and the horizontal green line extends from the 20th to the 80th percentile. Initially the first saccade's integration window is high and then it decreases and approaches zero during a dead time before the first saccade's initiation. The second saccade's integration window begins low but then increases and extends throughout the first saccade's dead time.
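The error bars and the dashed significance band can be computed per time bin as sketched below. Two assumptions are made here and are not confirmed by the text. First, the band is drawn around the null hypothesis of zero-mean noise with the known external SD (1.5 cd/m², or about 7.0 gray levels at 0.214 cd/m² per gray level). Second, the critical value of 1.96 approximates the two-tailed 95% t value, which is reasonable when thousands of trials contribute to a bin. The function name is hypothetical. For example, if 2,349 samples contributed to a bin, the band half-width would be 1.96 × 7.01 / √2349 ≈ 0.28 gray levels.

```python
import numpy as np

GRAY_LEVEL_CD = 0.214                # 1 gray level = 0.214 cd/m^2 (Fig. 3 caption)
NOISE_SD_GRAY = 1.5 / GRAY_LEVEL_CD  # external noise SD in gray levels (about 7.0)


def summarize_bins(samples, noise_sd=NOISE_SD_GRAY, crit=1.96):
    """Per-bin mean, SEM, null band half-width and significance flag.

    samples : (n_trials, n_bins) saccade-aligned noise values, NaN where a trial has no data
    """
    n = np.sum(~np.isnan(samples), axis=0)
    mean = np.nanmean(samples, axis=0)
    sem = np.nanstd(samples, axis=0, ddof=1) / np.sqrt(n)  # error bars
    band = crit * noise_sd / np.sqrt(n)                    # dashed lines under the null
    return mean, sem, band, np.abs(mean) > band
```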

The results show that the mechanism driving the first saccade weights early information heavily and has a dead time before the saccade during which visual information is no longer integrated (the classification plot is close to zero). The mechanism driving the second saccade begins integrating information later but continues collecting information during the first saccade's dead time. In addition, visual information presented during the execution of the first saccade (an ≈40-ms region beginning at time 0 on the plots) does not influence the second saccade endpoint. Finally, two observers show a low-amplitude short integration window after the first saccade. However, the relatively higher amplitude of the integration window before the first saccade suggests that the second saccade's endpoint is driven mostly by visual information acquired before the first saccade.

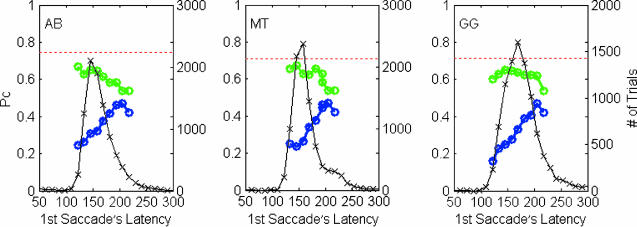

The part of the classification plot actually used by each saccade will depend on how long the stimulus was displayed before the saccade began, i.e., on the first saccade's latency. Indeed, the first saccade's percent correct increases as its latency increases (Fig. 4). In contrast, the visual processing for second saccades will be less affected by latency variations because their classification plot is initially much smaller. Hence, the percent correct of the second saccade, as a function of the first saccade's latency, shows a plateau, which is also consistent with previous studies (26).

Fig. 4.

Percent correct of the first and second saccades as a function of the first saccade's latency, shown by the blue and green lines, respectively. The data are presented in time bins of 12 ms. Values of percent correct (left axis) were calculated only for time bins with >150 trials. The number of trials in each time bin is shown by the black line (right axis). For reference, a red dashed line shows the percent correct of the perceptual decision.
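The binning used for Fig. 4 can be sketched as follows. The sketch uses 12-ms bins of first-saccade latency and reports percent correct only for bins with more than 150 trials. Anchoring the first bin at the largest multiple of 12 ms below the shortest latency is an assumption, and the function name is hypothetical. The same function serves for the first saccade and the second saccade: only the `correct` flags change, while the binning variable stays the first saccade's latency.

```python
import numpy as np


def percent_correct_by_latency(latency_ms, correct, bin_ms=12, min_trials=150):
    """Percent correct as a function of first-saccade latency.

    latency_ms : first-saccade latency per trial (ms)
    correct    : whether the saccade of interest (first or second) landed on the target
    Returns bin centers, trial counts per bin, and percent correct
    (NaN for bins with min_trials or fewer trials).
    """
    latency_ms = np.asarray(latency_ms, float)
    correct = np.asarray(correct, bool)
    start = np.floor(latency_ms.min() / bin_ms) * bin_ms
    idx = ((latency_ms - start) // bin_ms).astype(int)
    n_bins = idx.max() + 1
    counts = np.bincount(idx, minlength=n_bins)
    hits = np.bincount(idx, weights=correct.astype(float), minlength=n_bins)
    centers = start + bin_ms * (np.arange(n_bins) + 0.5)
    pc = np.full(n_bins, np.nan)
    keep = counts > min_trials
    pc[keep] = 100.0 * hits[keep] / counts[keep]
    return centers, counts, pc
```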

Discussion

The classification plots, which directly measure the time course of visual information used to guide the first and second saccades, are consistent with previous studies suggesting that processing for the second saccade begins before the execution of the first saccade, i.e., parallel programming (6-11). The previous results were unable to determine at what times visual information presented before the first saccade is accumulated for the guidance of the second saccade. However, our temporal reverse correlation technique, which uses eye position information, shows that the accumulation of visual information driving the second saccade overlaps with that driving the first saccade and extends through the dead time of the first saccade. Furthermore, visual information guiding the second saccade is integrated over a longer time interval than that for the first saccade. This finding predicts that the second saccade during search is based on more information than the first saccade, which provides an explanation for previous results,∥ even in the absence of transsaccadic integration.

The rapid initial rise of the first saccade's classification plot shows that the first saccade begins integrating information at stimulus onset. Assuming a constant dead time, short-latency saccades therefore have less time to accumulate information than long-latency saccades do. In contrast, the second saccade's weighting is initially small and becomes large only later, so the visual processing for second saccades should be less affected by latency variations. This prediction is confirmed by the present results, in which the percent correct of the first saccade increases with first saccade latency, whereas second saccade performance remains constant (Fig. 4).
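The latency argument can be made explicit with a simple relation. It assumes, as the text does, a constant dead time $T_{\text{dead}}$ of roughly 100 ms and integration that begins at stimulus onset. The symbols are introduced here for illustration only. For a first saccade with latency $L$, the stimulus time available for integration is

```latex
T_{\text{int}}(L) = \max\left(0,\; L - T_{\text{dead}}\right)
```

With $T_{\text{dead}} = 100$ ms, a 150-ms saccade can therefore integrate at most 50 ms of the stimulus, whereas a 300-ms saccade can integrate up to 200 ms.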

Speed-accuracy tradeoffs in search tasks have been related to the beginning of movement preparation before the end of the visual processing stage (27, 28). Initiating the motor command based on partial visual processing or anticipation of target location increases the risk of executing an incorrect saccade. If rapid second saccades were based on information acquired only during the intersaccadic interval, then these second saccades would surely be very inaccurate. However, the costs of rapid saccades are reduced by the ability of the saccade to use information collected during the dead time of the previous saccade.

Our results also show that the second saccade was guided mostly by information presented before the first saccade rather than by information presented during the intersaccadic interval. Previous studies have shown that concurrent processing of saccades is more common when the interval between the saccades is short but occurs less frequently when the intersaccadic interval is long. For the present study, intersaccadic intervals were rather short (medians ranging from 140 to 172 ms) but longer than some previous reports of concurrent processing (9, 13). The short intersaccadic intervals in our study might be related to the fact that the display was presented for only 600 ms, creating time pressure for observers to search the display quickly (9). It is likely that, under conditions in which there was less time pressure and intersaccadic intervals were longer, the second saccade would be driven more strongly by information presented during the intersaccadic interval. Thus, in general, the way in which information is accrued will likely depend on the task. In addition, the fact that the present study and previous studies have shown that some information about likely target locations accrued before the execution of the first saccade is used by the second saccade does not imply that a full representation of scenes can be kept across saccades (29).

The accumulation of information used in guiding a sequence of saccades may be performed by subsets of visual neurons, in the superior colliculus (30) and the frontal eye fields (31), that are involved primarily in saccadic target selection and not directly involved in the timing and control of saccade initiation. Furthermore, a recent study (13) has found that activity of superior colliculus visuomotor neurons involved in generating a fast second saccade is increased before the initiation of the first saccade and maintained during the execution of the first saccade. However, this finding was true only for second saccades after intersaccadic intervals of 125 ms or less. Future studies should address whether the time course of visual information accrual as revealed from reverse correlation is different for saccades following short vs. long intersaccadic intervals.

In summary, the experiment presented here demonstrates that for a search task, saccades are not discrete, isolated events but instead need to be understood as a sequence of gaze shifts that result from concurrent visual processing.


Acknowledgments

We thank Leland Stone for many helpful discussions. This work was supported by National Aeronautics and Space Administration Grant NAG9-1329 and National Science Foundation Grant BCS-0135118.

This paper was submitted directly (Track II) to the PNAS office.

Footnotes

¶ We also investigated the use of an alternative criterion to define saccadic decisions. This criterion required a saccade's endpoint to be within 2° of a possible target location for the saccade to be assigned to that location. The use of this second criterion did not lead to any significant changes in our results.

∥ Eckstein, M. P., Beutter, B. R. & Stone, L. S. (1999) Perception 29, Suppl., 101a (abstr.).

References

- 1. Wurtz, R. H. & Goldberg, M. E. (1971) Science 171, 82-84.

- 2. Mays, L. E. & Sparks, D. L. (1980) J. Neurophysiol. 43, 207-232.

- 3. Goldberg, M. E. & Bruce, C. J. (1990) J. Neurophysiol. 64, 489-508.

- 4. Hanes, D. P. & Schall, J. D. (1996) Science 274, 427-430.

- 5. Becker, W. (1991) in Eye Movements, ed. Carpenter, R. H. S. (Macmillan, New York), p. 117.

- 6. Becker, W. & Jurgens, R. (1979) Vision Res. 19, 967-983.

- 7. Mokler, A. & Fischer, B. (1999) Exp. Brain Res. 125, 511-516.

- 8. Hooge, I. T., Beintema, J. A. & van den Berg, A. V. (1999) Exp. Brain Res. 129, 615-628.

- 9. Araujo, C., Kowler, E. & Pavel, M. (2001) Vision Res. 41, 3613-3625.

- 10. McPeek, R. M., Skavenski, A. A. & Nakayama, K. (2000) Vision Res. 40, 2499-2516.

- 11. Godijn, R. & Theeuwes, J. (2002) J. Exp. Psychol. Hum. Percept. Perform. 28, 1039-1054.

- 12. Zingale, C. M. & Kowler, E. (1987) Vision Res. 27, 1327-1341.

- 13. McPeek, R. M. & Keller, E. L. (2002) J. Neurophysiol. 87, 1805-1815.

- 14. Eckstein, M. P. & Ahumada, A. J. (January 2, 2002) J. Vision, 10.1167/2.1.i.

- 15. Ahumada, A. J. & Lovell, J. (1971) J. Acoust. Soc. Am. 49, 1751-1756.

- 16. Ringach, D. L., Hawken, M. J. & Shapley, R. (1997) Nature 387, 281-284.

- 17. Neri, P., Parker, A. J. & Blakemore, C. (1999) Nature 401, 695-698.

- 18. Gold, J. M., Murray, R. F., Bennett, P. J. & Sekuler, A. B. (2000) Curr. Biol. 10, 663-666.

- 19. Ahumada, A. J. (2002) J. Vision 2, 121-131.

- 20. Eckstein, M. P., Shimozaki, S. S. & Abbey, C. K. (2002) J. Vision 2, 25-45.

- 21. Neri, P. & Heeger, D. J. (2002) Nat. Neurosci. 5, 812-816.

- 22. Simoncelli, E. P. (2003) Trends Cognit. Sci. 7, 51-53.

- 23. Viviani, P. & Swensson, R. G. (1982) J. Exp. Psychol. Hum. Percept. Perform. 8, 113-126.

- 24. Findlay, J. M. (1997) Vision Res. 37, 617-631.

- 25. Eckstein, M. P., Beutter, B. R. & Stone, L. S. (2001) Perception 30, 1389-1401.

- 26. Findlay, J. M., Brown, V. & Gilchrist, I. D. (2001) Vision Res. 41, 87-95.

- 27. Dorris, M. C. & Munoz, D. P. (1998) J. Neurosci. 18, 7015-7026.

- 28. Bichot, N. P., Chenchal Rao, S. & Schall, J. D. (2001) Neuropsychologia 39, 972-982.

- 29. Henderson, J. M. & Hollingworth, A. (2003) Psychol. Sci. 14, 493-497.

- 30. Horwitz, G. D. & Newsome, W. T. (1999) Science 284, 1158-1161.

- 31. Murthy, A., Thompson, K. G. & Schall, J. D. (2001) J. Neurophysiol. 86, 2634-2637.
