Abstract

Policy Points:

Investigations on systematic methodologies for measuring integrated care should coincide with the growing interest in this field of research.

A systematic review of instruments provides insights into integrated care measurement, including setting the research agenda for validating available instruments and informing the decision to develop new ones.

This study is the first systematic review of instruments measuring integrated care with an evidence synthesis of the measurement properties.

We found 209 index instruments measuring different constructs related to integrated care; the strength of evidence on the adequacy of the majority of their measurement properties remained largely unassessed.

Context

Integrated care is an important strategy for increasing health system performance. Despite its growing significance, detailed evidence on the measurement properties of integrated care instruments remains vague and limited. Our systematic review aims to provide evidence on the state of the art in measuring integrated care.

Methods

Our comprehensive systematic review framework builds on the Rainbow Model of Integrated Care (RMIC). We searched MEDLINE/PubMed for published articles on the measurement properties of instruments measuring integrated care and identified eligible articles using a standard set of selection criteria. We assessed the methodological quality of every validation study reported using the COSMIN checklist and extracted data on study and instrument characteristics. We also evaluated the measurement properties of each examined instrument per validation study and provided a best evidence synthesis on the adequacy of measurement properties of the index instruments.

Findings

From the 300 eligible articles, we assessed the methodological quality of 379 validation studies from which we identified 209 index instruments measuring integrated care constructs. The majority of studies reported on instruments measuring constructs related to care integration (33%) and patient‐centered care (49%); fewer studies measured care continuity/comprehensive care (15%) and care coordination/case management (3%). We mapped 84% of the measured constructs to the clinical integration domain of the RMIC, with fewer constructs related to the domains of professional (3.7%), organizational (3.4%), and functional (0.5%) integration. Only 8% of the instruments were mapped to a combination of domains; none were mapped exclusively to the system or normative integration domains. The majority of instruments were administered to either patients (60%) or health care providers (20%). Of the measurement properties, responsiveness (4%), measurement error (7%), criterion validity (12%), and cross‐cultural validity (14%) were less commonly reported. We found <50% of the validation studies to be of good or excellent quality for any of the measurement properties. Only a minority of index instruments showed strong evidence of positive findings for internal consistency (15%), content validity (19%), and structural validity (7%), with moderate evidence of positive findings for internal consistency (14%) and construct validity (14%).

Conclusions

Our results suggest that the quality of measurement properties of instruments measuring integrated care needs improvement and that the less‐studied constructs and domains should become part of newly developed instruments.

Keywords: integrated care, measurement instruments, quality of measurement properties, instrument validation, systematic review

Health systems worldwide are in the midst of a demographic and epidemiological transition marked by population aging1, 2, 3 and an increasing burden of chronic diseases and disability.4 The synchronism of the change in demographics with the shift in disease patterns around the world inevitably impacts the health needs of populations. Health systems are compelled to make the necessary adjustments in order to continue supporting these needs. Along these lines, the World Health Organization (WHO) developed the Innovative Care for Chronic Conditions (ICCC) Framework in 2002 to facilitate a new way of organizing health care systems. Expanding on the Model for Effective Chronic Illness Care,5 one of the guiding principles of the ICCC framework is building integrated health care to improve chronic disease management.6

This growing importance of integrated care draws attention to the need for systematically investigating how stakeholders interpret and measure integrated care. In this widely evolving field of research, pioneers have regarded integrated care as a principal strategy for improving patient care and increasing health system performance. These improvements are often measured in terms of enhanced quality of the patient‐care experience, better health and well‐being of communities, and reduced per capita health care costs.7, 8, 9, 10, 11, 12 Despite such significance, detailed evidence on the measurement properties of standardized and validated instruments that measure integrated care remains vague and limited. This limitation may be partly due to the similarly evolving methodologies for investigating instruments in this field of research.

Our systematic review aimed to provide evidence on the state of the art in measuring integrated care, including an assessment of the measurement properties of available instruments. Toward this end, we developed a comprehensive systematic review framework from the literature. We used this framework to identify the instruments that measure integrated care and the constructs used to describe the degree of integration. Finally, we synthesized the evidence on the quality of the studies included and the measurement properties of the integrated care instruments we identified. Exploring integrated care in this milieu potentially offers practical insights that could effectively advance the current understanding, design, implementation, and evaluation of integrated care.

We begin this paper with an overview of the concept of integrated care and its measurement. Key theoretical, conceptual, and measurement frameworks are discussed to lay the groundwork for presenting the results of the systematic review. We subsequently discuss the significance of gathering evidence on the measurement properties of instruments that measure integrated care. A background on the methodology of the systematic review and the psychometric evaluation of instruments is also presented. This will enable the understanding of the fundamentals in performing a systematic review of the measurement properties of instruments and highlight the unique contribution of this study.

Defining Integrated Care

The burgeoning of integrated care as a novel area of formal research and practice started around two decades ago.8 Over the years, relevant works have created awareness and stimulated greater interest in the meaning, rationale, implementation, and implications of integrated care.7, 8, 9, 11, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34 Although health policies dating back to the 1940s shaped more recent integrated care initiatives,35 the imperative to remodel health systems may have also fueled the growing interest in integrated care. Health systems around the world have gradually evolved to better address the changing needs of the populations they serve.5, 32 Recent health care reforms in the United States, for example, prompted the emergence of accountable care systems, one of the earlier conceptualizations of integrated service delivery.36

An inherent challenge in relatively nascent fields such as integrated care is the surrounding conceptual ambiguity. Key stakeholders (ie, patients, health care providers, organization managers, and policymakers) have different perceptions of integrated care.8, 11 In espousing a health system perspective, our systematic review adopted the following working definition of “integrated health care”: “the management and delivery of health services such that people receive a continuum of health promotion, health protection, and disease prevention services, as well as diagnosis, treatment, long‐term care, rehabilitation, and palliative care services through the different levels and sites of care within the health system and according to their needs.”37 Among a few alternative definitions, we adopted this definition from the Pan American Health Organization and the WHO European Office for Integrated Health Care Services because of its comprehensive scope. Further, as the leading agency for international health affairs, the WHO recommends strategies that are often modifiable within the specific context of its individual member states.38 Hence, in using this definition, insights drawn from this paper can be potentially relevant to a wider international audience.

Key Theoretical and Conceptual Frameworks

The current literature contains a substantial amount of work synthesizing the key concepts of integrated care.8, 11, 16, 24, 29, 30, 31 Leutz previously outlined the principles of integrating medical and social services to clarify arguments surrounding the early understanding of integration.7, 9 However, the majority of these studies acknowledged that conceptual ambiguity remains a challenge. In fact, Minkman described integrated care as having various forms and lacking well‐defined boundaries among its core concepts.31 Indeed, several terms have been used in different health systems across the world to denote integrated care. These include managed care (United States),39 shared care (United Kingdom),16 transmural care (The Netherlands),40 and other terms such as continuity of care, case management, patient‐centered care, transitional care, and integrated delivery systems, to name a few.

There are various integrated care models across Western Europe (eg, United Kingdom,12, 13, 14, 18, 41 The Netherlands,13, 32, 42, 43 Denmark and Finland,44 Austria and Germany,32 and Sweden27) and North America (eg, the United States7, 9, 39, 45, 46 and Canada28). Examples include hospital‐physician relationships, health maintenance organizations (HMOs),15, 46 accountable care organizations (ACOs),39, 47, 48, 49 the chronic care model,5, 6 chains of care, and managed clinical networks.18, 27, 50

Some of the widely held integrated care models and strategies emerged from the American health system. Kreindler and colleagues found that the unique social identities of individual organizations influence perceptions of implementers of integrated care (eg, ACOs).39 The American model of HMOs is another often‐cited example of integration.48, 50 The HMO model, although based on a nonstandard definition, also contributed to generating some of the earliest concepts of integrated care.16 Lessons from successful HMOs (eg, Carle, Marshfield Clinic, Kaiser Permanente, Geisinger Health System)15, 41, 50 guided the design, implementation, and assessment of subsequent strategies for integrated care delivery.

Studies on integrated care models across European health systems also made substantial contributions in setting the foundations of integrated care. Disease management programs (The Netherlands and United Kingdom), chains of care (Sweden), and managed clinical networks (Scotland), for example, focus on meso‐level integration that involves care provision to particular groups of patients and populations.41 Other known integrated care models across Europe are presented in greater detail in the literature.51

The above‐mentioned models demonstrate how integrated care intrinsically relates to multidisciplinary components, objectives, and varying perspectives. Other studies that attempted to surmount this conceptual challenge have recognized the dynamic and polymorphous nature of the integrated care concept.8, 24 For example, Edgren viewed integrated health services as a complex adaptive system, an ever‐changing organic entity involving various interrelated drivers.22 Similarly, Minkman introduced an empirically tested conceptual framework known as the Development Model for Integrated Care (DMIC).31 Although limited to the context of Dutch disease management programs, the DMIC also recognized integrated care as a complex, multilevel process having management and organizational components that are simultaneously at work.33 Another study, which used a structured framework to better understand integrated care, focused on the enabling mechanisms of integrated care for managing patients with chronic disease.52 Valentijn et al. also recently introduced the Rainbow Model of Integrated Care (RMIC).53 As a comprehensive model, the RMIC supports a more coherent understanding and interpretation of the interactions among the complex dimensions of integrated care.33

Measuring Integrated Care

The preceding discourse points to the considerable progress in constructing models and exploring strategies for developing and implementing integrated care. Investigating its measurement can likewise benefit from adopting a similarly structured approach, especially because integrated care is a rapidly advancing field, akin to a moving target.

The often‐discussed conceptual ambiguity of integrated care is a key deterrent in measuring the dimensions of integration. Moreover, instrument development itself is an iterative process that requires considerable effort and time to adequately capture the integrated care concept, which makes its measurement even more challenging. As a result, theoretical foundations and psychometric assessment of existing instruments have become limited.20 Unlike more established research areas with clear‐cut methods of investigation, measuring a dynamic and multifaceted system such as integrated care can be quite complex. Nevertheless, systematic measurement methods are vital in continuously developing the knowledge base of integrated care. Measurement is key in identifying strategic areas for improving patient care and health system performance.

Existing reports on integrated care measurement range from systematic reviews to less‐structured but comprehensive literature reviews of the constructs commonly measured; the measurement approaches and methodologies; and the actual instruments used in measuring constructs related to integrated care. Based on existing conceptual frameworks, previous studies measured various constructs that relate to several components of integrated care. The constructs commonly measured and described in scoping the literature include person/patient‐centered care,54, 55 continuity of care,56, 57 care coordination,58, 59, 60 chronic disease management,61 quality measures in integrated care settings,62 integrated health care delivery,63, 64 teamwork in health care settings,65 and experiences of integrated care.66, 67

In terms of the measurement approaches, questionnaire surveys, registry data, and mixed data sources were identified as the most common methodologies in measuring integrated care.64 Other models for integrated care measurement further characterized the measurement of integrated care according to the levels, degree, or continuum of integration. For example, Leutz described the 3 levels of integration in the context of integrated medical and social services (ie, linkage, coordination, and full integration).7 Based on this model, Minkman suggested that specific needs of service users determine the required level of integration. For instance, linkage of services would be sufficient for clients with less complex needs whereas those who have more complex needs would require full integration.68, 69 In practice, however, integration involves a combination of these 3 levels of integration.9 Other research described a measurement model in terms of a continuum or cycle anchored by the 2 extremes of full segregation and full integration.17, 30 Browne et al. also developed a comprehensive model for measuring multiple dimensions of integrated human service networks. These networks consist of multisector agencies working together to provide a continuum of services for individuals with complex needs.20 Finally, Singer et al. proposed a framework using dimensions of coordination and patient‐centeredness to measure the degree of patient care integration.29

In terms of gathering evidence on the quality of measurement instruments, a recent systematic review focused on identifying available instruments for measuring care continuity.70 It further assessed the quality of the measurement properties of the available instruments. In so doing, that review set a precedent in the systematic investigation of measurement properties of instruments in this field of research (vis‐à‐vis traditional instruments for health status measurement).

These studies on the relevant constructs and measurement models offer a glimpse of 2 main themes in integrated care measurement: (1) what is measured and (2) how it is measured. The current literature fairly indicates that integrated care measurement largely focuses on measuring individual aspects of integrated care. This suggests an atomistic rather than comprehensive understanding of integrated care measurement. Although the value of using a comprehensive tool for measuring integrated care remains to be justified, adopting a comprehensive framework for measurement provides the opportunity to identify other equally important aspects of integrated care that remain under‐investigated. Furthermore, using a comprehensive instrument may also be relevant given the comprehensive scope of the definition of integrated care.

The current literature on integrated care measurement also points out the important distinction between the measurement of structure/process (ie, implementation and extent of achieving integration) and the measurement of outcomes (ie, evidence of effectiveness). Prior systematic reviews on health systems integration found that studies describing instruments that can measure both the processes and the outcomes of integrated care are limited,12, 24, 64 perhaps reasonably so, to avoid confounding by single‐source bias. Further, a previous study maintained that capturing each dimension of an integrated system (ie, structural input, function, and output) would require a specific measurement instrument.20 Although existing methods for measuring integrated care usually focus on the structures and processes (ie, at the organizational and administrative levels) rather than the outcomes (ie, impact),12 triangulating these measures with clinical outcomes enhances the interpretability of their findings.71 In our systematic review, we deemed the measurement of structures and processes as more informative in describing the actual delivery (providers) and experience (users) of integrated care, compared with the measurement of outcomes. The literature on evaluating the quality of medical care72 supports this assumption, as we further explain in the discussion of the systematic review framework.

Systematic Review Framework

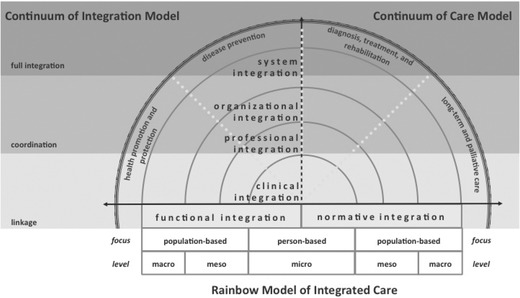

We developed a systematic review framework (Figure 1) to operationalize the concept and measurement of integrated care for our study and to enable the systematic evaluation of the instruments. This review framework was built on key studies on integrated care7, 30, 42, 43, 53 and the relevant publications from global health and research agencies.10, 12, 37, 73, 74 Based on our working definition, we put together key elements of the RMIC,42, 43, 53 the Institute of Medicine (IOM) continuum of care model,75 and the continuum of integration model.17, 30, 53

Figure 1.

Systematic Review Framework

The RMIC sits at the core of our systematic review framework (see Figure 1). The RMIC is a comprehensive model that presents the 6 dimensions of integrated care: clinical, professional, organizational, system, functional, and normative integration. The first 4 dimensions operate at the micro, meso, and macro levels of the health system where integration presumably takes place. The last 2 dimensions (functional and normative integration) support the linkages across the health system levels and the other dimensions in the model. The consensus‐based taxonomy of the key features of these dimensions is described elsewhere.42, 43

The rationale for applying the RMIC was twofold. First, the adoption of a comprehensive definition of integrated care warrants an equally comprehensive measurement framework, and the RMIC is one of the most comprehensive and tested models of integrated care. This unified conceptual framework can help address the ambiguity surrounding integrated care and guide subsequent investigations on the development of standards for its measurement. Second, the RMIC is based on the primary care tenets of person‐focused and population‐based care. Fundamentally, it uses primary care as a focal point to support integrated care. Integrated care offers to transfer the focus of care from high‐cost hospitalizations to lower‐cost ambulatory settings. In OECD countries, referral patterns appear to channel the entry of patients into the health care system via the primary care provider. Therefore, access to specialist care in these settings is often associated with a primary care referral. As gatekeepers, primary care providers are increasingly important and potentially the best positioned to undertake care coordination, especially for patients with comorbidities.76 In line with presenting the state of the art in measuring integrated care, it is only reasonable to adopt a framework that includes the key elements of primary care, a core component of integrated care.

We also included the IOM continuum of care model in our framework to depict the service delivery component of integration as a continuum that covers health promotion, disease prevention, disease detection and management, and long‐term care. Finally, to conceptualize the measurement of integrated care, our systematic review framework also adopted the continuum of integration model.17, 30, 53 This measurement model describes the degree of integration in terms of a continuum that spans the 2 extremes of full segregation and full integration; linkages, coordination, and cooperation lie between these 2 extremes.17

Finally, to reiterate an important assumption, our systematic review focused on the measurement of structures and processes that depict the experience of integrated care. This approach was based on examples from the literature20, 30, 58, 63, 77 that applied Donabedian's structure‐process‐outcome framework to evaluate the quality of medical care.72 The Donabedian model expresses important caveats in using outcomes in quality assessment. According to this model, outcome measures provide limited insights into the type and setting of the weaknesses or strengths they are supposed to indicate. While Donabedian recognized that outcomes remain as the ultimate indicators of the effectiveness and quality of care, examining the structure and process of care itself appears to better characterize the nature of the care provided and experienced. Moreover, enhancing the structures and processes is important in improving performance from a system perspective.14 This further underscores the value of measuring the structures and processes involved in integrated care—even though measuring the outcomes in terms of health system performance and patient health appears more relevant for other stakeholders.

Significance of the Systematic Review

When confronted with the task of selecting the appropriate instrument to measure integrated care, end users (ie, researchers, policymakers, and practitioners) could benefit from a comprehensive summary of instruments to guide their choice. The measurement properties of instruments are key considerations in making a well‐informed decision. Measurement properties are specific attributes of an instrument that characterize the strengths and weaknesses of a test or measurement.78 Instruments with good measurement properties are known to be more powerful in obtaining strong conclusions and allow for better interpretation of findings.79

In attempting to fill specific gaps in the literature, we conducted a systematic review of measurement properties of instruments measuring integrated care. To our knowledge, our study is the first of its kind to adopt a comprehensive systematic review framework that extensively considered construct and measurement models in the integrated care literature. Our systematic review aimed to provide new insights on integrated care measurement by answering the following research questions: (1) What instruments are currently available to measure integrated care? (2) What constructs were used to describe the degree of integration? and (3) What is the strength of evidence supporting the value of the measures reported (ie, the quality of the studies and the measurement properties of instruments)?

A systematic review of the measurement properties of instruments is designed to provide information that can support and justify the development of new instruments.80 Based on our findings, the inclusion of the less‐studied constructs and domains in new instruments may also be considered. More broadly, our review can contribute in setting the research agenda for further validating the available instruments and help introduce valuable insights in the field of integrated care and its measurement.

Methods

We developed and implemented a systematic review protocol (available upon request) based on published guidelines for best practice in systematic reviews of the measurement properties of instruments.80, 81, 82, 83, 84 We also adapted the recommended procedures from prior peer‐reviewed studies on measurement properties and integrated care measurement.58, 63, 70

Data Source and Search Strategy

We searched MEDLINE/PubMed in June 2014 and March 2015 (last updated search) and retrieved articles on the measurement properties of instruments measuring integrated care. One round of reference mining was performed on the eligible articles to capture additional relevant articles. Our systematic review framework, along with key papers on quality assessment of the measurement properties of instruments, guided the search strategy development.70, 81, 82, 83, 84 We modified the search strategy from previous research70 to correspond with our review framework before applying an additional search filter83 for studies on the measurement properties of instruments. Key terms for the construct search included “continuity of care,” “coordination of care,” “integrated care,” “patient‐centered care,” and “case management.” We summarized the key components of the final search strategy (online Appendix A) in Table 1.

Table 1.

Components of the Search Strategy Development

| Component | Description | Example of Key Terms | Remarks |
|---|---|---|---|
| Construct | Integrated care search terms | continuity of care, coordination of care, integrated care, patient‐centered care, case management | Modified from Uijen et al.70 to fit the conceptual framework |
| Instrument | Search terms for the instrument of interest | questionnaires, instruments, measure, survey | User‐defined based on Terwee et al.81 |
| Measurement properties | Measurement properties search filter | validation studies, measurement properties, inter‐rater | Directly applied from Terwee et al.81 |
| Exclusion | Exclusion filter | editorial, interview, legal cases | Directly applied from Terwee et al.81 |
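The components in Table 1 can be read as blocks of a Boolean query. The sketch below is illustrative only: it uses just the example terms from Table 1 rather than the full strategy in online Appendix A, and it assumes that the three inclusion components are joined with AND and the exclusion filter with NOT, which the text does not state explicitly. The names `COMPONENTS`, `EXCLUSION`, `or_block`, and `build_query` are hypothetical.

```python
# Illustrative sketch: term lists are the examples from Table 1, not the full
# search strategy, and the AND/NOT combination is an assumption.
COMPONENTS = {
    "construct": [
        "continuity of care",
        "coordination of care",
        "integrated care",
        "patient-centered care",
        "case management",
    ],
    "instrument": ["questionnaires", "instruments", "measure", "survey"],
    "measurement_properties": [
        "validation studies",
        "measurement properties",
        "inter-rater",
    ],
}
EXCLUSION = ["editorial", "interview", "legal cases"]


def or_block(terms):
    """Join terms into a parenthesized OR block, quoting multiword phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(components, exclusion):
    """AND the component blocks together, then exclude the exclusion block."""
    included = " AND ".join(or_block(terms) for terms in components.values())
    return f"{included} NOT {or_block(exclusion)}"


query = build_query(COMPONENTS, EXCLUSION)
print(query)
```

Keeping each component as a separate list mirrors the table: a term can be added to one component without touching the others.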

Study Selection

We selected eligible articles based on 4 main criteria: (1) availability of English full‐text article, (2) measurement of constructs of integrated care as defined in the systematic review framework, (3) use of a relevant instrument or questionnaire, and (4) adequate description of the development and/or validation of an instrument. Research metrics define a construct as “a characteristic or trait individuals possess to varying degrees that a test is designed to measure.”85 Our review defined a relevant construct as a feature of the integrated care experience that an instrument intends to measure. Given our previous assumption that structures and processes better depict the care experience than outcomes do, our study focused on finding instruments measuring constructs related to the structure and/or process of integrated care delivery. Therefore, the second and third selection criteria effectively excluded instruments that measure the impact of integrated care rather than the actual care experience.
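As a minimal sketch of the screening logic, the 4 criteria can be treated as a checklist in which an article is retained only if every criterion is met. Treating the criteria as strictly conjunctive is our reading of the text, and the `Article` record and its field names are hypothetical, introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Article:
    """Hypothetical screening record: one boolean per selection criterion."""

    english_full_text: bool                    # criterion 1
    measures_integrated_care_construct: bool   # criterion 2
    uses_relevant_instrument: bool             # criterion 3
    describes_development_or_validation: bool  # criterion 4


def is_eligible(article: Article) -> bool:
    """Return True only if the article meets all 4 criteria (assumed conjunctive)."""
    return all(
        (
            article.english_full_text,
            article.measures_integrated_care_construct,
            article.uses_relevant_instrument,
            article.describes_development_or_validation,
        )
    )


# An article about an outcome-only instrument fails criteria 2 and 3.
outcome_only = Article(True, False, False, True)
print(is_eligible(outcome_only))
```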

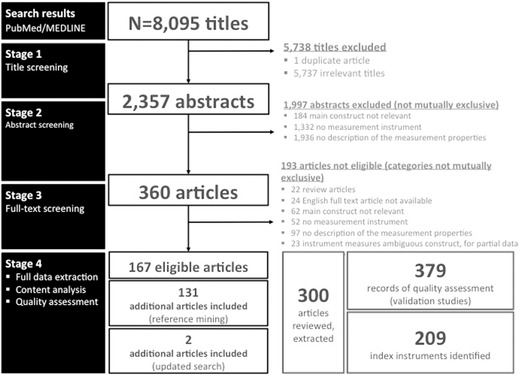

These 4 criteria were pilot tested on a sample of the search results prior to conducting the review. Thereafter, we independently screened the titles (MACB) (Stage 1) and abstracts (MACB and MN) (Stage 2) of articles from the main search results to identify records for full‐text retrieval. MACB and MN discussed and resolved inconsistent screening results and consulted 2 more researchers (YWL and HJMV) for confirmation if necessary. We (MACB and MN) further screened the articles based on the full text (Stage 3) to select the articles for inclusion in the final review (Stage 4). With best effort, we applied a similar selection procedure in the reference mining. The study flow diagram that details the study selection process along with the final search results is presented in Figure 2.

Figure 2.

Study Flow Diagram

Data Extraction

Using the standard data extraction form we constructed for our review, we abstracted the following data from the eligible articles: (1) basic article information (ie, author, title, journal name, year of publication, study eligibility); (2) validation study details (ie, design, objectives, setting, country); (3) description of respondents (ie, type, sample population, size, mean age, gender, disease status); (4) instrument details (ie, name, purpose, language, number of items, response scales, constructs purported to measure, constructs and domains of integrated care relevant to the conceptual framework); (5) details of instrument development (ie, item generation, refinement procedures, administration, scoring methods, theoretical basis); and (6) results of statistical analyses and measurement properties evaluated (ie, statistical methods, reported values for each measurement property). Index instruments that measure integrated care constructs were also identified from the eligible articles. An index instrument is a principal instrument or questionnaire from which other versions have been developed for validation (eg, the Care Transition Measure86 is an index instrument available in different versions and several languages).

Our review primarily aimed to identify instruments that measure integrated care and to describe the relevant constructs and domains the instruments used to measure integrated care. Hence, we later mapped the original constructs purportedly measured in the integrated care instruments to our systematic review framework. Based on our search strategy and systematic review framework, we recoded the original constructs into 4 categories of key constructs (Table 2). Care integration instruments, for example, included instruments that clearly specified measuring integrated care/care integration. Also classified under this key construct were instruments measuring combinations of multiple constructs that are relevant to our conceptual framework (ie, any combination of these constructs: continuity of care, coordination of care, integrated care, patient‐centered care, case management, patient experience, chronic care, long‐term care, and quality of care).

Table 2.

Instrument Categories According to Key Constructs of Integrated Care

| Key Construct | Description of Instruments |
|---|---|
| Care integration | |
| Care continuity/comprehensive care | |
| Care coordination/case management | |
| Patient‐centered care | |

We also classified the instruments according to the relevant domains of integrated care using the 6 dimensions of the RMIC (ie, clinical, professional, organizational, system, normative, and functional integration). The instruments were grouped into the 6 dimensions based on the taxonomy of key features described by the original framework developers.42 These steps facilitated a thorough and structured examination of the instruments and the constructs they measured.

Quality Assessment of Studies and Instruments

The COnsensus‐based Standards for the selection of health status Measurement INstruments (COSMIN) guided the quality assessment of the studies and the instruments we identified in this review.84, 87, 88, 89 In quality assessment, there is an important distinction between the quality of a study on the measurement properties and the quality of an instrument.90

Quality Assessment of Studies

We assessed the methodological quality of the studies using the COSMIN checklist with a 4‐point scale.84 This checklist is comparable to others that assess the quality of other types of studies included in a systematic review.89 It includes an item list assessing whether a particular study satisfied the methodological standards for every measurement property it examined (Table 3). Hence, we rated a study as poor, fair, good, or excellent for each item in the relevant measurement property. After rating each item, we applied a worst‐score‐counts algorithm to derive a COSMIN checklist quality score per measurement property examined. For example, even if a study scored excellent in 5 of the 6 items for internal consistency, the 1 item rated poor would give the study a poor rating for this measurement property. Each validation study reported in every eligible article was counted as 1 study and assigned a unique identifier. The identifiers served to distinguish the validation studies reported within a single eligible article. Each report of an instrument validation had a corresponding quality assessment of all the measurement properties considered (eg, a COSMIN quality score for internal consistency, or any other measurement property examined).
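The worst‐score‐counts rule described above can be illustrated with a minimal Python sketch. The function and variable names are hypothetical and are not part of the COSMIN checklist; the sketch only assumes the 4 rating levels named in the text.

```python
# Ratings ordered from worst to best, following the 4-point scale in the text.
RATING_ORDER = ["poor", "fair", "good", "excellent"]


def worst_score_counts(item_ratings):
    """Return the lowest item rating for one measurement property of one study."""
    if not item_ratings:
        raise ValueError("at least one item rating is required")
    # list.index raises ValueError for a rating outside the 4-point scale.
    return min(item_ratings, key=RATING_ORDER.index)


# Example from the text: 5 of 6 internal consistency items rated excellent, 1 rated poor.
ratings = ["excellent"] * 5 + ["poor"]
print(worst_score_counts(ratings))  # poor
```

Because the lowest item rating determines the score, a single weak item is enough to lower the quality score for that measurement property.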

Table 3.

Definition of Measurement Properties

| Measurement Property | Definition<sup>a</sup> |
|---|---|
| Reliability | |
| 1. Internal consistency | The degree/extent to which items in a (sub)scale are inter‐correlated, thus measuring the same construct |
| 2. Reliability | The proportion of the total variance in the measurements due to true differences among patients |
| 3. Measurement error | The systematic and random error of a patient's score that is not attributed to true changes in the construct to be measured |
| Validity | |
| 4. Content validity | The degree to which the content of an instrument is an adequate reflection of the construct to be measured |
| 5. Structural validity | The degree to which the scores of an instrument are an adequate reflection of the dimensionality of the construct to be measured |
| 6. Construct validity (hypothesis testing) | The degree to which the scores of an instrument are consistent with hypotheses (eg, with regard to internal relationships, relationships to scores of other instruments, or differences between relevant groups) based on the assumption that the other instrument validly measures the construct |
| 7. Criterion validity<sup>b</sup> | The extent to which scores on a particular questionnaire relate to a gold standard |
| 8. Cross‐cultural validity | The degree to which the performance of the items on a translated or culturally adapted health‐related patient reported outcomes (HR‐PRO) instrument are an adequate reflection of the performance of the items of the original version of the HR‐PRO instrument |
| Responsiveness | |
| 9. Responsiveness | The ability of an instrument to detect change over time in the construct to be measured |

Quality Assessment of Instruments

The quality of an instrument is determined by its actual measurement properties (ie, statistical parameters reported for reliability, validity, etc). Guidelines on the quality criteria for good measurement properties are available from the literature.70, 81 However, as of this writing, a well‐defined benchmark for what qualifies as adequate measurement property remains to be established.89 Nevertheless, it is important to assess the quality of a study (ie, COSMIN quality scores) before evaluating the evidence on the quality of any instrument it reports (ie, the actual results of the measurement properties reported).89 We therefore assessed the quality of the studies and the quality of the measurement properties of instruments separately.

Data Analysis and Best Evidence Synthesis

We combined all data extraction records to facilitate iterative data verification steps throughout the subsequent data analysis and synthesis. We implemented a basic data management system using SPSS version 20 and Microsoft Office 2010 (eg, Word, Excel) for the descriptive statistical analyses and qualitative data synthesis, respectively. We then presented our main results with a descriptive summary of (1) the eligible articles (ie, article information, study details, and respondents), (2) the identified instruments and the constructs they measured, (3) the methodological quality assessment of each validation study per article (ie, COSMIN quality scores), and (4) the evaluation of the adequacy (ie, overall quality) of measurement properties per index instrument.

In evaluating the adequacy of measurement properties, we considered the evidence on the quality of studies and measurement properties per index instrument. It is possible to perform quantitative analysis by statistical pooling of the actual measurement properties, provided that the studies are of at least fair quality and homogeneous. Homogeneity requires the studies to be adequately similar in terms of study characteristics and the results of the measurement properties.80, 90 So far, however, there is limited research on established methods for quantitative analysis of data from studies on measurement properties.80

Alternatively, qualitative analysis using best evidence synthesis allows drawing conclusions on the measurement properties when statistical pooling cannot be supported.80 In this approach, 4 factors determine the adequacy of the measurement properties of each index instrument: (1) the number of validation studies, (2) the methodological quality of the studies, (3) the adequacy of the results of the measurement property (positive or negative), and (4) the consistency of results from several studies on the same instrument.70, 80, 81 The qualitative approach takes into account cases where different studies assessed the measurement properties of the same index instrument. In such cases, the adequacy of measurement properties of an index instrument was assessed from the results of different studies.

Of the two approaches, the qualitative approach was deemed more appropriate for our review. Thus, we summarized the evidence on the quality of measurement properties using the modified quality criteria for measurement properties (Table 4) and the criteria for the levels of evidence and overall assessment of measurement properties of instruments (Table 5). These criteria were adapted from relevant systematic reviews of measurement properties and those from the COSMIN developers.70, 80, 84 MACB applied these criteria to all index instruments (n = 209). To improve the validity of the overall assessment, 2 coauthors (YWL and HJMV) randomly checked a subsample of these assessments (60 instruments), while another (MN) independently applied the criteria to a random selection of instruments (45 instruments).

Table 4.

Criteria for Rating the Adequacy of the Reported Measurement Properties

| Measurement Property | Reported Result | Quality Criteria<sup>a</sup> |
|---|---|---|
| Reliability | | |
| 1. Internal consistency | + | (Sub)scale unidimensional OR Cronbach's alpha(s) ≥ 0.70 |
| | ? | Dimensionality not known OR Cronbach's alpha not determined |
| | – | (Sub)scale not unidimensional OR Cronbach's alpha(s) < 0.70 |
| | 0 | Did not assess internal consistency |
| 2. Reliability | + | ICC/weighted Kappa ≥ 0.70 OR Pearson's r ≥ 0.80 |
| | ? | Neither ICC/weighted Kappa, nor Pearson's r determined |
| | – | ICC/weighted Kappa < 0.70 OR Pearson's r < 0.80 |
| | 0 | Did not assess reliability |
| 3. Measurement error | + | MIC > SDC OR MIC outside the LOA |
| | ? | MIC not defined |
| | – | MIC ≤ SDC OR MIC equals or inside LOA |
| | 0 | Did not assess measurement error |
| Validity | | |
| 4. Content validity | + | The target population considers all items in the questionnaire to be relevant AND considers the questionnaire to be complete |
| | ? | No target population involvement |
| | – | The target population considers items in the questionnaire to be irrelevant OR considers the questionnaire to be incomplete |
| | 0 | Did not assess content validity |
| 5. Structural validity | + | Factors should explain at least 50% of the variance |
| | ? | Explained variance not mentioned |
| | – | Factors explain < 50% of the variance |
| | 0 | Did not assess structural validity |
| 6. Construct validity (hypothesis testing) | + | Correlation with an instrument measuring the same construct ≥ 0.50 OR at least 75% of the results are in accordance with the hypotheses AND correlation with related constructs is higher than with unrelated constructs |
| | ? | Solely correlations determined with unrelated constructs |
| | – | Correlation with an instrument measuring the same construct < 0.50 OR < 75% of the results are in accordance with the hypotheses OR correlation with related constructs is lower than with unrelated constructs |
| | 0 | Did not assess construct validity |
| 7. Criterion validity<sup>b</sup> | + | Convincing arguments that gold standard is “gold” AND correlation with gold standard > 0.70 |
| | ? | No convincing arguments that gold standard is “gold” OR doubtful design or method |
| | – | Correlation with gold standard < 0.70, despite adequate design and method |
| | 0 | Did not assess criterion validity |
| Responsiveness | | |
| 8. Responsiveness | + | Correlation with an instrument measuring the same construct ≥ 0.50 OR at least 75% of the results are in accordance with the hypotheses OR AUC ≥ 0.70 AND correlation with related constructs is higher than with unrelated constructs |
| | ? | Solely correlations determined with unrelated constructs |
| | – | Correlation with an instrument measuring the same construct < 0.50 OR < 75% of the results are in accordance with the hypotheses OR AUC < 0.70 OR correlation with related constructs is lower than with unrelated constructs |
| | 0 | Did not assess responsiveness |

Abbreviations: AUC, area under the curve; ICC, intra‐class correlation coefficient; LOA, limits of agreement; MIC, minimal important change; SD, standard deviation; SDC, smallest detectable change.

<sup>a</sup> These criteria were adapted from Uijen et al.70 unless stated otherwise. The relevant criterion for cross‐cultural validity is not available from the current literature; this precluded rating the quality/adequacy of cross‐cultural validity property in the review.

<sup>b</sup> Adapted from Terwee et al.81
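As an illustration of how the criteria in Table 4 translate into a rating, the following Python sketch encodes 2 of the rows (reliability and structural validity). The function names and arguments are hypothetical. The sketch also assumes that a value meeting either positive threshold takes precedence when both a coefficient of agreement and a Pearson's r are available, which Table 4 does not specify, and it prints the negative rating as an ASCII hyphen.

```python
def rate_reliability(icc_or_kappa=None, pearson_r=None, assessed=True):
    """Rate reliability as '+', '?', '-', or '0' using the Table 4 thresholds."""
    if not assessed:
        return "0"  # reliability was not assessed
    if icc_or_kappa is None and pearson_r is None:
        return "?"  # neither ICC/weighted Kappa nor Pearson's r determined
    # Assumption: meeting either positive threshold is sufficient for '+'.
    icc_ok = icc_or_kappa is not None and icc_or_kappa >= 0.70
    r_ok = pearson_r is not None and pearson_r >= 0.80
    return "+" if (icc_ok or r_ok) else "-"


def rate_structural_validity(explained_variance=None, assessed=True):
    """Rate structural validity; explained_variance is a proportion (0 to 1)."""
    if not assessed:
        return "0"  # structural validity was not assessed
    if explained_variance is None:
        return "?"  # explained variance not mentioned
    return "+" if explained_variance >= 0.50 else "-"


print(rate_reliability(icc_or_kappa=0.75))  # +
print(rate_structural_validity(0.42))       # -
```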

Table 5.

Criteria for the Level of Evidence and Overall Assessment of Measurement Properties

| Criteria<sup>a</sup> | Overall Assessment | Level of Evidence |
|---|---|---|
| Consistent findings in multiple studies of good methodological quality OR in 1 study of excellent methodological quality | + + + or − − − | Strong |
| Consistent findings in multiple studies of fair methodological quality OR in 1 study of good methodological quality | + + or − − | Moderate |
| 1 study of fair methodological quality | + or − | Limited |
| Conflicting findings from multiple studies | +/− | Conflicting |
| Only studies of poor methodological quality OR only indeterminate results from multiple studies regardless of methodological quality | ? | Unknown |
| Measurement property not assessed | 0 | Not assessed |

<sup>a</sup> Adapted from Uijen et al.70
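The decision rules in Table 5 can be sketched in Python as follows. This is an illustrative reading of the table rather than the procedure the reviewers applied: the function name and input format are hypothetical, and the sketch assumes that poor‐quality studies and indeterminate results are set aside before consistency is checked, and that "good" includes studies rated excellent.

```python
def level_of_evidence(studies):
    """Combine (quality, result) pairs for one measurement property of one instrument.

    quality is 'poor', 'fair', 'good', or 'excellent'; result is '+', '-', or '?'.
    Returns (overall_assessment, level_of_evidence).
    """
    if not studies:
        return ("0", "Not assessed")
    # Assumption: only studies of at least fair quality with a determinate result count.
    usable = [(q, r) for q, r in studies if q != "poor" and r in ("+", "-")]
    if not usable:
        return ("?", "Unknown")
    results = {r for _, r in usable}
    if len(results) > 1:
        return ("+/-", "Conflicting")
    sign = results.pop()
    qualities = [q for q, _ in usable]
    n_good_or_better = sum(q in ("good", "excellent") for q in qualities)
    if "excellent" in qualities or n_good_or_better >= 2:
        return (sign * 3, "Strong")
    if n_good_or_better == 1 or len(qualities) >= 2:
        return (sign * 2, "Moderate")
    return (sign, "Limited")


print(level_of_evidence([("fair", "+"), ("fair", "+")]))  # ('++', 'Moderate')
```

The output uses compact ASCII symbols (eg, `+++`) in place of the spaced symbols shown in Table 5.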

Results

Our main search strategy retrieved 8,095 articles in June 2014. After systematically screening titles, abstracts, and full‐text papers, we included 167 of those articles in the full review. We included 131 additional articles in the full review after screening several potentially relevant articles that were identified in the reference mining. We expected to derive a number of additional articles from reference mining given the surrounding conceptual ambiguity of integrated care. Finally, our last updated search in March 2015 yielded 2 more articles. Our study selection process amounted to 300 unique articles for inclusion in the full review.
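The article counts reported above can be tallied with a short check. The variable names are illustrative only.

```python
# Articles included in the full review, by source, as reported in the text.
main_search = 167        # from the June 2014 search, after screening
reference_mining = 131   # additional articles found through reference mining
updated_search = 2       # from the March 2015 updated search

total_included = main_search + reference_mining + updated_search
print(total_included)  # 300
```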

We identified 209 index instruments measuring a relevant integrated care construct. We assigned a unique identifier to 379 instrument validation studies reported in the eligible articles, each with a corresponding quality assessment of measurement properties. We presented consumer tables in supplementary files (available online) to provide an overview of the key characteristics of the eligible articles (Online Appendix B); the identified instruments measuring integrated care in these articles (Online Appendix C); the methodological quality assessment of each validation study (Online Appendix D); and the adequacy of measurement properties per index instrument (Online Appendix E). We structured the following summary of results according to these major themes. In addition, we provided a list of instruments that have evidence of adequate measurement properties (Online Appendix F) and another list of instruments grouped by domain (Online Appendix G) as a quick reference catalogue of instruments (see online notes on appendices).

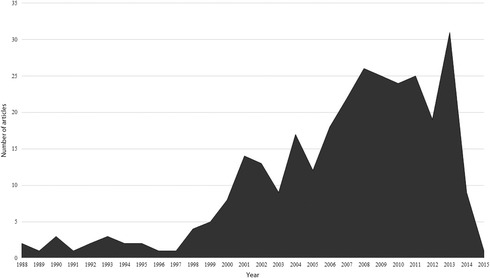

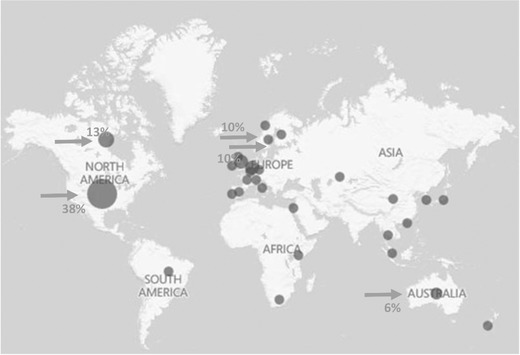

Eligible Articles Included in the Full Review

The number of relevant publications on instruments measuring integrated care–related constructs showed an increasing trend over the years (Figure 3). The gradual annual increase in published articles started in 1998 and peaked at approximately 30 publications in 2013. The United States (38%), Canada (13%), the Netherlands (10%), the United Kingdom (10%), and Australia (6%) were the top 5 contributors to the literature on the measurement properties of integrated care instruments since 1988, with a few contributions from Asia and Africa (Figure 4). Of the 300 articles included, nearly 60% were published in either a medical specialty (33%) or health services research journal (23%). Other common journal subject areas included nursing (12%), mental health and substance abuse (11%), and cancer and patient education (4% each), with a few other articles (<4%) from journals that focused on a range of areas, including clinical epidemiology, dental health, and service industry management.

Figure 3.

Number of Articles Published per Year from 1988 to 2015

Figure 4.

Distribution of Articles by Country of Publication

Instruments Measuring Integrated Care

Constructs and Domains

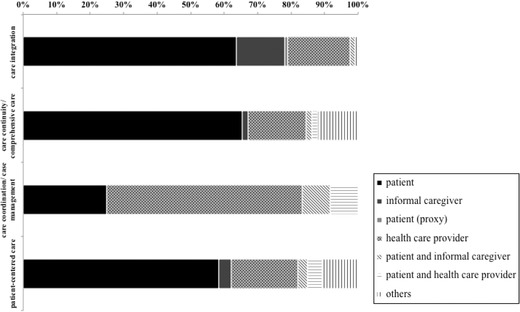

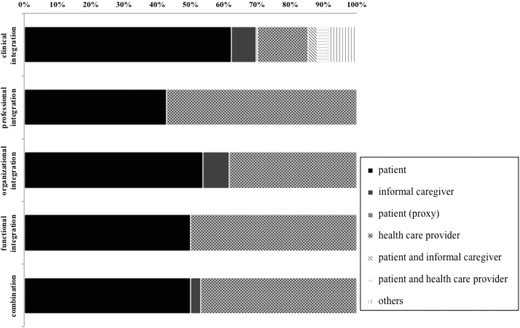

Table 6 presents a descriptive summary of the general and construct‐specific characteristics of instruments identified per validation study (n = 379). We initially determined the main constructs the instruments originally intended to measure. More than 50% of the instruments purported to measure patient‐centered care. After recoding these constructs to match the key constructs in our framework, the majority of the instruments measured care integration (33%) and patient‐centered care (49%). Fewer instruments measured constructs of care continuity/comprehensive care (15%) and care coordination/case management (3%). Furthermore, we mapped the majority of constructs to the clinical integration domain (84%), with fewer constructs related to the professional (3.7%), organizational (3.4%), and functional (0.5%) integration domains. None of the instruments exclusively assessed system or normative integration, and only 8% of the instruments measured a combination of any of the domains.

Table 6.

Characteristics of Instruments Measuring Integrated Care Constructs Identified in the Review (379 validation studies of 209 index instruments)

| General Characteristics | |
|---|---|
| Type of respondent | n (%) |
| Patient themselves | 228 (60) |
| Informal caregiver themselves | 26 (7) |
| Patients (proxy) | 1 (<1) |
| Health care providers | 77 (20) |
| Patients and informal caregivers | 9 (2) |
| Patients and health care providers | 10 (3) |
| Other<sup>a</sup> | 28 (7) |
| Health status of respondent | |
| Healthy | 40 (11) |
| With disease | 219 (58) |
| Mixed | 23 (6) |
| Not applicable<sup>c</sup> | 66 (17) |
| Not reported | 31 (8) |
| Disease category (n = 219) | |
| Non‐chronic condition | 3 (1) |
| Single chronic condition | 36 (16) |
| Multiple chronic conditions | 46 (21) |
| Cancer | 30 (14) |
| Mental and behavioral disorders | 35 (16) |
| Other nonspecific conditions<sup>d</sup> | 36 (16) |
| Not reported | 33 (15) |
| Setting (not mutually exclusive) | |
| General population | 39 (10) |
| Primary care | 148 (39) |
| Secondary care | 121 (32) |
| Specialist care | 127 (34) |
| Community services | 47 (12) |
| Home‐based care | 20 (5) |
| Social services | 1 (<1) |
| Nursing homes | 16 (4) |
| Pharmacies | 2 (<1) |
| Mode of administration | |
| Face‐to‐face interview | 40 (11) |
| Tele‐interview | 20 (5) |
| Self‐administered (PAPI, postal) | 235 (62) |
| Self‐administered (CAPI, electronic) | 13 (3) |
| Combination | 26 (7) |
| Other<sup>b</sup> | 30 (8) |
| Not reported | 15 (4) |
| Language administered in | |
| English | 249 (66) |
| Dutch | 32 (8) |
| Swedish | 13 (3) |
| Spanish | 6 (2) |
| Chinese | 5 (1) |
| Finnish | 5 (1) |
| German | 4 (1) |
| Korean | 4 (1) |
| Norwegian | 4 (1) |
| Thai | 3 (<1) |
| Portuguese | 3 (<1) |
| French | 2 (<1) |
| Japanese | 2 (<1) |
| Other<sup>e</sup> | 5 (1) |
| Multiple languages (at least 2) | 33 (9) |
| Not reported | 9 (2) |
| Construct‐specific Characteristics | |
| Constructs purported to measure | n (%) |
| Care integration | 25 (7) |
| Care continuity | 51 (14) |
| Care coordination | 26 (7) |
| Case management | 3 (<1) |
| Patient‐centered care | 216 (57) |
| Comprehensive care | 5 (1.3) |
| Patient satisfaction | 5 (1.3) |
| Chronic care | 10 (3) |
| Multiple constructs | 28 (7) |
| Other<sup>g</sup> | 10 (3) |
| Constructs measured (mapped to the framework) | |
| Care integration | 124 (33) |
| Care continuity/comprehensive care | 58 (15) |
| Care coordination/case management | 12 (3) |
| Patient‐centered care | 185 (49) |
| Domain classification (mapped to the framework)<sup>h</sup> | |
| Clinical integration | 318 (84) |
| Professional integration | 14 (4) |
| Organizational integration | 13 (3) |
| System integration | 0 (0) |
| Functional integration | 2 (<1) |
| Normative integration | 0 (0) |
| Combination | 32 (8) |

Abbreviations: CAPI, computer‐assisted personal interviewing; PAPI, paper and pencil interviewing; proxy, an informal caregiver or another representative responds on behalf of the patient.

<sup>a</sup> Direct observer or rater, verbal coding, organizations, researchers, students.

<sup>b</sup> Direct observer or rater, checklist.

<sup>c</sup> Respondents were not patients.

<sup>d</sup> Only described as patients.

<sup>e</sup> Italian, South African, Hebrew, Arabic, and Greek.

<sup>f</sup> Rehabilitation center, university/teaching institution/medical schools, intermediate care facilities, integrated care organizations, health education facilities, long‐term care facilities, and dental facilities.

<sup>g</sup> Clinical risk management, cultural competency, geriatric care environment, quality of care, care processes, organizational access, etc.

<sup>h</sup> Normative and system integration domains were always assessed in combination with the other domains.

We also identified a few ambiguous instruments (n = 47). An ambiguous instrument purported to measure a construct that is not relevant to integrated care (as defined in our framework and original search strategy), but used key constructs of integrated care as indicators for the main construct it intended to measure. For instance, some patient satisfaction instruments measured patient satisfaction in terms of integrated care constructs (eg, satisfaction with continuity of care, process of care, etc). Hence, these instruments had components, dimensions, or subscales related to our definition of integrated care, but did not aim to directly measure integrated care. We excluded these instruments in the quality assessment to consistently adhere to our conceptual framework.

In 5 index instruments, we found different studies91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102 using similar instruments to measure 2 different constructs (details in Appendix C). For example, different studies used the Primary Care Assessment Survey to measure different constructs such as interpersonal communication102; accessibility, relational continuity, interpersonal processes of care, respectfulness, and management continuity101; and organizational access.100 Likewise, a study used the Components of Primary Care Index to measure key aspects of primary care99 and another for measuring comprehensive care.98 The Consumer Quality Index was also used to measure quality of care in general and in disease‐specific settings,92, 93, 94, 95, 96, 97 while another study used it to measure patient experience in long‐term care.91

Other Instrument Characteristics

The majority of instruments were administered to either patients (60%) or health care providers (20%). Other types of respondents included informal caregivers, proxies to patients, direct observers, or a combination of these types. More than half of the instruments involved patients with disease (58%), which ranged from non‐chronic conditions, multiple chronic conditions, and cancers, to mental and behavioral disorders. Chronic conditions included, among others, injuries and conditions requiring rehabilitation, chronic infectious and noninfectious diseases, diabetes, hypertension, arthritis, heart conditions, and asthma. Other conditions designated as “nonspecific conditions” included frailty, poor health, hospitalized patients, and other common diseases. Cancer was included in a separate category to distinguish it from other chronic diseases. Historically, cancer has been associated with an acute fatal illness, but recent developments in cancer therapy have changed the course and management of the disease.103 Some cancer types are now being managed as a chronic disease. Because our systematic review did not distinguish between specific cancer types, we elected not to combine cancer with the other chronic diseases.

Traditional self‐administration using paper and pencil was the most common mode of administration (62%), followed by face‐to‐face interviews (11%), direct observation checklists (8%), and tele‐interviews (5%). A few instruments used a combination of traditional and computer‐assisted self‐administered instruments as well as face‐to‐face and tele‐interviews (7%). The instruments measured integrated care constructs in various settings, but mainly in primary (39%), secondary (32%), and specialist care (34%). Although the instruments were developed in various languages, the majority were in English (66%), with relatively fewer instruments in Dutch (8%) or Swedish (3%).

Type of Respondents

Finally, we cross‐tabulated the constructs measured with the type of respondents to further characterize the instruments we identified. In general, instruments measuring integrated care constructs were largely administered to patients, except for instruments that measure care coordination and case management, which largely targeted health care providers (Figure 5). All key constructs were consistently measured in either patients or health care providers, but very few in both (eg, 8% of instruments measured care coordination/case management). While the proportions of instruments administered to informal caregivers were noteworthy in those that measure care integration (64%) and patient‐centered care (58%), the use of patient proxies in instrument validation was largely unremarkable (only 0.8% of the care integration instruments). Examining the instruments in terms of the domains and type of respondents revealed a similar pattern (Figure 6). Most instruments classified under each domain were administered to either patients or health care providers. For example, instruments measuring clinical integration constructs were administered to patients (60%) or health care providers (15%). Moreover, the instruments generally targeted patient respondents more than the health care providers, except in the professional integration domain in which 57% of instruments were administered to health care providers.

Figure 5.

Instrument Validation According to the Constructs and Type of Respondents

Figure 6.

Instrument Validation According to the Domains and Type of Respondents

Quality of Studies: The COSMIN Quality Scores

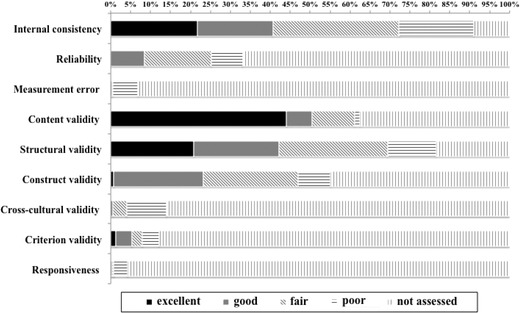

Table 7 presents a summary of the measurement properties of instruments evaluated in the validation studies (n = 379). At least half of the studies independently assessed the following measurement properties: internal consistency (91%), content validity (63%), structural validity (82%), and construct validity (55%). Cross‐cultural (14%) and criterion (12%) validity, measurement error (7%), and responsiveness (4%) were less commonly evaluated.

Table 7.

Summary of Measurement Properties Assessed in Records of Instrument Validation (n = 379)

| Measurement Property | n (%) |
|---|---|
| Internal consistency | 345 (91) |
| Reliability | 126 (33) |
| Measurement error | 26 (7) |
| Content validity | 237 (63) |
| Structural validity | 309 (82) |
| Construct validity (hypothesis testing) | 209 (55) |
| Cross‐cultural validity | 53 (14) |
| Criterion validity | 46 (12) |
| Responsiveness | 16 (4) |
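The percentages in Table 7 follow from dividing each count by the 379 validation studies. The short Python sketch below recomputes them from the reported counts; the dictionary and function names are illustrative only.

```python
TOTAL_STUDIES = 379  # validation studies assessed in the review

# Measurement property -> (count, reported percentage), copied from Table 7.
reported = {
    "Internal consistency": (345, 91),
    "Reliability": (126, 33),
    "Measurement error": (26, 7),
    "Content validity": (237, 63),
    "Structural validity": (309, 82),
    "Construct validity (hypothesis testing)": (209, 55),
    "Cross-cultural validity": (53, 14),
    "Criterion validity": (46, 12),
    "Responsiveness": (16, 4),
}


def percent(count, total=TOTAL_STUDIES):
    """Percentage of validation studies, rounded to the nearest whole number."""
    return round(100 * count / total)


for name, (count, reported_pct) in reported.items():
    print(f"{name}: {count}/{TOTAL_STUDIES} = {percent(count)}% (reported {reported_pct}%)")
```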

For each validation study, the COSMIN quality scores obtained per measurement property indicate the quality of the study for the measurement property reported (Figure 7). We found that <50% of the validation studies were of good or excellent quality for any of the measurement properties. Studies that assessed internal consistency were mostly of fair (32%) or excellent (22%) quality. Structural validity showed a similar distribution where a larger proportion of studies were of fair (27%) or excellent (21%) quality. Content validity showed the highest percentage of excellent quality studies (44%), while internal consistency had the greatest percentage of poor quality studies (19%).

Figure 7.

COSMIN Scores: Methodological Quality of Studies per Measurement Property

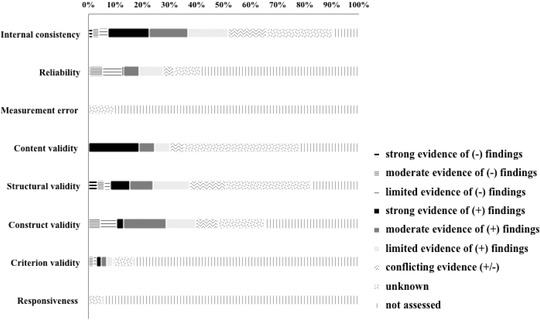

Quality of Instruments: The Best Evidence Synthesis

After separate assessments of the quality of studies (ie, COSMIN checklist) and the quality of instruments (ie, results of measurement properties reported), we combined the results from the 2 components to obtain the level of evidence and adequacy rating for each index instrument. Figure 8 shows the percentage of index instruments (n = 209) according to the level of evidence and adequacy of measurement properties. Consistent with the observed trends in each component assessed, at least 60% of the index instruments (n = 209) were evaluated for the following measurement properties: internal consistency (90%), content validity (78%), structural validity (74%), and construct validity (65%). The level of evidence for measurement error and responsiveness was largely unassessed or, where assessed, unknown. Correspondingly, the level of evidence for a considerable percentage of index instruments was unknown for internal consistency (24%), content validity (43%), structural validity (32%), and construct validity (17%). Nevertheless, a number of index instruments showed strong evidence of positive findings for internal consistency (15%), content validity (19%), and structural validity (7%), with a few others showing moderate evidence of positive findings for internal consistency (14%) and construct validity (16%). A few index instruments showed strong evidence of negative findings for internal consistency (1%), reliability (0.5%), and structural validity (3%).

Figure 8.

Level of Evidence for the Quality/Adequacy of the Measurement Properties

Discussion

Our systematic review examined studies on the measurement properties of instruments measuring integrated care. The study findings provide a comprehensive picture of important developments in integrated care measurement by presenting the currently available instruments and the relevant constructs and domains measured. We also summarized the strength of evidence for the adequacy of the measurement properties of these instruments. In the discussion, we further examine the implications of these findings within the context of the international literature and highlight the important research gaps addressed by the study.

Global Integrated Care Initiatives

The increasing number of relevant studies in our review underscores the growing interest in integrated care and its measurement. Although the majority of these studies involved work from Western Europe and North America, finding a few studies from Africa and Asia‐Pacific indicates the potential for expanding integrated care initiatives across the world. While adopting the design, implementation, and evaluation of integrated care is by no means straightforward, it is certainly worth exploring how other regions can learn from the early models of integrated care delivery and its measurement. After all, the use of integrated care as an important strategy for increasing health system performance should benefit as many health systems as possible. However, we must also recognize that the timing and relevance of implementing integrated care may differ for each health system.

Available Instruments

Our search strategy identified 209 index instruments that used different constructs to measure integrated care. Compared with prior systematic reviews on instruments,58, 63, 64, 70 the large number of instruments we identified in our review is consistent with the growing interest in integrated care described in the literature. Conversely, the paradigm shift toward chronic disease management and the resulting health care reforms1, 2, 3, 4 also may have driven the increasing relevance of integrated care. The large number of instruments also underscores the need to explore and develop more structured methodologies in the metrics of integrated care. Observing a considerable number of validated instruments (39%) tested in the primary care setting is consistent with our use of a framework that is based on primary care (eg, RMIC).53 In our review, we also found a few studies91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102 where similar instruments were used to measure different constructs of integrated care.98, 99, 100, 101, 102 This further demonstrates the need for a more standard process of selecting appropriate instruments to measure a particular construct.

Clearly, the integrated care instruments we identified measured varied constructs. This finding can be a direct consequence of our comprehensive framework designed to capture any construct relevant to the integrated care experience. In addition, our review included studies from as early as the 1980s. Such studies predate the recently developed taxonomy and conceptual framework of integrated care we used, which may have contributed to the nonspecific conceptualization of integrated care. The variation in the constructs measured remains consistent with previous accounts that described the multidimensionality of integrated care.8, 11 Moreover, the different constructs and dimensions measured in the instruments we identified can offer insights into the state of integrated care initiatives in the health systems where these instruments were developed. We venture this view given the importance of the “context of use” in instrument development and validation. Hence, the measurement model that is relevant to one health system may not necessarily work for another. For example, providers that focus on acute care services would be interested in evaluating structures and processes that are fundamentally different from those that have adopted a chronic care model (eg, integrating chronic disease management in primary care services). In this regard, the exclusion of the ambiguous instruments in our study emphasizes our focus on identifying instruments that measure the structure and process—rather than the outcomes—of integrated care. This important distinction underscores the importance of focusing on organizational structures and processes in improving overall performance of the system.14

Mapping the constructs purportedly measured by the instruments to our systematic review framework provided additional practical information. We categorized the majority of the instruments under patient‐centered care and care integration, with fewer instruments measuring care continuity/comprehensive care and care coordination/case management. The relative abundance of instruments measuring patient‐centered care is consistent with the majority of these instruments (60%) targeting patient respondents. These findings suggest the growing relevance of patients in assuming a more active role in the health system. Alternatively, the findings may indicate that health systems have started to recognize the importance of engaging patients in improving the health system.

In terms of the domains, we mapped only a few of the constructs to the professional, organizational, and functional integration domains, and we found that none of the instruments measured constructs specifically related to the normative or system integration domains. The relevant constructs for these domains are likely more deeply embedded (eg, organizational policies, value system),42 which could limit efforts to measure these areas. Although we mapped the constructs to combinations of these domains in some instruments, none of the instruments measured all the relevant constructs and domains of integrated care as defined in our review framework.

These results draw attention to the specific dimensions of integrated care that remain under‐investigated. Other equally important constructs (ie, care continuity/comprehensive care and care coordination/case management) and domains (ie, system and normative integration) were not extensively examined. Further, we did not find one comprehensive instrument that measured all the relevant dimensions of integrated care. The fundamental structure of a health system may influence the decision to measure parts rather than the whole of integrated care. It is also likely that some health systems choose to focus on specific aspects of integrated care—while dispensing with other aspects—given their respective priorities and prevailing needs, goals, and standards in health care delivery. For example, a study on instrument development involving a health system that aims to strengthen both person‐focused and population‐based care may elect to develop and validate an instrument that captures all the dimensions of integrated care as depicted in our framework. Fundamentally, a partial understanding of integrated care may be suitable given the proper context and objective of measurement. However, focusing on specific aspects of integrated care without the unifying elements of a comprehensive framework could further contribute to the conceptual ambiguity that has persistently plagued investigations in integrated care. It is also important to note the possibility that the capacity of standard measurement instruments may be limited and insufficient to adequately capture some dimensions of integrated care. Other data sources and measurement approaches (eg, registry data, mixed data sources, policy documents) may be needed to measure some dimensions.

While these findings clearly point to a measurement gap, they still imply that partial integrated care efforts are making headway. Finding studies that measured only certain dimensions of integrated care still indicates that ongoing integrated care initiatives are being measured. Whether a comprehensive instrument is needed to provide a better measure of integrated care remains an outstanding question63 and one that is beyond the scope of our study. Nevertheless, our systematic summary of the available instruments and their measurement properties provides a good starting point for subsequent investigations. Future studies should consider identifying and synthesizing all the necessary information that can provide sufficient evidence of the need for a comprehensive instrument. Doing so would entail a series of validity tests of the available comprehensive instruments.

Altogether, these findings on integrated care instruments reinforce assertions from previous studies that point to the lack of a standard definition and a unified framework for measuring integrated care. The complexity in operationalizing the integrated care concept has long made the task of measuring it even more challenging. Nevertheless, it is imperative to develop valid measures of integration across health care systems.16

Quality of Instruments

Besides identifying integrated care instruments and the constructs they measured, our systematic review also attempted to assess the quality of studies and the quality of instruments measuring integrated care. Applying the COSMIN checklist to these instruments showed that internal consistency, structural validity, content validity, and construct validity were the most commonly assessed measurement properties, whereas responsiveness, measurement error, and criterion and cross‐cultural validity were less commonly evaluated. Fewer than 50% of the validation studies were rated to be of good or excellent quality for any of the measurement properties. Only a minority of index instruments showed strong to moderate evidence of positive findings for some measurement properties (ie, internal consistency, content validity, structural validity, and construct validity). However, the strength of evidence on the adequacy of most of the measurement properties remained largely unassessed (see Figure 8). This is mainly due to having only poor quality studies or only indeterminate findings on the measurement properties of the instruments. As noted in a previous systematic review of measurement properties of care continuity instruments,70 these findings on the levels of evidence do not suggest that the available instruments are of poor quality. The results only highlight the need for more high‐quality studies that can adequately assess the measurement properties and, ultimately, the instrument quality.

The latter findings further position our review in the context of the broad integrated care literature. Assessing the quality of studies and measurement properties of integrated care instruments is important in refining the knowledge base in this field. Other systematic reviews on the instruments measuring integrated care concepts58, 63 did not include the quality assessment of the measurement properties of the instruments. Uijen and colleagues, however, provided a useful guide for our own work, as they closely examined the measurement properties of questionnaires measuring continuity of care.70 Moreover, the pervasiveness of using questionnaires in measuring integrated care64 suggests the need for a thorough validation of already existing measurement instruments across different settings.63, 77 The results of our systematic review can facilitate setting the research agenda for validating the currently available instruments and informing the decision to develop new ones.80 This study may also prompt other research areas to explore the value of a systematic review of the measurement properties of instruments. And as more reviews of this kind are conducted, the methodologies for systematic reviews of measurement properties can be improved.

Limitations and Strengths of the Systematic Review

It is important to consider these findings in light of the limitations of the review. First, our comprehensive systematic review framework resulted in a heterogeneous pool of studies and instruments. This heterogeneity in the study design characteristics and the statistical results of the measurement properties precluded more advanced quantitative analysis via statistical pooling. A quantitative approach could have improved the interpretability of the findings at a level sufficient to support recommendations for selecting the best instrument. Moreover, given the number of studies included in our review, we resolved to take the data from the studies at face value without verifying the data with the primary study investigators. Although we devised a basic data management system to maintain the validity of data extraction, the reporting of results in the primary studies also limited the quality of data extracted.

Second, we did not search the gray literature, which may contain relevant instruments. Considering the scope of our review, we anticipated a substantial number of relevant articles, which inevitably had an impact on the feasibility of searching multiple databases. Nevertheless, limiting the search to MEDLINE/PubMed is based on a reasonable assumption that the majority of relevant publications would have been indexed in PubMed, rather than in other databases that are recommended for clinical research and other sub‐branches of medicine. Experts in this field recommend using at least MEDLINE and Embase.80 Including Embase in our search, however, may not have provided additional relevant articles given that the database focuses more on drugs and pharmacology.104 Similarly, searching Embase would yield a greater number of case reports,105 which is an article type that is not relevant to our selection criteria.

Third, excluding non‐English articles may have introduced language bias. We expect the influence of such a bias to be small, given that English is the standard language in journals that publish works on integrated care. Furthermore, there is no evidence that the exclusion of languages other than English introduces any systematic bias in reviews.106 Finally, unlike conventional systematic reviews, we could not assess the impact of publication bias in the absence of standard methods of bias assessment for systematic reviews of measurement properties.80

Aside from these limitations, a systematic review of the measurement properties of instruments also presents general methodological challenges and learning opportunities. This type of systematic review has multiple outcome measures (ie, constructs and measurement properties) that may not be consistently evaluated in all the studies. Conversely, the evidence for one measurement property may come from multiple studies; each measurement property would then require its own set of data synthesis. Nevertheless, we put forth our best effort to present the quality of studies and measurement properties of instruments that would allow for a comprehensive overview of how integrated care is measured. Further, our data synthesis focused on the qualitative approach used in previous systematic reviews.58, 63, 70, 81 This approach permitted the best possible synthesis of evidence that accounted for the between‐study similarities, the quality of each study, and the consistency of measurement properties reported across different studies.81 Although the COSMIN checklist was originally developed for the assessment of instruments that intend to measure patient‐reported health outcomes,107 it was deemed an appropriate tool for assessing the quality of studies on other types of instruments.80

Despite these limitations, a key strength of our review is the completion of the formidable task of presenting a comprehensive picture of the integrated care measurement landscape. Pursuing maximum effort in capturing and synthesizing data on an evolving research area is challenging. Still, the insights gained from our review findings outweigh the challenges that had to be overcome. These insights, however, are only as sound as the supporting framework for understanding and measuring integrated care. Hence, we presented our findings within a framework that combined core elements of the IOM continuum of care model, the continuum of integration model, and the RMIC, which is central to the current understanding of integrated care.53 We identified a large number of studies on instruments measuring a range of different integrated care concepts. Moreover, our review included studies involving patients, informal caregivers, and health care providers that represent important groups of stakeholders in integrated care. We also exploited a rich dataset of instruments to provide potential end users with organized and structured information. Finally, we provided a best evidence synthesis of the quality of the measurement properties of the instruments we identified, which makes for a distinct contribution to the integrated care literature.

Study Implications

The results of systematic reviews are generally useful for research, guideline development in evidence‐based patient care, and policymaking.80 Used with proper consideration of the review framework and its inherent limitations, the results generated from our study have practical benefits for researchers, decision makers, and field practitioners alike.