Abstract

Network meta-analysis is a technique for comparing multiple treatments simultaneously in a single analysis by combining direct and indirect evidence within a network of randomized controlled trials. Network meta-analysis may assist in assessing the comparative effectiveness of different treatments regularly used in clinical practice and has therefore become attractive to clinicians. However, if proper caution is not taken in conducting and interpreting a network meta-analysis, inferences might be biased. The aim of this paper is to illustrate the process of network meta-analysis with the aid of a working example on first-line medical treatment for primary open-angle glaucoma. We discuss the key assumption of network meta-analysis, as well as the unique considerations for developing appropriate research questions, conducting the literature search, abstracting data, performing qualitative and quantitative synthesis, presenting results, drawing conclusions, and reporting the findings in a network meta-analysis.

Keywords: Network meta-analysis, Multiple treatment meta-analysis, Comparative effectiveness, Transitivity

Introduction

Systematic reviews of randomized controlled trials (RCTs) are generally considered the highest level of evidence for the relative effectiveness of interventions [1–3]. Meta-analysis is a statistical technique for quantitatively synthesizing similar studies from a systematic review. The conventional meta-analysis approach is useful, but is limited in that it can only compare two interventions at a time, and only those evaluated directly in head-to-head trials [4–6].

Network meta-analysis (NMA) is a relatively recent development that extends the principles of meta-analysis to the evaluation of multiple treatments in a single analysis. This is achieved by combining direct and indirect evidence. Direct evidence refers to evidence obtained from head-to-head RCTs; for example, in a trial comparing treatments A and B, direct evidence is the estimate of the relative effect between A and B. Indirect evidence refers to evidence obtained through one or more common comparators [7]. For example, in the absence of RCTs that directly evaluate A and B, interventions A and B can be compared indirectly if both have been compared to C in other studies; when direct A-B trials also exist, the three comparisons form a closed A-B-C “loop” of evidence. The combination of direct and indirect evidence is called mixed evidence.
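As a minimal sketch of how direct and indirect evidence combine, the Python below uses the adjusted indirect comparison of Bucher and colleagues, a standard technique that this paper does not itself describe. It assumes each input is a mean difference with a known variance and that the A-versus-C and B-versus-C estimates come from independent sets of trials; the numbers and function names are hypothetical.

```python
import math


def indirect_estimate(d_ac, var_ac, d_bc, var_bc):
    """Indirect estimate of A relative to B through common comparator C.

    d_ac: effect of A relative to C; d_bc: effect of B relative to C.
    The two estimates come from independent trials, so their variances add.
    """
    return d_ac - d_bc, var_ac + var_bc


def mixed_estimate(d_direct, var_direct, d_indirect, var_indirect):
    """Inverse-variance weighted combination of direct and indirect evidence."""
    w_direct, w_indirect = 1.0 / var_direct, 1.0 / var_indirect
    d_mixed = (w_direct * d_direct + w_indirect * d_indirect) / (w_direct + w_indirect)
    return d_mixed, 1.0 / (w_direct + w_indirect)


def ci95(d, var):
    """Normal-approximation 95% confidence interval."""
    half_width = 1.96 * math.sqrt(var)
    return d - half_width, d + half_width


# Hypothetical mean differences (mm Hg) and variances.
d_ind, v_ind = indirect_estimate(d_ac=3.0, var_ac=0.25, d_bc=2.0, var_bc=0.25)
d_mix, v_mix = mixed_estimate(1.4, 0.5, d_ind, v_ind)
print("indirect A vs B:", round(d_ind, 2), [round(x, 2) for x in ci95(d_ind, v_ind)])
print("mixed A vs B:", round(d_mix, 2), [round(x, 2) for x in ci95(d_mix, v_mix)])
```

Because the variances add, an indirect estimate is less precise than either of its inputs. Pooling it with direct evidence by inverse-variance weighting gives a mixed estimate that is more precise than either source alone, which is only meaningful if the two sources agree.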

A valid NMA must satisfy the assumption of transitivity: that there are no systematic differences between the available comparisons other than the treatments being compared [6]. Another way to view this assumption is that participants in a hypothetical RCT including all the treatments in the NMA could be randomized to any of the treatments. For example, in glaucoma, topical medications are prescribed as monotherapy for initial treatment, and combination therapies are used only in patients whose intraocular pressure is insufficiently controlled by monotherapy [8, 9]. Therefore, RCTs examining first-line treatment would not include combination therapies, and including combination treatments in an NMA of first-line treatments would introduce intransitivity. Transitivity would also be violated when treatment for a condition is based on genetic biomarkers. In breast cancer, expression of the human epidermal growth factor receptor 2 (HER2) gene is used to determine the treatment plan, because treatments targeting HER2 are used for HER2-positive but not HER2-negative breast cancer [10]. Therefore, treatments for HER2-positive and for HER2-negative breast cancer would not be evaluated in the same RCT and should not be included in the same NMA. Evaluating the transitivity assumption is critical because intransitivity will bias treatment effect estimates.

In this paper, we provide a tutorial and discuss the aspects of NMA that differ from a conventional pairwise meta-analysis, using a published NMA as a working example. A summary of the steps for a conventional systematic review and meta-analysis and the additional considerations for NMA is shown in Table 1.

Table 1.

Summary of steps of a conventional systematic review and additional considerations for a network meta-analysis

| Steps of a Systematic Review | Considerations for a Network Meta-analysis |
|---|---|
| 1. Define the review question and eligibility criteria | Question should benefit from network meta-analysis; define the treatment network |
| 2. Search for and select studies | Ensure the search is broad enough to capture treatments of interest |
| 3. Abstract data and assess risk of bias | Abstract information on potential effect modifiers that may violate the assumption of transitivity |
| 4. Synthesize evidence qualitatively | Evaluate the network geometry; evaluate transitivity |
| 5. Synthesize evidence quantitatively | Conduct pairwise meta-analyses first; use statistical models appropriate for network meta-analysis; evaluate inconsistency; summarize results using approaches suitable for network meta-analysis, such as league tables; if ranking, use appropriate ranking statistics, such as SUCRA |
| 6. Interpret results and draw conclusions | Carefully interpret results, especially rankings; interpret results in the context of the outcomes examined; if using the GRADE approach to evaluate quality of evidence, adapt it for network meta-analysis |
| 7. Report findings | Follow the PRISMA extension for network meta-analysis |

The working example we use is an NMA of first-line medical treatments for primary open-angle glaucoma [8]. This NMA includes 14 active treatments and placebo from 114 RCTs (20,275 participants) and examines the mean reduction in intraocular pressure at 3 months.

Defining the research question and treatment network

As with any systematic review, the first step is to define the research question. The recommended approach to developing the research question is the PICO (participants, intervention, comparator, and outcome) framework [11]. NMA can be used to answer comparative effectiveness questions when multiple interventions are available for a given condition. In addition, NMA can estimate the relative rankings of interventions. In our example, the research question is: What is the comparative effectiveness of first-line medical treatments for reducing intraocular pressure at 3 months in patients with primary open-angle glaucoma or ocular hypertension, and what are the relative rankings of these treatments [8]? Because this question concerns the comparative effectiveness of multiple treatments, it is suitable for NMA. In contrast, if the question focused on the effectiveness of one particular topical therapy against a single comparator, and enough studies were available for that specific comparison, it could be addressed more efficiently with a conventional pairwise meta-analysis.

The next step for NMA is defining the treatment network. Decisions need to be made about the size of the network and how finely treatments should be distinguished [12, 13]. Although it is ideal to include all treatments relevant to the population to have a complete evidence base, larger networks require more resources to acquire the data and may be more likely to violate transitivity [12, 14]. Interventions such as placebo or no treatment are usually included even if they are not of clinical interest, because they can inform other comparisons by providing indirect evidence. However, when placebo-controlled trials are qualitatively different from head-to-head trials (e.g., different susceptibility to reporting bias), combining them may be problematic.

How the interventions are defined is also important. Interventions can be split into individual drugs or specific doses of drugs, or lumped into drug classes or types of treatment (e.g., medical or behavioral). The decision to split or lump interventions should be based on clinical relevance. If all drugs within a class are considered clinically interchangeable, it may be sufficient to examine treatments at the class level. If there are important questions at the class, drug, and even dose levels, the reviewers can define a separate network for each level. In the glaucoma NMA, treatments were evaluated at the drug level [8].
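Lumping can be pictured as relabeling each trial arm with its class. The sketch below is illustrative only: the drug-to-class map covers just the drugs named in this paper (using their standard pharmacological classes), the trials are hypothetical, and the function name is ours. It also shows one consequence of lumping that reviewers need to plan for: a trial comparing two drugs of the same class becomes a class-versus-itself comparison that contributes no edge to the class-level network.

```python
from collections import Counter

# Illustrative drug-to-class map restricted to drugs named in this paper.
# A real review would pre-specify the complete map in its protocol.
DRUG_CLASS = {
    "bimatoprost": "prostaglandin analog",
    "latanoprost": "prostaglandin analog",
    "tafluprost": "prostaglandin analog",
    "travoprost": "prostaglandin analog",
    "timolol": "beta-blocker",
    "apraclonidine": "alpha-2 agonist",
    "placebo": "placebo",
}


def lump(comparisons, mapping):
    """Re-express drug-level comparisons as class-level comparisons.

    Returns two Counters keyed by sorted class pairs: between-class edges,
    which form the class-level network, and within-class comparisons,
    which compare a class with itself and must be handled separately.
    """
    between, within = Counter(), Counter()
    for drug_a, drug_b in comparisons:
        class_a, class_b = mapping[drug_a], mapping[drug_b]
        edge = tuple(sorted((class_a, class_b)))
        if class_a == class_b:
            within[edge] += 1
        else:
            between[edge] += 1
    return between, within


# Hypothetical two-arm trials (not the trials in the glaucoma NMA).
trials = [
    ("latanoprost", "timolol"),
    ("bimatoprost", "timolol"),
    ("latanoprost", "bimatoprost"),
    ("travoprost", "latanoprost"),
    ("apraclonidine", "placebo"),
]
between, within = lump(trials, DRUG_CLASS)
print(between)
print(within)
```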

Data collection process

Data collection in meta-analysis consists of a literature search, study screening, and data abstraction, as pre-specified in a protocol. NMA involves some additional considerations for data collection. Because the research question for an NMA is broader than for a conventional pairwise meta-analysis, the literature search is usually broader as well. The search should be conducted with an informationist (an information specialist or librarian) to ensure that all treatments of interest are captured in the identified studies [15].

For data abstraction, it is important to abstract information on potential effect modifiers from studies to enable the evaluation of transitivity. Effect modifiers are clinical and methodological features of the included studies that could affect the size of the treatment effect. They should be pre-specified in the protocol based on clinical experience or a review of the prior literature; relevant characteristics usually include study eligibility criteria, population characteristics, study design, and risk of bias items. In the glaucoma NMA, potential effect modifiers included participant age, baseline intraocular pressure, and study sponsorship.

Analyzing data

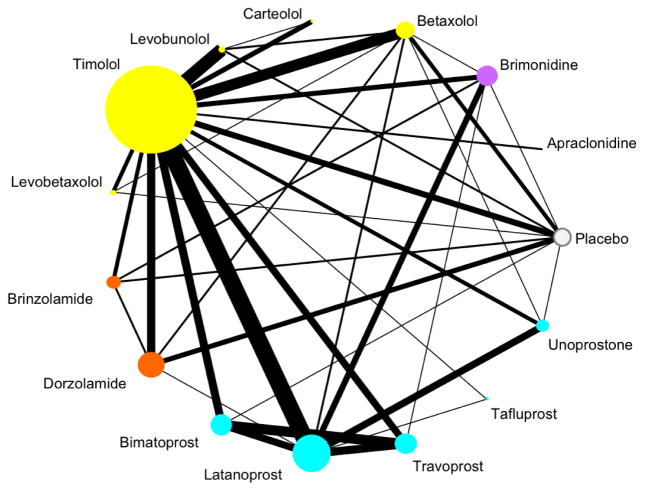

Before analyzing the data, it is important to understand the geometry of the network [13, 16]. The network geometry shows which interventions have been compared directly in RCTs and which can only be compared indirectly. It can be visualized using a network graph (Figure 1). In this example, the most common comparator is timolol, which has been compared in head-to-head trials with all other interventions in the network. Direct comparisons are missing for many pairs of interventions, such as placebo and latanoprost, a commonly prescribed glaucoma treatment. The width of the edges and the size of the nodes can convey the amount of information available; for example, they can be drawn proportional to the number of trials, the number of participants, or the precision.

Fig. 1.

Network graph. Each node represents one drug, color-coded by class. Here, the size of the node is proportional to the number of participants randomized to that drug, and the width of the edge is proportional to the number of trials comparing two drugs [8]
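The quantities behind a network graph like Figure 1 can be tabulated directly from the trial list. This sketch assumes two-arm trials recorded as (treatment, sample size, treatment, sample size); the records are hypothetical, not the 114 glaucoma trials. It counts trials per edge (for edge width) and participants per node (for node size), and checks that the network is connected, since a treatment with no path to the others cannot be compared with them even indirectly.

```python
from collections import defaultdict


def network_geometry(trials):
    """Summarize the geometry of a network of two-arm trials.

    Each trial is (treatment_1, n_1, treatment_2, n_2). Returns the number
    of trials per direct comparison (edge), the number of participants per
    treatment (node), and whether the network is connected.
    """
    edge_trials = defaultdict(int)
    node_participants = defaultdict(int)
    neighbors = defaultdict(set)
    for t1, n1, t2, n2 in trials:
        edge_trials[tuple(sorted((t1, t2)))] += 1
        node_participants[t1] += n1
        node_participants[t2] += n2
        neighbors[t1].add(t2)
        neighbors[t2].add(t1)

    # Depth-first search: the network is connected if every treatment can
    # be reached from the first one through direct comparisons.
    nodes = list(node_participants)
    seen = set()
    stack = nodes[:1]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(neighbors[node] - seen)
    connected = len(seen) == len(nodes)
    return dict(edge_trials), dict(node_participants), connected


# Hypothetical trials: (treatment, n, treatment, n).
trials = [
    ("timolol", 100, "latanoprost", 100),
    ("timolol", 50, "bimatoprost", 50),
    ("timolol", 80, "latanoprost", 80),
    ("placebo", 30, "timolol", 30),
]
edges, nodes, connected = network_geometry(trials)
print(edges)      # trials per edge: candidate edge widths
print(nodes)      # participants per node: candidate node sizes
print(connected)
```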

Qualitative synthesis

Every systematic review should include a qualitative synthesis before the quantitative synthesis, and this applies equally to NMA. In NMA, the qualitative synthesis includes assessing clinical and methodological heterogeneity, as in a conventional systematic review, as well as transitivity. Substantial variation in potential effect modifiers among studies within a comparison suggests important heterogeneity. Substantial differences in the distribution of effect modifiers between comparisons suggest intransitivity. For example, all the studies for one comparison may focus on younger populations while the studies for all other comparisons primarily examine older adults; this scenario is problematic if age is an effect modifier. If intransitivity is suspected based on the qualitative synthesis, a quantitative synthesis may not be appropriate.
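One simple way to support the qualitative assessment of transitivity is to tabulate each potential effect modifier by comparison. In the sketch below, the study records, the choice of mean age as the modifier, and the 10-year tolerance are all hypothetical; a flagged comparison is a prompt for clinical judgment, not a statistical test of transitivity.

```python
from collections import defaultdict
from statistics import mean, median


def modifier_by_comparison(studies, modifier):
    """Average a study-level effect modifier within each direct comparison."""
    groups = defaultdict(list)
    for study in studies:
        groups[tuple(sorted(study["arms"]))].append(study[modifier])
    return {comparison: mean(values) for comparison, values in groups.items()}


def flag_imbalance(summary, tolerance):
    """Flag comparisons whose average modifier differs from the median of the
    comparison averages by more than `tolerance`.

    The tolerance is a clinical judgment chosen by the reviewers; flagged
    comparisons warrant closer qualitative review.
    """
    center = median(summary.values())
    return sorted(c for c, value in summary.items() if abs(value - center) > tolerance)


# Hypothetical studies with mean participant age (years) as the modifier.
studies = [
    {"arms": ("latanoprost", "timolol"), "mean_age": 62},
    {"arms": ("timolol", "latanoprost"), "mean_age": 64},
    {"arms": ("bimatoprost", "timolol"), "mean_age": 63},
    {"arms": ("placebo", "timolol"), "mean_age": 45},
]
summary = modifier_by_comparison(studies, "mean_age")
print(summary)
print(flag_imbalance(summary, tolerance=10))
```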

Quantitative synthesis

Pairwise meta-analyses of all directly compared interventions should be carried out before conducting the NMA so that the statistical heterogeneity among studies within each comparison can be evaluated [17]. High statistical heterogeneity in the pairwise meta-analyses may reduce confidence in the NMA results.
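For a single direct comparison, a random-effects pairwise meta-analysis and its heterogeneity statistics can be sketched as follows. The DerSimonian-Laird estimator of the between-study variance τ² used here is one common choice, assumed for illustration; the paper does not specify an estimator, and others (e.g., restricted maximum likelihood) are also widely used. Inputs are hypothetical study-level mean differences and their variances.

```python
import math


def pairwise_random_effects(effects, variances):
    """DerSimonian-Laird random-effects meta-analysis of one comparison.

    effects: study-level estimates (e.g. mean differences); variances: their
    within-study variances. Returns (pooled, pooled_variance, tau2, Q, I2),
    with I2 expressed as a percentage.
    """
    k = len(effects)
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if df > 0 else 0.0
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    # Random-effects weights add tau2 to each within-study variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return pooled, 1.0 / sum(w_re), tau2, q, i2


pooled, var, tau2, q, i2 = pairwise_random_effects([1.0, 3.0], [1.0, 1.0])
print(f"pooled={pooled:.2f} 95% CI=({pooled - 1.96 * math.sqrt(var):.2f}, "
      f"{pooled + 1.96 * math.sqrt(var):.2f}) tau2={tau2:.2f} I2={i2:.0f}%")
```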

The next step is to develop the NMA model. Commonly used models include multivariate models [18, 19] and hierarchical models [20, 21]. In either case, a reference treatment, against which all other treatments are compared in the analysis, needs to be selected. The reference is usually a placebo or no-treatment group, or the most common comparator. In the glaucoma network, for example, either placebo or timolol would be an appropriate reference. All other treatments can then be compared with each other via the reference.
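To show how a reference treatment anchors the model, the sketch below fits a fixed-effect, contrast-based NMA by weighted least squares. It is a simplified stand-in, not the multivariate or hierarchical models cited above: it assumes two-arm trials only (multi-arm trials need correlated contrasts), ignores heterogeneity, and returns point estimates without standard errors. Each treatment's effect is estimated relative to the reference, and any other pairwise effect follows by subtraction.

```python
def solve(a, b):
    """Solve the small linear system a x = b (Gauss-Jordan, partial pivoting)."""
    n = len(a)
    m = [list(row) + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: is the network connected?")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fixed_effect_nma(studies, treatments, reference):
    """Fixed-effect NMA of two-arm contrasts by weighted least squares.

    studies: (t1, t2, y, var) with y the effect of t2 relative to t1.
    Returns each treatment's effect relative to `reference` (reference = 0).
    Under consistency, the effect of k relative to j is result[k] - result[j].
    """
    params = [t for t in treatments if t != reference]
    index = {t: i for i, t in enumerate(params)}
    p = len(params)
    xtwx = [[0.0] * p for _ in range(p)]
    xtwy = [0.0] * p
    for t1, t2, y, var in studies:
        # Design row: +1 for t2, -1 for t1; the reference has no column.
        x = [0.0] * p
        if t2 != reference:
            x[index[t2]] += 1.0
        if t1 != reference:
            x[index[t1]] -= 1.0
        w = 1.0 / var
        for i in range(p):
            xtwy[i] += w * x[i] * y
            for j in range(p):
                xtwx[i][j] += w * x[i] * x[j]
    estimates = solve(xtwx, xtwy)
    return {reference: 0.0, **dict(zip(params, estimates))}


# Hypothetical contrasts forming a closed A-B-C loop (A is the reference).
studies = [("A", "B", 1.0, 1.0), ("A", "C", 2.0, 1.0), ("B", "C", 1.0, 1.0)]
d = fixed_effect_nma(studies, ["A", "B", "C"], reference="A")
print(d)
print("C vs B:", d["C"] - d["B"])
```

Because the three hypothetical contrasts agree, adding the B-C trial leaves the estimates unchanged; a treatment with no path to the reference makes the system singular, which is one reason network connectivity matters.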

It is also important to specify how heterogeneity is assumed to act in the NMA model. Heterogeneity can be either comparison-specific or common across comparisons. Many NMAs assume a common heterogeneity when there are only a few studies per direct comparison, because heterogeneity can then be estimated more precisely by borrowing strength across comparisons [6]. Conversely, comparison-specific heterogeneity may be preferred when many studies inform each comparison and the assumption of a common heterogeneity is not plausible [6]. Routines and code for conducting NMA exist for R, Stata, and WinBUGS [19, 22–24]. Because NMA is statistically complex, regardless of the model used, it is advisable to work with a trained statistician when conducting an NMA.
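The two heterogeneity assumptions can be written out for a contrast-based random-effects model, sketched here for two-arm trials only (multi-arm trials need correlated random effects [18]). The notation below (observed contrast y, relative effect d, random effect δ, heterogeneity variance τ², reference R) is ours and is one common way to write such a model, not necessarily the parameterization used in the glaucoma NMA.

```latex
% y_{i,jk}: observed effect of treatment k relative to j in study i;
% s_i^2: its within-study variance.
y_{i,jk} = d_{jk} + \delta_{i,jk} + \varepsilon_{i}, \qquad
\varepsilon_{i} \sim N\left(0, s_i^{2}\right), \qquad
\delta_{i,jk} \sim N\left(0, \tau_{jk}^{2}\right)

% Common heterogeneity: a single variance shared by every comparison
\tau_{jk}^{2} = \tau^{2} \quad \text{for all comparisons } (j,k)

% Consistency: each relative effect is expressed through the reference R
d_{jk} = d_{Rk} - d_{Rj}, \qquad d_{RR} = 0
```

Under comparison-specific heterogeneity, each τ²_jk is estimated separately, which requires enough studies within each comparison.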

A further assumption of NMA is consistency, the statistical agreement between direct and indirect comparisons. Consistency is the statistical manifestation of transitivity in the data. Approaches for checking inconsistency fall into two categories: global and local. Global approaches evaluate inconsistency in the entire network by modifying the NMA model to account for potential inconsistency, whereas local approaches detect potential “hot spots” of inconsistency in the network, for example by examining individual loops of evidence separately. Using both types of methods is generally recommended. Inconsistency can be checked using routines in Stata or R, or with WinBUGS code [24–26].
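A simple local approach, the loop-specific method, compares the direct estimate of a comparison with the indirect estimate formed through the rest of a loop. The sketch below assumes the direct and indirect estimates are independent and uses hypothetical numbers; the function name is ours, not taken from the Stata or R routines cited above.

```python
import math


def loop_inconsistency(d_direct, var_direct, d_indirect, var_indirect):
    """Compare direct and indirect estimates of the same comparison.

    Returns the inconsistency factor (direct minus indirect), its standard
    error, the z statistic, and a two-sided p-value. Assumes the direct and
    indirect estimates are independent.
    """
    factor = d_direct - d_indirect
    se = math.sqrt(var_direct + var_indirect)
    z = factor / se
    # Two-sided normal p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return factor, se, z, p_value


# Hypothetical loop: direct A vs B = 1.4 (var 0.5); indirect via C = 1.0 (var 0.5).
factor, se, z, p = loop_inconsistency(1.4, 0.5, 1.0, 0.5)
print(f"IF={factor:.2f} SE={se:.2f} z={z:.2f} p={p:.2f}")
```

A large p-value should not be read as proof of consistency: with few studies per loop, tests of this kind have low power.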

If inconsistency is identified, there are several ways to handle it. First, reviewers should make sure there are no errors in data extraction. Next, the potential effect modifiers of studies within inconsistent loops should be examined more closely. Network meta-regression models can be fitted to check how the potential effect modifiers may affect the results. Sensitivity analyses excluding studies that may be sources of inconsistency may also help assess the robustness of the results. If there is substantial inconsistency and its sources cannot be identified, NMA may not be an appropriate method for synthesizing the data [6].
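A leave-one-out sensitivity analysis can be sketched for a single comparison with inverse-variance pooling. This simplifies the procedure described above, where the whole NMA would be refitted without the suspect studies; the study identifiers and data are hypothetical.

```python
def inverse_variance_pool(effects, variances):
    """Fixed-effect (inverse-variance) pooled estimate."""
    weights = [1.0 / v for v in variances]
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)


def leave_one_out(study_ids, effects, variances):
    """Re-estimate the pooled effect with each study excluded in turn."""
    results = {}
    for i, study in enumerate(study_ids):
        kept_effects = effects[:i] + effects[i + 1:]
        kept_variances = variances[:i] + variances[i + 1:]
        results[study] = inverse_variance_pool(kept_effects, kept_variances)
    return results


# Hypothetical studies of one comparison; "s3" looks like an outlier.
ids = ["s1", "s2", "s3"]
effects = [1.0, 1.0, 4.0]
variances = [1.0, 1.0, 1.0]
print("all studies:", inverse_variance_pool(effects, variances))
for study, estimate in leave_one_out(ids, effects, variances).items():
    print(f"without {study}: {estimate:.2f}")
```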

Presentation of results

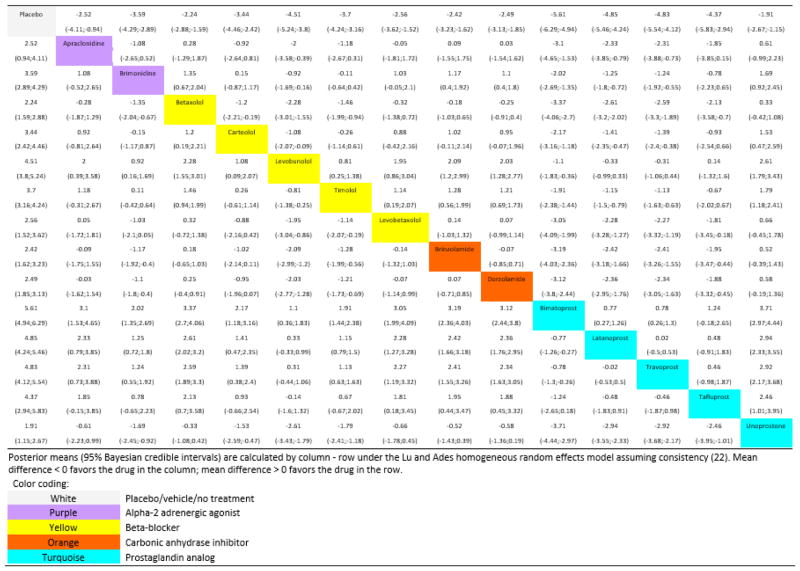

Relative effect estimates

NMA allows the relative effect between any pair of interventions to be estimated. In our example of 15 interventions, there are 15 × 14 / 2 = 105 relative effect estimates. These results can be presented in a square matrix called a league table [13]. The league table contains the relative effect estimates and their uncertainty for all possible pairs of interventions (Figure 2). For example, the first cell in the upper left corner shows that apraclonidine significantly reduces intraocular pressure compared to placebo (mean reduction: 2.52 mm Hg; 95% credible interval: 0.94, 4.11). The first column of the league table shows that all active interventions are more effective than placebo and that bimatoprost has the largest point estimate for intraocular pressure reduction, although the 95% credible intervals for many estimates in this column overlap. A single league table can show the results for up to two outcomes, one in each triangle of the matrix. For example, in an NMA of antimanic drugs for acute mania, the authors showed both efficacy and acceptability in one league table [27].

Fig. 2.

League table representing summary estimates from network meta-analysis [8]
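Under the consistency assumption, every cell of a league table can be derived from the effects of each treatment relative to the reference. The sketch below computes point estimates only (credible intervals would also need the covariance of the estimates), uses hypothetical values and a hypothetical treatment `drug_x`, and places the column treatment relative to the row treatment in each cell; published league tables differ in orientation, so this convention is an assumption.

```python
def league_table(effects_vs_reference):
    """Square matrix of pairwise relative effects from reference-based estimates.

    effects_vs_reference maps each treatment to its effect relative to a common
    reference (the reference maps to 0). Cell [row][col] holds the effect of
    the column treatment relative to the row treatment, assuming consistency.
    """
    names = list(effects_vs_reference)
    table = [
        [effects_vs_reference[col] - effects_vs_reference[row] for col in names]
        for row in names
    ]
    return names, table


def n_pairwise_comparisons(n_treatments):
    """Number of distinct treatment pairs: n(n - 1) / 2."""
    return n_treatments * (n_treatments - 1) // 2


# Hypothetical mean IOP reductions relative to placebo (mm Hg).
names, table = league_table({"placebo": 0.0, "timolol": 3.0, "drug_x": 4.0})
for name, row in zip(names, table):
    print(f"{name:>8}", " ".join(f"{v:6.2f}" for v in row))
print(n_pairwise_comparisons(15))  # 105, as in the glaucoma NMA
```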

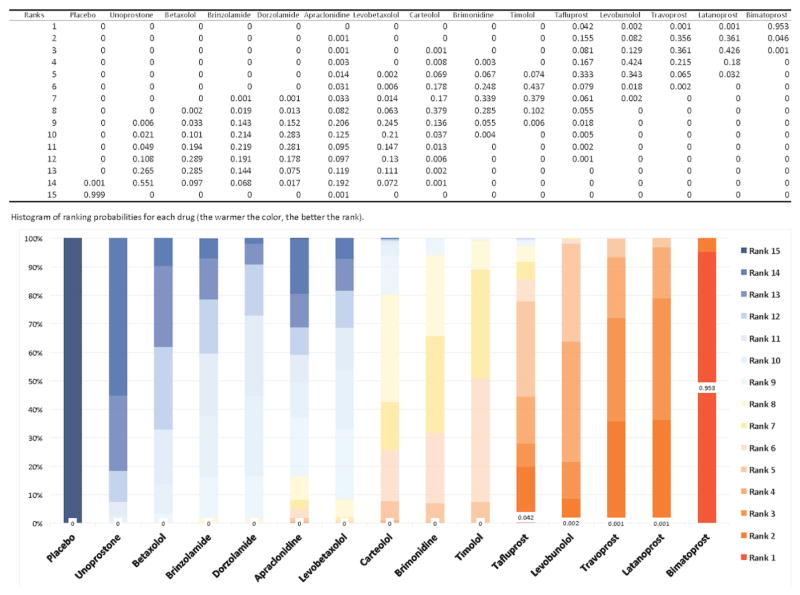

Ranking probabilities

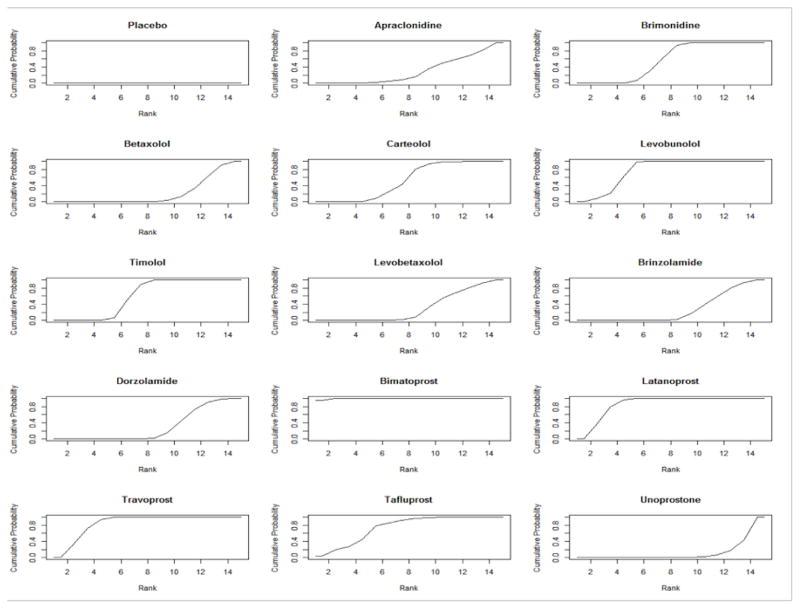

One of the advantages of NMA is that it allows interventions to be ranked. Based on the results of the NMA, we can calculate the probability of each intervention taking each possible rank (Figure 3). The probability of bimatoprost being ranked best is 95.3%, while tafluprost, which has the next highest probability of being ranked best, has a probability of 4.2%. Ranking treatments by the probability of being ranked best is discouraged because this approach does not account for the uncertainty in relative effect estimates and ignores the rest of the ranking distribution; an imprecisely estimated treatment can have a high probability of being best. It is more appropriate to base inferences about treatment ranking on the mean rank or the cumulative ranking probabilities [22]. Figure 4 shows the cumulative ranking probability curves for each treatment in our network. Using these curves, we can rank the treatments according to the surface under the cumulative ranking curve (SUCRA). The SUCRA value represents the probability that a treatment is among the best options [28]. A SUCRA value of 100% indicates the treatment is certain to be the most effective in the network, while a value of 0% indicates it is certain to be the least effective. The larger the SUCRA value, the higher the rank of an intervention in the network.

Fig. 3.

Ranking probabilities for any drug at any position [8]

Fig. 4.

Cumulative ranking probabilities for each treatment in the network. The surface under the cumulative ranking curve (SUCRA) value is the probability each treatment has of being among the best of those in the network, with larger values representing higher ranking probabilities. In this figure, the top three treatments are bimatoprost, latanoprost, and travoprost, with SUCRA values of 99.6%, 86.56%, and 85.76%, respectively [8]
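Ranking probabilities and SUCRA can be computed from draws of the treatment effects, such as posterior samples from a Bayesian NMA. In the sketch below, the draws are simulated from independent normal distributions with hypothetical means and standard deviations, which is a simplification (real draws are correlated across treatments), and larger effects are treated as better, as for mean intraocular pressure reduction. SUCRA is computed as the mean of the cumulative ranking probabilities over ranks 1 to a - 1, where a is the number of treatments; this equals (a - mean rank) / (a - 1).

```python
import random


def rank_probabilities(draws, larger_is_better=True):
    """Probability of each treatment taking each rank (rank 1 = best).

    draws: list of dicts {treatment: effect}, one dict per posterior or
    simulated draw. Returns {treatment: [P(rank 1), ..., P(rank a)]}.
    """
    names = list(draws[0])
    counts = {t: [0] * len(names) for t in names}
    for draw in draws:
        order = sorted(names, key=lambda t: draw[t], reverse=larger_is_better)
        for rank, t in enumerate(order):
            counts[t][rank] += 1
    return {t: [c / len(draws) for c in counts[t]] for t in names}


def sucra(probs):
    """Average of the cumulative ranking probabilities over ranks 1..a-1."""
    a = len(probs)
    cumulative, total = 0.0, 0.0
    for p in probs[:-1]:
        cumulative += p
        total += cumulative
    return total / (a - 1)


def mean_rank(probs):
    """Expected rank, with ranks numbered from 1 (best)."""
    return sum((rank + 1) * p for rank, p in enumerate(probs))


# Hypothetical, independent normal draws; real NMA draws would come from the
# fitted model and be correlated across treatments.
rng = random.Random(1)
params = {"placebo": (0.0, 0.1), "timolol": (3.0, 0.3), "drug_x": (3.2, 0.6)}
draws = [{t: rng.gauss(m, s) for t, (m, s) in params.items()} for _ in range(5000)]
for t, probs in rank_probabilities(draws).items():
    print(t, [round(p, 3) for p in probs],
          f"SUCRA={sucra(probs):.2f}", f"mean rank={mean_rank(probs):.2f}")
```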

Interpreting the results and drawing conclusions

Considerable care should be taken when interpreting the results and drawing conclusions from NMA. The conclusions drawn should depend on the outcomes examined. The clinical utility of interventions can be better understood if the analysis considers both effectiveness and safety outcomes. It is also important to consider whether the outcomes evaluated are surrogate outcomes or clinical or patient-important outcomes. For example, the primary outcome of the glaucoma NMA was intraocular pressure, a surrogate outcome for visual function. Although it serves as the basis for glaucoma drug approval by regulatory agencies, the validity of intraocular pressure as a surrogate outcome is not fully established: some studies support a relationship between intraocular pressure and visual function, while others provide evidence against it [29]. Moreover, relatively few glaucoma trials report visual function or other clinical or patient-important outcomes [8]. In such a case, the relevance of the NMA findings to clinicians and patients is less certain, and the conclusions should reflect this.

In the presence of heterogeneity or inconsistency in the network, poor quality of the underlying studies, or only a small amount of data, results need to be interpreted with caution. Ranking, while appealing as a way to facilitate clinical decisions, may be misleading. Even when appropriate ranking statistics such as SUCRA values are used, high-ranking treatments may still have modest or clinically insignificant effects, so rankings should be interpreted in the context of the treatment effects [30]. In the glaucoma NMA, the SUCRA method produced a distinct rank for each treatment, but within-class differences in treatment effect were small and potentially not clinically meaningful, indicating that factors other than effectiveness, such as cost, side effects, and patient preferences, may be more important in treatment selection [8].

A common approach for evaluating the quality of evidence from a systematic review is the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach [31]. In the GRADE approach as applied here, the evidence is evaluated on six domains: study limitations, heterogeneity, inconsistency, indirectness, imprecision, and publication bias. Considerations for study limitations include study design (randomized vs. non-randomized) and the risk of bias of the individual studies; evidence from non-randomized studies, or from randomized studies at high risk of bias, is downgraded. Important heterogeneity or inconsistency will downgrade the evidence. Indirectness refers to the applicability of the body of evidence to the question of interest in terms of population, interventions, and outcomes. If the review is interested in patients with comorbidities but the trials only include patients without comorbidities, the evidence would be downgraded for indirectness. Evidence would also be downgraded for indirectness if trials only examined surrogate outcomes rather than the clinical or patient-important outcomes of interest to the review. If effect estimates have wide uncertainty intervals that cross or are close to the null effect, the evidence is downgraded for imprecision. Finally, evidence of publication bias (i.e., that studies are more likely to be published if they have favorable results for the treatment of interest) will downgrade the evidence. Publication bias may be suspected if the direct evidence comes from a small number of commercially funded studies [32], or based on quantitative assessment using contour-enhanced funnel plots [33].

Two approaches have been proposed for applying GRADE to NMA [33, 34]. Both begin by evaluating each domain for each direct comparison. In the Salanti et al. approach, the ratings for each domain are combined across the direct comparisons to give domain-specific ratings, and the domain-specific ratings are then combined to determine the overall quality of the indirect or mixed evidence [33]. In the Puhan et al. approach, the ratings for the six domains are combined within each comparison to give comparison-specific summary ratings, and the summary ratings are then combined to determine the quality of the indirect and mixed evidence [34].

The conclusions of an NMA can be used to inform clinical practice guidelines, because guidelines often aim to make treatment recommendations in the context of multiple available options [35]. In our previous work based on the glaucoma NMA, we found that NMA may improve the timeliness of practice recommendations made in clinical guidelines [30].

Reporting of network meta-analysis

To make the results of a systematic review useful to researchers, clinicians, decision-makers, and patients, it is important to ensure that the entire process is transparent so that the strengths and weaknesses of the review can be properly evaluated. To this end, the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) statement was developed to provide guidance on essential items to report for transparency [36]. Due to the unique aspects of NMA, an extension to the PRISMA statement for NMA was recently published [37]. Items added to the extension statement include presenting, summarizing, and evaluating network geometry, and assessing and exploring inconsistency. Important modifications of the original statement include describing the eligibility criteria for treatments and how treatments will be lumped or split, using NMA-specific approaches to presenting results (such as league tables and treatment rankings), and discussing the validity of the transitivity assumption.

A few cautionary notes about network meta-analysis

NMA can be a powerful tool for comparative effectiveness research because it can incorporate indirect evidence and rank treatments. However, NMA is more complex than pairwise meta-analysis. The assumption of transitivity is strict and needs to be considered throughout the entire NMA process. Additional analyses, such as network meta-regression, are often required and further increase the complexity of the analysis. Furthermore, NMA is very resource-intensive: because NMAs generally ask broader questions, they usually involve more studies at each step of the systematic review, from screening to analysis, than a conventional meta-analysis. Before conducting an NMA, it is important to anticipate the time and resources required.

Conclusions

In summary, NMA is a promising method that can inform comparative effectiveness research in the presence of multiple treatments, but care is needed when using it. The clinical question should be developed with input from both a subject-area clinical expert and a statistician. Assessments of transitivity and consistency are integral to ensuring that the NMA is valid. Good reporting is important so that the NMA can be properly evaluated. Finally, the time and resources required to produce a high-quality NMA should be considered before undertaking one.

Footnotes

Conflict of Interest

The authors declare that they have no conflict of interest.

References

1. Guyatt GH, Sackett DL, Sinclair JC, et al. Users’ guides to the medical literature. IX. A method for grading health care recommendations. Evidence-Based Medicine Working Group. JAMA. 1995;274:1800–4. doi: 10.1001/jama.281.21.2029.

2. Brighton B, Bhandari M, Tornetta P, Felson DT. Hierarchy of evidence: from case reports to randomized controlled trials. Clin Orthop Relat Res. 2003 Aug:19–24. doi: 10.1097/01.blo.0000079323.41006.12.

3. Evans D. Hierarchy of evidence: A framework for ranking evidence evaluating healthcare interventions. J Clin Nurs. 2003;12:77–84. doi: 10.1046/j.1365-2702.2003.00662.x.

4. Cipriani A, Higgins JPT, Geddes JR, Salanti G. Conceptual and technical challenges in network meta-analysis. Ann Intern Med. 2013;159:130–7. doi: 10.7326/0003-4819-159-2-201307160-00008.

5. Biondi-Zoccai G, Abbate A, Benedetto U, et al. Network meta-analysis for evidence synthesis: What is it and why is it posed to dominate cardiovascular decision making? Int J Cardiol. 2015;182:309–314. doi: 10.1016/j.ijcard.2015.01.023.

6. Salanti G. Indirect and mixed-treatment comparison, network, or multiple-treatments meta-analysis: many names, many benefits, many concerns for the next generation evidence synthesis tool. Res Synth Methods. 2012;3:80–97. doi: 10.1002/jrsm.1037.

7. Li T, Puhan MA, Vedula SS, et al. Network meta-analysis-highly attractive but more methodological research is needed. BMC Med. 2011;9:79. doi: 10.1186/1741-7015-9-79.

8. Li T, Lindsley K, Rouse B, et al. Comparative Effectiveness of First-Line Medications for Primary Open-Angle Glaucoma: A Systematic Review and Network Meta-analysis. Ophthalmology. 2016;123:129–140. doi: 10.1016/j.ophtha.2015.09.005.

9. Michelessi M, Lindsley K, Yu T, Li T. Combination medical treatment for primary open angle glaucoma and ocular hypertension: a network meta-analysis. Cochrane Database Syst Rev. 2014. doi: 10.1002/14651858.CD011366.

10. Yu Q, Zhu Z, Liu Y, et al. Efficacy and Safety of HER2-Targeted Agents for Breast Cancer with HER2-Overexpression: A Network Meta-Analysis. PLoS One. 2015;10:e0127404. doi: 10.1371/journal.pone.0127404.

11. Russell R, Chung M, Balk EM, et al. Issues and Challenges in Conducting Systematic Reviews to Support Development of Nutrient Reference Values: Workshop Summary. Agency for Healthcare Research and Quality (US); Rockville, MD: 2009.

12. Caldwell DM. An overview of conducting systematic reviews with network meta-analysis. Syst Rev. 2014;3:109. doi: 10.1186/2046-4053-3-109.

13. Mavridis D, Giannatsi M, Cipriani A, Salanti G. A primer on network meta-analysis with emphasis on mental health. Evid Based Ment Health. 2015;18:40–46. doi: 10.1136/eb-2015-102088.

14. Sturtz S, Bender R. Unsolved issues of mixed treatment comparison meta-analysis: network size and inconsistency. Res Synth Methods. 2012;3:312–24. doi: 10.1002/jrsm.1058.

15. Li L, Tian J, Tian H, et al. Network meta-analyses could be improved by searching more sources and by involving a librarian. J Clin Epidemiol. 2014;67:1001–7. doi: 10.1016/j.jclinepi.2014.04.003.

16. Mills EJ, Thorlund K, Ioannidis JP. Demystifying trial networks and network meta-analysis. BMJ. 2013;346:f2914. doi: 10.1136/bmj.f2914.

17. Deeks JJ, Higgins JP, Altman DG. Chapter 9: Analysing data and undertaking meta-analyses. In: Cochrane Handbook for Systematic Reviews of Interventions. 2011.

18. Higgins JPT, Jackson D, Barrett JK, et al. Consistency and inconsistency in network meta-analysis: concepts and models for multi-arm studies. Res Synth Methods. 2012;3:98–110. doi: 10.1002/jrsm.1044.

19. White IR. Network meta-analysis. Stata J. 2015;15:951–985.

20. Salanti G, Higgins JP, Ades A, Ioannidis JP. Evaluation of networks of randomized trials. Stat Methods Med Res. 2008;17:279–301. doi: 10.1177/0962280207080643.

21. Lu G, Ades AE. Combination of direct and indirect evidence in mixed treatment comparisons. Stat Med. 2004;23:3105–3124. doi: 10.1002/sim.1875.

22. Chaimani A, Higgins JPT, Mavridis D, et al. Graphical tools for network meta-analysis in STATA. PLoS One. 2013;8:e76654. doi: 10.1371/journal.pone.0076654.

23. van Valkenhoef G, Lu G, de Brock B, et al. Automating network meta-analysis. Res Synth Methods. 2012;3:285–299. doi: 10.1002/jrsm.1054.

24. Dias S, Welton NJ, Sutton AJ, et al. Evidence synthesis for decision making 4: inconsistency in networks of evidence based on randomized controlled trials. Med Decis Making. 2013;33:641–56. doi: 10.1177/0272989X12455847.

25. White IR, Barrett JK, Jackson D, Higgins JPT. Consistency and inconsistency in network meta-analysis: model estimation using multivariate meta-regression. Res Synth Methods. 2012;3:111–125. doi: 10.1002/jrsm.1045.

26. Song F, Xiong T, Parekh-Bhurke S, et al. Inconsistency between direct and indirect comparisons of competing interventions: meta-epidemiological study. BMJ. 2011;343:d4909. doi: 10.1136/bmj.d4909.

27. Cipriani A, Barbui C, Salanti G, et al. Comparative efficacy and acceptability of antimanic drugs in acute mania: A multiple-treatments meta-analysis. Lancet. 2011;378:1306–1315. doi: 10.1016/S0140-6736(11)60873-8.

28. Salanti G, Ades A, Ioannidis JP. Graphical methods and numerical summaries for presenting results from multiple-treatment meta-analysis: an overview and tutorial. J Clin Epidemiol. 2011;64:163–71. doi: 10.1016/j.jclinepi.2010.03.016.

29. Medeiros FA. Biomarkers and surrogate endpoints in glaucoma clinical trials. Br J Ophthalmol. 2014:1–5. doi: 10.1136/bjophthalmol-2014-305550.

30. Rouse B, Cipriani A, Shi Q, et al. Network Meta-Analysis for Clinical Practice Guidelines – A Case Study on First-Line Medical Therapies for Primary Open-Angle Glaucoma. Ann Intern Med. 2016;164:674–82. doi: 10.7326/M15-2367.

31. Guyatt GH, Oxman AD, Vist GE, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336:924–6. doi: 10.1136/bmj.39489.470347.AD.

32. Guyatt GH, Oxman AD, Montori V, et al. GRADE guidelines: 5. Rating the quality of evidence - Publication bias. J Clin Epidemiol. 2011;64:1277–1282. doi: 10.1016/j.jclinepi.2011.01.011.

33. Salanti G, Del Giovane C, Chaimani A, et al. Evaluating the quality of evidence from a network meta-analysis. PLoS One. 2014. doi: 10.1371/journal.pone.0099682.

34. Puhan MA, Schunemann HJ, Murad MH, et al. A GRADE Working Group approach for rating the quality of treatment effect estimates from network meta-analysis. BMJ. 2014;349:g5630. doi: 10.1136/bmj.g5630.

35. Leucht S, Chaimani A, Cipriani AS, et al. Network meta-analyses should be the highest level of evidence in treatment guidelines. Eur Arch Psychiatry Clin Neurosci. 2016:10–13. doi: 10.1007/s00406-016-0715-4.

36. Moher D, Liberati A, Tetzlaff J, et al. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement (Reprinted from Annals of Internal Medicine). PLoS Med. 2009;6:e1000097. doi: 10.1371/journal.pmed.1000097.

37. Hutton B, Salanti G, Caldwell DM, et al. The PRISMA Extension Statement for Reporting of Systematic Reviews Incorporating Network Meta-analyses of Health Care Interventions: Checklist and Explanations. Ann Intern Med. 2015;162:777. doi: 10.7326/M14-2385.