Abstract

Motivation

Protein contacts contain key information for the understanding of protein structure and function and thus, contact prediction from sequence is an important problem. Recently exciting progress has been made on this problem, but the predicted contacts for proteins without many sequence homologs is still of low quality and not very useful for de novo structure prediction.

Method

This paper presents a new deep learning method that predicts contacts by integrating both evolutionary coupling (EC) and sequence conservation information through an ultra-deep neural network formed by two deep residual neural networks. The first residual network conducts a series of 1-dimensional convolutional transformation of sequential features; the second residual network conducts a series of 2-dimensional convolutional transformation of pairwise information including output of the first residual network, EC information and pairwise potential. By using very deep residual networks, we can accurately model contact occurrence patterns and complex sequence-structure relationship and thus, obtain higher-quality contact prediction regardless of how many sequence homologs are available for proteins in question.

Results

Our method greatly outperforms existing methods and leads to much more accurate contact-assisted folding. Tested on 105 CASP11 targets, 76 past CAMEO hard targets, and 398 membrane proteins, the average top L long-range prediction accuracy obtained by our method, one representative EC method CCMpred and the CASP11 winner MetaPSICOV is 0.47, 0.21 and 0.30, respectively; the average top L/10 long-range accuracy of our method, CCMpred and MetaPSICOV is 0.77, 0.47 and 0.59, respectively. Ab initio folding using our predicted contacts as restraints but without any force fields can yield correct folds (i.e., TMscore>0.6) for 203 of the 579 test proteins, while that using MetaPSICOV- and CCMpred-predicted contacts can do so for only 79 and 62 of them, respectively. Our contact-assisted models also have much better quality than template-based models especially for membrane proteins. The 3D models built from our contact prediction have TMscore>0.5 for 208 of the 398 membrane proteins, while those from homology modeling have TMscore>0.5 for only 10 of them. Further, even if trained mostly by soluble proteins, our deep learning method works very well on membrane proteins. In the recent blind CAMEO benchmark, our fully-automated web server implementing this method successfully folded 6 targets with a new fold and only 0.3L-2.3L effective sequence homologs, including one β protein of 182 residues, one α+β protein of 125 residues, one α protein of 140 residues, one α protein of 217 residues, one α/β of 260 residues and one α protein of 462 residues. Our method also achieved the highest F1 score on free-modeling targets in the latest CASP (Critical Assessment of Structure Prediction), although it was not fully implemented back then.

Availability

Author Summary

Protein contact prediction and contact-assisted folding has made good progress due to direct evolutionary coupling analysis (DCA). However, DCA is effective on only some proteins with a very large number of sequence homologs. To further improve contact prediction, we borrow ideas from deep learning, which has recently revolutionized object recognition, speech recognition and the GO game. Our deep learning method can model complex sequence-structure relationship and high-order correlation (i.e., contact occurrence patterns) and thus, improve contact prediction accuracy greatly. Our test results show that our method greatly outperforms the state-of-the-art methods regardless how many sequence homologs are available for a protein in question. Ab initio folding guided by our predicted contacts may fold many more test proteins than the other contact predictors. Our contact-assisted 3D models also have much better quality than homology models built from the training proteins, especially for membrane proteins. One interesting finding is that even trained mostly with soluble proteins, our method performs very well on membrane proteins. Recent blind CAMEO test confirms that our method can fold large proteins with a new fold and only a small number of sequence homologs.

This is a PLoS Computational Biology Methods paper.

Introduction

De novo protein structure prediction from sequence alone is one of most challenging problems in computational biology. Recent progress has indicated that some correctly-predicted long-range contacts may allow accurate topology-level structure modeling [1] and that direct evolutionary coupling analysis (DCA) of multiple sequence alignment (MSA) may reveal some long-range native contacts for proteins and protein-protein interactions with a large number of sequence homologs [2, 3]. Therefore, contact prediction and contact-assisted protein folding has recently gained much attention in the community. However, for many proteins especially those without many sequence homologs, the predicted contacts by the state-of-the-art predictors such as CCMpred [4], PSICOV [5], Evfold [6], plmDCA[7], Gremlin[8], MetaPSICOV [9] and CoinDCA [10] are still of low quality and insufficient for accurate contact-assisted protein folding [11, 12]. This motivates us to develop a better contact prediction method, especially for proteins without a large number of sequence homologs. In this paper we define that two residues form a contact if they are spatially proximal in the native structure, i.e., the Euclidean distance of their Cβ atoms less than 8Å [13].

Existing contact prediction methods roughly belong to two categories: evolutionary coupling analysis (ECA) and supervised machine learning. ECA predicts contacts by identifying co-evolved residues in a protein, such as EVfold [6], PSICOV [5], CCMpred [4], Gremlin [8], plmDCA and others [14–16]. However, DCA usually needs a large number of sequence homologs to be effective [10, 17]. Supervised machine learning predicts contacts from a variety of information, e.g., SVMSEQ [18], CMAPpro [13], PconsC2 [17], MetaPSICOV [9], PhyCMAP [19] and CoinDCA-NN [10]. Meanwhile, PconsC2 uses a 5-layer supervised learning architecture [17]; CoinDCA-NN and MetaPSICOV employ a 2-layer neural network [9]. CMAPpro uses a neural network with more layers, but its performance saturates at about 10 layers. Some supervised methods such as MetaPSICOV and CoinDCA-NN outperform ECA on proteins without many sequence homologs, but their performance is still limited by their shallow architectures.

To further improve supervised learning methods for contact prediction, we borrow ideas from very recent breakthrough in computer vision. In particular, we have greatly improved contact prediction by developing a brand-new deep learning model called residual neural network [20] for contact prediction. Deep learning is a powerful machine learning technique that has revolutionized image classification [21, 22] and speech recognition [23]. In 2015, ultra-deep residual neural networks [24] demonstrated superior performance in several computer vision challenges (similar to CASP) such as image classification and object recognition [25]. If we treat a protein contact map as an image, then protein contact prediction is kind of similar to (but not exactly same as) pixel-level image labeling, so some techniques effective for image labeling may also work for contact prediction. However, there are some important differences between image labeling and contact prediction. First, in computer vision community, image-level labeling (i.e., classification of a single image) has been extensively studied, but there are much fewer studies on pixel-level image labeling (i.e., classification of an individual pixel). Second, in many image classification scenarios, image size is resized to a fixed value, but we cannot resize a contact map since we need to do prediction for every residue pair (equivalent to an image pixel). Third, contact prediction has much more complex input features (including both sequential and pairwise features) than image labeling. Fourth, the ratio of contacts in a protein is very small (<2%). That is, the number of positive and negative labels in contact prediction is extremely unbalanced.

In this paper we present a very deep residual neural network for contact prediction. Such a network can capture very complex sequence-contact relationship and high-order contact correlation. We train this deep neural network using a subset of proteins with solved structures and then test it on public data including the CASP [26, 27] and CAMEO [28] targets as well as many membrane proteins. Our experimental results show that our method yields much better accuracy than existing methods and also result in much more accurate contact-assisted folding. The deep learning method described here will also be useful for the prediction of protein-protein and protein-RNA interfacial contacts.

Results

Deep learning model for contact prediction

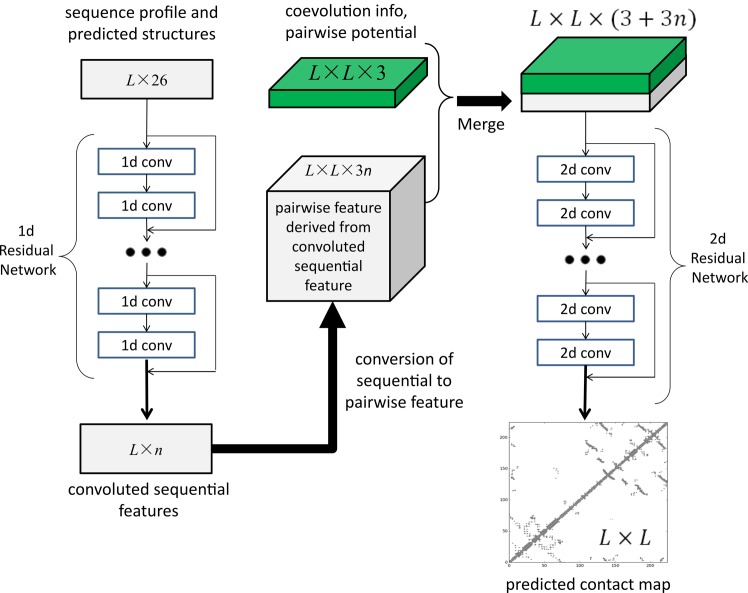

Fig 1 illustrates our deep neural network model for contact prediction [29]. Different from previous supervised learning approaches[9, 13] for contact prediction that employ only a small number of hidden layers (i.e., a shallow architecture), our deep neural network employs dozens of hidden layers. By using a very deep architecture, our model can automatically learn the complex relationship between sequence information and contacts and also model the interdependency among contacts and thus, improve contact prediction [17]. Our model consists of two major modules, each being a residual neural network. The first module conducts a series of 1-dimensional (1D) convolutional transformations of sequential features (sequence profile, predicted secondary structure and solvent accessibility). The output of this 1D convolutional network is converted to a 2-dimensional (2D) matrix by outer concatenation (an operation similar to outer product) and then fed into the 2nd module together with pairwise features (i.e., co-evolution information, pairwise contact and distance potential). The 2nd module is a 2D residual network that conducts a series of 2D convolutional transformations of its input. Finally, the output of the 2D convolutional network is fed into a logistic regression, which predicts the probability of any two residues form a contact. In addition, each convolutional layer is also preceded by a simple nonlinear transformation called rectified linear unit [30]. Mathematically, the output of 1D residual network is just a 2D matrix with dimension L×m where m is the number of new features (or hidden neurons) generated by the last convolutional layer of the network. Biologically, this 1D residual network learns the sequential context of a residue. By stacking multiple convolution layers, the network can learn information in a very large sequential context. The output of a 2D convolutional layer has dimension L×L×n where n is the number of new features (or hidden neurons) generated by this layer for one residue pair. The 2D residual network mainly learns contact occurrence patterns or high-order residue correlation (i.e., 2D context of a residue pair). The number of hidden neurons may vary at each layer.

Fig 1. Illustration of our deep learning model for contact prediction where L is the sequence length of one protein under prediction.

Our test data includes the 150 Pfam families described in [5], 105 CASP11 test proteins [31], 398 membrane proteins (S1 Table) and 76 CAMEO hard targets released from 10/17/2015 to 04/09/2016 (S2 Table). The tested methods include PSICOV [5], Evfold [6], CCMpred [4], plmDCA[7], Gremlin[8], and MetaPSICOV [9]. The former 5 methods employs pure DCA while MetaPSICOV [9] is a supervised learning method that performed the best in CASP11 [31]. All the programs are run with parameters set according to their respective papers. We cannot evaluate PconsC2 [17] since we failed to obtain any results from its web server. PconsC2 did not outperform MetaPSICOV in CASP11 [31], so it may suffice to just compare our method with MetaPSICOV.

Overall performance

We evaluate the accuracy of the top L/k (k = 10, 5, 2, 1) predicted contacts where L is protein sequence length [10]. We define that a contact is short-, medium- and long-range when the sequence distance of the two residues in a contact falls into [6, 11], [12, 23], and ≥24, respectively. The prediction accuracy is defined as the percentage of native contacts among the top L/k predicted contacts. When there are no L/k native (short- or medium-range) contacts, we replace the denominator by L/k in calculating accuracy. This may make the short- and medium-range accuracy look small although it is easier to predict short- and medium-range contacts than long-range ones.

As shown in Tables 1–4, our method outperforms all tested DCA methods and MetaPSICOV by a very large margin on the 4 test sets regardless of how many top predicted contacts are evaluated and no matter whether the contacts are short-, medium- or long-range. These results also show that two supervised learning methods greatly outperform the pure DCA methods and that the three pseudo-likelihood DCA methods plmDCA, Gremlin and CCMpred perform similarly, but outperform PSICOV (Gaussian model) and Evfold (maximum-entropy method). The advantage of our method is the smallest on the 150 Pfam families because many of them have a pretty large number of sequence homologs. In terms of top L long-range contact accuracy on the CASP11 set, our method exceeds CCMpred and MetaPSICOV by 0.32 and 0.20, respectively. On the 76 CAMEO hard targets, our method exceeds CCMpred and MetaPSICOV by 0.27 and 0.17, respectively. On the 398 membrane protein set, our method exceeds CCMpred and MetaPSICOV by 0.26 and 0.17, respectively. Our method uses a subset of protein features used by MetaPSICOV, but performs much better than MetaPSICOV due to our deep architecture and that we predict contacts of a protein simultaneously. Since the Pfam set is relatively easy, we will not analyze it any more in the following sections.

Table 1. Contact prediction accuracy on the 150 Pfam families.

| Method | Short | Medium | Long | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | |

| EVfold | 0.50 | 0.40 | 0.26 | 0.17 | 0.64 | 0.52 | 0.34 | 0.22 | 0.74 | 0.68 | 0.53 | 0.39 |

| PSICOV | 0.58 | 0.43 | 0.26 | 0.17 | 0.65 | 0.51 | 0.32 | 0.20 | 0.77 | 0.70 | 0.52 | 0.37 |

| CCMpred | 0.65 | 0.50 | 0.29 | 0.19 | 0.73 | 0.60 | 0.37 | 0.23 | 0.82 | 0.76 | 0.62 | 0.45 |

| plmDCA | 0.66 | 0.50 | 0.29 | 0.19 | 0.72 | 0.60 | 0.36 | 0.22 | 0.81 | 0.76 | 0.61 | 0.44 |

| Gremlin | 0.66 | 0.51 | 0.30 | 0.19 | 0.74 | 0.60 | 0.37 | 0.23 | 0.82 | 0.76 | 0.63 | 0.46 |

| MetaPSICOV | 0.82 | 0.70 | 0.45 | 0.27 | 0.83 | 0.73 | 0.52 | 0.33 | 0.92 | 0.87 | 0.74 | 0.58 |

| Our method | 0.93 | 0.81 | 0.51 | 0.30 | 0.93 | 0.86 | 0.62 | 0.38 | 0.98 | 0.96 | 0.89 | 0.74 |

Table 4. Contact prediction accuracy on 398 membrane proteins.

| Method | Short | Medium | Long | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | |

| EVfold | 0.16 | 0.13 | 0.09 | 0.07 | 0.28 | 0.22 | 0.13 | 0.09 | 0.44 | 0.37 | 0.26 | 0.18 |

| PSICOV | 0.22 | 0.16 | 0.10 | 0.07 | 0.29 | 0.21 | 0.13 | 0.09 | 0.42 | 0.34 | 0.23 | 0.16 |

| CCMpred | 0.27 | 0.19 | 0.11 | 0.08 | 0.36 | 0.26 | 0.15 | 0.10 | 0.52 | 0.45 | 0.31 | 0.21 |

| plmDCA | 0.26 | 0.18 | 0.11 | 0.08 | 0.35 | 0.25 | 0.14 | 0.09 | 0.51 | 0.42 | 0.29 | 0.20 |

| Gremlin | 0.27 | 0.19 | 0.11 | 0.07 | 0.37 | 0.26 | 0.15 | 0.10 | 0.52 | 0.45 | 0.32 | 0.21 |

| MetaPSICOV | 0.45 | 0.35 | 0.22 | 0.14 | 0.49 | 0.40 | 0.27 | 0.18 | 0.61 | 0.55 | 0.42 | 0.30 |

| Our method | 0.60 | 0.46 | 0.27 | 0.16 | 0.66 | 0.53 | 0.33 | 0.22 | 0.78 | 0.73 | 0.62 | 0.47 |

Table 2. Contact prediction accuracy on 105 CASP11 test proteins.

| Method | Short | Medium | Long | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | |

| EVfold | 0.25 | 0.21 | 0.15 | 0.12 | 0.33 | 0.27 | 0.19 | 0.13 | 0.37 | 0.33 | 0.25 | 0.19 |

| PSICOV | 0.29 | 0.23 | 0.15 | 0.12 | 0.34 | 0.27 | 0.18 | 0.13 | 0.38 | 0.33 | 0.25 | 0.19 |

| CCMpred | 0.35 | 0.28 | 0.17 | 0.12 | 0.40 | 0.32 | 0.21 | 0.14 | 0.43 | 0.39 | 0.31 | 0.23 |

| plmDCA | 0.32 | 0.26 | 0.17 | 0.12 | 0.39 | 0.31 | 0.21 | 0.14 | 0.42 | 0.38 | 0.30 | 0.23 |

| Gremlin | 0.35 | 0.27 | 0.17 | 0.12 | 0.40 | 0.31 | 0.21 | 0.14 | 0.44 | 0.40 | 0.31 | 0.23 |

| MetaPSICOV | 0.69 | 0.58 | 0.39 | 0.25 | 0.69 | 0.59 | 0.42 | 0.28 | 0.60 | 0.54 | 0.45 | 0.35 |

| Our method | 0.82 | 0.70 | 0.46 | 0.28 | 0.85 | 0.76 | 0.55 | 0.35 | 0.81 | 0.77 | 0.68 | 0.55 |

Table 3. Contact prediction accuracy on 76 past CAMEO hard targets.

| Method | Short | Medium | Long | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | |

| EVfold | 0.17 | 0.13 | 0.11 | 0.09 | 0.23 | 0.19 | 0.13 | 0.10 | 0.25 | 0.22 | 0.17 | 0.13 |

| PSICOV | 0.20 | 0.15 | 0.11 | 0.08 | 0.24 | 0.19 | 0.13 | 0.09 | 0.25 | 0.23 | 0.18 | 0.13 |

| CCMpred | 0.22 | 0.16 | 0.11 | 0.09 | 0.27 | 0.22 | 0.14 | 0.10 | 0.30 | 0.26 | 0.20 | 0.15 |

| plmDCA | 0.23 | 0.18 | 0.12 | 0.09 | 0.27 | 0.22 | 0.14 | 0.10 | 030 | 0.26 | 0.20 | 0.15 |

| Gremlin | 0.21 | 0.17 | 0.11 | 0.08 | 0.27 | 0.22 | 0.14 | 0.10 | 0.31 | 0.26 | 0.20 | 0.15 |

| MetaPSICOV | 0.56 | 0.47 | 0.31 | 0.20 | 0.53 | 0.45 | 0.32 | 0.22 | 0.47 | 0.42 | 0.33 | 0.25 |

| Our method | 0.67 | 0.57 | 0.37 | 0.23 | 0.69 | 0.61 | 0.42 | 0.28 | 0.69 | 0.65 | 0.55 | 0.42 |

Prediction accuracy with respect to the number of sequence homologs

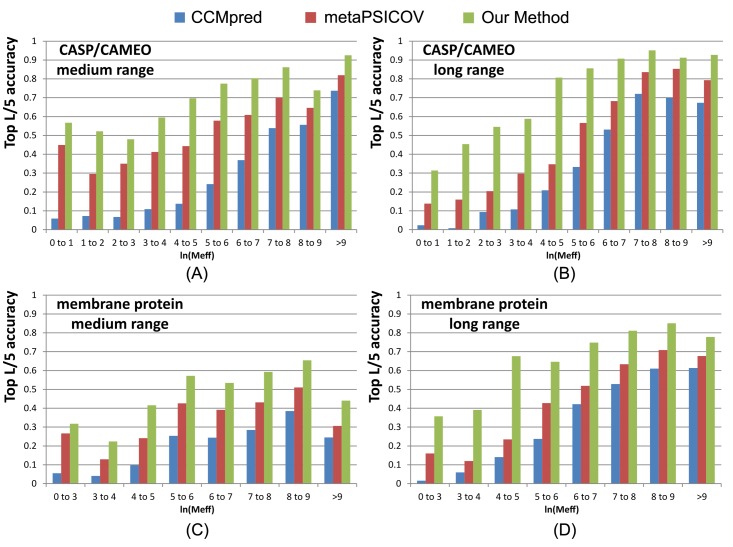

To examine the performance of our method with respect to the amount of homologous information available for a protein under prediction, we measure the effective number of sequence homologs in multiple sequence alignment (MSA) by Meff [19], which can be roughly interpreted as the number of non-redundant sequence homologs when 70% sequence identity is used as cutoff to remove redundancy (see Method for its formula). A protein with a smaller Meff has less homologous information. We divide all the test proteins into 10 bins according to ln(Meff) and then calculate the average accuracy of proteins in each bin. We merge the first 3 bins for the membrane protein set since they have a small number of proteins.

Fig 2 shows that the top L/5 contact prediction accuracy increases with respect to Meff, i.e., the number of effective sequence homologs, and that our method outperforms both MetaPSICOV and CCMpred regardless of Meff. Our long-range prediction accuracy is even better when ln(Meff)≤7 (equivalently Meff<1100), i.e., when the protein under prediction does not have a very large number of non-redundant sequence homologs. Our method has a large advantage over the other methods even when Meff is very big (>8000). This indicates that our method indeed benefits from some extra information such as inter-contact correlation or high-order residue correlation, which is orthogonal to pairwise co-evolution information.

Fig 2. Top L/5 accuracy of our method (green), CCMpred (blue) and MetaPSICOV (red) with respect to the amount of homologous information measured by ln(Meff).

The accuracy on the union of the 105 CASP and 76 CAMEO targets is displayed in (A) medium-range and (B) long-range. The accuracy on the membrane protein set is displayed in (C) medium-range and (D) long-range.

Contact-assisted protein folding

One of the important goals of contact prediction is to perform contact-assisted protein folding [11]. To test if our contact prediction can lead to better 3D structure modeling than the others, we build structure models for all the test proteins using the top predicted contacts as restraints of ab initio folding. For each test protein, we feed the top predicted contacts as restraints into the CNS suite [32] to generate 3D models. We measure the quality of a 3D model by a superposition-dependent score TMscore [33], which ranges from 0 to 1, with 0 indicating the worst and 1 the best, respectively. According to Xu and Zhang [34], a model with TMscore>0.5 (TMscore>0.6) is likely (highly likely) to have a correct fold. We also measure the quality of a 3D model by a superposition-independent score lDDT, which ranges from 0 to 100, with 0 indicating the worst and 100 the best, respectively.

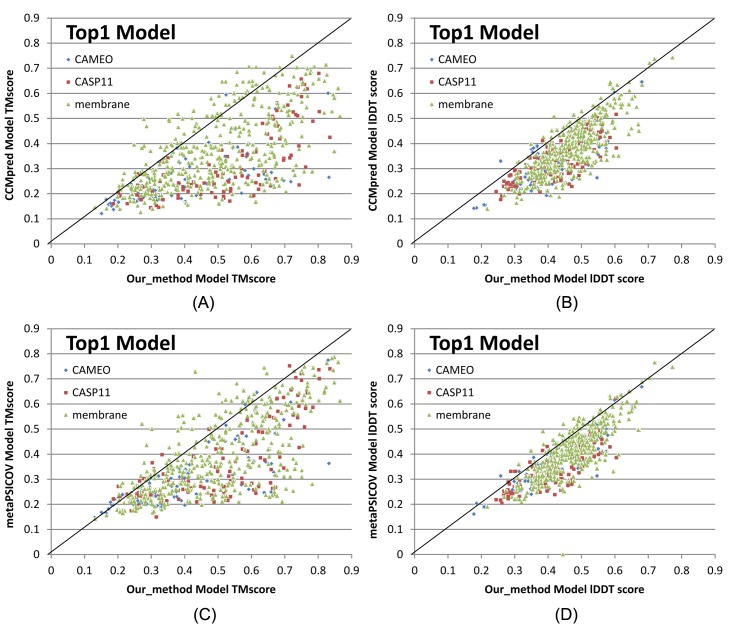

Fig 3 shows that our predicted contacts can generate much better 3D models than CCMpred and MetaPSICOV. On average, our 3D models are better than MetaPSICOV and CCMpred by ~0.12 TMscore unit and ~0.15 unit, respectively. When the top 1 models are evaluated, the average TMscore obtained by CCMpred, MetaPSICOV, and our method is 0.333, 0.377, and 0.518, respectively on the CASP dataset. The average lDDT of CCMpred, MetaPSICOV and our method is 31.7, 34.1 and 41.8, respectively. On the 76 CAMEO targets, the average TMsore of CCMpred, MetaPSICOV and our method is 0.256, 0.305 and 0.407, respectively. The average lDDT of CCMpred, MetaPSICOV and our method is 31.8, 35.4 and 40.2, respectively. On the membrane protein set, the average TMscore of CCMpred, MetaPSICOV and our method is 0.354, 0.387, and 0.493, respectively. The average lDDT of CCMpred, MetaPSICOV and our method is 38.1, 40.5 and 47.8, respectively. Same trend is observed when the best of top 5 models are evaluated (S1 Fig). On the CASP set, the average TMscore of the models generated by CCMpred, MetaPSICOV, and our method is 0.352, 0.399, and 0.543, respectively. The average lDDT of CCMpred, MetaPSICOV and our method is 32.3, 34.9 and 42.4, respectively. On the 76 CAMEO proteins, the average TMscore of CCMpred, MetaPSICOV, and our method is 0.271, 0.334, and 0.431, respectively. The average lDDT of CCMpred, MetaPSICOV and our method is 32.4, 36.1 and 40.9, respectively. On the membrane protein set, the average TMscore of CCMpred, MetaPSICOV, and our method is 0.385, 0.417, and 0.516, respectively. The average lDDT of CCMpred, MetaPSICOV and our method is 38.9, 41.2 and 48.5, respectively. In particular, when the best of top 5 models are considered, our predicted contacts can result in correct folds (i.e., TMscore>0.6) for 203 of the 579 test proteins, while MetaPSICOV- and CCMpred-predicted contacts can do so for only 79 and 62 of them, respectively.

Fig 3. Quality comparison of top 1 contact-assisted models generated by our method, CCMpred and MetaPSICOV on the 105 CASP11 targets (red square), 76 CAMEO targets (blue diamond) and 398 membrane protein targets (green triangle), respectively.

(A) and (B): comparison between our method (X-axis) and CCMpred (Y-axis) in terms of TMscore and lDDT, respectively. (C) and (D): comparison between our method (X-axis) and MetaPSICOV (Y-axis) in terms of TMscore and lDDT, respectively. lDDT is scaled to between 0 and 1.

Our method also generates much better contact-assisted models for the test proteins without many non-redundant sequence homologs. When the 219 of 579 test proteins with Meff≤500 are evaluated, the average TMscore of the top 1 models generated by our predicted contacts for the CASP11, CAMEO and membrane sets is 0.426, 0.365, and 0.397, respectively. By contrast, the average TMscore of the top 1 models generated by CCMpred-predicted contacts for the CASP11, CAMEO and membrane sets is 0.236, 0.214, and 0.241, respectively. The average TMscore of the top 1 models generated by MetaPSICOV-predicted contacts for the CASP11, CAMEO and membrane sets is 0.292, 0.272, and 0.274, respectively.

Contact-assisted models vs. template-based models

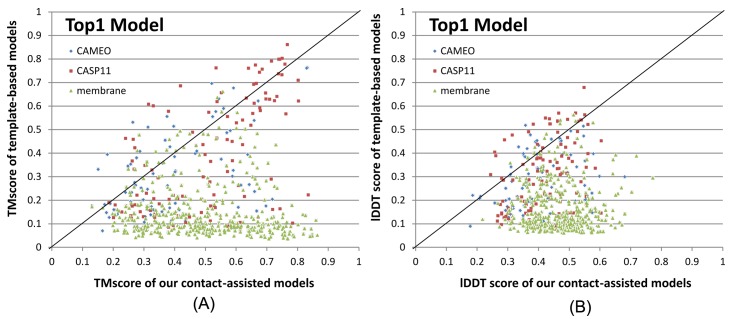

To compare the quality of our contact-assisted models and template-based models (TBMs), we built TBMs for all the test proteins using our training proteins as candidate templates. To generate TBMs for a test protein, we first run HHblits (with the UniProt20_2016 library) to generate an HMM file for the test protein, then run HHsearch with this HMM file to search for the best templates among the 6767 training proteins, and finally run MODELLER to build a TBM from each of the top 5 templates. Fig 4 shows the head-to-head comparison between our top 1 contact-assisted models and the top 1 TBMs on these three test sets in terms of both TMscore and lDDT. The average lDDT of our top 1 contact-assisted models is 45.7 while that of top 1 TBMs is only 20.7. When only the first models are evaluated, our contact-assisted models for the 76 CAMEO test proteins have an average TMscore 0.407 while the TBMs have an average TMscore 0.317. On the 105 CASP11 test proteins, the average TMscore of our contact-assisted models is 0.518 while that of the TBMs is only 0.393. On the 398 membrane proteins, the average TMscore of our contact-assisted models is 0.493 while that of the TBMs is only 0.149. Same trend is observed when top 5 models are compared (S2 Fig). The average lDDT of our top 5 contact-assisted models is 46.4 while that of top 5 TBMs is only 24.0. On the 76 CAMEO test proteins, the average TMscore of our contact-assisted models is 0.431 while that of the TBMs is only 0.366. On the 105 CASP11 test proteins, the average TMscore of our contact-assisted models is 0.543 while that of the TBMs is only 0.441. On the 398 membrane proteins, the average TMscore of our contact-assisted models is 0.516 while that of the TBMs is only 0.187. The low quality of TBMs further confirms that there is little redundancy between our training and test proteins (especially membrane proteins). This also indicates that our deep model does not predict contacts by simply copying from training proteins. That is, our method can predict contacts for a protein with a new fold.

Fig 4.

Comparison between our contact-assisted models of the three test sets and their template-based models in terms of (A) TMscore and (B) lDDT score. The top 1 models are evaluated.

Further, when the best of top 5 models are considered for all the methods, our contact-assisted models have TMscore>0.5 for 24 of the 76 CAMEO targets while TBMs have TMscore>0.5 for only 18 of them. Our contact-assisted models have TMscore >0.5 for 67 of the 105 CASP11 targets while TBMs have TMscore>0.5 for only 44 of them. Our contact-assisted models have TMscore>0.5 for 208 of the 398 membrane proteins while TBMs have TMscore >0.5 for only 10 of them. Our contact-assisted models for membrane proteins are much better than their TBMs because there is little similarity between the 6767 training proteins and the 398 test membrane proteins. When the 219 test proteins with ≤500 non-redundant sequence homologs are evaluated, the average TMscore of the TBMs is 0.254 while that of our contact-assisted models is 0.421. Among these 219 proteins, our contact-assisted models have TMscore>0.5 for 72 of them while TBMs have TMscore>0.5 for only 17 of them.

The above results imply that 1) when a query protein has no close templates, our contact-assisted modeling may work better than template-based modeling; 2) contact-assisted modeling shall be particularly useful for membrane proteins; and 3) our deep learning model does not predict contacts by simply copying contacts from the training proteins since our predicted contacts may result in much better 3D models than homology modeling.

Blind test in CAMEO

We have implemented our algorithm as a fully-automated contact prediction web server (http://raptorx.uchicago.edu/ContactMap/) and in September 2016 started to blindly test it through the weekly live benchmark CAMEO (http://www.cameo3d.org/). CAMEO is operated by the Schwede group, with whom we have never collaborated. CAMEO can be interpreted as a fully-automated CASP, but has a smaller number (~30) of participating servers since many CASP-participating servers are not fully automated and thus, cannot handle the large number of test targets used by CAMEO. Nevertheless, the CAMEO participants include some well-known servers such as Robetta[35], Phyre[36], RaptorX[37], Swiss-Model[38] and HHpred[39]. Meanwhile Robetta employs both ab initio folding and template-based modeling while the latter four employ mainly template-based modeling. Every weekend CAMEO sends test sequences to participating servers for prediction and then evaluates 3D models collected from servers. The test proteins used by CAMEO have no publicly available native structures until CAMEO finishes collecting models from participating servers.

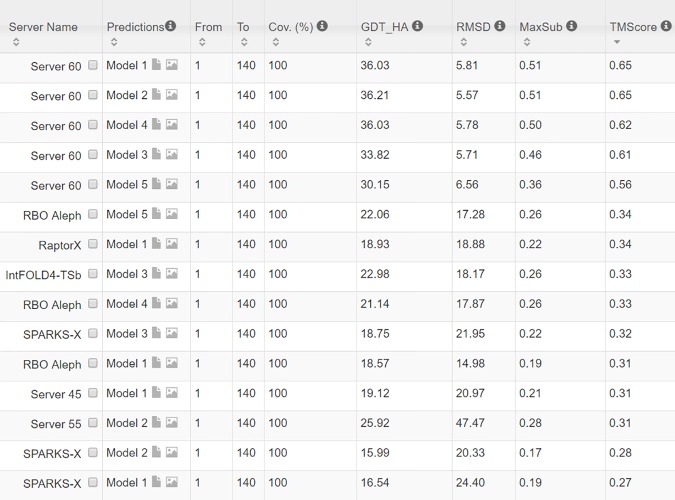

From 9/3/2016 to 10/31/2016, CAMEO in total released 41 hard targets (S3 Table). Although classified as hard by CAMEO, some of them may have distantly-related templates. Table 5 lists the contact prediction accuracy of our server in the blind CAMEO test as compared to the other methods. Again, our method outperforms the others by a very large margin no matter how many contacts are evaluated. The CAMEO evaluation of our contact-assisted 3D models is available at the CAMEO web site. You will need to register CAMEO in order to see all the detailed results of our contact server (ID: server60). Although our server currently build 3D models using only top predicted contacts without any force fields and fragment assembly procedures, our server predicts 3D models with TMscore>0.5 for 28 of the 41 targets and TMscore>0.6 for 16 of them. The average TMscore of the best of top 5 models built from the contacts predicted by our server, CCMpred and MetaPSICOV is 0.535, 0.316 and 0.392, respectively. See Fig 5 for the detailed comparison of the 3D models generated by our server, CCMpred and MetaPSICOV. Our server has also successfully folded 4 targets with a new fold plus one released in November 2016 (5flgB). See Table 6 for a summary of our prediction results of these targets and the below subsections for a detailed analysis. Among these targets, 5f5pH is particularly interesting since it has a sequence homolog in PDB but adopting a different conformation. That is, a template-based technique cannot obtain a good prediction for this target.

Table 5. Contact prediction accuracy on 41 recent CAMEO hard targets.

| Method | Short | Medium | Long | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | |

| EVfold | 0.20 | 0.15 | 0.11 | 0.08 | 0.25 | 0.19 | 0.12 | 0.09 | 0.33 | 0.29 | 0.21 | 0.15 |

| PSICOV | 0.21 | 0.16 | 0.11 | 0.08 | 0.26 | 0.20 | 0.11 | 0.08 | 0.33 | 0.30 | 0.21 | 0.15 |

| plmDCA | 0.26 | 0.19 | 0.12 | 0.09 | 0.28 | 0.23 | 0.13 | 0.09 | 0.38 | 0.33 | 0.24 | 0.17 |

| Gremlin | 0.25 | 0.18 | 0.12 | 0.08 | 0.29 | 0.22 | 0.13 | 0.09 | 0.37 | 0.34 | 0.25 | 0.17 |

| CCMpred | 0.24 | 0.18 | 0.12 | 0.08 | 0.29 | 0.22 | 0.13 | 0.09 | 0.37 | 0.34 | 0.24 | 0.17 |

| MetaPSICOV | 0.53 | 0.43 | 0.27 | 0.17 | 0.51 | 0.42 | 0.28 | 0.19 | 0.60 | 0.54 | 0.40 | 0.30 |

| Our server | 0.67 | 0.52 | 0.32 | 0.20 | 0.68 | 0.58 | 0.38 | 0.24 | 0.82 | 0.75 | 0.62 | 0.46 |

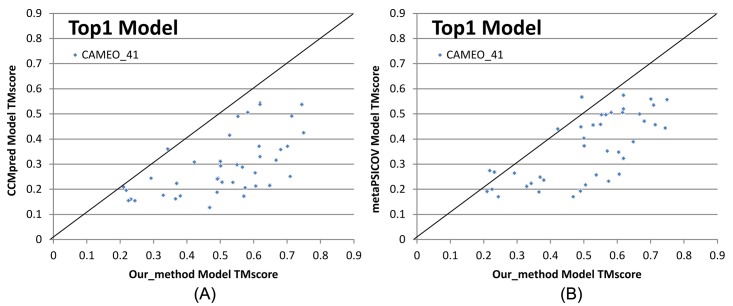

Fig 5. Quality comparison (measured by TMscore) of contact-assisted models generated by our server, CCMpred and MetaPSICOV on the 41 CAMEO hard targets.

(A) our server (X-axis) vs. CCMpred and (B) our server (X-axis) vs. MetaPSICOV.

Table 6. A summary of our blind prediction results on 5 CAMEO hard targets with a new fold.

| Target | CAMEO ID | Type | Len | Meff | Method | RMSD(Å) | TMscore |

|---|---|---|---|---|---|---|---|

| 2nc8A | 2016-09-10_00000002_1 | β | 182 | 250 | Our server | 6.5 | 0.61 |

| Best of the others | 12.18 | 0.47 | |||||

| 5dcjA | 2016-09-17_00000018_1 | α+β | 125 | 180 | Our server | 7.9 | 0.52 |

| Best of the others | 10.0 | 0.53 | |||||

| 5djeB | 2016-09-24_00000052_1 | α | 140 | 330 | Our server | 5.81 | 0.65 |

| Best of the others | 14.98 | 0.34 | |||||

| 5f5pH | 2016-10-15_00000047_1 | α | 217 | 65 | Our server | 4.21 | 0.71 |

| Best of the others | >40.0 | 0.48 | |||||

| 5flgB | 2016-11-12_00000046_1 | α/β | 260 | 113 | Our server | 7.12 | 0.61 |

| Best of the others | 16.9 | 0.35 |

Among these 41 hard targets, there are five multi-domain proteins: 5idoA, 5hmqF, 5b86B, 5b2gG and 5cylH. Table 7 shows that the average contact prediction accuracy of our method on these 5 multi-domain proteins is much better than the others. For multi-domain proteins, we use a superposition-independent score lDDT instead of TMscore to measure the quality of a 3D model. As shown in Table 8, the 3D models built by our server from predicted contacts have much better lDDT score than those built from CCMpred and MetaPSICOV.

Table 7. The average contact prediction accuracy of our method and the others on 5 multi-domain proteins among the 41 CAMEO hard targets.

| Method | Short | Medium | Long | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | L/10 | L/5 | L/2 | L | |

| EVfold | 0.17 | 0.13 | 0.09 | 0.07 | 0.18 | 0.12 | 0.08 | 0.06 | 0.54 | 0.40 | 0.26 | 0.18 |

| PSICOV | 0.27 | 0.18 | 0.10 | 0.07 | 0.26 | 0.17 | 0.11 | 0.07 | 0.62 | 0.49 | 0.31 | 0.20 |

| plmDCA | 0.29 | 0.23 | 0.11 | 0.07 | 0.32 | 0.22 | 0.11 | 0.08 | 0.66 | 0.51 | 0.34 | 0.22 |

| Gremlin | 0.30 | 0.24 | 0.12 | 0.08 | 0.32 | 0.22 | 0.12 | 0.07 | 0.67 | 0.52 | 0.36 | 0.23 |

| CCMpred | 0.30 | 0.23 | 0.12 | 0.08 | 0.32 | 0.22 | 0.12 | 0.08 | 0.66 | 0.51 | 0.35 | 0.23 |

| MetaPSICOV | 0.52 | 0.37 | 0.21 | 0.14 | 0.32 | 0.26 | 0.16 | 0.11 | 0.72 | 0.58 | 0.41 | 0.26 |

| Our method | 0.74 | 0.58 | 0.33 | 0.19 | 0.68 | 0.55 | 0.33 | 0.20 | 0.96 | 0.91 | 0.76 | 0.57 |

Table 8. The lDDT score of the 3D models built for the 5 multi-domain proteins using predicted contacts.

| Targets | Length | CCMpred | MetaPSICOV | Our |

|---|---|---|---|---|

| 5idoA | 512 | 23.67 | 24.24 | 36.83 |

| 5hmqF | 637 | 24.84 | 25.91 | 33.16 |

| 5b86B | 600 | 29.88 | 32.85 | 42.58 |

| 5b2gG | 364 | 28.52 | 30.47 | 47.91 |

| 5cylH | 370 | 22.21 | 23.37 | 30.62 |

Study of CAMEO target 2nc8A (CAMEO ID: 2016-09-10_00000002_1, PDB ID:2nc8)

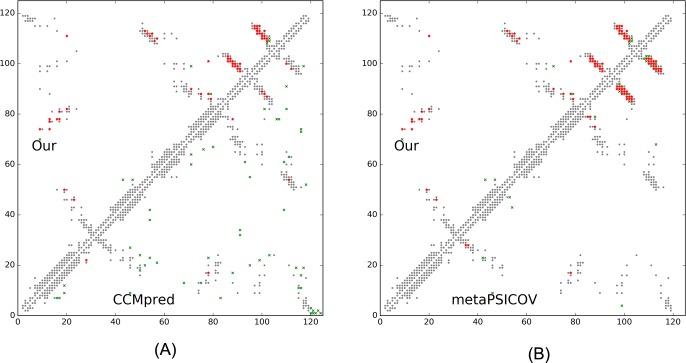

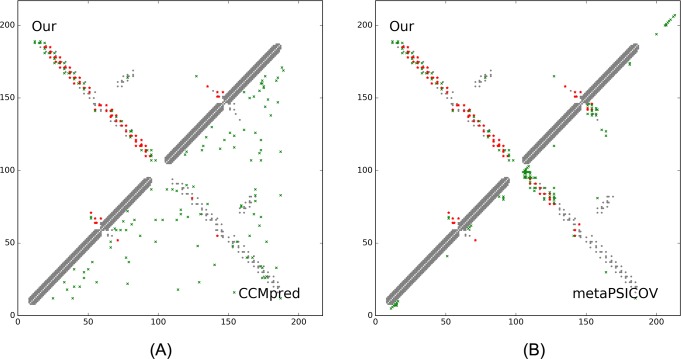

On September 10, 2016, CAMEO released two hard test targets for structure prediction. Our contact server successfully folded the hardest one (PDB ID: 2nc8), a mainly β protein of 182 residues. Table 9 shows that our server produced a much better contact prediction than CCMpred and MetaPSICOV. CCMpred has very low accuracy since HHblits detected only ~250 non-redundant sequence homologs for this protein, i.e., its Meff = 250. Fig 6 shows the predicted contact maps and their overlap with the native. MetaPSICOV fails to predict many long-range contacts while CCMpred introduces too many false positives.

Table 9. The long- and medium-range contact prediction accuracy of our method, MetaPSICOV and CCMpred on the CAMEO target 2nc8A.

| Long-range accuracy | Medium-range accuracy | |||||||

|---|---|---|---|---|---|---|---|---|

| L | L/2 | L/5 | L/10 | L | L/2 | L/5 | L/10 | |

| Our method | 0.764 | 0.923 | 0.972 | 1.0 | 0.450 | 0.769 | 0.972 | 1.0 |

| MetaPSICOV | 0.258 | 0.374 | 0.556 | 0.667 | 0.390 | 0.626 | 0.806 | 0.944 |

| CCMpred | 0.165 | 0.231 | 0.389 | 0.333 | 0.148 | 0.187 | 0.167 | 0.222 |

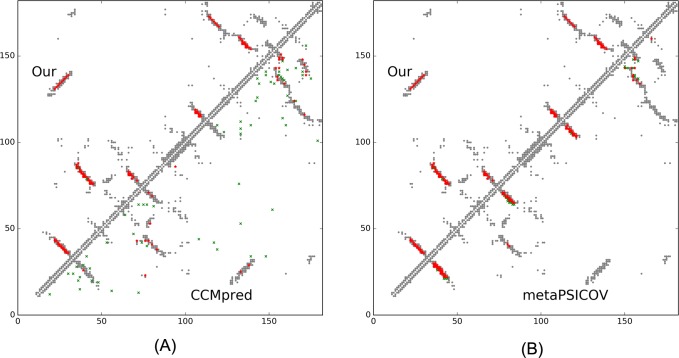

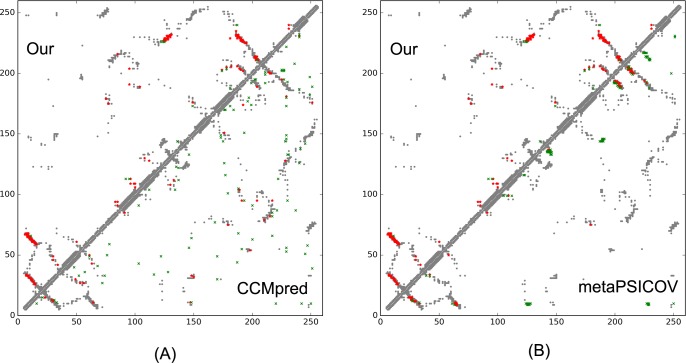

Fig 6. Overlap between top L/2 predicted contacts (in red or green) and the native contact map (in grey) for CAMEO target 2nc8A.

Red (green) dots indicate correct (incorrect) prediction. (A) The comparison between our prediction (in upper-left triangle) and CCMpred (in lower-right triangle). (B) The comparison between our prediction (in upper-left triangle) and MetaPSICOV (in lower-right triangle).

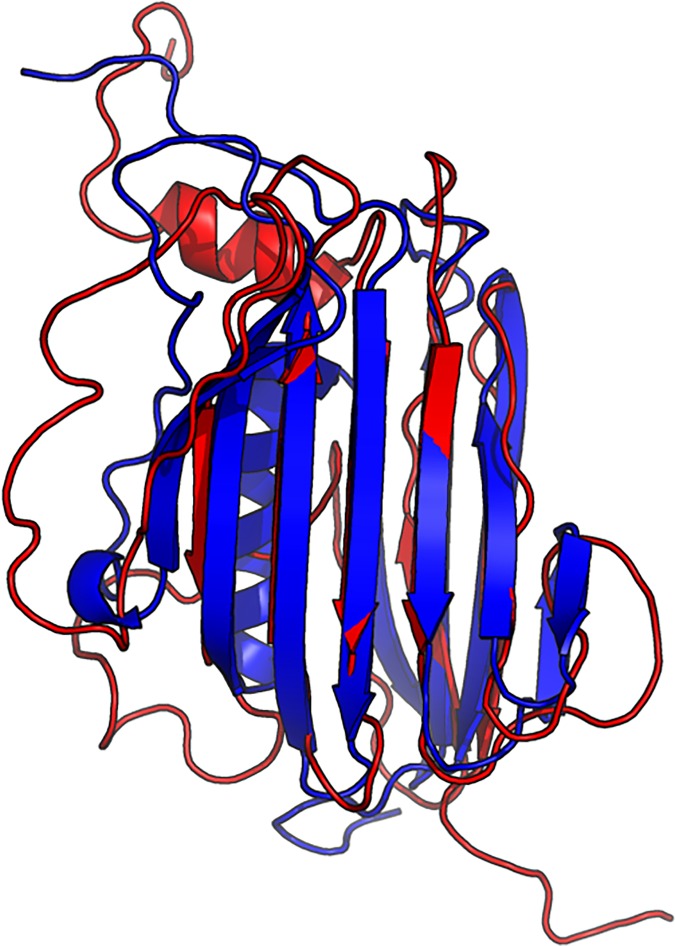

The 3D model submitted by our contact server has TMscore 0.570 (As of September 16, 2016, our server submits only one 3D model for each test protein) and the best of our top 5 models has TMscore 0.612 and RMSD 6.5Å. Fig 7 shows that the beta strands of our predicted model (red) matches well with the native (blue). To examine the superimposition of our model with its native structure from various angles, please see http://raptorx.uchicago.edu/DeepAlign/75097011/. By contrast, the best of top 5 models built by CNS from CCMpred- and MetaPSICOV-predicted contacts have TMscore 0.206 and 0.307, respectively, and RMSD 15.8Å and 14.2Å, respectively. The best TMscore obtained by the other CAMEO-participating servers is only 0.47 (Fig 8). Three top-notch servers HHpred, RaptorX and Robetta only submitted models with TMscore≤0.30. According to Xu and Zhang [34], a 3D model with TMscore<0.5 is unlikely to have a correct fold while a model with TMscore≥0.6 surely has a correct fold. That is, our contact server predicted a correct fold for this test protein while the others failed to.

Fig 7. Superimposition between our predicted model (red) and its native structure (blue) for the CAMEO test protein (PDB ID 2nc8 and chain A).

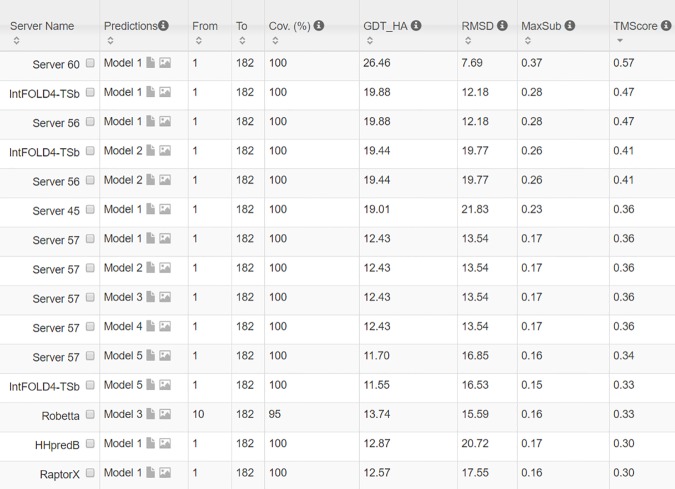

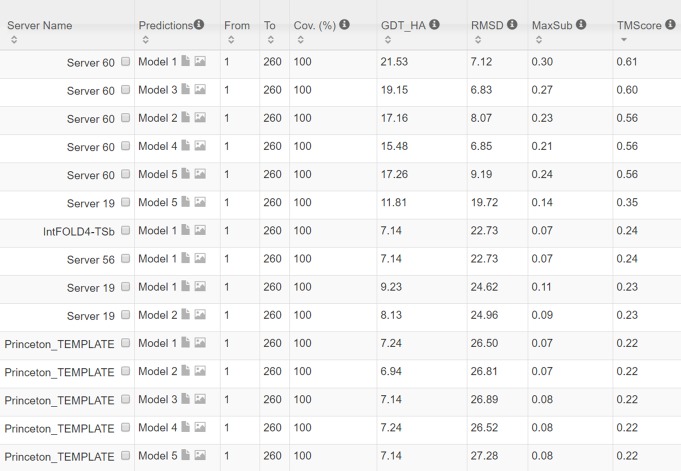

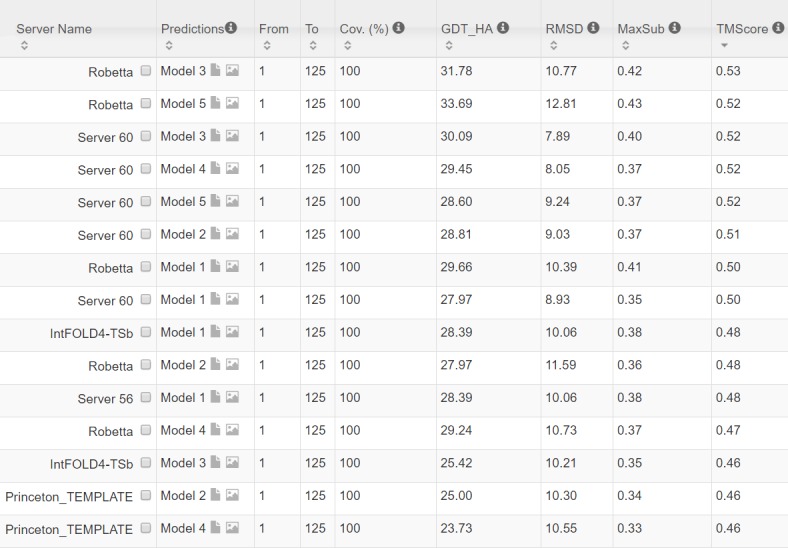

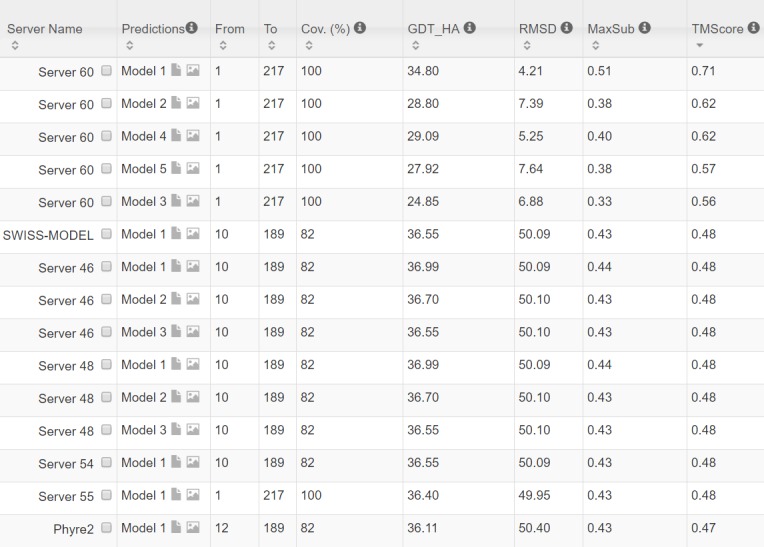

Fig 8. The list of top models submitted by CAMEO servers for 2nc8A and their quality scores.

The rightmost column displays the TMscore of submitted models. Server60 is our contact web server.

This test protein represents almost a novel fold. Our in-house structural homolog search tool DeepSearch[40] cannot identify structurally very similar proteins in PDB70 (created right before September 10, 2016) for this target. PDB70 is a set of representative structures in PDB, in which any two share less than 70% sequence identity. DeepSearch returned two weakly similar proteins 4kx7A and 4g2aA, which have TMscore 0.521 and 0.535 with the native structure of the target, respectively, and TMscore 0.465 and 0.466 with our best model, respectively. This is consistent with the fact that none of the template-based servers in CAMEO submitted a model with TMscore>0.5. We cannot find structurally similar proteins in PDB70 for our best model either; the best TMscore between PDB70 and our best model is only 0.480. That is, the models predicted by our method are not simply copied from the solved structures in PDB, and our method can indeed fold a relatively large β protein with a novel fold.

Study of CAMEO target 5dcjA (CAMEO ID: 2016-09-17_00000018_1, PDB ID:5dcj)

This target was released by CAMEO on September 17, 2016. It is an α+β sandwich protein of 125 residues. The four beta sheets of this protein are wrapped by one and three alpha helixes at two sides. Table 10 shows that our server produced a much better contact prediction than CCMpred and MetaPSICOV. Specifically, the contact map predicted by our method has L/2 long-range accuracy 0.645 while that by CCMpred and MetaPSICOV has L/2 accuracy only 0.05 and 0.194, respectively. CCMpred has very low accuracy since HHblits can only find ~180 non-redundant sequence homologs for this protein, i.e., its Meff = 180. Fig 9 shows the predicted contact maps and their overlap with the native. Both CCMpred and MetaPSICOV failed to predict some long-range contacts.

Table 10. The long- and medium-range contact prediction accuracy of our method, MetaPSICOV and CCMpred on the CAMEO target 5dcjA.

| Long range | Medium range | |||||||

|---|---|---|---|---|---|---|---|---|

| L | L/2 | L/5 | L/10 | L | L/2 | L/5 | L/10 | |

| Our method | 0.456 | 0.645 | 0.88 | 0.833 | 0.36 | 0.645 | 0.92 | 1.0 |

| metaPSICOV | 0.144 | 0.194 | 0.32 | 0.25 | 0.344 | 0.532 | 0.8 | 1.0 |

| CCMpred | 0.05 | 0.05 | 0.08 | 0.08 | 0.1 | 0.129 | 0.12 | 0.25 |

Fig 9. Overlap between top L/2 predicted contacts (in red or green) and the native contact map (in grey) for CAMEO target 5dcjA.

Red (green) dots indicate correct (incorrect) prediction. (A) The comparison between our prediction (in upper-left triangle) and CCMpred (in lower-right triangle). (B) The comparison between our prediction (in upper-left triangle) and MetaPSICOV (in lower-right triangle).

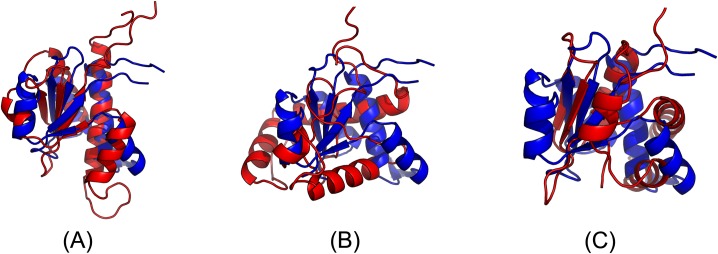

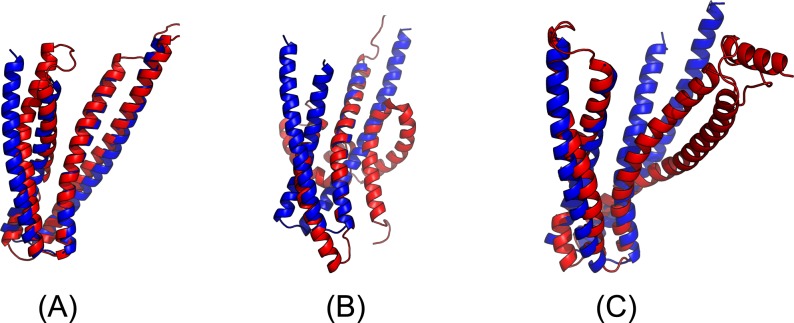

The first 3D model submitted by our contact server has TMscore 0.50 and the best of our 5 models has TMscore 0.52 and RMSD 7.9Å. The best of top 5 models built by CNS from CCMpred- and MetaPSICOV-predicted contacts have TMscore 0.243 and 0.361, respectively. Fig 10(A) shows that all the beta strands and the three surrounding alpha helices of our predicted model (in red) matches well with the native structure (blue), while the models from CCMpred (Fig 10(B)) and MetaPSICOV (Fig 10(C)) do not have a correct fold. To examine the superimposition of our model with its native structure from various angles, please see http://raptorx.uchicago.edu/DeepAlign/92913404/.

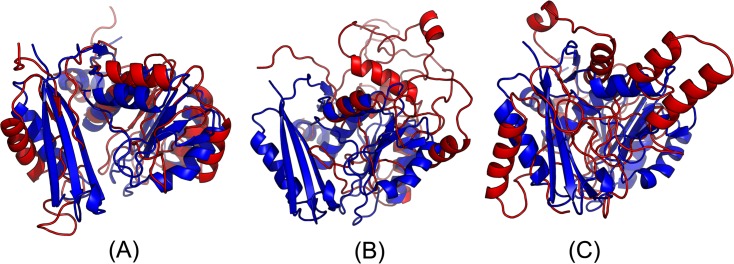

Fig 10. Superimposition between the predicted models (red) and the native structure (blue) for the CAMEO test protein (PDB ID 5dcj and chain A).

The models are built by CNS from the contacts predicted by (A) our method, (B) CCMpred, and (C) MetaPSICOV.

In terms of TMscore, our models have comparable quality to Robetta, but better than the other servers (Fig 11). In terms of RMSD and lDDT-Cα score, our models are better than all the others. In particular, our method produced better models than the popular homology modeling server HHpredB and our own template-based modeling server RaptorX, which submitted models with TMscore≤0.45.

Fig 11. The list of top models submitted by CAMEO-participating servers for 5dcjA and their quality scores.

The rightmost column displays the TMscore of submitted models. Server60 is our contact web server.

This target represents a novel fold. Searching through PDB70 created right before September 17, 2016 by our in-house structural homolog search tool DeepSearch cannot identify structurally similar proteins for this target. The most structurally similar proteins are 3lr5A and 5ereA, which have TMscore 0.431 and 0.45 with the target, respectively. This is consistent with the fact that none of the template-based servers in CAMEO can predict a good model for this target. By contrast, our contact-assisted model has TMscore 0.52, which is higher than all the template-based models.

Study of CAMEO target 5djeB (CAMEO ID: 2016-09-24_00000052_1, PDB ID: 5dje)

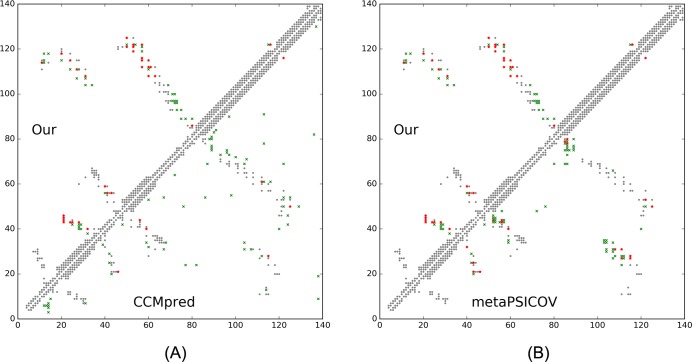

This target was released on September 24, 2016. It is an alpha protein of 140 residues with a novel fold. Table 11 shows that our server produced a much better contact prediction than CCMpred and MetaPSICOV. Specifically, the contact map predicted by our method has L/5 and L/10 long-range accuracy 50.0% and 71.4%, respectively, while that by CCMpred and MetaPSICOV has L/5 and L/10 accuracy less than 30%. HHblits can only find ~330 non-redundant sequence homologs for this target, i.e., its Meff = 330. Fig 12 shows the predicted contact maps and their overlap with the native. Both CCMpred and metaPSICOV failed to predict some long-range contacts.

Table 11. The long- and medium-range contact prediction accuracy of our method, MetaPSICOV and CCMpred on the CAMEO target 5djeB.

| Long range accuracy | Medium range accuracy | |||||||

|---|---|---|---|---|---|---|---|---|

| L | L/2 | L/5 | L/10 | L | L/2 | L/5 | L/10 | |

| Our method | 0.300 | 0.357 | 0.500 | 0.714 | 0.186 | 0.229 | 0.357 | 0.357 |

| metaPSICOV | 0.193 | 0.200 | 0.286 | 0.286 | 0.100 | 0.143 | 0.214 | 0.286 |

| CCMpred | 0.079 | 0.114 | 0.107 | 0.214 | 0.036 | 0.029 | 0.071 | 0.143 |

Fig 12. Overlap between top L/2 predicted contacts (in red and green) and the native contact map (in grey) for CAMEO target 5djeB.

Red (green) dots indicate correct (incorrect) prediction. (A) The comparison between our prediction (in upper-left triangle) and CCMpred (in lower-right triangle). (B)The comparison between our prediction (in upper-left triangle) and MetaPSICOV (in lower-right triangle).

The first 3D model submitted by our contact server has TMscore 0.65, while the best of our 5 models has TMscore 0.65 and RMSD 5.6Å. By contrast, the best of top 5 models built by CNS from CCMpred- and MetaPSICOV-predicted contacts have TMscore 0.404 and 0.427, respectively. Fig 13(A) shows that all the four alpha helices of our predicted model (in red) matches well with the native structure (blue), while the models from CCMpred (Fig 13(B)) and MetaPSICOV (Fig 13(C)) fail to predict the 3rd long helix correctly. To examine the superimposition of our model with its native structure from various angles, please see http://raptorx.uchicago.edu/DeepAlign/26652330/. Further, all other CAMEO registered servers, including the top-notch servers such as HHpred, RaptorX, SPARKS-X, and RBO Aleph (template-based and ab initio folding) only submitted models with TMscore≤0.35, i.e., failed to predict a correct fold (Fig 14).

Fig 13. Superimposition between the predicted models (red) and the native structure (blue) for the CAMEO test protein (PDB ID 5dje and chain B).

The models are built by CNS from the contacts predicted by (A) our method, (B) CCMpred, and (C) MetaPSICOV.

Fig 14. The list of top models submitted by CAMEO-participating servers for 5djeB and their quality scores.

The rightmost column displays the TMscore of submitted models. Server60 is our contact web server.

This target represents a novel fold. Searching through PDB70 created right before September 24, 2016 by our in-house structural homolog search tool DeepSearch cannot identify structurally similar proteins for this test protein. The most structurally similar proteins are 1u7lA and 4x5uA, which have TMscore 0.439 and 0.442 with the test protein, respectively. This is consistent with the fact that none of the template-based CAMEO-participating servers predicted a good model for this target. By contrast, our contact-assisted model has TMscore 0.65, much better than all the template-based models.

Study of CAMEO target 5f5pH (CAMEO ID: 2016-10-15_00000047_1, PDB ID: 5f5p)

On October 15, 2016, our contact web server successfully folded a very hard and also interesting CAMEO target (PDB ID: 5f5pH, CAMEO ID: 2016-10-15_00000047_1). This target is an alpha protein of 217 residues with four helices. Table 12 shows that our server produced a much better long-range contact prediction than CCMpred and MetaPSICOV. Specifically, our contact prediction has L/5 and L/10 long-range accuracy 76.7% and 95.2%, respectively, while MetaPSICOV has L/5 and L/10 accuracy less than 40%. This target has only ~65 non-redundant sequence homologs, i.e., its Meff = 65. The three methods have low L/k (k = 1, 2) medium-range accuracy because there are fewer than L/k native medium-range contacts while we use L/k as the denominator in calculating accuracy. As shown in Fig 15, CCMpred predicts too many false positives while MetaPSICOV predicts very few correct long-range contacts.

Table 12. The long- and medium-range contact prediction accuracy of our method, MetaPSICOV and CCMpred on the CAMEO target 5f5pH.

| Long-range accuracy | Medium-range accuracy | |||||||

|---|---|---|---|---|---|---|---|---|

| L | L/2 | L/5 | L/10 | L | L/2 | L/5 | L/10 | |

| Our server | 0.382 | 0.602 | 0.767 | 0.952 | 0.041 | 0.083 | 0.209 | 0.381 |

| metaPSICOV | 0.161 | 0.250 | 0.326 | 0.476 | 0.041 | 0.083 | 0.163 | 0.190 |

| CCMpred | 0.032 | 0.037 | 0.047 | 0.048 | 0.009 | 0.019 | 0.023 | 0.032 |

Fig 15. Overlap between top L/2 predicted contacts (in red and green) and the native contact map (in grey) for CAMEO target 5f5pH.

Red (green) dots indicate correct (incorrect) prediction. (A) The comparison between our prediction (in upper-left triangle) and CCMpred (in lower-right triangle). (B) The comparison between our prediction (in upper-left triangle) and MetaPSICOV (in lower-right triangle).

Our submitted 3D model has TMscore 0.71 and RMSD 4.21Å. By contrast, the best of top 5 models built by CNS from CCMpred- and MetaPSICOV-predicted contacts have TMscore 0.280 and 0.472, respectively. Fig 16(A) shows that our predicted model (in red) match well with the native structure (blue), while the model from CCMpred (Fig 16(B)) is completely wrong and the model from MetaPSICOV (Fig 16(C)) fails to place the 1st and 4th helices correctly. Please see http://raptorx.uchicago.edu/DeepAlign/14544627/ for the animated superimposition of our model with its native structure. As shown in the ranking list (Fig 17), all the other CAMEO-participating servers, including Robetta, HHpred, RaptorX, SPARKS-X, and RBO Aleph (template-based and ab initio folding) only submitted models with TMscore≤0.48 and RMSD>43.82Å. Our contact server is the only one that predicted a correct fold for this target.

Fig 16. Superimposition between the predicted models (red) and the native structure (blue) for the CAMEO target 5f5pH.

The models are built by CNS from the contacts predicted by (A) our method, (B) CCMpred, and (C) MetaPSICOV.

Fig 17. The list of top models submitted by CAMEO-participating servers for 5f5pH and their quality scores.

The rightmost column displays the TMscore of submitted models. Server60 is our contact web server.

To make sure our best model is not simply copied from the database of solved structures, we search our best model against PDB70 created right before October 15, 2016 using our in-house structural homolog search tool DeepSearch, which yields two weakly similar proteins 2yfaA and 4k1pA. They have TMscore 0.536 and 0.511 with our best model, respectively. This implies that our model is not simply copied from a solved structure in PDB.

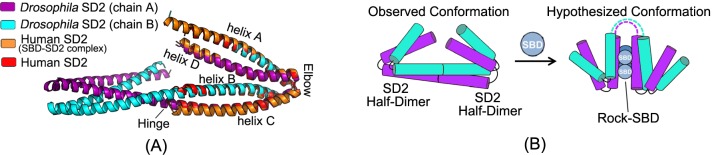

We ran BLAST on this target against PDB70 and surprisingly, found one protein 3thfA with E-value 3E-16 and sequence identity 35%. In fact, 3thfA and 5f5pH are two SD2 proteins from Drosophila and Human[41], respectively. Although homologous, they adopt different conformations and oligomerizations. In particular, 3thfA is a dimer and each monomer adopts a fold consisting of three segmented anti-parallel coiled-coil[42], whereas 5f5pH is a monomer that consists of two segmented antiparallel coiled-coils[41]. Superimposing the Human SD2 monomer onto the Drosophila SD2 dimer shows that the former structure was located directly in between the two structurally identical halves of the latter structure (see Fig 18(A)). That is, if our method predicts the contacts of 5f5pH by simply copying from 3thfA, it would produce a wrong 3D model. By contrast, all the other CAMEO-participating servers produced a wrong prediction for this target by using 3thfA as the template.

Fig 18.

(A) Structure superimposition of Drosophila SD2 and Human SD2. (B) Conformation change of Drosophila SD2 in binding with Rock-SBD.

Since SD2 protein may have conformational change when docking with Rock SBD protein, we check if the Drosophila SD2 monomer would change to a similar fold as the Human SD2 monomer or not. According to[41], the Human SD2 adopts a similar fold no matter whether it docks with the Rock SBD or not. According to [42], although the Drosophila SD2 dimer may have conformational change in the presence of Rock, the change only occurs in the hinge regions, but not at the adjacent identical halves. That is, even conformational change happens, the Drosophila SD2 monomer would not resemble the Human SD2 monomer (Fig 18(B)).

Study of CAMEO target 5flgB (CAMEO ID: 2016-11-12_00000046_1, PDB ID: 5flgB)

This target was released by CAMEO on November 12, 2016 and not included in the abovementioned 41 CAMEO hard targets. This target is a unique α/β protein with 260 residues. Table 13 shows that our server produced a much better (long-range) contact prediction than CCMpred and MetaPSICOV. In particular, our predicted contact map has L, L/2, L/5 and L/10 long-range accuracy 71.1%, 86.1%, 96.1% and 100.0%, respectively, while CCMpred- and MetaPSICOV-predicted contacts have long-range accuracy less than 35% since there are only ~113 effective sequence homologs for this protein, i.e., its Meff = 113. Fig 19 shows that both CCMpred and MetaPSICOV generated many false positive contact predictions and failed to predict long-range contacts.

Table 13. The long- and medium-range contact prediction accuracy of our method, MetaPSICOV and CCMpred on the CAMEO target 5flgB.

| Long-range accuracy | Medium-range accuracy | |||||||

|---|---|---|---|---|---|---|---|---|

| L | L/2 | L/5 | L/10 | L | L/2 | L/5 | L/10 | |

| Our server | 0.711 | 0.861 | 0.961 | 1.00 | 0.331 | 0.500 | 0.750 | 0.808 |

| MetaPSICOV | 0.208 | 0.262 | 0.269 | 0.288 | 0.242 | 0.285 | 0.442 | 0.615 |

| CCMpred | 0.165 | 0.184 | 0.308 | 0.346 | 0.150 | 0.215 | 0.346 | 0.385 |

Fig 19. Overlap between predicted contacts (in red and green) and the native (in grey) for CAMEO target 5flgB.

Red (green) dots indicate correct (incorrect) prediction. Top L/2 predicted contacts by each method are shown. The left picture shows the comparison between our prediction (in upper-left triangle) and CCMpred (in lower-right triangle) and the right picture shows the comparison between our prediction (in upper-left triangle) and MetaPSICOV (in lower-right triangle).

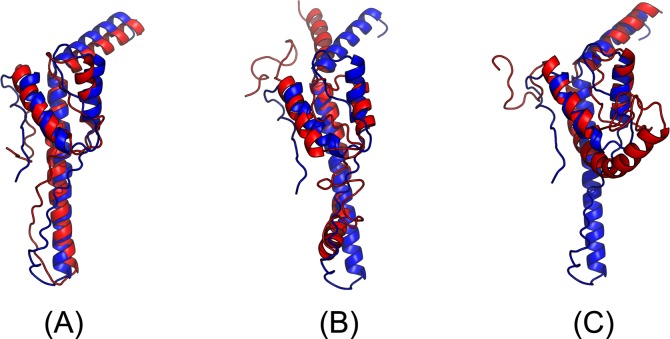

The 3D model submitted by our contact server has TMscore 0.61 and RMSD 7.12Å. The best of top 5 models built by CNS from CCMpred- and MetaPSICOV-predicted contacts have TMscore 0.240 and 0.267, respectively. Fig 20 shows that our method correctly modeled the overall fold, while CCMpred and MetaPSICOV failed. To examine the superimposition of our model with its native structure from various angles, please see http://raptorx.uchicago.edu/DeepAlign/12043612/. Furthermore, all the other CAMEO-participating servers, including the top-notch servers Robetta, HHpred, RaptorX, SPARKS-X, and RBO Aleph (template-based and ab initio folding), only submitted models with TMscore≤0.25 and RMSD>16.90Å (Fig 21). A 3D model with TMscore less than 0.25 does not have the correct fold while a model with TMscore≥0.6 very likely has a correct fold. That is, our contact server predicted a correct fold for this target while the others failed to.

Fig 20. Superimposition between the predicted models (red) and the native structure (blue) for the CAMEO test protein 5flgB.

The models are built by CNS from the contacts predicted by (A) our method, (B) CCMpred, and (C) MetaPSICOV.

Fig 21. The list of top models submitted by CAMEO-participating servers for 5flgB and their quality scores.

The rightmost column displays the model TMscore. Server60 is our contact web server.

This test protein has a novel fold. Searching through PDB70 created right before November 12, 2016 by our in-house structural homolog search tool DeepSearch cannot identify any similar structures. The most structurally similar proteins returned by DeepSearch are 2fb5A and 5dwmA, which have TMscore 0.367 and 0.355 with the native structure of this target, respectively. This is consistent with the fact that all the other CAMEO-participating servers failed to predict a correct fold for this target.

In addition, on December 3, 2016 CAMEO released a Leucine rich repeat protein (PDB chain: 5f0pA) of 462 residues with a new fold and Meff = 212 (see http://www.cameo3d.org/sp/targets/1-week/target/2016-12-03/5F0P_A/). Our server submitted a model with TMscore 0.70 and RMSD 6.89Å while the best model submitted by the others has TMscore only 0.42. Here we do not describe this target in detail to avoid elongating our paper further.

Conclusion and Discussion

This paper has presented a new deep (supervised) learning method that can greatly improve protein contact prediction. Our method distinguishes itself from previous supervised learning methods in that we employ a concatenation of two deep residual neural networks to model sequence-contact relationship, one for modeling of sequential features (i.e., sequence profile, predicted secondary structure and solvent accessibility) and the other for modeling of pairwise features (e.g., coevolution information). Ultra-deep residual network is the latest breakthrough in computer vision and has demonstrated the best performance in the computer vision challenge tasks (similar to CASP) in 2015. Our method is unique in that we predict all contacts of a protein simultaneously, which allows us to easily model high-order residue correlation. By contrast, existing supervised learning methods predict if two residues form a contact or not independent of the other residue pairs. Our (blind) test results show that our method dramatically improves contact prediction, exceeding currently the best methods (e.g., CCMpred, Evfold, PSICOV and MetaPSICOV) by a very large margin. Even without using any force fields and fragment assembly, ab initio folding using our predicted contacts as restraints can yield 3D structural models of correct fold for many more test proteins. Further, our experimental results also show that our contact-assisted models are much better than template-based models built from the training proteins of our deep model. We expect that our contact prediction methods can help reveal much more biological insights for those protein families without solved structures and close structural homologs.

Our method outperforms ECA due to a couple of reasons. First, ECA predicts contacts using information only in a single protein family, while our method learns sequence-structure relationship from thousands of protein families. Second, ECA considers only pairwise residue correlation, while our deep network architecture can capture high-order residue correlation (or contact occurrence patterns) very well. Our method uses a subset of protein features used by MetaPSICOV, but outperforms MetaPSICOV mainly because we explicitly model contact patterns (or high-order correlation), which is enabled by predicting contacts of a single protein simultaneously. MetaPSICOV employs a 2-stage approach. The 1st stage predicts if there is a contact between a pair of residues independent of the other residues. The 2nd stage considers the correlation between one residue pair and its neighboring pairs, but not in a very good way. In particular, the prediction errors in the 1st stage of MetaPSICOV cannot be corrected by the 2nd stage since two stages are trained separately. By contrast, we train all 2D convolution layers simultaneously (each layer is equivalent to one stage) so that later stages can correct prediction errors in early stages. In addition, a deep network can model much higher-order correlation and thus, capture information in a much larger context.

Our deep model does not predict contact maps by simply recognizing them from PDB, as evidenced by our experimental settings and results. First, we employ a strict criterion to remove redundancy so that there are no training proteins with sequence identity >25% or BLAST E-value <0.1 with any test proteins. Second, our contact-assisted models also have better quality than homology models, so it is unlikely that our predicted contact maps are simply copied from the training proteins. Third, our deep model trained by only soluble proteins works very well on membrane proteins. By contrast, the homology models built from soluble proteins for the membrane proteins have very low quality. Their average TMscore is no more than 0.17, which is the expected TMscore of any two randomly-chosen proteins. Finally, the blind CAMEO test indicates that our method successfully folded several targets with a new fold.

Our contact prediction method also performed the best in CASP12 in terms of the F1 score calculated on top L/2 long- and medium-range contacts of 38 free-modeling targets, although back then (May-July 2016) our method was not fully implemented. F1 score is a well-established and robust metric in evaluating the performance of a prediction method. Our method outperformed the 2nd best server iFold_1 by about 7.6% in terms of the total F1 score and the 3rd best server (i.e., an improved MetaPSICOV) by about 10.0%. Our advantage is even bigger when only top L/5 long- and medium-range contacts are evaluated. iFold_1 also used a deep neural network while the new MetaPSICOV used a deeper and wider network and more input features than the old version. This CASP result further confirms that deep learning can indeed improve protein contact prediction.

We have studied the impact of different input features. First of all, the co-evolution strength produced by CCMpred is very important. Without it, the top L/10 long-range prediction accuracy may drop by 0.15 for soluble proteins and more for membrane proteins. The larger performance degradation for membrane proteins is mainly because information learned from sequential features of soluble proteins is not very useful for membrane proteins. The depth of our deep model is as important as CCMpred, as evidenced by the fact that our deep method has much better accuracy than MetaPSICOV although we use a subset of protein features used by MetaPSICOV. Our test shows that a deep model with 9 and 30 layers have top L/10 accuracy ~0.1 and ~0.03 worse than a 60-layer model, respectively. This suggests that it is very important to model contact occurrence patterns (i.e., high-order residue correlation) by a deep architecture. The pairwise contact potential and mutual information may impact the accuracy by 0.02–0.03. The secondary structure and solvent accessibility may impact the accuracy by 0.01–0.02.

An interesting finding is that although our training set contains only ~100 membrane proteins, our model works well for membrane proteins, much better than CCMpred and MetaPSICOV. Even without using any membrane proteins in our training set, our deep models have almost the same accuracy on membrane proteins as those trained with membrane proteins. This implies that the sequence-structure relationship learned by our model from non-membrane proteins can generalize well to membrane protein contact prediction. This may be because that both soluble and membrane proteins share similar contact occurrence patterns in their contact maps and our deep method improves over previous methods by making a good use of contact occurrence patterns. We are going to study if we can further improve contact prediction accuracy of membrane proteins by including many more membrane proteins in the training set.

We may further improve contact prediction accuracy by enlarging the training set. First, the latest PDB25 has more than 10,000 proteins, which can provide many more training proteins than what we are using now. Second, when removing redundancy between training and test proteins, we may relax the BLAST E-value cutoff to 0.001 or simply drop it. This will improve the top L/k (k = 1, 2, 5, 10) contact prediction accuracy by 1–3% and accordingly the quality of the resultant 3D models by 0.01–0.02 in terms of TMscore. We may also improve the 3D model quality by combining our predicted contacts with energy function and fragment assembly. For example, we may feed our predicted contacts to Rosetta to build 3D models. Compared to CNS, Rosetta makes use of energy function and more local structural restraints through fragment assembly and thus, shall result in much better 3D models. Finally, instead of predicting contacts, our deep learning model actually can predict inter-residue distance distribution (i.e., distance matrix), which provides finer-grained information than contact maps and thus, shall benefit 3D structure modeling more than predicted contacts.

Our model achieves pretty good performance when using around 60–70 convolutional layers. A natural question to ask is can we further improve prediction accuracy by using many more convolutional layers? In computer vision, it has been shown that a 1001-layer residual neural network can yield better accuracy for image-level classification than a 100-layer network (but no result on pixel-level labeling is reported). Currently we cannot apply more than 100 layers to our model due to insufficient memory of a GPU card (12G). We plan to overcome the memory limitation by extending our training algorithm to run on multiple GPU cards. Then we will train a model with hundreds of layers to see if we can further improve prediction accuracy or not.

Method

Deep learning model details

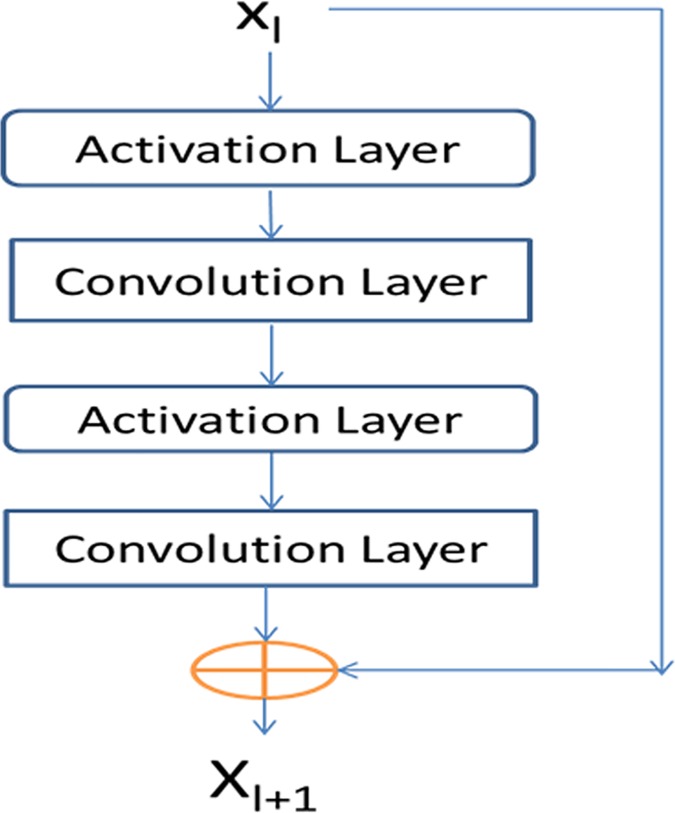

Residual network blocks

Our network consists of two residual neural networks, each in turn consisting of some residual blocks concatenated together. Fig 22 shows an example of a residual block consisting of 2 convolution layers and 2 activation layers. In this figure, Xl and Xl+1 are the input and output of the block, respectively. The activation layer conducts a simple nonlinear transformation of its input without using any parameters. Here we use the ReLU activation function [30] for such a transformation. Let f(Xl) denote the result of Xl going through the two activation layers and the two convolution layers. Then, Xl+1 is equal to Xl + f(Xl). That is, Xl+1 is a combination of Xl and its nonlinear transformation. Since f(Xl) is equal to the difference between Xl+1 and Xl, f is called residual function and this network called residual network. In the first residual network, Xl and Xl+1 represent sequential features and have dimension L×nl and L×nl+1, respectively, where L is protein sequence length and nl (nl+1) can be interpreted as the number of features or hidden neurons at each position (i.e., residue). In the 2nd residual network, Xl and Xl+1 represent pairwise features and have dimension L × L × nl and L × L× nl+1, respectively, where nl (nl+1) can be interpreted as the number of features or hidden neurons at one position (i.e., residue pair). Typically, we enforce nl ≤ nl+1 since one position at a higher level is supposed to carry more information. When nl < nl+1, in calculating Xl + f(Xl) we shall pad zeros to Xl so that it has the same dimension as Xl+1. To speed up training, we also add a batch normalization layer [43] before each activation layer, which normalizes its input to have mean 0 and standard deviation 1. The filter size (i.e., window size) used by a 1D convolution layer is 17 while that used by a 2D convolution layer is 3×3 or 5×5. By stacking many residual blocks together, even if at each convolution layer we use a small window size, our network can model very long-range interdependency between input features and contacts as well as the long-range interdependency between two different residue pairs. We fix the depth (i.e., the number of convolution layers) of the 1D residual network to 6, but vary the depth of the 2D residual network. Our experimental results show that with ~60 hidden neurons at each position and ~60 convolution layers for the 2nd residual network, our model can yield pretty good performance. Note that it has been shown that for image classification a convolutional neural network with a smaller window size but many more layers usually outperforms a network with a larger window size but fewer layers. Further, a 2D convolutional neural network with a smaller window size also has a smaller number of parameters than a network with a larger window size. See https://github.com/KaimingHe/deep-residual-networks for some existing implementations of 2D residual neural network. However, they assume an input of fixed dimension, while our network needs to take variable-length proteins as input.

Fig 22. A building block of our residual network with Xl and Xl+1 being input and output, respectively.

Each block consists of two convolution layers and two activation layers.

Our deep learning method for contact prediction is unique in at least two aspects. First, our model employs two multi-layer residual neural networks, which have not been applied to contact prediction before. Residual neural networks can pass both linear and nonlinear information from end to end (i.e., from the initial input to the final output). Second, we do contact prediction on the whole contact map by treating it as an individual image. In contrast, previous supervised learning methods separate the prediction of one residue pair from the others. By predicting contacts of a protein simultaneously, we can easily model long-range contact correlation and high-order residue correlation and long-range correlation between a contact and input features.

Convolutional operation

Existing deep learning development toolkits such as Theano (http://deeplearning.net/software/theano/) and Tensorflow (https://www.tensorflow.org/) have provided an API (application programming interface) for convolutional operation so that we do not need to implement it by ourselves. See http://deeplearning.net/tutorial/lenet.html and https://www.nervanasys.com/convolutional-neural-networks/ for a good tutorial of convolutional network. Please also see [44] for a detailed account of 1D convolutional network with application to protein sequence labeling. Roughly, a 1D convolution operation is de facto matrix-vector multiplication and 2D convolution can be interpreted similarly. Let X and Y (with dimensions L×m and L×n, respectively) be the input and output of a 1D convolutional layer, respectively. Let the window size be 2w+1 and s = (2w+1)m. The convolutional operator that transforms X to Y can be represented as a 2D matrix with dimension n×s, denoted as C. C is protein length-independent and each convolutional layer may have a different C. Let Xi be a submatrix of X centered at residue i (1≤ i ≤L) with dimension (2w+1)×m, and Yi be the i-th row of Y. We may calculate Yi by first flattening Xi to a vector of length s and then multiplying C and the flattened Xi.

Conversion of sequential features to pairwise features

We convert the output of the first module of our model (i.e., the 1-d residual neural network) to a 2D representation using an operation similar to outer product. Simply speaking, let v = {v1, v2, …, vi, …, vL} be the final output of the first module where L is protein sequence length and vi is a feature vector storing the output information for residue i. For a pair of residues i and j, we concatenate vi, v(i+j)/2 and vj to a single vector and use it as one input feature of this residue pair. The input features for this pair also include mutual information, the EC information calculated by CCMpred and pairwise contact potential [45, 46].

Loss function

We use maximum-likelihood method to train model parameters. That is, we maximize the occurrence probability of the native contacts (and non-contacts) of the training proteins. Therefore, the loss function is defined as the negative log-likelihood averaged over all the residue pairs of the training proteins. Since the ratio of contacts among all the residue pairs is very small, to make the training algorithm converge fast, we assign a larger weight to the residue pairs forming a contact. The weight is assigned such that the total weight assigned to contacts is approximately 1/8 of the number of non-contacts in the training set.

Regularization and optimization

To prevent overfitting, we employ L2-norm regularization to reduce the parameter space. That is, we want to find a set of parameters with a small L2 norm to minimize the loss function, so the final objective function to be minimized is the sum of loss function and the L2 norm of the model parameters (multiplied by a regularization factor). We use a stochastic gradient descent algorithm to minimize the objective function. It takes 20–30 epochs (each epoch scans through all the training proteins exactly once) to obtain a very good solution. The whole algorithm is implemented by Theano [47] and mainly runs on GPU.

Training and dealing with proteins of different lengths

Our network can take as input variable-length proteins. We train our deep network in a minibatch mode, which is routinely used in deep learning. That is, at each training iteration, we use a minibatch of proteins to calculate gradient and update the model parameters. A minibatch may have one or several proteins. We sort all training proteins by length and group proteins of similar lengths into minibatches. Considering that most proteins have length up to 600 residues, proteins in a minibatch often have the same length. In the case that they do not, we add zero padding to shorter proteins. Our convolutional operation is protein-length independent, so two different minibatches are allowed to have different protein lengths. We have tested minibatches with only a single protein or with several proteins. Both work well. However, it is much easier to implement minibatches with only a single protein.

Since our network can take as input variable-length lengths, we do not need to cut a long protein into segments in predicting contact maps. Instead we predict all contacts of a protein chain simultaneously. There is no need to use zero padding when only a single protein is predicted in a batch. Zero padding is needed only when several proteins of different lengths are predicted in a batch.

Training and test data

Our test data includes the 150 Pfam families [5], 105 CASP11 test proteins, 76 hard CAMEO test proteins released in 2015 (S1 Table) and 398 membrane proteins (S2 Table). All test membrane proteins have length no more than 400 residues and any two membrane proteins share less than 40% sequence identity. For the CASP test proteins, we use the official domain definitions, but we do not parse a CAMEO or membrane protein into domains.

Our training set is a subset of PDB25 created in February 2015, in which any two proteins share less than 25% sequence identity. We exclude a protein from the training set if it satisfies one of the following conditions: (i) sequence length smaller than 26 or larger than 700, (ii) resolution worse than 2.5Å, (iii) has domains made up of multiple protein chains, (iv) no DSSP information, and (v) there is inconsistency between its PDB, DSSP and ASTRAL sequences [48]. To remove redundancy with the test sets, we exclude any training proteins sharing >25% sequence identity or having BLAST E-value <0.1 with any test proteins. In total there are 6767 proteins in our training set, from which we have trained 7 different models. For each model, we randomly sampled ~6000 proteins from the training set to train the model and used the remaining proteins to validate the model and determine the hyper-parameters (i.e., regularization factor). The final model is the average of these 7 models.

Protein features

We use similar but fewer protein features as MetaPSICOV. In particular, the input features include protein sequence profile (i.e., position-specific scoring matrix), predicted 3-state secondary structure and 3-state solvent accessibility, direct co-evolutionary information generated by CCMpred, mutual information and pairwise potential [45, 46]. To derive these features, we need to generate MSA (multiple sequence alignment). For a training protein, we run PSI-BLAST (with E-value 0.001 and 3 iterations) to search the NR (non-redundant) protein sequence database dated in October 2012 to find its sequence homologs, and then build its MSA and sequence profile and predict other features (i.e., secondary structure and solvent accessibility). Sequence profile is represented as a 2D matrix with dimension L×20 where L is the protein length. Predicted secondary structure is represented as a 2D matrix with dimension L××3 (each entry is a predicted score or probability), so is the predicted solvent accessibility. Concatenating them together, we have a 2D matrix with dimension L×26, which is the input of our 1D residual network.

For a test protein, we generate four different MSAs by running HHblits [39] with 3 iterations and E-value set to 0.001 and 1, respectively, to search through the uniprot20 HMM library released in November 2015 and February 2016. From each individual MSA, we derive one sequence profile and employ our in-house tool RaptorX-Property [49] to predict the secondary structure and solvent accessibility accordingly. That is, for each test protein we generate 4 sets of input features and accordingly 4 different contact predictions. Then we average these 4 predictions to obtain the final contact prediction. This averaged contact prediction is about 1–2% better than that predicted from a single set of features. Although currently there are quite a few packages that can generate direct evolutionary coupling information, we only employ CCMpred to do so because it runs fast on GPU [4].

Programs to compare and evaluation metrics

We compare our method with PSICOV [5], Evfold [6], CCMpred [4], plmDCA, Gremlin, and MetaPSICOV [9]. The first 5 methods conduct pure DCA while MetaPSICOV employs supervised learning. MetaPSICOV [9] performed the best in CASP11 [31]. CCMpred, plmDCA, Gremlin perform similarly, but better than PSICOV and Evfold. All the programs are run with parameters set according to their respective papers. We evaluate the accuracy of the top L/k (k = 10, 5, 2, 1) predicted contacts where L is protein sequence length. The prediction accuracy is defined as the percentage of native contacts among the top L/k predicted contacts. We also divide contacts into three groups according to the sequence distance of two residues in a contact. That is, a contact is short-, medium- and long-range when its sequence distance falls into [6, 11], [12, 23], and ≥24, respectively.

Calculation of meff

Meff measures the amount of homologous information in an MSA (multiple sequence alignment). It can be interpreted as the number of non-redundant sequence homologs in an MSA when 70% sequence identity is used as cutoff. To calculate Meff, we first calculate the sequence identity between any two proteins in the MSA. Let a binary variable Sij denote the similarity between two protein sequences i and j. Sij is equal to 1 if and only if the sequence identity between i and j is at least 70%. For a protein i, we calculate the sum of Sij over all the proteins (including itself) in the MSA and denote it as Si. Finally, we calculate Meff as the sum of 1/Si over all the protein sequences in this MSA.

3D model construction by contact-assisted folding