Abstract

We consider the estimation of sparse graphical models that characterize the dependency structure of high-dimensional tensor-valued data. To facilitate the estimation of the precision matrix corresponding to each way of the tensor, we assume the data follow a tensor normal distribution whose covariance has a Kronecker product structure. The penalized maximum likelihood estimation of this model involves minimizing a non-convex objective function. In spite of the non-convexity of this estimation problem, we prove that an alternating minimization algorithm, which iteratively estimates each sparse precision matrix while fixing the others, attains an estimator with the optimal statistical rate of convergence as well as consistent graph recovery. Notably, such an estimator achieves estimation consistency with only one tensor sample, which is unobserved in previous work. Our theoretical results are backed by thorough numerical studies.

1 Introduction

High-dimensional tensor-valued data are prevalent in many fields such as personalized recommendation systems and brain imaging research [1, 2]. Traditional recommendation systems are mainly based on the user-item matrix, whose entry denotes each user’s preference for a particular item. To incorporate additional information into the analysis, such as the temporal behavior of users, we need to consider a user-item-time tensor. For another example, functional magnetic resonance imaging (fMRI) data can be viewed as a three way (third-order) tensor since it contains the brain measurements taken on different locations over time for various experimental conditions. Also, in the example of microarray study for aging [3], thousands of gene expression measurements are recorded on 16 tissue types on 40 mice with varying ages, which forms a four way gene-tissue-mouse-age tensor.

In this paper, we study the estimation of conditional independence structure within tensor data. For example, in the microarray study for aging we are interested in the dependency structure across different genes, tissues, ages and even mice. Assuming data are drawn from a tensor normal distribution, a straightforward way to estimate this structure is to vectorize the tensor and estimate the underlying Gaussian graphical model associated with the vector. Such an approach ignores the tensor structure and requires estimating a rather high dimensional precision matrix with insufficient sample size. For instance, in the aforementioned fMRI application the sample size is one if we aim to estimate the dependency structure across different locations, time and experimental conditions. To address such a problem, a popular approach is to assume the covariance matrix of the tensor normal distribution is separable in the sense that it is the Kronecker product of small covariance matrices, each of which corresponds to one way of the tensor. Under this assumption, our goal is to estimate the precision matrix corresponding to each way of the tensor. See § 1.1 for a detailed survey of previous work.

Despite the fact that the assumption of the Kronecker product structure of covariance makes the statistical model much more parsimonious, it poses significant challenges. In particular, the penalized negative log-likelihood function is non-convex with respect to the unknown sparse precision matrices. Consequently, there exists a gap between computational and statistical theory. More specifically, as we will show in §1.1, existing literature mostly focuses on establishing the existence of a local optimum that has desired statistical guarantees, rather than offering efficient algorithmic procedures that provably achieve the desired local optima. In contrast, we analyze an alternating minimization algorithm which iteratively minimizes the non-convex objective function with respect to each individual precision matrix while fixing the others. The established theoretical guarantees of the proposed algorithm are as follows. Suppose that we have n observations from a K-th order tensor normal distribution. We denote by mk, sk, dk (k = 1,…, K) the dimension, sparsity, and max number of non-zero entries in each row of the precision matrix corresponding to the k-th way of the tensor. Besides, we define . The k-th precision matrix estimator from our alternating minimization algorithm achieves a statistical rate of convergence in Frobenius norm, which is minimax-optimal since this is the best rate one can obtain even when the rest K − 1 true precision matrices are known [4]. Furthermore, under an extra irrepresentability condition, we establish a rate of convergence in max norm, which is also optimal, and a rate of convergence in spectral norm. These estimation consistency results and a sufficiently large signal strength condition further imply the model selection consistency of recovering all the edges. A notable implication of these results is that, when K ≥ 3, our alternating minimization algorithm can achieve estimation consistency in Frobenius norm even if we only have access to one tensor sample, which is often the case in practice. This phenomenon is unobserved in previous work. Finally, we conduct extensive experiments to evaluate the numerical performance of the proposed alternating minimization method. Under the guidance of theory, we propose a way to significantly accelerate the algorithm without sacrificing the statistical accuracy.

1.1 Related work and our contribution

A special case of our sparse tensor graphical model when K = 2 is the sparse matrix graphical model, which is studied by [5–8]. In particular, [5] and [6] only establish the existence of a local optima with desired statistical guarantees. Meanwhile, [7] considers an algorithm that is similar to ours. However, the statistical rates of convergence obtained by [6, 7] are much slower than ours when K = 2. See Remark 3.6 in §3.1 for a detailed comparison. For K = 2, our statistical rate of convergence in Frobenius norm recovers the result of [5]. In other words, our theory confirms that the desired local optimum studied by [5] not only exists, but is also attainable by an efficient algorithm. In addition, for matrix graphical model, [8] establishes the statistical rates of convergence in spectral and Frobenius norms for the estimator attained by a similar algorithm. Their results achieve estimation consistency in spectral norm with only one matrix observation. However, their rate is slower than ours with K = 2. See Remark 3.11 in §3.2 for a detailed discussion. Furthermore, we allow K to increase and establish estimation consistency even in Frobenius norm for n = 1. Most importantly, all these results focus on matrix graphical model and can not handle the aforementioned motivating applications such as the gene-tissue-mouse-age tensor dataset.

In the context of sparse tensor graphical model with a general K, [9] shows the existence of a local optimum with desired rates, but does not prove whether there exists an efficient algorithm that provably attains such a local optimum. In contrast, we prove that our alternating minimization algorithm achieves an estimator with desired statistical rates. To achieve it, we apply a novel theoretical framework to separately consider the population and sample optimizers, and then establish the onestep convergence for the population optimizer (Theorem 3.1) and the optimal rate of convergence for the sample optimizer (Theorem 3.4). A new concentration result (Lemma B.1) is developed for this purpose, which is also of independent interest. Moreover, we establish additional theoretical guarantees including the optimal rate of convergence in max norm, the estimation consistency in spectral norm, and the graph recovery consistency of the proposed sparse precision matrix estimator.

In addition to the literature on graphical models, our work is also closely related to a recent line of research on alternating minimization for non-convex optimization problems [10–13]. These existing results mostly focus on problems such as dictionary learning, phase retrieval and matrix decomposition. Hence, our statistical model and analysis are completely different from theirs. Also, our paper is related to a recent line of work on tensor decomposition. See, e.g., [14–17] and the references therein. Compared with them, our work focuses on the graphical model structure within tensor-valued data.

Notation

For a matrix A = (Ai,j) ∈ ℝd×d, we denote ‖A‖∞, ‖A‖2, ‖A‖F as its max, spectral, and Frobenius norm, respectively. We define ‖A‖1,off ≔ ∑i≠j |Ai,j| as its off-diagonal ℓ1 norm and |‖A‖|∞ ≔ maxi ∑j |Ai,j| as the maximum absolute row sum. Denote vec(A) as the vectorization of A which stacks the columns of A. Let tr(A) be the trace of A. For an index set 𝕊 = {(i, j), i, j ∈ {1,…, d}}, we define [A]𝕊 as the matrix whose entry indexed by (i, j) ∈ 𝕊 is equal to Ai,j, and zero otherwise. We denote 𝟙d as the identity matrix with dimension d×d. Throughout this paper, we use C, C1, C2,… to denote generic absolute constants, whose values may vary from line to line.

2 Sparse tensor graphical model

2.1 Preliminary

We employ the tensor notations used by [18]. Throughout this paper, higher order tensors are denoted by boldface Euler script letters, e.g. 𝒯. We consider a K-th order tensor 𝒯 ∈ ℝm1×m2×⋯×mK. When K = 1 it reduces to a vector and when K = 2 it reduces to a matrix. The (i1,…, iK)-th element of the tensor 𝒯 is denoted to be 𝒯i1,…,iK. Meanwhile, we define the vectorization of 𝒯 as vec(𝒯) ≔ (𝒯1,1,…,1,…, 𝒯m1,1,…,1,…, 𝒯1,m2,…,mK, 𝒯m1,m2,…,mK)⊤ ∈ ℝm with m = ∏k mk. In addition, we define the Frobenius norm of a tensor 𝒯 as .

For tensors, a fiber refers to the higher order analogue of the row and column of matrices. A fiber is obtained by fixing all but one of the indices of the tensor, e.g., the mode-k fiber of 𝒯(k) is given by 𝒯i1,…,,ik−1,:,ik+1,…,iK. Matricization, also known as unfolding, is the process to transform a tensor into a matrix. We denote 𝒯(k) as the mode-k matricization of a tensor 𝒯, which arranges the mode-k fibers to be the columns of the resulting matrix. Another useful operation in tensors is the k-mode product. The k-mode product of a tensor 𝒯 ∈ ℝm1×m2×⋯×mK with a matrix A ∈ ℝJ×mk is denoted as 𝒯 ×k A and is of the size m1 × ⋯× mk−1 × J × mk+1 × ⋯ × mK. Its entry is defined as . In addition, for a list of matrices {A1,…, AK} with Ak ∈ ℝmk×mk, k = 1,…, K, we define 𝒯 × {A1,…, AK} ≔ 𝒯 ×1 A1 ×2 × ⋯ ×K AK.

2.2 Model

A tensor 𝒯 ∈ ℝm1×m2×⋯×mK follows the tensor normal distribution with zero mean and covariance matrices Σ1,…, ΣK, denoted as 𝒯 ~ TN(0, Σ1,…, ΣK), if its probability density function is

| (2.1) |

where and . When K = 1, this tensor normal distribution reduces to the vector normal distribution with zero mean and covariance Σ1. According to [9, 18], it can be shown that 𝒯 ~ TN(0, Σ1,…, ΣK) if and only if vec(𝒯) ~ N(vec(0);ΣK ⊗ ⋯ ⊗ Σ1), where vec(0) ∈ ℝm and ⊗ is the matrix Kronecker product.

We consider the parameter estimation for the tensor normal model. Assume that we observe independently and identically distributed tensor samples 𝒯1,…, 𝒯n from TN(0; ). We aim to estimate the true covariance matrices () and their corresponding true precision matrices () where . To address the identifiability issue in the parameterization of the tensor normal distribution, we assume that for k = 1,…, K. This renormalization assumption does not change the graph structure of the original precision matrix.

A standard approach to estimate , k = 1,…, K, is to use the maximum likelihood method via (2.1). Up to a constant, the negative log-likelihood function of the tensor normal distribution is , where . To encourage the sparsity of each precision matrix in the high-dimensional scenario, we consider a penalized log-likelihood estimator, which is obtained by minimizing

| (2.2) |

where Pλk (·) is a penalty function indexed by the tuning parameter λk. In this paper, we focus on the lasso penalty [19], i.e., Pλk (Ωk) = λk‖Ωk‖1,off. This estimation procedure applies similarly to a broad family of other penalty functions.

We name the penalized model from (2.2) as the sparse tensor graphical model. It reduces to the sparse vector graphical model [20, 21] when K = 1, and the sparse matrix graphical model [5–8] when K = 2. Our framework generalizes them to fulfill the demand of capturing the graphical structure of higher order tensor-valued data.

2.3 Estimation

This section introduces the estimation procedure for the sparse tensor graphical model. A computationally efficient algorithm is provided to estimate the precision matrix for each way of the tensor.

Recall that in (2.2), qn(Ω1,…, ΩK) is jointly non-convex with respect to Ω1,…, ΩK. Nevertheless, qn(Ω1,…, ΩK) is a bi-convex problem since qn(Ω1,…, ΩK) is convex in Ωk when the rest K − 1 precision matrices are fixed. The bi-convex property plays a critical role in our algorithm construction and its theoretical analysis in §3.

According to its bi-convex property, we propose to solve this non-convex problem by alternatively update one precision matrix with other matrices fixed. Note that, for any k = 1,…, K, minimizing (2.2) with respect to Ωk while fixing the rest K − 1 precision matrices is equivalent to minimizing

| (2.3) |

Here , where with × the tensor product operation and [·](k) the mode-k matricization operation defined in §2.1. The result in (2.3) can be shown by noting that according to the properties of mode-k matricization shown by [18]. Hereafter, we drop the superscript k of if there is no confusion. Note that minimizing (2.3) corresponds to estimating vector-valued Gaussian graphical model and can be solved efficiently via the glasso algorithm [21].

Algorithm 1.

Solve sparse tensor graphical model via Tensor lasso (Tlasso)

| 1: | Input: Tensor samples 𝒯1…, 𝒯n, tuning parameters λ1,…, λK, max number of iterations T. |

| 2: | Initialize randomly as symmetric and positive definite matrices and set t = 0. |

| 3: | Repeat: |

| 4: | t = t + 1. |

| 5: | For k = 1,…, K: |

| 6: | Given , solve (2.3) for via glasso [21]. |

| 7: | Normalize such that . |

| 8: | End For |

| 9: | Until t = T. |

| 10: | Output: . |

The details of our Tensor lasso (Tlasso) algorithm are shown in Algorithm 1. It starts with a random initialization and then alternatively updates each precision matrix until it converges. In §3, we will illustrate that the statistical properties of the obtained estimator are insensitive to the choice of the initialization (see the discussion following Theorem 3.5).

3 Theory of statistical optimization

We first prove the estimation errors in Frobenius norm, max norm, and spectral norm, and then provide the model selection consistency of our Tlasso estimator. We defer all the proofs to the appendix.

3.1 Estimation error in Frobenius norm

Based on the penalized log-likelihood in (2.2), we define the population log-likelihood function as

| (3.1) |

By minimizing q(Ω1,…, ΩK) with respect to Ωk, k = 1,…, K, we obtain the population minimization function with the parameter Ω[K]−k ≔ {Ω1,…, Ωk−1, Ωk+1,…, ΩK}, i.e.,

| (3.2) |

Theorem 3.1

For any k = 1,…, K, if Ωj (j ≠ k) satisfies , then the population minimization function in (3.2) satisfies .

Theorem 3.1 shows a surprising phenomenon that the population minimization function recovers the true precision matrix up to a constant in only one iteration. If , j ≠ k, then . Otherwise, after a normalization such that ‖Mk(Ω[K]−k)‖F = 1, the normalized population minimization function still fully recovers . This observation suggests that setting T = 1 in Algorithm 1 is sufficient. Such a suggestion will be further supported by our numeric results.

In practice, when (3.1) is unknown, we can approximate it via its sample version qn(Ω1,…, ΩK) defined in (2.2), which gives rise to the statistical error in the estimation procedure. Analogously to (3.2), we define the sample-based minimization function with parameter Ω[K]−k as

| (3.3) |

In order to prove the estimation error, it remains to quantify the statistical error induced from finite samples. The following two regularity conditions are assumed for this purpose.

Condition 3.2

(Bounded Eigenvalues). For any k = 1,…, K, there is a constant C1 > 0 such that,

where and refer to the minimal and maximal eigenvalue of , respectively.

Condition 3.2 requires the uniform boundedness of the eigenvalues of true covariance matrices . It has been commonly assumed in the graphical model literature [22].

Condition 3.3

(Tuning). For any k = 1,…, K and some constant C2 > 0, the tuning parameter λk satisfies .

Condition 3.3 specifies the choice of the tuning parameters. In practice, a data-driven tuning procedure [23] can be performed to approximate the optimal choice of the tuning parameters.

Before characterizing the statistical error, we define a sparsity parameter for , k = 1,…, K. Let . Denote the sparsity parameter sk ≔ |𝕊k| − mk, which is the number of nonzero entries in the off-diagonal component of . For each k = 1,…, K, we define as the set containing and its neighborhood for some sufficiently large constant radius α > 0, i.e.,

| (3.4) |

Theorem 3.4

Assume Conditions 3.2 and 3.3 hold. For any k = 1,…, K, the statistical error of the sample-based minimization function defined in (3.3) satisfies that, for any fixed ,

| (3.5) |

where Mk(Ω[K]−k) and M̂k(Ω[K]−k) are defined in (3.2) and (3.3), and .

Theorem 3.4 establishes the statistical error associated with M̂k(Ω[K]−k) for arbitrary with j ≠ k. In comparison, previous work on the existence of a local solution with desired statistical property only establishes theorems similar to Theorem 3.4 for with j ≠ k. The extension to an arbitrary involves non-trivial technical barriers. Particularly, we first establish the rate of convergence of the difference between a sample-based quadratic form with its expectation (Lemma B.1) via Talagrand’s concentration inequality [24]. This result is also of independent interest. We then carefully characterize the rate of convergence of Sk defined in (2.3) (Lemma B.2). Finally, we develop (3.5) using the results for vector-valued graphical models developed by [25].

According to Theorem 3.1 and Theorem 3.4, we obtain the rate of convergence of the Tlasso estimator in terms of Frobenius norm, which is our main result.

Theorem 3.5

Assume that Conditions 3.2 and 3.3 hold. For any k = 1,…, K, if the initialization satisfies for any j ≠ k, then the estimator Ω̂k from Algorithm 1 with T = 1 satisfies,

| (3.6) |

where and is defined in (3.4).

Theorem 3.5 suggests that as long as the initialization is within a constant distance to the truth, our Tlasso algorithm attains a consistent estimator after only one iteration. This initialization condition trivially holds since for any that is positive definite and has unit Frobenius norm, we have by noting that for the identifiability of the tensor normal distribution. In literature, [9] shows that there exists a local minimizer of (2.2) whose convergence rate can achieve (3.6). However, it is unknown if their algorithm can find such minimizer since there could be many other local minimizers.

A notable implication of Theorem 3.5 is that, when K ≥ 3, the estimator from our Tlasso algorithm can achieve estimation consistency even if we only have access to one observation, i.e., n = 1, which is often the case in practice. To see it, suppose that K = 3 and n = 1. When the dimensions m1, m2, and m3 are of the same order of magnitude and sk = O(mk) for k = 1, 2, 3, all the three error rates corresponding to k = 1, 2, 3 in (3.6) converge to zero.

This result indicates that the estimation of the k-th precision matrix takes advantage of the information from the j-th way (j ≠ k) of the tensor data. Consider a simple case that K = 2 and one precision matrix is known. In this scenario the rows of the matrix data are independent and hence the effective sample size for estimating is in fact nm1. The optimality result for the vector-valued graphical model [4] implies that the optimal rate for estimating is , which matches our result in (3.6). Therefore, the rate in (3.6) obtained by our Tlasso estimator is minimax-optimal since it is the best rate one can obtain even when are known. As far as we know, this phenomenon has not been discovered by any previous work in tensor graphical model.

Remark 3.6

For K = 2, our tensor graphical model reduces to matrix graphical model with Kronecker product covariance structure [5–8]. In this case, the rate of convergence of Ω̂1 in (3.6) reduces to , which is much faster than established by [6] and established by [7]. In literature, [5] shows that there exists a local minimizer of the objective function whose estimation errors match ours. However, it is unknown if their estimator can achieve such convergence rate. On the other hand, our theorem confirms that our algorithm is able to find such estimator with optimal rate of convergence.

3.2 Estimation error in max norm and spectral norm

We next show the estimation error in max norm and spectral norm. Trivially, these estimation errors are bounded by that in Frobenius norm shown in Theorem 3.5. To develop improved rates of convergence in max and spectral norms, we need to impose stronger conditions on true parameters.

We first introduce some important notations. Denote dk as the maximum number of non-zeros in any row of the true precision matrices , that is,

| (3.7) |

with |·| the cardinality of the inside set. For each covariance matrix , we define . Denote the Hessian matrix , whose entry corresponds to the second order partial derivative of the objective function with respect to [Ωk]i,j and [Ωk]s,t. We define its sub-matrix indexed by the index set 𝕊k as , which is the |𝕊k| × |𝕊k| matrix with rows and columns of indexed by 𝕊k and 𝕊k, respectively. Moreover, we define . In order to establish the rate of convergence in max norm, we need to impose an irrepresentability condition on the Hessian matrix.

Condition 3.7

(Irrepresentability). For each k = 1,…, K, there exists some αk ∈ (0, 1] such that max

Condition 3.7 controls the influence of the non-connected terms in on the connected edges in 𝕊k. This condition has been widely applied in lasso penalized models [26, 27].

Condition 3.8

(Bounded Complexity). For each k = 1,…, K, the parameters are bounded and the parameter dk in (3.7) satisfies .

Theorem 3.9

Suppose Conditions 3.2, 3.3, 3.7 and 3.8 hold. Assume sk = O(mk) for k = 1,…, K and assume are in the same order, i.e., m1 ≍ m2 ≍ ⋯ ≍ mK. For each k, if the initialization satisfies for any j ≠ k, then the estimator Ω̂k from Algorithm 1 with T = 2 satisfies,

| (3.8) |

In addition, the edge set of Ω̂k is a subset of the true edge set of , that is, .

Theorem 3.9 shows that our Tlasso estimator achieves the optimal rate of convergence in max norm [4]. Here we consider the estimator obtained after two iterations since we require a new concentration inequality (Lemma B.3) for the sample covariance matrix, which is built upon the estimator in Theorem 3.5. A direct consequence from Theorem 3.9 is the estimation error in spectral norm.

Corollary 3.10

Suppose the conditions of Theorem 3.9 hold, for any k = 1,…, K, we have

| (3.9) |

Remark 3.11

Now we compare our obtained rate of convergence in spectral norm for K = 2 with that established in the sparse matrix graphical model literature. In particular, [8] establishes the rate of for k = 1, 2. Therefore, when , which holds for example in the bounded degree graphs, our obtained rate is faster. However, our faster rate comes at the price of assuming the irrepresentability condition. Using recent advance in nonconvex regularization [28], we can eliminate the irrepresentability condition. We leave this to future work.

3.3 Model selection consistency

Theorem 3.9 ensures that the estimated precision matrix correctly excludes all non-informative edges and includes all the true edges (i, j) with for some constant C > 0. Therefore, in order to achieve the model selection consistency, a sufficient condition is to assume that, for each k = 1,…, K, the minimal signal is not too small.

Theorem 3.12

Under the conditions of Theorem 3.9, if for some constant C > 0, then for any k = 1,…, K, , with high probability.

Theorem 3.12 indicates that our Tlasso estimator is able to correctly recover the graphical structure of each way of the high-dimensional tensor data. To the best of our knowledge, these is the first model selection consistency result in high dimensional tensor graphical model.

4 Simulations

We compare the proposed Tlasso estimator with two alternatives. The first one is the direct graphical lasso (Glasso) approach [21] which applies the glasso to the vectorized tensor data to estimate directly. The second alternative method is the iterative penalized maximum likelihood method (P-MLE) proposed by [9], whose termination condition is set to be .

For simplicity, in our Tlasso algorithm we set the initialization of k-th precision matrix as 𝟙mk for each k = 1,…, K and the total iteration T = 1. The tuning parameter λk is set as . For a fair comparison, the same tuning parameter is applied in the P-MLE method. In the direct Glasso approach, its tuning parameter is chosen by cross-validation via huge package [29].

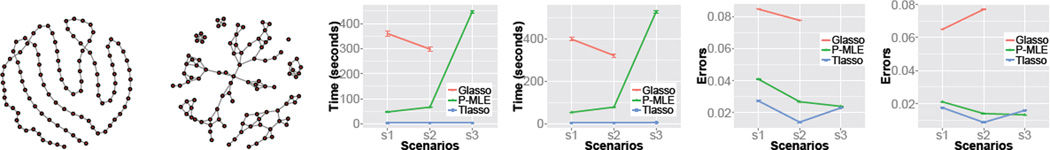

We consider two simulations with a third order tensor, i.e., K = 3. In Simulation 1, we construct a triangle graph, while in Simulation 2, we construct a four nearest neighbor graph for each precision matrix. An illustration of the generated graphs are shown in Figure 1. In each simulation, we consider three scenarios, i.e., s1: n = 10 and (m1, m2, m3) = (10, 10, 10); s2: n = 50 and (m1, m2, m3) = (10, 10, 10); s3: n = 10 and (m1, m2, m3) = (100, 5, 5). We repeat each example 100 times and compute the averaged computational time, the averaged estimation error of the Kronecker product of precision matrices , the true positive rate (TPR), and the true negative rate (TNR). More specifically, we denote be the (i, j)-th entry of , and define and .

Figure 1.

Left two plots: illustrations of the generated graphs; Middle two plots: computational time; Right two plots: estimation errors. In each group of two plots, the left (right) is for Simulation 1 (2).

As shown in Figure 1, our Tlasso is dramatically faster than both alternative methods. In Scenario s3, Tlasso takes about five seconds for each replicate, the P-MLE takes about 500 seconds while the direct Glasso method takes more than one hour and is omitted in the plot. Tlasso algorithm is not only computationally efficient but also enjoys superior estimation accuracy. In all examples, the direct Glasso method has significantly larger errors than Tlasso due to ignoring the tensor graphical structure. Tlasso outperforms P-MLE in Scenarios s1 and s2 and is comparable to it in Scenario s3.

Table 1 shows the variable selection performance. Our Tlasso identifies almost all edges in these six examples, while the Glasso and P-MLE method miss several true edges. On the other hand, Tlasso tends to include more non-connected edges than other methods.

Table 1.

A comparison of variable selection performance. Here TPR and TNR denote the true positive rate and true negative rate.

| Scenarios | Glasso | P-MLE | Tlasso | ||||

|---|---|---|---|---|---|---|---|

| TPR | TNR | TPR | TNR | TPR | TNR | ||

| Sim 1 | s1 | 0.27 (0.002) | 0.96 (0.000) | 1 (0) | 0.89 (0.002) | 1(0) | 0.76 (0.004) |

| s2 | 0.34 (0.000) | 0.93 (0.000) | 1 (0) | 0.89 (0.002) | 1(0) | 0.76 (0.004) | |

| s3 | / | / | 1 (0) | 0.93 (0.001) | 1(0) | 0.70 (0.004) | |

| Sim 2 | s1 | 0.08 (0.000) | 0.96 (0.000) | 0.93 (0.004) | 0.88 (0.002) | 1(0) | 0.65 (0.005) |

| s2 | 0.15 (0.000) | 0.92 (0.000) | 1 (0) | 0.85 (0.002) | 1(0) | 0.63 (0.005) | |

| s3 | / | / | 0.82 (0.001) | 0.93 (0.001) | 0.99(0.001) | 0.38 (0.002) | |

Supplementary Material

Acknowledgments

We would like to thank the anonymous reviewers for their helpful comments. Han Liu is grateful for the support of NSF CAREER Award DMS1454377, NSF IIS1408910, NSF IIS1332109, NIH R01MH102339, NIH R01GM083084, and NIH R01HG06841. Guang Cheng’s research is sponsored by NSF CAREER Award DMS1151692, NSF DMS1418042, Simons Fellowship in Mathematics, ONR N00014-15-1-2331 and a grant from Indiana Clinical and Translational Sciences Institute.

Contributor Information

Wei Sun, Yahoo Labs, Sunnyvale, CA.

Zhaoran Wang, Department of Operations Research, and Financial Engineering, Princeton University, Princeton, NJ.

Han Liu, Department of Operations Research, and Financial Engineering, Princeton University, Princeton, NJ.

Guang Cheng, Department of Statistics, Purdue University, West Lafayette, IN.

References

- 1.Rendle S, Schmidt-Thieme L. Pairwise interaction tensor factorization for personalized tag recommendation; International Conference on Web Search and Data Mining; 2010. [Google Scholar]

- 2.Allen GI. Sparse higher-order principal components analysis; International Conference on Artificial Intelligence and Statistics; 2012. [Google Scholar]

- 3.Zahn J, Poosala S, Owen A, Ingram D, et al. AGEMAP: A gene expression database for aging in mice. PLOS Genetics. 2007;3:2326–2337. doi: 10.1371/journal.pgen.0030201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Cai T, Liu W, Zhou HH. Estimating sparse precision matrix: Optimal rates of convergence and adaptive estimation. Annals of Statistics. 2015 [Google Scholar]

- 5.Leng C, Tang CY. Sparse matrix graphical models. Journal of the American Statistical Association. 2012;107:1187–1200. doi: 10.1080/01621459.2011.644498. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Yin J, Li H. Model selection and estimation in the matrix normal graphical model. Journal of Multivariate Analysis. 2012;107:119–140. doi: 10.1016/j.jmva.2012.01.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Tsiligkaridis T, Hero AO, Zhou S. On convergence of Kronecker graphical Lasso algorithms. IEEE Transactions on Signal Processing. 2013;61:1743–1755. [Google Scholar]

- 8.Zhou S. Gemini: Graph estimation with matrix variate normal instances. Annals of Statistics. 2014;42:532–562. [Google Scholar]

- 9.He S, Yin J, Li H, Wang X. Graphical model selection and estimation for high dimensional tensor data. Journal of Multivariate Analysis. 2014;128:165–185. [Google Scholar]

- 10.Jain P, Netrapalli P, Sanghavi S. Low-rank matrix completion using alternating minimization; Symposium on Theory of Computing; 2013. pp. 665–674. [Google Scholar]

- 11.Netrapalli P, Jain P, Sanghavi S. Phase retrieval using alternating minimization. Advances in Neural Information Processing Systems. 2013:2796–2804. [Google Scholar]

- 12.Sun J, Qu Q, Wright J. Complete dictionary recovery over the sphere. arXiv:1504.06785. 2015 [Google Scholar]

- 13.Arora S, Ge R, Ma T, Moitra A. Simple, efficient, and neural algorithms for sparse coding. arXiv:1503.00778. 2015 [Google Scholar]

- 14.Anandkumar A, Ge R, Hsu D, Kakade S, Telgarsky M. Tensor decompositions for learning latent variable models. Journal of Machine Learning Research. 2014;15:2773–2832. [Google Scholar]

- 15.Sun W, Lu J, Liu H, Cheng G. Provable sparse tensor decomposition. arXiv:1502.01425. 2015 [Google Scholar]

- 16.Zhe S, Xu Z, Chu X, Qi Y, Park Y. Scalable nonparametric multiway data analysis; International Conference on Artificial Intelligence and Statistics; 2015. [Google Scholar]

- 17.Zhe S, Xu Z, Qi Y, Yu P. Sparse bayesian multiview learning for simultaneous association discovery and diagnosis of alzheimer’s disease. Twenty-Ninth AAAI Conference on Artificial Intelligence. 2015 [Google Scholar]

- 18.Kolda T, Bader B. Tensor decompositions and applications. SIAM Review. 2009;51:455–500. [Google Scholar]

- 19.Tibshirani R. Regression shrinkage and selection via the Lasso. Journal of the Royal Statistical Society, Series B. 1996;58:267–288. [Google Scholar]

- 20.Yuan M, Lin Y. Model selection and estimation in the gaussian graphical model. Biometrika. 2007;94:19–35. [Google Scholar]

- 21.Friedman J, Hastie H, Tibshirani R. Sparse inverse covariance estimation with the graphical Lasso. Biostatistics. 2008;9:432–441. doi: 10.1093/biostatistics/kxm045. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Rothman AJ, Bickel PJ, Levina E, Zhu J. Sparse permutation invariant covariance estimation. Electronic Journal of Statistics. 2008;2:494–515. [Google Scholar]

- 23.Sun W, Wang J, Fang Y. Consistent selection of tuning parameters via variable selection stability. Journal of Machine Learning Research. 2013;14:3419–3440. [Google Scholar]

- 24.Ledoux M, Talagrand M. Probability in Banach Spaces: Isoperimetry and Processes. Springer; 2011. [Google Scholar]

- 25.Fan J, Feng Y, Wu Y. Network exploration via the adaptive Lasso and scad penalties. Annals of Statistics. 2009;3:521–541. doi: 10.1214/08-AOAS215SUPP. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Zhao P, Yu B. On model selection consistency of Lasso. Journal of Machine Learning Research. 2006;7:2541–2567. [Google Scholar]

- 27.Ravikumar P, Wainwright MJ, Raskutti G, Yu B. High-dimensional covariance estimation by minimizing ℓ1-penalized log-determinant divergence. Electronic Journal of Statistics. 2011;5:935–980. [Google Scholar]

- 28.Loh Po-Ling, Wainwright Martin J. Support recovery without incoherence: A case for nonconvex regularization. arXiv preprint arXiv:1412.5632. 2014 [Google Scholar]

- 29.Zhao T, Liu H, Roeder K, Lafferty J, Wasserman L. The huge package for high-dimensional undirected graph estimation in R. Journal of Machine Learning Research. 2012;13:1059–1062. [PMC free article] [PubMed] [Google Scholar]

- 30.Gupta A, Nagar D. Matrix variate distributions. Chapman and Hall/CRC Press; 2000. [Google Scholar]

- 31.Hoff P. Separable covariance arrays via the Tucker product, with applications to multivariate relational data. Bayesian Analysis. 2011;6:179–196. [Google Scholar]

- 32.Dawid AP. Some matrix-variate distribution theory: Notational considerations and a bayesian application. Biometrika. 1981;68:265–274. [Google Scholar]

- 33.Negahban S, Wainwright MJ. Estimation of (near) low-rank matrices with noise and high-dimensional scaling. Annals of Statistics. 2011;39:1069–1097. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.