Abstract

We consider a partially linear framework for modelling massive heterogeneous data. The major goal is to extract common features across all sub-populations while exploring heterogeneity of each sub-population. In particular, we propose an aggregation type estimator for the commonality parameter that possesses the (non-asymptotic) minimax optimal bound and asymptotic distribution as if there were no heterogeneity. This oracular result holds when the number of sub-populations does not grow too fast. A plug-in estimator for the heterogeneity parameter is further constructed, and shown to possess the asymptotic distribution as if the commonality information were available. We also test the heterogeneity among a large number of sub-populations. All the above results require to regularize each sub-estimation as though it had the entire sample size. Our general theory applies to the divide-and-conquer approach that is often used to deal with massive homogeneous data. A technical by-product of this paper is the statistical inferences for the general kernel ridge regression. Thorough numerical results are also provided to back up our theory.

Keywords: partially linear model, heterogenous data, massive data, reproducing kernel Hilbert space, joint asymptotics, mean square error, bias propagation

1. Introduction

In this paper, we propose a partially linear regression framework for modelling massive heterogeneous data. Let be samples from an underlying distribution that may change with N. We assume that there exist s independent sub-populations, and the data from the jth sub-population follows a partially linear model:

| (1.1) |

where ε has zero mean and known variance σ2. In the above model, Y depends on X through a linear function that may vary across all sub-populations, and depends on Z through a nonlinear function that is common to all sub-populations. The possibly different values of are viewed as the source of heterogeneity. We also point out that the sample sizes in some sub-populations could be extremely high in reality. Note that (1.1) is a typical “semi-nonparametric” model (Cheng and Shang, 2013) since we want to infer both commonality and heterogeneity components throughout the paper.

The model (1.1) is motivated by the following scenario: different labs conduct the same experiment on the relationship between a response variable Y (e.g., heart disease) and a set of predictors Z, X1, X2,...,Xp. It is known from biological knowledge that the dependence structure between Y and Z (e.g., blood pressure) should be homogeneous for all human. However, for the other covariates (e.g., certain genes), we allow their (linear) relations with Y to potentially vary in different labs. For example, the genetic functionality of different races might be heterogenous. The linear relation is assumed here for simplicity, and particularly suitable when the covariates are discrete.

Statistical modelling for massive data has attracted a flurry of recent research. For homogeneous data, the statistical studies of the divide-and-conquer method currently focus on either parametric inferences, e.g., Bag of Little Bootstraps (Kleiner et al., 2012), and parallel MCMC computing (Wang and Dunson, 2013), or nonparametric minimaxity (Zhang et al., 2013). The other relevant work includes high dimensional linear models with variable selection (Chen and Xie, 2012) and structured perceptron (McDonald et al., 2010). Heterogenous data are often handled by fitting mixture models (Aitkin and Rubin, 1985; McLachlan and Peel, 2004; Figueiredo and Jain, 2002), time varying coefficient models (Hastie and Tibshirani, 1993; Fan and Zhang, 1999) or multitask regression (Huang and Zhang, 2010; Nardi and Rinaldo, 2008; Obozinski et al., 2008). The recent high dimensional work includes Stäadler et al. (2010); Meinshausen and Bühlmann (2014). However, as far as we are aware, semi-nonparametric inference for massive homogeneous/heterogeneous data still remains untouched.

In this paper, our primary goal is to extract common features across all sub-populations while exploring heterogeneity of each sub-population. Specifically, we employ a simple aggregation procedure, which averages commonality estimators across all sub-populations, and then construct a plug-in estimator for each heterogeneity parameter based on the combined estimator for commonality. The secondary goal is to apply the divide-and-conquer method to the sub-population having a huge sample size that is unable to be processed in one single computer. The above purposes are achieved by estimating our statistical model (1.1) with the kernel ridge regression (KRR) method. The KRR framework is known to be very flexible and well supported by the general reproducing kernel Hilbert space (RKHS) theory (Mendelson, 2002; Steinwart et al., 2009; Zhang, 2005). In particular, the partial smoothing spline model (Wahba, 1990) can be viewed a special case. An important technical contribution of this paper is that a (point-wise) limit distribution of the KRR estimate is established by generalizing the smoothing spline inference results in Cheng and Shang (2013). This theoretical innovation makes our work go beyond the existing statistical study on the KRR estimation in large datastes, which mainly focus on their nonparametric minimaxity, e.g., Zhang et al. (2013); Bach (2012); Raskutti et al. (2014).

Our theoretical studies are mostly concerned with the so-called “oracle rule” for massive data. Specifically, we define the “oracle estimate” for commonality (heterogeneity) as the one computed when all the heterogeneity information are given (the commonality information is given in each-subpopulation), i.e., 's are known (f0 is known). We claim that a commonality estimator satisfies the oracle rule if it possesses the same minimax optimality and asymptotic distribution as the “oracle estimate” defined above. A major contribution of this paper is to derive the largest possible diverging rate of s under which our combined estimator for commonality satisfies the oracle rule. In other words, our aggregation procedure can “filter out” the heterogeneity in data when s does not grow too fast with N. Interestingly, we have to set a lower bound on s for our heterogeneity estimate to possess the asymptotic distribution as if the commonality information were available, i.e., oracle rule. Our second contribution is to test the heterogeneity among a large number of sub-populations by employing the recent Gaussian approximation theory (Chernozhukov et al., 2013). The above results directly apply to the divide-and-conquer approach that deals with the sub-population with a huge sample size. In this case, the “oracle estimate” is defined as those computed based on the entire (homogeneous) data in those sub-populations. A rather different goal here is to explore the most computationally efficient way to split the whole sample while performing the best possible statistical inference. Specifically, we derive the largest possible number of splits under which the averaged estimators for both components enjoy the same statistical properties as the oracle estimators.

In both homogeneous and heterogeneous setting above, we note that the upper bounds established for s increase with the smoothness of f0. Hence, our aggregation procedure favors smoother regression functions in the sense that more sub-populations/splits are allowed in the massive data. On the other hand, we have to admit that our upper and lower bound results for s are only sufficient conditions although empirical results show that our bounds are quite sharp. Another interesting finding is that even the semi-nonparametric estimation is applied to only one fraction of the entire data, it is nonetheless essential to regularize each sub-estimation as if it had the entire sample.

In the end, we highlight two key technical challenges: (i) nontrivial interaction between the parametric and nonparametric components in the semi-nonparametric estimation. In particular, we observe a “bias propagation” phenomenon: the bias introduced by the penalization of the nonparametric component propagates to the parametric component, and the resulting parametric bias in turn propagates back to the nonparametric component. To analyze this complicated propagation mechanism, we extend the existing RKHS theory to an enlarged partially linear function space by defining a novel inner product under which the expectation of the Hessian of the objective function becomes identity. (ii) double asymptotics framework in terms of diverging s and N. In this challenging regime, we develop more refined concentration inequalities in characterizing the concentration property of an aggregated empirical processes. These delicate theoretical analysis show that an average of s asymptotic linear expansions is still a valid one as .

The rest of the paper is organized as follows: Section 2 briefly introduces the general RKHS theory and discusses its extension to an enlarged partially linear function space. Section 3 describes our aggregation procedure, and studies the “oracle” property of this procedure from both asymptotic and non-asymptotic perspectives. The efficiency boosting of heterogeneity estimators and heterogenous testing results are also presented in this section. Section 4 applies our general theory to various examples with different smoothness. Section 5 is devoted to the analysis of divide-and-conquer algorithms for homogeneous data. Section 6 presents some numerical experiments. All the technical details are deferred to Section 7 or Online Supplementary.

2. Preliminaries

In this section, we briefly introduce the general RKHS theory, and then extend it to a partially linear function space. Below is a generic definition of RKHS (Berlinet and Thomas-Agnan, 2004):

Definition 2.1

Denote by a vector space of functions from a general set to . We say that is a reproducing kernel Hilbert space (RKHS) on , provided that:

-

(i)

is a vector subspace of ;

-

(ii)

is endowed with an inner product, denoted as , under which it becomes a Hilbert space;

-

(iii)

for every , the linear evaluation functional defined by Ey(f) = f(y) is bounded.

If is a RKHS, by Riesz representation, we have that for every , there exists a unique vector, , such that for every . The reproducing kernel for is defined as .

Denote , and as the distribution of U ( and are defined similarly). According to Definition 2.1, if and , then we can define a RKHS (endowed with a proper inner product ), in which the true function f0 is believed to lie. The corresponding kernel for is denoted by K such that for any : . By Mercer theorem, this kernel function has the following unique eigen-decomposition:

where μ1 ≥ μ2 ≥ ... > 0 are eigenvalues and are an orthonormal basis in . Let be the Fourier coefficient of f0 under the basis (given the -inner product ). Mercer theorem together with the reproducing property implies that , where δij is the Kronecker's delta. The smoothness of the functions in RKHS can be characterized by the decaying rate of . Below, we present three different decaying rates together with the corresponding kernel functions.

Finite rank kernel

the kernel has finite rank r if for all . For example, the linear kernel has rank d, and generates a d-dimensional linear function space. The eigenfunctions are given by for . The polynomial kernel K(z1, z2) = (1 + z1 z2)d has rank d + 1, and generates a space of polynomial functions with degree at most d. The eigenfunctions are given by for .

Exponentially decaying kernel

the kernel has eigenvalues that satisfy for some c1 c2 > 0. An example is the Gaussian kernel K(z1, z2) = exp(–|z1 – z2|2). The eigenfunctions are given by Sollich and Williams (2005)

| (2.1) |

for , where is the Hermite polynomial.

Polynomially decaying kernel

the kernel has eigenvalues that satisfy for some ν ≥ 1/2. Examples include those underlying for Sobolev space and Besov space (Birman and Solomjak, 1967). In particular, the eigenfunctions of a ν-th order periodic Sobolev space are trigonometric functions as specified in Section 4.3. The corresponding Sobolev kernels are given in Gu (2013).

In this paper, we consider the following penalized estimation:

| (2.2) |

where λ > 0 is a regularization parameter and is defined as the parameter space . For simplicity, we do not distinguish from its associated function throughout the paper. We call as partially linear kernel ridge regression (KRR) estimate in comparison with the nonparametric KRR estimate in Shawe-Taylor and Cristianini (2004). In particular, when is a ν-th order Sobolev space endowed with , becomes the commonly used partial smoothing spline estimate.

We next illustrate that can be viewed as a partially linear extension of in the sense that it shares some nice reproducing properties as this RKHS under the following inner product:

| (2.3) |

where and . Similar as the kernel function Kz, we can construct a linear operator such that for any . Also, construct another linear operator such that for any m and . See Proposition 2.3 for the construction of Ru and Pλ.

We next present a proposition illustrating the rational behind the definition of . Denote as the outer product on . Hence, is an operator from to .

Proposition 2.2

, where id is an identity operator on .

Proof

For any and , we have

Since the choice of is arbitrary, we conclude our proof.

As will be seen in the subsequent analysis, e.g., in Theorem 3.3, the operator is essentially the expectation of the Hessian of the objective function (w.r.t. Fréchet derivative) minimized in (2.2). Proposition 2.2 shows that the inversion of this Hessian matrix is trivial when the inner product is designed as in (2.3). Due to that, the theoretical analysis of based on the first order optimality condition becomes much more transparent.

To facilitate the construction of Ru and Pλ, we need to endow a new inner product with :

| (2.4) |

for any . Under (2.4), is still a RKHS as the evaluation functional is bounded by Lemma A.1. We denote the new kernel function as , and define a positive definite self-adjoint operator :

| (2.5) |

whose existence is proven in Lemma A.2. Since and , we now have by (2.4). We next define two crucial quantities needed in the construction: and its Riesz representer satisfying for all . Here, we implicity assume Bk is square integrable. The existence of Ak follows from the boundedness of the linear functional (by Riesz's representer theorem) as follows:

We are now ready to construct Ru and Pλ based on , Wλ, B and A introduced above, where B = (B1,..., Bp)T and A = (A1,...,Ap)T. Define and .

Proposition 2.3

For any u = (x, z), Ru can be expressed as , where

Moreover, for any , Pλm can be expressed as , where

Notation

Denote ∥ · ∥2 and ∥ · ∥∞ as the Euclidean L2 and infinity norm in , respectively. For any function , let . We use ∥ · ∥ to denote the spectral norm of matrices. For positive sequences an and bn, we write if there exists some universal constant constant c > 0 (c′ > 0) independent of n such that an ≤ cbn (an ≥ c′bn) for all . We denote if both and . We define h as the inverse of , which is known to be the “effective dimension” of a kernel K (Zhang, 2005). For any function space , define

where is an ∈-covering number of w.r.t. supreme norm. Define the following sets of functions: , , .

3. Heterogeneous Data: Aggregation of Commonality

In this section, we start from describing our aggregation procedure and model assumptions in Section 3.1. The main theoretical results are presented in Sections 3.2–3.4 showing that our combined estimate for commonality enjoys the “oracle property”. To be more specific, we show that it possesses the same (non-asymptotic) minimax optimal bound (in terms of mean-squared error) and asymptotic distribution as the “oracle estimate” computed when all the heterogeneity information are available:

| (3.1) |

The above nice properties hold when the number of sub-populations does not grow too fast and the smoothing parameter is chosen according to the entire sample size N. Based on this combined estimator, we further construct a plug-in estimator for each heterogeneity parameter , which possesses the asymptotic distribution as if the commonality were known, in Section 3.5. Interestingly, this oracular result holds when the number of sub-population is not too small. In the end, Section 3.6 tests the possible heterogeneity among a large number of sub-populations.

3.1. Method and Assumptions

The heterogeneous data setup and averaging procedure are described below:

1. Obverse data (Xi, Zi, Yi) with the known label Li ∈ {1, 2,...,s indicating the sub-population it belongs to, for i = 1,...,N. The size of samples from each sub-population is assumed to be the same, denoted by n, for simplicity. Hence, N = n × s.

2. On the j-th sub-population, obtain the following penalized estimator:

| (3.2) |

3. Obtain the final nonparametric estimate1 for commonality by averaging:

| (3.3) |

We point out that is not our final estimate for heterogeneity. In fact, it can be further improved based on f̄N,λ; see Section 3.5.

For simplicity, we will drop the subscripts (n, λ) and (N, λ) in those notation defined in (3.2) and (3.3) throughout the rest of this paper. The main assumptions of this section are stated below.

Assumption 3.1 (Regularity Condition)

(i) εi's are i.i.d. sub-Gaussian random variables independent of the designs; (ii) for all k, and is positive definite; (iii) Xi's are uniformly bounded by a constant cx.

Conditions in Assumption 3.1 are fairly standard in the literature. For example, the positive definiteness of Ω is needed for obtaining semiparametric efficient estimation; see Mammen and van de Geer (1997). Note that we do not require the independence between X and Z throughout the paper.

Assumption 3.2 (Kernel Condition)

We assume that there exist 0 < cϕ < ∞ and 0 < cK < ∞ such that and supz K(z, z) ≤ cK.

Assumption 3.2 is commonly assumed in kernel ridge regression literature (Zhang et al., 2013; Lafferty and Lebanon, 2005; Guo, 2002). In the case of finite rank kernel, e.g., linear and polynomial kernels, the eigenfunctions are uniformly bounded as long as Ƶ has finite support. As for the exponentially decaying kernels such as Gaussian kernel, we prove in Section 4.2 that the eigenfunctions given in (2.1) are uniformly bounded by 1.336. Lastly, for the polynomially decaying kernels, Proposition 2.2 in Shang and Cheng (2013) showed that the eigenfunctions induced from a ν-th order Sobolev space (under a proper inner product ) are uniformly bounded under mild smoothness conditions for the density of Z.

Assumption 3.3

For each . This is equivalent to

Assumption 3.3 requires the conditional expectation of Xk given Z = z is as smooth as f0(z). As can be seen in Section 3.4, this condition is imposed to control the bias of the parametric component, which is caused by penalization on the the nonparametric component. We call this interaction as the “bias propagation phenomenon”, and study it in Section 3.4.

3.2. Non-Asymptotic Bound for Mean-Squared Error

The primary goal of this section is to evaluate the estimation quality of the combined estimate from a non-asymptotic point of view. Specifically, we derive a finite sample upper bound for the mean-squared error . When s does not grow too fast, we show that MSE(f̄) is of the order , from which the aggregation effect on f can be clearly seen. If λ is chosen in the order of N, the mean-squared error attains the (un-improvable) optimal minimax rate. As a by-product, we establish a non-asymptotic upper bound for the mean-squared error of , i.e., . The results in this section together with Theorem 3.4 in Section 3.4 determine an upper bound of s under which f̄ enjoys the same statistical properties (minimax optimality and asymptotic distribution) as the oracle estimate f̂or.

Define τmin(Ω) as the minimum eigenvalue of Ω and as the trace of K. Moreover, let , , and .

Theorem 3.1

Suppose that Assumptions 3.1–3.3 hold. If , then we have

| (3.4) |

where , rn,s = (nh)−1/2log2 N + λ and C is some generic constant.

Typically, we require an upper bound for s so that the third term in the R.H.S. of (3.4) can be dominated by the first two terms, which correspond to variance and bias, respectively. Hence, we choose to attain the optimal bias-variance trade-off. The resulting rate coincides with the minimax optimal rate of the oracle estimate in different RKHS; see Section 4. This can be viewed as a non-asymptotic version of the “oracle property” of f̄. In comparison with the nonparametric KRR result in Zhang et al. (2013), we realize that adding one parametric component does not affect the finite sample upper bound (3.4).

As a by-product, we obtain a non-asymptotic upper bound for . This result is new, and also of independent interest.

Theorem 3.2

Suppose that Assumptions 3.1 – 3.3 hold. Then we have

| (3.5) |

where a(n, s, h, λ, ω) is defined in Theorem 3.1.

Again, the first term and second term in the R.H.S. of (3.5) correspond to the variance and bias, respectively. In particular, the second term comes from the bias propagation effect to be discussed in Section 3.4. By choosing λ= o(n−1/2), we can obtain the optimal rate of , i.e., O(n−1/2), but may lose the minimax optimality of MSE(f̄) in most cases.

3.3. Joint Asymptotic Distribution

In this section, we derive a preliminary result on the joint limit distribution of at any . A key issue with this result is that their centering is not at the true value. However, we still choose to present it here since we will observe an interesting phenomenon when removing the bias in Section 3.4.

Theorem 3.3 (Joint Asymptotics I)

Suppose that Assumptions 3.1 and 3.2 hold, and that as , , and . Suppose the following conditions are satisfied

| (3.6) |

| (3.7) |

Denote as , where . We have for any z0 ∈ Ƶ and j = 1,...,s,

-

(i)if s → ∞ then

where ;(3.8) -

(ii)if s is fixed, then

where .(3.9)

Part (i) of Theorem 3.3 says that and are asymptotically independent as s → ∞. This is not surprising since only samples in one sub-population (with size n) contribute to the estimation of the heterogeneity component while the entire sample (with size N) to commonality. As n/N = s−1 → 0, the former data becomes asymptotically independent of (or asymptotically ignorable to) the latter data. So are these two estimators. The estimation bias can be removed by placing a smoothness condition on Bk, i.e., Assumption 3.3. Interestingly, given this additional condition, even when s is fixed, these two estimators can still achieve the asymptotic independence if h → 0. Please see more details in next section.

3.4. Bias Propagation

In this section, we first analyze the source of estimation bias observed in the joint asymptotics Theorem 3.3. In fact, these analysis leads to a bias propagation phenomenon, which intuitively explains how Assumption 3.3 removes the estimation bias. More importantly, we show that f̄ shares exactly the same asymptotic distribution as f̂or, i.e., oracle rule, when s does not grow too fast and λ is chosen in the order of N.

Our study on propagation mechanism is motivated by the following simple observation. Denote and as the designs based on the samples from the jth sub-population and let . The first order optimality condition (w.r.t. β) gives

| (3.10) |

where is a n-dimensional vector with entries f0(Zi) for i ∈ Lj and is defined similarly. Hence, the estimation bias of inherits from that of f̂(j). A more complete picture on the propagation mechanism can be seen by decomposing the total bias into two parts:

| (3.11) |

| (3.12) |

according to Proposition 2.3. The first term in (3.12) explains the bias introduced by penalization; see (2.5). This bias propagates to the parametric component through B, as illustrated in (3.11). The parametric bias Lλf0 propagates back to the nonparametric component through the second term of (3.12). Therefore, by strengthening to , i.e., Assumption 3.3, it can be shown that the order of Lλf0 in (3.11) reduces to that of λ. And then we can remove Lλf0 asymptotically by choosing a sufficiently small λ. In this case, the nonparametric bias becomes Wλf0.

We summarize the above discussions in the following theorem:

Theorem 3.4. (Joint Asymptotics II)

Suppose Assumption 3.3 and the conditions in Theorem 3.3 hold. If we choose , then

-

(i)if s → ∞ then

where ;(3.13) -

(ii)if s is fixed, then

where and is the same as in (i).(3.14)

Moreover, if h → 0, then and in (i) and (ii).

The nonparametric estimation bias Wλf0(z0) can be further removed by performing undersmoothing, a standard procedure in nonparametric inference, e.g., Shang and Cheng (2013). We will illustrate this point in Section 4.

By examining the proof for case (ii) of Theorem 3.4 (and taking s = 1), we know that the oracle estimate f̄or defined in (3.1) attains the same asymptotic distribution as that of f̄ in (3.13) when s grows at a proper rate. Therefore, we claim that our combined estimate f̄ satisfies the desirable oracle property.

In Section 4, we apply Theorem 3.4 to several examples, and find that even though the minimization (3.2) is based only on one fraction of the entire sample, it is nonetheless essential to regularize each sub-estimation as if it had the entire sample. In other words, λ should be chosen in the order of N. Similar phenomenon also arises in analyzing minimax optimality of each sub-estimation; see Section 3.2.

Cheng and Shang (2013) have recently uncovered a joint asymptotics phenomenon in partial smoothing spline models: parametric estimate and (point-wise) nonparametric smoothing spline estimate become asymptotically independent after the parametric bias is removed. This corresponds to a special case of Part (ii) of Theorem 3.4 for polynomially decaying kernels with s = 1 and h → ∞. Therefore, case (ii) in Theorem 3.4 generalizes this new phenomenon to the partially linear kernel ridge regression models. When , e.g., for finite rank kernel, the semi-nonparametric estimation in consideration essentially reduces to a parametric one. Hence, it is not surprising that the asymptotic dependence remains.

Remark 3.1

Theorem 3.4 implies that when λ = o(n−1/2). When the error ∈ follows a Gaussian distribution, it is well known that achieves the semiparametric efficiency bound (Kosorok, 2007). Hence, the semiparametric efficient estimate can be the obtained by applying the kernel ridge method. However, we can further improve its estimation efficiency to a parametric level by taking advantage of f̄ (built on the whole samples). This is one important feature of massive data: strength-borrowing.

Efficiency Boosting: from semiparametric level to parametric level

The previous sections show that the combined estimate f̄ achieves the “oracle property” in both asymptotic and non-asymptotic senses when s does not grow too fast and λ is chosen according to the entire sample size. In this section, we employ f̄ to boost the estimation efficiency of from semiparametric level to parametric level. This leads to our final estimate for heterogeneity, i.e., defined in (3.15). More importantly, possesses the limit distribution as if the commonality in each sub-population were known, and hence satisfies the “oracle rule”. This interesting efficiency boosting phenomenon will be empirically verified in Section 6.

Specifically, we define the following improved estimator for β0:

| (3.15) |

Theorem 3.5 below shows that achieves the parametric efficiency bound as if the nonparametric component f were known. This is not surprising given that the nonparametric estimate f̄ now possesses a faster convergence rate after aggregation. What is truly interesting is that we need to set a lower bound for s, i.e., (3.16), and thus the homogeneous data setting is trivially excluded. This lower bound requirement slows down the convergence rate of , i.e., √n, such that f̄ can be treated as if it were known.

Theorem 3.5

Suppose Assumption 3.1 and 3.2 hold. If s satisfies

| (3.16) |

| (3.17) |

| (3.18) |

and we choose λ = o((Nh)−1), then we have

where .

Recall that X and Z are not assumed to be independent. Hence, the parametric efficiency bound Σ−1 is not larger than the semiparametric efficiency bound Ω−1.

3.6. Testing for Heterogeneity

The heterogeneity across different sub-populations is a crucial feature of massive data. However, there is still some chance that some sub-populations may share the same underlying distribution. In this section, we consider testing for the heterogeneity among sub-populations. We start from a simple pairwise testing, and then extend it to a more challenging simultaneous testing that can be applied to a large number of sub-populations.

Consider a general class of pairwise heterogeneity testing:

| (3.19) |

where is a q × q matrix with q ≥ p. The general formulation (3.19) can test either the whole vector or one fraction of is equal to that of . A test statistic can be constructed based on either or its improved version . Let be a confidence region satisfying for any b ~ N(0, Iq). Specifically, we have the following α-level Wald tests:

The consistency of the above tests are guaranteed by Theorem 3.6 below. In addition, we note that the power of the latter test is larger than the former; see the analysis below Theorem 3.6. The price we need to pay for this larger power is to require a lower bound on s.

Theorem 3.6

Suppose that the conditions in Theorem 3.4 are satisfied. Under the null hypothesis specified in (3.19), we have

Moreover, under the conditions in Theorem 3.5, we have

where .

The larger power of Ψ2 is due to the smaller asymptotic variance of , and can be deduced from the following power function. For simplicity, we consider , i.e., Q = (1, 0, 0 ..., 0). In this case, we have , and . The (asymptotic) power function under the alternative that for some non-zero β* is

where W ~ N(0, 1) and σ* is for Ψ1 and for Ψ2. Hence, a smaller σ* gives rise to a larger power, and Ψ2 is more powerful than Ψ1. Please see Section 6 for empirical support for this power comparison.

We next consider a simultaneous testing that is applied to a large number of sub-populations:

| (3.20) |

where , versus the alternative:

| (3.21) |

The above 's are pre-specified for each . If all 's are the same, then it becomes a type of heterogeneity test for the group of sub-populations indexed by . Here we allow to be as large as s, and thus it can increase with n. Let be the sample covariance matrix of X for the j-th sub-population, i.e., . Define the test statistic

We approximate the distribution of the above test statistic using multiplier bootstrap. Define the following quantity:

where ei's are i.i.d. N(0, σ2) independent of the data and is the k-th row of . Let . We employ the recent Gaussian approximation and multiplier bootstrap theory (Chernozhukov et al., 2013) to obtain the following theorem.

Theorem 3.7

Suppose Assumptions 3.1 and 3.2 hold. In addition, suppose (3.17) and (3.18) in Theorem 3.5 hold. For any with , if (i) , (ii) for some constants c1, C1 > 0, and (iii) , then under H0 and choosing λ = o((Nh)−1), we have

Remark 3.2

We can perform heterogeneity testing even without specifying 's. This can be done by simply reformulating the null hypothesis as follows (for simplicity we set ): H0 : α(j) = 0 for j ∈ [s – 1], where α(j) = β(j) – β(j+1) for j = 1,...,s – 1. The test statistic is . The bootstrap quantity is defined as

The proof is similar to that of Theorem 3.7 and is omitted.

4. Examples

In this section, we consider three specific classes of RKHS with different smoothness, characterized by the decaying rate of the eigenvalues: finite rank, exponential decay and polynomial decay. In particular, we give explicit upper bounds for s under which the combined estimate enjoys the oracle property, and also explicit lower bounds for obtaining efficiency boosting studied in Section 3.5. Interestingly, we find that the upper bound for s increases for RKHS with faster decaying eigenvalues. Hence, our aggregation procedure favors smoother regression functions in the sense that more sub-populations are allowed to be included in the observations. The choice of λ is also explicitly characterized in terms of the entire sample size and the decaying rate of eigenvalues. In all three examples, the undersmoothing is implicitly assumed for removing the nonparametric estimation bias. Our bounds on s and λ here are not the most general ones. Rather, we present the bounds that have less complicated forms but are still sufficient to deliver theoretical insights.

4.1. Example I: Finite Rank Kernel

The RKHS with finite rank kernels includes linear functions, polynomial functions, and, more generally, functional classes with finite dictionaries. In this case, the effective dimension is simply proportional to the rank r. Hence, . Combining this fact with Theorem 3.4, we get the following corollary for finite rank kernels:

Corollary 4.1

Suppose Assumption 3.1 – 3.3 hold and s → ∞. For any , if λ = o(N−1/2), log(λ−1) = o(N2log−12N) and , then

where and .

From the above Corollary, we can easily tell that the upper bound for s can be as large as o(N log−7 N) by choosing a sufficiently large λ. Hence, s can be chosen nearly as large as N. As for the lower bound of s for boosting the efficiency, we have by plugging into (3.16). This lower bound is clearly smaller than the upper bound. Hence, the efficiency boosting is feasible.

Corollary 4.2 below specifies conditions and s and λ under which f̄ achieves the nonparametric minimaxity.

Corollary 4.2

Suppose that Assumption 3.1 - 3.3 hold. When λ = r/N and s = o(N log−5 N), we have

for some constant C.

4.2. Example II: Exponential Decay Kernel

We next consider the RKHS for which the kernel has exponentially decaying eigenvalues, i.e., for some α > 0. In this case, we have by explicit calculations.

Corollary 4.3

Suppose Assumption 3.1 – 3.3 hold, and for any satisfies . If , and , then

where .

Corollary 4.3 implies the shrinking rate of the confidence interval for f0(z0) as (Nh)−1/2. This motivates us to choose λ (equivalently h) as large as possible. Plugging such a λ into the upper bound of s yields . For example, when p = 1(p = 2), the upper bound is . Note that this upper bound for s only differs from that for the finite rank kernel up to some logrithmic term. This is mainly because RKHS with exponentially decaying eigenvalues has an effective dimension (log N)1/p (for the above λ). Again, by (3.16) we get the lower bound of When , it is approximately .

As a concrete example, we consider the Gaussian kernel K(z1, z2) = exp (–|z1 – z2|2/2). The eigenfunctions are given in (2.1), and the eigenvalues are exponentially decaying, as , where η = √5–1)/2. According to Krasikov (2004), we can get that

Thus, Assumption 3.2 is satisfied. We next give an upper bound of in Corollary 4.3 as follows:

where equality follows from Lemma C.1 in Appendix C with the case t = 2. Hence, a (conservative) 100(1–α)% confidence interval for f0(z0) is given by f̄(z0) ± 1.3106σzα/2/√Nh.

Corollary 4.4

Suppose that Assumption 3.1 – 3.3 hold. By choosing λ = log1/p N/N and s = o(N log–(5p+3)/p N), we have

We know that the above rate is minimax optimal according to Zhang et al. (2013). Note that the upper bound for s required here is similar as that for obtaining the joint limiting distribution in Corollary 4.3.

4.3. Example III: Polynomial Decay Kernel

We now consider the RKHS for which the kernel has polynomially decaying eigenvalues, i.e., for some ν > 1/2. Hence, we can explicitly calculate that h = λ1/(2ν). The resulting penalized estimate is called as “partial smoothing spline” in the statistics literature; see Gu (2013); Wang (2011).

Corollary 4.5

Suppose Assumption 3.1 – 3.3 hold, and for any and . For any for some and , then

where .

Similarly, we choose to get the fastest shrinking rate of the confidence interval. Plugging the above λ into the upper bound for s, we get

When N is large, the above bound reduces to . We notice that the upper bound for s increases as ν increases, indicating that the aggregation procedure favors smoother functions. As an example, for the case that ν = 2, we have the upper bound for s = o(N17/36 log−6 N) ≈ o(N0.47 log−6 N). Again, we obtain the lower bound by plugging into (3.16). When , we get . For ν = 2, this is approximately .

As a concrete example, we consider the periodic Sobolev space with the following eigenfunctions:

| (4.1) |

and eigenvalues

| (4.2) |

Hence, Assumption 3.2 trivially holds. Under the above eigensystem, the following lemma gives an explicit expression of .

Lemma 4.1

Under the eigen-system defined by (4.1) and (4.2), we can explicitly calculate:

Therefore, by Corollary 4.5, we have that when and ,

| (4.3) |

where is given in Lemma 4.1. When and the upper bound for s = o(N17/36 log−6 N).

Corollary 4.6

Suppose that Assumption 3.1 - 3.3 hold. If we choose , and , the combined estimator achieves optimal rate of convergence, i.e.,

| (4.4) |

The above rate is known to be minimax optimal for the class of functions in consideration (Stone, 1985).

5. Application to Homogeneous Data: Divide-and-Conquer Approach

In this section, we apply the divide-and-conquer approach, which is commonly used to deal with massive homogeneous data, to some sub-populations that have huge sample sizes. A general goal of this section is to explore the most computationally efficient way to split the sample in those sub-populations while preserving the best possible statistical inference. Specifically, we want to derive the largest possible number of splits under which the averaged estimators for both components enjoy the same statistical performances as the “oracle” estimator that is computed based on the entire sample. Without loss of generality, we assume the entire sample to be homogeneous by setting all 's to be equal throughout this section.

The divide-and-conquer method randomly splits the massive data into s mutually exclusive subsamples. For simplicity, we assume all the subsamples share the same sample size, denoted as n. Hence, N = n × s. With a bit abuse of notation, we define the divide-and-conquer estimators as and when they are based on the j-th subsample. Thus, the averaged estimator is defined as

Comparing to the oracle estimator, the aggregation procedure reduces the computational complexity in terms of the entire sample size N to the sub-sample size N/s. In the case of kernel ridge regression, the complexity is O(N3), while our aggregation procedure (run in one single machine) reduces it to O(N3/s2). Propositions 5.1 and 5.2 below state conditions under which the divide-and-conquer estimators maintain the same statistical properties as oracle estimate, i.e., so-called oracle property.

Our first contribution is a non-asymptotic upper bound for MSE(f̄).

Proposition 5.1

Suppose that the conditions in Theorem 3.1 hold. We have that the divide and conquer estimator f̄ satisfies

| (5.1) |

where a(n, s, h, λ, ω), C1 and C2 are constants defined in Theorem 3.1.

Our second contribution is on the joint asymptotic distribution under the same conditions for (s, λ) required in the heterogeneous data setting.

Proposition 5.2

Suppose that the conditions in Theorem 3.4 hold. If we choose λ = o(N−1/2), then

where and . Moreover, if h → 0, then γz0 = 0. In this case, and .

The conclusion of Proposition 5.2 holds no matter s is fixed or diverges (once the condition for s in Theorem 3.4 are satisfied).

In view of Propositions 5.1 and 5.2, we note that the above upper bound and joint asymptotic distribution are exactly the same as those for the oracle estimate, i.e., s = 1.

6. Numercial Experiment

In this section, we empirically examine the impact of the number of sub-populations on the statistical inference built on . As will be seen, the simulation results strongly support our general theory.

Specifically, we consider the partial smoothing spline models in Section 4.3. In the simulation setup, we let ε ~ N(0,1), p = 1 and ν = 2 (cubic spline). Moreover Z ~ Uniform (−1, 1) and X = (W + Z)/2, where W ~ Unfiform(−1,1), such that X and Z are dependent. It is easy to show that Ω = E[(X – E[X|Z])2)] = 1/12 and Σ = E[X2] = 1/6. To design that heterogeneous data setting, we let for j = 1,2,...,s on the j-th subpopulation. The nonparametric function f0(z), which is common across all subpopulations, is assumed to be 0.6b30,17(z) + 0.4b3,11, where bα1,α2 is the density function for Beta(α1, α2).

We start from the 95% predictive interval (at (x0, z0)) implied by the joint asymptotic distribution (4.3):

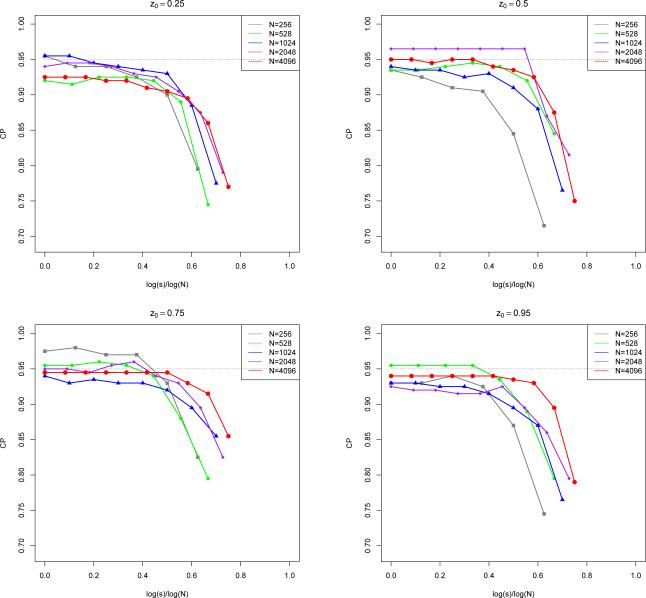

where is the predicted response. The unknown error variance σ is estimated by , where A(λ) denotes the smoothing matrix, followed by an aggregation . In the simulations, we fix x0 = 0.5 and choose z0 = 0.25, 0.5, 0.75 and 0.95. The coverage probability is calculated based on 200 repetitions. As for N and s, we set N = 256, 528, 1024, 2048, 4096, and choose s = 20, 21,...,2t–3 when N = 2t. The simulation results are summarized in Figure 1. We notice an interesting phase transition from Figure 1: when s ≤ s* where s* ≈ N0.45, the coverage probability is approximately 95%; when s ≥ s*, the coverage probability drastically decreaes. This empirical observation is strongly supported by our theory developed in Section 4.3 where s* ≈ N0.42 log−6N for ν = 2.

Fig 1.

Coverage probability of 95% predictive interval with different choices of s and N

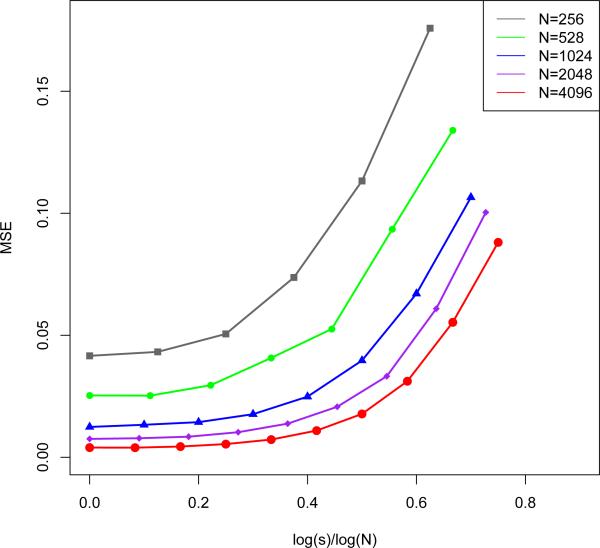

We next compute the mean-squared errors of f̄ under different choices of N and s in Figure 2. It is demonstrated that the increasing trends of MSE as s increases are very similar for different N. More importantly, all the MSE curves suddenly blow up when s ≈ N0.4. This is also close to our theoretical result that the transition point is around N0.45 log−6 N.

Fig 2.

Mean-square errors of f̄ under different choices of N and s

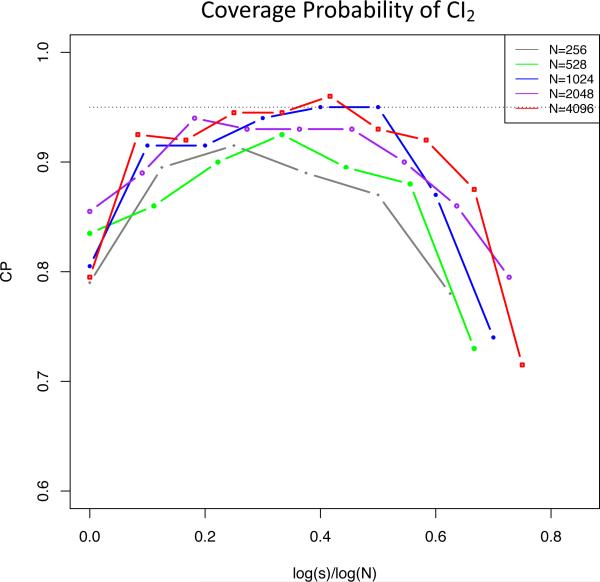

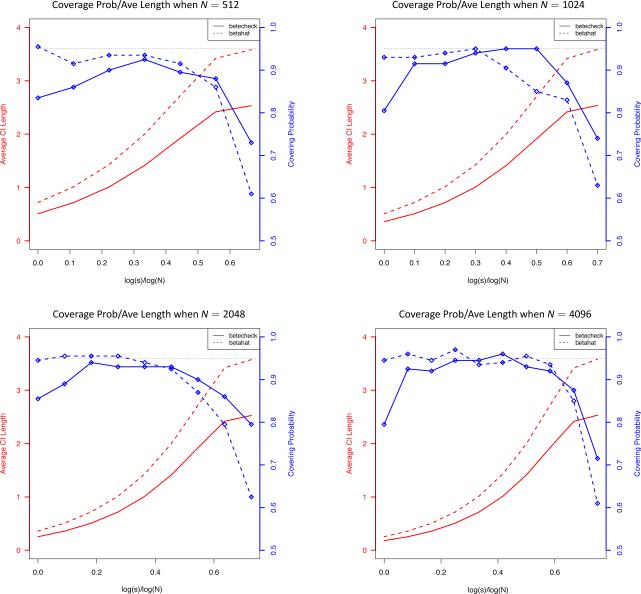

We next empirically verify the efficiency boosting theory developed in Section 3.5. Based on and , we construct the following two types of 95% confidence intervals for .

Obviously, CI2 is shorter than CI1. However, Theorem 3.5 shows that CI2 is valid only when s satisfies both a upper bound and a lower bound. This theoretical condition is empirically verified in Figure 3 which exhibits the validity range of CI2 in terms of s. In Figure 4, we further compare CI2 and CI1 in terms of their coverage probabilities and lengths. This figure shows that when s is in a proper range, the coverage probabilities of CI1 and CI2 are similar, while CI2 is significantly shorter.

Fig 3.

Coverage probability of 95% confidence interval based on

Fig 4.

Covergae probabilities and average lengths of 95% confidence intervals constructed based on and . In the above figures, dashed lines represent CI1, which is constructed based on , and solid lines represent CI2, which is constructed based on .

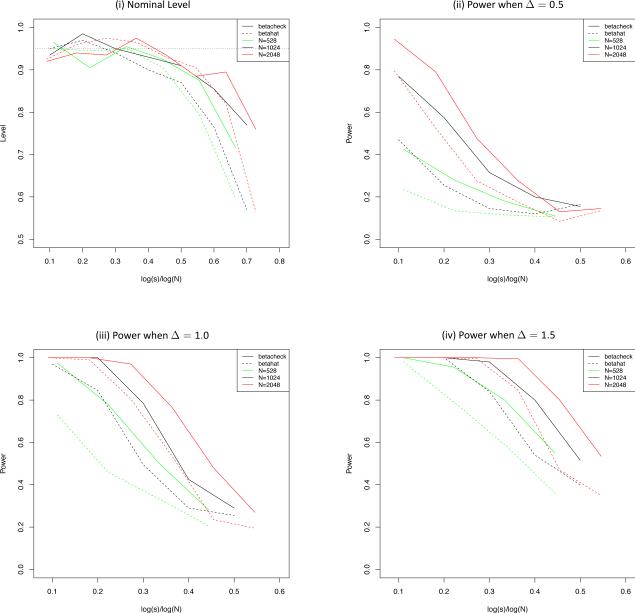

Lastly, we consider the heterogeneity testing. In Figure 5, we compare tests Ψ1 and Ψ2 under different choices of N and s ≥ 2. Specifically, Figure 5 (i) compares the nominal levels, while Figure 5 (ii) - (iv) compare the powers under various alternative hypotheses , where Δ = 0.5, 1, 1.5. It is clearly seen that both tests are consistent, and their powers increase as Δ or N increases. In addition, we observe that Ψ2 has uniformly larger powers than Ψ1.

Fig 5.

(i) Nominal level of heterogeneity tests Ψ1 and Ψ2; (ii) - (iv) Power of heterogeneity tests Ψ1 and Ψ2 when Δ= 0.5, 1.0, 1.5. In the above figures, dashed lines represent Ψ1, which is constructed based on , and solid lines represent Ψ2, which is constructed based on .

7. Proof of Main Results

In this section, we present main proofs of Theorem 3.1, 3.3 and 3.4 in the main text.

7.1. Proof of Theorem 3.1

Proof

We start from analyzing the minimization problem (3.2) on each sub-population. Recall m = (β,f) and U = (X, Z). The objective function can be rewritten as

The first order optimality condition (w.r.t. Fréchet derivative) gives

where . This implies that

where . Define . Adding on both sides of the above equation, we have

| (7.1) |

The L.H.S. of (7.1) can be rewritten as

where the last equality follows from proposition 2.2. Then (7.1) becomes

| (7.2) |

We will show that the first term in the R.H.S. of (7.2) weakly converges to a normal distribution, the second term contributes to the estimation bias, and that the last term is an asymptotically ignorable remainder term. We denote the last term as . Recall that Ru = (Lu, Nu) and . Thus the above remainder term decomposes into two components:

Similarly, (7.2) can be rewritten into the following two equations:

| (7.3) |

and

| (7.4) |

for all j = 1,...,s. Taking average of (7.4) for all j over s, and by definition of f̄, we have

| (7.5) |

where we used . By (7.5), it follows that

| (7.6) |

By Lemma A.5 and the fact that each NUiεi is i.i.d., it follows that

| (7.7) |

and

| (7.8) |

where C1 and C2 are constants specified in Lemma A.5.

As for the third term in (7.6), we have by independence across sub-populations that

| (7.9) |

Therefore it suffices to bound . We have the following lemma that controls this term:

Lemma 7.1

Suppose Assumptions 3.1, 3.2 and Condition (3.6) hold. We have for all j = 1,...,s

for sufficiently large n. Moreover, the inequality also holds for and

Combining (7.6) - (7.9) and Lemma 7.1, and by the fact that , we complete the proof of Theorem 3.1.

7.2. Proof of Theorem 3.3

Proof

Recall that where . This implies that and (7.5), for arbitrary x and z0,

In what follows, we will show that the main term (I) is asymptotically normal and the remainder term (II) is of order oP(1). Given that x is arbitrary, we apply Wold device to conclude the proof of joint asymptotic normality.

Asymptotic normality of (I)

We present that result for showing asymptotic normality of (I) in the following lemma and defer its proof to supplemental material.

Lemma 7.2.

Suppose that as , and . We have

-

(i)if s → ∞, then

(7.10) - (ii) if s is fixed, then

(7.11)

Control of the remainder term (II)

We now turn to bound the remainder term (II). We need the following lemma:

Lemma 7.3

Suppose Assumption 3.1, 3.2 and Condition (3.6) hold. We have the following two sets of results that control the remainder terms:

-

(i)For all j = 1,...,s

where and . Also, and . -

(ii)Moreover, we have

By Lemma 7.3, we have

| (7.12) |

where we used the boundedness of x. Also,

| (7.13) |

where the second inequality follows from Lemma A.4. Therefore by (7.12) and (7.13), we have

| (7.14) |

Now by definition of bn,s and condition (3.7), we have (II) = oP (1). Combining (7.10) and (7.14), it follows that if s → ∞, then

Combining (7.11) and (7.14), it follows that if s is fixed, then

By the arbitrariness of x, we reach the conclusion of the theorem using Wold device.

7.3. Proof of Lemma 7.3: Controlling the Remainder Term

Proof

(i) We first derive the bound of . Recall

Let , where cr is the constant specified in Lemma A.4. Note that Zn(m) is implicitly related to j but we omit the superscript of (j). We have . We apply Lemma F.1 to obtain an exponential inequality for . The first step is to show that Zn(m) is a sub-Gaussian process by Lemma G.1. Let . Now for any m1 and m2,

where we used the fact that by Lemma A.4. Note that . Therefore by Lemma G.1, we have for any t > 0,

| (7.15) |

Then by Lemma F.1, we have

| (7.16) |

where .

Define dn,s = crrn,sh−1/2 and . Again we do not specify its relationship with j. Define the event . On the event , we have

where we used the fact that by Lemma A.4. This implies for any (x, z). Letting x = 0, one gets , which further implies for all x by triangular inequality. Moreover, on the even we have

by the definition of . Hence, we have shown that . Combining this fact with (7.16), and noting that , we have

| (7.17) |

By the definition of m̃, and the relationship , we calculate that . Plugging the above form of Zn(m̃) into (7.17) and letting x = log N in (7.17), we have

| (7.18) |

where we used the definition that . Therefore we have

| (7.19) |

We have the following lemma that controls .

Lemma 7.4

If Assumption 3.1, 3.2 and Condition (3.6) are satisfied, then there exist a constant c such that

for all j = 1,...,s.

By Lemma 7.4 and (7.19) we have

| (7.20) |

(ii) We will use an Azuma-type inequality in Hilbert space to control the averaging remainder term , as all Rem(j) are independent and have zero mean. Define the event . By Lemma G.1, we have

| (7.21) |

Moreover, by (7.20),

| (7.22) |

Hence it follows that

as N → ∞, where the last inequality follows from (7.21), (7.22) and union bound. This finishes the proof.

We can apply similar arguments as above to bound and , by changing to , which is dominated by . The bounds of and then follow from triangular inequality.

7.4. Proof of Theorem 3.4

Proof

In view of Theorem 3.4, we first prove

| (7.23) |

for both (i) and (ii). By Proposition 2.3, we have

| (7.24) |

By Lemma A.5, it follows that under Assumption 3.3, . Now we turn to . Observe that

| (7.25) |

Applying Cauchy-Schwarz, we obtain

where the last inequality follows from the uniform boundedness of . Hence we have that Ak(z0) is uniformly bounded, which implies

Therefore, if we choose , then we get (7.23), which eliminates the estimation bias for .

Now we consider the asymptotic variance for cases (i) and (ii). It suffices to show that . By Lemma A.2 and (7.25), we have

Hence by dominated conference theorem, as λ → 0 we have . As h = O(1), it follows that .

When h → 0, we have , as Ak(z0) is uniformly bounded. Hence and .

Supplementary Material

Acknowledgments

Research Sponsored by NSF IIS1408910, NSF IIS1332109, NIH R01MH102339, NIH R01GM083084, and NIH R01HG06841

Research Sponsored by NSF CAREER Award DMS-1151692, DMS-1418042, Simons Foundation 305266. Guang Cheng was on sabbatical at Princeton while part of this work was carried out; he would like to thank the Princeton ORFE department for its hospitality.

Footnotes

AMS 2000 subject classifications: Primary 62G20, 62F25; secondary 62F10, 62F12

The commonality estimator f̄N,λ can be adjusted as a weighted sum if sub-sample sizes are different. In particular, the divide-and-conquer method can be applied to those sub-populations with huge sample sizes; see Section 5.

SUPPLEMENTARY MATERIAL

Supplementary material for: A Partially Linear Framework for Massive Heterogenous Data (DOI: To Be Assigned; .pdf). We provide the detailed proof in the supplement.

References

- Aitkin M, Rubin DB. Estimation and hypothesis testing in finite mixture models. Journal of the Royal Statistical Society. Series B (Methodological) 1985:67–75. [Google Scholar]

- Bach F. Sharp analysis of low-rank kernel matrix approximations. arXiv preprint arXiv. 2012:1208.2015. [Google Scholar]

- Berlinet A, Thomas-Agnan C. Reproducing kernel Hilbert spaces in probability and statistics. Vol. 3. Springer; 2004. [Google Scholar]

- Birman MŠ, Solomjak M. Piecewise-polynomial approximations of functions of the classes img align= absmiddle alt= w_p^ α tex_sm_2343_img1/img. Sbornik: Mathematics. 1967;2:295–317. [Google Scholar]

- Carl B, Triebel H. Inequalities between eigenvalues, entropy numbers, and related quantities of compact operators in banach spaces. Mathematische Annalen. 1980;251:129–133. [Google Scholar]

- Chen X, Xie M. Tech. rep., Technical Report 2012-01. Dept. Statistics, Rutgers Univ; 2012. A split-and-conquer approach for analysis of extraordinarily large data. [Google Scholar]

- Cheng G, Shang Z. Joint asymptotics for semi-nonparametric models under penalization. arXiv. 2013:1311.2628. [Google Scholar]

- Chernozhukov V, Chetverikov D, Kato K, et al. Gaussian approximations and multiplier bootstrap for maxima of sums of high-dimensional random vectors. The Annals of Statistics. 2013;41:2786–2819. [Google Scholar]

- Fan J, Zhang W. Statistical estimation in varying coefficient models. Annals of Statistics. 1999:1491–1518. [Google Scholar]

- Figueiredo MA, Jain AK. Unsupervised learning of finite mixture models. Pattern Analysis and Machine Intelligence, IEEE Transactions on. 2002;24:381–396. [Google Scholar]

- Gu C. Smoothing spline ANOVA models. Vol. 297. Springer; 2013. [Google Scholar]

- Guo W. Inference in smoothing spline analysis of variance. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2002;64:887–898. [Google Scholar]

- Hastie T, Tibshirani R. Varying-coefficient models. Journal of the Royal Statistical Society. Series B (Methodological) 1993:757–796. [Google Scholar]

- Huang J, Zhang T. The benefit of group sparsity. The Annals of Statistics. 2010;38:1978–2004. [Google Scholar]

- Kleiner A, Talwalkar A, Sarkar P, Jordan M. The big data bootstrap. arXiv preprint arXiv. 2012:1206.6415. [Google Scholar]

- Kosorok MR. Introduction to empirical processes and semiparametric inference. Springer; 2007. [Google Scholar]

- Krasikov I. New bounds on the hermite polynomials. arXiv preprint math/0401310. 2004 [Google Scholar]

- Lafferty JD, Lebanon G. Diffusion kernels on statistical manifolds. 2005 [Google Scholar]

- Mammen E, van de Geer S. Penalized quasi-likelihood estimation in partial linear models. The Annals of Statistics. 1997:1014–1035. [Google Scholar]

- McDonald R, Hall K, Mann G. Distributed training strategies for the structured perceptron. In Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics. Association for Computational Linguistics. 2010 [Google Scholar]

- McLachlan G, Peel D. Finite mixture models. John Wiley & Sons; 2004. [Google Scholar]

- Meinshausen N, Bühlmann P. Maximin effects in inhomogeneous large-scale data. arXiv preprint arXiv. 2014:1406.0596. [Google Scholar]

- Mendelson S. In Computational Learning Theory. Springer; 2002. Geometric parameters of kernel machines. [Google Scholar]

- Nardi Y, Rinaldo A. On the asymptotic properties of the group lasso estimator for linear models. Electronic Journal of Statistics. 2008;2:605–633. [Google Scholar]

- Obozinski G, Wainwright MJ, Jordan MI. Union support recovery in high-dimensional multivariate regression.. Communication, Control, and Computing, 2008 46th Annual Allerton Conference on; IEEE; 2008. [Google Scholar]

- Pinelis I. Optimum bounds for the distributions of martingales in banach spaces. The Annals of Probability. 1994:1679–1706. [Google Scholar]

- Raskutti G, Wainwright MJ, Yu B. Early stopping and non-parametric regression: an optimal data-dependent stopping rule. The Journal of Machine Learning Research. 2014;15:335–366. [Google Scholar]

- Shang Z, Cheng G. Local and global asymptotic inference in smoothing spline models. The Annals of Statistics. 2013;41:2608–2638. [Google Scholar]

- Shawe-Taylor J, Cristianini N. Kernel methods for pattern analysis. Cambridge university press; 2004. [Google Scholar]

- Sollich P, Williams CK. Deterministic and Statistical Methods in Machine Learning. Springer; 2005. Understanding gaussian process regression using the equivalent kernel. pp. 211–228. [Google Scholar]

- Städler N, Bühlmann P, van de Geer S. #21131-penalization for mixture regression models. Test. 2010;19:209–256. [Google Scholar]

- Steinwart I, Hush DR, Scovel C, et al. Optimal rates for regularized least squares regression. COLT; 2009. [Google Scholar]

- Stewart GW, Sun J.-g. Matrix perturbation theory. 1990 [Google Scholar]

- Stone CJ. Additive regression and other nonparametric models. The annals of Statistics. 1985:689–705. [Google Scholar]

- Tropp JA. User-friendly tail bounds for sums of random matrices. Foundations of Computational Mathematics. 2012;12:389–434. [Google Scholar]

- Tsybakov AB, Zaiats V. Introduction to nonparametric estimation. Vol. 11. Springer; 2009. [Google Scholar]

- Van Der Vaart AW, Wellner JA. Weak Convergence. Springer; 1996. [Google Scholar]

- van Handel R. Probability in high dimension: Lecture notes. 2014 [Google Scholar]

- Wahba G. Spline models for observational data. Vol. 59. Siam; 1990. [Google Scholar]

- Wang X, Dunson DB. Parallel mcmc via weierstrass sampler. arXiv preprint arXiv. 2013:1312.4605. [Google Scholar]

- Wang Y. Smoothing splines: methods and applications. CRC Press; 2011. [Google Scholar]

- Wasserman L. Steins method and the bootstrap in low and high dimensions: A tutorial. 2014 [Google Scholar]

- Williamson RC, Smola AJ, Scholkopf B. Generalization performance of regularization networks and support vector machines via entropy numbers of compact operators. Information Theory, IEEE Transactions on. 2001;47:2516–2532. [Google Scholar]

- Yang Y, Barron A. Information-theoretic determination of minimax rates of convergence. Annals of Statistics. 1999:1564–1599. [Google Scholar]

- Zhang T. Learning bounds for kernel regression using effective data dimensionality. Neural Computation. 2005;17:2077–2098. doi: 10.1162/0899766054323008. [DOI] [PubMed] [Google Scholar]

- Zhang Y, Duchi J, Wainwright M. Divide and conquer kernel ridge regression. Conference on Learning Theory. 2013 [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.