Abstract

Retraction of flawed articles is an important mechanism for correction of the scientific literature. We recently reported that the majority of retractions are associated with scientific misconduct. In the current study, we focused on the subset of retractions for which no misconduct was identified, in order to identify the major causes of error. Analysis of the retraction notices for 423 articles indexed in PubMed revealed that the most common causes of error-related retraction are laboratory errors, analytical errors, and irreproducible results. The most common laboratory errors are contamination and problems relating to molecular biology procedures (e.g., sequencing, cloning). Retractions due to contamination were more common in the past, whereas analytical errors are now increasing in frequency. A number of publications that have not been retracted despite being shown to contain significant errors suggest that barriers to retraction may impede correction of the literature. In particular, few cases of retraction due to cell line contamination were found despite recognition that this problem has affected numerous publications. An understanding of the errors leading to retraction can guide practices to improve laboratory research and the integrity of the scientific literature. Perhaps most important, our analysis has identified major problems in the mechanisms used to rectify the scientific literature and suggests a need for action by the scientific community to adopt protocols that ensure the integrity of the publication process.—Casadevall, A., Steen, R. G., Fang, F. C. Sources of error in the retracted scientific literature.

Keywords: bibliometric analysis, biomedical publishing, ethics

Delay is preferable to error.

Thomas Jefferson, in a letter to George Washington, 1792 (1)

Increasing concern has focused on the veracity and reliability of the scientific literature. Several studies have documented low rates of reproducibility of published literature and an alarming failure rate for preclinical observations that progress to clinical evaluation (2, 3). The causes for the irreproducibility of many studies are poorly understood, but several explanations are emerging, including bias in publication and outcome reporting (4, 5), insufficient statistical power (6), group dynamics among scientists (7), inadequate thresholds for statistical significance (8), and research misconduct, which in extreme cases involves outright fraud (9). Problems in the scientific enterprise are of great societal concern because science remains the best hope for finding solutions to some of humanity's greatest challenges (10). The reliability of science has been recently questioned in the general media in such venues as The Economist (11, 12) and The New York Times (13).

The retraction of a scientific article when the results are no longer considered to be valid plays a critical role in maintaining the integrity of the scientific literature (14). Published articles may be retracted for a myriad of reasons, ranging from fraud to publisher error. Authors and publishers alike may be reluctant to retract an article, as retractions provide unequivocal evidence of failure. Each retraction represents a loss of time and resources, damage to the prestige of science, and the potential to mislead the course of science and medical practice (15, 16). Hence, the frequency of retractions can be viewed as a surrogate marker of scientific failure, with the caveats that not all articles known to be erroneous are retracted, and the actual number of retractable articles in the literature is unknown. The rate of retraction of scientific articles is accelerating relative to the growth of the scientific literature (17), although whether this reflects more misconduct or greater scrutiny continues to be debated (18). In any event, the retracted literature provides opportunities to better understand the challenges of doing science and to improve the scientific enterprise.

We have previously categorized retractions as “honest” or “dishonest” to distinguish those resulting from error from those attributed to misconduct (19). We hypothesized that analyzing the causes responsible for error-related retraction can provide insights into the failings of contemporary science. We have previously established that the majority of articles are retracted because of scientific misconduct, including data fabrication, data falsification, plagiarism, and duplicate publication (9). Retractions due to misconduct may have devastating consequences for the careers of perpetrators, and their occurrence can undermine trust in the veracity of science. Although retractions due to honest error are less common than those for misconduct, articles retracted due to error are also increasing over time, and like those retracted for fraud, are associated with higher-impact journals than those retracted for plagiarism or duplicate publication (9).

A published retraction notice usually contains an explanation of why an article was retracted, although the amount of detail provided can vary tremendously. Both misconduct-related and error-related retractions are heterogeneous, as there are multiple types of misconduct and error. In this study, we utilized a previously assembled retraction database (9) to investigate the causes of error-related retractions, with the overall goal of identifying patterns that can inform future scientific practice and reduce the occurrence of errors in science. To our knowledge this is the first systematic analysis of error in the scientific literature.

MATERIALS AND METHODS

The database used for this study includes 2047 English language articles identified as retracted articles in PubMed as of May 3, 2012, and was previously compiled for a study that identified misconduct as the major cause for retraction in the scientific literature (9). The present study focused on the 439 articles categorized as error-related retractions and unassociated with scientific misconduct. Since the original analysis in 2012, information has come to light regarding 16 of the articles that were originally classified as error related; these have subsequently been reclassified as misconduct or possible misconduct and were excluded, leaving 423 articles for this analysis. To understand the causes of error, the retraction notices were reviewed, and the causes of retraction were classified into 8 categories: irreproducibility, laboratory error, analytical error, contamination, DNA-related errors, programming problems, control problems, or other. Category assignment was made by a single reviewer (A.C.). Laboratory error included subcategories of unique errors, contamination, DNA-related errors, and control problems. Analytical errors included programming errors. The “other” category includes cases in which retraction was triggered by inadvertent duplicate publication on the part of the publisher. Errata were enumerated from PubMed as articles retrieved with the keyword “journal” [ALL FIELDS] and the keywords “corrigend*,” “erratum,” or “errata.”

RESULTS AND DISCUSSION

We reviewed the retraction notices for 439 retracted articles published between 1979 and 2011 (9), in which no misconduct was apparent, to identify a cause for the withdrawal of the article. This represents ∼21% of the 2047 articles in the PubMed database that were retracted as of May 3, 2012, and 0.002% of the >20 million journal articles indexed as of that date. For 16 articles (3.6%), the cause for retraction was found to be misconduct or probable misconduct, based on information available since the compiling of the database (9); these articles were not further considered in the analysis.

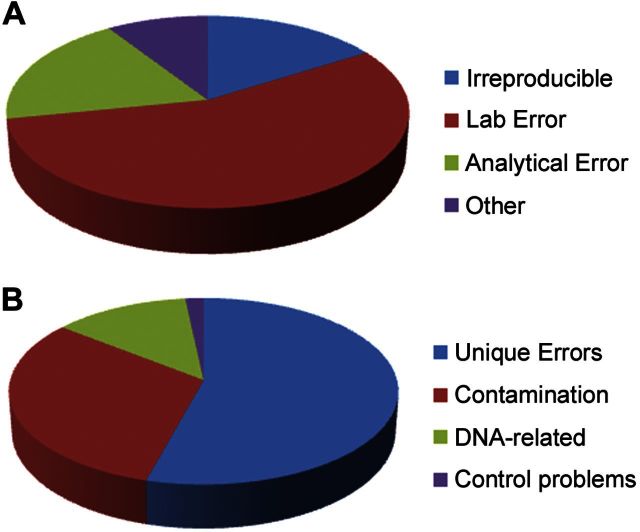

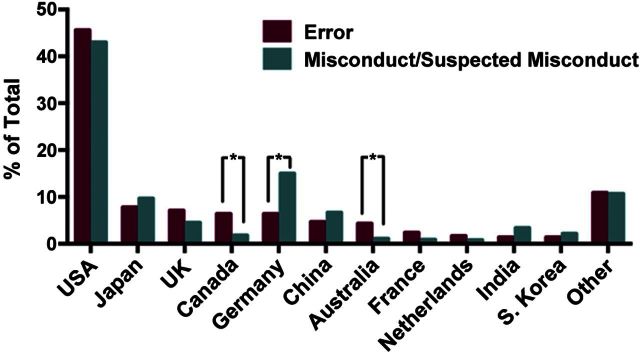

The articles retracted due to error were found to have been retracted for one of 3 major causes: laboratory error (236, 55.8%), analytical error (80, 18.9%), or a lack of reproducibility (68, 16.1%) (Fig. 1 and Table 1). For 39 articles (9.2%), the cause was indeterminate or failed to fall into one of the 3 major categories, and these were categorized as “other.” The causes of retraction in these cases included publisher errors leading to duplicate publication, priority claim errors, and editor-initiated retractions due to manuscript errors. Error-related retractions originated from 31 countries, with a distribution similar to that previously observed for retractions due to misconduct or suspected misconduct (ref. 9 and Fig. 2). Although PubMed entries are not indexed by country of origin, this most likely reflects the countries from which most research originates. The greater proportion of retractions for error from Canada and of retractions for misconduct from Germany is attributable to multiple retractions resulting from a single contamination event in Canada and a single dishonest investigator in Germany.

Figure 1.

A) Distribution of the major categories of error leading to retraction. B) Distribution of the major identifiable subcategories of laboratory error.

Table 1.

Category of errors before and after 2000

| Category | Retractions pre-2000 (n) | Retractions post-2000 (n) | Total [n (%)] | P |
|---|---|---|---|---|
| Irreproducibility | 38 | 30 | 68 (16.1) | 0.0017 |
| Laboratory error | 97 | 139 | 236 (55.8) | 0.2293 |
| Unique | 43 | 85 | 128 (54.2) | 0.0119 |
| Contamination | 44 | 30 | 74 (31.3) | 0.0002 |
| DNA-related | 8 | 22 | 30 (12.7) | 0.1118 |
| Control | 2 | 2 | 4 (1.7) | 0.6411 |
| Analytical error | 20 | 60 | 80 (18.9) | 0.0071 |
| Other | 8 | 31 | 39 (9.2) | 0.0155 |
| Total | 163 | 260 | 423 | |

P values calculated by 2-tailed Fisher's exact test using raw numbers of retracted papers.
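As an illustration of the test named in the footnote, the following is a minimal pure-Python sketch of a 2-tailed Fisher's exact test applied to the top-level rows of Table 1. It assumes that each comparison is a 2×2 table of category count versus all other retractions in the pre-2000 (n=163) and post-2000 (n=260) periods; the function name `fisher_exact_two_sided` and that table layout are our assumptions, and the sketch is not claimed to reproduce the exact procedure or software used for the published P values.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables (with the same
    margins) that are no more likely than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability of x in the top-left cell with all margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lo = max(0, col1 - row2)
    hi = min(row1, col1)
    p_obs = prob(a)
    # Small relative tolerance guards against floating-point ties.
    total = sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
    return min(1.0, total)


# Counts taken from Table 1 (pre-2000, post-2000); the layout is an assumption.
PRE_TOTAL, POST_TOTAL = 163, 260
rows = {
    "Irreproducibility": (38, 30),
    "Laboratory error": (97, 139),
    "Analytical error": (20, 60),
    "Other": (8, 31),
}
for name, (pre, post) in rows.items():
    p = fisher_exact_two_sided(pre, PRE_TOTAL - pre, post, POST_TOTAL - post)
    print(f"{name}: P = {p:.4f}")
```

The subcategory rows (unique, contamination, DNA-related, control) could be tested the same way by passing the appropriate denominators.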

Figure 2.

Countries of origin of articles retracted due to error. Percentage of error-related retractions by country is shown in comparison to the percentage of retractions for misconduct/suspected misconduct (9). *P ≤ 0.001; χ2 test.

More than half of all error-related retractions were attributed to laboratory error. Although the majority of instances of laboratory error were unique circumstances, we were able to subdivide laboratory errors into unique laboratory errors (128, 54.2%), contamination (74, 31.3%), DNA sequencing and cloning errors (30, 12.7%), and problems with controls (4, 1.7%) (Table 1). Unique laboratory errors were those that were specifically associated with the study in question and did not fall into the other categories. Laboratory errors sometimes resulted in multiple retractions when the error was propagated across different studies. For example, an error in an assay for measuring fospropofol drug concentration resulted in 6 retractions (20). Contamination was the single largest identifiable cause of retraction due to laboratory error, accounting for nearly one-third (31.3%) of laboratory error retractions. Isolated episodes of contamination sometimes resulted in multiple retractions when common reagents were used in multiple experiments. For example, an instance of contamination in which a reagent inadvertently contained cobra toxin or phospholipase resulted in the retraction of 13 articles (21), providing an example of the vulnerability of studies that rely on a single-source reagent.

We found that only 6 (1.4%) notices attributed the cause for retraction to contamination of cell lines or the use of inappropriate cells. The problem of cross-contamination of cell lines has been known for decades, but despite several calls to action to address this pervasive problem (22–24), the biological research community has thus far not mounted an adequate response (25). As early as 1981, a compilation of dozens of articles tainted by cell line contamination was published (26). The problem has continued to be amply documented in the literature: a study of 550 leukemia cell lines found that 14.9% were contaminated with other cells (27), and another study found widespread contamination of tumor cell lines (28). Capes-Davis et al. (29) have compiled a list of >350 contaminated cell lines and stressed the need for validation of cell lines. Recently, a study using genetic profiling identified contamination in 6 commonly used human adenoid cystic carcinoma cell lines, including HeLa cells and cells from nonhuman species (30). The paucity of retractions attributed to contaminated cell lines suggests that the literature contains many unretracted but potentially erroneous studies. Fields that rely heavily on the use of cell lines may be particularly affected by this problem, including cancer research, in which irreproducibility has been documented to be a major concern (2).

Analytical errors comprised the next largest category of retracted results, involving 18.9% of causes identified in error-related retraction notices. Unlike contamination, which is easily defined, analytical errors were a heterogeneous group that included errors in computer program coding, data transfer, data forms, spreadsheets, statistical analysis, calculations, crystallographic analysis, and histological interpretation. As with contamination of common reagents, analytical errors can result in multiple retractions for articles that rely on the same erroneous database or computer program. An error in an in-house program used for analyzing crystallographic data that inadvertently reversed two columns of data resulted in inverted electron density maps and led to the retraction of 5 articles (31). It is noteworthy that the same program had been used for other articles in preparation (31); had the error not been caught, additional retractions would likely have resulted. Incorrect clinical data entry into case study forms led to 7 retractions (32). Programming- and computer-related errors were blamed for 12 retractions. Recently, there has been increasing concern about the proliferation of commercial modeling programs that are used without a full understanding of the limitations of the source code and the assumptions made by users (33). It is, therefore, possible that programming errors will make a higher contribution to error in the future.
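The column-reversal error described above is the kind of problem that a known-answer check can catch before results are published. The following is a minimal sketch, not the program discussed in ref. 31: the column names `col_a` and `col_b`, the helper names, and the toy data are all hypothetical. It reads columns by header name rather than by position and verifies the output against a value worked out by hand.

```python
import csv
import io


def read_pairs(text, first, second):
    """Read two named numeric columns, regardless of their physical order."""
    reader = csv.DictReader(io.StringIO(text))
    return [(float(row[first]), float(row[second])) for row in reader]


def signed_difference(pairs):
    """Toy downstream quantity whose sign flips if the columns are swapped."""
    return sum(a - b for a, b in pairs)


# Same hypothetical data in two physical layouts.
REFERENCE = "col_a,col_b\n10.0,7.0\n12.0,8.0\n"
SWAPPED_LAYOUT = "col_b,col_a\n7.0,10.0\n8.0,12.0\n"

EXPECTED = 7.0  # worked out by hand: (10 - 7) + (12 - 8)

for text in (REFERENCE, SWAPPED_LAYOUT):
    result = signed_difference(read_pairs(text, "col_a", "col_b"))
    # Known-answer check: fail loudly instead of silently inverting the result.
    assert result == EXPECTED, f"known-answer check failed: {result}"
```

Had the columns been read by position, the second layout would have yielded −7.0 and the check would have failed.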

Retractions due to irreproducibility of published results accounted for 16.1% of error-related retractions (Table 1). The irreproducibility category included all notices in which the authors withdrew an article and cited an inability to reproduce prior results without providing an explanation for the problem. Clearly, this category must have included studies that were retracted due to laboratory and analytical errors, but the authors did not provide sufficient information in the retraction notice to make those assignments. The irreproducibility of the scientific literature has become a topic of increasing interest and concern, even in the general press (11–13). Numerous explanations have been suggested for the irreproducibility of scientific studies: inadequate characterization of key resources such as antibodies, model organisms, knockdown reagents, constructs, and cell lines (34); insufficiently detailed methods (35); publication bias favoring positive results (36); and random variations interpreted as significant results (37). The latter problem is particularly relevant to the biological sciences, which comprise the majority of articles indexed in PubMed. An application of Bayesian tests suggests that the commonly accepted criterion for statistical significance (P≤0.05) is not sufficiently stringent (8), which could lead to false-positive associations that are not reproducible. The concern about reproducibility of scientific studies has led to the emergence of entities that offer to reproduce research results for a fee (38). The pressure to publish has been blamed for reduced quality of scientific work. Brown and Ramaswamy analyzed the quality of protein structures and found an inverse correlation between quality and the impact factor of the journal in which the work was published, with the “worst offenders” regarding the publication of erroneous structural data being the high-impact general science journals (39).
This suggests that publishing incentives may perversely encourage haste and error.

We attempted to discern temporal trends by comparing the causes for error before and after the year 2000 (Table 1). This year was chosen somewhat arbitrarily, but it roughly corresponds to the widespread introduction of several new technologies in the biological sciences, including next-generation DNA sequencing, mass spectrometry, and RNA interference. The number of retractions caused by unique laboratory error events increased from 26.4 to 32.7% (P=0.0119), a trend that may reflect the increasing diversity of laboratory techniques used in biological research. Comparison of the pre-2000 and post-2000 retracted literature revealed no significant decrease in the prevalence of DNA sequencing-related errors despite improvements in the technologies available for DNA sequencing. Nevertheless, there were three interesting findings from the pre-2000 and post-2000 comparison. First, the number of retractions that admitted irreproducibility without providing an explanation was reduced from 23.3 to 11.5% (P=0.0017). Although we do not know the explanation for this trend, it is possible that the cost of retraction in terms of reputation and prestige has led investigators to provide more information to support their actions and/or conclusions. Second, the number of retractions attributed to contamination was significantly reduced from 27.0 to 11.5% (P=0.0002). Here, the explanation may be improved analytical techniques that provide more information with regard to sample purity. Furthermore, the widespread use of kits for carrying out molecular and biochemical techniques could be associated with a reduction in inadvertent contamination of reagents generated by individual investigators. However, the fact that a reagent originates from a commercial source does not guarantee its purity, and the increased reliance on commercial reagents raises the possibility that contamination at the source could simultaneously impact many laboratories.
Third, there was a significant increase in retractions attributed to analytical error, rising from 12.2 to 23.1% (P=0.01). Although it may be too early to identify the causes for this trend, it is possible that studies that generate large amounts of numerical data make data manipulation errors more likely (40).

We acknowledge several limitations to this study. First, by necessity, we relied on information in retraction notices to describe the source of error accurately. Such information has been shown to be potentially misleading, such as when misconduct is disguised by invoking error or irreproducibility (9). Second, the information in retraction notices represents the authors' version of events, and such notices do not generally receive peer review. Third, the number of retractions studied represents a small fraction of all problematic articles in the literature. Our experience with the paucity of retractions caused by cell contamination despite widespread recognition of the problem suggests that there may be biases in the type of studies that are retracted, such that in some fields, the retraction option is not used as frequently as it should be. Fourth, the information quality in retraction notices is highly variable, ranging from detailed explanations to terse and uninformative statements. Fifth, there is a striking lack of uniformity among journals in the quality of retraction notices (41). However, these limitations also suggest areas where the literature can be improved. For example, establishing criteria for retraction notices and for standardizing the information in notices could significantly improve this aspect of the scientific literature.

Although the number of problematic articles in the literature cannot be precisely ascertained, it is almost certain that retraction notices represent a small fraction of the erroneous literature. A number of articles widely regarded as erroneous remain in the literature despite published concerns and in some instances, calls for their retraction (some prominent examples are listed in Table 2). The paucity of retractions for contaminated cell lines suggests that problematic articles in some scientific fields may be ignored without being retracted. The mechanism of issuing errata or retracting articles is a useful tool to correct isolated errors, but errors that systematically affect entire fields (like cell contamination) require other remedies, such as the development of new standards moving forward.

Table 2.

Examples of unretracted articles containing significant errors

| Authors | Journal and year | Claim | Errata | Description of error |
|---|---|---|---|---|
| Traver (51) | Proceedings of the Entomological Society of Washington, 1951 | The author reported a mite infestation of her own scalp that was refractory to treatment and undetectable to others. | None | The author is now felt to have suffered from delusional parasitosis (52). |
| Steinschneider (53) | Pediatrics, 1972 | Multiple cases of SIDS were reported in a single family. | None | The children's mother was convicted of murder (54). |
| Davenas et al. (55) | Nature, 1988 | Serial dilutions of anti-IgE that eliminated the presence of any anti-IgE molecules were reported to remain capable of stimulating basophil degranulation. | None | A team of observers visited the authors' laboratory and pronounced the results to be a “delusion” (56). An independent group subsequently failed to reproduce the original findings (57), although their conclusions were not accepted by the original authors (58). |
| Fleischmann and Pons (59) | Journal of Electroanalytical Chemistry, 1989 | Nuclear fusion was reported from the electrolysis of deuterium on the surface of a palladium electrode at room temperature (“cold fusion”). | Erratum (60) | Potential sources of error were identified (61), and an independent group of physicists monitoring experiments in Pons' laboratory was unable to detect evidence of fusion (62). |
| Bagenal et al. (63) | Lancet, 1990 | Patients treated at a complementary therapy center for breast cancer exhibited higher mortality than those receiving conventional care. | None | A statistical analysis subsequently assessed the differences between cases and controls to be “so small that no conclusion could be made” (64). |
| Chow et al. (43) | Nature, 1993 | Combinations of mutations conferring resistance to 3 antiretroviral drugs were incompatible with HIV replication. | Erratum (45), acknowledging that additional unrecognized mutations were present | Other groups reported that multiresistant mutant HIV is still able to replicate (44, 65). |
| Bellgrau et al. (66) | Nature, 1995 | Expression of CD95L (FasL) was reported to prevent rejection of mismatched transplanted tissue. | Erratum (containing a minor correction only) | Other investigators were unable to reproduce the findings in other experimental systems (67–69). The author of an accompanying commentary retracted his commentary (70). |
| Gugliotti et al. (71) | Science, 2004 | RNA was reported to form hexagonal palladium nanoparticles. | None | A former collaborator concluded that the hexagonal crystals were a solvent artifact (72, 73). |
| Labandeira-Rey et al. (74) | Science, 2007 | Panton-Valentine Leukocidin (PVL) reported to be required for staphylococcal virulence. | None | Virulence phenotypes previously attributed to PVL found to be due to a mutation in the agr P2 promoter (75). |
| Wyatt et al. (76) | Science, 2010 | Nonribosomal peptides (aureusimines) reported to be required for staphylococcal virulence. | Erratum (77), acknowledging second site mutation | Virulence phenotypes previously attributed to aureusimines found to be due to a saeS mutation (78). |
| Wolfe-Simon et al. (46) | Science, 2011 | The bacterium GFAJ-1 was reported to substitute arsenate for phosphate in nucleic acids. | Editor's note (49) | Eight critical technical comments were published, followed by 2 articles showing that GFAJ-1 DNA does not contain arsenate (47, 48). |
| Regan et al. (79) | Journal of the National Cancer Institute, 2012 | CYP2D6 genotyping did not predict responsiveness of breast cancer patients to tamoxifen. | None | Deviation from Hardy-Weinberg equilibrium suggests genotyping errors (80). |

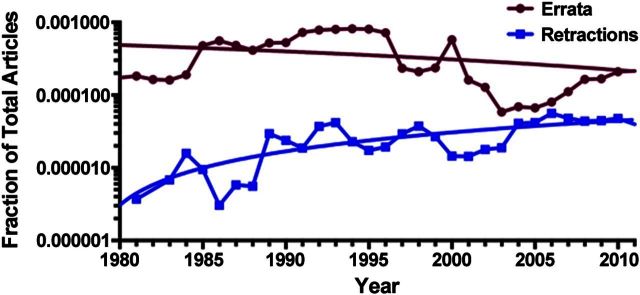

The percentage of scientific articles requiring correction ranges from 0.5% to >3%, depending on the field (42). Many corrections are minor, do not undermine the central conclusions of an article, and may be noted in errata (publisher's corrections) or corrigenda (authors' corrections). The incidence of errata and corrigenda has been stable over time, whereas the incidence of error-related retractions has been rising over the past 3 decades (Fig. 3). This suggests that errata/corrigenda and error-related retractions are independent and largely unrelated phenomena. However, we have occasionally observed correction notices that report major problems with publications. For example, a report in Nature in 1993 that combination antiviral chemotherapy halted HIV replication (43) was later found to be erroneous (44), but a correction was issued instead of a retraction (ref. 45 and Table 2). Recently, a bacterium was reported to incorporate arsenic rather than phosphorus into its DNA (46). This sensational finding was subsequently shown to be erroneous (47, 48), and the journal issued an editor's note (49), yet the original article has not been retracted. In other instances, we note that authors of erroneous articles have responded to criticism in a prompt and transparent manner (50), and we laud their actions as an example of the self-correcting nature of science.

Figure 3.

Errata and error-related retractions over time. Data from PubMed 1980–2011, inclusive. Decline in errata is nonsignificant (P=0.07); increase in error-related retractions is significant (P<0.0001).

In summary, the present study suggests that a few broad categories of error are responsible for the majority of error-related retractions. These categories suggest general areas in which scientists are most likely to encounter problems during the conduct of research. Some retraction notices provide details regarding how errors were discovered, which, in turn, suggest ways in which such problems may be avoided in the future. Many encountered problems could have been avoided by an experimental design that was more robust, incorporated more controls, or included independent secondary methods to verify results. For example, the ubiquity of contamination, with its potential for unraveling multiple studies, suggests the need for greater attention to the characterization of reagents, cell lines, vectors, etc. Errors involving the inadvertent use of incorrect cell lines might be reduced by the generation of validated bar-coded cell lines that can be easily verified before carrying out experimental work, a step that has been formally proposed by an international panel of scientists (25). We have suggested the use of checklists as a mechanism for reducing error (19), and the journal Nature has recently introduced a presubmission checklist in an effort to reduce error in manuscripts. We conclude that the analysis of error in the retracted literature has the potential to improve science by informing the best practices for research. Given the high personal, financial, and societal costs associated with erroneous scientific literature, the implementation of research practices to reduce error has the potential to significantly improve the scientific enterprise. On the basis of our analysis of the retracted literature, we suggest the adoption of specific measures to reduce experimental error (Table 3).

Table 3.

Examples of common errors and suggested remedies

| Category | Type | Example | PMID | Suggested remedy |
|---|---|---|---|---|
| Irreproducibility | | “Thus, our own present findings, along with those from other laboratories, contradict major findings from our previous report.” | 20134478 | Increase redundancy in experimental design, more replications, aim for more robust statistics |
| Lab error | Data entry | “Incorrect data were found to have been included on the case report forms and subsequently in the databases of some studies.” | 15504385 | Double entry or the creation of duplicate databases (81) |
| | | Data pertaining to a dopamine receptor D4 (DRD4) polymorphism, rs4646984, was mistakenly pasted into a column containing serotonin transporter gene (5-HTTLPR) data. | 20050156 | |
| | Contamination | “The cell line ACC3 which was used in the study … was reportedly found cross contaminated with HeLa.” | 22359742 | Cell line authentication and good cell line practice (24, 25) |
| | Selection | “The mice that had been ordered for the experiments described in our published article were, in fact, mistakenly mice that were doubly deficient in … receptor.” | 21209288 | Double check critical reagents, preferably by a second individual |
| | DNA related | “A mistake was made during the sequencing of the smk-1 allele from the…strain that was used in our studies.” | 20584918 | Validation of results by independent methods and incorporating more redundancy into experimental design |
| | | Methodological problem that resulted in a misidentification of a pseudogene as a novel mutation. | 21135394 | |
| | | “Resequencing of the cDNA clone obtained in the Y2H screen resolved a particularly GC-rich region upstream of the calcyon start codon that had been misread before and indicated that the calcyon coding sequence is out of frame with the GAL4 activation domain.” | 17170272 | |
| Data analysis | Statistical analysis | These errors relate to the numbers of families included and several erroneous values in the tables. This failure led, among others, to an extremely high correlation between the male subjects' mates and subjects' parents in the facial proportion jaw width/face width. | 19129144 | Statistical consultation and independent assessment of database integrity |
| | | “Errors in the statistical calculations and interpretation of the analyses presented in that article.” | 16983065 | |
| | Computation | “Due to a decimal place error for body weight…we have had to reanalyze our data upon reanalysis with the corrected value we have found our conclusions to change in a way that does not warrant publication.” | 17193703 | Double check critical calculations, preferably by a second individual |
| | | “Dilution errors were made in calculating the numbers of bacilli present in the lungs.” | 7594697 | |
| | | “We rechecked the original data and found that the equation used to correct for differences in weights between groups of animals was incorrect.” | 3882286 | |
| Programming | Coding error | As a result of a bug in the Perl script used to compare estimated trees with true trees, the clade confidence measures were sometimes associated with the incorrect clades. | 17658946 | Validate program outputs with known databases and results |
| Controls | | “A change in international convention of testing occurred between the time that the majority of controls were investigated and the time that the majority of patients were investigated. We incorrectly assumed these tests to be entirely equivalent.” | 9303950 | Identification of correct control group |

Example quotations are taken from the retraction notice. PMID refers to the retraction notice.
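The double-entry remedy listed in Table 3 for data entry errors can be illustrated with a short sketch. It assumes that the same records have been keyed in independently twice and stored as dictionaries indexed by a record identifier; the function name, field names, and values below are hypothetical and are not drawn from any retracted study.

```python
def double_entry_mismatches(first_pass, second_pass):
    """Return (record_id, field, value_1, value_2) for every disagreement
    between two independent entries of the same data."""
    mismatches = []
    for record_id in sorted(set(first_pass) | set(second_pass)):
        rec1 = first_pass.get(record_id, {})
        rec2 = second_pass.get(record_id, {})
        for field in sorted(set(rec1) | set(rec2)):
            v1, v2 = rec1.get(field), rec2.get(field)
            if v1 != v2:
                # A missing record or field appears as None on one side.
                mismatches.append((record_id, field, v1, v2))
    return mismatches


# Hypothetical example: the second pass contains a decimal place error.
entry_a = {
    "S001": {"weight_kg": 71.5, "genotype": "L/S"},
    "S002": {"weight_kg": 64.0, "genotype": "S/S"},
}
entry_b = {
    "S001": {"weight_kg": 7.15, "genotype": "L/S"},
    "S002": {"weight_kg": 64.0, "genotype": "S/S"},
}

for mismatch in double_entry_mismatches(entry_a, entry_b):
    print(mismatch)
```

Each reported disagreement would then be resolved against the source document before analysis proceeds.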

Finally, and perhaps most important, our analysis has revealed major problems in the mechanisms used to correct the scientific literature. These problems range from inadequate information in retraction notices to the continued presence of publications known to be erroneous in the literature and the use of errata to report major flaws in articles that should instead be retracted. Both the scientific community and society are dependent on the integrity and veracity of the scientific literature, which is now being questioned in the general media (11–13). This raises concern that future public support for the scientific enterprise could be eroded, and scientific findings of major societal importance might not be heeded. We are hopeful that our findings, together with our previous report that the majority of retractions are due to misconduct (9), will stimulate discussion to develop standards for dealing with error in the scientific literature and actions to improve its integrity.

REFERENCES

- 1. Jefferson T. (1792) Letter to George Washington, 16 May 1792, Philadelphia, PA

- 2. Begley C. G., Ellis L. M. (2012) Drug development: Raise standards for preclinical cancer research. Nature 483, 531–533

- 3. Prinz F., Schlange T., Asadullah K. (2011) Believe it or not: how much can we rely on published data on potential drug targets? Nat. Rev. Drug Discov. 10, 712

- 4. Dwan K., Altman D. G., Arnaiz J. A., Bloom J., Chan A. W., Cronin E., Decullier E., Easterbrook P. J., Von Elm E., Gamble C., Ghersi D., Ioannidis J. P., Simes J., Williamson P. R. (2008) Systematic review of the empirical evidence of study publication bias and outcome reporting bias. PLoS One 3, e3081

- 5. McGauran N., Wieseler B., Kreis J., Schuler Y. B., Kolsch H., Kaiser T. (2010) Reporting bias in medical research—a narrative review. Trials 11, 37

- 6. Button K. S., Ioannidis J. P., Mokrysz C., Nosek B. A., Flint J., Robinson E. S., Munafo M. R. (2013) Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–376

- 7. Rosmalen J. G., Oldehinkel A. J. (2011) The role of group dynamics in scientific inconsistencies: a case study of a research consortium. PLoS Med 8, e1001143

- 8. Johnson V. E. (2013) Revised standards for statistical evidence. Proc. Natl. Acad. Sci. U. S. A. 110, 19313–19317

- 9. Fang F. C., Steen R. G., Casadevall A. (2012) Misconduct accounts for the majority of retracted scientific publications. Proc. Natl. Acad. Sci. U. S. A. 109, 17028–17033

- 10. Casadevall A., Fang F. C. (2013, June 5) Science, heal thyself. Project Syndicate http://www.project-syndicate.org

- 11. Anonymous. (2013, October 19) Unreliable research: trouble at the lab. Economist http://www.economist.com/news/briefing/21588057-scientists-think-science-self-correcting-alarming-degree-it-not-trouble

- 12. Anonymous. (2013, October 17) Problems with scientific research: how science goes wrong. Economist http://www.economist.com/news/leaders/21588069-scientific-research-has-changed-world-now-it-needs-change-itself-how-science-goes-wrong

- 13. Johnson G. (2014, January 20) New truths that only one can see. New York Times http://www.nytimes.com/2014/01/21/science/new-truths-that-only-one-can-see.html?_r=0

- 14. Fang F. C., Casadevall A. (2011) Retracted science and the retraction index. Infect. Immun. 79, 3855–3859

- 15. Zarychanski R., Abou-Setta A. M., Turgeon A. F., Houston B. L., McIntyre L., Marshall J. C., Fergusson D. A. (2013) Association of hydroxyethyl starch administration with mortality and acute kidney injury in critically ill patients requiring volume resuscitation: a systematic review and meta-analysis. JAMA 309, 678–688

- 16. Husten L. (2013, July 31) How heart guidelines based on disgraced research may have caused thousands of deaths. Forbes http://www.forbes.com/sites/larryhusten/2013/07/31/european-heart-guidelines-based-on-disgraced-research-may-have-caused-thousands-of-deaths/

- 17. Steen R. G., Casadevall A., Fang F. C. (2013) Why has the number of scientific retractions increased? PLoS One 8, e68397

- 18. Fanelli D. (2013) Why growing retractions are (mostly) a good sign. PLoS Med 10, e1001563

- 19. Casadevall A., Fang F. C. (2012) Reforming science: methodological and cultural reforms. Infect. Immun. 80, 891–896

- 20. Struys M. M., Fechner J., Schuttler J., Schwilden H. (2010) Erroneously published fospropofol pharmacokinetic-pharmacodynamic data and retraction of the affected publications. Anesthesiology 112, 1056–1057

- 21. Quik M., Cook R. G., Revah F., Changeux J. P., Patrick J. (1993) Presence of α-cobratoxin and phospholipase A2 activity in thymopoietin preparations. Mol. Pharmacol. 44, 678–679

- 22. Nardone R. M. (2007) Eradication of cross-contaminated cell lines: a call for action. Cell Biol. Toxicol. 23, 367–372

- 23. Rojas A., Gonzalez I., Figueroa H. (2008) Cell line cross-contamination in biomedical research: a call to prevent unawareness. Acta Pharmacol. Sin. 29, 877–880

- 24. Knight L. A., Cree I. A. (2011) Quality assurance and good laboratory practice. Methods Mol. Biol. 731, 115–124

- 25. Barallon R., Bauer S. R., Butler J., Capes-Davis A., Dirks W. G., Elmore E., Furtado M., Kline M. C., Kohara A., Los G. V., MacLeod R. A., Masters J. R., Nardone M., Nardone R. M., Nims R. W., Price P. J., Reid Y. A., Shewale J., Sykes G., Steuer A. F., Storts D. R., Thomson J., Taraporewala Z., Alston-Roberts C., Kerrigan L. (2010) Recommendation of short tandem repeat profiling for authenticating human cell lines, stem cells, and tissues. In Vitro Cell. Dev. Biol. Anim. 46, 727–732

- 26. Nelson-Rees W. A., Daniels D. W., Flandermeyer R. R. (1981) Cross-contamination of cells in culture. Science 212, 446–452

- 27. Drexler H. G., Dirks W. G., Matsuo Y., MacLeod R. A. (2003) False leukemia-lymphoma cell lines: an update on over 500 cell lines. Leukemia 17, 416–426

- 28. MacLeod R. A., Dirks W. G., Matsuo Y., Kaufmann M., Milch H., Drexler H. G. (1999) Widespread intraspecies cross-contamination of human tumor cell lines arising at source. Int. J. Cancer 83, 555–563

- 29. Capes-Davis A., Theodosopoulos G., Atkin I., Drexler H. G., Kohara A., MacLeod R. A., Masters J. R., Nakamura Y., Reid Y. A., Reddel R. R., Freshney R. I. (2010) Check your cultures! A list of cross-contaminated or misidentified cell lines. Int. J. Cancer 127, 1–8

- 30. Phuchareon J., Ohta Y., Woo J. M., Eisele D. W., Tetsu O. (2009) Genetic profiling reveals cross-contamination and misidentification of 6 adenoid cystic carcinoma cell lines: ACC2, ACC3, ACCM, ACCNS, ACCS and CAC2. PLoS One 4, e6040

- 31. Miller G. (2006) Scientific publishing. A scientist's nightmare: software problem leads to five retractions. Science 314, 1856–1857

- 32. Rekers H., Affandi B. (2004) Implanon studies conducted in Indonesia. Contraception 70, 433

- 33. Joppa L. N., McInerny G., Harper R., Salido L., Takeda K., O'Hara K., Gavaghan D., Emmott S. (2013) Computational science. Troubling trends in scientific software use. Science 340, 814–815

- 34. Vasilevsky N. A., Brush M. H., Paddock H., Ponting L., Tripathy S. J., Larocca G. M., Haendel M. A. (2013) On the reproducibility of science: unique identification of research resources in the biomedical literature. PeerJ 1, e148

- 35. Ram K. (2013) Git can facilitate greater reproducibility and increased transparency in science. Source Code Biol. Med. 8, 7

- 36. Ter Riet G., Korevaar D. A., Leenaars M., Sterk P. J., Van Noorden C. J., Bouter L. M., Lutter R., Elferink R. P., Hooft L. (2012) Publication bias in laboratory animal research: a survey on magnitude, drivers, consequences and potential solutions. PLoS One 7, e43404

- 37. Loscalzo J. (2012) Irreproducible experimental results: causes, (mis)interpretations, and consequences. Circulation 125, 1211–1214

- 38. Couzin-Frankel J. (2012) Research quality. Service offers to reproduce results for a fee. Science 337, 1031

- 39. Brown E. N., Ramaswamy S. (2007) Quality of protein crystal structures. Acta Crystallogr. D Biol. Crystallogr. 63, 941–950

- 40. MacArthur D. (2012) Methods: face up to false positives. Nature 487, 427–428

- 41. Wager E., Williams P. (2011) Why and how do journals retract articles? An analysis of Medline retractions 1988–2008. J. Med. Ethics 37, 567–570

- 42. Grcar J. (2013) Comments and corrigenda in scientific literature. Am. Scientist 101, 16

- 43. Chow Y. K., Hirsch M. S., Merrill D. P., Bechtel L. J., Eron J. J., Kaplan J. C., D'Aquila R. T. (1993) Use of evolutionary limitations of HIV-1 multidrug resistance to optimize therapy. Nature 361, 650–654

- 44. Larder B. A., Kellam P., Kemp S. D. (1993) Convergent combination therapy can select viable multidrug-resistant HIV-1 in vitro. Nature 365, 451–453

- 45. Chow Y. K., Hirsch M. S., Kaplan J. C., D'Aquila R. T. (1993) HIV-1 error revealed. Nature 364, 679

- 46. Wolfe-Simon F., Switzer Blum J., Kulp T. R., Gordon G. W., Hoeft S. E., Pett-Ridge J., Stolz J. F., Webb S. M., Weber P. K., Davies P. C., Anbar A. D., Oremland R. S. (2011) A bacterium that can grow by using arsenic instead of phosphorus. Science 332, 1163–1166

- 47. Erb T. J., Kiefer P., Hattendorf B., Gunther D., Vorholt J. A. (2012) GFAJ-1 is an arsenate-resistant, phosphate-dependent organism. Science 337, 467–470

- 48. Reaves M. L., Sinha S., Rabinowitz J. D., Kruglyak L., Redfield R. J. (2012) Absence of detectable arsenate in DNA from arsenate-grown GFAJ-1 cells. Science 337, 470–473

- 49. Alberts B. (2011) Editor's note. Science 332, 1149

- 50. Tomkins J. L., Penrose M. A., Greeff J., Lebas N. R. (2011) Retraction. Science 333, 1220

- 51. Traver J. R. (1951) Unusual scalp dermatitis in humans caused by the mite, Dermatophagoides. (Acarine, Epidermoptidae). Proc. Entomol. Soc. Wash. 53, 1–25

- 52. Shelomi M. (2013) Mad scientist: the unique case of a published delusion. Sci. Eng. Ethics 19, 381–388

- 53. Steinschneider A. (1972) Prolonged apnea and the sudden infant death syndrome: clinical and laboratory observations. Pediatrics 50, 646–654

- 54. Pinholster G. (1994) SIDS paper triggers a murder charge. Science 264, 197–198

- 55. Davenas E., Beauvais F., Amara J., Oberbaum M., Robinzon B., Miadonna A., Tedeschi A., Pomeranz B., Fortner P., Belon P., Sainte-Laudy J., Poitevin B., Benveniste J. (1988) Human basophil degranulation triggered by very dilute antiserum against IgE. Nature 333, 816–818

- 56. Maddox J., Randi J., Stewart W. W. (1988) “High-dilution” experiments a delusion. Nature 334, 287–291

- 57. Hirst S. J., Hayes N. A., Burridge J., Pearce F. L., Foreman J. C. (1993) Human basophil degranulation is not triggered by very dilute antiserum against human IgE. Nature 366, 525–527

- 58. Benveniste J., Ducot B., Spira A. (1994) Memory of water revisited. Nature 370, 322

- 59. Fleischmann M., Pons S. (1989) Electrochemically induced nuclear fusion of deuterium. J. Electroanal. Chem. 261, 301–308

- 60. Fleischmann M., Pons S., Hawkins M. (1989) Errata. J. Electroanal. Chem. 263, 187–188

- 61. Miskelly G. M., Heben M. J., Kumar A., Penner R. M., Sailor M. J., Lewis N. S. (1989) Analysis of the published calorimetric evidence for electrochemical fusion of deuterium in palladium. Science 246, 793–796

- 62. Salamon M. H. M. W., Bergeson H. E., Crawford H. C., Delaney W. H., Henderson C. L., Li Y. Q., Rusho J. A., Sandquist G. M., Seltzer S. M. (1990) Limits on the emission of neutrons, γ-rays, electrons and protons from Pons/Fleischmann electrolytic cells. Nature 344, 401–405

- 63. Bagenal F. S., Easton D. F., Harris E., Chilvers C. E., McElwain T. J. (1990) Survival of patients with breast cancer attending Bristol Cancer Help Centre. Lancet 336, 606–610

- 64. Goodare H. (1994) The scandal of poor medical research. Wrong results should be withdrawn. Br. Med. J. 308, 593

- 65. Emini E. A., Graham D. J., Gotlib L., Condra J. H., Byrnes V. W., Schleif W. A. (1993) HIV and multidrug resistance. Nature 364, 679

- 66. Bellgrau D., Gold D., Selawry H., Moore J., Franzusoff A., Duke R. C. (1995) A role for CD95 ligand in preventing graft rejection. Nature 377, 630–632

- 67. Yagita H., Seino K., Kayagaki N., Okumura K. (1996) CD95 ligand in graft rejection. Nature 379, 682

- 68. Allison J., Georgiou H. M., Strasser A., Vaux D. L. (1997) Transgenic expression of CD95 ligand on islet beta cells induces a granulocytic infiltration but does not confer immune privilege upon islet allografts. Proc. Natl. Acad. Sci. U. S. A. 94, 3943–3947

- 69. Kang S. M., Schneider D. B., Lin Z., Hanahan D., Dichek D. A., Stock P. G., Baekkeskov S. (1997) Fas ligand expression in islets of Langerhans does not confer immune privilege and instead targets them for rapid destruction. Nat. Med. 3, 738–743

- 70. Vaux D. L. (1998) Immunology. Ways around rejection. Nature 394, 133

- 71. Gugliotti L. A., Feldheim D. L., Eaton B. E. (2004) RNA-mediated metal-metal bond formation in the synthesis of hexagonal palladium nanoparticles. Science 304, 850–852

- 72. Franzen S., Cerruti M., Leonard D. N., Duscher G. (2007) The role of selection pressure in RNA-mediated evolutionary materials synthesis. J. Am. Chem. Soc. 129, 15340–15346

- 73. Neff J. (2014, January 19) NC State professor uncovers problems in lab journal. News & Observer http://www.newsobserver.com/2014/01/19/3544566/in-a-notebook-at-nc-state-a-smoking.html

- 74. Labandeira-Rey M., Couzon F., Boisset S., Brown E. L., Bes M., Benito Y., Barbu E. M., Vazquez V., Hook M., Etienne J., Vandenesch F., Bowden M. G. (2007) Staphylococcus aureus Panton-Valentine leukocidin causes necrotizing pneumonia. Science 315, 1130–1133

- 75. Villaruz A. E., Bubeck Wardenburg J., Khan B. A., Whitney A. R., Sturdevant D. E., Gardner D. J., DeLeo F. R., Otto M. (2009) A point mutation in the agr locus rather than expression of the Panton-Valentine leukocidin caused previously reported phenotypes in Staphylococcus aureus pneumonia and gene regulation. J. Infect. Dis. 200, 724–734

- 76. Wyatt M. A., Wang W., Roux C. M., Beasley F. C., Heinrichs D. E., Dunman P. M., Magarvey N. A. (2010) Staphylococcus aureus nonribosomal peptide secondary metabolites regulate virulence. Science 329, 294–296

- 77. Wyatt M. A., Wang W., Roux C. M., Beasley F. C., Heinrichs D. E., Dunman P. M., Magarvey N. A. (2011) Clarification of “Staphylococcus aureus nonribosomal peptide secondary metabolites regulate virulence.” Science 333, 1381

- 78. Sun F., Cho H., Jeong D. W., Li C., He C., Bae T. (2010) Aureusimines in Staphylococcus aureus are not involved in virulence. PLoS One 5, e15703

- 79. Regan M. M., Leyland-Jones B., Bouzyk M., Pagani O., Tang W., Kammler R., Dell'orto P., Biasi M. O., Thurlimann B., Lyng M. B., Ditzel H. J., Neven P., Debled M., Maibach R., Price K. N., Gelber R. D., Coates A. S., Goldhirsch A., Rae J. M., Viale G., and Breast International Group (BIG) 1–98 Collaborative Group. (2012) CYP2D6 genotype and tamoxifen response in postmenopausal women with endocrine-responsive breast cancer: the breast international group 1–98 trial. J. Natl. Cancer Inst. 104, 441–451

- 80. Nakamura Y., Ratain M. J., Cox N. J., McLeod H. L., Kroetz D. L., Flockhart D. A. (2012) Re: CYP2D6 genotype and tamoxifen response in postmenopausal women with endocrine-responsive breast cancer: the Breast International Group 1–98 trial. J. Natl. Cancer Inst. 104, 1264

- 81. Atkinson I. (2012) Accuracy of data transfer: double data entry and estimating levels of error. J. Clin. Nurs. 21, 2730–2735