Within the context of a large-enrollment, introductory biology course, this study identifies which self-regulated learning strategies that students reported using are associated with higher exam grades and with improvement in exam grades early in the course.

ABSTRACT

In college introductory science courses, students are challenged with mastering large amounts of disciplinary content while developing as autonomous and effective learners. Self-regulated learning (SRL) is the process of setting learning goals, monitoring progress toward them, and applying appropriate study strategies. SRL characterizes successful, “expert” learners, and develops with time and practice. In a large, undergraduate introductory biology course, we investigated: 1) what SRL strategies students reported using the most when studying for exams, 2) which strategies were associated with higher achievement and with grade improvement on exams, and 3) what study approaches students proposed to use for future exams. Higher-achieving students, and students whose exam grades improved in the first half of the semester, reported using specific cognitive and metacognitive strategies significantly more frequently than their lower-achieving peers. Lower-achieving students more frequently reported that they did not implement their planned strategies or, if they did, still did not improve their outcomes. These results suggest that many students entering introductory biology have limited knowledge of SRL strategies and/or limited ability to implement them, which can impact their achievement. Course-specific interventions that promote SRL development should be considered as integral pedagogical tools, aimed at fostering development of students’ lifelong learning skills.

INTRODUCTION

Introductory science courses are particularly challenging to learners transitioning to college. Not surprisingly, these have been perceived for a long time as “gatekeeping courses,” meaning that they often have high failure rates and may discourage students from pursuing science, technology, engineering, and mathematics (STEM) degrees (Seymour and Hewitt, 1997; Daempfle, 2003). Some studies have highlighted the role of instructional styles, faculty expectations, and classroom climate as critical to first-year student success (Daempfle, 2003; Gasiewski et al., 2012). There is considerable evidence, however, that learners’ cognitive, behavioral, motivational, and developmental attributes also play an important role in the transition to college and contribute to academic achievement (Felder and Brent, 2004; Credé and Kuncel, 2008; Richardson et al., 2012). First-year science students face multiple challenges, including the need to succeed academically in multiple courses while adapting to college life, establishing social networks, and taking accountability for their own learning. They must also learn how to manage their time and commitments, how to study independently, and how to optimize the effectiveness of their study practices. “Learning how to learn,” which requires metacognitive skills, is vital in the first year of college and beyond. Students’ development as independent, inquisitive, and reflective learners, capable of lifelong intellectual growth, is increasingly included among the desired outcomes of the higher education experience (King et al., 2007). Accordingly, the STEM education community has developed interest in metacognition and self-regulated learning (SRL) as mediators and developmental outcomes of student learning (Zimmerman, 2002; Schraw et al., 2006; Dinsmore et al., 2008; Tanner, 2012; Stanton et al., 2015).

While metacognition and SRL are closely related concepts that may appear difficult to disentangle, they are distinct in significant ways (Dinsmore et al., 2008). The metacognition construct, relevant to learning in educational settings and in everyday life (Flavell, 1979; Veenman et al., 2006), can be conceptualized as including two broad components: knowledge of cognition and regulation of cognition. Learners who have knowledge of cognition are aware of what and how they learn, possess a toolbox of learning strategies and procedures, and know when and why it is appropriate to use certain strategies; regulation of cognition, on the other hand, refers to learners’ ability to plan, monitor, and evaluate their own learning (Schraw and Moshman, 1995; Schraw et al., 2006). Knowledge and regulation of cognition are coordinated through reflection, which allows an individual to use knowledge about learning, monitor his or her learning process, and take actions to regulate it (Ertmer and Newby, 1996).

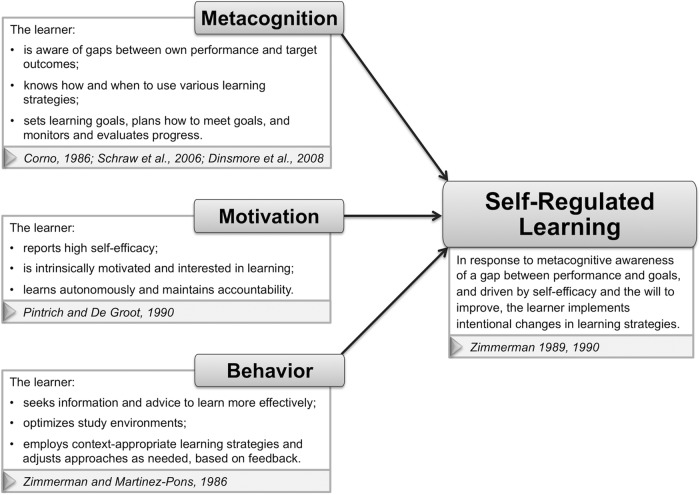

Metacognition is a necessary element of SRL but does not, by itself, result in self-regulated learning (Corno, 1986). Besides metacognition, SRL involves other critical components, such as motivation and behavior (Zimmerman, 1995; Wolters, 2003). In the educational psychology literature, SRL has been defined within a few different—although largely overlapping—theoretical frameworks (Boekaerts and Corno, 2005; Sitzmann and Ely, 2011). While each conceptualization of SRL may emphasize some aspects over others, they all converge on some fundamental assumptions. Namely, self-regulated learners set goals and actively and constructively engage in their learning by adjusting their efforts, approaches, and behaviors as needed to achieve these learning goals (Boekaerts and Corno, 2005; Sitzmann and Ely, 2011). In this study, we refer primarily to Zimmerman’s perspective of SRL (Zimmerman, 1989, 1990), which draws from social cognitive theory (Bandura, 1986, 1991) and defines self-regulated learners as those who actively participate in their own learning and control it through motivational, metacognitive, and behavioral engagement (Figure 1). These three aspects of SRL, in Zimmerman’s view, are manifest in learners who proactively and systematically engage in:

metacognitive processes, such as planning, goal setting, monitoring learning, and self-evaluating (Corno, 1986; Schraw et al., 2006; Dinsmore et al., 2008);

motivational processes, such as reporting high self-efficacy (belief in one’s ability to successfully complete academic tasks), having an intrinsic interest in their studies, assuming control of their learning, and accepting responsibility for their achievement outcomes (Pintrich and De Groot, 1990); and

behavioral processes, such as seeking information and advice, selecting and structuring optimal study environments, and adopting effective study strategies for a given task or context (Zimmerman and Martinez-Pons, 1986).

FIGURE 1.

The three components of self-regulated learning: metacognition, motivation, and behavior. According to Zimmerman (1989, 1990), a self-regulated learner demonstrates proactive and systematic engagement in all three components.

Self-regulation of learning, therefore, can be regarded as an application of metacognition—a learner-directed process that is influenced by motivation and involves the implementation of strategies aimed at achieving learning goals.

Several studies have linked SRL to academic performance, identifying a direct correlation between the extent to which learners are (or report being) self-regulated and their achievement in diverse disciplines and at different educational levels (Zimmerman and Martinez-Pons, 1986; Pintrich and De Groot, 1990; Kitsantas, 2002; Lopez et al., 2013). The ability to establish relationships between self-regulation and academic achievement, of course, depends on the availability of valid and reliable instruments to measure students’ SRL. Available instruments include surveys, such as the Motivated Strategies for Learning Questionnaire (MSLQ; Pintrich and De Groot, 1990; Pintrich et al., 1991) and the Learning and Study Strategies Inventory (LASSI; Weinstein et al., 1987). In addition to surveys, researchers have developed structured interview protocols, such as the Self-Regulated Learning Interview Schedule (SRLIS; Zimmerman and Martinez-Pons, 1986, 1988). The SRLIS was designed based on Zimmerman’s social cognitive view of SRL (Zimmerman and Martinez-Pons, 1986, 1988) and is aimed at assessing learners’ use of 14 specific self-regulated learning strategies. Zimmerman mapped the 14 SRL strategies onto three categories (Zimmerman, 2008): 1) metacognitive strategies included goal setting and planning, organizing and transforming, seeking information, and rehearsing and memorizing; 2) motivational strategies included self-evaluation and self-consequating; and 3) behavioral strategies included environmental structuring, keeping records and monitoring, reviewing texts/notes/exams, and seeking assistance from peers/instructors/other resources. The SRLIS interview protocol was initially developed and used with high school students. 
Students’ reports of the number of SRL strategies they used, and the consistency with which they used them, were strong predictors of 1) whether students were in the high- or low-achievement track and 2) their standardized test scores (Zimmerman and Martinez-Pons, 1986). Furthermore, students’ self-reported SRL strategy use, based on the SRLIS, coincided strongly with teachers’ ratings of these students’ self-regulated behavior in their classes (Zimmerman and Martinez-Pons, 1988).

Beginning as early as the preschool and kindergarten years (Vandevelde et al., 2013), SRL processes develop gradually in children (Paris and Newman, 1990) and become more sophisticated as students proceed through primary and secondary school (Veenman et al., 2006). At the undergraduate level, students are expected to be—or, at least, to become—autonomous, self-directed learners. Unfortunately, a significant proportion of students enter college lacking an adequate repertoire of learning strategies necessary to succeed academically (Ley and Young, 1998; Kiewra, 2002; Wingate, 2007). The transition from high school to college can be challenging for students because it also entails moving from a closely monitored and structured educational setting to an environment in which they have increased autonomy and responsibility for their own learning. Often, students who are academically underprepared at the onset of their college studies also seem to hold poor metacognitive skills, low self-esteem, and immature attributional beliefs (Carr et al., 1991; Ley and Young, 1998). Higher-achieving students who were very successful in high school frequently face similar difficulties. Despite stronger background knowledge, they are not prepared to assume control of their own learning (Dembo and Seli, 2004; Perry et al., 2005; Wingate, 2007). To meet the expectations of college science classes, students must activate an entire set of resources that include prior knowledge and preparation, self-efficacy beliefs and motivation, and effective cognitive and metacognitive strategies (Perry et al., 2005; Vandevelde et al., 2013). Especially in the context of learner-centered, constructivist classroom environments, the responsibility for learning is largely and intentionally shifted onto the learner (Perkins, 1991). Additionally, these settings include instruction and assessment that favor integrative, critical thinking and application of higher-order cognitive skills (Crowe et al., 2008). 
Therefore, students who do not possess a robust repertoire of self-regulated learning strategies may find navigating the road to success more challenging than expected. In this work, we focus on students’ SRL practices in the context of a large-enrollment introductory biology course.

“How should I study for the exam?” is possibly one of the most frequently asked questions in introductory biology. Unfortunately, it is difficult to provide a good, useful answer to this question. To answer, we would need to consider not only the course context, including learning goals, instructional style, and assessment demands, but also individual learner characteristics, such as students’ approaches to studying. Often, we find that we need to begin by asking the student, “How DO you study?” We need information about the basic toolbox of strategies students are equipped with and comfortable using. We also need to know what strategies, among the many possible, are most likely to be effective in our specific courses, based on what students are expected to know and be able to do.

In a large-enrollment, first-year introductory biology course for science majors and pre-medical/pre–professional health students, we wanted to identify what SRL strategies our students used most frequently and whether their use of any specific strategies corresponded with higher achievement on course exams. With an enrollment exceeding 400 students, we could not practically execute the formal interview protocol developed by Zimmerman (Zimmerman and Martinez-Pons, 1986). Thus, we translated the SRLIS protocol into a questionnaire aimed at identifying whether and how frequently our introductory biology students used each SRL strategy to prepare for exams. Specific goals of this exploratory study were to identify:

which SRL strategies our introductory biology students reported using most often;

which, if any, SRL strategies were associated with higher achievement on course exams and with grade improvement over time; and

what strategies students planned to adopt in preparing for future exams.

METHODS

Participants and Context

Participants in this study were enrolled in a first-semester introductory biology course for life science majors and pre-medical/pre–professional health students at a large, private research institution in the midwestern United States. The student population (n = 414) was composed for the most part of freshmen (∼88%) majoring in a STEM (e.g., biology, biochemistry, biomedical engineering) or allied health (e.g., public health, nutrition and dietetics, investigative medical sciences) discipline. Course content included principles of cell and molecular biology, genetics, cellular metabolism, and animal form and function. The investigation was conducted in three large sections of the course taught by two instructors who collaborated closely to develop instruction and assessment. Both instructors implemented a learner-centered and model-based pedagogy (Long et al., 2014; Reinagel and Bray Speth, 2016) based on the “flipped-classroom” approach (Seery, 2015). Students routinely completed preclass homework assignments, which included textbook readings and instructor-made screencasts, followed by online quizzes (administered through the course management system) and often paper-and-pencil assignments. In class, students worked in permanent collaborative groups of three to solve problems and apply their understanding in a variety of formats (discussions, worksheets, clicker questions, conceptual model construction). In-class learning activities were supported by undergraduate learning assistants (LAs, in a ratio of one LA to ∼40 students). Course assessment included three unit exams, spaced about 4 weeks apart, and a cumulative final exam.

This research was conducted in the context of a broader project, which was reviewed and approved by the local institutional review board (IRB; protocol #22988). At the beginning of the course, instructors informed students about the ongoing research with a recruitment statement shared electronically in the course management system. Students confirmed that they read the recruitment statement by checking a box and were given the option to decline participation in this study.

SRL Strategies Survey and Procedure

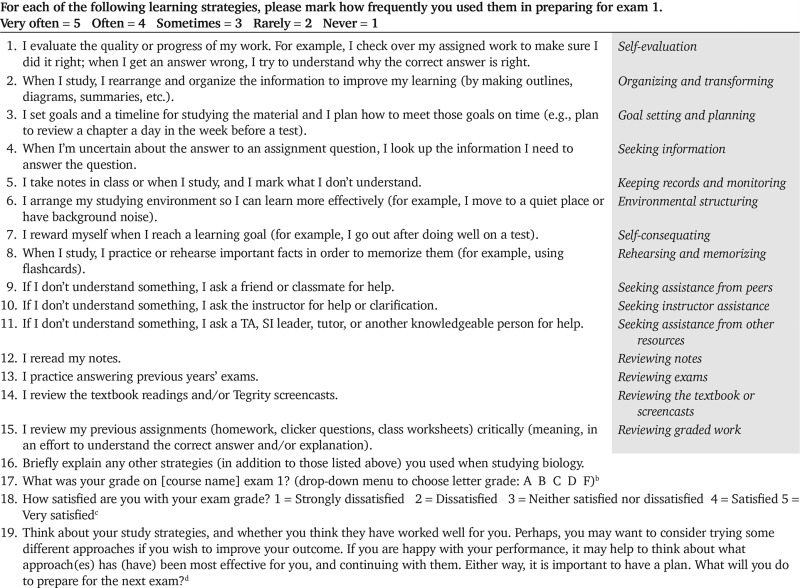

We developed a Likert-type questionnaire based on the categories of SRL strategies identified for the SRLIS structured interview protocol (Zimmerman and Martinez-Pons, 1986; Zimmerman, 1989). Using the original list of 14 SRL strategies, we adapted strategy descriptions to describe study behaviors in a language that reflected the experience of students in the context of our introductory biology course (Table 1). For example, Zimmerman’s category of “seeking social assistance” strategies was implemented in our survey as three items (9, 10, and 11; Table 1), each referring to a distinct social resource available to our students (classmates, instructor, or other knowledgeable peers such as teaching/learning assistants [TAs/LAs] or supplemental instructors [SI leaders], who are undergraduates). Furthermore, we added to the “reviewing records” category a strategy of “reviewing graded work,” which was not originally in the work of Zimmerman and Martinez-Pons (1986). In our course, students completed daily homework and in-class work that was graded and returned quickly to provide feedback on their learning. Therefore, in addition to traditional resources (notes, textbook, and tests), they also had low-stakes graded assessments they could use to study.

TABLE 1.

Survey 1, administered after exam 1, with the names of the strategies italicized and shaded in the table, on the right<sup>a</sup>

<sup>a</sup>The names of the strategies are based on Zimmerman and Martinez-Pons (1986, 1988) and were not shown to students during the survey; they are reported here as a reference and are used throughout the manuscript to report results. Survey 2 was identical to survey 1, except where noted.

<sup>b</sup>Survey 2: Q17. What is your grade on [course name] exam 2 (the midterm exam)? Q18. What was your grade on [course name] exam 1?

<sup>c</sup>This question was not included in survey 2.

<sup>d</sup>Survey 2: Q19. You answered a similar questionnaire after exam 1. At that time, you were invited to come up with a plan for how to study. Revisit your proposed study plan (you can even go back to the answer you entered). Did you follow your plan? Q20. What study strategies worked well for you in preparing for exam 2? What, if anything, did not work as well as you wished? Q21. Now, make your plan for the rest of the semester. How will you study for this course?

Students were asked to complete the SRL questionnaire twice: after receiving their graded exam 1 (survey 1, in week 5 of the course) and again after receiving their graded exam 2 (survey 2, in week 9). The questionnaires were administered online as homework assignments through the course management system, formatted as anonymous surveys. The system recorded each student’s responses separately from his or her identifier and only attributed a checkmark to students who submitted the survey. Because the questionnaire was assigned as a regular homework activity, students who submitted it were awarded credit toward their course homework grade (consistent with our IRB protocol). The complete survey (Table 1) asked students to report: 1) how often they used each of 15 SRL study strategies on a five-point Likert scale (1 = never, 2 = rarely, 3 = sometimes, 4 = often, 5 = very often), 2) the letter grade they earned on the exam (“A,” “B,” “C,” “D,” or “F”), and 3) their proposed study plan for the next exam(s). Response patterns were similar between the three course sections (unpublished data); therefore, we combined students into a single sample (survey 1: n = 388; survey 2: n = 385). No demographic data or other identifiable information were linked to students’ responses.

Data Analysis

For each strategy in the survey, we analyzed frequency of responses by pooling “very often” (5) and “often” (4) into a single category, which we refer to as “higher use.” Conversely, the responses “sometimes” (3), “rarely” (2), and “never” (1) were pooled into the single category of “lower use.” To determine whether strategy use differed significantly between exams, we performed a contingency analysis with a chi-square test of independence for each strategy. Because these were 2 × 2 contingency tables representing frequency of strategy use (higher, lower) against exam (exam 1, exam 2), we applied Yates’s correction for continuity, which controls for the underestimation of p values when using the continuous chi-square distribution to test independence between binomial variables (Yates, 1934).
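The pooling step and the corrected 2 × 2 test described above can be sketched as follows. This is a minimal illustration in Python using only the standard library, not the R code used in the study. The response lists are hypothetical, and the function names `pool_use` and `yates_chi_square` are invented for this example.

```python
from collections import Counter

# Critical value of the chi-square distribution for df = 1 at alpha = 0.05.
CRITICAL_DF1 = 3.841


def pool_use(likert_responses):
    """Pool 1-5 Likert responses into (higher use, lower use) counts.

    "Often" (4) and "very often" (5) count as higher use; 1-3 as lower use.
    """
    counts = Counter("higher" if r >= 4 else "lower" for r in likert_responses)
    return counts["higher"], counts["lower"]


def yates_chi_square(row1, row2):
    """Chi-square statistic with Yates's continuity correction for a 2 x 2 table."""
    a, b = row1
    c, d = row2
    n = a + b + c + d
    # Shrink |ad - bc| by n/2 (never below zero) before squaring.
    corrected = max(abs(a * d - b * c) - n / 2, 0.0)
    return n * corrected**2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Hypothetical responses for one strategy on each survey (not study data).
exam1 = [5] * 30 + [4] * 30 + [3] * 25 + [2] * 10 + [1] * 5
exam2 = [5] * 40 + [4] * 40 + [3] * 12 + [2] * 5 + [1] * 3

stat = yates_chi_square(pool_use(exam1), pool_use(exam2))
print(f"chi-square = {stat:.3f}, significant at 0.05: {stat > CRITICAL_DF1}")
```

Comparing the statistic with the tabulated critical value keeps the sketch free of external dependencies. A statistics package would normally report an exact p value instead.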

To analyze association between SRL strategy use and exam grade, we performed contingency analyses with chi-square tests of independence to determine whether exam grade (“A,” “B,” “C,” and “D”/“F”) depended upon how often a study strategy was used (higher use vs. lower use). We pooled “D” and “F” because of the smaller sample size compared with the other grade groups, and because both represent unsatisfactory performance. We also analyzed the association of grade improvement from exam 1 to exam 2 with strategy use frequency. All contingency analyses were performed in R with the default package (R Development Core Team, 2014).
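For the grade-by-use comparison, the same test generalizes to a table with four grade groups and two levels of use, giving df = (4 − 1)(2 − 1) = 3. The sketch below is an illustrative Python version under that reading; the counts are hypothetical, and `chi_square_independence` is an invented name rather than a function used in the study.

```python
# Critical values of the chi-square distribution at alpha = 0.05.
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815}


def chi_square_independence(table):
    """Return (statistic, df) for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of grade and strategy use.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


# Hypothetical counts (not study data): rows are grade groups A, B, C, D/F;
# columns are (higher use, lower use) of one strategy.
grade_by_use = [
    [80, 20],
    [70, 30],
    [60, 40],
    [50, 50],
]

stat, df = chi_square_independence(grade_by_use)
print(f"chi-square = {stat:.2f}, df = {df}, significant: {stat > CRITICAL_05[df]}")
```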

We developed a rubric to categorize the strategies that students proposed to use while studying for future exams (responses to Q19 on survey 1 and Q21 on survey 2). Using a general inductive approach to summarize emergent themes in qualitative data (Thomas, 2006), we identified student-proposed strategies that aligned with those generated by Zimmerman and Martinez-Pons (1986) and included any additional strategies that students suggested. The rubric (Table 2) includes the categories of student responses and examples of strategies within each category. Each student response could list more than one strategy; thus, rubric categories were not mutually exclusive. Two raters (A.J.S. and E.B.S.) independently coded a subset of student study plans from survey 1. From each group of students, which had been separated based on exam grade, ∼15% of responses were selected randomly for coding. Because the raters achieved an average of 94% agreement across all categories in the rubric, one rater (A.J.S.) coded the rest of the study plans.
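The text does not specify how percent agreement was computed. One plausible reading, sketched below in Python, treats each rubric category as a present/absent decision per response and averages agreement across categories. The codes are hypothetical, and `percent_agreement` is an invented helper.

```python
def percent_agreement(rater_a, rater_b, categories):
    """Per-category percent agreement between two raters.

    Each rater's coding is a list of sets, one set of category labels per
    response. The raters agree on a category for a response when both
    assigned it or both left it out.
    """
    if len(rater_a) != len(rater_b):
        raise ValueError("Both raters must code the same responses")
    agreement = {}
    for category in categories:
        matches = sum(
            (category in a) == (category in b) for a, b in zip(rater_a, rater_b)
        )
        agreement[category] = 100.0 * matches / len(rater_a)
    return agreement


# Hypothetical codes for four study plans (not study data).
categories = ["Self-evaluation", "Reviewing exams", "Seeking instructor assistance"]
rater_a = [
    {"Self-evaluation"},
    {"Reviewing exams", "Self-evaluation"},
    {"Seeking instructor assistance"},
    set(),
]
rater_b = [
    {"Self-evaluation"},
    {"Reviewing exams"},
    {"Seeking instructor assistance"},
    set(),
]

per_category = percent_agreement(rater_a, rater_b, categories)
average = sum(per_category.values()) / len(per_category)
print(per_category, f"average = {average:.1f}%")
```

Because a response can carry several codes, agreement is scored per category rather than per response, which matches the non-mutually-exclusive rubric described above.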

TABLE 2.

Rubric for coding study plans proposed by students in response to Q19 on survey 1 and Q21 on survey 2

| Category | Strategy examples (student plans to implement one or more of the following) |
|---|---|
| Self-evaluation | |
| Keeping records and monitoring; organizing and transforming | |
| Goal setting and planning; time management | |
| Seeking information | |
| Environmental structuring | |
| Seeking assistance from peers | |
| Seeking instructor assistance | |
| Seeking assistance from other resources | |
| Reviewing exams | |
| Reviewing notes and/or course materials | |
| Reviewing graded work | |
| Better aimed efforts (quality) | |
| More generic effort (quantity) | |
| Same as before (no change) | |

RESULTS

Identifying the Most (and Least) Used SRL Strategies

For each SRL strategy surveyed (Table 1), we calculated the frequency of students reporting “higher use” as the relative percentage out of the total number of survey participants. We ranked these percentages from highest to lowest to identify which strategies students reported using the most while studying for an exam (Supplemental Figure S1). In both surveys, the most used strategies (based on percentage of students reporting higher use) were: seeking information (survey 1 (S1): 91.8%; survey 2 (S2): 90.1%), environmental structuring (S1: 83.5%; S2: 81.8%), reviewing the textbook or screencasts (S1: 82.5%; S2: 80.8%), seeking assistance from peers (S1: 82.2%; S2: 77.4%), and keeping records and monitoring (S1: 82.0%; S2: 80.8%). Conversely, the two strategies students consistently reported using the least when preparing for exams were seeking instructor assistance (S1: 19.6%; S2: 16.1%) and seeking assistance from other resources (TAs, tutors, etc.; S1: 34.0%; S2: 35.6%).

The only strategy that students reported using significantly more often on survey 2 than they did on survey 1 was reviewing exams, which rose to become the third most used strategy in preparing for exam 2 (S1: 74.0%; S2: 81.0%; χ² = 5.5258, df = 1, p < 0.05). Frequency of use reported for the other strategies did not significantly change from exam 1 to exam 2.
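The ranking step can be illustrated with the percentages quoted in the text. The Python sketch below covers only the six strategies whose values are reported here; the remaining nine appear in Supplemental Figure S1 and are omitted, so the ranks it produces are relative to this subset. The helper name `rank_by_survey` is invented for the example.

```python
# Percentage of students reporting higher use on each survey (S1, S2),
# as quoted in the text for six of the 15 strategies.
higher_use = {
    "Seeking information": (91.8, 90.1),
    "Environmental structuring": (83.5, 81.8),
    "Reviewing the textbook or screencasts": (82.5, 80.8),
    "Seeking assistance from peers": (82.2, 77.4),
    "Keeping records and monitoring": (82.0, 80.8),
    "Reviewing exams": (74.0, 81.0),
}


def rank_by_survey(percentages, survey_index):
    """Return strategy names sorted from most to least used on one survey."""
    return sorted(
        percentages,
        key=lambda name: percentages[name][survey_index],
        reverse=True,
    )


survey1_rank = rank_by_survey(higher_use, 0)
survey2_rank = rank_by_survey(higher_use, 1)
print("Survey 1:", survey1_rank)
print("Survey 2:", survey2_rank)
```

Among these six strategies, reviewing exams moves from last on survey 1 to third on survey 2, in line with the change described above.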

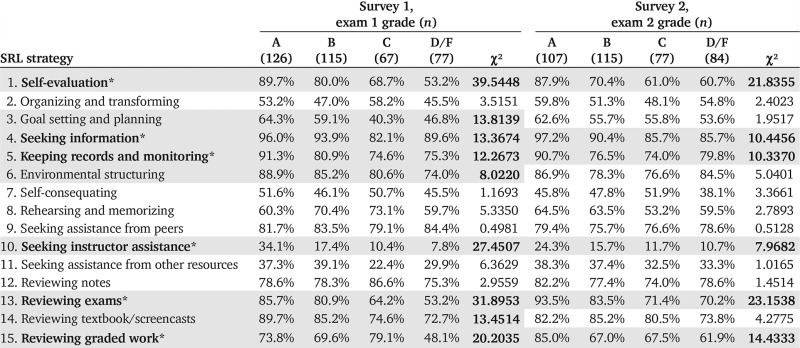

SRL Strategies Associated with Exam Grades

We performed contingency analyses to identify SRL strategies that were significantly associated with exam grades. Specifically, we looked for strategies that higher-achieving students—meaning those who earned an “A” on the exam—reported using significantly more often than lower-achieving students, who earned a “D” or “F” on the exam. We identified nine strategies in total (shaded in Table 3) that had a significant association with exam grades. Three of these only had a significant association with exam 1 grade (goal setting and planning, environmental structuring, and reviewing the textbook or screencasts). Six strategies had a significant association with grades on both exams. These were self-evaluation, seeking information, keeping records and monitoring, seeking instructor assistance, reviewing exams, and reviewing graded work.

TABLE 3.

Frequency with which students who earned different grades on exams reported using each of the 15 SRL study strategies often/very often (higher use)<sup>a</sup>

<sup>a</sup>The χ² value from the contingency analysis is reported for each strategy (df = 3; α = 0.05). Strategies having a significant association with exam grade are shaded; boldfaced χ² values indicate statistical significance. Boldfaced strategies marked with an asterisk were significantly associated with higher achievement on both exams.

Characterizing Strategies Proposed in Study Plans

We used the rubric for coding student-proposed strategies (Table 2) to analyze open-ended responses to the question of how students intended to prepare for subsequent exams. On both surveys, five categories of strategies emerged as the most commonly proposed by students, regardless of their exam grade: goal setting and planning/time management, reviewing notes and/or course materials, self-evaluation, keeping records and monitoring/organizing and transforming, and seeking assistance from other resources (survey 1 data are reported in Table 4; survey 2 data [unpublished] showed the same pattern of relative distribution as survey 1). Students most often expressed the intention to avoid procrastinating or cramming before an exam (goal setting and planning; time management) and to use their own notes and/or other provided resources more thoroughly. However, the proportion of students proposing to study more from notes and/or course materials was much greater among higher-achieving (“A”) than among lower-achieving (“D”/“F”) students (51.6% vs. 27.3%; Table 4). Conversely, students who earned a “D” or “F” on exam 1 more frequently planned on seeking expert peer help (from class TAs or LAs, tutors, supplemental instructors) compared with their higher-achieving peers (28.6% vs. 12.7%; Table 4).

TABLE 4.

Most commonly reported strategy categories students indicated on survey 1 when asked how they planned to study to prepare for future exams<sup>a</sup>

| Proposed strategy | A (n = 126) | B (n = 115) | C (n = 67) | D/F (n = 77) |
|---|---|---|---|---|
| Goal setting and planning; time management | 45.2% | 57.4% | 65.7% | 54.5% |
| Reviewing notes and/or course materials | 51.6% | 36.5% | 28.4% | 27.3% |
| Self-evaluation | 17.5% | 20.9% | 25.4% | 14.3% |
| Keeping records and monitoring; organizing and transforming | 23.8% | 20.9% | 26.9% | 14.3% |
| Seeking assistance from other resources (TA, SI leader, tutor, other expert) | 12.7% | 17.4% | 22.4% | 28.6% |

<sup>a</sup>Students are grouped based on their self-reported exam 1 grade. The table includes only strategies that at least 25% of students within a grade group reported that they would be using in the future.

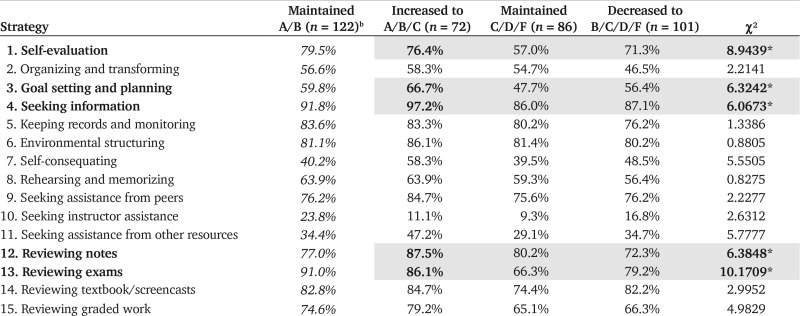

SRL Strategies Associated with Grade Improvement

To identify potential associations between frequency of use of specific strategies and grade improvement from exam 1 to exam 2, we binned students into four groups based on the exam grades they self-reported on survey 2 (Q17 and Q18 in survey 2; Table 1, footnote b). Students who earned a grade of “A” or “B” on exam 1 and maintained that grade on exam 2 (n = 122) were excluded from statistical analysis. The frequencies with which these students reported using each SRL strategy are reported in Table 5 for reference (italicized). We focused our analysis on the remaining students, binned into three groups: 72 students who earned a higher grade (“A,” “B,” or “C”) on the second exam than they did on the first; 86 students who maintained a grade of “C,” “D,” or “F”; and 101 students who earned a lower grade on the second exam than they did on the first (“B,” “C,” “D,” or “F”; Table 5). A series of contingency tests comparing use of each SRL strategy across these three student groups identified five strategies that were statistically associated with an improved exam grade (i.e., students who improved to an “A,” “B,” or “C” reported using these strategies significantly more often than students whose grade did not change or decreased from exam 1 to exam 2). These strategies were self-evaluation, goal setting and planning, seeking information, reviewing notes, and reviewing exams (listed in Table 5, along with relative proportions of students reporting using these strategies often/very often and with chi-square statistics).
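The binning described above can be expressed as a small classification rule. The Python sketch below is one possible reading of that rule, not the authors' procedure: it treats "maintained" as earning the same letter grade on both exams and counts any higher grade as improvement, although the text lists only "A," "B," or "C" as improved outcomes. The names `GRADE_ORDER` and `grade_change_group` are invented.

```python
GRADE_ORDER = {"F": 0, "D": 1, "C": 2, "B": 3, "A": 4}


def grade_change_group(exam1, exam2):
    """Assign a student to one of four groups from two self-reported letter grades."""
    first, second = GRADE_ORDER[exam1], GRADE_ORDER[exam2]
    if second > first:
        # Assumption: any higher grade counts as improvement; the text lists
        # only "A", "B", or "C" as improved outcomes.
        return "improved"
    if second < first:
        return "decreased"
    # Same grade on both exams: split by whether that grade was A/B or C/D/F.
    return "maintained A/B" if exam1 in ("A", "B") else "maintained C/D/F"


# Hypothetical (exam 1, exam 2) grade pairs, not study data.
students = [("C", "B"), ("A", "A"), ("D", "D"), ("B", "C")]
groups = [grade_change_group(e1, e2) for e1, e2 in students]

# Students who maintained an A or B are set aside, as in the analysis above.
analyzed = [g for g in groups if g != "maintained A/B"]
print(groups, analyzed)
```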

TABLE 5.

Association between self-reported use of SRL strategies and grade improvement from exam 1 to exam 2<sup>a</sup>

<sup>a</sup>Percentages indicate the proportion of students within each group who reported using each strategy with high frequency (i.e., often or very often). Sample sizes for each group are provided, along with the χ² value from the contingency analysis (df = 2; α = 0.05). Strategies having a significant association with grade improvement are noted with boldfaced font and asterisks next to the χ² values.

<sup>b</sup>Reported for reference only; this group was not included in the χ² analyses.

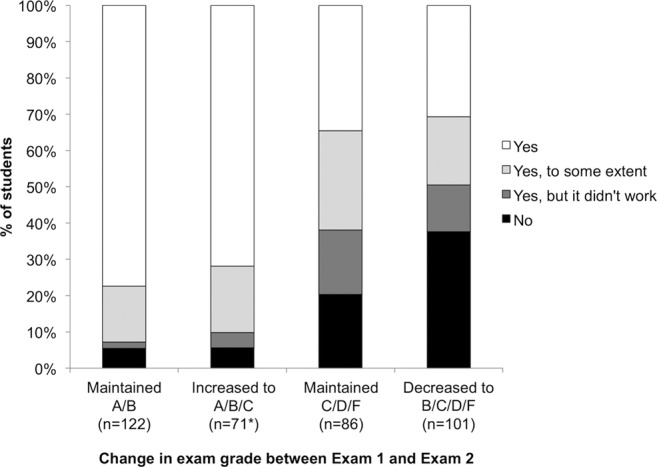

Characterizing Students’ Adherence to Their Study Plans

When asked whether they had followed through with the study strategies they had planned to implement after exam 1 (survey 2, Q19), most students stated they did, at least to some extent. Students’ answers fell into one of four categories: “yes” (students followed the plan they had made); “yes, to some extent” (students only implemented some of the strategies they had planned); “yes, but it didn’t work” (students said they followed their plans, yet they did not improve their grades); and “no” (students did not implement any of their intended strategies). The proportion of students who reported not following their plans was highest among students whose exam grade decreased (Figure 2).

FIGURE 2.

Proportion of students reporting on survey 2 (Q19) whether they had followed their study plans, grouped based on whether their exam grade improved, decreased, or did not change. The asterisk (*) by the group of students who increased their grade to A/B/C denotes that one student did not answer this question.

When asked how they planned to study for future exams, a subset of students indicated that they intended to carry on studying, to some degree, the same way they had studied before. More students, regardless of their exam grade, remarked they would continue to use the same strategies after exam 2 compared with after exam 1 (Table 6). Unsurprisingly, students earning an “A” were largely committed to strategies that had evidently worked well for them. Despite the poor outcome, 13% of the students who earned a “D” or “F” on exam 2 affirmed that they were going to continue to study as they did before; similarly, nearly 20% of students earning a “C” reported they did not intend to make any changes in their strategies.

TABLE 6.

Proportion of students reporting they intended to continue using the same strategies to prepare for future exams (i.e., did not plan to make any changes), shown by exam grade and in aggregate

| Exam grade | Survey 1: n | Survey 1: percentage of students who will continue with same strategies after exam 1 | Survey 2: n | Survey 2: percentage of students who will continue with same strategies after exam 2 |
|---|---|---|---|---|
| A | 126 | 46.8 | 107 | 68.2 |
| B | 115 | 18.3 | 115 | 40.0 |
| C | 67 | 4.5 | 77 | 19.5 |
| D/F | 77 | 1.3 | 84 | 13.1 |
| All students | 385 | 21.8 | 383 | 37.9 |
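The aggregate row of Table 6 can be checked against the per-grade rows. The short Python sketch below is offered only as an illustration: the student counts it derives are inferred by rounding n × percentage and are not reported in the table, and the names `TABLE_6` and `aggregate` are hypothetical.

```python
# Table 6 rows: exam grade -> (n, percentage intending to keep the same strategies).
TABLE_6 = {
    "survey_1": {"A": (126, 46.8), "B": (115, 18.3), "C": (67, 4.5), "D/F": (77, 1.3)},
    "survey_2": {"A": (107, 68.2), "B": (115, 40.0), "C": (77, 19.5), "D/F": (84, 13.1)},
}


def aggregate(rows):
    """Return (total n, inferred student count, aggregate percentage) for one survey."""
    total_n = sum(n for n, _ in rows.values())
    # Assumption: counts are recovered by rounding n * pct / 100 to the nearest student.
    total_count = sum(round(n * pct / 100) for n, pct in rows.values())
    return total_n, total_count, round(100 * total_count / total_n, 1)


for survey, rows in TABLE_6.items():
    print(survey, aggregate(rows))
# survey_1 (385, 84, 21.8)
# survey_2 (383, 145, 37.9)
```

Under this rounding assumption, the recomputed totals and percentages agree with the "All students" row.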

DISCUSSION

In a large-enrollment, first-semester introductory biology course, we identified which SRL strategies students reported using the most when preparing for the first and second course exams and which strategies were associated with higher exam grades and, more specifically, with grade improvement from exam 1 to exam 2. We derived the SRL strategies from a framework developed by Zimmerman (1989, 1990), who regards SRL as a metacognitive, motivational, and behavioral construct (Figure 1). Although Zimmerman mapped SRL strategies directly onto categories (motivational, metacognitive, or behavioral; Zimmerman, 2008), we do not necessarily view each student learning strategy as fitting exclusively into a single category. Self-evaluation practices, for example, can motivate a student to pursue further learning, but rest upon the student’s metacognitive ability to identify a gap between his/her performance and the desired outcome. Authors who used different categorization frameworks (e.g., Pintrich and De Groot, 1990) would define rehearsing and memorizing as a cognitive, rather than a behavioral, strategy. Regardless of labels, most researchers in the field have converged upon the same strategies as being the basis for self-regulation of learning.

The distribution of students reporting higher use (often/very often) for each of 15 surveyed SRL strategies did not change substantially from survey 1 to survey 2 (Supplemental Figure S1), except for a significant increase in the frequency with which students reported reviewing previous years’ exams when studying for exam 2, compared with exam 1. Unsurprisingly, seeking instructor and expert peer assistance were the least popular strategies among our students. This is consistent with our observation of overall poorly attended instructor and TA office hours. However, the exam grade breakdown revealed that the students who attended instructor office hours more frequently were those who earned an “A” on exams (Table 3). While this may suggest that attending office hours was beneficial to student learning, it more likely reflects higher-achieving students’ motivation to maintain high grades and confidence in bringing their questions to the instructor.

Not all SRL strategies were associated with higher exam grades in our population. In fact, of the 15 SRL strategies we surveyed, nine were significantly associated with achievement on exam 1, and only six of these maintained a significant association with exam 2 grades (Table 3). The six practices that, in our course, were associated with higher achievement on exams were self-evaluation, seeking information, keeping records and monitoring, seeking instructor assistance, reviewing exams, and reviewing graded work. Self-evaluating and reviewing graded work effectively require critical examination of one’s own work and of the feedback received on that work, when available. Interestingly, Zimmerman and Martinez-Pons (1986) found self-evaluation to be the only SRL strategy that did not effectively discriminate between high- and low-achieving high school students. This discrepancy may be related to the age difference between study populations, as metacognitive skills take time to develop. Our results, on the other hand, are largely consistent with those reported by Ley and Young (1998) in their study of differences in self-regulation between underprepared and regular-admission college students. Frequency of use of specific SRL strategies—self-evaluation, environmental structuring, organizing and transforming, keeping records and monitoring, and reviewing exams—was found to be significantly higher in regular-admission students than in their underprepared peers (Ley and Young, 1998). The specific study strategies that are associated with academic achievement in our study could also be a product of the instructional context. Indeed, research has indicated that assessment of SRL is context-dependent (Hadwin et al., 2001; Veenman et al., 2006). 
Our instructional design includes a significant amount of graded homework and constructed-response assessments evaluated with rubrics; therefore, it is understandable that frequent practice of self-evaluation correlates with better exam grades. In courses or contexts that do not leverage students’ self-evaluation, this practice may be less relevant to student achievement.

Consistent with the results of Stanton et al. (2015), a large fraction of our students proposed study plans demonstrating willingness to change the way they studied, or at least to adopt new strategies. A common theme emerging from students’ study plans for future exams was that of needing better time management (Table 4). Regardless of exam grade, most students mentioned the intention to avoid procrastinating and/or to make timetables and pace their studying, rather than cramming in the last days before the test. Students who earned low exam grades (“D” or “F”) proposed studying course materials, taking better notes, and self-evaluating less frequently than students earning higher grades; conversely, these lower-achieving students were much more inclined to seek expert peer assistance (e.g., undergraduate TAs/LAs) compared with higher-achieving students (Table 4). We may speculate that this pattern of responses reflects, or is related to, lower-achieving students’ lack of self-efficacy and of procedural metacognitive knowledge (Stanton et al., 2015). Accordingly, these students indicated that they intended to seek external sources of help more frequently than they planned on engaging in independent study practices (such as organizing and transforming their study materials, and self-evaluating; Table 4). Expert peers, made available by the institution, may appear to be more authoritative and reliable than a classmate, but less intimidating and more approachable than the instructor.

We analyzed our data to identify whether higher use of any specific SRL strategy was associated with grade improvement from exam 1 to exam 2. The practices of self-evaluation, goal setting and planning, seeking information, reviewing notes, and reviewing exams were significantly associated with grade improvement (Table 5). In other words, students who earned a better grade on exam 2 than they did on exam 1 reported using these key cognitive and metacognitive strategies more frequently than their peers.
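As an illustration only of how an association of this kind can be tested, the Python sketch below computes a chi-square statistic for a 2 × 2 table (higher vs. lower reported use of one strategy, by whether the exam grade improved). The counts are invented, the function name `chi_square_2x2` is hypothetical, and the continuity correction is included as an option; the analysis behind Table 5 may have differed in its details.

```python
import math


def chi_square_2x2(table, yates=True):
    """Return (chi2, p) for a 2x2 contingency table [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(observed - expected)
            if yates:
                # Continuity correction: shrink each deviation by 0.5, never below 0.
                diff = max(diff - 0.5, 0.0)
            chi2 += diff ** 2 / expected
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p


# Hypothetical counts (NOT from the study).
# Rows: grade improved / did not improve; columns: higher / lower reported use.
example = [[40, 20], [60, 80]]
chi2, p = chi_square_2x2(example)
print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```

A small p-value in such a test indicates that reported strategy use and grade improvement are not independent in the sample; it does not show that the strategy caused the improvement.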

Students whose grades improved also reported following their study plans more frequently than any other group (Figure 2). On the other hand, the frequency of students reporting not following their study plans was highest among those who maintained a low exam grade or dropped to a lower grade. Interestingly, a proportion of the students who achieved a lower grade on exam 2 (12.9%) or maintained a “C,” “D,” or “F” (17.4%) expressed frustration about having implemented new study strategies to no avail (Figure 2). These students often lamented that they did not understand what went wrong and why, and their comments revealed discouragement and uncertainty about how to move forward. This observation aligns with the findings of Stanton et al. (2015), who proposed that undergraduate students can be at different stages along a continuum of metacognitive regulation and awareness. We suspect that the students in our population who expressed surprise and dismay about their unrewarded efforts may fall in the emerging metacognitive regulation category, meaning that they can identify appropriate practices but lack the procedural knowledge needed to implement them effectively (Stanton et al., 2015).

By the time students received their graded midterm exam (exam 2) and filled out the second survey, the proportion of students who reported settling on their study strategies had noticeably increased (Table 6). At midterm, most of the higher-achieving students were comfortable with their strategies. The increase in the proportion of lower-achieving students (earning “C,” “D,” or “F”) who, at midterm, reported they did not plan on changing their study strategies is worrisome, as it may very well indicate that discouragement and low self-efficacy are settling in at this point for many lower-achieving students. Failure to follow through with changed plans, coupled with poor achievement on the second exam, may trigger a vicious circle in which procrastination, low self-efficacy, and poor performance reinforce one another (Wäschle et al., 2014).

Limitations and Future Directions

The questionnaire we developed and used in this study (Table 1) is not intended as a valid and reliable measure of students’ self-regulated learning abilities and should not be regarded as such. A variety of validated measures to assess SRL exist, each with a slightly different focus and purpose. The MSLQ (Pintrich and De Groot, 1990; Pintrich et al., 1991), for example, is an 81-item questionnaire that measures students’ motivation (value, expectancy, and affective components) and learning strategies (cognitive, metacognitive, and resource-management strategies). Another well-known instrument, the LASSI (Weinstein et al., 1987), targets three areas: will (anxiety, attitude, motivation), skill (information processing, selecting main ideas, test strategies), and self-regulation (concentration, self-testing, time management, using academic resources). Both of these popular instruments are fairly long and complex. For this exploratory study, we sought a brief yet comprehensive and targeted questionnaire that would allow us to survey the studying behaviors of our student population. The SRLIS (Zimmerman and Martinez-Pons, 1986, 1988), which departs from the self-report questionnaire format of the former two instruments, was developed to assess students’ use of SRL strategies across various learning contexts, from learning inside the classroom to studying and completing assignments at home. This interview protocol yielded useful insights about the self-regulated strategy use of high- and low-achieving high school students: higher-achieving students used more SRL strategies, and they used them more consistently (Zimmerman and Martinez-Pons, 1986). Ley and Young (1998) used the SRLIS with a sample of 59 college students. In their paper, they thoroughly discussed choosing a labor-intensive structured interview protocol rather than a Likert-scale instrument to avoid a potential source of error in measuring study behaviors. 
A Likert-scale questionnaire might, they suggested, prompt or cue students to report strategies they would not otherwise have thought about. In essence, they lamented the lack of validation of a Likert-scale instrument, analogous to the SRLIS, to measure use of self-regulated learning strategies.

It is important to note that our study, conducted with a sample of nearly 400 students, did not aim to measure SRL as a quantifiable characteristic of each learner. Our objective was to investigate 1) which self-regulated study strategies our students reported adopting in the specific context of our first-year introductory biology course, and 2) whether students’ achievement on our course exams was associated with their self-reported use of specific strategies. We intentionally chose, therefore, to translate the SRLIS strategy categories into a survey format for rapid, easy, repeated administration to a large student population. We are aware of the inherent limitations of self-reported data, because it is known that learners’ perceptions of their strategies may not correspond entirely to their behaviors (Schellings and Van Hout-Wolters, 2011; Veenman, 2011). However, the large sample size greatly increases the power of the data analysis. Another potential issue with retrospective questionnaires is that of “memory distortion,” which we aimed to limit by following best practices—that is, by administering the surveys shortly after the exams and making the questionnaire as task specific as possible (Schellings and Van Hout-Wolters, 2011).

Prior work on SRL has typically related measures of self-regulation to readily available achievement metrics, such as exam grades, course grades, or grade point average (e.g., Zimmerman and Martinez-Pons, 1986; Pintrich and De Groot, 1990). In this study, we used achievement on course exams as our dependent variable. Exam grades are an imperfect proxy for student learning, yet they are valuable in that they represent the feedback students had on their performance in our course context. Exams in this course included a mix of multiple-choice and constructed-response questions (e.g., constructing concept models of biological systems and articulating explanations) distributed across the knowledge, comprehension, and application/analysis levels of Bloom’s taxonomy. Assessment format and targeted cognitive skills are known to influence students’ perceptions of how to learn and study in a given course (Scouller, 1998; Broekkamp and Van Hout-Wolters, 2007; Crowe et al., 2008). Thus, exams that assess higher-order thinking skills may cue students to use strategies that foster deeper thinking and learning. Further research, however, is necessary to unpack the interconnections among self-regulation of learning, performance on course assessments, and deep approaches to learning.

The work we report here enables us to begin developing an emerging picture of the SRL habits our students bring to their first biology course. However, that picture can and should be further refined to allow identification of possible reasons underlying lower-achieving students’ difficulties with SRL practices (and, ideally, remedies for such difficulties). Future research, for example, should include measures of motivation and attitudes toward learning that can help explain differences in strategy use and performance. Capturing students’ self-efficacy would be particularly relevant, since self-efficacy influences 1) the kinds of goals students make for their learning, 2) the strategies they adopt and how effectively they use them, and 3) how they reflect on and adjust future goals and approaches (Zimmerman, 1989; Pintrich and De Groot, 1990; Richardson et al., 2012; Wäschle et al., 2014). Additionally, using identifiers to track students’ survey responses over time (rather than anonymous responses) and instructor-recorded exam grades (rather than self-reported exam grades) will allow for a richer analysis of students’ development of SRL, at least in the context of one course—namely, how students study initially, how they adjust their strategies based on their assessment outcomes, and whether their adjusted approaches affect their subsequent outcomes.

Implications for Instruction

Both disciplinary experts and “expert learners” in general are more metacognitive, reflective, and effective than novices in acquiring, retaining, and using their knowledge (Ertmer and Newby, 1996; Zimmerman, 2002). Most students entering introductory science courses, however, are not expert learners, and they need practice and feedback to develop robust cognitive and metacognitive strategies. It is critical that instructors, who are disciplinary experts, become cognizant that 1) students are still developing their learning strategies and 2) self-regulation can be fostered in concrete ways. There is, in fact, evidence that learners—regardless of academic ability—can develop SRL habits and, concurrently, improve their academic achievement (Eilam and Reiter, 2014). With appropriate instruction and training, or by participating in learning environments that are designed to promote SRL, students can acquire and strengthen self-regulatory processes (Ley and Young, 2001; Zimmerman, 2002; Perels et al., 2005; Lord et al., 2012).

Supporting students in becoming “expert learners” is one of the (sometimes covert) goals of the higher education experience. As such, it is rarely perceived as the responsibility of any individual course or instructor. While broad, nontargeted study skills seminars, workshops, or one-on-one tutoring are frequently offered by colleges and universities, they may not be the most effective way to enable students to acquire the cognitive and metacognitive skills needed to succeed in many diverse courses and academic tasks (Case and Gunstone, 2002; Steiner, 2016). Instructional approaches that foster development of self-regulated learning habits need to be embedded within specific course contexts and appropriately reflect content and practices of the discipline (Case and Gunstone, 2002; Steiner, 2016).

A variety of instructional strategies can be implemented in introductory courses to foster student development of SRL habits. Assignments such as the postexam survey in this study, exam reviews (also known as “exam wrappers”), and student-generated study plans (Stanton et al., 2015) are valuable ways of engaging students in metacognitive reflection on the effectiveness of their study strategies. However, it is important to be aware that not all undergraduates benefit equally from these “metacognitive prompts”; many may still struggle with metacognitive procedural knowledge and need more explicit coaching (Stanton et al., 2015). Other instructional practices that promote SRL development include 1) regular homework assignments, 2) intentionally designed formative assessments, and 3) frequent feedback to students about their learning (Butler and Winne, 1995; Nicol and Macfarlane-Dick, 2006; Boud and Molloy, 2013). Timely and effective feedback to students about their learning, in particular, is critical, as it provides learners with information about the gap between their performances and their expectations (or the instructor’s expectations). This awareness is a first, necessary step in the search for new, more effective strategies.

Instructional approaches aimed at promoting SRL should be tailored to students’ needs and foster their consistent engagement with disciplinary content and with the process of their own learning. To meet learners where they are and provide specific, relevant support, it is also important that instructors identify the learning strategies that are linked to success in a particular course. Data such as those presented in this study would enable development of evidence-based interventions, such as study skills workshops, that appropriately emphasize strategies associated with positive outcomes in a given context. We hypothesize that a course-specific study skills seminar that uses student data to point out key study strategies may be more convincing to students than a generic “how to study” workshop. Evidence can also inform decisions about the timing of interventions. Our data, particularly Table 6, suggest that, if one is planning an intervention to help students learn how to study, course midterm or later may be too late for many students who have already experienced failure and are beginning to feel helpless. The grades students earn on early course exams tend to predict final grades in several biology courses, including introductory biology (Jensen and Barron, 2014). While earning low grades early on does not necessarily “doom” students to poor performance for the rest of the course, it is crucial that students adopt effective study approaches as early as possible, perhaps because students are less likely to change their approaches as the semester progresses.

In summary, to meet the needs of all students and to connect SRL to the practices and ways of thinking in our discipline, it is critical that we weave instructional approaches promoting SRL development within the fabric of our courses and programs. Pedagogical strategies that increase students’ awareness of their content understanding and highlight context-appropriate study approaches should help to bring learning under stronger control of the learner.

Acknowledgments

We are very grateful to Laurie Russell for contributing to data collection and for providing feedback during survey development, Gerardo Camilo for advice on statistical analysis, and Janet Kuebli for helpful perspective on self-regulated learning and survey methods. We also thank members of the Bray Speth lab for critically reviewing earlier versions of the paper and the anonymous reviewers who provided insights and suggestions for improving the article. This article is based on work supported in part by the National Science Foundation (NSF; award 1245410). Any opinions, findings, and conclusions or recommendations expressed here are those of the authors and do not necessarily reflect the views of the NSF.

REFERENCES

- Bandura A. Social Foundations of Thought and Action: A Social Cognitive Theory. Englewood Cliffs, NJ: Prentice-Hall; 1986.

- Bandura A. Social cognitive theory of self-regulation. Organ Behav Hum Decis Process. 1991;50:248–287.

- Boekaerts M, Corno L. Self-regulation in the classroom: a perspective on assessment and intervention. Appl Psychol. 2005;54:199–231.

- Boud D, Molloy E. Rethinking models of feedback for learning: the challenge of design. Assess Eval High Educ. 2013;38:698–712.

- Broekkamp H, Van Hout-Wolters BHAM. Students’ adaptation of study strategies when preparing for classroom tests. Educ Psychol Rev. 2007;19:401–428.

- Butler DL, Winne PH. Feedback and self-regulated learning: a theoretical synthesis. Rev Educ Res. 1995;65:245–281.

- Carr M, Borkowski JG, Maxwell SE. Motivational components of underachievement. Dev Psychol. 1991;27:108.

- Case J, Gunstone R. Metacognitive development as a shift in approach to learning: an in-depth study. Stud High Educ. 2002;27:459–470.

- Corno L. The metacognitive control components of self-regulated learning. Contemp Educ Psychol. 1986;11:333–346.

- Credé M, Kuncel NR. Study habits, skills, and attitudes: the third pillar supporting collegiate academic performance. Perspect Psychol Sci. 2008;3:425–453. doi: 10.1111/j.1745-6924.2008.00089.x.

- Crowe A, Dirks C, Wenderoth MP. Biology in Bloom: implementing Bloom’s taxonomy to enhance student learning in biology. CBE Life Sci Educ. 2008;7:368–381. doi: 10.1187/cbe.08-05-0024.

- Daempfle PA. An analysis of the high attrition rates among first year college science, math, and engineering majors. J Coll Stud Ret. 2003;5:37–52.

- Dembo MH, Seli HP. Students’ resistance to change in learning strategies courses. J Dev Educ. 2004;27(3):2–11.

- Dinsmore DL, Alexander PA, Loughlin SM. Focusing the conceptual lens on metacognition, self-regulation, and self-regulated learning. Educ Psychol Rev. 2008;20:391–409.

- Eilam B, Reiter S. Long-term self-regulation of biology learning using standard junior high school science curriculum. Sci Educ. 2014;98:705–737.

- Ertmer PA, Newby TJ. The expert learner: strategic, self-regulated, and reflective. Instr Sci. 1996;24:1–24.

- Felder RM, Brent R. The intellectual development of science and engineering students. Part 1: models and challenges. J Eng Educ. 2004;93:269–277.

- Flavell JH. Metacognition and cognitive monitoring: a new area of cognitive–developmental inquiry. Am Psychol. 1979;34:906.

- Gasiewski JA, Eagan MK, Garcia GA, Hurtado S, Chang MJ. From gatekeeping to engagement: a multicontextual, mixed method study of student academic engagement in introductory STEM courses. Res High Educ. 2012;53:229–261. doi: 10.1007/s11162-011-9247-y.

- Hadwin AF, Winne PH, Stockley DB, Nesbit JC, Woszczyna C. Context moderates students’ self-reports about how they study. J Educ Psychol. 2001;93:477–487.

- Jensen PA, Barron JN. Midterm and first-exam grades predict final grades in biology courses. J Coll Sci Teach. 2014;44:82–89.

- Kiewra KA. How classroom teachers can help students learn and teach them how to learn. Theory Pract. 2002;41:71–80.

- King PM, Brown MK, Lindsay NK, Vanhecke JR. Liberal arts student learning outcomes: an integrated approach. About Campus. 2007;12:2–9.

- Kitsantas A. Test preparation and performance: a self-regulatory analysis. J Exp Educ. 2002;70:101–113.

- Ley K, Young DB. Self-regulation behaviors in underprepared (developmental) and regular admission college students. Contemp Educ Psychol. 1998;23:42–64. doi: 10.1006/ceps.1997.0956.

- Ley K, Young DB. Instructional principles for self-regulation. Educ Technol Res Dev. 2001;49:93–103.

- Long TM, Dauer JT, Kostelnik KM, Momsen JL, Wyse SA, Speth EB, Ebert-May D. Fostering ecoliteracy through model-based instruction. Front Ecol Environ. 2014;12:138–139.

- Lopez EJ, Nandagopal K, Shavelson RJ, Szu E, Penn J. Self-regulated learning study strategies and academic performance in undergraduate organic chemistry: an investigation examining ethnically diverse students. J Res Sci Teach. 2013;50:660–676.

- Lord SM, Prince MJ, Stefanou CR, Stolk JD, Chen JC. The effect of different active learning environments on student outcomes related to lifelong learning. Int J Eng Edu. 2012;28:606.

- Nicol DJ, Macfarlane-Dick D. Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud High Educ. 2006;31:199–218.

- Paris SG, Newman RS. Development aspects of self-regulated learning. Educ Psychol. 1990;25:87–102.

- Perels F, Gürtler T, Schmitz B. Training of self-regulatory and problem-solving competence. Learn Instr. 2005;15:123–139.

- Perkins DN. What constructivism demands of the learner. Educ Technol. 1991:19–21.

- Perry RP, Hladkyj S, Pekrun RH, Clifton RA, Chipperfield JG. Perceived academic control and failure in college students: a three-year study of scholastic attainment. Res High Educ. 2005;46:535–569.

- Pintrich PR, De Groot EV. Motivational and self-regulated learning components of classroom academic performance. J Educ Psychol. 1990;82:33.

- Pintrich PR, Smith DAF, Garcia T, McKeachie WJ. A Manual for the Use of the Motivated Strategies for Learning Questionnaire (MSLQ). Ann Arbor: University of Michigan; 1991.

- R Development Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2014.

- Reinagel A, Bray Speth E. Beyond the central dogma: model-based learning of how genes determine phenotypes. CBE Life Sci Educ. 2016;15:ar4. doi: 10.1187/cbe.15-04-0105.

- Richardson M, Abraham C, Bond R. Psychological correlates of university students’ academic performance: a systematic review and meta-analysis. Psychol Bull. 2012;138:353. doi: 10.1037/a0026838.

- Schellings G, Van Hout-Wolters B. Measuring strategy use with self-report instruments: theoretical and empirical considerations. Metacogn Learn. 2011;6:83–90.

- Schraw G, Crippen KJ, Hartley K. Promoting self-regulation in science education: metacognition as part of a broader perspective on learning. Res Sci Educ. 2006;36:111–139.

- Schraw G, Moshman D. Metacognitive theories. Educ Psychol Rev. 1995;7:351–371.

- Scouller K. The influence of assessment method on students’ learning approaches: multiple choice question examination versus assignment essay. High Educ. 1998;35:453–472.

- Seery MK. Flipped learning in higher education chemistry: emerging trends and potential directions. Chem Educ Res Pract. 2015;16:758–768.

- Seymour E, Hewitt NM. Talking about Leaving: Why Undergraduates Leave the Sciences. Boulder, CO: Westview; 1997.

- Sitzmann T, Ely K. A meta-analysis of self-regulated learning in work-related training and educational attainment: what we know and where we need to go. Psychol Bull. 2011;137:421. doi: 10.1037/a0022777.

- Stanton JD, Neider XN, Gallegos IJ, Clark NC. Differences in metacognitive regulation in introductory biology students: when prompts are not enough. CBE Life Sci Educ. 2015;14:ar15. doi: 10.1187/cbe.14-08-0135.

- Steiner H. The strategy project: promoting self-regulated learning through an authentic assignment. Int J Teach Learn High Educ. 2016;28:271–282.

- Tanner KD. Promoting student metacognition. CBE Life Sci Educ. 2012;11:113–120. doi: 10.1187/cbe.12-03-0033.

- Thomas DR. A general inductive approach for analyzing qualitative evaluation data. Am J Eval. 2006;27:237–246.

- Vandevelde S, Van Keer H, Rosseel Y. Measuring the complexity of upper primary school children’s self-regulated learning: a multi-component approach. Contemp Educ Psychol. 2013;38:407–425.

- Veenman MV, Van Hout-Wolters BH, Afflerbach P. Metacognition and learning: conceptual and methodological considerations. Metacogn Learn. 2006;1:3–14.

- Veenman MVJ. Alternative assessment of strategy use with self-report instruments: a discussion. Metacogn Learn. 2011;6:205–211.

- Wäschle K, Allgaier A, Lachner A, Fink S, Nückles M. Procrastination and self-efficacy: tracing vicious and virtuous circles in self-regulated learning. Learn Instr. 2014;29:103–114.

- Weinstein C, Schulte A, Palmer D. LASSI: Learning and Study Strategies Inventory. Clearwater, FL: H & H Publishing; 1987.

- Wingate U. A framework for transition: supporting “learning to learn” in higher education. High Educ Q. 2007;61:391–405.

- Wolters CA. Regulation of motivation: evaluating an underemphasized aspect of self-regulated learning. Educ Psychol. 2003;38:189–205.

- Yates F. Contingency table involving small numbers and the χ² test. Suppl J R Stat Soc. 1934;1:217–235.

- Zimmerman BJ. A social cognitive view of self-regulated academic learning. J Educ Psychol. 1989;81:329.

- Zimmerman BJ. Self-regulated learning and academic achievement: an overview. Educ Psychol. 1990;25:3–17.

- Zimmerman BJ. Self-regulation involves more than metacognition: a social cognitive perspective. Educ Psychol. 1995;30:217–221.

- Zimmerman BJ. Becoming a self-regulated learner: an overview. Theory Pract. 2002;41:64–70.

- Zimmerman BJ. Investigating self-regulation and motivation: historical background, methodological developments, and future prospects. Am Educ Res J. 2008;45:166–183.

- Zimmerman BJ, Martinez-Pons M. Development of a structured interview for assessing student use of self-regulated learning strategies. Am Educ Res J. 1986;23:614–628.

- Zimmerman BJ, Martinez-Pons M. Construct validation of a strategy model of student self-regulated learning. J Educ Psychol. 1988;80:284.
