Abstract

Objectives

Discrepancies between leaders' self-ratings and follower ratings of the leader are common but usually go unrecognized. Research on such discrepancies is limited, but there is evidence that they are associated with organizational context. This study examined leader-follower discrepancies in Implementation Leadership Scale (ILS) ratings of mental health clinic leaders and the association of those discrepancies with organizational climate for involvement and performance feedback. Both involvement and performance feedback may be important for evidence-based practice (EBP) implementation in mental health.

Methods

A total of 593 supervisors (i.e., leaders, n=80) and clinical service providers (i.e., followers, n=513) completed surveys including ratings of implementation leadership and organizational climate. Polynomial regression and response surface analyses were conducted to examine the associations of discrepancies in leader-follower ILS ratings with organizational involvement climate and performance feedback climate, aspects of climate likely to support EBP implementation.

Results

Both involvement climate and performance feedback climate were highest where leaders rated themselves low on the ILS and their followers rated those leaders high on the ILS (i.e., “humble leaders”).

Conclusions

Teams with “humble leaders” showed more positive organizational climate for involvement and for performance feedback, contextual factors important during EBP implementation and sustainment. Discrepancy in leader and follower ratings of implementation leadership should be a consideration in understanding and improving leadership and organizational climate for mental health services and for evidence-based practice implementation and sustainment in mental health and other allied health settings.

Keywords: leadership, implementation, discrepancy, organizational climate, evidence-based practice

Introduction

There is increasing demand for the use of public health interventions supported by rigorous scientific research, but frequently the promise of such evidence-based practices (EBPs) fails to translate into their effective implementation, sustained use, or intended public health benefits. To bridge this gap between research and effective delivery in practice, researchers increasingly recognize the importance of studying the process of EBP implementation and sustainment (1-4). Although individual provider factors contribute to successful EBP implementation (5), organizational factors are likely to have an equal or greater influence on EBP implementation (6, 7). Leadership is one factor that has been suggested to play an important role in organizational context and implementation of health innovations (8-10).

Organizational climate that supports EBP implementation and sustainment can facilitate implementation, and leadership is an antecedent of organizational culture and climate (11-17). For example, more positive leadership is associated with a climate of involvement, in which followers feel involved in problem solving and organizational decision making (18). Leaders who emphasize the importance of learning and establish trust with their followers foster the development of a positive feedback climate, which encourages receiving formal and informal performance feedback (19). Leader “credibility” has also been identified as an important facet of feedback climate, because leaders need to be knowledgeable about their followers' assigned tasks in order to judge performance on those tasks accurately (20).

Early research on leadership and implementation focused on general leadership constructs such as transformational leadership (21, 22). Leaders enact transformational leadership through behaviors that embody inspirational motivation, individualized consideration of followers, ability to engender buy-in and intellectual stimulation, and idealized influence or serving as a role model (23). However, research on developing specific types of climates such as safety climate (24, 25) and service climate (26) has increasingly considered leadership focused on the achievement of a specific strategic outcome (e.g., reducing accidents, improving customer service, respectively). Such a strategic leadership approach can also be applied to EBP implementation in the form of implementation leadership (27).

Implementing EBPs can be highly challenging and requires specific leader attributes, such as being knowledgeable about EBPs, engaging in proactive problem solving, persevering in the face of implementation challenges, and supporting service providers in the implementation process. The Implementation Leadership Scale (ILS) was developed as a pragmatic, brief, and efficient (3, 28, 29) measure of these leader behaviors, which are thought to promote a strategic climate for implementing and sustaining EBPs (27). The construct of implementation leadership is complementary to general leadership and is the focus of this study, which involves “first-level leaders” and their followers. First-level leaders (i.e., those who supervise others who provide direct services) may be particularly influential in supporting new practices because they are on the frontline, directly supervising clinicians and bridging organizational imperatives and clinical service provision as EBPs are integrated into daily work routines (30). However, leaders and followers do not always agree about the leader's behavior.

Research comparing leader and follower leadership ratings has focused on agreement and outcomes related to agreement. For example, Atwater and Yammarino's (31) model of leader-follower agreement posits that agreement on positive leadership ratings is more likely to be linked to positive outcomes and, conversely, that agreement on negative leadership ratings is linked to negative outcomes. For leaders who under- or overestimate their own leadership abilities and skills, findings are equivocal. For example, one set of studies found that leaders who rated themselves lower than others rated them were considered more effective leaders (32, 33). Other studies have shown that leaders who overestimate their leadership abilities tend to use hard persuasion tactics, such as pressure, to influence followers (34). Followers of such leaders are likely to view these hard influence tactics unfavorably and to recognize their leader's inaccurate evaluation of their own strengths. Moreover, leaders who overestimate their leadership behaviors tend to misdiagnose their strengths, adversely affecting their effectiveness as leaders (31). Although these studies have added to an understanding of the different types of disagreement, there has been limited research specifically focusing on leadership discrepancy and its effect on outcomes such as organizational climate. This is an important area of inquiry because recent work has shown that leader-follower discrepancies in transformational leadership ratings among mental health leaders can negatively affect organizational culture (35).

The present study, conducted in public mental health organizations, addresses the extent to which leader-follower discrepancies in leadership ratings are related to the organizational climate of the leaders' units, particularly organizational climate for involvement and performance feedback. Climate for involvement is important because EBP implementation requires participation and buy-in across organizational levels, especially from clinicians and service providers. Indeed, congruence of leadership across multiple levels may also be important during implementation (10). Climate for performance feedback is also important for EBP implementation because feedback and coaching on intervention fidelity are a critical part of implementing many EBPs. For example, in previous work in home-based services, a key implementation strategy was in-vivo coach observation with real-time feedback (36, 37). Thus, it is important to understand how implementation leadership relates to organizational involvement climate and feedback climate.

The purpose of the present study was to examine discrepancies between leader (i.e., clinic supervisor) self-ratings and their followers' (i.e., clinical service providers') ratings on the ILS, and the associations of these discrepancies with involvement and performance feedback climate in the leaders' teams. Based on past research showing that leaders who underestimate their leadership may be more effective (32, 33), we hypothesized that discrepancies in which leaders rated themselves lower than their followers rated them would be associated with higher levels of climate for involvement and performance feedback.

Methods

Participants

Eligible participants were 753 public mental health team leaders (i.e., leaders) and the service providers they supervised (i.e., followers) from 31 mental health service organizations in California. Of these 753 eligible participants, 593 (80 leaders and 513 providers) completed the measures used in these analyses (79% response rate). Table 1 provides demographic information about the leaders and providers.

Table 1. Participant Demographics.

| Characteristic | Leaders, n (or M) | Leaders, % | Providers (followers), n (or M) | Providers (followers), % |
|---|---|---|---|---|
| Age in years (M) | 45.4 | | 37.3 | |
| Years of experience (M) | 13.8 | | 6.2 | |
| Years in agency (M) | 5.9 | | 3.2 | |
| Gender | | | | |
| Male | 20 | 25% | 119 | 23% |
| Female | 60 | 75% | 394 | 77% |
| Race | | | | |
| Caucasian | 57 | 71% | 214 | 44% |
| African American | 3 | 4% | 85 | 17% |
| Asian American | 9 | 11% | 28 | 6% |
| Other | 11 | 14% | 165 | 34% |
| Ethnicity | | | | |
| Hispanic | 10 | 13% | 214 | 42% |
| Educational level | | | | |
| High school | - | - | 14 | 3% |
| Some college | 3 | 4% | 48 | 9% |
| Bachelor's degree | 1 | 1% | 117 | 23% |
| Some graduate work | 1 | 1% | 38 | 7% |
| Master's degree | 69 | 86% | 290 | 57% |
| Doctoral degree | 6 | 8% | 6 | 1% |
| Major of highest degree | | | | |
| Marriage/Family Therapy | 20 | 25% | 108 | 22% |
| Social Work | 4 | 5% | 56 | 11% |
| Psychology | 2 | 3% | 34 | 7% |
| Child Development | 2 | 3% | 31 | 6% |
| Human Relations | 38 | 48% | 144 | 29% |
| Other | 14 | 17% | 118 | 24% |

Note: N = 593 (leaders, n = 80; providers, i.e., followers, n = 513). M = mean.

Data Collection Procedures

The research team first obtained permission from agency executive directors or their designees to recruit leaders and their followers for participation in the study. Eligible leaders were identified as those who directly supervise staff in mental health treatment teams or workgroups. Data collection was completed using online surveys or in person with paper-and-pencil surveys. For online surveys, each participant received a link to the web survey and a unique password via email. For in-person surveys, paper forms were provided and completed at team meetings. In previous research we found no differences in ILS scores by method of survey administration (38). The survey took approximately 20-40 minutes, and participants received incentives by email following survey completion. The Institutional Review Board of San Diego State University approved this study. Participation was voluntary, and informed consent was obtained from all participants.

Measures

Implementation Leadership Scale (ILS; 27)

The ILS includes 12 items scored on a 0 (‘not at all’) to 4 (‘to a very great extent’) scale (27) and four subscales: Proactive Leadership (α = .93), Knowledgeable Leadership (α = .95), Supportive Leadership (α = .90), and Perseverant Leadership (α = .93). The total ILS score (α = .95) was created by computing the mean of the four subscales. The complete ILS measure and scoring instructions can be found in the “additional files” associated with the original scale development study (27). Leaders completed self-ratings of implementation leadership, and followers completed ratings of their leader's implementation leadership.
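To make the scoring rule concrete, the sketch below computes subscale scores and the total ILS score as the mean of the four subscale means. It assumes three items per subscale and uses a hypothetical item-to-subscale mapping (`ILS_SUBSCALES`); the actual item assignment and scoring instructions are in the additional files of the scale development study (27).

```python
from statistics import mean

# Hypothetical mapping of item positions to subscales (assumes 3 items each).
# Replace with the official ILS item assignment before real use.
ILS_SUBSCALES = {
    "proactive": (0, 1, 2),
    "knowledgeable": (3, 4, 5),
    "supportive": (6, 7, 8),
    "perseverant": (9, 10, 11),
}


def score_ils(items):
    """Return (subscale means, total score) for one set of 12 ILS responses.

    Each item is scored 0 ('not at all') to 4 ('to a very great extent');
    the total is the mean of the four subscale means.
    """
    if len(items) != 12:
        raise ValueError("expected 12 ILS item responses")
    if any(not 0 <= x <= 4 for x in items):
        raise ValueError("ILS items are scored from 0 to 4")
    subscales = {name: mean(items[i] for i in idx) for name, idx in ILS_SUBSCALES.items()}
    return subscales, mean(subscales.values())


subs, total = score_ils([4, 4, 4, 2, 2, 2, 3, 3, 3, 1, 1, 1])
print(subs, total)
```

With equal numbers of items per subscale, the mean of subscale means equals the mean of all items; the two-step form simply mirrors the description in the text.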

Organizational Climate Measure (OCM; 39)

The OCM consists of 17 scales capturing a number of organizational climate dimensions; in the present study we utilized the Involvement (α = .87, 6 items) and Performance Feedback (α = .79, 5 items) climate scales that measure potentially important aspects of organizational climate for implementation. Clinicians completed scales from the OCM.

Statistical Analyses

Follower ratings were aggregated to create a team-level rating of implementation leadership for each leader. Intraclass correlation coefficients (ICC(1)s) and average within-group agreement (awg(j)) statistics (40) supported the aggregation of team ratings (i.e., awg(j) > .70). Consistent with Fleenor and colleagues (41) and Shanock and colleagues (42), leader and team ILS scores were standardized, and leaders whose standardized self-rating differed from their team's standardized rating by .5 standard deviations or more were considered discrepant.
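The aggregation check can be sketched as follows. This is a minimal illustration, not the authors' analysis code: it assumes Brown and Hauenstein's awg index, computed per item as 1 - 2S²/([(H + L)M - M² - HL] × k/(k - 1)) and averaged across items to give awg(j), with the ILS bounds L = 0 and H = 4. The function names `awg_item` and `aggregate_team` and the toy team are hypothetical.

```python
from statistics import mean, variance


def awg_item(ratings, low=0.0, high=4.0):
    """a_wg for one item rated by k >= 2 raters on a [low, high] scale.

    a_wg = 1 - 2*S^2 / ([(H + L)*M - M^2 - H*L] * k / (k - 1)),
    where S^2 is the sample variance and M the mean of the ratings.
    """
    k = len(ratings)
    if k < 2:
        raise ValueError("a_wg needs at least two raters")
    m = mean(ratings)
    max_var = ((high + low) * m - m ** 2 - high * low) * k / (k - 1)
    if max_var == 0:
        # All raters chose the same scale endpoint; treated here as full agreement.
        return 1.0
    return 1 - 2 * variance(ratings) / max_var


def aggregate_team(follower_items, low=0.0, high=4.0, cutoff=0.70):
    """Team-level ILS score plus an a_wg(j) check for one leader's followers.

    follower_items: one list of item responses per follower (same items, same order).
    Returns (team mean, a_wg(j), True if a_wg(j) exceeds the cutoff).
    """
    n_items = len(follower_items[0])
    item_awgs = [
        awg_item([follower[j] for follower in follower_items], low, high)
        for j in range(n_items)
    ]
    awg_j = mean(item_awgs)  # a_wg(j) taken as the mean of the item-level indices
    team_score = mean(mean(follower) for follower in follower_items)
    return team_score, awg_j, awg_j > cutoff


# Hypothetical team of three followers answering three items
team = [[3, 3, 2], [3, 4, 2], [2, 3, 2]]
print(aggregate_team(team))
```

The index ranges from -1 (maximal disagreement given the mean) to 1 (perfect agreement), which is why a fixed cutoff such as .70 can be applied across teams with different mean ratings.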

To explore the relationship between discrepancies in leadership ratings and organizational climate (i.e., involvement climate and performance feedback climate), we conducted polynomial regression and response surface analyses (42-44). Consistent with past research using this technique, we focused on the slope and curvature along the y = x and y = -x lines of the response surface because they correspond directly to the substantive research questions of interest. The y = x line is the line along which follower and leader ratings are congruent, whereas the y = -x line is the line along which they are incongruent. We then examined the resulting response surfaces to characterize how organizational climate varied with congruence and incongruence between leader and follower ILS ratings.
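The four response surface quantities are linear combinations of the polynomial regression coefficients. In the model Z = b0 + b1X + b2Y + b3X² + b4XY + b5Y², substituting Y = X gives the congruence-line slope a1 = b1 + b2 and curvature a2 = b3 + b4 + b5, and substituting Y = -X gives the incongruence-line slope a3 = b1 - b2 and curvature a4 = b3 - b4 + b5. The sketch below encodes these standard formulas. Treating X as the leader self-rating and Y as the team rating is an assumption on our part; it is the assignment that reproduces the signs of the a-values reported in Table 3.

```python
def response_surface_tests(b1, b2, b3, b4, b5):
    """Slopes and curvatures along the lines of congruence and incongruence.

    Model (assumed): Z = b0 + b1*X + b2*Y + b3*X**2 + b4*X*Y + b5*Y**2,
    where X = leader self-rating and Y = team (follower) rating.
    The intercept b0 does not affect slopes or curvatures, so it is omitted.
    """
    return {
        "a1": b1 + b2,       # slope along the line of congruence (Y = X)
        "a2": b3 + b4 + b5,  # curvature along the line of congruence
        "a3": b1 - b2,       # slope along the line of incongruence (Y = -X)
        "a4": b3 - b4 + b5,  # curvature along the line of incongruence
    }


# Involvement coefficients from Table 3 (leader, team, leader^2, leader x team, team^2)
involvement = response_surface_tests(-0.09, 0.21, 0.23, -0.10, 0.09)
print({name: round(value, 2) for name, value in involvement.items()})
```

With the rounded involvement coefficients this yields a1 = .12, a2 = .22, a3 = -.30, and a4 = .42, matching Table 3. Testing each a-value for significance additionally requires the coefficient covariance matrix, which is not reported here.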

Results

Means, standard deviations, and correlations among the study variables included in the discrepancy analyses are presented in Table 2. Before conducting the polynomial regression and response surface analyses, we examined the ILS data to confirm that discrepancies existed (42). Three groups were identified: 31% (n=33) of leaders rated themselves higher than their followers rated them, 33% (n=35) rated themselves in agreement with their followers' ratings, and 36% (n=38) rated themselves lower than their followers rated them. Thus, over 65% (n=71) of the sample showed discrepancies.
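The grouping step can be sketched as below, assuming that leader self-ratings and aggregated team ratings are each standardized across leaders and that a difference of at least .5 SD in either direction marks a discrepancy. This is one way to apply the rule described in the Statistical Analyses section; the function names and toy data are hypothetical.

```python
from collections import Counter
from statistics import mean, stdev


def standardize(values):
    """Convert raw scores to z-scores using the sample mean and SD."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def classify_discrepancy(leader_scores, team_scores, threshold=0.5):
    """Label each leader by comparing standardized self- and team ratings.

    leader_scores[i] and team_scores[i] must refer to the same leader/team.
    """
    if len(leader_scores) != len(team_scores):
        raise ValueError("need one team score per leader")
    leader_z = standardize(leader_scores)
    team_z = standardize(team_scores)
    labels = []
    for lz, tz in zip(leader_z, team_z):
        diff = lz - tz
        if diff >= threshold:
            labels.append("over-rater")   # leader rates self higher than followers do
        elif diff <= -threshold:
            labels.append("under-rater")  # leader rates self lower ("humble leader")
        else:
            labels.append("in agreement")
    return labels


def summarize(labels):
    """Count and rounded percentage of leaders in each group."""
    counts = Counter(labels)
    n = len(labels)
    return {group: (c, round(100 * c / n)) for group, c in counts.items()}


# Hypothetical toy data (ILS means on the 0-4 scale)
leaders = [1.0, 2.0, 3.0, 2.5, 1.5]
teams = [3.0, 2.0, 1.0, 2.4, 1.6]
labels = classify_discrepancy(leaders, teams)
print(labels)
print(summarize(labels))
```

Standardizing each rating source separately puts leader and team scores on a common metric before differencing, so the .5 SD threshold is not affected by overall mean differences between self- and other-ratings.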

Table 2. Means, Standard Deviations, and Correlations among Study Variables.

| Variable | Mean | SD | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|---|
| 1. Provider ILS ratings | 2.42 | .79 | -- | | | |
| 2. Leader ILS ratings | 2.39 | .92 | .17 | -- | | |
| 3. OCM - Involvement | 1.81 | .48 | .27** | -.06 | -- | |
| 4. OCM - Performance Feedback | 1.95 | .43 | .31** | -.09 | .64** | -- |

Note: *p < .05. **p < .01.

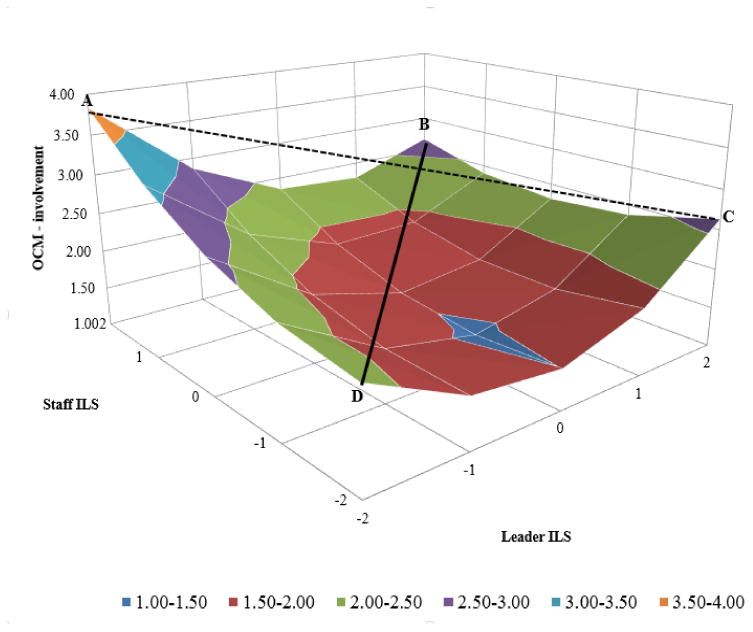

Results of the polynomial regression for associations between discrepancy on the ILS and the OCM Involvement scale are provided in Table 3, and the response surface is depicted in Figure 1. The line of incongruence (the dashed line in Figure 1) had a significant slope (a3 = -.30, t = -3.15, p < .01) and curvature (a4 = .42, t = 3.47, p < .01). The significant slope indicates that involvement climate scores were higher when leader ILS ratings were low and follower ILS ratings were high than when leader ILS ratings were high and follower ILS ratings were low. Thus, the association between discrepancy and involvement climate depended on who rated ILS more favorably (i.e., the direction of discrepancy matters). The significant positive curvature (i.e., convex surface) in Figure 1 indicates that involvement climate scores were higher as levels of discrepancy increased. With regard to the line of congruence (the solid line), the slope was non-significant (a1 = .12, t = 1.24, p = .22), indicating that involvement climate scores did not differ when leaders and followers agreed that ILS levels were high versus when they agreed that ILS levels were low. However, the curvature along the line of congruence was significant (a2 = .22, p < .01), indicating that the lowest levels of involvement occurred when there was agreement at intermediate ILS levels. As a follow-up analysis to clarify the nature of the findings, we compared the four corner points of the response surface, in line with the recommendations of Lee and Antonakis (45). This analysis revealed that involvement was highest at the left corner of the response surface (labeled A on the graph), where leaders rated themselves low and followers rated the leader high on the ILS. As summarized in Table 4, point A was significantly higher than all other corners of the surface (points B, C, and D), and the other three points did not differ from each other.
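The corner comparisons in Table 4 rest on predicted values from the fitted surface. The sketch below evaluates the Table 3 involvement equation at four corners. The corner coordinates are our inference, not stated in the text: placing the corners at ±2 units on each predictor (A = leader low/team high, B = both high, C = leader high/team low, D = both low) reproduces the Table 4 involvement values within rounding of the published coefficients. The F-tests comparing corners would additionally require the coefficient covariance matrix, which is not reported.

```python
def predict_climate(b, x, y):
    """Predicted climate from Z = b0 + b1*X + b2*Y + b3*X**2 + b4*X*Y + b5*Y**2."""
    b0, b1, b2, b3, b4, b5 = b
    return b0 + b1 * x + b2 * y + b3 * x ** 2 + b4 * x * y + b5 * y ** 2


# Rounded OCM Involvement coefficients from Table 3 (b0 ... b5)
INVOLVEMENT_B = (1.56, -0.09, 0.21, 0.23, -0.10, 0.09)

# Inferred corner coordinates (x = leader self-rating, y = team rating)
CORNERS = {"A": (-2, 2), "B": (2, 2), "C": (2, -2), "D": (-2, -2)}


def corner_values(b, corners=CORNERS):
    """Predicted value at each labeled corner, rounded to two decimals."""
    return {label: round(predict_climate(b, x, y), 2) for label, (x, y) in corners.items()}


print(corner_values(INVOLVEMENT_B))
```

Because the published coefficients are rounded to two decimals, small differences from Table 4 (e.g., about 2.68 rather than 2.67 at point B) are expected.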

Table 3. Polynomial Regression and Response Surface Analysis Results.

| Predictor | OCM Involvement, b | OCM Involvement, se | OCM Performance Feedback, b | OCM Performance Feedback, se |
|---|---|---|---|---|
| Constant | 1.56** | .10 | 1.69** | .07 |
| Implementation Leadership: Leader | -.09 | .06 | -.11* | .05 |
| Implementation Leadership: Team | .21** | .07 | .27** | .56 |
| Implementation Leadership: Leader squared | .23** | .07 | .27** | .06 |
| Implementation Leadership: Leader × Team | -.10* | .04 | -.12** | .03 |
| Implementation Leadership: Team squared | .09 | .10 | .09 | .07 |
| R² | .18 | | .37 | |
| Response surface tests | | | | |
| a1 | .12 | .10 | .16* | .07 |
| a2 | .22** | .08 | .23** | .08 |
| a3 | -.30** | .10 | -.37** | .07 |
| a4 | .42** | .12 | .47** | .08 |

Note: N = 80. Predictors were regressed onto each OCM climate scale. *p < .05. **p < .01.

Figure 1.

Response Surface for Involvement Climate predicted from discrepancy between leader and follower ratings of Implementation Leadership Scale Scores.

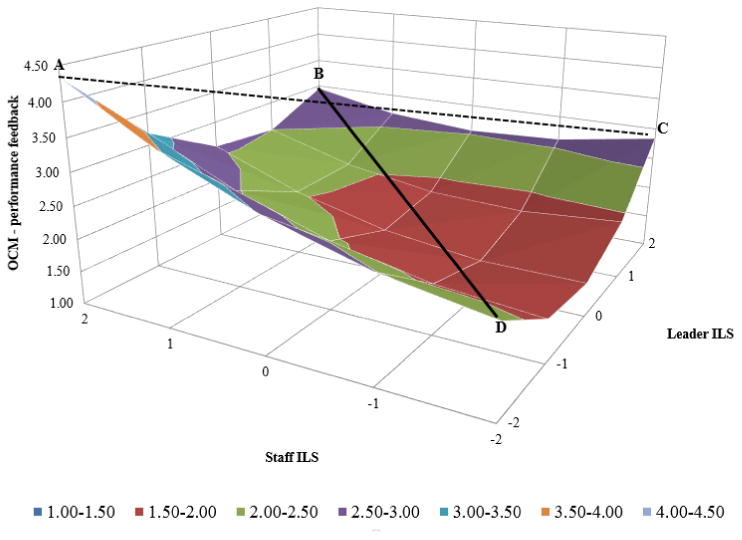

Table 4. Tests of Equality Between Predicted Values for Response Surfaces for Figure 1 (OCM Involvement) and Figure 2 (OCM Performance Feedback).

| | OCM Involvement | OCM Performance Feedback |
|---|---|---|
| Predicted value at specific point | | |
| A | 3.84 | 4.33 |
| B | 2.67 | 2.95 |
| C | 2.64 | 2.84 |
| D | 2.20 | 2.30 |
| Test of equality between predicted values (F-statistic) | | |
| Along the edges of the surface | | |
| A vs. B | 7.09* | 11.23** |
| B vs. C | .01 | .07 |
| C vs. D | .98 | 1.66 |
| D vs. A | 11.33** | 20.83** |
| Along diagonal lines | | |
| A vs. C | 10.38** | 26.42** |
| B vs. D | 2.70 | 9.17* |

Note: df for all F-statistics is (1, 74). *p < .05. **p < .001.

Table 3 provides the polynomial regression results for the associations between discrepancy on the ILS and the OCM Performance Feedback climate scale, and the corresponding response surface is provided in Figure 2. The line of incongruence (the dashed line in Figure 2) had a significant slope (a3 = -.37, t = -5.15, p < .001) and curvature (a4 = .47, t = 6.29, p < .001). The significant slope indicates that performance feedback scores were higher when leader ILS ratings were low and follower ILS ratings were high than when leader ILS ratings were high and follower ILS ratings were low. Thus, the association between discrepancy and performance feedback climate also depended on who rated ILS more favorably (i.e., the direction of discrepancy matters). The significant positive curvature (i.e., convex surface) indicates that performance feedback climate scores were higher as levels of discrepancy increased. With regard to the line of congruence (the solid line), the slope was also significant (a1 = .16, t = 2.25, p < .05), meaning that performance feedback scores differed when leaders and followers agreed that ILS levels were high versus when they agreed that ILS levels were low. Likewise, the curvature along the line of congruence was significant (a2 = .23, p < .01), indicating that the lowest levels of feedback climate occurred when there was agreement at intermediate ILS levels. Similar to the follow-up analysis conducted for involvement, we compared the four corner points of the response surface (45). This analysis revealed that performance feedback was highest at the left corner of the response surface (labeled A on the graph), where followers rated ILS high and leaders rated themselves low. As summarized in Table 4, point A was significantly higher than all other corners of the surface (points B, C, and D), point B was significantly higher than point D, and none of the other comparisons were significant.

Figure 2.

Response Surface for Performance Feedback Climate predicted from discrepancy between leader and follower ratings of Implementation Leadership Scale Scores.

Discussion

We found three almost equally distributed discrepancy/agreement groups: leaders and followers who agreed, leaders who rated themselves more positively than did their followers, and leaders who rated themselves lower than did their followers. We refer to the latter as “humble leaders.” Organizational climate for involvement and climate for feedback were most positive for humble leaders. These findings are consistent with research examining general leadership in other settings (32, 33) and support the effectiveness of humble leaders (46, 47). Moreover, discrepancies were associated with two aspects of organizational climate likely to be important for EBP implementation and sustainment.

Humble leadership was associated with significantly higher involvement climate and performance feedback climate than the opposite pattern, in which leaders rated themselves high but followers rated them low. This finding suggests that a leader-follower dynamic in which leaders rate themselves lower than their followers do may foster a more positive climate that supports the leader's capacity to implement EBPs. For example, leader humility is associated with increased humble behavior among followers and the development of shared team processes that support team goal achievement (48). However, the presence of humble leadership does not necessarily mean that EBPs will be implemented effectively. Effective leadership is likely a necessary but not sufficient condition for effective implementation, and leadership is one component of organizational capacity for implementation (49). Further research is needed to better understand how leader-follower discrepancies develop and influence followers' experiences of their workplace, and to examine additional factors that may affect implementation for both leaders and followers. Qualitative or mixed-methods approaches could be used to better understand leader and follower perceptions of leadership and their relationships to implementation climate (50) and to advance leadership and climate improvement strategies.

There are promising interventions for improving leadership and organizational context for implementation. The Leadership and Organizational Change for Implementation (LOCI) intervention (51) combines principles of transformational leadership with implementation leadership to train first-level leaders to develop more positive EBP implementation climate in their teams, while concurrently working with organizations to ensure the availability of organizational processes and supports (e.g., fidelity feedback, educational materials, coaching) for effective implementation. Another example is the ARC implementation strategy, which works across organizational levels to improve molar organizational culture and climate (52). In another approach, Zohar and Polachek (53) demonstrated that providing feedback to leaders about their followers' perceptions of their team's safety climate affected leader verbalizations and behaviors, organizational safety climate, and safety outcomes. Thus, multiple strategies (some with very low cost and burden) may be available to influence leader cognition and behavior and, ultimately, to improve organizational context and strategic outcomes.

There is a need for brief and pragmatic measures to guide leader development with the goal of changing strategic climate and improving implementation (54). Leader self-ratings can be compared with provider ratings of the leader to give leaders insight into the degree to which their own perspective is aligned with that of their followers. Thus, health and allied health care organizations can use the ILS so that leaders can assess their own leadership for EBP implementation at any stage of the implementation process, as outlined in the exploration, preparation, implementation, and sustainment (EPIS) implementation framework (1). In the early implementation phases (e.g., exploration and preparation), leaders might receive training in effective leadership to support EBP implementation. Such an implementation strategy could help facilitate the implementation process.

Some limitations of the present study should be noted. First, this study focused on organizational climate supportive of implementation as the distal outcome. Future studies of implementation leadership should examine additional outcomes such as implementation effectiveness, innovation effectiveness, and patient outcomes (55, 56). Second, this study was conducted in mental health organizations; the generalizability of these findings should be examined through replication in other health and allied health service sectors. Third, there were apparent differences in the race/ethnicity distributions of the leader and follower samples. There have been calls for leadership research to examine the degree to which such differences impact perceptions, relative power, and causality (57). Although this was beyond the scope of the present study, we recommend more detailed examination of these issues in future research. Finally, the data were cross-sectional; future research should examine these relationships prospectively and examine whether leader interventions affect leader-follower discrepancies.

Effective EBP implementation and sustainment is critical to improving the impact of effective interventions. Unfortunately, many implementation efforts fail or do not deliver interventions with the needed rigor or fidelity. It is therefore critical to understand how health care organization leaders and providers interact to create an organizational climate conducive to effective implementation and sustainment. The present study indicates that leader-follower discrepancy in implementation leadership ratings is associated with organizational climate relevant for EBP implementation. Leadership and organizational interventions to improve implementation and sustainment should be further developed and tested in order to advance implementation science and improve the public health impact of investments in clinical intervention development.

Footnotes

The authors report no conflicts of interest.

This work was previously presented at the [blinded] Conference.

Contributor Information

Gregory A. Aarons, University of California, San Diego - Psychiatry, 3020 Children's Way MC-5033, San Diego, California 92123; Child & Adolescent Services Research Center - San Diego, California

Mark G. Ehrhart, San Diego State University - Psychology, San Diego, California

Elisa M. Torres, University of California San Diego - Psychiatry, 9500 Gilman Dr. MC 0812, La Jolla, California 92093

Natalie K. Finn, University of California San Diego - Psychiatry, La Jolla, California

Rinad Beidas, University of Pennsylvania - Psychiatry, 3535 Market Street 3015, Philadelphia, Pennsylvania 19104.

References

- 1.Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38:4–23. doi: 10.1007/s10488-010-0327-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Damschroder L, Aron D, Keith R, et al. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4:50–64. doi: 10.1186/1748-5908-4-50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Proctor EK, Landsverk J, Aarons GA, et al. Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36:24–34. doi: 10.1007/s10488-008-0197-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Bammer G. Integration and implementation sciences: building a new specialization. Ecology and Society. 2005;2:6. [Google Scholar]

- 5.Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: The Evidence-Based Practice Attitude Scale (EBPAS) Mental Health Services Research. 2004;6:61–74. doi: 10.1023/b:mhsr.0000024351.12294.65. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Jacobs JA, Dodson EA, Baker EA, et al. Barriers to evidence-based decision making in public health: A national survey of chronic disease practitioners. Public Health Reports. 2010;125:736–42. doi: 10.1177/003335491012500516. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Beidas RS, Marcus S, Aarons GA, et al. Predictors of community therapists' use of therapy techniques in a large public mental health system. JAMA Pediatrics. 2015;169:374–82. doi: 10.1001/jamapediatrics.2014.3736. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Bass BM. Leadership and performance beyond expectations. New York, NY: Free Press; 1985. [Google Scholar]

- 9.Bass BM, Avolio BJ. The implications of transformational and transactional leadership for individual, team, and organizational development. In: Pasmore W, Woodman RW, editors. Research in organizational change and development. Greenwich, CT: JAI Press; 1990. [Google Scholar]

- 10. Aarons GA, Ehrhart MG, Farahnak LR, et al. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annual Review of Public Health. 2014;35:255–74. doi: 10.1146/annurev-publhealth-032013-182447.

- 11. Aarons GA, Sawitzky AC. Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychological Services. 2006;3:61–72. doi: 10.1037/1541-1559.3.1.61.

- 12. Aarons GA, Sawitzky AC. Organizational climate partially mediates the effect of culture on work attitudes and staff turnover in mental health services. Administration and Policy in Mental Health and Mental Health Services Research. 2006;33:289–301. doi: 10.1007/s10488-006-0039-1.

- 13. Ehrhart MG, Schneider B, Macey WH. Organizational climate and culture: An introduction to theory, research, and practice. New York, NY: Routledge; 2014.

- 14. Ehrhart MG. Leadership and procedural justice climate as antecedents of unit-level organizational citizenship behavior. Personnel Psychology. 2004;57:61–94.

- 15. Litwin G, Stringer R. Motivation and organizational climate. Cambridge, MA: Harvard University Press; 1968.

- 16. Zohar D, Tenne-Gazit O. Transformational leadership and group interaction as climate antecedents: A social network analysis. Journal of Applied Psychology. 2008;93:744–57. doi: 10.1037/0021-9010.93.4.744.

- 17. Tsui AS, Zhang Z-X, Wang H, et al. Unpacking the relationship between CEO leadership behavior and organizational culture. Leadership Quarterly. 2006;17:113–37.

- 18. Richardson HA, Vandenberg RJ. Integrating managerial perceptions and transformational leadership into a work-unit level model of employee involvement. Journal of Organizational Behavior. 2005;26:561–89.

- 19. Baker A, Perreault D, Reid A, et al. Feedback and organizations: feedback is good, feedback-friendly culture is better. Canadian Psychology. 2013;54:260–8.

- 20. Steelman LA, Levy PE, Snell AF. The feedback environment scale: construct definition, measurement, and validation. Educational and Psychological Measurement. 2004;64:165–84.

- 21. Michaelis B, Stegmaier R, Sonntag K. Shedding light on followers' innovation implementation behavior: The role of transformational leadership, commitment to change, and climate for initiative. Journal of Managerial Psychology. 2010;25:408–29.

- 22. Aarons GA, Sommerfeld DH. Leadership, innovation climate, and attitudes toward evidence-based practice during a statewide implementation. Journal of the American Academy of Child and Adolescent Psychiatry. 2012;51:423–31. doi: 10.1016/j.jaac.2012.01.018.

- 23. Bass BM, Avolio BJ. MLQ: Multifactor leadership questionnaire (Technical Report). Binghamton, NY: Center for Leadership Studies, Binghamton University; 1995.

- 24. Barling J, Loughlin C, Kelloway EK. Development and test of a model linking safety-specific transformational leadership and occupational safety. Journal of Applied Psychology. 2002;87:488–96. doi: 10.1037/0021-9010.87.3.488.

- 25. Zohar D. Modifying supervisory practices to improve subunit safety: A leadership-based intervention model. Journal of Applied Psychology. 2002;87:156–63. doi: 10.1037/0021-9010.87.1.156.

- 26. Schneider B, Ehrhart MG, Mayer DM, et al. Understanding organization-customer links in service settings. Academy of Management Journal. 2005;48:1017–32.

- 27. Aarons GA, Ehrhart MG, Farahnak LR. The Implementation Leadership Scale (ILS): Development of a brief measure of unit level implementation leadership. Implementation Science. 2014;9:45. doi: 10.1186/1748-5908-9-45.

- 28. Martinez RG, Lewis CC, Weiner BJ. Instrumentation issues in implementation science. Implementation Science. 2014;9:118. doi: 10.1186/s13012-014-0118-8.

- 29. Glasgow RE, Riley WT. Pragmatic measures: what they are and why we need them. American Journal of Preventive Medicine. 2013;45:237–43. doi: 10.1016/j.amepre.2013.03.010.

- 30. Priestland A, Hanig R. Developing first-level leaders. Harvard Business Review. 2005;83:112–20.

- 31. Atwater LE, Yammarino FJ. Self–other rating agreement. In: Ferris GR, editor. Research in personnel and human resources management. Greenwich, CT: JAI Press; 1997.

- 32. Van Velsor E, Taylor S, Leslie J. An examination of the relationships among self-perception accuracy, self-awareness, gender, and leader effectiveness. Human Resource Management. 1993;32:249–64.

- 33. Atwater LE, Roush P, Fischthal A. The influence of upward feedback on self and follower ratings of leadership. Personnel Psychology. 1995;48:35–59.

- 34. Berson Y, Sosik JJ. The relationship between self–other rating agreement and influence tactics and organizational processes. Group & Organization Management. 2007;32:675–98.

- 35. Aarons GA, Ehrhart MG, Farahnak LR, et al. Discrepancies in leader and follower ratings of transformational leadership: Relationships with organizational culture in mental health. Administration and Policy in Mental Health and Mental Health Services Research. doi: 10.1007/s10488-015-0672-7. In press.

- 36. Aarons GA, Sommerfeld DH, Hecht DB, et al. The impact of evidence-based practice implementation and fidelity monitoring on staff turnover: Evidence for a protective effect. Journal of Consulting and Clinical Psychology. 2009;77:270–80. doi: 10.1037/a0013223.

- 37. Chaffin M, Hecht D, Bard D, et al. A statewide trial of the SafeCare home-based services model with parents in Child Protective Services. Pediatrics. 2012;129:509–15. doi: 10.1542/peds.2011-1840.

- 38. Finn NK, Torres EM, Ehrhart MG, et al. Cross-validation of the Implementation Leadership Scale (ILS) in child welfare service organizations. Child Maltreatment. doi: 10.1177/1077559516638768. In press.

- 39. Patterson MG, West MA, Shackleton VJ, et al. Validating the organizational climate measure: Links to managerial practices, productivity and innovation. Journal of Organizational Behavior. 2005;26:379–408.

- 40. Brown RD, Hauenstein NMA. Interrater agreement reconsidered: An alternative to the rwg indices. Organizational Research Methods. 2005;8:165–84.

- 41. Fleenor JW, McCauley CD, Brutus S. Self-other rating agreement and leader effectiveness. Leadership Quarterly. 1996;7:487–506.

- 42. Shanock LR, Baran BE, Gentry WA, et al. Polynomial regression with response surface analysis: A powerful approach for examining moderation and overcoming limitations of difference scores. Journal of Business and Psychology. 2010;25:543–54.

- 43. Edwards JR. Alternatives to difference scores: Polynomial regression and response surface methodology. In: Drasgow F, Schmitt NW, editors. Advances in measurement and data analysis. San Francisco: Jossey-Bass; 2002.

- 44. Shanock LR, Allen JA, Dunn AM, et al. Less acting, more doing: How surface acting relates to perceived meeting effectiveness and other employee outcomes. Journal of Occupational and Organizational Psychology. 2013;86:457–76.

- 45. Lee YT, Antonakis J. When preference is not satisfied but the individual is: how power distance moderates person–job fit. Journal of Management. 2014;40:641–75.

- 46. Morris JA, Brotheridge CM, Urbanski JC. Bringing humility to leadership: Antecedents and consequences of leader humility. Human Relations. 2005;58:1323–50.

- 47. Owens BP, Hekman DR. Modeling how to grow: An inductive examination of humble leader behaviors, contingencies, and outcomes. Academy of Management Journal. 2012;55:787–818.

- 48. Owens B, Hekman DR. How does leader humility influence team performance? Exploring the mechanisms of contagion and collective promotion focus. Academy of Management Journal. In press.

- 49. Guerrero EG, Aarons GA, Palinkas LA. Organizational capacity for service integration in community-based addiction health services. American Journal of Public Health. 2014;104:e40–e7. doi: 10.2105/AJPH.2013.301842.

- 50. Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS). Implementation Science. 2014;9:157. doi: 10.1186/s13012-014-0157-1.

- 51. Aarons GA, Ehrhart MG, Farahnak LR, et al. Leadership and organizational change for implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implementation Science. 2015;10:11. doi: 10.1186/s13012-014-0192-y.

- 52. Glisson C, Schoenwald SK, Hemmelgarn A, et al. Randomized trial of MST and ARC in a two-level evidence-based treatment implementation strategy. Journal of Consulting and Clinical Psychology. 2010;78:537–50. doi: 10.1037/a0019160.

- 53. Zohar D, Polachek T. Discourse-based intervention for modifying supervisory communication as leverage for safety climate and performance improvement: A randomized field study. Journal of Applied Psychology. 2014;99:113. doi: 10.1037/a0034096.

- 54. Lewis CC, Weiner BJ, Stanick C, et al. Advancing implementation science through measure development and evaluation: a study protocol. Implementation Science. 2015;10:102. doi: 10.1186/s13012-015-0287-0.

- 55. Klein KJ, Conn AB, Sorra JS. Implementing computerized technology: An organizational analysis. Journal of Applied Psychology. 2001;86:811–24. doi: 10.1037/0021-9010.86.5.811.

- 56. Proctor EK, Silmere H, Raghavan R, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38:65–76. doi: 10.1007/s10488-010-0319-7.

- 57. Ospina S, Foldy E. A critical review of race and ethnicity in the leadership literature: Surfacing context, power and the collective dimensions of leadership. The Leadership Quarterly. 2009;20:876–96.