Abstract

Research in psychology and neuroscience has successfully modeled decision making as a process of noisy evidence accumulation to a decision bound. While there are several variants and implementations of this idea, the majority of these models make use of a noisy accumulation between two absorbing boundaries. A common assumption of these models is that decision parameters, e.g., the rate of accumulation (drift rate), remain fixed over the course of a decision, allowing the derivation of analytic formulas for the probabilities of hitting the upper or lower decision threshold, and the mean decision time. There is reason to believe, however, that many types of behavior would be better described by a model in which the parameters were allowed to vary over the course of the decision process.

In this paper, we use martingale theory to derive formulas for the mean decision time, hitting probabilities, and first passage time (FPT) densities of a Wiener process with time-varying drift between two time-varying absorbing boundaries. This model was first studied by Ratcliff (1980) in the two-stage form, and here we consider the same model for an arbitrary number of stages (i.e. intervals of time during which parameters are constant). Our calculations enable direct computation of mean decision times and hitting probabilities for the associated multistage process. We also provide a review of how martingale theory may be used to analyze similar models employing Wiener processes by re-deriving some classical results. In concert with a variety of numerical tools already available, the current derivations should encourage mathematical analysis of more complex models of decision making with time-varying evidence.

1. Introduction

Continuous time stochastic processes modeling a particle's diffusion (with drift) towards one of two absorbing boundaries have been used in a wide variety of applications including statistical physics (Farkas and Fulop, 2001), finance (Lin, 1998), economics (Webb, 2015), and health science (Horrocks and Thompson, 2004). Varieties of such models have also been applied extensively within psychology and neuroscience to describe both the behavior and neural activity associated with decision processes involved in perception, memory, attention, and cognitive control (Heath, 1992; Ratcliff and Rouder, 1998; Ratcliff and McKoon, 2008; Simen et al., 2009; Gold and Shadlen, 2001, 2007; Brunton et al., 2013; Feng et al., 2009; Shadlen and Newsome, 2001; Diederich and Oswald, 2014, 2016); for reviews see (Ratcliff and Smith, 2004; Bogacz et al., 2006; Busemeyer and Diederich, 2010).

In these stochastic accumulation decision models, the state variable x(t) is thought to represent the amount of accumulated noisy evidence at time t for decisions represented by the two absorbing boundaries, which we refer to as the upper (+) and lower (–) thresholds (boundaries). The evidence x(t) evolves in time according to a biased random walk with Gaussian increments, which may be written as dx(t) ~ Normal(μ dt, σ² dt), and a decision is said to be made at the random time τ, the smallest time t for which x(t) hits either the upper threshold (x(τ) = +ζ) or the lower threshold (x(τ) = −ζ), also known as the first passage time (FPT). The resulting decision dynamics are thus described by the FPT of the underlying model. In studying these processes one is often interested in relating the mean decision time and the probability of hitting a certain threshold (e.g. the probability of making a certain decision) to empirical data. For example, these metrics can offer valuable insight into how actions and cognitive processes might maximize reward rate, which is a simple function of the FPT properties (Bogacz et al., 2006).

However, not all decisions can be properly modeled if parameters are fixed throughout the duration of the decision process. Certain contexts can be better described by a model whose parameters change with time. In this article we analyze the time-dependent version of the Wiener process with drift between two absorbing boundaries, building on recent work that is focused on similar time-varying random walk models (Hubner et al., 2010; Diederich and Oswald, 2014). After reviewing how martingale theory can be used to analyze and re-derive the classical FPT results for the time independent case, we calculate results for the time-dependent case. The main theoretical results are presented in §5.2, where we provide closed form expressions for threshold-hitting probabilities and expected decision times. In Appendix D, we also describe how our methods can be applied to the more general Ornstein-Uhlenbeck (O-U) processes, which are similar to the Wiener diffusion processes albeit with an additional “leak” term. We conclude with a summary of the results and a discussion of how the present work interfaces with other similar analyses of time-varying random walk models.

2. Notation and terminology

Here we introduce the notation and terminology for describing the model we analyze, which is a Wiener process with (time-dependent) piecewise constant parameters. This simple stochastic model, and others close to it, have been studied before (Ratcliff, 1980; Heath, 1992; Smith, 2000; Diederich and Busemeyer, 2003, 2006; Diederich and Oswald, 2014; Bogacz et al., 2006; Wagenmakers et al., 2007), although the reader should note that our parameterization differs from that of some previous studies. Before describing the model, we first review the case where parameters are unchanging with time. In order to easily discuss this simpler model alongside the main time-dependent model analyzed in §5, we use the terms single-stage model and multistage model, respectively. Readers familiar with the popular Diffusion Decision Model of Ratcliff and McKoon (2008) should be aware that parameters in our model do not vary randomly trial-to-trial. Readers familiar with Bogacz et al. (2006) should be aware that the single-stage model (1) is equivalent to what Bogacz et al. (2006) call the “pure” Drift Diffusion Model.

2.1. The single-stage model/process with constant parameters

Consider the stochastic differential equation (SDE):

$$dx(t) = \mu\,dt + \sigma\,dW(t), \qquad x(0) = x_0, \tag{1}$$

where parameters μ and σ are constants referred to as the drift and diffusion rates, respectively; x0 is the initial condition (the initial evidence or starting point) of the decision process; and σdW(t) are independent Wiener increments with variance σ² dt. This simple stochastic model has successfully modeled the evolution of evidence between two decisions during a two-alternative forced choice task (Ratcliff and Rouder, 1998; Bogacz et al., 2006; Ratcliff et al., 2016), so that (1) can be interpreted as modeling a decision process in which an agent is integrating noisy evidence until sufficient evidence is gathered in favor of one of the two alternatives.

A decision is made when the evidence x(t) crosses one of the two symmetric decision thresholds ±ζ for the first time, also referred to as its first passage time (FPT). In other words, a decision occurs the instant x(t) crosses and is absorbed by one of the two boundaries. The two boundaries each correspond to one of the two possible decisions for the task. We will refer to the absorbing thresholds at +ζ and −ζ as the upper and lower decision boundaries/thresholds. To contrast with the next section, we will sometimes refer to this model with time-invariant parameters as the single-stage model or single-stage process. We again note that the parameterization used here differs from that employed by others (Smith, 2000; Ratcliff and Smith, 2004; Navarro and Fuss, 2009), although the underlying model is equivalent. Our formulation, compared to some others, does not include a parameter for “non-decision time” or “timeout” for a given trial. Such a term could be incorporated by shifting the entire reaction time distribution – it has no effect on any of our analyses.
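To make the single-stage process concrete, the following is a minimal Euler-Maruyama simulation sketch of (1). It is not part of the original analysis: the function name, the step size `dt`, and the cutoff `t_max` are illustrative assumptions, and the discretization slightly overestimates decision times because crossings are only detected on the time grid.

```python
import math
import random


def simulate_single_stage(mu, sigma, zeta, x0=0.0, dt=1e-3, rng=None, t_max=100.0):
    """Simulate one trial of dx = mu*dt + sigma*dW between thresholds +/-zeta.

    Returns (choice, t): choice is +1 (upper), -1 (lower), or None if no
    threshold was reached before t_max (t_max is an illustrative safeguard).
    """
    if rng is None:
        rng = random.Random()
    x, t = x0, 0.0
    sqrt_dt = math.sqrt(dt)
    while abs(x) < zeta and t < t_max:
        # Gaussian increment with mean mu*dt and variance sigma^2*dt
        x += mu * dt + sigma * sqrt_dt * rng.gauss(0.0, 1.0)
        t += dt
    if abs(x) < zeta:
        return None, t
    return (1 if x >= zeta else -1), t
```

Averaging `choice` and `t` over many simulated trials gives Monte Carlo estimates of the hitting probabilities and mean decision time, which can be compared against the analytic expressions derived in §3.3.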

2.2. Time-dependent, piecewise constant parameters

The assumption that model parameters remain constant throughout the decision process is unlikely to hold in many situations. For example, the quality of evidence may not be stationary (i.e., the drift rate and diffusion rate are not constant with respect to time) or decision urgency may require thresholds to collapse in order to force a decision by some deadline (Cisek et al., 2009; Mormann et al., 2010; Zhang et al., 2014; Drugowitsch et al., 2012).

In order to analyze such situations, we focus our present study on a two-stage model originally analyzed by Ratcliff (1980), which we generalize to an arbitrary number of stages. We refer to this slightly generalized model as a multistage model or multistage process, to distinguish it from (1) above. The multistage model allows for the drift rate, diffusion rate, and thresholds to be piecewise constant functions of time.

To fully describe the multistage model, we first partition the set of non-negative real numbers (i.e. time axis) into n intervals (or stages) [ti–1, ti], i ∈ {1, . . . , n} with t0 = 0 and tn = +∞. We then assume that the drift rate, the diffusion rate, and the decision thresholds are constant within each interval, but that their values change between intervals. Evidence integration is thus modeled by

$$dx(t) = \mu(t)\,dt + \sigma(t)\,dW(t), \qquad x(0) = x_0, \tag{2}$$

where

$$\mu(t) = \mu_i, \qquad \sigma(t) = \sigma_i, \qquad t_{i-1} \le t < t_i,$$

for each i ∈ {1, . . . , n}. The above assumptions are identical to the assumptions in Diederich and Oswald (2014, 2016). If n = 1, the multistage model reduces to the single-stage model (1). For expository clarity, we begin by assuming the decision thresholds are fixed at ±ζ, and in §6 we generalize to time-varying (piecewise constant) thresholds. Let τ be the first passage (decision) time for the multistage model.

We will frequently refer to the i-th stage of (2), which for t > ti–1, is written as

$$dx(t) = \mu_i\,dt + \sigma_i\,dW(t), \qquad x(t_{i-1}) = X_{i-1}, \tag{3}$$

where the initial condition Xi–1 is a random variable defined as x(ti–1) conditioned on there being no decision (threshold-crossing) before time ti–1. More precisely, the density of Xi–1 is the conditional distribution of x(ti–1) given that τ > ti–1. Thus, the random variable Xi–1 corresponds to realizations of the multistage model that remain within the thresholds ±ζ until time ti–1. For the first stage, X0 could be a point mass centered at x0, or it may be a random variable capturing the variability in starting points (Ratcliff and Rouder, 1998). The key difference between (3) and the single-stage process (1) is that the initial condition Xi–1 is a random variable whose distribution is determined by previous stages.
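The multistage process (2) can be sketched in the same way by switching the drift and diffusion rates whenever the simulated time passes a stage boundary. This is an illustrative sketch only: the function name, the list-based parameter layout (`mus`, `sigmas`, `t_edges`), and the numerical settings are assumptions, and the thresholds are held fixed at ±ζ as in §5.

```python
import math
import random


def simulate_multistage(x0, mus, sigmas, t_edges, zeta, dt=1e-3, rng=None, t_max=100.0):
    """Simulate one trial of the piecewise-constant model between +/-zeta.

    mus[i], sigmas[i] are the drift and diffusion rates of stage i+1.
    t_edges = [t_1, ..., t_{n-1}] are the switching times (t_0 = 0 and
    t_n = +infinity are implied), so len(mus) == len(t_edges) + 1.
    """
    if rng is None:
        rng = random.Random()
    x, t, stage = x0, 0.0, 0
    sqrt_dt = math.sqrt(dt)
    while abs(x) < zeta and t < t_max:
        # advance to the stage whose interval contains the current time
        while stage < len(t_edges) and t >= t_edges[stage]:
            stage += 1
        x += mus[stage] * dt + sigmas[stage] * sqrt_dt * rng.gauss(0.0, 1.0)
        t += dt
    if abs(x) < zeta:
        return None, t
    return (1 if x >= zeta else -1), t
```

Recording `x` at each switching time for trials that have not yet terminated gives empirical samples of the random initial conditions Xi described above.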

3. Martingale theory applied to the single-stage model

In this section, we give an introduction to the basic properties of martingales and the optional stopping theorem, which are the key mathematical tools used in calculating our main results in §5. For readers who are less familiar with martingale methods, we first derive the mean decision time, hitting probabilities, and FPT densities for the single- and two-stage models. These analyses provide an alternate approach to deriving these classical results as compared to other non-martingale based approaches (Ratcliff, 1980; Diederich and Oswald, 2014). We discuss the differences between these various approaches in §8.

3.1. Continuous time martingales

Consider a continuous time stochastic process η(t), t > 0. Let η(t1 : t2) denote the portion of η(t) between times t1 and t2. A stochastic process M(t) is said to be a martingale with respect to η(t) if the following three conditions hold:

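- M(t) is a function of η(0 : t) and does not depend on future values of η(t);¹

- M(t) is integrable, i.e., $\mathbb{E}[|M(t)|] < \infty$ for each t ≥ 0;

- M(t) satisfies the stationarity condition in expected value

$$\mathbb{E}[M(t_2) \mid \eta(0 : t_1)] = M(t_1), \quad \text{for all } 0 \le t_1 < t_2. \tag{4}$$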

The first condition means that given the realized values η(0 : t), we should be able to compute M(t) deterministically, so that M(t) does not depend on the future. The second condition is a regularity condition that ensures that M(t) is sufficiently² light-tailed and holds under several decision-making scenarios. The third condition is the most crucial – it enforces stationarity in expected value. This last condition can be interpreted as a “fair play” condition ensuring that chances of gaining and losing starting with value M(t1) at time t1 are the same, as in the classic example of a sequence of flips of a fair coin. When introducing a martingale, one often does not explicitly specify the process η(t), and in this case η(t) is assumed equal to M(t).

Martingale theory is very broad and there are many different choices for M(t) and η which are interesting. All of the calculations and results of this paper are constructed from two fundamental stochastic processes, which we now introduce. Let W(t) be the standard Wiener process and X(t) = μt + σW(t) be a single-stage Wiener process with drift rate μ and diffusion rate σ (without boundaries). We now consider some martingales associated with these two stochastic processes:

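- W(t) is a martingale. It is easy to verify that for 0 ≤ t1 < t2, $\mathbb{E}[W(t_2) \mid W(0 : t_1)] = W(t_1)$. Similarly, X(t) – μt is a martingale.

- W(t)² – t is a martingale. Note that for 0 ≤ t1 < t2, conditioned on W(t1), W(t2) has a Gaussian distribution with mean W(t1) and variance (t2 – t1). Therefore,

$$\mathbb{E}[W(t_2)^2 - t_2 \mid W(0 : t_1)] = W(t_1)^2 + (t_2 - t_1) - t_2 = W(t_1)^2 - t_1.$$

- For any $\lambda \in \mathbb{R}$, exp(λX(t) – λμt – λ²σ²t/2) is a martingale. Note that for 0 ≤ t1 < t2, conditioned on X(t1), X(t2) has a Gaussian distribution with mean X(t1) + μ(t2 – t1) and variance σ²(t2 – t1). Thus,

$$\mathbb{E}\big[e^{\lambda X(t_2) - \lambda\mu t_2 - \lambda^2\sigma^2 t_2/2} \mid X(0 : t_1)\big] = e^{\lambda X(t_1) + \lambda\mu(t_2 - t_1) + \lambda^2\sigma^2(t_2 - t_1)/2 - \lambda\mu t_2 - \lambda^2\sigma^2 t_2/2} = e^{\lambda X(t_1) - \lambda\mu t_1 - \lambda^2\sigma^2 t_1/2}.$$

For λ = −2μ/σ², this martingale reduces to exp(−2μX(t)/σ²), which is referred to as the exponential martingale.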
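As a numerical sanity check on the third martingale, one can verify that its expected value at a fixed time equals its initial value of 1. The sketch below does this by midpoint-rule integration against the Gaussian law of X(t) with X(0) = 0. It checks only a necessary consequence of the martingale property, not the property itself, and the function names and grid settings are illustrative assumptions.

```python
import math


def gaussian_expectation(f, mean, var, n_grid=20000, width=12.0):
    """Midpoint-rule approximation of E[f(Z)] for Z ~ Normal(mean, var)."""
    sd = math.sqrt(var)
    lo = mean - width * sd
    h = 2.0 * width * sd / n_grid
    total = 0.0
    for j in range(n_grid):
        z = lo + (j + 0.5) * h
        pdf = math.exp(-(z - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)
        total += f(z) * pdf * h
    return total


def exp_martingale_mean(lam, mu, sigma, t):
    """E[exp(lam*X(t) - lam*mu*t - lam^2*sigma^2*t/2)] for X(t) = mu*t + sigma*W(t)."""
    compensator = lam * mu * t + 0.5 * lam ** 2 * sigma ** 2 * t
    return gaussian_expectation(
        lambda x: math.exp(lam * x - compensator), mu * t, sigma ** 2 * t
    )
```

For example, `exp_martingale_mean(-2 * mu / sigma**2, mu, sigma, t)` evaluates the expectation of the exponential martingale exp(−2μX(t)/σ²), which should be close to 1 for any t > 0.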

3.2. Stopping times and the optional sampling theorem

The first passage time τ is a random variable defined by τ = inf{t > 0|x(t) ∉ (−ζ, ζ)}. We are interested in computing conditional expectations and probability densities of τ, which correspond to expected decision times and the corresponding distributions of response times. The key tool we borrow from the theory of martingales is a classic result known as Doob's optional sampling theorem (also known as the optional stopping theorem), which we motivate and introduce here. To understand the optional sampling theorem, one must first recall that the expected value of a martingale M(t) computed over all realizations starting from M(0) is equal to the initial expectation of M(0). That is, martingales by definition must satisfy the following:

$$\mathbb{E}[M(t)] = \mathbb{E}[M(0)], \quad \text{for all } t \ge 0.$$

One then wonders: Does a similar property extend to the random time τ? More specifically, if we consider different realizations of τ and compute averages of M(τ) at these realized values, does this average, as the number of realizations grows large, converge to the expected value of M(0)? The answer is affirmative if τ is well behaved and is independent of the process M(t). Indeed, in this case

$$\mathbb{E}[M(\tau)] = \mathbb{E}\big[\mathbb{E}[M(\tau) \mid \tau]\big] = \mathbb{E}\big[\mathbb{E}[M(0)]\big] = \mathbb{E}[M(0)], \tag{5}$$

where the outer expectation is with respect to τ and the inner expectation is with respect to M(t).

But what if τ is not independent of M(t)? In these cases the situation is more subtle. Suppose τ is bounded from above by t̄. Then, we can write

$$M(\tau) = M(0) + \int_0^{\bar{t}} \mathbf{1}(\tau \ge u)\, dM(u),$$

where 1(·) is the indicator function that takes value 0 if its argument is false and 1 otherwise, and dM(u) is the (random) increment in M(u) at time u. If we assume that the value 1(τ ≥ u) can be deterministically computed based on the knowledge of M(0 : u), we can then write

$$\mathbb{E}[M(\tau)] - \mathbb{E}[M(0)] = \int_0^{\bar{t}} \mathbb{E}\big[\mathbf{1}(\tau \ge u)\, dM(u)\big] = \int_0^{\bar{t}} \mathbb{E}\Big[\mathbb{E}\big[\mathbf{1}(\tau \ge u)\, dM(u) \mid M(0 : u)\big]\Big],$$

where the second equality follows from the law of total expectation³. The first equality requires swapping of integral and expectation operators, which is allowed because t̄ is finite. Furthermore, 1(τ ≥ u) is a deterministic function of M(0 : u) and thus $\mathbb{E}[\mathbf{1}(\tau \ge u)\, dM(u) \mid M(0 : u)] = \mathbf{1}(\tau \ge u)\, \mathbb{E}[dM(u) \mid M(0 : u)] = 0$, where the last equality follows by the definition of a martingale. Consequently, $\mathbb{E}[M(\tau)] = \mathbb{E}[M(0)]$ for a random variable τ and martingale M(t) if (i) the event τ ≥ u is determined by M(0 : u), and (ii) τ is bounded from above with probability one. A random variable satisfying the first condition is called a stopping time, and the above discussion is the optional sampling theorem, which we formally state:

The optional sampling theorem

Suppose M(t), t ≥ 0, is a martingale with respect to η(t) and τ is a bounded (with probability one) stopping time with respect to η(t). Then $\mathbb{E}[M(\tau)] = \mathbb{E}[M(0)]$.

Heuristically, the optional sampling theorem states that different realizations of a martingale M(t) stopped at random times average out to constitute a fair game. The crucial aspect is that the stationarity of the expected value holds even for random (stopping) times, including our first passage time τ. As we will see in §3.3, this stationarity enables us to calculate analytic expressions for first passage time properties by finding appropriate martingales.

A helpful example is to consider the standard Wiener process with initial position at X(0) = x0, and absorbing thresholds at ±ζ, with x0 between ±ζ. In this case X(t) is itself a martingale, the first passage time τ is a stopping time, and the optional sampling theorem says that $\mathbb{E}[X(0)] = \mathbb{E}[X(\tau)]$. The expectation on the left hand side is simply the average of the initial distribution of X(0), which is the number x0. The right hand side is more interesting: X(τ) is the random value of X(t) at the random decision time τ, the instant X(t) crosses +ζ or −ζ. Thus X(τ) attains one of two possible values, +ζ or −ζ, and $\mathbb{E}[X(\tau)]$ heuristically resembles an average over all +ζ's and −ζ's corresponding to sample paths starting at x0 and diffusing until they hit either +ζ or −ζ at τ. The optional sampling theorem says that this average of +ζ's and −ζ's, over all such sample paths, ends up being equal to the number x0.

3.3. Applications to the single-stage model

The optional sampling theorem is a powerful mathematical tool for decision-making models that associate decisions and decision times with a diffusion process crossing a threshold. Ratcliff's Diffusion Decision Model (Ratcliff, 1978; Ratcliff and McKoon, 2008), the leaky competing accumulator model (Usher and McClelland, 2001), and the EZ diffusion model (Wagenmakers et al., 2007) are popular examples of such models. The optional sampling theorem reduces the problem of computing analytic expressions for the statistics of the first passage times to identifying appropriate martingales. In this section we illustrate the flavor of such calculations for the single-stage model from (1) in §2.1. Recall that the decision time τ is defined by τ = inf{t > 0|x(t) ∉ (−ζ, ζ)}. Throughout this section, we introduce θ = (μ, σ, ζ) to slightly condense the notation when desired.

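We first compute $\mathbb{P}(x(\tau) = -\zeta)$, the probability of hitting the lower threshold. First, we let s = μ/σ², the ratio of the drift parameter to the squared diffusion parameter (i.e. signal to noise). Recall from §3.1 that for μ ≠ 0, exp(−2sX) is a martingale; since x(t) = x0 + X(t), so is exp(−2sx(t)). Applying the optional sampling theorem, we get

$$e^{-2 s x_0} = \mathbb{E}\big[e^{-2 s x(\tau)}\big] = e^{-2 s \zeta}\, \mathbb{P}(x(\tau) = \zeta) + e^{2 s \zeta}\, \mathbb{P}(x(\tau) = -\zeta).$$

Substituting $\mathbb{P}(x(\tau) = \zeta) = 1 - \mathbb{P}(x(\tau) = -\zeta)$ and solving for $\mathbb{P}(x(\tau) = -\zeta)$, we obtain a closed form expression

$$\mathbb{P}(x(\tau) = -\zeta) = \frac{e^{-2 s x_0} - e^{-2 s \zeta}}{e^{2 s \zeta} - e^{-2 s \zeta}}.$$

Similarly, for μ = 0, we note that X(t) is a martingale. Applying the optional sampling theorem, we get

$$x_0 = \mathbb{E}[x(\tau)] = \zeta\, \mathbb{P}(x(\tau) = \zeta) - \zeta\, \mathbb{P}(x(\tau) = -\zeta),$$

and following the same argument we obtain $\mathbb{P}(x(\tau) = -\zeta) = (\zeta - x_0)/(2\zeta)$. In summary, we get

$$P^{-}(x_0, \theta) = \begin{cases} \dfrac{e^{-2 s x_0} - e^{-2 s \zeta}}{e^{2 s \zeta} - e^{-2 s \zeta}}, & \mu \ne 0, \\[2ex] \dfrac{\zeta - x_0}{2\zeta}, & \mu = 0, \end{cases} \qquad P^{+}(x_0, \theta) = 1 - P^{-}(x_0, \theta), \tag{6}$$

where P±(x0, θ) is the probability of hitting the upper and the lower threshold, respectively.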
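The hitting probabilities are straightforward to evaluate numerically. The sketch below implements the closed form obtained from the optional sampling argument above, with the driftless case handled separately. The function names are illustrative assumptions, and no special care is taken for very large |μ|ζ/σ², where the exponentials can overflow.

```python
import math


def p_lower(x0, mu, sigma, zeta):
    """Probability that x(t) started at x0 reaches -zeta before +zeta."""
    if mu == 0.0:
        # driftless case: from x0 = zeta * (P+ - P-)
        return (zeta - x0) / (2.0 * zeta)
    s = mu / sigma ** 2  # ratio of drift to squared diffusion rate
    num = math.exp(-2.0 * s * x0) - math.exp(-2.0 * s * zeta)
    den = math.exp(2.0 * s * zeta) - math.exp(-2.0 * s * zeta)
    return num / den


def p_upper(x0, mu, sigma, zeta):
    """Probability of reaching +zeta first (complement of p_lower)."""
    return 1.0 - p_lower(x0, mu, sigma, zeta)
```

With an unbiased starting point x0 = 0 and μ ≠ 0, `p_lower` reduces to 1/(1 + exp(2μζ/σ²)), and reflecting both the drift and the starting point swaps the roles of the two thresholds.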

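To compute the expected decision time $\mathbb{E}[\tau]$, recall from §3.1 that X(t) – μt is a martingale. For μ ≠ 0, applying the optional sampling theorem yields

$$x_0 = \mathbb{E}[x(\tau) - \mu\tau] = \zeta\,\big(1 - 2\,\mathbb{P}(x(\tau) = -\zeta)\big) - \mu\, \mathbb{E}[\tau].$$

Solving for $\mathbb{E}[\tau]$, we get $\mathbb{E}[\tau] = \big(\zeta(1 - 2P^{-}(x_0, \theta)) - x_0\big)/\mu$. When μ = 0, recall from §3.1 that W(t)² – t is a martingale, so that x(t)² – σ²t is also a martingale, and the same argument as above yields $\mathbb{E}[\tau] = (\zeta^2 - x_0^2)/\sigma^2$. In summary, the mean decision time mDT(x0, θ) is given by

$$\mathrm{mDT}(x_0, \theta) = \begin{cases} \dfrac{\zeta\,\big(1 - 2P^{-}(x_0, \theta)\big) - x_0}{\mu}, & \mu \ne 0, \\[2ex] \dfrac{\zeta^2 - x_0^2}{\sigma^2}, & \mu = 0. \end{cases} \tag{7}$$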
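The mean decision time can be sketched in code in the same way. The block below is self-contained and therefore repeats the lower-threshold probability; the function names are illustrative assumptions, and the formulas are the closed forms that follow from the two martingale arguments above.

```python
import math


def p_lower(x0, mu, sigma, zeta):
    """Probability of reaching -zeta before +zeta, starting from x0."""
    if mu == 0.0:
        return (zeta - x0) / (2.0 * zeta)
    s = mu / sigma ** 2
    num = math.exp(-2.0 * s * x0) - math.exp(-2.0 * s * zeta)
    den = math.exp(2.0 * s * zeta) - math.exp(-2.0 * s * zeta)
    return num / den


def mean_decision_time(x0, mu, sigma, zeta):
    """Expected first passage time through +/-zeta, starting from x0."""
    if mu == 0.0:
        # from the martingale x(t)^2 - sigma^2 * t
        return (zeta ** 2 - x0 ** 2) / sigma ** 2
    # from the martingale x(t) - mu * t
    return (zeta * (1.0 - 2.0 * p_lower(x0, mu, sigma, zeta)) - x0) / mu
```

For x0 = 0 and μ ≠ 0 the result simplifies to (ζ/μ) tanh(μζ/σ²), which is a convenient value to test against.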

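We also wish to find τ's Laplace transform, or moment generating function⁴, $\mathbb{E}[e^{-\alpha\tau}]$. We remember from §3.1 that exp(λX(t) – λμt – λ²σ²t/2) is a martingale, and choose λ so that the coefficient of t becomes −α, i.e., λ solves the equation $\lambda\mu + \lambda^2\sigma^2/2 = \alpha$. The two solutions to this equation are

$$\lambda_1 = \frac{-\mu + \sqrt{\mu^2 + 2\alpha\sigma^2}}{\sigma^2}, \qquad \lambda_2 = \frac{-\mu - \sqrt{\mu^2 + 2\alpha\sigma^2}}{\sigma^2}.$$

Applying the optional sampling theorem, we obtain a pair of equations,

$$e^{\lambda_i x_0} = e^{\lambda_i \zeta}\, \mathbb{E}\big[e^{-\alpha\tau}\, \mathbf{1}(x(\tau) = \zeta)\big] + e^{-\lambda_i \zeta}\, \mathbb{E}\big[e^{-\alpha\tau}\, \mathbf{1}(x(\tau) = -\zeta)\big], \qquad i \in \{1, 2\},$$

which we can solve simultaneously for $\mathbb{E}[e^{-\alpha\tau}\, \mathbf{1}(x(\tau) = \pm\zeta)]$ to obtain

$$\mathbb{E}\big[e^{-\alpha\tau}\, \mathbf{1}(x(\tau) = \zeta)\big] = \frac{e^{\lambda_1 x_0 - \lambda_2 \zeta} - e^{\lambda_2 x_0 - \lambda_1 \zeta}}{e^{(\lambda_1 - \lambda_2)\zeta} - e^{-(\lambda_1 - \lambda_2)\zeta}}, \qquad \mathbb{E}\big[e^{-\alpha\tau}\, \mathbf{1}(x(\tau) = -\zeta)\big] = \frac{e^{\lambda_2 x_0 + \lambda_1 \zeta} - e^{\lambda_1 x_0 + \lambda_2 \zeta}}{e^{(\lambda_1 - \lambda_2)\zeta} - e^{-(\lambda_1 - \lambda_2)\zeta}}.$$

Thus, writing $\rho = \sqrt{\mu^2 + 2\alpha\sigma^2}/\sigma^2$ as shorthand (so that $\lambda_{1,2} = -\mu/\sigma^2 \pm \rho$), the moment generating function of the decision time is

$$\mathbb{E}\big[e^{-\alpha\tau}\big] = \frac{e^{\mu(\zeta - x_0)/\sigma^2}\, \sinh\big(\rho(\zeta + x_0)\big) + e^{-\mu(\zeta + x_0)/\sigma^2}\, \sinh\big(\rho(\zeta - x_0)\big)}{\sinh(2\rho\zeta)}. \tag{8}$$

As a byproduct, we also get the Laplace transform of conditional decision times:

$$\mathbb{E}\big[e^{-\alpha\tau} \mid x(\tau) = \zeta\big] = \frac{e^{\mu(\zeta - x_0)/\sigma^2}\, \sinh\big(\rho(\zeta + x_0)\big)}{P^{+}(x_0, \theta)\, \sinh(2\rho\zeta)}, \tag{9}$$

$$\mathbb{E}\big[e^{-\alpha\tau} \mid x(\tau) = -\zeta\big] = \frac{e^{-\mu(\zeta + x_0)/\sigma^2}\, \sinh\big(\rho(\zeta - x_0)\big)}{P^{-}(x_0, \theta)\, \sinh(2\rho\zeta)}. \tag{10}$$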
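The Laplace transform can be checked numerically against the earlier results: at α = 0 the two joint transforms should reduce to the hitting probabilities, and minus the derivative of their sum at α = 0 should reproduce the mean decision time. The sketch below uses the hyperbolic-sine form obtained by solving the pair of optional sampling equations. The function names and the finite-difference step `h` are illustrative assumptions, and the code requires μ ≠ 0 or α > 0.

```python
import math


def joint_laplace(alpha, x0, mu, sigma, zeta):
    """Return (E[e^{-alpha*tau} 1(upper)], E[e^{-alpha*tau} 1(lower)])."""
    rho = math.sqrt(mu ** 2 + 2.0 * alpha * sigma ** 2) / sigma ** 2
    if rho == 0.0:
        raise ValueError("this sketch requires mu != 0 or alpha > 0")
    denom = math.sinh(2.0 * rho * zeta)
    upper = math.exp(mu * (zeta - x0) / sigma ** 2) * math.sinh(rho * (zeta + x0)) / denom
    lower = math.exp(-mu * (zeta + x0) / sigma ** 2) * math.sinh(rho * (zeta - x0)) / denom
    return upper, lower


def mean_dt_from_laplace(x0, mu, sigma, zeta, h=1e-6):
    """Forward-difference estimate of E[tau] = -d/d(alpha) E[e^{-alpha*tau}] at alpha = 0."""
    u0, l0 = joint_laplace(0.0, x0, mu, sigma, zeta)
    u1, l1 = joint_laplace(h, x0, mu, sigma, zeta)
    return ((u0 + l0) - (u1 + l1)) / h
```

Higher moments could be estimated with higher-order differences in the same way, although analytic differentiation is preferable in practice because finite differences lose precision quickly.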

The derivatives of the Laplace transform yield moments of decision time (see Srivastava et al. (2016) for detailed derivation of conditional and unconditional moments of decision time using Laplace transforms). Here, we focus on expressions for conditional expected (mean) decision times that are the derivative of the Laplace transform with respect to −α computed at α = 0. The expected decision time conditioned on hitting the upper and lower boundaries are denoted by mDT+ and mDT−, and may be computed by differentiating (9) and (10):

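$$\mathrm{mDT}^{+}(x_0, \theta) = \begin{cases} \dfrac{2\zeta}{\mu} \coth\!\Big(\dfrac{2\mu\zeta}{\sigma^2}\Big) - \dfrac{\zeta + x_0}{\mu} \coth\!\Big(\dfrac{\mu(\zeta + x_0)}{\sigma^2}\Big), & \mu \ne 0, \\[2ex] \dfrac{4\zeta^2 - (\zeta + x_0)^2}{3\sigma^2}, & \mu = 0, \end{cases} \tag{11}$$

$$\mathrm{mDT}^{-}(x_0, \theta) = \begin{cases} \dfrac{2\zeta}{\mu} \coth\!\Big(\dfrac{2\mu\zeta}{\sigma^2}\Big) - \dfrac{\zeta - x_0}{\mu} \coth\!\Big(\dfrac{\mu(\zeta - x_0)}{\sigma^2}\Big), & \mu \ne 0, \\[2ex] \dfrac{4\zeta^2 - (\zeta - x_0)^2}{3\sigma^2}, & \mu = 0, \end{cases} \tag{12}$$

where the μ = 0 expressions are the limits of the μ ≠ 0 expressions as μ → 0. We again note that mDT±, like the unconditional quantities above, depend on the underlying parameters μ, x0, ζ, and σ.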

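We now compute τ's first passage time density f(t; x0, μ, σ, ζ), i.e., the probability density function of the decision time. This amounts to calculating the inverse Laplace transform of (8). In this case, the inverse Laplace transform needs to be expressed as an infinite series (see Lin (1998) for a detailed derivation):

$$f(t; x_0, \theta) = e^{-\mu x_0/\sigma^2 - \mu^2 t/(2\sigma^2)} \Big[ e^{\mu\zeta/\sigma^2}\, \vartheta\Big(t; \frac{\zeta + x_0}{\sigma}, \frac{2\zeta}{\sigma}\Big) + e^{-\mu\zeta/\sigma^2}\, \vartheta\Big(t; \frac{\zeta - x_0}{\sigma}, \frac{2\zeta}{\sigma}\Big) \Big], \tag{13}$$

where θ = (μ, σ, ζ), and ϑ(t; u, v) is a function (Borodin and Salminen, 2002, p. 451) defined by

$$\vartheta(t; u, v) = \sum_{k=-\infty}^{\infty} \frac{v - u + 2kv}{\sqrt{2\pi}\, t^{3/2}}\, e^{-(v - u + 2kv)^2/(2t)}, \qquad u < v.$$

Similarly, the first passage time density conditioned on a particular decision is given by

$$f^{+}(t; x_0, \theta) = e^{\mu(\zeta - x_0)/\sigma^2 - \mu^2 t/(2\sigma^2)}\, \vartheta\Big(t; \frac{\zeta + x_0}{\sigma}, \frac{2\zeta}{\sigma}\Big), \tag{14}$$

$$f^{-}(t; x_0, \theta) = e^{-\mu(\zeta + x_0)/\sigma^2 - \mu^2 t/(2\sigma^2)}\, \vartheta\Big(t; \frac{\zeta - x_0}{\sigma}, \frac{2\zeta}{\sigma}\Big), \tag{15}$$

where f±(t; x0, θ)dt is the probability of the event τ ∈ [t, t + dt) and x(τ) = ±ζ. Note that f defined in (13) is the sum of f+ and f−.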
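A truncated version of the series can be evaluated directly. The sketch below computes the two joint densities using the image-type series for ϑ that results from inverting the Laplace transform term by term, and integrates them by the midpoint rule so that the totals can be compared with the hitting probabilities and mean decision time. The truncation level `n_terms`, the integration horizon `t_max`, and the function names are illustrative assumptions.

```python
import math


def theta_series(t, u, v, n_terms=20):
    """Truncated series: sum over k of a*exp(-a^2/(2t)) / (sqrt(2*pi)*t^1.5), a = v-u+2kv."""
    total = 0.0
    for k in range(-n_terms, n_terms + 1):
        a = v - u + 2.0 * k * v
        total += a * math.exp(-a * a / (2.0 * t))
    return total / (math.sqrt(2.0 * math.pi) * t ** 1.5)


def fpt_density(t, x0, mu, sigma, zeta, n_terms=20):
    """Return (f_plus, f_minus): joint densities of tau with the upper/lower response."""
    v = 2.0 * zeta / sigma
    decay = math.exp(-mu ** 2 * t / (2.0 * sigma ** 2))
    f_plus = (math.exp(mu * (zeta - x0) / sigma ** 2) * decay
              * theta_series(t, (zeta + x0) / sigma, v, n_terms))
    f_minus = (math.exp(-mu * (zeta + x0) / sigma ** 2) * decay
               * theta_series(t, (zeta - x0) / sigma, v, n_terms))
    return f_plus, f_minus


def integrate_density(x0, mu, sigma, zeta, t_max=15.0, n_steps=6000):
    """Midpoint-rule estimates of (P+, P-, E[tau]) from the density on (0, t_max]."""
    h = t_max / n_steps
    p_plus = p_minus = mean = 0.0
    for j in range(n_steps):
        t = (j + 0.5) * h
        fp, fm = fpt_density(t, x0, mu, sigma, zeta)
        p_plus += fp * h
        p_minus += fm * h
        mean += t * (fp + fm) * h
    return p_plus, p_minus, mean
```

This form converges quickly for small t; for large t more terms are needed, which is one reason alternative large-time representations are used in the literature.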

Alternate derivations for the hitting probabilities, mean decision times, and FPT densities may be found in the decision making literature (Ratcliff and Smith, 2004; Bogacz et al., 2006; Navarro and Fuss, 2009). It is worth noting that the infinite series solution for the FPT density given in (13) is equivalent to the small-time representations for the FPT analyzed in Navarro and Fuss (2009) and Blurton et al. (2012). For completeness, we provide the alternative expression for the density in Appendix A.

4. Analysis of the two-stage model

In this section, we use the tools developed in §3 in order to analyze the two-stage process originally presented and analyzed in Ratcliff (1980). While our calculations lead to equivalent formulas for the first passage time densities, a martingale argument provides us with additional closed form expressions for the probability of hitting a particular threshold and expected decision times. Computation of these FPT statistics using the results of Ratcliff (1980) requires numerical integration of the FPT density, which our formulas now avoid.

We may explicitly write the two-stage model as

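$$dx(t) = \mu(t)\,dt + \sigma(t)\,dW(t), \qquad x(0) = x_0, \tag{16}$$

where

$$\mu(t) = \begin{cases} \mu_1, & 0 \le t < t_1, \\ \mu_2, & t \ge t_1, \end{cases} \qquad \sigma(t) = \begin{cases} \sigma_1, & 0 \le t < t_1, \\ \sigma_2, & t \ge t_1. \end{cases}$$

As before, the decision time τ = inf{t > 0|x(t) ∉ (−ζ, ζ)} is the first passage time with respect to boundaries at ±ζ. Let θi = (μi, σi, ti, ζ) and $\theta_{1:i} = (\theta_1, \ldots, \theta_i)$, for i ∈ {1, 2}.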

We interpret the first stage as a single-stage model with a deadline at t1. For a single-stage model with thresholds ±ζ and a deadline t1, the joint density gddln(x, τ; x0, t1, θ1) of the evidence x(t1) and the event τ ≥ t1 is given by (Douady, 1999; Durrett, 2010):

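$$g^{ddln}(x, \tau; x_0, t_1, \theta_1) = \frac{e^{\mu_1 (x - x_0)/\sigma_1^2 - \mu_1^2 t_1/(2\sigma_1^2)}}{\sqrt{2\pi\sigma_1^2 t_1}} \sum_{n=-\infty}^{\infty} \Big[ e^{-(x - x_0 + 4n\zeta)^2/(2\sigma_1^2 t_1)} - e^{-(x + x_0 - 2\zeta + 4n\zeta)^2/(2\sigma_1^2 t_1)} \Big], \qquad x \in (-\zeta, \zeta). \tag{17}$$

Here superscript “ddln” refers to the deadline. gddln may then be used to determine the FPT distribution by integrating it over the range of x, since this integral equals $\mathbb{P}(\tau \ge t_1)$. More importantly, dividing gddln by $\mathbb{P}(\tau \ge t_1)$ yields the conditional density of the evidence x(t1) conditioned on no decision until time t1.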
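The deadline density can be evaluated with a truncated method-of-images series, and integrating it over x gives the probability that no decision has been made by the deadline. The sketch below assumes the standard image expansion for a Wiener process with drift between two absorbing boundaries; the function names, the truncation level `n_terms`, and the grid size `n_grid` are illustrative assumptions.

```python
import math


def g_deadline(x, x0, t, mu, sigma, zeta, n_terms=10):
    """Joint density of x(t) = x and no threshold crossing before time t."""
    var = sigma ** 2 * t
    total = 0.0
    for n in range(-n_terms, n_terms + 1):
        # positive images at x0 + 4*n*zeta, negative images at 2*zeta - x0 + 4*n*zeta
        total += math.exp(-(x - x0 + 4.0 * n * zeta) ** 2 / (2.0 * var))
        total -= math.exp(-(x + x0 - 2.0 * zeta + 4.0 * n * zeta) ** 2 / (2.0 * var))
    drift_factor = math.exp(mu * (x - x0) / sigma ** 2 - mu ** 2 * t / (2.0 * sigma ** 2))
    return drift_factor * total / math.sqrt(2.0 * math.pi * var)


def survival_probability(x0, t, mu, sigma, zeta, n_grid=2000):
    """P(tau > t): midpoint-rule integral of g_deadline over (-zeta, zeta)."""
    dx = 2.0 * zeta / n_grid
    total = 0.0
    for j in range(n_grid):
        x = -zeta + (j + 0.5) * dx
        total += g_deadline(x, x0, t, mu, sigma, zeta) * dx
    return total
```

Dividing `g_deadline` by `survival_probability` gives the conditional density of the evidence at the deadline, which is the quantity that serves as the random initial condition of the next stage.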

4.1. Probability of hitting the lower threshold

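In trying to compute $\mathbb{P}(x(\tau) = -\zeta)$, we view the two-stage process as two single-stage processes in sequence. Let τ1 be the first passage time for the first stage by itself (without any deadline at t1) and define the random time min(τ1, t1), which is a stopping time. Applying the optional sampling theorem to the exponential martingale for the first stage (with μ1 ≠ 0 and s1 = μ1/σ1²) gives us

$$e^{-2 s_1 x_0} = \mathbb{E}\big[e^{-2 s_1 x(\min(\tau_1, t_1))}\big] = \mathbb{E}\big[e^{-2 s_1 x(\tau_1)} \mid \tau_1 \le t_1\big]\, \mathbb{P}(\tau_1 \le t_1) + \mathbb{E}\big[e^{-2 s_1 X_1}\big]\, \mathbb{P}(\tau_1 > t_1),$$

where $\mathbb{E}[e^{-2 s_1 x(\tau_1)} \mid \tau_1 \le t_1] = e^{-2 s_1 \zeta} + (e^{2 s_1 \zeta} - e^{-2 s_1 \zeta})\, \mathbb{P}(x(\tau) = -\zeta \mid \tau \le t_1)$, which yields

$$\mathbb{P}(x(\tau) = -\zeta \mid \tau \le t_1) = \frac{1}{e^{2 s_1 \zeta} - e^{-2 s_1 \zeta}} \left[ \frac{e^{-2 s_1 x_0} - \mathbb{E}\big[e^{-2 s_1 X_1}\big]\, \mathbb{P}(\tau_1 > t_1)}{\mathbb{P}(\tau_1 \le t_1)} - e^{-2 s_1 \zeta} \right].$$

In this expression, both $\mathbb{P}(\tau_1 > t_1)$ and $\mathbb{E}[e^{-2 s_1 X_1}]$ may be obtained from (17).

The second stage is another single-stage process, this time starting at time t1 with a random initial condition x(t1) with distribution given by (17). Computing the expected value of the standard lower threshold hitting probability (6) with respect to the random initial condition X1, i.e., x(t1) conditioned on τ1 > t1, we obtain (for μ2 ≠ 0 and s2 = μ2/σ2²)

$$\mathbb{P}(x(\tau) = -\zeta \mid \tau > t_1) = \mathbb{E}\big[P^{-}(X_1, \theta_2)\big] = \frac{\mathbb{E}\big[e^{-2 s_2 X_1}\big] - e^{-2 s_2 \zeta}}{e^{2 s_2 \zeta} - e^{-2 s_2 \zeta}}.$$

The term $\mathbb{E}[e^{-2 s_2 X_1}]$ can be readily computed from (17). Combining the previous two conditional expressions allows us to obtain

$$\mathbb{P}(x(\tau) = -\zeta) = \mathbb{P}(\tau \le t_1)\, \mathbb{P}(x(\tau) = -\zeta \mid \tau \le t_1) + \mathbb{P}(\tau > t_1)\, \mathbb{P}(x(\tau) = -\zeta \mid \tau > t_1).$$

This probability depends on all of the parameters of the two-stage model: x0, μ1, σ1, t1, μ2, σ2, ζ.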

4.2. Expected decision time

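To compute the expected decision time, we apply the optional sampling theorem to the martingale x(t) – μ1t with the stopping time min(τ1, t1) from the previous section to obtain

$$x_0 = \mathbb{E}\big[x(\tau_1) - \mu_1 \tau_1 \mid \tau_1 \le t_1\big]\, \mathbb{P}(\tau_1 \le t_1) + \big(\mathbb{E}[X_1] - \mu_1 t_1\big)\, \mathbb{P}(\tau_1 > t_1).$$

Solving for $\mathbb{E}[\tau \mid \tau \le t_1]$ (with μ1 ≠ 0, and using $\mathbb{E}[x(\tau_1) \mid \tau_1 \le t_1] = \zeta\,(1 - 2\,\mathbb{P}(x(\tau) = -\zeta \mid \tau \le t_1))$) we obtain

$$\mathbb{E}[\tau \mid \tau \le t_1] = \frac{1}{\mu_1} \left[ \zeta\,\big(1 - 2\,\mathbb{P}(x(\tau) = -\zeta \mid \tau \le t_1)\big) - \frac{x_0 - \big(\mathbb{E}[X_1] - \mu_1 t_1\big)\, \mathbb{P}(\tau_1 > t_1)}{\mathbb{P}(\tau_1 \le t_1)} \right].$$

Much like in the previous section, we observe that the second stage is similar to a single-stage process starting at time t1 with a random initial condition determined by (17). Thus, the associated expected first passage time is

$$\mathbb{E}[\tau \mid \tau > t_1] = t_1 + \mathbb{E}\big[\mathrm{mDT}(X_1, \theta_2)\big].$$

Combining the above expressions, we obtain

$$\mathbb{E}[\tau] = \mathbb{P}(\tau \le t_1)\, \mathbb{E}[\tau \mid \tau \le t_1] + \mathbb{P}(\tau > t_1)\, \mathbb{E}[\tau \mid \tau > t_1].$$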

4.3. First passage time density

We now compute the first passage time probability density function. Let F(t; θ, x0) be the cumulative distribution function of the decision time for the single-stage process (1) obtained by integrating (13). For t ≤ t1, the two-stage model is identical to the first-stage model and the first passage time distribution is F(t; θ1, x0). For t > t1, the first passage time distribution is

$$\mathbb{P}(\tau \le t) = F(t_1; \theta_1, x_0) + \big(1 - F(t_1; \theta_1, x_0)\big)\, \mathbb{E}\big[F(t - t_1; \theta_2, X_1)\big],$$

i.e., the distribution function corresponds to trajectories that reach threshold before t1 and trajectories that reach threshold between t1 and t. The latter trajectories can be modeled as trajectories of a single stage process starting at time t1 with stochastic initial condition. The stochastic initial condition leads to the expectation operator on the second term. The distribution of decision time conditioned on particular decisions can be computed analogously.
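The two-stage calculations of §4.1 and §4.2 can be sketched numerically by integrating the deadline density on a grid and combining the resulting moments with the single-stage formulas. This is an illustrative sketch under stated assumptions: it requires μ1 ≠ 0 (the exponential-martingale branch), uses a truncated image series and a midpoint rule, and all function names and numerical settings are hypothetical.

```python
import math


def p_lower(x0, mu, sigma, zeta):
    if mu == 0.0:
        return (zeta - x0) / (2.0 * zeta)
    s = mu / sigma ** 2
    return ((math.exp(-2 * s * x0) - math.exp(-2 * s * zeta))
            / (math.exp(2 * s * zeta) - math.exp(-2 * s * zeta)))


def mean_dt(x0, mu, sigma, zeta):
    if mu == 0.0:
        return (zeta ** 2 - x0 ** 2) / sigma ** 2
    return (zeta * (1.0 - 2.0 * p_lower(x0, mu, sigma, zeta)) - x0) / mu


def g_deadline(x, x0, t, mu, sigma, zeta, n_terms=10):
    var = sigma ** 2 * t
    total = 0.0
    for n in range(-n_terms, n_terms + 1):
        total += math.exp(-(x - x0 + 4 * n * zeta) ** 2 / (2 * var))
        total -= math.exp(-(x + x0 - 2 * zeta + 4 * n * zeta) ** 2 / (2 * var))
    drift = math.exp(mu * (x - x0) / sigma ** 2 - mu ** 2 * t / (2 * sigma ** 2))
    return drift * total / math.sqrt(2 * math.pi * var)


def two_stage_summary(x0, mu1, sigma1, t1, mu2, sigma2, zeta, n_grid=2000):
    """Return (P(lower threshold), E[tau]) for the two-stage model; needs mu1 != 0."""
    if mu1 == 0.0:
        raise ValueError("this sketch only covers mu1 != 0")
    s1 = mu1 / sigma1 ** 2
    dx = 2.0 * zeta / n_grid
    surv = i_exp = i_x = i_p2 = i_dt2 = 0.0
    for j in range(n_grid):
        x = -zeta + (j + 0.5) * dx
        w = g_deadline(x, x0, t1, mu1, sigma1, zeta) * dx
        surv += w                                   # P(tau > t1)
        i_exp += math.exp(-2 * s1 * x) * w          # E[exp(-2 s1 X1)] * P(tau > t1)
        i_x += x * w                                # E[X1] * P(tau > t1)
        i_p2 += p_lower(x, mu2, sigma2, zeta) * w   # stage-2 lower hits
        i_dt2 += mean_dt(x, mu2, sigma2, zeta) * w  # stage-2 residual time
    early = 1.0 - surv
    denom = math.exp(2 * s1 * zeta) - math.exp(-2 * s1 * zeta)
    # P(lower threshold and tau <= t1), from the exponential martingale
    p_low_early = (math.exp(-2 * s1 * x0) - i_exp - early * math.exp(-2 * s1 * zeta)) / denom
    # E[tau * 1(tau <= t1)], from the martingale x(t) - mu1 * t
    t_early = (zeta * (early - 2.0 * p_low_early) - x0 + i_x - mu1 * t1 * surv) / mu1
    return p_low_early + i_p2, t_early + surv * t1 + i_dt2
```

A useful consistency check is that when both stages share the same drift and diffusion rate, the two-stage results must coincide with the single-stage values regardless of where t1 is placed.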

5. Analysis of the multistage model

In this section we derive first passage time (FPT) properties of the multistage process defined in §2.2 using an approach similar to that employed throughout §4. The model is viewed as n modified processes in sequence in which for each stage, the initial condition is a random variable and only the decisions made before a deadline are considered. For the i-th stage process with a known distribution of initial condition Xi–1, we derive properties of the FPT conditioned on a decision before the deadline ti, along with the distribution of Xi for i ∈ {1, . . . , n}. This latter distribution, more precisely, is the distribution of x(ti) conditioned on the FPT for the i-th stage being greater than ti. We then use these properties sequentially for i ∈ {1, . . . , n} to determine the FPT properties during each stage. Finally, we aggregate FPT properties at each stage to compute FPT properties for the whole multistage process. Our calculations have features similar to an idea of Diederich and Busemeyer (2006), who first proposed that the bias (i.e. initial condition) of a stage may have a time dimension. In some sense our formulas below elucidate how previous temporal stages of processing affect the bias of future stages.

The extension of the FPT distribution computation from the two-stage model (Ratcliff, 1980) to the multistage model requires careful computation of expressions similar to (17) at the end of each stage. Also, in contrast to Ratcliff (1980), as in §4, our martingale based approach allows direct computation of the probability of hitting a particular threshold and expected decision times. As in the previous section, this avoids integration of the first passage time density to compute these quantities.

Throughout this section we use the following notations:

- τ = inf{t > 0|x(t) ∉ (−ζ, ζ)}, the first passage time through either threshold for the entire multistage process;

- τi = τ|τ > ti–1, the first passage time for the i-th stage (3) without any deadline;

- θi = (μi, σi, ti, ζ) and $\theta_{1:i} = (\theta_1, \ldots, \theta_i)$, representing the parameters for the i-th stage and stages 1, . . . , i, respectively;

- $s_i = \mu_i/\sigma_i^2$, the i-th stage ratio of signal to squared noise.

Here we are concerned with computation of FPT properties and allow θ1:n to be free parameters. For scenarios such as estimation of parameters, to ensure identifiability of the parameters, all diffusion rates may be set equal to unity. However, such cases are beyond the scope of this manuscript and we do not discuss these issues here.

5.1. FPT properties of the i-th stage

For the i-th stage, the initial condition Xi–1 is a random variable and only decisions made before the deadline ti are relevant. The analysis thus focuses on the random variable Xi–1 and the random time τi. Conditioned on a realization of Xi–1, the density of Xi can be computed using (17). If the density of Xi–1 is known, then the unconditional density of Xi can be obtained by computing the expected value of the conditional density of Xi with respect to Xi–1. Since the density of X0 is known, this procedure can be recursively applied to obtain densities of Xi–1, for each i ∈ {1, . . . , n}. Formally, the joint density of the evidence x(ti) and the event τ ≥ ti is

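$$g_i^{ddln}(x; x_0, \theta_{1:i}) = \mathbb{E}_{X_{i-1}}\big[ g^{ddln}(x, \tau; X_{i-1}, t_i - t_{i-1}, \theta_i) \big], \tag{18}$$

where $\mathbb{E}_{X_{i-1}}[\cdot]$ denotes the expected value with respect to Xi–1. Note that

$$\mathbb{P}(\tau_i > t_i) = \int_{-\zeta}^{\zeta} g_i^{ddln}(x; x_0, \theta_{1:i})\, dx.$$

Thus, the density of Xi, i.e., x(ti) conditioned on τi > ti, is determined by dividing $g_i^{ddln}$ by $\mathbb{P}(\tau_i > t_i)$, which can be computed by integrating $g_i^{ddln}$ over the range of x(ti). Note that the parameters in $g_i^{ddln}$ are x0 and θ1:i; this highlights the fact that the distribution of Xi depends on all previous stages.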

Similarly, the FPT density for the i-th stage conditioned on a realization of Xi–1 can be computed using (13), and the unconditional density can be obtained by computing the expected value of the conditional density with respect to Xi–1:

$$f_i(t; x_0, \theta_{1:i}) = \mathbb{E}_{X_{i-1}}\big[ f(t - t_{i-1}; X_{i-1}, \theta_i) \big], \tag{19}$$

where t > ti–1. The cumulative distribution function is obtained by integrating fi(t; x0, θ1:i). Note that every trajectory crossing the decision threshold before ti does so irrespective of the deadline at ti. Thus, the expression for density fi does not depend on ti.

To conclude this section, we state equations for computing hitting probabilities, mean decision times, and first passage time densities, conditional on a response during the i-th stage. The derivations of these expressions are found in Appendix B.

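(i) The probability of hitting the lower threshold given that a response is made during the i-th stage, denoted by $P_i^{-}(x_0, \theta_{1:i})$, is given by

$$P_i^{-}(x_0, \theta_{1:i}) = \begin{cases} \dfrac{1}{e^{2 s_i \zeta} - e^{-2 s_i \zeta}} \left[ \dfrac{\mathbb{E}[e^{-2 s_i X_{i-1}}] - \mathbb{E}[e^{-2 s_i X_i}]\, \mathbb{P}(\tau_i > t_i)}{\mathbb{P}(\tau_i \le t_i)} - e^{-2 s_i \zeta} \right], & \mu_i \ne 0, \\[3ex] \dfrac{1}{2\zeta} \left[ \zeta - \dfrac{\mathbb{E}[X_{i-1}] - \mathbb{E}[X_i]\, \mathbb{P}(\tau_i > t_i)}{\mathbb{P}(\tau_i \le t_i)} \right], & \mu_i = 0, \end{cases} \tag{20}$$

and $P_i^{+} = 1 - P_i^{-}$ is the probability of hitting the upper threshold during the i-th stage. These expressions depend on all of the model parameters up to and including the i-th stage (i.e. $P_i^{+}$ and $P_i^{-}$ both depend on x0 and θ1:i) and will be used in subsequent calculations.

(ii) The joint FPT density for the i-th stage process and a given upper/lower response, denoted by $f_i^{\pm}(t; x_0, \theta_{1:i})$, is given by

$$f_i^{+}(t; x_0, \theta_{1:i}) = \mathbb{E}_{X_{i-1}}\big[ f^{+}(t - t_{i-1}; X_{i-1}, \theta_i) \big], \tag{21}$$

$$f_i^{-}(t; x_0, \theta_{1:i}) = \mathbb{E}_{X_{i-1}}\big[ f^{-}(t - t_{i-1}; X_{i-1}, \theta_i) \big], \tag{22}$$

where the functions f±(t; x0, θi) are taken from (14) and (15). Again, these expressions depend on all of the multistage model parameters up to and including the i-th stage (i.e. both depend on x0 and θ1:i).

(iii) The mean decision time given a response during stage i, denoted by mDTi(x0, θ1:i), is given by

$$\mathrm{mDT}_i(x_0, \theta_{1:i}) = \begin{cases} \dfrac{1}{\mu_i} \left[ \zeta\,(1 - 2 P_i^{-}) - \dfrac{\mathbb{E}[X_{i-1}] - \mu_i t_{i-1} - \big(\mathbb{E}[X_i] - \mu_i t_i\big)\, \mathbb{P}(\tau_i > t_i)}{\mathbb{P}(\tau_i \le t_i)} \right], & \mu_i \ne 0, \\[3ex] \dfrac{1}{\sigma_i^2} \left[ \zeta^2 - \dfrac{\mathbb{E}[X_{i-1}^2] - \sigma_i^2 t_{i-1} - \big(\mathbb{E}[X_i^2] - \sigma_i^2 t_i\big)\, \mathbb{P}(\tau_i > t_i)}{\mathbb{P}(\tau_i \le t_i)} \right], & \mu_i = 0, \end{cases} \tag{23}$$

where, for the final stage i = n, $\mathbb{P}(\tau_n > t_n) = 0$ and the terms multiplying it are omitted.

(iv)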

5.2. FPT properties of the multistage model

For a given multistage process (2) with initial condition x0, we sequentially compute all of the distributions of the initial conditions Xi for each i ∈ {1, . . . , n} using (18). Then, we compute the properties of the FPT associated with the i-th stage. Finally, the total probability formula aggregates these results into FPT properties of the entire multistage model. The calculations are contained in Appendix C and are given in terms of earlier formulas. In the following, we omit the arguments of functions whenever it is clear from the context.

-

(i)Let t ≥ 0 be given such that t ∈ (tk–1, tk] for some k ∈ {1, . . . , n}. The FPT distribution for the multistage process is

Note that and .(26) -

(ii)The mean decision time, denoted by mDTms(x0, θ1:n), for the multistage process is

Put simply, the expected decision time is the sum of the expected decision times for the individual stages (mDTi) weighted by the probability of the decision in each stage .(27) -

(iii)The probability of hitting the lower threshold, denoted by , is

This expression is similar to (27), with the probability of hitting the lower threshold being the sum of the hitting probabilities for each stage weighted by the probability of the decision in each stage .(28) -

(iv)The mean decision time conditioned on hitting the upper/lower threshold is

(29)

(30)

Similar to (27), these equations show that the conditional decision times are weighted sums of the expected conditional decision times for each stage, with the weights being the conditional probability of the decision in each stage.

(v) The FPT cumulative distribution functions conditioned on hitting the upper/lower threshold are

(31)

(32)
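The weighted sums described in items (ii) and (iii) can be sketched in a few lines of Python. This is an illustration of the total probability argument only, not the authors' MATLAB implementation. The function name and its three inputs are hypothetical: `p_decide[i]` is assumed to be the probability that a decision occurs during stage i given that none occurred earlier, `mdt[i]` the mean decision time (measured from trial onset) given a decision during stage i, and `p_lower[i]` the probability of a lower-threshold response given a decision during stage i.

```python
def aggregate_stages(p_decide, mdt, p_lower):
    """Combine per-stage quantities with the law of total probability.

    All three arguments are hypothetical per-stage inputs (see the text above);
    if the last stage is unbounded in time, its p_decide entry should be 1.
    """
    survive = 1.0          # probability that no decision was made before this stage
    weights = []           # unconditional probability of deciding in each stage
    for p in p_decide:
        weights.append(survive * p)
        survive *= 1.0 - p
    mean_dt = sum(w * m for w, m in zip(weights, mdt))
    lower = sum(w * q for w, q in zip(weights, p_lower))
    return weights, mean_dt, lower


# Toy two-stage example with made-up numbers (not taken from the paper).
weights, mean_dt, lower = aggregate_stages([0.5, 1.0], [0.4, 1.6], [0.2, 0.4])
print(weights, mean_dt, lower)
```

The same weights can be reused for any other per-stage quantity that is defined conditionally on a decision occurring in that stage.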

6. Time-varying thresholds for the multistage process

The results in §5 were obtained under the assumption that the thresholds are constant throughout each stage. Now suppose that the thresholds for the i-th stage are ±ζi, i.e., the thresholds are piecewise constant. If the upper threshold decreases at time ti (i.e., ζi+1 < ζi) and x(ti) is in the interval (ζi+1, ζi), then the path is absorbed by the upper boundary, and the probability of this instantaneous absorption is calculated by integrating (18) from ζi+1 to ζi. Likewise, the probability of instantaneous absorption into the lower threshold at ti is determined by integrating (18) from −ζi to −ζi+1. The density of Xi is then found by truncating the support of the density in (18) to (−ζi+1, ζi+1) and normalizing the truncated density. If instead the threshold in the (i + 1)-th stage is larger than the threshold in the i-th stage, i.e., ζi+1 > ζi, there is no instantaneous absorption, and the density of Xi is found by extending the density in (18), assigning zero density to the previously undefined support (see Figure 1). In all cases, the updated Xi may be used for computations dealing with the (i + 1)-th stage of the multistage model. Code implementing all of the formulas through §5 with time-varying thresholds may be found at https://github.com/sffeng/multistage. In Appendix D, we describe how these ideas extend to a time-varying Ornstein-Uhlenbeck (O-U) model.

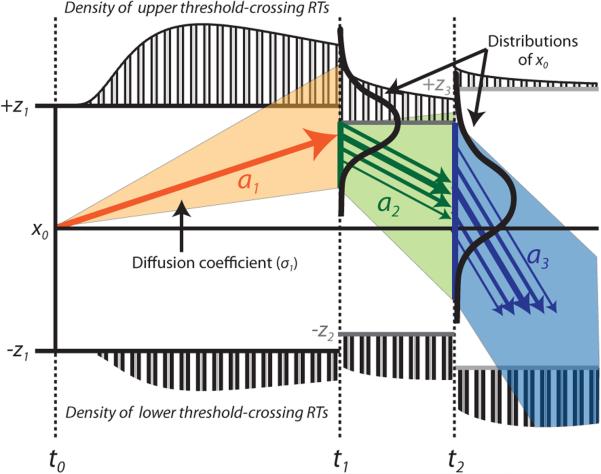

Figure 1.

Illustration of the key ideas for computation of FPT properties of the multistage model with piecewise constant drift and thresholds. The distribution of evidence x(ti), conditioned on no decision until ti, serves as the distribution of the initial condition for the (i + 1)-th stage process. If ζi+1 < ζi, then the probability of an instantaneous decision at time ti is computed as the probability that x(ti), conditioned on no decision until ti, does not belong to the set (−ζi+1, ζi+1). If ζi+1 > ζi, then there is no instantaneous decision, and the support of x(ti), conditioned on no decision until ti, is simply enlarged to (−ζi+1, ζi+1).
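The truncate-and-normalize step for a shrinking threshold can be sketched numerically. The Python below is a minimal illustration under stated assumptions, not the released MATLAB code: the density of x(ti) given no earlier decision is assumed to be available as samples `dens` on a grid `xs` spanning [−ζi, ζi], to integrate to one, and the new threshold `zeta_new` is assumed to coincide with a grid point. The function names are hypothetical.

```python
def mass_between(xs, dens, lo, hi):
    """Trapezoidal estimate of the probability mass on [lo, hi] (grid points only)."""
    pts = [(x, d) for x, d in zip(xs, dens) if lo <= x <= hi]
    return sum(0.5 * (pts[k][1] + pts[k + 1][1]) * (pts[k + 1][0] - pts[k][0])
               for k in range(len(pts) - 1))


def shrink_thresholds(xs, dens, zeta_new):
    """Handle a threshold drop from zeta_old = xs[-1] to zeta_new < zeta_old.

    Returns the instantaneous absorption probabilities at the upper and lower
    thresholds and the renormalized density on [-zeta_new, zeta_new].
    """
    zeta_old = xs[-1]
    p_upper = mass_between(xs, dens, zeta_new, zeta_old)
    p_lower = mass_between(xs, dens, -zeta_old, -zeta_new)
    p_inside = mass_between(xs, dens, -zeta_new, zeta_new)
    kept = [(x, d / p_inside) for x, d in zip(xs, dens) if -zeta_new <= x <= zeta_new]
    new_xs = [x for x, _ in kept]
    new_dens = [d for _, d in kept]
    return p_upper, p_lower, new_xs, new_dens


# Toy example: a uniform density on [-2, 2] and a new threshold of 1.
xs = [-2.0 + 0.5 * k for k in range(9)]
dens = [0.25] * 9
print(shrink_thresholds(xs, dens, 1.0))
```

When the threshold grows instead, no mass is absorbed and the density is simply padded with zeros on the newly added support.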

7. Numerical examples

In this section we apply our calculations from §5 and §6 to a variety of numerical experiments. In doing so, we compare the theoretical predictions obtained from the analysis in this paper with the numerical values obtained through Monte-Carlo simulations, thereby numerically verifying our derivations above. We also provide examples illustrating time pressure or changes in attention over the course of a decision process, and demonstrate how our work can help to find the optimal speed-accuracy trade-off by maximizing reward rate (or any other function of mean first passage time, threshold-hitting probability, and reward). Unless otherwise noted, Monte-Carlo simulations were obtained using 1000 runs; relatively few runs are used so that curves are visually distinguishable. Stochastic simulations use the Euler-Maruyama method with time step size 10⁻³. All of the above calculations have been implemented in MATLAB, and all code used to produce the figures in this section may be found at https://github.com/sffeng/multistage.
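For readers who want to reproduce the simulation side of these comparisons, the following Python sketch shows one way to simulate a single trial of a multistage process with the Euler-Maruyama method and step size 10⁻³. It is an independent illustration, not the authors' MATLAB code. The function name, the argument layout (lists of per-stage drifts, diffusion rates, and stage initiation times, with the last stage lasting until `t_max`), and the cutoff `t_max` are assumptions made here.

```python
import math
import random


def simulate_trial(x0, mus, sigmas, starts, zeta, dt=1e-3, t_max=50.0, rng=random):
    """Simulate one trial; return (decision_time, choice) with choice in {+1, -1}.

    Returns (None, 0) if neither threshold is reached before t_max.
    starts[i] is the initiation time of stage i (starts[0] should be 0).
    """
    x, stage = x0, 0
    sqrt_dt = math.sqrt(dt)
    n_steps = int(round(t_max / dt))
    for k in range(n_steps):
        t = k * dt
        # Advance to the stage that is active at time t.
        while stage + 1 < len(starts) and t >= starts[stage + 1]:
            stage += 1
        # Euler-Maruyama update: drift term plus scaled Gaussian increment.
        x += mus[stage] * dt + sigmas[stage] * sqrt_dt * rng.gauss(0.0, 1.0)
        if x >= zeta:
            return (k + 1) * dt, 1
        if x <= -zeta:
            return (k + 1) * dt, -1
    return None, 0


# Four-stage parameters from Section 7.1, with threshold zeta = 2.
random.seed(0)
trial = simulate_trial(-0.2, [0.1, 0.2, 0.05, 0.3], [1, 1.5, 1.25, 2], [0, 1, 2, 3], 2.0)
print(trial)
```

Repeating the call many times and tabulating decision times and choices gives empirical estimates against which the analytic expressions can be compared.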

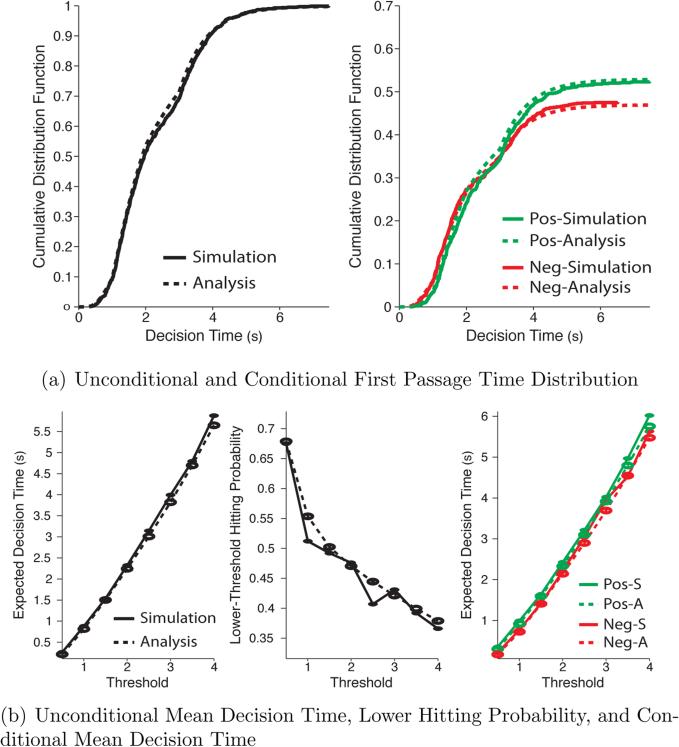

7.1. A Four-stage process

Consider a four stage process with drift and diffusion rates given by (μ1, μ2, μ3, μ4) = (0.1, 0.2, 0.05, 0.3) and (σ1, σ2, σ3, σ4) = (1, 1.5, 1.25, 2), respectively, with (t0, t1, t2, t3) = (0, 1, 2, 3) and initial condition x0 = −0.2. The cumulative distribution function (CDF) of the unconditional and conditional decision time for ζ = 2 obtained using the above analytic expressions (solid lines) and Monte-Carlo simulations (dotted lines) is shown in Figure 2(a). Similarly, the unconditional mean decision time, the lower hitting probability, and the mean conditional decision times (for upper/lower responses) are shown in Figure 2(b) as a function of threshold ζ. Note that the analytic expressions match closely with quantities computed using Monte-Carlo simulations. Also, notice that the CDF almost looks like a double-sigmoidal function (it starts to saturate around 0.6 before picking up and eventually saturating at 1) due to the drop in drift rate from 0.2 to 0.05.

Figure 2.

FPT calculations for a four-stage process with drift rates (μ1, μ2, μ3, μ4) = (0.1, 0.2, 0.05, 0.3), diffusion rates (σ1, σ2, σ3, σ4) = (1, 1.5, 1.25, 2), stage initiation times (t0, t1, t2, t3) = (0, 1, 2, 3), and initial condition x0 = −0.2. The FPT distribution is computed for threshold ζ = 2.

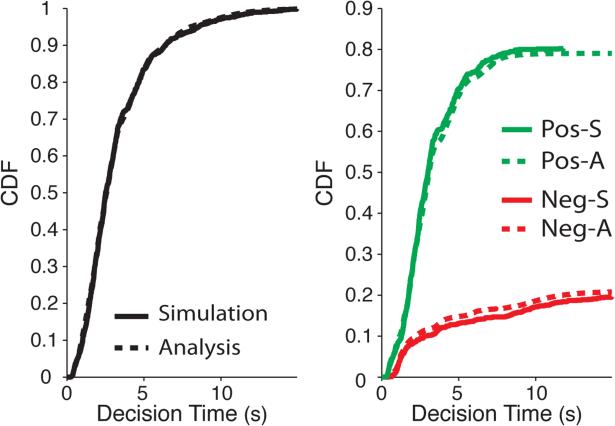

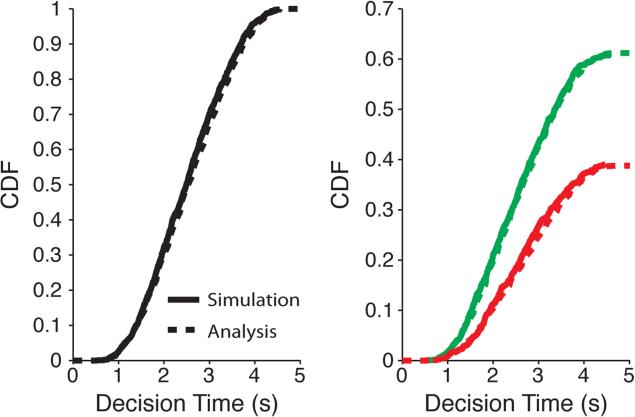

7.2. FPT Distribution for a process with Alternating Drift

In this example, the sign of the drift rate changes from stage to stage. This may be used to describe situations in which evidence accumulation changes dynamically with the decision-maker's focus of attention. For instance, Krajbich et al. (2010) have shown that the process of weighing two value-based options (e.g., foods) can be modeled with a process in which drift rates vary based on the option being attended at any given moment. We consider such a case using a 30-stage model in which the drift rates 1 and −0.75 alternate (i.e., μ1 = 1, μ2 = −0.75, μ3 = 1, . . .) to capture a situation in which the decision maker's attention alternates between two options, one of which has greater perceived value (higher drift rate) than the other. Let t0 = 0 and the remaining 29 stage initiation times be a fixed realization of 29 uniformly sampled points between 0 and 10. Assume x0 = 0, ζ = 2, and let the diffusion rate be stationary and equal to unity (σi = 1). The unconditional and conditional FPT distributions in this scenario obtained using both the analytic expressions (solid lines) and Monte-Carlo simulations (dotted lines) are shown in Figure 3. Note that the analytic expressions match closely with quantities computed using Monte-Carlo simulations.

Figure 3.

Unconditional and conditional FPT distributions for a 30-stage model with alternating drift rate. The drift rates are (μ1, μ2, μ3, . . .) = (1, −0.75, 1, . . .), diffusion rate at each stage is unity, the threshold ζ = 2, and stage initiation times are equally spaced throughout the interval (0, 10).

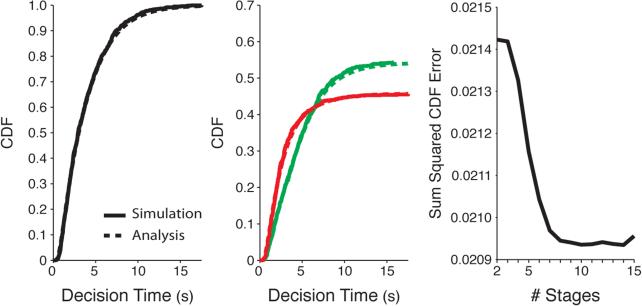

7.3. FPT Distribution for a model with gradually time-varying drift

Changes in evidence accumulation may occur gradually over time. For instance, White et al. (2011) proposed a “shrinking spotlight” model of the Eriksen Flanker Task, a task in which participants responding to the direction of a central arrow are influenced by the direction of arrows in the periphery (see also Servan-Schreiber et al., 1990; Liu et al., 2009; Servant et al., 2015). According to these models, evidence accumulation is initially influenced by all of the arrows (central as well as flankers, which may drive an incorrect response, modeled here as a lower threshold response), but as the attentional spotlight narrows, the drift rate is gradually more influenced by the central arrow alone. The multistage model, in spite of having discontinuous changes in parameters, can still be used to approximate a model with gradually time-varying parameters.

As a demonstration, we use a 20-stage model as an approximation to a model with continuously time-varying drift rate. Assume σi = 1, x0 = 0, ζ = 2, and let the stage initiation times t0, t1, . . . , t19 be equally spaced throughout the interval [0, 5]. Furthermore, suppose the drift rate during the i-th stage is −0.2 + 0.0263(i – 1). The unconditional and conditional FPT distributions for such a 20-stage process obtained using the analytic expressions (solid lines) and using Monte-Carlo simulations (dotted lines) are shown in Figure 4. Note that the analytic expressions match closely with quantities computed using Monte-Carlo simulations. We also show the error due to piecewise constant approximation of the drift rate as a function of number of stages (Figure 4, right). It should be noted that even for 5 stages the approximation error is very small.

Figure 4.

Unconditional (left) and conditional (middle) FPT distributions for a 20-stage process with gradually increasing drift rate. The drift rate for the i-th stage is μi = −0.2 + 0.0263(i – 1), diffusion rate at each stage is unity, the threshold ζ = 2, and stage initiation times are equally spaced throughout the interval [0, 5]. Right: The total squared error between the simulated and analytic CDFs (left) decreases as the number of discrete stages in the model increases. 10,000 simulations were used for each approximation.
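The stage schedule used in this example is simple arithmetic, and a short sketch may make it concrete. The Python function below (its name and defaults are hypothetical) builds the 20 equally spaced initiation times on [0, 5] and the drift rates −0.2 + 0.0263(i − 1). With 20 initiation times the spacing is 5/19 ≈ 0.263, so the per-stage increment of 0.0263 corresponds to a drift ramp with a slope of roughly 0.1 per unit time; this reading is an inference from the stated numbers rather than a statement made in the text.

```python
def linear_drift_stages(n_stages=20, t_end=5.0, mu_start=-0.2, mu_step=0.0263):
    """Piecewise-constant schedule approximating a linearly increasing drift.

    Returns the stage initiation times t_0, ..., t_{n-1} (equally spaced on
    [0, t_end]) and the drift rate used during each stage.
    """
    starts = [t_end * k / (n_stages - 1) for k in range(n_stages)]
    mus = [mu_start + mu_step * k for k in range(n_stages)]
    return starts, mus


starts, mus = linear_drift_stages()
for t, mu in zip(starts, mus):
    print(f"stage starting at t = {t:.3f}: drift = {mu:.4f}")
```

Changing `n_stages` (and `mu_step` accordingly) gives the coarser or finer approximations whose error is reported in the right panel of Figure 4.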

7.4. Collapsing Thresholds

One may be interested in modeling a decision process in which thresholds are dynamic rather than constant across stages. This can be used to describe discrete changes in choice strategy, or a continuous change in thresholds over time; the latter approach has been successful at describing behavior under conditions that either involve an explicit response deadline (e.g., Milosavljevic et al., 2010; Frazier and Yu, 2008) or where there is an implicit opportunity cost for longer time spent accumulating evidence (Drugowitsch et al., 2012). Recently, Voskuilen et al. (2016) used analytic methods to model collapsing boundaries in order to compare fixed boundaries against collapsing boundaries in diffusion models.

Here, we model such a situation using a 20-stage process as an approximation to a diffusion model with continuously collapsing thresholds, i.e., ζ ↓ 0 with time. The drift rate and the diffusion rate are constant and equal to 0.15 and 1, respectively, x0 = 0, and the stage initiation times t0, t1, . . . , t19 are equally spaced throughout the interval [0, 5]. The threshold in the i-th stage is . The unconditional and conditional FPT distributions for such a 20-stage process obtained using the analytic expressions (solid lines) and using Monte-Carlo simulations (dotted lines) are shown in Figure 5. Note that the analytic expressions match closely with quantities computed using Monte-Carlo simulations.

Figure 5.

Unconditional and conditional FPT distributions for a 20-stage process with collapsing thresholds. The drift rate and diffusion rate at each stage are 0.15 and 1, respectively. The stage initiation times are equally spaced throughout the interval [0, 5], and the threshold at i-th stage is , for i = {1, . . . , 19}.

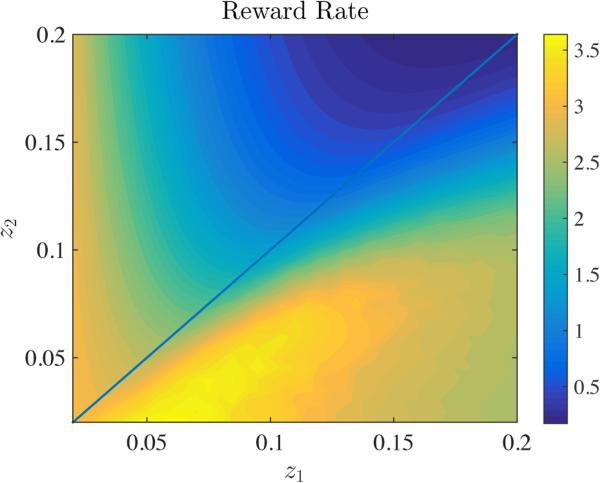

7.5. Optimizing the Speed-Accuracy Trade-off in a Two-stage Model

Human decision-making in many two alternative forced choice signal detection tasks has been successfully captured by the single stage model. In such tasks, hitting the upper/lower boundary is interpreted as a correct/incorrect response. The accuracy of a decision can then be determined by the sign of the drift rate – if μ is positive, participants are said to be more accurate the more likely they are to hit the upper threshold and more error-prone the more likely they are to hit the lower threshold. One then assumes without loss of generality that the drift rate is positive, in which case the lower hitting probability is called the error rate and the upper hitting probability is called the accuracy. Here, the choice of threshold dictates the speed-accuracy trade-off, i.e., the trade-off between a fast decision and an accurate decision. In the previous examples, the thresholds have been known and we have characterized the associated error rate and first passage time properties. These can be used to define a joint function of speed and accuracy that may dictate how humans/animals choose to set and adjust their threshold. In particular, it has been proposed (Bogacz et al., 2006) that human subjects choose a threshold that maximizes reward rate (RR), defined as

| (33) |

where Tnd is the sensory and motor processing time (non-decision time) and P− and the mean decision time are computed using the expressions derived in §5.

The reward rate for a two-stage process is shown in Figure 6. For the set of parameters in Figure 6, reward rate is maximal at approximately (ζ1, ζ2) = (0.06, 0.01). Thus, maximizing reward rate in this setting requires, interestingly, that the thresholds differ across stages.

Figure 6.

Reward rate as a function of the thresholds for the two-stage model. The parameters are μ1 = 0.5, μ2 = −0.1, σ1 = σ2 = 0.1, x0 = ζ1/2, and t1 = 0.1. The non-decision time Tnd = 0.3. Note that the maximum reward rate is achieved for ζ1 ≠ ζ2.

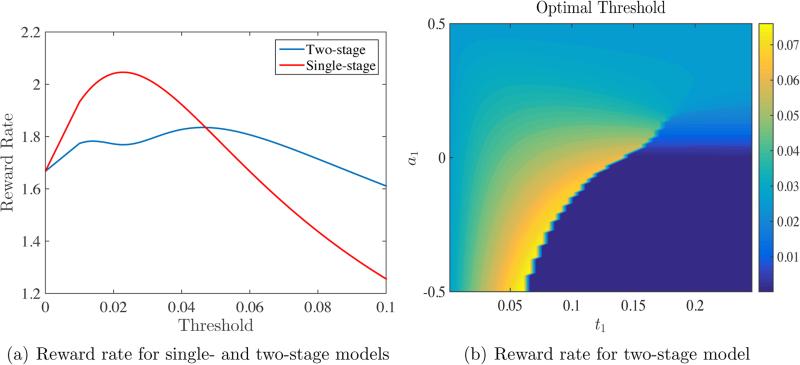

Setting the threshold to be constant across stages (ζ1 = ζ2), we can compare how reward rate changes with this constant threshold in a single-stage (traditional) versus a two-stage model. As shown in Figure 7(a), we find that this reward rate function is unimodal in a single-stage model (as previously observed) whereas it is bimodal in a two-stage model. Figure 7(b) explores this parameter space in greater depth and shows that the curvature of reward rate (and in particular the relative height of its first and second modes) varies as a function of, for example, the length and drift rate of the first stage. As a result, this analysis reveals a discontinuous jump in optimal threshold as these parameters vary. Whether individuals are sensitive to these discontinuities when setting thresholds for a multistage decision-making task deserves further exploration.

Figure 7.

(a) For the single-stage model μ1 = 0.2, σ1 = 0.1 and x0 = 0, while for the two-stage model μ1 = 0.1, μ2 = 0.5, σ1 = σ2 = 0.1, x0 = 0 and t1 = 0.15. The non-decision time Tnd = 0.3. The reward rate for the single-stage model is a unimodal function and achieves a unique local maximum, while the reward rate for the two-stage model has two local maxima. (b) Optimal threshold for two-stage model obtained by maximizing reward rate. The left panel shows the variation of the optimal threshold as a function of t1 and μ1. The other parameters are μ2 = 0.5, σ1 = σ2 = 0.1, and x0 = 0. The regions of the contour plot associated with t1 = 0 and μ1 = 0.5 correspond to the single-stage model.
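The single-stage case in Figure 7(a) can be explored with a short Python sketch. Two assumptions are made here and should be checked against (33): reward rate is taken to be (1 − P−)/(mDT + Tnd), i.e., accuracy divided by mean total time per trial, and P− and mDT are computed from the standard closed forms for a Wiener process with drift μ ≠ 0 between thresholds ±ζ, which follow from optional sampling arguments of the kind reviewed in §3. Function names are hypothetical, and the grid search is a convenience rather than the authors' procedure.

```python
import math


def single_stage_stats(mu, sigma, zeta, x0=0.0):
    """Lower-threshold hitting probability and mean decision time (mu != 0)."""
    a = 2.0 * mu / sigma ** 2
    p_lower = (math.exp(-a * x0) - math.exp(-a * zeta)) / (
        math.exp(a * zeta) - math.exp(-a * zeta))
    # From E[x(tau)] - mu * E[tau] = x0 with x(tau) = +zeta or -zeta.
    mean_dt = (zeta * (1.0 - 2.0 * p_lower) - x0) / mu
    return p_lower, mean_dt


def reward_rate(zeta, mu=0.2, sigma=0.1, x0=0.0, t_nd=0.3):
    """Assumed form of the reward rate: accuracy over mean time per trial."""
    p_lower, mean_dt = single_stage_stats(mu, sigma, zeta, x0)
    return (1.0 - p_lower) / (mean_dt + t_nd)


# Grid search over thresholds, using the single-stage parameters of Figure 7(a).
zetas = [0.001 * k for k in range(1, 201)]
best_zeta = max(zetas, key=reward_rate)
print(best_zeta, reward_rate(best_zeta))
```

For a two-stage model the same search would be run with P− and the mean decision time taken from the multistage expressions of §5 instead of the single-stage closed forms.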

8. Discussion

In this paper we analyze the first passage time properties of a Wiener process between two absorbing boundaries with piecewise constant (time-dependent) parameters, which we call a multistage model or multistage process. Our main theoretical results, collected in §5, add to previous work on analyzing time-dependent random walk models in psychology and neuroscience. Broadly speaking, these can be split into three approaches. One approach is the integral equation approach introduced and developed in Smith (1995, 2000); Smith and Ratcliff (2009). Another approach is the matrix based Markov Chain approximation which has been applied to a wide variety of multi-attribute choice settings (Diederich and Busemeyer, 2003; Diederich and Oswald, 2016, 2014). A third approach analyzes the backward partial differential equation associated with the multistage process (Ratcliff, 1980; Heath, 1992). The results of §5 align most closely with the work of the third approach, as we also directly analyze the multistage stochastic process, albeit with different techniques. Whereas Ratcliff (1980) and Heath (1992) analyzed a multistage process by solving the Kolmogorov partial differential equation, we employ martingale theory (e.g. the optional sampling theorem) in order to obtain analytic results which extend those of previous studies. In doing so, our work also builds on martingale-based analyses described by Smith (1990) for a single stage model.

The modeler utilizing time-dependent random walk models in decision making should be aware of all of the above approaches, as one approach may demand additional approximations compared to another, due to differences in how they discretize temporal dynamics. For example, when modeling experiments with continuously (gradually) changing stimuli (e.g., White et al. (2011); Zhang et al. (2014)) one may find the integral equation approach more natural, given the continuity and smoothness assumptions built in to the techniques. Diederich and Oswald's model also allows for continuous changes in boundaries. In contrast, our methods must approximate the underlying gradually changing drift with a piecewise constant function, thereby inducing some additional error in the calculations of first passage times. If, however, the application lends itself well to discrete changes in drift rate or threshold (e.g., Krajbich et al. (2010)), then both the matrix based methods and methods discussed in this paper may be more natural, as they explicitly consider such discrete changes in the underlying calculations. Ornstein-Uhlenbeck processes lend themselves more naturally to the matrix based approach of Diederich and Busemeyer (2003), compared to our analysis in Appendix D, since our analysis requires a change in time scale that the matrix method does not. In the specific case of a multistage model (2), our work provides semi-analytic formulas that can be easily computed or studied further (see below). In general, the modeler should be ready to employ the most suitable approach given their situation.

The reader should also be aware of the software tools available for each approach, as there already exist several good non-martingale based software packages for computing FPT statistics. One package implementing the integral equation approach is that of Drugowitsch (2014), which computes first passage time densities using the stable numerical approximations developed in Smith (2000). More recently, highly optimized codes for a broad class of diffusion models have been developed by Verdonck et al. (2015), with implementations on both CPUs and GPUs. Compared to other available codes, the matrix approach of Diederich and Busemeyer (2003) is substantially simpler and more elegant to implement: one can compute desired choice probabilities and mean decision times in fewer than a few dozen lines of MATLAB code. For practitioners wishing to write all of their own model code from scratch, this may be a considerable advantage. The MATLAB code released with this report (https://github.com/sffeng/multistage) provides implementations of the results from §5 and allows one to reproduce all of the figures from §7. Unlike the work of Verdonck et al. (2015), our work is not immediately focused on developing a rapid numerical tool for simulation, but rather on introducing martingale theory as a useful mathematical tool for analyzing multistage decision models. Thus, the codes released with this report are not intended to compete with the efficiency of the aforementioned codes, which have been highly optimized and tuned for throughput, but instead to demonstrate the simplicity and effectiveness of our analysis. These considerations notwithstanding, our results do suggest promising avenues for future numerical work. Particularly relevant is work by Navarro and Fuss (2009), Blurton et al. (2012), and Gondan et al. (2014), who developed efficient numerical schemes for evaluating the relevant infinite sums involved in FPT calculations. Similar methods could be applied to results in §5.1 and §5.2 to develop efficient multistage codes, which could in turn contribute to the growing collection of numerical tools available for practitioners using diffusion models to study decision making.

In §3 we re-derived classical mean decision times and choice probabilities equivalent to results first derived for a discrete time random walk model using the Wald identity (Laming, 1968; Link and Heath, 1975; Link, 1975; Smith, 1990), which itself is a corollary of the optional sampling theorem. Smith (1990) notes that results concerning the discrete time random walk model with Gaussian increments and the continuous time single stage Wiener diffusion model of §3 should be equivalent, which is indeed the case. Furthermore, the moment generating function derived in §3.3 is identical to that obtained by Smith (1990, p. 9) via the Wald identity. Our aim in presenting §3 was to introduce the martingale analysis used in §5 by first demonstrating its utility in re-deriving these classical results. We hope these calculations provide an intuition for the computations in §5 in a less technical setting. For reviews of the classical results on random walks in psychological decision making, see Townsend and Ashby (1983, pp. 300-301) and Luce (1986, pp. 328-334).

One particularly useful aspect of equations (20) through (32) is that they demonstrate how various other measures of performance, such as the lower-threshold hitting probability during each stage, evolve as the underlying dynamics change. Using these, one may efficiently compute a variety of behavioral measures of performance without resorting to first computing the FPT densities. Our results may also serve as a starting point for further analysis of more complicated stochastic decision models. In appendix D, we show how our results apply to Ornstein-Uhlenbeck processes, which approximate leaky integration over the course of evidence accumulation, e.g., the Leaky Competing Accumulator model [LCA] (Usher and McClelland, 2001). Given that the LCA itself can in certain cases approximate a reduced form of more complex and biologically plausible models of interactions across neuronal populations (e.g., Wang, 2002; Wong and Wang, 2006; Bogacz, 2007), our work may help analysts better understand time-varying dynamics within and across neural networks, and how such dynamics relate to complex cognitive phenomena.

Beyond its analytic and numerical utility, our uses of martingale theory, and the optional sampling theorem in particular, provide some theoretical insights into random walk decision models. For example, a single stage model with zero drift rate (i.e. Wiener process) and lower/upper thresholds at 0 and 1 necessarily has the qualitative property that the probability of hitting the upper threshold equals the initial (nonrandom) position x0. Although these results are known in the probability literature (Doob, 1953), they provide practitioners and experimentalists a unique way of planning and analyzing experiments based on decisions thought to evolve according to diffusion processes. In summary, we hope that the tools described in this article and the results of §5 will encourage computational and mathematical analyses of decision models involving time-dependent parameters.
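The zero-drift example above follows in two lines from the optional sampling theorem. As a brief worked sketch, write P+ for the probability of hitting the upper threshold at 1 (notation used here for illustration). Since x(t) is a martingale when the drift is zero and the stopped process is bounded between 0 and 1,

```latex
\begin{align*}
x_0 = \mathbb{E}[x(0)] = \mathbb{E}[x(\tau)]
    = 1 \cdot P^{+} + 0 \cdot (1 - P^{+}) = P^{+} .
\end{align*}
```

The result does not depend on the diffusion rate, which affects only how long the decision takes.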

Highlights.

Multistage processes model decisions involving time-varying stimuli

Martingale theory provides derivations of classical reaction time properties

Semi-analytic formulas for mean decision time and hitting probability are derived

These derivations contribute to the study of richer decision processes

Acknowledgements

We thank the editor, Philip Smith, and the referees for helpful comments, especially regarding this article's exposition and discussion. We thank Ryan Webb for the reference to Smith (2000) and the associated code. We thank Phil Holmes and Patrick Simen for helpful comments and discussions. This work was jointly supported by the C.V. Starr Foundation (AS), the Insley-Blair Pyne Fund, ONR grant N00014-14-1-0635 and ARO grant W911NF-14-1-0431 (VS and NEL).

Appendix A. Alternative expression for FPT density of the single stage model

An alternative expression to the FPT density (13) that can be obtained by solving the Fokker-Planck equation (Feller, 1968) is:

Appendix B. Derivation of expressions in §5.1

We first establish (20). First consider the case μi > 0. Let be the filtration defined by the evolution of the multistage process (2) until time t conditioned on τ > ti–1. A filtration can be thought of as an increasing sequence of available information. For some s ∈ (ti–1, t), as shown in §3, {exp(−2six(t))}t≥ti–1 is a martingale, i.e., .

Furthermore, is a stopping time. Therefore, it follows from optional sampling theorem that

Solving the above equation for , we obtain the desired expression.

For μi = 0, , as shown in §3, {x(t)}t≥ti is a martingale. Therefore, applying the optional sampling theorem, we obtain

Solving the above equation for , we obtain the desired expression.

The formulas (21) and (22) immediately follow from applying expectation to (14) and (15), respectively.

To establish (23) for μi ≠ 0, we note from §3 that for the i-th stage, {x(t) – μit}t≥ti is a martingale. Therefore, applying the optional sampling theorem, we obtain

Solving the above equation for yields the desired expression.

For μi = 0, we note from §3 that is a martingale. Therefore, applying the optional sampling theorem, we obtain

Solving the above equation for yields the desired expression.

Next, we need to establish that the Laplace transform of the density for the FPT for a particular decision made before ti is

To establish this, we consider the stochastic process . From §3, we note that is a martingale for each , i.e.,

We choose two particular values of λ:

Note that for . Therefore, stochastic processes {exp(λ1x(t) – αt)}t≥0 and {exp(λ2x(t) – αt)}t≥ti–1 are martingales. Now applying the optional sampling theorem, we obtain

| (B.1) |

Similarly,

| (B.2) |

Equations (B.1) and (B.2) are two simultaneous equations in two unknowns and . Solving for these unknowns, we obtain

and

Simplifying these expressions, we obtain the desired expression.

Finally, (24) and (25) follow from differentiating the Laplace transform with respect to −α, and then evaluating at α = 0.
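The differentiation step rests on a general identity that may be worth stating. In notation introduced here for illustration, let A be the event that a particular threshold is hit and let g(α) = E[exp(−ατ) 1_A] be the corresponding Laplace transform. Assuming E[τ 1_A] is finite so that differentiation under the expectation is justified,

```latex
\begin{align*}
-\frac{d}{d\alpha}\, g(\alpha) \Big|_{\alpha = 0}
  = \mathbb{E}\!\left[\tau\, e^{-\alpha \tau}\, \mathbf{1}_A\right] \Big|_{\alpha = 0}
  = \mathbb{E}[\tau\, \mathbf{1}_A],
\qquad
\mathbb{E}[\tau \mid A] = \frac{\mathbb{E}[\tau\, \mathbf{1}_A]}{g(0)} ,
\end{align*}
```

since g(0) = P(A). Higher moments follow in the same way from higher derivatives.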

Appendix C. Performance metrics for the overall multistage process

We start by establishing (26). Since t ∈ (tk–1, tk],

We now establish (27). We note that

To establish (28), we note that

Equations (29) and (30) follow similarly to (27), and Equations (31) and (32) follow similarly to (26).

Appendix D. Time-varying Ornstein-Uhlenbeck model

In this section, we discuss how the ideas presented in §5 and §6 can be used to compute FPT properties for a time-varying Ornstein-Uhlenbeck (O-U) model.

The O-U model captures decision-making through the first passage of trajectories of an O-U process (Cox and Miller, 1965) through two thresholds. Our calculations for the multistage process also help analyze the Ornstein-Uhlenbeck (O-U) model. Similar to the multistage process, the n-stage O-U process with piecewise constant parameters is defined by

| (D.1) |

where

for each i ∈ {1, . . . , n}. Due to the extra −λ(t)x(t)dt term, the O-U process is a leaky integrator, while the original single stage process is a perfect integrator (λ(t) = 0).

Here, leaky integration means that the noisy signal is integrated in time with exponentially increasing (λ < 0) or decreasing (λ > 0) weights on past observations. Such exponential weights lead to ‘recency’ or ‘decay’ effects, i.e., the earlier stages (or late stages) may have greater influence on the ultimate decision, whereas with the single stage process, all of the signal throughout the entire decision period is weighted equally.

Appendix D.1. The O-U process as a transformation of the Wiener process

In this section we show how our calculations for the multistage process can be easily applied to decision models driven by O-U processes via a transformed Wiener process (Cox and Miller, 1965, §5.9).

Consider the single-stage O-U process

| (D.2) |

The O-U process (D.2) can be written as a time-varying location-scale transformation of the Wiener process (Cox and Miller, 1965, §5.9), i.e.,

| (D.3) |

In order to derive (D.3), note that for O-U process (D.2)

Note that the stochastic process is equivalent to the stochastic process σW((1 – exp(−2λt))/2λ) in the sense of distribution. Furthermore, σW((1 – exp(−2λt))/2λ) is equivalent to the stochastic process e−λtW(σ2(exp(2λt) – 1)/2λ) in a similar sense. This means that for each realization of the process there exists an identical realization of the process e–λtW(σ2(exp(2λt) – 1)/2λ).

If we define the transformed time by so that . Then

We refer to this process as a Wiener process evolving on exponential time scale.

Appendix D.2. First passage time of the O-U process

We now consider the first passage time of the O-U process (D.2) with respect to symmetric thresholds ±ζ. If x(t) = ±ζ, we have . We denote this last quantity ζ±(u). Consequently, the FPT for x(t) with respect to thresholds ±ζ is a continuous transformation of the FPT of a Wiener process starting at x0 and evolving on the transformed time u with respect to time-varying thresholds at ζ±(u). Since u is a monotonically increasing function, the distribution of the first passage time τ can be obtained from u(τ), the FPT distribution of the Wiener process. Furthermore, the lower threshold hitting probabilities of the two processes are the same.

Note that transforming the FPT problem for the O-U process (D.2) into the FPT problem for the Wiener process evolving on the exponential time scale removes all the parameters from the underlying process x0 + W(u) and puts them in the thresholds ζ±(u) and the exponential time scale u. In addition to allowing the results of §5 to be applied to multistage O-U processes, the above transformation is also helpful in speeding up Monte-Carlo simulations of the O-U process. Since the transformed process evolves on an exponential time scale, Monte-Carlo simulations with the transformed process should take time that is roughly a logarithmic function of the time taken by the O-U process (D.2).

Computation of FPT distributions for the Wiener process with time-varying thresholds is, to our knowledge, not analytically tractable. However, the time-varying thresholds can be approximated by piecewise constant thresholds, and approximate FPT distributions can then be computed using the multistage model. Unlike in the case described for the multistage process, the thresholds ζ± are asymmetric for the transformed process (i.e., ζ+ ≠ −ζ−); such a case can be easily handled by replacing the expressions in (13) and (18) with the corresponding expressions for asymmetric thresholds (see Douady, 1999; Borodin and Salminen, 2002).
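The time change and the resulting moving thresholds can be sketched in Python. The sketch assumes that the single-stage O-U process (D.2) has the form dx = (μ − λx)dt + σ dW with λ ≠ 0, consistent with the −λ(t)x(t)dt term mentioned above. Under that assumption, e^{λt}x(t) − (μ/λ)(e^{λt} − 1) equals x0 + W(u) in distribution, with u = σ²(exp(2λt) − 1)/(2λ). Function names are hypothetical, and the threshold expression is derived here from the assumed form rather than copied from the text.

```python
import math


def to_transformed_time(t, lam, sigma):
    """Transformed time u(t) = sigma^2 * (exp(2*lam*t) - 1) / (2*lam)."""
    return sigma ** 2 * math.expm1(2.0 * lam * t) / (2.0 * lam)


def to_original_time(u, lam, sigma):
    """Inverse of to_transformed_time."""
    return math.log1p(2.0 * lam * u / sigma ** 2) / (2.0 * lam)


def transformed_thresholds(t, zeta, mu, lam):
    """Upper and lower thresholds for x0 + W(u) corresponding to x(t) = +/- zeta."""
    growth = math.exp(lam * t)
    shift = (mu / lam) * (growth - 1.0)
    return zeta * growth - shift, -zeta * growth - shift


# Sample the moving thresholds on a coarse grid of original times, as one would
# when approximating them by piecewise constant thresholds.
for k in range(6):
    t = 0.2 * k
    u = to_transformed_time(t, lam=0.5, sigma=1.0)
    upper, lower = transformed_thresholds(t, zeta=2.0, mu=0.3, lam=0.5)
    print(f"t = {t:.1f}, u = {u:.4f}, thresholds = ({lower:.4f}, {upper:.4f})")
```

The sampled values make the asymmetry visible: for μ ≠ 0 the upper and lower thresholds are shifted by the same amount, so they are no longer mirror images of each other.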

Appendix D.3. Approximate computation of the FPT distribution of the multistage O-U process

Similar to the transformation described in Appendix D.1, the multistage O-U process (D.1) for t ∈ [ti–1, ti) can be written as

| (D.4) |

Let . Also, let τi and Xi–1, i ∈ {1, . . . , n} be defined similarly to the multistage model. Then, conditioned on a realization of Xi–1, the FPT problem of the i-th stage O-U process can be equivalently written as the FPT problem of the Wiener process Xi–1 + W(ui(t)) with respect to thresholds

We can approximate each stage of the O-U process (D.1) by a multistage process representing the above Wiener process with time varying thresholds. This sequence of multistage processes is itself a larger multistage process that approximates (D.1) and its FPT distribution can be computed using the method developed in §5. Note that this method only yields FPT distributions. The expected decision times and probability of hitting a particular threshold can be computed by integrating these distributions.

Footnotes

More precisely, M(t) is progressively measurable with respect to the sigma algebra generated by η(0 : t). See (Doob, 1953, chap. 2), (Karatzas and Shreve, 1998, chap. 1), or (Revuz and Yor, 1999, chap. 1).

M(t) may be heavy tailed due to non-existence of second and higher moments.

For an integrable random variable Y and an arbitrary random variable Z, E[Y] = E[E[Y|Z]]. Loosely speaking, the law of total expectation states that the expectation of a random variable can be computed by first computing its expectation conditional on another random variable, and then taking the expected value of the resulting conditional expectation.

The moment-generating function (technically, the two-sided Laplace transform) of a random variable X is , a function of . It is often of interest because it specifies the probability distribution of X, and can be used to obtain the moments of X.

Bibliography

- Blurton SP, Kesselmeier M, Gondan M. Fast and accurate calculations for cumulative first-passage time distributions in Wiener diffusion models. Journal of Mathematical Psychology. 2012;56(6):470–475.

- Bogacz R. Optimal decision-making theories: linking neurobiology with behaviour. Trends in Cognitive Sciences. 2007;11(3):118–125. doi: 10.1016/j.tics.2006.12.006.

- Bogacz R, Brown E, Moehlis J, Holmes PJ, Cohen JD. The physics of optimal decision making: A formal analysis of models of performance in two-alternative forced-choice tasks. Psychological Review. 2006;113(4):700–765. doi: 10.1037/0033-295X.113.4.700.

- Borodin AN, Salminen P. Handbook of Brownian Motion: Facts and Formulae. Springer; 2002.

- Brunton BW, Botvinick MM, Brody CD. Rats and humans can optimally accumulate evidence for decision-making. Science. 2013;340(6128):95–98. doi: 10.1126/science.1233912.

- Busemeyer JR, Diederich A. Cognitive modeling. Sage; 2010.

- Cisek P, Puskas GA, El-Murr S. Decisions in changing conditions: The urgency-gating model. The Journal of Neuroscience. 2009;29(37):11560–11571. doi: 10.1523/JNEUROSCI.1844-09.2009.

- Cox DR, Miller HD. The Theory of Stochastic Processes. Methuen & Co. Ltd.; 1965.

- Diederich A, Busemeyer JR. Simple matrix methods for analyzing diffusion models of choice probability, choice response time, and simple response time. Journal of Mathematical Psychology. 2003;47(3):304–322.

- Diederich A, Busemeyer JR. Modeling the effects of payoff on response bias in a perceptual discrimination task: Bound-change, drift-rate-change, or two-stage-processing hypothesis. Perception & Psychophysics. 2006;68(2):194–207. doi: 10.3758/bf03193669. URL http://dx.doi.org/10.3758/BF03193669.

- Diederich A, Oswald P. Sequential sampling model for multiattribute choice alternatives with random attention time and processing order. Frontiers in Human Neuroscience. 2014;8(697):1–13. doi: 10.3389/fnhum.2014.00697.

- Diederich A, Oswald P. Multi-stage sequential sampling models with finite or infinite time horizon and variable boundaries. Journal of Mathematical Psychology. 2016 In press.

- Doob J-L. Stochastic Processes. John Wiley & Sons, Inc., Chapman & Hall; 1953.

- Douady R. Closed form formulas for exotic options and their lifetime distribution. International Journal of Theoretical and Applied Finance. 1999;2(1):17–42.

- Drugowitsch J. C++ diffusion model toolset with Python and Matlab interfaces. 2014. GitHub repository: https://github.com/jdrugo/dm, commit 5729cd891b6ab37981acc02d04016870f0a998.

- Drugowitsch J, Moreno-Bote R, Churchland AK, Shadlen MN, Pouget A. The cost of accumulating evidence in perceptual decision making. The Journal of Neuroscience. 2012;32(11):3612–3628. doi: 10.1523/JNEUROSCI.4010-11.2012.

- Durrett R. Probability: Theory and Examples. Cambridge University Press; 2010.

- Farkas Z, Fulop T. One-dimensional drift-diffusion between two absorbing boundaries: Application to granular segregation. Journal of Physics A: Mathematical and General. 2001;34(15):3191–3198.

- Feller W. An Introduction to Probability Theory and its Applications. Vol. 1. John Wiley & Sons; 1968.

- Feng S, Holmes P, Rorie A, Newsome WT. Can monkeys choose optimally when faced with noisy stimuli and unequal rewards. PLoS Computational Biology. 2009;5(2):e1000284. doi: 10.1371/journal.pcbi.1000284.

- Frazier P, Yu AJ. Sequential hypothesis testing under stochastic deadlines. In: Platt J, Koller D, Singer Y, Roweis S, editors. Advances in Neural Information Processing Systems 20. Curran Associates, Inc.; 2008. pp. 465–472.

- Gold JI, Shadlen MN. Neural computations that underlie decisions about sensory stimuli. Trends in Cognitive Sciences. 2001;5(1):10–16. doi: 10.1016/s1364-6613(00)01567-9.

- Gold JI, Shadlen MN. The neural basis of decision making. Annual Review of Neuroscience. 2007;30(1):535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- Gondan M, Blurton SP, Kesselmeier M. Even faster and even more accurate first-passage time densities and distributions for the Wiener diffusion model. Journal of Mathematical Psychology. 2014 Jun;60:20–22.

- Heath RA. A general nonstationary diffusion model for two-choice decision-making. Mathematical Social Sciences. 1992;23(3):283–309.

- Horrocks J, Thompson ME. Modeling event times with multiple outcomes using the Wiener process with drift. Lifetime Data Analysis. 2004;10(1):29–49. doi: 10.1023/b:lida.0000019254.29153.1a.

- Hubner R, Steinhauser M, Lehle C. A dual-stage two-phase model of selective attention. Psychological Review. 2010;117(3):759–784. doi: 10.1037/a0019471.

- Karatzas I, Shreve S. Brownian Motion and Stochastic Calculus. 2nd Edition. Vol. 113. Springer-Verlag; New York: 1998.

- Krajbich I, Armel C, Rangel A. Visual fixations and the computation and comparison of value in simple choice. Nature Neuroscience. 2010;13(10):1292–1298. doi: 10.1038/nn.2635.

- Laming DRJ. Information theory of choice-reaction times. Academic Press; 1968.

- Lin XS. Double barrier hitting time distributions with applications to exotic options. Insurance: Mathematics and Economics. 1998;23(1):45–58.

- Link S, Heath R. A sequential theory of psychological discrimination. Psychometrika. 1975;40(1):77–105.

- Link SW. The relative judgment theory of two choice response time. Journal of Mathematical Psychology. 1975;12(1):114–135.

- Liu S, Yu AJ, Holmes P. Dynamical analysis of Bayesian inference models for the Eriksen task. Neural Computation. 2009;21(6):1520–1553. doi: 10.1162/neco.2009.03-07-495.

- Luce RD. Response times: Their role in inferring elementary mental organization. No. 8. Oxford University Press; 1986.

- Milosavljevic M, Malmaud J, Huth A, Koch C, Rangel A. The drift diffusion model can account for the accuracy and reaction time of value-based choices under high and low time pressure. Judgment and Decision Making. 2010;5(6):437–449.

- Mormann MM, Malmaud J, Huth A, Koch C, Rangel A. The drift diffusion model can account for the accuracy and reaction time of value-based choices under high and low time pressure. 2010 Available at SSRN 1901533.

- Navarro DJ, Fuss IG. Fast and accurate calculations for first-passage times in Wiener diffusion models. Journal of Mathematical Psychology. 2009;53(4):222–230.

- Ratcliff R. A theory of memory retrieval. Psychological Review. 1978;85(2):59–108.

- Ratcliff R. A note on modeling accumulation of information when the rate of accumulation changes over time. Journal of Mathematical Psychology. 1980;21(2):178–184.

- Ratcliff R, McKoon G. The diffusion decision model: Theory and data for two-choice decision tasks. Neural Computation. 2008;20(4):873–922. doi: 10.1162/neco.2008.12-06-420.

- Ratcliff R, Rouder JN. Modeling response times for two-choice decisions. Psychological Science. 1998;9(5):347–356.

- Ratcliff R, Smith PL. A comparison of sequential sampling models for two-choice reaction time. Psychological Review. 2004;111(2):333–367. doi: 10.1037/0033-295X.111.2.333.

- Ratcliff R, Smith PL, Brown SD, McKoon G. Diffusion decision model: Current issues and history. Trends in Cognitive Sciences. 2016;20(4):260–281. doi: 10.1016/j.tics.2016.01.007.

- Revuz D, Yor M. Continuous Martingales and Brownian Motion. Vol. 293. Springer; Berlin Heidelberg: 1999.

- Servan-Schreiber D, Printz H, Cohen J. A network model of catecholamine effects-gain, signal-to-noise ratio, and behavior. Science. 1990;249(4971):892–895. doi: 10.1126/science.2392679.

- Servant M, White C, Montagnini A, Burle B. Using covert response activation to test latent assumptions of formal decision-making models in humans. The Journal of Neuroscience. 2015;35(28):10371–10385. doi: 10.1523/JNEUROSCI.0078-15.2015.

- Shadlen MN, Newsome WT. Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. Journal of Neurophysiology. 2001;86(4):1916–1936. doi: 10.1152/jn.2001.86.4.1916.

- Simen P, Contreras D, Buck C, Hu P, Holmes P, Cohen JD. Reward rate optimization in two-alternative decision making: Empirical tests of theoretical predictions. Journal of Experimental Psychology: Human Perception and Performance. 2009;35(6):1865. doi: 10.1037/a0016926.

- Smith PL. A note on the distribution of response times for a random walk with Gaussian increments. Journal of Mathematical Psychology. 1990;34(4):445–459.

- Smith PL. Psychophysically principled models of visual simple reaction time. Psychological Review. 1995;102(3):567.

- Smith PL. Stochastic dynamic models of response time and accuracy: A foundational primer. Journal of Mathematical Psychology. 2000;44(3):408–463. doi: 10.1006/jmps.1999.1260.

- Smith PL, Ratcliff R. An integrated theory of attention and decision making in visual signal detection. Psychological Review. 2009;116(2):283. doi: 10.1037/a0015156.

- Srivastava V, Holmes P, Simen P. Explicit moments of decision times for single- and double-threshold drift-diffusion processes. Journal of Mathematical Psychology. 2016 In press.

- Townsend JT, Ashby FG. Stochastic modeling of elementary psychological processes. Cambridge University Press; 1983.

- Usher M, McClelland J. The time course of perceptual choice: the leaky, competing accumulator model. Psychological Review. 2001;108(3):550. doi: 10.1037/0033-295x.108.3.550.

- Verdonck S, Meers K, Tuerlinckx F. Efficient simulation of diffusion-based choice rt models on CPU and GPU. Behavior Research Methods. 2015:1–15. doi: 10.3758/s13428-015-0569-0.

- Voskuilen C, Ratcliff R, Smith PL. Comparing fixed and collapsing boundary versions of the diffusion model. Journal of Mathematical Psychology. 2016;73:59–79. doi: 10.1016/j.jmp.2016.04.008.

- Wagenmakers E-J, Van Der Maas HL, Grasman RP. An EZ-diffusion model for response time and accuracy. Psychonomic Bulletin & Review. 2007;14(1):3–22. doi: 10.3758/bf03194023.

- Wang X-J. Probabilistic decision making by slow reverberation in cortical circuits. Neuron. 2002;36(5):955–968. doi: 10.1016/s0896-6273(02)01092-9.

- Webb R. The dynamics of stochastic choice. 2015 Jul; Working paper: available at SSRN 2226018.

- White CN, Ratcliff R, Starns JJ. Diffusion models of the flanker task: Discrete versus gradual attentional selection. Cognitive Psychology. 2011;63(4):210–238. doi: 10.1016/j.cogpsych.2011.08.001.

- Wong K-F, Wang X-J. A recurrent network mechanism of time integration in perceptual decisions. The Journal of Neuroscience. 2006;26(4):1314–1328. doi: 10.1523/JNEUROSCI.3733-05.2006.

- Zhang S, Lee MD, Vandekerckhove J, Maris G, Wagenmakers E-J. Time-varying boundaries for diffusion models of decision making and response time. Frontiers in Psychology. 2014;5(1364). doi: 10.3389/fpsyg.2014.01364.