Abstract

In linear regression models with high dimensional data, the classical z-test (or t-test) for testing the significance of each single regression coefficient is no longer applicable. This is mainly because the number of covariates exceeds the sample size. In this paper, we propose a simple and novel alternative by introducing the Correlated Predictors Screening (CPS) method to control for predictors that are highly correlated with the target covariate. Accordingly, the classical ordinary least squares approach can be employed to estimate the regression coefficient associated with the target covariate. In addition, we demonstrate that the resulting estimator is consistent and asymptotically normal even if the random errors are heteroscedastic. This enables us to apply the z-test to assess the significance of each covariate. Based on the p-value obtained from testing the significance of each covariate, we further conduct multiple hypothesis testing by controlling the false discovery rate at the nominal level. Then, we show that the multiple hypothesis testing achieves consistent model selection. Simulation studies and empirical examples are presented to illustrate the finite sample performance and the usefulness of the proposed method, respectively.

Keywords: Correlated Predictors Screening, False discovery rate, High dimensional data, Single coefficient test

1. Introduction

In linear regression models, it is a common practice to employ the z-test (or t-test) to assess whether an individual predictor (or covariate) is significant when the number of covariates (p) is smaller than the sample size (n). This test has been widely applied across various fields (e.g., economics, finance and marketing) and is available in most statistical software. One usually applies the ordinary least squares (OLS) approach to estimate regression coefficients and standard errors for constructing a z-test (or t-test); see, for example, Draper and Smith (1998) and Wooldridge (2002). However, in a high dimensional linear model with p exceeding n, the classical z-test (or t-test) is not applicable because it is infeasible to compute the OLS estimators of p regression coefficients. This motivates us to modify the classical z-test (or t-test) to accommodate high dimensional data.

In high dimensional regression analysis, hypothesis testing has attracted considerable attention (Goeman et al., 2006, 2011; Zhong and Chen, 2011). Since these papers mainly focus on testing a large set of coefficients against a high dimensional alternative, their approaches are not applicable for testing the significance of a single coefficient. To address this, Bühlmann (2013) applied the ridge estimation approach and obtained a test statistic to examine the significance of an individual coefficient. His proposed test involves a bias correction, which distinguishes it from the classical z-test (or t-test) via the OLS approach. Meanwhile, Zhang and Zhang (2014) proposed a low dimensional projection procedure to construct confidence intervals for a linear combination of a small subset of regression coefficients. The key assumption behind their procedure is the existence of good initial estimators for the unknown regression coefficients and the unknown standard deviation of random errors. Consequently, a penalty function with a tuning parameter is required to implement Zhang and Zhang’s (2014) procedure. Later, van de Geer et al. (2014) extended the results of Zhang and Zhang (2014) to a broad class of models and general loss functions.

Instead of the ridge estimation and low dimensional projection, Fan and Lv (2008) and Fan et al. (2011) used the correlation approach to screen out those covariates that have weak correlations with the response variable. As a result, the total number of predictors that are highly correlated with the response variable is smaller than the sample size. However, Cho and Fryzlewicz (2012) found out that such a screening process via the marginal correlation procedure may not be reliable when the predictors are highly correlated. To this end, they proposed a tilting correlation screening (TCS) procedure to measure the contribution of the target variable to the response. Motivated by the TCS idea of Cho and Fryzlewicz (2012), we develop a new testing procedure that can lead to accurate inferences. Specifically, we adopt the TCS idea and introduce the Correlated Predictors Screening (CPS) method to control for predictors that are highly correlated with the target covariate before a hypothesis test is conducted. It is worth noting that Cho and Fryzlewicz (2012) mainly focus on variable selection, while we aim at hypothesis testing.

If the total number of highly correlated predictors resulting from the CPS procedure is smaller than the sample size, their effects can be profiled out from both the response and the target predictor via projections. Based on the profiled response and the profiled predictor, we are able to employ a classical simple regression model to obtain the OLS estimate of the target regression coefficient. We then demonstrate that the resulting estimator is $n^{1/2}$-consistent and asymptotically normal, even if the random errors are heteroscedastic, as considered by Belloni et al. (2012, 2014). Accordingly, a z-test statistic can be constructed for testing the target coefficient. Under some mild conditions, we show that the p-values obtained by the asymptotic normal distribution satisfy the weak dependence assumption of Storey et al. (2004). As a result, the multiple hypothesis testing procedure of Storey et al. (2004) can be directly applied to control the false discovery rate (FDR). Finally, we demonstrate that the proposed multiple testing procedure achieves model selection consistency.

The rest of the article is organized as follows. Section 2 introduces the model notation, proposes the CPS method, and establishes the theoretical properties of the hypothesis tests via the CPS as well as the FDR procedures. Section 3 presents simulation studies, while Section 4 provides real data analyses. Some concluding remarks are given in Section 5. All technical details are relegated to the Appendix.

2. The methodology

2.1. The CPS method

Let (Yi, Xi) be a random vector collected from the ith subject (1 ≤ i ≤ n), where Yi ∈ ℝ1 is the response variable and Xi = (Xi1, …, Xip)⊤ ∈ ℝp is the associated p-dimensional predictor vector with E(Xi) = 0 and cov(Xi) = Σ = (σj1j2 ) ∈ ℝp×p. In addition, the response variable has been centralized such that E(Yi) = 0. Unless explicitly stated otherwise, we hereafter assume that p ≫ n and n tends to infinity for asymptotic behavior. Then, consider the linear regression model,

$$Y_i = X_i^\top \beta + \varepsilon_i, \tag{2.1}$$

where β = (β1, …, βp)⊤ ∈ ℝp is an unknown regression coefficient vector. Motivated by Belloni et al. (2012, 2014), we assume that the error terms εi are independently distributed with E(εi|Xi) = 0 and finite variance $\operatorname{var}(\varepsilon_i) = \sigma_i^2$ for i = 1, …, n. In addition, define the average of error variances as $\bar\sigma_n^2 = n^{-1}\sum_{i=1}^{n}\sigma_i^2$, and assume that $\bar\sigma_n^2 \to \bar\sigma^2$ as n → ∞ for some finite positive constant $\bar\sigma^2$. To assess the significance of a single coefficient, we test the null hypothesis H0 : βj = 0 for any given j. Without loss of generality, we focus on testing the first regression coefficient. That is,

$$H_0: \beta_1 = 0 \quad \text{vs.} \quad H_1: \beta_1 \neq 0, \tag{2.2}$$

and the same testing procedure is applicable to the rest of the individual regression coefficients.

For the sake of convenience, let 𝕐 = (Y1, …, Yn)⊤ ∈ ℝn be the vector of responses, 𝕏 = (X1, …, Xn)⊤ ∈ ℝn×p be the design matrix with the jth column 𝕏j ∈ ℝn, and ℰ = (ε1, …, εn)⊤ ∈ ℝn. In addition, let ℓ be an arbitrary index set with cardinality |ℓ|. Then, define Xiℓ = (Xij : j ∈ ℓ)⊤ ∈ ℝ|ℓ|, 𝕏ℓ = (X1ℓ, …, Xnℓ)⊤ = (𝕏j : j ∈ ℓ) ∈ ℝn×|ℓ|, Σℓ = (σj1j2 : j1 ∈ ℓ, j2 ∈ ℓ) ∈ ℝ|ℓ|×|ℓ|, and . Moreover, define Σℓaℓb = (σj1j2 : j1 ∈ ℓa, j2 ∈ ℓb) ∈ ℝ|ℓa|×|ℓb| for any two arbitrary index sets ℓa and ℓb, which implies Σℓℓ = Σℓ.

Before constructing the test statistic, we first control for those predictors that are highly correlated with Xi1. Otherwise, they can generate a confounding effect due to multicollinearity and yield an incorrect estimator of β1. Specifically, the marginal regression coefficient is not a consistent estimator of β1 when 𝕐 − 𝕏1β1 and 𝕏1 have a strong linear relationship. To remove the confounding effect, define ρ1j = corr(Xi1, Xij) as the correlation coefficient of Xi1 and Xij for j = 2, …, p, and . We also assume that the |ρ1j| are distinct. Then, let 𝒮k be the set of k indices whose associated predictors have the largest absolute correlations with Xi1:

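$$\mathcal{S}_k = \{\, 2 \le j \le p : |\rho_{1j}| \text{ is among the } k \text{ largest of } |\rho_{12}|, \ldots, |\rho_{1p}| \,\}. \tag{2.3}$$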

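The choice of k (i.e., 𝒮k) will be discussed in Remark 2. With a slight abuse of notation, we sometimes denote 𝒮k by 𝒮 in the rest of the paper for the sake of convenience. To remove the confounding effect due to Xi𝒮, we construct the profiled response and predictor as 𝕐̃ = 𝒬𝒮𝕐 and 𝕏̃1 = 𝒬𝒮𝕏1, respectively, where $\mathcal{Q}_{\mathcal{S}} = I_n - \mathbb{X}_{\mathcal{S}}(\mathbb{X}_{\mathcal{S}}^\top \mathbb{X}_{\mathcal{S}})^{-1}\mathbb{X}_{\mathcal{S}}^\top$ and In ∈ ℝn×n is the n×n identity matrix. We next follow the OLS approach and obtain the estimate of the target coefficient β1,

$$\hat\beta_1 = (\tilde{\mathbb{X}}_1^\top \tilde{\mathbb{X}}_1)^{-1}\, \tilde{\mathbb{X}}_1^\top \tilde{\mathbb{Y}}.$$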

We refer to the above procedure as the Correlated Predictors Screening (CPS) method, β̂1 as the CPS estimator of β1, and 𝒮 as the CPS set of Xi1.
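The steps above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions: the function name `cps_estimate`, the use of centered sample correlations in place of the population ρ1j, and the fixed choice `k=5` are all hypothetical and not taken from the paper, which selects k by a sequential testing procedure described later.

```python
import numpy as np

def cps_estimate(X, y, target=0, k=5):
    """Illustrative CPS-style estimate of one coefficient (k is fixed by the caller)."""
    x1 = X[:, target]
    # Sample correlations between the target column and every column.
    Xc = X - X.mean(axis=0)
    norms = np.linalg.norm(Xc, axis=0)
    corr = (Xc.T @ Xc[:, target]) / (norms * norms[target])
    corr[target] = 0.0  # never select the target itself
    # Indices of the k largest absolute sample correlations.
    S = np.argsort(-np.abs(corr))[:k]
    # Profile out X_S from y and x1, i.e. apply Q_S = I - X_S (X_S' X_S)^{-1} X_S'.
    XS = X[:, S]
    y_t = y - XS @ np.linalg.lstsq(XS, y, rcond=None)[0]
    x_t = x1 - XS @ np.linalg.lstsq(XS, x1, rcond=None)[0]
    # Simple OLS of the profiled response on the profiled predictor.
    beta1 = (x_t @ y_t) / (x_t @ x_t)
    return beta1, S

# Hypothetical toy data with p > n and AR(1)-type correlated predictors.
rng = np.random.default_rng(0)
n, p = 200, 500
Z = rng.standard_normal((n, p))
X = np.empty((n, p))
X[:, 0] = Z[:, 0]
for j in range(1, p):
    X[:, j] = 0.5 * X[:, j - 1] + np.sqrt(0.75) * Z[:, j]
beta = np.zeros(p)
beta[[0, 3]] = 1.0
y = X @ beta + rng.standard_normal(n)

beta1_hat, S_hat = cps_estimate(X, y, target=0, k=5)
```

Because only k columns are projected out, the least squares problems stay well posed even though p exceeds n, which is the practical point of the construction.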

It is of interest to note that the proposed CPS estimator β̂1 is closely related to the estimator obtained via the “added-variable plot” approach (e.g., see Cook and Weisberg, 1998). To illustrate their relationship, let 𝕏−1 be the collection of all covariates in 𝕏 except for 𝕏1. Then the method of “added-variable plot” essentially takes the residuals from regressing 𝕐 against 𝕏−1 as the response and the residuals from regressing 𝕏1 against 𝕏−1 as covariates. Although both approaches can be used to assess the effect of 𝕏−1 on the estimation of β1, they are different. Specifically, the “added-variable plot” approach requires regressing 𝕏1 on all remaining covariates, which is not computable when the dimension p is larger than n. By contrast, CPS only considers those predictors in 𝒮 that are highly correlated with 𝕏1, which is applicable in high dimensional settings.
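When p < n, the added-variable construction reproduces the full OLS coefficient exactly (the Frisch-Waugh-Lovell identity), which is the low dimensional special case of CPS with 𝒮 containing all remaining covariates. The following sketch checks this numerically on made-up data; the dimensions, coefficients, and variable names are assumptions chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 50, 4
X = rng.standard_normal((n, p))
y = X @ np.array([1.0, -2.0, 0.5, 0.0]) + rng.standard_normal(n)

# Coefficient of the first covariate from the full OLS fit.
beta_full = np.linalg.lstsq(X, y, rcond=None)[0][0]

# Added-variable construction: residualize y and x1 on all other covariates,
# then regress the first set of residuals on the second.
X_rest = X[:, 1:]
res_y = y - X_rest @ np.linalg.lstsq(X_rest, y, rcond=None)[0]
res_x = X[:, 0] - X_rest @ np.linalg.lstsq(X_rest, X[:, 0], rcond=None)[0]
beta_avp = (res_x @ res_y) / (res_x @ res_x)
```

The two numbers agree up to floating point error. With p > n the regression on `X_rest` no longer has a unique solution, which is why CPS restricts the projection to the screened set.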

Making inferences about β1 in high dimensional models is challenging because these inferences can depend on the accuracy of estimating the whole vector β; see Belloni et al. (2014), van de Geer et al. (2014) and Zhang and Zhang (2014). The main contribution of our proposed CPS method is employing a simple marginal regression approach to estimate β1 after controlling for the predictors that are highly correlated with 𝕏1. As a result, the profiled predictor, 𝕏̃1, is approximately independent of the remaining covariates. This allows us to not only directly estimate β1, but also make inferences about β1. The theoretical properties of the CPS estimator and associated test statistic are presented below.

2.2. Asymptotic normality of the CPS estimator and test statistic

To make inferences, we study the asymptotic properties of the CPS estimator β̂1. Define $\varrho_{j_1 j_2}(\mathcal{S}) = \sigma_{j_1 j_2} - \Sigma_{j_1 \mathcal{S}}\Sigma_{\mathcal{S}}^{-1}\Sigma_{\mathcal{S} j_2}$, which measures the partial covariance of Xij1 and Xij2 after controlling for the effect of Xi𝒮 = (Xij : j ∈ 𝒮)⊤ ∈ ℝ|𝒮|. Then, we make the following assumptions to facilitate the technical proofs, although they are admittedly not the weakest possible assumptions.

- (C1) Gaussian condition. Assume that the Xi's are independent and normally distributed with mean 0 and covariance matrix Σ.

- (C2) Bounded diagonal elements. There exist two finite constants, cmax and a second constant, such that the diagonal components of Σ and Σ−1 are bounded above by cmax and by the second constant, respectively.

- (C3) Predictor dimension. There exist two positive constants ħ < 1 and ν > 0 such that $\log p \le \nu n^{\hbar}$ for every n > 0.

- (C4) Partial covariance. There exists a constant ξ > 3/2 such that $\max_{j \notin \mathcal{S}} |\varrho_{1j}(\mathcal{S})| = O(|\mathcal{S}|^{-\xi})$ as |𝒮| → ∞.

- (C5) Dimension of the CPS set. There exist a CPS set 𝒮 and two positive constants Ca and Cb such that $C_a n^{\nu_1} \le |\mathcal{S}| \le C_b n^{\nu_2}$, where ν1 and ν2 are two positive constants with 1/(2ξ) < ν1 ≤ ν2 < 1/3 and ħ + 3ν2 < 1, and ħ is defined in Condition (C3).

- (C6) Regression coefficients. Assume that for some constant Cmax > 0 and ϖ < min(1/4, ξν1 − 1/2), where ξ and ν1 are defined in (C4) and (C5), respectively.

Condition (C1) is a common condition used for high dimensional data to simplify theoretical proofs; see, for example, Wang (2009) and Zhang and Zhang (2014). This condition can be relaxed to sub-Gaussian random variables (Wang, 2012; Li et al., 2012), and our theoretical results still hold. Condition (C2) is a mild condition that has been well discussed in Liu (2013). Condition (C3) allows the dimension of predictors p to diverge exponentially with the sample size n, so that p can be much larger than n. Condition (C4) is a technical condition for simplifying the proofs of our theory. It requires that the partial covariance between the target covariate and any other predictor that does not belong to 𝒮, after controlling for the effect of Xi𝒮 (i.e., the key confounders), converges towards 0 at a fast rate as |𝒮| → ∞. This condition is satisfied for many typical covariance structures, e.g., diagonal and autoregressive structures. It is worth noting that, according to (C1), the conditional distribution of Xi1 given Xi,−1 = (Xi2, …, Xip)⊤ ∈ ℝp−1 remains normal. As a result, there exists a coefficient vector θ(1) = (θ(1),1, …, θ(1),p−1)⊤ ∈ ℝp−1 such that $X_{i1} = \theta_{(1)}^\top X_{i,-1} + e_{i1}$, where ei1 is a random error that is independent of Xi,−1. Furthermore, if 𝒮 ⊃ 𝒮θ = {j : θ(1),j ≠ 0}, then maxj∉𝒮 |ϱ1j(𝒮)| = 0, and hence Condition (C4) is satisfied. This condition is also closely related to the assumption given in Theorem 5 of Zhang and Zhang (2014). Moreover, Condition (C5), together with Condition (C4), ensures that the size of the CPS set is much smaller than the sample size, but it does not imply that the number of regressors highly correlated with 𝕏1 is bounded. Condition (C5) is used to guarantee that maxj∉𝒮 |ϱ1j(𝒮)| is of order $o(n^{-1/2})$, so that the bias of β̂1 vanishes. Note that the size of the CPS set in Condition (C5) depends on the rate at which maxj∉𝒮 |ϱ1j(𝒮)| → 0. Thus, Condition (C5) can be dropped if θ(1) has finitely many nonzero elements or Σ follows an autoregressive structure so that $\max_{j \notin \mathcal{S}} |\varrho_{1j}(\mathcal{S})| = O\{\exp(-\bar\zeta |\mathcal{S}|^{\bar\eta})\}$ for some positive constants ζ̄ and η̄. Lastly, Condition (C6) is satisfied when β is sparse with only a finite number of nonzero coefficients. Under the above conditions, we obtain the following result.

Theorem 1

Assume that Conditions (C1)–(C6) hold. We then have , where , 𝒮+ = {1}∪ 𝒮, and 𝒮* = {j : j ∉ 𝒮+}.

Using the results of two lemmas in Appendix A, we are able to prove the above theorem; see the detailed proofs in Appendix B. By Theorem 1, we construct the test statistic,

| (2.4) |

where ,ℰ̂𝒮+ is the residual vector obtained by regressing Yi on Xi𝒮+, and Xi𝒮+ = (Xij : j ∈ 𝒮+)⊤. Applying similar techniques to those used in the proof of Theorem 1 under Conditions (C1)–(C6), together with Slutsky’s theorem and the result that obtained from Lemma 3 and Condition (C2), we can verify that is the consistent estimator of . As a result, Z1 is asymptotically standard normal under H0, and one can reject the null hypothesis if |Z1| > z1−α/2, where zα stands for the αth quantile of the standard normal distribution. Note that if p < n and 𝒮 = {j : j ≠ 1}, the test statistic Z1 is the same as the classical z-test statistic.

To make the testing procedure practically useful, one needs to select the CPS set 𝒮 among the sets 𝒮k for k ≥ 1. Since 𝒮k in (2.3) is unknown in practice, we consider its estimator

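$$\hat{\mathcal{S}}_k = \{\, 2 \le j \le p : |\hat\rho_{1j}| \text{ is among the } k \text{ largest of } |\hat\rho_{12}|, \ldots, |\hat\rho_{1p}| \,\}, \tag{2.5}$$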

where ρ̂1j is the sample correlation coefficient of Xi1 and Xij and . The connection between 𝒮k and its sample counterpart 𝒮̂k is established in the following proposition.

Proposition 1

Let |ρ1ji | be the ith largest absolute value of {ρ1j : 2 ≤ j ≤ p}. Assume that, for any 1 ≤ k ≤ Cbnν2 with Cb and ν2 being defined in Condition (C5), mini≥dmaxnν2/2(|ρ1jk| − |ρ1jk+i |) > dmin/nν2 for some positive constants dmin and dmax. Then, under Conditions (C1) and (C3), for any CPS set 𝒮k0 satisfying k0 ≤ Cbnν2, there exists k* ≤ k0 + dmaxnν2 such that P(𝒮̂k* ⊃ 𝒮k0 ) → 1.

The proof is given in Appendix C. The condition mini≥dmaxnν2/2(|ρ1jk | − |ρ1jk+i |) > dmin/nν2 for some finite positive constants dmin and dmax is quite mild, and it ensures that the difference between |ρ1jl | and |ρ1jm| cannot be too small when |jl − jm| is large enough. By Condition (C5), there exists a positive integer k0 ∈ (Canν1, Cbnν2 ) such that 𝒮k0 is the CPS set. According to Proposition 1, we can then find k* ≤ k0 + dmaxnν2 = O(nν2 ) satisfying P(𝒮̂k* ⊃ 𝒮k0 ) → 1. This indicates that, with probability tending to one, there exists a set among the paths 𝒮̂k that contains the CPS set. By Condition (C4), we further have that . Using this result, one can verify that Theorem 1 holds by replacing 𝒮k0 with 𝒮̂k*.

Proposition 1 indicates that the sequential selection of the CPS set along the paths 𝒮̂k (k = 1, …, p − 1) is attainable. In practice, however, k is unknown and needs to be selected effectively. By the results of Corollary 1 of Kalisch and Bühlmann (2007), we have that |ϱ̂1j(𝒮̄) − ϱ1j(𝒮̄)| = Op(n−1/2) uniformly for any conditional set with size |𝒮̄| = O(nν2 ), which leads to for any k = O(nν2 ). This indicates that the sample partial correlation is close to its true partial correlation as the sample size gets large. Motivated by this finding, we propose choosing the CPS set among the paths 𝒮̂k by sequentially testing the partial correlations. Specifically, for any k ≥ 1, let ϱ̂1j(𝒮̂k) be the sample counterpart of ϱ1j(𝒮k) and define $\hat F_{1j}(\hat{\mathcal{S}}_k) = \tfrac{1}{2}\log[\{1 + \hat\varrho_{1j}(\hat{\mathcal{S}}_k)\}/\{1 - \hat\varrho_{1j}(\hat{\mathcal{S}}_k)\}]$, which is in the spirit of Fisher’s Z-transformation for the purpose of identifying nodes (variables) that have edges connected to the variable Xi1 in a Gaussian graph (see Kalisch and Bühlmann, 2007). Then, we select the smallest size of k sequentially such that (denoted as k̂), for every , where γ is a pre-specified significance level and . Employing Lemma 3 in Kalisch and Bühlmann (2007) that |F̂1j(𝒮̄)−F1j(𝒮̄)| = Op(n−1/2) uniformly for any conditional set with size |𝒮̄| = O(nν2 ), we then have , which immediately leads to . Using the result of Proposition 1 and Condition (C4), we further obtain |𝒮̂k̂| ≤ (Cb + dmax)nν2. Hence, the k̂ selected via the sequential testing procedure is of order O(nν2 ), which is directly related to the assumption imposed on 𝒮k in Condition (C5).

Remark 1

It is worth noting that the proposed CPS method is based on the same idea as the tilting method of Cho and Fryzlewicz (2012), namely controlling the effect of the predictors that could generate a confounding effect. However, there is a difference between these two methods in one of their scaling factors. Specifically, our proposed test statistic is

and the tilted correlation of Cho and Fryzlewicz (2012) is

Note that 𝒮+ = 𝒮 ∪ {1}. The asymptotic properties of these two quantities above can be quite different when β1 ≠ 0 because the difference between 𝕐⊤ 𝒬𝒮+𝕐 and 𝕐⊤𝒬𝒮𝕐 can be large. This indicates that the tilted correlation approach designed for variable selection may not be appropriate for hypothesis testing.

Remark 2

Based on partial correlation, we construct the CPS set. An alternative approach is via the correlation approach proposed by Cho and Fryzlewicz (2012, Section 3.4), who focused on testing correlations between covariates by controlling the false discovery rate. Although their method is quite useful for variable selection, it raises the following two concerns for our testing procedure. First, Theorem 1 may not be valid via the correlation approach. The reason is that Theorem 1 requires the partial covariance, maxj |ϱ1j(𝒮)|, to converge to 0 at a fast rate so that the bias of β̂1 is asymptotically negligible; see the proof of Theorem 1 in Appendix B for details. However, the correlation approach only ensures the convergence of maxj |ρ1j|, but not maxj |ϱ1j(𝒮)|. Hence, β̂1 may yield a nontrivial bias by using the correlation approach. Second, their method requires that only a small proportion of the ρj1j2 s are nonzero. Accordingly, it may not be applicable for our proposed test when correlations among predictors are either non-sparse or less sparse (see the covariance structure with the polynomial decay setting above Example 4).

Remark 3

We use a single screening approach to obtain the CPS set of the target covariate, which yields the CPS estimator of the target regression coefficient. On the other hand, Zhang and Zhang (2014) employed the scaled lasso procedure of Sun and Zhang (2012) to obtain the initial estimators of all regression coefficients and the scale parameter estimator. Then, they applied the classical lasso procedure to find the low dimensional projection vector. In sum, Zhang and Zhang (2014) applied the lasso approach to find the low dimensional projection estimator (LDPE) for the target regression coefficient. When 𝕏 has orthogonal columns and p < n, both approaches lead to the same parameter estimator as that obtained from the marginal univariate regression (MUR). In general, however, the two approaches are quite different, and it seems nearly impossible to find the exact relationship between the CPS estimator and LDPE when the columns of 𝕏 are not orthogonal.

2.3. Controlling the False Discovery Rate (FDR)

In identifying significant coefficients among the high dimensional regression coefficients βj (j = 1, …, p), a multiple testing procedure can be considered by testing H0j : βj = 0 simultaneously. Denote the p-value obtained by testing each individual null hypothesis, H0j, as pj = 2{1 − Φ(|Zj|)}, where Zj is the test statistic and can be constructed similarly to that in Eq. (2.4). To guard against false discoveries, we next develop a procedure to control the false discovery rate (Benjamini and Hochberg, 1995).
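The two-sided p-value pj = 2{1 − Φ(|Zj|)} can be computed with the standard library alone. The helper name `two_sided_pvalue` below is hypothetical; the sketch uses the identity 2{1 − Φ(x)} = erfc(x/√2) for x ≥ 0, which avoids the cancellation in 1 − Φ(x) for large |Zj|.

```python
import math

def two_sided_pvalue(z):
    """p = 2 * (1 - Phi(|z|)), written via the complementary error function."""
    return math.erfc(abs(z) / math.sqrt(2.0))

# A statistic at the usual 5% critical value gives a p-value of about 0.05.
p_at_critical = two_sided_pvalue(1.959964)
```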

Let 𝒩0 = {j : βj = 0} be the set of variables whose associated coefficients are truly zero and 𝒩1 = {j : βj ≠ 0} be the set of variables whose associated coefficients are truly nonzero. For any significance level t ∈ [0, 1], let V(t) = #{j ∈ 𝒩0 : pj ≤ t} be the number of falsely rejected hypotheses, S(t) = #{j ∈ 𝒩1 : pj ≤ t} be the number of correctly rejected hypotheses, and R(t) = #{j : pj ≤ t} be the total number of rejected hypotheses. We adopt the approach of Storey et al. (2004) to implement the multiple testing procedure, which is less conservative than the method of Benjamini and Hochberg (1995) and is applicable under a weak dependence structure (Storey et al., 2004). To this end, define FDP(t) = V(t)/[R(t)∨1] and FDR(t) = E{V(t)/[R(t)∨1]}, where R(t) ∨ 1 = max{R(t), 1}. Then, the estimator proposed by Storey (2002) is

$$\widehat{\mathrm{FDR}}_\lambda(t) = \frac{\hat\pi_0(\lambda)\, t}{\{R(t) \vee 1\}/p}, \tag{2.6}$$

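where $\hat\pi_0(\lambda) = \{(1-\lambda)p\}^{-1}\{p - R(\lambda)\}$ is an estimate of π0 = p0/p, p0 = |𝒩0| is the number of true null hypotheses, and λ ∈ [0, 1) is a tuning parameter. Then, for any pre-specified significance level q and a fixed λ, consider the cutoff point chosen by the thresholding rule applied to the estimator in (2.6), $t_q(\widehat{\mathrm{FDR}}_\lambda) = \sup\{0 \le t \le 1 : \widehat{\mathrm{FDR}}_\lambda(t) \le q\}$. We reject the null hypotheses for those p-values that are less than or equal to $t_q(\widehat{\mathrm{FDR}}_\lambda)$.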
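As a rough illustration of the thresholding rule, the sketch below implements the standard point estimate of Storey (2002), taking the FDR estimate at t to be p·π̂0(λ)·t / max{R(t), 1}. The function name `storey_reject` is hypothetical, and the finite-sample modifications discussed by Storey et al. (2004) are deliberately omitted. Since the estimate increases in t between consecutive ordered p-values, the rejection set is determined by the largest ordered p-value at which the estimate is still at most q.

```python
import numpy as np

def storey_reject(pvals, q=0.05, lam=0.5):
    """Return a boolean rejection mask and pi0_hat(lambda)."""
    pvals = np.asarray(pvals, dtype=float)
    p = pvals.size
    # pi0_hat(lambda) = {p - R(lambda)} / {(1 - lambda) p}
    pi0 = np.sum(pvals > lam) / ((1.0 - lam) * p)
    order = np.argsort(pvals)
    sorted_p = pvals[order]
    ranks = np.arange(1, p + 1)
    # Estimated FDR at t = p_(i) is p * pi0 * p_(i) / i; compare it with q.
    ok = p * pi0 * sorted_p <= q * ranks
    reject = np.zeros(p, dtype=bool)
    if ok.any():
        i_star = np.max(np.nonzero(ok)[0])
        reject[order[: i_star + 1]] = True
    return reject, pi0

# Made-up p-values: three clear signals followed by seven nulls.
pvals = [0.0001, 0.0002, 0.0003, 0.6, 0.7, 0.8, 0.9, 0.95, 0.4, 0.3]
reject, pi0_hat = storey_reject(pvals, q=0.05, lam=0.5)
```

In this toy input five p-values exceed λ = 0.5, so π̂0(λ) = 5/(0.5 × 10) = 1, and only the three smallest p-values satisfy the threshold condition.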

To study the theoretical property of , we begin by introducing two notations. Let be the average probability of all rejected hypotheses and 𝒮j be the CPS set of covariates Xij. We next demonstrate that asymptotically provides strong control of FDR at the pre-specified nominal level q.

Theorem 2

Assume that p0/p → 1 as p goes to infinity, limn→∞ T1,n(t) = T1(t) and, for any k ∈ 𝒩0, , where T1(t) is a continuous function and Λ0 = max{maxj∈𝒩0 |𝒮j|, |𝒩1|}. Under Conditions (C1)–(C6), we have that .

The proof is given in Appendix D. In general, the dependences among the test statistics Zj become stronger as the overlap among the CPS sets increases. To control the dependences, they must have weaker dependence among covariates as the size of overlap increases. Accordingly, the condition for any k ∈ 𝒩0, in Theorem 2, controls the overall dependence between the covariates in the union of the CPS sets ∪l∈𝒩0 𝒮l for any fixed k ∈ 𝒩0; see Fan et al. (2012) for a similar condition on dependence. In addition, Λ0 provides an upper bound on the size of the overlap among the CPS sets. Assume that Σ follows an autoregressive structure such that the |𝒮j|s are small compared with n and for any 1 ≤ k ≤ p. Hence, the above condition is satisfied. In sum, in (2.6) is applicable under weak dependence. For a more general dependence structure, one might apply the FDP estimation procedure proposed by Fan et al. (2012).

2.4. Model selection consistency

According to Theorem 2, for any given significance level q > 0, the FDR can be controlled asymptotically by setting the threshold at . This result motivates us to further investigate the model selection consistency by letting q → 0. In fact, the model selection consistency in high dimensional linear models has been intensively studied in the variable selection literature. There is a large body of papers discussing the model selection consistency via the penalized likelihood approach (e.g., Meinshausen and Bühlmann, 2006; Zhao and Yu, 2006; Huang et al., 2007). However, the use of p-values for model selection has not received considerable attention. Some exceptions include Bunea et al. (2006) who considered variable selection consistency using p-values under the condition p = o(n1/2), and Meinshausen et al. (2009) who investigated the consistency of a two-step procedure involving screening and then a multiple test procedure. It is worth noting that the p-value obtained in Meinshausen et al. (2009) is not designed for assessing the significance of a single coefficient. The aim of this section in our paper is to study the model selection consistency using p-values obtained from the test proposed in Section 2.2.

For any given nominal levels αn, let be an estimate of 𝒩1, the set containing all the variables whose associated coefficients are truly nonzero. Assume that αn → 0 as n → ∞. By Theorem 2, the probability of obtaining false discoveries is , which implies that . Thus, this procedure requires a sure screening property to obtain model selection consistency. Before demonstrating this property, two additional assumptions are given below.

- (C7) There exist two positive constants κ and Cκ such that $\min_{j \in \mathcal{N}_1} |\beta_j| > C_\kappa n^{-\kappa}$ for κ + ħ < 1/2, where ħ is defined in (C3).

- (C8) There exists some positive constant Ce such that for any ℓ > 0 and 1 ≤ j ≤ p, .

Condition (C7) is a minimum signal assumption, and similar conditions are commonly considered in the variable screening literature (Fan and Lv, 2008; Wang, 2009). We further assume that the random errors εi are independent and normally distributed. Using the fact that n−1||𝕏j||2 → 1 and that follows a normal distribution with finite variance for j = 1, …, p, Condition (C8) is satisfied. The above conditions, together with Conditions (C1)–(C6), lead to the following result.

Theorem 3

Under Conditions (C1)–(C8), there exists a sequence of significance levels αn → 0 such that .

The proof of Theorem 3 is given in Appendix E. According to the proof of Theorem 3, one can select αn at the level of $\alpha_n = 2\{1 - \Phi(n^{j})\}$ with ħ < j < 1/2 − κ. This selection implies that pαn/log(p) → 0 as n → ∞, which is similar to the assumption (Cq) in Bunea et al. (2006). Compared with the penalized likelihood method, the proposed testing procedure is able to control the false discovery rate and the family-wise error rate for the given αn. This is important especially in the finite sample case; see Meinshausen et al. (2009) for a detailed discussion.

3. Simulation studies

To demonstrate the finite sample performance of the proposed methods, we consider four simulation studies with different covariance patterns and distributions among predictors. Each simulation includes three different sample sizes (n = 100, 200, 500) and two different dimensions of predictors (p = 1000 and 2000). All simulation results presented in this section were based on 1000 realizations. The nominal level α of the CPS test and the significance level q of FDR are both set to 5%. Moreover, to determine the CPS set for each predictor, three different significance levels were considered (α = 0.01, 0.05, and 0.10). Since the results were similar, we only report the case with the nominal level α = 0.05.

To study the significance of each individual regression coefficient, consider the proposed test statistic Zrj for testing the jth coefficient in the rth simulation, where j = 1, …, p and r = 1, …, 1000. Then, define an indicator measure Irj = I(|Zrj| > z1−α/2) and compute the empirical rejection probability (ERP) for the jth coefficient test, $\mathrm{ERP}_j = 1000^{-1}\sum_{r=1}^{1000} I_{rj}$. As a result, ERPj is the empirical size when the null hypothesis H0j : βj = 0 holds, while it is the empirical power under the alternative hypothesis. Subsequently, define the average empirical size (ES) and the average empirical power (EP) as $\mathrm{ES} = |\mathcal{N}_0|^{-1}\sum_{j \in \mathcal{N}_0} \mathrm{ERP}_j$ and $\mathrm{EP} = |\mathcal{N}_1|^{-1}\sum_{j \in \mathcal{N}_1} \mathrm{ERP}_j$, respectively. Accordingly, ES and EP provide overall measures for assessing the performance of the single coefficient test. Based on the p-values of the Zrj tests, we next employ the multiple testing procedure of Storey et al. (2004) to study the performance of multiple tests via the empirical FDR discussed in Section 2.3. It is worth noting that we adopt the commonly used tuning parameter λ = 1/2 in the first two examples, and its robustness is evaluated in Example 3. To assess the effect of model selection consistency, we examine the average true rate (TR) and the average false rate (FR). When the true model can be identified consistently, TR and FR should approach 1 and 0, respectively, as the sample size gets large. For the sake of comparison, we also examine the marginal univariate regression (MUR) test (i.e., the classical t-test obtained from the marginal univariate regression model) and the low dimensional projection estimator (LDPE) proposed by Zhang and Zhang (2014) and van de Geer et al. (2014) in the Monte Carlo studies. The tuning parameter of the LDPE method is set to $\{2\log p/n\}^{1/2}$, as suggested by Zhang and Zhang (2014). It is noteworthy that we do not include the method of Bühlmann (2013) for comparison since it is not optimal, as shown by van de Geer et al. (2014).
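The size and power summaries can be computed directly from a matrix of simulated test statistics. The following is a minimal sketch; the function name `empirical_size_power`, the array layout (replications in rows, coefficients in columns), and the toy input are assumptions made for illustration only.

```python
import numpy as np

def empirical_size_power(Z, null_mask, z_crit=1.959964):
    """Z has shape (replications, p); null_mask[j] is True when beta_j = 0."""
    null_mask = np.asarray(null_mask, dtype=bool)
    # I_rj = 1 when the jth test rejects in replication r (two-sided, 5% level by default).
    rejections = np.abs(np.asarray(Z, dtype=float)) > z_crit
    # ERP_j: rejection frequency of the jth test across replications.
    erp = rejections.mean(axis=0)
    es = erp[null_mask].mean()    # average empirical size over the null set
    ep = erp[~null_mask].mean()   # average empirical power over the non-null set
    return erp, es, ep

# Toy input: 4 replications, one null coefficient and one non-null coefficient.
Z_toy = np.array([[0.0, 3.0], [2.5, 3.0], [0.0, 0.0], [0.0, 3.0]])
erp, es, ep = empirical_size_power(Z_toy, null_mask=[True, False])
```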

Example 1: Autocorrelated predictors

Consider a linear regression model with autocorrelated predictors Xi generated from a multivariate normal distribution with mean 0 and covariance Σ = (σj1j2) ∈ ℝp×p with $\sigma_{j_1 j_2} = 0.5^{|j_1 - j_2|}$. Although different predictors are correlated with each other, the correlation decreases to 0 as the distance |j1 − j2| between Xij1 and Xij2 increases. The regression coefficient vector β is such that $\beta_{3j+1} = 1$ for any 0 ≤ j ≤ d0, and βj = 0 otherwise. Note that d0 = |𝒩1| represents the number of non-zero regression coefficients. In this example, we consider three different values of d0 (d0 = 10, 50, 100) to investigate the performance of the proposed test under sparse (i.e., d0 = 10) and less sparse (i.e., d0 = 50 and 100) scenarios. In addition, the average variance of εi (i.e., σ̄2) is chosen to generate a theoretical . Moreover, the variance of each εi is independently generated from a uniform distribution with the lower and upper endpoints σ̄2/2 and 3σ̄2/2, respectively. This yields a heteroscedastic linear regression model.
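A data-generating sketch for this design is given below. Several details are assumptions rather than the authors' settings: the function name `simulate_example1` is hypothetical, the average error variance `sigma_bar2` is left as a free argument because its calibration is not recoverable here, and exactly d0 nonzero coefficients are placed at the (1-based) positions 1, 4, 7, … so that |𝒩1| = d0.

```python
import numpy as np

def simulate_example1(n, p, d0, sigma_bar2=1.0, seed=0):
    rng = np.random.default_rng(seed)
    # AR(1) recursion: unit variances and cov(X_ij1, X_ij2) = 0.5 ** |j1 - j2|.
    rho = 0.5
    Z = rng.standard_normal((n, p))
    X = np.empty((n, p))
    X[:, 0] = Z[:, 0]
    for j in range(1, p):
        X[:, j] = rho * X[:, j - 1] + np.sqrt(1.0 - rho**2) * Z[:, j]
    # beta_{3j+1} = 1 in 1-based indexing corresponds to 0-based indices 0, 3, 6, ...
    beta = np.zeros(p)
    beta[3 * np.arange(d0)] = 1.0
    # Heteroscedastic errors: var(eps_i) drawn uniformly on [sigma_bar2/2, 3*sigma_bar2/2).
    var_i = rng.uniform(sigma_bar2 / 2.0, 3.0 * sigma_bar2 / 2.0, size=n)
    eps = rng.standard_normal(n) * np.sqrt(var_i)
    y = X @ beta + eps
    return X, y, beta, var_i

X_sim, y_sim, beta_sim, var_sim = simulate_example1(n=50, p=40, d0=10)
```

The recursion avoids forming and factorizing the p × p covariance matrix, which matters for p = 1000 or 2000; it requires 3(d0 − 1) < p so that all nonzero positions exist.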

The results for d0 = 10 are presented in Table 1. Since the results for d0 = 50 and 100 yield a similar pattern to those in Table 1, we provide them in the supplementary material to save space. Table 1 shows that both CPS and MUR control the size well, while MUR has a larger power than CPS. After closely examining MUR’s performance, however, we find that its ES can be misleading. For example, Xi2 ∈ 𝒩0 is moderately correlated with a nonzero predictor Xi1 ∈ 𝒩1. As a result, the empirical size for testing H0 : β2 = 0 obtained from MUR could be as large as 0.90. On the other hand, most predictors in 𝒩0 are nearly independent of the predictors in 𝒩1 and the response variable. Accordingly, MUR can have a reasonable average empirical size and a high average true rate (TR). This misleading result can be detected by the empirical false discovery rate (FDR) being much greater than the nominal level. In addition, the average false rate (FR) becomes larger as the sample size increases. Therefore, the MUR approach should be used with caution when testing a single coefficient, conducting multiple hypothesis tests, or selecting variables.

Table 1.

Simulation results for Example 1 with α = 5%, q = 5% and d0 = 10.

| p | n | Methods | ES | EP | FDR | TR | FR |
|---|---|---|---|---|---|---|---|
| 1000 | 100 | MUR | 0.055 | 0.981 | 0.753 | 0.812 | 0.004 |
| 1000 | 100 | LDPE | 0.071 | 0.882 | 0.396 | 0.808 | 0.028 |
| 1000 | 100 | CPS | 0.056 | 0.495 | 0.127 | 0.451 | 0.000 |
| 1000 | 200 | MUR | 0.054 | 1.000 | 0.726 | 1.000 | 0.007 |
| 1000 | 200 | LDPE | 0.059 | 0.980 | 0.354 | 0.901 | 0.014 |
| 1000 | 200 | CPS | 0.053 | 0.792 | 0.073 | 0.762 | 0.000 |
| 1000 | 500 | MUR | 0.054 | 1.000 | 0.691 | 1.000 | 0.011 |
| 1000 | 500 | LDPE | 0.055 | 1.000 | 0.201 | 1.000 | 0.006 |
| 1000 | 500 | CPS | 0.053 | 0.982 | 0.053 | 0.958 | 0.000 |
| 2000 | 100 | MUR | 0.057 | 0.884 | 0.717 | 0.731 | 0.014 |
| 2000 | 100 | LDPE | 0.078 | 0.731 | 0.429 | 0.690 | 0.035 |
| 2000 | 100 | CPS | 0.055 | 0.442 | 0.128 | 0.362 | 0.000 |
| 2000 | 200 | MUR | 0.053 | 1.000 | 0.793 | 0.951 | 0.020 |
| 2000 | 200 | LDPE | 0.059 | 0.941 | 0.390 | 0.892 | 0.009 |
| 2000 | 200 | CPS | 0.053 | 0.712 | 0.078 | 0.668 | 0.000 |
| 2000 | 500 | MUR | 0.052 | 1.000 | 0.826 | 1.000 | 0.023 |
| 2000 | 500 | LDPE | 0.055 | 1.000 | 0.229 | 1.000 | 0.006 |
| 2000 | 500 | CPS | 0.052 | 0.979 | 0.056 | 0.971 | 0.000 |

We next study the performance of LDPE. Table 1 indicates that, although LDPE can control the size well at a reasonable level, it fails to control the FDR at the nominal level, particularly in small samples. For instance, when the sample size n = 100, the FDR values are 0.396 and 0.429 for p = 1000 and p = 2000, respectively. In contrast to MUR and LDPE, the CPS approach not only controls the size well, but also leads to FDR converging to the nominal level as the sample size increases. Furthermore, the average TR increases towards 1 and the average FR decreases to 0, both of which are consistent with theoretical findings.

In addition to d0 = 10, the results for d0 = 50 and 100 in Tables S1 and S2 of the supplementary material indicate that CPS is still superior to MUR and LDPE under the less sparse scenario. It is of interest to note that LDPE does not control the size well under less sparse regression models. This finding is not surprising since LDPE depends heavily on the accuracy of estimating the whole vector β. In sum, CPS performs well for testing a single coefficient, and the resulting p-values are reliable for multiple hypothesis tests and model selection.

Example 2: Moving average predictors

In this example, we generate data from a linear regression model with predictors following the moving average model of order 1: Xi = ui + 0.5ui−1 for i = 2, …, n and X1 = u1, where ui are independently generated from a multivariate normal distribution with mean 0 and covariance 0.8Ip for i = 1, …, n. Accordingly, the covariance matrix of Xi can be written as Σ = (σj1j2) ∈ ℝp×p with σj1j2 = 1 if j1 = j2, σj1j2 = 0.4 if |j1 − j2| = 1, and σj1j2 = 0 otherwise. The regression coefficients β, the number of non-zero coefficients d0, and the variance of εi are the same as those in Example 1.
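The stated entries of Σ can be checked with a short calculation. The derivation below assumes that the moving average acts across the p coordinates of the predictor vector, that is, X_{ij} = u_{ij} + 0.5u_{i,j−1} for j ≥ 2 with independent u_{ij} of variance 0.8. This coordinatewise reading is an assumption, adopted because Σ is indexed by j1 and j2.

```latex
\begin{aligned}
\operatorname{var}(X_{ij}) &= 0.8\,(1 + 0.5^{2}) = 1, \\
\operatorname{cov}(X_{ij}, X_{i,j+1}) &= 0.5\,\operatorname{var}(u_{ij}) = 0.5 \times 0.8 = 0.4, \\
\operatorname{cov}(X_{ij}, X_{i,j+k}) &= 0 \quad \text{for } k \ge 2 .
\end{aligned}
```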

Table 2 reports the results for d0 = 10, and similar findings for d0 = 50 and 100 can be found in Tables S3 and S4, respectively, of the supplementary material. Table 2 shows that both CPS and MUR control the size well. However, MUR fails to control FDR at the nominal level. In fact, its FDR is much greater than the nominal level. In addition, its average false rate (FR) becomes larger as the sample size increases. We next study the performance of LDPE. Table 2 indicates that LDPE fails to control the FDR at the nominal level, particularly in small samples, although it can control the size well at a reasonable level. In addition, LDPE fails to control the size well for less sparse regression models (see Tables S3 and S4 for d0 = 50 and 100, respectively, in the supplementary material). This finding is not surprising since LDPE depends heavily on the accuracy of estimating the whole vector β. In contrast to MUR and LDPE, the resulting p-values obtained by CPS are reliable for multiple hypothesis tests and model selection. Furthermore, CPS performs well even under less sparse models (see Tables S3 and S4 in the supplementary material), and this nice property is not enjoyed by MUR and LDPE.

Table 2.

Simulation results for Example 2 with α = 5%, q = 5% and d0 = 10.

| p | n | Methods | ES | EP | FDR | TR | FR |
|---|---|---|---|---|---|---|---|
| 1000 | 100 | MUR | 0.054 | 0.929 | 0.289 | 0.787 | 0.005 |
| 1000 | 100 | LDPE | 0.070 | 0.793 | 0.179 | 0.765 | 0.021 |
| 1000 | 100 | CPS | 0.057 | 0.386 | 0.120 | 0.398 | 0.000 |
| 1000 | 200 | MUR | 0.053 | 1.000 | 0.236 | 1.000 | 0.009 |
| 1000 | 200 | LDPE | 0.061 | 0.998 | 0.147 | 1.000 | 0.015 |
| 1000 | 200 | CPS | 0.053 | 0.947 | 0.068 | 0.952 | 0.000 |
| 1000 | 500 | MUR | 0.052 | 1.000 | 0.186 | 1.000 | 0.011 |
| 1000 | 500 | LDPE | 0.054 | 1.000 | 0.098 | 1.000 | 0.002 |
| 1000 | 500 | CPS | 0.051 | 1.000 | 0.051 | 1.000 | 0.000 |
| 2000 | 100 | MUR | 0.054 | 0.882 | 0.282 | 0.716 | 0.009 |
| 2000 | 100 | LDPE | 0.074 | 0.737 | 0.169 | 0.724 | 0.027 |
| 2000 | 100 | CPS | 0.058 | 0.329 | 0.126 | 0.325 | 0.000 |
| 2000 | 200 | MUR | 0.053 | 1.000 | 0.229 | 1.000 | 0.012 |
| 2000 | 200 | LDPE | 0.060 | 0.968 | 0.128 | 0.954 | 0.015 |
| 2000 | 200 | CPS | 0.054 | 0.874 | 0.071 | 0.901 | 0.000 |
| 2000 | 500 | MUR | 0.051 | 1.000 | 0.156 | 1.000 | 0.015 |
| 2000 | 500 | LDPE | 0.056 | 1.000 | 0.093 | 1.000 | 0.006 |
| 2000 | 500 | CPS | 0.051 | 1.000 | 0.055 | 1.000 | 0.000 |

Example 3: Equally correlated predictors

Consider a model with equally correlated predictors, Xi, generated from a multivariate normal distribution with mean 0 and a compound symmetric covariance matrix Σ = (σj1j2) ∈ ℝp×p, where σj1j2 = 1 if j1 = j2 and σj1j2 = 0.5 for any j1 ≠ j2. In addition, the regression coefficients are set as follows: βj = 5 for 1 ≤ j ≤ d0, and βj = 0 for j > d0. The number of non-zero regression coefficients d0 and the variance of εi are the same as those in Example 1.
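A compound symmetric design of this kind can be simulated without forming Σ, by adding a shared standard normal factor to independent standard normal noise. The sketch below is one convenient construction, not necessarily the one used to produce the reported results; the function name `simulate_equicorrelated` is hypothetical.

```python
import numpy as np

def simulate_equicorrelated(n, p, d0, rho=0.5, seed=0):
    """Predictors with unit variances and common pairwise correlation rho."""
    rng = np.random.default_rng(seed)
    shared = rng.standard_normal((n, 1))   # factor common to all p predictors
    own = rng.standard_normal((n, p))      # predictor-specific noise
    # var = rho + (1 - rho) = 1 and cov = rho for any two distinct predictors
    X = np.sqrt(rho) * shared + np.sqrt(1.0 - rho) * own
    # beta_j = 5 for the first d0 predictors and 0 otherwise
    beta = np.zeros(p)
    beta[:d0] = 5.0
    return X, beta
```

Because every null predictor shares the common factor with the d0 signals, its marginal covariance with the response is rho × 5 × d0 under this design, which does not vanish as p grows.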

To save space, we only present the results for d0 = 10 in Table 3, and the results for d0 = 50 and 100 are in Tables S5 and S6, respectively, of the supplementary material. Table 3 indicates that MUR performs poorly in terms of both ES and FDR measures. This finding is not surprising because every predictor in 𝒩0 is equally correlated with those predictors in 𝒩1. As a result, the marginal correlation between any predictor in 𝒩0 and the response variable is bounded well away from 0. Thus, MUR’s empirical rejection probability is close to 100%, which leads to highly inflated ES and FDR. Furthermore, FR equals 1 at all sample sizes, which implies that MUR tends to over reject the null hypothesis. Moreover, the results of LDPE are similar to those in Tables 1–2. On the other hand, the ES and FDR of CPS are close to the nominal level, except for the case of CPS with n = 100. Moreover, TR and EP increase towards 1 as the sample size gets large, and FR equals 0 at all sample sizes.

Table 3.

Simulation results for Example 3 with α = 5%, q = 5% and d0 = 10.

| p | n | Test | ES | EP | FDR | TR | FR |
|---|---|---|---|---|---|---|---|
| 1000 | 100 | MUR | 1.000 | 1.000 | 0.997 | 1.000 | 1.000 |
| 1000 | 100 | LDPE | 0.054 | 0.528 | 0.349 | 0.477 | 0.009 |
| 1000 | 100 | CPS | 0.058 | 0.449 | 0.141 | 0.418 | 0.000 |
| 1000 | 200 | MUR | 1.000 | 1.000 | 0.998 | 1.000 | 1.000 |
| 1000 | 200 | LDPE | 0.054 | 0.832 | 0.207 | 0.719 | 0.005 |
| 1000 | 200 | CPS | 0.049 | 0.747 | 0.051 | 0.701 | 0.000 |
| 1000 | 500 | MUR | 1.000 | 1.000 | 0.998 | 1.000 | 1.000 |
| 1000 | 500 | LDPE | 0.051 | 1.000 | 0.162 | 0.981 | 0.004 |
| 1000 | 500 | CPS | 0.049 | 0.987 | 0.048 | 0.965 | 0.000 |
| 2000 | 100 | MUR | 1.000 | 1.000 | 0.996 | 1.000 | 1.000 |
| 2000 | 100 | LDPE | 0.050 | 0.465 | 0.402 | 0.428 | 0.008 |
| 2000 | 100 | CPS | 0.055 | 0.398 | 0.138 | 0.366 | 0.000 |
| 2000 | 200 | MUR | 1.000 | 1.000 | 0.997 | 1.000 | 1.000 |
| 2000 | 200 | LDPE | 0.049 | 0.776 | 0.232 | 0.713 | 0.005 |
| 2000 | 200 | CPS | 0.051 | 0.689 | 0.055 | 0.664 | 0.000 |
| 2000 | 500 | MUR | 1.000 | 1.000 | 0.995 | 1.000 | 1.000 |
| 2000 | 500 | LDPE | 0.050 | 1.000 | 0.144 | 0.962 | 0.002 |
| 2000 | 500 | CPS | 0.050 | 0.937 | 0.050 | 0.915 | 0.000 |

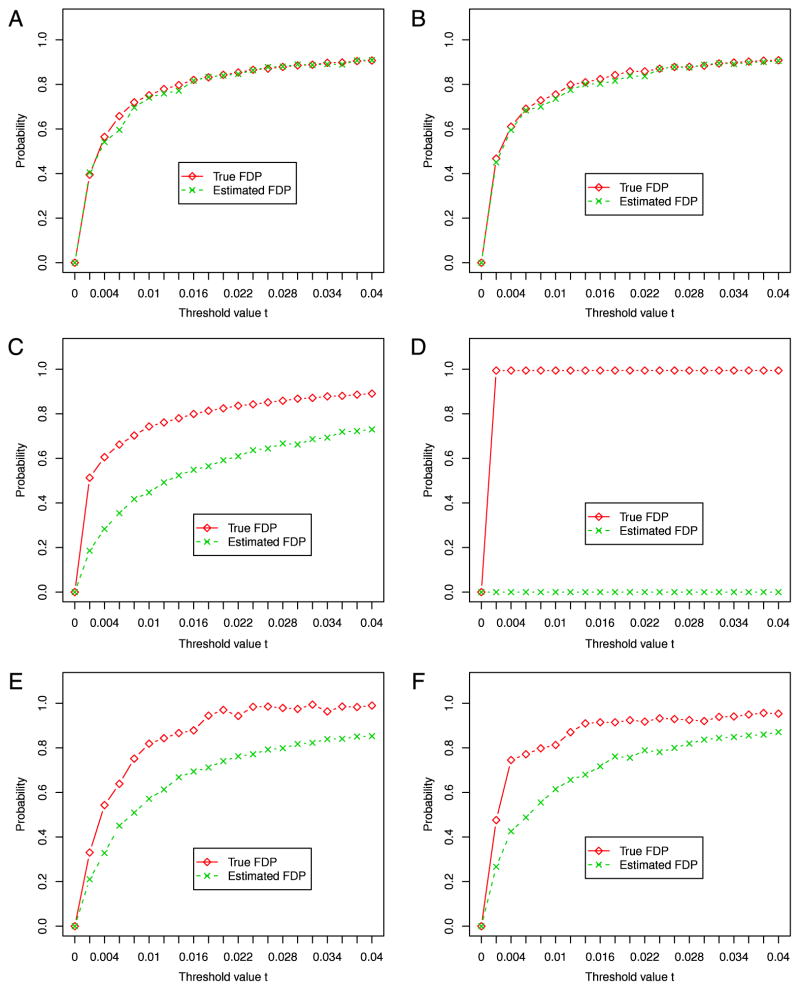

From the above simulation studies, we find that FDR plays an important role for examining the reliability of test statistics. Hence, we next study the accuracy for the estimation of FDR discussed in Section 2.3. Since we are interested in the statistical behavior of the number of false discoveries V(t), we follow Fan et al.’s (2012) suggestion and compare in (2.6), with λ = 1/2, to FDP(t) calculated via V(t)/[R(t) ∨ 1]. For the sake of illustration, we consider the same simulation settings as given in Examples 1 and 3 with n = 100, p = 1000 and d0 = 10. Panels A and B in Fig. 1 depict and FDP(t), obtained via the CPS method for Examples 1 and 3, respectively, across various t values. In contrast, Panels C and D are calculated via the MUR approach and Panels E and F are calculated via the LDPE method. Fig. 1 clearly shows that calculated from the p-values of CPS is reliable and consistent with the theoretical finding in Theorem 2. However, MUR and LDPE do not provide accurate estimates of FDP, and they should be used with caution in high dimensional data analysis.
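For a simulated data set in which the true nulls are known, the realized FDP(t) = V(t)/[R(t) ∨ 1] and a Storey-type estimate can be computed from the p-values as sketched below. The exact form of the estimator in (2.6) is not reproduced in this section, so the code uses the standard estimator of Storey et al. (2004) as an assumption; the function name `fdp_and_estimate` is hypothetical.

```python
import numpy as np

def fdp_and_estimate(pvals, is_null, t, lam=0.5):
    """Realized FDP(t) and a Storey-type estimate at rejection threshold t."""
    pvals = np.asarray(pvals, dtype=float)
    is_null = np.asarray(is_null, dtype=bool)
    R_t = int(np.sum(pvals <= t))               # total rejections R(t)
    V_t = int(np.sum((pvals <= t) & is_null))   # false rejections V(t)
    fdp = V_t / max(R_t, 1)                     # FDP(t) = V(t) / [R(t) v 1]
    # Estimated number of true nulls from the p-values exceeding lam
    # (assumed Storey-type form, not necessarily identical to (2.6))
    p0_hat = np.sum(pvals > lam) / (1.0 - lam)
    fdr_hat = p0_hat * t / max(R_t, 1)
    return fdp, fdr_hat
```

Evaluating both quantities over a grid of t values and plotting one against the other gives a comparison of the kind described above.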

Fig. 1.

Panels A and B depict the estimated FDP value (i.e., ) compared with the true FDP value obtained via the CPS method for Examples 1 and 3, respectively. Panels C and D are obtained via the MUR approach, and Panels E and F are obtained via the LDPE method.

The above three examples have demonstrated that CPS performs well across three commonly used covariance structures. It is worth noting that Conditions (C4) and (C5) hold in the first two examples, while these conditions are invalid in the third example. However, CPS still performs well in Example 3, which shows its robustness. Motivated by an anonymous referee’s comments, we present an additional study with the covariance structure Σ = Ip + uu⊤, where u = (u1, …, up)⊤ ∈ ℝp, uj = δj^{−2} for j = 1, …, p, and δ is a finite constant. Accordingly, cov(Xi1, Xij) = (δ/j)^2, so that the covariances decay polynomially, and ρ1j = (δ/j)^2/{(1 + δ^2)(1 + δ^2/j^4)}^{0.5}. Hence, there are quite a number of predictors that are highly correlated with Xi1 when δ is large enough. One can also verify that maxj∉𝒮 |ϱ1j(𝒮)| = O(|𝒮|^{−2}) as |𝒮| → ∞, and then both Conditions (C4) and (C5) hold. Our simulation results indicate that CPS performs well; see Table S7 in the supplementary material.

Example 4: Robustness of covariate distribution and λ parameter

In the first three examples, the covariate vectors Xi were generated from a multivariate normal distribution and the tuning parameter λ was set to be 1/2. To assess the robustness of CPS against the covariate distribution and λ, we conduct simulation studies for various values of λ and three distributions of Xi = Σ^{1/2}Zi, where each element of Zi is randomly generated from the standard normal distribution, the standardized exponential distribution exp(1), and the normal mixture distribution 0.1N(0, 3^2) + 0.9N(0, 1), respectively, for i = 1, …, n, and the Σs are correspondingly defined in Examples 1–3. Since all results are qualitatively similar, we only report the case when λ = 0.1, d0 = |𝒩1| = 10 and Zi follows a standardized exponential distribution. The results in Tables 4–6 show similar findings to those in Tables 1–3, respectively. Hence, Monte Carlo studies indicate that the CPS approach is robust against the covariate distribution and the threshold parameter λ.
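The three covariate distributions can be generated as sketched below. The sketch makes two assumptions: "standardized exponential" is read as an exp(1) variable shifted to mean zero (its variance is already one), and Σ^{1/2} is taken to be the symmetric square root obtained from an eigendecomposition. The function names are hypothetical.

```python
import numpy as np

def draw_innovations(n, p, dist, rng):
    """Draw the n x p matrix whose rows are Z_i for the chosen distribution."""
    if dist == "normal":
        return rng.standard_normal((n, p))
    if dist == "exponential":
        # exp(1) has mean 1 and variance 1; centring gives mean 0 (assumed reading)
        return rng.exponential(1.0, size=(n, p)) - 1.0
    if dist == "mixture":
        # 0.1 N(0, 3^2) + 0.9 N(0, 1): pick the wide component with probability 0.1
        wide = rng.random((n, p)) < 0.1
        return np.where(wide, 3.0, 1.0) * rng.standard_normal((n, p))
    raise ValueError(f"unknown distribution: {dist}")

def sqrt_psd(Sigma):
    """Symmetric square root of a positive semi-definite matrix."""
    vals, vecs = np.linalg.eigh(Sigma)
    vals = np.clip(vals, 0.0, None)   # guard against tiny negative eigenvalues
    return (vecs * np.sqrt(vals)) @ vecs.T

def simulate_predictors(n, Sigma, dist, seed=0):
    """Rows are X_i = Sigma^{1/2} Z_i."""
    rng = np.random.default_rng(seed)
    Z = draw_innovations(n, Sigma.shape[0], dist, rng)
    # The root is symmetric, so Z @ root stacks the vectors Sigma^{1/2} Z_i as rows
    return Z @ sqrt_psd(Sigma)
```

Note that the mixture as written has variance 0.1 × 9 + 0.9 = 1.8, so under that distribution the covariance of Xi is 1.8Σ unless Zi is rescaled; the sketch does not rescale.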

Table 4.

Simulation results for Example 4 with α = 5%, q = 5%, d0 = 10, Σ as given in Example 1, λ = 0.1, and Xis being generated from a standardized exponential distribution.

| p | n | Methods | ES | EP | FDR | TR | FR |
|---|---|---|---|---|---|---|---|
| 1000 | 100 | MUR | 0.055 | 0.962 | 0.728 | 0.833 | 0.008 |
| 1000 | 100 | LDPE | 0.068 | 0.881 | 0.407 | 0.816 | 0.021 |
| 1000 | 100 | CPS | 0.057 | 0.518 | 0.129 | 0.492 | 0.000 |
| 1000 | 200 | MUR | 0.055 | 1.000 | 0.721 | 0.975 | 0.012 |
| 1000 | 200 | LDPE | 0.058 | 0.995 | 0.349 | 0.912 | 0.014 |
| 1000 | 200 | CPS | 0.052 | 0.775 | 0.067 | 0.744 | 0.000 |
| 1000 | 500 | MUR | 0.052 | 1.000 | 0.722 | 1.000 | 0.016 |
| 1000 | 500 | LDPE | 0.055 | 1.000 | 0.174 | 1.000 | 0.006 |
| 1000 | 500 | CPS | 0.052 | 0.991 | 0.053 | 0.971 | 0.000 |
| 2000 | 100 | MUR | 0.056 | 0.882 | 0.723 | 0.711 | 0.015 |
| 2000 | 100 | LDPE | 0.069 | 0.731 | 0.441 | 0.704 | 0.022 |
| 2000 | 100 | CPS | 0.058 | 0.449 | 0.130 | 0.401 | 0.000 |
| 2000 | 200 | MUR | 0.056 | 1.000 | 0.744 | 0.922 | 0.018 |
| 2000 | 200 | LDPE | 0.063 | 0.943 | 0.386 | 0.915 | 0.016 |
| 2000 | 200 | CPS | 0.054 | 0.738 | 0.076 | 0.703 | 0.000 |
| 2000 | 500 | MUR | 0.052 | 1.000 | 0.782 | 1.000 | 0.022 |
| 2000 | 500 | LDPE | 0.053 | 1.000 | 0.196 | 1.000 | 0.005 |
| 2000 | 500 | CPS | 0.053 | 0.988 | 0.057 | 0.981 | 0.000 |

Table 6.

Simulation results for Example 4 with α = 5%, q = 5%, d0 = 10, Σ as given in Example 3, λ = 0.1, and Xis being generated from a standardized exponential distribution.

| p | n | Test | ES | EP | FDR | TR | FR |
|---|---|---|---|---|---|---|---|
| 1000 | 100 | MUR | 1.000 | 1.000 | 0.998 | 1.000 | 1.000 |
| 1000 | 100 | LDPE | 0.054 | 0.521 | 0.374 | 0.496 | 0.005 |
| 1000 | 100 | CPS | 0.059 | 0.449 | 0.126 | 0.433 | 0.000 |
| 1000 | 200 | MUR | 1.000 | 1.000 | 0.998 | 1.000 | 1.000 |
| 1000 | 200 | LDPE | 0.054 | 0.832 | 0.211 | 0.743 | 0.002 |
| 1000 | 200 | CPS | 0.049 | 0.743 | 0.052 | 0.683 | 0.000 |
| 1000 | 500 | MUR | 1.000 | 1.000 | 0.998 | 1.000 | 1.000 |
| 1000 | 500 | LDPE | 0.050 | 1.000 | 0.127 | 0.982 | 0.001 |
| 1000 | 500 | CPS | 0.049 | 0.982 | 0.051 | 0.970 | 0.000 |
| 2000 | 100 | MUR | 1.000 | 1.000 | 0.996 | 1.000 | 1.000 |
| 2000 | 100 | LDPE | 0.052 | 0.471 | 0.412 | 0.451 | 0.012 |
| 2000 | 100 | CPS | 0.054 | 0.429 | 0.143 | 0.352 | 0.000 |
| 2000 | 200 | MUR | 1.000 | 1.000 | 0.996 | 1.000 | 1.000 |
| 2000 | 200 | LDPE | 0.052 | 0.772 | 0.217 | 0.717 | 0.005 |
| 2000 | 200 | CPS | 0.051 | 0.712 | 0.058 | 0.686 | 0.000 |
| 2000 | 500 | MUR | 1.000 | 1.000 | 0.997 | 1.000 | 1.000 |
| 2000 | 500 | LDPE | 0.050 | 1.000 | 0.143 | 0.981 | 0.002 |
| 2000 | 500 | CPS | 0.052 | 0.952 | 0.050 | 0.937 | 0.000 |

4. Real data analysis

To illustrate the usefulness of the proposed method, we consider two empirical examples. The first example analyzes financial data and the second example studies supermarket data.

4.1. Index fund data

The data set consists of a total of n = 155 observations, in which the response Yi is the weekly return of the Shanghai composite index. The explanatory variables Xi are the returns of p = 382 stocks traded on the Shanghai stock exchange during the period from Oct. 9, 2010 to Sep. 28, 2013, with i = 1, …, 155. We assume that there is a linear relationship between Yi and Xi, namely Yi = Xi⊤β + εi, as given in Eq. (2.1). In addition, both the response and predictors are standardized so that they have zero mean and unit variance. The task of this study is to identify a small number of relevant stocks that financial managers can use to establish a portfolio that tracks the return of the Shanghai composite index.

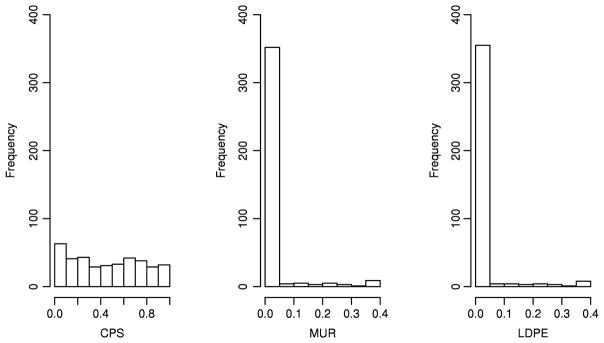

To identify important stocks (predictors) that are associated with Yi, we employ the CPS, MUR, and LDPE methods and test the significance of each individual regression coefficient, namely, testing H0j : βj = 0 vs. H1j : βj ≠ 0 for j = 1, …, 382. Here, the tuning parameter of the LDPE method is set to {2 log p/n}^{1/2}, as suggested by Zhang and Zhang (2014). Since the asymptotic distribution of the p-values obtained from the above test statistics is uniform on [0, 1], we use histograms to illustrate their performance. Fig. 2 depicts the histograms of the p-values for testing H0j (j = 1, …, 382) via the three tests. Based on the CPS test, we find 32 p-values that are less than the significance level α = 5%. After controlling the false discovery rate via the method of Storey et al. (2004) at the level of q = 5%, the number of hypotheses H0j being rejected is 12. As a result, we have identified the 12 most important stocks that can be used for index tracking.

Fig. 2.

Index fund data. The histograms of the p-values for the CPS, MUR, and LDPE tests.

In contrast, the histogram of the p-values calculated from the MUR tests is heavily skewed with very thin tails. This suggests that most of its p-values are very small. Consequently, it rejected a total of 161 hypotheses H0j after controlling the FDR at the level of q = 5%. This finding is not surprising since the covariates in the model are highly correlated due to the existence of latent factors, as observed by Fama and French (1993). Analogous results can be found in the histogram of the p-values generated from LDPE. In sum, CPS is able to identify the most relevant stocks from high dimensional data, while MUR and LDPE cannot.

4.2. Supermarket data

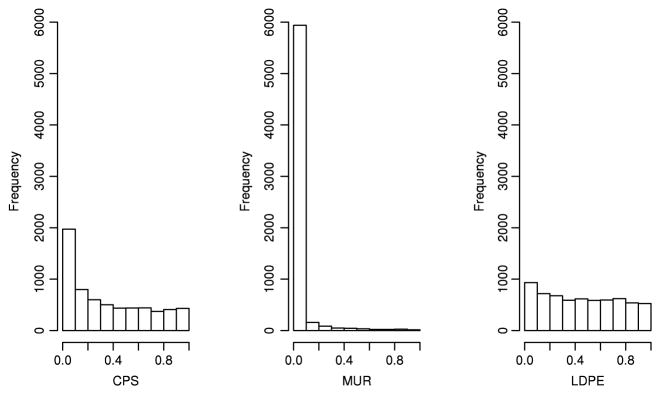

This data set contains a total of n = 464 daily records. For each record, the response variable (Yi) is the number of customers and the predictors (Xi1, …, Xip) are the sales volumes of p = 6398 products. Consider a linear relationship between Yi and Xi = (Xi1, …, Xip)⊤ ∈ ℝp, given by Yi = Xi⊤β + εi, where both the response and predictors are standardized so that they have zero mean and unit variance. The purpose of this study is to determine a small number of products that attract the most customers.

We apply the proposed CPS, MUR, and LDPE methods to test the significance of each regression coefficient, namely H0j : βj = 0 vs. H1j : βj ≠ 0. Fig. 3 depicts the three histograms of the p-values computed via the CPS, MUR, and LDPE methods, respectively. For the CPS method, the pattern of the histogram indicates that most of the H0j are true and the p-values are asymptotically valid. There are 1426 p-values that are less than the significance level α = 5%. After controlling the false discovery rate via the method of Storey et al. (2004) at the level of q = 5%, the number of hypotheses H0j being rejected is 132. In other words, we have identified the 132 most important products on which the supermarket decision maker (or manager) might perform further analysis. In contrast, for the MUR method, the histogram of the p-values is extremely skewed with very thin tails. It rejected a total of 5648 hypotheses H0j after controlling the FDR at the level of q = 5%. In addition, the histogram of the p-values generated from the LDPE tests in Fig. 3 shows a flat pattern over the entire interval [0, 1]. As a result, it is not surprising to find that a total of 535 p-values are less than the significance level α = 5%, while none of them is significant after controlling the false discovery rate at the level of q = 5%.

Fig. 3.

Supermarket data. The histograms of the p-values for the CPS, MUR, and LDPE tests.

The above two examples indicate that the CPS method not only provides a simple and efficient approach to computing the p-value for testing a single coefficient in a high dimensional linear model, but also results in reliable p-values for multiple hypothesis testing.

5. Discussion

In linear regression models with high dimensional data, we propose a single screening procedure, Correlated Predictors Screening (CPS), to control for predictors that are highly correlated with the target covariate. This allows us to employ the classical ordinary least squares approach to obtain the parameter estimator. We then demonstrate that the resulting estimator is asymptotically normal. Accordingly, we extend the classical t-test (or z-test) for testing a single coefficient to the high dimensional setting. Based on the p-value obtained from testing the significance of each covariate, the multiple hypothesis testing is established by controlling the false discovery rate at the nominal level. In addition, we show that multiple hypothesis test leads to consistent model selection. Accordingly, the main focus of this paper is on statistical inference rather than variable selection and parameter estimation, which are often the aims of regularization methods such as LASSO (Tibshirani, 1996) and SCAD (Fan and Li, 2001).

The proposed CPS method can be extended for testing a small subset of regression coefficients. Consider the hypothesis:

H0 : βℳ = 0 vs. H1 : βℳ ≠ 0, (5.1)

where ℳ is a pre-specified index set with a fixed size and βℳ = (βj: j ∈ ℳ)⊤ ∈ ℝ|ℳ| is the subvector of β corresponding to ℳ. Without loss of generality, we assume that ℳ = {j: 1 ≤ j ≤ |ℳ|} and 1 < |ℳ| ≪ n. Then, define an overall CPS set of ℳ as 𝒮ℳ = ⋃j∈ℳ 𝒮j, where 𝒮j is the CPS set for the jth predictor in ℳ. Accordingly, the target parameter estimator βℳ = (βj: j ∈ ℳ) ⊤ ∈ ℝ|ℳ| can be estimated by , where 𝕏ℳ = (𝕏j: j ∈ ℳ) ∈ ℝn×|ℳ|. Applying similar techniques to those used in the proof of Theorem 1, we can show that n1/2(β̂ℳ −βℳ)→d N(0,Σβ), where with and . Consequently, an F-type test statistic can be constructed to test (5.1).

To broaden the usefulness of the proposed method, we conclude the article by discussing three possible research avenues. Firstly, from the model aspect, it would be practically useful to extend the CPS method to generalized linear models, single index models, partial linear models, and survival models. Secondly, from the data aspect, it is important to generalize the proposed CPS method to accommodate categorical explanatory variables, repeated measurements, and missing observations. Lastly, to control the FDR at the nominal level, we have imposed a weak dependence assumption in Theorem 2. Hence, it would be useful to employ the method of Fan et al. (2012) to adjust for the arbitrary covariance dependence among test statistics Zj. We believe these extensions would enhance the usefulness of CPS in high dimensional data analysis.

Supplementary Material

Table 5.

Simulation results for Example 4 with α = 5%, q = 5%, d0 = 10, Σ as given in Example 2, λ = 0.1, and Xis being generated from a standardized exponential distribution.

| p | n | Test | ES | EP | FDR | TR | FR |
|---|---|---|---|---|---|---|---|
| 1000 | 100 | MUR | 0.055 | 0.938 | 0.296 | 0.792 | 0.009 |
| 1000 | 100 | LDPE | 0.071 | 0.802 | 0.185 | 0.772 | 0.022 |
| 1000 | 100 | CPS | 0.056 | 0.404 | 0.132 | 0.401 | 0.000 |
| 1000 | 200 | MUR | 0.055 | 1.000 | 0.228 | 1.000 | 0.012 |
| 1000 | 200 | LDPE | 0.064 | 1.000 | 0.152 | 1.000 | 0.013 |
| 1000 | 200 | CPS | 0.052 | 0.943 | 0.070 | 0.947 | 0.000 |
| 1000 | 500 | MUR | 0.053 | 1.000 | 0.190 | 1.000 | 0.017 |
| 1000 | 500 | LDPE | 0.054 | 1.000 | 0.103 | 1.000 | 0.004 |
| 1000 | 500 | CPS | 0.052 | 1.000 | 0.052 | 1.000 | 0.000 |
| 2000 | 100 | MUR | 0.053 | 0.891 | 0.290 | 0.725 | 0.009 |
| 2000 | 100 | LDPE | 0.072 | 0.744 | 0.181 | 0.731 | 0.030 |
| 2000 | 100 | CPS | 0.059 | 0.352 | 0.133 | 0.332 | 0.000 |
| 2000 | 200 | MUR | 0.053 | 1.000 | 0.242 | 1.000 | 0.013 |
| 2000 | 200 | LDPE | 0.059 | 0.977 | 0.153 | 0.973 | 0.018 |
| 2000 | 200 | CPS | 0.054 | 0.853 | 0.069 | 0.911 | 0.000 |
| 2000 | 500 | MUR | 0.054 | 1.000 | 0.134 | 1.000 | 0.015 |
| 2000 | 500 | LDPE | 0.053 | 1.000 | 0.097 | 1.000 | 0.009 |
| 2000 | 500 | CPS | 0.052 | 1.000 | 0.058 | 1.000 | 0.000 |

Acknowledgments

Wei Lan’s research was supported by National Natural Science Foundation of China (NSFC, 11401482, 71532001). Ping-Shou Zhong’s research was supported by a National Science Foundation grant DMS 1309156. Runze Li’s research was supported by a National Science Foundation grant DMS 1512422, National Institute on Drug Abuse (NIDA) grants P50 DA039838, P50 DA036107, and R01 DA039854. Hansheng Wang’s research was supported in part by National Natural Science Foundation of China (NSFC, 11131002, 11271031, 71532001), the Business Intelligence Research Center at Peking University, and the Center for Statistical Science at Peking University. The authors thank the Editor, the AE and reviewers for their constructive comments, which have led to a dramatic improvement of the earlier version of this paper. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NSF, NIH and NIDA.

Appendix A. Four useful lemmas

Before proving the theoretical results, we present the following four lemmas which are needed in the proofs. The first lemma is directly borrowed from Lemma A.3 of Bickel and Levina (2008), and the second lemma can be found in Bendat and Piersol (1966). As a result, we only verify the third and fourth lemmas.

Lemma 1

Let σ̂j1j2 = n^{−1}Σi Xij1Xij2 and ρ̂j1j2 = σ̂j1j2/{σ̂j1j1 σ̂j2j2}^{1/2}, and assume that Condition (C1) holds. Then, there exist three positive constants ζ0 > 0, C1 > 0, and C2 > 0, such that (i) P(|σ̂j1j2 − σj1j2| > ζ) ≤ C1 exp(−C2nζ^2) and (ii) P(|ρ̂j1j2 − ρj1j2| > ζ) ≤ C1 exp(−C2nζ^2) for any 0 < ζ < ζ0 and every 1 ≤ j1, j2 ≤ p.

Lemma 2

Let (U1, U2, U3, U4)⊤ ∈ ℝ4 be a 4-dimensional normal random vector with E(Uj) = 0 and var(Uj) = 1 for 1 ≤ j ≤ 4. We then have E(U1U2U3U4) = δ12δ34 + δ13δ24 + δ14δ23, where δij = E(UiUj).
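The identity in Lemma 2 can be checked numerically. The Monte Carlo sketch below uses an arbitrary correlation matrix with entries 0.5^{|i−j|}, chosen only for illustration and not taken from the paper, and compares the simulated fourth moment with δ12δ34 + δ13δ24 + δ14δ23.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative correlation matrix with unit variances: R[i, j] = 0.5 ** |i - j|
idx = np.arange(4)
R = 0.5 ** np.abs(idx[:, None] - idx[None, :])

# Draw a large sample of the 4-dimensional normal vector (U1, U2, U3, U4)
U = rng.multivariate_normal(np.zeros(4), R, size=1_000_000)

# Simulated E(U1 U2 U3 U4)
empirical = np.mean(U[:, 0] * U[:, 1] * U[:, 2] * U[:, 3])

# delta12 * delta34 + delta13 * delta24 + delta14 * delta23
theoretical = R[0, 1] * R[2, 3] + R[0, 2] * R[1, 3] + R[0, 3] * R[1, 2]
print(empirical, theoretical)
```

For this choice of R the right-hand side equals 0.25 + 0.0625 + 0.0625 = 0.375, and the simulated moment should agree with it up to Monte Carlo error.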

Lemma 3

Assume that Conditions (C1)–(C3) hold, and m = O(nν2) for some positive constant ν2 which satisfies 3ν2 + ħ < 1, where ħ is given in (C3). Then, .

Proof

Since and , it suffices to show that

| (A.1) |

Denote ||A|| = {tr(AA⊤)}1/2 for any arbitrary matrix A. Since is the conditional variance of X1 given X𝒮, . Then by Condition (C2), we have . Then, we obtain (A.1) if the following two uniform convergence results hold:

| (A.2) |

| (A.3) |

Accordingly, it suffices to demonstrate (A.2) and (A.3).

It is noteworthy that, for any 𝒮 satisfying |𝒮| ≤ m, we have

This, together with the Bonferroni inequality, Condition (C1), Lemma 1(i), and the fact that #{𝒮 ⊂ {1, …, p}: |𝒮| ≤ m} ≤ p^m, implies

| (A.4) |

Furthermore, by the assumptions in Lemma 3 (m = O(n^{ν2})) and Condition (C3) (log p ≤ νn^ħ), we have that m log p = O(n^{ν2+ħ}). Moreover, using the assumptions in Lemma 3 again (3ν2 + ħ < 1), the right-hand side of (A.4) converges towards 0 as n → ∞. Hence, we have proved (A.2). Applying similar techniques to those used in the proof of (A.2), we can also demonstrate (A.3). This completes the entire proof.

Lemma 4

Assume that (a) limp→∞ V(t)/p0 = G0(t) and limp→∞ S(t)/(p − p0) = G1(t), where G0(t) and G1(t) are continuous functions; (b) 0 < G0(t) ≤ t for t ∈ (0, 1]; (c) limp→∞ p0/p = 1. Then, we have .

Proof

By slightly modifying the proof of Theorem 4 in Storey et al. (2004), we can demonstrate the result. The detailed proof can be obtained from the authors upon request.

Appendix B. Proof of Theorem 1

Let and . Then, β̂1 − β1 = T1 + T2. Using the fact that E(εi|Xi) = 0, one can show that cov(T1, T2) = E(T1T2) − E(T1)E(T2) = 0. Therefore, T1 and T2 are uncorrelated. To prove the theorem, hence, it suffices to show that is asymptotically bivariate normal. By Conditions (C1)–(C3) and Lemma 2, we obtain that , and . Accordingly, we have

Applying the same arguments as those given above, we also obtain that

Let and . Then, it can be shown that E(ξi1) = 0, , and . Using Conditions (C4)–(C6), we further obtain that . Moreover,

The bivariate Central Limit Theorem, together with the above results, implies that

is asymptotically bivariate normal with mean zero and diagonal covariance matrix V = Diag(Vii). In addition, and . Consequently, is asymptotically normal with mean zero and variance V11 + V22, which completes the proof.

Appendix C. Proof of Proposition 1

As defined in (2.3), 𝒮k0 = {j1, …, jk0} contains the indices whose associated predictors have the k0 largest absolute correlations with Xi1. For a given k̄, 𝒮̂k̄ is defined as in (2.5). In addition, the event {𝒮̂k̄ ⊅ 𝒮k0} indicates that there exists at least one index, say ji1 ∈ 𝒮k0 (i1 ≤ k0), but ji1 ∉ 𝒮̂k̄. Then, for any k̄ satisfying k0 + dmaxnν2/2 < k̄ < k0 + dmaxnν2 with 1 ≤ k0 ≤ Cbnν2, we have {𝒮̂k̄ ⊅ 𝒮k0} ⊂ {There exist indices i1 ≤ k0 and i2 > k̄ that satisfy |ρ̂1ji2| > |ρ̂1ji1|}. The reasoning is as follows. When ji1 ∉ 𝒮̂k̄, it implies that there exists some index, say ji2 with i2 > k̄, such that ji2 ∈ 𝒮̂k̄. Otherwise, all indices jk in 𝒮̂k̄ satisfy k ≤ k̄0, which implies that 𝒮̂k̄ = {j1, …, jk̄} contains 𝒮k0 as a subset. This yields a contradiction. As a result, we have P(𝒮̂k̄ ⊅ 𝒮k0) ≤ P(There exist indices i1 ≤ k0 and i2 > k̄ that satisfy |ρ̂1ji2 | > |ρ̂1ji1|). Thus,

After simple calculation, we obtain that

This, together with Lemma 1(ii) and the assumption in Proposition 1 that |ρ1ji1| − |ρ1ji2| > dminn−ν2 for any i2 − i1 > dmaxnν2/2, leads to

By Condition (C3), the negative sign of the first term on the right-hand side of the above equation, , dominates the second term log p. As a result,

which completes the proof.

Appendix D. Proof of Theorem 2

We mainly apply Lemma 4 to prove Theorem 2. To this end, we need to show the following two results,

| (D.1) |

| (D.2) |

as p → ∞, where . Since the proofs for (D.1) and (D.2) are quite similar, we only verify (D.1). By the law of large numbers, it is enough to show that

| (D.3) |

It is worth noting that the left-hand side of (D.3) is equivalent to

| (D.4) |

Using the fact that var{I(|Zj| ≥ z1−t/2)} ≤ E{I(|Zj| ≥ z1−t/2)} ≤ 1 and the assumption that p0/p → 1, together with the Cauchy–Schwarz inequality, we have that J2 ≤ p^{−2}|𝒩1| Σj∈𝒩1 var{I(|Zj| ≥ z1−t/2)} ≤ (p − p0)^2/p^2 → 0. In addition, applying the Cauchy–Schwarz inequality, we obtain

Accordingly, to prove (D.3), we only need to show that J1 = O(p−δ) for some δ > 0. It can be seen that

Since J11 ≤ p0/p2 → 0 as p → ∞, it suffices to show that J12 = O(p−δ) for some δ > 0. Note that

where I1 = E{I(Zj1≥ z1−t/2)I(Zj2 ≥ z1−t/2)} − E{I(Zj1 ≥ z1−t/2)}E{I(Zj2 ≥ z1−t/2)}, I2 = E{I(Zj1 ≥ z1−t/2)I(Zj2 ≤ −z1−t/2)} − E{I(Zj1 ≥ z1−t/2)}E{I(Zj2 ≤ −z1−t/2)}, I3 = E{I(Zj1 ≤ −z1−t/2)I(Zj2 ≥ z1−t/2)} − E{I(Zj1 ≤ −z1−t/2)}E{I(Zj2 ≥ z1−t/2)} and I4 = E{I(Zj1 ≤ −z1−t/2)I(Zj2 ≤ −z1−t/2)} − E{I(Zj1 ≤ −z1−t/2)}E{I(Zj2 ≤ −z1−t/2)}. Since the proofs for I1 to I4 are essentially the same, we only focus on I1.

Applying the asymptotic expansion of Zj given in the proof of Theorem 1, we have

| (D.5) |

where , and . As a result, for any j ∈ 𝒩0, and Zj can be expressed as a summation of independent and identically distributed (i.i.d.) random variables uij. In addition, Condition (C1) implies that uij has an exponential tail. This, together with the bivariate large deviation result (Zhong et al., 2013), leads to

where ρj1j2 = corr(uij1, uij2) and

Without loss of generality, we assume that ρj1j2 = corr(uij1, uij2) > 0 and z1−t/2 > 0. Then, by using the inequality in Willink (2004), we have

| (D.6) |

where ζ = {(1−ρj1j2)/(1+ρj1j2)}1/2. Accordingly, we obtain that

After algebraic simplification, (1 − ζ)/ρj1j2 → 1 as ρj1j2 → 0. Hence, I1/(CIρj1j2) → 1 for a positive constant CI, which implies that cov{I(|Zj1| ≥ z1−t/2), I(|Zj2| ≥ z1−t/2)} ≈ CI |ρj1j2|. Consequently, if Σj∈𝒩0 |ρjk| = o(p) for any k ∈ 𝒩0, then J12 = O(p^{−δ}) for some δ > 0.
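The limit (1 − ζ)/ρj1j2 → 1 can be seen from a second-order expansion of ζ around zero. Writing ρ for ρj1j2, a brief sketch of the calculation is:

```latex
\zeta = \left(\frac{1-\rho}{1+\rho}\right)^{1/2}
      = \left\{1 - 2\rho + 2\rho^{2} + O(\rho^{3})\right\}^{1/2}
      = 1 - \rho + \tfrac{1}{2}\rho^{2} + O(\rho^{3}),
\qquad\text{so that}\qquad
\frac{1-\zeta}{\rho} = 1 - \tfrac{1}{2}\rho + O(\rho^{2}) \to 1
\quad\text{as } \rho \to 0 .
```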

To complete the proof, we next verify the above condition Σj∈𝒩0 |ρjk| = o(p) for any k ∈ 𝒩0. By the Cauchy–Schwarz inequality, we need to show that . Since is the conditional variance of Xj given X𝒮j, . Therefore, uniformly by Condition (C2) and is bounded, respectively, for any j ∈ 𝒩0. Hence, maxj . In addition, (D.5) implies var(uij) = 1. As a result, we only need to demonstrate that , where υjk = cov(uij, uik).

It can be shown that υjk = υjk,1+υjk,2, where υjk,1 = cov(ξij, ξik), υjk,2 = cov(δij, δik), and ξij and δij are defined after Eq. (D.5). Hence, to complete the proof, it suffices to show the following results:

| (D.7) |

We begin with proving the first equation of (D.7). Applying the Cauchy–Schwarz inequality, it can be shown that

We then study the above three components separately.

By definition, we have uniformly for any j = 1, … , p. This, together with Condition (C5), implies that uniformly for any j. As a result, we have

where the second summation on the right-hand side of the above equation is 0 since, for k ∈ 𝒮j, is one of the component of the vector . We next consider the second term . Using the fact that the conditional variance of Xij is non-negative and then applying Condition (C2), we have . This, together with the assumption in Theorem 2, Condition (C2), and the fact that |𝒮k| ≤ Λ0, leads to

Employing similar techniques, we can show that . The above results complete the proof of the first equation in (D.7).

Subsequently, we will verify the second equation of (D.7). According to the result in the proof of Theorem 1, for some positive constant Cδ. It follows that p−1Σj∈𝒩0E2(δij)E2(δik) = o(1); hence, we only need to show that p−1Σj∈𝒩0E2(δijδik) = o(1). After algebraic simplification, we obtain that

For the sake of simplicity, we suppress the subscript i in the rest of the proof.

We first demonstrate Q1j = o(1) for each j ∈ 𝒩0. By Lemma 2 with some tedious calculations, we obtain that

By Condition (C2), we have |σj2j3| ≤ cmax. As a result.

Then employing Condition (C6), we obtain Σj |βj| = O(nϖ). In addition, Conditions (C4) and (C5) imply that ϱjk(𝒮j) = O(n−1/2). The above results lead to

uniformly for any j. Applying similar techniques, we can also show that and , which complete the proof of Q1j = o(1).

We next verify Q2j = o(1) for each j ∈ 𝒩0. After algebraic calculation, we obtain that

where represents the j3th element of . By Conditions (C2), (C4) and (C5), we have that and ϱjj2 (𝒮j) ≤ maxj2∉𝒮jϱjj2(𝒮j) = O(n−1/2). These results, in conjunction with Condition (C6), yield

Employing similar techniques, we can also demonstrate that and , which lead to Q2j = o(1). This, together with Q1j = o(1), implies that

which completes the proof of (D.1).

It is worth noting that G0,n(t) → t. This, in conjunction with (D.1), (D.2), and the assumptions T1,n → T1(t) with T1(t) continuous and p0/p → 1 as p→∞, indicates that Conditions (a), (b), and (c) in Lemma 4 hold. Accordingly, the proof of Theorem 2 is complete.
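The limit G0,n(t) → t can be illustrated numerically. The sketch below assumes that G0,n(t) is the average rejection rate among true null hypotheses at p-value threshold t (its definition lies outside this appendix), and it replaces the test statistics by independent N(0, 1) draws, which is an idealization rather than the dependence structure treated in the proof. The function name and the simulation sizes are hypothetical.

```python
import random
from statistics import NormalDist


def null_rejection_fraction(t, num_tests=100_000, seed=0):
    """Fraction of independent N(0, 1) statistics whose two-sided p-value is <= t."""
    rng = random.Random(seed)
    # |Z| >= z_{1 - t/2} is equivalent to a two-sided p-value of at most t.
    cutoff = NormalDist().inv_cdf(1 - t / 2)
    hits = sum(abs(rng.gauss(0.0, 1.0)) >= cutoff for _ in range(num_tests))
    return hits / num_tests


if __name__ == "__main__":
    for t in (0.01, 0.05, 0.10):
        print(t, round(null_rejection_fraction(t), 4))
```

Under these assumptions, the printed fractions should lie close to the corresponding thresholds t.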

Appendix E. Proof of Theorem 3

Let Zj = n1/2β̂j/σ̂βj be the test statistic and pj be the corresponding p-value for j = 1, … , p. Define αn = 2{1 − Φ(nj)} for some ħ < j < 1/2 − κ, where ħ and κ are given in Condition (C7); hence, αn → 0 as n → ∞. To prove the theorem, it suffices to show that

It is worth noting that n → ∞ implicitly implies p → ∞. We establish the two statements above in the following two steps.
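To make the threshold αn = 2{1 − Φ(n^j)} defined at the start of this appendix concrete, the following minimal sketch evaluates it for a few sample sizes. The exponent value 0.3 is purely illustrative: it stands in for a constant satisfying ħ < j < 1/2 − κ and is not taken from the paper. The function names are hypothetical.

```python
import math


def alpha_n(n, exponent):
    """Two-sided normal tail probability 2 * {1 - Phi(n ** exponent)}."""
    # 2 * (1 - Phi(x)) equals erfc(x / sqrt(2)); erfc avoids cancellation for large x.
    return math.erfc(n ** exponent / math.sqrt(2))


def rejects(z_stat, n, exponent):
    """True when the two-sided p-value of z_stat is at most alpha_n(n, exponent)."""
    # p-value <= alpha_n is equivalent to |z_stat| >= n ** exponent.
    return abs(z_stat) >= n ** exponent


if __name__ == "__main__":
    for n in (100, 1_000, 10_000):
        print(n, alpha_n(n, exponent=0.3))
```

The printed values decrease rapidly with n, in line with αn → 0; comparing a p-value with αn is the same as comparing |Zj| with n^j, which is the form used in Steps I and II.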

STEP I

We show that P{V(αn) > 0} → 0. Using the fact that for 1 ≤ j ≤ p, we have

This, together with Bonferroni’s inequality, leads to

| (E.1) |

Consider the quantity , which is in the first term of the right-hand side of the above equation. Employing the same technique as used in the proof of Lemma 3, we obtain that . By Condition (C2), one can easily verify that . The above two results lead to uniformly for any j. Accordingly, there exists some constant C3 such that

This, in conjunction with Bonferroni’s inequality and Condition (C8), yields

for some positive constant C4. By definition, ħ < 2j. Thus, the first term on the right-hand side of the above equation, −Cℰn2j/4, dominates the second term νnħ, which immediately leads to

We next consider the quantity , which is in the second term of the right-hand side of Eq. (E.1). It is worth noting that

for some finite positive constant C5.

Using the results of and discussed after (E.1), we have that . In addition, Condition (C4), together with the fact that 𝒮j satisfies Condition (C5), leads to . By Corollary 1 of Kalisch and Bühlmann (2007), we immediately obtain

for every ħ < b < 1. Taking b = (ħ + j)/2, we then have . This, in conjunction with the above result , results in

In sum, we have shown the asymptotic behavior of the first component on the right-hand side of (E.1).

STEP II

We prove that P{S(αn)/(p − p0) = 1} → 1 as n → ∞. By definition, we have

Applying the asymptotic result of the first component on the right-hand side of (E.1), we have maxj |n1/2( β̂j−βj)/σ̂βj| = o(nj). Then, by Condition (C7), which requires minj∈𝒩1|βj| ≥ Cκn−κ for some constants Cκ > 0 and κ > 0, we further obtain that minj∈𝒩1|n1/2βj/σ̂βj| = O(n1/2−κ). Moreover, by Bonferroni’s inequality and the fact that j +κ < 1/2, we have

which completes the proof of Step II. Consequently, the entire proof is complete.
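The separation argument behind Step II can be written out with the triangle inequality. This is a sketch rather than the paper's displayed derivation: the threshold exponent is written as \jmath to distinguish it from the covariate index j, and the lower bound on the signal term additionally assumes that the standard errors are bounded above in probability.

```latex
\min_{j \in \mathcal{N}_1} |Z_j|
  \;\ge\;
  \min_{j \in \mathcal{N}_1}
    \left| \frac{n^{1/2}\beta_j}{\hat\sigma_{\beta_j}} \right|
  \;-\;
  \max_{1 \le j \le p}
    \left| \frac{n^{1/2}(\hat\beta_j - \beta_j)}{\hat\sigma_{\beta_j}} \right| .
```

The first term on the right-hand side is of order n^{1/2−κ} by Condition (C7), while the second is o(n^{\jmath}) by Step I. Since \jmath + κ < 1/2, the right-hand side exceeds n^{\jmath} with probability tending to one, so every p_j with j ∈ 𝒩1 falls below αn.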

Appendix F. Supplementary data

Supplementary material related to this article can be found online at http://dx.doi.org/10.1016/j.jeconom.2016.05.016.

References

- Belloni A, Chen D, Chernozhukov V, Hansen C. Sparse models and methods for optimal instruments with an application to eminent domain. Econometrica. 2012;80:2369–2429.

- Belloni A, Chernozhukov V, Hansen C. Inference on treatment effects after selection amongst high-dimensional controls. Rev Econom Stud. 2014;81:608–650.

- Bendat JS, Piersol AG. Measurement and Analysis of Random Data. Wiley; New York: 1966.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J R Stat Soc Ser B Stat Methodol. 1995;57:289–300.

- Bickel P, Levina E. Regularized estimation of large covariance matrices. Ann Statist. 2008;36:199–227.

- Bühlmann P. Statistical significance in high-dimensional linear models. Bernoulli. 2013;19:1212–1242.

- Bunea F, Wegkamp M, Auguste A. Consistent variable selection in high dimensional regression via multiple testing. J Statist Plann Inference. 2006;136:4349–4364.

- Cho H, Fryzlewicz P. High dimensional variable selection via tilting. J R Stat Soc Ser B Stat Methodol. 2012;74:593–622.

- Cook RD, Weisberg S. Residuals and Influence in Regression. Chapman and Hall; New York: 1998.

- Draper NR, Smith H. Applied Regression Analysis. 3rd ed. Wiley; New York: 1998.

- Fama EF, French KR. Common risk factors in the return on stocks and bonds. J Financ Econ. 1993;33:3–56.

- Fan J, Han X, Gu W. Estimating false discovery proportion under arbitrary covariance dependence. J Amer Statist Assoc. 2012;107:1019–1035. doi: 10.1080/01621459.2012.720478.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J Amer Statist Assoc. 2001;96:1348–1360.

- Fan J, Lv J. Sure independence screening for ultra-high dimensional feature space (with discussion). J R Stat Soc Ser B Stat Methodol. 2008;70:849–911. doi: 10.1111/j.1467-9868.2008.00674.x.

- Fan J, Lv J, Qi L. Sparse high-dimensional models in economics. The Annual Review of Economics. 2011;3:291–317. doi: 10.1146/annurev-economics-061109-080451.

- Goeman J, Geer VD, Houwelingen V. Testing against a high-dimensional alternative. J R Stat Soc Ser B Stat Methodol. 2006;68:477–493.

- Goeman J, Houwelingen V, Finos L. Testing against a high dimensional alternative in the generalized linear model: asymptotic type I error control. Biometrika. 2011;98:381–390.

- Huang J, Ma S, Zhang CH. Adaptive Lasso for sparse high-dimensional regression models. Statist Sinica. 2007;18:1603–1618.

- Kalisch M, Bühlmann P. Estimating high-dimensional directed acyclic graphs with the PC-algorithm. J Mach Learn Res. 2007;8:613–636.

- Li R, Zhong W, Zhu LP. Feature screening via distance correlation learning. J Amer Statist Assoc. 2012;107:1129–1139. doi: 10.1080/01621459.2012.695654.

- Liu WD. Gaussian graphical model estimation with false discovery rate control. Ann Statist. 2013;41:2948–2978.

- Meinshausen N, Bühlmann P. High-dimensional graphs and variable selection with the Lasso. Ann Statist. 2006;34:1436–1462.

- Meinshausen N, Meier L, Bühlmann P. P-values for high-dimensional regression. J Amer Statist Assoc. 2009;104:1671–1681.

- Storey JD. A direct approach to false discovery rates. J R Stat Soc Ser B Stat Methodol. 2002;64:479–498.

- Storey JD, Taylor JE, Siegmund D. Strong control, conservative point estimation and simultaneous conservative consistency of false discovery rates: a unified approach. J R Stat Soc Ser B Stat Methodol. 2004;66:187–205.

- Sun T, Zhang CH. Scaled sparse linear regression. Biometrika. 2012;99:879–898.

- Tibshirani RJ. Regression shrinkage and selection via the Lasso. J R Stat Soc Ser B Stat Methodol. 1996;58:267–288.

- van de Geer S, Bühlmann P, Ritov Y, Dezeure R. On asymptotically optimal confidence regions and tests for high-dimensional models. Ann Statist. 2014;42:1166–1202.

- Wang H. Forward regression for ultra-high dimensional variable screening. J Amer Statist Assoc. 2009;104:1512–1524.

- Wang H. Factor profiled independence screening. Biometrika. 2012;99:15–28.

- Willink R. Bounds on the bivariate normal distribution function. Commun Stat - Theory Methods. 2004;33:2281–2297.

- Wooldridge J. Econometric Analysis of Cross Section and Panel Data. MIT Press; USA: 2002.

- Zhang CH, Zhang SS. Confidence intervals for low dimensional parameters in high dimensional linear models. J R Stat Soc Ser B Stat Methodol. 2014;76:217–242.

- Zhao P, Yu B. On model selection consistency of Lasso. J Mach Learn Res. 2006;7:2541–2563.

- Zhong PS, Chen SX. Tests for high dimensional regression coefficients with factorial designs. J Amer Statist Assoc. 2011;106:260–274.

- Zhong PS, Chen SX, Xu M. Tests alternative to higher criticism for high dimensional means under sparsity and column-wise dependence. Ann Statist. 2013;41:2820–2851.
