Abstract

Population-wide oscillations are ubiquitously observed in mesoscopic signals of cortical activity. In these network states a global oscillatory cycle modulates the propensity of neurons to fire. Synchronous activation of neurons has been hypothesized to be a separate channel of signal processing information in the brain. A salient question is therefore if and how oscillations interact with spike synchrony and in how far these channels can be considered separate. Experiments indeed showed that correlated spiking co-modulates with the static firing rate and is also tightly locked to the phase of beta-oscillations. While the dependence of correlations on the mean rate is well understood in feed-forward networks, it remains unclear why and by which mechanisms correlations tightly lock to an oscillatory cycle. We here demonstrate that such correlated activation of pairs of neurons is qualitatively explained by periodically-driven random networks. We identify the mechanisms by which covariances depend on a driving periodic stimulus. Mean-field theory combined with linear response theory yields closed-form expressions for the cyclostationary mean activities and pairwise zero-time-lag covariances of binary recurrent random networks. Two distinct mechanisms cause time-dependent covariances: the modulation of the susceptibility of single neurons (via the external input and network feedback) and the time-varying variances of single unit activities. For some parameters, the effectively inhibitory recurrent feedback leads to resonant covariances even if mean activities show non-resonant behavior. Our analytical results open the question of time-modulated synchronous activity to a quantitative analysis.

Author summary

In network theory, statistics are often considered to be stationary. While this assumption can be justified by experimental insights to some extent, it is often also made for reasons of simplicity. However, the time-dependence of statistical measures do matter in many cases. For example, time-dependent processes are examined for gene regulatory networks or networks of traders at stock markets. Periodically changing activity of remote brain areas is visible in the local field potential (LFP) and its influence on the spiking activity is currently debated in neuroscience. In experimental studies, however, it is often difficult to determine time-dependent statistics due to a lack of sufficient data representing the system at a certain time point. Theoretical studies, in contrast, allow the assessment of the time dependent statistics with arbitrary precision. We here extend the analysis of the correlation structure of a homogeneously connected EI-network consisting of binary model neurons to the case including a global sinusoidal input to the network. We show that the time-dependence of the covariances—to first order—can be explained analytically. We expose the mechanisms that modulate covariances in time and show how they are shaped by inhibitory recurrent network feedback and the low-pass characteristics of neurons. These generic properties carry over to more realistic neuron models.

Introduction

To date it is unclear which channels the brain uses to represent and process information. A rate-based view is argued for by the apparent stochasticity of firing [1] and by the high sensitivity of the network dynamics to single spikes [2]. In an extreme view, correlated firing is a mere epiphenomenon of neurons being connected. Indeed, a large body of literature has elucidated how correlations relate to the connectivity structure [3–14]. But the matter is further complicated by the observation that firing rates and correlations tend to be co-modulated, as demonstrated experimentally and explained theoretically [4, 5]. If the brain employs correlated firing as a means to process or represent information, this requires in particular that the appearance of correlated events is modulated in a time-dependent manner. Indeed, such modulations have been experimentally observed in relation to the expectation of the animal to receive task-relevant information [15, 16] or in relation to attention [17].

Oscillations are an extreme case of a time-dependent modulation of the firing rate of cells. They are ubiquitously observed in diverse brain areas and typically involve the concerted activation of populations of neurons [18]. They can therefore conveniently be studied in the local field potential (LFP) that represents a complementary window to the spiking activity of individual neurons or small groups thereof: It is composed of the superposition of the activity of hundreds of thousands to millions of neurons [19, 20] and forward modeling studies have confirmed [21] that it is primarily driven by the synaptic inputs to the local network [22–24]. As the LFP is a quantity that can be measured relatively easily, this mesoscopic signal is experimentally well documented. Its interpretation is, however, still debated. For example, changes in the amplitude of one of the components of the spectrum of the LFP have been attributed to changes in behavior (cf. e.g. [25]).

A particular entanglement between rates and correlations is the correlated firing of spikes in pairs of neurons in relation to the phase of an ongoing oscillation. With the above interpretation of the LFP primarily reflecting the input to the cells, it is not surprising that the mean firing rate of neurons may modulate in relation to this cycle. The recurrent network model indeed confirms this expectation, as shown in Fig 1A. It is, however, unclear if and by which mechanisms the covariance of firing follows the oscillatory cycle. The simulation shown in Fig 1B indeed exhibits a modulation of the covariance between the activities of pairs of cells. Such modulations have also been observed in experiments:

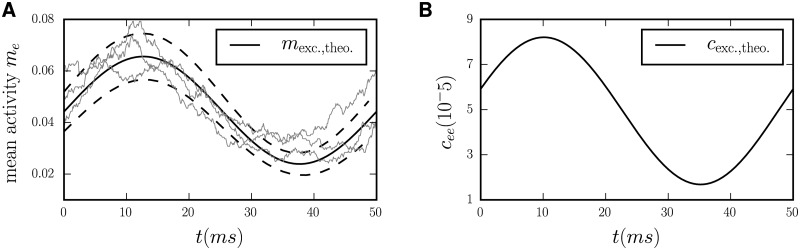

Fig 1.

A Time-varying mean activity of the excitatory population in a balanced EI-network (parameters given in Table 1). Thin gray lines are the outcomes of three independent simulations, the solid black line indicates the mean activity predicted by the theory (Eqs (7) and (8)). Dashed black lines indicate the range of expected fluctuations of the population activity (± one standard deviation): The square of the fluctuation magnitude is given by the variance of the population activity (Eqs (3) and (4)). B Population-averaged cross covariance .

Denker et al. [26] have shown that the synchronous activation of pairs of neurons within milliseconds preferentially appears at a certain phase of the oscillatory component of the LFP in the beta-range—in their words the spike-synchrony is “phase-locked” to the beta-range of the LFP. They explain their data by a conceptual model, in which an increase in the local input, assumed to dominate the LFP, leads to the activation of cell assemblies. The current work investigates an alternative hypothesis: We ask if a periodically-driven random network is sufficient to explain the time-dependent modulation of covariances between the activities of pairs of cells or whether additional structural features of the network are required to explain this experimental observation.

To investigate the mechanisms causing time-dependent covariances in an analytically tractable case, we here present the simplest model that we could come up with that captures the most important features: A local network receiving periodically changing external input. The randomly connected neurons receive sinusoidally modulated input, interpreted as originating from other brain areas and mimicking the major source of the experimentally observed LFP. While it is obvious that the mean activity in a network follows an imposed periodic stimulation, it is less so for covariances. In the following we will address the question why they are modulated in time as well. Extending the analysis of mean activities and covariances in the stationary state [13, 27, 28], we here expose the fundamental mechanisms that shape covariances in periodically driven networks.

Our network model includes five fundamental properties of neuronal dynamics: First, we assume that the state of low and irregular activity in the network [1] is a consequence of its operation in the balanced state [29, 30], where negative feedback dynamically stabilizes the activity. Second, we assume that each neuron receives a large number of synaptic inputs [31], each of which only has a minor effect on the activation of the receiving cell, so that total synaptic input currents are close to Gaussian. Third, we assume the neurons are activated in a threshold-like manner depending on their input. Fourth, we assume a characteristic time scale τ that measures the duration of the influence a presynaptic neuron has on its postsynaptic targets. Fifth, the output of the neuron is dichotomous or binary, spike or no spike, rather than continuous. As a consequence, the variance of the single unit activity is a direct function of its mean.

We here show how each of the five above-mentioned fundamental properties of neuronal networks shape and give rise to the mechanisms that cause time-dependent covariances. The presented analytical expressions for the linear response of covariances expose two different paths by which a time-dependence arises: By the modulation of single-unit variances and by the modulation of the linear gain resulting from the non-linearity of the neurons. The interplay of negative recurrent feedback and direct external drive can cause resonant behavior of covariances even if mean activities are non-resonant. Qualitatively, these results explain the modulation of synchrony in relation to oscillatory cycles that are observed in experiments, but a tight locking of synchronous events to a particular phase of the cycle is beyond the mechanisms found in the here-studied models.

Results

To understand the locking of synchronous activity to an oscillatory cycle, as observed experimentally, we here need to consider time-dependent network states. We are in particular interested in the covariance between two stochastic variables x1 and x2, which is defined as c(t) = 〈δx1(t)δx2(t)〉 = 〈(x1(t) − 〈x2(t)〉) (x1(t) − 〈x2(t)〉〉, where 〈…〉 denotes the average over realizations. In words, the covariance in a time-dependent setting measures the co-variability of a pair of signals with respect to their respective mean. The mean value itself may depend on time. Only if this quantity can be determined with sufficient accuracy, time-dependent covariances can be calculated correctly. This is the source of the technical problems occurring in the context of a time-dependent covariance: It may be hard to assess the covariance, much more its time-dependence, because it is overshadowed by the time-varying mean. If a stochastic model is given, however, disentangling the time dependence of different cumulants, like mean and covariance, is possible. A theoretical study to understand the prevalent mechanisms that cause time-dependent covariances in a network model is therefore a necessary first step. In the following we identify these mechanisms by which time-dependent covariances of activities arise in oscillatory-driven recurrent networks. In Fig 1A we show the population-averaged activity of the excitatory population activity in a balanced EI-network together with the theoretical prediction to be developed in the sequel: The fluctuations around the mean show a wider spread close to the peak of the oscillation than at the trough. Correspondingly, the covariance between pairs of neurons in panel B has its peaks and troughs at points of high and low variability of the population activity in A, respectively.

Binary network model and its mean field equations

To address our central question, whether a periodically-driven random network explains the experimental observations of time-modulated pairwise covariances, we consider a minimal model here. It consists of one inhibitory (I) population and, in the latter part of the paper, additionally one excitatory population (E) of binary model neurons [6, 27, 29, 32]. Neurons within these populations are recurrently and randomly connected. All neurons are driven by a global sinusoidal input mimicking the incoming oscillatory activity that is visible in the LFP, illustrated in Fig 2. The local network may in addition receive input from an external excitatory population (X), representing the surrounding of the local network. The fluctuations imprinted by the external population, providing shared inputs to pairs of cells, in addition drive the pairwise covariances within the network [13, c.f. especially the discussion]. Therefore we need the external population X to arrive at a realistic setting that includes all sources of covariances. In the following, we extend the analysis of cumulants in networks of binary neurons presented in [6, 13, 27, 28, 33] to the time-dependent setting. This formal analysis allows us to obtain analytical approximations for the experimentally observable quantities, such as pairwise covariances, that expose the mechanisms shaping correlated network activity.

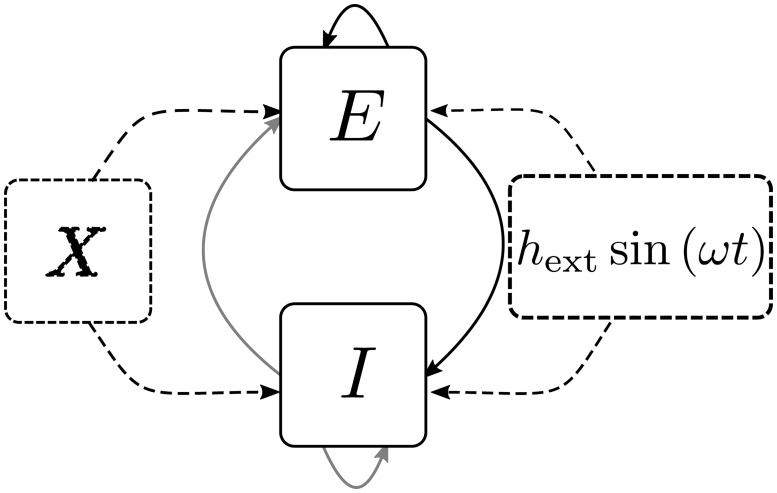

Fig 2. Recurrent balanced network driven by oscillatory input.

Recurrently connected excitatory (E) and inhibitory (I) populations (Erdős-Rényi random network with connection probability p) receiving input from an external (X) excitatory population. Additionally, all neurons in the microcircuit receive a sinusoidal signal of amplitude hext and frequency ω, representing the oscillatory activity received from external brain areas.

Binary model neurons at each point in time are either inactive ni = 0 or active ni = 1. The time evolution of the network follows the Glauber dynamics [34]; the neurons are updated asynchronously. At every infinitesimal time step dt, any neuron is chosen with probability . After an update, neuron i is in the state 1 with the probability Fi(n) and in the 0-state with probability 1 − Fi(n), where the activation function F is chosen to be

| (1) |

We here introduced the connectivity matrix J with the synaptic weights describing the influence of neuron j on neuron i. The weight Jij is negative for an inhibitory neuron j and positive for an excitatory neuron. Due to the synaptic coupling the outcome of the update of neuron i potentially depends on the state n = (n1, …, nN) of all other neurons in the network. Compared to the equations in [13, page 4], we added an external sinusoidal input to the neurons representing the influence of other cortical or subcortical areas and Gaussian uncorrelated noise with vanishing mean 〈ξi〉 = 0 and covariance . The threshold θi depends on the neuron type and will be chosen according to the desired mean activity.

We employ the neural simulation package NEST [35, 36] for simulations. Analytical results are obtained by mean-field theory [6, 13, 27, 28, 37, 38] and are described for completeness and consistency of notation in the section “Methods”. In the main text we only mention the main steps and assumptions entering the approximations. The basic idea is to describe the time evolution of the Markov system in terms of its probability distribution p(n, t). Using the master Eq 14 we obtain ordinary differential equations (ODEs) for the moments of p(n, t). In particular we are interested in the population averaged mean activities mα, variances aα, and covariances cαβ

| (2) |

| (3) |

| (4) |

which are defined as expectation values 〈〉 over realizations of the network activity, where the stochastic update of the neurons and the external noisy input presents the source of randomness in the network. The dynamics couples moments of arbitrarily high order [33]. To close this set of equations, we neglect cumulants of order higher than two, which also approximates the input by a Gaussian stochastic variable with cumulants that vanish for orders higher than two [39]. This simplification can be justified by noticing that the number of neurons contributing to the input is large and their activity is weakly correlated, which makes the central limit theorem applicable. In a homogeneous random network, on expectation there are Kαβ = pαβ Nβ synapses from population β to a neuron in population α. Here pαβ is the connection probability; the probability that there is a synapse from any neuron in population β to a particular neuron in population α and Nα is the size of the population. Mean Eq (2) and covariance Eq (4) then follow the coupled set of ordinary differential equations (ODEs, see section II A in S1 Text for derivation)

| (5) |

| (6) |

where α ↔ β indicates the transposed term. The Gaussian truncation employed here is parameterized by the mean μα and the variance of the summed input to a neuron in population α. These, in turn, are functions of the mean activity and the covariance, given by Eqs (18) and (19), respectively.

Here φ is the expectation value of the activation function, which is smooth, even though the activation function itself is a step function, therefore not even continuous. The function φ fulfills limm → 0 φ = 0 and limm → 1 φ = 1 and monotonically increases. Its derivative S with respect to μ has a single maximum and is largest for the mean input μ within a region with size σ around the threshold θ. S measures the strength of the response to a slow input and is therefore termed susceptibility. The definitions are given in “Methods” in Eqs (17) and (20).

The stationary solution (indicated by a bar) of the ODEs Eqs (5) and (6) can be found by solving the equations

| (7) |

| (8) |

numerically and self-consistently, as it was done in [13, 27, 33].

The full time-dependent solution of Eqs (5) and (6) can, of course, be determined numerically without any further assumptions. Besides the comparison with simulation results, this will give us a check for the subsequently applied linear perturbation theory. The resulting analytical results allow the identification of the major mechanisms shaping the time-dependence of the first two cumulants. To this end, we linearize the ODEs Eqs (5) and (6) around their stationary solutions. We only keep the linear term of order hext of the deviation, justifying a Fourier ansatz for the solutions. For the mean activities this results in with

| (9) |

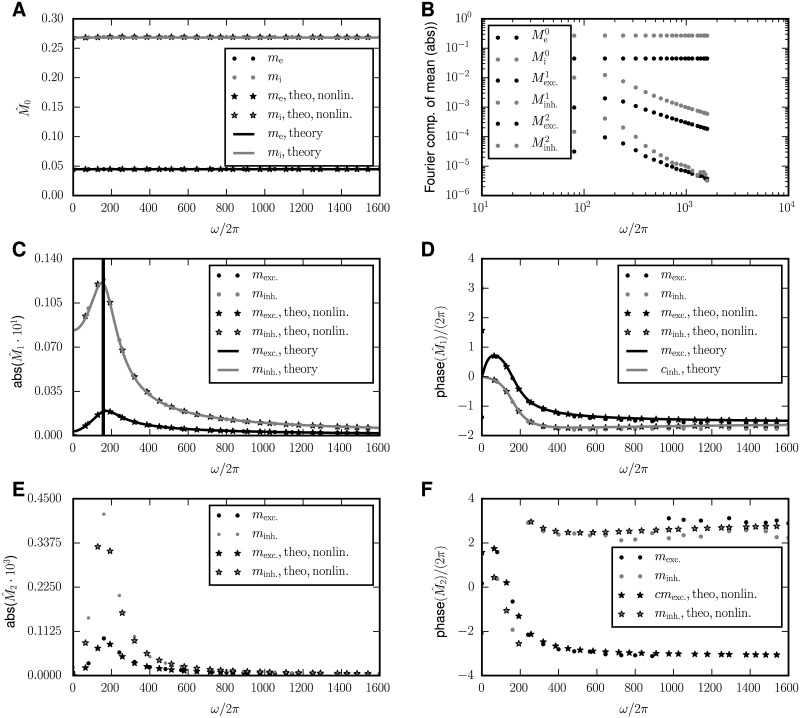

The time-dependence of σ was neglected here, which can be justified for large networks (“Methods”, Eqs (22) and (30)). The matrix U represents the basis change that transforms into a diagonal matrix with λα the corresponding eigenvalues. We see that, independent of the number of populations or the detailed form of the connectivity matrix, the amplitude of the time-dependent part of the mean activities has the shape of a low-pass-filtered signal to first order in hext. Therefore the phase of δm lags behind the external drive and its amplitude decreases asymptotically like , as can be seen in Fig 3A and 3B.

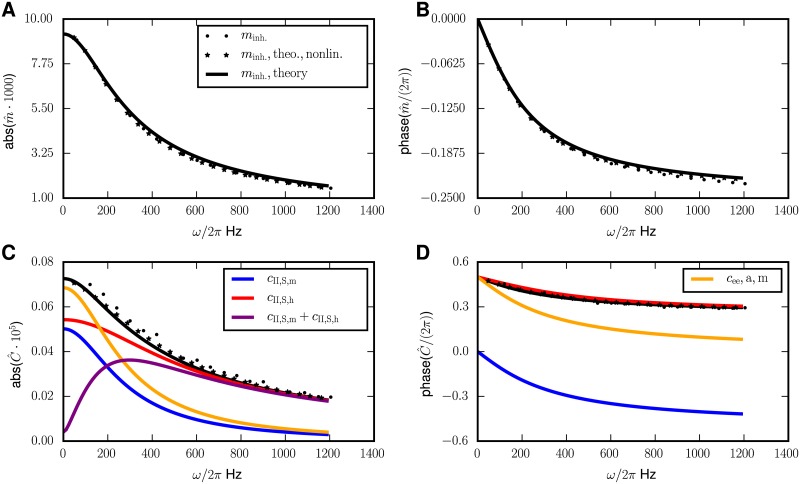

Fig 3. Periodically driven single population network.

Dependence of the modulations of the mean activity and covariances on the driving frequency ω. A Amplitude of modulation of mean activity. B Phase of modulation of mean activity relative to the external drive. C Amplitude of modulation of covariances. D Phase of modulation of covariance relative to the external drive. In all panels, the analytical predictions (Eqs (9) and (39)) are shown as solid black curves. The black curve is the complete solution. The different contributions to the time-dependent covariances, identified in Eq (12), are shown separately: The Sh-term in red, the Sm-term in blue, their sum in purple, and the a-term in orange. Numerical solutions of the full mean-field equations (Eqs (5) and (6)) are shown as stars and simulation results by dots (only indicated in the legend of A). The numerical results are obtained by using the integrate.ode-method from the python-package scipy [41] with the option “lsoda”, meaning that either implicit Adams- or backward differentiation-algorithms (depending on the given problem) are used. Network parameters: Number of neurons NI = 5000, connection probability pII = 0.1, coupling strength JII = −1, mean activity mI ≈ 0.3, and .

If we also separate the covariances into their stationary part and a small deviation that is linear in the external drive, , expand S (μα (t), σα (t)) and a (t) around their stationary values, keeping only the terms of order hext and neglect contributions from the time-dependent variation of the variance of the input σ2 (see “Methods”, especially Eq (30) for a discussion of this point), we get the ODE

| (10) |

where we introduced the point-wise (Hadamard) product ⊛ of two matrices A and B [see 40, for a consistent notation of matrix operations] as (A ⊛ B)ij ≔ AijBij, defined the matrix with the entries diag (x)ij := δij xi for the vector x = (x1, ‥, xn) and set to bring our main equation into a compact form.

We can now answer the question posed in the beginning: Why does a global periodic drive influence the cross covariances in the network at all and does not just make the mean activities oscillate? First, the variances are modulated with time, simply because they are determined via Eq (3) by the modulated mean activities. A neuron i with modulated autocorrelation ai(t) projects via Jji to another neuron j and therefore shapes the pairwise correlation cji(t) in a time-dependent way. We call this effect the “modulated-autocovariances-drive”, indicated by the curly brace in the second line of Eq (10). Its form in index notation is . This is the low-pass-filtered input.

The other contributions are a bit more subtle and less obvious, as they are absent in networks with a linear activation function. The derivative of the expectation value of the activation function, the susceptibility, contributes linearly to the ODE of the covariances. As the threshold-like activation function gives rise to a nonlinear dependence of φ on the mean input μ, the susceptibility S = φ′ is not constant, but depends on the instantaneous mean input. The latter changes as a function of time by the direct external drive and by the recurrent feedback of the oscillating mean activity, indicated by the terms denoted by the curly braces in the third line of Eq (10). Together, we call these two term the “susceptibility terms”. Both terms are of the same form

| (11) |

but with different δμα. This form shows how the time-dependent modulation of the mean input δμα, by the second derivative of the gain function , influences the transmission of covariances. The sum following is identical to the one in the static case Eq (8). For the “recurrent drive”, the time-dependent input is given by δμα(t) = ∑β Kαβ Jαβ δmβ(t), which is a superposition of the time-dependent activities that project to population α and is therefore low-pass-filtered, too. The term due to “direct drive” is δμα(t) = hext sin(ωt).

We solve Eq (10) by transforming into the eigensystem of and inserting a Fourier ansatz, . The solution consists of a low-pass filtered part coming from the direct drive and two parts that are low-pass filtered twice, coming from the recurrent drive and the modulated-autocovariances-drive. For a detailed derivation, consult the section “Covariances: Stationary part and response to a perturbation in linear order”.

We have calculated higher Fourier modes of the simulated network activity and of the numerical solution of the mean-field equations to check if they are small enough to be neglected, so that the response is dominated by the linear part. Of course, it would be possible to derive analytical expressions for those as well. However, we will see that the linear order and the corresponding first harmonic qualitatively and for remarkably large perturbations even quantitatively gives the right predictions. The limits of this approximation are analyzed in Fig D in S1 Text. We will therefore constrain our analysis to controlling the higher harmonics through the numerical solution.

In the following we will study three different models of balanced neuronal networks to expose the different mechanisms in their respective simplest setting.

Single population

As a first example, we quantitatively study the particular case of a single population, which has to be inhibitory to ensure stable stationary activity. Let us look at the behavior of the different contributions in Eq (10) to the modulated covariance and their mutual relation. Written explicitly, the terms driving the time variation of the covariance are

| (12) |

With respect to their dependence on the number of synaptic connections |K|, the sum of the two susceptibility terms is of the same order of magnitude as the modulated-autocovariances-drive (cf. 37 in the section “Methods”), therefore their interplay determines the shape of the solution of Eq (10) and we cannot neglect either term in favor of the other.

To analyze the contributions to δc, it is reasonable to first focus on the quasi-static case ω → 0, because its analysis is simplest and, due to the continuity of the observed quantities, it carries over to the case of biologically relevant small frequencies up to the β-range. For ω → 0, the solution δc in Eq (10) has the same sign as the sum of the inhomogeneities, because it is given by a multiplication with 0.5 (1 − W)−1, where W < 0. The main information—especially about the sign—is therefore already included in these inhomogeneities, that we termed “recurrent drive” and “direct drive” (the susceptibility terms) and “modulated-autocovariances-drive” in the previous section. The modulation of the covariance δc(t) then results by low pass filtering their sum. Individually they yield the Sm-term and Sh-term (together the S-terms) and the a-term, respectively.

In a general balanced network, the deviation of the mean activity from the stationary solution δm(t) is in phase with the perturbation for ω ≈ 0 and lags behind it for larger ω due to the “forgetfulness” of the network caused by the leak term in the ODE. At low frequencies, the recurrent drive ∝ K ⊛ J δm(t) therefore partly cancels the direct drive ∝ hext sin (ωt). This is because the rate response δm is in phase, and the feedback KJ < 0 in the network is negative. The cancellation becomes less efficient at larger frequencies, because the recurrent drive asymptotically decays like ω−1 and is phase-shifted; the mean activity is low-pass-filtered Eq (9). The direct drive, in contrast, does not depend on the driving frequency ω. Therefore, the Sm-term is low-pass-filtered twice and the Sh-term term only once, therefore their sum has a peak at an intermediate frequency, as visible in Fig 3C, purple curve. Note that this cancellation generally appears in the balanced state, because the network feedback is always effectively inhibitory. Furthermore, the modulated-autocovariances-drive only vanishes for ; for realistic activity it is in anti-phase with δm(t), because it is defined including W < 0, which flips the phase by π.

Average covariances in inhibitory networks are negative [13]. As a consequence, in the setting of a single inhibitory population there is a second kind of cancellation: The two terms and in the prefactor of the susceptibility terms in Eq (12) partly cancel; their sum in fact vanishes in the large N limit [cf. 13, eq. (32) and their Fig 5]. This leads to the dominance of the a-term, shown in Fig 3D (orange curve). The maximum in the S-terms is therefore overshadowed by the a-term, which asymptotically also shows a second order low pass characteristics with ∝ ω−2. So in the purely inhibitory network the peak is not visible in the sum of all contributions to .

In summary, the model of a single population in the balanced state exposes several generic features of time-dependent mean activities and covariances: Mean activities and the direct drive contribution to covariances follow the external modulation with first order low pass characteristics. The Sm-term and the a-term of the covariances, being mediated by the mean activity, consequently expose a second order low pass filtering. The direct drive and the recurrent drive (the susceptibility terms) to large extent cancel at low frequencies, but not at high ones. Due to their overall decay in amplitude with increasing frequency, an intermediate maximum arises in their sum. In the single population model this peak is typically overshadowed by the a-term. This is because of the suppression of population fluctuations by negative feedback in the stationary state [10], which causes a small population variance and the latter term controls the amplitude of the susceptibility terms.

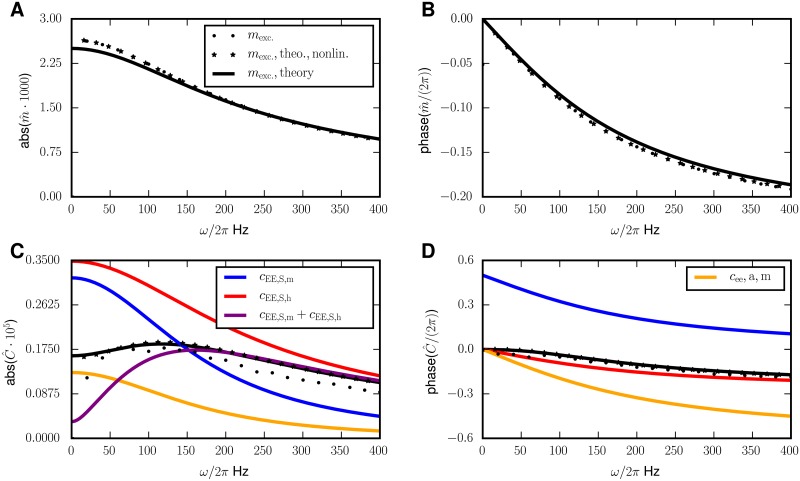

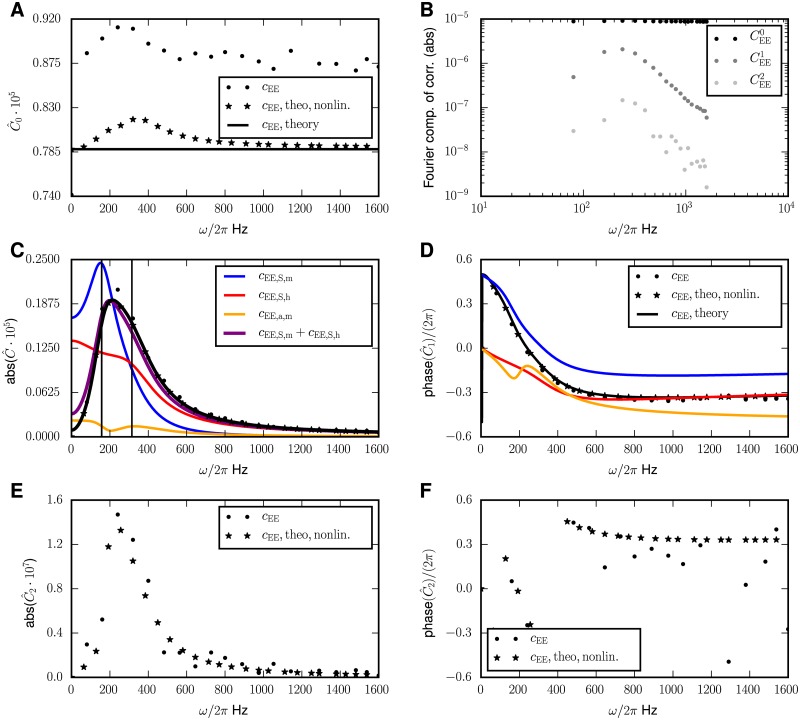

Two homogeneously connected populations

A slightly more realistic, but still simple setup is an EI-network with the same input for the inhibitory and the excitatory neurons, as studied before, in [13, parameters, except mX as in fig. 6 there]. This network is also inhibition-dominated, therefore we observe qualitatively the same competition of the two S-terms leading to the existence of a maximum in the ω-dependence of |C1|. In contrast to the single population case, in the E-I network the peak may be visible. This is because—in contrast to the single population case—covariances in this setup may also be positive, preventing the cancellation with the variances in the term that drives the S-terms. The latter contribution may therefore dominate over the a-term at small ω. Its dominance increases the larger the covariances are, which for example arises when increasing the external drive or by lowering the noise level at the input to the neurons. The “resonance” effect itself increases for weaker the excitatory synapses.

Fig 4C, indeed shows a peak of the response of the covariances at a frequency of about 120 Hz. We here focus on the covariances between excitatory neurons, because their activities are recorded most often and also cell assemblies are normally assumed to consist of excitatory neurons.

Fig 4. Periodically driven E-I network.

A Amplitude of modulation of the mean activity deviating from the stationary value for the excitatory population. B Phase of the modulation of the mean activity. C Different contributions to the amplitude of the covariance between pairs of excitatory cells in dependence of the frequency ω of the external drive. D Phase of covariances relative to the driving signal. Analytical theory (Eqs (9) and (39)) shown by solid black curves, numerical solutions of the full mean field equations (Eqs (5) and (6)) (stars) and simulation results (dots, only indicated in the legend of A). Same color code as in C. In C and D, the contributions to the variation of covariances are shown separately: The Sh-term in red, the Sm-term in blue, their sum in purple and the a-term in yellow. The legend for C and D is split over both panels. Numerical solutions obtained by the same methods as in Fig 3. Parameters: NE = NI = NX = 8192, pE = pI = pX = p = 0.2, mE = mI ≈ 0.11, mX = 0.25, identical to [13, e.g. fig. 6].

Two populations with inhomogeneous connections

The example of homogeneous connectivity helps to explain the fundamental mechanisms that shape the covariances; it is, however, certainly not very realistic. Furthermore, in the case of synaptic weights being different for individual receiving populations, the linearized connectivity W can have a pair of complex eigenvalues, which is qualitatively different to the setup described before. To check if the theory also works for parameters satisfying biological constraints, we choose the connectivity and activity levels in accordance to experimental studies. Apart from the results from [26], the parameters were measured in the layer 2/3 in the barrel cortex of mice. We select this layer, because it is the assumed location of cell assemblies [42], allowing us to relate our results to the original hypothesis of excess synchrony by activation of assemblies [26], a feature that could be considered in future studies. The connection probabilities are taken from [43], the fractions of excitatory and inhibitory neurons from [44] and the membrane time constant is extracted from [45, supplementary material]. We adjust the neurons’ thresholds such that the stationarity condition is fulfilled for mα = τνα, where α ∈ {exc., inh.}, να is the firing rate of the respective population and τ is the neuronal time constant. Note that the mapping m = τν implies a slightly different notion of a “spike” of a binary neuron than previously used [28]. The two conventions agree in the limit of vanishing firing rates (cf. section II B in S1 Text). The firing rate of 18 Hz given in [26] presumably reflects the activity of excitatory neurons (private communication). To obtain the firing rate of the inhibitory neurons νinh., we scale the measurement from [26] by the ratio νinh/νexc. from [44]. All parameters are summarized in Table 1. The effective connectivity W of this system has two conjugate complex eigenvalues. Therefore, there exists a resonance frequency also for the mean activity, shown in Fig 5C.

Table 1. Parameters for the biologically inspired network model used in Figs 5 and 6 and Figs A, B, C and D in S1 Text.

| exc. | inh. | ν (Hz) | mean act. | #neurons | |||

|---|---|---|---|---|---|---|---|

| exc. | connection prob. | 0.168 | 0.5 | 18 | 0.045 | 1691 | τ = 2.5 ms |

| synaptic weight | 0.37 | −0.52 | |||||

| inh. | connection prob. | 0.327 | 0.36 | 108 | 0.27 | 230 | mext = 0.1 |

| synaptic weight | 0.82 | −0.54 |

Fig 5. Driven E-I network with biologically inspired parameters: Mean activity.

From the first to the third row, the zeroth to second Fourier mode of the mean activity is shown. A Constant part of mean activity (zeroth order). B First three Fourier-modes of the mean activities on a loglog-scale. C Amplitude of first mode of the mean activity. D Phase of first mode relative to driving signal. E and F are structured analogous to C and D for the second Fourier modes. Solid curves indicate the linear theory (Eq (9)), stars numerical integration of the full mean field equations (Eqs (5) and (6)) and dots the simulation results of the full network. Black symbols indicate the activity of excitatory, gray symbols of inhibitory neurons. Numerical results obtained by the same methods as in Fig 3. Noise amplitudes σnoise,E = σnoise,I = 10, σnetwork,E = 2.8, σnetwork,E = 4.6, other parameters of the network model given in Table 1.

In the two upper panels of Figs 5 and 6, we compare the stationary values for the mean activity Eq (7) and the covariances Eq (8) with the respective time averaged results of the simulation and with the numerical solution of the full mean-field equations. The stationary statistics have been investigated before for other parameters in finite networks [13] and in the limit N → ∞ [6]. The second harmonics extracted from the simulations and the numerical solution of the full mean-field equations show good agreement and are overall small compared to the zeroth and first harmonics, justifying the truncation of the Fourier series in the analytical theory after the first term.

Fig 6. Driven E-I network with biologically inspired parameters: EE-Covariance.

Response of the covariance to a perturbation with frequency ω in the Fourier space. A Zeroth Fourier mode (time independent part) of the covariance. B Absolut value of the first three Fourier components of the cee-covariances on a loglog-scale. C Absolute value of the first order of the time-dependent part of the covariance. D Phase angle in relation to the driving signal. E and F are analogous to C and D for the second Fourier modes. Solid lines indicate the linear theory Eq (39), stars the results of the numerical solution of the full mean-field theory Eqs (5) and (6) and dots the direct simulation of the full network. Numerical results obtained by the same methods as in Fig 3. Parameters of the network model as in Fig 5.

The first harmonics of the mean activity (see Fig 5) and covariances (see Fig 6) predicted by the linear response theory agree well with simulations and the numerical solution. This is not necessarily clear a priori because the perturbation in the input to every neuron is of the order , where σ is the input noise level of the unperturbed system. However, linear response theory works surprisingly well, even for the covariances caused by a perturbation leading to a response of the same order of magnitude as the stationary value. Increasing the perturbation strength hext further ultimately leads to a breakdown of the linear perturbation theory, visible in the growing absolute values of the second Fourier modes of mean activities and covariances (Fig D in S1 Text). The maximal modulation in the firing rates amounts to ≈ 0.8 Hz for the excitatory and 4.9 Hz for the inhibitory neurons.

In this biologically inspired setting, it is also interesting to apply the Unitary Event (UE) analysis to our data, as it was done for experimental data in [26]. Because this is a bit aside the scope of this paper, we present this part in the appendix, Sec. I in S1 Text.

The connectivity matrix has complex eigenvalues , so we observe a resonance of the mean activities at the frequencies

indicated by a vertical line in Fig 5C. The components of δm are composed of different modes, therefore their maximum does not appear exactly at fres, mean. The covariances are shaped by more modes: In general, the covariance matrix for a three-dimensional quantity has 6 independent components. In our case, cXX is always 0, which is a consequence of the missing feedback to X. Now, the evolution of every mode of is given by the sum of two eigenvalues of 1 − W, i.e. 2 − λ, 2 − λ*, 2 − 2λ, 2 − 2λ* and 2 − (λ* + λ). The missing mode is the “trivial” one owing to the vanishing eigenvalue of W. So the behavior of the “kernel” of the ODE for δc is given by the resonances at and . In addition, the inhomogeneity of the ODE (10) (its right hand side) is already resonant at . All these modes are mixed with different strength in the different modes of δc, giving rise to a maximum of |C1| somewhere in the vicinity of fres, mean and 2fres, mean. In all cases the “resonances” are damped, therefore, a resonance catastrophe, induced by δm oscillating with the resonance frequency of δc, cannot occur. We also notice that all resonances are the stronger, the closer ℜ(λ) is to 1, the critical point, which makes sense intuitively: The damping comes from the overall inhibitory feedback; at the critical point the leak term is exactly compensated by positive feedback of identical magnitude. It is worth noticing that the effect of the partial cancellation of the S-terms, which can be read off from Eq (10) and is described in the previous subsections for small ω is still valid. The functional form of |C1(ω)|, however, is now mainly determined by the resonances due to the complex eigenvalues of W.

The ω-dependencies of the cII- and the cEI- covariances shown in the appendix are qualitatively similar (Fig. B and Fig. C in S1 Text). The stationary covariance is well predicted by the theory [13], which is confirmed here.

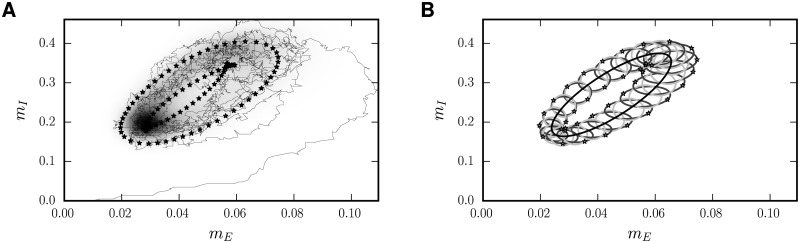

Fig 7 illustratively summarizes the results of this section. In panel A, the probability of the binary system to be in a certain activity state (minh, mexc)T is indicated by different gray shades, the darker, the higher the probability to find it in the respective area. On top, the area including the most probable network states, as predicted by the linear theory, is indicated by black dots. Its construction is depicted in panel B: We draw the limit cycle (black) formed by the points (〈minh(t)〉, 〈mexc(t)〉)T as a parametric plot with time as parameter. Then, we define the points on the error ellipse (minh, mexc)T as follows

| (13) |

where δmT := (minh, mexc, 0)T − (〈minh〉, 〈mexc〉, 0)T and

In this way, the solutions δm(t) of Eq (13) are composed of all points that are one standard deviation away from the expected activity. The covariances enter the total population averaged variability, given by

with the definitions Eqs (3) and (4).

Fig 7. Distribution of population-averaged activity of periodically driven E-I network.

A Empirical density of population activity of the E-I network. Gray shading indicates time-averaged occupation of states. The thin mid gray curve is a sample of the binary dynamics of 10 periods after the start of the simulation. The black dots indicate the σ-region predicted by the linear theory as described by Eq (13) in the main text. B Limit cycle of the linear theory (black ellipse), together with error ellipses stemming from the sum of covariances and variances (dark gray, slightly tilted) and representing solely variances (light gray). The stars are at the same places as in A. Parameters are given in Table 1, only the perturbation strength is increased to hext = 6 (noise level around σE ≃ 14, σI ≃ 23) for reasons of readability (for this value the simulated results already show deviations from the linear approximation as shown in Fig. D in S1 Text). The perturbing frequency is chosen to be f = 80 Hz.

The two points on the border of the dark gray error-ellipses of the full covariances with the largest distance to the tangent of the limit cycle at (〈minh〉, 〈mexc〉) are marked by a star, which, taken together, form the border of a tube-shaped σ-area. This tube indicates the region in which we most likely expect to find the system. To visualize the contributions of auto- and pairwise covariances, we plot in light gray the error ellipses based solely on the variances (cpop (t) is diagonal in this case). The dark error ellipses are bigger than the light ones, indicating that the covariances are positive and their axes are tilted; the cEI = cIE-component is nonzero. Furthermore, the error ellipses significantly change their size in time, indicative of the modulation of the fluctuations with time. The variances grow monotically with the respective mean activities, explaining that the light gray ellipses are largest (smallest) where the mean activities are largest (smallest). One can read off the phase shift of cEE to mE to be roughly : the deviation of the dark gray error ellipses from the light gray ones is largest at the points where and δmI (t) is minimal.

Methods

Glauber dynamics in mean-field theory

We have left out so far several steps in the derivation of the results that were not necessary for the presentation of the main ideas. In this section, we will therefore give a self-contained derivation of our results also necessitating paraphrases of some results known from earlier works. The starting point is the master equation for the probability density of the possible network states emerging from the Glauber dynamics [34] described in “Binary network model and its mean field equations” (see for the following also [13, 37])

| (14) |

where

The activation function Fi(n) is given by Eq (1).

Using the master equation (for details cf. section II A in S1 Text), one can derive a differential equation for the mean activity of the neuron i, 〈ni〉 (t) = ∑n p(n, t)ni and the raw covariance of the neurons i and j, 〈ni (t) nj (t)〉 = ∑n p(n, t)ninj [6, 13, 27, 34, 37]. This yields

| (15) |

As mentioned in “Binary network model and its mean field equations”, we assume that the input hi coming from the local and the external population is normally distributed, say with mean μi and standard deviation σi given by

| (16) |

where the average 〈〉 is taken over realizations of the stochastic dynamics and we used the element-wise (Hadamard) product (see main text).

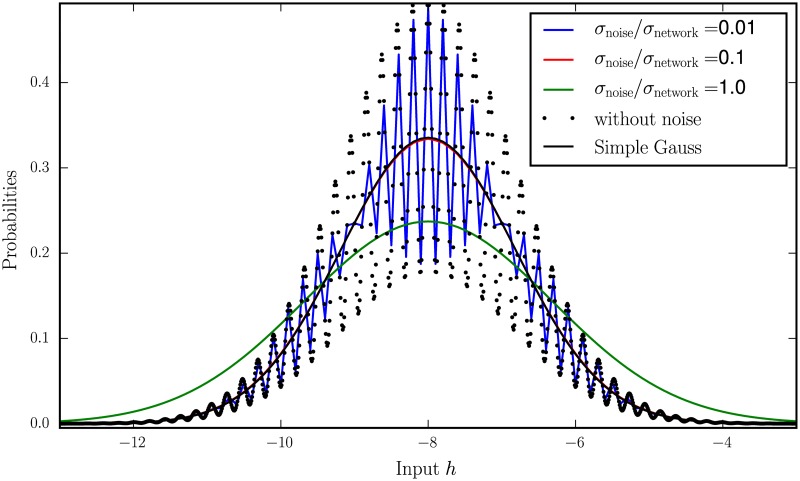

The additional noise introduced in Eq (1) effectively leads to a smoothing of the neurons’ activation threshold and broadens the width of the input distribution. It can be interpreted as additional variability coming from other brain areas. Furthermore, it is computationally convenient, because the theory assumes the input to be a (continuous) Gaussian distribution, while in the simulation, the input , being a sum of discrete binary variables, can only assume discrete values. The smoothing by the additive noise therefore improves the agreement of the continuous theory with the discrete simulation. Already weak external noise compared to the intrinsic noise is sufficient to obtain a quite smooth probability distribution of the input (Fig 8).

Fig 8. Distribution of inputs from binary neurons for different noise levels.

Probability distribution of synaptic input hi = ∑j Jijnj + ξi of a neuron in a network of independently active cells nj with 〈nE〉 = 〈nI〉 = 0.2 and synaptic weights jI = −0.21, jE = 0.01. was deliberately chosen to be large because only then the convolution of a binomial distribution “squeezed” to the step size jE with the binomial distribution squeezed to the step size |jI| results in a probability distribution with many local maxima leading to the impression of an oscillation. The noiseless case ξi = 0 is shown as black dots. The solid black curve indicates the Gaussian approximation (cf. e.g. Eq (16), here without perturbation) of this distribution from the main text. This distribution appears in the expectation values of the activation function F (cf. e.g. Eq (1)): It is a Gaussian distribution with the mean μ = KE jE mE + KIjI mI and the variance of the original binomial distributions Binom(mE, KE), Binom(mI, KI). The other curves indicate convolutions with the Gaussian noise of different magnitudes σnoise, given in units of the noise level σnetwork intrinsically produced by the network.

The description in terms of a coupled set of moment equations instead of the ODE for the full probability distribution here serves to reduce the dimensionality: It is sufficient to describe the time evolution of the moments on the population level, rather than on the level of individual units. To this end we need to assume that the synaptic weights Jij only depend on the population α, β ∈ {exc., inh., ext.} that i and j belong to, respectively, and thus (re)name them Jαβ (homogeneity). Furthermore, we assume that not all neurons are connected to each other, but that Kαβ is the number of incoming connections a neuron in population α receives from a neuron in population β (fixed in-degree). The incoming connections to each neuron are chosen randomly, uniformly distributed over all possible sending neurons. This leads to expressions for the population averaged input hα, mean activity mα and covariance cαβ, formally nearly identical to those on the single cell level and analogous to those in [13, sec. Mean-field solution].

Mean activity: Stationary part and response to perturbation in linear order

We are now able to calculate the quantity 〈Fα (n(t), t)〉 = 〈H (hα (t) − θ)〉 (recall that hα (t) is a Gaussian random variable with mean μα (t) and standard deviation σα (t)), the nonlinearity of the ODEs 15 on the population level. Multiplying H (hα (t) − θα) by the Gaussian probability density for hα (t), we get, after substitution of the integration variable,

| (17) |

where we defined the average input μα and the width of the input distribution σα

| (18) |

| (19) |

Recall that we defined to be the quantity x in the stationary case (without external input). For the linear approximation around , and hext = 0, we have to take into account all dependencies via inner derivatives. We set and . Note that δμ includes the variation of μ both because of fluctuations in the network and because of the external drive. The Taylor expansion up to linear order is

where we introduced the susceptibility on the population level

| (20) |

Now, we express δσα and δμα via and (cf. [13, eq. (29)] for the time-independent case):

| (21) |

| (22) |

Note that in δμα (but not δσα), the perturbation occurs again explicitly. In Eq (33), we demonstrate that δc scales like , like in the stationary case. Furthermore, we certainly have and , thus

We therefore neglect δσ in our calculations for δm from Eq (24) on. This yields for the linearization of the ODE Eq (5)

| (23) |

| (24) |

where we used the relation , derived from Eq (7) in connection with Eq (17), which implies that this expression does not depend on K, but solely on and we defined

The only change compared to the setup in [13] is again the occurrence of a periodic term, here sin (ωt).

We solve Eq (24) by transforming it into the eigenbasis of the matrix Wαβ

| (25) |

We multiply Eq (24) by U−1, define δmα ≔ (U−1)αβ δmβ and get

| (26) |

Note that the input is projected onto the respective eigenmodes. Eq (26) can be solved including the transient phase by the method of variation of constants.

But as we are only interested in the cyclostationary part of the solution, we can neglect the solution of the homogeneous part and solely compute the particular solution. Observe that for a differentiable function δmα because . We insert the ansatz and solve for , which gives Eq (9) of the main text. For further calculations, keep in mind that and therefore δmα are of order . In section II C in S1 Text, we describe how to extract the right phase of the real solution from the complex ansatz.

Covariances: Stationary part and response to a perturbation in linear order

Using Eq (15) in the population-averaged version, we calculate the derivative of the zero time-lag covariance

getting

Neglecting cumulants of order higher than two, we can expand the expectation value 〈Fi (n (t)) δnj (t)〉 (cf. [13, 33, section “Linearized equation for correlations and susceptibility”]) and get

| (27) |

After carrying out the population averaging, we get the ordinary differential equation

| (28) |

Therefore, the stationary part of the covariances fulfills the relation (cf. [13, 33])

| (29) |

As for the mean activities, we want to make a little step (of order hext, to be precise) away from the stationary state determining the deviation . For that, we have to calculate the Taylor expansion of S (μα (t), σα (t)) in δm, i.e.

where δμα and δσα are given by Eq (21) and

Here again, the relation was used to estimate the dependence on K. We insert the linearization of S and the expressions for δμ and δσ, Eq (21), into the ODE for to get, after neglecting the contributions of order and sorting the rest into terms proportional to δc, hext and δm respectively:

| (30) |

Before finally solving for δc (t), we want to justify the assumption , which we needed already in the beginning to determine δm (t), by a short calculation. We insert Eq (22) into Eq (30) and switch to matrix notation for brevity, which yields

| (31) |

We can rewrite the left hand side in order to recognize the parts, which are identical to the right hand side of Eq (23), i.e. the ODE for δm (t) without the neglect of δσ, which gives

| (32) |

Bringing the δc-terms on the left hand side finally yields

| (33) |

We have therefore shown that—independent of the scaling of the synaptic weights J—the relation holds not only for the zero-mode, i.e. for the stationary case, but also for the time-dependent part. Note that for our actual calculation of δm, we have neglected its dependence on δσ, as it is one order smaller than the δμ-contribution. However, this is not true for δc because of the cancellation of the two contributions to δμ. Inserting the rhs of the ODE Eq (24) actually used to determine δm and shifting the δc-contribution of δσ back to the other side, we arrive at

| (34) |

We want to compare the contribution from δμ in the second line of Eq (34) with the contribution from δσ in the third line. As pointed out above, they scale in the same way with the system size N, given that we do not rescale the driving frequency with N. Therefore, its contribution stays equally important if we enlarge the network. We neglect it anyway, which can be justified by comparing the decisive part of the prefactors of the δσ and the δμ-parts (the remaining parts are of the same order of magnitude):

This inequality is fulfilled for the three settings used in this work, whereas the first term is one or two orders of magnitude larger than the second. Especially, this inequality can always be fulfilled if the externally generated noise level is high. Therefore, even if the neglect of the δσ-contribution to δc cannot be justified by the standard mean-field argument that it decays faster with the system size than other terms, it is applicable because the input fluctuations are large enough—for all system sizes. This largely simplifies the calculations because the ODE for δc can be solved by transforming into the eigensystem of W, which would not be possible after including the more involved term emerging from δσ. Taking into account the neglected term would require to reformulate the problem as an equation for the vector (δcEE, δcEI, ‥), which would be much less intuitive. Furthermore, there is an indirect argument for high frequencies that does rely on the system size: The ω-dependence of the absolute value of the maxima of δm and δc scales with the eigenvalues of W, which scale with . Thus, changing the system size N in first order just stretches the ω-axis. Therefore, the “interesting” frequencies do scale with N, which leads to the dominance of the derivative term in the second line of Eq (34) over the δσ-term. Note that the observation from Eq (32) that

| (35) |

| (36) |

is a direct consequence of the recurrent drive being effectively inhibitory (for other networks, the expansion around the stationary point would not make sense): Any of the two terms in the susceptibility terms are of order bigger than their sum. Furthermore, we see from Eq (33) that the sum of the susceptibility terms is of the same order of magnitude with respect to its dependence on K (or, equivalently, the connection probabilities and the system size) as the term coming from the time modulation of the variances (modulated-autocovariances-drive).

We define

| (37) |

and

| (38) |

in order to end up with the index-free version Eq (10). The first two inhomogeneities, the susceptibility terms introduced in the main part (“Results“) reflect the nonlinearity of the gain-function.

With U given in Eq (25), we multiply Eq (30) from the left by U−1 and from the right by (U−1)T to get (cf. [13, 33])

We are only interested in the cyclostationary statistics, so we can ignore again the transient state making the ansatz . Inserting this ansatz and transforming back into the original system, we get

| (39) |

Together with Eq (9), this is the main result of this section.

Discussion

The present work offers an extension of the well-known binary neuronal network model beyond the stationary case [6, 13, 27, 28, 33]. We here describe the influence of a sinusoidally modulated input on the mean activities and the covariances to study the statistics of recurrently generated network activity in an oscillatory regime, ubiquitously observed in cortical activity [18].

Comparing with the results of the simulation of the binary network with NEST [35, 36] and the numerical solution of the full mean-field ODE, we are able to show that linear perturbation theory is sufficient to explain the most important effects occurring due to sinusoidal drive. This enables us to understand the mechanisms by the help of analytical expressions and furthermore we can predict the network response to any time-dependent perturbation with existing Fourier representation by decomposing the perturbing input into its Fourier components.

We find that the amplitude of the modulation of the mean activity is of the order , where λα, α ∈ {E, I} are the eigenvalues of the effective connectivity matrix W, i.e. the input is filtered by a first order low-pass filter and the amplitude of the modulation decays like ∝ ω−1 for large frequencies. This finding is in line with earlier work on the network susceptibility [27, esp. section V].

The qualitatively new result here is the identification of two distinct mechanisms by which the covariances δc are modulated in time. First, covariances are driven by the direct modulation of the susceptibility S due to the time-dependent external input and by the recurrent input from the local network. Second, time-modulated variances, analogous to their role in the stationary setting [13], drive the pairwise covariances.

Our setup is the minimal network model, in which these effects can be observed—minimal in the sense that we would lose these properties if we further simplified the model: The presence of a nonlinearity in the neuronal dynamics, here assumed to be a threshold-like activation function, is required for the modulation of covariances by the time-dependent change of the effective gain. In a linear rate model [10, 46] this effect would be absent, because mean activities and covariances then become independent.

The second mechanism relies on the binary nature of neuronal signal transmission: the variance a(t) of the binary neuronal signal is, at each point in time, completely determined by its mean m(t). This very dependence provides the second mechanism by which the temporally modulated mean activity causes time-dependent covariances, because all fluctuations and therefore all covariances are driven by the variance a(t).

Rate models have successfully been used to explain the smallness of pairwise covariances [6] by negative feedback [10]. A crucial difference is that their state is continuous, rather than binary. As a consequence, the above-mentioned fluctuations present due to the discrete nature of the neuronal signal transmission need to be added artificially: The pairwise statistics of spiking or binary networks are equivalent to the statistics of rate models with additive white noise [46]. To obtain qualitative or even quantitative agreement of time-dependent covariances between spiking or binary networks and rate models, the variance of this additive noise needs to be chosen such that its variance is a function of the mean activity and its time derivative.

The direct modulation of the susceptibility S due to the time-dependent external input leads to a contribution to the covariances with first order low-pass filter characteristics that dominates the modulated covariances at large frequencies. For small—and probably biologically realistic—frequencies (typically the LFP shows oscillations in the β-range around 20 Hz), however, the modulation of the susceptibility by the local input from the network leads to an equally important additional modulation of the susceptibility. The intrinsic fluctuations of the network activity are moreover driven by the time-dependent modulation of the variance, which is a function of the mean activity as well. Because the mean activity follows the external drive in a low-pass filtered manner, the latter two contributions hence exhibit a second order low-pass-filter characteristics. These contributions are therefore important at the small frequencies we are interested in here.

The two terms modulating the susceptibility, by the direct input and by the feedback of the mean activity through the network, have opposite signs in balanced networks. In addition they have different frequency dependencies. In networks in which the linearized connectivity has only real eigenvalues, these two properties together lead to their summed absolute value having a maximum. Whether or not the total modulation of the covariance shows resonant behavior, however, depends also on the third term that stems from the modulated variances. We find that in purely inhibitory networks, the resonance peak is typically overshadowed by the latter term. This is because inhibitory feedback leads to negative average covariances [13], which we show here reduce the driving force for the two resonant contributions. In balanced E-I networks, the driving force is not reduced, so the resonant contribution can become dominant.

For the biologically motivated parameters used in the last setting studied here, the effective coupling matrix W has complex eigenvalues which cause resonant mean activities. If the inhomogeneity was independent of the driving frequency, δc would have resonant modes with frequency fres and 2fres. Due to the mixing of the different modes and by the frequency dependence of the inhomogeneity driving the modulation of covariances, these modes determine only the ballpark for the location of the resonance in the covariance. Especially the resonances are not sharp enough so that each of them is visible in any combination of the modes. Different behavior is expected near the critical point where .

For predictions of experimental results, however, a more careful choice of reasonable biological parameters would be necessary. In particular, the external drive should be gauged such that the modulations of the mean activities are in the experimentally observed range. Still, our setup shows that the theory presented here works in the biologically plausible parameter range.

The goal of extracting fundamental mechanisms of time-dependent covariances guides the here presented choice of the level of detail of our model. Earlier works [6, 28, 29] showed that our setup without sinusoidal drive is sufficient to qualitatively reproduce and explain phenomena observed in vivo, like high variability of neuronal activity and small covariances. The latter point can be explained in binary networks by the suppression of fluctuations by inhibitory feedback, which is a general mechanism also applicable to other neuron models [10] and even finds application outside neuroscience, for example in electrical engineering [47]. The high variability observed in binary networks can be explained by the network being in the balanced state, that robustly emerges in the presence of negative feedback [29, 30]. In this state, the mean excitatory and inhibitory synaptic inputs cancel so far that the summed input to a neuron fluctuates around its threshold. This explanation holds also for other types of model networks and also for biological neural networks [48]. We have seen here that the operation in the balanced state, at low frequencies, gives rise to a partial cancellation of the modulation of covariances.

Our assumption of a network of homogeneously connected binary neurons implements the general feature of neuronal networks that every neuron receives input from a macroscopic number of other neurons, letting the impact of a single synaptic afferent on the activation of a cell be small and the summed input be distributed close to Gaussian: For uncorrelated incoming activity, the ratio between the fluctuations caused by a single input and the fluctuations of the total input is , independent of how synapses scale with N. However, the input to a neuron is actually not independent, but weakly correlated, with covariances decaying at least as fast as N−1 [6, 29]. Therefore this additional contribution to the fluctuations also decays like . The Gaussian approximation of the synaptic input relies crucially on these properties. Dahmen et al. [39] investigated third order cumulants, the next order of non-Gaussian corrections to this approximation. They found that the approximation has a small error even down to small networks of about 500 neurons and 50 synaptic inputs per neuron. These estimates hold as long as all synaptic weights are of equal size. For distributed synaptic amplitudes, in particular those following a wide or heavy-tailed distributions (e.g. [49, 50], reviewed in [51]), we expect the simple mean-field approximation applied here to require corrections due to the strong effect of single synapses.

The generic feature of neuronal dynamics, the threshold-like nonlinearity that determines the activation of a neuron, is shared by the binary, the leaky integrate-and-fire and, approximately, also the Hodgkin-Huxley model neuron. An important approximation entering our theory is the linearity of the dynamic response with respect to the perturbation. We estimate the validity of our theory by comparison to direct simulations. To estimate the breakdown of this approximation we compare the linear response to the first non-linear correction. We observe that the second order harmonics in the considered range of parameters remains as small as about 10 percent of the first harmonics. The quadratic contribution to the transfer properties of the neurons stems from the curvature of the effective gain function φ (Eq (17)). The linear portion of this gain function, in turn, is controlled by the amplitude σ of the synaptic noise. One therefore expects a breakdown of the linear approximation as soon as the temporal modulation of the mean input is of the order of this amplitude. Fig D in S1 Text shows that with the parameters hext = 1 and σexc,inh ≈ 10, used in the plots Figs 5 and 6 and Fig. B and Fig. C in S1 Text, the linear approximation is good, whereas in Fig 7, we used hext = 6, for which the linear perturbation theory already begins to break down. The latter figure is mainly supposed to give an intuitive impression.

A generic property that is shared by nearly all neuron models is the characteristic duration τ during which the activity of a sending cell affects the downstream neuron. For the binary neuron model, this time scale is identical to the mean interval τ between updates, because, once active, a neuron will stay active until the next update. It most certainly deactivates at that point, because we here consider low activity states prevalent in cortex [1]. In the leaky integrate-and-fire model the exponentially decaying membrane voltage with time constant τ is qualitatively similar: it sustains the effect that an input has on the output for this time scale. As a consequence, neurons transmit their input in a low-pass filtered manner to their output. This feature persists for more realistic spiking models, as shown for the leaky integrate-and-fire model [52, 53], the exponential integrate-and-fire model [52, 53], and the quadratic integrate-and-fire model [54]. We therefore expect that the qualitative properties reported here will carry over to these models.

A possible application of the framework developed in this paper is a quantitative comparison of the neuronal activity in the model network to the analysis of data measured in cortex [26]. Detecting the occurrence of so called Unitary Events (UE, [55–57], see also Sec. I in S1 Text), the authors observed that the simultaneous activation of neurons above the level expected for independence is locked to certain phases of the LFP. They hypothesized that the reason for this observation is the activation of cell assemblies. The results presented here show that the correlated activation of pairs of neurons is modulated by a sinusoidal drive even in a completely unstructured random network. In consequence, the locking of pairwise events to the cycle of the LFP is more pronounced for correlated events than for single spikes. Future work needs to quantitatively compare experimental data to the results from the model presented here. The closed form expressions for the modulations of the mean activities and covariances enable such an approach and the effective study of the dependence on the model parameters. A quantitative comparison needs to convert mean activities and pairwise covariances for binary neurons into the probability to measure a unitary event, interpreting the binary neuron states as binned spike trains. Preliminary results indicate that already the homogeneous network presented in this work can show some features described in [26]. In Sec. I in S1 Text, we apply the Unitary Event analysis to our setting. The presented methods will be helpful to analyze the modulation of synchrony in the presence of cell assemblies [58] in the model. This can be done by enhancing the connection probability among groups of excitatory neurons, similar as in [59] and will yield a more realistic model, which captures also nonlinear effects in the perturbation. Technically this extension amounts to the introduction of additional populations and the change of the connectivity matrix to reflect that these populations represent cell assemblies.

The relation of spiking activity to mesoscopic measures, such as the LFP, is still an open question. These population measures of neuronal activity naturally depend on the statistics of the microscopic activity they are composed of. Pairwise covariances, the focus of the current work, in particular tend to dominate the variance of any mesoscopic signal of summed activity: The contribution of covariances grows quadratically in the number of components, the contribution of variances only linearly [60, Box 2][10, eq. (1)][21, eq. (1),(2)]. Under the assumption that the LFP mainly reflects the input to a local recurrent network [21, 24], we have shown here that these two signals—spikes and LFPs—are intimately related; not only does the afferent oscillatory drive trivially modulate the propensity to produce spikes, their firing rate, but also the joint statistics of pairs of neurons by the three distinct pathways exposed in the present analysis. Forward modeling studies have shown that the spatial reach of the LFP critically depends on covariances, with elevated covariances leading to larger reach [21]. In this light our work shows that a local piece of neuronal tissue driven by a source of coherent oscillations will more effectively contribute to the local field potential itself: not only the spiking rate is modulated accordingly, but also the covariances are increased and decreased in a periodic manner, further amplifying the modulation of the generated local field potential and temporally modulating the spatial reach of the signal.

Functional consequences of the findings presented here deduce from the hypothesis that communication channels in cortex may effectively be multiplexed by the selective excitation of different areas with coherent oscillations [61, 62]. The presented analysis exposes that oscillatory drive to a local piece of cortex alone already effectively enhances coherent firing beyond the level expected based on the assumption of independence. If synchronous activity is employed as a dimension to represent information, it is hence tightly entangled with time-dependent changes of the mean activity. A similar conclusion was drawn from the observation that covariance transmission in feed-forward networks is monotonously increasing with firing rate [4, 5]. Any information-carrying modulation of synchronous activity must hence go beyond the here investigated effects, which can be regarded the baseline given by the non-stationary activity in networks without function. Since the mechanisms we have exposed only depend on generic features of cortical tissue—networks of non-linear neurons, connectivity with strong convergence and divergence, and dynamic stabilization by inhibition—the time-dependent entanglement of mean activity and covariances qualitatively exists in any network with these properties. In this view, our analysis can help to distinguish the level of time-modulated covariances in neural tissues that are surprising, and are therefore candidates to be attributed to function, from those that need to be expected in networks due to their generic properties.

Supporting information

In this text, we show how to apply the UE-analysis to a model network of binary neurons choosing the parameters from Table 1. We also present the derivation of the ODEs for the first two moments, we discuss the different possibilities to define a spike in a binary network and show how to handle the complex phase jump induced by the usage of the sine-function as a perturbation. Furthermore, we include the plots of the II- and EI-component of the covariances for the parameters of Table 1 and a plot of the mean activities and the EE-covariance for varying perturbation strength hext for the same parameters.

(PDF)

Acknowledgments

The authors would like to thank PierGianLuca Porta Mana for his great support and Michael Denker, Sonja Grün and the whole INM-6 for fruitful discussions. All network simulations were carried out with NEST (http://www.nest-simulator.org).

Data Availability

All python files are available from the dryad database (doi:10.5061/dryad.45sg0).

Funding Statement

This work was partly supported by the Helmholtz young investigator group VH-NG-1028 (https://www.helmholtz.de/en/jobs_talent/funding_programs/helmholtz_young_investigators_groups/), the Helmholtz Portfolio Supercomputing and Modeling for the Human Brain (SMHB, http://www.fz-juelich.de/inm/inm-1/EN/Forschung/JuBrain/Helmholtz%20Portfolio.html) and EU Grant 604102 (Human Brain Project, HBP, https://www.humanbrainproject.eu/). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Softky WR, Koch C. The Highly Irregular Firing of Cortical Cells Is Inconsistent with Temporal Integration of Random EPSPs. J Neurosci. 1993;13(1):334–350. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. London M, Roth A, Beeren L, Häusser M, Latham PE. Sensitivity to perturbations in vivo implies high noise and suggests rate coding in cortex. Nature. 2010. July;466(1):123–128. 10.1038/nature09086 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Lindner B, Doiron B, Longtin A. Theory of oscillatory firing induced by spatially correlated noise and delayed inhibitory feedback. Phys Rev E. 2005;72:061919 10.1103/PhysRevE.72.061919 [DOI] [PubMed] [Google Scholar]

- 4. De la Rocha J, Doiron B, Shea-Brown E, Kresimir J, Reyes A. Correlation between neural spike trains increases with firing rate. Nature. 2007. August;448(16):802–807. 10.1038/nature06028 [DOI] [PubMed] [Google Scholar]

- 5. Shea-Brown E, Josic K, de la Rocha J, Doiron B. Correlation and synchrony transfer in integrate-and-fire neurons: basic properties and consequences for coding. Phys Rev Lett. 2008. March;100:108102 10.1103/PhysRevLett.100.108102 [DOI] [PubMed] [Google Scholar]

- 6. Renart A, De La Rocha J, Bartho P, Hollender L, Parga N, Reyes A, et al. The asynchronous State in Cortical Circuits. Science. 2010. January;327:587–590. 10.1126/science.1179850 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Pernice V, Staude B, Cardanobile S, Rotter S. How Structure Determines Correlations in Neuronal Networks. PLoS Comput Biol. 2011. May;7(5):e1002059 10.1371/journal.pcbi.1002059 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Trousdale J, Hu Y, Shea-Brown E, Josic K. Impact of network structure and cellular response on spike time correlations. PLoS Comput Biol. 2012;8(3):e1002408 10.1371/journal.pcbi.1002408 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Pernice V, Staude B, Cardanobile S, Rotter S. Recurrent interactions in spiking networks with arbitrary topology. Phys Rev E. 2012;85(3):031916 10.1103/PhysRevE.85.031916 [DOI] [PubMed] [Google Scholar]

- 10. Tetzlaff T, Helias M, Einevoll G, Diesmann M. Decorrelation of neural-network activity by inhibitory feedback. PLoS Comput Biol. 2012;8(8):e1002596 10.1371/journal.pcbi.1002596 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Hu Y, Trousdale J, Josić K, Shea-Brown E. Motif statistics and spike correlations in neuronal networks. Journal of Statistical Mechanics: Theory and Experiment. 2013;2013(03):P03012 10.1088/1742-5468/2013/03/P03012 [DOI] [Google Scholar]

- 12. Helias M, Tetzlaff T, Diesmann M. Echoes in correlated neural systems. New J Phys. 2013;15:023002 10.1088/1367-2630/15/2/023002 [DOI] [Google Scholar]

- 13. Helias M, Tetzlaff T, Diesmann M. The correlation structure of local cortical networks intrinsically results from recurrent dynamics. PLoS Comput Biol. 2014;10(1):e1003428 10.1371/journal.pcbi.1003428 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Rosenbaum R, Smith MA, Kohn A, Rubin JE, Doiron B. The spatial structure of correlated neuronal variability. Nat Neurosci. 2017;20:107–114. 10.1038/nn.4433 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Riehle A, Grün S, Diesmann M, Aertsen A. Spike Synchronization and Rate Modulation Differentially Involved in Motor Cortical Function. Science. 1997. December;278(5345):1950–1953. 10.1126/science.278.5345.1950 [DOI] [PubMed] [Google Scholar]

- 16. Kilavik BE, Roux S, Ponce-Alvarez A, Confais J, Gruen S, Riehle A. Long-term Modifications in Motor Cortical Dynamics induced by intensive practice. J Neurosci. 2009;29:12653–12663. 10.1523/JNEUROSCI.1554-09.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Cohen MR, Maunsell JHR. Attention improves performance primarily by reducing interneuronal correlations. Nat Neurosci. 2009;12:1594–1600. 10.1038/nn.2439 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Buzsáki G, Draguhn A. Neuronal Oscillations in Cortical Networks. Science. 2004;304:1926–1929. 10.1126/science.1099745 [DOI] [PubMed] [Google Scholar]

- 19. Nunez PL, Ramesh S. Electric fields of the brain: the neurophysics of EEG. Oxford University Press; 2006. [Google Scholar]

- 20. Ray S, Crone NE, Niebur E, Franaszczuk PJ, Hsiao SS. Neural correlates of high-gamma oscillations (60–200 Hz) in macaque local field potentials and their potential implications in electrocorticography. J Neurosci. 2008. November;28(45):11526–11536. 10.1523/JNEUROSCI.2848-08.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Lindén H, Tetzlaff T, Potjans TC, Pettersen KH, Grün S, Diesmann M, et al. Modeling the spatial reach of the LFP. Neuron. 2011;72(5):859–872. 10.1016/j.neuron.2011.11.006 [DOI] [PubMed] [Google Scholar]

- 22. Mitzdorf U. Current Source-Density Method and Application in Cat Cerebral Cortex: Investigation of Evoked Potentials and EEG Phenomena. Physiol Rev. 1985;65(1):37–100. [DOI] [PubMed] [Google Scholar]

- 23. Viswanathan A, Freeman RD. Neurometabolic coupling in cerebral cortex reflects synaptic more than spiking activity. Nature Neuroscience. 2007;10:1308–1312. 10.1038/nn1977 [DOI] [PubMed] [Google Scholar]

- 24. Mazzoni A, Lindén H, Cuntz H, Lansner A, Panzeri S, Einevoll G. Computing the Local Field Potential (LFP) from Integrate-and-Fire Network Models. PLoS Comput Biol. 2015;11:1–38. 10.1371/journal.pcbi.1004584 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Scherberger H, Jarvis MR, Andersen RA. Cortical Local Field Potential Encodes Movement Intentions in the Posterior Parietal Cortex. Neuron. 2005;46(2):347–354. Available from: http://www.sciencedirect.com/science/article/pii/S0896627305001996. 10.1016/j.neuron.2005.03.004 [DOI] [PubMed] [Google Scholar]

- 26. Denker M, Roux S, Lindén H, Diesmann M, Riehle A, Grün S. The Local Field Potential Reflects Surplus Spike Synchrony. Cereb Cortex. 2011. December;21:2681–2695. 10.1093/cercor/bhr040 [DOI] [PMC free article] [PubMed] [Google Scholar]