Abstract

The spiking activity of single neurons can be well described by a nonlinear integrate-and-fire model that includes somatic adaptation. When exposed to fluctuating inputs, sparsely coupled populations of these model neurons exhibit stochastic collective dynamics that can be effectively characterized using the Fokker-Planck equation. This approach, however, leads to a model with an infinite-dimensional state space and non-standard boundary conditions. Here we derive from that description four simple models for the spike rate dynamics in terms of low-dimensional ordinary differential equations using two different reduction techniques: one uses the spectral decomposition of the Fokker-Planck operator, the other is based on a cascade of two linear filters and a nonlinearity, which are determined from the Fokker-Planck equation and semi-analytically approximated. We evaluate the reduced models for a wide range of biologically plausible input statistics and find that both approximation approaches lead to spike rate models that accurately reproduce the spiking behavior of the underlying adaptive integrate-and-fire population. The cascade-based models are overall the most accurate and robust, especially in the sensitive region of rapidly changing input. For the mean-driven regime, however, when input fluctuations are not too strong and fast, the best performing model is based on the spectral decomposition. The low-dimensional models also reproduce well the stable oscillatory spike rate dynamics that are generated either by recurrent synaptic excitation and neuronal adaptation or through delayed inhibitory synaptic feedback. The computational demands of the reduced models are very low, but the implementation complexity differs between the model variants.
Therefore we have made available, as open source software, implementations that efficiently integrate the low-dimensional spike rate models as well as the Fokker-Planck partial differential equation for arbitrary model parametrizations. The derived spike rate descriptions retain a direct link to the properties of single neurons, allow for convenient mathematical analyses of network states, and are well suited for application in neural mass/mean-field based brain network models.

Author summary

Characterizing the dynamics of biophysically modeled, large neuronal networks usually involves extensive numerical simulations. As an alternative to this expensive procedure we propose efficient models that describe the network activity in terms of a few ordinary differential equations. These systems are simple to solve and allow for convenient investigations of asynchronous, oscillatory or chaotic network states because linear stability analyses and powerful related methods are readily applicable. We build upon two research lines that have received substantial attention in the last two decades: (i) the development of single neuron models of reduced complexity that can accurately reproduce a large repertoire of observed neuronal behavior, and (ii) different approaches to approximate the Fokker-Planck equation that represents the collective dynamics of large neuronal networks. We combine these advances and extend recent approximation methods of the latter kind to obtain spike rate models that reproduce the macroscopic dynamics of the underlying neuronal network surprisingly well. At the same time, the microscopic properties are retained through the single neuron model parameters. To enable fast adoption, we have released an efficient Python implementation as open source software under a free license.

Introduction

There is considerable evidence that information in the brain, about a particular stimulus for example, is contained in the collective neuronal spiking activity averaged over populations of neurons with similar properties (population spike rate code) [1, 2]. Although these populations can comprise a large number of neurons [3], they often exhibit low-dimensional collective spiking dynamics [4] that can be measured using neural mass signals such as the local field potential or the electroencephalogram.

The behavior of cortical networks at that level is often studied computationally by simulating multiple (realistically large or subsampled) populations of synaptically coupled individual spiking model neurons. A popular choice of single cell description for this purpose is the class of two-variable integrate-and-fire models [5, 6], which describe the evolution of the fast (somatic) membrane voltage and of an adaptation variable that represents a slowly decaying potassium current. These models are computationally efficient and can be successfully calibrated using electrophysiological recordings of real cortical neurons and standard stimulation protocols [5, 7–10] to accurately reproduce their subthreshold and spiking activity. The choice of such (simple) neuron models, however, does not guarantee acceptably short simulation durations for a recurrent network, especially when large numbers of neurons and synaptic connections between them are considered.

A fast and mathematically tractable alternative to simulations of large networks is provided by population activity models in terms of low-dimensional ordinary differential equations (i.e., consisting of only a few variables) that typically describe the evolution of the spike rate. These reduced models can be rapidly solved and allow for convenient analyses of the dynamical network states using well-known methods that are simple to implement. A popular example is the Wilson-Cowan model [11], which was also extended to account for (slow) neuronal adaptation [12] and short-term synaptic depression [13]. Models of this type have been successfully applied to qualitatively characterize the possible dynamical states of coupled neuronal populations using phase space analyses [11–13], yet they lack a direct link, in terms of model parameters, to more biophysically described networks of (calibrated) spiking neurons.

Recently, derived population activity models have been proposed that bridge the gap between single neuron properties and mesoscopic network dynamics. These models are described by integral equations [14, 15] or partial differential equations [16, 17].

Here we derive simple models in terms of low-dimensional ordinary differential equations (ODEs) for the spike rate dynamics of sparsely coupled adaptive nonlinear integrate-and-fire neurons that are exposed to noisy synaptic input. The derivations are based on a Fokker-Planck equation that describes the neuronal population activity in the mean-field limit of large networks. We develop reduced models building on recent methodological advances in two different approaches: the first is based on a spectral decomposition of the Fokker-Planck operator under two different slowness assumptions [18–20]. In the second approach we consider a cascade of linear temporal filters and a nonlinear function, which are determined from the Fokker-Planck equation and semi-analytically approximated, building upon [21]. Both approaches are extended to include an adaptation current, a nonlinear spike generating current and recurrent coupling with distributed synaptic delays.

We systematically and quantitatively evaluate the reproduction accuracy of the developed low-dimensional spike rate models over a wide range of biologically plausible parameter values. In addition, we provide numerical implementations of the different reduction methods as well as of the Fokker-Planck equation as an open source project under a free license.

For the derived models in this contribution we use the adaptive exponential integrate-and-fire (aEIF) model [5] to describe individual neurons, which is similar to the model proposed by Izhikevich [6] but includes biophysically meaningful parameters and a refined description of spike initiation. However, the presented derivations are equally applicable when using the Izhikevich model instead (requiring only a small number of simple substitutions in the code).

Through their parameters the derived models retain a direct, quantitative link to the underlying spiking model neurons, and they are described in a well-established, convenient form (ODEs) that can be rapidly solved and analyzed. Therefore, these models are well suited (i) for mathematical analyses of dynamical states at the population level, e.g., linear stability analyses of attractors, and (ii) for application in multi-population brain network models. Apart from a specific network setting, the derived models are also appropriate as a spike rate description of individual neurons under noisy input conditions.

This article contains mildly redundant model specifications so that readers who are not interested in the methodological foundations can directly read Sect. Results, which is self-contained.

Results

Model reduction

The quantity of interest is the population-averaged number of spikes emitted by a large homogeneous network of N sparsely coupled aEIF model neurons per small time interval, i.e., the spike rate rN(t). The state of neuron i at time t is described by the membrane voltage Vi(t) and adaptation current wi(t), which evolve piecewise continuously in response to the overall synaptic current Isyn,i = Iext,i(t) + Irec,i(t). This input current consists of the fluctuating network-external drive Iext,i = C[μext(t) + σext(t)ξext,i(t)], with membrane capacitance C, time-varying moments μext and σext, and unit Gaussian white noise process ξext,i, as well as the recurrent input Irec,i. The latter causes delayed postsynaptic potentials (i.e., deflections of Vi) of small amplitude J triggered by the spikes of K presynaptic neurons (see Sect. Methods for details).
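To make the definition of rN(t) concrete, a minimal sketch of the estimator (pooled spike count per small time bin, divided by N and by the bin width) is given below. The function name, the bin width and the synthetic Poisson spike data are illustrative assumptions, not part of the published implementation.

```python
import numpy as np

def population_spike_rate(spike_times_ms, n_neurons, t_end_ms, dt_ms=0.1):
    """Estimate r_N(t): pooled spike count per bin divided by (N * bin width).

    spike_times_ms: 1D array of spike times (ms) pooled over all neurons.
    Returns the left bin edges (ms) and the rate in spikes per neuron per ms
    (multiply by 1000 to obtain Hz).
    """
    n_bins = int(round(t_end_ms / dt_ms))
    edges = np.linspace(0.0, n_bins * dt_ms, n_bins + 1)
    counts, _ = np.histogram(spike_times_ms, bins=edges)
    rate = counts / (n_neurons * dt_ms)
    return edges[:-1], rate

# Synthetic example: 1000 neurons, each firing at about 10 Hz for 1 s.
rng = np.random.default_rng(0)
n, t_end = 1000, 1000.0
n_spikes = rng.poisson(0.01 * t_end, size=n)          # 0.01 spikes/ms = 10 Hz
spikes = np.concatenate([rng.uniform(0.0, t_end, k) for k in n_spikes])
t, r_N = population_spike_rate(spikes, n, t_end, dt_ms=1.0)
```

The bin width trades temporal resolution against finite-size noise: for a fixed N, smaller bins give noisier estimates of rN(t).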

Here we present two approaches by which the spike rate dynamics of the large, stochastic delay-differential equation system for the 2N states (Vi, wi) can be described by simple models in terms of low-dimensional ODEs. Both approaches (i) take into account adaptation current dynamics that are sufficiently slow, which allows replacing the individual adaptation current wi by its population average 〈w〉, governed by

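$$\frac{d\langle w\rangle}{dt} = \frac{a\left(\langle V\rangle_\infty - E_w\right) - \langle w\rangle}{\tau_w} + b\,r(t), \tag{1}$$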

where a, Ew, b, τw are the adaptation current model parameters (subthreshold conductance, reversal potential, spike-triggered increment, time constant, respectively), 〈V〉∞ is the steady-state membrane voltage averaged across the population (which can vary over time, see below), and r is the spike rate of the respective low-dimensional model. Furthermore, both approaches (ii) are based on the observation that the collective dynamics of a large, sparsely coupled (and noise driven) network of integrate-and-fire type neurons can be well described by a Fokker-Planck equation. In this intermediate Fokker-Planck (FP) model the overall synaptic input is approximated by a mean part with additive white Gaussian fluctuations, Isyn,i/C ≈ μsyn(t, rd) + σsyn(t, rd)ξi(t), that are uncorrelated between neurons. The moments of the overall synaptic input,

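$$\mu_{\mathrm{syn}}(t, r_d) = \mu_{\mathrm{ext}}(t) + J K\, r_d(t), \qquad \sigma^2_{\mathrm{syn}}(t, r_d) = \sigma^2_{\mathrm{ext}}(t) + J^2 K\, r_d(t), \tag{2}$$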

depend on time via the moments of the external input and, due to recurrent coupling, on the delayed spike rate rd. The latter is governed by

$$\frac{dr_d}{dt} = \frac{r - r_d}{\tau_d}, \tag{3}$$

which corresponds to individual propagation delays drawn from an exponentially distributed random variable with mean τd. The FP model involves solving a partial differential equation (PDE) to obtain the time-varying membrane voltage distribution p(V, t) and the spike rate r(t).
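The building blocks shared by all reduced models (input moments, mean adaptation current and delayed spike rate) can be sketched with a forward-Euler step as below. The sketch assumes that Eq (2) takes the usual diffusion-approximation form μsyn = μext + J K rd and σ²syn = σ²ext + J² K rd, that Eq (1) reads τw d〈w〉/dt = a(〈V〉∞ − Ew) − 〈w〉 + τw b r, and that Eq (3) reads drd/dt = (r − rd)/τd. The function names and numerical values are hypothetical; in a full model, r and 〈V〉∞ would be supplied by one of the reduced models at every step.

```python
import math

def synaptic_moments(mu_ext, sigma_ext, J, K, r_d):
    """Mean and standard deviation of the overall synaptic input (cf. Eq (2)).

    Units: mu in mV/ms, sigma in mV/sqrt(ms), J in mV, r_d in 1/ms.
    The recurrent variance term J**2*K*r_d is nonnegative for any sign of J.
    """
    mu_syn = mu_ext + J * K * r_d
    sigma_syn = math.sqrt(sigma_ext**2 + J**2 * K * r_d)
    return mu_syn, sigma_syn

def euler_step(w_mean, r_d, r, V_inf, dt, a, E_w, b, tau_w, tau_d):
    """One forward-Euler step of the mean adaptation current (Eq (1))
    and the exponentially delayed spike rate (Eq (3)).

    Units: a in nS, V and E_w in mV (so a*(V - E_w) is in pA), b and w in pA,
    r in 1/ms, times in ms.
    """
    dw = (a * (V_inf - E_w) - w_mean) / tau_w + b * r
    dr_d = (r - r_d) / tau_d
    return w_mean + dt * dw, r_d + dt * dr_d

# Hold r and <V>_inf fixed (illustrative values) and relax to steady state.
w_mean, r_d = 0.0, 0.0
r, V_inf = 0.01, -60.0                 # 10 Hz and -60 mV
for _ in range(50_000):                # 5 s at dt = 0.1 ms
    w_mean, r_d = euler_step(w_mean, r_d, r, V_inf, dt=0.1,
                             a=3.0, E_w=-80.0, b=40.0, tau_w=200.0, tau_d=3.0)
mu_syn, sigma_syn = synaptic_moments(1.5, 2.0, J=0.05, K=100, r_d=r_d)
```

With these values 〈w〉 settles at a(〈V〉∞ − Ew) + τw b r = 60 pA + 80 pA = 140 pA, and rd converges to r.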

The first reduction approach is based on the spectral decomposition of the Fokker-Planck operator and leads to the following two low-dimensional models: the “basic” model variant (spec1) is given by a complex-valued differential equation describing the spike rate evolution in its real part,

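$$\frac{dr}{dt} = \lambda_1(\mu_{\mathrm{tot}}, \sigma_{\mathrm{tot}})\left[r - r_\infty(\mu_{\mathrm{tot}}, \sigma_{\mathrm{tot}})\right], \tag{4}$$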

where λ1(μtot, σtot) is the dominant eigenvalue of the Fokker-Planck operator and r∞(μtot, σtot) is the steady-state spike rate. Its parameters λ1, r∞, and 〈V〉∞ (cf. Eq (1)) depend on the total input moments, given by μtot(t) = μsyn − 〈w〉/C and σtot(t) = σsyn(t), which close the model (Eqs (1)–(4)). The other, “advanced” spectral model variant (spec2) is given by a real-valued second order differential equation for the spike rate,

| (5) |

where the dots denote time derivatives. Its parameters β2, β1, β0, βc, r∞ and 〈V〉∞ depend on the total input moments (μtot, σtot) as follows: the latter two parameters explicitly, as in the basic model above, the former four indirectly via the first two dominant eigenvalues λ1, λ2 and via additional quantities obtained from the (stationary and the first two nonstationary) eigenfunctions of the Fokker-Planck operator and its adjoint. Furthermore, the parameter βc depends explicitly on the population-averaged adaptation current 〈w〉, on the delayed spike rate rd, and on the first and second order time derivatives of the external moments μext and σ²ext.
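One time step of the spec1 model can be sketched as follows, assuming Eq (4) has the linear relaxation form dr/dt = λ1(r − r∞) for a complex state r whose real part is the spike rate. Holding λ1 and r∞ fixed within each step makes an exponential update exact for that step; this integration scheme is our choice for illustration and not necessarily the one used in the published code. The values of λ1 and r∞ are hypothetical (Re{λ1} = −1/15 per ms matches the intrinsic time scale of about 15 ms mentioned in the results below); in the model they would be read from look-up tables at the current (μtot, σtot).

```python
import cmath

def spec1_step(r, lam1, r_inf, dt):
    """Advance dr/dt = lam1 * (r - r_inf) by one step of length dt.

    lam1 (complex, 1/ms) and r_inf (real, 1/ms) are held fixed during the
    step, so r_inf + (r - r_inf) * exp(lam1 * dt) is exact for that step.
    The spike rate is the real part of the complex state r.
    """
    return r_inf + (r - r_inf) * cmath.exp(lam1 * dt)

# Hypothetical frozen quantities: 15 ms decay time, 0.2 rad/ms rotation.
lam1 = complex(-1.0 / 15.0, 0.2)
r_inf = 0.02                        # 20 Hz expressed in 1/ms
r = 0j
rates = []
for _ in range(3000):               # 300 ms at dt = 0.1 ms
    r = spec1_step(r, lam1, r_inf, dt=0.1)
    rates.append(r.real)
```

A nonzero imaginary part of λ1 makes the real part overshoot r∞ before settling, i.e., a single complex mode already produces damped oscillatory relaxation of the spike rate.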

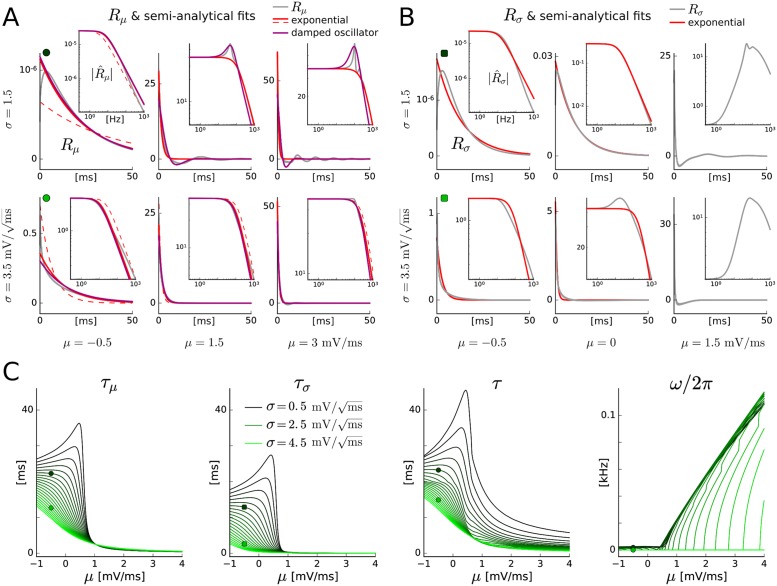

The second approach is based on a Linear-Nonlinear (LN) cascade, in which a separate linear temporal filter is applied to each of the time-varying mean and standard deviation of the overall synaptic input, μsyn and σsyn, followed by a common nonlinear function that yields the population spike rate. These three components (two linear filters and a nonlinearity) are extracted from the Fokker-Planck equation. Approximating the linear filters using exponentials and damped oscillating functions yields two model variants. In the basic “exponential” (LNexp) model the filtered mean μf and standard deviation σf of the overall synaptic input are given by

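$$\frac{d\mu_f}{dt} = \frac{\mu_{\mathrm{syn}} - \mu_f}{\tau_\mu(\mu_{\mathrm{eff}}, \sigma_{\mathrm{eff}})}, \qquad \frac{d\sigma_f}{dt} = \frac{\sigma_{\mathrm{syn}} - \sigma_f}{\tau_\sigma(\mu_{\mathrm{eff}}, \sigma_{\mathrm{eff}})}, \tag{6}$$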

where the time constants τμ(μeff, σeff), τσ(μeff, σeff) depend on the effective (filtered) input mean μeff(t) = μf − 〈w〉/C and standard deviation σeff(t) = σf. The “damped oscillator” (LNdos) model variant, on the other hand, describes the filtered input moments by

| (7) |

| (8) |

where the time constants τ(μtot, σtot), τσ(μtot, σtot) and the angular frequency ω(μtot, σtot) depend on the total input moments defined above. In both LN model variants the spike rate is obtained by the nonlinear transformation of the effective input moments through the steady-state spike rate,

$$r(t) = r_\infty\left(\mu_{\mathrm{eff}}(t), \sigma_{\mathrm{eff}}(t)\right), \tag{9}$$

and the steady-state mean membrane voltage 〈V〉∞ (cf. Eq (1)) is also evaluated at (μeff, σeff).
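The LNexp model is simple enough to sketch directly. The step below assumes that Eq (6) consists of the first-order relaxations dμf/dt = (μsyn − μf)/τμ and dσf/dt = (σsyn − σf)/τσ, with both time constants evaluated at (μeff, σeff), and that Eq (9) evaluates r∞ at the effective moments. The functions `toy_tau_mu`, `toy_tau_sigma` and `toy_r_inf` are hypothetical stand-ins chosen only to make the example run; in the actual model these quantities are derived from the Fokker-Planck equation.

```python
import math

def ln_exp_step(mu_f, sigma_f, w_mean, mu_syn, sigma_syn, dt, C,
                tau_mu, tau_sigma, r_inf):
    """One forward-Euler step of the LNexp filters followed by the nonlinearity.

    tau_mu, tau_sigma and r_inf are callables of the effective moments
    (mu_eff, sigma_eff); in practice they would be look-up tables.
    """
    mu_eff = mu_f - w_mean / C           # pA / pF = mV/ms
    sigma_eff = sigma_f
    mu_f_new = mu_f + dt * (mu_syn - mu_f) / tau_mu(mu_eff, sigma_eff)
    sigma_f_new = sigma_f + dt * (sigma_syn - sigma_f) / tau_sigma(mu_eff, sigma_eff)
    r = r_inf(mu_f_new - w_mean / C, sigma_f_new)   # spike rate, cf. Eq (9)
    return mu_f_new, sigma_f_new, r

# Hypothetical stand-ins, NOT derived from the Fokker-Planck equation.
def toy_tau_mu(mu, sigma):
    return 5.0                                       # ms

def toy_tau_sigma(mu, sigma):
    return 3.0                                       # ms

def toy_r_inf(mu, sigma):
    return 0.05 * math.log1p(math.exp(mu - 2.0 + 0.5 * sigma))  # softplus, 1/ms

mu_f, sigma_f, w_mean, C = 0.0, 1.0, 50.0, 200.0     # C in pF, w in pA
for _ in range(5000):                                # 250 ms at dt = 0.05 ms
    mu_f, sigma_f, r = ln_exp_step(mu_f, sigma_f, w_mean, mu_syn=2.0,
                                   sigma_syn=2.0, dt=0.05, C=C,
                                   tau_mu=toy_tau_mu, tau_sigma=toy_tau_sigma,
                                   r_inf=toy_r_inf)
```

For constant input, the filtered moments converge to (μsyn, σsyn) and the rate to r∞(μsyn − 〈w〉/C, σsyn); the adaptation current would be updated alongside via Eq (1).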

These four models (spec1, spec2, LNexp, LNdos) from both reduction approaches involve a number of parameters that depend on the strengths of synaptic input and adaptation current only via the total or effective input moments. We refer to these parameters as quantities below to distinguish them from fixed (independent) parameters. The computational complexity when numerically solving the models forward in time (for different parametrizations) can be greatly reduced by precomputing those quantities for a range of values for the total/effective input moments and using look-up tables during time integration. Changing any parameter value of the external input, the recurrent coupling or the adaptation current does not require renewed precomputations, enabling rapid explorations of parameter space and efficient (linear) stability analyses of network states.
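The look-up step can be sketched as bilinear interpolation on a uniform (μ, σ) grid. This is a minimal illustration under our own assumptions (uniform grids, clipping instead of extrapolation, scalar queries); the published implementation may use a different interpolation scheme. The tabulated function is a toy bilinear surface, standing in for a precomputed quantity such as r∞, so that the interpolation result can be checked exactly.

```python
import numpy as np

def bilinear_lookup(table, mu_grid, sigma_grid, mu, sigma):
    """Bilinearly interpolate table[i, j] = q(mu_grid[i], sigma_grid[j]).

    Assumes uniformly spaced, increasing grids; query points outside the
    grid are clipped to its boundary (no extrapolation).
    """
    dmu = mu_grid[1] - mu_grid[0]
    dsig = sigma_grid[1] - sigma_grid[0]
    x = (np.clip(mu, mu_grid[0], mu_grid[-1]) - mu_grid[0]) / dmu
    y = (np.clip(sigma, sigma_grid[0], sigma_grid[-1]) - sigma_grid[0]) / dsig
    i = min(int(x), len(mu_grid) - 2)      # lower-left cell index
    j = min(int(y), len(sigma_grid) - 2)
    fx, fy = x - i, y - j                  # fractional position in the cell
    return ((1 - fx) * (1 - fy) * table[i, j] + fx * (1 - fy) * table[i + 1, j]
            + (1 - fx) * fy * table[i, j + 1] + fx * fy * table[i + 1, j + 1])

# Precompute once (toy surface standing in for, e.g., r_inf).
mu_grid = np.linspace(-1.0, 5.0, 61)       # mV/ms, step 0.1
sigma_grid = np.linspace(0.5, 5.0, 46)     # mV/sqrt(ms), step 0.1
M, S = np.meshgrid(mu_grid, sigma_grid, indexing="ij")
table = 2.0 * M + 3.0 * S + M * S
value = bilinear_lookup(table, mu_grid, sigma_grid, 0.37, 1.23)
```

Because only the query coordinates depend on the input moments, the same table serves any choice of J, K, τd, a, b or τw, which is what makes parameter exploration cheap.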

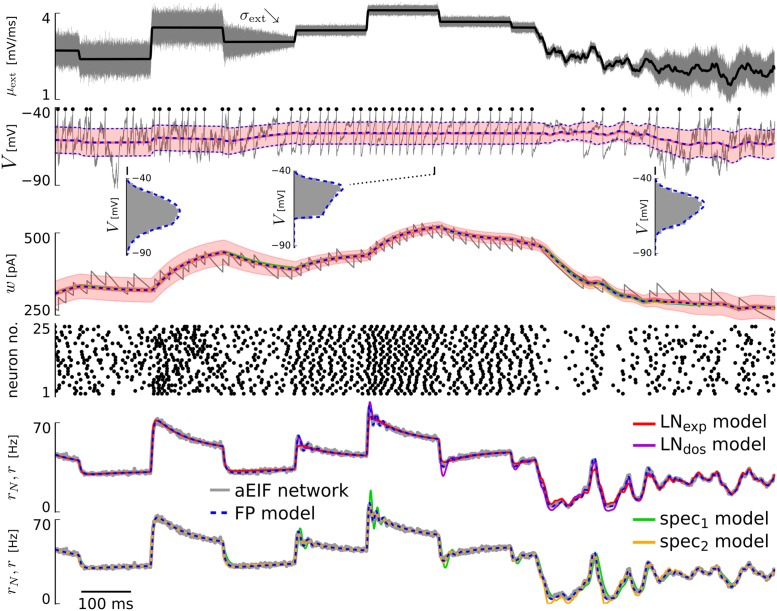

The full specification of the “ground truth” system (network of aEIF neurons), the derivations of the intermediate description (FP model) and of the low-dimensional spike rate models, together with concrete numerical implementations, are provided in Sect. Methods, which is complemented by the supporting material S1 Text. In Fig 1 we visualize the outputs of the different models for an example excitatory aEIF network exposed to external input with varying mean μext(t) and standard deviation σext(t).

Fig 1. Example of aEIF network response and output of derived models for varying input.

From top to bottom: Mean input μext (black) together with input standard deviation σext (gray, visualized for one neuron by sampling the respective white noise process ξext,i). 2nd row: Membrane voltage V of one neuron (gray, with spike times highlighted by black dots) and membrane voltage statistics from the excitatory coupled aEIF population of 50,000 neurons (red) and from the FP model (blue dashed): mean ± standard deviation over time, as well as voltage histograms (gray) and probability densities p(V, t) (blue dashed) at three indicated time points. 3rd row: Adaptation current w of one neuron (gray) and mean adaptation currents of all models ± standard deviation for the aEIF network (shaded area). Note that differences in the mean adaptation currents of the different models are hardly recognizable. 4th row: Spike times of a subset of 25 neurons randomly chosen from the network. Below: Spike rate r of the LN cascade based models (LNexp, LNdos) and the spectral models (spec1, spec2) in comparison to the FP model and the aEIF network (rN). The values of the coupling parameters were J = 0.05 mV, K = 100, τd = 3 ms.

Performance for variations of the mean input

Here, and in the subsequent two sections, we assess the accuracy of the four low-dimensional models in reproducing the spike rate dynamics of the underlying aEIF population. The intermediate FP model is included for reference. The derived models generate population activity in response to the overall synaptic input moments μsyn and σ²syn. These depend on time via the external moments μext(t) and σ²ext(t), and via the delayed spike rate rd(t). Therefore, it is useful to first consider an uncoupled population and suitable variations of the external input moments that effectively mimic a range of biologically plausible presynaptic spike rate dynamics. This allows us to systematically compare the reproduction performance of the different models over a manageable parameter space (without K, J, τd), while still providing useful information on the accuracy for recurrent networks.

For many network settings the dominant effect of synaptic coupling is on the mean input (cf. Eq (2)). Therefore, we first consider in detail a time-varying mean with constant variance of the input. Specifically, to account for a wide range of oscillation frequencies of presynaptic spike rates, μext is described by an Ornstein-Uhlenbeck (OU) process

$$\frac{d\mu_{\mathrm{ext}}}{dt} = \frac{\bar\mu_{\mathrm{ext}} - \mu_{\mathrm{ext}}}{\tau_\mu^{\mathrm{ou}}} + \vartheta_\mu\sqrt{\frac{2}{\tau_\mu^{\mathrm{ou}}}}\,\xi(t), \tag{10}$$

where $\tau_\mu^{\mathrm{ou}}$ denotes the correlation time, $\bar\mu_{\mathrm{ext}}$ and ϑμ are the mean and standard deviation of the stationary normal distribution, i.e., $\lim_{t\to\infty}\mu_{\mathrm{ext}}(t) \sim \mathcal{N}(\bar\mu_{\mathrm{ext}}, \vartheta_\mu^2)$, and ξ is a unit Gaussian white noise process. Sample time series generated from the OU process are filtered using a Gaussian kernel with a small standard deviation σt to obtain sufficiently differentiable time series (as required by the spec2 and LNdos models). The filtered realization is then used for all models to allow for a quantitative comparison of the different spike rate responses to the same input. The value of σt we use in this study effectively removes very high oscillation frequencies, which are rarely observed, while lower frequencies [22] are passed.
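Input time series of this kind can be generated as sketched below: an OU process with stationary distribution N(mean, std²) and correlation time tau, sampled with the exact discrete-time (AR(1)) update of the OU equation above, followed by smoothing with a normalized Gaussian kernel of width σt. The parameter values, the edge padding and the truncation of the kernel at ±4σt are illustrative assumptions rather than the settings of this study.

```python
import numpy as np

def ou_process(mean, std, tau, dt, n_steps, rng):
    """OU samples with stationary law N(mean, std**2) and correlation time tau.

    Uses the exact AR(1) discretization of
    dx/dt = (mean - x)/tau + std*sqrt(2/tau)*xi(t), started at the mean.
    """
    decay = np.exp(-dt / tau)
    kicks = std * np.sqrt(1.0 - decay**2) * rng.standard_normal(n_steps)
    x = np.empty(n_steps)
    x[0] = mean
    for k in range(1, n_steps):
        x[k] = mean + (x[k - 1] - mean) * decay + kicks[k]
    return x

def gaussian_smooth(x, sigma_t, dt):
    """Convolve with a normalized Gaussian kernel (std sigma_t); the edges are
    padded with the boundary values so the output has the input's length."""
    half = int(np.ceil(4.0 * sigma_t / dt))
    t = np.arange(-half, half + 1) * dt
    kernel = np.exp(-0.5 * (t / sigma_t) ** 2)
    kernel /= kernel.sum()
    return np.convolve(np.pad(x, half, mode="edge"), kernel, mode="valid")

# Illustrative values (not the study's exact settings).
rng = np.random.default_rng(42)
dt = 0.1                                             # ms
mu_ext = ou_process(mean=1.5, std=0.5, tau=50.0, dt=dt, n_steps=200_000, rng=rng)
mu_ext_smooth = gaussian_smooth(mu_ext, sigma_t=1.0, dt=dt)
```

The AR(1) update is exact for any step size, so the stationary statistics do not depend on dt; the smoothing step then supplies the differentiability needed by the spec2 and LNdos models.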

The parameter space we explore covers large and small correlation times of μext, strong and weak baseline mean input and input standard deviation σext, and, for each of these combinations, an interval from small to large variation magnitudes ϑμ. The correlation time and ϑμ determine how rapidly and how strongly μext(t) fluctuates.

We apply two performance measures, as in [21]. One is given by Pearson’s correlation coefficient,

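$$\rho(r_N, r) = \frac{\sum_{j=1}^{M}\left(r_N(t_j) - \bar r_N\right)\left(r(t_j) - \bar r\right)}{\sqrt{\sum_{j=1}^{M}\left(r_N(t_j) - \bar r_N\right)^2}\,\sqrt{\sum_{j=1}^{M}\left(r(t_j) - \bar r\right)^2}}, \tag{11}$$

between the (discretely given) spike rates of the aEIF population and each derived model, with time averages $\bar r_N$ and $\bar r$ over a time interval of length tM − t1. For comparison, we also include the correlation coefficient between the aEIF population spike rate and the time-varying mean input, ρ(rN, μext). In addition, to assess absolute differences we calculate the root mean square (RMS) distance,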

$$d_{\mathrm{RMS}}(r_N, r) = \sqrt{\frac{1}{M}\sum_{j=1}^{M}\left(r_N(t_j) - r(t_j)\right)^2}, \tag{12}$$

where M denotes the number of elements of the respective time series (rN, r).
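Both performance measures are straightforward to compute for equally sampled time series; a minimal NumPy sketch follows. The helper `compare`, which discards the initial transient (1 s in the evaluations reported here), is a hypothetical convenience function.

```python
import numpy as np

def pearson_rho(r_N, r):
    """Pearson correlation coefficient of two equally sampled rate series (Eq (11))."""
    dN = r_N - r_N.mean()
    d = r - r.mean()
    return np.sum(dN * d) / np.sqrt(np.sum(dN**2) * np.sum(d**2))

def rms_distance(r_N, r):
    """Root mean square distance of two equally sampled rate series (Eq (12))."""
    return np.sqrt(np.mean((r_N - r) ** 2))

def compare(r_N, r, dt_ms, t_skip_ms=1000.0):
    """Drop the initial transient of length t_skip_ms, then return (rho, d_RMS)."""
    k = int(round(t_skip_ms / dt_ms))
    return pearson_rho(r_N[k:], r[k:]), rms_distance(r_N[k:], r[k:])
```

The two measures are complementary: ρ is insensitive to constant offsets and scaling, which is exactly what dRMS captures.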

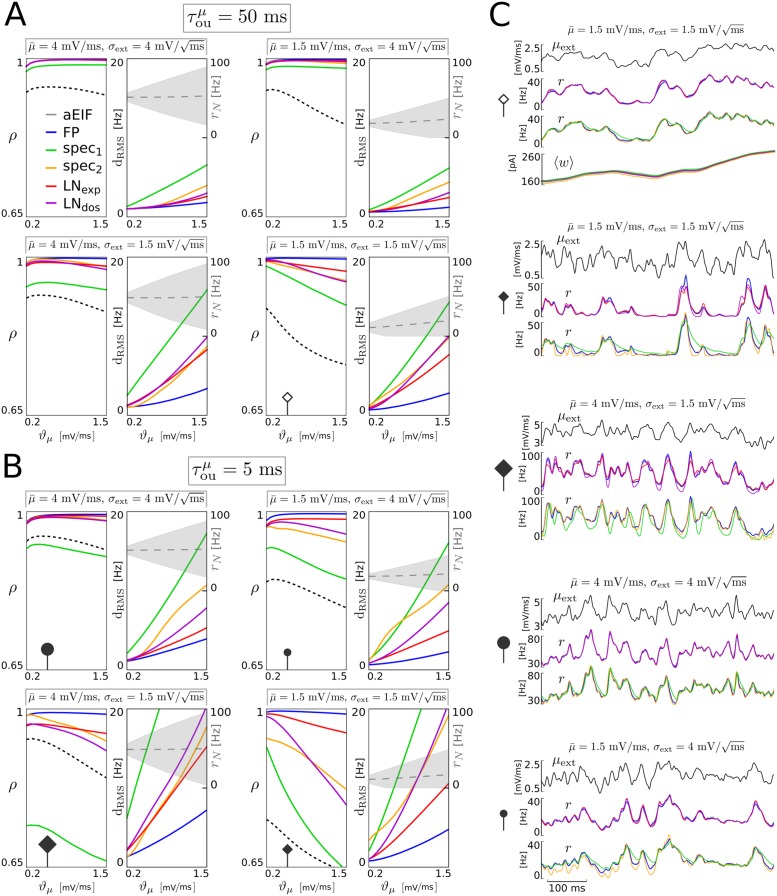

We find that three of the four low-dimensional spike rate models (spec2, LNexp, LNdos) reproduce the spike rate rN of the aEIF neurons very well over the explored parameter space: ρ > 0.95 for the LNexp model and ρ ≳ 0.8 for each of the spec2 and LNdos models, see Fig 2. Only the basic spectral model (spec1) is substantially less accurate. Among the best models, the simplest (LNexp) overall outperforms spec2 and LNdos, in particular for fast and strong mean input variations. In the strongly mean-driven regime, however, the best performing model is spec2.

Fig 2. Reproduction accuracy of the reduced models for variations of the mean input.

Pearson correlation coefficient (ρ) and root mean square distance (dRMS) between the spike rate time series rN(t) of the aEIF population and r(t) of each derived model (FP, spec1, spec2, LNexp, LNdos) for different strengths of the baseline mean input, input standard deviation σext and mean input variation ϑμ, for a large value of the correlation time of μext (A, moderately fast variations) as well as for a smaller value (B, rapid variations). The input-output correlation (between μext and rN) is included as a reference (black dashed lines), and mean ± standard deviation of the population spike rate rN are indicated (gray dashed lines, shaded areas). For each parametrization, activity time series of 60 s duration were generated (from 50,000 aEIF neurons and from each derived model), and the first second of each was omitted to exclude transients, since the initial conditions of the models were not matched. Representative time series examples are shown on the right with parameter values indicated (C), where empty and filled symbols correspond to large and small correlation time, respectively, relating the examples to panels A and B. The adaptation current traces were excluded in all but the first example to allow for a larger number of parameter points.

We observe that the performance of each spike rate model decreases (with a model-specific slope) (i) with increasing variation strength ϑμ beyond a certain (small) value, and (ii) with decreasing correlation time, i.e., faster changes of μext. For small values of ϑμ, fluctuations of rN that are caused by the finite aEIF population size N, and do not depend on the fluctuations of μext, deteriorate the performance measured by ρ (see also [21], p.13 right). This explains why ρ does not increase for any of the models as ϑμ decreases towards zero. The FP model is by far the most accurate spike rate description in terms of both measures, correlation coefficient ρ and RMS distance. This is not surprising because the four low-dimensional models are derived from that (infinite-dimensional) representation. Thus, the performance of the FP system defines an upper bound on the correlation coefficient ρ and a lower bound on the RMS distance for the low-dimensional models.

In detail: for moderately fast changing mean input (large correlation time) the three models spec2, LNexp and LNdos exhibit excellent reproduction performance with ρ > 0.95, and spec1 shows correlation coefficients of at least ρ = 0.9 (Fig 2A), which is substantially better than ρ(rN, μext). The small differences between the three top models are better assessed with the RMS distance measure. For large input variance the two LN models perform best (cf. Fig 2A, top, and for an example, Fig 2C). For weak input variance and large mean (small σext, large baseline mean) the spec2 model outperforms the LN models, unless the variation magnitude ϑμ is very large. For small baseline mean, where transient activity is interleaved with periods of quiescence, the LNexp model performs best, except for weak variations ϑμ, where LNdos is slightly better (see Fig 2A, bottom).

Stronger differences in performance emerge for faster changes of the mean input μext(t) (i.e., for small correlation time), see Fig 2B, and for examples, Fig 2C. The spec1 model again performs worst, with ρ values even below the input/output correlation baseline ρ(rN, μext) for large mean input (cf. Fig 2B, left). The spec1 spike rate typically decays too slowly (cf. Fig 2C). The three better performing models differ as follows: for large input variance and mean (large σext and large baseline mean), where the spike rate response to the input is rather fast (cf. increased ρ(rN, μext)), the performance of all three models in terms of ρ is very high, but the RMS distance measure indicates that LNexp is the most accurate model (cf. Fig 2B, top). For weak mean input LNexp is once again the top model, while LNdos and, especially noticeably, spec2 show a performance decline (see example in Fig 2C). For weak input variance (Fig 2A, bottom), where significant (oscillatory) excursions of the spike rates in response to changes in the mean input can be observed (see also Fig 1), we obtain the following contrast: for large mean drive the spec2 model performs best, except for large variation amplitudes ϑμ, at which LNexp is more accurate. Smaller mean input, on the other hand, corresponds to the most sensitive regime, where periods of quiescence alternate with rapidly increasing and decaying spike rates. The LNexp model shows the most robust and accurate spike rate reproduction in this setting, while LNdos and spec2 each exhibit decreased correlation and larger RMS distances, spec2 even for moderate input variation intensities ϑμ. The slowness approximation underlying the spec2 model likely introduces an error here because the external input changes (on the scale of the small correlation time of μext) are fast compared with the rather slow intrinsic time scale set by the dominant eigenvalue, 1/|Re{λ1}| ≈ 15 ms (cf. visualization of the spectrum in Sect. Spectral models).
Note that for these weak inputs the distribution of the spike rate is rather asymmetric (cf. Fig 2B). Interestingly, the LNdos model performs worse than LNexp for large mean input variations (i.e., large ϑμ) in general, and only slightly better for small input variance and mean input variations that are not too large and fast.

Note that decreasing the Gaussian filter width σt to smaller values, e.g., fractions of a millisecond, can lead to a strong performance decline of the spec2 model because of its explicit dependence on the first and second order time derivatives of the mean input.
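Why a small σt hurts a model that uses second time derivatives can be illustrated with a toy signal: the root mean square of a finite-difference second derivative grows rapidly as the smoothing width shrinks, because differentiation amplifies the high-frequency components that a narrow kernel lets through. The signal below (a slow sinusoid plus white noise) and the chosen widths are assumptions for illustration only, not the OU input used in this study.

```python
import numpy as np

def gaussian_smooth(x, sigma_t, dt):
    """Normalized Gaussian smoothing with edge padding (same length as x)."""
    half = int(np.ceil(4.0 * sigma_t / dt))
    t = np.arange(-half, half + 1) * dt
    kernel = np.exp(-0.5 * (t / sigma_t) ** 2)
    kernel /= kernel.sum()
    return np.convolve(np.pad(x, half, mode="edge"), kernel, mode="valid")

def rms_second_derivative(x, dt):
    """RMS of a finite-difference estimate of d^2x/dt^2."""
    d2 = np.gradient(np.gradient(x, dt), dt)
    return np.sqrt(np.mean(d2 ** 2))

rng = np.random.default_rng(1)
dt = 0.05                                    # ms
t = np.arange(0.0, 2000.0, dt)               # 2 s of toy input
mu = 1.5 + 0.5 * np.sin(2.0 * np.pi * t / 100.0) + 0.2 * rng.standard_normal(t.size)
sizes = {s: rms_second_derivative(gaussian_smooth(mu, s, dt), dt)
         for s in (0.2, 1.0, 2.0)}           # sigma_t in ms
```

The second-derivative magnitude for σt = 0.2 ms exceeds that for σt = 2 ms by orders of magnitude, so any term proportional to it (as in βc of the spec2 model) becomes dominated by high-frequency content.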

Furthermore, Fig 3 shows how the adaptation parameters affect the reproduction performance of the different models. The adaptation time constant τw and the spike-triggered adaptation increment b are varied simultaneously (keeping their product constant) such that the average spike rate and adaptation current, and thus the spiking regime, remain comparable for all parametrizations. As expected, the accuracy of the derived models decreases for faster adaptation current dynamics, due to the adiabatic approximation that relies on sufficiently slow adaptation (cf. Sect. Methods). Interestingly, however, the performance of all reduced models (except spec1) declines only slightly as the adaptation time constant decreases to the value of the membrane time constant (where the assumption of separated time scales underlying the adiabatic approximation is clearly violated). This robustness is particularly pronounced for input with large baseline mean and small noise amplitude σext, cf. Fig 3B.
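Why fixing the product τw b keeps the regime comparable can be seen from the fixed point of the population-averaged adaptation dynamics. As a short worked example, assuming Eq (1) has the form τw d〈w〉/dt = a(〈V〉∞ − Ew) − 〈w〉 + τw b r (our reading of the population-averaged aEIF adaptation equation), setting the time derivative to zero gives:

```latex
0 = \frac{a\,(\langle V\rangle_\infty - E_w) - \langle w\rangle^{*}}{\tau_w} + b\,r^{*}
\quad\Longrightarrow\quad
\langle w\rangle^{*} = a\,(\langle V\rangle_\infty - E_w) + \tau_w\, b\, r^{*}.
```

With τw b = 8 pA s, the spike-triggered contribution is 8 pA s × r*, e.g., 80 pA at r* = 10 Hz, for every (τw, b) pair: b = 40 pA at τw = 200 ms and b = 400 pA at τw = 20 ms. Since 〈V〉∞ and r* themselves depend on 〈w〉 through the effective input, this does not fix the operating point by itself, but it shows that b and τw enter the stationary state only through their product, while τw alone sets the relaxation time.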

Fig 3. Effect of adaptation current timescale on reproduction accuracy.

Performance measures and population spike rate statistics (cf. Fig 2A and 2B) as a function of the adaptation time constant τw, which takes values between 20 ms (equal to the membrane time constant) and 200 ms (used throughout the rest of the study). The spike-triggered adaptation increment b was co-varied (inversely proportional to τw) such that the product τw b = 8 pA s is fixed for all shown parametrizations. The input mean μext(t) fluctuates with a given correlation time and strength ϑμ = 0.54 mV/ms (same value as for the examples in Fig 2C) around a smaller (A) and a larger (B) baseline mean, while the input standard deviation σext is constant. Note that the rightmost parametrization of A corresponds to Fig 2C (top example) and is contained in Fig 2A (bottom right), while that of B is shown in Fig 2A (bottom left).

Performance for variations of the input variance

For perfectly balanced excitatory and inhibitory synaptic coupling, the contribution of presynaptic activity to the mean input μsyn is zero by definition, but the input variance is always increased (linearly) by a presynaptic spike rate, even for a negative synaptic efficacy J (cf. Eq (2)). To assess the performance of the derived models in this scenario, but within the reference setting of an uncoupled population, we consider a constant external mean drive μext and let the variance σ²ext evolve according to a filtered OU process (like that used for the mean input μext in the previous section), parametrized by the mean (baseline variance) and standard deviation ϑσ2 of its stationary normal distribution and by its correlation time; the Gaussian filter standard deviation σt is as before.

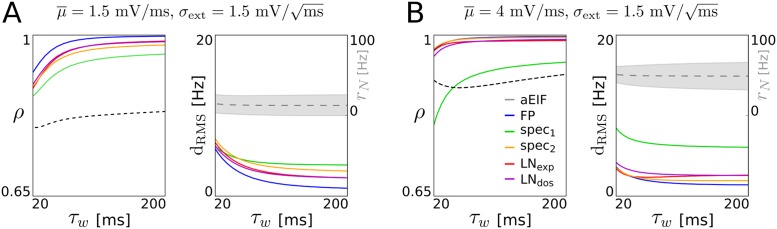

The results for two input parametrizations are shown in Fig 4. For large input mean μext and rapidly varying variance, the spike rate response of the aEIF population is reproduced very well by the FP model and, to a large extent, by the spec2 model (cf. Fig 4A). This may be attributed to the fact that the latter model depends on the first two time derivatives of the input variance σ²ext. The LN models cannot reproduce the rapid spike rate excursions well in this setting, and the spec1 model performs worst, exhibiting spike rate dynamics that lag behind rN(t), which leads to a very small correlation coefficient ρ (below the input/output correlation baseline). For smaller mean input μext and moderately fast varying variance (larger correlation time), the fluctuating aEIF population spike rate is again reproduced well by the FP model, while the rate response of the spec2 model is over-sensitive to changes in the input variance, as indicated by the large RMS distance (see Fig 4B). This effect is even stronger for faster variations, i.e., a smaller correlation time (cf. supplementary visualization S1 Fig). The LN models perform better in this setting, and the spec1 model again performs worst in terms of the correlation coefficient ρ due to its time-lagged spike rate response.

Fig 4. Performance for variations of the input variance.

Time series of population spike rate and mean adaptation current from the different models in response to varying input variance σ²ext(t) for large mean input and rapid variations, μext = 4 mV/ms (A), and for small mean input and moderately fast variations, μext = 1.5 mV/ms (B). The remaining input parameters included ϑσ2 = 2 mV²/ms. For the aEIF population, 〈w〉 ± standard deviation is visualized (red shaded areas). Note that the mean adaptation time series of all models, as well as the spike rates of the cascade based models, lie on top of each other. The indicated Pearson correlation coefficients (with dashed input-output correlation) and root mean square distances were calculated from simulated spike rate time series of 60 s duration, from which the first second was excluded, as the initial conditions of the models were not matched. In A the correlation ρ (but not the distance dRMS) between the spike rates of the spec1 model and the aEIF population is strongly decreased due to a small time lag between the two time series, which is difficult to see in the figure.

It should be noted that the input standard deviation σext (plus a nonnegative contribution in case of recurrent input) cannot be chosen completely freely: it must stay above a lower bound that depends on our parametrization. This is due to theoretical reasons (Fokker-Planck formalism) and practical reasons (numerics of the Fokker-Planck solution and of the calculation of the derived quantities, such as r∞).
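One simple way to respect such a lower bound when driving the models with a fluctuating variance is to clip the variance trace before taking its square root, as sketched below. The bound `sigma_min` is a hypothetical placeholder (the admissible value depends on the parametrization), the baseline variance is illustrative, the Gaussian smoothing step is omitted, and clipping is our assumption rather than the procedure used in this study.

```python
import numpy as np

def sigma_from_variance_ou(var_mean, var_std, tau, dt, n_steps, sigma_min, seed=0):
    """Sample an OU variance trace (exact AR(1) update) and convert it to a
    standard deviation trace that never falls below sigma_min."""
    rng = np.random.default_rng(seed)
    decay = np.exp(-dt / tau)
    kicks = var_std * np.sqrt(1.0 - decay**2) * rng.standard_normal(n_steps)
    var = np.empty(n_steps)
    var[0] = var_mean
    for k in range(1, n_steps):
        var[k] = var_mean + (var[k - 1] - var_mean) * decay + kicks[k]
    # Negative or very small variances are inadmissible for the Fokker-Planck
    # description, so clip from below before taking the square root.
    sigma = np.sqrt(np.maximum(var, sigma_min**2))
    return var, sigma

# Illustrative values: variance in mV^2/ms, sigma in mV/sqrt(ms), times in ms.
var, sigma = sigma_from_variance_ou(var_mean=2.0, var_std=2.0, tau=10.0,
                                    dt=0.1, n_steps=20_000, sigma_min=0.5)
```

Clipping distorts the statistics whenever the OU trace dips below the bound, so in practice one would rather choose the baseline and ϑσ2 such that this happens rarely.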

Oscillations in a recurrent network

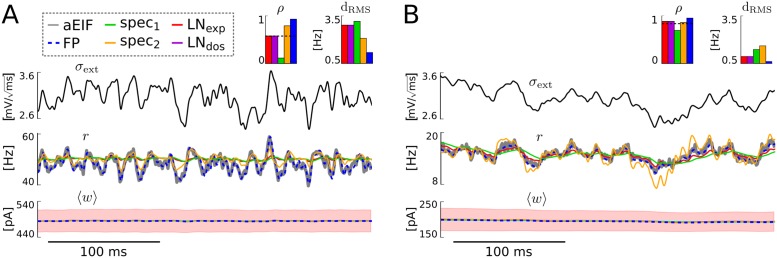

To demonstrate the applicability of the low-dimensional models for network analyses, we consider a recurrently coupled population of aEIF neurons that produces self-sustained network oscillations through the interplay of strong excitatory feedback and spike-triggered adaptation or, alternatively, through delayed recurrent synaptic inhibition [16, 23]. The former oscillation type is quite sensitive to changes in the input, adaptation and especially coupling parameters, because of the current-based synaptic coupling considered here and the lack of (synaptic) inhibition and refractoriness. For example, a small increase in coupling strength can lead to a dramatic (unphysiological) increase in oscillation amplitude because of strong recurrent excitation. This therefore constitutes a challenging setting for evaluating the reduced spike rate models, in particular when the network operates close to a bifurcation.

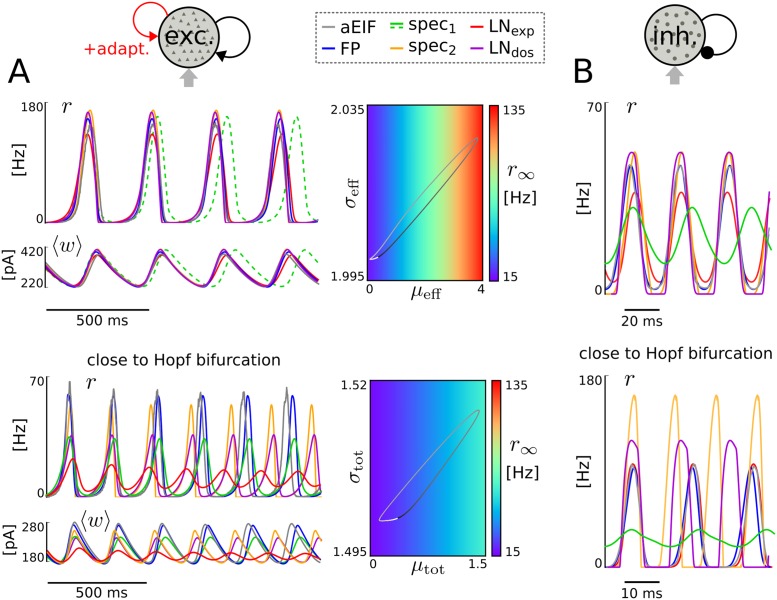

In Fig 5A we present two example parametrizations from a region (in parameter space) that is characterized by stable oscillations. This means that the network exhibits oscillatory spike rate dynamics for constant external input moments μext and σext. The derived models reproduce the limit cycle behavior of the aEIF network surprisingly well, apart from small deviations in frequency and amplitude (FP, spec2, LNdos, LNexp) and a larger frequency mismatch (spec1), see Fig 5A, top. For weaker input moments and increased spike-triggered adaptation strength the network is closer to a Hopf bifurcation [16, 23]. It is therefore not surprising that the differences in oscillation period and amplitude are more prominent in this case (cf. Fig 5A, bottom). The bifurcation point of the LNexp model is slightly shifted, as indicated by the slowly damped oscillatory convergence to a fixed point. This suggests that the bifurcation parameter value of each derived model is close to the true critical parameter value of the aEIF network but may differ slightly in a model-dependent way.

Fig 5. Network-generated oscillations.

Oscillatory population spike rate and mean adaptation current of 50,000 excitatorily coupled aEIF neurons and of each derived model (for constant external input moments), generated by the interplay of recurrent excitation and adaptation current (A) and by delayed recurrent inhibition (B). In addition, the limit cycle is shown in terms of the steady-state spike rate r∞ as a function of the effective input moments μeff, σeff for the LNexp model (A, top) and as a function of the total input moments μtot, σtot for the spec2 model (A, bottom). The phase of the cycle is visualized by a grayscale color code (increasing phase from black to white). The values for the input, adaptation and coupling parameters were μext = 1.5 mV/ms, , a = 3 nS, b = 30 pA (A, top), μext = 1.275 mV/ms, , a = 3 nS, b = 60 pA (A, bottom), K = 1000, J = 0.03 mV, τd = 3 ms (A, both). In B the adaptation was removed (a = b = 0) and the delays were identical, dij = d; the input and coupling parameter values were μext = 1.5 mV/ms, , K = 1000, J = −0.0357 mV, d = 10 ms (top) and μext = 3 mV/ms, , K = 1000, J = −0.087 mV, d = 5 ms (bottom).

The second type of oscillation is generated by delayed synaptic inhibition [22] and does not depend on the (neuronal) inhibition provided by an adaptation current. To demonstrate this independence, the adaptation current was disabled (by setting a = b = 0) for the two examples shown in Fig 5B. As for the previous oscillation type, the low-dimensional models (except spec1) reproduce the spike rate limit cycle of the aEIF network surprisingly well, in particular for weak external input (see Fig 5B, top). For larger external input and stronger inhibition with shorter delay the network operates close to a Hopf bifurcation, leading to larger, model-dependent differences in oscillation amplitude and frequency (Fig 5B, bottom). Note that the intermediate (Fokker-Planck) model reproduces the inhibition-based oscillations very well, which demonstrates the applicability of the underlying mean-field approximation. We would also like to note that enabling the adaptation current only decreases the average spike rates and does not affect the reproduction accuracy.

We would like to emphasize that the preceding comprehensive evaluations for an uncoupled population provide deeper insight into the reproduction performance, also for a recurrent network, than the four examples shown here, as explained in Sect. Performance for variations of the mean input. For example, the (improved) reproduction performance for increased input variance in the uncoupled setting (cf. Fig 2) is informative of the reproduction performance for networks of excitatory and inhibitory neurons that are roughly balanced, i.e., where the overall input mean is rather small compared to the input standard deviation.

Implementation and computational complexity

We have developed efficient implementations of the derived models in the Python programming language, employing the library Numba for just-in-time compilation to machine code [24]. These include (i) the numerical integration of the Fokker-Planck model using an accurate finite volume scheme with implicit time discretization (cf. Sect. Methods), (ii) the parallelized precalculation of the quantities required by the low-dimensional spike rate models, (iii) the time integration of the latter models, and example scripts demonstrating (i)–(iii). The code is available as open source software under a free license at GitHub: https://github.com/neuromethods/fokker-planck-based-spike-rate-models

With regard to computational cost, and summarizing the results for several aEIF network parametrizations, the time required to generate population activity time series with the low-dimensional spike rate models is usually several orders of magnitude shorter than for the numerical simulation of the original aEIF network and a few orders of magnitude shorter than for the numerical solution of the FP model. For example, for a population of 50,000 coupled neurons with 2% connection probability, a single simulation run of 5 s and the same integration time step across the models, the computation times amounted to 1.1–3.6 s for the low-dimensional models (ordered from fast to slow: LNexp, spec1, LNdos, spec2), about 100 s for the FP model and roughly 1500 s for the aEIF network simulation on a dual-core laptop computer. The time difference to the network simulation increases substantially with the number of neurons and connections and with the spiking activity within the network, due to the extensive propagation of synaptic events. Note that the speedup becomes even more pronounced with an increasing number of populations: the runtimes of the FP model and the aEIF network simulation scale linearly, whereas the low-dimensional models show a sublinear runtime increase due to the vectorization of the state variables representing the different populations.
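To make the reported orders of magnitude concrete, the short Python snippet below simply divides the runtimes quoted above. The constant names are illustrative, and the values are the approximate timings from the text, not new measurements.

```python
import math

# Runtimes in seconds as reported in the text (one 5 s run, 50,000 neurons)
T_LOWDIM_FASTEST = 1.1   # LNexp
T_LOWDIM_SLOWEST = 3.6   # spec2
T_FP = 100.0             # Fokker-Planck model (approximate)
T_NETWORK = 1500.0       # aEIF network simulation (approximate)


def speedup(t_reference, t_model):
    """Speedup factor of a model relative to a reference runtime and its
    base-10 order of magnitude."""
    factor = t_reference / t_model
    return factor, math.log10(factor)


for ref_name, t_ref in [("aEIF network", T_NETWORK), ("FP model", T_FP)]:
    for label, t_model in [("fastest", T_LOWDIM_FASTEST), ("slowest", T_LOWDIM_SLOWEST)]:
        factor, magnitude = speedup(t_ref, t_model)
        print(f"{ref_name} vs {label} low-dim model: {factor:.0f}x (10^{magnitude:.1f})")
```

For this particular example the low-dimensional models are thus roughly 400 to 1400 times faster than the network simulation and roughly 28 to 91 times faster than the FP model.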

The derived low-dimensional (ODE) spike rate models are very efficient to integrate, provided that the required input-dependent parameters are available as precalculated look-up quantities. For the grids used in this contribution, the precomputation took 40 min for the cascade models (LNexp, LNdos) and 120 min for the spectral models (spec1, spec2), in both cases on a hexa-core desktop computer. The longer calculation time for the spectral models was due to the finer internal grid for the mean input (see S1 Text).
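Once the look-up quantities are tabulated, each integration step only needs to evaluate them at the current input moments. The sketch below assumes bilinear interpolation on a rectangular (μ, σ) grid; the function name `bilinear_lookup`, the σ grid spacing and the linear stand-in quantity are hypothetical, and the released implementation may interpolate differently.

```python
import numpy as np


def bilinear_lookup(mu_grid, sigma_grid, table, mu, sigma):
    """Bilinear interpolation of table[i, j], precomputed on the grid
    (mu_grid[i], sigma_grid[j]), at the scalar point (mu, sigma).
    Points outside the grid are clamped to its boundary."""
    mu = np.clip(mu, mu_grid[0], mu_grid[-1])
    sigma = np.clip(sigma, sigma_grid[0], sigma_grid[-1])
    # lower-left node of the grid cell containing (mu, sigma)
    i = int(np.clip(np.searchsorted(mu_grid, mu) - 1, 0, len(mu_grid) - 2))
    j = int(np.clip(np.searchsorted(sigma_grid, sigma) - 1, 0, len(sigma_grid) - 2))
    # fractional position inside that cell (0..1 in each direction)
    tx = (mu - mu_grid[i]) / (mu_grid[i + 1] - mu_grid[i])
    ty = (sigma - sigma_grid[j]) / (sigma_grid[j + 1] - sigma_grid[j])
    return ((1 - tx) * (1 - ty) * table[i, j] + tx * (1 - ty) * table[i + 1, j]
            + (1 - tx) * ty * table[i, j + 1] + tx * ty * table[i + 1, j + 1])


# Hypothetical precomputed quantity; a linear stand-in replaces, e.g.,
# a steady-state rate or a filter time constant obtained from the FP model.
mu_grid = np.arange(-1.0, 5.0 + 1e-9, 0.025)    # spacing as in Table 1 (mV/ms)
sigma_grid = np.arange(0.5, 5.0 + 1e-9, 0.1)    # hypothetical spacing
MU, SIG = np.meshgrid(mu_grid, sigma_grid, indexing="ij")
table = 2.0 * MU + 3.0 * SIG

value = bilinear_lookup(mu_grid, sigma_grid, table, 1.2345, 2.345)
```

Bilinear interpolation reproduces a linear stand-in exactly, which makes the example easy to check; for the real quantities the grid resolution controls the interpolation error.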

Note that although the time integration of the spec2 model has a computational cost of the same order as that of the other low-dimensional models, its implementation is more complex because of the many quantities it depends on, cf. Eqs (63)–(66).

Discussion

In this contribution we have developed four low-dimensional models that approximate the spike rate dynamics of coupled aEIF neurons and retain all parameters of the underlying model neurons. These simple spike rate models were derived in two different ways from a Fokker-Planck PDE that describes the evolving membrane voltage distribution in the mean-field limit of large networks and is complemented by an ODE for the population-averaged slow adaptation current. Two of the reduced spike rate models (spec1 and spec2) were obtained by a truncated spectral decomposition of the Fokker-Planck operator, assuming vanishingly slow (for spec1) or moderately slow (for spec2) changes of the input moments. The other two reduced models (LNexp and LNdos) are described by a cascade of linear filters (one for the input mean and another for its standard deviation) and a nonlinearity, which were derived from the Fokker-Planck equation; the filters were subsequently approximated semi-analytically. Our approaches build upon [18–20] as well as [21], and extend those methods to adaptive nonlinear integrate-and-fire neurons that are sparsely coupled with distributed delays (cf. Sect. Methods).

We have compared the different spike rate representations for a range of biologically plausible input statistics and found that three of the reduced models (spec2, LNexp and LNdos) accurately reproduce the spiking activity of the underlying aEIF population, while one model (spec1) is the least accurate. Among the best models, the simplest one (LNexp) was the most robust and, somewhat surprisingly, overall outperformed spec2 and LNdos, especially in the sensitive regime of rapidly changing sub- and suprathreshold mean drive and, in general, for rapid and strong input variations. Unlike the other two models, LNexp did not exhibit exaggerated deflections in that regime. This result is likely due to the importance of the quantitatively correct decay time of the filter for the mean input in the LNexp model, while the violations of the slowness assumptions of the spec2 and LNdos models seem more harmful in this regime. In the strongly mean-driven regime, however, the best performing model was spec2, both for variations of the mean drive (as long as these variations are not too strong and fast) and for variations of the input variance.

We have also demonstrated that the low-dimensional models reproduce well the dynamics of recurrently coupled aEIF populations in terms of asynchronous states (see Fig 1) and spike rate oscillations (cf. Fig 5); mild deviations at critical (bifurcation) parameter values are expected due to the approximate nature of the model reduction.

The computational demands of the low-dimensional models are very modest in comparison to the aEIF network and also to the integration of the Fokker-Planck PDE, for which we have developed a novel finite volume discretization scheme. We would like to emphasize that changing the value of any input, coupling or adaptation current parameter does not require repeating the precomputations. To facilitate the application of the presented models, we have made available implementations that precompute all required quantities and numerically integrate the derived low-dimensional spike rate models as well as the Fokker-Planck equation, together with example (Python) scripts, as open source software.

Since the derived models are formulated in terms of simple ODEs, they allow for convenient linear stability analyses, e.g., based on the eigenvalues of the Jacobian matrix of the respective vector field. In this way network states can be rapidly characterized by quantifying the bifurcation structure of the population dynamics, including regions of the parameter space where multiple fixed points and/or limit cycle attractors coexist. For a characterization of stable network states by numerical continuation and an assessment of their controllability through neuromodulators using the LNexp model, see [23] (ch. 4.2) and [25]. Furthermore, the low-dimensional models are well suited to be employed in large neuronal networks of multiple populations for efficient simulations of population-averaged activity time series. Overall, the LNexp model appears to be a good candidate for that purpose, considering its accuracy and robustness as well as its computational and implementational simplicity.
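As a hedged illustration of such an analysis, the sketch below locates a fixed point, builds the Jacobian by central finite differences and classifies the fixed point from its eigenvalues. It uses a hypothetical two-dimensional toy rate model with adaptation (sigmoidal transfer function, illustrative constants) as a stand-in for the derived models, whose vector fields additionally depend on the precomputed look-up quantities.

```python
import numpy as np

# Hypothetical toy model (NOT one of the derived models): state x = (r, w)
TAU_R, TAU_W = 10.0, 200.0            # time constants (ms), illustrative
C_EXC, BETA, I_EXT = 6.0, 4.0, -2.0   # recurrent gain, adaptation, input


def phi(x):
    """Sigmoidal transfer function (stand-in for a steady-state rate)."""
    return 1.0 / (1.0 + np.exp(-x))


def vector_field(x):
    r, w = x
    dr = (-r + phi(I_EXT + C_EXC * r - w)) / TAU_R
    dw = (-w + BETA * r) / TAU_W
    return np.array([dr, dw])


def fixed_point(lo=0.0, hi=1.0, tol=1e-12):
    """At a fixed point w = BETA*r and r = phi(I_EXT + (C_EXC - BETA)*r);
    solve the scalar equation by bisection (g(lo) > 0 > g(hi) here)."""
    g = lambda r: phi(I_EXT + (C_EXC - BETA) * r) - r
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:
            lo = mid
        else:
            hi = mid
    r = 0.5 * (lo + hi)
    return np.array([r, BETA * r])


def numerical_jacobian(f, x, h=1e-6):
    """Central finite differences; column k holds df/dx_k."""
    jac = np.empty((len(x), len(x)))
    for k in range(len(x)):
        e = np.zeros_like(x)
        e[k] = h
        jac[:, k] = (f(x + e) - f(x - e)) / (2.0 * h)
    return jac


x_star = fixed_point()
eig = np.linalg.eigvals(numerical_jacobian(vector_field, x_star))
stable = bool(np.all(eig.real < 0))
print(x_star, eig, "stable" if stable else "unstable")
```

For the chosen constants the eigenvalues form a complex-conjugate pair with negative real part, i.e., a stable focus with damped oscillatory convergence; a pair crossing the imaginary axis under a parameter change would signal a Hopf bifurcation.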

Extensions

Heterogeneity

We considered a homogeneous population of neurons in the sense that the parameter values are identical across model neurons, except for those of the synaptic input. We thereby assume that neurons with similar dynamical properties can be grouped into populations [3]. Heterogeneity is incorporated by distributed synaptic delays, by sparse random coupling, and by fluctuating external inputs for each neuron. The (reduced) population models further allow for heterogeneous synaptic strengths sampled from a Gaussian distribution, which can be included in a straightforward way [16, 26] (see also Sect. Methods). Distributed values for other parameters (of the isolated model neurons within the same population) are currently not supported.

Multiple populations

The presented mean-field network model can be easily adjusted for multiple populations. In this case we obtain a low-dimensional ODE for each population and the overall synaptic moments for population k become

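$$
\mu_{\mathrm{syn},k}(t) = \mu_{\mathrm{ext},k}(t) + \sum_{l} J_{kl} K_{kl}\, r_{d,kl}(t), \qquad
\sigma^2_{\mathrm{syn},k}(t) = \sigma^2_{\mathrm{ext},k}(t) + \sum_{l} J_{kl}^2 K_{kl}\, r_{d,kl}(t) \tag{13}
$$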

where Jkl is the synaptic strength for the Kkl neurons from population l targeting neurons from population k and rd,kl is the delayed spike rate of population l affecting population k (cf. Eq (2)). For each pair of coupled populations we may consider identical or distributed delays (using distributions from the exponential family) as well as identical or distributed synaptic strengths (sampled from a Gaussian distribution).
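A vectorized evaluation of the moments for all target populations at once could look as follows. The sketch assumes the form μsyn,k = μext,k + Σl Jkl Kkl rd,kl and σ²syn,k = σ²ext,k + Σl J²kl Kkl rd,kl (the multi-population analogue of the single-population moments in the Methods); the function name and the example numbers are hypothetical.

```python
import numpy as np


def synaptic_moments(mu_ext, sigma_ext, J, K, r_d):
    """Overall synaptic moments for every target population k.

    mu_ext, sigma_ext: shape (P,), external moments (mV/ms, mV/sqrt(ms))
    J, K, r_d: shape (P, P), entry [k, l] refers to source l -> target k
      (J in mV, K = number of inputs, r_d = delayed rate in spikes/ms).
    Returns mu_syn (mV/ms) and sigma_syn (mV/sqrt(ms)), each of shape (P,)."""
    mu_syn = mu_ext + np.sum(J * K * r_d, axis=1)
    sigma_syn2 = sigma_ext**2 + np.sum(J**2 * K * r_d, axis=1)
    return mu_syn, np.sqrt(sigma_syn2)


# Hypothetical example: an excitatory (l = 0) and an inhibitory (l = 1) source
mu_ext = np.array([1.5, 1.5])
sigma_ext = np.array([2.0, 2.0])
J = np.array([[0.03, -0.1], [0.03, -0.1]])
K = np.array([[800, 200], [600, 150]])
r_d = np.array([[0.005, 0.01], [0.005, 0.01]])   # 5 Hz and 10 Hz
mu_syn, sigma_syn = synaptic_moments(mu_ext, sigma_ext, J, K, r_d)
```

Storing rd per population pair allows a different delay distribution for each connection type; if all pairs with the same source share one delay distribution, rd reduces to one row vector broadcast over the targets.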

Synaptic coupling

Here we described synaptic interaction by delayed (delta) current pulses with delays sampled from an exponential distribution. This description leads to a fluctuating overall synaptic input current with white noise characteristics. Interestingly, for the mean-field dynamics this setting is very similar to considering exponentially decaying synaptic currents with a decay constant that matches that of the delay distribution, although the overall synaptic input current is a colored noise process in that case; see [27] and, for an intuitive explanation, [28].
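The similarity can be made explicit for the mean input (a short derivation added for illustration, not taken from the cited works): with the exponential delay density pd(τ) = exp(−τ/τd)/τd, the delayed spike rate rd obeys a first-order linear ODE, which is the same equation that governs the mean of an exponentially decaying synaptic current with time constant τd (kernel normalized to unit area) driven by the spike rate r.

$$
\begin{aligned}
r_d(t) &= \int_0^\infty \frac{e^{-\tau/\tau_d}}{\tau_d}\, r(t-\tau)\, d\tau
        = \frac{1}{\tau_d}\int_{-\infty}^{t} e^{-(t-s)/\tau_d}\, r(s)\, ds,\\
\frac{dr_d}{dt} &= \frac{r(t)}{\tau_d} - \frac{1}{\tau_d^2}\int_{-\infty}^{t} e^{-(t-s)/\tau_d}\, r(s)\, ds
 \;=\; \frac{r(t) - r_d(t)}{\tau_d}.
\end{aligned}
$$

Only the mean input coincides in this way; the fluctuations remain white in one case and colored in the other, as stated above.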

A conductance-based model of synaptic coupling can also be considered in principle [16, 29], which results in a multiplicative noise process for the overall synaptic input. This, however, would in general impede the beneficial concept of precalculated “look-up” quantities that are unaffected by the input and coupling parameters.

It should be noted that most current- or conductance-based models of synaptic coupling (including the one considered here) can produce unphysiologically large amounts of synaptic current in case of high presynaptic activity, unless the coupling parameters are carefully tuned. This problem can be solved, for example, by considering a (more realistic) model of synaptic coupling based on [30], from which activity-dependent coupling terms can be derived for the mean-field and reduced population models ([23], ch. 4.2). Using that description ensures robust simulation of population activity time series without having to fine-tune the coupling parameter values, which is particularly useful for multi-population network models. In this contribution, however, we used a basic synaptic coupling model for simplicity, as it has frequently been applied in the mean-field literature.

Input noise process

The Gaussian stochastic process driving the individual neurons could also be substituted by colored noise, which would lead to a Fokker-Planck model with increased dimensionality [31]. However, this would require more complex and computationally expensive numerical schemes not only to solve that model but also for the different dimension reduction approaches.

Slow adaptation

To derive low-dimensional models of population activity we approximated the adaptation current by its population average, justified by its slow dynamics compared to the other time scales of the system. This approximation is equivalent to a first order moment closure method [17]. In case of a faster adaptation time scale the approximation may be improved by considering second and higher order moments [17, 32].

Population size

The mean-field models presented here can well reproduce the dynamics of population-averaged state variables (that is, spike rate, mean membrane voltage, and mean adaptation current) for large populations (N → ∞ in the derivation). Fluctuations of those average variables due to the finite size of neuronal populations, however, are not captured. Hence, it would be interesting to extend the mean-field models so as to reproduce these (so-called) finite size effects, for example, by incorporating an appropriate stochastic process [18] or using concepts from [15].

Cascade approach

For uncoupled EIF populations (without an adaptation current) and constant input standard deviation it has been shown that the LN cascade approximation performs well for physiological ranges of amplitude and time scale for mean input variations [21]. Our results for the cascade models are consistent with [21], but the performance is substantially improved for the sensitive low (baseline) input regime (LNexp and LNdos, also in absence of adaptation), and damped oscillatory behavior (including over- and undershoots) is accounted for by the LNdos model.

To achieve these improvements we semi-analytically fit the linear filters derived from the Fokker-Planck equation using exponential and damped oscillator functions considering a range of input frequencies. The approximation can be further improved by using more complex functions, such as a damped oscillator with two time scales. That, however, can lead to less robustness (i.e., undesired model behavior) for rapid and strong changes of the input moments (cf. Sect. Methods).
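The idea of fitting an exponential filter over a range of input frequencies can be sketched as follows, assuming the target linear response is available as complex values normalized to one at zero frequency. The exponential kernel h(t) = exp(−t/τ)/τ (t ≥ 0) has the transfer function H(f) = 1/(1 + i2πfτ). The synthetic target, the frequency range and the brute-force search over candidate τ values are hypothetical simplifications, not the semi-analytical procedure described in the Methods.

```python
import numpy as np


def exp_filter_transfer(freqs_khz, tau_ms):
    """Transfer function of h(t) = exp(-t/tau)/tau: H(f) = 1/(1 + i 2 pi f tau).
    With f in kHz and tau in ms the product f*tau is dimensionless."""
    return 1.0 / (1.0 + 1j * 2.0 * np.pi * freqs_khz * tau_ms)


def fit_exp_time_constant(freqs_khz, response, taus_ms):
    """Return the candidate tau minimizing the summed squared distance between
    the exponential-filter transfer function and the given complex response."""
    errors = [np.sum(np.abs(exp_filter_transfer(freqs_khz, tau) - response) ** 2)
              for tau in taus_ms]
    return taus_ms[int(np.argmin(errors))]


freqs_khz = np.linspace(0.001, 0.1, 200)        # 1 Hz ... 100 Hz
target = exp_filter_transfer(freqs_khz, 7.3)    # synthetic stand-in response
taus_ms = np.arange(0.1, 50.0, 0.1)             # candidate time constants (ms)
tau_fit = fit_exp_time_constant(freqs_khz, target, taus_ms)
```

With a real target (e.g., a linear response computed from the Fokker-Planck equation) the fit would no longer be exact, and the chosen frequency range and weighting determine which aspects of the response the exponential filter captures best.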

LN cascade models are frequently applied in neuroscience to describe population activity, and the model components are often determined from electrophysiological recordings using established techniques. The methodology presented here contributes to establishing quantitative links between networks of spiking neurons, a mesoscopic description of population activity and recordings at the population level.

Spectral approach

Here we provide a new numerical solver for the eigenvalue problem of the Fokker-Planck operator and its adjoint. This allows us to compute the full spectrum together with the associated eigenfunctions and is applicable to nonlinear integrate-and-fire models, extending [18, 19, 33].

Using that solver, the spec2 model, which is based on two eigenvalues, can be further improved by interpolating its coefficients, Eqs (63)–(66), around the double eigenvalues at the spectrum's real-to-complex transition. This interpolation would effectively smooth the quantities (e.g., preventing the jumps and kinks that are present in the visualization of Sect. Spectral models) and is expected to increase the spike rate reproduction accuracy (particularly for weak mean input) beyond what was reported in this contribution.

The spec2 model can also be extended to yield a third order ODE with everywhere smooth coefficients by considering an additional eigenvalue (cf. Sect. Remarks on the spectrum).

Moreover, the spec2 model, and more generally the whole spectral decomposition approach, can be extended to account for a refractory period in the presence of time-varying total input moments, e.g., by building upon previous attempts [18, 34, 35].

Furthermore, it could be beneficial to explicitly quantify the approximation error due to the slowness assumption that underlies the spec2 model by integration of the (original) spectral representation of the Fokker-Planck model.

Both reduced spectral models allow for a refined description of the mean adaptation current dynamics, cf. Eq (1), by replacing the mean membrane voltage 〈V〉 with its steady-state value 〈V〉∞, using that the membrane voltage distribution is available through the eigenfunctions of the Fokker-Planck operator.

The numerical eigenvalue solver can be extended in a straightforward way to yield quantities that are required by the original spectral representation of the Fokker-Planck model and by the corresponding stochastic equation for finite population size [18].

Alternative derived models

In addition to the work we build upon [18–21] (cf. Sect. Methods), there are a few other approaches to derive spike rate models from populations of spiking neurons. Some methods also result in an ODE system, taking into account (slow) neuronal adaptation [17, 26, 36–38] or disregarding it [39]. The settings differ from the work presented here in that (i) the intrinsic neuronal dynamics are adiabatically neglected [17, 26, 36, 37], (ii) only uncoupled populations [38] or all-to-all connected networks [17, 36, 39] are assumed instead of sparse connectivity, and (iii) (fixed) heterogeneous instead of fluctuating input is considered [39]. Notably, these previous methods yield a rather qualitative agreement with the underlying spiking neuron population activity, except for [39], where an excellent quantitative reproduction for (non-adaptive) quadratic integrate-and-fire oscillators with quenched input randomness is reported.

Other approaches yield mesoscopic representations of population activity in terms of model classes that are substantially less efficient to simulate and more complicated to analyze than low-dimensional ODEs [14–17, 40–42]. The spike rate dynamics in these models has been described (i) by a rather complex ODE system that depends on a stochastic jump process derived for integrate-and-fire neurons without adaptation [40], (ii) by PDEs for recurrently connected aEIF [16] or Izhikevich [17] neurons, (iii) by an integro-PDE with displacement for non-adaptive neurons [42] or (iv) by integral equations that represent the (mean) activity of coupled phenomenological spiking neurons without [41] and with adaptation [14, 15].

Furthermore, the stationary condition of a noise-driven population of adaptive EIF neurons [32, 43, 44] and the first order spike rate response to weak input modulations [43, 44] have been analyzed using the Fokker-Planck equation. Ref. [32] also considered a refined approximation of the (purely spike-triggered) adaptation current including higher order moments.

It may be interesting for future studies to explore ways to extend the presented methods and relax some of the underlying assumptions, in particular, considering (i) the diffusion approximation (via shot noise input, e.g., [45, 46]), (ii) the Poisson assumption (e.g., using the concept from [47] in combination with results from [48]) and (iii) (noise) correlations (see, e.g., [49]).

Methods

Here we present all models in detail, namely the aEIF network (ground truth), the mean-field FP system (intermediate model) and the low-dimensional models spec1, spec2, LNexp and LNdos, including step-by-step derivations and essential information on the respective numerical solution methods. An implementation of these models in Python is available at GitHub: https://github.com/neuromethods/fokker-planck-based-spike-rate-models

Network model

We consider a large (homogeneous) population of N synaptically coupled aEIF model neurons [5]. Specifically, for each neuron (i = 1, …, N), the dynamics of the membrane voltage Vi is described by

$$
C\,\frac{dV_i}{dt} = I_L(V_i) + I_{\exp}(V_i) - w_i + I_{\mathrm{syn},i}(t), \tag{14}
$$

where the capacitive current through the membrane with capacitance C equals the sum of three ionic currents and the synaptic current Isyn,i. The ionic currents consist of a linear leak current IL(Vi) = −gL(Vi − EL) with conductance gL and reversal potential EL, a nonlinear term Iexp(Vi) = gL ΔT exp((Vi − VT)/ΔT) that approximates the rapidly increasing Na+ current at spike initiation with threshold slope factor ΔT and effective threshold voltage VT, and the adaptation current wi which reflects a slowly deactivating K+ current. The adaptation current evolves according to

$$
\tau_w\,\frac{dw_i}{dt} = a\,(V_i - E_w) - w_i, \tag{15}
$$

with adaptation time constant τw. Its strength depends on the subthreshold membrane voltage via the conductance a, and Ew denotes its reversal potential. When Vi increases beyond VT, it diverges to infinity in finite time due to the exponentially increasing current Iexp(Vi), which defines a spike. In practice, however, the spike is said to occur when Vi reaches a given value Vs, the spike voltage. The downswing of the spike is not explicitly modeled; instead, when Vi ≥ Vs, the membrane voltage Vi is instantaneously reset to a lower value Vr. At the same time, the adaptation current wi is incremented by the value of the parameter b, which implements suprathreshold (spike-dependent) activation of the adaptation current.

Immediately after the reset, Vi and wi are clamped (i.e., remain constant) for a short refractory period Tref, and subsequently governed again by Eqs (14) and (15). At the end of the Methods section we describe how (optionally) a spike shape can be included in the aEIF model, together with the associated small changes for the models derived from it.

To complete the network model the synaptic current in Eq (14) needs to be specified: for each cell it is given by the sum of recurrent and external input, Isyn,i = Irec,i(t) + Iext,i(t). Recurrent synaptic input is received from K other neurons of the network, that are connected in a sparse (K ≪ N) and uniformly random way, and is modeled by

$$
I_{\mathrm{rec},i}(t) = C \sum_{j=1}^{K} J_{ij} \sum_{t_j} \delta(t - t_j - d_{ij}), \tag{16}
$$

where δ denotes the Dirac delta function. Every spike of one of the K presynaptic neurons with indices j and spike times tj causes a postsynaptic membrane voltage jump of size Jij. The coupling strength is positive (negative) for excitation (inhibition) and of small magnitude. Here it is chosen to be constant, i.e., Jij = J. Each of these membrane voltage deflections occurs after a time delay dij that takes into account (axonal and dendritic) spike propagation times and is sampled (independently) from a probability distribution pd. In this work we use exponentially distributed delays, i.e., pd(τ) = exp(−τ/τd)/τd (for τ ≥ 0) with mean delay τd.

The second type of synaptic input is a fluctuating current generated from network-external neurons,

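$$
I_{\mathrm{ext},i}(t) = C\left[\mu_{\mathrm{ext}}(t) + \sigma_{\mathrm{ext}}(t)\,\xi_{\mathrm{ext},i}(t)\right], \tag{17}
$$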

with time-varying moments μext and σext, and unit Gaussian white noise process ξext,i. The latter is uncorrelated with that of other neurons j ≠ i, i.e., 〈ξext,i(t)ξext,j(t + τ)〉 = δ(τ)δij, where 〈·〉 denotes expectation (w.r.t. the joint ensemble of noise realizations at times t and t + τ) and δij is the Kronecker delta. This external current, for example, accurately approximates the input generated by a large number of independent Poisson neurons that produce instantaneous postsynaptic potentials of small magnitude, cf. [48].

The spike rate rN of the network is defined as the population-averaged number of emitted spikes per time interval [t, t + ΔT],

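$$
r_N(t) = \frac{1}{N\,\Delta T} \int_t^{t+\Delta T} \sum_{i=1}^{N} \sum_{t_i} \delta(s - t_i)\, ds, \tag{18}
$$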

where the interval size ΔT is in practice chosen small enough to capture the dynamical structure and large enough to yield a reasonably smooth time evolution for a finite network, i.e., N < ∞.
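Binning spike times into the population rate of Eq (18) takes only a few lines; the sketch below is a hypothetical helper (not the BRIAN2 monitor used for the simulations) with bin width ΔT = 1 ms as in Table 1.

```python
import numpy as np


def population_rate(spike_times_ms, n_neurons, t_end_ms, bin_ms=1.0):
    """Population spike rate as in Eq (18): spikes of all N neurons in each
    bin [t, t + bin_ms) (numpy's last bin also includes its right edge),
    divided by N * bin_ms. Returns bin start times (ms) and the rate in
    spikes/ms per neuron (multiply by 1000 for Hz)."""
    edges = np.arange(0.0, t_end_ms + bin_ms, bin_ms)
    counts, _ = np.histogram(spike_times_ms, bins=edges)
    return edges[:-1], counts / (n_neurons * bin_ms)


# Toy example: 2 neurons, 3 ms, 1 ms bins
t_bins, rate = population_rate(np.array([0.2, 0.5, 1.7, 2.1, 2.2, 2.9]), 2, 3.0)
```

A larger bin width smooths the estimate for finite N at the cost of temporal resolution, which is the trade-off described above.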

We chose values for the neuron model parameters to describe cortical pyramidal cells, which exhibit “regular spiking” behavior and spike frequency adaptation [7, 50, 51]. For the complete parameter specification see Table 1.

Table 1. Parameter values used throughout the study.

| Name | Symbol | Value |
|---|---|---|
| Network model | | |
| Number of neurons | N | 50,000 |
| Membrane capacitance | C | 200 pF |
| Leak conductance | gL | 10 nS |
| Leak reversal potential | EL | −65 mV |
| Threshold slope factor | ΔT | 1.5 mV |
| Threshold voltage | VT | −50 mV |
| Spike voltage | Vs | −40 mV |
| Reset voltage | Vr | −70 mV |
| Subthreshold adaptation conductance¹ | a | 4 nS |
| Spike-triggered adaptation increment¹ | b | 40 pA |
| Adaptation reversal potential | Ew | −80 mV |
| Adaptation time constant | τw | 200 ms |
| Refractory period² | Tref | 0 ms |
| Gaussian filter width for external input | σt | 1 ms |
| Discretization time step | Δt | 0.05 ms |
| Spike rate estimation bin width | ΔT | 1 ms |
| Fokker-Planck model | | |
| Membrane voltage lower bound | Vlb | −200 mV |
| Finite-volume membrane voltage spacing | ΔV | 0.028 mV |
| Discretization time step | Δt | 0.05 ms |
| Low-dimensional models | | |
| Discretization time step | Δt | 0.01 ms |
| Membrane voltage spacing³ | ΔV | 0.01 mV |
| Spacing of mean input³ | Δμ | 0.025 mV/ms |
| Spacing of input standard deviation³ | Δσ | |

¹If not specified otherwise.

²A nonzero refractory period is not supported by the spec2 model.

³Parameters for precalculation of model quantities (before simulation).

All network simulations were performed using the Python software BRIAN2 [52, 53] with C++ code generation enabled for efficiency. The aEIF model Eqs (14) and (15) were discretized using the Euler-Maruyama method with equidistant time step Δt and initialized with wi(0) = 0 and Vi(0) sampled independently from a Gaussian initial distribution p0(V) with mean Vr − δV and standard deviation δV/2, where δV = VT − Vr. Note that the models derived in the following sections do not depend on this particular initial density shape but allow for an arbitrary density function p0.
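For readers without BRIAN2, the scheme just described can be sketched in plain NumPy for an uncoupled population (no recurrent input) driven by the external current of Eq (17), Iext,i = C[μext + σext ξext,i]. This is not the simulation code used in the study; Tref = 0 as in Table 1, and the function name, population size and duration are hypothetical.

```python
import numpy as np

# Table 1 values; units: mV, ms, pF, nS, pA (nS * mV = pA, pA / pF = mV/ms)
C, G_L, E_L, DELTA_T, V_T = 200.0, 10.0, -65.0, 1.5, -50.0
V_S, V_R, A, B, E_W, TAU_W = -40.0, -70.0, 4.0, 40.0, -80.0, 200.0


def simulate_uncoupled_aeif(mu_ext, sigma_ext, n=1000, t_end=500.0, dt=0.05, seed=0):
    """Euler-Maruyama integration of Eqs (14)-(15) for n uncoupled aEIF neurons
    with I_syn = I_ext = C*(mu_ext + sigma_ext*xi) and T_ref = 0.
    mu_ext in mV/ms, sigma_ext in mV/sqrt(ms).
    Returns spike times (ms), spiking neuron indices and final w (pA)."""
    rng = np.random.default_rng(seed)
    delta_v = V_T - V_R
    # Gaussian initial density p0: mean V_r - deltaV, std deltaV/2; w(0) = 0
    v = rng.normal(V_R - delta_v, delta_v / 2.0, size=n)
    w = np.zeros(n)
    spike_times, spike_ids = [], []
    for step in range(int(round(t_end / dt))):
        i_ion = -G_L * (v - E_L) + G_L * DELTA_T * np.exp((v - V_T) / DELTA_T)
        v_new = (v + dt * ((i_ion - w) / C + mu_ext)
                 + sigma_ext * np.sqrt(dt) * rng.standard_normal(n))
        w = w + dt * (A * (v - E_W) - w) / TAU_W   # uses V at the old time step
        v = v_new
        fired = np.flatnonzero(v >= V_S)
        if fired.size:
            spike_times.extend([(step + 1) * dt] * fired.size)
            spike_ids.extend(fired.tolist())
            v[fired] = V_R   # reset
            w[fired] += B    # spike-triggered adaptation increment
    return np.array(spike_times), np.array(spike_ids), w


spike_t, spike_i, w_end = simulate_uncoupled_aeif(mu_ext=4.0, sigma_ext=1.0,
                                                  n=100, t_end=100.0)
```

Because spikes are detected only at the discrete time points, the threshold crossing is resolved with a precision of Δt; the steep exponential current makes the overshoot beyond Vs within one step small for Δt = 0.05 ms.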

Fokker-Planck system

Adiabatic approximation

The time scales of the (slow) K+ channel kinetics, which are effectively described by the adaptation current wi, cf. Eq (15), are typically much larger than those of the faster membrane voltage dynamics modeled by Eq (14), i.e., τw ≫ C/gL [54–57] (here 200 ms vs 20 ms, cf. Table 1). This observation justifies replacing the individual adaptation current wi in Eq (14) by its population average, 〈w〉N = (1/N) Σi wi, in order to reduce computational demands and enable further analysis. The mean adaptation current is then governed by [16, 26, 48, 58]

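$$
\frac{d\langle w\rangle_N}{dt} = \frac{a\,(\langle V\rangle_N - E_w) - \langle w\rangle_N}{\tau_w} + b\, r_N(t), \tag{19}
$$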

where 〈V〉N denotes the time-varying population average of the membrane voltage of non-refractory neurons.

The dynamics of the population-averaged adaptation current reflecting the non-refractory proportion of neurons are well captured by Eq (19) as long as Tref is small compared to τw. In this (physiologically plausible) case 〈w〉N from Eq (19) can be considered equal to the average adaptation current over the refractory proportion of neurons [16, 48].
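Because Eq (15) is linear in Vi and wi, averaging it over the population and adding the increments b for the spikes emitted per unit time yields τw d〈w〉N/dt = a(〈V〉N − Ew) − 〈w〉N + b τw rN(t) without further approximation; the approximation lies only in replacing wi by 〈w〉N in Eq (14). The hedged sketch below integrates this equation with forward Euler for given time series of 〈V〉N and rN; the function name and example values are hypothetical.

```python
import numpy as np


def integrate_mean_adaptation(r, v_mean, w0=0.0, a=4.0, b=40.0, e_w=-80.0,
                              tau_w=200.0, dt=0.01):
    """Forward-Euler integration of
        tau_w d<w>/dt = a(<V> - E_w) - <w> + b tau_w r(t),
    with r in spikes/ms, v_mean in mV, a in nS, b and w in pA, times in ms.
    r and v_mean are sampled with step dt; returns <w> at len(r) + 1 times."""
    w = np.empty(len(r) + 1)
    w[0] = w0
    for k in range(len(r)):
        w[k + 1] = w[k] + dt * ((a * (v_mean[k] - e_w) - w[k]) / tau_w + b * r[k])
    return w


n_steps = 20000                                   # 2 s at dt = 0.1 ms
w_trace = integrate_mean_adaptation(np.full(n_steps, 0.01),    # 10 Hz
                                    np.full(n_steps, -60.0), dt=0.1)
```

For constant inputs the solution relaxes with time constant τw to 〈w〉∞ = a(〈V〉 − Ew) + b τw r, i.e., 80 pA + 80 pA = 160 pA for 〈V〉 = −60 mV and r = 10 Hz with the Table 1 values of a, b, Ew and τw.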

Mean field limit

For large networks (N → ∞) the recurrent input can be approximated by a mean part with additive fluctuations, Irec,i(t) ≈ C[J K rd(t) + J √(K rd(t)) ξrec,i(t)], with delayed spike rate

$$
r_d(t) = \int_0^\infty p_d(\tau)\, r(t - \tau)\, d\tau, \tag{20}
$$

i.e., the spike rate convolved with the delay distribution, and unit white Gaussian noise process ξrec,i that is uncorrelated to that of any other neuron [16, 18, 26, 34].
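For the exponential delay density pd(τ) = exp(−τ/τd)/τd the convolution can be replaced by the ODE τd drd/dt = r − rd, so no spike rate history needs to be stored. The sketch below compares both computations for a step in the rate; the function names are hypothetical, and the convolution uses a simple left Riemann sum, whose error is of order Δt/τd.

```python
import numpy as np


def delayed_rate_convolution(r, dt, tau_d):
    """r_d(t) = int_0^inf p_d(tau) r(t - tau) dtau for p_d(tau) = exp(-tau/tau_d)/tau_d,
    as a causal discrete convolution (left Riemann sum, r = 0 for t < 0)."""
    kernel = np.exp(-np.arange(len(r)) * dt / tau_d) / tau_d
    return np.convolve(r, kernel)[: len(r)] * dt


def delayed_rate_ode(r, dt, tau_d):
    """Same quantity from tau_d dr_d/dt = r - r_d, using the exact update for
    an input r that is constant over each time step."""
    decay = np.exp(-dt / tau_d)
    r_d = np.zeros(len(r))
    for k in range(1, len(r)):
        r_d[k] = r_d[k - 1] * decay + (1.0 - decay) * r[k - 1]
    return r_d


dt, tau_d = 0.01, 3.0                    # ms; tau_d as in Fig 5A
t = np.arange(5000) * dt
r_step = np.ones_like(t)                 # rate switched on at t = 0 (1 spike/ms)
exact = 1.0 - np.exp(-t / tau_d)         # analytic r_d for the step input
r_d_conv = delayed_rate_convolution(r_step, dt, tau_d)
r_d_ode = delayed_rate_ode(r_step, dt, tau_d)
```

The ODE form needs one state variable per delay distribution, whereas the convolution grows with the length of the stored rate history; other delay densities generally do not admit such a simple reduction.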

This step is valid under the assumptions (i) that there are sufficiently many incoming synaptic connections (K ≫ 1) with weights |Jij| small compared with VT − Vr, together with sufficient presynaptic activity (diffusion approximation), (ii) that neuronal spike trains can be approximated by independent Poisson processes (Poisson assumption), and (iii) that the correlations between the fluctuations of synaptic inputs to different neurons vanish (mean-field limit). The latter assumption is fulfilled for sparse and uniformly random synaptic connectivity, but also when synaptic strengths Jij and delays dij are independently distributed (in case of less sparse or random connections) [18].

This approximation of the recurrent input allows us to replace the overall synaptic current in Eq (14) by Isyn,i = C[μsyn(t, rd) + σsyn(t, rd)ξi(t)] with overall synaptic moments

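$$
\mu_{\mathrm{syn}}(t, r_d) = \mu_{\mathrm{ext}}(t) + J K\, r_d(t), \qquad
\sigma^2_{\mathrm{syn}}(t, r_d) = \sigma^2_{\mathrm{ext}}(t) + J^2 K\, r_d(t), \tag{21}
$$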

and an (overall) unit Gaussian white noise ξi that is uncorrelated with that of any other neuron. Here we have used that the external current Iext,i and the recurrent synaptic current Irec,i are independent of each other.

The resulting mean-field dynamics of the membrane voltage is given by

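$$
\frac{dV_i}{dt} = \frac{I_L(V_i) + I_{\exp}(V_i) - \langle w\rangle}{C} + \mu_{\mathrm{syn}}(t, r_d) + \sigma_{\mathrm{syn}}(t, r_d)\, \xi_i(t), \tag{22}
$$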

and corresponds to a McKean-Vlasov type of equation with distributed delays [59] and a discontinuity due to the reset mechanism [60], which complements the dynamics of Vi as before. The population-averaged adaptation current 〈w〉 = limN → ∞〈w〉N is governed by

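$$
\frac{d\langle w\rangle}{dt} = \frac{a\,(\langle V\rangle - E_w) - \langle w\rangle}{\tau_w} + b\, r(t), \tag{23}
$$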

with mean membrane voltage (of non-refractory neurons) 〈V〉 = limN→∞〈V〉N and spike rate r = limN→∞,ΔT→0 rN(t).

Remarks: Instead of exponentially distributed synaptic delays we may also consider other continuous densities pd, identical delays, pd(τ) = δ(τ − d) with d > 0, or no delays at all, pd(τ) = δ(τ). Instead of identical synaptic strengths one may also consider strengths Jij drawn independently from a normal distribution with mean Jm and variance Jv, in which case the overall synaptic moments become μsyn = μext(t) + JmKrd(t) and σ²syn = σ²ext(t) + (Jm² + Jv)Krd(t), cf. [16, 26].

Continuity equation

In the membrane voltage evolution, Eq (22), individual neurons are exchangeable, as they are described by the same stochastic equations and are coupled to each other exclusively through the (delayed) spike rate via the overall synaptic moments μsyn and σ²syn. Therefore, the adiabatic and mean-field approximations allow us to represent the collective dynamics of a large network by a (1+1-dimensional) Fokker-Planck equation [16, 18, 26, 34],

$$\frac{\partial p(V,t)}{\partial t} + \frac{\partial q_p(V,t)}{\partial V} = 0 \tag{24}$$

which describes (in continuity form) the evolution of the probability density p(V, t) of finding a neuron in state V at time t. The probability flux is given by

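$$q_p(V,t) = \left[\frac{I_L(V) + I_{\exp}(V)}{C} + \mu_{\mathrm{tot}}(t)\right] p(V,t) - \frac{\sigma_{\mathrm{tot}}^2(t)}{2}\,\frac{\partial p(V,t)}{\partial V} \tag{25}$$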

with total input mean and standard deviation,

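$$\mu_{\mathrm{tot}}(t, r_d, \langle w\rangle) = \mu_{\mathrm{syn}}(t, r_d) - \frac{\langle w\rangle(t)}{C} \tag{26}$$

$$\sigma_{\mathrm{tot}}(t, r_d) = \sigma_{\mathrm{syn}}(t, r_d) \tag{27}$$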

Note that the mean adaptation current (simply) subtracts from the synaptic mean in the drift term, cf. Eq (22).

The mean adaptation current evolves according to Eq (23) with time-dependent mean membrane voltage (of the non-refractory neurons)

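$$\langle V\rangle(t) = \frac{\int_{-\infty}^{V_s} V\, p(V,t)\, dV}{\int_{-\infty}^{V_s} p(V,t)\, dV} \tag{28}$$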

The spike rate r is obtained by the probability flux through Vs,

$$r(t) = q_p(V_s, t) \tag{29}$$

To account for the reset condition of the aEIF neuron dynamics and to ensure that probability mass is conserved, Eq (24) is complemented by the reinjection condition,

$$q_p(V_r^{+}, t) - q_p(V_r^{-}, t) = r(t - T_{\mathrm{ref}}) \tag{30}$$

where Vr⁻ and Vr⁺ denote the left- and right-sided limits at the reset voltage Vr, by an absorbing boundary at Vs,

$$p(V_s, t) = 0 \tag{31}$$

and a natural (reflecting) boundary condition,

$$\lim_{V \to -\infty} q_p(V, t) = 0 \tag{32}$$

Together with the initial membrane voltage distribution p(V, 0) = p0(V) and the initial mean adaptation current 〈w〉(0) = 0, the Fokker-Planck mean-field model is now completely specified.

Note that p(V, t) only reflects the proportion of neurons that are not refractory at time t, given by $P(t) = \int_{-\infty}^{V_s} p(V,t)\,dV$ (< 1 for Tref > 0 and r(t) > 0). The total probability density that the membrane voltage is V at time t is given by p(V, t) + pref(V, t) with refractory density pref(V, t) = (1 − P(t)) δ(V − Vr). At the end of the Methods section we describe how an (optional) spike shape extension for the aEIF model changes the calculation of pref and 〈V〉.

In practice we consider a finite reflecting lower barrier Vlb instead of −∞, both for the numerical solution (next section) and for the low-dimensional approximations of the Fokker-Planck PDE (cf. sections below). Vlb is chosen sufficiently small so as not to distort the free diffusion of the membrane voltage for values below the reset, i.e., Vlb ≪ Vr. The density p(V, t) is then supported on [Vlb, Vs] for each time t, and in all expressions above V → −∞ is replaced by Vlb.
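On the truncated domain [Vlb, Vs], the proportion P(t) of non-refractory neurons and their mean membrane voltage 〈V〉, Eq (28), reduce to sums once p(V, t) is represented by cell averages on an equidistant grid (as in the next section). A minimal sketch, assuming midpoint quadrature and taking 〈V〉 as the mean over non-refractory neurons (i.e., normalized by P(t)); the helper name is hypothetical:

```python
import numpy as np


def nonrefractory_stats(p, V_lb, V_s):
    """P(t), <V> and refractory mass 1 - P(t) from cell averages p_m on [V_lb, V_s]."""
    N_V = p.size
    dV = (V_s - V_lb) / N_V
    V_centers = V_lb + dV * (np.arange(N_V) + 0.5)
    P = dV * p.sum()                          # mass of non-refractory neurons
    V_mean = dV * np.dot(V_centers, p) / P    # mean over non-refractory neurons only
    return P, V_mean, 1.0 - P                 # last entry: weight of p_ref at V_r


# uniform density on [-70, -50] mV holding 90% of the population
V_lb, V_s, N_V = -100.0, -40.0, 600
dV = (V_s - V_lb) / N_V
centers = V_lb + dV * (np.arange(N_V) + 0.5)
p = np.where((centers > -70.0) & (centers < -50.0), 0.9 / 20.0, 0.0)
P, V_mean, refractory_mass = nonrefractory_stats(p, V_lb, V_s)
```

Here P = 0.9, 〈V〉 = −60 mV, and the remaining 10% of the population would sit in pref at Vr.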

Finite volume discretization

In this work we focus on low-dimensional approximations of the FP model. To obtain a reference for the reduced models it is, however, valuable to solve the (full) FP system, Eqs (24)–(32). Here we outline an accurate and robust method of solution that exploits the linear form of the FP model, in contrast to previously described numerical schemes [61, 62], which both require rather small time steps due to the steeply increasing exponential current Iexp in the flux qp close to the spike voltage Vs.

We first discretize the (finite) domain [Vlb, Vs] into NV equidistant grid cells with centers Vm (m = 1, …, NV) that satisfy V1 < V2 < ⋯ < VNV, where $V_{1/2} = V_{\mathrm{lb}}$ and $V_{N_V+1/2} = V_s$ are the outermost cell borders. Within each cell the numerical approximation of p(V, t) is assumed to be constant and corresponds to the average value denoted by p(Vm, t). Integrating Eq (24) combined with Eq (30) over the volume of cell m and applying the divergence theorem yields

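$$\frac{d}{dt}\, p(V_m, t) = \frac{q_p(V_{m-1/2}, t) - q_p(V_{m+1/2}, t)}{\Delta V} + \delta_{m, m_r}\,\frac{r(t - T_{\mathrm{ref}})}{\Delta V} \tag{33}$$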

where ΔV is the grid spacing and mr corresponds to the index of the cell that contains the reset voltage Vr. To solve Eq (33) forward in time, the fluxes at the borders of each cell need to be approximated. Since the Fokker-Planck PDE belongs to the class of drift-diffusion equations, this can be accurately achieved by the first-order Scharfetter-Gummel flux [63, 64],

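$$q_p(V_{m+1/2}, t) \approx v_{m+1/2}\, \frac{p(V_m, t) - p(V_{m+1}, t)\, \exp\!\left(-v_{m+1/2}\,\Delta V / D\right)}{1 - \exp\!\left(-v_{m+1/2}\,\Delta V / D\right)} \tag{34}$$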

where $v_{m+1/2} = [I_L(V_{m+1/2}) + I_{\exp}(V_{m+1/2})]/C + \mu_{\mathrm{tot}}(t)$ and $D = \sigma_{\mathrm{tot}}^2(t)/2$ denote the drift and diffusion coefficients, respectively (cf. Eq (25)). This exponentially fitted scheme [64] is globally first-order convergent [65] and yields, for large drifts, $|v_{m+1/2}|\,\Delta V / D \gg 1$, the upwind flux, sharing its stability properties. For vanishing drifts, on the other hand, the centered difference method is recovered [64], which leads to more accurate solutions than the upwind scheme in regimes of strong diffusion.
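The Scharfetter-Gummel flux, Eq (34), can be evaluated stably for any drift by writing it with the Bernoulli function B(x) = x/(eˣ − 1): with a = vΔV/D one has v/(1 − e⁻ᵃ) = (D/ΔV)B(−a) and v e⁻ᵃ/(1 − e⁻ᵃ) = (D/ΔV)B(a). The sketch below uses function names of our own choosing and illustrates the two limits mentioned above:

```python
import numpy as np


def bernoulli(x):
    """B(x) = x / (exp(x) - 1) with the continuous extension B(0) = 1."""
    x = np.asarray(x, dtype=float)
    small = np.abs(x) < 1e-12
    safe = np.where(small, 1.0, x)                  # avoid 0 / 0
    return np.where(small, 1.0, safe / np.expm1(safe))


def sg_flux(p_left, p_right, v, D, dV):
    """Scharfetter-Gummel flux through the border between cells m and m+1.

    Identical to v (p_left - p_right e^{-a}) / (1 - e^{-a}) with a = v dV / D, but written
    with the Bernoulli function so that v = 0 and large |v| are evaluated stably.
    """
    a = v * dV / D                                  # cell Peclet number
    return (D / dV) * (bernoulli(-a) * p_left - bernoulli(a) * p_right)


print(sg_flux(1.0, 2.0, v=100.0, D=0.5, dV=0.1))   # strong drift: close to the upwind value v * p_left
print(sg_flux(1.0, 2.0, v=0.0, D=0.5, dV=0.1))     # no drift: central difference D (p_left - p_right) / dV
```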

For the time discretization we rewrite Eq (33) (with Eq (34)) in vectorized form and approximate the time derivative by a first-order backward difference to ensure numerical stability. This yields, in each time step of length Δt, a linear system for the values pn+1 of the (discretized) probability density at tn+1, given the values pn at the previous time step tn and the spike rate at time tn+1−nref, for which the refractory period has just passed,

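$$\left(\mathbf{I} - \Delta t\, \mathbf{G}^n\right) \mathbf{p}^{n+1} = \mathbf{p}^n + \Delta t\, r^{n+1-n_{\mathrm{ref}}}\, \mathbf{c} \tag{35}$$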

with vector elements $p^n_m = p(V_m, t_n)$, m = 1, …, NV, and $c_m = \delta_{m, m_r}/\Delta V$. The refractory period in time steps is given by nref = ⌈Tref/Δt⌉, where the brackets denote the ceiling function, and I is the identity matrix. This linear equation can be efficiently solved with runtime complexity O(NV) due to the tridiagonal structure of Gn, which contains the discretization of the membrane voltage (cf. Eqs (33) and (34)), including the absorbing and reflecting boundary conditions (Eqs (31) and (32)). For details we refer to S1 Text.

In this representation the spike rate, Eq (29), is obtained by evaluating the Scharfetter-Gummel flux, Eq (34), at the spike voltage Vs. To take the absorbing boundary condition, Eq (31), into account, we introduce an auxiliary ghost cell [66] with center VNV+1 and value p(VNV+1, t) = −p(VNV, t), such that the linearly interpolated density vanishes at Vs. This yields

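$$r^{n+1} = v_{N_V+1/2}\; \frac{1 + \exp\!\left(-v_{N_V+1/2}\,\Delta V / D\right)}{1 - \exp\!\left(-v_{N_V+1/2}\,\Delta V / D\right)}\; p^{n+1}_{N_V} \tag{36}$$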

where the drift and diffusion coefficients, $v_{N_V+1/2}$ and D, are evaluated at tn. The mean membrane voltage (of non-refractory neurons), Eq (28), used for the dynamics of the mean adaptation current, Eq (23), is calculated by $\langle V\rangle^{n+1} = \sum_m V_m\, p^{n+1}_m \big/ \sum_m p^{n+1}_m$.
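With the ghost value p(VNV+1) = −p(VNV), the Scharfetter-Gummel flux through Vs collapses to a single coefficient times the density in the last cell, which is the content of Eq (36). A small sketch of this rate evaluation, again writing the exponentials through the Bernoulli function B(x) = x/(eˣ − 1); the function names are hypothetical:

```python
import numpy as np


def bernoulli(x):
    """B(x) = x / (exp(x) - 1) with B(0) = 1."""
    x = np.asarray(x, dtype=float)
    small = np.abs(x) < 1e-12
    safe = np.where(small, 1.0, x)
    return np.where(small, 1.0, safe / np.expm1(safe))


def rate_from_last_cell(p_last, v_top, D, dV):
    """Spike rate as the SG flux through V_s with ghost value p_{N_V+1} = -p_{N_V}.

    A p_N - B p_ghost = (A + B) p_N = v (1 + e^{-a}) / (1 - e^{-a}) p_N with a = v dV / D.
    """
    a = v_top * dV / D
    return float((D / dV) * (bernoulli(-a) + bernoulli(a)) * p_last)


# the coefficient approaches v for strong drift and 2 D / dV for vanishing drift
print(rate_from_last_cell(0.01, v_top=100.0, D=0.5, dV=0.1))
print(rate_from_last_cell(0.01, v_top=0.0, D=0.5, dV=0.1))
```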

In practice, we initialize $p^0_m = p_0(V_m)$ and solve the linear system, Eq (35), in each time step using the function solve_banded (scipy.linalg) from the Python library SciPy [67]. Note that (for a recurrent network or time-varying external input) the tridiagonal matrix Gn has to be constructed in each time step tn, which can be time consuming, especially for small ΔV and/or small Δt. Therefore, we accelerate this task by just-in-time compilation with the LLVM-based Python package Numba [24], which yields an efficient implementation.
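The following sketch assembles one time step of Eq (35) and solves it with scipy.linalg.solve_banded. It is a plain NumPy/SciPy version without Numba, and it assumes Tref = Δt (nref = 1, cf. the remark below), constant input moments and illustrative EIF parameter values; all function names are hypothetical. Because the reinjection only enters the right-hand side, the matrix I − ΔtGn stays tridiagonal:

```python
import numpy as np
from scipy.linalg import solve_banded

# Illustrative EIF parameters (assumed values): pF, nS, mV; time in ms
C, gL, EL, DT, VT = 200.0, 10.0, -65.0, 1.5, -50.0
V_lb, V_r, V_s = -100.0, -70.0, -40.0


def eif_drift(V, mu):
    """Drift [I_L(V) + I_exp(V)] / C + mu in mV/ms."""
    return (-gL * (V - EL) + gL * DT * np.exp((V - VT) / DT)) / C + mu


def bernoulli(x):
    """B(x) = x / (exp(x) - 1) with B(0) = 1."""
    x = np.asarray(x, dtype=float)
    small = np.abs(x) < 1e-12
    safe = np.where(small, 1.0, x)
    return np.where(small, 1.0, safe / np.expm1(safe))


def implicit_euler_step(p, r_reinject, mu_tot, sigma_tot, dt):
    """Solve (I - dt G^n) p^{n+1} = p^n + dt r^{n+1-n_ref} c; return p^{n+1} and r^{n+1}."""
    N_V = p.size
    dV = (V_s - V_lb) / N_V
    borders = V_lb + dV * np.arange(1, N_V + 1)        # upper borders V_{m+1/2}; last one is V_s
    v = eif_drift(borders, mu_tot)                     # drift coefficients at t_n
    D = 0.5 * sigma_tot**2                             # diffusion coefficient
    A = (D / dV) * bernoulli(-v * dV / D)              # SG flux: q_{m+1/2} = A_m p_m - B_m p_{m+1}
    B = (D / dV) * bernoulli(v * dV / D)
    out = A[-1] + B[-1]                                # flux through V_s with ghost value -p_{N_V}
    diag = np.empty(N_V)                               # diagonal of dV * G
    diag[0] = -A[0]                                    # reflecting border at V_lb: no flux
    diag[1:-1] = -(B[:-2] + A[1:-1])
    diag[-1] = -(B[-2] + out)
    ab = np.zeros((3, N_V))                            # banded storage of I - dt G
    ab[0, 1:] = -dt * B[:-1] / dV                      # superdiagonal
    ab[1, :] = 1.0 - dt * diag / dV                    # main diagonal
    ab[2, :-1] = -dt * A[:-1] / dV                     # subdiagonal
    rhs = p.copy()
    m_r = int((V_r - V_lb) / dV)                       # cell receiving the reinjected flux
    rhs[m_r] += dt * r_reinject / dV
    p_new = solve_banded((1, 1), ab, rhs)
    return p_new, out * p_new[-1]


N_V, dt = 600, 0.05
dV = (V_s - V_lb) / N_V
centers = V_lb + dV * (np.arange(N_V) + 0.5)
p = np.exp(-0.5 * ((centers - EL) / 2.0) ** 2)
p /= dV * p.sum()                                      # normalized initial density p_0
r = 0.0                                                # nobody refractory at t = 0
for n in range(2000):                                  # 100 ms with T_ref = dt, i.e. n_ref = 1
    p, r = implicit_euler_step(p, r, mu_tot=1.5, sigma_tot=2.0, dt=dt)
print(f"r = {1000 * r:.1f} Hz, mass check = {dV * p.sum() + dt * r:.12f}")
```

Since the columns of Gn sum to zero except for the outflux through Vs, this scheme conserves ΔV Σm pm + Δt r (non-refractory plus refractory mass) up to round-off, which makes a convenient sanity check.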

Remark: for a vanishing refractory period Tref = 0 the matrix Gn would lose its tridiagonal structure due to the instantaneous reinjection, cf. Eq (36). In this case we enforce a minimal refractory period of one time step, Tref = Δt, which is an excellent approximation if the time step is chosen sufficiently small and the spike rate does not exceed biologically plausible values.

Low-dimensional approximations

In the following sections we present two approaches by which simple spike rate models can be derived from the Fokker-Planck mean-field model described in the previous section, cf. Eqs (20), (21) and (23)–(32).

The derived models are described by low-dimensional ordinary differential equations (ODEs) which depend on a number of quantities defined in the plane of (generic) input mean and standard deviation (μ, σ). To explain this concept more clearly we consider, as an example, the steady-state spike rate, which is a quantity required by all reduced models. The steady-state spike rate as a function of μ and σ,

| (37) |

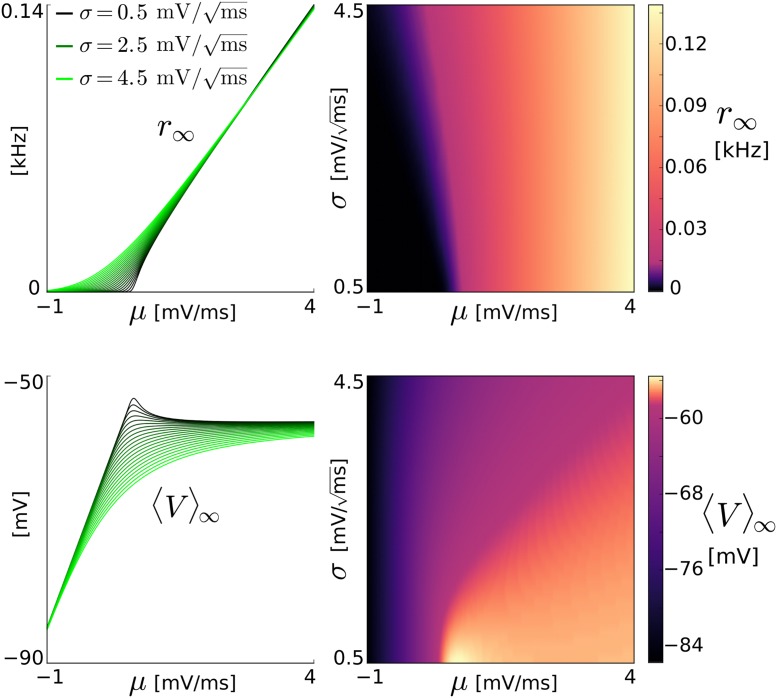

denotes the stationary value of Eq (29) under replacement of the (time-varying) total moments μtot and in the probability flux qp, Eq (25), by (constants) μ and σ2, respectively. Thus the steady-state spike rate r∞ effectively corresponds to that of an uncoupled EIF population whose membrane voltage is governed by dVi/dt = [IL(Vi) + Iexp(Vi)]/C + μ + σξi(t) plus reset condition, i.e., adaptation and synaptic current dynamics are detached. For a visualization of r∞(μ, σ) see Fig 6.

Fig 6. Steady-state spike rate and mean membrane voltage for a population of EIF neurons.

r∞ and 〈V〉∞ for an uncoupled population of EIF neurons (aEIF with a = b = 0) as a function of (generic) input mean μ and standard deviation σ, calculated from the (steady-state) Fokker-Planck equation, shown in two different representations (left and right, each).
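Quantities such as those in Fig 6 can, for instance, be obtained by integrating the stationary flux relation backward from Vs. This threshold-integration approach is substituted here for illustration and is not necessarily the procedure used to generate the figure; the sketch assumes Tref = 0, a lower bound Vlb = −100 mV, illustrative EIF parameter values and a hypothetical function name:

```python
import numpy as np

# Illustrative EIF parameters (assumed values): pF, nS, mV; time in ms
C, gL, EL, DT, VT = 200.0, 10.0, -65.0, 1.5, -50.0
V_lb, V_r, V_s = -100.0, -70.0, -40.0


def eif_steady_state(mu, sigma, h=0.005):
    """Return (r_inf in 1/ms, <V>_inf in mV) for an uncoupled EIF population, T_ref = 0 assumed.

    Stationary flux relation: (sigma^2 / 2) dp/dV = f(V) p - q(V), with the flux q equal to
    the rate above V_r and zero below it. Integrating backward from p(V_s) = 0 for unit rate
    gives an unnormalized density whose integral equals 1 / r_inf.
    """
    n = int(round((V_s - V_lb) / h))
    V = V_s - h * np.arange(n + 1)                     # V_s, V_s - h, ..., V_lb
    f = (-gL * (V - EL) + gL * DT * np.exp((V - VT) / DT)) / C + mu   # drift in mV/ms
    p = np.zeros_like(V)                               # p[0] = p(V_s) = 0 (absorbing)
    for k in range(1, n + 1):
        alpha = 2.0 * f[k - 1] / sigma**2
        beta = (2.0 / sigma**2) if V[k] >= V_r else 0.0  # unit flux only above the reset
        # exact step of dp/dV = alpha p - beta with alpha, beta frozen on [V[k], V[k-1]]
        if abs(alpha * h) < 1e-12:
            gain = h
        else:
            gain = -np.expm1(-alpha * h) / alpha
        p[k] = p[k - 1] * np.exp(-alpha * h) + beta * gain
    weights = np.full(n + 1, h)                        # trapezoidal rule
    weights[0] = weights[-1] = 0.5 * h
    mass = np.dot(weights, p)                          # equals 1 / r_inf
    return 1.0 / mass, np.dot(weights, V * p) / mass


r1, V1 = eif_steady_state(mu=1.0, sigma=2.0)
r2, V2 = eif_steady_state(mu=2.0, sigma=2.0)
print(f"r_inf = {1000 * r1:.1f} Hz and {1000 * r2:.1f} Hz, <V>_inf = {V1:.2f} mV and {V2:.2f} mV")
```

Repeating such a call over a grid of (μ, σ) values is one way to fill the lookup tables described below.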

When simulating the reduced models, these quantities need to be evaluated at each discrete time point t for a value of (μ, σ) that depends on the overall synaptic moments μsyn(t), σ²syn(t) and on the mean adaptation current 〈w〉(t) in a model-specific way (as described in the following sections). An example trajectory of r∞ in the (μ, σ) space for a network showing stable spike rate oscillations is shown in Fig 5.

Importantly, these quantities depend on the parameters of synaptic input (J, K, τd, μext, σext) and adaptation current (a, b, τw, Ew) only through their arguments (μ, σ). Therefore, for given parameter values of the EIF model (C, gL, EL, ΔT, VT, Vr, Tref) we precalculate those quantities on a (reasonably large and sufficiently dense) grid of μ and σ values, and access them during time integration by interpolating the quantity values stored in a table. This greatly reduces the computational complexity and enables rapid numerical simulations.
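The precalculated tables can be queried by bilinear interpolation, for example with SciPy's RegularGridInterpolator. In the sketch below the grid ranges are assumptions and placeholder_r_inf is a hypothetical smooth stand-in for values that would actually come from the steady-state Fokker-Planck solution:

```python
import numpy as np
from scipy.interpolate import RegularGridInterpolator

mu_grid = np.linspace(-1.0, 5.0, 121)       # mV/ms (assumed range)
sigma_grid = np.linspace(0.5, 5.0, 46)      # mV/sqrt(ms) (assumed range)


def placeholder_r_inf(mu, sigma):
    # HYPOTHETICAL stand-in; real table entries would come from the steady-state FP solution
    return 0.001 * (1.0 + mu) * (1.0 + sigma)


MU, SIGMA = np.meshgrid(mu_grid, sigma_grid, indexing="ij")
r_inf_table = RegularGridInterpolator((mu_grid, sigma_grid), placeholder_r_inf(MU, SIGMA))


def lookup(table, mu, sigma):
    """Bilinear table lookup; arguments are clipped to the precomputed (mu, sigma) domain."""
    mu = np.clip(mu, mu_grid[0], mu_grid[-1])
    sigma = np.clip(sigma, sigma_grid[0], sigma_grid[-1])
    return float(table([[mu, sigma]])[0])


print(lookup(r_inf_table, 1.234, 2.345), placeholder_r_inf(1.234, 2.345))
```

Clipping is one simple policy for inputs outside the table; choosing a sufficiently large grid, as stated above, keeps such cases rare.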

The derived low-dimensional models describe the spike rate dynamics but generally do not express the evolution of the entire membrane voltage distribution. Therefore, the mean adaptation dynamics, which depends on the density p(V, t) (via 〈V〉, cf. Eq (23)), is adjusted by approximating the mean membrane voltage 〈V〉 by the expectation over the steady-state distribution,

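$$\langle V\rangle \approx \langle V\rangle_\infty(\mu, \sigma) = \frac{\int_{V_{\mathrm{lb}}}^{V_s} V\, p_\infty(V)\, dV}{\int_{V_{\mathrm{lb}}}^{V_s} p_\infty(V)\, dV} \tag{38}$$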

which is valid for sufficiently slow adaptation current dynamics [48, 58]. The steady-state distribution is defined as p∞(V) = limt → ∞ p(V, t; μtot = μ, σtot = σ), representing the stationary membrane voltages of an uncoupled EIF population for generic input mean μ and standard deviation σ. The mean adaptation current in all reduced models is thus governed by

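$$\frac{d\langle w\rangle}{dt} = \frac{a\,\big(\langle V\rangle_\infty - E_w\big) - \langle w\rangle}{\tau_w} + b\, r(t) \tag{39}$$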

where the evaluation of the quantity 〈V〉∞ in terms of particular values for μ and σ at a given time t is model-specific (cf. the following sections). Note again that the calculation of 〈V〉∞ changes slightly when considering an (optional) spike shape extension for the aEIF model, as described at the end of the Methods section.
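As a minimal illustration of Eq (39), the sketch below freezes 〈V〉∞ and r (in the reduced models both would change with the model-specific (μ, σ) at every step) and checks that the explicit Euler iteration relaxes to the fixed point 〈w〉* = a(〈V〉∞ − Ew) + bτw r. Parameter defaults are illustrative assumptions and the function name is hypothetical:

```python
def mean_adaptation_step(w_mean, V_mean_inf, r, dt, a=4.0, b=40.0, tau_w=200.0, E_w=-80.0):
    """Explicit Euler step of Eq (39); defaults are illustrative assumptions (nS, pA, ms, mV)."""
    return w_mean + dt * ((a * (V_mean_inf - E_w) - w_mean) / tau_w + b * r)


# frozen <V>_inf = -60 mV and r = 0.01 / ms (10 Hz): <w> relaxes to a (<V>_inf - E_w) + b tau_w r
w = 0.0
for _ in range(40000):        # 4 s with dt = 0.1 ms, i.e. twenty adaptation time constants
    w = mean_adaptation_step(w, V_mean_inf=-60.0, r=0.01, dt=0.1)
w_fixed_point = 4.0 * (-60.0 + 80.0) + 40.0 * 200.0 * 0.01   # 80 pA + 80 pA
```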

The Fokker-Planck model does not restrict the form of the delay distribution pd, except that the convolution with the spike rate r, Eq (20), has to be well defined. Here, however, we aim at specifying the complete network dynamics in terms of a low-dimensional ODE system. Exploiting the exponential form of the delay distribution pd we obtain a simple ordinary differential equation for the delayed spike rate,

$$\frac{dr_d}{dt} = \frac{r - r_d}{\tau_d} \tag{40}$$

which is equivalent to the convolution rd = r * pd.
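The equivalence can be checked numerically: for a step input r(t) = 1 (t ≥ 0), convolving with the exponential density pd(τ) = exp(−τ/τd)/τd gives rd(t) = 1 − exp(−t/τd), which an explicit Euler integration of Eq (40) reproduces up to an O(Δt) error. A sketch with assumed values for Δt and τd and a hypothetical function name:

```python
import numpy as np


def delayed_rate_euler(r, dt, tau_d, r_d0=0.0):
    """Explicit Euler integration of Eq (40), dr_d/dt = (r - r_d) / tau_d, on a uniform time grid."""
    r_d = np.empty(len(r))
    state = r_d0
    for k, r_k in enumerate(r):
        r_d[k] = state                         # value at t_k
        state += dt * (r_k - state) / tau_d    # advance to t_{k+1}
    return r_d


# step input r(t) = 1 for t >= 0: convolving with the exponential delay density gives 1 - exp(-t / tau_d)
dt, tau_d = 0.01, 5.0
t = np.arange(0.0, 50.0, dt)
r_d = delayed_rate_euler(np.ones_like(t), dt, tau_d)
max_err = np.max(np.abs(r_d - (1.0 - np.exp(-t / tau_d))))
print(f"max deviation from the exact convolution: {max_err:.1e}")
```

The ODE form only needs the current value of rd, whereas the convolution requires storing the rate history.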

Note that, more generally, any delay distribution from the exponential family allows one to represent the delayed spike rate rd by an equivalent ODE instead of a convolution integral [68]. Identical delays, rd(t) = r(t − d), are also possible but lead to delay differential equations. Naturally, in the case of no delays, we simply have rd(t) = r(t).

To simulate the reduced models, standard explicit time discretization schemes can be applied: directly to the first-order equations of the LNexp model and, for the other models (LNdos, spec1, spec2), to the respective equivalent (real) first-order systems. Note that when the explicit Euler method is used to integrate any of the latter three low-dimensional models, a sufficiently small integration time step Δt is required to prevent oscillatory artifacts. Although the explicit Euler method works well for the parameter values used in this contribution, we have additionally implemented Heun's method, i.e., the explicit trapezoidal rule, which is second-order accurate.
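The difference between the two explicit schemes can be seen on the scalar test problem dy/dt = −y, which is an assumption chosen for illustration rather than one of the reduced models. The step functions below (names are our own) accept NumPy arrays, so they apply unchanged to the real first-order systems mentioned above:

```python
import numpy as np


def euler_step(f, t, y, dt):
    """Explicit Euler, first order."""
    return y + dt * f(t, y)


def heun_step(f, t, y, dt):
    """Heun's method (explicit trapezoidal rule), second order."""
    k1 = f(t, y)
    k2 = f(t + dt, y + dt * k1)
    return y + 0.5 * dt * (k1 + k2)


def integrate(step, f, y0, dt, n_steps):
    y = np.asarray(y0, dtype=float)
    for n in range(n_steps):
        y = step(f, n * dt, y, dt)
    return y


decay = lambda t, y: -y            # test problem dy/dt = -y with y(0) = 1, so y(1) = exp(-1)
err_euler = abs(integrate(euler_step, decay, 1.0, 0.1, 10) - np.exp(-1.0))
err_heun = abs(integrate(heun_step, decay, 1.0, 0.1, 10) - np.exp(-1.0))
print(f"error at t = 1: Euler {err_euler:.1e}, Heun {err_heun:.1e}")
```

Heun's method costs two right-hand-side evaluations per step instead of one, which is usually offset by the larger admissible Δt.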

Spectral models

Eigendecomposition of the Fokker-Planck operator

Following and extending [18] we can specify the Fokker-Planck operator

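$$\mathcal{L}(\mu, \sigma)\, p = -\frac{\partial}{\partial V}\left[\left(\frac{I_L(V) + I_{\exp}(V)}{C} + \mu\right) p\right] + \frac{\sigma^2}{2}\,\frac{\partial^2 p}{\partial V^2} \tag{41}$$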

for an uncoupled EIF population receiving (constant) input (μ, σ), cf. Sect. Low-dimensional approximations. This operator allows us to rewrite the FP dynamics of the recurrent aEIF network, Eq (24), as

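$$\frac{\partial p(V,t)}{\partial t} = \mathcal{L}\big(\mu_{\mathrm{tot}}(t, r_d, \langle w\rangle),\, \sigma_{\mathrm{tot}}(t, r_d)\big)\, p(V,t) \tag{42}$$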

which depends on the (time-varying) total input moments μtot(t, rd, 〈w〉) and σ²tot(t, rd), cf. Eqs (26) and (27), through the drift and diffusion coefficients, respectively.

For each value of (μ, σ) the operator ℒ(μ, σ) possesses an infinite, discrete set of eigenvalues λn in the left complex half-plane, including zero [18], i.e., Re{λn} ≤ 0, and associated eigenfunctions ϕn(V) (n = 0, 1, 2, …) satisfying

$$\mathcal{L}(\mu, \sigma)\, \phi_n(V) = \lambda_n(\mu, \sigma)\, \phi_n(V) \tag{43}$$

Furthermore, the boundary conditions, Eqs (30)–(32), have to be fulfilled by each eigenfunction ϕn separately, i.e., the absorbing boundary at the spike voltage,

$$\phi_n(V_s) = 0 \tag{44}$$

and the reflecting barrier at the (finite) lower bound voltage,

$$q_{\phi_n}(V_{\mathrm{lb}}) = 0 \tag{45}$$

must hold. The eigenflux is given by

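$$q_{\phi_n}(V) = \left[\frac{I_L(V) + I_{\exp}(V)}{C} + \mu\right] \phi_n(V) - \frac{\sigma^2}{2}\,\frac{\partial \phi_n(V)}{\partial V} \tag{46}$$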

i.e., the flux qp of Eq (25) with the eigenfunction ϕn and the (constant) generic input moments (μ, σ²) instead of the density p and the (time-varying) total input moments, respectively. Moreover, the eigenflux qϕn has to be reinjected at the reset voltage, cf. Eq (30),

$$q_{\phi_n}(V_r^{+}) - q_{\phi_n}(V_r^{-}) = q_{\phi_n}(V_s) \tag{47}$$

where we have neglected the refractory period, i.e., Tref = 0. Note that incorporating a refractory period Tref > 0 is straightforward only for the simplified case of vanishing total input moment variations, dμtot/dt = dσ²tot/dt = 0, which is described in the following section; it is not captured here in general.
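One simple way to approximate the leading eigenvalues is to diagonalize the finite-volume discretization of ℒ(μ, σ), with the ghost-cell outflux at Vs reinjected into the reset cell (Tref = 0, Eq (47)) and zero flux at Vlb (Eq (45)). This is a sketch under those assumptions with illustrative EIF parameter values and hypothetical names, not necessarily the method behind Fig 7A. Because every column of the resulting matrix sums to zero and all off-diagonal entries are non-negative, it has an eigenvalue at zero and, by Gershgorin's theorem, no eigenvalue with positive real part, consistent with Re{λn} ≤ 0:

```python
import numpy as np

# Illustrative EIF parameters (assumed values): pF, nS, mV; time in ms
C, gL, EL, DT, VT = 200.0, 10.0, -65.0, 1.5, -50.0
V_lb, V_r, V_s = -100.0, -70.0, -40.0


def bernoulli(x):
    """B(x) = x / (exp(x) - 1) with B(0) = 1."""
    x = np.asarray(x, dtype=float)
    small = np.abs(x) < 1e-12
    safe = np.where(small, 1.0, x)
    return np.where(small, 1.0, safe / np.expm1(safe))


def discretized_fp_operator(mu, sigma, N_V=300):
    """Dense finite-volume approximation of L(mu, sigma) with instantaneous reinjection."""
    dV = (V_s - V_lb) / N_V
    borders = V_lb + dV * np.arange(1, N_V + 1)           # upper cell borders, last = V_s
    v = (-gL * (borders - EL) + gL * DT * np.exp((borders - VT) / DT)) / C + mu
    D = 0.5 * sigma**2
    A = (D / dV) * bernoulli(-v * dV / D)                 # SG: q_{m+1/2} = A_m p_m - B_m p_{m+1}
    B = (D / dV) * bernoulli(v * dV / D)
    op = np.zeros((N_V, N_V))
    idx = np.arange(N_V - 1)
    op[idx + 1, idx] += A[:-1] / dV                       # inflow from the cell below
    op[idx, idx + 1] += B[:-1] / dV                       # inflow from the cell above
    op[idx, idx] -= A[:-1] / dV                           # outflow upward
    op[idx + 1, idx + 1] -= B[:-1] / dV                   # outflow downward
    out = (A[-1] + B[-1]) / dV                            # flux through V_s (ghost value -p_{N_V})
    op[N_V - 1, N_V - 1] -= out
    op[int((V_r - V_lb) / dV), N_V - 1] += out            # reinjection at V_r
    return op


lam = np.linalg.eigvals(discretized_fp_operator(mu=1.5, sigma=2.0))
lam = lam[np.argsort(-lam.real)]                          # sort by decreasing real part
print(lam[:3])                                            # approx. lambda_0 = 0, then the slowest modes
```

Only the eigenvalues with small magnitude are meaningful approximations of the continuous spectrum; the fast end of the discrete spectrum depends on the grid.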

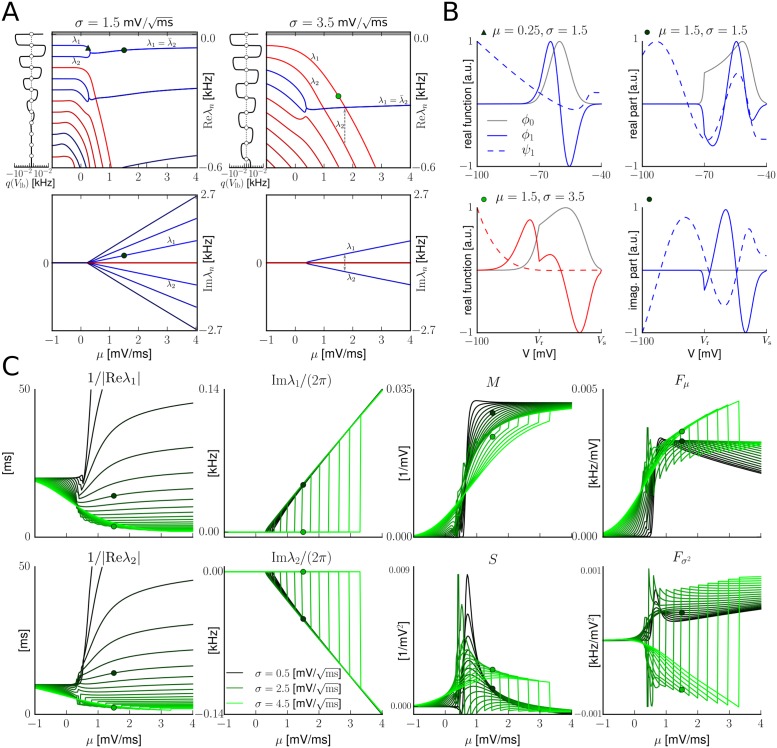

The spectrum of ℒ is shown in Fig 7A and further discussed in Sect. Remarks on the spectrum.

Fig 7. Spectrum of the Fokker-Planck operator and related quantities.