Abstract

Source imaging based on magnetoencephalography (MEG) and electroencephalography (EEG) allows for the non-invasive analysis of brain activity with high temporal and good spatial resolution. As the bioelectromagnetic inverse problem is ill-posed, constraints are required. For the analysis of evoked brain activity, spatial sparsity of the neuronal activation is a common assumption. It is often taken into account using convex constraints based on the l1-norm. The resulting source estimates are, however, biased in amplitude and often suboptimal in terms of source selection due to high correlations in the forward model. In this work, we demonstrate that an inverse solver based on a block-separable penalty with a Frobenius norm per block and an l0.5-quasinorm over blocks addresses both of these issues. For solving the resulting non-convex optimization problem, we propose the iterative reweighted Mixed Norm Estimate (irMxNE), an optimization scheme based on iterative reweighted convex surrogate optimization problems, which are solved efficiently using a block coordinate descent scheme and an active set strategy. We compare the proposed sparse imaging method to the dSPM and the RAP-MUSIC approach based on two MEG data sets. We provide empirical evidence based on simulations and analysis of MEG data that the proposed method improves on the standard Mixed Norm Estimate (MxNE) in terms of amplitude bias, support recovery, and stability.

Index Terms: Electrophysical imaging, brain, inverse methods, magnetoencephalography, electroencephalography, structured sparsity

I. Introduction

Source imaging with magnetoencephalography (MEG) and electroencephalography (EEG) delivers insights into the active brain with high temporal and good spatial resolution in a non-invasive way [1]. It is based on solving the bioelectromagnetic inverse problem, which is a high-dimensional ill-posed regression problem. In order to render its solution unique, constraints have to be imposed reflecting a priori assumptions on the neuronal sources. In the past, several source reconstruction techniques have been proposed, which are based on the assumption that only a few focal brain regions are involved in a specific cognitive task. Inverse methods favoring sparse focal source configurations to explain the MEG/EEG signals include parametric [2], scanning [3]–[6], and imaging approaches [7]–[12]. These techniques, which are partly used in clinical routine, are suitable e.g. for analyzing evoked responses or epileptic spike activity. Classic MEG/EEG source imaging techniques using sparsity-inducing penalties are the Selective Minimum Norm Method [7] and the Minimum Current Estimate (MCE) [13]. Both approaches are based on the Lasso [14], i.e., regularized regression with an l1-norm penalty, which is a convex surrogate for the optimal, but NP-hard l0-norm regularized regression problem. To reduce the sensitivity to noise and avoid discontinuous, scattered source activations [8], mixed norms such as the l2,1-mixed-norm used in Group Lasso [15] or Group Basis Pursuit [16] can be applied. The idea is to take the spatio-temporal characteristics of neuronal activity into account by imposing structured sparsity in space or time [8], [9], [17], [18]. We refer to [19] for a general review on group selection in high-dimensional models. A prominent example is the Mixed-Norm Estimate (MxNE) proposed in [9], which extends the MCE to multiple measurement vector problems by applying a block-separable convex penalty.
Each block represents the source activation over time of a dipole with free orientation at a specific source location. Spatial sparsity is promoted by an l1-norm penalty over blocks, whereas a Frobenius norm per block promotes stationary source estimates, i.e., a source with a non-zero amplitude at one time instant has a non-zero amplitude during the full time window of interest [9], [20]. The Frobenius norm also prevents the orientations of the free orientation dipoles from being biased towards the coordinate axes [21]. These convex approaches allow for fast algorithms with guaranteed global convergence. However, the resulting source estimates are biased in amplitude and often suboptimal in terms of support recovery [22], which is impaired by the high spatial correlation of the MEG/EEG forward model. As shown e.g. in the field of compressed sensing, promoting sparsity by applying non-convex penalties, such as logarithmic or lp-quasinorm penalties with 0 < p < 1, improves support reconstruction in terms of feature selection, amplitude bias, and stability [22]–[24]. Several approaches for solving the resulting non-convex optimization problem have been proposed including generalized shrinkage [25], iterative reweighted l1 [22], [26]–[28], or iterative reweighted l2 optimization [29]–[33]. See [27], [34] for a review of these approaches for single and multiple measurement vector problems. Several MEG/EEG sparse source imaging techniques based on iterative reweighted l2 optimization have been proposed [29], [35]–[38]. An iterative reweighted l1 optimization technique for EEG source imaging was proposed in [39], which however does not impose structured sparsity and applies a fixed orientation constraint [40]. In this paper, we propose the iterative reweighted Mixed-Norm Estimate (irMxNE), a novel MEG/EEG sparse source imaging approach based on the framework of iterative reweighted l1, which promotes structured sparsity to improve MEG/EEG source reconstruction. 
A preliminary version of this method was presented in [41]. Similar approaches have recently been proposed in other fields of research [34], [42]. The irMxNE is based on a non-convex block-separable penalty, which combines a Frobenius norm per block and an l0.5-quasinorm over blocks. The non-convex objective function is minimized iteratively by computing a sequence of weighted MxNE problems. For solving the convex surrogate problems, we propose a new computationally efficient strategy, which combines block coordinate descent [27], [43], [44] and a forward active set strategy with convergence controlled by means of the duality gap, which converges significantly faster than the original MxNE algorithm proposed in [9]. We provide information on the integration of different source orientation constraints [40] and discuss specific problems of MEG/EEG source imaging such as depth bias compensation and amplitude bias correction. We present empirical evidence using simulations and analysis of two experimental MEG data sets that the proposed method outperforms MCE and MxNE in terms of amplitude bias, active source identification, and stability. Finally, we compare the proposed approach with the dSPM [45] and RAP-MUSIC method [5] based on two MEG data sets.

Notation

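We mark vectors with bold letters, $\mathbf{a} \in \mathbb{R}^{N}$, and matrices with capital bold letters, $\mathbf{A} \in \mathbb{R}^{N \times M}$. The transpose of a vector or matrix is denoted by $\mathbf{a}^T$ and $\mathbf{A}^T$. The scalar $\mathbf{a}[i]$ is the $i$th element of $\mathbf{a}$. $\mathbf{A}[i,:]$ corresponds to the $i$th row and $\mathbf{A}[:,j]$ to the $j$th column of $\mathbf{A}$. $\|\mathbf{A}\|_{\mathrm{Fro}}$ indicates the Frobenius norm, and $\|\mathbf{A}\|$ the spectral norm of a matrix.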

II. Materials and Methods

A. The inverse problem

The MEG/EEG forward problem describes the linear relationship between the MEG/EEG measurements $\mathbf{M} \in \mathbb{R}^{N \times T}$ ($N$ number of sensors, $T$ number of time instants) and the source activation $\mathbf{X} \in \mathbb{R}^{(SO) \times T}$ ($S$ number of source locations, $O$ number of orthogonal dipoles per source location with $O = 1$ if source orientation is postulated, e.g. using the cortical constraint [46], and typically $O = 3$ otherwise). The model then reads:

$$\mathbf{M} = \mathbf{G}\mathbf{X} + \mathbf{E} \tag{1}$$

where $\mathbf{G} \in \mathbb{R}^{N \times (SO)}$ is the gain or leadfield matrix, a known instantaneous mixing matrix, which links source and sensor signals. $\mathbf{E}$ is the measurement noise, which is assumed to be additive, white, and Gaussian, $\mathbf{E}[:,j] \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ for all $j$. This assumption is acceptable on the basis of a proper spatial whitening of the data using an estimate of the noise covariance [47]. As $SO \gg N$, the MEG/EEG inverse problem is ill-posed and constraints have to be imposed on the source activation matrix $\mathbf{X}$ to render the solution unique. By partitioning $\mathbf{X}$ into $S$ blocks $\mathbf{X}_s \in \mathbb{R}^{O \times T}$, where each $\mathbf{X}_s$ represents the source activation at a specific source location $s$ over time and across $O$ orthogonal current dipoles, we can apply a penalty term promoting block sparsity by combining a Frobenius norm per block and an l0.5-quasinorm penalty over blocks. The optimization problem reads:

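$$\hat{\mathbf{X}} = \arg\min_{\mathbf{X}} \frac{1}{2}\|\mathbf{M} - \mathbf{G}\mathbf{X}\|_{\mathrm{Fro}}^{2} + \lambda \sum_{s=1}^{S} \sqrt{\|\mathbf{X}_s\|_{\mathrm{Fro}}} \tag{2}$$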

where λ > 0 is the regularization parameter balancing the data fit and penalty term. Similar to the constraint applied in MxNE [9], this penalty promotes source estimates with only a few focal sources that have non-zero activations during the entire time interval of interest. The Frobenius norm per block Xs, which combines l2-norm penalties over time and orientation as proposed in [8], [13], [20], imposes stationarity of the source estimate and prevents the source orientations from being biased towards the coordinate axes [21]. The l0.5-quasinorm penalty promotes spatial sparsity.
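The cost function described above can be evaluated in a few lines. The following is a minimal NumPy sketch, not the authors' implementation: the function names `block_norms` and `irmxne_objective` are hypothetical, the factor 1/2 on the data fit is an assumption, and the rows of X are assumed to be ordered so that the O orientations of one source location are consecutive.

```python
import numpy as np


def block_norms(X, n_orient):
    """Frobenius norm of each block X_s (n_orient consecutive rows of X)."""
    n_sources = X.shape[0] // n_orient
    blocks = X.reshape(n_sources, n_orient, -1)
    return np.sqrt((blocks ** 2).sum(axis=(1, 2)))


def irmxne_objective(M, G, X, lam, n_orient):
    """Data fit plus lam times the sum of square roots of the block norms."""
    residual = M - G @ X
    data_fit = 0.5 * np.sum(residual ** 2)  # assumed 1/2 scaling
    penalty = np.sum(np.sqrt(block_norms(X, n_orient)))
    return data_fit + lam * penalty


# Toy example: 2 sensors, 2 fixed-orientation sources, 2 time samples.
G = np.eye(2)
M = np.array([[4.0, 0.0], [0.0, 0.0]])
X = np.array([[4.0, 0.0], [0.0, 0.0]])
value = irmxne_objective(M, G, X, lam=1.0, n_orient=1)
```

In this toy case the residual vanishes and only the first block is non-zero, so the cost reduces to the square root of its Frobenius norm.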

B. Iterative reweighted Mixed Norm Estimate

The proposed block-separable regularization functional is an extension of the l2,p-quasinorm penalty with 0 < p < 1 used in [22], [26], [27], [32]. These works showed, based on the framework of Difference of Convex functions programming or Majorization-Minimization algorithms, that the resulting non-convex optimization problem can be solved by iteratively solving a sequence of weighted convex surrogate optimization problems with weights being defined based on the previous estimate. The convex surrogate problem is obtained by replacing the non-decreasing concave function with a convex upper bound using a local linear approximation at the current estimate. By solving this sequence of surrogate problems, the value of the non-convex objective function decreases, but without guarantee for convergence to a global minimum. The cost function in Eq. (2) can thus be minimized by computing the sequence of convex problems given in Eq. (3). The weights for the $k$th iteration are obtained from the previous source estimate $\hat{\mathbf{X}}^{(k-1)}$. Intuitively, sources with high amplitudes in the $(k-1)$th iteration will be less penalized in the $k$th iteration and therefore further promoted.

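$$\hat{\mathbf{X}}^{(k)} = \arg\min_{\mathbf{X}} \frac{1}{2}\|\mathbf{M} - \mathbf{G}\mathbf{X}\|_{\mathrm{Fro}}^{2} + \lambda \sum_{s=1}^{S} \frac{\|\mathbf{X}_s\|_{\mathrm{Fro}}}{\mathbf{w}^{(k)}[s]}, \qquad \mathbf{w}^{(k)}[s] = 2\sqrt{\|\hat{\mathbf{X}}_s^{(k-1)}\|_{\mathrm{Fro}}} \tag{3}$$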

As each iteration is equivalent to solving a weighted MxNE problem, we call this optimization scheme the iterative reweighted MxNE (irMxNE). Due to the non-convexity of the optimization problem in Eq. (2), the procedure is sensitive to the initialization of $\mathbf{w}^{(k)}[s]$. In this paper, we use $\mathbf{w}^{(1)}[s] = 1$ for all $s$ as proposed in [26]. Consequently, the first iteration of irMxNE is equivalent to solving a standard MxNE problem. As each iteration of the iterative scheme in Eq. (3) solves a convex problem with guaranteed global convergence, the initialization of $\mathbf{X}$ has no influence on the final solution. $\mathbf{X}$ can thus be chosen arbitrarily and we use warm starts for improving the computation time. For sources with $\|\hat{\mathbf{X}}_s^{(k-1)}\|_{\mathrm{Fro}} = 0$, Eq. (3) has an infinite regularization term. Typically, a smoothing parameter ε is added to prevent the weights from becoming zero [22], [26], [31]. Here, we reformulate the weighted MxNE subproblem and apply the weights without epsilon smoothing by scaling the gain matrix with a weighting matrix $\mathbf{W}^{(k)}$ as given in Eq. (4). After convergence, we reapply the scaling to $\tilde{\mathbf{X}}^{(k)}$ to obtain the final estimate $\hat{\mathbf{X}}^{(k)} = \mathbf{W}^{(k)}\tilde{\mathbf{X}}^{(k)}$.

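$$\tilde{\mathbf{X}}^{(k)} = \arg\min_{\tilde{\mathbf{X}}} \frac{1}{2}\|\mathbf{M} - \mathbf{G}\mathbf{W}^{(k)}\tilde{\mathbf{X}}\|_{\mathrm{Fro}}^{2} + \lambda \sum_{s=1}^{S} \|\tilde{\mathbf{X}}_s\|_{\mathrm{Fro}} \tag{4}$$

with $\mathbf{W}^{(k)} \in \mathbb{R}^{SO \times SO}$ being a diagonal matrix, which is computed according to Eq. (5):

$$\mathbf{W}^{(k)} = \mathrm{diag}\left(\mathbf{w}^{(k)} \otimes \mathbf{1}^{(O)}\right) \quad \text{with} \quad \mathbf{w}^{(k)}[s] = 2\sqrt{\|\hat{\mathbf{X}}_s^{(k-1)}\|_{\mathrm{Fro}}} \tag{5}$$

where $\mathbf{1}^{(O)} \in \mathbb{R}^{O}$ is a vector of ones and $\otimes$ is the Kronecker product. In each MxNE iteration, we restrict the source space to source locations with $\mathbf{w}^{(k)}[s] > 0$ to reduce the computation time.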
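The construction of the weighting matrix can be sketched as follows. This is an illustration under stated assumptions: the weights are taken as w[s] = 2·sqrt(‖X_s‖_Fro), which is what a local linear approximation of the square root yields, and the function name `reweighting_matrix` as well as the row ordering of X (orientations of one source in consecutive rows) are hypothetical choices.

```python
import numpy as np


def reweighting_matrix(X_prev, n_orient):
    """Return per-source weights w and the diagonal matrix W = diag(w kron 1)."""
    n_sources = X_prev.shape[0] // n_orient
    blocks = X_prev.reshape(n_sources, n_orient, -1)
    norms = np.sqrt((blocks ** 2).sum(axis=(1, 2)))
    w = 2.0 * np.sqrt(norms)  # assumed weight definition
    W = np.diag(np.kron(w, np.ones(n_orient)))
    return w, W


# Two source locations with two orientations each, two time samples.
X_prev = np.array([[3.0, 4.0],   # source 0, orientation 0
                   [0.0, 0.0],   # source 0, orientation 1
                   [0.0, 0.0],   # source 1, orientation 0
                   [0.0, 0.0]])  # source 1, orientation 1
w, W = reweighting_matrix(X_prev, n_orient=2)
active_sources = np.flatnonzero(w > 0)  # sources kept in the next iteration
```

Sources with zero weight drop out of the next weighted problem, which corresponds to the restriction of the source space mentioned above.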

We control the global convergence of each weighted MxNE subproblem in Eq. (4) by monitoring the duality gap. For details on convex duality in the context of optimization with sparsity-inducing penalties, we refer to [48]. In the following, we summarize the rationale for this stopping criterion. For a general minimization problem, the minimum of the primal objective function ℱp (X) is bounded below by the maximum of the associated dual objective function ℱd (Y), i.e., ℱp(X*) ≥ ℱd(Y*), where X* and Y* are the optimal solutions of the primal and dual problem. The duality gap η = ℱp (X) − ℱd (Y) ≥ 0, where X and Y are the current values of the primal and dual variable, is thus non-negative and provides an upper bound on the difference between ℱp (X) and ℱp(X*). If strong duality holds, the duality gap at the optimum is zero. To use this stopping criterion in practice, we need to derive the dual problem and choose a good feasible dual variable Y given a value of X, which allows for η = 0 at the optimum.

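Due to Slater’s conditions [49], strong duality holds for the MxNE subproblem and we can check convergence of an iterative optimization scheme solving Eq. (4) by computing the current duality gap $\eta^{(i)} = \mathcal{F}_p(\mathbf{X}^{(i)}) - \mathcal{F}_d(\mathbf{Y}^{(i)}) \geq 0$. Based on the Fenchel-Rockafellar duality theorem [50], the dual objective function associated to the primal objective function

$$\mathcal{F}_p(\mathbf{X}) = \frac{1}{2}\|\mathbf{M} - \mathbf{G}\mathbf{X}\|_{\mathrm{Fro}}^{2} + \lambda\,\Omega(\mathbf{X}), \qquad \Omega(\mathbf{X}) = \sum_{s=1}^{S} \|\mathbf{X}_s\|_{\mathrm{Fro}}$$

is given in Eq. (6). For a detailed derivation, we refer to [9].

$$\mathcal{F}_d(\mathbf{Y}) = -\frac{1}{2}\|\mathbf{Y}\|_{\mathrm{Fro}}^{2} + \mathrm{Tr}(\mathbf{Y}^T\mathbf{M}) - \lambda\,\Omega^{*}\!\left(\frac{\mathbf{G}^T\mathbf{Y}}{\lambda}\right) \tag{6}$$

where Tr indicates the trace of a square matrix, and $\Omega^{*}$ the Fenchel conjugate of $\Omega$, which is the indicator function of the associated dual norm. As shown in [9], a natural mapping from the primal to the dual space is given by a scaling of the residual $\mathbf{R} = \mathbf{M} - \mathbf{G}\mathbf{X}$. This is motivated by the fact that the solution of the dual problem at the optimum is proportional to the residual, which follows from the associated KKT conditions [9]. The scaling is done according to Eq. (7) such that the dual variable $\mathbf{Y}$ satisfies the constraint of $\Omega^{*}$.

$$\mathbf{Y}^{(i)} = \mathbf{R}^{(i)} \min\left(\frac{\lambda}{\max_s \|\mathbf{G}_s^T\mathbf{R}^{(i)}\|_{\mathrm{Fro}}},\, 1\right) \tag{7}$$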

In practice, we terminate the iterative optimization scheme for solving MxNE when the estimate at the $i$th iteration, $\mathbf{X}^{(i)}$, is ε-optimal with $\varepsilon = 10^{-6}$, i.e., $\eta^{(i)} < 10^{-6}$. According to [9], this is a conservative choice provided that the data is scaled by spatial pre-whitening.
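The stopping criterion can be illustrated with a short NumPy sketch. It assumes the l2,1 primal objective with a 1/2-scaled data fit and a dual variable obtained by rescaling the residual until the block-wise constraint ‖G_sᵀY‖_Fro ≤ λ holds; the function names are hypothetical and the toy problem is chosen so that the optimum is known in closed form.

```python
import numpy as np


def block_norms(A, n_orient):
    """Frobenius norm of each block of n_orient consecutive rows."""
    blocks = A.reshape(A.shape[0] // n_orient, n_orient, -1)
    return np.sqrt((blocks ** 2).sum(axis=(1, 2)))


def duality_gap(M, G, X, lam, n_orient):
    """Primal minus dual objective for the (weighted) MxNE subproblem."""
    R = M - G @ X
    primal = 0.5 * np.sum(R ** 2) + lam * block_norms(X, n_orient).sum()
    # Rescale the residual so that the dual variable is feasible.
    dual_norm = block_norms(G.T @ R, n_orient).max()
    scaling = 1.0 if dual_norm <= lam else lam / dual_norm
    Y = scaling * R
    dual = -0.5 * np.sum(Y ** 2) + np.sum(Y * M)  # Tr(Y^T M) = sum(Y * M)
    return primal - dual


# Toy problem with G = I: the optimum is a soft-thresholding of M.
G = np.eye(2)
M = np.array([[3.0], [0.5]])
X_opt = np.array([[2.0], [0.0]])
gap_opt = duality_gap(M, G, X_opt, lam=1.0, n_orient=1)
gap_zero = duality_gap(M, G, np.zeros((2, 1)), lam=1.0, n_orient=1)
```

The gap vanishes at the optimum and is strictly positive at the all-zero starting point, so comparing it against ε gives a certificate of ε-optimality.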

For solving the weighted MxNE subproblems, we propose a block coordinate descent (BCD) scheme [43], which, for the problem at hand, converges faster than the Fast Iterative Shrinkage-Thresholding algorithm (FISTA) proposed earlier in [9] (cf. section III-B). A BCD scheme for solving the Group LASSO was proposed in [27], [44]. The subproblem per block has a closed form solution, which involves applying the group soft-thresholding operator, the proximity operator associated to the l2,1-mixed-norm [9]. Accordingly, the closed form solution for the BCD subproblems solving the MxNE problem can be derived, which is given in Eq. (8).

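$$\mathbf{X}_s \leftarrow \mathbf{Z}_s \max\left(1 - \frac{\mu[s]\,\lambda}{\|\mathbf{Z}_s\|_{\mathrm{Fro}}},\, 0\right) \quad \text{with} \quad \mathbf{Z}_s = \mathbf{X}_s + \mu[s]\,\mathbf{G}_s^T(\mathbf{M} - \mathbf{G}\mathbf{X}) \tag{8}$$

Here, $\mathbf{G}_s$ denotes the columns of $\mathbf{G}$ belonging to the $s$th source location. The step length $\mu[s]$ for each BCD subproblem is determined by $\mu[s] = L_s^{-1}$ with $L_s = \|\mathbf{G}_s^T\mathbf{G}_s\|$ being the Lipschitz constant of the data-fit restricted to the $s$th source location. This step length is typically larger than the step length applicable in iterative proximal gradient methods, which is upper-bounded by the inverse of $L = \|\mathbf{G}^T\mathbf{G}\|$. Pseudo code for the BCD scheme is shown in Algorithm 1.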

Algorithm 1.

MxNE with BCD

| Require: | M, G, X, μ, λ > 0, ε > 0, and S. |

| 1: | Initialization: η = ℱp (X) − ℱd (Y) |

| 2: | while η ≥ ε do |

| 3: | for s = 1 to S do |

| 4: | Xs ← Solve Eq. (8) with X, μ, and M |

| 5: | end for |

| 6: | η = ℱp (X) − ℱd (Y) |

| 7: | end while |
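A runnable counterpart to Algorithm 1 might look as follows. This is a sketch rather than the reference implementation: it runs a fixed number of cyclic sweeps instead of monitoring the duality gap, recomputes the step lengths inside the loop for brevity, and assumes the group soft-thresholding update with step length 1/‖G_sᵀG_s‖. The name `mxne_bcd` and the parameter `n_sweeps` are hypothetical.

```python
import numpy as np


def mxne_bcd(M, G, lam, n_orient, n_sweeps=200):
    """Cyclic block coordinate descent for the l2,1-penalized problem."""
    n_sources = G.shape[1] // n_orient
    X = np.zeros((G.shape[1], M.shape[1]))
    R = M.copy()  # residual M - G X, updated incrementally
    for _ in range(n_sweeps):
        for s in range(n_sources):
            idx = slice(s * n_orient, (s + 1) * n_orient)
            G_s = G[:, idx]
            lipschitz = np.linalg.norm(G_s.T @ G_s, ord=2)  # spectral norm
            if lipschitz == 0.0:
                continue  # block has no influence on the data
            mu = 1.0 / lipschitz
            X_old = X[idx].copy()
            Z = X_old + mu * (G_s.T @ R)  # gradient step on block s
            norm_Z = np.linalg.norm(Z)    # Frobenius norm
            shrink = max(1.0 - mu * lam / norm_Z, 0.0) if norm_Z > 0 else 0.0
            X[idx] = shrink * Z           # group soft-thresholding
            R -= G_s @ (X[idx] - X_old)
    return X


# Toy problem with G = I, where the solution is a soft-thresholding of M.
G = np.eye(2)
M = np.array([[3.0], [0.5]])
X_bcd = mxne_bcd(M, G, lam=1.0, n_orient=1)
```

Updating the residual incrementally avoids recomputing G X after every block update, which is what makes the per-block cost small compared to a full proximal gradient step.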

Algorithm 2.

MxNE with BCD and active set strategy

| Require: | M, G, λ> 0, ε> 0, and S. |

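| 1: | Initialization: X = 0, $\mathcal{A} = \emptyset$, η = ℱp (X) − ℱd (Y) |

| 2: | for s = 1 to S do |

| 3: | $\mu[s] = \lVert \mathbf{G}_s^T \mathbf{G}_s \rVert^{-1}$ |

| 4: | end for |

| 5: | while η ≥ ε do |

| 6: | Compute the KKT score $\lVert \mathbf{G}_s^T(\mathbf{M} - \mathbf{G}\mathbf{X}) \rVert_{\mathrm{Fro}}$ for each source $s \notin \mathcal{A}$ |

| 7: | Add the N sources with the highest score to $\mathcal{A}$ |

| 8: | Define $\mathbf{G}_{\mathcal{A}}$ and $\mathbf{X}_{\mathcal{A}}$ by restricting G and X to $\mathcal{A}$ |

| 9: | Solve Algorithm 1 with $\mathbf{G}_{\mathcal{A}}$, μ, and $\mathbf{X}_{\mathcal{A}}$ |

| 10: | $\mathbf{X}_s = (\mathbf{X}_{\mathcal{A}})_s$ for $s \in \mathcal{A}$, else 0 |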

| 11: | η = ℱp (X) − ℱd (Y) |

| 12: | end while |

The BCD scheme is typically applied using a cyclic sweep pattern, i.e., all blocks are updated in a cyclic order in each iteration. However, as the penalty term in Eq. (2) promotes spatial sparsity, most of the blocks of $\hat{\mathbf{X}}$ are zero. We can thus reduce the computation time by primarily updating blocks that are likely to be non-zero, while keeping the remaining blocks at zero. For this purpose, data-dependent sweep patterns (such as greedy approaches based on steepest descent [51], [52]) or active set strategies [53], [54] can be applied. In this paper, we combine BCD with a forward active set strategy proposed in [9], [54]. Pseudo code for the proposed MxNE solver is provided in Algorithm 2.

We start by estimating an initial active set of sources $\mathcal{A}$ by evaluating the Karush-Kuhn-Tucker (KKT) optimality conditions, which state that $\|\mathbf{G}_s^T(\mathbf{M} - \mathbf{G}\hat{\mathbf{X}})\|_{\mathrm{Fro}} \leq \lambda$ if $\hat{\mathbf{X}}_s = \mathbf{0}$ [9]. We select the N sources that violate this condition the most as the initial active set (we use N = 10 in practice). Subsequently, we restrict the source space to the sources in $\mathcal{A}$ and estimate $\hat{\mathbf{X}}_{\mathcal{A}}$ by solving Eq. (4) with convergence controlled by the duality gap. After convergence of this restricted optimization problem, we check whether $\hat{\mathbf{X}}_{\mathcal{A}}$ is also an ε-optimal solution for the original optimization problem (without restricting the source space to $\mathcal{A}$) by computing the corresponding duality gap η assuming that all sources, which are not part of the active set, have zero activation. If $\hat{\mathbf{X}}_{\mathcal{A}}$ is not an ε-optimal solution, indicated by η ≥ ε, we re-evaluate the KKT optimality conditions and update the active set by adding the N sources with the highest score. We then repeat the procedure with warm start.
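The growth step of the active set can be sketched as below. The score ‖G_sᵀ(M − GX)‖_Fro and its comparison against λ are assumptions derived from the KKT conditions of the l2,1 problem; the names `kkt_scores`, `grow_active_set`, and `n_add` are hypothetical, and only sources that actually violate the condition are added, which is a design choice of this sketch.

```python
import numpy as np


def kkt_scores(M, G, X, n_orient):
    """Block-wise Frobenius norm of G_s^T (M - G X) for every source."""
    GtR = G.T @ (M - G @ X)
    blocks = GtR.reshape(G.shape[1] // n_orient, n_orient, -1)
    return np.sqrt((blocks ** 2).sum(axis=(1, 2)))


def grow_active_set(active_set, scores, lam, n_add=10):
    """Add up to n_add sources with the largest KKT violation."""
    order = np.argsort(scores)[::-1]  # highest score first
    candidates = [int(s) for s in order
                  if scores[s] > lam and int(s) not in active_set]
    return sorted(set(active_set) | set(candidates[:n_add]))


# Toy problem: three fixed-orientation sources, starting from X = 0.
G = np.eye(3)
M = np.array([[3.0], [0.5], [2.0]])
scores = kkt_scores(M, G, np.zeros((3, 1)), n_orient=1)
first_pick = grow_active_set([], scores, lam=1.0, n_add=1)
all_violators = grow_active_set([], scores, lam=1.0, n_add=10)
```

After the restricted problem has been solved on the selected sources, the same scores can be recomputed with the new estimate to decide whether further sources have to be added.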

Algorithm 3.

Iterative reweighted MxNE

| Require: | M, G, λ> 0, ε > 0, τ > 0, and K. |

| 1: | Initialization: $\mathbf{W}^{(1)} = \mathbf{I}$, $\hat{\mathbf{X}}^{(0)} = \mathbf{0}$ |

| 2: | for k = 1 to K do |

| 3: | G(k) = GW(k) |

| 4: | Solve Algorithm 2 with G(k) and X(k) |

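| 5: | $\hat{\mathbf{X}}^{(k)} = \mathbf{W}^{(k)} \tilde{\mathbf{X}}^{(k)}$ |

| 6: | if $\lVert \hat{\mathbf{X}}^{(k)} - \hat{\mathbf{X}}^{(k-1)} \rVert_{\infty} < \tau$ then |

| 7: | break |

| 8: | end if |

| 9: | W(k+1) ← Solve Eq. 5 with $\hat{\mathbf{X}}^{(k)}$ |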

| 10: | end for |

We terminate irMxNE when $\|\hat{\mathbf{X}}^{(k)} - \hat{\mathbf{X}}^{(k-1)}\|_{\infty} < \tau$ with a user-specified threshold τ, which we set to $10^{-6}$ in practice. The proposed optimization algorithm for irMxNE is fast enough to allow its usage in practical MEG/EEG applications. Full pseudo code for irMxNE is provided in Algorithm 3.
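The outer reweighting loop can be sketched in NumPy as follows. Several points are assumptions of this sketch and not taken from the text: the weights are w[s] = 2·sqrt(‖X_s‖_Fro), the stopping rule uses the largest absolute change between successive estimates, and the inner solver `solve_mxne` is a plain cyclic BCD with a fixed number of sweeps that stands in for Algorithm 2 (no active set, no duality gap). All function and parameter names are hypothetical.

```python
import numpy as np


def block_norms(X, n_orient):
    blocks = X.reshape(X.shape[0] // n_orient, n_orient, -1)
    return np.sqrt((blocks ** 2).sum(axis=(1, 2)))


def solve_mxne(M, G, lam, n_orient, n_sweeps=100):
    """Stand-in inner solver: cyclic BCD with group soft-thresholding."""
    X = np.zeros((G.shape[1], M.shape[1]))
    R = M.copy()
    for _ in range(n_sweeps):
        for s in range(G.shape[1] // n_orient):
            idx = slice(s * n_orient, (s + 1) * n_orient)
            G_s = G[:, idx]
            lipschitz = np.linalg.norm(G_s.T @ G_s, ord=2)
            if lipschitz == 0.0:
                continue
            mu = 1.0 / lipschitz
            X_old = X[idx].copy()
            Z = X_old + mu * (G_s.T @ R)
            norm_Z = np.linalg.norm(Z)
            shrink = max(1.0 - mu * lam / norm_Z, 0.0) if norm_Z > 0 else 0.0
            X[idx] = shrink * Z
            R -= G_s @ (X[idx] - X_old)
    return X


def irmxne(M, G, lam, n_orient, n_reweight=10, tau=1e-6):
    """Iteratively reweighted MxNE on a source space that shrinks over time."""
    w = np.ones(G.shape[1] // n_orient)  # first pass is a standard MxNE
    X_hat = np.zeros((G.shape[1], M.shape[1]))
    for _ in range(n_reweight):
        active = np.flatnonzero(w > 0)
        if active.size == 0:
            break
        rows = (active[:, None] * n_orient + np.arange(n_orient)).ravel()
        w_rows = np.repeat(w[active], n_orient)
        X_tilde = solve_mxne(M, G[:, rows] * w_rows, lam, n_orient)
        X_new = np.zeros_like(X_hat)
        X_new[rows] = w_rows[:, None] * X_tilde  # undo the column scaling
        converged = np.max(np.abs(X_new - X_hat)) < tau
        X_hat = X_new
        if converged:
            break
        w = 2.0 * np.sqrt(block_norms(X_hat, n_orient))
    return X_hat


G = np.eye(2)
M = np.array([[3.0], [0.5]])
X_mxne = solve_mxne(M, G, lam=1.0, n_orient=1)
X_irmxne = irmxne(M, G, lam=1.0, n_orient=1)
```

In this toy problem the MxNE amplitude of the first source is 2.0, whereas the reweighted estimate settles at roughly 2.7 for a true value of 3.0, which illustrates the reduced amplitude bias discussed in the text.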

C. Source constraints and bias

1) Source orientation

The proposed BCD scheme is applicable for MEG/EEG inverse problems without and with orientation constraint. For imposing a loose orientation constraint [40], we apply a weighting matrix $\mathbf{K} = \mathrm{diag}([1, \rho, \rho])$ to each block of the gain matrix $\mathbf{G}_s \in \mathbb{R}^{N \times 3}$ with $\mathbf{G}_s[:,1]$ corresponding to the dipole oriented normally to the cortical surface, and $\mathbf{G}_s[:,2]$ and $\mathbf{G}_s[:,3]$ to the two tangential dipoles. The weighting parameter $0 < \rho \leq 1$ controls up to which angle the rotating dipole may deviate from the normal direction [20], [40]. The orientation-weighted gain matrix is hence defined as $\mathbf{G}(\mathbf{I}^{(S)} \otimes \mathbf{K})$, where $\mathbf{I}^{(S)} \in \mathbb{R}^{S \times S}$ is the identity matrix. Since the penalty in Eq. (2) does not promote sparsity along orientations, the irMxNE result is not biased towards the coordinate axes [21]. When the source orientation is postulated a priori (e.g. normal to the cortical surface), each block $\mathbf{X}_s$ corresponds to the activation of a fixed dipole. Consequently, the Frobenius norm per block can be replaced by the l2-norm of the source activation of the corresponding fixed dipole.
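The loose orientation weighting amounts to a column scaling of the gain matrix, which a short NumPy sketch makes explicit. It assumes that the three columns of each source location are ordered as normal, tangential, tangential, as described above; the function name `apply_loose_orientation` is hypothetical.

```python
import numpy as np


def apply_loose_orientation(G, rho):
    """Right-multiply G by the Kronecker product of I^(S) and K."""
    n_sources = G.shape[1] // 3
    K = np.diag([1.0, rho, rho])
    return G @ np.kron(np.eye(n_sources), K)


# Toy gain matrix: 2 sensors, 2 source locations with 3 orientations each.
G = np.arange(12, dtype=float).reshape(2, 6)
G_loose = apply_loose_orientation(G, rho=0.2)
```

For large source spaces, scaling the tangential columns directly avoids building the dense Kronecker product; the explicit product is kept here only to mirror the formula.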

2) Depth bias compensation

Due to the attenuation of the bioelectromagnetic field with increasing distance between source and sensor, deep sources require higher source amplitudes to generate sensor signals of equal strength compared to superficial sources. Consequently, inverse methods, which are based on constraints penalizing the source amplitudes, have a bias towards superficial sources. In order to compensate this bias, each block of the gain matrix is weighted a priori. Here, we apply the depth bias compensation proposed in [55], which computes the weights used for depth bias compensation based on the SVD of the gain matrix.

3) Amplitude bias compensation

Source activations estimated with source reconstruction approaches based on lp-quasinorms with 0 < p ≤ 1, such as MxNE and irMxNE, show a varying degree of amplitude bias due to the inherent shrinkage. The standard practice for compensating the amplitude bias consists in computing the least squares fit after restricting the source space to the support of $\hat{\mathbf{X}}$, which is typically an over-determined optimization problem. In contrast, we apply the debiasing approach proposed in [20], which preserves the source characteristics and orientations estimated with irMxNE by estimating a scaling factor for each source, which is constrained to be above 1 and constant over orientation and time. The bias-corrected source estimate is computed as $\mathbf{D}\hat{\mathbf{X}}$, where the diagonal scaling matrix $\mathbf{D}$ is obtained by solving a convex optimization problem subject to these constraints [20].

D. Simulation setup

We compare MCE, MxNE and irMxNE in terms of amplitude bias, support recovery, and stability using simulated auditory evoked fields. The simulation, which was repeated 100 times, is based on a real gain matrix computed with a three-shell boundary element model using 4699 cortical sources with fixed orientation (normal to the cortical surface), and a 306-channel Elekta Neuromag Vectorview system (Elekta Neuromag Oy, Helsinki, Finland) with 102 magnetometers and 204 gradiometers. The sampling rate was set to 1 kHz and we restricted the analysis to the time window from 60 ms to 150 ms. We generated single trials by activating two dipolar sources, one in each transverse temporal gyrus, with Gaussian functions peaking at 100 ms and 110 ms with peak amplitudes of 55 nAm and 45 nAm, yielding the simulated source activation $\mathbf{X}_{\mathrm{sim}}$. Background activity was generated by ten dipolar sources placed randomly on the cortical surface. Each dipole was activated with filtered white noise with a peak amplitude of 100 nAm. The filter coefficients were determined by fitting an auto-regressive process of order 5 to real baseline MEG data [20]. By averaging 100 single trials, the SNR of the evoked response, which we compute using spatially whitened data, was set to SNR = 2.63 ± 0.46. Source reconstruction was computed without orientation constraint, where none of the dipoles used to generate the gain matrix was oriented perpendicularly to the cortical surface. All methods were applied with different regularization parameters λ given as a percentage of the respective λmax, which is the smallest regularization parameter leading to an empty active set [9]. We evaluate the source reconstruction performance by means of the true and false positive counts. We consider a source to be a true positive if its geodesic distance along the cortical surface from the true source location is less than 1 cm. A value of 1 cm is what would be considered an acceptable localization error for most neuroscience applications.
Moreover, we present the active set size and the root mean square error (RMSE) in the sensor space. To evaluate the stability of the reconstructed support, we compute Krippendorff’s alpha [56].
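For MxNE, the value of λmax follows from the optimality conditions of the l2,1 problem: the all-zero estimate is optimal as soon as λ is at least the largest block-wise Frobenius norm of GᵀM. The sketch below computes this quantity under that assumption; it does not cover MCE, whose penalty differs, and the function name `lambda_max` is hypothetical.

```python
import numpy as np


def lambda_max(M, G, n_orient):
    """Smallest lambda for which the MxNE estimate is entirely zero."""
    GtM = G.T @ M
    blocks = GtM.reshape(G.shape[1] // n_orient, n_orient, -1)
    return np.sqrt((blocks ** 2).sum(axis=(1, 2))).max()


# Toy problem: 2 sensors, 2 fixed-orientation sources, 2 time samples.
G = np.eye(2)
M = np.array([[3.0, 4.0], [1.0, 0.0]])
lam_max = lambda_max(M, G, n_orient=1)
lam = 0.6 * lam_max  # regularization given as 60 % of lambda_max
```

Expressing λ as a fraction of λmax makes the regularization level comparable across data sets with different scaling.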

E. Experimental MEG data

We evaluate the performance of MxNE and irMxNE using data from the MIND multi-site MEG study [57]–[59]. We use two different data sets from one exemplary subject, auditory evoked fields (AEF) and somatosensory evoked fields (SEF), recorded using the 306-channel Elekta Neuromag Vectorview system. A detailed description of the data and paradigms can be found in [57]–[59]. For the AEF data set, we report results for AEFs evoked by left auditory stimulation with pure tones of 500 Hz. The analysis window for source estimation was chosen from 50 ms to 200 ms based on visual inspection of the evoked data to capture the dominant N100m component. For the SEF data set, we analyzed SEFs evoked by bipolar electrical stimulation (0.2 ms in duration) of the left median nerve. To capture the main peaks of the evoked response and to exclude the strong stimulus artifact, the analysis window was chosen from 18 ms to 200 ms based on visual inspection. Following the standard pipeline from the MNE software [60], signal preprocessing for both data sets consisted of signal-space projection for suppressing environmental noise, and baseline correction using pre-stimulus data (from −200 ms to −20 ms). Epochs with peak-to-peak amplitudes exceeding predefined rejection parameters (3 pT for magnetometers, 400 pT/m for gradiometers, and 150 µV for EOG) were assumed to be affected by artifacts and discarded. This resulted in 96 (AEF) and 294 (SEF) artifact-free epochs, which were resampled to 500 Hz. The gain matrix was computed using a set of 7498 cortical locations, and a three-layer boundary element model. The stability of the source reconstruction was tested using a resampling technique. For each data set, we generated 100 random sets of epochs by randomly selecting 80% of all available epochs without replacement. The noise covariance matrix for spatial whitening was estimated for each subsample using prestimulus data (from −200 ms to −20 ms).
We applied both MxNE and irMxNE on the average of each random set without orientation constraint. Due to the lack of a ground truth, the source reconstruction performance is evaluated by means of the Goodness of Fit (GOF) and the active set size. To compare results with well established source reconstruction techniques, we compute the dSPM solution [45] without orientation constraint and the RAP-MUSIC estimate [5] for both data sets using the MNE-Python software [61]. For RAP-MUSIC, we use single-dipole and two-dipole independent topographies to address the problem of correlated sources [4]. A similar idea is pursued e.g. by dual core beamformers [62]. The correlation threshold was set to 0.95 as proposed by Mosher et al. [4]. The rank of the signal subspace was determined by thresholding the eigenvalues of the data covariance based on an estimate of the noise variance. As we apply a spatial whitening, the threshold was set to 1.
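The resampling procedure used for the stability analysis can be sketched as follows. The example operates on a synthetic array of epochs with shape (epochs, channels, time samples); the function name `subsample_evoked`, the seed, and the rounding of the subset size are assumptions, and the per-subsample re-estimation of the noise covariance is omitted.

```python
import numpy as np


def subsample_evoked(epochs, n_sets=100, fraction=0.8, seed=0):
    """Average random subsets of epochs drawn without replacement."""
    rng = np.random.default_rng(seed)
    n_epochs = epochs.shape[0]
    n_keep = int(round(fraction * n_epochs))
    evoked = []
    for _ in range(n_sets):
        picks = rng.choice(n_epochs, size=n_keep, replace=False)
        evoked.append(epochs[picks].mean(axis=0))
    return np.stack(evoked)


# Synthetic stand-in for 96 artifact-free epochs (4 channels, 10 samples).
epochs = np.random.default_rng(1).standard_normal((96, 4, 10))
evoked_sets = subsample_evoked(epochs)
```

Each of the resulting averages can then be passed to the inverse solver, and the variability of the selected sources across the sets indicates how stable the reconstructed support is.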

III. Results

A. Simulation study

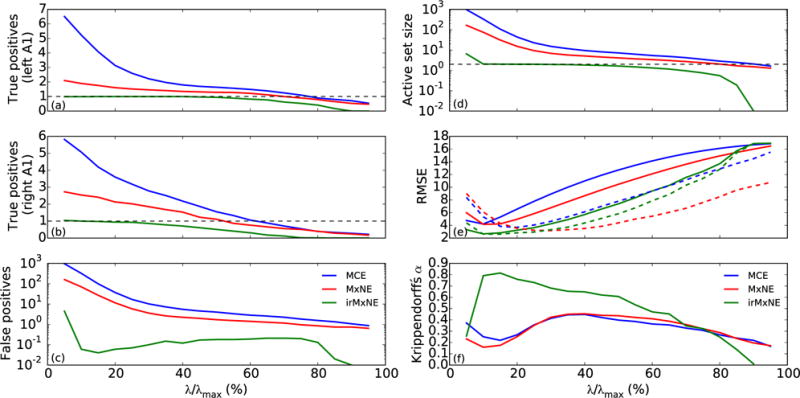

The results of the simulation study (100 repetitions) for different regularization parameters (from 5 to 100% of λmax) are presented in Fig. 1. The source space contained 4699 sources and one source per hemisphere was active, as indicated by the horizontal dashed lines in Fig. 1a and b. True positive counts above this threshold indicate suboptimally sparse source estimates, whereas counts close to zero indicate false negatives. We can see that the irMxNE approach provides the best support recovery. It reconstructs single dipoles in both regions of interest, whereas MCE and MxNE find multiple correlated sources. Particularly for low values of λ, MCE and MxNE overestimate the size of the active set, leading to a large number of false positives, whereas irMxNE generates significantly fewer false positives. The mean active set size confirms that irMxNE provides the sparsest result of all three methods. The mean RMSE is shown in Fig. 1e. While all methods profit from the debiasing procedure, the effect on irMxNE is less pronounced compared to the other methods, indicating a reduced amplitude bias. The best result is obtained with irMxNE. Krippendorff’s α indicates that the support reconstructed with irMxNE is more stable compared to MCE or MxNE. The source estimate is thus less dependent on the epochs used for generating the evoked response.

Fig. 1.

Results of the simulation study based on simulated AEFs for MCE, MxNE and irMxNE. The source space contained a total of 4699 sources and the simulation was repeated 100 times: (a) mean true positive count (left A1), (b) mean true positive count (right A1), (c) mean false positive count, (d) mean active set size, (e) mean RMSE without (solid) and with (dashed) debiasing, and (f) Krippendorff’s α.

B. Experimental MEG data

1) Auditory evoked fields

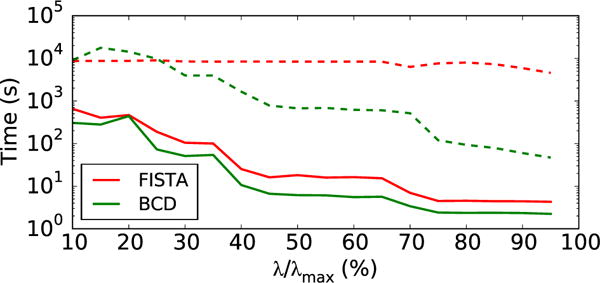

We first compare the performance of the proposed BCD scheme for solving the weighted MxNE with the Fast Iterative Shrinkage Thresholding Algorithm (FISTA) [63], a proximal gradient method used in [9]. Both methods were applied with and without active set strategy. All computations were performed on a computer with a 2.4 GHz Intel Core 2 Duo processor and 8 GB RAM. The computation times as a function of λ are presented in Fig. 2. The BCD scheme outperforms FISTA both with and without active set strategy. Combining the BCD scheme and the active set strategy reduces the computation time by a factor of 100 and makes it possible to compute the MxNE on real MEG/EEG data in a few seconds. Since subsequent MxNE iterations are significantly faster due to the restriction of the source space, irMxNE also runs in a few seconds on real MEG/EEG source localization problems.

Fig. 2.

Computation time as a function of λ for MxNE on real MEG data (free orientation) using BCD and FISTA with (solid) and without (dashed) active set strategy.

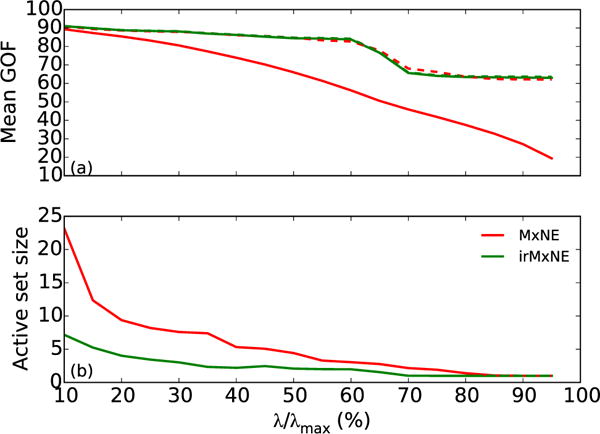

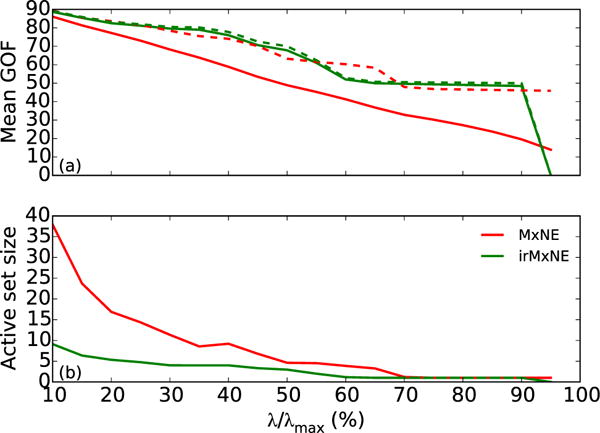

We applied MxNE and irMxNE (with and without debiasing) with different regularization parameters λ to 100 AEF data sets generated by averaging randomly selected subsets of epochs. The mean GOF around the N100m component (from 90 ms to 150 ms) and the mean active set size are presented in Fig. 3 as a function of λ. We can see that the debiasing procedure has a strong effect on MxNE, whereas the GOF of the irMxNE result is only slightly improved indicating less amplitude bias. Debiased MxNE and irMxNE yield similar GOFs with similar plateaus, but irMxNE provides a sparser, i.e., simpler model.

Fig. 3.

Mean GOF, and active set size for MxNE and irMxNE without (solid) and with (dashed) debiasing for the AEF data.

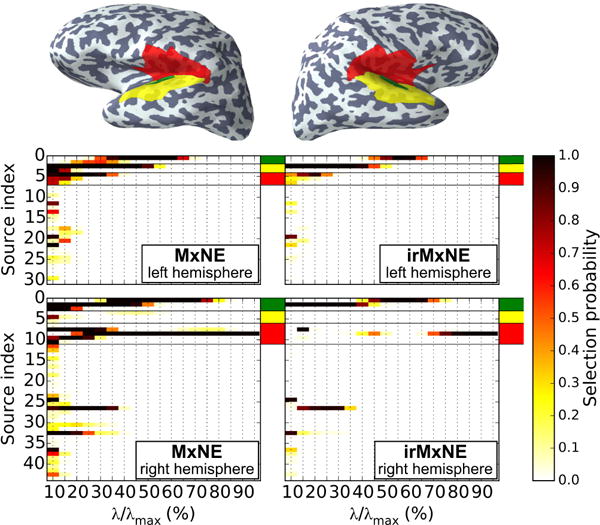

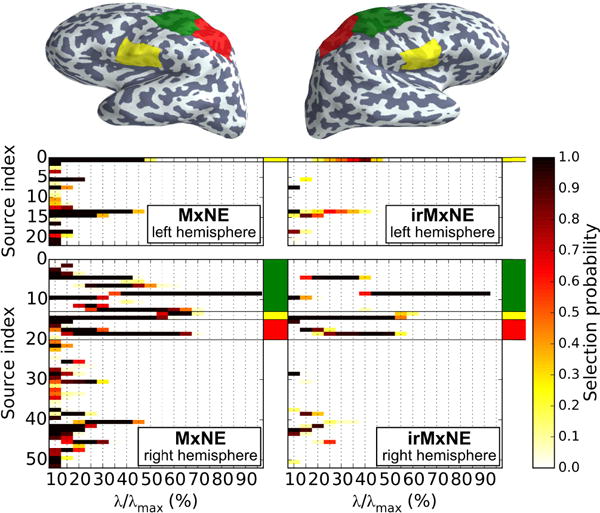

The selection probability for all sources being, at least once, part of the active set obtained with MxNE or irMxNE is shown in Fig. 4. MxNE selects multiple sources with high probability within each region of interest, which is a consequence of the correlated design. The irMxNE approach is more selective and provides sparser source estimates. Moreover, the number of false positives, i.e., sources outside of the regions of interest, is lower for irMxNE, particularly for low values of λ.

Fig. 4.

Source selection probability for the AEF data set using MxNE (left) and irMxNE (right). The plot is restricted to sources that are active in at least one random subsample. The colored patches on the inflated brain indicate regions of interest based on anatomical labels (green, yellow, red). Source indices, which are in the regions of interest, are highlighted by corresponding color marks. The transversal temporal gyrus is indicated in green.

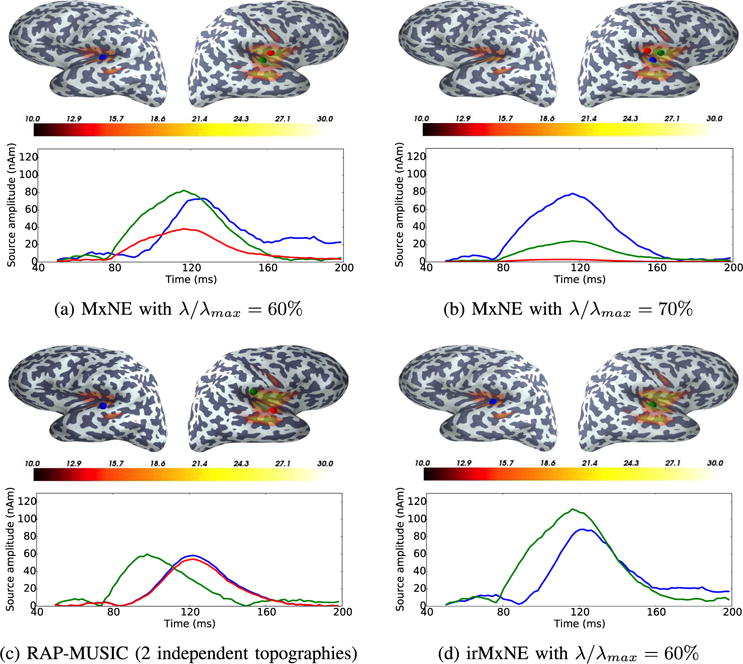

Exemplary source reconstructions for debiased MxNE and irMxNE are illustrated in Fig. 5. For comparison, we present a RAP-MUSIC estimate based on single- and two-dipole independent topographies [4], [5]. The maximum dSPM score [45] per source is shown as an overlay on each cortical surface.

MxNE with λ/λmax = 60% shows activation in both primary auditory cortices with main peaks around 110 ms, corresponding to the N100m component. The activation in the right hemisphere is, however, split into two highly correlated dipoles, which are partly located on the wrong side of the Sylvian fissure. Increasing λ does not fix the latter issue, since dipoles in the left primary auditory cortex are eliminated before the spurious activity in the right hemisphere is erased. The loss of the active source in the left auditory cortex is also indicated by the drop of the GOF in Fig. 3.

The size of the signal subspace for RAP-MUSIC was estimated to be 50 by the thresholding procedure. Because it is based on an empirical estimate of the data covariance, this procedure tends to overestimate the rank of the signal subspace [5], so the RAP-MUSIC estimate depends on the correlation threshold. With the setting proposed in [4], only two independent topographies, a single- and a two-dipole topography, yield sufficient subspace correlations. The dipoles are reconstructed close to the primary auditory cortex in both hemispheres. The GOF of the three-dipole model is 86.7%. Using single- and two-dipole topographies provides better RAP-MUSIC estimates than using only single-dipole topographies.

The irMxNE with λ/λmax = 60%, which converged after 10 iterations, reconstructs single dipoles in both primary auditory cortices. Intuitively, the green and blue sources, which are the strongest sources according to MxNE with λ/λmax = 60%, are favored at the next iteration of the reweighting scheme, which prunes out the source on the wrong side of the Sylvian fissure present in the MxNE result. The estimated source locations roughly match the peaks of the dSPM estimate. The source estimate obtained with dSPM or similar linear inverse methods (sLORETA, MNE, etc.) is, however, spatially smeared. To reduce the smearing of the dSPM estimate, one could increase the threshold, but the threshold would then become time dependent and certainly too high to reveal weaker sources. Alternatively, post-processing is generally required, e.g. by defining regions of interest, to improve interpretability. Note also that, in contrast to dSPM, source amplitudes obtained with irMxNE are moments of electrical dipoles expressed in nAm, which is similar to dipole fitting procedures [2]. The GOF of the two-dipole model obtained with irMxNE is 81.9% and thus only slightly lower than that of the three-dipole model obtained with RAP-MUSIC.

Fig. 5.

Source reconstruction results using AEFs evoked by left auditory stimulation. The estimated source locations for MxNE (a, b), RAP-MUSIC (c) and irMxNE (d), indicated by colored spheres, and the corresponding time courses are color-coded. The maximum of the dSPM estimate per source, which is thresholded for visualization purposes, is shown as an overlay on each cortical surface.

2) Somatosensory evoked fields

We applied MxNE and irMxNE (with and without debiasing) with different regularization parameters to 100 averaged random subsets of epochs of the SEF data set. The mean GOF and the corresponding active set size for MxNE and irMxNE (with and without debiasing) as a function of the regularization parameter λ are shown in Fig. 6. Again, irMxNE yields significantly sparser source estimates, which nevertheless achieve a better GOF than MxNE. The GOFs of the MxNE and irMxNE results with and without debiasing also illustrate that the irMxNE source estimates are less biased in amplitude.

Fig. 6.

Mean GOF and active set size for MxNE and irMxNE without (solid) and with (dashed) debiasing for the SEF data.
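The two quantities tracked in Fig. 6 can be computed from any row-sparse source estimate. The following sketch assumes the common definition GOF = 1 − ‖M − GX‖²_F / ‖M‖²_F for measurements M, gain matrix G, and source estimate X; the definition used for the reported values may differ in details such as whitening. The function names and the toy data are illustrative and are not taken from MNE-Python. The example also shows why amplitude bias lowers the GOF: shrinking the correct sources by one half leaves a quarter of the signal energy unexplained.

```python
import numpy as np


def goodness_of_fit(M, G, X):
    """Fraction of the measured signal energy explained by G @ X (assumed definition)."""
    residual = M - G @ X
    return 1.0 - np.sum(residual ** 2) / np.sum(M ** 2)


def active_set_size(X):
    """Number of sources (rows of X) with a non-zero time course."""
    return int(np.count_nonzero(np.linalg.norm(X, axis=1)))


# Toy example: 2 active sources out of 30, noise-free measurements.
rng = np.random.default_rng(0)
G = rng.standard_normal((10, 30))        # hypothetical gain matrix
X_true = np.zeros((30, 5))
X_true[[4, 17]] = rng.standard_normal((2, 5))
M = G @ X_true

gof_unbiased = goodness_of_fit(M, G, X_true)        # exact amplitudes
gof_shrunk = goodness_of_fit(M, G, 0.5 * X_true)    # amplitudes biased towards zero
n_active = active_set_size(X_true)
```

In this toy case the support is identical for both estimates, so the GOF difference is due to amplitude bias alone, which is the effect that debiasing is meant to remove.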

Fig. 7 presents the selection probability for all sources that are non-zero in at least one MxNE or irMxNE estimate. The irMxNE typically selects only one source per region of interest for different values of λ. The number of false positives is also significantly lower. These results confirm the findings obtained from the AEF data set in section III-B1. The stability analysis also reveals that the source in the ipsilateral secondary somatosensory cortex (iS2) is less stable than the contralateral sources, which might be caused by its relatively weak field pattern [12].

Fig. 7.

Selection probability for sources obtained with MxNE (left) and irMxNE (right) for the SEF data. The plot is restricted to sources that are active in at least one random subsample. The colored patches on the inflated brain indicate regions of interest based on anatomical labels (green, yellow, red). Source indices that lie in the regions of interest are highlighted by corresponding color marks.

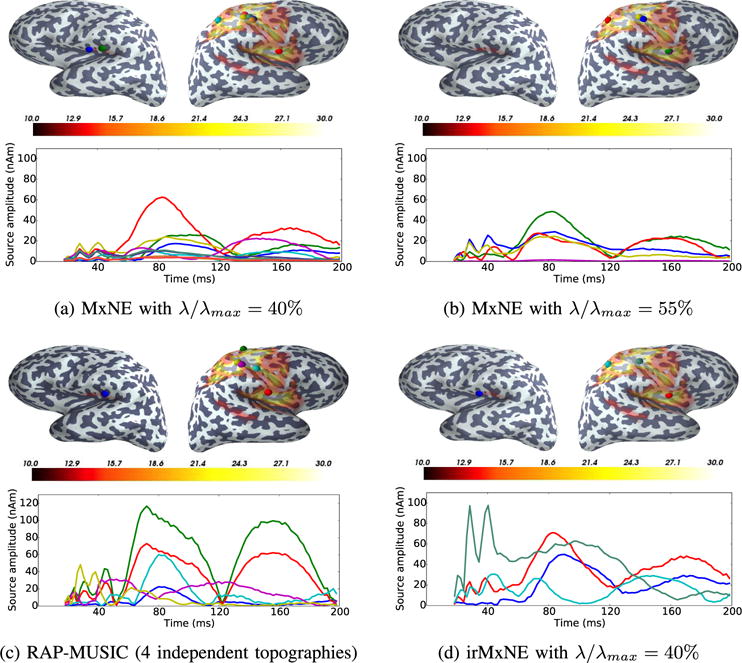
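The selection probabilities shown in Fig. 7 are relative frequencies over random subsamples. A minimal sketch of this bookkeeping is given below, assuming that each subsample is the average of a random subset of epochs and that a sparse solver returns the indices of its active sources. The helper names, the hard-coded active sets, and the simulated epochs are hypothetical and serve only as illustration.

```python
import numpy as np


def random_subset_average(epochs, subset_size, rng):
    """Average a random subset of epochs; epochs has shape (n_epochs, n_sensors, n_times)."""
    picks = rng.choice(epochs.shape[0], size=subset_size, replace=False)
    return epochs[picks].mean(axis=0)


def selection_probability(active_sets, n_sources):
    """Fraction of subsamples in which each source is active.

    Returns the indices of sources active at least once and their probabilities.
    """
    counts = np.zeros(n_sources)
    for active in active_sets:
        counts[np.unique(np.asarray(active, dtype=int))] += 1
    ever_active = np.flatnonzero(counts)
    return ever_active, counts[ever_active] / len(active_sets)


# Hypothetical active sets returned by a sparse solver on four subsamples.
active_sets = [[3, 7], [3], [3, 8], [3, 7]]
sources, prob = selection_probability(active_sets, n_sources=10)

# Averaging a random half of 20 simulated epochs (5 sensors, 8 time samples).
rng = np.random.default_rng(0)
epochs = rng.standard_normal((20, 5, 8))
evoked = random_subset_average(epochs, subset_size=10, rng=rng)
```

Restricting the output to sources that are active at least once mirrors the restriction used in the plots.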

Fig. 8 presents source reconstruction results obtained with MxNE and irMxNE for selected regularization parameters. As in section III-B1, we show a RAP-MUSIC estimate and add the maximum dSPM score per source as an overlay to all subfigures. MxNE with λ/λmax = 40% reconstructs dipoles in the contralateral primary somatosensory cortex (cS1), the contralateral and ipsilateral secondary somatosensory cortices (cS2 and iS2), and the posterior parietal cortex (cPPC). The source locations roughly coincide with the main peaks of the dSPM estimate. As for the AEF data set, the source activation per region is split into several correlated dipoles. An increase of the regularization parameter results in a loss of physiologically meaningful source activity such as the activation in iS2, which is visible in the dSPM estimate. The relevance of this activation is also indicated by the drop of the GOF in Fig. 6. The signal subspace estimation for RAP-MUSIC yields a signal subspace size of 43. Hence, the RAP-MUSIC estimate depends on the choice of the correlation threshold. For our settings, four independent topographies, two single- and two two-dipole topographies, are above the subspace correlation threshold. Dipoles are reconstructed in all relevant areas. The activation in cS1 is split into several dipoles, probably due to the fixed-orientation source model applied in RAP-MUSIC. The GOF of this six-dipole model is 82.6%. The RAP-MUSIC estimate benefits from using single- and two-dipole topographies. The irMxNE approach with λ/λmax = 40% converged after 14 reweightings. The resulting source estimate contains four single dipoles representing activation in each of the four regions. The GOF of the four-dipole model obtained with irMxNE is 81.4% and thus higher than the GOF of the corresponding MxNE estimate and only slightly lower than the GOF of the six-dipole model obtained with RAP-MUSIC.

Fig. 8.

Source reconstruction results using SEFs evoked by electrical stimulation of the left median nerve. The estimated source locations for MxNE (a, b), RAP-MUSIC (c) and irMxNE (d), indicated by colored spheres, and the corresponding time courses are color-coded. The maximum of the dSPM estimate per source, which is thresholded for visualization purposes, is shown as an overlay on each cortical surface.

IV. Discussion and Conclusion

In this work, we presented irMxNE, an MEG/EEG inverse solver based on regularized regression with a non-convex block-separable penalty. The non-convex optimization problem is solved by iteratively solving a sequence of weighted MxNE problems, which allows for fast algorithms and global convergence control at each iteration. We proposed a new algorithm for solving the MxNE surrogate problems combining BCD and a forward active set strategy, which significantly decreases the computation time compared to the original MxNE algorithm [9]. This new algorithm makes the proposed iterative reweighted optimization scheme applicable to practical MEG/EEG applications. The approach is also applicable to other block-separable non-convex penalties such as the logarithmic penalty proposed in [22] by adapting the definition of the weights in Eq. (5).

The irMxNE method is designed for offline source reconstruction, which is still the main application of MEG/EEG source imaging in research and clinical routine. However, we are aware of a growing interest in real-time brain monitoring [64]. New techniques such as parallel BCD schemes [51], clustering approaches [65], and safe rules [66] can help to further reduce the computation time.

As proposed in [22], [26], the first iteration of irMxNE is equivalent to computing the standard MxNE. Consequently, the irMxNE result is at least as sparse as the MxNE estimate. The iterative reweighting procedure can thus be considered as a post-processing for MxNE improving source recovery, stability, and amplitude bias. This was confirmed by empirical results based on simulations and two MEG data sets. We attribute this to the spatial correlation and the poor conditioning of the forward operator in MEG/EEG source analysis. An alternative approach to improve the conditioning of the inverse problem based on clustering the columns of the gain matrix is presented in [65], which however affects the spatial resolution.

The source locations reconstructed by irMxNE roughly coincided with the main peaks of the dSPM estimate, which demonstrates that the proposed inverse solver can present a simple and easy-to-interpret spatio-temporal picture of the active sources. The models reconstructed with RAP-MUSIC provided a slightly higher goodness of fit, but contained more active sources. We found that RAP-MUSIC benefits from using single-dipole and two-dipole independent topographies. The use of higher-order source models, which are limited to a small number of correlated sources, however significantly increases the computational complexity, particularly for source spaces with high resolution. Approaches improving the computation time of RAP-MUSIC are presented in [64]. In contrast, irMxNE makes no assumption on the number of correlated sources. Its computation time is not dramatically affected by the resolution of the source space.

Model selection for sparse source imaging approaches, which amounts here to choosing the regularization parameter, is a critical aspect. Automatic approaches based on minimizing the prediction error such as cross-validation tend to overestimate the number of active sources and increase the false positive rate. Here, we selected the regularization parameter based on the GOF and the size of the active set. A similar procedure is used e.g. in sequential dipole fitting. The development of an automatic model selection procedure for the proposed inverse solver is future work. In particular, approaches maximizing model stability are an interesting alternative [67], [68]. Model selection is however a general issue in MEG/EEG source reconstruction. In our comparison with RAP-MUSIC, we found e.g. that the size of the signal subspace and the correlation threshold have a strong influence on the final source estimate. Due to the limited number of samples, we can only obtain an empirical estimate of the data covariance, which involves the risk of overestimating the size of the signal subspace using the thresholding procedure. The correlation threshold is used to switch to higher-order source models and to account for noise components in the signal subspace.

Similar to MxNE, irMxNE assumes that the locations of active sources are constant over time. Hence, it should be applied to data for which this model assumption is approximately true, e.g., by selecting intervals of interest or applying a moving window approach. To go beyond stationary sources, the reconstruction of non-stationary focal source activation can be improved by applying sparsity constraints in the time-frequency domain such as in the TF-MxNE [20]. Preliminary results on the application of non-convex regularization for such models based on iterative reweighting procedures were presented in [69]. The irMxNE solver is available in the MNE-Python package [61].
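The reweighting scheme summarized in this section can be sketched in a few lines of NumPy. The code below is a simplified illustration and not the MNE-Python implementation: it assumes fixed-orientation sources (one row of X per source), uses plain cyclic BCD without the active set strategy, and takes the penalty of source s in each weighted problem to be 1 / (2 √‖X_s‖), which follows from majorizing the square root in the l0.5-quasinorm at the previous iterate and should be checked against Eq. (5). All function and variable names, the toy gain matrix, and the choice of λ as a fraction of the λmax of the first (MxNE) iteration are assumptions made for this example.

```python
import numpy as np


def weighted_mxne_bcd(M, G, lam, penalty, n_sweeps=100):
    """Cyclic BCD for 0.5*||M - G X||_F^2 + lam * sum_s penalty[s] * ||X[s]||_2.

    One row of X per source (fixed orientation). Sources with an infinite
    penalty are pruned, i.e. kept at zero.
    """
    n_sources = G.shape[1]
    X = np.zeros((n_sources, M.shape[1]))
    R = M.copy()                              # residual M - G @ X
    lipschitz = np.sum(G ** 2, axis=0)        # per-source step-size constants
    for _ in range(n_sweeps):
        for s in np.flatnonzero(np.isfinite(penalty)):
            g = G[:, s]
            z = X[s] + g @ R / lipschitz[s]   # gradient step on block s
            norm_z = np.linalg.norm(z)
            thresh = lam * penalty[s] / lipschitz[s]
            shrink = max(1.0 - thresh / norm_z, 0.0) if norm_z > 0 else 0.0
            x_new = shrink * z                # block soft-thresholding
            R += np.outer(g, X[s] - x_new)    # keep the residual in sync
            X[s] = x_new
    return X


def irmxne(M, G, lam_ratio, n_reweightings=5):
    """Iteratively reweighted MxNE for an l0.5-quasinorm over sources (sketch)."""
    lam_max = np.max(np.linalg.norm(G.T @ M, axis=1))   # smallest lam giving X = 0
    lam = lam_ratio * lam_max
    penalty = np.ones(G.shape[1])                        # first pass is plain MxNE
    for _ in range(n_reweightings):
        X = weighted_mxne_bcd(M, G, lam, penalty)
        row_norms = np.linalg.norm(X, axis=1)
        with np.errstate(divide="ignore"):
            penalty = 1.0 / (2.0 * np.sqrt(row_norms))   # inf for inactive sources
    return X


# Toy problem with correlated gain columns and two active sources.
rng = np.random.default_rng(42)
G = rng.standard_normal((20, 60))
G += 0.8 * np.roll(G, 1, axis=1)             # correlate neighboring columns
X_true = np.zeros((60, 10))
X_true[[10, 40]] = 5.0 * rng.standard_normal((2, 10))
M = G @ X_true + 0.1 * rng.standard_normal((20, 10))

X_mxne = irmxne(M, G, lam_ratio=0.3, n_reweightings=1)   # standard MxNE
X_ir = irmxne(M, G, lam_ratio=0.3, n_reweightings=5)
support_mxne = set(np.flatnonzero(np.linalg.norm(X_mxne, axis=1)))
support_ir = set(np.flatnonzero(np.linalg.norm(X_ir, axis=1)))
```

Because a source that reaches zero receives an infinite penalty in all later iterations, the support of `X_ir` is by construction a subset of the support of `X_mxne`, which illustrates the statement that the irMxNE result is at least as sparse as the MxNE estimate.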

Acknowledgments

The authors would like to thank M. S. Hämäläinen for providing the experimental MEG data sets.

This work was supported by the German Research Foundation (Ha 2899/21-1), the European Union (FP7-PEOPLE-2013-IAPP 610950), the EDF and Jacques Hadamard Mathematical Foundation (Gaspard Monge Program for Optimization and operations research), the French National Research Agency (ANR-14-NEUC-0002-01), and the National Institutes of Health (R01 MH106174).

Contributor Information

Daniel Strohmeier, Institute of Biomedical Engineering and Informatics, Technische Universität Ilmenau, Ilmenau, Germany.

Yousra Bekhti, LTCI, CNRS, Télécom ParisTech, Université Paris-Saclay, Paris, France.

Jens Haueisen, Institute of Biomedical Engineering and Informatics, Technische Universität Ilmenau, Ilmenau, Germany and the Biomagnetic Center, Department of Neurology, Jena University Hospital, Jena, Germany.

Alexandre Gramfort, LTCI, CNRS, Télécom ParisTech, Université Paris-Saclay, Paris, France and the NeuroSpin, CEA Saclay, Bat. 145, Gif-sur-Yvette Cedex, France.

References

- 1. Baillet S, Mosher JC, Leahy RM. Electromagnetic brain mapping. IEEE Signal Proc Mag. 2001 Nov;18(6):14–30.

- 2. Scherg M, Von Cramon D. Two bilateral sources of the late AEP as identified by a spatio-temporal dipole model. Electroencephalogr Clin Neurophysiol. 1985 Jan;62(1):32–44. doi: 10.1016/0168-5597(85)90033-4.

- 3. Mosher JC, Lewis PS, Leahy RM. Multiple dipole modeling and localization from spatio-temporal MEG data. IEEE Trans Biomed Eng. 1992 Jun;39(6):541–557. doi: 10.1109/10.141192.

- 4. Mosher JC, Leahy RM. Recursive MUSIC: a framework for EEG and MEG source localization. IEEE Trans Biomed Eng. 1998 Jan;45(11):1342–1354. doi: 10.1109/10.725331.

- 5. Mosher JC, Leahy RM. Source localization using recursively applied and projected (RAP) MUSIC. IEEE Trans Signal Process. 1999 Feb;47(2):332–340. doi: 10.1109/10.867959.

- 6. Xu XL, Xu B, He B. An alternative subspace approach to EEG dipole source localization. Phys Med Biol. 2004 Jan;49(2):327. doi: 10.1088/0031-9155/49/2/010.

- 7. Matsuura K, Okabe Y. Selective minimum-norm solution of the biomagnetic inverse problem. IEEE Trans Biomed Eng. 1995 Jun;42(6):608–615. doi: 10.1109/10.387200.

- 8. Ou W, Hamalainen MS, Golland P. A distributed spatio-temporal EEG/MEG inverse solver. NeuroImage. 2009 Feb;44(3):932–946. doi: 10.1016/j.neuroimage.2008.05.063.

- 9. Gramfort A, Kowalski M, Hämäläinen MS. Mixed-norm estimates for the M/EEG inverse problem using accelerated gradient methods. Phys Med Biol. 2012 Apr;57(7):1937–1961. doi: 10.1088/0031-9155/57/7/1937.

- 10. Lucka F, Pursiainen S, Burger M, Wolters C. Hierarchical bayesian inference for the EEG inverse problem using realistic FE head models: Depth localization and source separation for focal primary currents. NeuroImage. 2012 Apr;61(4):1364–1382. doi: 10.1016/j.neuroimage.2012.04.017.

- 11. Wipf D, Nagarajan S. A unified bayesian framework for MEG/EEG source imaging. NeuroImage. 2009 Feb;44(3):947–966. doi: 10.1016/j.neuroimage.2008.02.059.

- 12. Sorrentino A, Parkkonen L, Pascarella A, Campi C, Piana M. Dynamical MEG source modeling with multi-target bayesian filtering. Hum Brain Mapp. 2009 Jun;30(6):1911–1921. doi: 10.1002/hbm.20786.

- 13. Uutela K, Hämäläinen MS, Somersalo E. Visualization of magnetoencephalographic data using minimum current estimates. NeuroImage. 1999 Aug;10(2):173–180. doi: 10.1006/nimg.1999.0454.

- 14. Tibshirani R. Regression Shrinkage and Selection Via the Lasso. J Royal Stat Soc B. 1996 Jan;58(1):267–288.

- 15. Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. J Royal Stat Soc B. 2006 Feb;68(1):49–67.

- 16. Liao X, Li H, Carin L. Generalized Alternating Projection for Weighted-l2,1 Minimization with Applications to Model-Based Compressive Sensing. SIAM J Imaging Sci. 2014 Apr;7(2):797–823.

- 17. Haufe S, Nikulin VV, Ziehe A, Müller K-R, Nolte G. Combining sparsity and rotational invariance in EEG/MEG source reconstruction. NeuroImage. 2008 Aug;42(2):726–738. doi: 10.1016/j.neuroimage.2008.04.246.

- 18. Bolstad A, Van Veen B, Nowak R. Space-time event sparse penalization for magneto-/electroencephalography. NeuroImage. 2009 Jul;46(4):1066–1081. doi: 10.1016/j.neuroimage.2009.01.056.

- 19. Huang J, Breheny P, Ma S. A selective review of group selection in high-dimensional models. Statist Sci. 2012 Nov;27(4). doi: 10.1214/12-STS392.

- 20. Gramfort A, Strohmeier D, Haueisen J, Hämäläinen MS, Kowalski M. Time-frequency mixed-norm estimates: Sparse M/EEG imaging with non-stationary source activations. NeuroImage. 2013 Apr;70:410–422. doi: 10.1016/j.neuroimage.2012.12.051.

- 21. Chang WT, Ahlfors SP, Lin FH. Sparse current source estimation for MEG using loose orientation constraints. Hum Brain Mapp. 2013 Dec;34(9):2190–2201. doi: 10.1002/hbm.22057.

- 22. Candès EJ, Wakin MB, Boyd SP. Enhancing sparsity by reweighted l1 minimization. J Fourier Anal Applicat. 2008 Dec;14(5–6):877–905.

- 23. Chartrand R. Exact reconstruction of sparse signals via nonconvex minimization. IEEE Signal Proc Let. 2007 Oct;14(10):707–710.

- 24. Saab R, Chartrand R, Yilmaz O. Stable sparse approximations via nonconvex optimization. IEEE Int Conference on Acoustics, Speech and Signal Processing (ICASSP) 2008:3885–3888.

- 25. Woodworth J, Chartrand R. Compressed sensing recovery via nonconvex shrinkage penalties. arXiv:1504.02923. 2015 Apr; [Online]. Available: http://arxiv.org/abs/1504.02923.

- 26. Gasso G, Rakotomamonjy A, Canu S. Recovering sparse signals with a certain family of nonconvex penalties and DC programming. IEEE Trans Signal Process. 2009 Dec;57(12):4686–4698.

- 27. Rakotomamonjy A. Surveying and comparing simultaneous sparse approximation (or group-lasso) algorithms. Signal Process. 2011 Jul;91(7):1505–1526.

- 28. Zhang Z, Rao BD. Sparse signal recovery with temporally correlated source vectors using sparse bayesian learning. IEEE J Sel Topics Signal Process. 2011 Sep;5(5):912–926.

- 29. Gorodnitsky IF, Rao BD. Sparse signal reconstruction from limited data using FOCUSS: A re-weighted minimum norm algorithm. IEEE Trans Signal Process. 1997 Mar;45(3):600–616.

- 30. Rao BD, Kreutz-Delgado K. An affine scaling methodology for best basis selection. IEEE Trans Signal Process. 1999 Jan;47(1):187–200.

- 31. Chartrand R, Yin W. Iteratively reweighted algorithms for compressive sensing. IEEE Int Conference on Acoustics, Speech and Signal Processing (ICASSP) 2008:3869–3872.

- 32. Cotter SF, Rao BD, Engan K, Kreutz-Delgado K. Sparse solutions to linear inverse problems with multiple measurement vectors. IEEE Trans Signal Process. 2005 Jul;53(7):2477–2488.

- 33. Zhang Z, Rao BD. Iterative reweighted algorithms for sparse signal recovery with temporally correlated source vectors. IEEE Int Conference on Acoustics, Speech and Signal Processing (ICASSP) 2011:3932–3935.

- 34. Wipf D, Nagarajan S. Iterative reweighted l1 and l2 methods for finding sparse solutions. IEEE J Sel Topics Signal Process. 2010 Apr;4(2):317–329.

- 35. Gorodnitsky IF, George JS, Rao BD. Neuromagnetic source imaging with FOCUSS: a recursive weighted minimum norm algorithm. Electroencephalogr Clin Neurophysiol. 1995 Oct;95(4):231–251. doi: 10.1016/0013-4694(95)00107-a.

- 36. Portniaguine O, Zhdanov MS. Focusing geophysical inversion images. Geophysics. 1999 Jun;64(3):874–887.

- 37. Nagarajan SS, Portniaguine O, Hwang D, Johnson C, Sekihara K. Controlled support MEG imaging. NeuroImage. 2006 Nov;33(3):878–885. doi: 10.1016/j.neuroimage.2006.07.023.

- 38. Liu H, Schimpf PH, Dong G, Gao X, Yang F, Gao S. Standardized shrinking LORETA-FOCUSS (SSLOFO): a new algorithm for spatio-temporal EEG source reconstruction. IEEE Trans Biomed Eng. 2005 Oct;52(10):1681–1691. doi: 10.1109/TBME.2005.855720.

- 39. Xu P, Tian Y, Chen H, Yao D. Lp norm iterative sparse solution for EEG source localization. IEEE Trans Biomed Eng. 2007 Mar;54(3):400–409. doi: 10.1109/TBME.2006.886640.

- 40. Lin F-H, Belliveau JW, Dale AM, Hämäläinen MS. Distributed current estimates using cortical orientation constraints. Hum Brain Mapp. 2006 Jan;27(1):1–13. doi: 10.1002/hbm.20155.

- 41. Strohmeier D, Haueisen J, Gramfort A. Improved MEG/EEG source localization with reweighted mixed-norms. 4th Int Workshop on Pattern Recognition in Neuroimaging (PRNI) 2014:1–4.

- 42. Zhang Z, Rao BD. Exploiting correlation in sparse signal recovery problems: Multiple measurement vectors, block sparsity, and time-varying sparsity. 28th Int Conference on Machine learning (ICML) 2011.

- 43. Tseng P. Approximation accuracy, gradient methods, and error bound for structured convex optimization. Math Program. 2010 Oct;125:263–295.

- 44. Qin Z, Scheinberg K, Goldfarb D. Efficient block-coordinate descent algorithms for the group lasso. Math Program Comput. 2013 Jun;5(2):143–169.

- 45. Dale AM, Liu AK, Fischl BR, Buckner RL, Belliveau JW, Lewine JD, Halgren E. Dynamic statistical parametric mapping: Combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron. 2000 Apr;26(1):55–67. doi: 10.1016/s0896-6273(00)81138-1.

- 46. Dale A, Sereno M. Improved localization of cortical activity by combining EEG and MEG with MRI cortical surface reconstruction: a linear approach. J Cognitive Neurosci. 1993 Spring;5(2):162–176. doi: 10.1162/jocn.1993.5.2.162.

- 47. Engemann DA, Gramfort A. Automated model selection in covariance estimation and spatial whitening of MEG and EEG signals. NeuroImage. 2015 Mar;108:328–342. doi: 10.1016/j.neuroimage.2014.12.040.

- 48. Bach F, Jenatton R, Mairal J, Obozinski G. Optimization with sparsity-inducing penalties. Foundations and Trends® in Machine Learning. 2012 Jan;4(1):1–106.

- 49. Boyd S, Vandenberghe L. Convex optimization. Cambridge: Cambridge University Press; 2004.

- 50. Rockafellar RT. Convex analysis. Princeton: Princeton University Press; 1997.

- 51. Li Y, Osher S. Coordinate descent optimization for l1 minimization with application to compressed sensing; a greedy algorithm. Inverse Problems and Imaging. 2009 Aug;3(3):487–503.

- 52. Wei X, Yuan Y, Ling Q. DOA estimation using a greedy block coordinate descent algorithm. IEEE Trans Signal Process. 2012 Dec;60(12):6382–6394.

- 53. Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. J Stat Softw. 2010 Feb;33(1):1–22.

- 54. Roth V, Fischer B. The group-lasso for generalized linear models: uniqueness of solutions and efficient algorithms. 25th Int Conference on Machine learning (ICML) 2008:848–855.

- 55. Huang MX, Huang CW, Robb A, Angeles A, Nichols SL, Baker DG, Song T, Harrington DL, Theilmann RJ, Srinivasan R, et al. MEG source imaging method using fast l1 minimum-norm and its applications to signals with brain noise and human resting-state source amplitude images. NeuroImage. 2014 Jan;84:585–604. doi: 10.1016/j.neuroimage.2013.09.022.

- 56. Hayes AF, Krippendorff K. Answering the call for a standard reliability measure for coding data. Commun Methods Meas. 2007 Dec;1(1):77–89.

- 57. Aine CJ, Sanfratello L, Ranken D, Best E, MacArthur JA, Wallace T, Gilliam K, Donahue CH, Montaño R, Bryant JE, Scott A, Stephen JM. MEG-SIM: a web portal for testing MEG analysis methods using realistic simulated and empirical data. Neuroinformatics. 2012 Apr;10(2):141–158. doi: 10.1007/s12021-011-9132-z.

- 58. Weisend M, Hanlon F, Montano R, Ahlfors S, Leuthold A, Pantazis D, Mosher J, Georgopoulos A, Hämäläinen MS, Aine C. Paving the way for cross-site pooling of magnetoencephalography (MEG) data. Intern Congress Series. 2007 Aug;1300:615–618.

- 59. Ou W, Golland P, Hämäläinen M. Sources of variability in MEG. Medical Image Computing and Computer-Assisted Intervention (MICCAI) 2007:751–759. doi: 10.1007/978-3-540-75759-7_91.

- 60. Gramfort A, Luessi M, Larson E, Engemann DA, Strohmeier D, Brodbeck C, Parkkonen L, Hämäläinen MS. MNE software for processing MEG and EEG data. NeuroImage. 2014 Feb;86:446–460. doi: 10.1016/j.neuroimage.2013.10.027.

- 61. Gramfort A, Luessi M, Larson E, Engemann DA, Strohmeier D, Brodbeck C, Goj R, Jas M, Brooks T, Parkkonen L, Hämäläinen MS. MEG and EEG data analysis with MNE-Python. Frontiers in Neurosci. 2013 Dec;7(267). doi: 10.3389/fnins.2013.00267.

- 62. Diwakar M, Huang MX, Srinivasan R, Harrington DL, Robb A, Angeles A, Muzzatti L, Pakdaman R, Song T, Theilmann RJ, Lee RR. Dual-core beamformer for obtaining highly correlated neuronal networks in MEG. NeuroImage. 2011 Jan;54(1):253–263. doi: 10.1016/j.neuroimage.2010.07.023.

- 63. Beck A, Teboulle M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J on Imaging Sci. 2009 Jan;2(1):183–202.

- 64. Dinh C. Brain Monitoring: Real-Time Localization of Neuronal Activity. Herzogenrath: Shaker; 2015.

- 65. Dinh C, Strohmeier D, Luessi M, Güllmar D, Baumgarten D, Haueisen J, Hämäläinen MS. Real-time MEG source localization using regional clustering. Brain topography. 2015 Nov;28(6):771–784. doi: 10.1007/s10548-015-0431-9.

- 66. Fercoq O, Gramfort A, Salmon J. Mind the duality gap: safer rules for the lasso. 32nd Int Conference on Machine Learning (ICML) 2015:333–342.

- 67. Lim C, Yu B. Estimation stability with cross validation (ESCV). J Comp Graph Stat. 2015 [Online]. Available: http://dx.doi.org/10.1080/10618600.2015.1020159.

- 68. Sun W, Wang J, Fang Y. Consistent selection of tuning parameters via variable selection stability. J Mach Learn Res. 2013 Nov;14(1):3419–3440.

- 69. Strohmeier D, Gramfort A, Haueisen J. MEG/EEG source imaging with a non-convex penalty in the time-frequency domain. 5th Int Workshop on Pattern Recognition in Neuroimaging (PRNI) 2015:1–4.