Abstract

Environmental stimuli and objects, including rewards, are often processed sequentially in the brain. Recent work suggests that the phasic dopamine reward prediction-error response follows a similar sequential pattern. An initial brief, unselective and highly sensitive increase in activity detects a wide range of environmental stimuli and then quickly evolves into the main response component, which reflects subjective reward value and utility. This temporal evolution allows the dopamine reward prediction-error signal to optimally combine speed and accuracy.

Rewards induce behaviours that enable animals to obtain necessary objects for survival. Although the term ‘reward’ is commonly associated with happiness, in scientific terms rewards have three functions. First, they can act as positive reinforcers to induce learning. Second, rewards elicit movements towards the desired object and constitute factors to be considered in economic choices. Their value for the individual decision maker is subjective and can be formalized as economic utility. The third reward function is associated with emotions, such as pleasure and desire. This third function is difficult to test in animals, but the first two and their underlying brain processes can be quantitatively assessed using specific behavioural tasks, and hence are the focus of this article.

Electrophysiological investigations in monkeys, rats and mice have identified individual neurons that signal reward-related information in the midbrain dopamine system (substantia nigra and ventral tegmental area (VTA)), striatum, orbitofrontal cortex, amygdala and associated structures1. These reward neurons process specific aspects of rewards — such as their amount, probability, subjective value, formal economic utility and risk — in forms that are suitable for learning and decision-making. Most dopamine neurons in the substantia nigra and VTA show brief, phasic responses to rewards and reward-predicting stimuli. These responses code a temporal reward prediction error, which reflects the difference in value between a received reward and a predicted reward at each moment in time2–9. This fast dopamine signal differs distinctly from the slower dopamine activity increases that reflect reward risk10 or, more inconsistently, behavioural reactivity2,11–14; it differs most from the tonic dopamine level that is necessary to enable neuronal processes underlying a wide range of behaviours (BOX 1).

Box 1. Fast, slow and tonic dopamine functions.

What does dopamine do? How can a single, simple chemical be involved in such diverse processes as movement (and its disruption in Parkinson disease), attention (disrupted in attention deficit hyperactivity disorder (ADHD)), cognition (disrupted in schizophrenia) and motivation162? Some answers may lie in the different timescales across which dopamine operates1.

At the fastest, subsecond timescale (see the figure, part a), dopamine neurons show a phasic two-component prediction-error response that, as I argue in this article, transitions from salience and detection to reward value. This phasic dopamine response can be measured by electrophysiology and voltammetry, and constitutes a highly time-specific neuronal signal that is capable of influencing other fast neuronal systems involved in rapid behavioural functions.

At an intermediate timescale (in the seconds to minutes range) a wide variety of behaviours and brain functions are associated with slower changes in dopamine levels that are revealed by dialysis (see the figure, part b) and voltammetry. These include behavioural activation or forced inactivation, stress, attention, reward-related behaviour, punishment and movement156,163–167. These changes in dopamine levels are unlikely to be driven by subsecond changes in dopamine impulses and thus may be unrelated to reward prediction error. Instead, they may be mediated by slower impulse changes in the seconds range or by presynaptic interactions157,159,168. Their function may be to homeostatically adjust the sensitivity of the fast, phasic dopamine reward responses169.

At the slowest timescale, dopamine exerts an almost tonic influence on postsynaptic structures (see the figure, part c). Parkinson disease, ADHD and schizophrenia are associated with deficits in the tonic, finely regulated release of dopamine, which enables the functions of the postsynaptic neurons that mediate movement, cognition, attention and motivation. The effects of tonic dopamine reductions in Parkinson disease are partly remedied by pharmacological dopamine-receptor stimulation, which cannot reinstate phasic dopamine responses but can provide similar receptor occupation to the natural tonic dopamine levels. Thus, the deficits in Parkinson disease are not easily explained by reductions of phasic dopamine changes.

Taken together, dopamine neurotransmission, unlike that mediated by most other neurotransmitters, exerts different influences on neuronal processes and behaviour at different timescales.

Δ [DA], change in dopamine concentration. Part b of the figure is adapted with permission from REF. 163, Elsevier.

Despite robust evidence for their involvement in reward coding, research over more than three decades has shown that some dopamine neurons show phasic activity increases in response to non-rewarding and aversive stimuli2,6,15–27. This discrepancy does not rule out a role for phasic dopamine release in reward processing; indeed, other components of the brain’s reward systems also contain distinct neuronal populations that code non-rewarding events28–32. However, the extent to which phasic dopamine responses code reward versus non-reward information has been difficult to resolve because of their sensitivity to experimental conditions19.

Several recent studies have encouraged a revision of our views on the nature of the phasic dopamine reward response. The studies demonstrate distinct subcomponents of the phasic dopamine response33, provide an alternative explanation for activations in response to aversive stimuli25–27 and document strong sensitivity to some unrewarded stimuli34. Here, I outline and evaluate the evidence for a more elaborate view of the phasic dopamine reward prediction-error signal, which evolves from an initial response that unselectively detects any potential reward (including stimuli that turn out to be aversive or neutral) to a subsequent main component that codes the by now well-identified reward value. Furthermore, I suggest that the reward prediction-error response should be specifically considered to be a utility prediction error signal35.

Processing of reward components

Reward components

Rewards consist of distinct sensory and value components (FIG. 1). Their neuronal processing takes time and engages sequential mechanisms, which becomes particularly evident when the rewards consist of more-complex objects and stimuli. Rewards first impinge on the body through their physical sensory impact. They draw attention through their physical salience, which facilitates initial detection. The specific identity of rewards derives from their physical parameters, such as size, form, colour and position, which engage subsequent sensory and cognitive processes. Comparison with known objects determines their novelty, which draws attention through novelty salience and surprise salience. During and after their identification, valuation takes place. Value is the essential feature that distinguishes rewards from other objects and stimuli; it can be estimated from behavioural preferences that are elicited in choices. Value draws attention because it provides motivational salience. The various forms of salience — physical, novelty, surprise and motivational — induce stimulus-driven attention, which selects information and modulates neuronal processing36–40. Thus, neuronal reward processing evolves in time from unselective sensory detection to the more demanding and crucial stages of identification and valuation. These processes lead to internal decisions and overt choices between different options, to actions towards the chosen option and to feedback that updates the neural representation of a reward’s value.

Figure 1. Reward components.

A reward is composed of sensory and value-related components. The sensory components have an impact on sensory receptors and neurons, and drive initial sensory processing that detects and subsequently identifies the reward. Reward value, which specifically reflects the positively motivating function of rewards, is processed only after the object has been identified. Value does not primarily reflect physical parameters but rather the brain’s subjective assessment of the usefulness of the reward for survival and reproduction. These sequential processes result in decisions and actions, and drive reinforcement as a result of the experienced outcome. For further details, see REF. 114.

Sequential processing in other systems

Research in sensory, cognitive and reward systems has long recognized the component nature of stimuli and objects. Although simple stimuli may be processed too rapidly to reveal their dissociable components, more-sophisticated events take longer to identify, discriminate and valuate. For example, during a visual search task, neurons in the frontal eye fields, lateral intraparietal cortex (LIP) and cortical visual area V4 exhibit an initial unselective response and require a further 50–120 ms to discriminate targets from distractors41–44 (FIG. 2a). Similarly, during perceptual discriminations between partly coherently moving dots, neuronal activity in the LIP and dorsolateral prefrontal cortex becomes distinctive only 120–200 ms after the initial detection response45,46. Even responses in regions of the primary sensory cortex, such as V1 or the mouse somatosensory barrel cortex, show temporal evolutions from gross tuning for stimulus properties to a more finely discriminative response47–53. The results of other studies have supported an alternative view in which hierarchically connected neurons evaluate both the available information and prior knowledge about the scene52. Although these results demonstrate a sequential neuronal processing flow, typical feature- or category-specific neurons in the inferotemporal cortex process highly specific stimulus properties of visual objects at the same time as they detect and identify them54. Despite these exceptions, there is a body of evidence suggesting that many sensory and cognitive neurons process the different components of demanding stimuli in consecutive steps (Supplementary information S1 (table)).

Figure 2. Sequential neuronal processing of stimulus and reward components.

a | Sequential processing in a cognitive system. The graph shows the time course of target discrimination in a monkey frontal eye field neuron during attentional selection. The animal’s task was to distinguish between a target stimulus and a distractor. The neuron initially detects both stimuli indiscriminately (blue zone); only later does its response differentiate between the stimuli (red zone). b | Sequential dopamine processing of reward. The graph shows distinct, sequential components of the dopamine prediction-error response to conditioned stimuli predicting either non-reward or reward delivery. These responses reflect initial transient object detection, which is indiscriminate, and subsequent reward identification and valuation, which distinguishes between reward and no reward prediction. c | The components of the dopamine prediction-error response in part b that relate to detection and valuation can be distinguished by statistics. The partial beta (slope) coefficients of the double linear regression on physical intensity and reward value show distinct time courses, indicating the dynamic evolution from initial detection to subsequent valuation. d | Voltammetric dopamine responses in rat nucleus accumbens distinguish between a reward-predicting conditioned stimulus (CS+) and a non-reward-predicting conditioned stimulus (CS−). Again, the dopamine release comprises an initial indiscriminate detection component and a subsequent identification and value component. e | A more demanding random dot motion discrimination task reveals completely separated dopamine response components. Increasing motion coherence (MC) results in more accurate motion discrimination and thus higher reward probability (p). 
The initial, stereotyped, non-differential activation reflects stimulus detection and decreases back to baseline (blue zone); the subsequent separate, graded increase develops when the animal signals stimulus discrimination; it codes reward value (red zone), which in this case derives from reward probability10,74. [DA], dopamine concentration. Part a is adapted, with permission, from REF. 44, Proceedings of the National Academy of Sciences. Part b is adapted from REF. 6, republished with permission of Society for Neuroscience, from Coding of predicted reward omission by dopamine neurons in a conditioned inhibition paradigm, Tobler, P. N., Dickinson, A. & Schultz, W., 23 (32), 2003; permission conveyed through Copyright Clearance Center, Inc. Part c is adapted from REF. 25, republished with permission of Society for Neuroscience, from Multiphasic temporal dynamics in responses of midbrain dopamine neurons to appetitive and aversive stimuli, Fiorillo, C. D., Song, M. R. & Yun, S. R., 33 (11), 2013; permission conveyed through Copyright Clearance Center, Inc. Part d is from REF. 60, Nature Publishing Group. Part e is adapted from REF. 33, republished with permission of Society for Neuroscience, from Temporally extended dopamine responses to perceptually demanding reward-predictive stimuli, Nomoto, K., Schultz, W., Watanabe, T. & Sakagami, M., 30 (32), 2010; permission conveyed through Copyright Clearance Center, Inc.

Processing a reward requires an additional valuation step (FIG. 1). Neurons in primary reward structures such as the amygdala show an initial sensory response, followed 60–300 ms later by a separate value component31,55,56, and V1 and inferotemporal cortex neurons show similar response transitions with differently rewarded stimuli57,58. Thus, the neuronal processing of rewards might also involve sequential steps.

Sequential processing in dopamine neurons

Similar to the responses of neurons in other cognition- and reward-related brain regions, phasic dopamine reward prediction-error responses show a temporal evolution with sequential components5,6,9,17,22,25,33,35,59–62 (FIG. 2b–e; Supplementary information S1 (table)). An initial, short-latency and short-duration activation of dopamine neurons detects any environmental object before having properly identified and valued it. The subsequently evolving response component properly identifies the object and values it in a finely graded manner. These prediction-error components are often difficult to discern when simple, rapidly distinguishable stimuli are used; however, they can be revealed by specific statistical methods25 (FIG. 2c) or by using more demanding stimuli that require longer processing33 (FIG. 2e). Below, I describe in detail the characteristics of these components and the factors influencing them.
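The statistical separation of the two components (FIG. 2c) can be illustrated with a minimal sketch. It uses synthetic data rather than recordings: an early response window is constructed to depend on physical intensity and a later window on reward value, and a two-predictor linear regression recovers the partial slope for each predictor. The function name `partial_betas`, the slope of 5 and the noise level are assumptions made for illustration only and are not taken from REF. 25.

```python
import random

def partial_betas(y, x1, x2):
    """Partial slopes of y on x1 and x2 (ordinary least squares with intercept)."""
    n = len(y)
    my, m1, m2 = sum(y) / n, sum(x1) / n, sum(x2) / n
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    return (s1y * s22 - s2y * s12) / det, (s2y * s11 - s1y * s12) / det

rng = random.Random(0)  # fixed seed so the synthetic data are reproducible
n_trials = 200
intensity = [rng.random() for _ in range(n_trials)]  # hypothetical physical intensity
value = [rng.random() for _ in range(n_trials)]      # hypothetical reward value

# Assumed generative model: early window follows intensity, late window follows value.
early = [5.0 * i + rng.gauss(0.0, 0.5) for i in intensity]
late = [5.0 * v + rng.gauss(0.0, 0.5) for v in value]

early_b_int, early_b_val = partial_betas(early, intensity, value)
late_b_int, late_b_val = partial_betas(late, intensity, value)
print("early window:", early_b_int, early_b_val)
print("late window: ", late_b_int, late_b_val)
```

Applied to successive time bins, the same regression would trace how the intensity slope fades while the value slope grows.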

The initial component: detection

Effective stimuli

The initial component of the phasic dopamine reward prediction-error response is a brief activation (an increase in the frequency of neuronal impulses) that occurs unselectively in response to a large variety of unpredicted events, such as rewards9,35, reward-predicting stimuli9,25,33,59–62 (FIG. 2b–e), unrewarded stimuli5,16,17,20,34, aversive stimuli22 and conditioned inhibitors predicting reward omission6 (FIG. 2b). Owing to their varying sensitivities, some dopamine neurons show little or no initial component. True to the notion that dopamine neurons are involved in temporal prediction-error coding, the initial activation is sensitive to the time of stimulus occurrence and thus codes a temporal-event prediction error33. Stimuli of all sensory modalities are effective in eliciting this initial response component; those tested include loud sounds, intense light flashes, rapidly moving visual objects and liquids touching the mouth2,9,16,19,20,35. The unselective and multisensory nature of the initial activation thus corresponds to the large range and heterogeneous nature of potentially rewarding stimuli and objects present in the environment.

Sensitivity to stimulus characteristics

Several factors can enhance the initial dopamine activation. These factors may thus explain why the phasic dopamine response has sometimes been suggested to be involved in aversive processing21,22,24,63 or to primarily reflect attention64,65. Stimuli of sufficient physical intensity elicit the initial dopamine activation in a graded manner (FIG. 3a), irrespective of their positive or negative value associations25. Physically weaker stimuli generate little or no initial dopamine activations6,66. Such physically weak stimuli will only induce a dopamine activation if they are rewards or are associated with rewards4,6 (see below).

Figure 3. Factors influencing the initial dopamine activation.

a | Influence of physical intensity. In the example shown, stronger (yet non-aversive) sounds generate higher initial dopamine responses than weaker sounds. b | Influence of reward context. The left graph shows dopamine responses to the presentation of either a reward or one of two unrewarded pictures of different sizes in an experiment in which the contexts in which each is presented are distinct. The right graph shows that the dopamine neuron responses to the pictures are much more substantial when they are presented in the same context as the reward (that is, when the presentations occur in one common trial block with a common background picture and when the liquid spout is always present). c | Influence of reward generalization between stimuli that share physical characteristics. In the example shown, a conditioned visual aversive stimulus activates 16% of dopamine neurons when it is pseudorandomly interspersed with an auditory reward-predicting stimulus. However, the same visual aversive stimulus activates 65% of dopamine neurons when the alternating reward-predicting stimulus is also visual. d | Influence of stimulus novelty. The graph shows activity in a single dopamine neuron during an experiment in which an animal is repeatedly presented with a novel, unrewarded stimulus (rapid vertical opening of the door of an empty box). Dots representing action potentials are plotted in sequential trials from top down. Activation was substantial in the first ten trials. However, 60 trials later, the same dopamine neuron shows a diminished response. Horizontal eye movement traces displayed above and below the neuronal rasters illustrate the change in behaviour that correlates with decreasing novelty (less eye movement towards the stimulus; y-axis represents eye position to the right). Part a is adapted from REF. 25, republished with permission of Society for Neuroscience, from Multiphasic temporal dynamics in responses of midbrain dopamine neurons to appetitive and aversive stimuli, Fiorillo, C. D., Song, M. R. & Yun, S. R., 33 (11), 2013; permission conveyed through Copyright Clearance Center, Inc. Part b is adapted with permission from REF. 34, Elsevier. Part c is from REF. 19, Nature Publishing Group. Part d is adapted with permission from REF. 2, American Physiological Society.

The context in which a stimulus is presented can also enhance the initial activation. Unrewarded stimuli elicit little dopamine activation when they are well separated from reward; however, the same unrewarded stimuli effectively elicit dopamine activations when presented in the same context in which the animal receives a reward34 (FIG. 3b). Similarly, increasing the probability that the animal will receive a reward in a given experiment constitutes a more rewarded context and increases the incidence of dopamine activations to unrewarded stimuli5,6,34. Neurons might be primed by a rewarded context and initially process every unidentified stimulus as a potential reward until the opposite is proven. These neuronal context sensitivities may involve behavioural pseudoconditioning or higher-order context conditioning67.

The physical resemblance of a stimulus to other stimuli known to be associated with a reward can enhance the initial dopamine activation through generalization5,6,17,19,60. For example, a visual stimulus that is paired with an aversive experience (air puff) leads only to small dopamine activations when it is randomly interspersed between presentations of an auditory reward-predicting stimulus; however, the same conditioned visual aversive stimulus induces substantial activations when it is alternated with a visual reward-predicting stimulus19 (FIG. 3c). This is analogous to behavioural generalization, in which ‘neutral’ stimuli elicit similar reactions to physically similar target stimuli68. Thus, an otherwise ineffective, unrewarded or even aversive stimulus may drive the initial dopamine neuron activation as a result of generalization with a rewarded stimulus or a reward that requires only superficial identification. The enhancement might also affect the main dopamine response component if more-specific assessment of stimulus similarity requires some identification.

Novel stimuli, whether rewarded or not, can enhance dopamine activations. The response of dopamine neurons to an initial novel stimulus decreases with stimulus repetition2,4 (FIG. 3d). However, novelty alone is ineffective in activating dopamine neurons: physically weak novel stimuli fail to induce a dopamine response6. Similarly to generalization, novelty detection involves comparison with an existing stimulus and thus requires identification, which is proposed to take place in the main dopamine response.

These findings show that the initial dopamine response is sensitive to factors that are related to potential reward availability. Stimuli of high intensity are potential rewards and should be prioritized for processing so as to not miss a reward. Stimuli occurring in reward contexts or resembling known rewards have a fair chance to be rewards themselves. Novel stimuli are potential rewards until their true value has been determined. They are more likely to be rewards than non-novel stimuli whose lack of reward value has already been established. Thus, even the earliest dopamine detection response is already geared towards rewards.

Salience

The factors that enhance the initial dopamine activation are closely associated with different forms of stimulus-driven salience. Stimulus intensity provides physical salience. Stimuli that become effective in rewarding contexts or through response generalization are motivationally salient because of these reward associations. The mechanism by which salience induces the initial dopamine response component may apply primarily to rewarding stimuli, because the negative value of stimuli — including punishers25, negative reward prediction errors3,5,69 and conditioned reward inhibitors6 — is unlikely to induce dopamine activations. Novel or surprising stimuli are salient owing to their rare or unpredicted occurrence. The distinction between these different forms of salience might be important because they are thought to affect different aspects of behaviour, such as the identification of stimuli, valuation of rewards and processing of decisions, actions and reinforcement.

Benefits of initial unselective processing

At first sight, it might be assumed that an unselective response that occurs before value processing constitutes an inaccurate neuronal signal prone to inducing erroneous, disadvantageous behavioural reactions. One may wonder why such unselective responses have survived evolution.

However, although the initial activation appears unselective, it is (as outlined above) sensitive to modulation by several factors that are related to potential reward availability. Stimuli of high intensity should be prioritized for processing in order to not miss a reward. Stimuli occurring in reward contexts or resembling known rewards have a reasonable chance of being rewards themselves. Novel stimuli are potential rewards until their true value has been determined and are thus more likely to be rewards than non-novel stimuli whose lack of reward value has already been established. Thus, the earliest dopamine detection response is already tuned towards reward. The wide, multisensory sensitivity of the response, on the other hand, would facilitate the detection of a maximal number of potential reward objects that should be attended to and identified quickly to avoid missing a reward.

Through stimulus-driven salience, the early dopamine activation component might serve to transiently enhance the ability of rewards to induce learning and action. This mechanism is formalized in the attentional Pearce–Hall learning rule70, in which surprise salience derived from reward prediction errors enhances the learning rate, as do physical and motivational salience. Thus, higher salience would induce faster learning, whereas lower salience would result in smaller and more fine-tuned learning steps. During action generation, stimulus-driven salience and top-down attention are known to enhance the neuronal processing of sensory stimuli and resulting behavioural responses36–40, and salience processing might also underlie the enhancing effects of reward on the accuracy of spatial target detection71. Similarly, by conveying physical, novelty and motivational salience, the initial dopamine response component might boost and sharpen subsequent reward value processing and ultimately increase action accuracy. This notion mirrors an earlier idea about sequential processing of global and finer stimulus categories in the inferotemporal cortex: that is, “… global information could be used as a ‘header’ to prepare destination areas for receiving more detailed information” (REF. 48).
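The idea that salience scales the learning rate can be sketched in a few lines. The code below is a simplified hybrid in the spirit of the Pearce–Hall rule, not the exact formulation of REF. 70: a fixed stimulus salience and a running associability (driven by recent absolute prediction errors) jointly scale an error-driven update. The parameter names `salience`, `gamma` and `alpha0`, and their values, are assumptions chosen for illustration.

```python
def pearce_hall(rewards, salience=0.5, gamma=0.3, alpha0=1.0):
    """Simplified Pearce-Hall-style learner.

    Returns a list of (prediction_after_update, associability_used, error)
    tuples, one per trial.
    """
    v, alpha = 0.0, alpha0
    history = []
    for r in rewards:
        delta = r - v                      # reward prediction error
        v += salience * alpha * delta      # salience and associability scale the step
        history.append((v, alpha, delta))
        # Associability tracks recent surprise: large errors keep it high.
        alpha = gamma * abs(delta) + (1.0 - gamma) * alpha
    return history

rewards = [1.0] * 30
high = pearce_hall(rewards, salience=0.8)  # hypothetical intense stimulus
low = pearce_hall(rewards, salience=0.2)   # hypothetical weak stimulus
print("prediction after 5 trials, high salience:", high[4][0])
print("prediction after 5 trials, low salience: ", low[4][0])
```

In this sketch the more salient stimulus approaches the asymptotic prediction in fewer trials, and associability falls as the reward becomes predicted, so later updates become smaller.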

Through its rapid detection of potential rewards, the initial dopamine activation might provide a temporal advantage by inducing early preparatory processes that lead to faster behavioural reactions towards important stimuli. As the response occurs more quickly than most behavioural reactions, there would still be time to cancel the behavioural initiation process if the subsequent valuation of a stimulus labels it as worthless or damaging. Thus, the lower accuracy of the initial response would not compromise behavioural actions. A temporal gain of several tens of milliseconds, together with attentional response enhancement, might be important in competitive situations that require rapid behavioural reactions. As Darwin said72, in the long run of evolution, any small edge will ultimately result in an advantage.

I suggest that, through the early detection of salient stimuli, the initial dopamine response component affords a gain in speed and processing without substantially compromising accuracy, thus supporting the function of the phasic dopamine reward signal.

The main component: valuation

The dopamine reward prediction-error response evolves from the initial unselective stimulus detection and gradually sharpens into the increasingly specific identification and valuation of the stimulus25,33 (FIG. 2c,e). This later component — rather than the initial detection activation described above — defines the function of the phasic dopamine response and reflects the evolving neuronal processing that is required to fully appreciate the value of the stimulus. Higher-than-predicted rewards (generating positive prediction errors) elicit brief dopamine activations, lower-than-predicted rewards (generating negative prediction errors) induce decreases in activity (‘depressions’), and accurately predicted rewards do not change the activity. These responses constitute biological implementations of the crucial error term for reinforcement learning according to the Rescorla–Wagner model and temporal difference reinforcement models73; such a signal is appropriate for mediating learning and updating of reward predictions for approach behaviour and economic decisions4.
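The three cases described here (positive error, negative error and no error) follow directly from the Rescorla–Wagner error term, as the following minimal sketch shows. The learning rate `alpha` and the reward sequence are illustrative assumptions, and the sketch models only the error term, not neuronal firing.

```python
def rescorla_wagner(rewards, alpha=0.2, v0=0.0):
    """Return (predictions, errors) for a sequence of received rewards."""
    v = v0
    predictions, errors = [], []
    for r in rewards:
        delta = r - v            # prediction error: received minus predicted reward
        predictions.append(v)
        errors.append(delta)
        v += alpha * delta       # update the prediction in proportion to the error
    return predictions, errors

# 50 rewarded trials followed by one unexpected omission.
preds, errs = rescorla_wagner([1.0] * 50 + [0.0])
print("first reward (unpredicted):", errs[0])
print("50th reward (well predicted):", errs[49])
print("omitted reward:", errs[50])
```

The first reward yields a large positive error, the well-predicted reward yields an error near zero, and the omission yields a negative error, mirroring activation, no change and depression, respectively.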

Subjective reward value

A reward’s value cannot be measured directly but is estimated from observable behavioural choices. Thus, value is a theoretical construct that is used to explain learning and decision-making. In being defined by the individual’s needs and behaviour, value is necessarily subjective. The construction of reward value involves brain mechanisms that include those mediated by dopamine neurons in the substantia nigra and VTA.

The material components of rewards are often difficult to assess and, most importantly, do not fully define their subjective value. Although phasic dopamine responses increase with the expected reward value (the summed product of the amounts and probabilities of the rewards, an objective, physical reward measure)7,10,59,74,75, it is intrinsically unclear whether they code objective or subjective reward value. One way to resolve the issue is to examine choices between rewards that are objectively equal. When a monkey chooses between identical amounts of blackcurrant juice and orange juice and shows a preference for blackcurrant juice, it can be inferred that the blackcurrant juice has a higher subjective value to the monkey than the orange juice9. Similarly, preferences for risky over safe rewards with identical mean volumes suggest increased subjective value due to the risk. Even with a larger range of safe and risky liquid and food rewards, monkeys show well-ranked choice preferences. The animals’ preferences satisfy transitivity (when preferring reward A over reward B, and reward B over reward C, they also prefer reward A over reward C), which suggests meaningful rather than chance behaviour9. Another way to estimate subjective value is to have an individual choose between the reward in question and a common reference reward; the psychophysically determined amount of the reference reward at which the individual becomes equally likely to select either option (choice indifference) indicates the subjective value of the reward in question. It is measured in physical units of the common reference reward (known as a ‘common currency’, such as millilitres of blackcurrant juice). Dopamine neurons show higher activations in response to the preferred juice, and their activity also correlates with the indifference amounts in choices between risky and safe rewards, indicating that the neurons consistently code subjective rather than objective reward value9. 
A relationship between dopamine neuron activity and subjective value can also be seen when the reward value is reduced by the addition of an aversive liquid25 (Supplementary information S2 (box)).
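Two of the quantities used in this argument are simple to state in code: the objective expected value of a reward (the summed product of amounts and probabilities) and the transitivity of a set of pairwise choice preferences. The sketch below uses hypothetical amounts and reward labels; the function names are assumptions introduced for illustration.

```python
from itertools import permutations

def expected_value(outcomes):
    """outcomes: iterable of (amount, probability) pairs."""
    return sum(amount * prob for amount, prob in outcomes)

def is_transitive(prefers):
    """prefers: set of (a, b) pairs meaning 'a is chosen over b'."""
    items = {x for pair in prefers for x in pair}
    return all(
        (a, c) in prefers
        for a, b, c in permutations(items, 3)
        if (a, b) in prefers and (b, c) in prefers
    )

# A hypothetical gamble and a safe reward with identical objective value (in ml).
gamble_ev = expected_value([(0.1, 0.5), (0.4, 0.5)])
safe_ev = expected_value([(0.25, 1.0)])
print("gamble EV:", gamble_ev, "safe EV:", safe_ev)

ranked = {("A", "B"), ("B", "C"), ("A", "C")}
cyclic = {("A", "B"), ("B", "C"), ("C", "A")}
print("ranked preferences transitive:", is_transitive(ranked))
print("cyclic preferences transitive:", is_transitive(cyclic))
```

A consistent preference for the gamble over the safe reward, despite equal expected value, is the kind of choice that reveals a subjective value differing from the objective one.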

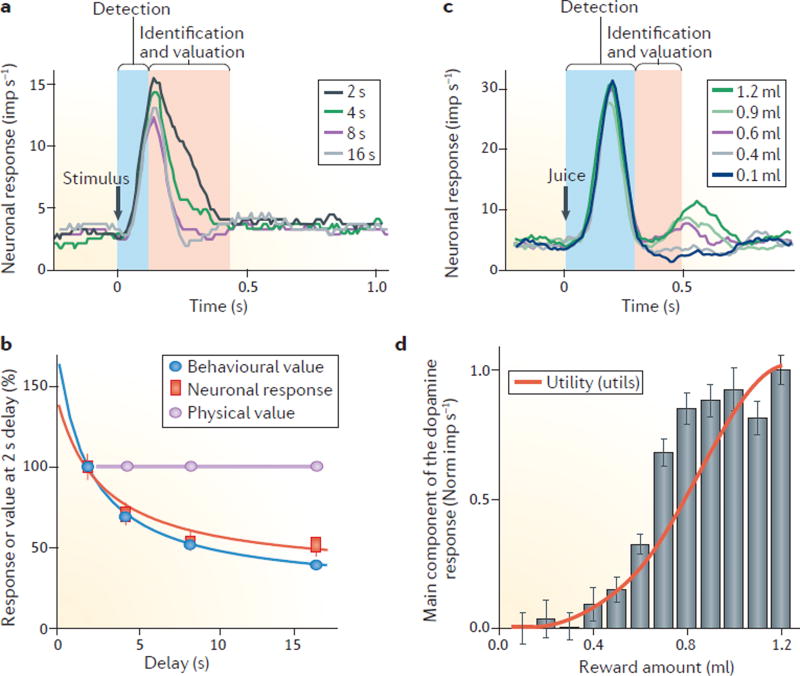

Temporal discounting is another way to dissociate subjective value from objective value. Rewards lose subjective value after delays, even when they remain physically unchanged76. Correspondingly, although the initial component of the dopamine response to a stimulus that predicts a delayed reward stays almost constant, the second component of the dopamine response decreases as the delay increases (FIG. 4a) and follows closely the hyperbolic decay of subjective value assessed by measuring behaviour61,62. Similar temporal discounting can be observed in dopamine voltammetry77.
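Hyperbolic discounting is commonly written as V = A / (1 + kD), where A is the reward amount, D the delay and k a discount factor. The sketch below evaluates this function at the delays of 2, 4, 8 and 16 seconds shown in FIG. 4a; the value of `k` is an arbitrary assumption and is not a fitted parameter from REFS 61,62.

```python
def hyperbolic_value(amount, delay_s, k=0.2):
    """Subjective value of `amount` delivered after `delay_s` seconds."""
    return amount / (1.0 + k * delay_s)

delays = [2, 4, 8, 16]                       # seconds, as in FIG. 4a
values = [hyperbolic_value(1.0, d) for d in delays]
for d, v in zip(delays, values):
    print(f"delay {d:2d} s -> discounted value {v:.3f}")
```

The physical amount is the same in every case; only the delay changes, which is why a response that follows this curve is taken to code subjective rather than objective value.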

Figure 4. Subjective value and utility coding by the main dopamine response component.

a | Temporal discounting of a reward’s subjective value is reflected in the dopamine response. The figure shows the averaged activity from 54 dopamine neurons responding to 4 stimuli predicting the same amount of a reward that is delivered after delays of 2, 4, 8 or 16 seconds. The stimulus response is reduced as the delay before receiving the reward increases, suggesting that the value coding by these neurons is subjective and decreases with the delay after which the reward is delivered. The value-related response decrement occurs primarily in the later, main response component (red zone). b | Corresponding temporal discounting in both the behavioural response and the main dopamine response component reveals subjective value coding. c | Monotonic increase of the main dopamine response component (red zone) when an animal is provided with an increasing amount of an unpredicted juice reward. By contrast, the initial response component (blue zone) is unselective and reflects only the detection of juice flow onset. d | The dopamine reward prediction-error signal codes formal economic utility. For the experiment described in c, the red line shows the utility function as estimated from behavioural choices. The grey bars show a nonlinear increase in the main component of the dopamine response (red zone in c) to juice reward, reflecting the positive prediction error generated by its unpredicted delivery. Parts a and b are adapted from REF. 61, republished with permission of Society for Neuroscience, from Influence of reward delays on responses of dopamine neurons, Kobayashi, S. & Schultz, W., 28 (31), 2008; permission conveyed through Copyright Clearance Center, Inc. Parts c and d are adapted with permission from REF. 35, Elsevier.

These findings indicate that the second dopamine response component codes the subjective value of different types of rewards, risky rewards, composite rewards and delayed rewards.

Utility

Economic utility provides the most constrained, principled and conceptualized definition of the subjective value of rewards. It is the result of 300 years of mathematical and economic theory that incorporates basic ideas about the acquisition and exchange of goods78–81. Additions, such as prospect theory82, provide important extensions but do not question the fundamental role of utility. Probably the most important potential that utility has for neuroscience lies in the assumption that utility provides an internal, private metric for subjective reward value83. Utility as an internal value reflects individual choice preferences and constitutes a mathematical function of objective, physical reward amount84,85. This function, u(x), is usually, but not necessarily, nonlinear over x. By contrast, the subjective value derived from choice preferences and indifference points described above provides a measure in physical units (such as millilitres, pounds or dollars of a common reference reward) but does not tell us how much a physical unit of the reference reward is privately worth to the individual decision maker. By estimating utility, we could obtain such a private measure.

A private, internal metric for reward value would allow researchers to establish a neuronal value function. This function would relate the frequency of action potentials to the internal reward value that matters to the decision maker. The number of action potentials during one well-defined period — for example, for 200 ms after a stimulus — would quantitate how much the reward is valued by the monkey’s neurons, and thus how much it is worth privately to the monkey.

Economic theory suggests that numeric estimates of utility can be obtained experimentally in choices involving risky rewards81,86,87. The simplest and most confound-free form of risk can be tested by using equiprobable gambles in which a small and a large reward occur with equal probability of p = 0.5 (REF. 88). To obtain utility functions, we can use specifically structured choices between such gambles and variable safe (riskless) rewards (known as a ‘fractile procedure’ (REFS 89,90)) and estimate ‘certainty equivalents’: the amount of the safe reward that is required for the animal to select this reward as often as the gamble. All certainty equivalents are then used to construct the utility function89,90. In such a procedure, a monkey’s choices reveal nonlinear utility35. When the amount of reward is low, the utility function is convex (its slope progressively increases), which indicates that monkeys tend to be risk-seeking when the stakes are low, as previously observed in other monkeys91,92 and humans93–95. The utility function becomes concave with higher liquid amounts (its slope progressively flattens), which is consistent with the risk avoidance seen in traditional utility functions85,96. The convex–concave shape is similar to the inflected utility functions that have been modelled for humans97,98. Thus, it is possible to experimentally estimate numeric economic utility functions in monkeys that are suitable for mathematically valid correlations with numeric neuronal reward responses.
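The logic of the fractile procedure can be sketched in code. This is only an illustration under stated assumptions: the `hidden_utility` function (a smoothstep curve on 0 to 1.2 arbitrary units) stands in for the animal's unknown utility, and a bisection search stands in for the behavioural titration of the safe reward. All names and numbers are hypothetical.

```python
def hidden_utility(x, x_max=1.2):
    """Hypothetical convex-then-concave utility on [0, x_max], scaled to [0, 1]."""
    z = x / x_max
    return z * z * (3.0 - 2.0 * z)  # convex below the midpoint, concave above

def certainty_equivalent(low, high, utility, tol=1e-6):
    """Safe amount whose utility equals the expected utility of a 50/50 gamble."""
    target = 0.5 * utility(low) + 0.5 * utility(high)
    lo, hi = low, high
    while hi - lo > tol:  # bisection; valid because utility is monotonic
        mid = 0.5 * (lo + hi)
        if utility(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def fractile_points(x_min, x_max, utility, depth=3):
    """Build (amount, utility) pairs from nested certainty equivalents.

    Utility is anchored at u(x_min) = 0 and u(x_max) = 1; each certainty
    equivalent is assigned the mean utility of the two gamble outcomes.
    """
    points = {x_min: 0.0, x_max: 1.0}
    intervals = [(x_min, 0.0, x_max, 1.0)]
    for _ in range(depth):
        next_intervals = []
        for a, ua, b, ub in intervals:
            ce = certainty_equivalent(a, b, utility)
            u_ce = 0.5 * (ua + ub)
            points[ce] = u_ce
            next_intervals += [(a, ua, ce, u_ce), (ce, u_ce, b, ub)]
        intervals = next_intervals
    return sorted(points.items())

for amount, u in fractile_points(0.0, 1.2, hidden_utility, depth=2):
    print(f"amount {amount:.3f} -> utility {u:.2f}")
```

In this sketch a certainty equivalent above the gamble's expected value indicates risk seeking (convex range), and one below it indicates risk avoidance (concave range).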

The most basic and straightforward method to elicit a dopamine reward prediction-error response involves delivery of an unpredicted reward (juice) at a spout. Reward amount is defined by the duration of juice flow out of the spout. The start of liquid flow thus indicates the onset of reward delivery, but the final amount becomes appreciable only when the liquid flow terminates. Dopamine neurons show an initial, uniform detection response to liquid-flow onset that is unaffected by reward amount, and a second response component that increases monotonically with the final amount and signals value35 (FIG. 4c). Importantly, the second dopamine response component increases only gradually when juice amounts are small, then more steeply with intermediate juice amounts, and then more gradually again with higher amounts, following the nonlinear curvature of the utility function (FIG. 4d). Thus, the main, fully evolved dopamine reward prediction-error response correlates with numeric, quantitative utility.

To truly determine whether the dopamine signal codes numeric utility, experimental tests should use well-defined gambles that satisfy the conditions for utility81, rather than unpredicted rewards in which the risk is poorly defined. For example, when using binary, equiprobable gambles with well-defined and identical variance risk, the higher of the two gamble outcomes elicits non-monotonically varying positive dopamine reward prediction-error responses that reflect the nonlinear shape of the utility function35. These responses with well-defined gambles match well the responses obtained with free rewards and demonstrate a neuronal signal for formal numeric utility, as stringently defined by economic choice theory.

Because the fully developed main response component codes utility, the phasic dopamine reward prediction-error response can be specified as a utility prediction-error signal. All other factors that might affect utility — including risk, delay and effort cost — were held constant in these experiments; therefore the utility signal reflects income utility rather than net-benefit utility. Although economists consider utility to be a hypothetical construct that explains decision-making but lacks a physical existence, dopamine responses seem to represent a physical correlate for utility.
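A utility prediction error can be written as the utility of the received outcome minus the expected utility of the predicted outcomes. The sketch below assumes a hypothetical convex-then-concave `utility` function and three hypothetical equiprobable gambles with identical spread; none of the numbers come from the cited experiments.

```python
def utility(x, x_max=1.2):
    """Hypothetical convex-then-concave utility, scaled to [0, 1] on [0, x_max]."""
    z = min(max(x / x_max, 0.0), 1.0)
    return z * z * (3.0 - 2.0 * z)

def utility_prediction_error(outcome, predicted_outcomes, probabilities):
    """Utility of the received outcome minus the expected utility of the prediction."""
    expected = sum(p * utility(x) for x, p in zip(predicted_outcomes, probabilities))
    return utility(outcome) - expected

# Three binary, equiprobable gambles with the same spread (0.3 units)
# placed in the low, middle and high range of reward amounts.
gambles = [(0.1, 0.4), (0.5, 0.8), (0.9, 1.2)]
errors = [utility_prediction_error(high, (low, high), (0.5, 0.5)) for low, high in gambles]

for (low, high), pe in zip(gambles, errors):
    print(f"gamble {low}/{high}: prediction error for higher outcome = {pe:.3f}")
```

With this assumed function the positive prediction error for the higher outcome is largest for the middle gamble, where the utility function is steepest, which illustrates how the same physical difference between outcomes can produce non-monotonically varying responses.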

Downstream influences

Correct behaviour based on late component

As described above, the initial dopamine activation is transient, and it is likely that the accurate value representation of the second dopamine response component can quickly compensate for the initial unselectivity. The second response component persists throughout the resulting behaviour until the reward is received, as revealed by the graded positive prediction-error response to reward delivery5,33. This prediction-error response is large with intermediate reward probability, which generates an intermediate value prediction11, and decreases progressively when higher reward-probability predictions lead to less-surprising rewards33 (FIG. 5a). Similar persistence of the second response component is apparent in temporal discounting experiments, in which longer delays associated with lower values result in higher prediction-error responses to the full reward61. Thus, the initial activation lasts only until the subsequent value component conveys the accurate reward value information, which remains present until the reward occurs (FIG. 5b), covering the entire period of planning, initiation, execution and outcome of action. In this way, the initial dopamine signal can be beneficial without unfocusing or misleading the behaviour.
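How a persisting value prediction shapes the response at the time of the reward can be sketched as follows. The function `reward_prediction_errors` and the unit reward value are hypothetical; the sketch simply assumes that the prediction equals reward probability times reward value.

```python
def reward_prediction_errors(probability, reward_value=1.0):
    """Prediction errors at outcome time for a stimulus predicting reward with `probability`."""
    prediction = probability * reward_value   # value prediction held since the stimulus
    pe_reward = reward_value - prediction     # error if the reward is delivered
    pe_omission = 0.0 - prediction            # error if the reward is omitted
    return pe_reward, pe_omission

for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    pe_plus, pe_minus = reward_prediction_errors(p)
    print(f"p = {p:.2f}: reward -> {pe_plus:+.2f}, omission -> {pe_minus:+.2f}")
```

Under this assumption a fully predicted reward yields no error while its omission yields a negative error, and a reward after an unrewarded stimulus yields a full positive error, matching the cases described for FIG. 5b.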

Figure 5. Persistent accurate value representation.

The reward prediction error signalled by the main dopamine response component following a conditioned stimulus remains present until the time of reward. a | The graphs illustrate persistent reward representation in a random dot motion discrimination task in which distinct dopamine response components can initially be observed (blue and red zones). The reward prediction-error response subsequently decreases in a manner that correlates with increasing reward probability (right), suggesting that a neuronal representation of reward value persists after the onset of the value response component of the dopamine response. b | Schematics showing how an accurate reward-value representation may persist until the reward is received. As shown in the top panel, after a rewarded stimulus generates a detection response that develops into a full reward-value activation, reward delivery, which induces no prediction error (no PE), elicits no dopamine response; by contrast, reward omission, generating a negative prediction error (−PE), induces a dopamine depression. However, as shown in the bottom panel, after an unrewarded stimulus generates a detection response that develops into a dopamine depression, a surprising reward elicits a positive prediction error (+PE) and dopamine activation, whereas no reward fails to generate a prediction error and dopamine response. Thus, the dopamine responses at the time of reward probe the reward prediction that exists at that moment. This proposed mechanism expands on previous suggestions that have not taken into account the two dopamine response components4,5. Part a is adapted from REF. 33, republished with permission of Society for Neuroscience, from Temporally extended dopamine responses to perceptually demanding reward-predictive stimuli, Nomoto, K., Schultz, W., Watanabe, T. & Sakagami, M. 30 (32), 2010; permission conveyed through Copyright Clearance Center, Inc.

Both the initial, unselective detection information and the specific value information present in the second dopamine response component are propagated to downstream neurons, many of which show similar multi-component responses (FIG. 2a; Supplementary information S1 (table)). Thus, despite the transient, inaccurate, first dopamine response component, the quickly following second response component would allow neurons to distinguish rewards from non-rewards early enough to influence behavioural reactions. This is similar to the multi-component response patterns observed in other sensory, cognitive and reward neurons (FIG. 2a; Supplementary information S1 (table)). Thus, the two-component dopamine signalling mechanism combines speed, efficiency and accuracy in reward processing.

The two-component mechanism operates on a narrow timescale that requires unaltered, precise processing in the 10 ms range. Any changes in the temporal structure of the phasic dopamine response might disturb the valuation and lead to impaired postsynaptic processing of reward information. Thus, stimulant drugs, which are known to prolong increases in dopamine concentration99, might extend the effects of the initial activation component so that the dopamine surge overlaps with the second, value response and thus generates a false value signal for postsynaptic neurons. This mechanism may contribute to stimulant drug addiction and behavioural alterations in psychiatric disorders (BOX 2).
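The proposed overlap can be illustrated with a deliberately simplified sketch. It assumes first-order dopamine clearance, d[DA]/dt = release − uptake_rate × [DA], which is a simplification and not the uptake kinetics used in the cited voltammetry work; the burst duration, uptake rates and the 250 ms readout time are all hypothetical.

```python
def dopamine_trace(uptake_rate, burst_end_ms=100, total_ms=400, release_per_ms=1.0):
    """Euler simulation (1 ms steps) of d[DA]/dt = release - uptake_rate * [DA].

    All parameters are hypothetical and in arbitrary units.
    """
    concentration = 0.0
    trace = []
    for t in range(total_ms):
        release = release_per_ms if t < burst_end_ms else 0.0  # brief initial burst
        concentration += release - uptake_rate * concentration
        trace.append(concentration)
    return trace

control = dopamine_trace(uptake_rate=0.05)    # assumed normal uptake
inhibited = dopamine_trace(uptake_rate=0.01)  # assumed uptake inhibition

t_value = 250  # hypothetical time (ms) falling in the later, value-coding period
print(f"control:   residual fraction {control[t_value] / max(control):.4f}")
print(f"inhibited: residual fraction {inhibited[t_value] / max(inhibited):.4f}")
```

In this toy model the dopamine increase from the initial burst has largely cleared by the later period when uptake is normal, but a substantial fraction remains when uptake is slowed, which is the kind of carry-over the text proposes.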

Box 2. Altered dopamine responses and drug addiction.

Recognition of the two-component nature of phasic dopamine responses may allow us to speculate on possible mechanisms of drug addiction. The response structure described here must be precisely transmitted to postsynaptic neurons to preserve the specific information present in each component. Therefore, as is the case for any sophisticated mechanism, it is vulnerable to alterations. Cocaine and other psychostimulants are known to enhance and prolong impulse-dependent changes in dopamine neuron firing (see the figure, part a). When a sufficiently strong but motivationally neutral stimulus induces the unselective initial response component, the effects of dopamine uptake inhibition by cocaine prolong the dopamine increase beyond the initial response period (blue zone) and might carry it into the subsequent period of the main response component that signals reward value (red zone). Part b of the figure shows the time courses of the changes in dopamine concentration derived from the initial response component to an unrewarded conditioned stimulus (CS−; blue zone) and the continuation of this response into the value component in the case of a reward-predicting conditioned stimulus (CS+; red zone), as shown also in FIG. 2d with a compressed time course. With the prolongation of the first, unselective dopamine response component by cocaine, an unrewarded stimulus would appear to postsynaptic neurons as a reward rather than an undefined stimulus and induce erroneous learning, approach and decision-making. In addition, the cocaine-induced blockade of dopamine uptake may lead to a supranatural dopamine boost that is unfiltered by sensory receptors and is likely to induce exaggerated postsynaptic plasticity effects. Thus, the transformation of the unselective dopamine detection response into a false reward-value response may constitute a possible mechanism contributing to psychostimulant addiction. 
A similar mechanism may apply to any psychotic or attentional disorder in which reduced neuronal processing precision might compromise critical transitions between the dopamine-response components and induce false value messages and wrong environmental associations.

[DA], dopamine concentration. Figure part a is adapted with permission from REF. 99, Jones, S. R., Garris, P. A. & Wightman, R. M. Different effects of cocaine and nomifensine on dopamine uptake in the caudate-putamen and nucleus accumbens. J. Pharmacol. Exp. Ther. 274, 396–403 (1995). Figure part b is adapted from REF. 60, Nature Publishing Group.

Updating predictions and decision variables

The main, utility prediction-error response component (FIG. 4c,d) might provide a suitable reinforcement signal for updating neuronal utility signals35. The underlying mechanism may consist of dopamine-dependent plasticity in postsynaptic striatal and cortical neurons100–106 and involve a three-factor Hebbian learning mechanism with input, output and dopamine signals4. A positive prediction error would enhance behaviour-related neuronal activity that resulted in a reward, whereas a negative prediction error would reduce neuronal activity and thus disfavour behaviour associated with a lower reward. Indeed, optogenetic activation of midbrain dopamine neurons induces learning of place preference, nose poking and lever pressing in rodents107–112. These learning effects depend on dopamine D1 receptors mediating long-term potentiation (LTP) and long-term depression (LTD) in striatal neurons103,104. The teaching effects of phasic dopamine responses may differ between striatal neuron types because optogenetic stimulation of neurons expressing D1 or D2 dopamine receptors induces learning of behavioural preferences and dispreferences, respectively113.
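A three-factor Hebbian rule of the kind mentioned above is often written as a weight change proportional to the product of presynaptic activity, postsynaptic activity and the dopamine signal. The sketch below assumes this simple multiplicative form; the function name, the learning rate and the activity values are hypothetical.

```python
def three_factor_update(weight, pre_activity, post_activity, dopamine_pe, learning_rate=0.1):
    """Change a synaptic weight by learning_rate * pre * post * dopamine prediction error."""
    eligibility = pre_activity * post_activity  # coincident input and output activity
    return weight + learning_rate * eligibility * dopamine_pe

w = 0.5
w_rewarded = three_factor_update(w, 1.0, 1.0, +1.0)  # active synapse, positive error
w_punished = three_factor_update(w, 1.0, 1.0, -1.0)  # active synapse, negative error
w_inactive = three_factor_update(w, 0.0, 1.0, +1.0)  # silent input, positive error

print(w_rewarded, w_punished, w_inactive)
```

In this form a positive prediction error strengthens only those synapses that were active when the behaviour occurred, a negative prediction error weakens them, and inactive synapses are left unchanged.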

Dopamine-dependent plasticity may affect neuronal populations whose activity characteristics comply with specific formalisms of competitive decision models, such as object value, action value, chosen value and their derivatives114. The dopamine reward prediction-error response conforms to the formalism of chosen value, reflecting the value of the object or action that is chosen by the animal115; it might be driven by inputs from striatal and cortical reward neurons116–119. The output of the dopamine signal may affect object-value and action-value coding neurons in the striatum and frontal cortex whose particular signals are suitable for competitive decision processes116,120–124. Thus, the main, utility prediction-error response component of dopamine neurons shows appropriate characteristics for inducing learning and value updating in predictions and decision processes, although formal utility coding remains to be established in postsynaptic striatal and cortical neurons.

Immediate influences on behaviour

Phasic dopamine signals may also affect behavioural reactions through an immediate focusing effect on cortico-striatal connections. Through such a mechanism, weak afferent activity might be filtered out, and only information from the most active inputs might be passed on to postsynaptic striatal neurons125–127. Optogenetic activation of mouse midbrain dopamine neurons elicits immediate behavioural actions, including contralateral rotation and locomotion110. Correspondingly, reduction of dopamine bursting activity through NMDA-receptor knockout prolongs reaction time128, and dopamine depletion reduces learned neuronal responses in striatum129. These behavioural findings might be explained by a neuronal mechanism in which dopamine prolongs transitions to excitatory membrane up states in D1 receptor-expressing striatal direct pathway neurons130, but reduces membrane up states and prolongs membrane down states in D2 receptor-expressing striatal indirect pathway neurons131; both effects conceivably facilitate behavioural reactions. This link to behaviour might be confirmed by the effects of optogenetic stimulation of striatal neurons; stimulation of neurons expressing dopamine D1 receptors increases behavioural choices towards contralateral nose-poke targets, whereas stimulation of D2 receptor-expressing striatal neurons increases ipsilateral choices (or contralateral dispreferences), suggesting that these two populations of neurons have differential effects on the coding of action value132. These effects would reflect value influences from the second dopamine response component on neurons in the striatum and frontal cortex.

Addressing open issues

Unlikely aversive activation

Recognition of the two-component dopamine response structure and the influence of physical impact, reward context, reward generalization and novelty on the initial response component may help us to scrutinize the impact of aversive stimuli on dopamine neurons. There is a long history of ‘aversive’ dopamine activations seen in electrophysiological and voltammetric studies on awake and anaesthetized animals15,17,19,21–24,63,133. However, recent reports that distinguished between different stimulus components of punishers suggest that the physical impact of punishers is the major determinant for dopamine activations, and the psychophysically assessed aversive nature of the punishers did not explain the recorded dopamine activations25–27. Thus, it is possible that the previously reported activations of dopamine neurons by punishers15,17,19,21–24,134 may have been due to physical impact of the tested stimuli, rather than true aversiveness. Reward context and reward generalization19 may have had an additional facilitating influence on these dopamine activations, which would merit further tests.

Some of the dopamine activations observed in response to aversive stimuli might reflect outright reward processes. The end of exposure to a punisher can be rewarding because of the relief it provides135. Correspondingly, aversive stimuli induce delayed, post-stimulus dopamine release in the shell of the nucleus accumbens63,133, the magnitude of which predicts successful punishment avoidance136. These responses are distinct from immediate dopamine changes in the nucleus accumbens core63,133 (which may reflect physical impact, reward generalization or reward context).

Optogenetic stimulation of habenula inputs to dopamine neurons induces behavioural place preference changes137 that are compatible with both aversive and reward accounts of dopamine function, whereas electrical habenula stimulation affects preferences in a way that is compatible with a rewarding dopamine influence138. In support of the latter result, habenula stimulation elicits inhibitory electrophysiological dopamine responses139–141 through an intermediate reticular nucleus138, rather than the excitatory responses required for a postulated aversive dopamine influence on place preferences137.

The phasic dopamine activations by aversive stimuli seem to constitute the initial, unselective dopamine response component driven by physical impact25, and possibly boosted by reward context and reward generalization19, rather than reflecting a straightforward aversive response; however, the existence of some truly aversive dopamine activations can never be completely ruled out. For a more extensive discussion of the issue, see Supplementary information S2 (box).

Salience

Recognition of the two-component structure of phasic dopamine responses may also resolve earlier controversies, which suggested an attentional rather than rewarding role of the dopamine prediction-error signal64,65 on the basis of activations following exposure to physically salient stimuli16,20 and punishers24. It might seem as if dopamine neurons were involved in driving attention as a result of physical salience if they were tested in the complete absence of rewards. Without any rewards, reward prediction errors would not occur, and the second, value response component would be absent: an initial, salience response component could then be interpreted as the principal dopamine response. Testing with reward should reveal the complete dopamine signal.

A role for the dopamine prediction-error response in mediating attention derived from motivational salience (which is common to reward and punishers) would be confirmed if dopamine neurons were shown to exhibit the same (activating) response to rewards and punishers24. However, the improbability of truly aversive dopamine activations25 makes this interpretation unlikely. This suggests that theories of dopamine function based on motivational salience142 present an incomplete account of phasic dopamine function.

The incentive-salience hypothesis captures a different form of salience: one that is associated with conditioned stimuli for rewards, not punishers. The hypothesis postulates that dopamine neurons function in approach behaviour rather than in learning143. Its experimental basis is the dopamine antagonist-induced deficit in approach behaviour, but not learning, that is seen in so-called sign-tracking rat strains144. However, specific learning deficits do occur in mice in which NMDA-receptor knockout in dopamine neurons results in reduced dopamine burst activity, suggesting a connection between phasic dopamine activity and the learning of specific tasks128. Thus, the evidence for a strictly differential dopamine role in approach behaviour versus learning is at best inconclusive. The incentive-salience hypothesis and the prediction-error account are difficult to compare and might not be mutually exclusive: incentive salience concerns dopamine’s influences on behaviour, whereas prediction-error coding concerns the properties of the dopamine prediction-error signal itself, which can have many functions. Indeed, a prediction-error signal can support both learning and efficient performance145.

The two-component response structure thus provides a viable account of phasic salience signalling by dopamine neurons. The response to salient stimuli does not represent the full coding potential of dopamine neurons: rather, it constitutes only the initial, undifferentiated component of the dopamine reward prediction-error signal.

Dopamine diversity

The functional interpretation of the phasic dopamine response may shed light on the currently debated diversity of dopamine mechanisms; these debates focus on differences between phasic dopamine prediction-error responses26 or concern variations of dopamine functions akin to those in other brain systems146.

The least diverse of dopamine’s functions are the phasic electrophysiological dopamine responses, whose latency, duration and type of information coding vary only in a graded (not categorical) manner between neurons. In this respect, dopamine neurons contrast strongly with non-dopamine neurons in the striatum and frontal cortex, which show wide varieties of activations and depressions at different time points before and after different stimuli and behavioural events114,147–149. Dopamine neurons in medial and lateral, or dorsal and ventral, midbrain positions do show graded differences in responses to rewarding and aversive stimuli2,23,24,26,33. However, because aversive responses may primarily concern the initial response component25, it is possible that their regional distributions might be explained by varying sensitivities to stimulus intensity, reward context and reward generalization (FIG. 3). Thus, a strong activation in response to the physical impact of an aversive stimulus in particularly sensitive neurons may supersede a depression reflecting the negative value, and this might appear as a categorical difference in aversive and motivational salience coding15,23,24,134. Furthermore, the phasic dopamine response has been suggested to process cognitive signals in working memory and visual search tasks150. However, on closer inspection, dopamine responses in such elaborate tasks constitute standard reward prediction-error signals2,33,59,66,69,75,151–153. Altogether, the phasic dopamine reward signal is remarkably similar across neurons and so far seems to show graded rather than strictly categorical differences.

In contrast to the phasic dopamine reward prediction-error signal, all other aspects of dopamine function are diverse, including dopamine neuron morphology, electrophysiology, neurochemistry, connectivity and contribution to behaviour. For example, various subsets of dopamine neurons (1–44%) show slow, sluggish and diverse changes in the seconds time range that are inconsistently related to various aspects of behavioural task engagement and reactivity with stimulus-triggered or self-initiated arm or mouth movements11,12,14,18; these changes fail to occur with more-controlled arm, mouth and eye movements in a considerable range of studies2,5,9,13,151,152,154 (thus, dopamine impulse activity does not seem to reliably code the movement processes that are deficient in Parkinson disease). In addition, a well-controlled slow activation reflects reward risk during the stimulus–reward interval in about 30% of dopamine neurons10. Voltammetric studies in rats show similarly slow striatal dopamine increases with movements towards cocaine levers155 and with reward proximity and value156; the release might derive from the above-mentioned slow impulse activities or from presynaptic influences of non-dopamine terminals157. Impulse-dependent dopamine release is also heterogeneous and sensitive to varying degrees of modulation. For example, risky cues induce differential voltammetric dopamine responses in the nucleus accumbens core but non-differential responses in the shell158. Further, inhomogeneous neuronal release of acetylcholine, glutamate and substance P affects dopamine release159–161, which, together with the heterogeneous striosome-matrix compartments, would result in diverse dopamine release. More than with most other neurons, the time course of dopamine neuron function varies vastly1 (BOX 1). It is unclear whether all dopamine neurons, or only specific subgroups, participate in all of these functions. 
Furthermore, like most other neurons, dopamine neurons vary in morphology, connections, neurotransmitter colocalization, receptor location, neurotransmitter sensitivity and membrane channels. Also, the ultimate function of dopamine neurons on behaviour differs depending on the anatomical projection and function of the postsynaptic neurons they are influencing.

The phasic electrophysiological dopamine reward signal is remarkably similar across neurons and shows graded rather than strictly categorical differences. It affects diverse downstream dopamine and non-dopamine mechanisms, which together make dopamine function as diverse as the function of other neuronal systems. In this way, the homogeneous phasic dopamine signal influences other brain structures with heterogeneous functions and thus exerts differential and specific influences on behaviour.

Conclusions and future directions

Recent research has revealed the beneficial component structure of the phasic dopamine reward prediction-error signal during its dynamic evolution. This processing structure is well established in neurons involved in sophisticated, higher-order processes but has long been overlooked for dopamine neurons. It can explain both the salience and punishment accounts of dopamine function. According to this account, salience concerns only the initial and transient part of the dopamine response, whereas punishers activate dopamine neurons through their physical impact rather than their aversiveness. These advances also address the debated issue of dopamine diversity; the phasic reward signal has been shown to be remarkably similar between dopamine neurons and shows only graded variations that are typical for biological phenomena rather than categorical differences; however, all other aspects of dopamine function are as diverse as in other neuronal systems. In moving beyond these issues, we now identify dopamine prediction-error signals for subjective reward value and formal economic utility. Together with recent molecular, cellular and synaptic work, these results will help to better characterize reward signals in other key structures of the brain and construct a neuronal theory of basic learning, utility and economic decision-making.

Supplementary Material

Acknowledgments

The author thanks A. Dickinson, P. Bossaerts, C. R. Plott and C. Harris for discussions about animal learning theory and experimental economics; his collaborators on the cited studies for their ingenuity, work and patience; and three anonymous referees for comments. The author is also indebted to K. Nomoto, M. Sakagami and C. D. Fiorillo, whose recent experiments encouraged the ideas proposed in this article. The author acknowledges grant support from the Wellcome Trust (Principal Research Fellowship, Programme and Project Grants: 058365, 093270 and 095495), the European Research Council (ERC Advanced Grant 293549) and the US National Institutes of Health Caltech Conte Center (P50MH094258).

Glossary

- Behavioural pseudoconditioning

A situation in which the context (environment) is paired, through Pavlovian conditioning, to a reinforcer that is present in this environment. Any stimulus occurring in this context thus reflects the same association, without being explicitly paired with the reinforcer. Pseudoconditioning endows an unpaired stimulus with motivational value.

- Context conditioning

An association between a specific stimulus (for example, a reward or punisher) and a context (for example, an environment, including all stimuli except the specific explicit stimulus).

- Down states

Neuronal membrane states that are defined by hyperpolarized membrane potentials and very little firing.

- Economic utility

A mathematical, usually nonlinear function that derives the internal subjective reward value u from the objective value x. Utility is the fundamental variable that decision-makers maximize in rational economic choices between differently valued options.

- Hebbian learning

A cellular mechanism of learning, proposed by Donald Hebb, according to which the connection between a presynaptic and a postsynaptic cell is strengthened if the presynaptic cell is successful in activating a postsynaptic cell.

- Motivational salience

The ability of a stimulus to elicit attention due to its positive (reward) or negative (punishment) motivational value. Motivational salience is common to reward and punishment.

- Novelty salience

The ability of a stimulus to elicit attention due to its novelty.

- Physical salience

The ability of a stimulus to elicit attention by standing out, due to its physical intensity or conspicuousness.

- Rescorla–Wagner model

The prime error-driven reinforcement model for Pavlovian conditioning, in which the prediction error (reward or punishment outcome minus current prediction) is multiplied by a learning factor and added to the current prediction to result in an updated prediction.

- Surprise salience

The ability of a stimulus to elicit attention due to its unexpectedness.

- Temporal difference reinforcement models

A family of non-trial-based reinforcement learning models in which the difference between the expected and actual values of a particular state (prediction error) in a sequence of behaviours is used as a teaching signal to facilitate the acquisition of associative rules or policies to direct future behaviour. Temporal difference learning extends Rescorla–Wagner-type reinforcement models to real time and higher-order reinforcers.

- Up states

Neuronal membrane states that are defined by relatively depolarized membrane potentials and frequent action potential firing.

- Visual search task

An experimental paradigm in which subjects are asked to detect a ‘target’ item (for example, a red dot) among an array of distractor items (for example, many green dots).

- Voltammetry

An electrochemical measurement of oxidation-reduction currents across a range of imposed voltages, used in neuroscience for assessing concentrations of specific molecules, such as dopamine.

Footnotes

Competing interests statement

The author declares no competing interests.

See online article: S1 (table) | S2 (box)

References

- 1. Schultz W. Multiple dopamine functions at different time courses. Ann. Rev. Neurosci. 2007;30:259–288. doi: 10.1146/annurev.neuro.28.061604.135722.

- 2. Ljungberg T, Apicella P, Schultz W. Responses of monkey dopamine neurons during learning of behavioral reactions. J. Neurophysiol. 1992;67:145–163. doi: 10.1152/jn.1992.67.1.145.

- 3. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 4. Schultz W. Predictive reward signal of dopamine neurons. J. Neurophysiol. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- 5. Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500.

- 6. Tobler PN, Dickinson A, Schultz W. Coding of predicted reward omission by dopamine neurons in a conditioned inhibition paradigm. J. Neurosci. 2003;23:10402–10410. doi: 10.1523/JNEUROSCI.23-32-10402.2003.

- 7. Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020.

- 8. Pan W-X, Schmidt R, Wickens JR, Hyland BI. Dopamine cells respond to predicted events during classical conditioning: evidence for eligibility traces in the reward-learning network. J. Neurosci. 2005;25:6235–6242. doi: 10.1523/JNEUROSCI.1478-05.2005.

- 9. Lak A, Stauffer WR, Schultz W. Dopamine prediction error responses integrate subjective value from different reward dimensions. Proc. Natl Acad. Sci. USA. 2014;111:2343–2348. doi: 10.1073/pnas.1321596111.

- 10. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

- 11. Schultz W, Ruffieux A, Aebischer P. The activity of pars compacta neurons of the monkey substantia nigra in relation to motor activation. Exp. Brain Res. 1983;51:377–387.

- 12. Schultz W. Responses of midbrain dopamine neurons to behavioral trigger stimuli in the monkey. J. Neurophysiol. 1986;56:1439–1462. doi: 10.1152/jn.1986.56.5.1439.

- 13. DeLong MR, Crutcher MD, Georgopoulos AP. Relations between movement and single cell discharge in the substantia nigra of the behaving monkey. J. Neurosci. 1983;3:1599–1606. doi: 10.1523/JNEUROSCI.03-08-01599.1983.

- 14. Romo R, Schultz W. Dopamine neurons of the monkey midbrain: contingencies of responses to active touch during self-initiated arm movements. J. Neurophysiol. 1990;63:592–606. doi: 10.1152/jn.1990.63.3.592.

- 15. Chiodo LA, Antelman SM, Caggiula AR, Lineberry CG. Sensory stimuli alter the discharge rate of dopamine (DA) neurons: evidence for two functional types of DA cells in the substantia nigra. Brain Res. 1980;189:544–549. doi: 10.1016/0006-8993(80)90366-2.

- 16. Steinfels GF, Heym J, Strecker RE, Jacobs BL. Behavioral correlates of dopaminergic unit activity in freely moving cats. Brain Res. 1983;258:217–228. doi: 10.1016/0006-8993(83)91145-9.

- 17. Schultz W, Romo R. Responses of nigrostriatal dopamine neurons to high intensity somatosensory stimulation in the anesthetized monkey. J. Neurophysiol. 1987;57:201–217. doi: 10.1152/jn.1987.57.1.201.

- 18. Schultz W, Romo R. Dopamine neurons of the monkey midbrain: contingencies of responses to stimuli eliciting immediate behavioral reactions. J. Neurophysiol. 1990;63:607–624. doi: 10.1152/jn.1990.63.3.607.

- 19. Mirenowicz J, Schultz W. Preferential activation of midbrain dopamine neurons by appetitive rather than aversive stimuli. Nature. 1996;379:449–451. doi: 10.1038/379449a0.

- 20. Horvitz JC, Stewart T, Jacobs BL. Burst activity of ventral tegmental dopamine neurons is elicited by sensory stimuli in the awake cat. Brain Res. 1997;759:251–258. doi: 10.1016/s0006-8993(97)00265-5.

- 21. Guarraci FA, Kapp BS. An electrophysiological characterization of ventral tegmental area dopaminergic neurons during differential pavlovian fear conditioning in the awake rabbit. Behav. Brain Res. 1999;99:169–179. doi: 10.1016/s0166-4328(98)00102-8.

- 22. Joshua M, Adler A, Mitelman R, Vaadia E, Bergman H. Midbrain dopaminergic neurons and striatal cholinergic interneurons encode the difference between reward and aversive events at different epochs of probabilistic classical conditioning trials. J. Neurosci. 2008;28:11673–11684. doi: 10.1523/JNEUROSCI.3839-08.2008.

- 23. Brischoux F, Chakraborty S, Brierley DI, Ungless MA. Phasic excitation of dopamine neurons in ventral VTA by noxious stimuli. Proc. Natl Acad. Sci. USA. 2009;106:4894–4899. doi: 10.1073/pnas.0811507106.

- 24. Matsumoto M, Hikosaka O. Two types of dopamine neuron distinctively convey positive and negative motivational signals. Nature. 2009;459:837–841. doi: 10.1038/nature08028.

- 25. Fiorillo CD, Song MR, Yun SR. Multiphasic temporal dynamics in responses of midbrain dopamine neurons to appetitive and aversive stimuli. J. Neurosci. 2013;33:4710–4725. doi: 10.1523/JNEUROSCI.3883-12.2013.

- 26. Fiorillo CD, Yun SR, Song MR. Diversity and homogeneity in responses of midbrain dopamine neurons. J. Neurosci. 2013;33:4693–4709. doi: 10.1523/JNEUROSCI.3886-12.2013.

- 27. Fiorillo CD. Two dimensions of value: dopamine neurons represent reward but not aversiveness. Science. 2013;341:546–549. doi: 10.1126/science.1238699.

- 28. Thorpe SJ, Rolls ET, Maddison S. The orbitofrontal cortex: neuronal activity in the behaving monkey. Exp. Brain Res. 1983;49:93–115. doi: 10.1007/BF00235545.

- 29. Ravel S, Legallet E, Apicella P. Responses of tonically active neurons in the monkey striatum discriminate between motivationally opposing stimuli. J. Neurosci. 2003;23:8489–8497. doi: 10.1523/JNEUROSCI.23-24-08489.2003.

- 30. Roitman MF, Wheeler RA, Carelli RM. Nucleus accumbens neurons are innately tuned for rewarding and aversive taste stimuli, encode their predictors, and are linked to motor output. Neuron. 2005;45:587–597. doi: 10.1016/j.neuron.2004.12.055.

- 31. Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- 32. Amemori K-I, Graybiel AM. Localized microstimulation of primate pregenual cingulate cortex induces negative decision-making. Nat. Neurosci. 2012;15:776–785. doi: 10.1038/nn.3088.

- 33. Nomoto K, Schultz W, Watanabe T, Sakagami M. Temporally extended dopamine responses to perceptually demanding reward-predictive stimuli. J. Neurosci. 2010;30:10692–10702. doi: 10.1523/JNEUROSCI.4828-09.2010.

- 34. Kobayashi S, Schultz W. Reward contexts extend dopamine signals to unrewarded stimuli. Curr. Biol. 2014;24:56–62. doi: 10.1016/j.cub.2013.10.061.

- 35. Stauffer WR, Lak A, Schultz W. Dopamine reward prediction error responses reflect marginal utility. Curr. Biol. 2014;24:2491–2500. doi: 10.1016/j.cub.2014.08.064.

- 36. Bushnell MC, Goldberg ME, Robinson DL. Behavioral enhancement of visual responses in monkey cerebral cortex. I. Modulation in posterior parietal cortex related to selective visual attention. J. Neurophysiol. 1981;46:755–772. doi: 10.1152/jn.1981.46.4.755.

- 37. Treue S, Maunsell JHR. Attentional modulation of visual motion processing in cortical areas MT and MST. Nature. 1996;382:539–541. doi: 10.1038/382539a0.

- 38. Womelsdorf T, Anton-Erxleben K, Pieper F, Treue S. Dynamic shifts of visual receptive fields in cortical area MT by spatial attention. Nat. Neurosci. 2006;9:1156–1160. doi: 10.1038/nn1748.

- 39. Nardo D, Santangelo V, Macaluso E. Stimulus-driven orienting of visuo-spatial attention in complex dynamic environments. Neuron. 2011;69:1015–1028. doi: 10.1016/j.neuron.2011.02.020.

- 40. Annic A, Bocquillon P, Bourriez J-L, Derambure P, Dujardin K. Effects of stimulus-driven and goal-directed attention on prepulse inhibition of the cortical responses to an auditory pulse. Clin. Neurophysiol. 2014;125:1576–1588. doi: 10.1016/j.clinph.2013.12.002.

- 41. Thompson KG, Hanes DP, Bichot NP, Schall JD. Perceptual and motor processing stages identified in the activity of macaque frontal eye field neurons during visual search. J. Neurophysiol. 1996;76:4040–4055. doi: 10.1152/jn.1996.76.6.4040.

- 42. Ipata AE, Gee AL, Bisley JW, Goldberg ME. Neurons in the lateral intraparietal area create a priority map by the combination of disparate signals. Exp. Brain Res. 2009;192:479–488. doi: 10.1007/s00221-008-1557-8.

- 43. Ipata AE, Gee AL, Goldberg ME. Feature attention evokes task-specific pattern selectivity in V4 neurons. Proc. Natl Acad. Sci. USA. 2012;109:16778–16785. doi: 10.1073/pnas.1215402109.

- 44. Pooresmaeili A, Poort J, Roelfsema PR. Simultaneous selection by object-based attention in visual and frontal cortex. Proc. Natl Acad. Sci. USA. 2014;111:6467–6472. doi: 10.1073/pnas.1316181111.

- 45. Shadlen MN, Newsome WT. Neural basis of a perceptual decision in the parietal cortex (Area LIP) of the rhesus monkey. J. Neurophysiol. 2001;86:1916–1936. doi: 10.1152/jn.2001.86.4.1916.

- 46. Roitman JD, Shadlen MN. Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. J. Neurosci. 2002;22:9475–9489. doi: 10.1523/JNEUROSCI.22-21-09475.2002.

- 47. Ringach DL, Hawken MJ, Shapley R. Dynamics of orientation tuning in macaque primary visual cortex. Nature. 1997;387:281–284. doi: 10.1038/387281a0.

- 48. Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–873. doi: 10.1038/23703.

- 49. Bredfeldt CE, Ringach DL. Dynamics of spatial frequency tuning in macaque V1. J. Neurosci. 2002;22:1976–1984. doi: 10.1523/JNEUROSCI.22-05-01976.2002.

- 50. Hegdé J, Van Essen DC. Temporal dynamics of shape analysis in macaque visual area V2. J. Neurophysiol. 2004;92:3030–3042. doi: 10.1152/jn.00822.2003.

- 51. Roelfsema PR, Tolboom M, Khayat PS. Different processing phases for features, figures, and selective attention in the primary visual cortex. Neuron. 2007;56:785–792. doi: 10.1016/j.neuron.2007.10.006.

- 52. Hegdé J. Time course of visual perception: Coarse-to-fine processing and beyond. Prog. Neurobiol. 2008;84:405–439. doi: 10.1016/j.pneurobio.2007.09.001.

- 53. Lak A, Arabzadeh E, Harris JA, Diamond ME. Correlated physiological and perceptual effects of noise in a tactile stimulus. Proc. Natl Acad. Sci. USA. 2010;107:7981–7986. doi: 10.1073/pnas.0914750107.

- 54. Hung CP, Kreiman G, Poggio T, DiCarlo JJ. Fast readout of object identity from macaque inferior temporal cortex. Science. 2005;310:863–866. doi: 10.1126/science.1117593.

- 55. Ambroggi F, Ishikawa A, Fields HL, Nicola SM. Basolateral amygdala neurons facilitate reward-seeking behavior by exciting nucleus accumbens neurons. Neuron. 2008;59:648–661. doi: 10.1016/j.neuron.2008.07.004.