Abstract

Recent advances in high-resolution fluorescence microscopy have enabled the systematic study of morphological changes induced by chemical and genetic perturbations in large populations of cells. This has facilitated the discovery of signaling pathways underlying diseases and the development of new pharmacological treatments. In these studies, however, the data are complex, so morphological features are, in the vast majority of cases, quantified and analyzed manually. This significantly slows data processing and often limits the information gained to a descriptive level. Thus, there is an urgent need for highly efficient automated tools for the analysis and processing of fluorescence images. In this paper, we present the application of a method based on the shearlet representation to the analysis of confocal images of neurons. The shearlet representation is a recently introduced method that combines multiscale data analysis with superior directional sensitivity. This makes it particularly effective for representing objects that span a wide range of scales and have highly anisotropic features. Here, we apply the shearlet representation to the detection of somas of neurons in culture and to the extraction of geometrical features of neuronal processes in brain tissue. We propose it as a new framework for large-scale fluorescence image analysis of biomedical data.

Keywords: curvelets, fluorescent microscopy, image processing, segmentation, shearlets, sparse representations, wavelets

1. Introduction

High-resolution fluorescence microscopy has emerged during the last decade as a fundamental tool for the study of molecular mechanisms in biological samples. Unlike traditional optical microscopy, fluorescence microscopy is based on the absorption and subsequent re-emission of light by fluorophores with separable spectral properties. Through chemical conjugation, these fluorophores can be linked with a high degree of specificity to single molecules and used as probes to track the subcellular localization and expression pattern of any protein of interest. Hence, fluorescence microscopy can be invaluable for unraveling structure-function properties at the single-cell level, in tissues, or in whole organisms. Furthermore, when combined with automated image acquisition and robotic handling, it can be applied to the systematic study of morphological changes within large populations of cells under a variety of perturbations (e.g., drugs, compounds, gene silencing). As a result, automated fluorescence microscopy has become an essential technique for discovering new molecular pathways in diseases or new potential pharmacological treatments. However, the manual or semi-manual analysis of the large data sets acquired in these studies is very labor-intensive, and there is an urgent need for automated methods that can rapidly and objectively process and analyze the image data [24, 27].

The efficient analysis and processing of fluorescence images presents several challenges beyond those of standard image processing problems. Fluorescence images are formed by detecting the small amounts of light emitted by the fluorophores. This process is affected by the randomness of photon emission, by the spatial uncertainty inherent in photon detection, and by photochemical reactions such as photobleaching, whereby a fluorophore loses its ability to fluoresce over time [28]. As a result, images acquired with fluorescence microscopy usually appear blurry and noisy. An additional difficulty stems from the complexity of the image data, which typically exhibit large variations in contrast and object size, with features of interest ranging from several tens of microns down to the resolution limit (~0.1 micron). Because of all these factors, standard image processing tools frequently perform poorly on fluorescence images. Several specialized algorithms have therefore been proposed in the literature, and different types of data often require different approaches [5, 22, 27].

Wavelets and multiscale methods, in particular, have been very successful in signal and image processing during the last decade. Several wavelet-based methods have been applied to fluorescence images for tasks such as denoising, deblurring and segmentation (e.g., [3, 5, 38, 39]). Even though wavelets generally outperform Fourier-based and other traditional methods, they have serious limitations in multidimensional applications. This is because the analyzing functions associated with the wavelet transform have poor directional sensitivity. They are therefore rather inefficient at representing edges and other anisotropic features, which are frequently the most relevant objects in multidimensional data. To overcome this limitation, a new class of improved multiscale methods has recently emerged, most notably shearlets and curvelets [2]. These methods combine the advantages of multiscale analysis with the ability to efficiently encode directional information. Thanks to these properties, shearlets provide optimally sparse representations of 2D and 3D data containing edge discontinuities [2, 10, 21]. They have also been shown to perform very competitively in image denoising and enhancement, regularization and feature extraction [6, 20, 30, 44]. In particular, shearlet-based denoising algorithms have recently been applied to fluorescence images [7, 17], where they were shown to significantly outperform competing wavelet-based methods.

Motivated by the properties of directional multiscale representations, in this paper we present two algorithms which take advantage of the shearlet transform to efficiently and robustly extract the local geometry content from fluorescent imaging data. In particular, we will describe an algorithm for the automated identification of somas in neuronal cultures and an algorithm for the automated quantification of geometrical features of neurites in brain tissue.

The paper is organized as follows. In Section 2, we recall the basic properties of the shearlet transform. We then present the application of our shearlet-based approach to two problems: the identification of somas in neuronal cultures (Section 3) and the quantification of geometrical features of neurites in neuronal networks (Section 4).

2. The Shearlet Transform

Before presenting the shearlet transform, let us briefly recall a few facts from the theory of wavelets.

2.1. The wavelet transform

Wavelets were introduced in the 1980s to provide efficient representations of functions with point discontinuities [25].

The basic idea at the core of this approach is to generate a family of waveforms ranging over several scales and locations through the action of dilation and translation operators on a fixed 'mother' function. Formally, in the two-dimensional case, let $G$ be a subgroup of the group $GL_2(\mathbb{R})$ of $2 \times 2$ invertible matrices. The affine systems generated by $\psi \in L^2(\mathbb{R}^2)$ are the collections of functions of the form

$$\psi_{M,t}(x) = |\det M|^{-1/2}\, \psi\big(M^{-1}(x - t)\big), \qquad M \in G,\ t \in \mathbb{R}^2. \tag{2.1}$$

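If there is an admissible function $\psi$ such that any $f \in L^2(\mathbb{R}^2)$ can be recovered via the reproducing formula

$$f = \int_G \int_{\mathbb{R}^2} \langle f, \psi_{M,t} \rangle\, \psi_{M,t}\, dt\, d\lambda(M),$$

where $\lambda$ is a measure on $G$, then $\psi$ is a continuous wavelet and the continuous wavelet transform is the mapping

$$f \mapsto \mathcal{W}_\psi f(M,t) = \langle f, \psi_{M,t} \rangle, \qquad (M,t) \in G \times \mathbb{R}^2. \tag{2.2}$$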

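There are many examples of continuous wavelet transforms [41]. The simplest case is when the matrices $M$ have the form $aI$, where $a > 0$ and $I$ is the identity matrix. In this case, the admissible $\psi$ needs to satisfy the simple condition

$$\int_0^\infty |\hat\psi(a\xi)|^2\, \frac{da}{a} = 1 \quad \text{for a.e. } \xi \in \mathbb{R}^2.$$

In this situation, one obtains the 'traditional' continuous wavelet transform

$$\mathcal{W}_\psi f(a,t) = \langle f, \psi_{a,t} \rangle, \qquad \psi_{a,t}(x) = a^{-1}\, \psi\big(a^{-1}(x - t)\big), \quad a > 0,\ t \in \mathbb{R}^2, \tag{2.3}$$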

where the dilation factor is the same for all coordinate directions. Note that the classical discrete wavelet transform (in 2D) is obtained by discretizing (2.3) at $a = 2^{-j}$, $t = 2^{-j}k$, $j \in \mathbb{Z}$, $k \in \mathbb{Z}^2$.

One of the most useful properties of the continuous wavelet transform is its ability to identify the singularities of functions and distributions. Namely, let $f$ be a function or distribution that is smooth apart from a discontinuity at a point $x_0$. Then the continuous wavelet transform $\mathcal{W}_\psi f(a,t)$ decays rapidly as $a \to 0$, unless $t = x_0$ [15]. This property is critical for capturing local regularity. It also explains the ability of discrete wavelets to provide sparse approximations of functions with point singularities, which is one major reason for the success of wavelets in signal processing applications.
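The following minimal sketch illustrates this property in a 1D analogue. It is our own illustration, not code from [15]. It uses the first derivative of a Gaussian as a hypothetical analyzing wavelet, the 1D normalization $a^{-1/2}$, and a plain Riemann sum. These are applied to the step function $f = \chi_{(x_0, \infty)}$ with $x_0 = 0$.

```python
import numpy as np


def psi(u):
    """First derivative of a Gaussian: an odd, zero-mean 1D wavelet (illustrative choice)."""
    return -u * np.exp(-u**2 / 2)


def cwt_step(a, t, x0=0.0, half_width=50.0, n=200001):
    """Approximate W f(a, t) = a^{-1/2} * integral f(x) psi((x - t)/a) dx
    for the step function f = 1 on (x0, infinity).

    The substitution u = (x - t)/a gives a^{1/2} * integral f(t + a u) psi(u) du,
    evaluated here with a Riemann sum on [-half_width, half_width].
    """
    u = np.linspace(-half_width, half_width, n)
    du = u[1] - u[0]
    f = (t + a * u > x0).astype(float)  # step function sampled at x = t + a u
    return np.sqrt(a) * np.sum(f * psi(u)) * du


for a in (0.2, 0.1, 0.05, 0.025):
    at_jump = cwt_step(a, t=0.0)  # t = x0: slow decay
    away = cwt_step(a, t=1.0)     # t != x0: decays faster than any power of a
    print(f"a={a:<6} W(a, x0)={at_jump:+.4f}  W(a, 1)={away:+.2e}")
```

For this wavelet, one can check analytically that $\mathcal{W}_\psi f(a, x_0) = -a^{1/2}$. By contrast, $\mathcal{W}_\psi f(a, 1) = -a^{1/2} e^{-1/(2a^2)}$, which decays faster than any power of $a$. The printed values should follow these two formulas up to quadrature error.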

2.2. The shearlet transform

Despite its useful properties, the traditional continuous wavelet transform does not provide much information about the geometry of the singularities of a function or distribution. In many applications, edge-type discontinuities are frequently the most significant features, and it is desirable not only to identify their locations but also to capture their orientations. As shown by one of the authors and collaborators in [11, 19], multiscale analysis can be combined with improved geometric sensitivity by constructing a non-isotropic version of the continuous wavelet transform (2.2), called the continuous shearlet transform.

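For appropriate admissible functions $\psi^{(h)}, \psi^{(v)} \in L^2(\mathbb{R}^2)$ and matrices

$$M_{as} = \begin{pmatrix} a & -\sqrt{a}\,s \\ 0 & \sqrt{a} \end{pmatrix}, \qquad N_{as} = \begin{pmatrix} \sqrt{a} & 0 \\ -\sqrt{a}\,s & a \end{pmatrix}, \qquad a > 0,\ s \in \mathbb{R},$$

we define the horizontal and vertical (continuous) shearlets by

$$\psi^{(h)}_{a,s,t}(x) = |\det M_{as}|^{-1/2}\, \psi^{(h)}\big(M_{as}^{-1}(x - t)\big)$$

and

$$\psi^{(v)}_{a,s,t}(x) = |\det N_{as}|^{-1/2}\, \psi^{(v)}\big(N_{as}^{-1}(x - t)\big),$$
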
respectively. The reason for choosing two systems of analyzing functions is to ensure a more uniform covering of the range of directions through the shearing variable s. Indeed, rather than using a single shearlet system where s ranges over ℝ, we will use the two systems of shearlets defined above and let s range over a finite interval only.
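To make the anisotropic scaling concrete, the sketch below builds the dilation matrices. It assumes the standard choice from the shearlet literature, $M_{as} = B_s A_a$ with $A_a = \mathrm{diag}(a, \sqrt{a})$ and shear $B_s = \begin{pmatrix} 1 & -s \\ 0 & 1 \end{pmatrix}$, and the analogous vertical matrix $N_{as}$ with the two coordinates swapped. It then evaluates an affine atom $|\det M|^{-1/2}\psi(M^{-1}(x - t))$. The helper names are hypothetical.

```python
import numpy as np


def horizontal_matrix(a, s):
    """M_as = B_s @ A_a with A_a = diag(a, sqrt(a)) and B_s = [[1, -s], [0, 1]] (assumed)."""
    A = np.diag([a, np.sqrt(a)])
    B = np.array([[1.0, -s], [0.0, 1.0]])
    return B @ A  # [[a, -s*sqrt(a)], [0, sqrt(a)]]


def vertical_matrix(a, s):
    """N_as: the horizontal construction with the roles of x1 and x2 swapped."""
    A = np.diag([np.sqrt(a), a])
    B = np.array([[1.0, 0.0], [-s, 1.0]])
    return B @ A  # [[sqrt(a), 0], [-s*sqrt(a), a]]


def affine_atom(psi, M, t):
    """Return x -> |det M|^{-1/2} psi(M^{-1}(x - t)) for points x of shape (..., 2)."""
    Minv = np.linalg.inv(M)
    c = abs(np.linalg.det(M)) ** -0.5
    return lambda x: c * psi((np.asarray(x) - t) @ Minv.T)


M = horizontal_matrix(1 / 16, 0.5)
print(M)
print(np.linalg.det(M))  # equals a**1.5 = 1/64 up to rounding
```

The determinant $a^{3/2}$ is what produces the normalization $a^{-3/4}$ of the shearlets. The unequal diagonal entries $a$ and $\sqrt{a}$ make the atoms increasingly elongated as $a \to 0$.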

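To define our admissible functions $\psi^{(h)}, \psi^{(v)}$, for $\xi = (\xi_1, \xi_2) \in \mathbb{R}^2$, let

$$\hat\psi^{(h)}(\xi) = \hat\psi_1(\xi_1)\, \hat\psi_2\!\left(\frac{\xi_2}{\xi_1}\right), \qquad \hat\psi^{(v)}(\xi) = \hat\psi_1(\xi_2)\, \hat\psi_2\!\left(\frac{\xi_1}{\xi_2}\right), \tag{2.4}$$

where $\hat\psi_1, \hat\psi_2 \in C^\infty(\mathbb{R})$, $\operatorname{supp} \hat\psi_1 \subset [-2, -\tfrac12] \cup [\tfrac12, 2]$, $\operatorname{supp} \hat\psi_2 \subset [-1, 1]$, and they satisfy the following conditions:

$$\int_0^\infty |\hat\psi_1(a\omega)|^2\, \frac{da}{a} = 1 \quad \text{for a.e. } \omega \in \mathbb{R}, \tag{2.5}$$

$$\|\psi_2\|_2 = 1. \tag{2.6}$$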

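Observe that, in the frequency domain, a horizontal shearlet has the form

$$\hat\psi^{(h)}_{a,s,t}(\xi) = a^{3/4}\, e^{-2\pi i \xi \cdot t}\, \hat\psi_1(a\xi_1)\, \hat\psi_2\!\left(a^{-1/2}\left(\frac{\xi_2}{\xi_1} - s\right)\right).$$

This shows that each such function has the following support:

$$\operatorname{supp} \hat\psi^{(h)}_{a,s,t} \subset \left\{ (\xi_1, \xi_2) : \xi_1 \in \left[-\tfrac{2}{a}, -\tfrac{1}{2a}\right] \cup \left[\tfrac{1}{2a}, \tfrac{2}{a}\right],\ \left|\frac{\xi_2}{\xi_1} - s\right| \le \sqrt{a} \right\}.$$

That is, the frequency support of $\hat\psi^{(h)}_{a,s,t}$ is a pair of trapezoids, symmetric with respect to the origin, oriented along a line of slope $s$.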
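The trapezoidal support can be checked pointwise. This sketch assumes $\operatorname{supp}\hat\psi_1 \subset [-2,-\tfrac12] \cup [\tfrac12,2]$ and $\operatorname{supp}\hat\psi_2 \subset [-1,1]$, which are the standard choices in the shearlet literature. It encodes the two conditions $\tfrac{1}{2a} \le |\xi_1| \le \tfrac{2}{a}$ and $|\xi_2/\xi_1 - s| \le \sqrt{a}$. The function name is hypothetical.

```python
import numpy as np


def in_horizontal_support(xi1, xi2, a, s):
    """True where (xi1, xi2) lies in the frequency support of a horizontal shearlet
    at scale a and shear s, assuming supp psi1_hat in [-2, -1/2] U [1/2, 2] and
    supp psi2_hat in [-1, 1]:
        1/(2a) <= |xi1| <= 2/a   and   |xi2/xi1 - s| <= sqrt(a).
    """
    xi1 = np.asarray(xi1, dtype=float)
    xi2 = np.asarray(xi2, dtype=float)
    radial = (np.abs(xi1) >= 1 / (2 * a)) & (np.abs(xi1) <= 2 / a)
    with np.errstate(divide="ignore", invalid="ignore"):
        slope = xi2 / xi1  # xi1 = 0 is outside the radial band anyway
    return radial & (np.abs(slope - s) <= np.sqrt(a))


a, s = 1 / 16, 0.5
print(in_horizontal_support(1 / a, s / a, a, s))                     # on the wedge axis
print(in_horizontal_support(1 / a, (s + 2 * np.sqrt(a)) / a, a, s))  # slope too far from s
print(in_horizontal_support(4 / a, 4 * s / a, a, s))                 # outside the radial band
```

At $|\xi_1| \approx 1/a$, the wedge has width $2a^{-1/2}$ in $\xi_2$, against a radial length of $3/(2a)$. Its aspect ratio is therefore about $\tfrac43 a^{1/2}$, which tends to 0 as $a \to 0$. This is the anisotropy described in the next paragraph.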

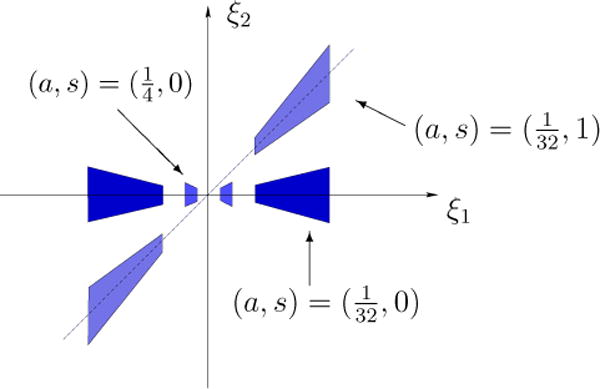

The horizontal and vertical shearlets form a collection of functions that range over various locations and scales, like the elements of a traditional wavelet system. They also range over various orientations, controlled by the variable $s$, and their frequency supports become highly anisotropic at fine scales ($a \to 0$). In the space domain, these functions are not compactly supported. However, their supports are essentially concentrated on orientable rectangles with side lengths $a$ and $\sqrt{a}$ and orientation controlled by $s$. The support becomes increasingly thin as $a \to 0$. The frequency supports of some representative horizontal shearlets are illustrated in Figure 1.

Figure 1.

Frequency supports of the horizontal shearlets for different values of a and s.

For and , each system of continuous shearlets spans a subspace of L2(ℝ2) consisting of functions having frequency supports in one of the horizontal or vertical cones defined in the frequency domain by

More precisely, the following proposition from [19] shows that the horizontal and vertical shearlets, with appropriate ranges for a, s, t, form continuous reproducing systems for the spaces of L2 functions whose frequency supports are contained in and , respectively.

Proposition 1

Let ψ(h) and ψ(v) be given by (2.4) with and satisfying (2.5) and (2.6). Let

with a similar definition for . We have the following reproducing formulas.

- For all ,

- For all ,

where both identities hold in the weak topology of L2(ℝ2).

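Using the horizontal and vertical shearlets, we define the (fine-scale) continuous shearlet transform on $L^2(\mathbb{R}^2)$ as the mapping

$$f \mapsto \mathcal{SH}_\psi f(a, s, t), \qquad a \in (0, \tfrac14],\ s \in [-\infty, \infty],\ t \in \mathbb{R}^2,$$

given by

$$\mathcal{SH}_\psi f(a, s, t) = \begin{cases} \langle f, \psi^{(h)}_{a,s,t} \rangle & \text{if } a \in (0, \tfrac14],\ |s| \le 1,\ t \in \mathbb{R}^2, \\ \langle f, \psi^{(v)}_{a,1/s,t} \rangle & \text{if } a \in (0, \tfrac14],\ |s| > 1,\ t \in \mathbb{R}^2. \end{cases} \tag{2.7}$$

In the above expression, we adopt the convention that the limit value of $\psi^{(v)}_{a,1/s,t}$ for $s = \pm\infty$ is set equal to $\psi^{(v)}_{a,0,t}$.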

The term fine-scale refers to the fact that the shearlet transform $\mathcal{SH}_\psi f$ given by (2.7) is only defined for fine scales, $a \in (0, \tfrac14]$. In fact, as is clear from Proposition 1, $\mathcal{SH}_\psi$ defines an isometry on $L^2(\mathbb{R}^2 \setminus [-2,2]^2)^\vee$, but not on all of $L^2(\mathbb{R}^2)$. Here $L^2(\mathbb{R}^2 \setminus [-2,2]^2)^\vee$ denotes the subspace of $L^2(\mathbb{R}^2)$ consisting of functions with frequency support away from $[-2,2]^2$. This is not a limitation, since the geometric characterization of singularities only requires asymptotic estimates as $a$ approaches 0.

Thanks to its directional sensitivity, the continuous shearlet transform is able to capture the geometry of edges precisely, going beyond the limitations of the traditional wavelet approach. Consider a cartoon-like image $f$, that is, a simplified model of natural images consisting of regular regions separated by smooth (or $C^m$ regular, $m \ge 2$) edges. The shearlet transform of $f$ then describes the locations and orientations of the edges of $f$ through its asymptotic decay at fine scales. In particular, we have the following theorem from [11].

Theorem 2

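Let $f = \chi_S$, where $S \subset \mathbb{R}^2$ is a compact set whose boundary $\partial S$ is a simple smooth curve. Then we have the following.

- If $t \notin \partial S$, then $\lim_{a \to 0^+} a^{-N}\, \mathcal{SH}_\psi f(a,s,t) = 0$ for all $N > 0$.

- If $t \in \partial S$ and $s$ does not correspond to the normal direction of $\partial S$ at $t$, then $\lim_{a \to 0^+} a^{-N}\, \mathcal{SH}_\psi f(a,s,t) = 0$ for all $N > 0$.

- If $t \in \partial S$ and $s = s_0$ corresponds to the normal direction of $\partial S$ at $t$, then $\lim_{a \to 0^+} a^{-3/4}\, \mathcal{SH}_\psi f(a,s_0,t) \neq 0$.

In other words, the continuous shearlet transform of $f = \chi_S$ decays rapidly, asymptotically for $a \to 0$, unless $t$ is on the boundary of $S$ and $s$ corresponds to the normal direction of $\partial S$ at $t$. In that case, one observes the slow decay $|\mathcal{SH}_\psi f(a,s_0,t)| \sim a^{3/4}$.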

This result can be extended to planar regions S whose boundaries contain corner points and even to more general functions which are not necessarily characteristic functions of sets [11, 12].

The discrete shearlet transform is obtained by sampling (2.7) at a = 2−2j, s = 2−jℓ, , for j, ℓ ∈ ℤ, k ∈ ℤ2 [14]. In this case, with an abuse of notation, we write the discrete shearlet transform as the mapping

This transform inherits some useful analytic properties from its continuous counterpart and can be implemented by fast wavelet-like algorithms. These efficient numerical implementations, combined with the property that discrete shearlet representations provide highly sparse approximations, make the discrete shearlet transform very useful for singularity detection and other image processing applications [20, 44].
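The parameter sampling described above, $a = 2^{-2j}$ and $s = 2^{-j}\ell$, can be tabulated directly. In this minimal sketch, which is ours, the horizontal shears are assumed to satisfy $|s| \le 1$, as for the horizontal system in the continuous setting. Thus $\ell$ ranges over $-2^j, \dots, 2^j$. The translation grid is omitted, and the function name is hypothetical.

```python
def horizontal_shear_grid(j):
    """Return (a_j, shears) for the horizontal system at scale index j, with
    a_j = 2^{-2j} and s_{j,l} = 2^{-j} * l restricted to |s_{j,l}| <= 1 (assumed range)."""
    a_j = 2.0 ** (-2 * j)
    shears = [l * 2.0 ** (-j) for l in range(-2**j, 2**j + 1)]
    return a_j, shears


for j in range(1, 4):
    a_j, shears = horizontal_shear_grid(j)
    print(j, a_j, len(shears))  # 2^(j+1) + 1 shears at scale a_j
```

The shear spacing $2^{-j} = \sqrt{a_j}$ halves each time $a_j$ is divided by 4. As a result, the number of directions roughly doubles from one scale to the next, reflecting the parabolic scaling of the shearlet system.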

3. Soma identification in fluorescent images of neuronal cultures

Recall that a neuron typically possesses two morphological structures: a round cell body called the soma, and several branched processes called neurites, i.e., dendrites and axons. In many biological studies, it is necessary to identify the individual neurons and their subcellular compartments (soma and neurites) in a culture or in brain tissue. For example, High Content Analysis applications for drug screening normally require the extraction and quantification of several morphological features on large datasets in order to identify compounds that cause phenotypic changes. Clearly, in these studies, traditional analysis methods such as manual segmentation, or semi-automated segmentation with human intervention, are highly impractical. Automated methods for the quantification of morphological features are therefore essential.

The automated extraction of the location and morphology of the somas in a neuronal network is essential for such applications, and several ideas have been proposed in the literature. Some popular methods use intensity thresholding [37, 40]. This is very effective for images acquired with phase-contrast microscopy, where the somas often have higher contrast than the neurites. However, this approach is unreliable in fluorescence microscopy due to the inhomogeneity of the fluorescence intensity. Other, more sophisticated methods use Laplacian-of-Gaussian and related filters [1, 32] to detect local maxima. However, irregular intensity profiles inside the soma frequently produce more than one candidate for a single soma. Other, more flexible methods rely on morphological operators [37, 43].

In this paper, we propose a novel approach that takes advantage of the shearlet transform to accurately separate the somas from the neurites. It does so by classifying appropriate geometric descriptors extracted from the fluorescence image data. Our method is motivated by the properties of the shearlet transform presented in Section 2 concerning the detection of the location and orientation of edges.

3.1. A shearlet-based Directional Ratio

Let us consider the cartoon-like image of a neuron $f = \chi_S$ in Figure 2. Here $S$ is a subset of $\mathbb{R}^2$ consisting of the union of a disk-shaped region and a thin, long solid rectangle. One might expect $S$ to be compact but, to simplify the mathematical analysis, we assume that the thin rectangle extends to infinity. Moreover, we take its centerline to be the $y$-axis, and we take the disk-shaped region to be centered at the origin and attached to the rectangle, so that $S$ is connected. The continuous shearlet transform provides a precise characterization of the boundary $\partial S$ of the region $S$. Indeed, $\mathcal{SH}_\psi f(a,s,t)$ has rapid asymptotic decay, as $a \to 0$, for all $(s,t)$ except for $t$ located on $\partial S$ and $s$ associated with the normal orientation to $\partial S$ at $t$. Of course, the discrete version of the shearlet transform can only be computed down to a finite finest resolution level. In this case, we expect that, at fine scales, the shearlet coefficients of largest magnitude will be located near the edges, at values of the shear parameter corresponding to the normal orientation to the edges. As shown by one of the authors in [44], this observation can be used to distinguish points near the edges from points away from the edges. Near an edge, the local orientation of the edge remains constant. The shearlet coefficient corresponding to a directional subband parallel to this orientation will therefore have a significant value. This is visible in Panel B of Figure 3, which shows the consistent directional sensitivity of the shearlet transform near the singularities for a single scale $a$. The panel illustrates that, at higher scales, the response of the transform is more consistent in thinner neurites and less consistent in thicker ones.

The same phenomenon is observed in the interior of a neurite. There, the orientations of maximum response appear to be distributed almost like white noise, in stark contrast to the consistently strong responses at the boundaries of the neurite. However, at intermediate scales, depending on the thickness of a neurite relative to its length (aspect ratio), the shearlet transform "sees" the entire neurite as a singularity spatially organized along its centerline. The orientations of maximum response in the interior of the neurite and on its boundary then become more consistent. We conclude that tubular and non-tubular structures exhibit different degrees of directional coherence at different scales in the shearlet transform domain.
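Panel B of Figure 3 is a map of the orientation of maximum response. The sketch below reproduces that idea with a deliberately simplified directional filter bank. Hard-edged angular wedges in the FFT domain stand in for the shearlet subbands, so this is not the shearlet transform used in the paper. The helper names `wedge_filters` and `max_response_orientation` are hypothetical.

```python
import numpy as np


def wedge_filters(n, n_dirs, r_min, r_max):
    """Hard-edged directional band-pass masks on an n x n FFT grid.

    Simplified stand-in for shearlet subbands (not a shearlet system): mask k keeps
    the frequencies with radius in [r_min, r_max) whose orientation, taken modulo pi,
    lies in [k*pi/n_dirs, (k+1)*pi/n_dirs).
    """
    f = np.fft.fftfreq(n) * n                   # integer frequency indices
    fy, fx = np.meshgrid(f, f, indexing="ij")  # axis 0 = rows (y), axis 1 = columns (x)
    radius = np.hypot(fx, fy)
    angle = np.mod(np.arctan2(fy, fx), np.pi)   # orientation of the frequency vector
    band = (radius >= r_min) & (radius < r_max)
    edges = np.linspace(0.0, np.pi, n_dirs + 1)
    masks = [band & (angle >= edges[k]) & (angle < edges[k + 1]) for k in range(n_dirs)]
    return np.stack(masks).astype(float)


def max_response_orientation(img, masks):
    """Filter img with each mask (via FFT) and return |coefficients| together with
    the per-pixel index of the direction with the largest response."""
    F = np.fft.fft2(img)
    coeffs = np.abs(np.fft.ifft2(F[None, :, :] * masks, axes=(-2, -1)))
    return coeffs, np.argmax(coeffs, axis=0)


# Synthetic neurite: a vertical bar, constant along y, so its spectrum lies on fy = 0.
n = 64
img = np.zeros((n, n))
img[:, 28:36] = 1.0
coeffs, orient = max_response_orientation(img, wedge_filters(n, 8, 4, 16))
print(orient[n // 2, 24:40])
```

A bar that is constant along the vertical axis has all of its Fourier energy on the horizontal frequency axis. Direction 0 (frequency orientations near 0, i.e., normal to the vertical edges) therefore wins near the bar. In practice, the same map would be computed from shearlet coefficients at one scale.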

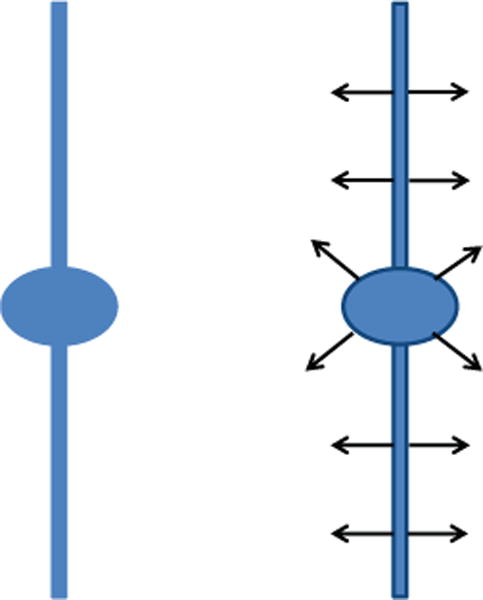

Figure 2.

Left: Cartoon-like model of a neurite and a soma. Right: The continuous shearlet transform of the cartoon-like image has rapid asymptotic decay everywhere except at the boundaries, for values of the shear variable corresponding to the normal orientation.

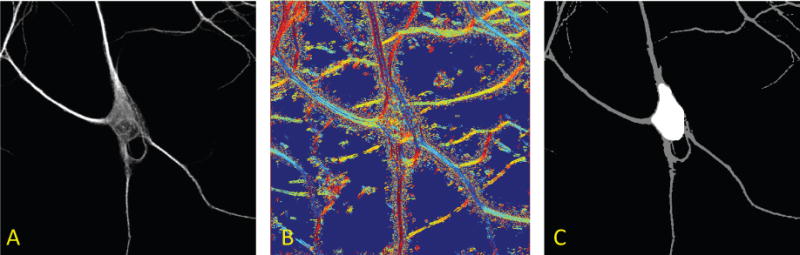

Figure 3.

Automatic detection of the soma. Panel A shows the maximum projection of a neuron imaged from fluorescent microscopy. Panel B illustrates the maximum response orientation components of the image (directions are color-coded) computed using the continuous shearlet decomposition at a single scale. Note that similar or slowly-changing colors are located along the neurites, unlike the soma region where there are no dominant orientations, that is, directional components vary randomly creating a white noise pattern. Using the directional ratio, we can accurately segment the soma from the rest of the structure in the image (Panel C).

As a measure of directional coherence, we consider a notion of directional ratio with respect to the continuous shearlet transform, which we use as a shape descriptor to separate isotropic from anisotropic structures. This concept was originally introduced in [29] using another set of analysis atoms. Similar directional ratios can be defined using other classes of multiscale transforms induced by appropriate ‘directional’ atoms.

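The shearlet-based Directional Ratio of $f$ at scale $a > 0$ and location $t \in \mathbb{R}^2$ is given by

$$DR_\psi f(a,t) = \frac{\min_{s} |\mathcal{SH}_\psi f(a,s,t)|}{\max_{s} |\mathcal{SH}_\psi f(a,s,t)|} \tag{3.1}$$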

and measures the strength of directional information at scale $a$ and location $t$. The next theorem predicts consistent responses of the directional ratio on tubular structures and thus formalizes the preceding discussion.
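We assume here that the directional ratio is the ratio of the smallest to the largest coefficient magnitude over the shear parameter at a fixed scale. This is our reading, and it matches the numerator and denominator discussed in the proof below. Under that assumption, the ratio can be computed per pixel from any stack of directional coefficients at one scale. The small `eps` guard against division by zero is our addition.

```python
import numpy as np


def directional_ratio(coeffs, eps=1e-12):
    """Per-pixel directional ratio min_s |c_s| / max_s |c_s|.

    coeffs: array of shape (n_shears, H, W) holding directional coefficients at one
    scale. eps avoids division by zero where every coefficient vanishes.
    """
    mag = np.abs(coeffs)
    return mag.min(axis=0) / (mag.max(axis=0) + eps)


# Pixel 0: one dominant direction (tubular-like). Pixel 1: all directions comparable.
c = np.zeros((8, 1, 2))
c[:, 0, 0] = [5.0, 0.1, 0.05, 0.1, 0.2, 0.1, 0.05, 0.1]
c[:, 0, 1] = [1.0, 0.9, 1.1, 1.0, 0.95, 1.05, 1.0, 0.9]
print(directional_ratio(c))  # 0.01 at the first pixel, about 0.82 at the second
```

A small ratio signals one dominant orientation, as in a neurite. In isotropic regions, both the minimum and the maximum are small, so the ratio is not informative there.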

Theorem 3

Let f = χS be a cartoon-like image of a neuron, where S contains two disjoint subsets: A, a ball centered at the origin with radius R > 0 and B, the cylinder [−r, r]×[2R, + ∞), r > 0. Let the cylinder [−r, r] × [0, ∞) be contained in S. Moreover, assume that

the Fourier transforms of both ψ1 and ψ2 are C∞ and even;

the wavelet function ψ1 has two “plateaus” in the frequency domain: for all 3/4 ≤ |ξ| ≤ 3/2;

the “bump” function ψ2 satisfies for all |ξ| ≤ 1/4 and that .

Then, for 4r ≤ a ≤ 1/4, there exists a threshold τ such that the shearlet-based Directional Ratio of f, given by (3.1), satisfies:

| (3.2) |

On the other hand, if $\varepsilon > 0$ is an arbitrary positive number, then, for every $y \in \kappa_\varepsilon A$, every orientation $s$ and every sufficiently fine scale $a$, where $0 < \kappa_\varepsilon < 1$, we have that .

3.2. Proof of Theorem 3

Before starting the proof of the theorem we remark that the constant c above must exceed 1 because the support of is the interval and ‖ψ2‖2 = 1. Also, with no loss of generality, we can assume that .

Proof

We begin by recalling that the sinc function is given by

Let y = (y1, y2) be an arbitrary element in B which is contained in the rectangle C = [−r, r] × [y2 − R, y2 +R] ⊂ B. Since the length of the cylinder B is large enough, with no loss of generality we can shift the origin in order to have y2 = 0. We have:

| (3.3) |

The absolute integrability of the shearlet functions implies that, for any ε > 0, there exists a square with side of length 2R0, such that

A similar relation holds for , with the roles of R0 and r0 reversed. For technical reasons, we will assume R0 > 1/2. Later in the proof we will determine ε. The above inequality implies that, for both the vertical and horizontal shearlets, we have

| (3.4) |

and

Our strategy is to show that the numerator in the fraction (3.3) is significantly smaller than the denominator. We begin by estimating the latter.

We stress that we have not specified the quantity ε yet, thus R0 still needs to be determined. However, we postpone this task until the end of the analysis of the behavior of directional ratio on the tubular part of the cartoon-like neuron.

On the other hand, $f = \chi_S$ contains the rectangle $[-r, r] \times [-R, R]$, which in turn contains $y$. Once again, with no loss of generality, since $S$ extends as far as we wish in the positive direction of the $y$-axis, we can assume $R > R_0$. Our assumptions on $R_0$ and on the scale $a$ imply $aR_0 \ge 2r$ (since $a \ge 4r$ and $R_0 > 1/2$). Consequently, both rectangles $-y + [-r, r] \times [-R, R]$ and $[-aR_0, aR_0] \times [-a^{1/2}r_0, a^{1/2}r_0]$ are contained in the rectangle $[-aR_0, aR_0] \times [-R, R]$.

We observe that

which, if combined with aR0 ≥ 2r, allows us to take y1 = 0 and to conclude that

Thus,

We also observe that

where we drop the absolute value of ξ1 due to the symmetry of the integrand with respect to the ξ2-axis. First, we estimate the inner integral. To do so, we set . Then we observe:

Note that

Since , the scale a does not exceed and , we conclude that both and are greater than 1. In conclusion,

| (3.5) |

Next, we examine the outer integral

The selection of the scale a implies for every value of ξ1 in the interval of integration. Since the sinc function takes positive values in the open interval and for all , using (3.5) we finally conclude that

| (3.6) |

Now we turn our attention to the numerator of (3.3); we need to show that it is much smaller than the denominator. We have that

Regardless of how y1 varies between −r and r, the rectangle always stays inside ℝ × [−R, R], so . Therefore, we have that

| (3.7) |

Note that

Since is even, we obtain that the latter double integral is equal to

| (3.8) |

We now estimate the inner integral. First, using the change of variable , we obtain that the inner integral in (3.8) is equal to

By applying the Cauchy-Schwarz inequality to $\psi_2$, which has norm equal to 1, we deduce . Therefore, the inner integral in (3.8) is bounded above by . Thus, the absolute value of the integral in (3.8) is bounded above by

where the last inequality follows from our assumption on the scale a. Using (3.7) and the last inequality, we obtain that

Next, using (3.6), we derive the following upper bound for :

Taking , we get that , for all y ∈ B.

To complete the proof, we turn our attention to the isotropic part of the cartoon neuron, the ball $A$. Since $A$ is a ball centered at the origin, it is enough to prove the statement for horizontal shearlets only. As in the first part of the proof, for each $\varepsilon > 0$ we can find a square with side of length $2R_{0,\varepsilon}$ such that

The shearing parameter $s$ varies between $-1$ and $1$ and, as it does so, it "shears" the square $Q := [-R_{0,\varepsilon}, R_{0,\varepsilon}] \times [-R_{0,\varepsilon}, R_{0,\varepsilon}]$. Therefore, if the scale is sufficiently fine, it is not hard to see that there exists $0 < \kappa_\varepsilon < 1$ such that

for every $y \in \kappa_\varepsilon A$. Now, let $-1 \le s \le 1$. Then . However, . Thus,

because $y + M_{as}(Q) \subseteq A$. Therefore, we conclude that

□

The last part of the proof of Theorem 3 illustrates the behavior of the shearlet transform in isotropic regions. We do not need to take $\varepsilon$ very small because, as the scale becomes finer, the quantity $2a^{3/4}\varepsilon$ decreases as well. In any case, the main implication of the second part of Theorem 3 concerns the isotropic part of the cartoon-like neuron. There, the shearlet transform attains very small values everywhere, as long as the location variable of the transform is away from the boundaries. As a result, the values of the Directional Ratio vary wildly in isotropic regions (see Fig. 3.2), and the Directional Ratio is therefore not useful for detecting such regions directly. As we discuss in the next paragraph, isotropic regions such as somas can nevertheless be detected and segmented.

To distinguish somas from neurites, we use Theorem 3. It predicts that, at appropriate scales, the directional ratio in the neurites does not exceed a certain threshold. The existence of such a threshold can be verified numerically, as shown in Fig. 3.2.
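The following is a minimal sketch of this identification step under stated assumptions, not the authors' pipeline. We are given a binary mask of the segmented neuron and a directional-ratio map at a suitable scale. Neuron pixels whose ratio exceeds a threshold τ are treated as soma candidates, and the largest connected component is kept. Both the value of τ and the largest-component rule are our illustrative choices.

```python
import numpy as np
from scipy import ndimage


def soma_candidates(dr, neuron_mask, tau):
    """Largest connected region of neuron pixels whose directional ratio exceeds tau.

    dr: directional-ratio map at one scale; neuron_mask: boolean neuron segmentation;
    tau: threshold that neurite pixels are expected not to exceed (cf. Theorem 3).
    """
    candidates = neuron_mask & (dr > tau)
    labels, n_regions = ndimage.label(candidates)  # 4-connected components by default
    if n_regions == 0:
        return np.zeros_like(candidates)
    sizes = np.bincount(labels.ravel())[1:]         # pixel count of each labeled region
    return labels == np.argmax(sizes) + 1


# Cartoon neuron: a disk (soma) attached to a thin vertical bar (neurite).
yy, xx = np.mgrid[:64, :64]
disk = (yy - 20) ** 2 + (xx - 32) ** 2 <= 8 ** 2
bar = (np.abs(xx - 32) <= 1) & (yy > 20)
neuron = disk | bar
dr = np.where(disk, 0.6, 0.05)  # hypothetical ratio values: high in the soma, low in the neurite
dr[50, 32] = 0.5                # an isolated spurious pixel inside the neurite
soma = soma_candidates(dr, neuron, tau=0.3)
print(soma.sum() == disk.sum())
```

Keeping only the largest component discards isolated pixels along the neurites whose ratio happens to exceed τ.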

Thus, given a segmented neuron, we can identify within this region the grid points corresponding to the soma. Moreover, Proposition 4 below indicates that the directional ratio is weakly differentiable in the interior of the tubular structures that model the (thin) neurites. This property can be used to delineate the boundary between a neurite and a soma via a level-set method. Such a method can locate this boundary significantly more accurately than plain thresholding.

Recall that level-set methods propagate a surface or a curve that evolves until it coincides with a desired level set. In our case, this level set is the boundary between a neurite and a soma. Since the directional ratio is weakly differentiable, the gradient field required to evolve the interface curve toward the desired level set can be defined by taking finite differences of the directional ratio. To initialize the evolution, we can use the boundary of the set of points inside the soma whose values of , for a suitable $a$, are less than a threshold predicted by Theorem 3. In [29], we proposed a similar method to segment somas in fluorescence images of neurons.
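Two ingredients named in this paragraph can be sketched briefly: a finite-difference gradient of the directional ratio, and an initial curve given by the boundary of a binary initialization region. The level-set evolution itself, for which any standard solver could be used, is omitted. The function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def initial_contour(region):
    """Boundary pixels of a binary region: pixels of the region not kept by erosion."""
    return region & ~ndimage.binary_erosion(region)


def dr_gradient(dr):
    """Finite-difference gradient (d/dy, d/dx) of a directional-ratio map.

    np.gradient uses central differences in the interior and one-sided
    differences at the borders.
    """
    return np.gradient(dr)


region = np.zeros((9, 9), dtype=bool)
region[2:7, 2:7] = True
print(initial_contour(region).sum())  # 16 boundary pixels of the 5 x 5 square
yy, xx = np.mgrid[:9, :9]
gy, gx = dr_gradient(0.01 * xx)       # a linear ramp in x
print(gy.max(), gx.mean())            # 0 along y, about 0.01 along x
```

Central differences are adequate here because Proposition 4 guarantees a Lipschitz (hence weakly differentiable) ratio in the region of interest. Near somas, where the ratio varies wildly, the gradient is not expected to be meaningful.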

3.3. Weak differentiability

Let us now turn our attention to the weak differentiability of the directional ratio of f, where f is a cartoon-like neuron. To establish that , given by the ratio (3.1), satisfies a Lipschitz condition, it is enough to verify this property for the numerator and the denominator of that ratio. To control how small the denominator can become, we assume that in an open set Ω there exists α > 0 such that for all y ∈ Ω. Select y ∈ Ω and a vector h such that y + h ∈ Ω. Using the triangle inequality we have

for every scale $a$ and shear variable $s$. A similar inequality holds for horizontal shearlets. We will assume $\Omega$ to be convex. Since the Fourier transforms of $\psi^{(v)}$ and $\psi^{(h)}$ are $C^\infty$ and compactly supported, $\psi^{(v)}$ and $\psi^{(h)}$ belong to the Schwartz space. Hence, all of their partial derivatives are uniformly bounded.

By applying the Mean Value Theorem of differential calculus we obtain that

where $C$ is a constant derived from the $L^\infty$-bounds of the partial derivatives of the horizontal and vertical shearlets. Since the shear variable $s$ is taken in $[-1, 1]$, the same constant $C$ works uniformly in $s$. Therefore, for every $s \in [-1, 1]$ we have that

By combining horizontal and vertical shearlets, we deduce that

By swapping the points y and y + h in the previous inequality, we obtain that

On the other hand, a similar argument establishes that

Combining these two observations, we obtain the following result.

Proposition 4

Assume that there exists $\alpha > 0$ such that the denominator of (3.1) is bounded below by $\alpha$ at every point $y$ of the open and convex set $\Omega \subset \mathbb{R}^2$. Then the shearlet-based Directional Ratio at scale $a$, restricted to $\Omega$, satisfies a Lipschitz condition and is therefore weakly differentiable on $\Omega$.

The weak differentiability of follows from the fact that is absolutely continuous since it satisfies a Lipschitz condition.
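The step summarized as "combining these two observations" can be written out explicitly. We assume, consistent with our reading of (3.1), that the Directional Ratio is the quotient $N/D$, where $N(y)$ and $D(y)$ are the minimum and the maximum over all shears of $|\mathcal{SH}_\psi f(a,s,y)|$. We also assume that $y, y+h \in \Omega$, that $D \ge \alpha$ on $\Omega$, and that $C$ is the uniform constant obtained above.

```latex
% Minima and maxima of uniformly Lipschitz families are Lipschitz:
|N(y+h) - N(y)| \le \sup_s \Big|\, |\mathcal{SH}_\psi f(a,s,y+h)| - |\mathcal{SH}_\psi f(a,s,y)| \,\Big| \le C|h|,
\qquad |D(y+h) - D(y)| \le C|h|.

% Quotient estimate, using D \ge \alpha on \Omega and 0 \le N(y) \le D(y):
\left| \frac{N(y+h)}{D(y+h)} - \frac{N(y)}{D(y)} \right|
  = \left| \frac{N(y+h) - N(y)}{D(y+h)} + \frac{N(y)}{D(y)} \cdot \frac{D(y) - D(y+h)}{D(y+h)} \right|
  \le \frac{C|h|}{\alpha} + \frac{C|h|}{\alpha} = \frac{2C}{\alpha}\,|h|.
```

The factor $N(y)/D(y) \le 1$ is what allows the second term to be controlled by the same constant as the first.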

4. Quantitative measure of alteration in biological neural networks

Structural and morphological changes of neurites often precede functional deficits or neuronal death associated with many brain disorders, and several methods have been proposed to quantify these phenotypes. Popular methods include Sholl analysis [36, 23], the Schoenen Ramification Index [35] and fractal analysis [26], which are used to measure the branching characteristics of neurons. Another is the tortuosity index, defined as the ratio of the arc length of a curve to the distance between its end points, which describes the degree of 'straightness' of a given neurite [31, 42]. These methods are very effective at the single-neuron level and have often been used to classify neuron populations. However, they cannot provide a quantitative measure of the structural properties of a biological neural network. Emerging evidence indicates that many brain disorders are associated with the disruption of the network of neurites in defined brain areas [4, 16]. Thus, the ability to quantify the loss of regularity and structural organization in these networks could be very helpful for unraveling fundamental structure-function properties involved in the onset of brain disorders.
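For comparison with the network-level measure introduced below, the single-neurite tortuosity index just described can be computed from an ordered sampling of a neurite's centerline. This is a minimal sketch; the function name and the polyline input format are our assumptions.

```python
import numpy as np


def tortuosity(points):
    """Arc length of a sampled curve divided by the distance between its end points.

    points: array of shape (n, 2) with the curve samples in order
    (n >= 2, with distinct end points).
    """
    p = np.asarray(points, dtype=float)
    arc = np.sum(np.linalg.norm(np.diff(p, axis=0), axis=1))
    chord = np.linalg.norm(p[-1] - p[0])
    return arc / chord


t = np.linspace(0, np.pi, 1001)
print(tortuosity(np.c_[t, np.zeros_like(t)]))   # straight segment: 1.0
print(tortuosity(np.c_[np.cos(t), np.sin(t)]))  # half circle: close to pi/2
```

The index equals 1 for a straight neurite and grows as the neurite winds. It describes one curve at a time, which is why it cannot capture the collective organization of a network.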

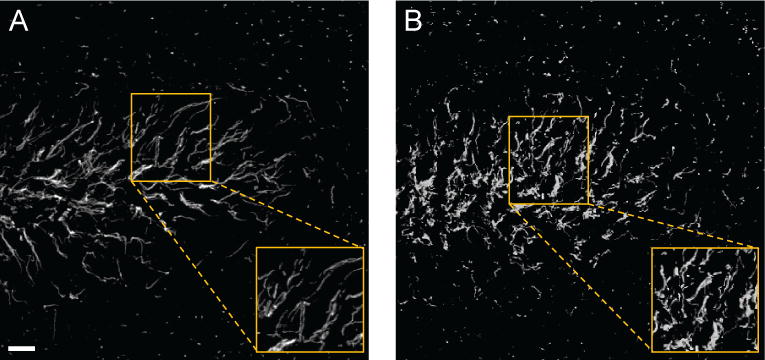

An example of the type of data for which we wish to provide a quantitative analysis is illustrated in Fig. 5. There, axonal processes in brain tissue from a control mouse (Fig. 5(a)) and from a transgenic mouse model of neurodegeneration (Fig. 5(b)) are visualized with confocal fluorescence microscopy. In the control specimen, the axonal processes are rather straight and tend to run parallel to one another. In the transgenic animal, by contrast, neighbouring processes are poorly aligned and tortuous, leading to an overall disrupted and chaotic pattern. To deal with these types of data, we introduce a new approach that models a network of neurites as a system of tubular structures. Our observation is that one can measure the overall structural organization and regularity of this system by estimating the statistical spread of the orientations of the tubular structures sampled over the region they occupy.

Figure 5.

Representative confocal images of axonal processes in the dentate gyrus, a brain area important for cognitive function, in a control mouse (A) and in a transgenic mouse model of neurodegeneration (B). Axonal processes are labeled with an anti-beta-IV-spectrin antibody and visualized with an Alexa 488-conjugated secondary antibody. Note that the pattern distribution of beta-IV-spectrin is disrupted in the dentate gyrus of the transgenic animal. The boxed region is enlarged on the bottom right of each panel to highlight the orientation pattern of the axonal processes at a higher resolution. Scale bar = 10 μm.

A direct approach to measuring the spread of orientations would be to extract the local directional information of the tubular structures and then apply the notion of circular variance from directional statistics [8]. For an ideal tubular structure, this can be done by detecting the edges and estimating their orientations. In fluorescent images, however, edges are difficult to extract reliably because of noise. Therefore, we introduce an alternative procedure that uses the shearlet transform to estimate the local directional content of an image. As illustrated in Section 2, the continuous shearlet transform maps a function $f$ into the elements $\mathcal{SH}_\psi f(a,s,t)$, which measure the energy content of $f$ near $t$ at the scale and orientation associated with $a$ and $s$, respectively. Hence, for $a_j = 2^{-2j}$ and $t_k$ fixed, the functions $s \mapsto \mathcal{SH}_\psi f(a_j,s,t_k)$ measure the distribution of the directional content of $f$ at scale $2^{-2j}$ and location $t_k$. We will use these functions to build a histogram of the directional content of an image.
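For comparison, the direct approach mentioned above can be sketched as follows, assuming that local neurite orientations (in radians) have already been estimated, for instance from detected edges, which is precisely the step that is unreliable in noisy fluorescent images. Because an orientation θ and θ + π describe the same undirected line, the sketch uses the standard angle-doubling device for axial data before computing the circular variance V = 1 − R, where R is the mean resultant length. The function name is hypothetical.

```python
import cmath
from typing import Iterable


def axial_circular_variance(angles: Iterable[float]) -> float:
    """Circular variance of axial orientations given in radians.

    Assumption: the orientations were estimated beforehand (not shown).
    Doubling each angle identifies theta with theta + pi, mapping axial
    data onto the full circle, where V = 1 - |mean resultant vector|.
    """
    doubled = [cmath.exp(2j * theta) for theta in angles]
    if not doubled:
        raise ValueError("need at least one angle")
    mean_vector = sum(doubled) / len(doubled)
    return 1.0 - abs(mean_vector)
```

A value near 0 indicates nearly parallel structures, while a value near 1 indicates orientations spread uniformly over all directions.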

4.1. Shearlet-based histogram of directional content

To define a histogram of the directional content of an image $I$ using the shearlet transform, we consider the functions $s \mapsto \mathcal{SH}_\psi I(a_j,s,t_k)$ evaluated at the discrete directional values $s(\ell) = 2^{-j}\ell$, $\ell \in N_j$, where $N_j$ is a finite set depending on $j$. We assume that the image is normalized so that $\|I\|_2 = 1$. For each fixed scale $j$, we compute the quantities

$$H_j(\ell) = \sum_{k} \left| \mathcal{SH}_\psi I(j,\ell,k) \right|^2, \qquad \ell \in N_j,$$

where $\mathcal{SH}_\psi I(j,\ell,k)$ is the discrete shearlet transform introduced in Sec. 2. Hence, $H_j$ describes the amount of energy of $I$ in each directional band indexed by $\ell$.
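The histogram $H_j$ can be computed in a few lines once the discrete shearlet coefficients of the normalized image are available. The sketch below does not implement the shearlet transform itself: it assumes a hypothetical array layout `(n_directions, rows, cols)` holding the coefficients at one scale $j$, as would be produced by some discrete shearlet implementation, and it reads "energy" as the sum of squared coefficient magnitudes over all locations $k$.

```python
import numpy as np


def normalize_image(image: np.ndarray) -> np.ndarray:
    """Rescale the image so that its l2 norm is 1, as assumed in the text."""
    image = np.asarray(image, dtype=float)
    norm = np.linalg.norm(image)  # Frobenius norm = l2 norm over all pixels
    if norm == 0:
        raise ValueError("image is identically zero")
    return image / norm


def directional_histogram(coeffs_j: np.ndarray) -> np.ndarray:
    """Energy of the image in each directional band at one scale j.

    Assumption (hypothetical layout): coeffs_j has shape
    (n_directions, rows, cols), and coeffs_j[l] holds the discrete
    shearlet coefficients for direction index l at every location k.
    Returns H_j with H_j[l] = sum over k of |coeffs_j[l, k]|**2.
    """
    coeffs_j = np.asarray(coeffs_j)
    if coeffs_j.ndim != 3:
        raise ValueError("expected shape (n_directions, rows, cols)")
    # np.abs handles complex-valued coefficients as well as real ones.
    return np.sum(np.abs(coeffs_j) ** 2, axis=(1, 2))
```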

Using the vectors $H_j$, we define a measure of the directional spread by computing a distance between the image $I$ and an appropriate reference image $I_0$. The reference image $I_0$ models the situation where all neurites are perfectly straight and parallel to one another, so that its feature vectors $H^0_j$ have all components equal to 0 except for one (with $\|I_0\|_2 = 1$). We define the directionality spread index as the quantity

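$$\nu_2(I) = \min_{d} \left( \sum_{\ell \in N_j} \left| H_j\big([\ell+d]_{n_j}\big) - H^0_j(\ell) \right|^2 \right)^{1/2} \tag{4.1}$$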

where $[\ell+d]_{n_j}$ is the sum $\ell+d$ modulo $n_j$ and is used to ensure that $I_0$ is compared with all possible rotated versions of $I$. That is, $\nu_2(I)$ is the rotation-invariant $\ell^2$ distance between the feature vectors of $I$ and $I_0$. Note that $\nu_2$ ranges between $\nu_2(I) = 0$, corresponding to the case where all neurites in the image $I$ are perfectly straight and parallel to one another, and $\nu_2(I) \approx 2$, corresponding to the case of maximum misalignment, where the directional content is uniformly distributed among all directional bands.
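The rotation-invariant $\ell^2$ index of (4.1) amounts to comparing a cyclically shifted histogram with a single-peak reference and keeping the smallest distance. The sketch below assumes that both histograms are indexed from 0 to n − 1 at a fixed scale; the height of the reference peak depends on the normalization of $I_0$ and is exposed here as a hypothetical parameter `peak`.

```python
import numpy as np


def reference_histogram(n: int, peak: float = 1.0) -> np.ndarray:
    """Hypothetical reference H_j^0: all energy in a single band.

    The value of `peak` is an assumption; it depends on how I_0 is normalized.
    """
    h0 = np.zeros(n)
    h0[0] = peak
    return h0


def spread_index_l2(h: np.ndarray, h0: np.ndarray) -> float:
    """Rotation-invariant l2 distance between directional histograms.

    Computes min over d of sqrt(sum_l |h[(l + d) mod n] - h0[l]|**2),
    i.e. h is cyclically shifted over all n directional bands.
    """
    h = np.asarray(h, dtype=float)
    h0 = np.asarray(h0, dtype=float)
    if h.ndim != 1 or h.shape != h0.shape:
        raise ValueError("h and h0 must be 1-D arrays of equal length")
    n = h.size
    # np.roll(h, -d)[l] == h[(l + d) % n]
    return min(float(np.linalg.norm(np.roll(h, -d) - h0)) for d in range(n))
```

With `h0 = reference_histogram(4)`, the histogram `[0, 0, 1, 0]` gives 0 because it is a rotated copy of the reference, while the uniform histogram `[0.25] * 4` gives √0.75 ≈ 0.87 for this particular choice of peak height.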

However, while the $\ell^2$ norm in (4.1) is a natural mathematical choice, it is not very ‘physical’ (the inadequacy of the $\ell^2$ norm for measuring distances between features is well known in computer vision, e.g., [9, 34]). A more effective alternative is the Earth Mover’s Distance (EMD), known in mathematics as the Wasserstein metric, which is based on the solution of a transportation problem and was originally proposed to provide a consistent measure of distance or dissimilarity between two distributions of mass [33, 34]. This metric is particularly appealing in our context. In fact, the histogram associated with the vector $H_j$ can be viewed as the amount of directional content piled over the various directional bins. The directionality spread index associated with the EMD measures the minimum cost of turning these piles into the single pile corresponding to the reference image $I_0$. In other words, it measures the cost of turning a network of possibly misaligned neurites into an ideal configuration where all neurites run parallel to one another. Unlike other distances between histograms, such as the information-theoretic Kullback-Leibler divergence [18] and the entropy, which only compare bins with the same index and do not use information across bins, the EMD is a cross-bin distance: it is not affected by binning differences and meaningfully matches the physical notion of closeness.
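Because the reference histogram has all of its mass in a single band, the EMD-based index needs no general transportation solver: every unit of directional content must be moved to that band, so the cost is simply the mass-weighted distance to it, minimized over the position of the band. The sketch below assumes that the ground distance is the number of bins separating two directions on the circle of directional bands and that $H_j$ is rescaled to unit total mass; the text does not specify these choices, so the resulting values are not expected to reproduce the scale of the EMD column in Table 1. Function names are hypothetical.

```python
import numpy as np


def circular_bin_distance(a: int, b: int, n: int) -> int:
    """Number of bins separating bins a and b on a circle of n bins."""
    diff = abs(a - b) % n
    return min(diff, n - diff)


def spread_index_emd(h: np.ndarray) -> float:
    """EMD from histogram h to a single pile, minimized over the pile position.

    Assumptions: h is rescaled to unit total mass, the reference has unit
    mass in one band, and the ground distance is circular_bin_distance.
    Since all mass must move to band d, the EMD is sum_l h[l] * dist(l, d).
    """
    h = np.asarray(h, dtype=float)
    if h.ndim != 1 or h.size == 0 or h.sum() <= 0:
        raise ValueError("h must be a non-empty 1-D histogram with positive mass")
    h = h / h.sum()
    n = h.size
    costs = [
        sum(h[l] * circular_bin_distance(l, d, n) for l in range(n))
        for d in range(n)
    ]
    return float(min(costs))
```

Comparing two arbitrary histograms, rather than a histogram and a single pile, requires solving the full transportation problem described in [33, 34].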

To illustrate the use of our new definition, we computed the directionality spread index ν2 and its variants associated with the EMD and other distances on a set of confocal fluorescent images similar to those in Fig. 5, in order to compare the axonal processes in brain tissue from control mice versus transgenic mice. The values of the directionality spread index associated with the ℓ2 norm, the EMD, the Kullback-Leibler distance (KLD) and the entropy distance are reported in Table 1. For each population (control animals, i.e., wild type, vs. transgenic animals), the table shows the mean directionality spread index computed over 8 samples together with the standard deviation. Note that only the values of the directionality spread index associated with the EMD passed the normality test. These results show that the difference between the directionality spread indices of the two populations is statistically significant.

Table 1.

Mean tortuosity scores using different metrics. Data are in the form: mean ± SD; p < 0.0001. The values are obtained from 8 sample images for each population.

| Sample tissue | EMD | KLD | ℓ2 | Entropy |
|---|---|---|---|---|
| Wild type | 28.1 ± 3.4 | 32.8 ± 3.4 | 1.9 ± 0.07 | 4.7 ± 0.11 |
| Transgenic | 37.3 ± 1.5 | 45.8 ± 1.9 | 1.5 ± 0.02 | 4.9 ± 0.01 |

5. Conclusion

In this paper, we have shown that directional multiscale representations and, in particular, the shearlet representation offer a powerful and flexible framework for the analysis and processing of fluorescent images of complex biomedical data. The power of the shearlet representation comes from its ability to combine multiscale data analysis with high directional sensitivity, making this approach particularly effective for the representation of objects defined over a wide range of scales and with highly anisotropic features, such as the somas and neurites that are the main morphological constituents of imaged neurons. We have applied the shearlet representation to detect somas in fluorescent images of neuronal cultures and to extract geometrical features from complex networks of neuronal processes in brain tissue. Our theoretical observations and numerical results show that the shearlet approach offers a very competitive analytical and computational framework for the geometric quantification of morphological features from large-scale fluorescent images of neurons.

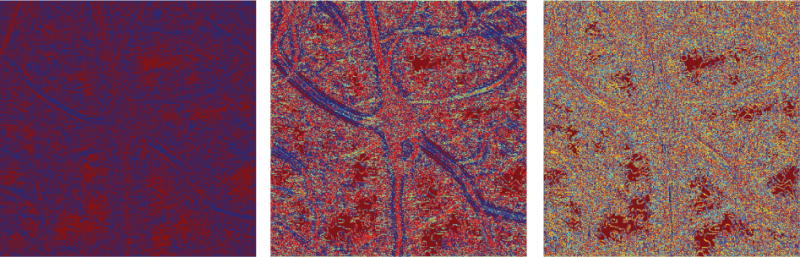

Figure 4.

Values of directional ratio (color-coded) computed at various discrete scales; from left to right: , , and according to the conventions of this paper. Using the convention of the discrete shearlet transform, from left to right we show the directional ratio at two, three and five scales below the resolution level of the original. As the scale becomes coarser, the values of the directional ratio in the interior of neurites become consistently more uniform and do not exceed a certain low threshold, whose existence is predicted by Theorem 3.2. In the interior of the soma and of thicker neurites, the values of directional ratio vary wildly, once again as predicted by Theorem 3.2.

Acknowledgments

This work was partially supported by NSF-DMS 1320910 (MP), 1008900 (DL), 1005799 (DL), by NHARP-003652-0136-2009 (MP,DL) and by NIH R01 MH095995 (FL).

Footnotes

AMS subject classification: 42C15, 42C40, 92C15, 92C55

References

- 1. Al-Kofahi Y, Lassoued W, Lee W, Roysam B. Improved automatic detection and segmentation of cell nuclei in histopathology images. IEEE Trans Biomed Eng. 2010;57:841–852. doi: 10.1109/TBME.2009.2035102.

- 2. Candès EJ, Donoho DL. New tight frames of curvelets and optimal representations of objects with piecewise C2 singularities. Comm Pure and Appl Math. 2004;56:216–266.

- 3. Chang CW, Mycek MA. Total variation versus wavelet-based methods for image denoising in fluorescence lifetime imaging microscopy. J Biophotonics. 2012 May;5(5–6):449–57. doi: 10.1002/jbio.201100137.

- 4. Delatour B, Blanchard V, Pradier L, Duyckaerts C. Alzheimer pathology disorganizes cortico-cortical circuitry: direct evidence from a transgenic animal model. Neurobiol Dis. 2004;16(1):41–7. doi: 10.1016/j.nbd.2004.01.008.

- 5. Dima A, Scholz M, Obermayer K. Automatic Segmentation and Skeletonization of Neurons From Confocal Microscopy Images Based on the 3-D Wavelet Transform. IEEE Trans Image Process. 2002;11:790–801. doi: 10.1109/TIP.2002.800888.

- 6. Easley G, Labate D, Lim W. Sparse Directional Image Representations using the Discrete Shearlet Transform. Appl Comput Harmon Anal. 2008;25:25–46.

- 7. Easley G, Labate D, Negi PS. 3D data denoising using combined sparse dictionaries. Math Model Nat Phen. 2013;8(1):60–74.

- 8. Fisher NI. Statistical Analysis of Circular Data. Cambridge University Press; 1993.

- 9. Grigorescu C, Petkov N. Distance sets for shape filters and shape recognition. IEEE Trans on Image Processing. 2003;12(10):1274–1286. doi: 10.1109/TIP.2003.816010.

- 10. Guo K, Labate D. Optimally Sparse Multidimensional Representation using Shearlets. SIAM J Math Anal. 2007;39:298–318.

- 11. Guo K, Labate D. Characterization and analysis of edges using the continuous shearlet transform. SIAM Journal on Imaging Sciences. 2009;2:959–986.

- 12. Guo K, Labate D, Lim W. Edge Analysis and identification using the Continuous Shearlet Transform. Appl Comput Harmon Anal. 2009;27:24–46.

- 13. Guo K, Labate D. Analysis and Detection of Surface Discontinuities using the 3D Continuous Shearlet Transform. Appl Comput Harmon Anal. 2010;30:231–242.

- 14. Guo K, Labate D. The Construction of Smooth Parseval Frames of Shearlets. Math Model Nat Phen. 2013;8(1):82–105.

- 15. Holschneider M. Wavelets. Analysis tool. Oxford University Press; Oxford: 1995.

- 16. Jacobs B, Praag H, Gage F. Adult brain neurogenesis and psychiatry: a novel theory of depression. Mol Psychiatry. 2000;5(3):262–269. doi: 10.1038/sj.mp.4000712.

- 17. James TF, Luisi J, Nenov MN, Panova-Electronova N, Labate D, Laezza F. The Nav1.2 channel is regulated by glycogen synthase kinase 3 (GSK3). 2013. Submitted to Neuropharmacology.

- 18. Kullback S. Information theory and statistics. John Wiley and Sons; NY: 1959.

- 19. Kutyniok G, Labate D. Resolution of the Wavefront Set using Continuous Shearlets. Trans Amer Math Soc. 2009;361:2719–2754.

- 20. Kutyniok G, Labate D. Shearlets: Multiscale Analysis for Multivariate Data. Birkhäuser; Boston: 2012.

- 21. Labate D, Lim W, Kutyniok G, Weiss G. Sparse Multidimensional Representation using Shearlets. Wavelets XI; San Diego, CA: 2005. pp. 254–262. SPIE Proc. 5914, SPIE, Bellingham, WA, (2005).

- 22. Lamprecht MR, Sabatini DM, Carpenter AE. CellProfiler: free, versatile software for automated biological image analysis. Biotechniques. 2007;42(1):71–75. doi: 10.2144/000112257.

- 23. Langhammer CG, Previtera PM, Sweet ES, Sran SS, Chen M, Firestein BL. Automated Sholl analysis of digitized neuronal morphology at multiple scales: Whole cell Sholl analysis versus Sholl analysis of arbor subregions. Cytometry A. 2010;77(12):1160–1168. doi: 10.1002/cyto.a.20954.

- 24. Li F, Yin Z, Jin G, Zhao H, Wong ST. Bioimage informatics for systems pharmacology. PLoS Comput Biol. 2013 Apr;9(4). doi: 10.1371/journal.pcbi.1003043. Chapter 17.

- 25. Mallat S. A Wavelet Tour of Signal Processing. Academic Press; San Diego, CA: 1998.

- 26. Milosevic NT, Ristanovic D, Stankovic JB. Fractal analysis of the laminar organization of spinal cord neurons. Journal of Neuroscience Methods. 2005;146(2):198–204. doi: 10.1016/j.jneumeth.2005.02.009.

- 27. Murphy RF, Meijering E, Danuser G. Special issue on molecular and cellular bioimaging. IEEE Trans Image Process. 2005;14:1233–1236.

- 28. Ntziachristos V. Fluorescence molecular imaging. Annu Rev Biomed Eng. 2006;8:1–33. doi: 10.1146/annurev.bioeng.8.061505.095831.

- 29. Ozcan B, Labate D, Jimenez D, Papadakis M. Directional and non-directional representations for the characterization of neuronal morphology. Wavelets XV; San Diego, CA: 2013. SPIE Proc. 8858 (2013).

- 30. Patel VM, Easley GR, Healy DM. Shearlet-based deconvolution. IEEE Trans Image Proc. 2009;18(12):2673–2685. doi: 10.1109/TIP.2009.2029594.

- 31. Portera-Cailliau C, Weimer RM, De Paola V, Caroni P, Svoboda K. Diverse modes of axon elaboration in the developing neocortex. PLoS Biol. 2005;3(8):1473–1487. doi: 10.1371/journal.pbio.0030272.

- 32. Qi X, Xing F, Foran D, Yang L. Robust segmentation of overlapping cells in histopathology specimens using parallel seed detection and repulsive level set. IEEE Trans Biomed Eng. 2012;59(3):754–765. doi: 10.1109/TBME.2011.2179298.

- 33. Rubner Y, Tomasi C, Guibas LJ. A Metric for Distributions with Applications to Image Databases; Proceedings ICCV; 1998. pp. 59–66.

- 34. Rubner Y, Tomasi C, Guibas LJ. The Earth Mover’s Distance as a Metric for Image Retrieval. International Journal of Computer Vision. 2000;40(2):99–121.

- 35. Schoenen J. The dendritic organization of the human spinal cord: the dorsal horn. Neuroscience. 1982;7:2057–2087. doi: 10.1016/0306-4522(82)90120-8.

- 36. Sholl DA. Dendritic organization in the neurons of the visual and motor cortices of the cat. J Anat. 1953;87:387–406.

- 37. Vallotton P, Lagerstrom R, Sun C, Buckley M, Wang D. Automated Analysis of Neurite Branching in Cultured Cortical Neurons Using HCA-Vision. Cytom Part A. 2007;71(10):889–895. doi: 10.1002/cyto.a.20462.

- 38. Vonesch C, Unser M. A Fast Thresholded Landweber Algorithm for Wavelet-Regularized Multidimensional Deconvolution. IEEE Transactions on Image Processing. 2008;17(4):539–549. doi: 10.1109/TIP.2008.917103.

- 39. Vonesch C, Unser M. A Fast Multilevel Algorithm for Wavelet-Regularized Image Restoration. IEEE Transactions on Image Processing. 2009 Mar;18(3):509–523. doi: 10.1109/TIP.2008.2008073.

- 40. Wählby C, Sintorn IM, Erlandsson F, Borgefors G, Bengtsson E. Combining intensity, edge and shape information for 2D and 3D segmentation of cell nuclei in tissue sections. J Microsc. 2004;215:67–76. doi: 10.1111/j.0022-2720.2004.01338.x.

- 41. Weiss G, Wilson E. The mathematical theory of wavelets; Proceeding of the NATO–ASI Meeting. Harmonic Analysis 2000. A Celebration; Kluwer Publisher; 2001.

- 42. Wen Q, Stepanyants A, Elston GN, Grosberg AY, Chklovskii DB. Maximization of the connectivity repertoire as a statistical principle governing the shapes of dendritic arbors. PNAS. 2009;106(30):12536–12541. doi: 10.1073/pnas.0901530106.

- 43. Yan C, Li A, Zhang B, Ding W, Luo Q, Gong H. Automated and accurate detection of soma location and surface morphology in large-scale 3D neuron images. PLoS One. 2013 Apr 24;8(4). doi: 10.1371/journal.pone.0062579.

- 44. Yi S, Labate D, Easley GR, Krim H. A Shearlet approach to Edge Analysis and Detection. IEEE Trans Image Process. 2009;18(5):929–941. doi: 10.1109/TIP.2009.2013082.