Abstract

The pharmaceutical industry has long known that approximately 80% of the results of academic laboratories cannot be reproduced when repeated in industry laboratories. Yet academic investigators are typically unaware of this problem, which severely impedes the drug development process. This “academic-industrial complication” is not one of deception, but rather a complex issue related to how scientific research is carried out and translated in strikingly different enterprises. This opinion article describes the reasons for inconsistencies between academic and industrial laboratories and what can be done to repair this failure of translation.

Keywords: Translational failure, bench to bedside, drug development

Relationship Issues in Drug Discovery

Thirty-five years ago a landmark article in Science defined the term Academic-Industrial Complex [1]. It explored the complex relationship between academia and industry, at a time when industry was funding increasing numbers of academic research laboratories and centers. Within the broad umbrella covered by this term was the relationship between research done in laboratories at universities (“academic”) and its development by pharmaceutical and medical device companies, including start-up companies (“industry”). The value of this relationship is the production of new knowledge in academic laboratories, which is then translated by industry to develop new treatments or diagnostic techniques.

Although the academic-industrial cooperation should be a fertile environment for the scientific enterprise, there is now a general recognition that the results of academic laboratories are frequently irreproducible in industry laboratories [2]. Bayer found that only 20–25% of academic findings could be reproduced by their in-house team [3], and Amgen found only 11% [4]. A specific example relates to the sirtuin activators, which were shown effective in early publications from an academic laboratory and in data from a start-up company, Sirtris, but not when studied by larger companies such as Pfizer [5]. Research and development heads at large companies often privately acknowledge that the results they obtain when attempting to use the fruits of academic laboratory research are not the same as those reported in publications or confidential disclosures.

Several recent articles have pointed out serious issues in reproducibility [2, 3, 6–9], which handicap the ability of industry to develop the work from academia.

In contrast to the recognition of this problem on the part of industry, there is usually disbelief on the part of academic researchers when informed of the problem. The academic world believes in its research, and views the failure to translate from academia to industry as an issue related to protocols, misunderstandings, or even incompetence.

This commentary describes sources of inconsistencies between the results of academic research and their replication in industrial laboratories, proscribes factors that increase the risk of discrepancies, and prescribes better methods for collaboration between the two enterprises.

Successful Translation of Academic Results to Industry

There are many examples of how research in academic laboratories has translated into drugs or devices that have been further developed and marketed by industry. Examples include the discovery of a taxane extracted from European yews (Taxus baccata) in the CNRS laboratory of Pierre Potier, co-financed by Rhône-Poulenc Rorer, which led to the commercialization of docetaxel (Taxotere) for cancer therapy. Vaccines have also commonly been discovered in university laboratories. The first human hepatitis B vaccine was prepared by Philippe Maupas at the University of Tours, France, before being prepared by Glaxo. Most recently, a team led by Kim Lewis of Northeastern University used an electronic chip to discover new antibiotics extracted from soil microbes which are active against most resistant bacterial strains [10]. Teixobactin, the first new antibiotic developed in the past 30 years, is highly effective against Mycobacterium tuberculosis and Staphylococcus aureus without inducing resistant strains, representing a revolution in antimicrobial development which many companies struggle to achieve.

In the United States, the Bayh-Dole Act defines the terms delineating how the use of patents derived from federally-funded academic research can be licensed to industrial partners. Licensing fees typically go to the university, the laboratory, and the investigator(s) that are inventors on the patent. Similar incentive strategies are being developed in European countries, particularly in France. This powerful incentive has increasingly led to interest in translating research from academia to industry, a dramatically different picture from the practice of science hundreds of years ago, when scientific curiosity was the key driver for research done by individuals in small and often privately-funded laboratories.

Problems with Reproducing Academic Results in Industry

Despite these successes, failures are more common. Translational failures in this setting are of four types: (1) the experimental data from the academic side are not true (e.g. from incorrect interpretation, “p hacking” [11], or falsification); (2) the experimental data from the industry side are not true; (3) both sets of data are true, but different, because experimental models, reagents, parameters, instruments, or environments differ between the two; or (4) the data are reproduced accurately, but industry’s requirements for effect sizes, sample sizes, lack of toxicity, or other features differ from those of the academic laboratory.

To better understand the problem of failed translation, it is important to understand the roles and perspectives of the parties (see Figure 1). From the standpoint of industry, there are several explanations for the assumption that academic results are inaccurate or irreproducible. First, there is a belief that the interests of the investigator in publishing (“publish or perish”) and the need to maintain grant funding can trump the accounting of results that are less than overwhelming. For most scientists in academia there is a strong pressure to publish papers and obtain grant funding. This pressure can be reduced in systems where researchers have permanent positions, and their own salaries do not depend on obtaining grants, yet remains high because academic evaluation and funding are mostly based on publication ranking. The main advantage of permanent positions for senior researchers is the possibility to engage in risky research despite the potential for failure.

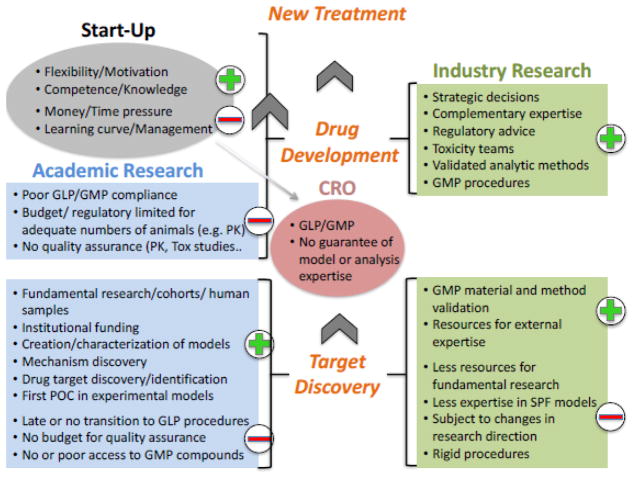

Figure 1.

The relations between academic institutions (light blue), industry (light green), start-up companies (gray), and contract research organizations (CRO; dark pink) in the drug development process, which is depicted as proceeding from the bottom to the top. Green plus signs (+) represent areas of strengths; red minus signs (−) represent areas of weaknesses or challenges.

The number of universities providing “hard money,” where salaries are guaranteed as a part of tenure-track employment, is diminishing. It is rare that researchers have guaranteed grant funding from other sources. Even the awards given to the most productive investigators, such as the Javits Award from the National Institute of Neurological Disorders and Stroke (7 years) or investigatorships from the Howard Hughes Medical Institute (5 years), do not have a lifetime guarantee. The need to publish one’s results to obtain further grant funding can be a source of pressure on investigators, and unconscious over-interpretation, or in worse cases, embellishment of data, might ensue.

Second, the limitation of government-derived funding and the rise of disease-based foundations (e.g. the Cystic Fibrosis Foundation or the American Diabetes Foundation) as sources of research funding can induce investigators to incorporate a clinical relevance to a research project for which the connection may be flimsy. Third, many top-tier journals favor submissions which tell a compelling story, and can inadvertently promote the overselling of research data. At the same time, it is precisely those high-impact articles that may stimulate industry interest.

Our experience has been that similar problems can arise in small start-up companies, where employees may lose their jobs when experiments do not work. Such pressure can unconsciously influence interpretation of subjective experimental variables. On the other hand, start-ups appear to play a special role intermediate between academia and industry. Start-up personnel often originate from the academic laboratory, and because it is their own project, they are probably more likely to work harder to reproduce results, identify pitfalls, and understand the source of problems. Given that their jobs depend on translatability of their work, start-up personnel may have a tremendous incentive to explore inconsistent results and mitigate them by improving procedures, models, and reagents. This emphasis on understanding and refining experimental methods could help the project survive. Contrast this with big pharma, where projects are terminated because there is insufficient incentive to understand initially negative results. If start-ups were not performing the role of reproducing, refining, and improving the science originated by academia, probably far less of it would go further. Industry companies are unable to perform this because they are not structured to do so.

Although there are well-publicized examples of fraudulent research, it is unlikely that failed replication is the result of fraud. This is not based on a belief in the inherent honesty of one group of scientists over another, but rather a statistical argument that the fruits of academic research are frequently able to be replicated in other laboratories, albeit when accompanied by communication between the originator and the copier. If roughly 80% of academic research were indeed fraudulent, then presumably that should result in an equivalently high rate of failed reproducibility in the literature. Instead, academic laboratories appear to be able to reproduce the work of others, although this is not always the case [9]. Even when results are reproducible, it does not mean that the results are also true, as pointed out by Ioannidis [12]. Failure to reproduce results can suffer from a bias against publishing negative or unclear results, leading to the file-drawer effect [13], while if many laboratories attempt to reproduce an effect, some may detect the opposite effect by chance, i.e. the Proteus phenomenon [14]. Together, these concerns are fundamental issues in the reproducibility of academic research.
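The distinction between a result being statistically significant and being true can be made concrete with a small calculation. The following is a minimal sketch, assuming the simplest no-bias form of the positive predictive value (PPV) discussed in [12]; the function name and the example inputs (pre-study odds of 1:10, power of 0.8 or 0.2) are hypothetical illustrations, not values taken from this article.

```python
def positive_predictive_value(prior_odds: float, power: float, alpha: float = 0.05) -> float:
    """Share of 'significant' findings that reflect a true effect.

    prior_odds: assumed pre-study odds that a tested relationship is true
    power:      probability of detecting a true effect (1 - beta)
    alpha:      significance threshold (false positive rate per null effect)
    Bias and multiple competing teams are deliberately ignored here.
    """
    true_positives = power * prior_odds   # per unit of null relationships tested
    false_positives = alpha
    return true_positives / (true_positives + false_positives)


# Hypothetical inputs: 1 true relationship for every 10 null ones.
for assumed_power in (0.8, 0.2):
    ppv = positive_predictive_value(prior_odds=0.1, power=assumed_power)
    print(f"power={assumed_power:.1f} -> PPV={ppv:.2f}")
```

Under these assumed inputs, lowering power from 0.8 to 0.2 drops the fraction of significant findings that are true from well over half to under a third, without any fraud being involved.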

An issue relating to reproducing academic research is that the methodologies used for designing experiments, collecting data, and analyzing results may be less rigorous than those used in industry for the same purpose. We previously described this “Lost in Translation problem,” and suggested that differences in methodological rigor in basic science laboratory research could help explain why it is frequently not reproduced in clinical trials [15]. For example, a problem with reproducing preclinical studies of neuroprotective agents in stroke in clinical trials led to the formation of the Stroke Therapy Academic Industry Roundtable (STAIR), which produced recommendations for improving the quality of basic research in the field [16]. The deficiencies raised by the STAIR consortium apply both to academic researchers and to those in industry doing replication. The ARRIVE guidelines [17] for reporting animal research are akin to the CONSORT guidelines for clinical trials, and address the frequent lack of detail in how animal experiments are reported. The DEPART guidelines [8] are an example of osteoarthritis-specific considerations for how animal experiments are designed, while all NIH-supported preclinical research must now adhere to the NIH Principles and Guidelines for Reporting Preclinical Research [18]. Applying clinical trial methods to the bench, e.g. the preclinical randomized controlled multicenter trial [19], can help improve rigor and thus reproducibility.

Incentives to publish positive findings can promote biased interpretation of data, as suggested above. A different type of bias occurs where initial experiments showing exciting results are followed by experiments that fail to repeat the positive results, and even though those negative experiments are repeatable, they are ignored or rationalized as a result of some other factor, e.g. different personnel or a change in reagent lots. Finally, an over-reliance on p values for interpreting the likelihood of a hypothesis can lead to practices such as “p-hacking,” where data are analyzed until they yield a significant p value [20].
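One way to see why repeated analysis of the same data is hazardous is to compute how quickly the chance of a spurious “significant” result grows with the number of analyses. This is a minimal sketch under a simplifying assumption that the analyses are independent and that no real effect exists; the function name and the numbers of analyses shown are hypothetical.

```python
def familywise_false_positive_rate(alpha: float, n_tests: int) -> float:
    """Probability of at least one p < alpha among n_tests independent
    analyses when every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** n_tests


# Assumed scenario: no true effect, threshold of 0.05, 1 to 20 analyses.
for n_tests in (1, 5, 10, 20):
    rate = familywise_false_positive_rate(0.05, n_tests)
    print(f"{n_tests:2d} analyses -> {rate:.3f}")
```

Real analyses of a single dataset are usually correlated, so the true inflation is typically smaller than this independent-test bound, but the direction of the effect is the same.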

Another issue is that experiments in academic laboratories may use doses of drugs that are too high, with resultant poor selectivity and even toxicity. Academic scientists infrequently conduct pharmacokinetic studies that would help define appropriate drug concentration, duration of exposure at the target site, and other factors – all of which are critical concerns for the industrial scientist.

The View from the Academic Side

We have discussed the observation from the industry side that academic research is frequently not repeatable. Conversely, from the standpoint of academic researchers, industry does not always have the detailed knowledge needed to reproduce the academic laboratory’s results. First, a frequent assumption made by academic laboratories when their results fail to be translated in industry laboratories is that the industry laboratory may not have equivalent levels of expertise in the specialized methods required to carry out replication of the former’s results. Many companies subcontract animal studies to specialized contract research organizations, and given the need for these organizations to have many animal models on their menu, they may not have sufficient expertise to carry out the research at the same level of proficiency as an academic laboratory which uses only that model. An academic laboratory may have spent years developing a specific model, validating it, and ensuring the repeatability and reliability of the model over many years of use. A contract research organization or industry laboratory may have come to the model late in the development pathway, and may only use the model when there is a need. The model is likely to have been established based on published protocols describing the model, but it is well-known that simple replication of a procedure does not guarantee reproducibility. A protocol may not have enough detail to ensure repeatability, or there may be subtle issues that are not even written down.

This problem is particularly acute in specialties where specialized clinical expertise is critical to the analysis of the results of animal models, e.g. ophthalmology. In a contract research organization (CRO), technicians often receive ad hoc training to evaluate clinical scores in a new model, while in academic laboratories, specialty-trained physicians typically have years of experience with the particular model and its evaluation. It is paradoxical that clinical trials are conducted by credentialed expert physicians, but preclinical evaluation of models is often delegated to technicians or laboratory managers without particular expertise in the therapeutic area. The result is that valid work appears irreproducible. An ill-chosen comparator (e.g. positive or negative controls) can lead to the incorrect assumption that a drug does not work. For example, an intraocular corticosteroid may be highly effective in regressing choroidal neovascularization in mice but not humans, while the opposite may be true for an anti-vascular endothelial growth factor agent. Testing the latter against a corticosteroid positive control in mice would falsely identify it as poorly effective, even though it would be highly effective in clinical trials when compared to the corticosteroid.

When an uncommon or complex animal model is replicated from an originating academic laboratory to a recipient academic laboratory, the nature of the academic relationship allows for great flexibility in transferring the critical details from one to the other. Two colleagues in the same field can discuss the details by phone, and will frequently arrange for a skilled postdoctoral fellow or advanced graduate student to spend time in the originating laboratory, learning the written and unwritten minutiae of the method. This helps ensure that the technique is carried out faithfully and with all the details necessary for proper replication. Such a process may be less practical when the originating laboratory is an academic laboratory and the recipient laboratory is a contract research organization or industry laboratory, because of perceived barriers between nonprofit and for-profit organizations, need for confidentiality, or simply the belief that animal models are not very complicated to reproduce.

Second, business issues drive industry to identify drugs that will not fail or that are guaranteed to succeed. This strongly limits the number of drugs that can be studied when the threshold for carrying on research is very high. For example, if an academic laboratory identifies a drug as being able to decrease the progression rate of a disease by 30–50%, this will be accepted as scientifically significant. From the academic point of view, a 30–50% success is impressive. If it is novel, repeatable, tied to a biologically or medically relevant target, and accompanied by data establishing the mechanism by which it occurs, such a result would qualify for publication in a high-impact journal. From the industry point of view, these factors may not be great enough to assure subsequent success in translating the research to human clinical trials and eventual FDA approval. From their point of view, a 30–50% decrease in progression rate in an animal model may translate to a lower effect size in humans [15], and this result may not be enough for a future development process to be financially viable. Even if the effect size in humans is predicted to be clinically relevant, the project will be abandoned if the developmental, regulatory, and commercial pathway requirements are not clearly apparent. A project can also be abandoned when the licensing arrangement between the academic institution and a company depends on the interpretation of results. If remuneration is tied to the strength of the data, then even reproducible data may be “not strong enough” from the financial perspective of the company, and incorrectly labeled as a translational failure.

Similarly, industry may commit limited resources toward confirming the results from an academic laboratory; in some cases only a single set of experiments is performed. Without calculations of variability and power analysis, a false negative result from a small number of experiments may lead to premature termination of the project. The result is that the academic laboratory may feel that industry is being too harsh in its requirements, while the industry laboratory may judge the academic laboratory as being unrealistic.
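The risk of a false negative from a single small confirmation experiment can be estimated before the experiment is run. The sketch below uses a normal approximation for a two-sided comparison of two equal-sized groups; it is an illustration under stated assumptions, not a substitute for a formal power analysis. The function names, the standardized effect size of 1.0, and the group size of 6 are hypothetical, and the approximation is somewhat optimistic for very small groups compared with an exact t-test calculation.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def approx_power(effect_size_d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-group comparison.

    effect_size_d: assumed standardized difference between group means
    n_per_group:   number of animals (or samples) in each group
    """
    z_crit = _STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    shift = effect_size_d * math.sqrt(n_per_group / 2.0)
    # Probability that the test statistic lands beyond either critical value.
    return (1.0 - _STD_NORMAL.cdf(z_crit - shift)) + _STD_NORMAL.cdf(-z_crit - shift)


def n_per_group_for_power(effect_size_d: float, power: float = 0.80, alpha: float = 0.05) -> int:
    """Smallest group size reaching the target power under the same approximation."""
    z_alpha = _STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    z_beta = _STD_NORMAL.inv_cdf(power)
    return math.ceil(2.0 * ((z_alpha + z_beta) / effect_size_d) ** 2)


# Hypothetical confirmation study: large true effect, 6 animals per group.
print(f"power with n=6 per group: {approx_power(1.0, 6):.2f}")
print(f"n per group for 80% power: {n_per_group_for_power(1.0)}")
```

With these assumed inputs, a single experiment with 6 animals per group would miss a real, large effect more often than it would detect it, which is the situation in which a project can be terminated prematurely.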

Solutions

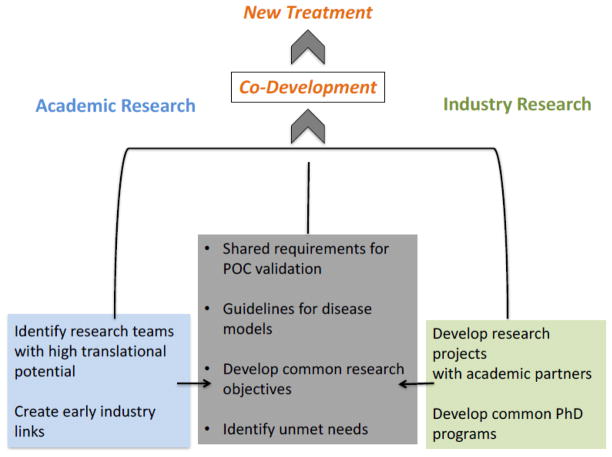

Given the large number of potential problems associated with transferring results from academic laboratories to industry laboratories, it may seem that the problem is insurmountable. However, there are strategies that can be used to address the problem and improve the quality of transferability (Figure 2).

Figure 2.

A proposed model for improving interactions among academic institutions (light blue), industry (light green), and start-up companies (gray) in the drug development process, with the goal of addressing the problems identified in the article.

Sensitizing researchers to transferability

As discussed earlier, many if not most researchers outside of industry are unaware of the problem we have raised. Educating academic researchers and trainees about problems with transferability could help improve the quality of translation. It is a rare academic laboratory that is aware of the procedures that industry laboratories follow in confirming results. Standards for laboratory practices, such as Good Laboratory Practices (GLP), are unknown to most academic laboratories, yet following GLP rules on standard operating procedures, predetermined study plans, quality assurance methods, test systems, and others would increase the quality and transferability of their work. If academic laboratories are aware in advance of what is likely to be needed for validation in an industry laboratory, it is more likely that the translation process can be improved. Researchers should attempt to have their own results reproduced by other researchers in their own laboratories. They should be sensitive to the importance of having sufficient effect size to drive future development, what we have called the “Princess and the Pea Problem” [15]. Another strategy is a team approach, which helps avoid having the same person design the experiments, perform them, and analyze the results.

Relations with industry

Paralleling the theme in the previous paragraph, we suggest that improving relations between academic laboratories and industry laboratories would be beneficial when there is a high likelihood of need for translation between the two. For example, internships or other educational opportunities for young or early-career researchers in the industry environment would be beneficial. Exposure to protocols such as GLP for standards and other types of certifications would be useful for academic laboratories to see how rigorous protocols can be followed. There should be discussions to agree on the positive and negative controls used in experiments.

It is important to provide enough financial support to perform the requisite experiments with sufficient numbers of animals, and pay for the number of repeat experiments needed to have results that are statistically worthwhile. Researchers should not only become better trained in statistics, but realize that the statistical methods used in biologic research are worthless when studies are underpowered because of constraints on the number of animals used in a study. In addition, instruments have to be certified, protocols have to be standardized, and adequate time has to be devoted to carrying out the steps. Joint efforts (both financial and methodological) between universities and national research agencies should support teams particularly dedicated to translational research to achieve an adequate level of rigor, which could include financial incentives for reproducible results [21].

We believe that the most advantageous approach to dealing with the complex nature of the academic and industry collaboration is for there to be early partnerships between the two. Such collaborations can be at the level of joint programs that start at very basic levels, progressing through development of new drugs and devices, and at the translational level, where clinical trials are envisaged, designed, and carried out. Industry has both the financial support and the methodology for carrying out expert-level research. The same is true for academic laboratories, but in many cases there is incomplete overlap between the two. Partnership and joint ventures can help deal with differences in knowledge base, techniques, and approach.

An example of a partnership is the transfer of information for carrying out complex animal models. As mentioned above, the nature of the relationship between academic laboratories facilitates the transfer of critical details from one to the other, and it is common that movement of personnel allows hands-on learning of a model that may be relatively rare. Consulting or collaborative arrangements could make this a standard practice between academic and industry laboratories, based on the same rationale behind collaborative networks [22], and thereby improve the reliability and reproducibility of complex models.

Another advantage of industry is that it is fully aware of the work that needs to be done to satisfy regulatory agencies, something which is not always known to academic researchers. By involving academic researchers early in the process, and with an understanding of the development pathway that would lead to regulatory approval, joint research programs can be optimized.

Industry knows how to kill a project early, an apparently harsh approach that is valuable in optimizing resource use to promote the most valuable projects. This is different from what occurs in academia, where a grant may be funded for a set period, and even if an approach appears to be failing, it is difficult to give up the project. Stopping the project early might mean that the money would have to be returned to the granting agency. The industry approach to project fate is one that could inform the practices of academia and government funding. On the other hand, industry procedures that are overly rigid can close the window of discovery. The great flexibility of being able to modify protocols and evolve experimental designs based on results is an opportunity enjoyed by academic researchers, and leads to innovation. Clearly, there is a double-edged sword associated with rigorous and restrictive experimental procedures.

Concluding Remarks

The relationship between academic and industrial research is complicated. In theory, there should be a natural flow of useful information between early-stage experiments in academic laboratories and confirmation, refinement, and development in industry. In practice, this often fails to occur, for a variety of reasons that have already been discussed. This failure of translation is deleterious. Academic researchers have ideas and creativity that can lead to significant advances, and the failure to reproduce them in the industry laboratory is not one of academic malfeasance but more likely reflects a system failure. We have pointed out several approaches that can address these issues (see Outstanding Questions), which hopefully can lead to an improved level of cooperation between the most important partners in translational biomedical research.

Trends Box.

The drug development process has historically thrived on results of basic research in academic laboratories which are then refined in industry laboratories and translated to clinical studies

Academic laboratories increasingly participate in applied research directed toward disease, as a result of funding pressures from granting agencies to focus on clinically relevant investigations

Recent experience and retrospective studies have demonstrated that the process is frequently flawed, with as many as 70–90% of academic laboratory findings not being reproduced when repeated in the industry setting

Start-up companies are positioned in the middle of the discovery process, with expertise in the drug and the experimental models, yet subject to the financial and time pressures of industry

The result is slowing and inefficiency in drug development, leading to a drying up of pipelines.

Outstanding questions.

Would industry benefit from rotations in academic laboratories for practical experience, thereby improving the ability to confirm preclinical results? Examples include exposure to specific animal models, methods for assaying results, and specifics of drug administration.

Are contract research organizations (CROs) unbiased because they do not have an incentive for results in one direction or another, or is there a bias towards showing positive effects that would make it more likely that further studies are requested and contracts renewed?

What level of masking should be required at the level of a laboratory (academic, industry, or CRO) attempting to replicate data? For example, masked drug and placebo samples can be provided by a company to a CRO, followed by secondary masking when aliquoted for specific experiments, in addition to the typical masking of the person running the experiments. Does the combination of three levels of masking decrease unconscious bias more than what occurs in usual practice?

Can rules guide the number and type of repetitions of preclinical models at the industry level? Should proof of efficacy be required in more than one strain of animal, more than one laboratory site, and/or more than one animal model? How should the number of repetitions be determined? How can restrictions on animal numbers required for validation be dealt with?

In replicating animal models, should similar models be used or should they differ greatly to make it more likely that an effect would fail to translate to clinical validation? Reinforcement by variation is a tenet of “ensemble” approaches to dealing with chaotic systems, but this has not been quantified for translational research.

Could guidelines be developed from collaborative academic-industrial consortia and used to standardize the use of specific animal models for specific human diseases? For example, are there animal models that would satisfy all parties for validating the efficacy of a compound for a specific disease or sign?

How valuable is sending samples to more than one CRO as a control on the reliability and accuracy of research done in CROs? Results can be analyzed not only for whether they are positive, but also for whether the findings are within the same approximate range of protection or inhibition. What methods can be used to decide the level of inter-CRO replication?

Does the fact that a CRO has SOP and GLP management procedures guarantee the quality of the human evaluation of models? How is the expertise of the operators measured and guaranteed in CROs?

Acknowledgments

Grant Support: Canada Research Chairs, National Institutes of Health R21EY025074 and P30EY016665, Research to Prevent Blindness, Fonds National Suisse 320030_156401, Agence Nationale de la Recherche ANR-15-CE18-0032 ROCK SUR MER.

Footnotes

Conflict of Interest

The authors declare the following financial relationships:

LAL – Consultant to Aerie, Inotek, Quark, Regenera, and Teva.

FBC – Consultant to Bayer, Quark, Teva, Allergan, Eyevensys, Novartis, Thrombogenics, Logitec, Genentech, Boehringer-Ingelheim, Steba Biotech, Solid Drug Development.


References

- 1. Culliton BJ. The academic-industrial complex. Science. 1982;216(4549):960–2. doi: 10.1126/science.11643748.

- 2. Freedman S, Mullane K. The academic–industrial complex: navigating the translational and cultural divide. Drug Discovery Today. 2017. doi: 10.1016/j.drudis.2017.03.005.

- 3. Prinz F, et al. Believe it or not: how much can we rely on published data on potential drug targets? Nat Rev Drug Discov. 2011;10(9):712–712. doi: 10.1038/nrd3439-c1.

- 4. Begley CG, Ellis LM. Drug development: Raise standards for preclinical cancer research. Nature. 2012;483(7391):531–3. doi: 10.1038/483531a.

- 5. Pacholec M, et al. SRT1720, SRT2183, SRT1460, and resveratrol are not direct activators of SIRT1. J Biol Chem. 2010;285(11):8340–51. doi: 10.1074/jbc.M109.088682.

- 6. Jarvis MF, Williams M. Irreproducibility in Preclinical Biomedical Research: Perceptions, Uncertainties, and Knowledge Gaps. Trends Pharmacol Sci. 2016;37(4):290–302. doi: 10.1016/j.tips.2015.12.001.

- 7. Goodman SN, et al. What does research reproducibility mean? Science Translational Medicine. 2016;8(341):341ps12–341ps12. doi: 10.1126/scitranslmed.aaf5027.

- 8. Smith MM, et al. Considerations for the design and execution of protocols for animal research and treatment to improve reproducibility and standardization: “DEPART well-prepared and ARRIVE safely”. Osteoarthritis Cartilage. 2017;25(3):354–363. doi: 10.1016/j.joca.2016.10.016.

- 9. Mullane K, Williams M. Enhancing Reproducibility: Failures from Reproducibility Initiatives underline core challenges. Biochemical Pharmacology. 2017. doi: 10.1016/j.bcp.2017.04.008.

- 10. Ling LL, et al. A new antibiotic kills pathogens without detectable resistance. Nature. 2015;517(7535):455–9. doi: 10.1038/nature14098.

- 11. Simonsohn U, et al. P-curve: a key to the file-drawer. Journal of Experimental Psychology: General. 2014;143(2):534. doi: 10.1037/a0033242.

- 12. Ioannidis JP. Why most published research findings are false. PLoS Med. 2005;2(8):e124. doi: 10.1371/journal.pmed.0020124.

- 13. Rosenthal R. The file drawer problem and tolerance for null results. Psychological Bulletin. 1979;86(3):638.

- 14. Ioannidis JP, Trikalinos TA. Early extreme contradictory estimates may appear in published research: the Proteus phenomenon in molecular genetics research and randomized trials. Journal of Clinical Epidemiology. 2005;58(6):543–549. doi: 10.1016/j.jclinepi.2004.10.019.

- 15. Levin LA, Danesh-Meyer HV. Lost in translation: Bumps in the road between bench and bedside. JAMA. 2010;303(15):1533–4. doi: 10.1001/jama.2010.463.

- 16. Fisher M, et al. Update of the stroke therapy academic industry roundtable preclinical recommendations. Stroke. 2009;40(6):2244–50. doi: 10.1161/STROKEAHA.108.541128.

- 17. Kilkenny C, et al. Improving bioscience research reporting: the ARRIVE guidelines for reporting animal research. PLoS Biol. 2010;8(6):e1000412. doi: 10.1371/journal.pbio.1000412.

- 18. Landis SC, et al. A call for transparent reporting to optimize the predictive value of preclinical research. Nature. 2012;490(7419):187. doi: 10.1038/nature11556.

- 19. Llovera G, et al. Results of a preclinical randomized controlled multicenter trial (pRCT): Anti-CD49d treatment for acute brain ischemia. Science Translational Medicine. 2015;7(299):299ra121–299ra121. doi: 10.1126/scitranslmed.aaa9853.

- 20. Nuzzo R. Scientific method: statistical errors. Nature. 2014;506(7487):150–2. doi: 10.1038/506150a.

- 21. Rosenblatt M. An incentive-based approach for improving data reproducibility. American Association for the Advancement of Science. 2016. doi: 10.1126/scitranslmed.aaf5003.

- 22. Ivinson AJ. Collaboration in Translational Neuroscience: A Call to Arms. Neuron. 2014;84(3):521–525. doi: 10.1016/j.neuron.2014.10.036.