Abstract

Recent advances in brain imaging techniques, measurement approaches, and storage capacities have provided an unprecedented supply of high temporal resolution neural data. These data present a remarkable opportunity to gain a mechanistic understanding not just of circuit structure, but also of circuit dynamics and their role in cognition and disease. Such understanding necessitates a description of the raw observations, and a delineation of computational models and mathematical theories that accurately capture fundamental principles behind the observations. Here we review recent advances in a range of modeling approaches that embrace the temporally-evolving interconnected structure of the brain and summarize that structure in a dynamic graph. We describe recent efforts to model dynamic patterns of connectivity, dynamic patterns of activity, and patterns of activity atop connectivity. In the context of these models, we review important considerations in statistical testing, including parametric and non-parametric approaches. Finally, we offer thoughts on careful and accurate interpretation of dynamic graph architecture, and outline important future directions for method development.

The increasing availability of human neuroimaging data acquired at high temporal resolution has spurred efforts to model and interpret these data in a manner that provides insights into circuit dynamics [1]. Such data span many distinct imaging modalities and capture inherently different indicators of underlying neural activity, neurotransmitter function, and excitatory/inhibitory balance [2, 3]. Particularly amenable to whole-brain acquisitions, the development of multiband imaging has provided an order of magnitude increase in the temporal resolution of one of the slowest imaging measurements: functional magnetic resonance imaging (fMRI) [4]. Over limited areas of cortex, intracranial electrocorticography (ECoG) complements magnetoencephalography (MEG) and electroencephalography (EEG) by providing sampling frequencies of approximately 2 kHz and direct measurements of synchronized postsynaptic potentials at the exposed cortical surface [5]. In each case, data can be sampled from many brain areas over hours (fMRI) to weeks (ECoG), providing increasingly rich neurophysiology for models of brain dynamics [6], both to explain observations in a single modality, and to link observations across modalities [7].

A common guiding principle across many of these modeling endeavors is that the brain is an interconnected complex system (Fig. 1), and that understanding neural function may therefore require theoretical tools and computational methods that embrace that interconnected structure [8]. The language of networks and graphs has proven particularly useful in describing interconnected structures throughout the world in which we live [9]: from vasculature [10] and genetics [11] to social groups [12] and physical materials [13]. Historically, the application of network science to each of these domains tends to begin with a careful description of the network architecture present in the system, including comparisons to appropriate statistical null models [14]. Descriptive statistics then give way to generative models that support prediction and classification, and eventually efforts focus on fundamental theories of network development, growth, and function [15]. In the context of neural systems, these tools are fairly nascent – with the majority of efforts focusing on description [16], a few efforts beginning to tackle generation and prediction [17–23], and still little truly tackling theory [24, 25].

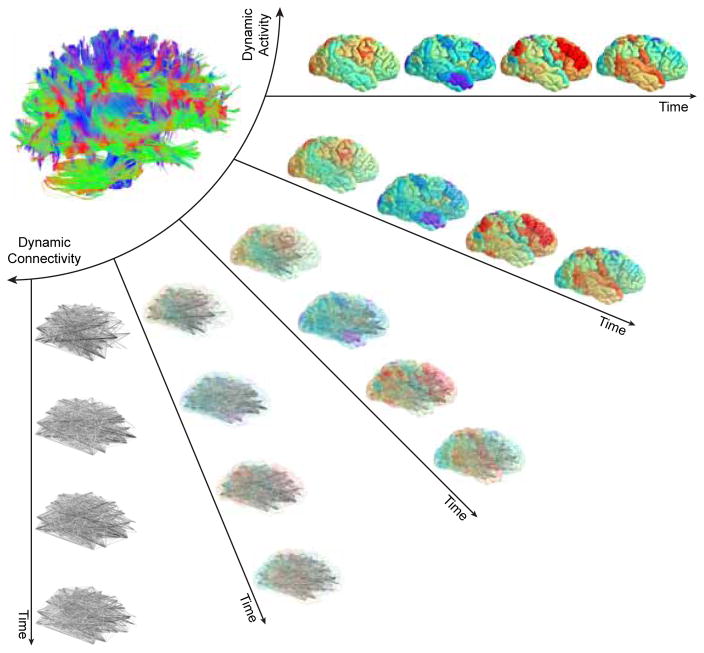

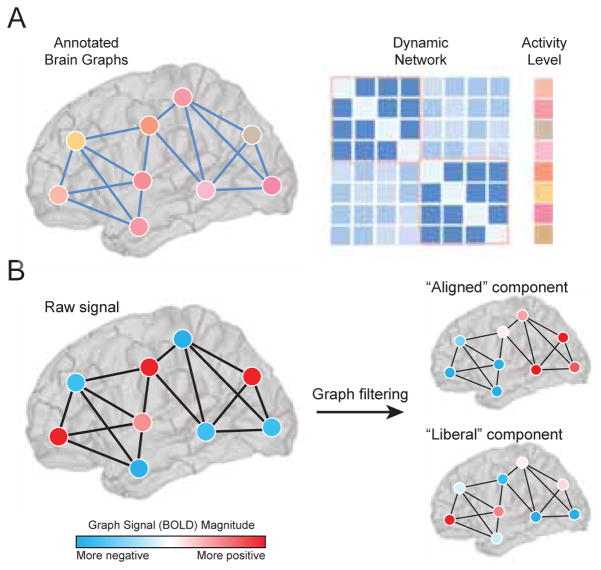

FIG. 1. Mesoscale network methods can address activity, connectivity, or the two together.

In the human brain, the structural connectome supports a diverse repertoire of functional brain dynamics, ranging from the patterns of activity across individual brain regions to the dynamic patterns of connectivity between brain regions. Current methods to study the brain as a networked system usually address connectivity alone (either static or dynamic) or activity alone. Methods developed to address the relations between connectivity and activity are few in number, and further efforts connecting them will be an important area for future growth in the field. In particular, the development of methods in which activity and connectivity can be weighted differently – such as is possible in annotated graphs, which we review later in this article – could provide much-needed insight into their complementary roles in neural processing.

The recent extension of network models to the time domain supports the movement from description to prediction (and eventually theory), and also capitalizes on the increasing availability of high-resolution neuroimaging data. Variously referred to as dynamic graphs [26], temporal networks [27], or dynamic networks [28], graph-based models of time-evolving interconnection patterns are particularly well-poised to enhance our understanding of dynamic neural processes from cognition and psychosis [29, 30], to development and aging [31, 32]. Here we review several of these recently developed methods that have been built upon the mathematical foundations of graph theory [33, 34], and we complement a description of the approaches with a discussion of statistical testing and interpretation. To ensure that the treatise is conceptually accessible and manageable in terms of length, we place a particular focus on approaches quantifying mesoscale network architecture, by which we mean salient organizational characteristics at topological scales that are larger than single or a few nodes, and smaller than the entire network. A commonly studied example of mesoscale structure is modularity [35]. Mesoscale network architecture has proven particularly important in our conceptual understanding of the structural organization of the brain [36], as well as its function [37] and response to perturbation via stimulation [38]. Readers are directed elsewhere for recent reviews on local and global-scale network architecture [39], multi-scale network models [40], multiscale biophysical models [41], ICA-based approaches [42], dynamic causal modeling [43], and whole-brain dynamical systems models [6] including the Virtual Brain [44].

The remainder of this review is organized as follows. We begin with a simple description of network (or graph) models that can be derived from functional signals across different imaging modalities. We then describe recent efforts to model network dynamics by considering (i) patterns of connectivity, (ii) levels of activity, and (iii) activity atop connectivity. Next, we discuss statistical testing of network dynamics, building on graph null models, time series null models, and other related inference approaches. We conclude with a discussion of important considerations when interpreting network dynamics, and outline a few future directions that we find particularly exciting.

GRAPH MODELS OF FUNCTIONAL SIGNALS

Before describing the recent methodological advances in dynamic graph models, and their application to neuroimaging data, it is important to clarify a few definitions. First, we use the term graph in the mathematical sense to indicate a graph G = (V, E) composed of a vertex (or node) set V with size N and an edge set E, and we store this information in an adjacency matrix A, whose elements Aij indicate the strength of edges between nodes [33, 34]. Second, we use the term model to indicate a simplified representation of raw observations; a graph model parsimoniously encodes the relationships between system components [45]. Given these first two definitions, it is natural that we use the term dynamic graph model to indicate a time-ordered set of graph models of data: a single adjacency matrix encodes the pattern of connectivity at a single time point or in a single time window t of data, and the set of adjacency matrices extends that encoding over many time points or many time windows. Dynamic graph models have the disadvantage of ignoring non-relational aspects of the data, such as discrete properties of vertices (e.g., node size, function, or history) or non-discrete properties of the system that lie along a continuum (e.g., chemical gradients in biological systems, or fluids in physical systems). Nevertheless, dynamic graph models have unique advantages in providing access to a host of computational tools and conceptual frameworks developed by the applied mathematics community over the last few decades. Moreover, dynamic graph models are a singular representation that can be flexibly applied across spatial and temporal scales, thereby supporting multimodal investigations [46, 47] and cross-species analyses [48, 49].

Dynamic graph models can be built from fMRI, EEG, MEG, ECoG, and other imaging modalities using similar principles. First, vertices of the graph (or nodes of the network) need to be chosen, followed by a measure quantifying the strength of edges linking two vertices. In fMRI, nodes are commonly chosen as contiguous volumes defined by either functional or anatomical boundaries [50, 51]. Edge weights are commonly defined by a Pearson correlation coefficient [52]; however, a growing number of studies use magnitude-squared coherence to increase robustness to artifacts and to ensure that regional variability in the hemodynamic response function does not create artifactual structure as it can in a correlation matrix [53–55]. In EEG and MEG data, nodes usually represent either sensors or sources obtained after applying source-localization techniques; edges usually represent spectral coherence [56], mutual information [57], phase lag index [58], or synchronization likelihood [59]. In ECoG data, an increasingly popular method to define functional relationships between sensors is multi-taper coherence [60, 61]. Note: While neuron-level recordings are not the focus of this exposition, the tools we describe here are equally applicable to dynamic graphs in which neurons are represented as nodes [62], and in which relationships between neurons are summarized in, for example, shuffle-corrected cross-correlograms [63–66].

After nodes have been chosen and the edge measure defined, the commonly-used approach for generating a dynamic graph model is to delineate time windows, where the pattern of functional connectivity in each time window is encoded in an adjacency matrix. Choosing the size of the time window is important. Short windows can hamper accurate estimates of functional connectivity within frequency bands that are not adequately sampled within that time period [67, 68], while long windows may only reflect the time-invariant network structure of the data [69–71]. Intuitively, to achieve accurate estimates of covariation in fluctuations at any time scale, one would like to include multiple cycles of the signal: the more cycles included, the greater the confidence in the estimated covariation [67, 72]. In our recent work, we demonstrated that short time windows – on the order of 20–30 s in fMRI – may better reflect individual differences while long time windows – on the order of 2–3 min in fMRI – may reflect network architectures that are reproducible over iterative measurement [68]. We also suggest that a reasonable method for choosing a time window of interest is to maximize the variability in the network’s flexible reconfigurations over time (see later sections for further details). Prior work has used similar approaches to suggest optimal time windows on the order of a few tens of seconds for human BOLD data, and 1 s for ECoG data [60, 61, 68, 73].
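As an illustration of the windowing step, the following is a minimal sketch that assumes Pearson correlation as the edge measure and non-overlapping windows. The function name `sliding_window_graphs`, the window length, and the synthetic data are all hypothetical choices made for this example, not values recommended by the studies cited above.

```python
import numpy as np

def sliding_window_graphs(ts, window, step):
    """Return a (n_windows, N, N) stack of Pearson-correlation adjacency matrices.

    ts     : array of shape (N, T), one row per node (region or sensor)
    window : window length in samples
    step   : shift between consecutive windows in samples
    """
    n_nodes, n_samples = ts.shape
    graphs = []
    for start in range(0, n_samples - window + 1, step):
        A = np.corrcoef(ts[:, start:start + window])  # N x N correlation matrix
        np.fill_diagonal(A, 0.0)                      # drop self-connections
        graphs.append(A)
    return np.stack(graphs)

# Synthetic stand-in for regional time series: 5 nodes, 200 samples.
rng = np.random.default_rng(0)
ts = rng.standard_normal((5, 200))
dyn = sliding_window_graphs(ts, window=40, step=40)
print(dyn.shape)  # one adjacency matrix per window
```

Setting `step` smaller than `window` would give overlapping windows. Replacing `np.corrcoef` with a coherence estimate would follow the same pattern.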

MODELING NETWORK DYNAMICS

The construction process described in the previous section provides a dynamic graph model from which one can begin to infer organizational principles and their temporal variation. In this section, we describe methods that build on these models to characterize time-evolving patterns of connectivity. We then describe a set of related methods that characterize time-evolving patterns of activity, and we conclude this section by describing methods that explicitly characterize how activity occurs atop connectivity.

Considering patterns of connectivity

Time-varying graph dynamics can be thought of as a type of system evolution (Fig. 2). When considering canonical forms of evolution, one quite naturally thinks about modularity [74]: the nearly decomposable nature of many adaptive systems that supports their potential for evolution and development [75–77]. Modularity is a consistently observed characteristic of graph models of brain function [78, 79], where it is thought to facilitate segregation of function [80], progressive integration of information across network architectures [38], learning without forgetting [81], and potential for rehabilitation after injury [82].

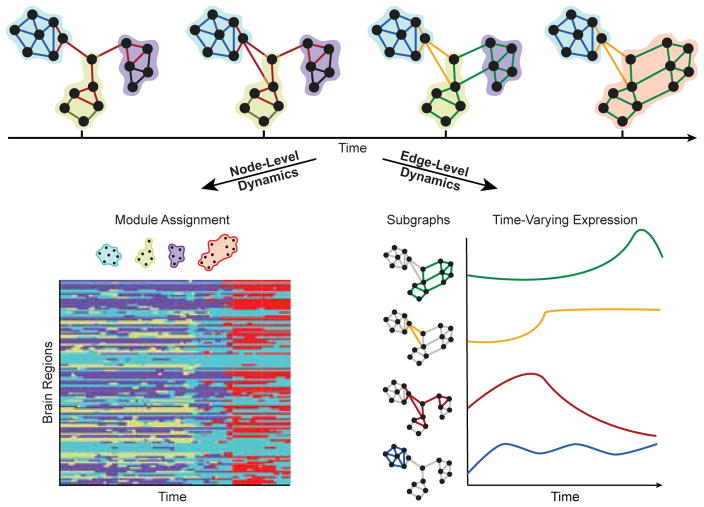

FIG. 2. Dynamic network modules and subgraphs.

(Top) Network science enables investigators to study dynamic architecture of complex brain networks in terms of the collective organization of nodes and of edges. Clusters of strongly interconnected nodes are known as modules, and clusters of edges whose strengths, or edge weights, vary together in time are known as subgraphs. Nodes and edges of the same module or subgraph are shaded by color. Each module represents a collection of nodes that are highly interconnected to one another and sparsely connected to nodes of other modules, and each node may only be a member of a single module. Each subgraph is a recurring pattern of edges that link information between nodes at the same points in time, and each edge can belong to multiple subgraphs. (Bottom Left) Dynamic community detection assigns nodes to time-varying modules. Nodes may shift their participation between modules over time based on the demands of the system. (Bottom Right) Non-negative matrix factorization pursues a parts-based decomposition of the dynamic network into subgraphs and time-varying coefficients, which quantify the level of expression of each subgraph over time.

The ability to assess time-varying modular architecture in brain graphs is critical for an understanding of the exact trajectories of network reconfiguration that accompany healthy cognitive function and development [83], as well as the identification of altered trajectories characteristic of disease [84]. Yet, there are several computational challenges that must be addressed. The simplest method to assess time-varying modular architecture is to identify modules in each time window, and then develop statistics to characterize their changes. However, identifying changes in modules requires that we have a map from a module in one time window to itself in the next time window. Such a map is not a natural byproduct of methods applied to individual time windows separately; due to the heuristic nature of the common community detection algorithms [85–87], a module assigned the label of module 1 in time window l need not be the same as the module assigned the same label in time window r. The historic Hungarian algorithm (developed in 1955) can be used to attempt a re-labeling to create an accurate mapping [88, 89], but the algorithm fails when ties occur, and is not parameterized to assess mappings sensitive to module-to-module similarities occurring over different time scales.
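To make the label-matching problem concrete, the sketch below relabels the modules of one time window so that they overlap maximally with those of the previous window. It is a brute-force search over permutations, used here only as a small stand-in for the Hungarian algorithm. For realistic numbers of modules an assignment solver such as `scipy.optimize.linear_sum_assignment` would be the usual choice. The function name `match_labels` and the toy partitions are hypothetical.

```python
from itertools import permutations

def match_labels(prev, curr):
    """Relabel `curr` so that its modules overlap maximally with those in `prev`."""
    labels_prev = sorted(set(prev))
    labels_curr = sorted(set(curr))
    if len(labels_curr) > len(labels_prev):
        raise ValueError("this sketch assumes no new modules appear")

    def overlap(a, b):
        # number of nodes labelled a in prev and b in curr
        return sum(1 for p, c in zip(prev, curr) if p == a and c == b)

    best_map, best_score = None, -1
    for perm in permutations(labels_prev, len(labels_curr)):
        score = sum(overlap(a, b) for a, b in zip(perm, labels_curr))
        if score > best_score:  # ties are broken arbitrarily (first found)
            best_map, best_score = dict(zip(labels_curr, perm)), score
    return [best_map[c] for c in curr]

prev = [1, 1, 1, 2, 2, 3]   # module labels in time window l
curr = [2, 2, 1, 3, 3, 1]   # same nodes, arbitrary labels in time window r
print(match_labels(prev, curr))  # [1, 1, 3, 2, 2, 3]
```

The arbitrary tie-breaking in the inner loop is exactly the weakness noted above: when two relabelings score equally, the mapping is not uniquely determined.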

Dynamic Community Detection

A recent solution to these problems lies in transforming the ordered set of adjacency matrices that compose a dynamic graph model into a multilayer network [90]. Here, the graph in one time window is linked to the graph in adjacent time windows by identity edges that connect a node in one time window to itself in neighboring time windows [91, 92]; this identity linking is performed for all nodes. Then, one can identify modules – and their temporal variation – by maximizing a multilayer modularity quality function:

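$$
Q = \frac{1}{2\mu} \sum_{ijlr} \left[ \left( A_{ijl} - \gamma_l P_{ijl} \right) \delta_{lr} + \delta_{ij}\, \omega_{jlr} \right] \delta(g_{il}, g_{jr}) \tag{1}
$$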

where Aijl is the weight of the edge between nodes i and j in a time window l; the community assignment of node i in layer l is gil, the community assignment of node j in layer r is gjr, and δ(gil, gjr) = 1 if gil = gjr and 0 otherwise; the total edge weight is μ = (1/2) Σjr κjr, where κjl = kjl + cjl is the strength of node j in layer l, kjl is the intra-layer strength of node j in layer l, and cjl = Σr ωjlr is the inter-layer strength of node j in layer l. The variable Pijl is the corresponding element of a specified null model, which can be tuned to account for different characteristics of the system [13, 93, 94]. The parameter γl is a structural resolution parameter of layer l that can be used to tune the number of communities identified, with lower values providing sensitivity to large-scale community structure and higher values providing sensitivity to small-scale community structure. The strength of the identity link between node j in layer r and node j in layer l is the dimensionless quantity ωjlr, which can be used to tune the temporal resolution of the identified module reconfiguration process, with lower values providing sensitivity to high-frequency (relative to the sampling rate) changes in community structure and higher values providing sensitivity to low-frequency changes in community structure [95].
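The quantities defined above can be assembled into a short numerical check. The sketch below evaluates the multilayer modularity of a given partition. It does not optimize it. It assumes a Newman-Girvan null model within each layer (Pijl = kil kjl / 2ml), a single γ for all layers, and identity links of uniform weight ω between adjacent layers only. These are illustrative choices, and the function name `multilayer_modularity` is hypothetical.

```python
import numpy as np

def multilayer_modularity(A, g, gamma=1.0, omega=1.0):
    """Evaluate Q for undirected layers A of shape (L, N, N) and labels g of shape (L, N).

    Assumptions of this sketch: Newman-Girvan null model per layer, uniform
    gamma, and identity links of weight omega between adjacent layers only.
    """
    L, N, _ = A.shape
    k = A.sum(axis=2)              # intra-layer strength k_{jl}, shape (L, N)
    two_m = k.sum(axis=1)          # twice the total edge weight of each layer
    c = np.full((L, N), 2.0 * omega)   # inter-layer strength c_{jl}
    c[0] -= omega                      # first and last layers have one neighbour
    c[-1] -= omega                     # (for L == 1 this leaves c = 0)
    two_mu = (k + c).sum()         # 2 * mu = sum over j, l of kappa_{jl}

    Q = 0.0
    for l in range(L):
        P = np.outer(k[l], k[l]) / two_m[l]        # null model P_{ijl}
        same = g[l][:, None] == g[l][None, :]      # delta(g_il, g_jl)
        Q += ((A[l] - gamma * P) * same).sum()
    for l in range(L - 1):
        # each identity link appears twice in the sum over (l, r) pairs
        Q += 2.0 * omega * np.sum(g[l] == g[l + 1])
    return Q / two_mu

# Toy example: two identical layers, each with two disconnected edges.
edge = np.zeros((4, 4))
edge[0, 1] = edge[1, 0] = edge[2, 3] = edge[3, 2] = 1.0
A = np.stack([edge, edge])
g_good = np.array([[0, 0, 1, 1], [0, 0, 1, 1]])   # one module per edge
g_one = np.zeros((2, 4), dtype=int)               # everything in one module
print(multilayer_modularity(A, g_good), multilayer_modularity(A, g_one))
```

In this toy case the partition that places each connected pair in its own module scores higher than the single-module partition, as expected.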

The dynamic community detection approach has several strengths. First, it solves the matching problem that defines which module in one time window “is the same as” which module in another time window. Second, it provides tuning parameters that enable one to access information about both fine and coarse topological scales of network reconfiguration (γ), as well as both fine and coarse temporal scales of network reconfiguration (ω). Third, the formulation allows one to construct and incorporate hypothesis-specific null models (P). Fourth, unlike statistically-driven methods based on principal components analysis or independent components analysis, modules need not be completely independent from one another, but instead edges with nonzero weight can exist between a node in one module and a node in another module. Fifth, the method provides natural ways to assess overlapping community structure, either by examining the probability that nodes are assigned to a given community over multiple optimizations of the modularity quality function, or by extending the tool to identify communities of edges [96–98]. Sixth, generative models for module reconfiguration processes are beginning to facilitate the potential transition from description to prediction and theory [99, 100]. These advantages have proven critical for studies of network dynamics accompanying working memory [28], attention [101], mood [102], motor learning [103, 104], reinforcement learning [105], language processing [106, 107], intertask differences [68, 73], normative development and aging [108, 109], inter-frequency relationships [110], and behavioral chunking [94].

Non-Negative Matrix Factorization

Of course, the clustering of nodes into functionally-cohesive modules is just one of potentially many organizational principles characterizing dynamic brain networks. Dynamic community detection provides a lens on the dynamics of node-level organization in the network, but does not explicitly describe the dynamics of edges that link nodes within and between modules. Recent advances in graph theoretic tools based on machine learning can provide insights into additional constraints on the evolution of brain systems [32, 111, 112] by addressing open questions such as: How are the edges linking network nodes changing with time? Do all edges reorganize as a cohesive group, or are there smaller clusters of edges that reorganize at different rates or in different ways? Could the same edge link two nodes of the same module at one point in time and link two nodes of different modules at another point in time?

One set of tools that can begin answering these questions is an unsupervised machine learning approach known as non-negative matrix factorization (NMF) [113]. NMF has previously been applied to neuroimaging data to extract structure in morphometric variables [114], tumor heterogeneity [115], and resting state fMRI [116]. In the context of dynamic graph models, NMF objectively identifies clusters of co-evolving edges, known as subgraphs, in large, temporally-resolved data sets [117]. Conceptually, subgraphs are mathematical basis functions of the dynamic brain graph whose weighted linear combination – given by a set of time-varying basis weights or expression coefficients for each subgraph – reconstructs a repertoire of graph configurations observed over time. In contrast to the hard-partitioning of nodes into discrete modules in dynamic community detection, NMF pursues a soft-partitioning of the network such that all graph nodes and edges participate to varying degrees in each subgraph, which is represented by a weighted adjacency matrix (see [117] for an in-depth comparison of network modules and network subgraphs).

To apply NMF to the dynamic graph model, the edges in the N × N × T dynamic adjacency matrix must be non-negative and unraveled into an N(N − 1)/2 × T network configuration matrix Â. Next, one can minimize the L2-norm reconstruction error between Â and the matrix product of two non-negative matrices W – an N(N − 1)/2 × m subgraph matrix – and H – an m × T time-varying expression coefficients matrix – such that:

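$$
\min_{W,\,H} \; \frac{1}{2} \left\| \hat{A} - WH \right\|_F^2 + \alpha \left\| W \right\|_F^2 + \beta \sum_{t=1}^{T} \left\| H(:,t) \right\|_1^2 \quad \text{s.t.} \quad W \geq 0,\; H \geq 0 \tag{2}
$$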

where ||·||F is the Frobenius norm operator, ||·||1 is the L1 norm operator, m ∈ [2, min(N(N − 1)/2, T) − 1] is a rank parameter of the factored matrices that can be used to tune the number of subgraphs to identify, β is a tunable penalty weight to impose sparse temporal expression coefficients, and α is a tunable regularization of the edge strengths for subgraphs [118]. To tune these parameters without overfitting the model to dynamic network data, recent studies have employed parameter grid search [32] and random sampling approaches [112, 117].
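A minimal numerical sketch of this factorization follows. It unravels an N × N × T tensor into the configuration matrix and then applies Lee-Seung-style multiplicative updates to an objective with a squared-error fit, a Frobenius penalty on W weighted by α, and a squared L1 penalty on each column of H weighted by β. Multiplicative updates are a swapped-in solver chosen for brevity and are not necessarily the algorithm used in the cited work. The function names, parameter values, and random data are hypothetical.

```python
import numpy as np

def unravel(dyn):
    """Stack the upper triangle of an (N, N, T) tensor into an N(N-1)/2 x T matrix."""
    iu = np.triu_indices(dyn.shape[0], k=1)
    return dyn[iu[0], iu[1], :]

def objective(A_hat, W, H, alpha, beta):
    """Squared-error fit plus the two penalty terms."""
    fit = 0.5 * np.linalg.norm(A_hat - W @ H, "fro") ** 2
    return fit + alpha * np.linalg.norm(W, "fro") ** 2 + beta * np.sum(H.sum(axis=0) ** 2)

def sparse_nmf(A_hat, m, alpha=0.0, beta=0.0, n_iter=500, seed=0, eps=1e-12):
    """Multiplicative-update sketch; returns non-negative W (E x m) and H (m x T)."""
    rng = np.random.default_rng(seed)
    E, T = A_hat.shape
    W = rng.random((E, m))
    H = rng.random((m, T))
    for _ in range(n_iter):
        W *= (A_hat @ H.T) / (W @ (H @ H.T) + 2 * alpha * W + eps)
        col_sums = H.sum(axis=0, keepdims=True)  # gradient of the squared L1 penalty
        H *= (W.T @ A_hat) / ((W.T @ W) @ H + 2 * beta * col_sums + eps)
    return W, H

# Synthetic non-negative, symmetric dynamic graph: N = 4 nodes, T = 30 windows.
rng = np.random.default_rng(1)
dyn = rng.random((4, 4, 30))
dyn = (dyn + dyn.transpose(1, 0, 2)) / 2
A_hat = unravel(dyn)                      # shape (6, 30)
W, H = sparse_nmf(A_hat, m=2, alpha=0.01, beta=0.01)
print(W.shape, H.shape, objective(A_hat, W, H, 0.01, 0.01))
```

Each column of `W` can be folded back into an N × N weighted adjacency matrix to visualize a subgraph, and each row of `H` gives that subgraph's expression over time.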

NMF has distinct advantages over other unsupervised matrix decomposition approaches such as PCA [119]. First, the non-negativity constraint in the NMF approach means that subgraphs can be interpreted as additive components of the original dynamic network. That is, the relative expression of different subgraphs can be judged purely based on the positivity of the expression coefficients during a given time window. Second, NMF does not make any explicit assumptions about the orthogonality or independence of the resulting subgraphs, which provides added flexibility in uncovering network components with overlapping sub-structures that are specific to different brain processes. Recent applications of NMF to characterize network dynamics at the edge-level have led to important insights into the evolution of executive networks during healthy neurodevelopment [32] and have uncovered putative network components of function and dysfunction in medically refractory epilepsy [112].

Considering levels of activity

The approaches described in the previous section seek to characterize mesoscale structure in dynamic graph models in which nodes represent brain areas and edges represent functional connections. Yet, one can construct alternative graph models from neuroimaging data to test different sorts of hypotheses. In particular, a set of new approaches has begun to be developed for understanding how brain states evolve over time, where a state is defined as a pattern of activity over all brain regions [120–124], rather than as a pattern of connectivity. This notion of brain state is one that has its roots in the analysis of EEG and MEG data [125], where the voltage pattern across a set of sensors or sources has been referred to as a microstate [126, 127]. The composition and dynamics of microstates predict working memory performance [128] and are altered in disease [129]. Emerging graph theoretical tools have become available to study how such states evolve into one another. These activity-centric approaches are reminiscent of multi-voxel pattern analysis (MVPA) approaches in the sense that the object of interest is a multi-region pattern of activity [130, 131]; yet, they differ from MVPA in that they explicitly use computational tools from graph theory to understand complex patterns of relationships between states.

Time-by-Time Graphs

Perhaps the simplest example of such an approach is the construction of so-called time-by-time networks [132–134]: a graph whose nodes represent the time point of an instantaneous measurement, and whose edges represent similarities between pairs of time points (Fig. 3A–B). For example, in the context of BOLD fMRI, a node could represent a repetition time sample (TR), and an edge between two TRs could indicate a degree of similarity or distance between the brain state at TR l and the brain state at TR r. We will represent this graph as the adjacency matrix T which is of dimension T × T, where T is the number of time points at which an instantaneous measurement was acquired, in contrast to the traditionally studied adjacency matrix A which is of dimension N × N, where N is the number of brain regions.
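The construction can be sketched in a few lines, assuming Pearson correlation between activity patterns as the similarity function. The function name `time_by_time_graph` and the two synthetic activity patterns are hypothetical and chosen only so that the expected structure is easy to see.

```python
import numpy as np

def time_by_time_graph(activity):
    """Build a T x T similarity graph from an (N, T) matrix of regional activity.

    Each node is a time point; each edge is the Pearson correlation between
    the N-region activity patterns at two time points.
    """
    S = np.corrcoef(activity.T)   # rows of activity.T are time points
    np.fill_diagonal(S, 0.0)      # no self-edges
    return S

# Two synthetic "states" over N = 10 regions, visited in the order a a b b a b.
a = np.arange(10.0)
b = (a - 4.5) ** 2                # uncorrelated with a by construction
rng = np.random.default_rng(0)
activity = np.stack([a, a, b, b, a, b], axis=1) + 0.01 * rng.standard_normal((10, 6))
S = time_by_time_graph(activity)
print(np.round(S, 2))
```

Time points that share a state are joined by edges near 1, whereas time points in different states are joined by edges near 0. Community detection on such a matrix would therefore group time points by state.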

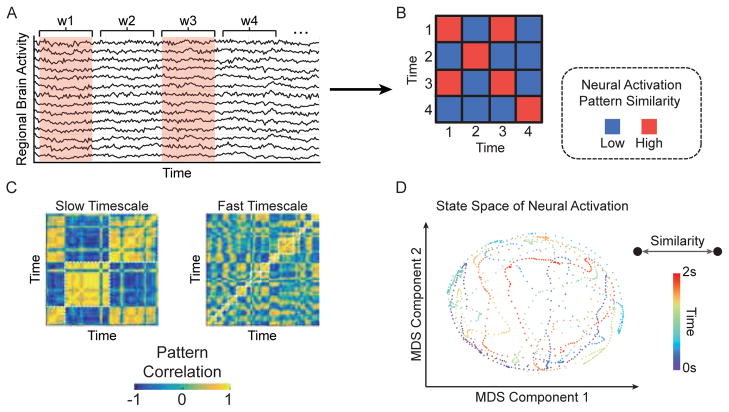

FIG. 3. State space of brain activity patterns.

(A) The time-by-time graph captures similarities in neural activation patterns between different points in time. In practice, one can compute the average brain activity for individual brain regions within discrete time windows and compare the resulting pattern of activation between two time windows using a similarity function, such as the Pearson correlation. (B) The resulting time-by-time graph has an adjacency matrix representation in which each time window is a node and the neural activation similarity between a pair of time windows is an edge. (C) Clustering tools based on graph theory or machine learning can identify groups of time windows – or states – with similar patterns of neural activation. By parametrically varying the number of clusters, or their size, one can examine the dynamic states over multiple time scales (Adapted from [136]). (D) Multidimensional scaling (MDS) [137] can be used to trace the trajectory of a dynamical system through state space by projecting a high-dimensional, time-by-time graph onto a two-dimensional subspace. In this subspace, each point is a neural activation pattern in a time window and the spatial proximity between two points represents the similarity of the neural activation pattern between time windows. The example shown here is an MDS projection of a time-by-time graph derived from ECoG in an epilepsy patient during a 2 s resting period. Each point represents a 2 ms time window and is shaded based on its occurrence in the 2 s interval. The depicted state space demonstrates an interleaved trajectory in which the system revisits and crosses through paths visited at earlier time points. These tools can be readily adapted to characterize the evolution of a neural system in conjunction with changes in behavior.

After constructing a time-by-time network, one can apply graph theoretical techniques to extract the community structure of the graph, to identify canonical states, and to quantify the transitions between them (Fig. 3C–D). Efforts in this vein have identified different canonical states in rest [132, 133] versus task [134], and observed that flexible transitions between states change over development [132] and predict individual differences in learning [134]. While these studies have focused on the cluster structure of time-by-time graphs, other metrics – including local clustering and global efficiency – could also be applied to these networks to better understand how the brain traverses states over time. In related work, reproducible temporal sequences of states have been referred to as lag threads [135], and boundaries between states have offered important insights into the storage and retrieval of events in long-term memory [136].

Topological Data Analysis: Mapper

A conceptually similar approach begins with the same underlying data type (an N×T matrix representing regional activity magnitudes as a function of time) and applies tools from algebraic topology to uncover meaningful – and statistically unexpected – structure in evolving patterns of neural activity [138].

Generally, these raw data arise from sampling a possibly high-dimensional manifold which describes all possible occurrences of the observed data type – implying that the global shape or topological features of this manifold could inform our understanding of processes specific to the system. In mathematics, multiple methods exist for returning topological information about a manifold. One such method is to construct a simplified object from our topological space X, called the Reeb graph [139], which captures the evolution of the connected components within level sets of a continuous function f: X → ℝ. Recall that a level set is the collection of inputs (elements of X) which are all mapped to the same output (value in ℝ). As an example, if our topological space X is a torus (Fig. 4A), then we can use the height function h: X → ℝ, so the level sets, h⁻¹(c) for c ∈ ℝ, are horizontal slices of X at height c. Note each horizontal slice has one or two (or zero) connected components. As we move from one slice to the next, we record only the critical points at which the number of connected components changes, and how the connected components split or merge at these points. With nodes as critical points and edges indicating connected component evolution, the Reeb graph succinctly reflects these topological features (Fig. 4A, right).

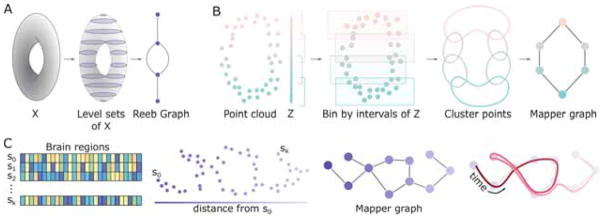

FIG. 4. Mapping temporal structure with algebraic topology.

(A) Schematic of Reeb graph construction. Given a topological space X, here a torus, the Reeb graph is constructed by examining the evolution of the level sets from h(X). (B) Illustration of the Mapper algorithm. Beginning with a point cloud and parameter space Z, points are binned and clustered. Resulting clusters are collapsed to nodes in the final Mapper graph, and edges between nodes exist if the two corresponding clusters share points from the original point cloud. (C) Example use of topological data analysis for dynamic networks. The initial point cloud here is the collection of brain states across time, and the parameter space is the distance from the initial state of the system. Following the path of time (right, red curve) on the Mapper graph may yield insights into system evolution.

While we would prefer a simple summary such as the Reeb graph for neural processes, we record noisy point clouds (perhaps from neuron spiking, region activity, or microstates at each time point) instead of nice manifolds. One approach for computing summary objects such as this in the presence of noisy data is called Mapper [140], which begins with a point cloud Y and two functions: (i) as before, f: Y → Z for some parameter space Z, and (ii) a distance metric on Y (Fig. 4B, left). We then choose a cover of Z, that is, a collection of open sets Uα with Z ⊆ ∪α Uα. If Z is a subset of the real line, in practice we often use a small number of intervals with fixed length. We then look to the collection of points of Y falling within f⁻¹(Uα) for each α. In other words, we bin points in Y based on their associated z ∈ Z, and cluster these points using the chosen distance metric. This is analogous to taking level sets and determining connected components when constructing the Reeb graph. The last step creates the output graph (called the Mapper graph in [140]), defined using a single node for each cluster and laying edges between nodes whose corresponding clusters share at least one point y ∈ Y.
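The binning, clustering, and linking steps can be sketched as follows for a one-dimensional parameter space. This is an illustrative toy, not the reference implementation of [140]: it assumes a cover of equal-length overlapping closed intervals, Euclidean distance, and single-linkage clustering at a fixed threshold `eps`. The names `mapper_graph` and `_components` are hypothetical, as is the circular point cloud.

```python
import numpy as np
from itertools import combinations

def _components(Y, idx, eps):
    """Single-linkage clusters: connected components of the eps-neighbourhood graph."""
    remaining = set(idx.tolist())
    comps = []
    while remaining:
        stack = [remaining.pop()]
        comp = set(stack)
        while stack:
            p = stack.pop()
            near = {q for q in remaining if np.linalg.norm(Y[p] - Y[q]) <= eps}
            remaining -= near
            comp |= near
            stack.extend(near)
        comps.append(comp)
    return comps

def mapper_graph(Y, f_vals, n_intervals=4, overlap=0.3, eps=0.3):
    """Return (clusters, edges) of a Mapper graph for a 1-D filter f_vals on points Y."""
    lo, hi = f_vals.min(), f_vals.max()
    # Interval length chosen so that n overlapping intervals exactly span [lo, hi].
    length = (hi - lo) / (n_intervals - (n_intervals - 1) * overlap)
    step = length * (1 - overlap)
    clusters = []
    for a in range(n_intervals):
        start = lo + a * step
        in_bin = (f_vals >= start) & (f_vals <= start + length + 1e-9)
        clusters.extend(_components(Y, np.flatnonzero(in_bin), eps))
    # Two clusters are linked if they share at least one original point.
    edges = {(i, j) for i, j in combinations(range(len(clusters)), 2)
             if clusters[i] & clusters[j]}
    return clusters, edges

# Toy point cloud: 40 points on a circle, filtered by their x-coordinate.
theta = 2 * np.pi * np.arange(40) / 40
Y = np.column_stack([np.cos(theta), np.sin(theta)])
clusters, edges = mapper_graph(Y, f_vals=Y[:, 0])
print(len(clusters), len(edges))
```

For this circle the two end intervals each contain a single arc, while the two middle intervals each split into an upper and a lower arc. The resulting graph is therefore a closed loop, mirroring the topology of the sampled space.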

The Mapper algorithm or similar methods have been used to stratify disease states and identify patient subgroups [141–143], conduct proteomics analyses [144, 145], and compare brain morphology [146, 147]. These methods are now ripe for application in dynamic networks, as discussed in this review. One possible avenue to describe the shape of a neural state space might involve modeling the states of brain activity {s0, s1, …, sk} as points in a cloud and defining the association parameter Z as the Euclidean distance between the initial state, s0, and all other states (Fig. 4C, left). By binning and clustering points in the cloud, we could recover a graph summarizing the topological features of the state space traversed. This approach would enable us to track whether the system re-visits previously encountered states or enters novel states, as evidenced by loops, dead zones, and branches of the traversed path itself (Fig. 4C, right). Additional possibilities include using density or eccentricity as parameters, or combining these which would yield a higher dimensional output [140].

Considering activity atop connectivity

The approaches described thus far address either evolving patterns of connectivity (dynamic community detection and non-negative matrix factorization) or evolving patterns of activity (time-by-time networks and Reeb graphs). A natural next question is whether these two perspectives on brain function can be combined in a way that provides insights into how activity occurs atop connectivity. In this section we describe two recently developed approaches, stemming respectively from applied mathematics and engineering – annotated graphs and graph signal processing – that have been applied to multimodal neuroimaging data to better understand brain network dynamics.

Annotated graphs

The traditional composition of a graph includes identical nodes and non-identical (weighted) edges, and this composition is therefore naturally encoded in an adjacency matrix A. What is not traditionally included in graph construction – and also not naturally encoded in an adjacency matrix – is any identity or weight associated with a node. Yet, in many systems, nodes differ by size, location, and importance in a way that need not be identically related to their role in the network topology. Indeed, understanding how a node’s features may help to explain its connectivity, or how a node’s connectivity may constrain its features can be critical for explaining a system’s observed dynamics and function.

Annotated graphs are graphs that allow for scalar values or categories (annotations) to be associated with each node (Fig. 5A). These graphs are represented by both an adjacency matrix A of dimension N × N, and a vector x of dimension N × 1. Characterizing annotated graph structure, and performing statistical inference, requires an expansion of the common graph analysis toolkit [148, 149]. In an unannotated graph, network communities are composed of densely interconnected nodes. In an annotated graph, network communities are composed of nodes that are both densely interconnected and have similar annotations. Recent efforts have formalized the study of community structure in such graphs by writing down the probability of observing a given annotated graph as a product of the probability of observing that exemplar of a weighted stochastic block model and the probability of observing the annotations [150]. That is, assuming independence between the annotation x and the graph A with block structure θ and community partition z:

$$P(A, x \mid \theta, z) = P(A \mid \theta, z)\, P(x \mid \theta, z) \tag{3}$$

where the first term of the right side of the equation accounts for the probability of observing the graph given the community structure under the assumptions of the weighted stochastic block model. This term relies on the assumption that interconnected nodes are likely to be in the same community. The second term accounts for the probability of observing the continuously valued annotations given the community structure [151]. This term relies on the assumption that nodes with similar annotations are more likely to be in the same community. Methods are available to fit this model to existing data to extract community structure and quantify the degree to which that community structure aligns with node-level annotations. These methods function by finding the community partition z that maximizes the probability of Eq. 3.
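To make the factorization concrete, the following Python sketch evaluates the logarithm of the joint probability for a candidate partition z. It is a deliberately simplified stand-in for the model of [150, 151]: it assumes a binary, undirected graph with Bernoulli block densities rather than a weighted stochastic block model, assumes Gaussian annotations within each community, and plugs in maximum-likelihood parameter estimates for θ. The function name `annotated_log_likelihood` is hypothetical.

```python
import numpy as np

def annotated_log_likelihood(A, x, z):
    """log P(A | theta, z) + log P(x | theta, z), with theta fitted to the partition z."""
    A, x, z = np.asarray(A), np.asarray(x, dtype=float), np.asarray(z)
    iu = np.triu_indices(len(z), k=1)            # count each undirected pair once
    edge = A[iu].astype(float)
    zi, zj = z[iu[0]], z[iu[1]]
    labels = np.unique(z)

    # Graph term (assumption: Bernoulli edges with one density per pair of blocks).
    log_p_graph = 0.0
    for a_i, a in enumerate(labels):
        for b in labels[a_i:]:
            mask = ((zi == a) & (zj == b)) | ((zi == b) & (zj == a))
            if not mask.any():
                continue
            p = np.clip(edge[mask].mean(), 1e-9, 1 - 1e-9)
            log_p_graph += np.sum(edge[mask] * np.log(p) + (1 - edge[mask]) * np.log(1 - p))

    # Annotation term (assumption: one Gaussian per community).
    log_p_annot = 0.0
    for a in labels:
        xa = x[z == a]
        mu, var = xa.mean(), max(xa.var(), 1e-6)
        log_p_annot += np.sum(-0.5 * np.log(2 * np.pi * var) - (xa - mu) ** 2 / (2 * var))

    return log_p_graph + log_p_annot

# Two planted communities of four nodes whose annotations differ by community.
A = np.zeros((8, 8), dtype=int)
A[:4, :4] = 1
A[4:, 4:] = 1
np.fill_diagonal(A, 0)
x = np.array([0.0, 0.1, -0.1, 0.05, 1.0, 1.1, 0.9, 1.05])
ll_planted = annotated_log_likelihood(A, x, z=[0, 0, 0, 0, 1, 1, 1, 1])
ll_mixed = annotated_log_likelihood(A, x, z=[0, 1, 0, 1, 0, 1, 0, 1])
print(ll_planted > ll_mixed)
```

A fitting procedure would search over partitions z for the one that maximizes this quantity; the sketch only scores partitions that are supplied to it.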

FIG. 5. Brain activity on brain graphs.

(A) Annotated graphs enable the investigator to model scalar or categorical values associated with each node. These graphs are represented by both an adjacency matrix A of dimension N × N, and a vector x of dimension N × 1 (Adapted from [151]). (B) Graph signal processing allows one to interpret and manipulate signals atop nodes in a mathematical space defined by their underlying graphical structure. A graph signal is defined on each vertex in a graph. For example, the signal could represent the level of BOLD activity at brain regions interconnected by a network of fiber tracts. Graph filters can be constructed using the eigenvectors of A that are most and least aligned with its structure. Applying these filters to a graph signal decomposes it into aligned/misaligned components. Elements of the aligned component will tend to have the same sign if they are joined by a connection. The elements of the misaligned or “liberal” component, on the other hand, may change sign frequently, even if joined by a direct structural connection.

Initial efforts applying these tools to neuroimaging data have demonstrated that discrepancies between BOLD magnitudes and the community structure of functional connectivity patterns predict individual differences in the learning of a new motor skill [151]. The model defined in that study also included a parameter that could be used to tune the relative contribution of the annotation versus the connectivity to the estimated community structure; this tunable parameter allows one to test hypotheses about the relative contribution of an annotation (e.g., BOLD magnitude) and a network (e.g., functional connectivity) to neural markers of cognition or disease. Future efforts could build on these preliminary findings to incorporate different types of annotations that are agnostic to network organization, such as measurements of time series complexity [152], gray matter density, cortical thickness, oxidative metabolism [153], gene expression [154], or cytoarchitectural characteristics [155]. Another class of interesting annotations includes statistics that summarize complementary features of network organization, such as a regional statistic of the structural network annotating a functional graph, or summary statistics of edge covariance matrices including node degree of the functional hypergraph [156–158] or archetype [159] annotating structural or functional graphs. More generally, annotated graphs can be used in this way to better understand the relationships between these regional characteristics and inter-regional estimates of structural or functional connectivity.

Graph signal processing

A discrete-time signal consists of a series of observations, and can be operated on and transformed using tools from classical signal processing, e.g. filtered, denoised, downsampled, etc. [160]. Many signals, however, are defined on the vertices of a graph and therefore exhibit interdependencies that are contingent upon the graph’s topological organization. Graph signal processing (GSP) is a set of mathematical tools that implement operations from classical signal processing while simultaneously incorporating and respecting the graphical structure underlying the signal [161] (Fig. 5B). While GSP, in general, has been widely applied for purposes of image compression [162] and semisupervised learning [163] (among others), only recently has it been used to investigate patterns in neuroimaging data [164–166].

One particularly interesting application involves using graph Fourier analysis to study the relationship of a graph signal to an underlying network. This approach, analogous to classical Fourier analysis, decomposes a (graph) signal along a set of components, each of which represents a different mode of spatial variation (graph frequency) with respect to the graph’s topological structure. These modes are given by an eigendecomposition of the graph Laplacian matrix, L = D − A, where D = diag(s1, …, sN) and where si = Σj Aij. The eigendecomposition results in a set of ordered eigenvalues, λ0 ≤ λ1 ≤ … ≤ λN−1, and corresponding eigenvectors, Λ0, …, ΛN−1. Each eigenvector can be characterized in terms of its “alignment” with respect to A, a measure of how smoothly it varies over the network. Calculated as Σi,j AijΛk(i)Λk(j), an eigenvector’s alignment takes on a positive value when elements with the same sign are also joined by a connection. The variable alignments (smoothness) of eigenvectors are, intuitively, analogous to the different frequencies in classical signal processing.
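As a small worked example of these definitions, the Python sketch below builds the Laplacian of a toy graph, computes its eigendecomposition, and evaluates the alignment of each eigenvector. The function names `graph_fourier_basis` and `graph_fourier_transform` and the six-node ring graph are illustrative choices, not part of any cited toolbox.

```python
import numpy as np

def graph_fourier_basis(A):
    """Eigendecomposition of L = D - A and the alignment of each eigenvector with A."""
    A = np.asarray(A, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    eigvals, eigvecs = np.linalg.eigh(L)      # ascending eigenvalues, orthonormal columns
    # alignment_k = sum_ij A_ij * v_k(i) * v_k(j)
    alignment = np.einsum("ik,ij,jk->k", eigvecs, A, eigvecs)
    return eigvals, eigvecs, alignment

def graph_fourier_transform(eigvecs, x):
    """Project a graph signal x onto the graph Fourier modes."""
    return eigvecs.T @ x

# Toy example: an unweighted ring of six nodes.
N = 6
A = np.zeros((N, N))
for i in range(N):
    A[i, (i + 1) % N] = A[(i + 1) % N, i] = 1.0

eigvals, eigvecs, alignment = graph_fourier_basis(A)
x = np.array([1.0, 2.0, 0.5, -1.0, 0.0, 3.0])
x_hat = graph_fourier_transform(eigvecs, x)
x_back = eigvecs @ x_hat                      # inverse transform recovers the signal
print(np.round(eigvals, 3), np.round(alignment, 3))
```

Because every node of the ring has degree 2, the alignment of each unit-norm eigenvector here equals 2 − λk, so the smoothest (lowest-frequency) modes are the most aligned. For graphs with heterogeneous degree the relationship between eigenvalue and alignment is less direct.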

One recent study applied graph Fourier analysis to neuroimaging data to study the relationship of regional activity (BOLD) and inter-regional white-matter networks [167]. In this study, the authors performed a sort of “connectome filtering” by designing two separate graph filters from the eigendecomposition of the structural connectivity matrix. The “low-frequency” filter was constructed from the eigenvectors with the greatest alignment while the “high-frequency” filter was constructed from the least aligned eigenvectors (the authors refer to the low- and high-frequency filters as “aligned” and “liberal”, respectively). Both filters were applied to the vector time series of BOLD activity B = [b1(t), …, bN(t)], decomposing regional time series into filtered time series B̃aligned and B̃liberal. The filtered time series encoded the components of the BOLD signal that were aligned and misaligned with the brain’s white-matter connectivity. Interestingly, the variability of the “liberal” signal over time was predictive of cognitive switching costs in a visual perception task, much more so than that of the “aligned” signal. This finding suggests that brain signals that deviate from the underlying white-matter scaffolding promote cognitive flexibility.
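The filtering step can be sketched in a few lines of Python. This is an illustration of the general idea rather than the procedure of [167]: it assumes that the filters are projections onto the k lowest- and k highest-frequency eigenvectors of the Laplacian L = D − A, and the function name `connectome_filter`, the cutoff `k`, and the toy path graph are hypothetical.

```python
import numpy as np

def connectome_filter(A, B, k):
    """Split signals B (N regions x T time points) into aligned and liberal components."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    _, V = np.linalg.eigh(L)                  # columns ordered from low to high graph frequency
    V_low, V_high = V[:, :k], V[:, -k:]       # requires 2 * k <= N so the two sets are disjoint
    B_aligned = V_low @ (V_low.T @ B)         # projection onto the smoothest modes
    B_liberal = V_high @ (V_high.T @ B)       # projection onto the least smooth modes
    return B_aligned, B_liberal

# Toy structural network: a path of six regions, with random "BOLD" time series.
N, T = 6, 50
A = np.diag(np.ones(N - 1), 1) + np.diag(np.ones(N - 1), -1)
rng = np.random.default_rng(0)
B = rng.standard_normal((N, T))
B_aligned, B_liberal = connectome_filter(A, B, k=2)

# Temporal variability of each component, per region.
liberal_variability = B_liberal.std(axis=1)
aligned_variability = B_aligned.std(axis=1)
```

A signal that is constant across regions of a connected graph lies entirely in the aligned component, whereas a signal that flips sign between neighbouring regions loads mainly on the liberal component.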

These recent applications of graph signal processing [164, 166, 167] highlight its utility for studying neural systems at the network level. Nonetheless, there are open methodological and neurobiological questions. For instance, the graph filtering procedure described above operates on the BOLD activity at each instant, independent of the activity at all other time points, and also assumes that the underlying network structure is fixed (i.e. static). Extending the framework to explicitly incorporate the dynamic nature of the signal/network remains an unresolved issue [168]. Also, while graph signal processing operations can identify network-level correlates of behavioral relevance, how these operators are realized neurobiologically is also unclear. Future work could be directed to investigate these and other open questions.

STATISTICAL TESTING OF NETWORK DYNAMICS

When constructing and characterizing dynamic graph models and other graph-based representations of neuroimaging data, it is important to determine whether the dynamics that are observed are expected or unexpected in an appropriate null model. While no single null model is appropriate for every scientific question, there is a family of null models that have proven particularly useful in testing the significance of different features of brain network dynamics. Generally speaking, these null models fall into two broad categories: those that directly alter the structure of the graph, and those that alter the structure of the time series used to construct the graph. In this section, we will describe common examples of both of these types of null models, and we will also discuss statistical approaches for identifying data-driven boundaries between time windows used to construct the graphs.

Graph null models

The construction of graph-based null models for statistical inference has a long history dating back to the foundations of graph theory [33, 34]. Common null models used to query the architecture of static graphs include the Erdős–Rényi random graph model and the regular lattice [169–171] – two benchmark models that are widely used in estimating small-worldness [172, 173]. To address questions regarding network development and associated physical constraints, both spatial null models [93, 174] and growing null models [22] have proven particularly useful. In each case, the null model purposefully maintains some features of interest, while destroying others.

When moving from static graph models to dynamic graph models, one can either devise model-based nulls or permutation-based nulls. Because generative models of network reconfiguration are relatively new [99, 100], and none have been validated as accurate fits to neuroimaging data, the majority of nulls exercised in dynamic graph analysis are permutation-based nulls. In prior literature, there are three features of a dynamic graph model that are fairly straightforward to permute: the temporal order of the adjacency matrices, the pattern of connectivity within any given adjacency matrix, and (for multilayer graphs) the rules for placing and weighting the inter-layer identity links [103]. Permuting the order of time windows uniformly at random in a dynamic graph model is commonly referred to as a temporal null model. Permuting the connectivity within a single time window (that is, permuting the location of edges uniformly at random throughout the adjacency matrix) is commonly referred to as a connectional null model. For multilayer networks in which the graph in time window t is linked to the graph in time window t + 1 and also to the graph in time window t−1, one can permute the identity links connecting a node with itself in neighboring time windows uniformly at random. This is commonly referred to as a nodal null model.
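The three permutations can be written compactly in Python. The sketch below assumes that the dynamic graph is stored as an array of symmetric adjacency matrices with zero diagonal (one per time window), and it encodes the rewired inter-layer identity links as one random node-to-node mapping per pair of consecutive layers. The function names and this encoding are illustrative assumptions rather than the interface of any published toolbox.

```python
import numpy as np

def temporal_null(layers, rng):
    """Permute the order of the time windows uniformly at random."""
    layers = np.asarray(layers)
    return layers[rng.permutation(layers.shape[0])]

def connectional_null(A, rng):
    """Permute edge locations uniformly at random within one symmetric adjacency matrix."""
    A = np.asarray(A, dtype=float)
    iu = np.triu_indices(A.shape[0], k=1)
    shuffled = np.zeros_like(A)
    shuffled[iu] = rng.permutation(A[iu])     # shuffle the upper triangle
    return shuffled + shuffled.T              # mirror it to keep the matrix symmetric

def nodal_null(n_nodes, n_layers, rng):
    """Row t maps each node in layer t to a randomly chosen node in layer t + 1."""
    return np.stack([rng.permutation(n_nodes) for _ in range(n_layers - 1)])

# Toy dynamic graph: five time windows, four nodes.
rng = np.random.default_rng(0)
layers = rng.random((5, 4, 4))
layers = (layers + layers.transpose(0, 2, 1)) / 2
layers[:, np.arange(4), np.arange(4)] = 0.0

layers_temporal = temporal_null(layers, rng)
layer0_connectional = connectional_null(layers[0], rng)
interlayer_links = nodal_null(n_nodes=4, n_layers=5, rng=rng)
```

In a typical analysis each null would be sampled many times, the statistic of interest recomputed on every sample, and the observed value compared against the resulting distribution.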

These permutation-based null models are important benchmarks against which to compare dynamic graph architectures because they separately perturb distinct dimensions of the dynamic graph’s structure. The temporal null model can be used to test hypotheses regarding the nature of the temporal evolution of the graph; the connectional null model can be used to test hypotheses regarding the nature of the intra-window pattern of functional connectivity; and the nodal null model can be used to test hypotheses regarding the importance of regional identity in the observed dynamics [95]. It will be interesting in the future to expand this set of non-parametric null models to include parametric null models that have been carefully constructed to fit generalized statistical structure of neuroimaging data.

Time series null models

Dynamic graph null models are critical for practitioners conversant in graph theory to understand the driving influences in their data. Yet, others trained in dynamical system theory may wish to understand better how characteristics of the time series drive the observed time-evolving patterns of functional connectivity that constitute the dynamic graph model. In particular, one might wish to separately account for the impact of motion, noise, and fatigue on the observed dynamics and determine the degree to which the time series or network patterns derived from them remain non-stationary, either at rest (e.g., [175–177]) or during effortful cognitive processing (e.g., [178]). For this final question of whether the time series are non-stationary, surrogate data time series are particularly useful.

Specifically, a common problem that one might wish to address is the question of whether the observed patterns of network dynamics are due to linearity versus nonlinearity in the time series, or whether the underlying process is stationary versus non-stationary [179]. Here, we use the term linear to indicate that each value in the time series is linearly dependent on past values in the time series, or on both present and past values of some i.i.d. process [180]. Determining the extent of non-linearity is important because it determines whether one should use linear versus nonlinear statistics to characterize the time series [181]. Furthermore, determining whether the underlying process is stationary versus nonstationary informs whether the observed network dynamics could be interpreted as being driven by a fundamental change in the cognitive processes employed [182]. To demonstrate that observed patterns of network dynamics are indicative of meaningful non-stationarities, surrogate data techniques can be used to construct pseudo time series that retain signal properties under the assumption of stationarity and build a distribution reflecting the expected value of a chosen test statistic.

Two common methods to address this question are the Fourier transform (FT) surrogate and the amplitude adjusted Fourier transform (AAFT) surrogate. Both methods preserve the mean, variance, and autocorrelation function of the original time series, by scrambling the phase of time series in Fourier space [183]. The AAFT extends the FT surrogate by also retaining the amplitude distribution of the original signal [184]. First, we assume that the linear properties of the time series are specified by the squared amplitudes of the discrete Fourier transform

$$|S_u|^2 = \left| \frac{1}{\sqrt{T}} \sum_{t=0}^{T-1} s_t \, e^{i 2\pi u t / T} \right|^2 \tag{4}$$

where st denotes an element in a time series of length T and Su denotes a complex Fourier coefficient in the Fourier transform of s. We can construct the FT surrogate data by multiplying the Fourier transform by phases chosen uniformly at random and transforming back to the time domain:

$$\bar{s}_t = \frac{1}{\sqrt{T}} \sum_{u=0}^{T-1} e^{i a_u} \, |S_u| \, e^{-i 2\pi u t / T} \tag{5}$$

where au ∈ [0, 2π) are chosen independently and uniformly at random. This approach has proven useful for characterizing brain networks in prior studies [52, 95].
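A minimal NumPy sketch of the FT surrogate follows. It multiplies each Fourier coefficient of a real-valued series by a random phase and inverts the transform; the function name `ft_surrogate` is hypothetical. Using the real-input FFT keeps the surrogate real-valued, and leaving the zero-frequency (and, for even T, Nyquist) term untouched preserves the mean. These are implementation choices made here, not details taken from the cited studies.

```python
import numpy as np

def ft_surrogate(s, rng):
    """Phase-randomized surrogate with the same power spectrum as the real series s."""
    s = np.asarray(s, dtype=float)
    T = s.size
    S = np.fft.rfft(s)                                   # non-negative frequencies only
    phases = rng.uniform(0.0, 2.0 * np.pi, size=S.size)  # a_u drawn uniformly from [0, 2*pi)
    phases[0] = 0.0                                      # leave the mean untouched
    if T % 2 == 0:
        phases[-1] = 0.0                                 # the Nyquist term must stay real
    return np.fft.irfft(S * np.exp(1j * phases), n=T)

rng = np.random.default_rng(1)
t = np.arange(256)
s = np.sin(2 * np.pi * t / 32) + 0.5 * rng.standard_normal(t.size) + 2.0
surrogate = ft_surrogate(s, rng)

same_spectrum = np.allclose(np.abs(np.fft.rfft(surrogate)), np.abs(np.fft.rfft(s)))
print(same_spectrum, np.isclose(surrogate.mean(), s.mean()))
```

Because the amplitude of every Fourier coefficient is unchanged, the surrogate shares the mean, variance, and (circular) autocorrelation of the original series, while its temporal ordering is scrambled.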

An important feature of these approaches is that they can be used to alter nonlinear relationships between time series while preserving linear relationships (for example, by scrambling the phase of time series x in the same way as time series y), or they can be used to alter both linear and nonlinear relationships between time series (for example, by scrambling the phase of time series x independently from time series y) [183]. Other methods that are similar in spirit include those that generate surrogate data using stable vector autoregressive models [190] that approximately preserve the power and cross-spectrum of the actual time series [182]. In each case, after creating surrogate data time series, one can re-apply time window boundaries and extract functional connectivity patterns in each time window to create dynamic graph models. Then one can compare statistics of the dynamic graph models constructed from surrogate data time series with the statistics obtained from the true data [191]. In all efforts to construct surrogate data, it is important to carefully consider the nature of the measurement technique, peculiarities of the data itself, and the specificity of the hypothesis being tested.
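The distinction between shared and independent phase scrambling can be demonstrated with a short Python sketch. The function name `multivariate_ft_surrogate` and the `shared_phases` flag are hypothetical, and the two synthetic series are generated only for illustration.

```python
import numpy as np

def multivariate_ft_surrogate(X, rng, shared_phases=True):
    """Phase-randomize each row of X (n_series x T), with shared or independent phases."""
    X = np.asarray(X, dtype=float)
    n, T = X.shape
    S = np.fft.rfft(X, axis=1)
    n_phase_sets = 1 if shared_phases else n   # one phase set broadcasts over all rows
    phases = rng.uniform(0.0, 2.0 * np.pi, size=(n_phase_sets, S.shape[1]))
    phases[:, 0] = 0.0                         # keep each series' mean
    if T % 2 == 0:
        phases[:, -1] = 0.0                    # keep the Nyquist term real
    return np.fft.irfft(S * np.exp(1j * phases), n=T, axis=1)

rng = np.random.default_rng(2)
x = rng.standard_normal(512)
y = x + 0.1 * rng.standard_normal(512)         # y is strongly, linearly coupled to x
X = np.vstack([x, y])

r_true = np.corrcoef(X)[0, 1]
r_shared = np.corrcoef(multivariate_ft_surrogate(X, rng, shared_phases=True))[0, 1]
r_indep = np.corrcoef(multivariate_ft_surrogate(X, rng, shared_phases=False))[0, 1]
print(round(r_true, 3), round(r_shared, 3), round(r_indep, 3))
```

Adding the same phase to both series leaves their cross-spectrum, and hence their correlation, unchanged; independent phases destroy it. Repeating either procedure many times and recomputing a dynamic graph statistic on each surrogate yields the null distribution against which the observed statistic can be compared.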

Change points

Thus far, our discussion regarding statistical inference on dynamic graph models presumes that one has previously determined a set of time windows of the observed time series in which to measure functional connectivity [67, 68]. The common approach is to choose a single time window size, and then to apply it either in a nonoverlapping fashion or in an overlapping fashion [32, 103]. There are several benefits to this approach, including effectively controlling for the influence of the time series length on the observed network architecture. However, one disadvantage of the fixed-window-length approach is that one may be insensitive to changes in the neurophysiological state that occur at non-regular intervals. To address this issue, several methods have been proposed to identify change points – points in time where the generative process underlying the data appears to change – both in patterns of connectivity [192, 193] and in patterns of activity [194, 195]. These methods employ a data-driven framework to identify irregularly sized time windows before constructing brain graphs using the modeling approaches discussed earlier. Such change point-based network models can potentially enhance tracking of network dynamics alongside changes in cognitive state.
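The difference between fixed and data-driven windows can be made concrete with the following Python sketch. It does not detect change points; the change point locations are supplied by hand and stand in for the output of methods such as those in [192–195]. The function names and the use of Pearson correlation as the connectivity measure are assumptions for illustration.

```python
import numpy as np

def fixed_windows(T, length, step):
    """(start, stop) pairs for fixed-length windows; step < length gives overlap."""
    return [(start, start + length) for start in range(0, T - length + 1, step)]

def change_point_windows(T, change_points):
    """(start, stop) pairs for irregular windows delimited by the given change points."""
    bounds = [0, *sorted(change_points), T]
    return list(zip(bounds[:-1], bounds[1:]))

def windowed_connectivity(X, windows):
    """One correlation matrix per window for time series X (n_regions x T)."""
    X = np.asarray(X, dtype=float)
    return np.stack([np.corrcoef(X[:, a:b]) for a, b in windows])

rng = np.random.default_rng(3)
X = rng.standard_normal((4, 100))

nonoverlapping = fixed_windows(T=100, length=20, step=20)
overlapping = fixed_windows(T=100, length=20, step=10)
irregular = change_point_windows(T=100, change_points=[40, 55])   # hypothetical change points

graphs_fixed = windowed_connectivity(X, nonoverlapping)
graphs_irregular = windowed_connectivity(X, irregular)
print(graphs_fixed.shape, graphs_irregular.shape)
```

Note that irregular windows contain different numbers of samples, so connectivity estimates from short windows are noisier than those from long windows; this is the trade-off against the fixed-window approach mentioned above.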

INTERPRETING NETWORK DYNAMICS

At the conclusion of any study applying the modeling techniques described in this review, it is important to interpret the observed network dynamics within the context in which the data were acquired. When studying network dynamics during a task, assessing the correlation between brain and behavior – both raw behavioral data and parameter values for models fit to the behavioral data – can help the investigator infer network mechanisms associated with task performance [25]. Relevant questions include which network dynamics accompany which sorts of cognitive processes or mental states. For example, recent observations point to a role for frontal-parietal network flexibility in motor learning [104], reinforcement learning [105], working memory [28], and cognitive flexibility [28]. In the case of time-by-time graphs, it is also relevant to link brain states to not only processing functions but also to representation functions, for example by combining local MVPA analyses with global graph analyses [196]. Finally, in both task and rest studies, it is also useful to determine the relationship between brain network dynamics and online measurements of physiology such as pupil diameter [197], galvanic skin response, or fatigue [102].

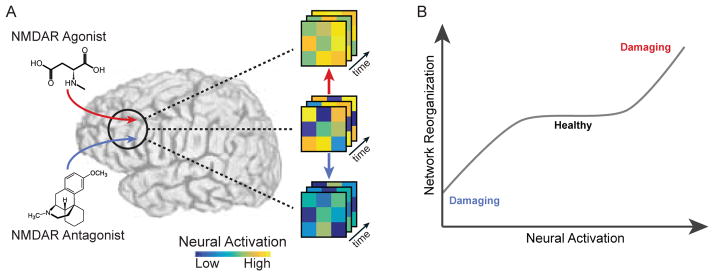

Outside of the context of a single study, it is important to build an intuition for what types of neurophysiological mechanisms may be driving certain types of brain network reconfiguration – irrespective of whether the subject is performing a task or simply resting inside the scanner. Particularly promising approaches for pinpointing neurophysiological drivers include pharma-fMRI studies, which suggest that distinct neurotransmitters may play important roles in driving network dynamics (Fig. 6). Using an NMDA-receptor antagonist, Braun and colleagues demonstrated that network flexibility – assessed from dynamic community detection – is increased relative to placebo, suggesting a critical role for glutamate in fMRI-derived brain network dynamics [30]. Preliminary data also hint at a role for serotonin by linking positive affect with network flexibility [102], a role for norepinephrine by linking pupil diameter to network reconfiguration [197], and a role for stress-related corticosteroids and catecholamines in facilitating reallocation of resources between competing attention and executive control networks [198]. However, the relative impact of dopamine, serotonin, glutamate, and norepinephrine on network reconfiguration properties remains elusive, and therefore forms a promising area for further research.

FIG. 6. Pharmacologic modulation of network dynamics.

(A) By blocking or enhancing neurotransmitter release through pharmacologic manipulation, investigators can perturb the dynamics of brain activity. For example, an NMDA receptor agonist might hyper-excite brain activity [185–187], while an NMDA receptor antagonist might reduce levels of brain activity [188, 189]. (B) Hypothetically speaking, by exogenously modulating levels of a neurotransmitter, one might be able to titrate the dynamics of brain activity and the accompanying functional connectivity to avoid potentially damaging brain states.

Besides pharmacological drivers, there is an ever-growing body of literature suggesting that temporal fluctuations in neural activity and functional network patterns are largely constrained by underlying networks of structural connections [199, 200] – i.e. the material projections and tracts among neurons and brain regions that collectively comprise a connectome [201]. Over long periods of time, functional network topology largely recapitulates these structural links [18], but over shorter intervals can more freely decouple from this structure [202, 203], possibly in order to efficiently meet ongoing cognitive demands [204]. Even over short durations, functional networks maintain close structural support such that the most stable functional connections (i.e. those that are least variable over time) are among those with corresponding direct structural links [205, 206]. Multimodal and freely available datasets, such as the Human Connectome Project [207], the Nathan Kline Institute Rockland Sample [208], and the Philadelphia Neurodevelopmental Cohort [209], all of which acquire both diffusion-weighted and functional MRI for massive cohorts, make it increasingly possible to further investigate the role of structure in shaping temporal fluctuations in functional networks.

FUTURE DIRECTIONS

Prospectively, it will be important to further develop tools and models to understand the dynamic networks that support human cognition. Including additional biological realism and constraints will become increasingly important as these networks are inherently multi-layered and embedded, including spatially distributed circuits in neocortex, cortico-subcortical loops, and local networks in the basal ganglia and cerebellum. Efforts are expected to target specific computational and theoretical challenges for mathematical development including models for non-stationary network dynamics, coupled multilayer stochastic block models and dynamics atop them, and extensions of temporal non-negative matrix factorization to annotated graphs. These efforts offer promise not only in providing descriptive statistics to characterize cognitive processes, but also in pushing the boundaries beyond description and into prediction and eventually fundamental theories of network development, growth, and function [15, 25].

Acknowledgments

We thank Andrew C. Murphy and John D. Medaglia for helpful comments on an earlier version of this manuscript. A.N.K., A.E.S., R.F.B., and D.S.B. would like to acknowledge support from the John D. and Catherine T. MacArthur Foundation, the Alfred P. Sloan Foundation, the National Institutes of Health (1R01HD086888-01), and the National Science Foundation (BCS-1441502, CAREER PHY-1554488, BCS-1631550). The content is solely the responsibility of the authors and does not necessarily represent the official views of any of the funding agencies.

APPENDIX

Useful tools include the following:

For dynamic community detection tools see http://netwiki.amath.unc.edu/GenLouvain/GenLouvain. [210]

For statistics of dynamic modules see http://commdetect.weebly.com/.

For non-negative matrix factorization for dynamic graph models see https://doi.org/10.5281/zenodo.583150 [211]

For statistics on dynamic graph models see https://doi.org/10.5281/zenodo.583170. [212]

References

- 1.Glaser JI, Kording KP. The development and analysis of integrated neuroscience data. Fron Comput Neurosci. 2016;10:11. doi: 10.3389/fncom.2016.00011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Duncan NW, Wiebking C, Northoff G. Associations of regional GABA and glutamate with intrinsic and extrinsic neural activity in humans-a review of multimodal imaging studies. Neurosci Biobehav Rev. 2014;47:36–52. doi: 10.1016/j.neubiorev.2014.07.016. [DOI] [PubMed] [Google Scholar]

- 3.Hall EL, Robson SE, Morris PG, Brookes MJ. The relationship between MEG and fMRI. Neuroimage. 2014;102:80–91. doi: 10.1016/j.neuroimage.2013.11.005. [DOI] [PubMed] [Google Scholar]

- 4.Moeller S, et al. Multiband multislice GE-EPI at 7 tesla, with 16-fold acceleration using partial parallel imaging with application to high spatial and temporal whole-brain fMRI. Magn Reson Med. 2010;63:1144–1153. doi: 10.1002/mrm.22361. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Dringenberg HC, Vanderwolf CH. Involvement of direct and indirect pathways in electrocorticographic activation. Neurosci Biobehav Rev. 1998;22:243–257. doi: 10.1016/s0149-7634(97)00012-2. [DOI] [PubMed] [Google Scholar]

- 6.Breakspear M. Dynamic models of large-scale brain activity. Nat Neurosci. 2017;20:340–352. doi: 10.1038/nn.4497. [DOI] [PubMed] [Google Scholar]

- 7.Jones SR, et al. Quantitative analysis and biophysically realistic neural modeling of the MEG mu rhythm: rhythmogenesis and modulation of sensory-evoked responses. J Neurophysiol. 2009;102:3554–3572. doi: 10.1152/jn.00535.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Bassett DS, Gazzaniga MS. Understanding complexity in the human brain. Trends Cogn Sci. 2011;15:200–209. doi: 10.1016/j.tics.2011.03.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Newman MEJ. Networks: An Introduction. Oxford University Press; 2010. [Google Scholar]

- 10.Shih AY, et al. Robust and fragile aspects of cortical blood flow in relation to the underlying angioarchitecture. Microcirculation. 2015;22:204–218. doi: 10.1111/micc.12195. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Conaco C, et al. Functionalization of a protosynaptic gene expression network. Proc Natl Acad Sci U S A. 2012;109:10612–10618. doi: 10.1073/pnas.1201890109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Kearns M, Judd S, Tan J, Wortman J. Behavioral experiments on biased voting in networks. Proc Natl Acad Sci U S A. 2009;106:1347–1352. doi: 10.1073/pnas.0808147106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Papadopoulus L, Puckett J, Daniels KE, Bassett DS. Evolution of network architecture in a granular material under compression. axXiv. 2016;1603:08159. doi: 10.1103/PhysRevE.94.032908. [DOI] [PubMed] [Google Scholar]

- 14.Butts CT. Revisiting the foundations of network analysis. Science. 2009;325:414–416. doi: 10.1126/science.1171022. [DOI] [PubMed] [Google Scholar]

- 15.Proulx S, Promislow D, Phillips PC. Network thinking in ecology and evolution. Trends in Ecology and Evolution. 2005;20:345–353. doi: 10.1016/j.tree.2005.04.004. [DOI] [PubMed] [Google Scholar]

- 16.Rubinov M, Sporns O. Complex network measures of brain connectivity: uses and interpretations. Neuroimage. 2010;52:1059–1069. doi: 10.1016/j.neuroimage.2009.10.003. [DOI] [PubMed] [Google Scholar]

- 17.Ghosh A, Rho Y, McIntosh AR, Kotter R, Jirsa VK. Noise during rest enables the exploration of the brain’s dynamic repertoire. PLoS Comput Biol. 2008;4:e1000196. doi: 10.1371/journal.pcbi.1000196. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Deco G, Jirsa VK, McIntosh AR. Emerging concepts for the dynamical organization of resting-state activity in the brain. Nature Reviews Neuroscience. 2011;12:43–56. doi: 10.1038/nrn2961. [DOI] [PubMed] [Google Scholar]

- 19.Gollo LL, Breakspear M. The frustrated brain: from dynamics on motifs to communities and networks. Philos Trans R Soc Lond B Biol Sci. 2014;369 doi: 10.1098/rstb.2013.0532. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Messe A, Hutt MT, Konig P, Hilgetag CC. A closer look at the apparent correlation of structural and functional connectivity in excitable neural networks. Sci Rep. 2015;5:7870. doi: 10.1038/srep07870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Betzel RF, et al. Generative models of the human connectome. Neuroimage. 2016;124:1054–1064. doi: 10.1016/j.neuroimage.2015.09.041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Klimm F, Bassett DS, Carlson JM, Mucha PJ. Resolving structural variability in network models and the brain. PLoS Computational Biology. 2014;10:e1003491. doi: 10.1371/journal.pcbi.1003491. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Vertes PE, et al. Simple models of human brain functional networks. Proc Natl Acad Sci U S A. 2012;109:5868–5873. doi: 10.1073/pnas.1111738109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Valiant LG. What must a global theory of cortex explain? Curr Opin Neurobiol. 2014;25:15–19. doi: 10.1016/j.conb.2013.10.006. [DOI] [PubMed] [Google Scholar]

- 25.Bassett DS, Mattar MG. A network neuroscience of human learning: Potential to inform quantitative theories of brain and behavior. Trends Cogn Sci. 2017;S1364-6613:30016–5. doi: 10.1016/j.tics.2017.01.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Kim H, Anderson R. Temporal node centrality in complex networks. Phys Rev E Stat Nonlin Soft Matter Phys. 2012;85:026107. doi: 10.1103/PhysRevE.85.026107. [DOI] [PubMed] [Google Scholar]

- 27. Holme P, Saramaki J. Temporal networks. Phys Rep. 2012;519:97–125.

- 28. Braun U, et al. Dynamic reconfiguration of frontal brain networks during executive cognition in humans. Proc Natl Acad Sci U S A. 2015;112:11678–11683. doi: 10.1073/pnas.1422487112.

- 29. Dwyer DB, et al. Large-scale brain network dynamics supporting adolescent cognitive control. J Neurosci. 2014;34:14096–14107. doi: 10.1523/JNEUROSCI.1634-14.2014.

- 30. Braun U, et al. Dynamic reconfiguration of brain networks: a potential schizophrenia genetic risk mechanism modulated by NMDA receptor function. 2016 doi: 10.1073/pnas.1608819113. Submitted.

- 31. Sato JR, et al. Temporal stability of network centrality in control and default mode networks: Specific associations with externalizing psychopathology in children and adolescents. Hum Brain Mapp. 2015;36:4926–4937. doi: 10.1002/hbm.22985.

- 32. Chai LR, et al. Evolution of brain network dynamics in neurodevelopment. Network Neuroscience. 2017 doi: 10.1162/NETN_a_00001. Epub ahead of print.

- 33. Bollobas B. Random Graphs. Academic Press; 1985.

- 34. Bollobas B. Graph Theory: An Introductory Course. Springer-Verlag; 1979.

- 35. Almendral JA, Criado R, Leyva I, Buldu JM, Sendina-Nadal I. Introduction to focus issue: mesoscales in complex networks. Chaos. 2011;21:016101. doi: 10.1063/1.3570920.

- 36. Lo CY, He Y, Lin CP. Graph theoretical analysis of human brain structural networks. Rev Neurosci. 2011;22:551–563. doi: 10.1515/RNS.2011.039.

- 37. Bertolero MA, Yeo BT, D’Esposito M. The modular and integrative functional architecture of the human brain. Proc Natl Acad Sci U S A. 2015;112:E6798–E6807. doi: 10.1073/pnas.1510619112.

- 38. Cocchi L, et al. Dissociable effects of local inhibitory and excitatory theta-burst stimulation on large-scale brain dynamics. J Neurophysiol. 2015;113:3375–3385. doi: 10.1152/jn.00850.2014.

- 39. Sizemore AE, Bassett DS. Dynamic graph metrics: Tutorial, toolbox, and tale. 2017 doi: 10.1016/j.neuroimage.2017.06.081. In preparation.

- 40. Betzel RF, Bassett DS. Multi-scale brain networks. Neuroimage. 2016 doi: 10.1016/j.neuroimage.2016.11.006. Epub Ahead of Print.

- 41. Kopell NJ, Gritton HJ, Whittington MA, Kramer MA. Beyond the connectome: the dynome. Neuron. 2014;83:1319–1328. doi: 10.1016/j.neuron.2014.08.016.

- 42. Calhoun VD, Miller R, Pearlson G, Adali T. The chronnectome: time-varying connectivity networks as the next frontier in fMRI data discovery. Neuron. 2014;84:262–274. doi: 10.1016/j.neuron.2014.10.015.

- 43. Stephan KE, Roebroeck A. A short history of causal modeling of fMRI data. Neuroimage. 2012;62:856–863. doi: 10.1016/j.neuroimage.2012.01.034.

- 44. Ritter P, Schirner M, McIntosh AR, Jirsa VK. The virtual brain integrates computational modeling and multimodal neuroimaging. Brain Connect. 2013;3:121–145. doi: 10.1089/brain.2012.0120.

- 45. Bullmore ET, Bassett DS. Brain graphs: graphical models of the human brain connectome. Annu Rev Clin Psychol. 2011;7:113–140. doi: 10.1146/annurev-clinpsy-040510-143934.

- 46. Uludag K, Roebroeck A. General overview on the merits of multimodal neuroimaging data fusion. Neuroimage. 2014;102:3–10. doi: 10.1016/j.neuroimage.2014.05.018.

- 47. Muldoon SF, Bassett DS. Network and multilayer network approaches to understanding human brain dynamics. Philosophy of Science. 2016 Epub Ahead of Print.

- 48. Fitch WT. Toward a computational framework for cognitive biology: unifying approaches from cognitive neuroscience and comparative cognition. Phys Life Rev. 2014;11:329–364. doi: 10.1016/j.plrev.2014.04.005.

- 49. van den Heuvel MP, Bullmore ET, Sporns O. Comparative connectomics. Trends in Cognitive Sciences. 2016;20:345–361. doi: 10.1016/j.tics.2016.03.001.

- 50. Cammoun L, et al. Mapping the human connectome at multiple scales with diffusion spectrum MRI. Journal of Neuroscience Methods. 2012;203:386–397. doi: 10.1016/j.jneumeth.2011.09.031.

- 51. Glasser MF, et al. A multi-modal parcellation of human cerebral cortex. Nature. 2016;536:171–178. doi: 10.1038/nature18933.

- 52. Zalesky A, Fornito A, Bullmore E. On the use of correlation as a measure of network connectivity. Neuroimage. 2012;60:2096–2106. doi: 10.1016/j.neuroimage.2012.02.001.

- 53. Sun FT, Miller LM, Rao AA, D’Esposito M. Functional connectivity of cortical networks involved in bimanual motor sequence learning. Cereb Cortex. 2007;17:1227–1234. doi: 10.1093/cercor/bhl033.

- 54. Zhang Z, Telesford QK, Giusti C, Lim KO, Bassett DS. Choosing wavelet methods, filters, and lengths for functional brain network construction. PLoS One. 2016;11:e0157243. doi: 10.1371/journal.pone.0157243.

- 55. Sun FT, Miller LM, D’Esposito M. Measuring interregional functional connectivity using coherence and partial coherence analyses of fMRI data. Neuroimage. 2004;21:647–658. doi: 10.1016/j.neuroimage.2003.09.056.

- 56. Obando C, De Vico Fallani F. A statistical model for brain networks inferred from large-scale electrophysiological signals. J R Soc Interface. 2017;14:20160940. doi: 10.1098/rsif.2016.0940.

- 57. Bassett DS, et al. Cognitive fitness of cost-efficient brain functional networks. Proc Natl Acad Sci U S A. 2009;106:11747–11752. doi: 10.1073/pnas.0903641106.

- 58. Fraga Gonzalez G, et al. Graph analysis of EEG resting state functional networks in dyslexic readers. Clin Neurophysiol. 2016;127:3165–3175. doi: 10.1016/j.clinph.2016.06.023.

- 59. Bartolomei F, et al. Disturbed functional connectivity in brain tumour patients: evaluation by graph analysis of synchronization matrices. Clin Neurophysiol. 2006;117:2039–2049. doi: 10.1016/j.clinph.2006.05.018.

- 60. Khambhati AN, et al. Dynamic network drivers of seizure generation, propagation and termination in human neocortical epilepsy. PLoS Comput Biol. 2015;11:e1004608. doi: 10.1371/journal.pcbi.1004608.

- 61. Khambhati A, Davis K, Lucas T, Litt B, Bassett DS. Virtual cortical resection reveals push-pull network control preceding seizure evolution. Neuron. 2016;91:1170–1182. doi: 10.1016/j.neuron.2016.07.039.

- 62. Wiles L, et al. Autaptic connections shift network excitability and bursting. Sci Rep. 2017;7:44006. doi: 10.1038/srep44006.

- 63. Cohen MR, Kohn A. Measuring and interpreting neuronal correlations. Nat Neurosci. 2011;14:811–819. doi: 10.1038/nn.2842.

- 64. Brody CD. Disambiguating different covariation types. Neural Comput. 1999;11:1527–1535. doi: 10.1162/089976699300016124.

- 65. Brody CD. Correlations without synchrony. Neural Comput. 1999;11:1537–1551. doi: 10.1162/089976699300016133.

- 66. Dann B, Michaels JA, Schaffelhofer S, Scherberger H. Uniting functional network topology and oscillations in the fronto-parietal single unit network of behaving primates. eLife. 2016;5:e15719. doi: 10.7554/eLife.15719.

- 67. Leonardi N, Van De Ville D. On spurious and real fluctuations of dynamic functional connectivity during rest. Neuroimage. 2015;104:430–436. doi: 10.1016/j.neuroimage.2014.09.007.

- 68. Telesford QK, et al. Detection of functional brain network reconfiguration during task-driven cognitive states. Neuroimage. 2016;S1053-8119:30198–30207. doi: 10.1016/j.neuroimage.2016.05.078.

- 69. Chu CJ, et al. Emergence of stable functional networks in long-term human electroencephalography. J Neurosci. 2012;32:2703–2713. doi: 10.1523/JNEUROSCI.5669-11.2012.

- 70. Kramer MA, et al. Emergence of persistent networks in long-term intracranial EEG recordings. J Neurosci. 2011;31:15757–15767. doi: 10.1523/JNEUROSCI.2287-11.2011.

- 71. Jones DT, et al. Non-stationarity in the “resting brain’s” modular architecture. PLoS One. 2012;7:e39731. doi: 10.1371/journal.pone.0039731.

- 72. Zalesky A, Breakspear M. Towards a statistical test for functional connectivity dynamics. Neuroimage. 2015;114:466–470. doi: 10.1016/j.neuroimage.2015.03.047.

- 73. Mattar MG, Cole MW, Thompson-Schill SL, Bassett DS. A functional cartography of cognitive systems. PLoS Comput Biol. 2015;11:e1004533. doi: 10.1371/journal.pcbi.1004533.

- 74. Schlosser G, Wagner GP. Modularity in development and evolution. University of Chicago Press; 2004.

- 75. Simon H. The architecture of complexity. Proceedings of the American Philosophical Society. 1962;106:467–482.

- 76. Kirschner M, Gerhart J. Evolvability. Proc Natl Acad Sci U S A. 1998;95:8420–8427. doi: 10.1073/pnas.95.15.8420.

- 77. Wagner GP, Altenberg L. Complex adaptations and the evolution of evolvability. Evolution. 1996;50:967–976. doi: 10.1111/j.1558-5646.1996.tb02339.x.

- 78. Salvador R, et al. Neurophysiological architecture of functional magnetic resonance images of human brain. Cerebral Cortex. 2005;15:1332–1342. doi: 10.1093/cercor/bhi016.

- 79. Meunier D, Achard S, Morcom A, Bullmore E. Age-related changes in modular organization of human brain functional networks. Neuroimage. 2009;44:715–723. doi: 10.1016/j.neuroimage.2008.09.062.

- 80. Sporns O, Betzel RF. Modular brain networks. Annu Rev Psychol. 2016;67 doi: 10.1146/annurev-psych-122414-033634.

- 81. Ellefsen KO, Mouret JB, Clune J. Neural modularity helps organisms evolve to learn new skills without forgetting old skills. PLoS Comput Biol. 2015;11:e1004128. doi: 10.1371/journal.pcbi.1004128.

- 82. Arnemann KL, et al. Functional brain network modularity predicts response to cognitive training after brain injury. Neurology. 2015;84:1568–1574. doi: 10.1212/WNL.0000000000001476.

- 83. Liao X, Cao M, Xia M, He Y. Individual differences and time-varying features of modular brain architecture. Neuroimage. 2017;152:94–107. doi: 10.1016/j.neuroimage.2017.02.066.

- 84. Gu S, et al. Emergence of system roles in normative neurodevelopment. Proc Natl Acad Sci U S A. 2015;112:13681–13686. doi: 10.1073/pnas.1502829112.

- 85. Porter MA, Onnela JP, Mucha PJ. Communities in networks. Notices of the AMS. 2009;56:1082–1097.

- 86. Fortunato S. Community detection in graphs. Physics Reports. 2010;486:75–174.

- 87. Fortunato S, Hric D. Community detection in networks: A user guide. Physics Reports. 2016;659:1–44.

- 88. Kuhn HW. The Hungarian method for the assignment problem. Naval Research Logistics Quarterly. 1955;2:83–97.

- 89. Kuhn HW. Variants of the Hungarian method for assignment problems. Naval Research Logistics Quarterly. 1956;3:253–258.

- 90. Mucha PJ, Richardson T, Macon K, Porter MA, Onnela JP. Community structure in time-dependent, multiscale, and multiplex networks. Science. 2010;328:876–878. doi: 10.1126/science.1184819.

- 91. Kivelä M, et al. Multilayer networks. J Complex Netw. 2014;2:203–271.

- 92. De Domenico M. Multilayer modeling and analysis of human brain networks. GigaScience. 2017 doi: 10.1093/gigascience/gix004.

- 93. Betzel RF, et al. The modular organization of human anatomical brain networks: Accounting for the cost of wiring. Network Neuroscience. 2017:1–27. doi: 10.1162/NETN_a_00002. Posted online on 6 Jan 2017.

- 94. Wymbs NF, Bassett DS, Mucha PJ, Porter MA, Grafton ST. Differential recruitment of the sensorimotor putamen and frontoparietal cortex during motor chunking in humans. Neuron. 2012;74:936–946. doi: 10.1016/j.neuron.2012.03.038.

- 95. Bassett DS, et al. Robust detection of dynamic community structure in networks. Chaos. 2013;23:013142. doi: 10.1063/1.4790830.

- 96. Ball B, Karrer B, Newman MEJ. An efficient and principled method for detecting communities in networks. Phys Rev E. 2011;84:036103. doi: 10.1103/PhysRevE.84.036103.

- 97. Evans TS, Lambiotte R. Line graphs, link partitions, and overlapping communities. Phys Rev E Stat Nonlin Soft Matter Phys. 2009;80:016105. doi: 10.1103/PhysRevE.80.016105.