Abstract

Unlike most optical coherence microscopy (OCM) systems, dynamic speckle-field interferometric microscopy (DSIM) achieves depth sectioning through the spatial-coherence gating effect. Under high numerical aperture (NA) speckle-field illumination, our previous experiments demonstrated a depth resolution of less than 1 μm in reflection-mode DSIM, while doubling the diffraction-limited resolution, as in structured illumination. However, no physical model has rigorously described the speckle imaging process, in particular the sectioning effect under high illumination and imaging NA in DSIM. In this paper, we develop such a model based on diffraction tomography theory and speckle statistics. Using this model, we calculate the system response function, which is then used to obtain the depth resolution limit of reflection-mode DSIM. The theoretically calculated depth resolution limit is in excellent agreement with experimental results. We envision that our physical model will not only help in understanding the imaging process in DSIM, but also enable better design of such systems for depth-resolved measurements in biological cells and tissues.

OCIS codes: (050.1960) Diffraction theory, (180.1655) Coherence tomography, (110.3175) Interferometric imaging, (110.6150) Speckle imaging, (180.3170) Interference microscopy

1. Introduction

Depth selectivity, or the so-called sectioning effect, is important in optical imaging of microscopic objects with complex 3D features [1–3]. Over the years, many depth-resolved optical microscopy techniques have been proposed, including scanning confocal microscopy (SCM) [4,5], structured-illumination microscopy [6,7], two-photon fluorescence microscopy [8,9], light sheet microscopy [10,11], optical coherence tomography (OCT) [12,13], and optical diffraction tomography (ODT) [14,15]. Among these methods, SCM is the most widely implemented. Furthermore, C. J. R. Sheppard and his colleagues pioneered the 3D coherent transfer function (CTF) method to help understand the optical sectioning effect in SCM systems [16,17].

Interferometric microscopy offers extreme sensitivity in measuring sample deformation or absorption along the axial dimension without fluorescence staining [18–22]. In an interferometric microscopy system operating in wide-field imaging mode, the sectioning effect can be realized through either the spatial- or the temporal-coherence property of light. OCT, as an interferometric imaging technique, normally uses the temporal-coherence gating effect to achieve depth-resolved measurements [13]. Its depth resolution is typically a few microns and is mainly determined by the bandwidth, or temporal coherence, of the light source. Similarly, the spatial-coherence gating effect has also been utilized in interferometric microscopy to obtain depth-resolved measurements [23]. B. Redding et al. reported a full-field interferometric confocal imaging method in which the spatial coherence was manipulated by using a multimode fiber [24]; however, the measured spatial resolution was limited to a few microns. Soon after, Y. Choi et al. demonstrated a reflection-mode dynamic speckle-field quantitative phase microscopy system with ~500 nm lateral resolution and ~1 μm depth resolution [25]. This type of system is promising for studying the nanoscale dynamics of depth-resolved structures, such as the plasma and nuclear membranes, in complex eukaryotic cells. If applied to 3D imaging, this reflection phase imaging system could potentially solve the “missing cone” problem in image reconstruction, which otherwise requires a priori constraints, such as non-negativity and piecewise smoothness, for convergence [26,27].

Despite the recent experimental advances in depth-resolved interferometric imaging using dynamic speckle fields, a full physical model describing the sectioning effect in such systems is still lacking [23]. Most previous theoretical analyses of depth resolution, including the SCM transfer function calculations [16,17], were based on small-scattering-angle or paraxial approximations, in which the diffraction effects that can degrade image reconstruction quality in high-NA imaging are not fully accounted for. Later, C. J. R. Sheppard’s group also calculated the 3D CTF of holographic tomography under high-NA imaging conditions [28]. Recently, by solving the inverse scattering problem with diffraction tomography theory, accurate 3D CTFs have been obtained for low temporal-coherence interferometric tomography systems, enabling more precise 3D reconstruction with improved spatial resolution in all dimensions for tissue [29,30] and cellular imaging [31–33]. This highlights the importance of including diffraction effects in coherent imaging.

In this paper, we extend diffraction tomography theory to dynamic speckle-field interferometric microscopy (DSIM). We develop a model to calculate the axial response function of reflection-mode DSIM systems, which can be used to determine the depth resolution. The theoretically calculated depth resolution agrees well with our previous experimental results [25]. A full description of the physical model is provided in the following. First, we describe a typical reflection-mode DSIM system, including the light scattering process, the interfering fields, and the detection measurement function. Then, we solve for the scattered field from the inhomogeneous wave equation to calculate the cross-correlation function, which is directly related to the measured quantity. Finally, we calculate the axial response function of a thin 2D slice to obtain the depth resolution. Our study shows that the depth resolution is inversely proportional to the square of the NA of the illumination and imaging objective. In the Discussion section, we also verify that transmission-mode DSIM systems do not provide a sectioning effect for flat objects.

2. Reflection-mode dynamic speckle-field interferometric microscopy

In this section, we first describe the working principle of a typical reflection-mode DSIM system. Then, we solve for the backward scattered field from an arbitrary object to determine the measurement function on the detector plane. This lays the foundation for calculating the axial response function and the depth resolution.

2.1 System configuration and working principle

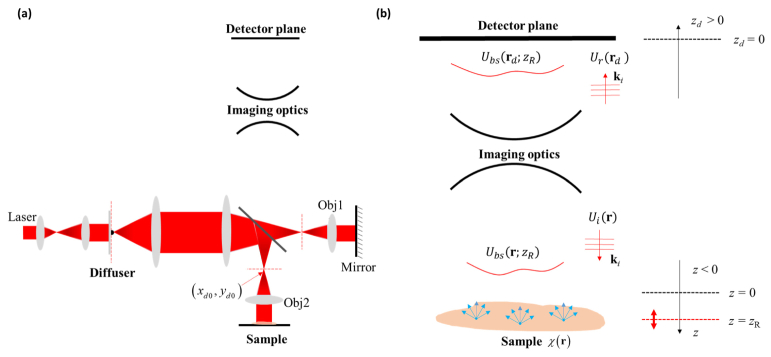

Typically, a Linnik-type interferometer is used in a reflection-mode DSIM system. Figure 1(a) shows the schematic of such a system (more details can be found in [23,25]). The optical field of interest originates at the diffuser plane, a disk-shaped ground glass, which is conjugate to the back focal planes of both the reference-arm objective (Obj1) and the imaging-arm objective (Obj2). The sample surface, the reference mirror, and the detector are also in conjugate planes through the imaging optics. When the diffuser rotates at high speed (allowing the speckles to be sufficiently averaged within the camera integration time), the generated dynamic speckle field forms a smooth distribution in the objective back-aperture planes. According to the theoretical model developed below, this distribution should ideally fill the back apertures of Obj1 and Obj2 uniformly to achieve optimum illumination and the best sectioning effect. Next, we describe the fields involved in the imaging process, as illustrated in Fig. 1(b).

Fig. 1.

Illustration of reflection-mode DSIM. (a) The system configuration of a reflection-mode DSIM based on a Linnik-type interferometer; (b) A description of the electromagnetic fields involved in the imaging system.

In our imaging system, the diffuser is in a Fourier plane, where the speckle field is generated. Following the theoretical framework in [23], we assume that the speckle field immediately after the diffuser plane has an angular spectrum distribution $A(\mathbf{k}_\perp)$, where $\mathbf{k}_\perp$ is the transverse component of the wavevector. For simplicity, we assume a 1:1 4f relay system between the diffuser plane and the back focal planes (or back aperture planes) of Obj1 and Obj2. Thus, the speckle angular distribution at the back aperture planes is still $A(\mathbf{k}_\perp)$. A particular wavevector $\mathbf{k}_\perp$, corresponding to a physical point on the back focal plane of Obj1 at $\boldsymbol{\rho} = \lambda f\,\mathbf{k}_\perp/(2\pi)$, where $f$ is the focal length of the objective and $\lambda$ is the laser wavelength in free space, generates an incident plane wave $U_i(\mathbf{r};\mathbf{k}_\perp)$ in the sample space, as shown in Fig. 1(b), given by

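$$U_i(\mathbf{r};\mathbf{k}_\perp) = A(\mathbf{k}_\perp)\exp\left[i\left(\mathbf{k}_\perp\cdot\mathbf{r}_\perp - k_z z\right)\right], \tag{1}$$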

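where $k_z = \sqrt{k_m^2 - |\mathbf{k}_\perp|^2}$ follows from the dispersion relation (because the incident field satisfies the homogeneous wave equation), $k_0 = 2\pi/\lambda$ is the propagation constant in free space, $n_m$ is the refractive index of the medium, $k_m = n_m k_0$ is the propagation constant in the medium, and $\mathbf{r} = (\mathbf{r}_\perp, z)$ is the position vector. The plane wave illuminates the sample, which is described by the scattering potential $F(\mathbf{r}) = k_0^2\left[n^2(\mathbf{r}) - n_m^2\right]$, where $n(\mathbf{r})$ is the sample refractive index distribution. As a result, a backward scattered field $U_s(\mathbf{r};\mathbf{k}_\perp)$ is generated. To obtain depth-resolved measurements, the sample is scanned along the axial direction around the focal plane. For a sample focal displacement $\Delta z$, the backward scattered field in the sample space and in the detector space is denoted by $U_s(\mathbf{r};\mathbf{k}_\perp,\Delta z)$ and $U_s(\mathbf{r}_d;\mathbf{k}_\perp,\Delta z)$, respectively, where $\mathbf{r}_d = (\mathbf{r}_{d\perp}, z_d)$ is the position vector in the detector space. On the detector plane there is also a plane-wave component $U_r(\mathbf{r}_d;\mathbf{k}_\perp)$ coming from the reference arm, which has a form similar to that of the incident field,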

| (2) |

The backscattered sample field and the reference field interfere at the detector plane, creating an intensity distribution. From the measured intensity, we obtain $\mathrm{Re}\{\Gamma(\mathbf{r}_d;\Delta z)\}$, the real part of the cross-correlation function between the sample and reference fields, for each $\Delta z$. Since we aim to model the physical imaging process, we need a full description of the cross-correlation function, which requires solving for the sample scattered field.
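The wavevector bookkeeping of this subsection can be sketched numerically. The snippet below is a minimal sketch assuming the sine-condition mapping $\rho = f k_\perp/k_0$ (equivalently $\rho = \lambda f k_\perp/(2\pi)$) between a back-focal-plane radius and the transverse wavevector, together with the dispersion relation $k_z = \sqrt{k_m^2 - |\mathbf{k}_\perp|^2}$. The function name `incident_wavevector` and the focal length and wavelength used in the example are hypothetical, chosen only for illustration.

```python
import math

def incident_wavevector(rho_um, f_um, wavelength_um, n_m):
    """Map a radial back-focal-plane position to an incident plane wave in the medium.

    Hypothetical helper. Assumes the sine-condition mapping rho = f * k_perp / k0
    and the homogeneous dispersion relation k_z = sqrt(k_m**2 - k_perp**2).
    Lengths in micrometres; wavenumbers in rad/um; angle in radians.
    """
    k0 = 2 * math.pi / wavelength_um         # propagation constant in free space
    k_m = n_m * k0                           # propagation constant in the medium
    k_perp = k0 * rho_um / f_um              # transverse wavevector set by the BFP position
    if k_perp >= k_m:
        raise ValueError("k_perp must be smaller than k_m for a propagating wave")
    k_z = math.sqrt(k_m**2 - k_perp**2)      # axial wavenumber from the dispersion relation
    theta = math.asin(k_perp / k_m)          # propagation angle in the medium
    return k_perp, k_z, theta

# Edge of an NA = 1.0 pupil for an assumed f = 3 mm objective in water (n_m = 1.33).
k_perp, k_z, theta = incident_wavevector(3000.0, 3000.0, 0.633, 1.33)
```

Setting $\rho = f\,\mathrm{NA}$ places the point at the edge of the aperture, where $k_\perp = k_0\,\mathrm{NA}$ is the largest transverse wavevector passed by the objective.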

2.2 Solving the backward scattered field

The sample scattered field can be described by the inhomogeneous wave equation [14]:

$$\nabla^2 U_s(\mathbf{r}) + k_m^2\,U_s(\mathbf{r}) = -F(\mathbf{r})\,U(\mathbf{r}), \tag{3}$$

where $U(\mathbf{r})$ is the total driving field, which consists of both the incident and the scattered fields, $U = U_i + U_s$. Under the first-order Born approximation, $U \approx U_i$, which allows us to solve for the backward scattered field in the $z > 0$ sample space, $U_s(\mathbf{k}'_\perp, z;\mathbf{k}_\perp,\Delta z)$, for different sample focal displacements $\Delta z$ as (see the Appendix for the derivation)

| (4) |

where $\mathbf{k}'_\perp$ is the Fourier transform variable with respect to $\mathbf{r}_\perp$ and $q = \sqrt{k_m^2 - |\mathbf{k}'_\perp|^2}$ is the axial projection of the scattered wavevector ($\mathbf{k}'_\perp$ and $q$ have units of m$^{-1}$). Note that, for simplicity, we use the same symbol for a physical quantity in different spaces throughout this paper and identify the representation by its variables. For example, a quantity written as a function of $\mathbf{k}$ in Eq. (4) is in the 3D Fourier transform space. The imaging condition ensures that the field at $z = 0$ (defined at the sample surface) is conjugate to the camera detector plane, $z_d = 0$. Therefore,

| (5) |

where $\mathbf{k}_{d\perp}$ is the Fourier transform variable of the transverse detector coordinate $\mathbf{r}_{d\perp}$; the sample and detector coordinates are related through a magnification $M$, i.e., $\mathbf{r}_{d\perp} = M\mathbf{r}_\perp$ and $\mathbf{k}_{d\perp} = \mathbf{k}'_\perp/M$. In Eq. (5), the aperture function $P(\mathbf{k}'_\perp)$ has been introduced, which defines the spatial-frequency bandwidth limited by the objective numerical aperture. Next, the scattered field solution is used to calculate the cross-correlation function and, from it, the system response function.

3. System response function

In this section, we calculate the axial response function of reflection-mode DSIM. First, we calculate the scattered field from a thin step phase object. Then, we calculate the cross-correlation function by considering the speckle statistics.

3.1 Thin step object response

A homogeneous thin object, as illustrated in Fig. 2, is used as the sample to calculate the axial response function. The object varies only along $z$: it is laterally infinite and has an axial width $z_o$. Its scattering potential can therefore be described by a rectangle function in $z$, $\mathrm{rect}(z/z_o)$ (the constant part of the scattering potential has been ignored, as it does not contribute to the axial response calculation). The 3D Fourier transform of this scattering potential is

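$$F(\mathbf{k}) = (2\pi)^2 F_0\, z_o\,\delta(\mathbf{k}_\perp)\,\frac{\sin(k_z z_o/2)}{k_z z_o/2}, \tag{6}$$

where $F_0$ is the constant scattering-potential contrast of the slab and the forward transform is taken as $F(\mathbf{k}) = \int F(\mathbf{r})\,e^{-i\mathbf{k}\cdot\mathbf{r}}\,d^3r$.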

Substituting this expression into Eq. (4), we obtain the backward scattered field in the sample space,

| (7) |

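Next, we take a 2D inverse Fourier transform of Eq. (7) over $\mathbf{k}'_\perp$. This Fourier integral can be evaluated directly using the sifting property of the delta function, i.e., $\int f(\mathbf{k}'_\perp)\,\delta(\mathbf{k}'_\perp - \mathbf{k}_\perp)\,d^2k'_\perp = f(\mathbf{k}_\perp)$. Hence,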

| (8) |

The dispersion relation of the incident field makes $q = \sqrt{k_m^2 - |\mathbf{k}_\perp|^2} = k_z$. Then, Eq. (8) simplifies to

| (9) |

If $z_o$ is very small, such that $k_z z_o \ll 1$, the sinc-type factor in Eq. (9) approaches unity and the scattered field becomes

| (10) |
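The sinc factor produced by the rectangle function, and its approach to unity for a thin slab, can be checked numerically. This is a minimal sketch assuming the forward transform $\int f(z)\,e^{-ik_z z}\,dz$ and the normalized $\mathrm{sinc}(x) = \sin(\pi x)/(\pi x)$; the helper names are hypothetical. In the backscattering geometry the slab is probed at the axial frequency $2k_z$ (incident plus returning wave), so the relevant factor is $z_o\,\mathrm{sinc}(k_z z_o/\pi)$, which tends to $z_o$ as $k_z z_o \to 0$.

```python
import cmath
import math

def sinc(x):
    """Normalized sinc, sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def rect_transform_numeric(kz, z_o, n=20000):
    """Midpoint-rule estimate of the integral of exp(-i kz z) over |z| <= z_o / 2."""
    step = z_o / n
    total = 0j
    for j in range(n):
        z = -z_o / 2 + (j + 0.5) * step        # midpoint of the j-th subinterval
        total += cmath.exp(-1j * kz * z) * step
    return total

def rect_transform_analytic(kz, z_o):
    """Closed form of the same integral: z_o * sinc(kz * z_o / (2 pi))."""
    return z_o * sinc(kz * z_o / (2 * math.pi))

def backscatter_factor(kz, z_o):
    """Slab factor probed at the axial frequency 2*kz, normalized by z_o."""
    return rect_transform_analytic(2 * kz, z_o) / z_o
```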

At the detector plane, we have

| (11) |

Equation (11) is the backward scattered field solution, where the phase term, proportional to $2k_z\Delta z$, reflects the double pass of the field through the sample. Interestingly, in transmission-mode operation the forward scattered field has no $\Delta z$-dependent phase term, indicating that it cannot provide a sectioning effect for flat objects (see the Discussion for details).
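The double-pass phase can be reproduced with a one-dimensional first-order Born calculation. The sketch below assumes an incident wave $e^{-ik_z z}$ travelling toward $-z$, the outgoing 1D Green's function $(i/2k_z)\,e^{ik_z|z - z'|}$, a slab of constant potential `F0` centred at $\Delta z$, and evaluation of the upward-going wave at $z = 0$. The function name and the numbers in the test are hypothetical, and constant factors may differ from those used in the text.

```python
import cmath

def born_backscatter_1d(kz, F0, z_o, dz_defocus, n=4000):
    """First-order Born backscattered amplitude of a thin slab, evaluated at z = 0.

    Assumptions (hypothetical sketch): incident wave exp(-i kz z) travelling toward -z,
    outgoing 1D Green's function (i / (2 kz)) exp(i kz |z - z'|), and a slab with
    constant potential F0 occupying |z - dz_defocus| <= z_o / 2. The upward-going
    wave continued to z = 0 is (i / (2 kz)) * integral F(z') exp(-2i kz z') dz'.
    """
    step = z_o / n
    acc = 0j
    for j in range(n):
        zp = dz_defocus - z_o / 2 + (j + 0.5) * step   # midpoint inside the slab
        acc += F0 * cmath.exp(-2j * kz * zp) * step     # incident phase x return-path phase
    return 1j / (2 * kz) * acc
```

Shifting the slab by $\Delta z$ multiplies the result by $e^{-2ik_z\Delta z}$, and for a thin slab the magnitude approaches $F_0 z_o/(2k_z)$; the $1/k_z$ factor is what later turns the pupil integral into a pure sinc envelope.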

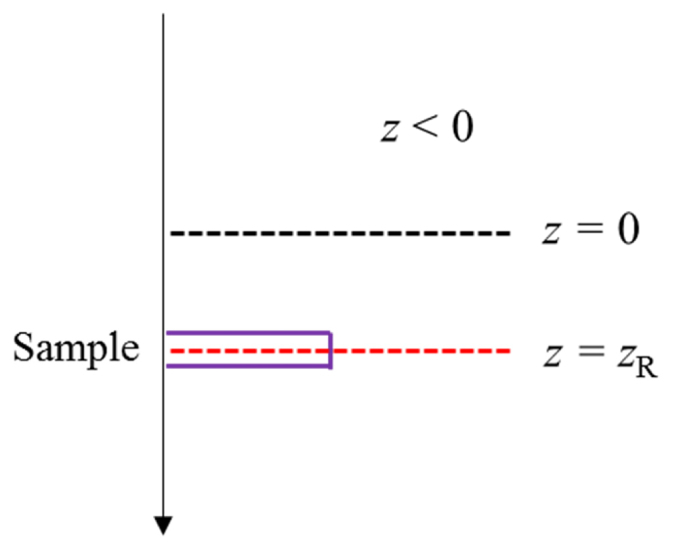

Fig. 2.

Illustration of a thin step phase object, defined by a rectangle function.

3.2 Speckle-field statistics

Next, we calculate the correlation function, taking the speckle-field statistics into account. The speckle-field angular spectrum $A(\mathbf{k}_\perp)$ is a complex function, which can be written as

| (12) |

where $N^2$ is the number of independent scattering areas. The amplitude and phase distributions have the following statistical properties (see Goodman [34]): the amplitude and phase of each elementary component are statistically independent of each other and of those of all other components (i.e., the scattering area elements are unrelated, and the strength of a given scattered component bears no relation to its phase); and the phases are uniformly distributed over the primary interval $(-\pi, \pi)$. The detector measures the real part of the cross-correlation function between the sample backward scattered field and the reference field, i.e., $\mathrm{Re}\{\Gamma(\mathbf{r}_d;\Delta z)\}$, which includes the contributions of all speckle wavevectors weighted by the distribution function. Therefore, $\Gamma$ is a summation of all possible individual correlation pairs $U_s(\mathbf{r}_d;\mathbf{k}_\perp,\Delta z)\,U_r^*(\mathbf{r}_d;\mathbf{k}'_\perp)$,

| (13) |

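In the above equation, we have changed the notation of $\mathbf{k}_\perp$ and $\mathbf{k}'_\perp$ to $\mathbf{k}_{\perp m}$ and $\mathbf{k}_{\perp n}$ to make the mathematical operations clearer. With the solutions for $U_r$ and $U_s$ given in Eq. (2) and Eq. (11), we can write down the exact form of $\Gamma$, that is,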

| (14) |

where . Using trigonometric identities, we can write as

| (15) |

Furthermore, $\mathrm{Re}\{\Gamma\}$ can be broken into two parts: the matched speckle terms ($m = n$) and the unmatched terms ($m \neq n$). For $m \neq n$, the phases of the two components are independent and uniformly distributed, so their difference, taken modulo $2\pi$, is also uniformly distributed (the unwrapped difference has a triangular distribution over $(-2\pi, 2\pi)$). Because the rotating diffuser produces uncorrelated speckle patterns, taking the ensemble average over many patterns reduces the unmatched correlation terms to zero. Therefore, only the matched terms survive, leaving

| (16) |

where $\langle\cdot\rangle$ denotes the ensemble average over M speckle realizations. When M is very large, this ensemble average yields a smooth distribution $S(\mathbf{k}_\perp) = \langle|A(\mathbf{k}_\perp)|^2\rangle$, also called the original speckle spectral distribution. Note that $S(\mathbf{k}_\perp)$ is also band-limited by the objective aperture function $P(\mathbf{k}_\perp)$, since the illumination and imaging paths share the same objective lens in the reflection DSIM system. For this reason, the product of $S(\mathbf{k}_\perp)$ and the aperture function is replaced by $S(\mathbf{k}_\perp)$ alone in Eq. (16).
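The cancellation of the unmatched terms can be illustrated with a small Monte Carlo experiment. This is a minimal sketch assuming fixed amplitudes, phases drawn independently and uniformly from $(-\pi, \pi)$ in each realization (one realization per diffuser position), and an arbitrary matrix `coupling` standing in for the deterministic part of each correlation pair; all names are hypothetical.

```python
import cmath
import math
import random

def ensemble_average(amps, coupling, n_realizations, seed=0):
    """Monte Carlo average of sum over (m, p) of A_m * conj(A_p) * coupling[m][p].

    Hypothetical sketch: amplitudes are held fixed, and in every realization (one
    diffuser position) each phase is drawn independently from U(-pi, pi).
    coupling[m][p] stands in for the deterministic part of each correlation pair.
    """
    rng = random.Random(seed)
    size = len(amps)
    acc = 0j
    for _ in range(n_realizations):
        a = [amps[m] * cmath.exp(1j * rng.uniform(-math.pi, math.pi)) for m in range(size)]
        acc += sum(a[m] * a[p].conjugate() * coupling[m][p]
                   for m in range(size) for p in range(size))
    return acc / n_realizations

def matched_only(amps, coupling):
    """Prediction from the speckle statistics: only the matched (m == p) terms survive."""
    return sum(abs(amps[m]) ** 2 * coupling[m][m] for m in range(len(amps)))
```

As the number of realizations grows, `ensemble_average` converges toward `matched_only`, with residual fluctuations shrinking roughly as one over the square root of the number of realizations.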

3.3 The axial response function

To calculate the axial response function, the expression for $\mathrm{Re}\{\Gamma\}$ in Eq. (16) is converted into an integral form:

| (17) |

For the best depth selectivity, $S(\mathbf{k}_\perp)$ should be uniform within the objective back aperture. There are many ways to achieve this, such as magnifying the distribution with an additional 4f system [25]. For the best sectioning effect, $S(\mathbf{k}_\perp)$ is assumed to be uniform and $P(\mathbf{k}_\perp)$ is assumed to be a circular disk function. Thus, $S(\mathbf{k}_\perp)$ is also a circular disk function with the same distribution as $P(\mathbf{k}_\perp)$; the radius of the disk is determined by the numerical aperture of the objective. By writing $\mathbf{k}_\perp = (k_\perp\cos\varphi, k_\perp\sin\varphi)$, the integral in Eq. (17) is converted into polar coordinates:

| (18) |

The range of $k_\perp = |\mathbf{k}_\perp|$ is limited by the objective numerical aperture, from 0 to $k_0\,\mathrm{NA}$. The integral over $\varphi$ can be carried out directly, because the integrand is circularly symmetric (it contributes a factor of $2\pi$), giving

| (19) |

With the change of variables $k_z = \sqrt{k_m^2 - k_\perp^2}$, for which $k_\perp\,dk_\perp = -k_z\,dk_z$, Eq. (19) becomes

| (20) |

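The above integral can be evaluated analytically, giving

$$\Gamma(\Delta z) \propto \left(k_m - k_{z,\min}\right)\exp\left[-i\left(k_m + k_{z,\min}\right)\Delta z\right]\mathrm{sinc}\left[\frac{\left(k_m - k_{z,\min}\right)\Delta z}{\pi}\right], \tag{21}$$

where $k_{z,\min} = \sqrt{k_m^2 - k_0^2\,\mathrm{NA}^2}$ is the smallest axial wavenumber admitted by the objective aperture, and the sinc function is defined as $\mathrm{sinc}(x) = \sin(\pi x)/(\pi x)$.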

The above framework establishes a mathematical model of the axial response of the reflection-mode DSIM system: given the objective numerical aperture, the illumination wavelength, and the refractive index of the medium, we can compute $\mathrm{Re}\{\Gamma\}$ as a function of $\Delta z$ to obtain the axial response function of DSIM, from which the depth resolution can then be determined.
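The reduction from the 2D pupil integral to a closed form can be verified numerically. The sketch below assumes a uniformly filled circular pupil, a per-component weight $1/k_z$ (the factor a Green's-function solution of the Helmholtz equation contributes; treated here as an assumption, with all constant prefactors dropped), and the phase convention $e^{-2ik_z\Delta z}$. It compares a midpoint-rule evaluation of $2\pi\int_0^{k_0\mathrm{NA}} e^{-2ik_z\Delta z}\,k_\perp\,dk_\perp/k_z$ with the closed form $2\pi w\,e^{-i(k_m + k_{z,\min})\Delta z}\,\sin(w\Delta z)/(w\Delta z)$, where $w = k_m - k_{z,\min}$. Function names and the parameter values in the test are illustrative only.

```python
import cmath
import math

def axial_response_numeric(dz, wavelength, n_m, na, n=20000):
    """Midpoint-rule pupil integral: 2*pi * int exp(-2i kz dz) / kz * k_perp dk_perp."""
    k0 = 2 * math.pi / wavelength
    k_m = n_m * k0
    h = k0 * na / n                              # step in k_perp up to k0 * NA
    acc = 0j
    for j in range(n):
        kp = (j + 0.5) * h                       # midpoint in k_perp
        kz = math.sqrt(k_m**2 - kp**2)           # dispersion relation
        acc += cmath.exp(-2j * kz * dz) / kz * kp * h
    return 2 * math.pi * acc

def axial_response_analytic(dz, wavelength, n_m, na):
    """Closed form after k_perp dk_perp = -kz dkz (same normalization as above)."""
    k0 = 2 * math.pi / wavelength
    k_m = n_m * k0
    kz_min = math.sqrt(k_m**2 - (k0 * na) ** 2)  # smallest axial wavenumber in the pupil
    w = k_m - kz_min
    x = w * dz                                   # envelope argument in radians
    envelope = 1.0 if x == 0 else math.sin(x) / x
    return 2 * math.pi * w * cmath.exp(-1j * (k_m + kz_min) * dz) * envelope
```

The real part of either result traces an oscillating axial response whose envelope is the sinc factor, which is the quantity used to define the depth resolution.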

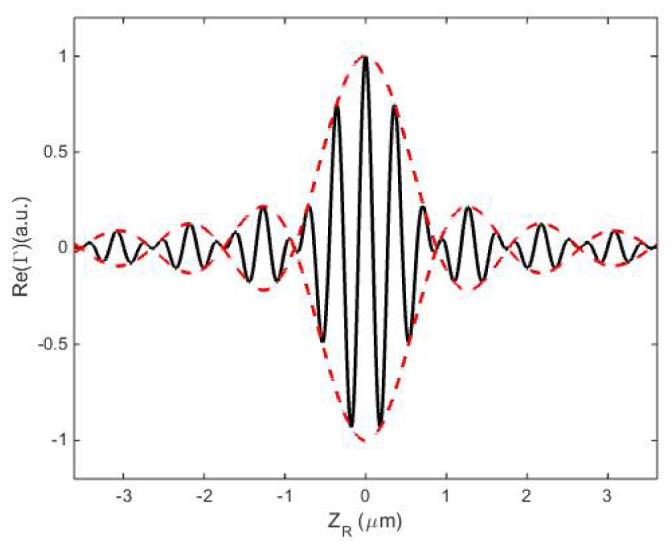

4. Depth resolution

In this section, the axial response model is evaluated with the specifications of an experimental system and then used to quantify the sectioning effect in terms of depth resolution. We use the parameters of our previous experiment [25], which also provides a way to validate the theoretical model. In that reflection-mode DSIM system, the sample host medium is water and two water-immersion objectives are used; inserting the laser wavelength, the refractive index of water, and the objective NA of that system into Eq. (21), we obtain the axial response function shown in Fig. 3. The solid black curve is the axial response function, i.e., $\mathrm{Re}\{\Gamma\}$ versus the defocus position $\Delta z$, and the dashed red curve is its envelope, from which the position of the first zero and the half-maximum point are determined. The depth resolution is defined as the full width at half maximum (FWHM) of the envelope, which is 1.06 μm, in good agreement with our previous study [25].

Fig. 3.

Axial response function for the experimental parameters of [25], obtained by calculating $\mathrm{Re}\{\Gamma\}$ at different defocus positions $\Delta z$.
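For a sinc-shaped envelope, the first zero and the FWHM follow in closed form. As a worked step, assuming the envelope is $\sin(w\Delta z)/(w\Delta z)$ with $w = k_m - k_{z,\min}$ (as obtained from a uniformly filled pupil with a $1/k_z$ weight; an assumption of this sketch):

```latex
w = k_m - k_{z,\min} = k_0\left(n_m - \sqrt{n_m^2 - \mathrm{NA}^2}\right), \qquad k_0 = \frac{2\pi}{\lambda}

\Delta z_0 = \frac{\pi}{w} = \frac{\lambda}{2\left(n_m - \sqrt{n_m^2 - \mathrm{NA}^2}\right)}
\qquad \text{(first zero of the envelope)}

\mathrm{FWHM} = \frac{2x_{1/2}}{w} \approx 1.207\,\Delta z_0,
\qquad \frac{\sin x_{1/2}}{x_{1/2}} = \frac{1}{2} \;\Rightarrow\; x_{1/2} \approx 1.8955

\mathrm{NA} \ll n_m:\quad n_m - \sqrt{n_m^2 - \mathrm{NA}^2} \approx \frac{\mathrm{NA}^2}{2n_m}
\;\Rightarrow\; \mathrm{FWHM} \approx 1.207\,\frac{n_m\lambda}{\mathrm{NA}^2}
```

The last line shows where an inverse-square dependence on NA comes from in this envelope.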

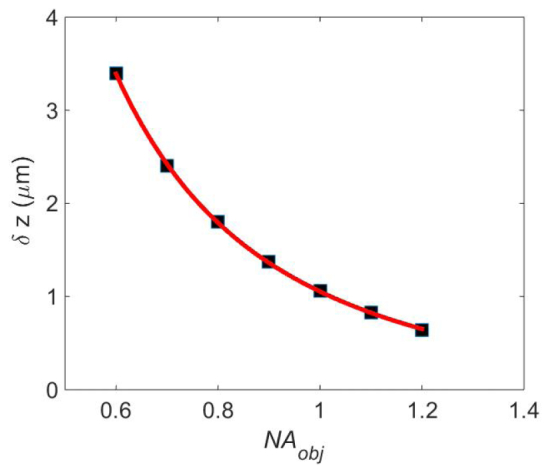

Next, we study the relationship between the depth resolution and the objective numerical aperture. Depth resolution values are obtained for NA ranging from 0.6 to 1.2 in steps of 0.1. In Fig. 4, the depth resolution is plotted as a function of NA (black squares). Curve fitting shows a $1/\mathrm{NA}^2$ trend over the plotted data range; the fit is shown as the solid red line. This result is expected, as the depth resolution of coherent microscopes typically scales as $1/\mathrm{NA}^2$. Therefore, in a reflection-mode DSIM system, the higher the numerical aperture, the better the depth resolution. According to this calculation, a depth resolution as small as 0.65 μm can be achieved at NA = 1.2. Note that achieving the best depth resolution requires a uniform speckle spectral distribution over the whole back aperture of the high-NA objective.

Fig. 4.

Relationship between depth resolution (vertical axis) and objective numerical aperture (horizontal axis).
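The NA sweep can be sketched with a closed-form envelope. This minimal sketch assumes the envelope $\sin(w\Delta z)/(w\Delta z)$ with $w = k_0\left(n_m - \sqrt{n_m^2 - \mathrm{NA}^2}\right)$ and uses illustrative values $\lambda = 0.633$ μm and $n_m = 1.33$ (assumptions, not necessarily the parameters of [25]); it fits a power law $\mathrm{FWHM} \propto \mathrm{NA}^p$ by least squares in log-log coordinates. Because $n_m - \sqrt{n_m^2 - \mathrm{NA}^2} \approx \mathrm{NA}^2/(2n_m)$ only for $\mathrm{NA} \ll n_m$, the local exponent grows in magnitude as NA approaches $n_m$, so the $1/\mathrm{NA}^2$ law is best read as a trend over the plotted range.

```python
import math

def half_max_x():
    """Root of sin(x)/x = 1/2 on (0, pi), found by bisection (sin(x)/x decreases there)."""
    lo, hi = 1e-9, math.pi
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if math.sin(mid) / mid > 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def fwhm(wavelength, n_m, na):
    """FWHM of the envelope sin(w dz)/(w dz), with w = k0 * (n_m - sqrt(n_m**2 - NA**2))."""
    k0 = 2 * math.pi / wavelength
    w = k0 * (n_m - math.sqrt(n_m**2 - na**2))
    return 2 * half_max_x() / w

def power_law_exponent(xs, ys):
    """Least-squares slope p of log(y) versus log(x), i.e. y ~ C * x**p."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    num = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    den = sum((a - mx) ** 2 for a in lx)
    return num / den

# NA from 0.6 to 1.2 in steps of 0.1; wavelength (um) and n_m are illustrative values.
nas = [round(0.6 + 0.1 * i, 1) for i in range(7)]
widths = [fwhm(0.633, 1.33, na) for na in nas]
exponent = power_law_exponent(nas, widths)
```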

5. Discussion

We have developed a physical model that describes the sectioning effect in a reflection-mode interferometric system with speckle-field illumination. The sectioning effect arises from the spatially incoherent illumination. A broadband source could also be used in such a system to further enhance the depth selectivity; however, it is not yet clear how much enhancement this would provide. In principle, this theoretical framework can be extended to incorporate temporal coherence to answer this question. We note that frameworks considering temporal coherence have been reported for the transmission case [31,32]. Another important question is whether transmission-mode DSIM can provide a sectioning effect. To answer this, we calculate the forward scattered field using Eq. (33b) in the Appendix for the same step object described in Section 3. The field is given as

| (22) |

Similarly, we can Fourier transform the field into the spatial domain representation,

| (23) |

The dispersion relation dictates that $q = k_z$, making

| (24) |

The detector plane sees the field at z = 0 plane as

| (25) |

It turns out that the forward scattered field in Eq. (25) is not a function of $\Delta z$ and thus provides no sectioning effect. Note that this calculation assumes flat, thin objects with no lateral structure; for objects with lateral features, sectioning does occur, as was demonstrated in [36]. The missing axial-frequency information in the low transverse-frequency region is known as the “missing cone” problem in 3D optical imaging. A subsequent paper will discuss this issue in more detail by calculating the 3D CTF of both reflection- and transmission-mode DSIM systems.
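The contrast between the two geometries can be traced to the phase bookkeeping of a single plane-wave component. This sketch assumes an incident wave $e^{-ik_z z}$, a thin slab at axial position $\Delta z$, and propagation from the slab to an observation plane $z_{\mathrm{obs}}$ as $e^{+ik_z(z_{\mathrm{obs}} - \Delta z)}$ for the backward (upward) wave and $e^{-ik_z(z_{\mathrm{obs}} - \Delta z)}$ for the forward (downward) wave. The total phase is then $k_z z_{\mathrm{obs}} - 2k_z\Delta z$ in reflection but $-k_z z_{\mathrm{obs}}$ in transmission, so only the reflected component carries $\Delta z$. The helper names, the $1/k_z$ weight, and the numbers in the test are illustrative assumptions.

```python
import cmath
import math

def slab_phase(kz, dz, z_obs, backward):
    """Phase of one plane-wave component scattered by a thin slab at axial position dz."""
    incident = -kz * dz                                     # exp(-i kz z) at the slab
    if backward:
        propagation = kz * (z_obs - dz)                     # upward wave toward z_obs
    else:
        propagation = -kz * (z_obs - dz)                    # downward wave toward z_obs
    return incident + propagation

def pupil_response(dz, wavelength, n_m, na, backward, z_obs=0.0, n=2000):
    """Sum the slab response over a uniformly filled circular pupil (1/kz weight, constants dropped)."""
    k0 = 2 * math.pi / wavelength
    k_m = n_m * k0
    h = k0 * na / n
    acc = 0j
    for j in range(n):
        kp = (j + 0.5) * h                                  # midpoint in k_perp
        kz = math.sqrt(k_m**2 - kp**2)                      # dispersion relation
        acc += cmath.exp(1j * slab_phase(kz, dz, z_obs, backward)) / kz * kp * h
    return 2 * math.pi * acc
```

Moving the slab changes the reflected sum (its magnitude follows the sinc envelope), whereas the transmitted sum at a fixed plane below the slab is unchanged.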

6. Summary

In conclusion, we have developed a mathematical model that describes the axial response function of reflection-mode dynamic speckle-field interferometric microscopy. The model is based on diffraction tomography theory and speckle statistics and yields a spatial correlation function, from which the axial response function is obtained and used to determine the depth resolution. The theoretically calculated depth resolution is in excellent agreement with our experimental results. Using this method, we also studied the relationship between depth resolution and objective numerical aperture, which follows the expected inverse-square law. We envision that the developed physical model will contribute to the understanding of the sectioning effect in interferometric systems with spatially incoherent illumination, and that it can guide the design of such systems for better performance in the future.

Appendix: optical diffraction tomography

We start with the inhomogeneous wave equation that describes the scattered field [31,32]:

$$\nabla^2 U_s(\mathbf{r}) + k_m^2\,U_s(\mathbf{r}) = -F(\mathbf{r})\,U(\mathbf{r}), \tag{26}$$

where $U(\mathbf{r})$ is approximated as $U_i(\mathbf{r})$. Equation (26) can be solved in $\mathbf{k}$ space (the spatial spectrum space) by taking the 3D Fourier transform of both sides, namely

| (27) |

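where $\mathbf{k} = (\mathbf{k}'_\perp, k_z)$ is the Fourier transform variable of $\mathbf{r}$. Assuming the object is centered at $z = \Delta z$, i.e., $F(\mathbf{r}) \rightarrow F(\mathbf{r} - \Delta z\,\hat{\mathbf{z}})$, the above equation can be revised to the following form to incorporate this sample shift: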

| (28) |

By re-arranging Eq. (28), the scattered field is solved as

| (29) |

where $q = \sqrt{k_m^2 - |\mathbf{k}'_\perp|^2}$ (note that $q$ is not $k_z$, because the homogeneous dispersion relation does not apply to the scattered field [35]). The two fractional terms in Eq. (29), $1/(k_z + q)$ and $1/(k_z - q)$, correspond to the forward and backward scattered fields, respectively. This can be seen by performing an inverse Fourier transform of Eq. (29) over $k_z$. The inverse Fourier transform of $1/k_z$ gives, up to a constant factor, a sign function, $\mathrm{sgn}(z)$ (which can also be written as $1 - 2H(-z)$, where $H(z)$ is the Heaviside function). We can therefore write

| (30) |
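The direction assignment of the two fractional terms can be made explicit. As a worked step, assuming the factor multiplying the source term in Eq. (29) is $1/(k_z^2 - q^2)$, which follows from Eq. (26) with $\partial/\partial z \rightarrow ik_z$, and the inverse transform $f(z) = (1/2\pi)\int \tilde f(k_z)\,e^{ik_z z}\,dk_z$ taken as a principal value:

```latex
\frac{1}{k_z^2 - q^2} = \frac{1}{2q}\left(\frac{1}{k_z - q} - \frac{1}{k_z + q}\right)

\frac{1}{2\pi}\,\mathrm{P.V.}\!\int_{-\infty}^{\infty} \frac{e^{i k_z z}}{k_z \mp q}\,dk_z
= \frac{i}{2}\,\mathrm{sgn}(z)\,e^{\pm i q z}
```

For $z > 0$ the $1/(k_z - q)$ term gives a wave $e^{iqz}$ travelling toward $+z$, and for $z < 0$ the $1/(k_z + q)$ term gives $e^{-iqz}$ travelling toward $-z$. With the illumination arriving from the $z > 0$ side, the first is the backward-scattered and the second the forward-scattered contribution, consistent with the assignment stated above.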

For backward scattering, we consider the term. The backward scattered field, denoted as has the following form

| (31a) |

For the forward scattering, we consider the term that results in a forward scattered field, denoted as in the form of

| (31b) |

Next, we write the convolution in z in Eq. (31a) as an integral

| (32) |

where The above integral is a Fourier transform over z that turns back to the 3D Fourier transform domain with a phase shift which is in addition to the phase term giving:

| (33a) |

Following the same derivation, we can write the forward scattered field as:

| (33b) |

Funding

US National Institutes of Health (NIH) grants 9P41EB015871-26A1 and 1R01HL121386-01A1; Hamamatsu Corp.; and the National Research Foundation Singapore through the Singapore-MIT Alliance for Research and Technology’s BioSystems and Micromechanics Inter-Disciplinary Research program.

References and links

1. Agard D. A., “Optical sectioning microscopy: cellular architecture in three dimensions,” Annu. Rev. Biophys. Bioeng. 13(1), 191–219 (1984). 10.1146/annurev.bb.13.060184.001203

2. Conchello J. A., Lichtman J. W., “Optical sectioning microscopy,” Nat. Methods 2(12), 920–931 (2005). 10.1038/nmeth815

3. Keller P. J., Pampaloni F., Stelzer E. H. K., “Life sciences require the third dimension,” Curr. Opin. Cell Biol. 18(1), 117–124 (2006). 10.1016/j.ceb.2005.12.012

4. Minsky M., “Memoir on inventing the confocal scanning microscope,” Scanning 10(4), 128–138 (1988). 10.1002/sca.4950100403

5. Wilson T., “Optical sectioning in confocal fluorescent microscopes,” J. Microsc-Oxford 154(2), 143–156 (1989). 10.1111/j.1365-2818.1989.tb00577.x

6. Neil M. A. A., Juskaitis R., Wilson T., “Method of obtaining optical sectioning by using structured light in a conventional microscope,” Opt. Lett. 22(24), 1905–1907 (1997). 10.1364/OL.22.001905

7. Gustafsson M. G. L., Shao L., Carlton P. M., Wang C. J. R., Golubovskaya I. N., Cande W. Z., Agard D. A., Sedat J. W., “Three-dimensional resolution doubling in wide-field fluorescence microscopy by structured illumination,” Biophys. J. 94(12), 4957–4970 (2008). 10.1529/biophysj.107.120345

8. So P. T. C., Dong C. Y., Masters B. R., Berland K. M., “Two-photon excitation fluorescence microscopy,” Annu. Rev. Biomed. Eng. 2(1), 399–429 (2000). 10.1146/annurev.bioeng.2.1.399

9. Denk W., Strickler J. H., Webb W. W., “Two-photon laser scanning fluorescence microscopy,” Science 248(4951), 73–76 (1990). 10.1126/science.2321027

10. Dodt H. U., Leischner U., Schierloh A., Jährling N., Mauch C. P., Deininger K., Deussing J. M., Eder M., Zieglgänsberger W., Becker K., “Ultramicroscopy: three-dimensional visualization of neuronal networks in the whole mouse brain,” Nat. Methods 4(4), 331–336 (2007). 10.1038/nmeth1036

11. Huisken J., Swoger J., Del Bene F., Wittbrodt J., Stelzer E. H. K., “Optical sectioning deep inside live embryos by selective plane illumination microscopy,” Science 305(5686), 1007–1009 (2004). 10.1126/science.1100035

12. Huang D., Swanson E. A., Lin C. P., Schuman J. S., Stinson W. G., Chang W., Hee M. R., Flotte T., Gregory K., Puliafito C. A., Fujimoto J. G., “Optical coherence tomography,” Science 254(5035), 1178–1181 (1991). 10.1126/science.1957169

13. Fercher A. F., Drexler W., Hitzenberger C. K., Lasser T., “Optical coherence tomography - principles and applications,” Rep. Prog. Phys. 66(2), 239–303 (2003). 10.1088/0034-4885/66/2/204

14. Wolf E., “Three-dimensional structure determination of semi-transparent objects from holographic data,” Opt. Commun. 1(4), 153–156 (1969). 10.1016/0030-4018(69)90052-2

15. Choi W., Fang-Yen C., Badizadegan K., Oh S., Lue N., Dasari R. R., Feld M. S., “Tomographic phase microscopy,” Nat. Methods 4(9), 717–719 (2007). 10.1038/nmeth1078

16. Sheppard C. J. R., Gu M., Mao X. Q., “Three-dimensional coherent transfer-function in a reflection-mode confocal scanning microscope,” Opt. Commun. 81(5), 281–284 (1991). 10.1016/0030-4018(91)90616-L

17. Gu M., Principles of Three Dimensional Imaging in Confocal Microscopes (World Scientific, Singapore; River Edge, NJ, 1996).

18. Sommargren G. E., “Optical heterodyne profilometry,” Appl. Opt. 20(4), 610–618 (1981). 10.1364/AO.20.000610

19. Creath K., “Phase-measurement interferometry techniques for nondestructive testing,” Moire Techniques, Holographic Interferometry, Optical NDT, and Applications to Fluid Mechanics 1554, 701–707 (1991).

20. Bhaduri B., Edwards C., Pham H., Zhou R., Nguyen T. H., Goddard L. L., Popescu G., “Diffraction phase microscopy: principles and applications in materials and life sciences,” Adv. Opt. Photonics 6(1), 57–119 (2014). 10.1364/AOP.6.000057

21. Hosseini P., Zhou R., Kim Y. H., Peres C., Diaspro A., Kuang C., Yaqoob Z., So P. T. C., “Pushing phase and amplitude sensitivity limits in interferometric microscopy,” Opt. Lett. 41(7), 1656–1659 (2016). 10.1364/OL.41.001656

22. Cuche E., Marquet P., Depeursinge C., “Simultaneous amplitude-contrast and quantitative phase-contrast microscopy by numerical reconstruction of Fresnel off-axis holograms,” Appl. Opt. 38(34), 6994–7001 (1999). 10.1364/AO.38.006994

23. Somekh M. G., See C. W., Goh J., “Wide field amplitude and phase confocal microscope with speckle illumination,” Opt. Commun. 174(1-4), 75–80 (2000). 10.1016/S0030-4018(99)00657-4

24. Redding B., Bromberg Y., Choma M. A., Cao H., “Full-field interferometric confocal microscopy using a VCSEL array,” Opt. Lett. 39(15), 4446–4449 (2014). 10.1364/OL.39.004446

25. Choi Y., Hosseini P., Choi W., Dasari R. R., So P. T. C., Yaqoob Z., “Dynamic speckle illumination wide-field reflection phase microscopy,” Opt. Lett. 39(20), 6062–6065 (2014). 10.1364/OL.39.006062

26. Agard D. A., Hiraoka Y., Shaw P., Sedat J. W., “Fluorescence microscopy in three dimensions,” Methods Cell Biol. 30, 353–377 (1989). 10.1016/S0091-679X(08)60986-3

27. Sung Y., Choi W., Lue N., Dasari R. R., Yaqoob Z., “Stain-free quantification of chromosomes in live cells using regularized tomographic phase microscopy,” PLoS One 7(11), e49502 (2012). 10.1371/journal.pone.0049502

28. Kou S. S., Sheppard C. J. R., “Image formation in holographic tomography: high-aperture imaging conditions,” Appl. Opt. 48(34), H168–H175 (2009). 10.1364/AO.48.00H168

29. Ralston T. S., Marks D. L., Carney P. S., Boppart S. A., “Interferometric synthetic aperture microscopy,” Nat. Phys. 3(2), 129–134 (2007). 10.1038/nphys514

30. Shemonski N. D., South F. A., Liu Y. Z., Adie S. G., Carney P. S., Boppart S. A., “Computational high-resolution optical imaging of the living human retina,” Nat. Photonics 9(7), 440–443 (2015). 10.1038/nphoton.2015.102

31. Kim T., Zhou R., Mir M., Babacan S. D., Carney P. S., Goddard L. L., Popescu G., “White-light diffraction tomography of unlabelled live cells,” Nat. Photonics 8(3), 256–263 (2014). 10.1038/nphoton.2013.350

32. Zhou R., Kim T., Goddard L. L., Popescu G., “Inverse scattering solutions using low-coherence light,” Opt. Lett. 39(15), 4494–4497 (2014). 10.1364/OL.39.004494

33. Kim T., Zhou R. J., Goddard L. L., Popescu G., “Solving inverse scattering problems in biological samples by quantitative phase imaging,” Laser Photonics Rev. 10(1), 13–39 (2016). 10.1002/lpor.201400467

34. Goodman J. W., “Statistical properties of laser speckle patterns,” in Laser Speckle and Related Phenomena (Springer, 1975), pp. 9–75.

35. Shan M., Nastasa V., Popescu G., “Statistical dispersion relation for spatially broadband fields,” Opt. Lett. 41(11), 2490–2492 (2016). 10.1364/OL.41.002490

36. Choi Y., Yang T. D., Lee K. J., Choi W., “Full-field and single-shot quantitative phase microscopy using dynamic speckle illumination,” Opt. Lett. 36(13), 2465–2467 (2011). 10.1364/OL.36.002465