Significance

Rapid growth in sensor and recording technologies is spurring rapid growth in time series data. Nonstationary and oscillatory structure in time series is commonly analyzed using time-varying spectral methods. These widely used techniques lack a statistical inference framework applicable to the entire time series. We develop a state-space multitaper (SS-MT) framework for time-varying spectral analysis of nonstationary time series. We efficiently implement the SS-MT spectrogram estimation algorithm in the frequency domain as parallel 1D complex Kalman filters. In analyses of human EEGs recorded under general anesthesia, the SS-MT paradigm provides enhanced denoising (>10 dB) and spectral resolution relative to standard multitaper methods, a flexible time-domain decomposition of the time series, and a broadly applicable, empirical Bayes’ framework for statistical inference.

Keywords: nonparametric spectral analysis, spectral representation theorem, complex Kalman filter, statistical inference, big data

Abstract

Time series are an important data class that includes recordings ranging from radio emissions, seismic activity, global positioning data, and stock prices to EEG measurements, vital signs, and voice recordings. Rapid growth in sensor and recording technologies is increasing the production of time series data and the importance of rapid, accurate analyses. Time series data are commonly analyzed using time-varying spectral methods to characterize their nonstationary and often oscillatory structure. Current methods provide local estimates of data features. However, they do not offer a statistical inference framework that applies to the entire time series. The important advances that we report are state-space multitaper (SS-MT) methods, which provide a statistical inference framework for time-varying spectral analysis of nonstationary time series. We model nonstationary time series as a sequence of second-order stationary Gaussian processes defined on nonoverlapping intervals. We use a frequency-domain random-walk model to relate the spectral representations of the Gaussian processes across intervals. The SS-MT algorithm efficiently computes spectral updates using parallel 1D complex Kalman filters. An expectation–maximization algorithm computes static and dynamic model parameter estimates. We test the framework in time-varying spectral analyses of simulated time series and EEG recordings from patients receiving general anesthesia. Relative to standard multitaper (MT), SS-MT gave enhanced spectral resolution and noise reduction (10 dB) and allowed statistical comparisons of spectral properties among arbitrary time series segments. SS-MT also extracts time-domain estimates of signal components. The SS-MT paradigm is a broadly applicable, empirical Bayes’ framework for statistical inference that can help ensure accurate, reproducible findings from nonstationary time series analyses.

The importance of developing principled methods to solve big data problems is now broadly appreciated (sites.nationalacademies.org/DEPS/BMSA/DEPS_171738). Time series are an important big data class that includes signals ranging from gravitational waves (1), solar variations (2), radar emissions (3), seismic activity (4), global positioning data (5), and stock prices (6) to neural spike train measurements (7), EEG recordings (8), vital signs (9), and voice recordings (10). Rapid growth in sensor and recording technologies in science, engineering, and economics is increasing time series data production and with it, the importance of conducting rapid, accurate analyses. Such analyses require extracting specific data features and characterizing their uncertainty in a way that makes possible formal statistical inferences the same way as they are conducted in simpler problems.

A range of time-frequency methods is used to characterize the nonstationary and often oscillatory features in time series data. Standard nonparametric spectral methods estimate the spectrum (i.e., the frequency content of the time series in a small time interval on which the data are presumed to be stationary) (8, 11, 12). Fourier-based spectral methods estimate only signal power as a function of frequency and therefore, cannot provide time-domain signal estimates. Spectrogram estimation (time-varying spectral analysis), which entails estimating the frequency content as function of time for nonstationary data, is carried out by simply repeating spectrum estimation on overlapping or nonoverlapping time intervals. Spectrum estimates on adjacent intervals (8, 10–14) are not formally related. While recently developed time-frequency methods address the general problem of minimizing the resolution tradeoff between time and frequency, these techniques are computationally intensive, give their best performance in high signal-to-noise problems, and to date, have had limited application in actual time series analyses (15, 16). Despite their use to study important problems, current time-frequency methods have a critical shortcoming. None of these methods provides a statistical inference framework applicable to the entire time series. (1–10).

State-space (SS) modeling is a flexible, established inference framework for analyzing systems with properties that evolve with time (17–21). This paradigm has been used for time-frequency analysis of nonstationary time series with parametric time series models (22–24), harmonic regression models (25), and nonparametric time series models using batch processing (26). Given stationary data recorded on a finite interval, multitaper (MT) methods are optimal for balancing the bias–variance tradeoff in spectrum estimation conducted by combining Fourier-based methods with tapering (8, 11, 12). Therefore, a plausible approach to analyzing nonstationary and oscillatory time series is to combine SS modeling with MT methods to optimize locally the bias–variance tradeoff, relate spectral estimates across local intervals, and conduct formal statistical inference.

The important advances that we report are state-space multitaper (SS-MT) methods, which provide a statistical inference framework for time-varying spectral analysis of nonstationary time series. The balance of the paper is organized as follows. In Theory, we define the SS-MT time-frequency model, SS-MT spectrogram estimation algorithm, time-domain signal extraction algorithm, and empirical Bayes’ inference framework. In Applications, we illustrate use of the algorithms in the analysis of a simulated nonstationary time series and of EEG time series recorded from patients under general anesthesia. Discussion summarizes the properties of the SS-MT paradigm and highlights the implications of this future research for solving large data inference problems.

Theory

An SS-MT Time-Frequency Model.

Assume that we observe a nonstationary time series of the form

| [1] |

where is a zero mean, second-order, locally stationary Gaussian process and is independent, zero mean Gaussian noise with common variance for . A common practice in analyses of nonstationary time series is to assume a minimal interval length and that data are stationary on intervals having this minimal length (SI Appendix, Table S1). We define the local stationarity of by assuming that we can write , where defines the number of distinct, nonoverlapping stationary intervals in and is the number of observations per stationary interval. We index the stationary intervals as and the points per interval as . For example, if we have 1,440 s of a time series that is stationary on 1-s intervals and recorded at 250 Hz, then , , and .

We present the data on stationary interval as the vector of length , with the th element that is , , and for and . By the spectral representation theorem (27), we can express each as

| [2] |

where is a matrix with the th element that is . are differences of orthogonal Gaussian increments, and we define .

To relate the data on adjacent intervals, we assume that the Gaussian increment differences are linked by the random walk model

| [3] |

where we assume that is a zero mean, independent complex Gaussian process with diagonal covariance matrix for . Eq. 3 defines a stochastic continuity constraint on the nonstationary time series in the frequency domain.

To represent the observation model [2] in the frequency domain, we let be the Fourier transform operator defined as the matrix with the th element that is . Taking the Fourier transform of Eq. 2 yields

| [4] |

where , , and is a zero mean, complex Gaussian vector with diagonal covariance matrix . Eqs. 2 and 3 define a frequency-domain SS model for the nonstationary time series.

To combine the SS and MT paradigms, we note that, in the absence of Eq. 3, MT methods with Slepian functions selected as tapers would be used to estimate the spectrum on each stationary interval (11, 12). Therefore, as in the application of MT methods, given , the number of data points in the stationary interval and , the data sampling rate, we also assume that , the desired frequency resolution for the spectral analysis, has been specified for the problem. Next, , the number of tapers, is chosen to minimize the local bias–variance tradeoff for spectrum estimation on each stationary interval using the standard MT formula (28). We index the tapers as .

We let denote the operator for applying the th Slepian taper to the data, denote the tapered data, and denote the Fourier transform of the tapered data. If we take the Slepian tapers to be orthonormal, then by theorem 4.4.2 in ref. 29, the Fourier transform of each tapered series has the same probability distribution and thus, the same spectral representation as in Eq. 4. Therefore, we write

| [5] |

and we view and as a realization of and of , respectively, observable through the th tapered series. It follows that the random walk model in Eq. 3 has the realization

| [6] |

where we assume that is a zero mean, independent complex Gaussian vector with a diagonal covariance matrix for and . Eq. 6 induces stochastic continuity constraints on the tapered nonstationary time series. Eqs. 5 and 6 define an SS-MT time-frequency model. Use of the Slepian tapers to achieve the desired frequency resolution given the assumed length of the local stationary intervals transforms the original time series [2] and its state model [3] into independent time series [5] and their respective independent state models [6].

SS-MT Spectrogram Estimation Algorithm.

The linear complex Gaussian form of Eqs. 5 and 6 suggests that a Kalman filter algorithm can be used to compute the sequence of increment differences (23) and thus, the sequence of spectrum estimates. For this problem, the Kalman filter has a special structure. Because the are independent, there are separate, independent -dimensional Kalman filters. In addition, because is orthogonal across frequencies, there are, for each tapered series, parallel 1D complex Kalman filter algorithms, one for each frequency . Hence, the Gaussian increment differences can be recursively estimated by applying 1D complex Kalman filter algorithms to the tapered time series. Assuming that the increment difference estimates have been computed on interval , then for tapered series , the 1D complex Kalman filter algorithm for estimating on interval is

| [7a] |

| [7b] |

| [7c] |

| [7d] |

where the Kalman gain for , , and is

| [8] |

The notation denotes the estimate on stationary interval given all of the data observed through stationary interval . The derivation of the Kalman filter algorithm is in SI Appendix. We assume that the algorithm has initial conditions and . We carry out their estimation along with the model parameters using an expectation–maximization (EM) algorithm, which we describe in SI Appendix. Given the Kalman filter estimate of the increment differences on interval , the SS-MT spectrogram estimate at frequency on interval is

| [9] |

where is the th SS eigenspectrogram at frequency (11). Each SS eigenspectrogram is a spectrogram estimate computed by weighting the data with a different Slepian taper. Like the MT spectrogram defined below [10], SS-MT spectrogram estimate [9] is the average of the approximately independent SS eigenspectrograms.

Eqs. 7–9 define the SS-MT algorithm for spectrogram estimation for nonstationary time series. For each tapered series, the increment difference estimate on interval is a weighted average between the increment difference estimate on interval and the difference between the Fourier transform of the tapered series and the increment difference estimate on interval . The weighting depends on the Kalman gain, which is between zero and one by construction. If the Kalman gain is close to zero, then the one-step prediction variance is small relative to the observation variance , and hence, the increment difference estimate on interval is close to the estimate on interval . If the Kalman gain is close to one, then the one-step prediction variance is large relative to the observation variance, meaning that the uncertainty in the prediction of the increment difference on interval based on the data up through interval is large. In this case, the increment difference estimate on interval is close to the Fourier transform of the tapered series observed on interval .

In the absence of the state models [3 and 6], Eq. 9 becomes the MT spectrogram estimate

| [10] |

where and is the th MT eigenspectrogram at frequency (12). In the absence of tapering, Eq. 9 becomes the SS periodogram estimate

| [11] |

which is computed by applying parallel 1D complex Kalman filters to the Fourier transformed data . In the absence of tapering and the SS model, Eq. 11 becomes the periodogram estimate

| [12] |

where . By comparing the SS-MT algorithm [7–9] with the standard MT [10], the periodogram [12], and the SS periodogram [11] algorithms, it is possible to understand the effects on spectrogram estimation of combining the MT approach with SS modeling. In addition, the SS-MT paradigm can be applied to compute cross-spectrograms between two or more time series that are described in SI Appendix (SI Appendix, Fig. S12).

Time-Domain Signal Extraction.

Given the , we can estimate the denoised time-domain signal as

| [13] |

where . The extracted signal is a linear combination of the estimated increment differences across all of the frequencies. Frequency components on different stationary intervals are related, because all are estimated by the complex Kalman filter algorithm in Eqs. 7a–7d. Hence, selective filtering, such as high-pass, low-pass, and band-pass filtering, can be performed by simply choosing the components of in the desired frequency range. Given a set of , not necessarily sequential frequencies, for , we can obtain the filtered time-domain signal as

| [14] |

where the components of , outside the frequencies and their conjugate symmetric frequencies, are all zero. Eq. 14 provides a highly flexible alternative to an empirical mode decomposition that allows extraction of a time-domain signal composed of any specified frequency components. The analytic version of the filtered time-domain signal can be computed as

| [15] |

for and . Here, and are the instantaneous amplitude and phase of the time-domain signal in the specified frequency range, respectively (SI Appendix, Figs. S1 and S2). This computation obviates the need to apply a Hilbert–Huang transform to either filtered data or data processed by an empirical mode decomposition to estimate instantaneous amplitude and phase.

Inferences for Functions of the Increment Differences.

To make inferences for functions of the increment differences at any time points, we compute the joint distribution of the increment differences conditional on all of the data in the time series using the fixed interval smoothing algorithm (20, 21), which is

| [16] |

where the initial conditions are and for and . To compute the covariances between any two states, we use the covariance smoothing algorithm defined as (20)

| [17] |

for . Eqs. 16 and 17 allow us to compute the joint distribution of the increment differences conditional on all of the data. Therefore, we can compute the distribution of any function of the increment differences by Monte Carlo methods (30). For each Monte Carlo sample, we draw from the joint distribution and compute the function of interest. The histogram of the function of interest provides a Monte Carlo estimate of its posterior probability density. The estimate is empirical Bayes, because it is computed conditional on the maximum likelihood parameter estimates (31).

Model Parameter and Initial Condition Estimation.

The Kalman filter [7 and 8], Kalman smoother [16], and covariance smoothing [17] algorithms assume that the initial states , the initial state variances , and the model parameters and are known. We use an EM algorithm (32) designed after refs. 20 and 21) to compute maximum likelihood estimates of the initial conditions and the model parameters. The details are given in SI Appendix.

Applications

Spectrogram Analysis of Simulated Data.

We first tested the SS-MT algorithm on the simulated nonstationary process (Fig. 1A) defined by the sixth-order autoregressive model adapted from ref. 12:

| [18] |

where s and is independent, zero mean Gaussian noise with unit variance. The spectrogram of the model has three peaks at , , and Hz (Fig. 1B). All three peaks grow linearly in height and width with time. We added an independent zero mean Gaussian noise with variance set to achieve a signal-to-noise ratio of 0 dB. The sampling rate is 64 Hz. Eq. 18 is nonstationary at each time . However, because it can be approximated in small intervals by a stationary sixth-order autoregressive process, it satisfies the Dahlhaus definition of local stationarity (33). We set and . We choose , the number of tapers, to be four, which corresponds to a spectral resolution of Hz for the MT methods. We estimated the model parameters from the first 50 observations using the EM algorithm (SI Appendix).

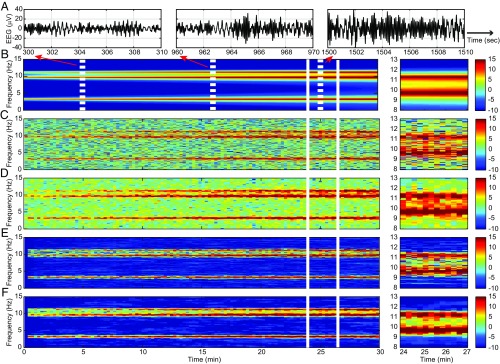

Fig. 1.

Spectrogram analysis of the time-varying sixth-order autoregressive process defined in Eq. 18. (A) Ten-second segments from the simulated time series starting at 5, 16, and 25 min. (B) True spectrogram. (C) Periodogram. (D) MT spectrogram. (E) SS periodogram. (F) SS-MT spectrogram. Right shows for each panel a zoomed-in display of the 3 min between 24 and 27 min. The color scale is in decibels.

Fig. 1 C–F shows the periodogram (Fig. 1C), the MT spectrogram (Fig. 1D), the SS periodogram (Fig. 1E), and the SS-MT spectrogram (Fig. 1F). While all four methods capture the general structure of the true spectrogram (Fig. 1B), there are clear differences. The MT spectrogram (Fig. 1D) shows, as expected, better resolution of (less variability in estimating) the three peaks compared with the periodogram (Fig. 1C). However, when comparing the power in the frequency bands outside the three peaks, both the periodogram (Fig. 1C) and the MT spectrogram (Fig. 1D and SI Appendix, Fig. S6) overestimate the noise relative to the true spectrogram (Fig. 1B and SI Appendix, Fig. S6 C and D) by 10–15 dB. The SS periodogram (Fig. 1E) and the SS-MT spectrogram (Fig. 1F and SI Appendix, Fig. S6 C and D) estimate the noise outside the three peaks to be at or near −10 dB as in the true spectrogram (Fig. 1B and SI Appendix, Fig. S6 C and D). The MT and the SS-MT spectrograms capture well and agree closely in their estimates of the power in the three peaks (Fig. 1 D, Right and F, Right and SI Appendix, Fig. S6).

A key difference between the MT and the SS-MT spectrograms appears as the power increases. As the heights of the spectral peaks at 9.5 and 11.5 Hz increase, the depth of the “valley” in the spectrogram between them increases also (Fig. 1B and SI Appendix, Fig. S6 C and D). The valley is at 5 dB between minutes 24 and 27 (Fig. 1B, Right and SI Appendix, Fig. S6D). The MT spectrogram estimates the valley to be at 10 dB (Fig. 1D, Right and SI Appendix, Fig. S6D). In contrast, the SS-MT spectrograms estimates the valley to be at 4 dB (Fig. 1D, Right and SI Appendix, Fig. S6D). In addition, the mean squared error was lower at all frequencies for the SS-MT algorithm compared with the other three methods (SI Appendix, Table S2). We explain the enhanced denoising and enhanced spectral resolution of the SS-MT algorithm relative to the MT algorithm after we analyze the real EEG recordings in the next examples. In SI Appendix, we assess the effect of stationary interval length on spectrogram estimation.

Spectrogram Analysis of the EEG During General Anesthesia.

Anesthetic drugs act in the brain to create the altered states of general anesthesia by producing highly structured oscillations that disrupt normal information flow between brain regions (34, 35). Because these oscillations are readily visible in the EEG, EEG and EEG-derived measures are commonly used to track in real time the brain states of patients receiving general anesthesia and sedation (36). We illustrate the SS-MT methods by comparing them with the other three spectrogram methods in the analysis of EEG recordings from patients during general anesthesia.

The EEG recordings, in this example and the subsequent examples, are deidentified data collected as part of protocols at the Massachusetts General Hospital (MGH) that have been approved by the MGH Human Research Committee. For EEGs recorded from patients, informed consent was not required, whereas for EEGs recorded from volunteer subjects, informed consent was required and was obtained. For each patient, the EEG was continuously recorded during general anesthesia using the Sedline monitor (Masimo) with the standard six-electrode frontal montage. The Sedline array records from electrodes located approximately at positions Fp1, Fp2, F7, and F8. On each channel, the electrode impedance was less than 5 kohms. We used the EEG data recorded at Fp1 for the spectral analyses and the EEG data recorded at Fp1 and Fp2 for the coherence analyses (SI Appendix, Fig. S12). We began the EEG recordings approximately 3–5 min before induction of general anesthesia and continued the recordings for ∼3–5 min after extubation.

The data analyzed in Fig. 2 consist of 190 min of EEG recorded at 250 Hz during maintenance of general anesthesia using the ether anesthetic sevoflurane with oxygen. Hence, we take = 2,850,000. We set = 500 based on our several years of experience analyzing the EEG of anesthetized patients. Hence, we have = 5,750. We chose , the number of tapers, to be three. This corresponds to a spectral resolution of 2 Hz for the MT method. We estimated the model parameters and initial conditions from the first 5 min of data using the EM algorithm. To estimate the observation noise variance in the EM algorithm, we restricted the analysis to frequencies in the physiologically relevant range from 0.1 to 30 Hz. The raw EEG signal (Fig. 2B) shows strong modulation with changes in the sevoflurane concentration (Fig. 2A).

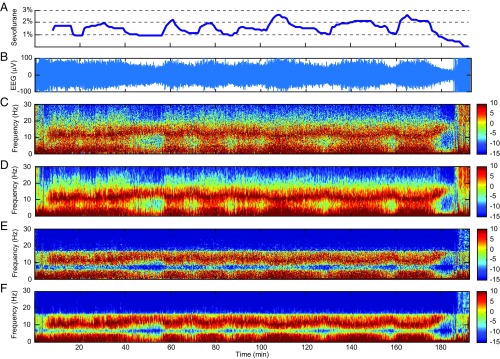

Fig. 2.

Spectrogram analysis of EEG time series recorded from a patient under general anesthesia maintained with sevoflurane and oxygen. (A) The expired concentration of sevoflurane. (B) Raw EEG signals. (C) Periodogram. (D) MT method spectrogram. (E) SS periodogram. (F) SS-MT spectrogram. The color scale is in decibels.

All four spectrograms for these data show the well-known alpha-beta oscillations (8–17 Hz) and slow-delta oscillations (0.1–4 Hz) that are characteristic of general anesthesia maintained by sevoflurane (36). When the sevoflurane concentration increases, the power in the alpha-beta band shifts to lower frequencies, while the power in the slow-delta band power shifts to higher frequencies. The opposite changes occur when the sevoflurane concentration decreases. The spectral changes associated with increases in the sevoflurane concentration appear as increases in theta oscillation power (4–8 Hz) (36). The periodogram (Fig. 2C) shows diffuse, grainy power between 10 and 17 Hz and in the slow-delta range. As expected, the MT spectrogram (Fig. 2D) has higher spectral resolution relative to the periodogram. Both the periodogram and the MT spectrogram show diffuse power ranging from 7 to −2 dB in the theta range and from −5 to −15 dB in the beta-gamma range (17 Hz). Relative to the periodogram and the MT spectrogram, the SS periodogram (Fig. 2E) and the SS-MT spectrogram (Fig. 2F) show substantially greater denoising defined as a reduction in power in the frequency bands with low power. For the latter two spectrograms, the power in the beta-gamma range is uniformly at −15 dB, which is a 5–15 dB power reduction relative to the MT spectrogram. Both the SS periodogram and the SS-MT spectrogram estimate the power in the theta band to be 10–15 dB less than that for either the periodogram or the MT spectrogram. Like the periodogram, the prominent alpha-beta and the slow-delta power in the SS periodogram is grainy and diffuse.

Spectrogram Analysis of the EEG During Transitions Among Anesthetic States.

To illustrate the full potential of the SS-MT algorithm, we reanalyze 155.4 min of EEG data recorded at 250 Hz from a frontal lead in a human volunteer subject receiving i.v. propofol administered by a computer-controlled infusion at an increasing and then, a decreasing infusion rate (35). Gradually increasing the propofol infusion rate allows the subject to transition gradually from being awake to unconsciousness (UNC). Gradually decreasing the propofol infusion rate from the rate required to achieve the maximum target effect site concentration (model-derived brain concentration) allows the subject to transition from UNC to the awake state. Baseline EEG was recorded for 20 min while the subject lay supine with eyes closed and received no propofol. After the baseline period, propofol was administered through a computer-controlled infusion to achieve five different effect site concentrations in increasing levels (Fig. 3A) (35). After completing the fifth level, the propofol infusion rate was systematically decreased to achieve a similar sequence of target effect site concentrations in decreasing order until the infusion was stopped. Each target effect site concentration was maintained for 14 min.

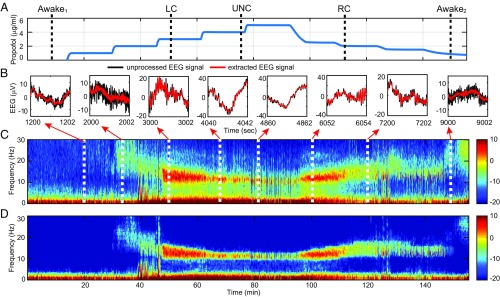

Fig. 3.

Spectrogram analysis of EEG recorded in a volunteer subject receiving a computer-controlled infusion of propofol. (A) Time course of propofol target effect site concentrations based on the Schneider model (35). The black dashed vertical lines define the anesthetic states determined by the behavioral analysis: awake1, baseline conscious state; LC; UNC; RC; and awake2, final conscious state. (B) Two seconds of unprocessed EEG (black curves) and of EEG extracted from the SS-MT analysis (red curves) [14] at different target effect site concentrations. (C) MT method spectrogram. (D) SS-MT spectrogram. The color scale is in decibels.

Based on the analyses of the subject’s responses to yes–no questions administered every 4 s, we identified five distinct behavioral or anesthetic states: conscious, loss of consciousness (LC), UNC, recovering consciousness (RC), and conscious (Fig. 3A, black dashed vertical lines) (35). The important scientific question to answer is whether these distinct anesthetic states are associated with distinct EEG signatures.

We take and set , , and . This gives a spectral resolution of 1.5 Hz for the MT method. Because we expect the EEG frequency content to change substantially with changes in target effect site concentration, we estimated, using the EM algorithm, a new set of model parameters from the first 5 min of EEG data recorded at each level. The effects of changing the propofol infusion rate are apparent in the unprocessed EEG (Fig. 3B, black curves), the denoised time-domain signal (Eq. 14 and Fig. 3B, red curves), the MT spectrogram (Fig. 3C), and the SS-MT spectrogram (Fig. 3D). At baseline, moderate-amplitude slow oscillations dominate the EEG. Low-amplitude beta-gamma oscillations appear midway through level 2 and transition into narrow-band, high-amplitude alpha oscillations by level 4. At the same time, the slow oscillations transition to high-amplitude, slow-delta oscillations. By level 5, the alpha oscillations have nearly dissipated, and the EEG is dominated by slow-delta oscillations. As the propofol infusion rate is decreased, EEG dynamics are recapitulated in reverse. As in the previous examples, the SS-MT spectrogram shows substantial spectral denoising and increased resolution relative to the MT spectrogram. The denoised time-domain signals and the SS-MT spectrogram strongly suggest that different oscillatory components are present in the EEG when the subject is in different anesthetic states.

Inferring Differences in Spectral Power Between Anesthetic States.

To make a formal statistical inference about the relationship between these anesthetic states and EEG signatures, we compare the power across the spectrogram from 0.1 to 30 Hz among representative 100-s intervals during each of the anesthetic states (Fig. 3A). The 100-s intervals are baseline awake period awake1, 500–600 s; LC, 3,100–3,200 s; UNC, 4,600–4,700 s; RC, 6,600–6,700 s; and final awake state awake2, 9,000–9,100 s. To compare two 100-s intervals for each frequency in a given frequency range, we compute the average difference spectrogram between two intervals:

| [19] |

where and are two distinct 100-s intervals. To determine if there is a significant change in the spectrogram between any two of the anesthetic states, we use a Monte Carlo procedure to compute an approximate empirical Bayes’ 95% confidence interval (95% CI) for (30). Together, the Kalman filter [7 and 8], the Kalman smoothing [16], and the covariance smoothing [17] algorithms define the multivariate complex Gaussian joint posterior density of for , conditional on the parameter estimates. The quantity is a function of the , so that given a random sample of the , we can compute . By drawing a large number of the , say 1,000, we can, therefore, compute 95% CIs for (Fig. 4). A significant difference in power is observed if the zero is not in the 95% CI.

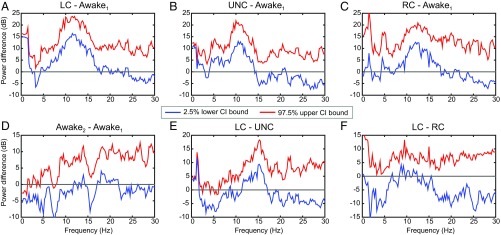

Fig. 4.

The 95% CIs for comparisons of the average power differences between the anesthetic states in Fig. 3A. Each panel shows the 95% empirical Bayes confidence interval (CI) for the average power difference defined by the lower 2.5% CI bound (blue curves), and the upper 97.5% CI bound (red curves). (A) LC-awake1: LC compared with baseline conscious state. (B) UNC-awake1: UNC compared with baseline conscious state. (C) RC-awake1: RC compared with baseline conscious state. (D) Awake2-awake1: final conscious state compared with baseline conscious state. (E) LC-UNC: LC compared with the UNC state. (F) LC-RC: LC compared with RC.

We show 6 of 10 possible comparisons of differences in power among the five anesthetic states (Fig. 4). The LC and UNC states show significant increases in power in the slow-delta and alpha bands relative to awake1, the baseline awake state (Fig. 4 A and B). There is also a significant increase in power in the upper part of the slow-delta and in the alpha bands between RC and awake1 (Fig. 4C) and between LC and the UNC state (Fig. 4E). In contrast, there are no appreciable differences in power between awake2 and awake1 (Fig. 4D) or between LC and RC (Fig. 4F). These findings are in complete agreement with the structure of the power in the spectrogram (Fig. 3D). We can conclude that there are significant quantifiable differences in EEG power between different anesthetic states and that those differences can range from 10 to 20 dB (95% CI). These findings also agree with and go beyond the original analyses of these data, in which hypothesis testing methods with Bonferroni corrections were used to compare the anesthetized states with just awake1 (35). Using the model in Eq. 18, we assessed the coverage probability of the empirical Bayes’ CIs in a simulation study (SI Appendix) and found that the actual and nominal coverage probabilities are in good agreement.

Spectrogram Denoising.

The SS-MT spectrogram has greater denoising than the MT spectrogram (Figs. 1–3) because of the stochastic continuity constraints (Eq. 3) and the eigenspectrogram averaging (SI Appendix, Figs. S3 and S4). The stochastic continuity constraint has a different independent effect at each frequency. In both the theoretical and the real data examples, the state variances, , are small (0.05–4 dB) for frequencies with low power and large (27–38 dB) for frequencies with high power (SI Appendix, Fig. S5, blue curves). The Kalman gains, , reached steady-state values within 5–10 updates, and like the state variances, the Kalman gains were small (0.1–0.4) for frequencies with low power and large (0.7–0.95) for frequencies with high power (SI Appendix, Fig. S5, red curves). Rewriting Eq. 7c as

| [20] |

shows that the increment difference estimate on interval is a weighted average between the increment difference estimate on interval and the Fourier transform of the tapered data on interval . In particular, frequencies with low power weight more than . This weighting favors suppressing increases or fluctuations in the low-power or noise frequencies. In contrast, frequencies with high power weight more the new information in . These differential effects denoise the spectrogram by heightening the contrast between frequencies with high power and those with low power in the analysis of the simulated data (Fig. 1F and SI Appendix, Fig. S6 C and D) and in the analysis of the actual EEG recordings (Fig. 2F and SI Appendix, Figs. S6C and S9C).

Averaging the MT eigenspectrograms reduces the variability in the MT spectrogram (SI Appendix, Fig. S3) (11). Each SS-MT eigenspectrogram (SI Appendix, Fig. S4 A–C) has variability comparable with the SS periodogram (Fig. 2E) (29). Averaging the SS-MT eigenspectrograms reduces the variability of the SS-MT spectrogram at each frequency relative to the SS periodogram (SI Appendix, Fig. S4D) by [9], thus giving the SS-MT spectrogram a further denoising enhancement.

Spectral Resolution and Leakage Reduction.

Kalman filter updating [7c and 20] enhances the spectral resolution of the SS-MT spectrogram relative to the MT spectrogram by reducing leakage. To see why, assume that and are the true spectrograms on time interval at two frequencies and , respectively, and that . Let be the frequency resolution chosen for the MT analysis. If (), then in the MT analysis, the narrow (broad)-band power at leaks into the power at (12). The extent of the leakage is governed by the power spectral density of each taper (SI Appendix, Figs. S7, S8, S10, and S11). In the SS-MT analysis, because has low power, weights much more than , the term in Eq. 20 that carries the leakage from . Hence, broad- and narrow-band power leakage from into the power at is reduced, because the Kalman gain at is small.

For example, at 70 min (Fig. 2 D and F and SI Appendix, Fig. S9 A and C), the MT and SS-MT spectrograms agree in the high-power frequency bands (i.e., 0.1–5 and 9.5–15.5 Hz) and disagree in the low-power frequency bands (5.1–9.4 and 15.6–30 Hz); 6 Hz is just on the border of the narrow-band leakage from 5 Hz for the MT spectrogram (SI Appendix, Fig. S9A). The 12-dB difference between the MT and the SS-MT spectrograms at 6 Hz results, because the former has leakage from the power at 5 Hz, whereas the latter has enhanced denoising and reduced leakage. A 10- to 15-dB power difference persists between the MT and SS-MT spectrograms beyond 15 Hz because of the small values of the Kalman gain in this frequency band (SI Appendix, Fig. S9 C and D).

At 80 min (Fig. 2 D and F and SI Appendix, Fig. S9 B and D), the MT and SS-MT spectrograms also agree in the high-power frequency bands (0.1–5 and 10.5–15 Hz) and disagree in the low-power frequency bands (i.e., 5.1–9.4 and 15.1–30 Hz). A similar argument explains the 7-dB difference in power at 16 Hz between the MT and the SS-MT spectrograms at minute 80. The same argument also explains in the analysis of the simulated data example the 7-dB difference in power at 11 Hz in the MT and SS-MT spectrograms at 25 min (SI Appendix, Fig. S6D). The differences between MT and SS-MT tapering are discussed in SI Appendix.

Discussion

Time series are a prominent big data class with growth that is being spurred by innovations in sensing and recording technologies. These data track dynamic processes, making accurate real-time analyses an important objective. Aside from simply extracting important features from the series, the analysis should provide associated measures of uncertainty, so that formal statistical inference can be conducted the same way that it would be conducted for questions arising from smaller, simpler datasets. No current time-frequency analysis method provides an inference framework applicable to the entire series (8, 11–16). To address the inference problem for nonstationary time series, we combined the MT and the SS approaches into an empirical Bayes’ paradigm for frequency- and time-domain analyses of nonstationary time series. We showed the SS-MT inference paradigm by analyzing differences in EEG power between different propofol-induced anesthetic states (Fig. 4). By reporting the results in terms of 95% empirical Bayes’ CIs, we measure directly the effect size (i.e., how much EEG power differs among propofol’s anesthetic states). We base our inferences on 95% CIs derived from the empirical Bayes’ estimate of the joint posterior distribution of the power across all of the frequencies and all times in the time series rather than on tests of multiple hypotheses. Our small simulation study (SI Appendix) suggests that the nominal and actual coverage probabilities of the empirical Bayes’ CIs are in good agreement. The empirical Bayes’ paradigm has been suggested as a practical approach to solving large-scale inference problems (37).

Our analyses offer scientific insights. The SS-MT spectrograms show denoising and spectral resolution that more clearly define the frequency content of anesthetic EEG signals. As a consequence, the results in Fig. 2 suggest that most of the theta oscillation power in the sevoflurane spectrogram could be caused by power shifts from both the alpha band above and the slow-delta band below. The results in Fig. 4 allow us to make formal inferences about EEG power difference as a function of the level of unconsciousness in a single individual.

SS-MT Time-Frequency Analysis of Nonstationary Time Series.

In addition to providing a statistical inference framework, the SS-MT paradigm has other desirable features. By the spectral representation theorem, the orthogonal increment differences are the fundamental process that underlies a stationary time series (27, 38). Hence, we defined nonstationarity by starting with the common practice of choosing a minimal interval on which the time series is assumed stationary (SI Appendix, Table S1) and then applying a stochastic continuity constraint [3 and 6] to link the increment differences across the minimal intervals. We constructed the SS model by taking the observed data to be the Fourier transforms of the tapered data series [5]. For a given taper, the Fourier transform of the tapered data is independent, complex Gaussian observations in the frequency domain. Hence, to estimate the increment differences, we implemented in parallel independent 1D complex Kalman filters [7a–7d]. Given the tapers, the algorithms run in parallel, and the SS-MT spectrogram (cross-spectrogram) estimates are computed by summing the eigenspectrograms (eigen cross-spectrograms) in Eq. 9 (SI Appendix, Eq. S14) at each Kalman filter update. Parallel computation makes SS-MT spectrogram estimation attractive for real-time applications. Each 1D complex Kalman filter has an associated Kalman smoother [16], covariance smoothing [17], and an EM algorithm for parameter estimation at each frequency (SI Appendix).

Both the SS and the MT components of SS-MT analysis contribute significantly to spectrogram denoising. The state variances and Kalman gains are high (low) at frequencies with high (low) power (SI Appendix, Fig. S5). Therefore, the Kalman filter updating [7c and 20] denoises the spectrogram by heightening the contrast between high- and low-power spectrogram ordinates (Figs. 1 D and F, 2 D and F, and 3 C and D). The MT component of the SS-MT algorithm further contributes to the denoising by averaging the eigenspectrograms to reduce the variance at all frequencies by (SI Appendix, Figs. S3 and S4). In addition, SS estimation [7c and 20] enhances the spectral resolution in the SS-MT spectrogram relative to the MT spectrogram by reducing both narrow-band and broad-band leakage (SI Appendix, Figs. S6 and S9). Because the Kalman gains at low-power frequencies are small, leakage from even nearby frequencies with high power is reduced (SI Appendix, Figs. S6 and S9). In our simulated and real data examples, the effect of SS updating on denoising and spectral resolution was a 10- to 15-dB difference between the SS-MT and the MT spectrograms in the low-power frequency bands (Figs. 1 D and F, 2 D and F, and 3 C and D and SI Appendix, Figs. S6 and S9).

By applying the spectral representation theorem to the estimated increment differences [14 and 15], we extracted the time-domain signals within specified frequency bands as well as instantaneous phase and amplitude (Fig. 3B and SI Appendix, Figs. S1 and S2). The SS-MT paradigm is highly flexible, because arbitrary combinations of frequency components can be chosen to construct the time-domain signal. Time-domain signal extraction is not possible with standard nonparametric spectral methods, which only estimate power as a function of frequency. Estimating instantaneous phase and amplitude with conventional approaches requires a separate analysis (14). The SS-MT paradigm conducts spectral analysis, signal extraction, and instantaneous phase and amplitude estimation as parts of a unified framework.

Theoretical and Problem-Specific Methods Development.

Our local stationarity definition differs from the Dahlhaus definition, which assumes time-invariant increment differences with deterministic continuity of the time-dependent transfer function in the spectral representation (33). The lengths of the local intervals are either assumed (33) or estimated (39). The local spectral estimates are computed as the squared modulus of the Fourier transform of the data after applying a single taper or by fitting a parametric model using local Whittle likelihoods (40). Like the MT methods, these local estimation approaches do not combine information across the local stationary intervals.

In contrast, the stochastic continuity constraint imposed by the random walk model enables recursive estimation of the time-dependent increment differences and the spectrogram. The current form of the continuity constraint has a theoretical drawback. It implies that, at each frequency, spectral power grows with time, since the theoretical spectrum on stationary interval at frequency is

| [21] |

In practice, the spectrogram estimate does not explode, because on every stationary interval , , the Kalman gain is bounded between zero and one [8]. If the Kalman gain is zero, then the spectrogram estimate on interval is the SS-MT spectrogram estimate on interval , whereas if the Kalman gain is one, then the spectrogram estimate on interval is the MT spectrogram estimate on interval [20]. Nevertheless, a possible correction to Eq. 21 is to assume that the increment differences follow a stationary model, such as

| [22] |

where we assume that . Hence, we have

| [23] |

Eq. 22 means that the nonstationary time series has an underlying stationary increments process. The parameter can easily be estimated in the EM algorithm. Our SS approach falls into the class of regularization methods for solving big data problems (41). Thus, the current wealth of regularization research can be applied to the SS-MT paradigm.

In our data analyses, we followed the common practice of setting the stationary interval a priori (SI Appendix, Table S1). Our analyses (SI Appendix, Fig. S14 and Table S2) suggest that the spectrogram estimates can be sensitive to interval choice and that different stationary intervals could be optimal for different frequencies. Therefore, in future work, we will incorporate interval choice into the estimation by evaluating the likelihood as a function of stationary interval length.

At present, both our model-fitting algorithms and inference framework depend critically on the Gaussian observation and state models [2 and 3]. Description of signal frequency content and inferences may be inaccurate when these assumptions do not hold. As an alternative, model-fitting and inference using non-Gaussian SS models can be readily carried out using sequential Monte Carlo methods (42). This extension will be the topic of a future investigation.

Application of the SS-MT paradigm in time-frequency analyses of different types of nonstationary time series is a productive way to extend our methods by allowing the question under investigation to guide problem-specific development of the framework. We will continue to study EEG time series recorded under different anesthetic drugs (36). The EEG recorded during sevoflurane general anesthesia (Fig. 2) suggests a smooth process defining continuity among the time intervals. Therefore, higher-order stationary models could be chosen to impose a greater degree of smoothness on the increment differences and the spectrogram. In contrast, the EEG recorded during induction of and recovery from propofol-induced unconsciousness (Fig. 3) suggests that a process with jump discontinuities may be a more appropriate state model for these data. SI Appendix, Table S1 summarizes problems from different fields of science that have used MT spectral methods to study nonstationary processes. These several applications suggest a rich testbed for further development of the SS-MT paradigm.

Adaptive model parameter estimation using local likelihood, SS, or method of moments techniques can be combined with different continuity models. A key decision in using adaptive estimation is defining the timescale of the parameter updates. We used the target anesthetic levels as covariates—subject matter constraints—to set this timescale in Fig. 3. Subject matter constraints may also be used to reduce the number of parameters. We limited state variance estimation to frequencies below 30 Hz based on knowledge of the frequency range relevant for tracking anesthetic states (36). The naïve empirical Bayes’ CIs had good coverage probabilities based on our small simulation study of the model in Eq. 18. These intervals can be further calibrated by taking account of the uncertainty in the maximum likelihood parameter estimates obtained from the EM algorithm (43). Computation of the SS-MT cross-spectrogram (SI Appendix, Fig. S12) suggests that our paradigm can be extended to inference problems for multivariate nonstationary time series.

The SS-MT paradigm provides a computationally efficient framework for spectrogram estimation, time-domain signal extraction, and statistical inference for nonstationary time series.

Supplementary Material

Acknowledgments

This work was partially supported by National Research Foundation of Korea Grant 2017R1C1B5017254 from the Korean Government (to S.K.), a Guggenheim Fellowship in Applied Mathematics (to E.N.B.), NIH Transformative Research Award R01-GM104948 (to E.N.B.), NIH Award P01-GM118629 (to E.N.B.,), funds from MGH (E.N.B.), and funds from the Picower Institute for Learning and Memory (E.N.B.).

Footnotes

Conflict of interest statement: The authors have applied for patents on the use of these methods to track brain states of patients receiving general anesthesia and sedation.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1702877115/-/DCSupplemental.

References

- 1.Chatterji S, Blackburn L, Martin G, Katsavounidis E. Multiresolution techniques for the detection of gravitational-wave bursts. Classical Quantum Gravity. 2004;21:S1809–S1818. [Google Scholar]

- 2.Haigh JD, Winning AR, Toumi R, Harder JW. An influence of solar spectral variations on radiative forcing of climate. Nature. 2010;467:696–699. doi: 10.1038/nature09426. [DOI] [PubMed] [Google Scholar]

- 3.Haykin SS, Steinhardt AO. Adaptive Radar Detection and Estimation. Vol 11 Wiley-Interscience; New York: 1992. [Google Scholar]

- 4.Emery WJ, Thomson RE. Data Analysis Methods in Physical Oceanography. Elsevier Science; Amsterdam: 2001. [Google Scholar]

- 5.Misra P, Enge P. Global Positioning Systems: Signals, Measurements, and Performance. Granga-Jamuna; Lincoln, MA: 2006. [Google Scholar]

- 6.Zheng X, Chen BM. Stock Market Modeling and Forecasting. Vol 442 Springer; London: 2013. [Google Scholar]

- 7.Truccolo W, Eden UT, Fellows MR, Donoghue JP, Brown EN. A point process framework for relating neural spiking activity to spiking history, neural ensemble, and extrinsic covariate effects. J Neurophysiol. 2005;93:1074–1089. doi: 10.1152/jn.00697.2004. [DOI] [PubMed] [Google Scholar]

- 8.Mitra P, Bokil H. Observed Brain Dynamics. Oxford Univ Press; New York: 2007. [Google Scholar]

- 9.Yilmaz T, Foster R, Hao Y. Detecting vital signs with wearable wireless sensors. Sensors. 2010;10:10837–10862. doi: 10.3390/s101210837. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Quatieri TF. Discrete-Time Speech Signal Processing: Principles and Practice. Prentice Hall; Englewood Cliffs, NJ: 2008. [Google Scholar]

- 11.Percival DB, Walden AT. Spectral Analysis for Physical Applications. Cambridge Univ Press; Cambridge, UK: 1993. [Google Scholar]

- 12.Babadi B, Brown EN. A review of multitaper spectral analysis. IEEE Trans Biomed Eng. 2014;61:1555–1564. doi: 10.1109/TBME.2014.2311996. [DOI] [PubMed] [Google Scholar]

- 13.Koornwinder TH. Wavelets: An Elementary Treatment of Theory and Applications. World Scientific; River Edge, NJ: 1995. [Google Scholar]

- 14.Huang NE, et al. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc R Soc Math Phys Eng Sci. 1998;454:903–995. [Google Scholar]

- 15.Daubechies I, Lu J, Wu H-T. Synchrosqueezed wavelet transforms: An empirical mode decomposition-like tool. Appl Comput Harmon Anal. 2011;30:243–261. [Google Scholar]

- 16.Daubechies I, Wang YG, Wu H-T. ConceFT: Concentration of frequency and time via a multitapered synchrosqueezed transform. Philos Trans A Math Phys Eng Sci. 2016;374:20150193. doi: 10.1098/rsta.2015.0193. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Fahrmeir L, Tutz G. Multivariate Statistical Modeling Based on Generalized Linear Models. Springer; New York: 2001. [Google Scholar]

- 18.Durbin J, Koopman SJ. Time Series Analysis by State Space Methods. Vol 38 Oxford Univ Press; Oxford: 2012. [Google Scholar]

- 19.Brown EN, Frank LM, Tang D, Quirk MC, Wilson MA. A statistical paradigm for neural spike train decoding applied to position prediction from ensemble firing patterns of rat hippocampal place cells. J Neurosci. 1998;18:7411–7425. doi: 10.1523/JNEUROSCI.18-18-07411.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Smith AC, Brown EN. Estimating a state-space model from point process observations. Neural Comput. 2003;15:965–991. doi: 10.1162/089976603765202622. [DOI] [PubMed] [Google Scholar]

- 21.Shumway RH, Stoffer DS. An approach to time series smoothing and forecasting using the EM algorithm. J Time Ser Anal. 1982;3:253–264. [Google Scholar]

- 22.Bohlin T. Analysis of EEG signals with changing spectra a short-word Kalman estimator. Math Biosci. 1977;35:221–259. [Google Scholar]

- 23.Kitagawa G, Gersch W. Smoothness Priors Analysis of Time Series. Vol 116 Springer; Berlin: 1996. [Google Scholar]

- 24.Tarvainen MP, Hiltunen JK, Ranta-aho PO, Karjalainen PA. Estimation of nonstationary EEG with Kalman smoother approach: An application to event-related synchronization (ERS) IEEE Trans Biomed Eng. 2004;51:516–524. doi: 10.1109/TBME.2003.821029. [DOI] [PubMed] [Google Scholar]

- 25.Qi Y, Minka TP, Picard RW. Bayesian spectrum estimation of unevenly sampled nonstationary data. Proc IEEE Int Conf Acoust Speech Signal Process. 2002;2:1473–1476. [Google Scholar]

- 26.Ba D, Babadi B, Purdon PL, Brown EN. Robust spectrotemporal decomposition by iteratively reweighted least squares. Proc Natl Acad Sci USA. 2014;111:E5336–E5345. doi: 10.1073/pnas.1320637111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Shumway RH, Stoffer DS. Time Series Analysis and Its Applications. Springer; New York: 2011. [Google Scholar]

- 28.Prerau MJ, Brown RE, Bianchi MT, Ellenbogen JM, Purdon PL. Sleep neurophysiological dynamics through the lens of multitaper spectral analysis. Physiology. 2017;32:60–92. doi: 10.1152/physiol.00062.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Brillinger DR. Time Series: Data Analysis and Theory. Vol 38 SIAM; Philadelphia: 2001. [Google Scholar]

- 30.Czanner G, Eden UT, Wirth S, Yanike M, Suzuki WA, Brown EN. Analysis of between-trial and within-trial neural spiking dynamics. J Neurophysiol. 2008;99:2672–2693. doi: 10.1152/jn.00343.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Carlin BP, Louis TA. Bayesian Methods for Data Analysis. 3rd Ed CRC; Boca Raton, FL: 2009. [Google Scholar]

- 32.Dempster NM, Laird NM, Rubin DB. Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B Stat Methodol. 1977;39:1–38. [Google Scholar]

- 33.Dahlhaus R. Fitting time series models to nonstationary processes. Anal Stat. 1997;25:1–37. [Google Scholar]

- 34.Cimenser A, et al. Tracking brain states under general anesthesia by using global coherence analysis. Proc Natl Acad Sci USA. 2011;108:8832–8837. doi: 10.1073/pnas.1017041108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Purdon PL, et al. Electroencephalogram signatures of loss and recovery of consciousness from propofol. Proc Natl Acad Sci USA. 2013;110:E1142–E1151. doi: 10.1073/pnas.1221180110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Purdon PL, Sampson A, Pavone KJ, Brown EN. Clinical electroencephalography for anesthesiologists part I: Background and basic signatures. Anesthesiology. 2015;123:937–960. doi: 10.1097/ALN.0000000000000841. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Efron B. Large-Scale Inference: Empirical Bayes Methods for Estimation, Testing, and Prediction. Cambridge Univ Press; Cambridge, UK: 2013. [Google Scholar]

- 38.Priestley MB. Spectral Analysis and Time Series. Academic; London: 1981. [Google Scholar]

- 39.Adak S. Time-dependent spectral analysis of nonstationary time series. J Amer Statist Assoc. 1998;93:1488–1501. [Google Scholar]

- 40.Dahlhaus RD. A likelihood approximation for locally stationary processes. Annal Stat. 2000;28:1762–1794. [Google Scholar]

- 41.Fan J, Han F, Liu H. Challenges of big data analysis. Natl Sci Rev. 2014;1:293–314. doi: 10.1093/nsr/nwt032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Doucet A, De Freitas N, Gordon N. Sequential Monte Carlo Methods in Practice. Springer; New York: 2001. [Google Scholar]

- 43.McLachlan GJ, Krishnan T. The EM Algorithm and Extensions. Wiley; Hoboken, NJ: 2008. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.