Significance

Most physical theories are effective theories, descriptions at the scale visible to our experiments which ignore microscopic details. Seeking general ways to motivate such theories, we find an information theory perspective: If we select the model which can learn as much information as possible from the data, then we are naturally led to a simpler model, by a path independent of concerns about overfitting. This is encoded as a Bayesian prior which is nonzero only on a subspace of the original parameter space. We differ from earlier prior selection work by not considering an infinite quantity of data. Having finite data is always a limit on the resolution of an experiment, and in our framework this selects how complicated a theory is appropriate.

Keywords: effective theory, model selection, renormalization group, Bayesian prior choice, information theory

Abstract

We use the language of uninformative Bayesian prior choice to study the selection of appropriately simple effective models. We advocate for the prior which maximizes the mutual information between parameters and predictions, learning as much as possible from limited data. When many parameters are poorly constrained by the available data, we find that this prior puts weight only on boundaries of the parameter space. Thus, it selects a lower-dimensional effective theory in a principled way, ignoring irrelevant parameter directions. In the limit where there are sufficient data to tightly constrain any number of parameters, this reduces to the Jeffreys prior. However, we argue that this limit is pathological when applied to the hyperribbon parameter manifolds generic in science, because it leads to dramatic dependence on effects invisible to experiment.

Physicists prefer simple models not because nature is simple, but because most of its complication is usually irrelevant. Our most rigorous understanding of this idea comes from the Wilsonian renormalization group (1–3), which describes mathematically the process of zooming out and losing sight of microscopic details. These details influence the effective theory which describes macroscopic observables only through a few relevant parameter combinations, such as the critical temperature or the proton mass. The remaining irrelevant parameters can be ignored, as they are neither constrained by past data nor useful for predictions. Such models can now be understood as part of a large class called sloppy models (4–14), whose usefulness relies on a similar compression of a large microscopic parameter space down to just a few relevant directions.

This justification for model simplicity is different from the one more often discussed in statistics, motivated by the desire to avoid overfitting (15–21). Since irrelevant parameters have an almost invisible effect on predicted data, they cannot be excluded on these grounds. Here we motivate their exclusion differently: We show that simplifying a model can often allow it to extract more information from a limited dataset and that this offers a guide for choosing appropriate effective theories.

We phrase the question of model selection as part of the choice of a Bayesian prior on some high-dimensional parameter space. In a set of nested models, we can always move to a simpler model by using a prior which is nonzero only on some subspace. Recent work has suggested that interpretable effective models are typically obtained by taking some parameters to their limiting values, often $0$ or $\infty$, thus restricting to lower-dimensional boundaries of the parameter manifold (22).

Our setup is that we wish to learn about a theory by performing some experiment which produces data $x \in X$. The theory and the experiment are together described by a probability distribution $p(x|\theta)$, for each value of the theory’s parameters $\theta \in \Theta$. This function encodes both the quality and the quantity of data to be collected.

The mutual information (MI) between the parameters and their expected data is defined as $\mathrm{MI} = I(X;\Theta) = S(\Theta) - S(\Theta|X)$, where $S$ is the Shannon entropy (23). The MI thus quantifies the information which can be learned about the parameters by measuring the data, or equivalently, the information about the data which can be encoded in the parameters (24, 25). Defining $p_\star(\theta)$ by maximizing this, we see the following:

i) The prior $p_\star(\theta)$ is almost always discrete (26–30), with weight only on a finite number $K$ of points or atoms (Figs. 1 and 2):

$$p_\star(\theta) = \sum_{a=1}^{K} \lambda_a \, \delta(\theta - \theta_a).$$

ii) When data are abundant, $p_\star(\theta)$ approaches the Jeffreys prior $p_J(\theta)$ (31–33). As this continuum limit is approached, the proper spacing of the atoms shrinks as a power law (Fig. 3).

iii) When data are scarce, most atoms lie on boundaries of the parameter space, corresponding to effective models with fewer parameters (Fig. 4). The resulting distribution of weight along relevant directions is much more even than that given by the Jeffreys prior (Fig. 5).

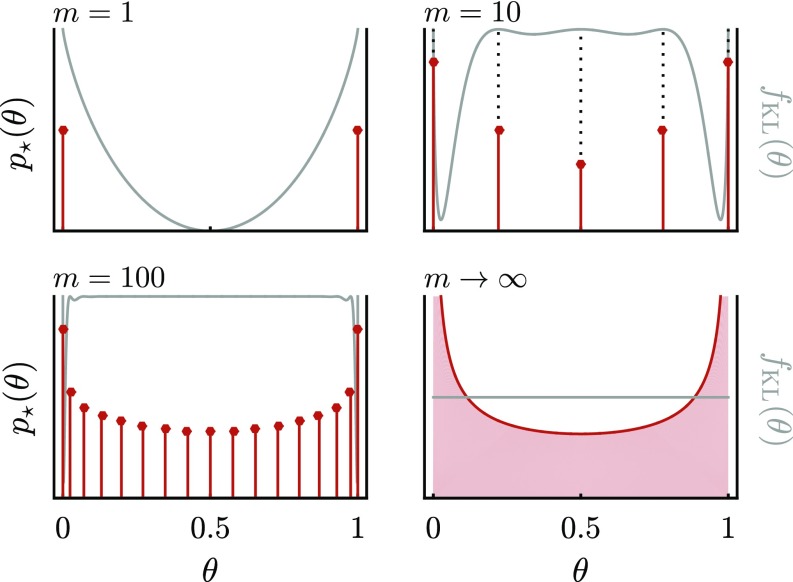

Fig. 1.

Optimal priors for the Bernoulli model (Eq. 1). Red lines indicate the positions of delta functions in $p_\star(\theta)$, which are at the maxima of $f_{KL}(\theta)$, Eq. 3. As $m \to \infty$ these coalesce into the Jeffreys prior $p_J(\theta)$.

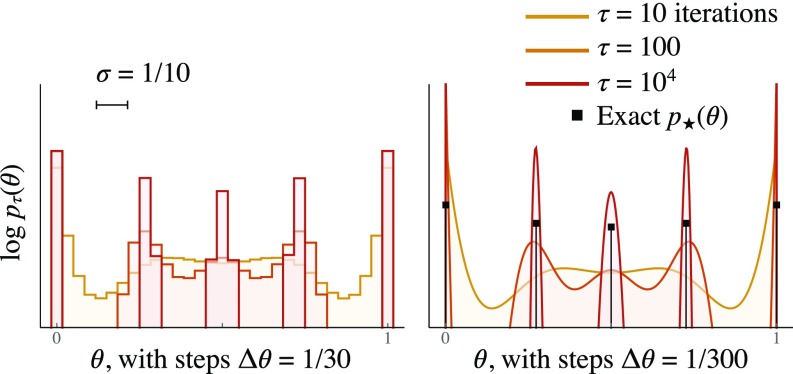

Fig. 2.

Convergence of the BA algorithm. This is for the one-parameter Gaussian model Eq. 2 with $L = 10$ (comparable to $m = 10$ in Fig. 1). Right shows $\theta$ discretized into 10 times as many points, but $p(\theta)$ clearly converges to the same 5 delta functions.

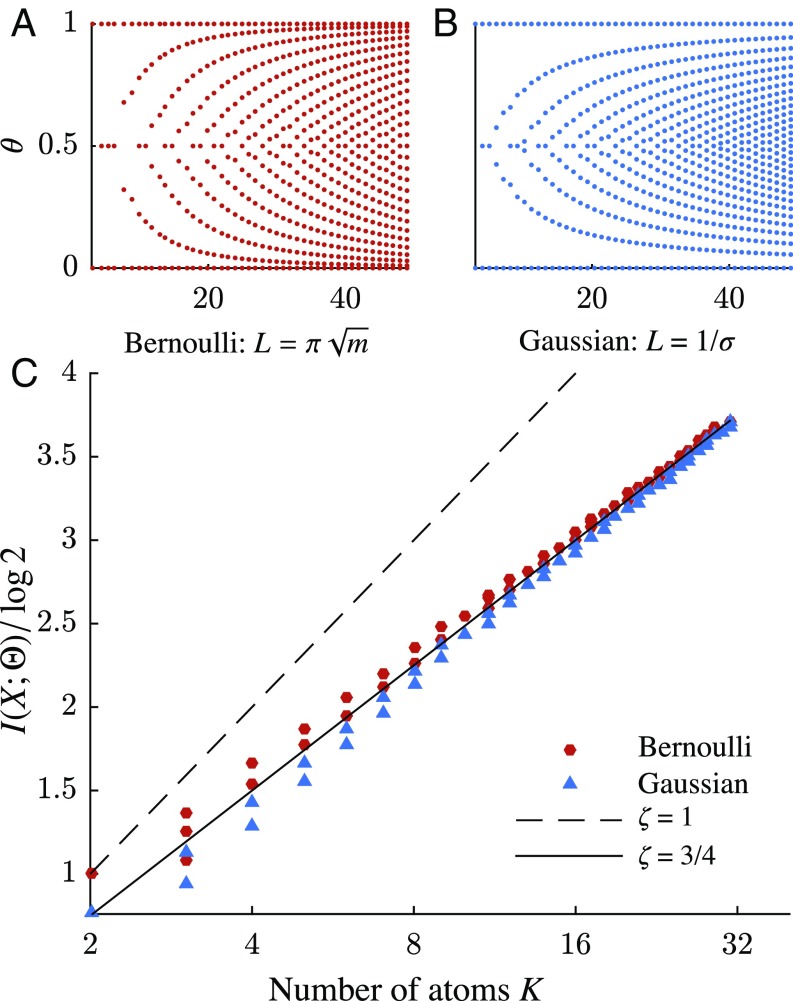

Fig. 3.

Behavior of $p_\star(\theta)$ with increasing Fisher length. A and B show the atoms of $p_\star(\theta)$ for the two one-dimensional models as $L$ is increased (i.e., we perform more repetitions $m$ or have smaller noise $\sigma$). C shows the scaling of the MI (in bits) with the number of atoms $K$. The dashed line is the bound $\mathrm{MI} \leq \log K$, and the solid line is the scaling law $\mathrm{MI} \sim \tfrac{3}{4} \log K$.

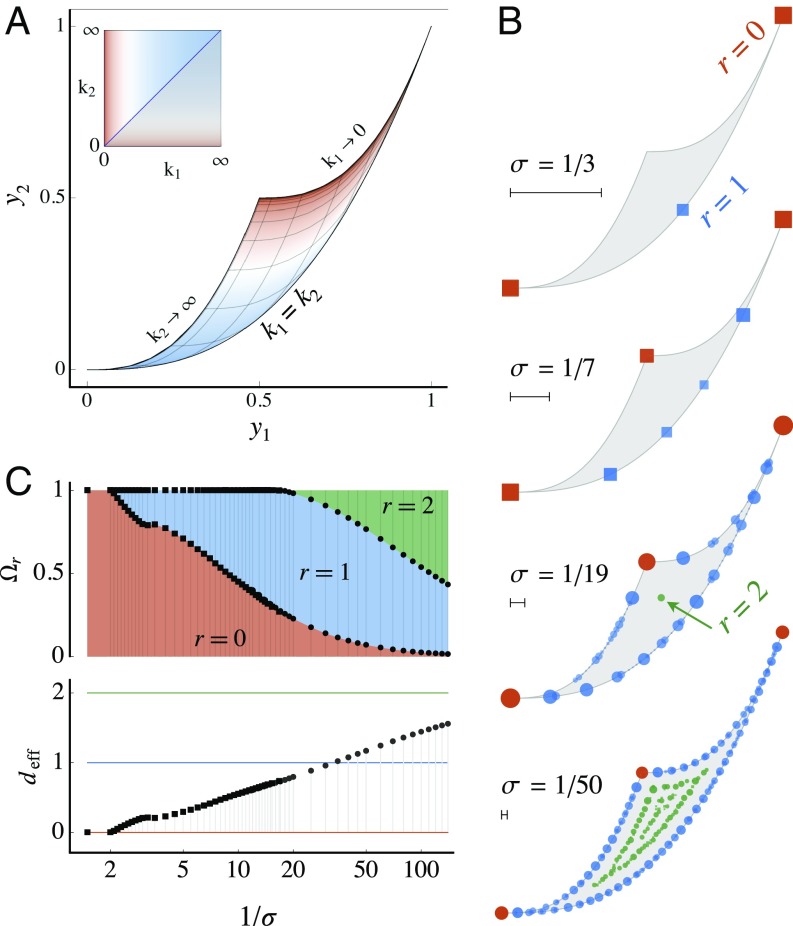

Fig. 4.

Parameters and priors for the exponential model (Eq. 5). A shows the area of the $\vec{y}$ plane covered by all decay constants $k_1, k_2 \geq 0$. B shows the positions of the delta functions of the optimal prior $p_\star(\vec{y})$ for several values of $\sigma$, with colors indicating the dimensionality $d$ at each point. C shows the proportion of weight on these dimensionalities.

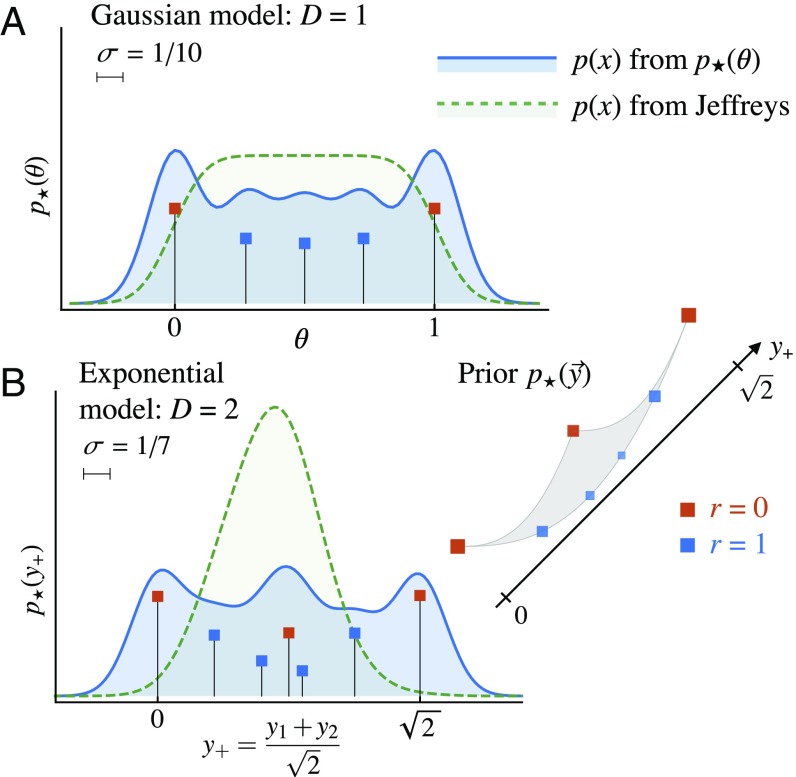

Fig. 5.

Distributions of expected data $p(x)$ from different priors. A is the one-parameter Gaussian model, with $L = 10$. B projects the two-parameter exponential model onto the $y_+$ direction, for $\sigma = 1/7$ where the perpendicular direction should be irrelevant. The length of the relevant direction is about the same as the one-parameter case: $L_+ = 7\sqrt{2}$. Note that the distribution of expected data $p(x_+)$ from the Jeffreys prior here is quite different, with almost no weight at the ends of the range ($0$ and $\sqrt{2}$), because this prior still weights the area and not the length.

After some preliminaries, we demonstrate these properties in three simple examples, each a stylized version of a realistic experiment. To see the origin of discreteness, we study the bias of an unfair coin and the value of a single variable corrupted with Gaussian noise. To see how models of lower dimension arise, we then study the problem of inferring decay rates in a sum of exponentials.

In Supporting Information, we discuss the algorithms used for finding $p_\star(\theta)$, and we apply some more traditional model selection tools to the sum of exponentials example.

Priors and Geometry

Bayes’ theorem tells us how to update our knowledge of $\theta$ upon observing data $x$, from prior $p(\theta)$ to posterior $p(\theta|x) = p(x|\theta)\,p(\theta)/p(x)$, where $p(x) = \int d\theta \, p(\theta)\, p(x|\theta)$. In the absence of better knowledge we must pick an uninformative prior which codifies our ignorance. The naive choice of a flat prior $p(\theta) = \mathrm{const.}$ has undesirable features, in particular making $p(x)$ depend on the choice of parameterization, through the measure $d\theta$.
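The parameterization dependence of a flat prior can be seen in a small numerical sketch. It assumes a single coin flip with heads probability $\theta$ and compares a prior flat in $\theta$ with one flat in an alternative coordinate $\phi = \theta^2$. The function name and the choice of $\phi$ are illustrative assumptions, not taken from the text.

```python
import numpy as np

def predicted_heads_probability(theta_of_u, n_grid=100_000):
    """p(x = heads) under a prior that is flat in the coordinate u on [0, 1].

    theta_of_u maps the flat coordinate u to the heads probability theta.
    """
    u = (np.arange(n_grid) + 0.5) / n_grid   # midpoints of a uniform grid in u
    return float(np.mean(theta_of_u(u)))

# Prior flat in theta itself: u = theta.
p_heads_flat_theta = predicted_heads_probability(lambda u: u)

# Prior flat in phi = theta**2 (an assumed reparameterization): theta = sqrt(phi).
p_heads_flat_phi = predicted_heads_probability(np.sqrt)

print(p_heads_flat_theta, p_heads_flat_phi)
```

The two "flat" priors predict heads with probability 1/2 and about 2/3, respectively, although they describe the same model.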

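The Jeffreys prior $p_J(\theta)$ is invariant under changes of parameterization because it is constructed from some properties of the experiment (34). This $p_J(\theta) \propto \sqrt{\det g_{\mu\nu}(\theta)}$ is, up to normalization, the volume form arising from the Fisher information metric or matrix (FIM):

$$g_{\mu\nu}(\theta) = \int dx \, p(x|\theta) \, \frac{\partial \log p(x|\theta)}{\partial \theta^\mu} \, \frac{\partial \log p(x|\theta)}{\partial \theta^\nu}.$$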

This Riemannian metric defines a reparameterization-invariant distance between points, $ds^2 = \sum_{\mu,\nu=1}^{D} g_{\mu\nu} \, d\theta^\mu d\theta^\nu$. It measures the distinguishability of the data which $\theta$ and $\theta + d\theta$ are expected to produce, in units of standard deviations. Repeating an (independent and identically distributed) experiment $m$ times means considering $p^m(\vec{x}|\theta) = \prod_{j=1}^{m} p(x_j|\theta)$, which leads to metric $g^m_{\mu\nu}(\theta) = m \, g_{\mu\nu}(\theta)$. However, the factor $m^{D/2}$ in the volume is lost by normalizing $p_J(\theta)$. Thus, the Jeffreys prior depends on the type of experiment, but not the quantity of data.
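The cancellation can be written out in one line. This is a sketch assuming $D$ parameters, a normalizable volume, and that the metric for $m$ independent repetitions is $m$ times the single-experiment metric (Fisher information is additive over independent repetitions). The superscript $(m)$ is notation introduced here.

```latex
p_J^{(m)}(\theta)
  = \frac{\sqrt{\det\big[m\, g_{\mu\nu}(\theta)\big]}}
         {\int d\theta'\, \sqrt{\det\big[m\, g_{\mu\nu}(\theta')\big]}}
  = \frac{m^{D/2}\, \sqrt{\det g_{\mu\nu}(\theta)}}
         {m^{D/2} \int d\theta'\, \sqrt{\det g_{\mu\nu}(\theta')}}
  = p_J^{(1)}(\theta)
```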

Bernardo defined a prior $p_\star(\theta)$ by maximizing the MI between parameters and the expected data from $m$ repetitions and then a reference prior by taking the limit $m \to \infty$ (29, 31). Under certain benign assumptions, this reference prior is exactly the Jeffreys prior (31–33), providing an alternative justification for $p_J(\theta)$.

We differ in taking seriously that the amount of data collected is always finite.* Besides being physically unrealistic, the limit $m \to \infty$ is pathological both for model selection and for prior choice. In this limit any number of parameters can be perfectly inferred, justifying an arbitrarily complicated model. In addition, in this limit the posterior $p(\theta|x)$ becomes independent of any smooth prior.†

Geometrically, the defining feature of sloppy models is that they have a parameter manifold with hyperribbon structure (6–9): There are some long directions (corresponding to $d$ relevant, or stiff, parameters) and many shorter directions ($D - d$ irrelevant, or sloppy, parameter combinations). These lengths are often estimated using the eigenvalues of $g_{\mu\nu}$ and have logarithms that are roughly evenly spaced over many orders of magnitude (4, 5). The effect of coarse graining is to shrink irrelevant directions (here using the technical meaning of irrelevant: a parameter which shrinks under renormalization group flow) while leaving relevant directions extended, producing a sloppy manifold (8, 14). By contrast, the limit $m \to \infty$ has the effect of expanding all directions, thus erasing the distinction between directions longer and shorter than the critical length scale of (approximately) 1 SD.

On such a hyperribbon, the Jeffreys prior has an undesirable feature: Since it is constructed from the $D$-dimensional notion of volume, its weight along the relevant directions always depends on the volume of the irrelevant directions. This gives it extreme dependence on which irrelevant parameters are included in the model.‡ The optimal prior $p_\star(\theta)$ avoids this dependence because it is almost always discrete, at finite $m$.§ It puts weight on a set of nearly distinguishable points, closely spaced along the relevant directions, but ignoring the irrelevant ones. Yet being the solution to a reparameterization-invariant optimization problem, the prior $p_\star(\theta)$ retains this good feature of $p_J(\theta)$.

Maximizing the MI was originally done to calculate the capacity of a communication channel, and we can borrow techniques from rate-distortion theory here: The algorithms we use were developed there (37, 38), and the discreteness we exploit was discovered several times in engineering (26–28, 39). In statistics, this problem is more often discussed as an equivalent minimax problem (40). Discreteness was also observed in other minimax problems (41–43) and later in directly maximizing MI (29, 30, 33, 44). However, it does not seem to have been seen as useful, and none of these papers explicitly find discrete priors in dimension $D > 1$, which is where we see attractive properties. Discreteness has been useful, although for different reasons, in the idea of rational inattention in economics (45, 46). There, market actors have a finite bandwidth for news, and this drives them to make discrete choices despite all the dynamics being continuous. Rate-distortion theory has also been useful in several areas of biology (47–49), and discreteness emerges in a recent theoretical model of the immune system (50).

We view this procedure of constructing the optimal prior as a form of model selection, picking out the subspace of $\Theta$ on which $p_\star(\theta)$ has support. This depends on the likelihood function $p(x|\theta)$ and the data space $X$, but not on the observed data $x$. In this regard it is closer to Jeffreys’ perspective on prior selection than to tools like the information criteria and Bayes factors, which are used at the stage of fitting to data. We discuss this difference at length in Model Selection from Data.

One-Parameter Examples

We begin with some problems with a single bounded parameter, of length $L$ in the Fisher metric. These tractable cases illustrate the generic behavior along either short (irrelevant) or long (relevant, $L \gg 1$) parameter directions in higher-dimensional examples.

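Our first example is the Bernoulli problem, in which we wish to determine the probability $\theta \in [0,1]$ that an unfair coin gives heads, using the data from $m$ trials. It is sufficient to record the total number of heads $x$, which occurs with probability

$$p(x|\theta) = \frac{m!}{x!\,(m-x)!} \, \theta^x (1-\theta)^{m-x}. \tag{1}$$

This gives $g_{\theta\theta} = m / [\theta(1-\theta)]$, thus $p_J(\theta) = [\pi \sqrt{\theta(1-\theta)}]^{-1}$, and proper parameter space length $L = \int \sqrt{ds^2} = \pi \sqrt{m}$.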

In the extreme case $m = 1$, the optimal prior is two delta functions, $p_\star(\theta) = \tfrac{1}{2}\delta(\theta) + \tfrac{1}{2}\delta(\theta - 1)$, and $\mathrm{MI} = \log 2$, exactly one bit (29, 30, 33). Before an experiment that will run only once, this places equal weight on both outcomes; afterward it records the outcome. As $m$ increases, weight is moved from the boundary onto interior points, which increase in number and ultimately approach the smooth $p_J(\theta)$ (Figs. 1 and 3A).
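The one-flip case is easy to check numerically. The sketch below assumes the binomial likelihood for $x$ heads in $m$ flips and evaluates the MI of a discrete prior directly. The helper names are hypothetical, and the evenly weighted grid is only a stand-in for a flat prior, included for comparison.

```python
import numpy as np
from math import comb, pi, sqrt

def binomial_likelihood(thetas, m):
    """Matrix P[a, x] = p(x | theta_a) for x = 0..m heads in m flips."""
    thetas = np.asarray(thetas, dtype=float)[:, None]
    x = np.arange(m + 1)[None, :]
    coeff = np.array([comb(m, k) for k in range(m + 1)], dtype=float)[None, :]
    return coeff * thetas**x * (1.0 - thetas)**(m - x)

def mutual_information_bits(weights, likelihood):
    """I(X; Theta) in bits for a discrete prior with the given atom weights."""
    weights = np.asarray(weights, dtype=float)
    p_x = weights @ likelihood                  # marginal p(x)
    joint = weights[:, None] * likelihood       # p(theta_a, x)
    mask = joint > 0                            # convention: 0 log 0 = 0
    ratio = likelihood[mask] / np.broadcast_to(p_x, likelihood.shape)[mask]
    return float(np.sum(joint[mask] * np.log2(ratio)))

m = 1
fisher_length = pi * sqrt(m)                    # L = pi * sqrt(m) for the coin

# Two atoms at the ends of the interval, with equal weight.
mi_two_atoms = mutual_information_bits([0.5, 0.5], binomial_likelihood([0.0, 1.0], m))

# For comparison: equal weights on a fine grid (a stand-in for a flat prior).
grid = np.linspace(0.0, 1.0, 1001)
mi_flat = mutual_information_bits(np.full(grid.size, 1.0 / grid.size),
                                  binomial_likelihood(grid, m))
print(fisher_length, mi_two_atoms, mi_flat)
```

The two-atom prior gives one bit, while the evenly weighted grid gives roughly 0.28 bits: spreading weight over indistinguishable interior points wastes information.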

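Similar behavior is seen in a second example, in which we measure one real number $x$, normally distributed with known $\sigma$ about the parameter $\theta \in [0,1]$:

$$p(x|\theta) = \frac{1}{\sqrt{2\pi}\,\sigma} \, e^{-(x-\theta)^2/2\sigma^2}. \tag{2}$$

Repeated measurements are equivalent to smaller $\sigma$ (by $\sigma \to \sigma/\sqrt{m}$), so we fix $m = 1$ here. The Fisher metric is $g_{\theta\theta} = 1/\sigma^2$, and thus $L = 1/\sigma$. An optimal prior is shown in Fig. 2; in Fig. 5A it is shown along with its implied distribution of expected data $p(x)$. This is similar to that implied by the Jeffreys prior, here $p_J(\theta) = 1$.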
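The equivalence between repetitions and smaller noise can be made explicit. As a sketch, assume $m$ independent draws $x_1, \ldots, x_m$ from a Gaussian with mean $\theta$ and known standard deviation $\sigma$, with $\theta$ confined to the unit interval. Their mean $\bar{x}$ is a sufficient statistic, so

```latex
\bar{x} = \frac{1}{m} \sum_{j=1}^{m} x_j \sim \mathcal{N}\!\left(\theta,\ \frac{\sigma^2}{m}\right),
\qquad
g_{\theta\theta} = \frac{m}{\sigma^2},
\qquad
L = \int_0^1 \sqrt{g_{\theta\theta}}\; d\theta = \frac{\sqrt{m}}{\sigma}
```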

We calculated $p_\star(\theta)$ numerically in two ways. After discretizing both $\theta$ and $x$, we can use the Blahut–Arimoto (BA) algorithm (37, 38). This converges to the global maximum, which is a discrete distribution (Fig. 2). Alternatively, using our knowledge that $p_\star(\theta)$ is discrete, we can instead adjust the positions $\theta_a$ and weights $\lambda_a$ of a finite number of atoms. See Algorithms for more details.
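A minimal sketch of the BA iteration for the one-parameter Gaussian model follows. It is not the authors’ implementation (see Algorithms for that); the noise level, grid sizes, iteration count, and function names are all assumptions chosen for illustration, with $\theta$ confined to $[0, 1]$.

```python
import numpy as np

def gaussian_likelihood(thetas, xs, sigma):
    """Rows are p(x | theta) on a grid of x values, renormalized to sum to one."""
    z = (xs[None, :] - thetas[:, None]) / sigma
    lik = np.exp(-0.5 * z**2)
    return lik / lik.sum(axis=1, keepdims=True)

def kl_to_marginal(prior, lik):
    """f_KL(theta) = sum_x p(x|theta) log[p(x|theta) / p(x)], in nats."""
    p_x = prior @ lik
    return np.sum(lik * (np.log(lik) - np.log(p_x)[None, :]), axis=1)

def blahut_arimoto(lik, n_iter=2000):
    """Multiplicative update p(theta) <- p(theta) exp[f_KL(theta)] / Z."""
    prior = np.full(lik.shape[0], 1.0 / lik.shape[0])   # start from a flat prior
    for _ in range(n_iter):
        prior = prior * np.exp(kl_to_marginal(prior, lik))
        prior /= prior.sum()
    return prior

sigma = 0.25                              # assumed noise, so L = 1/sigma = 4
thetas = np.linspace(0.0, 1.0, 41)        # assumed theta grid on [0, 1]
xs = np.linspace(-1.0, 2.0, 301)          # x grid reaching 4 sigma past each end
lik = gaussian_likelihood(thetas, xs, sigma)

flat = np.full(thetas.size, 1.0 / thetas.size)
prior = blahut_arimoto(lik)
mi_flat = float(flat @ kl_to_marginal(flat, lik))     # MI of the flat start, nats
mi_opt = float(prior @ kl_to_marginal(prior, lik))    # MI after the iterations
print(mi_flat, mi_opt, prior[0], prior[-1])
```

Each update multiplies the weight at $\theta$ by $e^{f_{KL}(\theta)}$, so weight drains away from grid points whose predicted data are less distinguishable from the marginal than average. On a fixed grid the atoms can only sit on grid points, which is one motivation for the second approach of moving atom positions directly.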

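To see analytically why discreteness arises, we write the MI as

$$\mathrm{MI} = I(X;\Theta) = \int d\theta \, p(\theta) \, f_{KL}(\theta), \qquad f_{KL}(\theta) = D_{KL}\big[\, p(x|\theta) \,\big\|\, p(x) \,\big], \tag{3}$$

where $D_{KL}$ is the Kullback–Leibler divergence.¶ Maximizing MI over all functions $p(\theta)$ with $\int d\theta \, p(\theta) = 1$ gives $f_{KL}(\theta) = \mathrm{const.}$ But the maximizing function will not, in general, obey $p(\theta) \geq 0$. Subject to this inequality $p_\star(\theta)$ must satisfy

$$p_\star(\theta) > 0, \;\; f_{KL}(\theta) = \mathrm{MI} \qquad \text{or} \qquad p_\star(\theta) = 0, \;\; f_{KL}(\theta) \leq \mathrm{MI}$$

at every $\theta$. With finite data $f_{KL}(\theta) - \mathrm{MI}$ must be an analytic function of $\theta$ and therefore must be smooth with a finite number of zeros, corresponding to the atoms of $p_\star(\theta)$ (Fig. 1). See refs. 28, 29, and 46 for related arguments for discreteness and refs. 41–43 for other approaches.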
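These optimality conditions can be checked by hand in the simplest case. The sketch below assumes the one-flip coin model with a prior of two equal-weight atoms at $\theta = 0$ and $\theta = 1$, and evaluates the Kullback–Leibler divergence $f_{KL}(\theta)$ on a grid. The function name is hypothetical.

```python
import numpy as np

def f_kl_one_flip(theta, atoms, weights):
    """D_KL[p(x|theta) || p(x)] in nats for a single coin flip, x in {tails, heads}."""
    p_heads = float(np.dot(weights, atoms))              # marginal p(x = heads)
    p_x = np.array([1.0 - p_heads, p_heads])
    p_x_given_theta = np.array([1.0 - theta, theta])
    mask = p_x_given_theta > 0                           # convention: 0 log 0 = 0
    return float(np.sum(p_x_given_theta[mask]
                        * np.log(p_x_given_theta[mask] / p_x[mask])))

atoms = np.array([0.0, 1.0])      # assumed two-atom prior for one flip
weights = np.array([0.5, 0.5])

# MI is the prior-weighted average of f_KL over the atoms.
mi = sum(w * f_kl_one_flip(a, atoms, weights) for a, w in zip(atoms, weights))
f_on_grid = np.array([f_kl_one_flip(t, atoms, weights)
                      for t in np.linspace(0.0, 1.0, 101)])
print(mi, f_on_grid.max(), f_on_grid.min())
```

Here $f_{KL}$ equals the MI ($\log 2$ nats) exactly at the two atoms and is smaller everywhere in between, so no interior point could gain weight without lowering the MI.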

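The number of atoms occurring in $p_\star(\theta)$ increases as the data improve. For $K$ atoms there is an absolute bound $\mathrm{MI} \leq \log K$, saturated if they are perfectly distinguishable. In Fig. 3C we observe that the optimal priors instead approach a line $\mathrm{MI} \to \zeta \log K$, with slope $\zeta \approx 0.75$. At large $L$ the length of parameter space is proportional to the number of distinguishable points, and hence $\mathrm{MI} \to \log L$. Together these imply $K \sim L^{1/\zeta}$, and so the average number density of atoms grows as

$$\rho_0 = K/L \sim L^{1/\zeta - 1} \approx L^{1/3}, \qquad L \gg 1. \tag{4}$$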

Thus, the proper spacing between atoms shrinks to zero in the limit of infinite data; i.e., neighboring atoms cease to be distinguishable.
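The chain of estimates behind this scaling can be laid out step by step. As a sketch, write $\zeta$ for the observed slope of MI against $\log K$ (about $3/4$ in Fig. 3C), $K$ for the number of atoms, and $L$ for the Fisher length; prefactors are dropped throughout.

```latex
\zeta \log K \approx \mathrm{MI} \approx \log L
\;\Longrightarrow\;
K \sim L^{1/\zeta},
\qquad
\rho_0 = \frac{K}{L} \sim L^{1/\zeta - 1} = L^{1/3} \quad (\zeta = 3/4),
\qquad
\text{proper spacing} \sim \frac{1}{\rho_0} \sim L^{-1/3}
```

For example, at $L = 100$ this suggests a number of atoms of order $100^{4/3} \approx 464$, up to the omitted prefactor.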

To derive this scaling law analytically, in a related paper (51) we consider a field theory for the number density of atoms, in which the entropy density (omitting numerical factors) is $S = \mathrm{const.} - e^{-\rho^2} [\rho^4 (\rho')^2 + 1]$. From this we find $\zeta = 3/4$, which is consistent with both examples presented above.

Multiparameter Example

In the examples above, $p_\star(\theta)$ concentrates weight on the edges of its allowed domain when data are scarce (i.e., when $m$ is small or $\sigma$ is large, and hence $L$ is small). We next turn to a multiparameter model in which some parameter combinations are ill-constrained and where edges correspond to reduced models.

The physical picture is that we wish to determine the composition of an unknown radioactive source, from data of $x_t$ Geiger counter clicks at some times $t$. As parameters we have the quantities $A_\mu$ and decay constants $k_\mu$ of isotopes $\mu$. The probability of observing $x_t$ should be a Poisson distribution (of mean $y_t$) at each time, but we approximate these by Gaussians of fixed $\sigma$ to write#

$$p(\vec{x}|\vec{y}) \propto \prod_t e^{-(x_t - y_t)^2/2\sigma^2}, \qquad y_t = \sum_\mu A_\mu \, e^{-k_\mu t}. \tag{5}$$

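We can see the essential behavior with just two isotopes in fixed quantities: $A_\mu = \tfrac{1}{2}$, and thus $\vec{\theta} = (k_1, k_2)$. Measuring at only two times $t_1$ and $t_2$, we almost have a 2D version of Eq. 2, in which the center of the distribution $\vec{y} = (y_1, y_2)$ plays the role of $\theta$ above. The mapping between $\vec{\theta}$ and $\vec{y}$ is shown in Fig. 4A, fixing $t_2/t_1 = e$. The FIM is proportional to the ordinary Euclidean metric for $\vec{y}$, but not for $\vec{\theta}$:

$$g_{\mu\nu}(\vec{\theta}) = \frac{1}{\sigma^2} \sum_{t} \frac{\partial y_t}{\partial \theta^\mu} \frac{\partial y_t}{\partial \theta^\nu} \quad \Longleftrightarrow \quad g_{st}(\vec{y}) = \frac{1}{\sigma^2} \, \delta_{st}. \tag{6}$$

Thus, the Jeffreys prior is a constant on the allowed region of the $\vec{y}$ plane.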
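The pullback of the metric from the data coordinates to the decay constants can be sketched numerically. The code assumes two isotopes with equal amplitudes $1/2$, Gaussian noise of fixed $\sigma$, and expected counts $y_t = \sum_\mu A_\mu e^{-k_\mu t}$. The measurement times, the noise level, the sample decay constants, and the function names are illustrative assumptions.

```python
import numpy as np

def expected_counts(k, times, amplitudes):
    """y_t = sum_mu A_mu * exp(-k_mu * t) for each measurement time t."""
    return np.exp(-np.outer(times, k)) @ amplitudes

def jacobian(k, times, amplitudes):
    """J[t, mu] = d y_t / d k_mu = -t * A_mu * exp(-k_mu * t)."""
    return -times[:, None] * amplitudes[None, :] * np.exp(-np.outer(times, k))

def fisher_metric(k, times, amplitudes, sigma):
    """Pull the Euclidean metric delta_st / sigma^2 on y back to the decay constants."""
    jac = jacobian(k, times, amplitudes)
    return jac.T @ jac / sigma**2

times = np.array([1.0, np.e])        # assumed measurement times
amplitudes = np.array([0.5, 0.5])    # two isotopes in equal, fixed quantities
sigma = 0.1                          # assumed noise level

g_generic = fisher_metric(np.array([0.5, 2.0]), times, amplitudes, sigma)
g_equal = fisher_metric(np.array([1.0, 1.0]), times, amplitudes, sigma)

# sqrt(det g) is the unnormalized Jeffreys density in the (k_1, k_2) coordinates.
jeffreys_generic = np.sqrt(np.linalg.det(g_generic))
det_equal = np.linalg.det(g_equal)   # vanishes where k_1 = k_2
print(jeffreys_generic, det_equal)
```

With equal amplitudes the two columns of the Jacobian coincide when $k_1 = k_2$, so the volume element vanishes there: in the decay-constant coordinates the Jeffreys density goes to zero on the line of equal decay constants.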

Then we proceed to find the optimum $p_\star(\vec{y})$ for this model, shown in Fig. 4B for various values of $\sigma$. When $\sigma$ is large, this has delta functions only in two of the corners, allowing only $k_1 = k_2 = 0$ and $k_1 = k_2 = \infty$. As $\sigma$ is decreased, new atoms appear first along the lower boundary (corresponding to the one-dimensional model where $k_1 = k_2$) and then along the other boundaries. At sufficiently small $\sigma$, atoms start filling in the (2D) interior.

To show this progression in Fig. 4C, we define $\Omega_d$ as the total weight on all edges of dimension $d$ and an effective dimensionality $d_\mathrm{eff} = \sum_{d=1}^{D} d \, \Omega_d$. This increases smoothly from 0 toward $D = 2$ as the data improve.
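As a sketch of this bookkeeping, the effective dimensionality is the weight-averaged dimension of the boundary pieces carrying the atoms (corners have dimension 0, edges 1, the interior 2). The weights below are hypothetical, chosen only to illustrate three regimes; they are not values from Fig. 4C.

```python
def effective_dimensionality(weight_by_dimension):
    """d_eff = sum over d of d * Omega_d, where Omega_d is the total prior weight
    on pieces of the parameter manifold of dimension d."""
    total = sum(weight_by_dimension.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("weights Omega_d must sum to one")
    return sum(d * omega for d, omega in weight_by_dimension.items())

# Hypothetical weights for illustration only.
scarce_data = {0: 1.0, 1: 0.0, 2: 0.0}       # all weight on corner atoms
medium_data = {0: 0.4, 1: 0.5, 2: 0.1}       # most weight on corners and edges
abundant_data = {0: 0.05, 1: 0.15, 2: 0.8}   # most weight in the 2D interior

d_eff_values = [effective_dimensionality(w)
                for w in (scarce_data, medium_data, abundant_data)]
print(d_eff_values)
```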

At medium values of $\sigma$, the prior $p_\star$ almost ignores the width of the parameter manifold and cares mostly about its length ($L_+ = \sqrt{2}/\sigma$ along the diagonal). This behavior is very different from that of the Jeffreys prior: In Fig. 5B we demonstrate this by plotting the distributions of data $p(x)$ implied by these two priors. Jeffreys puts almost no weight near the ends of the long (i.e., stiff or relevant) parameter’s range because the (sloppy or irrelevant) width happens to be even narrower there than in the middle. By contrast, our effective model puts significant weight on each end, much like the one-parameter model in Fig. 5A.

The difference between one and two parameters being relevant (in Fig. 4B) is very roughly $\sigma = 1/7$ to $\sigma = 1/50$, a factor 7 in Fisher length and thus a factor 50 in the number of repetitions $m$ (perhaps the difference between a week’s data and a year’s). These numbers are artificially small to demonstrate the appearance of models away from the boundary: More realistic models often have manifold lengths spread over many orders of magnitude (5, 8) and thus have some parameters inaccessible even with centuries of data. To measure these we need a qualitatively different experiment, justifying a different effective theory.
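The step from Fisher length to repetitions is a one-line estimate. It is sketched here under the assumption, as in the one-parameter Gaussian example, that $m$ repetitions rescale every Fisher length by $\sqrt{m}$; the subscripts 1 and 2 label the two experiments being compared.

```latex
L \propto \sqrt{m}
\;\Longrightarrow\;
\frac{m_2}{m_1} = \left( \frac{L_2}{L_1} \right)^{2} \approx 7^2 = 49 \approx 50
```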

The one-dimensional model along the lower edge of Fig. 4A is the effective theory with equal decay constants. This remains true if we allow more parameters in Eq. 5, and $p_\star(\vec{y})$ will still place a similar weight there.‖ Measuring $x_t$ also at later times will add more thin directions to the manifold (7), but the one-dimensional boundary corresponding to equal decay constants will still have significant weight. The fact that such edges give human-readable simpler models (unlike arbitrary submanifolds) was the original motivation for preferring them in ref. 22, and it is very interesting that our optimization procedure has the same preference.**

Discussion

While the three examples we have studied here are very simple, they demonstrate a principled way of selecting optimal effective theories, especially in high-dimensional settings. Following ref. 45, we may call this rational ignorance.

The prior which encodes this selection is the maximally uninformative prior, in the sense of leaving maximum headroom for learning from data. But its construction depends on the likelihood function $p(x|\theta)$, and thus it contains knowledge about the experiment through which we are probing nature. The Jeffreys prior also depends on the experiment, but more weakly: It is independent of the number of repetitions $m$, precisely because it is the limit $m \to \infty$ of the optimal prior (32, 33).

Under either of these prescriptions, performing a second experiment may necessitate a change in the prior, leading to a change in the posterior not described by Bayes’ theorem. If the second experiment is different from the first one, then changing to the Jeffreys prior for the combined experiment (and then applying Bayes’ rule just once) will have this effect (55, 56).†† Our prescription differs from that of Jeffreys in also regarding more repetitions of an identical experiment as being different. Many experiments would have much higher resolution if they could be repeated for all eternity. The fact that they cannot is an important limit on the accuracy of our knowledge, and our proposal treats this limitation on the same footing as the rest of the specification of the experiment.

Keeping $m$ finite is where we differ from earlier work on prior selection. Bernardo’s reference prior (31) maximizes the same MI, but always in the $m \to \infty$ limit where it gives a smooth analytically tractable function. Using $I(X;\Theta)$ to quantify what can be learned from an experiment goes back to Lindley (24). That finite information implies a discrete distribution was known at least since refs. 26 and 27. What has been overlooked is that this discreteness is useful for avoiding a problem with the Jeffreys prior on the hyperribbon parameter spaces generic in science (5): Because it weights the irrelevant parameter volume, the Jeffreys prior has strong dependence on microscopic effects invisible to experiment. The limit $m \to \infty$ has erased the divide between relevant and irrelevant parameters, by throwing away the natural length scale on the parameter manifold. By contrast, $p_\star(\theta)$ retains discreteness at roughly this scale, allowing it to ignore irrelevant directions. Along a relevant parameter direction this discreteness is no worse than rounding $\theta$ to as many digits as we can hope to measure, and we showed that in fact the spacing of atoms decreases faster than our accuracy improves.

Model selection is more often studied not as part of prior selection, but at the stage of fitting the parameters to data. From noisy data, one is tempted to fit a model which is more complicated than reality; avoiding such overfitting improves predictions. The Akaike information criterion (AIC), Bayesian information criterion (BIC) (15, 61), and related criteria (19, 20, 62–64) are subleading terms of various measures in the $m \to \infty$ limit, in which all (nonsingular) parameters of the true model can be accurately measured. Techniques like minimum description length (MDL), normalized maximum likelihood (NML), and cross-validation (62, 65, 66) need not take this limit, but all are applied after seeing the data. They favor minimally flexible models close to the data seen, while our procedure favors one answer which can distinguish as many different outcomes as possible. It is curious that both approaches can point toward simplicity. We explore this contrast in more detail in Model Selection from Data.‡‡

Being discrete, the prior $p_\star(\theta)$ is very likely to exclude the true value of the parameter, if such a $\theta_\mathrm{true} \in \Theta$ exists. This is not a flaw: The spirit of effective theory is to focus on what is relevant for describing the data, deliberately ignoring microscopic effects which we know to exist (67). Thus, the same effective theory can emerge from different microscopic physics [as in the universality of critical points describing phase transitions (68)]. The relevant degrees of freedom are often quasiparticles [such as the Cooper pairs of superconductivity (69)] which do not exist in the microscopic theory, but give a natural and simple description at the scale being observed. We argued here for such simplicity not on the grounds of the difficulty of simulating $10^{23}$ electrons or of human limitations, but based on the natural measure of information learned.

There is similar simplicity to be found outside of physics. For example, the Michaelis–Menten law for enzyme kinetics (70) is derived as a limit in which only the ratios of some reaction rates matter and is useful regardless of the underlying system. In more complicated systems which we cannot solve by hand, and for which the symmetries and scaling arguments used in physics cannot be applied, we hope that our information approach may be useful for identifying the appropriately detailed theory.

Acknowledgments

We thank Vijay Balasubramanian, William Bialek, Robert de Mello Koch, Peter Grünwald, Jon Machta, James Sethna, Paul Wiggins, and Ned Wingreen for discussion and comments. We thank International Centre for Theoretical Sciences Bangalore for hospitality. H.H.M. was supported by NIH Grant R01GM107103. M.K.T. was supported by National Science Foundation (NSF)-Energy, Power, and Control Networks 1710727. B.B.M. was supported by a Lewis-Sigler Fellowship and by NSF Division of Physics 0957573. M.C.A. was supported by Narodowe Centrum Nauki Grant 2012/06/A/ST2/00396.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

*Interned for 5 y, John Kerrich flipped his coin only $10^4$ times (35). With computers we can do better, but even the Large Hadron Collider generated only about $10^{18}$ bits of data (36).

†For simplicity we consider only regular models; i.e., we assume all parameters are structurally identifiable.

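‡See Fig. 5 for a demonstration of this point. For another example, consider a parameter manifold $\Theta$ which is a cone, with Fisher metric $ds^2 = (50\, d\vartheta)^2 + \vartheta^2 \, d\Omega_n^2$: There is one relevant direction $\vartheta \in [0,1]$ of length $L = 50$, and there are $n$ irrelevant directions forming a sphere of diameter $\vartheta$. Then the prior on $\vartheta$ alone implied by $p_J(\vec{\theta})$ is $p(\vartheta) = (n+1)\,\vartheta^n$, putting most of the weight near $\vartheta = 1$, dramatically so if $n = D - 1$ is large. But since only the relevant direction is visible to our experiment, the region $\vartheta \approx 0$ ought to be treated similarly to $\vartheta \approx 1$. The prior $p_\star(\vec{\theta})$ has this property.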

§We offer both numerical and analytic arguments for discreteness below. The exception to discreteness is that if there is an exact continuous symmetry, $p_\star(\theta)$ will be constant along it. For example, if our Gaussian model Eq. 2 is placed on a circle (identifying both $\theta \sim \theta + 1$ and $x \sim x + 1$), then the optimum prior is a constant.

#Using a normal distribution of fixed $\sigma$ here is what allows the metric in Eq. 6 to be so simple. However, the qualitative behavior from the Poisson distribution is very similar.

‖If we have more parameters than measurements, then the model must be singular. In fact the exponential model of Fig. 4 is already slightly singular, since $k_1 \leftrightarrow k_2$ does not change the data; we could cure this by restricting to $k_2 \geq k_1$, or by working with $\vec{y}$, to obtain a regular model.

**Edges of the parameter manifold give simpler models not only in the sense of having fewer parameters, but also in an algorithmic sense. For example, the Michaelis–Menten model is analytically solvable (52) in a limit which corresponds to a manifold boundary (53). Stable linear dynamical systems of order $n$ are model boundaries of order $n + 1$ systems (54). Taking some parameter combinations to the extreme can lock spins into Kadanoff blocks (53).

‡‡Model selection usually starts from a list of models to be compared, in our language a list of submanifolds of $\Theta$. We can also consider maximizing mutual information in this setting, rather than with an unconstrained function $p(\theta)$, and unsurprisingly we observe a similar preference for highly flexible simpler models. This is also discussed in Eq. S3.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1715306115/-/DCSupplemental.

References

- 1.Kadanoff LP. Scaling laws for Ising models near . Physics. 1966;2:263–272. [Google Scholar]

- 2.Wilson KG. Renormalization group and critical phenomena. 1. Renormalization group and the Kadanoff scaling picture. Phys Rev. 1971;B4:3174–3183. [Google Scholar]

- 3.Cardy JL. Scaling and Renormalization in Statistical Physics. Cambridge Univ Press; Cambridge, UK: 1996. [Google Scholar]

- 4.Waterfall JJ, et al. Sloppy-model universality class and the Vandermonde matrix. Phys Rev Lett. 2006;97:150601–150604. doi: 10.1103/PhysRevLett.97.150601. [DOI] [PubMed] [Google Scholar]

- 5.Gutenkunst RN, et al. Universally sloppy parameter sensitivities in systems biology models. PLoS Comput Biol. 2007;3:1871–1878. doi: 10.1371/journal.pcbi.0030189. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Transtrum MK, Machta BB, Sethna JP. Why are nonlinear fits to data so challenging? Phys Rev Lett. 2010;104:060201. doi: 10.1103/PhysRevLett.104.060201. [DOI] [PubMed] [Google Scholar]

- 7.Transtrum MK, Machta BB, Sethna JP. Geometry of nonlinear least squares with applications to sloppy models and optimization. Phys Rev E. 2011;83:036701. doi: 10.1103/PhysRevE.83.036701. [DOI] [PubMed] [Google Scholar]

- 8.Machta BB, Chachra R, Transtrum MK, Sethna JP. Parameter space compression underlies emergent theories and predictive models. Science. 2013;342:604–607. doi: 10.1126/science.1238723. [DOI] [PubMed] [Google Scholar]

- 9.Transtrum MK, et al. Perspective: Sloppiness and emergent theories in physics, biology, and beyond. J Chem Phys. 2015;143:010901. doi: 10.1063/1.4923066. [DOI] [PubMed] [Google Scholar]

- 10.O’Leary T, Sutton AC, Marder E. Computational models in the age of large datasets. Curr Opin Neurobiol. 2015;32:87–94. doi: 10.1016/j.conb.2015.01.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Niksic T, Vretenar D. Sloppy nuclear energy density functionals: Effective model reduction. Phys Rev C. 2016;94:024333. [Google Scholar]

- 12.Dhruva VR, Anderson J, Papachristodoulou A. Delineating parameter unidentifiabilities in complex models. Phys Rev E. 2017;95:032314. doi: 10.1103/PhysRevE.95.032314. [DOI] [PubMed] [Google Scholar]

- 13.Bohner G, Venkataraman G. Identifiability, reducibility, and adaptability in allosteric macromolecules. J Gen Physiol. 2017;149:547–560. doi: 10.1085/jgp.201611751. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Raju A, Machta BB, Sethna JP. 2017. Information geometry and the renormalization group. arXiv:1710.05787.

- 15.Akaike H. A new look at the statistical model identification. IEEE Trans Automat Contr. 1974;19:716–772. [Google Scholar]

- 16.Sugiura N. Further analysts of the data by Akaike’s information criterion and the finite corrections. Commun Stat Theory Meth. 1978;7:13–26. [Google Scholar]

- 17.Balasubramanian V. Statistical inference, Occam’s razor, and statistical mechanics on the space of probability distributions. Neural Comp. 1997;9:349–368. [Google Scholar]

- 18.Myung IJ, Balasubramanian V, Pitt MA. Counting probability distributions: Differential geometry and model selection. Proc Natl Acad Sci USA. 2000;97:11170–11175. doi: 10.1073/pnas.170283897. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Spiegelhalter DJ, Best NG, Carlin BP, Linde AVD. Bayesian measures of model complexity and fit. J R Stat Soc B. 2002;64:583–639. [Google Scholar]

- 20.Watanabe S. Asymptotic equivalence of Bayes cross validation and widely applicable information criterion in singular learning theory. JMLR. 2010;11:3571–3594. [Google Scholar]

- 21.LaMont CH, Wiggins PA. Information-based inference for singular models and finite sample sizes 2017 [Google Scholar]

- 22.Transtrum MK, Qiu P. Model reduction by manifold boundaries. Phys Rev Lett. 2014;113:098701. doi: 10.1103/PhysRevLett.113.098701. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Shannon CE. A mathematical theory of communication. Bell Sys Tech J. 1948;27:623–656. [Google Scholar]

- 24.Lindley DV. On a measure of the information provided by an experiment. Ann Math Stat. 1956;27:986–100. [Google Scholar]

- 25.Rényi A. On some basic problems of statistics from the point of view of information theory. Proc 5th Berkeley Symp Math Stat Prob. 1967:531–543. [Google Scholar]

- 26.Färber G. Die Kanalkapazität allgemeiner Übertragunskanäle bei begrenztem Signalwertbereich beliebigen Signalübertragungszeiten sowie beliebiger Störung. Arch Elektr Übertr. 1967;21:565–574. [Google Scholar]

- 27.Smith JG. The information capacity of amplitude-and variance-constrained scalar Gaussian channels. Inf Control. 1971;18:203–219. [Google Scholar]

- 28.Fix SL. Rate distortion functions for squared error distortion measures. Proc 16th Annu Allerton Conf Commun Control Comput. 1978:704–711. [Google Scholar]

- 29.Berger JO, Bernardo JM, Mendoza M. On priors that maximize expected information. In: Klein J, Lee J, editors. Recent Developments in Statistics and Their Applications. Freedom Academy; Seoul, Korea: 1988. pp. 1–20. [Google Scholar]

- 30. Zhang Z. 1994. Discrete noninformative priors. PhD thesis (Yale University, New Haven, CT).

- 31. Bernardo JM. Reference posterior distributions for Bayesian inference. J R Stat Soc B. 1979;41:113–147.

- 32. Clarke BS, Barron AR. Jeffreys’ prior is asymptotically least favorable under entropy risk. J Stat Plan Infer. 1994;41:37–60.

- 33. Scholl HR. Shannon optimal priors on independent identically distributed statistical experiments converge weakly to Jeffreys’ prior. Test. 1998;7:75–94.

- 34. Jeffreys H. An invariant form for the prior probability in estimation problems. Proc R Soc A. 1946;186:453–461. doi: 10.1098/rspa.1946.0056.

- 35. Kerrich JE. An Experimental Introduction to the Theory of Probability. E Munksgaard; Copenhagen: 1946.

- 36. O’Luanaigh C. 2013. CERN data centre passes 100 petabytes. home.cern. Available at https://home.cern/about/updates/2013/02/cern-data-centre-passes-100-petabytes.

- 37. Arimoto S. An algorithm for computing the capacity of arbitrary discrete memoryless channels. IEEE Trans Inf Theory. 1972;18:14–20.

- 38. Blahut R. Computation of channel capacity and rate-distortion functions. IEEE Trans Inf Theory. 1972;18:460–473.

- 39. Rose K. A mapping approach to rate-distortion computation and analysis. IEEE Trans Inf Theory. 1994;40:1939–1952.

- 40. Haussler D. A general minimax result for relative entropy. IEEE Trans Inf Theory. 1997;43:1276–1280.

- 41. Ghosh MN. Uniform approximation of minimax point estimates. Ann Math Stat. 1964;35:1031–1047.

- 42. Casella G, Strawderman WE. Estimating a bounded normal mean. Ann Stat. 1981;9:870–878.

- 43. Feldman I. Constrained minimax estimation of the mean of the normal distribution with known variance. Ann Stat. 1991;19:2259–2265.

- 44. Chen M, Dey D, Müller P, Sun D, Ye K. Frontiers of Statistical Decision Making and Bayesian Analysis. Springer; New York: 2010.

- 45. Sims CA. Rational inattention: Beyond the linear-quadratic case. Am Econ Rev. 2006;96:158–163.

- 46. Jung J, Kim J, Matějka F, Sims CA. 2015. Discrete actions in information-constrained decision problems. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.696.267.

- 47. Laughlin S. A simple coding procedure enhances a neuron’s information capacity. Z Naturforsch C. 1981;36:910–912.

- 48. Tkačik G, Callan CG, Bialek W. Information flow and optimization in transcriptional regulation. Proc Natl Acad Sci USA. 2008;105:12265–12270. doi: 10.1073/pnas.0806077105.

- 49. Petkova MD, Tkačik G, Bialek W, Wieschaus EF, Gregor T. 2016. Optimal decoding of information from a genetic network. arXiv:1612.08084.

- 50. Mayer A, Balasubramanian V, Mora T, Walczak AM. How a well-adapted immune system is organized. Proc Natl Acad Sci USA. 2015;112:5950–5955. doi: 10.1073/pnas.1421827112.

- 51. Abbott MC, Machta BB. 2017. An information scaling law. arXiv:1710.09351.

- 52. Schnell S, Mendoza C. Closed form solution for time-dependent enzyme kinetics. J Theor Biol. 1997;187:207–212.

- 53. Transtrum M, Hart G, Qiu P. 2014. Information topology identifies emergent model classes. arXiv:1409.6203.

- 54. Paré PE, Wilson AT, Transtrum MK, Warnick SC. A unified view of balanced truncation and singular perturbation approximations. 2015 American Control Conference. 2015. doi: 10.1109/ACC.2015.7171025.

- 55. Lewis N. Combining independent Bayesian posteriors into a confidence distribution, with application to estimating climate sensitivity. J Stat Plan Inference. 2017. doi: 10.1016/j.jspi.2017.09.013.

- 56. Lewis N. 2013. Modification of Bayesian updating where continuous parameters have differing relationships with new and existing data. arXiv:1308.2791.

- 57. Poole D, Raftery AE. Inference for deterministic simulation models: The Bayesian melding approach. J Am Stat Assoc. 2000;95:1244–1255.

- 58. Seidenfeld T. Why I am not an objective Bayesian; some reflections prompted by Rosenkrantz. Theory Decis. 1979;11:413–440.

- 59. Kass RE, Wasserman L. The selection of prior distributions by formal rules. J Am Stat Assoc. 1996;91:1343–1370.

- 60. Williamson J. Objective Bayesianism, Bayesian conditionalisation and voluntarism. Synthese. 2009;178:67–85.

- 61. Schwarz G. Estimating the dimension of a model. Ann Stat. 1978;6:461–464.

- 62. Rissanen J. Modeling by shortest data description. Automatica. 1978;14:465–471.

- 63. Wallace CS, Boulton DM. An information measure for classification. Comput J. 1968;11:185–194.

- 64. Watanabe S. A widely applicable Bayesian information criterion. J Mach Learn Res. 2013;14:867–897.

- 65. Grünwald PD, Myung IJ, Pitt MA. Advances in Minimum Description Length: Theory and Applications. MIT Press; Cambridge, MA: 2009.

- 66. Arlot S, Celisse A. A survey of cross-validation procedures for model selection. Stat Surv. 2010;4:40–79.

- 67. Anderson PW. More is different. Science. 1972;177:393–396. doi: 10.1126/science.177.4047.393.

- 68. Batterman RW. Philosophical implications of Kadanoff’s work on the renormalization group. J Stat Phys. 2017;167:559–574.

- 69. Bardeen J, Cooper LN, Schrieffer JR. Theory of superconductivity. Phys Rev. 1957;108:1175–1204.

- 70. Michaelis L, Menten ML. The kinetics of invertin action. FEBS Lett. 2013;587:2712–2720. doi: 10.1016/j.febslet.2013.07.015.
