Abstract

Correlation-based Hebbian plasticity is thought to shape neuronal connectivity during development and learning, whereas homeostatic plasticity is thought to stabilize network activity. Here we investigate another, new aspect of this dichotomy: Can Hebbian associative properties also emerge as a network effect from a plasticity rule based on homeostatic principles on the neuronal level? To address this question, we simulated a recurrent network of leaky integrate-and-fire neurons, in which excitatory connections are subject to a structural plasticity rule based on firing rate homeostasis. We show that a subgroup of neurons develops stronger within-group connectivity as a consequence of receiving stronger external stimulation. In an experimentally well-documented scenario we show that feature-specific connectivity, similar to what has been observed in rodent visual cortex, can emerge from such a plasticity rule. The experience-dependent structural changes triggered by stimulation are long-lasting and decay only slowly when the neurons are exposed again to unspecific external inputs.

Introduction

Network plasticity involves connectivity changes at different levels. Changes in the strength of already existing synapses are known as functional plasticity, whereas structural changes of axonal or dendritic morphology, as well as the creation of new and the deletion of already existing synapses, are known as structural plasticity. During certain stages of development, axons and dendrites have been shown to grow and degenerate depending on neuronal activation1–3. Structural changes, however, are not limited to the extent and shape of neurites, but also include more subtle alterations in spines and boutons. Spine remodeling on excitatory cells due to neuronal activity has been observed in vitro using organotypic hippocampal cultures4–6 and in vivo in sensory and in motor cortex of rodents7–11. Not only changes in postsynaptic spines, but also changes in presynaptic structures have been reported in organotypic hippocampal slice cultures12–14.

The exact rules governing activity-dependent structural changes, however, are still not understood. Computational models have tried to shed light on this issue by simulations, showing for example that many observed features of cortical connectivity could be achieved through the interaction of multiple plasticity mechanisms15,16, and that homeostatic regulation of neuronal activity on multiple time scales is necessary in order to stabilize Hebbian changes17,18. Homeostatic plasticity, in this context, usually refers to a regulation of neuronal connectivity that results in stabilization of neuronal activity at a set point. There is growing evidence for homeostatic regulation of cortical connectivity (for a review, see Turrigiano19). More recently, homeostatic regulation of cortical activity has been demonstrated in vivo in rodent visual cortex20–23. Hebbian plasticity, on the other hand, is used to describe mechanisms that change connections between two neurons based on the correlation between their respective activities. Many of these aspects were discussed at a recent conference devoted to the interaction of Hebbian and homeostatic plasticity24.

But is it the case that the associative principles defining Hebbian learning must rely on pre-post correlations that are available exclusively at the level of individual synapses? Or could associative learning in networks also emerge from plasticity rules that are based on homeostatic principles on the level of whole neurons? The latter was first proposed by Dammasch25 and, to our knowledge, has not been followed up since then. He proposed that Hebbian learning could emerge as a network property by using an algorithm based on firing rate homeostasis of individual neurons, with no reference to correlation. The idea that associative learning could also emerge from the principle of homeostasis brings an important new aspect into the discussion of integrating Hebbian and homeostatic plasticity. Experimental data usually describe the effects of plasticity on connectivity, but there are still many details missing. Therefore, it is currently not an easy task to differentiate between three scenarios for how associative learning might arise: It could emerge from a correlation-based Hebbian learning rule, it might arise as a network effect of a learning rule based on homeostasis, or it could occur as a combination of both.

In this paper, we will explore these questions by testing the idea proposed by Dammasch25, using a reimplementation of his algorithm in a more modern modeling framework. We use a structural plasticity rule based on firing rate homeostasis recently implemented in NEST26,27 and a recurrent network of leaky integrate-and-fire (LIF) excitatory and inhibitory neurons. We show that a strongly interconnected assembly of neurons emerges when these neurons are jointly stimulated with stronger external input. We then test the associative properties in an experimentally well-documented scenario, employing a simple model for the maturation of circuits in the primary visual cortex of rodents (V1).

It has been shown that neurons in adult V1 have an increased probability to be synaptically linked to other neurons that have a similar preference for visual features28. Later, it was demonstrated that this feature-specific bias in connectivity was not present at the time of eye opening, and that it developed only after some weeks of visual experience29, suggesting that plastic mechanisms shape the maturation of V1 circuits through visual experience. Surprisingly, a feature-specific bias in connectivity was also shown to develop after eye opening in dark-reared mice lacking any visual input30. The idea of activity-dependent plastic mechanisms shaping the maturation of these networks, however, was not ruled out. Spontaneous retinal activity is also present in dark-reared animals31, and plasticity could refine V1 networks based on patterned activity received by pairs of neurons that share some of these inputs. Moreover, the relationship between connectivity and neuronal response to natural movies was not as strong for dark-reared mice as for normally reared mice30, suggesting that plastic mechanisms contribute to the maturation of V1 circuits in an essential way, and that the full maturation of feature-specific connectivity depends on visual experience.

Recently, Sadeh et al.32 have shown that a bias for feature-specific connectivity can emerge in balanced random networks of LIF neurons with a synaptic plasticity rule that combines Hebbian and homeostatic mechanisms. They used, however, a functional plasticity rule, which limits a direct comparison to experimental data on connectivity. Here, we show that feature-specific connectivity can also emerge in a network of LIF neurons with a structural plasticity rule in which correlations are implicitly evaluated through the random combination of presynaptic and postsynaptic elements in the network, and which does not require that synapses keep track of the presynaptic activity. Moreover, we observed long-lasting structural after-effects of stimulation. This property is compatible with the notion of a persistent memory that is not reflected by activity at every moment.

Methods

Network simulations

We simulate a recurrent network of N = 12500 current-based LIF neurons, of which NE = 0.8 N are excitatory and NI = 0.2 N are inhibitory. The sub-threshold dynamics of the membrane potential Vi of neuron i obeys the differential equation

$$\tau_m \frac{dV_i(t)}{dt} = -V_i(t) + \tau_m \sum_j J_{ij}\, S_j(t - d) \tag{1}$$

where τm is the membrane time constant. The synaptic weight Jij from a presynaptic neuron j to a postsynaptic neuron i is the peak amplitude of the postsynaptic potential and depends on the type of the presynaptic neuron. Excitatory connections have a strength of JE = J = 0.1 mV. Inhibitory connections are stronger by a factor g = 8 such that JI = −gJ = −0.8 mV. A spike train $S_j(t) = \sum_k \delta(t - t_k^j)$ consists of all spikes produced by neuron j. Some of these neurons represent the external input. The lumped spike train of all external neurons to a given neuron in the network is modeled as a Poisson process of rate νext, and external input to different neurons is assumed to be independent. All synapses have a constant transmission delay of d = 1.5 ms. When the membrane potential reaches the firing threshold Vth = 20 mV, the neuron emits a spike to all postsynaptic neurons, and its membrane potential is reset to Vr = 10 mV and held there for a refractory period of tref = 2 ms.
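As a minimal sketch of the neuron model in Eq. 1, the following Python snippet integrates a single neuron with the parameters of Table 1, driven only by its external Poisson input. It is not the NEST implementation used for the simulations: recurrent input and the synaptic delay d are omitted, each incoming spike is assumed to cause an instantaneous jump of size J in the membrane potential, and the function name `simulate_lif`, the step size `dt` and the exact-leak update are illustrative assumptions.

```python
import numpy as np

def simulate_lif(nu_ext=15000.0, J=0.1, tau_m=20.0, V_th=20.0, V_r=10.0,
                 t_ref=2.0, dt=0.1, T=2000.0, seed=0):
    """Single LIF neuron with delta synapses and external Poisson input only.

    Units: mV and ms; nu_ext is given in spikes/s. Returns spike times in ms.
    Illustrative sketch, not the NEST model used in the paper.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(T / dt))
    # Number of external spikes arriving in each time step (Poisson process)
    counts = rng.poisson(nu_ext * dt * 1e-3, size=n_steps)
    decay = np.exp(-dt / tau_m)            # exact leak over one time step
    V = 0.0
    refractory_until = -np.inf
    spikes = []
    for step in range(n_steps):
        t = step * dt
        if t < refractory_until:
            V = V_r                        # clamped during the refractory period
            continue
        V = V * decay + J * counts[step]   # leak, then instantaneous PSP jumps
        if V >= V_th:
            spikes.append(t)
            V = V_r
            refractory_until = t + t_ref
    return np.array(spikes)

spike_times = simulate_lif()
rate_hz = len(spike_times) / 2.0           # T = 2000 ms = 2 s
```

Without recurrent inhibition, the mean drive J νext τm = 0.1 mV × 15 kHz × 20 ms = 30 mV lies above the threshold, so this isolated neuron fires at a much higher rate than the excitatory neurons embedded in the inhibition-dominated network.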

The indegree is fixed at 0.1NI for inhibitory to inhibitory and inhibitory to excitatory connections, and at 0.1NE for excitatory to inhibitory synapses. After connections of these types are established, they remain unchanged throughout the simulation. In contrast, excitatory to excitatory (EE) connections are initially absent and emerge only from the structural plasticity rule. All simulations were conducted using the NEST simulator33,34. Numerical values of all parameters of our model are collected in Table 1.

Table 1.

Parameters of the simulation and neuron model.

| Parameter | Symbol | Value |
|---|---|---|
| Number of neurons | N | 12500 |
| Number of excitatory neurons | NE | 10000 |
| Number of inhibitory neurons | NI | 2500 |
| Incoming excitatory connections per inhibitory neuron | CE | 1000 |
| Incoming inhibitory connections per neuron | CI | 250 |
| Reference weight | J | 0.1 mV |
| Ratio inhibition to excitation | g | 8 |
| Excitatory weight | JE | 0.1 mV |
| Inhibitory weight | JI | −0.8 mV |
| External weight | Jext | 0.1 mV |
| Rate of external input | νext | 15 kHz |
| Membrane time constant | τm | 20 ms |
| Synaptic delay | d | 1.5 ms |
| Threshold potential | Vth | 20 mV |
| Reset potential | Vr | 10 mV |
| Refractory period | tref | 2 ms |

Homeostatic structural plasticity (SP)

The SP model used in our work has been recently implemented in NEST27. The implementation combines precursor models by Dammasch35, van Ooyen & van Pelt36 and van Ooyen37. This model has been employed before to study the rewiring of networks after lesion or stroke26,38,39, the specific properties of small-world networks40, the emergence of critical dynamics in developing neuronal networks41, and neurogenesis in the adult dentate gyrus42,43. All these models, however, included a distance-dependent kernel for the formation of new synapses, which is not part of the NEST implementation27 that was used in our present study.

Neuronal activity and synaptic elements

The EE connections in the network are volatile and undergo continuous remodeling, controlled by the SP algorithm. In its first versions, the model had continuous representations of pre- and postsynaptic densities, which were used for accessing connectivity between two neurons42. Later, it was adapted to have a discrete number of axonal and dendritic elements, which are combined to form synapses between neurons38. In the original model, the electrical activity of a neuron is represented by its intracellular calcium concentration, which is a lowpass filtered version of its time-dependent firing rate. In this paper, we use the lowpass filtered spike train of neuron i as a measure for its instantaneous firing rate ri

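$$\tau_r \frac{dr_i(t)}{dt} = -r_i(t) + S_i(t) \tag{2}$$

where $S_i(t) = \sum_k \delta(t - t_k^i)$ is the spike train of neuron i. The time constant of the lowpass filter was chosen as τr = 10 s throughout all our simulations.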

Excitatory neurons are assigned a target rate ρ and a set of pre- and postsynaptic elements, which can be interpreted as axonal boutons and dendritic spines, respectively. The numbers of presynaptic elements, zpre, and postsynaptic elements, zpost, evolve as a function of the neuron’s firing rate according to

$$\beta_k \frac{dz_i^k(t)}{dt} = \rho - r_i(t), \qquad k \in \{\mathrm{pre}, \mathrm{post}\} \tag{3}$$

where i is the index of the neuron, and βk is a growth parameter. We use a target rate ρ = 8 Hz and growth parameter β = 2 for both pre- and postsynaptic elements of all excitatory neurons, unless stated otherwise.
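The two update rules above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the NEST code: it assumes that the rate trace obeys τr dr/dt = −r + S(t), so that each spike increments r by 1/τr, and that the linear growth rule has the form β dz/dt = ρ − r, with β slowing down growth (consistent with the growth time constant increasing with β reported in the Results). The function name `update_rate_and_elements` and the forward-Euler step are hypothetical choices.

```python
import numpy as np

def update_rate_and_elements(r, z, spike_counts, dt, tau_r=10.0, beta=2.0, rho=8.0):
    """Advance the rate trace r (Hz) and the element count z by one step of dt seconds.

    spike_counts: number of spikes each neuron emitted during this step.
    Assumed forms: tau_r * dr/dt = -r + S(t) and beta * dz/dt = rho - r.
    """
    # Exponential decay of the trace, then a jump of 1/tau_r per emitted spike
    r = r * np.exp(-dt / tau_r) + spike_counts / tau_r
    # Forward-Euler step of the linear growth rule: elements grow below
    # the target rate and shrink above it
    z = z + dt * (rho - r) / beta
    return r, z

# Usage: three silent neurons accumulate elements at rho / beta = 4 per second
r = np.zeros(3)
z = np.zeros(3)
for _ in range(10):                       # 10 steps of 0.1 s
    r, z = update_rate_and_elements(r, z, np.zeros(3), dt=0.1)
```

The same rule is applied to pre- and postsynaptic elements, so in a full model this update would be called once for zpre and once for zpost with the same rate trace.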

Previous work on SP has used different functions to describe how these elements change with the neuron’s activity, such as linear38,41–43, Gaussian26,27,39, or logistic40. Since there is currently no direct experimental data showing how the number of these elements varies with firing rate, we chose a generic linear function to implement a simple phenomenological model of firing rate homeostasis. We use the same growth rule and the same parameters for both types of elements of all neurons in our network. In the original model26, free elements that are not engaged in a synapse decay with a certain rate. In the model considered here, however, free elements do not decay with time. We did run test simulations that included the decay, and found that our main results were not altered (see Supplementary material).

At regular intervals Δt = 100 ms, the structural plasticity rules are applied to delete already existing and create new synaptic contacts. Numerical values of the parameters are summarized in Table 2.

Table 2.

Parameters of the plasticity rule. Numbers in bold are the default values that are used if no specification is given.

| Parameter | Symbol | Value |
|---|---|---|
| Firing rate time constant | τr | 10 s |
| Synaptic elements growth parameter | β | 0.2, 0.625, **2**, 6.25, 20 |
| Target rate | ρ | 5, 6, 7, **8**, 9 Hz |
| Structural plasticity interval | Δt | 100 ms |

Synapse deletion and creation

At regular intervals Δt, when rewiring is scheduled, a neuron may have more or fewer pre- and postsynaptic elements than it has actual synapses. In that case, synapses are either deleted or created, in order to match the number of active synaptic contacts to the number of elements. To this end, the number of postsynaptic (presynaptic) elements is compared to the number of existing incoming (outgoing) synapses of each neuron

$$\Delta z_i^{\mathrm{pre}} = \lfloor z_i^{\mathrm{pre}} \rfloor - \sum_j C_{ji}, \qquad \Delta z_i^{\mathrm{post}} = \lfloor z_i^{\mathrm{post}} \rfloor - \sum_j C_{ij} \tag{4}$$

where C is the matrix containing the number of synapses between presynaptic neurons j and postsynaptic neurons i, and ⌊·⌋ denotes rounding down to an integer number of elements. If the neuron has more synaptic contacts than synaptic elements (Δzk < 0), synapses are deleted. The synapses to be deleted are randomly chosen among the existing contacts a neuron has. After a synapse has been deleted due to a loss of presynaptic (postsynaptic) elements, the corresponding postsynaptic (presynaptic) elements remain available for a new connection. If the neuron has more elements than contacts (Δzk > 0), the neuron is considered to have Δzk free synaptic elements. All free synaptic elements in the network are randomly combined into pairs of pre- and postsynaptic elements to form new synapses. The number of synapses formed is limited by both the total number of free presynaptic and the total number of free postsynaptic elements in the full network. Each newly created synapse has a fixed strength J. Multiple synapses between the same pair of neurons are allowed, but auto-synapses (connections of a neuron onto itself) are not. See Butz & van Ooyen26 and Diaz-Pier et al.27 for more details on the implementation of the model.
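The deletion and creation steps described above can be sketched as a single rewiring function. This is a simplified illustration and not the NEST implementation: the function name `rewire` is hypothetical, element counts are assumed to be rounded down and clipped at zero, and a randomly drawn pair that would form an auto-synapse is simply dropped rather than redrawn.

```python
import numpy as np

def rewire(C, z_pre, z_post, rng):
    """One structural plasticity update of the integer matrix C[i, j] (synapses j -> i)."""
    C = C.copy()
    n = C.shape[0]
    # Deletion (postsynaptic side): more incoming synapses than postsynaptic elements
    for i in range(n):
        n_elem = max(int(np.floor(z_post[i])), 0)
        excess = int(C[i, :].sum()) - n_elem
        if excess > 0:
            sources = np.repeat(np.arange(n), C[i, :])   # one entry per existing synapse
            for j in rng.choice(sources, size=excess, replace=False):
                C[i, j] -= 1
    # Deletion (presynaptic side): more outgoing synapses than presynaptic elements
    for j in range(n):
        n_elem = max(int(np.floor(z_pre[j])), 0)
        excess = int(C[:, j].sum()) - n_elem
        if excess > 0:
            targets = np.repeat(np.arange(n), C[:, j])
            for i in rng.choice(targets, size=excess, replace=False):
                C[i, j] -= 1
    # Creation: count free elements after deletion, then pair them at random
    free_post = np.maximum(np.floor(z_post) - C.sum(axis=1), 0).astype(int)
    free_pre = np.maximum(np.floor(z_pre) - C.sum(axis=0), 0).astype(int)
    post_pool = rng.permutation(np.repeat(np.arange(n), free_post))
    pre_pool = rng.permutation(np.repeat(np.arange(n), free_pre))
    n_new = min(len(post_pool), len(pre_pool))           # limited by the scarcer element type
    for i, j in zip(post_pool[:n_new], pre_pool[:n_new]):
        if i != j:                                       # auto-synapses are not allowed
            C[i, j] += 1                                 # multiple synapses per pair are allowed
    return C

# Usage: five unconnected neurons with two free elements of each type
rng = np.random.default_rng(0)
C = rewire(np.zeros((5, 5), dtype=int), np.full(5, 2.7), np.full(5, 2.7), rng)
```

Because free elements are pooled over the whole network before pairing, neurons that offer many free elements at the same time are more likely to become connected to each other, which is the property the subgroup stimulation experiment probes.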

Subgroup stimulation

The networks were first grown without structured input, with all excitatory neurons receiving external Poisson input with the same rate νext. Stimulation was started after 750 s, when enough EE connections were grown, and, apart from small fluctuations, all neurons fired at their target rate. During the stimulation period, a subgroup comprising 10% of the excitatory neurons received an increased external input (1.1νext for 150 s). After stimulation, the external input was set back to its original value (νext) for all excitatory neurons. Both the activity and the connectivity of the network were monitored for 5500 s.

Visual stimulation protocol

For the visual cortex simulations, we consider a network similar to the one described in Sect. Network simulations. The stimulation only starts after the networks have created enough EE connections such that the preset firing rate can be maintained. Visual stimulation is simulated by providing the excitatory neurons with Poisson input, the rate of which depends on the orientation of the stimulus (see Sadeh et al.32 for details of the protocol). The baseline firing rate νext is the same as during the growth period. The modulation depends on the orientation of the visual stimulation θ, the preferred orientation (PO) θPO of the input, and a modulation gain parameter μ

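$$\nu(\theta) = \nu_{\mathrm{ext}} \left[ 1 + \mu \cos\left( 2 (\theta - \theta_{\mathrm{PO}}) \right) \right] \tag{5}$$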

Each neuron is assigned a parameter θPO, which is randomly drawn from a uniform distribution on [0°, 180°). During the stimulation phase, a different θ is randomly drawn from a uniform distribution on the interval [0°, 180°) and presented to all excitatory neurons for a duration of tst = 1 s. We use a modulation μ = 0.15, and the stimulation protocol consists of presenting a total of Nst = 5000 different stimuli. After the stimulation, the external input to all excitatory neurons is once again set to its initial non-modulated value of νext, and the network is simulated for another tpost = 10000 s. Numerical values of all parameters regarding the stimulation protocol are collected in Table 3.
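The stimulation protocol can be illustrated with a short Python sketch. It assumes that the input rate follows a cosine tuning curve with a period of 180°, ν(θ) = νext[1 + μ cos(2(θ − θPO))], which is the form used in the sketch and should be checked against Sadeh et al.32; the function name `stimulus_rates` and the random seed are hypothetical.

```python
import numpy as np

def stimulus_rates(theta_deg, theta_po_deg, nu_ext=15000.0, mu=0.15):
    """External Poisson rate (spikes/s) of each neuron for stimulus orientation theta.

    Assumed tuning: nu_ext * (1 + mu * cos(2 * (theta - theta_PO))).
    """
    delta = np.deg2rad(theta_deg - np.asarray(theta_po_deg, dtype=float))
    return nu_ext * (1.0 + mu * np.cos(2.0 * delta))

rng = np.random.default_rng(1)
theta_po = rng.uniform(0.0, 180.0, size=10000)   # input PO of each excitatory neuron
stimuli = rng.uniform(0.0, 180.0, size=5)        # a few stimuli; the protocol uses N_st = 5000
for theta in stimuli:
    rates = stimulus_rates(theta, theta_po)
    # in the protocol, each stimulus is presented for t_st = 1 s with these rates
```

With μ = 0.15, the input rate of a neuron varies between 0.85 νext for the orthogonal orientation and 1.15 νext for its preferred orientation.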

Table 3.

Parameters of the stimulation protocol.

| Parameter | Symbol | Value |
|---|---|---|
| Modulation of external input | μ | 0.15 |
| Stimulus orientation | θ | [0°, 180°) |
| Input preferred orientation | θPO | [0°, 180°) |
| Time per stimulus | tst | 1 s |
| Number of stimuli | Nst | 5000 |
| Time post stimulation | tpost | 10000 s |

Spike train analysis

The spike count correlation between a pair of neurons i and j was calculated as the Pearson correlation coefficient

$$CC_{ij} = \frac{c_{ij}}{\sqrt{c_{ii}\, c_{jj}}} \tag{6}$$

where cij is the covariance between spike counts extracted from spike trains xi and xj of two neurons, and cii is the variance of spike counts extracted from xi. Correlations were calculated from spike trains comprising 20 s of activity, using bins of size 10 ms.
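A minimal NumPy sketch of this measure is given below. The function name `spike_count_correlation` and its arguments are illustrative; spike times are assumed to be given in seconds, and the defaults correspond to the 20 s window and 10 ms bins stated above.

```python
import numpy as np

def spike_count_correlation(spikes_i, spikes_j, t_start=0.0, t_stop=20.0, bin_size=0.01):
    """Pearson correlation coefficient of binned spike counts of two neurons."""
    n_bins = int(round((t_stop - t_start) / bin_size))
    edges = t_start + bin_size * np.arange(n_bins + 1)
    x_i, _ = np.histogram(spikes_i, bins=edges)   # spike counts per bin
    x_j, _ = np.histogram(spikes_j, bins=edges)
    c = np.cov(x_i, x_j)                          # 2 x 2 covariance matrix of the counts
    return c[0, 1] / np.sqrt(c[0, 0] * c[1, 1])

# Usage with two independent surrogate spike trains of about 8 Hz
rng = np.random.default_rng(2)
train_a = np.sort(rng.uniform(0.0, 20.0, size=160))
train_b = np.sort(rng.uniform(0.0, 20.0, size=160))
cc = spike_count_correlation(train_a, train_b)
```

The value is undefined for a neuron that emits no spikes in the window, since its count variance is zero; such neurons would have to be excluded before averaging.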

The irregularity of the spike train of neuron i was measured as the coefficient of variation of its inter-spike intervals

$$CV_i = \frac{\sigma_i}{\mu_i} \tag{7}$$

where μi is the mean and σi is the standard deviation of the inter-spike intervals extracted from the spike train of neuron i (duration 20 s).
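The coefficient of variation can be computed directly from the spike times, as in the following sketch. The function name `cv_isi` is illustrative, and the population standard deviation (NumPy's default) is an assumption, since the text does not specify the estimator.

```python
import numpy as np

def cv_isi(spike_times):
    """Coefficient of variation of the inter-spike intervals of one spike train."""
    isi = np.diff(np.sort(spike_times))   # inter-spike intervals
    return isi.std() / isi.mean()

# Usage: a perfectly regular train has CV = 0, a Poisson train has CV close to 1
regular = np.arange(0.0, 20.0, 0.125)
rng = np.random.default_rng(3)
poisson = np.cumsum(rng.exponential(1.0 / 8.0, size=2000))
cv_regular, cv_poisson = cv_isi(regular), cv_isi(poisson)
```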

Data availability

The datasets generated during and analysed during the current study are available from the corresponding author on reasonable request.

Results

We start by growing recurrent networks of excitatory and inhibitory LIF neurons. All synaptic connections are static, except for EE connections, which are initially absent and grow according to a structural plasticity rule that implements firing rate homeostasis (see Methods for more details). Before stimulation, we characterized the networks formed under the influence of the structural plasticity rule for uniform (untuned) external stimulation.

Grown networks are random in absence of structured input

The target rate is set to ρ = 8 Hz for all excitatory neurons, which is the expected firing rate of excitatory neurons for the parameter set we are using and 10% EE connectivity. As expected, average EE in- and outdegree increase from 0 until stabilizing at approximately 1000, corresponding to an average EE connectivity of 10% (Fig. 1B and C). The plasticity rule is always active and individual EE connections are still being created and deleted, but we consider the network to be in equilibrium at this point, when excitatory neurons fire on average at their target rate.

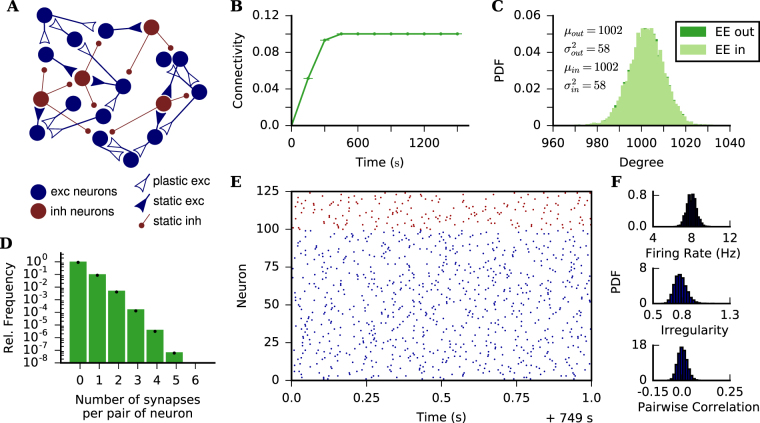

Figure 1.

Structure of grown networks. (A) The network is composed of 80% excitatory and 20% inhibitory LIF neurons. EE connections are plastic and follow the SP rule. All other connections are static and randomly created at the beginning of the simulation, such that all neurons have a fixed indegree corresponding to 10% of the presynaptic population size. (B) Time evolution of average EE connectivity. Dots and bars indicate mean ± standard deviation across 10 independent simulation runs. Highest standard deviation in the time series is 2.8 × 10⁻⁵. (C) Indegree and outdegree distributions for EE connections after 750 s simulation time. μin, σ²in, μout and σ²out are the means and the variances of the shown indegree and outdegree distributions, respectively. (D) Normalized histogram of the number of synapses per contact between pairs of neurons after 750 s of simulation. Black dots refer to a Poisson distribution with rate parameter matching the average connectivity of the simulated network. (E) Raster plot showing 1 s activity of 100 excitatory and 25 inhibitory neurons (randomly chosen) after 750 s of simulation. (F) Histograms of firing rate, irregularity and pairwise correlation for all (pairs of) excitatory neurons after the network has reached a statistical equilibrium state (judged “by eye”). The neurons fire in an asynchronous-irregular (AI) regime. Data were extracted from 20 s of activity, the bin size used for calculating CC was 10 ms. (B–F) Target rate ρ = 8 Hz and β = 2 for all subplots.

Since multiple synapses between the same pair of neurons are allowed, we also looked into the distribution of the number of synapses between pairs of pre- and postsynaptic neurons. Figure 1D shows that this distribution is roughly a Poisson distribution. If individual contacts could be considered as independent random variables with a Poisson distribution, the in- and outdegree of individual neurons would also follow a Poisson distribution and satisfy μ ≈ σ². Figure 1C shows, however, that the distributions of in- and outdegrees have σ² < μ. In the SP model, in- and outdegree distributions change for different distributions of target rates. We also performed simulations in which target rates were drawn from broader distributions, which also yielded broader distributions of in- and outdegree, and the main results on feature-specific connectivity were not altered (see Supplementary material). Since a thorough study of the effect of target rate on degree distributions was beyond the scope of this paper, for simplicity we fixed ρ = 8 Hz for all excitatory neurons.

For the parameters used in our simulations, an inhibition-dominated random network of LIF neurons with 10% connection probability has been shown to have low firing rates, as well as asynchronous and irregular (AI) spike trains44. Figure 1E shows the activity of the network in equilibrium, after 750 s of simulation; the population raster plot indicates that the network is in an AI state. Pairs of neurons fire with a low correlation coefficient (CC, bin size 10 ms) (Fig. 1F), and individual spike trains have a coefficient of variation (CV) around 0.7 (Fig. 1F). As expected from the choice of ρ, the firing rate of excitatory neurons has a mean value of 8 Hz in equilibrium (Fig. 1F). Firing rates, pairwise correlation and irregularity were calculated from spike trains recorded during 20 s of simulation.

The networks grown according to the SP rule do exhibit some non-random features (see Supplementary Material). However, we consider these deviations from random networks as small. After 750 s of simulation, networks with a target rate ρ = 8 Hz for all excitatory neurons, and a growth parameter β = 2 for all synaptic elements have an essentially random structure and an activity that can be classified as AI.

Time constant of growth process depends on target rate and the growth parameter for synaptic elements

We then determined the time scale of network growth. To that end we simulated a network in which all neurons receive Poisson input with the same rate νext until equilibrium was reached. We considered the network to be in equilibrium when connectivity (i.e. the in- and outdegree distributions) is stable. In this state, individual synapses are still plastic and are created, deleted and recreated as the simulation runs. For these simulations, all neurons had the same target firing rate ρ, but we simulated networks with different values set for ρ.

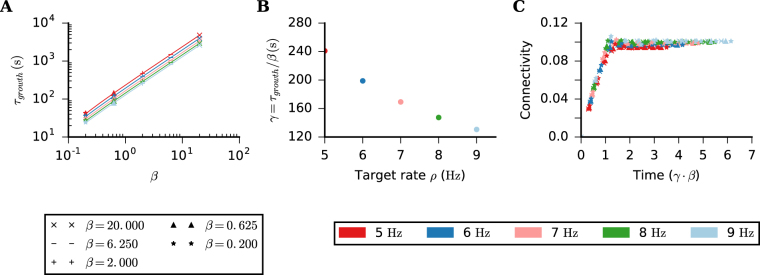

The evolution of EE connectivity is plotted in Fig. 1B. The time scale of that growth process depends on how fast synaptic elements grow (parameter β), but also on the target rate ρ (β = 2 and ρ = 8 Hz in Fig. 1B). The number of new synapses created in the network depends on the number of available free elements. This number, in turn, depends on the growth parameter β for the synaptic elements. Therefore, it comes as no surprise that the network growth also depends on β. The observed relation between the time constant of network growth and the target rate ρ, however, is not self-explanatory, as for networks with fixed incoming inhibitory connections and fixed external input, the gain in firing rate depends non-linearly on the actual number of incoming excitatory connections to excitatory neurons44.

To better understand this dependence, we defined a growth time constant τgrowth = s/α, where s is the plateau value of the connectivity, calculated here as the average connectivity for the last 4 discrete time points in a long enough simulation, and α is the highest (mostly initial) slope extracted from the connectivity time series. We then plotted τgrowth against the growth parameter β of synaptic elements, for different values of ρ (Fig. 2A). The range of parameters considered here spanned more than 3 orders of magnitude, so we used a log-log plot to represent it. The exponent, however, is 1, indicating a linear dependence of τgrowth on β. Also, the growth of EE connectivity is faster for simulations with higher ρ, as can be seen in Fig. 2A. The time constant of EE connectivity growth is, therefore, a function of both β and ρ. In order to express this dependency of the growth time constant on ρ, we extracted the coefficient γ = τgrowth/β from the simulated data (Fig. 2B). Finally, we rescaled the time axis of Fig. 1B by dividing it by τgrowth for given values of β and ρ, obtaining a growth curve in time units of γ ⋅ β (Fig. 2C).
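The definition τgrowth = s/α can be made concrete with a short sketch. The function name `growth_time_constant`, the finite-difference estimate of the slope, and the surrogate growth curve in the usage example are assumptions for illustration; the text does not state how the slope was extracted from the time series.

```python
import numpy as np

def growth_time_constant(t, connectivity, n_plateau=4):
    """Estimate tau_growth = s / alpha from a sampled connectivity time series."""
    s = np.mean(connectivity[-n_plateau:])                 # plateau: mean of the last points
    alpha = np.max(np.diff(connectivity) / np.diff(t))     # steepest finite-difference slope
    return s / alpha

# Usage with a surrogate curve that saturates at 10% connectivity
t = np.arange(0.0, 2001.0, 10.0)                           # time in seconds
surrogate = 0.1 * (1.0 - np.exp(-t / 100.0))
tau_growth = growth_time_constant(t, surrogate)
gamma = tau_growth / 2.0                                   # gamma = tau_growth / beta for beta = 2
```

For an exponentially saturating curve, the estimate is close to the exponential time constant, with a small upward bias that depends on the sampling interval.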

Figure 2.

Time scale of network growth. (A) Time constant of the growth process (defined in text) extracted from Fig. 1B plotted against β, for different values of ρ. This plot summarizes the result of 25 simulation runs, using 5 different values of β and 5 different values of ρ. In each simulation, all excitatory neurons have the same parameters ρ and β, for both presynaptic and postsynaptic elements. (B) Gain γ = τgrowth/β plotted against ρ. (C) Time evolution of the average EE connectivity of the 25 simulation runs in (A), rescaled by τgrowth.

We found that the growth process is stable throughout at least 3 orders of magnitude of the parameter β. An even slower process would lead to exceedingly long simulation times, but we have no reason to believe that this would destabilize the system. A value of β could easily be chosen such that network growth would happen in hours or days, matching experimental data of structural plasticity. Making the process faster, however, may destabilize the system, as the rate of new contacts created would increase. Apart from mathematical constraints, there are also biological limits regarding speed, of course, as a fast system also requires high turnover rates and efficient transport of proteins and other molecules.

From now on, all simulated networks have a target rate ρ = 8 Hz for all excitatory neurons, and all synaptic elements have a growth parameter β = 2.

Associative properties of SP

In his paper, Dammasch25 suggested that the compensation algorithm, on which this particular SP model26,27 is based, could implement a form of Hebbian learning. This becomes clear when analyzing the equations by Butz & van Ooyen26 describing the expected change in connectivity induced by the SP algorithm:

| 8 |

Please note that we have adapted Eq. 8 from Butz & van Ooyen26 to match the nomenclature used in the present paper. Also, we only account for the simplest case here, in which only EE connections are plastic, and where the formation of synapses does not depend on distance. Eq. 8 shows that connectivity increases when two neurons are simultaneously in a low activity state, implementing Hebbian learning through a covariance rule. On the other hand, in periods of elevated activity, the connectivity decreases in an unspecific manner, similar to a weight-dependent synaptic scaling, affecting both pre- and postsynaptic elements.

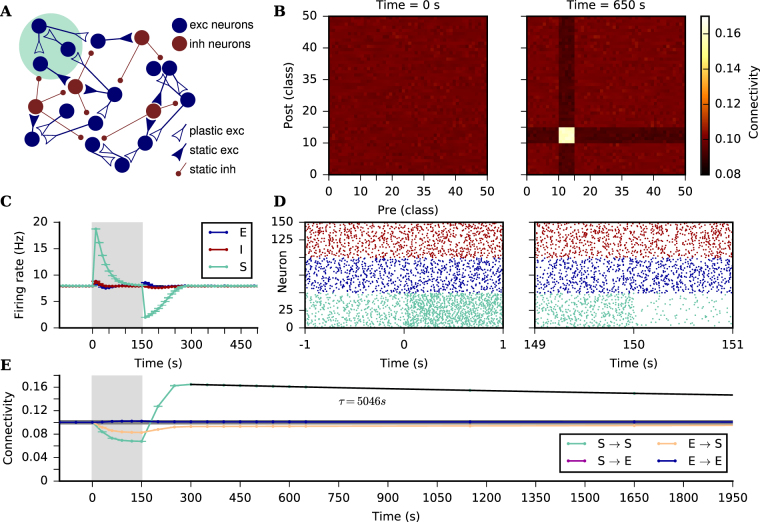

The associative properties of SP are tested by stimulating a subgroup of neurons and quantifying the changes in their connectivity. After the network was grown and all excitatory neurons were firing roughly at their target rate, a subgroup of the excitatory neurons was stimulated with higher external input for a duration of 150 s. Upon stimulation onset, the instantaneous firing rate of the stimulated subgroup increases (Fig. 3C), pushing the neurons away from their target rate and triggering a rewiring of their dendrites and axons. The connectivity of the neurons then decays (Fig. 3E) until the instantaneous rate again reaches its target value, and the average connectivity then stabilizes. When specific stimulation stops, and all excitatory neurons receive again un-tuned external input, the firing rate of the subgroup drops to a level below the set point, as the excitatory amplification by the recurrent network is now reduced due to the deletion of connections during stimulation. The firing rate below the set point triggers once again rewiring, and the neurons create new pre- and postsynaptic elements. Since the effect of specific stimulation on the firing rate of the remaining network is much smaller (Fig. 3C and E), there are more synaptic elements available from the subgroup, and it is more likely for them to create connections within the subgroup than with neurons outside of it (Fig. 3B).

Figure 3.

Associative properties of the SP rule. (A) A recurrent network of excitatory and inhibitory neurons is grown from scratch (see text for details). After it has reached a statistical equilibrium of connectivity, a subgroup (S) comprising 10% of the excitatory neurons is stimulated with a strong external input for 150 s. All the other excitatory (E) and inhibitory (I) neurons in the network are still stimulated with the same external input as during the growth phase. (B) Connectivity matrix before specific stimulation (left), and after specific stimulation has been off for 500 s (right). Neurons are divided into 50 equally large classes and colors correspond to average connectivity between classes. Neurons are sorted such that classes 10 to 15 comprise neurons belonging to the stimulated subgroup. (C) The S neurons (green), E neurons (blue), and I neurons (red) change their firing rates due to a change in external input, but also due to the induced changes in connectivity. As the firing rates of excitatory neurons are subject to individual homeostatic control, they are all back to normal after another 150 s once the extra stimulus is turned off. Dots and bars indicate mean ± standard deviation across 10 independent simulation runs. Highest standard deviation in the time series is 0.09 Hz. (D) Raster plot for 50 neurons randomly chosen from S, 50 from E, and 50 from I. Shown are 2 s before and after specific stimulation starts (left) and 2 s before and after specific stimulation ends (right). (E) Average connectivity within the stimulated subgroup (green), among excitatory neurons not belonging to the subgroup (blue), as well as across populations from non-stimulated excitatory neurons to the stimulated subgroup (orange) and from the stimulated subgroup to non-stimulated excitatory neurons (purple). Dots and bars indicate mean ± standard deviation across 10 independent simulation runs. Highest standard deviation in the time series is 5 × 10⁻⁴. The grey horizontal line indicates the average connectivity right before specific stimulation starts. The black line is an exponential fit to the subgroup to subgroup connectivity, from which the time constant τ was extracted. The structural association among jointly stimulated neurons induced by stimulation persists for a very long time. Grey boxes in (C) and (E) indicate the time when external input to the stimulated subgroup is on.

The structural plasticity rule continuously remodels the network, and connectivity keeps changing even after the firing rate of the subgroup has reached its target (approximately 300 s after the end of specific stimulation, see Fig. 3C). In this case, however, all neurons are firing on average at their target rate, and rewiring is slower, as the change in the number of elements is proportional to r(t) − ρ. The higher connectivity that was formed within the stimulated subgroup persists long after activity has returned to its pre-stimulation level (Fig. 3C and E).
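The dependence of rewiring speed on the distance from the target rate can be illustrated with a minimal sketch. It assumes a linear controller of the form dz/dt = β(ρ − r(t)), with the sign chosen so that elements are created below the target rate and deleted above it. The function names, the forward-Euler integration, and all numerical values are illustrative assumptions, not the parameters used in the simulations.

```python
def element_growth_rate(rate_hz, target_rate_hz, beta):
    """Change in the number of synaptic elements per second.

    Assumed linear controller: positive (growth) below the target rate,
    negative (deletion) above it, zero exactly at the target.
    """
    return beta * (target_rate_hz - rate_hz)


def integrate_elements(z0, rates_hz, target_rate_hz, beta, dt):
    """Forward-Euler integration of the element count along a firing-rate trace."""
    z = z0
    trace = [z]
    for r in rates_hz:
        z += dt * element_growth_rate(r, target_rate_hz, beta)
        trace.append(z)
    return trace


# Hypothetical values: a neuron held 4 Hz below its target grows elements
# steadily, whereas a neuron at its target rate does not change at all.
below_target = integrate_elements(0.0, [4.0] * 10, 8.0, 0.5, 0.1)
at_target = integrate_elements(0.0, [8.0] * 10, 8.0, 0.5, 0.1)
```

In this picture, residual rewiring at equilibrium is driven only by fluctuations of r(t) around ρ, which is why it proceeds slowly.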

SP leads to feature-specific connectivity in a simple model for the maturation of V1

We were then interested in testing the associative properties in a biologically more realistic scenario. As an example, we consider a simple model for the maturation of V1, in which different stimuli are presented consecutively, and neurons respond to them according to their own functional preferences. Once the network had formed and reached equilibrium, excitatory neurons were driven by external input that was tuned to stimulus orientation to simulate visual experience. Each neuron received external input as a Poissonian spike train, the rate of which was modulated according to a tuning curve with an input PO (θPO) that was randomly assigned at the beginning of the simulation. More specifically, the modulation depended on the difference between θPO and the stimulus orientation (SO), which changed randomly at fixed time intervals. According to the stimulation protocol, neurons receive a slightly higher external input when the presented SO is similar to their input PO, which also entails a higher output rate (Fig. 4A–C).
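The stimulation protocol can be sketched as follows. The cosine tuning curve, the modulation depth, the baseline rate, and the function names are assumptions made for illustration; the text above only states that the input rate depends on the difference between θPO and SO.

```python
import math
import random


def tuned_input_rate(nu_ext, modulation, stimulus_orientation, preferred_orientation):
    """External input rate of one neuron, assuming cosine tuning with period pi."""
    delta = stimulus_orientation - preferred_orientation
    return nu_ext * (1.0 + modulation * math.cos(2.0 * delta))


def poisson_spike_times(rate_hz, duration_s, rng):
    """Homogeneous Poisson spike train built from exponential inter-spike intervals."""
    times = []
    t = rng.expovariate(rate_hz)
    while t < duration_s:
        times.append(t)
        t += rng.expovariate(rate_hz)
    return times


rng = random.Random(1)
n_neurons = 5
# The input PO is drawn once per neuron and kept fixed afterwards.
preferred = [rng.uniform(0.0, math.pi) for _ in range(n_neurons)]
for _ in range(3):  # three consecutive stimuli of 1 s each
    so = rng.uniform(0.0, math.pi)  # a new stimulus orientation per interval
    rates = [tuned_input_rate(1000.0, 0.1, so, po) for po in preferred]
    trains = [poisson_spike_times(r, 1.0, rng) for r in rates]
```

With this choice of tuning curve the input averaged over all orientations equals the unmodulated rate, so only the modulation, not the mean drive, changes during stimulation.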

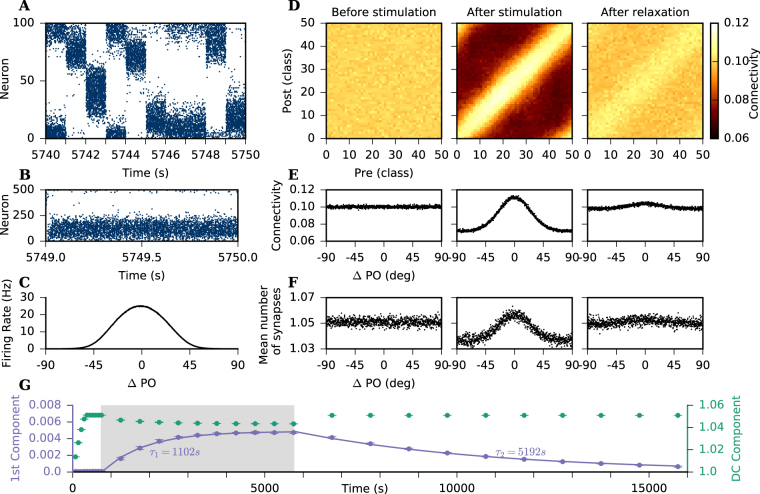

Figure 4.

Emergence of feature specific connectivity. (A) Raster plot of the activity of 100 randomly chosen excitatory neurons during 10 s of stimulation. Neurons are sorted according to their input PO. Every 1 s a new stimulus orientation is randomly chosen and presented, leading to external inputs to all excitatory neurons that are modulated according to their respective input PO. (B) Activity of 500 randomly chosen excitatory neurons for the last 1 s of a stimulation of total duration of 5000 s. Neurons are sorted according to their respective input PO. (C) Tuning curves averaged across all excitatory neurons using the spikes generated during the last 20 stimuli. (D) Connectivity matrix, pre- and post-synaptic neurons are sorted according to their PO and subdivided into 50 equally large classes of similar PO. Colorbar shows average connectivity between classes accounting for multiple contacts. (E) Mean connection probability, also accounting for multiple contacts, plotted against the difference between pre and post PO. Pairs of neurons are sorted into 1000 bins, and shown is the average connectivity for each individual bin. (F) Mean number of synapses plotted against the difference between pre and post PO. Only contacts between pairs of neurons that contain at least one synapse are considered. (D–F) Left column: Connectivity at the end of the initial growth phase (t = 750 s). No orientation bias in connectivity is visible. Middle column: At the end of the stimulation phase (t = 5750 s), connectivity is strongly modulated according to the difference between pre and post PO. Right column: After 10000 s of unmodulated input (t = 15750 s), connectivity still has a slight orientation bias. Note that the scales are the same across columns. (G) First two Fourier components of the connectivity as a function of the difference between pre and post PO (E). 
The left axis (purple) shows the time evolution of the first component, and the right axis (green) shows the time evolution of the DC component. We use non-linear least squares to fit exponential functions to each time series and extract their time constants, τ1 and τ2, respectively. The grey shaded area indicates the period of visual stimulation. Dots and bars indicate mean ± standard deviation across 10 independent simulation runs. Highest standard deviation in the time series is 2.6 × 10⁻⁴ for the DC component and 2 × 10⁻⁴ for the first component.

Before oriented stimulation, the connectivity between excitatory neurons was random, and in particular, there was no bias of connectivity with regard to the PO of neurons (Fig. 4D and E left columns). After the presentation of 5000 different stimuli, however, the connectivity pattern of the excitatory neurons changes, and neurons are more likely to connect to neurons with similar PO (Fig. 4D middle column). The connectivity is, therefore, modulated according to the difference in the POs of pairs of neurons (Fig. 4E middle column). During modulated stimulation, neurons fire either at a lower or at a higher rate than their target rate, according to the difference between the SO and their PO. Whenever they fire below their target rate, they create synaptic elements. In contrast, if they fire above their target rate, they delete synaptic elements. Neurons with similar PO increase their number of elements during the same stimulus periods and, therefore, have a higher probability of creating synapses between each other.

Figure 4D and E refer to average connectivity between classes of neurons, and synapses contribute the same to connectivity irrespective of whether they are established between two different pairs of neurons or between the same pair. Figure 4F shows how the number of synapses between pairs of connected neurons is modulated according to the difference in their respective POs. After visual stimulation, neurons are more likely to create multiple synapses to other neurons with similar PO, as compared to neurons with different PO. Another aspect to notice in Fig. 4D and E is the conspicuous drop in average connectivity following the feature-specific stimulation. One possible explanation for this drop is the non-linearity of the input-output response curve of LIF neurons. During oriented stimulation the average output is higher, although the average input to the neurons is still the same as before stimulation. We performed simulations with a static network with 10% connectivity and the same modulated external input, mimicking visual experience. We found that the average firing rate of the neurons increased by 1 Hz during stimulation (see Supplementary material). In the network with SP such an increase in firing rate would lead to a decrease in average connectivity to keep the average firing rate at its target value.

Structural changes induced by stimulation decay very slowly

As in the case of subgroup stimulation (Fig. 3E), the network structure formed during visual stimulation persists long after the end of stimulation. After 5000 stimuli, we set the external input to all excitatory neurons back to a uniform value of νext to see what happens to the SP-induced connectivity in the absence of any structured stimulation. Even after 10000 s of non-modulated external input, the connectivity of excitatory neurons is still slightly modulated according to their PO (Fig. 4D and E right columns).

Figure 4G shows the time evolution of the first two Fourier components of the feature connectivity modulation (Fig. 4E) during the network growth, stimulation and post-stimulation phases. As previously observed in Fig. 4D and E, there is a drop in average connectivity during the visual stimulation phase, which can be seen in this case as a decrease in the DC component of the modulated signal. Also, during the visual stimulation phase, there is an increase in the first Fourier component, corresponding to the modulation of the signal that happens simultaneously with the decrease in average connectivity (Fig. 4G).
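As an illustration of this decomposition, the following sketch computes a DC component and a first Fourier component from connectivity values binned by PO difference. The normalization, the assumption of bins uniformly spaced over one full period π, and the function name are choices made here for illustration and may differ from the analysis behind Fig. 4G.

```python
import cmath
import math


def fourier_components(connectivity, delta_theta):
    """DC component and amplitude of the first Fourier component.

    connectivity[k] is the mean connectivity in bin k, delta_theta[k] the
    PO difference (radians) at the bin center. Bins are assumed to be
    uniformly spaced over one full period of length pi.
    """
    n = len(connectivity)
    dc = sum(connectivity) / n
    first = 2.0 / n * sum(
        c * cmath.exp(-2j * d) for c, d in zip(connectivity, delta_theta)
    )
    return dc, abs(first)


# Synthetic check: a cosine-modulated profile with known mean and amplitude.
n_bins = 50
deltas = [-math.pi / 2 + math.pi * k / n_bins for k in range(n_bins)]
profile = [0.10 + 0.02 * math.cos(2.0 * d) for d in deltas]
dc, first = fourier_components(profile, deltas)
```

For the synthetic profile the function recovers the mean (0.10) as the DC component and the modulation amplitude (0.02) as the first component, up to floating-point error.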

After the stimulation phase, when all excitatory neurons receive once again the same non-modulated external input νext, the average connectivity returns quickly to the value it had before stimulation. The modulation component of the connectivity, on the other hand, decays slowly back to its original value, indicating that there are different time scales for creating and destroying feature specific connectivity based on the modulation of external input. We used non-linear least squares to fit an exponential function to the first Fourier component of the modulated signal during and after stimulation and extracted the time constants for both processes, shown in Fig. 4G. As described previously, the process of creating feature specific connectivity is 5 times faster (τ1 ≈ 1000 s) than destroying it (τ2 ≈ 5000 s). The time series of the Fourier components for multiplicity modulation are qualitatively very similar to the feature specific modulation (see Supplementary material).
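A time constant can be extracted from such a time series along the following lines. This is a sketch under stated assumptions: the functional form y(t) = a·exp(−t/τ) + c is assumed, and instead of a general non-linear least-squares solver it uses a separable approach (scan τ, solve the linear least-squares problem for a and c at each τ, then refine τ by golden-section search). All names and the synthetic data are hypothetical.

```python
import math


def _linear_fit(t, y, tau):
    """For fixed tau, least-squares amplitude a and offset c of a*exp(-t/tau) + c."""
    e = [math.exp(-ti / tau) for ti in t]
    n = len(t)
    se, see = sum(e), sum(ei * ei for ei in e)
    sy, sey = sum(y), sum(ei * yi for ei, yi in zip(e, y))
    det = n * see - se * se
    a = (n * sey - se * sy) / det
    c = (sy - a * se) / n
    sse = sum((a * ei + c - yi) ** 2 for ei, yi in zip(e, y))
    return a, c, sse


def fit_exponential(t, y, tau_min, tau_max, n_grid=200, n_refine=60):
    """Fit y = a*exp(-t/tau) + c; returns (a, tau, c)."""
    # Coarse scan over a logarithmically spaced grid of candidate time constants.
    ratio = tau_max / tau_min
    grid = [tau_min * ratio ** (k / (n_grid - 1)) for k in range(n_grid)]
    best = min(range(n_grid), key=lambda k: _linear_fit(t, y, grid[k])[2])
    lo = grid[max(best - 1, 0)]
    hi = grid[min(best + 1, n_grid - 1)]
    # Golden-section refinement of tau inside the bracketing interval.
    g = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(n_refine):
        x1 = hi - g * (hi - lo)
        x2 = lo + g * (hi - lo)
        if _linear_fit(t, y, x1)[2] < _linear_fit(t, y, x2)[2]:
            hi = x2
        else:
            lo = x1
    tau = 0.5 * (lo + hi)
    a, c, _ = _linear_fit(t, y, tau)
    return a, tau, c


# Synthetic decay with a known time constant of 5000 s.
times = [100.0 * k for k in range(101)]
values = [0.02 * math.exp(-ti / 5000.0) + 0.1 for ti in times]
a_fit, tau_fit, c_fit = fit_exponential(times, values, 100.0, 1.0e5)
```

Splitting the problem this way leaves a one-dimensional search over τ, which avoids the sensitivity to initial guesses that generic solvers can show for exponential models.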

Discussion

In our study, we employed a structural plasticity (SP) rule based on the homeostasis of firing rates26,27 to grow random networks of excitatory and inhibitory neurons. We allowed only EE connections to grow; all other synapses were static. We showed that in such a configuration an implementation of homeostatic structural plasticity shares important functional properties with a Hebbian learning rule, with different time scales for the creation and decay of the newly formed associations. In a generic model of visual cortex, we showed that feature-specific connectivity, similar to what has been observed in V1 of mice, can emerge from the SP model. This feature-specific connectivity persists even after the feature-specific stimulation has been turned off.

The time scales of structural plasticity in the brain cover several orders of magnitude, ranging from minutes45 up to hours or even days4,9,46. Here we show how the SP model can be adjusted so that simulations remain stable across time scales covering at least three orders of magnitude. Minerbi et al.46 continuously imaged cultures of rat cortical neurons and found that, although the distribution of synaptic sizes was stable over days, individual synapses were continuously remodeled. In the SP model, individual pre- and postsynaptic elements are remodeled on a fast time scale, whereas the global network structure evolves at a much slower pace. Therefore, average connectivity and the distributions of in- and outdegrees might be stable, but individual synapses are still plastic, as new synapses are continuously created and existing ones deleted.

We show in Fig. 2 that the time scale of network growth depends on the growth parameter for the synaptic elements (β). A thorough study on the influence of all parameters of the growth rule on network remodeling was beyond the scope of our present study. Therefore, we used the simplest combination of identical linear controllers for both pre- and postsynaptic elements. There is, however, experimental evidence that the growth of axons is somewhat slower than that of spines47, but the exact rules governing the growth of synaptic elements are still unknown. The choice of a linear function to implement the homeostatic controller of firing rates is, in any case, in accordance with empirical studies that demonstrated an increase in the number of newly formed spines on cortical neurons in adult mice after monocular deprivation9 and after small lesions of the retina48. More recently, several studies20–22 showed a regulation of firing rate through homeostatic plasticity in the rodent visual cortex in vivo. Keck et al.20 demonstrated an increase of spine size in vivo after a change of sensory input through a retinal lesion, indicating a compensatory recovery through functional plasticity, but no change in spine density after the lesion. This does not completely rule out the hypothesis that sensory deprivation could trigger structural plasticity mechanisms, as the homeostatic regulation of activity is probably the result of an interaction between functional and structural plasticity. In this specific case, the spine turnover due to structural plasticity, as previously observed by Keck et al.48, and an increase of the strength of existing connections together lead to a recovery of neuronal activity. Regarding presynaptic elements, Canty et al.49 have recently demonstrated axon regrowth in vivo after ablation, with axonal bouton densities similar to the state before the lesion, in accordance with a putative homeostatic mechanism.

Several known aspects of cortical network structure and dynamics were not reproduced in our simulations, such as a broad and skewed distribution of firing rates50–52 and synaptic strength53, and specific motif statistics for pairs and triplets of neurons54,55. The structure and dynamics observed in cortical neuronal networks, however, emerge from the interplay of multiple plasticity mechanisms. A full account of structural remodeling of cortical networks should, therefore, include multiple plasticity mechanisms56,57. With such models, however, it is not an easy task to understand which effects are due to individual processes and which are due to combined mechanisms. On the one hand, by simulating only one plasticity rule in isolation, we were able to report a very interesting property of a growth rule based on firing rate homeostasis. On the other hand, it must remain open how SP interacts with other forms of plasticity, and how the above-mentioned property is changed in the presence of other plastic mechanisms that simultaneously update connectivity in the network.

We have provided support for an idea put forward by Dammasch25: Hebbian plasticity is not necessarily tied to individual synapses, but can also emerge as a system property. The homeostatic control of structural plasticity is achieved on the level of whole neurons, and not of individual synapses. In this model, neurons have control over the number of synaptic elements, which are putative synapses, but not directly over the formation of specific synapses. The realization of a synaptic contact is, in fact, implemented by randomly wiring available elements. Thus, Hebbian learning is implemented through the availability and random wiring of free elements in the network. In contrast to traditional rules implementing Hebbian learning at individual synapses, in the SP model it is not necessary that the neurons keep track of the individual activity of other neurons. Instead, they only need to keep track of their own activity, and a random wiring scheme implements the correlation dependence. Hebbian association, therefore, is formed due to neurons controlling their own total input and output, but not the weight of individual synapses, an idea that may be related to the neurocentric view of learning proposed by Titley et al.58. In their review, Fauth & Tetzlaff59 distinguish two types of structural plasticity rules: (i) Hebbian, if there is an increase (decrease) of the number of synapses during high (low) activity and (ii) homeostatic, if there is an increase (decrease) of the number of synapses during low (high) activity. The SP rule is, according to this definition, a specific variant of homeostatic structural plasticity. On the network level, however, it implements a form of Hebbian plasticity. The classification proposed by Fauth & Tetzlaff59 takes the rules causing the changes in number of pre- and postsynaptic elements into consideration. Another possible classification, however, would consider the effects of the plastic mechanisms on the connectivity. 
It is actually not an easy task to distinguish these two options in experiments, since what we observe is the effect on the network, and it may be impossible to know what were the mechanisms that led to the observed effects.
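The random wiring step described above can be sketched as follows. The function name, the pooling of free elements, and the fact that self-connections are not excluded are simplifying assumptions made for illustration; the simulations themselves may handle these details differently.

```python
import random


def wire_free_elements(free_pre, free_post, rng):
    """Randomly pair free presynaptic with free postsynaptic elements.

    free_pre[i] and free_post[i] are the numbers of free axonal and
    dendritic elements of neuron i. Returns a list of (pre, post) synapses.
    Self-connections are not excluded in this simplified sketch.
    """
    pre_pool = [i for i, k in enumerate(free_pre) for _ in range(k)]
    post_pool = [j for j, k in enumerate(free_post) for _ in range(k)]
    rng.shuffle(pre_pool)
    rng.shuffle(post_pool)
    # zip stops at the shorter pool, so surplus elements stay unconnected.
    return list(zip(pre_pool, post_pool))


# Neurons 0 and 1 have free elements at the same time, neurons 2 and 3 have none.
synapses = wire_free_elements([2, 2, 0, 0], [2, 2, 0, 0], random.Random(0))
```

In this example all new synapses necessarily fall among neurons 0 and 1: no neuron tracks the activity of any other, yet neurons that grow elements during the same period end up connected to each other.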

Another interesting feature implemented by SP in our specific study is the difference in time scales for establishing and deleting modulated connectivity. If we consider specific non-random connectivity to implement some sort of memory for previous experiences of the system, this would mean that the system learns faster than it forgets. This appears to happen because the rewiring in the network is triggered by (and depends on) the discrepancy between the actual activity of the neuron and its target rate. Learning takes place if there is modulated external input, and the neuronal firing rate is drawn away from its setpoint, leading to strong rewiring. When the external input is not modulated any more, the neuron’s activity recovers quickly back to its target rate, and the rewiring then becomes very slow, depending on the amplitude of random fluctuations. In a more theoretical framework, Fauth et al.60 showed recently that fast learning and slow forgetting can occur in a stochastic model of structural plasticity. In their model, new synapses are randomly formed with a constant probability, and randomly deleted with a probability that depends on the number of the existing synapses and the current stimulation. In our simulations, in contrast, both the creation and the deletion of synapses depend on the number of synaptic elements of each neuron, which in turn depends on its level of activity. Deletion also depends on the number of existing synaptic contacts between pre- and postsynaptic neurons, due to competition when deleting an existing contact. Different rules for the growth of synaptic elements could of course lead to different dependencies. These rules might also influence other properties of the SP model we describe in this paper, such as the capability to form associations.

Hiratani & Fukai61 demonstrated the formation of cell assemblies of strongly connected cells in a random recurrent network with short-term depression, log-STDP62 and homeostatic plasticity. As in other computational models17,63, the stronger connectivity is accompanied by sustained activity of neurons, consistent with the concept of a working memory. Our results, in contrast, show a memory trace in the connectivity of neurons without sustained activity, more consistent with the idea of contextual memories. Another clear difference between these models concerns the time evolution of average synaptic weights. Hiratani & Fukai61 show an increase in average synaptic weight throughout the stimulation. In our simulations, the average connectivity decreases during stimulation due to the homeostatic principles underlying the plasticity rule, and it increases after the specific stimulation has been turned off. This would imply that immediately after the end of stimulation, connectivity between the stimulated neurons is lower than baseline. Although this seems counter-intuitive, there have indeed been studies showing perceptual deterioration after trial repetition for subjects tested in a certain task on the same day64–67, followed by perceptual improvement after 24 and 48 hours67, which would be in agreement with the observed dynamics of connectivity in our simulations. Experimental data on plasticity usually report values of connectivity before and after a stimulation, but do not allow insight into the connectivity dynamics. Knowing the time evolution of these connectivity values during different stimulation protocols could give us important hints about the exact mechanisms of plastic changes and help constrain the plasticity models.

A straightforward consequence of homeostatic plasticity is the stabilization of activity in neuronal networks. This aspect has been thoroughly studied over many years (see ref. 19 for a review on homeostatic plasticity for stabilizing neuronal activity). The synaptic homeostasis hypothesis should be mentioned in this context68. It states that Hebbian learning during awake states leads to an increase in firing rates, and homeostatic plasticity during sleep states has the goal to restore activity back to baseline levels. Hengen et al.23 recently showed that the opposite is the case. They continuously monitored the firing rate of individual visual cortical neurons in freely behaving rats over several days and showed that homeostasis is actually inhibited by sleep and promoted by wake states. It is of course possible that homeostatic plasticity has an exclusive role for network stabilization, even if it is active during wake and not sleep states. In any case, all these aspects taken together suggest that there could be more to homeostatic plasticity than just stabilizing the network.

Acknowledgements

Supported by Erasmus Mundus/EuroSPIN, BMBF (grant BFNT 01GQ0830) and DFG (grant EXC 1086). The HPC facilities are funded by the state of Baden-Württemberg through bwHPC and DFG grant INST 39/963-1 FUGG. We thank Sandra Diaz-Pier and Mikaël Naveau from the Research Center Jülich for support on new features of NEST, and Uwe Grauer from the Bernstein Center Freiburg as well as Bernd Wiebelt and Michael Janczyk from the Freiburg University Computing Center for their assistance with HPC applications. The article processing charge was covered by the open access publication fund of the University of Freiburg.

Author Contributions

J.G. and S.R. conceptualized the main goals, J.G. performed the simulations, J.G. and S.R. analyzed the results, S.R. supervised the work, J.G. and S.R. wrote and revised the manuscript.

Competing Interests

The authors declare no competing interests.

Footnotes

Electronic supplementary material

Supplementary information accompanies this paper at 10.1038/s41598-018-22077-3.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Cohan CS, Kater SB. Suppression of neurite elongation and growth cone motility by electrical activity. Science. 1986;232:1638–40. doi: 10.1126/science.3715470. [DOI] [PubMed] [Google Scholar]

- 2.Van Huizen F, Romijn H. Tetrodotoxin enhances initial neurite outgrowth from fetal rat cerebral cortex cells in vitro. Brain Res. 1987;408:271–274. doi: 10.1016/0006-8993(87)90386-6. [DOI] [PubMed] [Google Scholar]

- 3.Mattson MP, Kater S. Excitatory and inhibitory neurotransmitters in the generation and degeneration of hippocampal neuroarchitecture. Brain Res. 1989;478:337–348. doi: 10.1016/0006-8993(89)91514-X. [DOI] [PubMed] [Google Scholar]

- 4.Harris KM, Kirov SA. Dendrites are more spiny on mature hippocampal neurons when synapses are inactivated. Nat. Neurosci. 1999;2:878–883. doi: 10.1038/13178. [DOI] [PubMed] [Google Scholar]

- 5.Wiegert JS, Oertner TG. Long-term depression triggers the selective elimination of weakly integrated synapses. Proc. Natl. Acad. Sci. 2013;110:E4510–9. doi: 10.1073/pnas.1315926110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Oh WC, Parajuli LK, Zito K. Heterosynaptic Structural Plasticity on Local Dendritic Segments of Hippocampal CA1 Neurons. Cell Rep. 2015;10:162–169. doi: 10.1016/j.celrep.2014.12.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Zuo Y, Yang G, Kwon E, Gan W-B. Long-term sensory deprivation prevents dendritic spine loss in primary somatosensory cortex. Nature. 2005;436:261–265. doi: 10.1038/nature03715. [DOI] [PubMed] [Google Scholar]

- 8.Zuo Y, Lin A, Chang P, Gan W-B. Development of Long-Term Dendritic Spine Stability in Diverse Regions of Cerebral Cortex. Neuron. 2005;46:181–189. doi: 10.1016/j.neuron.2005.04.001. [DOI] [PubMed] [Google Scholar]

- 9.Hofer SB, Mrsic-Flogel TD, Bonhoeffer T, Hübener M. Experience leaves a lasting structural trace in cortical circuits. Nature. 2009;457:313–317. doi: 10.1038/nature07487. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Xu T, et al. Rapid formation and selective stabilization of synapses for enduring motor memories. Nature. 2009;462:915–919. doi: 10.1038/nature08389. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Yang G, Pan F, Gan W-B. Stably maintained dendritic spines are associated with lifelong memories. Nature. 2009;462:920–924. doi: 10.1038/nature08577. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Nikonenko I, Jourdain P, Muller D. Presynaptic remodeling contributes to activity-dependent synaptogenesis. J. Neurosci. 2003;23:8498–505. doi: 10.1523/JNEUROSCI.23-24-08498.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Schuemann A, Klawiter A, Bonhoeffer T, Wierenga CJ. Structural plasticity of GABAergic axons is regulated by network activity and GABAA receptor activation. Front. Neural Circuits. 2013;7:113. doi: 10.3389/fncir.2013.00113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Ninan I, Liu S, Rabinowitz D, Arancio O. Early presynaptic changes during plasticity in cultured hippocampal neurons. EMBO J. 2006;25:4361–4371. doi: 10.1038/sj.emboj.7601318. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Zheng P, Triesch J. Robust development of synfire chains from multiple plasticity mechanisms. Front. Computat. Neurosci. 2014;8:66. doi: 10.3389/fncom.2014.00066. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Miner D, Triesch J. Plasticity-Driven Self-Organization under Topological Constraints Accounts for Non-random Features of Cortical Synaptic Wiring. PLOS Computat. Biol. 2016;12:e1004759. doi: 10.1371/journal.pcbi.1004759. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Zenke, F., Agnes, E. J. & Gerstner, W. Diverse synaptic plasticity mechanisms orchestrated to form and retrieve memories in spiking neural networks. Nat. Commun. 6, 10.1038/ncomms7922 (2015). [DOI] [PMC free article] [PubMed]

- 18.Zenke F, Gerstner W. Hebbian plasticity requires compensatory processes on multiple timescales. Philos. Transact. Royal Soc. B. 2017;372:20160259. doi: 10.1098/rstb.2016.0259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Turrigiano G. Homeostatic synaptic plasticity: local and global mechanisms for stabilizing neuronal function. Cold Spring Harb. perspectives in biology. 2012;4:a005736. doi: 10.1101/cshperspect.a005736. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Keck T, et al. Synaptic Scaling and Homeostatic Plasticity in the Mouse Visual Cortex In Vivo. Neuron. 2013;80:327–334. doi: 10.1016/j.neuron.2013.08.018. [DOI] [PubMed] [Google Scholar]

- 21.Hengen KB, Lambo ME, Van Hooser SD, Katz DB, Turrigiano GG. Firing rate homeostasis in visual cortex of freely behaving rodents. Neuron. 2013;80:335–42. doi: 10.1016/j.neuron.2013.08.038. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Barnes SJ, et al. Subnetwork-Specific Homeostatic Plasticity in Mouse Visual Cortex In Vivo. Neuron. 2015;86:1290–303. doi: 10.1016/j.neuron.2015.05.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Hengen KB, Torrado Pacheco A, McGregor JN, Van Hooser SD, Turrigiano GG. Neuronal Firing Rate Homeostasis Is Inhibited by Sleep and Promoted by Wake. Cell. 2016;165:180–191. doi: 10.1016/j.cell.2016.01.046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Fox K, Stryker M. Integrating Hebbian and homeostatic plasticity: introduction. Philos. Transactions Royal Soc. B. 2017;372:20160413. doi: 10.1098/rstb.2016.0413. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Dammasch, I. E. Structural relization of a Hebb-type learning rule. In M, C. R. (ed.) Models of Brain Functions (Cambridge Univeristy Press, 1989).

- 26.Butz M, van Ooyen A. A simple rule for dendritic spine and axonal bouton formation can account for cortical reorganization after focal retinal lesions. PLOS Computat. Biol. 2013;9:e1003259. doi: 10.1371/journal.pcbi.1003259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Diaz-Pier, S., Naveau, M., Butz-Ostendorf, M. & Morrison, A. Automatic Generation of Connectivity for Large-Scale Neuronal Network Models through Structural Plasticity. Front. Neuroanat. 10, 10.3389/fnana.2016.00057 (2016). [DOI] [PMC free article] [PubMed]

- 28.Ko H, et al. Functional specificity of local synaptic connections in neocortical networks. Nature. 2011;473:87–91. doi: 10.1038/nature09880. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Ko H, et al. The emergence of functional microcircuits in visual cortex. Nature. 2013;496:96–100. doi: 10.1038/nature12015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Ko H, Mrsic-Flogel TD, Hofer SB. Emergence of feature-specific connectivity in cortical microcircuits in the absence of visual experience. J. Neurosci. 2014;34:9812–6. doi: 10.1523/JNEUROSCI.0875-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Demas J, Eglen SJ, Wong ROL. Developmental loss of synchronous spontaneous activity in the mouse retina is independent of visual experience. J. Neurosci. 2003;23:2851–60. doi: 10.1523/JNEUROSCI.23-07-02851.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Sadeh S, Clopath C, Rotter S. Emergence of Functional Specificity in Balanced Networks with Synaptic Plasticity. PLOS Computat. Biol. 2015;11:e1004307. doi: 10.1371/journal.pcbi.1004307. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Gewaltig M-O, Diesmann M. NEST (NEural Simulation Tool) Scholarpedia. 2007;2:1430. doi: 10.4249/scholarpedia.1430. [DOI] [Google Scholar]

- 34.Bos, H. et al. NEST 2.10.0, 10.5281/ZENODO.44222 (2015).

- 35.Dammasch IE, Wagner GP, Wolff JR. Self-stabilization of neuronal networks. Biol. Cybern. 1986;54:211–222. doi: 10.1007/BF00318417. [DOI] [PubMed] [Google Scholar]

- 36.van Ooyen A, van Pelt J. Activity-dependent Outgrowth of Neurons and Overshoot Phenomena in Developing Neural Networks. J. Theor. Biol. 1994;167:27–43. doi: 10.1006/jtbi.1994.1047. [DOI] [Google Scholar]

- 37.Van Ooyen A, Van Pelt J, Corner MA. Implications of activity dependent neurite outgrowth for neuronal morphology and network development. J. Theoret. Biol. 1995;172:63–82. doi: 10.1006/jtbi.1995.0005. [DOI] [PubMed] [Google Scholar]

- 38.Butz M, Van Ooyen A, Wörgötter F. A model for cortical rewiring following deafferentation and focal stroke. Front. Computat. Neurosci. 2009;3:10. doi: 10.3389/neuro.10.010.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Butz M, Steenbuck ID, van Ooyen A. Homeostatic structural plasticity can account for topology changes following deafferentation and focal stroke. Front. Neuroanat. 2014;8:115. doi: 10.3389/fnana.2014.00115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Butz M, Steenbuck ID, van Ooyen A. Homeostatic structural plasticity increases the efficiency of small-world networks. Front. Synaptic Neurosci. 2014;6:7. doi: 10.3389/fnsyn.2014.00007.

- 41.Tetzlaff C, Okujeni S, Egert U, Wörgötter F, Butz M. Self-Organized Criticality in Developing Neuronal Networks. PLOS Comput. Biol. 2010;6:e1001013. doi: 10.1371/journal.pcbi.1001013.

- 42.Butz M, Lehmann K, Dammasch IE, Teuchert-Noodt G. A theoretical network model to analyse neurogenesis and synaptogenesis in the dentate gyrus. Neural Networks. 2006;19:1490–1505. doi: 10.1016/j.neunet.2006.07.007.

- 43.Butz M, Teuchert-Noodt G, Grafen K, van Ooyen A. Inverse relationship between adult hippocampal cell proliferation and synaptic rewiring in the dentate gyrus. Hippocampus. 2008;18:879–898. doi: 10.1002/hipo.20445.

- 44.Brunel N. Dynamics of Sparsely Connected Networks of Excitatory and Inhibitory Spiking Neurons. J Comput. Neurosci. 2000;8:183–208. doi: 10.1023/A:1008925309027.

- 45.Bonhoeffer T, Engert F. Dendritic spine changes associated with hippocampal long-term synaptic plasticity. Nature. 1999;399:66–70. doi: 10.1038/19978.

- 46.Minerbi A, et al. Long-Term Relationships between Synaptic Tenacity, Synaptic Remodeling, and Network Activity. PLOS Biol. 2009;7:e1000136. doi: 10.1371/journal.pbio.1000136.

- 47.Majewska AK, Newton JR, Sur M. Remodeling of Synaptic Structure in Sensory Cortical Areas In Vivo. J. Neurosci. 2006;26:3021–3029. doi: 10.1523/JNEUROSCI.4454-05.2006.

- 48.Keck T, et al. Massive restructuring of neuronal circuits during functional reorganization of adult visual cortex. Nat. Neurosci. 2008;11:1162–1167. doi: 10.1038/nn.2181.

- 49.Canty AJ, et al. In-vivo single neuron axotomy triggers axon regeneration to restore synaptic density in specific cortical circuits. Nat. Commun. 2013;4:777–791. doi: 10.1038/ncomms3038.

- 50.Hromádka T, DeWeese MR, Zador AM. Sparse Representation of Sounds in the Unanesthetized Auditory Cortex. PLOS Biol. 2008;6:e16. doi: 10.1371/journal.pbio.0060016.

- 51.O’Connor DH, Peron SP, Huber D, Svoboda K. Neural Activity in Barrel Cortex Underlying Vibrissa-Based Object Localization in Mice. Neuron. 2010;67:1048–1061. doi: 10.1016/j.neuron.2010.08.026.

- 52.Mizuseki K, Buzsáki G. Preconfigured, Skewed Distribution of Firing Rates in the Hippocampus and Entorhinal Cortex. Cell Rep. 2013;4:1010–1021. doi: 10.1016/j.celrep.2013.07.039.

- 53.Cossell L, et al. Functional organization of excitatory synaptic strength in primary visual cortex. Nature. 2015;518:399–403. doi: 10.1038/nature14182.

- 54.Song S, Sjöström PJ, Reigl M, Nelson S, Chklovskii DB. Highly nonrandom features of synaptic connectivity in local cortical circuits. PLOS Biol. 2005;3:e68. doi: 10.1371/journal.pbio.0030068.

- 55.Perin R, Berger TK, Markram H. A synaptic organizing principle for cortical neuronal groups. Proc. Natl. Acad. Sci. 2011;108:5419–5424. doi: 10.1073/pnas.1016051108.

- 56.Toyoizumi T, Kaneko M, Stryker M, Miller K. Modeling the Dynamic Interaction of Hebbian and Homeostatic Plasticity. Neuron. 2014;84:497–510. doi: 10.1016/j.neuron.2014.09.036.

- 57.Deger M, Seeholzer A, Gerstner W. Multicontact Co-operativity in Spike-Timing–Dependent Structural Plasticity Stabilizes Networks. Cereb. Cortex. 2017;61:247–258. doi: 10.1093/cercor/bhx339.

- 58.Titley HK, Brunel N, Hansel C. Toward a Neurocentric View of Learning. Neuron. 2017;95:19–32. doi: 10.1016/j.neuron.2017.05.021.

- 59.Fauth M, Tetzlaff C. Opposing Effects of Neuronal Activity on Structural Plasticity. Front. Neuroanat. 2016;10:75. doi: 10.3389/fnana.2016.00075.

- 60.Fauth M, Wörgötter F, Tetzlaff C. Formation and Maintenance of Robust Long-Term Information Storage in the Presence of Synaptic Turnover. PLOS Comput. Biol. 2015;11:e1004684. doi: 10.1371/journal.pcbi.1004684.

- 61.Hiratani N, Fukai T. Interplay between short- and long-term plasticity in cell-assembly formation. PLOS ONE. 2014;9:e101535. doi: 10.1371/journal.pone.0101535.

- 62.Gilson M, Fukai T. Stability versus neuronal specialization for STDP: long-tail weight distributions solve the dilemma. PLOS ONE. 2011;6:e25339. doi: 10.1371/journal.pone.0025339.

- 63.Sadeh S, Clopath C, Rotter S. Processing of Feature Selectivity in Cortical Networks with Specific Connectivity. PLOS ONE. 2015;10:e0127547. doi: 10.1371/journal.pone.0127547.

- 64.Mednick SC, Arman AC, Boynton GM. The time course and specificity of perceptual deterioration. Proc. Natl. Acad. Sci. 2005;102:3881–3885. doi: 10.1073/pnas.0407866102.

- 65.Censor N, Karni A, Sagi D. A link between perceptual learning, adaptation and sleep. Vision Res. 2006;46:4071–4074. doi: 10.1016/j.visres.2006.07.022.

- 66.Ofen N, Moran A, Sagi D. Effects of trial repetition in texture discrimination. Vision Res. 2007;47:1094–1102. doi: 10.1016/j.visres.2007.01.023.

- 67.Mednick S, Nakayama K, Stickgold R. Sleep-dependent learning: a nap is as good as a night. Nat. Neurosci. 2003;6:697–698. doi: 10.1038/nn1078.

- 68.Tononi G, Cirelli C. Sleep and the Price of Plasticity: From Synaptic and Cellular Homeostasis to Memory Consolidation and Integration. Neuron. 2014;81:12–34. doi: 10.1016/j.neuron.2013.12.025.

Data Availability Statement

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.