Abstract

Bayesian inference plays an important role in phylogenetics, evolutionary biology, and in many other branches of science. It provides a principled framework for dealing with uncertainty and quantifying how it changes in the light of new evidence. For many complex models and inference problems, however, only approximate quantitative answers are obtainable. Approximate Bayesian computation (ABC) refers to a family of algorithms for approximate inference that makes a minimal set of assumptions by only requiring that sampling from a model is possible. We explain here the fundamentals of ABC, review the classical algorithms, and highlight recent developments. [ABC; approximate Bayesian computation; Bayesian inference; likelihood-free inference; phylogenetics; simulator-based models; stochastic simulation models; tree-based models.]

Introduction

Many recent models in biology describe nature to a high degree of accuracy but are not amenable to analytical treatment. The models can, however, be simulated on computers and we can thereby replicate many complex phenomena such as the evolution of genomes (Marttinen et al. 2015), the dynamics of gene regulation (Toni et al. 2009), or the demographic spread of a species (Currat and Excoffier 2004; Fagundes et al. 2007; Itan et al. 2009; Excoffier et al. 2013). Such simulator-based models are often stochastic and have multiple parameters. While it is usually relatively easy to generate data from the models for any configuration of the parameters, the real interest is often focused on the inverse problem: the identification of parameter configurations that would plausibly lead to data that are sufficiently similar to the observed data. Solving such a nonlinear inverse problem is generally a very difficult task.

Bayesian inference provides a principled framework for solving the aforementioned inverse problem. A prior probability distribution on the model parameters is used to describe the initial beliefs about what values of the parameters could be plausible. The prior beliefs are updated in light of the observed data by means of the likelihood function. Computing the likelihood function, however, is mostly impossible for simulator-based models due to the unobservable (latent) random quantities that are present in the model. In some cases, Monte Carlo methods offer a way to handle the latent variables such that an approximate likelihood is obtained, but these methods have their limitations, and for large and complex models, they are “too inefficient by far” (Green et al. 2015, p. 848). To deal with models where likelihood calculations fail, other techniques have been developed that are collectively referred to as likelihood-free inference or approximate Bayesian computation (ABC).

In a nutshell, ABC algorithms sample from the posterior distribution of the parameters by finding values that yield simulated data sufficiently resembling the observed data. ABC is widely used in systematics. For instance, Hickerson et al. (2006) used ABC to test for simultaneous divergence between members of species pairs. Fan and Kubatko (2011) estimated the topology and speciation times of a species tree under the coalescent model using ABC. Their method does not require sequence data, but only gene tree topology information, and was found to perform favorably in terms of both accuracy and computation time. Slater et al. (2012) used ABC to simultaneously infer rates of diversification and trait evolution from incompletely sampled phylogenies and trait data. They found their ABC approach to be comparable to likelihood-based methods that use complete data sets. In addition, it can handle extremely sparsely sampled phylogenies and trees containing very large numbers of species. Ratmann et al. (2012) used ABC to fit two different mechanistic phylodynamic models for interpandemic influenza A(H3N2) using both surveillance data and sequence data simultaneously. The simultaneous consideration of these two types of data allowed them to drastically constrain the parameter space and expose model deficiencies using the ABC framework. Very recently, Baudet et al. (2015) used ABC to reconstruct the coevolutionary history of host–parasite systems. The ABC-based method was shown to handle large trees beyond the scope of other existing methods.

While widely applicable, ABC comes with its own set of difficulties, that are of both computational and statistical nature. The two main intrinsic difficulties are how to efficiently find plausible parameter values and how to define what is similar to the observed data and what is not. All ABC algorithms have to deal with these two issues in some manner, and the different algorithms discussed here essentially differ in how they tackle the two problems.

The remainder of this article is structured as follows. We next discuss important properties of simulator-based models and point out difficulties when performing statistical inference with them. The discussion leads to the basic rejection ABC algorithm which is presented in the subsequent section. This is followed by a presentation of popular ABC algorithms that have been developed to increase the computational efficiency. We then consider several recent advances that aim to improve ABC both computationally and statistically. The final section provides conclusions and a discussion about likelihood-free inference methods related to ABC.

Simulator-based Models

Definition

Simulator-based models are functions  that map the model parameters

that map the model parameters  and some random variables

and some random variables  to data

to data  . The functions

. The functions  are generally implemented as computer programs where the parameter values are provided as input and where the random variables are drawn sequentially by making calls to a random number generator. The parameters

are generally implemented as computer programs where the parameter values are provided as input and where the random variables are drawn sequentially by making calls to a random number generator. The parameters  govern the properties of interest of the generated data, whereas the random variables

govern the properties of interest of the generated data, whereas the random variables  represent the stochastic variation inherent to the simulated process.

represent the stochastic variation inherent to the simulated process.

The mapping  may be as complex as needed, and this generality of simulator-based models allows researchers to implement hypotheses about how the data were generated without having to make excessive compromises motivated by mathematical simplicity, or other reasons not related to the scientific question being investigated.

may be as complex as needed, and this generality of simulator-based models allows researchers to implement hypotheses about how the data were generated without having to make excessive compromises motivated by mathematical simplicity, or other reasons not related to the scientific question being investigated.

Due to the presence of the random variables  , the outputs of the simulator fluctuate randomly even when using exactly the same values of the model parameters

, the outputs of the simulator fluctuate randomly even when using exactly the same values of the model parameters  . This means that we can consider the simulator to define a random variable

. This means that we can consider the simulator to define a random variable  whose distribution is implicitly determined by the distribution of

whose distribution is implicitly determined by the distribution of  and the mapping

and the mapping  acting on

acting on  for a given

for a given  . For this reason, simulator-based models are sometimes called implicit models (Diggle and Gratton 1984). Using the properties of transformation of random variables, it is possible to formally write down the distribution of

. For this reason, simulator-based models are sometimes called implicit models (Diggle and Gratton 1984). Using the properties of transformation of random variables, it is possible to formally write down the distribution of  . For instance, for a fixed value of

. For instance, for a fixed value of  , the probability that

, the probability that  takes values in an

takes values in an  neighborhood

neighborhood  around the observed data

around the observed data  is equal to the probability to draw values of

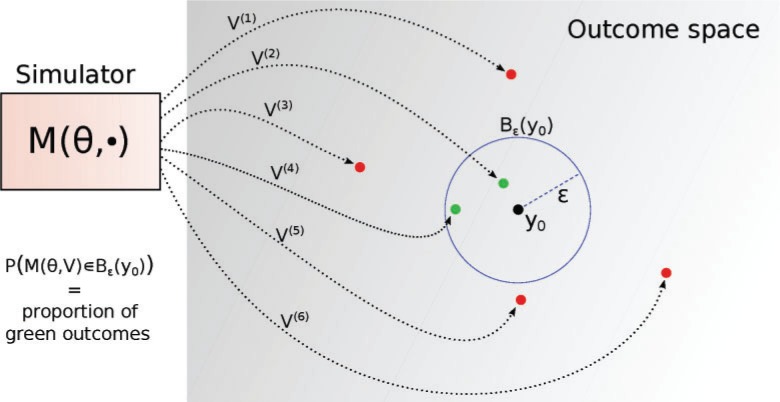

is equal to the probability to draw values of  that are mapped to that neighborhood (Fig. 1),

that are mapped to that neighborhood (Fig. 1),

| (1) |

Computing the probability analytically is impossible for complex models. But it is possible to test empirically whether a particular outcome  of the simulation ends up in the neighborhood of

of the simulation ends up in the neighborhood of  or not (Fig. 1). We will see that this property of simulator-based models plays a key role in performing inference about their parameters.

or not (Fig. 1). We will see that this property of simulator-based models plays a key role in performing inference about their parameters.

Figure 1.

Illustration of the stochastic simulator  run multiple times with a fixed value of

run multiple times with a fixed value of  . The black dot

. The black dot  is the observed data and the arrows point to different simulated data sets. Two outcomes, marked in green, are less than

is the observed data and the arrows point to different simulated data sets. Two outcomes, marked in green, are less than  away from

away from  . The proportion of such outcomes provides an approximation of the likelihood of

. The proportion of such outcomes provides an approximation of the likelihood of  for the observed data

for the observed data  .

.

Example

As an example of a simulator-based model, we here present the simple yet analytically intractable model by Tanaka et al. (2006) for the spread of tuberculosis. We will use the model throughout the article for illustrating different concepts and methods.

The model begins with one infectious host and stops when a fixed number of infectious hosts  is exceeded (Fig. 2). In the simulation, it is assumed that each infectious host randomly infects other individuals from an unlimited supply of hosts with the rate

is exceeded (Fig. 2). In the simulation, it is assumed that each infectious host randomly infects other individuals from an unlimited supply of hosts with the rate  , each time transmitting a specific strain of the communicable pathogen, characterized by its haplotype. It is thus effectively assumed that a strong transmission bottleneck occurs, such that only a single strain is passed forward in each transmission event, despite the eventual genetic variation persisting in the within-host pathogen population. Further, each infected host is considered to be infectious immediately. The model states that a host stops being infectious, that is, recovers or dies, randomly with the rate

, each time transmitting a specific strain of the communicable pathogen, characterized by its haplotype. It is thus effectively assumed that a strong transmission bottleneck occurs, such that only a single strain is passed forward in each transmission event, despite the eventual genetic variation persisting in the within-host pathogen population. Further, each infected host is considered to be infectious immediately. The model states that a host stops being infectious, that is, recovers or dies, randomly with the rate  , and the pathogen of the host mutates randomly within the host at the rate

, and the pathogen of the host mutates randomly within the host at the rate  , thereby generating a novel haplotype under a single-locus infinite alleles model. The parameters of the model are thus

, thereby generating a novel haplotype under a single-locus infinite alleles model. The parameters of the model are thus  . The output of the simulator is a vector of cluster sizes in the simulated population of infected hosts, where clusters are the groups of hosts infected by the same haplotype of the pathogen. After the simulation, a random sample of size

. The output of the simulator is a vector of cluster sizes in the simulated population of infected hosts, where clusters are the groups of hosts infected by the same haplotype of the pathogen. After the simulation, a random sample of size  is taken from the population yielding the vector of cluster sizes

is taken from the population yielding the vector of cluster sizes  present in the sample. For example,

present in the sample. For example,  corresponds to a sample of size

corresponds to a sample of size  containing one cluster with six infected hosts, one cluster with three hosts, two clusters with two hosts each, as well as seven singleton clusters. Note that this model of pathogen spread is atypical in the sense that the observation times of the infections are all left implicit in the sampling process, in contrast to the standard likelihood formulation used for infectious disease epidemiological models (Anderson and May 1992).

containing one cluster with six infected hosts, one cluster with three hosts, two clusters with two hosts each, as well as seven singleton clusters. Note that this model of pathogen spread is atypical in the sense that the observation times of the infections are all left implicit in the sampling process, in contrast to the standard likelihood formulation used for infectious disease epidemiological models (Anderson and May 1992).

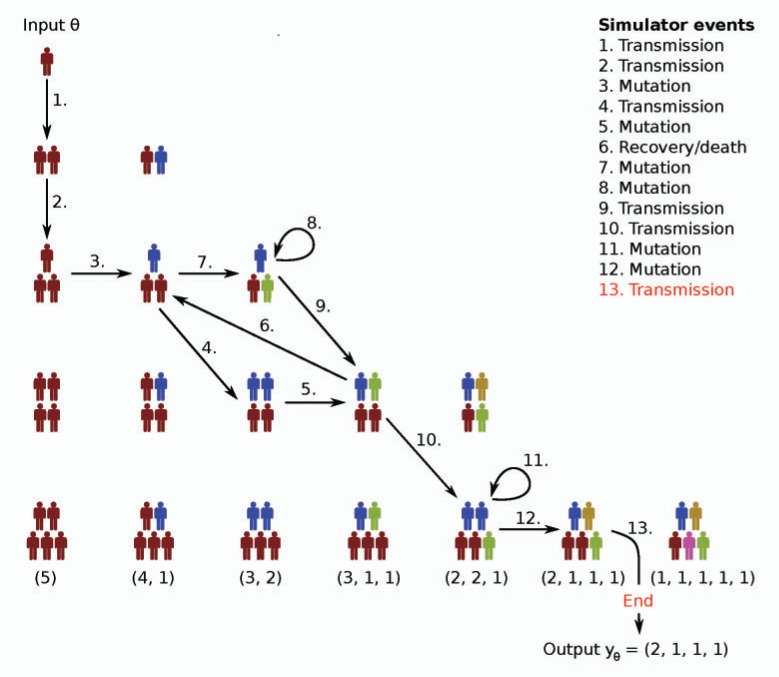

Figure 2.

An example of a transmission process simulated under a parameter configuration  without subsampling of the simulated infectious population. Arrows indicate the sequence of random events taking place in the simulation and different colors represent different haplotypes of the pathogen. The simulation starts with one infectious host who transmits the pathogen to another host. After one more transmission event, the pathogen undergoes a mutation within one of the three hosts infected so far (event three). As the sixth event in the simulation, one of the haplotypes is removed from the population due to the recovery/death of the corresponding host. The simulation stops when the infectious population size exceeds

without subsampling of the simulated infectious population. Arrows indicate the sequence of random events taking place in the simulation and different colors represent different haplotypes of the pathogen. The simulation starts with one infectious host who transmits the pathogen to another host. After one more transmission event, the pathogen undergoes a mutation within one of the three hosts infected so far (event three). As the sixth event in the simulation, one of the haplotypes is removed from the population due to the recovery/death of the corresponding host. The simulation stops when the infectious population size exceeds  and the simulator outputs the generated

and the simulator outputs the generated  . The nodes not connected by arrows show all the other possible configurations of the infectious population, but which were not visited in this example run of the simulator. The bottom row lists the possible outputs of the simulator (cluster size vectors) under their corresponding population configuration.

. The nodes not connected by arrows show all the other possible configurations of the infectious population, but which were not visited in this example run of the simulator. The bottom row lists the possible outputs of the simulator (cluster size vectors) under their corresponding population configuration.

Difficulties in Performing Statistical Inference

Values of the parameters  that are plausible in the light of the observations

that are plausible in the light of the observations  can be determined via statistical inference either by finding values that maximize the probability in Equation (1) for some sufficiently small

can be determined via statistical inference either by finding values that maximize the probability in Equation (1) for some sufficiently small  or by determining their posterior distribution. In more detail, in maximum likelihood estimation, the parameters are determined by maximizing the likelihood function

or by determining their posterior distribution. In more detail, in maximum likelihood estimation, the parameters are determined by maximizing the likelihood function  ,

,

| (2) |

where  is a proportionality factor that may depend on

is a proportionality factor that may depend on  , which is needed when

, which is needed when  shrinks to zero as

shrinks to zero as  approaches zero. If the output of the simulator can only take a countable number of values,

approaches zero. If the output of the simulator can only take a countable number of values,  is called a discrete random variable and the definition of the likelihood simplifies to

is called a discrete random variable and the definition of the likelihood simplifies to  , which equals the probability of simulating data equal to the observed data. In Bayesian inference, the essential characterization of the uncertainty about the model parameters is defined by their conditional distribution given the data, that is, the posterior distribution

, which equals the probability of simulating data equal to the observed data. In Bayesian inference, the essential characterization of the uncertainty about the model parameters is defined by their conditional distribution given the data, that is, the posterior distribution  ,

,

| (3) |

where  is the prior distribution of the parameters.

is the prior distribution of the parameters.

For complex models neither the probability in Equation (1) nor the likelihood function  are available analytically in closed form as a function of

are available analytically in closed form as a function of  , which is the reason why statistical inference is difficult for simulator-based models.

, which is the reason why statistical inference is difficult for simulator-based models.

For the model of tuberculosis transmission presented in the previous section, computing the likelihood function becomes intractable if the infectious population size  is large, or if the death rate

is large, or if the death rate  (Stadler 2011). This is because for large

(Stadler 2011). This is because for large  , the state space of the process, that is, the number of different cluster vectors, grows very quickly. This makes exact numerical calculation of the likelihood infeasible because in essence, every possible path to the outcome should be accounted for (Fig. 2). Moreover, if the death rate

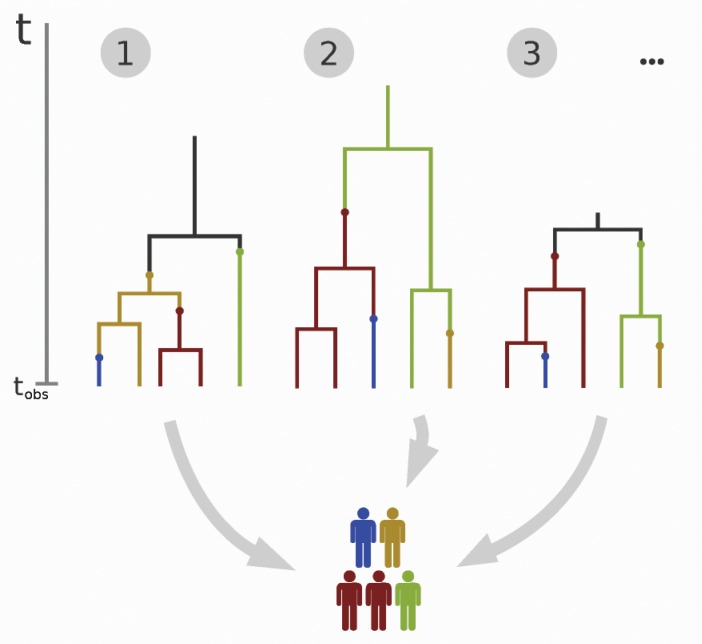

, the state space of the process, that is, the number of different cluster vectors, grows very quickly. This makes exact numerical calculation of the likelihood infeasible because in essence, every possible path to the outcome should be accounted for (Fig. 2). Moreover, if the death rate  is nonzero, the process is allowed to return to previous states which further complicates the computations. Finally, the assumption that not all infectious hosts are observed contributes additionally to the intractability of the likelihood. Stadler (2011) approached the problem using transmission trees (Fig. 3). The likelihood function stays, however, intractable because of the vast number of different trees that all yield the same observed data and thus need to be considered when evaluating the likelihood of a parameter value.

is nonzero, the process is allowed to return to previous states which further complicates the computations. Finally, the assumption that not all infectious hosts are observed contributes additionally to the intractability of the likelihood. Stadler (2011) approached the problem using transmission trees (Fig. 3). The likelihood function stays, however, intractable because of the vast number of different trees that all yield the same observed data and thus need to be considered when evaluating the likelihood of a parameter value.

Figure 3.

The transmission process in Figure 2 can also be described with transmission trees (Stadler 2011) paired with mutations. The trees are characterized by their structure, the length of their edges, and the mutations on the edges (marked with small circles that change the color of the edge, where colors represent the different haplotypes of the pathogen). The figure shows three examples of different trees that yield the same observed data at the observation time  . Calculating the likelihood of a parameter value requires summing over all possible trees yielding the observed data, which is computationally impossible when the sample size is large.

. Calculating the likelihood of a parameter value requires summing over all possible trees yielding the observed data, which is computationally impossible when the sample size is large.

Inference via Rejection Sampling

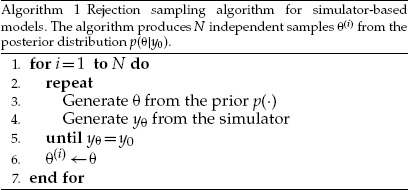

We present here an algorithm for exact posterior inference that is applicable when  can only take countably many values, that is, if

can only take countably many values, that is, if  is a discrete random variable. As shown above, in this case

is a discrete random variable. As shown above, in this case  . The presented algorithm forms the basis of the algorithms for ABC discussed in the later sections.

. The presented algorithm forms the basis of the algorithms for ABC discussed in the later sections.

In general, samples from the prior distribution  of the parameters can be converted into samples from the posterior

of the parameters can be converted into samples from the posterior  by retaining each sampled value with a probability proportional to

by retaining each sampled value with a probability proportional to  . This can be done sequentially by first sampling a parameter value from the prior,

. This can be done sequentially by first sampling a parameter value from the prior,  and then accepting the obtained value with the probability

and then accepting the obtained value with the probability  . This procedure corresponds to rejection sampling (see e.g., Robert and Casella 2004, Chapter 2). Now with the likelihood

. This procedure corresponds to rejection sampling (see e.g., Robert and Casella 2004, Chapter 2). Now with the likelihood  being equal to the probability that

being equal to the probability that  , the latter step can be implemented for simulator-based models even when

, the latter step can be implemented for simulator-based models even when  is not available analytically: we run the simulator and check whether the generated data equal the observed data. This gives the rejection algorithm for simulator-based models summarized as Algorithm 1. Rubin (1984) used it to provide intuition about how Bayesian inference about parameters works in general.

is not available analytically: we run the simulator and check whether the generated data equal the observed data. This gives the rejection algorithm for simulator-based models summarized as Algorithm 1. Rubin (1984) used it to provide intuition about how Bayesian inference about parameters works in general.

To obtain another interpretation of Algorithm 1, recall that for discrete random variables the posterior distribution  is, by definition, equal to the joint distribution of

is, by definition, equal to the joint distribution of  and

and  , normalized by the probability that

, normalized by the probability that  . That is, the posterior is obtained by conditioning on the event

. That is, the posterior is obtained by conditioning on the event  . We can thus understand the test for equality of

. We can thus understand the test for equality of  and

and  on line 5 of the algorithm as an implementation of the conditioning operation.

on line 5 of the algorithm as an implementation of the conditioning operation.

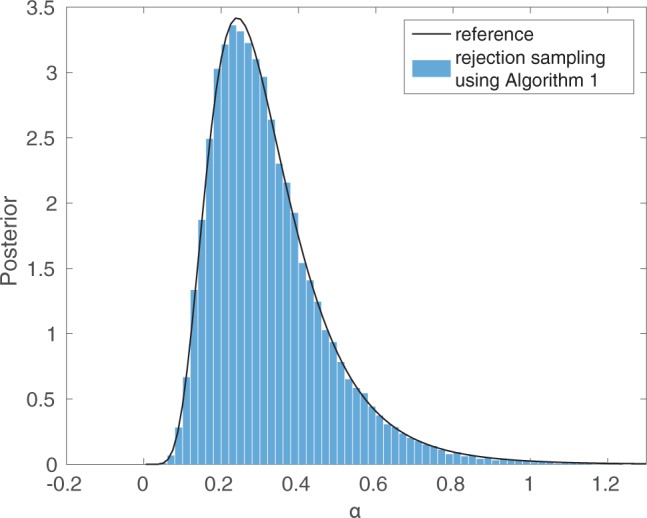

To illustrate Algorithm 1, we generated a synthetic data set  from the tuberculosis transmission model by running the simulator with the parameter values

from the tuberculosis transmission model by running the simulator with the parameter values  ,

,  ,

,  , and setting the population size to

, and setting the population size to  . We further assumed that the whole population is observed, which yielded the observed data

. We further assumed that the whole population is observed, which yielded the observed data  . The assumptions about the size of the population, and that the whole population was observed, are unrealistic but they enable a comparison to the exact posterior distribution, which in this setting can be numerically computed using Theorem 1 of Stadler (2011). In this case, the histogram of samples obtained with Algorithm 1 matches the posterior distribution very accurately (Fig. 4). To obtain this result, we assumed that both of the parameters

. The assumptions about the size of the population, and that the whole population was observed, are unrealistic but they enable a comparison to the exact posterior distribution, which in this setting can be numerically computed using Theorem 1 of Stadler (2011). In this case, the histogram of samples obtained with Algorithm 1 matches the posterior distribution very accurately (Fig. 4). To obtain this result, we assumed that both of the parameters  and

and  were known and assigned a uniform prior distribution in the interval

were known and assigned a uniform prior distribution in the interval  for the sole unknown parameter, the transmission rate

for the sole unknown parameter, the transmission rate  . A total of 20 million data sets

. A total of 20 million data sets  were simulated, out of which 40,000 matched

were simulated, out of which 40,000 matched  (acceptance rate of 0.2%).

(acceptance rate of 0.2%).

Figure 4.

Exact inference for a simulator-based model of tuberculosis transmission. A very simple setting was chosen where the exact posterior can be numerically computed (black line), and where Algorithm 1 is applicable (blue bars).

Fundamentals of approximate Bayesian computation

The Rejection ABC Algorithm

While Algorithm 1 produces independent samples from the posterior, the probability that the simulated data equal the observed data is often negligibly small, which renders the algorithm impractical as virtually no simulated realizations of  will be accepted. The same problem holds true if the generated data can take uncountably many values, that is, when

will be accepted. The same problem holds true if the generated data can take uncountably many values, that is, when  is a continuous random variable.

is a continuous random variable.

To make inference feasible, the acceptance criterion  in Algorithm 1 can be relaxed to

in Algorithm 1 can be relaxed to

| (4) |

where  and

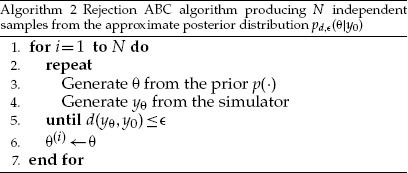

and  is a “distance” function that measures the discrepancy between the two data sets, as considered relevant for the inference. With this modification, Algorithm 1 becomes the rejection ABC algorithm summarized as Algorithm 2. The first implementation of this algorithm appeared in the work by Pritchard et al. (1999).

is a “distance” function that measures the discrepancy between the two data sets, as considered relevant for the inference. With this modification, Algorithm 1 becomes the rejection ABC algorithm summarized as Algorithm 2. The first implementation of this algorithm appeared in the work by Pritchard et al. (1999).

Algorithm 2 does not produce samples from the posterior  in Equation (3) but samples from an approximation

in Equation (3) but samples from an approximation  ,

,

| (5) |

which is the posterior distribution of  conditional on the event

conditional on the event  . Equation (5) is obtained by approximating the intractable likelihood function

. Equation (5) is obtained by approximating the intractable likelihood function  in Equation (2) with

in Equation (2) with  ,

,

| (6) |

The approximation is two-fold. First, the distance function  is generally not a metric in the mathematical sense, namely

is generally not a metric in the mathematical sense, namely  even if

even if  . This may happen, for example, when

. This may happen, for example, when  is defined through summary statistics that remove information from the data (see below). Second,

is defined through summary statistics that remove information from the data (see below). Second,  is chosen large enough so that enough samples will be accepted. Intuitively, the likelihood of a parameter value is approximated by the probability that running the simulator with said parameter value produces data within

is chosen large enough so that enough samples will be accepted. Intuitively, the likelihood of a parameter value is approximated by the probability that running the simulator with said parameter value produces data within  distance of

distance of  (Fig. 1).

(Fig. 1).

The distance  is typically computed by first reducing the data to suitable summary statistics

is typically computed by first reducing the data to suitable summary statistics  and then computing the distance

and then computing the distance  between them, so that

between them, so that  , where

, where  is often the Euclidean or some other metric for the summary statistics. When combining different summary statistics, they are usually re-scaled so that they contribute equally to the distance (as, e.g., done by Pritchard et al. 1999).

is often the Euclidean or some other metric for the summary statistics. When combining different summary statistics, they are usually re-scaled so that they contribute equally to the distance (as, e.g., done by Pritchard et al. 1999).

In addition to the accuracy of the approximation  , the distance

, the distance  and the threshold

and the threshold  also influence the computing time required to obtain samples. For instance, if

also influence the computing time required to obtain samples. For instance, if  and the distance

and the distance  is such that

is such that  if and only if

if and only if  , then Algorithm 2 becomes Algorithm 1 and

, then Algorithm 2 becomes Algorithm 1 and  becomes

becomes  but the computing time to obtain samples from

but the computing time to obtain samples from  would typically be impractically large. Hence, on a very fundamental level, there is a trade-off between statistical and computational efficiency in ABC (see e.g., Beaumont et al. 2002, p. 2027).

would typically be impractically large. Hence, on a very fundamental level, there is a trade-off between statistical and computational efficiency in ABC (see e.g., Beaumont et al. 2002, p. 2027).

We next illustrate Algorithm 2 and the mentioned trade-off using the previous example about tuberculosis transmission. Two distances  and

and  are considered,

are considered,

| (7) |

where  is the number of clusters contained in the data divided by the sample size

is the number of clusters contained in the data divided by the sample size  and

and  is a genetic diversity measure defined as

is a genetic diversity measure defined as  , where

, where  is the size of the

is the size of the  -th cluster. For

-th cluster. For  , we have

, we have  and

and  . For both

. For both  and

and  , the absolute difference between the summary statistics is used as the metric

, the absolute difference between the summary statistics is used as the metric  .

.

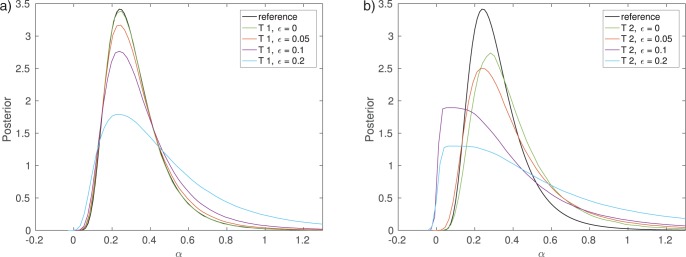

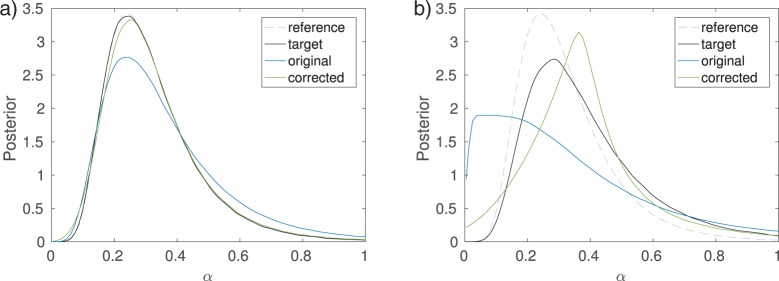

For this example, using the summary statistic  instead of the full data does not lead to a visible deterioration of the inferred posterior when

instead of the full data does not lead to a visible deterioration of the inferred posterior when  (Fig. 5a). For summary statistic

(Fig. 5a). For summary statistic  , however, there is a clear difference as the posterior mode and mean are shifted to larger values of

, however, there is a clear difference as the posterior mode and mean are shifted to larger values of  and the posterior variance is larger too (Fig. 5b). In both cases, increasing

and the posterior variance is larger too (Fig. 5b). In both cases, increasing  , that is, accepting more parameters, leads to an approximate posterior distribution that is less concentrated than the true posterior.

, that is, accepting more parameters, leads to an approximate posterior distribution that is less concentrated than the true posterior.

Figure 5.

Inference results for the transmission rate  of tuberculosis. The plots show the posterior distributions obtained with Algorithm 2 and 20 million simulated data sets (proposals). a) Cluster frequency as a summary statistic. b) Genetic diversity as a summary statistic.

of tuberculosis. The plots show the posterior distributions obtained with Algorithm 2 and 20 million simulated data sets (proposals). a) Cluster frequency as a summary statistic. b) Genetic diversity as a summary statistic.

Algorithm 2 with summary statistic  produces results comparable to Algorithm 1 but from the computational efficiency point of view the number of simulations required to obtain the approximate posterior differs between the two algorithms. It can be seen that for a computational budget of 100,000 simulations, the posterior obtained by Algorithm 1 differs substantially from the exact posterior, while the posterior from Algorithm 2 with

produces results comparable to Algorithm 1 but from the computational efficiency point of view the number of simulations required to obtain the approximate posterior differs between the two algorithms. It can be seen that for a computational budget of 100,000 simulations, the posterior obtained by Algorithm 1 differs substantially from the exact posterior, while the posterior from Algorithm 2 with  is still matching it well (Fig. 6a). The relatively poor result with Algorithm 1 is due to its low acceptance rate (here 0.2%). While the accepted samples do follow the exact posterior

is still matching it well (Fig. 6a). The relatively poor result with Algorithm 1 is due to its low acceptance rate (here 0.2%). While the accepted samples do follow the exact posterior  , the algorithm did not manage to produce enough accepted realizations within the computational budget available, which implies that the Monte Carlo error of the posterior approximation remains nonnegligible.

, the algorithm did not manage to produce enough accepted realizations within the computational budget available, which implies that the Monte Carlo error of the posterior approximation remains nonnegligible.

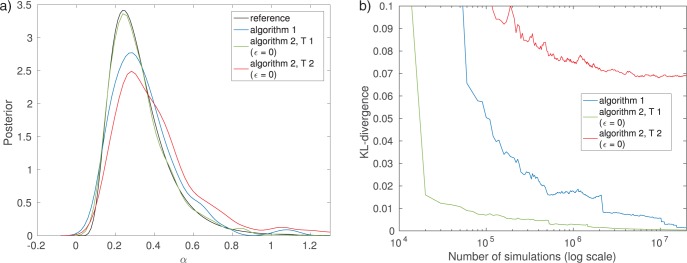

Figure 6.

Comparison of the efficiency of Algorithms 1 and 2. Smaller KL divergence means more accurate inference of the posterior distribution. Note that the stopping criterion of the algorithm has here been changed to be the total number of runs of the simulator instead of the number of accepted samples. a) Results after 100,000 simulations. b) Accuracy versus computational cost.

Plotting the number of data sets simulated versus the accuracy of the inferred posterior distribution allows us to further study the trade-off between statistical and computational efficiency between the different algorithms (Fig. 6b). The accuracy is measured by the Kullback–Leibler (KL) divergence (Kullback and Leibler 1951) between the exact and the inferred posterior. Algorithm 2 with summary statistic  features the best trade-off, whereas using summary statistic

features the best trade-off, whereas using summary statistic  performs the worst. The curve of the latter one flattens out after approximately 1 million simulations, showing the approximation error introduced by using the summary statistic

performs the worst. The curve of the latter one flattens out after approximately 1 million simulations, showing the approximation error introduced by using the summary statistic  . For Algorithm 1, nonzero values of the KL divergence are due to the Monte Carlo error only and it will approach the true posterior as the number of simulations grows. When using summary statistics, nonzero values of the KL divergence are due to both the Monte Carlo error and the use of the summary statistics. In this particular example, the error caused by the summary statistic

. For Algorithm 1, nonzero values of the KL divergence are due to the Monte Carlo error only and it will approach the true posterior as the number of simulations grows. When using summary statistics, nonzero values of the KL divergence are due to both the Monte Carlo error and the use of the summary statistics. In this particular example, the error caused by the summary statistic  is, however, negligible.

is, however, negligible.

Choice of the Summary Statistics

If the distance  is computed by projecting the data to summary statistics followed by their comparison using a metric in the summary statistics space (e.g., the Euclidean distance), the quality of the inference hinges on the summary statistics chosen (Figs. 5 and 6).

is computed by projecting the data to summary statistics followed by their comparison using a metric in the summary statistics space (e.g., the Euclidean distance), the quality of the inference hinges on the summary statistics chosen (Figs. 5 and 6).

For consistent performance of ABC algorithms, the summary statistics should be sufficient for the parameters, but this is often not the case. Additionally, with the increase in the number of summary statistics used, more simulations tend to be rejected so that an increasing number of simulation runs is needed to obtain a satisfactory number of accepted parameter values, making the algorithm computationally extremely inefficient. This is known as the curse of dimensionality for ABC (see also the discussion in the review paper by Beaumont 2010).

One of the main remedies to the above issue is to efficiently choose informative summary statistics. Importantly, the summary statistics that are informative for the parameters in a neighborhood of the true parameter value, and the summary statistics most informative globally, are significantly different (Nunes and Balding 2010). General intuition suggests that the set of summary statistics that are locally sufficient would be a subset of the globally sufficient ones. Therefore, a good strategy seems to first find a locality containing the true parameter with high enough probability and then choose informative statistics depending on that locality. However, this can be difficult in practice because rather different parameter values can produce summary statistics that are contained in the same locality.

In line with the above, Nunes and Balding (2010), Fearnhead and Prangle (2012), and Aeschbacher et al. (2012) first defined “locality” through a pilot ABC run and then chose the statistics in that locality. Four methods for choosing the statistics are generally used: (i) a sequential scheme based on the principle of approximate sufficiency (Joyce and Marjoram 2008); (ii) selection of a subset of the summary statistics maximizing prespecified criteria such as the Akaike information criterion (used by Blum et al. 2013) or the entropy of a distribution (used by Nunes and Balding 2010); (iii) partial least square regression which finds linear combinations of the original summary statistics that are maximally decorrelated and at the same time highly correlated with the parameters (Wegmann et al. 2009); (iv) assuming a statistical model between parameters and transformed statistics of simulated data, summary statistics are chosen by minimizing a loss function (Aeschbacher et al. 2012; Fearnhead and Prangle 2012). For comparison of the above methods in simulated and practical examples, we refer readers to the work by Blum and François (2010), Aeschbacher et al. (2012), and Blum et al. (2013).

Choice of the Threshold

Having the distance function  specified, possibly using summary statistics, the remaining factor in the approximation of the posterior in Equation (5) is the specification of the threshold

specified, possibly using summary statistics, the remaining factor in the approximation of the posterior in Equation (5) is the specification of the threshold  .

.

Larger values of  result in biased approximations

result in biased approximations  (see e.g., Fig. 5). The gain is a faster algorithm, meaning a reduced Monte Carlo error as one is able to produce more samples per unit of time. Therefore, when specifying the threshold the goal is to find a good balance between the bias and the Monte Carlo error. We illustrate this using Algorithm 2 with the full data without reduction to summary statistics [in other words,

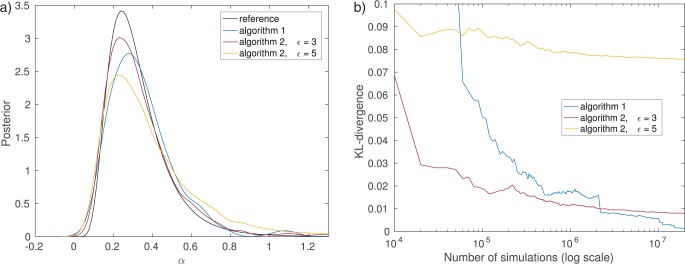

(see e.g., Fig. 5). The gain is a faster algorithm, meaning a reduced Monte Carlo error as one is able to produce more samples per unit of time. Therefore, when specifying the threshold the goal is to find a good balance between the bias and the Monte Carlo error. We illustrate this using Algorithm 2 with the full data without reduction to summary statistics [in other words,  ]. In this case, Algorithm 2 with

]. In this case, Algorithm 2 with  is identical to Algorithm 1. Here the choice

is identical to Algorithm 1. Here the choice  results in a better posterior compared to

results in a better posterior compared to  when using a maximal number of 100,000 simulations (Fig. 7a). This means that the gain from reduced Monte Carlo error is greater than the loss incurred by the bias. But this is no longer true for

when using a maximal number of 100,000 simulations (Fig. 7a). This means that the gain from reduced Monte Carlo error is greater than the loss incurred by the bias. But this is no longer true for  where the bias dominates. Eventually, the exact method will converge to the true posterior, whereas the other two continue to suffer from the bias caused by the larger threshold (Fig. 7b). However, with smaller computational budgets (less than 2 million simulations in our example), more accurate results are obtained with the nonzero threshold

where the bias dominates. Eventually, the exact method will converge to the true posterior, whereas the other two continue to suffer from the bias caused by the larger threshold (Fig. 7b). However, with smaller computational budgets (less than 2 million simulations in our example), more accurate results are obtained with the nonzero threshold  .

.

Figure 7.

Comparison of the trade-off between Monte Carlo error and bias. Algorithm 1 is equivalent here to Algorithm 2 with  . Smaller KL divergences mean more accurate inference of the posterior distribution. a) Results after 100,000 simulations. b) Accuracy versus computational cost.

. Smaller KL divergences mean more accurate inference of the posterior distribution. a) Results after 100,000 simulations. b) Accuracy versus computational cost.

The choice of the threshold is typically made by experimenting with a precomputed pool of simulation–parameter pairs  . Rather than setting the threshold value by hand, it is often determined by accepting some small proportion of the simulations (e.g., 1%, see Beaumont et al. 2002). The choice between different options can be made more rigorous by using some of the simulated data sets in the role of the observed data and solving the inference problem for them using the remaining data sets. As the data-generating parameters are known for the simulated observations, different criteria, such as the mean squared error (MSE) between the mean of the approximation and the generating parameters can be used to make the choice [see e.g. Faisal et al. (2013) and the section on validation of ABC]. This also allows one to assess the reliability of the inference procedure. Prangle et al. (2014) discuss the use of the coverage property (Wegmann et al. 2009) as the criterion to choose the threshold value

. Rather than setting the threshold value by hand, it is often determined by accepting some small proportion of the simulations (e.g., 1%, see Beaumont et al. 2002). The choice between different options can be made more rigorous by using some of the simulated data sets in the role of the observed data and solving the inference problem for them using the remaining data sets. As the data-generating parameters are known for the simulated observations, different criteria, such as the mean squared error (MSE) between the mean of the approximation and the generating parameters can be used to make the choice [see e.g. Faisal et al. (2013) and the section on validation of ABC]. This also allows one to assess the reliability of the inference procedure. Prangle et al. (2014) discuss the use of the coverage property (Wegmann et al. 2009) as the criterion to choose the threshold value  . Intuitively, the coverage property tests if the parameter values

. Intuitively, the coverage property tests if the parameter values  used to artificially generate a data set

used to artificially generate a data set  are covered by the credible intervals constructed from the ABC output for

are covered by the credible intervals constructed from the ABC output for  at correct rates (i.e.,

at correct rates (i.e.,  credible intervals should contain the true parameter in

credible intervals should contain the true parameter in  of the tests).

of the tests).

If one plans to increase the computational budget after initial experiments, theoretical convergence results can be used to adjust the threshold value. Barber et al. (2015) provide convergence results for an optimal  sequence with respect to the MSE of a posterior expectation (e.g., the posterior mean). The theoretically optimal sequence for the threshold

sequence with respect to the MSE of a posterior expectation (e.g., the posterior mean). The theoretically optimal sequence for the threshold  is achieved by making it proportional to

is achieved by making it proportional to  as

as  , where

, where  is the number of accepted samples. If the constant in this relation is estimated in a pilot run, one can compute the new theoretically optimal threshold based on the planned increase in the computational budget. Blum (2010) derives corresponding results using an approach based on conditional density estimation, finding that

is the number of accepted samples. If the constant in this relation is estimated in a pilot run, one can compute the new theoretically optimal threshold based on the planned increase in the computational budget. Blum (2010) derives corresponding results using an approach based on conditional density estimation, finding that  should optimally be proportional to

should optimally be proportional to  as

as  , where

, where  is the dimension of the parameter space and

is the dimension of the parameter space and  the total number of simulations performed [see also Fearnhead and Prangle (2012), Silk et al. (2013), and Biau et al. (2015), for similar results].

the total number of simulations performed [see also Fearnhead and Prangle (2012), Silk et al. (2013), and Biau et al. (2015), for similar results].

Beyond simple rejection sampling

The basic rejection ABC algorithm is essentially a trial and error scheme where the trial (proposal) values are sampled from the prior. We now review three popular algorithms that seek to improve upon the basic rejection approach. The first two aim at constructing proposal distributions that are closer to the posterior, whereas the third is a correction method that aims at adjusting samples obtained by ABC algorithms so that they are closer to the posterior.

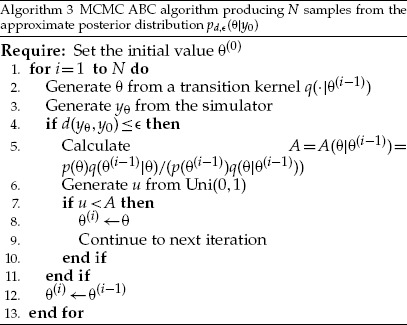

Markov Chain Monte Carlo ABC

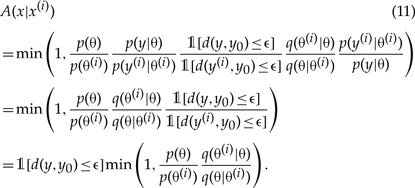

The Markov chain Monte Carlo (MCMC) ABC algorithm is based on the Metropolis–Hastings MCMC algorithm that is often used in Bayesian statistics (Robert and Casella 2004, Chapter 7). In order to leverage this algorithm, we write  in Equation (5) as the marginal distribution of

in Equation (5) as the marginal distribution of  ,

,

| (8) |

where  denotes the probability density (mass) function of

denotes the probability density (mass) function of  , and

, and  equals one if

equals one if  and zero otherwise. Importantly, while

and zero otherwise. Importantly, while  is generally unknown for simulator-based models, it is still possible to use

is generally unknown for simulator-based models, it is still possible to use  as the target distribution in a Metropolis–Hastings MCMC algorithm by choosing the proposal distribution in the right way. The obtained (marginal) samples of

as the target distribution in a Metropolis–Hastings MCMC algorithm by choosing the proposal distribution in the right way. The obtained (marginal) samples of  then follow the approximate posterior

then follow the approximate posterior  .

.

Assuming that the Markov chain is at iteration  in state

in state  where

where  , the Metropolis–Hastings algorithm involves sampling candidate states

, the Metropolis–Hastings algorithm involves sampling candidate states  from a proposal distribution

from a proposal distribution  and accepting the candidates with the probability

and accepting the candidates with the probability  ,

,

| (9) |

Choosing the proposal distribution such that the move from  to

to  does not depend on the value of

does not depend on the value of  , and that

, and that  is sampled from the simulator-based model with parameter value

is sampled from the simulator-based model with parameter value  irrespective of

irrespective of  , we have

, we have

| (10) |

where  is a suitable proposal distribution for

is a suitable proposal distribution for  . As a result of this choice, the unknown quantities in Equation (9) cancel out,

. As a result of this choice, the unknown quantities in Equation (9) cancel out,

|

This means that the acceptance probability is only probabilistic in  since a proposal

since a proposal  is immediately rejected if the condition

is immediately rejected if the condition  is not met. While the Markov chain operates in the

is not met. While the Markov chain operates in the  space, the choice of the proposal distribution decouples the acceptance criterion into an ordinary Metropolis–Hastings criterion for

space, the choice of the proposal distribution decouples the acceptance criterion into an ordinary Metropolis–Hastings criterion for  and the previously seen ABC rejection criterion for

and the previously seen ABC rejection criterion for  . The resulting algorithm, shown in full in the Appendix, is known as MCMC ABC algorithm and was introduced by Marjoram et al. (2003).

. The resulting algorithm, shown in full in the Appendix, is known as MCMC ABC algorithm and was introduced by Marjoram et al. (2003).

An advantage of the MCMC ABC algorithm is that the parameter values do not need to be drawn from the prior, which most often hampers the rejection sampler by incurring a high rejection rate of the proposals. As the Markov chain converges, the proposed parameter values follow the posterior with some added noise. A potential disadvantage, however, is the continuing presence of the rejection condition  which dominates the acceptance rate of the algorithm. Parameters in the tails of the posteriors have, by definition, a small probability to generate data

which dominates the acceptance rate of the algorithm. Parameters in the tails of the posteriors have, by definition, a small probability to generate data  satisfying the rejection condition, which can lead to a “sticky” Markov chain where the state tends to remain constant for many iterations.

satisfying the rejection condition, which can lead to a “sticky” Markov chain where the state tends to remain constant for many iterations.

Sequential Monte Carlo ABC

The sequential Monte Carlo (SMC) ABC algorithm can be considered as an adaptation of importance sampling which is a popular technique in statistics (see e.g., Robert and Casella 2004, Chapter 3). If one uses a general distribution  in place of the prior

in place of the prior  , Algorithm 2 produces samples that follow a distribution proportional to

, Algorithm 2 produces samples that follow a distribution proportional to  . However, by weighting the accepted parameters

. However, by weighting the accepted parameters  with

with  ,

,

| (12) |

the resulting weighted samples follow  . This kind of trick is used in importance sampling and can be employed in ABC to iteratively morph the prior into a posterior.

. This kind of trick is used in importance sampling and can be employed in ABC to iteratively morph the prior into a posterior.

The basic idea is to use a sequence of shrinking thresholds  and to define the proposal distribution

and to define the proposal distribution  at iteration

at iteration  based on the weighted samples

based on the weighted samples  from the previous iteration (Fig. 8). This is typically done by defining a mixture distribution,

from the previous iteration (Fig. 8). This is typically done by defining a mixture distribution,

| (13) |

where  is often a Gaussian distribution with mean

is often a Gaussian distribution with mean  and a covariance matrix estimated from the samples. Sampling from

and a covariance matrix estimated from the samples. Sampling from  can be done by choosing

can be done by choosing  with probability

with probability  and then perturbing the chosen parameter according to

and then perturbing the chosen parameter according to  . The proposed sample is then accepted or rejected as in Algorithm 2 and the weights of the accepted samples are computed with Equation (12). Such iterative algorithms were proposed by Sisson et al. (2007); Beaumont et al. (2009); Toni et al. (2009) and are called SMC ABC algorithms or population Monte Carlo ABC algorithms. The algorithm by Beaumont et al. (2009) is given in the Appendix.

. The proposed sample is then accepted or rejected as in Algorithm 2 and the weights of the accepted samples are computed with Equation (12). Such iterative algorithms were proposed by Sisson et al. (2007); Beaumont et al. (2009); Toni et al. (2009) and are called SMC ABC algorithms or population Monte Carlo ABC algorithms. The algorithm by Beaumont et al. (2009) is given in the Appendix.

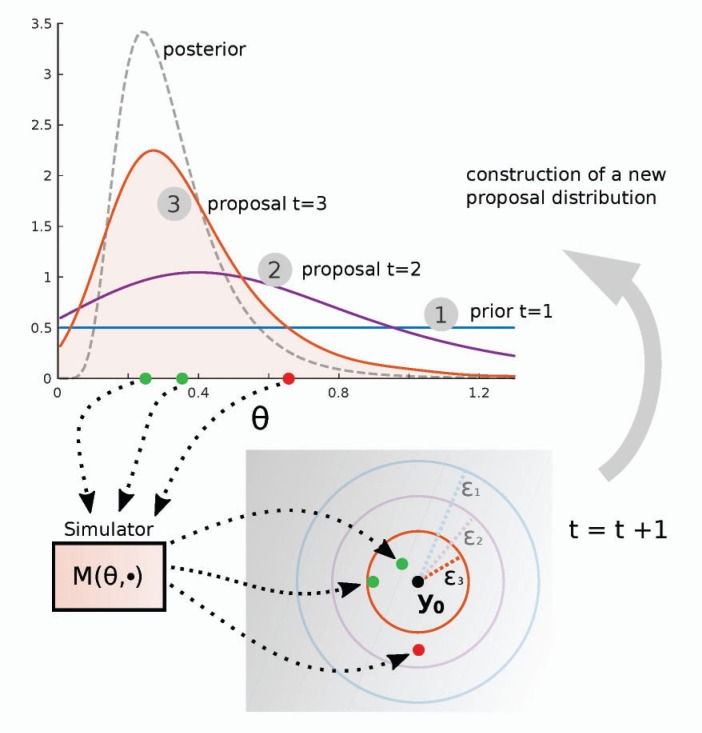

Figure 8.

Illustration of sequential Monte Carlo ABC using the tuberculosis example. The first proposal distribution is the prior and the threshold value used is  . The proposal distribution in iteration

. The proposal distribution in iteration  is based on the sample of size

is based on the sample of size  from the previous iteration. The threshold value

from the previous iteration. The threshold value  is decreased at every iteration as the proposal distributions become similar to the true posterior. The figure shows parameters drawn from the proposal distribution of the third iteration (

is decreased at every iteration as the proposal distributions become similar to the true posterior. The figure shows parameters drawn from the proposal distribution of the third iteration ( ). The red proposal is rejected because the corresponding simulation outcome is too far from the observed data

). The red proposal is rejected because the corresponding simulation outcome is too far from the observed data  . At iteration

. At iteration  , however, it would have been accepted. After iteration

, however, it would have been accepted. After iteration  , the accepted parameter values follow the approximate posterior

, the accepted parameter values follow the approximate posterior  . As long as the threshold values

. As long as the threshold values  decrease, the approximation becomes more accurate at each iteration.

decrease, the approximation becomes more accurate at each iteration.

Similar to the MCMC ABC, the samples proposed by the SMC algorithm follow the posterior  with some added noise. The proposed parameter values are drawn from the prior only at the first iteration after which adaptive proposal distributions

with some added noise. The proposed parameter values are drawn from the prior only at the first iteration after which adaptive proposal distributions  closer to the true posterior are used (see Fig. 8 for an illustration). This reduces the running time as the number of rejections is lower compared to the basic rejection ABC algorithm. For small values of

closer to the true posterior are used (see Fig. 8 for an illustration). This reduces the running time as the number of rejections is lower compared to the basic rejection ABC algorithm. For small values of  , however, the probability to accept a parameter value becomes very small, even if the parameter value was sampled from the true posterior. This results in long computing times in the final iterations of the algorithm without notable improvements in the approximation of the posterior.

, however, the probability to accept a parameter value becomes very small, even if the parameter value was sampled from the true posterior. This results in long computing times in the final iterations of the algorithm without notable improvements in the approximation of the posterior.

Post-Sampling Correction Methods

We assume here that the distance  is specified in terms of summary statistics, that is,

is specified in terms of summary statistics, that is,  , with

, with  and

and  . As

. As  decreases to zero, the approximate posterior

decreases to zero, the approximate posterior  in Equation (5) converges to

in Equation (5) converges to  , where we use

, where we use  to denote the conditional distribution of

to denote the conditional distribution of  given a value of the summary statistics

given a value of the summary statistics  . While small values of

. While small values of  are thus preferred in theory, making them too small is not feasible in practice because of the correspondingly small acceptance rate and the resulting large Monte Carlo error. We here present two schemes that aim at adjusting

are thus preferred in theory, making them too small is not feasible in practice because of the correspondingly small acceptance rate and the resulting large Monte Carlo error. We here present two schemes that aim at adjusting  without further sampling so that the adjusted distribution is closer to

without further sampling so that the adjusted distribution is closer to  .

.

For the first scheme, we note that if we had a mechanism to sample from  , we could sample from the limiting approximate posterior by using

, we could sample from the limiting approximate posterior by using  . The post-sampling correction methods in the first scheme thus estimate

. The post-sampling correction methods in the first scheme thus estimate  and use the estimated conditional distributions to sample from

and use the estimated conditional distributions to sample from  . In order to facilitate sampling,

. In order to facilitate sampling,  is expressed in terms of a generative (regression) model,

is expressed in terms of a generative (regression) model,

| (14) |

where  is a vector-valued function and

is a vector-valued function and  a vector of random variables for the residuals. By suitably defining

a vector of random variables for the residuals. By suitably defining  , we can assume that the random variables of the vector

, we can assume that the random variables of the vector  are independent, of zero mean and equal variance, and that their distribution

are independent, of zero mean and equal variance, and that their distribution  does not depend on

does not depend on  . Importantly, the model does not need to hold for all

. Importantly, the model does not need to hold for all  because, ultimately, we would like to sample from it using

because, ultimately, we would like to sample from it using  only. Assuming that the model

only. Assuming that the model  holds for

holds for  and that we have (weighted) samples

and that we have (weighted) samples  available from an ABC algorithm with a threshold

available from an ABC algorithm with a threshold  , the model

, the model  can be estimated by regressing

can be estimated by regressing  on the summary statistics

on the summary statistics  .

.

In order to sample  using the estimated model

using the estimated model  , we need to know the distribution of

, we need to know the distribution of  . For that, the residuals

. For that, the residuals  are determined by solving the regression equation,

are determined by solving the regression equation,

| (15) |

The residuals  can be used to estimate

can be used to estimate  , or as usually is the case in ABC, be directly employed in the sampling of the

, or as usually is the case in ABC, be directly employed in the sampling of the  ,

,

| (16) |

If the original samples  are weighted, both the

are weighted, both the  and the new “adjusted” samples

and the new “adjusted” samples  inherit the weights. By construction, if the relation between

inherit the weights. By construction, if the relation between  and

and  is estimated correctly, the (weighted) samples

is estimated correctly, the (weighted) samples  follow

follow  with

with  .

.

In most models  employed so far, the individual components of

employed so far, the individual components of  are treated separately, thus not accounting for possible correlations between them. For this paragraph we thus let

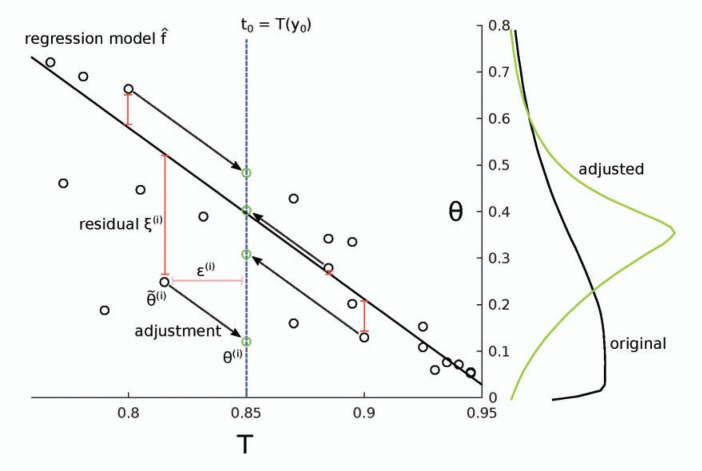

are treated separately, thus not accounting for possible correlations between them. For this paragraph we thus let  be a scalar. The first regression model used was linear (Beaumont et al. 2002),

be a scalar. The first regression model used was linear (Beaumont et al. 2002),

| (17) |

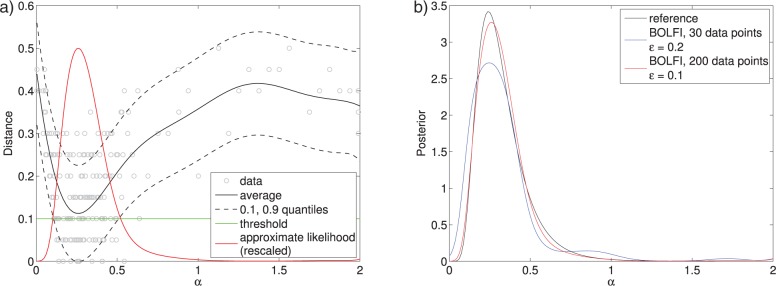

which results in the adjustment  , where

, where  is the learned regression coefficient (Fig. 9). When applied to the model of the spread of tuberculosis, with summary statistic

is the learned regression coefficient (Fig. 9). When applied to the model of the spread of tuberculosis, with summary statistic  [see Equation (7)], the adjustment is able to correct the bias caused by the nonzero threshold

[see Equation (7)], the adjustment is able to correct the bias caused by the nonzero threshold  , that is the estimated model

, that is the estimated model  is accurate (Fig. 10a). With summary statistic

is accurate (Fig. 10a). With summary statistic  , the threshold

, the threshold  is too large for accurate adjustment, although the result is still closer to the target distribution than the original (Figure 10b). Note also that here the target distribution of the adjustment is substantially different from the true posterior due to the bias incurred by summary statistic

is too large for accurate adjustment, although the result is still closer to the target distribution than the original (Figure 10b). Note also that here the target distribution of the adjustment is substantially different from the true posterior due to the bias incurred by summary statistic  .

.

Figure 9.

Illustration of the linear regression adjustment (Beaumont et al. 2002). First, the regression model  is learned and then, based on

is learned and then, based on  , the simulations are adjusted as if they were sampled from

, the simulations are adjusted as if they were sampled from  with

with  . Note that the residuals

. Note that the residuals  are preserved. The change in the posterior densities after the adjustment is shown on the right. Here, the black (original) and green (adjusted) curves are the same as in Figure 10(b).

are preserved. The change in the posterior densities after the adjustment is shown on the right. Here, the black (original) and green (adjusted) curves are the same as in Figure 10(b).

Figure 10.

Linear regression adjustment (Beaumont et al. 2002). applied to the example model of the spread of tuberculosis (compare to Fig. 5). The target distribution of the adjustment is the posterior  with the threshold decreased to

with the threshold decreased to  . Note that when using summary statistic

. Note that when using summary statistic  the target distribution is substantially different from the true posterior (reference) because of the bias incurred by

the target distribution is substantially different from the true posterior (reference) because of the bias incurred by  . a)

. a)  with

with  . b)

. b)  with

with  .

.

Also nonlinear models  have been proposed. Blum (2010) assumed a quadratic model,

have been proposed. Blum (2010) assumed a quadratic model,

| (18) |

where  is a symmetric matrix that adds a quadratic term to the linear adjustment. A more general nonlinear model was considered by Blum and François (2010),

is a symmetric matrix that adds a quadratic term to the linear adjustment. A more general nonlinear model was considered by Blum and François (2010),

| (19) |

where  models the conditional mean and

models the conditional mean and  the conditional standard deviation of

the conditional standard deviation of  . Both functions were fitted using a multi-layer neural network, and denoting the learned functions by

. Both functions were fitted using a multi-layer neural network, and denoting the learned functions by  and

and  , the following adjustments were obtained

, the following adjustments were obtained

| (20) |

The term  is an estimate of the posterior mean of

is an estimate of the posterior mean of  , whereas

, whereas  is an estimate of the posterior standard deviation of the parameter. They can both be used to succinctly summarize the posterior distribution of

is an estimate of the posterior standard deviation of the parameter. They can both be used to succinctly summarize the posterior distribution of  .

.

A more complicated model  is not necessarily better than a simpler one. It depends on the amount of training data available to fit it, that is, the amount of original samples

is not necessarily better than a simpler one. It depends on the amount of training data available to fit it, that is, the amount of original samples  that satisfy

that satisfy  . The different models presented above were compared by Blum and François (2010) who also pointed out that techniques for model selection from the regression literature can be used to select among them.

. The different models presented above were compared by Blum and François (2010) who also pointed out that techniques for model selection from the regression literature can be used to select among them.

While the first scheme to adjust  consists of estimating

consists of estimating  , the second scheme consists of estimating

, the second scheme consists of estimating  , that is the conditional distribution of the summary statistics given a parameter value. The rationale of this approach is that knowing

, that is the conditional distribution of the summary statistics given a parameter value. The rationale of this approach is that knowing  implies knowing the approximate likelihood function

implies knowing the approximate likelihood function  for

for  , because

, because  when the distance

when the distance  is specified in terms of summary statistics.

is specified in terms of summary statistics.

Importantly,  does not need to be known everywhere but only locally around

does not need to be known everywhere but only locally around  , where

, where  .

.

If we use  to denote the distribution of

to denote the distribution of  conditional on

conditional on  and

and  , Leuenberger and Wegmann (2010) showed that

, Leuenberger and Wegmann (2010) showed that  takes the role of a local likelihood function and

takes the role of a local likelihood function and  the role of a local prior, and that the local posterior equals the true posterior

the role of a local prior, and that the local posterior equals the true posterior  .

.

The functional form of  is generally not known. However, as in the first scheme, running an ABC algorithm with threshold

is generally not known. However, as in the first scheme, running an ABC algorithm with threshold  provides data

provides data  that can be used to estimate a model of

that can be used to estimate a model of  . Since the model does not need to hold for all values of the summary statistics, but only for those in the neighborhood of

. Since the model does not need to hold for all values of the summary statistics, but only for those in the neighborhood of  , Leuenberger and Wegmann (2010) proposed to model

, Leuenberger and Wegmann (2010) proposed to model  as Gaussian with constant covariance matrix and a mean depending linearly on

as Gaussian with constant covariance matrix and a mean depending linearly on  . When the samples

. When the samples  are used to approximate

are used to approximate  as a kernel density estimate, the Gaussianity assumption on

as a kernel density estimate, the Gaussianity assumption on  facilitates the derivation of closed-form formulae to adjust the kernel density representation of

facilitates the derivation of closed-form formulae to adjust the kernel density representation of  so that it becomes an approximation of

so that it becomes an approximation of  (Leuenberger and Wegmann 2010).

(Leuenberger and Wegmann 2010).

While Leuenberger and Wegmann (2010) modeled  as Gaussian, other models can be used as well. Alternatively, one may make the mean of the Gaussian depend nonlinearly on

as Gaussian, other models can be used as well. Alternatively, one may make the mean of the Gaussian depend nonlinearly on  and allow the covariance of the summary statistic depend on

and allow the covariance of the summary statistic depend on  . This was done by Wood (2010) and the model was found rich enough to represent

. This was done by Wood (2010) and the model was found rich enough to represent  for all values of the summary statistics and not only for those in the neighborhood of the observed one.

for all values of the summary statistics and not only for those in the neighborhood of the observed one.

Recent developments

We here present recent advances that aim to make ABC both computationally and statistically more efficient. This presentation focuses on our own work (Gutmann et al. 2014; Gutmann and Corander 2016).

Computational Efficiency

The computational cost of ABC can be attributed to two main factors:

(1) Most of the parameter values result in large distances between the simulated and observed data and those parameter values for which the distances tend to be small are unknown.

(2) Generating simulated data sets, that is, running the simulator, may be costly.

MCMC ABC and SMC ABC were partly introduced to avoid proposing parameters in regions where the distance is large. Nonetheless, typically millions of simulations are needed to infer the posterior distribution of a handful of parameters only. A key obstacle to efficiency in these algorithms is the continued presence of the rejection mechanism  , or more generally, the online decisions about the similarity between

, or more generally, the online decisions about the similarity between  and

and  . In recent work, Gutmann and Corander (2016) proposed a framework called Bayesian optimization for likelihood-free inference (BOLFI) for performing ABC which overcomes this obstacle by learning a probabilistic model about the stochastic relation between the parameter values and the distance (Fig. 11). After learning, the model can be used to approximate

. In recent work, Gutmann and Corander (2016) proposed a framework called Bayesian optimization for likelihood-free inference (BOLFI) for performing ABC which overcomes this obstacle by learning a probabilistic model about the stochastic relation between the parameter values and the distance (Fig. 11). After learning, the model can be used to approximate  , and thus

, and thus  , for any

, for any  without requiring further runs of the simulator (Fig. 12).

without requiring further runs of the simulator (Fig. 12).

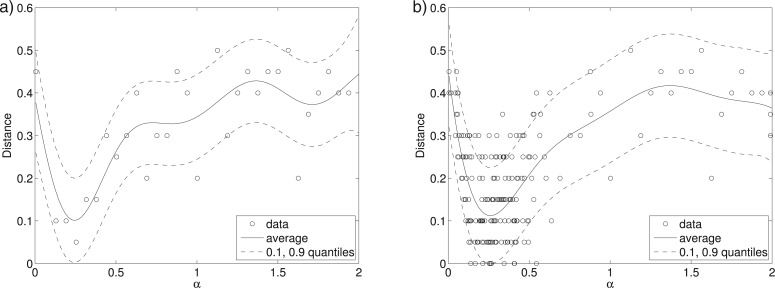

Figure 11.

The basic idea of BOLFI is to model the distance, and to prioritize regions of the parameter space where the distance tends to be small. The solid curves show the modeled average behavior of the distance  , and the dashed curves its variability for the tuberculosis example. a) After initialization (30 data points). b) After active data acquisition (200 data points).

, and the dashed curves its variability for the tuberculosis example. a) After initialization (30 data points). b) After active data acquisition (200 data points).

Figure 12.

In BOLFI, the estimated model of  is used to approximate

is used to approximate  by computing the probability that the distance is below a threshold

by computing the probability that the distance is below a threshold  . This kind of likelihood approximation leads to a model-based approximation of

. This kind of likelihood approximation leads to a model-based approximation of  . The KL-divergence between the reference solution and the BOLFI solution with 30 data points is 0.09, and for 200 data points it is 0.01. Comparison with Figure 6 shows that BOLFI increases the computational efficiency of ABC by several orders of magnitude. a) Approximate likelihood function. b) Model-based posteriors.

. The KL-divergence between the reference solution and the BOLFI solution with 30 data points is 0.09, and for 200 data points it is 0.01. Comparison with Figure 6 shows that BOLFI increases the computational efficiency of ABC by several orders of magnitude. a) Approximate likelihood function. b) Model-based posteriors.

Like the post-sampling correction methods presented in the previous section, BOLFI relies on a probabilistic model to make ABC more efficient. However, the quantities modeled differ, since in the post-sampling correction methods the relation between summary statistics and parameters is modeled, while BOLFI focuses on the relation between the parameters and the distance. A potential advantage of the latter approach is that the distance is a univariate quantity while the parameters and summary statistics may be multidimensional. Furthermore, BOLFI does not assume that the distance is defined via summary statistics and can be used without first running another ABC algorithm.

Learning of the model of  requires data about the relation between

requires data about the relation between  and

and  . In BOLFI, the data are actively acquired focusing on regions of the parameter space where the distance tends to be small. This is achieved by leveraging techniques from Bayesian optimization (see e.g., Jones 2001; Brochu et al. 2010), hence its name. Ultimately, the framework provided by Gutmann and Corander (2016) reduces the computational cost of ABC by addressing both of the factors mentioned above. The first point is addressed by learning from data which parameter values tend to have small distances, whereas the second problem is resolved by focusing on areas where the distance tends to be small when learning the model and by not requiring further runs of the simulator once the model is learned.

. In BOLFI, the data are actively acquired focusing on regions of the parameter space where the distance tends to be small. This is achieved by leveraging techniques from Bayesian optimization (see e.g., Jones 2001; Brochu et al. 2010), hence its name. Ultimately, the framework provided by Gutmann and Corander (2016) reduces the computational cost of ABC by addressing both of the factors mentioned above. The first point is addressed by learning from data which parameter values tend to have small distances, whereas the second problem is resolved by focusing on areas where the distance tends to be small when learning the model and by not requiring further runs of the simulator once the model is learned.

While BOLFI is not restricted to a particular model for  , Gutmann and Corander (2016) used Gaussian processes in the applications in their paper. Gaussian processes have also been used in other work as surrogate models for quantities that are expensive to compute. Wilkinson (2014) used them to model the logarithm of

, Gutmann and Corander (2016) used Gaussian processes in the applications in their paper. Gaussian processes have also been used in other work as surrogate models for quantities that are expensive to compute. Wilkinson (2014) used them to model the logarithm of  , and the training data were constructed based on quasi-random numbers covering the parameter space. Meeds and Welling (2014) used Gaussian processes to model the empirical mean and covariances of the summary statistics as a function of

, and the training data were constructed based on quasi-random numbers covering the parameter space. Meeds and Welling (2014) used Gaussian processes to model the empirical mean and covariances of the summary statistics as a function of  . Instead of simulating these quantities for every

. Instead of simulating these quantities for every  , values from the model were used in a MCMC algorithm in approximating the likelihood. These approaches have been demonstrated to assist in speeding up ABC.

, values from the model were used in a MCMC algorithm in approximating the likelihood. These approaches have been demonstrated to assist in speeding up ABC.

Statistical Efficiency