Abstract

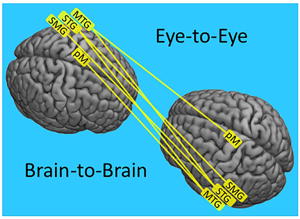

Human eye-to-eye contact is a primary source of social cues and communication. In spite of the biological significance of this interpersonal interaction, the underlying neural processes are not well-understood. This knowledge gap, in part, reflects limitations of conventional neuroimaging methods, including solitary confinement in the bore of a scanner and minimal tolerance of head movement that constrain investigations of natural, two-person interactions. However, these limitations are substantially resolved by recent technical developments in functional near-infrared spectroscopy (fNIRS), a non-invasive spectral absorbance technique that detects changes in blood oxygen levels in the brain by using surface-mounted optical sensors. Functional NIRS is tolerant of limited head motion and enables simultaneous acquisitions of neural signals from two interacting partners in natural conditions. We employ fNIRS to advance a data-driven theoretical framework for two-person neuroscience motivated by the Interactive Brain Hypothesis which proposes that interpersonal interaction between individuals evokes neural mechanisms not engaged during solo, non-interactive, behaviors. Within this context, two specific hypotheses related to eye-to-eye contact, functional specificity and functional synchrony, were tested. The functional specificity hypothesis proposes that eye-to-eye contact engages specialized, within-brain, neural systems; and the functional synchrony hypothesis proposes that eye-to-eye contact engages specialized, across-brain, neural processors that are synchronized between dyads. Signals acquired during eye-to-eye contact between partners (interactive condition) were compared to signals acquired during mutual gaze at the eyes of a picture-face (non-interactive condition). 
In accordance with the specificity hypothesis, responses during eye-to-eye contact were greater than eye-to-picture gaze for a left frontal cluster that included pars opercularis (associated with canonical language production functions known as Broca’s region), pre- and supplementary motor cortices (associated with articulatory systems), as well as the subcentral area. This frontal cluster was also functionally connected to a cluster located in the left superior temporal gyrus (associated with canonical language receptive functions known as Wernicke’s region), primary somatosensory cortex, and the subcentral area. In accordance with the functional synchrony hypothesis, cross-brain coherence during eye-to-eye contact relative to eye-to-picture gaze increased for signals originating within left superior temporal, middle temporal, and supramarginal gyri as well as the pre- and supplementary motor cortices of both interacting brains. These synchronous cross-brain regions are also associated with known language functions, and were partner-specific (i.e., disappeared with randomly assigned partners). Together, both within and across-brain neural correlates of eye-to-eye contact included components of previously established productive and receptive language systems. These findings reveal a left frontal, temporal, and parietal long-range network that mediates neural responses during eye-to-eye contact between dyads, and advance insight into elemental mechanisms of social and interpersonal interactions.

Keywords: Two-person neuroscience, hyperscanning, fNIRS, eye contact, Interactive Brain Hypothesis, cross-brain coherence, social neuroscience

Graphical abstract

Introduction

Eye contact between two humans establishes a universally recognized social link and a conduit for non-verbal interpersonal communication including salient and emotional information. The dynamic exchange of reciprocal information without words between individuals via eye-to-eye contact constitutes a unique opportunity to model mechanisms of human interpersonal communication. A distinctive feature of eye-to-eye contact between two individuals is the rapid and reciprocal exchange of salient information in which each send and receive “volley” is altered in response to the previous, and actions are produced simultaneously with reception and interpretive processes. Common wisdom, well-recognized within the humanities disciplines, regards eye-to-eye contact as a highly poignant social event, and this insight is also an active topic in social neuroscience. It has been proposed that the effects of direct eye gaze in typical individuals involve “privileged access” to specialized neural systems that process and interpret facial cues, including social and communication signals (Allison et al., 2000; Emery, 2000; Ethofer et al., 2011). Support for this hypothesis is based, in part, on imaging studies in which single participants view pictures of faces rather than making real eye-to-eye contact with another individual (Rossion et al., 2003). For example, “eye contact effects” have been observed by comparing neural activity during epochs of viewing static face pictures with either direct or averted gaze during tasks such as passive viewing, identity matching, detection of gender, or discrimination of gaze direction (Senju & Johnson, 2009). Distributed face-selective regions, including the fusiform gyrus, amygdala, superior temporal gyrus, and orbitofrontal cortices, were found to be upregulated during direct gaze relative to indirect gaze.
These findings support a classic model for hierarchical processing of static representations of faces with specialized processing for direct gaze (Kanwisher et al., 1997; Haxby et al., 2000; Zhen et al., 2013). The specialized neural impact of direct eye gaze in typical participants (Johnson et al., 2015) underscores the salience of eyes in early development and neurological disorders, particularly with respect to language and autism spectrum disorders (Golarai et al., 2006; Jones & Klin, 2013).

Eye-to-eye contact in natural situations, however, also includes additional features such as dynamic and rapid perception of eye movements, interpretation of facial expressions, as well as appreciation of context and social conditions (Myllyneva & Hietanen, 2015, 2016; Teufel et al., 2009). A hyperscanning (simultaneous imaging of two individuals) study using fMRI and two separate scanners has shown that responses to eye movement cues can be distinguished from object movement cues, and that partner-to-partner signal synchrony is increased during joint eye tasks (Saito et al., 2010), consistent with enhanced sensitivity to dynamic properties of eyes. Further insight into the social and emotional processing streams stimulated by eye contact originates from behavioral studies where cognitive appraisal of pictured eye-gaze directions has been shown to be modulated by social context (Teufel et al., 2009), and neural responses recorded by electroencephalography (EEG) were found to be amplified during perceptions of being seen by others (Myllyneva & Hietanen, 2016). Modulation of EEG signals has also been associated with viewing actual faces compared to pictured faces and found to be dependent upon both social attributions and the direction of the gaze (Myllyneva & Hietanen, 2015). Extending this evidence for interconnected processing systems related to faces, eyes, emotion, and social context, a recent clinical study using functional magnetic resonance imaging (fMRI) showed symptomatic improvement in patients with generalized anxiety disorder, an affect disorder characterized by negative emotional responses to faces, after treatment with paroxetine that was correlated with neural changes to direct vs. averted gaze pictures (Schneier et al., 2011).

Other single brain studies using fMRI and EEG have confirmed that motion contained in video sequences of faces enhances cortical responses in the right superior temporal sulcus, bilateral fusiform face area, and bilateral inferior occipital gyrus (Schultz & Pilz, 2009; Trautmann et al., 2009; Recio et al., 2011). Dynamic face stimuli, compared to static pictures, have also been associated with enhanced differentiation of emotional valences (Trautmann-Lengsfeld et al., 2013), and these systems were found to be sensitive to the rate of facial movement (Schultz et al., 2013). Moving trajectories of facial expressions morphed from neutral to either a positive or negative valence and recorded by magnetoencephalography (MEG) revealed similar findings with the added observation that pre-motor activity was concomitant with activity in the temporal visual areas, suggesting that motion sensitive mechanisms associated with dynamic facial expressions may also predict facial expression trajectories (Furl et al., 2010). Similar findings have been reported from passive viewing of static and dynamic faces using fMRI, confirming that these effects were lateralized to the right hemisphere (Sato et al., 2004). Overall, these findings establish the neural salience of faces based on single brain responses to either dynamic picture stimuli or to live faces with dynamic emotional expressions, and provide a compelling rationale and background for extending the investigational and theoretical approach to direct and natural eye-to-eye contact between two interacting partners where both single brain and cross-brain effects can be observed.

Recent technical advances in neuroimaging using fNIRS pave the way for hyperscanning during natural and spontaneous social interactions, and enable a new genre of experimental paradigms to investigate interpersonal and social mechanisms (Cheng et al., 2015; Dommer et al., 2012; Funane et al., 2011; Holper et al., 2012; Jiang et al., 2015; Osaka et al., 2014, 2015; Vanutelli et al., 2015; Pinti et al., 2015). These pioneering studies have demonstrated the efficacy of new social and dual-brain approaches, and have given rise to novel research questions that address the neurobiology of social interactions (Babiloni & Astolfi, 2014; García & Ibáñez, 2014; Schilbach et al., 2013, 2014). Concomitant with these technical and experimental advances, a recently proposed theoretical framework, the Interactive Brain Hypothesis, advances the idea that live social interaction drives dynamic neural activity with direct consequences for cognition (Di Paolo & De Jaegher, 2012; De Jaegher et al., 2016). This hypothesis also encompasses the conjecture that social interaction engages neural processes that either do not occur or are less active during similar solo activities (De Jaegher et al., 2010). Supporting observations and experimental paradigms that incorporate real-time interactions to test aspects of this broad hypothesis are nascent but emerging (Konvalinka & Roepstorff, 2012; Schilbach et al., 2013), and highlight new experimental approaches that probe “online” processes, i.e., processes that become manifest when two agents coordinate their visual attention (Schilbach, 2014).

A pivotal aspect of online two-brain investigations relates to the measures of cross-brain linkages. Oscillatory coupling of signals between brains is assumed to reflect cooperating mechanisms that underlie sensation, perception, cognition, and/or action. Cross-brain coherence (a linking indicator) measured by oscillatory coupling between two brains during natural interactive tasks has been reported for both fNIRS (hemodynamic) and EEG (electroencephalographic) signals, and encompasses a wide range of functions. For example, hyperscanning of dyads engaged in cooperative and obstructive interactions during a turn-based game of Jenga using fNIRS revealed inter-brain neural synchrony between right middle and superior frontal gyri during both cooperative and obstructive interaction, and in the dorsomedial prefrontal cortex during cooperative interactions only (Liu et al., 2016). Spontaneous imitation of hand movements between two partners generated EEG signals in the alpha-mu (8–12Hz) frequency band between right central-parietal regions of the cooperating brains, suggesting that an inter-brain synchronizing network was active during this interaction (Dumas et al., 2010). Behavioral synchrony while playing a guitar in a duet has been shown to produce synchronous neural oscillatory activity in the delta (1–4Hz) and theta (4–8Hz) frequency bands, suggesting a hyperbrain (two interconnected brains) model for dyadic musical duets (Lindenberger et al., 2009; Sänger et al., 2012). Further, a topology of hyperbrain networks including nodes from both brains has been reported based on musical improvisation tasks and synchronous oscillations between delta and theta frequency bands (Müller et al., 2013). 
In an EEG study of kissing, hyperbrain networks were found to increase oscillatory coherence during romantic kissing relative to kissing one’s own hand, suggesting that this cross-frequency coupling represents neural mechanisms that support interpersonally coordinated voluntary action (Müller & Lindenberger, 2014).

Here, we extend this technical and experimental background with an objective test of the Interactive Brain Hypothesis using eye-to-eye contact in natural interactive conditions. Specifically, the neural effects of direct eye-to-eye contact between two participants (“online” interactive condition) are compared with the neural effects of gaze at a static picture of eyes in a face (“offline” non-interactive condition) (Schilbach, 2014). Two hypotheses emerge from this general framework: a within-brain specificity hypothesis and a cross-brain synchrony hypothesis. In the case of the former, localized effects and functional connectivity are expected to reveal neural systems associated with live online eye-to-eye contact. In the latter case, cross-brain synchrony evidenced by coherence of signals between dyads is expected to increase for the eye-to-eye condition relative to the eye-to-picture condition reflecting neural processes associated with dynamic online visual responses associated with eyes. The two conditions differ with respect to interpersonal interaction and dynamic properties, and observed neural effects would be due to both interaction effects and motion sensitivity. See the Discussion Section for a further review.

Neural effects of eye-to-eye contact were determined by acquisition of hemodynamic signals using functional near-infrared spectroscopy during simultaneous neuroimaging of two interacting individuals. Functional NIRS is well suited for concurrent neuroimaging of two partners because the signal detectors are head-mounted, participants are seated across from each other, and the system is tolerant of limited head movement (Eggebrecht et al., 2014). Hemodynamic signals that represent variations in oxygen levels of oxyhemoglobin (OxyHb) and deoxyhemoglobin (deOxyHb) (Villringer & Chance, 1997) serve as a proxy for neural activity, similar to variations in magnetic resonance caused by deOxyHb changes measured in fMRI (Boas et al., 2004, 2014; Ferrari & Quaresima, 2012). Thus, the deOxyHb signal (rather than the OxyHb signal) is most closely related to the fMRI signal. The correspondence between both hemodynamic signals acquired by fNIRS and the blood oxygen level-dependent (BOLD) signal acquired by fMRI is well-established (Sato et al., 2013; Scholkmann et al., 2013), and each reflects metabolic processes associated with task-specific neural activity (Cui et al., 2011; Eggebrecht et al., 2014; Sato et al., 2013; Scholkmann et al., 2014; Strangman et al., 2002). The OxyHb signal is more likely to include additional components originating from systemic effects such as blood flow, blood pressure, and respiration than the deOxyHb signal (Boas et al., 2004, 2014; Ferrari & Quaresima, 2012; Tachtsidis & Scholkmann, 2016), and is therefore distributed more globally than the deOxyHb signal (Zhang et al., 2016). Due to these added factors, the amplitude of the OxyHb signal is characteristically higher than that of the deOxyHb signal, accounting for the fact that the OxyHb signal is frequently selected as the signal of choice for fNIRS studies.
However, the potential advantages of maximizing spatial precision related to neural-specific functional activity while minimizing the possible confounds of signal components due to non-neural signal origins favor the use of the deOxyHb signal. Accordingly, the deOxyHb signal is reported in this investigation. To confirm the neural origin of these signals, as opposed to a possible global cardiovascular origin, an EEG study using the same paradigm was performed (see Supplementary Materials). Results showed enhanced evoked potential responses during the eye-to-eye condition as compared to the eye-to-picture condition in general accordance with the findings reported below, absent the spatial specificity.

Materials and Methods

Participants

Thirty-eight healthy adults (19 pairs, 27 ± 9 years of age, 58% female, 90% right-handed (Oldfield, 1971)) participated in this two-person interactive hyperscanning paradigm using fNIRS. Forty participants were recruited and two participants were excluded because of poor signal quality associated with motion artifacts. All participants provided written informed consent in accordance with guidelines approved by the Yale University Human Investigation Committee (HIC #1501015178). Dyads were assigned in order of recruitment, and participants were either strangers prior to the experiment or casually acquainted as classmates. Participants were not stratified further by affiliation or dyad gender mix. Ten pairs were mixed gender, six pairs were female-female, and three pairs were male-male.

Paradigm

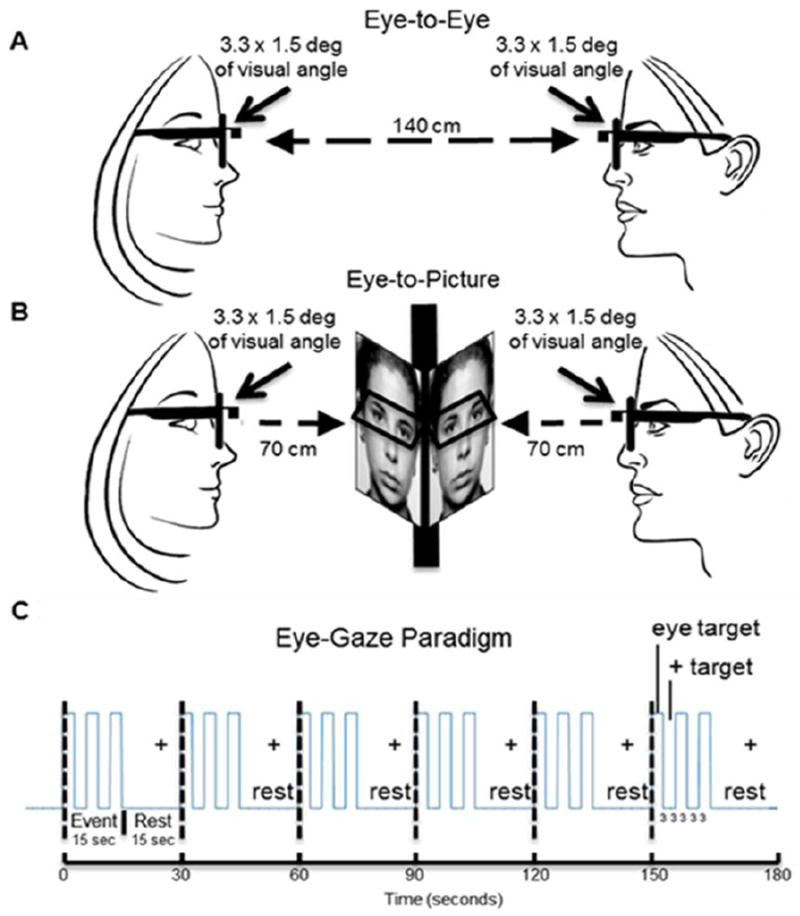

Participant dyads were positioned 140 cm across a table from each other with a direct face-to-face view of each other. A fixation crosshair was located 10 degrees of visual angle to the right of the inferred line-of-sight between the two participants. A virtual “eye box” for both of the participants and for the photograph of a neutral face (Ekman et al., 1975) that was attached to the occluder inserted between the participants subtended 3.3 × 1.5 degrees of visual angle (See Figure 1A and 1B, respectively). The face (real or picture) was out of view for each participant when gaze was averted by 10 deg off the line of direct face-gaze to the crosshair. Participants were instructed to minimize head movement, not to talk to each other, and to maintain facial expressions that were as neutral as possible. At the start of a run, they fixated on the crosshair. An auditory cue prompted participants to gaze at their partner’s eyes in the eye-to-eye condition (Figure 1A), or at the eyes of the face photograph in the eye-to-picture condition (Figure 1B). The auditory tone also cued viewing the crosshair during the rest/baseline condition according to the protocol time series (Figure 1C). The 15-second (s) active task period alternated with a 15 s rest/baseline period. The task period consisted of three 6 s cycles in which gaze alternated “on” for 3 s and “off” for 3 s for each of three events (Figure 1C), and the time series was performed in the same way for the real face and for the pictured face. During the 15 s rest/baseline period, participants focused on the fixation crosshair, as in the case of the 3 s “off” periods that separated the eye contact and gaze events, and were instructed to “clear their minds” during this break. The 3 s time period for the eye-to-eye contact events was selected for participant comfort due to the difficulty of maintaining eye contact with a real partner for periods longer than 3 s. 
The 15 s “activity” epoch with alternating eye contact events was processed as a single block. The photographed face was the same for all participants and runs. Each 3-minute run was repeated twice.
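The run timing described above can be sketched as a per-second timeline. This is a minimal illustration rather than the stimulus code used in the study: the function name `build_run_timeline` is hypothetical, the rest-first ordering within each 30 s cycle is an assumption, and the sketch assumes that the 3 s “off” interval after the third gaze event is absorbed into the following rest period so that the active epoch lasts 15 s.

```python
import numpy as np

def build_run_timeline(n_cycles=6, rest_s=15, task_s=15, on_s=3, off_s=3):
    """Return a per-second array: 1 = gaze on the eyes, 0 = gaze on the crosshair."""
    timeline = []
    for _ in range(n_cycles):
        # Rest/baseline period: fixation on the crosshair (assumed to come first).
        timeline.extend([0] * rest_s)
        # Active epoch: alternate "on" and "off" intervals until task_s is filled.
        t = 0
        while t < task_s:
            on = min(on_s, task_s - t)
            timeline.extend([1] * on)
            t += on
            off = min(off_s, task_s - t)
            timeline.extend([0] * off)
            t += off
    return np.array(timeline)

timeline = build_run_timeline()
print("run length (s):", timeline.size)
print("seconds of eye gaze:", int(timeline.sum()))
print("number of gaze events:", int((np.diff(timeline) == 1).sum()))
```

Under these assumptions, each 30 s cycle contains three 3 s gaze events, and six cycles give the 3-minute run length stated in the text.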

Figure 1. Hyperscanning configuration and time series.

A. Eye-to-Eye condition. Partners faced each other at an eye-to-eye distance of 140 cm. The eye regions subtended by both the real eyes and the picture eyes were 3.3 × 1.5 degrees of visual angle. B. Eye-to-picture condition. An occluder separated partners and a neutral face picture was placed in front of each at a distance of 70 cm, subtending the same visual angles as the eye-to-eye condition. Eye-tracking was recorded by scene and gaze measures of each participant. A rest target (crosshair) was located 10 degrees to the right of each target center. C. The event sequence for the experiment consisted of six 30 s cycles partitioned into 15 s of rest and 15 s of active on-off eye-gaze epochs. Active epochs contained three 6 s cycles each with three seconds of eye-gaze and three seconds of rest consisting of fixation on a crosshair located 10 degrees off the eye-gaze line of sight.
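As a worked example of the geometry in Figure 1, the physical size of the “eye box” and the lateral offset of the crosshair can be estimated from the stated visual angles and viewing distances. The helper names `extent_cm` and `offset_cm` are hypothetical, and the sketch assumes a flat target plane perpendicular to the line of sight.

```python
import math

def extent_cm(angle_deg, distance_cm):
    """Linear size that subtends angle_deg when centered on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def offset_cm(angle_deg, distance_cm):
    """Lateral offset of a point located angle_deg away from the line of sight."""
    return distance_cm * math.tan(math.radians(angle_deg))

# Viewing distances stated in the caption: 140 cm (partner) and 70 cm (picture).
for distance in (140.0, 70.0):
    width = extent_cm(3.3, distance)     # horizontal extent of the eye box
    height = extent_cm(1.5, distance)    # vertical extent of the eye box
    cross = offset_cm(10.0, distance)    # crosshair offset from the target center
    print(f"{distance:.0f} cm: box {width:.2f} x {height:.2f} cm, crosshair {cross:.2f} cm")
```

Because the extent scales linearly with distance, a picture viewed at 70 cm must be half the physical size of the real eye region viewed at 140 cm to subtend the same visual angle.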

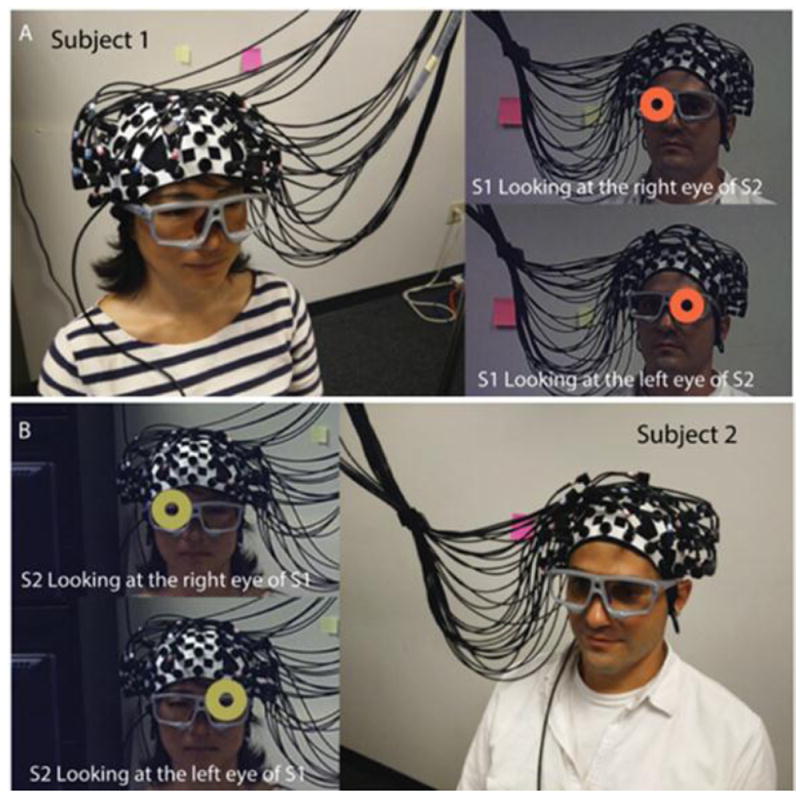

Eye-Tracking and fNIRS setup

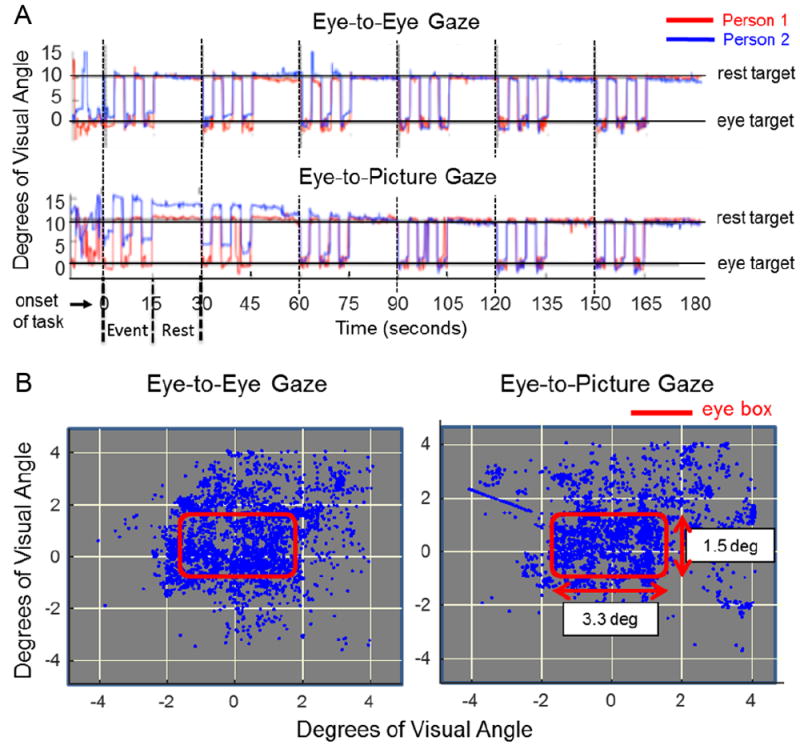

A two-person eye-tracking system with scene and eye-position monitoring cameras embedded into eyeglass frames for each participant was used during fNIRS recordings (Figure 2). Eye-gaze positions for both conditions were calibrated prior to each experiment. SMI ETG2 eye-tracking glasses provided position resolution of 0.5 degrees of visual angle and effective temporal resolution of 30 Hz. Eye-tracking signals were synchronized with stimulus presentations and acquisitions of neural signals via a TTL trigger mechanism. Glasses were placed on each participant and a nose bridge was adjusted for comfort and signal optimization. The calibration step illustrated in Figure 2 was repeated prior to the start of each trial. Eye-tracking traces as a function of time (x-axis) and location (y-axis) are shown in Figure 3A for an illustrative dyad. The red traces indicate eye positions for participant 1 while blue traces indicate eye positions for participant 2. The top row indicates the traces during the eye-to-eye contact with the partner, and the second row indicates the eye-to-photograph gaze.

Figure 2. fNIRS and eye-tracking setup.

Dual-brain hyperscanning setup using the Shimadzu LABNIRS is shown for two illustrative participants. The optode layout consists of 16 detector and emitter pairs per cap configured to provide 42 channels per participant. fNIRS signals were synchronized across participants and the eye-tracking system. A. Participant 1 views the right eye (orange circle, upper panel) and left eye (orange circle, lower panel) of participant 2. B. Participant 2 views the right eye (yellow circle, upper panel) and left eye (yellow circle, lower panel) of Participant 1. These calibrations demonstrate position accuracy related to gaze position.

Figure 3. Eye-tracking and eye gaze comparisons.

A. Eye-tracking traces of two illustrative participants (red and blue) document typical performance during eye gaze events and rest. The y-axis represents degrees of visual angle subtended on the eye of the participant; 0 represents the eye-target center. The crosshair was located 10 degrees away from the eye target (either direct eye contact or gaze of picture eyes). Top and bottom rows illustrate the absence of evidence for eye-tracking differences during direct eye-to-eye contact and joint eye-gaze of the face picture between the two participants. Similarly, comparison of all participants provides no evidence for differences during eye-to-eye gaze and eye-to-picture gaze. B. Eye gaze positions recorded during the eye-to-eye task (left column) and eye-to-picture task (right column) experiments. The red box delineates the 3.3 × 1.5 degree of visual angle window region enclosing both eyes of the participant’s partner and picture. Comparison of all participants provides no evidence for differences during either the eye-to-eye task or the eye-to-picture task.

A linear regression between the group datasets indicated a correlation coefficient of at least 0.96 between eye-to-eye contact and eye-to-picture gaze, demonstrating the absence of evidence for differences between the eye-tracking in either condition. Consistency of fixations within the 3.3 × 1.5-degree “eye box” was evaluated by the x, y location at the mid-point of the 3 s temporal epoch. Figure 3B shows the point cloud from all eye-to-eye (left panel) and eye-to-photo (right panel) conditions. As in the case of the eye-tracking, target accuracy results failed to show differences between the eye-to-eye and eye-to-photograph conditions. These measures rule out eye behavior as a possible source of observed neural differences.
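The two eye-tracking checks described above (agreement between gaze traces and fixation accuracy within the eye box) can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study: the function names are hypothetical, the traces are synthetic rather than recorded data, gaze positions are expressed in degrees relative to the eye-target center, and a Pearson correlation stands in for the regression reported in the text.

```python
import numpy as np

def fraction_in_eye_box(x_deg, y_deg, width_deg=3.3, height_deg=1.5):
    """Fraction of gaze samples inside a box centered on the eye target (0, 0)."""
    x = np.asarray(x_deg, dtype=float)
    y = np.asarray(y_deg, dtype=float)
    inside = (np.abs(x) <= width_deg / 2) & (np.abs(y) <= height_deg / 2)
    return float(inside.mean())

def trace_correlation(trace_a, trace_b):
    """Pearson correlation between two equally sampled gaze traces."""
    return float(np.corrcoef(trace_a, trace_b)[0, 1])

# Synthetic traces (not study data): 0 deg on the eyes, 10 deg on the crosshair,
# alternating every 3 s at 30 Hz, with independent noise for each condition.
rng = np.random.default_rng(0)
ideal = np.tile(np.r_[np.zeros(90), np.full(90, 10.0)], 5)
eye_to_eye = ideal + rng.normal(0.0, 0.3, ideal.size)
eye_to_picture = ideal + rng.normal(0.0, 0.3, ideal.size)

r = trace_correlation(eye_to_eye, eye_to_picture)
print(f"correlation between conditions: {r:.3f}")
print("fraction inside eye box:", fraction_in_eye_box([0.0, 1.0, 2.0], [0.0, 0.5, 0.0]))
```

A high correlation between conditions together with similar in-box fractions would be consistent with comparable eye behavior in the two tasks, which is the argument made in the text.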

Signal Acquisition

Hemodynamic signals were acquired using a 64-fiber (84-channel) continuous-wave fNIRS system (Shimadzu LABNIRS, Kyoto, Japan) designed for hyperscanning of two participants. The cap and optode layout of the system (Figure 2) illustrate the extended head coverage for both participants achieved by distribution of 42 channels over both hemispheres of the scalp (Figure 4A). Channel distances were set at 3 cm. A lighted fiber-optic probe (Daiso, Hiroshima, Japan) was used to displace all hair from each optode channel prior to placement of the optode inside the holder to ensure a solid connection with the scalp. Resistance was measured for each channel prior to recording to assure acceptable signal-to-noise ratios, and adjustments (including hair removal) were made until all channels met the minimum criteria established by the LABNIRS recording standards (Noah et al., 2015; Ono et al., 2014; Tachibana et al., 2011). Three wavelengths of light (780, 805, and 830 nm) are delivered by each emitter in the LABNIRS system and each detector measures the absorbance for each of these wavelengths. For each channel, the measured absorption for each wavelength is converted to corresponding concentration changes for deoxyhemoglobin (780 nm), oxyhemoglobin (830 nm), and the total combined deoxyhemoglobin and oxyhemoglobin (805 nm) according to a modified Beer-Lambert equation in which raw optical density changes are converted into relative chromophore concentration changes (i.e., ΔOxyHb, ΔdeOxyHb, and ΔTotalHb) (arbitrary units, μmol cm) based on the following equations (Boas et al., 2004; Cope et al., 1988; Matcher, 1995):

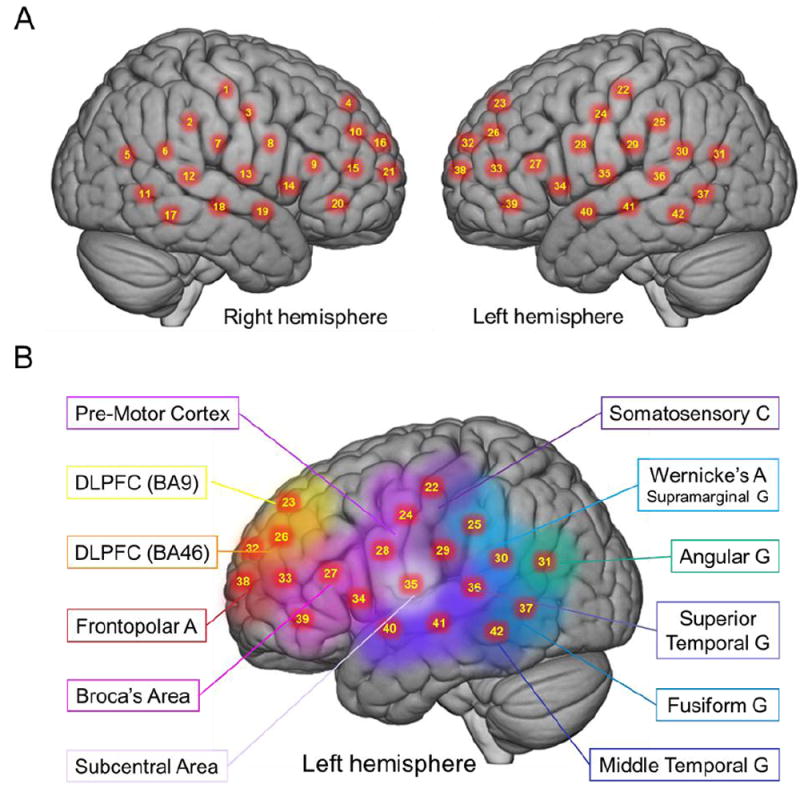
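The conversion described above is a linear combination of the absorbance changes at the three wavelengths. The following sketch illustrates that structure only. The coefficient values are an assumption: they are values commonly quoted for Shimadzu three-wavelength systems and are not taken from this text, so the published equations remain authoritative. The function name `absorbance_to_hb` is hypothetical.

```python
import numpy as np

# ASSUMED coefficients (not confirmed by this text).
# Rows: deOxyHb, OxyHb. Columns: absorbance change at 780, 805, 830 nm.
COEFFS = np.array([
    [1.8545, -0.2394, -1.0947],   # deOxyHb
    [-1.4887, 0.5970, 1.4847],    # OxyHb
])

def absorbance_to_hb(delta_abs):
    """Convert absorbance changes, shape (n_samples, 3), to relative Hb changes."""
    delta_abs = np.asarray(delta_abs, dtype=float)
    # Each chromophore is a weighted sum of the three wavelength signals.
    deoxy, oxy = (delta_abs @ COEFFS.T).T
    total = oxy + deoxy   # total hemoglobin is the sum of both chromophores
    return oxy, deoxy, total

# Usage with made-up absorbance changes for two samples of one channel.
oxy, deoxy, total = absorbance_to_hb([[0.010, 0.012, 0.015],
                                      [0.008, 0.011, 0.016]])
print("dOxyHb:", oxy)
print("ddeOxyHb:", deoxy)
print("dTotalHb:", total)
```

Expressing the conversion as a matrix product makes it straightforward to apply the same weights to every channel and time point at once.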

Figure 4. Channels and channel groups.

A. Right and left hemispheres of a single rendered brain illustrate average locations (red dots) for channel centroids. Montreal Neurological Institute (MNI) coordinates were determined by digitizing emitter and detector locations in relation to the conventional 10-20 system based on frontal, posterior, dorsal, and lateral landmarks. See Table 1 for coordinates, anatomical regions, Brodmann’s Area (BA), and regional probabilities for each channel. B. Average locations of channel group centroids are represented by colored regions on a rendered brain image. Right hemisphere centroids are equivalent to left hemisphere centroids displayed here (see Table 2). Abbreviations: DLPFC (9), dorsolateral prefrontal cortex in Brodmann’s Area (BA) 9; DLPFC (46), dorsolateral prefrontal cortex in BA46.

Optode Localization

The anatomical locations of optodes in relation to standard head landmarks, including inion; nasion; top center, Cz; left tragus (T3); and right tragus (T4), were determined for each participant using a Patriot 3D Digitizer (Polhemus, Colchester, VT), and linear transform techniques were applied as previously described (Eggebrecht et al., 2012; Ferradal et al., 2014; Okamoto & Dan, 2005; Singh et al., 2005). The Montreal Neurological Institute (MNI) coordinates (Mazziotta et al., 2001) for the channels were obtained using the NIRS-SPM software (Ye et al., 2009) with MATLAB (Mathworks, Natick, MA), and the corresponding anatomical locations of each channel were determined by the provided atlas (Rorden & Brett, 2000). Table 1 lists the median-averaged MNI coordinates and anatomical regions with probability estimates for each of the channels shown in Figure 4A.

Table 1. Channels, group-averaged coordinates, anatomical regions, and atlas-based probabilities.*

Channel numbers (as shown on Figure 4A) are listed in the left column. Anatomical locations of the channels are determined for each participant by digitization based on standard 10-20 fiducial markers. Group-averaged (n = 38) centroids of the channels are based on the Montreal Neurological Institute (MNI) coordinate system (Mazziotta et al., 2001) and listed as x, y, z coordinates for which [-] values indicate left hemisphere. Anatomical region labels are indicated as presented by the NIRS-SPM utility (Ye et al., 2009) based on representation (Rorden & Brett, 2000) of the Damasio stereotaxic atlas (Damasio, 1995). Anatomical regions, including Brodmann’s Areas (BA) with probabilities of inclusion in the channel region/cluster, are shown in the center and right columns.

| Channel number | MNI Coordinates | Anatomical Region | BA | Probability | ||

|---|---|---|---|---|---|---|

| X | Y | Z | ||||

| 1 | 56.83 | -20.39 | 48.61 | Primary Somatosensory Cortex | 1 | 0.38 |

| Primary Somatosensory Cortex | 3 | 0.38 | ||||

| Primary Motor Cortex | 4 | 0.24 | ||||

| 2 | 61.63 | -33.70 | 37.97 | Primary Somatosensory Cortex | 2 | 0.18 |

| Supramarginal Gyrus, part of Wernicke’s Area | 40 | 0.82 | ||||

| 3 | 59.36 | -7.23 | 37.74 | Primary Somatosensory Cortex | 1 | 0.06 |

| Primary Somatosensory Cortex | 3 | 0.19 | ||||

| Primary Motor Cortex | 4 | 0.28 | ||||

| Pre- and Supplementary Motor Cortex | 6 | 0.14 | ||||

| Subcentral Area | 43 | 0.33 | ||||

| 4 | 20.35 | 47.41 | 47.40 | Dorsolateral Prefrontal Cortex | 9 | 1.00 |

| 5 | 50.56 | -73.06 | 13.86 | V3 | 19 | 0.46 |

| Fusiform Gyrus | 37 | 0.20 | ||||

| Angular Gyrus, part of Wernicke’s Area | 39 | 0.34 | ||||

| 6 | 61.88 | -47.51 | 17.48 | Middle Temporal Gyrus | 21 | 0.25 |

| Superior Temporal Gyrus | 22 | 0.75 | ||||

| Fusiform Gyrus | 37 | 0.01 | ||||

| 7 | 63.26 | -21.52 | 26.13 | Primary Somatosensory Cortex | 2 | 0.82 |

| Superior Temporal Gyrus | 22 | 0.10 | ||||

| Subcentral Area | 43 | 0.07 | ||||

| Retrosubicular Area | 48 | 0.01 | ||||

| 8 | 60.41 | 2.82 | 23.29 | Primary Motor Cortex | 4 | 0.01 |

| Pre- and Supplementary Motor Cortex | 6 | 0.59 | ||||

| Subcentral Area | 43 | 0.41 | ||||

| 9 | 53.37 | 27.75 | 20.58 | Pars Opercularis, part of Broca’s Area | 44 | 0.02 |

| Pars Triangularis, part of Broca’s Area | 45 | 0.98 | ||||

| 10 | 36.29 | 48.59 | 32.06 | Dorsolateral Prefrontal Cortex | 9 | 0.18 |

| Pars Triangularis, part of Broca’s Area | 45 | 0.04 | ||||

| Dorsolateral Prefrontal Cortex | 46 | 0.78 | ||||

| 11 | 57.83 | -59.56 | -2.98 | Fusiform Gyrus | 37 | 1.00 |

| 12 | 64.93 | -35.49 | 9.08 | Superior Temporal Gyrus | 22 | 1.00 |

| 13 | 63.38 | -9.54 | 12.93 | Superior Temporal Gyrus | 22 | 0.62 |

| Subcentral Area | 43 | 0.28 | ||||

| Retrosubicular Area | 48 | 0.11 | ||||

| 14 | 56.74 | 13.53 | 5.83 | Pre- and Supplementary Motor Cortex | 6 | 0.17 |

| Temporopolar Area | 38 | 0.15 | ||||

| Pars Opercularis, part of Broca’s Area | 44 | 0.14 | ||||

| Pars Triangularis, part of Broca’s Area | 45 | 0.01 | ||||

| Retrosubicular Area | 48 | 0.54 | ||||

| 15 | 45.40 | 46.57 | 17.01 | Pars Triangularis, part of Broca’s Area | 45 | 0.44 |

| Dorsolateral Prefrontal Cortex | 46 | 0.56 | ||||

| 16 | 22.68 | 61.13 | 29.80 | Dorsolateral Prefrontal Cortex | 9 | 0.26 |

| Frontopolar Area | 10 | 0.47 | ||||

| Dorsolateral Prefrontal Cortex | 46 | 0.26 | ||||

| 17 | 61.83 | -47.40 | -9.79 | Inferior Temporal Gyrus | 20 | 0.36 |

| Fusiform Gyrus | 37 | 0.64 | ||||

| 18 | 65.65 | -23.10 | -4.17 | Middle Temporal Gyrus | 21 | 0.94 |

| Superior Temporal Gyrus | 22 | 0.06 | ||||

| 19 | 61.23 | -2.33 | -7.83 | Middle Temporal Gyrus | 21 | 0.89 |

| Temporopolar Area | 38 | 0.02 | ||||

| Retrosubicular Area | 48 | 0.09 | ||||

| 20 | 51.22 | 37.68 | 1.78 | Pars Triangularis, part of Broca’s area | 45 | 0.82 |

| Dorsolateral Prefrontal Cortex | 46 | 0.18 | ||||

| 21 | 34.28 | 61.15 | 15.32 | Frontopolar Area | 10 | 0.69 |

| Dorsolateral Prefrontal Cortex | 46 | 0.31 | ||||

| 22 | -53.95 | -18.88 | 47.90 | Primary Somatosensory Cortex | 1 | 0.09 |

| Primary Somatosensory Cortex | 3 | 0.60 | ||||

| Primary Motor Cortex | 4 | 0.30 | ||||

| Pre- and Supplementary Motor Cortex | 6 | 0.01 | ||||

| 23 | -16.15 | 48.24 | 46.76 | Dorsolateral Prefrontal Cortex | 9 | 1.00 |

| 24 | -56.34 | -7.24 | 37.61 | Primary Somatosensory Cortex | 1 | 0.02 |

| Primary Somatosensory Cortex | 3 | 0.17 | ||||

| Primary Motor Cortex | 4 | 0.37 | ||||

| Pre- and Supplementary Motor Cortex | 6 | 0.14 | ||||

| Subcentral Area | 43 | 0.30 | ||||

| 25 | -59.27 | -32.34 | 36.98 | Primary Somatosensory Cortex | 2 | 0.46 |

| Supramarginal Gyrus, part of Wernicke’s Area | 40 | 0.54 | ||||

| 26 | -32.93 | 49.10 | 31.93 | Dorsolateral Prefrontal Cortex | 9 | 0.15 |

| Pars Triangularis, part of Broca’s Area | 45 | 0.01 | ||||

| Dorsolateral Prefrontal Cortex | 46 | 0.84 | ||||

| 27 | -50.21 | 27.57 | 20.96 | Pars Opercularis, part of Broca’s Area | 44 | 0.01 |

| Pars Triangularis, part of Broca’s Area | 45 | 0.99 | ||||

| 28 | -57.56 | 2.43 | 23.44 | Pre- and Supplementary Motor Cortex | 6 | 0.57 |

| Subcentral Area | 43 | 0.43 | ||||

| 29 | -60.69 | -20.44 | 26.54 | Primary Somatosensory Cortex | 2 | 0.70 |

| Superior Temporal Gyrus | 22 | 0.05 | ||||

| Subcentral Area | 43 | 0.10 | ||||

| Retrosubicular Area | 48 | 0.14 | ||||

| 30 | -60.48 | -45.93 | 19.27 | Middle Temporal Gyrus | 21 | 0.03 |

| Superior Temporal Gyrus | 22 | 0.97 | ||||

| 31 | -51.10 | -70.19 | 17.35 | V3 | 19 | 0.30 |

| Fusiform Gyrus | 37 | 0.13 | ||||

| Angular Gyrus, part of Wernicke’s Area | 39 | 0.57 | ||||

| 32 | -17.33 | 62.01 | 30.03 | Dorsolateral Prefrontal Cortex | 9 | 0.28 |

| Frontopolar Area | 10 | 0.56 | ||||

| Dorsolateral Prefrontal Cortex | 46 | 0.16 | ||||

| 33 | -41.83 | 47.52 | 17.33 | Pars Triangularis, part of Broca’s Area | 45 | 0.38 |

| Dorsolateral Prefrontal Cortex | 46 | 0.62 | ||||

| 34 | -54.34 | 13.25 | 6.56 | Pre- and Supplementary Motor Cortex | 6 | 0.20 |

| Temporopolar Area | 38 | 0.06 | ||||

| Pars Opercularis, part of Broca’s Area | 44 | 0.15 | ||||

| Pars Triangularis, part of Broca’s Area | 45 | 0.01 | ||||

| Retrosubicular Area | 48 | 0.58 | ||||

| 35 | -60.77 | -9.69 | 14.68 | Superior Temporal Gyrus | 22 | 0.54 |

| Subcentral Area | 43 | 0.35 | ||||

| Retrosubicular Area | 48 | 0.11 | ||||

| 36 | -62.47 | -34.81 | 9.89 | Superior Temporal Gyrus | 22 | 0.99 |

| Primary and Auditory Association Cortex | 42 | 0.01 | ||||

| 37 | -57.43 | -57.78 | 0.33 | Fusiform Gyrus | 37 | 1.00 |

| 38 | -28.93 | 62.53 | 15.44 | Frontopolar Area | 10 | 0.75 |

| Dorsolateral Prefrontal Cortex | 46 | 0.25 | ||||

| 39 | -48.28 | 38.47 | 2.13 | Pars Triangularis, part of Broca’s Area | 45 | 0.79 |

| Dorsolateral Prefrontal Cortex | 46 | 0.21 | ||||

| 40 | -59.13 | -2.55 | -6.89 | Middle Temporal Gyrus | 21 | 0.74 |

| Temporopolar Area | 38 | 0.07 | ||||

| Retrosubicular Area | 48 | 0.19 | ||||

| 41 | -63.04 | -23.25 | -3.67 | Middle Temporal Gyrus | 21 | 0.94 |

| Superior Temporal Gyrus | 22 | 0.06 | ||||

| 42 | -60.25 | -46.80 | -8.37 | Inferior Temporal Gyrus | 20 | 0.36 |

| Middle Temporal Gyrus | 21 | 0.04 | ||||

| Fusiform Gyrus | 37 | 0.60 | ||||

Atlas references: Mazziotta et al. (2001), Rorden and Brett (2000)

BA: Brodmann’s Area

Signal Processing

Baseline drift was modeled and removed using a fourth-degree polynomial fitted to the raw fNIRS signals (MATLAB). Any channel without a signal due to insufficient optode contact with the scalp was identified automatically from the root mean square of the raw data, when its magnitude was more than 10 times greater than the average signal. Approximately 4.5% of the channels in the entire data set were automatically removed prior to subsequent analyses based on this criterion; however, no single participant’s data were removed entirely.
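The two preprocessing steps described above can be sketched in Python as follows. This is a minimal illustration and not the MATLAB code used in the study. The function names are hypothetical, and reading "the average signal" as the mean root mean square across channels is an assumption.

```python
import numpy as np

def remove_baseline_drift(signal, degree=4):
    """Fit a polynomial of the given degree to one channel and subtract it."""
    # Time is rescaled to [-1, 1] so the polynomial fit stays well conditioned.
    t = np.linspace(-1.0, 1.0, signal.size)
    coeffs = np.polyfit(t, signal, degree)
    return signal - np.polyval(coeffs, t)

def flag_bad_channels(raw, factor=10.0):
    """Flag channels whose RMS exceeds `factor` times the mean RMS.

    raw has shape (n_channels, n_samples).
    Assumption: "average signal" is taken as the mean RMS over all channels.
    """
    rms = np.sqrt(np.mean(raw ** 2, axis=1))
    return rms > factor * rms.mean()
```

With a small number of channels, a single extreme channel inflates the mean and can mask itself; a median-based reference would be more robust, but it is not what the text describes.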

Global Mean Removal

Global systemic effects (e.g., blood pressure, respiration, and blood flow variation) have previously been shown to alter relative blood hemoglobin concentrations (Kirilina et al., 2012; Tak & Ye, 2014), which raises the possibility of inadvertently measuring hemodynamic responses that are not due to neurovascular coupling (Tachtsidis & Scholkmann, 2016). These global components were removed using a principal component analysis (PCA) spatial filter (Zhang et al., 2016) prior to general linear model (GLM) analysis. This technique exploits the advantages of the extended optode coverage of both heads in this system in order to distinguish signals that originate from local sources assumed to be specific to the neural events under investigation by removing signal components due to global sources assumed to originate from systemic cardiovascular functions.
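The idea of removing a spatially global component can be illustrated with a simplified sketch. The version below removes the leading principal component(s) of the channel data outright. This is a cruder stand-in for the spatial filter of Zhang et al. (2016), which is not reproduced here; the function name and the choice to remove one component are assumptions.

```python
import numpy as np

def remove_global_component(data, n_remove=1):
    """Remove the leading principal component(s) from multichannel data.

    data has shape (n_samples, n_channels). The leading component is
    assumed to capture the signal shared by most channels (systemic effects).
    """
    centered = data - data.mean(axis=0, keepdims=True)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    s_kept = s.copy()
    s_kept[:n_remove] = 0.0          # drop the global component(s)
    return (u * s_kept) @ vt         # rebuild the data from the remaining components
```

This only works as intended when the coverage is broad enough that a shared component is unlikely to be a local neural response, which is the rationale given above for using the extended optode coverage.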

Voxel-wise contrast effects

The 42-channel fNIRS data sets per participant were reshaped into 3-D volume images for the first-level GLM analysis using SPM8. The beta values (i.e., the amplitude of the deOxyHb signal) were normalized to standard MNI space using linear interpolation. The computational mask was subdivided into a total of 3,753 voxels of 2 × 2 × 2 mm that “tiled” the cortical shell consisting of 18 mm of brain below the cortical surface and covered by the 42 optodes (see Figure 4A). This approach provided a spatial resolution advantage achieved by interpolation between the channels. The anatomical variation across participants was used to generate the distributed response maps. Results were rendered on a standard MNI brain template using MRIcroGL (http://www.mccauslandcenter.sc.edu/mricrogl/home/). Anatomical locations of peak voxel activity were identified using NIRS-SPM (Tak & Ye, 2014; Ye et al., 2009).

Channel-wise contrast effects

Although voxel-wise analysis offers the best estimate of spatial localization by virtue of interpolated centroids computed from spatially distributed signals, this method is susceptible to false positive findings as a result of multiple voxel comparisons. One alternative approach takes advantage of the coarse spatial sampling characteristic of fNIRS by using the discrete channels as the analysis unit. This channel-wise approach compromises estimates of spatial location, but optimizes statistical validity by reducing the number of comparisons. All channel locations for each participant were converted to MNI space and registered to median locations using non-linear interpolation. Once in normalized space, comparisons across conditions were based on discrete channel units as originally acquired rather than the voxel units.

Conjunction between Voxel-wise and Channel-wise approaches

The decision rule for reported results was a conjunction between the two analysis methods, voxel-wise and channel-wise, in which results of each analysis were required to meet a minimal statistical threshold of p < 0.05. Statistical confidence is enhanced by this conjunction rule, as non-duplicated findings are not included.
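The conjunction rule amounts to a logical AND over the two analyses. A minimal sketch, with a hypothetical function name, is:

```python
def meets_conjunction(p_voxel, p_channel, alpha=0.05):
    """Report a finding only if both analyses pass the threshold."""
    return p_voxel < alpha and p_channel < alpha
```

For example, a region with p = 0.009 in the voxel-wise analysis and p = 0.042 in the channel-wise analysis satisfies the rule, whereas a region that passes only one of the two analyses does not.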

Functional Connectivity

Psychophysiological Interaction (PPI) analysis (Friston et al., 1994, 2003) using the gPPI toolbox (McLaren et al., 2012) with SPM8 was employed to measure the strength of functional connections between remote brain regions. The PPI analysis is described with the following equations:

| [1] |

| [2] |

in which H is the hemodynamic response function and H(x) denotes the convolution of signal x with kernel H. gp is the demeaned time course of the task, in which 1 represents task time and -1 represents a rest period. βi is the beta value of the PPI, and βp and βk are the beta values for the task and the time course of the seed, respectively. Yk is the fNIRS data obtained at the seed region. In this study, seed region k is the functionally defined cluster based on results of the general linear contrast. xa is the estimated neural activity of the seed region, and ei is the residual error.
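As a reading aid, the standard PPI model can be written in the notation defined above as follows. This is a sketch assembled from those symbol definitions; the arrangement of terms and the symbol $Y_i$ (the signal at a target location $i$) are assumptions, not a transcription of Equations 1 and 2.

$$Y_k = H(x_a)$$

$$Y_i = H(x_a \cdot g_p)\,\beta_i + H(g_p)\,\beta_p + Y_k\,\beta_k + e_i$$

In this form, the first expression relates the measured seed signal to the underlying neural activity, and $\beta_i$ in the second expression weights the interaction between seed activity and task, which is the quantity tested for connectivity changes between conditions.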

Wavelet Analysis and Cross-Brain Coherence

Cross-brain coherence measures based on wavelet analysis and related correlation techniques have been applied in neuroimaging studies to confirm synchronous neural activation between two individuals and to analyze cross-brain synchrony during either live interpersonal interactions or delayed story-telling and listening paradigms (Cui et al., 2012; Jiang et al., 2012; Scholkmann et al., 2013; Tang et al., 2016; Kawasaki et al., 2013; Hasson et al., 2004; Dumas et al., 2010; Liu et al., 2017). Wavelet analysis decomposes a time-varying signal into frequency components. Cross-brain coherence is measured as a correlation between two corresponding frequency components (Torrence & Compo, 1998; Lachaux et al., 1999, 2002), and is represented as a function of the period of the frequency components.
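A simplified version of this computation is sketched below. It uses a Morlet wavelet transform computed with the FFT and smooths the spectra in time only, then averages over time to give one coherence value per period. The function names, the Morlet parameter, and the smoothing window are assumptions chosen for illustration; this is not the implementation used in the study.

```python
import numpy as np

OMEGA0 = 6.0  # Morlet central frequency (a common default, assumed here)

def morlet_cwt(x, dt, periods):
    """Continuous Morlet wavelet transform at the given Fourier periods."""
    n = x.size
    x_hat = np.fft.fft(x - x.mean())
    omega = 2.0 * np.pi * np.fft.fftfreq(n, d=dt)
    # Convert Fourier period to wavelet scale for the Morlet wavelet.
    scales = np.asarray(periods, dtype=float) * (OMEGA0 + np.sqrt(2.0 + OMEGA0 ** 2)) / (4.0 * np.pi)
    coeffs = np.empty((scales.size, n), dtype=complex)
    for i, s in enumerate(scales):
        psi_hat = np.where(omega > 0, np.exp(-0.5 * (s * omega - OMEGA0) ** 2), 0.0)
        psi_hat *= np.pi ** -0.25 * np.sqrt(2.0 * np.pi * s / dt)
        coeffs[i] = np.fft.ifft(x_hat * psi_hat)
    return coeffs, scales

def smooth_in_time(rows, scales, dt):
    """Gaussian smoothing along time, with a width proportional to the scale."""
    n = rows.shape[1]
    out = np.empty_like(rows)
    for i, s in enumerate(scales):
        half = min(int(np.ceil(3.0 * s / dt)), (n - 1) // 2)
        k = np.arange(-half, half + 1) * dt
        kernel = np.exp(-0.5 * (k / s) ** 2)
        kernel /= kernel.sum()
        out[i] = np.convolve(rows[i], kernel, mode="same")
    return out

def wavelet_coherence(x, y, dt, periods):
    """Time-averaged squared wavelet coherence between x and y, per period."""
    wx, scales = morlet_cwt(x, dt, periods)
    wy, _ = morlet_cwt(y, dt, periods)
    cross = smooth_in_time(wx * np.conj(wy), scales, dt)
    power_x = smooth_in_time(np.abs(wx) ** 2, scales, dt)
    power_y = smooth_in_time(np.abs(wy) ** 2, scales, dt)
    coherence = np.abs(cross) ** 2 / (power_x * power_y)
    return coherence.mean(axis=1)
```

The smoothing step is essential: without it, the ratio is identically 1 at every time point and period. Two signals from different brains that share an oscillation at a given period yield a value near 1 at that period, while independent signals yield lower values.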

To confirm this coherence method, we performed a test experiment using visual checkerboards reversing at different rates (see Supplementary Methods Section). If two participants viewed the same sequence of flashing checkerboards, signals in their corresponding visual cortices would be expected to be 100% synchronous. If, however, two participants viewed different sequences of flashing checkerboards, signals in their corresponding visual cortices would be expected to show less or no synchronous neural activity, and the wavelet analysis would therefore be expected to show no or only partial coherence. Further details of this validating experiment are outlined in the supplementary information, and the observations confirm these expectations.

In the present study, cross-brain coherence between dyads was measured between pairs of brain regions. Individual channels were grouped into anatomical regions based on shared anatomy, which served to optimize signal-to-noise ratios and reduce potential Type I errors due to multiple comparisons. The average number of channels in each region was 1.68 ± 0.70. Grouping was achieved by identifying 12 bilateral ROIs from the acquired channels and automatically assigning the channels to these groups. The ROIs were: 1) angular gyrus (BA39); 2) dorsolateral prefrontal cortex (BA9); 3) dorsolateral prefrontal cortex (BA46); 4) pars triangularis (BA45); 5) supramarginal gyrus (BA40); 6) fusiform gyrus (BA37); 7) middle temporal gyrus (BA21); 8) superior temporal gyrus (BA22); 9) somatosensory cortex (BA1, 2, and 3); 10) premotor and supplementary motor cortex (BA6); 11) subcentral area (BA43); and 12) frontopolar cortex (BA10) (see the rendering in Figure 4B for the left hemisphere and the median centroid coordinates in Table 2).
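The grouping step can be pictured as averaging the signals of all channels assigned to the same region. The sketch below assumes that each channel already carries a single region label; the function name and the labeling scheme are hypothetical.

```python
import numpy as np

def average_by_region(data, channel_regions):
    """Average channel time courses within each anatomical region.

    data has shape (n_channels, n_samples); channel_regions holds one
    region label per channel (assumed to be assigned beforehand).
    """
    regions = {}
    for label in sorted(set(channel_regions)):
        idx = [i for i, r in enumerate(channel_regions) if r == label]
        regions[label] = data[idx].mean(axis=0)
    return regions
```

Averaging within a region reduces uncorrelated noise, and working with 12 regions per hemisphere rather than individual channels reduces the number of region pairs that are tested.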

Table 2. Channel groups and group-averaged centroids.

Channels (See Figure 4B) are grouped according to common anatomical regions (left column), including hemisphere, average number of channels, average MNI coordinates, anatomical notation for the region label, Brodmann’s Area (BA), and probability of inclusion.

| Group Name | Hemisphere | nCh* | MNI X | MNI Y | MNI Z | Anatomical Region | BA | Probability |
|---|---|---|---|---|---|---|---|---|
| Angular Gyrus | Left | 0.50 | -58 | -68 | 27 | Angular Gyrus | 39 | 0.95 |
| | Right | 0.45 | 56 | -71 | 23 | Angular Gyrus | 39 | 0.91 |
| DLPFC BA9 | Left | 1.53 | -18 | 50 | 44 | Dorsolateral Prefrontal Cortex | 9 | 1.00 |
| | Right | 1.40 | 23 | 48 | 45 | Dorsolateral Prefrontal Cortex | 9 | 1.00 |
| DLPFC BA46 | Left | 1.73 | -40 | 51 | 28 | Dorsolateral Prefrontal Cortex | 46 | 0.77 |
| | | | | | | Pars Triangularis | 45 | 0.23 |
| | Right | 1.95 | 42 | 51 | 26 | Dorsolateral Prefrontal Cortex | 46 | 0.75 |
| | | | | | | Pars Triangularis | 45 | 0.25 |
| Pars Triangularis | Left | 2.85 | -55 | 34 | 13 | Pars Triangularis | 45 | 1.00 |
| | Right | 2.83 | 58 | 34 | 14 | Pars Triangularis | 45 | 1.00 |
| Supramarginal Gyrus | Left | 0.90 | -66 | -37 | 39 | Supramarginal Gyrus | 40 | 0.75 |
| | | | | | | Primary Somatosensory Cortex | 2 | 0.21 |
| | Right | 0.90 | 69 | -35 | 40 | Supramarginal Gyrus | 40 | 0.77 |
| | | | | | | Primary Somatosensory Cortex | 2 | 0.22 |
| Fusiform Gyrus | Left | 1.58 | -64 | -57 | -3 | Fusiform Gyrus | 37 | 0.89 |
| | Right | 1.80 | 66 | -56 | -3 | Fusiform Gyrus | 37 | 0.89 |
| MTG | Left | 2.35 | -69 | -19 | -7 | Middle Temporal Gyrus | 21 | 0.90 |
| | Right | 2.65 | 71 | -18 | -7 | Middle Temporal Gyrus | 21 | 0.87 |
| STG | Left | 2.40 | -68 | -33 | 13 | Superior Temporal Gyrus | 22 | 0.88 |
| | Right | 2.58 | 71 | -32 | 12 | Superior Temporal Gyrus | 22 | 0.93 |
| Somatosensory Area | Left | 2.15 | -65 | -21 | 39 | Primary Somatosensory Cortex | 2 | 0.49 |
| | | | | | | Primary Somatosensory Cortex | 1 | 0.38 |
| | Right | 1.78 | 66 | -22 | 41 | Primary Somatosensory Cortex | 1 | 0.50 |
| | | | | | | Primary Somatosensory Cortex | 2 | 0.36 |
| Pre-Motor Cortex | Left | 1.10 | -62 | 6 | 26 | Pre- and Supplementary Motor Cortex | 6 | 0.58 |
| | | | | | | Subcentral Area | 43 | 0.30 |
| | Right | 1.05 | 65 | 8 | 18 | Pre- and Supplementary Motor Cortex | 6 | 0.66 |
| | | | | | | Subcentral Area | 43 | 0.20 |
| Subcentral Area | Left | 1.65 | -66 | -6 | 27 | Subcentral Area | 43 | 0.92 |
| | Right | 1.70 | 68 | -5 | 28 | Subcentral Area | 43 | 0.91 |
| Frontopolar Area | Left | 1.28 | -26 | 64 | 20 | Frontopolar Cortex | 10 | 0.71 |
| | | | | | | Dorsolateral Prefrontal Cortex | 46 | 0.29 |
| | Right | 1.10 | 32 | 64 | 20 | Frontopolar Cortex | 10 | 0.65 |
| | | | | | | Dorsolateral Prefrontal Cortex | 46 | 0.35 |

nCh: Average number of channels, BA: Brodmann’s Area

Results

Voxel-wise analysis

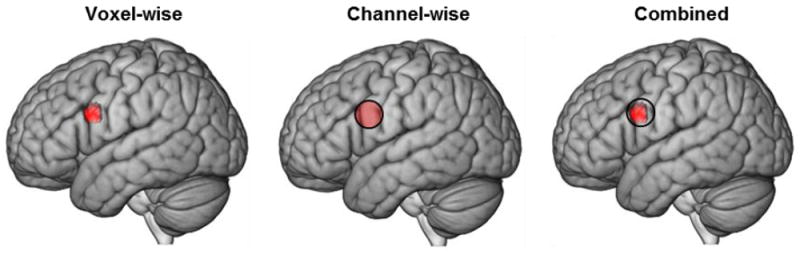

A unique eye-to-eye contact effect [eye-to-eye] > [eye-to-picture] was observed in the left hemisphere (Figure 5, left panel) with peak voxel located at (-54, 8, 26) (p = 0.009). In accordance with the NIRS-SPM atlas (Tak et al., 2016; Mazziotta et al., 2001), the spatial distribution of the cluster included pars opercularis (Brodmann’s Area (BA) 44, part of Broca’s Area, known to be associated with speech production), 40%; pre-motor/supplementary motor cortex (BA6, also part of the frontal language system associated with speech articulation), 45%; and the subcentral area (BA43, a region without a previously described functional role), 12%.

Figure 5. Contrast effects.

fNIRS deOxyHb signals, n = 38. Voxel-wise analysis (left): Colored region on the left hemisphere of the rendered brain indicates the contrast [Eye-to-Eye > Eye-to-Picture]. MNI coordinates = (-54, 8, 26); peak voxel t = 2.4; p = 0.009; n of voxels (an index of regional area) = 75. Channel-wise analysis (middle): Colored region on the left hemisphere of the rendered brain, Channel 28 (see Figure 4B), indicates the contrast [Eye-to-Eye > Eye-to-Picture]. MNI coordinates = (-58, 2, 23); peak voxel t = 1.78; p = 0.042. The right rendering shows the correspondence between the voxel-wise cluster (red area) and channel 28 (open circle). The significant regions overlap by 57%.

Channel-wise analysis

Similar to the voxel-wise analysis, a unique eye-to-eye contact effect [eye-to-eye > eye-to-picture] was observed in the left hemisphere (Figure 5, middle panel) within channel 28 (average central location: (-58, 2.4, 23), p = 0.042). In accordance with the NIRS-SPM atlas, the spatial distribution of the channel (see Figure 4A) included pre- and supplementary motor cortex (BA6, 57%) and the subcentral area (BA43, 43%). These two anatomical regions overlap with two of the three regions that subtend 57% of the cluster identified in the voxel-wise analysis, and meet the conjunction criteria for a consensus between the two analyses.

Combined voxel-wise and channel-wise approaches

Based on the decision rule to report only the conjunction of voxel-wise and channel-wise findings, we take the cluster at and around channel 28 as the main finding, and conclude that the eye-to-eye effect is greater than the eye-to-picture effect for this left frontal cluster.

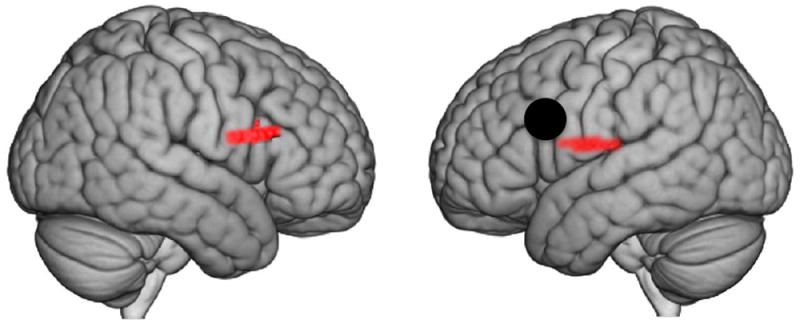

Functional Connectivity

Increases in functional connectivity between the above eye-to-eye contact cluster and remote brain regions during eye-to-eye contact relative to eye-to-picture gaze are taken as evidence of large-scale neural mechanisms sensitive to eye-to-eye contact. The functionally-determined eye-to-eye cluster with peak voxel at (-54, 8, 26) is shown as the seed (black dot) on Figure 6 and Table 3 (left column) and reveals functional connections to a right hemisphere homologue to the seed cluster with peak voxel at (56, 22, 18) (p = 0.008), which includes pars triangularis, 52%; pars opercularis, 32%; and pre-motor and supplementary motor cortex, 11% (Table 3, top rows). A left hemisphere cluster was also functionally connected to the seed cluster (Table 3, bottom rows) with peak voxel at (-60, -16, 20) (p = 0.018), including superior temporal gyrus (STG, part of Wernicke’s Area, associated with receptive language processes) 40%; primary somatosensory cortex, 18%; and the subcentral area, 35%. Together, these functional connectivity results provide evidence for a long-range left frontal to temporal-parietal network including substantial overlap with canonical language processing systems associated with Broca’s and Wernicke’s regions.

Figure 6. Functional Connectivity by Psychophysiological Interaction (PPI), n = 38 (deOxyHb signals).

Black dot indicates the centroid of the functionally determined seed region (-54, 8, 26), based on the [Eye-to-Eye > Eye-to-Picture] contrast (see Figure 5, left panel). The result does not change with seed (-58, 2, 23) based on the centroid of channel 28 (see Figure 5, middle panel). Colored areas show regions with higher functional connectivity to the seed during eye-to-eye contact than eye-to-picture gaze (p ≤ 0.025). See Table 3.

Table 3. Functional connectivity based on psychophysiological interaction [Eye-to-Eye > Eye-to-Picture].

Functional connectivity increases relative to picture gaze are shown for the functionally defined anatomical seed (left column). Areas included in the functional networks are identified by coordinates, levels of statistical significance (t-value and p), regions included in the cluster including Brodmann’s Area (BA), estimated probability, and n of voxels, which is a measure of cluster volume.

| Functionally Defined Seed | Connected Peak Voxel Coordinates* | t value | p | Anatomical Regions in Cluster | BA | Probability | n of Voxels |
|---|---|---|---|---|---|---|---|
| Eye-to-eye Contact Effect (-54, 8, 26) (Pre and Suppl. Motor, Pars Opercularis, Subcentral Area) | (56, 22, 18) | 2.54 | 0.008 | Pars Triangularis | 45 | 0.52 | 119 |
| | | | | Pars Opercularis | 44 | 0.32 | |
| | | | | Pre and Supplementary Motor Cortex | 6 | 0.11 | |
| | (-60, -16, 20) | 2.16 | 0.018 | Superior Temporal Gyrus | 22 | 0.40 | 48 |
| | | | | Subcentral Area | 43 | 0.35 | |
| | | | | Primary Somatosensory Cortex | 2 | 0.18 | |

Coordinates are based on the MNI system and (-) indicates left hemisphere.

Cross-Brain Coherence

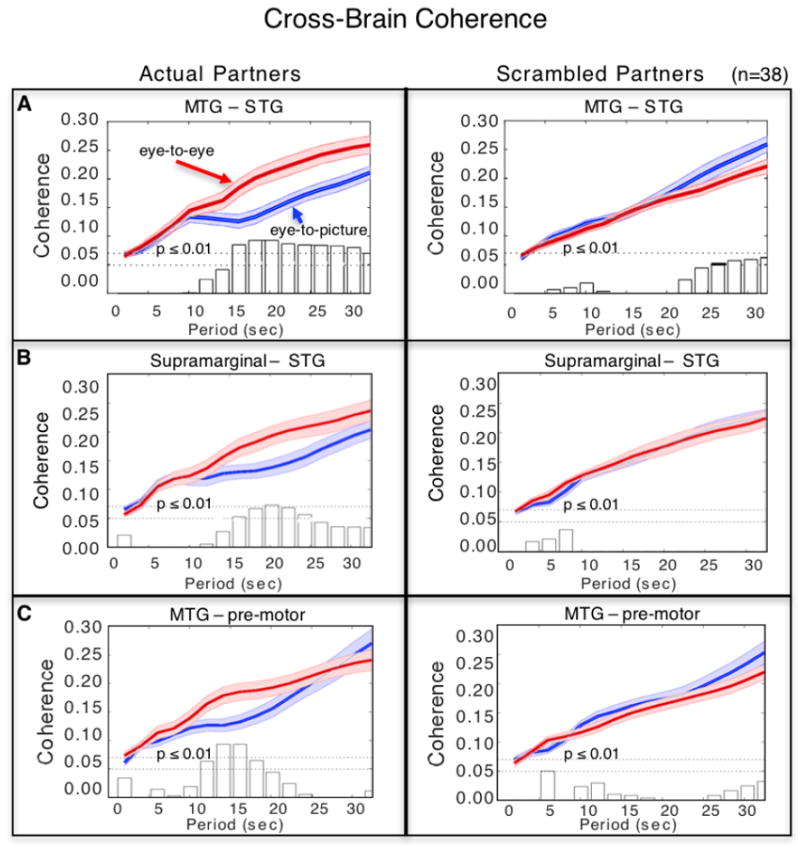

The cross-brain synchrony hypothesis, that eye-to-eye contact is associated with increased coherence of signals between the interacting dyads, was evaluated using wavelet analysis (Cui et al., 2012; Jiang et al., 2012). Eye-to-eye and eye-to-picture signals acquired from the 12 predefined anatomical regions (Figure 4B, Table 2) were decomposed into wavelet kernels that were correlated across brains. Temporal period (x-axis, seconds) and cross-brain coherence (y-axis, correlation) functions were determined for the eye-to-eye (red) and eye-to-picture (blue) conditions (Figure 7). The plotted functions represent the average coherence for each of the periods on the x-axis. The expectation based on our hypothesis is that cross-brain synchrony for the eye-to-eye condition would exceed cross-brain synchrony for the eye-to-picture condition for pairs of brain areas specialized for interactive functions that depend upon real and dynamic eyes. Further, we expect that the coherence difference will not be present when partners are computationally exchanged (i.e., when participants are randomly assigned to scrambled rather than true partners; see Figure 7, right column). The vertical bars along the x-axis of the figure report t-values reflecting differences between the two conditions. The top dotted horizontal line indicates p < 0.01, and the bottom line indicates p < 0.05. The decision rule is satisfied with two or more contiguous bars at p < 0.01.

Figure 7. Cross-brain coherence.

Signal coherence between participants (y-axis) is plotted against the period of the frequency components (x-axis) for the eye-to-eye (red) and the eye-to-picture (blue) conditions (shaded areas: ±1 SEM). Bar graphs indicate significance levels for the separations between the two conditions for each of the period values on the x-axis. The upper horizontal dashed line indicates (p ≤ 0.01) and the lower line indicates (p ≤ 0.05). Left panels show coherence between actual partners, and right panels show coherence between scrambled partners. Cross-brain coherence is shown between A. middle temporal gyrus (MTG) and superior temporal gyrus (STG) (Partners: p = 0.001, t = 3.79; Scrambled: no significant effect); B. supramarginal gyrus and STG (Partners: p = 0.008, t = 2.83; Scrambled: no significant effect); and C. MTG and pre-motor cortex (Partners: p = 0.001, t = 4.00; Scrambled: no significant effect).

Comparison of coherence between actual partners and “scrambled” partners distinguishes between two interpretations: 1) cross-brain correlations are due to similar operations regardless of cross-brain interactions, or 2) cross-brain correlations are due to events specific to the partner interaction. If the coherence between a pair of brain areas remains significant during the scrambled cases, then we decide in favor of option 1. If coherence does not survive the partner scrambling technique, then we conclude in favor of option 2. Cross-brain coherence between signals within three regional pairs, namely middle temporal gyrus (MTG) and superior temporal gyrus (STG) (Figure 7A), supramarginal gyrus (SMG) and STG (Figure 7B), and pre- and supplementary motor cortex and MTG (Figure 7C), was higher during the [eye-to-eye] condition than during the [eye-to-picture] condition (p < 0.01) for periods of 12–24 s, a range between the high and low frequency noise ranges within the 30 s task cycle.
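The logic of the partner-scrambling control can be sketched as follows. Each participant is re-paired with a member of a different dyad, and the same similarity measure is recomputed. In this illustration a Pearson correlation stands in for wavelet coherence, and the function names and the random re-pairing scheme are assumptions rather than the procedure used in the study.

```python
import numpy as np

def scramble_partners(n_dyads, rng):
    """Return a random re-pairing in which no one keeps their true partner."""
    if n_dyads < 2:
        raise ValueError("need at least two dyads to scramble partners")
    while True:
        perm = rng.permutation(n_dyads)
        if not np.any(perm == np.arange(n_dyads)):
            return perm

def mean_pair_similarity(signals_a, signals_b, partner_index):
    """Average correlation between each A signal and its assigned B signal.

    signals_a and signals_b have shape (n_dyads, n_samples);
    partner_index[i] gives the B participant paired with A participant i.
    """
    r = [np.corrcoef(signals_a[i], signals_b[j])[0, 1]
         for i, j in enumerate(partner_index)]
    return float(np.mean(r))
```

If similarity is driven by the task alone (option 1), it should persist for scrambled pairs; if it is driven by events within each interaction (option 2), it should be present for true pairs, `np.arange(n_dyads)`, and largely vanish for scrambled pairs.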

Discussion

This study extends conventional neuroimaging of task-based eye-to-eye functions using single brains in isolation to two brains during live eye-to-eye contact by introducing a hyperscanning paradigm using fNIRS to simultaneously acquire functional responses from two individuals. The overall goal was to investigate the neural basis for online social interaction as it relates to eye-to-eye contact. Left frontal regions found to be more sensitive to eye-to-eye contact than to eye-to-picture gaze were functionally connected to left temporal-parietal regions, contributing to the accumulation of evidence for integrated face-to-language processing during eye-to-eye contact (Baron-Cohen et al., 1997). Further, these findings are consistent with feature processing mechanisms associated with the eye-to-eye contact task, as anticipated by the specificity hypothesis proposing that within-brain neural activity associated with eye-to-eye contact will be greater than neural activity associated with eye-to-picture gaze.

Here we introduce hemodynamic brain-to-brain coherence as a neural marker of shared processes associated with specific pairs of anatomical regions during eye-to-eye contact. Signal components (wavelets), originating from within the temporal-parietal (MTG, STG, SMG) and frontal (pre- and supplementary motor cortex) systems of the two interacting brains were more synchronized during online eye-to-eye conditions than during off-line eye-to-picture conditions. These results are consistent with rapid processing streams possibly optimized for facial motion as well as automatic and reciprocal information exchanges as would be expected for online streaming of social cues such as micro-expressions that automatically occur during natural interpersonal interactions. Cross-brain coherence was observed primarily between temporal-parietal systems with known receptive and interpretive language functions (Hagoort, 2014; Poeppel, 2014). In this case, these region pairs were engaged during rapidly-exchanged streaming of visual information that was presumably sent and received between participant brains. This synchrony was partner-specific, suggesting that the receptive language system also functions as a receptive gateway for non-verbal social cues and neural interpretation.

Together, results of this study support the widely held view that eye-to-eye contact is a socially salient and fundamental interactive stimulus that is associated with specialized neural processes. Specifically, we find that these processes include left frontal, subcentral, and temporal-parietal systems. Although the left frontal and temporal-parietal systems include neural regions with known associations to language processes, the subcentral area (BA43) remains a region without a known function. The subcentral area is formed by the union of the pre- and post-central gyri at the inferior end of the central sulcus in face-sensitive topography, with internal projections that extend into the supramarginal area over the inner surface of the operculum with a medial boundary at the insular cortex (Brodmann & Garey, 1999). The finding of subcentral area activity within the contrast [eye-to-eye > eye-to-picture] and functional connections to the eye-to-eye effect cluster suggests a possible role for this area within a nexus of distributed neural mechanisms associated with social interaction and eye contact. This interesting speculation is a topic for further research and model development.

Classical theoretical frameworks arising from models of attention classify cognition into fast and slow processes, sometimes referred to as automatic and controlled processes, respectively (Posner & Snyder, 1975; Shiffrin & Schneider, 1977). Similarly, in the parallel distributed processing framework (Cohen et al., 1990), automaticity is modeled as a rapid continuous process in contrast to attentional control, which is modeled as a slow intermittent process similar to hierarchical feature-based processing in vision. Here these processing models are considered in the context of the findings from this study.

Hierarchical models of task-based feature detection and human face perception (Allison et al., 2000; Emery, 2000; Ethofer et al., 2011; Kanwisher et al., 1997; Rossion et al., 2003; Schneier et al., 2011; Senju & Johnson, 2009; Zhen et al., 2013) are grounded in relatively slow, feature extracting, visual responses. The contrast and functional connectivity results shown here confirm that these models can also be applied to neural events that occur in natural environments and social interactions that are spontaneous and not determined as in the classical stimulus-response paradigms. Decomposition of fNIRS signals by wavelet analysis exposes component frequencies higher than the task-based 30 s cycle that are correlated across brains during eye-to-eye contact. Specifically, coherence across brains that is greater for the eye-to-eye condition than the eye-to-picture condition occurred within periods between 10 s and 20 s (see Figure 7), which is shorter (higher frequency) than the task period of 30 s, and raises novel theoretical considerations related to mechanisms for high-level perception of rapidly streaming, socially meaningful visual cues.

A related theoretical approach to high-level visual perception previously referred to as Reverse Hierarchy Theory (Ahissar & Hochstein, 2004; Hochstein & Ahissar, 2002; Hochstein et al., 2015) has addressed a similar question arising from visual search studies. This framework proposes mechanisms for assessments of visual scenes based on the computation of global statistics. Accordingly, perception of the “gist” of a scene does not depend upon conscious perception of underlying local details, but rather is dependent upon a rapid computation of a deviation from the global statistic. This accounts for observations of visual search in which very rapid “pop out” perceptions occur during search operations when an element differs categorically from all other elements in a set (Treisman & Gelade, 1980). This classical framework is consistent with reported lesion effects associated with the left temporal-parietal junction, suggesting specificity of the region for the perception of global rather than local features (Robertson et al., 1988). Signals arising from the left temporal-parietal system were also found in this experiment to synchronize across brains during eye-to-eye contact, and support the suggestion that this region is sensitive to rapid global processes. This “high-level-first” model of social perception, combined with the suggestion of a specific temporal-parietal substrate, provides a possible theoretical framework for the detection and interpretation of rapidly streaming social information in eye-to-eye contact, as observed in this study.

A hyperbrain network for neural processing during eye-to-eye contact

Although well-known neural systems that underlie spoken language exchanges between individuals continuously receive and transmit auditory signals during spontaneous and natural communications, similar models are rare (if they exist at all) for continuously streaming visual information. Our results suggest that language-sensitive networks, and their capabilities for rapid on-line streaming, may also be employed during eye-to-eye contact between dyads, consistent with multimodal capabilities for these well-known interactive systems.

This framework contributes a foundation for a mechanistic understanding of neural computations related to eye-to-eye contact. The findings suggest that specific left frontal, central, and temporal-parietal regions that are typically engaged during speech reception and production (Hagoort, 2014; Poeppel, 2014) plus the subcentral area are seemingly “called to action” and bound together during eye-to-eye contact. One interpretation of the coherence results suggests that signals may synchronize across brains in the service of functions presumably related to sending, receiving, and interpreting rapidly streaming, socially relevant, visual cues. However, another interpretation of the coherence results suggests that synchronization of signals across brains is evidence for simultaneous processing of the same information on the same time scale. Thus, processes represented by coherence would be socially informative rather than specifically interactive. Clarity between these two options might be enhanced by future investigations where a dynamic video condition is employed for additional comparisons of coherence when viewing a dynamic but non-interactive face stimulus as opposed to viewing either a static face picture or real eye-to-eye contact. Nonetheless, the observation that the eye-to-eye coherence effect was specific for actual partners and not for computationally-selected (scrambled) partners (Figure 7, right column) suggests that coherence is sensitive to rapid and automatic communicative signs between interacting individuals. This approach raises the new question of how rapid visual signals are automatically perceived and interpreted within the context of task-based deliberate processes during live social interaction, and opens novel future directions for investigations aimed at understanding the interwoven neural mechanisms that underlie complex visual and social interactions in natural, online situations.

Advantages, limitations, and future directions

Comparison of real eye-to-eye interactions with gaze directed at static pictures of faces and eyes

A direct-gaze photograph of a face is a standard stimulus frequently applied to investigate eye contact effects (Senju & Johnson, 2009; Johnson et al., 2015), particularly in fMRI acquisitions. This was a primary consideration in the choice of a control stimulus in this study. The question of a difference between the two conditions was considered a key starting point. If there were no difference, then we would conclude that the real and static direct gaze engaged the same neural systems. If, however, as was the case, we observed a difference, then we would conclude that real eyes matter. The “real eyes matter” result could be interpreted as either due to a difference in the perception of dynamic and static images, or due to an interaction effect as hypothesized. A static photograph of a face and eyes lacks several aspects of real interacting faces, including movement and response contingencies, that are relevant to the interpretation of comparisons with real faces and eyes. Given this, the study might have employed a dynamic face video as a control condition to theoretically provide a closer comparison with the real face absent the responses due to motion alone. It is well-established that facial motion and dynamic facial expressions enhance signal strength in face recognition and emotion processing areas such as the right superior temporal sulcus, the bilateral fusiform face area, and the inferior occipital gyrus (Schultz & Pilz, 2009; Trautmann et al., 2009; Recio et al., 2011). Further, dynamic faces enhance differentiation of emotional valences (Trautmann-Lengsfeld et al., 2013), and engage motion sensitive systems (Furl et al., 2010; Sato et al., 2004). However, these stimulus complexities and systems are associated with faces and context processing, whereas the focus of this study was on eyes only (which was confirmed by the eye-tracking and gaze locations).

Although the focus on eyes in this study reduced the possible impact of face processing mechanisms on the findings, the possible influence of subtle eye movements not involved in interpersonal interaction cannot be totally ruled out and requires further investigation. For example, social but non-interactive stimuli typically contain meaningful facial expressions, and specifically controlling for these effects would strengthen evidence for or against interaction-specific neural activations. However, the findings of this study show left-hemisphere lateralized effects in keeping with dominant language-related functions, whereas findings related to socially meaningful but non-interactive facial movement and emotional expressions are predominantly reported in the right hemisphere (Sato et al., 2004; Schultz & Pilz, 2009). These considerations suggest that the observations reported here are not due primarily to random eye motion or socially meaningful face perceptions, as further suggested by the loss of coherence when partners were computationally mixed. However, the question of what stimulus features actually drive visual interpersonal interactions remains an open topic for future investigative approaches.
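The logic of the partner-mixing control can be illustrated with a minimal sketch on synthetic data. It assumes, purely for illustration, that true partners share a common signal component, and it uses a plain Pearson correlation as a stand-in for the wavelet coherence used in the study. The function names, number of dyads, and noise levels are hypothetical and are not taken from the paper.

```python
import math
import random
from statistics import mean


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return num / den


def simulate_dyads(n_dyads=8, n_samples=600, seed=0):
    """Synthetic dyads: each pair shares one component plus independent noise (assumption)."""
    rng = random.Random(seed)
    dyads = []
    for _ in range(n_dyads):
        shared = [rng.gauss(0, 1) for _ in range(n_samples)]
        a = [s + rng.gauss(0, 1) for s in shared]
        b = [s + rng.gauss(0, 1) for s in shared]
        dyads.append((a, b))
    return dyads


def coupling_real_vs_mixed(dyads):
    """Mean |r| for true partners versus every mismatched A-B pairing."""
    real = [abs(pearson(a, b)) for a, b in dyads]
    mixed = [
        abs(pearson(dyads[i][0], dyads[j][1]))
        for i in range(len(dyads))
        for j in range(len(dyads))
        if i != j
    ]
    return mean(real), mean(mixed)


dyads = simulate_dyads()
real_mean, mixed_mean = coupling_real_vs_mixed(dyads)
print(f"true partners: {real_mean:.2f}, mixed partners: {mixed_mean:.2f}")
```

In this toy setting, coupling that depends on a component shared only within a dyad drops toward zero when partners are re-paired, which is the pattern a partner-specific effect would be expected to show.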

Spatial resolution

The technical solutions to hyperscanning in ecologically valid environments using fNIRS, as opposed to chaining two or more fMRI scanners together (Montague et al., 2002; Saito et al., 2010), come with unique features and limitations. Among the limitations are the relatively low spatial resolution (approximately 3 cm) and the restriction of signal acquisition to superficial cortical tissues. The spatial resolution limitation is graphically represented by the channel layout (Figure 4A), and the “fuzzy” colored patches illustrated in Figure 4B provide a realistic representation of regional anatomical boundaries. Since exact locations for each of the reported areas are not acquired, anatomical results from this study are appropriately considered approximate and are subjects for future investigation with techniques that provide higher spatial resolution.

Temporal resolution

The signal acquisition rate (30 Hz) provides a relatively high temporal resolution that facilitates computations of connectivity and coherence across brains. Dense temporal sampling enriches the information content of the signal, contributing to the sensitivity of the functional connectivity and wavelet analyses employed for the GLM and cross-brain coherence measures. In the case of time-locked and task-based paradigms, the conventional goal is to isolate low-frequency, functionally specific neural responses. However, conventional methods do not interrogate neural mechanisms responsive to high-frequency, spontaneously generated, interpersonal, and continuously streaming information between interacting individuals. The temporal advantages of dual-brain studies using fNIRS facilitate a novel genre of experimental and computational approaches. These include new analysis tools that do not assume time-locked block and event-related designs, and that capture signals generated by neural events occurring on a relatively high-frequency time scale. Acquisition of signals that provide insight into the neurobiology engaged in detection and interpretation of these rapid, online, and transient cues constitutes a foundational basis for understanding the neurobiology of social communication. We exploit the fNIRS advantage of high temporal resolution by using the dual-brain paradigm with a signal decomposition approach (wavelet analysis) to evaluate region-specific cross-brain processes. These techniques reveal an emerging instance of functional specificity for cross-brain effects, and constitute a fundamental technical and theoretical advance for investigations of social interactions.
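As a rough illustration of how a wavelet-based coherence value can be computed between two signals sampled at 30 Hz, the sketch below convolves each signal with a complex Morlet wavelet at one period and forms a normalized cross-spectrum. This is a simplified sketch under stated assumptions and not the study's pipeline: the signals are synthetic, the 2 s period is chosen only to keep the demonstration fast, the Morlet parameter `omega0 = 6` is a common default, and coherence is averaged over the whole record rather than smoothed locally in time and scale. All function and variable names are hypothetical.

```python
import cmath
import math
import random

FS = 30.0  # acquisition rate in Hz, as stated in the text


def morlet_coefficients(signal, period_s, fs=FS, omega0=6.0):
    """Complex Morlet wavelet coefficients at a single period, by direct convolution."""
    scale = period_s * omega0 / (2 * math.pi)  # scale (s) whose carrier has this period
    half = int(3 * scale * fs)                 # truncate the kernel at +/- 3 scales
    offsets = range(-half, half + 1)
    # Complex conjugate of the Morlet wavelet, sampled at the signal rate.
    kernel = [
        cmath.exp(-1j * omega0 * (k / (fs * scale)))
        * math.exp(-0.5 * (k / (fs * scale)) ** 2)
        for k in offsets
    ]
    # Keep only samples where the whole kernel fits inside the record.
    return [
        sum(signal[n + k] * w for k, w in zip(offsets, kernel))
        for n in range(half, len(signal) - half)
    ]


def coherence(wx, wy):
    """Squared coherence of two coefficient series, averaged over the whole record."""
    cross = sum(a * b.conjugate() for a, b in zip(wx, wy))
    power_x = sum(abs(a) ** 2 for a in wx)
    power_y = sum(abs(b) ** 2 for b in wy)
    return abs(cross) ** 2 / (power_x * power_y)


rng = random.Random(1)
n = int(60 * FS)   # 60 s of synthetic data
period = 2.0       # hypothetical period of interest, in seconds
shared = [math.sin(2 * math.pi * i / (period * FS)) for i in range(n)]
partner_a = [s + rng.gauss(0, 1) for s in shared]
partner_b = [s + rng.gauss(0, 1) for s in shared]  # shares the oscillation with A
stranger = [rng.gauss(0, 1) for _ in range(n)]     # no shared component

w_a = morlet_coefficients(partner_a, period)
w_b = morlet_coefficients(partner_b, period)
w_s = morlet_coefficients(stranger, period)
coh_coupled = coherence(w_a, w_b)
coh_uncoupled = coherence(w_a, w_s)
print(f"coupled: {coh_coupled:.2f}, uncoupled: {coh_uncoupled:.2f}")
```

Because the measure is a normalized cross-spectrum, it lies between 0 and 1 and does not depend on a block or event-related design, only on a consistent phase relation between the two signals at the chosen period.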

Signal processing and validation

The exclusive use of the deOxyHb signal for this investigation assures a close approximation to the blood oxygen level-dependent (BOLD) signal that is acquired for neuroimaging using fMRI and assumed to be a proxy for neural events. This assumption is supported for the deOxyHb signal in this investigation by the EEG results (a direct measure of neural activity; see Supplementary Materials). However, as indicated above, the amplitude of the deOxyHb signal is reduced relative to the OxyHb signal (Zhang et al., 2016), which further challenges data analysis procedures. Although hemodynamic signals acquired by fNIRS are typically analyzed with tools and assumptions similar to those applied to fMRI data (Ye et al., 2009), analogous statistical simulations and validations have not been performed on fNIRS data. The validity of statistical approaches commonly applied to neuroimaging studies based on contrasts of fMRI data has recently been called into question (Eklund et al., 2016). However, signals acquired by the two techniques (fMRI and fNIRS) differ in several major respects that are expected to impact these concerns: fNIRS signals originate from a much larger unit size, are acquired at a higher temporal resolution, are sensitive only to superficial cortex, and are measured by absorption rather than magnetic susceptibility. Nonetheless, although to a lesser extent than for fMRI, the risk of false positive results due to multiple comparisons for the contrast results remains a concern for fNIRS data as well, and we have adopted measures to minimize this risk. The additional discrete channel-wise approach, together with a decision rule that final results must be significant on both voxel-wise and channel-wise analyses, contributes the advantages of a conjunction approach. The underlying assumption of the conjunction decision rule is that a signal due to noise will differ from a similar signal due to true neural activity in the probability that it will be found in measurements taken with multiple techniques. Since the methods employed here are not independent, the advantage of the two analyses is less than multiplicative but is nonetheless incremental. Consistency between the contrast results and the independent cross-brain coherence findings of left frontal-temporal-parietal topology, however, adds further confidence in the statistical approaches applied to these data. Further, the finding of an eye-to-eye effect with the same paradigm based on the EEG data reported in Supplementary Materials provides additional face validity, and motivates future studies using simultaneous EEG and fNIRS acquisitions.
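The "less than multiplicative" remark can be made explicit with a short worked bound. Let A and B denote a false positive in the voxel-wise and channel-wise analyses, respectively, each occurring with probability α. The symbols, the assumption of positive dependence between the two analyses, and the illustrative value α = 0.05 are introduced here for exposition and are not values reported in the study.

```latex
\begin{align}
P(A \cap B) &= P(A)\,P(B \mid A) = \alpha\,P(B \mid A), \\
\alpha^{2} &\le P(A \cap B) \le \alpha
  \quad \text{(assuming } P(B \mid A) \ge P(B) = \alpha \text{)}, \\
\alpha = 0.05 &\;\Rightarrow\; 0.0025 \le P(A \cap B) \le 0.05 .
\end{align}
```

The lower bound corresponds to fully independent analyses (the multiplicative case) and the upper bound to fully redundant analyses. Two dependent but non-identical analyses fall between these extremes, which is why the gain from the conjunction rule is incremental.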

Optode coverage

Notable limitations of this investigation also include the absence of optodes over the occipital lobe, which prevents detailed observations of visual processes. However, the current configuration of extended optode coverage over both hemispheres for both participants pioneers a new level of global sampling for fNIRS, and serves to illustrate the advantages of further developments that increase the coverage of whole heads. In future studies, we intend to populate the whole head with detectors that cover the occipital regions as well as the frontal, temporal, and parietal regions.

Acknowledgments

The authors are grateful for the significant contributions of Swethasri Dravida, MD/PhD student, Yale School of Medicine; Jeiyoun Park and Pawan Lapborisuth, Yale University undergraduates; and Dr. Ilias Tachtsidis, Department of Medical Physics and Biomedical Engineering, University College London. This research was partially supported by the National Institute of Mental Health of the National Institutes of Health under award number R01MH107513 (PI JH), Burroughs Wellcome Fund Collaborative Research Travel Grant (JAN), and the Japan Society for the Promotion of Science (JSPS) Grants in Aid for Scientific Research (KAKENHI) JP15H03515 and JP16K01520 (PI YO). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The data reported in this paper are available upon request.

Footnotes

Author contributions: J.H., X.Z., and J.A.N. designed and performed the research, analyzed data, and wrote the paper. Y.O. contributed laboratory facilities and engineering expertise for the EEG investigations included in the supplementary material.

Conflict of Interest: The authors declare no conflicts of interest.

This work has not been published previously and is not under consideration for publication elsewhere. All authors have approved the manuscript in its present form.

References

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR. A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. The Journal of Neuroscience. 2000;20(7):2683–2690. doi: 10.1523/JNEUROSCI.20-07-02683.2000.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: Role of the STS region. Trends in Cognitive Sciences. 2000;4(7):267–278. doi: 10.1016/S1364-6613(00)01501-1.

- Ahissar M, Hochstein S. The reverse hierarchy theory of visual perceptual learning. Trends in Cognitive Sciences. 2004;8:457–464. doi: 10.1016/j.tics.2004.08.011.

- Babiloni F, Astolfi L. Social neuroscience and hyperscanning techniques: Past, present and future. Neuroscience & Biobehavioral Reviews. 2014;44:76–93. doi: 10.1016/j.neubiorev.2012.07.006.

- Baron-Cohen S, Wheelwright S, Jolliffe T. Is There a “Language of the Eyes”? Evidence from Normal Adults, and Adults with Autism or Asperger Syndrome. Visual Cognition. 1997;4(3):311–331. doi: 10.1080/713756761.

- Boas DA, Dale AM, Franceschini MA. Diffuse optical imaging of brain activation: Approaches to optimizing image sensitivity, resolution, and accuracy. Neuroimage. 2004;23(Suppl 1):S275–288. doi: 10.1016/j.neuroimage.2004.07.011.

- Boas DA, Elwell CE, Ferrari M, Taga G. Twenty years of functional near-infrared spectroscopy: Introduction for the special issue. Neuroimage. 2014;85(Pt 1):1–5. doi: 10.1016/j.neuroimage.2013.11.033.

- Brodmann K, Garey L. Brodmann’s “Localisation in the cerebral cortex”. London: Imperial College Press; 1999.

- Cheng X, Li X, Hu Y. Synchronous brain activity during cooperative exchange depends on gender of partner: A fNIRS-based hyperscanning study. Human Brain Mapping. 2015;36(6):2039–2048. doi: 10.1002/hbm.22754.

- Cohen JD, Dunbar K, McClelland JL. On the control of automatic processes: A parallel distributed processing account of the Stroop effect. Psychological Review. 1990;97(3):332. doi: 10.1037/0033-295x.97.3.332.

- Cope M, Delpy DT, Reynolds EOR, Wray S, Wyatt J, van der Zee P. Methods of quantitating cerebral near infrared spectroscopy data. In: Mochizuki M, Honig CR, Koyama T, Goldstick TK, Bruley DF, editors. Oxygen Transport to Tissue X. Boston, MA: Springer US; 1988. pp. 183–189.

- Cui X, Bray S, Bryant DM, Glover GH, Reiss AL. A quantitative comparison of NIRS and fMRI across multiple cognitive tasks. Neuroimage. 2011;54(4):2808–2821. doi: 10.1016/j.neuroimage.2010.10.069.

- Cui X, Bryant DM, Reiss AL. NIRS-based hyperscanning reveals increased interpersonal coherence in superior frontal cortex during cooperation. Neuroimage. 2012;59(3):2430–2437. doi: 10.1016/j.neuroimage.2011.09.003.

- Damasio H. Human brain anatomy in computerized images. New York: Oxford University Press; 1995.

- De Jaegher H, Di Paolo E, Gallagher S. Can social interaction constitute social cognition? Trends in Cognitive Sciences. 2010;14(10):441–447. doi: 10.1016/j.tics.2010.06.009.

- De Jaegher H, Di Paolo E, Adolphs R. What does the interactive brain hypothesis mean for social neuroscience? A dialogue. Philosophical Transactions of the Royal Society B: Biological Sciences. 2016;371(1693). doi: 10.1098/rstb.2015.0379.

- Di Paolo E, De Jaegher H. The interactive brain hypothesis. Frontiers in Human Neuroscience. 2012;6(163). doi: 10.3389/fnhum.2012.00163.